Advanced Context Engineering for LLM Agents

The Problem

Modern LLMs face several fundamental challenges when deployed as agents:

- Limited Context Windows: Fixed token limits restrict information processing capacity.
- Memory Management: Difficulty balancing active context vs. long-term storage.
- Relevance Determination: Challenges in identifying the most pertinent information.
- Information Organization: Need for optimal structuring of contextual data.

These limitations create bottlenecks in complex, multi-turn interactions where agents must keep a conversation coherent while still accessing relevant historical information. As agents grow more sophisticated, effective context management increasingly separates a merely functional system from one that can truly understand and respond to complex, evolving user needs.

The System

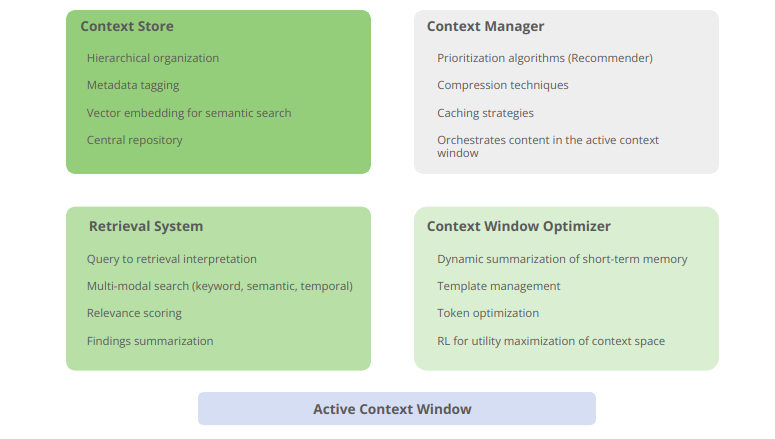

I propose a system of four interconnected components that work together to manage context intelligently.

The Context Store is a hierarchical repository that combines TF-IDF-based metadata tagging, vector embeddings for semantic search, and temporal indexing across sessions, conversations, and topics.
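As a rough illustration of how these three indexes could sit side by side, here is a minimal in-memory sketch. The class and method names (`ContextStore`, `under`, `semantic`, `between`), the three-level session/conversation/topic path, and the choice of the top three TF-IDF terms as tags are all assumptions for illustration. Embeddings are passed in precomputed because no particular embedding model is specified.

```python
import bisect
import math
import time
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Entry:
    session: str        # top level of the (assumed) hierarchy
    conversation: str   # second level
    topic: str          # third level
    text: str
    embedding: list     # vector from some embedding model (not shown)
    timestamp: float = field(default_factory=time.time)
    tags: list = field(default_factory=list)


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class ContextStore:
    """Hypothetical in-memory store; a real system would use a DB plus a vector index."""

    def __init__(self):
        self.entries = []
        self.by_path = defaultdict(list)  # hierarchy prefix -> entry indices
        self.by_time = []                 # sorted (timestamp, index) pairs

    def _tfidf_tags(self, text, k=3):
        # TF from this entry; IDF from the entries stored so far plus this one.
        terms = text.lower().split()
        n_docs = len(self.entries) + 1
        scores = {}
        for term, count in Counter(terms).items():
            df = 1 + sum(term in e.text.lower().split() for e in self.entries)
            scores[term] = (count / len(terms)) * (math.log(n_docs / df) + 1.0)
        return sorted(scores, key=lambda t: (-scores[t], t))[:k]

    def add(self, entry):
        entry.tags = self._tfidf_tags(entry.text)
        idx = len(self.entries)
        self.entries.append(entry)
        path = (entry.session, entry.conversation, entry.topic)
        for depth in range(1, len(path) + 1):  # index every prefix of the path
            self.by_path[path[:depth]].append(idx)
        bisect.insort(self.by_time, (entry.timestamp, idx))

    def under(self, *path):
        """All entries below a session, session/conversation, or full path."""
        return [self.entries[i] for i in self.by_path.get(tuple(path), [])]

    def semantic(self, query_vec, k=3):
        return sorted(self.entries, key=lambda e: -cosine(query_vec, e.embedding))[:k]

    def between(self, start, end):
        """Temporal range query via binary search on the sorted time index."""
        lo = bisect.bisect_left(self.by_time, (start, -1))
        hi = bisect.bisect_right(self.by_time, (end, math.inf))
        return [self.entries[i] for _, i in self.by_time[lo:hi]]
```

Indexing every prefix of the path is what makes the store "hierarchical" here: a lookup by session alone, or by session and conversation, is a single dictionary access.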

The Context Manager orchestrates information flow using prioritization algorithms, compression techniques, and caching strategies to optimize the active context window.
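A minimal sketch of prioritization, compression, and caching working together, assuming each candidate item already carries a numeric priority. The names `Item`, `pack`, and `compress` are hypothetical, whitespace words stand in for real tokenizer counts, and "compression" here is plain truncation where a real system might call a summarizer.

```python
from dataclasses import dataclass
from functools import lru_cache


def count_tokens(text: str) -> int:
    # Assumption: whitespace words stand in for real tokenizer counts.
    return len(text.split())


@lru_cache(maxsize=1024)
def compress(text: str, max_tokens: int) -> str:
    # Hypothetical compression by truncation; an SLM summary could replace it.
    # lru_cache serves repeated (text, size) requests from the cache.
    return " ".join(text.split()[:max_tokens])


@dataclass
class Item:
    text: str
    priority: float  # higher means more important to keep


def pack(items: list, budget: int, min_compressed: int = 5) -> list:
    """Fill the window greedily by priority, compressing items that do not fit."""
    window = []
    remaining = budget
    for item in sorted(items, key=lambda i: i.priority, reverse=True):
        cost = count_tokens(item.text)
        if cost <= remaining:
            window.append(item.text)
            remaining -= cost
        elif remaining >= min_compressed:
            # Too big as-is, but enough room is left for a compressed version.
            short = compress(item.text, remaining)
            window.append(short)
            remaining -= count_tokens(short)
        # Otherwise the item is skipped for this turn.
    return window
```

Greedy packing by priority is not optimal in the knapsack sense, but it is predictable and cheap, which matters when it runs on every turn.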

The Retrieval System locates relevant information through hybrid search that combines keyword, semantic, and temporal signals, scoring relevance with BM25, cosine similarity, and probabilistic ranking.
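One way to fuse the three signals is a weighted sum of a normalized BM25 score, cosine similarity, and an exponential recency decay. This is a sketch under stated assumptions: the weights (0.4/0.4/0.2), the one-hour half-life, and min-max normalization of BM25 are illustrative choices, and the separate "probabilistic ranking" step is not modeled beyond BM25 itself, which comes from the probabilistic relevance framework.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Okapi BM25 score of each document for the query (whitespace tokenization)."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n or 1.0
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        s = 0.0
        for term in query.lower().split():
            df = sum(term in t for t in tokenized)
            if df == 0:
                continue
            idf = math.log((n - df + 0.5) / (df + 0.5) + 1.0)
            f = tf[term]
            s += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(s)
    return scores


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_rank(query, query_vec, docs, now, weights=(0.4, 0.4, 0.2), half_life=3600.0):
    """docs: list of (text, embedding, timestamp). Returns (score, text), best first."""
    kw = bm25_scores(query, [d[0] for d in docs])
    lo, hi = min(kw), max(kw)
    kw_norm = [(s - lo) / (hi - lo) if hi > lo else 0.0 for s in kw]  # BM25 -> [0, 1]
    ranked = []
    for (text, vec, ts), k in zip(docs, kw_norm):
        sem = cosine(query_vec, vec)
        rec = 0.5 ** ((now - ts) / half_life)  # halves every half_life seconds
        ranked.append((weights[0] * k + weights[1] * sem + weights[2] * rec, text))
    ranked.sort(key=lambda p: p[0], reverse=True)
    return ranked
```

Normalizing BM25 first matters because its raw scores are unbounded, while cosine similarity and the decay term already fall in a fixed range.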

Finally, the Context Window Optimizer maximizes utility within token constraints through dynamic summarization with lightweight small language models (SLMs), consistent template management, and token optimization that balances detail against brevity.

Key Differentiating Features

This system offers several unique capabilities that set it apart from traditional context management approaches.

Relevance-aware summarization dynamically adjusts summary detail based on information importance, ensuring critical context is preserved while reducing token usage.
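A minimal sketch of the idea, assuming relevance is already scored in [0, 1]. The thresholds (keep verbatim at 0.8 or above, drop below 0.2), the linear scaling in between, and the 8-word floor are hypothetical. `truncate_summarizer` is a placeholder where an SLM call would go; any function with the same `(text, max_tokens)` shape can be passed in.

```python
from typing import Callable

Summarizer = Callable[[str, int], str]


def truncate_summarizer(text: str, max_tokens: int) -> str:
    # Placeholder for a small-language-model call: keeps the first words only.
    return " ".join(text.split()[:max_tokens])


def detail_budget(relevance: float, full_tokens: int, floor: int = 8) -> int:
    """Map a relevance score in [0, 1] to a target length (thresholds assumed)."""
    if relevance >= 0.8:
        return full_tokens                             # critical: keep verbatim
    if relevance < 0.2:
        return 0                                       # negligible: drop it
    return max(floor, round(full_tokens * relevance))  # scale with relevance


def summarize_by_relevance(text: str, relevance: float,
                           summarize: Summarizer = truncate_summarizer) -> str:
    full = len(text.split())
    target = detail_budget(relevance, full)
    if target == 0:
        return ""
    if target >= full:
        return text
    return summarize(text, target)
```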

Context persistence strategies provide configurable data retention policies that determine what information persists across sessions, enabling true long-term agent memory.
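Configurable retention could look something like the sketch below, applied when a session ends. The memory categories, TTLs, and importance thresholds in `POLICIES` are hypothetical examples, as is the rule that unknown categories are not persisted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RetentionPolicy:
    ttl_seconds: Optional[float]  # None means no expiry
    min_importance: float         # below this, the memory is not persisted
    cross_session: bool           # does it survive the end of a session?


# Hypothetical categories and values; a deployment would load these from config.
POLICIES = {
    "user_preference": RetentionPolicy(None, 0.0, True),
    "task_state": RetentionPolicy(86_400, 0.3, True),
    "small_talk": RetentionPolicy(3_600, 0.7, False),
}
# Unknown categories are dropped at session end.
DEFAULT_POLICY = RetentionPolicy(0.0, 1.0, False)


@dataclass
class Memory:
    category: str
    importance: float
    created: float
    text: str


def persist_on_session_end(memories, now, policies=POLICIES):
    """Return only the memories that the configured policies allow to persist."""
    kept = []
    for m in memories:
        p = policies.get(m.category, DEFAULT_POLICY)
        expired = p.ttl_seconds is not None and now - m.created > p.ttl_seconds
        if p.cross_session and m.importance >= p.min_importance and not expired:
            kept.append(m)
    return kept
```

Keeping the policy as data rather than code means retention can be tuned per deployment without touching the agent logic.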

Task-specific templates optimize context structures for different agent tasks, improving both efficiency and response quality.
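As a sketch, a task template can be an ordered list of sections, each given a share of the token budget. The task names, section names, and percentages in `TEMPLATES` are hypothetical, whitespace words again stand in for tokens, and the section headers are not counted against the budget.

```python
# Hypothetical task templates: ordered sections with a percentage of the budget.
TEMPLATES = {
    "code_review": [("instructions", 15), ("diff", 55), ("history", 30)],
    "customer_support": [("instructions", 20), ("profile", 20), ("history", 60)],
}


def render(task: str, parts: dict, budget: int) -> str:
    """Assemble a context string from a task template, capping each section."""
    blocks = []
    for name, pct in TEMPLATES[task]:
        limit = budget * pct // 100                   # integer share of the budget
        words = parts.get(name, "").split()[:limit]   # whitespace words as tokens
        if words:                                     # skip empty sections entirely
            blocks.append(f"[{name.upper()}]\n" + " ".join(words))
    return "\n\n".join(blocks)
```

Integer percentages keep the per-section limits exact, so the section shares can never add up to more than the budget.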

Memory consolidation periodically refines long-term memory by merging redundant entries and pruning outdated information, keeping the system efficient over time.
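One consolidation pass might look like the sketch below. Word-set Jaccard similarity stands in for embedding similarity, and the age limit, the 0.75 duplicate threshold, and the `pinned` flag are assumptions for illustration; newer memories win when two entries say nearly the same thing.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    timestamp: float
    pinned: bool = False  # pinned memories are never retired or merged


def jaccard(a: str, b: str) -> float:
    # Word-set overlap as a cheap stand-in for embedding similarity.
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


def consolidate(memories, now, max_age, dup_threshold=0.75):
    """One consolidation pass: retire stale memories, collapse near-duplicates."""
    kept = []
    # Newest first, so the most recent version of a repeated fact survives.
    for m in sorted(memories, key=lambda m: m.timestamp, reverse=True):
        if m.pinned:
            kept.append(m)
            continue
        if now - m.timestamp > max_age:
            continue  # outdated: retire it
        if any(jaccard(m.text, k.text) >= dup_threshold for k in kept):
            continue  # near-duplicate of a newer memory
        kept.append(m)
    return kept
```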

Most importantly, explainable context decisions provide transparency by clearly outlining why specific context is included or excluded, fostering trust and enabling systematic debugging and optimization.
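A simple way to make selection explainable is to record a human-readable reason alongside every include or exclude decision. In this minimal sketch, `Candidate`, `Decision`, the 0.3 relevance threshold, and whitespace token counting are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    id: str
    text: str
    relevance: float


@dataclass
class Decision:
    id: str
    included: bool
    reason: str


def select_with_explanations(cands, budget, min_relevance=0.3):
    """Select context by relevance under a budget, logging why for each item."""
    decisions = []
    remaining = budget
    for c in sorted(cands, key=lambda c: c.relevance, reverse=True):
        cost = len(c.text.split())  # whitespace words as a token proxy
        if c.relevance < min_relevance:
            reason = f"relevance {c.relevance:.2f} below threshold {min_relevance:.2f}"
            decisions.append(Decision(c.id, False, reason))
        elif cost > remaining:
            decisions.append(Decision(c.id, False, f"needs {cost} tokens, only {remaining} left"))
        else:
            remaining -= cost
            reason = f"relevance {c.relevance:.2f}; used {cost} tokens, {remaining} left"
            decisions.append(Decision(c.id, True, reason))
    return decisions
```

Printing the log, for example `for d in decisions: print(d.id, "IN " if d.included else "OUT", d.reason)`, gives a per-turn audit trail that can be inspected when the agent answers from the wrong context.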

More about this idea can be found on Encyclopedia Autonomica.

What challenges have you faced with context management in your LLM projects? Share your experiences and let's discuss potential solutions in the comments below.