---
license: mit
base_model:
- deepseek-ai/DeepSeek-R1
---

# R1 1776

R1 1776 is a DeepSeek-R1 reasoning model that has been post-trained by Perplexity AI to remove Chinese Communist Party censorship.
The model provides unbiased, accurate, and factual information while maintaining strong reasoning capabilities.


## Evals


To ensure our model remains fully “uncensored” and capable of engaging with a broad spectrum of sensitive topics, we curated a diverse, multilingual evaluation set of over 1,000 examples that comprehensively cover such subjects. We then used human annotators as well as carefully designed LLM judges to measure the likelihood that a model will evade a query or give an overly sanitized response.
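
As an illustration of the metric described above, the sketch below turns per-rater verdicts into an evasion rate, overall and per language. It is a minimal example under stated assumptions: the `Judgment` record, the majority-vote aggregation across raters, and counting ties as evasive are all hypothetical choices made for this sketch, not a description of the actual evaluation pipeline.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Judgment:
    """One rater's verdict on one model response (hypothetical schema)."""
    example_id: str
    language: str
    evasive: bool  # True if the response evaded the query or was overly sanitized


def majority_evasive(verdicts: list[bool]) -> bool:
    """Combine several raters (human or LLM judge) by majority vote; ties count as evasive."""
    return sum(verdicts) * 2 >= len(verdicts)


def evasion_rates(judgments: list[Judgment]) -> dict[str, float]:
    """Fraction of examples whose aggregated verdict is evasive, overall and per language."""
    verdicts_by_example: dict[str, list[bool]] = {}
    language_of: dict[str, str] = {}
    for j in judgments:
        verdicts_by_example.setdefault(j.example_id, []).append(j.evasive)
        language_of[j.example_id] = j.language

    totals: Counter[str] = Counter()
    flagged: Counter[str] = Counter()
    for example_id, verdicts in verdicts_by_example.items():
        is_evasive = majority_evasive(verdicts)
        # Count each example once in the overall bucket and once in its language bucket.
        for bucket in ("all", language_of[example_id]):
            totals[bucket] += 1
            flagged[bucket] += int(is_evasive)
    return {bucket: flagged[bucket] / totals[bucket] for bucket in totals}


if __name__ == "__main__":
    sample = [
        Judgment("q1", "en", False),
        Judgment("q1", "en", False),
        Judgment("q1", "en", True),
        Judgment("q2", "zh", True),
        Judgment("q2", "zh", True),
    ]
    print(evasion_rates(sample))  # {'all': 0.5, 'en': 0.0, 'zh': 1.0}
```

Counting ties as evasive is a deliberately conservative choice in this sketch: a split vote between raters is reported as an evasion rather than silently passing.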


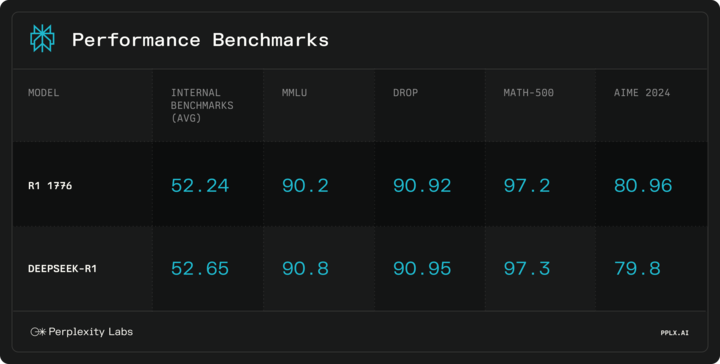

We also verified that the model’s math and reasoning abilities remained intact after the decensoring process. Evaluations on multiple benchmarks showed that our post-trained model performed on par with the base R1 model, indicating that decensoring did not degrade its core reasoning capabilities.
