Abhishek Thakur committed

Commit d84ea96 · 1 Parent(s): b565952

more docs

- docs/source/competition_space.mdx +38 -1

- docs/source/custom_metric.mdx +138 -0

- docs/source/leaderboard.mdx +1 -0

- docs/source/pricing.mdx +1 -1

- docs/source/submit.mdx +1 -0

- docs/source/teams.mdx +3 -0

docs/source/competition_space.mdx

CHANGED

|

@@ -1 +1,38 @@

|

|

| 1 |

-

# Competition Space

| 1 |

+

# Competition Space

|

| 2 |

+

|

| 3 |

+

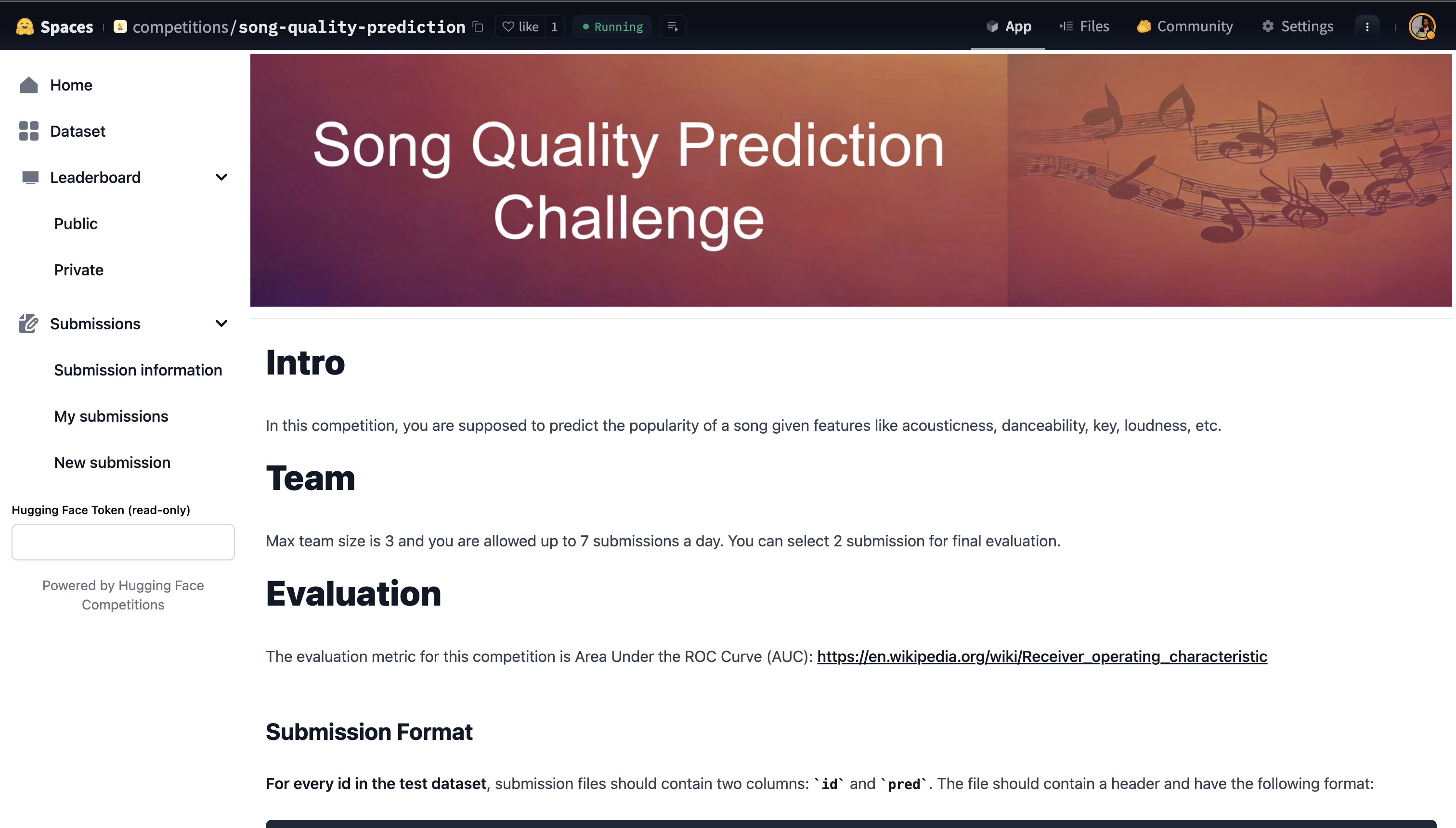

A competition space is a Hugging Face Space where the actual competition takes place. Participants use it to make submissions and get scores, view the leaderboard, and join discussions.

|

| 4 |

+

|

| 5 |

+

Check out an example competition space below:

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

The competition space consists of the following:

|

| 10 |

+

|

| 11 |

+

- Competition description (content is fetched from private competition repo)
- Dataset description (content is fetched from private competition repo)
- Leaderboard (content is fetched from private competition repo)
    - Public (available to everyone, all the time)
    - Private (available to everyone, but only after the competition ends)
- Submissions
    - Submission guidelines (content is fetched from private competition repo)
    - My submissions (users can see their own submissions)
    - New submission (users can make new submissions)
- Discussions (accessible via community tab)

|

| 21 |

+

|

| 22 |

+

### Secrets

|

| 23 |

+

|

| 24 |

+

The competition space requires two secrets:

|

| 25 |

+

|

| 26 |

+

- `HF_TOKEN`: the Hugging Face write token of the user who created the competition space. This token must remain valid for the duration of the competition; if it expires, the competition space will stop working. If you change, refresh, or delete this token, you will need to update this secret.
- `COMPETITION_ID`: the path of the private competition repo, e.g. `abhishek/private-competition-data`. If you rename the private competition repo, you will need to update this secret.

|

| 28 |

+

|

| 29 |

+

Note: The above two secrets are crucial for the competition space to work!
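As a rough illustration of how code running inside the space could pick these secrets up: Hugging Face Spaces expose secrets to the app as environment variables, so a startup check might look like the sketch below. The helper name `load_competition_secrets` is hypothetical and is not part of the competitions library; the token value is a placeholder.

```python
import os


def load_competition_secrets(env=None):
    """Return the two required secrets, or raise if one is missing.

    Hypothetical helper for illustration; not part of the competitions library.
    """
    env = os.environ if env is None else env
    required = ("HF_TOKEN", "COMPETITION_ID")
    # Treat unset and empty values the same way: both are unusable.
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing required secret(s): {', '.join(missing)}")
    return {name: env[name] for name in required}


if __name__ == "__main__":
    # Placeholder values only; the repo path is the example used above.
    demo_env = {
        "HF_TOKEN": "hf_xxx",
        "COMPETITION_ID": "abhishek/private-competition-data",
    }
    print(load_competition_secrets(demo_env)["COMPETITION_ID"])
```

Failing early with a clear message is a design choice here: a missing or empty secret would otherwise only show up later as a failed download from the private repo.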

|

| 30 |

+

|

| 31 |

+

### Public & private competition spaces

|

| 32 |

+

|

| 33 |

+

A competition space can be public or private. A public competition space is available to everyone, all the time.

|

| 34 |

+

A private competition space is only available to the members of the organization the competition space is created in.

|

| 35 |

+

|

| 36 |

+

You can make a private competition space public at any point.

|

| 37 |

+

|

| 38 |

+

Generally, we recommend testing every aspect of the competition space in a private competition space before making it public.

|

docs/source/custom_metric.mdx

CHANGED

|

@@ -0,0 +1,138 @@

| 1 |

+

# Custom metric

|

| 2 |

+

|

| 3 |

+

If the default scikit-learn metrics do not fit your competition, you can define your own metric.

|

| 4 |

+

|

| 5 |

+

This requires the organizer to know Python.

|

| 6 |

+

|

| 7 |

+

### How to define a custom metric

|

| 8 |

+

|

| 9 |

+

To define a custom metric, change `EVAL_METRIC` in `conf.json` to `custom`. You must also make sure that `EVAL_HIGHER_IS_BETTER` is set to `1` or `0` depending on whether a higher value of the metric is better or not.
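A minimal sketch of this first step, assuming `conf.json` is a flat JSON object and that the flag is stored as the integer `1` or `0` (the exact value types and the other keys in your file may differ). The helper `enable_custom_metric` and the starting config are made up for the example.

```python
import json


def enable_custom_metric(conf_text, higher_is_better):
    """Return conf.json text with the custom metric switched on."""
    conf = json.loads(conf_text)
    conf["EVAL_METRIC"] = "custom"
    # 1 if a higher metric value is better, 0 otherwise
    conf["EVAL_HIGHER_IS_BETTER"] = 1 if higher_is_better else 0
    return json.dumps(conf, indent=4)


if __name__ == "__main__":
    # Hypothetical starting config; a real conf.json contains more keys.
    original = '{"EVAL_METRIC": "accuracy_score", "EVAL_HIGHER_IS_BETTER": 1}'
    print(enable_custom_metric(original, higher_is_better=False))
```

All other keys are passed through unchanged, so the function only touches the two settings named above.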

|

| 10 |

+

|

| 11 |

+

The second step is to create a file `metric.py` in the private competition repo.

|

| 12 |

+

The file should contain a `compute` function that takes competition params as input.

|

| 13 |

+

|

| 14 |

+

The following excerpt checks whether the metric is custom and, if so, computes the metric value:

|

| 15 |

+

|

| 16 |

+

```python
import importlib
import os
import sys

from huggingface_hub import hf_hub_download


def compute_metrics(params):
    if params.metric == "custom":
        # Download the organizer's metric.py from the private competition repo
        metric_file = hf_hub_download(
            repo_id=params.competition_id,
            filename="metric.py",
            token=params.token,
            repo_type="dataset",
        )
        # Make the downloaded file importable as the module "metric"
        sys.path.append(os.path.dirname(metric_file))
        metric = importlib.import_module("metric")
        evaluation = metric.compute(params)
    ...
```

|

| 32 |

+

|

| 33 |

+

You can find this code in `compute_metrics.py` in the competitions GitHub repo.
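To see why `metric.py` needs a top-level `compute` function, here is a small self-contained sketch of the same loading pattern: append the file's directory to `sys.path`, then import the module by name. It writes a stand-in module to a temporary directory instead of downloading from the Hub; the module name `demo_metric`, its contents, and the helper names are made up for the example.

```python
import importlib
import os
import sys
import tempfile


def load_compute_from(directory, module_name):
    """Import `module_name` from `directory` and return its `compute` function."""
    sys.path.append(directory)
    importlib.invalidate_caches()  # make sure the freshly written file is seen
    module = importlib.import_module(module_name)
    return module.compute


def demo():
    with tempfile.TemporaryDirectory() as tmp_dir:
        # Stand-in for the organizer's metric.py
        with open(os.path.join(tmp_dir, "demo_metric.py"), "w") as f:
            f.write(
                "def compute(params):\n"
                "    return {'public_score': 0.5, 'private_score': 0.25}\n"
            )
        compute = load_compute_from(tmp_dir, "demo_metric")
        return compute(params=None)


if __name__ == "__main__":
    print(demo())
```

If the module has no `compute` attribute, the lookup raises `AttributeError`, which is the failure you would expect from a `metric.py` that defines its function under a different name.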

|

| 34 |

+

|

| 35 |

+

`params` is defined as:

|

| 36 |

+

|

| 37 |

+

```python
class EvalParams(BaseModel):
    competition_id: str
    competition_type: str
    metric: str
    token: str
    team_id: str
    submission_id: str
    submission_id_col: str
    submission_cols: List[str]
    submission_rows: int
    output_path: str
    submission_repo: str
    time_limit: int
```
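When developing `metric.py`, it can help to build a `params`-like object by hand. The sketch below mirrors the fields above with a standard-library dataclass. `LocalEvalParams` and `make_demo_params` are hypothetical stand-ins (not the library's `EvalParams`), and every value shown is a placeholder.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LocalEvalParams:
    """Hand-built stand-in with the same field names as the params object."""

    competition_id: str
    competition_type: str
    metric: str
    token: str
    team_id: str
    submission_id: str
    submission_id_col: str
    submission_cols: List[str]
    submission_rows: int
    output_path: str
    submission_repo: str
    time_limit: int


def make_demo_params():
    # All values are placeholders chosen for illustration.
    return LocalEvalParams(
        competition_id="abhishek/private-competition-data",
        competition_type="generic",
        metric="custom",
        token="hf_xxx",
        team_id="team-1",
        submission_id="sub-1",
        submission_id_col="id",
        submission_cols=["id", "target"],
        submission_rows=100,
        output_path="/tmp/output",
        submission_repo="",
        time_limit=600,
    )


if __name__ == "__main__":
    params = make_demo_params()
    print(f"submissions/{params.team_id}-{params.submission_id}.csv")
```

Because a `compute` function only reads attributes such as `params.team_id`, any object exposing the same attribute names is enough for local experiments.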

|

| 52 |

+

|

| 53 |

+

You are free to do whatever you want to in the `compute` function.

|

| 54 |

+

In the end it must return a dictionary with the following keys:

|

| 55 |

+

|

| 56 |

+

```python
{
    "public_score": metric_value,
    "private_score": metric_value,
}
```

|

| 62 |

+

|

| 63 |

+

Public and private scores can be floats, or dictionaries if you want to report multiple metrics.

|

| 64 |

+

Example for multiple metrics:

|

| 65 |

+

|

| 66 |

+

```python
{
    "public_score": {
        "metric1": metric_value,
        "metric2": metric_value,
    },
    "private_score": {
        "metric1": metric_value,
        "metric2": metric_value,
    },
}
```

|

| 78 |

+

|

| 79 |

+

Note: When using multiple metrics, a base metric (the first one) will be used to rank the participants in the competition.
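To make the shape concrete, here is a sketch that builds a multi-metric result and picks out the base metric. It assumes that "the first one" means the first key in the dictionary's insertion order, which is how Python dictionaries iterate. The metric names, the values, and the `base_metric` helper are illustrative and not part of the competitions library.

```python
def base_metric(scores):
    """Return (name, value) for the metric used for ranking.

    Assumption: for a dictionary, the base metric is the first key in
    insertion order; a plain float is reported under the name "score".
    """
    if isinstance(scores, dict):
        name = next(iter(scores))
        return name, scores[name]
    return "score", scores


def build_evaluation():
    # Metric names and values are made up for illustration.
    return {
        "public_score": {"accuracy": 0.91, "f1": 0.88},
        "private_score": {"accuracy": 0.89, "f1": 0.86},
    }


if __name__ == "__main__":
    evaluation = build_evaluation()
    print(base_metric(evaluation["public_score"]))
```

Under this assumption, putting the metric you want to rank by first in both dictionaries keeps the public and private leaderboards consistent.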

|

| 80 |

+

|

| 81 |

+

### Example of a custom metric

|

| 82 |

+

|

| 83 |

+

```python
import pandas as pd
from huggingface_hub import hf_hub_download


def compute(params):
    solution_file = hf_hub_download(
        repo_id=params.competition_id,
        filename="solution.csv",
        token=params.token,
        repo_type="dataset",
    )

    solution_df = pd.read_csv(solution_file)

    submission_filename = f"submissions/{params.team_id}-{params.submission_id}.csv"
    submission_file = hf_hub_download(
        repo_id=params.competition_id,
        filename=submission_filename,
        token=params.token,
        repo_type="dataset",
    )
    submission_df = pd.read_csv(submission_file)

    public_ids = solution_df[solution_df.split == "public"][params.submission_id_col].values
    private_ids = solution_df[solution_df.split == "private"][params.submission_id_col].values

    public_solution_df = solution_df[solution_df[params.submission_id_col].isin(public_ids)]
    public_submission_df = submission_df[submission_df[params.submission_id_col].isin(public_ids)]

    private_solution_df = solution_df[solution_df[params.submission_id_col].isin(private_ids)]
    private_submission_df = submission_df[submission_df[params.submission_id_col].isin(private_ids)]

    public_solution_df = public_solution_df.sort_values(params.submission_id_col).reset_index(drop=True)
    public_submission_df = public_submission_df.sort_values(params.submission_id_col).reset_index(drop=True)

    private_solution_df = private_solution_df.sort_values(params.submission_id_col).reset_index(drop=True)
    private_submission_df = private_submission_df.sort_values(params.submission_id_col).reset_index(drop=True)

    # CALCULATE METRICS HERE.......
    # _metric = SOME METRIC FUNCTION
    target_cols = [col for col in solution_df.columns if col not in [params.submission_id_col, "split"]]
    public_score = _metric(public_solution_df[target_cols], public_submission_df[target_cols])
    private_score = _metric(private_solution_df[target_cols], private_submission_df[target_cols])

    evaluation = {
        "public_score": public_score,
        "private_score": private_score,
    }
    return evaluation
```

|

| 134 |

+

|

| 135 |

+

Take a careful look at the above code.

|

| 136 |

+

You can see that we are downloading the solution file and the submission file from the dataset repo.

|

| 137 |

+

We are then calculating the metric on the public and private splits of the solution and submission files.

|

| 138 |

+

Finally, we are returning the metric values in a dictionary.
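The example leaves `_metric` undefined. One possible choice, shown here only as an illustration, is a mean absolute error averaged over all target columns. The sketch is written in plain Python over rows of numbers so that it runs without extra dependencies; with the DataFrames from the example you could pass `public_solution_df[target_cols].values.tolist()` and the matching submission rows. It assumes both inputs are already sorted by ID and have the same shape, as the example arranges, and the function name is hypothetical.

```python
def mean_absolute_error(solution_rows, submission_rows):
    """Mean absolute difference over every cell of two equally shaped tables.

    Each argument is a list of rows, and each row is a list of numbers
    (one entry per target column).
    """
    if len(solution_rows) != len(submission_rows):
        raise ValueError("solution and submission must have the same number of rows")

    total = 0.0
    count = 0
    for sol_row, sub_row in zip(solution_rows, submission_rows):
        if len(sol_row) != len(sub_row):
            raise ValueError("rows must have the same number of target columns")
        for sol_value, sub_value in zip(sol_row, sub_row):
            total += abs(float(sol_value) - float(sub_value))
            count += 1

    if count == 0:
        raise ValueError("nothing to score")
    return total / count


if __name__ == "__main__":
    solution = [[1.0], [2.0]]
    submission = [[1.5], [1.0]]
    print(mean_absolute_error(solution, submission))
```

With an error metric like this one, lower values are better, so `EVAL_HIGHER_IS_BETTER` would be set to `0`.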

|

docs/source/leaderboard.mdx

CHANGED

|

@@ -0,0 +1 @@

| 1 |

+

# Understanding the leaderboard

|

docs/source/pricing.mdx

CHANGED

|

@@ -2,7 +2,7 @@

|

|

| 2 |

|

| 3 |

Creating a competition is free. However, you will need to pay for the compute resources used to run the competition. The cost of the compute resources depends on the type of competition you create.

|

| 4 |

|

| 5 |

-

- generic: generic competitions are free to run. you can, however, upgrade the compute to cpu-

|

| 6 |

|

| 7 |

- script: script competitions require a compute resource to run the participant's code. You can choose between a variety of CPU and GPU instances (T4, A10G and even A100). The cost of the compute resource is charged per hour.

|

| 8 |

|

|

|

|

| 2 |

|

| 3 |

Creating a competition is free. However, you will need to pay for the compute resources used to run the competition. The cost of the compute resources depends on the type of competition you create.

|

| 4 |

|

| 5 |

+

- generic: generic competitions are free to run. You can, however, upgrade the compute to cpu-upgrade to speed up the metric calculation and reduce the waiting time for participants.

|

| 6 |

|

| 7 |

- script: script competitions require a compute resource to run the participant's code. You can choose between a variety of CPU and GPU instances (T4, A10G and even A100). The cost of the compute resource is charged per hour.

|

| 8 |

|

docs/source/submit.mdx

CHANGED

|

@@ -0,0 +1 @@

| 1 |

+

# Making a submission

|

docs/source/teams.mdx

CHANGED

|

@@ -0,0 +1,3 @@

| 1 |

+

# Teaming up

|

| 2 |

+

|

| 3 |

+

Coming soon!

|