Commit 7af2d02: Set up for pyautogen 0.1.x

- .gitattributes +36 -0
- Dockerfile +19 -0
- README.md +39 -0
- app.py +320 -0
- autogen-rag.gif +3 -0
- autogen.png +0 -0
- requirements.txt +2 -0

.gitattributes (added)

```
*.7z filter=lfs diff=lfs merge=lfs -text
*.arrow filter=lfs diff=lfs merge=lfs -text
*.bin filter=lfs diff=lfs merge=lfs -text
*.bz2 filter=lfs diff=lfs merge=lfs -text
*.ckpt filter=lfs diff=lfs merge=lfs -text
*.ftz filter=lfs diff=lfs merge=lfs -text
*.gz filter=lfs diff=lfs merge=lfs -text
*.h5 filter=lfs diff=lfs merge=lfs -text
*.joblib filter=lfs diff=lfs merge=lfs -text
*.lfs.* filter=lfs diff=lfs merge=lfs -text
*.mlmodel filter=lfs diff=lfs merge=lfs -text
*.model filter=lfs diff=lfs merge=lfs -text
*.msgpack filter=lfs diff=lfs merge=lfs -text
*.npy filter=lfs diff=lfs merge=lfs -text
*.npz filter=lfs diff=lfs merge=lfs -text
*.onnx filter=lfs diff=lfs merge=lfs -text
*.ot filter=lfs diff=lfs merge=lfs -text
*.parquet filter=lfs diff=lfs merge=lfs -text
*.pb filter=lfs diff=lfs merge=lfs -text
*.pickle filter=lfs diff=lfs merge=lfs -text
*.pkl filter=lfs diff=lfs merge=lfs -text
*.pt filter=lfs diff=lfs merge=lfs -text
*.pth filter=lfs diff=lfs merge=lfs -text
*.rar filter=lfs diff=lfs merge=lfs -text
*.safetensors filter=lfs diff=lfs merge=lfs -text
saved_model/**/* filter=lfs diff=lfs merge=lfs -text
*.tar.* filter=lfs diff=lfs merge=lfs -text
*.tar filter=lfs diff=lfs merge=lfs -text
*.tflite filter=lfs diff=lfs merge=lfs -text
*.tgz filter=lfs diff=lfs merge=lfs -text
*.wasm filter=lfs diff=lfs merge=lfs -text
*.xz filter=lfs diff=lfs merge=lfs -text
*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
autogen-rag.gif filter=lfs diff=lfs merge=lfs -text
```
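These patterns decide which files Git hands to the LFS filter, which is why `autogen-rag.gif` is stored as an LFS object while `autogen.png` is not. The sketch below approximates that matching under stated assumptions: it only handles patterns without a `/` (Git compares those against the file's basename at any directory depth), it skips `saved_model/**/*`, and it uses Python's `fnmatchcase` as a stand-in for Git's own wildmatch. It is an illustration, not Git's algorithm; `git check-attr filter -- <path>` reports what Git actually applies.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Every pattern from the .gitattributes above except saved_model/**/*,
# which contains '/' and would need Git's path-anchored matching.
LFS_PATTERNS = """
*.7z *.arrow *.bin *.bz2 *.ckpt *.ftz *.gz *.h5 *.joblib *.lfs.* *.mlmodel
*.model *.msgpack *.npy *.npz *.onnx *.ot *.parquet *.pb *.pickle *.pkl *.pt
*.pth *.rar *.safetensors *.tar.* *.tar *.tflite *.tgz *.wasm *.xz *.zip *.zst
*tfevents* autogen-rag.gif
""".split()


def uses_lfs(path: str) -> bool:
    """Rough check for whether `path` would get filter=lfs.

    Slash-free patterns are compared with the basename at any depth;
    fnmatchcase stands in for Git's wildmatch on these simple globs.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in LFS_PATTERNS)


if __name__ == "__main__":
    for p in ["autogen-rag.gif", "autogen.png", "models/checkpoint.safetensors"]:
        print(p, uses_lfs(p))
```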

Dockerfile (added)

```dockerfile
FROM python:3.10.13-slim-bookworm

# Setup user to not run as root
RUN adduser --disabled-password --gecos '' autogen
RUN adduser autogen sudo
RUN echo '%sudo ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers
USER autogen

# Setup working directory
WORKDIR /home/autogen
COPY . /home/autogen/

# Install app requirements
RUN pip3 install --no-cache-dir torch --index-url https://download.pytorch.org/whl/cpu
RUN pip3 install -U pip && pip3 install --no-cache-dir -r requirements.txt
ENV PATH="${PATH}:/home/autogen/.local/bin"

EXPOSE 7860
ENTRYPOINT ["python3", "app.py"]
```

README.md

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Autogen Demos

|

| 3 |

+

emoji: 🌖

|

| 4 |

+

colorFrom: pink

|

| 5 |

+

colorTo: blue

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.47.1

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

license: mit

|

| 11 |

+

---

|

| 12 |

+

|

| 13 |

+

# Microsoft AutoGen: Retrieve Chat Demo

|

| 14 |

+

|

| 15 |

+

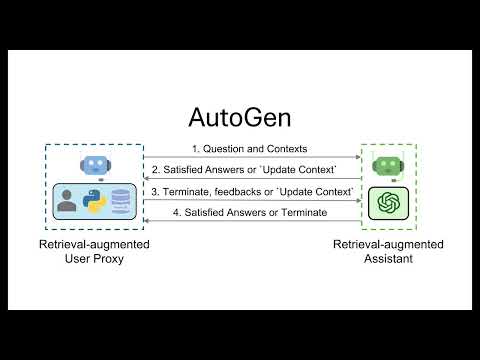

This demo shows how to use the RetrieveUserProxyAgent and RetrieveAssistantAgent to build a chatbot.

|

| 16 |

+

|

| 17 |

+

## Run app

|

| 18 |

+

```

|

| 19 |

+

# Install dependencies

|

| 20 |

+

pip3 install --no-cache-dir torch --index-url https://download.pytorch.org/whl/cpu

|

| 21 |

+

pip3 install --no-cache-dir -r requirements.txt

|

| 22 |

+

|

| 23 |

+

# Launch app

|

| 24 |

+

python app.py

|

| 25 |

+

```

|

| 26 |

+

|

| 27 |

+

## Run docker locally

|

| 28 |

+

```

|

| 29 |

+

docker build -t autogen/rag .

|

| 30 |

+

docker run -it autogen/rag -p 7860:7860

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

#### [GitHub](https://github.com/microsoft/autogen) [Discord](https://discord.gg/pAbnFJrkgZ) [Blog](https://microsoft.github.io/autogen/blog/2023/10/18/RetrieveChat) [Paper](https://arxiv.org/abs/2308.08155) [SourceCode](https://github.com/thinkall/autogen-demo) [OnlineApp](https://huggingface.co/spaces/thinkall/autogen-demos)

|

| 34 |

+

|

| 35 |

+

- Watch the demo video

|

| 36 |

+

|

| 37 |

+

[](https://www.youtube.com/embed/R3cB4V7dl70)

|

| 38 |

+

|

| 39 |

+

|

app.py (added)

```python
import gradio as gr
import os
from pathlib import Path
import autogen
import chromadb
import multiprocessing as mp
from autogen.retrieve_utils import TEXT_FORMATS, get_file_from_url, is_url
from autogen.agentchat.contrib.retrieve_assistant_agent import RetrieveAssistantAgent
from autogen.agentchat.contrib.retrieve_user_proxy_agent import (
    RetrieveUserProxyAgent,
    PROMPT_CODE,
)

TIMEOUT = 60


def initialize_agents(config_list, docs_path=None):
    if isinstance(config_list, gr.State):
        _config_list = config_list.value
    else:
        _config_list = config_list
    if docs_path is None:
        docs_path = "https://raw.githubusercontent.com/microsoft/autogen/main/README.md"

    assistant = RetrieveAssistantAgent(
        name="assistant",
        system_message="You are a helpful assistant.",
    )

    ragproxyagent = RetrieveUserProxyAgent(
        name="ragproxyagent",
        human_input_mode="NEVER",
        max_consecutive_auto_reply=5,
        retrieve_config={
            "task": "code",
            "docs_path": docs_path,
            "chunk_token_size": 2000,
            "model": _config_list[0]["model"],
            "client": chromadb.PersistentClient(path="/tmp/chromadb"),
            "embedding_model": "all-mpnet-base-v2",
            "customized_prompt": PROMPT_CODE,
            "get_or_create": True,
            "collection_name": "autogen_rag",
        },
    )

    return assistant, ragproxyagent


def initiate_chat(config_list, problem, queue, n_results=3):
    global assistant, ragproxyagent
    if isinstance(config_list, gr.State):
        _config_list = config_list.value
    else:
        _config_list = config_list
    if len(_config_list[0].get("api_key", "")) < 2:
        queue.put(
            ["Hi, nice to meet you! Please enter your API keys in the text boxes below."]
        )
        return
    else:
        llm_config = (
            {
                "request_timeout": TIMEOUT,
                # "seed": 42,
                "config_list": _config_list,
                "use_cache": False,
            },
        )
        assistant.llm_config.update(llm_config[0])
    assistant.reset()
    try:
        ragproxyagent.initiate_chat(
            assistant, problem=problem, silent=False, n_results=n_results
        )
        messages = ragproxyagent.chat_messages
        messages = [messages[k] for k in messages.keys()][0]
        messages = [m["content"] for m in messages if m["role"] == "user"]
        print("messages: ", messages)
    except Exception as e:
        messages = [str(e)]
    queue.put(messages)


def chatbot_reply(input_text):
    """Run one chat turn in a subprocess and return its messages, or an error on timeout."""
    queue = mp.Queue()
    process = mp.Process(
        target=initiate_chat,
        args=(config_list, input_text, queue),
    )
    process.start()
    try:
        # process.join(TIMEOUT+2)
        messages = queue.get(timeout=TIMEOUT)
    except Exception as e:
        messages = [
            str(e)
            if len(str(e)) > 0
            else "Invalid Request to OpenAI, please check your API keys."
        ]
    finally:
        try:
            process.terminate()
        except Exception:
            pass
    return messages


def get_description_text():
    return """
# Microsoft AutoGen: Retrieve Chat Demo

This demo shows how to use the RetrieveUserProxyAgent and RetrieveAssistantAgent to build a chatbot.

#### [GitHub](https://github.com/microsoft/autogen) [Discord](https://discord.gg/pAbnFJrkgZ) [Blog](https://microsoft.github.io/autogen/blog/2023/10/18/RetrieveChat) [Paper](https://arxiv.org/abs/2308.08155)
"""


global assistant, ragproxyagent

with gr.Blocks() as demo:
    config_list, assistant, ragproxyagent = (
        gr.State(
            [
                {
                    "api_key": "",
                    "api_base": "",
                    "api_type": "azure",
                    "api_version": "2023-07-01-preview",
                    "model": "gpt-35-turbo",
                }
            ]
        ),
        None,
        None,
    )
    assistant, ragproxyagent = initialize_agents(config_list)

    gr.Markdown(get_description_text())
    chatbot = gr.Chatbot(
        [],
        elem_id="chatbot",
        bubble_full_width=False,
        avatar_images=(None, (os.path.join(os.path.dirname(__file__), "autogen.png"))),
        # height=600,
    )

    txt_input = gr.Textbox(
        scale=4,
        show_label=False,
        placeholder="Enter text and press enter",
        container=False,
    )

    with gr.Row():

        def update_config(config_list):
            global assistant, ragproxyagent
            config_list = autogen.config_list_from_models(
                model_list=[os.environ.get("MODEL", "gpt-35-turbo")],
            )
            if not config_list:
                config_list = [
                    {
                        "api_key": "",
                        "api_base": "",
                        "api_type": "azure",
                        "api_version": "2023-07-01-preview",
                        "model": "gpt-35-turbo",
                    }
                ]
            llm_config = (
                {
                    "request_timeout": TIMEOUT,
                    # "seed": 42,
                    "config_list": config_list,
                },
            )
            assistant.llm_config.update(llm_config[0])
            ragproxyagent._model = config_list[0]["model"]
            return config_list

        def set_params(model, oai_key, aoai_key, aoai_base):
            os.environ["MODEL"] = model
            os.environ["OPENAI_API_KEY"] = oai_key
            os.environ["AZURE_OPENAI_API_KEY"] = aoai_key
            os.environ["AZURE_OPENAI_API_BASE"] = aoai_base
            return model, oai_key, aoai_key, aoai_base

        txt_model = gr.Dropdown(
            label="Model",
            choices=[
                "gpt-4",
                "gpt-35-turbo",
                "gpt-3.5-turbo",
            ],
            allow_custom_value=True,
            value="gpt-35-turbo",
            container=True,
        )
        txt_oai_key = gr.Textbox(
            label="OpenAI API Key",
            placeholder="Enter key and press enter",
            max_lines=1,
            show_label=True,
            value=os.environ.get("OPENAI_API_KEY", ""),
            container=True,
            type="password",
        )
        txt_aoai_key = gr.Textbox(
            label="Azure OpenAI API Key",
            placeholder="Enter key and press enter",
            max_lines=1,
            show_label=True,
            value=os.environ.get("AZURE_OPENAI_API_KEY", ""),
            container=True,
            type="password",
        )
        txt_aoai_base_url = gr.Textbox(
            label="Azure OpenAI API Base",
            placeholder="Enter base url and press enter",
            max_lines=1,
            show_label=True,
            value=os.environ.get("AZURE_OPENAI_API_BASE", ""),
            container=True,
            type="password",
        )

    clear = gr.ClearButton([txt_input, chatbot])

    with gr.Row():

        def upload_file(file):
            return update_context_url(file.name)

        upload_button = gr.UploadButton(
            "Click to upload a context file or enter a url in the right textbox",
            file_types=[f".{i}" for i in TEXT_FORMATS],
            file_count="single",
        )

        txt_context_url = gr.Textbox(
            label="Enter the url to your context file and chat on the context",
            info=f"File must be in the format of [{', '.join(TEXT_FORMATS)}]",
            max_lines=1,
            show_label=True,
            value="https://raw.githubusercontent.com/microsoft/autogen/main/README.md",
            container=True,
        )

    txt_prompt = gr.Textbox(
        label="Enter your prompt for Retrieve Agent and press enter to replace the default prompt",
        max_lines=40,
        show_label=True,
        value=PROMPT_CODE,
        container=True,
        show_copy_button=True,
    )

    def respond(message, chat_history, model, oai_key, aoai_key, aoai_base):
        global config_list
        set_params(model, oai_key, aoai_key, aoai_base)
        config_list = update_config(config_list)
        messages = chatbot_reply(message)
        _msg = (
            messages[-1]
            if len(messages) > 0 and messages[-1] != "TERMINATE"
            else messages[-2]
            if len(messages) > 1
            else "Context is not enough for answering the question. Please press `enter` in the context url textbox to make sure the context is activated for the chat."
        )
        chat_history.append((message, _msg))
        return "", chat_history

    def update_prompt(prompt):
        ragproxyagent.customized_prompt = prompt
        return prompt

    def update_context_url(context_url):
        global assistant, ragproxyagent

        file_extension = Path(context_url).suffix
        print("file_extension: ", file_extension)
        if file_extension.lower() not in [f".{i}" for i in TEXT_FORMATS]:
            return f"File must be in the format of {TEXT_FORMATS}"

        if is_url(context_url):
            try:
                file_path = get_file_from_url(
                    context_url,
                    save_path=os.path.join("/tmp", os.path.basename(context_url)),
                )
            except Exception as e:
                return str(e)
        else:
            file_path = context_url
            context_url = os.path.basename(context_url)

        try:
            chromadb.PersistentClient(path="/tmp/chromadb").delete_collection(
                name="autogen_rag"
            )
        except Exception:
            pass
        assistant, ragproxyagent = initialize_agents(config_list, docs_path=file_path)
        return context_url

    txt_input.submit(
        respond,
        [txt_input, chatbot, txt_model, txt_oai_key, txt_aoai_key, txt_aoai_base_url],
        [txt_input, chatbot],
    )
    txt_prompt.submit(update_prompt, [txt_prompt], [txt_prompt])
    txt_context_url.submit(update_context_url, [txt_context_url], [txt_context_url])
    upload_button.upload(upload_file, upload_button, [txt_context_url])


if __name__ == "__main__":
    demo.launch(share=True, server_name="0.0.0.0")
```
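`chatbot_reply` runs each chat turn in a separate process and waits at most `TIMEOUT` seconds on a `multiprocessing.Queue`, so a hung model call cannot block the Gradio handler; the worker is terminated either way. Below is a stripped-down sketch of that pattern with no AutoGen dependency. `slow_worker` is a hypothetical stand-in for `initiate_chat`, and the explicit `fork` start method is an assumption made because `initiate_chat` reads module globals (`assistant`, `ragproxyagent`) that the child only sees in their current state if it is forked from the parent, as on Linux with Python 3.10.

```python
import multiprocessing as mp
import queue
import time

# Assumption: prefer "fork" so the child inherits the parent's module state,
# which is what app.py's initiate_chat relies on for its globals.
_CTX = mp.get_context("fork" if "fork" in mp.get_all_start_methods() else None)


def slow_worker(problem, out_q, delay):
    """Hypothetical stand-in for initiate_chat: sleep, then report a reply list."""
    time.sleep(delay)
    out_q.put([f"answer to: {problem}"])


def reply_with_timeout(problem, delay, timeout):
    out_q = _CTX.Queue()
    process = _CTX.Process(target=slow_worker, args=(problem, out_q, delay))
    process.start()
    try:
        # Block until the worker reports back or the timeout expires.
        messages = out_q.get(timeout=timeout)
    except queue.Empty:
        # str(queue.Empty()) is "", which is why the app substitutes a fixed hint.
        messages = ["Timed out waiting for a reply."]
    finally:
        # Stop the worker whether or not it finished, then reap it.
        process.terminate()
        process.join()
    return messages


if __name__ == "__main__":
    print(reply_with_timeout("2 + 2?", delay=0.1, timeout=5))  # ['answer to: 2 + 2?']
    print(reply_with_timeout("hang", delay=10, timeout=0.5))  # ['Timed out waiting for a reply.']
```

One consequence of this design is that the app's `except Exception as e` branch cannot tell a slow model from an invalid key: both surface as an empty `queue.Empty`, hence the generic "check your API keys" message.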

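`initiate_chat` keeps only the `user`-role entries of the proxy agent's first conversation (in pyautogen 0.1.x an agent stores the messages it receives under role `user`, so these are the assistant's replies), and `respond` then shows the last one unless it is the bare `TERMINATE` signal, falling back to the one before it or to a hint about the context. The pure-function sketch below mirrors those two steps so the edge cases are easy to check. `extract_replies`, `pick_reply`, and the sample data are hypothetical; real `chat_messages` keys are agent objects, and a string key is used here only to keep the example standalone.

```python
FALLBACK = (
    "Context is not enough for answering the question. Please press `enter` in the "
    "context url textbox to make sure the context is activated for the chat."
)

# Hypothetical chat_messages-style data (not real AutoGen output).
SAMPLE_CHAT_MESSAGES = {
    "assistant": [
        {"role": "assistant", "content": "Question plus retrieved context"},
        {"role": "user", "content": "AutoGen is a multi-agent framework."},
        {"role": "assistant", "content": ""},
        {"role": "user", "content": "TERMINATE"},
    ]
}


def extract_replies(chat_messages):
    """Contents of 'user'-role messages in the first conversation, as initiate_chat does."""
    first_conversation = next(iter(chat_messages.values()))
    return [m["content"] for m in first_conversation if m["role"] == "user"]


def pick_reply(messages):
    """Same branching as respond(): skip a trailing TERMINATE, else fall back."""
    if len(messages) > 0 and messages[-1] != "TERMINATE":
        return messages[-1]
    if len(messages) > 1:
        return messages[-2]
    return FALLBACK


if __name__ == "__main__":
    print(pick_reply(extract_replies(SAMPLE_CHAT_MESSAGES)))
    # AutoGen is a multi-agent framework.
```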

autogen-rag.gif (added, stored with Git LFS)

autogen.png (added)

requirements.txt (added)

```text
pyautogen[retrievechat]>=0.1.10,<0.2
gradio<4.0.0
```