GoogleSearchTool-fix

#33

by

davidxia

- opened

- README.md +3 -22

- bonus-unit1/bonus-unit1.ipynb +13 -18

- bonus-unit2/monitoring-and-evaluating-agents.ipynb +0 -657

- fr/unit1/dummy_agent_library.ipynb → dummy_agent_library.ipynb +318 -159

- fr/bonus-unit1/bonus-unit1.ipynb +0 -0

- fr/bonus-unit2/monitoring-and-evaluating-agents.ipynb +0 -657

- fr/unit2/langgraph/agent.ipynb +0 -326

- fr/unit2/langgraph/mail_sorting.ipynb +0 -457

- fr/unit2/llama-index/agents.ipynb +0 -334

- fr/unit2/llama-index/components.ipynb +0 -0

- fr/unit2/llama-index/tools.ipynb +0 -274

- fr/unit2/llama-index/workflows.ipynb +0 -402

- fr/unit2/smolagents/code_agents.ipynb +0 -0

- fr/unit2/smolagents/multiagent_notebook.ipynb +0 -0

- fr/unit2/smolagents/retrieval_agents.ipynb +0 -0

- fr/unit2/smolagents/tool_calling_agents.ipynb +0 -605

- fr/unit2/smolagents/tools.ipynb +0 -0

- fr/unit2/smolagents/vision_agents.ipynb +0 -548

- unit1/dummy_agent_library.ipynb +0 -539

- unit2/langgraph/agent.ipynb +0 -332

- unit2/langgraph/mail_sorting.ipynb +0 -457

- unit2/llama-index/agents.ipynb +0 -334

- unit2/llama-index/components.ipynb +0 -0

- unit2/llama-index/tools.ipynb +0 -274

- unit2/llama-index/workflows.ipynb +0 -401

- unit2/smolagents/code_agents.ipynb +0 -0

- unit2/smolagents/multiagent_notebook.ipynb +0 -0

- unit2/smolagents/retrieval_agents.ipynb +8 -8

- unit2/smolagents/tool_calling_agents.ipynb +14 -14

- unit2/smolagents/tools.ipynb +19 -19

- unit2/smolagents/vision_agents.ipynb +15 -18

- unit2/smolagents/vision_web_browser.py +3 -4

README.md

CHANGED

|

@@ -2,26 +2,7 @@

|

|

| 2 |

license: apache-2.0

|

| 3 |

---

|

| 4 |

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

## 📚 Notebook Index

|

| 8 |

-

|

| 9 |

-

| Unit | Notebook Name | Redirect Link |

|

| 10 |

-

|--------------|------------------------------------|----------------|

|

| 11 |

-

| Unit 1 | Dummy Agent Library | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit1/dummy_agent_library.ipynb) |

|

| 12 |

-

| Unit 2.1 - smolagents | Code Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/code_agents.ipynb) |

|

| 13 |

-

| Unit 2.1 - smolagents | Multi-agent Notebook | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/multiagent_notebook.ipynb) |

|

| 14 |

-

| Unit 2.1 - smolagents | Retrieval Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/retrieval_agents.ipynb) |

|

| 15 |

-

| Unit 2.1 - smolagents | Tool Calling Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/tool_calling_agents.ipynb) |

|

| 16 |

-

| Unit 2.1 - smolagents | Tools | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/tools.ipynb) |

|

| 17 |

-

| Unit 2.1 - smolagents | Vision Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/vision_agents.ipynb) |

|

| 18 |

-

| Unit 2.1 - smolagents | Vision Web Browser | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/smolagents/vision_web_browser.py) |

|

| 19 |

-

| Unit 2.2 - LlamaIndex | Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/llama-index/agents.ipynb) |

|

| 20 |

-

| Unit 2.2 - LlamaIndex | Components | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/llama-index/components.ipynb) |

|

| 21 |

-

| Unit 2.2 - LlamaIndex | Tools | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/llama-index/tools.ipynb) |

|

| 22 |

-

| Unit 2.2 - LlamaIndex | Workflows | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/llama-index/workflows.ipynb) |

|

| 23 |

-

| Unit 2.3 - LangGraph | Agent | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/langgraph/agent.ipynb) |

|

| 24 |

-

| Unit 2.3 - LangGraph | Mail Sorting | [↗](https://huggingface.co/agents-course/notebooks/blob/main/unit2/langgraph/mail_sorting.ipynb) |

|

| 25 |

-

| Bonus Unit 1 | Gemma SFT & Thinking Function Call | [↗](https://huggingface.co/agents-course/notebooks/blob/main/bonus-unit1/bonus-unit1.ipynb) |

|

| 26 |

-

| Bonus Unit 2 | Monitoring & Evaluating Agents | [↗](https://huggingface.co/agents-course/notebooks/blob/main/bonus-unit2/monitoring-and-evaluating-agents.ipynb) |

|

| 27 |

|

|

|

|

|

|

|

|

|

| 2 |

license: apache-2.0

|

| 3 |

---

|

| 4 |

|

| 5 |

+

Example notebooks, part of the [Hugging Face Agents Course](https://huggingface.co/learn/agents-course/unit0/introduction).

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

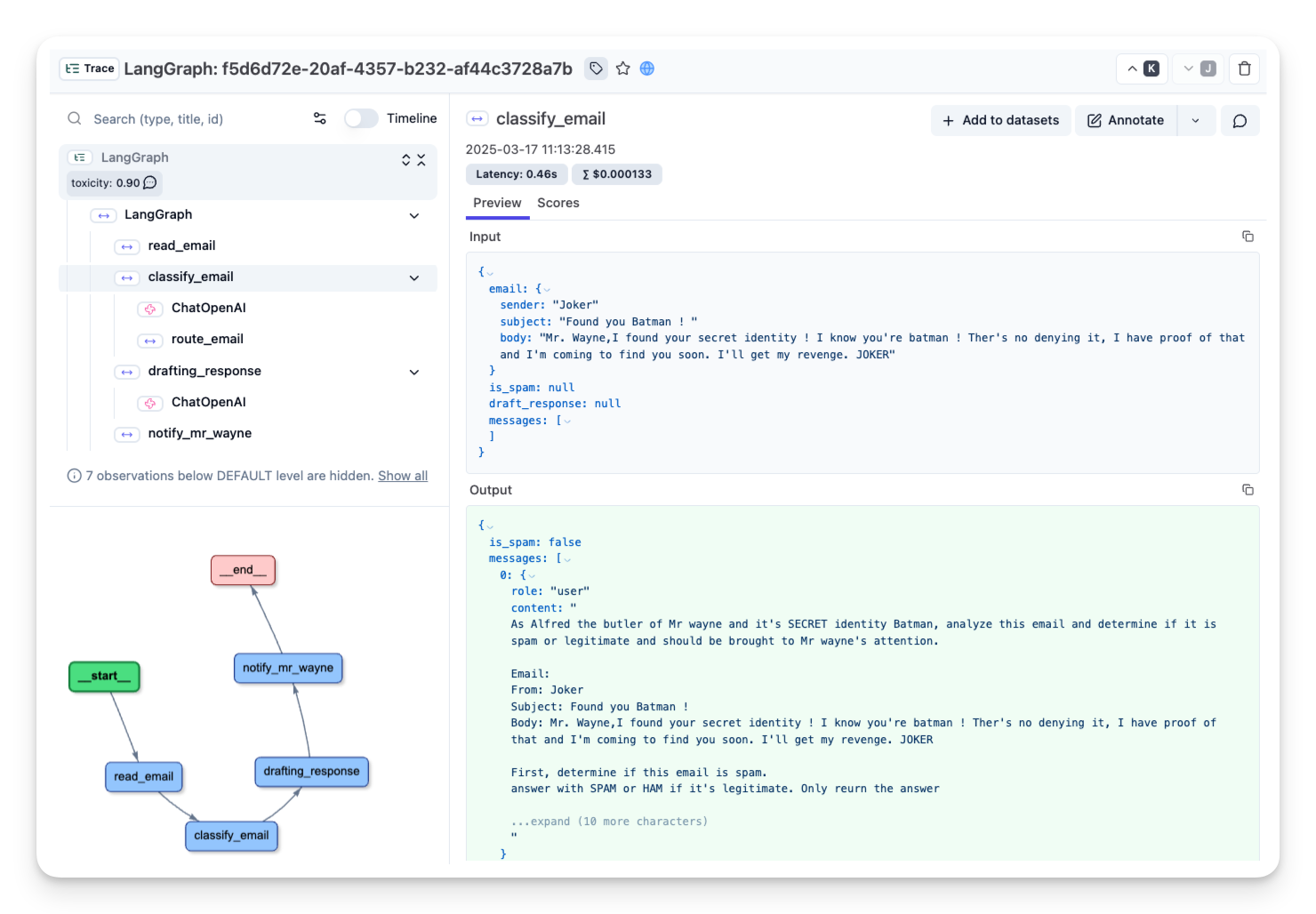

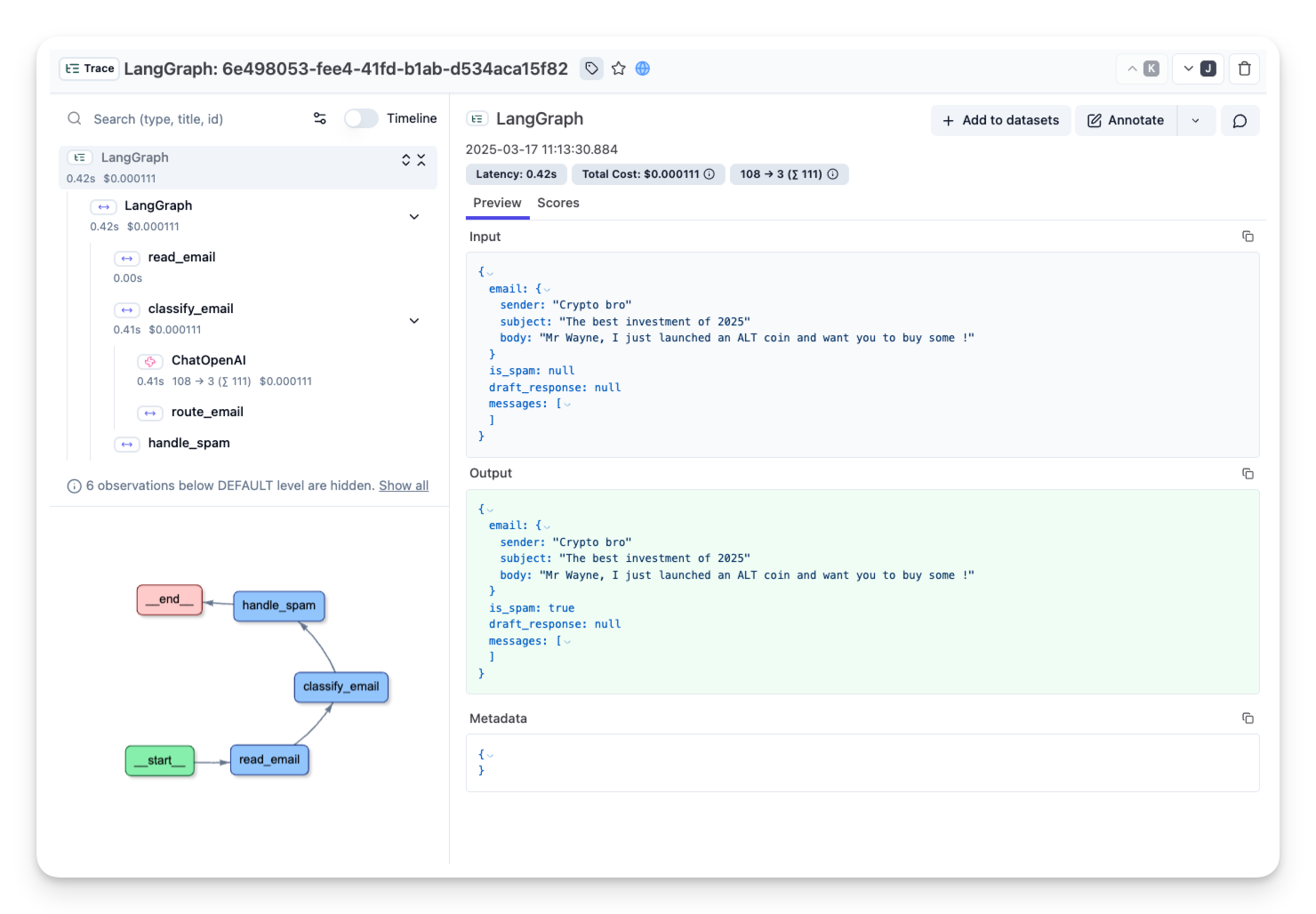

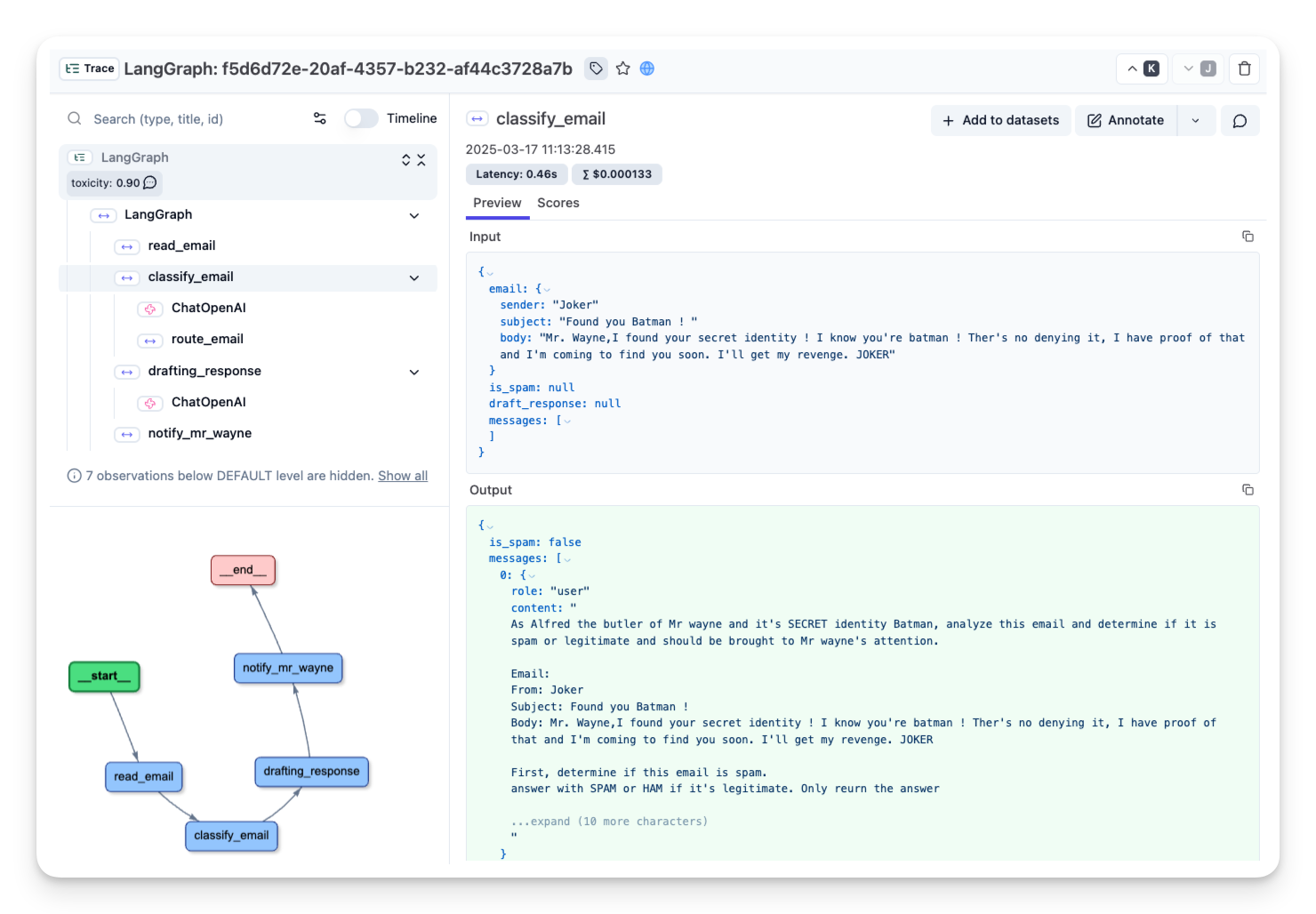

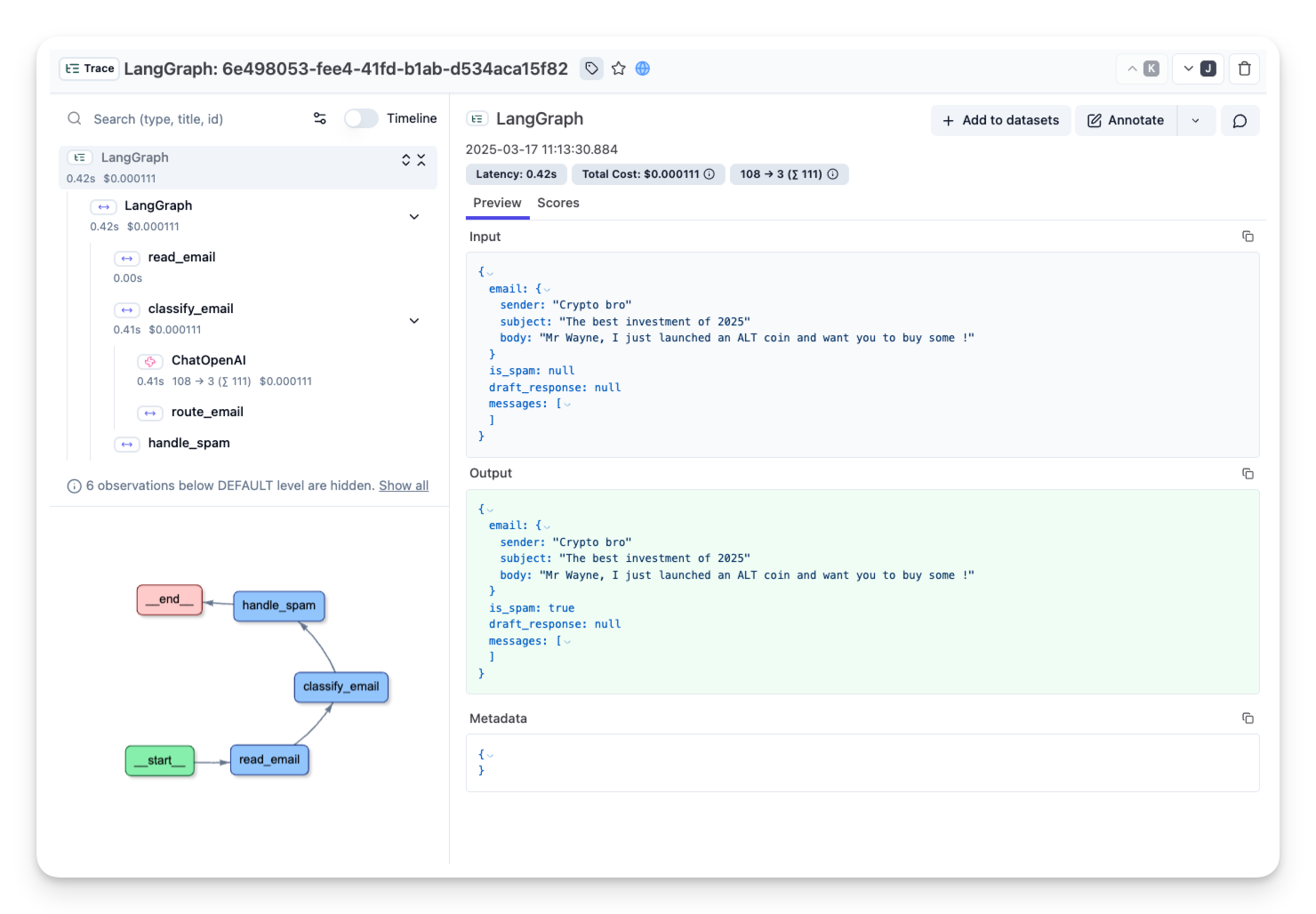

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 6 |

|

| 7 |

+

* [Dummy Agent Library](https://huggingface.co/agents-course/notebooks/blob/main/dummy_agent_library.ipynb) – Creating an Agent from scratch. It's complicated!

|

| 8 |

+

*

|

bonus-unit1/bonus-unit1.ipynb

CHANGED

|

@@ -23,7 +23,7 @@

|

|

| 23 |

"id": "gWR4Rvpmjq5T"

|

| 24 |

},

|

| 25 |

"source": [

|

| 26 |

-

"##

|

| 27 |

"\n",

|

| 28 |

"Before diving into the notebook, you need to:\n",

|

| 29 |

"\n",

|

|

@@ -103,7 +103,7 @@

|

|

| 103 |

"source": [

|

| 104 |

"## Step 2: Install dependencies 📚\n",

|

| 105 |

"\n",

|

| 106 |

-

"We need multiple

|

| 107 |

"\n",

|

| 108 |

"- `bitsandbytes` for quantization\n",

|

| 109 |

"- `peft`for LoRA adapters\n",

|

|

@@ -130,9 +130,7 @@

|

|

| 130 |

"!pip install -q -U peft\n",

|

| 131 |

"!pip install -q -U trl\n",

|

| 132 |

"!pip install -q -U tensorboardX\n",

|

| 133 |

-

"!pip install -q wandb

|

| 134 |

-

"!pip install -q -U torchvision\n",

|

| 135 |

-

"!pip install -q -U transformers"

|

| 136 |

]

|

| 137 |

},

|

| 138 |

{

|

|

@@ -165,7 +163,7 @@

|

|

| 165 |

"id": "vBAkwg9zu6A1"

|

| 166 |

},

|

| 167 |

"source": [

|

| 168 |

-

"## Step 4: Import the

|

| 169 |

"\n",

|

| 170 |

"Don't forget to put your HF token."

|

| 171 |

]

|

|

@@ -186,7 +184,7 @@

|

|

| 186 |

"import torch\n",

|

| 187 |

"import json\n",

|

| 188 |

"\n",

|

| 189 |

-

"from transformers import AutoModelForCausalLM, AutoTokenizer,

|

| 190 |

"from datasets import load_dataset\n",

|

| 191 |

"from trl import SFTConfig, SFTTrainer\n",

|

| 192 |

"from peft import LoraConfig, TaskType\n",

|

|

@@ -321,10 +319,7 @@

|

|

| 321 |

"source": [

|

| 322 |

"dataset = dataset.map(preprocess, remove_columns=\"messages\")\n",

|

| 323 |

"dataset = dataset[\"train\"].train_test_split(0.1)\n",

|

| 324 |

-

"print(dataset)

|

| 325 |

-

"\n",

|

| 326 |

-

"dataset[\"train\"] = dataset[\"train\"].select(range(100))\n",

|

| 327 |

-

"dataset[\"test\"] = dataset[\"test\"].select(range(10))"

|

| 328 |

]

|

| 329 |

},

|

| 330 |

{

|

|

@@ -342,7 +337,7 @@

|

|

| 342 |

"\n",

|

| 343 |

"1. A *User message* containing the **necessary information with the list of available tools** inbetween `<tools></tools>` then the user query, here: `\"Can you get me the latest news headlines for the United States?\"`\n",

|

| 344 |

"\n",

|

| 345 |

-

"2. An *Assistant message* here called \"model\" to fit the criterias from gemma models containing two new phases, a **\"thinking\"** phase contained in `<think></think>` and an **\"Act\"** phase contained in `<tool_call

|

| 346 |

"\n",

|

| 347 |

"3. If the model contains a `<tools_call>`, we will append the result of this action in a new **\"Tool\"** message containing a `<tool_response></tool_response>` with the answer from the tool."

|

| 348 |

]

|

|

@@ -624,8 +619,8 @@

|

|

| 624 |

" eothink = \"</think>\"\n",

|

| 625 |

" tool_call=\"<tool_call>\"\n",

|

| 626 |

" eotool_call=\"</tool_call>\"\n",

|

| 627 |

-

" tool_response=\"<

|

| 628 |

-

" eotool_response=\"</

|

| 629 |

" pad_token = \"<pad>\"\n",

|

| 630 |

" eos_token = \"<eos>\"\n",

|

| 631 |

" @classmethod\n",

|

|

@@ -655,7 +650,7 @@

|

|

| 655 |

"source": [

|

| 656 |

"## Step 9: Let's configure the LoRA\n",

|

| 657 |

"\n",

|

| 658 |

-

"This is we are going to define the parameter of our adapter. Those

|

| 659 |

]

|

| 660 |

},

|

| 661 |

{

|

|

@@ -707,7 +702,7 @@

|

|

| 707 |

},

|

| 708 |

"outputs": [],

|

| 709 |

"source": [

|

| 710 |

-

"username=\"Jofthomas\"#

|

| 711 |

"output_dir = \"gemma-2-2B-it-thinking-function_calling-V0\" # The directory where the trained model checkpoints, logs, and other artifacts will be saved. It will also be the default name of the model when pushed to the hub if not redefined later.\n",

|

| 712 |

"per_device_train_batch_size = 1\n",

|

| 713 |

"per_device_eval_batch_size = 1\n",

|

|

@@ -1196,7 +1191,7 @@

|

|

| 1196 |

},

|

| 1197 |

{

|

| 1198 |

"cell_type": "code",

|

| 1199 |

-

"execution_count":

|

| 1200 |

"id": "56b89825-70ac-42c1-934c-26e2d54f3b7b",

|

| 1201 |

"metadata": {

|

| 1202 |

"colab": {

|

|

@@ -1476,7 +1471,7 @@

|

|

| 1476 |

"device = \"auto\"\n",

|

| 1477 |

"config = PeftConfig.from_pretrained(peft_model_id)\n",

|

| 1478 |

"model = AutoModelForCausalLM.from_pretrained(config.base_model_name_or_path,\n",

|

| 1479 |

-

" device_map

|

| 1480 |

" )\n",

|

| 1481 |

"tokenizer = AutoTokenizer.from_pretrained(peft_model_id)\n",

|

| 1482 |

"model.resize_token_embeddings(len(tokenizer))\n",

|

|

|

|

| 23 |

"id": "gWR4Rvpmjq5T"

|

| 24 |

},

|

| 25 |

"source": [

|

| 26 |

+

"## Prerequisites 🏗️\n",

|

| 27 |

"\n",

|

| 28 |

"Before diving into the notebook, you need to:\n",

|

| 29 |

"\n",

|

|

|

|

| 103 |

"source": [

|

| 104 |

"## Step 2: Install dependencies 📚\n",

|

| 105 |

"\n",

|

| 106 |

+

"We need multiple librairies:\n",

|

| 107 |

"\n",

|

| 108 |

"- `bitsandbytes` for quantization\n",

|

| 109 |

"- `peft`for LoRA adapters\n",

|

|

|

|

| 130 |

"!pip install -q -U peft\n",

|

| 131 |

"!pip install -q -U trl\n",

|

| 132 |

"!pip install -q -U tensorboardX\n",

|

| 133 |

+

"!pip install -q wandb"

|

|

|

|

|

|

|

| 134 |

]

|

| 135 |

},

|

| 136 |

{

|

|

|

|

| 163 |

"id": "vBAkwg9zu6A1"

|

| 164 |

},

|

| 165 |

"source": [

|

| 166 |

+

"## Step 4: Import the librairies\n",

|

| 167 |

"\n",

|

| 168 |

"Don't forget to put your HF token."

|

| 169 |

]

|

|

|

|

| 184 |

"import torch\n",

|

| 185 |

"import json\n",

|

| 186 |

"\n",

|

| 187 |

+

"from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed\n",

|

| 188 |

"from datasets import load_dataset\n",

|

| 189 |

"from trl import SFTConfig, SFTTrainer\n",

|

| 190 |

"from peft import LoraConfig, TaskType\n",

|

|

|

|

| 319 |

"source": [

|

| 320 |

"dataset = dataset.map(preprocess, remove_columns=\"messages\")\n",

|

| 321 |

"dataset = dataset[\"train\"].train_test_split(0.1)\n",

|

| 322 |

+

"print(dataset)"

|

|

|

|

|

|

|

|

|

|

| 323 |

]

|

| 324 |

},

|

| 325 |

{

|

|

|

|

| 337 |

"\n",

|

| 338 |

"1. A *User message* containing the **necessary information with the list of available tools** inbetween `<tools></tools>` then the user query, here: `\"Can you get me the latest news headlines for the United States?\"`\n",

|

| 339 |

"\n",

|

| 340 |

+

"2. An *Assistant message* here called \"model\" to fit the criterias from gemma models containing two new phases, a **\"thinking\"** phase contained in `<think></think>` and an **\"Act\"** phase contained in `<tool_call></<tool_call>`.\n",

|

| 341 |

"\n",

|

| 342 |

"3. If the model contains a `<tools_call>`, we will append the result of this action in a new **\"Tool\"** message containing a `<tool_response></tool_response>` with the answer from the tool."

|

| 343 |

]

|

|

|

|

| 619 |

" eothink = \"</think>\"\n",

|

| 620 |

" tool_call=\"<tool_call>\"\n",

|

| 621 |

" eotool_call=\"</tool_call>\"\n",

|

| 622 |

+

" tool_response=\"<tool_reponse>\"\n",

|

| 623 |

+

" eotool_response=\"</tool_reponse>\"\n",

|

| 624 |

" pad_token = \"<pad>\"\n",

|

| 625 |

" eos_token = \"<eos>\"\n",

|

| 626 |

" @classmethod\n",

|

|

|

|

| 650 |

"source": [

|

| 651 |

"## Step 9: Let's configure the LoRA\n",

|

| 652 |

"\n",

|

| 653 |

+

"This is we are going to define the parameter of our adapter. Those a the most important parameters in LoRA as they define the size and importance of the adapters we are training."

|

| 654 |

]

|

| 655 |

},

|

| 656 |

{

|

|

|

|

| 702 |

},

|

| 703 |

"outputs": [],

|

| 704 |

"source": [

|

| 705 |

+

"username=\"Jofthomas\"# REPLCAE with your Hugging Face username\n",

|

| 706 |

"output_dir = \"gemma-2-2B-it-thinking-function_calling-V0\" # The directory where the trained model checkpoints, logs, and other artifacts will be saved. It will also be the default name of the model when pushed to the hub if not redefined later.\n",

|

| 707 |

"per_device_train_batch_size = 1\n",

|

| 708 |

"per_device_eval_batch_size = 1\n",

|

|

|

|

| 1191 |

},

|

| 1192 |

{

|

| 1193 |

"cell_type": "code",

|

| 1194 |

+

"execution_count": 19,

|

| 1195 |

"id": "56b89825-70ac-42c1-934c-26e2d54f3b7b",

|

| 1196 |

"metadata": {

|

| 1197 |

"colab": {

|

|

|

|

| 1471 |

"device = \"auto\"\n",

|

| 1472 |

"config = PeftConfig.from_pretrained(peft_model_id)\n",

|

| 1473 |

"model = AutoModelForCausalLM.from_pretrained(config.base_model_name_or_path,\n",

|

| 1474 |

+

" device_map=\"auto\",\n",

|

| 1475 |

" )\n",

|

| 1476 |

"tokenizer = AutoTokenizer.from_pretrained(peft_model_id)\n",

|

| 1477 |

"model.resize_token_embeddings(len(tokenizer))\n",

|

bonus-unit2/monitoring-and-evaluating-agents.ipynb

DELETED

|

@@ -1,657 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"cells": [

|

| 3 |

-

{

|

| 4 |

-

"cell_type": "markdown",

|

| 5 |

-

"metadata": {},

|

| 6 |

-

"source": [

|

| 7 |

-

"# Bonus Unit 1: Observability and Evaluation of Agents\n",

|

| 8 |

-

"\n",

|

| 9 |

-

"In this tutorial, we will learn how to **monitor the internal steps (traces) of our AI agent** and **evaluate its performance** using open-source observability tools.\n",

|

| 10 |

-

"\n",

|

| 11 |

-

"The ability to observe and evaluate an agent’s behavior is essential for:\n",

|

| 12 |

-

"- Debugging issues when tasks fail or produce suboptimal results\n",

|

| 13 |

-

"- Monitoring costs and performance in real-time\n",

|

| 14 |

-

"- Improving reliability and safety through continuous feedback\n",

|

| 15 |

-

"\n",

|

| 16 |

-

"This notebook is part of the [Hugging Face Agents Course](https://www.hf.co/learn/agents-course/unit1/introduction)."

|

| 17 |

-

]

|

| 18 |

-

},

|

| 19 |

-

{

|

| 20 |

-

"cell_type": "markdown",

|

| 21 |

-

"metadata": {},

|

| 22 |

-

"source": [

|

| 23 |

-

"## Exercise Prerequisites 🏗️\n",

|

| 24 |

-

"\n",

|

| 25 |

-

"Before running this notebook, please be sure you have:\n",

|

| 26 |

-

"\n",

|

| 27 |

-

"🔲 📚 **Studied** [Introduction to Agents](https://huggingface.co/learn/agents-course/unit1/introduction)\n",

|

| 28 |

-

"\n",

|

| 29 |

-

"🔲 📚 **Studied** [The smolagents framework](https://huggingface.co/learn/agents-course/unit2/smolagents/introduction)"

|

| 30 |

-

]

|

| 31 |

-

},

|

| 32 |

-

{

|

| 33 |

-

"cell_type": "markdown",

|

| 34 |

-

"metadata": {},

|

| 35 |

-

"source": [

|

| 36 |

-

"## Step 0: Install the Required Libraries\n",

|

| 37 |

-

"\n",

|

| 38 |

-

"We will need a few libraries that allow us to run, monitor, and evaluate our agents:"

|

| 39 |

-

]

|

| 40 |

-

},

|

| 41 |

-

{

|

| 42 |

-

"cell_type": "code",

|

| 43 |

-

"execution_count": null,

|

| 44 |

-

"metadata": {},

|

| 45 |

-

"outputs": [],

|

| 46 |

-

"source": [

|

| 47 |

-

"%pip install langfuse 'smolagents[telemetry]' openinference-instrumentation-smolagents datasets 'smolagents[gradio]' gradio --upgrade"

|

| 48 |

-

]

|

| 49 |

-

},

|

| 50 |

-

{

|

| 51 |

-

"cell_type": "markdown",

|

| 52 |

-

"metadata": {},

|

| 53 |

-

"source": [

|

| 54 |

-

"## Step 1: Instrument Your Agent\n",

|

| 55 |

-

"\n",

|

| 56 |

-

"In this notebook, we will use [Langfuse](https://langfuse.com/) as our observability tool, but you can use **any other OpenTelemetry-compatible service**. The code below shows how to set environment variables for Langfuse (or any OTel endpoint) and how to instrument your smolagent.\n",

|

| 57 |

-

"\n",

|

| 58 |

-

"**Note:** If you are using LlamaIndex or LangGraph, you can find documentation on instrumenting them [here](https://langfuse.com/docs/integrations/llama-index/workflows) and [here](https://langfuse.com/docs/integrations/langchain/example-python-langgraph). "

|

| 59 |

-

]

|

| 60 |

-

},

|

| 61 |

-

{

|

| 62 |

-

"cell_type": "code",

|

| 63 |

-

"execution_count": 1,

|

| 64 |

-

"metadata": {},

|

| 65 |

-

"outputs": [],

|

| 66 |

-

"source": [

|

| 67 |

-

"import os\n",

|

| 68 |

-

"\n",

|

| 69 |

-

"# Get keys for your project from the project settings page: https://cloud.langfuse.com\n",

|

| 70 |

-

"os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"pk-lf-...\" \n",

|

| 71 |

-

"os.environ[\"LANGFUSE_SECRET_KEY\"] = \"sk-lf-...\" \n",

|

| 72 |

-

"os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 EU region\n",

|

| 73 |

-

"# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 US region\n",

|

| 74 |

-

"\n",

|

| 75 |

-

"# Set your Hugging Face and other tokens/secrets as environment variable\n",

|

| 76 |

-

"os.environ[\"HF_TOKEN\"] = \"hf_...\" "

|

| 77 |

-

]

|

| 78 |

-

},

|

| 79 |

-

{

|

| 80 |

-

"cell_type": "markdown",

|

| 81 |

-

"metadata": {},

|

| 82 |

-

"source": [

|

| 83 |

-

"With the environment variables set, we can now initialize the Langfuse client. get_client() initializes the Langfuse client using the credentials provided in the environment variables."

|

| 84 |

-

]

|

| 85 |

-

},

|

| 86 |

-

{

|

| 87 |

-

"cell_type": "code",

|

| 88 |

-

"execution_count": 12,

|

| 89 |

-

"metadata": {},

|

| 90 |

-

"outputs": [

|

| 91 |

-

{

|

| 92 |

-

"name": "stdout",

|

| 93 |

-

"output_type": "stream",

|

| 94 |

-

"text": [

|

| 95 |

-

"Langfuse client is authenticated and ready!\n"

|

| 96 |

-

]

|

| 97 |

-

}

|

| 98 |

-

],

|

| 99 |

-

"source": [

|

| 100 |

-

"from langfuse import get_client\n",

|

| 101 |

-

" \n",

|

| 102 |

-

"langfuse = get_client()\n",

|

| 103 |

-

" \n",

|

| 104 |

-

"# Verify connection\n",

|

| 105 |

-

"if langfuse.auth_check():\n",

|

| 106 |

-

" print(\"Langfuse client is authenticated and ready!\")\n",

|

| 107 |

-

"else:\n",

|

| 108 |

-

" print(\"Authentication failed. Please check your credentials and host.\")"

|

| 109 |

-

]

|

| 110 |

-

},

|

| 111 |

-

{

|

| 112 |

-

"cell_type": "code",

|

| 113 |

-

"execution_count": 13,

|

| 114 |

-

"metadata": {},

|

| 115 |

-

"outputs": [

|

| 116 |

-

{

|

| 117 |

-

"name": "stderr",

|

| 118 |

-

"output_type": "stream",

|

| 119 |

-

"text": [

|

| 120 |

-

"Attempting to instrument while already instrumented\n"

|

| 121 |

-

]

|

| 122 |

-

}

|

| 123 |

-

],

|

| 124 |

-

"source": [

|

| 125 |

-

"from openinference.instrumentation.smolagents import SmolagentsInstrumentor\n",

|

| 126 |

-

" \n",

|

| 127 |

-

"SmolagentsInstrumentor().instrument()"

|

| 128 |

-

]

|

| 129 |

-

},

|

| 130 |

-

{

|

| 131 |

-

"cell_type": "markdown",

|

| 132 |

-

"metadata": {},

|

| 133 |

-

"source": [

|

| 134 |

-

"## Step 2: Test Your Instrumentation\n",

|

| 135 |

-

"\n",

|

| 136 |

-

"Here is a simple CodeAgent from smolagents that calculates `1+1`. We run it to confirm that the instrumentation is working correctly. If everything is set up correctly, you will see logs/spans in your observability dashboard."

|

| 137 |

-

]

|

| 138 |

-

},

|

| 139 |

-

{

|

| 140 |

-

"cell_type": "code",

|

| 141 |

-

"execution_count": null,

|

| 142 |

-

"metadata": {},

|

| 143 |

-

"outputs": [],

|

| 144 |

-

"source": [

|

| 145 |

-

"from smolagents import InferenceClientModel, CodeAgent\n",

|

| 146 |

-

"\n",

|

| 147 |

-

"# Create a simple agent to test instrumentation\n",

|

| 148 |

-

"agent = CodeAgent(\n",

|

| 149 |

-

" tools=[],\n",

|

| 150 |

-

" model=InferenceClientModel()\n",

|

| 151 |

-

")\n",

|

| 152 |

-

"\n",

|

| 153 |

-

"agent.run(\"1+1=\")"

|

| 154 |

-

]

|

| 155 |

-

},

|

| 156 |

-

{

|

| 157 |

-

"cell_type": "markdown",

|

| 158 |

-

"metadata": {},

|

| 159 |

-

"source": [

|

| 160 |

-

"Check your [Langfuse Traces Dashboard](https://cloud.langfuse.com/traces) (or your chosen observability tool) to confirm that the spans and logs have been recorded.\n",

|

| 161 |

-

"\n",

|

| 162 |

-

"Example screenshot from Langfuse:\n",

|

| 163 |

-

"\n",

|

| 164 |

-

"\n",

|

| 165 |

-

"\n",

|

| 166 |

-

"_[Link to the trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/1b94d6888258e0998329cdb72a371155?timestamp=2025-03-10T11%3A59%3A41.743Z)_"

|

| 167 |

-

]

|

| 168 |

-

},

|

| 169 |

-

{

|

| 170 |

-

"cell_type": "markdown",

|

| 171 |

-

"metadata": {},

|

| 172 |

-

"source": [

|

| 173 |

-

"## Step 3: Observe and Evaluate a More Complex Agent\n",

|

| 174 |

-

"\n",

|

| 175 |

-

"Now that you have confirmed your instrumentation works, let's try a more complex query so we can see how advanced metrics (token usage, latency, costs, etc.) are tracked."

|

| 176 |

-

]

|

| 177 |

-

},

|

| 178 |

-

{

|

| 179 |

-

"cell_type": "code",

|

| 180 |

-

"execution_count": null,

|

| 181 |

-

"metadata": {},

|

| 182 |

-

"outputs": [],

|

| 183 |

-

"source": [

|

| 184 |

-

"from smolagents import (CodeAgent, DuckDuckGoSearchTool, InferenceClientModel)\n",

|

| 185 |

-

"\n",

|

| 186 |

-

"search_tool = DuckDuckGoSearchTool()\n",

|

| 187 |

-

"agent = CodeAgent(tools=[search_tool], model=InferenceClientModel())\n",

|

| 188 |

-

"\n",

|

| 189 |

-

"agent.run(\"How many Rubik's Cubes could you fit inside the Notre Dame Cathedral?\")"

|

| 190 |

-

]

|

| 191 |

-

},

|

| 192 |

-

{

|

| 193 |

-

"cell_type": "markdown",

|

| 194 |

-

"metadata": {},

|

| 195 |

-

"source": [

|

| 196 |

-

"### Trace Structure\n",

|

| 197 |

-

"\n",

|

| 198 |

-

"Most observability tools record a **trace** that contains **spans**, which represent each step of your agent’s logic. Here, the trace contains the overall agent run and sub-spans for:\n",

|

| 199 |

-

"- The tool calls (DuckDuckGoSearchTool)\n",

|

| 200 |

-

"- The LLM calls (InferenceClientModel)\n",

|

| 201 |

-

"\n",

|

| 202 |

-

"You can inspect these to see precisely where time is spent, how many tokens are used, and so on:\n",

|

| 203 |

-

"\n",

|

| 204 |

-

"\n",

|

| 205 |

-

"\n",

|

| 206 |

-

"_[Link to the trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/1ac33b89ffd5e75d4265b62900c348ed?timestamp=2025-03-07T13%3A45%3A09.149Z&display=preview)_"

|

| 207 |

-

]

|

| 208 |

-

},

|

| 209 |

-

{

|

| 210 |

-

"cell_type": "markdown",

|

| 211 |

-

"metadata": {},

|

| 212 |

-

"source": [

|

| 213 |

-

"## Online Evaluation\n",

|

| 214 |

-

"\n",

|

| 215 |

-

"In the previous section, we learned about the difference between online and offline evaluation. Now, we will see how to monitor your agent in production and evaluate it live.\n",

|

| 216 |

-

"\n",

|

| 217 |

-

"### Common Metrics to Track in Production\n",

|

| 218 |

-

"\n",

|

| 219 |

-

"1. **Costs** — The smolagents instrumentation captures token usage, which you can transform into approximate costs by assigning a price per token.\n",

|

| 220 |

-

"2. **Latency** — Observe the time it takes to complete each step, or the entire run.\n",

|

| 221 |

-

"3. **User Feedback** — Users can provide direct feedback (thumbs up/down) to help refine or correct the agent.\n",

|

| 222 |

-

"4. **LLM-as-a-Judge** — Use a separate LLM to evaluate your agent’s output in near real-time (e.g., checking for toxicity or correctness).\n",

|

| 223 |

-

"\n",

|

| 224 |

-

"Below, we show examples of these metrics."

|

| 225 |

-

]

|

| 226 |

-

},

|

| 227 |

-

{

|

| 228 |

-

"cell_type": "markdown",

|

| 229 |

-

"metadata": {},

|

| 230 |

-

"source": [

|

| 231 |

-

"#### 1. Costs\n",

|

| 232 |

-

"\n",

|

| 233 |

-

"Below is a screenshot showing usage for `Qwen2.5-Coder-32B-Instruct` calls. This is useful to see costly steps and optimize your agent. \n",

|

| 234 |

-

"\n",

|

| 235 |

-

"\n",

|

| 236 |

-

"\n",

|

| 237 |

-

"_[Link to the trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/1ac33b89ffd5e75d4265b62900c348ed?timestamp=2025-03-07T13%3A45%3A09.149Z&display=preview)_"

|

| 238 |

-

]

|

| 239 |

-

},

|

| 240 |

-

{

|

| 241 |

-

"cell_type": "markdown",

|

| 242 |

-

"metadata": {},

|

| 243 |

-

"source": [

|

| 244 |

-

"#### 2. Latency\n",

|

| 245 |

-

"\n",

|

| 246 |

-

"We can also see how long it took to complete each step. In the example below, the entire conversation took 32 seconds, which you can break down by step. This helps you identify bottlenecks and optimize your agent.\n",

|

| 247 |

-

"\n",

|

| 248 |

-

"\n",

|

| 249 |

-

"\n",

|

| 250 |

-

"_[Link to the trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/1ac33b89ffd5e75d4265b62900c348ed?timestamp=2025-03-07T13%3A45%3A09.149Z&display=preview)_"

|

| 251 |

-

]

|

| 252 |

-

},

|

| 253 |

-

{

|

| 254 |

-

"cell_type": "markdown",

|

| 255 |

-

"metadata": {},

|

| 256 |

-

"source": [

|

| 257 |

-

"#### 3. Additional Attributes\n",

|

| 258 |

-

"\n",

|

| 259 |

-

"You may also pass additional attributes to your spans. These can include `user_id`, `tags`, `session_id`, and custom metadata. Enriching traces with these details is important for analysis, debugging, and monitoring of your application’s behavior across different users or sessions."

|

| 260 |

-

]

|

| 261 |

-

},

|

| 262 |

-

{

|

| 263 |

-

"cell_type": "code",

|

| 264 |

-

"execution_count": null,

|

| 265 |

-

"metadata": {},

|

| 266 |

-

"outputs": [],

|

| 267 |

-

"source": [

|

| 268 |

-

"from smolagents import (CodeAgent, DuckDuckGoSearchTool, InferenceClientModel)\n",

|

| 269 |

-

"\n",

|

| 270 |

-

"search_tool = DuckDuckGoSearchTool()\n",

|

| 271 |

-

"agent = CodeAgent(\n",

|

| 272 |

-

" tools=[search_tool],\n",

|

| 273 |

-

" model=InferenceClientModel()\n",

|

| 274 |

-

")\n",

|

| 275 |

-

"\n",

|

| 276 |

-

"with langfuse.start_as_current_span(\n",

|

| 277 |

-

" name=\"Smolagent-Trace\",\n",

|

| 278 |

-

" ) as span:\n",

|

| 279 |

-

" \n",

|

| 280 |

-

" # Run your application here\n",

|

| 281 |

-

" response = agent.run(\"What is the capital of Germany?\")\n",

|

| 282 |

-

" \n",

|

| 283 |

-

" # Pass additional attributes to the span\n",

|

| 284 |

-

" span.update_trace(\n",

|

| 285 |

-

" input=\"What is the capital of Germany?\",\n",

|

| 286 |

-

" output=response,\n",

|

| 287 |

-

" user_id=\"smolagent-user-123\",\n",

|

| 288 |

-

" session_id=\"smolagent-session-123456789\",\n",

|

| 289 |

-

" tags=[\"city-question\", \"testing-agents\"],\n",

|

| 290 |

-

" metadata={\"email\": \"[email protected]\"},\n",

|

| 291 |

-

" )\n",

|

| 292 |

-

" \n",

|

| 293 |

-

"# Flush events in short-lived applications\n",

|

| 294 |

-

"langfuse.flush()"

|

| 295 |

-

]

|

| 296 |

-

},

|

| 297 |

-

{

|

| 298 |

-

"cell_type": "markdown",

|

| 299 |

-

"metadata": {},

|

| 300 |

-

"source": [

|

| 301 |

-

""

|

| 302 |

-

]

|

| 303 |

-

},

|

| 304 |

-

{

|

| 305 |

-

"cell_type": "markdown",

|

| 306 |

-

"metadata": {},

|

| 307 |

-

"source": [

|

| 308 |

-

"#### 4. User Feedback\n",

|

| 309 |

-

"\n",

|

| 310 |

-

"If your agent is embedded into a user interface, you can record direct user feedback (like a thumbs-up/down in a chat UI). Below is an example using [Gradio](https://gradio.app/) to embed a chat with a simple feedback mechanism.\n",

|

| 311 |

-

"\n",

|

| 312 |

-

"In the code snippet below, when a user sends a chat message, we capture the trace in Langfuse. If the user likes/dislikes the last answer, we attach a score to the trace."

|

| 313 |

-

]

|

| 314 |

-

},

|

| 315 |

-

{

|

| 316 |

-

"cell_type": "code",

|

| 317 |

-

"execution_count": null,

|

| 318 |

-

"metadata": {},

|

| 319 |

-

"outputs": [],

|

| 320 |

-

"source": [

|

| 321 |

-

"import gradio as gr\n",

|

| 322 |

-

"from smolagents import (CodeAgent, InferenceClientModel)\n",

|

| 323 |

-

"from langfuse import get_client\n",

|

| 324 |

-

"\n",

|

| 325 |

-

"langfuse = get_client()\n",

|

| 326 |

-

"\n",

|

| 327 |

-

"model = InferenceClientModel()\n",

|

| 328 |

-

"agent = CodeAgent(tools=[], model=model, add_base_tools=True)\n",

|

| 329 |

-

"\n",

|

| 330 |

-

"trace_id = None\n",

|

| 331 |

-

"\n",

|

| 332 |

-

"def respond(prompt, history):\n",

|

| 333 |

-

" with langfuse.start_as_current_span(\n",

|

| 334 |

-

" name=\"Smolagent-Trace\"):\n",

|

| 335 |

-

" \n",

|

| 336 |

-

" # Run your application here\n",

|

| 337 |

-

" output = agent.run(prompt)\n",

|

| 338 |

-

"\n",

|

| 339 |

-

" global trace_id\n",

|

| 340 |

-

" trace_id = langfuse.get_current_trace_id()\n",

|

| 341 |

-

"\n",

|

| 342 |

-

" history.append({\"role\": \"assistant\", \"content\": str(output)})\n",

|

| 343 |

-

" return history\n",

|

| 344 |

-

"\n",

|

| 345 |

-

"def handle_like(data: gr.LikeData):\n",

|

| 346 |

-

" # For demonstration, we map user feedback to a 1 (like) or 0 (dislike)\n",

|

| 347 |

-

" if data.liked:\n",

|

| 348 |

-

" langfuse.create_score(\n",

|

| 349 |

-

" value=1,\n",

|

| 350 |

-

" name=\"user-feedback\",\n",

|

| 351 |

-

" trace_id=trace_id\n",

|

| 352 |

-

" )\n",

|

| 353 |

-

" else:\n",

|

| 354 |

-

" langfuse.create_score(\n",

|

| 355 |

-

" value=0,\n",

|

| 356 |

-

" name=\"user-feedback\",\n",

|

| 357 |

-

" trace_id=trace_id\n",

|

| 358 |

-

" )\n",

|

| 359 |

-

"\n",

|

| 360 |

-

"with gr.Blocks() as demo:\n",

|

| 361 |

-

" chatbot = gr.Chatbot(label=\"Chat\", type=\"messages\")\n",

|

| 362 |

-

" prompt_box = gr.Textbox(placeholder=\"Type your message...\", label=\"Your message\")\n",

|

| 363 |

-

"\n",

|

| 364 |

-

" # When the user presses 'Enter' on the prompt, we run 'respond'\n",

|

| 365 |

-

" prompt_box.submit(\n",

|

| 366 |

-

" fn=respond,\n",

|

| 367 |

-

" inputs=[prompt_box, chatbot],\n",

|

| 368 |

-

" outputs=chatbot\n",

|

| 369 |

-

" )\n",

|

| 370 |

-

"\n",

|

| 371 |

-

" # When the user clicks a 'like' button on a message, we run 'handle_like'\n",

|

| 372 |

-

" chatbot.like(handle_like, None, None)\n",

|

| 373 |

-

"\n",

|

| 374 |

-

"demo.launch()\n"

|

| 375 |

-

]

|

| 376 |

-

},

|

| 377 |

-

{

|

| 378 |

-

"cell_type": "markdown",

|

| 379 |

-

"metadata": {},

|

| 380 |

-

"source": [

|

| 381 |

-

"User feedback is then captured in your observability tool:\n",

|

| 382 |

-

"\n",

|

| 383 |

-

""

|

| 384 |

-

]

|

| 385 |

-

},

|

| 386 |

-

{

|

| 387 |

-

"cell_type": "markdown",

|

| 388 |

-

"metadata": {},

|

| 389 |

-

"source": [

|

| 390 |

-

"#### 5. LLM-as-a-Judge\n",

|

| 391 |

-

"\n",

|

| 392 |

-

"LLM-as-a-Judge is another way to automatically evaluate your agent's output. You can set up a separate LLM call to gauge the output’s correctness, toxicity, style, or any other criteria you care about.\n",

|

| 393 |

-

"\n",

|

| 394 |

-

"**Workflow**:\n",

|

| 395 |

-

"1. You define an **Evaluation Template**, e.g., \"Check if the text is toxic.\"\n",

|

| 396 |

-

"2. Each time your agent generates output, you pass that output to your \"judge\" LLM with the template.\n",

|

| 397 |

-

"3. The judge LLM responds with a rating or label that you log to your observability tool.\n",

|

| 398 |

-

"\n",

|

| 399 |

-

"Example from Langfuse:\n",

|

| 400 |

-

"\n",

|

| 401 |

-

"\n",

|

| 402 |

-

""

|

| 403 |

-

]

|

| 404 |

-

},

|

| 405 |

-

{

|

| 406 |

-

"cell_type": "code",

|

| 407 |

-

"execution_count": null,

|

| 408 |

-

"metadata": {},

|

| 409 |

-

"outputs": [],

|

| 410 |

-

"source": [

|

| 411 |

-

"# Example: Checking if the agent’s output is toxic or not.\n",

|

| 412 |

-

"from smolagents import (CodeAgent, DuckDuckGoSearchTool, InferenceClientModel)\n",

|

| 413 |

-

"\n",

|

| 414 |

-

"search_tool = DuckDuckGoSearchTool()\n",

|

| 415 |

-

"agent = CodeAgent(tools=[search_tool], model=InferenceClientModel())\n",

|

| 416 |

-

"\n",

|

| 417 |

-

"agent.run(\"Can eating carrots improve your vision?\")"

|

| 418 |

-

]

|

| 419 |

-

},

|

| 420 |

-

{

|

| 421 |

-

"cell_type": "markdown",

|

| 422 |

-

"metadata": {},

|

| 423 |

-

"source": [

|

| 424 |

-

"You can see that the answer of this example is judged as \"not toxic\".\n",

|

| 425 |

-

"\n",

|

| 426 |

-

""

|

| 427 |

-

]

|

| 428 |

-

},

|

| 429 |

-

{

|

| 430 |

-

"cell_type": "markdown",

|

| 431 |

-

"metadata": {},

|

| 432 |

-

"source": [

|

| 433 |

-

"#### 6. Observability Metrics Overview\n",

|

| 434 |

-

"\n",

|

| 435 |

-

"All of these metrics can be visualized together in dashboards. This enables you to quickly see how your agent performs across many sessions and helps you to track quality metrics over time.\n",

|

| 436 |

-

"\n",

|

| 437 |

-

""

|

| 438 |

-

]

|

| 439 |

-

},

|

| 440 |

-

{

|

| 441 |

-

"cell_type": "markdown",

|

| 442 |

-

"metadata": {},

|

| 443 |

-

"source": [

|

| 444 |

-

"## Offline Evaluation\n",

|

| 445 |

-

"\n",

|

| 446 |

-

"Online evaluation is essential for live feedback, but you also need **offline evaluation**—systematic checks before or during development. This helps maintain quality and reliability before rolling changes into production."

|

| 447 |

-

]

|

| 448 |

-

},

|

| 449 |

-

{

|

| 450 |

-

"cell_type": "markdown",

|

| 451 |

-

"metadata": {},

|

| 452 |

-

"source": [

|

| 453 |

-

"### Dataset Evaluation\n",

|

| 454 |

-

"\n",

|

| 455 |

-

"In offline evaluation, you typically:\n",

|

| 456 |

-

"1. Have a benchmark dataset (with prompt and expected output pairs)\n",

|

| 457 |

-

"2. Run your agent on that dataset\n",

|

| 458 |

-

"3. Compare outputs to the expected results or use an additional scoring mechanism\n",

|

| 459 |

-

"\n",

|

| 460 |

-

"Below, we demonstrate this approach with the [GSM8K dataset](https://huggingface.co/datasets/gsm8k), which contains math questions and solutions."

|

| 461 |

-

]

|

| 462 |

-

},

|

| 463 |

-

{

|

| 464 |

-

"cell_type": "code",

|

| 465 |

-

"execution_count": null,

|

| 466 |

-

"metadata": {},

|

| 467 |

-

"outputs": [],

|

| 468 |

-

"source": [

|

| 469 |

-

"import pandas as pd\n",

|

| 470 |

-

"from datasets import load_dataset\n",

|

| 471 |

-

"\n",

|

| 472 |

-

"# Fetch GSM8K from Hugging Face\n",

|

| 473 |

-

"dataset = load_dataset(\"openai/gsm8k\", 'main', split='train')\n",

|

| 474 |

-

"df = pd.DataFrame(dataset)\n",

|

| 475 |

-

"print(\"First few rows of GSM8K dataset:\")\n",

|

| 476 |

-

"print(df.head())"

|

| 477 |

-

]

|

| 478 |

-

},

|

| 479 |

-

{

|

| 480 |

-

"cell_type": "markdown",

|

| 481 |

-

"metadata": {},

|

| 482 |

-

"source": [

|

| 483 |

-

"Next, we create a dataset entity in Langfuse to track the runs. Then, we add each item from the dataset to the system. (If you’re not using Langfuse, you might simply store these in your own database or local file for analysis.)"

|

| 484 |

-

]

|

| 485 |

-

},

|

| 486 |

-

{

|

| 487 |

-

"cell_type": "code",

|

| 488 |

-

"execution_count": null,

|

| 489 |

-

"metadata": {},

|

| 490 |

-

"outputs": [],

|

| 491 |

-

"source": [

|

| 492 |

-

"from langfuse import get_client\n",

|

| 493 |

-

"langfuse = get_client()\n",

|

| 494 |

-

"\n",

|

| 495 |

-

"langfuse_dataset_name = \"gsm8k_dataset_huggingface\"\n",

|

| 496 |

-

"\n",

|

| 497 |

-

"# Create a dataset in Langfuse\n",

|

| 498 |

-

"langfuse.create_dataset(\n",

|

| 499 |

-

" name=langfuse_dataset_name,\n",

|

| 500 |

-

" description=\"GSM8K benchmark dataset uploaded from Huggingface\",\n",

|

| 501 |

-

" metadata={\n",

|

| 502 |

-

" \"date\": \"2025-03-10\", \n",

|

| 503 |

-

" \"type\": \"benchmark\"\n",

|

| 504 |

-

" }\n",

|

| 505 |

-

")"

|

| 506 |

-

]

|

| 507 |

-

},

|

| 508 |

-

{

|

| 509 |

-

"cell_type": "code",

|

| 510 |

-

"execution_count": null,

|

| 511 |

-

"metadata": {},

|

| 512 |

-

"outputs": [],

|

| 513 |

-

"source": [

|

| 514 |

-

"for idx, row in df.iterrows():\n",

|

| 515 |

-

" langfuse.create_dataset_item(\n",

|

| 516 |

-

" dataset_name=langfuse_dataset_name,\n",

|

| 517 |

-

" input={\"text\": row[\"question\"]},\n",

|

| 518 |

-

" expected_output={\"text\": row[\"answer\"]},\n",

|

| 519 |

-

" metadata={\"source_index\": idx}\n",

|

| 520 |

-

" )\n",

|

| 521 |

-

" if idx >= 9: # Upload only the first 10 items for demonstration\n",

|

| 522 |

-

" break"

|

| 523 |

-

]

|

| 524 |

-

},

|

| 525 |

-

{

|

| 526 |

-

"cell_type": "markdown",

|

| 527 |

-

"metadata": {},

|

| 528 |

-

"source": [

|

| 529 |

-

""

|

| 530 |

-

]

|

| 531 |

-

},

|

| 532 |

-

{

|

| 533 |

-

"cell_type": "markdown",

|

| 534 |

-

"metadata": {},

|

| 535 |

-

"source": [

|

| 536 |

-

"#### Running the Agent on the Dataset\n",

|

| 537 |

-

"\n",

|

| 538 |

-

"We define a helper function `run_smolagent()` that:\n",

|

| 539 |

-

"1. Starts a Langfuse span\n",

|

| 540 |

-

"2. Runs our agent on the prompt\n",

|

| 541 |

-

"3. Records the trace ID in Langfuse\n",

|

| 542 |

-

"\n",

|

| 543 |

-

"Then, we loop over each dataset item, run the agent, and link the trace to the dataset item. We can also attach a quick evaluation score if desired."

|

| 544 |

-

]

|

| 545 |

-

},

|

| 546 |

-

{

|

| 547 |

-

"cell_type": "code",

|

| 548 |

-

"execution_count": null,

|

| 549 |

-

"metadata": {},

|

| 550 |

-

"outputs": [],

|

| 551 |

-

"source": [

|

| 552 |

-

"from opentelemetry.trace import format_trace_id\n",

|

| 553 |

-

"from smolagents import (CodeAgent, InferenceClientModel, LiteLLMModel)\n",

|

| 554 |

-

"from langfuse import get_client\n",

|

| 555 |

-

" \n",

|

| 556 |

-

"langfuse = get_client()\n",

|

| 557 |

-

"\n",

|

| 558 |

-

"\n",

|

| 559 |

-

"# Example: using InferenceClientModel or LiteLLMModel to access openai, anthropic, gemini, etc. models:\n",

|

| 560 |

-

"model = InferenceClientModel()\n",

|

| 561 |

-

"\n",

|

| 562 |

-

"agent = CodeAgent(\n",

|

| 563 |

-

" tools=[],\n",

|

| 564 |

-

" model=model,\n",

|

| 565 |

-

" add_base_tools=True\n",

|

| 566 |

-

")\n",

|

| 567 |

-

"\n",

|

| 568 |

-

"dataset_name = \"gsm8k_dataset_huggingface\"\n",

|

| 569 |

-

"current_run_name = \"smolagent-notebook-run-01\" # Identifies this specific evaluation run\n",

|

| 570 |

-

" \n",

|

| 571 |

-

"# Assume 'run_smolagent' is your instrumented application function\n",

|

| 572 |

-

"def run_smolagent(question):\n",

|

| 573 |

-

" with langfuse.start_as_current_generation(name=\"qna-llm-call\") as generation:\n",

|

| 574 |

-

" # Simulate LLM call\n",

|

| 575 |

-

" result = agent.run(question)\n",

|

| 576 |

-

" \n",

|

| 577 |

-

" # Update the trace with the input and output\n",

|

| 578 |

-

" generation.update_trace(\n",

|

| 579 |

-

" input= question,\n",

|

| 580 |

-

" output=result,\n",

|

| 581 |

-

" )\n",

|

| 582 |

-

" \n",

|

| 583 |

-

" return result\n",

|

| 584 |

-

" \n",

|

| 585 |

-

"dataset = langfuse.get_dataset(name=dataset_name) # Fetch your pre-populated dataset\n",

|

| 586 |

-

" \n",

|

| 587 |

-

"for item in dataset.items:\n",

|

| 588 |

-

" \n",

|

| 589 |

-

" # Use the item.run() context manager\n",

|

| 590 |

-

" with item.run(\n",

|

| 591 |

-

" run_name=current_run_name,\n",

|

| 592 |

-

" run_metadata={\"model_provider\": \"Hugging Face\", \"temperature_setting\": 0.7},\n",

|

| 593 |

-

" run_description=\"Evaluation run for GSM8K dataset\"\n",

|

| 594 |

-

" ) as root_span: # root_span is the root span of the new trace for this item and run.\n",

|

| 595 |

-

" # All subsequent langfuse operations within this block are part of this trace.\n",

|

| 596 |

-

" \n",

|

| 597 |

-

" # Call your application logic\n",

|

| 598 |

-

" generated_answer = run_smolagent(question=item.input[\"text\"])\n",

|

| 599 |

-

" \n",

|

| 600 |

-

" print(item.input)"

|

| 601 |

-

]

|

| 602 |

-

},

|

| 603 |

-

{

|

| 604 |

-

"cell_type": "markdown",

|

| 605 |

-

"metadata": {},

|

| 606 |

-

"source": [

|

| 607 |

-

"You can repeat this process with different:\n",

|

| 608 |

-

"- Models (OpenAI GPT, local LLM, etc.)\n",

|

| 609 |

-

"- Tools (search vs. no search)\n",

|

| 610 |

-

"- Prompts (different system messages)\n",

|

| 611 |

-

"\n",

|

| 612 |

-

"Then compare them side-by-side in your observability tool:\n",

|

| 613 |

-

"\n",

|

| 614 |

-

"\n",

|

| 615 |

-

"\n"

|

| 616 |

-

]

|

| 617 |

-

},

|

| 618 |

-

{

|

| 619 |

-

"cell_type": "markdown",

|

| 620 |

-

"metadata": {},

|

| 621 |

-

"source": [

|

| 622 |

-

"## Final Thoughts\n",

|

| 623 |

-

"\n",

|

| 624 |

-

"In this notebook, we covered how to:\n",

|

| 625 |

-

"1. **Set up Observability** using smolagents + OpenTelemetry exporters\n",

|

| 626 |

-

"2. **Check Instrumentation** by running a simple agent\n",

|

| 627 |

-

"3. **Capture Detailed Metrics** (cost, latency, etc.) through an observability tools\n",

|

| 628 |

-

"4. **Collect User Feedback** via a Gradio interface\n",

|

| 629 |

-

"5. **Use LLM-as-a-Judge** to automatically evaluate outputs\n",

|

| 630 |

-

"6. **Perform Offline Evaluation** with a benchmark dataset\n",

|

| 631 |

-

"\n",

|

| 632 |

-

"🤗 Happy coding!"

|

| 633 |

-

]

|

| 634 |

-

}

|

| 635 |

-

],

|

| 636 |

-

"metadata": {

|

| 637 |

-

"kernelspec": {

|

| 638 |

-

"display_name": ".venv",

|

| 639 |

-

"language": "python",

|

| 640 |

-

"name": "python3"

|

| 641 |

-

},

|

| 642 |

-

"language_info": {

|

| 643 |

-

"codemirror_mode": {

|

| 644 |

-

"name": "ipython",

|

| 645 |

-

"version": 3

|

| 646 |

-

},

|

| 647 |

-

"file_extension": ".py",

|

| 648 |

-

"mimetype": "text/x-python",

|

| 649 |

-

"name": "python",

|

| 650 |

-

"nbconvert_exporter": "python",

|

| 651 |

-

"pygments_lexer": "ipython3",

|

| 652 |

-

"version": "3.13.2"

|

| 653 |

-

}

|

| 654 |

-

},

|

| 655 |

-

"nbformat": 4,

|

| 656 |

-

"nbformat_minor": 2

|

| 657 |

-

}

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|