hexsha

stringlengths 40

40

| size

int64 5

1.04M

| ext

stringclasses 6

values | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 3

344

| max_stars_repo_name

stringlengths 5

125

| max_stars_repo_head_hexsha

stringlengths 40

78

| max_stars_repo_licenses

sequencelengths 1

11

| max_stars_count

int64 1

368k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 3

344

| max_issues_repo_name

stringlengths 5

125

| max_issues_repo_head_hexsha

stringlengths 40

78

| max_issues_repo_licenses

sequencelengths 1

11

| max_issues_count

int64 1

116k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 3

344

| max_forks_repo_name

stringlengths 5

125

| max_forks_repo_head_hexsha

stringlengths 40

78

| max_forks_repo_licenses

sequencelengths 1

11

| max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | content

stringlengths 5

1.04M

| avg_line_length

float64 1.14

851k

| max_line_length

int64 1

1.03M

| alphanum_fraction

float64 0

1

| lid

stringclasses 191

values | lid_prob

float64 0.01

1

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

0ca84eec978b9de6eeb072304b5498fc042a4e5e | 3,505 | md | Markdown | Docs/HOWTO_linux.md | Arjanit21/Digital-electronics-3 | 2b28277b5bdce2129cc6131ca56cc56f7bf095f6 | [

"MIT"

] | null | null | null | Docs/HOWTO_linux.md | Arjanit21/Digital-electronics-3 | 2b28277b5bdce2129cc6131ca56cc56f7bf095f6 | [

"MIT"

] | null | null | null | Docs/HOWTO_linux.md | Arjanit21/Digital-electronics-3 | 2b28277b5bdce2129cc6131ca56cc56f7bf095f6 | [

"MIT"

] | null | null | null | ## How to use AVR template on Linux

1. Download and install the [Visual Studio Code](https://code.visualstudio.com/) source code editor.

2. The AVR template requires the following packages:

```bash

sudo apt-get install git make avrdude putty doxygen doxygen-gui

```

3. Download the latest AVR 8-bit Toolchain (Linux 64-bit) from the Microchip [webpage](https://www.microchip.com/mplab/avr-support/avr-and-arm-toolchains-c-compilers), from this [repository](../Install/avr8-gnu-toolchain-3.6.2.1778-linux.any.x86_64.tar.gz), or from Microchip's [archive](https://www.microchip.com/en-us/development-tools-tools-and-software/avr-and-sam-downloads-archive), and extract all files to the `/opt` directory:

```bash

sudo tar -xzvf avr8-gnu-toolchain-3.6.2.1778-linux.any.x86_64.tar.gz -C /opt/

```

4. Download this [repository](https://github.com/tomas-fryza/Digital-electronics-2/archive/master.zip) and extract its `Examples` folder to your local computer.

5. Start Visual Studio Code, open the `Examples` folder, and in `Examples/Makefile.in` enable and/or modify the Linux parameters to match your local settings:

```Makefile

## Linux

PREFIX = /opt/avr8-gnu-toolchain-linux_x86_64

AVRDUDE = avrdude

RM = rm -f

# See "dmesg" command output

USBPORT = /dev/ttyUSB0

## Windows

#PREFIX = C:\Appz\Avr\avr8-gnu-toolchain-win32_x86

#AVRDUDE = C:\Appz\Avr\avrdude.exe

#RM = del

## See USB-SERIAL CH340 port in Device Manager

#USBPORT = COM3

```

6. In Visual Studio Code, open a new terminal via **Terminal > New Terminal** and change the working directory to `Examples/blink`:

```bash

cd Examples/blink

ls

```

All tasks are handled by the `Makefile`. The following commands are available for compiling and programming:

```bash

make all

make flash

make size

make list

make clean

```

7. To create a new project, make a new directory within the `Labs` folder and copy the three files `main.c`, `Makefile`, and `README.md` from the `Examples/blink` project to `Labs/new-project-folder`.
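If you create projects often, the copy in step 7 can be scripted. The following is a minimal Python sketch using only the standard library; `new_project` is a hypothetical helper, and it assumes `root` is the repository root, i.e. the directory that contains both `Examples` and `Labs`. It refuses to overwrite an existing project folder.

```python
import shutil
from pathlib import Path

# The three files step 7 says to copy from the blink example.
TEMPLATE_FILES = ("main.c", "Makefile", "README.md")


def new_project(root, name, template="Examples/blink"):
    """Create Labs/<name> under root and copy the template files into it (hypothetical helper)."""
    root = Path(root)
    src = root / template
    dst = root / "Labs" / name
    # parents=True also creates Labs/ if needed; exist_ok=False avoids clobbering a project.
    dst.mkdir(parents=True, exist_ok=False)
    for filename in TEMPLATE_FILES:
        shutil.copy2(src / filename, dst / filename)  # copy2 also preserves timestamps
    return dst
```

For example, `new_project(".", "my-lab")` run from the repository root would create `Labs/my-lab` with the three template files.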

> If your Arduino board (or clone) does not contain any bootloader, follow instructions at [Instructables](https://www.instructables.com/id/How-to-fix-bad-Chinese-Arduino-clones/) or [Arduino webpages](https://www.arduino.cc/en/Tutorial/ArduinoISP).

>

> Install **AVR Support** extension in Visual Studio Code for AVR assembly language support.

>

#### Tested on operating systems

**Name** | **Version** | **Date (YYYY-MM-DD)** | **Note** |
:--------- | :------------------------- | :-------------------: | :------------------ |
Linux Mint | 20.1, Ulyssa | 2021-06-28 | Office |
Linux Mint | 20.1, Ulyssa | 2021-06-24 | Laptop |
Ubuntu | 20.04.1 LTS, Focal Fossa | 2020-12-22 | Student, VirtualBox |
Ubuntu | 20.04.1 LTS, Focal Fossa | 2020-12-10 | Student, Laptop |
Linux Mint | 18.3, Sylvia | 2019-06-13 | Laptop |
Linux Mint | 18.2, Sonya | 2019-05-17 | Lab SC 6.61 |
Ubuntu | 18.04.1 LTS, Bionic Beaver | 2019-05-15 | Office |
Ubuntu | 16.04, Xenial Xerus | 2018-09-15 | Office |

```bash

# FYI: How to check OS version in Linux

cat /etc/os-release

```

| 44.367089 | 436 | 0.62311 | eng_Latn | 0.453536 |

0ca8b4baa6e7dceef8060ead3dfb0d14ae566e7f | 510 | md | Markdown | archives/2022-03-26.md | erbanku/v2ex-hot-hub | 746387344a12bbb5265a65511af95f9e3eddd6c1 | [

"MIT"

] | null | null | null | archives/2022-03-26.md | erbanku/v2ex-hot-hub | 746387344a12bbb5265a65511af95f9e3eddd6c1 | [

"MIT"

] | null | null | null | archives/2022-03-26.md | erbanku/v2ex-hot-hub | 746387344a12bbb5265a65511af95f9e3eddd6c1 | [

"MIT"

] | null | null | null | # v2ex Hot Topics

`Last updated: 2022-03-26 11:19:05 +0800`

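1. [Turns out a 64GB iPhone / iPad is enough](https://www.v2ex.com/t/842826)

1. [How to install WeChat on Ubuntu 20.04](https://www.v2ex.com/t/842818)

1. [My heart races when playing games](https://www.v2ex.com/t/842861)

1. [Has Caiyun Weather on iOS given up on free users?](https://www.v2ex.com/t/842823)

1. [Down to the final round of offers!](https://www.v2ex.com/t/842869)

1. [Same MySQL 8, yet I just can't figure out why this happens; hoping someone can help me analyze it](https://www.v2ex.com/t/842853)

1. [Question: why are people selling their M1 Air laptops?](https://www.v2ex.com/t/842841)

1. [If you use the Insert key often, think carefully before choosing a Lenovo/Xiaoxin laptop](https://www.v2ex.com/t/842808)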

| 39.230769 | 74 | 0.696078 | yue_Hant | 0.788207 |

0ca8d64f4fc6f6d4d2ce2ec38c0bee2a39c70770 | 447 | md | Markdown | README.md | Elycin/Larahacks | 1559d1098f3ab99643c6a929577066a005ef29e9 | [

"MIT"

] | null | null | null | README.md | Elycin/Larahacks | 1559d1098f3ab99643c6a929577066a005ef29e9 | [

"MIT"

] | null | null | null | README.md | Elycin/Larahacks | 1559d1098f3ab99643c6a929577066a005ef29e9 | [

"MIT"

] | null | null | null | # Larahacks

A bunch of hacks and tweaks that you can use in your Laravel project.

## Models

### Traits

You can use a trait by adding `use {TraitName};` inside your model class.

- [Write Through Cache](https://github.com/Elycin/Larahacks/blob/main/Traits/WriteThroughCache.php)

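A write-through cache writes every change to both the database and the cache, so the cache stays consistent with the stored data; this makes it a good fit when your application depends heavily on the cache. It requires a cache store that supports tags (such as Redis or Memcached).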
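The trait itself is PHP and its code is not reproduced here. As a language-agnostic illustration only, the following Python sketch shows the write-through pattern the trait is named after: every write goes to the backing store and the cache together, and reads are served from the cache when possible. The `WriteThroughCache` class and the plain dicts standing in for the database and cache are assumptions of this sketch; Laravel's cache tags are not modelled.

```python
class WriteThroughCache:
    """Write-through cache sketch: writes update the store and the cache together."""

    def __init__(self, store):
        self.store = store  # dict standing in for the database (assumption)
        self.cache = {}     # dict standing in for the cache store (assumption)

    def get(self, key):
        # Serve from the cache when possible; on a miss, read the store and populate the cache.
        if key in self.cache:
            return self.cache[key]
        value = self.store[key]
        self.cache[key] = value
        return value

    def put(self, key, value):
        # Write-through: persist first, then update the cache,
        # so the cache never holds a value the store does not.
        self.store[key] = value
        self.cache[key] = value

    def delete(self, key):
        # Remove from both layers so the cache cannot serve deleted data.
        self.store.pop(key, None)
        self.cache.pop(key, None)
```

The trade-off is that every write pays for two updates, in exchange for reads that can trust the cache.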

| 34.384615 | 157 | 0.771812 | eng_Latn | 0.997758 |

0ca9191f9cc1f72f0db6f13a30c9487cbca2ecc7 | 714 | md | Markdown | docs/components/form/rating.md | CareyToboo/amis | b43445931614a42462c2d2add173ad58a0a5ec30 | [

"Apache-2.0"

] | 2 | 2021-04-10T10:20:50.000Z | 2021-04-10T10:26:59.000Z | docs/components/form/rating.md | CareyToboo/amis | b43445931614a42462c2d2add173ad58a0a5ec30 | [

"Apache-2.0"

] | null | null | null | docs/components/form/rating.md | CareyToboo/amis | b43445931614a42462c2d2add173ad58a0a5ec30 | [

"Apache-2.0"

] | 1 | 2021-04-10T10:27:10.000Z | 2021-04-10T10:27:10.000Z | ---

title: Rating 评分

description:

type: 0

group: null

menuName: Rating 评分

icon:

order: 37

---

## Basic Usage

```schema:height="400" scope="body"

{

"type": "form",

"api": "https://houtai.baidu.com/api/mock2/form/saveForm",

"controls": [

{

"type": "rating",

"name": "rating",

"label": "评分"

}

]

}

```

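## Properties

When used as a form item, the rating control supports the following settings in addition to those listed in the [common form item properties](./formitem#%E5%B1%9E%E6%80%A7%E8%A1%A8):

| Property | Type | Default | Description |
| -------- | --------- | ------- | ------------------ |
| half | `boolean` | `false` | Whether half-star selection is allowed |
| count | `number` | `5` | Total number of stars available |
| readOnly | `boolean` | `false` | Read-only |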

| 17.414634 | 84 | 0.45098 | yue_Hant | 0.263985 |

0ca9e4db11b9810d4a8b60973692a55f159a8914 | 2,571 | md | Markdown | docker/README.md | doytsujin/trow | 9925d57dae8095a80c99e997e9470ed0be13c425 | [

"Apache-2.0"

] | null | null | null | docker/README.md | doytsujin/trow | 9925d57dae8095a80c99e997e9470ed0be13c425 | [

"Apache-2.0"

] | null | null | null | docker/README.md | doytsujin/trow | 9925d57dae8095a80c99e997e9470ed0be13c425 | [

"Apache-2.0"

] | null | null | null | # Building Trow

The easiest way to build Trow is via Dockerfile. From this directory, either run `build.sh` or run something similar to the following:

```

docker build -f Dockerfile -t trow ..

```

Note that the build context needs to be the root directory of the project (*not* the directory with

the Dockerfile).

To run tests, use the `build.sh` script or `Dockerfile.test` image (tests will run as part of the build).

Once issues related to TLS libraries have been resolved, a minimal build based on a scratch image

will be added.

## Multiplatform Builds

There are several ways to produce multiplatform builds with Docker:

1. Build directly on the target hardware.

2. Use Docker multiplatform support, e.g. the `--platform` argument available with buildx, to produce images for other platforms. This uses QEMU internally to emulate the target platform. In practice, I hit issues with this solution, seemingly because of bugs in QEMU and interactions with multi-stage builds.

3. Use Rust cross-compilation to produce a binary for the target platform and copy it into a base image for the target platform. This requires a bit more configuration, but does work. When targeting a low-powered platform (e.g. Raspberry Pi), this option may be considerably faster than building directly on the hardware or using emulation.

Our Dockerfile uses option 3 (with Docker multiplatform support to assemble the final image). Assuming you're running on amd64, you can run the following:

```

docker buildx build --pull --load -t trow:armv7 -f Dockerfile --platform linux/arm/v7 ../

```

You can build a multi-platform image (or rather, a manifest pointing to multiple images) with:

```

docker buildx build --pull -t trow -f Dockerfile --platform linux/arm/v7,linux/arm64,linux/amd64 ../

```

Be aware that you can't load the result into a local Docker instance with `--load`, as it doesn't currently understand multi-platform manifests; push it to a registry with `--push` instead.

All of this assumes you have a recent version of Docker with BuildKit installed.

Note that `--pull` avoids an issue whereby Docker can use the wrong base image and `--load` puts the

image into the host Docker image cache.
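To make the `--load` restriction concrete, here is a small, hypothetical Python helper that assembles the `docker buildx build` arguments used above and refuses to combine `--load` with more than one platform. It only builds the argument list (running it, e.g. with `subprocess.run(cmd, check=True)`, is left to the caller), and failing early instead of silently switching to `--push` is a design choice of this sketch, not something the Trow build scripts do.

```python
def buildx_command(tag, platforms, push=False, dockerfile="Dockerfile", context="../"):
    """Return a `docker buildx build` argv list (hypothetical helper)."""
    if not platforms:
        raise ValueError("at least one platform is required")
    if push:
        output = "--push"  # multi-platform manifests must go to a registry
    elif len(platforms) == 1:
        output = "--load"  # a single-platform image can go into the local image cache
    else:
        raise ValueError("--load cannot handle a multi-platform manifest; use push=True")
    return ["docker", "buildx", "build", "--pull", output, "-t", tag,
            "-f", dockerfile, "--platform", ",".join(platforms), context]
```

With a single platform, `buildx_command("trow:armv7", ["linux/arm/v7"])` reproduces the armv7 command shown earlier.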

If you get an error about an unsupported platform, you may need to install binfmt handlers. This can be done for common platforms with `docker run --privileged --rm docker/binfmt:a7996909642ee92942dcd6cff44b9b95f08dad64` (also see [qus](https://github.com/dbhi/qus) for an alternative approach and an explanation of what is happening here). Restart Docker or create a new builder instance after doing this.

| 43.576271 | 113 | 0.770517 | eng_Latn | 0.997911 |

0ca9fc6d6caa3e4dbbc40ccf32f66003a2658d31 | 3,310 | md | Markdown | README.md | Bhaskers-Blu-Org2/GuardedFabricTools | 76abbdaf396d0cd87d105cfa35a6241475372272 | [

"MIT"

] | 6 | 2018-01-24T11:59:27.000Z | 2019-04-07T02:41:11.000Z | README.md | Bhaskers-Blu-Org2/GuardedFabricTools | 76abbdaf396d0cd87d105cfa35a6241475372272 | [

"MIT"

] | 3 | 2019-09-30T17:11:02.000Z | 2020-06-23T14:17:53.000Z | README.md | Microsoft/GuardedFabricTools | 76abbdaf396d0cd87d105cfa35a6241475372272 | [

"MIT"

] | 5 | 2020-04-10T00:52:36.000Z | 2021-09-09T01:31:48.000Z | # Guarded Fabric Tools

A PowerShell module containing tools to make deploying shielded virtual machines and managing a guarded fabric easier.

Included tools:

- **New-ShieldedVM** helps you deploy a shielded VM from PowerShell using a template disk and shielding data file. This function is intended for use on a guarded host.

- **ConvertTo-ShieldedVM** allows you to quickly add a virtual TPM and security policy to an existing VM. This function is intended for use on a guarded host.

- **New-ShieldingDataAnswerFile** generates answer files (also called unattend files) that automate configuration of Windows or Linux in a shielded VM. These answer files are compliant with System Center Virtual Machine Manager and `New-ShieldedVM`. This function is intended for use on the machine where you are preparing a shielding data file.

- **Get-HgsAttestationReport** queries the event log on an HGS server for information about recent attestation attempts to help you understand which hosts have tried attesting and whether or not they passed. This function is intended for use on an HGS server. [Additional documentation](./AttestationReport/Usage.md)

- **Add-AccessRule** and its accompanying extensions to the X509Certificate2 class in PowerShell allow you to manage the access control list (ACL) on certificate private keys through PowerShell. This function is intended for use on an HGS server when granting the group managed service account access to use the HGS encryption and signing keys. [Additional documentation](./CertificateManagement/Usage.md)

Check out the [official documentation](https://aka.ms/ShieldedVMs) for more information about shielded virtual machines in Windows Server.

## Installing

To use the Guarded Fabric Tools in a production environment, download and install the digitally signed module from the PowerShell Gallery. See [Guarded Fabric Tools on the PowerShell Gallery](https://www.powershellgallery.com/packages/GuardedFabricTools/).

Run the following command in PowerShell to install the module.

```powershell

Install-Module -Name GuardedFabricTools

```

If the computer where you're installing the module does not have internet connectivity, use [Save-Module](https://docs.microsoft.com/en-us/powershell/module/PowershellGet/Save-Module) to download the files and copy them manually to `C:\Program Files\WindowsPowerShell\Modules` on the target machine.

## Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/). For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

| 84.871795 | 405 | 0.807251 | eng_Latn | 0.994476 |

0caaee7d59e8ba370aa32b5789cfd30d0c3c8f9b | 3,642 | md | Markdown | mdop/dart-v7/planning-how-to-save-and-deploy-the-dart-70-recovery-image.md | pahuijbr/windows-itpro-docs | 81c52b83f06e20aea1fdb3c47500c3ebd2a7a120 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2021-08-04T12:48:06.000Z | 2021-08-04T12:48:06.000Z | mdop/dart-v7/planning-how-to-save-and-deploy-the-dart-70-recovery-image.md | heatherpoulsen/windows-itpro-docs | fe1a16acde81ae5a0f24a63dc6ef94b5a2a38c63 | [

"CC-BY-3.0"

] | 1 | 2021-02-01T22:14:31.000Z | 2021-02-01T23:02:31.000Z | mdop/dart-v7/planning-how-to-save-and-deploy-the-dart-70-recovery-image.md | heatherpoulsen/windows-itpro-docs | fe1a16acde81ae5a0f24a63dc6ef94b5a2a38c63 | [

"CC-BY-3.0"

] | 1 | 2020-07-13T22:27:08.000Z | 2020-07-13T22:27:08.000Z | ---

title: Planning How to Save and Deploy the DaRT 7.0 Recovery Image

description: Planning How to Save and Deploy the DaRT 7.0 Recovery Image

author: jamiejdt

ms.assetid: d96e9363-6186-4fc3-9b83-ba15ed9694a5

ms.pagetype: mdop

ms.mktglfcycl: support

ms.sitesec: library

ms.prod: w7

---

# Planning How to Save and Deploy the DaRT 7.0 Recovery Image

Use the information in this section when you plan for saving and deploying the Microsoft Diagnostics and Recovery Toolset (DaRT) 7 recovery image.

## Planning How to Save and Deploy the DaRT Recovery Image

You can save and deploy the DaRT recovery image by using the following methods. When you are determining the method that you will use, consider the advantages and disadvantages of each. Also, consider how you want to use DaRT in your enterprise.

**Note**

You might want to use more than one method in your organization. For example, you can boot into DaRT from a remote partition for most situations and have a USB flash drive available in case the end-user computer cannot connect to the network.

The following table shows some advantages and disadvantages of each method of using DaRT in your organization.

<table>

<colgroup>

<col width="33%" />

<col width="33%" />

<col width="33%" />

</colgroup>

<thead>

<tr class="header">

<th align="left">Method to Boot into DaRT</th>

<th align="left">Advantages</th>

<th align="left">Disadvantages</th>

</tr>

</thead>

<tbody>

<tr class="odd">

<td align="left"><p>From a CD or DVD</p></td>

<td align="left"><p>Supports scenarios in which the master boot record (MBR) is corrupted and you cannot access the hard disk. Also supports cases in which there is no network connection.</p>

<p>This is most familiar to users of earlier versions of DaRT, and a CD or DVD can be burned directly from the <strong>DaRT Recovery Image Wizard</strong>.</p></td>

<td align="left"><p>Requires that someone with access to the CD or DVD is physically at the end-user computer to boot into DaRT.</p></td>

</tr>

<tr class="even">

<td align="left"><p>From a USB flash drive (UFD)</p></td>

<td align="left"><p>Provides the same advantages as booting from a CD or DVD and also supports computers that have no CD or DVD drive.</p></td>

<td align="left"><p>Requires you to format the UFD before you can use it to boot into DaRT. Also requires that someone with access to the UFD is physically at the end-user computer to boot into DaRT.</p></td>

</tr>

<tr class="odd">

<td align="left"><p>From a remote (network) partition</p></td>

<td align="left"><p>Lets you boot into DaRT without needing a CD, DVD, or UFD. Also allows for easy upgrades of DaRT because there is only one file location to update.</p></td>

<td align="left"><p>Does not work if the end-user computer is not connected to the network.</p>

<p>Widely available to end users and might require additional security considerations when you are creating the recovery image.</p></td>

</tr>

<tr class="even">

<td align="left"><p>From a recovery partition</p></td>

<td align="left"><p>Lets you boot into DaRT without needing a CD, DVD, or UFD, including in cases where there is no network connectivity.</p>

<p>Also, can be implemented and managed as part of your standard Windows image process by using automated distribution tools, such as System Center Configuration Manager.</p></td>

<td align="left"><p>When updating DaRT, requires you to update all computers in your enterprise instead of just one partition (on the network) or device (CD, DVD, or UFD).</p></td>

</tr>

</tbody>

</table>

## Related topics

[Planning to Deploy DaRT 7.0](planning-to-deploy-dart-70.md)

| 42.847059 | 245 | 0.7419 | eng_Latn | 0.996037 |

0cab19a8eba05bb099db3ffe4753496e7c044894 | 907 | md | Markdown | _posts/2018-11-08-python-Goal_of_Python-03.md | TheFrancisHe/TheFrancisHe.github.io | 984b359eba57fddb524ba41a162574ba59d4dbc2 | [

"MIT"

] | null | null | null | _posts/2018-11-08-python-Goal_of_Python-03.md | TheFrancisHe/TheFrancisHe.github.io | 984b359eba57fddb524ba41a162574ba59d4dbc2 | [

"MIT"

] | null | null | null | _posts/2018-11-08-python-Goal_of_Python-03.md | TheFrancisHe/TheFrancisHe.github.io | 984b359eba57fddb524ba41a162574ba59d4dbc2 | [

"MIT"

] | null | null | null | ---

layout: post

title: Goal of Python

subtitle: Python, a developer's good companion

date: 2018-11-08

author: HD

catalog: true

tags:

- Python

---

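## Main Text

*Supplementing existing concepts*

**1. As easy to understand as plain English**

Consider this situation:

A developer implements a feature in some language, and after a while even the developer can no longer read the code they wrote.

**2. One way: ideally there is only one way to do something**

When implementing a feature, avoid flashy, show-off code.

**3. "Life is short, I use Python"**

Less code ==> more problems solved

**4. OO: object-oriented**

Utility classes can be reused directly; this reusability reflects OO.

Procedural languages probably offer weaker reuse by comparison.

**5. Extensibility**

Python can be combined with C and C++.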
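As a concrete illustration of combining Python with C, the standard-library `ctypes` module can call functions in a compiled C library directly. This minimal sketch calls the C standard library's `abs()`; it assumes a Unix-like system (Linux or macOS) where `ctypes.util.find_library("c")` can locate libc, and `c_abs` is a hypothetical wrapper name.

```python
import ctypes
import ctypes.util

# Load the C standard library (assumes a Unix-like system; not valid as-is on Windows).
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature `int abs(int)` so ctypes converts values correctly.
libc.abs.argtypes = [ctypes.c_int]
libc.abs.restype = ctypes.c_int


def c_abs(n):
    """Call the C library's abs() from Python."""
    return libc.abs(n)


if __name__ == "__main__":
    print(c_abs(-42))  # 42
```

For larger C or C++ code bases, hand-written extension modules or binding generators are the usual alternatives to `ctypes`.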

---

*Python programs*

**Demonstrating an error in an interpreted language:**

```python
print("Hello Python");
print("Hello World");
prit("Hello error");
```

**Output:**

```console
[dba@bda 认识Python]$ python 01-HelloPython.py
Hello Python
Hello World    // The first two lines were still printed: the interpreter runs the statements in order, so the error only surfaces when line 3 is reached.
Traceback (most recent call last):
  File "01-HelloPython.py", line 3, in <module>
    prit("Hello error");
NameError: name 'prit' is not defined
[dba@bda 认识Python]$
```
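The "one statement at a time" behaviour has a limit worth knowing: CPython first compiles the whole file to bytecode, so a syntax error anywhere stops the script before its first line runs, whereas a misspelled name such as `prit` is only detected when that line executes. The sketch below uses the built-in `compile()` and `exec()` to show both cases; `run`, `HELLO`, and `BROKEN` are hypothetical names chosen for this example.

```python
import contextlib
import io

# The three-line program from the demo, and a variant with a syntax error.
HELLO = 'print("Hello Python")\nprint("Hello World")\nprit("Hello error")\n'
BROKEN = 'print("Hello Python")\nprint("Hello World"\n'  # unclosed parenthesis


def run(source, filename="01-HelloPython.py"):
    """Compile, then execute, source; return (captured stdout, error name or None)."""
    out = io.StringIO()
    try:
        # The whole file is compiled before any statement runs.
        code = compile(source, filename, "exec")
    except SyntaxError:
        return out.getvalue(), "SyntaxError"  # nothing has been printed
    error = None
    with contextlib.redirect_stdout(out):
        try:
            exec(code, {})  # statements execute in order...
        except NameError:
            error = "NameError"  # ...and an unknown name fails only when reached
    return out.getvalue(), error
```

`run(HELLO)` returns both greetings plus `"NameError"`, while `run(BROKEN)` returns an empty string and `"SyntaxError"`: not even the first `print` runs.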

> Each line of code should perform one action: a good habit when writing Python.

> The strict syntax keeps the resulting code neat and concise.

| 10.670588 | 47 | 0.652701 | yue_Hant | 0.542079 |

0cac1f572bfcc396b591ad1337c0518a3c826b42 | 1,947 | md | Markdown | clients/csharp/docs/RevisionHistoryApi.md | Soluto/tweek-openapi-clients | feee32006743ea4bb815f2608bd95950439388c3 | [

"Apache-2.0"

] | null | null | null | clients/csharp/docs/RevisionHistoryApi.md | Soluto/tweek-openapi-clients | feee32006743ea4bb815f2608bd95950439388c3 | [

"Apache-2.0"

] | null | null | null | clients/csharp/docs/RevisionHistoryApi.md | Soluto/tweek-openapi-clients | feee32006743ea4bb815f2608bd95950439388c3 | [

"Apache-2.0"

] | null | null | null | # Org.OpenAPITools.Api.RevisionHistoryApi

All URIs are relative to *http://localhost/api/v2*

Method | HTTP request | Description
------------- | ------------- | -------------
[**GetRevisionHistory**](RevisionHistoryApi.md#getrevisionhistory) | **GET** /revision-history |

<a name="getrevisionhistory"></a>

# **GetRevisionHistory**

> `List<Object> GetRevisionHistory (string keyPath, string since)`

Get Revision History

### Example

```csharp

using System;

using System.Diagnostics;

using Org.OpenAPITools.Api;

using Org.OpenAPITools.Client;

using Org.OpenAPITools.Model;

namespace Example

{

public class GetRevisionHistoryExample

{

public void main()

{

// Configure HTTP basic authorization: bearerAuth

Configuration.Default.Username = "YOUR_USERNAME";

Configuration.Default.Password = "YOUR_PASSWORD";

var apiInstance = new RevisionHistoryApi();

var keyPath = "keyPath_example"; // string |

var since = "since_example"; // string |

try

{

List<Object> result = apiInstance.GetRevisionHistory(keyPath, since);

Debug.WriteLine(result);

}

catch (Exception e)

{

Debug.Print("Exception when calling RevisionHistoryApi.GetRevisionHistory: " + e.Message );

}

}

}

}

```

### Parameters

Name | Type | Description | Notes
------------- | ------------- | ------------- | -------------
**keyPath** | **string**| |
**since** | **string**| |

### Return type

**List&lt;Object&gt;**

### Authorization

[bearerAuth](../README.md#bearerAuth)

### HTTP request headers

- **Content-Type**: Not defined

- **Accept**: application/json
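For readers not using the generated C# client, the same call can be made over plain HTTP. This is a minimal Python sketch using only the standard library. It assumes that `keyPath` and `since` are sent as query-string parameters and that `bearerAuth` means an `Authorization: Bearer <token>` header; this page does not state either explicitly. `revision_history_request` is a hypothetical helper that only builds the request.

```python
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "http://localhost/api/v2"  # "All URIs are relative to" this base


def revision_history_request(key_path, since, token):
    """Build (but do not send) a GET /revision-history request."""
    # Assumption: both parameters travel in the query string.
    query = urlencode({"keyPath": key_path, "since": since})
    return Request(
        f"{BASE_URL}/revision-history?{query}",
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {token}",  # assumption: bearer token scheme
        },
        method="GET",
    )
```

To send it, pass the request to `urllib.request.urlopen` and decode the JSON body with `json.load`.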

[[Back to top]](#) [[Back to API list]](../README.md#documentation-for-api-endpoints) [[Back to Model list]](../README.md#documentation-for-models) [[Back to README]](../README.md)

| 25.618421 | 180 | 0.598356 | yue_Hant | 0.618772 |

0cac7995f0fc53b875848e33da19c99784dd6829 | 149 | md | Markdown | jitsi/installer/buster/README.md | emrahcom/emrah-tools | 6b1460464240970a4d94ed1e4d0c45c235b93759 | [

"Apache-2.0"

] | 14 | 2020-11-23T14:23:55.000Z | 2022-03-06T09:29:09.000Z | jitsi/installer/buster/README.md | emrahcom/emrah-tools | 6b1460464240970a4d94ed1e4d0c45c235b93759 | [

"Apache-2.0"

] | 3 | 2021-02-09T18:17:45.000Z | 2021-04-12T15:00:06.000Z | jitsi/installer/buster/README.md | emrahcom/emrah-tools | 6b1460464240970a4d94ed1e4d0c45c235b93759 | [

"Apache-2.0"

] | 6 | 2021-02-02T10:47:21.000Z | 2021-12-16T03:26:13.000Z | # jitsi-buster-installer

This repo has been moved to

[jitsi-contrib / installers](https://github.com/jitsi-contrib/installers/tree/main/jitsi-base)

| 29.8 | 94 | 0.785235 | eng_Latn | 0.618461 |

0cad8b5da86ad43e591c08271bd47df94f41f184 | 845 | md | Markdown | apps/todosnavigators/JS-HTML5-localstorage-CRUD-master/README.md | ribafs/mobile | 518f8bf5b9a8b62d639a6c8e034f78ee925fac9f | [

"MIT"

] | 1 | 2021-02-10T03:10:21.000Z | 2021-02-10T03:10:21.000Z | apps/todosnavigators/JS-HTML5-localstorage-CRUD-master/README.md | ribafs/mobile | 518f8bf5b9a8b62d639a6c8e034f78ee925fac9f | [

"MIT"

] | null | null | null | apps/todosnavigators/JS-HTML5-localstorage-CRUD-master/README.md | ribafs/mobile | 518f8bf5b9a8b62d639a6c8e034f78ee925fac9f | [

"MIT"

] | null | null | null | # CRUD with JS and HTML5 localstorage

It's a basic CRUD (create, read, update, delete) application written in ES5 that saves its data to HTML5 localStorage. It's a practice project for learning the JavaScript DOM and HTML5.

## Visit Site

https://sudiptochy.github.io/JS-HTML5-localstorage-CRUD/

## Getting Started

Clone or download this repository as a ZIP and run it on a local server.

### Prerequisites

What you need to run the project:

```

HTML5, CSS3, JS(ES6), Local Server

```

## Built With

* [ES6](https://developer.mozilla.org/bm/docs/Web/JavaScript)

* [HTML5](https://www.w3schools.com/html/html5_intro.asp)

* [CSS3](https://www.w3schools.com/css/default.asp)

## Features

* Create, read, update, and delete data

* Search any data

* Sort data in ascending or descending order | 27.258065 | 180 | 0.746746 | eng_Latn | 0.913194 |

0cad98088a6018ed622329aa0fc7c8897561255a | 18 | md | Markdown | content/p1.md | bep/hugo-alpine-test | 7680792c2f9dc2c0acdd1af696370243fcd43946 | [

"MIT"

] | 13 | 2020-01-27T16:31:23.000Z | 2022-01-28T13:49:28.000Z | content/p1.md | bep/hugo-alpine-test | 7680792c2f9dc2c0acdd1af696370243fcd43946 | [

"MIT"

] | 2 | 2020-05-05T13:16:27.000Z | 2020-05-23T23:33:05.000Z | content/p1.md | bep/hugo-alpine-test | 7680792c2f9dc2c0acdd1af696370243fcd43946 | [

"MIT"

] | 2 | 2021-11-26T13:31:10.000Z | 2022-01-23T09:51:13.000Z | ---

title: P1

---

| 4.5 | 9 | 0.388889 | eng_Latn | 0.82527 |

0cad9fde410bec89f723b119898083c0f7647962 | 75 | md | Markdown | packages/components/spin/demo/Icon.md | 15051107253/idux | b5a95457edefb2de6a2d2ab35e85a458d0288112 | [

"MIT"

] | 170 | 2021-08-22T15:33:08.000Z | 2022-03-31T03:58:00.000Z | packages/components/spin/demo/Icon.md | Tyh2001/idux | 152f9782acbec9619fdc1e02cb91894aacfb44e4 | [

"MIT"

] | 435 | 2021-08-07T06:42:30.000Z | 2022-03-31T07:51:52.000Z | packages/components/spin/demo/Icon.md | Tyh2001/idux | 152f9782acbec9619fdc1e02cb91894aacfb44e4 | [

"MIT"

] | 42 | 2021-08-09T14:36:56.000Z | 2022-03-24T12:03:30.000Z | ---

order: 2

title:

zh: 图标

en: icon

---

## zh

图标名称

## en

icon name

| 5 | 10 | 0.493333 | nld_Latn | 0.661249 |

0cadb6576ec57607cc52a91989573c51a3df469e | 6,908 | md | Markdown | docs/containers/includes/vs-2017/container-tools.md | mhartkorn/visualstudio-docs | 506d078d467def6509f22fc8ba522cdae1917a98 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/containers/includes/vs-2017/container-tools.md | mhartkorn/visualstudio-docs | 506d078d467def6509f22fc8ba522cdae1917a98 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/containers/includes/vs-2017/container-tools.md | mhartkorn/visualstudio-docs | 506d078d467def6509f22fc8ba522cdae1917a98 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Visual Studio Container Tools with ASP.NET Core

author: ghogen

description: Learn how to use Visual Studio 2017 tooling and Docker for Windows

ms.author: ghogen

ms.date: 02/01/2019

ms.technology: vs-azure

ms.topic: include

---

With Visual Studio, you can easily build, debug, and run containerized ASP.NET Core apps and publish them to Azure Container Registry, Docker Hub, Azure App Service, or your own container registry. In this article, we'll publish to Container Registry.

## Prerequisites

* [Docker Desktop](https://hub.docker.com/editions/community/docker-ce-desktop-windows)

* [Visual Studio 2017](https://visualstudio.microsoft.com/vs/older-downloads/?utm_medium=microsoft&utm_source=docs.microsoft.com&utm_campaign=vs+2017+download) with the **Web Development**, **Azure Tools**, and/or **.NET Core cross-platform development** workloads installed

* To publish to Azure Container Registry, an Azure subscription. [Sign up for a free trial](https://azure.microsoft.com/free/dotnet/).

## Installation and setup

For Docker installation, first review the information at [Docker Desktop for Windows: What to know before you install](https://docs.docker.com/docker-for-windows/install/#what-to-know-before-you-install). Next, install [Docker Desktop](https://hub.docker.com/editions/community/docker-ce-desktop-windows).

## Add a project to a Docker container

1. From the Visual Studio menu, select **File > New > Project**.

1. Under the **Templates** section of the **New Project** dialog box, select **Visual C# > Web**.

1. Select **ASP.NET Core Web Application** or if you want to use the .NET Framework instead of .NET Core, select **ASP.NET Web Application**.

1. Give your new application a name (or take the default) and select **OK**.

1. Select **Web Application**.

1. Check the **Enable Docker Support** checkbox.

The screenshot shows .NET Core; if you're using .NET Framework, it looks a bit different.

1. Select the type of container you want (Windows or Linux) and click **OK**.

## Dockerfile overview

A *Dockerfile*, the recipe for creating a final Docker image, is created in the project. Refer to the [Dockerfile reference](https://docs.docker.com/engine/reference/builder/) to understand the commands it contains:

```

FROM mcr.microsoft.com/dotnet/aspnet:2.1 AS base

WORKDIR /app

EXPOSE 59518

EXPOSE 44364

FROM mcr.microsoft.com/dotnet/sdk:2.1 AS build

WORKDIR /src

COPY HelloDockerTools/HelloDockerTools.csproj HelloDockerTools/

RUN dotnet restore HelloDockerTools/HelloDockerTools.csproj

COPY . .

WORKDIR /src/HelloDockerTools

RUN dotnet build HelloDockerTools.csproj -c Release -o /app

FROM build AS publish

RUN dotnet publish HelloDockerTools.csproj -c Release -o /app

FROM base AS final

WORKDIR /app

COPY --from=publish /app .

ENTRYPOINT ["dotnet", "HelloDockerTools.dll"]

```

The preceding *Dockerfile* is based on the `mcr.microsoft.com/dotnet/aspnet` image (the successor to the older [microsoft/aspnetcore](https://hub.docker.com/r/microsoft/aspnetcore/) image), and includes instructions for modifying the base image by building your project and adding it to the container. If you're using the .NET Framework, the base image will be different.

When the new project dialog's **Configure for HTTPS** check box is checked, the *Dockerfile* exposes two ports. One port is used for HTTP traffic; the other port is used for HTTPS. If the check box isn't checked, a single port (80) is exposed for HTTP traffic.

## Debug

Select **Docker** from the debug drop-down in the toolbar, and start debugging the app. You might see a message with a prompt about trusting a certificate; choose to trust the certificate to continue.

The **Output** window shows what actions are taking place.

Open the **Package Manager Console** (PMC) from the menu **Tools > NuGet Package Manager > Package Manager Console**.

The resulting Docker image of the app is tagged as *dev*. The image is based on the *2.1-aspnetcore-runtime* tag of the *microsoft/dotnet* base image. Run the `docker images` command in the **Package Manager Console** (PMC) window. The images on the machine are displayed:

```console

REPOSITORY TAG IMAGE ID CREATED SIZE

hellodockertools dev d72ce0f1dfe7 30 seconds ago 255MB

microsoft/dotnet 2.1-aspnetcore-runtime fcc3887985bb 6 days ago 255MB

```

> [!NOTE]
> The **dev** image does not contain the app binaries and other content, as **Debug** configurations use volume mounting to provide the iterative edit and debug experience. To create a production image containing all contents, use the **Release** configuration.

Run the `docker ps` command in PMC. Notice that the app is running in the container:

```console

CONTAINER ID    IMAGE                  COMMAND                   CREATED          STATUS          PORTS                   NAMES
baf9a678c88d    hellodockertools:dev   "C:\\remote_debugge..."   21 seconds ago   Up 19 seconds   0.0.0.0:37630->80/tcp   dockercompose4642749010770307127_hellodockertools_1

```

## Publish Docker images

Once the develop-and-debug cycle of the app is complete, you can create a production image of the app.

1. Change the configuration drop-down to **Release** and build the app.

1. Right-click your project in **Solution Explorer** and choose **Publish**.

1. On the publish target dialog, select the **Container Registry** tab.

1. Choose **Create New Azure Container Registry** and click **Publish**.

1. Fill in your desired values in the **Create a new Azure Container Registry** dialog.

| Setting | Suggested value | Description |

| ------------ | ------- | -------------------------------------------------- |

| **DNS Prefix** | Globally unique name | Name that uniquely identifies your container registry. |

| **Subscription** | Choose your subscription | The Azure subscription to use. |

| **[Resource Group](/azure/azure-resource-manager/resource-group-overview)** | myResourceGroup | Name of the resource group in which to create your container registry. Choose **New** to create a new resource group.|

| **[SKU](/azure/container-registry/container-registry-skus)** | Standard | Service tier of the container registry |

| **Registry Location** | A location close to you | Choose a location in a [region](https://azure.microsoft.com/regions/) near you or near other services that will use your container registry. |

![Visual Studio's create Azure Container Registry dialog][0]

1. Click **Create**.

## Next steps

You can now pull the container from the registry to any host capable of running Docker images, for example [Azure Container Instances](/azure/container-instances/container-instances-tutorial-deploy-app).

[0]:../../media/hosting-web-apps-in-docker/vs-acr-provisioning-dialog.png

| 56.622951 | 305 | 0.729155 | eng_Latn | 0.911344 |

0cae65009498c142b2b1f64d85e00ef32c00a1d5 | 664 | md | Markdown | docs/devices/philips/7199960ph.md | augustskare/homebridge-z2m | 450427eb9328ecb4720744e05cc42235dc66b65a | [

"Apache-2.0"

] | 139 | 2020-06-28T00:14:19.000Z | 2022-03-30T05:11:11.000Z | docs/devices/philips/7199960ph.md | augustskare/homebridge-z2m | 450427eb9328ecb4720744e05cc42235dc66b65a | [

"Apache-2.0"

] | 380 | 2020-06-30T23:11:31.000Z | 2022-03-31T18:32:23.000Z | docs/devices/philips/7199960ph.md | augustskare/homebridge-z2m | 450427eb9328ecb4720744e05cc42235dc66b65a | [

"Apache-2.0"

] | 31 | 2020-07-12T06:29:50.000Z | 2022-03-13T02:30:53.000Z | ---

title: "Philips 7199960PH Homebridge/HomeKit integration"

description: "Add HomeKit support to your Philips 7199960PH, using Homebridge, Zigbee2MQTT and homebridge-z2m."

---

<!---

This file has been GENERATED using src/docgen/docgen.ts

DO NOT EDIT THIS FILE MANUALLY!

-->

# Philips 7199960PH

> Hue Iris

# Services and characteristics

The following HomeKit Services and Characteristics are exposed by the Philips 7199960PH:

* [Lightbulb](../../light.md)

  * Brightness

  * Hue

  * On

  * Saturation

# Related

* [Other devices from Philips](../index.md#philips)

* [Zigbee2MQTT documentation for this device](https://www.zigbee2mqtt.io/devices/7199960PH.html) | 25.538462 | 111 | 0.753012 | eng_Latn | 0.724105 |

0cae776101ffbdc1e0ea3bc5ef8e37e449ac20a4 | 1,035 | md | Markdown | README.md | vorasagar7/microservices-frontend-test | df69a0de13095041912b361696aca34ff6ef53cc | [

"MIT"

] | null | null | null | README.md | vorasagar7/microservices-frontend-test | df69a0de13095041912b361696aca34ff6ef53cc | [

"MIT"

] | 15 | 2017-03-07T07:22:52.000Z | 2017-03-07T08:04:21.000Z | README.md | vorasagar7/microservices-frontend-test | df69a0de13095041912b361696aca34ff6ef53cc | [

"MIT"

] | null | null | null | # Microservice-Catalog-Frontend

[](https://travis-ci.org/p632-sp-2017/microservice-catalog-frontend) [](https://codeclimate.com/github/p632-sp-2017/microservice-catalog-frontend) [](https://codeclimate.com/github/p632-sp-2017/microservice-catalog-frontend/coverage) [](https://codeclimate.com/github/p632-sp-2017/microservice-catalog-frontend)

This is the Microservice Catalog project made under UITS for the P632 course.

Steps to Run:

1) Install Dependencies

```

cd src/main/app

yarn install

```

2) Run Application

```

cd ../../..

mvn spring-boot:run

```

3) Stop Application

```

mvn spring-boot:stop

```

| 47.045455 | 738 | 0.776812 | yue_Hant | 0.285604 |

0cae9cf5ef18f3cea69eb6ed253742192cca374b | 462 | md | Markdown | README.md | herrecito/engine | 42dc0288068ac7d215cc5c96d59299c60ea43a49 | [

"Unlicense"

] | 11 | 2015-07-29T08:07:20.000Z | 2019-07-11T14:20:59.000Z | README.md | herrecito/engine | 42dc0288068ac7d215cc5c96d59299c60ea43a49 | [

"Unlicense"

] | 2 | 2017-05-27T22:00:03.000Z | 2020-12-27T17:48:55.000Z | README.md | herrecito/engine | 42dc0288068ac7d215cc5c96d59299c60ea43a49 | [

"Unlicense"

] | null | null | null |

# Dependencies

* SDL 2.0

* SDL_image 2.0

* GLEW

# Build

If you want to take it for a spin, build with:

    make

Create a map with:

    ./bin/editor

(Left button to add walls, right button to delete, S to save, L to load)

And run it with:

    ./bin/engine

You'll need some textures and spritesheets:

* ascii.png

* ceil.png

* floor.png

* pistol.png

* wall.png

You can find mine here: http://imgur.com/a/F2Cnu

| 13.588235 | 72 | 0.675325 | eng_Latn | 0.862529 |

0caf2593e26be0aeaaafee0ca7e4774c235f47e4 | 1,289 | md | Markdown | docs/framework/windows-workflow-foundation/4207-maximumretriesexceededforsqlcommand.md | trubor/docs.ru-ru | 95745f1c3bd3bb4cf7026dc91d786b97e56fcc70 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/framework/windows-workflow-foundation/4207-maximumretriesexceededforsqlcommand.md | trubor/docs.ru-ru | 95745f1c3bd3bb4cf7026dc91d786b97e56fcc70 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/framework/windows-workflow-foundation/4207-maximumretriesexceededforsqlcommand.md | trubor/docs.ru-ru | 95745f1c3bd3bb4cf7026dc91d786b97e56fcc70 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2021-10-31T15:06:56.000Z | 2021-10-31T15:06:56.000Z | ---

description: 'Learn more about: 4207 - MaximumRetriesExceededForSqlCommand'

title: 4207 - MaximumRetriesExceededForSqlCommand

ms.date: 03/30/2017

ms.assetid: 8c8bee26-9ad4-4e01-bd16-0e1fd510fb6b

ms.openlocfilehash: e831da08e37010afaa33f3a52cd7cf7a9b4d713b

ms.sourcegitcommit: ddf7edb67715a5b9a45e3dd44536dabc153c1de0

ms.translationtype: MT

ms.contentlocale: ru-RU

ms.lasthandoff: 02/06/2021

ms.locfileid: "99742723"

---

# <a name="4207---maximumretriesexceededforsqlcommand"></a>4207 - MaximumRetriesExceededForSqlCommand

## <a name="properties"></a>Properties

|||

|-|-|

|ID|4207|

|Keywords|Quota, WFInstanceStore|

|Level|Information|

|Channel|Microsoft-Windows-Application Server-Applications/Debug|

## <a name="description"></a>Description

Indicates that the SQL provider has retried a SQL command the maximum number of times and will not attempt it again.

## <a name="message"></a>Message

The maximum number of retries for the SQL command has been reached. No further retries will be attempted.

## <a name="details"></a>Details

|Data Item Name|Data Item Type|Description|

|--------------------|--------------------|-----------------|

|AppDomain|xs:string|The string returned by AppDomain.CurrentDomain.FriendlyName.|

| 34.837838 | 131 | 0.742436 | rus_Cyrl | 0.228015 |

0caf38767f3ecced3b521c441869e35698b89461 | 835 | md | Markdown | skills/B01I763DSY/README.md | zwang695/alexa-skills-list | 43fb6168a3313f004d02a910d1d8930f42a5fce4 | [

"MIT"

] | null | null | null | skills/B01I763DSY/README.md | zwang695/alexa-skills-list | 43fb6168a3313f004d02a910d1d8930f42a5fce4 | [

"MIT"

] | null | null | null | skills/B01I763DSY/README.md | zwang695/alexa-skills-list | 43fb6168a3313f004d02a910d1d8930f42a5fce4 | [

"MIT"

] | null | null | null | # [planoFacts](http://alexa.amazon.com/#skills/amzn1.echo-sdk-ams.app.e6acf209-eada-4aaa-bd2e-154d9961dfaa)

0

To use the planoFacts skill, try saying...

* *Alexa, open plano facts.*

* *Alexa, begin plano facts.*

* *Alexa, start plano facts.*

Get a fact about Plano

***

### Skill Details

* **Invocation Name:** plano facts

* **Category:** null

* **ID:** amzn1.echo-sdk-ams.app.e6acf209-eada-4aaa-bd2e-154d9961dfaa

* **ASIN:** B01I763DSY

* **Author:** secretsquirrel123

* **Release Date:** July 11, 2016 @ 08:27:36

* **In-App Purchasing:** No

| 33.4 | 287 | 0.706587 | kor_Hang | 0.23598 |

0cb07cd1fa064abf12974c97c66ffdb76e9dc453 | 234 | md | Markdown | _posts/1933-09-01-pioneer-james-lummus-writes-about.md | MiamiMaritime/miamimaritime.github.io | d087ae8c104ca00d78813b5a974c154dfd9f3630 | [

"MIT"

] | null | null | null | _posts/1933-09-01-pioneer-james-lummus-writes-about.md | MiamiMaritime/miamimaritime.github.io | d087ae8c104ca00d78813b5a974c154dfd9f3630 | [

"MIT"

] | null | null | null | _posts/1933-09-01-pioneer-james-lummus-writes-about.md | MiamiMaritime/miamimaritime.github.io | d087ae8c104ca00d78813b5a974c154dfd9f3630 | [

"MIT"

] | null | null | null | ---

title: Pioneer James Lummus writes about

tags:

- Sep 1933

---

Pioneer James Lummus writes about life in Miami in 1896.

Newspapers: **Miami Morning News or The Miami Herald**

Page: **8**, Section: **N/A**

| 19.5 | 58 | 0.628205 | eng_Latn | 0.931737 |

0cb0904c762da46609b6c95799cca75b7468ed65 | 3,734 | md | Markdown | README.md | Andrei-Dolgolev/humbug | 5936fc6e2c9dbf35ecf964983a1ef2253e4e085c | [

"Apache-2.0"

] | null | null | null | README.md | Andrei-Dolgolev/humbug | 5936fc6e2c9dbf35ecf964983a1ef2253e4e085c | [

"Apache-2.0"

] | null | null | null | README.md | Andrei-Dolgolev/humbug | 5936fc6e2c9dbf35ecf964983a1ef2253e4e085c | [

"Apache-2.0"

] | null | null | null | # humbug

Humbug helps you understand what keeps users coming back to your developer tool as well as any

friction they experience.

Humbug lets you collect basic system information and crash reports while respecting your users'

privacy. In addition to getting reports, you get to be [GDPR](https://gdpr-info.eu/)-compliant from

day one.

Humbug is currently available in the following programming languages:

1. [Python](./python)

2. [Go](./go)

3. JavaScript (coming soon)

If you would like support for another programming language, please

[create an issue](https://github.com/bugout-dev/humbug/issues/new).

---

## Using Humbug

### Trying it out

First, sign up for an account at https://bugout.dev.

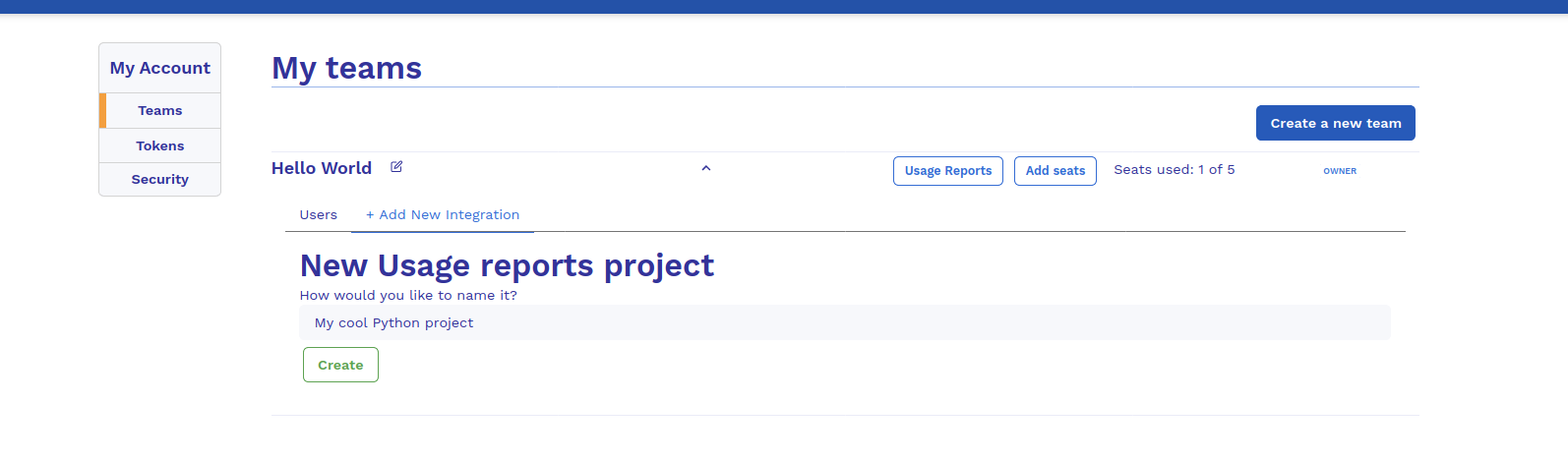

Once you have created your account, go to the [`Account > Teams`](https://bugout.dev/account/teams)

page and create a team:

Once you have created a team, you should see something like this:

Click on the `Usage Reports` button on your new team to set up reporting:

Enter a name for your project:

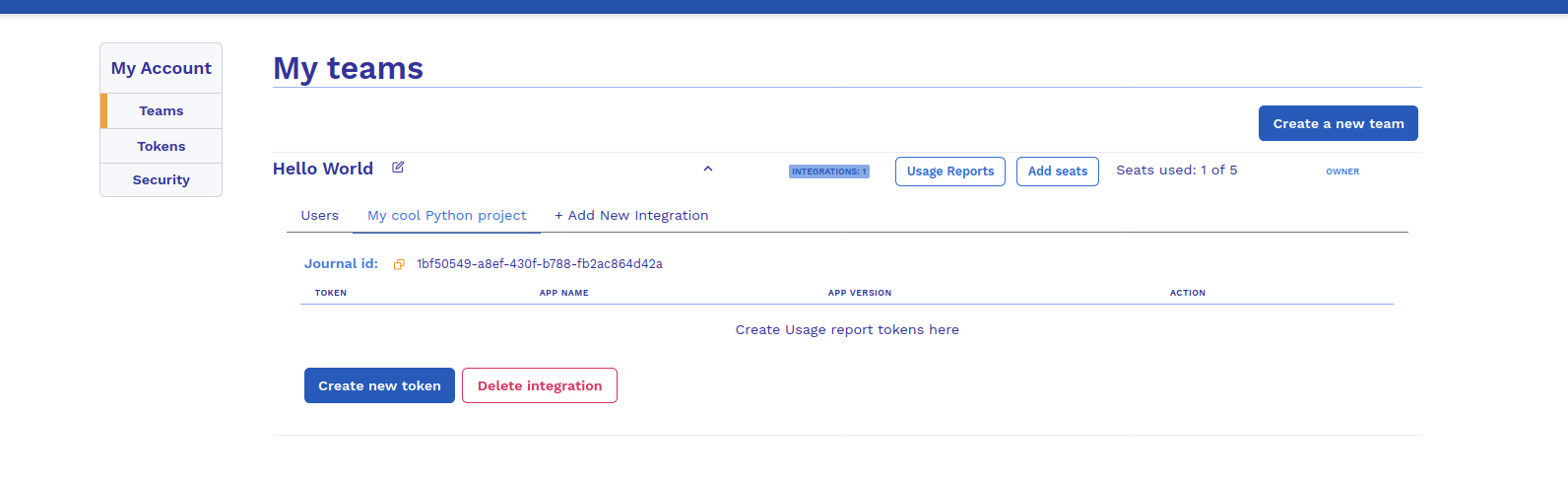

This should result in a view like this one:

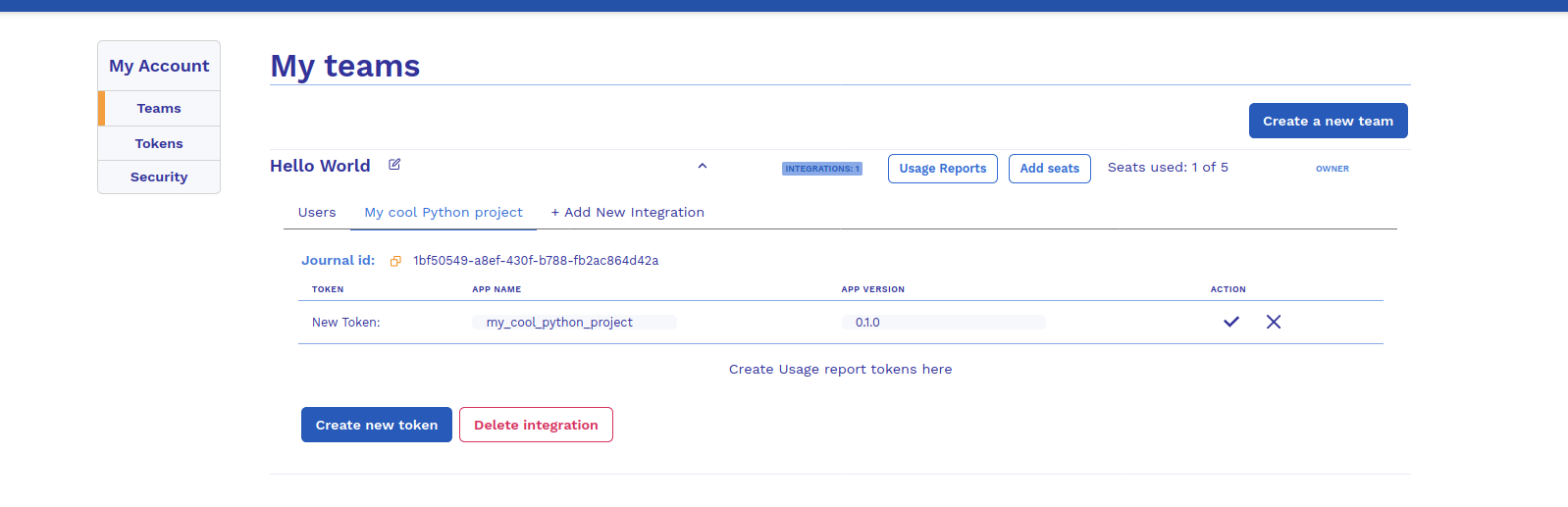

Now, create a new token that you can use for reporting:

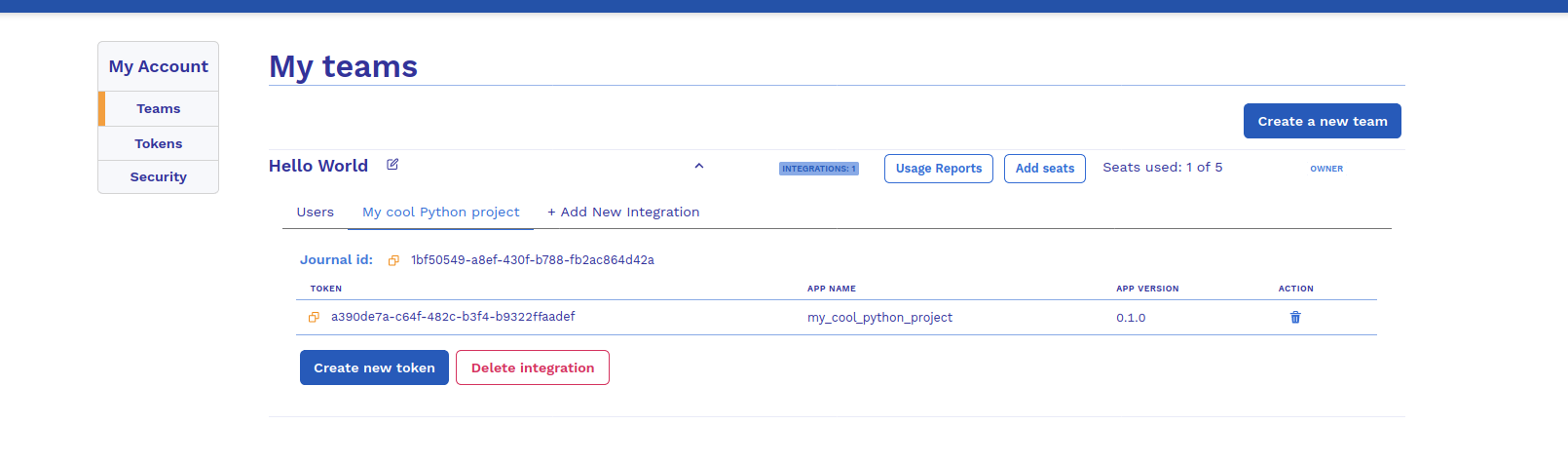

Which should get you to a view like this one:

Make special note of the `Journal ID` and the `Token`. You will need them in the next step, where

you will instrument your application to register usage reports with Bugout.

Here are some examples of how to do this in:

1. [Python](./python/README.md#integration)

#### Using the demo journal and token

If you would like to try things out with the demo integration from above, just email

[me](mailto:[email protected]) ([zomglings](https://github.com/zomglings)) with your Bugout

username and I will add you to the demo team.

You can also reach me on the [Bugout.dev community slack](https://join.slack.com/t/bugout-dev/shared_invite/zt-fhepyt87-5XcJLy0iu702SO_hMFKNhQ).

#### From development to production

We recommend generating one token for development and testing and using different tokens for each

version of your production library or application.

### Accessing reports

You can access your Bugout knowledge base at https://bugout.dev, via the Bugout API, or using the

`bugout` command line tool.

Bugout client libraries:

1. [Python](https://pypi.org/project/bugout/)

2. [Go](https://github.com/bugout-dev/bugout-go)

3. [JavaScript](https://github.com/bugout-dev/bugout-js)

The `bugout` command line tool can be installed from:

https://github.com/bugout-dev/bugout-go/releases/latest

You can use [`humbug.bash`](https://gist.github.com/zomglings/a82ea32e8533afe62278bb2056e95621)

to download your Humbug reports to your filesystem in an easy-to-analyze JSON format.

### Getting help

You can get help by:

1. [Creating an issue](https://github.com/bugout-dev/humbug/issues/new)

2. [Asking for help on the Bugout.dev community Slack](https://join.slack.com/t/bugout-dev/shared_invite/zt-fhepyt87-5XcJLy0iu702SO_hMFKNhQ)

3. [Emailing zomglings](mailto:[email protected])

4. [Scheduling a meeting with zomglings](https://calendly.com/neeraj-simiotics/bugout-30)

| 37.34 | 144 | 0.767006 | eng_Latn | 0.950873 |

0cb0faca99c335f26148953b214f8fc08496cc85 | 10,107 | md | Markdown | docs/ssms/scripting/run-the-transact-sql-debugger.md | ysy68251435/sql-docs | 56b963446965f3a4bb0fa1446f49578dbff382e0 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/ssms/scripting/run-the-transact-sql-debugger.md | ysy68251435/sql-docs | 56b963446965f3a4bb0fa1446f49578dbff382e0 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/ssms/scripting/run-the-transact-sql-debugger.md | ysy68251435/sql-docs | 56b963446965f3a4bb0fa1446f49578dbff382e0 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: "Run the Transact-SQL Debugger | Microsoft Docs"

ms.custom: ""

ms.date: "03/14/2017"

ms.prod: sql

ms.technology: scripting

ms.reviewer: ""

ms.topic: conceptual

helpviewer_keywords:

- "Transact-SQL debugger, sysadmin requirement"

- "Transact-SQL debugger, supported versions"

- "Query Editor [Database Engine], right-click menu"

- "debugging [SQL Server], T-SQL debugger"

- "Transact-SQL debugger, Query Editor shortcut menu"

- "Transact-SQL debugger, stopping"

- "Transact-SQL debugger, Debug menu"

- "debugging [SQL Server]"

- "Transact-SQL debugger, Debug toolbar"

- "Transact-SQL debugger, keyboard shortcuts"

- "Transact-SQL debugger, starting"

ms.assetid: 386f6d09-dbec-4dc7-9e8a-cd9a4a50168c

author: markingmyname

ms.author: maghan

manager: jroth

monikerRange: ">=aps-pdw-2016||=azuresqldb-current||=azure-sqldw-latest||>=sql-server-2016||=sqlallproducts-allversions||>=sql-server-linux-2017||=azuresqldb-mi-current"

---

# Run the Transact-SQL Debugger

[!INCLUDE[appliesto-ss-asdb-asdw-pdw-md](../../includes/appliesto-ss-asdb-asdw-pdw-md.md)]

You can start the [!INCLUDE[tsql](../../includes/tsql-md.md)] debugger after you open a [!INCLUDE[ssDE](../../includes/ssde-md.md)] Query Editor window. Then, you can run your [!INCLUDE[tsql](../../includes/tsql-md.md)] code in debug mode until you stop the debugger. You can set options to customize how the debugger runs.

## Starting and Stopping the Debugger

The requirements to start the [!INCLUDE[tsql](../../includes/tsql-md.md)] debugger are as follows:

- If your [!INCLUDE[ssDE](../../includes/ssde-md.md)] Query Editor is connected to an instance of the [!INCLUDE[ssDE](../../includes/ssde-md.md)] on another computer, you must have configured the debugger for remote debugging. For more information, see [Configure firewall rules before running the TSQL Debugger](../../relational-databases/scripting/configure-firewall-rules-before-running-the-tsql-debugger.md).

- [!INCLUDE[ssManStudioFull](../../includes/ssmanstudiofull-md.md)] must be running under a Windows account that is a member of the sysadmin fixed server role.

- The [!INCLUDE[ssDE](../../includes/ssde-md.md)] Query Editor window must be connected by using either a Windows Authentication or [!INCLUDE[ssNoVersion](../../includes/ssnoversion-md.md)] Authentication login that is a member of the sysadmin fixed server role.

- The [!INCLUDE[ssDE](../../includes/ssde-md.md)] Query Editor window must be connected to an instance of the [!INCLUDE[ssDE](../../includes/ssde-md.md)] from [!INCLUDE[ssVersion2005](../../includes/ssversion2005-md.md)] Service Pack 2 (SP2) or later. You cannot run the debugger when the Query Editor window is connected to an instance that is in single-user mode.

We recommend that [!INCLUDE[tsql](../../includes/tsql-md.md)] code be debugged on a test server, not a production server, for the following reasons:

- Debugging is a highly privileged operation. Therefore, only members of the sysadmin fixed server role are allowed to debug in [!INCLUDE[ssNoVersion](../../includes/ssnoversion-md.md)].

- Debugging sessions often run for long periods of time while you investigate the operations of several [!INCLUDE[tsql](../../includes/tsql-md.md)] statements. Locks, such as update locks, that are acquired by the session might be held for extended periods, until the session is ended or the transaction committed or rolled back.

Starting the [!INCLUDE[tsql](../../includes/tsql-md.md)] debugger puts the Query Editor window into debug mode. When the Query Editor window enters debug mode, the debugger pauses at the first line of code. You can then step through the code, pause the execution on specific [!INCLUDE[tsql](../../includes/tsql-md.md)] statements, and use the debugger windows to view the current execution state. You can start the debugger either by clicking the **Debug** button on the **Query** toolbar or by clicking **Start Debugging** on the **Debug** menu.

The Query Editor window stays in debug mode until either the last statement in the Query Editor window finishes or you stop debug mode. You can stop debug mode and statement execution by using any one of the following methods:

- On the **Debug** menu, click **Stop Debugging**.

- On the **Debug** toolbar, click the **Stop Debugging** button.

- On the **Query** menu, click **Cancel Executing Query**.

- On the **Query** toolbar, click the **Cancel Executing Query** button.

You can also stop debug mode and allow for the remaining [!INCLUDE[tsql](../../includes/tsql-md.md)] statements to finish executing by clicking **Detach All** on the **Debug** menu.

## Controlling the Debugger

You can control how the [!INCLUDE[tsql](../../includes/tsql-md.md)] debugger operates by using the following menu commands, toolbars, and shortcuts:

- The **Debug** menu and the **Debug** toolbar. Both the **Debug** menu and **Debug** toolbar are inactive until the focus is placed in an open Query Editor window. They remain active until the current project is closed.

- The debugger keyboard shortcuts.

- The Query Editor shortcut menu. The shortcut menu is displayed when you right-click a line in a Query Editor window. When the Query Editor window is in debug mode, the shortcut menu displays debugger commands that apply to the selected line or string.

- Menu items and context commands in the windows that are opened by the debugger, such as the **Watch** or **Breakpoints** windows.

The following table shows the debugger menu commands, toolbar buttons, and keyboard shortcuts.

|Debug menu command|Editor shortcut command|Toolbar button|Keyboard shortcut|Action|

|------------------------|-----------------------------|--------------------|-----------------------|------------|

|**Windows/Breakpoints**|Not available|**Breakpoints**|CTRL+ALT+B|Display the **Breakpoints** window in which you can view and manage breakpoints.|

|**Windows/Watch/Watch1**|Not available|**Breakpoints/Watch/Watch1**|CTRL+ALT+W, 1|Display the **Watch1** window.|

|**Windows/Watch/Watch2**|Not available|**Breakpoints/Watch/Watch2**|CTRL+ALT+W, 2|Display the **Watch2** window.|

|**Windows/Watch/Watch3**|Not available|**Breakpoints/Watch/Watch3**|CTRL+ALT+W, 3|Display the **Watch3** window.|

|**Windows/Watch/Watch4**|Not available|**Breakpoints/Watch/Watch4**|CTRL+ALT+W, 4|Display the **Watch4** window.|

|**Windows/Locals**|Not available|**Breakpoints/Locals**|CTRL+ALT+V, L|Display the **Locals** window.|

|**Windows/Call Stack**|Not available|**Breakpoints/Call Stack**|CTRL+ALT+C|Display the **Call Stack** window.|

|**Windows/Threads**|Not available|**Breakpoints/Threads**|CTRL+ALT+H|Display the **Threads** window.|

|**Continue**|Not available|**Continue**|ALT+F5|Run to the next breakpoint. **Continue** is not active until you are focused on a Query Editor window that is in debug mode.|

|**Start Debugging**|Not available|**Start Debugging**|ALT+F5|Put a Query Editor window into debug mode and run to the first breakpoint. If you are focused on a Query Editor window that is in debug mode, **Start Debugging** is replaced by **Continue**.|

|**Break All**|Not available|**Break All**|CTRL+ALT+BREAK|This feature is not used by the [!INCLUDE[tsql](../../includes/tsql-md.md)] debugger.|

|**Stop Debugging**|Not available|**Stop Debugging**|SHIFT+F5|Take a Query Editor window out of debug mode and return it to regular mode.|

|**Detach All**|Not available|Not available|Not available|Stops debug mode, but executes the remaining statements in the Query Editor window.|

|**Step Into**|Not available|**Step Into**|F11|Run the next statement, and also open a new Query Editor window in debug mode if the next statement runs a stored procedure, trigger, or function.|

|**Step Over**|Not available|**Step Over**|F10|Same as **Step Into**, except that no functions, stored procedures, or triggers are debugged.|

|**Step Out**|Not available|**Step Out**|SHIFT+F11|Execute the remaining code in a trigger, function, or stored procedure without pausing for any breakpoints. Regular debug mode resumes when control is returned to the code that called the module.|

|Not available|**Run To Cursor**|Not available|CTRL+F10|Execute all code from the last stop location to the current cursor location without stopping at any breakpoints.|

|**QuickWatch**|**QuickWatch**|Not available|CTRL+ALT+Q|Display the **QuickWatch** window.|

|**Toggle Breakpoint**|**Breakpoint/Insert Breakpoint**|Not available|F9|Position a breakpoint on the current or selected [!INCLUDE[tsql](../../includes/tsql-md.md)] statement.|

|Not available|**Breakpoint/Delete Breakpoint**|Not available|Not available|Delete the breakpoint from the selected line.|

|Not available|**Breakpoint/Disable Breakpoint**|Not available|Not available|Disable the breakpoint on the selected line. The breakpoint remains on the line of code, but will not stop execution until it is reenabled.|

|Not available|**Breakpoint/Enable Breakpoint**|Not available|Not available|Enable the breakpoint on the selected line.|

|**Delete All Breakpoints**|Not available|Not available|CTRL+SHIFT+F9|Delete all breakpoints.|

|**Disable All Breakpoints**|Not available|Not available|Not available|Disable all breakpoints.|

|Not available|**Add Watch**|Not available|Not available|Add the selected expression to the **Watch** window.|

## See Also

[Transact-SQL Debugger](../../relational-databases/scripting/transact-sql-debugger.md)

[Step Through Transact-SQL Code](../../relational-databases/scripting/step-through-transact-sql-code.md)

[Transact-SQL Debugger Information](../../relational-databases/scripting/transact-sql-debugger-information.md)

[Database Engine Query Editor (SQL Server Management Studio)](../../relational-databases/scripting/database-engine-query-editor-sql-server-management-studio.md)

[Live Query Statistics](../../relational-databases/performance/live-query-statistics.md)

| 91.054054 | 549 | 0.731374 | eng_Latn | 0.939101 |

0cb11faa86e32d7ce817c685ecf5ff31bcd49ffe | 880 | md | Markdown | docs/components/trainhook/README.md | limberc/HyperGAN | b074e74abf0ed9b81bd52084706e3707a47e0fe2 | [

"MIT"

] | 889 | 2016-08-27T01:37:35.000Z | 2018-10-07T19:47:56.000Z | docs/components/trainhook/README.md | limberc/HyperGAN | b074e74abf0ed9b81bd52084706e3707a47e0fe2 | [

"MIT"

] | 101 | 2016-11-30T03:34:02.000Z | 2018-10-02T13:50:52.000Z | docs/components/trainhook/README.md | limberc/HyperGAN | b074e74abf0ed9b81bd52084706e3707a47e0fe2 | [

"MIT"

] | 145 | 2016-09-27T06:56:24.000Z | 2018-09-25T16:09:28.000Z | ---

description: Train hooks provide training events and loss modification to trainers.

---

# Train Hook

[https://github.com/HyperGAN/HyperGAN/tree/master/hypergan/train\_hooks](https://github.com/HyperGAN/HyperGAN/tree/master/hypergan/train_hooks)

## Access

```python

gan.trainer.train_hooks # => [...]

```

Train hooks are set up and invoked by the trainer.

## Events

Override these methods to change the training loop:

```python

before_step(step, feed_dict)

after_step(step, feed_dict)

after_create()

gradients(d_grads, g_grads)

```

### before\_step\(step, feed\_dict\)

### after\_step\(step, feed\_dict\)

Executed before and after each training step, respectively. `feed_dict` is what is being sent to the graph during the training step.
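The following framework-free Python sketch makes the call order concrete. `ToyTrainer` and `StepLogger` are hypothetical stand-ins, not HyperGAN classes, and the `learning_rate` key is an illustrative assumption. The sketch shows only the pattern described above: a trainer calls every hook's `before_step` before the step and `after_step` after it, and hooks can modify `feed_dict` in between.

```python
class StepLogger:
    """Hypothetical hook that records when it is called."""

    def __init__(self):
        self.events = []

    def before_step(self, step, feed_dict):
        # A hook may adjust what is about to be fed to the training step.
        feed_dict["learning_rate"] = 0.1 if step < 2 else 0.01
        self.events.append(("before", step))

    def after_step(self, step, feed_dict):
        self.events.append(("after", step))


class ToyTrainer:
    """Hypothetical trainer that invokes its hooks around each step."""

    def __init__(self, train_hooks):
        self.train_hooks = train_hooks

    def step(self, step):
        feed_dict = {}
        for hook in self.train_hooks:
            hook.before_step(step, feed_dict)
        # ... the real optimization step would run here, using feed_dict ...
        for hook in self.train_hooks:
            hook.after_step(step, feed_dict)
        return feed_dict


hook = StepLogger()
trainer = ToyTrainer([hook])
for i in range(3):
    last_feed = trainer.step(i)
print(hook.events)
print(last_feed)
```

Hooks run in list order, so a hook later in `train_hooks` sees any `feed_dict` changes made by earlier hooks.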

### after\_create\(\)

Runs after the trainer is created.

### gradients\(d\_grads, g\_grads\)

Refines the gradients before they are applied to the optimizer.
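Below is a hypothetical `gradients` hook that clips every value to a fixed range, written in plain Python. It assumes, as the description above suggests, that the trainer applies whatever `(d_grads, g_grads)` pair the hook returns. In HyperGAN itself the gradients are framework tensors rather than lists of floats, and `ClipGradients` and `apply_hooks` are illustrative names only.

```python
class ClipGradients:
    """Hypothetical hook: clip each gradient value to [-limit, limit]."""

    def __init__(self, limit=1.0):
        self.limit = limit

    def _clip(self, grads):
        return [max(-self.limit, min(self.limit, g)) for g in grads]

    def gradients(self, d_grads, g_grads):
        # Return the refined pair; the (assumed) trainer applies what is returned.
        return self._clip(d_grads), self._clip(g_grads)


def apply_hooks(hooks, d_grads, g_grads):
    """Pass gradients through each hook in turn before they reach the optimizer."""
    for hook in hooks:
        d_grads, g_grads = hook.gradients(d_grads, g_grads)
    return d_grads, g_grads


d, g = apply_hooks([ClipGradients(limit=0.5)], [0.2, -3.0], [1.5, 0.1])
print(d, g)  # [0.2, -0.5] [0.5, 0.1]
```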

| 20.952381 | 143 | 0.740909 | eng_Latn | 0.97338 |

0cb1338eb164607ba42e5237943cec4dd6538c7f | 5,906 | md | Markdown | resources/website/content/fr/certificates.md | dominicporter/web | 073effa83391c1a77e1317eb4cb39fa0a941ecf9 | [

"Apache-2.0"

] | 1 | 2019-12-31T07:17:55.000Z | 2019-12-31T07:17:55.000Z | resources/website/content/fr/certificates.md | dominicporter/web | 073effa83391c1a77e1317eb4cb39fa0a941ecf9 | [

"Apache-2.0"

] | 8 | 2019-12-04T10:27:59.000Z | 2020-01-05T14:33:26.000Z | resources/website/content/fr/certificates.md | winwisely99/web | 935ee6006644abda2cd8aa3563e86eacad73eba6 | [

"Apache-2.0"

] | null | null | null | ---

title: Chain of Trust

slug: certificates

top_graphic: 5

lastmod: 2019-05-01

---

# Root Certificates

Our roots are kept safely offline. We issue end-entity certificates signed by the intermediates in the next section.

* Active

  * [ISRG Root X1 (self-signed)](/certs/isrgrootx1.pem.txt)

We have set up websites to test certificates chaining to our roots.

* ISRG Root X1 Valid Certificate

  * [https://valid-isrgrootx1.letsencrypt.org/](https://valid-isrgrootx1.letsencrypt.org/)

* ISRG Root X1 Revoked Certificate

  * [https://revoked-isrgrootx1.letsencrypt.org/](https://revoked-isrgrootx1.letsencrypt.org/)

* ISRG Root X1 Expired Certificate

  * [https://expired-isrgrootx1.letsencrypt.org/](https://expired-isrgrootx1.letsencrypt.org/)

# Intermediate Certificates

IdenTrust has also cross-signed our intermediates. This allows our end-entity certificates to be accepted by all major browsers while we propagate our own root.

Under normal circumstances, certificates issued by Let's Encrypt will come from "Let's Encrypt Authority X3". The other intermediate, "Let's Encrypt Authority X4", is reserved for disaster recovery and will only be used if we lose the ability to use "Let's Encrypt Authority X3". The X1 and X2 intermediates were our first generation of intermediates. We have replaced them with new intermediates that are more compatible with Windows XP.

* Active

  * [Let's Encrypt Authority X3 (Signed by IdenTrust)](/certs/lets-encrypt-x3-cross-signed.pem.txt)

  * [Let's Encrypt Authority X3 (Signed by ISRG Root X1)](/certs/letsencryptauthorityx3.pem.txt)

* Backup

  * [Let's Encrypt Authority X4 (Signed by IdenTrust)](/certs/lets-encrypt-x4-cross-signed.pem.txt)

  * [Let's Encrypt Authority X4 (Signed by ISRG Root X1)](/certs/letsencryptauthorityx4.pem.txt)

* Retired

  * [Let's Encrypt Authority X2 (Signed by IdenTrust)](/certs/lets-encrypt-x2-cross-signed.pem.txt)

  * [Let's Encrypt Authority X2 (Signed by ISRG Root X1)](/certs/letsencryptauthorityx2.pem.txt)

  * [Let's Encrypt Authority X1 (Signed by IdenTrust)](/certs/lets-encrypt-x1-cross-signed.pem.txt)

  * [Let's Encrypt Authority X1 (Signed by ISRG Root X1)](/certs/letsencryptauthorityx1.pem.txt)

# Cross Signing

Our intermediate "Let's Encrypt Authority X3" represents a single public/private key pair.

The private key of that pair generates the signature for all end-entity certificates, that is, the certificates we issue for use on your server.

Our intermediate is signed by ISRG Root X1. However, since we are a very new certificate authority, ISRG Root X1 is not yet trusted in most browsers.

To be trusted right away, our intermediate is also signed by another certificate authority, IdenTrust, whose root is already trusted by the major browsers. Specifically, IdenTrust signed our intermediate using their "DST Root CA X3" root certificate (now called "TrustID X3 Root"). [Download "TrustID X3 Root" from identrust.com](https://www.identrust.com/support/downloads) (or, alternatively, download a copy here: [.pem](/certs/trustid-x3-root.pem.txt), [.p7b](/certs/trustid-x3-root.p7b)).

This means there are two certificates available that both represent our intermediate. One is signed by DST Root CA X3, and the other is signed by ISRG Root X1. The easiest way to tell them apart is to look at their Issuer field.

When configuring a web server, the server operator configures not only the end-entity certificate but also a list of intermediates. These help browsers verify that the end-entity certificate has a chain of trust leading to a trusted root certificate. Almost all server operators will choose to serve a chain containing the intermediate certificate with Subject "Let's Encrypt Authority X3" and Issuer "DST Root CA X3". The software recommended by Let's Encrypt, [Certbot](https://certbot.org), makes this configuration seamless.
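The following toy Python model illustrates why the choice of served intermediate matters. It is a sketch under heavy simplifying assumptions, not how browsers actually validate certificates. Each certificate is reduced to its Subject and Issuer names, and a chain is accepted if following issuers through the served intermediates reaches a root in the client's trust store. Real path validation also checks signatures, validity periods, revocation, and extensions. The leaf name `example.com` and the function name are hypothetical.

```python
# Toy certificates: only Subject and Issuer names are modeled.
CERTS = {
    "leaf": {"subject": "example.com", "issuer": "Let's Encrypt Authority X3"},
    "x3_cross": {"subject": "Let's Encrypt Authority X3", "issuer": "DST Root CA X3"},
    "x3_isrg": {"subject": "Let's Encrypt Authority X3", "issuer": "ISRG Root X1"},
}


def chain_reaches_trusted_root(cert, intermediates, trusted_roots, depth=0, max_depth=5):
    """Return True if following issuer names from `cert` through the served
    intermediates ends at a name in `trusted_roots` (signatures are NOT checked)."""
    if cert["issuer"] in trusted_roots:
        return True
    if depth >= max_depth:
        return False
    # Try every served intermediate whose Subject matches the current Issuer.
    return any(
        chain_reaches_trusted_root(c, intermediates, trusted_roots, depth + 1, max_depth)
        for c in intermediates
        if c["subject"] == cert["issuer"]
    )


# A client that (in this toy model) only trusts IdenTrust's root:
old_client_roots = {"DST Root CA X3"}
print(chain_reaches_trusted_root(CERTS["leaf"], [CERTS["x3_cross"]], old_client_roots))  # True
print(chain_reaches_trusted_root(CERTS["leaf"], [CERTS["x3_isrg"]], old_client_roots))   # False
```

Both intermediates share the same Subject, so in this model the Issuer field alone decides which trust store can complete the chain.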

The following image shows the relationships between our certificates:

<img src="/certs/isrg-keys.png" alt="ISRG key relationship diagram">

# OCSP Signing Certificate

This certificate is used to sign OCSP responses for the Let's Encrypt Authority intermediates, so that we don't need to bring the root key online to sign those responses. A copy of this certificate is automatically included in those OCSP responses, so subscribers don't need to do anything with it. It is included here for informational purposes only.

* [ISRG Root OCSP X1 (Signed by ISRG Root X1)](/certs/isrg-root-ocsp-x1.pem.txt)

# Certificate Transparency

We are committed to transparency in our operations and in the certificates we issue. We submit all certificates to [Certificate Transparency logs](https://www.certificate-transparency.org/) as we issue them. You can view all issued Let's Encrypt certificates via these links:

* [Issued by Let's Encrypt Authority X1](https://crt.sh/?Identity=%25&iCAID=7395)

* [Issued by Let's Encrypt Authority X3](https://crt.sh/?Identity=%25&iCAID=16418)

# More Info

The private keys for the ISRG root CA and the Let's Encrypt intermediate CAs are stored on hardware security modules (HSMs), which provide a high degree of protection against key theft.

All ISRG keys are currently RSA keys. We are [planning to generate ECDSA keys]({{< ref "/upcoming-features.md" >}}).

# DotnetCoreSmtp Project

## Author

[Artem Erkal Ucar](https://github.com/artemeu)

## Project setup instructions

This project is an SMTP email service built as a .NET 5.0 Web API.

## Installation

Download and install the .NET 5.0 SDK from <https://dotnet.microsoft.com/download>.

## Configuration

Update the `EmailSettings` section of `DotnetCoreSmtp.Api/appsettings.json` with valid sender account details:

```json
"EmailSettings": {
  "SenderEmailAddress": "[email protected]",
  "SenderEmailAddressPassword": "password",
  "SenderName": "Sender Name",
  "Port": 587,
  "Host": "Email Host Name"
},
```

## Run the Project

In the DotnetCoreSmtp.Api project directory, run:

```bash
dotnet run
```

## Swagger

The project includes a Swagger UI, available at <http://localhost:8034/swagger/index.html> while the API is running.

## Send Email

Send a `POST` request to the `/Smtp/send/email` endpoint. The project runs on localhost (or the machine's local network IP address) on port 8034:

```text
POST http://localhost:8034/Smtp/send/email
```

## Request Body

The request body is a JSON object with the following fields:

```json
{
  "recipientEmailAddress": "[email protected]",
  "emailSubject": "string",
  "emailContent": "string"
}
```
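The service itself is C#, but any HTTP client can call it. Below is a minimal Java 11+ client sketch built on `java.net.http.HttpClient`. It assumes the API is running locally on port 8034 and that the endpoint accepts `application/json`. The JSON escaping is hand-rolled and handles only quotes, backslashes and newlines; a real client would use a JSON library. The class name, helper names and example addresses are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class SendEmailClient {
    // Assumed endpoint: local run on port 8034, route taken from this README.
    static final String ENDPOINT = "http://localhost:8034/Smtp/send/email";

    // Minimal JSON string literal: escapes quotes, backslashes and newlines only.
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                default: sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Request body with the three fields shown in the example above.
    static String buildBody(String recipient, String subject, String content) {
        return "{\"recipientEmailAddress\":" + jsonString(recipient)
            + ",\"emailSubject\":" + jsonString(subject)
            + ",\"emailContent\":" + jsonString(content) + "}";
    }

    static HttpRequest buildRequest(String recipient, String subject, String content) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(buildBody(recipient, subject, content)))
            .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest("recipient@example.com", "Hello", "Test message");
        // Fails with a connection error unless the API is running (dotnet run).
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Building the request separately from sending it keeps the payload easy to inspect, or to compare with what the Swagger UI sends, without a running server.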

---

title: Managing the Local DCBX Willing State

description: Managing the Local DCBX Willing State

ms.date: 04/20/2017

---

# Managing the Local DCBX Willing State

The IEEE 802.1Qaz draft standard defines the Data Center Bridging Exchange (DCBX) protocol. This protocol allows DCB configuration parameters to be exchanged between the network adapter (local peer) and a directly connected remote peer. This allows these peers to adapt and tune Quality of Service (QoS) parameters to optimize data transfer over the connection.

Based on the local and remote QoS parameter settings, the miniport driver resolves the conflicts and derives a set of operational QoS parameters. The network adapter uses these operational parameters for the prioritized transmission of packets to the remote peer. For more information about how the driver resolves its operational NDIS QoS parameter settings, see [Resolving Operational NDIS QoS Parameters](resolving-operational-ndis-qos-parameters.md).

DCBX consists of DCB type-length-value (TLV) settings that are carried over Link Layer Discovery Protocol (LLDP) packets. A separate TLV is defined for the following types of QoS parameters:

- [Enhanced Transmission Selection (ETS)](enhanced-transmission-selection--ets--algorithm.md)

- [Priority-based Flow Control (PFC)](priority-based-flow-control--pfc.md)

The TLVs for ETS and PFC define a bit known as the *Willing* bit. If the network adapter sends its TLV settings to the remote peer with the Willing bit set to one, it indicates that the adapter is willing to accept QoS parameters from the remote peer.

The ability to set individual Willing bits in these TLVs depends on the local DCBX Willing state that is managed by the miniport driver. The miniport driver must follow these guidelines for managing the local DCBX Willing state:

- If the local DCBX Willing state is disabled, the local Willing bit must be set to zero in the DCBX TLVs. In this case, the operational QoS parameters are always resolved from the local QoS parameters. For more information on these parameters, see [Setting Local NDIS QoS Parameters](setting-local-ndis-qos-parameters.md).

- If the local DCBX Willing state is enabled, the local Willing bit must be set to one in the DCBX TLVs. In this case, the operational QoS parameters must be resolved from the remote QoS parameters. For more information on these parameters, see [Receiving Remote NDIS QoS Parameters](receiving-remote-ndis-qos-parameters.md).

**Note** If the local DCBX Willing state is enabled, the miniport driver can also resolve its operational QoS parameters based on any proprietary QoS settings that are defined by the independent hardware vendor (IHV). The driver can do this only for QoS parameters that are not configured remotely by the peer or locally by the operating system.

The miniport driver manages the local DCBX Willing state in the following way:

- When the miniport driver is initialized through a call to its [*MiniportInitializeEx*](/windows-hardware/drivers/ddi/ndis/nc-ndis-miniport_initialize) function, it should enable the local DCBX Willing state based on proprietary QoS settings that are defined by the IHV.

- The DCB component (Msdcb.sys) issues an object identifier (OID) method request of [OID\_QOS\_PARAMETERS](./oid-qos-parameters.md) to configure the local QoS parameters on a network adapter. The **InformationBuffer** member of the [**NDIS\_OID\_REQUEST**](/windows-hardware/drivers/ddi/oidrequest/ns-oidrequest-ndis_oid_request) structure for this OID request contains a pointer to an [**NDIS\_QOS\_PARAMETERS**](/windows-hardware/drivers/ddi/ntddndis/ns-ntddndis-_ndis_qos_parameters) structure.

If the **NDIS\_QOS\_PARAMETERS\_WILLING** flag is set in the **Flags** member of this structure, the miniport driver enables the local DCBX Willing state. If this flag is not set, the miniport driver disables the local DCBX Willing state.
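The state transitions and rules above can be summarized in a small, language-neutral model, sketched here in Java. This is not NDIS code: a real miniport driver is written in C and reads the flag from the **Flags** member of **NDIS\_QOS\_PARAMETERS**. The model also leaves out the IHV-specific settings described in the Note. The class, enum and method names are hypothetical, and the flag is passed as a boolean rather than by its numeric value.

```java
class DcbxWillingModel {
    // Which parameter set the operational QoS parameters are resolved from.
    enum Source { LOCAL, REMOTE }

    private boolean willing;

    // Models MiniportInitializeEx: the initial state comes from IHV-defined settings.
    DcbxWillingModel(boolean ihvDefaultWilling) {
        this.willing = ihvDefaultWilling;
    }

    // Models handling an OID_QOS_PARAMETERS request: the NDIS_QOS_PARAMETERS_WILLING
    // flag (passed here as a boolean) enables or disables the Willing state.
    void onQosParameters(boolean willingFlagSet) {
        this.willing = willingFlagSet;
    }

    // Willing bit the adapter advertises in its ETS and PFC TLVs.
    int tlvWillingBit() {
        return willing ? 1 : 0;
    }

    // Disabled: resolve from local parameters. Enabled: resolve from remote parameters.
    Source operationalSource() {
        return willing ? Source.REMOTE : Source.LOCAL;
    }

    public static void main(String[] args) {
        DcbxWillingModel adapter = new DcbxWillingModel(true);
        System.out.println(adapter.tlvWillingBit() + " " + adapter.operationalSource()); // 1 REMOTE
        adapter.onQosParameters(false);
        System.out.println(adapter.tlvWillingBit() + " " + adapter.operationalSource()); // 0 LOCAL
    }
}
```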

For more information about LLDP, refer to the IEEE 802.1AB-2005 standard.

For more information about the local DCBX Willing bits and TLVs, refer to the IEEE 802.1Qaz draft standard.

**Note** Starting with Windows Server 2012, the DCB component can be configured through a PowerShell cmdlet to set or clear the **NDIS\_QOS\_PARAMETERS\_WILLING** flag when it issues an [OID\_QOS\_PARAMETERS](./oid-qos-parameters.md) request. This causes the miniport driver to enable or disable the local DCBX Willing state, respectively.

---

title: "The Territorial Division of Power: A Survey on Brazil"

date: 2014-01-01

publishDate: 2019-07-10T03:08:17.665598Z

authors: ["Marta Arretche", "Rogerio Schlegel", "Diogo Ferrari"]

publication_types: ["1"]

abstract: ""

featured: false

publication: "*Paper presented at the International Political Science Association Annual Meeting (IPSA)*"

---

# Leetcode

Given an array of integers, find if the array contains any duplicates.

Your function should return true if any value appears at least twice in the array, and it should return false if every element is distinct.

Example 1:

Input: [1,2,3,1]

Output: true

Example 2:

Input: [1,2,3,4]

Output: false

Example 3:

Input: [1,1,1,3,3,4,3,2,4,2]

Output: true

# Solution

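```java
package leetcode217_ContainsDuplicate;

import java.util.HashSet;
import java.util.Set;

class Solution {
    // Returns true if any value appears at least twice in nums.
    // O(n) time and O(n) extra space, using a hash set of values seen so far.
    public boolean containsDuplicate(int[] nums) {
        Set<Integer> seen = new HashSet<>();
        for (int value : nums) {
            // Set.add returns false when the value is already present.
            if (!seen.add(value)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Solution solution = new Solution();
        System.out.println(solution.containsDuplicate(new int[] {1, 2, 3, 1}));                   // true
        System.out.println(solution.containsDuplicate(new int[] {1, 2, 3, 4}));                   // false
        System.out.println(solution.containsDuplicate(new int[] {1, 1, 1, 3, 3, 4, 3, 2, 4, 2})); // true
    }
}
```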
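A common alternative replaces the hash set with sorting. After sorting, equal values are adjacent, so one pass comparing neighbours finds any duplicate, in O(n log n) time. This sketch sorts a copy so the caller's array is left untouched. Sorting in place would avoid the extra array at the cost of modifying the input. The class name is hypothetical.

```java
import java.util.Arrays;

class SortedContainsDuplicate {
    // Sort a copy, then look for two equal neighbours.
    static boolean containsDuplicate(int[] nums) {
        int[] sorted = Arrays.copyOf(nums, nums.length);
        Arrays.sort(sorted);
        for (int i = 1; i < sorted.length; i++) {
            if (sorted[i] == sorted[i - 1]) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(containsDuplicate(new int[] {1, 2, 3, 1}));                   // true
        System.out.println(containsDuplicate(new int[] {1, 2, 3, 4}));                   // false
        System.out.println(containsDuplicate(new int[] {1, 1, 1, 3, 3, 4, 3, 2, 4, 2})); // true
    }
}
```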
