url

stringlengths 62

66

| repository_url

stringclasses 1

value | labels_url

stringlengths 76

80

| comments_url

stringlengths 71

75

| events_url

stringlengths 69

73

| html_url

stringlengths 50

56

| id

int64 377M

2.15B

| node_id

stringlengths 18

32

| number

int64 1

29.2k

| title

stringlengths 1

487

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 2

classes | assignee

dict | assignees

list | comments

sequence | created_at

int64 1.54k

1.71k

| updated_at

int64 1.54k

1.71k

| closed_at

int64 1.54k

1.71k

⌀ | author_association

stringclasses 4

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

234k

⌀ | reactions

dict | timeline_url

stringlengths 71

75

| state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/2712 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2712/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2712/comments | https://api.github.com/repos/huggingface/transformers/issues/2712/events | https://github.com/huggingface/transformers/issues/2712 | 558,705,269 | MDU6SXNzdWU1NTg3MDUyNjk= | 2,712 | a problem occur when I train Chinese distilgpt2 model | {

"login": "ScottishFold007",

"id": 36957508,

"node_id": "MDQ6VXNlcjM2OTU3NTA4",

"avatar_url": "https://avatars.githubusercontent.com/u/36957508?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ScottishFold007",

"html_url": "https://github.com/ScottishFold007",

"followers_url": "https://api.github.com/users/ScottishFold007/followers",

"following_url": "https://api.github.com/users/ScottishFold007/following{/other_user}",

"gists_url": "https://api.github.com/users/ScottishFold007/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ScottishFold007/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ScottishFold007/subscriptions",

"organizations_url": "https://api.github.com/users/ScottishFold007/orgs",

"repos_url": "https://api.github.com/users/ScottishFold007/repos",

"events_url": "https://api.github.com/users/ScottishFold007/events{/privacy}",

"received_events_url": "https://api.github.com/users/ScottishFold007/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

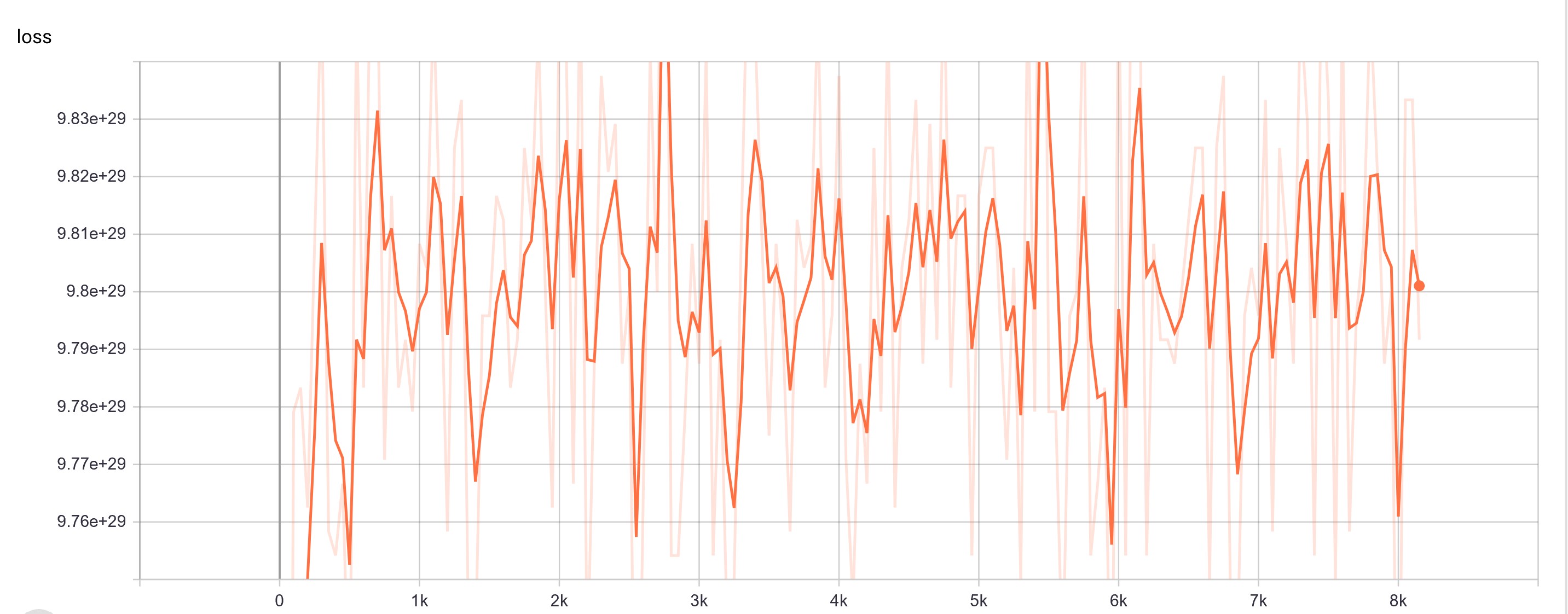

] | 1,580 | 1,586 | 1,586 | CONTRIBUTOR | null | ### When I was training a new model from zero to one, the following questions appeared, please help me answer them, thank you very much!

C:\Users\gaochangkuan\Desktop\transformers-master\examples\distillation>python train.py --student_type gpt2 --student_config training_configs/distilgpt2.json --teacher_type gpt2 --teacher_name distilgpt2 --alpha_ce 5.0 --alpha_mlm 2.0 --alpha_cos 1.0 --alpha_clm 0.0 --mlm --freeze_pos_embs --data_file data/binarized_text.bert-base-chinese.pickle --token_counts data/token_counts.bert-base-chinese.pickle --dump_path model --force

02/02/2020 22:26:54 - INFO - transformers.file_utils - PID: 27864 - PyTorch version 1.4.0+cpu available.

02/02/2020 22:27:02 - INFO - utils - PID: 27864 - Experiment will be dumped and logged in model

02/02/2020 22:27:02 - INFO - utils - PID: 27864 - Param: Namespace(adam_epsilon=1e-06, alpha_ce=5.0, alpha_clm=0.0, alpha_cos=1.0, alpha_mlm=2.0, alpha_mse=0.0, batch_size=5, checkpoint_interval=4000, data_file='data/binarized_text.bert-base-chinese.pickle', dump_path='model', force=True, fp16=False, fp16_opt_level='O1', freeze_pos_embs=True, freeze_token_type_embds=False, gradient_accumulation_steps=50, group_by_size=True, initializer_range=0.02, is_master=True, learning_rate=0.0005, local_rank=0, log_interval=500, master_port=-1, max_grad_norm=5.0, mlm=True, mlm_mask_prop=0.15, mlm_smoothing=0.7, multi_gpu=False, n_epoch=3, n_gpu=0, restrict_ce_to_mask=False, seed=56, student_config='training_configs/distilgpt2.json', student_pretrained_weights=None, student_type='gpt2', teacher_name='distilgpt2', teacher_type='gpt2', temperature=2.0, token_counts='data/token_counts.bert-base-chinese.pickle', warmup_prop=0.05, weight_decay=0.0, word_keep=0.1, word_mask=0.8, word_rand=0.1)

Using cache found in C:\Users\gaochangkuan/.cache\torch\hub\huggingface_pytorch-pretrained-BERT_master

02/02/2020 22:27:12 - INFO - transformers.configuration_utils - PID: 27864 - loading configuration file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese-config.json from cache at C:\Users\gaochangkuan\.cache\torch\transformers\8a3b1cfe5da58286e12a0f5d7d182b8d6eca88c08e26c332ee3817548cf7e60a.3767c74c8ed285531d04153fe84a0791672aff52f7249b27df341dbce09b8305

02/02/2020 22:27:12 - INFO - transformers.configuration_utils - PID: 27864 - Model config BertConfig {

"architectures": [

"BertForMaskedLM"

],

"attention_probs_dropout_prob": 0.1,

"bos_token_id": 0,

"directionality": "bidi",

"do_sample": false,

"eos_token_ids": 0,

"finetuning_task": null,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"id2label": {

"0": "LABEL_0",

"1": "LABEL_1"

},

"initializer_range": 0.02,

"intermediate_size": 3072,

"is_decoder": false,

"label2id": {

"LABEL_0": 0,

"LABEL_1": 1

},

"layer_norm_eps": 1e-12,

"length_penalty": 1.0,

"max_length": 20,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_beams": 1,

"num_hidden_layers": 12,

"num_labels": 2,

"num_return_sequences": 1,

"output_attentions": false,

"output_hidden_states": false,

"output_past": true,

"pad_token_id": 0,

"pooler_fc_size": 768,

"pooler_num_attention_heads": 12,

"pooler_num_fc_layers": 3,

"pooler_size_per_head": 128,

"pooler_type": "first_token_transform",

"pruned_heads": {},

"repetition_penalty": 1.0,

"temperature": 1.0,

"top_k": 50,

"top_p": 1.0,

"torchscript": false,

"type_vocab_size": 2,

"use_bfloat16": false,

"vocab_size": 21128

}

02/02/2020 22:27:22 - INFO - transformers.tokenization_utils - PID: 27864 - loading file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese-vocab.txt from cache at C:\Users\gaochangkuan\.cache\torch\transformers\8a0c070123c1f794c42a29c6904beb7c1b8715741e235bee04aca2c7636fc83f.9b42061518a39ca00b8b52059fd2bede8daa613f8a8671500e518a8c29de8c00

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Special tokens {'unk_token': 100, 'sep_token': 102, 'pad_token': 0, 'cls_token': 101, 'mask_token': 103}

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Loading data from data/binarized_text.bert-base-chinese.pickle

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Loading token counts from data/token_counts.bert-base-chinese.pickle (already pre-computed)

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Splitting 124 too long sequences.

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Remove 2840 too short (<=11 tokens) sequences.

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Remove 0 sequences with a high level of unknown tokens (50%).

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - 30807 sequences

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Data loader created.

02/02/2020 22:27:22 - INFO - utils - PID: 27864 - Loading student config from training_configs/distilgpt2.json

02/02/2020 22:27:22 - INFO - transformers.configuration_utils - PID: 27864 - loading configuration file training_configs/distilgpt2.json

02/02/2020 22:27:22 - INFO - transformers.configuration_utils - PID: 27864 - Model config GPT2Config {

"architectures": null,

"attn_pdrop": 0.1,

"bos_token_id": 0,

"do_sample": false,

"embd_pdrop": 0.1,

"eos_token_ids": 0,

"finetuning_task": null,

"id2label": {

"0": "LABEL_0",

"1": "LABEL_1"

},

"initializer_range": 0.02,

"is_decoder": false,

"label2id": {

"LABEL_0": 0,

"LABEL_1": 1

},

"layer_norm_epsilon": 1e-05,

"length_penalty": 1.0,

"max_length": 20,

"model_type": "gpt2",

"n_ctx": 1024,

"n_embd": 768,

"n_head": 12,

"n_layer": 6,

"n_positions": 1024,

"num_beams": 1,

"num_labels": 2,

"num_return_sequences": 1,

"output_attentions": false,

"output_hidden_states": false,

"output_past": true,

"pad_token_id": 0,

"pruned_heads": {},

"repetition_penalty": 1.0,

"resid_pdrop": 0.1,

"summary_activation": null,

"summary_first_dropout": 0.1,

"summary_proj_to_labels": true,

"summary_type": "cls_index",

"summary_use_proj": true,

"temperature": 1.0,

"top_k": 50,

"top_p": 1.0,

"torchscript": false,

"use_bfloat16": false,

"vocab_size": 21128

}

02/02/2020 22:27:24 - INFO - utils - PID: 27864 - Student loaded.

02/02/2020 22:27:24 - INFO - transformers.configuration_utils - PID: 27864 - loading configuration file E:\GPT2_Text_generation\GPT2-Chinese-master\GPT2Model\config.json

02/02/2020 22:27:24 - INFO - transformers.configuration_utils - PID: 27864 - Model config GPT2Config {

"architectures": null,

"attn_pdrop": 0.1,

"bos_token_id": 0,

"do_sample": false,

"embd_pdrop": 0.1,

"eos_token_ids": 0,

"finetuning_task": null,

"id2label": {

"0": "LABEL_0"

},

"initializer_range": 0.02,

"is_decoder": false,

"label2id": {

"LABEL_0": 0

},

"layer_norm_epsilon": 1e-05,

"length_penalty": 1.0,

"max_length": 20,

"model_type": "gpt2",

"n_ctx": 1024,

"n_embd": 768,

"n_head": 12,

"n_layer": 10,

"n_positions": 1024,

"num_beams": 1,

"num_labels": 1,

"num_return_sequences": 1,

"output_attentions": false,

"output_hidden_states": true,

"output_past": true,

"pad_token_id": 0,

"pruned_heads": {},

"repetition_penalty": 1.0,

"resid_pdrop": 0.1,

"summary_activation": null,

"summary_first_dropout": 0.1,

"summary_proj_to_labels": true,

"summary_type": "cls_index",

"summary_use_proj": true,

"temperature": 1.0,

"top_k": 50,

"top_p": 1.0,

"torchscript": false,

"use_bfloat16": false,

"vocab_size": 21128

}

02/02/2020 22:27:24 - INFO - transformers.modeling_utils - PID: 27864 - loading weights file E:\GPT2_Text_generation\GPT2-Chinese-master\GPT2Model\pytorch_model.bin

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Teacher loaded from distilgpt2.

21128 21128

768 768

1024 1024

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Initializing Distiller

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Using [0, 3, 7, 11, 15, 19, 23, 27, 31, 35, 39, 43, 47, 51, 55, 59, 63, 67, 71, 75, 79, 83, 87, 91, 95, 99, 103, 107, 111, 115, 119, 123, 127, 131, 135, 139, 143, 147, 151, 155, 159, 163, 167, 171, 175, 179, 183, 187, 191, 195, 199, 203, 207, 211, 215, 219, 223, 227, 231, 235, 239, 243, 247, 251, 255, 259, 263, 267, 271, 275, 279, 283, 287, 291, 295, 299, 303, 307, 311, 315, 319, 323, 327, 331, 335, 339, 343, 347, 351, 355, 359, 363, 367, 371, 375, 379, 383, 387, 391, 395, 399, 403, 407, 411, 415, 419, 423, 427, 431, 435, 439, 443, 447, 451, 455, 459, 463, 467, 471, 475, 479, 483, 487, 491, 495, 499, 503, 507, 511, inf] as bins for aspect lengths quantization

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Count of instances per bin: [1267 1907 1866 1702 1584 1483 1380 1237 1205 1101 1047 974 882 854

758 672 598 583 593 519 492 453 444 414 371 338 352 305

290 298 260 250 260 214 210 200 189 190 149 153 121 125

124 106 116 105 87 100 78 103 73 70 74 78 65 70

52 43 46 51 48 38 49 28 32 41 34 27 29 31

28 39 28 23 25 26 17 25 23 12 20 17 17 20

8 12 15 16 8 11 11 10 13 11 3 9 8 5

9 5 6 6 6 4 10 4 6 3 3 3 2 4

3 6 4 3 7 2 6 9 1 2 6 2 3 134]

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Using MLM loss for LM step.

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - --- Initializing model optimizer

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - ------ Number of trainable parameters (student): 58755072

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - ------ Number of parameters (student): 59541504

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - --- Initializing Tensorboard

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - Starting training

02/02/2020 22:27:27 - INFO - utils - PID: 27864 - --- Starting epoch 0/2

-Iter: 0%| | 0/6162 [00:00<?, ?it/s]Traceback (most recent call last):

File "train.py", line 329, in <module>

main()

File "train.py", line 324, in main

distiller.train()

File "C:\Users\gaochangkuan\Desktop\transformers-master\examples\distillation\distiller.py", line 355, in train

self.step(input_ids=token_ids, attention_mask=attn_mask, lm_labels=lm_labels)

File "C:\Users\gaochangkuan\Desktop\transformers-master\examples\distillation\distiller.py", line 385, in step

input_ids=input_ids, attention_mask=attention_mask

**ValueError: too many values to unpack (expected 2)** | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2712/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2712/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2711 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2711/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2711/comments | https://api.github.com/repos/huggingface/transformers/issues/2711/events | https://github.com/huggingface/transformers/issues/2711 | 558,698,789 | MDU6SXNzdWU1NTg2OTg3ODk= | 2,711 | TypeError: apply_gradients() missing 1 required positional argument: 'clip_norm' | {

"login": "dimitreOliveira",

"id": 16668746,

"node_id": "MDQ6VXNlcjE2NjY4NzQ2",

"avatar_url": "https://avatars.githubusercontent.com/u/16668746?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dimitreOliveira",

"html_url": "https://github.com/dimitreOliveira",

"followers_url": "https://api.github.com/users/dimitreOliveira/followers",

"following_url": "https://api.github.com/users/dimitreOliveira/following{/other_user}",

"gists_url": "https://api.github.com/users/dimitreOliveira/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dimitreOliveira/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dimitreOliveira/subscriptions",

"organizations_url": "https://api.github.com/users/dimitreOliveira/orgs",

"repos_url": "https://api.github.com/users/dimitreOliveira/repos",

"events_url": "https://api.github.com/users/dimitreOliveira/events{/privacy}",

"received_events_url": "https://api.github.com/users/dimitreOliveira/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi, indeed this optimizer `AdamWeightDecay` requires an additional argument for truncating the gradient norm.\r\n\r\nIt essentially feeds the `clip_norm` argument (which is the second required argument in `apply_gradients`) to [tf.clip_by_global_norm](https://www.tensorflow.org/api_docs/python/tf/clip_by_global_norm).\r\n\r\nYou can see a usage example in our [run_tf_ner.py example](https://github.com/huggingface/transformers/blob/master/examples/run_tf_ner.py#L203)",

"I wasn't able to implement this fix on my problem but I think this answer closes the issue, thank!",

"This problem occurs if you don't specify `clip_norm` when calling `apply_gradients`.\r\nIf using a custom training loop, the fix is easy :)\r\nIf you are using `keras.model.fit`, you can do it the following way:\r\n\r\n```\r\nfrom functools import partialmethod\r\n\r\nAdamWeightDecay.apply_gradients = partialmethod(AdamWeightDecay.apply_gradients, clip_norm=1.0)\r\noptimizer = create_optimizer(p.learning_rate, num_train_steps=total_steps, num_warmup_steps=warmup_steps)\r\n```"

] | 1,580 | 1,588 | 1,581 | CONTRIBUTOR | null | # 🐛 Bug

## Information

Model I am using (TFBertModel):

Language I am using the model on (English):

Also I'm using `tensorflow==2.1.0` and `transformers==2.3.0`

The problem arises when using:

* [x] the official example scripts: (give details below)

I'm trying to use the `optimization_tf.create_optimizer` from the source code.

The tasks I am working on is:

* [x] my own task or dataset: (give details below)

Just a text classification task.

## To reproduce

Steps to reproduce the behavior:

1. Try to use the model the regular way

2. When running the model with "optimization_tf.create_optimizer"

## Environment info

```

/opt/conda/lib/python3.6/site-packages/tensorflow_core/python/keras/engine/training_eager.py in _process_single_batch(model, inputs, targets, output_loss_metrics, sample_weights, training)

271 loss_scale_optimizer.LossScaleOptimizer):

272 grads = model.optimizer.get_unscaled_gradients(grads)

--> 273 model.optimizer.apply_gradients(zip(grads, trainable_weights))

274 else:

275 logging.warning('The list of trainable weights is empty. Make sure that'

TypeError: apply_gradients() missing 1 required positional argument: 'clip_norm'

```

## How I am able to run

On the class `AdamWeightDecay` and the method `apply_gradients` I just call the supper function like this:

```

def apply_gradients(self, grads_and_vars, name=None):

return super().apply_gradients(grads_and_vars)

```

but as you can see I'm not using the `clip_norm` as the source example uses.

Is there a way to use the original source function as described in the source code? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2711/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2711/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2710 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2710/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2710/comments | https://api.github.com/repos/huggingface/transformers/issues/2710/events | https://github.com/huggingface/transformers/pull/2710 | 558,667,128 | MDExOlB1bGxSZXF1ZXN0MzY5OTg5ODQ3 | 2,710 | Removed unused fields in DistilBert TransformerBlock | {

"login": "guillaume-be",

"id": 27071604,

"node_id": "MDQ6VXNlcjI3MDcxNjA0",

"avatar_url": "https://avatars.githubusercontent.com/u/27071604?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/guillaume-be",

"html_url": "https://github.com/guillaume-be",

"followers_url": "https://api.github.com/users/guillaume-be/followers",

"following_url": "https://api.github.com/users/guillaume-be/following{/other_user}",

"gists_url": "https://api.github.com/users/guillaume-be/gists{/gist_id}",

"starred_url": "https://api.github.com/users/guillaume-be/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/guillaume-be/subscriptions",

"organizations_url": "https://api.github.com/users/guillaume-be/orgs",

"repos_url": "https://api.github.com/users/guillaume-be/repos",

"events_url": "https://api.github.com/users/guillaume-be/events{/privacy}",

"received_events_url": "https://api.github.com/users/guillaume-be/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=h1) Report\n> Merging [#2710](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/2ba147ecffa28e5a4f96eebd09dcd642117dedae?src=pr&el=desc) will **decrease** coverage by `0.27%`.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #2710 +/- ##\n==========================================\n- Coverage 74.09% 73.81% -0.28% \n==========================================\n Files 93 93 \n Lines 15248 15243 -5 \n==========================================\n- Hits 11298 11252 -46 \n- Misses 3950 3991 +41\n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [src/transformers/modeling\\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/2710/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19kaXN0aWxiZXJ0LnB5) | `95.79% <ø> (-0.07%)` | :arrow_down: |\n| [src/transformers/modeling\\_tf\\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/2710/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9hdXRvLnB5) | `52.94% <0%> (-21.57%)` | :arrow_down: |\n| [src/transformers/pipelines.py](https://codecov.io/gh/huggingface/transformers/pull/2710/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9waXBlbGluZXMucHk=) | `68.79% <0%> (-3.33%)` | :arrow_down: |\n| [src/transformers/tokenization\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2710/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `84.87% <0%> (-0.82%)` | :arrow_down: |\n| [src/transformers/modeling\\_tf\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2710/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `92.3% <0%> (-0.52%)` | :arrow_down: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=footer). Last update [2ba147e...d40db22](https://codecov.io/gh/huggingface/transformers/pull/2710?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n"

] | 1,580 | 1,582 | 1,582 | CONTRIBUTOR | null | A few fields in the TransformerBlock are unused - this small PR cleans it up.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2710/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2710/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/2710",

"html_url": "https://github.com/huggingface/transformers/pull/2710",

"diff_url": "https://github.com/huggingface/transformers/pull/2710.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/2710.patch",

"merged_at": 1582232902000

} |

https://api.github.com/repos/huggingface/transformers/issues/2709 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2709/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2709/comments | https://api.github.com/repos/huggingface/transformers/issues/2709/events | https://github.com/huggingface/transformers/issues/2709 | 558,655,284 | MDU6SXNzdWU1NTg2NTUyODQ= | 2,709 | DistributedDataParallel for multi-gpu single-node runs in run_lm_finetuning.py | {

"login": "Genius1237",

"id": 15867363,

"node_id": "MDQ6VXNlcjE1ODY3MzYz",

"avatar_url": "https://avatars.githubusercontent.com/u/15867363?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Genius1237",

"html_url": "https://github.com/Genius1237",

"followers_url": "https://api.github.com/users/Genius1237/followers",

"following_url": "https://api.github.com/users/Genius1237/following{/other_user}",

"gists_url": "https://api.github.com/users/Genius1237/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Genius1237/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Genius1237/subscriptions",

"organizations_url": "https://api.github.com/users/Genius1237/orgs",

"repos_url": "https://api.github.com/users/Genius1237/repos",

"events_url": "https://api.github.com/users/Genius1237/events{/privacy}",

"received_events_url": "https://api.github.com/users/Genius1237/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"As far as I can see, the script fully supports DDP:\r\n\r\nhttps://github.com/huggingface/transformers/blob/2ba147ecffa28e5a4f96eebd09dcd642117dedae/examples/run_lm_finetuning.py#L282-L286\r\n\r\nI haven't run the script myself, but looking at the source this should work with the [torch launch](https://pytorch.org/docs/stable/distributed.html#launch-utility) utility. Your command would then look like this when using a single node with four GPUs.\r\n\r\n```bash\r\npython -m torch.distributed.launch --nproc_per_node 4 run_lm_finetuning.py [arguments]\r\n```\r\n",

"Ah ok, I wasn't aware that it had to be launched this way. I was looking at the code and thought it DDP would happen only when the process was launched across multiple nodes.\r\n\r\nThanks for the help @BramVanroy "

] | 1,580 | 1,580 | 1,580 | CONTRIBUTOR | null | # 🚀 Feature request

<!-- A clear and concise description of the feature proposal.

Please provide a link to the paper and code in case they exist. -->

Modify `run_lm_finetuning.py` with DDP for multi-gpu single-node jobs.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request

related to a problem? e.g., I'm always frustrated when [...]. If this is related

to another GitHub issue, please link here too. -->

In it's current state, `run_lm_finetuning.py` does not run in DDP for multi-gpu single-node training jobs. This results in all but the first GPU having very low utilization (as low as 50%, when the first one is in the high 80%) due to the way simple DP works. Once implemented, the load would be more evenly balanced across all the GPUs.

## Your contribution

<!-- Is there any way that you could help, e.g. by submitting a PR?

Make sure to read the CONTRIBUTING.MD readme:

https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md -->

I can help implementing this feature, but would need guidance on what should/shouldn't be modified to get this working properly. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2709/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2709/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2708 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2708/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2708/comments | https://api.github.com/repos/huggingface/transformers/issues/2708/events | https://github.com/huggingface/transformers/issues/2708 | 558,610,431 | MDU6SXNzdWU1NTg2MTA0MzE= | 2,708 | Can't pickle local object using the finetuning example. | {

"login": "Normand-1024",

"id": 17085216,

"node_id": "MDQ6VXNlcjE3MDg1MjE2",

"avatar_url": "https://avatars.githubusercontent.com/u/17085216?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Normand-1024",

"html_url": "https://github.com/Normand-1024",

"followers_url": "https://api.github.com/users/Normand-1024/followers",

"following_url": "https://api.github.com/users/Normand-1024/following{/other_user}",

"gists_url": "https://api.github.com/users/Normand-1024/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Normand-1024/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Normand-1024/subscriptions",

"organizations_url": "https://api.github.com/users/Normand-1024/orgs",

"repos_url": "https://api.github.com/users/Normand-1024/repos",

"events_url": "https://api.github.com/users/Normand-1024/events{/privacy}",

"received_events_url": "https://api.github.com/users/Normand-1024/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Do you mind specifying which versions of everything you're using, as detailed in the [bug report issue template](https://github.com/huggingface/transformers/issues/new/choose)?",

"Hi @Normand-1024 \r\n\r\nwere you able to fix this error?\r\nas i am getting the same error while trying to run glue task (QQP) but works fine when i run MRPC.",

"Hi,\r\n\r\nI was able to get rid of this error by upgrading the torch version.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"Still having this issue with transformers `2.11.0`.",

"@lucadiliello did you maange to fix it?",

"Not yet. A solution would be to use `dill` instead of `pickle`... but I'm not sure how to do it.",

"Getting same error,\r\nNot sure how to fix this error.",

"same error, with all newest version",

"I solved by reimplementing all the schedulers without lambda functions. [here](https://github.com/iKernels/transformers-lightning) I published many schedulers.",

"Same error with all newest version too.\r\n\r\n\r\n",

"UP. \r\nIs it a package version related issue?",

"Having the same issue: \r\n`Can't pickle local object 'get_linear_schedule_with_warmup.<locals>.lr_lambda'`",

"For running the example scripts passing `--no_multi_process` solved it for me.\r\n\r\nI haven't looked into the huggingface code yet but I could imagine that [this](https://stackoverflow.com/questions/52265120/python-multiprocessing-pool-attributeerror) is the bug here. I think it only shows up when `spawn` instead of `fork` is used to create new processes, which is why the developers might have missed it.",

"I set the `gpus=1`, and it works. ",

"Well, this seems that it is a local object that can not be forked, you may define it at each forked process. This may work well. However, somebody should fix it."

] | 1,580 | 1,647 | 1,587 | NONE | null | I was testing out the finetuning example from the repo:

`python run_lm_finetuning.py --train_data_file="finetune-output/KantText.txt" --output_dir="finetune-output/hugkant" --model_type=gpt2 --model_name_or_path=gpt2 --do_train --block_size=128`

While saving the checkpoint, it gives the following error:

```

Traceback (most recent call last):

File "run_lm_finetuning.py", line 790, in <module>

main()

File "run_lm_finetuning.py", line 740, in main

global_step, tr_loss = train(args, train_dataset, model, tokenizer)

File "run_lm_finetuning.py", line 398, in train

torch.save(scheduler.state_dict(), os.path.join(output_dir, "scheduler.pt"))

File "D:\Software\Python\lib\site-packages\torch\serialization.py", line 209, in save

return _with_file_like(f, "wb", lambda f: _save(obj, f, pickle_module, pickle_protocol))

File "D:\Software\Python\lib\site-packages\torch\serialization.py", line 134, in _with_file_like

return body(f)

File "D:\Software\Python\lib\site-packages\torch\serialization.py", line 209, in <lambda>

return _with_file_like(f, "wb", lambda f: _save(obj, f, pickle_module, pickle_protocol))

File "D:\Software\Python\lib\site-packages\torch\serialization.py", line 282, in _save

pickler.dump(obj)

AttributeError: Can't pickle local object 'get_linear_schedule_with_warmup.<locals>.lr_lambda'

``` | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2708/reactions",

"total_count": 12,

"+1": 12,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2708/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2707 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2707/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2707/comments | https://api.github.com/repos/huggingface/transformers/issues/2707/events | https://github.com/huggingface/transformers/pull/2707 | 558,559,779 | MDExOlB1bGxSZXF1ZXN0MzY5OTEyMjM4 | 2,707 | Fix typo in examples/utils_ner.py | {

"login": "falcaopetri",

"id": 8387736,

"node_id": "MDQ6VXNlcjgzODc3MzY=",

"avatar_url": "https://avatars.githubusercontent.com/u/8387736?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/falcaopetri",

"html_url": "https://github.com/falcaopetri",

"followers_url": "https://api.github.com/users/falcaopetri/followers",

"following_url": "https://api.github.com/users/falcaopetri/following{/other_user}",

"gists_url": "https://api.github.com/users/falcaopetri/gists{/gist_id}",

"starred_url": "https://api.github.com/users/falcaopetri/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/falcaopetri/subscriptions",

"organizations_url": "https://api.github.com/users/falcaopetri/orgs",

"repos_url": "https://api.github.com/users/falcaopetri/repos",

"events_url": "https://api.github.com/users/falcaopetri/events{/privacy}",

"received_events_url": "https://api.github.com/users/falcaopetri/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=h1) Report\n> Merging [#2707](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ddb6f9476b58ed9bf4433622ca9aa49932929bc0?src=pr&el=desc) will **not change** coverage.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #2707 +/- ##\n=======================================\n Coverage 74.25% 74.25% \n=======================================\n Files 92 92 \n Lines 15216 15216 \n=======================================\n Hits 11298 11298 \n Misses 3918 3918\n```\n\n\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=footer). Last update [ddb6f94...dd19c80](https://codecov.io/gh/huggingface/transformers/pull/2707?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"Good catch, thanks"

] | 1,580 | 1,580 | 1,580 | CONTRIBUTOR | null | `"%s-%d".format()` -> `"{}-{}".format()` | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2707/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2707/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/2707",

"html_url": "https://github.com/huggingface/transformers/pull/2707",

"diff_url": "https://github.com/huggingface/transformers/pull/2707.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/2707.patch",

"merged_at": 1580573458000

} |

https://api.github.com/repos/huggingface/transformers/issues/2706 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2706/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2706/comments | https://api.github.com/repos/huggingface/transformers/issues/2706/events | https://github.com/huggingface/transformers/issues/2706 | 558,555,100 | MDU6SXNzdWU1NTg1NTUxMDA= | 2,706 | Load from tf2.0 checkpoint fail | {

"login": "stevewyl",

"id": 12755003,

"node_id": "MDQ6VXNlcjEyNzU1MDAz",

"avatar_url": "https://avatars.githubusercontent.com/u/12755003?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stevewyl",

"html_url": "https://github.com/stevewyl",

"followers_url": "https://api.github.com/users/stevewyl/followers",

"following_url": "https://api.github.com/users/stevewyl/following{/other_user}",

"gists_url": "https://api.github.com/users/stevewyl/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stevewyl/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stevewyl/subscriptions",

"organizations_url": "https://api.github.com/users/stevewyl/orgs",

"repos_url": "https://api.github.com/users/stevewyl/repos",

"events_url": "https://api.github.com/users/stevewyl/events{/privacy}",

"received_events_url": "https://api.github.com/users/stevewyl/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Hi, in order to convert an official checkpoint to a checkpoint readable by `transformers`, you need to use the script `convert_bert_original_tf_checkpoint_to_pytorch`. You can then load it in a `BertModel` (PyTorch) or a `TFBertModel` (TensorFlow), by specifying the argument `from_pt=True` in your `from_pretrained` method.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,580 | 1,586 | 1,586 | NONE | null | # 🐛 Bug

## Information

Model I am using (Bert, XLNet ...): Bert

Language I am using the model on (English, Chinese ...): English

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Download tf2.0 checkpoint from https://storage.googleapis.com/cloud-tpu-checkpoints/bert/keras_bert/uncased_L-12_H-768_A-12.tar.gz

2. unpack the model tar.gz to `bert_models` folder

3. start an iPython console and type following codes:

```python

import tensorflow as tf

from transformers import TFBertModel, BertConfig

config = BertConfig.from_json_file("./bert_models/uncased_L-12_H-768_A-12/bert_config.json")

model = TFBertModel.from_pretrained("./bert_models/uncased_L-12_H-768_A-12/bert_model.ckpt.index", config=config)

```

4. I check the original code from tf2.0 and found they didn't implement model.load_weights when by_name is True. Error is following:

NotImplementedError: Weights may only be loaded based on topology into Models when loading TensorFlow-formatted weights (got by_name=True to load_weights)

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

## Environment

* OS: CentOS Linux release 7.4.1708 (Core)

* Python version: 3.7.6

* PyTorch version: 1.3.1

* `transformers` version (or branch):

* Using GPU ? Yes

* Distributed or parallel setup ? No

* Any other relevant information:

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2706/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2706/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2705 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2705/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2705/comments | https://api.github.com/repos/huggingface/transformers/issues/2705/events | https://github.com/huggingface/transformers/issues/2705 | 558,519,030 | MDU6SXNzdWU1NTg1MTkwMzA= | 2,705 | What is the input for TFBertForSequenceClassification? | {

"login": "sainimohit23",

"id": 26195811,

"node_id": "MDQ6VXNlcjI2MTk1ODEx",

"avatar_url": "https://avatars.githubusercontent.com/u/26195811?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sainimohit23",

"html_url": "https://github.com/sainimohit23",

"followers_url": "https://api.github.com/users/sainimohit23/followers",

"following_url": "https://api.github.com/users/sainimohit23/following{/other_user}",

"gists_url": "https://api.github.com/users/sainimohit23/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sainimohit23/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sainimohit23/subscriptions",

"organizations_url": "https://api.github.com/users/sainimohit23/orgs",

"repos_url": "https://api.github.com/users/sainimohit23/repos",

"events_url": "https://api.github.com/users/sainimohit23/events{/privacy}",

"received_events_url": "https://api.github.com/users/sainimohit23/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Have a look at an example, for instance [`run_tf_glue.py`](https://github.com/huggingface/transformers/blob/master/examples/run_tf_glue.py). To better understand all the arguments, I advise you to read the [documentation](https://huggingface.co/transformers/model_doc/bert.html#bertmodel). You'll find that token_type_ids are\r\n\r\n> Segment token indices to indicate first and second portions of the inputs. Indices are selected in [0, 1]: 0 corresponds to a sentence A token, 1 corresponds to a sentence B token\r\n\r\nSo they're only practically useful if your input contains two sequences (for instance if you wish to model some relationship between sentence A and sentence B). In your case, it's probably not needed.",

"Hi @BramVanroy as you said I tried to run the code from run_tf_glue.py. Yesterday it was working fine on google colab. But today when I tried to rerun the script. I am getting following error:\r\n\r\n```\r\nImportError Traceback (most recent call last)\r\n<ipython-input-7-63fb7d040ab0> in <module>()\r\n 4 import tensorflow_datasets\r\n 5 \r\n----> 6 from transformers import (\r\n 7 BertConfig,\r\n 8 BertForSequenceClassification,\r\n\r\nImportError: cannot import name 'TFBertForSequenceClassification'\r\n```",

"Looks like there was some issue in colab session. So, closing this.",

"@sainimohit23 Getting similar issue in local Jupyter notebook. \r\n\"AttributeError: module 'transformers' has no attribute 'TFBertForSequenceClassification' \" \r\nLooks like there is some changes in transformers package.\r\n\r\nlet me know if this is fixed..\r\n",

"> @sainimohit23 Getting similar issue in local Jupyter notebook.\r\n> \"AttributeError: module 'transformers' has no attribute 'TFBertForSequenceClassification' \"\r\n> Looks like there is some changes in transformers package.\r\n> \r\n> let me know if this is fixed..\r\n\r\nAre you using the latest version of transformers? Try updating, because it is right there in the source code:\r\n\r\nhttps://github.com/huggingface/transformers/blob/5c3d441ee1dc9150ccaf1075eb0168bbfe28c7f9/src/transformers/modeling_tf_bert.py#L875",

"@BramVanroy Using latest version of transformers. Double checked. \r\nLet me know if there is any other issue.\r\n\r\nPlease find below details useful. \r\n```\r\n`AttributeError Traceback (most recent call last)\r\n<ipython-input-6-5c0ab52ed729> in <module>\r\n----> 1 model = BertForSequenceClassification.from_pretrained('sentiment_model/',from_tf=True) # re-load\r\n 2 tokenizer = BertTokenizer.from_pretrained('sentiment_model/')\r\n\r\n~\\AppData\\Roaming\\Python\\Python37\\site-packages\\transformers\\modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)\r\n 485 from transformers import load_tf2_checkpoint_in_pytorch_model\r\n 486 \r\n--> 487 model = load_tf2_checkpoint_in_pytorch_model(model, resolved_archive_file, allow_missing_keys=True)\r\n 488 except ImportError:\r\n 489 logger.error(\r\n\r\n~\\AppData\\Roaming\\Python\\Python37\\site-packages\\transformers\\modeling_tf_pytorch_utils.py in load_tf2_checkpoint_in_pytorch_model(pt_model, tf_checkpoint_path, tf_inputs, allow_missing_keys)\r\n 223 # Instantiate and load the associated TF 2.0 model\r\n 224 tf_model_class_name = \"TF\" + pt_model.__class__.__name__ # Add \"TF\" at the beggining\r\n--> 225 tf_model_class = getattr(transformers, tf_model_class_name)\r\n 226 tf_model = tf_model_class(pt_model.config)\r\n 227 \r\n\r\nAttributeError: module 'transformers' has no attribute 'TFBertForSequenceClassification'`\r\n```",

"I went through the source code, and this should work _unless_ Tensorflow is not installed in your environment. In such a case, the Tensorflow models are not imported in __init__. Make sure that Tensorflow is installed.\r\n\r\nhttps://github.com/huggingface/transformers/blob/0dbddba6d2c5b2c6fc08866358c1994a00d6a1ff/src/transformers/__init__.py#L287-L313",

"Hi @BramVanroy, Thanks for the help there was a miss match of tensorflow version, but it looks like the issue is something different. \r\n`RuntimeError: storage has wrong size: expected -273778883 got 768`\r\n\r\nEither fine tuned model is corrupted or other issue. .\r\n\r\nThanks",

"Can you post the full trace?",

"@BramVanroy Please find the below details useful. \r\nLet me know what can be the issue. \r\n```\r\nRuntimeError Traceback (most recent call last)\r\n<ipython-input-14-d609d3be6585> in <module>\r\n 2 # model = BertForSequenceClassification.from_pretrained('sentiment_model/')\r\n 3 \r\n----> 4 model = BertForSequenceClassification.from_pretrained(\"sentiment_model/\", num_labels=2)\r\n 5 tokenizer = BertTokenizer.from_pretrained('sentiment_model/')\r\n\r\n~\\AppData\\Roaming\\Python\\Python37\\site-packages\\pytorch_pretrained_bert\\modeling.py in from_pretrained(cls, pretrained_model_name_or_path, *inputs, **kwargs)\r\n 601 if state_dict is None and not from_tf:\r\n 602 weights_path = os.path.join(serialization_dir, WEIGHTS_NAME)\r\n--> 603 state_dict = torch.load(weights_path, map_location='cpu')\r\n 604 if tempdir:\r\n 605 # Clean up temp dir\r\n\r\n~\\AppData\\Roaming\\Python\\Python37\\site-packages\\torch\\serialization.py in load(f, map_location, pickle_module, **pickle_load_args)\r\n 384 f = f.open('rb')\r\n 385 try:\r\n--> 386 return _load(f, map_location, pickle_module, **pickle_load_args)\r\n 387 finally:\r\n 388 if new_fd:\r\n\r\n~\\AppData\\Roaming\\Python\\Python37\\site-packages\\torch\\serialization.py in _load(f, map_location, pickle_module, **pickle_load_args)\r\n 578 for key in deserialized_storage_keys:\r\n 579 assert key in deserialized_objects\r\n--> 580 deserialized_objects[key]._set_from_file(f, offset, f_should_read_directly)\r\n 581 if offset is not None:\r\n 582 offset = f.tell()\r\n\r\nRuntimeError: storage has wrong size: expected -273778883 got 768\r\n```\r\n\r\n",

"@vijender412 i found this [comment](https://github.com/pytorch/pytorch/issues/12042#issuecomment-426466826) useful ",

"I don't use Tensorflow, but the documentation suggests that you should load your model like this:\r\n\r\nhttps://github.com/huggingface/transformers/blob/20fc18fbda3669c2f4a3510e0705b2acd54bff07/src/transformers/modeling_utils.py#L366-L368",

"@ArashHosseini gone through that but was not able to link my code.\r\n\r\n@BramVanroy \r\nThe fine tuned model was saved using'\r\n```\r\nmodel.save_pretrained('./sentiment_model/')\r\ntokenizer.save_pretrained('./sentiment_model/')\r\n```\r\nAnd files created were (config.json,pytorch_model.bin,special_tokens_map.json,tokenizer_config.json,vocab.txt) So no checkpoint were created wrt to tensorflow. \r\n\r\nNow as per documentation the loading should be \r\n```\r\nmodel = BertForSequenceClassification.from_pretrained(\"sentiment_model/\", num_labels=2)\r\ntokenizer = BertTokenizer.from_pretrained('sentiment_model/')\r\n```\r\nThe tokenizer is getting loaded but getting issues while loading model. \r\n\"RuntimeError: storage has wrong size: expected -273778883 got 768\"\r\n",

"Then why did you say in your original comment that you had a Tensorflow mismatch? \r\n\r\nI am not sure why this happens. Please open your own topic, and provide all necessary information from the template.",

"@BramVanroy Earlier I was getting this issue\r\n`AttributeError: module 'transformers' has no attribute 'TFBertForSequenceClassification'`\r\nwhich got resolved by changing tensorflow version to 2.0. \r\n**For current issue l will create a new issue after tracing out my code from scratch.**",

"> @ArashHosseini gone through that but was not able to link my code.\r\n> \r\n> @BramVanroy\r\n> The fine tuned model was saved using'\r\n> \r\n> ```\r\n> model.save_pretrained('./sentiment_model/')\r\n> tokenizer.save_pretrained('./sentiment_model/')\r\n> ```\r\n> \r\n> And files created were (config.json,pytorch_model.bin,special_tokens_map.json,tokenizer_config.json,vocab.txt) So no checkpoint were created wrt to tensorflow.\r\n> \r\n> Now as per documentation the loading should be\r\n> \r\n> ```\r\n> model = BertForSequenceClassification.from_pretrained(\"sentiment_model/\", num_labels=2)\r\n> tokenizer = BertTokenizer.from_pretrained('sentiment_model/')\r\n> ```\r\n> \r\n> The tokenizer is getting loaded but getting issues while loading model.\r\n> \"RuntimeError: storage has wrong size: expected -273778883 got 768\"\r\n\r\nHi, I meet the same issue(can't load state_dict after saving it), Have you solve it?"

] | 1,580 | 1,584 | 1,580 | NONE | null | # ❓ Questions & Help

What is the input for TFBertForSequenceClassification?

## Details

I have a simple multiclass text data on which I want to train the BERT model.

From docs I have found the input format of data:

```a list of varying length with one or several input Tensors IN THE ORDER given in the docstring: model([input_ids, attention_mask]) or model([input_ids, attention_mask, token_type_ids])```

In my understanding:

`input_ids`- tokenized sentences, generated from BERT tokenizer.

`attention_mask`- As name suggests it is attention mask. I should use it to mask out padding tokens. Please correct me if I am wrong.

Now what is `token_type_ids'? is it necessary?

When I tried to print output_shape of the model? I got:

`AttributeError: The layer has never been called and thus has no defined output shape.`

So, let's say my dataset has 5 classes. Does this model expect one-hot encoded vector of shape [BATCH_SIZE, CLASSES] for .fit() method?

Also if I don't use .from_pretrained() method, will it load an untrained model? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2705/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2705/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2704 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2704/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2704/comments | https://api.github.com/repos/huggingface/transformers/issues/2704/events | https://github.com/huggingface/transformers/issues/2704 | 558,474,768 | MDU6SXNzdWU1NTg0NzQ3Njg= | 2,704 | How to make transformers examples use GPU? | {

"login": "abhijith-athreya",

"id": 387274,

"node_id": "MDQ6VXNlcjM4NzI3NA==",

"avatar_url": "https://avatars.githubusercontent.com/u/387274?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/abhijith-athreya",

"html_url": "https://github.com/abhijith-athreya",

"followers_url": "https://api.github.com/users/abhijith-athreya/followers",

"following_url": "https://api.github.com/users/abhijith-athreya/following{/other_user}",

"gists_url": "https://api.github.com/users/abhijith-athreya/gists{/gist_id}",

"starred_url": "https://api.github.com/users/abhijith-athreya/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/abhijith-athreya/subscriptions",

"organizations_url": "https://api.github.com/users/abhijith-athreya/orgs",

"repos_url": "https://api.github.com/users/abhijith-athreya/repos",

"events_url": "https://api.github.com/users/abhijith-athreya/events{/privacy}",

"received_events_url": "https://api.github.com/users/abhijith-athreya/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"GPU should be used by default and can be disabled with the `no_cuda` flag. If your GPU is not being used, that means that PyTorch can't access your CUDA installation. \r\n\r\nWhat is the output of running this in your Python interpreter?\r\n\r\n```python\r\nimport torch\r\ntorch.cuda.is_available()\r\n```",

"Thanks for the response. The output is True. Looks like it is using the GPU. But the utilization never crosses 10%. ",

"And how is your CPU usage? Which GPU are you using? Which settings are you using? (Batch size, seq len...)",

"CPU Usage also is less than 10%. I'm using a Ryzen 3700X with Nvidia 2080 ti. I did not change any default settings of the batch size (4) and sequence length. ",

"@abhijith-athreya What was the issue? I am facing the same issue. I am encoding the sentences using bert model but it's quite slow and not using GPU too.\r\n",

"You need to post some sample code @monk1337, also https://discuss.huggingface.co will be more suited",

"@julien-c \r\n\r\nIt's working now.\r\n\r\nfrom transformers import BertTokenizer, BertModel, BertForMaskedLM\r\ndef assign_GPU(Tokenizer_output):\r\n \r\n tokens_tensor = Tokenizer_output['input_ids'].to('cuda:0')\r\n token_type_ids = Tokenizer_output['token_type_ids'].to('cuda:0')\r\n attention_mask = Tokenizer_output['attention_mask'].to('cuda:0')\r\n \r\n output = {'input_ids' : tokens_tensor, \r\n 'token_type_ids' : token_type_ids, \r\n 'attention_mask' : attention_mask}\r\n \r\n return output\r\n\r\n\r\n\r\n```\r\nsentence = 'Hello World!'\r\ntokenizer = BertTokenizer.from_pretrained('bert-large-uncased')\r\nmodel = BertModel.from_pretrained('bert-large-uncased')\r\n\r\ninputs = assign_GPU(tokenizer(sentence, return_tensors=\"pt\"))\r\nmodel = model.to('cuda:0')\r\noutputs = model(**inputs)\r\noutputs\r\n```",

"> @julien-c\r\n> \r\n> It's working now.\r\n> \r\n> from transformers import BertTokenizer, BertModel, BertForMaskedLM\r\n> def assign_GPU(Tokenizer_output):\r\n> \r\n> ```\r\n> tokens_tensor = Tokenizer_output['input_ids'].to('cuda:0')\r\n> token_type_ids = Tokenizer_output['token_type_ids'].to('cuda:0')\r\n> attention_mask = Tokenizer_output['attention_mask'].to('cuda:0')\r\n> \r\n> output = {'input_ids' : tokens_tensor, \r\n> 'token_type_ids' : token_type_ids, \r\n> 'attention_mask' : attention_mask}\r\n> \r\n> return output\r\n> ```\r\n> \r\n> ```\r\n> sentence = 'Hello World!'\r\n> tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')\r\n> model = BertModel.from_pretrained('bert-large-uncased')\r\n> \r\n> inputs = assign_GPU(tokenizer(sentence, return_tensors=\"pt\"))\r\n> model = model.to('cuda:0')\r\n> outputs = model(**inputs)\r\n> outputs\r\n> ```\r\n\r\nHey, I just want to complement here. The current version of transformers does support the call to `to()` for the `BatchEncoding` returned by the tokenizer, making it much more cleaner:\r\n\r\n```python\r\n> device = \"cuda:0\" if torch.cuda.is_available() else \"cpu\"\r\n> sentence = 'Hello World!'\r\n> tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')\r\n> model = BertModel.from_pretrained('bert-large-uncased')\r\n\r\n> inputs = tokenizer(sentence, return_tensors=\"pt\").to(device)\r\n> model = model.to(device)\r\n> outputs = model(**inputs)\r\n```",

"wanted to add that in the new version of transformers, the Pipeline instance can also be run on GPU using as in the following example:\r\n```python\r\npipeline = pipeline(TASK, \r\n model=MODEL_PATH,\r\n device=1, # to utilize GPU cuda:1\r\n device=0, # to utilize GPU cuda:0\r\n device=-1) # default value which utilize CPU\r\n```",

"> wanted to add that in the new version of transformers, the Pipeline instance can also be run on GPU using as in the following example:\r\n> \r\n> ```python\r\n> pipeline = pipeline(TASK, \r\n> model=MODEL_PATH,\r\n> device=1, # to utilize GPU cuda:1\r\n> device=0, # to utilize GPU cuda:0\r\n> device=-1) # default value which utilize CPU\r\n> ```\r\n\r\nAnd about work with multiple GPUs?"

] | 1,580 | 1,659 | 1,580 | NONE | null | # ❓ Questions & Help

I'm training the run_lm_finetuning.py with wiki-raw dataset. The training seems to work fine, but it is not using my GPU. Is there any flag which I should set to enable GPU usage?

## Details

I'm training the run_lm_finetuning.py with wiki-raw dataset. The training seems to work fine, but it is not using my GPU. Is there any flag which I should set to enable GPU usage?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2704/reactions",

"total_count": 8,

"+1": 8,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2704/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2703 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2703/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2703/comments | https://api.github.com/repos/huggingface/transformers/issues/2703/events | https://github.com/huggingface/transformers/issues/2703 | 558,468,127 | MDU6SXNzdWU1NTg0NjgxMjc= | 2,703 | run_lm_finetuning.py on bert-base-uncased with wikitext-2-raw does not work | {

"login": "abhijith-athreya",

"id": 387274,

"node_id": "MDQ6VXNlcjM4NzI3NA==",

"avatar_url": "https://avatars.githubusercontent.com/u/387274?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/abhijith-athreya",

"html_url": "https://github.com/abhijith-athreya",

"followers_url": "https://api.github.com/users/abhijith-athreya/followers",

"following_url": "https://api.github.com/users/abhijith-athreya/following{/other_user}",

"gists_url": "https://api.github.com/users/abhijith-athreya/gists{/gist_id}",

"starred_url": "https://api.github.com/users/abhijith-athreya/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/abhijith-athreya/subscriptions",

"organizations_url": "https://api.github.com/users/abhijith-athreya/orgs",

"repos_url": "https://api.github.com/users/abhijith-athreya/repos",

"events_url": "https://api.github.com/users/abhijith-athreya/events{/privacy}",

"received_events_url": "https://api.github.com/users/abhijith-athreya/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi, did you manage to fix your issue?",

"Hi,\r\nYes, I took the latest build, and it worked without any changes. "

] | 1,580 | 1,580 | 1,580 | NONE | null | # 🐛 Bug

## Running run_lm_finetuning.py on bert-base-uncased with wikitext-2-raw does not work.

Model I am using (Bert, XLNet ...): Bert - bert-base-uncased

Language I am using the model on (English, Chinese ...): English

The problem arises when using:

* [*] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [*] an official GLUE/SQUaD task: train language model on wikitext

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Installed Transformers from the source (git pull and then pip install). Downloaded Wikitext-2 raw dataset.

2. Ran this command ""python run_lm_finetuning.py --output_dir=output --model_type=bert --model_name_or_path=bert-base-uncased --do_train --train_data_file=E:\\Code\\data\\wikitext-2-raw\\wiki.train.raw --do_eval --eval_data_file=E:\\Code\\data\\wikitext-2-raw\\wiki.test.raw --mlm""

3. This fails in train() method. I haven't touched the code. Stacktraces below:

python run_lm_finetuning.py --output_dir=output --model_type=bert --model_name_or_path=bert-base-uncased --do_train --train_data_file=E:\\Code\\data\\wikitext-2-raw\\wiki.train.raw --do_eval --eval_data_file=E:\\Code\\data\\wikitext-2-raw\\wiki.test.raw --mlm

2020-01-31 21:51:38.831236: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudart64_101.dll

01/31/2020 21:51:40 - WARNING - __main__ - Process rank: -1, device: cuda, n_gpu: 1, distributed training: False, 16-bits training: False

01/31/2020 21:51:40 - INFO - transformers.file_utils - https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json not found in cache or force_download set to True, downloading to C:\Users\athre\AppData\Local\Temp\tmp91_kkef0

01/31/2020 21:51:40 - INFO - transformers.file_utils - copying C:\Users\athre\AppData\Local\Temp\tmp91_kkef0 to cache at C:\Users\athre\.cache\torch\transformers\4dad0251492946e18ac39290fcfe91b89d370fee250efe9521476438fe8ca185.8f56353af4a709bf5ff0fbc915d8f5b42bfff892cbb6ac98c3c45f481a03c685

01/31/2020 21:51:40 - INFO - transformers.file_utils - creating metadata file for C:\Users\athre\.cache\torch\transformers\4dad0251492946e18ac39290fcfe91b89d370fee250efe9521476438fe8ca185.8f56353af4a709bf5ff0fbc915d8f5b42bfff892cbb6ac98c3c45f481a03c685

01/31/2020 21:51:40 - INFO - transformers.file_utils - removing temp file C:\Users\athre\AppData\Local\Temp\tmp91_kkef0

01/31/2020 21:51:40 - INFO - transformers.configuration_utils - loading configuration file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json from cache at C:\Users\athre\.cache\torch\transformers\4dad0251492946e18ac39290fcfe91b89d370fee250efe9521476438fe8ca185.8f56353af4a709bf5ff0fbc915d8f5b42bfff892cbb6ac98c3c45f481a03c685

01/31/2020 21:51:40 - INFO - transformers.configuration_utils - Model config {

"architectures": [

"BertForMaskedLM"

],

"attention_probs_dropout_prob": 0.1,

"finetuning_task": null,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"id2label": {

"0": "LABEL_0",

"1": "LABEL_1"

},

"initializer_range": 0.02,

"intermediate_size": 3072,

"is_decoder": false,

"label2id": {

"LABEL_0": 0,

"LABEL_1": 1

},

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"num_attention_heads": 12,

"num_hidden_layers": 12,

"num_labels": 2,

"output_attentions": false,

"output_hidden_states": false,

"output_past": true,

"pruned_heads": {},

"torchscript": false,

"type_vocab_size": 2,

"use_bfloat16": false,

"vocab_size": 30522

}

01/31/2020 21:51:40 - INFO - transformers.file_utils - https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt not found in cache or force_download set to True, downloading to C:\Users\athre\AppData\Local\Temp\tmpx8hth4qr

01/31/2020 21:51:41 - INFO - transformers.file_utils - copying C:\Users\athre\AppData\Local\Temp\tmpx8hth4qr to cache at C:\Users\athre\.cache\torch\transformers\26bc1ad6c0ac742e9b52263248f6d0f00068293b33709fae12320c0e35ccfbbb.542ce4285a40d23a559526243235df47c5f75c197f04f37d1a0c124c32c9a084

01/31/2020 21:51:41 - INFO - transformers.file_utils - creating metadata file for C:\Users\athre\.cache\torch\transformers\26bc1ad6c0ac742e9b52263248f6d0f00068293b33709fae12320c0e35ccfbbb.542ce4285a40d23a559526243235df47c5f75c197f04f37d1a0c124c32c9a084

01/31/2020 21:51:41 - INFO - transformers.file_utils - removing temp file C:\Users\athre\AppData\Local\Temp\tmpx8hth4qr

01/31/2020 21:51:41 - INFO - transformers.tokenization_utils - loading file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt from cache at C:\Users\athre\.cache\torch\transformers\26bc1ad6c0ac742e9b52263248f6d0f00068293b33709fae12320c0e35ccfbbb.542ce4285a40d23a559526243235df47c5f75c197f04f37d1a0c124c32c9a084

01/31/2020 21:51:41 - INFO - transformers.file_utils - https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-pytorch_model.bin not found in cache or force_download set to True, downloading to C:\Users\athre\AppData\Local\Temp\tmpy8kf8hkd

01/31/2020 21:54:14 - INFO - transformers.file_utils - copying C:\Users\athre\AppData\Local\Temp\tmpy8kf8hkd to cache at C:\Users\athre\.cache\torch\transformers\aa1ef1aede4482d0dbcd4d52baad8ae300e60902e88fcb0bebdec09afd232066.36ca03ab34a1a5d5fa7bc3d03d55c4fa650fed07220e2eeebc06ce58d0e9a157

01/31/2020 21:54:14 - INFO - transformers.file_utils - creating metadata file for C:\Users\athre\.cache\torch\transformers\aa1ef1aede4482d0dbcd4d52baad8ae300e60902e88fcb0bebdec09afd232066.36ca03ab34a1a5d5fa7bc3d03d55c4fa650fed07220e2eeebc06ce58d0e9a157

01/31/2020 21:54:14 - INFO - transformers.file_utils - removing temp file C:\Users\athre\AppData\Local\Temp\tmpy8kf8hkd

01/31/2020 21:54:14 - INFO - transformers.modeling_utils - loading weights file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-pytorch_model.bin from cache at C:\Users\athre\.cache\torch\transformers\aa1ef1aede4482d0dbcd4d52baad8ae300e60902e88fcb0bebdec09afd232066.36ca03ab34a1a5d5fa7bc3d03d55c4fa650fed07220e2eeebc06ce58d0e9a157

01/31/2020 21:54:16 - INFO - transformers.modeling_utils - Weights from pretrained model not used in BertForMaskedLM: ['cls.seq_relationship.weight', 'cls.seq_relationship.bias']

01/31/2020 21:54:18 - INFO - __main__ - Training/evaluation parameters Namespace(adam_epsilon=1e-08, block_size=510, cache_dir=None, config_name=None, device=device(type='cuda'), do_eval=True, do_train=True, eval_all_checkpoints=False, eval_data_file='E:\\\\Code\\\\data\\\\wikitext-2-raw\\\\wiki.test.raw', evaluate_during_training=False, fp16=False, fp16_opt_level='O1', gradient_accumulation_steps=1, learning_rate=5e-05, line_by_line=False, local_rank=-1, logging_steps=500, max_grad_norm=1.0, max_steps=-1, mlm=True, mlm_probability=0.15, model_name_or_path='bert-base-uncased', model_type='bert', n_gpu=1, no_cuda=False, num_train_epochs=1.0, output_dir='output', overwrite_cache=False, overwrite_output_dir=False, per_gpu_eval_batch_size=4, per_gpu_train_batch_size=4, save_steps=500, save_total_limit=None, seed=42, server_ip='', server_port='', should_continue=False, tokenizer_name=None, train_data_file='E:\\\\Code\\\\data\\\\wikitext-2-raw\\\\wiki.train.raw', warmup_steps=0, weight_decay=0.0)

01/31/2020 21:54:18 - INFO - __main__ - Loading features from cached file E:\\Code\\data\\wikitext-2-raw\bert_cached_lm_510_wiki.train.raw

01/31/2020 21:54:18 - INFO - __main__ - ***** Running training *****

01/31/2020 21:54:18 - INFO - __main__ - Num examples = 4664

01/31/2020 21:54:18 - INFO - __main__ - Num Epochs = 1

01/31/2020 21:54:18 - INFO - __main__ - Instantaneous batch size per GPU = 4

01/31/2020 21:54:18 - INFO - __main__ - Total train batch size (w. parallel, distributed & accumulation) = 4

01/31/2020 21:54:18 - INFO - __main__ - Gradient Accumulation steps = 1

01/31/2020 21:54:18 - INFO - __main__ - Total optimization steps = 1166

Epoch: 0%| | 0/1 [00:00<?, ?it/s]C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [0,0,0] Assertion `t >= 0 && t < n_classes` failed. | 0/1166 [00:00<?, ?it/s]

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [1,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [2,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [3,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [4,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [5,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [6,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [7,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [8,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [9,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [10,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [11,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [12,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [13,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [14,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [15,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [16,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [17,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [18,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [19,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [20,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [21,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [22,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [24,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [25,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [26,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [27,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [28,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [29,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [30,0,0] Assertion `t >= 0 && t < n_classes` failed.

C:/w/1/s/windows/pytorch/aten/src/THCUNN/ClassNLLCriterion.cu:106: block: [0,0,0], thread: [31,0,0] Assertion `t >= 0 && t < n_classes` failed.

Traceback (most recent call last):

File "run_lm_finetuning.py", line 790, in <module>

main()

File "run_lm_finetuning.py", line 740, in main

global_step, tr_loss = train(args, train_dataset, model, tokenizer)

File "run_lm_finetuning.py", line 356, in train

loss.backward()

File "E:\Code\torch_env\lib\site-packages\torch\tensor.py", line 195, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "E:\Code\torch_env\lib\site-packages\torch\autograd\__init__.py", line 99, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA error: device-side assert triggered

Epoch: 0%| | 0/1 [00:00<?, ?it/s]

Iteration: 0%|

## Expected behavior

Training should start.

## Environment

* OS: Windows 10

* Python version: 3.7

* PyTorch version: 1.4 stable

* `transformers` version (or branch): Latest (Jan-31-2020)

* Using GPU ? Yes

* Distributed or parallel setup ? Only 1 GPU

* Any other relevant information:

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/2703/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/2703/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/2702 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/2702/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/2702/comments | https://api.github.com/repos/huggingface/transformers/issues/2702/events | https://github.com/huggingface/transformers/issues/2702 | 558,450,963 | MDU6SXNzdWU1NTg0NTA5NjM= | 2,702 | DistilBERT does not support token type ids, but the tokenizers produce them | {

"login": "dirkgr",

"id": 920638,

"node_id": "MDQ6VXNlcjkyMDYzOA==",

"avatar_url": "https://avatars.githubusercontent.com/u/920638?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dirkgr",

"html_url": "https://github.com/dirkgr",

"followers_url": "https://api.github.com/users/dirkgr/followers",

"following_url": "https://api.github.com/users/dirkgr/following{/other_user}",

"gists_url": "https://api.github.com/users/dirkgr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dirkgr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dirkgr/subscriptions",

"organizations_url": "https://api.github.com/users/dirkgr/orgs",

"repos_url": "https://api.github.com/users/dirkgr/repos",

"events_url": "https://api.github.com/users/dirkgr/events{/privacy}",