text

stringlengths 20

57.3k

| labels

class label 4

classes |

|---|---|

Title: error of processing all videos in upload folder

Body: when running

`!python inference_realesrgan_video.py --input upload -n RealESRGANv2-animevideo-xsx2 -s 4 -v -a --half --suffix outx2

`

I'm getting :

```

Traceback (most recent call last):

File "inference_realesrgan_video.py", line 199, in <module>

main()

File "inference_realesrgan_video.py", line 108, in main

if mimetypes.guess_type(args.input)[0].startswith('video'): # is a video file

AttributeError: 'NoneType' object has no attribute 'startswith'

```

I'm unable to run the inference on the videos inside the upload folder one after the other.

Hope someone can help fix this issue.

| 1medium

|

Title: Instance Segmentation

Body: Are there any plans to add support for instance segmentation in the future? From what I understand it is currently not supported, correct?

| 1medium

|

Title: Decimating a mesh with islands results in missing cells

Body: `mesh.ncells` > `mesh.cells.shape[0]` after `mesh.decimate_pro().clean()`

This causes issues as `mesh.cell_centers()` matches the number of cells returned by `mesh.ncells`. Trying to use `mesh.cell_centers()` and `mesh.cells`. So it looks like `mesh.cells` is missing some cells.

I wasn't able to reproduce this with the typical bunny model but I found out it only occurs with a mesh that has both multiple regions and a face that is only connected to the rest of the mesh by one vertex. The issue is resolved after running `mesh = mesh.extract_largest_region()` and then `mesh.clean()`.

Maybe an error should raise if decimating a mesh with small islands?

| 2hard

|

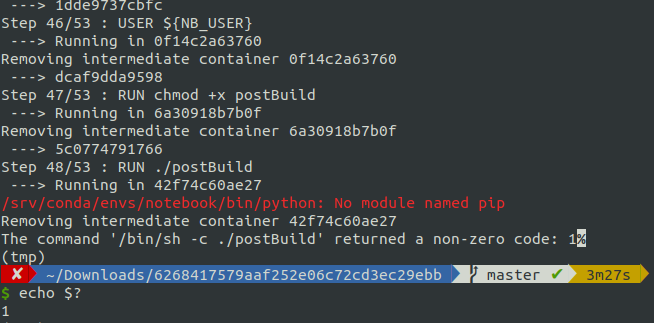

Title: Unable to run docker container (missing ipython_genutils package)

Body: @pplonski With a clone of the repo, I am unable to run a docker container containing the `mercury_demo` .

The message **Problem while loading notebooks. Please try again later or contact Mercury administrator.** displays

Steps to reproduce:

Clone `https://github.com/MarvinKweyu/mercury-docker-demo` and run the container

| 1medium

|

Title: Error: Warning! Status check on the deployed lambda failed. A GET request to '/' yielded a 502 response code.

Body: <!--- Provide a general summary of the issue in the Title above -->

## Context

When im trying to deploy using _zappa update dev_ im getting this error:

Error: Warning! Status check on the deployed lambda failed. A GET request to '/' yielded a 502 response code.

## Expected Behavior

Could not get a valid link such as https://**********.execute-api.us-west-2.amazonaws.com/dev such as this

## Actual Behavior

`{'message': 'An uncaught exception happened while servicing this request. You can investigate this with the `zappa tail` command.', 'traceback': ['Traceback (most recent call last):', ' File /var/task/handler.py, line 540, in handler with Response.from_app(self.wsgi_app, environ) as response:', ' File /var/task/werkzeug/wrappers/base_response.py, line 287, in from_app return cls(*_run_wsgi_app(app, environ, buffered))', ' File /var/task/werkzeug/wrappers/base_response.py, line 26, in _run_wsgi_app return _run_wsgi_app(*args)', ' File /var/task/werkzeug/test.py, line 1119, in run_wsgi_app app_rv = app(environ, start_response)', TypeError: 'NoneType' object is not callable]}`

when im trying to access any endpoint from my application

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa 0.54.1

* Python version 3.7

* Zappa settings

{

"dev": {

"app_function": "app.app",

"aws_region": "us-west-2",

"profile_name": "default",

"project_name": "***************",

"runtime": "python3.7",

"s3_bucket": "zappa-*********"

}

}

| 1medium

|

Title: Remove shadow

Body: Please, how can I remove the shadow?

| 0easy

|

Title: Allow early commiting transaction from inside the context manager before raising an exception

Body: ## Problem

Use case:

```python

async def get_transaction():

async with db.tx() as tx:

yield tx

@router.post("/")

async def endpoint(tx: Annotated[Prisma, Depends(get_transaction)]):

await tx.entity.delete_many()

if not_good:

raise HTTPException()

return "ok"

```

I require the removal of the `entity` to be committed, even though the function was interrupted with an exception.

## Suggested solution

Add a `commit` method to the `Prisma` class:

```python

class Prisma:

...

async def commit(self):

if self._tx_id:

await self._engine.commit_transaction(self._tx_id)

```

Similar function could be added for rollback.

Usage example:

```python

@router.post("/")

async def endpoint(tx: Annotated[Prisma, Depends(get_transaction)]):

await tx.entity.delete_many()

if not_good:

await tx.commit()

raise HTTPException()

return "ok"

```

## Alternatives

Instead of calling `commit_transaction` it may be possible to set an internal flag that will be consulted on the context exit.

## Additional context

Currently I am using this function to do what I want:

```python

async def early_commit(tx: prisma.Prisma):

if tx._tx_id: # pyright: ignore[reportPrivateUsage]

await tx._engine.commit_transaction( # pyright: ignore[reportPrivateUsage]

tx._tx_id # pyright: ignore[reportPrivateUsage]

)

```

| 1medium

|

Title: Multithreading race condition when lazy loading NUMPY_TYPES

Body: The following code crashes randomly with "Illegal instruction" (tested with orjson 3.8.7 and 3.8.8):

```

import orjson

import multiprocessing.pool

class X:

pass

def mydump(i):

orjson.dumps({'abc': X()}, option=orjson.OPT_SERIALIZE_NUMPY, default=lambda x: None)

# mydump(0)

with multiprocessing.pool.ThreadPool(processes=16) as pool:

pool.map(mydump, (i for i in range(0, 16)))

```

Commenting out the mydump(0) call circumvents the issue (with CPython 3.8.13). When building without --strip and with RUST_BACKTRACE=1, the following call stack can be seen:

```

thread '<unnamed>' panicked at 'Lazy instance has previously been poisoned', /usr/local/cargo/registry/src/github.com-1ecc6299db9ec823/once_cell-1.17.1/src/lib.rs:749:25

stack backtrace:

0: rust_begin_unwind

at /rustc/2c8cc343237b8f7d5a3c3703e3a87f2eb2c54a74/library/std/src/panicking.rs:575:5

1: core::panicking::panic_fmt

at /rustc/2c8cc343237b8f7d5a3c3703e3a87f2eb2c54a74/library/core/src/panicking.rs:64:14

2: orjson::serialize::numpy::is_numpy_scalar

3: orjson::serialize::serializer::pyobject_to_obtype_unlikely

4: <orjson::serialize::serializer::PyObjectSerializer as serde::ser::Serialize>::serialize

5: <orjson::serialize::dict::Dict as serde::ser::Serialize>::serialize

6: <orjson::serialize::serializer::PyObjectSerializer as serde::ser::Serialize>::serialize

7: dumps

8: cfunction_vectorcall_FASTCALL_KEYWORDS

at /Python-3.8.13/build_release/../Objects/methodobject.c:441:24

...

```

This happens no matter whether numpy is installed or not.

| 2hard

|

Title: Labelling while plotting time-series data over a network

Body: Hi @marcomusy ,

This is related to the question that was posted [here](https://github.com/marcomusy/vedo/issues/183).

When I run the code below,

```

import networkx as nx

from vedo import *

G = nx.gnm_random_graph(n=10, m=15, seed=1)

nxpos = nx.spring_layout(G)

nxpts = [nxpos[pt] for pt in sorted(nxpos)]

nx_lines = [ (nxpts[i], nxpts[j]) for i, j in G.edges() ]

nx_pts = Points(nxpts, r=12)

nx_edg = Lines(nx_lines).lw(2)

# node values

values = [[1, .80, .10, .79, .70, .60, .75, .78, .65, .90],

[3, .80, .10, .79, .70, .60, .75, .78, .65, .10],

[1, .30, .10, .79, .70, .60, .75, .78, .65, .90]]

time = [0.0, 0.1, 0.2] # in seconds

for val,t in zip(values, time):

nx_pts.cmap('YlGn', val, vmin=0.1, vmax=3)

if t==0:

nx_pts.addScalarBar()

# make a plot title

x0, x1 = nx_pts.xbounds()

y0, y1 = nx_pts.ybounds()

t = Text('My μ-Graph at time='+str(t)+' seconds',

font='BPmonoItalics', justify='center', s=.07, c='lb')

t.pos((x0+x1)/2, y1*1.4)

show(nx_pts, nx_edg, nx_pts.labels('id',c='w'), t,

interactive=True, bg='black')

```

The `text` overlaps

I see this issue in the current version.

Could you please suggest how to update the `text` that corresponds to each time instant and remove the `text` of the previous time step?

| 1medium

|

Title: Enabling data ingestion pipelines

Body: For now, the ingestion of new data is managed in `backend/worker/quivr_worker/process/process_file.py` or in `backend/core/quivr_core/brain/brain.py` using the `get_processor_class` in `backend/core/quivr_core/processor/registry.py`.

This approach prevents the construction and use of more complex ingestion pipelines, for instance based on LangGraph.

We would need to restructure the code so that a Data Ingestion pipeline can be build and used by `backend/core/quivr_core/brain/brain.py` or by `backend/api/quivr_api/modules/upload/controller/upload_routes.py`

| 2hard

|

Title: Add the ability to specify custom feature column names and save/recreate them during serialization

Body: - As a user, I wish I could use Featuretools to specify custom column names for the feature columns that are generated when a feature matrix is calculated. Column names are automatically generated based on a variety of factors including the primitive name, the base features and any parameters passed to the primitive, but it would be beneficial in some circumstances to allow users to easily override these names with a `Feature.set_feature_names` method to directly set the `Feature._names` attribute, rather than having the names generated.

This setter should include a simple check to confirm that the number of feature names provided matches the number of output columns for the feature. Optionally, the names could be serialized only in situations where the user has set custom names.

#### Code Example

```python

custom_feature_names = ["feat_col1", "feat_col2"]

my_feature.set_feature_names(custom_feature_names)

assert my_feature.get_feature_names == custom_feature_names

ft.save_features([my_feature], "features.json")

deserialized_features = ft.load_features("features.json")

assert deserialized_features[0].get_feature_names == custom_feature_names

```

| 1medium

|

Title: Sub folders are not indexed in Nextcloud watch folder

Body:

## 📝 Description of issue:

My nextcloud watch folder contains many sub folders (organized by date, occasion, etc) and LibrePhotos are only importing a few of those folders. So if `/nextcloud` containers `/nextcloud/photoset1` `nextcloud/photoset2` `/nextcloud/photoset3` it is only importing the photos in photoset 1 and 3

## 🔁 How can we reproduce it:

1. connect nextcloud to librephotos

2. choose a scan directory that includes subfolders

3. click scan photos (nextcloud)

4. an incomplete number of folders/photos are infexed

## Please provide additional information:

- 💻 Operating system: Ubuntu 22.04 in a proxmox VM

- ⚙ Architecture (x86 or ARM): x86

- 🔢 Librephotos version:

- 📸 Librephotos installation method (Docker, Kubernetes, .deb, etc.):

* 🐋 If Docker or Kubernets, provide docker-compose image tag: `latest`

- 📁 How is you picture library mounted (Local file system (Type), NFS, SMB, etc.): SMB

- ☁ If you are virtualizing librephotos, Virtualization platform (Proxmox, Xen, HyperV, etc.): proxmox

| 1medium

|

Title: [AZURE] Job and cluster terminated due to a Runtime error after 2 days running

Body: **Versions:**

skypilot==0.7.0

skypilot-nightly==1.0.0.dev20250107

**Description:**

Job and cluster was terminated by Skypilot without any retry due to this runtime error. The controller free disk space, memory and CPU resources are fine.

`(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:41:12 utils.py:95] === Checking the job status... ===

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:41:12 utils.py:101] Job status: JobStatus.RUNNING

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:41:12 utils.py:104] ==================================

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:41:43 common_utils.py:404] Caught Failed to parse status from Azure response: None.. Retrying.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:42:21 common_utils.py:404] Caught Failed to parse status from Azure response: None.. Retrying.

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] Traceback (most recent call last):

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/backends/backend_utils.py", line 1791, in _query_cluster_status_via_cloud_api

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] node_status_dict = provision_lib.query_instances(

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/utils/common_utils.py", line 386, in _record

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return f(*args, **kwargs)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/provision/__init__.py", line 52, in _wrapper

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return impl(*args, **kwargs)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/utils/common_utils.py", line 400, in method_with_retries

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return method(*args, **kwargs)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/provision/azure/instance.py", line 984, in query_instances

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] p.starmap(_fetch_and_map_status,

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/miniconda3/envs/skypilot-runtime/lib/python3.10/multiprocessing/pool.py", line 375, in starmap

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return self._map_async(func, iterable, starmapstar, chunksize).get()

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/miniconda3/envs/skypilot-runtime/lib/python3.10/multiprocessing/pool.py", line 774, in get

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] raise self._value

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/miniconda3/envs/skypilot-runtime/lib/python3.10/multiprocessing/pool.py", line 125, in worker

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] result = (True, func(*args, **kwds))

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/miniconda3/envs/skypilot-runtime/lib/python3.10/multiprocessing/pool.py", line 51, in starmapstar

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return list(itertools.starmap(args[0], args[1]))

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/provision/azure/instance.py", line 976, in _fetch_and_map_status

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] status = _get_instance_status(compute_client, node, resource_group)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/provision/azure/instance.py", line 740, in _get_instance_status

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return AzureInstanceStatus.from_raw_states(provisioning_state, None)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/provision/azure/instance.py", line 128, in from_raw_states

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] raise exceptions.ClusterStatusFetchingError(

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] sky.exceptions.ClusterStatusFetchingError: Failed to parse status from Azure response: None.

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394]

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] During handling of the above exception, another exception occurred:

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394]

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] Traceback (most recent call last):

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/jobs/controller.py", line 369, in run

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] succeeded = self._run_one_task(task_id, task)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/jobs/controller.py", line 273, in _run_one_task

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] handle) = backend_utils.refresh_cluster_status_handle(

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/utils/common_utils.py", line 386, in _record

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return f(*args, **kwargs)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/backends/backend_utils.py", line 2328, in refresh_cluster_status_handle

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] record = refresh_cluster_record(

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/backends/backend_utils.py", line 2290, in refresh_cluster_record

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] return _update_cluster_status_no_lock(cluster_name)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/backends/backend_utils.py", line 1959, in _update_cluster_status_no_lock

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] node_statuses = _query_cluster_status_via_cloud_api(handle)

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] File "/home/azureuser/skypilot-runtime/lib/python3.10/site-packages/sky/backends/backend_utils.py", line 1799, in _query_cluster_status_via_cloud_api

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] raise exceptions.ClusterStatusFetchingError(

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394] sky.exceptions.ClusterStatusFetchingError: Failed to query Azure cluster 'noleak-yolov5mblob-150-6l-73' status: [sky.exceptions.ClusterStatusFetchingError] Failed to parse status from Azure response: None.

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:394]

(noleak_yolov5mblob_150102025, pid=1267637) E 01-18 00:42:54 controller.py:397] Unexpected error occurred: [sky.exceptions.ClusterStatusFetchingError] Failed to query Azure cluster 'noleak-yolov5mblob-150-6l-73' status: [sky.exceptions.ClusterStatusFetchingError] Failed to parse status from Azure response: None.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:42:54 state.py:480] Unexpected error occurred: [sky.exceptions.ClusterStatusFetchingError] Failed to query Azure cluster 'noleak-yolov5mblob-150-6l-73' status: [sky.exceptions.ClusterStatusFetchingError] Failed to parse status from Azure response: None.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:42:56 controller.py:523] Killing controller process 1267707.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:42:56 controller.py:531] Controller process 1267707 killed.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:42:56 controller.py:533] Cleaning up any cluster for job 73.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:43:01 storage.py:645] Verifying bucket for storage test-bucket

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:43:01 storage.py:997] Storage type StoreType.AZURE already exists under storage account 'sky63566309a1c8c949'.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) W 01-18 00:43:06 task.py:153] Docker login configs SKYPILOT_DOCKER_PASSWORD, SKYPILOT_DOCKER_SERVER, SKYPILOT_DOCKER_USERNAME are provided, but no docker image is specified in `image_id`. The login configs will be ignored.

(noleak_yolov5mblob_150102025, pid=1267637) I 01-18 00:43:19 controller.py:542] Cluster of managed job 73 has been cleaned up.

`

| 2hard

|

Title: Allow setting of alias along with version, for use with rollback

Body: ## Context

AWS lambdas allow you to set an `alias` along with `versions`, per the [documentation](https://docs.aws.amazon.com/lambda/latest/dg/aliases-intro.html). Although this may not be useful within the zappa_settings, having a switch like `--alias` during zappa deploy could allow a user to set this field, and reference said alias during a rollback. This could also allow for other useful features, like setting a `default` rollback, if a function fails, but for now, just being able to create the references would be useful.

## Use case

For our projects, I have been using AWS tags to create a tag for the function, setting it to the most current git commit hash, so we can compare the latest commit to the currently deployed commit. It allows us to reference it so that we can directly deploy any previous commit, without being tied to 'how many versions before'. Ideally, setting the aliases could be a better way of handling this use case.

## Optional use case

Regarding this use case, (this would be terribly specific), it could be useful to have aliases set by default to git commit hashes, so they could be referenced, and allow a different type of hash or naming mechanism in zappa_settings. Thus, we could rollback to specific commits by referencing aliases, while the 'versions back' ability would still remain.

| 1medium

|

Title: Colorbar is not showing last tick

Body: Hi,

I have added a horizontal colorbar to a heatmap figure and can't get the last tick to show. Here are some of things I tried and thought are the most logical:

```

colorbar= dict(

orientation='h',

y=1.01,

tickformat=".0%",

tickmode='array',

tickvals=[0, 0.25, 0.5, 0.75, 1],

showticksuffix='last',

),

```

and

```

colorbar= dict(

orientation='h',

y=1.01,

tickformat=".0%",

tickmode='linear',

tick0=0,

dtick=0.25,

nticks=5,

ticklabeloverflow="allow",

),

```

but both yield the same result:

I have also tried moving the bar around the x axis and played with labels and length, but it always seems missing.

Is this a bug?

Plotly: `5.24.1` and Python: `3.12.7`

| 1medium

|

Title: Demonstrate how to use the trained model with new data in examples

Body: This is more of a feature request around examples.

It'd be very useful to extend the examples to demonstrate how one might use the trained models on new data. This is already done for the generative models such as the NLP City Name Generator but when it comes to the classifiers the examples are currently only concerned with creating networks and training. They never show how the model can be used on new data.

For example, the `lstm.py` currently finishes with the line where `mode.fit` is called. What I'm suggesting is to extend the example code to include a case where the model is used on new data.

````python

.....

# Training

model = tflearn.DNN(net, tensorboard_verbose=0)

model.fit(trainX, trainY, validation_set=(testX, testY), show_metric=True,

batch_size=32)

# Use

new_sentence = 'this is a new sentence to be analysed using the trained model`

# code to prepare the new string ....

predictions = model.predict(new_sentence)

print(predictions)

````

Same goes for the computer vision examples.

This can be particularly useful for people (like myself) who are new to machine learning.

| 1medium

|

Title: Punycode support

Body: I wanted to use https://twitter.com/rfreebern/status/1214560971185778693 with httpie, alas,

```

» http 👻:6677

http: error: InvalidURL: Failed to parse: http://👻:6677

```

Should resolve to `http://xn--9q8h:6677`

| 1medium

|

Title: How to extract multiple faces in align_dataset_mtcnn.py

Body: I execute:

python src/align/align_dataset_mtcnn.py input output --image_size 160 --margin 32 --random_order

And there are multiple faces in one of those images,but the result only shows one face in each image,how can i modify this code?

Please give me some tips~~~

| 1medium

|

Title: Unhandled Exception (534dde169)

Body: Autosploit version: `3.0`

OS information: `Linux-4.15.0-kali2-amd64-x86_64-with-Kali-kali-rolling-kali-rolling`

Running context: `autosploit.py -a -q ****** -C WORKSPACE LHOST 192.168.19.128 -e`

Error meesage: `'access_token'`

Error traceback:

```

Traceback (most recent call):

File "/root/AutoSploit/autosploit/main.py", line 110, in main

AutoSploitParser().single_run_args(opts, loaded_tokens, loaded_exploits)

File "/root/AutoSploit/lib/cmdline/cmd.py", line 207, in single_run_args

save_mode=search_save_mode

File "/root/AutoSploit/api_calls/zoomeye.py", line 88, in search

raise AutoSploitAPIConnectionError(str(e))

errors: 'access_token'

```

Metasploit launched: `False`

| 1medium

|

Title: Решение вопроса 5.11 не стабильно

Body: Даже при выставленных random_state параметрах, best_score лучшей модели отличается от вариантов в ответах.

Подтверждено запуском несколькими участниками.

Возможно влияют конкретные версии пакетов на расчеты.

Могу приложить ipynb, на котором воспроизводится.

| 1medium

|

Title: Duplicate sentences and missing sentences in large-v3

Body: Duplicate sentences and missing sentences in large-v3

| 1medium

|

Title: deprecated `def embed_kernel` whcih should use directly ipykernel

Body:

| 1medium

|

Title: Table triggers render on mouse move

Body: Since the tooltip feature addition, the table is re-rendering on each mouse move. While it's mostly cached an unexpensive vs. an actual full re-render, this still takes ~15ms and happens extremely often.

- there's no need to re-render the table when moving inside a cell, a normal debounce for activating the tooltip will do

- there's no need to do this if the table doesn't have tooltips (or better yet, if the cells involved do not have tooltips)

| 1medium

|

Title: Retrieve object primary keys with Relay

Body: Hello,

Using Relay, the `id` field is not the primary key of the ingredients objects in the database. Is there a way to get it back ?

```

query {

allIngredients {

edges {

node {

id,

name

}

}

}

}

```

Thanks,

| 1medium

|

Title: Unexpected keyword arguments with textual entailment

Body: Following the code sample at https://demo.allennlp.org/textual-entailment, I stumbled across an issue (discussed in #4192 ), but has not been solved:

```python

pip install allennlp==1.0.0 allennlp-models==1.0.0

from allennlp.predictors.predictor import Predictor

import allennlp_models.tagging

predictor = Predictor.from_path("https://storage.googleapis.com/allennlp-public-models/snli-roberta-2020-07-29.tar.gz")

predictor.predict(

premise="Two women are wandering along the shore drinking iced tea.",

hypothesis="Two women are sitting on a blanket near some rocks talking about politics."

)

```

And it returns an error:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-33-9b5a14a8a794> in <module>()

1 predictor.predict(

2 premise="Two women are wandering along the shore drinking iced tea.",

----> 3 hypothesis="Two women are sitting on a blanket near some rocks talking about politics."

4 )

TypeError: predict() got an unexpected keyword argument 'premise'

```

It should just return the values.

| 1medium

|

Title: Type-safe "optional-nullable" fields

Body: ### Preface

it is a common practice in strawberry that when your data layer have

an optional field i.e

```py

class Person:

name: str

phone: str | None = None

```

and you want to update it you would use `UNSET` in the mutation input

in order to check whether this field provided by the client or not like so:

```py

@strawberry.input

class UpdatePersonInput:

id: strawberry.ID

name: str| None

phone: str | None = UNSET

@strawberry.mutation

def update_person(input: UpdatePersonInput) -> Person:

inst = service.get_person(input.id)

if name := input.name:

inst.name = name

if input.phone is not UNSET:

inst.phone = input.phone # ❌ not type safe

service.save(inst)

```

Note that this is not an optimization rather a business requirement.

if the user wants to nullify the phone it won't be possible other wise

OTOH you might nullify the phone unintentionally.

This approach can cause lots of bugs since you need to **remember** that you have

used `UNSET` and to handle this correspondingly.

Since strawberry claims to

> Strawberry leverages Python type hints to provide a great developer experience while creating GraphQL Libraries.

it is only natural for us to provide a typesafe way to mitigate this.

### Proposal

The `Option` type.which will require only this minimal implementation

```py

import dataclasses

@dataclasses.dataclass

class Some[T]:

value: T

def some(self) -> Some[T | None] | None:

return self

@dataclasses.dataclass

class Nothing[T]:

def some(self) -> Some[T | None] | None:

return None

Maybe[T] = Some[T] | Nothing[T]

```

and this is how you'd use it

```py

@strawberry.input

class UpdatePersonInput:

id: strawberry.ID

name: str| None

phone: Maybe[str | None]

@strawberry.mutation

def update_person(input: UpdatePersonInput) -> Person:

inst = service.get_person(input.id)

if name := input.name:

inst.name = name

if phone := input.phone.some():

inst.phone = phone.value # ✅ type safe

service.save(inst)

```

Currently if you want to know if a field was provided

### Backward compat

`UNSET` can remain as is for existing codebases.

`Option` would be handled separately.

### which `Option` library should we use?

1. **Don't use any library craft something minimal our own** as suggested above.

2. ** use something existing**

The sad truth is that there are no well-maintained libs in the ecosystem.

Never the less it is not hard to maintain something just for strawberry since the implementation

is rather straight forward and not much features are needed. we can fork either

- https://github.com/rustedpy/maybe

- https://github.com/MaT1g3R/option

and just forget about it.

3. **allow users to decide**

```py

# before anything

strawberry.register_option_type((MyOptionType, NOTHING))

```

then strawberry could use that and you could use whatever you want.

- [ ] Core functionality

- [ ] Alteration (enhancement/optimization) of existing feature(s)

- [x] New behavior

| 2hard

|

Title: SelfAttributes Fail When Called Through PostGeneration functions

Body: I have a simplified set of factory definitions below. If i try to create a WorkOrderKit object, via WorkOrderKitFactory(), it successfully generates a workorderkit with factory_boy 2.6.1 but fails with 2.9.2. I'm wondering if this is a bug or if it worked unintentionally before and this is the intended behavior. (If it is the intended behavior, do you have any suggestions on achieving this behavior now?)

The whole example django project: https://bitbucket.org/marky1991/factory-test/ .

If you would like to test it yourself, checkout the project, setup the database, run setup_db.psql, and then run factory_test/factory_test/factory_test_app/test.py.

Please let me know if anything is unclear or if you have any questions.

```python

import factory

from factory.declarations import SubFactory, SelfAttribute

from factory.fuzzy import FuzzyText, FuzzyChoice

from factory_test_app import models

class ItemFactory(factory.DjangoModelFactory):

class Meta:

model = models.Item

barcode = factory.fuzzy.FuzzyText(length=10)

class OrderHdrFactory(factory.DjangoModelFactory):

order_nbr = factory.fuzzy.FuzzyText(length=20)

class Meta:

model = models.OrderHdr

@factory.post_generation

def order_dtls(self, create, extracted, **kwargs):

if not create:

return

if extracted is not None:

for order_dtl in extracted:

order_dtl.order = self

author.save()

return

for _ in range(5):

OrderDtlFactory(order=self,

**kwargs)

class WorkOrderKitFactory(factory.DjangoModelFactory):

class Meta:

model = models.WorkOrderKit

work_order_nbr = factory.fuzzy.FuzzyText(length=20)

item = SubFactory(ItemFactory)

sales_order = SubFactory(OrderHdrFactory,

order_dtls__item=SelfAttribute("..item"))

class OrderDtlFactory(factory.DjangoModelFactory):

class Meta:

model = models.OrderDtl

order = SubFactory(OrderHdrFactory,

order_dtls=[])

item = SubFactory(ItemFactory)

```

The traceback in 2.9.1:

```

Traceback (most recent call last):

File "factory_test_app/test.py", line 8, in <module>

kit = WorkOrderKitFactory()

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 46, in __call__

return cls.create(**kwargs)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 568, in create

return cls._generate(enums.CREATE_STRATEGY, kwargs)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 505, in _generate

return step.build()

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 275, in build

step.resolve(pre)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 224, in resolve

self.attributes[field_name] = getattr(self.stub, field_name)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 366, in __getattr__

extra=declaration.context,

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/declarations.py", line 306, in evaluate

return self.generate(step, defaults)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/declarations.py", line 395, in generate

return step.recurse(subfactory, params, force_sequence=force_sequence)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 236, in recurse

return builder.build(parent_step=self, force_sequence=force_sequence)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 296, in build

context=postgen_context,

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/declarations.py", line 570, in call

instance, create, context.value, **context.extra)

File "/home/lgfdev/factory_test/factory_test/factory_test_app/factories.py", line 29, in order_dtls

**kwargs)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 46, in __call__

return cls.create(**kwargs)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 568, in create

return cls._generate(enums.CREATE_STRATEGY, kwargs)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/base.py", line 505, in _generate

return step.build()

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 275, in build

step.resolve(pre)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 224, in resolve

self.attributes[field_name] = getattr(self.stub, field_name)

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/builder.py", line 366, in __getattr__

extra=declaration.context,

File "/home/lgfdev/ve_factory_test/local/lib/python2.7/site-packages/factory/declarations.py", line 137, in evaluate

target = step.chain[self.depth - 1]

IndexError: tuple index out of range

```

| 2hard

|

Title: 高版本的torch报错未发现torch._six

Body: **System information**

* Have I writtenTraceback (most recent call last):

File "C:\Work\Pycharm\faster_rcnn\train_mobilenetv2.py", line 11, in <module>

from train_utils import GroupedBatchSampler, create_aspect_ratio_groups

File "C:\Work\Pycharm\faster_rcnn\train_utils\__init__.py", line 4, in <module>

from .coco_eval import CocoEvaluator

File "C:\Work\Pycharm\faster_rcnn\train_utils\coco_eval.py", line 7, in <module>

import torch._six

ModuleNotFoundError: No module named 'torch._six'

我的torch版本是2.0.1

| 1medium

|

Title: window上用BERT在XNLI任务上Fine-tuning报错

Body: 在LARK/BERT目录中,执行python -u run_classifier.py --task_name XNLI --use_cuda true --do_train true --do_val true --do_test true --batch_size 8192 --in_tokens true --init_pretraining_params chinese_L-12_H-768_A-12/params --data_dir ./XNLI --checkpoints ./XNLI_checkpoints --save_steps 1000 --weight_decay 0.01 --warmup_proportion 0.0 --validation_steps 25 --epoch 1 --max_seq_len 512 --bert_config_path chinese_L-12_H-768_A-12/bert_config.json --learning_rate 1e-4 --skip_steps 10 --random_seed 1,使用BERT在NLP任务上Fine-tuning,报错信息如下:

| 1medium

|

Title: Support for PySpark

Body: **Is your feature request related to a problem? Please describe.**

Hello, I see that this package supports Pandas, but does it support pyspark? I'd like to use this on large datasets and pandas is insufficient for my use case.

**Describe the outcome you'd like:**

I'd like to be able to run this on large datasets over 10k+ rows. Do you think this would be possible?

| 1medium

|

Title: 指令精调之后,除了指令里面的问题能回答,其他回答全是none

Body: ### 提交前必须检查以下项目

- [X] 请确保使用的是仓库最新代码(git pull),一些问题已被解决和修复。

- [X] 我已阅读[项目文档](https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/wiki)和[FAQ章节](https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/wiki/常见问题)并且已在Issue中对问题进行了搜索,没有找到相似问题和解决方案。

- [X] 第三方插件问题:例如[llama.cpp](https://github.com/ggerganov/llama.cpp)、[LangChain](https://github.com/hwchase17/langchain)、[text-generation-webui](https://github.com/oobabooga/text-generation-webui)等,同时建议到对应的项目中查找解决方案。

### 问题类型

模型训练与精调

### 基础模型

Chinese-Alpaca-2 (7B/13B)

### 操作系统

Linux

### 详细描述问题

对Chinese-llama-alpaca2-hf进行指令精调,后将得到的lora与Chinese-llama-alpaca2-hf进行合并,但只能回答指令精调中的内容,其他任何语句的回答均为none

```

# 请在此处粘贴运行代码(请粘贴在本代码块里)

``lr=1e-4

lora_rank=64

lora_alpha=128

lora_trainable="q_proj,v_proj,k_proj,o_proj,gate_proj,down_proj,up_proj"

modules_to_save="embed_tokens,lm_head"

lora_dropout=0.05

pretrained_model=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/chinese-alpaca-2-7b-hf

chinese_tokenizer_path=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/chinese-alpaca-2-7b-hf

dataset_dir=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/data_final/train

per_device_train_batch_size=1

per_device_eval_batch_size=1

gradient_accumulation_steps=8

max_seq_length=512

output_dir=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/base_sft/lora

peft_model=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/chinese-alpaca-2-lora-7b

validation_file=/home/sensorweb/lijialin/llm_Chinese/Chinese-LLaMA-Alpaca-2/data_final/eval/eval.json

deepspeed_config_file=ds_zero2_no_offload.json

torchrun --nnodes 1 --nproc_per_node 1 run_clm_sft_with_peft.py \

--deepspeed ${deepspeed_config_file} \

--model_name_or_path ${pretrained_model} \

--tokenizer_name_or_path ${chinese_tokenizer_path} \

--dataset_dir ${dataset_dir} \

--per_device_train_batch_size ${per_device_train_batch_size} \

--per_device_eval_batch_size ${per_device_eval_batch_size} \

--do_train \

--do_eval \

--seed $RANDOM \

--fp16 \

--num_train_epochs 1 \

--lr_scheduler_type cosine \

--learning_rate ${lr} \

--warmup_ratio 0.03 \

--weight_decay 0 \

--logging_strategy steps \

--logging_steps 10 \

--save_strategy steps \

--save_total_limit 3 \

--evaluation_strategy steps \

--eval_steps 100 \

--save_steps 200 \

--gradient_accumulation_steps ${gradient_accumulation_steps} \

--preprocessing_num_workers 8 \

--max_seq_length ${max_seq_length} \

--output_dir ${output_dir} \

--overwrite_output_dir \

--ddp_timeout 30000 \

--logging_first_step True \

--torch_dtype float16 \

--validation_file ${validation_file} \

--peft_path ${peft_model} \

--load_in_kbits 16

### 依赖情况(代码类问题务必提供)

```

# 请在此处粘贴依赖情况(请粘贴在本代码块里)

```

### 运行日志或截图

```

# 请在此处粘贴运行日志(请粘贴在本代码块里)

```

history: [[ 'nihao', None]]Input length: 38 history: [['nihao', 'None' ],['*SBAS#InSAR可观测什么事件',"形变速率'],['事件',"滑坡形变特征']

| 1medium

|

Title: How do I subscribe to block_action using bolt?

Body: How do I subscribe to `block_action` using bolt?

I've found the decorator in the source code, but there are no comprehensive examples and the docs omit it. The decorator takes constrains argument, what do I pass there? I tried "button" and the value that I use for my action blocks, but nothing changes. I am just frustrated at this point.

What I am trying to do:

I am trying to open a modal when user clicks on a button in an ephemeral message.

| 1medium

|

Title: [BUG]ValueError: Model Dlinear is not supported yet.

Body: **Bug Report Checklist**

<!-- Please ensure at least one of the following to help the developers troubleshoot the problem: -->

- [ ] I provided code that demonstrates a minimal reproducible example. <!-- Ideal, especially via source install -->

- [ ] I confirmed bug exists on the latest mainline of AutoGluon via source install. <!-- Preferred -->

- [ ] I confirmed bug exists on the latest stable version of AutoGluon. <!-- Unnecessary if prior items are checked -->

**Describe the bug**

ValueError: Model Dlinear is not supported yet.

**Expected behavior**

<!-- A clear and concise description of what you expected to happen. -->

**To Reproduce**

<!-- A minimal script to reproduce the issue. Links to Colab notebooks or similar tools are encouraged.

```

predictor = TimeSeriesPredictor(

quantile_levels=None,

prediction_length=prediction_length,

eval_metric="RMSE",

freq="15T",

path=f"{station}-{prediction_length}_ahead-15min/" + pd.Timestamp.now().strftime("%Y_%m_%d_%H_%M_%S"),

known_covariates_names=known_covariates_name,

target="power",

)

predictor.fit(

train_data,

# presets="best_quality",

hyperparameters={

"DlinearModel": {}

},

num_val_windows=3,

refit_every_n_windows=1,

refit_full=True,

)

```

**Screenshots / Logs**

<!-- If applicable, add screenshots or logs to help explain your problem. -->

**Installed Versions**

<!-- Please run the following code snippet: -->

gluonts 0.14.4

autogluon 1.0.0

autogluon.common 1.0.0

autogluon.core 1.0.0

autogluon.features 1.0.0

autogluon.multimodal 1.0.0

autogluon.tabular 1.0.0

autogluon.timeseries 1.0.0

Python 3.10.14

</details>

| 1medium

|

Title: Building Horovod 0.23.0 w HOROVOD_GPU=CUDA on a system with ROCM also installed-- Build tries to use ROCM too

Body: **Environment:**

1. Framework: TensorFlow, PyTorch

2. Framework version: 2.7.0, 1.9.1

3. Horovod version: 0.23.0

4. MPI version: MPICH 3.4.2

5. CUDA version: 11.4.2

6. NCCL version: 2.11.4

7. Python version: 3.9.7

8. Spark / PySpark version: NA

9. Ray version: NA

10. OS and version: Ubuntu 20.04

11. GCC version: GCC 9.3.0

12. CMake version: 3.21.4

**Bug report:**

Trying to build Horovod w/ CUDA, on a system that also has ROCM 4.3.1 installed, and despite setting `HOROVOD_GPU=CUDA` it looks like the install is trying to build against ROCM too:

```

$> HOROVOD_WITH_TENSORFLOW=1 \

HOROVOD_WITH_PYTORCH=1 \

HOROVOD_WITH_MPI=1 \

HOROVOD_GPU_OPERATIONS=NCCL \

HOROVOD_BUILD_CUDA_CC_LIST=35,70,80 \

HOROVOD_BUILD_ARCH_FLAGS="-march=x86-64" \

HOROVOD_CUDA_HOME=/usr/local/cuda-11.4 \

HOROVOD_GPU=CUDA \

pip install horovod[tensorflow,pytorch]

...

[ 74%] Building CXX object horovod/torch/CMakeFiles/pytorch.dir/__/common/common.cc.o

cd /tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/build/temp.linux-x86_64-3.9/RelWithDebInfo/horovod/torch && /usr/bin/c++ -DEIGEN_MPL2_ONLY=1 -DHAVE_CUDA=1 -DHAVE_GLOO=1 -DHAVE_GPU=1 -DHAVE_MPI=1 -DHAVE_NCCL=1 -DHAVE_NVTX=1 -DHAVE_ROCM=1 -DHOROVOD_GPU_ALLGATHER=78 -DHOROVOD_GPU_ALLREDUCE=78 -DHOROVOD_GPU_ALLTOALL=78 -DHOROVOD_GPU_BROADCAST=78 -DTORCH_API_INCLUDE_EXTENSION_H=1 -DTORCH_VERSION=1009001000 -Dpytorch_EXPORTS -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/HTTPRequest/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/assert/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/config/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/core/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/detail/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/iterator/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/lockfree/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/mpl/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/parameter/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/predef/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/preprocessor/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/static_assert/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/type_traits/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/boost/utility/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/lbfgs/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/gloo -I/usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/tensorflow/include -I/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/third_party/flatbuffers/include -isystem /spack/opt/spack/linux-ubuntu20.04-x86_64/gcc-9.3.0/mpich-3.4.2-qfhacakdkcdmvjzstuukmphjr4khbdgn/include -isystem /usr/local/cuda-11.4/include -isystem /usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/torch/include -isystem /usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/torch/include/TH -isystem /usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/torch/include/THC -isystem /usr/local/miniconda3/envs/cuda/include/python3.9 No ROCm runtime is found, using ROCM_HOME='/opt/rocm-4.3.1' -MD -MT horovod/torch/CMakeFiles/pytorch.dir/__/common/common.cc.o -MF CMakeFiles/pytorch.dir/__/common/common.cc.o.d -o CMakeFiles/pytorch.dir/__/common/common.cc.o -c /tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/horovod/common/common.cc

c++: error: No: No such file or directory

c++: error: ROCm: No such file or directory

c++: error: runtime: No such file or directory

c++: error: is: No such file or directory

c++: error: found,: No such file or directory

c++: error: using: No such file or directory

c++: error: ROCM_HOME=/opt/rocm-4.3.1: No such file or directory

make[2]: *** [horovod/torch/CMakeFiles/pytorch.dir/build.make:76: horovod/torch/CMakeFiles/pytorch.dir/__/common/common.cc.o] Error 1

make[2]: Leaving directory '/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/build/temp.linux-x86_64-3.9/RelWithDebInfo'

make[1]: *** [CMakeFiles/Makefile2:446: horovod/torch/CMakeFiles/pytorch.dir/all] Error 2

make[1]: Leaving directory '/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/build/temp.linux-x86_64-3.9/RelWithDebInfo'

make: *** [Makefile:136: all] Error 2

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/setup.py", line 167, in <module>

setup(name='horovod',

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/setuptools/__init__.py", line 153, in setup

return distutils.core.setup(**attrs)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/core.py", line 148, in setup

dist.run_commands()

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/dist.py", line 966, in run_commands

self.run_command(cmd)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/dist.py", line 985, in run_command

cmd_obj.run()

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/wheel/bdist_wheel.py", line 299, in run

self.run_command('build')

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/dist.py", line 985, in run_command

cmd_obj.run()

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/command/build.py", line 135, in run

self.run_command(cmd_name)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/dist.py", line 985, in run_command

cmd_obj.run()

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/site-packages/setuptools/command/build_ext.py", line 79, in run

_build_ext.run(self)

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/distutils/command/build_ext.py", line 340, in run

self.build_extensions()

File "/tmp/pip-install-bs7lwyxo/horovod_9543edab589b4acfbafcd2a92c02c4c3/setup.py", line 100, in build_extensions

subprocess.check_call([cmake_bin, '--build', '.'] + cmake_build_args,

File "/usr/local/miniconda3/envs/cuda/lib/python3.9/subprocess.py", line 373, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command '['cmake', '--build', '.', '--config', 'RelWithDebInfo', '--', 'VERBOSE=1']' returned non-zero exit status 2.

----------------------------------------

ERROR: Failed building wheel for horovod

Running setup.py clean for horovod

Failed to build horovod

...

```

| 2hard

|

Title: [Migrated] When no aws_environment_variables are defined in settings, a zappa update will delete any vars defined in the console

Body: Originally from: https://github.com/Miserlou/Zappa/issues/1010 by [seanpaley](https://github.com/seanpaley)

<!--- Provide a general summary of the issue in the Title above -->

## Context

Title says it - when I have no aws_environment_variables in my zappa_settings.json, any env vars I set manually in the Lambda console disappear on zappa update. If I define one var in the aws_environment_variables dictionary, the vars I manually set persist after an update.

## Expected Behavior

Manually defined vars don't get deleted.

## Actual Behavior

They get deleted.

## Possible Fix

<!--- Not obligatory, but suggest a fix or reason for the bug -->

## Steps to Reproduce

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

<!--- reproduce this bug include code to reproduce, if relevant -->

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 43.0

* Operating System and Python version: mac os, python 2.7.13

* The output of `pip freeze`:

argcomplete==1.8.2

base58==0.2.4

boto3==1.4.4

botocore==1.5.40

certifi==2017.4.17

chardet==3.0.4

click==6.7

docutils==0.13.1

durationpy==0.4

future==0.16.0

futures==3.1.1

hjson==2.0.7

idna==2.5

jmespath==0.9.3

kappa==0.6.0

lambda-packages==0.16.1

placebo==0.8.1

psycopg2==2.7.1

python-dateutil==2.6.1

python-slugify==1.2.4

PyYAML==3.12

requests==2.18.1

s3transfer==0.1.10

six==1.10.0

toml==0.9.2

tqdm==4.14.0

troposphere==1.9.4

Unidecode==0.4.21

urllib3==1.21.1

Werkzeug==0.12

wsgi-request-logger==0.4.6

zappa==0.43.0

* Your `zappa_settings.py`:

| 1medium

|

Title: Cannot import OptimizerLRSchedulerConfig or OptimizerLRSchedulerConfigDict

Body: ### Bug description

Since I bumped up `lightning` to `2.5.0`, the `configure_optimizers` has been failing the type checker. I saw that `OptimizerLRSchedulerConfig` had been replaced with `OptimizerLRSchedulerConfigDict`, but I cannot import any of them.

### What version are you seeing the problem on?

v2.5

### How to reproduce the bug

```python

import torch

import pytorch_lightning as pl

from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

from torch.optim.lr_scheduler import ReduceLROnPlateau

class Model(pl.LightningModule):

...

def configure_optimizers(self) -> OptimizerLRSchedulerConfigDict:

optimizer = torch.optim.Adam(self.parameters(), lr=1e-3)

scheduler = ReduceLROnPlateau(

optimizer, mode="min", factor=0.1, patience=20, min_lr=1e-6

)

return {

"optimizer": optimizer,

"lr_scheduler": {

"scheduler": scheduler,

"monitor": "val_loss",

"interval": "epoch",

"frequency": 1,

},

}

```

### Error messages and logs

```

In [2]: import lightning

In [3]: lightning.__version__

Out[3]: '2.5.0'

In [4]: from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Cell In[4], line 1

----> 1 from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

ImportError: cannot import name 'OptimizerLRSchedulerConfigDict' from 'lightning.pytorch.utilities.types' (/home/test/.venv/lib/python3.11/site-packages/lightning/pytorch/utilities/types.py)

In [5]: from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfig

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Cell In[5], line 1

----> 1 from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfig

ImportError: cannot import name 'OptimizerLRSchedulerConfig' from 'lightning.pytorch.utilities.types' (/home/test/.venv/lib/python3.11/site-packages/lightning/pytorch/utilities/types.py)

```

### Environment

<details>

<summary>Current environment</summary>

```

#- PyTorch Lightning Version (e.g., 2.5.0):

#- PyTorch Version (e.g., 2.5):

#- Python version (e.g., 3.12):

#- OS (e.g., Linux):

#- CUDA/cuDNN version:

#- GPU models and configuration:

#- How you installed Lightning(`conda`, `pip`, source):

```

</details>

### More info

_No response_

| 1medium

|

Title: get_portfolio_history TypeError:: Cannot convert Float64Index to dtype datetime64[ns]

Body: I've used this for months to check my account's current profit/loss % for the day:

```

# Connect to the Alpaca API

alpaca = tradeapi.REST(API_KEY, API_SECRET, APCA_API_BASE_URL, 'v2')

# Define our destination time zone

tz_dest = pytz.timezone('America/New_York')

# Get the current date

today = datetime.datetime.date(datetime.datetime.now(tz_dest))

# Get today's account history

history = alpaca.get_portfolio_history(date_start=today, timeframe = '1D', extended_hours = False).df

# Format profit/loss as a string and percent

profit_pct = str("{:.2%}".format(history.profit_loss_pct[0]))

```

As of today though, I'm getting this error when I try to put any date in the "date_start" command of the get_portfolio_history function. I haven't been able to find a date format it'll take yet. I keep hitting this error:

**TypeError: Cannot convert Float64Index to dtype datetime64[ns]; integer values are required for conversion**

It seems that the error from these two earlier issues has re-surfaced. Help?

https://github.com/alpacahq/alpaca-trade-api-python/issues/62#issue-422913769

https://github.com/alpacahq/alpaca-trade-api-python/issues/53#issue-405386294

| 1medium

|

Title: How to add request.user to serializer?

Body: The codes seems not passing context to serializer when it's called.

`serializer = cls._meta.serializer_class(data=new_obj)`

I would like to add `request.user` when it tries to save, I couldn't find a way to do it.

Anyone has done this before?

| 1medium

|

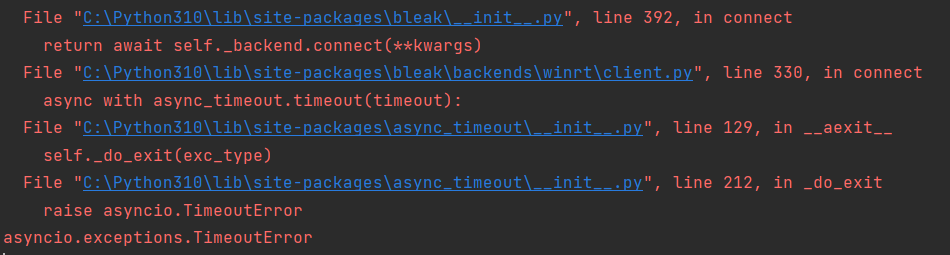

Title: micro:bit disconnected after pairing (In JustWorks setting) and cannot connect to micro:bit again

Body: * bleak version: 0.18.1

* Python version: 3.10.7

* Operating System: Windows10

### Description

I want to connect to micro:bit using bleak's api in windows 10 OS. And use the

> BleakClient.write_gatt_char

to send messge to the micro:bit Event Service (UUID: E95D93AF-251D-470A-A062-FA1922DFA9A8) (Which have Characteristic: 'E95D5404-251D-470A-A062-FA1922DFA9A8' to trigger the event I've been written and downloaded to micro:bit). Unfortunately I found out that I must paired with microbit in order to send message from my computer. So I used:

```

async with BleakClient(device,disconnected_callback=disconnected_callback,timeout=15.0) as client:

print("Pairing Client")

await client.pair()

....

codes that use to send message

```

to pair with micro:bit. I found out that micro:bit is successfully connect and pair with my computer. But after pairing finished, disconnected_callback called out (Though I saw it is still connected On Windows Bluetooth setting)

After that I add connect command after paired, and it always failed with **TimeoutError**:

```

async with BleakClient(device,disconnected_callback=disconnected_callback,timeout=15.0) as client:

print("Pairing Client")

await pairClient(client)

await asyncio.sleep(10)

print("Reconnecting")

print(f'is connect? {client.is_connected}')

if not client.is_connected:

await client.connect()

```

### What I Did

This is an example code that micro:bit used

https://makecode.microbit.org/_1yFbXM6TyPxT

This is an brief python code (minimal code that can run) that I used to connect and pair with micro:bit :

```

import time

import asyncio

import logging

from bleak import BleakClient, BLEDevice, BleakGATTCharacteristic

from bleak import BleakScanner

#device pair tag

deviceChar = "zipeg"

disconnected_event = asyncio.Event()

def disconnected_callback(client):

print("Disconnected callback called!")

disconnected_event.set()

async def scanWithNamePart(wanted_name_part):

'''

Find device by specific string

:param wanted_name_part:

:return:

'''

device = await BleakScanner.find_device_by_filter(

lambda d,ad: (wanted_name_part.lower() in d.name or wanted_name_part.lower() in d.name.lower()) if d.name is not None else False

)

print(device)

device_data = device

return device_data

async def mainCheck():

#main function

print("Discover device by name:")

device: BLEDevice = await scanWithNamePart(deviceChar)

if device is not None:

print("Device Found!")

else:

print(f"Failed to discover device with string: {deviceChar}")

return 0

async with BleakClient(device,disconnected_callback=disconnected_callback,timeout=15.0) as client:

print("Pairing Client")

await client.pair()

await asyncio.sleep(10)

print(f'is connect? {client.is_connected}')

if not client.is_connected:

print("Reconnecting")

await client.connect()

```

And the running result from Terminal:

'''

Discover device by name:

D1:09:0B:5C:D7:FC: BBC micro:bit [zipeg]

Device Found!

Pairing Client

INFO:bleak.backends.winrt.client:Services resolved for BleakClientWinRT (D1:09:0B:5C:D7:FC)

INFO:bleak.backends.winrt.client:Paired to device with protection level 1.

Disconnected callback called!

Reconnecting

is connect? False

Disconnected callback called!

Traceback (most recent call last):

File "C:\Python310\lib\site-packages\bleak\backends\winrt\client.py", line 331, in connect

await event.wait()

File "C:\Python310\lib\asyncio\locks.py", line 214, in wait

await fut

asyncio.exceptions.CancelledError

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\...\microbit_control.py", line 271, in <module>

asyncio.run(mainCheck())

File "C:\Python310\lib\asyncio\runners.py", line 44, in run

return loop.run_until_complete(main)

File "C:\Python310\lib\asyncio\base_events.py", line 646, in run_until_complete

return future.result()

File "C:\...\microbit_control.py", line 221, in mainCheck

await client.connect()

File "C:\Python310\lib\site-packages\bleak\__init__.py", line 392, in connect

return await self._backend.connect(**kwargs)

File "C:\Python310\lib\site-packages\bleak\backends\winrt\client.py", line 330, in connect

async with async_timeout.timeout(timeout):

File "C:\Python310\lib\site-packages\async_timeout\__init__.py", line 129, in __aexit__

self._do_exit(exc_type)

File "C:\Python310\lib\site-packages\async_timeout\__init__.py", line 212, in _do_exit

raise asyncio.TimeoutError

asyncio.exceptions.TimeoutError

'''

### Logs

I opened Bleak_Logging andd this is the result:

```

Discover device by name:

2022-10-11 17:43:29,454 bleak.backends.winrt.scanner DEBUG: Received 7F:BA:14:5F:F8:35: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7F:BA:14:5F:F8:35: Unknown.

2022-10-11 17:43:29,456 bleak.backends.winrt.scanner DEBUG: Received 7F:BA:14:5F:F8:35: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7F:BA:14:5F:F8:35: Unknown.

2022-10-11 17:43:29,458 bleak.backends.winrt.scanner DEBUG: Received 4E:FB:74:B8:29:3C: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 4E:FB:74:B8:29:3C: Unknown.

2022-10-11 17:43:29,459 bleak.backends.winrt.scanner DEBUG: Received 4A:1E:A1:26:F4:50: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 4A:1E:A1:26:F4:50: Unknown.

2022-10-11 17:43:29,460 bleak.backends.winrt.scanner DEBUG: Received 4A:1E:A1:26:F4:50: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 4A:1E:A1:26:F4:50: Unknown.

2022-10-11 17:43:29,461 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,464 bleak.backends.winrt.scanner DEBUG: Received 5C:A7:C9:C4:04:3A: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 5C:A7:C9:C4:04:3A: Unknown.

2022-10-11 17:43:29,465 bleak.backends.winrt.scanner DEBUG: Received 5C:A7:C9:C4:04:3A: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 5C:A7:C9:C4:04:3A: Unknown.

2022-10-11 17:43:29,467 bleak.backends.winrt.scanner DEBUG: Received 46:4F:18:B2:E4:CF: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 46:4F:18:B2:E4:CF: Unknown.

2022-10-11 17:43:29,469 bleak.backends.winrt.scanner DEBUG: Received 46:4F:18:B2:E4:CF: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 46:4F:18:B2:E4:CF: Unknown.

2022-10-11 17:43:29,470 bleak.backends.winrt.scanner DEBUG: Received 09:99:4B:01:CD:3C: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 09:99:4B:01:CD:3C: Unknown.

2022-10-11 17:43:29,472 bleak.backends.winrt.scanner DEBUG: Received 29:18:E2:EB:C9:7D: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 29:18:E2:EB:C9:7D: Unknown.

2022-10-11 17:43:29,473 bleak.backends.winrt.scanner DEBUG: Received 0F:E9:A6:AE:17:19: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 0F:E9:A6:AE:17:19: Unknown.

2022-10-11 17:43:29,475 bleak.backends.winrt.scanner DEBUG: Received 0B:16:3D:50:42:BD: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 0B:16:3D:50:42:BD: Unknown.

2022-10-11 17:43:29,476 bleak.backends.winrt.scanner DEBUG: Received 06:0B:51:37:3C:77: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 06:0B:51:37:3C:77: Unknown.

2022-10-11 17:43:29,481 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,573 bleak.backends.winrt.scanner DEBUG: Received 61:FD:DA:F3:5B:5B: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 61:FD:DA:F3:5B:5B: Unknown.

2022-10-11 17:43:29,575 bleak.backends.winrt.scanner DEBUG: Received 61:FD:DA:F3:5B:5B: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 61:FD:DA:F3:5B:5B: Unknown.

2022-10-11 17:43:29,577 bleak.backends.winrt.scanner DEBUG: Received 0F:E9:A6:AE:17:19: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 0F:E9:A6:AE:17:19: Unknown.

2022-10-11 17:43:29,578 bleak.backends.winrt.scanner DEBUG: Received 29:18:E2:EB:C9:7D: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 29:18:E2:EB:C9:7D: Unknown.

2022-10-11 17:43:29,581 bleak.backends.winrt.scanner DEBUG: Received 75:A3:C8:DD:0B:24: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 75:A3:C8:DD:0B:24: Unknown.

2022-10-11 17:43:29,583 bleak.backends.winrt.scanner DEBUG: Received 06:0B:51:37:3C:77: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 06:0B:51:37:3C:77: Unknown.

2022-10-11 17:43:29,588 bleak.backends.winrt.scanner DEBUG: Received 6D:B2:A0:39:C6:93: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 6D:B2:A0:39:C6:93: Unknown.

2022-10-11 17:43:29,589 bleak.backends.winrt.scanner DEBUG: Received 6D:B2:A0:39:C6:93: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 6D:B2:A0:39:C6:93: Unknown.

2022-10-11 17:43:29,594 bleak.backends.winrt.scanner DEBUG: Received 4A:1E:A1:26:F4:50: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 4A:1E:A1:26:F4:50: Unknown.

2022-10-11 17:43:29,595 bleak.backends.winrt.scanner DEBUG: Received 4A:1E:A1:26:F4:50: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 4A:1E:A1:26:F4:50: Unknown.

2022-10-11 17:43:29,714 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,716 bleak.backends.winrt.scanner DEBUG: Received 76:FD:EF:61:02:C4: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 76:FD:EF:61:02:C4: Unknown.

2022-10-11 17:43:29,718 bleak.backends.winrt.scanner DEBUG: Received E9:49:CE:EC:44:B3: mobike.

DEBUG:bleak.backends.winrt.scanner:Received E9:49:CE:EC:44:B3: mobike.

2022-10-11 17:43:29,719 bleak.backends.winrt.scanner DEBUG: Received 69:D2:4E:A9:07:BA: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 69:D2:4E:A9:07:BA: Unknown.

2022-10-11 17:43:29,720 bleak.backends.winrt.scanner DEBUG: Received 69:D2:4E:A9:07:BA: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 69:D2:4E:A9:07:BA: Unknown.

2022-10-11 17:43:29,753 bleak.backends.winrt.scanner DEBUG: Received 25:F3:1E:DF:34:0D: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 25:F3:1E:DF:34:0D: Unknown.

2022-10-11 17:43:29,757 bleak.backends.winrt.scanner DEBUG: Received 6F:CA:44:42:9A:FB: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 6F:CA:44:42:9A:FB: Unknown.

2022-10-11 17:43:29,759 bleak.backends.winrt.scanner DEBUG: Received CA:1D:25:29:E2:C7: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received CA:1D:25:29:E2:C7: Unknown.

2022-10-11 17:43:29,760 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,803 bleak.backends.winrt.scanner DEBUG: Received 79:13:B2:67:E8:9E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 79:13:B2:67:E8:9E: Unknown.

2022-10-11 17:43:29,810 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,813 bleak.backends.winrt.scanner DEBUG: Received 46:4F:18:B2:E4:CF: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 46:4F:18:B2:E4:CF: Unknown.

2022-10-11 17:43:29,815 bleak.backends.winrt.scanner DEBUG: Received 46:4F:18:B2:E4:CF: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 46:4F:18:B2:E4:CF: Unknown.

2022-10-11 17:43:29,816 bleak.backends.winrt.scanner DEBUG: Received 69:90:EA:55:4C:CB: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 69:90:EA:55:4C:CB: Unknown.

2022-10-11 17:43:29,822 bleak.backends.winrt.scanner DEBUG: Received 12:76:BC:82:9C:33: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 12:76:BC:82:9C:33: Unknown.

2022-10-11 17:43:29,825 bleak.backends.winrt.scanner DEBUG: Received 7D:07:F2:06:19:E9: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7D:07:F2:06:19:E9: Unknown.

2022-10-11 17:43:29,826 bleak.backends.winrt.scanner DEBUG: Received 7D:07:F2:06:19:E9: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7D:07:F2:06:19:E9: Unknown.

2022-10-11 17:43:29,831 bleak.backends.winrt.scanner DEBUG: Received 7B:9D:B8:3D:F3:E4: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7B:9D:B8:3D:F3:E4: Unknown.

2022-10-11 17:43:29,832 bleak.backends.winrt.scanner DEBUG: Received 22:F0:52:59:39:80: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 22:F0:52:59:39:80: Unknown.

2022-10-11 17:43:29,835 bleak.backends.winrt.scanner DEBUG: Received 10:6D:61:74:6F:3E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 10:6D:61:74:6F:3E: Unknown.

2022-10-11 17:43:29,931 bleak.backends.winrt.scanner DEBUG: Received 76:FD:EF:61:02:C4: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 76:FD:EF:61:02:C4: Unknown.

2022-10-11 17:43:29,933 bleak.backends.winrt.scanner DEBUG: Received 52:11:FA:6D:49:99: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 52:11:FA:6D:49:99: Unknown.

2022-10-11 17:43:29,934 bleak.backends.winrt.scanner DEBUG: Received 52:11:FA:6D:49:99: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 52:11:FA:6D:49:99: Unknown.

2022-10-11 17:43:29,935 bleak.backends.winrt.scanner DEBUG: Received 7B:9D:B8:3D:F3:E4: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 7B:9D:B8:3D:F3:E4: Unknown.

2022-10-11 17:43:29,938 bleak.backends.winrt.scanner DEBUG: Received 2F:F3:72:2E:1D:B1: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received 2F:F3:72:2E:1D:B1: Unknown.

2022-10-11 17:43:29,940 bleak.backends.winrt.scanner DEBUG: Received C0:00:00:11:40:7E: Unknown.

DEBUG:bleak.backends.winrt.scanner:Received C0:00:00:11:40:7E: Unknown.

2022-10-11 17:43:29,941 bleak.backends.winrt.scanner DEBUG: Received 35:E5:49:CD:8F:64: Unknown.