| repo_name | issue_id | text |
|---|---|---|

ckulka/baikal-docker | 976463277 | Title: Exposing local directories for all directories within /var/www/baikal/

Question:

username_0: As the title says, I've tried mapping ./www to /var/www, creating all the directories manually, and applying the same permissions as in the example docker-compose.yml file to all the folders, but when I spin the container up it doesn't work.

The reason I need to do this is that I need to edit the .htaccess file in the html directory (to remap the default iPhone CardDAV path), but my container doesn't have vim, vi, nano, or any other text editor installed. Installing one looks like a PITA at face value, and I would much rather map everything to a local directory for any future config changes.

Answers:

username_1: Hi @username_0, just to make sure I understand your scenario correctly: you only need to modify the `/var/www/baikal/html/.htaccess` file?

I would recommend mounting just that modified file into the container and not the entire `/var/www/baikal` folder, for example based on [examples/docker-compose.localvolumes.yaml](https://github.com/username_1/baikal-docker/blob/8781617f179fb7b115a179d47f425c460996c876/examples/docker-compose.localvolumes.yaml):

```yaml
version: '2'
services:
  baikal:
    image: username_1/baikal:nginx
    restart: always
    ports:
      - "80:80"
    volumes:
      - ./config:/var/www/baikal/config
      - ./data:/var/www/baikal/Specific
      # Mount your modified .htaccess file
      - ./my.htaccess:/var/www/baikal/html/.htaccess
```

My main reason is that you then don't have to worry about all the other files in the `/var/www/baikal` folder, especially when later upgrading to a newer version.

If you really (for other reasons) want to mount all of `/var/www/baikal` from a local directory, you'll have to:

1. Copy the Baikal files from the Docker image into your local directory

2. Fix file permissions

3. Add the mount in the Docker Compose file

```bash
# Step 1: create the local directory and copy the Baikal files out of the image
# (the files end up in /my/local/directory/baikal)
mkdir /my/local/directory
docker run --rm -it -v /my/local/directory:/tmp username_1/baikal cp -R /var/www/baikal /tmp

# Step 2: fix file permissions (Nginx image)
chown -R 101:101 /my/local/directory

# Step 2, alternative: fix file permissions (Apache image)
chown -R 33:33 /my/local/directory
```
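If you go the full-directory route, a quick way to double-check step 2 is to list anything whose owner doesn't match. A minimal Python sketch, assuming you run it on the host against the directory you mounted; the helper name `files_not_owned_by` and the script name in the usage comment are hypothetical, and the UID/GID pairs are the ones given above (101:101 for Nginx, 33:33 for Apache):

```python
import os
import sys


def files_not_owned_by(root: str, uid: int, gid: int) -> list:
    """Return every path under `root` (including `root`) not owned by uid:gid."""
    paths = [root]
    # os.walk does not descend into symlinked directories by default, and each
    # entry appears exactly once in its parent's dirnames/filenames.
    for dirpath, dirnames, filenames in os.walk(root):
        paths.extend(os.path.join(dirpath, name) for name in dirnames + filenames)
    mismatched = []
    for path in paths:
        st = os.lstat(path)  # lstat: inspect symlinks themselves, not targets
        if (st.st_uid, st.st_gid) != (uid, gid):
            mismatched.append(path)
    return mismatched


if __name__ == "__main__":
    # Hypothetical usage: python3 check_owner.py /my/local/directory 101 101
    directory, want_uid, want_gid = sys.argv[1], int(sys.argv[2]), int(sys.argv[3])
    bad = files_not_owned_by(directory, want_uid, want_gid)
    for path in bad:
        print(path)
    print(f"{len(bad)} path(s) with unexpected ownership")
```

After the `chown` in step 2 this should report 0 paths; anything it lists is a file the web server user may not be able to read or write.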

Let me know if that helps 👍

username_0: I did not know you could mount a single file! That is a much easier option, let me do that now.

username_0: @username_1 OK so I've done the above and it has worked a treat. I am now however having issues with the rewriting for iOS 14. I've found several different links all suggesting different formats, such as:

```
RewriteRule /carddav /card.php [R,L]
RewriteRule /caldav /cal.php [R,L]
```

```
RewriteRule /.well-known/carddav /card.php [R,L]
RewriteRule /.well-known/caldav /cal.php [R,L]
```

```
RedirectPermanent /.well-known/caldav http://servername.tld/cal.php
RedirectPermanent /.well-known/carddav http://servername.tld/card.php
```

```
rewrite ^/.well-known/caldav /dav.php redirect;
rewrite ^/.well-known/carddav /dav.php redirect;
```

And others that I can no longer find.

I have also just stumbled across [this](https://github.com/sabre-io/Baikal/issues/744). Much like the OP there, I've tried many of those URIs too and none have worked. One comment mentions that from iOS 12 onwards CardDAV only works over HTTPS (which I have not configured, because of certificates), yet the OP also mentions CardDAV working over HTTP. I cannot get any output from either of the log files in /var/log/nginx/, and I've seen my iPhone autopopulating the URI with dav.php rather than card.php, so I honestly don't know what to believe any more!
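Since the nginx logs weren't showing anything, one way to see what the server actually answers is to request the well-known URIs (RFC 6764 defines `/.well-known/caldav` and `/.well-known/carddav` for service discovery) directly and look at the redirect. A rough Python sketch, assuming `http://servername.tld` stands for your server (it's the placeholder from the snippets above) and using a hypothetical helper name, `well_known_redirect`:

```python
from __future__ import annotations

import http.client
from urllib.parse import urlsplit


def well_known_redirect(base_url: str, service: str) -> tuple[int, str | None]:
    """Request /.well-known/<service> once, without following redirects.

    Returns the HTTP status and the Location header (None if absent), so you
    can see which path (e.g. /dav.php or /card.php) the server points clients at.
    """
    parts = urlsplit(base_url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.hostname, parts.port, timeout=10)
    try:
        # http.client never follows redirects, which is exactly what we want here.
        conn.request("GET", f"/.well-known/{service}")
        resp = conn.getresponse()
        resp.read()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


if __name__ == "__main__":
    for svc in ("caldav", "carddav"):
        print(svc, well_known_redirect("http://servername.tld", svc))
```

A 3xx status with a `Location` such as `/dav.php` means a rewrite is active; a 404 or 200 suggests the rule isn't being applied at all.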

username_1: I'm afraid I can't help you there, as I don't have an iPhone. Just one thing (and this is, to some extent, me misleading you): nginx doesn't use the `.htaccess` file; I forgot to take that out when adapting the Docker Compose example.

Can you please retry this with the Apache variant, e.g. just `image: username_1/baikal`?

If that doesn't work either, I think your best bet is to open an issue in the Baikal repository, given this is related to how Baikal works with iOS 14 on Apache. They maintain the code and configuration and might have a working setup/example for iOS 14. |

fenom-template/fenom | 252730880 | Title: Custom Filters/Modifiers

Question:

username_0: Does Fenom have a filter where you can use your own custom PHP functions? I see that it was on the To Do list, but I've also seen items on a different To Do list that seem to be implemented already, so I couldn't figure out whether this one was still pending.

Answers:

username_0: Never mind. I tested it out and it works.

Status: Issue closed

|

jfbercher/jupyter_latex_envs | 198163978 | Title: References issue

Question:

username_0: (<a id="cit-gerstner2014neuronal" href="#call-gerstner2014neuronal">Gerstner, 2014</a>) <NAME>, ``_Neuronal dynamics : from single neurons to networks and models of cognition_'', 2014.

I think the quotes on the last line should be "..." rather than ``...''.

Answers:

username_1: Hi, these kinds of quotes render correctly in LaTeX as "typographic quotes" instead of typewriter/straight quotes. See the Wikipedia [article](https://en.wikipedia.org/wiki/Quotation_mark) on quotes. Anyway, if you want to change this (e.g. because it looks better in the HTML output), you can edit the citation templates at the top of the file `latex_env.js`. Thanks for using this extension. I am always happy to know that it is useful for people!
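For reference, the substitution involved is just `` to “ and '' to ”. A minimal Python sketch of that mapping, purely illustrative: the extension's actual templates live in `latex_env.js` and are JavaScript, and `latex_quotes_to_typographic` is a hypothetical name.

```python
def latex_quotes_to_typographic(text: str) -> str:
    """Turn LaTeX ``...'' double quotes into Unicode curly quotes for HTML."""
    # `` is LaTeX's opening double quote, '' its closing double quote.
    return text.replace("``", "\u201c").replace("''", "\u201d")


print(latex_quotes_to_typographic("``_Neuronal dynamics_''"))  # prints “_Neuronal dynamics_”
```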

Status: Issue closed

|

godotengine/godot | 361671177 | Title: Allow expression node for visual shaders

Question:

username_0: **Issue description:**

Allow custom shader code inside of the visual shaders.

I made a mockup of what the node will look like.

Answers:

username_1: The inputs could be auto-generated from uniforms in the shader code.

username_0: This is the definition of a function. You mean scan the shader code for variables?

username_1: I meant adding something like `uniform vec3 Color = ...;` to the shader code, and the node automatically adding an input called `Color` which is passed to the shader.
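The idea above could look roughly like this: scan the expression's code for `uniform` declarations and turn each one into an input port. A hypothetical Python sketch using a regex, which only approximates a real shader parser (Godot has its own shader language parser; nothing here is its API):

```python
from __future__ import annotations

import re

# Matches e.g. "uniform vec3 Color = vec3(1.0);" or
# "uniform highp float amount : hint_range(0, 1) = 0.5;"
UNIFORM_RE = re.compile(
    r"^\s*uniform\s+(?:(?:lowp|mediump|highp)\s+)?(\w+)\s+(\w+)",
    re.MULTILINE,
)


def uniform_inputs(shader_code: str) -> list[tuple[str, str]]:
    """Return (type, name) pairs, one per uniform, i.e. one per input port."""
    return UNIFORM_RE.findall(shader_code)
```

Lines starting with `//` are skipped because the pattern is anchored at the start of a line, but a regex will still miss unusual formatting such as declarations split across lines; a real node would more likely reuse the shader compiler's own parse result.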

username_2: I've created a similar `Expression` node for my own noise module in:

https://github.com/username_2/godot-anl/commit/facbbcc59e0666c93b4a7877ec61910491eeb0f6

https://github.com/username_2/godot-anl/commit/299bfc61840a6bfec7f711bc8a577f1bdfae941f

I've adapted the visual shader codebase to my own needs; some structures are similar, so it might be useful...

username_3: I've started implementing it. I'm not sure the resulting form will be the same, but I'm always trying to achieve the best result. Demo screenshot:

Status: Issue closed

|

jasonrohrer/OneLifeData7 | 555843014 | Title: Bear cave with bear on it doesn't block movement.

Question:

username_0:

Basically, this bug was abused in two successful apocalypses, where anyone who had no idea how the bug worked had zero chance to stop it.

Basically: block the tile directly in front of the bear cave with an impassable tile.

Double-click the bear cave to wake the bear, putting it into the animation where it stands at the mouth.

While the bear is sticking out of the cave, run through the bear tile, as it doesn't block movement.

????

Profit.

Answers:

username_0: Also to a lesser extent you can do the same by putting a goose on a tree stump. A lot less abusable but the same concept.

username_1: Unfortunately, due to the way moving animals work, they can't cross blocking tiles.

So any block tile that generates a moving animal has to become temporarily unblocking.

I will look at the code and see if there's a way to let an animal that is blocked by the same tile that it's currently standing on "escape", but this would prevent you from slamming a door in a sheep's face.

Blocking tiles, I think, prevent you from walking south, ever ever ever, even if you're standing on that tile (this is why a door can get slammed in your face and block you from "ghosting" through it).

And in the case of the bear and the goose, they NEED to walk south. |

CocoaPods/CocoaPods | 310694570 | Title: git version issue

Question:

username_0: <!--

ℹ Please fill out this template when filing an issue.

All lines beginning with an ℹ symbol instruct you with

what info we expect.

Before you start, are you using the latest CocoaPods release?

A lot changes with Xcode releases that are not backwards compatible.

Not an issue about the CocoaPods command line app? Please file an issue in the appropriate repo - https://github.com/CocoaPods

Issues are for feature requests, and bugs; questions should go to Stack Overflow

Using CocoaPods <= 0.39: http://blog.cocoapods.org/Sharding/

Using Xcode 8: Requires CocoaPods 1.1.0 or above.

Issue with Nanaimo not loading:

Please run `[sudo] gem uninstall nanaimo` and remove all but the latest version.

Issues with `pod search`? Try deleting your cache `rm -rf ~/Library/Caches/CocoaPods`first.

-->

* [ ] I've read and understood the [*CONTRIBUTING guidelines and have done my best effort to follow](https://github.com/CocoaPods/CocoaPods/blob/master/CONTRIBUTING.md).

# Report

## What did you do?

ℹ Please replace these two lines with what you did.

e.g. Run `pod install`

## What did you expect to happen?

ℹ Please replace these two lines with what you expected to happen.

e.g. Install all pod dependencies correctly.

## What happened instead?

ℹ Please replace these two lines with of what happened instead.

e.g. Pod A is missing the subspec B for target C.

## CocoaPods Environment

ℹ Please replace these two lines with the output of `pod env`.

e.g. via `pod env | pbcopy`

## Project that demonstrates the issue

ℹ Please link to a project we can download that reproduces the issue.

You can delete this section if your issue is unrelated to build problems,

i.e. it's only an issue with CocoaPods the tool.

Answers:

username_1: please fill in the template

Status: Issue closed

|

whatwg/html | 104063207 | Title: Consider defining "write-only" form elements.

Question:

username_0: I put together a "write-only" strawman a few months ago that might be worth evaluating: https://username_0.github.io/credentialmanagement/writeonly/

Discussed briefly on https://lists.w3.org/Archives/Public/public-whatwg-archive/2014Oct/0181.html and http://discourse.wicg.io/t/write-only-input-fields/598.

Answers:

username_1: Even though "Removing the writeonly attribute will not clear an element’s write-only value flag. Once that flag is set for an element, it cannot be cleared." is true, it seems you can just as easily replace the `<input>`. Perhaps it should specify a nonce you cannot access from the page?

username_1: I'm going to close this issue as it's mostly noise for us and not helping you get more attention for the idea. Hope that's acceptable. Once there's more interest we can figure out the logistics.

Status: Issue closed

username_0: Yeah, no worries. I've poked some folks on Chrome's password management team (Hi, @vabr!) in the hopes of getting them interested in this. If I can find someone to implement this kind of thing, I'll clean up the spec in the form of PRs.

username_2: @username_1 What is the best way to re-open this discussion?

username_1: @username_2 https://discourse.wicg.io/ perhaps. |

ansible/community | 137046233 | Title: create a label explicitly for greg/robyn tasks

Question:

username_0: Considering making a label to be used as a secondary label for things that only Greg / Robyn can do for the time being. This is stuff like "pay bills / do expenses", meetup-related tasks, etc.

This is mostly because we don't necessarily want to have to assign everything to one or the other of us (particularly when it's in backlog and either of us could do it) immediately; and because we want contributors to be able to easily find things they can do.

Since we're often the ones who create these greg-or-robyn items, it seems like it would be easier for us to label those explicitly rather than labeling everything else as "adopt me" if it's adoptable (because then we have a combo of unlabeled + adopt-me things, even though unlabeled things are often adoptable, particularly if we don't religiously triage/label incoming issues).

Answers:

username_0: Also: I suggest "batmobile" as the label. Only because right now we know that at least greg and robyn are in that car, but the car could have more passengers in the future :)

Only half-jokingly :) (But willing to consider other things)

username_0: Also:

Yes, we could delineate along the lines of "backlog" vs. "ready" (i.e. things can only be adopted from the ready column, not the backlog column), and maybe we keep our things in backlog until one of us self-assigns one and we put it in progress.

Arguments against that: backlog is sort of a capacity-management strategy; normally it would be a way to constrain how much a team is working on -- backlog can't move over until time is freed up to do things.

In a contribution environment, that's kind of a weird thing; by definition, when a new contributor wants to contribute by working on a specific task, the capacity of the overall team increases, in a sense.

It almost seems like "adopt me" type things would be in backlog; someone could indicate they want to adopt or ask questions, and once they or we collectively scope out the further plan in the issue and when they think it might be done, it goes into Ready; once they start *actually doing work* (and showing it / linking to it in the issue), it goes to in progress.

Or maybe too philosophical for this early in the morning. :)

username_1: So why would we create a separate tag here, versus just assigning it to one of us?

Status: Issue closed

username_1: Per irc convo:

<•username_1> Not sure why we wouldn't just assign it to one of us if we know up front that it belongs to one of us.

2:50 PM <•username_1> i.e. if it's in that class -- i.e. the kind of thing only Robyn or Greg *could* do -- then we'd know that up front and assign it.


2:51 PM <•username_1> And yes, that might mean Robyn and Greg reassigning things back and forth and that's fine. :)


2:51 PM <•username_1> Which implies that if it's a task we think anyone can do, we leave it unassigned.

2:52 PM <•username_1> (And then we are free to pick it up ourselves once we move it into in_progress). |

Azure/azure-cli | 1010647994 | Title: az vm list-sizes doesn't support location norwaywest

Question:

username_0: **Resource Provider: VM**

<!--- What is the Azure resource provider your feature is part of? --->

**Description of Feature or Work Requested: Location support for Norway West**

<!--- Provide a brief description of the feature or work requested. A link to conceptual documentation may be helpful too. --->

**Minimum API Version Required**

<!--- What is the minimum API version of your service required to implement your feature? --->

**Swagger Link**

<!--- Provide a link to the location of your feature(s) in the REST API specs repo. If your feature(s) has corresponding commit or pull request in the REST API specs repo, provide them. This should be on the master branch of the REST API specs repo. --->

**Target Date**

<!--- If you have a target date for release of this feature/work, please provide it. While we can't guarantee these dates,

it will help us prioritize your request against other requests. --->

Answers:

username_0: Below is the output of the command

`# az vm list-sizes --location norwaywest -o table`

(NoRegisteredProviderFound) No registered resource provider found for location 'norwaywest' and API version '2021-04-01' for type 'locations/vmSizes'. The supported api-versions are '2015-05-01-preview, 2015-06-15, 2016-03-30, 2016-04-30-preview, 2016-08-30, 2017-03-30, 2017-12-01, 2018-04-01, 2018-06-01, 2018-10-01, 2019-03-01, 2019-07-01, 2019-12-01, 2020-06-01, 2020-12-01, 2021-03-01, 2021-04-01, 2021-07-01'. The supported locations are 'eastus, eastus2, westus, centralus, northcentralus, southcentralus, northeurope, westeurope, eastasia, southeastasia, japaneast, japanwest, australiaeast, australiasoutheast, australiacentral, brazilsouth, southindia, centralindia, westindia, canadacentral, canadaeast, westus2, westcentralus, uksouth, ukwest, koreacentral, koreasouth, francecentral, southafricanorth, uaenorth, switzerlandnorth, germanywestcentral, norwayeast, jioindiawest, westus3'.
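The error message itself is the quickest diagnostic here: `norwaywest` isn't in the supported-locations list, while `norwayeast` is. A small Python sketch that pulls that list out of the message text, assuming the message keeps the `The supported locations are '...'` wording shown above (that wording is not a documented, stable format):

```python
from __future__ import annotations

import re


def supported_locations(error_message: str) -> set[str]:
    """Extract the location names from "The supported locations are '...'"."""
    match = re.search(r"The supported locations are '([^']*)'", error_message)
    if match is None:
        return set()
    return {name.strip() for name in match.group(1).split(",") if name.strip()}


msg = "... The supported locations are 'eastus, norwayeast, westus3'."
locs = supported_locations(msg)
print("norwaywest" in locs, "norwayeast" in locs)  # prints: False True
```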

username_1: Compute |

QutEcoacoustics/audio-analysis | 371363479 | Title: Continuous integration build and tests need to be run on other platforms

Question:

username_0: ## Is your feature request related to a problem? Please describe.

We only test and build on Windows. We have no visibility into failures on other platforms until users report them.

## Describe the solution you'd like

Our CI process should ideally run on both Mac and Linux.

## Describe alternatives you've considered

There are no alternatives. We probably don't need to build the project; maybe we could just run unit tests or some more basic black-box tests?

## Additional context

I think Azure Pipelines may offer a great way to do this.

Status: Issue closed |

PyMySQL/PyMySQL | 349780555 | Title: Deprecate old password

Question:

username_0: The old password format is not recommended for any version of MySQL that PyMySQL supports.

I don't test it. I don't use it. I don't want to support it. And the existing code is ugly.

Deprecate it in the next version, and remove it eventually.

Answers:

username_1: So how does one use the new password? I looked at the docs trying to understand what I am doing wrong, but couldn't figure it out (I'm using the password= keyword argument in the pymysql.connect function, is this no longer correct?).

PS: I arrived here from https://github.com/PyMySQL/PyMySQL/pull/713, wondering why the fix hadn't been pushed to PyPI yet.

username_0: Because making a new release takes my time and energy.

And there is a far better solution: upgrading the password.

Note that the old password is the pre-4.1 format. MySQL 4.1 was released in 2003!

The MySQL 5.7 CLI has already dropped old password support.
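To make the "old password" vs "new password" distinction concrete: it is about how the server stores the account's password hash, not about the `password=` argument to `pymysql.connect`. A minimal Python sketch, assuming you (or a DBA) can read the stored hash for the account: 4.1+ hashes are `*` followed by 40 hex digits, computed as SHA1(SHA1(password)), while pre-4.1 hashes are 16 hex digits. The function names are hypothetical.

```python
import hashlib
import re


def mysql41_password_hash(password: str) -> str:
    """Hash format used since MySQL 4.1: '*' + uppercase hex of SHA1(SHA1(pw))."""
    inner = hashlib.sha1(password.encode("utf-8")).digest()
    return "*" + hashlib.sha1(inner).hexdigest().upper()


def password_hash_scheme(stored_hash: str) -> str:
    """Classify a stored account hash by its shape."""
    if re.fullmatch(r"\*[0-9A-Fa-f]{40}", stored_hash):
        return "4.1+ (mysql_native_password)"
    if re.fullmatch(r"[0-9A-Fa-f]{16}", stored_hash):
        return "pre-4.1 (old password)"
    return "unknown"
```

An account whose hash classifies as pre-4.1 needs its password re-set on the server so that the 41-character format is stored; no client-side argument changes that.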

username_1: Believe it or not, I did google, but I was under the assumption that "new password" was a pymysql concept, not a server setting. I absolutely respect your time and realize that you are under no obligation to answer my questions.

That said, I cannot upgrade a 20 year old system like that. So if you stop supporting it, I'll have to use a different package.

username_2: @username_1 - I'm in the same situation. @username_0, why would you remove backwards-compatible support? Users should have the option to use less secure methods if they so choose - not everyone is able to upgrade older systems as @username_1 already pointed out.

username_0: Huh. You have the option to use an old version of PyMySQL, or to fork this project.

Don't forget this is not Oracle's project: I maintain this project almost as a volunteer.

Or do you want to donate $10/month for me to maintain old password support?

username_0: One more note: even Oracle (the MySQL owner) removed old password support from the MySQL client library.

Why do you think one volunteer maintainer should support a 20-year-old legacy feature longer than the owner company?

username_3: This is really unfortunate. I have a project that needs to connect to an old server that doesn't use new password yet. I can't force the server maintainer to update it, I just need it to work. Backwards compatibility would have been nice, I'll have to find an alternative library to use.

username_0: You can use an old PyMySQL, just as you are using the old password format, an old MySQL CLI, etc.

username_3: I wonder if something else is going on then, because the command-line client works fine for the DB I'm connecting to, but I get the same error from the client. Is there a way to tell (with restricted rights on the DB) what's happening?

username_0: Don't bother me anymore.

MySQL already **removed** (not only deprecated) it.

I haven't tested it in many years. I don't want to test and maintain it anymore.

I'm tired of maintaining this project, and I must reduce its maintenance cost.

Status: Issue closed

|

chenyee1981/bibizip | 548520783 | Title: Cape breton Community Housing Association -

Question:

username_0: [https://www.bibizip.com/#git](https://www.bibizip.com/#git)

`https://www.bibizip.com/company/view/uXnS/CAREER TRAINING CONCEPTS INC./1` salary @

`https://www.bibizip.com/company/view/vKVX/Kunstverein Trier Junge Kunst/1` salary @

`https://www.bibizip.com/company/view/dYYX/Safe Work Medicina e Segurança do Trabalho /1` salary @

`https://www.bibizip.com/company/view/7bry/MERRION HALL MANAGEMENT COMPANY LTD/1` salary @

`https://www.bibizip.com/company/view/2IUO/P Douglas Mays/1` salary @

`https://www.bibizip.com/company/view/BA9T/Proton Systems - India/1` salary @

`https://www.bibizip.com/company/view/BxOM/PECULIAR SUPPLIERS/1` salary @

`https://www.bibizip.com/company/view/e33I/BEMA RAIL TRAINING ACADEMY LIMITED/1` salary @

`https://www.bibizip.com/company/view/448R/Discover Avalon, the Land of Wonder and Enchantment/1` salary @

`https://www.bibizip.com/company/view/HNQA/FORT WAYNE TURNERS/1` salary @

`https://www.bibizip.com/company/view/QWgB/MARYLAND STREAM RESTORATION ASSOCIATION/1` salary @

`https://www.bibizip.com/company/view/qwRT/Saileela Tapes - India/1` salary @

`https://www.bibizip.com/company/view/8SVx/Telebyte S.A.de C.V./1` salary @

`https://www.bibizip.com/company/view/y2eZ/Eat Tucker/1` salary @

`https://www.bibizip.com/company/view/Epwn/HJELM-PEDERSEN CONSULT/1` salary @

`https://www.bibizip.com/company/view/EcRX/Instituto De Pesquisas Veterinarias Especializada/1` salary @

`https://www.bibizip.com/company/view/5zTO/Worldspeaking/1` salary @

`https://www.bibizip.com/company/view/ql2I/Sekundarschule Hinrich Brunsberg/1` salary @

`https://www.bibizip.com/company/view/81iS/STRATEGOS CONSULTING PRIV LIMITED/1` salary @

`https://www.bibizip.com/company/view/y2PY/EL CLUB DEL ASESOR INTERSOFT SL/1` salary @

`https://www.bibizip.com/company/view/6pMn/Zellar Home Care/1` salary @

`https://www.bibizip.com/company/view/pwKR/AMBER ADVOKATER I HÄSSLEHOLM OCH ÄLMHULT AB/1` salary @

`https://www.bibizip.com/company/view/kFGh/BreaCan/1` salary @

`https://www.bibizip.com/company/view/OlKJ/DELTA OFİS/1` salary @

`https://www.bibizip.com/company/view/7X6J/Maenan Abbey/1` salary @

`https://www.bibizip.com/company/view/aa4J/Agro del mañana/1` salary @

`https://www.bibizip.com/company/view/e5xU/Bait Al Tamur - India/1` salary @

`https://www.bibizip.com/company/view/LqUn/Golden Orange Turizm/1` salary @

`https://www.bibizip.com/company/view/oLD1/dfp.hu/1` salary @

`https://www.bibizip.com/company/view/IIQJ/KSB Service COTUMER/1` salary @

`https://www.bibizip.com/company/view/se8L/Q1033 KTMQ/1` salary @

`https://www.bibizip.com/company/view/DqUZ/Saksham Sales Corporation - India/1` salary @

`https://www.bibizip.com/company/view/UtCV/Audika AG Switzerland/1` salary @

`https://www.bibizip.com/company/view/1QMP/ARTISAN FERN LTD/1` salary @

`https://www.bibizip.com/company/view/U1fA/Angler Lawn & Landscape, Inc/1` salary @

`https://www.bibizip.com/company/view/e5Zx/BABY BELLE EXCLUSIVE/1` salary @

`https://www.bibizip.com/company/view/PUEY/C.C.G., Inc./1` salary @

`https://www.bibizip.com/company/view/EpKG/Honk Product/1` salary @

`https://www.bibizip.com/company/view/sEhS/Rigsurveys/1` salary @

`https://www.bibizip.com/company/view/a2nx/beahead.biz/1` salary @

`https://www.bibizip.com/company/view/V75R/West Feliciana Parish/1` salary @

`https://www.bibizip.com/company/view/U1ky/Ambaji Medical - India/1` salary @

`https://www.bibizip.com/company/view/UtPT/ALUVENT/1` salary @

`https://www.bibizip.com/company/view/1Qxx/afigraficas ltda/1` salary @

`https://www.bibizip.com/company/view/e5YZ/Blog Neoorog/1` salary @

`https://www.bibizip.com/company/view/PHGO/<NAME>/1` salary @

`https://www.bibizip.com/company/view/y2dh/Entretien Dijonnais/1` salary @

`https://www.bibizip.com/company/view/lDxP/The Friesian Connection/1` salary @

`https://www.bibizip.com/company/view/5O8L/SEVEN RANGERS HEALTHCARE PRIVATE LIMITED/1` salary @

`https://www.bibizip.com/company/view/K9xj/Signing Agent/1` salary @

`https://www.bibizip.com/company/view/e3Vi/Be at your Best/1` salary @

`https://www.bibizip.com/company/view/0ghx/Landesjugendchor Sachsen/1` salary @

`https://www.bibizip.com/company/view/BJ1Y/PRISM Resources Group/1` salary @

`https://www.bibizip.com/company/view/UStG/Anthony Passerino/1` salary @

`https://www.bibizip.com/company/view/UKES/Aza INC/1` salary @

`https://www.bibizip.com/company/view/kT39/BD AND P HOTELS (INDIA) PRIVATE LIMITED/1` salary @

`https://www.bibizip.com/company/view/kJFb/brunnebyved/1` salary @

`https://www.bibizip.com/company/view/9kLO/Bhardwaj Hospital - India/1` salary @

`https://www.bibizip.com/company/view/b4pd/CxSAST/1` salary @

[Truncated]

`https://www.bibizip.com/company/view/nSCJ/Route 64/1` salary @

`https://www.bibizip.com/company/view/Rvoz/Megamedia Sp. z o.o./1` salary @

`https://www.bibizip.com/company/view/csuE/Optary Consult GmbH/1` salary @

`https://www.bibizip.com/company/view/gadO/Asian Business Publication Ltd (ABPL Group)/1` salary @

`https://www.bibizip.com/company/view/1stB/Apollo PN Strapping/1` salary @

`https://www.bibizip.com/company/view/PPLT/Chakana Pacific/1` salary @

`https://www.bibizip.com/company/view/wkPP/Inkubator Ås/1` salary @

`https://www.bibizip.com/company/view/VV2z/Thorpe Park/1` salary @

`https://www.bibizip.com/company/view/gMmE/ANTELOPE INVESTMENT INC/1` salary @

`https://www.bibizip.com/company/view/ut4L/Centro Veterinario A Marosa/1` salary @

`https://www.bibizip.com/company/view/3fQR/Casa Green /1` salary @

`https://www.bibizip.com/company/view/oXgJ/DPSG St. Franziskus St. Marien Herne Eickel/1` salary @

`https://www.bibizip.com/company/view/OrPY/Dataflow Computers/1` salary @

`https://www.bibizip.com/company/view/wkOT/Inversiones Luesco SAS/1` salary @

`https://www.bibizip.com/company/view/7SbY/MediBus/1` salary @

`https://www.bibizip.com/company/view/sCiU/Pacific Controls Ltd/1` salary @

`https://www.bibizip.com/company/view/n11P/Referral Marketing & Business Networking/1` salary @

`https://www.bibizip.com/company/view/g2Yx/ARDEN TRANSFORMATION/1` salary @

`https://www.bibizip.com/company/view/9RPM/BIKAPPA S.R.L./1` salary @

`https://www.bibizip.com/company/view/ba8z/Cape breton Community Housing Association/1` salary @ |

gryffon/ringteki | 426987852 | Title: Asahina Takako lag on showing fase

Question:

username_0: A card recently placed face-down in a province is not immediately available to view via Takako's ability. It takes another action or a pass (I think) before it becomes available.

It's a really small bug, but having that information fresh can sometimes affect your play.

Cheers! |

Lagalt/Lagalt | 846022584 | Title: Bug: Posting new project with userId returns null user

Question:

username_0: Making a POST request to [https://lagalt-server.herokuapp.com/api/v1/projects](https://lagalt-server.herokuapp.com/api/v1/projects) returns `user: null`. The project is added to the DB and mapped to the correct user, but the response body doesn't show it...

Request body:

`{"title": "testT2", "industry": "testI", "description": "testD", "gitlink": "testGit", "progress": null, "skills": [], "user": {"id": 1}}`

Response (HTTP 201):

`{"id": 15, "title": "testT2", "industry": "testI", "description": "testD", "gitlink": "testGit", "progress": null, "skills": [], "user": null}`

Status: Issue closed

Answers:

username_0: The POST response body now includes the user ID as it should. Rolling back from the Google ID fixed it. |

machinezone/IXWebSocket | 574328039 | Title: "Compressed bit must be 0 if no negotiated deflate-frame extension" in Safari

Question:

username_0: Hello!

We're getting an error when trying to make a WebSocket connection from JavaScript in Safari to a C++ server using the latest version of the IXWebSocket library. The error is as follows:

`Compressed bit must be 0 if no negotiated deflate-frame extension`

We don't get this error as of IXWebSocket's SHA 1bb847a51cc54fc6417831a5a6c8b4e245923b4a but we are getting it with master.

Our suspicion is that it may be related to the per-message deflate compression changes that were made recently. Specifically, it seems that Safari/WebKit does not support per-message deflate (they offer their own "deflate-frame" compression). So, we attempted to turn off per-message deflate in our IXWebSocket server, but we're still getting this error. We're not sure if that means that it's not related to per-message deflate after all, or if it means that we aren't correctly turning it off.

Either way, we thought you'd like to know. For now, we're going to keep using 1bb847a51cc54fc6417831a5a6c8b4e245923b4a. Please let us know if you have any questions or if we can provide any additional information!
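For context on the error text: in RFC 6455 the second-highest bit of a frame's first header byte is RSV1, which the permessage-deflate extension (RFC 7692) uses as the "compressed" flag. If no negotiated extension defines that bit, the receiver must fail the connection. A minimal Python sketch of that check, with a hypothetical function name; it is not IXWebSocket or WebKit code:

```python
from __future__ import annotations

CONTROL_OPCODES = {0x8, 0x9, 0xA}  # close, ping, pong
CONTINUATION = 0x0


def check_first_header_byte(b: int, deflate_negotiated: bool) -> str | None:
    """Validate FIN/RSV/opcode bits of a frame's first byte; None means OK.

    Layout (RFC 6455): FIN=0x80, RSV1=0x40, RSV2=0x20, RSV3=0x10, opcode=0x0F.
    """
    opcode = b & 0x0F
    if b & 0x30:
        return "RSV2/RSV3 must be 0"
    if not b & 0x40:
        return None  # RSV1 clear: nothing compressed, always acceptable
    if not deflate_negotiated:
        # The situation Safari reports: compressed bit set, no extension agreed.
        return "Compressed bit must be 0 if no deflate extension was negotiated"
    if opcode in CONTROL_OPCODES or opcode == CONTINUATION:
        # RFC 7692: only the first frame of a data message may set RSV1.
        return "RSV1 not allowed on control or continuation frames"
    return None
```

So a server that sets RSV1 on outgoing frames even though the handshake didn't negotiate compression would trigger exactly this error in a strict client, which is consistent with, though not proof of, the commit the maintainer identifies.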

Answers:

username_1: Hi Maia,

Thanks for the report. Sorry I broke something recently ... I'm pretty sure that the bad commit is this one:

```
commit 4c66a7561e225103efc9caf262d3224700ccb70b
Author: <NAME> <<EMAIL>>
Date: Tue Feb 18 21:38:28 2020 -0800

    (WebSocketServer) add option to disable deflate compression, exposed with the -x option to ws echo_server
```

The commit right before it is 111475e65c30e23bd215db9dff515f88e680895b, so that one should be safe. I'll try to fix this soon. We now have unit tests hooked up to GitHub Actions, by the way.

username_1: The unit tests are green now; I believe that 4ef04b8 has fixed the problem.

username_0: Confirmed! Looking good with 4ef04b8. Thanks very much for the amazingly quick turnaround, and for making such a great library! This ticket can be closed from our standpoint.

Cheers!

--maia

username_1: Great, thanks for testing and for using it!

The 'fix' could be polished a bit; I don't know when I'll get to that.

username_2: Thanks Benjamin!

username_1: My pleasure Sam, undoing my previous mistake was a piece of cake 🎂🎂🎂🎂 !

Status: Issue closed

|

tobischw/notery | 419763181 | Title: Is this Paginated editor?

Question:

username_0:

Answers:

username_1: Hi Sunjinwu,

this is not the paginated editor but a hackathon submission. I am still working on the paginated editor and hope to have a working example soon. There are still a couple of issues related to backspacing/deleting blocks.

Status: Issue closed

|

prettier/prettier | 654858261 | Title: Adding prettier-ignore comment in JSX doubles the comment

Question:

username_0:

```jsx
test
</div>
);
}
```

**Expected behavior:**

I would expect Prettier to ignore this ugly block entirely. This is probably a pretty uncommon use case, but I was trying to get a `ts-ignore` comment to work in JSX by following the instructions here (https://github.com/microsoft/TypeScript/issues/27552#issuecomment-550531540), and ended up in a state where every time I saved the file, Prettier's auto-formatting would double the number of comments I had.

Answers:

username_1:

```jsx
test
</div>
);
}
```

Status: Issue closed

|

alexryd/homebridge-shelly | 567880135 | Title: Please add support for the new Shelly Bulb Duo

Question:

username_0: Hi there, please add support for the new Shelly Bulb Duo. Homebridge does find the device, but as an unknown device. Please find the output of the describe command below. Thank you and best regards

server:~ server$ homebridge-shelly describe 192.168.x.x

Type: SHBDUO-1

CoAP description: {"blk":[{"I":0,"D":"Channel0"}],"sen":[{"I":111,"T":"S","D":"Brightness","R":"0/100","L":0}]}

CoAP status: {"G":[[0,111,1073740964]]}

HTTP Settings:: {"device":{"type":"SHBDUO-1","mac":"98F4ABD16245","hostname":"ShellyBulbDuo-D16245","num_outputs":1},"wifi_ap":{"enabled":false,"ssid":"ShellyBulbDuo-D16245","key":""},"wifi_sta":{"enabled":true,"ssid":"Wireless","ipv4_method":"dhcp","ip":null,"gw":null,"mask":null,"dns":null},"wifi_sta1":{"enabled":false,"ssid":null,"ipv4_method":"dhcp","ip":null,"gw":null,"mask":null,"dns":null},"mqtt":{"enable":false,"server":"192.168.33.3:1883","user":"","id":"ShellyBulbDuo-D16284","reconnect_timeout_max":60,"reconnect_timeout_min":2,"clean_session":true,"keep_alive":60,"max_qos":0,"retain":false,"update_period":30},"coiot":{"update_period":15},"sntp":{"server":"time.google.com"},"login":{"enabled":false,"unprotected":false,"username":"admin","password":"<PASSWORD>"},"pin_code":"AIwp$C","name":"","fw":"20200129-155730/master@a18bfaec","discoverable":true,"build_info":{"build_id":"20200129-155730/master@a18bfaec","build_timestamp":"2020-01-29T15:57:30Z","build_version":"1.0"},"cloud":{"enabled":true,"connected":true},"timezone":"Europe/Madrid","lat":41.0123,"lng":13.0123,"tzautodetect":true,"tz_utc_offset":3600,"tz_dst":false,"tz_dst_auto":true,"time":"22:57","hwinfo":{"hw_revision":"prod-2019-12","batch_id":0},"mode":"white","transition":1000,"lights":[{"ison":true,"brightness":70,"white":36,"temp":4068,"default_state":"on","auto_on":0,"auto_off":0,"schedule":false,"schedule_rules":[]}],"night_mode":{"enabled":0,"start_time":"00:00","end_time":"00:00","brightness":0}}

HTTP Status:: {"wifi_sta":{"connected":true,"ssid":"Wireless","ip":"192.168.x.x","rssi":-54},"cloud":{"enabled":true,"connected":true},"mqtt":{"connected":false},"time":"22:57","serial":22,"has_update":false,"mac":"98F4ABD16245","lights":[{"ison":true,"brightness":70,"white":36,"temp":4068}],"meters":[{"power":4.41,"is_valid":"true"}],"update":{"status":"idle","has_update":false,"new_version":"20200129-155730/master@a18bfaec","old_version":"20200129-155730/master@a18bfaec"},"ram_total":50656,"ram_free":39600,"fs_size":233681,"fs_free":166915,"uptime":18794}

server:~ server$

Answers:

username_1: +1

username_2: Seems like the Duo is not reporting its state correctly over CoAP. @username_0 could you update your device to the latest firmware and then run the describe command again?

username_1: pi@homebridge:~ $ homebridge-shelly describe 192.168.XXX.XXX

Type: SHBDUO-1

CoAP description: {"blk":[{"I":0,"D":"Channel0"}],"sen":[{"I":121,"T":"S","D":"State","R":"0/1","L":0},{"I":111,"T":"S","D":"Brightness","R":"0/100","L":0},{"I":131,"T":"S","D":"ColorTemperature","R":"2700/6500","L":0},{"I":141,"T":"P","D":"Power","R":"0/9","L":0},{"I":211,"T":"S","D":"Energy counter 0 [W-min]","L":0},{"I":212,"T":"S","D":"Energy counter 1 [W-min]","L":0},{"I":213,"T":"S","D":"Energy counter 2 [W-min]","L":0},{"I":214,"T":"S","D":"Energy counter total [W-min]","L":0}]}

CoAP status: {"G":[[0,121,0],[0,111,37],[0,131,0],[0,141,0],[0,211,0],[0,212,0],[0,213,0],[0,214,3896]]}

HTTP Settings:: {"device":{"type":"SHBDUO-1","mac":"98F4ABD0CF33","hostname":"ShellyBulbDuo-D0CF33","num_outputs":1},"wifi_ap":{"enabled":false,"ssid":"ShellyBulbDuo-D0CF33","key":""},"wifi_sta":{"enabled":true,"ssid":"MySSID","ipv4_method":"static","ip":"192.168.XXX.XXX","gw":"192.168.XXX.1","mask":"255.255.255.0","dns":null},"wifi_sta1":{"enabled":false,"ssid":null,"ipv4_method":"dhcp","ip":null,"gw":null,"mask":null,"dns":null},"mqtt":{"enable":false,"server":"192.168.33.3:1883","user":"","id":"ShellyBulbDuo-D0CF33","reconnect_timeout_max":60,"reconnect_timeout_min":2,"clean_session":true,"keep_alive":60,"max_qos":0,"retain":false,"update_period":30},"coiot":{"update_period":15},"sntp":{"server":"time.google.com","enabled":true},"login":{"enabled":false,"unprotected":false,"username":"admin","password":"<PASSWORD>"},"pin_code":"!w$L#J","name":"","fw":"20200320-123338/v1.6.2@514044b4","discoverable":false,"build_info":{"build_id":"20200320-123338/v1.6.2@514044b4","build_timestamp":"2020-03-20T12:33:38Z","build_version":"1.0"},"cloud":{"enabled":true,"connected":true},"timezone":"Europe/Berlin","lat":53.551102,"lng":9.99368,"tzautodetect":true,"tz_utc_offset":7200,"tz_dst":true,"tz_dst_auto":true,"time":"09:17","unixtime":1586510273,"hwinfo":{"hw_revision":"prod-2019-12","batch_id":0},"mode":"white","transition":1000,"lights":[{"ison":false,"brightness":37,"white":0,"temp":0,"default_state":"on","auto_on":0,"auto_off":0,"schedule":true,"out_on_url":"","out_off_url":"","schedule_rules":["0000bss-0123456-50;0;on","0000bsr-0123456-50;0;off"]}],"night_mode":{"enabled":false,"start_time":"00:00","end_time":"00:00","brightness":0}}

HTTP Status:: {"wifi_sta":{"connected":true,"ssid":"MySSID","ip":"192.168.XXX.XXX","rssi":-66},"cloud":{"enabled":true,"connected":true},"mqtt":{"connected":false},"time":"09:17","unixtime":1586510273,"serial":6846,"has_update":false,"mac":"98F4ABD0CF33","lights":[{"ison":false,"has_timer":false,"timer_remaining":0,"brightness":37,"white":0,"temp":0}],"meters":[{"power":0,"is_valid":"true","timestamp":1586510273,"counters":[0,0,0],"total":3896}],"update":{"status":"idle","has_update":false,"new_version":"20200320-123338/v1.6.2@514044b4","old_version":"20200320-123338/v1.6.2@514044b4"},"ram_total":50376,"ram_free":39440,"fs_size":233681,"fs_free":163652,"uptime":423286}

username_0: Does the code from user username_1 solve it?

username_0: Update done, attached the output:

server:~ server$ homebridge-shelly describe 192.168.x.xx

Type: SHBDUO-1

CoAP description: {"blk":[{"I":0,"D":"Channel0"}],"sen":[{"I":121,"T":"S","D":"State","R":"0/1","L":0},{"I":111,"T":"S","D":"Brightness","R":"0/100","L":0},{"I":131,"T":"S","D":"ColorTemperature","R":"2700/6500","L":0},{"I":141,"T":"P","D":"Power","R":"0/9","L":0},{"I":211,"T":"S","D":"Energy counter 0 [W-min]","L":0},{"I":212,"T":"S","D":"Energy counter 1 [W-min]","L":0},{"I":213,"T":"S","D":"Energy counter 2 [W-min]","L":0},{"I":214,"T":"S","D":"Energy counter total [W-min]","L":0}]}

CoAP status: {"G":[[0,121,0],[0,111,73],[0,131,4828],[0,141,0],[0,211,0],[0,212,0],[0,213,0],[0,214,1601]]}

HTTP Settings:: {"device":{"type":"SHBDUO-1","mac":"xxx","hostname":"ShellyBulbDuo-xxx","num_outputs":1},"wifi_ap":{"enabled":false,"ssid":"ShellyBulbDuo-xxx","key":""},"wifi_sta":{"enabled":true,"ssid":"xxx","ipv4_method":"dhcp","ip":null,"gw":null,"mask":null,"dns":null},"wifi_sta1":{"enabled":false,"ssid":null,"ipv4_method":"dhcp","ip":null,"gw":null,"mask":null,"dns":null},"mqtt":{"enable":false,"server":"192.168.33.3:1883","user":"","id":"ShellyBulbDuo-xxx","reconnect_timeout_max":60,"reconnect_timeout_min":2,"clean_session":true,"keep_alive":60,"max_qos":0,"retain":false,"update_period":30},"coiot":{"update_period":15},"sntp":{"server":"time.google.com","enabled":true},"login":{"enabled":false,"unprotected":false,"username":"admin","password":"<PASSWORD>"},"pin_code":"AIwp$C","name":"","fw":"20200320-123338/v1.6.2@514044b4","discoverable":false,"build_info":{"build_id":"20200320-123338/v1.6.2@514044b4","build_timestamp":"2020-03-20T12:33:38Z","build_version":"1.0"},"cloud":{"enabled":true,"connected":true},"timezone":"Europe/Madrid","lat":46.0564,"lng":14.5081,"tzautodetect":true,"tz_utc_offset":7200,"tz_dst":true,"tz_dst_auto":true,"time":"09:33","unixtime":1586511219,"hwinfo":{"hw_revision":"prod-2019-12","batch_id":0},"mode":"white","transition":1000,"lights":[{"ison":false,"brightness":73,"white":56,"temp":4828,"default_state":"on","auto_on":0,"auto_off":0,"schedule":false,"out_on_url":"","out_off_url":"","schedule_rules":[]}],"night_mode":{"enabled":false,"start_time":"00:00","end_time":"00:00","brightness":0}}

HTTP Status:: {"wifi_sta":{"connected":true,"ssid":"xxx","ip":"192.168.x.xx","rssi":-55},"cloud":{"enabled":true,"connected":true},"mqtt":{"connected":false},"time":"09:33","unixtime":1586511219,"serial":16256,"has_update":false,"mac":"xxx","lights":[{"ison":false,"has_timer":false,"timer_remaining":0,"brightness":73,"white":56,"temp":4828}],"meters":[{"power":0,"is_valid":"true","timestamp":1586511219,"counters":[0,0,0],"total":1601}],"update":{"status":"idle","has_update":false,"new_version":"20200320-123338/v1.6.2@514044b4","old_version":"20200320-123338/v1.6.2@514044b4"},"ram_total":50376,"ram_free":39364,"fs_size":233681,"fs_free":164154,"uptime":1008166}
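The CoAP output above is easier to read once the two parts are paired up. The description's `sen` list names each sensor ID. Each entry in the status `G` list appears to be a `[block, sensor ID, value]` triple.

The sketch below shows one way to decode it. `decode_coap_status` is a hypothetical helper, not part of homebridge-shelly. It uses the description and status strings posted above after the v1.6.2 update. Notably, the first (pre-update) description lists only the Brightness sensor (ID 111), and its status reported a value of 1073740964 for it, well outside the declared `0/100` range.

```python
import json


def decode_coap_status(description_json, status_json):
    """Pair each [block, sensor_id, value] triple in the status "G" list with
    the sensor name ("D") declared in the description's "sen" list."""
    sensors = {sen["I"]: sen["D"] for sen in json.loads(description_json)["sen"]}
    return {sensors.get(sensor_id, f"unknown sensor {sensor_id}"): value
            for _block, sensor_id, value in json.loads(status_json)["G"]}


# Description and status posted above after updating to firmware v1.6.2
DESCRIPTION = (
    '{"blk":[{"I":0,"D":"Channel0"}],"sen":['
    '{"I":121,"T":"S","D":"State","R":"0/1","L":0},'
    '{"I":111,"T":"S","D":"Brightness","R":"0/100","L":0},'
    '{"I":131,"T":"S","D":"ColorTemperature","R":"2700/6500","L":0},'
    '{"I":141,"T":"P","D":"Power","R":"0/9","L":0},'
    '{"I":211,"T":"S","D":"Energy counter 0 [W-min]","L":0},'
    '{"I":212,"T":"S","D":"Energy counter 1 [W-min]","L":0},'
    '{"I":213,"T":"S","D":"Energy counter 2 [W-min]","L":0},'
    '{"I":214,"T":"S","D":"Energy counter total [W-min]","L":0}]}'
)
STATUS = ('{"G":[[0,121,0],[0,111,73],[0,131,4828],[0,141,0],'
          '[0,211,0],[0,212,0],[0,213,0],[0,214,1601]]}')

decoded = decode_coap_status(DESCRIPTION, STATUS)
```

With the updated firmware, the decoded values (State 0, Brightness 73, ColorTemperature 4828) line up with the `lights` entry in the HTTP status.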

username_0: Thank you for taking the time to look into it.

username_2: Thanks @username_0 @username_1. I've added support for the Duo and published it as a beta version. You can try it out by installing `homebridge-shelly@beta` and then restarting homebridge.

username_0: Alexryd, thank you! It works great.

username_1: Yes, it does! Thanks, great job :-)

username_3: Great, thanks, it works.

Any chance of adjusting the light-temp, too?

Have a splendid day =)

username_2: @username_3 You should be able to adjust the color temperature already?

username_3: Hi,

It looks like a local problem: Siri can't access the internet, although other connections work fine.

I'm including the screenshot:

Sorry for not checking further before commenting.


username_0: Confirmed, I can change the brightness and the temperature of the light with HomeKit.

Status: Issue closed

|

odranoelBR/vue-quasar-admin-example | 212611767 | Title: Infinite-scroll CPU spikes

Question:

username_0: Scrolling down to load more todos causes CPU spikes, according to: http://forum.quasar-framework.org/topic/269/quasar-admin-examples/4

Answers:

username_0: I did not find the reason for the peaks.

By the way, I added a timeout (like the Quasar showcase does), and now it's OK.

Fixed: 68b9d277426f7f128b3a38883652807bc2cbd2f3

Thanks for the report on the forum.

Status: Issue closed

|

spring-cloud/spring-cloud-stream-binder-kafka | 261467143 | Title: Consider using KStreamBuilderFactoryBean from Spring Kafka

Question:

username_0: See this comment: https://github.com/spring-cloud/spring-cloud-stream-binder-kafka/pull/198#discussion_r140350539

Answers:

username_0: The latest changes in the `kafka streams` binder, which use `StreamsBuilderFactoryBean` from `spring-kafka`, address this issue.

Status: Issue closed

|

naser44/1 | 119252130 | Title: Mozilla: We no longer need Google's money to keep growing

Question:

username_0: <a href="http://ift.tt/1lOKWo3">Mozilla: We no longer need Google's money to keep growing</a> |

hubmapconsortium/portal-ui | 792271820 | Title: Replace Nystrom with Blood in portal information

Question:

username_0: ## Feedback

__Help Desk Issue Tracker URL__: https://on.spiceworks.com/tickets/open/1080/activity

__Message__: Please update the contact info for questions about HuBMAP infrastructure from <NAME> to <NAME> (<EMAIL>). Here is an example, although there might be others:

https://portal.hubmapconsortium.org/docs/infrastructure

Answers:

username_1: Added to the next sprint. |

sbt/sbt | 519068504 | Title: Stopping sbt requires pressing CTRL+C twice

Question:

username_0: ## steps

sbt version: 1.3.3

1. Start an akka-http app via sbt

2. CTRL+C => exception but sbt / akka still runs

3. CTRL+C => sbt stops

## problem

The following exception occurs for the first CTRL+C

```
Exception in thread "sbt-bg-threads-1" java.util.concurrent.RejectedExecutionException
    at java.base/java.util.concurrent.ForkJoinPool.externalPush(ForkJoinPool.java:1880)
    at java.base/java.util.concurrent.ForkJoinPool.externalSubmit(ForkJoinPool.java:1921)
    at java.base/java.util.concurrent.ForkJoinPool.execute(ForkJoinPool.java:2453)
    at scala.concurrent.impl.ExecutionContextImpl.execute(ExecutionContextImpl.scala:24)
    at sbt.internal.BackgroundThreadPool$BackgroundRunnable.$anonfun$cleanup$1(DefaultBackgroundJobService.scala:390)
    at sbt.internal.BackgroundThreadPool$BackgroundRunnable.$anonfun$cleanup$1$adapted(DefaultBackgroundJobService.scala:389)
    at scala.collection.immutable.List.foreach(List.scala:392)
    at sbt.internal.BackgroundThreadPool$BackgroundRunnable.cleanup(DefaultBackgroundJobService.scala:389)
    at sbt.internal.BackgroundThreadPool$BackgroundRunnable.run(DefaultBackgroundJobService.scala:359)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
    at java.base/java.lang.Thread.run(Thread.java:834)
```

## expectation

It should stop immediately.

## notes

This behaviour changed when we updated sbt from 1.2.8 to 1.3.0 (maybe with 1.3.2)

Answers:

username_1: This is especially tricky when trying to kill the sbt process via script.

username_2: May be related: after an update to SBT 1.3.3, Play! framework cannot be stopped using CTRL-C when launched using `sbt run`.

It only logs `[warn] Canceling execution...` and does not stop. This forces me to kill the process.

Reverting to 1.2.8 fixes the problem. Going to 1.3.0 makes it happen again.

username_3: Seeing this too on sbt 1.3.3 with Akka microservices.

Status: Issue closed

username_4: @username_0 Thanks for the report. This is one of the sbt 1.3.x features we added: Ctrl-C cancels the running task instead of exiting sbt.

If you want the older behavior, you can do this:

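```scala
// sbt 1.3.x makes Ctrl-C cancel the running task; setting this to false
// restores the older behavior, where Ctrl-C exits sbt
Global / cancelable := false
```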

username_5: @username_4 This seems to be caused by `StandardMain.executionContext` being shut down by `StandardMain.closeRunnable` before `BackgroundRunnable.cleanup()` gets its chance to execute the `stopListeners` callbacks on it:

https://github.com/sbt/sbt/blob/v1.3.8/main/src/main/scala/sbt/Main.scala#L123

https://github.com/sbt/sbt/blob/v1.3.8/main/src/main/scala/sbt/internal/DefaultBackgroundJobService.scala#L390

This leads to an infinite loop in `AbstractBackgroundJobService.shutdown()` because the job is never removed from `jobSet`:

https://github.com/sbt/sbt/blob/v1.3.8/main/src/main/scala/sbt/internal/DefaultBackgroundJobService.scala#L390

Should the `stopListeners` callbacks be executed on `AbstractBackgroundJobService.pool` instead? That pool seems to stay alive exactly as long as all the jobs and their callbacks are executing:

https://github.com/sbt/sbt/blob/v1.3.8/main/src/main/scala/sbt/internal/DefaultBackgroundJobService.scala#L168
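The ordering problem described above can be reproduced in a small Python analog. This is not sbt's code: `ToyJobService`, `job_set`, and `run_job` are hypothetical names chosen to mirror the description.

In `concurrent.futures`, submitting work to an executor that has already been shut down raises `RuntimeError`, much as Java's `ForkJoinPool` throws `RejectedExecutionException`. Cleanup therefore never removes the job, and any shutdown loop waiting for an empty job set would spin forever. Running the callbacks on a pool owned by the job service, as proposed, lets cleanup finish.

```python
from concurrent.futures import ThreadPoolExecutor


class ToyJobService:
    """Hypothetical stand-in for a background job service (not sbt's API)."""

    def __init__(self, callback_executor):
        self.job_set = set()
        self.callback_executor = callback_executor

    def run_job(self, name, stop_listeners):
        self.job_set.add(name)
        # Cleanup: run every stop listener on the callback executor, then
        # forget the job. If that executor was already shut down, submit()
        # raises RuntimeError and the job is never removed from job_set.
        try:
            for listener in stop_listeners:
                self.callback_executor.submit(listener).result()
        except RuntimeError:
            return False
        self.job_set.discard(name)
        return True


# Broken ordering: the shared executor is shut down before cleanup runs.
shared = ThreadPoolExecutor(max_workers=1)
shared.shutdown()
broken = ToyJobService(shared)
broken_ok = broken.run_job("app", [lambda: None])

# Proposed ordering: callbacks run on a pool that outlives the jobs.
own_pool = ThreadPoolExecutor(max_workers=1)
fixed = ToyJobService(own_pool)
fixed_ok = fixed.run_job("app", [lambda: None])
own_pool.shutdown()
```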

I’ll create a PR to address this. |

glotzerlab/signac | 543732545 | Title: H5Store locking issues

Question:

username_0: ### Description

I have been facing two issues that may or may not be related. Both issues are related to HDF5 file locking, a feature that was [implemented in HDF5 1.10.0 and changed in HDF5 1.10.1](https://support.hdfgroup.org/ftp/HDF5/releases/ReleaseFiles/hdf5-1.10.1-RELEASE.txt). This file locking feature is meant to help make SWMR (single writer, multiple reader) operations safer.

### To reproduce

The tests for storing pandas DataFrames in an H5Store fail. The file is already open from the `H5Store` instance, so when the `_h5get` function creates a pandas `HDFStore` object (which uses pytables internally), the file fails to open because it's already locked by the `H5Store`.

### Error output

Example of a test failure message:

```python

======================================================================

ERROR: test_clear (__main__.H5StorePandasDataTest)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/home/username_0/miniconda3/envs/py38/lib/python3.8/site-packages/pandas/io/pytables.py", line 627, in open

self._handle = tables.open_file(self._path, self._mode, **kwargs)

File "/home/username_0/miniconda3/envs/py38/lib/python3.8/site-packages/tables/file.py", line 315, in open_file

return File(filename, mode, title, root_uep, filters, **kwargs)

File "/home/username_0/miniconda3/envs/py38/lib/python3.8/site-packages/tables/file.py", line 778, in __init__

self._g_new(filename, mode, **params)

File "tables/hdf5extension.pyx", line 492, in tables.hdf5extension.File._g_new

tables.exceptions.HDF5ExtError: HDF5 error back trace

File "H5F.c", line 509, in H5Fopen

unable to open file

File "H5Fint.c", line 1400, in H5F__open

unable to open file

File "H5Fint.c", line 1615, in H5F_open

unable to lock the file

File "H5FD.c", line 1640, in H5FD_lock

driver lock request failed

File "H5FDsec2.c", line 941, in H5FD_sec2_lock

unable to lock file, errno = 11, error message = 'Resource temporarily unavailable'

End of HDF5 error back trace

Unable to open/create file '/tmp/signac_test_h5store_952j661f/signac_test_h5store.h5'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "test_h5store.py", line 286, in test_clear

self.assertEqual(h5s[key], d)

File "/home/username_0/code/signac/signac/core/h5store.py", line 405, in __getitem__

return _h5get(self, self._file, key)

File "/home/username_0/code/signac/signac/core/h5store.py", line 137, in _h5get

with _pandas.HDFStore(grp.file.filename, mode='r') as store_:

File "/home/username_0/miniconda3/envs/py38/lib/python3.8/site-packages/pandas/io/pytables.py", line 505, in __init__

self.open(mode=mode, **kwargs)

File "/home/username_0/miniconda3/envs/py38/lib/python3.8/site-packages/pandas/io/pytables.py", line 661, in open

raise IOError(str(e))

OSError: HDF5 error back trace

File "H5F.c", line 509, in H5Fopen

unable to open file

File "H5Fint.c", line 1400, in H5F__open

unable to open file

File "H5Fint.c", line 1615, in H5F_open

[Truncated]

Default FS encoding: utf-8

Default locale: (en_US, UTF-8)

-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

Summary of the h5py configuration

---------------------------------

h5py 2.10.0

HDF5 1.10.5

Python 3.7.6 | packaged by conda-forge | (default, Dec 27 2019, 00:09:34)

[GCC 7.3.0]

sys.platform linux

sys.maxsize 9223372036854775807

numpy 1.17.3

```

### System configuration

- Operating System: Ubuntu 18.04

- Version of Python: 3.7 or 3.8

- Version of signac: 1.3

Answers:

username_1: @username_0 is there anything we can do about this other than document the usage of `HDF5_USE_FILE_LOCKING` (with all appropriate "here there be dragons" warnings for SWMR users)?
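A minimal sketch of the `HDF5_USE_FILE_LOCKING` workaround mentioned above. It assumes you accept losing the protection that HDF5 1.10's file locking gives SWMR readers.

Setting the variable to `FALSE` turns that locking off for the process. To be safe, set it before h5py or PyTables is imported, so the HDF5 library sees it from the start. `disable_hdf5_file_locking` is a hypothetical helper, not part of signac.

```python
import os
import sys
import warnings


def disable_hdf5_file_locking():
    """Turn off HDF5 (>= 1.10) file locking for this process (hypothetical helper).

    Warning: this removes the safety net that file locking provides for
    single-writer/multiple-reader (SWMR) access.
    """
    if "h5py" in sys.modules or "tables" in sys.modules:
        warnings.warn("h5py/tables already imported; set HDF5_USE_FILE_LOCKING "
                      "before importing them to be sure it takes effect")
    os.environ["HDF5_USE_FILE_LOCKING"] = "FALSE"


disable_hdf5_file_locking()
```

Exporting the variable in the shell (`export HDF5_USE_FILE_LOCKING=FALSE`) before starting Python achieves the same thing without touching code.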

username_0: @username_1 This was partly resolved by #266. Otherwise

Status: Issue closed

|

SunPower/PVMismatch | 249247218 | Title: use lookup table for 2-diode parameters (a la SAM)

Question:

username_0: related to #50 and #51

At PVSC Janine Freeman introduced a great idea to nearly eliminate errors from diode-analog cell models, which was to use a lookup table.

Since we already have the `pvmismatch.contrib.gen_coeffs` methods, all we need is to loop over it so it creates an IEC-61853 table but for Isat1, Isat2, Rs and Rsh instead of Imp, Vmp, Isc, and Voc.

Then we would have to add the interpolation methods to `pvmismatch.pvcell`, or at least the option to use them.
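The loop-then-interpolate idea could look roughly like the sketch below. It assumes a fit function `fit_fn(E, T)` that returns `(Isat1, Isat2, Rs, Rsh)` at one irradiance and cell temperature. In practice this would be a wrapper around your `gen_coeffs`-based fit; its exact call is not shown here.

`build_table`, `make_lookup`, and `toy_fit` are hypothetical names, not PVMismatch API. Interpolation uses SciPy's `RegularGridInterpolator` (bilinear), which may differ from what SAM does. The grid uses the irradiance and temperature levels of the IEC 61853-1 matrix. `toy_fit` is a non-physical linear stand-in so the example runs on its own.

```python
import numpy as np
from scipy.interpolate import RegularGridInterpolator

PARAM_NAMES = ("Isat1", "Isat2", "Rs", "Rsh")

# Irradiance (W/m^2) and cell temperature (degC) levels of the IEC 61853-1 matrix
IRRADIANCES = np.array([100.0, 200.0, 400.0, 600.0, 800.0, 1000.0, 1100.0])
TEMPERATURES = np.array([15.0, 25.0, 50.0, 75.0])


def build_table(fit_fn, irradiances, temperatures):
    """Call fit_fn(E, T) -> (Isat1, Isat2, Rs, Rsh) at every grid point."""
    table = np.empty((len(irradiances), len(temperatures), len(PARAM_NAMES)))
    for i, e in enumerate(irradiances):
        for j, t in enumerate(temperatures):
            table[i, j, :] = fit_fn(e, t)
    return table


def make_lookup(irradiances, temperatures, table):
    """Return lookup(E, T) -> dict of bilinearly interpolated parameters."""
    interp = RegularGridInterpolator((irradiances, temperatures), table)

    def lookup(e, t):
        return dict(zip(PARAM_NAMES, interp([[e, t]])[0]))

    return lookup


def toy_fit(e, t):
    # NOT a physical model: a linear stand-in so the sketch is self-contained.
    return (1e-11 * (1.0 + 0.01 * t), 1e-7, 0.004 + 1e-6 * e, 10.0 + 0.01 * e)


lookup = make_lookup(IRRADIANCES, TEMPERATURES,
                     build_table(toy_fit, IRRADIANCES, TEMPERATURES))
params = lookup(500.0, 30.0)
```

Storing the whole table as one array with the four parameters in the last axis means a single interpolator call returns all four values at once.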

Answers:

username_0: see [**Significant Improvement in Module Performance Prediction Accuracy Based on IEC-61853 Data** by <NAME>an at PVPMC-8](https://www.slideshare.net/sandiaecis/06-20170509-freeman-8th-pvpmc-iec-61853-presentation)

username_0: see [Trello](https://trello.com/c/qpLucqHp)

username_1: Dear Mikofski: I saw the function `gen_coeffs.gen_two_diode()`, which can return cell parameters such as Isat1, Isat2, Rs, and Rsh. However, some R&D projects also need the cell reverse-bias parameters such as ARBD, BRBD, VRBD, and NRBD. Can the current package solve this problem?

username_0: @username_1, no, I didn't add methods to fit reverse breakdown parameters, because AFAIK there isn't an IEC standard to test or measure for this, and often there is little or no published data on reverse bias characteristics of cells or modules.

But I agree that we should at least provide some guidelines or rules of thumb, maybe in the documentation or the wiki. Probably we should get a few other researchers to give their input?

The equations for the reverse breakdown are in issue #25 and in [`pvcell.py`](../blob/master/pvmismatch/pvmismatch_lib/pvcell.py#L169-L174).

Here's what I would suggest:

1. Try to find out what the breakdown voltage is and use that to set `VRBD`. Typically, front-contact p-type c-Si cells break down in reverse bias somewhere between -17.0 V and -25.0 V. SunPower modules are back-contact n-type, and they break down between -3.5 V and -5.5 V.

2. Try to get real reverse-bias test data and fit it using [`scipy.optimize.curve_fit`](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html) to get ARBD, BRBD and NRBD using the equation from #25 and . Otherwise, just use the defaults for ARBD, BRBD, and NRBD. Unfortunately, this model is not great, but there is an issue (#26) to make a more flexible reverse-bias model.
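Step 2 could look roughly like the following sketch. Its assumptions:

- It uses a Bishop-style reverse-bias shunt term, I = (V/Rsh)·[1 + a·(1 − V/VRBD)^(−n)], and leaves BRBD out. Check the exact form in `pvcell.py` and #25 before reusing any fitted numbers.
- It holds Rsh and VRBD fixed (VRBD from step 1).
- It linearizes the model so that `curve_fit` only has to fit a straight line.

The "measurements" are synthetic and noise-free, generated from the model itself with illustrative values. With real, noisy data, the linearization amplifies noise near V = 0, so fitting the nonlinear model directly may be more robust. `reverse_bias_current` and `fit_a_n` are hypothetical names.

```python
import numpy as np
from scipy.optimize import curve_fit


def reverse_bias_current(v, rsh, a_rbd, v_rbd, n_rbd):
    """Shunt current with a Bishop-style avalanche term (BRBD omitted)."""
    return v / rsh * (1.0 + a_rbd * (1.0 - v / v_rbd) ** (-n_rbd))


def fit_a_n(v, i, rsh, v_rbd):
    """Fit a_rbd and n_rbd with rsh and v_rbd held fixed.

    Linearized: log(i*rsh/v - 1) = log(a_rbd) - n_rbd * log(1 - v/v_rbd).
    Requires v_rbd < v < 0 so both logarithms are defined.
    """
    x = np.log(1.0 - v / v_rbd)
    y = np.log(i * rsh / v - 1.0)

    def line(x_, log_a, n):
        return log_a - n * x_

    (log_a, n_rbd), _cov = curve_fit(line, x, y, p0=(0.0, 1.0))
    return np.exp(log_a), n_rbd


# Synthetic, noise-free "measurements" (illustrative values, not real cell data)
RSH, VRBD = 20.0, -5.5
v_meas = np.linspace(-5.2, -0.1, 40)
i_meas = reverse_bias_current(v_meas, RSH, 0.05, VRBD, 3.0)
a_fit, n_fit = fit_a_n(v_meas, i_meas, RSH, VRBD)
```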

username_1: Thanks, Mark.

I will try following your idea, and I will give you feedback on the results, whether good or bad.

Hoping PVMismatch becomes more and more powerful.

username_2: What does your fitting data look like? I have a “global” single-diode model fitting already in production, and I’m always curious to compare fit values between single- and double-diode models. |

ClinGen/clincoded | 186000446 | Title: Suppress a few moi terms in GDM pull down (for create GDM page)

Question:

username_0: We need to suppress a few terms in the moi of the Create GDM pull-down:

Also, as shown in the image, change "Autosomal unknown" to "Unknown".

Answers:

username_0: There is one record (from Tam) that uses one of the moi's we "X"d out. Could we switch it to "X-linked inheritance", and then add the adjective "recessive" when we add the second pull-down (R9)? I can let Tam know. "X-linked inheritance" is still correct.

username_1: For reference in production:

**GDMs mode of inheritance (only 3 types have been used, in 21 records)**

- Autosomal recessive inheritance (HP:0000007)

- X-linked recessive inheritance (HP:0001419)

- Autosomal dominant inheritance (HP:0000006)

**Interpretations mode of inheritance (only 2 types have been used [of 8 records that have mode of inheritance])**

- Autosomal recessive inheritance (HP:0000007)

- Autosomal dominant inheritance (HP:0000006)

username_1: https://1103-kd-modeofinheritancechange-2ee9609-karen.demo.clinicalgenome.org

username_0: Looks/works great in release candidate for both GCI and VCI - thank you for adding.

username_1: Included in last release (R8). Nice job and thanks for your hard work.

Status: Issue closed

|

OpenZeppelin/openzeppelin-test-helpers | 769013313 | Title: patch for "npm audit fix"

Question:

username_0: If I understood correctly, the latest available version, `"@openzeppelin/test-helpers": "0.5.9"`, needs the following updates:

```

┌───────────────┬──────────────────────────────────────────────────────────────┐

│ High │ Signature Malleability │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Package │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Patched in │ >=6.5.3 │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Dependency of │ @openzeppelin/test-helpers [dev] │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Path │ @openzeppelin/test-helpers > @truffle/contract > web3 > │

│ │ web3-eth > web3-eth-abi > ethers > elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ More info │ https://npmjs.com/advisories/1547 │

└───────────────┴──────────────────────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────────────────────────────────────┐

│ High │ Signature Malleability │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Package │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Patched in │ >=6.5.3 │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Dependency of │ @openzeppelin/test-helpers [dev] │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Path │ @openzeppelin/test-helpers > @truffle/contract > web3 > │

│ │ web3-eth > web3-eth-contract > web3-eth-abi > ethers > │

│ │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ More info │ https://npmjs.com/advisories/1547 │

└───────────────┴──────────────────────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────────────────────────────────────┐

│ High │ Signature Malleability │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Package │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Patched in │ >=6.5.3 │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Dependency of │ @openzeppelin/test-helpers [dev] │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Path │ @openzeppelin/test-helpers > @truffle/contract > web3 > │

│ │ web3-eth > web3-eth-ens > web3-eth-contract > web3-eth-abi > │

│ │ ethers > elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ More info │ https://npmjs.com/advisories/1547 │

└───────────────┴──────────────────────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────────────────────────────────────┐

│ High │ Signature Malleability │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Package │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Patched in │ >=6.5.3 │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Dependency of │ @openzeppelin/test-helpers [dev] │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Path │ @openzeppelin/test-helpers > @truffle/contract > web3 > │

│ │ web3-eth > web3-eth-ens > web3-eth-abi > ethers > elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ More info │ https://npmjs.com/advisories/1547 │

└───────────────┴──────────────────────────────────────────────────────────────┘

┌───────────────┬──────────────────────────────────────────────────────────────┐

│ High │ Signature Malleability │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Package │ elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Patched in │ >=6.5.3 │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Dependency of │ @openzeppelin/test-helpers [dev] │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ Path │ @openzeppelin/test-helpers > @truffle/contract > │

│ │ web3-eth-abi > ethers > elliptic │

├───────────────┼──────────────────────────────────────────────────────────────┤

│ More info │ https://npmjs.com/advisories/1547 │

└───────────────┴──────────────────────────────────────────────────────────────┘

```

Answers:

username_1: Hi @username_0! I’m sorry that you had this issue.

Thanks so much for reporting it! The project owner will review and triage this issue as soon as they can.

Some of the dependencies could be updated to resolve some of these issues for OpenZeppelin Test Helpers (similar to: https://github.com/OpenZeppelin/openzeppelin-test-environment/issues/152).

username_2: +1

`found 44102 vulnerabilities (42409 low, 24 moderate, 1669 high) in 2328 scanned packages`

very annoying actually :-)

username_3: @username_0 I've released a new version that will allow updating the @truffle/contract dependency to one that doesn't have the vulnerabilities you reported.

---

@username_2 Where did you get that `npm audit` report? After this release the only reported vulnerabilities in `npm audit` for OpenZeppelin Test Helpers should be a couple of low severity ones originating in web3.

Status: Issue closed

|

HubSpot/hubspot-api-nodejs | 1013815373 | Title: Duplicate parameter names for some API definitions

Question:

username_0: Some .d.ts files have duplicate parameter names.

The examples I've found are in filesApi.d.ts:

`options` appears twice in `replace` and `upload`

https://unpkg.com/browse/@hubspot/[email protected]/lib/codegen/files/api/filesApi.d.ts

This may be a more systemic issue than just this file.

Answers:

username_0: One further note: this doesn't seem to be just an issue with the definitions. I think the JavaScript is equally affected, since both parameters have the same name there too, so the logic is probably wrong. |

mozillazg/python-pinyin | 782581605 | Title: Provide helper functions for converting between pinyin tone styles

Question:

username_0: Provide several helper functions for converting between pinyin tone styles, implementing the following conversions:

* `nǐ hǎo` -> `ni hao`

* `nǐ hǎo` -> `ni3 ha3o`

* `ni3 ha3o` -> `nǐ hǎo`

* `ni3 ha3o` -> `ni3 hao3`

* `ni3 ha3o` -> `ni hao`

* `nǐ hǎo` -> `ni3 hao3`

* `ni3 hao3` -> `nǐ hǎo`

* `ni3 hao3` -> `ni3 ha3o`

* `ni3 hao3` -> `ni hao`
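The nine conversions above can be built from four small helpers plus a "strip tones" function. The sketch below is standalone. The helpers python-pinyin actually added may differ, and the function names here are hypothetical.

It assumes lowercase input and covers only the vowels a, e, i, o, u, ü. Syllabic m/n/ng and the `v` spelling of ü are out of scope. The three styles are handled as follows:

- `nǐ hǎo` carries tone marks.
- `ni3 ha3o` puts the tone digit right after the marked vowel.
- `ni3 hao3` puts the digit at the end of the syllable.

Moving a digit from the end back onto a vowel uses the usual placement rule: a or e takes the mark, o takes it in "ou", and otherwise the last vowel does.

```python
import re
import unicodedata

# Toned vowel -> (plain vowel, tone 1-4); only a e i o u ü are covered.
_TONED = {}
for _plain, _marks in {"a": "āáǎà", "e": "ēéěè", "i": "īíǐì",
                       "o": "ōóǒò", "u": "ūúǔù", "ü": "ǖǘǚǜ"}.items():
    for _tone, _ch in enumerate(_marks, start=1):
        _TONED[_ch] = (_plain, _tone)
_MARKED = {key: ch for ch, key in _TONED.items()}
_SYLLABLE = re.compile(r"[a-zü1-5]+")


def tone_to_tone2(text):
    """nǐ hǎo -> ni3 ha3o (digit right after the marked vowel)."""
    text = unicodedata.normalize("NFC", text)
    return "".join(f"{_TONED[c][0]}{_TONED[c][1]}" if c in _TONED else c
                   for c in text)


def tone2_to_tone(text):
    """ni3 ha3o -> nǐ hǎo."""
    return re.sub(r"([aeiouü])([1-4])",
                  lambda m: _MARKED[(m.group(1), int(m.group(2)))], text)


def tone2_to_tone3(text):
    """ni3 ha3o -> ni3 hao3 (digit moved to the end of each syllable)."""
    def move(m):
        s = m.group(0)
        return re.sub(r"[1-5]", "", s) + "".join(re.findall(r"[1-5]", s))
    return _SYLLABLE.sub(move, text)


def tone3_to_tone2(text):
    """ni3 hao3 -> ni3 ha3o (digit placed after the vowel carrying the tone)."""
    def place(m):
        s = m.group(0)
        if not s[-1].isdigit():
            return s
        letters, tone = s[:-1], s[-1]
        if "a" in letters:
            idx = letters.index("a")
        elif "e" in letters:
            idx = letters.index("e")
        elif "ou" in letters:
            idx = letters.index("ou")
        else:
            vowels = [i for i, c in enumerate(letters) if c in "iouü"]
            if not vowels:
                return s
            idx = vowels[-1]
        return letters[:idx + 1] + tone + letters[idx + 1:]
    return _SYLLABLE.sub(place, text)


def to_normal(text):
    """Any of the styles above -> ni hao."""
    return re.sub(r"[1-5]", "", tone_to_tone2(text))
```

The remaining conversions are compositions. For example, `nǐ hǎo -> ni3 hao3` is `tone2_to_tone3(tone_to_tone2(text))`, and `ni3 hao3 -> nǐ hǎo` is `tone2_to_tone(tone3_to_tone2(text))`.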

Status: Issue closed |

topcoder-platform/TCO21-Regionals-QA-Competition | 961167996 | Title: [Chrome] App shows 2 feedback buttons in the “Specs” Tab

Question:

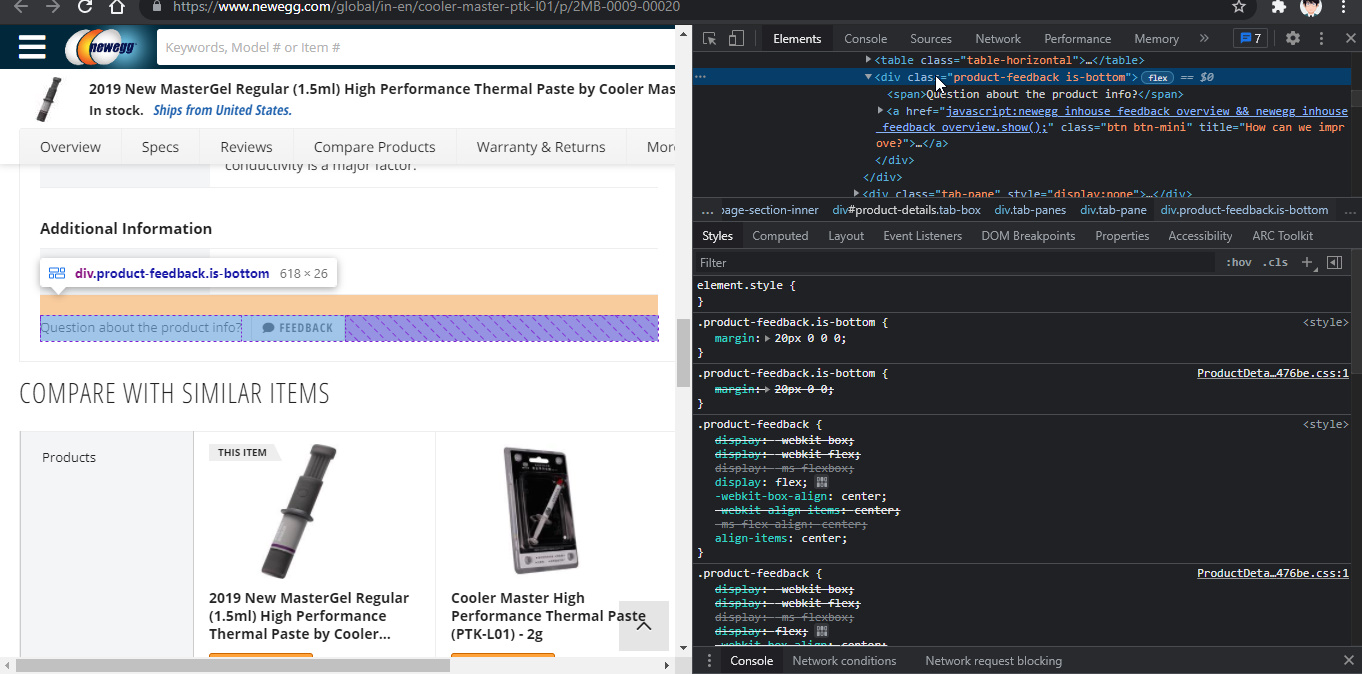

username_0: Summary :

[Chrome] App shows 2 feedback buttons in the “Specs” Tab

Target URL:

https://www.newegg.com/cooler-master-pro/p/2MB-0009-00008

Steps to reproduce:

1. Open the https://www.newegg.com/cooler-master-pro/p/2MB-0009-00008

2. Scroll down and click “Specs”

3. Verify the count of Feedback button

Actual:

App shows 2 feedback buttons in the “Specs” Tab

Expected:

App should show only 1 feedback button in the “Specs” Tab

Environment:

• Device(s): Windows

• Resolution: 1920×1080

• Operating System: Windows 10

• Browser(s): Chrome | Version 92.0.4515.131 (Official Build) (64-bit)

https://user-images.githubusercontent.com/31862600/128264990-446deea1-4be4-4109-8bdf-80a36ec8cf37.mp4

Answers:

username_1: Valid

username_2: Not a bug. If the content is long, the user would have to scroll all the way back up to give `Feedback`, so the application shows feedback buttons at both the top and the bottom. This is intentional; check the `class` names in the code:

```

<div class="product-feedback is-bottom"><span>Question about the product info?</span><a href="javascript:newegg_inhouse_feedback_overview && newegg_inhouse_feedback_overview.show();" class="btn btn-mini" title="How can we improve?"><i class="fas fa-comment"></i> Feedback</a></div>

```

```

<div class="product-feedback is-top"><span>Question about the product info?</span><a href="javascript:newegg_inhouse_feedback_overview && newegg_inhouse_feedback_overview.show();" class="btn btn-mini" title="How can we improve?"><i class="fas fa-comment"></i> Feedback</a></div>

```

**Submitter**: 0 Points

**Challenger**: 0 Points | Invalid challenge,

ref: https://github.com/topcoder-platform/TCO21-Regionals-QA-Competition/issues/193#issuecomment-894592042 |

richterger/Perl-LanguageServer | 747523395 | Title: Question: Disable Symbol list provider

Question:

username_0: Hi @username_1

Since the symbol table sometimes gets stuck, is it possible to disable it in this extension so that another provider can be used for the symbol list?

((enjoy))

cr

Status: Issue closed

Answers:

username_1: You can disable the whole extension, because the whole extension was getting stuck. Hopefully this issue is solved with commit c3e8670c41a28027b27dbe5d9a8394c49ce640c7 |

phpactor/phpactor | 611644894 | Title: [RPC][transform] Convert transform sub-commands to first-class commands

Question:

username_0: Possible sub-commands of `transform` at the moment:

- complete_constructor

- add_missing_properties

- fix_namespace_class_name

- implement_contracts

I think it would be good to convert these "sub-commands" to "first-class" commands because:

- it would allow to run them directly without going through the `transform` command

- it would allow to specify extra arguments for any of them without affecting the others if the need arises later

- it would allow to list them in the context menu directly: currently you need to pick transform from the context menu, then pick a transformation, instead of just picking the command in 1 step (unless you provide a key-binding to trigger the transform-choice menu directly)

- it would allow to show/hide them in the context menu based on the given context: e.g. in the constructor the `complete_constructor` is relevant while the `fix_namespace_class_name` and `implement_contracts` are not

- it would simplify the context menu: having to go multiple choice steps can be annoying

Basically what I am saying is that it is better to make the `context_menu` command a bit more smart and let it provide relevant commands in the given context instead of providing commands which trigger further choice menus (triggering an input callback is still fine if the picked command needs further input).

Note that even if you convert these to "first-class" command as I suggested above you could still keep the current "transform choice menu" if you want to. It would be just a chooser type command like the `context_menu` anyway.
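The context-aware menu proposed above could be modeled roughly as follows. This Python sketch illustrates the idea only; it is not phpactor code, which is PHP with its menu defined in `menu.json`.

`Command`, `COMMANDS`, and `context_menu` are hypothetical names. The context assigned to each command is an illustrative guess, apart from the issue's own example: in a constructor, `complete_constructor` applies while `fix_namespace_class_name` and `implement_contracts` do not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    name: str
    contexts: frozenset  # context kinds in which the command is relevant


# Illustrative context assignments (hypothetical)
COMMANDS = (
    Command("complete_constructor", frozenset({"constructor"})),
    Command("add_missing_properties", frozenset({"class", "constructor"})),
    Command("fix_namespace_class_name", frozenset({"file"})),
    Command("implement_contracts", frozenset({"class"})),
)


def context_menu(context):
    """List the first-class commands relevant to the cursor's context."""
    return [c.name for c in COMMANDS if context in c.contexts]
```

Because each command is first-class, the menu becomes a simple filter, and the same commands can still be invoked directly without going through a chooser.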

Answers:

username_1: These are "context less" refactorings - they apply to the entire file but there is no reason they cannot be part of the context menu - https://github.com/phpactor/phpactor/blob/develop/lib/Extension/ContextMenu/menu.json (other than running out of space and key bindings ...) |

mbrn/material-table | 529277988 | Title: Problem with update editcomponent by props

Question:

username_0: Hi, I am facing a problem: I built a custom editComponent with react-select, and its option values come from props. However, material-table does not re-render after receiving the new props.

I have tried two ways to solve it. First, I set the react-select `options` to `props.selectList`.

Second, I tried to reset the whole columns definition, but it is still not working.

Is there any way to solve this problem?
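The editor in the code below turns a comma-separated string into react-select options and back inline. A minimal sketch of that round trip as two plain helpers, assuming the column value is a comma-separated string (or empty/undefined for a freshly added row) and that react-select may hand `onChange` a `null` instead of an empty array when a multi-select is cleared, as some versions do. `toOptions` and `fromOptions` are hypothetical names, not part of material-table or react-select. Separately, note that inside `editComponent: props => (...)` the parameter `props` shadows the surrounding component's `props`, so `props.selectList` there reads the edit-component props (which most likely do not carry `selectList`) rather than the list passed to the outer component; renaming the parameter, e.g. to `editProps`, keeps the outer list visible.

```javascript
// Hypothetical helpers for a multi-select column whose value is stored
// as a comma-separated string such as "a,b,c".

// Convert the stored string into react-select option objects.
// Empty or missing values (e.g. a new row) become an empty array, and
// empty segments are dropped instead of being turned into "".
function toOptions(csv) {
  if (!csv) return [];
  return csv
    .split(',')
    .filter((item) => item !== '')
    .map((item) => ({ value: item, label: item }));
}

// Convert the selected options back into the stored string.
// A null/undefined selection (a cleared multi-select) becomes "".
function fromOptions(selected) {
  return (selected || []).map((option) => option.value).join(',');
}
```

Inside the editor, `value={toOptions(editProps.value)}` and `onChange={(selected) => editProps.onChange(fromOptions(selected))}` would then replace the inline `split`/`map`/`join` calls.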

Following is my code

```jsx
const updateState = (list) => {
  let tempState = state
  tempState.columns[4].editComponent = props => (
    <Select
      name='positiveNumerator'
      options={list}
      value={props.value.split(',').map(function(elem){
        return(elem==""? "": {value:elem, label:elem})
      })}
      isMulti={true}
      onChange={e => props.onChange(e.map(function(elem){return elem.value}).join(','))}
    />
  )
}

const [items, setItems] = useState(props.selectList)

useEffect(() => {
  // props change
  console.log(`items`, items, props.selectList);
  setItems(props.selectList);
  updateState(props.selectList)
}, [props.selectList]);

const [state, setState] = useState({
  columns: [
    {
      title: 'Positive Numerator',
      field: 'positiveNumerator',
      editComponent: props => (
        <Select
          name='positiveNumerator'
          options={props.selectList}
          value={props.value.split(',').map(function(elem){
            return(elem==""? "": {value:elem, label:elem})
          })}
          isMulti={true}
          onChange={e => props.onChange(e.map(function(elem){return elem.value}).join(','))}
        />
      ),
    },
  ],
});
``` |

HypixelDev/PublicAPI | 505612619 | Title: Json schema

Question:

username_0: Currently it's hard to figure out whether something will return a double or an integer. If there were a JSON schema for the returned objects, it would be easy to write a wrapper in any other language by using https://app.quicktype.io/ to convert the schema into the desired language.

Status: Issue closed

Answers:

username_1: Unfortunately, the API is mostly data served directly from our database. So creating a schema for all of the data isn't really on the table. |

bigskysoftware/htmx | 892572418 | Title: Input array not send empty fields

Question:

username_0: Hello, I have a form with an additional multi-value field, and I use the input's name to turn it into an array that I receive in the PHP backend, something like:

`<input type="text" name="subject[]">`

Since I can have several of these and the fields are optional, htmx only sends the ones that are filled in and ignores the empty ones, which ends up building the array incorrectly on the server side. With jQuery Ajax it works correctly. Is there any way to resolve this?

https://www.php.net/manual/en/faq.html.php#faq.html.arrays

Answers:

username_0: @username_1 can you help me with this problem?

username_1: htmx does it the "normal" way: unchecked checkboxes are ignored. Some frameworks, such as Rails, work around this by also including a hidden version of the input, but I don't think we should support server-side-specific needs.
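Whatever the client ends up sending, one server-side workaround is to give each repeated input an explicit index in its name (`name="subject[0]"`, `name="subject[1]"`, ...): PHP then keys `$_POST['subject']` by those indices, so a missing empty field leaves a gap instead of shifting the later entries. The sketch below only simulates the client side in JavaScript, assuming empty values are dropped as described in the report; `indexedEntries` is a hypothetical helper, and `URLSearchParams` stands in for the encoded request body.

```javascript
// Hypothetical helper: name each repeated field with an explicit index
// (subject[0], subject[1], ...) and drop empty values, mimicking a client
// that omits empty inputs.
function indexedEntries(baseName, values) {
  return values
    .map((value, index) => [`${baseName}[${index}]`, value])
    .filter(([, value]) => value !== '');
}

// Three subject fields, the middle one left empty.
const params = new URLSearchParams(indexedEntries('subject', ['Intro', '', 'Summary']));

// The encoded body still records the position each value came from,
// so the server sees subject[0] and subject[2] without any shifting.
console.log(params.toString()); // → subject%5B0%5D=Intro&subject%5B2%5D=Summary
```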

Status: Issue closed

|

Berserker66/MultiWorld-Utilities | 600695703 | Title: Entrance rando removes silvers when swordless and hard/expert pool are set

Question:

username_0: This has been raised several times on the main GitHub repository, but Berserker told me during a multi to submit it here, so here I am.

When running the entrance randomizer, and swordless and hard/expert pool are set, the logically-hard-required silvers end up removed. This does apply to multi as well; I experienced it personally by way of receiving a green 20 in place of the second progressive bow when it was sent to me.

My understanding, from reading descriptions by others who are more familiar with the code than I, is that it's something to do with the patch order. The interaction of swordless and hard/expert happens correctly, but then entrance comes in, sees the pool setting, and strips the silvers. This results in an effectively unwinnable seed.

Answers:

username_1: Yeah, I don't know enough about the baserom asm to figure out where it is going wrong. I can tell someone already tried to fix it. Relevant bits from rom.py:

```python
#Work around for json patch ordering issues - write bow limit separately so that it is replaced in the patch
rom.write_bytes(0x180098, [difficulty.progressive_bow_limit, overflow_replacement])

if difficulty.progressive_bow_limit < 2 and world.swords[player] == 'swordless':
    rom.write_bytes(0x180098, [2, overflow_replacement])
    rom.write_byte(0x180181, 0x01)  # Make silver arrows work only on ganon
    rom.write_byte(0x180182, 0x00)  # Don't auto equip silvers on pickup
```

^ That `if` gets triggered. It might just be missing flags. The overflow replacement is 20 rupees; this might need to put the bow back in instead of rupees. Would need to try tinkering with it.

username_1: Further checking shows that this is where

```python
#Work around for json patch ordering issues - write bow limit separately so that it is replaced in the patch
rom.write_bytes(0x180098, [difficulty.progressive_bow_limit, overflow_replacement])
```

came from in the first place, so that won't solve anything.

username_2: That line was solving an issue where the json rom contained 0x180098 = 1 and also 0x180098 = 2. On Python 3.5 and below, the order of the patch was non-deterministic, and the flag would end up as 1. The flag at 0x180098 is the number of bows you're allowed to collect.

So I would have expected this to be fixed in Multiworld Utils 1.9, and in previous versions to only affect hard / swordless / enemizer (including sprite changing) seeds generated with Python 3.5 and below.

If that's not the case, then there's another bug with the bow limit and hopefully a way to replicate it.
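The ordering problem described above can be illustrated with a small sketch: when a patch contains two entries for the same address, the final byte depends on which entry is applied last, and writing the value explicitly after the patch removes that dependence. This is a simplified JavaScript stand-in, not the project's `rom.py`: `writeBytes`, `applyPatch`, `buildRom`, and the patch layout are hypothetical, it writes only the single bow-limit byte, and only the address `0x180098` and the values 1 and 2 come from the discussion.

```javascript
const BOW_LIMIT_ADDR = 0x180098; // bow-limit flag address, from the discussion above

// Hypothetical: write a run of bytes starting at addr.
function writeBytes(rom, addr, bytes) {
  bytes.forEach((b, i) => { rom.set(addr + i, b); });
}

// Hypothetical: apply patch entries in the given order; later writes win.
function applyPatch(rom, entries) {
  for (const [addr, bytes] of entries) writeBytes(rom, addr, bytes);
}

// The same conflicting patch, iterated in two possible orders.
const orderA = [[BOW_LIMIT_ADDR, [1]], [BOW_LIMIT_ADDR, [2]]];
const orderB = [[BOW_LIMIT_ADDR, [2]], [BOW_LIMIT_ADDR, [1]]];

function buildRom(entries, bowLimit) {
  const rom = new Map(); // sparse stand-in for the ROM buffer
  applyPatch(rom, entries);
  // Workaround: write the intended value after the patch so it always wins.
  if (bowLimit !== undefined) writeBytes(rom, BOW_LIMIT_ADDR, [bowLimit]);
  return rom;
}

console.log(buildRom(orderA).get(BOW_LIMIT_ADDR));    // 2
console.log(buildRom(orderB).get(BOW_LIMIT_ADDR));    // 1
console.log(buildRom(orderB, 2).get(BOW_LIMIT_ADDR)); // 2
```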

username_1: Utils 1.9 only supports Python 3.7 and up; it uses annotation features that were introduced in 3.7.

username_2: Ok, so as an update that seed was generated with Multiworld Utils 1.8 which means that it doesn't have the json patch fix. It might still be another issue though, especially if the 2nd bow doesn't appear in the spoiler log.

username_0: I'm happy to report that a run of similar settings on the current release did not produce the issue. I was able to collect the silvers (in tile room after collecting somaria in DM room, no less) and utilize them against Ganon.

[ER_M482833355_Spoiler.txt](https://github.com/username_1/MultiWorld-Utilities/files/4495901/ER_M482833355_Spoiler.txt)

[ER_M482833355.zip](https://github.com/username_1/MultiWorld-Utilities/files/4495902/ER_M482833355.zip)

username_1: Hm. I'll have to check non-progressive again, then. It might spawn two instances of Bow and none of Silvers, which would explain why I didn't see them in my tests, since I was also looking for Progressive Bow (Alt), which is missing from the progressive log.

Status: Issue closed

username_1: This seems to be fixed. Should someone encounter this again on newer versions just open a new issue with a spoiler log please. |

ruby-concurrency/concurrent-ruby | 182235549 | Title: Documentation enhance: Is it possible to upgrade a reentrant rw-lock from read to write?

Question:

username_0: Hi!

On the page http://ruby-concurrency.github.io/concurrent-ruby/Concurrent/ReentrantReadWriteLock.html there is no information about up- or downgrading locks. The parent index page gives some info, but not a lot.

IMHO it would be helpful to add a brief sentence stating whether or not it is possible to upgrade and/or downgrade a lock, as done in https://docs.oracle.com/javase/7/docs/api/java/util/concurrent/locks/ReentrantReadWriteLock.html -> "Lock Downgrading"

Answers:

username_1: Thanks for letting us know. Makes sense to add it. Would you open a PR with the update, please?

username_0: I'd love to, but I can't write documentation about something I don't know (that's why I asked the question here).

username_1: Ah, sorry, I misunderstood. ReentrantReadWriteLock supports both downgrading and upgrading.

username_1: Examples can be found in specs: https://github.com/ruby-concurrency/concurrent-ruby/blob/master/spec/concurrent/atomic/reentrant_read_write_lock_spec.rb

username_1: done in 476f59383e6bb357d41416f7e1693ede6350e7bb

Status: Issue closed

|

asmagin/Cake.Sitecore | 331369286 | Title: Deprecation warning for "mapCoverage" option when running unit tests

Question:

username_0: ● Deprecation Warning:

Option "mapCoverage" has been removed, as it's no longer necessary.

Please update your configuration.

Configuration Documentation:

https://facebook.github.io/jest/docs/configuration.html

PASS Project\Shared\client\client.index.test.ts

PROJECTNAME Sample Test

√ sample (3ms)

```

Answers:

username_0: After an offline chat with @asmagin, it turns out that this issue isn't actually part of Cake but of the base solution we are using, which is a separate repo from the same developer. As such, this issue can be closed.

Status: Issue closed

|

vuetifyjs/vuetify | 1107618757 | Title: [Bug Report][3.0.0-alpha.12] RadioGroup with row prop always gets column

Question:

username_0: ### Environment

**Vuetify Version:** 3.0.0-alpha.12

**Last working version:** 2.6.2

**Vue Version:** 3.2.26

**Browsers:** Chrome 97.0.4692.71

**OS:** Windows 10

### Steps to reproduce

No special steps; just create this form and it renders in a column layout.

### Expected Behavior

Row layout

### Actual Behavior

Column layout

### Reproduction Link

<a href="https://codepen.io/username_0/pen/vYeMMZQ" target="_blank">https://codepen.io/username_0/pen/vYeMMZQ</a>

### Other comments

This also happens on the [documentation](https://next.vuetifyjs.com/en/components/radio-buttons/) page.


Answers:

username_0: Also, through my own carelessness, I missed that V3 is currently for testing purposes only and created my project with it. Is there a way to downgrade it to V2 instead of recreating the project? |

gnab/remark | 152064483 | Title: Code syntax highlighting: multiline comments