status (stringclasses, 1 value) | repo_name (stringclasses, 31 values) | repo_url (stringclasses, 31 values) | issue_id (int64, 1 to 104k) | title (stringlengths, 4 to 369) | body (stringlengths, 0 to 254k, ⌀) | issue_url (stringlengths, 37 to 56) | pull_url (stringlengths, 37 to 54) | before_fix_sha (stringlengths, 40 to 40) | after_fix_sha (stringlengths, 40 to 40) | report_datetime (timestamp[us, tz=UTC]) | language (stringclasses, 5 values) | commit_datetime (timestamp[us, tz=UTC]) | updated_file (stringlengths, 4 to 188) | file_content (stringlengths, 0 to 5.12M) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

closed

|

apache/dolphinscheduler

|

https://github.com/apache/dolphinscheduler

| 3,463 |

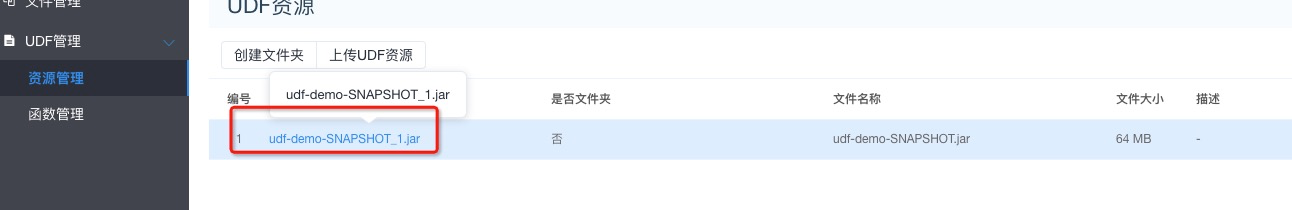

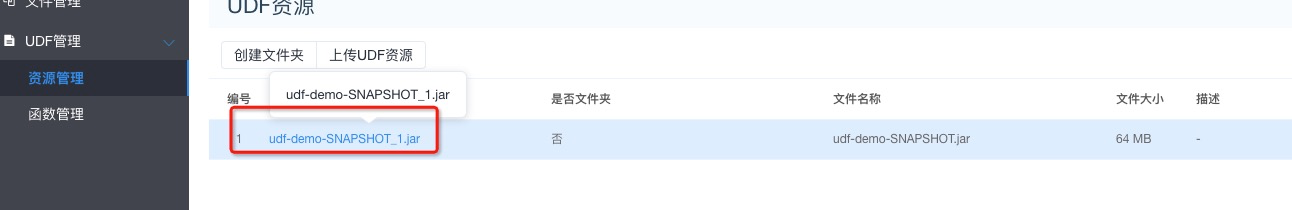

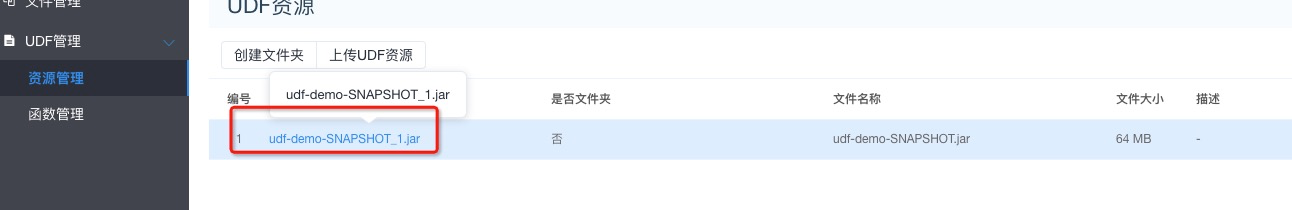

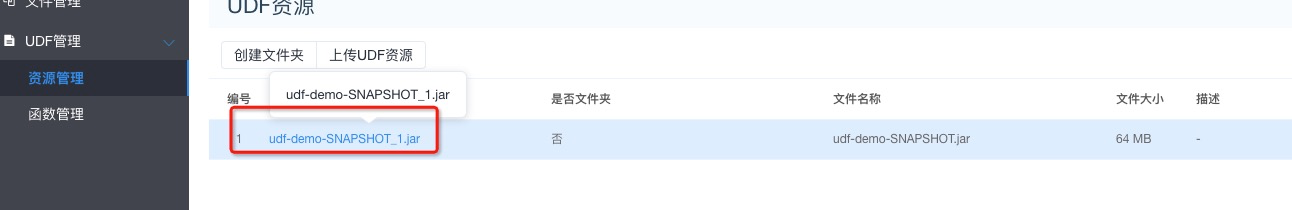

[Bug][api] rename the udf resource file associated with the udf function, Failed to execute hive task

|

1. Rename the udf resource file associated with the udf function

2. Executing the Hive task fails

**Which version of Dolphin Scheduler:**

-[1.3.2-release]

|

https://github.com/apache/dolphinscheduler/issues/3463

|

https://github.com/apache/dolphinscheduler/pull/3482

|

a678c827600d44623f30311574b8226c1c59ace2

|

c8322482bbd021a89c407809abfcdd50cf3b2dc6

| 2020-08-11T03:37:09Z |

java

| 2020-08-13T08:19:11Z |

dolphinscheduler-api/src/main/java/org/apache/dolphinscheduler/api/service/ResourcesService.java

|

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.api.service;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.serializer.SerializerFeature;

import com.baomidou.mybatisplus.core.metadata.IPage;

import com.baomidou.mybatisplus.extension.plugins.pagination.Page;

import org.apache.commons.collections.BeanMap;

import org.apache.dolphinscheduler.api.dto.resources.ResourceComponent;

import org.apache.dolphinscheduler.api.dto.resources.filter.ResourceFilter;

import org.apache.dolphinscheduler.api.dto.resources.visitor.ResourceTreeVisitor;

import org.apache.dolphinscheduler.api.dto.resources.visitor.Visitor;

import org.apache.dolphinscheduler.api.enums.Status;

import org.apache.dolphinscheduler.api.exceptions.ServiceException;

import org.apache.dolphinscheduler.api.utils.PageInfo;

import org.apache.dolphinscheduler.api.utils.Result;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.ResourceType;

import org.apache.dolphinscheduler.common.utils.*;

import org.apache.dolphinscheduler.dao.entity.*;

import org.apache.dolphinscheduler.dao.mapper.*;

import org.apache.dolphinscheduler.dao.utils.ResourceProcessDefinitionUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

import org.springframework.web.multipart.MultipartFile;

import java.io.IOException;

import java.text.MessageFormat;

import java.util.*;

import java.util.regex.Matcher;

import java.util.stream.Collectors;

import static org.apache.dolphinscheduler.common.Constants.*;

/**

* resources service

*/

@Service

public class ResourcesService extends BaseService {

private static final Logger logger = LoggerFactory.getLogger(ResourcesService.class);

@Autowired

private ResourceMapper resourcesMapper;

@Autowired

private UdfFuncMapper udfFunctionMapper;

@Autowired

private TenantMapper tenantMapper;

@Autowired

private UserMapper userMapper;

@Autowired

private ResourceUserMapper resourceUserMapper;

@Autowired

private ProcessDefinitionMapper processDefinitionMapper;

/**

* create directory

*

* @param loginUser login user

* @param name alias

* @param description description

* @param type type

* @param pid parent id

* @param currentDir current directory

* @return create directory result

*/

@Transactional(rollbackFor = Exception.class)

public Result createDirectory(User loginUser,

String name,

String description,

ResourceType type,

int pid,

String currentDir) {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

String fullName = currentDir.equals("/") ? String.format("%s%s",currentDir,name):String.format("%s/%s",currentDir,name);

if (pid != -1) {

Resource parentResource = resourcesMapper.selectById(pid);

if (parentResource == null) {

putMsg(result, Status.PARENT_RESOURCE_NOT_EXIST);

return result;

}

if (!hasPerm(loginUser, parentResource.getUserId())) {

putMsg(result, Status.USER_NO_OPERATION_PERM);

return result;

}

}

if (checkResourceExists(fullName, 0, type.ordinal())) {

logger.error("resource directory {} already exists, can't recreate", fullName);

putMsg(result, Status.RESOURCE_EXIST);

return result;

}

Date now = new Date();

Resource resource = new Resource(pid,name,fullName,true,description,name,loginUser.getId(),type,0,now,now);

try {

resourcesMapper.insert(resource);

putMsg(result, Status.SUCCESS);

Map<Object, Object> dataMap = new BeanMap(resource);

Map<String, Object> resultMap = new HashMap<String, Object>();

for (Map.Entry<Object, Object> entry: dataMap.entrySet()) {

if (!"class".equalsIgnoreCase(entry.getKey().toString())) {

resultMap.put(entry.getKey().toString(), entry.getValue());

}

}

result.setData(resultMap);

} catch (Exception e) {

logger.error("resource already exists, can't recreate ", e);

throw new RuntimeException("resource already exists, can't recreate");

}

//create directory in hdfs

createDirecotry(loginUser,fullName,type,result);

return result;

}

/**

* create resource

*

* @param loginUser login user

* @param name alias

* @param desc description

* @param file file

* @param type type

* @param pid parent id

* @param currentDir current directory

* @return create result code

*/

@Transactional(rollbackFor = Exception.class)

public Result createResource(User loginUser,

String name,

String desc,

ResourceType type,

MultipartFile file,

int pid,

String currentDir) {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

if (pid != -1) {

Resource parentResource = resourcesMapper.selectById(pid);

if (parentResource == null) {

putMsg(result, Status.PARENT_RESOURCE_NOT_EXIST);

return result;

}

if (!hasPerm(loginUser, parentResource.getUserId())) {

putMsg(result, Status.USER_NO_OPERATION_PERM);

return result;

}

}

// file is empty

if (file.isEmpty()) {

logger.error("file is empty: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_FILE_IS_EMPTY);

return result;

}

// file suffix

String fileSuffix = FileUtils.suffix(file.getOriginalFilename());

String nameSuffix = FileUtils.suffix(name);

// determine file suffix

if (!(StringUtils.isNotEmpty(fileSuffix) && fileSuffix.equalsIgnoreCase(nameSuffix))) {

// the suffix of the new name must match the suffix of the uploaded file

logger.error("rename file suffix and original suffix must be consistent: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_SUFFIX_FORBID_CHANGE);

return result;

}

//If resource type is UDF, only jar packages are allowed to be uploaded, and the suffix must be .jar

if (Constants.UDF.equals(type.name()) && !JAR.equalsIgnoreCase(fileSuffix)) {

logger.error(Status.UDF_RESOURCE_SUFFIX_NOT_JAR.getMsg());

putMsg(result, Status.UDF_RESOURCE_SUFFIX_NOT_JAR);

return result;

}

if (file.getSize() > Constants.MAX_FILE_SIZE) {

logger.error("file size is too large: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_SIZE_EXCEED_LIMIT);

return result;

}

// check whether the resource name already exists

String fullName = currentDir.equals("/") ? String.format("%s%s",currentDir,name):String.format("%s/%s",currentDir,name);

if (checkResourceExists(fullName, 0, type.ordinal())) {

logger.error("resource {} already exists, can't recreate", name);

putMsg(result, Status.RESOURCE_EXIST);

return result;

}

Date now = new Date();

Resource resource = new Resource(pid,name,fullName,false,desc,file.getOriginalFilename(),loginUser.getId(),type,file.getSize(),now,now);

try {

resourcesMapper.insert(resource);

putMsg(result, Status.SUCCESS);

Map<Object, Object> dataMap = new BeanMap(resource);

Map<String, Object> resultMap = new HashMap<>();

for (Map.Entry<Object, Object> entry: dataMap.entrySet()) {

if (!"class".equalsIgnoreCase(entry.getKey().toString())) {

resultMap.put(entry.getKey().toString(), entry.getValue());

}

}

result.setData(resultMap);

} catch (Exception e) {

logger.error("resource already exists, can't recreate ", e);

throw new RuntimeException("resource already exists, can't recreate");

}

// fail upload

if (!upload(loginUser, fullName, file, type)) {

logger.error("upload resource: {} file: {} failed.", name, file.getOriginalFilename());

putMsg(result, Status.HDFS_OPERATION_ERROR);

throw new RuntimeException(String.format("upload resource: %s file: %s failed.", name, file.getOriginalFilename()));

}

return result;

}

/**

* check whether the resource exists

*

* @param fullName fullName

* @param userId user id

* @param type type

* @return true if resource exists

*/

private boolean checkResourceExists(String fullName, int userId, int type ){

List<Resource> resources = resourcesMapper.queryResourceList(fullName, userId, type);

return resources != null && !resources.isEmpty();

}

/**

* update resource

* @param loginUser login user

* @param resourceId resource id

* @param name name

* @param desc description

* @param type resource type

* @param file resource file

* @return update result code

*/

@Transactional(rollbackFor = Exception.class)

public Result updateResource(User loginUser,

int resourceId,

String name,

String desc,

ResourceType type,

MultipartFile file) {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

Resource resource = resourcesMapper.selectById(resourceId);

if (resource == null) {

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

if (!hasPerm(loginUser, resource.getUserId())) {

putMsg(result, Status.USER_NO_OPERATION_PERM);

return result;

}

if (name.equals(resource.getAlias()) && desc.equals(resource.getDescription())) {

putMsg(result, Status.SUCCESS);

return result;

}

// check whether a resource with the new name already exists

String originFullName = resource.getFullName();

String originResourceName = resource.getAlias();

String fullName = String.format("%s%s",originFullName.substring(0,originFullName.lastIndexOf("/")+1),name);

if (!originResourceName.equals(name) && checkResourceExists(fullName, 0, type.ordinal())) {

logger.error("resource {} already exists, can't recreate", name);

putMsg(result, Status.RESOURCE_EXIST);

return result;

}

if (file != null) {

// file is empty

if (file.isEmpty()) {

logger.error("file is empty: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_FILE_IS_EMPTY);

return result;

}

// file suffix

String fileSuffix = FileUtils.suffix(file.getOriginalFilename());

String nameSuffix = FileUtils.suffix(name);

// determine file suffix

if (!(StringUtils.isNotEmpty(fileSuffix) && fileSuffix.equalsIgnoreCase(nameSuffix))) {

// the suffix of the new name must match the suffix of the uploaded file

logger.error("rename file suffix and original suffix must be consistent: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_SUFFIX_FORBID_CHANGE);

return result;

}

//If resource type is UDF, only jar packages are allowed to be uploaded, and the suffix must be .jar

if (Constants.UDF.equals(type.name()) && !JAR.equalsIgnoreCase(FileUtils.suffix(originFullName))) {

logger.error(Status.UDF_RESOURCE_SUFFIX_NOT_JAR.getMsg());

putMsg(result, Status.UDF_RESOURCE_SUFFIX_NOT_JAR);

return result;

}

if (file.getSize() > Constants.MAX_FILE_SIZE) {

logger.error("file size is too large: {}", file.getOriginalFilename());

putMsg(result, Status.RESOURCE_SIZE_EXCEED_LIMIT);

return result;

}

}

// query tenant by user id

String tenantCode = getTenantCode(resource.getUserId(),result);

if (StringUtils.isEmpty(tenantCode)){

return result;

}

// verify whether the resource exists in storage

// get the path of origin file in storage

String originHdfsFileName = HadoopUtils.getHdfsFileName(resource.getType(),tenantCode,originFullName);

try {

if (!HadoopUtils.getInstance().exists(originHdfsFileName)) {

logger.error("{} not exist", originHdfsFileName);

putMsg(result,Status.RESOURCE_NOT_EXIST);

return result;

}

} catch (IOException e) {

logger.error(e.getMessage(),e);

throw new ServiceException(Status.HDFS_OPERATION_ERROR);

}

if (!resource.isDirectory()) {

//get the origin file suffix

String originSuffix = FileUtils.suffix(originFullName);

String suffix = FileUtils.suffix(fullName);

boolean suffixIsChanged = false;

if (StringUtils.isBlank(suffix) && StringUtils.isNotBlank(originSuffix)) {

suffixIsChanged = true;

}

if (StringUtils.isNotBlank(suffix) && !suffix.equals(originSuffix)) {

suffixIsChanged = true;

}

//verify whether suffix is changed

if (suffixIsChanged) {

//need verify whether this resource is authorized to other users

Map<String, Object> columnMap = new HashMap<>();

columnMap.put("resources_id", resourceId);

List<ResourcesUser> resourcesUsers = resourceUserMapper.selectByMap(columnMap);

if (CollectionUtils.isNotEmpty(resourcesUsers)) {

List<Integer> userIds = resourcesUsers.stream().map(ResourcesUser::getUserId).collect(Collectors.toList());

List<User> users = userMapper.selectBatchIds(userIds);

String userNames = users.stream().map(User::getUserName).collect(Collectors.toList()).toString();

logger.error("resource is authorized to user {},suffix not allowed to be modified", userNames);

putMsg(result,Status.RESOURCE_IS_AUTHORIZED,userNames);

return result;

}

}

}

// updateResource data

Date now = new Date();

resource.setAlias(name);

resource.setFullName(fullName);

resource.setDescription(desc);

resource.setUpdateTime(now);

if (file != null) {

resource.setFileName(file.getOriginalFilename());

resource.setSize(file.getSize());

}

try {

resourcesMapper.updateById(resource);

if (resource.isDirectory()) {

List<Integer> childrenResource = listAllChildren(resource,false);

if (CollectionUtils.isNotEmpty(childrenResource)) {

String matcherFullName = Matcher.quoteReplacement(fullName);

List<Resource> childResourceList = new ArrayList<>();

List<Resource> resourceList = resourcesMapper.listResourceByIds(childrenResource.toArray(new Integer[childrenResource.size()]));

childResourceList = resourceList.stream().map(t -> {

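// quote the original full name so that regex metacharacters in it are matched literally
t.setFullName(t.getFullName().replaceFirst(java.util.regex.Pattern.quote(originFullName), matcherFullName));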

t.setUpdateTime(now);

return t;

}).collect(Collectors.toList());

resourcesMapper.batchUpdateResource(childResourceList);

}

}

putMsg(result, Status.SUCCESS);

Map<Object, Object> dataMap = new BeanMap(resource);

Map<String, Object> resultMap = new HashMap<>(5);

for (Map.Entry<Object, Object> entry: dataMap.entrySet()) {

if (!Constants.CLASS.equalsIgnoreCase(entry.getKey().toString())) {

resultMap.put(entry.getKey().toString(), entry.getValue());

}

}

result.setData(resultMap);

} catch (Exception e) {

logger.error(Status.UPDATE_RESOURCE_ERROR.getMsg(), e);

throw new ServiceException(Status.UPDATE_RESOURCE_ERROR);

}

// if name unchanged, return directly without moving on HDFS

if (originResourceName.equals(name) && file == null) {

return result;

}

if (file != null) {

// fail upload

if (!upload(loginUser, fullName, file, type)) {

logger.error("upload resource: {} file: {} failed.", name, file.getOriginalFilename());

putMsg(result, Status.HDFS_OPERATION_ERROR);

throw new RuntimeException(String.format("upload resource: %s file: %s failed.", name, file.getOriginalFilename()));

}

if (!fullName.equals(originFullName)) {

try {

HadoopUtils.getInstance().delete(originHdfsFileName,false);

} catch (IOException e) {

logger.error(e.getMessage(),e);

throw new RuntimeException(String.format("delete resource: %s failed.", originFullName));

}

}

return result;

}

// get the path of dest file in hdfs

String destHdfsFileName = HadoopUtils.getHdfsFileName(resource.getType(),tenantCode,fullName);

try {

logger.info("start hdfs copy {} -> {}", originHdfsFileName, destHdfsFileName);

HadoopUtils.getInstance().copy(originHdfsFileName, destHdfsFileName, true, true);

} catch (Exception e) {

logger.error(MessageFormat.format("hdfs copy {0} -> {1} fail", originHdfsFileName, destHdfsFileName), e);

putMsg(result,Status.HDFS_COPY_FAIL);

throw new ServiceException(Status.HDFS_COPY_FAIL);

}

return result;

}

/**

* query resources list paging

*

* @param loginUser login user

* @param direcotryId id of the directory to list; -1 skips the directory existence check

* @param type resource type

* @param searchVal search value

* @param pageNo page number

* @param pageSize page size

* @return resource list page

*/

public Map<String, Object> queryResourceListPaging(User loginUser, int direcotryId, ResourceType type, String searchVal, Integer pageNo, Integer pageSize) {

HashMap<String, Object> result = new HashMap<>(5);

Page<Resource> page = new Page<>(pageNo, pageSize);

int userId = loginUser.getId();

if (isAdmin(loginUser)) {

userId= 0;

}

if (direcotryId != -1) {

Resource directory = resourcesMapper.selectById(direcotryId);

if (directory == null) {

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

}

IPage<Resource> resourceIPage = resourcesMapper.queryResourcePaging(page,

userId,direcotryId, type.ordinal(), searchVal);

PageInfo<Resource> pageInfo = new PageInfo<>(pageNo, pageSize);

pageInfo.setTotalCount((int)resourceIPage.getTotal());

pageInfo.setLists(resourceIPage.getRecords());

result.put(Constants.DATA_LIST, pageInfo);

putMsg(result,Status.SUCCESS);

return result;

}

/**

* create directory in hdfs

* @param loginUser login user

* @param fullName full name

* @param type resource type

* @param result Result

*/

private void createDirecotry(User loginUser,String fullName,ResourceType type,Result result){

// query tenant

String tenantCode = tenantMapper.queryById(loginUser.getTenantId()).getTenantCode();

String directoryName = HadoopUtils.getHdfsFileName(type,tenantCode,fullName);

String resourceRootPath = HadoopUtils.getHdfsDir(type,tenantCode);

try {

if (!HadoopUtils.getInstance().exists(resourceRootPath)) {

createTenantDirIfNotExists(tenantCode);

}

if (!HadoopUtils.getInstance().mkdir(directoryName)) {

logger.error("create resource directory {} of hdfs failed",directoryName);

putMsg(result,Status.HDFS_OPERATION_ERROR);

throw new RuntimeException(String.format("create resource directory: %s failed.", directoryName));

}

} catch (Exception e) {

logger.error("create resource directory {} of hdfs failed", directoryName, e);

putMsg(result,Status.HDFS_OPERATION_ERROR);

throw new RuntimeException(String.format("create resource directory: %s failed.", directoryName));

}

}

/**

* upload file to hdfs

*

* @param loginUser login user

* @param fullName full name

* @param file file

* @param type resource type

* @return true if the file was uploaded to hdfs, false otherwise

*/

private boolean upload(User loginUser, String fullName, MultipartFile file, ResourceType type) {

// save to local

String fileSuffix = FileUtils.suffix(file.getOriginalFilename());

String nameSuffix = FileUtils.suffix(fullName);

// determine file suffix

if (!(StringUtils.isNotEmpty(fileSuffix) && fileSuffix.equalsIgnoreCase(nameSuffix))) {

return false;

}

// query tenant

String tenantCode = tenantMapper.queryById(loginUser.getTenantId()).getTenantCode();

// random file name

String localFilename = FileUtils.getUploadFilename(tenantCode, UUID.randomUUID().toString());

// save file to hdfs, and delete original file

String hdfsFilename = HadoopUtils.getHdfsFileName(type,tenantCode,fullName);

String resourcePath = HadoopUtils.getHdfsDir(type,tenantCode);

try {

// if tenant dir not exists

if (!HadoopUtils.getInstance().exists(resourcePath)) {

createTenantDirIfNotExists(tenantCode);

}

org.apache.dolphinscheduler.api.utils.FileUtils.copyFile(file, localFilename);

HadoopUtils.getInstance().copyLocalToHdfs(localFilename, hdfsFilename, true, true);

} catch (Exception e) {

logger.error(e.getMessage(), e);

return false;

}

return true;

}

/**

* query resource list

*

* @param loginUser login user

* @param type resource type

* @return resource list

*/

public Map<String, Object> queryResourceList(User loginUser, ResourceType type) {

Map<String, Object> result = new HashMap<>(5);

int userId = loginUser.getId();

if(isAdmin(loginUser)){

userId = 0;

}

List<Resource> allResourceList = resourcesMapper.queryResourceListAuthored(userId, type.ordinal(),0);

Visitor resourceTreeVisitor = new ResourceTreeVisitor(allResourceList);

//JSONArray jsonArray = JSON.parseArray(JSON.toJSONString(resourceTreeVisitor.visit().getChildren(), SerializerFeature.SortField));

result.put(Constants.DATA_LIST, resourceTreeVisitor.visit().getChildren());

putMsg(result,Status.SUCCESS);

return result;

}

/**

* query resource list, keeping only .jar files

*

* @param loginUser login user

* @param type resource type

* @return resource list

*/

public Map<String, Object> queryResourceJarList(User loginUser, ResourceType type) {

Map<String, Object> result = new HashMap<>(5);

int userId = loginUser.getId();

if(isAdmin(loginUser)){

userId = 0;

}

List<Resource> allResourceList = resourcesMapper.queryResourceListAuthored(userId, type.ordinal(),0);

List<Resource> resources = new ResourceFilter(".jar",new ArrayList<>(allResourceList)).filter();

Visitor resourceTreeVisitor = new ResourceTreeVisitor(resources);

result.put(Constants.DATA_LIST, resourceTreeVisitor.visit().getChildren());

putMsg(result,Status.SUCCESS);

return result;

}

/**

* delete resource

*

* @param loginUser login user

* @param resourceId resource id

* @return delete result code

* @throws Exception exception

*/

@Transactional(rollbackFor = Exception.class)

public Result delete(User loginUser, int resourceId) throws Exception {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

//get resource and hdfs path

Resource resource = resourcesMapper.selectById(resourceId);

if (resource == null) {

logger.error("resource file not exist, resource id {}", resourceId);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

if (!hasPerm(loginUser, resource.getUserId())) {

putMsg(result, Status.USER_NO_OPERATION_PERM);

return result;

}

String tenantCode = getTenantCode(resource.getUserId(),result);

if (StringUtils.isEmpty(tenantCode)){

return result;

}

// get the ids of all resources used by released process definitions

List<Map<String, Object>> list = processDefinitionMapper.listResources();

Map<Integer, Set<Integer>> resourceProcessMap = ResourceProcessDefinitionUtils.getResourceProcessDefinitionMap(list);

Set<Integer> resourceIdSet = resourceProcessMap.keySet();

// get all children of the resource

List<Integer> allChildren = listAllChildren(resource,true);

Integer[] needDeleteResourceIdArray = allChildren.toArray(new Integer[allChildren.size()]);

// if the resource type is UDF, check whether it is bound to a UDF function

if (resource.getType() == (ResourceType.UDF)) {

List<UdfFunc> udfFuncs = udfFunctionMapper.listUdfByResourceId(needDeleteResourceIdArray);

if (CollectionUtils.isNotEmpty(udfFuncs)) {

logger.error("can't be deleted,because it is bound by UDF functions:{}",udfFuncs.toString());

putMsg(result,Status.UDF_RESOURCE_IS_BOUND,udfFuncs.get(0).getFuncName());

return result;

}

}

if (resourceIdSet.contains(resource.getPid())) {

logger.error("can't be deleted, because it is used by a process definition");

putMsg(result, Status.RESOURCE_IS_USED);

return result;

}

resourceIdSet.retainAll(allChildren);

if (CollectionUtils.isNotEmpty(resourceIdSet)) {

logger.error("can't be deleted, because it is used by a process definition");

for (Integer resId : resourceIdSet) {

logger.error("resource id:{} is used by process definition {}",resId,resourceProcessMap.get(resId));

}

putMsg(result, Status.RESOURCE_IS_USED);

return result;

}

// get hdfs file by type

String hdfsFilename = HadoopUtils.getHdfsFileName(resource.getType(), tenantCode, resource.getFullName());

//delete data in database

resourcesMapper.deleteIds(needDeleteResourceIdArray);

resourceUserMapper.deleteResourceUserArray(0, needDeleteResourceIdArray);

//delete file on hdfs

HadoopUtils.getInstance().delete(hdfsFilename, true);

putMsg(result, Status.SUCCESS);

return result;

}

/**

* verify resource by name and type

* @param fullName resource full name

* @param type resource type

* @param loginUser login user

* @return a Result with SUCCESS status if the resource name does not exist yet, otherwise a Result with an error status

*/

public Result verifyResourceName(String fullName, ResourceType type,User loginUser) {

Result result = new Result();

putMsg(result, Status.SUCCESS);

if (checkResourceExists(fullName, 0, type.ordinal())) {

logger.error("resource type:{} name:{} already exists, can't create again.", type, fullName);

putMsg(result, Status.RESOURCE_EXIST);

} else {

// query tenant

Tenant tenant = tenantMapper.queryById(loginUser.getTenantId());

if(tenant != null){

String tenantCode = tenant.getTenantCode();

try {

String hdfsFilename = HadoopUtils.getHdfsFileName(type,tenantCode,fullName);

if(HadoopUtils.getInstance().exists(hdfsFilename)){

logger.error("resource type:{} name:{} already exists in hdfs {}, can't create again.", type, fullName,hdfsFilename);

putMsg(result, Status.RESOURCE_FILE_EXIST,hdfsFilename);

}

} catch (Exception e) {

logger.error(e.getMessage(),e);

putMsg(result,Status.HDFS_OPERATION_ERROR);

}

}else{

putMsg(result,Status.TENANT_NOT_EXIST);

}

}

return result;

}

/**

* query a resource by full name and type, or the parent of a resource by id

* @param fullName resource full name

* @param id resource id

* @param type resource type

* @return the matching resource when queried by full name, or the parent resource when queried by id; an error status if none exists

*/

public Result queryResource(String fullName,Integer id,ResourceType type) {

Result result = new Result();

if (StringUtils.isBlank(fullName) && id == null) {

logger.error("You must input one of fullName and pid");

putMsg(result, Status.REQUEST_PARAMS_NOT_VALID_ERROR);

return result;

}

if (StringUtils.isNotBlank(fullName)) {

List<Resource> resourceList = resourcesMapper.queryResource(fullName,type.ordinal());

if (CollectionUtils.isEmpty(resourceList)) {

logger.error("resource file not exist, resource full name {} ", fullName);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

putMsg(result, Status.SUCCESS);

result.setData(resourceList.get(0));

} else {

Resource resource = resourcesMapper.selectById(id);

if (resource == null) {

logger.error("resource file not exist, resource id {}", id);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

Resource parentResource = resourcesMapper.selectById(resource.getPid());

if (parentResource == null) {

logger.error("parent resource file not exist, resource id {}", id);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

putMsg(result, Status.SUCCESS);

result.setData(parentResource);

}

return result;

}

/**

* view resource file online

*

* @param resourceId resource id

* @param skipLineNum skip line number

* @param limit limit

* @return resource content

*/

public Result readResource(int resourceId, int skipLineNum, int limit) {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

// get resource by id

Resource resource = resourcesMapper.selectById(resourceId);

if (resource == null) {

logger.error("resource file not exist, resource id {}", resourceId);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

//check preview or not by file suffix

String nameSuffix = FileUtils.suffix(resource.getAlias());

String resourceViewSuffixs = FileUtils.getResourceViewSuffixs();

if (StringUtils.isNotEmpty(resourceViewSuffixs)) {

List<String> strList = Arrays.asList(resourceViewSuffixs.split(","));

if (!strList.contains(nameSuffix)) {

logger.error("resource suffix {} not support view, resource id {}", nameSuffix, resourceId);

putMsg(result, Status.RESOURCE_SUFFIX_NOT_SUPPORT_VIEW);

return result;

}

}

String tenantCode = getTenantCode(resource.getUserId(),result);

if (StringUtils.isEmpty(tenantCode)){

return result;

}

// hdfs path

String hdfsFileName = HadoopUtils.getHdfsResourceFileName(tenantCode, resource.getFullName());

logger.info("resource hdfs path is {} ", hdfsFileName);

try {

if(HadoopUtils.getInstance().exists(hdfsFileName)){

List<String> content = HadoopUtils.getInstance().catFile(hdfsFileName, skipLineNum, limit);

putMsg(result, Status.SUCCESS);

Map<String, Object> map = new HashMap<>();

map.put(ALIAS, resource.getAlias());

map.put(CONTENT, String.join("\n", content));

result.setData(map);

}else{

logger.error("read file {} not exist in hdfs", hdfsFileName);

putMsg(result, Status.RESOURCE_FILE_NOT_EXIST,hdfsFileName);

}

} catch (Exception e) {

logger.error("Resource {} read failed", hdfsFileName, e);

putMsg(result, Status.HDFS_OPERATION_ERROR);

}

return result;

}

/**

* create resource file online

*

* @param loginUser login user

* @param type resource type

* @param fileName file name

* @param fileSuffix file suffix

* @param desc description

* @param content content

* @return create result code

*/

@Transactional(rollbackFor = Exception.class)

public Result onlineCreateResource(User loginUser, ResourceType type, String fileName, String fileSuffix, String desc, String content,int pid,String currentDirectory) {

Result result = new Result();

// return early if resource upload (hdfs) is not enabled

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

//check file suffix

String nameSuffix = fileSuffix.trim();

String resourceViewSuffixs = FileUtils.getResourceViewSuffixs();

if (StringUtils.isNotEmpty(resourceViewSuffixs)) {

List<String> strList = Arrays.asList(resourceViewSuffixs.split(","));

if (!strList.contains(nameSuffix)) {

logger.error("resource suffix {} does not support online creation", nameSuffix);

putMsg(result, Status.RESOURCE_SUFFIX_NOT_SUPPORT_VIEW);

return result;

}

}

String name = fileName.trim() + "." + nameSuffix;

String fullName = currentDirectory.equals("/") ? String.format("%s%s",currentDirectory,name):String.format("%s/%s",currentDirectory,name);

result = verifyResourceName(fullName,type,loginUser);

if (!result.getCode().equals(Status.SUCCESS.getCode())) {

return result;

}

// save data

Date now = new Date();

Resource resource = new Resource(pid,name,fullName,false,desc,name,loginUser.getId(),type,content.getBytes().length,now,now);

resourcesMapper.insert(resource);

putMsg(result, Status.SUCCESS);

Map<Object, Object> dataMap = new BeanMap(resource);

Map<String, Object> resultMap = new HashMap<>();

for (Map.Entry<Object, Object> entry: dataMap.entrySet()) {

if (!Constants.CLASS.equalsIgnoreCase(entry.getKey().toString())) {

resultMap.put(entry.getKey().toString(), entry.getValue());

}

}

result.setData(resultMap);

String tenantCode = tenantMapper.queryById(loginUser.getTenantId()).getTenantCode();

result = uploadContentToHdfs(fullName, tenantCode, content);

if (!result.getCode().equals(Status.SUCCESS.getCode())) {

throw new RuntimeException(result.getMsg());

}

return result;

}

/**

* update resource content

*

* @param resourceId resource id

* @param content content

* @return update result code

*/

@Transactional(rollbackFor = Exception.class)

public Result updateResourceContent(int resourceId, String content) {

Result result = new Result();

// if resource upload startup

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

putMsg(result, Status.HDFS_NOT_STARTUP);

return result;

}

Resource resource = resourcesMapper.selectById(resourceId);

if (resource == null) {

logger.error("read file not exist, resource id {}", resourceId);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

//check can edit by file suffix

String nameSuffix = FileUtils.suffix(resource.getAlias());

String resourceViewSuffixs = FileUtils.getResourceViewSuffixs();

if (StringUtils.isNotEmpty(resourceViewSuffixs)) {

List<String> strList = Arrays.asList(resourceViewSuffixs.split(","));

if (!strList.contains(nameSuffix)) {

logger.error("resource suffix {} not support update, resource id {}", nameSuffix, resourceId);

putMsg(result, Status.RESOURCE_SUFFIX_NOT_SUPPORT_VIEW);

return result;

}

}

String tenantCode = getTenantCode(resource.getUserId(),result);

if (StringUtils.isEmpty(tenantCode)){

return result;

}

resource.setSize(content.getBytes().length);

resource.setUpdateTime(new Date());

resourcesMapper.updateById(resource);

result = uploadContentToHdfs(resource.getFullName(), tenantCode, content);

if (!result.getCode().equals(Status.SUCCESS.getCode())) {

throw new RuntimeException(result.getMsg());

}

return result;

}

/**

* @param resourceName resource name

* @param tenantCode tenant code

* @param content content

* @return result

*/

private Result uploadContentToHdfs(String resourceName, String tenantCode, String content) {

Result result = new Result();

String localFilename = "";

String hdfsFileName = "";

try {

localFilename = FileUtils.getUploadFilename(tenantCode, UUID.randomUUID().toString());

if (!FileUtils.writeContent2File(content, localFilename)) {

// write file fail

logger.error("write file {} fail, content is {}", localFilename, content);

putMsg(result, Status.RESOURCE_NOT_EXIST);

return result;

}

// get resource file hdfs path

hdfsFileName = HadoopUtils.getHdfsResourceFileName(tenantCode, resourceName);

String resourcePath = HadoopUtils.getHdfsResDir(tenantCode);

logger.info("resource hdfs path is {} ", hdfsFileName);

HadoopUtils hadoopUtils = HadoopUtils.getInstance();

if (!hadoopUtils.exists(resourcePath)) {

// create if tenant dir not exists

createTenantDirIfNotExists(tenantCode);

}

if (hadoopUtils.exists(hdfsFileName)) {

hadoopUtils.delete(hdfsFileName, false);

}

hadoopUtils.copyLocalToHdfs(localFilename, hdfsFileName, true, true);

} catch (Exception e) {

logger.error(e.getMessage(), e);

result.setCode(Status.HDFS_OPERATION_ERROR.getCode());

result.setMsg(String.format("copy %s to hdfs %s fail", localFilename, hdfsFileName));

return result;

}

putMsg(result, Status.SUCCESS);

return result;

}

/**

* download file

*

* @param resourceId resource id

* @return resource content

* @throws Exception exception

*/

public org.springframework.core.io.Resource downloadResource(int resourceId) throws Exception {

// if resource upload startup

if (!PropertyUtils.getResUploadStartupState()){

logger.error("resource upload startup state: {}", PropertyUtils.getResUploadStartupState());

throw new RuntimeException("hdfs not startup");

}

Resource resource = resourcesMapper.selectById(resourceId);

if (resource == null) {

logger.error("download file not exist, resource id {}", resourceId);

return null;

}

if (resource.isDirectory()) {

logger.error("resource id {} is directory,can't download it", resourceId);

throw new RuntimeException("can't download directory");

}

int userId = resource.getUserId();

User user = userMapper.selectById(userId);

if(user == null){

logger.error("user id {} not exists", userId);

throw new RuntimeException(String.format("resource owner id %d not exist",userId));

}

Tenant tenant = tenantMapper.queryById(user.getTenantId());

if(tenant == null){

logger.error("tenant id {} not exists", user.getTenantId());

throw new RuntimeException(String.format("The tenant id %d of resource owner not exist",user.getTenantId()));

}

String tenantCode = tenant.getTenantCode();

String hdfsFileName = HadoopUtils.getHdfsFileName(resource.getType(), tenantCode, resource.getFullName());

String localFileName = FileUtils.getDownloadFilename(resource.getAlias());

logger.info("resource hdfs path is {} ", hdfsFileName);

HadoopUtils.getInstance().copyHdfsToLocal(hdfsFileName, localFileName, false, true);

return org.apache.dolphinscheduler.api.utils.FileUtils.file2Resource(localFileName);

}

/**

* list all files

*

* @param loginUser login user

* @param userId user id

* @return unauthorized result code

*/

public Map<String, Object> authorizeResourceTree(User loginUser, Integer userId) {

Map<String, Object> result = new HashMap<>();

if (checkAdmin(loginUser, result)) {

return result;

}

List<Resource> resourceList = resourcesMapper.queryResourceExceptUserId(userId);

List<ResourceComponent> list ;

if (CollectionUtils.isNotEmpty(resourceList)) {

Visitor visitor = new ResourceTreeVisitor(resourceList);

list = visitor.visit().getChildren();

}else {

list = new ArrayList<>(0);

}

result.put(Constants.DATA_LIST, list);

putMsg(result,Status.SUCCESS);

return result;

}

/**

* unauthorized file

*

* @param loginUser login user

* @param userId user id

* @return unauthorized result code

*/

public Map<String, Object> unauthorizedFile(User loginUser, Integer userId) {

Map<String, Object> result = new HashMap<>();

if (checkAdmin(loginUser, result)) {

return result;

}

List<Resource> resourceList = resourcesMapper.queryResourceExceptUserId(userId);

List<Resource> list ;

if (resourceList != null && resourceList.size() > 0) {

Set<Resource> resourceSet = new HashSet<>(resourceList);

List<Resource> authedResourceList = resourcesMapper.queryAuthorizedResourceList(userId);

getAuthorizedResourceList(resourceSet, authedResourceList);

list = new ArrayList<>(resourceSet);

}else {

list = new ArrayList<>(0);

}

Visitor visitor = new ResourceTreeVisitor(list);

result.put(Constants.DATA_LIST, visitor.visit().getChildren());

putMsg(result,Status.SUCCESS);

return result;

}

/**

* unauthorized udf function

*

* @param loginUser login user

* @param userId user id

* @return unauthorized result code

*/

public Map<String, Object> unauthorizedUDFFunction(User loginUser, Integer userId) {

Map<String, Object> result = new HashMap<>(5);

//only admin can operate

if (checkAdmin(loginUser, result)) {

return result;

}

List<UdfFunc> udfFuncList = udfFunctionMapper.queryUdfFuncExceptUserId(userId);

List<UdfFunc> resultList = new ArrayList<>();

Set<UdfFunc> udfFuncSet = null;

if (CollectionUtils.isNotEmpty(udfFuncList)) {

udfFuncSet = new HashSet<>(udfFuncList);

List<UdfFunc> authedUDFFuncList = udfFunctionMapper.queryAuthedUdfFunc(userId);

getAuthorizedResourceList(udfFuncSet, authedUDFFuncList);

resultList = new ArrayList<>(udfFuncSet);

}

result.put(Constants.DATA_LIST, resultList);

putMsg(result,Status.SUCCESS);

return result;

}

/**

* authorized udf function

*

* @param loginUser login user

* @param userId user id

* @return authorized result code

*/

public Map<String, Object> authorizedUDFFunction(User loginUser, Integer userId) {

Map<String, Object> result = new HashMap<>();

if (checkAdmin(loginUser, result)) {

return result;

}

List<UdfFunc> udfFuncs = udfFunctionMapper.queryAuthedUdfFunc(userId);

result.put(Constants.DATA_LIST, udfFuncs);

putMsg(result,Status.SUCCESS);

return result;

}

/**

* authorized file

*

* @param loginUser login user

* @param userId user id

* @return authorized result

*/

public Map<String, Object> authorizedFile(User loginUser, Integer userId) {

Map<String, Object> result = new HashMap<>(5);

if (checkAdmin(loginUser, result)){

return result;

}

List<Resource> authedResources = resourcesMapper.queryAuthorizedResourceList(userId);

Visitor visitor = new ResourceTreeVisitor(authedResources);

logger.info(JSON.toJSONString(visitor.visit(), SerializerFeature.SortField));

String jsonTreeStr = JSON.toJSONString(visitor.visit().getChildren(), SerializerFeature.SortField);

logger.info(jsonTreeStr);

result.put(Constants.DATA_LIST, visitor.visit().getChildren());

putMsg(result,Status.SUCCESS);

return result;

}

/**

* get authorized resource list

*

* @param resourceSet resource set

* @param authedResourceList authorized resource list

*/

private void getAuthorizedResourceList(Set<?> resourceSet, List<?> authedResourceList) {

Set<?> authedResourceSet = null;

if (CollectionUtils.isNotEmpty(authedResourceList)) {

authedResourceSet = new HashSet<>(authedResourceList);

resourceSet.removeAll(authedResourceSet);

}

}

/**

* get tenantCode by UserId

*

* @param userId user id

* @param result return result

* @return tenant code, or null if the user or tenant does not exist

*/

private String getTenantCode(int userId,Result result){

User user = userMapper.selectById(userId);

if (user == null) {

logger.error("user {} not exists", userId);

putMsg(result, Status.USER_NOT_EXIST,userId);

return null;

}

Tenant tenant = tenantMapper.queryById(user.getTenantId());

if (tenant == null){

logger.error("tenant not exists");

putMsg(result, Status.TENANT_NOT_EXIST);

return null;

}

return tenant.getTenantCode();

}

/**

* list all children id

* @param resource resource

* @param containSelf whether add self to children list

* @return all children id

*/

List<Integer> listAllChildren(Resource resource,boolean containSelf){

List<Integer> childList = new ArrayList<>();

if (resource.getId() != -1 && containSelf) {

childList.add(resource.getId());

}

if(resource.isDirectory()){

listAllChildren(resource.getId(),childList);

}

return childList;

}

/**

* list all children id

* @param resourceId resource id

* @param childList child list

*/

void listAllChildren(int resourceId,List<Integer> childList){

List<Integer> children = resourcesMapper.listChildren(resourceId);

for (int childId : children) {

childList.add(childId);

listAllChildren(childId, childList);

}

}

}

|

closed

|

apache/dolphinscheduler

|

https://github.com/apache/dolphinscheduler

| 3,463 |

[Bug][api] rename the udf resource file associated with the udf function, Failed to execute hive task

|

1. Rename the udf resource file associated with the udf function

2. Executing the hive task fails

**Which version of Dolphin Scheduler:**

-[1.3.2-release]
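One plausible reading of the two steps above, offered only as a sketch: a UDF record stores both a resource id and a copy of the resource name (the test data in this repository sets both `resourceId` and `resourceName`), so renaming the resource can leave the copied name stale. The class `UdfRenameSketch`, the nested `Udf` type and the method `propagateRename` are hypothetical names for illustration and are not taken from DolphinScheduler.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: keeps the name cached on each UDF record in sync after a rename.
final class UdfRenameSketch {

    /** Minimal stand-in for a UDF record that caches the name of its resource. */
    static final class Udf {
        final int resourceId;
        String resourceName;

        Udf(int resourceId, String resourceName) {
            this.resourceId = resourceId;
            this.resourceName = resourceName;
        }
    }

    /**
     * Writes the new name to every UDF record that points at the renamed resource.
     * Returns the number of records that were updated.
     */
    static int propagateRename(List<Udf> udfs, int resourceId, String newName) {
        int updated = 0;
        for (Udf udf : udfs) {
            if (udf.resourceId == resourceId) {
                udf.resourceName = newName;
                updated++;
            }
        }
        return updated;
    }

    public static void main(String[] args) {
        List<Udf> udfs = new ArrayList<>();
        udfs.add(new Udf(1, "old.jar"));
        udfs.add(new Udf(2, "other.jar"));

        int updated = propagateRename(udfs, 1, "new.jar");
        System.out.println(updated + " record(s) updated, first now points at " + udfs.get(0).resourceName);
    }
}
```

Matching on the resource id rather than on the old name is the design choice here: the id does not change on rename, so it stays a reliable key.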

|

https://github.com/apache/dolphinscheduler/issues/3463

|

https://github.com/apache/dolphinscheduler/pull/3482

|

a678c827600d44623f30311574b8226c1c59ace2

|

c8322482bbd021a89c407809abfcdd50cf3b2dc6

| 2020-08-11T03:37:09Z |

java

| 2020-08-13T08:19:11Z |

dolphinscheduler-dao/src/main/java/org/apache/dolphinscheduler/dao/mapper/UdfFuncMapper.java

|

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.dao.mapper;

import org.apache.dolphinscheduler.dao.entity.UdfFunc;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;

import com.baomidou.mybatisplus.core.metadata.IPage;

import org.apache.ibatis.annotations.Param;

import java.util.List;

/**

* udf function mapper interface

*/

public interface UdfFuncMapper extends BaseMapper<UdfFunc> {

/**

* select udf by id

* @param id udf id

* @return UdfFunc

*/

UdfFunc selectUdfById(@Param("id") int id);

/**

* query udf function by ids and function name

* @param ids ids

* @param funcNames funcNames

* @return udf function list

*/

List<UdfFunc> queryUdfByIdStr(@Param("ids") int[] ids,

@Param("funcNames") String funcNames);

/**

* udf function page

* @param page page

* @param userId userId

* @param searchVal searchVal

* @return udf function IPage

*/

IPage<UdfFunc> queryUdfFuncPaging(IPage<UdfFunc> page,

@Param("userId") int userId,

@Param("searchVal") String searchVal);

/**

* query udf function by type

* @param userId userId

* @param type type

* @return udf function list

*/

List<UdfFunc> getUdfFuncByType(@Param("userId") int userId,

@Param("type") Integer type);

/**

* query udf function except userId

* @param userId userId

* @return udf function list

*/

List<UdfFunc> queryUdfFuncExceptUserId(@Param("userId") int userId);

/**

* query authed udf function

* @param userId userId

* @return udf function list

*/

List<UdfFunc> queryAuthedUdfFunc(@Param("userId") int userId);

/**

* list authorized UDF function

* @param userId userId

* @param udfIds UDF function id array

* @return UDF function list

*/

<T> List<UdfFunc> listAuthorizedUdfFunc (@Param("userId") int userId,@Param("udfIds")T[] udfIds);

/**

* list UDF by resource id

* @param resourceIds resource id array

* @return UDF function list

*/

List<UdfFunc> listUdfByResourceId(@Param("resourceIds") Integer[] resourceIds);

/**

* list authorized UDF by resource id

* @param resourceIds resource id array

* @return UDF function list

*/

List<UdfFunc> listAuthorizedUdfByResourceId(@Param("userId") int userId,@Param("resourceIds") int[] resourceIds);

}

|

closed

|

apache/dolphinscheduler

|

https://github.com/apache/dolphinscheduler

| 3,463 |

[Bug][api] rename the udf resource file associated with the udf function, Failed to execute hive task

|

1. Rename the udf resource file associated with the udf function

2. Executing the hive task fails

**Which version of Dolphin Scheduler:**

-[1.3.2-release]

|

https://github.com/apache/dolphinscheduler/issues/3463

|

https://github.com/apache/dolphinscheduler/pull/3482

|

a678c827600d44623f30311574b8226c1c59ace2

|

c8322482bbd021a89c407809abfcdd50cf3b2dc6

| 2020-08-11T03:37:09Z |

java

| 2020-08-13T08:19:11Z |

dolphinscheduler-dao/src/main/resources/org/apache/dolphinscheduler/dao/mapper/UdfFuncMapper.xml

|

<?xml version="1.0" encoding="UTF-8" ?>

<!--

~ Licensed to the Apache Software Foundation (ASF) under one or more

~ contributor license agreements. See the NOTICE file distributed with

~ this work for additional information regarding copyright ownership.

~ The ASF licenses this file to You under the Apache License, Version 2.0

~ (the "License"); you may not use this file except in compliance with

~ the License. You may obtain a copy of the License at

~

~ http://www.apache.org/licenses/LICENSE-2.0

~

~ Unless required by applicable law or agreed to in writing, software

~ distributed under the License is distributed on an "AS IS" BASIS,

~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

~ See the License for the specific language governing permissions and

~ limitations under the License.

-->

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >

<mapper namespace="org.apache.dolphinscheduler.dao.mapper.UdfFuncMapper">

<select id="selectUdfById" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where id = #{id}

</select>

<select id="queryUdfByIdStr" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where 1 = 1

<if test="ids != null and ids != ''">

and id in

<foreach collection="ids" item="i" open="(" close=")" separator=",">

#{i}

</foreach>

</if>

<if test="funcNames != null and funcNames != ''">

and func_name = #{funcNames}

</if>

order by id asc

</select>

<select id="queryUdfFuncPaging" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where 1=1

<if test="searchVal!= null and searchVal != ''">

and func_name like concat('%', #{searchVal}, '%')

</if>

<if test="userId != 0">

and id in (

select udf_id from t_ds_relation_udfs_user where user_id=#{userId}

union select id as udf_id from t_ds_udfs where user_id=#{userId})

</if>

order by create_time desc

</select>

<select id="getUdfFuncByType" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where type=#{type}

<if test="userId != 0">

and id in (

select udf_id from t_ds_relation_udfs_user where user_id=#{userId}

union select id as udf_id from t_ds_udfs where user_id=#{userId})

</if>

</select>

<select id="queryUdfFuncExceptUserId" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where user_id <![CDATA[ <> ]]> #{userId}

</select>

<select id="queryAuthedUdfFunc" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

SELECT u.*

from t_ds_udfs u,t_ds_relation_udfs_user rel

WHERE u.id = rel.udf_id

AND rel.user_id = #{userId}

</select>

<select id="listAuthorizedUdfFunc" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where

id in (select udf_id from t_ds_relation_udfs_user where user_id=#{userId}

union select id as udf_id from t_ds_udfs where user_id=#{userId})

<if test="udfIds != null and udfIds != ''">

and id in

<foreach collection="udfIds" item="i" open="(" close=")" separator=",">

#{i}

</foreach>

</if>

</select>

<select id="listUdfByResourceId" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where 1=1

<if test="resourceIds != null and resourceIds != ''">

and resource_id in

<foreach collection="resourceIds" item="i" open="(" close=")" separator=",">

#{i}

</foreach>

</if>

</select>

<select id="listAuthorizedUdfByResourceId" resultType="org.apache.dolphinscheduler.dao.entity.UdfFunc">

select *

from t_ds_udfs

where

id in (select udf_id from t_ds_relation_udfs_user where user_id=#{userId}

union select id as udf_id from t_ds_udfs where user_id=#{userId})

<if test="resourceIds != null and resourceIds != ''">

and resource_id in

<foreach collection="resourceIds" item="i" open="(" close=")" separator=",">

#{i}

</foreach>

</if>

</select>

</mapper>

|

closed

|

apache/dolphinscheduler

|

https://github.com/apache/dolphinscheduler

| 3,463 |

[Bug][api] rename the udf resource file associated with the udf function, Failed to execute hive task

|

1. Rename the udf resource file associated with the udf function

2. Executing the hive task fails

**Which version of Dolphin Scheduler:**

-[1.3.2-release]

|

https://github.com/apache/dolphinscheduler/issues/3463

|

https://github.com/apache/dolphinscheduler/pull/3482

|

a678c827600d44623f30311574b8226c1c59ace2

|

c8322482bbd021a89c407809abfcdd50cf3b2dc6

| 2020-08-11T03:37:09Z |

java

| 2020-08-13T08:19:11Z |

dolphinscheduler-dao/src/test/java/org/apache/dolphinscheduler/dao/mapper/UdfFuncMapperTest.java

|

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.dao.mapper;

import com.baomidou.mybatisplus.core.metadata.IPage;

import com.baomidou.mybatisplus.extension.plugins.pagination.Page;

import org.apache.dolphinscheduler.common.enums.UdfType;

import org.apache.dolphinscheduler.common.enums.UserType;

import org.apache.dolphinscheduler.dao.entity.UDFUser;

import org.apache.dolphinscheduler.dao.entity.UdfFunc;

import org.apache.dolphinscheduler.dao.entity.User;

import org.junit.Assert;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.annotation.Rollback;

import org.springframework.test.context.junit4.SpringRunner;

import org.springframework.transaction.annotation.Transactional;

import java.util.Arrays;

import java.util.Date;

import java.util.List;

import static java.util.stream.Collectors.toList;

@RunWith(SpringRunner.class)

@SpringBootTest

@Transactional

@Rollback(true)

public class UdfFuncMapperTest {

@Autowired

private UserMapper userMapper;

@Autowired

UdfFuncMapper udfFuncMapper;

@Autowired

UDFUserMapper udfUserMapper;

/**

* insert one udf

* @return UdfFunc

*/

private UdfFunc insertOne(){

UdfFunc udfFunc = new UdfFunc();

udfFunc.setUserId(1);

udfFunc.setFuncName("dolphin_udf_func");

udfFunc.setClassName("org.apache.dolphinscheduler.test.mr");

udfFunc.setType(UdfType.HIVE);

udfFunc.setResourceId(1);

udfFunc.setResourceName("dolphin_resource");

udfFunc.setCreateTime(new Date());

udfFunc.setUpdateTime(new Date());

udfFuncMapper.insert(udfFunc);

return udfFunc;

}

/**

* insert one udf

* @return UdfFunc

*/

private UdfFunc insertOne(User user){

UdfFunc udfFunc = new UdfFunc();

udfFunc.setUserId(user.getId());

udfFunc.setFuncName("dolphin_udf_func");

udfFunc.setClassName("org.apache.dolphinscheduler.test.mr");

udfFunc.setType(UdfType.HIVE);

udfFunc.setResourceId(1);

udfFunc.setResourceName("dolphin_resource");

udfFunc.setCreateTime(new Date());

udfFunc.setUpdateTime(new Date());

udfFuncMapper.insert(udfFunc);

return udfFunc;

}

/**

* insert one user

* @return User

*/

private User insertOneUser(){

User user = new User();

user.setUserName("user1");

user.setUserPassword("1");

user.setEmail("[email protected]");

user.setUserType(UserType.GENERAL_USER);

user.setCreateTime(new Date());

user.setTenantId(1);

user.setUpdateTime(new Date());

userMapper.insert(user);

return user;

}

/**

* insert one user

* @return User

*/

private User insertOneUser(String userName){

User user = new User();

user.setUserName(userName);

user.setUserPassword("1");

user.setEmail("[email protected]");

user.setUserType(UserType.GENERAL_USER);

user.setCreateTime(new Date());

user.setTenantId(1);

user.setUpdateTime(new Date());

userMapper.insert(user);

return user;

}

/**

* insert UDFUser

* @param user user

* @param udfFunc udf func

* @return UDFUser

*/

private UDFUser insertOneUDFUser(User user,UdfFunc udfFunc){

UDFUser udfUser = new UDFUser();

udfUser.setUdfId(udfFunc.getId());

udfUser.setUserId(user.getId());

udfUser.setCreateTime(new Date());

udfUser.setUpdateTime(new Date());

udfUserMapper.insert(udfUser);

return udfUser;

}

/**

* create general user

* @return User

*/

private User createGeneralUser(String userName){

User user = new User();

user.setUserName(userName);

user.setUserPassword("1");

user.setEmail("[email protected]");

user.setUserType(UserType.GENERAL_USER);

user.setCreateTime(new Date());

user.setTenantId(1);

user.setUpdateTime(new Date());

userMapper.insert(user);

return user;

}

/**

* test update

*/

@Test

public void testUpdate(){

//insertOne

UdfFunc udfFunc = insertOne();

udfFunc.setResourceName("dolphin_resource_update");

udfFunc.setResourceId(2);

udfFunc.setClassName("org.apache.dolphinscheduler.test.mrUpdate");

udfFunc.setUpdateTime(new Date());

//update

int update = udfFuncMapper.updateById(udfFunc);

Assert.assertEquals(1, update);

}

/**

* test delete

*/

@Test

public void testDelete(){

//insertOne

UdfFunc udfFunc = insertOne();

//delete

int delete = udfFuncMapper.deleteById(udfFunc.getId());

Assert.assertEquals(1, delete);

}

/**

* test query

*/

@Test

public void testQuery(){

//insertOne

UdfFunc udfFunc = insertOne();

//query

List<UdfFunc> udfFuncList = udfFuncMapper.selectList(null);

Assert.assertNotEquals(udfFuncList.size(), 0);

}

/**

* test query udf by ids

*/

@Test

public void testQueryUdfByIdStr() {

//insertOne

UdfFunc udfFunc = insertOne();

//insertOne

UdfFunc udfFunc1 = insertOne();

int[] idArray = new int[]{udfFunc.getId(),udfFunc1.getId()};

//queryUdfByIdStr

List<UdfFunc> udfFuncList = udfFuncMapper.queryUdfByIdStr(idArray,"");

Assert.assertNotEquals(udfFuncList.size(), 0);

}

/**

* test page

*/

@Test

public void testQueryUdfFuncPaging() {

//insertOneUser

User user = insertOneUser();

//insertOne

UdfFunc udfFunc = insertOne(user);

//queryUdfFuncPaging

Page<UdfFunc> page = new Page(1,3);

IPage<UdfFunc> udfFuncIPage = udfFuncMapper.queryUdfFuncPaging(page,user.getId(),"");

Assert.assertNotEquals(udfFuncIPage.getTotal(), 0);

}

/**

* test get udffunc by type

*/

@Test

public void testGetUdfFuncByType() {

//insertOneUser

User user = insertOneUser();

//insertOne

UdfFunc udfFunc = insertOne(user);

//getUdfFuncByType

List<UdfFunc> udfFuncList = udfFuncMapper.getUdfFuncByType(user.getId(), udfFunc.getType().ordinal());

Assert.assertNotEquals(udfFuncList.size(), 0);

}

/**

* test query udffunc except userId

*/

@Test

public void testQueryUdfFuncExceptUserId() {

//insertOneUser

User user1 = insertOneUser();

User user2 = insertOneUser("user2");

//insertOne

UdfFunc udfFunc1 = insertOne(user1);

UdfFunc udfFunc2 = insertOne(user2);

List<UdfFunc> udfFuncList = udfFuncMapper.queryUdfFuncExceptUserId(user1.getId());

Assert.assertNotEquals(udfFuncList.size(), 0);

}

/**

* test query authed udffunc

*/

@Test

public void testQueryAuthedUdfFunc() {

//insertOneUser

User user = insertOneUser();

//insertOne

UdfFunc udfFunc = insertOne(user);

//insertOneUDFUser

UDFUser udfUser = insertOneUDFUser(user, udfFunc);

//queryAuthedUdfFunc

List<UdfFunc> udfFuncList = udfFuncMapper.queryAuthedUdfFunc(user.getId());

Assert.assertNotEquals(udfFuncList.size(), 0);

}

@Test

public void testListAuthorizedUdfFunc(){

//create general user

User generalUser1 = createGeneralUser("user1");

User generalUser2 = createGeneralUser("user2");

//create udf function

UdfFunc udfFunc = insertOne(generalUser1);

UdfFunc unauthorizdUdfFunc = insertOne(generalUser2);

//udf function ids

Integer[] udfFuncIds = new Integer[]{udfFunc.getId(),unauthorizdUdfFunc.getId()};

List<UdfFunc> authorizedUdfFunc = udfFuncMapper.listAuthorizedUdfFunc(generalUser1.getId(), udfFuncIds);

Assert.assertEquals(generalUser1.getId(),udfFunc.getUserId());

Assert.assertNotEquals(generalUser1.getId(),unauthorizdUdfFunc.getUserId());

Assert.assertFalse(authorizedUdfFunc.stream().map(t -> t.getId()).collect(toList()).containsAll(Arrays.asList(udfFuncIds)));

//authorize object unauthorizdUdfFunc to generalUser1

insertOneUDFUser(generalUser1,unauthorizdUdfFunc);

authorizedUdfFunc = udfFuncMapper.listAuthorizedUdfFunc(generalUser1.getId(), udfFuncIds);

Assert.assertTrue(authorizedUdfFunc.stream().map(t -> t.getId()).collect(toList()).containsAll(Arrays.asList(udfFuncIds)));

}

}

|

closed

|

apache/dolphinscheduler

|

https://github.com/apache/dolphinscheduler

| 3,324 |

[Bug][Master] there exists some problems in checking task dependency

|

**Describe the bug**

There are some problems in the Master-Server execution logic. Some nodes can execute normally even though their dependency is not satisfied. As in the screenshots below, if you build the same workflow, you will find that the result is wrong.

**Screenshots**

As you can see, task B should not be executed, but the actual result is the opposite. When task X is followed by a condition-type node, the tasks that depend on X will probably be executed.

**Which version of Dolphin Scheduler:**

-[1.3.2-snapshot]

**Probable reason**

- it's wrong because of the code below in the red rectangle.
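The expected behaviour described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from `MasterExecThread`: the class `DependencyCheckSketch`, the `State` enum and the method `canRun` are hypothetical, and the sketch assumes that a condition-type node may run once its upstream tasks have finished with any outcome, while an ordinary node needs every upstream task to have succeeded.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of a dependency check; names are illustrative only.
final class DependencyCheckSketch {

    enum State { SUCCESS, FAILURE }

    /**
     * Decides whether a node may be submitted.
     * An upstream task missing from the map has not finished yet, so nothing may run.
     * An ordinary node additionally requires every upstream task to be SUCCESS.
     */
    static boolean canRun(List<String> upstream, Map<String, State> completed, boolean conditionsNode) {
        for (String dep : upstream) {
            State state = completed.get(dep);
            if (state == null) {
                return false;
            }
            if (!conditionsNode && state != State.SUCCESS) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Map<String, State> completed = new HashMap<>();
        completed.put("X", State.FAILURE);
        List<String> upstream = Arrays.asList("X");

        // Ordinary task B depends on the failed task X.
        System.out.println("B may run: " + canRun(upstream, completed, false));
        // A condition-type node after X evaluates the outcome of X.
        System.out.println("conditions node may run: " + canRun(upstream, completed, true));
    }
}
```

Under these assumptions, the presence of a condition-type node after X changes nothing for B: B is checked with `conditionsNode` set to `false` and stays blocked while X is in the `FAILURE` state.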

|

https://github.com/apache/dolphinscheduler/issues/3324

|

https://github.com/apache/dolphinscheduler/pull/3473

|

927985c012342f0c9f1dde397d66b5737571fed2

|

5756d6b02910d139f00b4b412078f32c5ca14c54

| 2020-07-27T15:35:45Z |

java

| 2020-08-13T11:02:14Z |

dolphinscheduler-server/src/main/java/org/apache/dolphinscheduler/server/master/runner/MasterExecThread.java

|

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.server.master.runner;

import com.alibaba.fastjson.JSON;

import com.google.common.collect.Lists;

import org.apache.commons.io.FileUtils;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.*;

import org.apache.dolphinscheduler.common.graph.DAG;

import org.apache.dolphinscheduler.common.model.TaskNode;

import org.apache.dolphinscheduler.common.model.TaskNodeRelation;

import org.apache.dolphinscheduler.common.process.ProcessDag;

import org.apache.dolphinscheduler.common.task.conditions.ConditionsParameters;

import org.apache.dolphinscheduler.common.thread.Stopper;

import org.apache.dolphinscheduler.common.thread.ThreadUtils;

import org.apache.dolphinscheduler.common.utils.*;

import org.apache.dolphinscheduler.dao.entity.ProcessInstance;

import org.apache.dolphinscheduler.dao.entity.Schedule;

import org.apache.dolphinscheduler.dao.entity.TaskInstance;

import org.apache.dolphinscheduler.dao.utils.DagHelper;

import org.apache.dolphinscheduler.remote.NettyRemotingClient;

import org.apache.dolphinscheduler.server.master.config.MasterConfig;

import org.apache.dolphinscheduler.server.utils.AlertManager;

import org.apache.dolphinscheduler.service.bean.SpringApplicationContext;

import org.apache.dolphinscheduler.service.process.ProcessService;

import org.apache.dolphinscheduler.service.quartz.cron.CronUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.File;

import java.io.IOException;

import java.util.*;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Future;

import static org.apache.dolphinscheduler.common.Constants.*;

/**

* master exec thread: splits the DAG and executes the process instance

*/

public class MasterExecThread implements Runnable {

/**

* logger of MasterExecThread

*/

private static final Logger logger = LoggerFactory.getLogger(MasterExecThread.class);

/**

* process instance

*/

private ProcessInstance processInstance;

/**

* running task nodes

*/

private final Map<MasterBaseTaskExecThread,Future<Boolean>> activeTaskNode = new ConcurrentHashMap<>();

/**

* task exec service

*/

private final ExecutorService taskExecService;

/**

* whether a task node failed to be submitted

*/

private boolean taskFailedSubmit = false;

/**

* recover node id list

*/

private List<TaskInstance> recoverNodeIdList = new ArrayList<>();

/**

* error task list

*/

private Map<String,TaskInstance> errorTaskList = new ConcurrentHashMap<>();

/**

* complete task list

*/

private Map<String, TaskInstance> completeTaskList = new ConcurrentHashMap<>();

/**

* ready to submit task list

*/

private Map<String, TaskInstance> readyToSubmitTaskList = new ConcurrentHashMap<>();

/**

* depend failed task map

*/

private Map<String, TaskInstance> dependFailedTask = new ConcurrentHashMap<>();

/**

* forbidden task map

*/

private Map<String, TaskNode> forbiddenTaskList = new ConcurrentHashMap<>();

/**

* skip task map

*/

private Map<String, TaskNode> skipTaskNodeList = new ConcurrentHashMap<>();

/**

* recover tolerance fault task list

*/

private List<TaskInstance> recoverToleranceFaultTaskList = new ArrayList<>();

/**

* alert manager

*/

private AlertManager alertManager = new AlertManager();

/**

* the object of DAG

*/

private DAG<String,TaskNode,TaskNodeRelation> dag;

/**

* process service

*/

private ProcessService processService;

/**

* master config

*/

private MasterConfig masterConfig;

/**

* netty remoting client

*/

private NettyRemotingClient nettyRemotingClient;

/**

* constructor of MasterExecThread

* @param processInstance processInstance

* @param processService processService

* @param nettyRemotingClient nettyRemotingClient

*/

public MasterExecThread(ProcessInstance processInstance, ProcessService processService, NettyRemotingClient nettyRemotingClient){

this.processService = processService;

this.processInstance = processInstance;

this.masterConfig = SpringApplicationContext.getBean(MasterConfig.class);

int masterTaskExecNum = masterConfig.getMasterExecTaskNum();

this.taskExecService = ThreadUtils.newDaemonFixedThreadExecutor("Master-Task-Exec-Thread",

masterTaskExecNum);

this.nettyRemotingClient = nettyRemotingClient;

}

@Override

public void run() {

// process instance is null

if (processInstance == null){

logger.info("process instance does not exist");

return;

}

// check to see if it's done

if (processInstance.getState().typeIsFinished()){

logger.info("process instance is done : {}",processInstance.getId());

return;

}

try {

if (processInstance.isComplementData() && Flag.NO == processInstance.getIsSubProcess()){

// complement data for a process instance that is not a sub process

executeComplementProcess();

}else{

// execute flow

executeProcess();

}

}catch (Exception e){

logger.error("master exec thread exception", e);

logger.error("process execute failed, process id:{}", processInstance.getId());

processInstance.setState(ExecutionStatus.FAILURE);

processInstance.setEndTime(new Date());

processService.updateProcessInstance(processInstance);

}finally {

taskExecService.shutdown();

// post handle

postHandle();

}

}

/**

* execute process

* @throws Exception exception

*/

private void executeProcess() throws Exception {

prepareProcess();

runProcess();

endProcess();

}

/**

* execute complement process

* @throws Exception exception

*/

private void executeComplementProcess() throws Exception {

Map<String, String> cmdParam = JSONUtils.toMap(processInstance.getCommandParam());

Date startDate = DateUtils.getScheduleDate(cmdParam.get(CMDPARAM_COMPLEMENT_DATA_START_DATE));

Date endDate = DateUtils.getScheduleDate(cmdParam.get(CMDPARAM_COMPLEMENT_DATA_END_DATE));

processService.saveProcessInstance(processInstance);

// get schedules

int processDefinitionId = processInstance.getProcessDefinitionId();

List<Schedule> schedules = processService.queryReleaseSchedulerListByProcessDefinitionId(processDefinitionId);

List<Date> listDate = Lists.newLinkedList();

if(!CollectionUtils.isEmpty(schedules)){

for (Schedule schedule : schedules) {

listDate.addAll(CronUtils.getSelfFireDateList(startDate, endDate, schedule.getCrontab()));

}

}

// get first fire date

Iterator<Date> iterator = null;

Date scheduleDate = null;

if(!CollectionUtils.isEmpty(listDate)) {

iterator = listDate.iterator();

scheduleDate = iterator.next();

processInstance.setScheduleTime(scheduleDate);