text

|

|---|

How to sort a Python list descending based on item count, but return a list with sorted items only, and not a count also?

Question: I am generating some large lists in Python, and I need to figure out the absolute fastest way to return a sorted list from something like this:

myList = ['a','b','c','a','c','a']

and return a list of it in descending order, based on the count of the items,

so it looks like this.

sortedList = ['a','c','b']

I have been using Counter().most_common(), but this returns a list of (item, count) tuples in descending order of count. I really just need a tuple or list of the items in descending order of count, without the count amounts. Any ideas?

Edit: So would doing something like this be faster?

myList = ['a','b','c','a','c','a']

count = Counter(myList).most_common()

res = [k for k,v in count]

Answer:

from collections import Counter

myList = ['a','b','c','a','c','a']

res = [k for k,v in Counter(myList).most_common()]

# ['a', 'c', 'b']
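The same idea can be wrapped in a small helper. This is a minimal sketch in Python 3; the function name `items_by_frequency` is made up for illustration and is not part of `collections`.

```python
from collections import Counter

def items_by_frequency(items):
    """Return the distinct items, most frequent first, without the counts."""
    # most_common() yields (item, count) pairs already sorted by count.
    return [item for item, _count in Counter(items).most_common()]

my_list = ['a', 'b', 'c', 'a', 'c', 'a']
print(items_by_frequency(my_list))  # ['a', 'c', 'b']
```

Counting is a single pass over the list, so for large inputs the cost is dominated by sorting the distinct items rather than by the list length.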

|

python threading wont start in background

Question: This is my class for turning an LED on and off and making it blink on a Raspberry Pi. I want to stop the blink thread after some time, but the thread won't run in the background.

> class LED:

>

>

> _GPIOPORT=None

> flagstop=0

> flag=threading.Event()

>

>

> def __init__(self,GPIONUM):

> self._GPIOPORT=GPIONUM

> GPIO.setmode(GPIO.BOARD)

> GPIO.setwarnings(False)

> GPIO.setup(self._GPIOPORT, GPIO.OUT)

> self.flag.set()

>

>

> def TurnOn(self):

> self.flag.clear()

> print 'Turn On ...'

> GPIO.output(self._GPIOPORT,True)

>

> def TurnOff(self):

> self.flag.clear()

> print 'Turn Off ...'

> GPIO.output(self._GPIOPORT,False)

>

> def Blink(self,delay,e):

> print 'Thread Blink Create ...'

> t1=threading.Thread(self.BlinkThread(delay,e))

> t1.start()

> print 'Thread Started'

>

> def BlinkThread(self,delaytime,event):

> print 'BlinkTrehad Start ....'

> while not event:

> GPIO.output(self._GPIOPORT,False)

> sleep(delaytime)

> GPIO.output(self._GPIOPORT,True)

> sleep(delaytime)

>

and this is my module for use this class :

import LED

from time import sleep

import threading

if __name__ == '__main__':

e=threading.Event()

e.set()

ledblue=LED.LED(11)

ledblue.TurnOn()

sleep(1)

ledblue.Blink(1,e)

ledblue.TurnOn()

"Thread Started" is never printed to the console, and the loop runs inline instead of in the background.

Answer: You are calling BlinkThread() in the Thread constructor instead of just

passing the function reference and arguments and letting the thread start the

function. Change it to:

    def Blink(self,delay,e):
        print 'Thread Blink Create ...'
        t1=threading.Thread(target=self.BlinkThread, args=(delay,e))
        t1.start()
        print 'Thread Started'
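A related point, offered as an observation rather than as part of the original answer: the posted loop tests `while not event:`, and a `threading.Event` object is always truthy, so that loop body would never run; `event.is_set()` is the usual check. The sketch below is written for Python 3 and replaces the GPIO calls with a plain callback so it can run anywhere. The class name `Blinker` and the `toggle` callback are hypothetical stand-ins.

```python
import threading
import time

class Blinker:
    """Toggle a callback in a background thread until told to stop."""

    def __init__(self, toggle):
        self._toggle = toggle            # stand-in for GPIO.output(port, state)
        self._stop = threading.Event()
        self._thread = None

    def start(self, delay):
        self._stop.clear()
        # Pass the function object and its arguments; do not call it here.
        self._thread = threading.Thread(
            target=self._run, args=(delay,), daemon=True
        )
        self._thread.start()

    def _run(self, delay):
        state = False
        while not self._stop.is_set():
            state = not state
            self._toggle(state)
            # wait() returns early if stop() is called during the delay.
            self._stop.wait(delay)

    def stop(self):
        self._stop.set()
        if self._thread is not None:
            self._thread.join()

states = []
blinker = Blinker(states.append)
blinker.start(0.01)
time.sleep(0.1)      # the main thread keeps running while the LED "blinks"
blinker.stop()
print(len(states) >= 2)
```

Using `Event.wait(delay)` instead of `sleep(delay)` lets the thread stop promptly rather than finishing a full delay first.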

|

Speech library installation error

Question: I am new to Python and I installed the

[`speech`](https://pypi.python.org/pypi/speech) library. But whenever I'm

importing `speech` from Python shell it's giving the error

>>> import speech

Traceback (most recent call last):

File "<pyshell#0>", line 1, in <module>

import speech

File "C:\Python34\lib\site-packages\speech-0.5.2-py3.4.egg\speech.py", line 55, in <module>

from win32com.client import constants as _constants

File "C:\Python34\lib\site-packages\win32com\__init__.py", line 5, in <module>

import win32api, sys, os

ImportError: DLL load failed: The specified module could not be found.

Answer: You need to install `pywin32`.

Use `easy_install pywin32` or `pip install pywin32`

|

Random number generator in Python on Linux

Question: This is the code that i've written:

import os

import string

import random

def id_generator(size=8, chars=string.digits):

return ''.join(random.choice(chars) for _ in range(size))

b="echo " + id_generator() + "> file.txt"

os.system(100*b)

Now the problem is that when i run the script it returns one random number not

100. I want that when i open **file.txt** it must be like:

123456

241721

126428

How can i resolve this problem?

Answer: The redirection overwrites the previous contents of the file. You want `>>`, not `>`. Note also that `100*b` repeats the command string 100 times rather than running the command 100 times, and `id_generator()` is called only once when `b` is built.

There is no need to use `os.system()` and shell redirection here, by the way. You can do:

    with open('file.txt', 'w') as f:
        for _ in range(100):
            f.write(id_generator() + '\n')

This is also more cross-platform.

|

How to add c compiler options to distutils

Question: I want to call Mathematica from C code and C code from Python. I have the

individual parts working, but I can't put everything together.

When I compile the C code that calls Mathematica, then I use the following

command in makefile

$(CC) -O mlcall.c -I$(INCDIR) -L$(LIBDIR) -l${MLLIB} ${EXTRALIBS} -o $@

Where

MLINKDIR = /opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit

SYS=Linux-x86-64

CADDSDIR = ${MLINKDIR}/${SYS}/CompilerAdditions

INCDIR = ${CADDSDIR}

LIBDIR = ${CADDSDIR}

MLLIB = ML64i3

My question is how can I use distutils with those same options (currently I'm

getting undefined symbol: MLActivate error, when calling from Python and I

think the problem is because I'm not using these options)?

I saw the answer <http://stackoverflow.com/a/16078447/1335014> and tried to

use CFLAGS (by running the following script):

MLINKDIR=/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit

SYS="Linux-x86-64"

CADDSDIR=${MLINKDIR}/${SYS}/CompilerAdditions

INCDIR=${CADDSDIR}

LIBDIR=${CADDSDIR}

MLLIB=ML64i3

EXTRALIBS="-lm -lpthread -lrt -lstdc++"

export CFLAGS="-I$INCDIR -L$LIBDIR -l${MLLIB} ${EXTRALIBS}"

So I get the following output for `echo $CFLAGS`

-I/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions -L/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions -lML64i3 -lm -lpthread -lrt -lstdc++

Which seems correct, but didn't have any effect. Maybe because I'm adding more

than one option.

Answer: I realized that if you don't modify the C source code then nothing is compiled

(the original error I had stayed because of that reason). Using CFLAGS, as I

did, actually does fix the problem.

After digging around in the distutils documentation I found two additional fixes (these only use `setup.py` and don't require any environment variables).

1:

from distutils.core import setup, Extension

module1 = Extension('spammodule',

sources = ['spammodule.c'],

extra_compile_args=["-I/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions"],

extra_link_args=["-L/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions", "-lML64i3", "-lm", "-lpthread", "-lrt", "-lstdc++"])

setup (name = 'MyPackage',

version = '1.0',

description = 'This is a demo package',

ext_modules = [module1])

2:

from distutils.core import setup, Extension

module1 = Extension('spammodule',

sources = ['spammodule.c'],

include_dirs=['/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions'],

library_dirs=['/opt/Mathematica-9.0/SystemFiles/Links/MathLink/DeveloperKit/Linux-x86-64/CompilerAdditions'],

libraries=["ML64i3", "m", "pthread", "rt", "stdc++"])

setup (name = 'MyPackage',

version = '1.0',

description = 'This is a demo package',

ext_modules = [module1])

|

Python: Aggregate data for different users on different days

Question: I'm a new Python user and learning how to manipulate/aggregate data.

I have some sample data of the format:

User Date Price

A 20130101 50

A 20130102 20

A 20130103 30

B 20130201 40

B 20130202 20

and so on.

I'm looking for some aggregates around each user and expecting an output for

mean spend like:

User Mean_Spend

A 33

B 30

I could read line by line and get aggregates for one user but I'm struggling

to read the data for different users.

Any suggestions highly appreciated around how to read the file for different

users.

Thanks

Answer: The `collections` module has a `Counter` class ([documentation](https://docs.python.org/2/library/collections.html#counter-objects)), a `dict` subclass that's meant for this kind of quick summation. Naively, you could use one to accumulate the spend amounts, and another to tally the number of transactions, and then divide.

    from collections import Counter

    accumulator = Counter()
    transactions = Counter()

    # assuming your input is exactly as shown...
    with open('my_foo.txt', 'r') as f:
        f.readline()  # skip header line
        for line in f.readlines():
            parts = line.split()
            transactions[parts[0]] += 1
            accumulator[parts[0]] += int(parts[2])

    result = dict((k, float(accumulator[k])/transactions[k]) for k in transactions)
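For a version that runs without a file on disk, here is a minimal Python 3 sketch of the same accumulate-then-divide idea. It reads the sample rows from the question out of an in-memory string; the names `SAMPLE` and `mean_spend` are illustrative only.

```python
import io
from collections import defaultdict

SAMPLE = """User Date Price
A 20130101 50
A 20130102 20
A 20130103 30
B 20130201 40
B 20130202 20
"""

def mean_spend(lines):
    """Return {user: mean price} for whitespace-separated User/Date/Price rows."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    next(lines)  # skip the header row
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines
        user, _date, price = line.split()
        totals[user] += float(price)
        counts[user] += 1
    return {user: totals[user] / counts[user] for user in totals}

result = mean_spend(io.StringIO(SAMPLE))
for user in sorted(result):
    print(user, round(result[user], 2))
```

Passing an open file object instead of the `io.StringIO` wrapper should work the same way, since both are iterated line by line.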

|

Django CSV export

Question: I want to export a CSV file from the database, but I get the following error saying "tuple index out of range", and I don't know why:

Request Method: GET

Request URL: http://www.article/export_excel/

Django Version: 1.6.2

Exception Type: IndexError

Exception Value: tuple index out of range

Exception Location: /var/www/article/views.py in export_excel, line 191

Python Executable: /usr/bin/python

Python Version: 2.6.6

Python Path:

['/usr/lib/python2.6/site-packages/pip-1.5.2-py2.6.egg',

'/usr/lib64/python26.zip',

'/usr/lib64/python2.6',

'/usr/lib64/python2.6/plat-linux2',

'/usr/lib64/python2.6/lib-tk',

'/usr/lib64/python2.6/lib-old',

'/usr/lib64/python2.6/lib-dynload',

'/usr/lib64/python2.6/site-packages',

'/usr/lib/python2.6/site-packages',

'/usr/lib/python2.6/site-packages/setuptools-0.6c11-py2.6.egg-info',

Server time: Thu, 22 May 2014 14:45:02 +0900

Traceback Switch to copy-and-paste view

/usr/lib/python2.6/site-packages/django/core/handlers/base.py in get_response

response = wrapped_callback(request, *callback_args, **callback_kwargs)

...

▶ Local vars

/var/www/article/views.py in export_excel

day = att[2].day

...

▶ Local vars

This is my views.py :

from datetime import datetime, time, date, timedelta

class workLog(object):

def __init__(self, name, day, attTime, leaveTime):

self.name = name

self.day = day

self.attTime = attTime

self.leaveTime = leaveTime

def export_excel(request):

from staffprofile.models import Myattendance,Myleavework

response = HttpResponse(mimetype='application/vnd.ms-excel; charset="Shift_JIS"')

response['Content-Disposition'] = 'attachment; filename=file.csv'

writer = csv.writer(response)

titles = ["No","name","day","attendance_time", "leave_time"]

writer.writerow(titles)

obj_all = attendance.objects.filter(user_id = 3).values_list('user', 'contact_date', 'contact_time').order_by("-contact_date")

lea = leavework.objects.filter(user_id = 3).values_list('contact_time').order_by('-contact_date')

S = Staff.objects.all()

row = [workLog('name', i, None, None) for i in range(32)]

for att in obj_all.filter(user_id = 3).values_list('contact_date'):

day = att[2]

log = row[day]

if log.attTime is None:

log.attTime = att[2]

elif log.attTime < att[2]:

log.attTime = att[2]

for leav in lea:

day = leav[2].day

log = row[day]

if log.leaveTime is None:

log.leaveTime = leav[2]

elif log.leaveTime < leav[2]:

log.leaveTime = leav[2]

for log in row:

if log.attTime is not None:

if log.leaveTime is not None:

row.append((log.attTime, log.leaveTime))

else:

row.append(None)

else:

if log.leaveTime is not None:

row(None)

writer.writerow(row)

return response

Answer: <https://docs.djangoproject.com/en/dev/ref/models/querysets/#values-list>

The statement `obj_all.filter(user_id = 3).values_list('contact_date')` returns a sequence of 1-tuples (n rows by 1 column), so each `att` has length 1 and `att[2]` is out of range.
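The effect can be reproduced without Django. The sketch below uses plain tuples as stand-ins for the rows that `values_list()` yields, one element per requested field; the sample dates and the helper `day_of` are hypothetical.

```python
import datetime

# Stand-ins for rows from values_list(): one tuple per row,
# with one element per requested field (hypothetical sample data).
one_field_rows = [(datetime.date(2014, 5, 22),)]
three_field_rows = [(3, datetime.date(2014, 5, 22), datetime.time(9, 0))]

def day_of(row, index):
    """Return the day of month stored at row[index]."""
    return row[index].day

try:
    day_of(one_field_rows[0], 2)
    error = None
except IndexError as exc:
    error = exc  # a 1-tuple has no index 2

print(repr(error))
print(day_of(one_field_rows[0], 0))    # index 0 is the only valid position
print(day_of(three_field_rows[0], 1))  # the date is at index 1 in this row
```

In the view, that would mean either indexing with `att[0]` or requesting every field the loop needs in a single `values_list(...)` call.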

|

Scatter-plot matrix with lowess smoother

Question: **What would the Python code be for a scatter-plot matrix with lowess

smoothers similar to the following one?**

I'm not sure about the original source of the graph. I saw it on [this post](http://stats.stackexchange.com/questions/64789/exploring-a-scatter-plot-matrix-for-many-variables) on CrossValidated. The ellipses define the covariance according to the original post. I'm not sure what the numbers mean.

Answer: I adapted the pandas scatter_matrix function and got a decent result:

import pandas as pd

import numpy as np

frame = pd.DataFrame(np.random.randn(100, 4), columns=['A','B','C','D'])

fig = scatter_matrix_lowess(frame, alpha=0.4, figsize=(12,12));

fig.suptitle('Scatterplot matrix with lowess smoother', fontsize=16);

* * *

This is the code for `scatter_matrix_lowess`:

def scatter_matrix_lowess(frame, alpha=0.5, figsize=None, grid=False,

diagonal='hist', marker='.', density_kwds=None,

hist_kwds=None, range_padding=0.05, **kwds):

"""

Draw a matrix of scatter plots with lowess smoother.

This is an adapted version of the pandas scatter_matrix function.

Parameters

----------

frame : DataFrame

alpha : float, optional

amount of transparency applied

figsize : (float,float), optional

a tuple (width, height) in inches

ax : Matplotlib axis object, optional

grid : bool, optional

setting this to True will show the grid

diagonal : {'hist', 'kde'}

pick between 'kde' and 'hist' for

either Kernel Density Estimation or Histogram

plot in the diagonal

marker : str, optional

Matplotlib marker type, default '.'

hist_kwds : other plotting keyword arguments

To be passed to hist function

density_kwds : other plotting keyword arguments

To be passed to kernel density estimate plot

range_padding : float, optional

relative extension of axis range in x and y

with respect to (x_max - x_min) or (y_max - y_min),

default 0.05

kwds : other plotting keyword arguments

To be passed to scatter function

Examples

--------

>>> df = DataFrame(np.random.randn(1000, 4), columns=['A','B','C','D'])

>>> scatter_matrix_lowess(df, alpha=0.2)

"""

import matplotlib.pyplot as plt

from matplotlib.artist import setp

import pandas.core.common as com

from pandas.compat import range, lrange, lmap, map, zip

from statsmodels.nonparametric.smoothers_lowess import lowess

df = frame._get_numeric_data()

n = df.columns.size

fig, axes = plt.subplots(nrows=n, ncols=n, figsize=figsize, squeeze=False)

# no gaps between subplots

fig.subplots_adjust(wspace=0, hspace=0)

mask = com.notnull(df)

marker = _get_marker_compat(marker)

hist_kwds = hist_kwds or {}

density_kwds = density_kwds or {}

# workaround because `c='b'` is hardcoded in matplotlibs scatter method

kwds.setdefault('c', plt.rcParams['patch.facecolor'])

boundaries_list = []

for a in df.columns:

values = df[a].values[mask[a].values]

rmin_, rmax_ = np.min(values), np.max(values)

rdelta_ext = (rmax_ - rmin_) * range_padding / 2.

boundaries_list.append((rmin_ - rdelta_ext, rmax_+ rdelta_ext))

for i, a in zip(lrange(n), df.columns):

for j, b in zip(lrange(n), df.columns):

ax = axes[i, j]

if i == j:

values = df[a].values[mask[a].values]

# Deal with the diagonal by drawing a histogram there.

if diagonal == 'hist':

ax.hist(values, **hist_kwds)

elif diagonal in ('kde', 'density'):

from scipy.stats import gaussian_kde

y = values

gkde = gaussian_kde(y)

ind = np.linspace(y.min(), y.max(), 1000)

ax.plot(ind, gkde.evaluate(ind), **density_kwds)

ax.set_xlim(boundaries_list[i])

else:

common = (mask[a] & mask[b]).values

ax.scatter(df[b][common], df[a][common],

marker=marker, alpha=alpha, **kwds)

# The following 2 lines are new and add the lowess smoothing

ys = lowess(df[a][common], df[b][common])

ax.plot(ys[:,0], ys[:,1], 'red', linewidth=1)

ax.set_xlim(boundaries_list[j])

ax.set_ylim(boundaries_list[i])

ax.set_xlabel('')

ax.set_ylabel('')

_label_axis(ax, kind='x', label=b, position='bottom', rotate=True)

_label_axis(ax, kind='y', label=a, position='left')

if j!= 0:

ax.yaxis.set_visible(False)

if i != n-1:

ax.xaxis.set_visible(False)

for ax in axes.flat:

setp(ax.get_xticklabels(), fontsize=8)

setp(ax.get_yticklabels(), fontsize=8)

return fig

def _label_axis(ax, kind='x', label='', position='top',

ticks=True, rotate=False):

from matplotlib.artist import setp

if kind == 'x':

ax.set_xlabel(label, visible=True)

ax.xaxis.set_visible(True)

ax.xaxis.set_ticks_position(position)

ax.xaxis.set_label_position(position)

if rotate:

setp(ax.get_xticklabels(), rotation=90)

elif kind == 'y':

ax.yaxis.set_visible(True)

ax.set_ylabel(label, visible=True)

# ax.set_ylabel(a)

ax.yaxis.set_ticks_position(position)

ax.yaxis.set_label_position(position)

return

def _get_marker_compat(marker):

import matplotlib.lines as mlines

import matplotlib as mpl

if mpl.__version__ < '1.1.0' and marker == '.':

return 'o'

if marker not in mlines.lineMarkers:

return 'o'

return marker

|

Process Memory grows huge -Tornado CurlAsyncHTTPClient

Question: I am using Tornado CurlAsyncHTTPClient. My process memory keeps growing for

both blocking and non blocking requests when I instantiate corresponding

httpclients for each request. This memory usage growth does not happen if I

just have one instance of the

httpclients(tornado.httpclient.HTTPClient/tornado.httpclient.AsyncHTTPClient)

and reuse them.

Also, if I use SimpleAsyncHTTPClient instead of CurlAsyncHTTPClient, this memory growth does not happen irrespective of how I instantiate.

Here is a sample code that reproduces this,

import tornado.httpclient

import json

import functools

instantiate_once = False

tornado.httpclient.AsyncHTTPClient.configure('tornado.curl_httpclient.CurlAsyncHTTPClient')

hc, io_loop, async_hc = None, None, None

if instantiate_once:

hc = tornado.httpclient.HTTPClient()

io_loop = tornado.ioloop.IOLoop()

async_hc = tornado.httpclient.AsyncHTTPClient(io_loop=io_loop)

def fire_sync_request():

global count

if instantiate_once:

global hc

if not instantiate_once:

hc = tornado.httpclient.HTTPClient()

url = '<Please try with a url>'

try:

resp = hc.fetch(url)

except (Exception,tornado.httpclient.HTTPError) as e:

print str(e)

if not instantiate_once:

hc.close()

def fire_async_requests():

#generic response callback fn

def response_callback(response):

response_callback_info['response_count'] += 1

if response_callback_info['response_count'] >= request_count:

io_loop.stop()

if instantiate_once:

global io_loop, async_hc

if not instantiate_once:

io_loop = tornado.ioloop.IOLoop()

requests = ['<Please add ur url to try>']*5

response_callback_info = {'response_count': 0}

request_count = len(requests)

global count

count +=request_count

hcs=[]

for url in requests:

kwargs ={}

kwargs['method'] = 'GET'

if not instantiate_once:

async_hc = tornado.httpclient.AsyncHTTPClient(io_loop=io_loop)

async_hc.fetch(url, callback=functools.partial(response_callback), **kwargs)

if not instantiate_once:

hcs.append(async_hc)

io_loop.start()

for hc in hcs:

hc.close()

if not instantiate_once:

io_loop.close()

if __name__ == '__main__':

import sys

if sys.argv[1] == 'sync':

while True:

output = fire_sync_request()

elif sys.argv[1] == 'async':

while True:

output = fire_async_requests()

To reproduce, set the instantiate_once variable to False and execute python check.py sync or python check.py async. The process memory increases continuously.

With instantiate_once=True, this does not happen.

Also, if I use SimpleAsyncHTTPClient instead of CurlAsyncHTTPClient, this memory growth does not happen.

I have python 2.7/ tornado 2.3.2/ pycurl(libcurl/7.26.0 GnuTLS/2.12.20

zlib/1.2.7 libidn/1.25 libssh2/1.4.2 librtmp/2.3)

I could reproduce the same issue with latest tornado 3.2

Please help me to understand this behaviour and figure out the right way of

using tornado as http library.

Answer: HTTPClient and AsyncHTTPClient are designed to be reused, so it will always be

more efficient not to recreate them all the time. In fact, AsyncHTTPClient

will try to magically detect if there is an existing AsyncHTTPClient on the

same IOLoop and use that instead of creating a new one.

But even though it's better to reuse one http client object, it shouldn't leak

to create many of them as you're doing here (as long as you're closing them).

This looks like a bug in pycurl: <https://github.com/pycurl/pycurl/issues/182>

|

Test failures ("no transaction is active") with Ghost.py

Question: I have a Django project that does some calculations in Javascript.

I am using [Ghost.py](http://jeanphix.me/Ghost.py/) to try and incorporate

efficient tests of the Javascript calculations into the Django test suite:

from ghost.ext.django.test import GhostTestCase

class CalculationsTest(GhostTestCase):

def setUp(self):

page, resources = self.ghost.open('http://localhost:8081/_test/')

self.assertEqual(page.http_status, 200)

def test_frobnicate(self):

result, e_resources = self.ghost.evaluate('''

frobnicate(test_data, "argument");

''')

self.assertEqual(result, 1.204)

(where `frobnicate()` is a Javascript function on the test page).

This works very well if I run one test at a time.

If, however, I run `django-admin.py test`, I get

Traceback (most recent call last):

...

result = self.run_suite(suite)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/test/runner.py", line 113, in run_suite

).run(suite)

File "/usr/lib64/python2.7/unittest/runner.py", line 151, in run

test(result)

File "/usr/lib64/python2.7/unittest/suite.py", line 70, in __call__

return self.run(*args, **kwds)

File "/usr/lib64/python2.7/unittest/suite.py", line 108, in run

test(result)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/test/testcases.py", line 184, in __call__

super(SimpleTestCase, self).__call__(result)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/ghost/test.py", line 53, in __call__

self._post_teardown()

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/test/testcases.py", line 796, in _post_teardown

self._fixture_teardown()

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/test/testcases.py", line 817, in _fixture_teardown

inhibit_post_syncdb=self.available_apps is not None)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/__init__.py", line 159, in call_command

return klass.execute(*args, **defaults)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/base.py", line 285, in execute

output = self.handle(*args, **options)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/base.py", line 415, in handle

return self.handle_noargs(**options)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/commands/flush.py", line 81, in handle_noargs

self.emit_post_syncdb(verbosity, interactive, db)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/commands/flush.py", line 101, in emit_post_syncdb

emit_post_sync_signal(set(all_models), verbosity, interactive, database)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/core/management/sql.py", line 216, in emit_post_sync_signal

interactive=interactive, db=db)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/dispatch/dispatcher.py", line 185, in send

response = receiver(signal=self, sender=sender, **named)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/contrib/auth/management/__init__.py", line 82, in create_permissions

ctype = ContentType.objects.db_manager(db).get_for_model(klass)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/contrib/contenttypes/models.py", line 47, in get_for_model

defaults = {'name': smart_text(opts.verbose_name_raw)},

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/models/manager.py", line 154, in get_or_create

return self.get_queryset().get_or_create(**kwargs)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/models/query.py", line 388, in get_or_create

six.reraise(*exc_info)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/models/query.py", line 380, in get_or_create

obj.save(force_insert=True, using=self.db)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/transaction.py", line 305, in __exit__

connection.commit()

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/backends/__init__.py", line 168, in commit

self._commit()

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/backends/__init__.py", line 136, in _commit

return self.connection.commit()

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/utils.py", line 99, in __exit__

six.reraise(dj_exc_type, dj_exc_value, traceback)

File "/home/carl/.virtualenvs/fecfc/lib/python2.7/site-packages/django/db/backends/__init__.py", line 136, in _commit

return self.connection.commit()

django.db.utils.OperationalError: cannot commit - no transaction is active

(running with `django-nose` gives weirder, inconsistent results)

Any clues on how to prevent this issue, which is currently standing in the way

of CI?

Answer: I haven't used Ghost myself, but I had to use [TransactionTestCase](https://docs.djangoproject.com/en/1.8/topics/testing/tools/#django.test.TransactionTestCase) to get a similar test working. Could you try changing GhostTestCase accordingly and see if that works?

|

Problem running python scripts outside of Eclipse

Question: All my Python scripts work just fine when I run them in Eclipse. However, when I drag them onto python.exe, they never work; the cmd window opens and closes immediately. If I run them from a command in cmd, so it doesn't close, I get errors like:

**ImportError: No module named _Some Name_**

and the like. How can I resolve this issue?

Answer: Your pathing is wrong. In eclipse right click on the project properties >

PyDev - PYTHONPATH or Project References. The source folders show all of the

pathing that eclipse automatically handles. It is likely that the module you

are trying to import is in the parent directory.

Project

src/

my_module.py

import_module.py

You may want to make a python package/library.

Project

bin/

my_module.py

lib_name/

__init__.py

import_module.py

other_module.py

In this instance my_module.py has no clue where import_module.py is. It has to

know where the library is. I believe other_module.py as well as my_module.py

needs to have from lib_name import import_module if they are in a library.

my_module.py

    import inspect
    import os
    import sys

    # Add the parent directory to the path
    CURRDIR = os.path.dirname(os.path.abspath(inspect.getfile(inspect.currentframe())))
    PARENTDIR = os.path.dirname(CURRDIR)
    sys.path.append(PARENTDIR)

    from lib_name import import_module
    import_module.do_something()

ADVANCED: The way I like to do things is to add a setup.py file which uses

setuptools. This file handles distributing your project library. It is

convenient if you are working on several related projects. You can use pip or

the setup.py commandline arguments to create a link to the library in your

site-packages python folder (The location for all of the installed python

libraries).

Terminal

pip install -e existing/folder/that/has/setup.py

This adds the directory containing your library to the easy-install.pth file and creates an .egg-link file in site-packages. Then you don't really have to worry about pathing for that library; just use from lib_name import import_module.

|

socket error - python

Question: i want to get the local private machine's address, running the following piece

of code:

socket.gethostbyaddr(socket.gethostname())

gives the error:

socket.herror: [Errno 2] Host name lookup failure

i know i can see local machine's address, by using

socket.gethostbyname(socket.gethostname())

but it shows the public address of my network (or machine), and ifconfig shows another address for my wlan. Can someone help me with this issue? Thanks

Answer: I believe you're going to find

[netifaces](https://pypi.python.org/pypi/netifaces/0.10.4) a little more

useful here.

It appears to be a cross-platform library to deal with Network Interfaces.

**Example:**

>>> from netifaces import interfaces, ifaddresses

>>> interfaces()

['lo', 'sit0', 'enp3s0', 'docker0']

>>> ifaddresses("enp3s0")

{17: [{'broadcast': 'ff:ff:ff:ff:ff:ff', 'addr': 'bc:5f:f4:97:5a:69'}], 2: [{'broadcast': '10.0.0.255', 'netmask': '255.255.255.0', 'addr': '10.0.0.2'}], 10: [{'netmask': 'ffff:ffff:ffff:ffff::', 'addr': '2001:470:edee:0:be5f:f4ff:fe97:5a69'}, {'netmask': 'ffff:ffff:ffff:ffff::', 'addr': 'fe80::be5f:f4ff:fe97:5a69%enp3s0'}]}

>>>

>>> ifaddresses("enp3s0")[2][0]["addr"]

'10.0.0.2' # <-- My Desktop's LAN IP Address.
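If installing a third-party package is not an option, a commonly used standard-library alternative is to open a UDP socket and ask which local address the operating system would route from. This is a different technique from netifaces, sketched here for Python 3 under the assumption that IPv4 is in use. The probe address `192.0.2.1` is a reserved documentation address chosen only for illustration, and `local_ip` is a hypothetical helper name.

```python
import socket

def local_ip(probe_host="192.0.2.1", probe_port=80):
    """Best-effort guess at the address of the interface used for outbound traffic."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends nothing; it only selects a route.
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No usable route (for example, no network): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()

address = local_ip()
print(address)
```

This reports only the address of the interface chosen for that route, so on a machine with several interfaces netifaces remains the more complete tool.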

|

Python\Numpy: Comparing arrays with NAN

Question: Why are the following two lists not equal?

a = [1.0, np.NAN]

b = np.append(np.array(1.0), [np.NAN]).tolist()

I am using the following to check for identicalness.

((a == b) | (np.isnan(a) & np.isnan(b))).all(), np.in1d(a,b)

Using `np.in1d(a, b)` it seems the `np.NAN` values are not equal but I am not

sure why this is. Can anyone shed some light on this issue?

Answer: `NaN` values never compare equal. That is, the test `NaN==NaN` is always `False` _by definition of `NaN`_.

So a list such as `[1.0, NaN]` does not compare equal to another list that holds a different `NaN` object, even when the two lists look identical. It does still compare equal to itself, as the example further down shows.

If you want to test a variable to see if it's `NaN` in `numpy`, you use the

`numpy.isnan()` function. I don't see any obvious way of obtaining the

comparison semantics that you seem to want other than by “manually” iterating

over the list with a loop.

Consider the following:

    import math
    import numpy as np

    def nan_eq(a, b):
        for i,j in zip(a,b):
            if i!=j and not (math.isnan(i) and math.isnan(j)):
                return False
        return True

    a=[1.0, float('nan')]
    b=[1.0, float('nan')]

    print( float('nan')==float('nan') )
    print( a==a )
    print( a==b )
    print( nan_eq(a,a) )

It will print:

False

True

False

True

The test `a==a` succeeds because Python's list comparison treats two elements that are the same object as equal before it falls back to `==`, so the `NaN` stored in `a` matches itself. In `a==b` the two `NaN` values are distinct objects, so the element-wise `==` is used and fails.
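The identity effect can be seen directly with a short Python 3 sketch. The helper `lists_equal_nan` is a hypothetical name; it also checks lengths, which the `zip`-based loop above does not.

```python
import math

nan = float('nan')

same_object = [1.0, nan]
other_object = [1.0, float('nan')]   # a second, distinct NaN object

print(nan == nan)                     # False: NaN is never equal to itself
print(same_object == [1.0, nan])      # True: both lists hold the same NaN object
print(same_object == other_object)    # False: different NaN objects, and == fails

def lists_equal_nan(a, b):
    """Element-wise equality for lists of floats that treats NaN as equal to NaN."""
    return len(a) == len(b) and all(
        x == y or (math.isnan(x) and math.isnan(y)) for x, y in zip(a, b)
    )

print(lists_equal_nan(same_object, other_object))  # True
```

This would explain the question's result: `np.NAN` in `a` and the float produced by `.tolist()` in `b` are different objects, so the plain list comparison reports them as unequal.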

|

Simplegui module for Windows 7

Question: Is there any way to install the SimpleGUI module on Windows 7 for Python 3.2 with all its module dependencies, or is running Linux the only way to have it?

Answer: Yes. Go to: <https://pypi.python.org/pypi/SimpleGUICS2Pygame/>

1. Download whichever Python you use

2. Change the EGG extension to ZIP

3. Extract in Python

4. Instead of 'import simplegui' type: import SimpleGUICS2Pygame.simpleguics2pygame as simplegui

DONE!

|

Dynamic functions creation from json, python

Question: I am new to python and I need to create class on the fly from the following

json:

{

"name": "ICallback",

"functions": [

{

"name": "OnNavigation",

"parameters": [

{"name":"Type", "type": "int", "value": "0"},

{"name":"source", "type": "int", "value": "0"},

{"name":"tabId", "type": "string", "value": ""},

{"name":"Url", "type": "string", "value": ""},

{"name":"Context", "type": "int", "value": "0"}

]

}

]

}

I found how to create class, but I don't understand how to create methods on

the fly. For now function will just raise `NotImplemented` exception.

Answer: So you already know to create a class:

    class Void(object):
        pass

    kls = Void()

You want to create a method from a JSON, but I'm going to do it from a string

that can be created from the JSON:

from types import MethodType

d = {}

exec "def OnNavigation(self, param): return param" in d

kls.OnNavigation = MethodType(d["OnNavigation"], kls)

# setattr(kls, "OnNavigation", MethodType(d["OnNavigation"], kls))

print kls.OnNavigation("test")

Should output _test_.
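An alternative that avoids `exec` is to build the class with `type()` and create each method as a closure. This is a minimal Python 3 sketch, not the approach of the answer above. It assumes the JSON layout from the question (shortened to two parameters here), and the helper names `make_method` and `class_from_spec` are made up for illustration.

```python
import json

SPEC = json.loads('''
{
  "name": "ICallback",
  "functions": [
    {"name": "OnNavigation",
     "parameters": [
       {"name": "Type", "type": "int", "value": "0"},
       {"name": "Url", "type": "string", "value": ""}
     ]}
  ]
}
''')

def make_method(func_spec):
    """Build a placeholder method named after the JSON function entry."""
    name = func_spec["name"]

    def method(self, *args, **kwargs):
        # Placeholder body, as requested in the question.
        raise NotImplementedError("%s is not implemented" % name)

    method.__name__ = name
    return method

def class_from_spec(spec):
    """Create a class whose methods come from spec['functions']."""
    namespace = {f["name"]: make_method(f) for f in spec["functions"]}
    return type(spec["name"], (object,), namespace)

ICallback = class_from_spec(SPEC)

try:
    ICallback().OnNavigation(0, Url="http://example.com")
except NotImplementedError as exc:
    print(exc)  # OnNavigation is not implemented
```

Creating the function inside `make_method` gives each method its own `name`, which avoids the late-binding surprise of defining closures directly inside a loop.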

|

Control line ending of Print in Python

Question: I've read elsewhere that I can prevent print from going to the next line by

adding a "," to the end of the statement. However, is there a way to control

this conditionally? To sometimes end the line and sometimes not based on

variables?

Answer: One solution without future imports:

print "my line of text",

if print_newline:

    print

which is equivalent to:

from __future__ import print_function

print("my line of text", end="\n" if print_newline else "")
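
Another option that behaves the same in Python 2 and Python 3 is to write to `sys.stdout` directly and decide on the line ending yourself. A minimal sketch; `emit` is a hypothetical helper name, not something from the standard library:

```python
import sys


def emit(text, newline=True, stream=None):
    """Write text, adding a line break only when newline is true."""
    stream = sys.stdout if stream is None else stream
    stream.write(text + ("\n" if newline else ""))


emit("my line of text", newline=False)
emit(" continues here")
```

The optional `stream` argument is only there so the helper can be pointed at a file or a buffer instead of the console.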

|

Creating a gradebook with the pandas module

Question: So I have recently started teaching a course and wanted to handle my grades

using python and the pandas module. For this class the students work in groups

and turn in one assignment per table. I have a file with all of the students

that is formatted like such

Name, Email, Table

"John Doe", [email protected], 3

"Jane Doe", [email protected], 5

.

.

.

and another file with the grades for each table for the assignments done

Table, worksheet, another assignment, etc

1, 8, 15, 4

2, 9, 23, 5

3, 3, 20, 7

.

.

.

What I want to do is assign the appropriate grade to each student based on

their table number. Here is what I have done

import pandas as pd

t_data = pd.read_csv('table_grades.csv')

roster = pd.read_csv('roster.csv')

for i in range(1, len(t_data.columns)):

    x = []

    for j in range(len(roster)):

        for k in range(len(t_data)):

            if roster.Table.values[j] == k+1:

                x.append(t_data[t_data.columns.values[i]][k])

    roster[t_data.columns.values[i]] = x

This does what I want, but I feel like there must be a better way to do a task

like this using pandas. I am new to pandas and appreciate any help.

Answer: IIUC -- unfortunately your code doesn't run for me with your data and you

didn't give example output, so I can't be sure -- you're looking for `merge`.

Adding a new student, Fred Smith, to table 3:

In [182]: roster.merge(t_data, on="Table")

Out[182]:

Name Email Table worksheet another assignment etc

0 John Doe [email protected] 3 3 20 7

1 Fred Smith [email protected] 3 3 20 7

[2 rows x 6 columns]

or maybe an outer merge, to make it easier to spot missing/misaligned data:

In [183]: roster.merge(t_data, on="Table", how="outer")

Out[183]:

Name Email Table worksheet another assignment etc

0 John Doe [email protected] 3 3 20 7

1 Fred Smith [email protected] 3 3 20 7

2 Jane Doe [email protected] 5 NaN NaN NaN

3 NaN NaN 1 8 15 4

4 NaN NaN 2 9 23 5

[5 rows x 6 columns]

|

calling python from R with instant output to console

Question: I run python scripts from R using the R command:

system('python test.py')

But my print statements in test.py do not appear in the R console until the

python program is finished. I would like to view the print statements as the

python program is running inside R. I have also tried `sys.stdout.write()`,

but the result is the same. Any help is greatly appreciated.

Here is my code for test.py:

import time

for i in range(10):

print 'i=',i

time.sleep(5)

Answer: Tested on Windows 8 with R v3.0.1

Simply right-click on the R console, then **untick/unselect** the `Buffered Output`

option. Now when you execute your code you should see the

output of the `print` statements!

Update:

I forgot to mention that I also needed to add `sys.stdout.flush()` after the

`print` statement in the python file.

import time

import sys

for i in range(5):

    print 'i=',i

    sys.stdout.flush()

    time.sleep(1)

Also if you select the `Buffered Output` option then when you left click on

the r console while your script is executing you shall see the output. Keep

clicking and the output is shown. :)
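
For reference, here is roughly what the flushing version looks like in Python 3 syntax, where `print` takes a `flush` argument (available since Python 3.3). This is a sketch rather than the original script; `report_progress` is a hypothetical name and the `stream` parameter exists only to make it easy to try out.

```python
import sys
import time


def report_progress(steps, delay=0.0, stream=None):
    """Print one line per step and flush it immediately."""
    stream = sys.stdout if stream is None else stream
    for i in range(steps):
        # flush=True pushes the line out right away instead of
        # leaving it in the output buffer until the script ends.
        print("i=", i, file=stream, flush=True)
        time.sleep(delay)


report_progress(3)
```

Running the interpreter with the `-u` switch (`python -u test.py`) is another way to get unbuffered output without touching the script.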

|

cx_Freeze is not finding self defined modules in my project when creating exe

Question: I have a project that runs from GUI.py and imports modules I created.

Specifically it imports modules from a "Library" package that exists in the

same directory as GUI.py. I want to freeze the scripts with cx_Freeze to

create a windows executable, and I can create the exe, but when I try to run

it, I get: "ImportError: No module named Library."

I see in the output that all the modules that I import from Library aren't

imported. Here's what my setup.py looks like:

import sys, os

from cx_Freeze import setup, Executable

build_exe_options = {"packages":['Libary', 'Graphs', 'os'],

"includes":["tkinter", "csv", "subprocess", "datetime", "shutil", "random", "Library", "Graphs"],

"include_files": ['GUI','HTML','Users','Tests','E.icns', 'Graphs'],

}

base = None

exe = None

if sys.platform == "win32":

exe = Executable(

script="GUI.py",

initScript = None,

base = "Win32GUI",

targetDir = r"built",

targetName = "GUI.exe",

compress = True,

copyDependentFiles = True,

appendScriptToExe = False,

appendScriptToLibrary = False,

icon = None

)

base = "Win32GUI"

setup( name = "MrProj",

version = "2.0",

description = "My project",

options = {"build.exe": build_exe_options},

#executables = [Executable("GUI.py", base=base)]

executables = [exe]

)

I've tried everything that I could find in StackOverflow and made a lot of

fixes based upon similar problems people were having. However, no matter what

I do, I can't seem to get cx_freeze to import my modules in Library.

My setup.py is in the same directory as GUI.py and the Library directory.

I'm running it on a Windows 7 Laptop with cx_Freeze-4.3.3.

I have python 3.4 installed.

Any help would be a godsend, thank you very much!

Answer: If listing `Library` (funny name by the way) in `packages` doesn't work, you could try

putting it in the `includes` list as a workaround. For this to work you may

have to explicitly include every single submodule, like:

includes = ['Library', 'Library.submodule1', 'Library.sub2', ...]

For the `include_files` you have to add each file with full (relative) path.

Directories don't work.

You could of course make use of `os.listdir()` or the `glob` module to append

paths to your include_files like this:

from glob import glob

...

include_files = ['GUI.py','HTML','Users','E.icns', 'Graphs']

include_files += glob('Tests/*/*')

...

In some cases something like `sys.path.insert(0, '.')` or even

`sys.path.insert(0, '..')` can help.
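
If you do end up listing every submodule by hand, the list can be generated instead. The following is a sketch using only `os.walk`; `list_submodules` is a hypothetical helper, and it assumes that every package directory contains an `__init__.py`.

```python
import os


def list_submodules(package_dir):
    """Return dotted names for a package and all modules beneath it."""
    package_dir = os.path.abspath(package_dir)
    parent = os.path.dirname(package_dir)
    names = []
    for root, dirs, files in os.walk(package_dir):
        if "__init__.py" not in files:
            dirs[:] = []  # not a package, so do not descend further
            continue
        dirs.sort()  # visit subpackages in a stable order
        package = os.path.relpath(root, parent).replace(os.sep, ".")
        names.append(package)
        for filename in sorted(files):
            if filename.endswith(".py") and filename != "__init__.py":
                names.append(package + "." + filename[:-3])
    return names
```

In `setup.py` this could be used as `includes = list_submodules("Library")`. It may also be worth double-checking the question's `setup.py` itself: the `packages` list spells the name `'Libary'`, and the cx_Freeze documentation uses `build_exe` (with an underscore) as the key in `options`.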

|

Selenium Webdriver with Firebug + NetExport + FireStarter not creating a har file in Python

Question: I am currently running Selenium with Firebug, NetExport, and (trying out)

FireStarter in Python trying to get the network traffic of a URL. I expect a

HAR file to appear in the directory listed, however nothing appears. When I

test it in Firefox and go through the UI, a HAR file is exported and saved so

I know the code itself functions as expected. After viewing multiple examples

I do not see what I am missing.

I am using Firefox 29.0.1 Firebug 1.12.8 FireStarter 0.1a6 NetExport 0.9b6

Has anyone else encountered this issue? I am receiving a "webFile.txt" file

being filled out correctly.

After looking up each version of the add-ons they are supposed to be

compatible with the version of Firefox I am using. I tried using Firefox

version 20, however that did not help. I am currently pulling source code.

In addition I have tried it with and without FireStarter, and I have tried

refreshing the page manually in both cases to try to generate a HAR.

My code looks like this:

import urllib2

import sys

import re

import os

import subprocess

import hashlib

import time

import datetime

from browsermobproxy import Server

from selenium import webdriver

import selenium

a=[];

theURL='';

fireBugPath = '/Users/tai/Documents/workspace/testSelenium/testS/firebug.xpi';

netExportPath = '/Users/tai/Documents/workspace/testSelenium/testS/netExport.xpi';

fireStarterPath = '/Users/tai/Documents/workspace/testSelenium/testS/fireStarter.xpi';

profile = webdriver.firefox.firefox_profile.FirefoxProfile();

profile.add_extension( fireBugPath);

profile.add_extension(netExportPath);

profile.add_extension(fireStarterPath);

#firefox preferences

profile.set_preference("app.update.enabled", False)

profile.native_events_enabled = True

profile.set_preference("webdriver.log.file", "/Users/tai/Documents/workspace/testSelenium/testS/webFile.txt")

profile.set_preference("extensions.firebug.DBG_STARTER", True);

profile.set_preference("extensions.firebug.currentVersion", "1.12.8");

profile.set_preference("extensions.firebug.addonBarOpened", True);

profile.set_preference("extensions.firebug.addonBarOpened", True);

profile.set_preference('extensions.firebug.consoles.enableSite', True)

profile.set_preference("extensions.firebug.console.enableSites", True);

profile.set_preference("extensions.firebug.script.enableSites", True);

profile.set_preference("extensions.firebug.net.enableSites", True);

profile.set_preference("extensions.firebug.previousPlacement", 1);

profile.set_preference("extensions.firebug.allPagesActivation", "on");

profile.set_preference("extensions.firebug.onByDefault", True);

profile.set_preference("extensions.firebug.defaultPanelName", "net");

#set net export preferences

profile.set_preference("extensions.firebug.netexport.alwaysEnableAutoExport", True);

profile.set_preference("extensions.firebug.netexport.autoExportToFile", True);

profile.set_preference("extensions.firebug.netexport.saveFiles", True);

profile.set_preference("extensions.firebug.netexport.autoExportToServer", False);

profile.set_preference("extensions.firebug.netexport.Automation", True);

profile.set_preference("extensions.firebug.netexport.showPreview", False);

profile.set_preference("extensions.firebug.netexport.pageLoadedTimeout", 15000);

profile.set_preference("extensions.firebug.netexport.timeout", 10000);

profile.set_preference("extensions.firebug.netexport.defaultLogDir", "/Users/tai/Documents/workspace/testSelenium/testS/har");

profile.update_preferences();

browser = webdriver.Firefox(firefox_profile=profile);

def openURL(url,s):

theURL = url;

time.sleep(6);

#browser = webdriver.Chrome();

browser.get(url); #load the url in firefox

time.sleep(3); #wait for the page to load

browser.execute_script("window.scrollTo(0, document.body.scrollHeight/5);")

time.sleep(1); #wait for the page to load

browser.execute_script("window.scrollTo(0, document.body.scrollHeight/4);")

time.sleep(1); #wait for the page to load

browser.execute_script("window.scrollTo(0, document.body.scrollHeight/3);")

time.sleep(1); #wait for the page to load

browser.execute_script("window.scrollTo(0, document.body.scrollHeight/2);")

time.sleep(1); #wait for the page to load

browser.execute_script("window.scrollTo(0, document.body.scrollHeight);")

searchText='';

time.sleep(20); #wait for the page to load

if(s.__len__() >0):

for x in range(0, s.__len__()):

searchText+= ("" + browser.find_element_by_id(x));

else:

searchText+= browser.page_source;

a=getMatches(searchText)

#print ("\n".join(swfLinks));

print('\n'.join(removeNonURL(a)));

# print(browser.page_source);

browser.quit();

return a;

def found_window(name):

try: browser.switch_to_window(name)

except NoSuchWindowException:

return False

else:

return True # found window

def removeFirstQuote(tex):

for x in tex:

b = x[1:];

if not b in a:

a.append(b);

return a;

def getMatches(t):

return removeFirstQuote(re.findall('([\"|\'][^\"|\']*\.swf)', t));

def removeNonURL(t):

a=[];

for b in t:

if(b.lower()[:4] !="http" ):

if(b[0] == "//"):

a.append(theURL+b[2:b.__len__()]);

else:

while(b.lower()[:4] !="http" and b.__len__() >5):

b=b[1:b.__len__()];

a.append(b);

else:

a.append(b);

return a;

openURL("http://www.chron.com",a);

Answer: I fixed this issue for my own work by setting a longer wait before closing the

browser. I think netexport is currently set to export only after the

program has quit, so no file is written. The line causing this is:

profile.set_preference("extensions.firebug.netexport.pageLoadedTimeout", 15000);

From the [netexport source

code](https://github.com/firebug/netexport/blob/master/defaults/preferences/prefs.js)

we have that `pageLoadedTimeout` is the "Number of milliseconds to wait after

the last page request to declare the page loaded". So I suspect all your minor

page loads are preventing netexport from having enough time to write the file.

One caveat is that you set the system to export automatically after 10 s, so I'm not

sure why you are not getting half-loaded JSON files.
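
Rather than guessing a fixed sleep, one way to implement the longer wait is to poll the export directory until a `.har` file shows up, and only then close the browser. This is a sketch using the standard library only; `wait_for_har` is a hypothetical helper, and the timeout values are assumptions that should be chosen to exceed the `pageLoadedTimeout` setting.

```python
import glob
import os
import time


def wait_for_har(directory, timeout=60.0, poll=0.5):
    """Return the path of the first .har file found, or None on timeout."""
    deadline = time.time() + timeout
    while True:
        matches = sorted(glob.glob(os.path.join(directory, "*.har")))
        if matches:
            return matches[0]
        if time.time() >= deadline:
            return None
        time.sleep(poll)
```

In the question's script this would be called with the `defaultLogDir` path just before `browser.quit()`.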

|

Extract a number of continuous digits from a random string in python

Question: I am trying to parse this list of strings that contains ID values as a series

of 7 digits but I am not sure how to approach this.

lst1=[

"(Tower 3rd fl floor_WINDOW CORNER : option 2_ floor cut out_small_wood) : GA -

Floors : : Model Lines : id 3925810

(Tower 3rd fl floor_WINDOW CORNER : option 2_ floor cut out_small_wood) : GA - Floors : Floors : Floor : Duke new core floors : id 3925721",

"(Tower 3rd fl floor_WINDOW CORNER : option 3_ floor cut out_large_wood) : GA - Floors : : Model Lines : id 3976019

(Tower 3rd fl floor_WINDOW CORNER : option 3_ floor cut out_large_wood) : GA - Floors : Floors : Floor : Duke new core floors : id 3975995"

]

I really want to pull out just the digit values and combine them into one

string separated by a semicolon ";". The resulting list would be something like

this:

lst1 = ["3925810; 3925721", "3976019; 3975995"]

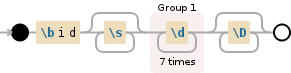

Answer: You can use a regular expression, like this

import re

pattern = re.compile(r"\bid\s*?(\d+)")

print ["; ".join(pattern.findall(item)) for item in lst1]

# ['3925810; 3925721', '3976019; 3975995']

[Debuggex Demo](https://www.debuggex.com/r/WjsdMuFf3Ajy4sfR)

If you want to make sure that the numbers you pick will only be of length 7,

then you can do it like this

pattern = re.compile(r"\bid\s*?(\d{7})\D*?")

[Debuggex Demo](https://www.debuggex.com/r/4fFYJ9l06fEJScay)

The `\b` refers to a word boundary, so it makes sure that `id` is a

separate word. It is followed by zero or more whitespace characters, and then

we match numeric digits; `\d` is just shorthand notation for `[0-9]`.

`{7}` matches exactly seven of them, and the trailing `\D*?` refers to

non-digit characters (`\D` is the inverse of `\d`).
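
One thing to be aware of: because `\D*?` is lazy and may match nothing, the pattern above will also accept the first seven digits of a longer number. If that matters, a negative lookahead can reject longer runs. A sketch in Python 3 syntax, with shortened sample strings standing in for the question's data; `extract_ids` is a hypothetical helper name:

```python
import re

# "id", optional whitespace, exactly seven digits, and no further digit.
ID_PATTERN = re.compile(r"\bid\s*(\d{7})(?!\d)")


def extract_ids(items):
    """Join the seven-digit ids found in each string with '; '."""
    return ["; ".join(ID_PATTERN.findall(item)) for item in items]


samples = [
    "GA - Floors : : Model Lines : id 3925810\n"
    "GA - Floors : Floors : Floor : Duke new core floors : id 3925721",
    "GA - Floors : : Model Lines : id 3976019\n"
    "GA - Floors : Floors : Floor : Duke new core floors : id 3975995",
]
print(extract_ids(samples))
```

The `(?!\d)` part consumes nothing; it only asserts that the character after the seventh digit is not another digit.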

|

karger min cut algorithm in python 2.7

Question: Here is my code for the Karger min cut algorithm. To the best of my knowledge

the algorithm I have implemented is right, but I don't get the right answer. If

someone can check what's going wrong I would be grateful.

import random

from random import randint

#loading data from the text file#

with open('data.txt') as req_file:

mincut_data = []

for line in req_file:

line = line.split()

if line:

line = [int(i) for i in line]

mincut_data.append(line)

#extracting edges from the data #

edgelist = []

nodelist = []

for every_list in mincut_data:

nodelist.append(every_list[0])

temp_list = []

for temp in range(1,len(every_list)):

temp_list = [every_list[0], every_list[temp]]

flag = 0

for ad in edgelist:

if set(ad) == set(temp_list):

flag = 1

if flag == 0 :

edgelist.append([every_list[0],every_list[temp]])

#karger min cut algorithm#

while(len(nodelist) > 2):

val = randint(0,(len(edgelist)-1))

print val

target_edge = edgelist[val]

replace_with = target_edge[0]

should_replace = target_edge[1]

for edge in edgelist:

if(edge[0] == should_replace):

edge[0] = replace_with

if(edge[1] == should_replace):

edge[1] = replace_with

edgelist.remove(target_edge)

nodelist.remove(should_replace)

for edge in edgelist:

if edge[0] == edge[1]:

edgelist.remove(edge)

print ('edgelist remaining: ',edgelist)

print ('nodelist remaining: ',nodelist)

The test case data is :

1 2 3 4 7

2 1 3 4

3 1 2 4

4 1 2 3 5

5 4 6 7 8

6 5 7 8

7 1 5 6 8

8 5 6 7

Please copy it in a text file and save it as "data.txt" and run the program

The answer should be : the number of min cuts is 2 and the cuts are at edges

[(1,7), (4,5)]

Answer: Karger's algorithm is a randomized algorithm. That is, each time you run it

it produces a solution which is in no way guaranteed to be the best. The general

approach is to run it many times and keep the best solution. For many

configurations there will be many solutions which are best or approximately

best, so you heuristically find a good solution quickly.

As far as I can see, you are only running the algorithm once. Thus the

solution is unlikely to be the optimal one. Try running it 100 times in a for

loop and holding onto the best solution.
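
To make the "run it many times" advice concrete, here is a sketch in Python 3 syntax. It is not a fix of the question's code: it uses a commonly used equivalent formulation (shuffle the edges, then contract them in that order with a union-find structure until two groups remain), it assumes the graph is connected, and `karger_cut` and `min_cut` are hypothetical names. The edge list is the question's test data with each edge written once.

```python
import random

EDGES = [(1, 2), (1, 3), (1, 4), (1, 7), (2, 3), (2, 4), (3, 4),
         (4, 5), (5, 6), (5, 7), (5, 8), (6, 7), (6, 8), (7, 8)]


def karger_cut(edges, rng):
    """One random contraction run; returns the edges left crossing the cut."""
    leader = {v: v for edge in edges for v in edge}
    groups = len(leader)

    def find(v):
        # Follow leader links to the group representative (path halving).
        while leader[v] != v:
            leader[v] = leader[leader[v]]
            v = leader[v]
        return v

    pool = list(edges)
    rng.shuffle(pool)
    for u, v in pool:
        if groups == 2:
            break
        ru, rv = find(u), find(v)
        if ru != rv:  # edges inside one group are self-loops, skip them
            leader[rv] = ru
            groups -= 1
    return [(u, v) for u, v in edges if find(u) != find(v)]


def min_cut(edges, trials=100, seed=None):
    """Repeat the random run and keep the smallest cut seen."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        cut = karger_cut(edges, rng)
        if best is None or len(cut) < len(best):
            best = cut
    return best


print(min_cut(EDGES, trials=200))
```

A single call to `karger_cut` will often return a cut larger than the minimum, which is exactly why the repetition in `min_cut` is needed.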

|

Django with apache and wsgi throws ImportError

Question: I'm trying to deploy my Django app to an Apache server with no luck. I

succeeded with the WSGI sample application, and tried to host an empty Django

project. While it works properly with the manage.py runserver, it throws the

following error when using apache:

[notice] Apache/2.2.22 (Debian) PHP/5.4.4-14+deb7u9 mod_python/3.3.1 Python/2.7.3 mod_wsgi/2.7 configured -- resuming normal operations

[error] [client x.x.x.x] mod_wsgi (pid=8300): Exception occurred processing WSGI script '/usr/local/www/django/myapp/wsgi.py'.

[error] [client x.x.x.x] Traceback (most recent call last):

[error] [client x.x.x.x] File "/usr/local/lib/python2.7/dist-packages/django/core/handlers/wsgi.py", line 187, in __call__

[error] [client x.x.x.x] self.load_middleware()

[error] [client x.x.x.x] File "/usr/local/lib/python2.7/dist-packages/django/core/handlers/base.py", line 44, in load_middleware

[error] [client x.x.x.x] for middleware_path in settings.MIDDLEWARE_CLASSES:

[error] [client x.x.x.x] File "/usr/local/lib/python2.7/dist-packages/django/conf/__init__.py", line 54, in __getattr__

[error] [client x.x.x.x] self._setup(name)

[error] [client x.x.x.x] File "/usr/local/lib/python2.7/dist-packages/django/conf/__init__.py", line 49, in _setup

[error] [client x.x.x.x] self._wrapped = Settings(settings_module)

[error] [client x.x.x.x] File "/usr/local/lib/python2.7/dist-packages/django/conf/__init__.py", line 132, in __init__

[error] [client x.x.x.x] % (self.SETTINGS_MODULE, e)

[error] [client x.x.x.x] ImportError: Could not import settings 'myapp.settings' (Is it on sys.path? Is there an import error in the settings file?): No module named myapp.settings

My wsgi.py is the following:

"""

WSGI config for myapp project.

It exposes the WSGI callable as a module-level variable named ``application``.

For more information on this file, see

https://docs.djangoproject.com/en/1.6/howto/deployment/wsgi/

"""

import os

os.environ.setdefault("DJANGO_SETTINGS_MODULE", "myapp.settings")

from django.core.wsgi import get_wsgi_application

application = get_wsgi_application()

I have a wsgi.conf in the conf.d library for apache:

<VirtualHost *:80>

ServerName myapp.example.com

ServerAlias myapp

ServerAdmin [email protected]

DocumentRoot /var/www

<Directory /usr/local/www/django>

Order allow,deny

Allow from all

</Directory>

WSGIDaemonProcess myapp processes=2 threads=15 display-name=%{GROUP}

WSGIProcessGroup myapp

WSGIScriptAlias /myapp /usr/local/www/django/myapp/wsgi.py

LoadModule wsgi_module /usr/lib/apache2/modules/mod_wsgi.so

</VirtualHost>

WSGIPythonPath /usr/local/www/django/myapp

[SOLVED] Thanks, I started all over again, made the suggested modifications to

my configuration files, and now it's working. I couldn't flag both suggestions

as correct, but I think both of them were necessary, and I had a third (fourth,

fifth...) bug too, which went away after reinstallation.

Answer: It looks like you've been using an old guide for setting up apache2 / wsgi.

I'd recommend using the official guide at

<https://code.google.com/p/modwsgi/wiki/InstallationInstructions>

Anyway, your specific problem is that the WSGI application isn't picking up

the Python path correctly. Change your VirtualHost conf to something like this

<VirtualHost *:80>

ServerName myapp.example.com

ServerAlias myapp

ServerAdmin [email protected]

DocumentRoot /usr/local/www/django/myapp

WSGIDaemonProcess myapp processes=2 threads=15 display-name=%{GROUP} python-path=/usr/local/www/django/myapp:/path/to/system/python/site-packages

WSGIProcessGroup myapp

WSGIScriptAlias / /usr/local/www/django/myapp/wsgi.py

<Directory /usr/local/www/django/myapp>

<Files wsgi.py>

Order allow,deny

Allow from all

</Files>

</Directory>

</VirtualHost>
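
Another approach sometimes used alongside `python-path` is to extend `sys.path` from inside `wsgi.py` itself. The sketch below assumes (the question does not say so explicitly) that `wsgi.py` sits inside the `myapp` package, so the directory that has to be importable is the one containing `myapp/`. Both helper names are hypothetical.

```python
import os
import sys


def project_root_for(wsgi_file):
    """Return the directory that contains the package holding wsgi_file."""
    package_dir = os.path.dirname(os.path.abspath(wsgi_file))
    return os.path.dirname(package_dir)


def ensure_on_path(directory, path=None):
    """Put directory at the front of the import path if it is missing."""
    path = sys.path if path is None else path
    if directory not in path:
        path.insert(0, directory)
    return path
```

Inside `wsgi.py` this would be called as `ensure_on_path(project_root_for(__file__))` before the `os.environ.setdefault(...)` line, so that `myapp.settings` can be imported. If your layout differs, adjust which directory is added.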

|

Cannot connect to FTP server

Question: I'm not able to connect to an FTP server; I get the error below:

vmware@localhost ~]$ python try_ftp.py

Traceback (most recent call last):

File "try_ftp.py", line 5, in <module>

f = ftplib.FTP('ftp.python.org')

File "/usr/lib/python2.6/ftplib.py", line 116, in __init__

self.connect(host)

File "/usr/lib/python2.6/ftplib.py", line 131, in connect

self.sock = socket.create_connection((self.host, self.port), self.timeout)

File "/usr/lib/python2.6/socket.py", line 567, in create_connection

raise error, msg

socket.error: [Errno 101] Network is unreachable

I'm writing a very simple code

import ftplib

f = ftplib.FTP('ftp.python.org')

f.login('anonymous','[email protected]')

f.dir()

f.retrlines('RETR motd')

f.quit()

I checked my proxy settings, but they are set to "System proxy settings".

Please suggest what should I do.

Thanks, Sam

Answer:

[torxed@archie ~]$ telnet ftp.python.org 21

Trying 82.94.164.162...

Connection failed: Connection refused

Trying 2001:888:2000:d::a2...

telnet: Unable to connect to remote host: Network is unreachable

The hostname itself is not the problem (you mentioned that ping works); the

problem is the default port of 21. Either that port is closed, or they're not running a standard FTP server on

that host at all and are instead using HTTP as a transport:

<https://www.python.org/ftp/python/>

Try against **ftp.acc.umu.se** instead.

[torxed@archie ~]$ python

Python 3.3.5 (default, Mar 10 2014, 03:21:31)

[GCC 4.8.2 20140206 (prerelease)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import ftplib

>>> f = ftplib.FTP('ftp.acc.umu.se')

>>>

|

python abstract attribute (not property)

Question: What's the best practice to define an abstract instance attribute, but not as

a property?

I would like to write something like:

class AbstractFoo(metaclass=ABCMeta):

@property

@abstractmethod

def bar(self):

pass

class Foo(AbstractFoo):

def __init__(self):

self.bar = 3

Instead of:

class Foo(AbstractFoo):

    def __init__(self):

        self._bar = 3

    @property

    def bar(self):

        return self._bar

    @bar.setter

    def bar(self, bar):

        self._bar = bar

    @bar.deleter

    def bar(self):

        del self._bar

Properties are handy, but for a simple attribute requiring no computation they

are overkill. This is especially important for abstract classes which will

be subclassed and implemented by the user (I don't want to force someone to

use `@property` when they could just have written `self.foo = foo` in

`__init__`).

[Abstract attributes in Python](http://stackoverflow.com/questions/2736255/abstract-attributes-in-python)

question proposes as only answer to use `@property` and

`@abstractmethod`: it doesn't answer my question.

<http://code.activestate.com/recipes/577761-simple-abstract-constants-to-use-when-abstractprop/>

may be the right way, but I am not sure. It also only

works with class attributes and not instance attributes.

Answer: If you really want to enforce that a subclass defines a given attribute, you

can use a metaclass. Personally, I think it may be overkill and not very

pythonic, but you could do something like this:

class AbstractFooMeta(type):

def __call__(cls, *args, **kwargs):

"""Called when you call Foo(*args, **kwargs) """

obj = type.__call__(cls, *args, **kwargs)

obj.check_bar()

return obj

class AbstractFoo(object):

__metaclass__ = AbstractFooMeta

bar = None

def check_bar(self):

if self.bar is None:

raise NotImplementedError('Subclasses must define bar')

class GoodFoo(AbstractFoo):

def __init__(self):

self.bar = 3

class BadFoo(AbstractFoo):

def __init__(self):

pass

Basically, the metaclass redefines `__call__` to make sure `check_bar` is

called after `__init__` has run on the instance.

GoodFoo() # ok

BadFoo() # raises NotImplementedError
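
Note that the `__metaclass__` attribute is Python 2 syntax; Python 3 ignores it, so the check would never run there. Since the question's code uses the Python 3 form (`metaclass=ABCMeta`), here is a sketch of the same idea in Python 3 syntax, reusing the class names from above:

```python
class AbstractFooMeta(type):
    def __call__(cls, *args, **kwargs):
        """Runs when Foo(*args, **kwargs) is called."""
        obj = super().__call__(*args, **kwargs)  # runs __new__ and __init__
        obj.check_bar()
        return obj


class AbstractFoo(metaclass=AbstractFooMeta):
    bar = None  # placeholder that subclasses are expected to replace

    def check_bar(self):
        if self.bar is None:
            raise NotImplementedError('Subclasses must define bar')


class GoodFoo(AbstractFoo):
    def __init__(self):
        self.bar = 3


class BadFoo(AbstractFoo):
    def __init__(self):
        pass
```

One limitation of this approach is that `None` is used as the "not set" marker, so a subclass cannot legitimately set `bar` to `None`.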

|

How to use itertools to compute all combinations with repeating elements?

Question: I have tried to use

[itertools](https://docs.python.org/2/library/itertools.html) to compute all

combinations of a list `['a', 'b', 'c']` using `combinations_with_replacement`

with repeating elements. The problem is in the fact that the indices seem to

be used to distinguish the elements:

> Return r length subsequences of elements from the input iterable allowing

> individual elements to be repeated more than once.

>

> Combinations are emitted in lexicographic sort order. So, if the input

> iterable is sorted, the combination tuples will be produced in sorted order.

>

> Elements are treated as unique based on their position, not on their value.

> So if the input elements are unique, the generated combinations will also be

> unique.

So this code snippet:

import itertools

for item in itertools.combinations_with_replacement(['a','b','c'], 3):

print (item)

results in this output:

('a', 'a', 'a')

('a', 'a', 'b')

('a', 'a', 'c')

('a', 'b', 'b')

('a', 'b', 'c')

('a', 'c', 'c')

('b', 'b', 'b')

('b', 'b', 'c')

('b', 'c', 'c')

('c', 'c', 'c')

And what I need is the combination set to contain elements like: `('a', 'b',

'a')` which seem to be missing. How to compute the complete combination set?

Answer: It sounds like you want

[`itertools.product`](https://docs.python.org/2/library/itertools.html#itertools.product):

>>> from itertools import product

>>> for item in product(['a', 'b', 'c'], repeat=3):

... print item

...

('a', 'a', 'a')

('a', 'a', 'b')

('a', 'a', 'c')

('a', 'b', 'a')

('a', 'b', 'b')

('a', 'b', 'c')

('a', 'c', 'a')

('a', 'c', 'b')

('a', 'c', 'c')

('b', 'a', 'a')

('b', 'a', 'b')

('b', 'a', 'c')

('b', 'b', 'a')

('b', 'b', 'b')

('b', 'b', 'c')

('b', 'c', 'a')

('b', 'c', 'b')

('b', 'c', 'c')

('c', 'a', 'a')

('c', 'a', 'b')

('c', 'a', 'c')

('c', 'b', 'a')

('c', 'b', 'b')

('c', 'b', 'c')

('c', 'c', 'a')

('c', 'c', 'b')

('c', 'c', 'c')

>>>
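
To see the difference between the two functions at a glance, here is a small sketch in Python 3 syntax that compares their results for the same input:

```python
from itertools import combinations_with_replacement, product

letters = ['a', 'b', 'c']

# Order does not matter here, so ('a', 'b', 'a') is never produced.
unordered = list(combinations_with_replacement(letters, 3))

# Every position is chosen independently, so order matters.
ordered = list(product(letters, repeat=3))

print(len(unordered), len(ordered))
print(('a', 'b', 'a') in unordered, ('a', 'b', 'a') in ordered)
```

`combinations_with_replacement` yields each multiset of letters once, in sorted position order, while `product` yields every ordered tuple, which is 3 ** 3 results for three letters and three positions.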

|

DB2 Query Error SQL0204N Even With The Schema Defined

Question: I'm using pyodbc to access DB2 10.1.0

I have a login account named foobar and a schema with the same name. I have a

table named users under the schema.

When I'm logged in as foobar, I can run the following query successfully from

the command line:

select * from users

I have a small Python script that I'm using to connect to the database. The

script is:

#!/usr/bin/python

import pyodbc

if __name__ == "__main__":

accessString ="DRIVER={DB2};DATABASE=MYDATABASE;SERVER=localhost;UID=foobar; PWD=foobarish1;CURRENTSCHEMA=FOOBAR"

print accessString

cnxn = pyodbc.connect(accessString , autocommit=True)

cursor = cnxn.cursor()

query = "SELECT * FROM USERS"

cursor.execute(query)

rows = cursor.fetchall()

for row in rows:

print 'Row data'

print row[0]

cursor.close()

cnxn.close()

When I run the script, I get the following error:

('42S02', '[42S02] [IBM][CLI Driver][DB2/LINUXX8664] SQL0204N "FOOBAR.USERS"

is an undefined name. SQLSTATE=42704\n (-204) (SQLExecDirectW)')

This usually means that the schema isn't defined. However, if I change the

query in the script to:

VALUES CURRENT SCHEMA

the script runs successfully and it returns

FOOBAR

Does anyone know how to fix this so I can query the user table? Your

assistance and insight is appreciated.

EDIT: I've also tried adding the schema directly to the table name, making the

query

SELECT * FROM FOOBAR.USERS

and I still get the same error.

Answer: I had the same issue and solved it by setting the schema explicitly with a query:

SET CURRENT SCHEMA foobar

|

Plotting histogram from dictionary Python

Question: I have a dictionary with one value associated to each key.

I would like to plot this dictionary as a bar chart with `matplotlib`, set a

different color for each bar, and find a way to use long strings as legible

labels.

X = np.arange(len(dictionay))

pl.bar(X, dictionay.values(), align='center', width=0.5)

pl.xticks(X, dictionay.keys())

pl.xticks(rotation=20)

ymax = max(dictionay.values()) + 1

pl.ylim(0, ymax)

pl.show()

The result:

My keys are very long so we don't see anything clearly! And in addition it

would be great to have this plot sorted by y values. I know a dictionary can't

be sorted so may I go through a list?

Any ideas?

Thanks

Answer: > I would like to plot this dictionary with matplotlib, set a different color

> for each key and find a way to plot the key which is a long string ... And

> in addition it would be great to have this plot sorted.

Unfortunately the best way I was able to graph long strings was to truncate

them. I arbitrarily chose 15 characters as the maximum length, you can use any

length you find appropriate.

The following code defines a dictionary (`Dictionary`), creates a list of

sorted keys and sorted values by value from largest to smallest, and truncates

keys that are too long to display well. When the bar plot is graphed, it is

done one bar at a time so it can set an individual color to the bar. The color

is chosen by iterating through the tuple defined at the beginning (Colors).

import numpy as np

import matplotlib.pyplot as plt

Dictionary = {"A":3,"C":5,"B":2,"D":3,"E":4,

"A very long key that will be truncated when it is graphed":1}

Dictionary_Length = len(Dictionary)

Max_Key_Length = 15

Sorted_Dict_Values = sorted(Dictionary.values(), reverse=True)

Sorted_Dict_Keys = sorted(Dictionary, key=Dictionary.get, reverse=True)

for i in range(0,Dictionary_Length):

    Key = Sorted_Dict_Keys[i]

    Key = Key[:Max_Key_Length]

    Sorted_Dict_Keys[i] = Key

X = np.arange(Dictionary_Length)

Colors = ('b','g','r','c') # blue, green, red, cyan

Figure = plt.figure()

Axis = Figure.add_subplot(1,1,1)

for i in range(0,Dictionary_Length):

    Axis.bar(X[i], Sorted_Dict_Values[i], align='center',width=0.5, color=Colors[i%len(Colors)])

Axis.set_xticks(X)

xtickNames = Axis.set_xticklabels(Sorted_Dict_Keys)

plt.setp(xtickNames, rotation=20)

plt.xticks(rotation=20)

ymax = max(Sorted_Dict_Values) + 1

plt.ylim(0,ymax)

plt.show()

Output graph:
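
The sort-and-truncate step described above can also be done in one pass over the dictionary's items, which keeps each label paired with its value. A sketch using only the standard library; `sorted_bars` is a hypothetical helper and the 15-character limit is the same arbitrary choice as before:

```python
def sorted_bars(data, max_label_length=15):
    """Return (labels, values) ordered by value, largest first."""
    ordered = sorted(data.items(), key=lambda item: item[1], reverse=True)
    labels = [key[:max_label_length] for key, _ in ordered]
    values = [value for _, value in ordered]
    return labels, values


labels, values = sorted_bars({"A": 3, "C": 5, "B": 2,
                              "A very long key that will be truncated": 1})
print(labels)
print(values)
```

The two returned lists can be passed to the plotting calls in place of `Sorted_Dict_Keys` and `Sorted_Dict_Values`.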

|

Is there a max image size (pixel width and height) within wx where png images lose there transparency?

Question: Initially, I loaded in 5 .png's with transparent backgrounds using wx.Image()

and every single one kept its transparent background and looked the way I

wanted it to on the canvas (it kept the background of the canvas). These png

images were about (200,200) in size. I proceeded to load a png image with a

transparent background that was about (900,500) in size onto the canvas and it

made the transparency a black box around the image. Next, I opened the image

up with gimp and exported the transparent image as a smaller size. Then when I

loaded the image into Python the image kept its transparency. Is there a max

image size (pixel width and height) within wx where PNG images lose their

transparency? Any info would help. Keep in mind that I can't resize the

picture before it is loaded into wxpython. If I do that, it will have already

lost its transparency.

import wx

import os

def opj(path):

return apply(os.path.join, tuple(path.split('/')))

def saveSnapShot(dcSource):

size = dcSource.Size

bmp= wx.EmptyBitmap(size.width, size.height)

memDC = wx.MemoryDC()

memDC.SelectObject(bmp)

memDC.Blit(0, 0, size.width, size.height, dcSource, 0,0)

memDC.SelectObject(wx.NullBitmap)

img = bmp.ConvertToImage()

img.SaveFile('path to new image created', wx.BITMAP_TYPE_JPEG)

def main():

app = wx.App(None)

testImage = wx.Image(opj('path to original image'), wx.BITMAP_TYPE_PNG).ConvertToBitmap()

draw_bmp = wx.EmptyBitmap(1500, 1500)

canvas_dc = wx.MemoryDC(draw_bmp)

background = wx.Colour(208, 11, 11)

canvas_dc.SetBackground(wx.Brush(background))

canvas_dc.Clear()

canvas_dc.DrawBitmap(testImage,0, 0)

saveSnapShot(canvas_dc)

if __name__ == '__main__':

main()

Answer: I don't know if I got this right. But if I convert your example from MemoryDC

to PaintDC, then I could fix the transparency issue. The key was to pass True

to useMask in DrawBitmap method. If I omit useMask parameter, it will default

to False and no transparency will be used.

The documentation is here: <http://www.wxpython.org/docs/api/wx.DC-class.html#DrawBitmap>

I hope this is what you wanted to do...

import wx

class myFrame(wx.Frame):

    def __init__(self, testImage):

        wx.Frame.__init__(self, None, size=testImage.Size)

        self.Bind(wx.EVT_PAINT, self.OnPaint)

        self.testImage = testImage

        self.Show()

    def OnPaint(self, event):

        dc = wx.PaintDC(self)

        background = wx.Colour(255, 0, 0)

        dc.SetBackground(wx.Brush(background))

        dc.Clear()

        #dc.DrawBitmap(self.testImage, 0, 0) # black background

        dc.DrawBitmap(self.testImage, 0, 0, True) # transparency on, now red

def main():

    app = wx.App(None)

    testImage = wx.Image(r"path_to_image.png", wx.BITMAP_TYPE_PNG).ConvertToBitmap()

    Frame = myFrame(testImage)

    app.MainLoop()

if __name__ == '__main__':

    main()

(Edit) OK. I think your original example can be fixed in a similar way, by

changing these two calls (in saveSnapShot and main respectively):

memDC.Blit(0, 0, size.width, size.height, dcSource, 0, 0, useMask=True)

canvas_dc.DrawBitmap(testImage, 0, 0, useMask=True)

Just making sure that useMask is True was enough to fix the transparency issue

in your example, too.
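To make the effect of useMask more concrete, here is a toy model in plain Python. It is only a sketch of the idea and not how wx implements DrawBitmap: the `blit` function, the pixel tuples and the assumption that the hidden pixels of the PNG are stored as black are all made up for illustration.

```python
def blit(dest, src, mask=None):
    """Copy src onto a copy of dest (both are lists of pixel rows).

    If a mask is given, only pixels whose mask entry is True are copied,
    so the destination shows through everywhere else.
    """
    out = [row[:] for row in dest]
    for y, row in enumerate(src):
        for x, pixel in enumerate(row):
            if mask is None or mask[y][x]:
                out[y][x] = pixel
    return out


RED = (208, 11, 11)      # canvas background, as in the question
BLACK = (0, 0, 0)        # assumed colour stored under the transparent pixels
WHITE = (255, 255, 255)  # an opaque pixel of the image

canvas = [[RED, RED], [RED, RED]]
image = [[WHITE, BLACK], [BLACK, WHITE]]
mask = [[True, False], [False, True]]   # True = opaque pixel

plain = blit(canvas, image)          # no mask: the black pixels are copied too
masked = blit(canvas, image, mask)   # with mask: the canvas stays red there
```

Without the mask every source pixel overwrites the canvas, which is the black box from the question; with the mask only the opaque pixels are copied.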

|

Using Vagrant and VM with python-django & PostgreSQL

Question: I am trying to make a python-django project on a VM with Python/Django 2.7.6

and PostgreSQL 9.3.4 installed.

I am following [this](https://docs.djangoproject.com/en/1.6/intro/tutorial01/)

tutorial. After making

[changes](https://docs.djangoproject.com/en/1.6/ref/settings/#std:setting-DATABASES)

in settings.py for Postgres, I run vagrant up and vagrant ssh, and then

python manage.py syncdb shows the following error.

File "manage.py", line 10, in <module>

execute_from_command_line(sys.argv)

File "/usr/lib/python2.7/dist-packages/django/core/management/__init__.py", line 399, in execute_from_command_line

utility.execute()

File "/usr/lib/python2.7/dist-packages/django/core/management/__init__.py", line 392, in execute

self.fetch_command(subcommand).run_from_argv(self.argv)

File "/usr/lib/python2.7/dist-packages/django/core/management/base.py", line 242, in run_from_argv

self.execute(*args, **options.__dict__)

File "/usr/lib/python2.7/dist-packages/django/core/management/base.py", line 280, in execute

translation.activate('en-us')

File "/usr/lib/python2.7/dist-packages/django/utils/translation/__init__.py", line 130, in activate

return _trans.activate(language)

File "/usr/lib/python2.7/dist-packages/django/utils/translation/trans_real.py", line 188, in activate

_active.value = translation(language)

File "/usr/lib/python2.7/dist-packages/django/utils/translation/trans_real.py", line 177, in translation

default_translation = _fetch(settings.LANGUAGE_CODE)

File "/usr/lib/python2.7/dist-packages/django/utils/translation/trans_real.py", line 159, in _fetch

app = import_module(appname)

File "/usr/lib/python2.7/dist-packages/django/utils/importlib.py", line 40, in import_module

__import__(name)

File "/usr/lib/python2.7/dist-packages/django/contrib/admin/__init__.py", line 6, in <module>

from django.contrib.admin.sites import AdminSite, site

File "/usr/lib/python2.7/dist-packages/django/contrib/admin/sites.py", line 4, in <module>

from django.contrib.admin.forms import AdminAuthenticationForm

File "/usr/lib/python2.7/dist-packages/django/contrib/admin/forms.py", line 6, in <module>

from django.contrib.auth.forms import AuthenticationForm

File "/usr/lib/python2.7/dist-packages/django/contrib/auth/forms.py", line 17, in <module>

from django.contrib.auth.models import User

File "/usr/lib/python2.7/dist-packages/django/contrib/auth/models.py", line 48, in <module>

class Permission(models.Model):

File "/usr/lib/python2.7/dist-packages/django/db/models/base.py", line 96, in __new__

new_class.add_to_class('_meta', Options(meta, **kwargs))

File "/usr/lib/python2.7/dist-packages/django/db/models/base.py", line 264, in add_to_class

value.contribute_to_class(cls, name)

File "/usr/lib/python2.7/dist-packages/django/db/models/options.py", line 124, in contribute_to_class

self.db_table = truncate_name(self.db_table, connection.ops.max_name_length())

File "/usr/lib/python2.7/dist-packages/django/db/__init__.py", line 34, in __getattr__

return getattr(connections[DEFAULT_DB_ALIAS], item)

File "/usr/lib/python2.7/dist-packages/django/db/utils.py", line 198, in __getitem__

backend = load_backend(db['ENGINE'])

File "/usr/lib/python2.7/dist-packages/django/db/utils.py", line 113, in load_backend

return import_module('%s.base' % backend_name)

File "/usr/lib/python2.7/dist-packages/django/utils/importlib.py", line 40, in import_module

__import__(name)

File "/usr/lib/python2.7/dist-packages/django/db/backends/postgresql_psycopg2/base.py", line 25, in <module>

raise ImproperlyConfigured("Error loading psycopg2 module: %s" % e)

django.core.exceptions.ImproperlyConfigured: Error loading psycopg2 module: No module named psycopg2

One more thing: I am able to run a project with SQLite on the VM.

What should I do?

Answer: `psycopg2` is the name of the Python module normally used to interface with

PostgreSQL. As the last line of the traceback says, it is not installed, so you

will need to install it.

You should be able to install it with pip, using `pip install psycopg2`. pip

itself can usually be installed through your package manager - on Ubuntu, for

instance, IIRC the package is called python-pip for Python 2 and python3-pip

for Python 3.
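If you want to confirm the diagnosis before and after installing, a quick check from inside the VM could look like the sketch below. The helper name `module_available` is made up for this example. The ENGINE value is the backend path that appears in the traceback; the database name is a placeholder.

```python
import importlib


def module_available(name):
    """Return True if the module `name` can be imported by this interpreter."""
    try:
        importlib.import_module(name)
    except ImportError:
        return False
    return True


# Hypothetical settings.py fragment: this ENGINE is the backend that imports
# psycopg2, which is why the SQLite project works and this one does not.
DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql_psycopg2',
        'NAME': 'mydatabase',  # placeholder
    }
}

if not module_available('psycopg2'):
    print('psycopg2 is missing; try: pip install psycopg2')
```

Run it with the same Python that runs manage.py (here the system Python 2.7 inside the VM), since a module installed for another interpreter will not be found.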

|

Error with hex encode in Python 3.3

Question: I am trying to modify the code from

[this question](http://stackoverflow.com/questions/3241929/python-find-dominant-most-common-color-in-an-image)

to use in Python 3.3 (I installed Pillow, SciPy and NumPy):

import struct

from PIL import Image

import scipy

import scipy.misc

import scipy.cluster

NUM_CLUSTERS = 5

print ('reading image')

im = Image.open("image.jpg")

im = im.resize((150, 150)) # optional, to reduce time

ar = scipy.misc.fromimage(im)

shape = ar.shape

ar = ar.reshape(scipy.product(shape[:2]), shape[2])

print ('finding clusters')

codes, dist = scipy.cluster.vq.kmeans(ar, NUM_CLUSTERS)

print ('cluster centres:\n', codes)

vecs, dist = scipy.cluster.vq.vq(ar, codes) # assign codes

counts, bins = scipy.histogram(vecs, len(codes)) # count occurrences

index_max = scipy.argmax(counts) # find most frequent

peak = codes[index_max]

colour = ''.join(chr(c) for c in peak).encode('hex')

print ('most frequent is %s (#%s)' % (peak, colour))

But I get this error:

Traceback (most recent call last):

  File "C:/Users/User/Desktop/pyt33_pic.py", line 24, in <module>

    colour = ''.join(chr(c) for c in peak).encode('hex')

LookupError: unknown encoding: hex

What am I doing wrong?

Answer: In 2.x the codec "hex_codec" is aliased to "hex". This alias is restored in

3.4. A bigger change is that bytes-to-bytes encodings such as this one are no

longer available through `str.encode`; in Python 3 you have to call

`codecs.encode` on a bytes-like object. Additionally, for string formatting

you'll need to decode the result. For example:

>>> import codecs

>>> peak

array([131, 128, 124], dtype=uint8)

>>> codecs.encode(peak, 'hex_codec').decode('ascii')

'83807c'

Alternatively you can use the `format` function to individually convert the

numbers to hex:

>>> ''.join(format(c, '02x') for c in peak)

'83807c'
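Putting this together, the failing line in the question could be replaced with a small helper like the following. This is only a sketch: the function names are made up, and the rounding and clamping are an assumption added in case the cluster centre comes back as floats rather than the uint8 values shown above.

```python
import binascii


def rgb_to_hex(peak):
    """Format an (R, G, B) sequence as a lowercase hex string such as '83807c'."""
    # Round and clamp to 0..255 so float cluster centres are handled as well.
    channels = [max(0, min(255, int(round(c)))) for c in peak]
    return ''.join(format(c, '02x') for c in channels)


def rgb_to_hex_hexlify(peak):
    """Same result via binascii.hexlify, which works on bytes in Python 3."""
    channels = bytearray(max(0, min(255, int(round(c)))) for c in peak)
    return binascii.hexlify(bytes(channels)).decode('ascii')


colour = rgb_to_hex([131, 128, 124])
print('most frequent is #%s' % colour)
```

Both helpers avoid the "hex" codec alias entirely, so they behave the same on 3.3 and on later versions.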

|