| sha (string, 40 chars) | text (string, 1–13.4M chars) | id (string, 2–117 chars) | tags (list, 1–7.91k items) | created_at (string, 25 chars) | metadata (string, 2–875k chars) | last_modified (string, 25 chars) | arxiv (list, 0–25 items) | languages (list, 0–7.91k items) | tags_str (string, 17–159k chars) | text_str (string, 1–447k chars) | text_lists (list, 0–352 items) | processed_texts (list, 1–353 items) | tokens_length (list, 1–353 items) | input_texts (list, 1–40 items) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

12b6d35dd29a10d2cb9f9e44b2c0666414f90dc1

|

# Dataset Card for "autotree_pmlb_letter_sgosdt_l256_d3_sd0"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

yzhuang/autotree_pmlb_letter_sgosdt_l256_d3_sd0

|

[

"region:us"

] |

2023-09-01T07:56:29+00:00

|

{"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "input_x", "sequence": {"sequence": "float32"}}, {"name": "input_y", "sequence": {"sequence": "float32"}}, {"name": "rtg", "sequence": "float64"}, {"name": "status", "sequence": {"sequence": "float32"}}, {"name": "split_threshold", "sequence": {"sequence": "float32"}}, {"name": "split_dimension", "sequence": "int64"}], "splits": [{"name": "train", "num_bytes": 523118976, "num_examples": 10000}, {"name": "validation", "num_bytes": 523120000, "num_examples": 10000}], "download_size": 61880916, "dataset_size": 1046238976}}

|

2023-09-01T07:56:37+00:00

|

[] |

[] |

TAGS

#region-us

|

# Dataset Card for "autotree_pmlb_letter_sgosdt_l256_d3_sd0"

More Information needed

|

[

"# Dataset Card for \"autotree_pmlb_letter_sgosdt_l256_d3_sd0\"\n\nMore Information needed"

] |

[

"TAGS\n#region-us \n",

"# Dataset Card for \"autotree_pmlb_letter_sgosdt_l256_d3_sd0\"\n\nMore Information needed"

] |

[

6,

31

] |

[

"passage: TAGS\n#region-us \n# Dataset Card for \"autotree_pmlb_letter_sgosdt_l256_d3_sd0\"\n\nMore Information needed"

] |

1699f8a2eef308efd4aa9207b5151f7c95f11f20

|

# Dataset Card for Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco

## Dataset Description

- **Homepage:**

- **Repository:** https://huggingface.co/Charlie911/vicuna-7b-v1.5-lora-mctaco

- **Paper:**

- **Leaderboard:** https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

- **Point of Contact:** [email protected]

### Dataset Summary

Dataset automatically created during the evaluation run of model [Charlie911/vicuna-7b-v1.5-lora-mctaco](https://huggingface.co/Charlie911/vicuna-7b-v1.5-lora-mctaco) on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).

The dataset is composed of 64 configurations, each one corresponding to one of the evaluated tasks.

The dataset has been created from 3 run(s). Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run. The "train" split always points to the latest results.
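
The split names listed in this card's metadata appear to follow a simple convention: the run timestamp with `-` and `:` replaced by `_` (for example, run `2023-09-17T20:27:23.554125` corresponds to split `2023_09_17T20_27_23.554125`). The sketch below assumes that convention, which is inferred from the names in this card rather than documented, and also shows how one might select the most recent run among the timestamped splits. The helpers `split_name_for_run` and `latest_split` are hypothetical.

```python
from datetime import datetime

# Assumed format of a run timestamp, e.g. "2023-09-17T20:27:23.554125".
RUN_FORMAT = "%Y-%m-%dT%H:%M:%S.%f"
# Assumed format of the matching split name, e.g. "2023_09_17T20_27_23.554125".
SPLIT_FORMAT = "%Y_%m_%dT%H_%M_%S.%f"


def split_name_for_run(run_timestamp: str) -> str:
    """Hypothetical helper: map a run timestamp to its (assumed) split name."""
    # Parse first so that a malformed timestamp raises ValueError.
    datetime.strptime(run_timestamp, RUN_FORMAT)
    return run_timestamp.replace("-", "_").replace(":", "_")


def latest_split(split_names: list) -> str:
    """Hypothetical helper: return the timestamped split of the most recent run."""
    timestamped = []
    for name in split_names:
        try:
            timestamped.append((datetime.strptime(name, SPLIT_FORMAT), name))
        except ValueError:
            # Skip non-timestamp splits such as "latest" or "train".
            continue
    # Compare by parsed datetime; raises ValueError if no split matched.
    return max(timestamped)[1]


print(split_name_for_run("2023-09-17T20:27:23.554125"))  # prints 2023_09_17T20_27_23.554125
print(latest_split(["2023_09_01T09_00_53.100273", "2023_09_01T09_03_24.370765", "latest"]))
```

Parsing into `datetime` rather than sorting raw strings keeps the comparison correct even if a split name did not share this fixed-width layout.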

An additional configuration, "results", stores all the aggregated results of the run (and is used to compute and display the aggregated metrics on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)).

To load the details from a run, you can for instance do the following:

```python
from datasets import load_dataset

data = load_dataset(
    "open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco",
    "harness_winogrande_5",
    split="train",
)
```
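
The configuration name passed to `load_dataset` (here `"harness_winogrande_5"`) seems to be the task key used in the results (`"harness|winogrande|5"`) with `|`, `-` and `:` replaced by underscores; for instance, `harness|hendrycksTest-abstract_algebra|5` matches the `harness_hendrycksTest_abstract_algebra_5` configuration listed in the metadata. This rule is inferred from the names in this card, not documented, and `config_name_for_task` is a hypothetical helper.

```python
def config_name_for_task(task_key: str) -> str:
    """Hypothetical helper: derive a configuration name from a results task key."""
    # Assumed rule: every separator used in task keys becomes an underscore.
    for separator in ("|", "-", ":"):
        task_key = task_key.replace(separator, "_")
    return task_key


for key in ("harness|winogrande|5", "harness|arc:challenge|25",
            "harness|hendrycksTest-abstract_algebra|5"):
    print(key, "->", config_name_for_task(key))
```

The returned string can then be passed as the second positional argument of `load_dataset`, as in the loading snippet.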

## Latest results

These are the [latest results from run 2023-09-17T20:27:23.554125](https://huggingface.co/datasets/open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco/blob/main/results_2023-09-17T20-27-23.554125.json). Note that the repository may contain results for other tasks if successive evaluations did not cover the same tasks; you can find each of them in the results and in the "latest" split of each evaluation:

```python
{
    "all": {
        "em": 0.2488464765100671,
        "em_stderr": 0.004427614016278926,
        "f1": 0.28849937080536914,
        "f1_stderr": 0.00442953185165108,
        "acc": 0.372010258662628,
        "acc_stderr": 0.00929094831305589
    },
    "harness|drop|3": {
        "em": 0.2488464765100671,
        "em_stderr": 0.004427614016278926,
        "f1": 0.28849937080536914,
        "f1_stderr": 0.00442953185165108
    },
    "harness|gsm8k|5": {
        "acc": 0.04473085670962851,
        "acc_stderr": 0.005693886131407047
    },
    "harness|winogrande|5": {
        "acc": 0.6992896606156275,
        "acc_stderr": 0.012888010494704732
    }
}
```
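
The `"all"` entry appears to be an unweighted mean of each metric over the tasks that report it: `acc` equals the mean of the GSM8K and Winogrande accuracies, and `em`/`f1` equal the DROP values because only DROP reports them. The sketch below reproduces those numbers under that assumption; the averaging rule is inferred from the values above, not taken from the leaderboard's code, and the `*_stderr` fields here are likewise plain means rather than a pooled standard error. The `aggregate` helper is hypothetical.

```python
from statistics import mean

# Per-task results copied from the block above.
results = {
    "harness|drop|3": {
        "em": 0.2488464765100671,
        "em_stderr": 0.004427614016278926,
        "f1": 0.28849937080536914,
        "f1_stderr": 0.00442953185165108,
    },
    "harness|gsm8k|5": {"acc": 0.04473085670962851, "acc_stderr": 0.005693886131407047},
    "harness|winogrande|5": {"acc": 0.6992896606156275, "acc_stderr": 0.012888010494704732},
}


def aggregate(per_task: dict) -> dict:
    """Hypothetical helper: average each metric over the tasks that report it."""
    values: dict = {}
    for metrics in per_task.values():
        for name, value in metrics.items():
            values.setdefault(name, []).append(value)
    return {name: mean(vals) for name, vals in values.items()}


for name, value in aggregate(results).items():
    print(f"{name}: {value:.6f}")
```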

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed]

|

open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco

|

[

"region:us"

] |

2023-09-01T08:01:17+00:00

|

{"pretty_name": "Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco", "dataset_summary": "Dataset automatically created during the evaluation run of model [Charlie911/vicuna-7b-v1.5-lora-mctaco](https://huggingface.co/Charlie911/vicuna-7b-v1.5-lora-mctaco) on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).\n\nThe dataset is composed of 64 configuration, each one coresponding to one of the evaluated task.\n\nThe dataset has been created from 3 run(s). Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run.The \"train\" split is always pointing to the latest results.\n\nAn additional configuration \"results\" store all the aggregated results of the run (and is used to compute and display the agregated metrics on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)).\n\nTo load the details from a run, you can for instance do the following:\n```python\nfrom datasets import load_dataset\ndata = load_dataset(\"open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco\",\n\t\"harness_winogrande_5\",\n\tsplit=\"train\")\n```\n\n## Latest results\n\nThese are the [latest results from run 2023-09-17T20:27:23.554125](https://huggingface.co/datasets/open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco/blob/main/results_2023-09-17T20-27-23.554125.json)(note that their might be results for other tasks in the repos if successive evals didn't cover the same tasks. 
You find each in the results and the \"latest\" split for each eval):\n\n```python\n{\n \"all\": {\n \"em\": 0.2488464765100671,\n \"em_stderr\": 0.004427614016278926,\n \"f1\": 0.28849937080536914,\n \"f1_stderr\": 0.00442953185165108,\n \"acc\": 0.372010258662628,\n \"acc_stderr\": 0.00929094831305589\n },\n \"harness|drop|3\": {\n \"em\": 0.2488464765100671,\n \"em_stderr\": 0.004427614016278926,\n \"f1\": 0.28849937080536914,\n \"f1_stderr\": 0.00442953185165108\n },\n \"harness|gsm8k|5\": {\n \"acc\": 0.04473085670962851,\n \"acc_stderr\": 0.005693886131407047\n },\n \"harness|winogrande|5\": {\n \"acc\": 0.6992896606156275,\n \"acc_stderr\": 0.012888010494704732\n }\n}\n```", "repo_url": "https://huggingface.co/Charlie911/vicuna-7b-v1.5-lora-mctaco", "leaderboard_url": "https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard", "point_of_contact": "[email protected]", "configs": [{"config_name": "harness_arc_challenge_25", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|arc:challenge|25_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|arc:challenge|25_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|arc:challenge|25_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_drop_3", "data_files": [{"split": "2023_09_17T20_27_23.554125", "path": ["**/details_harness|drop|3_2023-09-17T20-27-23.554125.parquet"]}, {"split": "latest", "path": ["**/details_harness|drop|3_2023-09-17T20-27-23.554125.parquet"]}]}, {"config_name": "harness_gsm8k_5", "data_files": [{"split": "2023_09_17T20_27_23.554125", "path": ["**/details_harness|gsm8k|5_2023-09-17T20-27-23.554125.parquet"]}, {"split": "latest", "path": ["**/details_harness|gsm8k|5_2023-09-17T20-27-23.554125.parquet"]}]}, {"config_name": "harness_hellaswag_10", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": 
["**/details_harness|hellaswag|10_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hellaswag|10_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hellaswag|10_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:00:53.100273.parquet", 
"**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:00:53.100273.parquet", 
"**/details_harness|hendrycksTest-management|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-virology|5_2023-09-01T09:00:53.100273.parquet", "**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-management|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-virology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-management|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:03:24.370765.parquet", 
"**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-virology|5_2023-09-01T09:03:24.370765.parquet", "**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_abstract_algebra_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-abstract_algebra|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_anatomy_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-anatomy|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_astronomy_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-astronomy|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_business_ethics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": 
["**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-business_ethics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_clinical_knowledge_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-clinical_knowledge|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_biology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_biology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_chemistry_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_chemistry|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_computer_science_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": 
["**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_computer_science|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_mathematics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_medicine_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_medicine|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_college_physics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-college_physics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_computer_security_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": 
["**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-computer_security|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_conceptual_physics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-conceptual_physics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_econometrics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-econometrics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_electrical_engineering_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-electrical_engineering|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_elementary_mathematics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": 
["**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-elementary_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_formal_logic_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-formal_logic|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_global_facts_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-global_facts|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_biology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_biology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_chemistry_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": 
["**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_chemistry|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_computer_science_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_computer_science|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_european_history_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_european_history|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_geography_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_geography|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_government_and_politics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": 
["**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_government_and_politics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_macroeconomics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_macroeconomics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_mathematics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_mathematics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_microeconomics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_microeconomics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": 
"harness_hendrycksTest_high_school_physics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_physics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_psychology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_psychology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_statistics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_statistics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_high_school_us_history_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_us_history|5_2023-09-01T09:03:24.370765.parquet"]}]}, 
{"config_name": "harness_hendrycksTest_high_school_world_history_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-high_school_world_history|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_human_aging_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-human_aging|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_human_sexuality_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-human_sexuality|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_international_law_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-international_law|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_jurisprudence_5", 
"data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-jurisprudence|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_logical_fallacies_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-logical_fallacies|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_machine_learning_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-machine_learning|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_management_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-management|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-management|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-management|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_marketing_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": 
["**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-marketing|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_medical_genetics_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-medical_genetics|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_miscellaneous_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-miscellaneous|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_moral_disputes_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-moral_disputes|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_moral_scenarios_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": 
"2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-moral_scenarios|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_nutrition_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-nutrition|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_philosophy_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-philosophy|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_prehistory_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-prehistory|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_professional_accounting_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:03:24.370765.parquet"]}, 
{"split": "latest", "path": ["**/details_harness|hendrycksTest-professional_accounting|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_professional_law_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-professional_law|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_professional_medicine_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-professional_medicine|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_professional_psychology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-professional_psychology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_public_relations_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", 
"path": ["**/details_harness|hendrycksTest-public_relations|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_security_studies_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-security_studies|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_sociology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-sociology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_us_foreign_policy_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-us_foreign_policy|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_hendrycksTest_virology_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-virology|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-virology|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-virology|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": 
"harness_hendrycksTest_world_religions_5", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|hendrycksTest-world_religions|5_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_truthfulqa_mc_0", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["**/details_harness|truthfulqa:mc|0_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["**/details_harness|truthfulqa:mc|0_2023-09-01T09:03:24.370765.parquet"]}, {"split": "latest", "path": ["**/details_harness|truthfulqa:mc|0_2023-09-01T09:03:24.370765.parquet"]}]}, {"config_name": "harness_winogrande_5", "data_files": [{"split": "2023_09_17T20_27_23.554125", "path": ["**/details_harness|winogrande|5_2023-09-17T20-27-23.554125.parquet"]}, {"split": "latest", "path": ["**/details_harness|winogrande|5_2023-09-17T20-27-23.554125.parquet"]}]}, {"config_name": "results", "data_files": [{"split": "2023_09_01T09_00_53.100273", "path": ["results_2023-09-01T09:00:53.100273.parquet"]}, {"split": "2023_09_01T09_03_24.370765", "path": ["results_2023-09-01T09:03:24.370765.parquet"]}, {"split": "2023_09_17T20_27_23.554125", "path": ["results_2023-09-17T20-27-23.554125.parquet"]}, {"split": "latest", "path": ["results_2023-09-17T20-27-23.554125.parquet"]}]}]}

|

2023-09-17T19:27:35+00:00

|

[] |

[] |

TAGS

#region-us

|

# Dataset Card for Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco

## Dataset Description

- Homepage:

- Repository: URL

- Paper:

- Leaderboard: URL

- Point of Contact: clementine@URL

### Dataset Summary

Dataset automatically created during the evaluation run of model Charlie911/vicuna-7b-v1.5-lora-mctaco on the Open LLM Leaderboard.

The dataset is composed of 64 configurations, each one corresponding to one of the evaluated tasks.

The dataset has been created from 3 runs. Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run. The "latest" split always points to the latest results.

An additional configuration, "results", stores all the aggregated results of each run (and is used to compute and display the aggregated metrics on the Open LLM Leaderboard).

To load the details from a run, you can for instance do the following:
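A minimal sketch of loading one run's details with the Hugging Face `datasets` library. The repository id `open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco` is an assumption based on the leaderboard's usual naming and is not stated in this card. The config names (`harness_winogrande_5`, `results`) and the timestamp-to-split mapping follow the metadata of this card, where a run at `2023-09-17T20:27:23.554125` becomes the split `2023_09_17T20_27_23.554125`.

```python
from typing import Optional

# ASSUMPTION: repository id inferred from the Open LLM Leaderboard naming
# convention; it is not given in this card.
REPO_ID = "open-llm-leaderboard/details_Charlie911__vicuna-7b-v1.5-lora-mctaco"


def split_name_for_run(timestamp: str) -> str:
    """Map a run timestamp such as '2023-09-17T20:27:23.554125' to the split
    name used in the configs ('2023_09_17T20_27_23.554125')."""
    return timestamp.replace("-", "_").replace(":", "_")


def load_details(config_name: str, timestamp: Optional[str] = None):
    """Load one task configuration; defaults to the 'latest' split."""
    # Imported lazily so the helper above works without `datasets` installed.
    from datasets import load_dataset

    split = "latest" if timestamp is None else split_name_for_run(timestamp)
    return load_dataset(REPO_ID, config_name, split=split)
```

For example, `load_details("harness_winogrande_5")` would fetch the latest Winogrande details, and `load_details("results", "2023-09-01T09:03:24.370765")` the aggregated results of that earlier run.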

## Latest results

These are the latest results from run 2023-09-17T20:27:23.554125 (note that there might be results for other tasks in the repo if successive evals didn't cover the same tasks. You can find each in the "results" configuration and in the "latest" split of each eval):

### Supported Tasks and Leaderboards

### Languages

## Dataset Structure

### Data Instances

### Data Fields

### Data Splits

## Dataset Creation

### Curation Rationale

### Source Data

#### Initial Data Collection and Normalization

#### Who are the source language producers?

### Annotations

#### Annotation process

#### Who are the annotators?

### Personal and Sensitive Information

## Considerations for Using the Data

### Social Impact of Dataset

### Discussion of Biases

### Other Known Limitations

## Additional Information

### Dataset Curators

### Licensing Information

### Contributions

|

[

"# Dataset Card for Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco",

"## Dataset Description\n\n- Homepage: \n- Repository: URL\n- Paper: \n- Leaderboard: URL\n- Point of Contact: clementine@URL",

"### Dataset Summary\n\nDataset automatically created during the evaluation run of model Charlie911/vicuna-7b-v1.5-lora-mctaco on the Open LLM Leaderboard.\n\nThe dataset is composed of 64 configurations, each one corresponding to one of the evaluated tasks.\n\nThe dataset has been created from 3 runs. Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run. The \"latest\" split always points to the latest results.\n\nAn additional configuration, \"results\", stores all the aggregated results of each run (and is used to compute and display the aggregated metrics on the Open LLM Leaderboard).\n\nTo load the details from a run, you can for instance do the following:",

"## Latest results\n\nThese are the latest results from run 2023-09-17T20:27:23.554125 (note that there might be results for other tasks in the repo if successive evals didn't cover the same tasks. You can find each in the \"results\" configuration and in the \"latest\" split of each eval):",

"### Supported Tasks and Leaderboards",

"### Languages",

"## Dataset Structure",

"### Data Instances",

"### Data Fields",

"### Data Splits",

"## Dataset Creation",

"### Curation Rationale",

"### Source Data",

"#### Initial Data Collection and Normalization",

"#### Who are the source language producers?",

"### Annotations",

"#### Annotation process",

"#### Who are the annotators?",

"### Personal and Sensitive Information",

"## Considerations for Using the Data",

"### Social Impact of Dataset",

"### Discussion of Biases",

"### Other Known Limitations",

"## Additional Information",

"### Dataset Curators",

"### Licensing Information",

"### Contributions"

] |

[

"TAGS\n#region-us \n",

"# Dataset Card for Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco",

"## Dataset Description\n\n- Homepage: \n- Repository: URL\n- Paper: \n- Leaderboard: URL\n- Point of Contact: clementine@URL",

"### Dataset Summary\n\nDataset automatically created during the evaluation run of model Charlie911/vicuna-7b-v1.5-lora-mctaco on the Open LLM Leaderboard.\n\nThe dataset is composed of 64 configurations, each one corresponding to one of the evaluated tasks.\n\nThe dataset has been created from 3 runs. Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run. The \"latest\" split always points to the latest results.\n\nAn additional configuration, \"results\", stores all the aggregated results of each run (and is used to compute and display the aggregated metrics on the Open LLM Leaderboard).\n\nTo load the details from a run, you can for instance do the following:",

"## Latest results\n\nThese are the latest results from run 2023-09-17T20:27:23.554125 (note that there might be results for other tasks in the repo if successive evals didn't cover the same tasks. You can find each in the \"results\" configuration and in the \"latest\" split of each eval):",

"### Supported Tasks and Leaderboards",

"### Languages",

"## Dataset Structure",

"### Data Instances",

"### Data Fields",

"### Data Splits",

"## Dataset Creation",

"### Curation Rationale",

"### Source Data",

"#### Initial Data Collection and Normalization",

"#### Who are the source language producers?",

"### Annotations",

"#### Annotation process",

"#### Who are the annotators?",

"### Personal and Sensitive Information",

"## Considerations for Using the Data",

"### Social Impact of Dataset",

"### Discussion of Biases",

"### Other Known Limitations",

"## Additional Information",

"### Dataset Curators",

"### Licensing Information",

"### Contributions"

] |

[

6,

26,

31,

174,

66,

10,

4,

6,

6,

5,

5,

5,

7,

4,

10,

10,

5,

5,

9,

8,

8,

7,

8,

7,

5,

6,

6,

5

] |

[

"passage: TAGS\n#region-us \n# Dataset Card for Evaluation run of Charlie911/vicuna-7b-v1.5-lora-mctaco## Dataset Description\n\n- Homepage: \n- Repository: URL\n- Paper: \n- Leaderboard: URL\n- Point of Contact: clementine@URL### Dataset Summary\n\nDataset automatically created during the evaluation run of model Charlie911/vicuna-7b-v1.5-lora-mctaco on the Open LLM Leaderboard.\n\nThe dataset is composed of 64 configurations, each one corresponding to one of the evaluated tasks.\n\nThe dataset has been created from 3 runs. Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run. The \"latest\" split always points to the latest results.\n\nAn additional configuration, \"results\", stores all the aggregated results of each run (and is used to compute and display the aggregated metrics on the Open LLM Leaderboard).\n\nTo load the details from a run, you can for instance do the following:## Latest results\n\nThese are the latest results from run 2023-09-17T20:27:23.554125 (note that there might be results for other tasks in the repo if successive evals didn't cover the same tasks. You can find each in the \"results\" configuration and in the \"latest\" split of each eval):### Supported Tasks and Leaderboards### Languages## Dataset Structure### Data Instances### Data Fields### Data Splits## Dataset Creation### Curation Rationale### Source Data#### Initial Data Collection and Normalization#### Who are the source language producers?### Annotations#### Annotation process#### Who are the annotators?### Personal and Sensitive Information## Considerations for Using the Data### Social Impact of Dataset### Discussion of Biases### Other Known Limitations## Additional Information### Dataset Curators### Licensing Information### Contributions"

] |

ba3ef806166aa5b525ed5696f17a12ede79960c0

|

# Dataset Card for "dark_granger"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

mickume/dark_granger

|

[

"region:us"

] |

2023-09-01T08:09:53+00:00

|

{"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 160190011, "num_examples": 925620}], "download_size": 99596348, "dataset_size": 160190011}}

|

2023-10-31T11:03:39+00:00

|

[] |

[] |

TAGS

#region-us

|

# Dataset Card for "dark_granger"

More Information needed

|

[

"# Dataset Card for \"dark_granger\"\n\nMore Information needed"

] |

[

"TAGS\n#region-us \n",

"# Dataset Card for \"dark_granger\"\n\nMore Information needed"

] |

[

6,

15

] |

[

"passage: TAGS\n#region-us \n# Dataset Card for \"dark_granger\"\n\nMore Information needed"

] |

f4bbafac5d2d95757840936213313decd9449d2e

|

# Dataset Card for "rick_and_morty_text_to_image"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

qwertyaditya/rick_and_morty_text_to_image

|

[

"region:us"

] |

2023-09-01T08:18:52+00:00

|

{"configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}], "dataset_info": {"features": [{"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 3791351.0, "num_examples": 40}], "download_size": 3456089, "dataset_size": 3791351.0}}

|

2023-09-01T08:31:35+00:00

|

[] |

[] |

TAGS

#region-us

|

# Dataset Card for "rick_and_morty_text_to_image"

More Information needed

|

[

"# Dataset Card for \"rick_and_morty_text_to_image\"\n\nMore Information needed"

] |

[

"TAGS\n#region-us \n",

"# Dataset Card for \"rick_and_morty_text_to_image\"\n\nMore Information needed"

] |

[

6,

22

] |

[

"passage: TAGS\n#region-us \n# Dataset Card for \"rick_and_morty_text_to_image\"\n\nMore Information needed"

] |

3c092a5f2eecf4993a1f37de9482948286e7cac9

|

# Dataset Card for "srbd1_segmented2"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

Lancelot53/srbd1_segmented2

|

[

"region:us"

] |

2023-09-01T08:25:34+00:00

|

{"dataset_info": {"features": [{"name": "html", "dtype": "string"}, {"name": "response", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 1452582, "num_examples": 1508}], "download_size": 405675, "dataset_size": 1452582}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}]}

|

2023-09-01T08:25:39+00:00

|

[] |

[] |

TAGS

#region-us

|

# Dataset Card for "srbd1_segmented2"

More Information needed

|

[

"# Dataset Card for \"srbd1_segmented2\"\n\nMore Information needed"

] |

[

"TAGS\n#region-us \n",

"# Dataset Card for \"srbd1_segmented2\"\n\nMore Information needed"

] |

[

6,

19

] |

[

"passage: TAGS\n#region-us \n# Dataset Card for \"srbd1_segmented2\"\n\nMore Information needed"

] |

4c918c3e2bd96dfda634afe307093e298a9d3aa4

|

This is a Non-IID split version of [databricks/databricks-dolly-15k](https://huggingface.co/datasets/databricks/databricks-dolly-15k).

|

fedml/databricks-dolly-15k-niid

|

[

"size_categories:10K<n<100K",

"language:en",

"license:cc-by-sa-3.0",

"region:us"

] |

2023-09-01T08:51:54+00:00

|

{"language": ["en"], "license": "cc-by-sa-3.0", "size_categories": ["10K<n<100K"], "configs": [{"config_name": "default", "default": true, "data_files": [{"split": "train", "path": "train.parquet"}, {"split": "test", "path": "test.parquet"}]}], "dataset_info": {"config_name": "default", "features": [{"name": "instruction", "dtype": "string"}, {"name": "context", "dtype": "string"}, {"name": "response", "dtype": "string"}, {"name": "category", "dtype": "string"}]}}

|

2023-09-05T11:03:26+00:00

|

[] |

[

"en"

] |

TAGS

#size_categories-10K<n<100K #language-English #license-cc-by-sa-3.0 #region-us

|

This is a Non-IID split version of databricks/databricks-dolly-15k.

|

[] |

[

"TAGS\n#size_categories-10K<n<100K #language-English #license-cc-by-sa-3.0 #region-us \n"

] |

[

33

] |

[

"passage: TAGS\n#size_categories-10K<n<100K #language-English #license-cc-by-sa-3.0 #region-us \n"

] |

cc2a593691f05888a3895be54f68950baa938be3

|

**Product name** - [MarineFlex Ultra](https://marineflex-ultra-reviews.jimdosite.com/)

**Category** - Dietary supplement, Flexibility, Mobility.

**Benefits** - Treats Joint Pain

**Dosage** - Take 2 pills every day

**Availability** - [Online](https://www.healthsupplement24x7.com/get-marineflex-ultra)

**Official Website** - [https://www.healthsupplement24x7.com/get-marineflex-ultra](https://www.healthsupplement24x7.com/get-marineflex-ultra)

With the help of [Marine Flex Ultra](https://pdfhost.io/v/oUE.LN6TI_MarineFlex_Ultra_New_Update_2023_Reduce_Joint_Pain_Boosting_Flexibility_Mobility_Faster), people can restore their youthful mobility and flexibility and get back to their favorite activities. The joint support formula contains nutrients that relieve pain and soothe inflammation and swelling. It improves physical function and reduces joint discomfort. [MarineFlex Ultra](https://www.ivoox.com/marineflex-ultra-new-update-2023-reduce-joint-pain-audios-mp3_rf_115267571_1.html) supports a healthy inflammatory response and enhances the production of synovial fluid. This fluid nourishes and lubricates the cartilage and joints.


### _**[Visit MarineFlex Ultra Official Website Here](https://www.healthsupplement24x7.com/get-marineflex-ultra)**_

**What is MarineFlex Ultra?**

-----------------------------

[MarineFlex Ultra](https://healthsupplements24x7.blogspot.com/2023/08/marineflex-ultra.html) helps you move better and have stronger bones. It eases joint stiffness, aching, and swelling, and works to keep your joints healthy for longer.

[MarineFlex Ultra](https://soundcloud.com/marine-flex-ultra/marineflex-ultra-new-update-2023-reduce-joint-pain-boosting-flexibility-mobility-faster) aims to fix the main cause of the joint deterioration that comes with age, without causing side effects. It works by increasing synovial fluid production, lowering inflammation, and nourishing and lubricating the joints.

**How Does It Work?**

---------------------

[MarineFlex Ultra](https://www.townscript.com/e/marineflex-ultra-323231) is made from a unique blend of essential ingredients and systemic proteolytic proteins. At the site of pain, your body forms tissue made up of proteins and white blood cells (leukocytes) that repair the damage. However, once healing is complete, this sticky tissue can obstruct the flow of red blood cells, which deliver oxygen to every part of the body.

**Benefits of Marine Flex Ultra**

---------------------------------

### Reduces Joint Pain and Discomfort

One of the primary benefits of Marine Flex Ultra is reducing joint pain and discomfort. The ingredients work together to target the underlying causes of pain, providing relief and improving overall joint health.

### Supports Joint Health and Mobility

[Marine Flex Ultra](https://groups.google.com/g/marineflex-ultra-pills/c/u9UnGYu1Zr8) helps support joint health by promoting synovial fluid production. This fluid cushions and lubricates the joints, leading to improved mobility and less joint stiffness.

### Promotes a Healthy Inflammatory Response

Chronic inflammation causes joint pain and discomfort. Marine Flex Ultra contains ingredients that support a healthy inflammatory response, helping to alleviate issues caused by inflammation.

### Enhances Bone Marrow Function

Bone marrow plays a crucial role in joint health, as it produces cells that contribute to the maintenance and repair of joint tissues. Marine Flex Ultra supports bone marrow function, promoting overall joint health.

### Increases Circulation

Marine Flex Ultra improves blood flow and the delivery of nutrients and oxygen to the joints and other parts of the body.

### Strengthens Bones

The ingredients in Marine Flex Ultra support bone and muscle strength, which helps prevent fractures.


### _**[Order Your Supply Of Marine Flex Ultra Now And Start Enjoying The Benefits!](https://www.healthsupplement24x7.com/get-marineflex-ultra)**_

**Ingredients of MarineFlex Ultra!**

------------------------------------

Here are all of the active ingredients in MarineFlex Ultra and how they work, according to Dr. Kahn and the official MarineFlex Ultra website:

**Green-Lipped Mussel**: Green-lipped mussel, also known as _Perna canaliculus_, is a rare marine organism that grows only in the clean, pristine waters off the coast of New Zealand. This mussel gives Marine Flex Ultra its name, because the primary active ingredient comes from the ocean. Green-lipped mussels are rich in omega-3 fatty acids, including proven inflammation fighters like DHA and EPA.

**Boswellia Serrata**: Boswellia serrata extract comes from a tree native to India. It has been used in traditional Indian medicine (Ayurveda) for centuries as a general health and wellness aid. Today, we know boswellia serrata is rich in phytochemicals (plant-based antioxidants) and other natural compounds that can decrease knee pain, boost mobility, and help with swelling and inflammation.

**Ashwagandha**: Today, we know ashwagandha works because it is rich in a substance called Withaferin A (WFA). This substance appears to help with chronic joint pain by suppressing inflammatory cytokines. In fact, WFA could help suppress inflammation throughout the body, leading to positive effects on cognition, physical energy, mobility, and more. Many people with joint pain have chronic inflammation, and ashwagandha could help.

**Hyaluronic Acid**: Hyaluronic acid is known for holding many times its weight in water, increasing hydration throughout the body, including in the spaces within your joints. Hyaluronic acid supports the synovial fluid and lubrication between your joints, and it also stimulates new cell formation to help repair cartilage, so it supports joint pain relief in multiple ways.

**MSM**: MSM helps your body form new cartilage and decreases joint inflammation. Dr. Kahn cites one study where 50 patients with knee osteoarthritis took MSM or a placebo pill. After 12 weeks, MSM significantly decreased pain and physical function impairment compared to the placebo. Today, many people with joint pain take MSM daily to help with the condition.

**Collagen**: Collagen is the most abundant connective protein in the body, and many people take collagen daily for anti-aging, wrinkle defense, joint pain, and muscle recovery. It helps to repair cartilage and other joint cells. The reason is simple: most cartilage is made of collagen, and collagen plays a crucial role in holding your body together.

**Chondroitin Sulfate**: Like glucosamine sulfate, chondroitin sulfate is well known for its effects on joint pain relief and bone repair. As proof, Dr. Kahn cites one study involving 162 patients with osteoarthritis in their hands. Patients took chondroitin sulfate or a placebo pill, and those in the chondroitin sulfate group had significantly less hand pain than those taking the placebo.

**15+ Other Herbs and Plants**: Other ingredients include bromelain, calendula, burdock, cetyl myristoleate, yucca, feverfew, shark cartilage, horsetail, white willow bark, gentian root, cinnamon, shatavari, N-acetyl D-glucosamine, grape root extract, and Rehmannia root.


### _**[\[Click Here To Order\] Unlock The Benefits Of MarineFlex Ultra's Natural Ingredients.](https://www.healthsupplement24x7.com/get-marineflex-ultra)**_

**What Is the Dosage for MarineFlex Ultra?**

--------------------------------------------

MarineFlex Ultra is a supplement for people looking for natural ways to relieve pain and discomfort. Every bottle contains a month's supply: 90 capsules.

The recommended serving size for this supplement is 3 capsules daily. It is important to consult a doctor before using the supplement. The formula should not be taken together with blood-thinning supplements.

**Marine Flex Ultra Side Effects**

----------------------------------

According to several users, Marine Flex Ultra is safe to take, but an overdose may be harmful. Taking the supplement on an empty stomach may cause indigestion, such as gas. As with any other supplement, you must give your body time to adjust to it. Apart from this, Marine Flex Ultra has an excellent track record and a clean safety history.

The only way consumers can be sure of purchasing [MarineFlex Ultra](https://events.humanitix.com/marineflex-ultra-new-update-2023-reduce-joint-pain-boosting-flexibility-mobility-faster) is through the official website. Consumers can choose from various quantities, and they can even opt in to a subscription.

**Pricing Of MarineFlex Ultra**

-------------------------------

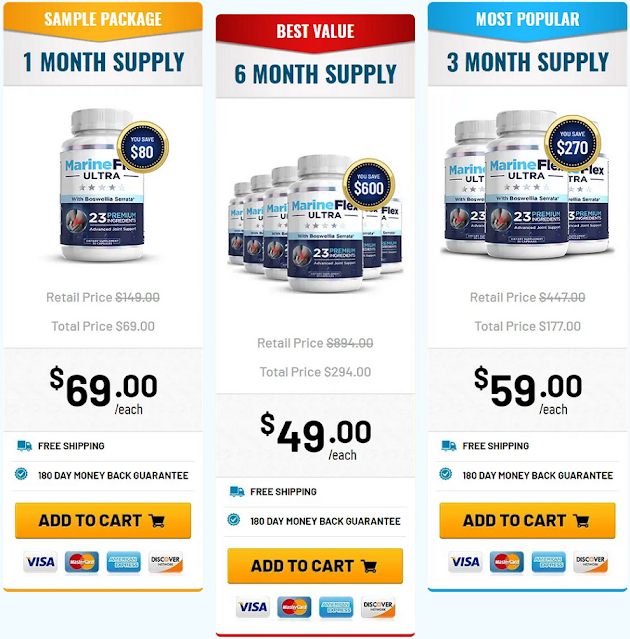

The Marine Flex Ultra supplement is affordably priced because the manufacturers wanted to make it a viable pain-relief option. The Marine Flex Ultra website currently offers three packages, listed here.

The packages available are:

_**1-Month Supply: $69.00 per bottle + free shipping**_

_**3-Month Supply: $59.00 per bottle + free shipping**_

_**6-Month Supply: $49.00 per bottle + free shipping**_


### _**[\[SPECIAL DISCOUNT\] Click Here To Visit MarineFlex Ultra Official Website](https://www.healthsupplement24x7.com/get-marineflex-ultra)**_

**MarineFlex Ultra™ 180-Day Money-Back Guarantee!**

---------------------------------------------------

The [MarineFlex Ultra](https://marineflexultra.clubeo.com/page/marineflex-ultra-new-update-2023-reduce-joint-pain-boosting-flexibility-mobility-faster.html)™ is backed by a 100% money-back guarantee for a full 180 days from your original purchase.

If you're not completely satisfied with our product or your results within the first 180 days of your purchase, simply let us know at [MarineFlex Ultra](https://marineflexultra.clubeo.com/calendar/2023/09/01/marineflex-ultra-new-update-2023-reduce-joint-pain-boosting-flexibility-mobility-faster)™ and we'll give you a refund within 48 hours of the product being returned. That's right: simply return the [MarineFlex Ultra](https://marineflexultrareviews.hashnode.dev/marineflex-ultra-new-update-2023-reduce-joint-pain-boosting-flexibility-mobility-faster), even empty bottles, anytime within 180 days of your purchase and you'll receive a refund, no questions asked!

**Where To Buy?**

-----------------

When it comes to purchasing [Marine Flex Ultra](https://www.scoop.it/topic/marineflex-ultra-reviews), you have a few options. You can buy the supplement directly from their website, or you can purchase it from various retailers.

It is important to remember that Marine Flex Ultra is not FDA approved, so you should always take the recommended dose. If you are taking other medications, talk to your doctor before taking Marine Flex Ultra.

**Final Verdict**

-----------------