| sha (string, length 40) | text (string, length 1 to 13.4M) | id (string, length 2 to 117) | tags (list, length 1 to 7.91k) | created_at (string, length 25) | metadata (string, length 2 to 875k) | last_modified (string, length 25) | arxiv (list, length 0 to 25) | languages (list, length 0 to 7.91k) | tags_str (string, length 17 to 159k) | text_str (string, length 1 to 447k) | text_lists (list, length 0 to 352) | processed_texts (list, length 1 to 353) | tokens_length (list, length 1 to 353) | input_texts (list, length 1 to 40) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

4a5009abc37cbb2d3fd1a745f80e5ea1405ba9aa |

# Dataset

`GSM8K_zh` is a dataset for mathematical reasoning in Chinese; its question-answer pairs are translated from GSM8K (https://github.com/openai/grade-school-math/tree/master) by `GPT-3.5-Turbo` with few-shot prompting.

The dataset consists of 7473 training samples and 1319 testing samples. The former is for **supervised fine-tuning**, while the latter is for **evaluation**.

For training samples, `question_zh` and `answer_zh` are the question and answer keys, respectively.

For testing samples, only the translated questions are provided (`question_zh`).
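
The key layout above can be illustrated with a minimal sketch that turns one record into a prompt/response pair for supervised fine-tuning. The helper name `to_sft_pair`, the output keys `prompt` and `response`, and the two sample records are assumptions made up for illustration; only the `question_zh` and `answer_zh` keys come from this card.

```python
from typing import Dict, Optional


def to_sft_pair(record: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Map one record to a prompt/response pair.

    Returns None when the record has no `answer_zh`, which is the
    case for testing samples according to the card.
    """
    question = record.get("question_zh")
    answer = record.get("answer_zh")
    if not question or not answer:
        return None
    return {"prompt": question.strip(), "response": answer.strip()}


# Hypothetical records, invented for illustration (not taken from the dataset).
train_record = {
    "question_zh": "小明有3个苹果，又买了2个，一共有几个？",
    "answer_zh": "3 + 2 = 5",
}
test_record = {"question_zh": "一本书12元，买3本要多少钱？"}

print(to_sft_pair(train_record))  # a dict with "prompt" and "response"
print(to_sft_pair(test_record))   # None, because there is no answer key
```

Returning `None` for records without an answer keeps testing samples out of a fine-tuning set without raising an error.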

# Citation

If you find the `GSM8K_zh` dataset useful for your projects/papers, please cite the following paper.

```bibtex

@article{yu2023metamath,

title={MetaMath: Bootstrap Your Own Mathematical Questions for Large Language Models},

author={Yu, Longhui and Jiang, Weisen and Shi, Han and Yu, Jincheng and Liu, Zhengying and Zhang, Yu and Kwok, James T and Li, Zhenguo and Weller, Adrian and Liu, Weiyang},

journal={arXiv preprint arXiv:2309.12284},

year={2023}

}

``` | meta-math/GSM8K_zh | [

"task_categories:question-answering",

"size_categories:n<1K",

"language:en",

"language:zh",

"license:mit",

"math",

"math-qa",

"chinese-math-qa",

"region:us"

] | 2023-12-04T03:08:44+00:00 | {"language": ["en", "zh"], "license": "mit", "size_categories": ["n<1K"], "task_categories": ["question-answering"], "tags": ["math", "math-qa", "chinese-math-qa"]} | 2023-12-04T04:02:01+00:00 | [] | [

"en",

"zh"

] | TAGS

#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #chinese-math-qa #region-us

|

# Dataset

'GSM8K_zh' is a dataset for mathematical reasoning in Chinese, question-answer pairs are translated from GSM8K (URL by 'GPT-3.5-Turbo' with few-shot prompting.

The dataset consists of 7473 training samples and 1319 testing samples. The former is for supervised fine-tuning, while the latter is for evaluation.

for training samples, 'question_zh' and 'answer_zh' are question and answer keys, respectively;

for testing samples, only the translated questions are provided ('question_zh').

If you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper.

| [

"# Dataset\n\n'GSM8K_zh' is a dataset for mathematical reasoning in Chinese, question-answer pairs are translated from GSM8K (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 7473 training samples and 1319 testing samples. The former is for supervised fine-tuning, while the latter is for evaluation.\n\nfor training samples, 'question_zh' and 'answer_zh' are question and answer keys, respectively;\nfor testing samples, only the translated questions are provided ('question_zh').\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] | [

"TAGS\n#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #chinese-math-qa #region-us \n",

"# Dataset\n\n'GSM8K_zh' is a dataset for mathematical reasoning in Chinese, question-answer pairs are translated from GSM8K (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 7473 training samples and 1319 testing samples. The former is for supervised fine-tuning, while the latter is for evaluation.\n\nfor training samples, 'question_zh' and 'answer_zh' are question and answer keys, respectively;\nfor testing samples, only the translated questions are provided ('question_zh').\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] | [

55,

172

] | [

"passage: TAGS\n#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #chinese-math-qa #region-us \n# Dataset\n\n'GSM8K_zh' is a dataset for mathematical reasoning in Chinese, question-answer pairs are translated from GSM8K (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 7473 training samples and 1319 testing samples. The former is for supervised fine-tuning, while the latter is for evaluation.\n\nfor training samples, 'question_zh' and 'answer_zh' are question and answer keys, respectively;\nfor testing samples, only the translated questions are provided ('question_zh').\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] |

38ee9a74b40f897c2347b5068fda5d7208cac0ce |

Original dataset: [databricks/databricks-dolly-15k](https://huggingface.co/datasets/databricks/databricks-dolly-15k) | nlp-with-deeplearning/ko.databricks-dolly-15k | [

"task_categories:question-answering",

"task_categories:summarization",

"size_categories:10K<n<100K",

"language:en",

"language:ko",

"license:cc-by-nc-sa-4.0",

"region:us"

] | 2023-12-04T03:12:08+00:00 | {"language": ["en", "ko"], "license": "cc-by-nc-sa-4.0", "size_categories": ["10K<n<100K"], "task_categories": ["question-answering", "summarization"]} | 2023-12-04T03:27:59+00:00 | [] | [

"en",

"ko"

] | TAGS

#task_categories-question-answering #task_categories-summarization #size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us

|

Original dataset: databricks/databricks-dolly-15k | [] | [

"TAGS\n#task_categories-question-answering #task_categories-summarization #size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us \n"

] | [

62

] | [

"passage: TAGS\n#task_categories-question-answering #task_categories-summarization #size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us \n"

] |

9347e6bba50eb20ad36b572f17eeba6d377dd5a1 |

# Dataset

`MetaMathQA_GSM8K_zh` is a dataset for mathematical reasoning in Chinese; its question-answer pairs are translated from MetaMathQA (https://huggingface.co/datasets/meta-math/MetaMathQA) by `GPT-3.5-Turbo` with few-shot prompting.

The dataset consists of 231685 samples.

# Citation

If you find the `MetaMathQA_GSM8K_zh` dataset useful for your projects/papers, please cite the following paper.

```bibtex

@article{yu2023metamath,

title={MetaMath: Bootstrap Your Own Mathematical Questions for Large Language Models},

author={Yu, Longhui and Jiang, Weisen and Shi, Han and Yu, Jincheng and Liu, Zhengying and Zhang, Yu and Kwok, James T and Li, Zhenguo and Weller, Adrian and Liu, Weiyang},

journal={arXiv preprint arXiv:2309.12284},

year={2023}

}

``` | meta-math/MetaMathQA_GSM8K_zh | [

"task_categories:question-answering",

"size_categories:n<1K",

"language:en",

"language:zh",

"license:mit",

"math",

"math-qa",

"region:us"

] | 2023-12-04T03:13:47+00:00 | {"language": ["en", "zh"], "license": "mit", "size_categories": ["n<1K"], "task_categories": ["question-answering"], "tags": ["math", "math-qa"]} | 2023-12-04T05:23:20+00:00 | [] | [

"en",

"zh"

] | TAGS

#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #region-us

|

# Dataset

'MetaMathQA_GSM8K_zh' is a dataset for mathematical reasoning in Chinese,

question-answer pairs are translated from MetaMathQA (URL by 'GPT-3.5-Turbo' with few-shot prompting.

The dataset consists of 231685 samples.

If you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper.

| [

"# Dataset\n\n'MetaMathQA_GSM8K_zh' is a dataset for mathematical reasoning in Chinese, \nquestion-answer pairs are translated from MetaMathQA (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 231685 samples.\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] | [

"TAGS\n#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #region-us \n",

"# Dataset\n\n'MetaMathQA_GSM8K_zh' is a dataset for mathematical reasoning in Chinese, \nquestion-answer pairs are translated from MetaMathQA (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 231685 samples.\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] | [

48,

101

] | [

"passage: TAGS\n#task_categories-question-answering #size_categories-n<1K #language-English #language-Chinese #license-mit #math #math-qa #region-us \n# Dataset\n\n'MetaMathQA_GSM8K_zh' is a dataset for mathematical reasoning in Chinese, \nquestion-answer pairs are translated from MetaMathQA (URL by 'GPT-3.5-Turbo' with few-shot prompting.\nThe dataset consists of 231685 samples.\n\n\n\nIf you find the 'GSM8K_zh' dataset useful for your projects/papers, please cite the following paper."

] |

dec24968c6e3eea2d2f0691d57797299374ba612 |

Original dataset: [teknium/openhermes](https://huggingface.co/datasets/teknium/openhermes) | nlp-with-deeplearning/ko.openhermes | [

"task_categories:text-generation",

"size_categories:100K<n<1M",

"language:en",

"language:ko",

"license:cc-by-nc-sa-4.0",

"distillation",

"synthetic data",

"gpt",

"region:us"

] | 2023-12-04T03:14:12+00:00 | {"language": ["en", "ko"], "license": "cc-by-nc-sa-4.0", "size_categories": ["100K<n<1M"], "task_categories": ["text-generation"], "tags": ["distillation", "synthetic data", "gpt"]} | 2023-12-04T03:26:54+00:00 | [] | [

"en",

"ko"

] | TAGS

#task_categories-text-generation #size_categories-100K<n<1M #language-English #language-Korean #license-cc-by-nc-sa-4.0 #distillation #synthetic data #gpt #region-us

|

Original dataset: teknium/openhermes | [] | [

"TAGS\n#task_categories-text-generation #size_categories-100K<n<1M #language-English #language-Korean #license-cc-by-nc-sa-4.0 #distillation #synthetic data #gpt #region-us \n"

] | [

63

] | [

"passage: TAGS\n#task_categories-text-generation #size_categories-100K<n<1M #language-English #language-Korean #license-cc-by-nc-sa-4.0 #distillation #synthetic data #gpt #region-us \n"

] |

56303eec1fe0f52eb30a3191a0ec569953f9b34b |

Original dataset: [nvidia/HelpSteer](https://huggingface.co/datasets/nvidia/HelpSteer) | nlp-with-deeplearning/Ko.HelpSteer | [

"size_categories:10K<n<100K",

"language:en",

"language:ko",

"license:cc-by-nc-sa-4.0",

"region:us"

] | 2023-12-04T03:18:07+00:00 | {"language": ["en", "ko"], "license": "cc-by-nc-sa-4.0", "size_categories": ["10K<n<100K"]} | 2023-12-04T03:25:23+00:00 | [] | [

"en",

"ko"

] | TAGS

#size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us

|

Original dataset: nvidia/HelpSteer | [] | [

"TAGS\n#size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us \n"

] | [

40

] | [

"passage: TAGS\n#size_categories-10K<n<100K #language-English #language-Korean #license-cc-by-nc-sa-4.0 #region-us \n"

] |

f2a0896a737fb03eb81bc88cc96cc187f73e9c1b |

This dataset represents the supervised fine-tuning instructions for HuatuoGPT2, with all responses generated by GPT-4. We have made 50,000 entries of this dataset publicly available.

- **Repository:** https://github.com/FreedomIntelligence/HuatuoGPT-II | FreedomIntelligence/HuatuoGPT2_sft_instruct_GPT4_50K | [

"license:apache-2.0",

"region:us"

] | 2023-12-04T03:41:42+00:00 | {"license": "apache-2.0"} | 2023-12-04T11:39:54+00:00 | [] | [] | TAGS

#license-apache-2.0 #region-us

|

This dataset represents the supervised fine-tuning instructions for HuatuoGPT2, with all responses generated by GPT-4. We have made 50,000 entries of this dataset publicly available.

- Repository: URL | [] | [

"TAGS\n#license-apache-2.0 #region-us \n"

] | [

14

] | [

"passage: TAGS\n#license-apache-2.0 #region-us \n"

] |

121352c0e164f001cbd2fc29aa6d4ceb23779104 | # Dataset Card for "thestack_omp"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | celinelee/thestack_omp | [

"region:us"

] | 2023-12-04T03:44:36+00:00 | {"dataset_info": {"features": [{"name": "source", "dtype": "string"}, {"name": "c", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 2214853376.8, "num_examples": 76232}, {"name": "valid", "num_bytes": 276856672.1, "num_examples": 9529}, {"name": "test", "num_bytes": 276856672.1, "num_examples": 9529}], "download_size": 828673457, "dataset_size": 2768566721.0}} | 2023-12-04T19:52:10+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "thestack_omp"

More Information needed | [

"# Dataset Card for \"thestack_omp\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"thestack_omp\"\n\nMore Information needed"

] | [

6,

16

] | [

"passage: TAGS\n#region-us \n# Dataset Card for \"thestack_omp\"\n\nMore Information needed"

] |

2efd9081cd858f09e56a44ed3105b3af9cce35d0 |

# Sea-bench - a benchmark for evaluating chat assistants in Southeast Asian languages.

**See interactive benchmark view at [Spaces/SeaLLMs/Sea-bench](https://huggingface.co/spaces/SeaLLMs/Sea-bench)**

While there are popular benchmarks to evaluate LLMs as helpful assistants, such as [MT-bench](https://huggingface.co/spaces/lmsys/mt-bench),

they are English-only and likely unsuitable for evaluating performance in low-resource languages.

Due to this lack of multilingual benchmarks for assistant-style models, we engaged native linguists to build a multilingual test set with instructions that

cover 9 Southeast Asian languages, called [Sea-bench](https://huggingface.co/datasets/SeaLLMs/Sea-bench).

The linguists sourced such data by manually translating open-source English test sets, collecting real user questions from local forums and websites,

collecting real math and reasoning questions from reputable sources, as well as writing test instructions and questions themselves.

Sea-bench consists of diverse categories of instructions for evaluating models, described as follows:

* Task-solving: This type of data comprises various text understanding and processing tasks that test the ability of the language model to perform certain NLP tasks such as summarization, translation, etc.

* Math-reasoning: This includes math problems and logical reasoning tasks.

* General-instruction data: This type of data consists of general user-centric instructions, which evaluate the model's ability in general knowledge and writing. Examples for this type can be requests for recommendations, such as "Suggest three popular books," or instructions that require the model to generate creative outputs, like "Write a short story about a dragon."

* NaturalQA: This consists of queries posted by real users, often in popular local forums, involving local contexts or scenarios. The aim is to test the model's capacity to understand and respond coherently to colloquial language, idioms, and locally contextual references.

* Safety: This includes both general safety and local context-related safety instructions. The instructions could involve testing the model's understanding of safe practices, its ability to advise on safety rules, or its capacity to respond to safety-related queries. While most general safety questions are translated from open sources, other local context-related safety instructions are written by linguists of each language. Safety data only covers Vietnamese, Indonesian, and Thai.

The released Sea-bench test set is a **small subset** that contains **20 questions per task type per language**.

Sea-bench can be evaluated in the same way as MT-bench, using GPT-4 as a judge with either score-based grading or pairwise (peer) comparison.

Instructions to evaluate models on Sea-bench (score-based grading):

```bash

# Clone and install LLM judge: https://github.com/lm-sys/FastChat/tree/main/fastchat/llm_judge

# Download SeaLLMs/Sea-bench files to folder `Sea-bench`

# Copy `Sea-bench` folder to llm `FastChat/fastchat/llm_judge`

# Run generation, similar to MT-bench, e.g:

python gen_model_answer.py --model-path lmsys/vicuna-7b-v1.5 --model-id vicuna-7b-v1.5 --bench-name Sea-bench

# Run LLM judgement

python gen_judgment.py \

--parallel 6 \

--bench-name Sea-bench \

--model-list ${YOUR_MODEL_NAME}

```
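
Once judgments have been generated, per-model averages can be computed from the resulting JSONL file. The following is a minimal sketch, not part of the FastChat tooling: it assumes each line is a JSON object with a `model` field and a numeric `score` field, and that a negative score marks a judgment that could not be parsed. Those field names and that convention are assumptions about the file layout, and the sample lines are made up.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable


def mean_scores(lines: Iterable[str]) -> Dict[str, float]:
    """Average the judge score per model over JSONL judgment lines."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        row = json.loads(line)
        score = row.get("score")
        # Assumption: a missing or negative score means the judgment failed.
        if score is None or score < 0:
            continue
        totals[row["model"]] += score
        counts[row["model"]] += 1
    return {model: totals[model] / counts[model] for model in totals}


# Hypothetical judgment lines, invented for illustration.
sample = [
    '{"model": "model-a", "score": 8}',
    '{"model": "model-a", "score": 6}',
    '{"model": "model-b", "score": -1}',
    '{"model": "model-b", "score": 7}',
]
print(mean_scores(sample))
```

To use it on a real file, pass an open file handle, for example `mean_scores(open(path, encoding="utf-8"))`, where `path` points at your judgment file.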

## Evaluation results:

Sea-bench is used to evaluate [SeaLLMs](https://huggingface.co/SeaLLMs/SeaLLM-Chat-13b), a group of language models built with a focus on Southeast Asian languages.

## Contribution

If you have Sea-bench evaluations of your model that you would like included in the aggregated results, please submit a pull request with an updated [model_judgment/gpt-4_single.jsonl](https://huggingface.co/datasets/SeaLLMs/Sea-bench/tree/main/model_judgment/gpt-4_single.jsonl) file. Please use a model name different from the ones already in the file.

## Citation

If you find our project useful, please star our repo and cite our work as follows. Corresponding Author: [[email protected]](mailto:[email protected])

```

@article{damonlpsg2023seallm,

author = {Xuan-Phi Nguyen*, Wenxuan Zhang*, Xin Li*, Mahani Aljunied*,

Qingyu Tan, Liying Cheng, Guanzheng Chen, Yue Deng, Sen Yang,

Chaoqun Liu, Hang Zhang, Lidong Bing},

title = {SeaLLMs - Large Language Models for Southeast Asia},

year = 2023,

Eprint = {arXiv:2312.00738},

}

``` | SeaLLMs/Sea-bench | [

"size_categories:n<1K",

"language:en",

"language:vi",

"language:id",

"language:km",

"language:th",

"language:lo",

"language:my",

"language:ms",

"language:tl",

"language:zh",

"license:apache-2.0",

"region:us"

] | 2023-12-04T03:53:06+00:00 | {"language": ["en", "vi", "id", "km", "th", "lo", "my", "ms", "tl", "zh"], "license": "apache-2.0", "size_categories": ["n<1K"], "configs": [{"config_name": "Question", "data_files": "question.jsonl"}]} | 2023-12-12T13:34:52+00:00 | [] | [

"en",

"vi",

"id",

"km",

"th",

"lo",

"my",

"ms",

"tl",

"zh"

] | TAGS

#size_categories-n<1K #language-English #language-Vietnamese #language-Indonesian #language-Khmer #language-Thai #language-Lao #language-Burmese #language-Malay (macrolanguage) #language-Tagalog #language-Chinese #license-apache-2.0 #region-us

|

# Sea-bench - a benchmark for evaluating chat assistants in Southeast Asian languages.

See interactive benchmark view at Spaces/SeaLLMs/Sea-bench

While there are popular benchmarks to evaluate LLMs as helpful assistants, such as MT-bench,

they are only English-based and likely to be unsuitable to evaluate performances in low-resource languages.

Due to this lack of multilingual benchmarks for assistant-style models, we engaged native linguists to build a multilingual test set with instructions that

cover 9 Southeast Asian languages, called Sea-bench.

The linguists sourced such data by manually translating open-source English test sets, collecting real user questions from local forums and websites,

collecting real math and reasoning questions from reputable sources, as well as writing test instructions and questions themselves.

Our Sea-Bench consists of diverse categories of instructions to evaluate models, as described in the following:

* Task-solving: This type of data comprises various text understanding and processing tasks that test the ability of the language model to perform certain NLP tasks such as summarization, translation, etc.

* Math-reasoning: This includes math problems and logical reasoning tasks.

* General-instruction data: This type of data consists of general user-centric instructions, which evaluate the model's ability in general knowledge and writing. Examples for this type can be requests for recommendations, such as "Suggest three popular books," or instructions that require the model to generate creative outputs, like "Write a short story about a dragon."

* NaturalQA: This consists of queries posted by real users, often in popular local forums, involving local contexts or scenarios. The aim is to test the model's capacity to understand and respond coherently to colloquial language, idioms, and locally contextual references.

* Safety: This includes both general safety and local context-related safety instructions. The instructions could involve testing the model's understanding of safe practices, its ability to advise on safety rules, or its capacity to respond to safety-related queries. While most general safety questions are translated from open sources, other local context-related safety instructions are written by linguists of each language. Safety data only covers Vietnamese, Indonesian, and Thai.

The released Sea-bench test set is a small subset that contains 20 questions per task type per language.

Sea-bench can be evaluated similar to MT-bench, using GPT-4 as a judge in a score-based grading metrics and a peer comparison (or pairwise comparison) manner.

Instructions to evaluate models on Sea-bench (score-based grading):

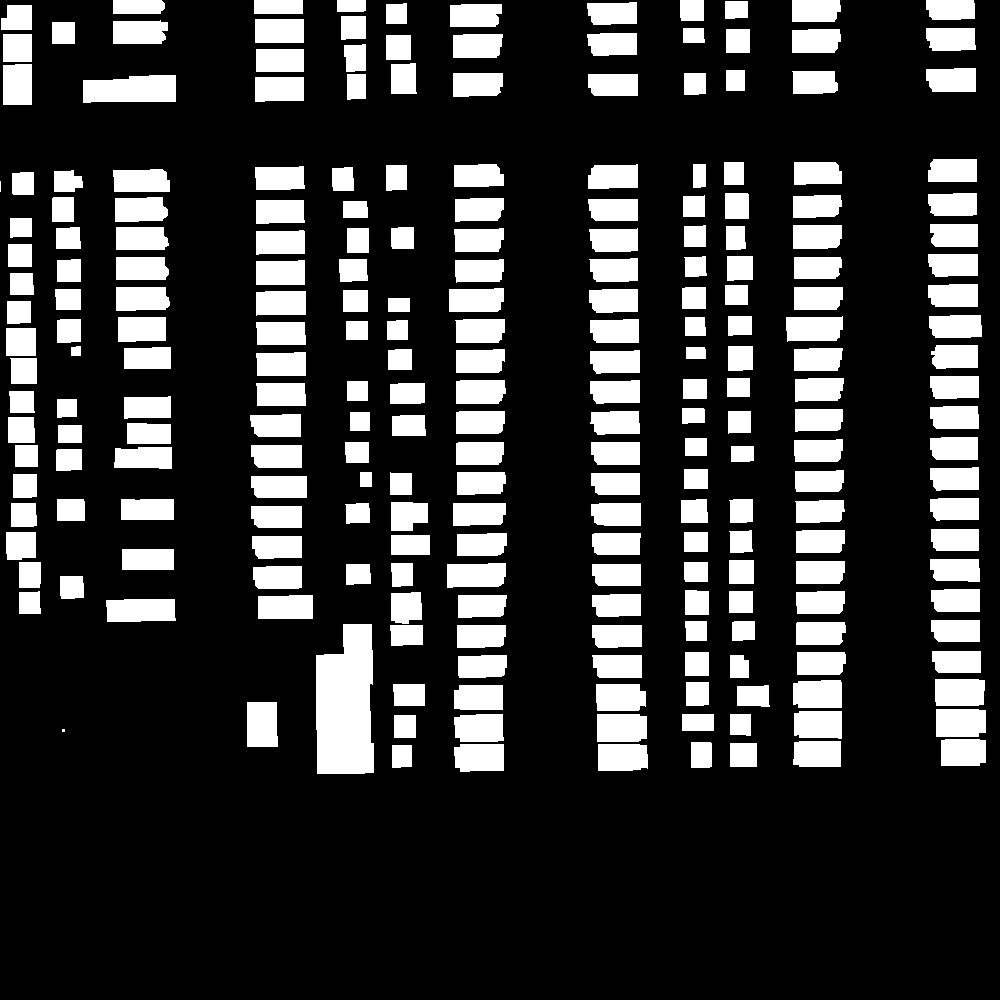

## Evaluation results:

!fig_sea_bench_side_by_side.png

Sea-bench is used to evaluate SeaLLMs, a group of language models built with focus in Southeast Asian languages.

## Contribution

If you have Sea-bench evaluations on your model that you would like into the aggregated results. Please kindly submit a pull request with an updated model_judgment/gpt-4_single.jsonl

file. Please use a different model name than the ones indicated in the file.

If you find our project useful, hope you can star our repo and cite our work as follows. Corresponding Author: l.bing@URL

| [

"# Sea-bench - a benchmark for evaluating chat assistants in Southeast Asian languages.\n\nSee interactive benchmark view at Spaces/SeaLLMs/Sea-bench\n\nWhile there are popular benchmarks to evaluate LLMs as helpful assistants, such as MT-bench, \nthey are only English-based and likely to be unsuitable to evaluate performances in low-resource languages. \nDue to this lack of multilingual benchmarks for assistant-style models, we engaged native linguists to build a multilingual test set with instructions that \ncover 9 Southeast Asian languages, called Sea-bench. \nThe linguists sourced such data by manually translating open-source English test sets, collecting real user questions from local forums and websites, \ncollecting real math and reasoning questions from reputable sources, as well as writing test instructions and questions themselves.\n\nOur Sea-Bench consists of diverse categories of instructions to evaluate models, as described in the following:\n* Task-solving: This type of data comprises various text understanding and processing tasks that test the ability of the language model to perform certain NLP tasks such as summarization, translation, etc.\n* Math-reasoning: This includes math problems and logical reasoning tasks.\n* General-instruction data: This type of data consists of general user-centric instructions, which evaluate the model's ability in general knowledge and writing. Examples for this type can be requests for recommendations, such as \"Suggest three popular books,\" or instructions that require the model to generate creative outputs, like \"Write a short story about a dragon.\"\n* NaturalQA: This consists of queries posted by real users, often in popular local forums, involving local contexts or scenarios. The aim is to test the model's capacity to understand and respond coherently to colloquial language, idioms, and locally contextual references.\n* Safety: This includes both general safety and local context-related safety instructions. 
The instructions could involve testing the model's understanding of safe practices, its ability to advise on safety rules, or its capacity to respond to safety-related queries. While most general safety questions are translated from open sources, other local context-related safety instructions are written by linguists of each language. Safety data only covers Vietnamese, Indonesian, and Thai.\n\nThe released Sea-bench test set is a small subset that contains 20 questions per task type per language. \n\nSea-bench can be evaluated similar to MT-bench, using GPT-4 as a judge in a score-based grading metrics and a peer comparison (or pairwise comparison) manner.\n\nInstructions to evaluate models on Sea-bench (score-based grading):",

"## Evaluation results:\n\n!fig_sea_bench_side_by_side.png\n\nSea-bench is used to evaluate SeaLLMs, a group of language models built with focus in Southeast Asian languages.",

"## Contribution\n\nIf you have Sea-bench evaluations on your model that you would like into the aggregated results. Please kindly submit a pull request with an updated model_judgment/gpt-4_single.jsonl\n file. Please use a different model name than the ones indicated in the file.\n\n\nIf you find our project useful, hope you can star our repo and cite our work as follows. Corresponding Author: l.bing@URL"

] | [

"TAGS\n#size_categories-n<1K #language-English #language-Vietnamese #language-Indonesian #language-Khmer #language-Thai #language-Lao #language-Burmese #language-Malay (macrolanguage) #language-Tagalog #language-Chinese #license-apache-2.0 #region-us \n",

"# Sea-bench - a benchmark for evaluating chat assistants in Southeast Asian languages.\n\nSee interactive benchmark view at Spaces/SeaLLMs/Sea-bench\n\nWhile there are popular benchmarks to evaluate LLMs as helpful assistants, such as MT-bench, \nthey are only English-based and likely to be unsuitable to evaluate performances in low-resource languages. \nDue to this lack of multilingual benchmarks for assistant-style models, we engaged native linguists to build a multilingual test set with instructions that \ncover 9 Southeast Asian languages, called Sea-bench. \nThe linguists sourced such data by manually translating open-source English test sets, collecting real user questions from local forums and websites, \ncollecting real math and reasoning questions from reputable sources, as well as writing test instructions and questions themselves.\n\nOur Sea-Bench consists of diverse categories of instructions to evaluate models, as described in the following:\n* Task-solving: This type of data comprises various text understanding and processing tasks that test the ability of the language model to perform certain NLP tasks such as summarization, translation, etc.\n* Math-reasoning: This includes math problems and logical reasoning tasks.\n* General-instruction data: This type of data consists of general user-centric instructions, which evaluate the model's ability in general knowledge and writing. Examples for this type can be requests for recommendations, such as \"Suggest three popular books,\" or instructions that require the model to generate creative outputs, like \"Write a short story about a dragon.\"\n* NaturalQA: This consists of queries posted by real users, often in popular local forums, involving local contexts or scenarios. The aim is to test the model's capacity to understand and respond coherently to colloquial language, idioms, and locally contextual references.\n* Safety: This includes both general safety and local context-related safety instructions. 
The instructions could involve testing the model's understanding of safe practices, its ability to advise on safety rules, or its capacity to respond to safety-related queries. While most general safety questions are translated from open sources, other local context-related safety instructions are written by linguists of each language. Safety data only covers Vietnamese, Indonesian, and Thai.\n\nThe released Sea-bench test set is a small subset that contains 20 questions per task type per language. \n\nSea-bench can be evaluated similar to MT-bench, using GPT-4 as a judge in a score-based grading metrics and a peer comparison (or pairwise comparison) manner.\n\nInstructions to evaluate models on Sea-bench (score-based grading):",

"## Evaluation results:\n\n!fig_sea_bench_side_by_side.png\n\nSea-bench is used to evaluate SeaLLMs, a group of language models built with focus in Southeast Asian languages.",

"## Contribution\n\nIf you have Sea-bench evaluations on your model that you would like into the aggregated results. Please kindly submit a pull request with an updated model_judgment/gpt-4_single.jsonl\n file. Please use a different model name than the ones indicated in the file.\n\n\nIf you find our project useful, hope you can star our repo and cite our work as follows. Corresponding Author: l.bing@URL"

] | [

79,

614,

51,

101

] | [

"passage: TAGS\n#size_categories-n<1K #language-English #language-Vietnamese #language-Indonesian #language-Khmer #language-Thai #language-Lao #language-Burmese #language-Malay (macrolanguage) #language-Tagalog #language-Chinese #license-apache-2.0 #region-us \n"

] |

c6da1ea3a6350f7a18b84e3e197666a2f67e3526 |

# Code Instruct Multiturn

Originally from https://huggingface.co/datasets/TokenBender/glaive_coder_raw_text; multi-turn conversations were generated with ChatGPT 3.5 using the UltraChat template.

Notebooks at https://github.com/mesolitica/malaysian-dataset/tree/master/chatbot/code-instruct-multiturn

Some generated pairs are bad and need to be rejected; follow https://github.com/mesolitica/malaysian-dataset/blob/master/llm-instruction/prepare-malaysian-ultrachat.ipynb

- [ultrachat-glaive_coder_raw_text.jsonl](ultrachat-glaive_coder_raw_text.jsonl), 127253 rows, 1.43 GB
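
A minimal sketch of turning one conversation into plain chat messages, assuming each row is a list of turns with the keys `role`, `content`, `content_ms` and `indon` as in the example data. The helper `to_messages` is hypothetical, and so is its rejection rule (drop a conversation if any turn has `indon` set or has no text); the actual rejection criteria are in the linked notebook and may differ. The sample conversation is made up.

```python
from typing import Dict, List, Optional

Turn = Dict[str, object]


def to_messages(turns: List[Turn]) -> Optional[List[Dict[str, str]]]:
    """Build role/content messages, preferring `content_ms` over `content`.

    Returns None when the conversation is rejected by the hypothetical
    rule described above.
    """
    messages: List[Dict[str, str]] = []
    for turn in turns:
        if turn.get("indon"):
            return None  # hypothetical rule: reject flagged conversations
        text = turn.get("content_ms") or turn.get("content")
        if not text:
            return None  # no usable text in this turn
        messages.append({"role": str(turn["role"]), "content": str(text)})
    return messages


# Hypothetical conversation, invented for illustration.
conversation = [
    {"role": "user", "content": "Apa itu Python?",
     "content_ms": None, "indon": False},
    {"role": "assistant", "content": "Python ialah bahasa pengaturcaraan.",
     "content_ms": "Python adalah bahasa pengaturcaraan.", "indon": False},
]
print(to_messages(conversation))
```

Falling back to `content` when `content_ms` is `None` mirrors the first user turn in the example data, where only `content` is populated.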

## Example data

```python

[{'role': 'user',

'content': 'Bagaimana cara untuk memasang Python 3 pada instans AWS EC2? Saya telah mencuba menggunakan arahan `sudo yum install python3`, tetapi saya menerima mesej ralat yang mengatakan `Tiada pakej python3 tersedia.`. Saya telah mencari dalam talian tetapi tidak menemui sebarang penyelesaian. Adakah saya perlu memuat turun dan memasangnya secara manual?',

'content_ms': None,

'indon': False},

{'role': 'assistant',

'content': 'Untuk memasang Python 3 pada instans AWS EC2, anda boleh menggunakan arahan `yum` untuk memasang pakej yang diperlukan. Namun, cara terbaik untuk memasang Python 3 adalah dengan menggunakan EPEL (Extra Packages for Enterprise Linux) repository. Anda boleh menggunakan arahan berikut untuk memasang EPEL repository:\n\n```bash\nsudo yum install epel-release\n```\n\nSelepas itu, anda boleh memasang Python 3 dengan menggunakan arahan berikut:\n\n```bash\nsudo yum install python3\n```\n\nDengan langkah-langkah ini, anda seharusnya dapat memasang Python 3 dengan mudah pada instans AWS EC2 anda. Semoga berjaya!',

'content_ms': 'Untuk memasang Python 3 pada instans AWS EC2, anda boleh menggunakan arahan `yum` untuk memasang pakej yang diperlukan. Walau bagaimanapun, cara terbaik untuk memasang Python 3 adalah dengan menggunakan repositori EPEL (Extra Packages for Enterprise Linux). Anda boleh menggunakan arahan berikut untuk memasang repositori EPEL:\n\n```bash\nsudo yum install epel-release\n```\n\nSelepas itu, anda boleh memasang Python 3 dengan menggunakan arahan berikut:\n\n```bash\nsudo yum install python3\n```\n\nDengan langkah-langkah ini, anda sepatutnya dapat memasang Python 3 dengan mudah pada instans AWS EC2 anda. Semoga berjaya!',

'indon': False},

{'role': 'user',

'content': 'Terima kasih atas panduannya. Saya ingin tahu apakah pakej-pakej lain yang perlu saya pasang bagi memastikan Python 3 berfungsi dengan baik? Adakah ada langkah-langkah tambahan yang perlu saya ikuti setelah memasang Python 3? Saya ingin memastikan bahawa semua kebergantungan dan konfigurasi yang diperlukan dipenuhi.',

'content_ms': 'Terima kasih atas panduan. Saya ingin tahu apakah pakej lain yang perlu saya pasang untuk memastikan Python 3 berfungsi dengan baik? Adakah terdapat langkah tambahan yang perlu saya ikuti selepas memasang Python 3? Saya ingin memastikan semua kebergantungan dan konfigurasi yang diperlukan dipenuhi.',

'indon': False},

{'role': 'assistant',

'content': 'Setelah anda memasang Python 3, terdapat beberapa pakej tambahan yang disyorkan untuk memastikan ia berfungsi dengan baik. Berikut adalah beberapa pakej yang perlu anda pasang:\n\n1. Setuptools: Pakej ini menyediakan alat bantu untuk mengelola dan memasang pustaka Python. Anda boleh memasangnya dengan menggunakan pip dengan arahan berikut: \n```bash\nsudo pip3 install setuptools\n```\n\n2. Pip: Pakej ini merupakan pengurus pustaka Python yang popular. Ia membolehkan anda memasang dan menguruskan pustaka pihak ketiga dengan mudah. Anda boleh memasangnya dengan menggunakan arahan berikut:\n```bash\nsudo yum install python3-pip\n```\n\n3. Virtualenv: Pakej ini membolehkan anda membuat persekitaran Python yang berasingan untuk setiap projek. Ini adalah cara yang baik untuk mengurangkan konflik kebergantungan antara projek yang berbeza. Anda boleh memasangnya dengan menggunakan pip:\n```bash\nsudo pip3 install virtualenv\n```\n\nSetelah memasang pakej-pakej ini, anda sepatutnya mempunyai pengaturan asas untuk menggunakan Python 3 secara efektif. Jika anda memperlukan konfigurasi tambahan untuk kegunaan tertentu, ia akan bergantung kepada keperluan projek anda. Tetapi dalam kebanyakan kes, langkah-langkah ini sudah mencukupi untuk memastikan Python 3 berfungsi dengan baik. Jika anda menghadapi sebarang isu atau memerlukan bantuan tambahan, jangan segan untuk bertanya!',

'content_ms': 'Selepas anda memasang Python 3, terdapat beberapa pakej tambahan yang disyorkan untuk memastikan ia berfungsi dengan baik. Berikut adalah beberapa pakej yang anda perlu pasang:\n\n1. Setuptools: Pakej ini menyediakan alat bantu untuk menguruskan dan memasang perpustakaan Python. Anda boleh memasangnya dengan menggunakan pip dengan arahan berikut:\n```bash\nsudo pip3 install setuptools\n```\n\n2. Pip: Pakej ini adalah pengurus perpustakaan Python yang popular. Ia membolehkan anda memasang dan menguruskan perpustakaan pihak ketiga dengan mudah. Anda boleh memasangnya dengan menggunakan arahan berikut:\n```bash\nsudo yum install python3-pip\n```\n\n3. Virtualenv: Pakej ini membolehkan anda membuat persekitaran Python yang berasingan untuk setiap projek. Ini adalah cara yang baik untuk mengurangkan konflik kebergantungan antara projek yang berbeza. Anda boleh memasangnya dengan menggunakan pip:\n```bash\nsudo pip3 install virtualenv\n```\n\nSetelah memasang pakej-pakej ini, anda sepatutnya mempunyai pengaturan asas untuk menggunakan Python 3 dengan berkesan. Jika anda memerlukan konfigurasi tambahan untuk kegunaan tertentu, ia akan bergantung pada keperluan projek anda. Tetapi dalam kebanyakan kes, langkah-langkah ini sudah mencukupi untuk memastikan Python 3 berfungsi dengan baik. Jika anda menghadapi sebarang masalah atau memerlukan bantuan tambahan, jangan ragu untuk bertanya!',

'indon': False}]

``` | mesolitica/chatgpt-code-instruct-multiturn | [

"task_categories:conversational",

"language:ms",

"region:us"

] | 2023-12-04T04:01:33+00:00 | {"language": ["ms"], "task_categories": ["conversational"]} | 2024-02-02T08:23:33+00:00 | [] | [

"ms"

] | TAGS

#task_categories-conversational #language-Malay (macrolanguage) #region-us

|

# Code Instruct Multiturn

Originally from URL; the Ultrachat template was used to generate multi-turn conversations with ChatGPT 3.5.

Notebooks are at URL.

Some pairs are bad and need to be rejected; see URL.

- ultrachat-glaive_coder_raw_text.jsonl, 127253 rows, 1.43 GB

## Example data

bash\nsudo yum install epel-release\nbash\nsudo yum install python3\nbash\nsudo yum install epel-release\nbash\nsudo yum install python3\nbash\nsudo pip3 install setuptools\nbash\nsudo yum install python3-pip\nbash\nsudo pip3 install virtualenv\nbash\nsudo pip3 install setuptools\nbash\nsudo yum install python3-pip\nbash\nsudo pip3 install virtualenv\n | [

"# Code Instruct Multiturn\n\nOriginally from URL use Ultrachat template to generate multiturn using ChatGPT3.5\n\nNotebooks at URL\n\nThere are bad pairs you need to reject, follow URL\n\n- ultrachat-glaive_coder_raw_text.jsonl, 127253 rows, 1.43 GB",

"## Example data\n\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\n"

] | [

"TAGS\n#task_categories-conversational #language-Malay (macrolanguage) #region-us \n",

"# Code Instruct Multiturn\n\nOriginally from URL use Ultrachat template to generate multiturn using ChatGPT3.5\n\nNotebooks at URL\n\nThere are bad pairs you need to reject, follow URL\n\n- ultrachat-glaive_coder_raw_text.jsonl, 127253 rows, 1.43 GB",

"## Example data\n\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\n"

] | [

26,

70,

129

] | [

"passage: TAGS\n#task_categories-conversational #language-Malay (macrolanguage) #region-us \n# Code Instruct Multiturn\n\nOriginally from URL use Ultrachat template to generate multiturn using ChatGPT3.5\n\nNotebooks at URL\n\nThere are bad pairs you need to reject, follow URL\n\n- ultrachat-glaive_coder_raw_text.jsonl, 127253 rows, 1.43 GB## Example data\n\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo yum install epel-release\\nbash\\nsudo yum install python3\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\nbash\\nsudo pip3 install setuptools\\nbash\\nsudo yum install python3-pip\\nbash\\nsudo pip3 install virtualenv\\n"

] |

61ec86634fe97cabd3b687b06f7a458a97aab7ef |

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
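The card leaves this section unfilled. As a minimal sketch only, and not the author's documented usage, the snippet below shows how a PEFT adapter is commonly attached to its base model. It assumes the repository id `minimindy/lora-checkpoint-50` and the base model `baffo32/decapoda-research-llama-7B-hf` given in this card's metadata, assumes the adapter is a LoRA adapter (suggested by the repository name), and assumes `transformers`, `peft`, and `bitsandbytes` are installed. The helper name `load_model` is hypothetical.

```python
# Identifiers taken from the card metadata; everything else is an assumption.
BASE_MODEL_ID = "baffo32/decapoda-research-llama-7B-hf"
ADAPTER_ID = "minimindy/lora-checkpoint-50"


def load_model(base_id=BASE_MODEL_ID, adapter_id=ADAPTER_ID):
    """Load the base model in 8-bit and attach the adapter (downloads weights)."""
    # Imported lazily so this module can be read without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
    from peft import PeftModel

    tokenizer = AutoTokenizer.from_pretrained(base_id)
    base = AutoModelForCausalLM.from_pretrained(
        base_id,
        # 8-bit loading matches the quantization setting reported in this card.
        quantization_config=BitsAndBytesConfig(load_in_8bit=True),
        device_map="auto",
    )
    # Wrap the base model with the adapter weights from the Hub.
    model = PeftModel.from_pretrained(base, adapter_id)
    model.eval()
    return tokenizer, model
```

Calling `load_model()` needs network access and a GPU supported by `bitsandbytes`; the function is not invoked here for that reason.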

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32
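The settings listed above can be written as keyword arguments for `transformers.BitsAndBytesConfig`. This is a hedged sketch rather than the training script itself: the names `QUANT_KWARGS` and `make_quant_config` are hypothetical, and `quant_method` is omitted because, as far as we know, the config class sets it internally instead of accepting it as an argument.

```python
# Values copied from the list above; quant_method is intentionally left out.
QUANT_KWARGS = {
    "load_in_8bit": True,
    "load_in_4bit": False,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "fp4",
    "bnb_4bit_use_double_quant": False,
    "bnb_4bit_compute_dtype": "float32",
}


def make_quant_config(kwargs=None):
    """Build a BitsAndBytesConfig from the recorded settings (needs transformers)."""
    # Lazy import keeps the dictionary usable without transformers installed.
    from transformers import BitsAndBytesConfig

    return BitsAndBytesConfig(**(kwargs or QUANT_KWARGS))
```

Because `load_in_8bit` is true and `load_in_4bit` is false, the `bnb_4bit_*` entries are recorded defaults that should have no effect on an 8-bit run.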

### Framework versions

- PEFT 0.6.3.dev0 | minimindy/lora-checkpoint-50 | [

"arxiv:1910.09700",

"region:us"

] | 2023-12-04T04:01:57+00:00 | {"library_name": "peft", "base_model": "baffo32/decapoda-research-llama-7B-hf"} | 2023-12-04T05:58:17+00:00 | [

"1910.09700"

] | [] | TAGS

#arxiv-1910.09700 #region-us

|

# Model Card for Model ID

## Model Details

### Model Description

- Developed by:

- Funded by [optional]:

- Shared by [optional]:

- Model type:

- Language(s) (NLP):

- License:

- Finetuned from model [optional]:

### Model Sources [optional]

- Repository:

- Paper [optional]:

- Demo [optional]:

## Uses

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

## Bias, Risks, and Limitations

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

## Training Details

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

- Training regime:

#### Speeds, Sizes, Times [optional]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

## Model Examination [optional]

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type:

- Hours used:

- Cloud Provider:

- Compute Region:

- Carbon Emitted:

## Technical Specifications [optional]

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

## Citation [optional]

BibTeX:

APA:

## Glossary [optional]

## More Information [optional]

## Model Card Authors [optional]

## Model Card Contact

## Training procedure

The following 'bitsandbytes' quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.3.dev0 | [

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\n\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact",

"## Training procedure\n\nThe following 'bitsandbytes' quantization config was used during training:\n- quant_method: bitsandbytes\n- load_in_8bit: True\n- load_in_4bit: False\n- llm_int8_threshold: 6.0\n- llm_int8_skip_modules: None\n- llm_int8_enable_fp32_cpu_offload: False\n- llm_int8_has_fp16_weight: False\n- bnb_4bit_quant_type: fp4\n- bnb_4bit_use_double_quant: False\n- bnb_4bit_compute_dtype: float32",

"### Framework versions\n\n- PEFT 0.6.3.dev0"

] | [

"TAGS\n#arxiv-1910.09700 #region-us \n",

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\n\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact",

"## Training procedure\n\nThe following 'bitsandbytes' quantization config was used during training:\n- quant_method: bitsandbytes\n- load_in_8bit: True\n- load_in_4bit: False\n- llm_int8_threshold: 6.0\n- llm_int8_skip_modules: None\n- llm_int8_enable_fp32_cpu_offload: False\n- llm_int8_has_fp16_weight: False\n- bnb_4bit_quant_type: fp4\n- bnb_4bit_use_double_quant: False\n- bnb_4bit_compute_dtype: float32",

"### Framework versions\n\n- PEFT 0.6.3.dev0"

] | [

15,

6,

3,

54,

28,

3,

4,

9,

9,

10,

42,

20,

3,

4,

5,

9,

11,

13,

3,

12,

5,

4,

5,

3,

4,

9,

53,

9,

8,

6,

3,

14,

8,

7,

9,

4,

164,

13

] | [

"passage: TAGS\n#arxiv-1910.09700 #region-us \n# Model Card for Model ID## Model Details### Model Description\n\n\n\n\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:## Uses### Direct Use### Downstream Use [optional]### Out-of-Scope Use## Bias, Risks, and Limitations### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.## How to Get Started with the Model\n\nUse the code below to get started with the model.## Training Details### Training Data### Training Procedure#### Preprocessing [optional]#### Training Hyperparameters\n\n- Training regime:#### Speeds, Sizes, Times [optional]## Evaluation### Testing Data, Factors & Metrics#### Testing Data#### Factors#### Metrics### Results#### Summary## Model Examination [optional]## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:## Technical Specifications [optional]### Model Architecture and Objective### Compute Infrastructure#### Hardware#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:## Glossary [optional]## More Information [optional]## Model Card Authors [optional]## Model Card Contact"

] |

4ba8e43ab50177eed64df3a96e78d898978d5771 | ### Dataset

The glue consists of a roughly even three-way split between:

- The entirety of HF No Robots.

- The entirety of TinyPixel/orca-mini

- Part of the Alpaca dataset (randomly chosen) | athirdpath/Merge_Glue | [

"language:en",

"license:cc-by-nc-4.0",

"region:us"

] | 2023-12-04T04:29:39+00:00 | {"language": ["en"], "license": "cc-by-nc-4.0"} | 2023-12-05T11:00:18+00:00 | [] | [

"en"

] | TAGS

#language-English #license-cc-by-nc-4.0 #region-us

| ### Dataset

The glue consists of a roughly even three-way split between:

- The entirety of HF No Robots.

- The entirety of TinyPixel/orca-mini

- Part of the Alpaca dataset (randomly chosen) | [

"### Dataset\n\nThe glue consists of a roughly even three-way split between:\n- The entirety of HF No Robots.\n- The entirety of TinyPixel/orca-mini\n- Part of the Alpaca dataset (randomly chosen)"

] | [

"TAGS\n#language-English #license-cc-by-nc-4.0 #region-us \n",

"### Dataset\n\nThe glue consists of a roughly even three-way split between:\n- The entirety of HF No Robots.\n- The entirety of TinyPixel/orca-mini\n- Part of the Alpaca dataset (randomly chosen)"

] | [

21,

60

] | [

"passage: TAGS\n#language-English #license-cc-by-nc-4.0 #region-us \n### Dataset\n\nThe glue consists of a roughly even three-way split between:\n- The entirety of HF No Robots.\n- The entirety of TinyPixel/orca-mini\n- Part of the Alpaca dataset (randomly chosen)"

] |

dabc6f941af13714bc347b14efd5dcaf81629b80 |

# Bangumi Image Base of Kami Nomi Zo Shiru Sekai

This is the image base of the bangumi Kami Nomi zo Shiru Sekai. We detected 60 characters and 5684 images in total. The full dataset is [here](all.zip).

**Please note that these image bases are not guaranteed to be 100% clean; they may contain noise.** If you intend to train models manually on this dataset, we recommend preprocessing the downloaded data to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).
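As one possible starting point for that preprocessing, the sketch below lists the images per character folder and skips the noise cluster. It assumes the archive has already been extracted and that the extracted layout mirrors the download links in the table (one folder per character index, with `-1` holding the noise cluster); that layout, the file extensions, and the function name `list_character_images` are assumptions, not something the card confirms.

```python
from pathlib import Path

# Extensions treated as images; an assumption about the archive contents.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def list_character_images(root, skip_noise=True):
    """Map each character folder name to a sorted list of its image paths."""
    result = {}
    for sub in sorted(Path(root).iterdir()):
        if not sub.is_dir():
            continue
        # "-1" is the folder name used for the noise cluster in the table.
        if skip_noise and sub.name == "-1":
            continue
        result[sub.name] = sorted(
            p for p in sub.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
        )
    return result
```

Dropping the `-1` folder removes only the images already flagged as noise; mislabeled images inside the character folders still need manual review.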

Here is a preview of the characters:

| # | Images | Download | Preview 1 | Preview 2 | Preview 3 | Preview 4 | Preview 5 | Preview 6 | Preview 7 | Preview 8 |

|:------|---------:|:---------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|

| 0 | 331 | [Download](0/dataset.zip) |  |  |  |  |  |  |  |  |

| 1 | 1290 | [Download](1/dataset.zip) |  |  |  |  |  |  |  |  |

| 2 | 64 | [Download](2/dataset.zip) |  |  |  |  |  |  |  |  |

| 3 | 289 | [Download](3/dataset.zip) |  |  |  |  |  |  |  |  |

| 4 | 77 | [Download](4/dataset.zip) |  |  |  |  |  |  |  |  |

| 5 | 244 | [Download](5/dataset.zip) |  |  |  |  |  |  |  |  |

| 6 | 18 | [Download](6/dataset.zip) |  |  |  |  |  |  |  |  |

| 7 | 74 | [Download](7/dataset.zip) |  |  |  |  |  |  |  |  |

| 8 | 27 | [Download](8/dataset.zip) |  |  |  |  |  |  |  |  |

| 9 | 29 | [Download](9/dataset.zip) |  |  |  |  |  |  |  |  |

| 10 | 49 | [Download](10/dataset.zip) |  |  |  |  |  |  |  |  |

| 11 | 129 | [Download](11/dataset.zip) |  |  |  |  |  |  |  |  |

| 12 | 114 | [Download](12/dataset.zip) |  |  |  |  |  |  |  |  |

| 13 | 492 | [Download](13/dataset.zip) |  |  |  |  |  |  |  |  |

| 14 | 353 | [Download](14/dataset.zip) |  |  |  |  |  |  |  |  |

| 15 | 38 | [Download](15/dataset.zip) |  |  |  |  |  |  |  |  |

| 16 | 95 | [Download](16/dataset.zip) |  |  |  |  |  |  |  |  |

| 17 | 25 | [Download](17/dataset.zip) |  |  |  |  |  |  |  |  |

| 18 | 37 | [Download](18/dataset.zip) |  |  |  |  |  |  |  |  |

| 19 | 14 | [Download](19/dataset.zip) |  |  |  |  |  |  |  |  |

| 20 | 49 | [Download](20/dataset.zip) |  |  |  |  |  |  |  |  |

| 21 | 208 | [Download](21/dataset.zip) |  |  |  |  |  |  |  |  |

| 22 | 13 | [Download](22/dataset.zip) |  |  |  |  |  |  |  |  |

| 23 | 19 | [Download](23/dataset.zip) |  |  |  |  |  |  |  |  |

| 24 | 136 | [Download](24/dataset.zip) |  |  |  |  |  |  |  |  |

| 25 | 41 | [Download](25/dataset.zip) |  |  |  |  |  |  |  |  |

| 26 | 30 | [Download](26/dataset.zip) |  |  |  |  |  |  |  |  |

| 27 | 29 | [Download](27/dataset.zip) |  |  |  |  |  |  |  |  |

| 28 | 12 | [Download](28/dataset.zip) |  |  |  |  |  |  |  |  |

| 29 | 26 | [Download](29/dataset.zip) |  |  |  |  |  |  |  |  |

| 30 | 84 | [Download](30/dataset.zip) |  |  |  |  |  |  |  |  |

| 31 | 324 | [Download](31/dataset.zip) |  |  |  |  |  |  |  |  |

| 32 | 16 | [Download](32/dataset.zip) |  |  |  |  |  |  |  |  |

| 33 | 11 | [Download](33/dataset.zip) |  |  |  |  |  |  |  |  |

| 34 | 7 | [Download](34/dataset.zip) |  |  |  |  |  |  |  | N/A |

| 35 | 17 | [Download](35/dataset.zip) |  |  |  |  |  |  |  |  |

| 36 | 25 | [Download](36/dataset.zip) |  |  |  |  |  |  |  |  |

| 37 | 18 | [Download](37/dataset.zip) |  |  |  |  |  |  |  |  |

| 38 | 50 | [Download](38/dataset.zip) |  |  |  |  |  |  |  |  |

| 39 | 21 | [Download](39/dataset.zip) |  |  |  |  |  |  |  |  |

| 40 | 5 | [Download](40/dataset.zip) |  |  |  |  |  | N/A | N/A | N/A |

| 41 | 51 | [Download](41/dataset.zip) |  |  |  |  |  |  |  |  |

| 42 | 10 | [Download](42/dataset.zip) |  |  |  |  |  |  |  |  |

| 43 | 7 | [Download](43/dataset.zip) |  |  |  |  |  |  |  | N/A |

| 44 | 12 | [Download](44/dataset.zip) |  |  |  |  |  |  |  |  |

| 45 | 18 | [Download](45/dataset.zip) |  |  |  |  |  |  |  |  |

| 46 | 10 | [Download](46/dataset.zip) |  |  |  |  |  |  |  |  |

| 47 | 7 | [Download](47/dataset.zip) |  |  |  |  |  |  |  | N/A |

| 48 | 14 | [Download](48/dataset.zip) |  |  |  |  |  |  |  |  |

| 49 | 20 | [Download](49/dataset.zip) |  |  |  |  |  |  |  |  |

| 50 | 22 | [Download](50/dataset.zip) |  |  |  |  |  |  |  |  |

| 51 | 19 | [Download](51/dataset.zip) |  |  |  |  |  |  |  |  |

| 52 | 7 | [Download](52/dataset.zip) |  |  |  |  |  |  |  | N/A |

| 53 | 12 | [Download](53/dataset.zip) |  |  |  |  |  |  |  |  |

| 54 | 16 | [Download](54/dataset.zip) |  |  |  |  |  |  |  |  |

| 55 | 5 | [Download](55/dataset.zip) |  |  |  |  |  | N/A | N/A | N/A |

| 56 | 6 | [Download](56/dataset.zip) |  |  |  |  |  |  | N/A | N/A |

| 57 | 23 | [Download](57/dataset.zip) |  |  |  |  |  |  |  |  |

| 58 | 6 | [Download](58/dataset.zip) |  |  |  |  |  |  | N/A | N/A |

| noise | 489 | [Download](-1/dataset.zip) |  |  |  |  |  |  |  |  |

| BangumiBase/kaminomizoshirusekai | [

"size_categories:1K<n<10K",

"license:mit",

"art",

"region:us"

] | 2023-12-04T05:16:11+00:00 | {"license": "mit", "size_categories": ["1K<n<10K"], "tags": ["art"]} | 2023-12-04T08:33:31+00:00 | [] | [] | TAGS

#size_categories-1K<n<10K #license-mit #art #region-us

| Bangumi Image Base of Kami Nomi Zo Shiru Sekai

==============================================

This is the image base of the bangumi Kami Nomi zo Shiru Sekai. We detected 60 characters and 5684 images in total. The full dataset is here.

Please note that these image bases are not guaranteed to be 100% clean; they may contain noise. If you intend to train models manually on this dataset, we recommend preprocessing the downloaded data to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| [] | [

"TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] | [

25

] | [

"passage: TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] |

cf77665e7d6dc28a9b57cb9155a3c5406e902ca0 |

# Bangumi Image Base of Kimi Ni Todoke

This is the image base of the bangumi Kimi ni Todoke. We detected 31 characters and 4387 images in total. The full dataset is [here](all.zip).

**Please note that these image bases are not guaranteed to be 100% clean; they may contain noise.** If you intend to train models manually on this dataset, we recommend preprocessing the downloaded data to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| # | Images | Download | Preview 1 | Preview 2 | Preview 3 | Preview 4 | Preview 5 | Preview 6 | Preview 7 | Preview 8 |

|:------|---------:|:---------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|

| 0 | 897 | [Download](0/dataset.zip) |  |  |  |  |  |  |  |  |

| 1 | 234 | [Download](1/dataset.zip) |  |  |  |  |  |  |  |  |

| 2 | 778 | [Download](2/dataset.zip) |  |  |  |  |  |  |  |  |

| 3 | 36 | [Download](3/dataset.zip) |  |  |  |  |  |  |  |  |

| 4 | 48 | [Download](4/dataset.zip) |  |  |  |  |  |  |  |  |

| 5 | 166 | [Download](5/dataset.zip) |  |  |  |  |  |  |  |  |

| 6 | 222 | [Download](6/dataset.zip) |  |  |  |  |  |  |  |  |

| 7 | 38 | [Download](7/dataset.zip) |  |  |  |  |  |  |  |  |

| 8 | 52 | [Download](8/dataset.zip) |  |  |  |  |  |  |  |  |

| 9 | 34 | [Download](9/dataset.zip) |  |  |  |  |  |  |  |  |

| 10 | 16 | [Download](10/dataset.zip) |  |  |  |  |  |  |  |  |

| 11 | 408 | [Download](11/dataset.zip) |  |  |  |  |  |  |  |  |

| 12 | 571 | [Download](12/dataset.zip) |  |  |  |  |  |  |  |  |

| 13 | 27 | [Download](13/dataset.zip) |  |  |  |  |  |  |  |  |

| 14 | 238 | [Download](14/dataset.zip) |  |  |  |  |  |  |  |  |

| 15 | 46 | [Download](15/dataset.zip) |  |  |  |  |  |  |  |  |

| 16 | 19 | [Download](16/dataset.zip) |  |  |  |  |  |  |  |  |

| 17 | 48 | [Download](17/dataset.zip) |  |  |  |  |  |  |  |  |

| 18 | 42 | [Download](18/dataset.zip) |  |  |  |  |  |  |  |  |

| 19 | 29 | [Download](19/dataset.zip) |  |  |  |  |  |  |  |  |

| 20 | 47 | [Download](20/dataset.zip) |  |  |  |  |  |  |  |  |

| 21 | 86 | [Download](21/dataset.zip) |  |  |  |  |  |  |  |  |

| 22 | 62 | [Download](22/dataset.zip) |  |  |  |  |  |  |  |  |

| 23 | 18 | [Download](23/dataset.zip) |  |  |  |  |  |  |  |  |

| 24 | 18 | [Download](24/dataset.zip) |  |  |  |  |  |  |  |  |

| 25 | 89 | [Download](25/dataset.zip) |  |  |  |  |  |  |  |  |

| 26 | 17 | [Download](26/dataset.zip) |  |  |  |  |  |  |  |  |

| 27 | 25 | [Download](27/dataset.zip) |  |  |  |  |  |  |  |  |

| 28 | 17 | [Download](28/dataset.zip) |  |  |  |  |  |  |  |  |

| 29 | 6 | [Download](29/dataset.zip) |  |  |  |  |  |  | N/A | N/A |

| noise | 53 | [Download](-1/dataset.zip) |  |  |  |  |  |  |  |  |

| BangumiBase/kiminitodoke | [

"size_categories:1K<n<10K",

"license:mit",

"art",

"region:us"

] | 2023-12-04T05:16:29+00:00 | {"license": "mit", "size_categories": ["1K<n<10K"], "tags": ["art"]} | 2023-12-04T08:20:23+00:00 | [] | [] | TAGS

#size_categories-1K<n<10K #license-mit #art #region-us

| Bangumi Image Base of Kimi Ni Todoke

====================================

This is the image base of the bangumi Kimi ni Todoke. We detected 31 characters and 4387 images in total. The full dataset is here.

Please note that these image bases are not guaranteed to be 100% clean; they may contain noise. If you intend to train models manually on this dataset, we recommend preprocessing the downloaded data to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| [] | [

"TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] | [

25

] | [

"passage: TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] |

a6e5bbc38bd072b251ac2c9e25ab3d10462677d0 |

# Bangumi Image Base of Ef - A Tale

This is the image base of the bangumi Ef - A Tale. We detected 21 characters and 2004 images in total. The full dataset is [here](all.zip).

**Please note that these image bases are not guaranteed to be 100% clean; they may contain noise.** If you intend to train models manually on this dataset, we recommend preprocessing the downloaded data to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| # | Images | Download | Preview 1 | Preview 2 | Preview 3 | Preview 4 | Preview 5 | Preview 6 | Preview 7 | Preview 8 |

|:------|---------:|:---------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|

| 0 | 101 | [Download](0/dataset.zip) |  |  |  |  |  |  |  |  |

| 1 | 52 | [Download](1/dataset.zip) |  |  |  |  |  |  |  |  |

| 2 | 127 | [Download](2/dataset.zip) |  |  |  |  |  |  |  |  |

| 3 | 252 | [Download](3/dataset.zip) |  |  |  |  |  |  |  |  |

| 4 | 157 | [Download](4/dataset.zip) |  |  |  |  |  |  |  |  |

| 5 | 193 | [Download](5/dataset.zip) |  |  |  |  |  |  |  |  |

| 6 | 53 | [Download](6/dataset.zip) |  |  |  |  |  |  |  |  |

| 7 | 81 | [Download](7/dataset.zip) |  |  |  |  |  |  |  |  |

| 8 | 21 | [Download](8/dataset.zip) |  |  |  |  |  |  |  |  |

| 9 | 15 | [Download](9/dataset.zip) |  |  |  |  |  |  |  |  |

| 10 | 323 | [Download](10/dataset.zip) |  |  |  |  |  |  |  |  |

| 11 | 103 | [Download](11/dataset.zip) |  |  |  |  |  |  |  |  |

| 12 | 11 | [Download](12/dataset.zip) |  |  |  |  |  |  |  |  |

| 13 | 24 | [Download](13/dataset.zip) |  |  |  |  |  |  |  |  |

| 14 | 10 | [Download](14/dataset.zip) |  |  |  |  |  |  |  |  |

| 15 | 24 | [Download](15/dataset.zip) |  |  |  |  |  |  |  |  |

| 16 | 76 | [Download](16/dataset.zip) |  |  |  |  |  |  |  |  |

| 17 | 44 | [Download](17/dataset.zip) |  |  |  |  |  |  |  |  |

| 18 | 155 | [Download](18/dataset.zip) |  |  |  |  |  |  |  |  |

| 19 | 27 | [Download](19/dataset.zip) |  |  |  |  |  |  |  |  |

| noise | 155 | [Download](-1/dataset.zip) |  |  |  |  |  |  |  |  |

| BangumiBase/efatale | [

"size_categories:1K<n<10K",

"license:mit",

"art",

"region:us"

] | 2023-12-04T05:17:05+00:00 | {"license": "mit", "size_categories": ["1K<n<10K"], "tags": ["art"]} | 2023-12-04T06:58:35+00:00 | [] | [] | TAGS

#size_categories-1K<n<10K #license-mit #art #region-us

| Bangumi Image Base of Ef - A Tale

=================================

This is the image base of the bangumi Ef - A Tale. We detected 21 characters and 2004 images in total. The full dataset is here.

Please note that these image bases are not guaranteed to be 100% clean; they may in fact contain noise. If you intend to manually train models using this dataset, we recommend preprocessing the downloaded dataset to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| [] | [

"TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] | [

25

] | [

"passage: TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] |

2821c8cd2744932a4a0992607d1aea96e40c6405 |

# Bangumi Image Base of Kaichou Wa Maid-sama!

This is the image base of the bangumi Kaichou wa Maid-sama!. We detected 47 characters and 3607 images in total. The full dataset is [here](all.zip).

**Please note that these image bases are not guaranteed to be 100% clean; they may in fact contain noise.** If you intend to manually train models using this dataset, we recommend preprocessing the downloaded dataset to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| # | Images | Download | Preview 1 | Preview 2 | Preview 3 | Preview 4 | Preview 5 | Preview 6 | Preview 7 | Preview 8 |

|:------|---------:|:---------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|:-------------------------------|

| 0 | 60 | [Download](0/dataset.zip) |  |  |  |  |  |  |  |  |

| 1 | 46 | [Download](1/dataset.zip) |  |  |  |  |  |  |  |  |

| 2 | 6 | [Download](2/dataset.zip) |  |  |  |  |  |  | N/A | N/A |

| 3 | 423 | [Download](3/dataset.zip) |  |  |  |  |  |  |  |  |

| 4 | 55 | [Download](4/dataset.zip) |  |  |  |  |  |  |  |  |

| 5 | 144 | [Download](5/dataset.zip) |  |  |  |  |  |  |  |  |

| 6 | 68 | [Download](6/dataset.zip) |  |  |  |  |  |  |  |  |

| 7 | 9 | [Download](7/dataset.zip) |  |  |  |  |  |  |  |  |

| 8 | 204 | [Download](8/dataset.zip) |  |  |  |  |  |  |  |  |

| 9 | 124 | [Download](9/dataset.zip) |  |  |  |  |  |  |  |  |

| 10 | 106 | [Download](10/dataset.zip) |  |  |  |  |  |  |  |  |

| 11 | 34 | [Download](11/dataset.zip) |  |  |  |  |  |  |  |  |

| 12 | 66 | [Download](12/dataset.zip) |  |  |  |  |  |  |  |  |

| 13 | 78 | [Download](13/dataset.zip) |  |  |  |  |  |  |  |  |

| 14 | 40 | [Download](14/dataset.zip) |  |  |  |  |  |  |  |  |

| 15 | 70 | [Download](15/dataset.zip) |  |  |  |  |  |  |  |  |

| 16 | 55 | [Download](16/dataset.zip) |  |  |  |  |  |  |  |  |

| 17 | 32 | [Download](17/dataset.zip) |  |  |  |  |  |  |  |  |

| 18 | 70 | [Download](18/dataset.zip) |  |  |  |  |  |  |  |  |

| 19 | 854 | [Download](19/dataset.zip) |  |  |  |  |  |  |  |  |

| 20 | 64 | [Download](20/dataset.zip) |  |  |  |  |  |  |  |  |

| 21 | 50 | [Download](21/dataset.zip) |  |  |  |  |  |  |  |  |

| 22 | 27 | [Download](22/dataset.zip) |  |  |  |  |  |  |  |  |

| 23 | 106 | [Download](23/dataset.zip) |  |  |  |  |  |  |  |  |

| 24 | 134 | [Download](24/dataset.zip) |  |  |  |  |  |  |  |  |

| 25 | 24 | [Download](25/dataset.zip) |  |  |  |  |  |  |  |  |

| 26 | 26 | [Download](26/dataset.zip) |  |  |  |  |  |  |  |  |

| 27 | 22 | [Download](27/dataset.zip) |  |  |  |  |  |  |  |  |

| 28 | 31 | [Download](28/dataset.zip) |  |  |  |  |  |  |  |  |

| 29 | 24 | [Download](29/dataset.zip) |  |  |  |  |  |  |  |  |

| 30 | 28 | [Download](30/dataset.zip) |  |  |  |  |  |  |  |  |

| 31 | 35 | [Download](31/dataset.zip) |  |  |  |  |  |  |  |  |

| 32 | 37 | [Download](32/dataset.zip) |  |  |  |  |  |  |  |  |

| 33 | 10 | [Download](33/dataset.zip) |  |  |  |  |  |  |  |  |

| 34 | 20 | [Download](34/dataset.zip) |  |  |  |  |  |  |  |  |

| 35 | 13 | [Download](35/dataset.zip) |  |  |  |  |  |  |  |  |

| 36 | 19 | [Download](36/dataset.zip) |  |  |  |  |  |  |  |  |

| 37 | 56 | [Download](37/dataset.zip) |  |  |  |  |  |  |  |  |

| 38 | 9 | [Download](38/dataset.zip) |  |  |  |  |  |  |  |  |

| 39 | 104 | [Download](39/dataset.zip) |  |  |  |  |  |  |  |  |

| 40 | 10 | [Download](40/dataset.zip) |  |  |  |  |  |  |  |  |

| 41 | 41 | [Download](41/dataset.zip) |  |  |  |  |  |  |  |  |

| 42 | 9 | [Download](42/dataset.zip) |  |  |  |  |  |  |  |  |

| 43 | 5 | [Download](43/dataset.zip) |  |  |  |  |  | N/A | N/A | N/A |

| 44 | 7 | [Download](44/dataset.zip) |  |  |  |  |  |  |  | N/A |

| 45 | 12 | [Download](45/dataset.zip) |  |  |  |  |  |  |  |  |

| noise | 140 | [Download](-1/dataset.zip) |  |  |  |  |  |  |  |  |

| BangumiBase/kaichouwamaidsama | [

"size_categories:1K<n<10K",

"license:mit",

"art",

"region:us"

] | 2023-12-04T05:17:21+00:00 | {"license": "mit", "size_categories": ["1K<n<10K"], "tags": ["art"]} | 2023-12-04T07:11:45+00:00 | [] | [] | TAGS

#size_categories-1K<n<10K #license-mit #art #region-us

| Bangumi Image Base of Kaichou Wa Maid-sama!

===========================================

This is the image base of the bangumi Kaichou wa Maid-sama!. We detected 47 characters and 3607 images in total. The full dataset is here.

Please note that these image bases are not guaranteed to be 100% clean; they may in fact contain noise. If you intend to manually train models using this dataset, we recommend preprocessing the downloaded dataset to remove potentially noisy samples (each sample has roughly a 1% probability of being noisy).

Here is a preview of the characters:

| [] | [

"TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] | [

25

] | [

"passage: TAGS\n#size_categories-1K<n<10K #license-mit #art #region-us \n"

] |

547412eecd50d7f87c8357b2de4d4b2987215217 | # Dataset Card for "sni-10k-flat"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | sordonia/sni-10k-flat | [

"region:us"

] | 2023-12-04T05:32:08+00:00 | {"dataset_info": {"features": [{"name": "source", "dtype": "string"}, {"name": "target", "dtype": "string"}, {"name": "task_name", "dtype": "string"}, {"name": "task_source", "dtype": "string"}, {"name": "split", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 715867937, "num_examples": 549506}], "download_size": 204866337, "dataset_size": 715867937}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}]}]} | 2023-12-04T13:49:06+00:00 | [] | [] | TAGS

#region-us

| # Dataset Card for "sni-10k-flat"

More Information needed | [

"# Dataset Card for \"sni-10k-flat\"\n\nMore Information needed"

] | [

"TAGS\n#region-us \n",

"# Dataset Card for \"sni-10k-flat\"\n\nMore Information needed"

] | [

6,

16

] | [

"passage: TAGS\n#region-us \n# Dataset Card for \"sni-10k-flat\"\n\nMore Information needed"

] |

ba4815d6a62f9607c02b6b016b3eebfd47ae5aeb |

# OpenPlatypus-Commercial version

This dataset focuses on improving LLM logical reasoning skills and was used to train the Platypus2 models. It comprises the following datasets, which were filtered first by keyword search and then with Sentence Transformers to remove questions with a similarity above 80%:

| Dataset Name | License Type |

|--------------------------------------------------------------|--------------|

| [PRM800K](https://github.com/openai/prm800k) | MIT |

| [SciBench](https://github.com/mandyyyyii/scibench) | MIT |

| [TheoremQA](https://huggingface.co/datasets/wenhu/TheoremQA) | MIT |

| [`nuprl/leetcode-solutions-python-testgen-gpt4`](https://huggingface.co/datasets/nuprl/leetcode-solutions-python-testgen-gpt4/viewer/nuprl--leetcode-solutions-python-testgen-gpt4/train?p=1) | None listed |

| [`jondurbin/airoboros-gpt4-1.4.1`](https://huggingface.co/datasets/jondurbin/airoboros-gpt4-1.4.1) | other |

| [`TigerResearch/tigerbot-kaggle-leetcodesolutions-en-2k`](https://huggingface.co/datasets/TigerResearch/tigerbot-kaggle-leetcodesolutions-en-2k/viewer/TigerResearch--tigerbot-kaggle-leetcodesolutions-en-2k/train?p=2) | apache-2.0 |

| [openbookQA](https://huggingface.co/datasets/openbookqa/viewer/additional/train?row=35) | apache-2.0 |

| [ARB](https://arb.duckai.org) | MIT |