sha

stringlengths 40

40

| text

stringlengths 0

13.4M

| id

stringlengths 2

117

| tags

list | created_at

stringlengths 25

25

| metadata

stringlengths 2

31.7M

| last_modified

stringlengths 25

25

|

|---|---|---|---|---|---|---|

8236d452590550fa360857c51b6137daab6d5aa1

|

ValenHumano/reviews_filmaffinity

|

[

"license:gpl",

"region:us"

] |

2023-04-13T20:53:13+00:00

|

{"license": "gpl"}

|

2023-04-13T20:54:34+00:00

|

|

925b307210c970ed357c9c99302b307891fdc9b0

|

# Dataset Card for "chunk_245"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_245

|

[

"region:us"

] |

2023-04-13T21:02:58+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 14219234064.625, "num_examples": 148043}], "download_size": 12535753833, "dataset_size": 14219234064.625}}

|

2023-04-13T21:14:44+00:00

|

52e30d75c03b9966971e6e24a0db9e39c0247979

|

# Dataset Card for "chunk_246"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_246

|

[

"region:us"

] |

2023-04-13T21:48:32+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 15612986592.75, "num_examples": 162554}], "download_size": 14032898971, "dataset_size": 15612986592.75}}

|

2023-04-13T22:01:31+00:00

|

dffbc89b2ff9d11de09d25cab50634205d3b9b1f

|

# Dataset Card for "chunk_248"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_248

|

[

"region:us"

] |

2023-04-13T21:57:46+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 17332822080.5, "num_examples": 180460}], "download_size": 14606880914, "dataset_size": 17332822080.5}}

|

2023-04-13T22:06:14+00:00

|

27f4236adda67b4dffe7a2ba6ae45cf967009304

|

# Dataset Card for "chunk_232"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_232

|

[

"region:us"

] |

2023-04-13T22:10:58+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 22610275488.25, "num_examples": 235406}], "download_size": 20883870881, "dataset_size": 22610275488.25}}

|

2023-04-13T22:44:45+00:00

|

a2c2fc2eb8066fa34580912995f328a890dd9ad7

|

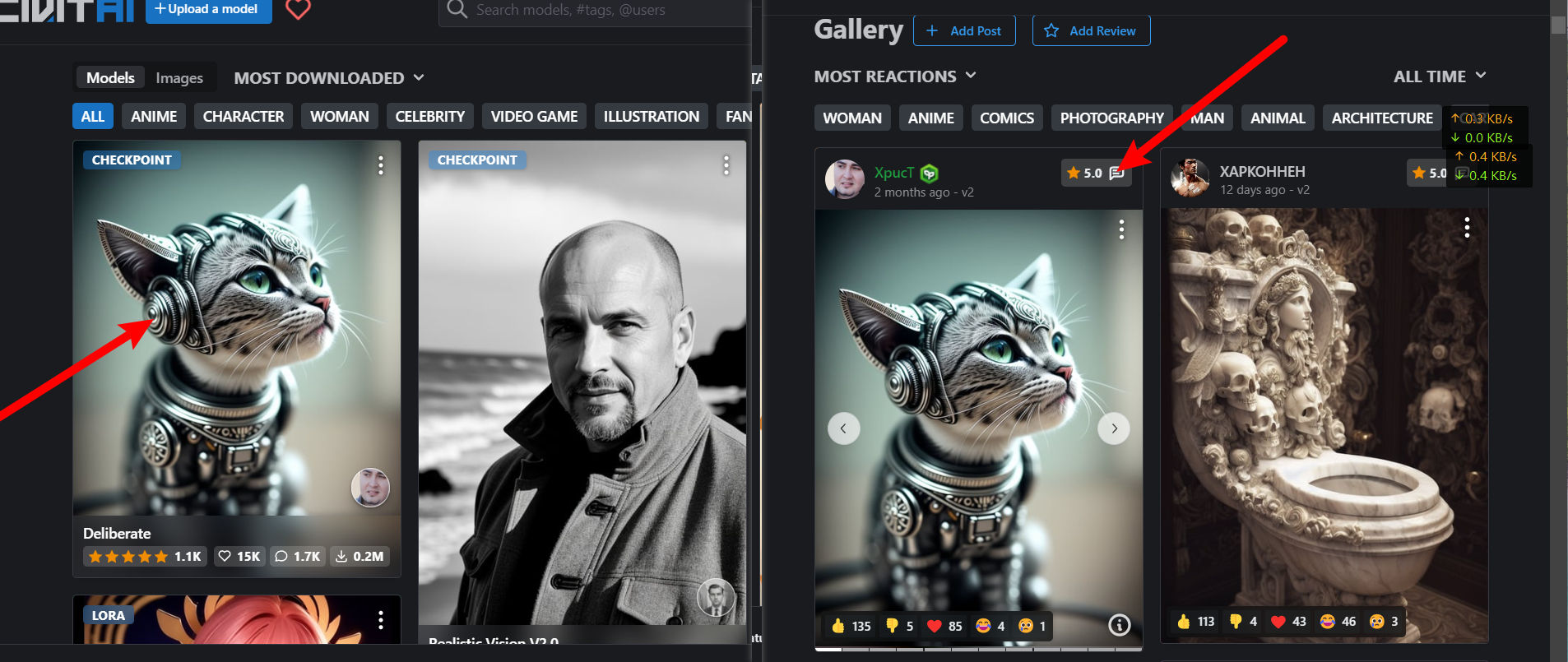

# Model and Gallery Data in Civitai

### Dataset Summary

This dataset contains model information and the gallery data attached to each model.

1. I crawled the data from [Civitai](https://civitai.com/) using GitHub Codespaces and Deno. Downloading it safely took 6 hours 😄.

2. This dataset can be used to build interesting models, such as an automatic prompt generator or a prompt improver.

3. The code that crawls all the data is in a GitHub repo. [Link](https://github.com/KonghaYao/tinyproxy/tree/main/civitai)

### Dataset

1. /index/index.jsonl: basic information for every model.

2. /index/index.filter.jsonl: the filtered models.

3. /index/info.jsonl: information for every gallery post.

### Notes on the dataset

1. The dataset contains many **NSFW** prompts and image URLs.

2. A `jsonl` file stores one JSON object per row. I simply joined an array with '\n' and wrote it to the file, so some rows may be malformed.
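Because the rows were joined by hand, a reader that tolerates blank or broken lines is safer than one that assumes every line parses. The following is a minimal sketch, not the author's tooling: `iter_jsonl` is a hypothetical helper and the sample rows are invented for illustration.

```python
import json
from typing import Any, Iterable, Iterator


def iter_jsonl(lines: Iterable[str]) -> Iterator[Any]:
    """Yield one parsed value per non-empty line, skipping lines that fail to parse."""
    for line_number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # blank lines can appear when rows are joined manually
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            # A malformed row is reported and skipped instead of aborting the read.
            print(f"skipping malformed row at line {line_number}")


# Hypothetical sample rows; the real files have their own fields.
sample = ['{"id": 1, "name": "a"}', "", '{"id": 2, "name": ', '{"id": 3, "name": "c"}']
records = list(iter_jsonl(sample))
```

An open file object can be passed in place of `sample`, since iterating a text file yields its lines.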

|

KonghaYao/civitai_all_data

|

[

"language:en",

"license:cc-by-4.0",

"art",

"region:us"

] |

2023-04-13T22:31:04+00:00

|

{"language": ["en"], "license": "cc-by-4.0", "tags": ["art"]}

|

2023-04-14T00:38:02+00:00

|

1e1ca050bf85410fc59b329889859fd108d5be6a

|

# Dataset Card for "counterfact-easy"

The dataset from ROME, simplified to just the main assertions (no paraphrased prompts included).

|

azhx/counterfact-easy

|

[

"region:us"

] |

2023-04-13T23:04:19+00:00

|

{"dataset_info": {"features": [{"name": "subject", "dtype": "string"}, {"name": "proposition", "dtype": "string"}, {"name": "label", "dtype": {"class_label": {"names": {"0": "False", "1": "True"}}}}, {"name": "case_id", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 3112032.700396916, "num_examples": 39455}, {"name": "test", "num_bytes": 345711.2996030841, "num_examples": 4383}], "download_size": 1618051, "dataset_size": 3457744.0}}

|

2023-04-30T16:41:27+00:00

|

1133d0436694a1c3252667025033a2c41b025b34

|

MiruP/LoraGirl

|

[

"region:us"

] |

2023-04-13T23:14:14+00:00

|

{}

|

2023-04-13T23:14:34+00:00

|

|

5f5021e94b3f45e7e19083f58662c87c4211835e

|

# Dataset Card for "chunk_249"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_249

|

[

"region:us"

] |

2023-04-13T23:23:11+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 20808991296.5, "num_examples": 216652}], "download_size": 18692442529, "dataset_size": 20808991296.5}}

|

2023-04-13T23:34:30+00:00

|

a1daadbd88541e1fe208aeec0a136b875518d8c2

|

# Dataset Card for "ChatCombined"

Combined 5 AI Conversational datasets, added a <|SYSTEM|> prompt for each, and broke the conversation down with <|USER|> and <|ASSISTANT|> tags.

You will need to add these tokens to your tokenizer to fully utilize this dataset: <|SYSTEM|> <|USER|> <|ASSISTANT|>
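How the tokens are registered depends on the tokenizer library in use, so that step is not shown here. The sketch below only illustrates how the three tags delimit turns inside a `text` value. It assumes each tag is immediately followed by its content, which the card does not spell out, and the example record is invented.

```python
import re

# The three tags named in the card.
TAGS = ("<|SYSTEM|>", "<|USER|>", "<|ASSISTANT|>")
TAG_PATTERN = re.compile("(" + "|".join(re.escape(tag) for tag in TAGS) + ")")


def split_turns(text: str) -> list[tuple[str, str]]:
    """Return (tag, content) pairs in the order they appear in `text`."""
    parts = TAG_PATTERN.split(text)
    # parts[0] is whatever precedes the first tag; after that, tags and contents alternate.
    turns = []
    for tag, content in zip(parts[1::2], parts[2::2]):
        turns.append((tag, content.strip()))
    return turns


# Hypothetical record, invented for illustration.
example = "<|SYSTEM|>You are helpful.<|USER|>Hi there<|ASSISTANT|>Hello!"
turns = split_turns(example)
```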

Collated dataset links:

* [Alpaca GPT-4](https://huggingface.co/datasets/c-s-ale/alpaca-gpt4-data)

* [databricks-dolly-15k](https://github.com/databrickslabs/dolly)

* [Helpful and Harmless](https://huggingface.co/datasets/Dahoas/full-hh-rlhf)

* [Vicuna](https://huggingface.co/datasets/jeffwan/sharegpt_vicuna) - English subset only

* [GPT4ALL-J](https://huggingface.co/datasets/nomic-ai/gpt4all-j-prompt-generations)

## Citations

```bibtex

@misc{alpaca,

author = {Rohan Taori and Ishaan Gulrajani and Tianyi Zhang and Yann Dubois and Xuechen Li and Carlos Guestrin and Percy Liang and Tatsunori B. Hashimoto },

title = {Stanford Alpaca: An Instruction-following LLaMA model},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/tatsu-lab/stanford_alpaca}},

}

```

```bibtex

@misc{bai2022training,

title={Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback},

author={Yuntao Bai and Andy Jones and Kamal Ndousse and Amanda Askell and Anna Chen and Nova DasSarma and Dawn Drain and Stanislav Fort and Deep Ganguli and Tom Henighan and Nicholas Joseph and Saurav Kadavath and Jackson Kernion and Tom Conerly and Sheer El-Showk and Nelson Elhage and Zac Hatfield-Dodds and Danny Hernandez and Tristan Hume and Scott Johnston and Shauna Kravec and Liane Lovitt and Neel Nanda and Catherine Olsson and Dario Amodei and Tom Brown and Jack Clark and Sam McCandlish and Chris Olah and Ben Mann and Jared Kaplan},

year={2022},

eprint={2204.05862},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

```bibtex

@misc{vicuna2023,

title = {Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality},

url = {https://vicuna.lmsys.org},

author = {Chiang, Wei-Lin and Li, Zhuohan and Lin, Zi and Sheng, Ying and Wu, Zhanghao and Zhang, Hao and Zheng, Lianmin and Zhuang, Siyuan and Zhuang, Yonghao and Gonzalez, Joseph E. and Stoica, Ion and Xing, Eric P.},

month = {March},

year = {2023}

}

```

```bibtex

@misc{gpt4all,

author = {Yuvanesh Anand and Zach Nussbaum and Brandon Duderstadt and Benjamin Schmidt and Andriy Mulyar},

title = {GPT4All: Training an Assistant-style Chatbot with Large Scale Data Distillation from GPT-3.5-Turbo},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/nomic-ai/gpt4all}},

}

```

```bibtex

@article{peng2023gpt4llm,

title={Instruction Tuning with GPT-4},

author={Baolin Peng and Chunyuan Li and Pengcheng He and Michel Galley and Jianfeng Gao},

journal={arXiv preprint arXiv:2304.03277},

year={2023}

}

```

|

dmayhem93/ChatCombined

|

[

"task_categories:text-generation",

"task_categories:conversational",

"size_categories:1M<n<10M",

"language:en",

"license:cc-by-nc-4.0",

"arxiv:2204.05862",

"region:us"

] |

2023-04-13T23:29:29+00:00

|

{"language": ["en"], "license": "cc-by-nc-4.0", "size_categories": ["1M<n<10M"], "task_categories": ["text-generation", "conversational"], "dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 2530432677, "num_examples": 1045936}], "download_size": 1272242079, "dataset_size": 2530432677}}

|

2023-04-19T12:03:40+00:00

|

4505b975058c8e8440aa41b65b690f24e66eb095

|

# Dataset Card for "discursos-primera-class-separated-by-idx"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

Sleoruiz/discursos-primera-class-separated-by-idx

|

[

"region:us"

] |

2023-04-14T00:08:05+00:00

|

{"dataset_info": {"features": [{"name": "text", "dtype": "string"}, {"name": "name", "dtype": "string"}, {"name": "comision", "dtype": "string"}, {"name": "gaceta_numero", "dtype": "string"}, {"name": "fecha_gaceta", "dtype": "string"}, {"name": "labels", "sequence": "string"}, {"name": "scores", "sequence": "float64"}, {"name": "idx", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 33715220, "num_examples": 21172}], "download_size": 16042383, "dataset_size": 33715220}}

|

2023-04-14T00:08:20+00:00

|

dcc59473b3d939bbc101b756deeceeb3a992feb8

|

# Dataset Card for Dataset Name

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

This dataset card aims to be a base template for new datasets. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/datasetcard_template.md?plain=1).

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed]

|

gvaccaro1/ventas

|

[

"license:unknown",

"region:us"

] |

2023-04-14T00:09:38+00:00

|

{"license": "unknown"}

|

2023-04-14T00:10:37+00:00

|

d002a90383ca4547e59aedaa1ac6b8364339cb3c

|

SFKs/dd.s

|

[

"license:openrail",

"region:us"

] |

2023-04-14T01:24:01+00:00

|

{"license": "openrail"}

|

2023-04-14T01:24:01+00:00

|

|

793876137726a66b106583ef2c4b8b3021b2dea2

|

<div align="center">

<article style="display: flex; flex-direction: column; align-items: center; justify-content: center;">

<p align="center"><img width="300" src="https://user-images.githubusercontent.com/25022954/209616423-9ab056be-5d62-4eeb-b91d-3b20f64cfcf8.svg" /></p>

<h1 style="width: 100%; text-align: center;"></h1>

</p>

</article>

<a href="./README_zh-CN.md" >简体中文</a> | English

</div>

## Introduction

LabelU is an open source data annotation tool that supports Chinese. It currently offers image annotation capabilities such as rectangle, polygon, point, line, classification, and caption. It supports computer vision tasks including detection, classification, segmentation, text transcription, line detection, and key point detection. You can customize an annotation task by freely combining tools, and export data in COCO and MASK formats.

## Getting started

### Install locally with miniconda

```

# Download and Install miniconda

# https://docs.conda.io/en/latest/miniconda.html

# Create virtual environment(python = 3.7)

conda create -n labelu python=3.7

# Activate virtual environment

conda activate labelu

# Install labelu

pip install labelu

# Start labelu, server: http://localhost:8000

labelu

```

### Install for local development

```

# Download and Install miniconda

# https://docs.conda.io/en/latest/miniconda.html

# Create virtual environment(python = 3.7)

conda create -n labelu python=3.7

# Activate virtual environment

conda activate labelu

# Install poetry

# https://python-poetry.org/docs/#installing-with-the-official-installer

# Install all package dependencies

poetry install

# Start labelu, server: http://localhost:8000

uvicorn labelu.main:app --reload

# Update submodule

git submodule update --remote --merge

```

## Features

- Uniform: six image annotation tools are provided, configurable through a simple visual interface or YAML

- Unlimited: multiple tools can be freely combined to meet most image annotation requirements

<p align="center">

<img style="width: 600px" src="https://user-images.githubusercontent.com/25022954/209318236-79d3a5c3-2700-46c3-b59a-62d9c132a6c3.gif">

</p>

- Universal: supports multiple data export formats, including LabelU, COCO, and Mask

## Scenes

### Computer Vision

- Detection: Detection scenes for vehicles, license plates, pedestrians, faces, industrial parts, etc.

- Classification: Detection of object classification, target characteristics, right and wrong judgments, and other classification scenarios

- Semantic segmentation: Human body segmentation, panoramic segmentation, drivable area segmentation, vehicle segmentation, etc.

- Text transcription: Text detection and recognition of license plates, invoices, insurance policies, signs, etc.

- Contour detection: positioning line scenes such as human contour lines, lane lines, etc.

- Key point detection: positioning scenes such as human face key points, vehicle key points, road edge key points, etc.

## Usage

- [Guide](./docs/GUIDE.md)

## Annotation Format

- [LabelU Annotation Format](./docs/annotation%20format/README.md)

## Communication

Welcome to the OpenDataLab WeChat group!

<p align="center">

<img style="width: 400px" src="https://user-images.githubusercontent.com/25022954/208374419-2dffb701-321a-4091-944d-5d913de79a15.jpg">

</p>

## Links

- [labelU-Kit](https://github.com/opendatalab/labelU-Kit)(Powered by labelU-Kit)

## LICENSE

This project is released under the [Apache 2.0 license](./LICENSE).

|

SFKs/ddd.com

|

[

"task_categories:text-classification",

"language:ae",

"license:openrail",

"region:us"

] |

2023-04-14T01:24:28+00:00

|

{"language": ["ae"], "license": "openrail", "task_categories": ["text-classification"]}

|

2023-05-06T05:23:01+00:00

|

fd15bae7d280480f86790b16d982a8297a676801

|

Rana is an alter in Duskfall Crew's system -

Virtual World Lycoris sets are based on Dissociative Identity Disorder

Actually wait.. Rana is A FORMER alter, and is now fused with Tobias and Tori lol.

|

EarthnDusk/RanaLycoris

|

[

"task_categories:text-to-image",

"size_categories:n<1K",

"language:en",

"license:creativeml-openrail-m",

"lora",

"lycoris",

"region:us"

] |

2023-04-14T01:50:57+00:00

|

{"language": ["en"], "license": "creativeml-openrail-m", "size_categories": ["n<1K"], "task_categories": ["text-to-image"], "pretty_name": "Rana Solas", "tags": ["lora", "lycoris"]}

|

2023-04-14T03:45:49+00:00

|

80ecb9f917c03d75fbb0f4e74398f318aac2e9fd

|

Alex Brightman Lycoris

|

Duskfallcrew/Alex_Brightman

|

[

"task_categories:text-to-image",

"size_categories:1K<n<10K",

"language:en",

"license:creativeml-openrail-m",

"lora",

"lycoris",

"region:us"

] |

2023-04-14T02:40:31+00:00

|

{"language": ["en"], "license": "creativeml-openrail-m", "size_categories": ["1K<n<10K"], "task_categories": ["text-to-image"], "pretty_name": "Alex Brightman", "tags": ["lora", "lycoris"]}

|

2023-04-14T03:42:51+00:00

|

876b6f8f30a0370c8d9339a319c275dbc4e5b2cc

|

# Dataset Card for "asr_capstone_train"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

Sammarieo/asr_capstone_train

|

[

"region:us"

] |

2023-04-14T03:13:49+00:00

|

{"dataset_info": {"features": [{"name": "path", "dtype": "audio"}, {"name": "sentence", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 14641650.150537634, "num_examples": 74}], "download_size": 11853152, "dataset_size": 14641650.150537634}}

|

2023-04-14T03:13:51+00:00

|

e4d3e981788368d1a0335242a40c743d7116e98a

|

# Dataset Card for "asr_capstone_test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

Sammarieo/asr_capstone_test

|

[

"region:us"

] |

2023-04-14T03:13:51+00:00

|

{"dataset_info": {"features": [{"name": "path", "dtype": "audio"}, {"name": "sentence", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 4118361.8494623657, "num_examples": 19}], "download_size": 3257710, "dataset_size": 4118361.8494623657}}

|

2023-04-14T03:13:53+00:00

|

85c21d41f284cd99ca0a03bce4b6b7afa41e6261

|

# Dataset Card for "asr_capstone_project"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

Sammarieo/asr_capstone_project

|

[

"region:us"

] |

2023-04-14T03:29:23+00:00

|

{"dataset_info": {"features": [{"name": "path", "dtype": "audio"}, {"name": "sentence", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 14641650.150537634, "num_examples": 74}, {"name": "test", "num_bytes": 4118361.8494623657, "num_examples": 19}], "download_size": 0, "dataset_size": 18760012.0}}

|

2023-04-14T03:52:14+00:00

|

92983f25edd02de572fe93e0f76c3d7f551be2b6

|

# Model Card: Pixiv HiRes Performance Testing Dataset

**Dataset Name:** pixiv_hires_performance_testing

**Dataset Version:** 1.0

## Dataset Summary

The Pixiv HiRes Performance Testing Dataset is a small, high-quality dataset of 1024x1024 resolution images curated from the largest single file size images on Pixiv between 2020 and 2023. These images have been collected and resized to a minimum resolution of 1024x1024 pixels to create a dataset suitable for performance testing and benchmarking of image-based machine learning models, specifically targeting tasks like image synthesis, style transfer, and image-to-image translation.

## Dataset Size

- Number of Images: 2,000

- Total File Size: 2.5 GB

## Dataset Composition

- Images are sourced from the largest single file size images on Pixiv between 2020 and 2023.

- Images have a minimum resolution of 1024x1024 pixels.

- The dataset is diverse, containing a variety of image styles and subjects, including illustrations, paintings, and photographs.

- All images have been resized to fit the 1024x1024 resolution requirement.

## Dataset Preprocessing and Cleaning

- Resizing: Images were resized using bicubic interpolation to maintain a consistent resolution of 1024x1024 pixels across the dataset.

- Image format: All images have been converted to the lossless PNG format to minimize compression artifacts and preserve image quality.

## Dataset Split

This dataset is not split into train, validation, and test sets, as it is intended for performance testing and benchmarking purposes rather than training models. Users are encouraged to create their own splits as needed.

## Intended Use

The Pixiv HiRes Performance Testing Dataset is designed for evaluating the performance of image-based machine learning models, particularly those focused on high-resolution image synthesis, style transfer, and image-to-image translation. It can be used for:

- Benchmarking and comparing the performance of different models.

- Assessing the robustness and generalization capabilities of models when dealing with high-resolution images.

- Fine-tuning and optimizing model hyperparameters for high-resolution image tasks.

## Limitations and Considerations

- The dataset is relatively small and may not cover all possible variations in image content and style.

- Images are sourced from Pixiv, which may have a bias towards certain types of art styles and subject matter.

- The dataset may contain some images with mature or explicit content. Users should be aware of this when using the dataset for research or applications.

## Data Source

Images in this dataset are sourced from Pixiv, specifically the largest single file size images uploaded between 2020 and 2023.

|

kiriyamaX/1024PerfTest

|

[

"region:us"

] |

2023-04-14T03:39:32+00:00

|

{}

|

2023-04-14T05:21:17+00:00

|

89b0da7937a9dc2f7b48dce62f79cb9738fe6320

|

sliderforthewin/whisper-medium-lt

|

[

"license:unknown",

"region:us"

] |

2023-04-14T03:59:30+00:00

|

{"license": "unknown"}

|

2023-04-14T03:59:30+00:00

|

|

c03ebd4e389b24a01f9b4915fd2de529a8fed95e

|

The AAPL Stock dataset includes daily historical stock market data for Apple Inc. from the NASDAQ stock exchange, covering 12 December 1980 to 1 April 2023. The dataset includes seven columns: Date, Open, High, Low, Close, Adjusted Close, and Volume.

The "Date" column contains the date of each trading day, while the "Open," "High," "Low," and "Close" columns represent the stock prices of Apple Inc. at the opening, highest, lowest, and closing points of each trading day, respectively.

The "Adjusted Close" column is the closing price adjusted to reflect any corporate actions, such as stock splits, dividends, or mergers and acquisitions. Finally, the "Volume" column indicates the number of shares of Apple Inc. that were traded on each trading day.

This dataset can be used to analyze the historical performance of Apple Inc. stock, to create predictive models, or to perform technical analysis to identify patterns or trends in the data. It is suitable for use in financial analysis, machine learning, and other data-driven applications.
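As one example of such an analysis, day-over-day returns can be computed from the adjusted close. This is a minimal sketch using only the standard library. The rows are invented, and the header spellings are assumed from the column names listed above, so the actual file may differ.

```python
import csv
import io

# Hypothetical rows, invented for illustration; header spellings are assumed from the card.
SAMPLE_CSV = """Date,Open,High,Low,Close,Adjusted Close,Volume
2023-03-29,10.0,10.5,9.8,10.0,10.0,1000
2023-03-30,10.0,11.2,9.9,11.0,11.0,1200
2023-03-31,11.0,11.1,9.7,9.9,9.9,900
"""


def daily_returns(rows: list[dict]) -> list[tuple[str, float]]:
    """Simple day-over-day return of the adjusted close, one entry per row after the first."""
    returns = []
    for previous, current in zip(rows, rows[1:]):
        prev_price = float(previous["Adjusted Close"])
        curr_price = float(current["Adjusted Close"])
        returns.append((current["Date"], curr_price / prev_price - 1.0))
    return returns


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
returns = daily_returns(rows)
```

The adjusted close is used rather than the raw close so that splits and dividends do not show up as artificial price jumps.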

|

chrisaydat/applestockpricehistory

|

[

"license:openrail",

"region:us"

] |

2023-04-14T04:12:06+00:00

|

{"license": "openrail"}

|

2023-04-15T18:58:33+00:00

|

ee77f1e9a00dd52e10ab5d3d6115c89015c00022

|

# Dataset Card for "SheepsDiffusion"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

GreeneryScenery/SheepsDiffusion

|

[

"region:us"

] |

2023-04-14T04:22:01+00:00

|

{"dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "square_image", "dtype": "image"}, {"name": "conditioning_image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 10700034070.0, "num_examples": 10000}], "download_size": 10815458379, "dataset_size": 10700034070.0}}

|

2023-04-14T08:33:03+00:00

|

293f37e0dac967cb6449262a69ba09641017c3be

|

# Dataset Card for "hindawi_fonts"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

gagan3012/hindawi_fonts

|

[

"region:us"

] |

2023-04-14T04:46:23+00:00

|

{"dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "label", "dtype": {"class_label": {"names": {"0": "Noto_Sans_Arabic", "1": "Readex_Pro", "2": "Amiri", "3": "Noto_Kufi_Arabic", "4": "Reem_Kufi_Fun", "5": "Lateef", "6": "Changa", "7": "Kufam", "8": "ElMessiri", "9": "Reem_Kufi", "10": "Noto_Naskh_Arabic", "11": "Reem_Kufi_Ink", "12": "Tajawal", "13": "Aref_Ruqaa_Ink", "14": "Markazi_Text", "15": "IBM_Plex_Sans_Arabic", "16": "Vazirmatn", "17": "Harmattan", "18": "Gulzar", "19": "Scheherazade_New", "20": "Cairo", "21": "Amiri_Quran", "22": "Noto_Nastaliq_Urdu", "23": "Mada", "24": "Aref_Ruqaa", "25": "Almarai", "26": "Alkalami", "27": "Qahiri"}}}}, {"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 4209517973.992, "num_examples": 64624}, {"name": "validation", "num_bytes": 471903903.624, "num_examples": 7196}, {"name": "test", "num_bytes": 471903903.624, "num_examples": 7196}], "download_size": 5057297184, "dataset_size": 5153325781.24}}

|

2023-04-14T04:49:45+00:00

|

f65286c5b8aec1cadf8fb62d628cbef56c149e08

|

# Dataset Card for XNLI Code-Mixed Corpus (Sampled)

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

### Supported Tasks and Leaderboards

Binary mode classification (spoken vs written)

### Languages

- English

- German

- French

- German-English code-mixed by Equivalence Constraint Theory

- German-English code-mixed by Matrix Language Theory

- French-English code-mixed by Equivalence Constraint Theory

- French-English code-mixed by Matrix Language Theory

## Dataset Structure

### Data Instances

{

'text': "And he said , Mama , I 'm home",

'label': 0

}

### Data Fields

- text: sentence

- label: binary label of text (0: spoken 1: written)

### Data Splits

- monolingual
  - train (English, German, French monolingual): 2490
  - test (English, German, French monolingual): 5007
- de_ec
  - train (English, German, French monolingual): 2490
  - test (German-English code-mixed by Equivalence Constraint Theory): 14543
- de_ml
  - train (English, German, French monolingual): 2490
  - test (German-English code-mixed by Matrix Language Theory): 12750
- fr_ec
  - train (English, German, French monolingual): 2490
  - test (French-English code-mixed by Equivalence Constraint Theory): 18653
- fr_ml
  - train (English, German, French monolingual): 2490
  - test (French-English code-mixed by Matrix Language Theory): 17381

### Other Statistics

#### Average Sentence Length

- monolingual
  - train: 19.18714859437751
  - test: 19.321150389454765
- de_ec
  - train: 19.18714859437751
  - test: 11.24314103004882
- de_ml
  - train: 19.18714859437751
  - test: 12.159450980392156
- fr_ec
  - train: 19.18714859437751
  - test: 12.26526564091567
- fr_ml
  - train: 19.18714859437751
  - test: 13.486968528853346

#### Label Split

- monolingual
  - train
    - 0: 498
    - 1: 1992
  - test
    - 0: 1002
    - 1: 4005
- de_ec
  - train
    - 0: 498
    - 1: 1992
  - test
    - 0: 2777
    - 1: 11766
- de_ml
  - train
    - 0: 498
    - 1: 1992
  - test
    - 0: 2329
    - 1: 10421
- fr_ec
  - train
    - 0: 498
    - 1: 1992
  - test
    - 0: 3322
    - 1: 15331
- fr_ml
  - train
    - 0: 498
    - 1: 1992
  - test
    - 0: 2788
    - 1: 14593
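The counts above show that the two classes are imbalanced in every split. The short sketch below, which is illustrative and not part of the dataset tooling, computes the share of label 0 (spoken) from those counts, which may help when choosing metrics or class weights.

```python
# Label counts copied from the "Label Split" list (0: spoken, 1: written).
LABEL_COUNTS = {
    "train (all configs)": {0: 498, 1: 1992},
    "monolingual test": {0: 1002, 1: 4005},
    "de_ec test": {0: 2777, 1: 11766},
    "de_ml test": {0: 2329, 1: 10421},
    "fr_ec test": {0: 3322, 1: 15331},
    "fr_ml test": {0: 2788, 1: 14593},
}


def spoken_fraction(counts: dict[int, int]) -> float:
    """Fraction of examples labelled 0 (spoken)."""
    return counts[0] / (counts[0] + counts[1])


fractions = {name: spoken_fraction(c) for name, c in LABEL_COUNTS.items()}
for name, fraction in fractions.items():
    print(f"{name}: {fraction:.3f} spoken")
```

The training split is exactly one spoken sentence for every four written ones, and the test splits fall somewhat below that ratio.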

## Dataset Creation

### Curation Rationale

Using the XNLI Parallel Corpus, we generated a code-mixed corpus using CodeMixed Text Generator, and sampled a maximum of 30 sentences per original English sentence.

The XNLI Parallel Corpus is available here:

https://huggingface.co/datasets/nanakonoda/xnli_parallel

It was created from the XNLI corpus.

More information is available in the datacard for the XNLI Parallel Corpus.

Here is the link and citation for the original CodeMixed Text Generator paper.

https://github.com/microsoft/CodeMixed-Text-Generator

```

@inproceedings{rizvi-etal-2021-gcm,

title = "{GCM}: A Toolkit for Generating Synthetic Code-mixed Text",

author = "Rizvi, Mohd Sanad Zaki and

Srinivasan, Anirudh and

Ganu, Tanuja and

Choudhury, Monojit and

Sitaram, Sunayana",

booktitle = "Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations",

month = apr,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.eacl-demos.24",

pages = "205--211",

abstract = "Code-mixing is common in multilingual communities around the world, and processing it is challenging due to the lack of labeled and unlabeled data. We describe a tool that can automatically generate code-mixed data given parallel data in two languages. We implement two linguistic theories of code-mixing, the Equivalence Constraint theory and the Matrix Language theory to generate all possible code-mixed sentences in the language-pair, followed by sampling of the generated data to generate natural code-mixed sentences. The toolkit provides three modes: a batch mode, an interactive library mode and a web-interface to address the needs of researchers, linguists and language experts. The toolkit can be used to generate unlabeled text data for pre-trained models, as well as visualize linguistic theories of code-mixing. We plan to release the toolkit as open source and extend it by adding more implementations of linguistic theories, visualization techniques and better sampling techniques. We expect that the release of this toolkit will help facilitate more research in code-mixing in diverse language pairs.",

}

```

### Source Data

XNLI Code-Mixed Corpus

https://huggingface.co/datasets/nanakonoda/xnli_cm

XNLI Parallel Corpus

https://huggingface.co/datasets/nanakonoda/xnli_parallel

#### Original Source Data

XNLI Parallel Corpus was created using the XNLI Corpus.

https://github.com/facebookresearch/XNLI

Here is the citation for the original XNLI paper.

```

@InProceedings{conneau2018xnli,

author = "Conneau, Alexis

and Rinott, Ruty

and Lample, Guillaume

and Williams, Adina

and Bowman, Samuel R.

and Schwenk, Holger

and Stoyanov, Veselin",

title = "XNLI: Evaluating Cross-lingual Sentence Representations",

booktitle = "Proceedings of the 2018 Conference on Empirical Methods

in Natural Language Processing",

year = "2018",

publisher = "Association for Computational Linguistics",

location = "Brussels, Belgium",

}

```

#### Initial Data Collection and Normalization

We removed all punctuation from the XNLI Parallel Corpus except apostrophes.
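The card does not say how the punctuation was removed. One way such a step could look is sketched below under two assumptions: "punctuation" means every character in a Unicode punctuation category, and "apostrophes" means the ASCII `'` only. `strip_punctuation` is a hypothetical helper, not the authors' code.

```python
import unicodedata


def strip_punctuation(sentence: str) -> str:
    """Drop every Unicode punctuation character except the ASCII apostrophe."""
    kept = [
        ch for ch in sentence
        if ch == "'" or not unicodedata.category(ch).startswith("P")
    ]
    # Collapse the gaps left behind by removed punctuation.
    return " ".join("".join(kept).split())


cleaned = strip_punctuation("Hello, world! It's fine.")
```

Under these assumptions a typographic apostrophe would be removed as well, so it would need its own exception if the source text contains one.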

#### Who are the source language producers?

N/A

### Annotations

#### Annotation process

N/A

#### Who are the annotators?

N/A

### Personal and Sensitive Information

N/A

## Considerations for Using the Data

### Social Impact of Dataset

N/A

### Discussion of Biases

N/A

### Other Known Limitations

N/A

## Additional Information

### Dataset Curators

N/A

### Licensing Information

N/A

### Citation Information

### Contributions

N/A

|

nanakonoda/xnli_cm_sample

|

[

"task_categories:text-classification",

"annotations_creators:expert-generated",

"language_creators:found",

"multilinguality:multilingual",

"size_categories:1M<n<10M",

"source_datasets:extended|xnli",

"language:en",

"language:de",

"language:fr",

"mode classification",

"aligned",

"code-mixed",

"region:us"

] |

2023-04-14T04:49:35+00:00

|

{"annotations_creators": ["expert-generated"], "language_creators": ["found"], "language": ["en", "de", "fr"], "license": [], "multilinguality": ["multilingual"], "size_categories": ["1M<n<10M"], "source_datasets": ["extended|xnli"], "task_categories": ["text-classification"], "task_ids": [], "pretty_name": "XNLI Code-Mixed Corpus (Sampled)", "tags": ["mode classification", "aligned", "code-mixed"], "dataset_info": [{"config_name": "monolingual", "features": [{"name": "text", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 317164, "num_examples": 2490}, {"name": "test", "num_bytes": 641496, "num_examples": 5007}], "download_size": 891209, "dataset_size": 958660}, {"config_name": "de_ec", "features": [{"name": "text", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 317164, "num_examples": 2490}, {"name": "test", "num_bytes": 1136549, "num_examples": 14543}], "download_size": 1298619, "dataset_size": 1453713}, {"config_name": "de_ml", "features": [{"name": "text", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 317164, "num_examples": 2490}, {"name": "test", "num_bytes": 1068937, "num_examples": 12750}], "download_size": 1248962, "dataset_size": 1386101}, {"config_name": "fr_ec", "features": [{"name": "text", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 317164, "num_examples": 2490}, {"name": "test", "num_bytes": 1520429, "num_examples": 18653}], "download_size": 1644995, "dataset_size": 1837593}, {"config_name": "fr_ml", "features": [{"name": "text", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 317164, "num_examples": 2490}, {"name": "test", "num_bytes": 1544539, "num_examples": 17381}], "download_size": 1682885, "dataset_size": 1861703}], "download_size": 891209, "dataset_size": 958660}

|

2023-05-01T21:13:21+00:00

|

b0e7aaae7d4e6075dde902f4a72528c8d3c8b645

|

dmxlc3M6Ly85YjE0MDE5ZS03OTE1LTRmNzAtYjlhYS1jNTBjNTdlYWQ3N2ZAanAuYWluZXdlcmEuY246NDQzP2VuY3J5cHRpb249bm9uZSZzZWN1cml0eT10bHMmc25pPWpwLmFpbmV3ZXJhLmNuJmZwPWNocm9tZSZ0eXBlPXdzJmhvc3Q9anAuYWluZXdlcmEuY24mcGF0aD0lMkZ0cHpud3MjSlAtQWluZXdlcmElMjBWTEVTU19XUw==

|

DikaZ/vle

|

[

"region:us"

] |

2023-04-14T05:08:44+00:00

|

{}

|

2024-01-26T18:04:44+00:00

|

c469e777afc4abb3e935df325f4ff783a3727e5d

|

# Dataset Card for "diffsion_from_scratch"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

lansinuote/diffsion_from_scratch

|

[

"region:us"

] |

2023-04-14T05:34:05+00:00

|

{"dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 119417305.0, "num_examples": 833}], "download_size": 99672356, "dataset_size": 119417305.0}}

|

2023-04-14T05:36:47+00:00

|

e6768fe915c4c09355993cf2438a93f9332b535e

|

# XSUM NO

A Norwegian summarization dataset custom-made for evaluating or fine-tuning GPT models.

## Data Collection

Data was scraped from Aftenposten.no and Vg.no; the summary column consists of each article's title and ingress (the lead paragraph).

## How to Use

```python

from datasets import load_dataset

data = load_dataset("MasterThesisCBS/XSum_NO")

```
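
Once loaded, each record can be turned into a (source, reference) pair for summarization evaluation. The sketch below assumes the `body` and `summary` string fields listed in the dataset metadata; the helper name `to_eval_pair` and the sample record are illustrative only and are not part of the dataset.

```python
def to_eval_pair(record):
    """Return (source_text, reference_summary) from one dataset record.

    Assumes the record is a dict with `body` and `summary` string fields.
    """
    source = (record.get("body") or "").strip()
    reference = (record.get("summary") or "").strip()
    return source, reference


# Hypothetical record for illustration only (not taken from the dataset).
sample = {
    "title": "Tittel",
    "lead": "Ingress.",
    "body": " Artikkeltekst. ",
    "summary": "Tittel Ingress.",
}
source, reference = to_eval_pair(sample)
```

With a real split, the same helper could be applied per row, for example over `data["test"]`.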

### Dataset Curators

[John Oskar Holmen Skjeldrum](mailto:[email protected]) and [Peder Tanberg](mailto:[email protected])

|

MasterThesisCBS/XSum_NO

|

[

"task_categories:text-generation",

"task_categories:summarization",

"language:no",

"language:nb",

"license:cc-by-4.0",

"summarization",

"region:us"

] |

2023-04-14T05:38:00+00:00

|

{"language": ["no", "nb"], "license": "cc-by-4.0", "task_categories": ["text-generation", "summarization"], "pretty_name": "XSUM Norwegian", "tags": ["summarization"], "dataset_info": {"features": [{"name": "title", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "timestamp", "dtype": "string"}, {"name": "body", "dtype": "string"}, {"name": "lead", "dtype": "string"}, {"name": "body_length", "dtype": "float64"}, {"name": "summary", "dtype": "string"}, {"name": "prompt_train", "dtype": "string"}, {"name": "prompt_test", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 284661834, "num_examples": 64070}, {"name": "test", "num_bytes": 14882449, "num_examples": 3373}], "download_size": 186192491, "dataset_size": 299544283}}

|

2023-04-16T09:34:50+00:00

|

a37ec0f7f586d36757be8e636d6001cc40b6194d

|

alpindale/light-novels

|

[

"license:creativeml-openrail-m",

"region:us"

] |

2023-04-14T06:40:01+00:00

|

{"license": "creativeml-openrail-m"}

|

2023-04-14T17:46:15+00:00

|

|

e70e188295ea831f4979b99a49dc319830e44fb2

|

# Amazon ESCI/ESCI-S dataset

A combination of [Amazon ESCI](https://github.com/amazon-science/esci-data) and [ESCI-S](https://github.com/shuttie/esci-s) datasets in a JSON format.

Used for fine-tuning bi- and cross-encoder models in the [Metarank](https://huggingface.co/metarank) project.

## Dataset format

The dataset is encoded in a JSON-line format, where each row is a single ranking event,

with all item metadata pre-joined. An example:

```json

{

"query": "!qscreen fence without holes",

"e": [

{

"title": "Zippity Outdoor Products ZP19026 Lightweight Portable Vinyl Picket Fence Kit w/Metal Base(42\" H x 92\" W), White",

"desc": "..."

},

{

"title": "Sunnyglade 6 feet x 50 feet Privacy Screen Fence Heavy Duty Fencing Mesh Shade Net Cover for Wall Garden Yard Backyard (6 ft X 50 ft, Green)",

"desc": "..."

},

{

"title": "Amgo 6' x 50' Black Fence Privacy Screen Windscreen,with Bindings & Grommets, Heavy Duty for Commercial and Residential, 90% Blockage, Cable Zip Ties Included, (Available for Custom Sizes)",

"desc": "..."

},

{

"title": "Amgo 4' x 50' Black Fence Privacy Screen Windscreen,with Bindings & Grommets, Heavy Duty for Commercial and Residential, 90% Blockage, Cable Zip Ties Included, (Available for Custom Sizes)",

"desc": "..."

}

]

}

```
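
A minimal sketch of reading this format, assuming one JSON object per line with the `query`, `e`, and `title` keys shown in the example above. The function name `iter_query_title_pairs` is hypothetical, and the in-memory string stands in for a real file.

```python
import json


def iter_query_title_pairs(lines):
    """Yield (query, title) pairs from JSON-lines ranking events."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between events
        event = json.loads(line)
        for item in event.get("e", []):
            yield event["query"], item["title"]


# Tiny in-memory example with the same shape as the sample event.
raw = '{"query": "fence", "e": [{"title": "A", "desc": "..."}, {"title": "B", "desc": "..."}]}\n'
pairs = list(iter_query_title_pairs(raw.splitlines()))
```

Pairs like these are one plausible input for scoring query-item relevance with a cross-encoder; how labels are attached depends on fields not shown in the example.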

## License

Apache 2.0

|

metarank/esci

|

[

"size_categories:10K<n<100K",

"language:en",

"license:apache-2.0",

"shopping",

"ranking",

"amazon",

"region:us"

] |

2023-04-14T07:30:46+00:00

|

{"language": ["en"], "license": "apache-2.0", "size_categories": ["10K<n<100K"], "pretty_name": "ESCI", "tags": ["shopping", "ranking", "amazon"]}

|

2023-04-14T07:45:10+00:00

|

9706bb83f87039fdc84e371aaab8d35400e8635f

|

armonia/wasm-smart-contract

|

[

"license:mit",

"region:us"

] |

2023-04-14T07:35:44+00:00

|

{"license": "mit"}

|

2023-04-14T07:35:44+00:00

|

|

9b6554a29c39954f4d17a9d2c93cba0b872c0e77

|

ChigozieAnyaejiP/Interview_questions

|

[

"task_categories:question-answering",

"size_categories:n<1K",

"language:en",

"license:mit",

"region:us"

] |

2023-04-14T07:37:27+00:00

|

{"language": ["en"], "license": "mit", "size_categories": ["n<1K"], "task_categories": ["question-answering"]}

|

2023-04-17T08:44:40+00:00

|

|

610846e5ff81a6307585bb8c466bbc2ceb50fddf

|

# h2oGPT Data Card

## Summary

H2O.ai's `h2ogpt-oig-instruct-cleaned` is an open-source instruct-type dataset for fine-tuning of large language models, licensed for commercial use.

- Number of rows: `195436`

- Number of columns: `1`

- Column names: `['input']`

## Source

- [Original LAION OIG Dataset](https://github.com/LAION-AI/Open-Instruction-Generalist)

- [LAION OIG data detoxed and filtered down by scripts in h2oGPT repository](https://github.com/h2oai/h2ogpt/blob/b8f15efcc305a953c52a0ee25b8b4897ceb68c0a/scrape_dai_docs.py)

|

h2oai/h2ogpt-oig-instruct-cleaned

|

[

"language:en",

"license:apache-2.0",

"gpt",

"llm",

"large language model",

"open-source",

"region:us"

] |

2023-04-14T07:43:25+00:00

|

{"language": ["en"], "license": "apache-2.0", "thumbnail": "https://h2o.ai/etc.clientlibs/h2o/clientlibs/clientlib-site/resources/images/favicon.ico", "tags": ["gpt", "llm", "large language model", "open-source"]}

|

2023-04-19T03:42:50+00:00

|

a91bed2d6c8ca8c178337fd8bfe0e2682074d4b9

|

# Dataset Card for "java_unifiedbug_5-1"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

nguyenminh871/java_unifiedbug_5-1

|

[

"region:us"

] |

2023-04-14T07:59:11+00:00

|

{"dataset_info": {"features": [{"name": "Unnamed: 0", "dtype": "int64"}, {"name": "func", "dtype": "string"}, {"name": "target", "dtype": {"class_label": {"names": {"0": true, "1": false}}}}, {"name": "project", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 20529988.026214454, "num_examples": 6699}, {"name": "test", "num_bytes": 6843329.342071485, "num_examples": 2233}, {"name": "validation", "num_bytes": 6846393.976796999, "num_examples": 2234}], "download_size": 0, "dataset_size": 34219711.34508294}}

|

2023-04-19T02:22:09+00:00

|

1ef8009372dc207ed84b947953c809dc0d0d6ec2

|

# Dataset Card for "shp_with_features_20k"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

tomekkorbak/shp_with_features_20k

|

[

"region:us"

] |

2023-04-14T08:02:03+00:00

|

{"dataset_info": {"features": [{"name": "post_id", "dtype": "string"}, {"name": "domain", "dtype": "string"}, {"name": "upvote_ratio", "dtype": "float64"}, {"name": "history", "dtype": "string"}, {"name": "c_root_id_A", "dtype": "string"}, {"name": "c_root_id_B", "dtype": "string"}, {"name": "created_at_utc_A", "dtype": "int64"}, {"name": "created_at_utc_B", "dtype": "int64"}, {"name": "score_A", "dtype": "int64"}, {"name": "score_B", "dtype": "int64"}, {"name": "human_ref_A", "dtype": "string"}, {"name": "human_ref_B", "dtype": "string"}, {"name": "labels", "dtype": "int64"}, {"name": "seconds_difference", "dtype": "float64"}, {"name": "score_ratio", "dtype": "float64"}, {"name": "helpfulness_A", "dtype": "float64"}, {"name": "helpfulness_B", "dtype": "float64"}, {"name": "specificity_A", "dtype": "float64"}, {"name": "specificity_B", "dtype": "float64"}, {"name": "intent_A", "dtype": "float64"}, {"name": "intent_B", "dtype": "float64"}, {"name": "factuality_A", "dtype": "float64"}, {"name": "factuality_B", "dtype": "float64"}, {"name": "easy-to-understand_A", "dtype": "float64"}, {"name": "easy-to-understand_B", "dtype": "float64"}, {"name": "relevance_A", "dtype": "float64"}, {"name": "relevance_B", "dtype": "float64"}, {"name": "readability_A", "dtype": "float64"}, {"name": "readability_B", "dtype": "float64"}, {"name": "enough-detail_A", "dtype": "float64"}, {"name": "enough-detail_B", "dtype": "float64"}, {"name": "biased:_A", "dtype": "float64"}, {"name": "biased:_B", "dtype": "float64"}, {"name": "fail-to-consider-individual-preferences_A", "dtype": "float64"}, {"name": "fail-to-consider-individual-preferences_B", "dtype": "float64"}, {"name": "repetetive_A", "dtype": "float64"}, {"name": "repetetive_B", "dtype": "float64"}, {"name": "fail-to-consider-context_A", "dtype": "float64"}, {"name": "fail-to-consider-context_B", "dtype": "float64"}, {"name": "too-long_A", "dtype": "float64"}, {"name": "too-long_B", "dtype": "float64"}, {"name": 
"__index_level_0__", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 20532157.0, "num_examples": 9459}, {"name": "test", "num_bytes": 20532157.0, "num_examples": 9459}], "download_size": 23638147, "dataset_size": 41064314.0}}

|

2023-04-14T08:02:38+00:00

|

bf4ab5bc29be00f3c0d27b7adc33bd59cd528608

|

# Dataset Card for "chunk_240"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_240

|

[

"region:us"

] |

2023-04-14T08:28:04+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 27411234768.125, "num_examples": 285391}], "download_size": 25751179542, "dataset_size": 27411234768.125}}

|

2023-04-14T08:43:00+00:00

|

fa4da4b0154baa874b3fab4b45e21ef8fa68b9a6

|

Quake24/paraphrasedTwitter

|

[

"license:apache-2.0",

"region:us"

] |

2023-04-14T08:49:09+00:00

|

{"license": "apache-2.0"}

|

2023-04-14T08:49:50+00:00

|

|

087755e95af101619497cc0680afcf25674144a6

|

Quake24/paraphrasedPayPal

|

[

"license:apache-2.0",

"region:us"

] |

2023-04-14T08:58:45+00:00

|

{"license": "apache-2.0"}

|

2023-04-14T09:07:21+00:00

|

|

4ef6c763011ea5ade96d8a1b586bf3aaeb8ac42d

|

# Dataset Card for "SheepsDiffusionNet"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

GreeneryScenery/SheepsDiffusionNet

|

[

"region:us"

] |

2023-04-14T09:18:49+00:00

|

{"dataset_info": {"features": [{"name": "prompt", "dtype": "string"}, {"name": "image", "dtype": "image"}, {"name": "conditioning_image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 8712517143.812, "num_examples": 32719}], "download_size": 8690399921, "dataset_size": 8712517143.812}}

|

2023-04-14T10:33:33+00:00

|

993e3168e921d84ca60474ece7db824ea5fcc6ed

|

mstz/segment

|

[

"license:cc-by-4.0",

"region:us"

] |

2023-04-14T09:21:45+00:00

|

{"license": "cc-by-4.0"}

|

2023-04-14T09:25:43+00:00

|

|

a3388b88f0f44e979f3b010fade27e38e973ca2d

|

# Dataset Card for "arquivo_news"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

jfecunha/arquivo_news

|

[

"region:us"

] |

2023-04-14T09:24:40+00:00

|

{"dataset_info": {"features": [{"name": "image_id", "dtype": "int64"}, {"name": "words", "sequence": "string"}, {"name": "bboxes", "sequence": {"sequence": "int64"}}, {"name": "labels", "sequence": "float64"}, {"name": "image", "dtype": "binary"}, {"name": "image_path", "dtype": "string"}, {"name": "source", "dtype": "string"}, {"name": "__index_level_0__", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 419370110, "num_examples": 528}, {"name": "test", "num_bytes": 110356586, "num_examples": 133}], "download_size": 509864102, "dataset_size": 529726696}}

|

2023-05-03T14:29:12+00:00

|

979d9154fc3a69f70d7430a2edd72a4859d6d687

|

# Dataset Card for "Modified-Caption-Train-Set"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

JerryMo/Modified-Caption-Train-Set

|

[

"region:us"

] |

2023-04-14T09:32:55+00:00

|

{"dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 113638008.565, "num_examples": 2485}], "download_size": 112605764, "dataset_size": 113638008.565}}

|

2023-04-15T05:38:47+00:00

|

e8dde316f685a0ef45767016e206ce61a78af3d0

|

# Landsat

The [Landsat dataset](https://archive-beta.ics.uci.edu/dataset/146/statlog+landsat+satellite) from the [UCI repository](https://archive-beta.ics.uci.edu/).

# Configurations and tasks

| **Configuration** | **Task** | **Description** |

|-----------------------|---------------------------|-------------------------|

| landsat | Multiclass classification.| |

| landsat_0 | Binary classification. | Is the image of class 0? |

| landsat_1 | Binary classification. | Is the image of class 1? |

| landsat_2 | Binary classification. | Is the image of class 2? |

| landsat_3 | Binary classification. | Is the image of class 3? |

| landsat_4 | Binary classification. | Is the image of class 4? |

| landsat_5 | Binary classification. | Is the image of class 5? |
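
The binary configurations read as one-vs-rest versions of the multiclass task. A minimal sketch of that relabeling, assuming integer class labels; the function name `to_binary_labels` is hypothetical, and this is not necessarily how the dataset's loading script builds the binary configurations.

```python
def to_binary_labels(labels, positive_class):
    """Map multiclass labels to 1 (is positive_class) or 0 (any other class)."""
    return [1 if label == positive_class else 0 for label in labels]


# Illustrative labels only; a `landsat_3`-style target marks class 3 as positive.
multiclass = [0, 3, 5, 3, 1]
landsat_3_style = to_binary_labels(multiclass, positive_class=3)
```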

|

mstz/landsat

|

[

"task_categories:tabular-classification",

"size_categories:1K<n<10K",

"language:en",

"license:cc",

"landsat",

"tabular_classification",

"binary_classification",

"multiclass_classification",

"UCI",

"region:us"

] |

2023-04-14T09:42:16+00:00

|

{"language": ["en"], "license": "cc", "size_categories": ["1K<n<10K"], "task_categories": ["tabular-classification"], "pretty_name": "Landsat", "tags": ["landsat", "tabular_classification", "binary_classification", "multiclass_classification", "UCI"], "configs": ["landsat", "landsat_binary"]}

|

2023-04-16T16:33:23+00:00

|

b0450ad3c59980bd6975e5f6756b779766fe4d26

|

### Dataset Summary

A collection of datasets preprocessed and formatted as ChatML (https://github.com/openai/openai-python/blob/main/chatml.md), with an added context message to help RWKV, which has no lookback.

The dataset makes use of two more tokens. You will need to use the supplied 20b_tokeniser file with both training and inference.

### Languages

Mainly English; there may be a few bits of other languages.

### Things to do

1. Improve system prompt effect on output.

2. Get more reasoning data.

3. More data!

### Format

Example below

```

<|im_start|>system

You are a teacher.<|im_end|>

<|im_start|>user

Analyse the following data to answer the question "Which homecoming-related traditions are illegal?":

<data>

Dartmouth Night starts the college's traditional \"Homecoming\" weekend with an evening of speeches, a parade, and a bonfire. Traditionally, the freshman class builds the bonfire and then runs around it a set number of times in concordance with their class year; the class of 2009 performed 109 circuits, the class of 1999 performed 99, etc. The College officially discourages a number of student traditions of varying degrees of antiquity. During the circling of the bonfire, upperclassmen encourage the freshmen to \"touch the fire\", an action legally considered trespassing and prohibited by police officials present. At halftime of the Homecoming football game on the Saturday of the weekend, some upperclassmen encourage freshman to \"rush the field\", although no upperclassman has seen a significant rush since several injuries sustained during the 1986 rush prompted the school to ban the practice. Among the two or three students who sometimes run across the field, those who are arrested are charged with trespassing (the independent newspaper The Dartmouth Review claimed to set up a fund to automatically pay any fines associated with freshman who rush the field.) However, in 2012 this was proven false when two students rushed the field and were arrested for disorderly conduct. The Dartmouth Review ignored their emails until finally replying and denying that this fund had ever existed. These students then had to pay $300 fines out of pocket. For the 2011 Homecoming game, however, over 40 members of the Class of 2015 rushed the field at homecoming without any action taken by Safety and Security or the Hanover Police Department.

</data>

Which homecoming-related traditions are illegal?

<|im_end|>

<|im_start|>assistant

Touching the bonfire, and rushing the football field during halftime of the homecoming game<|im_end|>

```
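
The layout above can be produced from a list of role/content messages. This is a sketch under assumptions: the function name `format_chatml` is hypothetical, and the exact whitespace before `<|im_end|>` in the real data may differ from what is emitted here.

```python
def format_chatml(messages):
    """Render a list of {"role", "content"} dicts in the ChatML-style layout."""
    parts = []
    for message in messages:
        # Each turn is wrapped in <|im_start|>ROLE ... <|im_end|>.
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>")
    return "\n".join(parts)


conversation = [
    {"role": "system", "content": "You are a teacher."},
    {"role": "user", "content": "What is 2 + 2?"},
    {"role": "assistant", "content": "4"},
]
text = format_chatml(conversation)
```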

|

m8than/raccoon_instruct_conversation

|

[

"region:us"

] |

2023-04-14T09:43:38+00:00

|

{}

|

2023-09-23T02:52:13+00:00

|

d1140e25cb2557e5503c7c766e2f78da5b084dd3

|

On Windows, it should run with the executable from [ggml-japanese-gpt2](https://github.com/thx-pw/ggml/releases).

Download and save the ggml-format bin file and the SentencePiece-format model file that share the same file name.

Usage is as follows.

```

gpt-2.exe -m ggml-model-japanese-gpt2-medium-f16.bin -p "こんにちは"

```

The file format of `ggml-model-japanese-gpt2-xsmall` is currently broken, so it will not run even if you download it.

This will be fixed later.

|

inu-ai/ggml-japanese-gpt2

|

[

"language:ja",

"license:mit",

"region:us"

] |

2023-04-14T09:51:10+00:00

|

{"language": ["ja"], "license": "mit"}

|

2023-04-14T17:11:40+00:00

|

21724fafe6793c9fa62dcf844cd11762f3ccabb8

|

# AutoTrain Dataset for project: beproj_meeting_summarization_usingt5

## Dataset Description

This dataset has been automatically processed by AutoTrain for project beproj_meeting_summarization_usingt5.

### Languages

The BCP-47 code for the dataset's language is en.

## Dataset Structure

### Data Instances

A sample from this dataset looks as follows:

```json

[

{

"feat_id": "16e6a86e9189b5566c19bc7fc48d923139da9bd2",

"text": "(CNN)A TV series based on the 1999 sci-fi film \"Galaxy Quest\" is in the works at Paramount Television. The DreamWorks film centered on the cast of a canceled space TV show who are accidentally sent to a spaceship and must save an alien nation. TV Land's 'Younger' renewed for second season . The film's scribe Robert Gordon is expected to write the TV version and executive produce with the film's director Dean Parisot, producer Mark Johnson and Johnson's producing partner Melissa Bernstein. 'The Voice' coaches CeeLo Green, Gwen Stefani and Usher to return . The film starred Tim Allen, Sigourney Weaver, Alan Rickman, Tony Shalhoub, Sam Rockwell, Daryl Mitchell and Enrico Colantoni. PBS to conduct \"Internal Review\" over Ben Affleck's request to hide slave-owner ancestry . \"Galaxy Quest\" is the latest movie to be adapted for the small screen. This pilot season, ABC has \"Uncle Buck,\" CBS has \"Rush Hour\" and Fox has \"Minority Report.\" Paramount Television specifically has turned several of the studio's hit films into TV series. \"School of Rock\" will debut on Nickelodeon later this year, and USA recently ordered a pilot for \"Shooter,\" based on the Mark Wahlberg film. \u00a92015 The Hollywood Reporter. All rights reserved.",

"target": "\"Galaxy Quest\" TV series in the works .\nShow would be based on the cult classic 1999 sci-fi comedy ."

},

{

"feat_id": "3815d19af18ff22be6ad6095722d7367bb7271af",

"text": "A paramedic who pretended he was gay to get close to women before sexually assaulting them has been struck off the medical register. Christopher Bridger, 25, from Stevenage, Hertfordshire, attacked three women after separate drinking sessions and was jailed for 12 years after being convicted of rape and four other abuse charges last year. The HCPC Conduct and Competence Committee today removed him from the register after hearing his crimes and describing them as 'a serious breach of trust'. Christopher Bridger, 25, who was jailed for 12 years after he sexually assaulted three women, has been struck off the medical register . A jury at Guildford Crown Court, Surrey, found him guilty of raping a fellow student while he was studying to be a paramedic at St George's University Hospital in London in 2008. He had accompanied her back to her halls following a Freshers' Week fancy dress party and began kissing and cuddling her, despite being told to stop. He then raped her but astonishingly broke down in tears afterwards and said: 'I just want to like girls.' The woman told the jury she ended up comforting Bridger, despite knowing he was in the wrong. His other victims were co-workers at South East Coast Ambulance Service NHS Trust, where he started working in 2010. A lesbian colleague told the court she was molested by Bridger after a staff Christmas party while her girlfriend was in the same hotel bed. The HCPC Conduct and Competence Committee found his crimes were a 'serious breach of trust' The women, aged in their 20s - who cannot be named for legal reasons - were forced to relive their ordeals after the ambulance worker accused them of lying during a trial in July last year. His colleague explained how Bridger came up to her hotel room after she got extremely intoxicated at the party in December 2011. 
He climbed into bed between his victim and her partner and the woman awoke to find him sexually assaulting her and pleasuring himself as her girlfriend lay asleep next to them. She kept quiet, fearing her partner wouldn't understand what had happened, but the day after on his birthday, he sheepishly sent the woman a number of text messages apologising for his behaviour. One text said: 'It was one night of stupidity for which I will be eternally sorry.' Another said: 'You don't have to forgive me, I'm just telling you the truth. I'm ashamed of myself.' His final victim was also a colleague from the South East Coast Ambulance Service, who said she was sexually assaulted after she allowed him to stay at her house after a dinner in October 2012. Bridger was suspended from work after the incidents were reported to South East Coast Ambulance Services bosses in 2012. He was jailed for 12 years and ordered to sign the Sex Offenders' Register for life but failed to attend today's medical register hearing. Striking him off, chair of the HCPC panel, Nicola Bastin said: 'The panel has heard that the offences were committed against three vulnerable young women who were known to the registrant as friends and colleagues including a student paramedic. This represented a serious breach of trust. 'The panel has also heard that the women were vulnerable due to the effects of alcohol and that one of the offences was committed when the woman was asleep. 'The panel has considered this case very carefully and cannot find any redeeming features on the part of the registrant. A jury at Guildford Crown Court, Surrey, found him guilty of rape and four other sex abuse charges . 'The panel takes the view that this case is serious, it does indeed involve abuse of trust, sexual abuse of a serious nature and, furthermore, there is no evidence of insight on the part of the registrant.' 
The HCPC panel chairman Brian Wroe added: 'The registrant entered a plea of not guilty to each of the charges and was found guilty following a 13 day trial. 'This showed Christopher Bridger lacks the insight into the circumstances which resulted in the convictions and does not take responsibility for his actions.' When he was sentenced in September, Mr Recorder Mark Milliken-Smith told him: 'These were wicked, mean and utterly cowardly offences which have and will have serious consequences on these young women and those around them for a very long time.'",

"target": "Christopher Bridger, 25, attacked three women after drinking sessions .\nHe was convicted of rape and four other abuse charges at court last year .\nAmbulance worker told women he was gay before assaulting them in bed .\nHCPC Conduct and Competence Committee removed him from register .\nPanel described crimes against three women as 'a serious breach of trust'"

}

]

```

### Dataset Fields

The dataset has the following fields (also called "features"):

```json

{

"feat_id": "Value(dtype='string', id=None)",

"text": "Value(dtype='string', id=None)",

"target": "Value(dtype='string', id=None)"

}

```

### Dataset Splits

This dataset is split into a train and a validation split. The split sizes are as follows:

| Split name | Num samples |

| ------------ | ------------------- |

| train | 2400 |

| valid | 600 |

|

ishajo/autotrain-data-beproj_meeting_summarization_usingt5

|

[

"task_categories:summarization",

"language:en",

"region:us"

] |

2023-04-14T09:57:03+00:00

|

{"language": ["en"], "task_categories": ["summarization"]}

|

2023-04-14T09:59:49+00:00

|

a83a833ca1b2688cd5fcb65e8176745a19b7ead2

|

Madiator2011/lyoko-dataset

|

[

"task_categories:question-answering",

"language:en",

"license:mit",

"region:us"

] |

2023-04-14T10:03:49+00:00

|

{"language": ["en"], "license": "mit", "task_categories": ["question-answering"], "pretty_name": "Lyoko Wiki"}

|

2023-04-14T10:07:01+00:00

|

|

ca947c50163da32281216ebe1cf081f9c352406b

|

Vector store of embeddings for CFA Level 1 Curriculum

This is a FAISS vector store created with Sentence Transformer embeddings using LangChain. Use it for similarity search, question answering or anything else that leverages embeddings! 😃

Creating these embeddings can take a while so here's a convenient, downloadable one 🤗

How to use

Download data

Load to use with LangChain

```
pip install -qqq langchain sentence_transformers faiss-cpu huggingface_hub
```

```python
import os

from langchain.embeddings import HuggingFaceEmbeddings, HuggingFaceInstructEmbeddings
from langchain.vectorstores.faiss import FAISS
from huggingface_hub import snapshot_download

# download the vectorstore for the book you want
# NOTE: `book` is never defined in the original snippet; set it to the name of
# the book folder under `books/` that you want before running this call.
cache_dir = "cfa_level_1_cache"
vectorstore = snapshot_download(
    repo_id="nickmuchi/CFA_Level_1_Text_Embeddings",
    repo_type="dataset",
    revision="main",
    allow_patterns=f"books/{book}/*",  # to download only the one book
    cache_dir=cache_dir,
)

# get path to the `vectorstore` folder that you just downloaded
# we'll look inside the `cache_dir` for the folder we want
target_dir = "cfa/cfa_level_1"
target_path = None

# Walk through the directory tree recursively
for root, dirs, files in os.walk(cache_dir):
    # `dirs` holds bare folder names, so a nested path such as "cfa/cfa_level_1"
    # is checked by joining it onto the current root instead
    candidate = os.path.join(root, target_dir)
    if os.path.isdir(candidate):
        # Keep the full path of the target directory and stop searching
        target_path = candidate
        break

# load embeddings
# this is what was used to create embeddings for the text
embed_instruction = "Represent the financial paragraph for document retrieval: "
query_instruction = "Represent the question for retrieving supporting documents: "

model_sbert = "sentence-transformers/all-mpnet-base-v2"
sbert_emb = HuggingFaceEmbeddings(model_name=model_sbert)

model_instr = "hkunlp/instructor-large"
instruct_emb = HuggingFaceInstructEmbeddings(
    model_name=model_instr,
    embed_instruction=embed_instruction,
    query_instruction=query_instruction,
)

# load vector store to use with langchain
docsearch = FAISS.load_local(folder_path=target_path, embeddings=sbert_emb)

# similarity search
question = "How do you hedge the interest rate risk of an MBS?"
search = docsearch.similarity_search(question, k=4)

for item in search:
    print(item.page_content)
    print(f"From page: {item.metadata['page']}")
    print("---")
```

|

nickmuchi/CFA_Level_1_Text_Embeddings

|

[

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:conversational",

"task_categories:sentence-similarity",

"language:en",

"license:apache-2.0",

"faiss",

"langchain",

"instructor embeddings",

"vector stores",

"LLM",

"region:us"

] |

2023-04-14T10:26:21+00:00

|

{"language": ["en"], "license": "apache-2.0", "task_categories": ["question-answering", "summarization", "conversational", "sentence-similarity"], "pretty_name": "FAISS Vector Store of Embeddings of the Chartered Financial Analysts Level 1 Curriculum", "tags": ["faiss", "langchain", "instructor embeddings", "vector stores", "LLM"]}

|

2023-04-14T10:47:20+00:00

|

362ac0a54642ecb5728e700e9e1dbbed4f459c9c

|

# Dataset Card for "processed_pubmed_scientific_papers"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

JYumeko/processed_pubmed_scientific_papers

|

[

"region:us"

] |

2023-04-14T10:26:43+00:00

|

{"dataset_info": {"features": [{"name": "abstract", "dtype": "string"}, {"name": "summary", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 1713154010, "num_examples": 119924}, {"name": "validation", "num_bytes": 96932057, "num_examples": 6633}, {"name": "test", "num_bytes": 96752765, "num_examples": 6658}], "download_size": 879691152, "dataset_size": 1906838832}}

|

2023-04-14T10:30:48+00:00

|

120b4b160e5cd041f1157e553a9f18a33806d0f9

|

# Dataset Card for "chunk_257"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_257

|

[

"region:us"

] |

2023-04-14T10:36:04+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 20717169408.0, "num_examples": 215696}], "download_size": 18802186566, "dataset_size": 20717169408.0}}

|

2023-04-14T10:53:47+00:00

|

b622388b094179e36a428408b6773892ebe62508

|

UnipaPolitoUnimore/covid_unipa_polito_unimore_mild

|

[

"license:unknown",

"region:us"

] |

2023-04-14T10:49:24+00:00

|

{"license": "unknown"}

|

2023-04-14T11:12:04+00:00

|

|

f32ffe9f47bbe0e72cab9e6faf6aa98121d548c8

|

# Shuttle

The [Shuttle dataset](https://archive-beta.ics.uci.edu/dataset/146/statlog+shuttle+satellite) from the [UCI repository](https://archive-beta.ics.uci.edu/).

# Configurations and tasks

| **Configuration** | **Task** | **Description** |

|-----------------------|---------------------------|-------------------------|

| shuttle | Multiclass classification.| |

| shuttle_0 | Binary classification. | Is the image of class 0? |

| shuttle_1 | Binary classification. | Is the image of class 1? |

| shuttle_2 | Binary classification. | Is the image of class 2? |

| shuttle_3 | Binary classification. | Is the image of class 3? |

| shuttle_4 | Binary classification. | Is the image of class 4? |

| shuttle_5 | Binary classification. | Is the image of class 5? |

| shuttle_6 | Binary classification. | Is the image of class 6? |

|

mstz/shuttle

|

[

"task_categories:tabular-classification",

"size_categories:10K<n<100K",

"language:en",

"license:cc",

"shuttle",

"tabular_classification",

"binary_classification",

"multiclass_classification",

"UCI",

"region:us"

] |

2023-04-14T11:03:39+00:00

|

{"language": ["en"], "license": "cc", "size_categories": ["10K<n<100K"], "task_categories": ["tabular-classification"], "pretty_name": "Shuttle", "tags": ["shuttle", "tabular_classification", "binary_classification", "multiclass_classification", "UCI"], "configs": ["shuttle", "shuttle_binary"]}

|

2023-04-16T16:58:41+00:00

|

e653eeaf1e8b6a3280469d1de6612886669605a9

|

# Dataset Card for "chunk_247"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_247

|

[

"region:us"

] |

2023-04-14T11:17:48+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 15218325360.375, "num_examples": 158445}], "download_size": 13050596541, "dataset_size": 15218325360.375}}

|

2023-04-14T11:36:48+00:00

|

c766e6943b44bf27432a595c0a226e5c50c5405d

|

# Human-vs-Machine

This is a dataset collection created in relation to a bachelor thesis written by Nicolai Thorer Sivesind and Andreas Bentzen Winje. It contains human-produced and machine-generated text samples from two domains: Wikipedia introductions and scientific research abstracts.

Each of the two domains is an existing dataset reformatted for text classification:

[GPT-wiki-intros](https://huggingface.co/datasets/aadityaubhat/GPT-wiki-intro):

+ Generated samples are produced using the GPT-3 model, _text-curie-001_

+ Target content set by title of real Wikipedia introduction and a starter sentence.

+ Target word count of 200 words each.

+ Contains 150k data points of each class.

+ Created by Aaditya Bhat

[ChatGPT-Research-Abstracts](https://huggingface.co/datasets/NicolaiSivesind/ChatGPT-Research-Abstracts):

+ Generated samples are produced using the GPT-3.5 model, _GPT-3.5-turbo-0301_ (a snapshot of the model used in ChatGPT as of 1 March 2023).

+ Target content set by title of real abstract.

+ Target word count equal to that of the human-produced abstract.

+ Contains 10k data points of each class.

+ Created by Nicolai Thorer Sivesind

### Credits

+ [GPT-wiki-intro](https://huggingface.co/datasets/aadityaubhat/GPT-wiki-intro), by Aaditya Bhat

### Citation

Please use the following citation:

```

@misc{sivesind_2023,
    author    = {Nicolai Thorer Sivesind and Andreas Bentzen Winje},
    title     = {Human-vs-Machine},
    year      = 2023,
    publisher = {Hugging Face}
}

```

More information about the dataset will be added once the thesis is finished (end of May 2023).

|

NicolaiSivesind/human-vs-machine

|

[

"task_categories:text-classification",

"size_categories:100K<n<1M",

"language:en",

"license:cc",

"chatgpt",

"gpt",

"research abstracts",

"wikipedia introductions",

"region:us"

] |

2023-04-14T11:24:29+00:00

|

{"language": ["en"], "license": "cc", "size_categories": ["100K<n<1M"], "task_categories": ["text-classification"], "pretty_name": "Human vs Machine - Labled text segments produced by humans and LLMs", "tags": ["chatgpt", "gpt", "research abstracts", "wikipedia introductions"]}

|

2023-05-11T12:03:54+00:00

|

ff323b592a1edb847e5c8979f86c419c7311f312

|

# Dataset Card for "chunk_255"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_255

|

[

"region:us"

] |

2023-04-14T11:55:36+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 17459797536.25, "num_examples": 181782}], "download_size": 14918899973, "dataset_size": 17459797536.25}}

|

2023-04-14T12:17:27+00:00

|

6fae26975634d298ab926f81583fc7f193f6be38

|

# Dataset Card for "chunk_256"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

|

one-sec-cv12/chunk_256

|

[

"region:us"

] |

2023-04-14T12:09:35+00:00

|

{"dataset_info": {"features": [{"name": "audio", "dtype": {"audio": {"sampling_rate": 16000}}}], "splits": [{"name": "train", "num_bytes": 16750098864.875, "num_examples": 174393}], "download_size": 14215175625, "dataset_size": 16750098864.875}}

|

2023-04-14T12:32:40+00:00

|

01bf3e707bfcd1601ac9722b60536df606aab38a

|

This dataset was created by translating "databricks-dolly-15k.jsonl" into Urdu. It is licensed under CC BY 3.0.

.اس ڈیٹا سیٹ کو "ڈیٹابرکس-ڈولی" کو اردو میں ترجمہ کرکے تیار کیا گیا تھا

databricks-dolly-15k https://github.com/databrickslabs/dolly/tree/master/data

|

aaqibsaeed/databricks-dolly-15k-ur

|

[

"license:cc-by-3.0",

"region:us"

] |

2023-04-14T12:13:53+00:00

|

{"license": "cc-by-3.0"}

|

2023-04-14T12:24:03+00:00

|

c0b1111933a7b87bef0e5b3221d8e5f76b5ac27c

|

# Symbolic Instruction Tuning

This is the official repo hosting the datasets used in the paper [From Zero to Hero: Examining the Power of Symbolic Tasks in Instruction Tuning](https://arxiv.org/abs/2304.07995). The training code can be found [here](https://github.com/sail-sg/symbolic-instruction-tuning).

|

sail/symbolic-instruction-tuning

|

[

"license:mit",

"arxiv:2304.07995",

"region:us"

] |

2023-04-14T12:25:45+00:00

|

{"license": "mit"}

|

2023-07-19T06:53:13+00:00

|

bd9e1492d1097af225551c4740f1691d78c0eaff

|

AyoubChLin/20_ag_cnn_tokenize_bart_zeroShot

|

[

"license:apache-2.0",

"region:us"

] |

2023-04-14T12:56:46+00:00

|

{"license": "apache-2.0"}

|

2023-04-14T15:40:30+00:00

|

|

5cdeb6a737bcb9928546ff2aac035f92ac5e32c4

|

### Dataset Summary

First 10k rows of the scientific_papers["pubmed"] dataset. 8:1:1 split (10000:1250:1250).

### Usage

```

from datasets import load_dataset

# Each split is loaded separately by name from the Hugging Face Hub.
train_dataset = load_dataset("ronitHF/pubmed-10k-8.1.1", split="train")
val_dataset = load_dataset("ronitHF/pubmed-10k-8.1.1", split="validation")
test_dataset = load_dataset("ronitHF/pubmed-10k-8.1.1", split="test")

```
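
As a quick sanity check on the split sizes quoted in the summary, the sketch below computes each split's share of the total. The counts are the ones stated above; mapping them to `train`, `validation`, and `test` in that order is an assumption, and `split_fractions` is a hypothetical helper, not part of the dataset.

```python
def split_fractions(sizes: dict) -> dict:
    """Return each split's share of the total number of rows."""
    total = sum(sizes.values())
    return {name: count / total for name, count in sizes.items()}


# Counts as quoted in the dataset summary (10000:1250:1250).
sizes = {"train": 10000, "validation": 1250, "test": 1250}
fractions = split_fractions(sizes)
print(fractions)
```

With these counts the shares come out to 0.8, 0.1, and 0.1, which matches the stated 8:1:1 ratio.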

|

ronitHF/pubmed-10k-8.1.1

|

[

"task_categories:summarization",

"size_categories:1K<n<10K",

"region:us"

] |

2023-04-14T13:07:00+00:00

|

{"size_categories": ["1K<n<10K"], "task_categories": ["summarization"], "pretty_name": "PubMed 10k (8:1:1)"}

|

2023-04-16T17:34:21+00:00

|

8e1763a767682436d4dae87086da55e46fabe593

|

hongyan123/highlights_momentDETR

|

[

"license:mit",

"region:us"

] |

2023-04-14T13:17:50+00:00

|

{"license": "mit"}

|

2023-04-14T13:17:50+00:00

|

|

532411c5bc01809928045c05a72f38708757fc00

|