| sha (string, length 40) | text (string, length 0 to 13.4M) | id (string, length 2 to 117) | tags (list) | created_at (string, length 25) | metadata (string, length 2 to 31.7M) | last_modified (string, length 25) |

|---|---|---|---|---|---|---|

6ebad500e4c26070bf0250887f2ea1add40535e9 | # Dataset Card for "task-pages"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | osanseviero/task-pages | [

"region:us"

] | 2022-11-18T06:58:02+00:00 | {"dataset_info": {"features": [{"name": "CHANNEL_NAME", "dtype": "string"}, {"name": "URL", "dtype": "string"}, {"name": "TITLE", "dtype": "string"}, {"name": "DESCRIPTION", "dtype": "string"}, {"name": "TRANSCRIPTION", "dtype": "string"}, {"name": "SEGMENTS", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 27732, "num_examples": 2}], "download_size": 29958, "dataset_size": 27732}} | 2022-11-18T07:01:05+00:00 |

1b7fe9e45386ba995a6e91128bcfd3b278fb7c42 | # Dataset Card for "azure"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | osanseviero/azure | [

"task_categories:automatic-speech-recognition",

"whisper",

"whispering",

"region:us"

] | 2022-11-18T07:06:59+00:00 | {"task_categories": ["automatic-speech-recognition"], "dataset_info": {"features": [{"name": "CHANNEL_NAME", "dtype": "string"}, {"name": "URL", "dtype": "string"}, {"name": "TITLE", "dtype": "string"}, {"name": "DESCRIPTION", "dtype": "string"}, {"name": "TRANSCRIPTION", "dtype": "string"}, {"name": "SEGMENTS", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 27732, "num_examples": 2}], "download_size": 29958, "dataset_size": 27732}, "tags": ["whisper", "whispering"]} | 2022-11-18T07:07:02+00:00 |

744e1fd35ab07eb9d83860154b4298d453050009 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-2e778dac-2622-46c9-930e-6f9e705a27bf-2018 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T10:00:57+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T10:01:40+00:00 |

f1323cd10266ca8d8e135a7f567210d03a747139 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-61fd61be-9af8-4428-ac3c-2fe701ee60d1-2119 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T10:10:19+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T10:10:55+00:00 |

924ae6077edddf60f3ad2f2cbc54df3825a70930 |

# WikiCAT_es: Spanish Text Classification dataset

## Dataset Description

- **Paper:**

- **Point of Contact:** [email protected]

**Repository**

### Dataset Summary

WikiCAT_es is a Spanish corpus for thematic text classification tasks. It is created automatically from Wikipedia and Wikidata sources, and contains 8401 articles from the Spanish Wikipedia classified under 12 different categories.

This dataset was developed by BSC TeMU as part of the PlanTL project, and is intended to evaluate the capability of language technology (LT) to generate useful synthetic corpora.

### Supported Tasks and Leaderboards

Text classification, Language Model

### Languages

ES - Spanish

## Dataset Structure

### Data Instances

Two json files, one for each split.

### Data Fields

We used a simple model with the article text and associated labels, without further metadata.

#### Example:

<pre>

{'sentence': 'La economía de Reunión se ha basado tradicionalmente en la agricultura. La caña de azúcar ha sido el cultivo principal durante más de un siglo, y en algunos años representa el 85% de las exportaciones. El gobierno ha estado impulsando el desarrollo de una industria turística para aliviar el alto desempleo, que representa más del 40% de la fuerza laboral.(...) El PIB total de la isla fue de 18.800 millones de dólares EE.UU. en 2007.', 'label': 'Economía'}

</pre>

#### Labels

'Religión', 'Entretenimiento', 'Música', 'Ciencia_y_Tecnología', 'Política', 'Economía', 'Matemáticas', 'Humanidades', 'Deporte', 'Derecho', 'Historia', 'Filosofía'
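Most classifiers expect integer class ids rather than label strings. The following is a minimal sketch of one way to encode the labels above; the id assignment (list order) and the helper name `encode_example` are assumptions for illustration, not part of the dataset.

```python
# Label names copied from the list above; the integer ids are an arbitrary choice.
LABELS = [
    "Religión", "Entretenimiento", "Música", "Ciencia_y_Tecnología",
    "Política", "Economía", "Matemáticas", "Humanidades",
    "Deporte", "Derecho", "Historia", "Filosofía",
]

label2id = {name: idx for idx, name in enumerate(LABELS)}
id2label = {idx: name for name, idx in label2id.items()}


def encode_example(example):
    """Return a copy of a {'sentence', 'label'} record with an added integer 'label_id'."""
    return {**example, "label_id": label2id[example["label"]]}
```

A record whose label is missing from the list raises a `KeyError`, which makes unexpected labels visible early.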

### Data Splits

* hfeval_esv5.json: 1681 label-document pairs

* hftrain_esv5.json: 6716 label-document pairs

## Dataset Creation

### Methodology

The "Category" pages represent the topics.

For each topic, we extract the pages associated with that first level of the hierarchy, and use the summary as the representative text.

### Curation Rationale

### Source Data

#### Initial Data Collection and Normalization

The source data are the thematic categories in the different Wikipedias.

#### Who are the source language producers?

### Annotations

#### Annotation process

Automatic annotation

#### Who are the annotators?

[N/A]

### Personal and Sensitive Information

No personal or sensitive information included.

## Considerations for Using the Data

### Social Impact of Dataset

We hope this corpus contributes to the development of language models in Spanish.

### Discussion of Biases

We are aware that this data might contain biases. We have not applied any steps to reduce their impact.

### Other Known Limitations

[N/A]

## Additional Information

### Dataset Curators

Text Mining Unit (TeMU) at the Barcelona Supercomputing Center ([email protected]).

For further information, send an email to ([email protected]).

This work was funded by the [Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA)](https://avancedigital.mineco.gob.es/en-us/Paginas/index.aspx) within the framework of the [Plan-TL](https://plantl.mineco.gob.es/Paginas/index.aspx).

### Licensing Information

This work is licensed under [CC Attribution 4.0 International](https://creativecommons.org/licenses/by/4.0/) License.

Copyright by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) (2022)

### Contributions

[N/A]

| PlanTL-GOB-ES/WikiCAT_esv2 | [

"task_categories:text-classification",

"task_ids:multi-class-classification",

"annotations_creators:automatically-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:unknown",

"language:es",

"license:cc-by-sa-3.0",

"region:us"

] | 2022-11-18T10:18:53+00:00 | {"annotations_creators": ["automatically-generated"], "language_creators": ["found"], "language": ["es"], "license": ["cc-by-sa-3.0"], "multilinguality": ["monolingual"], "size_categories": ["unknown"], "source_datasets": [], "task_categories": ["text-classification"], "task_ids": ["multi-class-classification"], "pretty_name": "wikicat_esv2"} | 2023-07-27T08:13:16+00:00 |

facf3772e67d51b7d27508477f777565c6c720f5 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-ec388423-7e76-47a7-a778-e7cfff84a71c-2220 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T10:28:05+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T10:28:40+00:00 |

9c34d9a5a168152c846b6d3e07f34e97dff5f0bd | shiertier/conda | [

"license:other",

"region:us"

] | 2022-11-18T10:28:37+00:00 | {"license": "other"} | 2022-11-18T14:46:33+00:00 |

|

2278fe0ab44d4eaf561999862ac1a67ec0fbf4b7 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-fc4d51f7-9dde-4256-8b44-b5a68a081b2b-2321 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T10:42:05+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T10:42:41+00:00 |

2f1635ca28e9b62ec23b10311b737f02996d799b | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-ef91170b-c394-482c-8a00-6b7bc5ea5574-2422 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T10:59:57+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T11:00:32+00:00 |

febb64298be8a000cbc22029364c563b0b9c2105 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-622e0c30-b54d-415c-87b9-70c107d23cec-2523 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T11:04:27+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T11:05:03+00:00 |

b7cdd5ca43df8833b34d5ca2d4088051b6b82926 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-e6349348-5660-49a6-843b-4c305a6146f2-2624 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T11:09:03+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T11:09:39+00:00 |

bfc6a6071e5fc81c992e01784c8195aa1d23e910 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Binary Text Classification

* Model: autoevaluate/binary-classification

* Dataset: glue

* Config: sst2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-project-6a0cd869-0e5a-4c97-8312-c7fea68b3609-2725 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-11-18T11:19:34+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["glue"], "eval_info": {"task": "binary_classification", "model": "autoevaluate/binary-classification", "metrics": ["matthews_correlation"], "dataset_name": "glue", "dataset_config": "sst2", "dataset_split": "validation", "col_mapping": {"text": "sentence", "target": "label"}}} | 2022-11-18T11:20:11+00:00 |

ceab8df9442a7649bc1f6656df30bda47e646039 | prerana17/testing1 | [

"license:afl-3.0",

"region:us"

] | 2022-11-18T11:25:01+00:00 | {"license": "afl-3.0"} | 2022-11-18T15:41:31+00:00 |

|

07b121a28410f42f09cf334d35a1042c48a60d67 | # Citation

```

@article{DBLP:journals/corr/abs-1710-10196,

author = {Tero Karras and

Timo Aila and

Samuli Laine and

Jaakko Lehtinen},

title = {Progressive Growing of GANs for Improved Quality, Stability, and Variation},

journal = {CoRR},

volume = {abs/1710.10196},

year = {2017},

url = {http://arxiv.org/abs/1710.10196},

eprinttype = {arXiv},

eprint = {1710.10196},

timestamp = {Mon, 13 Aug 2018 16:46:42 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1710-10196.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | Chris1/celebA-HQ | [

"arxiv:1710.10196",

"region:us"

] | 2022-11-18T14:01:17+00:00 | {} | 2022-11-18T14:30:59+00:00 |

23c0a29d28d0bbdb4c2bbcff56fda332379e69b0 | # Dataset Card for "sidewalk-imagery"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | pattern123/sidewalk-imagery | [

"region:us"

] | 2022-11-18T14:01:20+00:00 | {"dataset_info": {"features": [{"name": "pixel_values", "dtype": "image"}, {"name": "label", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 3138394.0, "num_examples": 10}], "download_size": 3139599, "dataset_size": 3138394.0}} | 2022-11-19T05:23:06+00:00 |

11be2afac6a84a3963bc34b66eb2c6fcfca4264e | arbml/arabic_pos_dialect | [

"license:apache-2.0",

"region:us"

] | 2022-11-18T14:17:01+00:00 | {"license": "apache-2.0"} | 2022-11-18T14:40:06+00:00 |

|

6dc072319bf629545aa7926c37d15c002d951c9e | awkwardneutrino/arkghn | [

"license:creativeml-openrail-m",

"region:us"

] | 2022-11-18T15:58:14+00:00 | {"license": "creativeml-openrail-m"} | 2022-11-18T16:00:46+00:00 |

|

21187d1891b1911eeb12022294a5681e28edb7eb |

# Dataset Card for Dataset Name

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

This dataset card aims to be a base template for new datasets. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/datasetcard_template.md?plain=1).

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed] | ccao/test | [

"region:us"

] | 2022-11-18T16:17:38+00:00 | {} | 2023-01-19T05:02:01+00:00 |

2bc7902538956d23470bd31923ae3ff2d12757bb | # Dataset Card for "cstop_artificial"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | WillHeld/cstop_artificial | [

"region:us"

] | 2022-11-18T20:13:29+00:00 | {"dataset_info": {"features": [{"name": "utterance", "dtype": "string"}, {"name": " semantic_parse", "dtype": "string"}, {"name": "semantic_parse", "dtype": "string"}], "splits": [{"name": "eval", "num_bytes": 113084, "num_examples": 559}, {"name": "test", "num_bytes": 233020, "num_examples": 1167}, {"name": "train", "num_bytes": 819464, "num_examples": 4077}], "download_size": 371646, "dataset_size": 1165568}} | 2022-12-10T17:54:16+00:00 |

9e0916d21f6fbedd8a1786e8be29b0df87b40bb1 |

<h4> Usage </h4>

To use this embedding you have to download the file and put it into the "\stable-diffusion-webui\embeddings" folder

To use it in a prompt add

<em style="font-weight:600">art by rogue_style </em>

add <b>[ ]</b> around it to reduce its weight.

<h4> Included Files </h4>

<ul>

<li>500 steps <em>Usage: art by rogue_style-500</em></li>

<li>3500 steps <em>Usage: art by rogue_style-3500</em></li>

<li>6500 steps <em>Usage: art by rogue_style</em> </li>

</ul>

cheers<br>

Wipeout

<h4> Example Pictures </h4>

<table>

<tbody>

<tr>

<td><img height="100%/" width="100%" src="https://i.imgur.com/JefZ3cA.png"></td>

<td><img height="100%/" width="100%" src="https://i.imgur.com/YBJzVIi.png"></td>

<td><img height="100%/" width="100%" src="https://i.imgur.com/96iutfu.png"></td>

<td><img height="100%/" width="100%" src="https://i.imgur.com/SBKfnc4.png"></td>

</tr>

</tbody>

</table>

<h4> prompt comparison </h4>

<em> click the image to enlarge</em>

<a href="https://i.imgur.com/a6te4zG.png" target="_blank"><img height="50%" width="50%" src="https://i.imgur.com/a6te4zG.png"></a>

| zZWipeoutZz/rogue_style | [

"license:creativeml-openrail-m",

"region:us"

] | 2022-11-18T20:40:41+00:00 | {"license": "creativeml-openrail-m"} | 2022-11-19T15:03:02+00:00 |

74c07125220961838bfdecf0c0b205d32c471e1d | mamivac/db | [

"license:openrail",

"region:us"

] | 2022-11-18T21:17:16+00:00 | {"license": "openrail"} | 2022-11-18T21:17:16+00:00 |

|

45ae712ac42fa0209015db476c1d040e17442527 | # Dataset Card for "highways-hacktum"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | ogimgio/highways-hacktum | [

"region:us"

] | 2022-11-18T23:59:44+00:00 | {"dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "label", "dtype": {"class_label": {"names": {"0": "footway", "1": "primary"}}}}], "splits": [{"name": "train", "num_bytes": 1155915978.0, "num_examples": 500}, {"name": "validation", "num_bytes": 284161545.0, "num_examples": 125}], "download_size": 1431719317, "dataset_size": 1440077523.0}} | 2022-11-19T00:04:45+00:00 |

74a44153625d0382b9d3c8af0a49a16e0c3cef0e |

# Dataset Card for ravnursson_asr

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [Ravnursson Faroese Speech and Transcripts](http://hdl.handle.net/20.500.12537/276)

- **Repository:** [Clarin.is](http://hdl.handle.net/20.500.12537/276)

- **Paper:** [ASR Language Resources for Faroese](https://aclanthology.org/2023.nodalida-1.4.pdf)

- **Paper:** [Creating a basic language resource kit for faroese.](https://aclanthology.org/2022.lrec-1.495.pdf)

- **Point of Contact:** [Annika Simonsen](mailto:[email protected]), [Carlos Mena](mailto:[email protected])

### Dataset Summary

The corpus "RAVNURSSON FAROESE SPEECH AND TRANSCRIPTS" (or RAVNURSSON Corpus for short) is a collection of speech recordings with transcriptions intended for Automatic Speech Recognition (ASR) applications in Faroese, the language spoken on the Faroe Islands. It was curated at Reykjavík University (RU) in 2022.

The RAVNURSSON Corpus is an extract of the "Basic Language Resource Kit 1.0" (BLARK 1.0) [1] developed by the Ravnur Project from the Faroe Islands [2]. As a matter of fact, the name RAVNURSSON comes from Ravnur (a tribute to the Ravnur Project) and the suffix "son" which in Icelandic means "son of". Therefore, the name "RAVNURSSON" means "The (Icelandic) son of Ravnur". The double "ss" is just for aesthetics.

The audio was collected by recording speakers reading texts. The participants are aged 15-83, divided into 3 age groups: 15-35, 36-60 and 61+.

The speech files come from 249 female speakers and 184 male speakers, 433 speakers in total. The recordings were made on TASCAM DR-40 Linear PCM audio recorders using the built-in stereo microphones in 16-bit WAVE format with a sample rate of 48 kHz, and were then downsampled to 16 kHz, 16-bit mono for this corpus.

[1] Simonsen, A., Debess, I. N., Lamhauge, S. S., & Henrichsen, P. J. Creating a basic language resource kit for Faroese. In LREC 2022. 13th International Conference on Language Resources and Evaluation.

[2] Website. The Project Ravnur under the Talutøkni Foundation https://maltokni.fo/en/the-ravnur-project

### Example Usage

The RAVNURSSON Corpus is divided into 3 splits: train, validation and test. To load all the splits at once:

```python

from datasets import load_dataset

ravnursson = load_dataset("carlosdanielhernandezmena/ravnursson_asr")

```

To load a specific split (for example, the validation split), pass its name through the `split` argument:

```python

from datasets import load_dataset

ravnursson = load_dataset("carlosdanielhernandezmena/ravnursson_asr",split="validation")

```

### Supported Tasks

automatic-speech-recognition: The dataset can be used to train a model for Automatic Speech Recognition (ASR). The model is presented with an audio file and asked to transcribe the audio file to written text. The most common evaluation metric is the word error rate (WER).
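As an illustration of the metric mentioned above, here is a minimal pure-Python sketch of word error rate: the word-level edit distance between reference and hypothesis, divided by the number of reference words. The function name `word_error_rate` is hypothetical, and whitespace tokenization is an assumption; established evaluation toolkits may tokenize or normalize differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] = edit distance between the processed prefix of ref and hyp[:j]
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)
```

Because insertions are counted, the value can exceed 1.0 when the hypothesis is much longer than the reference.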

### Languages

The audio is in Faroese.

The reading prompts for the RAVNURSSON Corpus have been generated by expert linguists. The whole corpus was balanced for phonetic and dialectal coverage; Test and Dev subsets are gender-balanced. Tabular computer-searchable information is included as well as written documentation.

## Dataset Structure

### Data Instances

```python

{

'audio_id': 'KAM06_151121_0101',

'audio': {

'path': '/home/carlos/.cache/HuggingFace/datasets/downloads/extracted/32b4a757027b72b8d2e25cd9c8be9c7c919cc8d4eb1a9a899e02c11fd6074536/dev/RDATA2/KAM06_151121/KAM06_151121_0101.flac',

'array': array([ 0.0010376 , -0.00521851, -0.00393677, ..., 0.00128174,

0.00076294, 0.00045776], dtype=float32),

'sampling_rate': 16000

},

'speaker_id': 'KAM06_151121',

'gender': 'female',

'age': '36-60',

'duration': 4.863999843597412,

'normalized_text': 'endurskin eru týdningarmikil í myrkri',

'dialect': 'sandoy'

}

```

### Data Fields

* `audio_id` (string) - id of audio segment

* `audio` (datasets.Audio) - a dictionary containing the path to the audio, the decoded audio array, and the sampling rate. In non-streaming mode (default), the path points to the locally extracted audio. In streaming mode, the path is the relative path of an audio inside its archive (as files are not downloaded and extracted locally).

* `speaker_id` (string) - id of speaker

* `gender` (string) - gender of speaker (male or female)

* `age` (string) - range of age of the speaker: Younger (15-35), Middle-aged (36-60) or Elderly (61+).

* `duration` (float32) - duration of the audio file in seconds.

* `normalized_text` (string) - normalized audio segment transcription

* `dialect` (string) - dialect group, for example "Suðuroy" or "Sandoy".
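The fields above can be read as ordinary dictionary keys. The sketch below uses a synthetic record with the same keys as the instance shown in "Data Instances" (the audio array is silence, not real data) and recomputes the duration from the array length and sampling rate. The helper name `summarize_sample` and the 0.01 s tolerance are assumptions for illustration.

```python
def summarize_sample(sample: dict) -> dict:
    """Collect a few fields of one record and recompute its duration from the audio."""
    audio = sample["audio"]
    computed = len(audio["array"]) / audio["sampling_rate"]
    return {
        "audio_id": sample["audio_id"],
        "speaker_id": sample["speaker_id"],
        "n_words": len(sample["normalized_text"].split()),
        "computed_duration": computed,
        "duration_matches": abs(computed - sample["duration"]) < 0.01,
    }


# Synthetic record: 32000 zero samples at 16 kHz, i.e. 2 seconds of silence.
fake_sample = {
    "audio_id": "KAM06_151121_0101",
    "audio": {"path": "example.flac", "array": [0.0] * 32000, "sampling_rate": 16000},
    "speaker_id": "KAM06_151121",
    "gender": "female",
    "age": "36-60",
    "duration": 2.0,
    "normalized_text": "endurskin eru týdningarmikil í myrkri",
    "dialect": "sandoy",
}
summary = summarize_sample(fake_sample)
```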

### Data Splits

The speech material has been subdivided into portions for training (train), development (evaluation) and testing (test). Lengths of each portion are: train = 100h08m, test = 4h30m, dev (evaluation)=4h30m.

To load a specific portion, please see the section "Example Usage" above.

The development and test portions have exactly 10 male and 10 female speakers each and both portions have exactly the same size in hours (4.5h each).
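A gender balance like the one described above can be checked by counting distinct `speaker_id` values per `gender`. This is a minimal sketch on toy records with hypothetical speaker ids; the function name `speakers_per_gender` is an assumption, and the same function should work on any iterable of records that carry these two fields.

```python
from collections import defaultdict


def speakers_per_gender(records):
    """Count distinct speaker_id values for each gender in an iterable of records."""
    speakers = defaultdict(set)
    for record in records:
        speakers[record["gender"]].add(record["speaker_id"])
    return {gender: len(ids) for gender, ids in speakers.items()}


# Toy records with hypothetical speaker ids; two utterances share a speaker.
toy_records = [
    {"speaker_id": "spk_a", "gender": "female"},
    {"speaker_id": "spk_a", "gender": "female"},
    {"speaker_id": "spk_b", "gender": "male"},
    {"speaker_id": "spk_c", "gender": "male"},
]
counts = speakers_per_gender(toy_records)
```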

## Dataset Creation

### Curation Rationale

The directory called "speech" contains all the speech files of the corpus. The files in the speech directory are divided into three directories: train, dev and test. The train portion is sub-divided into three types of recordings: RDATA1O, RDATA1OP and RDATA2; this is due to the organization of the recordings in the original BLARK 1.0. There, the recordings are divided into Rdata1 and Rdata2.

One main difference between Rdata1 and Rdata2 is that the reading environment for Rdata2 was controlled by software called "PushPrompt", which is included in the original BLARK 1.0. Another main difference is that in Rdata1 there are some available transcriptions labeled at the phoneme level. For this reason the audio files from Rdata1 in the speech directory of the RAVNURSSON corpus are divided into the folders RDATA1O, where "O" is for "Orthographic", and RDATA1OP, where "O" is for "Orthographic" and "P" is for "Phonetic".

In the case of the dev and test portions, the data come only from Rdata2 which does not have labels at the phonetic level.

It is important to clarify that the RAVNURSSON Corpus only includes transcriptions at the orthographic level.

### Source Data

#### Initial Data Collection and Normalization

The dataset was released with normalized text only at an orthographic level in lower-case. The normalization process was performed by automatically removing punctuation marks and characters that are not present in the Faroese alphabet.
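The normalization described above can be approximated as follows. This is only a sketch under stated assumptions, not the authors' actual script: it assumes the 29-letter Faroese alphabet, drops every other non-space character (digits included), and collapses whitespace. The name `normalize_text` is hypothetical.

```python
# The 29 letters of the Faroese alphabet, lower-case.
FAROESE_ALPHABET = set("aábdðefghiíjklmnoóprstuúvyýæø")


def normalize_text(text: str) -> str:
    """Lower-case the text and keep only Faroese letters and single spaces."""
    kept = "".join(
        ch if ch in FAROESE_ALPHABET else (" " if ch.isspace() else "")
        for ch in text.lower()
    )
    # Collapse runs of whitespace and trim both ends.
    return " ".join(kept.split())
```

Whether the original process deleted out-of-alphabet characters or replaced them with spaces is not stated in the card; this sketch deletes them.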

#### Who are the source language producers?

* The utterances were recorded using a TASCAM DR-40.

* Participants self-reported their age group, gender, native language and dialect.

* Participants are aged between 15 and 83 years.

* The corpus contains 71949 speech files from 433 speakers, totalling 109 hours and 9 minutes.

### Annotations

#### Annotation process

Most of the reading prompts were selected by experts from a Faroese text corpus (news, blogs, Wikipedia etc.) and were edited to fit the format. Reading prompts that are within specific domains (such as Faroese place names, numbers, license plates, telling time etc.) were written by the Ravnur Project. Then, a software tool called PushPrompt was used for the reading sessions (voice recordings). PushPrompt presents the text items in the reading material to the reader, allowing them to manage the session interactively (adjusting the reading tempo, repeating speech productions at will, inserting short breaks as needed, etc.). When the reading session is completed, a log file (with time stamps for each production) is written as a data table compliant with the TextGrid format.

#### Who are the annotators?

The corpus was annotated by the [Ravnur Project](https://maltokni.fo/en/the-ravnur-project)

### Personal and Sensitive Information

The dataset consists of people who have donated their voice. You agree to not attempt to determine the identity of speakers in this dataset.

## Considerations for Using the Data

### Social Impact of Dataset

This is the first ASR corpus in Faroese.

### Discussion of Biases

As the number of reading prompts was limited, many prompts in the RAVNURSSON corpus are read by more than one speaker. This is relevant because it is common practice in ASR to create a language model using the prompts that are found in the train portion of the corpus. That is not recommended for the RAVNURSSON Corpus, as many prompts are shared across the portions, which would introduce a significant bias in the language modeling task.

In this section we present some statistics about the repeated prompts across all the portions of the corpus.

- In the train portion:

* Total number of prompts = 65616

* Number of unique prompts = 38646

There are 26970 repeated prompts in the train portion. In other words, 41.1% of the prompts are repeated.

- In the test portion:

* Total number of prompts = 3002

* Number of unique prompts = 2887

There are 115 repeated prompts in the test portion. In other words, 3.83% of the prompts are repeated.

- In the dev portion:

* Total number of prompts = 3331

* Number of unique prompts = 3302

There are 29 repeated prompts in the dev portion. In other words, 0.87% of the prompts are repeated.

- Considering the corpus as a whole:

* Total number of prompts = 71949

* Number of unique prompts = 39945

There are 32004 repeated prompts in the whole corpus. In other words, 44.48% of the prompts are repeated.

Note: although prompts are shared, none of the 3 portions of the corpus share speakers.
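The percentages above follow from the totals and unique counts, where "repeated" means total minus unique. A minimal sketch of that arithmetic is shown here; the function names are illustrative and are not part of the corpus tooling.

```python
def percent_repeated(total, unique):
    """Percentage of prompts that are repeats, given total and unique counts."""
    return round(100.0 * (total - unique) / total, 2)


def repetition_stats(prompts):
    """Return (total, unique, repeated, percent_repeated) for a list of prompt strings."""
    total = len(prompts)
    unique = len(set(prompts))
    repeated = total - unique
    percent = percent_repeated(total, unique) if total else 0.0
    return total, unique, repeated, percent


# Counts taken from the statistics listed in this section.
for name, total, unique in [
    ("train", 65616, 38646),
    ("test", 3002, 2887),
    ("dev", 3331, 3302),
    ("whole corpus", 71949, 39945),
]:
    print(name, total - unique, percent_repeated(total, unique))
```

The same `repetition_stats` helper could be applied to the transcripts of any split to check for prompt overlap before building a language model.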

### Other Known Limitations

"RAVNURSSON FAROESE SPEECH AND TRANSCRIPTS" by Carlos Daniel Hernández Mena and Annika Simonsen is licensed under a Creative Commons Attribution 4.0 International (CC BY 4.0) License with the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

## Additional Information

### Dataset Curators

The dataset was collected by Annika Simonsen and curated by Carlos Daniel Hernández Mena.

### Licensing Information

[CC-BY-4.0](https://creativecommons.org/licenses/by/4.0/)

### Citation Information

```

@misc{carlosmenaravnursson2022,

title={Ravnursson Faroese Speech and Transcripts},

author={Hernandez Mena, Carlos Daniel and Simonsen, Annika},

year={2022},

url={http://hdl.handle.net/20.500.12537/276},

}

```

### Contributions

This project was made possible under the umbrella of the Language Technology Programme for Icelandic 2019-2023. The programme, which is managed and coordinated by Almannarómur, is funded by the Icelandic Ministry of Education, Science and Culture.

Special thanks to Dr. Jón Guðnason, professor at Reykjavík University and head of the Language and Voice Lab (LVL) for providing computational resources.

| carlosdanielhernandezmena/ravnursson_asr | [

"task_categories:automatic-speech-recognition",

"annotations_creators:expert-generated",

"language_creators:expert-generated",

"multilinguality:monolingual",

"size_categories:10K<n<100K",

"source_datasets:original",

"language:fo",

"license:cc-by-4.0",

"faroe islands",

"faroese",

"ravnur project",

"speech recognition in faroese",

"region:us"

] | 2022-11-19T00:02:04+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["expert-generated"], "language": ["fo"], "license": ["cc-by-4.0"], "multilinguality": ["monolingual"], "size_categories": ["10K<n<100K"], "source_datasets": ["original"], "task_categories": ["automatic-speech-recognition"], "task_ids": [], "pretty_name": "RAVNURSSON FAROESE SPEECH AND TRANSCRIPTS", "tags": ["faroe islands", "faroese", "ravnur project", "speech recognition in faroese"]} | 2023-07-10T20:20:03+00:00 |

45f2b87fa811b809c4482efc897ab9580fa3f910 | munozariasjm/tab_pib_4_7 | [

"license:mit",

"region:us"

] | 2022-11-19T02:31:12+00:00 | {"license": "mit"} | 2022-11-30T10:40:06+00:00 |

|

b908bad5ef0759d2c03baf09715a98aedda9ded1 |

# Dataset Card for kodak

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** <https://r0k.us/graphics/kodak/>

- **Repository:** <https://github.com/MohamedBakrAli/Kodak-Lossless-True-Color-Image-Suite>

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

The pictures below link to lossless, true color (24 bits per pixel, aka "full

color") images. It is my understanding they have been released by the Eastman

Kodak Company for unrestricted usage. Many sites use them as a standard test

suite for compression testing, etc. Prior to this site, they were only

available in the Sun Raster format via ftp. This meant that the images could

not be previewed before downloading. Since their release, however, the lossless

PNG format has been incorporated into all the major browsers. Since PNG

supports 24-bit lossless color (which GIF and JPEG do not), it is now possible

to offer this browser-friendly access to the images.

### Supported Tasks and Leaderboards

- Image compression
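
When the images are used as a compression test suite, results are usually reported relative to the uncompressed 24-bit size. The sketch below shows that arithmetic only; the helper names are hypothetical, and the image dimensions are inputs supplied by the caller rather than values stated in this card.

```python
def raw_size_bytes(width, height, bits_per_pixel=24):
    """Uncompressed size of an image in bytes (24 bits per pixel by default)."""
    return width * height * bits_per_pixel // 8


def compression_ratio(raw_bytes, compressed_bytes):
    """How many times smaller the compressed file is than the raw pixel data."""
    return raw_bytes / compressed_bytes


def bits_per_pixel(compressed_bytes, width, height):
    """Average number of bits the compressed file spends on each pixel."""
    return 8 * compressed_bytes / (width * height)


# Toy numbers: a 4x2 image stored in 12 bytes.
raw = raw_size_bytes(4, 2)
print(raw, compression_ratio(raw, 12), bits_per_pixel(12, 4, 2))
```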

### Languages

- en

## Dataset Structure

### Data Instances

- [](https://r0k.us/graphics/kodak/kodak/kodim01.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim02.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim03.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim04.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim05.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim06.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim07.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim08.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim09.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim10.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim11.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim12.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim13.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim14.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim15.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim16.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim17.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim18.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim19.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim20.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim21.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim22.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim23.png)

- [](https://r0k.us/graphics/kodak/kodak/kodim24.png)

### Data Fields

### Data Splits

## Dataset Creation

### Curation Rationale

### Source Data

#### Initial Data Collection and Normalization

#### Who are the source language producers?

<https://www.kodak.com>

### Annotations

#### Annotation process

#### Who are the annotators?

### Personal and Sensitive Information

## Considerations for Using the Data

### Social Impact of Dataset

### Discussion of Biases

### Other Known Limitations

## Additional Information

### Dataset Curators

### Licensing Information

[LICENSE](LICENSE)

### Citation Information

### Contributions

Thanks to [@Freed-Wu](https://github.com/Freed-Wu) for adding this dataset.

| Freed-Wu/kodak | [

"task_categories:other",

"annotations_creators:no-annotation",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:n<1K",

"source_datasets:original",

"language:en",

"license:gpl-3.0",

"region:us"

] | 2022-11-19T05:43:53+00:00 | {"annotations_creators": ["no-annotation"], "language_creators": ["found"], "language": ["en"], "license": ["gpl-3.0"], "multilinguality": ["monolingual"], "size_categories": ["n<1K"], "source_datasets": ["original"], "task_categories": ["other"], "task_ids": [], "pretty_name": "kodak", "tags": [], "dataset_info": {"features": [{"name": "image", "dtype": "image"}], "splits": [{"name": "test", "num_bytes": 15072, "num_examples": 24}], "download_size": 15072, "dataset_size": 15072}} | 2022-11-19T05:43:53+00:00 |

40df846508452e5b666a40f5def1e9dddd321975 | uetchy/thesession | [

"license:odbl",

"region:us"

] | 2022-11-19T07:14:42+00:00 | {"license": "odbl"} | 2022-11-19T07:14:42+00:00 |

|

aadde6fe7f3a14364dcf4ed61b6173625beffead | # Dataset Card for "koikatsu-cards"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | chenrm/koikatsu-cards | [

"region:us"

] | 2022-11-19T08:54:34+00:00 | {"dataset_info": {"features": [{"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 43368873054.078, "num_examples": 10178}, {"name": "test", "num_bytes": 20733059.0, "num_examples": 5}], "download_size": 56731523062, "dataset_size": 43389606113.078}} | 2022-11-19T10:33:28+00:00 |

4eaa49ac06038400da1437d5cd98686ac3712ab0 | # Dataset Card for "del"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | galman33/del | [

"region:us"

] | 2022-11-19T12:26:10+00:00 | {"dataset_info": {"features": [{"name": "lat", "dtype": "float64"}, {"name": "lon", "dtype": "float64"}, {"name": "country_code", "dtype": "string"}, {"name": "pixels", "dtype": {"array3_d": {"shape": [256, 256, 3], "dtype": "uint8"}}}], "splits": [{"name": "train", "num_bytes": 3816359256, "num_examples": 8300}], "download_size": 1455177025, "dataset_size": 3816359256}} | 2022-11-19T12:45:41+00:00 |

4f15697f40bdb5be4c583942d825e48627d4bef5 | # Dataset Card for "gal_yair_83000_256x256"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | galman33/gal_yair_83000_256x256 | [

"region:us"

] | 2022-11-19T12:29:05+00:00 | {"dataset_info": {"features": [{"name": "lat", "dtype": "float64"}, {"name": "lon", "dtype": "float64"}, {"name": "country_code", "dtype": "string"}, {"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 8075570913.0, "num_examples": 83000}], "download_size": 8075813262, "dataset_size": 8075570913.0}} | 2022-11-19T12:33:25+00:00 |

24aea2a13efaddb810cdff6ae2b133ccb565d025 |

---

dataset_info:

features:

- name: index

dtype: string

- name: question

dtype: string

- name: context

dtype: string

- name: text

dtype: string

- name: answer_start

dtype: int64

- name: c_id

dtype: int64

splits:

- name: train

num_bytes: 61868003

num_examples: 48344

download_size: 10512179

dataset_size: 61868003

---

# Dataset Card for "Arabic_SQuAD"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)
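
The features listed above (`context`, `text`, `answer_start`) resemble the SQuAD layout. Assuming, as in SQuAD, that `answer_start` is a character offset into `context` (the card does not confirm this), a record can be sanity-checked with a sketch like the following. The helper names and the toy record are illustrative only.

```python
def answer_span(example):
    """Return the (start, end) character span of the answer within the context."""
    start = example["answer_start"]
    end = start + len(example["text"])
    return start, end


def answer_matches_context(example):
    """True if the context slice at answer_start equals the answer text."""
    start, end = answer_span(example)
    return example["context"][start:end] == example["text"]


# Toy record with the same field names as the dataset; the content is made up.
record = {
    "question": "Where was the workshop held?",
    "context": "The workshop was held in Florence.",
    "text": "Florence",
    "answer_start": 25,
}
print(answer_span(record), answer_matches_context(record))
```

Machine-translated answers do not always align with the translated context, so a check of this kind may be worth running before training an extractive reader.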

---

# Citation

```

@inproceedings{mozannar-etal-2019-neural,

title = "Neural {A}rabic Question Answering",

author = "Mozannar, Hussein and

Maamary, Elie and

El Hajal, Karl and

Hajj, Hazem",

booktitle = "Proceedings of the Fourth Arabic Natural Language Processing Workshop",

month = aug,

year = "2019",

address = "Florence, Italy",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/W19-4612",

doi = "10.18653/v1/W19-4612",

pages = "108--118",

abstract = "This paper tackles the problem of open domain factual Arabic question answering (QA) using Wikipedia as our knowledge source. This constrains the answer of any question to be a span of text in Wikipedia. Open domain QA for Arabic entails three challenges: annotated QA datasets in Arabic, large scale efficient information retrieval and machine reading comprehension. To deal with the lack of Arabic QA datasets we present the Arabic Reading Comprehension Dataset (ARCD) composed of 1,395 questions posed by crowdworkers on Wikipedia articles, and a machine translation of the Stanford Question Answering Dataset (Arabic-SQuAD). Our system for open domain question answering in Arabic (SOQAL) is based on two components: (1) a document retriever using a hierarchical TF-IDF approach and (2) a neural reading comprehension model using the pre-trained bi-directional transformer BERT. Our experiments on ARCD indicate the effectiveness of our approach with our BERT-based reader achieving a 61.3 F1 score, and our open domain system SOQAL achieving a 27.6 F1 score.",

}

```

---

| ZIZOU/Arabic_Squad | [

"region:us"

] | 2022-11-19T12:58:41+00:00 | {} | 2022-11-26T09:36:45+00:00 |

e9805e363e41f46225021bc9f3da6d0b0483b8e1 | This dataset is for use in Automatic Speech Recognition (ASR) for a project at the University of Zambia (UNZA). | unza/unza-nyanja | [

"region:us"

] | 2022-11-19T14:18:39+00:00 | {} | 2022-11-19T17:42:38+00:00 |

d7c094f2a6ae22d41a3c143b42033e61c6ecfd72 | # Dataset Card for "L1_poleval_korpus_pelny_train"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_pelny_train | [

"region:us"

] | 2022-11-19T14:43:15+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 764265, "num_examples": 9443}], "download_size": 509113, "dataset_size": 764265}} | 2022-11-19T18:25:13+00:00 |

56bfdb5641038be046b772f3378e9b45b06a6bb2 | # Dataset Card for "L1_poleval_korpus_pelny_test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_pelny_test | [

"region:us"

] | 2022-11-19T14:43:36+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 71297, "num_examples": 891}], "download_size": 47500, "dataset_size": 71297}} | 2022-11-19T18:25:17+00:00 |

c4d9f2faf033cc7f4f86b65814ae21df6e2ba768 | # Dataset Card for "L1_poleval_korpus_wzorcowy_train"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_wzorcowy_train | [

"region:us"

] | 2022-11-19T14:55:23+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 20564, "num_examples": 253}], "download_size": 15381, "dataset_size": 20564}} | 2022-11-19T18:25:28+00:00 |

85e49f1e1bc10c9cacc44a24d17112d42fb09ccb | # Dataset Card for "L1_poleval_korpus_wzorcowy_test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_wzorcowy_test | [

"region:us"

] | 2022-11-19T14:55:27+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 1963, "num_examples": 25}], "download_size": 2784, "dataset_size": 1963}} | 2022-11-19T18:25:32+00:00 |

3a031ae539b268125dad64f3ac9d559bd9ea9e22 | # Dataset Card for "gal_yair_83000_100x100"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | galman33/gal_yair_83000_100x100 | [

"region:us"

] | 2022-11-19T14:56:55+00:00 | {"dataset_info": {"features": [{"name": "lat", "dtype": "float64"}, {"name": "lon", "dtype": "float64"}, {"name": "country_code", "dtype": "string"}, {"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 1423239502.0, "num_examples": 83000}], "download_size": 1423108777, "dataset_size": 1423239502.0}} | 2022-11-19T14:57:47+00:00 |

88c830071713a2d643fe625eb6bb49fdc3b638a9 | Juanchoxs/model1 | [

"license:openrail",

"region:us"

] | 2022-11-19T15:20:07+00:00 | {"license": "openrail"} | 2022-11-19T15:21:08+00:00 |

|

e8aa28670485bbe09d7cc3ce8a47ea4186f01429 |

# Dataset Card for FIB

## Dataset Summary

The FIB benchmark consists of 3579 examples for evaluating the factual inconsistency of large language models. Each example consists of a document and a pair of summaries: a factually consistent one and a factually inconsistent one. It is based on documents and summaries from XSum and CNN/DM.

Since this dataset is intended to evaluate the factual inconsistency of large language models, there is only a test split.

Accuracies should be reported separately for examples from XSum and for examples from CNN/DM, because the behavior of models on the two sources is expected to be very different. The factually inconsistent summaries are model-extracted from the document for CNN/DM but are model-generated for XSum.
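
Reporting accuracy per source can be sketched as below. This is only an illustration: the record keys `source` and `correct` are assumptions made for the example and are not field names documented by the benchmark.

```python
from collections import defaultdict


def accuracy_by_source(records):
    """Compute accuracy separately for each value of the (hypothetical) 'source' key."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        total[record["source"]] += 1
        correct[record["source"]] += int(record["correct"])
    return {source: correct[source] / total[source] for source in total}


# Toy results: one entry per evaluated example.
results = [
    {"source": "xsum", "correct": True},
    {"source": "xsum", "correct": False},
    {"source": "cnndm", "correct": True},
]
print(accuracy_by_source(results))
```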

### Citation Information

```

@article{tam2022fib,

title={Evaluating the Factual Consistency of Large Language Models Through Summarization},

author={Tam, Derek and Mascarenhas, Anisha and Zhang, Shiyue and Kwan, Sarah and Bansal, Mohit and Raffel, Colin},

journal={arXiv preprint arXiv:2211.08412},

year={2022}

}

```

### Licensing Information

license: cc-by-4.0 | r-three/fib | [

"region:us"

] | 2022-11-19T15:22:00+00:00 | {} | 2022-11-19T15:57:58+00:00 |

75e773476edee6fafc144ee1e0833fc06e106326 | nlhappy/CLUE-NER | [

"license:mit",

"region:us"

] | 2022-11-19T15:36:25+00:00 | {"license": "mit"} | 2022-11-19T15:43:09+00:00 |

|

93d367c80cbd29aad9c9412a95b95ec782509b39 | Two datasets: one with the original nuzhdiki and a smaller one with pupy derived from the nuzhdiki. Both are provided in audio and text form. | 4eJIoBek/nujdiki | [

"license:wtfpl",

"region:us"

] | 2022-11-19T16:23:30+00:00 | {"license": "wtfpl"} | 2023-02-13T15:35:25+00:00 |

51fbabf0496b056d0e018b99157a29261d6b9a93 | # Dataset Card for "L1_scraped_korpus_pelny_train"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_pelny_train | [

"region:us"

] | 2022-11-19T16:38:58+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 118294409, "num_examples": 1249536}], "download_size": 86623523, "dataset_size": 118294409}} | 2022-11-19T17:15:59+00:00 |

67a3ee8544898d09a5aefd43e59d2461a8231a65 | # Dataset Card for "L1_scraped_korpus_pelny_test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_pelny_test | [

"region:us"

] | 2022-11-19T16:39:05+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 29613125, "num_examples": 312385}], "download_size": 21671824, "dataset_size": 29613125}} | 2022-11-19T17:16:03+00:00 |

208f15b308d39fe537796a914044a9d1f9656a23 | # Dataset Card for "L1_scraped_korpus_wzorcowy_train"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_wzorcowy_train | [

"region:us"

] | 2022-11-19T16:39:36+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 4838134, "num_examples": 29488}], "download_size": 3466828, "dataset_size": 4838134}} | 2022-11-19T17:18:32+00:00 |

7e3a8ff3857ecff1c74aaba90a379cb57dad6cae | # Dataset Card for "L1_scraped_korpus_wzorcowy_test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_wzorcowy_test | [

"region:us"

] | 2022-11-19T16:39:40+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 1207567, "num_examples": 7372}], "download_size": 865883, "dataset_size": 1207567}} | 2022-11-19T17:18:35+00:00 |

9dede1f6ae1d869828ed07cd1ef20c07ec4d6b2f | # Dataset Card for "L1_scraped_korpus_wzorcowy"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_wzorcowy | [

"region:us"

] | 2022-11-19T16:39:44+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 4838134, "num_examples": 29488}, {"name": "test", "num_bytes": 1207567, "num_examples": 7372}], "download_size": 4332711, "dataset_size": 6045701}} | 2022-11-19T17:18:41+00:00 |

3aafab6b17f62690ee8aeba776319482cd1e9ce8 | lIlBrother/KsponSpeech-Phonetic | [

"license:apache-2.0",

"region:us"

] | 2022-11-19T16:43:59+00:00 | {"license": "apache-2.0"} | 2022-11-19T16:43:59+00:00 |

|

5c4e18e9fa5fd20be2eb840b2f43d241bc073fc9 | # Dataset Card for "L1_scraped_korpus_pelny"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_scraped_korpus_pelny | [

"region:us"

] | 2022-11-19T16:50:52+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 118294409, "num_examples": 1249536}, {"name": "test", "num_bytes": 29613125, "num_examples": 312385}], "download_size": 108295347, "dataset_size": 147907534}} | 2022-11-19T17:15:54+00:00 |

a953228ef0aad99a67b9da3b5cc2a7e6dc3fff55 | # Dataset Card for "L1_poleval_korpus_pelny"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_pelny | [

"region:us"

] | 2022-11-19T16:51:32+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 764265, "num_examples": 9443}, {"name": "test", "num_bytes": 71297, "num_examples": 891}], "download_size": 556613, "dataset_size": 835562}} | 2022-11-19T16:51:39+00:00 |

12d7363c2f20943fa85687da7ac2a6947fb2923a | # Dataset Card for "L1_poleval_korpus_wzorcowy"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Nikutka/L1_poleval_korpus_wzorcowy | [

"region:us"

] | 2022-11-19T16:51:55+00:00 | {"dataset_info": {"features": [{"name": "content", "dtype": "string"}, {"name": "label", "dtype": "int64"}], "splits": [{"name": "train", "num_bytes": 20564, "num_examples": 253}, {"name": "test", "num_bytes": 1963, "num_examples": 25}], "download_size": 18165, "dataset_size": 22527}} | 2022-11-19T16:52:02+00:00 |

2b67f85fe6ae0762e5b6bcb5e2202477c204dc83 |

_The Dataset Teaser is now enabled instead! Isn't this better?_

# TD 01: Natural Ground Textures

This dataset contains multi-photo texture captures in outdoor nature scenes — all focusing on the ground. Each set has different photos that showcase texture variety, making them ideal for training a domain-specific image generator!

Overall information about this dataset:

* **Format** — JPEG-XL, lossless RGB

* **Resolution** — 4032 × 2268

* **Device** — mobile camera

* **Technique** — hand-held

* **Orientation** — portrait or landscape

* **Author**: Alex J. Champandard

* **Configurations**: 4K, 2K (default), 1K

To load the medium- and high-resolution images of the dataset, you'll need to install `jxlpy` from [PyPI](https://pypi.org/project/jxlpy/) with `pip install jxlpy`:

```python

# Recommended use, JXL at high-quality.

from jxlpy import JXLImagePlugin

from datasets import load_dataset

d = load_dataset('texturedesign/td01_natural-ground-textures', 'JXL@4K', num_proc=4)

print(len(d['train']), len(d['test']))

```

The lowest-resolution images are available as PNG with a regular installation of `pillow`:

```python

# Alternative use, PNG at low-quality.

from datasets import load_dataset

d = load_dataset('texturedesign/td01_natural-ground-textures', 'PNG@1K', num_proc=4)

# EXAMPLE: Discard all other sets except Set #1 (applies to every split).

dataset = d.filter(lambda s: s['set'] == 1)

# EXAMPLE: Only keep the train images with index 0 and 2.

subset = dataset['train'].select([0, 2])

```

Use the built-in dataset methods `filter()` and `select()` to narrow down the loaded dataset for training, or to speed up development.

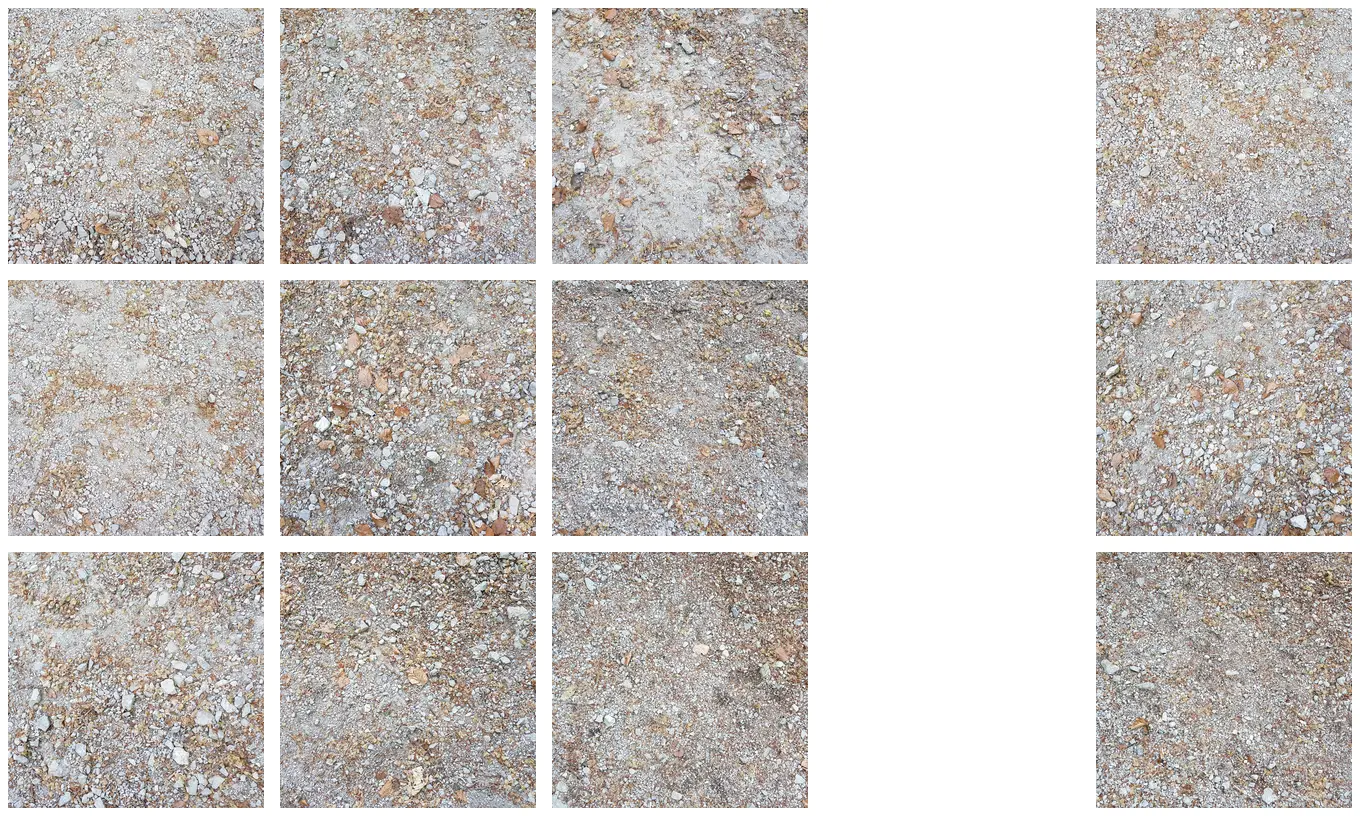

## Set #1: Rock and Gravel

* **Description**:

- surface rocks with gravel and coarse sand

- strong sunlight from the left, sharp shadows

* **Number of Photos**:

- 7 train

- 2 test

* **Edits**:

- rotated photos to align sunlight

- removed infrequent objects

* **Size**: 77.8 Mb

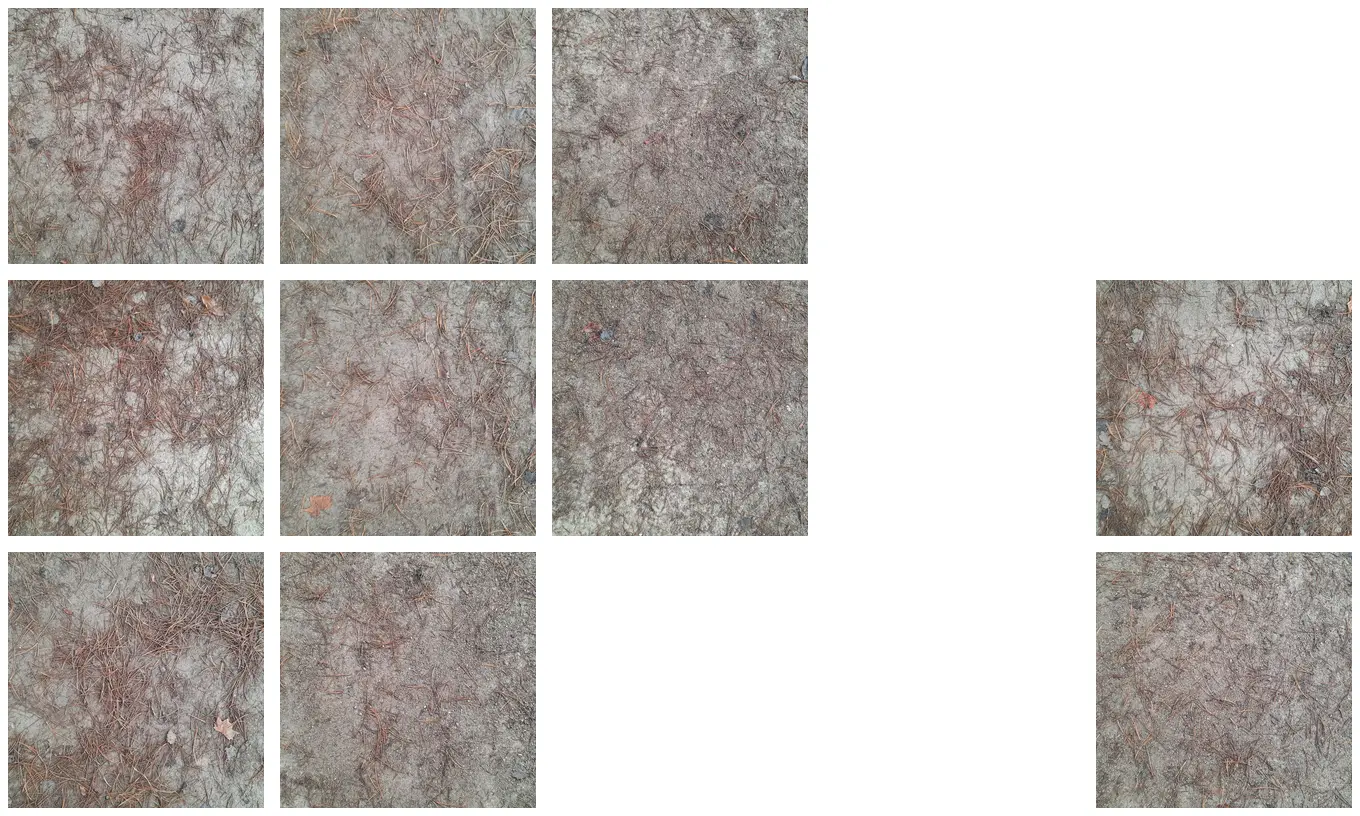

## Set #2: Dry Grass with Pine Needles

* **Description**:

- field of dry grass and pine needles

- sunlight from the top right, some shadows

* **Number of Photos**:

- 6 train

- 1 test

* **Edits**:

- removed dry leaves and large plants

- removed sticks, rocks and sporadic daisies

* **Size**: 95.2 Mb

## Set #3: Chipped Stones, Broken Leaves and Twiglets

* **Description**:

- autumn path with chipped stones and dry broken leaves

- diffuse light on a cloudy day, very soft shadows

* **Number of Photos**:

- 9 train

- 3 test

* **Edits**:

- removed anything that looks green, fresh leaves

- removed long sticks and large/odd stones

* **Size**: 126.9 Mb

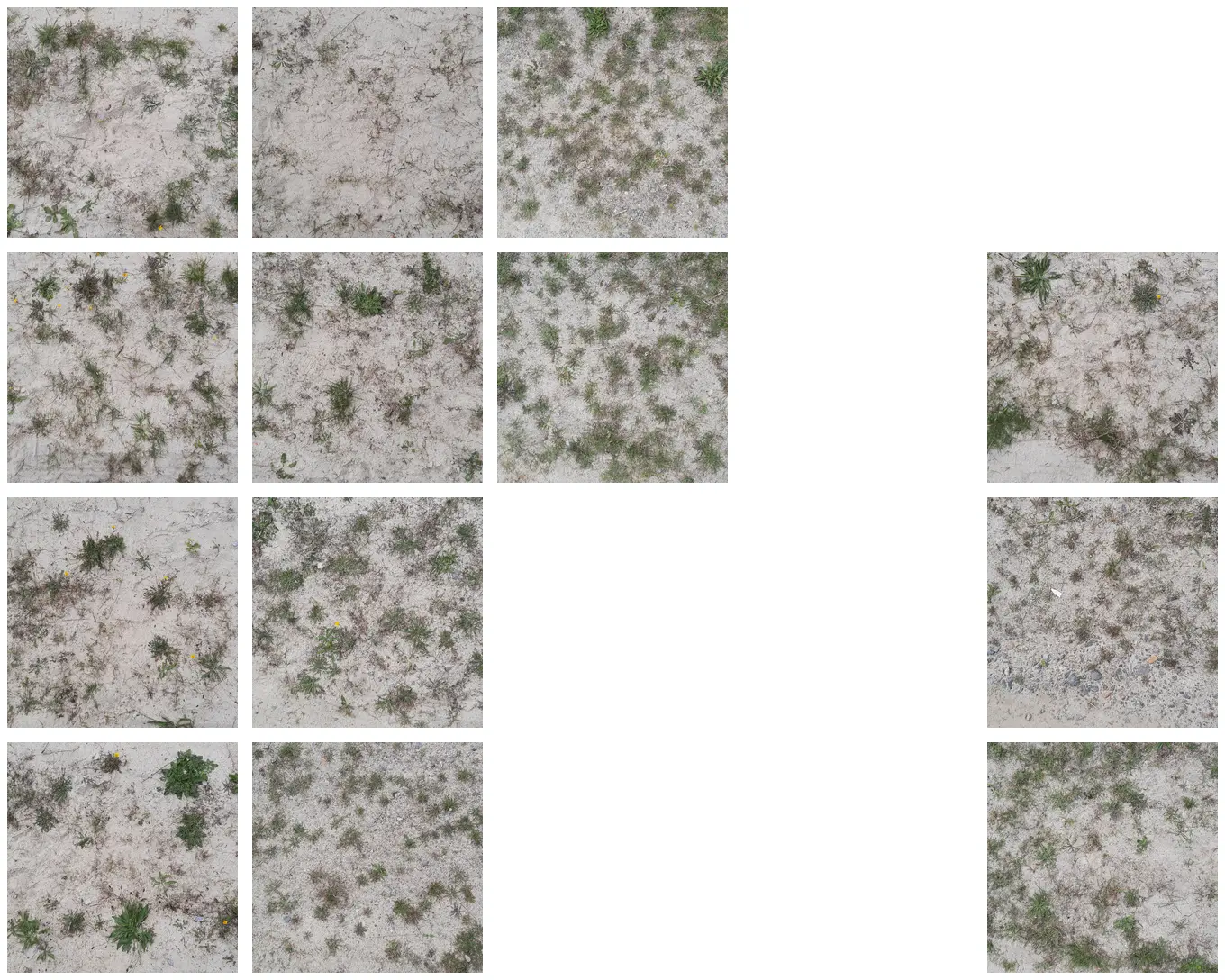

## Set #4: Grass Clumps and Cracked Dirt

* **Description**:

- clumps of green grass, clover and patches of cracked dirt

- diffuse light on cloudy day, shadows under large blades of grass

* **Number of Photos**:

- 9 train

- 2 test

* **Edits**:

- removed dry leaves, sporadic dandelions, and large objects

- histogram matching for two of the photos so the colors look similar

* **Size**: 126.8 Mb

## Set #5: Dirt, Stones, Rock, Twigs...

* **Description**:

- intricate micro-scene with grey dirt, surface rock, stones, twigs and organic debris

- diffuse light on cloudy day, soft shadows around the larger objects

* **Number of Photos**:

- 9 train

- 3 test

* **Edits**:

- removed odd objects that felt out-of-distribution

* **Size**: 102.1 Mb

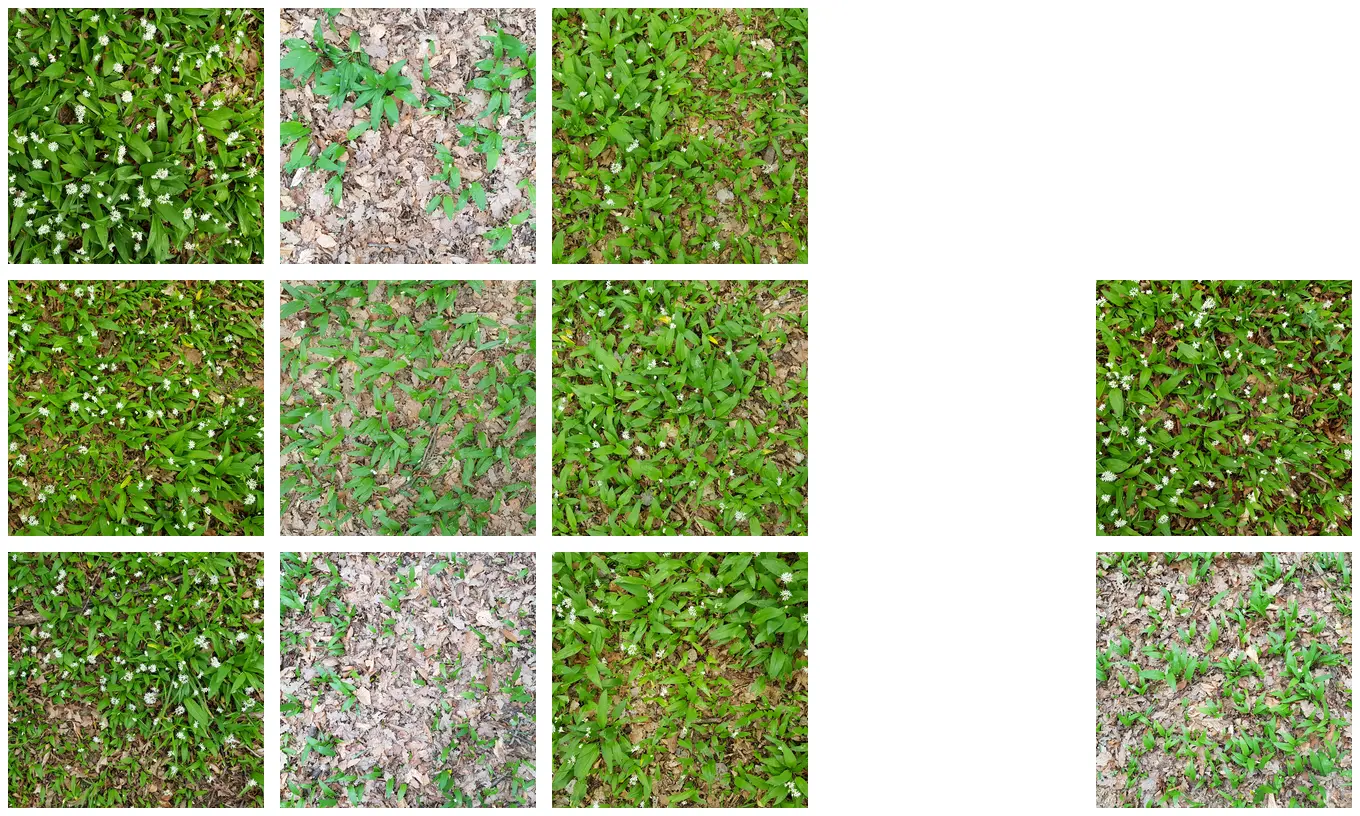

## Set #6: Plants with Flowers on Dry Leaves

* **Description**:

- leafy plants with white flowers on a bed of dry brown leaves

- soft diffuse light, shaded areas under the plants

* **Number of Photos**:

- 9 train

- 2 test

* **Edits**:

- none yet, inpainting doesn't work well enough

- would remove long sticks and pieces of wood

* **Size**: 105.1 Mb

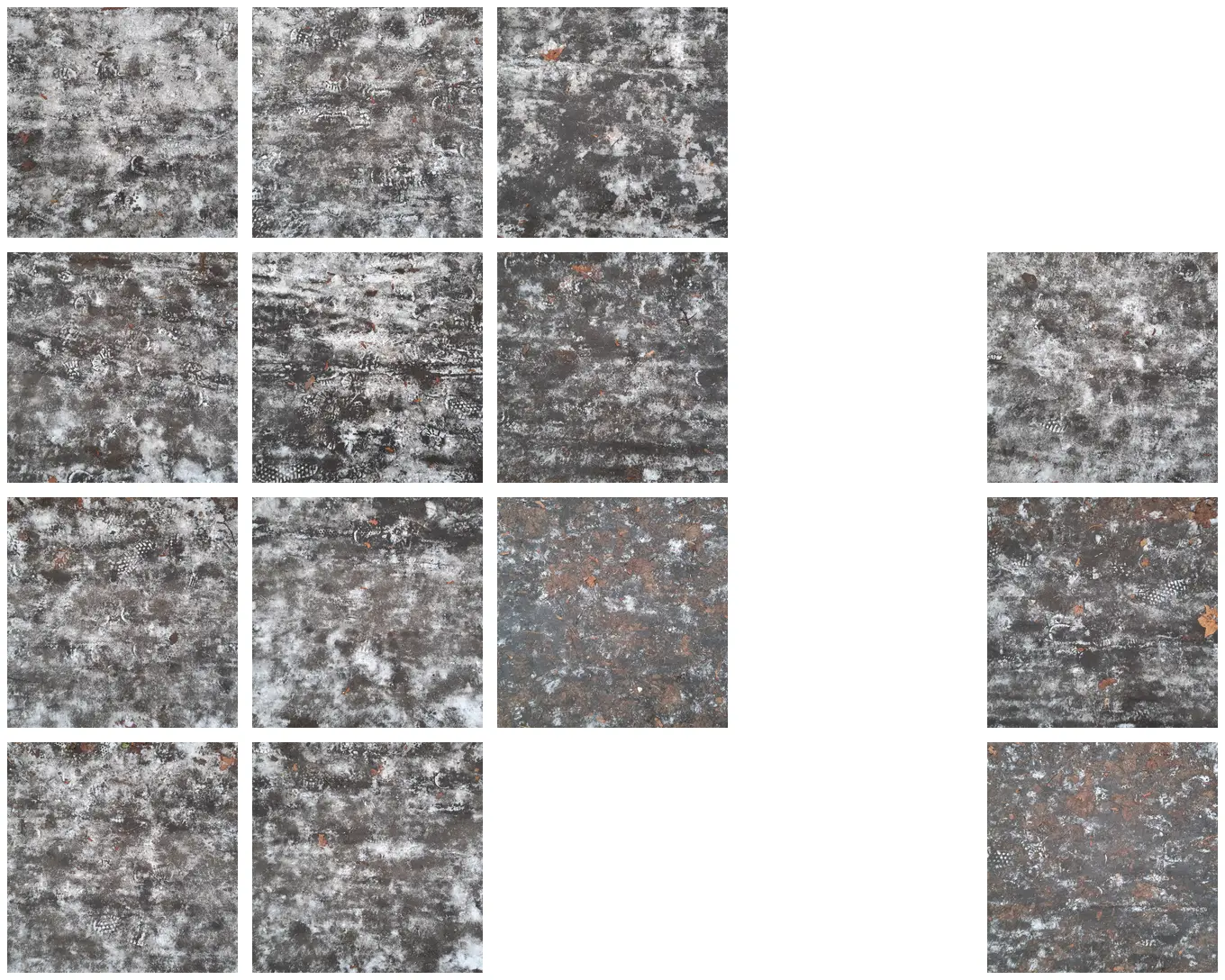

## Set #7: Frozen Footpath with Snow

* **Description**:

- frozen ground on a path with footprints

- areas with snow and dark brown ground beneath

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 11 train

- 3 test

* **Size**: 95.5 Mb

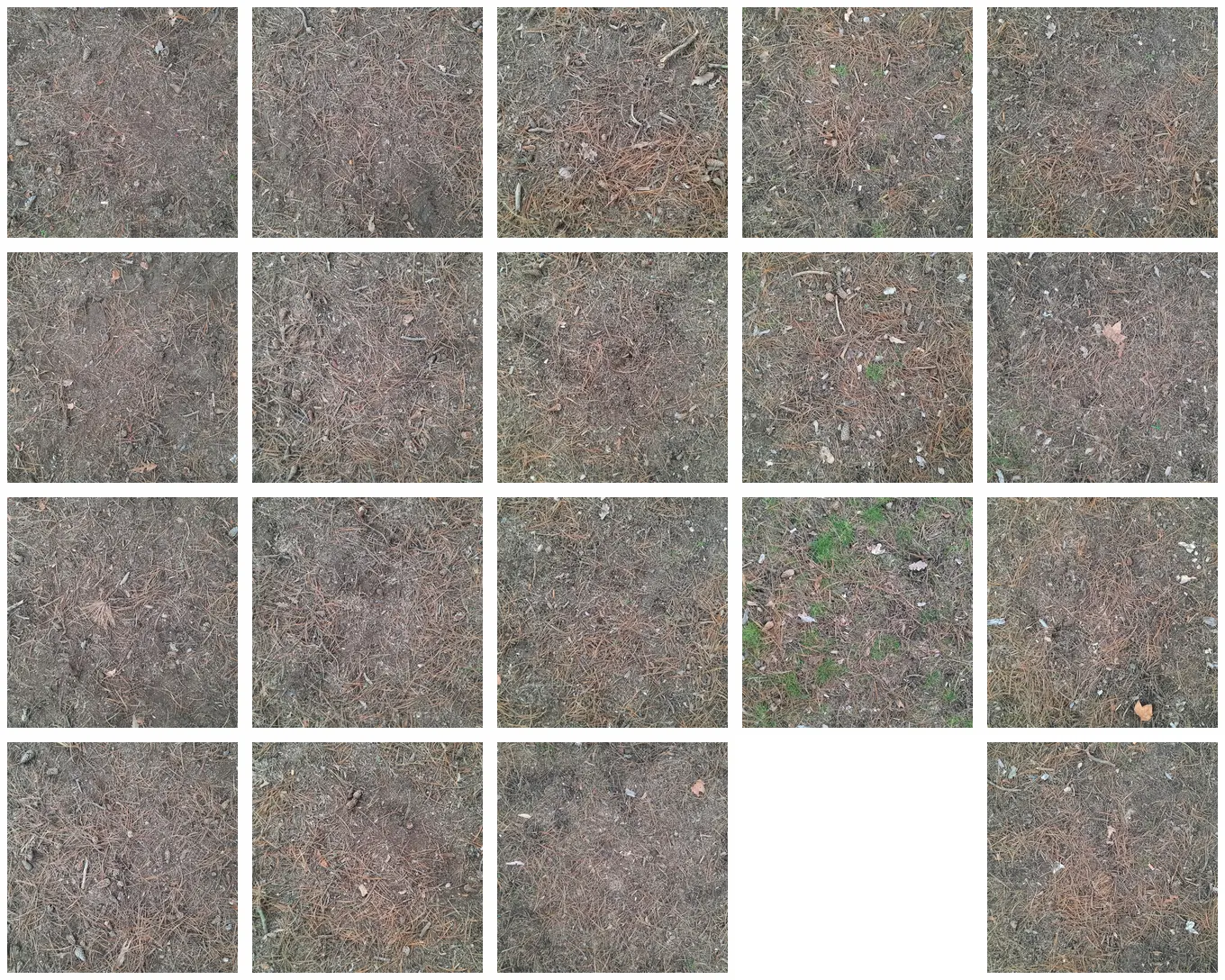

## Set #8: Pine Needles Forest Floor

* **Description**:

- forest floor with a mix of brown soil and grass

- variety of dry white leaves, sticks, pinecones, pine needles

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 15 train

- 4 test

* **Size**: 160.6 Mb

## Set #9: Snow on Grass and Dried Leaves

* **Description**:

- field in a park with short green grass

- large dried brown leaves and fallen snow on top

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 8 train

- 3 test

* **Size**: 99.8 Mb

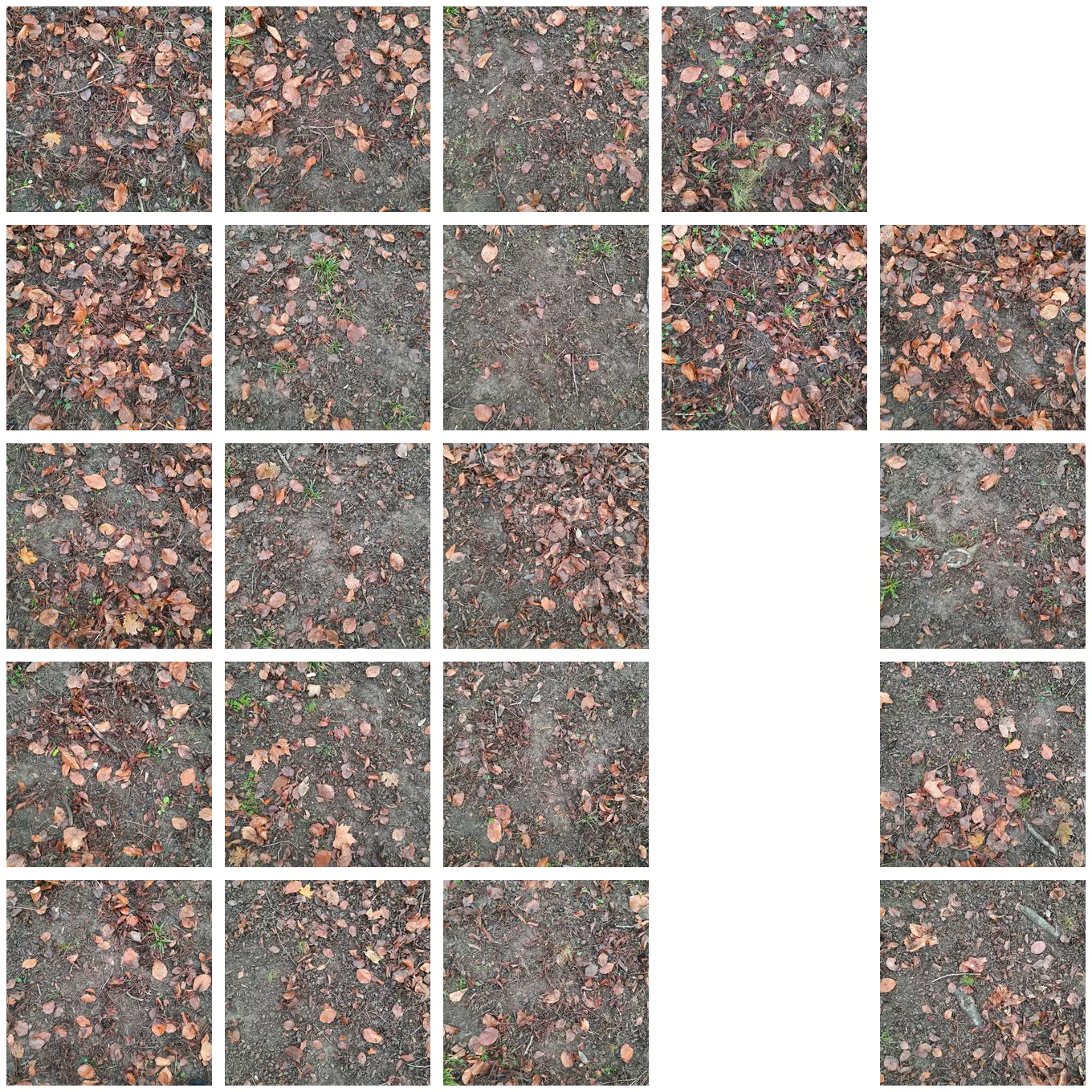

## Set #10: Brown Leaves on Wet Ground

* **Description**:

- fallen brown leaves on wet ground

- occasional tree root and twiglets

- diffuse lighting on a rainy day

* **Number of Photos**:

- 17 train

- 4 test

* **Size**: 186.2 Mb

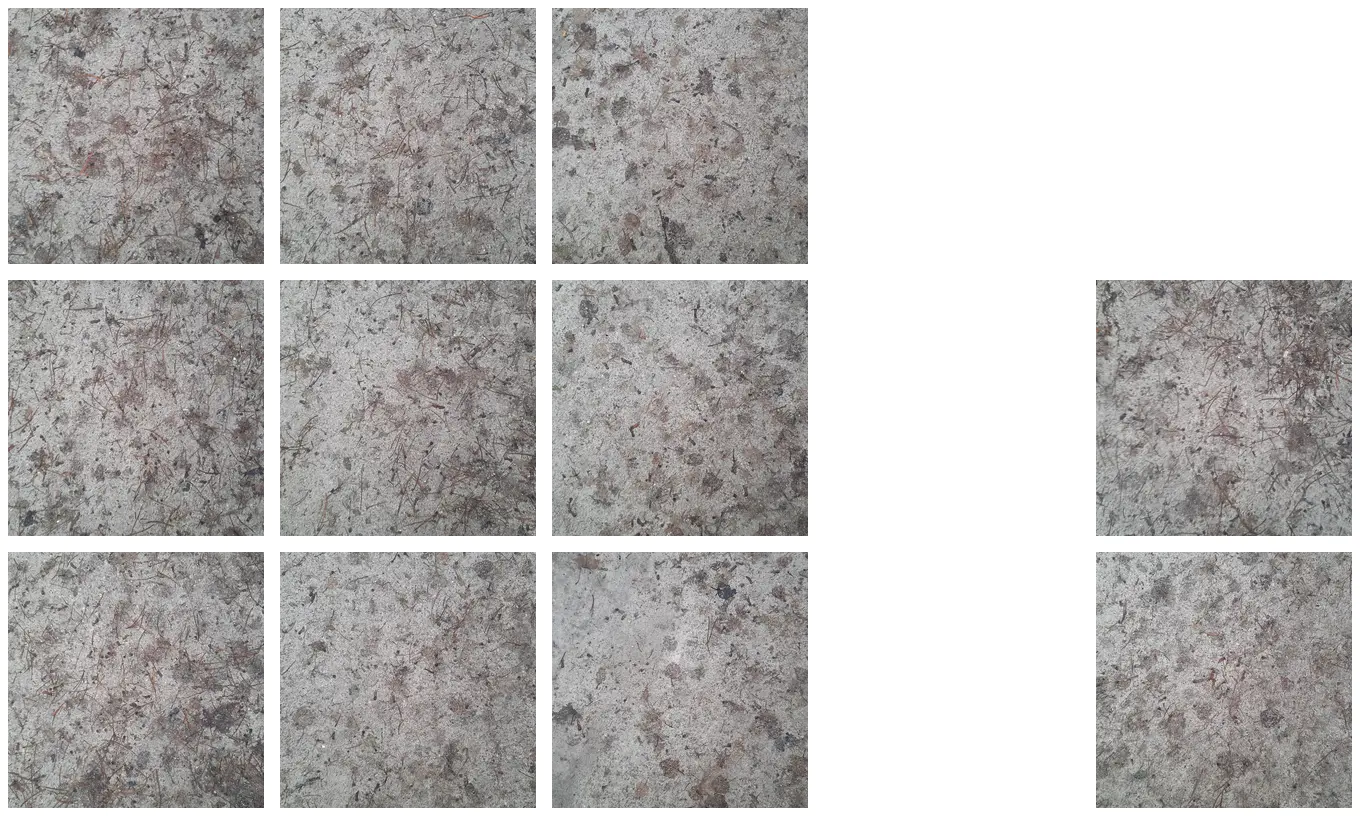

## Set #11: Wet Sand Path with Debris

* **Description**:

- hard sandy path in the rain

- decomposing leaves and other organic debris

- diffuse lighting on a rainy day

* **Number of Photos**:

- 17 train

- 4 test

* **Size**: 186.2 Mb

## Set #12: Wood Chips & Sawdust Sprinkled on Forest Path

* **Description**:

- wood chips, sawdust, twigs and roots on forest path

- intermittent sunlight with shadows of trees

* **Number of Photos**:

- 8 train

- 2 test

* **Size**: 110.4 Mb

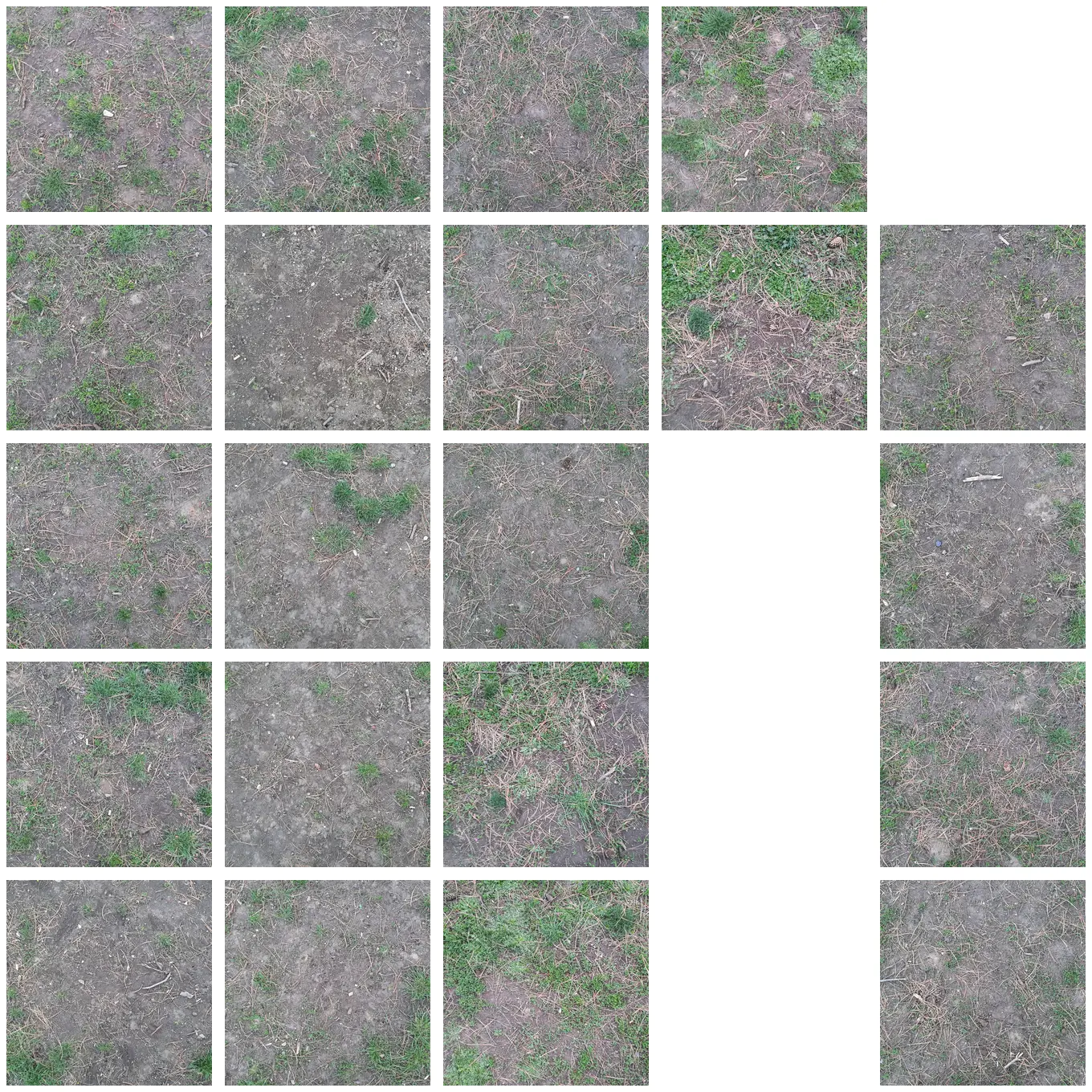

## Set #13: Young Grass Growing in the Dog Park

* **Description**:

- young grass growing in a dog park after overnight rain

- occasional stones, sticks and twigs, pine needles

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 17 train

- 4 test

* **Size**: 193.4 Mb

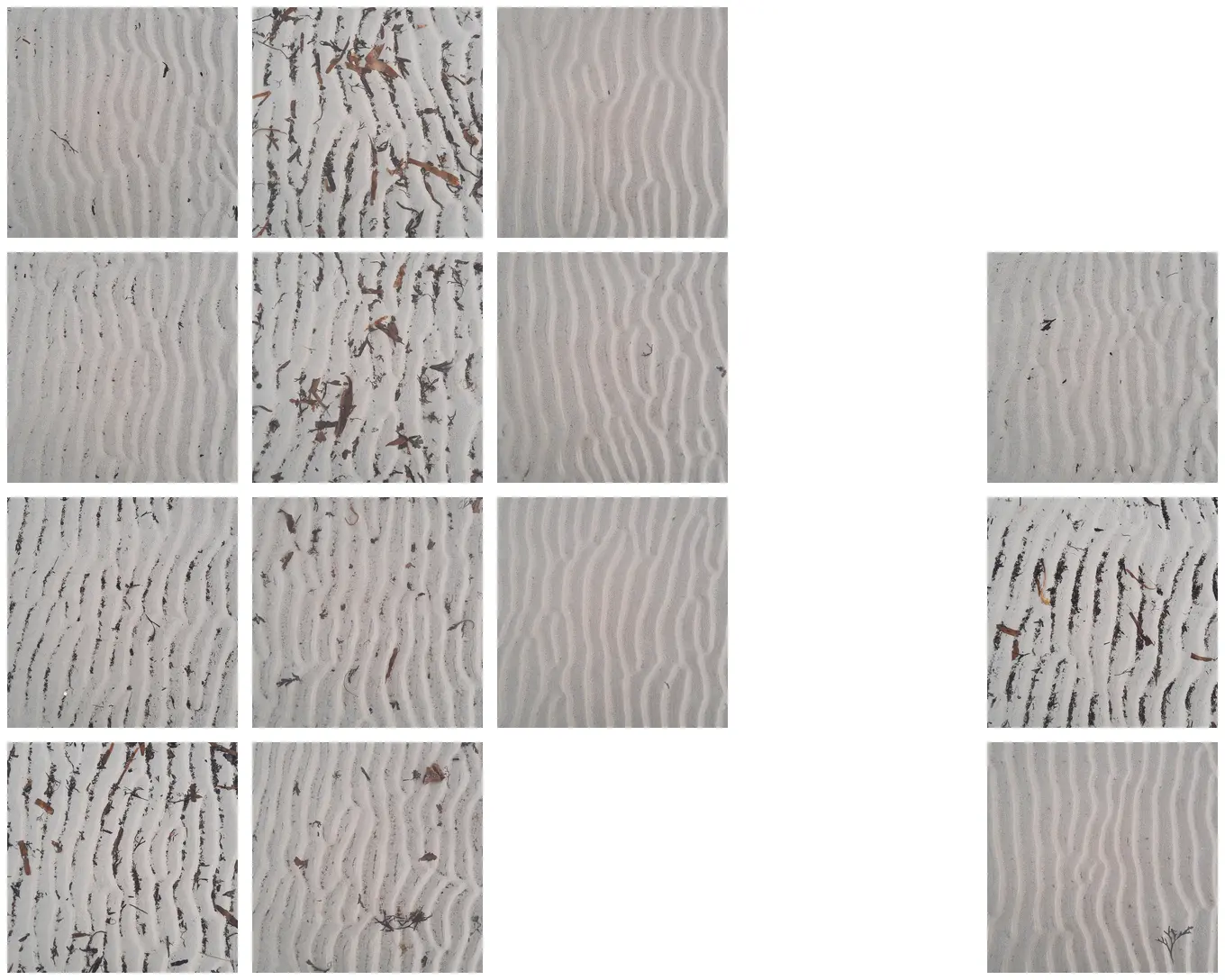

## Set #14: Wavy Wet Beach Sand

* **Description**:

- wavy wet sand on the beach after the tide retreated

- some dirt and large pieces of algae debris

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 11 train

- 3 test

* **Size**: 86.5 Mb

## Set #15: Dry Dirt Road and Debris from Trees

* **Description**:

- dirt road of dry compacted sand with debris on top

- old pine needles and dry brown leaves

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 8 train

- 2 test

* **Size**: 86.9 Mb

## Set #16: Sandy Beach Path with Grass Clumps

* **Description**:

- path with sand and clumps of grass heading towards the beach

- occasional blueish stones, leafy weeds, and yellow flowers

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 10 train

- 3 test

* **Size**: 118.8 Mb

## Set #17: Pine Needles and Brown Leaves on Park Floor

* **Description**:

- park floor with predominantly pine needles

- brown leaves from nearby trees, green grass underneath

- diffuse lighting on a cloudy day

* **Number of Photos**:

- 8 train

- 2 test

* **Size**: 99.9 Mb

| texturedesign/td01_natural-ground-textures | [

"task_categories:unconditional-image-generation",

"annotations_creators:expert-generated",

"size_categories:n<1K",

"source_datasets:original",

"license:cc-by-nc-4.0",

"texture-synthesis",

"photography",

"non-infringing",

"region:us"

] | 2022-11-19T17:43:30+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": [], "language": [], "license": ["cc-by-nc-4.0"], "multilinguality": [], "size_categories": ["n<1K"], "source_datasets": ["original"], "task_categories": ["unconditional-image-generation"], "task_ids": [], "pretty_name": "TD01: Natural Ground Texture Photos", "tags": ["texture-synthesis", "photography", "non-infringing"], "viewer": false} | 2023-09-02T09:21:04+00:00 |

a7ae4cad6d4d34f32c1912718be9037f0015b779 | starbotica/markkistler | [

"license:unknown",

"region:us"

] | 2022-11-19T19:23:57+00:00 | {"license": "unknown"} | 2022-11-19T19:37:19+00:00 |

|

bfc723a1831e441b95d5604a266ab939b48dd4f2 | # AutoTrain Dataset for project: autotrain_goodreads_string

## Dataset Description

This dataset has been automatically processed by AutoTrain for project autotrain_goodreads_string.

### Languages

The BCP-47 code for the dataset's language is en.

## Dataset Structure

### Data Instances

A sample from this dataset looks as follows:

```json

[

{

"target": 5,

"text": "This book was absolutely ADORABLE!!!!!!!!!!! It was an awesome, light and FUN read. \n I loved the characters but I absolutely LOVED Cam!!!!!!!!!!!! Major Swoooon Worthy! J \n \"You've been checking me out, haven't you? In-between your flaming insults? I feel like man candy.\" \n Seriously, between being HOT, FUNNY and OH SO VERY ADORABLE, Cam was the perfect catch!! \n \" I'm not going out with you Cam.\" \n \" I didn't ask you at this moment, now did I\" One side of his lips curved up. \" But you will eventually.\" \n \"You're delusional\" \n \"I'm determined.\" \n \" More like annoying.\" \n \" Most would say amazing.\" \n Cam and Avery's relationship is tough due to the secrets she keeps but he is the perfect match for breaking her out of her shell and facing her fears. \n This book is definitely a MUST READ. \n Trust me when I say this YOU will not regret it! \n www.Jenreadit.com"

},

{

"target": 4,

"text": "I FINISHED!!! This book took me FOREVER to read! But I am so glad I stuck with it, I really loved it. It took me a while to get into: this book has a TON of characters and storylines. But once I hit about the 100-page mark, I became very invested in the story and couldn't wait to see what would happen with Lizzie, Lane, Edward, Gin and the rest of the family. Oh, and Samuel T. There's a little bit of sex but mostly this is a sweeping romance novel, much like Dynasty and Dallas from the 1980's. If you loved those series, you will love this book. There's betrayal, unrequited love, family fortunes, and much scheming. \n There are many characters to love here and many to hate. Some are over-the-top but I loved the central storyline involving Lane and Lizzie. \n The author really gets the Southern mannerisms right, and the backdrop of the Kentucky Bourbon industry is fascinating. This book ends not so much on a cliffhanger but with many, many loose ends, and I will eagerly pick up the next book in this series."

}

]

```

### Dataset Fields

The dataset has the following fields (also called "features"):

```json

{

"target": "ClassLabel(num_classes=6, names=['0_stars', '1_stars', '2_stars', '3_stars', '4_stars', '5_stars'], id=None)",

"text": "Value(dtype='string', id=None)"

}

```
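As a minimal sketch, the integer `target` in each sample can be mapped back to its label name using the `names` list from the `ClassLabel` definition above. The helper name `decode_target` is hypothetical and not part of AutoTrain or the `datasets` library; only the label names come from the card.

```python
# Label names copied verbatim from the ClassLabel definition in the card.
STAR_NAMES = ["0_stars", "1_stars", "2_stars", "3_stars", "4_stars", "5_stars"]


def decode_target(target: int) -> str:
    """Return the label name for an integer class id (hypothetical helper)."""
    if not 0 <= target < len(STAR_NAMES):
        raise ValueError(f"target must be in [0, {len(STAR_NAMES) - 1}], got {target}")
    return STAR_NAMES[target]


if __name__ == "__main__":
    # The two sample records shown above carry targets 5 and 4.
    for target in (5, 4):
        print(target, "->", decode_target(target))
```

With the two sample records above, targets 5 and 4 decode to `5_stars` and `4_stars` respectively.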

### Dataset Splits

This dataset is split into a train and validation split. The split sizes are as follows:

| Split name | Num samples |

| ------------ | ------------------- |

| train | 2357 |

| valid | 592 |

| fernanda-dionello/good-reads-string | [

"task_categories:text-classification",

"language:en",

"region:us"

] | 2022-11-19T20:09:23+00:00 | {"language": ["en"], "task_categories": ["text-classification"]} | 2022-11-19T20:10:26+00:00 |

fd46dee0a684d1f651e353ce659e0f0ff11322e7 | # Dataset Card for "stefano-finetune"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | albluc24/stefano-finetune | [

"region:us"

] | 2022-11-19T20:30:42+00:00 | {"dataset_info": {"features": [{"name": "text", "dtype": "string"}, {"name": "audio", "dtype": "audio"}], "splits": [{"name": "eval", "num_bytes": 3732782.0, "num_examples": 1}, {"name": "train", "num_bytes": 227326609.0, "num_examples": 55}], "download_size": 0, "dataset_size": 231059391.0}} | 2022-11-19T20:46:15+00:00 |

ae1e4ca8b786994cd3192930ad28f1676b4b02ef | # Dataset Card for "two-minute-papers"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Whispering-GPT/two-minute-papers | [

"task_categories:automatic-speech-recognition",

"whisper",

"whispering",

"base",

"region:us"

] | 2022-11-19T20:52:17+00:00 | {"task_categories": ["automatic-speech-recognition"], "dataset_info": {"features": [{"name": "CHANNEL_NAME", "dtype": "string"}, {"name": "URL", "dtype": "string"}, {"name": "TITLE", "dtype": "string"}, {"name": "DESCRIPTION", "dtype": "string"}, {"name": "TRANSCRIPTION", "dtype": "string"}, {"name": "SEGMENTS", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 10435074, "num_examples": 737}], "download_size": 4626170, "dataset_size": 10435074}, "tags": ["whisper", "whispering", "base"]} | 2022-11-19T23:34:46+00:00 |

eb5f579468dc10ed0510d26e5f3de6b34f8700ca | # Dataset Card for "gal_yair_8300_100x100_fixed"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | galman33/gal_yair_8300_100x100_fixed | [

"region:us"

] | 2022-11-19T22:43:01+00:00 | {"dataset_info": {"features": [{"name": "lat", "dtype": "float64"}, {"name": "lon", "dtype": "float64"}, {"name": "country_code", "dtype": {"class_label": {"names": {"0": "ad", "1": "ae", "2": "al", "3": "aq", "4": "ar", "5": "au", "6": "bd", "7": "be", "8": "bg", "9": "bm", "10": "bo", "11": "br", "12": "bt", "13": "bw", "14": "ca", "15": "ch", "16": "cl", "17": "co", "18": "cz", "19": "de", "20": "dk", "21": "ec", "22": "ee", "23": "es", "24": "fi", "25": "fr", "26": "gb", "27": "gh", "28": "gl", "29": "gr", "30": "gt", "31": "hk", "32": "hr", "33": "hu", "34": "id", "35": "ie", "36": "il", "37": "is", "38": "it", "39": "ix", "40": "jp", "41": "kg", "42": "kh", "43": "kr", "44": "la", "45": "lk", "46": "ls", "47": "lt", "48": "lu", "49": "lv", "50": "me", "51": "mg", "52": "mk", "53": "mn", "54": "mo", "55": "mt", "56": "mx", "57": "my", "58": "nl", "59": "no", "60": "nz", "61": "pe", "62": "ph", "63": "pl", "64": "pt", "65": "ro", "66": "rs", "67": "ru", "68": "se", "69": "sg", "70": "si", "71": "sk", "72": "sn", "73": "sz", "74": "th", "75": "tn", "76": "tr", "77": "tw", "78": "ua", "79": "ug", "80": "us", "81": "uy", "82": "za"}}}}, {"name": "image", "dtype": "image"}], "splits": [{"name": "train", "num_bytes": 142019429.5, "num_examples": 8300}], "download_size": 141877783, "dataset_size": 142019429.5}} | 2022-11-26T13:15:23+00:00 |

f7a01f58b105e3b089b9c492cab91d4bc5d9c0ca | hounst/whitn | [

"license:cc",

"region:us"

] | 2022-11-20T00:55:52+00:00 | {"license": "cc"} | 2022-11-20T01:18:07+00:00 |

|

6af6fbe04f185dabec6605e5c3858bac1dd23396 |

# Genshin Datasets for Diff-SVC

## Repository Links

| Repository | Link |

| :--------------------------------------------------------: | :----------------------------------------------------------: |

| Diff-SVC | [Click here](https://github.com/prophesier/diff-svc) |

| 44.1 kHz vocoder | [Click here](https://openvpi.github.io/vocoders) |

| Genshin voice dataset | [Click here](https://github.com/w4123/GenshinVoice) |

| Pre-trained base models for training (for training on this dataset yourself; also usable for non-Genshin models) | [Click here](https://huggingface.co/Erythrocyte/Diff-SVC_Pre-trained_Models) |

| Trained Genshin Diff-SVC models (usable for fan-made derivative works) | [Click here](https://huggingface.co/Erythrocyte/Diff-SVC_Genshin_Models) |

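## Introduction

This dataset is for training Diff-SVC Genshin models. All uploaded datasets have already undergone `long-audio slicing` and `loudness matching`, so they can be `preprocessed and trained on directly`. Because each character's dataset is small, training together with a `pre-trained model` is recommended. The pre-trained models are listed in the table above, which also links to a `detailed tutorial` and `several optional pre-trained models`.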

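## Usage (all steps below must be run from the Diff-SVC directory)

### Windows

1. Download the dataset you need.

2. Extract it into the Diff-SVC root directory; if prompted to overwrite, confirm the overwrite.

4. Run the following commands in order to preprocess the data: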

```bash

set PYTHONPATH=.

set CUDA_VISIBLE_DEVICES=0

python preprocessing/binarize.py --config training/config_nsf.yaml

```

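5. Follow the tutorial to load a pre-trained model and train:

Tutorial: https://huggingface.co/Erythrocyte/Diff-SVC_Pre-trained_Models

### Linux

1. Run the following command to download the dataset you need (Nahida is used as the example here; for other characters, right-click a link in the table below and copy it):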

```bash

wget https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Sumeru/nahida.zip

```

2. Run the following command to extract it into the Diff-SVC root directory; enter y if prompted to overwrite:

```bash

unzip nahida.zip

```

4. Run the following commands in order to preprocess the data:

```bash

export PYTHONPATH=.

CUDA_VISIBLE_DEVICES=0 python preprocessing/binarize.py --config training/config_nsf.yaml

```

5. Follow the tutorial to load a pre-trained model and train:

Tutorial: https://huggingface.co/Erythrocyte/Diff-SVC_Pre-trained_Models

## Download Links

| Region | Character | Download |

| :--: | :----------: | :----------------------------------------------------------: |

| Mondstadt | Eula | [eula.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/eula.zip) |

| Mondstadt | Albedo | [albedo.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/albedo.zip) |

| Mondstadt | Venti | [venti.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/venti.zip) |

| Mondstadt | Mona | [mona.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/mona.zip) |

| Mondstadt | Klee | [klee.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/klee.zip) |

| Mondstadt | Jean | [jean.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/jean.zip) |

| Mondstadt | Diluc | [diluc.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Mondstadt/diluc.zip) |

| Liyue | Zhongli | [zhongli.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Liyue/zhongli.zip) |

| Inazuma | Raiden Shogun | [raiden.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Inazuma/raiden.zip) |

| Sumeru | Wanderer (Scaramouche) | [wanderer.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Sumeru/wanderer.zip) |

| Sumeru | Nahida | [nahida.zip](https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main/Sumeru/nahida.zip) |
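The links in the table above share one pattern: a base URL, a region folder, and an archive name. As a rough sketch only, the download and extraction steps could be scripted in Python with the standard library. The helper names `dataset_url` and `download_and_extract` are hypothetical, and the region-to-archive mapping is copied from the table rather than queried from the repository.

```python
import urllib.request
import zipfile
from pathlib import Path

# Base URL taken from the download links in the table above.
BASE_URL = "https://huggingface.co/datasets/Erythrocyte/Diff-SVC_Genshin_Datasets/resolve/main"

# Region folder -> archive names, copied from the table (not fetched live).
ARCHIVES = {
    "Mondstadt": ["eula", "albedo", "venti", "mona", "klee", "jean", "diluc"],
    "Liyue": ["zhongli"],
    "Inazuma": ["raiden"],
    "Sumeru": ["wanderer", "nahida"],
}


def dataset_url(character: str) -> str:
    """Build the download URL for one character archive (hypothetical helper)."""
    for region, names in ARCHIVES.items():
        if character in names:
            return f"{BASE_URL}/{region}/{character}.zip"
    raise KeyError(f"no archive listed for {character!r}")


def download_and_extract(character: str, dest: Path = Path(".")) -> Path:
    """Download the archive into dest and unpack it there, overwriting files."""
    archive = dest / f"{character}.zip"
    urllib.request.urlretrieve(dataset_url(character), str(archive))
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
    return archive


if __name__ == "__main__":
    # Only prints the URL; call download_and_extract("nahida") from the
    # Diff-SVC root directory to actually fetch and unpack the data.
    print(dataset_url("nahida"))
```

Unlike `unzip`, `ZipFile.extractall` overwrites existing files without prompting, which matches the instruction to accept the overwrite.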

| Erythrocyte/Diff-SVC_Genshin_Datasets | [

"Diff-SVC",

"Genshin",

"Genshin Impact",

"Voice Data",

"Voice Dataset",

"region:us"

] | 2022-11-20T01:25:40+00:00 | {"tags": ["Diff-SVC", "Genshin", "Genshin Impact", "Voice Data", "Voice Dataset"]} | 2022-12-19T01:33:22+00:00 |

d6115c5b9a52a758d6f0d08f0bd9b19df294edf9 |

# Dataset Card for EUWikipedias: A dataset of Wikipedias in the EU languages

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:** [Joel Niklaus](mailto:[email protected])

### Dataset Summary

Wikipedia dataset containing cleaned articles of all languages.