| Column | Type | Values / lengths |
|---|---|---|
| pipeline_tag | stringclasses | 48 values |
| library_name | stringclasses | 198 values |
| text | stringlengths | 1 to 900k |
| metadata | stringlengths | 2 to 438k |
| id | stringlengths | 5 to 122 |
| last_modified | null | |
| tags | listlengths | 1 to 1.84k |
| sha | null | |
| created_at | stringlengths | 25 to 25 |
| arxiv | listlengths | 0 to 201 |
| languages | listlengths | 0 to 1.83k |
| tags_str | stringlengths | 17 to 9.34k |
| text_str | stringlengths | 0 to 389k |
| text_lists | listlengths | 0 to 722 |
| processed_texts | listlengths | 1 to 723 |

text2text-generation

|

transformers

|

This model is used in the paper **Generative Relation Linking for Question Answering over Knowledge Bases**. [ArXiv](https://arxiv.org/abs/2108.07337), [GitHub](https://github.com/IBM/kbqa-relation-linking)

## Citation

```bibtex
@inproceedings{rossiello-genrl-2021,
  title={Generative relation linking for question answering over knowledge bases},
  author={Rossiello, Gaetano and Mihindukulasooriya, Nandana and Abdelaziz, Ibrahim and Bornea, Mihaela and Gliozzo, Alfio and Naseem, Tahira and Kapanipathi, Pavan},
  booktitle={International Semantic Web Conference},
  pages={321--337},
  year={2021},
  organization={Springer},
  url={https://link.springer.com/chapter/10.1007/978-3-030-88361-4_19},
  doi={10.1007/978-3-030-88361-4_19}
}
```

|

{"license": "apache-2.0"}

|

gaetangate/bart-large_genrl_lcquad1

| null |

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"arxiv:2108.07337",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2108.07337"

] |

[] |

TAGS

#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

This model is used in the paper Generative Relation Linking for Question Answering over Knowledge Bases. ArXiv, GitHub

|

[] |

[

"TAGS\n#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n"

] |

text2text-generation

|

transformers

|

This model is used in the paper **Generative Relation Linking for Question Answering over Knowledge Bases**. [ArXiv](https://arxiv.org/abs/2108.07337), [GitHub](https://github.com/IBM/kbqa-relation-linking)

## Citation

```bibtex
@inproceedings{rossiello-genrl-2021,
  title={Generative relation linking for question answering over knowledge bases},
  author={Rossiello, Gaetano and Mihindukulasooriya, Nandana and Abdelaziz, Ibrahim and Bornea, Mihaela and Gliozzo, Alfio and Naseem, Tahira and Kapanipathi, Pavan},
  booktitle={International Semantic Web Conference},
  pages={321--337},
  year={2021},
  organization={Springer},
  url={https://link.springer.com/chapter/10.1007/978-3-030-88361-4_19},
  doi={10.1007/978-3-030-88361-4_19}
}
```

|

{"license": "apache-2.0"}

|

gaetangate/bart-large_genrl_lcquad2

| null |

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"arxiv:2108.07337",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2108.07337"

] |

[] |

TAGS

#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

This model is used in the paper Generative Relation Linking for Question Answering over Knowledge Bases. ArXiv, GitHub

|

[] |

[

"TAGS\n#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n"

] |

text2text-generation

|

transformers

|

This model is used in the paper **Generative Relation Linking for Question Answering over Knowledge Bases**. [ArXiv](https://arxiv.org/abs/2108.07337), [GitHub](https://github.com/IBM/kbqa-relation-linking)

## Citation

```bibtex
@inproceedings{rossiello-genrl-2021,
  title={Generative relation linking for question answering over knowledge bases},
  author={Rossiello, Gaetano and Mihindukulasooriya, Nandana and Abdelaziz, Ibrahim and Bornea, Mihaela and Gliozzo, Alfio and Naseem, Tahira and Kapanipathi, Pavan},
  booktitle={International Semantic Web Conference},
  pages={321--337},
  year={2021},
  organization={Springer},
  url={https://link.springer.com/chapter/10.1007/978-3-030-88361-4_19},
  doi={10.1007/978-3-030-88361-4_19}
}
```

|

{"license": "apache-2.0"}

|

gaetangate/bart-large_genrl_qald9

| null |

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"arxiv:2108.07337",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2108.07337"

] |

[] |

TAGS

#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

This model is used in the paper Generative Relation Linking for Question Answering over Knowledge Bases. ArXiv, GitHub

|

[] |

[

"TAGS\n#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n"

] |

text2text-generation

|

transformers

|

This model is used in the paper **Generative Relation Linking for Question Answering over Knowledge Bases**. [ArXiv](https://arxiv.org/abs/2108.07337), [GitHub](https://github.com/IBM/kbqa-relation-linking)

## Citation

```bibtex
@inproceedings{rossiello-genrl-2021,
  title={Generative relation linking for question answering over knowledge bases},
  author={Rossiello, Gaetano and Mihindukulasooriya, Nandana and Abdelaziz, Ibrahim and Bornea, Mihaela and Gliozzo, Alfio and Naseem, Tahira and Kapanipathi, Pavan},
  booktitle={International Semantic Web Conference},
  pages={321--337},
  year={2021},
  organization={Springer},
  url={https://link.springer.com/chapter/10.1007/978-3-030-88361-4_19},
  doi={10.1007/978-3-030-88361-4_19}
}
```

|

{"license": "apache-2.0"}

|

gaetangate/bart-large_genrl_simpleq

| null |

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"arxiv:2108.07337",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2108.07337"

] |

[] |

TAGS

#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

This model is used in the paper Generative Relation Linking for Question Answering over Knowledge Bases. ArXiv, GitHub

|

[] |

[

"TAGS\n#transformers #pytorch #bart #text2text-generation #arxiv-2108.07337 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n"

] |

null | null |

test 123

|

{}

|

gaga42gaga42/test

| null |

[

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#region-us

|

test 123

|

[] |

[

"TAGS\n#region-us \n"

] |

text-generation

|

transformers

|

# Generating Right Wing News Using GPT2

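### I built a custom model using data from Kaggle

This is a new GPT-2 model fine-tuned on Fox News data.

### My model can be accessed at gagan3012/Fox-News-Generator

Check the [BenchmarkTest](https://github.com/gagan3012/Fox-News-Generator/blob/master/BenchmarkTest.ipynb) notebook for results. The model is hosted at [gagan3012/Fox-News-Generator](https://huggingface.co/gagan3012/Fox-News-Generator).

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gagan3012/Fox-News-Generator")
# AutoModelWithLMHead is deprecated; AutoModelForCausalLM loads GPT-2 with its language-modeling head
model = AutoModelForCausalLM.from_pretrained("gagan3012/Fox-News-Generator")

# Sample a continuation of an illustrative prompt (the prompt text is only an example)
inputs = tokenizer("Breaking news:", return_tensors="pt")
outputs = model.generate(**inputs, max_new_tokens=50, do_sample=True, pad_token_id=tokenizer.eos_token_id)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```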

|

{}

|

gagan3012/Fox-News-Generator

| null |

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# Generating Right Wing News Using GPT2

### I have built a custom model for it using data from Kaggle

Creating a new finetuned model using data from FOX news

### My model can be accessed at gagan3012/Fox-News-Generator

Check the BenchmarkTest notebook for results

Find the model at gagan3012/Fox-News-Generator

|

[

"# Generating Right Wing News Using GPT2",

"### I have built a custom model for it using data from Kaggle \n\nCreating a new finetuned model using data from FOX news",

"### My model can be accessed at gagan3012/Fox-News-Generator\n\nCheck the BenchmarkTest notebook for results\n\nFind the model at gagan3012/Fox-News-Generator"

] |

[

"TAGS\n#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# Generating Right Wing News Using GPT2",

"### I have built a custom model for it using data from Kaggle \n\nCreating a new finetuned model using data from FOX news",

"### My model can be accessed at gagan3012/Fox-News-Generator\n\nCheck the BenchmarkTest notebook for results\n\nFind the model at gagan3012/Fox-News-Generator"

] |

null |

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ViTGPT2I2A

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the vizwiz dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0708

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- total_train_batch_size: 4

- total_eval_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

- mixed_precision_training: Native AMP
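The two "total" batch sizes follow from the per-device sizes and the device count. The sketch below assumes data-parallel training with no gradient accumulation. The card lists no `gradient_accumulation_steps`, so the value of 1 is an assumption, and `effective_batch_size` is a hypothetical helper:

```python
def effective_batch_size(per_device: int, num_devices: int, grad_accum_steps: int = 1) -> int:
    """Examples processed per step across all devices (hypothetical helper)."""
    # Each device handles its own per-device batch; under data parallelism the
    # per-step total is the product, times any gradient-accumulation factor.
    return per_device * num_devices * grad_accum_steps

# Values from the hyperparameter list above (grad_accum_steps=1 is assumed)
total_train = effective_batch_size(per_device=2, num_devices=2)
total_eval = effective_batch_size(per_device=2, num_devices=2)
print(total_train, total_eval)  # 4 4
```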

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.1528 | 0.17 | 1000 | 0.0869 |

| 0.0899 | 0.34 | 2000 | 0.0817 |

| 0.084 | 0.51 | 3000 | 0.0790 |

| 0.0814 | 0.68 | 4000 | 0.0773 |

| 0.0803 | 0.85 | 5000 | 0.0757 |

| 0.077 | 1.02 | 6000 | 0.0745 |

| 0.0739 | 1.19 | 7000 | 0.0740 |

| 0.0719 | 1.37 | 8000 | 0.0737 |

| 0.0717 | 1.54 | 9000 | 0.0730 |

| 0.0731 | 1.71 | 10000 | 0.0727 |

| 0.0708 | 1.88 | 11000 | 0.0720 |

| 0.0697 | 2.05 | 12000 | 0.0717 |

| 0.0655 | 2.22 | 13000 | 0.0719 |

| 0.0653 | 2.39 | 14000 | 0.0719 |

| 0.0657 | 2.56 | 15000 | 0.0712 |

| 0.0663 | 2.73 | 16000 | 0.0710 |

| 0.0654 | 2.9 | 17000 | 0.0708 |

| 0.0645 | 3.07 | 18000 | 0.0716 |

| 0.0616 | 3.24 | 19000 | 0.0712 |

| 0.0607 | 3.41 | 20000 | 0.0712 |

| 0.0611 | 3.58 | 21000 | 0.0711 |

| 0.0615 | 3.76 | 22000 | 0.0711 |

| 0.0614 | 3.93 | 23000 | 0.0710 |

| 0.0594 | 4.1 | 24000 | 0.0716 |

| 0.0587 | 4.27 | 25000 | 0.0715 |

| 0.0574 | 4.44 | 26000 | 0.0715 |

| 0.0579 | 4.61 | 27000 | 0.0715 |

| 0.0581 | 4.78 | 28000 | 0.0715 |

| 0.0579 | 4.95 | 29000 | 0.0715 |
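The headline evaluation loss of 0.0708 matches the lowest validation loss in this table (step 17000, epoch 2.9), not the final row (0.0715). That pattern fits a run that kept the best checkpoint, but the card does not say so. A quick check using only the table's values:

```python
# Validation losses copied from the table above, one per 1000 steps (steps 1000..29000)
losses = [
    0.0869, 0.0817, 0.0790, 0.0773, 0.0757, 0.0745, 0.0740, 0.0737,
    0.0730, 0.0727, 0.0720, 0.0717, 0.0719, 0.0719, 0.0712, 0.0710,
    0.0708, 0.0716, 0.0712, 0.0712, 0.0711, 0.0711, 0.0710, 0.0716,
    0.0715, 0.0715, 0.0715, 0.0715, 0.0715,
]
val_losses = list(zip(range(1000, 30000, 1000), losses))

# Lowest validation loss versus the value at the end of training
best_step, best_loss = min(val_losses, key=lambda pair: pair[1])
final_step, final_loss = val_losses[-1]
print(best_step, best_loss)    # 17000 0.0708
print(final_step, final_loss)  # 29000 0.0715
```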

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.2+cu113

- Datasets 1.18.3

- Tokenizers 0.11.0

|

{"license": "apache-2.0", "tags": ["image-captioning", "generated_from_trainer"], "model-index": [{"name": "ViTGPT2I2A", "results": []}]}

|

gagan3012/ViTGPT2I2A

| null |

[

"transformers",

"pytorch",

"vision-encoder-decoder",

"image-captioning",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #vision-encoder-decoder #image-captioning #generated_from_trainer #license-apache-2.0 #endpoints_compatible #has_space #region-us

|

ViTGPT2I2A

==========

This model is a fine-tuned version of google/vit-base-patch16-224-in21k on the vizwiz dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0708

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 2

* eval\_batch\_size: 2

* seed: 42

* distributed\_type: multi-GPU

* num\_devices: 2

* total\_train\_batch\_size: 4

* total\_eval\_batch\_size: 4

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 5.0

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.16.2

* Pytorch 1.10.2+cu113

* Datasets 1.18.3

* Tokenizers 0.11.0

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 2\n* eval\\_batch\\_size: 2\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* total\\_train\\_batch\\_size: 4\n* total\\_eval\\_batch\\_size: 4\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 5.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.2\n* Pytorch 1.10.2+cu113\n* Datasets 1.18.3\n* Tokenizers 0.11.0"

] |

[

"TAGS\n#transformers #pytorch #vision-encoder-decoder #image-captioning #generated_from_trainer #license-apache-2.0 #endpoints_compatible #has_space #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 2\n* eval\\_batch\\_size: 2\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* total\\_train\\_batch\\_size: 4\n* total\\_eval\\_batch\\_size: 4\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 5.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.2\n* Pytorch 1.10.2+cu113\n* Datasets 1.18.3\n* Tokenizers 0.11.0"

] |

null |

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ViTGPT2_VW

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0771

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- total_train_batch_size: 4

- total_eval_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0

- mixed_precision_training: Native AMP
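The optimizer line gives Adam's hyperparameters. The sketch below is a textbook scalar Adam update using those betas and epsilon with the listed learning rate. It is not the Trainer's own implementation. The card lists no weight decay, so the sketch assumes none, in which case an AdamW-style optimizer reduces to this update:

```python
import math

def adam_step(param, grad, m, v, t, lr=2e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for zero-initialised m
    v_hat = v / (1 - beta2 ** t)               # bias correction for zero-initialised v
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# With a constant gradient, each step moves the parameter by roughly lr
param, m, v = 1.0, 0.0, 0.0
for t in range(1, 4):
    param, m, v = adam_step(param, grad=0.5, m=m, v=v, t=t)
print(param)  # close to 1.0 - 3 * 2e-5
```

The normalisation by `sqrt(v_hat)` is why the step size stays near `lr` regardless of the gradient's scale.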

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.1256 | 0.03 | 1000 | 0.0928 |

| 0.0947 | 0.07 | 2000 | 0.0897 |

| 0.0889 | 0.1 | 3000 | 0.0859 |

| 0.0888 | 0.14 | 4000 | 0.0842 |

| 0.0866 | 0.17 | 5000 | 0.0831 |

| 0.0852 | 0.2 | 6000 | 0.0819 |

| 0.0833 | 0.24 | 7000 | 0.0810 |

| 0.0835 | 0.27 | 8000 | 0.0802 |

| 0.081 | 0.31 | 9000 | 0.0796 |

| 0.0803 | 0.34 | 10000 | 0.0789 |

| 0.0814 | 0.38 | 11000 | 0.0785 |

| 0.0799 | 0.41 | 12000 | 0.0780 |

| 0.0786 | 0.44 | 13000 | 0.0776 |

| 0.0796 | 0.48 | 14000 | 0.0771 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.2+cu113

- Datasets 1.18.3

- Tokenizers 0.11.0

|

{"tags": ["generated_from_trainer"], "model-index": [{"name": "ViTGPT2_VW", "results": []}]}

|

gagan3012/ViTGPT2_VW

| null |

[

"transformers",

"pytorch",

"vision-encoder-decoder",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #vision-encoder-decoder #generated_from_trainer #endpoints_compatible #region-us

|

ViTGPT2\_VW

===========

This model is a fine-tuned version of [](URL on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0771

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 2

* eval\_batch\_size: 2

* seed: 42

* distributed\_type: multi-GPU

* num\_devices: 2

* total\_train\_batch\_size: 4

* total\_eval\_batch\_size: 4

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 1.0

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.16.2

* Pytorch 1.10.2+cu113

* Datasets 1.18.3

* Tokenizers 0.11.0

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 2\n* eval\\_batch\\_size: 2\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* total\\_train\\_batch\\_size: 4\n* total\\_eval\\_batch\\_size: 4\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 1.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.2\n* Pytorch 1.10.2+cu113\n* Datasets 1.18.3\n* Tokenizers 0.11.0"

] |

[

"TAGS\n#transformers #pytorch #vision-encoder-decoder #generated_from_trainer #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 2\n* eval\\_batch\\_size: 2\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* total\\_train\\_batch\\_size: 4\n* total\\_eval\\_batch\\_size: 4\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 1.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.2\n* Pytorch 1.10.2+cu113\n* Datasets 1.18.3\n* Tokenizers 0.11.0"

] |

image-to-text

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ViTGPT2_vizwiz

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0719

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.1207 | 0.07 | 1000 | 0.0906 |

| 0.0916 | 0.14 | 2000 | 0.0861 |

| 0.0879 | 0.2 | 3000 | 0.0840 |

| 0.0856 | 0.27 | 4000 | 0.0822 |

| 0.0834 | 0.34 | 5000 | 0.0806 |

| 0.0817 | 0.41 | 6000 | 0.0795 |

| 0.0812 | 0.48 | 7000 | 0.0785 |

| 0.0808 | 0.55 | 8000 | 0.0779 |

| 0.0796 | 0.61 | 9000 | 0.0771 |

| 0.0786 | 0.68 | 10000 | 0.0767 |

| 0.0774 | 0.75 | 11000 | 0.0762 |

| 0.0772 | 0.82 | 12000 | 0.0758 |

| 0.0756 | 0.89 | 13000 | 0.0754 |

| 0.0759 | 0.96 | 14000 | 0.0750 |

| 0.0756 | 1.02 | 15000 | 0.0748 |

| 0.0726 | 1.09 | 16000 | 0.0745 |

| 0.0727 | 1.16 | 17000 | 0.0745 |

| 0.0715 | 1.23 | 18000 | 0.0742 |

| 0.0726 | 1.3 | 19000 | 0.0741 |

| 0.072 | 1.37 | 20000 | 0.0738 |

| 0.0723 | 1.43 | 21000 | 0.0735 |

| 0.0715 | 1.5 | 22000 | 0.0734 |

| 0.0724 | 1.57 | 23000 | 0.0732 |

| 0.0723 | 1.64 | 24000 | 0.0730 |

| 0.0718 | 1.71 | 25000 | 0.0729 |

| 0.07 | 1.78 | 26000 | 0.0728 |

| 0.0702 | 1.84 | 27000 | 0.0726 |

| 0.0704 | 1.91 | 28000 | 0.0725 |

| 0.0703 | 1.98 | 29000 | 0.0725 |

| 0.0686 | 2.05 | 30000 | 0.0726 |

| 0.0687 | 2.12 | 31000 | 0.0726 |

| 0.0688 | 2.19 | 32000 | 0.0724 |

| 0.0677 | 2.25 | 33000 | 0.0724 |

| 0.0665 | 2.32 | 34000 | 0.0725 |

| 0.0684 | 2.39 | 35000 | 0.0723 |

| 0.0678 | 2.46 | 36000 | 0.0722 |

| 0.0686 | 2.53 | 37000 | 0.0722 |

| 0.067 | 2.59 | 38000 | 0.0721 |

| 0.0669 | 2.66 | 39000 | 0.0721 |

| 0.0673 | 2.73 | 40000 | 0.0721 |

| 0.0673 | 2.8 | 41000 | 0.0720 |

| 0.0662 | 2.87 | 42000 | 0.0720 |

| 0.0681 | 2.94 | 43000 | 0.0719 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

|

{"tags": ["generated_from_trainer", "image-to-text"], "model-index": [{"name": "ViTGPT2_vizwiz", "results": []}]}

|

gagan3012/ViTGPT2_vizwiz

| null |

[

"transformers",

"pytorch",

"vision-encoder-decoder",

"generated_from_trainer",

"image-to-text",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #vision-encoder-decoder #generated_from_trainer #image-to-text #endpoints_compatible #has_space #region-us

|

ViTGPT2\_vizwiz

===============

This model is a fine-tuned version of [](URL on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0719

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 8

* eval\_batch\_size: 8

* seed: 42

* distributed\_type: multi-GPU

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 3.0

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.17.0.dev0

* Pytorch 1.10.2+cu102

* Datasets 1.18.2.dev0

* Tokenizers 0.11.0

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* distributed\\_type: multi-GPU\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.17.0.dev0\n* Pytorch 1.10.2+cu102\n* Datasets 1.18.2.dev0\n* Tokenizers 0.11.0"

] |

[

"TAGS\n#transformers #pytorch #vision-encoder-decoder #generated_from_trainer #image-to-text #endpoints_compatible #has_space #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* distributed\\_type: multi-GPU\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.17.0.dev0\n* Pytorch 1.10.2+cu102\n* Datasets 1.18.2.dev0\n* Tokenizers 0.11.0"

] |

token-classification

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-tiny-finetuned-ner

This model is a fine-tuned version of [prajjwal1/bert-tiny](https://huggingface.co/prajjwal1/bert-tiny) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1689

- Precision: 0.8083

- Recall: 0.8274

- F1: 0.8177

- Accuracy: 0.9598
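The reported F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The following check uses the unrounded precision and recall from this card's metadata:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Unrounded values from this card's model-index metadata
precision = 0.8083060109289617
recall = 0.8273856136033113
print(round(f1_score(precision, recall), 4))  # 0.8177
```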

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0355 | 1.0 | 878 | 0.1692 | 0.8072 | 0.8248 | 0.8159 | 0.9594 |

| 0.0411 | 2.0 | 1756 | 0.1678 | 0.8101 | 0.8277 | 0.8188 | 0.9600 |

| 0.0386 | 3.0 | 2634 | 0.1697 | 0.8103 | 0.8269 | 0.8186 | 0.9599 |

| 0.0373 | 4.0 | 3512 | 0.1694 | 0.8106 | 0.8263 | 0.8183 | 0.9600 |

| 0.0383 | 5.0 | 4390 | 0.1689 | 0.8083 | 0.8274 | 0.8177 | 0.9598 |
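The 878 steps per epoch are consistent with the CoNLL-2003 English training split (14,041 sentences) at a batch size of 16, with the last partial batch kept. The card lists no `num_devices`, so single-device training is an assumption here. `steps_per_epoch` is a hypothetical helper:

```python
import math

def steps_per_epoch(num_examples: int, batch_size: int, drop_last: bool = False) -> int:
    """Optimizer steps in one epoch; a final partial batch counts unless dropped."""
    if drop_last:
        return num_examples // batch_size
    return math.ceil(num_examples / batch_size)

CONLL2003_TRAIN_SENTENCES = 14041  # size of the conll2003 "train" split
per_epoch = steps_per_epoch(CONLL2003_TRAIN_SENTENCES, batch_size=16)
print(per_epoch, per_epoch * 5)  # 878 4390
```

Five epochs at 878 steps each reproduce the final step count of 4390 in the table.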

### Framework versions

- Transformers 4.10.0

- Pytorch 1.9.0+cu102

- Datasets 1.11.0

- Tokenizers 0.10.3

|

{"tags": ["generated_from_trainer"], "datasets": ["conll2003"], "metrics": ["precision", "recall", "f1", "accuracy"], "model-index": [{"name": "bert-tiny-finetuned-ner", "results": [{"task": {"type": "token-classification", "name": "Token Classification"}, "dataset": {"name": "conll2003", "type": "conll2003", "args": "conll2003"}, "metrics": [{"type": "precision", "value": 0.8083060109289617, "name": "Precision"}, {"type": "recall", "value": 0.8273856136033113, "name": "Recall"}, {"type": "f1", "value": 0.8177345348001547, "name": "F1"}, {"type": "accuracy", "value": 0.9597597979252387, "name": "Accuracy"}]}]}]}

|

gagan3012/bert-tiny-finetuned-ner

| null |

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #tensorboard #bert #token-classification #generated_from_trainer #dataset-conll2003 #model-index #autotrain_compatible #endpoints_compatible #has_space #region-us

|

bert-tiny-finetuned-ner

=======================

This model is a fine-tuned version of prajjwal1/bert-tiny on the conll2003 dataset.

It achieves the following results on the evaluation set:

* Loss: 0.1689

* Precision: 0.8083

* Recall: 0.8274

* F1: 0.8177

* Accuracy: 0.9598

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 5

### Training results

### Framework versions

* Transformers 4.10.0

* Pytorch 1.9.0+cu102

* Datasets 1.11.0

* Tokenizers 0.10.3

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 5",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.10.0\n* Pytorch 1.9.0+cu102\n* Datasets 1.11.0\n* Tokenizers 0.10.3"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #bert #token-classification #generated_from_trainer #dataset-conll2003 #model-index #autotrain_compatible #endpoints_compatible #has_space #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 5",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.10.0\n* Pytorch 1.9.0+cu102\n* Datasets 1.11.0\n* Tokenizers 0.10.3"

] |

token-classification

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0614

- Precision: 0.9274

- Recall: 0.9363

- F1: 0.9319

- Accuracy: 0.9840

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2403 | 1.0 | 878 | 0.0701 | 0.9101 | 0.9202 | 0.9151 | 0.9805 |

| 0.0508 | 2.0 | 1756 | 0.0600 | 0.9220 | 0.9350 | 0.9285 | 0.9833 |

| 0.0301 | 3.0 | 2634 | 0.0614 | 0.9274 | 0.9363 | 0.9319 | 0.9840 |
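With `lr_scheduler_type: linear`, the learning rate decays linearly from 2e-05 to zero over training. The table's final step (2634 = 3 epochs × 878 steps) gives the total step count. The card lists no warmup, so the sketch below assumes `warmup_steps=0`. The function follows the usual warmup-then-linear-decay shape, but it is a standalone illustration rather than the library's scheduler:

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 2e-5, warmup_steps: int = 0) -> float:
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr over the warmup steps
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr at the end of warmup to 0 at total_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

TOTAL_STEPS = 2634  # final step in the table above
for step in (0, 878, 1756, 2634):
    print(step, linear_lr(step, TOTAL_STEPS))
```

At the end of each epoch the rate has dropped by one third of its initial value, reaching zero at step 2634.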

### Framework versions

- Transformers 4.10.2

- Pytorch 1.9.0+cu102

- Datasets 1.12.0

- Tokenizers 0.10.3

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "datasets": ["conll2003"], "metrics": ["precision", "recall", "f1", "accuracy"], "model-index": [{"name": "distilbert-base-uncased-finetuned-ner", "results": [{"task": {"type": "token-classification", "name": "Token Classification"}, "dataset": {"name": "conll2003", "type": "conll2003", "args": "conll2003"}, "metrics": [{"type": "precision", "value": 0.9274238227146815, "name": "Precision"}, {"type": "recall", "value": 0.9363463474661595, "name": "Recall"}, {"type": "f1", "value": 0.9318637274549098, "name": "F1"}, {"type": "accuracy", "value": 0.9839865283492462, "name": "Accuracy"}]}]}]}

|

gagan3012/distilbert-base-uncased-finetuned-ner

| null |

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #tensorboard #distilbert #token-classification #generated_from_trainer #dataset-conll2003 #license-apache-2.0 #model-index #autotrain_compatible #endpoints_compatible #region-us

|

distilbert-base-uncased-finetuned-ner
=====================================

This model is a fine-tuned version of distilbert-base-uncased on the conll2003 dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0614

* Precision: 0.9274

* Recall: 0.9363

* F1: 0.9319

* Accuracy: 0.9840

Model description
-----------------

More information needed

Intended uses & limitations
---------------------------

More information needed

Training and evaluation data
----------------------------

More information needed

Training procedure
------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 3

### Training results

### Framework versions

* Transformers 4.10.2

* Pytorch 1.9.0+cu102

* Datasets 1.12.0

* Tokenizers 0.10.3

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.10.2\n* Pytorch 1.9.0+cu102\n* Datasets 1.12.0\n* Tokenizers 0.10.3"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #distilbert #token-classification #generated_from_trainer #dataset-conll2003 #license-apache-2.0 #model-index #autotrain_compatible #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.10.2\n* Pytorch 1.9.0+cu102\n* Datasets 1.12.0\n* Tokenizers 0.10.3"

] |

text2text-generation

|

transformers

|

# keytotext

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face 🤗

[PyPI](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the T5 model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) folder.

## Usage:

Example usage: [Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) folder.

```

pip install keytotext

```

## UI:

UI: [Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom Streamlit component that I built: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

{"language": "en", "license": "mit", "tags": ["keytotext", "k2t-base", "Keywords to Sentences"], "datasets": ["WebNLG", "Dart"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t-base

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"keytotext",

"k2t-base",

"Keywords to Sentences",

"en",

"dataset:WebNLG",

"dataset:Dart",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #t5 #text2text-generation #keytotext #k2t-base #Keywords to Sentences #en #dataset-WebNLG #dataset-Dart #license-mit #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

!keytotext (1)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face

## UI:

UI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: ](https://user-images.githubusercontent.com/49101362/116334480-f5e57a00-a7dd-11eb-987c-186477f94b6e.png)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face 🤗

[PyPI](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the T5 model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) folder.

## Usage:

Example usage: [Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) folder.

```

pip install keytotext

```

## UI:

UI: [Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom Streamlit component that I built: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

{"language": "en", "license": "mit", "tags": ["keytotext", "k2t", "Keywords to Sentences"], "datasets": ["common_gen"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t-new

| null |

[

"transformers",

"pytorch",

"jax",

"t5",

"text2text-generation",

"keytotext",

"k2t",

"Keywords to Sentences",

"en",

"dataset:common_gen",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #jax #t5 #text2text-generation #keytotext #k2t #Keywords to Sentences #en #dataset-common_gen #license-mit #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

!keytotext (1)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face

## UI:

UI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: ](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/notebooks/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

[API](https://github.com/gagan3012/keytotext#api)

[Docker Hub](https://hub.docker.com/r/gagan30/keytotext)

[Hugging Face models](https://huggingface.co/models?filter=keytotext)

[Documentation](https://keytotext.readthedocs.io/en/latest/?badge=latest)

[Code style: black](https://github.com/psf/black)

The idea is to build a model that takes keywords as input and generates sentences as output.

Potential use cases include:

- Marketing

- Search engine optimization

- Topic generation

- Fine-tuning of topic modeling models

|

{"language": "en", "license": "MIT", "tags": ["keytotext", "k2t", "Keywords to Sentences"], "datasets": ["WebNLG", "Dart"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t-test

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"keytotext",

"k2t",

"Keywords to Sentences",

"en",

"dataset:WebNLG",

"dataset:Dart",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #t5 #text2text-generation #keytotext #k2t #Keywords to Sentences #en #dataset-WebNLG #dataset-Dart #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

<h1 align="center">keytotext</h1>

[PyPI](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/notebooks/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

[API](https://github.com/gagan3012/keytotext#api)

[Docker Hub](https://hub.docker.com/r/gagan30/keytotext)

[Hugging Face models](https://huggingface.co/models?filter=keytotext)

[Documentation](https://keytotext.readthedocs.io/en/latest/?badge=latest)

[Code style: black](https://github.com/psf/black)

The idea is to build a model that takes keywords as input and generates sentences as output.

Potential use cases include:

- Marketing

- Search engine optimization

- Topic generation

- Fine-tuning of topic modeling models

|

{"language": "en", "license": "MIT", "tags": ["keytotext", "k2t", "Keywords to Sentences"], "datasets": ["WebNLG", "Dart"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t-test3

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"keytotext",

"k2t",

"Keywords to Sentences",

"en",

"dataset:WebNLG",

"dataset:Dart",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #t5 #text2text-generation #keytotext #k2t #Keywords to Sentences #en #dataset-WebNLG #dataset-Dart #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

![keytotext (1)](https://user-images.githubusercontent.com/49101362/116334480-f5e57a00-a7dd-11eb-987c-186477f94b6e.png)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face 🤗

[PyPI](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the T5 model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) folder.

## Usage:

Example usage: [Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) folder.

```

pip install keytotext

```

## UI:

UI: [Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom Streamlit component that I built: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

{"language": "en", "license": "mit", "tags": ["keytotext", "k2t-tiny", "Keywords to Sentences"], "datasets": ["WebNLG", "Dart"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t-tiny

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"keytotext",

"k2t-tiny",

"Keywords to Sentences",

"en",

"dataset:WebNLG",

"dataset:Dart",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #t5 #text2text-generation #keytotext #k2t-tiny #Keywords to Sentences #en #dataset-WebNLG #dataset-Dart #license-mit #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

!keytotext (1)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face

## UI:

UI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: ](https://user-images.githubusercontent.com/49101362/116334480-f5e57a00-a7dd-11eb-987c-186477f94b6e.png)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face 🤗

[PyPI](https://pypi.org/project/keytotext/)

[Downloads](https://pepy.tech/project/keytotext)

[Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the T5 model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) folder.

## Usage:

Example usage: [Open in Colab](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) folder.

```

pip install keytotext

```

## UI:

UI: [Streamlit app](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom Streamlit component that I built: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

{"language": "en", "license": "mit", "tags": ["keytotext", "k2t", "Keywords to Sentences"], "datasets": ["WebNLG", "Dart"], "metrics": ["NLG"], "thumbnail": "Keywords to Sentences"}

|

gagan3012/k2t

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"keytotext",

"k2t",

"Keywords to Sentences",

"en",

"dataset:WebNLG",

"dataset:Dart",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #pytorch #t5 #text2text-generation #keytotext #k2t #Keywords to Sentences #en #dataset-WebNLG #dataset-Dart #license-mit #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us

|

# keytotext

!keytotext (1)

The idea is to build a model that takes keywords as input and generates sentences as output.

### Keytotext is powered by Hugging Face

## UI:

UI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI: \n\nIdea is to build a model which will take keywords as inputs and generate sentences as outputs.",

"### Keytotext is powered by Huggingface \n\n",

"## UI:\n\nUI:

model = AutoModelWithLMHead.from_pretrained("gagan3012/keytotext-small")

```

### Demo:

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/app.py)

https://share.streamlit.io/gagan3012/keytotext/app.py

### Example:

['India', 'Wedding'] -> We are celebrating today in New Delhi with three wedding anniversary parties.

|

{}

|

gagan3012/keytotext-small

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #t5 #text2text-generation #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

The idea is to build a model that takes keywords as input and generates sentences as output.

### Model:

Two models have been built:

- Using T5-base (size: 850 MB), which can be found here: URL

- Using T5-small (size: 230 MB), which can be found here: URL
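The quoted sizes are consistent with storing every parameter as a 32-bit float (4 bytes). The sketch below converts parameter counts to MiB. It assumes approximate counts of about 60.5M for T5-small and 222.9M for T5-base; these figures are not stated in this card, so treat the result as an estimate only.

```python
BYTES_PER_FP32 = 4

def fp32_size_mib(num_params: float) -> float:
    """Approximate checkpoint size in MiB if every parameter is stored as float32."""
    return num_params * BYTES_PER_FP32 / 1024 ** 2

# Assumed, approximate parameter counts for the two underlying T5 checkpoints.
approx_params = {"T5-small": 60.5e6, "T5-base": 222.9e6}

for name, n in approx_params.items():
    print(f"{name}: ~{fp32_size_mib(n):.0f} MiB")
```

Halving the bytes per parameter (float16) would roughly halve both estimates.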

#### Usage:

### Demo:

model = AutoModelWithLMHead.from_pretrained("gagan3012/keytotext-small")

```

### Demo:

[Streamlit app](https://share.streamlit.io/gagan3012/keytotext/app.py)

https://share.streamlit.io/gagan3012/keytotext/app.py

### Example:

['India', 'Wedding'] -> We are celebrating today in New Delhi with three wedding anniversary parties.

|

{}

|

gagan3012/keytotext

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #t5 #text2text-generation #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# keytotext

The idea is to build a model that takes keywords as input and generates sentences as output.

### Model:

Two models have been built:

- Using T5-base (size: 850 MB), which can be found here: URL

- Using T5-small (size: 230 MB), which can be found here: URL

#### Usage:

### Demo:

on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6250

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "model-index": [{"name": "model", "results": []}]}

|

gagan3012/model

| null |

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #tensorboard #gpt2 #text-generation #generated_from_trainer #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# model

This model is a fine-tuned version of distilgpt2 on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6250

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

[

"# model\n\nThis model is a fine-tuned version of distilgpt2 on an unknown dataset.\nIt achieves the following results on the evaluation set:\n- Loss: 3.6250",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 5e-05\n- train_batch_size: 2\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 3.0",

"### Training results",

"### Framework versions\n\n- Transformers 4.12.0.dev0\n- Pytorch 1.9.0+cu111\n- Datasets 1.13.3\n- Tokenizers 0.10.3"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #gpt2 #text-generation #generated_from_trainer #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# model\n\nThis model is a fine-tuned version of distilgpt2 on an unknown dataset.\nIt achieves the following results on the evaluation set:\n- Loss: 3.6250",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 5e-05\n- train_batch_size: 2\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 3.0",

"### Training results",

"### Framework versions\n\n- Transformers 4.12.0.dev0\n- Pytorch 1.9.0+cu111\n- Datasets 1.13.3\n- Tokenizers 0.10.3"

] |

text-generation

|

transformers

|


# pickuplines

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.7873
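For a causal language model such as GPT-2, the evaluation loss reported by the `Trainer` is the mean per-token cross-entropy in nats. Perplexity is therefore exp(loss). The sketch below assumes that interpretation of the loss above.

```python
import math

def perplexity(mean_cross_entropy: float) -> float:
    """Perplexity from a mean per-token cross-entropy loss measured in nats."""
    return math.exp(mean_cross_entropy)

eval_loss = 5.7873  # evaluation loss reported above
print(f"perplexity = {perplexity(eval_loss):.1f}")  # roughly 326
```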

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100.0

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

{"license": "mit", "tags": ["generated_from_trainer"], "model-index": [{"name": "pickuplines", "results": []}]}

|

gagan3012/pickuplines

| null |

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #tensorboard #gpt2 #text-generation #generated_from_trainer #license-mit #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# pickuplines

This model is a fine-tuned version of gpt2 on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.7873

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100.0

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

[

"# pickuplines\n\nThis model is a fine-tuned version of gpt2 on an unknown dataset.\nIt achieves the following results on the evaluation set:\n- Loss: 5.7873",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 5e-05\n- train_batch_size: 2\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 100.0",

"### Training results",

"### Framework versions\n\n- Transformers 4.12.0.dev0\n- Pytorch 1.9.0+cu111\n- Datasets 1.13.3\n- Tokenizers 0.10.3"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #gpt2 #text-generation #generated_from_trainer #license-mit #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# pickuplines\n\nThis model is a fine-tuned version of gpt2 on an unknown dataset.\nIt achieves the following results on the evaluation set:\n- Loss: 5.7873",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 5e-05\n- train_batch_size: 2\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 100.0",

"### Training results",

"### Framework versions\n\n- Transformers 4.12.0.dev0\n- Pytorch 1.9.0+cu111\n- Datasets 1.13.3\n- Tokenizers 0.10.3"

] |

text-generation

|

transformers

|

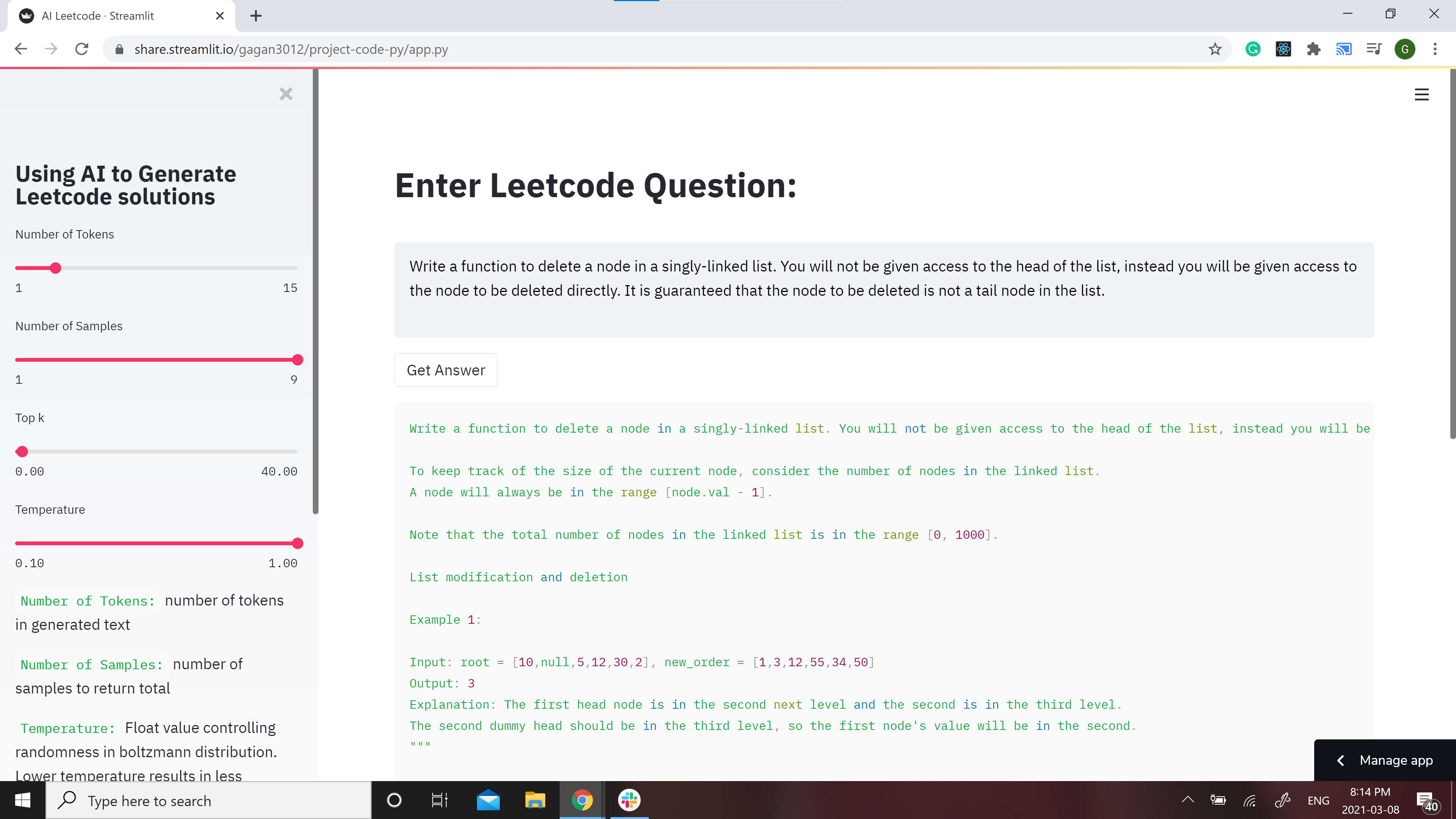

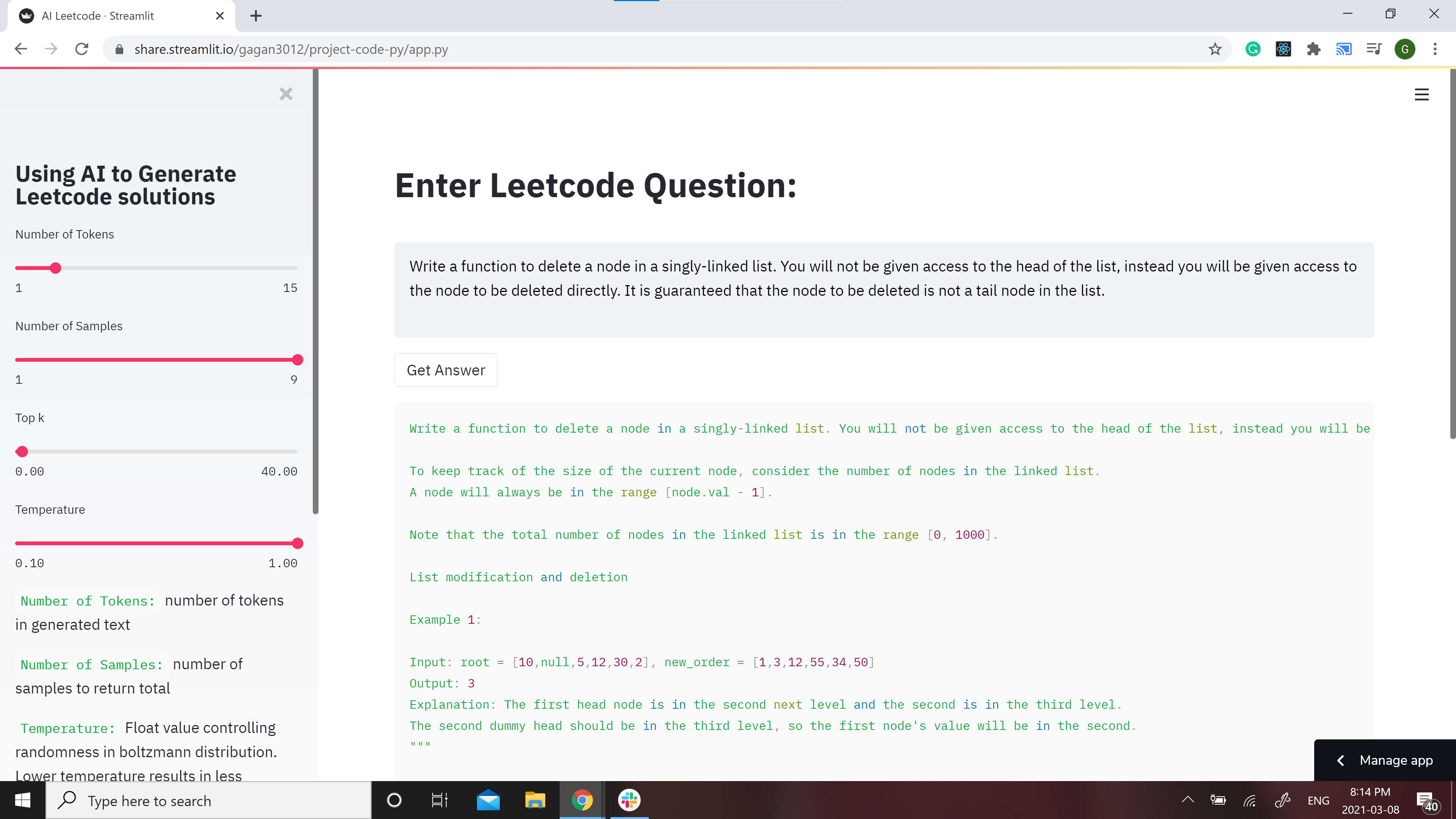

# Leetcode using AI :robot:

GPT-2 model for LeetCode questions in Python

**Note**: the answers might not make sense in some cases because of biases in GPT-2.

**Contributions:** If you would like to make the model better, contributions are welcome. Check out [CONTRIBUTIONS.md](https://github.com/gagan3012/project-code-py/blob/master/CONTRIBUTIONS.md).

### 📢 Favour:

If you find this repo helpful, it would be highly motivating if you could STAR⭐ it.

## Model

Two models have been developed for different use cases; they can be found at https://huggingface.co/gagan3012

The model weights can be found here: [GPT-2](https://huggingface.co/gagan3012/project-code-py) and [DistilGPT-2](https://huggingface.co/gagan3012/project-code-py-small)

### Example usage:

```python

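# GPT-2 is a causal (decoder-only) LM; AutoModelForCausalLM replaces the deprecated AutoModelWithLMHead.
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gagan3012/project-code-py")
model = AutoModelForCausalLM.from_pretrained("gagan3012/project-code-py")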

```

## Demo

[Streamlit app](https://share.streamlit.io/gagan3012/project-code-py/app.py)

A Streamlit web app has been set up to use the model: https://share.streamlit.io/gagan3012/project-code-py/app.py

## Example results:

### Question:

```

Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

```

### Answer:

```python

""" Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

For example,

a = 1->2->3

b = 3->1->2

t = ListNode(-1, 1)

Note: The lexicographic ordering of the nodes in a tree matters. Do not assign values to nodes in a tree.

Example 1:

Input: [1,2,3]

Output: 1->2->5

Explanation: 1->2->3->3->4, then 1->2->5[2] and then 5->1->3->4.

Note:

The length of a linked list will be in the range [1, 1000].

Node.val must be a valid LinkedListNode type.

Both the length and the value of the nodes in a linked list will be in the range [-1000, 1000].

All nodes are distinct.

"""

# Definition for singly-linked list.

# class ListNode:

# def __init__(self, x):

# self.val = x

# self.next = None

class Solution:

def deleteNode(self, head: ListNode, val: int) -> None:

"""

BFS

Linked List

:param head: ListNode

:param val: int

:return: ListNode

"""

if head is not None:

return head

dummy = ListNode(-1, 1)

dummy.next = head

dummy.next.val = val

dummy.next.next = head

dummy.val = ""

s1 = Solution()

print(s1.deleteNode(head))

print(s1.deleteNode(-1))

print(s1.deleteNode(-1))

```
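The generated answer above does not solve the stated problem; for instance, it returns immediately whenever `head` is not `None`. For comparison, here is the standard constant-time approach. It was written by hand and was not produced by the model. Because the node is guaranteed not to be the tail, you copy the next node's value into it and then unlink the next node.

```python
from typing import Optional

class ListNode:
    """Singly-linked list node, as in the LeetCode problem statement."""
    def __init__(self, val: int, next: "Optional[ListNode]" = None):
        self.val = val
        self.next = next

def delete_node(node: ListNode) -> None:
    """Delete `node` given only a reference to it (guaranteed not to be the tail)."""
    successor = node.next
    node.val = successor.val       # take over the successor's value...
    node.next = successor.next     # ...and bypass the successor

def to_list(head: Optional[ListNode]) -> list:
    """Collect node values from head to tail."""
    values = []
    while head is not None:
        values.append(head.val)
        head = head.next
    return values

# Build 4 -> 5 -> 1 -> 9 and delete the node holding 5.
head = ListNode(4, ListNode(5, ListNode(1, ListNode(9))))
delete_node(head.next)
print(to_list(head))  # prints [4, 1, 9]
```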

|

{}

|

gagan3012/project-code-py-small

| null |

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us

|

# Leetcode using AI :robot:

GPT-2 model for LeetCode questions in Python

Note: the answers might not make sense in some cases because of biases in GPT-2.

Contributions: If you would like to make the model better, contributions are welcome. Check out URL

### Favour:

If you find this repo helpful, it would be highly motivating if you could STAR⭐ it.

## Model

Two models have been developed for different use cases; they can be found at URL

The model weights can be found here: GPT-2 and DistilGPT-2

### Example usage:

## Demo

### 📢 Favour:

If you find this repo helpful, it would be highly motivating if you could STAR⭐ it.

## Model

Two models have been developed for different use cases; they can be found at https://huggingface.co/gagan3012

The model weights can be found here: [GPT-2](https://huggingface.co/gagan3012/project-code-py) and [DistilGPT-2](https://huggingface.co/gagan3012/project-code-py-small)

### Example usage:

```python

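# GPT-2 is a causal (decoder-only) LM; AutoModelForCausalLM replaces the deprecated AutoModelWithLMHead.
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gagan3012/project-code-py")
model = AutoModelForCausalLM.from_pretrained("gagan3012/project-code-py")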

```

## Demo

[Streamlit app](https://share.streamlit.io/gagan3012/project-code-py/app.py)

A Streamlit web app has been set up to use the model: https://share.streamlit.io/gagan3012/project-code-py/app.py

## Example results:

### Question:

```

Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

```

### Answer:

```python
""" Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

For example,

a = 1->2->3
b = 3->1->2
t = ListNode(-1, 1)

Note: The lexicographic ordering of the nodes in a tree matters. Do not assign values to nodes in a tree.

Example 1:

Input: [1,2,3]
Output: 1->2->5
Explanation: 1->2->3->3->4, then 1->2->5[2] and then 5->1->3->4.

Note:

The length of a linked list will be in the range [1, 1000].
Node.val must be a valid LinkedListNode type.
Both the length and the value of the nodes in a linked list will be in the range [-1000, 1000].
All nodes are distinct.
"""

# Definition for singly-linked list.
# class ListNode:
#     def __init__(self, x):
#         self.val = x
#         self.next = None

class Solution:
    def deleteNode(self, head: ListNode, val: int) -> None:
        """
        BFS
        Linked List
        :param head: ListNode
        :param val: int
        :return: ListNode
        """
        if head is not None:
            return head
        dummy = ListNode(-1, 1)
        dummy.next = head
        dummy.next.val = val
        dummy.next.next = head
        dummy.val = ""

s1 = Solution()
print(s1.deleteNode(head))
print(s1.deleteNode(-1))
print(s1.deleteNode(-1))
```
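
The generated answer above does not solve the task. It returns immediately whenever `head` is not `None`, and it never unlinks anything. For comparison, here is a conventional solution to this LeetCode problem. It was written by hand and not produced by the model. Because the head is unavailable, the given node is overwritten with its successor's value, and the successor is then unlinked. The `ListNode` class and the `from_list`/`to_list` helpers are illustrative additions for running the example.

```python
from typing import List, Optional


class ListNode:
    def __init__(self, val: int = 0, next: "Optional[ListNode]" = None):
        self.val = val
        self.next = next


class Solution:
    def deleteNode(self, node: ListNode) -> None:
        # The node is guaranteed not to be the tail, so node.next exists.
        # Copy the successor's value into this node, then skip the successor.
        node.val = node.next.val
        node.next = node.next.next


def from_list(values: List[int]) -> Optional[ListNode]:
    # Build a linked list from a Python list and return its head
    head: Optional[ListNode] = None
    for v in reversed(values):
        head = ListNode(v, head)
    return head


def to_list(head: Optional[ListNode]) -> List[int]:
    # Walk the list and collect its values
    out = []
    while head is not None:
        out.append(head.val)
        head = head.next
    return out


head = from_list([4, 5, 1, 9])
Solution().deleteNode(head.next)  # delete the node holding 5
print(to_list(head))  # [4, 1, 9]
```

This approach runs in O(1) time and space. It relies on the guarantee that the node is not the tail, since a tail node has no successor to copy from.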

|

{}

|

gagan3012/project-code-py

| null |

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# Leetcode using AI :robot:

GPT-2 Model for Leetcode Questions in Python

Note: the answers might not make sense in some cases because of biases in GPT-2.

Contributions: If you would like to make the model better, contributions are welcome. Check out URL

### Favour:

It would be highly motivating if you could STAR⭐ this repo if you find it helpful.

## Model

Two models have been developed for different use cases and they can be found at URL

The model weights can be found here: GPT-2 and DistilGPT-2

### Example usage:

## Demo

model = AutoModelWithLMHead.from_pretrained("gagan3012/rap-writer")

```

|

{}

|

gagan3012/rap-writer

| null |

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us

|

# Generating Rap song Lyrics like Eminem Using GPT2

### I have built a custom model for it using data from Kaggle

I created a new fine-tuned model using lyrics data from leading hip-hop stars.

### My model can be accessed at: gagan3012/rap-writer
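
The following is a minimal sketch of sampling lyrics from `gagan3012/rap-writer`. It assumes the checkpoint loads as a standard GPT-2 text-generation model, since its tags list `gpt2` and `text-generation`. The sketch uses sampling (`do_sample=True` with `top_k`/`top_p`) instead of greedy decoding, because greedy GPT-2 output tends to repeat itself, which hurts free-form lyrics. The specific values and the `sample_lyrics` helper are illustrative assumptions, not settings from the author.

```python
from typing import Any, Callable, Dict, List

Generator = Callable[..., List[Dict[str, Any]]]

# Illustrative sampling settings (assumptions, not tuned values)
SAMPLING_KWARGS = {"do_sample": True, "top_k": 50, "top_p": 0.95, "max_new_tokens": 80}


def sample_lyrics(opening_line: str, generator: Generator, n: int = 3) -> List[str]:
    # num_return_sequences asks the pipeline for n independent samples
    outputs = generator(opening_line, num_return_sequences=n, **SAMPLING_KWARGS)
    return [o["generated_text"] for o in outputs]


def load_generator(model_id: str = "gagan3012/rap-writer") -> Generator:
    from transformers import pipeline  # lazy import; needs transformers installed
    return pipeline("text-generation", model=model_id)
```

Calling `sample_lyrics("Look, if you had one shot", load_generator())` would return three different continuations. Because sampling is random, results vary between runs unless a seed is fixed (for example with `transformers.set_seed`).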

|

[

"# Generating Rap song Lyrics like Eminem Using GPT2",

"### I have built a custom model for it using data from Kaggle \n\nCreating a new finetuned model using data lyrics from leading hip-hop stars",

"### My model can be accessed at: gagan3012/rap-writer"

] |

[

"TAGS\n#transformers #pytorch #jax #gpt2 #text-generation #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us \n",

"# Generating Rap song Lyrics like Eminem Using GPT2",

"### I have built a custom model for it using data from Kaggle \n\nCreating a new finetuned model using data lyrics from leading hip-hop stars",

"### My model can be accessed at: gagan3012/rap-writer"

] |

text2text-generation

|

transformers

|

---

Summarisation model summarsiation
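
The following is a minimal sketch of using this checkpoint for summarisation. It assumes the model behaves like a standard T5 model fine-tuned for the task, since its tags list `t5` and `text2text-generation`. T5 checkpoints are conventionally prompted with a `summarize: ` prefix, but whether this particular model expects it is an assumption. The helper names and length limit are illustrative.

```python
from typing import Any, Callable, Dict, List

Generator = Callable[..., List[Dict[str, Any]]]

PREFIX = "summarize: "  # conventional T5 task prefix (assumed for this checkpoint)


def build_input(text: str) -> str:
    # Collapse runs of whitespace so line breaks and indentation do not waste tokens
    return PREFIX + " ".join(text.split())


def summarise(text: str, generator: Generator, max_new_tokens: int = 60) -> str:
    outputs = generator(build_input(text), max_new_tokens=max_new_tokens)
    return outputs[0]["generated_text"]


def load_generator(model_id: str = "gagan3012/summarsiation") -> Generator:
    from transformers import pipeline  # lazy import; requires transformers
    return pipeline("text2text-generation", model=model_id)
```

`summarise(article_text, load_generator())` would return the generated summary string. Unlike the text-generation pipeline, the text2text pipeline returns only the generated output, without the input echoed back.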

|

{}

|

gagan3012/summarsiation

| null |

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #t5 #text2text-generation #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us

|

---

Summarisation model summarsiation

|

[] |

[

"TAGS\n#transformers #pytorch #t5 #text2text-generation #autotrain_compatible #endpoints_compatible #has_space #text-generation-inference #region-us \n"

] |

automatic-speech-recognition

|

transformers

|


# wav2vec2-large-xls-r-300m-hindi

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.
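
Fine-tuned wav2vec2 speech-recognition checkpoints in transformers are usually trained with a CTC head (`Wav2Vec2ForCTC`). This card does not state that explicitly, so treat it as an assumption. With CTC, turning per-frame predictions into text takes three steps: take the most likely token for each frame, collapse consecutive repeats, and drop the blank token. The pure-Python sketch below shows that greedy step on token IDs. The toy Latin vocabulary, the blank ID and the `|` word delimiter are hypothetical; the real model uses its own Hindi vocabulary. In practice, `Wav2Vec2Processor.batch_decode` performs this decoding.

```python
from typing import Dict, List, Optional, Sequence


def ctc_greedy_decode(frame_ids: Sequence[int], blank_id: int, vocab: Dict[int, str]) -> str:
    # frame_ids: the argmax token ID for each audio frame
    chars: List[str] = []
    prev: Optional[int] = None
    for tok in frame_ids:
        # Emit a token only if it differs from the previous frame and is not blank
        if tok != prev and tok != blank_id:
            chars.append(vocab[tok])
        prev = tok
    # Assumption: "|" marks word boundaries, as in common wav2vec2 vocabularies
    return "".join(chars).replace("|", " ").strip()


# Hypothetical toy vocabulary: 0 = blank/pad, 5 = word delimiter
VOCAB = {0: "<pad>", 1: "h", 2: "e", 3: "l", 4: "o", 5: "|"}
frames = [1, 1, 0, 2, 3, 3, 0, 3, 4, 5, 0]
print(ctc_greedy_decode(frames, blank_id=0, vocab=VOCAB))  # hello
```

The blank between the two runs of `3` is what allows the double "l": without it, the repeats would be collapsed into a single letter.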

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
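
The hyperparameters above map fairly directly onto `transformers.TrainingArguments`. The sketch below is one plausible mapping, not the author's training script, and it rests on three assumptions:

- `output_dir` is a placeholder path.
- "Native AMP" corresponds to `fp16=True`.
- `total_train_batch_size` comes from a single device (16 x 2 accumulation steps = 32).

The sketch also reproduces the linear warmup-then-decay shape behind `lr_scheduler_type: linear`, to make the effect of the 500 warmup steps visible. The total step count depends on the dataset size, which the card does not give.

```python
# Values copied from the hyperparameter list above
HPARAMS = {
    "learning_rate": 3e-4,
    "per_device_train_batch_size": 16,
    "per_device_eval_batch_size": 8,
    "seed": 42,
    "gradient_accumulation_steps": 2,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "warmup_steps": 500,
    "num_train_epochs": 30,
    "fp16": True,  # assumed equivalent of "Native AMP"; needs a CUDA device
}


def effective_batch_size(hp: dict, num_devices: int = 1) -> int:
    # Number of examples contributing to each optimizer step
    return hp["per_device_train_batch_size"] * hp["gradient_accumulation_steps"] * num_devices


def linear_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    # Linear warmup from 0 to peak_lr, then linear decay to 0 at total_steps
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


def make_training_args(output_dir: str = "./wav2vec2-large-xls-r-300m-hindi"):  # hypothetical path
    from transformers import TrainingArguments  # lazy import
    return TrainingArguments(output_dir=output_dir, **HPARAMS)
```

For example, with a hypothetical 1,500 total steps, the learning rate climbs to 0.0003 at step 500 and is back down to 0.00015 by step 1,000.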

### Training results

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "datasets": ["common_voice"], "model-index": [{"name": "wav2vec2-large-xls-r-300m-hindi", "results": []}]}

|

gagan3012/wav2vec2-large-xls-r-300m-hindi

| null |

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #wav2vec2 #automatic-speech-recognition #generated_from_trainer #dataset-common_voice #license-apache-2.0 #endpoints_compatible #region-us

|

# wav2vec2-large-xls-r-300m-hindi

This model is a fine-tuned version of facebook/wav2vec2-xls-r-300m on the common_voice dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters