| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| string, length 18-22 | string, 1 class | string, 1 class | string, length 13-58 | string, length 1.1k-10.2k | string, length 151-4.94k | string, length 582-21k | int64, 271-2.05k | int64, 47-1.02k |

gh_patches_debug_36882

|

rasdani/github-patches

|

git_diff

|

ipython__ipython-5963

|

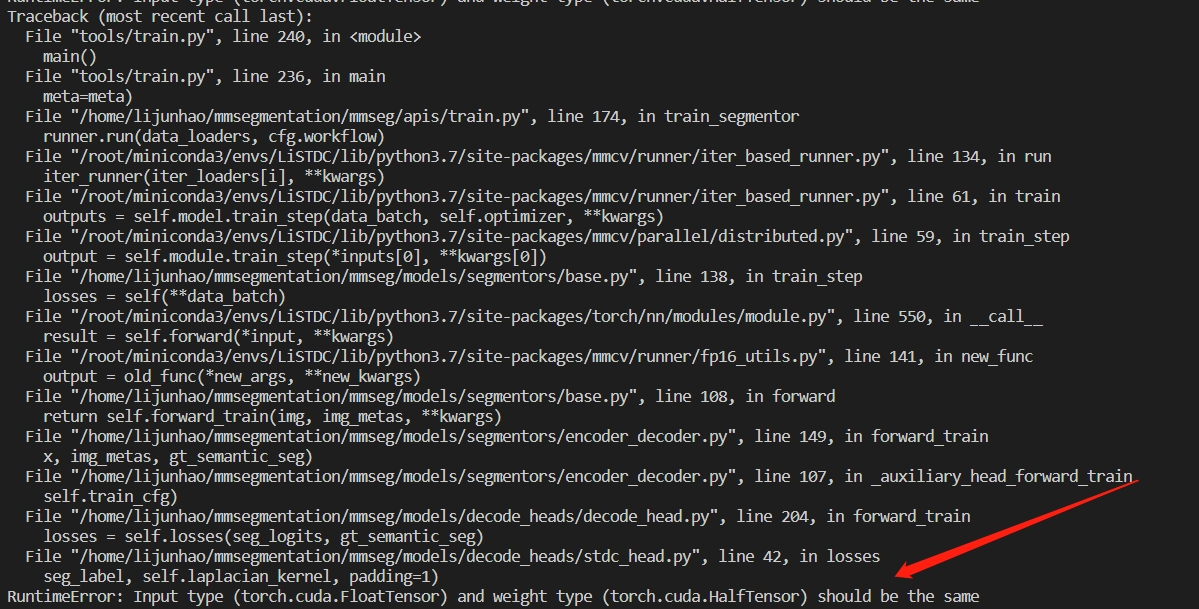

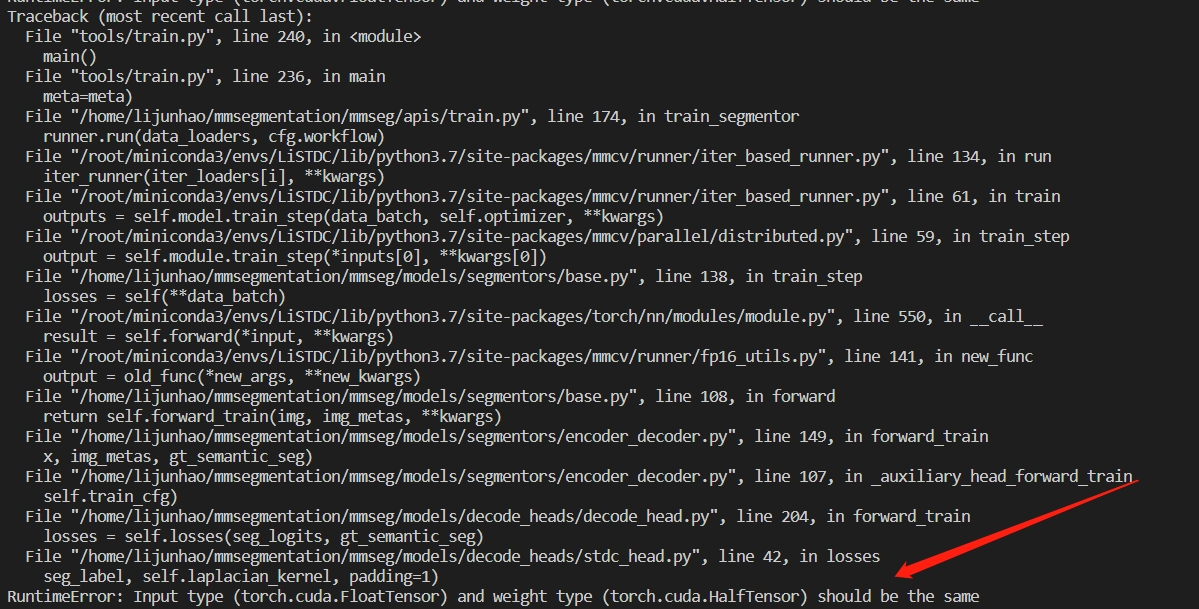

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Problems when displaying multiple views of ContainerWidget.

`ContainerView.render` registers anonymous functions to `this.model.on('displayed')` . This is a problem because when rendering multiple views of the same widget container, multiple instances of this anonymous function get registered to this event.

PS: This is also related to #5958

--- END ISSUE ---
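The mechanism described in the issue can be illustrated with a small Python sketch. `Emitter` is a hypothetical stand-in, not the Backbone or IPython event API. Each simulated view render registers a fresh anonymous callback, so a single `displayed` event runs once per view. Only a handler whose reference was kept can be removed again.

```python
class Emitter:
    """Hypothetical minimal event emitter; it deliberately does not de-duplicate handlers."""

    def __init__(self):
        self._handlers = {}

    def on(self, event, handler):
        self._handlers.setdefault(event, []).append(handler)

    def off(self, event, handler):
        # Removal needs the same function object that was registered, which an
        # anonymous callback nobody kept a reference to can never supply.
        handlers = self._handlers.get(event, [])
        if handler in handlers:
            handlers.remove(handler)

    def trigger(self, event):
        for handler in list(self._handlers.get(event, [])):
            handler()


calls = []
model = Emitter()

# Three views of the same model render; each registers a new anonymous callback.
for view_id in range(3):
    model.on("displayed", lambda v=view_id: calls.append(v))

model.trigger("displayed")
print(calls)  # [0, 1, 2]: one event, three callbacks


# A handler whose reference is kept can be unregistered, e.g. when its view is removed.
def on_displayed():
    calls.append("named")


model.on("displayed", on_displayed)
model.off("displayed", on_displayed)

calls.clear()
model.trigger("displayed")
print(calls)  # [0, 1, 2]: the named handler is gone, the anonymous ones remain
```

This only illustrates the symptom. The golden diff in this record touches the Python `ContainerWidget` only.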

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `IPython/html/widgets/widget_container.py`

Content:

```
1 """ContainerWidget class.
2
3 Represents a container that can be used to group other widgets.
4 """
5 #-----------------------------------------------------------------------------
6 # Copyright (c) 2013, the IPython Development Team.
7 #
8 # Distributed under the terms of the Modified BSD License.
9 #
10 # The full license is in the file COPYING.txt, distributed with this software.
11 #-----------------------------------------------------------------------------
12
13 #-----------------------------------------------------------------------------
14 # Imports
15 #-----------------------------------------------------------------------------
16 from .widget import DOMWidget
17 from IPython.utils.traitlets import Unicode, Tuple, TraitError
18
19 #-----------------------------------------------------------------------------
20 # Classes
21 #-----------------------------------------------------------------------------
22
23 class ContainerWidget(DOMWidget):
24     _view_name = Unicode('ContainerView', sync=True)
25
26     # Child widgets in the container.
27     # Using a tuple here to force reassignment to update the list.
28     # When a proper notifying-list trait exists, that is what should be used here.
29     children = Tuple()
30     _children = Tuple(sync=True)
31
32
33     def __init__(self, **kwargs):
34         super(ContainerWidget, self).__init__(**kwargs)
35         self.on_displayed(ContainerWidget._fire_children_displayed)
36
37     def _fire_children_displayed(self):
38         for child in self._children:
39             child._handle_displayed()
40
41     def _children_changed(self, name, old, new):
42         """Validate children list.
43
44         Makes sure only one instance of any given model can exist in the
45         children list.
46         An excellent post on uniqifiers is available at
47         http://www.peterbe.com/plog/uniqifiers-benchmark
48         which provides the inspiration for using this implementation. Below
49         I've implemented the `f5` algorithm using Python comprehensions."""
50         if new is not None:
51             seen = {}
52             def add_item(i):
53                 seen[i.model_id] = True
54                 return i
55             self._children = [add_item(i) for i in new if not i.model_id in seen]
56
57
58 class PopupWidget(ContainerWidget):
59     _view_name = Unicode('PopupView', sync=True)
60
61     description = Unicode(sync=True)
62     button_text = Unicode(sync=True)
63
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/IPython/html/widgets/widget_container.py b/IPython/html/widgets/widget_container.py
--- a/IPython/html/widgets/widget_container.py
+++ b/IPython/html/widgets/widget_container.py
@@ -2,58 +2,29 @@
 
 Represents a container that can be used to group other widgets.
 """
-#-----------------------------------------------------------------------------
-# Copyright (c) 2013, the IPython Development Team.
-#
+
+# Copyright (c) IPython Development Team.
 # Distributed under the terms of the Modified BSD License.
-#
-# The full license is in the file COPYING.txt, distributed with this software.
-#-----------------------------------------------------------------------------
 
-#-----------------------------------------------------------------------------
-# Imports
-#-----------------------------------------------------------------------------
 from .widget import DOMWidget
 from IPython.utils.traitlets import Unicode, Tuple, TraitError
 
-#-----------------------------------------------------------------------------
-# Classes
-#-----------------------------------------------------------------------------
-
 class ContainerWidget(DOMWidget):
     _view_name = Unicode('ContainerView', sync=True)
 
     # Child widgets in the container.
     # Using a tuple here to force reassignment to update the list.
     # When a proper notifying-list trait exists, that is what should be used here.
-    children = Tuple()
-    _children = Tuple(sync=True)
-
+    children = Tuple(sync=True)
 
     def __init__(self, **kwargs):
         super(ContainerWidget, self).__init__(**kwargs)
         self.on_displayed(ContainerWidget._fire_children_displayed)
 
     def _fire_children_displayed(self):
-        for child in self._children:
+        for child in self.children:
             child._handle_displayed()
 
-    def _children_changed(self, name, old, new):
-        """Validate children list.
-
-        Makes sure only one instance of any given model can exist in the 
-        children list.
-        An excellent post on uniqifiers is available at 
-        http://www.peterbe.com/plog/uniqifiers-benchmark
-        which provides the inspiration for using this implementation. Below
-        I've implemented the `f5` algorithm using Python comprehensions."""
-        if new is not None:
-            seen = {}
-            def add_item(i):
-                seen[i.model_id] = True
-                return i
-            self._children = [add_item(i) for i in new if not i.model_id in seen]
-
 
 class PopupWidget(ContainerWidget):
     _view_name = Unicode('PopupView', sync=True)

|

{"golden_diff": "diff --git a/IPython/html/widgets/widget_container.py b/IPython/html/widgets/widget_container.py\n--- a/IPython/html/widgets/widget_container.py\n+++ b/IPython/html/widgets/widget_container.py\n@@ -2,58 +2,29 @@\n \n Represents a container that can be used to group other widgets.\n \"\"\"\n-#-----------------------------------------------------------------------------\n-# Copyright (c) 2013, the IPython Development Team.\n-#\n+\n+# Copyright (c) IPython Development Team.\n # Distributed under the terms of the Modified BSD License.\n-#\n-# The full license is in the file COPYING.txt, distributed with this software.\n-#-----------------------------------------------------------------------------\n \n-#-----------------------------------------------------------------------------\n-# Imports\n-#-----------------------------------------------------------------------------\n from .widget import DOMWidget\n from IPython.utils.traitlets import Unicode, Tuple, TraitError\n \n-#-----------------------------------------------------------------------------\n-# Classes\n-#-----------------------------------------------------------------------------\n-\n class ContainerWidget(DOMWidget):\n _view_name = Unicode('ContainerView', sync=True)\n \n # Child widgets in the container.\n # Using a tuple here to force reassignment to update the list.\n # When a proper notifying-list trait exists, that is what should be used here.\n- children = Tuple()\n- _children = Tuple(sync=True)\n-\n+ children = Tuple(sync=True)\n \n def __init__(self, **kwargs):\n super(ContainerWidget, self).__init__(**kwargs)\n self.on_displayed(ContainerWidget._fire_children_displayed)\n \n def _fire_children_displayed(self):\n- for child in self._children:\n+ for child in self.children:\n child._handle_displayed()\n \n- def _children_changed(self, name, old, new):\n- \"\"\"Validate children list.\n-\n- Makes sure only one instance of any given model can exist in the \n- children list.\n- An 
excellent post on uniqifiers is available at \n- http://www.peterbe.com/plog/uniqifiers-benchmark\n- which provides the inspiration for using this implementation. Below\n- I've implemented the `f5` algorithm using Python comprehensions.\"\"\"\n- if new is not None:\n- seen = {}\n- def add_item(i):\n- seen[i.model_id] = True\n- return i\n- self._children = [add_item(i) for i in new if not i.model_id in seen]\n-\n \n class PopupWidget(ContainerWidget):\n _view_name = Unicode('PopupView', sync=True)\n", "issue": "Problems when displaying multiple views of ContainerWidget. \n`ContainerView.render` registers anonymous functions to `this.model.on('displayed')` . This is a problem because when rendering multiple views of the same widget container, multiple instances of this anonymous function get registered to this event. \n\nPS: This is also related to #5958\n\n", "before_files": [{"content": "\"\"\"ContainerWidget class. \n\nRepresents a container that can be used to group other widgets.\n\"\"\"\n#-----------------------------------------------------------------------------\n# Copyright (c) 2013, the IPython Development Team.\n#\n# Distributed under the terms of the Modified BSD License.\n#\n# The full license is in the file COPYING.txt, distributed with this software.\n#-----------------------------------------------------------------------------\n\n#-----------------------------------------------------------------------------\n# Imports\n#-----------------------------------------------------------------------------\nfrom .widget import DOMWidget\nfrom IPython.utils.traitlets import Unicode, Tuple, TraitError\n\n#-----------------------------------------------------------------------------\n# Classes\n#-----------------------------------------------------------------------------\n\nclass ContainerWidget(DOMWidget):\n _view_name = Unicode('ContainerView', sync=True)\n\n # Child widgets in the container.\n # Using a tuple here to force reassignment to update the list.\n 
# When a proper notifying-list trait exists, that is what should be used here.\n children = Tuple()\n _children = Tuple(sync=True)\n\n\n def __init__(self, **kwargs):\n super(ContainerWidget, self).__init__(**kwargs)\n self.on_displayed(ContainerWidget._fire_children_displayed)\n\n def _fire_children_displayed(self):\n for child in self._children:\n child._handle_displayed()\n\n def _children_changed(self, name, old, new):\n \"\"\"Validate children list.\n\n Makes sure only one instance of any given model can exist in the \n children list.\n An excellent post on uniqifiers is available at \n http://www.peterbe.com/plog/uniqifiers-benchmark\n which provides the inspiration for using this implementation. Below\n I've implemented the `f5` algorithm using Python comprehensions.\"\"\"\n if new is not None:\n seen = {}\n def add_item(i):\n seen[i.model_id] = True\n return i\n self._children = [add_item(i) for i in new if not i.model_id in seen]\n\n\nclass PopupWidget(ContainerWidget):\n _view_name = Unicode('PopupView', sync=True)\n \n description = Unicode(sync=True)\n button_text = Unicode(sync=True)\n", "path": "IPython/html/widgets/widget_container.py"}], "after_files": [{"content": "\"\"\"ContainerWidget class. 
\n\nRepresents a container that can be used to group other widgets.\n\"\"\"\n\n# Copyright (c) IPython Development Team.\n# Distributed under the terms of the Modified BSD License.\n\nfrom .widget import DOMWidget\nfrom IPython.utils.traitlets import Unicode, Tuple, TraitError\n\nclass ContainerWidget(DOMWidget):\n _view_name = Unicode('ContainerView', sync=True)\n\n # Child widgets in the container.\n # Using a tuple here to force reassignment to update the list.\n # When a proper notifying-list trait exists, that is what should be used here.\n children = Tuple(sync=True)\n\n def __init__(self, **kwargs):\n super(ContainerWidget, self).__init__(**kwargs)\n self.on_displayed(ContainerWidget._fire_children_displayed)\n\n def _fire_children_displayed(self):\n for child in self.children:\n child._handle_displayed()\n\n\nclass PopupWidget(ContainerWidget):\n _view_name = Unicode('PopupView', sync=True)\n \n description = Unicode(sync=True)\n button_text = Unicode(sync=True)\n", "path": "IPython/html/widgets/widget_container.py"}]}

| 886 | 516 |
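The golden diff above removes `_children_changed`. That method de-duplicated children by `model_id` while preserving order, using a list comprehension with a side-effecting helper (the `f5` uniqifier its docstring cites). The same behaviour can also be written as a plain loop. A minimal sketch, where `FakeWidget` is a hypothetical stand-in for a real widget:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FakeWidget:
    """Hypothetical stand-in for a widget; only `model_id` matters here."""
    model_id: str


def unique_by_model_id(widgets):
    """Keep the first widget seen for each model_id, preserving order."""
    seen = set()
    result = []
    for widget in widgets:
        if widget.model_id not in seen:
            seen.add(widget.model_id)
            result.append(widget)
    # Return a tuple to match the Tuple trait used for `children`.
    return tuple(result)


a, b = FakeWidget("a"), FakeWidget("b")
print([w.model_id for w in unique_by_model_id([a, b, a])])  # ['a', 'b']
```

As far as the diff shows, duplicate children are no longer filtered on the Python side after the fix.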

gh_patches_debug_2794

|

rasdani/github-patches

|

git_diff

|

bridgecrewio__checkov-5468

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[CKV_DOCKER_7] Erroneous failed check when --platform flag is used in multistage Dockerfile

**Describe the issue**

In the `CKV_DOCKER_7` check, when the `--platform` flag is used in a multistage Dockerfile, the check fails erroneously for images that have been created within the current Dockerfile and thus no version pegging should be required.

**Examples**

The Dockerfile below is green

```
FROM golang:1.20-bullseye AS base

FROM base AS build
```

but as soon as a `--platform` flag is added, the check fails:

```
FROM --platform=$BUILDPLATFORM golang:1.20-bullseye AS base

FROM base AS build
```

**Version (please complete the following information):**

- Checkov Version 2.4.4

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `checkov/dockerfile/checks/ReferenceLatestTag.py`

Content:

```
1 from __future__ import annotations
2
3 import re
4 from typing import TYPE_CHECKING
5
6 from checkov.common.models.enums import CheckCategories, CheckResult
7 from checkov.dockerfile.base_dockerfile_check import BaseDockerfileCheck
8
9 if TYPE_CHECKING:
10     from dockerfile_parse.parser import _Instruction
11
12 MULTI_STAGE_PATTERN = re.compile(r"(\S+)\s+as\s+(\S+)", re.IGNORECASE)
13
14
15 class ReferenceLatestTag(BaseDockerfileCheck):
16     def __init__(self) -> None:
17         name = "Ensure the base image uses a non latest version tag"
18         id = "CKV_DOCKER_7"
19         supported_instructions = ("FROM",)
20         categories = (CheckCategories.CONVENTION,)
21         super().__init__(name=name, id=id, categories=categories, supported_instructions=supported_instructions)
22
23     def scan_resource_conf(self, conf: list[_Instruction]) -> tuple[CheckResult, list[_Instruction] | None]:
24         stages = []
25
26         for content in conf:
27             base_image = content["value"]
28             if " as " in base_image.lower():
29                 # do an initial lookup before using the regex
30                 multi_stage = re.match(MULTI_STAGE_PATTERN, base_image)
31                 if multi_stage:
32                     base_image = multi_stage[1]
33                     stages.append(multi_stage[2])
34
35             if ":" not in base_image and base_image not in stages and base_image != "scratch":
36                 return CheckResult.FAILED, [content]
37             elif base_image.endswith(":latest"):
38                 return CheckResult.FAILED, [content]
39         return CheckResult.PASSED, [content]
40
41
42 check = ReferenceLatestTag()
43
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/checkov/dockerfile/checks/ReferenceLatestTag.py b/checkov/dockerfile/checks/ReferenceLatestTag.py
--- a/checkov/dockerfile/checks/ReferenceLatestTag.py
+++ b/checkov/dockerfile/checks/ReferenceLatestTag.py
@@ -9,7 +9,7 @@
 if TYPE_CHECKING:
     from dockerfile_parse.parser import _Instruction
 
-MULTI_STAGE_PATTERN = re.compile(r"(\S+)\s+as\s+(\S+)", re.IGNORECASE)
+MULTI_STAGE_PATTERN = re.compile(r"(?:--platform=\S+\s+)?(\S+)\s+as\s+(\S+)", re.IGNORECASE)
 
 
 class ReferenceLatestTag(BaseDockerfileCheck):

|

{"golden_diff": "diff --git a/checkov/dockerfile/checks/ReferenceLatestTag.py b/checkov/dockerfile/checks/ReferenceLatestTag.py\n--- a/checkov/dockerfile/checks/ReferenceLatestTag.py\n+++ b/checkov/dockerfile/checks/ReferenceLatestTag.py\n@@ -9,7 +9,7 @@\n if TYPE_CHECKING:\n from dockerfile_parse.parser import _Instruction\n \n-MULTI_STAGE_PATTERN = re.compile(r\"(\\S+)\\s+as\\s+(\\S+)\", re.IGNORECASE)\n+MULTI_STAGE_PATTERN = re.compile(r\"(?:--platform=\\S+\\s+)?(\\S+)\\s+as\\s+(\\S+)\", re.IGNORECASE)\n \n \n class ReferenceLatestTag(BaseDockerfileCheck):\n", "issue": "[CKV_DOCKER_7] Erroneous failed check when --platform flag is used in multistage Dockerfile\n**Describe the issue**\r\nIn the `CKV_DOCKER_7` check, when the `--platform` flag is used in a multistage Dockerfile, the check fails erroneously for images that have been created within the current Dockerfile and thus no version pegging should be required.\r\n\r\n**Examples**\r\nThe Dockerfile below is green\r\n\r\n```\r\nFROM golang:1.20-bullseye AS base\r\n\r\nFROM base AS build\r\n```\r\n\r\nbut as soon as a `--platform` flag is added, the check fails:\r\n\r\n```\r\nFROM --platform=$BUILDPLATFORM golang:1.20-bullseye AS base\r\n\r\nFROM base AS build\r\n```\r\n\r\n**Version (please complete the following information):**\r\n - Checkov Version 2.4.4\n", "before_files": [{"content": "from __future__ import annotations\n\nimport re\nfrom typing import TYPE_CHECKING\n\nfrom checkov.common.models.enums import CheckCategories, CheckResult\nfrom checkov.dockerfile.base_dockerfile_check import BaseDockerfileCheck\n\nif TYPE_CHECKING:\n from dockerfile_parse.parser import _Instruction\n\nMULTI_STAGE_PATTERN = re.compile(r\"(\\S+)\\s+as\\s+(\\S+)\", re.IGNORECASE)\n\n\nclass ReferenceLatestTag(BaseDockerfileCheck):\n def __init__(self) -> None:\n name = \"Ensure the base image uses a non latest version tag\"\n id = \"CKV_DOCKER_7\"\n supported_instructions = (\"FROM\",)\n categories = 
(CheckCategories.CONVENTION,)\n super().__init__(name=name, id=id, categories=categories, supported_instructions=supported_instructions)\n\n def scan_resource_conf(self, conf: list[_Instruction]) -> tuple[CheckResult, list[_Instruction] | None]:\n stages = []\n\n for content in conf:\n base_image = content[\"value\"]\n if \" as \" in base_image.lower():\n # do an initial lookup before using the regex\n multi_stage = re.match(MULTI_STAGE_PATTERN, base_image)\n if multi_stage:\n base_image = multi_stage[1]\n stages.append(multi_stage[2])\n\n if \":\" not in base_image and base_image not in stages and base_image != \"scratch\":\n return CheckResult.FAILED, [content]\n elif base_image.endswith(\":latest\"):\n return CheckResult.FAILED, [content]\n return CheckResult.PASSED, [content]\n\n\ncheck = ReferenceLatestTag()\n", "path": "checkov/dockerfile/checks/ReferenceLatestTag.py"}], "after_files": [{"content": "from __future__ import annotations\n\nimport re\nfrom typing import TYPE_CHECKING\n\nfrom checkov.common.models.enums import CheckCategories, CheckResult\nfrom checkov.dockerfile.base_dockerfile_check import BaseDockerfileCheck\n\nif TYPE_CHECKING:\n from dockerfile_parse.parser import _Instruction\n\nMULTI_STAGE_PATTERN = re.compile(r\"(?:--platform=\\S+\\s+)?(\\S+)\\s+as\\s+(\\S+)\", re.IGNORECASE)\n\n\nclass ReferenceLatestTag(BaseDockerfileCheck):\n def __init__(self) -> None:\n name = \"Ensure the base image uses a non latest version tag\"\n id = \"CKV_DOCKER_7\"\n supported_instructions = (\"FROM\",)\n categories = (CheckCategories.CONVENTION,)\n super().__init__(name=name, id=id, categories=categories, supported_instructions=supported_instructions)\n\n def scan_resource_conf(self, conf: list[_Instruction]) -> tuple[CheckResult, list[_Instruction] | None]:\n stages = []\n\n for content in conf:\n base_image = content[\"value\"]\n if \" as \" in base_image.lower():\n # do an initial lookup before using the regex\n multi_stage = re.match(MULTI_STAGE_PATTERN, 
base_image)\n if multi_stage:\n base_image = multi_stage[1]\n stages.append(multi_stage[2])\n\n if \":\" not in base_image and base_image not in stages and base_image != \"scratch\":\n return CheckResult.FAILED, [content]\n elif base_image.endswith(\":latest\"):\n return CheckResult.FAILED, [content]\n return CheckResult.PASSED, [content]\n\n\ncheck = ReferenceLatestTag()\n", "path": "checkov/dockerfile/checks/ReferenceLatestTag.py"}]}

| 883 | 151 |
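The fix in this record adds an optional non-capturing group, so a leading `--platform=...` is skipped before the image and stage name are captured. The sketch below replays the check's loop on raw `FROM` values. It is a simplification: `check_from_values` is a hypothetical helper, and the real check receives dockerfile-parse instruction dicts rather than strings. It shows the issue's example failing under the old pattern and passing under the new one.

```python
import re

# Pattern before the fix: re.match anchors at the start, so `--platform=...` is taken as the image.
OLD_PATTERN = re.compile(r"(\S+)\s+as\s+(\S+)", re.IGNORECASE)
# Pattern after the fix: an optional, non-capturing `--platform=...` prefix is skipped.
NEW_PATTERN = re.compile(r"(?:--platform=\S+\s+)?(\S+)\s+as\s+(\S+)", re.IGNORECASE)


def check_from_values(values, pattern):
    """Simplified replay of scan_resource_conf over raw FROM values."""
    stages = []
    for base_image in values:
        if " as " in base_image.lower():
            multi_stage = re.match(pattern, base_image)
            if multi_stage:
                base_image = multi_stage[1]
                stages.append(multi_stage[2])
        # Untagged images fail unless they name an earlier stage or `scratch`.
        if ":" not in base_image and base_image not in stages and base_image != "scratch":
            return "FAILED"
        if base_image.endswith(":latest"):
            return "FAILED"
    return "PASSED"


# The issue's second Dockerfile, as the values of its two FROM instructions.
dockerfile = [
    "--platform=$BUILDPLATFORM golang:1.20-bullseye AS base",
    "base AS build",
]
print(check_from_values(dockerfile, OLD_PATTERN))  # FAILED
print(check_from_values(dockerfile, NEW_PATTERN))  # PASSED
```

Because the prefix group is optional, `FROM` lines without `--platform` match exactly as before. The pattern only covers the `--platform=value` spelling; any other flag form is not handled by it.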

gh_patches_debug_31303

|

rasdani/github-patches

|

git_diff

|

alltheplaces__alltheplaces-3410

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Spider anytime_fitness is broken

During the global build at 2021-09-29-14-42-48, spider **anytime_fitness** failed with **0 features** and **0 errors**.

Here's [the log](https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/logs/anytime_fitness.txt) and [the output](https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/output/anytime_fitness.geojson) ([on a map](https://data.alltheplaces.xyz/map.html?show=https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/output/anytime_fitness.geojson))

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `locations/spiders/anytime_fitness.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 import scrapy
3 from locations.items import GeojsonPointItem
4 import json
5 import html
6
7
8 class AnytimeFitnessSpider(scrapy.Spider):
9     name = 'anytime_fitness'
10     item_attributes = { 'brand': "Anytime Fitness" }
11     allowed_domains = ['www.anytimefitness.com']
12
13     def start_requests(self):
14         url = 'https://www.anytimefitness.com/wp-content/uploads/gyms.json'
15         yield scrapy.Request(url, callback=self.parse)
16
17     def parse(self, response):
18         gyms = json.loads(response.body_as_unicode())
19
20         for gym in gyms:
21             yield GeojsonPointItem(
22                 lat = gym['latitude'],
23                 lon = gym['longitude'],
24                 addr_full = ", ".join(filter(None, [gym['content']['address'], gym['content']['address2']])),
25                 city = gym['content']['city'],
26                 phone = gym['content']['phone'],
27                 state = gym['content']['state_abbr'],
28                 postcode = gym['content']['zip'],
29                 ref = gym['content']['url'],
30                 country = gym['content']['country'],
31                 name = html.unescape(gym['content']['title']),
32                 extras = {"number": gym['content']['number']}
33                 )
34
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/locations/spiders/anytime_fitness.py b/locations/spiders/anytime_fitness.py
--- a/locations/spiders/anytime_fitness.py
+++ b/locations/spiders/anytime_fitness.py
@@ -7,27 +7,27 @@
 
 class AnytimeFitnessSpider(scrapy.Spider):
     name = 'anytime_fitness'
-    item_attributes = { 'brand': "Anytime Fitness" }
+    item_attributes = {'brand': "Anytime Fitness", 'brand_wikidata': "Q4778364"}
     allowed_domains = ['www.anytimefitness.com']
 
     def start_requests(self):
-        url = 'https://www.anytimefitness.com/wp-content/uploads/gyms.json'
+        url = 'https://www.anytimefitness.com/wp-content/uploads/locations.json'
         yield scrapy.Request(url, callback=self.parse)
 
     def parse(self, response):
-        gyms = json.loads(response.body_as_unicode())
+        gyms = json.loads(response.text)
 
         for gym in gyms:
             yield GeojsonPointItem(
-                lat = gym['latitude'],
-                lon = gym['longitude'],
-                addr_full = ", ".join(filter(None, [gym['content']['address'], gym['content']['address2']])),
-                city = gym['content']['city'],
-                phone = gym['content']['phone'],
-                state = gym['content']['state_abbr'],
-                postcode = gym['content']['zip'],
-                ref = gym['content']['url'],
-                country = gym['content']['country'],
-                name = html.unescape(gym['content']['title']),
-                extras = {"number": gym['content']['number']}
-                )
+                lat=gym['latitude'],
+                lon=gym['longitude'],
+                addr_full=", ".join(filter(None, [gym['content']['address'], gym['content']['address2']])),
+                city=gym['content']['city'],
+                phone=gym['content']['phone'],
+                state=gym['content']['state_abbr'],
+                postcode=gym['content']['zip'],
+                ref=gym['content']['url'],
+                country=gym['content']['country'],
+                name=html.unescape(gym['content']['title']),
+                extras={"number": gym['content']['number']}
+            )

|

{"golden_diff": "diff --git a/locations/spiders/anytime_fitness.py b/locations/spiders/anytime_fitness.py\n--- a/locations/spiders/anytime_fitness.py\n+++ b/locations/spiders/anytime_fitness.py\n@@ -7,27 +7,27 @@\n \n class AnytimeFitnessSpider(scrapy.Spider):\n name = 'anytime_fitness'\n- item_attributes = { 'brand': \"Anytime Fitness\" }\n+ item_attributes = {'brand': \"Anytime Fitness\", 'brand_wikidata': \"Q4778364\"}\n allowed_domains = ['www.anytimefitness.com']\n \n def start_requests(self):\n- url = 'https://www.anytimefitness.com/wp-content/uploads/gyms.json'\n+ url = 'https://www.anytimefitness.com/wp-content/uploads/locations.json'\n yield scrapy.Request(url, callback=self.parse)\n \n def parse(self, response):\n- gyms = json.loads(response.body_as_unicode())\n+ gyms = json.loads(response.text)\n \n for gym in gyms:\n yield GeojsonPointItem(\n- lat = gym['latitude'],\n- lon = gym['longitude'],\n- addr_full = \", \".join(filter(None, [gym['content']['address'], gym['content']['address2']])),\n- city = gym['content']['city'],\n- phone = gym['content']['phone'],\n- state = gym['content']['state_abbr'],\n- postcode = gym['content']['zip'],\n- ref = gym['content']['url'],\n- country = gym['content']['country'],\n- name = html.unescape(gym['content']['title']),\n- extras = {\"number\": gym['content']['number']}\n- )\n+ lat=gym['latitude'],\n+ lon=gym['longitude'],\n+ addr_full=\", \".join(filter(None, [gym['content']['address'], gym['content']['address2']])),\n+ city=gym['content']['city'],\n+ phone=gym['content']['phone'],\n+ state=gym['content']['state_abbr'],\n+ postcode=gym['content']['zip'],\n+ ref=gym['content']['url'],\n+ country=gym['content']['country'],\n+ name=html.unescape(gym['content']['title']),\n+ extras={\"number\": gym['content']['number']}\n+ )\n", "issue": "Spider anytime_fitness is broken\nDuring the global build at 2021-09-29-14-42-48, spider **anytime_fitness** failed with **0 features** and **0 errors**.\n\nHere's [the 
log](https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/logs/anytime_fitness.txt) and [the output](https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/output/anytime_fitness.geojson) ([on a map](https://data.alltheplaces.xyz/map.html?show=https://data.alltheplaces.xyz/runs/2021-09-29-14-42-48/output/anytime_fitness.geojson))\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nimport scrapy\nfrom locations.items import GeojsonPointItem\nimport json\nimport html\n\n\nclass AnytimeFitnessSpider(scrapy.Spider):\n name = 'anytime_fitness'\n item_attributes = { 'brand': \"Anytime Fitness\" }\n allowed_domains = ['www.anytimefitness.com']\n\n def start_requests(self):\n url = 'https://www.anytimefitness.com/wp-content/uploads/gyms.json'\n yield scrapy.Request(url, callback=self.parse)\n\n def parse(self, response):\n gyms = json.loads(response.body_as_unicode())\n\n for gym in gyms:\n yield GeojsonPointItem(\n lat = gym['latitude'],\n lon = gym['longitude'],\n addr_full = \", \".join(filter(None, [gym['content']['address'], gym['content']['address2']])),\n city = gym['content']['city'],\n phone = gym['content']['phone'],\n state = gym['content']['state_abbr'],\n postcode = gym['content']['zip'],\n ref = gym['content']['url'],\n country = gym['content']['country'],\n name = html.unescape(gym['content']['title']),\n extras = {\"number\": gym['content']['number']}\n )\n", "path": "locations/spiders/anytime_fitness.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\nimport scrapy\nfrom locations.items import GeojsonPointItem\nimport json\nimport html\n\n\nclass AnytimeFitnessSpider(scrapy.Spider):\n name = 'anytime_fitness'\n item_attributes = {'brand': \"Anytime Fitness\", 'brand_wikidata': \"Q4778364\"}\n allowed_domains = ['www.anytimefitness.com']\n\n def start_requests(self):\n url = 'https://www.anytimefitness.com/wp-content/uploads/locations.json'\n yield scrapy.Request(url, callback=self.parse)\n\n def parse(self, response):\n gyms = 
json.loads(response.text)\n\n for gym in gyms:\n yield GeojsonPointItem(\n lat=gym['latitude'],\n lon=gym['longitude'],\n addr_full=\", \".join(filter(None, [gym['content']['address'], gym['content']['address2']])),\n city=gym['content']['city'],\n phone=gym['content']['phone'],\n state=gym['content']['state_abbr'],\n postcode=gym['content']['zip'],\n ref=gym['content']['url'],\n country=gym['content']['country'],\n name=html.unescape(gym['content']['title']),\n extras={\"number\": gym['content']['number']}\n )\n", "path": "locations/spiders/anytime_fitness.py"}]}

| 772 | 503 |
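The golden diff for this record keeps the parsing logic. It points the spider at a different feed URL and reads the body with `response.text` instead of the deprecated `body_as_unicode()`. The field mapping itself can be checked without Scrapy. In the sketch below, `gym_to_fields` is a hypothetical helper covering a subset of the spider's fields. The sample payload is invented to mirror the keys the spider reads and is not taken from the real feed.

```python
import html
import json


def gym_to_fields(gym):
    """Map one feed entry to a subset of the spider's item fields."""
    content = gym["content"]
    return {
        "lat": gym["latitude"],
        "lon": gym["longitude"],
        # filter(None, ...) drops an empty second address line before joining.
        "addr_full": ", ".join(filter(None, [content["address"], content["address2"]])),
        "city": content["city"],
        "state": content["state_abbr"],
        "postcode": content["zip"],
        "ref": content["url"],
        # Titles may contain HTML entities such as &amp;.
        "name": html.unescape(content["title"]),
    }


# Invented sample payload; in the spider this string would be `response.text`.
body = json.dumps([{
    "latitude": 44.9,
    "longitude": -93.2,
    "content": {
        "address": "1 Main St", "address2": "", "city": "Anytown",
        "state_abbr": "MN", "zip": "55555",
        "url": "https://example.com/gym/1", "title": "Anytown &amp; Co",
    },
}])

fields = [gym_to_fields(g) for g in json.loads(body)]
print(fields[0]["addr_full"], "|", fields[0]["name"])  # 1 Main St | Anytown & Co
```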

gh_patches_debug_29620

|

rasdani/github-patches

|

git_diff

|

ansible__ansible-modules-core-3251

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

apache2_module hangs with "WARNING: The following essential module will be disabled."

I have this code in my playbook:

```
- apache2_module: name={{item}} state=absent
  with_items:
    - autoindex
    - negotiation
```

However someone decided that autoindex and negotiation both are "essential", so when I try to disable them I get this warning:

```
# a2dismod autoindex
WARNING: The following essential module will be disabled.
This might result in unexpected behavior and should NOT be done
unless you know exactly what you are doing!
autoindex
To continue type in the phrase 'Yes, do as I say!' or retry by passing '-f':
```

and expects me to actually type "Yes, do as I say!" (WTF?!?) if I don't provide "-f". The ansible module does not provide that -f parameter, so it locks up.

As weird as that is, I think there _at least_ should be a "force=true" option in apache2_module - or -f should be default.

(Not sure if that's true for all "a2dismod" instances because there is a suspiciously low amount of Google results (none). It is at least there for apache2 currently installed from dotdeb.org)

--- END ISSUE ---
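The hang occurs because `a2dismod` waits for the confirmation phrase, which an unattended module run never supplies. The issue proposes a `force=true` option that passes `-f`. A minimal sketch of assembling that command line is shown here. `build_a2dismod_command` and its `force` parameter are hypothetical; neither exists in the module listed below.

```python
def build_a2dismod_command(binary, name, force=False):
    """Hypothetical helper: return the a2dismod argument list.

    With force=True, `-f` is passed so a2dismod does not stop to ask for the
    confirmation phrase when disabling a module it marks as essential.
    """
    cmd = [binary]
    if force:
        cmd.append("-f")
    cmd.append(name)
    return cmd


print(build_a2dismod_command("/usr/sbin/a2dismod", "autoindex", force=True))
# ['/usr/sbin/a2dismod', '-f', 'autoindex']
print(build_a2dismod_command("/usr/sbin/a2dismod", "wsgi"))
# ['/usr/sbin/a2dismod', 'wsgi']
```

Building an argument list rather than a `"%s %s"` string also sidesteps quoting issues with the module name. Whether to make `-f` opt-in or the default is a design choice the issue leaves open.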

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `web_infrastructure/apache2_module.py`

Content:

```
1 #!/usr/bin/python
2 #coding: utf-8 -*-
3
4 # (c) 2013-2014, Christian Berendt <[email protected]>
5 #
6 # This module is free software: you can redistribute it and/or modify
7 # it under the terms of the GNU General Public License as published by
8 # the Free Software Foundation, either version 3 of the License, or
9 # (at your option) any later version.
10 #
11 # This software is distributed in the hope that it will be useful,
12 # but WITHOUT ANY WARRANTY; without even the implied warranty of
13 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
14 # GNU General Public License for more details.
15 #
16 # You should have received a copy of the GNU General Public License
17 # along with this software. If not, see <http://www.gnu.org/licenses/>.
18
19 DOCUMENTATION = '''
20 ---
21 module: apache2_module
22 version_added: 1.6
23 author: "Christian Berendt (@berendt)"
24 short_description: enables/disables a module of the Apache2 webserver
25 description:
26   - Enables or disables a specified module of the Apache2 webserver.
27 options:
28   name:
29     description:
30       - name of the module to enable/disable
31     required: true
32   state:
33     description:
34       - indicate the desired state of the resource
35     choices: ['present', 'absent']
36     default: present
37
38 requirements: ["a2enmod","a2dismod"]
39 '''
40
41 EXAMPLES = '''
42 # enables the Apache2 module "wsgi"
43 - apache2_module: state=present name=wsgi
44
45 # disables the Apache2 module "wsgi"
46 - apache2_module: state=absent name=wsgi
47 '''
48
49 import re
50
51 def _disable_module(module):
52     name = module.params['name']
53     a2dismod_binary = module.get_bin_path("a2dismod")
54     if a2dismod_binary is None:
55         module.fail_json(msg="a2dismod not found. Perhaps this system does not use a2dismod to manage apache")
56
57     result, stdout, stderr = module.run_command("%s %s" % (a2dismod_binary, name))
58
59     if re.match(r'.*\b' + name + r' already disabled', stdout, re.S|re.M):
60         module.exit_json(changed = False, result = "Success")
61     elif result != 0:
62         module.fail_json(msg="Failed to disable module %s: %s" % (name, stdout))
63     else:
64         module.exit_json(changed = True, result = "Disabled")
65
66 def _enable_module(module):
67     name = module.params['name']
68     a2enmod_binary = module.get_bin_path("a2enmod")
69     if a2enmod_binary is None:
70         module.fail_json(msg="a2enmod not found. Perhaps this system does not use a2enmod to manage apache")
71
72     result, stdout, stderr = module.run_command("%s %s" % (a2enmod_binary, name))
73
74     if re.match(r'.*\b' + name + r' already enabled', stdout, re.S|re.M):
75         module.exit_json(changed = False, result = "Success")
76     elif result != 0:
77         module.fail_json(msg="Failed to enable module %s: %s" % (name, stdout))
78     else:
79         module.exit_json(changed = True, result = "Enabled")
80
81 def main():
82     module = AnsibleModule(
83         argument_spec = dict(
84             name = dict(required=True),
85             state = dict(default='present', choices=['absent', 'present'])
86         ),
87     )
88
89     if module.params['state'] == 'present':
90         _enable_module(module)
91
92     if module.params['state'] == 'absent':
93         _disable_module(module)
94
95 # import module snippets
96 from ansible.module_utils.basic import *
97 if __name__ == '__main__':
98     main()
99
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/web_infrastructure/apache2_module.py b/web_infrastructure/apache2_module.py
--- a/web_infrastructure/apache2_module.py
+++ b/web_infrastructure/apache2_module.py
@@ -29,6 +29,12 @@
     description:
       - name of the module to enable/disable
     required: true
+  force:
+    description:
+      - force disabling of default modules and override Debian warnings
+    required: false
+    choices: ['yes', 'no']
+    default: no
   state:
     description:
       - indicate the desired state of the resource
@@ -50,10 +56,14 @@
 
 def _disable_module(module):
     name = module.params['name']
+    force = module.params['force']
     a2dismod_binary = module.get_bin_path("a2dismod")
     if a2dismod_binary is None:
         module.fail_json(msg="a2dismod not found. Perhaps this system does not use a2dismod to manage apache")
 
+    if force:
+        a2dismod_binary += ' -f'
+
     result, stdout, stderr = module.run_command("%s %s" % (a2dismod_binary, name))
 
     if re.match(r'.*\b' + name + r' already disabled', stdout, re.S|re.M):
@@ -82,6 +92,7 @@
     module = AnsibleModule(
         argument_spec = dict(
             name = dict(required=True),
+            force = dict(required=False, type='bool', default=False),
             state = dict(default='present', choices=['absent', 'present'])
         ),
     )

|

{"golden_diff": "diff --git a/web_infrastructure/apache2_module.py b/web_infrastructure/apache2_module.py\n--- a/web_infrastructure/apache2_module.py\n+++ b/web_infrastructure/apache2_module.py\n@@ -29,6 +29,12 @@\n description:\n - name of the module to enable/disable\n required: true\n+ force:\n+ description:\n+ - force disabling of default modules and override Debian warnings\n+ required: false\n+ choices: ['yes', 'no']\n+ default: no\n state:\n description:\n - indicate the desired state of the resource\n@@ -50,10 +56,14 @@\n \n def _disable_module(module):\n name = module.params['name']\n+ force = module.params['force']\n a2dismod_binary = module.get_bin_path(\"a2dismod\")\n if a2dismod_binary is None:\n module.fail_json(msg=\"a2dismod not found. Perhaps this system does not use a2dismod to manage apache\")\n \n+ if force:\n+ a2dismod_binary += ' -f'\n+\n result, stdout, stderr = module.run_command(\"%s %s\" % (a2dismod_binary, name))\n \n if re.match(r'.*\\b' + name + r' already disabled', stdout, re.S|re.M):\n@@ -82,6 +92,7 @@\n module = AnsibleModule(\n argument_spec = dict(\n name = dict(required=True),\n+ force = dict(required=False, type='bool', default=False),\n state = dict(default='present', choices=['absent', 'present'])\n ),\n )\n", "issue": "apache2_module hangs with \"WARNING: The following essential module will be disabled.\"\nI have this code in my playbook:\n\n```\n- apache2_module: name={{item}} state=absent\n with_items:\n - autoindex\n - negotiation\n```\n\nHowever someone decided that autoindex and negotiation both are \"essential\", so when I try to disable them I get this warning:\n\n```\n# a2dismod autoindex\nWARNING: The following essential module will be disabled.\nThis might result in unexpected behavior and should NOT be done\nunless you know exactly what you are doing!\n autoindex\n\nTo continue type in the phrase 'Yes, do as I say!' or retry by passing '-f':\n```\n\nand expects me to actually type \"Yes, do as I say!\" (WTF?!?) 
if I don't provide \"-f\". The ansible module does not provide that -f parameter, so it locks up.\n\nAs weird as that is, I think there _at least_ should be a \"force=true\" option in apache2_module - or -f should be default.\n\n(Not sure if that's true for all \"a2dismod\" instances because there is a suspiciously low amount of Google results (none). It is at least there for apache2 currently installed from dotdeb.org)\n\n", "before_files": [{"content": "#!/usr/bin/python\n#coding: utf-8 -*-\n\n# (c) 2013-2014, Christian Berendt <[email protected]>\n#\n# This module is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# This software is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with this software. 
If not, see <http://www.gnu.org/licenses/>.\n\nDOCUMENTATION = '''\n---\nmodule: apache2_module\nversion_added: 1.6\nauthor: \"Christian Berendt (@berendt)\"\nshort_description: enables/disables a module of the Apache2 webserver\ndescription:\n - Enables or disables a specified module of the Apache2 webserver.\noptions:\n name:\n description:\n - name of the module to enable/disable\n required: true\n state:\n description:\n - indicate the desired state of the resource\n choices: ['present', 'absent']\n default: present\n\nrequirements: [\"a2enmod\",\"a2dismod\"]\n'''\n\nEXAMPLES = '''\n# enables the Apache2 module \"wsgi\"\n- apache2_module: state=present name=wsgi\n\n# disables the Apache2 module \"wsgi\"\n- apache2_module: state=absent name=wsgi\n'''\n\nimport re\n\ndef _disable_module(module):\n name = module.params['name']\n a2dismod_binary = module.get_bin_path(\"a2dismod\")\n if a2dismod_binary is None:\n module.fail_json(msg=\"a2dismod not found. Perhaps this system does not use a2dismod to manage apache\")\n\n result, stdout, stderr = module.run_command(\"%s %s\" % (a2dismod_binary, name))\n\n if re.match(r'.*\\b' + name + r' already disabled', stdout, re.S|re.M):\n module.exit_json(changed = False, result = \"Success\")\n elif result != 0:\n module.fail_json(msg=\"Failed to disable module %s: %s\" % (name, stdout))\n else:\n module.exit_json(changed = True, result = \"Disabled\")\n\ndef _enable_module(module):\n name = module.params['name']\n a2enmod_binary = module.get_bin_path(\"a2enmod\")\n if a2enmod_binary is None:\n module.fail_json(msg=\"a2enmod not found. 
Perhaps this system does not use a2enmod to manage apache\")\n\n result, stdout, stderr = module.run_command(\"%s %s\" % (a2enmod_binary, name))\n\n if re.match(r'.*\\b' + name + r' already enabled', stdout, re.S|re.M):\n module.exit_json(changed = False, result = \"Success\")\n elif result != 0:\n module.fail_json(msg=\"Failed to enable module %s: %s\" % (name, stdout))\n else:\n module.exit_json(changed = True, result = \"Enabled\")\n\ndef main():\n module = AnsibleModule(\n argument_spec = dict(\n name = dict(required=True),\n state = dict(default='present', choices=['absent', 'present'])\n ),\n )\n\n if module.params['state'] == 'present':\n _enable_module(module)\n\n if module.params['state'] == 'absent':\n _disable_module(module)\n\n# import module snippets\nfrom ansible.module_utils.basic import *\nif __name__ == '__main__':\n main()\n", "path": "web_infrastructure/apache2_module.py"}], "after_files": [{"content": "#!/usr/bin/python\n#coding: utf-8 -*-\n\n# (c) 2013-2014, Christian Berendt <[email protected]>\n#\n# This module is free software: you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# This software is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with this software. 
If not, see <http://www.gnu.org/licenses/>.\n\nDOCUMENTATION = '''\n---\nmodule: apache2_module\nversion_added: 1.6\nauthor: \"Christian Berendt (@berendt)\"\nshort_description: enables/disables a module of the Apache2 webserver\ndescription:\n - Enables or disables a specified module of the Apache2 webserver.\noptions:\n name:\n description:\n - name of the module to enable/disable\n required: true\n force:\n description:\n - force disabling of default modules and override Debian warnings\n required: false\n choices: ['yes', 'no']\n default: no\n state:\n description:\n - indicate the desired state of the resource\n choices: ['present', 'absent']\n default: present\n\nrequirements: [\"a2enmod\",\"a2dismod\"]\n'''\n\nEXAMPLES = '''\n# enables the Apache2 module \"wsgi\"\n- apache2_module: state=present name=wsgi\n\n# disables the Apache2 module \"wsgi\"\n- apache2_module: state=absent name=wsgi\n'''\n\nimport re\n\ndef _disable_module(module):\n name = module.params['name']\n force = module.params['force']\n a2dismod_binary = module.get_bin_path(\"a2dismod\")\n if a2dismod_binary is None:\n module.fail_json(msg=\"a2dismod not found. Perhaps this system does not use a2dismod to manage apache\")\n\n if force:\n a2dismod_binary += ' -f'\n\n result, stdout, stderr = module.run_command(\"%s %s\" % (a2dismod_binary, name))\n\n if re.match(r'.*\\b' + name + r' already disabled', stdout, re.S|re.M):\n module.exit_json(changed = False, result = \"Success\")\n elif result != 0:\n module.fail_json(msg=\"Failed to disable module %s: %s\" % (name, stdout))\n else:\n module.exit_json(changed = True, result = \"Disabled\")\n\ndef _enable_module(module):\n name = module.params['name']\n a2enmod_binary = module.get_bin_path(\"a2enmod\")\n if a2enmod_binary is None:\n module.fail_json(msg=\"a2enmod not found. 
Perhaps this system does not use a2enmod to manage apache\")\n\n result, stdout, stderr = module.run_command(\"%s %s\" % (a2enmod_binary, name))\n\n if re.match(r'.*\\b' + name + r' already enabled', stdout, re.S|re.M):\n module.exit_json(changed = False, result = \"Success\")\n elif result != 0:\n module.fail_json(msg=\"Failed to enable module %s: %s\" % (name, stdout))\n else:\n module.exit_json(changed = True, result = \"Enabled\")\n\ndef main():\n module = AnsibleModule(\n argument_spec = dict(\n name = dict(required=True),\n force = dict(required=False, type='bool', default=False),\n state = dict(default='present', choices=['absent', 'present'])\n ),\n )\n\n if module.params['state'] == 'present':\n _enable_module(module)\n\n if module.params['state'] == 'absent':\n _disable_module(module)\n\n# import module snippets\nfrom ansible.module_utils.basic import *\nif __name__ == '__main__':\n main()\n", "path": "web_infrastructure/apache2_module.py"}]}

| 1,571 | 368 |
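A minimal sketch of the same `force` fix for the apache2_module row above, assuming one prefers to hand `a2dismod` an argument list instead of appending `' -f'` to the binary path string (Ansible's `AnsibleModule.run_command` accepts either a string or a list). The helper name `build_a2dismod_argv` and the example path are hypothetical, not part of the module:

```python
from typing import List


def build_a2dismod_argv(binary: str, name: str, force: bool = False) -> List[str]:
    """Return the a2dismod command line as a list of arguments.

    When ``force`` is true, ``-f`` is added so that a2dismod skips the
    interactive "Yes, do as I say!" prompt for essential modules instead
    of waiting on stdin (which is what made the task hang).
    """
    argv = [binary]
    if force:
        argv.append("-f")
    argv.append(name)
    return argv


# Hypothetical binary path; inside the module it would come from
# module.get_bin_path("a2dismod").
print(build_a2dismod_argv("/usr/sbin/a2dismod", "autoindex", force=True))
# ['/usr/sbin/a2dismod', '-f', 'autoindex']
```

Keeping `-f` as its own list element avoids relying on shell-style splitting of a combined string, which would matter if the binary path ever contained spaces.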

gh_patches_debug_21796

|

rasdani/github-patches

|

git_diff

|

psychopy__psychopy-4625

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

404 errors on some of the page in the HELP menus

Version used

- psychopy: 2021.2.3

https://www.psychopy.org/builder/builder.html

https://www.psychopy.org/api/api.html

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `psychopy/app/urls.py`

Content:

```
1 #!/usr/bin/env python
2 # -*- coding: utf-8 -*-
3
4 """A central location to store information about urls
5 """
6 urls = dict()
7
8 # links based on string names
9 urls['builder'] = "https://www.psychopy.org/builder/builder.html"
10 urls['builder.loops'] = "https://www.psychopy.org/builder/flow.html#loops"
11 # NB. builder components get their urls defined by the component code
12 # (so a custom component can have a url)
13
14 urls['downloads'] = "https://github.com/psychopy/psychopy/releases"
15 urls['changelog'] = "https://www.psychopy.org/changelog.html"
16
17 general = "https://www.psychopy.org/general/"
18 urls['prefs'] = general + "prefs.html"
19 urls['prefs.general'] = general + "prefs.html#general-settings"
20 urls['prefs.app'] = general + "prefs.html#application-settings"
21 urls['prefs.coder'] = general + "prefs.html#coder-settings"
22 urls['prefs.builder'] = general + "prefs.html#builder-settings"
23 urls['prefs.connections'] = general + "prefs.html#connection-settings"
24
25 # links keyed by wxIDs (e.g. menu item IDs)
26 urls['psychopyHome'] = "https://www.psychopy.org/"
27 urls['psychopyReference'] = "https://www.psychopy.org/api/api.html"
28 urls['coderTutorial'] = "https://www.psychopy.org/coder/tutorial1.html"
29 urls['builderHelp'] = urls['builder']
30 urls['builderDemos'] = "http://code.google.com/p/psychopy/downloads/list?can=2&q=demos"
31 urls['projsAbout'] = "https://www.psychopy.org/general/projects.html"
32
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/psychopy/app/urls.py b/psychopy/app/urls.py
--- a/psychopy/app/urls.py
+++ b/psychopy/app/urls.py
@@ -6,7 +6,7 @@
 urls = dict()
 
 # links based on string names
-urls['builder'] = "https://www.psychopy.org/builder/builder.html"
+urls['builder'] = "https://www.psychopy.org/builder"
 urls['builder.loops'] = "https://www.psychopy.org/builder/flow.html#loops"
 # NB. builder components get their urls defined by the component code
 # (so a custom component can have a url)
@@ -24,7 +24,7 @@
 
 # links keyed by wxIDs (e.g. menu item IDs)
 urls['psychopyHome'] = "https://www.psychopy.org/"
-urls['psychopyReference'] = "https://www.psychopy.org/api/api.html"
+urls['psychopyReference'] = "https://www.psychopy.org/api"
 urls['coderTutorial'] = "https://www.psychopy.org/coder/tutorial1.html"
 urls['builderHelp'] = urls['builder']
 urls['builderDemos'] = "http://code.google.com/p/psychopy/downloads/list?can=2&q=demos"

|

{"golden_diff": "diff --git a/psychopy/app/urls.py b/psychopy/app/urls.py\n--- a/psychopy/app/urls.py\n+++ b/psychopy/app/urls.py\n@@ -6,7 +6,7 @@\n urls = dict()\n \n # links based on string names\n-urls['builder'] = \"https://www.psychopy.org/builder/builder.html\"\n+urls['builder'] = \"https://www.psychopy.org/builder\"\n urls['builder.loops'] = \"https://www.psychopy.org/builder/flow.html#loops\"\n # NB. builder components get their urls defined by the component code\n # (so a custom component can have a url)\n@@ -24,7 +24,7 @@\n \n # links keyed by wxIDs (e.g. menu item IDs)\n urls['psychopyHome'] = \"https://www.psychopy.org/\"\n-urls['psychopyReference'] = \"https://www.psychopy.org/api/api.html\"\n+urls['psychopyReference'] = \"https://www.psychopy.org/api\"\n urls['coderTutorial'] = \"https://www.psychopy.org/coder/tutorial1.html\"\n urls['builderHelp'] = urls['builder']\n urls['builderDemos'] = \"http://code.google.com/p/psychopy/downloads/list?can=2&q=demos\"\n", "issue": "404 errors on some of the page in the HELP menus\nVersion used\r\n- psychopy: 2021.2.3\r\n\r\nhttps://www.psychopy.org/builder/builder.html\r\nhttps://www.psychopy.org/api/api.html\n", "before_files": [{"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n\n\"\"\"A central location to store information about urls\n\"\"\"\nurls = dict()\n\n# links based on string names\nurls['builder'] = \"https://www.psychopy.org/builder/builder.html\"\nurls['builder.loops'] = \"https://www.psychopy.org/builder/flow.html#loops\"\n# NB. 
builder components get their urls defined by the component code\n# (so a custom component can have a url)\n\nurls['downloads'] = \"https://github.com/psychopy/psychopy/releases\"\nurls['changelog'] = \"https://www.psychopy.org/changelog.html\"\n\ngeneral = \"https://www.psychopy.org/general/\"\nurls['prefs'] = general + \"prefs.html\"\nurls['prefs.general'] = general + \"prefs.html#general-settings\"\nurls['prefs.app'] = general + \"prefs.html#application-settings\"\nurls['prefs.coder'] = general + \"prefs.html#coder-settings\"\nurls['prefs.builder'] = general + \"prefs.html#builder-settings\"\nurls['prefs.connections'] = general + \"prefs.html#connection-settings\"\n\n# links keyed by wxIDs (e.g. menu item IDs)\nurls['psychopyHome'] = \"https://www.psychopy.org/\"\nurls['psychopyReference'] = \"https://www.psychopy.org/api/api.html\"\nurls['coderTutorial'] = \"https://www.psychopy.org/coder/tutorial1.html\"\nurls['builderHelp'] = urls['builder']\nurls['builderDemos'] = \"http://code.google.com/p/psychopy/downloads/list?can=2&q=demos\"\nurls['projsAbout'] = \"https://www.psychopy.org/general/projects.html\"\n", "path": "psychopy/app/urls.py"}], "after_files": [{"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n\n\"\"\"A central location to store information about urls\n\"\"\"\nurls = dict()\n\n# links based on string names\nurls['builder'] = \"https://www.psychopy.org/builder\"\nurls['builder.loops'] = \"https://www.psychopy.org/builder/flow.html#loops\"\n# NB. 
builder components get their urls defined by the component code\n# (so a custom component can have a url)\n\nurls['downloads'] = \"https://github.com/psychopy/psychopy/releases\"\nurls['changelog'] = \"https://www.psychopy.org/changelog.html\"\n\ngeneral = \"https://www.psychopy.org/general/\"\nurls['prefs'] = general + \"prefs.html\"\nurls['prefs.general'] = general + \"prefs.html#general-settings\"\nurls['prefs.app'] = general + \"prefs.html#application-settings\"\nurls['prefs.coder'] = general + \"prefs.html#coder-settings\"\nurls['prefs.builder'] = general + \"prefs.html#builder-settings\"\nurls['prefs.connections'] = general + \"prefs.html#connection-settings\"\n\n# links keyed by wxIDs (e.g. menu item IDs)\nurls['psychopyHome'] = \"https://www.psychopy.org/\"\nurls['psychopyReference'] = \"https://www.psychopy.org/api\"\nurls['coderTutorial'] = \"https://www.psychopy.org/coder/tutorial1.html\"\nurls['builderHelp'] = urls['builder']\nurls['builderDemos'] = \"http://code.google.com/p/psychopy/downloads/list?can=2&q=demos\"\nurls['projsAbout'] = \"https://www.psychopy.org/general/projects.html\"\n", "path": "psychopy/app/urls.py"}]}

| 739 | 285 |

gh_patches_debug_36007

|

rasdani/github-patches

|

git_diff

|

arviz-devs__arviz-414

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

arviz-darkgrid not working in example from quickstart

This command is not being recognized.

I installed the program from github on Mac OS 10.13 using macports Python3.5

az.style.use('arviz-darkgrid')

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 import codecs
2 import shutil
3 import os
4 import re
5
6 import setuptools
7 from setuptools import setup, find_packages
8 from setuptools.command.install import install
9 from setuptools.command.develop import develop
10
11
12 PROJECT_ROOT = os.path.dirname(os.path.realpath(__file__))
13 REQUIREMENTS_FILE = os.path.join(PROJECT_ROOT, 'requirements.txt')
14 README_FILE = os.path.join(PROJECT_ROOT, 'README.md')
15 VERSION_FILE = os.path.join(PROJECT_ROOT, 'arviz', '__init__.py')
16
17
18 # Ensure matplotlib dependencies are available to copy
19 # styles over
20 setuptools.dist.Distribution().fetch_build_eggs(['matplotlib>=3.0'])
21
22 def get_requirements():
23     with codecs.open(REQUIREMENTS_FILE) as buff:
24         return buff.read().splitlines()
25
26
27 def get_long_description():
28     with codecs.open(README_FILE, 'rt') as buff:
29         return buff.read()
30
31
32 def copy_styles():
33     from matplotlib import get_configdir
34     sd = os.path.join(get_configdir(), "stylelib")
35     source = os.path.dirname(os.path.realpath(__file__))
36     lsd = os.path.join(source, 'arviz', 'plots', 'styles')
37     styles = [f for f in os.listdir(lsd)]
38     if not os.path.isdir(sd):
39         os.makedirs(sd)
40     for s in styles:
41         shutil.copy(os.path.join(lsd, s), os.path.join(sd, s))
42
43
44 class DevelopStyles(develop):
45     def run(self):
46         copy_styles()
47         develop.run(self)
48
49 class InstallStyles(install):
50     def run(self):
51         copy_styles()
52         install.run(self)
53
54 def get_version():
55     lines = open(VERSION_FILE, 'rt').readlines()
56     version_regex = r"^__version__ = ['\"]([^'\"]*)['\"]"
57     for line in lines:
58         mo = re.search(version_regex, line, re.M)
59         if mo:
60             return mo.group(1)
61     raise RuntimeError('Unable to find version in %s.' % (VERSION_FILE,))
62
63 setup(
64     name='arviz',
65     version=get_version(),
66     description='Exploratory analysis of Bayesian models',
67     author='ArviZ Developers',
68     url="http://github.com/arviz-devs/arviz",
69     packages=find_packages(),
70     install_requires=get_requirements(),
71     long_description=get_long_description(),
72     long_description_content_type='text/markdown',
73     include_package_data=True,
74     cmdclass={
75         'develop': DevelopStyles,
76         'install': InstallStyles,
77     },
78 )
79
```

Path: `arviz/__init__.py`

Content:

```
1 # pylint: disable=wildcard-import,invalid-name,wrong-import-position
2 """ArviZ is a library for exploratory analysis of Bayesian models."""
3 __version__ = "0.2.1"
4
5 import logging
6 from matplotlib.pyplot import style
7
8 # Configure logging before importing arviz internals
9 _log = logging.getLogger("arviz")
10
11 if not logging.root.handlers:
12     handler = logging.StreamHandler()
13     _log.setLevel(logging.INFO)
14     _log.addHandler(handler)
15
16 from .data import *
17 from .plots import *
18 from .stats import *
19
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/arviz/__init__.py b/arviz/__init__.py
--- a/arviz/__init__.py
+++ b/arviz/__init__.py
@@ -2,9 +2,15 @@
 """ArviZ is a library for exploratory analysis of Bayesian models."""
 __version__ = "0.2.1"
 
+import os
 import logging
 from matplotlib.pyplot import style
 
+# add ArviZ's styles to matplotlib's styles
+arviz_style_path = os.path.join(os.path.dirname(__file__), "plots", "styles")
+style.core.USER_LIBRARY_PATHS.append(arviz_style_path)
+style.core.reload_library()
+
 # Configure logging before importing arviz internals
 _log = logging.getLogger("arviz")
 
diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -1,5 +1,4 @@
 import codecs
-import shutil
 import os
 import re
 
@@ -15,10 +14,6 @@
 VERSION_FILE = os.path.join(PROJECT_ROOT, 'arviz', '__init__.py')
 
 
-# Ensure matplotlib dependencies are available to copy
-# styles over
-setuptools.dist.Distribution().fetch_build_eggs(['matplotlib>=3.0'])
-
 def get_requirements():
     with codecs.open(REQUIREMENTS_FILE) as buff:
         return buff.read().splitlines()
@@ -28,29 +23,6 @@
     with codecs.open(README_FILE, 'rt') as buff:
         return buff.read()
 
-
-def copy_styles():
-    from matplotlib import get_configdir
-    sd = os.path.join(get_configdir(), "stylelib")
-    source = os.path.dirname(os.path.realpath(__file__))
-    lsd = os.path.join(source, 'arviz', 'plots', 'styles')
-    styles = [f for f in os.listdir(lsd)]
-    if not os.path.isdir(sd):
-        os.makedirs(sd)
-    for s in styles:
-        shutil.copy(os.path.join(lsd, s), os.path.join(sd, s))
-
-
-class DevelopStyles(develop):
-    def run(self):
-        copy_styles()
-        develop.run(self)
-
-class InstallStyles(install):
-    def run(self):
-        copy_styles()
-        install.run(self)
-
 def get_version():
     lines = open(VERSION_FILE, 'rt').readlines()
     version_regex = r"^__version__ = ['\"]([^'\"]*)['\"]"
@@ -71,8 +43,4 @@
     long_description=get_long_description(),
     long_description_content_type='text/markdown',
     include_package_data=True,
-    cmdclass={
-        'develop': DevelopStyles,
-        'install': InstallStyles,
-    },
 )

|

{"golden_diff": "diff --git a/arviz/__init__.py b/arviz/__init__.py\n--- a/arviz/__init__.py\n+++ b/arviz/__init__.py\n@@ -2,9 +2,15 @@\n \"\"\"ArviZ is a library for exploratory analysis of Bayesian models.\"\"\"\n __version__ = \"0.2.1\"\n \n+import os\n import logging\n from matplotlib.pyplot import style\n \n+# add ArviZ's styles to matplotlib's styles\n+arviz_style_path = os.path.join(os.path.dirname(__file__), \"plots\", \"styles\")\n+style.core.USER_LIBRARY_PATHS.append(arviz_style_path)\n+style.core.reload_library()\n+\n # Configure logging before importing arviz internals\n _log = logging.getLogger(\"arviz\")\n \ndiff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -1,5 +1,4 @@\n import codecs\n-import shutil\n import os\n import re\n \n@@ -15,10 +14,6 @@\n VERSION_FILE = os.path.join(PROJECT_ROOT, 'arviz', '__init__.py')\n \n \n-# Ensure matplotlib dependencies are available to copy\n-# styles over\n-setuptools.dist.Distribution().fetch_build_eggs(['matplotlib>=3.0'])\n-\n def get_requirements():\n with codecs.open(REQUIREMENTS_FILE) as buff:\n return buff.read().splitlines()\n@@ -28,29 +23,6 @@\n with codecs.open(README_FILE, 'rt') as buff:\n return buff.read()\n \n-\n-def copy_styles():\n- from matplotlib import get_configdir\n- sd = os.path.join(get_configdir(), \"stylelib\")\n- source = os.path.dirname(os.path.realpath(__file__))\n- lsd = os.path.join(source, 'arviz', 'plots', 'styles')\n- styles = [f for f in os.listdir(lsd)]\n- if not os.path.isdir(sd):\n- os.makedirs(sd)\n- for s in styles:\n- shutil.copy(os.path.join(lsd, s), os.path.join(sd, s))\n-\n-\n-class DevelopStyles(develop):\n- def run(self):\n- copy_styles()\n- develop.run(self)\n-\n-class InstallStyles(install):\n- def run(self):\n- copy_styles()\n- install.run(self)\n-\n def get_version():\n lines = open(VERSION_FILE, 'rt').readlines()\n version_regex = r\"^__version__ = ['\\\"]([^'\\\"]*)['\\\"]\"\n@@ -71,8 +43,4 @@\n long_description=get_long_description(),\n 
long_description_content_type='text/markdown',\n include_package_data=True,\n- cmdclass={\n- 'develop': DevelopStyles,\n- 'install': InstallStyles,\n- },\n )\n", "issue": "arviz-darkgrid not working in example from quickstart\nThis command is not being recognized.\r\nI installed the program from github on Mac OS 10.13 using macports Python3.5 \r\n\r\naz.style.use('arviz-darkgrid')\n", "before_files": [{"content": "import codecs\nimport shutil\nimport os\nimport re\n\nimport setuptools\nfrom setuptools import setup, find_packages\nfrom setuptools.command.install import install\nfrom setuptools.command.develop import develop\n\n\nPROJECT_ROOT = os.path.dirname(os.path.realpath(__file__))\nREQUIREMENTS_FILE = os.path.join(PROJECT_ROOT, 'requirements.txt')\nREADME_FILE = os.path.join(PROJECT_ROOT, 'README.md')\nVERSION_FILE = os.path.join(PROJECT_ROOT, 'arviz', '__init__.py')\n\n\n# Ensure matplotlib dependencies are available to copy\n# styles over\nsetuptools.dist.Distribution().fetch_build_eggs(['matplotlib>=3.0'])\n\ndef get_requirements():\n with codecs.open(REQUIREMENTS_FILE) as buff:\n return buff.read().splitlines()\n\n\ndef get_long_description():\n with codecs.open(README_FILE, 'rt') as buff:\n return buff.read()\n\n\ndef copy_styles():\n from matplotlib import get_configdir\n sd = os.path.join(get_configdir(), \"stylelib\")\n source = os.path.dirname(os.path.realpath(__file__))\n lsd = os.path.join(source, 'arviz', 'plots', 'styles')\n styles = [f for f in os.listdir(lsd)]\n if not os.path.isdir(sd):\n os.makedirs(sd)\n for s in styles:\n shutil.copy(os.path.join(lsd, s), os.path.join(sd, s))\n\n\nclass DevelopStyles(develop):\n def run(self):\n copy_styles()\n develop.run(self)\n\nclass InstallStyles(install):\n def run(self):\n copy_styles()\n install.run(self)\n\ndef get_version():\n lines = open(VERSION_FILE, 'rt').readlines()\n version_regex = r\"^__version__ = ['\\\"]([^'\\\"]*)['\\\"]\"\n for line in lines:\n mo = re.search(version_regex, line, 
re.M)\n if mo:\n return mo.group(1)\n raise RuntimeError('Unable to find version in %s.' % (VERSION_FILE,))\n\nsetup(\n name='arviz',\n version=get_version(),\n description='Exploratory analysis of Bayesian models',\n author='ArviZ Developers',\n url=\"http://github.com/arviz-devs/arviz\",\n packages=find_packages(),\n install_requires=get_requirements(),\n long_description=get_long_description(),\n long_description_content_type='text/markdown',\n include_package_data=True,\n cmdclass={\n 'develop': DevelopStyles,\n 'install': InstallStyles,\n },\n)\n", "path": "setup.py"}, {"content": "# pylint: disable=wildcard-import,invalid-name,wrong-import-position\n\"\"\"ArviZ is a library for exploratory analysis of Bayesian models.\"\"\"\n__version__ = \"0.2.1\"\n\nimport logging\nfrom matplotlib.pyplot import style\n\n# Configure logging before importing arviz internals\n_log = logging.getLogger(\"arviz\")\n\nif not logging.root.handlers:\n handler = logging.StreamHandler()\n _log.setLevel(logging.INFO)\n _log.addHandler(handler)\n\nfrom .data import *\nfrom .plots import *\nfrom .stats import *\n", "path": "arviz/__init__.py"}], "after_files": [{"content": "import codecs\nimport os\nimport re\n\nimport setuptools\nfrom setuptools import setup, find_packages\nfrom setuptools.command.install import install\nfrom setuptools.command.develop import develop\n\n\nPROJECT_ROOT = os.path.dirname(os.path.realpath(__file__))\nREQUIREMENTS_FILE = os.path.join(PROJECT_ROOT, 'requirements.txt')\nREADME_FILE = os.path.join(PROJECT_ROOT, 'README.md')\nVERSION_FILE = os.path.join(PROJECT_ROOT, 'arviz', '__init__.py')\n\n\ndef get_requirements():\n with codecs.open(REQUIREMENTS_FILE) as buff:\n return buff.read().splitlines()\n\n\ndef get_long_description():\n with codecs.open(README_FILE, 'rt') as buff:\n return buff.read()\n\ndef get_version():\n lines = open(VERSION_FILE, 'rt').readlines()\n version_regex = r\"^__version__ = ['\\\"]([^'\\\"]*)['\\\"]\"\n for line in lines:\n mo = 
re.search(version_regex, line, re.M)\n if mo:\n return mo.group(1)\n raise RuntimeError('Unable to find version in %s.' % (VERSION_FILE,))\n\nsetup(\n name='arviz',\n version=get_version(),\n description='Exploratory analysis of Bayesian models',\n author='ArviZ Developers',\n url=\"http://github.com/arviz-devs/arviz\",\n packages=find_packages(),\n install_requires=get_requirements(),\n long_description=get_long_description(),\n long_description_content_type='text/markdown',\n include_package_data=True,\n)\n", "path": "setup.py"}, {"content": "# pylint: disable=wildcard-import,invalid-name,wrong-import-position\n\"\"\"ArviZ is a library for exploratory analysis of Bayesian models.\"\"\"\n__version__ = \"0.2.1\"\n\nimport os\nimport logging\nfrom matplotlib.pyplot import style\n\n# add ArviZ's styles to matplotlib's styles\narviz_style_path = os.path.join(os.path.dirname(__file__), \"plots\", \"styles\")\nstyle.core.USER_LIBRARY_PATHS.append(arviz_style_path)\nstyle.core.reload_library()\n\n# Configure logging before importing arviz internals\n_log = logging.getLogger(\"arviz\")\n\nif not logging.root.handlers:\n handler = logging.StreamHandler()\n _log.setLevel(logging.INFO)\n _log.addHandler(handler)\n\nfrom .data import *\nfrom .plots import *\nfrom .stats import *\n", "path": "arviz/__init__.py"}]}

| 1,138 | 588 |

gh_patches_debug_30651

|

rasdani/github-patches

|

git_diff

|

googleapis__google-cloud-python-8481

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Firestore: sync shim module with 'firestore_v1' package.

See https://github.com/googleapis/google-cloud-python/issues/7533#issuecomment-495917295 &ff.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `firestore/google/cloud/firestore.py`

Content:

```

1 # Copyright 2017 Google LLC All rights reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """Python idiomatic client for Google Cloud Firestore."""

16

17

18 from google.cloud.firestore_v1 import __version__

19 from google.cloud.firestore_v1 import Client

20 from google.cloud.firestore_v1 import CollectionReference

21 from google.cloud.firestore_v1 import DELETE_FIELD

22 from google.cloud.firestore_v1 import DocumentReference

23 from google.cloud.firestore_v1 import DocumentSnapshot

24 from google.cloud.firestore_v1 import enums

25 from google.cloud.firestore_v1 import ExistsOption

26 from google.cloud.firestore_v1 import GeoPoint

27 from google.cloud.firestore_v1 import LastUpdateOption

28 from google.cloud.firestore_v1 import Query

29 from google.cloud.firestore_v1 import ReadAfterWriteError

30 from google.cloud.firestore_v1 import SERVER_TIMESTAMP

31 from google.cloud.firestore_v1 import Transaction

32 from google.cloud.firestore_v1 import transactional

33 from google.cloud.firestore_v1 import types

34 from google.cloud.firestore_v1 import Watch

35 from google.cloud.firestore_v1 import WriteBatch

36 from google.cloud.firestore_v1 import WriteOption

37

38

39 __all__ = [

40 "__version__",

41 "Client",

42 "CollectionReference",

43 "DELETE_FIELD",

44 "DocumentReference",

45 "DocumentSnapshot",

46 "enums",

47 "ExistsOption",

48 "GeoPoint",

49 "LastUpdateOption",

50 "Query",

51 "ReadAfterWriteError",

52 "SERVER_TIMESTAMP",

53 "Transaction",

54 "transactional",

55 "types",

56 "Watch",

57 "WriteBatch",

58 "WriteOption",

59 ]

60

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/firestore/google/cloud/firestore.py b/firestore/google/cloud/firestore.py
--- a/firestore/google/cloud/firestore.py
+++ b/firestore/google/cloud/firestore.py
@@ -16,6 +16,8 @@
 
 
 from google.cloud.firestore_v1 import __version__
+from google.cloud.firestore_v1 import ArrayRemove
+from google.cloud.firestore_v1 import ArrayUnion
 from google.cloud.firestore_v1 import Client
 from google.cloud.firestore_v1 import CollectionReference
 from google.cloud.firestore_v1 import DELETE_FIELD
@@ -24,7 +26,10 @@
 from google.cloud.firestore_v1 import enums
 from google.cloud.firestore_v1 import ExistsOption
 from google.cloud.firestore_v1 import GeoPoint
+from google.cloud.firestore_v1 import Increment
 from google.cloud.firestore_v1 import LastUpdateOption
+from google.cloud.firestore_v1 import Maximum
+from google.cloud.firestore_v1 import Minimum
 from google.cloud.firestore_v1 import Query
 from google.cloud.firestore_v1 import ReadAfterWriteError
 from google.cloud.firestore_v1 import SERVER_TIMESTAMP
@@ -38,6 +43,8 @@
 
 __all__ = [
     "__version__",
+    "ArrayRemove",
+    "ArrayUnion",
     "Client",
     "CollectionReference",
     "DELETE_FIELD",
@@ -46,7 +53,10 @@
     "enums",
     "ExistsOption",
     "GeoPoint",
+    "Increment",
     "LastUpdateOption",
+    "Maximum",
+    "Minimum",
     "Query",
     "ReadAfterWriteError",
     "SERVER_TIMESTAMP",

|