| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| string, length 18–22 | string, 1 class | string, 1 class | string, length 13–58 | string, length 1.1k–10.2k | string, length 151–4.94k | string, length 582–21k | int64, 271–2.05k | int64, 47–1.02k |

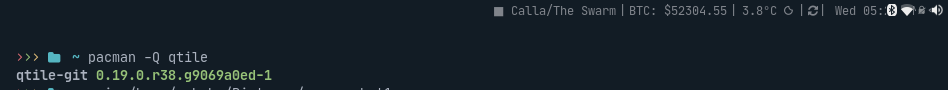

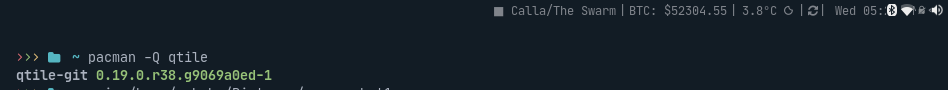

gh_patches_debug_33725

|

rasdani/github-patches

|

git_diff

|

modoboa__modoboa-1859

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Dashboard - server behind proxy

# Impacted versions

* Modoboa: 1.14.0

* Webserver: Nginx

# Steps to reproduce

Modoboa server is behind proxy, so no internet direct access

Acces dashboard via admin account

# Current behavior

504 Gateway Time-out

# Expected behavior

no error

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `modoboa/core/views/dashboard.py`

Content:

```
1 """Core dashboard views."""
2
3 import feedparser
4 import requests
5 from dateutil import parser
6 from requests.exceptions import RequestException
7
8 from django.contrib.auth import mixins as auth_mixins
9 from django.views import generic
10
11 from .. import signals
12
13 MODOBOA_WEBSITE_URL = "https://modoboa.org/"
14
15
16 class DashboardView(auth_mixins.AccessMixin, generic.TemplateView):
17     """Dashboard view."""
18
19     template_name = "core/dashboard.html"
20
21     def dispatch(self, request, *args, **kwargs):
22         """Check if user can access dashboard."""
23         if not request.user.is_authenticated or not request.user.is_admin:
24             return self.handle_no_permission()
25         return super(DashboardView, self).dispatch(request, *args, **kwargs)
26
27     def get_context_data(self, **kwargs):
28         """Add context variables."""
29         context = super(DashboardView, self).get_context_data(**kwargs)
30         context.update({
31             "selection": "dashboard", "widgets": {"left": [], "right": []}
32         })
33         # Fetch latest news
34         if self.request.user.language == "fr":
35             lang = "fr"
36         else:
37             lang = "en"
38         context.update({"selection": "dashboard"})
39
40         feed_url = "{}{}/weblog/feeds/".format(MODOBOA_WEBSITE_URL, lang)
41         if self.request.user.role != "SuperAdmins":
42             custom_feed_url = (
43                 self.request.localconfig.parameters.get_value("rss_feed_url"))
44             if custom_feed_url:
45                 feed_url = custom_feed_url
46         posts = feedparser.parse(feed_url)
47         entries = []
48         for entry in posts["entries"][:5]:
49             entry["published"] = parser.parse(entry["published"])
50             entries.append(entry)
51         context["widgets"]["left"].append("core/_latest_news_widget.html")
52         context.update({"news": entries})
53
54         hide_features_widget = self.request.localconfig.parameters.get_value(
55             "hide_features_widget")
56         if self.request.user.is_superuser or not hide_features_widget:
57             url = "{}{}/api/projects/?featured=true".format(
58                 MODOBOA_WEBSITE_URL, lang)
59             features = []
60             try:
61                 response = requests.get(url)
62             except RequestException:
63                 pass
64             else:
65                 if response.status_code == 200:
66                     features = response.json()
67             context["widgets"]["right"].append("core/_current_features.html")
68             context.update({"features": features})
69
70         # Extra widgets
71         result = signals.extra_admin_dashboard_widgets.send(
72             sender=self.__class__, user=self.request.user)
73         for _receiver, widgets in result:
74             for widget in widgets:
75                 context["widgets"][widget["column"]].append(
76                     widget["template"])
77                 # FIXME: can raise conflicts...
78                 context.update(widget["context"])
79
80         return context
81
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/modoboa/core/views/dashboard.py b/modoboa/core/views/dashboard.py
--- a/modoboa/core/views/dashboard.py
+++ b/modoboa/core/views/dashboard.py
@@ -8,6 +8,8 @@
 from django.contrib.auth import mixins as auth_mixins
 from django.views import generic
 
+from django.conf import settings
+
 from .. import signals
 
 MODOBOA_WEBSITE_URL = "https://modoboa.org/"
@@ -43,11 +45,12 @@
                 self.request.localconfig.parameters.get_value("rss_feed_url"))
             if custom_feed_url:
                 feed_url = custom_feed_url
-        posts = feedparser.parse(feed_url)
         entries = []
-        for entry in posts["entries"][:5]:
-            entry["published"] = parser.parse(entry["published"])
-            entries.append(entry)
+        if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:
+            posts = feedparser.parse(feed_url)
+            for entry in posts["entries"][:5]:
+                entry["published"] = parser.parse(entry["published"])
+                entries.append(entry)
         context["widgets"]["left"].append("core/_latest_news_widget.html")
         context.update({"news": entries})
 
@@ -57,13 +60,14 @@
             url = "{}{}/api/projects/?featured=true".format(
                 MODOBOA_WEBSITE_URL, lang)
             features = []
-            try:
-                response = requests.get(url)
-            except RequestException:
-                pass
-            else:
-                if response.status_code == 200:
-                    features = response.json()
+            if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:
+                try:
+                    response = requests.get(url)
+                except RequestException:
+                    pass
+                else:
+                    if response.status_code == 200:
+                        features = response.json()
             context["widgets"]["right"].append("core/_current_features.html")
             context.update({"features": features})

|

{"golden_diff": "diff --git a/modoboa/core/views/dashboard.py b/modoboa/core/views/dashboard.py\n--- a/modoboa/core/views/dashboard.py\n+++ b/modoboa/core/views/dashboard.py\n@@ -8,6 +8,8 @@\n from django.contrib.auth import mixins as auth_mixins\n from django.views import generic\n \n+from django.conf import settings\n+\n from .. import signals\n \n MODOBOA_WEBSITE_URL = \"https://modoboa.org/\"\n@@ -43,11 +45,12 @@\n self.request.localconfig.parameters.get_value(\"rss_feed_url\"))\n if custom_feed_url:\n feed_url = custom_feed_url\n- posts = feedparser.parse(feed_url)\n entries = []\n- for entry in posts[\"entries\"][:5]:\n- entry[\"published\"] = parser.parse(entry[\"published\"])\n- entries.append(entry)\n+ if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:\n+ posts = feedparser.parse(feed_url)\n+ for entry in posts[\"entries\"][:5]:\n+ entry[\"published\"] = parser.parse(entry[\"published\"])\n+ entries.append(entry)\n context[\"widgets\"][\"left\"].append(\"core/_latest_news_widget.html\")\n context.update({\"news\": entries})\n \n@@ -57,13 +60,14 @@\n url = \"{}{}/api/projects/?featured=true\".format(\n MODOBOA_WEBSITE_URL, lang)\n features = []\n- try:\n- response = requests.get(url)\n- except RequestException:\n- pass\n- else:\n- if response.status_code == 200:\n- features = response.json()\n+ if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:\n+ try:\n+ response = requests.get(url)\n+ except RequestException:\n+ pass\n+ else:\n+ if response.status_code == 200:\n+ features = response.json()\n context[\"widgets\"][\"right\"].append(\"core/_current_features.html\")\n context.update({\"features\": features})\n", "issue": "Dashboard - server behind proxy\n# Impacted versions\r\n\r\n* Modoboa: 1.14.0\r\n* Webserver: Nginx\r\n\r\n# Steps to reproduce\r\nModoboa server is behind proxy, so no internet direct access\r\nAcces dashboard via admin account\r\n\r\n# Current behavior\r\n504 Gateway Time-out\r\n\r\n# Expected behavior\r\nno error\r\n\n", "before_files": 
[{"content": "\"\"\"Core dashboard views.\"\"\"\n\nimport feedparser\nimport requests\nfrom dateutil import parser\nfrom requests.exceptions import RequestException\n\nfrom django.contrib.auth import mixins as auth_mixins\nfrom django.views import generic\n\nfrom .. import signals\n\nMODOBOA_WEBSITE_URL = \"https://modoboa.org/\"\n\n\nclass DashboardView(auth_mixins.AccessMixin, generic.TemplateView):\n \"\"\"Dashboard view.\"\"\"\n\n template_name = \"core/dashboard.html\"\n\n def dispatch(self, request, *args, **kwargs):\n \"\"\"Check if user can access dashboard.\"\"\"\n if not request.user.is_authenticated or not request.user.is_admin:\n return self.handle_no_permission()\n return super(DashboardView, self).dispatch(request, *args, **kwargs)\n\n def get_context_data(self, **kwargs):\n \"\"\"Add context variables.\"\"\"\n context = super(DashboardView, self).get_context_data(**kwargs)\n context.update({\n \"selection\": \"dashboard\", \"widgets\": {\"left\": [], \"right\": []}\n })\n # Fetch latest news\n if self.request.user.language == \"fr\":\n lang = \"fr\"\n else:\n lang = \"en\"\n context.update({\"selection\": \"dashboard\"})\n\n feed_url = \"{}{}/weblog/feeds/\".format(MODOBOA_WEBSITE_URL, lang)\n if self.request.user.role != \"SuperAdmins\":\n custom_feed_url = (\n self.request.localconfig.parameters.get_value(\"rss_feed_url\"))\n if custom_feed_url:\n feed_url = custom_feed_url\n posts = feedparser.parse(feed_url)\n entries = []\n for entry in posts[\"entries\"][:5]:\n entry[\"published\"] = parser.parse(entry[\"published\"])\n entries.append(entry)\n context[\"widgets\"][\"left\"].append(\"core/_latest_news_widget.html\")\n context.update({\"news\": entries})\n\n hide_features_widget = self.request.localconfig.parameters.get_value(\n \"hide_features_widget\")\n if self.request.user.is_superuser or not hide_features_widget:\n url = \"{}{}/api/projects/?featured=true\".format(\n MODOBOA_WEBSITE_URL, lang)\n features = []\n try:\n response = 
requests.get(url)\n except RequestException:\n pass\n else:\n if response.status_code == 200:\n features = response.json()\n context[\"widgets\"][\"right\"].append(\"core/_current_features.html\")\n context.update({\"features\": features})\n\n # Extra widgets\n result = signals.extra_admin_dashboard_widgets.send(\n sender=self.__class__, user=self.request.user)\n for _receiver, widgets in result:\n for widget in widgets:\n context[\"widgets\"][widget[\"column\"]].append(\n widget[\"template\"])\n # FIXME: can raise conflicts...\n context.update(widget[\"context\"])\n\n return context\n", "path": "modoboa/core/views/dashboard.py"}], "after_files": [{"content": "\"\"\"Core dashboard views.\"\"\"\n\nimport feedparser\nimport requests\nfrom dateutil import parser\nfrom requests.exceptions import RequestException\n\nfrom django.contrib.auth import mixins as auth_mixins\nfrom django.views import generic\n\nfrom django.conf import settings\n\nfrom .. import signals\n\nMODOBOA_WEBSITE_URL = \"https://modoboa.org/\"\n\n\nclass DashboardView(auth_mixins.AccessMixin, generic.TemplateView):\n \"\"\"Dashboard view.\"\"\"\n\n template_name = \"core/dashboard.html\"\n\n def dispatch(self, request, *args, **kwargs):\n \"\"\"Check if user can access dashboard.\"\"\"\n if not request.user.is_authenticated or not request.user.is_admin:\n return self.handle_no_permission()\n return super(DashboardView, self).dispatch(request, *args, **kwargs)\n\n def get_context_data(self, **kwargs):\n \"\"\"Add context variables.\"\"\"\n context = super(DashboardView, self).get_context_data(**kwargs)\n context.update({\n \"selection\": \"dashboard\", \"widgets\": {\"left\": [], \"right\": []}\n })\n # Fetch latest news\n if self.request.user.language == \"fr\":\n lang = \"fr\"\n else:\n lang = \"en\"\n context.update({\"selection\": \"dashboard\"})\n\n feed_url = \"{}{}/weblog/feeds/\".format(MODOBOA_WEBSITE_URL, lang)\n if self.request.user.role != \"SuperAdmins\":\n custom_feed_url = (\n 
self.request.localconfig.parameters.get_value(\"rss_feed_url\"))\n if custom_feed_url:\n feed_url = custom_feed_url\n entries = []\n if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:\n posts = feedparser.parse(feed_url)\n for entry in posts[\"entries\"][:5]:\n entry[\"published\"] = parser.parse(entry[\"published\"])\n entries.append(entry)\n context[\"widgets\"][\"left\"].append(\"core/_latest_news_widget.html\")\n context.update({\"news\": entries})\n\n hide_features_widget = self.request.localconfig.parameters.get_value(\n \"hide_features_widget\")\n if self.request.user.is_superuser or not hide_features_widget:\n url = \"{}{}/api/projects/?featured=true\".format(\n MODOBOA_WEBSITE_URL, lang)\n features = []\n if not settings.DISABLE_DASHBOARD_EXTERNAL_QUERIES:\n try:\n response = requests.get(url)\n except RequestException:\n pass\n else:\n if response.status_code == 200:\n features = response.json()\n context[\"widgets\"][\"right\"].append(\"core/_current_features.html\")\n context.update({\"features\": features})\n\n # Extra widgets\n result = signals.extra_admin_dashboard_widgets.send(\n sender=self.__class__, user=self.request.user)\n for _receiver, widgets in result:\n for widget in widgets:\n context[\"widgets\"][widget[\"column\"]].append(\n widget[\"template\"])\n # FIXME: can raise conflicts...\n context.update(widget[\"context\"])\n\n return context\n", "path": "modoboa/core/views/dashboard.py"}]}

| 1,085 | 432 |
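The modoboa patch in this row puts both outbound calls behind a `DISABLE_DASHBOARD_EXTERNAL_QUERIES` setting. A complementary safeguard, which is not part of that patch, is to bound how long the view can wait. Without a timeout, `requests.get` can block indefinitely, and the front proxy then gives up first (the 504 in the report). The sketch below assumes a hypothetical standalone helper `fetch_featured`, which is not a modoboa function. It uses the standard library's `urllib.request` so that it runs without Django. In the dashboard view itself, the equivalent change would be passing `timeout=` to `requests.get`.

```python
import json
import urllib.error
import urllib.request


def fetch_featured(url, disabled=False, timeout=5.0):
    """Hypothetical helper: decoded JSON from url, or [] on any failure."""
    if disabled:
        # Mirrors the DISABLE_DASHBOARD_EXTERNAL_QUERIES switch: no network I/O at all.
        return []
    try:
        # The timeout caps both connect and read waits, so a host without
        # outbound access fails fast instead of stalling the whole page.
        with urllib.request.urlopen(url, timeout=timeout) as response:
            if response.status != 200:
                return []
            return json.loads(response.read().decode("utf-8"))
    except (urllib.error.URLError, OSError, ValueError):
        # URLError/OSError: unreachable host, refused connection or timeout.
        # ValueError: malformed URL or a body that is not valid JSON.
        return []
```

Returning an empty list on every failure keeps the widget template renderable, which matches how the original view already falls back to `features = []`.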

gh_patches_debug_15542

|

rasdani/github-patches

|

git_diff

|

replicate__cog-553

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Dear friend,please tell me why I can't run it from cog example.

I am a newbie.

I run the code from cog examples.

I can run "cog run python",but I can't run following command.

input:

sudo cog predict -i @input.jpg

resluts:

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `python/cog/json.py`

Content:

```
1 from enum import Enum
2 import io
3 from typing import Any
4
5 from pydantic import BaseModel
6
7 from .types import Path
8
9 try:
10     import numpy as np  # type: ignore
11
12     has_numpy = True
13 except ImportError:
14     has_numpy = False
15
16
17 def encode_json(obj: Any, upload_file) -> Any:
18     """
19     Returns a JSON-compatible version of the object. It will encode any Pydantic models and custom types.
20
21     When a file is encountered, it will be passed to upload_file. Any paths will be opened and converted to files.
22
23     Somewhat based on FastAPI's jsonable_encoder().
24     """
25     if isinstance(obj, BaseModel):
26         return encode_json(obj.dict(exclude_unset=True), upload_file)
27     if isinstance(obj, dict):
28         return {key: encode_json(value, upload_file) for key, value in obj.items()}
29     if isinstance(obj, list):
30         return [encode_json(value, upload_file) for value in obj]
31     if isinstance(obj, Enum):
32         return obj.value
33     if isinstance(obj, Path):
34         with obj.open("rb") as f:
35             return upload_file(f)
36     if isinstance(obj, io.IOBase):
37         return upload_file(obj)
38     if has_numpy:
39         if isinstance(obj, np.integer):
40             return int(obj)
41         if isinstance(obj, np.floating):
42             return float(obj)
43         if isinstance(obj, np.ndarray):
44             return obj.tolist()
45     return obj
46
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/python/cog/json.py b/python/cog/json.py
--- a/python/cog/json.py
+++ b/python/cog/json.py
@@ -1,5 +1,6 @@
 from enum import Enum
 import io
+from types import GeneratorType
 from typing import Any
 
 from pydantic import BaseModel
@@ -26,7 +27,7 @@
         return encode_json(obj.dict(exclude_unset=True), upload_file)
     if isinstance(obj, dict):
         return {key: encode_json(value, upload_file) for key, value in obj.items()}
-    if isinstance(obj, list):
+    if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):
         return [encode_json(value, upload_file) for value in obj]
     if isinstance(obj, Enum):
         return obj.value

|

{"golden_diff": "diff --git a/python/cog/json.py b/python/cog/json.py\n--- a/python/cog/json.py\n+++ b/python/cog/json.py\n@@ -1,5 +1,6 @@\n from enum import Enum\n import io\n+from types import GeneratorType\n from typing import Any\n \n from pydantic import BaseModel\n@@ -26,7 +27,7 @@\n return encode_json(obj.dict(exclude_unset=True), upload_file)\n if isinstance(obj, dict):\n return {key: encode_json(value, upload_file) for key, value in obj.items()}\n- if isinstance(obj, list):\n+ if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):\n return [encode_json(value, upload_file) for value in obj]\n if isinstance(obj, Enum):\n return obj.value\n", "issue": "Dear friend,please tell me why I can't run it from cog example.\nI am a newbie.\r\nI run the code from cog examples.\r\nI can run \"cog run python\",but I can't run following command.\r\ninput:\r\nsudo cog predict -i @input.jpg\r\nresluts:\r\n\r\n\n", "before_files": [{"content": "from enum import Enum\nimport io\nfrom typing import Any\n\nfrom pydantic import BaseModel\n\nfrom .types import Path\n\ntry:\n import numpy as np # type: ignore\n\n has_numpy = True\nexcept ImportError:\n has_numpy = False\n\n\ndef encode_json(obj: Any, upload_file) -> Any:\n \"\"\"\n Returns a JSON-compatible version of the object. It will encode any Pydantic models and custom types.\n\n When a file is encountered, it will be passed to upload_file. 
Any paths will be opened and converted to files.\n\n Somewhat based on FastAPI's jsonable_encoder().\n \"\"\"\n if isinstance(obj, BaseModel):\n return encode_json(obj.dict(exclude_unset=True), upload_file)\n if isinstance(obj, dict):\n return {key: encode_json(value, upload_file) for key, value in obj.items()}\n if isinstance(obj, list):\n return [encode_json(value, upload_file) for value in obj]\n if isinstance(obj, Enum):\n return obj.value\n if isinstance(obj, Path):\n with obj.open(\"rb\") as f:\n return upload_file(f)\n if isinstance(obj, io.IOBase):\n return upload_file(obj)\n if has_numpy:\n if isinstance(obj, np.integer):\n return int(obj)\n if isinstance(obj, np.floating):\n return float(obj)\n if isinstance(obj, np.ndarray):\n return obj.tolist()\n return obj\n", "path": "python/cog/json.py"}], "after_files": [{"content": "from enum import Enum\nimport io\nfrom types import GeneratorType\nfrom typing import Any\n\nfrom pydantic import BaseModel\n\nfrom .types import Path\n\ntry:\n import numpy as np # type: ignore\n\n has_numpy = True\nexcept ImportError:\n has_numpy = False\n\n\ndef encode_json(obj: Any, upload_file) -> Any:\n \"\"\"\n Returns a JSON-compatible version of the object. It will encode any Pydantic models and custom types.\n\n When a file is encountered, it will be passed to upload_file. 
Any paths will be opened and converted to files.\n\n Somewhat based on FastAPI's jsonable_encoder().\n \"\"\"\n if isinstance(obj, BaseModel):\n return encode_json(obj.dict(exclude_unset=True), upload_file)\n if isinstance(obj, dict):\n return {key: encode_json(value, upload_file) for key, value in obj.items()}\n if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):\n return [encode_json(value, upload_file) for value in obj]\n if isinstance(obj, Enum):\n return obj.value\n if isinstance(obj, Path):\n with obj.open(\"rb\") as f:\n return upload_file(f)\n if isinstance(obj, io.IOBase):\n return upload_file(obj)\n if has_numpy:\n if isinstance(obj, np.integer):\n return int(obj)\n if isinstance(obj, np.floating):\n return float(obj)\n if isinstance(obj, np.ndarray):\n return obj.tolist()\n return obj\n", "path": "python/cog/json.py"}]}

| 775 | 177 |
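The issue text in this row shows no actual output, so the report alone does not pin down the failure. What the cog patch does change is the list branch of `encode_json`. Before the patch, only `list` values were recursed into. A tuple, set or generator was returned untouched, so any `Enum` or `Path` inside it reached the JSON layer unencoded, and a generator is not JSON-serializable at all. The sketch below is a stripped-down, hypothetical `encode_sequences` that keeps only the container and `Enum` branches. It is not cog's function, and `Color` is an illustrative enum.

```python
import json
from enum import Enum
from types import GeneratorType
from typing import Any


class Color(Enum):
    RED = "red"


def encode_sequences(obj: Any) -> Any:
    """Reduced encoder: recurse into containers, unwrap Enum members."""
    if isinstance(obj, dict):
        return {key: encode_sequences(value) for key, value in obj.items()}
    # The patched branch: every iterable container becomes a list, and its
    # items are encoded recursively (sets come out in iteration order).
    if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):
        return [encode_sequences(value) for value in obj]
    if isinstance(obj, Enum):
        return obj.value
    return obj


if __name__ == "__main__":
    # With the list-only branch, this tuple would pass through unchanged and
    # json.dumps would raise TypeError on the Color member inside it.
    print(json.dumps(encode_sequences((1, Color.RED))))
```

Because generators are consumed by the list comprehension, a predictor that yields outputs gets materialized into a plain JSON array.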

gh_patches_debug_12833

|

rasdani/github-patches

|

git_diff

|

mindee__doctr-219

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Demo app error when analyzing my first document

## 🐛 Bug

I tried to analyze a PNG and a PDF, got the same error. I try to change the model, didn't change anything.

## To Reproduce

Steps to reproduce the behavior:

1. Upload a PNG

2. Click on analyze document

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

```
KeyError: 0
Traceback:
File "/Users/thibautmorla/opt/anaconda3/lib/python3.8/site-packages/streamlit/script_runner.py", line 337, in _run_script
    exec(code, module.__dict__)
File "/Users/thibautmorla/Downloads/doctr/demo/app.py", line 93, in <module>
    main()
File "/Users/thibautmorla/Downloads/doctr/demo/app.py", line 77, in main
    seg_map = predictor.det_predictor.model(processed_batches[0])[0]
```

## Additional context

First image upload

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `demo/app.py`

Content:

```
1 # Copyright (C) 2021, Mindee.
2
3 # This program is licensed under the Apache License version 2.
4 # See LICENSE or go to <https://www.apache.org/licenses/LICENSE-2.0.txt> for full license details.
5
6 import os
7 import streamlit as st
8 import matplotlib.pyplot as plt
9
10 os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"
11
12 import tensorflow as tf
13 import cv2
14
15 gpu_devices = tf.config.experimental.list_physical_devices('GPU')
16 if any(gpu_devices):
17     tf.config.experimental.set_memory_growth(gpu_devices[0], True)
18
19 from doctr.documents import DocumentFile
20 from doctr.models import ocr_predictor
21 from doctr.utils.visualization import visualize_page
22
23 DET_ARCHS = ["db_resnet50"]
24 RECO_ARCHS = ["crnn_vgg16_bn", "crnn_resnet31", "sar_vgg16_bn", "sar_resnet31"]
25
26
27 def main():
28
29     # Wide mode
30     st.set_page_config(layout="wide")
31
32     # Designing the interface
33     st.title("DocTR: Document Text Recognition")
34     # For newline
35     st.write('\n')
36     # Set the columns
37     cols = st.beta_columns((1, 1, 1))
38     cols[0].header("Input document")
39     cols[1].header("Text segmentation")
40     cols[-1].header("OCR output")
41
42     # Sidebar
43     # File selection
44     st.sidebar.title("Document selection")
45     # Disabling warning
46     st.set_option('deprecation.showfileUploaderEncoding', False)
47     # Choose your own image
48     uploaded_file = st.sidebar.file_uploader("Upload files", type=['pdf', 'png', 'jpeg', 'jpg'])
49     if uploaded_file is not None:
50         if uploaded_file.name.endswith('.pdf'):
51             doc = DocumentFile.from_pdf(uploaded_file.read())
52         else:
53             doc = DocumentFile.from_images(uploaded_file.read())
54         cols[0].image(doc[0], "First page", use_column_width=True)
55
56     # Model selection
57     st.sidebar.title("Model selection")
58     det_arch = st.sidebar.selectbox("Text detection model", DET_ARCHS)
59     reco_arch = st.sidebar.selectbox("Text recognition model", RECO_ARCHS)
60
61     # For newline
62     st.sidebar.write('\n')
63
64     if st.sidebar.button("Analyze document"):
65
66         if uploaded_file is None:
67             st.sidebar.write("Please upload a document")
68
69         else:
70             with st.spinner('Loading model...'):
71                 predictor = ocr_predictor(det_arch, reco_arch, pretrained=True)
72
73             with st.spinner('Analyzing...'):
74
75                 # Forward the image to the model
76                 processed_batches = predictor.det_predictor.pre_processor(doc)
77                 seg_map = predictor.det_predictor.model(processed_batches[0])[0]
78                 seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),
79                                      interpolation=cv2.INTER_LINEAR)
80                 # Plot the raw heatmap
81                 fig, ax = plt.subplots()
82                 ax.imshow(seg_map)
83                 ax.axis('off')
84                 cols[1].pyplot(fig)
85
86                 # OCR
87                 out = predictor(doc)
88                 fig = visualize_page(out.pages[0].export(), doc[0], interactive=False)
89                 cols[-1].pyplot(fig)
90
91
92 if __name__ == '__main__':
93     main()
94
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/demo/app.py b/demo/app.py
--- a/demo/app.py
+++ b/demo/app.py
@@ -74,7 +74,8 @@
 
                 # Forward the image to the model
                 processed_batches = predictor.det_predictor.pre_processor(doc)
-                seg_map = predictor.det_predictor.model(processed_batches[0])[0]
+                seg_map = predictor.det_predictor.model(processed_batches[0])["proba_map"]
+                seg_map = tf.squeeze(seg_map, axis=[0, 3])
                 seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),
                                      interpolation=cv2.INTER_LINEAR)
                 # Plot the raw heatmap

|

{"golden_diff": "diff --git a/demo/app.py b/demo/app.py\n--- a/demo/app.py\n+++ b/demo/app.py\n@@ -74,7 +74,8 @@\n \n # Forward the image to the model\n processed_batches = predictor.det_predictor.pre_processor(doc)\n- seg_map = predictor.det_predictor.model(processed_batches[0])[0]\n+ seg_map = predictor.det_predictor.model(processed_batches[0])[\"proba_map\"]\n+ seg_map = tf.squeeze(seg_map, axis=[0, 3])\n seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),\n interpolation=cv2.INTER_LINEAR)\n # Plot the raw heatmap\n", "issue": "Demo app error when analyzing my first document\n## \ud83d\udc1b Bug\r\n\r\nI tried to analyze a PNG and a PDF, got the same error. I try to change the model, didn't change anything.\r\n\r\n## To Reproduce\r\n\r\nSteps to reproduce the behavior:\r\n\r\n1. Upload a PNG\r\n2. Click on analyze document\r\n\r\n\r\n<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->\r\n```\r\nKeyError: 0\r\nTraceback:\r\nFile \"/Users/thibautmorla/opt/anaconda3/lib/python3.8/site-packages/streamlit/script_runner.py\", line 337, in _run_script\r\n exec(code, module.__dict__)\r\nFile \"/Users/thibautmorla/Downloads/doctr/demo/app.py\", line 93, in <module>\r\n main()\r\nFile \"/Users/thibautmorla/Downloads/doctr/demo/app.py\", line 77, in main\r\n seg_map = predictor.det_predictor.model(processed_batches[0])[0]\r\n```\r\n\r\n\r\n## Additional context\r\n\r\nFirst image upload\n", "before_files": [{"content": "# Copyright (C) 2021, Mindee.\n\n# This program is licensed under the Apache License version 2.\n# See LICENSE or go to <https://www.apache.org/licenses/LICENSE-2.0.txt> for full license details.\n\nimport os\nimport streamlit as st\nimport matplotlib.pyplot as plt\n\nos.environ[\"TF_CPP_MIN_LOG_LEVEL\"] = \"2\"\n\nimport tensorflow as tf\nimport cv2\n\ngpu_devices = tf.config.experimental.list_physical_devices('GPU')\nif any(gpu_devices):\n 
tf.config.experimental.set_memory_growth(gpu_devices[0], True)\n\nfrom doctr.documents import DocumentFile\nfrom doctr.models import ocr_predictor\nfrom doctr.utils.visualization import visualize_page\n\nDET_ARCHS = [\"db_resnet50\"]\nRECO_ARCHS = [\"crnn_vgg16_bn\", \"crnn_resnet31\", \"sar_vgg16_bn\", \"sar_resnet31\"]\n\n\ndef main():\n\n # Wide mode\n st.set_page_config(layout=\"wide\")\n\n # Designing the interface\n st.title(\"DocTR: Document Text Recognition\")\n # For newline\n st.write('\\n')\n # Set the columns\n cols = st.beta_columns((1, 1, 1))\n cols[0].header(\"Input document\")\n cols[1].header(\"Text segmentation\")\n cols[-1].header(\"OCR output\")\n\n # Sidebar\n # File selection\n st.sidebar.title(\"Document selection\")\n # Disabling warning\n st.set_option('deprecation.showfileUploaderEncoding', False)\n # Choose your own image\n uploaded_file = st.sidebar.file_uploader(\"Upload files\", type=['pdf', 'png', 'jpeg', 'jpg'])\n if uploaded_file is not None:\n if uploaded_file.name.endswith('.pdf'):\n doc = DocumentFile.from_pdf(uploaded_file.read())\n else:\n doc = DocumentFile.from_images(uploaded_file.read())\n cols[0].image(doc[0], \"First page\", use_column_width=True)\n\n # Model selection\n st.sidebar.title(\"Model selection\")\n det_arch = st.sidebar.selectbox(\"Text detection model\", DET_ARCHS)\n reco_arch = st.sidebar.selectbox(\"Text recognition model\", RECO_ARCHS)\n\n # For newline\n st.sidebar.write('\\n')\n\n if st.sidebar.button(\"Analyze document\"):\n\n if uploaded_file is None:\n st.sidebar.write(\"Please upload a document\")\n\n else:\n with st.spinner('Loading model...'):\n predictor = ocr_predictor(det_arch, reco_arch, pretrained=True)\n\n with st.spinner('Analyzing...'):\n\n # Forward the image to the model\n processed_batches = predictor.det_predictor.pre_processor(doc)\n seg_map = predictor.det_predictor.model(processed_batches[0])[0]\n seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),\n 
interpolation=cv2.INTER_LINEAR)\n # Plot the raw heatmap\n fig, ax = plt.subplots()\n ax.imshow(seg_map)\n ax.axis('off')\n cols[1].pyplot(fig)\n\n # OCR\n out = predictor(doc)\n fig = visualize_page(out.pages[0].export(), doc[0], interactive=False)\n cols[-1].pyplot(fig)\n\n\nif __name__ == '__main__':\n main()\n", "path": "demo/app.py"}], "after_files": [{"content": "# Copyright (C) 2021, Mindee.\n\n# This program is licensed under the Apache License version 2.\n# See LICENSE or go to <https://www.apache.org/licenses/LICENSE-2.0.txt> for full license details.\n\nimport os\nimport streamlit as st\nimport matplotlib.pyplot as plt\n\nos.environ[\"TF_CPP_MIN_LOG_LEVEL\"] = \"2\"\n\nimport tensorflow as tf\nimport cv2\n\ngpu_devices = tf.config.experimental.list_physical_devices('GPU')\nif any(gpu_devices):\n tf.config.experimental.set_memory_growth(gpu_devices[0], True)\n\nfrom doctr.documents import DocumentFile\nfrom doctr.models import ocr_predictor\nfrom doctr.utils.visualization import visualize_page\n\nDET_ARCHS = [\"db_resnet50\"]\nRECO_ARCHS = [\"crnn_vgg16_bn\", \"crnn_resnet31\", \"sar_vgg16_bn\", \"sar_resnet31\"]\n\n\ndef main():\n\n # Wide mode\n st.set_page_config(layout=\"wide\")\n\n # Designing the interface\n st.title(\"DocTR: Document Text Recognition\")\n # For newline\n st.write('\\n')\n # Set the columns\n cols = st.beta_columns((1, 1, 1))\n cols[0].header(\"Input document\")\n cols[1].header(\"Text segmentation\")\n cols[-1].header(\"OCR output\")\n\n # Sidebar\n # File selection\n st.sidebar.title(\"Document selection\")\n # Disabling warning\n st.set_option('deprecation.showfileUploaderEncoding', False)\n # Choose your own image\n uploaded_file = st.sidebar.file_uploader(\"Upload files\", type=['pdf', 'png', 'jpeg', 'jpg'])\n if uploaded_file is not None:\n if uploaded_file.name.endswith('.pdf'):\n doc = DocumentFile.from_pdf(uploaded_file.read())\n else:\n doc = DocumentFile.from_images(uploaded_file.read())\n cols[0].image(doc[0], \"First 
page\", use_column_width=True)\n\n # Model selection\n st.sidebar.title(\"Model selection\")\n det_arch = st.sidebar.selectbox(\"Text detection model\", DET_ARCHS)\n reco_arch = st.sidebar.selectbox(\"Text recognition model\", RECO_ARCHS)\n\n # For newline\n st.sidebar.write('\\n')\n\n if st.sidebar.button(\"Analyze document\"):\n\n if uploaded_file is None:\n st.sidebar.write(\"Please upload a document\")\n\n else:\n with st.spinner('Loading model...'):\n predictor = ocr_predictor(det_arch, reco_arch, pretrained=True)\n\n with st.spinner('Analyzing...'):\n\n # Forward the image to the model\n processed_batches = predictor.det_predictor.pre_processor(doc)\n seg_map = predictor.det_predictor.model(processed_batches[0])[\"proba_map\"]\n seg_map = tf.squeeze(seg_map, axis=[0, 3])\n seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),\n interpolation=cv2.INTER_LINEAR)\n # Plot the raw heatmap\n fig, ax = plt.subplots()\n ax.imshow(seg_map)\n ax.axis('off')\n cols[1].pyplot(fig)\n\n # OCR\n out = predictor(doc)\n fig = visualize_page(out.pages[0].export(), doc[0], interactive=False)\n cols[-1].pyplot(fig)\n\n\nif __name__ == '__main__':\n main()\n", "path": "demo/app.py"}]}

| 1,387 | 156 |

gh_patches_debug_3507

|

rasdani/github-patches

|

git_diff

|

jazzband__pip-tools-1039

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

setup.py install_requires should have `"click>=7"` not `"click>=6"`

Thank you for all the work on this tool, it's very useful.

Issue:

As of 4.4.0 pip-tools now depends on version 7.0 of click, not 6.0.

The argument `show_envvar` is now being passed to `click.option()`

https://github.com/jazzband/pip-tools/compare/4.3.0...4.4.0#diff-c8673e93c598354ab4a9aa8dd090e913R183

That argument was added in click 7.0

https://click.palletsprojects.com/en/7.x/api/#click.Option

compared to

https://click.palletsprojects.com/en/6.x/api/#click.Option

Fix: setup.py install_requires should have `"click>=7"` not `"click>=6"`

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 """
2 pip-tools keeps your pinned dependencies fresh.
3 """
4 from os.path import abspath, dirname, join
5
6 from setuptools import find_packages, setup
7
8
9 def read_file(filename):
10     """Read the contents of a file located relative to setup.py"""
11     with open(join(abspath(dirname(__file__)), filename)) as thefile:
12         return thefile.read()
13
14
15 setup(
16     name="pip-tools",
17     use_scm_version=True,
18     url="https://github.com/jazzband/pip-tools/",
19     license="BSD",
20     author="Vincent Driessen",
21     author_email="[email protected]",
22     description=__doc__.strip(),
23     long_description=read_file("README.rst"),
24     long_description_content_type="text/x-rst",
25     packages=find_packages(exclude=["tests"]),
26     package_data={},
27     python_requires=">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*",
28     setup_requires=["setuptools_scm"],
29     install_requires=["click>=6", "six"],
30     zip_safe=False,
31     entry_points={
32         "console_scripts": [
33             "pip-compile = piptools.scripts.compile:cli",
34             "pip-sync = piptools.scripts.sync:cli",
35         ]
36     },
37     platforms="any",
38     classifiers=[
39         "Development Status :: 5 - Production/Stable",
40         "Intended Audience :: Developers",
41         "Intended Audience :: System Administrators",
42         "License :: OSI Approved :: BSD License",
43         "Operating System :: OS Independent",
44         "Programming Language :: Python",
45         "Programming Language :: Python :: 2",
46         "Programming Language :: Python :: 2.7",
47         "Programming Language :: Python :: 3",
48         "Programming Language :: Python :: 3.5",
49         "Programming Language :: Python :: 3.6",
50         "Programming Language :: Python :: 3.7",
51         "Programming Language :: Python :: 3.8",
52         "Programming Language :: Python :: Implementation :: CPython",
53         "Programming Language :: Python :: Implementation :: PyPy",
54         "Topic :: System :: Systems Administration",
55     ],
56 )
57
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -26,7 +26,7 @@
     package_data={},
     python_requires=">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*",
     setup_requires=["setuptools_scm"],
-    install_requires=["click>=6", "six"],
+    install_requires=["click>=7", "six"],
     zip_safe=False,
     entry_points={
         "console_scripts": [

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -26,7 +26,7 @@\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n- install_requires=[\"click>=6\", \"six\"],\n+ install_requires=[\"click>=7\", \"six\"],\n zip_safe=False,\n entry_points={\n \"console_scripts\": [\n", "issue": "setup.py install_requires should have `\"click>=7\"` not `\"click>=6\"`\nThank you for all the work on this tool, it's very useful.\r\n\r\nIssue:\r\nAs of 4.4.0 pip-tools now depends on version 7.0 of click, not 6.0.\r\n\r\nThe argument `show_envvar` is now being passed to `click.option()`\r\nhttps://github.com/jazzband/pip-tools/compare/4.3.0...4.4.0#diff-c8673e93c598354ab4a9aa8dd090e913R183\r\n\r\nThat argument was added in click 7.0\r\nhttps://click.palletsprojects.com/en/7.x/api/#click.Option\r\ncompared to \r\nhttps://click.palletsprojects.com/en/6.x/api/#click.Option\r\n\r\nFix: setup.py install_requires should have `\"click>=7\"` not `\"click>=6\"`\n", "before_files": [{"content": "\"\"\"\npip-tools keeps your pinned dependencies fresh.\n\"\"\"\nfrom os.path import abspath, dirname, join\n\nfrom setuptools import find_packages, setup\n\n\ndef read_file(filename):\n \"\"\"Read the contents of a file located relative to setup.py\"\"\"\n with open(join(abspath(dirname(__file__)), filename)) as thefile:\n return thefile.read()\n\n\nsetup(\n name=\"pip-tools\",\n use_scm_version=True,\n url=\"https://github.com/jazzband/pip-tools/\",\n license=\"BSD\",\n author=\"Vincent Driessen\",\n author_email=\"[email protected]\",\n description=__doc__.strip(),\n long_description=read_file(\"README.rst\"),\n long_description_content_type=\"text/x-rst\",\n packages=find_packages(exclude=[\"tests\"]),\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n install_requires=[\"click>=6\", \"six\"],\n 
zip_safe=False,\n entry_points={\n \"console_scripts\": [\n \"pip-compile = piptools.scripts.compile:cli\",\n \"pip-sync = piptools.scripts.sync:cli\",\n ]\n },\n platforms=\"any\",\n classifiers=[\n \"Development Status :: 5 - Production/Stable\",\n \"Intended Audience :: Developers\",\n \"Intended Audience :: System Administrators\",\n \"License :: OSI Approved :: BSD License\",\n \"Operating System :: OS Independent\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Topic :: System :: Systems Administration\",\n ],\n)\n", "path": "setup.py"}], "after_files": [{"content": "\"\"\"\npip-tools keeps your pinned dependencies fresh.\n\"\"\"\nfrom os.path import abspath, dirname, join\n\nfrom setuptools import find_packages, setup\n\n\ndef read_file(filename):\n \"\"\"Read the contents of a file located relative to setup.py\"\"\"\n with open(join(abspath(dirname(__file__)), filename)) as thefile:\n return thefile.read()\n\n\nsetup(\n name=\"pip-tools\",\n use_scm_version=True,\n url=\"https://github.com/jazzband/pip-tools/\",\n license=\"BSD\",\n author=\"Vincent Driessen\",\n author_email=\"[email protected]\",\n description=__doc__.strip(),\n long_description=read_file(\"README.rst\"),\n long_description_content_type=\"text/x-rst\",\n packages=find_packages(exclude=[\"tests\"]),\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n install_requires=[\"click>=7\", \"six\"],\n zip_safe=False,\n entry_points={\n \"console_scripts\": [\n \"pip-compile = 
piptools.scripts.compile:cli\",\n \"pip-sync = piptools.scripts.sync:cli\",\n ]\n },\n platforms=\"any\",\n classifiers=[\n \"Development Status :: 5 - Production/Stable\",\n \"Intended Audience :: Developers\",\n \"Intended Audience :: System Administrators\",\n \"License :: OSI Approved :: BSD License\",\n \"Operating System :: OS Independent\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Topic :: System :: Systems Administration\",\n ],\n)\n", "path": "setup.py"}]}

| 1,020 | 120 |
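The pip-tools row turns on a dependency floor. `show_envvar` was added to `click.Option` in click 7.0, so `click>=6` still admits click 6.x releases that cannot run pip-tools 4.4.0. Below is a rough, stdlib-only sketch of a release-floor comparison. The helpers `parse_release` and `satisfies_floor` are hypothetical, not part of pip-tools or setuptools, and the sketch is deliberately simpler than PEP 440. Real tooling should use `packaging.version.Version` instead.

```python
def parse_release(version: str) -> tuple:
    """Return the leading numeric release components, e.g. '7.1.2' -> (7, 1, 2).

    Simplified on purpose: parsing stops at the first non-numeric component,
    so a pre-release such as '8.0.0rc1' is truncated to (8, 0).
    This is NOT a full PEP 440 parser.
    """
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def satisfies_floor(installed: str, floor: str) -> bool:
    """True if `installed` is at least `floor` (e.g. the '7' in 'click>=7')."""
    a, b = parse_release(installed), parse_release(floor)
    # Pad with zeros so that '7' and '7.0.0' compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


print(satisfies_floor("6.7", "6"))  # True: the old floor admits click 6.7
print(satisfies_floor("6.7", "7"))  # False: the corrected floor rejects it
```

Zero-padding is the one subtle part. Without it, plain tuple comparison would rank `(7,)` below `(7, 0, 0)`.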

gh_patches_debug_5040

|

rasdani/github-patches

|

git_diff

|

pymodbus-dev__pymodbus-1355

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

pymodbus.simulator fails with no running event loop

### Versions

* Python: 3.9.2

* OS: Debian Bullseye

* Pymodbus: 3.1.3 latest dev branch

* Modbus Hardware (if used):

### Pymodbus Specific

* Server: tcp - sync/async

* Client: tcp - sync/async

### Description

Executing pymodbus.simulator from the commandline results in the following error:

```
$ pymodbus.simulator
10:39:28 INFO logging:74 Start simulator
Traceback (most recent call last):
  File "/usr/local/bin/pymodbus.simulator", line 33, in <module>
    sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())
  File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py", line 112, in main
    task = ModbusSimulatorServer(**cmd_args)
  File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py", line 134, in __init__
    server["loop"] = asyncio.get_running_loop()
RuntimeError: no running event loop
```

NOTE: I am running this from the pymodbus/server/simulator/ folder, so it picks up the example [setup.json](https://github.com/pymodbus-dev/pymodbus/blob/dev/pymodbus/server/simulator/setup.json) file.

Manually specifying available options from the commandline results in the same error as well:

```
$ pymodbus.simulator \
    --http_host 0.0.0.0 \
    --http_port 8080 \
    --modbus_server server \
    --modbus_device device \
    --json_file ~/git/pymodbus/pymodbus/server/simulator/setup.json

11:24:07 INFO logging:74 Start simulator
Traceback (most recent call last):
  File "/usr/local/bin/pymodbus.simulator", line 33, in <module>
    sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())
  File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py", line 112, in main
    task = ModbusSimulatorServer(**cmd_args)
  File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py", line 134, in __init__
    server["loop"] = asyncio.get_running_loop()
RuntimeError: no running event loop
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pymodbus/server/simulator/main.py`

Content:

```
1 #!/usr/bin/env python3
2 """HTTP server for modbus simulator.
3
4 The modbus simulator contain 3 distint parts:
5
6 - Datastore simulator, to define registers and their behaviour including actions: (simulator)(../../datastore/simulator.py)
7 - Modbus server: (server)(./http_server.py)
8 - HTTP server with REST API and web pages providing an online console in your browser
9
10 Multiple setups for different server types and/or devices are prepared in a (json file)(./setup.json), the detailed configuration is explained in (doc)(README.md)
11
12 The command line parameters are kept to a minimum:
13
14 usage: main.py [-h] [--modbus_server MODBUS_SERVER]
15                [--modbus_device MODBUS_DEVICE] [--http_host HTTP_HOST]
16                [--http_port HTTP_PORT]
17                [--log {critical,error,warning,info,debug}]
18                [--json_file JSON_FILE]
19                [--custom_actions_module CUSTOM_ACTIONS_MODULE]
20
21 Modbus server with REST-API and web server
22
23 options:
24   -h, --help            show this help message and exit
25   --modbus_server MODBUS_SERVER
26                         use <modbus_server> from server_list in json file
27   --modbus_device MODBUS_DEVICE
28                         use <modbus_device> from device_list in json file
29   --http_host HTTP_HOST
30                         use <http_host> as host to bind http listen
31   --http_port HTTP_PORT
32                         use <http_port> as port to bind http listen
33   --log {critical,error,warning,info,debug}
34                         set log level, default is info
35   --log_file LOG_FILE
36                         name of server log file, default is "server.log"
37   --json_file JSON_FILE
38                         name of json_file, default is "setup.json"
39   --custom_actions_module CUSTOM_ACTIONS_MODULE
40                         python file with custom actions, default is none
41 """
42 import argparse
43 import asyncio
44
45 from pymodbus import pymodbus_apply_logging_config
46 from pymodbus.logging import Log
47 from pymodbus.server.simulator.http_server import ModbusSimulatorServer
48
49
50 def get_commandline():
51     """Get command line arguments."""
52     parser = argparse.ArgumentParser(
53         description="Modbus server with REST-API and web server"
54     )
55     parser.add_argument(
56         "--modbus_server",
57         help="use <modbus_server> from server_list in json file",
58         type=str,
59     )
60     parser.add_argument(
61         "--modbus_device",
62         help="use <modbus_device> from device_list in json file",
63         type=str,
64     )
65     parser.add_argument(
66         "--http_host",
67         help="use <http_host> as host to bind http listen",
68         type=str,
69     )
70     parser.add_argument(
71         "--http_port",
72         help="use <http_port> as port to bind http listen",
73         type=str,
74     )
75     parser.add_argument(
76         "--log",
77         choices=["critical", "error", "warning", "info", "debug"],
78         help="set log level, default is info",
79         default="info",
80         type=str,
81     )
82     parser.add_argument(
83         "--json_file",
84         help='name of json file, default is "setup.json"',
85         type=str,
86     )
87     parser.add_argument(
88         "--log_file",
89         help='name of server log file, default is "server.log"',
90         type=str,
91     )
92     parser.add_argument(
93         "--custom_actions_module",
94         help="python file with custom actions, default is none",
95         type=str,
96     )
97     args = parser.parse_args()
98     pymodbus_apply_logging_config(args.log.upper())
99     Log.info("Start simulator")
100     cmd_args = {}
101     for argument in args.__dict__:
102         if argument == "log":
103             continue
104         if args.__dict__[argument] is not None:
105             cmd_args[argument] = args.__dict__[argument]
106     return cmd_args
107
108
109 def main():
110     """Run server."""
111     cmd_args = get_commandline()
112     task = ModbusSimulatorServer(**cmd_args)
113     asyncio.run(task.run_forever(), debug=True)
114
115
116 if __name__ == "__main__":
117     main()
118
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/pymodbus/server/simulator/main.py b/pymodbus/server/simulator/main.py
--- a/pymodbus/server/simulator/main.py
+++ b/pymodbus/server/simulator/main.py
@@ -106,11 +106,16 @@
     return cmd_args
 
 
-def main():
-    """Run server."""
+async def run_main():
+    """Run server async."""
     cmd_args = get_commandline()
     task = ModbusSimulatorServer(**cmd_args)
-    asyncio.run(task.run_forever(), debug=True)
+    await task.run_forever()
+
+
+def main():
+    """Run server."""
+    asyncio.run(run_main(), debug=True)
 
 
 if __name__ == "__main__":

|

{"golden_diff": "diff --git a/pymodbus/server/simulator/main.py b/pymodbus/server/simulator/main.py\n--- a/pymodbus/server/simulator/main.py\n+++ b/pymodbus/server/simulator/main.py\n@@ -106,11 +106,16 @@\n return cmd_args\n \n \n-def main():\n- \"\"\"Run server.\"\"\"\n+async def run_main():\n+ \"\"\"Run server async.\"\"\"\n cmd_args = get_commandline()\n task = ModbusSimulatorServer(**cmd_args)\n- asyncio.run(task.run_forever(), debug=True)\n+ await task.run_forever()\n+\n+\n+def main():\n+ \"\"\"Run server.\"\"\"\n+ asyncio.run(run_main(), debug=True)\n \n \n if __name__ == \"__main__\":\n", "issue": "pymodbus.simulator fails with no running event loop\n### Versions\r\n\r\n* Python: 3.9.2\r\n* OS: Debian Bullseye\r\n* Pymodbus: 3.1.3 latest dev branch\r\n* Modbus Hardware (if used):\r\n\r\n### Pymodbus Specific\r\n* Server: tcp - sync/async\r\n* Client: tcp - sync/async\r\n\r\n### Description\r\n\r\nExecuting pymodbus.simulator from the commandline results in the following error:\r\n\r\n```\r\n$ pymodbus.simulator\r\n10:39:28 INFO logging:74 Start simulator\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/pymodbus.simulator\", line 33, in <module>\r\n sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py\", line 112, in main\r\n task = ModbusSimulatorServer(**cmd_args)\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py\", line 134, in __init__\r\n server[\"loop\"] = asyncio.get_running_loop()\r\nRuntimeError: no running event loop\r\n```\r\nNOTE: I am running this from the pymodbus/server/simulator/ folder, so it picks up the example [setup.json](https://github.com/pymodbus-dev/pymodbus/blob/dev/pymodbus/server/simulator/setup.json) file.\r\n\r\nManually specifying available options from the commandline results in the same error as well:\r\n```\r\n$ pymodbus.simulator \\\r\n --http_host 
0.0.0.0 \\\r\n --http_port 8080 \\\r\n --modbus_server server \\\r\n --modbus_device device \\\r\n --json_file ~/git/pymodbus/pymodbus/server/simulator/setup.json\r\n\r\n11:24:07 INFO logging:74 Start simulator\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/pymodbus.simulator\", line 33, in <module>\r\n sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py\", line 112, in main\r\n task = ModbusSimulatorServer(**cmd_args)\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py\", line 134, in __init__\r\n server[\"loop\"] = asyncio.get_running_loop()\r\nRuntimeError: no running event loop\r\n```\r\n\n", "before_files": [{"content": "#!/usr/bin/env python3\n\"\"\"HTTP server for modbus simulator.\n\nThe modbus simulator contain 3 distint parts:\n\n- Datastore simulator, to define registers and their behaviour including actions: (simulator)(../../datastore/simulator.py)\n- Modbus server: (server)(./http_server.py)\n- HTTP server with REST API and web pages providing an online console in your browser\n\nMultiple setups for different server types and/or devices are prepared in a (json file)(./setup.json), the detailed configuration is explained in (doc)(README.md)\n\nThe command line parameters are kept to a minimum:\n\nusage: main.py [-h] [--modbus_server MODBUS_SERVER]\n [--modbus_device MODBUS_DEVICE] [--http_host HTTP_HOST]\n [--http_port HTTP_PORT]\n [--log {critical,error,warning,info,debug}]\n [--json_file JSON_FILE]\n [--custom_actions_module CUSTOM_ACTIONS_MODULE]\n\nModbus server with REST-API and web server\n\noptions:\n -h, --help show this help message and exit\n --modbus_server MODBUS_SERVER\n use <modbus_server> from server_list in json file\n --modbus_device MODBUS_DEVICE\n use <modbus_device> from device_list in json file\n --http_host HTTP_HOST\n use <http_host> as host to bind http 
listen\n --http_port HTTP_PORT\n use <http_port> as port to bind http listen\n --log {critical,error,warning,info,debug}\n set log level, default is info\n --log_file LOG_FILE\n name of server log file, default is \"server.log\"\n --json_file JSON_FILE\n name of json_file, default is \"setup.json\"\n --custom_actions_module CUSTOM_ACTIONS_MODULE\n python file with custom actions, default is none\n\"\"\"\nimport argparse\nimport asyncio\n\nfrom pymodbus import pymodbus_apply_logging_config\nfrom pymodbus.logging import Log\nfrom pymodbus.server.simulator.http_server import ModbusSimulatorServer\n\n\ndef get_commandline():\n \"\"\"Get command line arguments.\"\"\"\n parser = argparse.ArgumentParser(\n description=\"Modbus server with REST-API and web server\"\n )\n parser.add_argument(\n \"--modbus_server\",\n help=\"use <modbus_server> from server_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--modbus_device\",\n help=\"use <modbus_device> from device_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--http_host\",\n help=\"use <http_host> as host to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--http_port\",\n help=\"use <http_port> as port to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--log\",\n choices=[\"critical\", \"error\", \"warning\", \"info\", \"debug\"],\n help=\"set log level, default is info\",\n default=\"info\",\n type=str,\n )\n parser.add_argument(\n \"--json_file\",\n help='name of json file, default is \"setup.json\"',\n type=str,\n )\n parser.add_argument(\n \"--log_file\",\n help='name of server log file, default is \"server.log\"',\n type=str,\n )\n parser.add_argument(\n \"--custom_actions_module\",\n help=\"python file with custom actions, default is none\",\n type=str,\n )\n args = parser.parse_args()\n pymodbus_apply_logging_config(args.log.upper())\n Log.info(\"Start simulator\")\n cmd_args = {}\n for argument in args.__dict__:\n if argument == \"log\":\n continue\n if 
args.__dict__[argument] is not None:\n cmd_args[argument] = args.__dict__[argument]\n return cmd_args\n\n\ndef main():\n \"\"\"Run server.\"\"\"\n cmd_args = get_commandline()\n task = ModbusSimulatorServer(**cmd_args)\n asyncio.run(task.run_forever(), debug=True)\n\n\nif __name__ == \"__main__\":\n main()\n", "path": "pymodbus/server/simulator/main.py"}], "after_files": [{"content": "#!/usr/bin/env python3\n\"\"\"HTTP server for modbus simulator.\n\nThe modbus simulator contain 3 distint parts:\n\n- Datastore simulator, to define registers and their behaviour including actions: (simulator)(../../datastore/simulator.py)\n- Modbus server: (server)(./http_server.py)\n- HTTP server with REST API and web pages providing an online console in your browser\n\nMultiple setups for different server types and/or devices are prepared in a (json file)(./setup.json), the detailed configuration is explained in (doc)(README.md)\n\nThe command line parameters are kept to a minimum:\n\nusage: main.py [-h] [--modbus_server MODBUS_SERVER]\n [--modbus_device MODBUS_DEVICE] [--http_host HTTP_HOST]\n [--http_port HTTP_PORT]\n [--log {critical,error,warning,info,debug}]\n [--json_file JSON_FILE]\n [--custom_actions_module CUSTOM_ACTIONS_MODULE]\n\nModbus server with REST-API and web server\n\noptions:\n -h, --help show this help message and exit\n --modbus_server MODBUS_SERVER\n use <modbus_server> from server_list in json file\n --modbus_device MODBUS_DEVICE\n use <modbus_device> from device_list in json file\n --http_host HTTP_HOST\n use <http_host> as host to bind http listen\n --http_port HTTP_PORT\n use <http_port> as port to bind http listen\n --log {critical,error,warning,info,debug}\n set log level, default is info\n --log_file LOG_FILE\n name of server log file, default is \"server.log\"\n --json_file JSON_FILE\n name of json_file, default is \"setup.json\"\n --custom_actions_module CUSTOM_ACTIONS_MODULE\n python file with custom actions, default is none\n\"\"\"\nimport 
argparse\nimport asyncio\n\nfrom pymodbus import pymodbus_apply_logging_config\nfrom pymodbus.logging import Log\nfrom pymodbus.server.simulator.http_server import ModbusSimulatorServer\n\n\ndef get_commandline():\n \"\"\"Get command line arguments.\"\"\"\n parser = argparse.ArgumentParser(\n description=\"Modbus server with REST-API and web server\"\n )\n parser.add_argument(\n \"--modbus_server\",\n help=\"use <modbus_server> from server_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--modbus_device\",\n help=\"use <modbus_device> from device_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--http_host\",\n help=\"use <http_host> as host to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--http_port\",\n help=\"use <http_port> as port to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--log\",\n choices=[\"critical\", \"error\", \"warning\", \"info\", \"debug\"],\n help=\"set log level, default is info\",\n default=\"info\",\n type=str,\n )\n parser.add_argument(\n \"--json_file\",\n help='name of json file, default is \"setup.json\"',\n type=str,\n )\n parser.add_argument(\n \"--log_file\",\n help='name of server log file, default is \"server.log\"',\n type=str,\n )\n parser.add_argument(\n \"--custom_actions_module\",\n help=\"python file with custom actions, default is none\",\n type=str,\n )\n args = parser.parse_args()\n pymodbus_apply_logging_config(args.log.upper())\n Log.info(\"Start simulator\")\n cmd_args = {}\n for argument in args.__dict__:\n if argument == \"log\":\n continue\n if args.__dict__[argument] is not None:\n cmd_args[argument] = args.__dict__[argument]\n return cmd_args\n\n\nasync def run_main():\n \"\"\"Run server async.\"\"\"\n cmd_args = get_commandline()\n task = ModbusSimulatorServer(**cmd_args)\n await task.run_forever()\n\n\ndef main():\n \"\"\"Run server.\"\"\"\n asyncio.run(run_main(), debug=True)\n\n\nif __name__ == \"__main__\":\n main()\n", "path": 
"pymodbus/server/simulator/main.py"}]}

| 1,975 | 163 |
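The pymodbus failure has a specific cause. `asyncio.get_running_loop()` raises `RuntimeError` when it is called outside a running event loop, and the original `main()` built the server before `asyncio.run` had started one. The sketch below reproduces that pattern and the fix with a hypothetical `LoopBoundServer` class. It stands in for `ModbusSimulatorServer` and assumes only that the constructor captures the running loop, as the traceback shows.

```python
import asyncio


class LoopBoundServer:
    """Hypothetical stand-in for ModbusSimulatorServer: captures the loop in __init__."""

    def __init__(self):
        # Raises RuntimeError("no running event loop") outside a coroutine.
        self.loop = asyncio.get_running_loop()

    async def run_forever(self):
        await asyncio.sleep(0)  # a real server would serve requests here
        # The loop captured at construction is the one now running the server.
        return self.loop is asyncio.get_running_loop()


def broken_main():
    # Mirrors the original main(): the constructor runs before any loop exists.
    server = LoopBoundServer()
    return asyncio.run(server.run_forever())


async def run_main():
    # Mirrors the patch: construct inside the loop that asyncio.run started.
    server = LoopBoundServer()
    return await server.run_forever()


def fixed_main():
    return asyncio.run(run_main())
```

Wrapping construction in a coroutine, as the golden diff does, ensures that every object that captures the loop sees the same loop that later runs it.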

gh_patches_debug_11654

|

rasdani/github-patches

|

git_diff

|

mitmproxy__mitmproxy-2919

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Update socks mode access commandline + documentation (v3.0.2)

##### Steps to reproduce the problem:

1. mitmproxy --socks

"--socks is deprecated

Please use '--set socks=value' instead"

2. Check online documentation at:

https://mitmproxy.org/docs/latest/concepts-modes/#socks-proxy

3. Check mitmproxy --help

##### Any other comments? What have you tried so far?

1. The advice given here doesn't appear to work (no combinations I tried were accepted).

2. The online documentation stops at Socks Proxy (no content)

3. The --help text shows the correct method (--mode socks5)

##### System information

Mitmproxy: 3.0.2

Python: 3.5.5rc1

OpenSSL: OpenSSL 1.0.1f 6 Jan 2014

Platform: Linux-2.6.39.4-kat124-ga627d40-armv7l-with-debian-jessie-sid

(Android: KatKiss Marshmallow hosting Linux Deploy: Ubuntu Trusty [armhf] using pyenv)

<!-- Please use the mitmproxy forums (https://discourse.mitmproxy.org/) for support/how-to questions. Thanks! :) -->

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mitmproxy/utils/arg_check.py`

Content:

```
1 import sys
2
3 DEPRECATED = """
4 --cadir
5 -Z
6 --body-size-limit
7 --stream
8 --palette
9 --palette-transparent
10 --follow
11 --order
12 --no-mouse
13 --reverse
14 --socks
15 --http2-priority
16 --no-http2-priority
17 --no-websocket
18 --websocket
19 --spoof-source-address
20 --upstream-bind-address
21 --ciphers-client
22 --ciphers-server
23 --client-certs
24 --no-upstream-cert
25 --add-upstream-certs-to-client-chain
26 --upstream-trusted-cadir
27 --upstream-trusted-ca
28 --ssl-version-client
29 --ssl-version-server
30 --no-onboarding
31 --onboarding-host
32 --onboarding-port
33 --server-replay-use-header
34 --no-pop
35 --replay-ignore-content
36 --replay-ignore-payload-param
37 --replay-ignore-param
38 --replay-ignore-host
39 --replace-from-file
40 """
41
42 REPLACED = """
43 -t
44 -u
45 --wfile
46 -a
47 --afile
48 -z
49 -b
50 --bind-address
51 --port
52 -I
53 --ignore
54 --tcp
55 --cert
56 --insecure
57 -c
58 --replace
59 -i
60 -f
61 --filter
62 """
63
64 REPLACEMENTS = {
65     "--stream": "stream_large_bodies",
66     "--palette": "console_palette",
67     "--palette-transparent": "console_palette_transparent:",
68     "--follow": "console_focus_follow",
69     "--order": "view_order",
70     "--no-mouse": "console_mouse",
71     "--reverse": "view_order_reversed",
72     "--no-http2-priority": "http2_priority",
73     "--no-websocket": "websocket",
74     "--no-upstream-cert": "upstream_cert",
75     "--upstream-trusted-cadir": "ssl_verify_upstream_trusted_cadir",
76     "--upstream-trusted-ca": "ssl_verify_upstream_trusted_ca",
77     "--no-onboarding": "onboarding",
78     "--no-pop": "server_replay_nopop",
79     "--replay-ignore-content": "server_replay_ignore_content",
80     "--replay-ignore-payload-param": "server_replay_ignore_payload_params",
81     "--replay-ignore-param": "server_replay_ignore_params",
82     "--replay-ignore-host": "server_replay_ignore_host",
83     "--replace-from-file": "replacements (use @ to specify path)",
84     "-t": "--stickycookie",
85     "-u": "--stickyauth",
86     "--wfile": "--save-stream-file",
87     "-a": "-w Prefix path with + to append.",
88     "--afile": "-w Prefix path with + to append.",
89     "-z": "--anticomp",
90     "-b": "--listen-host",
91     "--bind-address": "--listen-host",
92     "--port": "--listen-port",
93     "-I": "--ignore-hosts",
94     "--ignore": "--ignore-hosts",
95     "--tcp": "--tcp-hosts",
96     "--cert": "--certs",
97     "--insecure": "--ssl-insecure",
98     "-c": "-C",
99     "--replace": "--replacements",
100     "-i": "--intercept",
101     "-f": "--view-filter",
102     "--filter": "--view-filter"
103 }
104
105
106 def check():
107     args = sys.argv[1:]
108     print()
109     if "-U" in args:
110         print("-U is deprecated, please use --mode upstream:SPEC instead")
111
112     if "-T" in args:
113         print("-T is deprecated, please use --mode transparent instead")
114
115     for option in ("-e", "--eventlog", "--norefresh"):
116         if option in args:
117             print("{} has been removed.".format(option))
118
119     for option in ("--nonanonymous", "--singleuser", "--htpasswd"):
120         if option in args:
121             print(
122                 '{} is deprecated.\n'
123                 'Please use `--proxyauth SPEC` instead.\n'
124                 'SPEC Format: "username:pass", "any" to accept any user/pass combination,\n'
125                 '"@path" to use an Apache htpasswd file, or\n'
126                 '"ldap[s]:url_server_ldap:dn_auth:password:dn_subtree" '
127                 'for LDAP authentication.'.format(option))
128
129     for option in REPLACED.splitlines():
130         if option in args:
131             print(
132                 "{} is deprecated.\n"
133                 "Please use `{}` instead.".format(
134                     option,
135                     REPLACEMENTS.get(option)
136                 )
137             )
138
139     for option in DEPRECATED.splitlines():
140         if option in args:
141             print(
142                 "{} is deprecated.\n"
143                 "Please use `--set {}=value` instead.\n"
144                 "To show all options and their default values use --options".format(
145                     option,
146                     REPLACEMENTS.get(option, None) or option.lstrip("-").replace("-", "_")
147                 )
148             )
149
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/mitmproxy/utils/arg_check.py b/mitmproxy/utils/arg_check.py
--- a/mitmproxy/utils/arg_check.py
+++ b/mitmproxy/utils/arg_check.py
@@ -11,7 +11,6 @@
 --order
 --no-mouse
 --reverse
---socks
 --http2-priority
 --no-http2-priority
 --no-websocket
@@ -59,6 +58,7 @@
 -i
 -f
 --filter
+--socks
 """
 
 REPLACEMENTS = {
@@ -99,7 +99,8 @@
     "--replace": "--replacements",
     "-i": "--intercept",
     "-f": "--view-filter",
-    "--filter": "--view-filter"
+    "--filter": "--view-filter",
+    "--socks": "--mode socks5"
 }
 
 

|