| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| string, length 18-22 | string, 1 distinct value | string, 1 distinct value | string, length 13-58 | string, length 1.1k-25.4k | string, length 145-5.13k | string, length 582-39.1k | int64, 271-4.1k | int64, 47-1.02k |

| gh_patches_debug_13173 | rasdani/github-patches | git_diff | ros__ros_comm-187 |

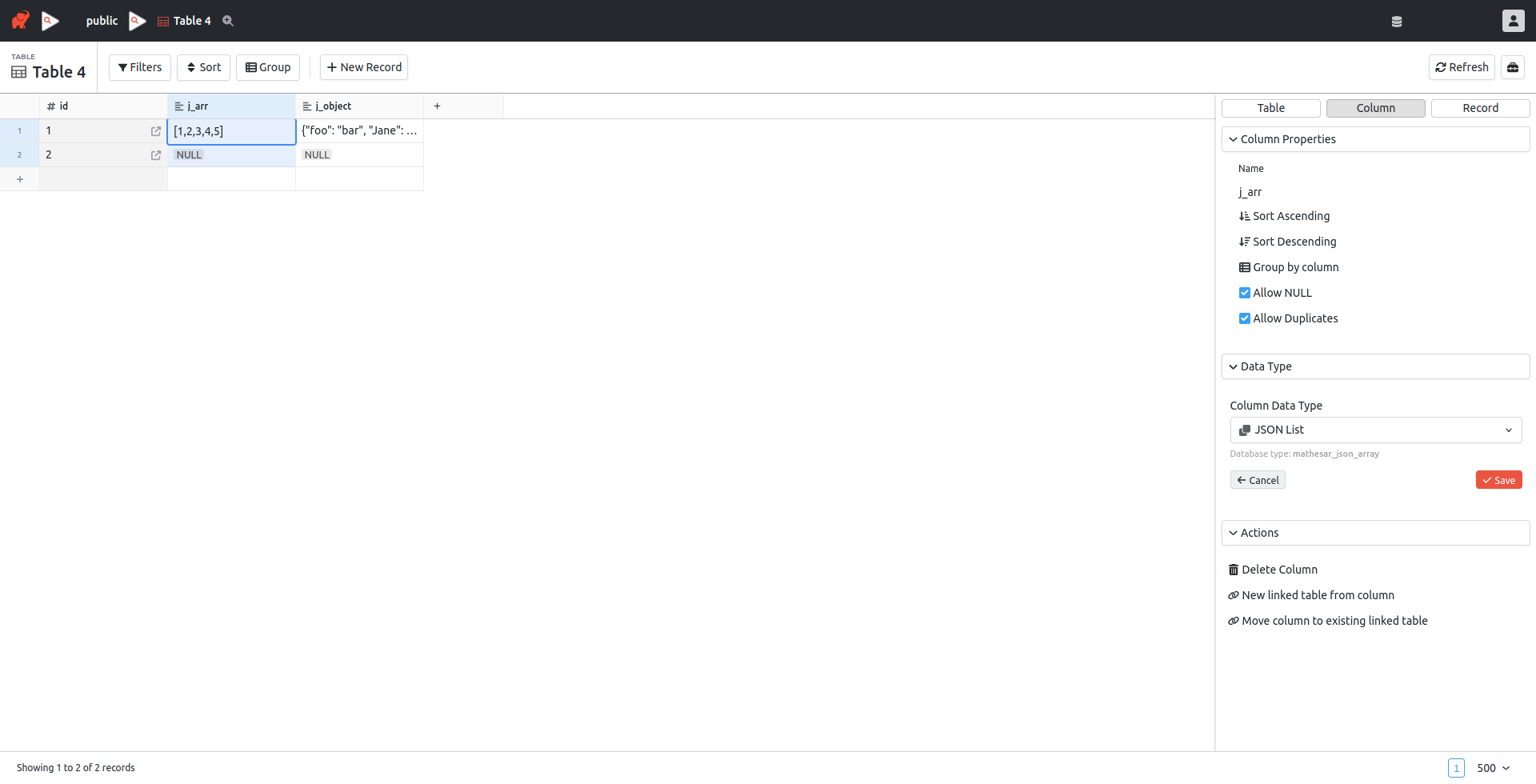

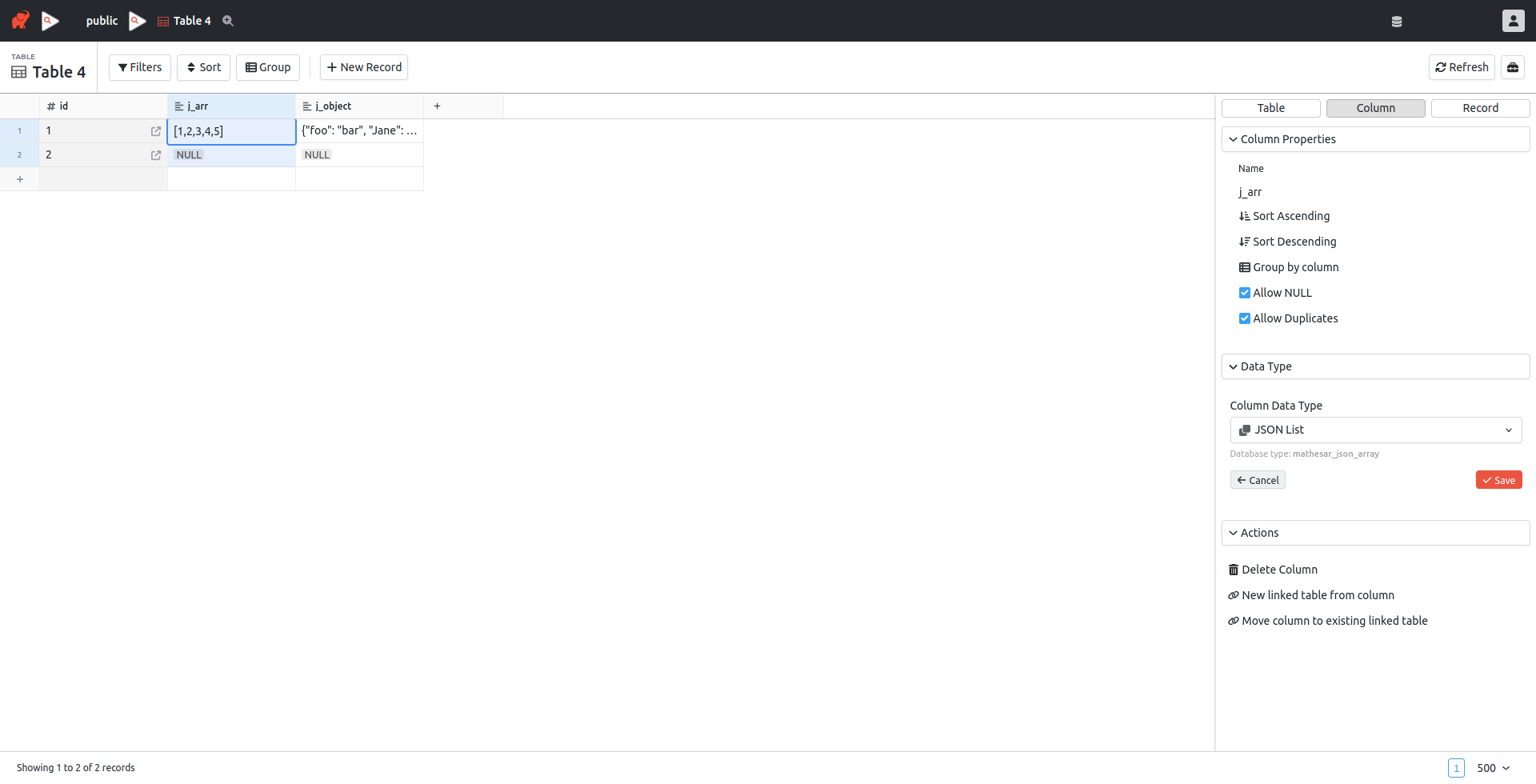

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

roslaunch --files prints error if multiple anon nodes have same id

When running:

```
roslaunch --files package_name launch_file.launch
```

I get the following error:

```
roslaunch file contains multiple nodes named [/$(anon foo)].
Please check all <node> 'name' attributes to make sure they are unique.
Also check that $(anon id) use different ids.
```

Note that when actually launching the launch file, this will not be a problem because the anonymous node names will be expanded with unique suffixes. Also, the list of files does not relate to the expansion of anonymous node names, so the file list should be printable regardless.

This is similar in spirit to bugs #94 and #65, both of which suffer because roslaunch simply prints an error and quits when it finds non-unique node names instead of doing as much as possible to help you find your error.

--- END ISSUE ---
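The issue objects that roslaunch "prints an error and quits" at the first non-unique node name, when it could report every problem at once. The Python sketch below collects all collisions in one pass. It assumes the node names are already available as a plain list. `find_duplicate_names` is a hypothetical helper, not part of roslaunch's API, and it does not reproduce roslaunch's own uniqueness check.

```python
from collections import Counter
from typing import Dict, Iterable


def find_duplicate_names(names: Iterable[str]) -> Dict[str, int]:
    """Return every node name that occurs more than once, with its count.

    Hypothetical helper: it gathers *all* collisions so a tool could report
    them together instead of stopping at the first one.
    """
    counts = Counter(names)
    return {name: n for name, n in counts.items() if n > 1}


if __name__ == "__main__":
    # Unexpanded $(anon ...) names are literally identical, so they collide.
    names = ["/$(anon foo)", "/$(anon foo)", "/talker", "/listener", "/talker"]
    for name, n in sorted(find_duplicate_names(names).items()):
        print("node name [%s] used %d times" % (name, n))
```

The function returns all duplicates instead of raising on the first one. The caller can then decide whether to print a full report, or, in a mode like `--files` that does not depend on node names, skip the check entirely.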

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `tools/roslaunch/src/roslaunch/rlutil.py`

Content:

```
1 # Software License Agreement (BSD License)
2 #
3 # Copyright (c) 2009, Willow Garage, Inc.
4 # All rights reserved.
5 #
6 # Redistribution and use in source and binary forms, with or without
7 # modification, are permitted provided that the following conditions
8 # are met:
9 #
10 # * Redistributions of source code must retain the above copyright
11 # notice, this list of conditions and the following disclaimer.
12 # * Redistributions in binary form must reproduce the above
13 # copyright notice, this list of conditions and the following
14 # disclaimer in the documentation and/or other materials provided
15 # with the distribution.
16 # * Neither the name of Willow Garage, Inc. nor the names of its
17 # contributors may be used to endorse or promote products derived
18 # from this software without specific prior written permission.
19 #
20 # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS
21 # "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT
22 # LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS
23 # FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE
24 # COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,
25 # INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,
26 # BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;
27 # LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER
28 # CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT
29 # LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN
30 # ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE
31 # POSSIBILITY OF SUCH DAMAGE.
32
33 """
34 Uncategorized utility routines for roslaunch.
35
36 This API should not be considered stable.
37 """
38
39 from __future__ import print_function
40
41 import os
42 import sys
43 import time
44
45 import roslib.packages
46
47 import rosclean
48 import rospkg
49 import rosgraph
50
51 import roslaunch.core
52 import roslaunch.config
53 import roslaunch.depends
54 from rosmaster import DEFAULT_MASTER_PORT
55
56 def check_log_disk_usage():
57     """
58     Check size of log directory. If high, print warning to user
59     """
60     try:
61         d = rospkg.get_log_dir()
62         roslaunch.core.printlog("Checking log directory for disk usage. This may take awhile.\nPress Ctrl-C to interrupt")
63         disk_usage = rosclean.get_disk_usage(d)
64         # warn if over a gig
65         if disk_usage > 1073741824:
66             roslaunch.core.printerrlog("WARNING: disk usage in log directory [%s] is over 1GB.\nIt's recommended that you use the 'rosclean' command."%d)
67         else:
68             roslaunch.core.printlog("Done checking log file disk usage. Usage is <1GB.")
69     except:
70         pass
71
72 def resolve_launch_arguments(args):
73     """
74     Resolve command-line args to roslaunch filenames.
75
76     :returns: resolved filenames, ``[str]``
77     """
78
79     # strip remapping args for processing
80     args = rosgraph.myargv(args)
81
82     # user can either specify:
83     # - filename + launch args
84     # - package + relative-filename + launch args
85     if not args:
86         return args
87     resolved_args = None
88     top = args[0]
89     if os.path.isfile(top):
90         resolved_args = [top] + args[1:]
91     elif len(args) == 1:
92         raise roslaunch.core.RLException("[%s] does not exist. please specify a package and launch file"%(top))
93     else:
94         try:
95             resolved = roslib.packages.find_resource(top, args[1])
96             if len(resolved) == 1:
97                 resolved = resolved[0]
98             elif len(resolved) > 1:
99                 raise roslaunch.core.RLException("multiple files named [%s] in package [%s]:%s\nPlease specify full path instead" % (args[1], top, ''.join(['\n- %s' % r for r in resolved])))
100         except rospkg.ResourceNotFound as e:
101             raise roslaunch.core.RLException("[%s] is not a package or launch file name"%top)
102         if not resolved:
103             raise roslaunch.core.RLException("cannot locate [%s] in package [%s]"%(args[1], top))
104         else:
105             resolved_args = [resolved] + args[2:]
106     return resolved_args
107
108 def _wait_for_master():
109     """
110     Block until ROS Master is online
111
112     :raise: :exc:`RuntimeError` If unexpected error occurs
113     """
114     m = roslaunch.core.Master() # get a handle to the default master
115     is_running = m.is_running()
116     if not is_running:
117         roslaunch.core.printlog("roscore/master is not yet running, will wait for it to start")
118     while not is_running:
119         time.sleep(0.1)
120         is_running = m.is_running()
121     if is_running:
122         roslaunch.core.printlog("master has started, initiating launch")
123     else:
124         raise RuntimeError("unknown error waiting for master to start")
125
126 _terminal_name = None
127
128 def _set_terminal(s):
129     import platform
130     if platform.system() in ['FreeBSD', 'Linux', 'Darwin', 'Unix']:
131         try:
132             print('\033]2;%s\007'%(s))
133         except:
134             pass
135
136 def update_terminal_name(ros_master_uri):
137     """
138     append master URI to the terminal name
139     """
140     if _terminal_name:
141         _set_terminal(_terminal_name + ' ' + ros_master_uri)
142
143 def change_terminal_name(args, is_core):
144     """
145     use echo (where available) to change the name of the terminal window
146     """
147     global _terminal_name
148     _terminal_name = 'roscore' if is_core else ','.join(args)
149     _set_terminal(_terminal_name)
150
151 def get_or_generate_uuid(options_runid, options_wait_for_master):
152     """
153     :param options_runid: run_id value from command-line or ``None``, ``str``
154     :param options_wait_for_master: the wait_for_master command
155     option. If this is True, it means that we must retrieve the
156     value from the parameter server and need to avoid any race
157     conditions with the roscore being initialized. ``bool``
158     """
159
160     # Three possible sources of the run_id:
161     #
162     # - if we're a child process, we get it from options_runid
163     # - if there's already a roscore running, read from the param server
164     # - generate one if we're running the roscore
165     if options_runid:
166         return options_runid
167
168     # #773: Generate a run_id to use if we launch a master
169     # process. If a master is already running, we'll get the
170     # run_id from it instead
171     param_server = rosgraph.Master('/roslaunch')
172     val = None
173     while val is None:
174         try:
175             val = param_server.getParam('/run_id')
176         except:
177             if not options_wait_for_master:
178                 val = roslaunch.core.generate_run_id()
179     return val
180
181 def check_roslaunch(f):
182     """
183     Check roslaunch file for errors, returning error message if check fails. This routine
184     is mainly to support rostest's roslaunch_check.
185
186     :param f: roslaunch file name, ``str``
187     :returns: error message or ``None``
188     """
189     try:
190         rl_config = roslaunch.config.load_config_default([f], DEFAULT_MASTER_PORT, verbose=False)
191     except roslaunch.core.RLException as e:
192         return str(e)
193
194     errors = []
195     # check for missing deps
196     base_pkg, file_deps, missing = roslaunch.depends.roslaunch_deps([f])
197     for pkg, miss in missing.iteritems():
198         if miss:
199             errors.append("Missing manifest dependencies: %s/manifest.xml: %s"%(pkg, ', '.join(miss)))
200
201     # load all node defs
202     nodes = []
203     for filename, rldeps in file_deps.iteritems():
204         nodes.extend(rldeps.nodes)
205
206     # check for missing packages
207     rospack = rospkg.RosPack()
208     for pkg, node_type in nodes:
209         try:
210             rospack.get_path(pkg)
211         except:
212             errors.append("cannot find package [%s] for node [%s]"%(pkg, node_type))
213
214     # check for missing nodes
215     for pkg, node_type in nodes:
216         try:
217             if not roslib.packages.find_node(pkg, node_type):
218                 errors.append("cannot find node [%s] in package [%s]"%(node_type, pkg))
219         except Exception as e:
220             errors.append("unable to find node [%s/%s]: %s"%(pkg, node_type, str(e)))
221
222     # Check for configuration errors, #2889
223     for err in rl_config.config_errors:
224         errors.append('ROSLaunch config error: %s' % err)
225
226     if errors:
227         return '\n'.join(errors)
228
229 def print_file_list(roslaunch_files):
230     """
231     :param roslaunch_files: list of launch files to load, ``str``
232
233     :returns: list of files involved in processing roslaunch_files, including the files themselves.
234     """
235     from roslaunch.config import load_config_default, get_roscore_filename
236     import roslaunch.xmlloader
237     try:
238         loader = roslaunch.xmlloader.XmlLoader(resolve_anon=False)
239         config = load_config_default(roslaunch_files, None, loader=loader, verbose=False, assign_machines=False)
240         files = [os.path.abspath(x) for x in set(config.roslaunch_files) - set([get_roscore_filename()])]
241         print('\n'.join(files))
242     except roslaunch.core.RLException as e:
243         print(str(e), file=sys.stderr)
244         sys.exit(1)
245
246
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/tools/roslaunch/src/roslaunch/rlutil.py b/tools/roslaunch/src/roslaunch/rlutil.py
--- a/tools/roslaunch/src/roslaunch/rlutil.py
+++ b/tools/roslaunch/src/roslaunch/rlutil.py
@@ -235,7 +235,7 @@
     from roslaunch.config import load_config_default, get_roscore_filename
     import roslaunch.xmlloader
     try:
-        loader = roslaunch.xmlloader.XmlLoader(resolve_anon=False)
+        loader = roslaunch.xmlloader.XmlLoader(resolve_anon=True)
         config = load_config_default(roslaunch_files, None, loader=loader, verbose=False, assign_machines=False)
         files = [os.path.abspath(x) for x in set(config.roslaunch_files) - set([get_roscore_filename()])]
         print('\n'.join(files))

|

{"golden_diff": "diff --git a/tools/roslaunch/src/roslaunch/rlutil.py b/tools/roslaunch/src/roslaunch/rlutil.py\n--- a/tools/roslaunch/src/roslaunch/rlutil.py\n+++ b/tools/roslaunch/src/roslaunch/rlutil.py\n@@ -235,7 +235,7 @@\n from roslaunch.config import load_config_default, get_roscore_filename\n import roslaunch.xmlloader\n try:\n- loader = roslaunch.xmlloader.XmlLoader(resolve_anon=False)\n+ loader = roslaunch.xmlloader.XmlLoader(resolve_anon=True)\n config = load_config_default(roslaunch_files, None, loader=loader, verbose=False, assign_machines=False)\n files = [os.path.abspath(x) for x in set(config.roslaunch_files) - set([get_roscore_filename()])]\n print('\\n'.join(files))\n", "issue": "roslaunch --files prints error if multiple anon nodes have same id\nWhen running:\n\n```\nroslaunch --files package_name launch_file.launch\n```\n\nI get the following error:\n\n```\nroslaunch file contains multiple nodes named [/$(anon foo)].\nPlease check all <node> 'name' attributes to make sure they are unique.\nAlso check that $(anon id) use different ids.\n```\n\nNote that when actually launching the launch file, this will not be a problem because the anonymous node names will be expanded with unique suffixes. 
Also, the list of files does not relate to the expansion of anonymous node names, so the file list should be printable regardless.\n\nThis is similar in spirit to bugs #94 and #65, both of which suffer because roslaunch simply prints an error and quits when it finds non-unique node names instead of doing as much as possible to help you find your error.\n\n", "before_files": [{"content": "# Software License Agreement (BSD License)\n#\n# Copyright (c) 2009, Willow Garage, Inc.\n# All rights reserved.\n#\n# Redistribution and use in source and binary forms, with or without\n# modification, are permitted provided that the following conditions\n# are met:\n#\n# * Redistributions of source code must retain the above copyright\n# notice, this list of conditions and the following disclaimer.\n# * Redistributions in binary form must reproduce the above\n# copyright notice, this list of conditions and the following\n# disclaimer in the documentation and/or other materials provided\n# with the distribution.\n# * Neither the name of Willow Garage, Inc. nor the names of its\n# contributors may be used to endorse or promote products derived\n# from this software without specific prior written permission.\n#\n# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS\n# \"AS IS\" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT\n# LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS\n# FOR A PARTICULAR PURPOSE ARE DISCLAIMED. 
IN NO EVENT SHALL THE\n# COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,\n# INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,\n# BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;\n# LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER\n# CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT\n# LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN\n# ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE\n# POSSIBILITY OF SUCH DAMAGE.\n\n\"\"\"\nUncategorized utility routines for roslaunch.\n\nThis API should not be considered stable.\n\"\"\"\n\nfrom __future__ import print_function\n\nimport os\nimport sys\nimport time\n\nimport roslib.packages\n\nimport rosclean\nimport rospkg\nimport rosgraph\n\nimport roslaunch.core\nimport roslaunch.config\nimport roslaunch.depends\nfrom rosmaster import DEFAULT_MASTER_PORT\n\ndef check_log_disk_usage():\n \"\"\"\n Check size of log directory. If high, print warning to user\n \"\"\"\n try:\n d = rospkg.get_log_dir()\n roslaunch.core.printlog(\"Checking log directory for disk usage. This may take awhile.\\nPress Ctrl-C to interrupt\") \n disk_usage = rosclean.get_disk_usage(d)\n # warn if over a gig\n if disk_usage > 1073741824:\n roslaunch.core.printerrlog(\"WARNING: disk usage in log directory [%s] is over 1GB.\\nIt's recommended that you use the 'rosclean' command.\"%d)\n else:\n roslaunch.core.printlog(\"Done checking log file disk usage. 
Usage is <1GB.\") \n except:\n pass\n\ndef resolve_launch_arguments(args):\n \"\"\"\n Resolve command-line args to roslaunch filenames.\n\n :returns: resolved filenames, ``[str]``\n \"\"\"\n\n # strip remapping args for processing\n args = rosgraph.myargv(args)\n \n # user can either specify:\n # - filename + launch args\n # - package + relative-filename + launch args\n if not args:\n return args\n resolved_args = None\n top = args[0]\n if os.path.isfile(top):\n resolved_args = [top] + args[1:]\n elif len(args) == 1:\n raise roslaunch.core.RLException(\"[%s] does not exist. please specify a package and launch file\"%(top))\n else:\n try:\n resolved = roslib.packages.find_resource(top, args[1])\n if len(resolved) == 1:\n resolved = resolved[0]\n elif len(resolved) > 1:\n raise roslaunch.core.RLException(\"multiple files named [%s] in package [%s]:%s\\nPlease specify full path instead\" % (args[1], top, ''.join(['\\n- %s' % r for r in resolved])))\n except rospkg.ResourceNotFound as e:\n raise roslaunch.core.RLException(\"[%s] is not a package or launch file name\"%top)\n if not resolved:\n raise roslaunch.core.RLException(\"cannot locate [%s] in package [%s]\"%(args[1], top))\n else:\n resolved_args = [resolved] + args[2:]\n return resolved_args\n\ndef _wait_for_master():\n \"\"\"\n Block until ROS Master is online\n \n :raise: :exc:`RuntimeError` If unexpected error occurs\n \"\"\"\n m = roslaunch.core.Master() # get a handle to the default master\n is_running = m.is_running()\n if not is_running:\n roslaunch.core.printlog(\"roscore/master is not yet running, will wait for it to start\")\n while not is_running:\n time.sleep(0.1)\n is_running = m.is_running()\n if is_running:\n roslaunch.core.printlog(\"master has started, initiating launch\")\n else:\n raise RuntimeError(\"unknown error waiting for master to start\")\n\n_terminal_name = None\n\ndef _set_terminal(s):\n import platform\n if platform.system() in ['FreeBSD', 'Linux', 'Darwin', 'Unix']:\n try:\n 
print('\\033]2;%s\\007'%(s))\n except:\n pass\n \ndef update_terminal_name(ros_master_uri):\n \"\"\"\n append master URI to the terminal name\n \"\"\"\n if _terminal_name:\n _set_terminal(_terminal_name + ' ' + ros_master_uri)\n\ndef change_terminal_name(args, is_core):\n \"\"\"\n use echo (where available) to change the name of the terminal window\n \"\"\"\n global _terminal_name\n _terminal_name = 'roscore' if is_core else ','.join(args)\n _set_terminal(_terminal_name)\n\ndef get_or_generate_uuid(options_runid, options_wait_for_master):\n \"\"\"\n :param options_runid: run_id value from command-line or ``None``, ``str``\n :param options_wait_for_master: the wait_for_master command\n option. If this is True, it means that we must retrieve the\n value from the parameter server and need to avoid any race\n conditions with the roscore being initialized. ``bool``\n \"\"\"\n\n # Three possible sources of the run_id:\n #\n # - if we're a child process, we get it from options_runid\n # - if there's already a roscore running, read from the param server\n # - generate one if we're running the roscore\n if options_runid:\n return options_runid\n\n # #773: Generate a run_id to use if we launch a master\n # process. If a master is already running, we'll get the\n # run_id from it instead\n param_server = rosgraph.Master('/roslaunch')\n val = None\n while val is None:\n try:\n val = param_server.getParam('/run_id')\n except:\n if not options_wait_for_master:\n val = roslaunch.core.generate_run_id()\n return val\n \ndef check_roslaunch(f):\n \"\"\"\n Check roslaunch file for errors, returning error message if check fails. 
This routine\n is mainly to support rostest's roslaunch_check.\n\n :param f: roslaunch file name, ``str``\n :returns: error message or ``None``\n \"\"\"\n try:\n rl_config = roslaunch.config.load_config_default([f], DEFAULT_MASTER_PORT, verbose=False)\n except roslaunch.core.RLException as e:\n return str(e)\n \n errors = []\n # check for missing deps\n base_pkg, file_deps, missing = roslaunch.depends.roslaunch_deps([f])\n for pkg, miss in missing.iteritems():\n if miss:\n errors.append(\"Missing manifest dependencies: %s/manifest.xml: %s\"%(pkg, ', '.join(miss)))\n \n # load all node defs\n nodes = []\n for filename, rldeps in file_deps.iteritems():\n nodes.extend(rldeps.nodes)\n\n # check for missing packages\n rospack = rospkg.RosPack()\n for pkg, node_type in nodes:\n try:\n rospack.get_path(pkg)\n except:\n errors.append(\"cannot find package [%s] for node [%s]\"%(pkg, node_type))\n\n # check for missing nodes\n for pkg, node_type in nodes:\n try:\n if not roslib.packages.find_node(pkg, node_type):\n errors.append(\"cannot find node [%s] in package [%s]\"%(node_type, pkg))\n except Exception as e:\n errors.append(\"unable to find node [%s/%s]: %s\"%(pkg, node_type, str(e)))\n \n # Check for configuration errors, #2889\n for err in rl_config.config_errors:\n errors.append('ROSLaunch config error: %s' % err)\n\n if errors:\n return '\\n'.join(errors)\n \ndef print_file_list(roslaunch_files):\n \"\"\"\n :param roslaunch_files: list of launch files to load, ``str``\n\n :returns: list of files involved in processing roslaunch_files, including the files themselves.\n \"\"\"\n from roslaunch.config import load_config_default, get_roscore_filename\n import roslaunch.xmlloader\n try:\n loader = roslaunch.xmlloader.XmlLoader(resolve_anon=False)\n config = load_config_default(roslaunch_files, None, loader=loader, verbose=False, assign_machines=False)\n files = [os.path.abspath(x) for x in set(config.roslaunch_files) - set([get_roscore_filename()])]\n 
print('\\n'.join(files))\n except roslaunch.core.RLException as e:\n print(str(e), file=sys.stderr)\n sys.exit(1)\n\n", "path": "tools/roslaunch/src/roslaunch/rlutil.py"}], "after_files": [{"content": "# Software License Agreement (BSD License)\n#\n# Copyright (c) 2009, Willow Garage, Inc.\n# All rights reserved.\n#\n# Redistribution and use in source and binary forms, with or without\n# modification, are permitted provided that the following conditions\n# are met:\n#\n# * Redistributions of source code must retain the above copyright\n# notice, this list of conditions and the following disclaimer.\n# * Redistributions in binary form must reproduce the above\n# copyright notice, this list of conditions and the following\n# disclaimer in the documentation and/or other materials provided\n# with the distribution.\n# * Neither the name of Willow Garage, Inc. nor the names of its\n# contributors may be used to endorse or promote products derived\n# from this software without specific prior written permission.\n#\n# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS\n# \"AS IS\" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT\n# LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS\n# FOR A PARTICULAR PURPOSE ARE DISCLAIMED. 
IN NO EVENT SHALL THE\n# COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,\n# INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,\n# BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;\n# LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER\n# CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT\n# LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN\n# ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE\n# POSSIBILITY OF SUCH DAMAGE.\n\n\"\"\"\nUncategorized utility routines for roslaunch.\n\nThis API should not be considered stable.\n\"\"\"\n\nfrom __future__ import print_function\n\nimport os\nimport sys\nimport time\n\nimport roslib.packages\n\nimport rosclean\nimport rospkg\nimport rosgraph\n\nimport roslaunch.core\nimport roslaunch.config\nimport roslaunch.depends\nfrom rosmaster import DEFAULT_MASTER_PORT\n\ndef check_log_disk_usage():\n \"\"\"\n Check size of log directory. If high, print warning to user\n \"\"\"\n try:\n d = rospkg.get_log_dir()\n roslaunch.core.printlog(\"Checking log directory for disk usage. This may take awhile.\\nPress Ctrl-C to interrupt\") \n disk_usage = rosclean.get_disk_usage(d)\n # warn if over a gig\n if disk_usage > 1073741824:\n roslaunch.core.printerrlog(\"WARNING: disk usage in log directory [%s] is over 1GB.\\nIt's recommended that you use the 'rosclean' command.\"%d)\n else:\n roslaunch.core.printlog(\"Done checking log file disk usage. 
Usage is <1GB.\") \n except:\n pass\n\ndef resolve_launch_arguments(args):\n \"\"\"\n Resolve command-line args to roslaunch filenames.\n\n :returns: resolved filenames, ``[str]``\n \"\"\"\n\n # strip remapping args for processing\n args = rosgraph.myargv(args)\n \n # user can either specify:\n # - filename + launch args\n # - package + relative-filename + launch args\n if not args:\n return args\n resolved_args = None\n top = args[0]\n if os.path.isfile(top):\n resolved_args = [top] + args[1:]\n elif len(args) == 1:\n raise roslaunch.core.RLException(\"[%s] does not exist. please specify a package and launch file\"%(top))\n else:\n try:\n resolved = roslib.packages.find_resource(top, args[1])\n if len(resolved) == 1:\n resolved = resolved[0]\n elif len(resolved) > 1:\n raise roslaunch.core.RLException(\"multiple files named [%s] in package [%s]:%s\\nPlease specify full path instead\" % (args[1], top, ''.join(['\\n- %s' % r for r in resolved])))\n except rospkg.ResourceNotFound as e:\n raise roslaunch.core.RLException(\"[%s] is not a package or launch file name\"%top)\n if not resolved:\n raise roslaunch.core.RLException(\"cannot locate [%s] in package [%s]\"%(args[1], top))\n else:\n resolved_args = [resolved] + args[2:]\n return resolved_args\n\ndef _wait_for_master():\n \"\"\"\n Block until ROS Master is online\n \n :raise: :exc:`RuntimeError` If unexpected error occurs\n \"\"\"\n m = roslaunch.core.Master() # get a handle to the default master\n is_running = m.is_running()\n if not is_running:\n roslaunch.core.printlog(\"roscore/master is not yet running, will wait for it to start\")\n while not is_running:\n time.sleep(0.1)\n is_running = m.is_running()\n if is_running:\n roslaunch.core.printlog(\"master has started, initiating launch\")\n else:\n raise RuntimeError(\"unknown error waiting for master to start\")\n\n_terminal_name = None\n\ndef _set_terminal(s):\n import platform\n if platform.system() in ['FreeBSD', 'Linux', 'Darwin', 'Unix']:\n try:\n 
print('\\033]2;%s\\007'%(s))\n except:\n pass\n \ndef update_terminal_name(ros_master_uri):\n \"\"\"\n append master URI to the terminal name\n \"\"\"\n if _terminal_name:\n _set_terminal(_terminal_name + ' ' + ros_master_uri)\n\ndef change_terminal_name(args, is_core):\n \"\"\"\n use echo (where available) to change the name of the terminal window\n \"\"\"\n global _terminal_name\n _terminal_name = 'roscore' if is_core else ','.join(args)\n _set_terminal(_terminal_name)\n\ndef get_or_generate_uuid(options_runid, options_wait_for_master):\n \"\"\"\n :param options_runid: run_id value from command-line or ``None``, ``str``\n :param options_wait_for_master: the wait_for_master command\n option. If this is True, it means that we must retrieve the\n value from the parameter server and need to avoid any race\n conditions with the roscore being initialized. ``bool``\n \"\"\"\n\n # Three possible sources of the run_id:\n #\n # - if we're a child process, we get it from options_runid\n # - if there's already a roscore running, read from the param server\n # - generate one if we're running the roscore\n if options_runid:\n return options_runid\n\n # #773: Generate a run_id to use if we launch a master\n # process. If a master is already running, we'll get the\n # run_id from it instead\n param_server = rosgraph.Master('/roslaunch')\n val = None\n while val is None:\n try:\n val = param_server.getParam('/run_id')\n except:\n if not options_wait_for_master:\n val = roslaunch.core.generate_run_id()\n return val\n \ndef check_roslaunch(f):\n \"\"\"\n Check roslaunch file for errors, returning error message if check fails. 
This routine\n is mainly to support rostest's roslaunch_check.\n\n :param f: roslaunch file name, ``str``\n :returns: error message or ``None``\n \"\"\"\n try:\n rl_config = roslaunch.config.load_config_default([f], DEFAULT_MASTER_PORT, verbose=False)\n except roslaunch.core.RLException as e:\n return str(e)\n \n errors = []\n # check for missing deps\n base_pkg, file_deps, missing = roslaunch.depends.roslaunch_deps([f])\n for pkg, miss in missing.iteritems():\n if miss:\n errors.append(\"Missing manifest dependencies: %s/manifest.xml: %s\"%(pkg, ', '.join(miss)))\n \n # load all node defs\n nodes = []\n for filename, rldeps in file_deps.iteritems():\n nodes.extend(rldeps.nodes)\n\n # check for missing packages\n rospack = rospkg.RosPack()\n for pkg, node_type in nodes:\n try:\n rospack.get_path(pkg)\n except:\n errors.append(\"cannot find package [%s] for node [%s]\"%(pkg, node_type))\n\n # check for missing nodes\n for pkg, node_type in nodes:\n try:\n if not roslib.packages.find_node(pkg, node_type):\n errors.append(\"cannot find node [%s] in package [%s]\"%(node_type, pkg))\n except Exception as e:\n errors.append(\"unable to find node [%s/%s]: %s\"%(pkg, node_type, str(e)))\n \n # Check for configuration errors, #2889\n for err in rl_config.config_errors:\n errors.append('ROSLaunch config error: %s' % err)\n\n if errors:\n return '\\n'.join(errors)\n \ndef print_file_list(roslaunch_files):\n \"\"\"\n :param roslaunch_files: list of launch files to load, ``str``\n\n :returns: list of files involved in processing roslaunch_files, including the files themselves.\n \"\"\"\n from roslaunch.config import load_config_default, get_roscore_filename\n import roslaunch.xmlloader\n try:\n loader = roslaunch.xmlloader.XmlLoader(resolve_anon=True)\n config = load_config_default(roslaunch_files, None, loader=loader, verbose=False, assign_machines=False)\n files = [os.path.abspath(x) for x in set(config.roslaunch_files) - set([get_roscore_filename()])]\n 
print('\\n'.join(files))\n except roslaunch.core.RLException as e:\n print(str(e), file=sys.stderr)\n sys.exit(1)\n\n", "path": "tools/roslaunch/src/roslaunch/rlutil.py"}]}

| 3,231 | 202 |

| gh_patches_debug_9186 | rasdani/github-patches | git_diff | fidals__shopelectro-199 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

SE Iterate tags in templates

[trello origin](https://trello.com/c/zulRj7lF/294-se-iterate-tags-in-templates)

[seo templates doc](https://docs.google.com/document/d/18DFBsuh6NT8hjyihOJ2bxw9zEe8z_070MBQrbAq0kvE/edit#)

**Problem**

After applying several multi-property filters, the SEO team found the heading *Laptop power supplies set by the user and from 220 V mains* [at this link](https://www.shopelectro.ru/catalog/categories/bloki-pitaniia-288/tags/ustanavlivaet-polzovatel-and-ot-seti-220-v/).

This reads badly. They propose the following heading instead:

*Laptop power supplies from 220 V mains, output voltage selection - set by the user*

**Solution**

To rework the heading, templates need a way to reference the name of one specific tag.

This can be solved by exposing the full `tags` object to the SEO templates.

At the moment they only get the truncated `tags.titles`.

--- END ISSUE ---
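The proposed fix gives SEO templates the full `tags` object instead of only a joined `tags.titles` string. The plain-Python sketch below shows why that matters. It assumes each tag carries a group name and its own name, and that titles are joined with "and", as the problematic heading suggests. The `Tag` class, the helpers, and the group names are all hypothetical; they are not shopelectro's models or its template engine.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tag:
    """Hypothetical stand-in for a tag: the group it belongs to and its name."""
    group: str
    name: str


def titles(tags: List[Tag]) -> str:
    """Roughly what a titles-only context offers: tag names joined with 'and'."""
    return " and ".join(tag.name for tag in tags)


def tag_by_group(tags: List[Tag], group: str) -> Tag:
    """Pick the tag that belongs to `group` (assumes exactly one match)."""
    return next(tag for tag in tags if tag.group == group)


def seo_heading(category: str, tags: List[Tag]) -> str:
    """Build the heading the SEO team asked for by addressing tags individually."""
    mains = tag_by_group(tags, "Power source")  # hypothetical group name
    voltage = tag_by_group(tags, "Output voltage selection")  # hypothetical group name
    return f"{category} {mains.name}, {voltage.group.lower()} - {voltage.name}"


if __name__ == "__main__":
    tags = [
        Tag("Output voltage selection", "set by the user"),
        Tag("Power source", "from 220 V mains"),
    ]
    print("Laptop power supplies " + titles(tags))
    print(seo_heading("Laptop power supplies", tags))
```

With only `titles`, the template cannot reorder tags or mention a group name. Access to individual tags lets it build the requested wording.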

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `shopelectro/views/catalog.py`

Content:

```
1 from functools import partial
2
3 from django.conf import settings
4 from django.http import HttpResponse, HttpResponseForbidden
5 from django.shortcuts import render, get_object_or_404
6 from django.views.decorators.http import require_POST
7 from django.urls import reverse
8 from django_user_agents.utils import get_user_agent
9
10 from catalog.views import catalog
11 from images.models import Image
12 from pages import views as pages_views
13
14 from shopelectro import config
15 from shopelectro import models
16 from shopelectro.views.helpers import set_csrf_cookie
17
18 PRODUCTS_ON_PAGE_PC = 48
19 PRODUCTS_ON_PAGE_MOB = 10
20
21
22 def get_products_count(request):
23     """Get Products count for response context depends on the `user_agent`."""
24     mobile_view = get_user_agent(request).is_mobile
25     return PRODUCTS_ON_PAGE_MOB if mobile_view else PRODUCTS_ON_PAGE_PC
26
27
28 # CATALOG VIEWS
29 class CategoryTree(catalog.CategoryTree):
30     category_model = models.Category
31
32
33 @set_csrf_cookie
34 class ProductPage(catalog.ProductPage):
35     pk_url_kwarg = None
36     slug_url_kwarg = 'product_vendor_code'
37     slug_field = 'vendor_code'
38
39     queryset = (
40         models.Product.objects
41         .filter(category__isnull=False)
42         .prefetch_related('product_feedbacks', 'page__images')
43         .select_related('page')
44     )
45
46     def get_context_data(self, **kwargs):
47         context = super(ProductPage, self).get_context_data(**kwargs)
48
49         group_tags_pairs = (
50             models.Tag.objects
51             .filter(products=self.object)
52             .get_group_tags_pairs()
53         )
54
55         return {
56             **context,
57             'price_bounds': config.PRICE_BOUNDS,
58             'group_tags_pairs': group_tags_pairs
59         }
60
61
62 # SHOPELECTRO-SPECIFIC VIEWS
63 @set_csrf_cookie
64 class IndexPage(pages_views.CustomPageView):
65
66     def get_context_data(self, **kwargs):
67         """Extended method. Add product's images to context."""
68         context = super(IndexPage, self).get_context_data(**kwargs)
69         mobile_view = get_user_agent(self.request).is_mobile
70
71         top_products = (
72             models.Product.objects
73             .filter(id__in=settings.TOP_PRODUCTS)
74             .prefetch_related('category')
75             .select_related('page')
76         )
77
78         images = Image.objects.get_main_images_by_pages(
79             models.ProductPage.objects.filter(
80                 shopelectro_product__in=top_products

81 )

82 )

83

84 categories = models.Category.objects.get_root_categories_by_products(

85 top_products)

86

87 prepared_top_products = []

88 if not mobile_view:

89 prepared_top_products = [

90 (product, images.get(product.page), categories.get(product))

91 for product in top_products

92 ]

93

94 return {

95 **context,

96 'category_tile': config.MAIN_PAGE_TILE,

97 'prepared_top_products': prepared_top_products,

98 }

99

100

101 def merge_products_and_images(products):

102 images = Image.objects.get_main_images_by_pages(

103 models.ProductPage.objects.filter(shopelectro_product__in=products)

104 )

105

106 return [

107 (product, images.get(product.page))

108 for product in products

109 ]

110

111

112 @set_csrf_cookie

113 class CategoryPage(catalog.CategoryPage):

114

115 def get_context_data(self, **kwargs):

116 """Add sorting options and view_types in context."""

117 context = super(CategoryPage, self).get_context_data(**kwargs)

118 products_on_page = get_products_count(self.request)

119

120 # tile is default view_type

121 view_type = self.request.session.get('view_type', 'tile')

122

123 category = context['category']

124

125 sorting = int(self.kwargs.get('sorting', 0))

126 sorting_option = config.category_sorting(sorting)

127

128 all_products = (

129 models.Product.objects

130 .prefetch_related('page__images')

131 .select_related('page')

132 .get_by_category(category, ordering=(sorting_option, ))

133 )

134

135 group_tags_pairs = (

136 models.Tag.objects

137 .filter(products__in=all_products)

138 .get_group_tags_pairs()

139 )

140

141 tags = self.kwargs.get('tags')

142 tags_metadata = {

143 'titles': '',

144 }

145

146 if tags:

147 slugs = models.Tag.parse_url_tags(tags)

148 tags = models.Tag.objects.filter(slug__in=slugs)

149

150 all_products = (

151 all_products

152 .filter(tags__in=tags)

153 # Use distinct because filtering by QuerySet tags,

154 # that related with products by many-to-many relation.

155 .distinct(sorting_option.lstrip('-'))

156 )

157

158 tags_titles = models.Tag.serialize_title_tags(

159 tags.get_group_tags_pairs()

160 )

161

162 tags_metadata['titles'] = tags_titles

163

164 def template_context(page, tags):

165 return {

166 'page': page,

167 'tags': tags,

168 }

169

170 page = context['page']

171 page.get_template_render_context = partial(

172 template_context, page, tags_metadata)

173

174 products = all_products.get_offset(0, products_on_page)

175

176 return {

177 **context,

178 'product_image_pairs': merge_products_and_images(products),

179 'group_tags_pairs': group_tags_pairs,

180 'total_products': all_products.count(),

181 'sorting_options': config.category_sorting(),

182 'sort': sorting,

183 'tags': tags,

184 'view_type': view_type,

185 'tags_metadata': tags_metadata,

186 'skip_canonical': bool(tags),

187 }

188

189

190 def load_more(request, category_slug, offset=0, sorting=0, tags=None):

191 """

192 Load more products of a given category.

193

194 :param sorting: preferred sorting index from CATEGORY_SORTING tuple

195 :param request: HttpRequest object

196 :param category_slug: Slug for a given category

197 :param offset: used for slicing QuerySet.

198 :return:

199 """

200 products_on_page = get_products_count(request)

201

202 category = get_object_or_404(models.CategoryPage, slug=category_slug).model

203 sorting_option = config.category_sorting(int(sorting))

204

205 products = (

206 models.Product.objects

207 .prefetch_related('page__images')

208 .select_related('page')

209 .get_by_category(category, ordering=(sorting_option,))

210 )

211

212 if tags:

213 tag_entities = models.Tag.objects.filter(

214 slug__in=models.Tag.parse_url_tags(tags)

215 )

216

217 products = (

218 products

219 .filter(tags__in=tag_entities)

220 # Use distinct because filtering by QuerySet tags,

221 # that related with products by many-to-many relation.

222 .distinct(sorting_option.lstrip('-'))

223 )

224

225 products = products.get_offset(int(offset), products_on_page)

226 view = request.session.get('view_type', 'tile')

227

228 return render(request, 'catalog/category_products.html', {

229 'product_image_pairs': merge_products_and_images(products),

230 'view_type': view,

231 'prods': products_on_page,

232 })

233

234

235 @require_POST

236 def save_feedback(request):

237 def get_keys_from_post(*args):

238 return {arg: request.POST.get(arg, '') for arg in args}

239

240 product_id = request.POST.get('id')

241 product = models.Product.objects.filter(id=product_id).first()

242 if not (product_id and product):

243 return HttpResponse(status=422)

244

245 fields = ['rating', 'name', 'dignities', 'limitations', 'general']

246 feedback_data = get_keys_from_post(*fields)

247

248 models.ProductFeedback.objects.create(product=product, **feedback_data)

249 return HttpResponse('ok')

250

251

252 @require_POST

253 def delete_feedback(request):

254 if not request.user.is_authenticated:

255 return HttpResponseForbidden('Not today, sly guy...')

256

257 feedback_id = request.POST.get('id')

258 feedback = models.ProductFeedback.objects.filter(id=feedback_id).first()

259 if not (feedback_id and feedback):

260 return HttpResponse(status=422)

261

262 feedback.delete()

263 return HttpResponse('Feedback with id={} was deleted.'.format(feedback_id))

264

265

266 class ProductsWithoutImages(catalog.ProductsWithoutImages):

267 model = models.Product

268

269

270 class ProductsWithoutText(catalog.ProductsWithoutText):

271 model = models.Product

272

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/shopelectro/views/catalog.py b/shopelectro/views/catalog.py

--- a/shopelectro/views/catalog.py

+++ b/shopelectro/views/catalog.py

@@ -141,6 +141,7 @@

tags = self.kwargs.get('tags')

tags_metadata = {

'titles': '',

+ 'raw': [],

}

if tags:

@@ -160,6 +161,7 @@

)

tags_metadata['titles'] = tags_titles

+ tags_metadata['raw'] = tags

def template_context(page, tags):

return {

|

{"golden_diff": "diff --git a/shopelectro/views/catalog.py b/shopelectro/views/catalog.py\n--- a/shopelectro/views/catalog.py\n+++ b/shopelectro/views/catalog.py\n@@ -141,6 +141,7 @@\n tags = self.kwargs.get('tags')\n tags_metadata = {\n 'titles': '',\n+ 'raw': [],\n }\n \n if tags:\n@@ -160,6 +161,7 @@\n )\n \n tags_metadata['titles'] = tags_titles\n+ tags_metadata['raw'] = tags\n \n def template_context(page, tags):\n return {\n", "issue": "SE Iterate tags in templates\n[trello origin](https://trello.com/c/zulRj7lF/294-se-iterate-tags-in-templates)\r\n[seo templates doc](https://docs.google.com/document/d/18DFBsuh6NT8hjyihOJ2bxw9zEe8z_070MBQrbAq0kvE/edit#)\r\n\r\n**\u041f\u0440\u043e\u0431\u043b\u0435\u043c\u0430**\r\n\u0421\u0435\u043e\u0448\u043d\u0438\u043a\u0438 \u043f\u043e\u0441\u043b\u0435 \u043d\u0435\u043a\u043e\u0442\u043e\u0440\u044b\u0445 \u043f\u0440\u0438\u043c\u0435\u043d\u0435\u043d\u0438\u0439 \u043c\u0443\u043b\u044c\u0442\u0438\u0441\u0432-\u0432 \u043e\u0431\u043d\u0430\u0440\u0443\u0436\u0438\u043b\u0438 \u0437\u0430\u0433\u043e\u043b\u043e\u0432\u043e\u043a *\u0411\u043b\u043e\u043a\u0438 \u043f\u0438\u0442\u0430\u043d\u0438\u044f \u0434\u043b\u044f \u043d\u043e\u0443\u0442\u0431\u0443\u043a\u043e\u0432 \u0443\u0441\u0442\u0430\u043d\u0430\u0432\u043b\u0438\u0432\u0430\u0435\u0442 \u043f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u0438 \u043e\u0442 \u0441\u0435\u0442\u0438 220 \u0412* [\u043f\u043e \u044d\u0442\u043e\u043c\u0443 \u043b\u0438\u043d\u043a\u0443](https://www.shopelectro.ru/catalog/categories/bloki-pitaniia-288/tags/ustanavlivaet-polzovatel-and-ot-seti-220-v/)\r\n\r\n\u041f\u043e\u043b\u0443\u0447\u0438\u043b\u043e\u0441\u044c \u043d\u0435\u0445\u043e\u0440\u043e\u0448\u043e. 
\u041f\u0440\u0435\u0434\u043b\u0430\u0433\u0430\u044e\u0442 \u0441\u0434\u0435\u043b\u0430\u0442\u044c \u0437\u0430\u0433\u043e\u043b\u043e\u0432\u043e\u043a \u0442\u0430\u043a\u0438\u043c:\r\n*\u0411\u043b\u043e\u043a\u0438 \u043f\u0438\u0442\u0430\u043d\u0438\u044f \u0434\u043b\u044f \u043d\u043e\u0443\u0442\u0431\u0443\u043a\u043e\u0432 \u043e\u0442 \u0441\u0435\u0442\u0438 220 \u0412, \u0432\u044b\u0431\u043e\u0440 \u0432\u044b\u0445\u043e\u0434\u043d\u043e\u0433\u043e \u043d\u0430\u043f\u0440\u044f\u0436\u0435\u043d\u0438\u044f - \u0443\u0441\u0442\u0430\u043d\u0430\u0432\u043b\u0438\u0432\u0430\u0435\u0442 \u043f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c*\r\n\r\n\r\n**\u0420\u0435\u0448\u0435\u043d\u0438\u0435**\r\n\u0427\u0442\u043e\u0431\u044b \u043f\u0435\u0440\u0435\u0434\u0435\u043b\u0430\u0442\u044c \u0437\u0430\u0433\u043e\u043b\u043e\u0432\u043e\u043a, \u043d\u0430\u043c \u043d\u0443\u0436\u043d\u043e \u0434\u043e\u0431\u0430\u0432\u0438\u0442\u044c \u0432 \u0448\u0430\u0431\u043b\u043e\u043d \u0432\u043e\u0437\u043c\u043e\u0436\u043d\u043e\u0441\u0442\u044c \u0443\u043a\u0430\u0437\u044b\u0432\u0430\u0442\u044c \u0438\u043c\u044f \u043e\u0434\u043d\u043e\u0433\u043e \u043a\u043e\u043d\u043a\u0440\u0435\u0442\u043d\u043e\u0433\u043e \u0442\u0435\u0433\u0430.\r\n\r\n\u0410 \u0440\u0435\u0448\u0438\u0442\u044c \u044d\u0442\u043e \u043c\u043e\u0436\u043d\u043e \u0442\u0430\u043a: \u0434\u043e\u0431\u0430\u0432\u043b\u044f\u0435\u043c \u043f\u043e\u043b\u043d\u043e\u0446\u0435\u043d\u043d\u044b\u0439 tags \u0432 seo-\u0448\u0430\u0431\u043b\u043e\u043d\u044b.\r\n\u0421\u0435\u0439\u0447\u0430\u0441 \u0443 \u043d\u0430\u0441 \u0442\u043e\u043b\u044c\u043a\u043e \u043e\u0431\u0440\u0435\u0437\u0430\u043d\u043d\u044b\u0439 tags.titles\n", "before_files": [{"content": "from functools import partial\n\nfrom django.conf import settings\nfrom django.http import HttpResponse, HttpResponseForbidden\nfrom django.shortcuts import render, 
get_object_or_404\nfrom django.views.decorators.http import require_POST\nfrom django.urls import reverse\nfrom django_user_agents.utils import get_user_agent\n\nfrom catalog.views import catalog\nfrom images.models import Image\nfrom pages import views as pages_views\n\nfrom shopelectro import config\nfrom shopelectro import models\nfrom shopelectro.views.helpers import set_csrf_cookie\n\nPRODUCTS_ON_PAGE_PC = 48\nPRODUCTS_ON_PAGE_MOB = 10\n\n\ndef get_products_count(request):\n \"\"\"Get Products count for response context depends on the `user_agent`.\"\"\"\n mobile_view = get_user_agent(request).is_mobile\n return PRODUCTS_ON_PAGE_MOB if mobile_view else PRODUCTS_ON_PAGE_PC\n\n\n# CATALOG VIEWS\nclass CategoryTree(catalog.CategoryTree):\n category_model = models.Category\n\n\n@set_csrf_cookie\nclass ProductPage(catalog.ProductPage):\n pk_url_kwarg = None\n slug_url_kwarg = 'product_vendor_code'\n slug_field = 'vendor_code'\n\n queryset = (\n models.Product.objects\n .filter(category__isnull=False)\n .prefetch_related('product_feedbacks', 'page__images')\n .select_related('page')\n )\n\n def get_context_data(self, **kwargs):\n context = super(ProductPage, self).get_context_data(**kwargs)\n\n group_tags_pairs = (\n models.Tag.objects\n .filter(products=self.object)\n .get_group_tags_pairs()\n )\n\n return {\n **context,\n 'price_bounds': config.PRICE_BOUNDS,\n 'group_tags_pairs': group_tags_pairs\n }\n\n\n# SHOPELECTRO-SPECIFIC VIEWS\n@set_csrf_cookie\nclass IndexPage(pages_views.CustomPageView):\n\n def get_context_data(self, **kwargs):\n \"\"\"Extended method. 
Add product's images to context.\"\"\"\n context = super(IndexPage, self).get_context_data(**kwargs)\n mobile_view = get_user_agent(self.request).is_mobile\n\n top_products = (\n models.Product.objects\n .filter(id__in=settings.TOP_PRODUCTS)\n .prefetch_related('category')\n .select_related('page')\n )\n\n images = Image.objects.get_main_images_by_pages(\n models.ProductPage.objects.filter(\n shopelectro_product__in=top_products\n )\n )\n\n categories = models.Category.objects.get_root_categories_by_products(\n top_products)\n\n prepared_top_products = []\n if not mobile_view:\n prepared_top_products = [\n (product, images.get(product.page), categories.get(product))\n for product in top_products\n ]\n\n return {\n **context,\n 'category_tile': config.MAIN_PAGE_TILE,\n 'prepared_top_products': prepared_top_products,\n }\n\n\ndef merge_products_and_images(products):\n images = Image.objects.get_main_images_by_pages(\n models.ProductPage.objects.filter(shopelectro_product__in=products)\n )\n\n return [\n (product, images.get(product.page))\n for product in products\n ]\n\n\n@set_csrf_cookie\nclass CategoryPage(catalog.CategoryPage):\n\n def get_context_data(self, **kwargs):\n \"\"\"Add sorting options and view_types in context.\"\"\"\n context = super(CategoryPage, self).get_context_data(**kwargs)\n products_on_page = get_products_count(self.request)\n\n # tile is default view_type\n view_type = self.request.session.get('view_type', 'tile')\n\n category = context['category']\n\n sorting = int(self.kwargs.get('sorting', 0))\n sorting_option = config.category_sorting(sorting)\n\n all_products = (\n models.Product.objects\n .prefetch_related('page__images')\n .select_related('page')\n .get_by_category(category, ordering=(sorting_option, ))\n )\n\n group_tags_pairs = (\n models.Tag.objects\n .filter(products__in=all_products)\n .get_group_tags_pairs()\n )\n\n tags = self.kwargs.get('tags')\n tags_metadata = {\n 'titles': '',\n }\n\n if tags:\n slugs = 
models.Tag.parse_url_tags(tags)\n tags = models.Tag.objects.filter(slug__in=slugs)\n\n all_products = (\n all_products\n .filter(tags__in=tags)\n # Use distinct because filtering by QuerySet tags,\n # that related with products by many-to-many relation.\n .distinct(sorting_option.lstrip('-'))\n )\n\n tags_titles = models.Tag.serialize_title_tags(\n tags.get_group_tags_pairs()\n )\n\n tags_metadata['titles'] = tags_titles\n\n def template_context(page, tags):\n return {\n 'page': page,\n 'tags': tags,\n }\n\n page = context['page']\n page.get_template_render_context = partial(\n template_context, page, tags_metadata)\n\n products = all_products.get_offset(0, products_on_page)\n\n return {\n **context,\n 'product_image_pairs': merge_products_and_images(products),\n 'group_tags_pairs': group_tags_pairs,\n 'total_products': all_products.count(),\n 'sorting_options': config.category_sorting(),\n 'sort': sorting,\n 'tags': tags,\n 'view_type': view_type,\n 'tags_metadata': tags_metadata,\n 'skip_canonical': bool(tags),\n }\n\n\ndef load_more(request, category_slug, offset=0, sorting=0, tags=None):\n \"\"\"\n Load more products of a given category.\n\n :param sorting: preferred sorting index from CATEGORY_SORTING tuple\n :param request: HttpRequest object\n :param category_slug: Slug for a given category\n :param offset: used for slicing QuerySet.\n :return:\n \"\"\"\n products_on_page = get_products_count(request)\n\n category = get_object_or_404(models.CategoryPage, slug=category_slug).model\n sorting_option = config.category_sorting(int(sorting))\n\n products = (\n models.Product.objects\n .prefetch_related('page__images')\n .select_related('page')\n .get_by_category(category, ordering=(sorting_option,))\n )\n\n if tags:\n tag_entities = models.Tag.objects.filter(\n slug__in=models.Tag.parse_url_tags(tags)\n )\n\n products = (\n products\n .filter(tags__in=tag_entities)\n # Use distinct because filtering by QuerySet tags,\n # that related with products by many-to-many 
relation.\n .distinct(sorting_option.lstrip('-'))\n )\n\n products = products.get_offset(int(offset), products_on_page)\n view = request.session.get('view_type', 'tile')\n\n return render(request, 'catalog/category_products.html', {\n 'product_image_pairs': merge_products_and_images(products),\n 'view_type': view,\n 'prods': products_on_page,\n })\n\n\n@require_POST\ndef save_feedback(request):\n def get_keys_from_post(*args):\n return {arg: request.POST.get(arg, '') for arg in args}\n\n product_id = request.POST.get('id')\n product = models.Product.objects.filter(id=product_id).first()\n if not (product_id and product):\n return HttpResponse(status=422)\n\n fields = ['rating', 'name', 'dignities', 'limitations', 'general']\n feedback_data = get_keys_from_post(*fields)\n\n models.ProductFeedback.objects.create(product=product, **feedback_data)\n return HttpResponse('ok')\n\n\n@require_POST\ndef delete_feedback(request):\n if not request.user.is_authenticated:\n return HttpResponseForbidden('Not today, sly guy...')\n\n feedback_id = request.POST.get('id')\n feedback = models.ProductFeedback.objects.filter(id=feedback_id).first()\n if not (feedback_id and feedback):\n return HttpResponse(status=422)\n\n feedback.delete()\n return HttpResponse('Feedback with id={} was deleted.'.format(feedback_id))\n\n\nclass ProductsWithoutImages(catalog.ProductsWithoutImages):\n model = models.Product\n\n\nclass ProductsWithoutText(catalog.ProductsWithoutText):\n model = models.Product\n", "path": "shopelectro/views/catalog.py"}], "after_files": [{"content": "from functools import partial\n\nfrom django.conf import settings\nfrom django.http import HttpResponse, HttpResponseForbidden\nfrom django.shortcuts import render, get_object_or_404\nfrom django.views.decorators.http import require_POST\nfrom django.urls import reverse\nfrom django_user_agents.utils import get_user_agent\n\nfrom catalog.views import catalog\nfrom images.models import Image\nfrom pages import views as 
pages_views\n\nfrom shopelectro import config\nfrom shopelectro import models\nfrom shopelectro.views.helpers import set_csrf_cookie\n\nPRODUCTS_ON_PAGE_PC = 48\nPRODUCTS_ON_PAGE_MOB = 10\n\n\ndef get_products_count(request):\n \"\"\"Get Products count for response context depends on the `user_agent`.\"\"\"\n mobile_view = get_user_agent(request).is_mobile\n return PRODUCTS_ON_PAGE_MOB if mobile_view else PRODUCTS_ON_PAGE_PC\n\n\n# CATALOG VIEWS\nclass CategoryTree(catalog.CategoryTree):\n category_model = models.Category\n\n\n@set_csrf_cookie\nclass ProductPage(catalog.ProductPage):\n pk_url_kwarg = None\n slug_url_kwarg = 'product_vendor_code'\n slug_field = 'vendor_code'\n\n queryset = (\n models.Product.objects\n .filter(category__isnull=False)\n .prefetch_related('product_feedbacks', 'page__images')\n .select_related('page')\n )\n\n def get_context_data(self, **kwargs):\n context = super(ProductPage, self).get_context_data(**kwargs)\n\n group_tags_pairs = (\n models.Tag.objects\n .filter(products=self.object)\n .get_group_tags_pairs()\n )\n\n return {\n **context,\n 'price_bounds': config.PRICE_BOUNDS,\n 'group_tags_pairs': group_tags_pairs\n }\n\n\n# SHOPELECTRO-SPECIFIC VIEWS\n@set_csrf_cookie\nclass IndexPage(pages_views.CustomPageView):\n\n def get_context_data(self, **kwargs):\n \"\"\"Extended method. 
Add product's images to context.\"\"\"\n context = super(IndexPage, self).get_context_data(**kwargs)\n mobile_view = get_user_agent(self.request).is_mobile\n\n top_products = (\n models.Product.objects\n .filter(id__in=settings.TOP_PRODUCTS)\n .prefetch_related('category')\n .select_related('page')\n )\n\n images = Image.objects.get_main_images_by_pages(\n models.ProductPage.objects.filter(\n shopelectro_product__in=top_products\n )\n )\n\n categories = models.Category.objects.get_root_categories_by_products(\n top_products)\n\n prepared_top_products = []\n if not mobile_view:\n prepared_top_products = [\n (product, images.get(product.page), categories.get(product))\n for product in top_products\n ]\n\n return {\n **context,\n 'category_tile': config.MAIN_PAGE_TILE,\n 'prepared_top_products': prepared_top_products,\n }\n\n\ndef merge_products_and_images(products):\n images = Image.objects.get_main_images_by_pages(\n models.ProductPage.objects.filter(shopelectro_product__in=products)\n )\n\n return [\n (product, images.get(product.page))\n for product in products\n ]\n\n\n@set_csrf_cookie\nclass CategoryPage(catalog.CategoryPage):\n\n def get_context_data(self, **kwargs):\n \"\"\"Add sorting options and view_types in context.\"\"\"\n context = super(CategoryPage, self).get_context_data(**kwargs)\n products_on_page = get_products_count(self.request)\n\n # tile is default view_type\n view_type = self.request.session.get('view_type', 'tile')\n\n category = context['category']\n\n sorting = int(self.kwargs.get('sorting', 0))\n sorting_option = config.category_sorting(sorting)\n\n all_products = (\n models.Product.objects\n .prefetch_related('page__images')\n .select_related('page')\n .get_by_category(category, ordering=(sorting_option, ))\n )\n\n group_tags_pairs = (\n models.Tag.objects\n .filter(products__in=all_products)\n .get_group_tags_pairs()\n )\n\n tags = self.kwargs.get('tags')\n tags_metadata = {\n 'titles': '',\n 'raw': [],\n }\n\n if tags:\n slugs = 
models.Tag.parse_url_tags(tags)\n tags = models.Tag.objects.filter(slug__in=slugs)\n\n all_products = (\n all_products\n .filter(tags__in=tags)\n # Use distinct because filtering by QuerySet tags,\n # that related with products by many-to-many relation.\n .distinct(sorting_option.lstrip('-'))\n )\n\n tags_titles = models.Tag.serialize_title_tags(\n tags.get_group_tags_pairs()\n )\n\n tags_metadata['titles'] = tags_titles\n tags_metadata['raw'] = tags\n\n def template_context(page, tags):\n return {\n 'page': page,\n 'tags': tags,\n }\n\n page = context['page']\n page.get_template_render_context = partial(\n template_context, page, tags_metadata)\n\n products = all_products.get_offset(0, products_on_page)\n\n return {\n **context,\n 'product_image_pairs': merge_products_and_images(products),\n 'group_tags_pairs': group_tags_pairs,\n 'total_products': all_products.count(),\n 'sorting_options': config.category_sorting(),\n 'sort': sorting,\n 'tags': tags,\n 'view_type': view_type,\n 'tags_metadata': tags_metadata,\n 'skip_canonical': bool(tags),\n }\n\n\ndef load_more(request, category_slug, offset=0, sorting=0, tags=None):\n \"\"\"\n Load more products of a given category.\n\n :param sorting: preferred sorting index from CATEGORY_SORTING tuple\n :param request: HttpRequest object\n :param category_slug: Slug for a given category\n :param offset: used for slicing QuerySet.\n :return:\n \"\"\"\n products_on_page = get_products_count(request)\n\n category = get_object_or_404(models.CategoryPage, slug=category_slug).model\n sorting_option = config.category_sorting(int(sorting))\n\n products = (\n models.Product.objects\n .prefetch_related('page__images')\n .select_related('page')\n .get_by_category(category, ordering=(sorting_option,))\n )\n\n if tags:\n tag_entities = models.Tag.objects.filter(\n slug__in=models.Tag.parse_url_tags(tags)\n )\n\n products = (\n products\n .filter(tags__in=tag_entities)\n # Use distinct because filtering by QuerySet tags,\n # that related 
with products by many-to-many relation.\n .distinct(sorting_option.lstrip('-'))\n )\n\n products = products.get_offset(int(offset), products_on_page)\n view = request.session.get('view_type', 'tile')\n\n return render(request, 'catalog/category_products.html', {\n 'product_image_pairs': merge_products_and_images(products),\n 'view_type': view,\n 'prods': products_on_page,\n })\n\n\n@require_POST\ndef save_feedback(request):\n def get_keys_from_post(*args):\n return {arg: request.POST.get(arg, '') for arg in args}\n\n product_id = request.POST.get('id')\n product = models.Product.objects.filter(id=product_id).first()\n if not (product_id and product):\n return HttpResponse(status=422)\n\n fields = ['rating', 'name', 'dignities', 'limitations', 'general']\n feedback_data = get_keys_from_post(*fields)\n\n models.ProductFeedback.objects.create(product=product, **feedback_data)\n return HttpResponse('ok')\n\n\n@require_POST\ndef delete_feedback(request):\n if not request.user.is_authenticated:\n return HttpResponseForbidden('Not today, sly guy...')\n\n feedback_id = request.POST.get('id')\n feedback = models.ProductFeedback.objects.filter(id=feedback_id).first()\n if not (feedback_id and feedback):\n return HttpResponse(status=422)\n\n feedback.delete()\n return HttpResponse('Feedback with id={} was deleted.'.format(feedback_id))\n\n\nclass ProductsWithoutImages(catalog.ProductsWithoutImages):\n model = models.Product\n\n\nclass ProductsWithoutText(catalog.ProductsWithoutText):\n model = models.Product\n", "path": "shopelectro/views/catalog.py"}]}

| 3,027 | 134 |

gh_patches_debug_17994

|

rasdani/github-patches

|

git_diff

|

alltheplaces__alltheplaces-6938

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Anthropologie spider produces transposed coordinates

https://www.alltheplaces.xyz/map/#7.69/-75.171/39.95

The cause is the upstream data:

https://www.anthropologie.com/stores/rittenhouse-square-philadelphia

It might be worth doing any of the following:

- Suspend the lat/long from the parser for now

- Contact the company (I'll probably do that shortly) about the bug

- Any kind of high level validations that can check the expected bounds for a scraper, vs the results?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `locations/spiders/anthropologie.py`

Content:

```

1 from scrapy.spiders import SitemapSpider

2

3 from locations.structured_data_spider import StructuredDataSpider

4

5

6 class AnthropologieSpider(SitemapSpider, StructuredDataSpider):

7 name = "anthropologie"

8 item_attributes = {"brand": "Anthropologie", "brand_wikidata": "Q4773903"}

9 allowed_domains = ["anthropologie.com"]

10 sitemap_urls = ["https://www.anthropologie.com/store_sitemap.xml"]

11 sitemap_rules = [("/stores/", "parse_sd")]

12 requires_proxy = True

13

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/locations/spiders/anthropologie.py b/locations/spiders/anthropologie.py

--- a/locations/spiders/anthropologie.py

+++ b/locations/spiders/anthropologie.py

@@ -1,5 +1,6 @@

from scrapy.spiders import SitemapSpider

+from locations.items import set_closed

from locations.structured_data_spider import StructuredDataSpider

@@ -10,3 +11,17 @@

sitemap_urls = ["https://www.anthropologie.com/store_sitemap.xml"]

sitemap_rules = [("/stores/", "parse_sd")]

requires_proxy = True

+

+ def pre_process_data(self, ld_data, **kwargs):

+ ld_data["geo"]["latitude"], ld_data["geo"]["longitude"] = (

+ ld_data["geo"]["longitude"],

+ ld_data["geo"]["latitude"],

+ )

+

+ def post_process_item(self, item, response, ld_data, **kwargs):

+ item["branch"] = item.pop("name").removeprefix(" - Anthropologie Store")

+

+ if item["branch"].startswith("Closed - ") or item["branch"].endswith(" - Closed"):

+ set_closed(item)

+

+ yield item

|

{"golden_diff": "diff --git a/locations/spiders/anthropologie.py b/locations/spiders/anthropologie.py\n--- a/locations/spiders/anthropologie.py\n+++ b/locations/spiders/anthropologie.py\n@@ -1,5 +1,6 @@\n from scrapy.spiders import SitemapSpider\n \n+from locations.items import set_closed\n from locations.structured_data_spider import StructuredDataSpider\n \n \n@@ -10,3 +11,17 @@\n sitemap_urls = [\"https://www.anthropologie.com/store_sitemap.xml\"]\n sitemap_rules = [(\"/stores/\", \"parse_sd\")]\n requires_proxy = True\n+\n+ def pre_process_data(self, ld_data, **kwargs):\n+ ld_data[\"geo\"][\"latitude\"], ld_data[\"geo\"][\"longitude\"] = (\n+ ld_data[\"geo\"][\"longitude\"],\n+ ld_data[\"geo\"][\"latitude\"],\n+ )\n+\n+ def post_process_item(self, item, response, ld_data, **kwargs):\n+ item[\"branch\"] = item.pop(\"name\").removeprefix(\" - Anthropologie Store\")\n+\n+ if item[\"branch\"].startswith(\"Closed - \") or item[\"branch\"].endswith(\" - Closed\"):\n+ set_closed(item)\n+\n+ yield item\n", "issue": "Anthropologie spider produces transposed coordinates\nhttps://www.alltheplaces.xyz/map/#7.69/-75.171/39.95\r\n\r\n\r\n\r\nThe cause is the upstream data:\r\n\r\nhttps://www.anthropologie.com/stores/rittenhouse-square-philadelphia\r\n\r\n\r\nIt might be worth doing any of the following:\r\n\r\n- Suspend the lat/long from the parser for now\r\n- Contact the company (I'll probably do that shortly) about the bug\r\n- Any kind of high level validations that can check the expected bounds for a scraper, vs the results?\r\n\r\n\n", "before_files": [{"content": "from scrapy.spiders import SitemapSpider\n\nfrom locations.structured_data_spider import StructuredDataSpider\n\n\nclass AnthropologieSpider(SitemapSpider, StructuredDataSpider):\n name = \"anthropologie\"\n item_attributes = {\"brand\": \"Anthropologie\", \"brand_wikidata\": \"Q4773903\"}\n allowed_domains = [\"anthropologie.com\"]\n sitemap_urls = [\"https://www.anthropologie.com/store_sitemap.xml\"]\n 
sitemap_rules = [(\"/stores/\", \"parse_sd\")]\n requires_proxy = True\n", "path": "locations/spiders/anthropologie.py"}], "after_files": [{"content": "from scrapy.spiders import SitemapSpider\n\nfrom locations.items import set_closed\nfrom locations.structured_data_spider import StructuredDataSpider\n\n\nclass AnthropologieSpider(SitemapSpider, StructuredDataSpider):\n name = \"anthropologie\"\n item_attributes = {\"brand\": \"Anthropologie\", \"brand_wikidata\": \"Q4773903\"}\n allowed_domains = [\"anthropologie.com\"]\n sitemap_urls = [\"https://www.anthropologie.com/store_sitemap.xml\"]\n sitemap_rules = [(\"/stores/\", \"parse_sd\")]\n requires_proxy = True\n\n def pre_process_data(self, ld_data, **kwargs):\n ld_data[\"geo\"][\"latitude\"], ld_data[\"geo\"][\"longitude\"] = (\n ld_data[\"geo\"][\"longitude\"],\n ld_data[\"geo\"][\"latitude\"],\n )\n\n def post_process_item(self, item, response, ld_data, **kwargs):\n item[\"branch\"] = item.pop(\"name\").removeprefix(\" - Anthropologie Store\")\n\n if item[\"branch\"].startswith(\"Closed - \") or item[\"branch\"].endswith(\" - Closed\"):\n set_closed(item)\n\n yield item\n", "path": "locations/spiders/anthropologie.py"}]}

| 630 | 268 |

gh_patches_debug_29189

|

rasdani/github-patches

|

git_diff

|

pytorch__pytorch-53822

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Problems in TensorPipeRpcBackendOptions device mapping documentation?

## 📚 Documentation

The new release of PyTorch 1.8 introduces CUDA-support in RPC.

I've referred to the RPC documentation, and the only reference for the CUDA-support I could find is under [`TensorPipeRpcBackendOptions`](https://pytorch.org/docs/1.8.0/rpc.html#torch.distributed.rpc.TensorPipeRpcBackendOptions) and [`set_device_map`](https://pytorch.org/docs/1.8.0/rpc.html#torch.distributed.rpc.TensorPipeRpcBackendOptions.set_device_map).

Seems like setting up CUDA-support is simply done by supplying a device mapping in the `TensorPipeRpcBackendOptions`, pretty cool.

However, I find the documentation for the `device_maps`/`device_map` to be unclear. It seems that `TensorPipeRpcBackendOptions`'s `device_maps` is a dictionary where the keys are worker names, but I'm not exactly sure what the structure of the dictionary's values should be like? Supposedly each value should be some sort of dictionary (as indicated by the parameter's type - `Dict[str, Dict]`), yet the example code provides a set: `device_maps={"worker1": {0, 1}}`. I don't really understand how does this "map worker0's cuda:0 to worker1's cuda:1"?

Same for `set_device_map`'s `device_map`, the parameter's type also indicates it's a dictionary (`(Dict of python:int, str, or torch.device)`), but doesn't quite explain its structure. And again, the example code provides a set: `options.set_device_map("worker1", {1, 2})`.

It is also not explained how to define a GPU->CPU mapping (or vice versa).
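The confusion above comes down to Python literal syntax. `{0, 1}` in the docstring is a set literal: two elements with no key-to-value pairing. `{0: 1}` is a dict that pairs the caller's device 0 with the callee's device 1.

The sketch below uses a hypothetical helper, `to_index_map`. It copies the shape of the loop in `set_device_map` from the listing further down: iterate over the keys, then index the mapping with each key. It uses plain ints instead of `torch.device`, so it illustrates the failure under that simplification and is not PyTorch's own code.

```python
# Hypothetical helper, modeled on the loop in set_device_map but using
# plain ints instead of torch.device objects.
def to_index_map(device_map):
    index_map = {}
    for k in device_map:
        # Indexing with the key only works for a mapping type such as dict;
        # a set raises TypeError here.
        index_map[int(k)] = int(device_map[k])
    return index_map


as_dict = {0: 1}  # dict: caller's device 0 -> callee's device 1
as_set = {0, 1}   # set literal: just the elements 0 and 1, no pairing

print(type(as_dict).__name__, type(as_set).__name__)  # dict set
print(to_index_map(as_dict))  # {0: 1}
try:
    to_index_map(as_set)
except TypeError as exc:
    print("TypeError:", exc)  # TypeError: 'set' object is not subscriptable
```

The listed `set_device_map` also raises `ValueError` unless both devices in every pair are CUDA devices. As written, that method therefore has no way to express a GPU-to-CPU mapping.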

Apart for this, there are 2 obvious errors in the example code provided in that documentation:

1. There is a missing comma in the following part:

```python
>>> rpc.init_rpc(
>>> "worker0",
>>> rank=0,
>>> world_size=2 # <-- missing comma
>>> backend=rpc.BackendType.TENSORPIPE,
>>> rpc_backend_options=options
>>> )
```

2. I don't see how it is possible that those two `print`s will give different results. I'm guessing that the second line should read `print(rets[1])`?

```python
>>> print(rets[0]) # tensor([2., 2.], device='cuda:0')
>>> print(rets[0]) # tensor([2., 2.], device='cuda:1')
```

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @gqchen @aazzolini @rohan-varma @jjlilley @osalpekar @jiayisuse @mrzzd @agolynski @SciPioneer @H-Huang @cbalioglu

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `torch/distributed/rpc/options.py`

Content:

```
1 from torch._C._distributed_rpc import _TensorPipeRpcBackendOptionsBase
2 from . import constants as rpc_contants
3
4 import torch
5
6 from typing import Dict, List
7
8
9 class TensorPipeRpcBackendOptions(_TensorPipeRpcBackendOptionsBase):
10 r"""
11 The backend options for
12 :class:`~torch.distributed.rpc.TensorPipeAgent`, derived from
13 :class:`~torch.distributed.rpc.RpcBackendOptions`.
14
15 Args:
16 num_worker_threads (int, optional): The number of threads in the
17 thread-pool used by
18 :class:`~torch.distributed.rpc.TensorPipeAgent` to execute
19 requests (default: 16).
20 rpc_timeout (float, optional): The default timeout, in seconds,
21 for RPC requests (default: 60 seconds). If the RPC has not
22 completed in this timeframe, an exception indicating so will
23 be raised. Callers can override this timeout for individual
24 RPCs in :meth:`~torch.distributed.rpc.rpc_sync` and
25 :meth:`~torch.distributed.rpc.rpc_async` if necessary.
26 init_method (str, optional): The URL to initialize the distributed
27 store used for rendezvous. It takes any value accepted for the
28 same argument of :meth:`~torch.distributed.init_process_group`
29 (default: ``env://``).
30 device_maps (Dict[str, Dict]): Device placement mappings from this
31 worker to the callee. Key is the callee worker name and value the
32 dictionary (``Dict`` of ``int``, ``str``, or ``torch.device``) that
33 maps this worker's devices to the callee worker's devices.
34 (default: ``None``)
35 """
36 def __init__(
37 self,
38 *,
39 num_worker_threads: int = rpc_contants.DEFAULT_NUM_WORKER_THREADS,
40 rpc_timeout: float = rpc_contants.DEFAULT_RPC_TIMEOUT_SEC,
41 init_method: str = rpc_contants.DEFAULT_INIT_METHOD,
42 device_maps: Dict = None,
43 _transports: List = None,
44 _channels: List = None,
45 ):
46 super().__init__(
47 num_worker_threads,
48 _transports,
49 _channels,
50 rpc_timeout,
51 init_method,
52 device_maps if device_maps else {}
53 )
54
55 def set_device_map(self, to: str, device_map: Dict):
56 r"""
57 Set device mapping between each RPC caller and callee pair. This
58 function can be called multiple times to incrementally add
59 device placement configurations.
60
61 Args:
62 worker_name (str): Callee name.
63 device_map (Dict of int, str, or torch.device): Device placement
64 mappings from this worker to the callee. This map must be
65 invertible.
66
67 Example::
68 >>> # both workers
69 >>> def add(x, y):
70 >>> print(x) # tensor([1., 1.], device='cuda:1')
71 >>> return x + y, (x + y).to(2)
72 >>>
73 >>> # on worker 0
74 >>> options = TensorPipeRpcBackendOptions(
75 >>> num_worker_threads=8,
76 >>> device_maps={"worker1": {0, 1}}
77 >>> # maps worker0's cuda:0 to worker1's cuda:1
78 >>> )
79 >>> options.set_device_map("worker1", {1, 2})
80 >>> # maps worker0's cuda:1 to worker1's cuda:2
81 >>>
82 >>> rpc.init_rpc(
83 >>> "worker0",
84 >>> rank=0,
85 >>> world_size=2
86 >>> backend=rpc.BackendType.TENSORPIPE,
87 >>> rpc_backend_options=options
88 >>> )
89 >>>
90 >>> x = torch.ones(2)
91 >>> rets = rpc.rpc_sync("worker1", add, args=(x.to(0), 1))
92 >>> # The first argument will be moved to cuda:1 on worker1. When
93 >>> # sending the return value back, it will follow the invert of
94 >>> # the device map, and hence will be moved back to cuda:0 and
95 >>> # cuda:1 on worker0
96 >>> print(rets[0]) # tensor([2., 2.], device='cuda:0')
97 >>> print(rets[0]) # tensor([2., 2.], device='cuda:1')
98 """
99 device_index_map = {}
100 curr_device_maps = super().device_maps
101 for k in device_map:
102 v = device_map[k]
103 k, v = torch.device(k), torch.device(v)
104 if k.type != 'cuda' or v.type != 'cuda':
105 raise ValueError(
106 "`set_device_map` only supports CUDA devices, "
107 f"but got device pair {k}: {v}"
108
109 )
110 if to in curr_device_maps and k.index in curr_device_maps[to]:
111 curr_v = super().device_maps[to][k.index]
112 if curr_v != v.index:
113 raise ValueError(
114 "`set_device_map` only supports 1-to-1 mapping, "
115 f"trying to map {k} to {v} and {curr_v}"
116 )
117 device_index_map[k.index] = v.index
118 super().set_device_map(to, device_index_map)
119
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
if __name__ == "__main__":
- asyncio.run(run_async_server("."), debug=True)
+ asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
if __name__ == "__main__":
- server = run_sync_server(".")
+ server = run_sync_server()
server.shutdown()
```

|

diff --git a/torch/distributed/rpc/options.py b/torch/distributed/rpc/options.py
--- a/torch/distributed/rpc/options.py
+++ b/torch/distributed/rpc/options.py
@@ -73,16 +73,16 @@
>>> # on worker 0
>>> options = TensorPipeRpcBackendOptions(
>>> num_worker_threads=8,
- >>> device_maps={"worker1": {0, 1}}
+ >>> device_maps={"worker1": {0: 1}}
>>> # maps worker0's cuda:0 to worker1's cuda:1
>>> )
- >>> options.set_device_map("worker1", {1, 2})
+ >>> options.set_device_map("worker1", {1: 2})
>>> # maps worker0's cuda:1 to worker1's cuda:2
>>>
>>> rpc.init_rpc(
>>> "worker0",
>>> rank=0,
- >>> world_size=2
+ >>> world_size=2,
>>> backend=rpc.BackendType.TENSORPIPE,
>>> rpc_backend_options=options
>>> )
@@ -94,7 +94,7 @@
>>> # the device map, and hence will be moved back to cuda:0 and
>>> # cuda:1 on worker0
>>> print(rets[0]) # tensor([2., 2.], device='cuda:0')
- >>> print(rets[0]) # tensor([2., 2.], device='cuda:1')
+ >>> print(rets[1]) # tensor([2., 2.], device='cuda:1')
"""
device_index_map = {}
curr_device_maps = super().device_maps

|