problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

| prompt

stringlengths 1.1k

25.4k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 582

39.1k

| num_tokens

int64 271

4.1k

| num_tokens_diff

int64 47

1.02k

|

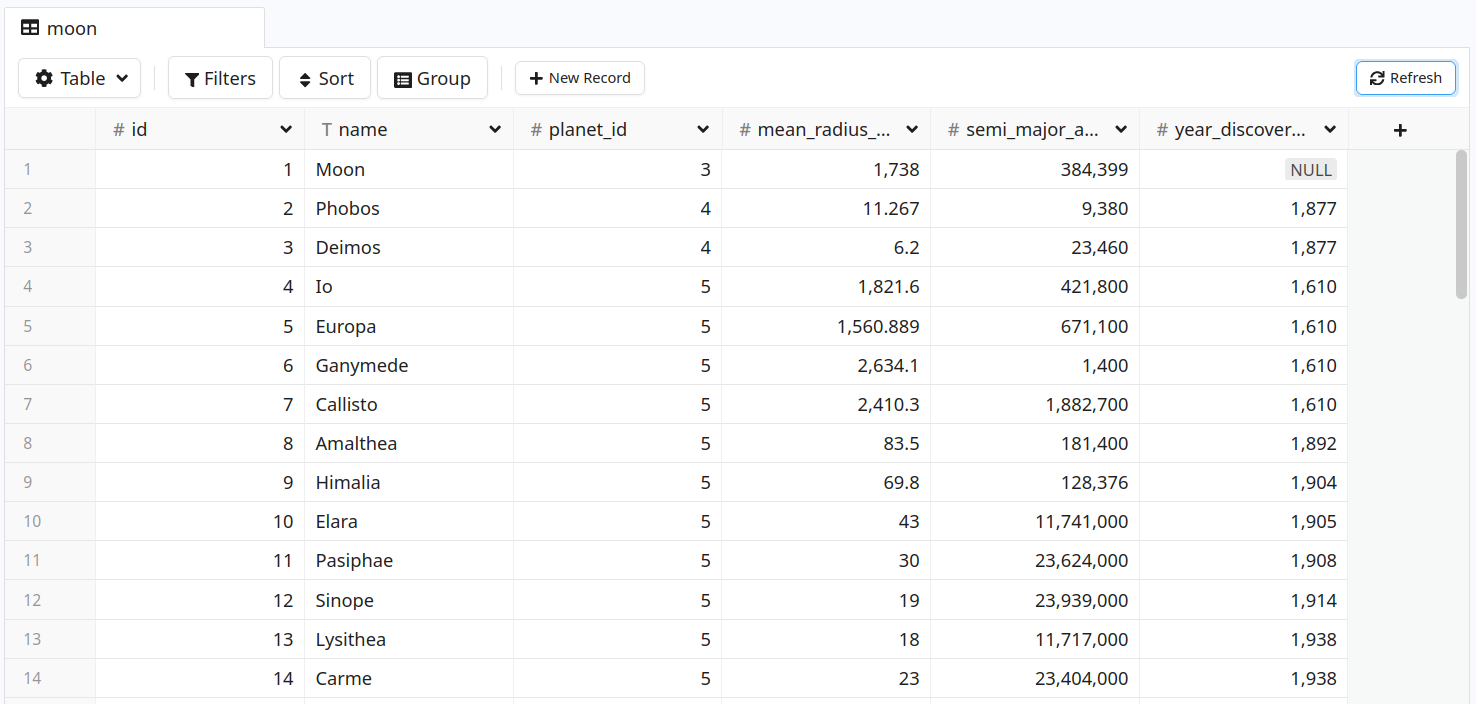

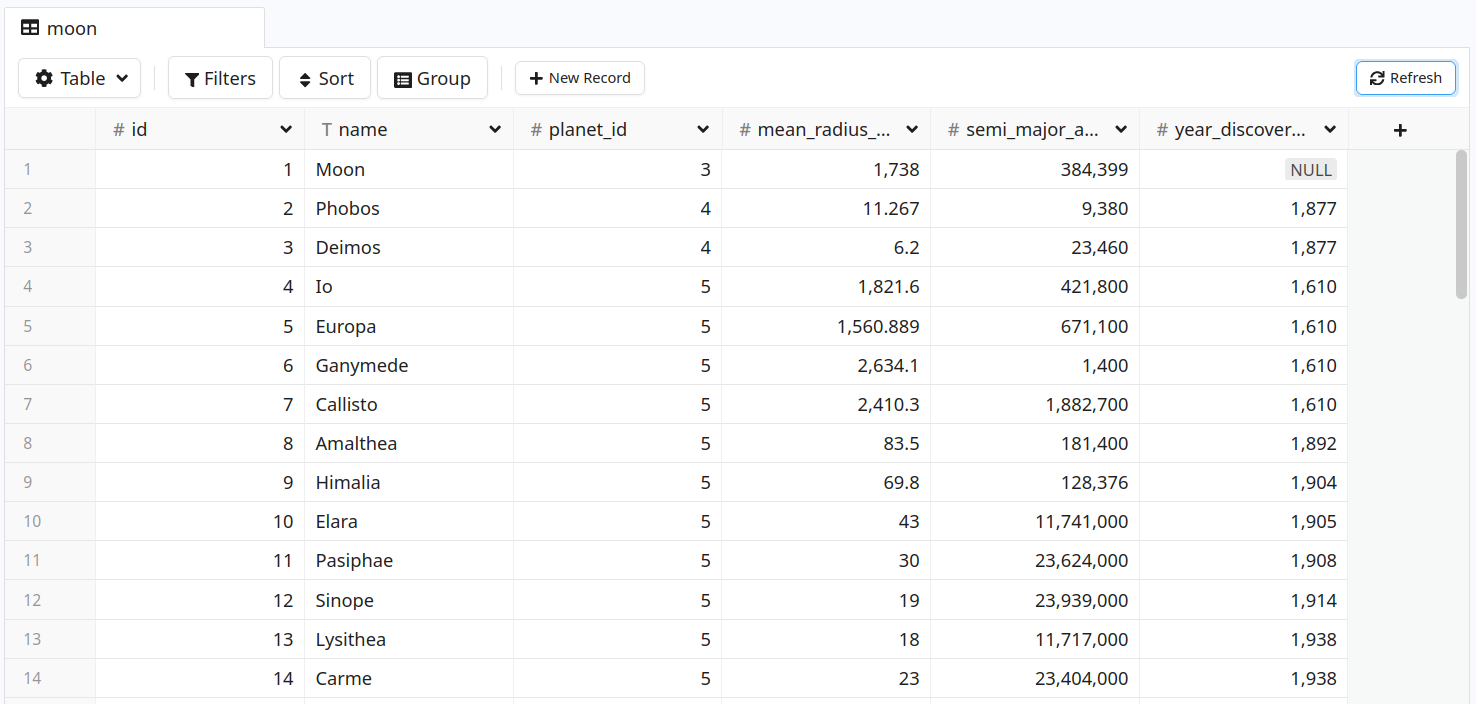

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_18040

|

rasdani/github-patches

|

git_diff

|

liqd__a4-meinberlin-1652

|

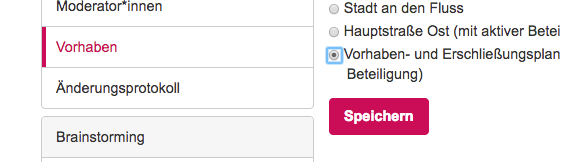

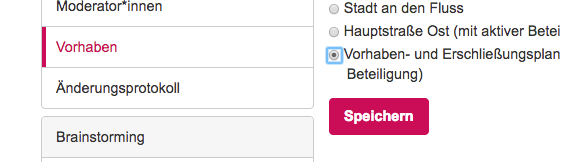

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

make it possible to detach plan from project

Now that connecting a project to a plan is no longer obligatory, initiators need to be able to undo a connection. Once I have clicked on a plan, I can no longer choose NOT to connect to a plan, which should be possible.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

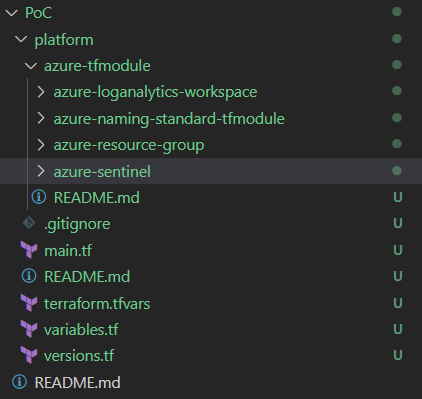

Path: `meinberlin/apps/plans/forms.py`

Content:

```
1 from django import forms
2 from django.conf import settings
3 from django.db.models import Q
4 from django.utils.translation import ugettext_lazy as _
5
6 from adhocracy4.dashboard.components.forms import ProjectDashboardForm
7 from adhocracy4.maps import widgets as maps_widgets
8 from adhocracy4.projects import models as project_models
9 from meinberlin.apps.contrib import widgets as contrib_widgets
10
11 from . import models
12
13
14 def get_theme_options():
15     return models.Plan.objects\
16         .filter(~Q(theme=''))\
17         .order_by('theme')\
18         .values_list('theme', flat=True)\
19         .distinct()
20
21
22 class PlanForm(forms.ModelForm):
23
24     class Meta:
25         model = models.Plan
26         fields = [
27             'title',
28             'description_image',
29             'contact',
30             'point',
31             'point_label',
32             'district',
33             'cost',
34             'description',
35             'theme',
36             'status',
37             'participation']
38         widgets = {
39             'point': maps_widgets.MapChoosePointWidget(
40                 polygon=settings.BERLIN_POLYGON),
41             'theme': contrib_widgets.TextWithDatalistWidget(attrs={
42                 'options': get_theme_options
43             })
44         }
45         error_messages = {
46             'point': {
47                 'required': _('Please locate the plan on the map.')
48             }
49         }
50
51     def __init__(self, *args, **kwargs):
52         super().__init__(*args, **kwargs)
53         self.fields['district'].empty_label = _('City wide')
54
55
56 class CustomMultipleChoiceField(forms.ModelMultipleChoiceField):
57
58     widget = forms.RadioSelect
59
60     def clean(self, value):
61         if value is None:
62             return super().clean([])
63         return super().clean([value])
64
65
66 class ProjectPlansDashboardForm(ProjectDashboardForm):
67     plans = CustomMultipleChoiceField(queryset=None,
68                                       label=_('Plans'))
69
70     class Meta:
71         model = project_models.Project
72         fields = ['plans']
73         required = False
74
75     def save(self, commit=False):
76         plans = self.cleaned_data['plans']
77         self.instance.plans.set(plans)
78
79     def __init__(self, *args, **kwargs):
80         super().__init__(*args, **kwargs)
81         self.initial['plans'] = self.instance.plans.all()
82         self.fields['plans'
83                     ].queryset = self.instance.organisation.plan_set.all()
84
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/meinberlin/apps/plans/forms.py b/meinberlin/apps/plans/forms.py

--- a/meinberlin/apps/plans/forms.py

+++ b/meinberlin/apps/plans/forms.py

@@ -55,10 +55,10 @@

class CustomMultipleChoiceField(forms.ModelMultipleChoiceField):

- widget = forms.RadioSelect

+ widget = forms.Select

def clean(self, value):

- if value is None:

+ if not value:

return super().clean([])

return super().clean([value])

@@ -79,5 +79,7 @@

def __init__(self, *args, **kwargs):

super().__init__(*args, **kwargs)

self.initial['plans'] = self.instance.plans.all()

- self.fields['plans'

- ].queryset = self.instance.organisation.plan_set.all()

+ self.fields['plans'].required = False

+ self.fields['plans'].empty_label = '----------'

+ self.fields['plans'].queryset = \

+ self.instance.organisation.plan_set.all()
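
The change to `clean` in the patch above hinges on how an unselected choice reaches the field. The following is a minimal, Django-free sketch of that check. It assumes (the issue does not state this) that a `Select` widget with an empty option submits an empty string rather than `None`. The names `wrap_choice_old` and `wrap_choice_new` are hypothetical stand-ins for the logic in `CustomMultipleChoiceField.clean` before it delegates to `super().clean(...)`.

```python
def wrap_choice_old(value):
    # Pre-patch check: only None counts as "nothing selected".
    if value is None:
        return []
    return [value]


def wrap_choice_new(value):
    # Patched check: any falsy value (None or '') counts as "nothing selected".
    if not value:
        return []
    return [value]


# Assumed submissions: a plan id, the empty option, and a missing field.
for submitted in ('7', '', None):
    print(repr(submitted), wrap_choice_old(submitted), wrap_choice_new(submitted))
```

Under that assumption, the pre-patch check would pass a one-element list containing an empty string on to validation, while the patched check passes an empty list, which is what lets a project end up with no plan attached.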

|

{"golden_diff": "diff --git a/meinberlin/apps/plans/forms.py b/meinberlin/apps/plans/forms.py\n--- a/meinberlin/apps/plans/forms.py\n+++ b/meinberlin/apps/plans/forms.py\n@@ -55,10 +55,10 @@\n \n class CustomMultipleChoiceField(forms.ModelMultipleChoiceField):\n \n- widget = forms.RadioSelect\n+ widget = forms.Select\n \n def clean(self, value):\n- if value is None:\n+ if not value:\n return super().clean([])\n return super().clean([value])\n \n@@ -79,5 +79,7 @@\n def __init__(self, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.initial['plans'] = self.instance.plans.all()\n- self.fields['plans'\n- ].queryset = self.instance.organisation.plan_set.all()\n+ self.fields['plans'].required = False\n+ self.fields['plans'].empty_label = '----------'\n+ self.fields['plans'].queryset = \\\n+ self.instance.organisation.plan_set.all()\n", "issue": "make it possible to detach plan from project\nnow that a connection of a project with a plan is no longer obligatory, initiators need to be able to undo a connection. Once I click on a plan I can no longer NOT connect to a plan, which should be possible.\r\n\r\n\r\n\n", "before_files": [{"content": "from django import forms\nfrom django.conf import settings\nfrom django.db.models import Q\nfrom django.utils.translation import ugettext_lazy as _\n\nfrom adhocracy4.dashboard.components.forms import ProjectDashboardForm\nfrom adhocracy4.maps import widgets as maps_widgets\nfrom adhocracy4.projects import models as project_models\nfrom meinberlin.apps.contrib import widgets as contrib_widgets\n\nfrom . 
import models\n\n\ndef get_theme_options():\n return models.Plan.objects\\\n .filter(~Q(theme=''))\\\n .order_by('theme')\\\n .values_list('theme', flat=True)\\\n .distinct()\n\n\nclass PlanForm(forms.ModelForm):\n\n class Meta:\n model = models.Plan\n fields = [\n 'title',\n 'description_image',\n 'contact',\n 'point',\n 'point_label',\n 'district',\n 'cost',\n 'description',\n 'theme',\n 'status',\n 'participation']\n widgets = {\n 'point': maps_widgets.MapChoosePointWidget(\n polygon=settings.BERLIN_POLYGON),\n 'theme': contrib_widgets.TextWithDatalistWidget(attrs={\n 'options': get_theme_options\n })\n }\n error_messages = {\n 'point': {\n 'required': _('Please locate the plan on the map.')\n }\n }\n\n def __init__(self, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.fields['district'].empty_label = _('City wide')\n\n\nclass CustomMultipleChoiceField(forms.ModelMultipleChoiceField):\n\n widget = forms.RadioSelect\n\n def clean(self, value):\n if value is None:\n return super().clean([])\n return super().clean([value])\n\n\nclass ProjectPlansDashboardForm(ProjectDashboardForm):\n plans = CustomMultipleChoiceField(queryset=None,\n label=_('Plans'))\n\n class Meta:\n model = project_models.Project\n fields = ['plans']\n required = False\n\n def save(self, commit=False):\n plans = self.cleaned_data['plans']\n self.instance.plans.set(plans)\n\n def __init__(self, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.initial['plans'] = self.instance.plans.all()\n self.fields['plans'\n ].queryset = self.instance.organisation.plan_set.all()\n", "path": "meinberlin/apps/plans/forms.py"}], "after_files": [{"content": "from django import forms\nfrom django.conf import settings\nfrom django.db.models import Q\nfrom django.utils.translation import ugettext_lazy as _\n\nfrom adhocracy4.dashboard.components.forms import ProjectDashboardForm\nfrom adhocracy4.maps import widgets as maps_widgets\nfrom adhocracy4.projects import models as project_models\nfrom 
meinberlin.apps.contrib import widgets as contrib_widgets\n\nfrom . import models\n\n\ndef get_theme_options():\n return models.Plan.objects\\\n .filter(~Q(theme=''))\\\n .order_by('theme')\\\n .values_list('theme', flat=True)\\\n .distinct()\n\n\nclass PlanForm(forms.ModelForm):\n\n class Meta:\n model = models.Plan\n fields = [\n 'title',\n 'description_image',\n 'contact',\n 'point',\n 'point_label',\n 'district',\n 'cost',\n 'description',\n 'theme',\n 'status',\n 'participation']\n widgets = {\n 'point': maps_widgets.MapChoosePointWidget(\n polygon=settings.BERLIN_POLYGON),\n 'theme': contrib_widgets.TextWithDatalistWidget(attrs={\n 'options': get_theme_options\n })\n }\n error_messages = {\n 'point': {\n 'required': _('Please locate the plan on the map.')\n }\n }\n\n def __init__(self, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.fields['district'].empty_label = _('City wide')\n\n\nclass CustomMultipleChoiceField(forms.ModelMultipleChoiceField):\n\n widget = forms.Select\n\n def clean(self, value):\n if not value:\n return super().clean([])\n return super().clean([value])\n\n\nclass ProjectPlansDashboardForm(ProjectDashboardForm):\n plans = CustomMultipleChoiceField(queryset=None,\n label=_('Plans'))\n\n class Meta:\n model = project_models.Project\n fields = ['plans']\n required = False\n\n def save(self, commit=False):\n plans = self.cleaned_data['plans']\n self.instance.plans.set(plans)\n\n def __init__(self, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.initial['plans'] = self.instance.plans.all()\n self.fields['plans'].required = False\n self.fields['plans'].empty_label = '----------'\n self.fields['plans'].queryset = \\\n self.instance.organisation.plan_set.all()\n", "path": "meinberlin/apps/plans/forms.py"}]}

| 1,038 | 240 |

gh_patches_debug_1022

|

rasdani/github-patches

|

git_diff

|

searxng__searxng-437

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Bug: microsoft academic engine

**Version of SearXNG, commit number if you are using on master branch and stipulate if you forked SearXNG**

Repository: https://github.com/tiekoetter/searxng

Branch: master

Version: 1.0.0-972-93548243

<!-- Check if these values are correct -->

**How did you install SearXNG?**

<!-- Did you install SearXNG using the official wiki or using searxng-docker

or manually by executing the searx/webapp.py file? -->

**What happened?**

<!-- A clear and concise description of what the bug is. -->

**How To Reproduce**

<!-- How can we reproduce this issue? (as minimally and as precisely as possible) -->

**Expected behavior**

<!-- A clear and concise description of what you expected to happen. -->

**Screenshots & Logs**

<!-- If applicable, add screenshots, logs to help explain your problem. -->

**Additional context**

<!-- Add any other context about the problem here. -->

**Technical report**

Error

* Error: httpx.TimeoutException

* Percentage: 50

* Parameters: `(None, None, None)`

* File name: `searx/search/processors/online.py:97`

* Function: `_send_http_request`

* Code: `response = req(params['url'], **request_args)`

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `searx/engines/microsoft_academic.py`

Content:

```
1 # SPDX-License-Identifier: AGPL-3.0-or-later
2 """
3  Microsoft Academic (Science)
4 """
5
6 from json import dumps, loads
7 from searx.utils import html_to_text
8
9 # about
10 about = {
11     "website": 'https://academic.microsoft.com',
12     "wikidata_id": 'Q28136779',
13     "official_api_documentation": 'http://ma-graph.org/',
14     "use_official_api": False,
15     "require_api_key": False,
16     "results": 'JSON',
17 }
18
19 categories = ['images']
20 paging = True
21 search_url = 'https://academic.microsoft.com/api/search'
22 _paper_url = 'https://academic.microsoft.com/paper/{id}/reference'
23
24
25 def request(query, params):
26     params['url'] = search_url
27     params['method'] = 'POST'
28     params['headers']['content-type'] = 'application/json; charset=utf-8'
29     params['data'] = dumps({
30         'query': query,
31         'queryExpression': '',
32         'filters': [],
33         'orderBy': 0,
34         'skip': (params['pageno'] - 1) * 10,
35         'sortAscending': True,
36         'take': 10,
37         'includeCitationContexts': False,
38         'profileId': '',
39     })
40
41     return params
42
43
44 def response(resp):
45     results = []
46     response_data = loads(resp.text)
47     if not response_data:
48         return results
49
50     for result in response_data['pr']:
51         if 'dn' not in result['paper']:
52             continue
53
54         title = result['paper']['dn']
55         content = _get_content(result['paper'])
56         url = _paper_url.format(id=result['paper']['id'])
57         results.append({
58             'url': url,
59             'title': html_to_text(title),
60             'content': html_to_text(content),
61         })
62
63     return results
64
65
66 def _get_content(result):
67     if 'd' in result:
68         content = result['d']
69         if len(content) > 300:
70             return content[:300] + '...'
71         return content
72
73     return ''
74
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/searx/engines/microsoft_academic.py b/searx/engines/microsoft_academic.py

--- a/searx/engines/microsoft_academic.py

+++ b/searx/engines/microsoft_academic.py

@@ -47,7 +47,7 @@

if not response_data:

return results

- for result in response_data['pr']:

+ for result in response_data.get('pr', {}):

if 'dn' not in result['paper']:

continue
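
The one-line change above swaps a subscript lookup for `dict.get` with a default, so a payload without a `pr` key yields no results instead of raising `KeyError`. A minimal sketch of the same pattern follows. The helper name `paper_titles` and both sample payloads are hypothetical; only the keys `pr`, `paper`, `dn` and `id` come from the engine code.

```python
def paper_titles(response_data):
    """Collect display names, tolerating a payload that has no 'pr' key."""
    titles = []
    # Same default as in the patch: iterating an empty dict yields nothing.
    for result in response_data.get('pr', {}):
        if 'dn' not in result['paper']:
            continue
        titles.append(result['paper']['dn'])
    return titles


# Hypothetical payloads: one without 'pr', one with a paper lacking 'dn'.
print(paper_titles({'error': 'timeout'}))
print(paper_titles({'pr': [{'paper': {'dn': 'A paper', 'id': 1}},
                           {'paper': {'id': 2}}]}))
```

Any empty iterable would work as the default; an empty list arguably reads more naturally, since `pr` is iterated as a sequence of results.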

|

{"golden_diff": "diff --git a/searx/engines/microsoft_academic.py b/searx/engines/microsoft_academic.py\n--- a/searx/engines/microsoft_academic.py\n+++ b/searx/engines/microsoft_academic.py\n@@ -47,7 +47,7 @@\n if not response_data:\n return results\n \n- for result in response_data['pr']:\n+ for result in response_data.get('pr', {}):\n if 'dn' not in result['paper']:\n continue\n", "issue": "Bug: microsoft academic engine\n**Version of SearXNG, commit number if you are using on master branch and stipulate if you forked SearXNG**\r\nRepository: https://github.com/tiekoetter/searxng\r\nBranch: master\r\nVersion: 1.0.0-972-93548243\r\n<!-- Check if these values are correct -->\r\n\r\n**How did you install SearXNG?**\r\n<!-- Did you install SearXNG using the official wiki or using searxng-docker\r\nor manually by executing the searx/webapp.py file? -->\r\n**What happened?**\r\n<!-- A clear and concise description of what the bug is. -->\r\n\r\n**How To Reproduce**\r\n<!-- How can we reproduce this issue? (as minimally and as precisely as possible) -->\r\n\r\n**Expected behavior**\r\n<!-- A clear and concise description of what you expected to happen. -->\r\n\r\n**Screenshots & Logs**\r\n<!-- If applicable, add screenshots, logs to help explain your problem. -->\r\n\r\n**Additional context**\r\n<!-- Add any other context about the problem here. 
-->\r\n\r\n**Technical report**\r\n\r\nError\r\n * Error: httpx.TimeoutException\r\n * Percentage: 50\r\n * Parameters: `(None, None, None)`\r\n * File name: `searx/search/processors/online.py:97`\r\n * Function: `_send_http_request`\r\n * Code: `response = req(params['url'], **request_args)`\r\n\r\n\n", "before_files": [{"content": "# SPDX-License-Identifier: AGPL-3.0-or-later\n\"\"\"\n Microsoft Academic (Science)\n\"\"\"\n\nfrom json import dumps, loads\nfrom searx.utils import html_to_text\n\n# about\nabout = {\n \"website\": 'https://academic.microsoft.com',\n \"wikidata_id\": 'Q28136779',\n \"official_api_documentation\": 'http://ma-graph.org/',\n \"use_official_api\": False,\n \"require_api_key\": False,\n \"results\": 'JSON',\n}\n\ncategories = ['images']\npaging = True\nsearch_url = 'https://academic.microsoft.com/api/search'\n_paper_url = 'https://academic.microsoft.com/paper/{id}/reference'\n\n\ndef request(query, params):\n params['url'] = search_url\n params['method'] = 'POST'\n params['headers']['content-type'] = 'application/json; charset=utf-8'\n params['data'] = dumps({\n 'query': query,\n 'queryExpression': '',\n 'filters': [],\n 'orderBy': 0,\n 'skip': (params['pageno'] - 1) * 10,\n 'sortAscending': True,\n 'take': 10,\n 'includeCitationContexts': False,\n 'profileId': '',\n })\n\n return params\n\n\ndef response(resp):\n results = []\n response_data = loads(resp.text)\n if not response_data:\n return results\n\n for result in response_data['pr']:\n if 'dn' not in result['paper']:\n continue\n\n title = result['paper']['dn']\n content = _get_content(result['paper'])\n url = _paper_url.format(id=result['paper']['id'])\n results.append({\n 'url': url,\n 'title': html_to_text(title),\n 'content': html_to_text(content),\n })\n\n return results\n\n\ndef _get_content(result):\n if 'd' in result:\n content = result['d']\n if len(content) > 300:\n return content[:300] + '...'\n return content\n\n return ''\n", "path": 
"searx/engines/microsoft_academic.py"}], "after_files": [{"content": "# SPDX-License-Identifier: AGPL-3.0-or-later\n\"\"\"\n Microsoft Academic (Science)\n\"\"\"\n\nfrom json import dumps, loads\nfrom searx.utils import html_to_text\n\n# about\nabout = {\n \"website\": 'https://academic.microsoft.com',\n \"wikidata_id\": 'Q28136779',\n \"official_api_documentation\": 'http://ma-graph.org/',\n \"use_official_api\": False,\n \"require_api_key\": False,\n \"results\": 'JSON',\n}\n\ncategories = ['images']\npaging = True\nsearch_url = 'https://academic.microsoft.com/api/search'\n_paper_url = 'https://academic.microsoft.com/paper/{id}/reference'\n\n\ndef request(query, params):\n params['url'] = search_url\n params['method'] = 'POST'\n params['headers']['content-type'] = 'application/json; charset=utf-8'\n params['data'] = dumps({\n 'query': query,\n 'queryExpression': '',\n 'filters': [],\n 'orderBy': 0,\n 'skip': (params['pageno'] - 1) * 10,\n 'sortAscending': True,\n 'take': 10,\n 'includeCitationContexts': False,\n 'profileId': '',\n })\n\n return params\n\n\ndef response(resp):\n results = []\n response_data = loads(resp.text)\n if not response_data:\n return results\n\n for result in response_data.get('pr', {}):\n if 'dn' not in result['paper']:\n continue\n\n title = result['paper']['dn']\n content = _get_content(result['paper'])\n url = _paper_url.format(id=result['paper']['id'])\n results.append({\n 'url': url,\n 'title': html_to_text(title),\n 'content': html_to_text(content),\n })\n\n return results\n\n\ndef _get_content(result):\n if 'd' in result:\n content = result['d']\n if len(content) > 300:\n return content[:300] + '...'\n return content\n\n return ''\n", "path": "searx/engines/microsoft_academic.py"}]}

| 1,175 | 115 |

gh_patches_debug_30987

|

rasdani/github-patches

|

git_diff

|

kubeflow__pipelines-5293

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

'kubeflow-pipelines-profile-controller' fails to deploy pods on profile creation when ResourceQuota is set in the profile.

In multi-user mode, `kubeflow-pipelines-profile-controller` appears to deploy the following pods when a Kubeflow profile is created.

- `ml-pipeline-ui-artifact`

- `ml-pipeline-visualizationserver`

When a `ResourceQuota` is set in the profile, `kubeflow-pipelines-profile-controller` fails to deploy `ml-pipeline-ui-artifact` and `ml-pipeline-visualizationserver` with the following error.

```
Warning FailedCreate 17m replicaset-controller
Error creating: pods "ml-pipeline-ui-artifact-684c5db68-s74w8" is forbidden: failed quota: kf-resource-quota: must specify cpu,memory
```

- Related code: [https://github.com/kubeflow/manifests/blob/master/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py](https://github.com/kubeflow/manifests/blob/master/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py)

- Cause: The container resource limits and requests are not set in the pod specs, so the pods cannot be deployed in a namespace that has a `ResourceQuota`.

Since a Kubeflow profile supports setting a `ResourceQuota`, `kubeflow-pipelines-profile-controller` should set container resource requests and limits in the pod specs to avoid the error above.

I confirmed that with the patch below, the ml-pipeline pods are deployed successfully.

```python
diff --git a/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py b/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py
index 75c6e5db..a0e71fbf 100644
--- a/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py
+++ b/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py
@@ -104,6 +104,16 @@ class Controller(BaseHTTPRequestHandler):
                                 "ports": [{
                                     "containerPort": 8888
                                 }],
+                                "resources": {
+                                    "requests": {
+                                        "cpu": "50m",
+                                        "memory": "200Mi"
+                                    },
+                                    "limits": {
+                                        "cpu": "500m",
+                                        "memory": "2Gi"
+                                    },
+                                }
                             }],
                             "serviceAccountName":
                                 "default-editor",
@@ -204,7 +214,17 @@ class Controller(BaseHTTPRequestHandler):
                                     "IfNotPresent",
                                 "ports": [{
                                     "containerPort": 3000
-                                }]
+                                }],
+                                "resources": {
+                                    "requests": {
+                                        "cpu": "50m",
+                                        "memory": "200Mi"
+                                    },
+                                    "limits": {
+                                        "cpu": "500m",
+                                        "memory": "2Gi"
+                                    },
+                                }
                             }],
                             "serviceAccountName":
                                 "default-editor"
```

Please take a look, thanks.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py`

Content:

```
1 # Copyright 2020 Google LLC
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 # http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 from http.server import BaseHTTPRequestHandler, HTTPServer
16 import json
17 import os
18 import base64
19
20 kfp_version = os.environ["KFP_VERSION"]
21 disable_istio_sidecar = os.environ.get("DISABLE_ISTIO_SIDECAR") == "true"
22 mlpipeline_minio_access_key = base64.b64encode(
23     bytes(os.environ.get("MINIO_ACCESS_KEY"), 'utf-8')).decode('utf-8')
24 mlpipeline_minio_secret_key = base64.b64encode(
25     bytes(os.environ.get("MINIO_SECRET_KEY"), 'utf-8')).decode('utf-8')
26
27
28 class Controller(BaseHTTPRequestHandler):
29     def sync(self, parent, children):
30         # HACK: Currently using serving.kubeflow.org/inferenceservice to identify
31         # kubeflow user namespaces.
32         # TODO: let Kubeflow profile controller add a pipeline specific label to
33         # user namespaces and use that label instead.
34         pipeline_enabled = parent.get("metadata", {}).get(
35             "labels", {}).get("serving.kubeflow.org/inferenceservice")
36
37         if not pipeline_enabled:
38             return {"status": {}, "children": []}
39
40         # Compute status based on observed state.
41         desired_status = {
42             "kubeflow-pipelines-ready": \
43                 len(children["Secret.v1"]) == 1 and \
44                 len(children["ConfigMap.v1"]) == 1 and \
45                 len(children["Deployment.apps/v1"]) == 2 and \
46                 len(children["Service.v1"]) == 2 and \
47                 len(children["DestinationRule.networking.istio.io/v1alpha3"]) == 1 and \
48                 len(children["AuthorizationPolicy.security.istio.io/v1beta1"]) == 1 and \
49                 "True" or "False"
50         }
51
52         # Generate the desired child object(s).
53         # parent is a namespace
54         namespace = parent.get("metadata", {}).get("name")
55         desired_resources = [
56             {
57                 "apiVersion": "v1",
58                 "kind": "ConfigMap",
59                 "metadata": {
60                     "name": "metadata-grpc-configmap",
61                     "namespace": namespace,
62                 },
63                 "data": {
64                     "METADATA_GRPC_SERVICE_HOST":
65                         "metadata-grpc-service.kubeflow",
66                     "METADATA_GRPC_SERVICE_PORT": "8080",
67                 },
68             },
69             # Visualization server related manifests below
70             {
71                 "apiVersion": "apps/v1",
72                 "kind": "Deployment",
73                 "metadata": {
74                     "labels": {
75                         "app": "ml-pipeline-visualizationserver"
76                     },
77                     "name": "ml-pipeline-visualizationserver",
78                     "namespace": namespace,
79                 },
80                 "spec": {
81                     "selector": {
82                         "matchLabels": {
83                             "app": "ml-pipeline-visualizationserver"
84                         },
85                     },
86                     "template": {
87                         "metadata": {
88                             "labels": {
89                                 "app": "ml-pipeline-visualizationserver"
90                             },
91                             "annotations": disable_istio_sidecar and {
92                                 "sidecar.istio.io/inject": "false"
93                             } or {},
94                         },
95                         "spec": {
96                             "containers": [{
97                                 "image":
98                                     "gcr.io/ml-pipeline/visualization-server:" +
99                                     kfp_version,
100                                 "imagePullPolicy":
101                                     "IfNotPresent",
102                                 "name":
103                                     "ml-pipeline-visualizationserver",
104                                 "ports": [{
105                                     "containerPort": 8888
106                                 }],
107                             }],
108                             "serviceAccountName":
109                                 "default-editor",
110                         },
111                     },
112                 },
113             },
114             {
115                 "apiVersion": "networking.istio.io/v1alpha3",
116                 "kind": "DestinationRule",
117                 "metadata": {
118                     "name": "ml-pipeline-visualizationserver",
119                     "namespace": namespace,
120                 },
121                 "spec": {
122                     "host": "ml-pipeline-visualizationserver",
123                     "trafficPolicy": {
124                         "tls": {
125                             "mode": "ISTIO_MUTUAL"
126                         }
127                     }
128                 }
129             },
130             {
131                 "apiVersion": "security.istio.io/v1beta1",
132                 "kind": "AuthorizationPolicy",
133                 "metadata": {
134                     "name": "ml-pipeline-visualizationserver",
135                     "namespace": namespace,
136                 },
137                 "spec": {
138                     "selector": {
139                         "matchLabels": {
140                             "app": "ml-pipeline-visualizationserver"
141                         }
142                     },
143                     "rules": [{
144                         "from": [{
145                             "source": {
146                                 "principals": ["cluster.local/ns/kubeflow/sa/ml-pipeline"]
147                             }
148                         }]
149                     }]
150                 }
151             },
152             {
153                 "apiVersion": "v1",
154                 "kind": "Service",
155                 "metadata": {
156                     "name": "ml-pipeline-visualizationserver",
157                     "namespace": namespace,
158                 },
159                 "spec": {
160                     "ports": [{
161                         "name": "http",
162                         "port": 8888,
163                         "protocol": "TCP",
164                         "targetPort": 8888,
165                     }],
166                     "selector": {
167                         "app": "ml-pipeline-visualizationserver",
168                     },
169                 },
170             },
171             # Artifact fetcher related resources below.
172             {
173                 "apiVersion": "apps/v1",
174                 "kind": "Deployment",
175                 "metadata": {
176                     "labels": {
177                         "app": "ml-pipeline-ui-artifact"
178                     },
179                     "name": "ml-pipeline-ui-artifact",
180                     "namespace": namespace,
181                 },
182                 "spec": {
183                     "selector": {
184                         "matchLabels": {
185                             "app": "ml-pipeline-ui-artifact"
186                         }
187                     },
188                     "template": {
189                         "metadata": {
190                             "labels": {
191                                 "app": "ml-pipeline-ui-artifact"
192                             },
193                             "annotations": disable_istio_sidecar and {
194                                 "sidecar.istio.io/inject": "false"
195                             } or {},
196                         },
197                         "spec": {
198                             "containers": [{
199                                 "name":
200                                     "ml-pipeline-ui-artifact",
201                                 "image":
202                                     "gcr.io/ml-pipeline/frontend:" + kfp_version,
203                                 "imagePullPolicy":
204                                     "IfNotPresent",
205                                 "ports": [{
206                                     "containerPort": 3000
207                                 }]
208                             }],
209                             "serviceAccountName":
210                                 "default-editor"
211                         }
212                     }
213                 }
214             },
215             {
216                 "apiVersion": "v1",
217                 "kind": "Service",
218                 "metadata": {
219                     "name": "ml-pipeline-ui-artifact",
220                     "namespace": namespace,
221                     "labels": {
222                         "app": "ml-pipeline-ui-artifact"
223                     }
224                 },
225                 "spec": {
226                     "ports": [{
227                         "name":
228                             "http", # name is required to let istio understand request protocol
229                         "port": 80,
230                         "protocol": "TCP",
231                         "targetPort": 3000
232                     }],
233                     "selector": {
234                         "app": "ml-pipeline-ui-artifact"
235                     }
236                 }
237             },
238         ]
239         print('Received request:', parent)
240         print('Desired resources except secrets:', desired_resources)
241         # Moved after the print argument because this is sensitive data.
242         desired_resources.append({
243             "apiVersion": "v1",
244             "kind": "Secret",
245             "metadata": {
246                 "name": "mlpipeline-minio-artifact",
247                 "namespace": namespace,
248             },
249             "data": {
250                 "accesskey": mlpipeline_minio_access_key,
251                 "secretkey": mlpipeline_minio_secret_key,
252             },
253         })
254
255         return {"status": desired_status, "children": desired_resources}
256
257     def do_POST(self):
258         # Serve the sync() function as a JSON webhook.
259         observed = json.loads(
260             self.rfile.read(int(self.headers.get("content-length"))))
261         desired = self.sync(observed["parent"], observed["children"])
262
263         self.send_response(200)
264         self.send_header("Content-type", "application/json")
265         self.end_headers()
266         self.wfile.write(bytes(json.dumps(desired), 'utf-8'))
267
268
269 HTTPServer(("", 80), Controller).serve_forever()
270
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py b/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py

--- a/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py

+++ b/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py

@@ -104,6 +104,16 @@

"ports": [{

"containerPort": 8888

}],

+ "resources": {

+ "requests": {

+ "cpu": "50m",

+ "memory": "200Mi"

+ },

+ "limits": {

+ "cpu": "500m",

+ "memory": "1Gi"

+ },

+ }

}],

"serviceAccountName":

"default-editor",

@@ -204,7 +214,17 @@

"IfNotPresent",

"ports": [{

"containerPort": 3000

- }]

+ }],

+ "resources": {

+ "requests": {

+ "cpu": "10m",

+ "memory": "70Mi"

+ },

+ "limits": {

+ "cpu": "100m",

+ "memory": "500Mi"

+ },

+ }

}],

"serviceAccountName":

"default-editor"

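The patch above adds a `resources` section to each container spec so that pods can be admitted in a namespace with a `ResourceQuota`. A small sketch of that idea on plain dictionaries follows. The helpers `with_resources` and `missing_quota_fields` are hypothetical and not part of `sync.py`. The check is a deliberate simplification that assumes the quota constrains both requests and limits for `cpu` and `memory`; the actual rule depends on how the quota is defined. The values are the ones the patch uses for `ml-pipeline-ui-artifact`.

```python
import json


def with_resources(container, requests, limits):
    """Return a copy of a container spec with a resources section added."""
    patched = dict(container)
    patched["resources"] = {"requests": dict(requests), "limits": dict(limits)}
    return patched


def missing_quota_fields(container, required=("cpu", "memory")):
    """List 'section.field' entries that the container spec leaves unset.

    Simplified stand-in for quota admission; assumes both requests and
    limits are constrained.
    """
    resources = container.get("resources", {})
    return [
        f"{section}.{field}"
        for section in ("requests", "limits")
        for field in required
        if field not in resources.get(section, {})
    ]


# Container spec trimmed to the fields relevant here.
ui_artifact = {
    "name": "ml-pipeline-ui-artifact",
    "ports": [{"containerPort": 3000}],
}
patched = with_resources(
    ui_artifact,
    requests={"cpu": "10m", "memory": "70Mi"},
    limits={"cpu": "100m", "memory": "500Mi"},
)

print(missing_quota_fields(ui_artifact))
print(missing_quota_fields(patched))
print(json.dumps(patched["resources"], indent=2))
```

Building the `resources` section through a helper rather than repeating the literal in each Deployment is only one possible design; the patch itself inlines the values per container.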
|

{"golden_diff": "diff --git a/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py b/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py\n--- a/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py\n+++ b/manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py\n@@ -104,6 +104,16 @@\n \"ports\": [{\n \"containerPort\": 8888\n }],\n+ \"resources\": {\n+ \"requests\": {\n+ \"cpu\": \"50m\",\n+ \"memory\": \"200Mi\"\n+ },\n+ \"limits\": {\n+ \"cpu\": \"500m\",\n+ \"memory\": \"1Gi\"\n+ },\n+ }\n }],\n \"serviceAccountName\":\n \"default-editor\",\n@@ -204,7 +214,17 @@\n \"IfNotPresent\",\n \"ports\": [{\n \"containerPort\": 3000\n- }]\n+ }],\n+ \"resources\": {\n+ \"requests\": {\n+ \"cpu\": \"10m\",\n+ \"memory\": \"70Mi\"\n+ },\n+ \"limits\": {\n+ \"cpu\": \"100m\",\n+ \"memory\": \"500Mi\"\n+ },\n+ }\n }],\n \"serviceAccountName\":\n \"default-editor\"\n", "issue": "'kubeflow-pipelines-profile-controller' fails to deploy pods on profile creation when ResourceQuota is set in the profile.\nIn multi-user mode, it seems like `kubeflow-pipelines-profile-controller` deploy below pods on Kubeflow profile creation.\r\n\r\n- `ml-pipeline-ui-artifact`\r\n- `ml-pipeline-visualizationserver`\r\n\r\nWhen `ResourceQuota` is set in the profile, `kubeflow-pipelines-profile-controller` fails to deploy `ml-pipeline-ui-artifact` and `ml-pipeline-visualizationserver` with below error.\r\n\r\n```\r\nWarning FailedCreate 17m replicaset-controller \r\nError creating: pods \"ml-pipeline-ui-artifact-684c5db68-s74w8\" is forbidden: failed quota: kf-resource-quota: must specify cpu,memory\r\n```\r\n\r\n- Related code: [https://github.com/kubeflow/manifests/blob/master/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py](https://github.com/kubeflow/manifests/blob/master/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py)\r\n- 
Cause: The container resource limit & request is not set on the pod specs, so the pods can not be deployed in the namespace (which has `ResourceQuota` ).\r\n\r\nSince Kubeflow profile supports setting `ResourceQuota`, `kubeflow-pipelines-profile-controller` should set container resource requests & limits in pod specs to avoid above errors. \r\n\r\nI confirmed that with below patch, ml-pipeline pods are successfully deployed.\r\n\r\n```python\r\ndiff --git a/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py b/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py\r\nindex 75c6e5db..a0e71fbf 100644\r\n--- a/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py\r\n+++ b/apps/pipeline/upstream/installs/multi-user/pipelines-profile-controller/sync.py\r\n@@ -104,6 +104,16 @@ class Controller(BaseHTTPRequestHandler):\r\n \"ports\": [{\r\n \"containerPort\": 8888\r\n }],\r\n+ \"resources\": {\r\n+ \"requests\": {\r\n+ \"cpu\": \"50m\",\r\n+ \"memory\": \"200Mi\"\r\n+ },\r\n+ \"limits\": {\r\n+ \"cpu\": \"500m\",\r\n+ \"memory\": \"2Gi\"\r\n+ },\r\n+ }\r\n }],\r\n \"serviceAccountName\":\r\n \"default-editor\",\r\n@@ -204,7 +214,17 @@ class Controller(BaseHTTPRequestHandler):\r\n \"IfNotPresent\",\r\n \"ports\": [{\r\n \"containerPort\": 3000\r\n- }]\r\n+ }],\r\n+ \"resources\": {\r\n+ \"requests\": {\r\n+ \"cpu\": \"50m\",\r\n+ \"memory\": \"200Mi\"\r\n+ },\r\n+ \"limits\": {\r\n+ \"cpu\": \"500m\",\r\n+ \"memory\": \"2Gi\"\r\n+ },\r\n+ }\r\n }],\r\n \"serviceAccountName\":\r\n \"default-editor\"\r\n```\r\n\r\nPlease take a look, thanks.\n", "before_files": [{"content": "# Copyright 2020 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, 
software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom http.server import BaseHTTPRequestHandler, HTTPServer\nimport json\nimport os\nimport base64\n\nkfp_version = os.environ[\"KFP_VERSION\"]\ndisable_istio_sidecar = os.environ.get(\"DISABLE_ISTIO_SIDECAR\") == \"true\"\nmlpipeline_minio_access_key = base64.b64encode(\n bytes(os.environ.get(\"MINIO_ACCESS_KEY\"), 'utf-8')).decode('utf-8')\nmlpipeline_minio_secret_key = base64.b64encode(\n bytes(os.environ.get(\"MINIO_SECRET_KEY\"), 'utf-8')).decode('utf-8')\n\n\nclass Controller(BaseHTTPRequestHandler):\n def sync(self, parent, children):\n # HACK: Currently using serving.kubeflow.org/inferenceservice to identify\n # kubeflow user namespaces.\n # TODO: let Kubeflow profile controller add a pipeline specific label to\n # user namespaces and use that label instead.\n pipeline_enabled = parent.get(\"metadata\", {}).get(\n \"labels\", {}).get(\"serving.kubeflow.org/inferenceservice\")\n\n if not pipeline_enabled:\n return {\"status\": {}, \"children\": []}\n\n # Compute status based on observed state.\n desired_status = {\n \"kubeflow-pipelines-ready\": \\\n len(children[\"Secret.v1\"]) == 1 and \\\n len(children[\"ConfigMap.v1\"]) == 1 and \\\n len(children[\"Deployment.apps/v1\"]) == 2 and \\\n len(children[\"Service.v1\"]) == 2 and \\\n len(children[\"DestinationRule.networking.istio.io/v1alpha3\"]) == 1 and \\\n len(children[\"AuthorizationPolicy.security.istio.io/v1beta1\"]) == 1 and \\\n \"True\" or \"False\"\n }\n\n # Generate the desired child object(s).\n # parent is a namespace\n namespace = parent.get(\"metadata\", {}).get(\"name\")\n desired_resources = [\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"ConfigMap\",\n \"metadata\": {\n \"name\": \"metadata-grpc-configmap\",\n \"namespace\": 
namespace,\n },\n \"data\": {\n \"METADATA_GRPC_SERVICE_HOST\":\n \"metadata-grpc-service.kubeflow\",\n \"METADATA_GRPC_SERVICE_PORT\": \"8080\",\n },\n },\n # Visualization server related manifests below\n {\n \"apiVersion\": \"apps/v1\",\n \"kind\": \"Deployment\",\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n },\n \"template\": {\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n \"annotations\": disable_istio_sidecar and {\n \"sidecar.istio.io/inject\": \"false\"\n } or {},\n },\n \"spec\": {\n \"containers\": [{\n \"image\":\n \"gcr.io/ml-pipeline/visualization-server:\" +\n kfp_version,\n \"imagePullPolicy\":\n \"IfNotPresent\",\n \"name\":\n \"ml-pipeline-visualizationserver\",\n \"ports\": [{\n \"containerPort\": 8888\n }],\n }],\n \"serviceAccountName\":\n \"default-editor\",\n },\n },\n },\n },\n {\n \"apiVersion\": \"networking.istio.io/v1alpha3\",\n \"kind\": \"DestinationRule\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"host\": \"ml-pipeline-visualizationserver\",\n \"trafficPolicy\": {\n \"tls\": {\n \"mode\": \"ISTIO_MUTUAL\"\n }\n }\n }\n },\n {\n \"apiVersion\": \"security.istio.io/v1beta1\",\n \"kind\": \"AuthorizationPolicy\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n }\n },\n \"rules\": [{\n \"from\": [{\n \"source\": {\n \"principals\": [\"cluster.local/ns/kubeflow/sa/ml-pipeline\"]\n }\n }]\n }]\n }\n },\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"Service\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": 
namespace,\n },\n \"spec\": {\n \"ports\": [{\n \"name\": \"http\",\n \"port\": 8888,\n \"protocol\": \"TCP\",\n \"targetPort\": 8888,\n }],\n \"selector\": {\n \"app\": \"ml-pipeline-visualizationserver\",\n },\n },\n },\n # Artifact fetcher related resources below.\n {\n \"apiVersion\": \"apps/v1\",\n \"kind\": \"Deployment\",\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n },\n \"name\": \"ml-pipeline-ui-artifact\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n },\n \"template\": {\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n },\n \"annotations\": disable_istio_sidecar and {\n \"sidecar.istio.io/inject\": \"false\"\n } or {},\n },\n \"spec\": {\n \"containers\": [{\n \"name\":\n \"ml-pipeline-ui-artifact\",\n \"image\":\n \"gcr.io/ml-pipeline/frontend:\" + kfp_version,\n \"imagePullPolicy\":\n \"IfNotPresent\",\n \"ports\": [{\n \"containerPort\": 3000\n }]\n }],\n \"serviceAccountName\":\n \"default-editor\"\n }\n }\n }\n },\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"Service\",\n \"metadata\": {\n \"name\": \"ml-pipeline-ui-artifact\",\n \"namespace\": namespace,\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n },\n \"spec\": {\n \"ports\": [{\n \"name\":\n \"http\", # name is required to let istio understand request protocol\n \"port\": 80,\n \"protocol\": \"TCP\",\n \"targetPort\": 3000\n }],\n \"selector\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n }\n },\n ]\n print('Received request:', parent)\n print('Desired resources except secrets:', desired_resources)\n # Moved after the print argument because this is sensitive data.\n desired_resources.append({\n \"apiVersion\": \"v1\",\n \"kind\": \"Secret\",\n \"metadata\": {\n \"name\": \"mlpipeline-minio-artifact\",\n \"namespace\": namespace,\n },\n \"data\": {\n \"accesskey\": mlpipeline_minio_access_key,\n \"secretkey\": mlpipeline_minio_secret_key,\n 
},\n })\n\n return {\"status\": desired_status, \"children\": desired_resources}\n\n def do_POST(self):\n # Serve the sync() function as a JSON webhook.\n observed = json.loads(\n self.rfile.read(int(self.headers.get(\"content-length\"))))\n desired = self.sync(observed[\"parent\"], observed[\"children\"])\n\n self.send_response(200)\n self.send_header(\"Content-type\", \"application/json\")\n self.end_headers()\n self.wfile.write(bytes(json.dumps(desired), 'utf-8'))\n\n\nHTTPServer((\"\", 80), Controller).serve_forever()\n", "path": "manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py"}], "after_files": [{"content": "# Copyright 2020 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom http.server import BaseHTTPRequestHandler, HTTPServer\nimport json\nimport os\nimport base64\n\nkfp_version = os.environ[\"KFP_VERSION\"]\ndisable_istio_sidecar = os.environ.get(\"DISABLE_ISTIO_SIDECAR\") == \"true\"\nmlpipeline_minio_access_key = base64.b64encode(\n bytes(os.environ.get(\"MINIO_ACCESS_KEY\"), 'utf-8')).decode('utf-8')\nmlpipeline_minio_secret_key = base64.b64encode(\n bytes(os.environ.get(\"MINIO_SECRET_KEY\"), 'utf-8')).decode('utf-8')\n\n\nclass Controller(BaseHTTPRequestHandler):\n def sync(self, parent, children):\n # HACK: Currently using serving.kubeflow.org/inferenceservice to identify\n # kubeflow user namespaces.\n # TODO: let Kubeflow profile controller add a pipeline specific label to\n # user namespaces and 
use that label instead.\n pipeline_enabled = parent.get(\"metadata\", {}).get(\n \"labels\", {}).get(\"serving.kubeflow.org/inferenceservice\")\n\n if not pipeline_enabled:\n return {\"status\": {}, \"children\": []}\n\n # Compute status based on observed state.\n desired_status = {\n \"kubeflow-pipelines-ready\": \\\n len(children[\"Secret.v1\"]) == 1 and \\\n len(children[\"ConfigMap.v1\"]) == 1 and \\\n len(children[\"Deployment.apps/v1\"]) == 2 and \\\n len(children[\"Service.v1\"]) == 2 and \\\n len(children[\"DestinationRule.networking.istio.io/v1alpha3\"]) == 1 and \\\n len(children[\"AuthorizationPolicy.security.istio.io/v1beta1\"]) == 1 and \\\n \"True\" or \"False\"\n }\n\n # Generate the desired child object(s).\n # parent is a namespace\n namespace = parent.get(\"metadata\", {}).get(\"name\")\n desired_resources = [\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"ConfigMap\",\n \"metadata\": {\n \"name\": \"metadata-grpc-configmap\",\n \"namespace\": namespace,\n },\n \"data\": {\n \"METADATA_GRPC_SERVICE_HOST\":\n \"metadata-grpc-service.kubeflow\",\n \"METADATA_GRPC_SERVICE_PORT\": \"8080\",\n },\n },\n # Visualization server related manifests below\n {\n \"apiVersion\": \"apps/v1\",\n \"kind\": \"Deployment\",\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n },\n \"template\": {\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n },\n \"annotations\": disable_istio_sidecar and {\n \"sidecar.istio.io/inject\": \"false\"\n } or {},\n },\n \"spec\": {\n \"containers\": [{\n \"image\":\n \"gcr.io/ml-pipeline/visualization-server:\" +\n kfp_version,\n \"imagePullPolicy\":\n \"IfNotPresent\",\n \"name\":\n \"ml-pipeline-visualizationserver\",\n \"ports\": [{\n \"containerPort\": 8888\n }],\n \"resources\": 
{\n \"requests\": {\n \"cpu\": \"50m\",\n \"memory\": \"200Mi\"\n },\n \"limits\": {\n \"cpu\": \"500m\",\n \"memory\": \"1Gi\"\n },\n }\n }],\n \"serviceAccountName\":\n \"default-editor\",\n },\n },\n },\n },\n {\n \"apiVersion\": \"networking.istio.io/v1alpha3\",\n \"kind\": \"DestinationRule\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"host\": \"ml-pipeline-visualizationserver\",\n \"trafficPolicy\": {\n \"tls\": {\n \"mode\": \"ISTIO_MUTUAL\"\n }\n }\n }\n },\n {\n \"apiVersion\": \"security.istio.io/v1beta1\",\n \"kind\": \"AuthorizationPolicy\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-visualizationserver\"\n }\n },\n \"rules\": [{\n \"from\": [{\n \"source\": {\n \"principals\": [\"cluster.local/ns/kubeflow/sa/ml-pipeline\"]\n }\n }]\n }]\n }\n },\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"Service\",\n \"metadata\": {\n \"name\": \"ml-pipeline-visualizationserver\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"ports\": [{\n \"name\": \"http\",\n \"port\": 8888,\n \"protocol\": \"TCP\",\n \"targetPort\": 8888,\n }],\n \"selector\": {\n \"app\": \"ml-pipeline-visualizationserver\",\n },\n },\n },\n # Artifact fetcher related resources below.\n {\n \"apiVersion\": \"apps/v1\",\n \"kind\": \"Deployment\",\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n },\n \"name\": \"ml-pipeline-ui-artifact\",\n \"namespace\": namespace,\n },\n \"spec\": {\n \"selector\": {\n \"matchLabels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n },\n \"template\": {\n \"metadata\": {\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n },\n \"annotations\": disable_istio_sidecar and {\n \"sidecar.istio.io/inject\": \"false\"\n } or {},\n },\n \"spec\": {\n \"containers\": [{\n \"name\":\n \"ml-pipeline-ui-artifact\",\n \"image\":\n 
\"gcr.io/ml-pipeline/frontend:\" + kfp_version,\n \"imagePullPolicy\":\n \"IfNotPresent\",\n \"ports\": [{\n \"containerPort\": 3000\n }],\n \"resources\": {\n \"requests\": {\n \"cpu\": \"10m\",\n \"memory\": \"70Mi\"\n },\n \"limits\": {\n \"cpu\": \"100m\",\n \"memory\": \"500Mi\"\n },\n }\n }],\n \"serviceAccountName\":\n \"default-editor\"\n }\n }\n }\n },\n {\n \"apiVersion\": \"v1\",\n \"kind\": \"Service\",\n \"metadata\": {\n \"name\": \"ml-pipeline-ui-artifact\",\n \"namespace\": namespace,\n \"labels\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n },\n \"spec\": {\n \"ports\": [{\n \"name\":\n \"http\", # name is required to let istio understand request protocol\n \"port\": 80,\n \"protocol\": \"TCP\",\n \"targetPort\": 3000\n }],\n \"selector\": {\n \"app\": \"ml-pipeline-ui-artifact\"\n }\n }\n },\n ]\n print('Received request:', parent)\n print('Desired resources except secrets:', desired_resources)\n # Moved after the print argument because this is sensitive data.\n desired_resources.append({\n \"apiVersion\": \"v1\",\n \"kind\": \"Secret\",\n \"metadata\": {\n \"name\": \"mlpipeline-minio-artifact\",\n \"namespace\": namespace,\n },\n \"data\": {\n \"accesskey\": mlpipeline_minio_access_key,\n \"secretkey\": mlpipeline_minio_secret_key,\n },\n })\n\n return {\"status\": desired_status, \"children\": desired_resources}\n\n def do_POST(self):\n # Serve the sync() function as a JSON webhook.\n observed = json.loads(\n self.rfile.read(int(self.headers.get(\"content-length\"))))\n desired = self.sync(observed[\"parent\"], observed[\"children\"])\n\n self.send_response(200)\n self.send_header(\"Content-type\", \"application/json\")\n self.end_headers()\n self.wfile.write(bytes(json.dumps(desired), 'utf-8'))\n\n\nHTTPServer((\"\", 80), Controller).serve_forever()\n", "path": "manifests/kustomize/base/installs/multi-user/pipelines-profile-controller/sync.py"}]}

| 3,575 | 331 |

gh_patches_debug_552

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-880

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Release 2.1.1

On the docket:

+ [x] PEX 2.1.0 regression: pex file won't build inside a running docker image as user #850

+ [x] Fully pin vendored requirements. #853

+ [x] Fix `tox -epackage` to create pex supporting 3.8. #843

+ [x] Pex erroneously warns about needing to use vendored `pkg_resources` for distributions with empty `namespace_packages.txt` metadata files. #840

+ [x] Interpreter discovery and pyenv don't interact well #782

+ [x] ensure_python_interpreter() bootstrapping broken on pypy shard #477

+ [x] Resolve error checking does not account for environment markers. #851

+ [x] Ensure Pex PEX constraints match pex wheel / sdist. #863

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pex/version.py`

Content:

```

1 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).

2 # Licensed under the Apache License, Version 2.0 (see LICENSE).

3

4 __version__ = '2.1.0'

5

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/pex/version.py b/pex/version.py

--- a/pex/version.py

+++ b/pex/version.py

@@ -1,4 +1,4 @@

# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).

# Licensed under the Apache License, Version 2.0 (see LICENSE).

-__version__ = '2.1.0'

+__version__ = '2.1.1'

|

{"golden_diff": "diff --git a/pex/version.py b/pex/version.py\n--- a/pex/version.py\n+++ b/pex/version.py\n@@ -1,4 +1,4 @@\n # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n # Licensed under the Apache License, Version 2.0 (see LICENSE).\n \n-__version__ = '2.1.0'\n+__version__ = '2.1.1'\n", "issue": "Release 2.1.1\nOn the docket:\r\n+ [x] PEX 2.1.0 regression: pex file won't build inside a running docker image as user #850\r\n+ [x] Fully pin vendored requirements. #853\r\n+ [x] Fix `tox -epackage` to create pex supporting 3.8. #843\r\n+ [x] Pex erroneously warns about needing to use vendored `pkg_resources` for distributions with empty `namespace_packages.txt` metadata files. #840\r\n+ [x] Interpreter discovery and pyenv don't interact well #782\r\n+ [x] ensure_python_interpreter() bootstrapping broken on pypy shard #477\r\n+ [x] Resolve error checking does not account for environment markers. #851\r\n+ [x] Ensure Pex PEX contraints match pex wheel / sdist. #863\r\n\r\n\n", "before_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = '2.1.0'\n", "path": "pex/version.py"}], "after_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = '2.1.1'\n", "path": "pex/version.py"}]}

| 515 | 94 |

gh_patches_debug_26729

|

rasdani/github-patches

|

git_diff

|

mathesar-foundation__mathesar-1398

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Allow the user to hide grouping separators for Number and Money

## Current behavior

- Look at this table of numbers. The right-most column is storing years as an integer, which I think will be common. But they are displayed with grouping separators. Ugh. That looks terrible!

- There is currently no way for the user to disable the display of grouping separators.

## Desired behavior

- The user can use display options to enable/disable grouping separators.

## Proposal

### Schema

- Use the `use_grouping` property as [spec'ed in this comment](https://github.com/centerofci/mathesar/discussions/1243#discussioncomment-2519195).

### UX

- Add a new form field labeled "Use Grouping Separators", with a dropdown input having options "Yes", "No", and "Auto". Display this new field before the already-existing "Format" field.

For integers, the default is "No".

For non-integers, and Money, the default is "Yes".

- Modify the "Format" form field to behave as follows:

- If we have an **integer with grouping separators**, then present the following options:

| option | value |
| --------- | ------- |
| Use browser locale | `null` |
| 1,234,567 | `'english'` |
| 1.234.567 | `'german'` |
| 1 234 567 | `'french'` |
| 12,34,567 | `'hindi'` |
| 1'234'567 | `'swiss'` |

- If we have an **integer without grouping separators**, then don't show the "format" option, because it's irrelevant. Keep value as `null`.

- If we have a **non-integer with grouping separators**, then present the following options:

| option | value |
| --------- | ------- |
| Use browser locale | `null` |
| 1,234,567.89 | `'english'` |
| 1.234.567,89 | `'german'` |
| 1 234 567,89 | `'french'` |
| 12,34,567.89 | `'hindi'` |
| 1'234'567.89 | `'swiss'` |

- If we have a **non-integer without grouping separators**, then present the following options:

| option | value |
| --------- | ------- |
| Use browser locale | `null` |
| 1234.56 | `'english'` |
| 1234,56 | `'german'` |

- When changing the "Use Grouping Separators" field from "No" to "Yes", we set a more specific value for the "Format" field, using the browser locale to resolve ambiguity. For example, if the user has configured `format` to `'german'` (because they just wanted a comma decimal separator), but their locale is `fr-FR`, then we change `'german'` to `'french'`, which also has a comma decimal separator but is more likely to match their desired grouping separator. We can use the browser locale to determine the format option by formatting a number and inspecting it. As a further edge case, if the user has configured `format` to `'german'`, but their locale is `en-US` (which is _not_ a more specific form of `'german'`), then we'd leave the value untouched.
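
The "No" to "Yes" rule in the last bullet can be sketched roughly as follows. This is only an illustration of one reading of the proposal, not code from the Mathesar repository: the function name `resolve_format_on_enable_grouping`, the `DECIMAL_SEPARATOR` table, and the assumption that the browser locale has already been mapped to one of the five format names are all hypothetical. The decimal separators are taken from the non-integer example table above.

```python
# Hypothetical sketch; none of these names exist in Mathesar.
# Decimal separator used by each named format, read off the example
# table for non-integers with grouping separators.
DECIMAL_SEPARATOR = {
    "english": ".",
    "german": ",",
    "french": ",",
    "hindi": ".",
    "swiss": ".",
}


def resolve_format_on_enable_grouping(current_format, locale_format):
    """Pick the `format` value after grouping separators are switched on.

    current_format: the stored value ('english', 'german', ...) or None,
        where None means "use browser locale".
    locale_format: the format name inferred from the browser locale
        (assumed to be computed elsewhere), or None if it is unknown.
    """
    # Nothing to refine when either side is unspecified.
    if current_format is None or locale_format is None:
        return current_format
    # The locale's format counts as "more specific" only if it keeps the
    # decimal separator the user already chose.
    if DECIMAL_SEPARATOR[locale_format] == DECIMAL_SEPARATOR[current_format]:
        return locale_format
    # Otherwise leave the configured value untouched.
    return current_format
```

Under these assumptions, a stored `'german'` with a French browser locale resolves to `'french'`, while a stored `'german'` with an English browser locale stays `'german'`, matching the two cases described in the bullet.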

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mathesar/api/display_options.py`

Content:

```

1 import json

2 from mathesar.database.types import UIType

3 from lazydict import LazyDictionary

4

5

6 def _money_display_options_schema():

7 with open("currency_info.json", "r") as info_file:

8 currency_info = json.load(info_file)

9 currency_codes = list(currency_info.keys())

10 return {

11 "options": [

12 {"name": "currency_code", "type": "string", "enum": currency_codes},

13 {

14 "name": "currency_details",

15 "type": "object",

16 "items": [

17 {"name": "symbol", "type": "string"},

18 {"name": "symbol_location", "type": "number", "enum": [1, -1]},

19 {"name": "decimal_symbol", "type": "string", "enum": [",", "."]},

20 {"name": "digit_grouping", "type": "array"},

21 {"name": "digit_symbol", "type": "string", "enum": [",", ".", " "]}

22 ]

23 }]

24 }

25

26

27 DISPLAY_OPTIONS_BY_UI_TYPE = LazyDictionary(

28 {

29 UIType.BOOLEAN:

30 {

31 "options": [

32 {

33 "name": "input", "type": "string",

34 "enum": ['dropdown', 'checkbox']

35 },

36 {

37 'name': "custom_labels", "type": "object",

38 "items": [

39 {"name": "TRUE", "type": "string"},

40 {'name': "FALSE", "type": "string"}

41 ]

42 }

43 ]

44

45 },

46 UIType.NUMBER:

47 {

48 "options": [

49 {

50 "name": "show_as_percentage",

51 "type": "string",

52 "enum": ['dropdown', 'checkbox']

53 },

54 {

55 "name": "locale",

56 "type": "string"

57 }

58 ]

59 },

60 UIType.DATETIME:

61 {

62 "options": [{"name": "format", "type": "string"}]

63 },

64 UIType.TIME:

65 {

66 "options": [{"name": "format", "type": "string"}]

67 },

68 UIType.DATE:

69 {

70 "options": [{"name": "format", "type": "string"}]

71 },

72 UIType.DURATION:

73 {

74 "options": [

75 {"name": "min", "type": "string"},

76 {"name": "max", "type": "string"},

77 {"name": "show_units", "type": "boolean"},

78 ]

79 },

80 # NOTE: below callable will be evaluated lazily by LazyDictionary

81 UIType.MONEY: _money_display_options_schema,

82 }

83 )

84

```

Path: `mathesar/api/serializers/shared_serializers.py`

Content:

```

1 from django.core.exceptions import ImproperlyConfigured

2 from rest_framework import serializers

3

4 from mathesar.api.exceptions.mixins import MathesarErrorMessageMixin

5 from mathesar.database.types import UIType, get_ui_type_from_db_type

6

7

8 class ReadOnlyPolymorphicSerializerMappingMixin:

9 """

10 This serializer mixin is helpful in serializing polymorphic models,

11 by switching to correct serializer based on the mapping field value.

12 """

13 default_serializer = None

14

15 def __new__(cls, *args, **kwargs):

16 if cls.serializers_mapping is None:

17 raise ImproperlyConfigured(

18 '`{cls}` is missing a '

19 '`{cls}.model_serializer_mapping` attribute'.format(cls=cls.__name__)

20 )

21 return super().__new__(cls, *args, **kwargs)

22

23 def _init_serializer(self, serializer_cls, *args, **kwargs):

24 if callable(serializer_cls):

25 serializer = serializer_cls(*args, **kwargs)

26 serializer.parent = self

27 else:

28 serializer = serializer_cls

29 return serializer

30

31 def __init__(self, *args, **kwargs):

32 super().__init__(*args, **kwargs)

33 self.serializers_cls_mapping = {}

34 serializers_mapping = self.serializers_mapping

35 self.serializers_mapping = {}

36 if self.default_serializer is not None:

37 self.default_serializer = self._init_serializer(self.default_serializer, *args, **kwargs)

38 for identifier, serializer_cls in serializers_mapping.items():

39 serializer = self._init_serializer(serializer_cls, *args, **kwargs)

40 self.serializers_mapping[identifier] = serializer

41 self.serializers_cls_mapping[identifier] = serializer_cls

42

43 def get_serializer_class(self, identifier):

44 if identifier in self.serializers_mapping:

45 return self.serializers_mapping.get(identifier)

46 else:

47 return self.default_serializer

48

49 def to_representation(self, instance):

50 serializer = self.get_serializer_class(self.get_mapping_field(instance))

51 if serializer is not None:

52 return serializer.to_representation(instance)

53 else:

54 return instance

55

56 def get_mapping_field(self, data):

57 mapping_field = getattr(self, "mapping_field", None)

58 if mapping_field is None:

59 raise Exception(

60 "Add a `mapping_field` to be used as a identifier"

61 "or override this method to return a identifier to identify a proper serializer"

62 )

63 return mapping_field

64

65

66 class ReadWritePolymorphicSerializerMappingMixin(ReadOnlyPolymorphicSerializerMappingMixin):

67 def to_internal_value(self, data):

68 serializer = self.get_serializer_class(self.get_mapping_field(data))

69 if serializer is not None:

70 return serializer.to_internal_value(data=data)

71 else:

72 data = {}

73 return data

74

75 def validate(self, attrs):

76 serializer = self.serializers_mapping.get(self.get_mapping_field(attrs))

77 if serializer is not None:

78 return serializer.validate(attrs)

79 return {}

80

81

82 class MonkeyPatchPartial:

83 """

84 Work around bug #3847 in djangorestframework by monkey-patching the partial

85 attribute of the root serializer during the call to validate_empty_values.

86 https://github.com/encode/django-rest-framework/issues/3847

87 """

88

89 def __init__(self, root):

90 self._root = root

91

92 def __enter__(self):

93 self._old = getattr(self._root, 'partial')

94 setattr(self._root, 'partial', False)

95

96 def __exit__(self, *args):

97 setattr(self._root, 'partial', self._old)

98

99

100 class OverrideRootPartialMixin:

101 """

102 This mixin is used to convert a serializer into a partial serializer,

103 based on the serializer `partial` property rather than the parent's `partial` property.

104 Refer to the issue

105 https://github.com/encode/django-rest-framework/issues/3847

106 """

107

108 def run_validation(self, *args, **kwargs):

109 if not self.partial:

110 with MonkeyPatchPartial(self.root):

111 return super().run_validation(*args, **kwargs)

112 return super().run_validation(*args, **kwargs)

113

114

115 class MathesarPolymorphicErrorMixin(MathesarErrorMessageMixin):

116 def get_serializer_fields(self, data):

117 return self.serializers_mapping[self.get_mapping_field(data)].fields

118

119

120 class BaseDisplayOptionsSerializer(MathesarErrorMessageMixin, OverrideRootPartialMixin, serializers.Serializer):

121 show_fk_preview = serializers.BooleanField(default=True)

122

123

124 class CustomBooleanLabelSerializer(MathesarErrorMessageMixin, serializers.Serializer):

125 TRUE = serializers.CharField()

126 FALSE = serializers.CharField()

127

128

129 # This is the key which will determine which display options serializer is used. Its value is

130 # supposed to be the column's DB type (a DatabaseType instance).

131 DISPLAY_OPTIONS_SERIALIZER_MAPPING_KEY = 'db_type'

132

133

134 class BooleanDisplayOptionSerializer(BaseDisplayOptionsSerializer):

135 input = serializers.ChoiceField(choices=[("dropdown", "dropdown"), ("checkbox", "checkbox")])

136 custom_labels = CustomBooleanLabelSerializer(required=False)

137

138

139 class AbstractNumberDisplayOptionSerializer(BaseDisplayOptionsSerializer):

140 number_format = serializers.ChoiceField(

141 required=False,

142 allow_null=True,

143 choices=['english', 'german', 'french', 'hindi', 'swiss']

144 )

145

146

147 class NumberDisplayOptionSerializer(AbstractNumberDisplayOptionSerializer):

148 show_as_percentage = serializers.BooleanField(default=False)

149

150

151 class MoneyDisplayOptionSerializer(AbstractNumberDisplayOptionSerializer):

152 currency_symbol = serializers.CharField()

153 currency_symbol_location = serializers.ChoiceField(choices=['after-minus', 'end-with-space'])

154

155

156 class TimeFormatDisplayOptionSerializer(BaseDisplayOptionsSerializer):

157 format = serializers.CharField(max_length=255)

158

159

160 class DateTimeFormatDisplayOptionSerializer(BaseDisplayOptionsSerializer):

161 time_format = serializers.CharField(max_length=255)

162 date_format = serializers.CharField(max_length=255)

163

164

165 class DurationDisplayOptionSerializer(BaseDisplayOptionsSerializer):

166 min = serializers.CharField(max_length=255)

167 max = serializers.CharField(max_length=255)

168 show_units = serializers.BooleanField()

169

170

171 class DisplayOptionsMappingSerializer(

172 OverrideRootPartialMixin,

173 MathesarPolymorphicErrorMixin,

174 ReadWritePolymorphicSerializerMappingMixin,

175 serializers.Serializer

176 ):

177 serializers_mapping = {

178 UIType.BOOLEAN: BooleanDisplayOptionSerializer,

179 UIType.NUMBER: NumberDisplayOptionSerializer,

180 UIType.DATETIME: DateTimeFormatDisplayOptionSerializer,

181 UIType.DATE: TimeFormatDisplayOptionSerializer,

182 UIType.TIME: TimeFormatDisplayOptionSerializer,

183 UIType.DURATION: DurationDisplayOptionSerializer,

184 UIType.MONEY: MoneyDisplayOptionSerializer,

185 }

186 default_serializer = BaseDisplayOptionsSerializer

187

188 def get_mapping_field(self, _):

189 return self._get_ui_type_of_column_being_serialized()

190

191 def _get_ui_type_of_column_being_serialized(self):

192 db_type = self.context[DISPLAY_OPTIONS_SERIALIZER_MAPPING_KEY]

193 ui_type = get_ui_type_from_db_type(db_type)

194 return ui_type

195

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/mathesar/api/display_options.py b/mathesar/api/display_options.py

--- a/mathesar/api/display_options.py

+++ b/mathesar/api/display_options.py

@@ -51,6 +51,11 @@

"type": "string",

"enum": ['dropdown', 'checkbox']

},

+ {

+ "name": "use_grouping",

+ "type": "string",

+ "enum": ['true', 'false', 'auto']

+ },

{

"name": "locale",

"type": "string"

diff --git a/mathesar/api/serializers/shared_serializers.py b/mathesar/api/serializers/shared_serializers.py

--- a/mathesar/api/serializers/shared_serializers.py

+++ b/mathesar/api/serializers/shared_serializers.py

@@ -143,6 +143,18 @@

choices=['english', 'german', 'french', 'hindi', 'swiss']

)

+ use_grouping = serializers.ChoiceField(required=False, choices=['true', 'false', 'auto'], default='auto')

+ """

+ The choices here correspond to the options available for the `useGrouping`

+ property within the [Intl API][1]. True and False are encoded as strings

+ instead of booleans to maintain consistency with the Intl API and to keep

+ the type consistent. We did considering using an optional boolean but

+ decided a string would be better, especially if we want to support other

+ options eventually, like "min2".

+

+ [1]: https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Intl/NumberFormat/NumberFormat

+ """

+

class NumberDisplayOptionSerializer(AbstractNumberDisplayOptionSerializer):

show_as_percentage = serializers.BooleanField(default=False)

|