| problem_id (string, lengths 18-22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13-58) | prompt (string, lengths 1.1k-25.4k) | golden_diff (string, lengths 145-5.13k) | verification_info (string, lengths 582-39.1k) | num_tokens (int64, 271-4.1k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_1116

|

rasdani/github-patches

|

git_diff

|

scikit-hep__pyhf-895

|

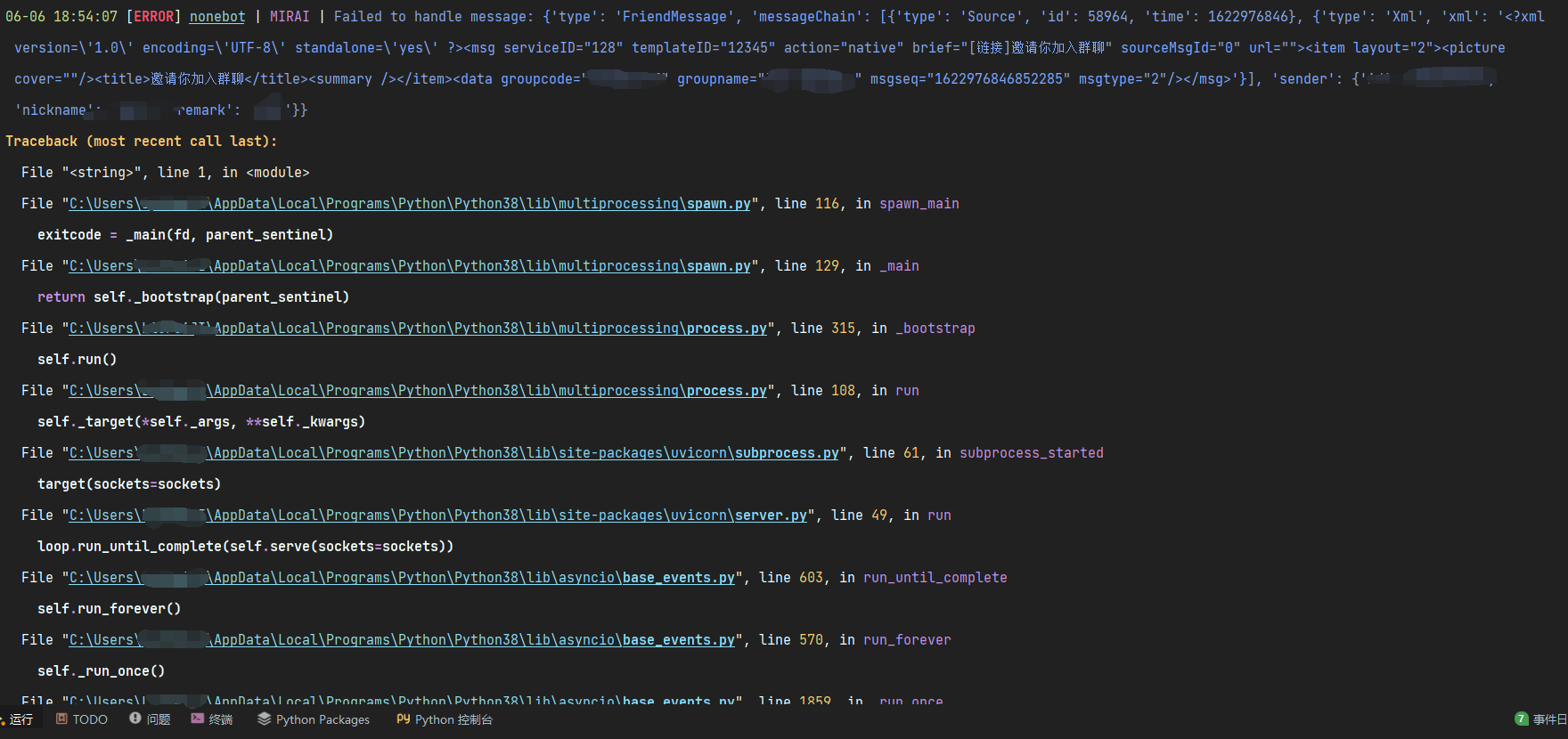

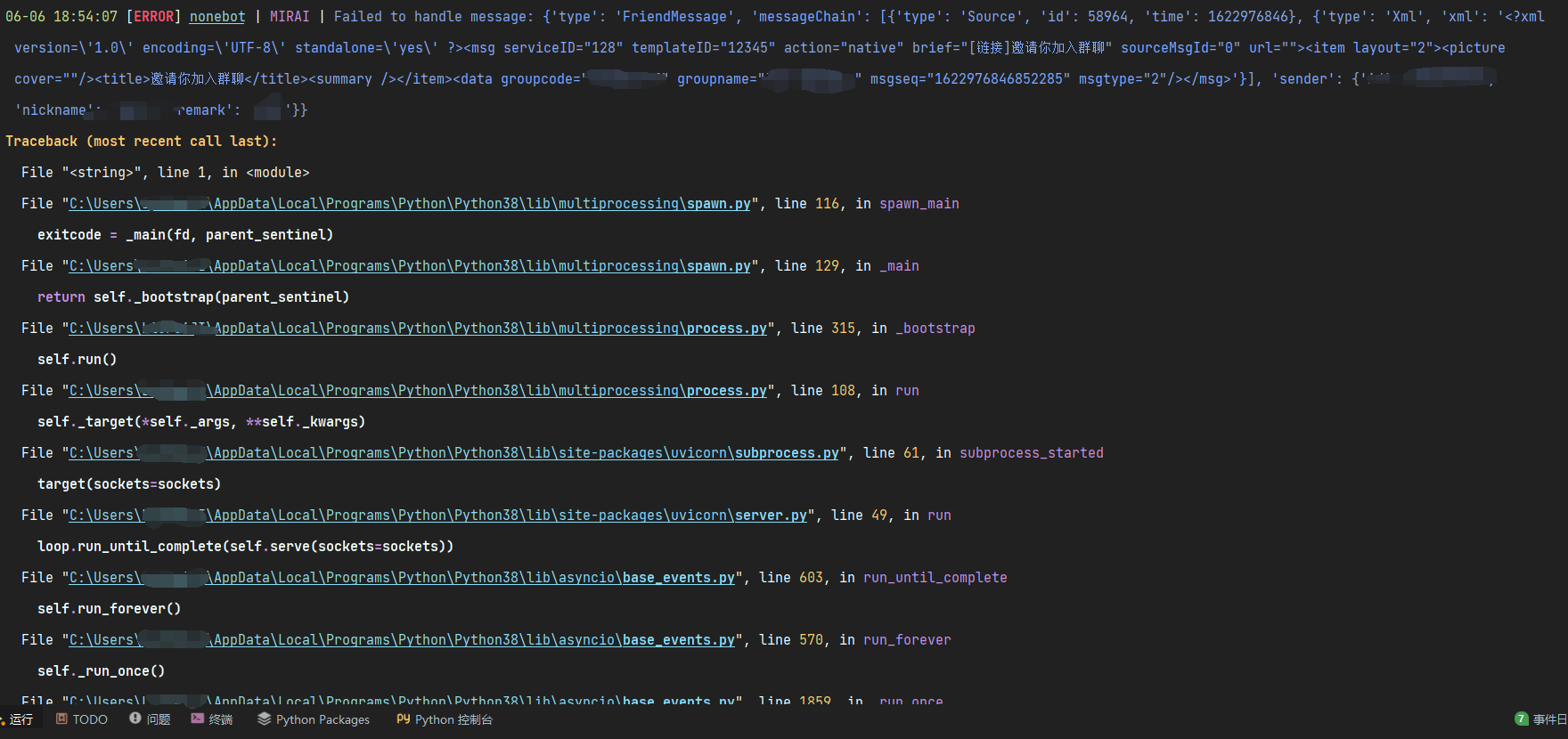

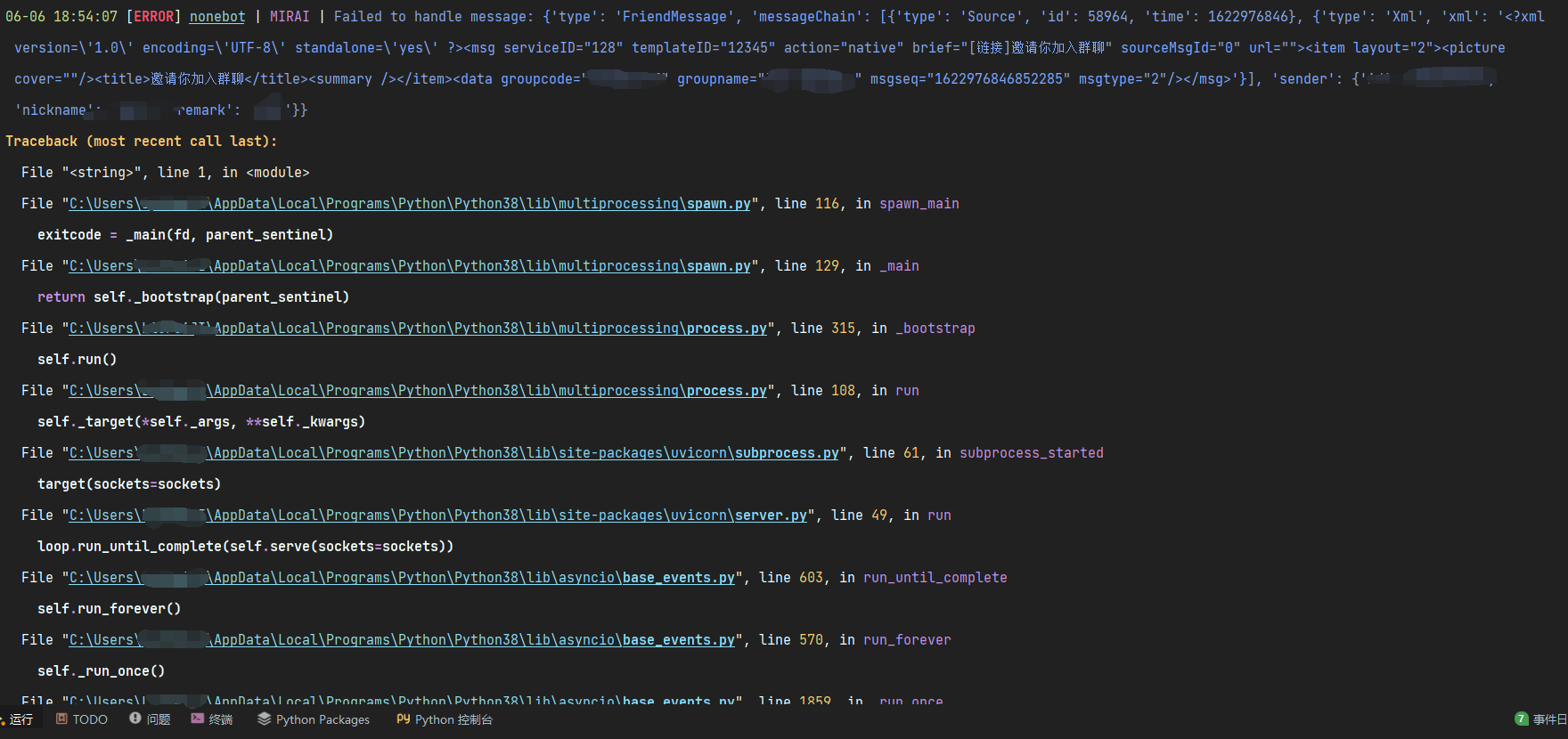

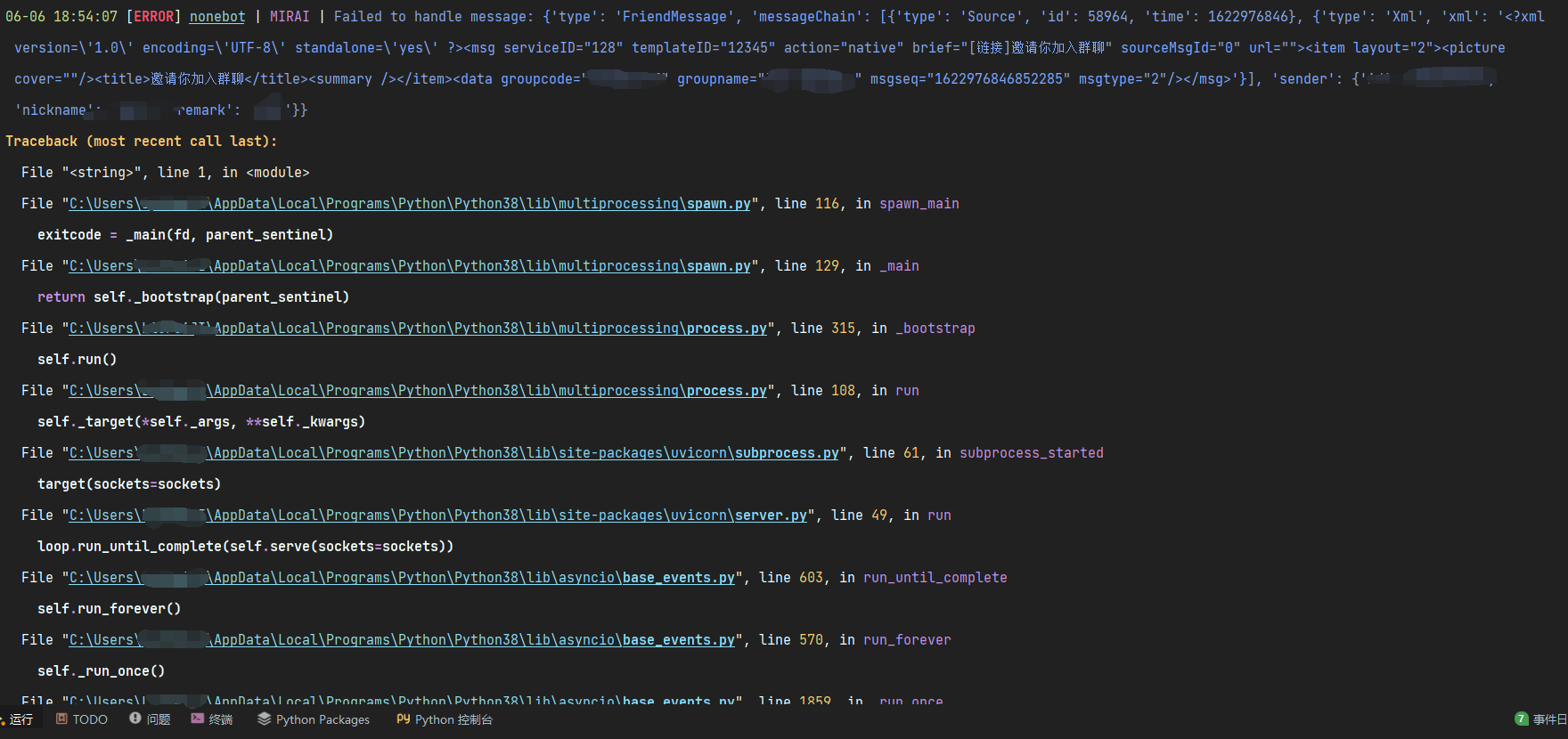

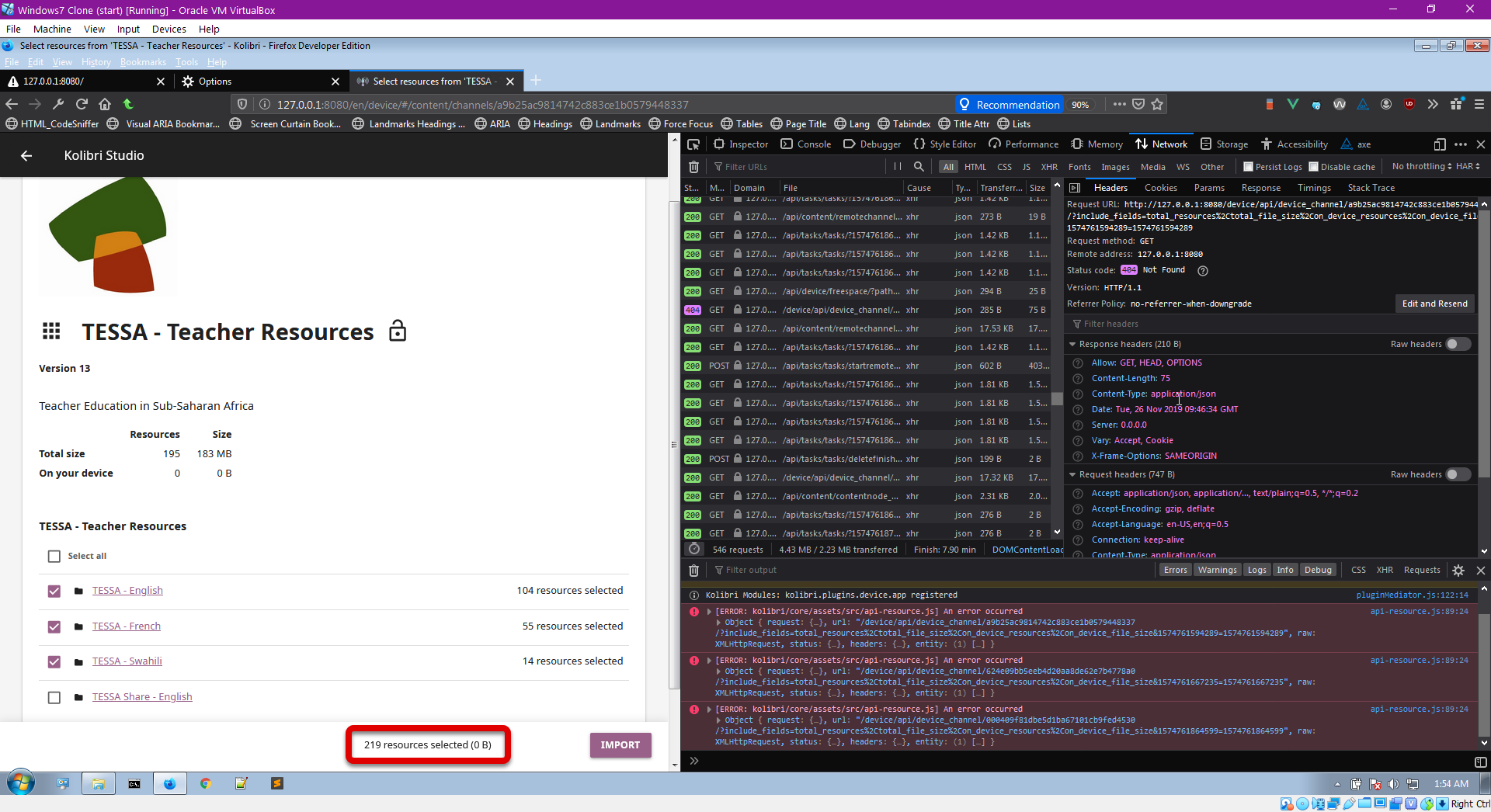

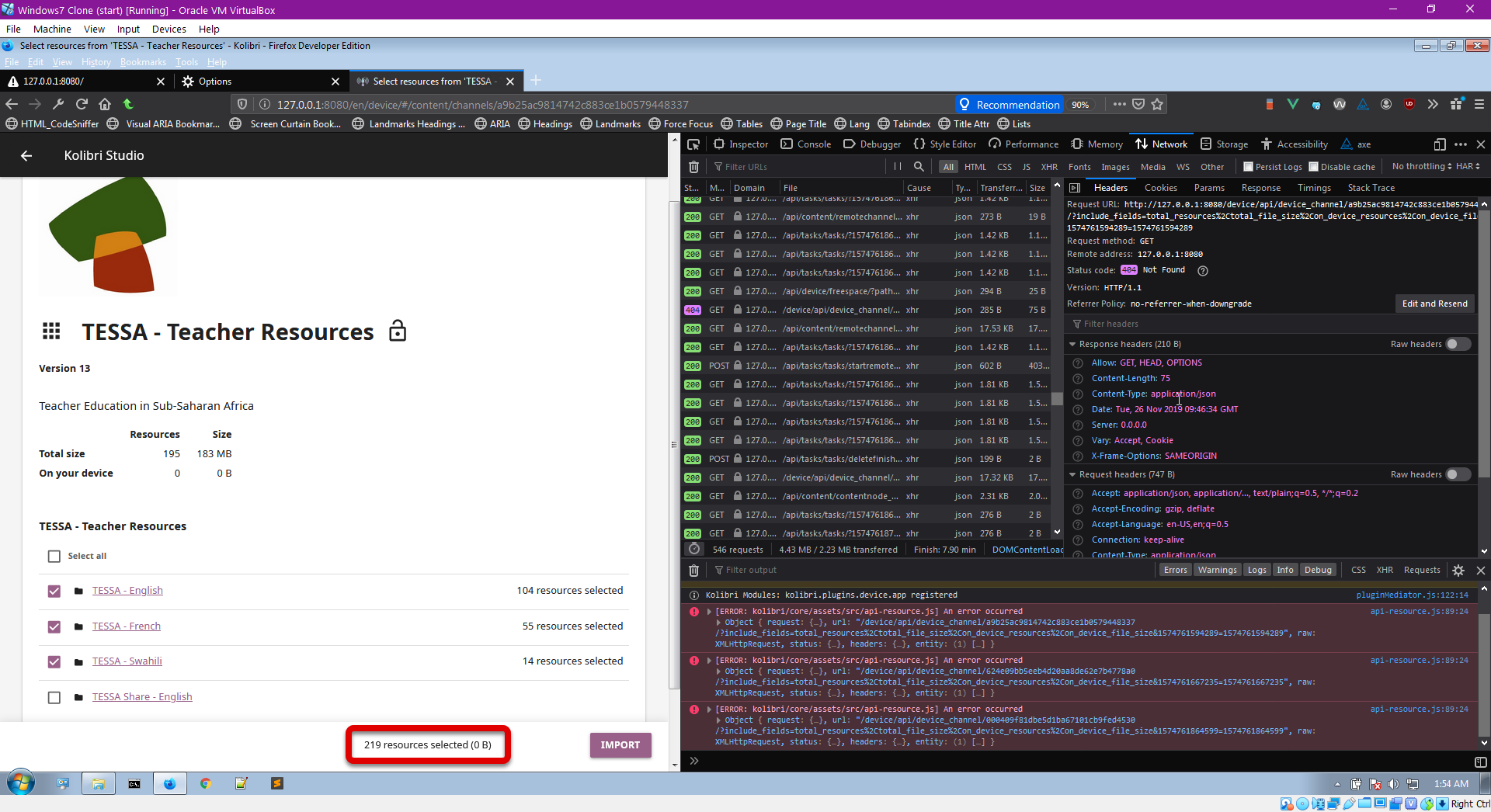

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Docs build broken with Sphinx v3.1.0

# Description

Today (2020-06-08) [Sphinx `v3.1.0`](https://github.com/sphinx-doc/sphinx/releases/tag/v3.1.0) was released which now classifies pyhf's particular usages of the "autoclass" directive as an Error in the docs generated for [`interpolators/code0.py`](https://github.com/scikit-hep/pyhf/blob/62becc2e469f89babf75534a2decfb3ace6ff179/src/pyhf/interpolators/code0.py)

```
Warning, treated as error:
/home/runner/work/pyhf/pyhf/docs/_generated/pyhf.interpolators.code0.rst:8:Error in "autoclass" directive:
1 argument(s) required, 0 supplied.

.. autoclass::
   :show-inheritance:

   .. rubric:: Methods

   .. automethod:: .__init__
##[error]Process completed with exit code 1.
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 from setuptools import setup
2
3 extras_require = {
4     'tensorflow': ['tensorflow~=2.0', 'tensorflow-probability~=0.8'],
5     'torch': ['torch~=1.2'],
6     'jax': ['jax~=0.1,>0.1.51', 'jaxlib~=0.1,>0.1.33'],
7     'xmlio': ['uproot'],
8     'minuit': ['iminuit'],
9 }
10 extras_require['backends'] = sorted(
11     set(
12         extras_require['tensorflow']
13         + extras_require['torch']
14         + extras_require['jax']
15         + extras_require['minuit']
16     )
17 )
18 extras_require['contrib'] = sorted(set(['matplotlib']))
19
20 extras_require['test'] = sorted(
21     set(
22         extras_require['backends']
23         + extras_require['xmlio']
24         + extras_require['contrib']
25         + [
26             'pyflakes',
27             'pytest~=3.5',
28             'pytest-cov>=2.5.1',
29             'pytest-mock',
30             'pytest-benchmark[histogram]',
31             'pytest-console-scripts',
32             'pytest-mpl',
33             'pydocstyle',
34             'coverage>=4.0',  # coveralls
35             'papermill~=2.0',
36             'nteract-scrapbook~=0.2',
37             'check-manifest',
38             'jupyter',
39             'uproot~=3.3',
40             'graphviz',
41             'jsonpatch',
42             'black',
43         ]
44     )
45 )
46 extras_require['docs'] = sorted(
47     set(
48         [
49             'sphinx',
50             'sphinxcontrib-bibtex',
51             'sphinx-click',
52             'sphinx_rtd_theme',
53             'nbsphinx',
54             'ipywidgets',
55             'sphinx-issues',
56             'sphinx-copybutton>0.2.9',
57         ]
58     )
59 )
60 extras_require['develop'] = sorted(
61     set(
62         extras_require['docs']
63         + extras_require['test']
64         + ['nbdime', 'bumpversion', 'ipython', 'pre-commit', 'twine']
65     )
66 )
67 extras_require['complete'] = sorted(set(sum(extras_require.values(), [])))
68
69
70 setup(
71     extras_require=extras_require,
72     use_scm_version=lambda: {'local_scheme': lambda version: ''},
73 )
74
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -46,7 +46,7 @@
 extras_require['docs'] = sorted(
     set(
         [
-            'sphinx',
+            'sphinx!=3.1.0',
             'sphinxcontrib-bibtex',
             'sphinx-click',
             'sphinx_rtd_theme',

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -46,7 +46,7 @@\n extras_require['docs'] = sorted(\n set(\n [\n- 'sphinx',\n+ 'sphinx!=3.1.0',\n 'sphinxcontrib-bibtex',\n 'sphinx-click',\n 'sphinx_rtd_theme',\n", "issue": "Docs build broken with Sphinx v3.1.0\n# Description\r\n\r\nToday (2020-06-08) [Sphinx `v3.1.0`](https://github.com/sphinx-doc/sphinx/releases/tag/v3.1.0) was released which now classifies pyhf's particular usages of the \"autoclass\" directive as an Error in the docs generated for [`interpolators/code0.py`](https://github.com/scikit-hep/pyhf/blob/62becc2e469f89babf75534a2decfb3ace6ff179/src/pyhf/interpolators/code0.py)\r\n\r\n```\r\nWarning, treated as error:\r\n/home/runner/work/pyhf/pyhf/docs/_generated/pyhf.interpolators.code0.rst:8:Error in \"autoclass\" directive:\r\n1 argument(s) required, 0 supplied.\r\n\r\n.. autoclass::\r\n :show-inheritance:\r\n\r\n\r\n\r\n\r\n\r\n\r\n\r\n .. rubric:: Methods\r\n\r\n\r\n\r\n .. automethod:: .__init__\r\n##[error]Process completed with exit code 1.\r\n```\n", "before_files": [{"content": "from setuptools import setup\n\nextras_require = {\n 'tensorflow': ['tensorflow~=2.0', 'tensorflow-probability~=0.8'],\n 'torch': ['torch~=1.2'],\n 'jax': ['jax~=0.1,>0.1.51', 'jaxlib~=0.1,>0.1.33'],\n 'xmlio': ['uproot'],\n 'minuit': ['iminuit'],\n}\nextras_require['backends'] = sorted(\n set(\n extras_require['tensorflow']\n + extras_require['torch']\n + extras_require['jax']\n + extras_require['minuit']\n )\n)\nextras_require['contrib'] = sorted(set(['matplotlib']))\n\nextras_require['test'] = sorted(\n set(\n extras_require['backends']\n + extras_require['xmlio']\n + extras_require['contrib']\n + [\n 'pyflakes',\n 'pytest~=3.5',\n 'pytest-cov>=2.5.1',\n 'pytest-mock',\n 'pytest-benchmark[histogram]',\n 'pytest-console-scripts',\n 'pytest-mpl',\n 'pydocstyle',\n 'coverage>=4.0', # coveralls\n 'papermill~=2.0',\n 'nteract-scrapbook~=0.2',\n 'check-manifest',\n 'jupyter',\n 
'uproot~=3.3',\n 'graphviz',\n 'jsonpatch',\n 'black',\n ]\n )\n)\nextras_require['docs'] = sorted(\n set(\n [\n 'sphinx',\n 'sphinxcontrib-bibtex',\n 'sphinx-click',\n 'sphinx_rtd_theme',\n 'nbsphinx',\n 'ipywidgets',\n 'sphinx-issues',\n 'sphinx-copybutton>0.2.9',\n ]\n )\n)\nextras_require['develop'] = sorted(\n set(\n extras_require['docs']\n + extras_require['test']\n + ['nbdime', 'bumpversion', 'ipython', 'pre-commit', 'twine']\n )\n)\nextras_require['complete'] = sorted(set(sum(extras_require.values(), [])))\n\n\nsetup(\n extras_require=extras_require,\n use_scm_version=lambda: {'local_scheme': lambda version: ''},\n)\n", "path": "setup.py"}], "after_files": [{"content": "from setuptools import setup\n\nextras_require = {\n 'tensorflow': ['tensorflow~=2.0', 'tensorflow-probability~=0.8'],\n 'torch': ['torch~=1.2'],\n 'jax': ['jax~=0.1,>0.1.51', 'jaxlib~=0.1,>0.1.33'],\n 'xmlio': ['uproot'],\n 'minuit': ['iminuit'],\n}\nextras_require['backends'] = sorted(\n set(\n extras_require['tensorflow']\n + extras_require['torch']\n + extras_require['jax']\n + extras_require['minuit']\n )\n)\nextras_require['contrib'] = sorted(set(['matplotlib']))\n\nextras_require['test'] = sorted(\n set(\n extras_require['backends']\n + extras_require['xmlio']\n + extras_require['contrib']\n + [\n 'pyflakes',\n 'pytest~=3.5',\n 'pytest-cov>=2.5.1',\n 'pytest-mock',\n 'pytest-benchmark[histogram]',\n 'pytest-console-scripts',\n 'pytest-mpl',\n 'pydocstyle',\n 'coverage>=4.0', # coveralls\n 'papermill~=2.0',\n 'nteract-scrapbook~=0.2',\n 'check-manifest',\n 'jupyter',\n 'uproot~=3.3',\n 'graphviz',\n 'jsonpatch',\n 'black',\n ]\n )\n)\nextras_require['docs'] = sorted(\n set(\n [\n 'sphinx!=3.1.0',\n 'sphinxcontrib-bibtex',\n 'sphinx-click',\n 'sphinx_rtd_theme',\n 'nbsphinx',\n 'ipywidgets',\n 'sphinx-issues',\n 'sphinx-copybutton>0.2.9',\n ]\n )\n)\nextras_require['develop'] = sorted(\n set(\n extras_require['docs']\n + extras_require['test']\n + ['nbdime', 'bumpversion', 'ipython', 
'pre-commit', 'twine']\n )\n)\nextras_require['complete'] = sorted(set(sum(extras_require.values(), [])))\n\n\nsetup(\n extras_require=extras_require,\n use_scm_version=lambda: {'local_scheme': lambda version: ''},\n)\n", "path": "setup.py"}]}

| 1,126 | 86 |
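
The `setup.py` in the record above builds its `complete` extra by concatenating every extras list, de-duplicating, and sorting. A minimal sketch of that step follows. The three-entry dictionary is a shortened, illustrative subset rather than the real file, and `flatten_extras` is a hypothetical helper name introduced only for this example. It also shows that a requirement string such as `'sphinx!=3.1.0'` passes through the aggregation unchanged.

```python
# Shortened, illustrative subset of the extras declared in setup.py
# (assumption: the real file has many more entries).
extras_require = {
    'xmlio': ['uproot'],
    'minuit': ['iminuit'],
    'docs': ['sphinx!=3.1.0', 'sphinx-click'],
}


def flatten_extras(extras):
    """Concatenate every extras list, drop duplicates, and sort the result."""
    # sum(list_of_lists, []) concatenates the lists; set() removes duplicates.
    return sorted(set(sum(extras.values(), [])))


complete = flatten_extras(extras_require)
print(complete)
```

Because the exclusion lives inside the requirement string itself, any extra that aggregates `docs` (such as `develop` or `complete`) would inherit it without further changes.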

gh_patches_debug_64733

|

rasdani/github-patches

|

git_diff

|

python-gitlab__python-gitlab-1099

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Duplicated code in gitlab/config.py

## Description of the problem, including code/CLI snippet

Duplicated code found in gitlab/config.py . I think one should be get from 'global'.

```python
self.http_username = None
self.http_password = None
try:
    self.http_username = self._config.get(self.gitlab_id, "http_username")
    self.http_password = self._config.get(self.gitlab_id, "http_password")
except Exception:
    pass

self.http_username = None
self.http_password = None
try:
    self.http_username = self._config.get(self.gitlab_id, "http_username")
    self.http_password = self._config.get(self.gitlab_id, "http_password")
except Exception:
    pass
```

## Expected Behavior

## Actual Behavior

## Specifications

- python-gitlab version: python-gitlab==2.2.0

- API version you are using (v3/v4): v4

- Gitlab server version (or gitlab.com):

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `gitlab/config.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 #
3 # Copyright (C) 2013-2017 Gauvain Pocentek <[email protected]>
4 #
5 # This program is free software: you can redistribute it and/or modify
6 # it under the terms of the GNU Lesser General Public License as published by
7 # the Free Software Foundation, either version 3 of the License, or
8 # (at your option) any later version.
9 #
10 # This program is distributed in the hope that it will be useful,
11 # but WITHOUT ANY WARRANTY; without even the implied warranty of
12 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
13 # GNU Lesser General Public License for more details.
14 #
15 # You should have received a copy of the GNU Lesser General Public License
16 # along with this program. If not, see <http://www.gnu.org/licenses/>.
17
18 import os
19 import configparser
20
21
22 def _env_config():
23     if "PYTHON_GITLAB_CFG" in os.environ:
24         return [os.environ["PYTHON_GITLAB_CFG"]]
25     return []
26
27
28 _DEFAULT_FILES = _env_config() + [
29     "/etc/python-gitlab.cfg",
30     os.path.expanduser("~/.python-gitlab.cfg"),
31 ]
32
33
34 class ConfigError(Exception):
35     pass
36
37
38 class GitlabIDError(ConfigError):
39     pass
40
41
42 class GitlabDataError(ConfigError):
43     pass
44
45
46 class GitlabConfigMissingError(ConfigError):
47     pass
48
49
50 class GitlabConfigParser(object):
51     def __init__(self, gitlab_id=None, config_files=None):
52         self.gitlab_id = gitlab_id
53         _files = config_files or _DEFAULT_FILES
54         file_exist = False
55         for file in _files:
56             if os.path.exists(file):
57                 file_exist = True
58         if not file_exist:
59             raise GitlabConfigMissingError(
60                 "Config file not found. \nPlease create one in "
61                 "one of the following locations: {} \nor "
62                 "specify a config file using the '-c' parameter.".format(
63                     ", ".join(_DEFAULT_FILES)
64                 )
65             )
66
67         self._config = configparser.ConfigParser()
68         self._config.read(_files)
69
70         if self.gitlab_id is None:
71             try:
72                 self.gitlab_id = self._config.get("global", "default")
73             except Exception as e:
74                 raise GitlabIDError(
75                     "Impossible to get the gitlab id (not specified in config file)"
76                 ) from e
77
78         try:
79             self.url = self._config.get(self.gitlab_id, "url")
80         except Exception as e:
81             raise GitlabDataError(
82                 "Impossible to get gitlab informations from "
83                 "configuration (%s)" % self.gitlab_id
84             ) from e
85
86         self.ssl_verify = True
87         try:
88             self.ssl_verify = self._config.getboolean("global", "ssl_verify")
89         except ValueError:
90             # Value Error means the option exists but isn't a boolean.
91             # Get as a string instead as it should then be a local path to a
92             # CA bundle.
93             try:
94                 self.ssl_verify = self._config.get("global", "ssl_verify")
95             except Exception:
96                 pass
97         except Exception:
98             pass
99         try:
100             self.ssl_verify = self._config.getboolean(self.gitlab_id, "ssl_verify")
101         except ValueError:
102             # Value Error means the option exists but isn't a boolean.
103             # Get as a string instead as it should then be a local path to a
104             # CA bundle.
105             try:
106                 self.ssl_verify = self._config.get(self.gitlab_id, "ssl_verify")
107             except Exception:
108                 pass
109         except Exception:
110             pass
111
112         self.timeout = 60
113         try:
114             self.timeout = self._config.getint("global", "timeout")
115         except Exception:
116             pass
117         try:
118             self.timeout = self._config.getint(self.gitlab_id, "timeout")
119         except Exception:
120             pass
121
122         self.private_token = None
123         try:
124             self.private_token = self._config.get(self.gitlab_id, "private_token")
125         except Exception:
126             pass
127
128         self.oauth_token = None
129         try:
130             self.oauth_token = self._config.get(self.gitlab_id, "oauth_token")
131         except Exception:
132             pass
133
134         self.job_token = None
135         try:
136             self.job_token = self._config.get(self.gitlab_id, "job_token")
137         except Exception:
138             pass
139
140         self.http_username = None
141         self.http_password = None
142         try:
143             self.http_username = self._config.get(self.gitlab_id, "http_username")
144             self.http_password = self._config.get(self.gitlab_id, "http_password")
145         except Exception:
146             pass
147
148         self.http_username = None
149         self.http_password = None
150         try:
151             self.http_username = self._config.get(self.gitlab_id, "http_username")
152             self.http_password = self._config.get(self.gitlab_id, "http_password")
153         except Exception:
154             pass
155
156         self.api_version = "4"
157         try:
158             self.api_version = self._config.get("global", "api_version")
159         except Exception:
160             pass
161         try:
162             self.api_version = self._config.get(self.gitlab_id, "api_version")
163         except Exception:
164             pass
165         if self.api_version not in ("4",):
166             raise GitlabDataError("Unsupported API version: %s" % self.api_version)
167
168         self.per_page = None
169         for section in ["global", self.gitlab_id]:
170             try:
171                 self.per_page = self._config.getint(section, "per_page")
172             except Exception:
173                 pass
174         if self.per_page is not None and not 0 <= self.per_page <= 100:
175             raise GitlabDataError("Unsupported per_page number: %s" % self.per_page)
176
177         self.pagination = None
178         try:
179             self.pagination = self._config.get(self.gitlab_id, "pagination")
180         except Exception:
181             pass
182
183         self.order_by = None
184         try:
185             self.order_by = self._config.get(self.gitlab_id, "order_by")
186         except Exception:
187             pass
188
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/gitlab/config.py b/gitlab/config.py
--- a/gitlab/config.py
+++ b/gitlab/config.py
@@ -145,14 +145,6 @@
         except Exception:
             pass

-        self.http_username = None
-        self.http_password = None
-        try:
-            self.http_username = self._config.get(self.gitlab_id, "http_username")
-            self.http_password = self._config.get(self.gitlab_id, "http_password")
-        except Exception:
-            pass
-
         self.api_version = "4"
         try:
             self.api_version = self._config.get("global", "api_version")

|

{"golden_diff": "diff --git a/gitlab/config.py b/gitlab/config.py\n--- a/gitlab/config.py\n+++ b/gitlab/config.py\n@@ -145,14 +145,6 @@\n except Exception:\n pass\n \n- self.http_username = None\n- self.http_password = None\n- try:\n- self.http_username = self._config.get(self.gitlab_id, \"http_username\")\n- self.http_password = self._config.get(self.gitlab_id, \"http_password\")\n- except Exception:\n- pass\n-\n self.api_version = \"4\"\n try:\n self.api_version = self._config.get(\"global\", \"api_version\")\n", "issue": "Duplicated code in gitlab/config.py\n## Description of the problem, including code/CLI snippet\r\nDuplicated code found in gitlab/config.py . I think one should be get from 'global'.\r\n```python\r\n self.http_username = None\r\n self.http_password = None\r\n try:\r\n self.http_username = self._config.get(self.gitlab_id, \"http_username\")\r\n self.http_password = self._config.get(self.gitlab_id, \"http_password\")\r\n except Exception:\r\n pass\r\n\r\n self.http_username = None\r\n self.http_password = None\r\n try:\r\n self.http_username = self._config.get(self.gitlab_id, \"http_username\")\r\n self.http_password = self._config.get(self.gitlab_id, \"http_password\")\r\n except Exception:\r\n pass\r\n```\r\n\r\n## Expected Behavior\r\n\r\n\r\n## Actual Behavior\r\n\r\n\r\n## Specifications\r\n\r\n - python-gitlab version: python-gitlab==2.2.0\r\n - API version you are using (v3/v4): v4\r\n - Gitlab server version (or gitlab.com): \r\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n#\n# Copyright (C) 2013-2017 Gauvain Pocentek <[email protected]>\n#\n# This program is free software: you can redistribute it and/or modify\n# it under the terms of the GNU Lesser General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# This program is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied 
warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU Lesser General Public License for more details.\n#\n# You should have received a copy of the GNU Lesser General Public License\n# along with this program. If not, see <http://www.gnu.org/licenses/>.\n\nimport os\nimport configparser\n\n\ndef _env_config():\n if \"PYTHON_GITLAB_CFG\" in os.environ:\n return [os.environ[\"PYTHON_GITLAB_CFG\"]]\n return []\n\n\n_DEFAULT_FILES = _env_config() + [\n \"/etc/python-gitlab.cfg\",\n os.path.expanduser(\"~/.python-gitlab.cfg\"),\n]\n\n\nclass ConfigError(Exception):\n pass\n\n\nclass GitlabIDError(ConfigError):\n pass\n\n\nclass GitlabDataError(ConfigError):\n pass\n\n\nclass GitlabConfigMissingError(ConfigError):\n pass\n\n\nclass GitlabConfigParser(object):\n def __init__(self, gitlab_id=None, config_files=None):\n self.gitlab_id = gitlab_id\n _files = config_files or _DEFAULT_FILES\n file_exist = False\n for file in _files:\n if os.path.exists(file):\n file_exist = True\n if not file_exist:\n raise GitlabConfigMissingError(\n \"Config file not found. 
\\nPlease create one in \"\n \"one of the following locations: {} \\nor \"\n \"specify a config file using the '-c' parameter.\".format(\n \", \".join(_DEFAULT_FILES)\n )\n )\n\n self._config = configparser.ConfigParser()\n self._config.read(_files)\n\n if self.gitlab_id is None:\n try:\n self.gitlab_id = self._config.get(\"global\", \"default\")\n except Exception as e:\n raise GitlabIDError(\n \"Impossible to get the gitlab id (not specified in config file)\"\n ) from e\n\n try:\n self.url = self._config.get(self.gitlab_id, \"url\")\n except Exception as e:\n raise GitlabDataError(\n \"Impossible to get gitlab informations from \"\n \"configuration (%s)\" % self.gitlab_id\n ) from e\n\n self.ssl_verify = True\n try:\n self.ssl_verify = self._config.getboolean(\"global\", \"ssl_verify\")\n except ValueError:\n # Value Error means the option exists but isn't a boolean.\n # Get as a string instead as it should then be a local path to a\n # CA bundle.\n try:\n self.ssl_verify = self._config.get(\"global\", \"ssl_verify\")\n except Exception:\n pass\n except Exception:\n pass\n try:\n self.ssl_verify = self._config.getboolean(self.gitlab_id, \"ssl_verify\")\n except ValueError:\n # Value Error means the option exists but isn't a boolean.\n # Get as a string instead as it should then be a local path to a\n # CA bundle.\n try:\n self.ssl_verify = self._config.get(self.gitlab_id, \"ssl_verify\")\n except Exception:\n pass\n except Exception:\n pass\n\n self.timeout = 60\n try:\n self.timeout = self._config.getint(\"global\", \"timeout\")\n except Exception:\n pass\n try:\n self.timeout = self._config.getint(self.gitlab_id, \"timeout\")\n except Exception:\n pass\n\n self.private_token = None\n try:\n self.private_token = self._config.get(self.gitlab_id, \"private_token\")\n except Exception:\n pass\n\n self.oauth_token = None\n try:\n self.oauth_token = self._config.get(self.gitlab_id, \"oauth_token\")\n except Exception:\n pass\n\n self.job_token = None\n try:\n 
self.job_token = self._config.get(self.gitlab_id, \"job_token\")\n except Exception:\n pass\n\n self.http_username = None\n self.http_password = None\n try:\n self.http_username = self._config.get(self.gitlab_id, \"http_username\")\n self.http_password = self._config.get(self.gitlab_id, \"http_password\")\n except Exception:\n pass\n\n self.http_username = None\n self.http_password = None\n try:\n self.http_username = self._config.get(self.gitlab_id, \"http_username\")\n self.http_password = self._config.get(self.gitlab_id, \"http_password\")\n except Exception:\n pass\n\n self.api_version = \"4\"\n try:\n self.api_version = self._config.get(\"global\", \"api_version\")\n except Exception:\n pass\n try:\n self.api_version = self._config.get(self.gitlab_id, \"api_version\")\n except Exception:\n pass\n if self.api_version not in (\"4\",):\n raise GitlabDataError(\"Unsupported API version: %s\" % self.api_version)\n\n self.per_page = None\n for section in [\"global\", self.gitlab_id]:\n try:\n self.per_page = self._config.getint(section, \"per_page\")\n except Exception:\n pass\n if self.per_page is not None and not 0 <= self.per_page <= 100:\n raise GitlabDataError(\"Unsupported per_page number: %s\" % self.per_page)\n\n self.pagination = None\n try:\n self.pagination = self._config.get(self.gitlab_id, \"pagination\")\n except Exception:\n pass\n\n self.order_by = None\n try:\n self.order_by = self._config.get(self.gitlab_id, \"order_by\")\n except Exception:\n pass\n", "path": "gitlab/config.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\n#\n# Copyright (C) 2013-2017 Gauvain Pocentek <[email protected]>\n#\n# This program is free software: you can redistribute it and/or modify\n# it under the terms of the GNU Lesser General Public License as published by\n# the Free Software Foundation, either version 3 of the License, or\n# (at your option) any later version.\n#\n# This program is distributed in the hope that it will be useful,\n# but WITHOUT ANY 
WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU Lesser General Public License for more details.\n#\n# You should have received a copy of the GNU Lesser General Public License\n# along with this program. If not, see <http://www.gnu.org/licenses/>.\n\nimport os\nimport configparser\n\n\ndef _env_config():\n if \"PYTHON_GITLAB_CFG\" in os.environ:\n return [os.environ[\"PYTHON_GITLAB_CFG\"]]\n return []\n\n\n_DEFAULT_FILES = _env_config() + [\n \"/etc/python-gitlab.cfg\",\n os.path.expanduser(\"~/.python-gitlab.cfg\"),\n]\n\n\nclass ConfigError(Exception):\n pass\n\n\nclass GitlabIDError(ConfigError):\n pass\n\n\nclass GitlabDataError(ConfigError):\n pass\n\n\nclass GitlabConfigMissingError(ConfigError):\n pass\n\n\nclass GitlabConfigParser(object):\n def __init__(self, gitlab_id=None, config_files=None):\n self.gitlab_id = gitlab_id\n _files = config_files or _DEFAULT_FILES\n file_exist = False\n for file in _files:\n if os.path.exists(file):\n file_exist = True\n if not file_exist:\n raise GitlabConfigMissingError(\n \"Config file not found. 
\\nPlease create one in \"\n \"one of the following locations: {} \\nor \"\n \"specify a config file using the '-c' parameter.\".format(\n \", \".join(_DEFAULT_FILES)\n )\n )\n\n self._config = configparser.ConfigParser()\n self._config.read(_files)\n\n if self.gitlab_id is None:\n try:\n self.gitlab_id = self._config.get(\"global\", \"default\")\n except Exception as e:\n raise GitlabIDError(\n \"Impossible to get the gitlab id (not specified in config file)\"\n ) from e\n\n try:\n self.url = self._config.get(self.gitlab_id, \"url\")\n except Exception as e:\n raise GitlabDataError(\n \"Impossible to get gitlab informations from \"\n \"configuration (%s)\" % self.gitlab_id\n ) from e\n\n self.ssl_verify = True\n try:\n self.ssl_verify = self._config.getboolean(\"global\", \"ssl_verify\")\n except ValueError:\n # Value Error means the option exists but isn't a boolean.\n # Get as a string instead as it should then be a local path to a\n # CA bundle.\n try:\n self.ssl_verify = self._config.get(\"global\", \"ssl_verify\")\n except Exception:\n pass\n except Exception:\n pass\n try:\n self.ssl_verify = self._config.getboolean(self.gitlab_id, \"ssl_verify\")\n except ValueError:\n # Value Error means the option exists but isn't a boolean.\n # Get as a string instead as it should then be a local path to a\n # CA bundle.\n try:\n self.ssl_verify = self._config.get(self.gitlab_id, \"ssl_verify\")\n except Exception:\n pass\n except Exception:\n pass\n\n self.timeout = 60\n try:\n self.timeout = self._config.getint(\"global\", \"timeout\")\n except Exception:\n pass\n try:\n self.timeout = self._config.getint(self.gitlab_id, \"timeout\")\n except Exception:\n pass\n\n self.private_token = None\n try:\n self.private_token = self._config.get(self.gitlab_id, \"private_token\")\n except Exception:\n pass\n\n self.oauth_token = None\n try:\n self.oauth_token = self._config.get(self.gitlab_id, \"oauth_token\")\n except Exception:\n pass\n\n self.job_token = None\n try:\n 
self.job_token = self._config.get(self.gitlab_id, \"job_token\")\n except Exception:\n pass\n\n self.http_username = None\n self.http_password = None\n try:\n self.http_username = self._config.get(self.gitlab_id, \"http_username\")\n self.http_password = self._config.get(self.gitlab_id, \"http_password\")\n except Exception:\n pass\n\n self.api_version = \"4\"\n try:\n self.api_version = self._config.get(\"global\", \"api_version\")\n except Exception:\n pass\n try:\n self.api_version = self._config.get(self.gitlab_id, \"api_version\")\n except Exception:\n pass\n if self.api_version not in (\"4\",):\n raise GitlabDataError(\"Unsupported API version: %s\" % self.api_version)\n\n self.per_page = None\n for section in [\"global\", self.gitlab_id]:\n try:\n self.per_page = self._config.getint(section, \"per_page\")\n except Exception:\n pass\n if self.per_page is not None and not 0 <= self.per_page <= 100:\n raise GitlabDataError(\"Unsupported per_page number: %s\" % self.per_page)\n\n self.pagination = None\n try:\n self.pagination = self._config.get(self.gitlab_id, \"pagination\")\n except Exception:\n pass\n\n self.order_by = None\n try:\n self.order_by = self._config.get(self.gitlab_id, \"order_by\")\n except Exception:\n pass\n", "path": "gitlab/config.py"}]}

| 2,275 | 145 |
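
The golden diff in the record above only deletes the duplicated block. The issue reporter's remark about reading one value from `global` points at a pattern that `config.py` already uses for options such as `timeout` and `api_version`, where the per-server section overrides `[global]`. The sketch below illustrates that lookup order with the standard-library `configparser`. The helper name `get_with_global_fallback`, the section name `somewhere`, and all option values are assumptions made for this example and are not part of python-gitlab.

```python
import configparser


def get_with_global_fallback(config, section, option, default=None):
    """Return `option` from `section`, else from [global], else `default`."""
    # Check the server-specific section first so it overrides [global].
    for candidate in (section, "global"):
        if config.has_option(candidate, option):
            return config.get(candidate, option)
    return default


# Hypothetical configuration, inlined as a string so the sketch is self-contained.
config = configparser.ConfigParser()
config.read_string("""
[global]
default = somewhere
timeout = 60

[somewhere]
url = https://gitlab.example.com
http_username = alice
""")

print(get_with_global_fallback(config, "somewhere", "http_username"))
print(get_with_global_fallback(config, "somewhere", "timeout"))
print(get_with_global_fallback(config, "somewhere", "http_password"))
```

Using `has_option` instead of a broad `try`/`except Exception` keeps a missing option distinct from other failures, which is a design choice of this sketch rather than something the patch introduces.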

gh_patches_debug_11412

|

rasdani/github-patches

|

git_diff

|

RedHatInsights__insights-core-3108

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

The modprobe combiner is raising AttributeError exceptions in production.

The AllModProbe combiner is throwing a number of the exception AttributeError("'bool' object has no attribute 'append'",) in production.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `insights/combiners/modprobe.py`

Content:

```
1 """
2 Modprobe configuration
3 ======================
4
5 The modprobe configuration files are normally available to rules as a list of
6 ModProbe objects. This combiner turns those into one set of data, preserving
7 the original file name that defined modprobe configuration line using a tuple.
8
9 """
10
11 from insights.core.plugins import combiner
12 from insights.parsers.modprobe import ModProbe
13 from .. import LegacyItemAccess
14
15 from collections import namedtuple
16
17
18 ModProbeValue = namedtuple("ModProbeValue", ['value', 'source'])
19 """
20 A value from a ModProbe source
21 """
22
23
24 @combiner(ModProbe)
25 class AllModProbe(LegacyItemAccess):
26     """
27     Combiner for accessing all the modprobe configuration files in one
28     structure.
29
30     It's important for our reporting and information purposes to know not
31     only what the configuration was but where it was defined. Therefore, the
32     format of the data in this combiner is slightly different compared to the
33     ModProbe parser. Here, each 'value' is actually a 2-tuple, with the
34     actual data first and the file name from whence the value came second.
35     This does mean that you need to pull the value out of each item - e.g.
36     using a list comprehension - but it means that every item is associated
37     with the file it was defined in.
38
39     In line with the ModProbe configuration parser, the actual value is
40     usually a list of the space-separated parts on the line, and the
41     definitions for each module are similarly kept in a list, which makes
42
43     Thanks to the LegacyItemAccess class, this can also be treated as a
44     dictionary for look-ups of data in the `data` attribute.
45
46     Attributes:
47         data (dict): The combined data structures, with each item as a
48             2-tuple, as described above.
49         bad_lines(list): The list of unparseable lines from all files, with
50             each line as a 2-tuple as described above.
51
52     Sample data files::
53
54         /etc/modprobe.conf:
55             # watchdog drivers
56             blacklist i8xx_tco
57
58             # Don't install the Firewire ethernet driver
59             install eth1394 /bin/true
60
61         /etc/modprobe.conf.d/no_ipv6.conf:
62             options ipv6 disable=1
63             install ipv6 /bin/true
64
65     Examples:
66         >>> all_modprobe = shared[AllModProbe]
67         >>> all_modprobe['alias']
68         []
69         >>> all_modprobe['blacklist']
70         {'i8xx_tco': ModProbeValue(True, '/etc/modprobe.conf')}
71         >>> all_modprobe['install']
72         {'eth1394': ModProbeValue(['/bin/true'], '/etc/modprobe.conf'),
73          'ipv6': ModProbeValue(['/bin/true'], '/etc/modprobe.conf.d/no_ipv6.conf')}
74     """
75     def __init__(self, modprobe):
76         self.data = {}
77         self.bad_lines = []
78         for mod in modprobe:
79             filename = mod.file_path  # relative path inside archive
80             # Copy data section
81             for section, sectdict in mod.data.items():
82                 if section not in self.data:
83                     self.data[section] = {}
84                 for name, value in sectdict.items():
85                     if name in self.data[section]:
86                         # append to this module's value - should only
87                         # happen for aliases.
88                         self.data[section][name][0].append(value)
89                     else:
90                         # create new tuple
91                         self.data[section][name] = ModProbeValue(value=value, source=filename)
92             # Copy bad lines, if any
93             if mod.bad_lines:
94                 self.bad_lines.extend(
95                     [ModProbeValue(value=line, source=filename) for line in mod.bad_lines]
96                 )
97         super(AllModProbe, self).__init__()
98
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/insights/combiners/modprobe.py b/insights/combiners/modprobe.py
--- a/insights/combiners/modprobe.py
+++ b/insights/combiners/modprobe.py
@@ -82,7 +82,7 @@
                 if section not in self.data:
                     self.data[section] = {}
                 for name, value in sectdict.items():
-                    if name in self.data[section]:
+                    if name in self.data[section] and type(self.data[section][name][0]) == list:
                         # append to this module's value - should only
                         # happen for aliases.
                         self.data[section][name][0].append(value)

|

{"golden_diff": "diff --git a/insights/combiners/modprobe.py b/insights/combiners/modprobe.py\n--- a/insights/combiners/modprobe.py\n+++ b/insights/combiners/modprobe.py\n@@ -82,7 +82,7 @@\n if section not in self.data:\n self.data[section] = {}\n for name, value in sectdict.items():\n- if name in self.data[section]:\n+ if name in self.data[section] and type(self.data[section][name][0]) == list:\n # append to this module's value - should only\n # happen for aliases.\n self.data[section][name][0].append(value)\n", "issue": "The modprobe combiner is raising AttributeError exceptions in production.\nThe AllModProbe combiner is throwing a number of the exception AttributeError(\"'bool' object has no attribute 'append'\",) in production.\n", "before_files": [{"content": "\"\"\"\nModprobe configuration\n======================\n\nThe modprobe configuration files are normally available to rules as a list of\nModProbe objects. This combiner turns those into one set of data, preserving\nthe original file name that defined modprobe configuration line using a tuple.\n\n\"\"\"\n\nfrom insights.core.plugins import combiner\nfrom insights.parsers.modprobe import ModProbe\nfrom .. import LegacyItemAccess\n\nfrom collections import namedtuple\n\n\nModProbeValue = namedtuple(\"ModProbeValue\", ['value', 'source'])\n\"\"\"\nA value from a ModProbe source\n\"\"\"\n\n\n@combiner(ModProbe)\nclass AllModProbe(LegacyItemAccess):\n \"\"\"\n Combiner for accessing all the modprobe configuration files in one\n structure.\n\n It's important for our reporting and information purposes to know not\n only what the configuration was but where it was defined. Therefore, the\n format of the data in this combiner is slightly different compared to the\n ModProbe parser. 
Here, each 'value' is actually a 2-tuple, with the\n actual data first and the file name from whence the value came second.\n This does mean that you need to pull the value out of each item - e.g.\n using a list comprehension - but it means that every item is associated\n with the file it was defined in.\n\n In line with the ModProbe configuration parser, the actual value is\n usually a list of the space-separated parts on the line, and the\n definitions for each module are similarly kept in a list, which makes\n\n Thanks to the LegacyItemAccess class, this can also be treated as a\n dictionary for look-ups of data in the `data` attribute.\n\n Attributes:\n data (dict): The combined data structures, with each item as a\n 2-tuple, as described above.\n bad_lines(list): The list of unparseable lines from all files, with\n each line as a 2-tuple as described above.\n\n Sample data files::\n\n /etc/modprobe.conf:\n # watchdog drivers\n blacklist i8xx_tco\n\n # Don't install the Firewire ethernet driver\n install eth1394 /bin/true\n\n /etc/modprobe.conf.d/no_ipv6.conf:\n options ipv6 disable=1\n install ipv6 /bin/true\n\n Examples:\n >>> all_modprobe = shared[AllModProbe]\n >>> all_modprobe['alias']\n []\n >>> all_modprobe['blacklist']\n {'i8xx_tco': ModProbeValue(True, '/etc/modprobe.conf')}\n >>> all_modprobe['install']\n {'eth1394': ModProbeValue(['/bin/true'], '/etc/modprobe.conf'),\n 'ipv6': ModProbeValue(['/bin/true'], '/etc/modprobe.conf.d/no_ipv6.conf')}\n \"\"\"\n def __init__(self, modprobe):\n self.data = {}\n self.bad_lines = []\n for mod in modprobe:\n filename = mod.file_path # relative path inside archive\n # Copy data section\n for section, sectdict in mod.data.items():\n if section not in self.data:\n self.data[section] = {}\n for name, value in sectdict.items():\n if name in self.data[section]:\n # append to this module's value - should only\n # happen for aliases.\n self.data[section][name][0].append(value)\n else:\n # create new tuple\n 
self.data[section][name] = ModProbeValue(value=value, source=filename)\n # Copy bad lines, if any\n if mod.bad_lines:\n self.bad_lines.extend(\n [ModProbeValue(value=line, source=filename) for line in mod.bad_lines]\n )\n super(AllModProbe, self).__init__()\n", "path": "insights/combiners/modprobe.py"}], "after_files": [{"content": "\"\"\"\nModprobe configuration\n======================\n\nThe modprobe configuration files are normally available to rules as a list of\nModProbe objects. This combiner turns those into one set of data, preserving\nthe original file name that defined modprobe configuration line using a tuple.\n\n\"\"\"\n\nfrom insights.core.plugins import combiner\nfrom insights.parsers.modprobe import ModProbe\nfrom .. import LegacyItemAccess\n\nfrom collections import namedtuple\n\n\nModProbeValue = namedtuple(\"ModProbeValue\", ['value', 'source'])\n\"\"\"\nA value from a ModProbe source\n\"\"\"\n\n\n@combiner(ModProbe)\nclass AllModProbe(LegacyItemAccess):\n \"\"\"\n Combiner for accessing all the modprobe configuration files in one\n structure.\n\n It's important for our reporting and information purposes to know not\n only what the configuration was but where it was defined. Therefore, the\n format of the data in this combiner is slightly different compared to the\n ModProbe parser. 
Here, each 'value' is actually a 2-tuple, with the\n actual data first and the file name from whence the value came second.\n This does mean that you need to pull the value out of each item - e.g.\n using a list comprehension - but it means that every item is associated\n with the file it was defined in.\n\n In line with the ModProbe configuration parser, the actual value is\n usually a list of the space-separated parts on the line, and the\n definitions for each module are similarly kept in a list, which makes\n\n Thanks to the LegacyItemAccess class, this can also be treated as a\n dictionary for look-ups of data in the `data` attribute.\n\n Attributes:\n data (dict): The combined data structures, with each item as a\n 2-tuple, as described above.\n bad_lines(list): The list of unparseable lines from all files, with\n each line as a 2-tuple as described above.\n\n Sample data files::\n\n /etc/modprobe.conf:\n # watchdog drivers\n blacklist i8xx_tco\n\n # Don't install the Firewire ethernet driver\n install eth1394 /bin/true\n\n /etc/modprobe.conf.d/no_ipv6.conf:\n options ipv6 disable=1\n install ipv6 /bin/true\n\n Examples:\n >>> all_modprobe = shared[AllModProbe]\n >>> all_modprobe['alias']\n []\n >>> all_modprobe['blacklist']\n {'i8xx_tco': ModProbeValue(True, '/etc/modprobe.conf')}\n >>> all_modprobe['install']\n {'eth1394': ModProbeValue(['/bin/true'], '/etc/modprobe.conf'),\n 'ipv6': ModProbeValue(['/bin/true'], '/etc/modprobe.conf.d/no_ipv6.conf')}\n \"\"\"\n def __init__(self, modprobe):\n self.data = {}\n self.bad_lines = []\n for mod in modprobe:\n filename = mod.file_path # relative path inside archive\n # Copy data section\n for section, sectdict in mod.data.items():\n if section not in self.data:\n self.data[section] = {}\n for name, value in sectdict.items():\n if name in self.data[section] and type(self.data[section][name][0]) == list:\n # append to this module's value - should only\n # happen for aliases.\n 
self.data[section][name][0].append(value)\n else:\n # create new tuple\n self.data[section][name] = ModProbeValue(value=value, source=filename)\n # Copy bad lines, if any\n if mod.bad_lines:\n self.bad_lines.extend(\n [ModProbeValue(value=line, source=filename) for line in mod.bad_lines]\n )\n super(AllModProbe, self).__init__()\n", "path": "insights/combiners/modprobe.py"}]}

| 1,305 | 146 |
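
The guard added by the patch in this record can be illustrated in isolation. The following is a minimal sketch, not the insights-core implementation: `merge_sections` and the `(filename, data)` input shape are hypothetical, `ModProbeValue` is redeclared locally so the snippet is self-contained, and `isinstance` stands in for the `type(...) == list` comparison used in the diff.

```python
from collections import namedtuple

# Local stand-in for the ModProbeValue named tuple from the listing.
ModProbeValue = namedtuple("ModProbeValue", ["value", "source"])


def merge_sections(parsed_files):
    """Merge per-file section dicts into one structure.

    parsed_files is an assumed shape: a list of (filename, data) pairs,
    where data maps section name -> {module name -> value}.
    """
    merged = {}
    for filename, data in parsed_files:
        for section, sectdict in data.items():
            target = merged.setdefault(section, {})
            for name, value in sectdict.items():
                existing = target.get(name)
                if existing is not None and isinstance(existing.value, list):
                    # Only list values (e.g. aliases) can be appended to.
                    existing.value.append(value)
                else:
                    # First definition, or a non-list value such as True:
                    # store a fresh tuple instead of calling .append on a bool.
                    target[name] = ModProbeValue(value=value, source=filename)
    return merged
```

With this guard, a module that is blacklisted in two files no longer triggers `'bool' object has no attribute 'append'`; the later definition simply replaces the earlier one, which mirrors the `else` branch of the patched code.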

gh_patches_debug_33260

|

rasdani/github-patches

|

git_diff

|

apache__airflow-1056

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

UnicodeDecodeError in bash_operator.py

Hi,

I see a lot of these errors when running `airflow backfill` :

```
Traceback (most recent call last):
  File "/usr/lib/python2.7/logging/__init__.py", line 851, in emit
    msg = self.format(record)
  File "/usr/lib/python2.7/logging/__init__.py", line 724, in format
    return fmt.format(record)
  File "/usr/lib/python2.7/logging/__init__.py", line 467, in format
    s = self._fmt % record.__dict__
UnicodeDecodeError: 'ascii' codec can't decode byte 0xc3 in position 13: ordinal not in range(128)
Logged from file bash_operator.py, line 72
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `airflow/operators/bash_operator.py`

Content:

```

1
2 from builtins import bytes
3 import logging
4 import sys
5 from subprocess import Popen, STDOUT, PIPE
6 from tempfile import gettempdir, NamedTemporaryFile
7
8 from airflow.utils import AirflowException
9 from airflow.models import BaseOperator
10 from airflow.utils import apply_defaults, TemporaryDirectory
11
12
13 class BashOperator(BaseOperator):
14     """
15     Execute a Bash script, command or set of commands.
16
17     :param bash_command: The command, set of commands or reference to a
18         bash script (must be '.sh') to be executed.
19     :type bash_command: string
20     :param env: If env is not None, it must be a mapping that defines the
21         environment variables for the new process; these are used instead
22         of inheriting the current process environment, which is the default
23         behavior.
24     :type env: dict
25     """
26     template_fields = ('bash_command', 'env')
27     template_ext = ('.sh', '.bash',)
28     ui_color = '#f0ede4'
29
30     @apply_defaults
31     def __init__(
32             self,
33             bash_command,
34             xcom_push=False,
35             env=None,
36             *args, **kwargs):
37         """
38         If xcom_push is True, the last line written to stdout will also
39         be pushed to an XCom when the bash command completes.
40         """
41         super(BashOperator, self).__init__(*args, **kwargs)
42         self.bash_command = bash_command
43         self.env = env
44         self.xcom_push_flag = xcom_push
45
46     def execute(self, context):
47         """
48         Execute the bash command in a temporary directory
49         which will be cleaned afterwards
50         """
51         bash_command = self.bash_command
52         logging.info("tmp dir root location: \n" + gettempdir())
53         with TemporaryDirectory(prefix='airflowtmp') as tmp_dir:
54             with NamedTemporaryFile(dir=tmp_dir, prefix=self.task_id) as f:
55
56                 f.write(bytes(bash_command, 'utf_8'))
57                 f.flush()
58                 fname = f.name
59                 script_location = tmp_dir + "/" + fname
60                 logging.info("Temporary script "
61                              "location :{0}".format(script_location))
62                 logging.info("Running command: " + bash_command)
63                 sp = Popen(
64                     ['bash', fname],
65                     stdout=PIPE, stderr=STDOUT,
66                     cwd=tmp_dir, env=self.env)
67
68                 self.sp = sp
69
70                 logging.info("Output:")
71                 line = ''
72                 for line in iter(sp.stdout.readline, b''):
73                     line = line.decode().strip()
74                     logging.info(line)
75                 sp.wait()
76                 logging.info("Command exited with "
77                              "return code {0}".format(sp.returncode))
78
79                 if sp.returncode:
80                     raise AirflowException("Bash command failed")
81
82         if self.xcom_push_flag:
83             return line
84
85     def on_kill(self):
86         logging.info('Sending SIGTERM signal to bash subprocess')
87         self.sp.terminate()
88

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/airflow/operators/bash_operator.py b/airflow/operators/bash_operator.py
--- a/airflow/operators/bash_operator.py
+++ b/airflow/operators/bash_operator.py
@@ -1,7 +1,6 @@

 from builtins import bytes
 import logging
-import sys
 from subprocess import Popen, STDOUT, PIPE
 from tempfile import gettempdir, NamedTemporaryFile

@@ -22,6 +21,7 @@
         of inheriting the current process environment, which is the default
         behavior.
     :type env: dict
+    :type output_encoding: output encoding of bash command
     """
     template_fields = ('bash_command', 'env')
     template_ext = ('.sh', '.bash',)
@@ -33,6 +33,7 @@
             bash_command,
             xcom_push=False,
             env=None,
+            output_encoding='utf-8',
             *args, **kwargs):
         """
         If xcom_push is True, the last line written to stdout will also
@@ -42,6 +43,7 @@
         self.bash_command = bash_command
         self.env = env
         self.xcom_push_flag = xcom_push
+        self.output_encoding = output_encoding

     def execute(self, context):
         """
@@ -70,7 +72,7 @@
                 logging.info("Output:")
                 line = ''
                 for line in iter(sp.stdout.readline, b''):
-                    line = line.decode().strip()
+                    line = line.decode(self.output_encoding).strip()
                     logging.info(line)
                 sp.wait()
                 logging.info("Command exited with "

|

{"golden_diff": "diff --git a/airflow/operators/bash_operator.py b/airflow/operators/bash_operator.py\n--- a/airflow/operators/bash_operator.py\n+++ b/airflow/operators/bash_operator.py\n@@ -1,7 +1,6 @@\n \n from builtins import bytes\n import logging\n-import sys\n from subprocess import Popen, STDOUT, PIPE\n from tempfile import gettempdir, NamedTemporaryFile\n \n@@ -22,6 +21,7 @@\n of inheriting the current process environment, which is the default\n behavior.\n :type env: dict\n+ :type output_encoding: output encoding of bash command\n \"\"\"\n template_fields = ('bash_command', 'env')\n template_ext = ('.sh', '.bash',)\n@@ -33,6 +33,7 @@\n bash_command,\n xcom_push=False,\n env=None,\n+ output_encoding='utf-8',\n *args, **kwargs):\n \"\"\"\n If xcom_push is True, the last line written to stdout will also\n@@ -42,6 +43,7 @@\n self.bash_command = bash_command\n self.env = env\n self.xcom_push_flag = xcom_push\n+ self.output_encoding = output_encoding\n \n def execute(self, context):\n \"\"\"\n@@ -70,7 +72,7 @@\n logging.info(\"Output:\")\n line = ''\n for line in iter(sp.stdout.readline, b''):\n- line = line.decode().strip()\n+ line = line.decode(self.output_encoding).strip()\n logging.info(line)\n sp.wait()\n logging.info(\"Command exited with \"\n", "issue": "UnicodeDecodeError in bash_operator.py\nHi,\n\nI see a lot of these errors when running `airflow backfill` : \n\n```\nTraceback (most recent call last):\n File \"/usr/lib/python2.7/logging/__init__.py\", line 851, in emit\n msg = self.format(record)\n File \"/usr/lib/python2.7/logging/__init__.py\", line 724, in format\n return fmt.format(record)\n File \"/usr/lib/python2.7/logging/__init__.py\", line 467, in format\n s = self._fmt % record.__dict__\nUnicodeDecodeError: 'ascii' codec can't decode byte 0xc3 in position 13: ordinal not in range(128)\nLogged from file bash_operator.py, line 72\n```\n\n", "before_files": [{"content": "\nfrom builtins import bytes\nimport logging\nimport sys\nfrom subprocess 
import Popen, STDOUT, PIPE\nfrom tempfile import gettempdir, NamedTemporaryFile\n\nfrom airflow.utils import AirflowException\nfrom airflow.models import BaseOperator\nfrom airflow.utils import apply_defaults, TemporaryDirectory\n\n\nclass BashOperator(BaseOperator):\n \"\"\"\n Execute a Bash script, command or set of commands.\n\n :param bash_command: The command, set of commands or reference to a\n bash script (must be '.sh') to be executed.\n :type bash_command: string\n :param env: If env is not None, it must be a mapping that defines the\n environment variables for the new process; these are used instead\n of inheriting the current process environment, which is the default\n behavior.\n :type env: dict\n \"\"\"\n template_fields = ('bash_command', 'env')\n template_ext = ('.sh', '.bash',)\n ui_color = '#f0ede4'\n\n @apply_defaults\n def __init__(\n self,\n bash_command,\n xcom_push=False,\n env=None,\n *args, **kwargs):\n \"\"\"\n If xcom_push is True, the last line written to stdout will also\n be pushed to an XCom when the bash command completes.\n \"\"\"\n super(BashOperator, self).__init__(*args, **kwargs)\n self.bash_command = bash_command\n self.env = env\n self.xcom_push_flag = xcom_push\n\n def execute(self, context):\n \"\"\"\n Execute the bash command in a temporary directory\n which will be cleaned afterwards\n \"\"\"\n bash_command = self.bash_command\n logging.info(\"tmp dir root location: \\n\" + gettempdir())\n with TemporaryDirectory(prefix='airflowtmp') as tmp_dir:\n with NamedTemporaryFile(dir=tmp_dir, prefix=self.task_id) as f:\n\n f.write(bytes(bash_command, 'utf_8'))\n f.flush()\n fname = f.name\n script_location = tmp_dir + \"/\" + fname\n logging.info(\"Temporary script \"\n \"location :{0}\".format(script_location))\n logging.info(\"Running command: \" + bash_command)\n sp = Popen(\n ['bash', fname],\n stdout=PIPE, stderr=STDOUT,\n cwd=tmp_dir, env=self.env)\n\n self.sp = sp\n\n logging.info(\"Output:\")\n line = ''\n for line in 
iter(sp.stdout.readline, b''):\n line = line.decode().strip()\n logging.info(line)\n sp.wait()\n logging.info(\"Command exited with \"\n \"return code {0}\".format(sp.returncode))\n\n if sp.returncode:\n raise AirflowException(\"Bash command failed\")\n\n if self.xcom_push_flag:\n return line\n\n def on_kill(self):\n logging.info('Sending SIGTERM signal to bash subprocess')\n self.sp.terminate()\n", "path": "airflow/operators/bash_operator.py"}], "after_files": [{"content": "\nfrom builtins import bytes\nimport logging\nfrom subprocess import Popen, STDOUT, PIPE\nfrom tempfile import gettempdir, NamedTemporaryFile\n\nfrom airflow.utils import AirflowException\nfrom airflow.models import BaseOperator\nfrom airflow.utils import apply_defaults, TemporaryDirectory\n\n\nclass BashOperator(BaseOperator):\n \"\"\"\n Execute a Bash script, command or set of commands.\n\n :param bash_command: The command, set of commands or reference to a\n bash script (must be '.sh') to be executed.\n :type bash_command: string\n :param env: If env is not None, it must be a mapping that defines the\n environment variables for the new process; these are used instead\n of inheriting the current process environment, which is the default\n behavior.\n :type env: dict\n :type output_encoding: output encoding of bash command\n \"\"\"\n template_fields = ('bash_command', 'env')\n template_ext = ('.sh', '.bash',)\n ui_color = '#f0ede4'\n\n @apply_defaults\n def __init__(\n self,\n bash_command,\n xcom_push=False,\n env=None,\n output_encoding='utf-8',\n *args, **kwargs):\n \"\"\"\n If xcom_push is True, the last line written to stdout will also\n be pushed to an XCom when the bash command completes.\n \"\"\"\n super(BashOperator, self).__init__(*args, **kwargs)\n self.bash_command = bash_command\n self.env = env\n self.xcom_push_flag = xcom_push\n self.output_encoding = output_encoding\n\n def execute(self, context):\n \"\"\"\n Execute the bash command in a temporary directory\n which will be 
cleaned afterwards\n \"\"\"\n bash_command = self.bash_command\n logging.info(\"tmp dir root location: \\n\" + gettempdir())\n with TemporaryDirectory(prefix='airflowtmp') as tmp_dir:\n with NamedTemporaryFile(dir=tmp_dir, prefix=self.task_id) as f:\n\n f.write(bytes(bash_command, 'utf_8'))\n f.flush()\n fname = f.name\n script_location = tmp_dir + \"/\" + fname\n logging.info(\"Temporary script \"\n \"location :{0}\".format(script_location))\n logging.info(\"Running command: \" + bash_command)\n sp = Popen(\n ['bash', fname],\n stdout=PIPE, stderr=STDOUT,\n cwd=tmp_dir, env=self.env)\n\n self.sp = sp\n\n logging.info(\"Output:\")\n line = ''\n for line in iter(sp.stdout.readline, b''):\n line = line.decode(self.output_encoding).strip()\n logging.info(line)\n sp.wait()\n logging.info(\"Command exited with \"\n \"return code {0}\".format(sp.returncode))\n\n if sp.returncode:\n raise AirflowException(\"Bash command failed\")\n\n if self.xcom_push_flag:\n return line\n\n def on_kill(self):\n logging.info('Sending SIGTERM signal to bash subprocess')\n self.sp.terminate()\n", "path": "airflow/operators/bash_operator.py"}]}

| 1,230 | 346 |
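
The idea behind the patch in this record, decoding subprocess output with an explicit encoding rather than relying on the interpreter default, can be sketched on its own. This is an illustrative sketch assuming Python 3 and a child process that emits UTF-8; `run_and_collect` is a hypothetical helper and is not part of Airflow.

```python
import subprocess
import sys


def run_and_collect(command, output_encoding="utf-8"):
    """Run a command and return (return code, decoded output lines).

    Each raw line is decoded with an explicit encoding, so non-ASCII bytes
    do not depend on the platform's default codec.
    """
    lines = []
    with subprocess.Popen(
        command, stdout=subprocess.PIPE, stderr=subprocess.STDOUT
    ) as proc:
        # readline returns b'' at end of stream, which stops the iteration.
        for raw in iter(proc.stdout.readline, b""):
            lines.append(raw.decode(output_encoding).strip())
    # Leaving the with-block waits for the child, so returncode is set here.
    return proc.returncode, lines


if __name__ == "__main__":
    # The child writes the UTF-8 bytes for an accented word to stdout.
    child = "import sys; sys.stdout.buffer.write(b'caf\\xc3\\xa9\\n')"
    print(run_and_collect([sys.executable, "-c", child]))
```

Making the encoding a parameter with a `'utf-8'` default follows the same design choice as the `output_encoding` argument in the diff: callers whose commands emit another encoding can override it.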

gh_patches_debug_10161

|

rasdani/github-patches

|

git_diff

|

onnx__sklearn-onnx-598

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

saving model works with binary classifier, fails with multiclass classifier

I am using Sklearn's SVC for a classifier, with TfidfVectorizer as the feature embedding method. Following #478 I am using `skl2onnx` version 1.7.1. My code is here:

```
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.svm import SVC
import numpy as np
from skl2onnx import convert_sklearn
from skl2onnx.common.data_types import FloatTensorType
#import json
#with open('fake_data.json', 'r') as f:
#    dataset = json.load(f)
#docs = [doc for (doc, _) in dataset]
#labels = [label for (_, label) in dataset]
data = [
    ["schedule a meeting", 0],
    ["schedule a sync with the team", 0],
    ["slot in a meeting", 0],
    ["call ron", 1],
    ["make a phone call", 1],
    ["call in on the phone", 2] # changing this from 2 to 1 will allow code to successfully run
]
docs = [doc for (doc, _) in data]
labels = [label for (_, label) in data]
vectorizer = TfidfVectorizer()
vectorizer.fit_transform(docs)
embeddings = vectorizer.transform(docs)
dim = embeddings.shape[1]
#embeddings = np.vstack(embeddings)
clf = SVC()
clf.fit(embeddings, labels)
initial_type = [('float_input', FloatTensorType([1, dim]))]
onnx_model = convert_sklearn(clf, initial_types=initial_type) # this is line 37, where the crash occurs
with open('model.onnx', 'wb') as f:
    f.write(onnx_model.SerializeToString())
```

When I run the above, I get:

```
Traceback (most recent call last):
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\common\_container.py", line 536, in add_node
    node = make_node(op_type, inputs, outputs, name=name,
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\proto\onnx_helper_modified.py", line 66, in make_node
    node.attribute.extend(
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\google\protobuf\internal\containers.py", line 410, in extend
    for message in elem_seq:
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\proto\onnx_helper_modified.py", line 67, in <genexpr>
    make_attribute(key, value, dtype=_dtype, domain=domain)
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\proto\onnx_helper_modified.py", line 175, in make_attribute
    raise ValueError(
ValueError: You passed in an iterable attribute but I cannot figure out its applicable type, key='coefficients', type=<class 'numpy.matrix'>, dtype=float32, types=[<class 'numpy.matrix'>, <class 'numpy.matrix'>].

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File ".\dust.py", line 37, in <module>
    onnx_model = convert_sklearn(clf, initial_types=initial_type)
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\convert.py", line 160, in convert_sklearn
    onnx_model = convert_topology(topology, name, doc_string, target_opset,
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\common\_topology.py", line 1069, in convert_topology
    conv(scope, operator, container)
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\common\_registration.py", line 29, in __call__
    return self._fct(*args)
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\operator_converters\support_vector_machines.py", line 214, in convert_sklearn_svm_classifier
    container.add_node(
  File "C:\Users\Stefan Larson\AppData\Local\Programs\Python\Python38\lib\site-packages\skl2onnx\common\_container.py", line 539, in add_node
    raise ValueError("Unable to create node '{}' with name='{}'."
ValueError: Unable to create node 'SVMClassifier' with name='SVMc'.
```

The code crashes at the `convert_sklearn` function call. However, when I alter the dataset to have only two classes ('1', and '0'), the code runs successfully.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `skl2onnx/proto/onnx_helper_modified.py`

Content:

```

1 # Modified file from
2 # https://github.com/onnx/onnx/blob/master/onnx/helper.py.
3 import collections
4 import numbers
5
6 from onnx import (
7     TensorProto, AttributeProto,
8     NodeProto, GraphProto
9 )
10 from onnx.helper import (  # noqa
11     make_tensor, make_model, make_graph, _to_bytes_or_false,
12     make_tensor_value_info, ValueInfoProto
13 )
14
15 try:
16     from onnx import SparseTensorProto
17     from onnx.helper import make_sparse_tensor  # noqa
18 except ImportError:
19     # onnx is too old.
20     SparseTensorProto = None
21
22 from onnx.numpy_helper import from_array  # noqa
23 from typing import (
24     Text, Sequence, Any, Optional,
25     List, cast
26 )
27 import numpy as np  # type: ignore
28
29
30 def make_node(
31         op_type,  # type: Text
32         inputs,  # type: Sequence[Text]
33         outputs,  # type: Sequence[Text]
34         name=None,  # type: Optional[Text]
35         doc_string=None,  # type: Optional[Text]
36         domain=None,  # type: Optional[Text]
37         _dtype=None,  # type: [np.float32, np.float64]
38         **kwargs  # type: Any
39 ):  # type: (...) -> NodeProto
40     """Construct a NodeProto.
41
42     Arguments:
43         op_type (string): The name of the operator to construct
44         inputs (list of string): list of input names
45         outputs (list of string): list of output names
46         name (string, default None): optional unique identifier for NodeProto
47         doc_string (string, default None): optional documentation
48             string for NodeProto
49         dtype: dtype for double used to infer
50         domain (string, default None): optional domain for NodeProto.
51             If it's None, we will just use default domain (which is empty)
52         **kwargs (dict): the attributes of the node. The acceptable values
53             are documented in :func:`make_attribute`.
54     """
55     node = NodeProto()
56     node.op_type = op_type
57     node.input.extend(inputs)
58     node.output.extend(outputs)
59     if name:
60         node.name = name
61     if doc_string:
62         node.doc_string = doc_string
63     if domain is not None:
64         node.domain = domain
65     if kwargs:
66         node.attribute.extend(
67             make_attribute(key, value, dtype=_dtype, domain=domain)
68             for key, value in sorted(kwargs.items()))
69     return node
70
71
72 def make_attribute(
73         key,  # type: Text
74         value,  # type: Any
75         dtype=None,  # type: [np.float32, np.float64]
76         domain='',  # type: Text
77         doc_string=None  # type: Optional[Text]
78 ):  # type: (...) -> AttributeProto
79     """Makes an AttributeProto based on the value type."""
80     attr = AttributeProto()
81     attr.name = key
82     if doc_string:
83         attr.doc_string = doc_string
84
85     is_iterable = isinstance(value, collections.abc.Iterable)
86     bytes_or_false = _to_bytes_or_false(value)
87
88     use_float64 = dtype == np.float64 and domain not in ('', 'ai.onnx.ml')
89
90     if isinstance(value, np.float32):
91         attr.f = value
92         attr.type = AttributeProto.FLOAT
93     elif isinstance(value, (float, np.float64)):
94         if use_float64:
95             attr.type = AttributeProto.TENSOR
96             attr.t.CopyFrom(
97                 make_tensor(
98                     key, TensorProto.DOUBLE, (1, ), [value]))
99         else:
100             attr.f = value
101             attr.type = AttributeProto.FLOAT
102     elif isinstance(value, np.int32):
103         attr.i = value
104         attr.type = AttributeProto.INT
105     elif isinstance(value, np.int64):
106         attr.i = value
107         attr.type = AttributeProto.INT
108     elif isinstance(value, numbers.Integral):
109         attr.i = value
110         attr.type = AttributeProto.INT
111     # string
112     elif bytes_or_false is not False:
113         assert isinstance(bytes_or_false, bytes)
114         attr.s = bytes_or_false
115         attr.type = AttributeProto.STRING
116     elif isinstance(value, TensorProto):
117         attr.t.CopyFrom(value)
118         attr.type = AttributeProto.TENSOR
119     elif (SparseTensorProto is not None and
120             isinstance(value, SparseTensorProto)):
121         attr.sparse_tensor.CopyFrom(value)
122         attr.type = AttributeProto.SPARSE_TENSOR
123     elif isinstance(value, GraphProto):
124         attr.g.CopyFrom(value)
125         attr.type = AttributeProto.GRAPH
126     # third, iterable cases
127     elif is_iterable:
128         byte_array = [_to_bytes_or_false(v) for v in value]
129         if all(isinstance(v, np.float32) for v in value):
130             attr.floats.extend(value)
131             attr.type = AttributeProto.FLOATS
132         elif all(isinstance(v, np.float64) for v in value):
133             if use_float64:
134                 attr.type = AttributeProto.TENSOR
135                 attr.t.CopyFrom(
136                     make_tensor(
137                         key, TensorProto.DOUBLE, (len(value), ), value))
138             else:
139                 attr.floats.extend(value)
140                 attr.type = AttributeProto.FLOATS
141         elif all(isinstance(v, float) for v in value):
142             if use_float64:
143                 attr.type = AttributeProto.TENSOR
144                 attr.t.CopyFrom(
145                     make_tensor(
146                         key, TensorProto.DOUBLE, (len(value), ), value))
147             else:
148                 attr.floats.extend(value)
149                 attr.type = AttributeProto.FLOATS
150         elif all(isinstance(v, np.int32) for v in value):
151             attr.ints.extend(int(v) for v in value)
152             attr.type = AttributeProto.INTS
153         elif all(isinstance(v, np.int64) for v in value):
154             attr.ints.extend(int(v) for v in value)
155             attr.type = AttributeProto.INTS
156         elif all(isinstance(v, numbers.Integral) for v in value):
157             # Turn np.int32/64 into Python built-in int.
158             attr.ints.extend(int(v) for v in value)
159             attr.type = AttributeProto.INTS
160         elif all(map(lambda bytes_or_false: bytes_or_false is not False,
161                      byte_array)):
162             attr.strings.extend(cast(List[bytes], byte_array))
163             attr.type = AttributeProto.STRINGS
164         elif all(isinstance(v, TensorProto) for v in value):
165             attr.tensors.extend(value)
166             attr.type = AttributeProto.TENSORS
167         elif (SparseTensorProto is not None and
168                 all(isinstance(v, SparseTensorProto) for v in value)):
169             attr.sparse_tensors.extend(value)
170             attr.type = AttributeProto.SPARSE_TENSORS
171         elif all(isinstance(v, GraphProto) for v in value):
172             attr.graphs.extend(value)
173             attr.type = AttributeProto.GRAPHS
174         else:
175             raise ValueError(
176                 "You passed in an iterable attribute but I cannot figure out "
177                 "its applicable type, key='{}', type={}, dtype={}, "
178                 "types={}.".format(
179                     key, type(value), dtype,
180                     [type(_) for _, __ in zip(value, range(0, 5))]))
181     else:
182         raise ValueError(
183             "Value '{}' is not valid attribute data type for attribute "
184             "'{}'.".format(value, key))
185     return attr
186
187
188 def get_attribute_value(attr):  # type: (AttributeProto) -> Any
189     if attr.type == AttributeProto.FLOAT:
190         return attr.f
191     elif attr.type == AttributeProto.INT:
192         return attr.i
193     elif attr.type == AttributeProto.STRING:
194         return attr.s
195     elif attr.type == AttributeProto.TENSOR:
196         return attr.t
197     elif attr.type == AttributeProto.GRAPH:
198         return attr.g
199     elif attr.type == AttributeProto.FLOATS:
200         return list(attr.floats)
201     elif attr.type == AttributeProto.INTS:
202         return list(attr.ints)
203     elif attr.type == AttributeProto.STRINGS:
204         return list(attr.strings)
205     elif attr.type == AttributeProto.TENSORS:
206         return list(attr.tensors)
207     elif attr.type == AttributeProto.GRAPHS:
208         return list(attr.graphs)
209     else:
210         raise ValueError("Unsupported ONNX attribute: {}".format(attr))
211

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/skl2onnx/proto/onnx_helper_modified.py b/skl2onnx/proto/onnx_helper_modified.py

--- a/skl2onnx/proto/onnx_helper_modified.py

+++ b/skl2onnx/proto/onnx_helper_modified.py

@@ -171,6 +171,10 @@

elif all(isinstance(v, GraphProto) for v in value):

attr.graphs.extend(value)

attr.type = AttributeProto.GRAPHS

+ elif isinstance(value, np.matrix):

+ return make_attribute(

+ key, np.asarray(value).ravel(), dtype=dtype, domain=domain,

+ doc_string=doc_string)

else:

raise ValueError(

"You passed in an iterable attribute but I cannot figure out "

|