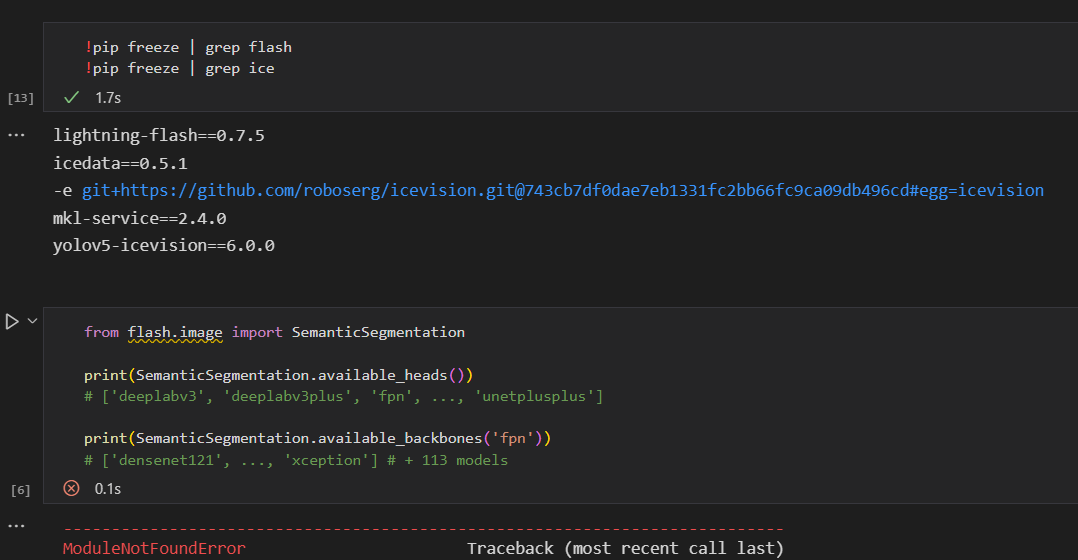

| problem_id (stringlengths 18–22) | source (stringclasses 1 value) | task_type (stringclasses 1 value) | in_source_id (stringlengths 13–58) | prompt (stringlengths 1.1k–25.4k) | golden_diff (stringlengths 145–5.13k) | verification_info (stringlengths 582–39.1k) | num_tokens (int64 271–4.1k) | num_tokens_diff (int64 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_43814 | rasdani/github-patches | git_diff | open-telemetry__opentelemetry-python-1135 |

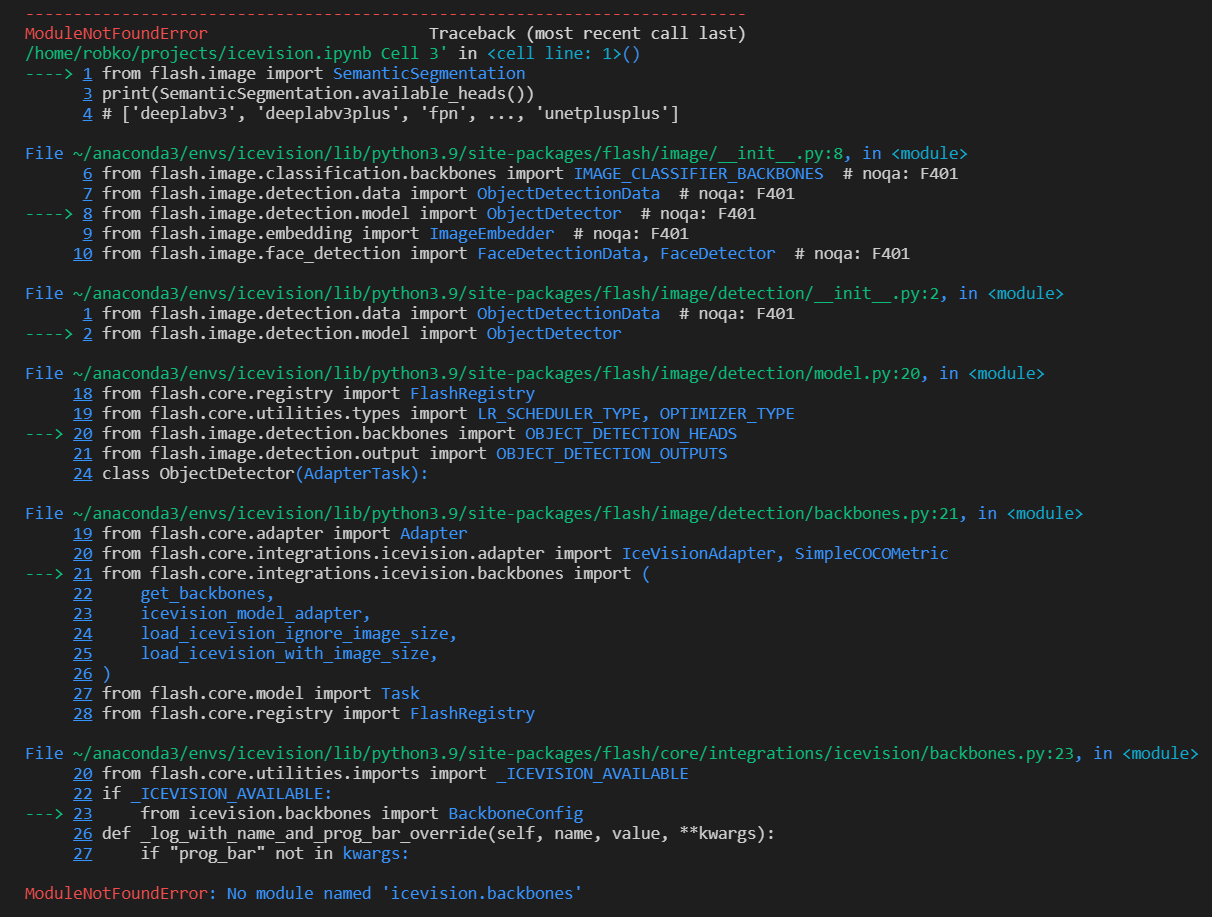

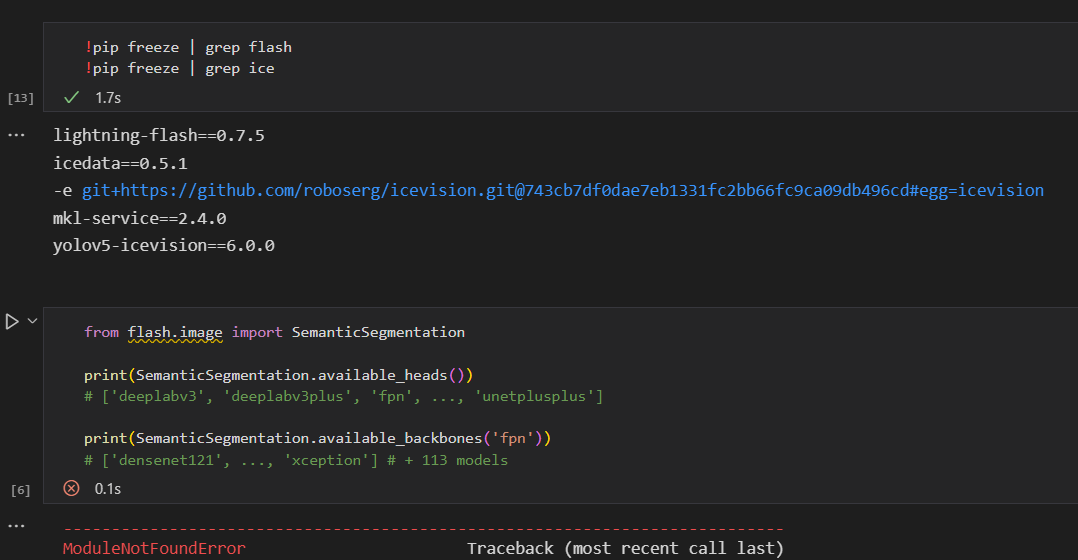

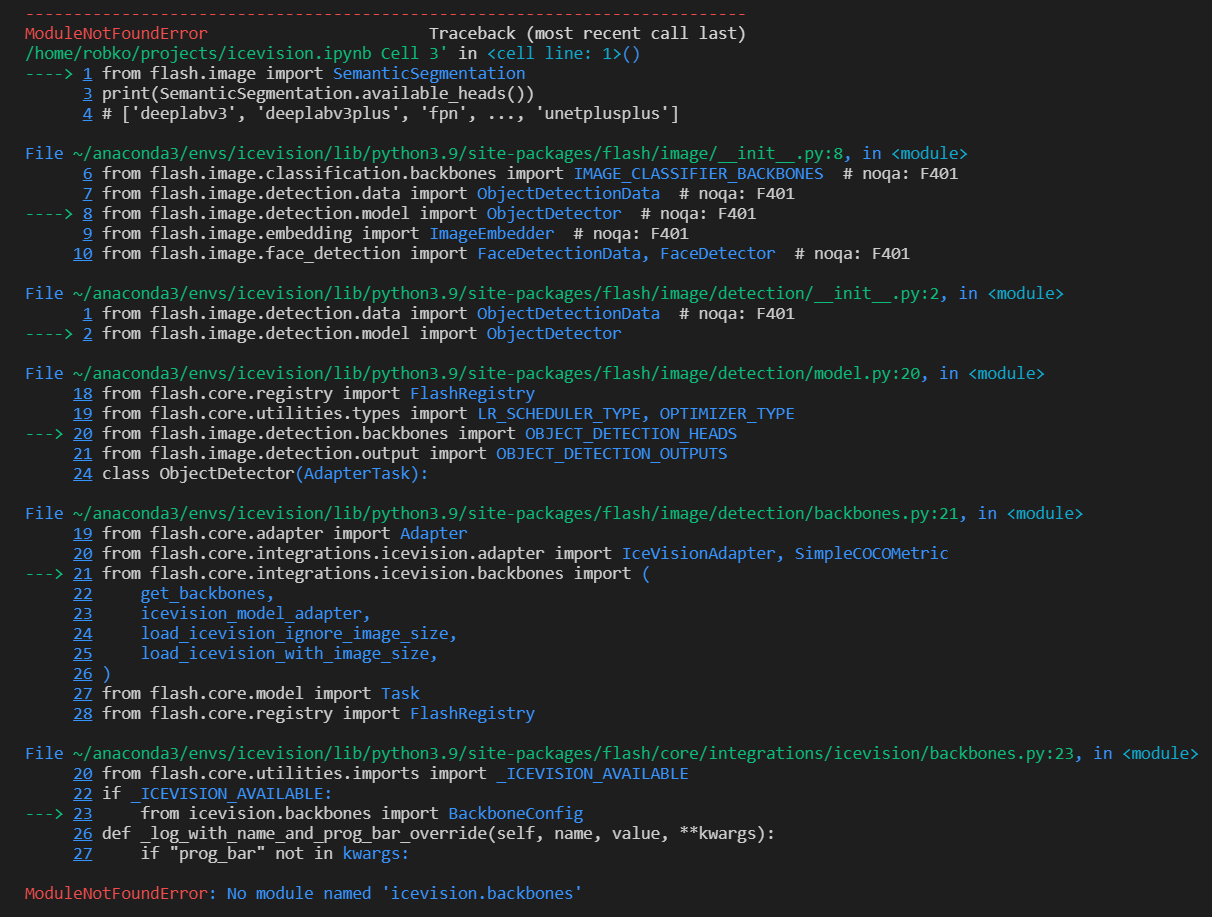

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Celery Instrumentation with apply_async function

I have a basic Django application that uses Celery to call a method via the `apply_async` and `delay` functions. I am trying to integrate Otel into my Django application, using version 0.10b0 of all Otel libraries. I used the Celery auto-instrumentation to produce the spans/traces, but it isn't working.

**Steps to reproduce**

I have attached my code in order to reproduce the issue.

1. Run the python application using

`python manage.py runserver`

<img width="684" alt="Screen Shot 2020-07-01 at 1 54 05 PM" src="https://user-images.githubusercontent.com/11060386/86276134-5bf37780-bba2-11ea-951e-75cca763ee55.png">

2. Then also start the celery worker using

`celery -A django_email_celery worker --loglevel=info`

<img width="1352" alt="Screen Shot 2020-07-01 at 1 55 11 PM" src="https://user-images.githubusercontent.com/11060386/86276219-82b1ae00-bba2-11ea-9a8d-96454683a694.png">

**What is the expected behavior?**

Once you hit the `localhost` url, I see the trace and spans created by the Otel. But I was expecting the `traceid` to continue from method `index()` in `views.py` to `send_log` in `tasks.py`.

**What is the actual behavior?**

Instead, a new trace is created at `send_log`.

<img width="748" alt="Screen Shot 2020-07-01 at 1 59 29 PM" src="https://user-images.githubusercontent.com/11060386/86276630-331fb200-bba3-11ea-9eab-5df16112a275.png">

<img width="1336" alt="Screen Shot 2020-07-01 at 2 01 44 PM" src="https://user-images.githubusercontent.com/11060386/86276746-737f3000-bba3-11ea-8750-be0e416399f0.png">

**Other Info**

I have added celery instrumentation in `celery.py` file

[django_email_celery.zip](https://github.com/open-telemetry/opentelemetry-python/files/4859611/django_email_celery.zip)

@ocelotl @codeboten

--- END ISSUE ---

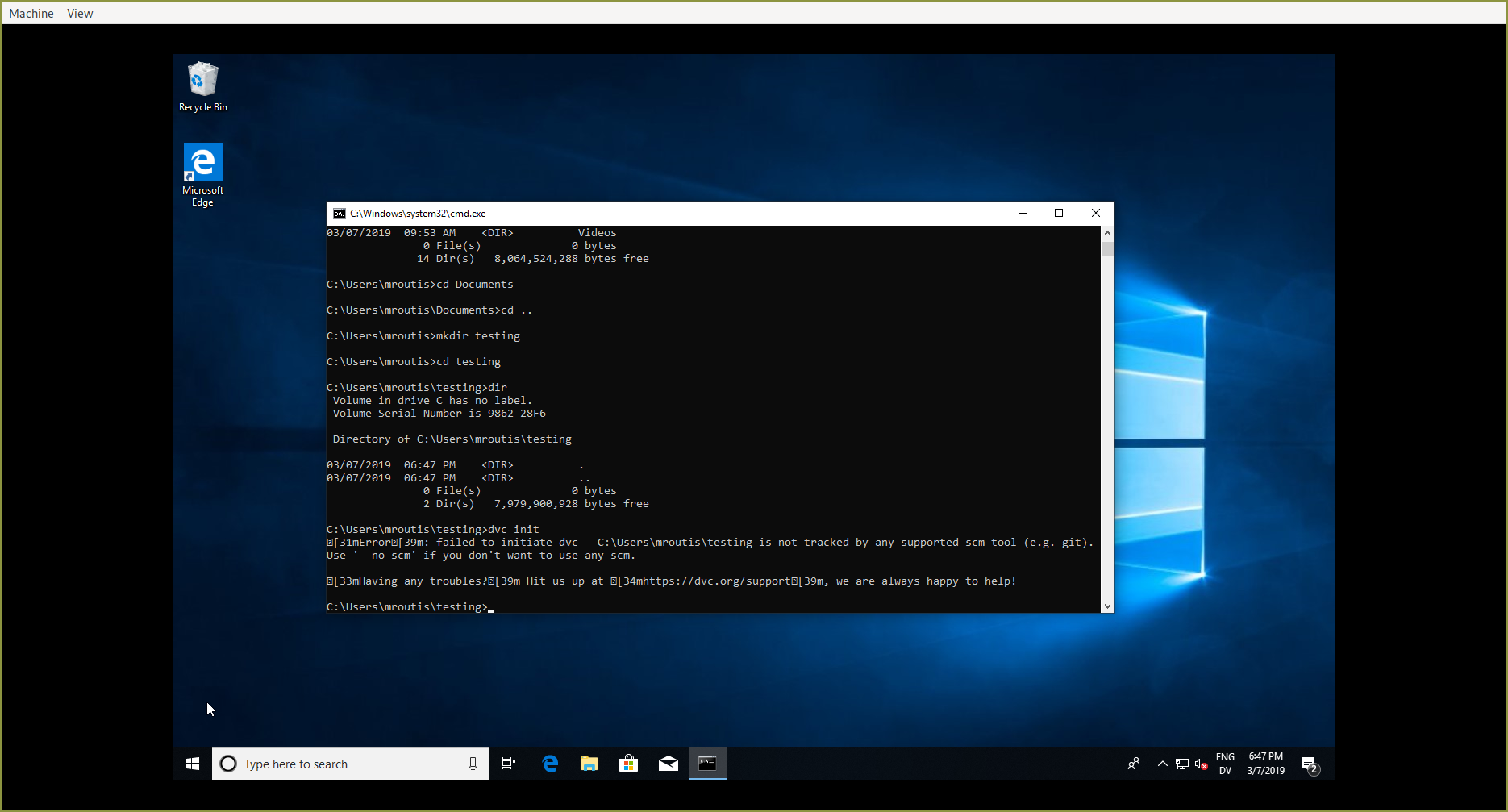

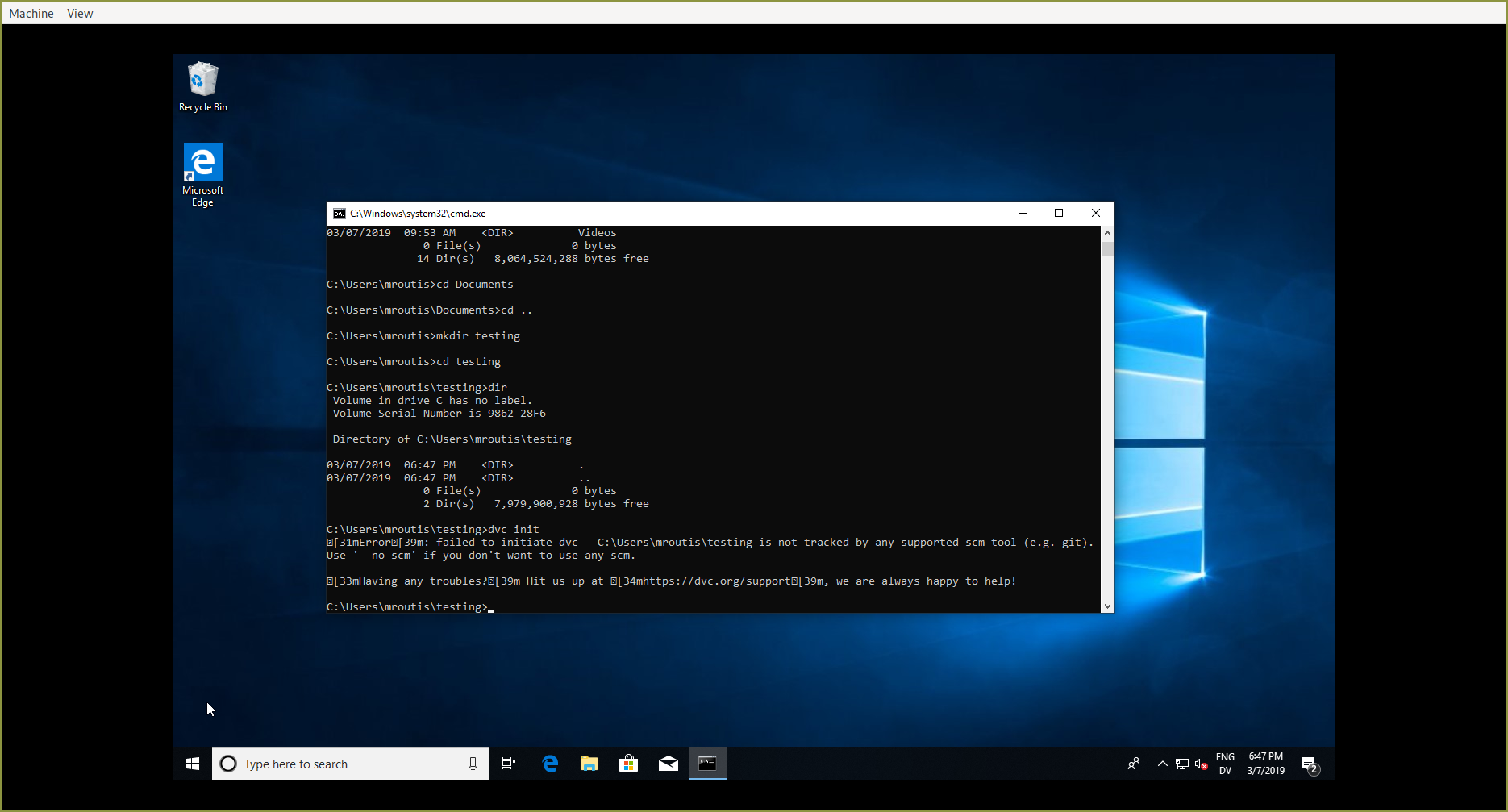

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py`

Content:

```
1 # Copyright The OpenTelemetry Authors
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 """
15 Instrument `celery`_ to trace Celery applications.
16
17 .. _celery: https://pypi.org/project/celery/
18
19 Usage
20 -----
21
22 * Start broker backend
23
24 .. code::
25
26     docker run -p 5672:5672 rabbitmq
27
28
29 * Run instrumented task
30
31 .. code:: python
32
33     from opentelemetry.instrumentation.celery import CeleryInstrumentor
34
35     CeleryInstrumentor().instrument()
36
37     from celery import Celery
38
39     app = Celery("tasks", broker="amqp://localhost")
40
41     @app.task
42     def add(x, y):
43         return x + y
44
45     add.delay(42, 50)
46
47 API
48 ---
49 """
50
51 import logging
52 import signal
53
54 from celery import signals  # pylint: disable=no-name-in-module
55
56 from opentelemetry import trace
57 from opentelemetry.instrumentation.celery import utils
58 from opentelemetry.instrumentation.celery.version import __version__
59 from opentelemetry.instrumentation.instrumentor import BaseInstrumentor
60 from opentelemetry.trace.status import Status, StatusCanonicalCode
61
62 logger = logging.getLogger(__name__)
63
64 # Task operations
65 _TASK_TAG_KEY = "celery.action"
66 _TASK_APPLY_ASYNC = "apply_async"
67 _TASK_RUN = "run"
68
69 _TASK_RETRY_REASON_KEY = "celery.retry.reason"
70 _TASK_REVOKED_REASON_KEY = "celery.revoked.reason"
71 _TASK_REVOKED_TERMINATED_SIGNAL_KEY = "celery.terminated.signal"
72 _TASK_NAME_KEY = "celery.task_name"
73 _MESSAGE_ID_ATTRIBUTE_NAME = "messaging.message_id"
74
75
76 class CeleryInstrumentor(BaseInstrumentor):
77     def _instrument(self, **kwargs):
78         tracer_provider = kwargs.get("tracer_provider")
79
80         # pylint: disable=attribute-defined-outside-init
81         self._tracer = trace.get_tracer(__name__, __version__, tracer_provider)
82
83         signals.task_prerun.connect(self._trace_prerun, weak=False)
84         signals.task_postrun.connect(self._trace_postrun, weak=False)
85         signals.before_task_publish.connect(
86             self._trace_before_publish, weak=False
87         )
88         signals.after_task_publish.connect(
89             self._trace_after_publish, weak=False
90         )
91         signals.task_failure.connect(self._trace_failure, weak=False)
92         signals.task_retry.connect(self._trace_retry, weak=False)
93
94     def _uninstrument(self, **kwargs):
95         signals.task_prerun.disconnect(self._trace_prerun)
96         signals.task_postrun.disconnect(self._trace_postrun)
97         signals.before_task_publish.disconnect(self._trace_before_publish)
98         signals.after_task_publish.disconnect(self._trace_after_publish)
99         signals.task_failure.disconnect(self._trace_failure)
100         signals.task_retry.disconnect(self._trace_retry)
101
102     def _trace_prerun(self, *args, **kwargs):
103         task = utils.retrieve_task(kwargs)
104         task_id = utils.retrieve_task_id(kwargs)
105
106         if task is None or task_id is None:
107             return
108
109         logger.debug("prerun signal start task_id=%s", task_id)
110
111         span = self._tracer.start_span(task.name, kind=trace.SpanKind.CONSUMER)
112
113         activation = self._tracer.use_span(span, end_on_exit=True)
114         activation.__enter__()
115         utils.attach_span(task, task_id, (span, activation))
116
117     @staticmethod
118     def _trace_postrun(*args, **kwargs):
119         task = utils.retrieve_task(kwargs)
120         task_id = utils.retrieve_task_id(kwargs)
121
122         if task is None or task_id is None:
123             return
124
125         logger.debug("postrun signal task_id=%s", task_id)
126
127         # retrieve and finish the Span
128         span, activation = utils.retrieve_span(task, task_id)
129         if span is None:
130             logger.warning("no existing span found for task_id=%s", task_id)
131             return
132
133         # request context tags
134         span.set_attribute(_TASK_TAG_KEY, _TASK_RUN)
135         utils.set_attributes_from_context(span, kwargs)
136         utils.set_attributes_from_context(span, task.request)
137         span.set_attribute(_TASK_NAME_KEY, task.name)
138
139         activation.__exit__(None, None, None)
140         utils.detach_span(task, task_id)
141
142     def _trace_before_publish(self, *args, **kwargs):
143         task = utils.retrieve_task_from_sender(kwargs)
144         task_id = utils.retrieve_task_id_from_message(kwargs)
145
146         if task is None or task_id is None:
147             return
148
149         span = self._tracer.start_span(task.name, kind=trace.SpanKind.PRODUCER)
150
151         # apply some attributes here because most of the data is not available
152         span.set_attribute(_TASK_TAG_KEY, _TASK_APPLY_ASYNC)
153         span.set_attribute(_MESSAGE_ID_ATTRIBUTE_NAME, task_id)
154         span.set_attribute(_TASK_NAME_KEY, task.name)
155         utils.set_attributes_from_context(span, kwargs)
156
157         activation = self._tracer.use_span(span, end_on_exit=True)
158         activation.__enter__()
159         utils.attach_span(task, task_id, (span, activation), is_publish=True)
160
161     @staticmethod
162     def _trace_after_publish(*args, **kwargs):
163         task = utils.retrieve_task_from_sender(kwargs)
164         task_id = utils.retrieve_task_id_from_message(kwargs)
165
166         if task is None or task_id is None:
167             return
168
169         # retrieve and finish the Span
170         _, activation = utils.retrieve_span(task, task_id, is_publish=True)
171         if activation is None:
172             logger.warning("no existing span found for task_id=%s", task_id)
173             return
174
175         activation.__exit__(None, None, None)
176         utils.detach_span(task, task_id, is_publish=True)
177
178     @staticmethod
179     def _trace_failure(*args, **kwargs):
180         task = utils.retrieve_task_from_sender(kwargs)
181         task_id = utils.retrieve_task_id(kwargs)
182
183         if task is None or task_id is None:
184             return
185
186         # retrieve and pass exception info to activation
187         span, _ = utils.retrieve_span(task, task_id)
188         if span is None:
189             return
190
191         status_kwargs = {"canonical_code": StatusCanonicalCode.UNKNOWN}
192
193         ex = kwargs.get("einfo")
194
195         if (
196             hasattr(task, "throws")
197             and ex is not None
198             and isinstance(ex.exception, task.throws)
199         ):
200             return
201
202         if ex is not None:
203             status_kwargs["description"] = str(ex)
204
205         span.set_status(Status(**status_kwargs))
206
207     @staticmethod
208     def _trace_retry(*args, **kwargs):
209         task = utils.retrieve_task_from_sender(kwargs)
210         task_id = utils.retrieve_task_id_from_request(kwargs)
211         reason = utils.retrieve_reason(kwargs)
212
213         if task is None or task_id is None or reason is None:
214             return
215
216         span, _ = utils.retrieve_span(task, task_id)
217         if span is None:
218             return
219
220         # Add retry reason metadata to span
221         # Use `str(reason)` instead of `reason.message` in case we get
222         # something that isn't an `Exception`
223         span.set_attribute(_TASK_RETRY_REASON_KEY, str(reason))
224
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py b/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py

--- a/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py

+++ b/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py

@@ -30,11 +30,20 @@

.. code:: python

+ from opentelemetry import trace

+ from opentelemetry.sdk.trace import TracerProvider

+ from opentelemetry.sdk.trace.export import BatchExportSpanProcessor

from opentelemetry.instrumentation.celery import CeleryInstrumentor

- CeleryInstrumentor().instrument()

-

from celery import Celery

+ from celery.signals import worker_process_init

+

+ @worker_process_init.connect(weak=False)

+ def init_celery_tracing(*args, **kwargs):

+ trace.set_tracer_provider(TracerProvider())

+ span_processor = BatchExportSpanProcessor(ConsoleSpanExporter())

+ trace.get_tracer_provider().add_span_processor(span_processor)

+ CeleryInstrumentor().instrument()

app = Celery("tasks", broker="amqp://localhost")

@@ -50,13 +59,15 @@

import logging

import signal

+from collections.abc import Iterable

from celery import signals # pylint: disable=no-name-in-module

-from opentelemetry import trace

+from opentelemetry import propagators, trace

from opentelemetry.instrumentation.celery import utils

from opentelemetry.instrumentation.celery.version import __version__

from opentelemetry.instrumentation.instrumentor import BaseInstrumentor

+from opentelemetry.trace.propagation import get_current_span

from opentelemetry.trace.status import Status, StatusCanonicalCode

logger = logging.getLogger(__name__)

@@ -106,9 +117,16 @@

if task is None or task_id is None:

return

+ request = task.request

+ tracectx = propagators.extract(carrier_extractor, request) or {}

+ parent = get_current_span(tracectx)

+

logger.debug("prerun signal start task_id=%s", task_id)

- span = self._tracer.start_span(task.name, kind=trace.SpanKind.CONSUMER)

+ operation_name = "{0}/{1}".format(_TASK_RUN, task.name)

+ span = self._tracer.start_span(

+ operation_name, parent=parent, kind=trace.SpanKind.CONSUMER

+ )

activation = self._tracer.use_span(span, end_on_exit=True)

activation.__enter__()

@@ -146,7 +164,10 @@

if task is None or task_id is None:

return

- span = self._tracer.start_span(task.name, kind=trace.SpanKind.PRODUCER)

+ operation_name = "{0}/{1}".format(_TASK_APPLY_ASYNC, task.name)

+ span = self._tracer.start_span(

+ operation_name, kind=trace.SpanKind.PRODUCER

+ )

# apply some attributes here because most of the data is not available

span.set_attribute(_TASK_TAG_KEY, _TASK_APPLY_ASYNC)

@@ -158,6 +179,10 @@

activation.__enter__()

utils.attach_span(task, task_id, (span, activation), is_publish=True)

+ headers = kwargs.get("headers")

+ if headers:

+ propagators.inject(type(headers).__setitem__, headers)

+

@staticmethod

def _trace_after_publish(*args, **kwargs):

task = utils.retrieve_task_from_sender(kwargs)

@@ -221,3 +246,10 @@

# Use `str(reason)` instead of `reason.message` in case we get

# something that isn't an `Exception`

span.set_attribute(_TASK_RETRY_REASON_KEY, str(reason))

+

+

+def carrier_extractor(carrier, key):

+ value = getattr(carrier, key, [])

+ if isinstance(value, str) or not isinstance(value, Iterable):

+ value = (value,)

+ return value
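The `carrier_extractor` helper added at the end of the patch reads a propagation key as an attribute of the carrier and always returns an iterable of values. The standalone sketch below repeats that logic outside OpenTelemetry to show its behavior. The `request` object is a hypothetical stand-in for Celery's `task.request`, and the `traceparent` value is illustrative only; neither comes from the repository.

```python
from collections.abc import Iterable
from types import SimpleNamespace


def carrier_extractor(carrier, key):
    # Same logic as the helper in the patch: look the key up as an attribute,
    # falling back to an empty list when it is absent.
    value = getattr(carrier, key, [])
    # Strings are iterable, so wrap them explicitly; wrap non-iterables too.
    if isinstance(value, str) or not isinstance(value, Iterable):
        value = (value,)
    return value


# Hypothetical stand-in for `task.request`; attribute names are assumptions.
request = SimpleNamespace(
    traceparent="00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01",
    retries=0,
)

found = carrier_extractor(request, "traceparent")   # header present as a string
missing = carrier_extractor(request, "tracestate")  # header absent
scalar = carrier_extractor(request, "retries")      # non-iterable attribute
```

A present string header comes back wrapped in a one-element tuple, an absent key yields the empty default list, and a non-iterable attribute is wrapped as well, so a caller can iterate over the result in every case.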

|

{"golden_diff": "diff --git a/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py b/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py\n--- a/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py\n+++ b/instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py\n@@ -30,11 +30,20 @@\n \n .. code:: python\n \n+ from opentelemetry import trace\n+ from opentelemetry.sdk.trace import TracerProvider\n+ from opentelemetry.sdk.trace.export import BatchExportSpanProcessor\n from opentelemetry.instrumentation.celery import CeleryInstrumentor\n \n- CeleryInstrumentor().instrument()\n-\n from celery import Celery\n+ from celery.signals import worker_process_init\n+\n+ @worker_process_init.connect(weak=False)\n+ def init_celery_tracing(*args, **kwargs):\n+ trace.set_tracer_provider(TracerProvider())\n+ span_processor = BatchExportSpanProcessor(ConsoleSpanExporter())\n+ trace.get_tracer_provider().add_span_processor(span_processor)\n+ CeleryInstrumentor().instrument()\n \n app = Celery(\"tasks\", broker=\"amqp://localhost\")\n \n@@ -50,13 +59,15 @@\n \n import logging\n import signal\n+from collections.abc import Iterable\n \n from celery import signals # pylint: disable=no-name-in-module\n \n-from opentelemetry import trace\n+from opentelemetry import propagators, trace\n from opentelemetry.instrumentation.celery import utils\n from opentelemetry.instrumentation.celery.version import __version__\n from opentelemetry.instrumentation.instrumentor import BaseInstrumentor\n+from opentelemetry.trace.propagation import get_current_span\n from opentelemetry.trace.status import Status, StatusCanonicalCode\n \n logger = logging.getLogger(__name__)\n@@ -106,9 +117,16 @@\n if task is None or task_id is None:\n return\n \n+ request = task.request\n+ tracectx = 
propagators.extract(carrier_extractor, request) or {}\n+ parent = get_current_span(tracectx)\n+\n logger.debug(\"prerun signal start task_id=%s\", task_id)\n \n- span = self._tracer.start_span(task.name, kind=trace.SpanKind.CONSUMER)\n+ operation_name = \"{0}/{1}\".format(_TASK_RUN, task.name)\n+ span = self._tracer.start_span(\n+ operation_name, parent=parent, kind=trace.SpanKind.CONSUMER\n+ )\n \n activation = self._tracer.use_span(span, end_on_exit=True)\n activation.__enter__()\n@@ -146,7 +164,10 @@\n if task is None or task_id is None:\n return\n \n- span = self._tracer.start_span(task.name, kind=trace.SpanKind.PRODUCER)\n+ operation_name = \"{0}/{1}\".format(_TASK_APPLY_ASYNC, task.name)\n+ span = self._tracer.start_span(\n+ operation_name, kind=trace.SpanKind.PRODUCER\n+ )\n \n # apply some attributes here because most of the data is not available\n span.set_attribute(_TASK_TAG_KEY, _TASK_APPLY_ASYNC)\n@@ -158,6 +179,10 @@\n activation.__enter__()\n utils.attach_span(task, task_id, (span, activation), is_publish=True)\n \n+ headers = kwargs.get(\"headers\")\n+ if headers:\n+ propagators.inject(type(headers).__setitem__, headers)\n+\n @staticmethod\n def _trace_after_publish(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n@@ -221,3 +246,10 @@\n # Use `str(reason)` instead of `reason.message` in case we get\n # something that isn't an `Exception`\n span.set_attribute(_TASK_RETRY_REASON_KEY, str(reason))\n+\n+\n+def carrier_extractor(carrier, key):\n+ value = getattr(carrier, key, [])\n+ if isinstance(value, str) or not isinstance(value, Iterable):\n+ value = (value,)\n+ return value\n", "issue": "Celery Instrumentation with apply_async function\nI have a basic django application which uses celery to call a method using apply_async and delay functions. I am trying to integrate Otel into my django application. I am using 0.10b0 version of all Otel libraries. I have used celery-auto instrumentation for produce the spans/traces. 
But I see that the celery-auto instrumentation isnt working. \r\n\r\n**Steps to reproduce**\r\nI have attached my code in order to reproduce the issue. \r\n1. Run the python application using \r\n`python manage.py runserver`\r\n<img width=\"684\" alt=\"Screen Shot 2020-07-01 at 1 54 05 PM\" src=\"https://user-images.githubusercontent.com/11060386/86276134-5bf37780-bba2-11ea-951e-75cca763ee55.png\">\r\n\r\n2. Then also start the celery worker using\r\ncelery -A django_email_celery worker --loglevel=info\r\n<img width=\"1352\" alt=\"Screen Shot 2020-07-01 at 1 55 11 PM\" src=\"https://user-images.githubusercontent.com/11060386/86276219-82b1ae00-bba2-11ea-9a8d-96454683a694.png\">\r\n\r\n\r\n**What is the expected behavior?**\r\nOnce you hit the `localhost` url, I see the trace and spans created by the Otel. But I was expecting the `traceid` to continue from method `index()` in `views.py` to `send_log` in `tasks.py`. \r\n\r\n**What is the actual behavior?**\r\nBut then the a new trace is being created at the `send_log`. 
\r\n<img width=\"748\" alt=\"Screen Shot 2020-07-01 at 1 59 29 PM\" src=\"https://user-images.githubusercontent.com/11060386/86276630-331fb200-bba3-11ea-9eab-5df16112a275.png\">\r\n<img width=\"1336\" alt=\"Screen Shot 2020-07-01 at 2 01 44 PM\" src=\"https://user-images.githubusercontent.com/11060386/86276746-737f3000-bba3-11ea-8750-be0e416399f0.png\">\r\n\r\n**Other Info**\r\nI have added celery instrumentation in `celery.py` file\r\n\r\n[django_email_celery.zip](https://github.com/open-telemetry/opentelemetry-python/files/4859611/django_email_celery.zip)\r\n\r\n@ocelotl @codeboten \n", "before_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"\nInstrument `celery`_ to trace Celery applications.\n\n.. _celery: https://pypi.org/project/celery/\n\nUsage\n-----\n\n* Start broker backend\n\n.. code::\n\n docker run -p 5672:5672 rabbitmq\n\n\n* Run instrumented task\n\n.. 
code:: python\n\n from opentelemetry.instrumentation.celery import CeleryInstrumentor\n\n CeleryInstrumentor().instrument()\n\n from celery import Celery\n\n app = Celery(\"tasks\", broker=\"amqp://localhost\")\n\n @app.task\n def add(x, y):\n return x + y\n\n add.delay(42, 50)\n\nAPI\n---\n\"\"\"\n\nimport logging\nimport signal\n\nfrom celery import signals # pylint: disable=no-name-in-module\n\nfrom opentelemetry import trace\nfrom opentelemetry.instrumentation.celery import utils\nfrom opentelemetry.instrumentation.celery.version import __version__\nfrom opentelemetry.instrumentation.instrumentor import BaseInstrumentor\nfrom opentelemetry.trace.status import Status, StatusCanonicalCode\n\nlogger = logging.getLogger(__name__)\n\n# Task operations\n_TASK_TAG_KEY = \"celery.action\"\n_TASK_APPLY_ASYNC = \"apply_async\"\n_TASK_RUN = \"run\"\n\n_TASK_RETRY_REASON_KEY = \"celery.retry.reason\"\n_TASK_REVOKED_REASON_KEY = \"celery.revoked.reason\"\n_TASK_REVOKED_TERMINATED_SIGNAL_KEY = \"celery.terminated.signal\"\n_TASK_NAME_KEY = \"celery.task_name\"\n_MESSAGE_ID_ATTRIBUTE_NAME = \"messaging.message_id\"\n\n\nclass CeleryInstrumentor(BaseInstrumentor):\n def _instrument(self, **kwargs):\n tracer_provider = kwargs.get(\"tracer_provider\")\n\n # pylint: disable=attribute-defined-outside-init\n self._tracer = trace.get_tracer(__name__, __version__, tracer_provider)\n\n signals.task_prerun.connect(self._trace_prerun, weak=False)\n signals.task_postrun.connect(self._trace_postrun, weak=False)\n signals.before_task_publish.connect(\n self._trace_before_publish, weak=False\n )\n signals.after_task_publish.connect(\n self._trace_after_publish, weak=False\n )\n signals.task_failure.connect(self._trace_failure, weak=False)\n signals.task_retry.connect(self._trace_retry, weak=False)\n\n def _uninstrument(self, **kwargs):\n signals.task_prerun.disconnect(self._trace_prerun)\n signals.task_postrun.disconnect(self._trace_postrun)\n 
signals.before_task_publish.disconnect(self._trace_before_publish)\n signals.after_task_publish.disconnect(self._trace_after_publish)\n signals.task_failure.disconnect(self._trace_failure)\n signals.task_retry.disconnect(self._trace_retry)\n\n def _trace_prerun(self, *args, **kwargs):\n task = utils.retrieve_task(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n logger.debug(\"prerun signal start task_id=%s\", task_id)\n\n span = self._tracer.start_span(task.name, kind=trace.SpanKind.CONSUMER)\n\n activation = self._tracer.use_span(span, end_on_exit=True)\n activation.__enter__()\n utils.attach_span(task, task_id, (span, activation))\n\n @staticmethod\n def _trace_postrun(*args, **kwargs):\n task = utils.retrieve_task(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n logger.debug(\"postrun signal task_id=%s\", task_id)\n\n # retrieve and finish the Span\n span, activation = utils.retrieve_span(task, task_id)\n if span is None:\n logger.warning(\"no existing span found for task_id=%s\", task_id)\n return\n\n # request context tags\n span.set_attribute(_TASK_TAG_KEY, _TASK_RUN)\n utils.set_attributes_from_context(span, kwargs)\n utils.set_attributes_from_context(span, task.request)\n span.set_attribute(_TASK_NAME_KEY, task.name)\n\n activation.__exit__(None, None, None)\n utils.detach_span(task, task_id)\n\n def _trace_before_publish(self, *args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_message(kwargs)\n\n if task is None or task_id is None:\n return\n\n span = self._tracer.start_span(task.name, kind=trace.SpanKind.PRODUCER)\n\n # apply some attributes here because most of the data is not available\n span.set_attribute(_TASK_TAG_KEY, _TASK_APPLY_ASYNC)\n span.set_attribute(_MESSAGE_ID_ATTRIBUTE_NAME, task_id)\n span.set_attribute(_TASK_NAME_KEY, task.name)\n utils.set_attributes_from_context(span, 
kwargs)\n\n activation = self._tracer.use_span(span, end_on_exit=True)\n activation.__enter__()\n utils.attach_span(task, task_id, (span, activation), is_publish=True)\n\n @staticmethod\n def _trace_after_publish(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_message(kwargs)\n\n if task is None or task_id is None:\n return\n\n # retrieve and finish the Span\n _, activation = utils.retrieve_span(task, task_id, is_publish=True)\n if activation is None:\n logger.warning(\"no existing span found for task_id=%s\", task_id)\n return\n\n activation.__exit__(None, None, None)\n utils.detach_span(task, task_id, is_publish=True)\n\n @staticmethod\n def _trace_failure(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n # retrieve and pass exception info to activation\n span, _ = utils.retrieve_span(task, task_id)\n if span is None:\n return\n\n status_kwargs = {\"canonical_code\": StatusCanonicalCode.UNKNOWN}\n\n ex = kwargs.get(\"einfo\")\n\n if (\n hasattr(task, \"throws\")\n and ex is not None\n and isinstance(ex.exception, task.throws)\n ):\n return\n\n if ex is not None:\n status_kwargs[\"description\"] = str(ex)\n\n span.set_status(Status(**status_kwargs))\n\n @staticmethod\n def _trace_retry(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_request(kwargs)\n reason = utils.retrieve_reason(kwargs)\n\n if task is None or task_id is None or reason is None:\n return\n\n span, _ = utils.retrieve_span(task, task_id)\n if span is None:\n return\n\n # Add retry reason metadata to span\n # Use `str(reason)` instead of `reason.message` in case we get\n # something that isn't an `Exception`\n span.set_attribute(_TASK_RETRY_REASON_KEY, str(reason))\n", "path": 
"instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py"}], "after_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"\nInstrument `celery`_ to trace Celery applications.\n\n.. _celery: https://pypi.org/project/celery/\n\nUsage\n-----\n\n* Start broker backend\n\n.. code::\n\n docker run -p 5672:5672 rabbitmq\n\n\n* Run instrumented task\n\n.. code:: python\n\n from opentelemetry import trace\n from opentelemetry.sdk.trace import TracerProvider\n from opentelemetry.sdk.trace.export import BatchExportSpanProcessor\n from opentelemetry.instrumentation.celery import CeleryInstrumentor\n\n from celery import Celery\n from celery.signals import worker_process_init\n\n @worker_process_init.connect(weak=False)\n def init_celery_tracing(*args, **kwargs):\n trace.set_tracer_provider(TracerProvider())\n span_processor = BatchExportSpanProcessor(ConsoleSpanExporter())\n trace.get_tracer_provider().add_span_processor(span_processor)\n CeleryInstrumentor().instrument()\n\n app = Celery(\"tasks\", broker=\"amqp://localhost\")\n\n @app.task\n def add(x, y):\n return x + y\n\n add.delay(42, 50)\n\nAPI\n---\n\"\"\"\n\nimport logging\nimport signal\nfrom collections.abc import Iterable\n\nfrom celery import signals # pylint: disable=no-name-in-module\n\nfrom opentelemetry import propagators, trace\nfrom opentelemetry.instrumentation.celery import utils\nfrom 
opentelemetry.instrumentation.celery.version import __version__\nfrom opentelemetry.instrumentation.instrumentor import BaseInstrumentor\nfrom opentelemetry.trace.propagation import get_current_span\nfrom opentelemetry.trace.status import Status, StatusCanonicalCode\n\nlogger = logging.getLogger(__name__)\n\n# Task operations\n_TASK_TAG_KEY = \"celery.action\"\n_TASK_APPLY_ASYNC = \"apply_async\"\n_TASK_RUN = \"run\"\n\n_TASK_RETRY_REASON_KEY = \"celery.retry.reason\"\n_TASK_REVOKED_REASON_KEY = \"celery.revoked.reason\"\n_TASK_REVOKED_TERMINATED_SIGNAL_KEY = \"celery.terminated.signal\"\n_TASK_NAME_KEY = \"celery.task_name\"\n_MESSAGE_ID_ATTRIBUTE_NAME = \"messaging.message_id\"\n\n\nclass CeleryInstrumentor(BaseInstrumentor):\n def _instrument(self, **kwargs):\n tracer_provider = kwargs.get(\"tracer_provider\")\n\n # pylint: disable=attribute-defined-outside-init\n self._tracer = trace.get_tracer(__name__, __version__, tracer_provider)\n\n signals.task_prerun.connect(self._trace_prerun, weak=False)\n signals.task_postrun.connect(self._trace_postrun, weak=False)\n signals.before_task_publish.connect(\n self._trace_before_publish, weak=False\n )\n signals.after_task_publish.connect(\n self._trace_after_publish, weak=False\n )\n signals.task_failure.connect(self._trace_failure, weak=False)\n signals.task_retry.connect(self._trace_retry, weak=False)\n\n def _uninstrument(self, **kwargs):\n signals.task_prerun.disconnect(self._trace_prerun)\n signals.task_postrun.disconnect(self._trace_postrun)\n signals.before_task_publish.disconnect(self._trace_before_publish)\n signals.after_task_publish.disconnect(self._trace_after_publish)\n signals.task_failure.disconnect(self._trace_failure)\n signals.task_retry.disconnect(self._trace_retry)\n\n def _trace_prerun(self, *args, **kwargs):\n task = utils.retrieve_task(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n request = task.request\n tracectx = 
propagators.extract(carrier_extractor, request) or {}\n parent = get_current_span(tracectx)\n\n logger.debug(\"prerun signal start task_id=%s\", task_id)\n\n operation_name = \"{0}/{1}\".format(_TASK_RUN, task.name)\n span = self._tracer.start_span(\n operation_name, parent=parent, kind=trace.SpanKind.CONSUMER\n )\n\n activation = self._tracer.use_span(span, end_on_exit=True)\n activation.__enter__()\n utils.attach_span(task, task_id, (span, activation))\n\n @staticmethod\n def _trace_postrun(*args, **kwargs):\n task = utils.retrieve_task(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n logger.debug(\"postrun signal task_id=%s\", task_id)\n\n # retrieve and finish the Span\n span, activation = utils.retrieve_span(task, task_id)\n if span is None:\n logger.warning(\"no existing span found for task_id=%s\", task_id)\n return\n\n # request context tags\n span.set_attribute(_TASK_TAG_KEY, _TASK_RUN)\n utils.set_attributes_from_context(span, kwargs)\n utils.set_attributes_from_context(span, task.request)\n span.set_attribute(_TASK_NAME_KEY, task.name)\n\n activation.__exit__(None, None, None)\n utils.detach_span(task, task_id)\n\n def _trace_before_publish(self, *args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_message(kwargs)\n\n if task is None or task_id is None:\n return\n\n operation_name = \"{0}/{1}\".format(_TASK_APPLY_ASYNC, task.name)\n span = self._tracer.start_span(\n operation_name, kind=trace.SpanKind.PRODUCER\n )\n\n # apply some attributes here because most of the data is not available\n span.set_attribute(_TASK_TAG_KEY, _TASK_APPLY_ASYNC)\n span.set_attribute(_MESSAGE_ID_ATTRIBUTE_NAME, task_id)\n span.set_attribute(_TASK_NAME_KEY, task.name)\n utils.set_attributes_from_context(span, kwargs)\n\n activation = self._tracer.use_span(span, end_on_exit=True)\n activation.__enter__()\n utils.attach_span(task, task_id, (span, activation), 
is_publish=True)\n\n headers = kwargs.get(\"headers\")\n if headers:\n propagators.inject(type(headers).__setitem__, headers)\n\n @staticmethod\n def _trace_after_publish(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_message(kwargs)\n\n if task is None or task_id is None:\n return\n\n # retrieve and finish the Span\n _, activation = utils.retrieve_span(task, task_id, is_publish=True)\n if activation is None:\n logger.warning(\"no existing span found for task_id=%s\", task_id)\n return\n\n activation.__exit__(None, None, None)\n utils.detach_span(task, task_id, is_publish=True)\n\n @staticmethod\n def _trace_failure(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id(kwargs)\n\n if task is None or task_id is None:\n return\n\n # retrieve and pass exception info to activation\n span, _ = utils.retrieve_span(task, task_id)\n if span is None:\n return\n\n status_kwargs = {\"canonical_code\": StatusCanonicalCode.UNKNOWN}\n\n ex = kwargs.get(\"einfo\")\n\n if (\n hasattr(task, \"throws\")\n and ex is not None\n and isinstance(ex.exception, task.throws)\n ):\n return\n\n if ex is not None:\n status_kwargs[\"description\"] = str(ex)\n\n span.set_status(Status(**status_kwargs))\n\n @staticmethod\n def _trace_retry(*args, **kwargs):\n task = utils.retrieve_task_from_sender(kwargs)\n task_id = utils.retrieve_task_id_from_request(kwargs)\n reason = utils.retrieve_reason(kwargs)\n\n if task is None or task_id is None or reason is None:\n return\n\n span, _ = utils.retrieve_span(task, task_id)\n if span is None:\n return\n\n # Add retry reason metadata to span\n # Use `str(reason)` instead of `reason.message` in case we get\n # something that isn't an `Exception`\n span.set_attribute(_TASK_RETRY_REASON_KEY, str(reason))\n\n\ndef carrier_extractor(carrier, key):\n value = getattr(carrier, key, [])\n if isinstance(value, str) or not isinstance(value, Iterable):\n value = 
(value,)\n return value\n", "path": "instrumentation/opentelemetry-instrumentation-celery/src/opentelemetry/instrumentation/celery/__init__.py"}]}

| 3,159 | 939 |

gh_patches_debug_38480

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-1149

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add support for PEX_INTERPRETER `-c`, `-m` and `-` special modes to PEX venvs.

#1128 lands a Venv tool that creates a venv from a PEX file with an entrypoint that fully emulates PEX behavior except for handling `-c`, `-m` and `-` special modes when `PEX_INTERPRETER` is activated. Implement these modes so that a PEX venv can be counted on to work exactly like a PEX file.

--- END ISSUE ---
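
The three special modes named in the issue mirror the way the `python` command line treats `-c`, `-m` and `-`. The sketch below shows one way to sort the arguments into these modes before choosing what to run. It is an illustration, not the project's implementation: the function name `classify_interpreter_args` and the mode labels are hypothetical.

```python
def classify_interpreter_args(args):
    """Classify interpreter-style arguments (hypothetical helper).

    Returns a (mode, payload, argv) tuple, where argv is the value that
    sys.argv would take for the code that is about to run.
    """
    args = list(args)
    if not args:
        # No arguments: drop into an interactive session.
        return ("repl", None, [])

    first = args[0]
    if first == "-m":
        if len(args) < 2:
            raise ValueError("Argument expected for the -m option")
        # The module name becomes argv[0], followed by its own arguments.
        return ("module", args[1], args[1:])
    if first == "-c":
        if len(args) < 2:
            raise ValueError("Argument expected for the -c option")
        # The command string is consumed; argv[0] is the literal "-c".
        return ("command", args[1], ["-c"] + args[2:])
    if first == "-":
        # Program text is read from standard input.
        return ("stdin", None, args)
    # Anything else is treated as a path to a script file.
    return ("file", first, args)
```

Keeping the classification separate from the execution step makes the argument handling easy to check without spawning an interpreter.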

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pex/tools/commands/venv.py`

Content:

```

1 # Copyright 2020 Pants project contributors (see CONTRIBUTORS.md).

2 # Licensed under the Apache License, Version 2.0 (see LICENSE).

3

4 from __future__ import absolute_import

5

6 import errno

7 import os

8 import shutil

9 import zipfile

10 from argparse import ArgumentParser, Namespace

11 from textwrap import dedent

12

13 from pex import pex_builder, pex_warnings

14 from pex.common import chmod_plus_x, safe_mkdir

15 from pex.environment import PEXEnvironment

16 from pex.pex import PEX

17 from pex.tools.command import Command, Error, Ok, Result

18 from pex.tools.commands.virtualenv import PipUnavailableError, Virtualenv

19 from pex.tracer import TRACER

20 from pex.typing import TYPE_CHECKING

21

22 if TYPE_CHECKING:

23 from typing import Tuple

24

25

26 # N.B.: We can't use shutil.copytree since we copy from multiple source locations to the same site

27 # packages directory destination. Since we're forced to stray from the stdlib here, support for

28 # hardlinks is added to provide a measurable speed up and disk space savings when possible.

29 def _copytree(

30 src, # type: str

31 dst, # type: str

32 exclude=(), # type: Tuple[str, ...]

33 collisions_ok=False, # type: bool

34 ):

35 # type: (...) -> None

36 safe_mkdir(dst)

37 link = True

38 for root, dirs, files in os.walk(src, topdown=True, followlinks=False):

39 if src == root:

40 dirs[:] = [d for d in dirs if d not in exclude]

41 files[:] = [f for f in files if f not in exclude]

42

43 for d in dirs:

44 try:

45 os.mkdir(os.path.join(dst, os.path.relpath(os.path.join(root, d), src)))

46 except OSError as e:

47 if e.errno != errno.EEXIST:

48 raise e

49

50 for f in files:

51 src_entry = os.path.join(root, f)

52 dst_entry = os.path.join(dst, os.path.relpath(src_entry, src))

53 try:

54 if link:

55 try:

56 os.link(src_entry, dst_entry)

57 continue

58 except OSError as e:

59 if e.errno != errno.EXDEV:

60 raise e

61 link = False

62 shutil.copy(src_entry, dst_entry)

63 except OSError as e:

64 if e.errno == errno.EEXIST:

65 pex_warnings.warn(

66 "Failed to overwrite {} with {}: {}".format(dst_entry, src_entry, e)

67 )

68 if not collisions_ok:

69 raise e

70

71

72 class Venv(Command):

73 """Creates a venv from the PEX file."""

74

75 def add_arguments(self, parser):

76 # type: (ArgumentParser) -> None

77 parser.add_argument(

78 "venv",

79 nargs=1,

80 metavar="PATH",

81 help="The directory to create the virtual environment in.",

82 )

83 parser.add_argument(

84 "-b",

85 "--bin-path",

86 choices=("prepend", "append"),

87 default=None,

88 help="Add the venv bin dir to the PATH in the __main__.py script.",

89 )

90 parser.add_argument(

91 "-f",

92 "--force",

93 action="store_true",

94 default=False,

95 help="If the venv directory already exists, overwrite it.",

96 )

97 parser.add_argument(

98 "--collisions-ok",

99 action="store_true",

100 default=False,

101 help=(

102 "Don't error if population of the venv encounters distributions in the PEX file "

103 "with colliding files."

104 ),

105 )

106 parser.add_argument(

107 "-p",

108 "--pip",

109 action="store_true",

110 default=False,

111 help="Add pip to the venv.",

112 )

113

114 def run(

115 self,

116 pex, # type: PEX

117 options, # type: Namespace

118 ):

119 # type: (...) -> Result

120

121 # 0. Create an empty virtual environment to populate with the PEX code and dependencies.

122 venv = Virtualenv.create(options.venv[0], interpreter=pex.interpreter, force=options.force)

123

124 # 1. Populate the venv with the PEX contents.

125 pex_info = pex.pex_info()

126 if zipfile.is_zipfile(pex.path()):

127 PEXEnvironment.explode_code(

128 pex.path(), pex_info, venv.site_packages_dir, exclude=("__main__.py",)

129 )

130 else:

131 _copytree(

132 src=pex.path(),

133 dst=venv.site_packages_dir,

134 exclude=(pex_info.internal_cache, pex_builder.BOOTSTRAP_DIR, "__main__.py"),

135 )

136

137 for dist in pex.activate():

138 _copytree(

139 src=dist.location,

140 dst=venv.site_packages_dir,

141 exclude=("bin",),

142 collisions_ok=options.collisions_ok,

143 )

144 dist_bin_dir = os.path.join(dist.location, "bin")

145 if os.path.isdir(dist_bin_dir):

146 _copytree(dist_bin_dir, venv.bin_dir, collisions_ok=options.collisions_ok)

147

148 # 2. Add a __main__ to the root of the venv for running the venv dir like a loose PEX dir

149 # and a main.py for running as a script.

150 main_contents = dedent(

151 """\

152 #!{venv_python} -sE

153

154 import os

155 import sys

156

157 python = {venv_python!r}

158 if sys.executable != python:

159 os.execv(python, [python, "-sE"] + sys.argv)

160

161 os.environ["VIRTUAL_ENV"] = {venv_dir!r}

162 sys.path.extend(os.environ.get("PEX_EXTRA_SYS_PATH", "").split(os.pathsep))

163

164 bin_dir = {venv_bin_dir!r}

165 bin_path = {bin_path!r}

166 if bin_path:

167 PATH = os.environ.get("PATH", "").split(os.pathsep)

168 if bin_path == "prepend":

169 PATH = [bin_dir] + PATH

170 else:

171 PATH.append(bin_dir)

172 os.environ["PATH"] = os.pathsep.join(PATH)

173

174 PEX_OVERRIDE_KEYS = ("PEX_INTERPRETER", "PEX_SCRIPT", "PEX_MODULE")

175 pex_overrides = dict(

176 (key, os.environ.pop(key)) for key in PEX_OVERRIDE_KEYS if key in os.environ

177 )

178 if len(pex_overrides) > 1:

179 sys.stderr.write(

180 "Can only specify one of {{overrides}}; found: {{found}}\\n".format(

181 overrides=", ".join(PEX_OVERRIDE_KEYS),

182 found=" ".join("{{}}={{}}".format(k, v) for k, v in pex_overrides.items())

183 )

184 )

185 sys.exit(1)

186

187 pex_script = pex_overrides.get("PEX_SCRIPT")

188 if pex_script:

189 script_path = os.path.join(bin_dir, pex_script)

190 os.execv(script_path, [script_path] + sys.argv[1:])

191

192 # TODO(John Sirois): Support `-c`, `-m` and `-` special modes when PEX_INTERPRETER is

193 # activated like PEX files do: https://github.com/pantsbuild/pex/issues/1136

194 pex_interpreter = pex_overrides.get("PEX_INTERPRETER", "").lower()

195 entry_point = (

196 "code:interact"

197 if pex_interpreter in ("1", "true")

198 else pex_overrides.get("PEX_MODULE", {entry_point!r} or "code:interact")

199 )

200 module_name, _, function = entry_point.partition(":")

201 if not function:

202 import runpy

203 runpy.run_module(module_name, run_name="__main__")

204 else:

205 import importlib

206 module = importlib.import_module(module_name)

207 # N.B.: Functions may be hung off top-level objects in the module namespace,

208 # e.g.: Class.method; so we drill down through any attributes to the final function

209 # object.

210 namespace, func = module, None

211 for attr in function.split("."):

212 func = namespace = getattr(namespace, attr)

213 func()

214 """.format(

215 venv_python=venv.interpreter.binary,

216 bin_path=options.bin_path,

217 venv_dir=venv.venv_dir,

218 venv_bin_dir=venv.bin_dir,

219 entry_point=pex_info.entry_point,

220 )

221 )

222 with open(venv.join_path("__main__.py"), "w") as fp:

223 fp.write(main_contents)

224 chmod_plus_x(fp.name)

225 os.symlink(os.path.basename(fp.name), venv.join_path("pex"))

226

227 # 3. Re-write any (console) scripts to use the venv Python.

228 for script in venv.rewrite_scripts(python_args="-sE"):

229 TRACER.log("Re-writing {}".format(script))

230

231 if options.pip:

232 try:

233 venv.install_pip()

234 except PipUnavailableError as e:

235 return Error(

236 "The virtual environment was successfully created, but Pip was not "

237 "installed:\n{}".format(e)

238 )

239

240 return Ok()

241

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/pex/tools/commands/venv.py b/pex/tools/commands/venv.py

--- a/pex/tools/commands/venv.py

+++ b/pex/tools/commands/venv.py

@@ -189,14 +189,47 @@

script_path = os.path.join(bin_dir, pex_script)

os.execv(script_path, [script_path] + sys.argv[1:])

- # TODO(John Sirois): Support `-c`, `-m` and `-` special modes when PEX_INTERPRETER is

- # activated like PEX files do: https://github.com/pantsbuild/pex/issues/1136

- pex_interpreter = pex_overrides.get("PEX_INTERPRETER", "").lower()

+ pex_interpreter = pex_overrides.get("PEX_INTERPRETER", "").lower() in ("1", "true")

+ PEX_INTERPRETER_ENTRYPOINT = "code:interact"

entry_point = (

- "code:interact"

- if pex_interpreter in ("1", "true")

- else pex_overrides.get("PEX_MODULE", {entry_point!r} or "code:interact")

+ PEX_INTERPRETER_ENTRYPOINT

+ if pex_interpreter

+ else pex_overrides.get("PEX_MODULE", {entry_point!r} or PEX_INTERPRETER_ENTRYPOINT)

)

+ if entry_point == PEX_INTERPRETER_ENTRYPOINT and len(sys.argv) > 1:

+ args = sys.argv[1:]

+ arg = args[0]

+ if arg == "-m":

+ if len(args) < 2:

+ sys.stderr.write("Argument expected for the -m option\\n")

+ sys.exit(2)

+ entry_point = module = args[1]

+ sys.argv = args[1:]

+ # Fall through to entry_point handling below.

+ else:

+ filename = arg

+ sys.argv = args

+ if arg == "-c":

+ if len(args) < 2:

+ sys.stderr.write("Argument expected for the -c option\\n")

+ sys.exit(2)

+ filename = "-c <cmd>"

+ content = args[1]

+ sys.argv = ["-c"] + args[2:]

+ elif arg == "-":

+ content = sys.stdin.read()

+ else:

+ with open(arg) as fp:

+ content = fp.read()

+

+ ast = compile(content, filename, "exec", flags=0, dont_inherit=1)

+ globals_map = globals().copy()

+ globals_map["__name__"] = "__main__"

+ globals_map["__file__"] = filename

+ locals_map = globals_map

+ {exec_ast}

+ sys.exit(0)

+

module_name, _, function = entry_point.partition(":")

if not function:

import runpy

@@ -217,6 +250,11 @@

venv_dir=venv.venv_dir,

venv_bin_dir=venv.bin_dir,

entry_point=pex_info.entry_point,

+ exec_ast=(

+ "exec ast in globals_map, locals_map"

+ if venv.interpreter.version[0] == 2

+ else "exec(ast, globals_map, locals_map)"

+ ),

)

)

with open(venv.join_path("__main__.py"), "w") as fp:

|

{"golden_diff": "diff --git a/pex/tools/commands/venv.py b/pex/tools/commands/venv.py\n--- a/pex/tools/commands/venv.py\n+++ b/pex/tools/commands/venv.py\n@@ -189,14 +189,47 @@\n script_path = os.path.join(bin_dir, pex_script)\n os.execv(script_path, [script_path] + sys.argv[1:])\n \n- # TODO(John Sirois): Support `-c`, `-m` and `-` special modes when PEX_INTERPRETER is\n- # activated like PEX files do: https://github.com/pantsbuild/pex/issues/1136\n- pex_interpreter = pex_overrides.get(\"PEX_INTERPRETER\", \"\").lower()\n+ pex_interpreter = pex_overrides.get(\"PEX_INTERPRETER\", \"\").lower() in (\"1\", \"true\")\n+ PEX_INTERPRETER_ENTRYPOINT = \"code:interact\"\n entry_point = (\n- \"code:interact\"\n- if pex_interpreter in (\"1\", \"true\")\n- else pex_overrides.get(\"PEX_MODULE\", {entry_point!r} or \"code:interact\")\n+ PEX_INTERPRETER_ENTRYPOINT\n+ if pex_interpreter\n+ else pex_overrides.get(\"PEX_MODULE\", {entry_point!r} or PEX_INTERPRETER_ENTRYPOINT)\n )\n+ if entry_point == PEX_INTERPRETER_ENTRYPOINT and len(sys.argv) > 1:\n+ args = sys.argv[1:]\n+ arg = args[0]\n+ if arg == \"-m\":\n+ if len(args) < 2:\n+ sys.stderr.write(\"Argument expected for the -m option\\\\n\")\n+ sys.exit(2)\n+ entry_point = module = args[1]\n+ sys.argv = args[1:]\n+ # Fall through to entry_point handling below.\n+ else:\n+ filename = arg\n+ sys.argv = args\n+ if arg == \"-c\":\n+ if len(args) < 2:\n+ sys.stderr.write(\"Argument expected for the -c option\\\\n\")\n+ sys.exit(2)\n+ filename = \"-c <cmd>\"\n+ content = args[1]\n+ sys.argv = [\"-c\"] + args[2:]\n+ elif arg == \"-\":\n+ content = sys.stdin.read()\n+ else:\n+ with open(arg) as fp:\n+ content = fp.read()\n+ \n+ ast = compile(content, filename, \"exec\", flags=0, dont_inherit=1)\n+ globals_map = globals().copy()\n+ globals_map[\"__name__\"] = \"__main__\"\n+ globals_map[\"__file__\"] = filename\n+ locals_map = globals_map\n+ {exec_ast}\n+ sys.exit(0)\n+\n module_name, _, function = entry_point.partition(\":\")\n if not 
function:\n import runpy\n@@ -217,6 +250,11 @@\n venv_dir=venv.venv_dir,\n venv_bin_dir=venv.bin_dir,\n entry_point=pex_info.entry_point,\n+ exec_ast=(\n+ \"exec ast in globals_map, locals_map\"\n+ if venv.interpreter.version[0] == 2\n+ else \"exec(ast, globals_map, locals_map)\"\n+ ),\n )\n )\n with open(venv.join_path(\"__main__.py\"), \"w\") as fp:\n", "issue": "Add support for PEX_INTERPRETER `-c`, `-m` and `-` special modes to PEX venvs.\n#1128 lands a Venv tool that creates a venv from a PEX file with an entrypoint that fully emulates PEX behavior except for handling `-c`, `-m` and `-` special modes when `PEX_INTERPRETER` is activated. Implement these modes so that a PEX venv can be counted on to work exactly like a PEX file.\n", "before_files": [{"content": "# Copyright 2020 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import absolute_import\n\nimport errno\nimport os\nimport shutil\nimport zipfile\nfrom argparse import ArgumentParser, Namespace\nfrom textwrap import dedent\n\nfrom pex import pex_builder, pex_warnings\nfrom pex.common import chmod_plus_x, safe_mkdir\nfrom pex.environment import PEXEnvironment\nfrom pex.pex import PEX\nfrom pex.tools.command import Command, Error, Ok, Result\nfrom pex.tools.commands.virtualenv import PipUnavailableError, Virtualenv\nfrom pex.tracer import TRACER\nfrom pex.typing import TYPE_CHECKING\n\nif TYPE_CHECKING:\n from typing import Tuple\n\n\n# N.B.: We can't use shutil.copytree since we copy from multiple source locations to the same site\n# packages directory destination. Since we're forced to stray from the stdlib here, support for\n# hardlinks is added to provide a measurable speed up and disk space savings when possible.\ndef _copytree(\n src, # type: str\n dst, # type: str\n exclude=(), # type: Tuple[str, ...]\n collisions_ok=False, # type: bool\n):\n # type: (...) 
-> None\n safe_mkdir(dst)\n link = True\n for root, dirs, files in os.walk(src, topdown=True, followlinks=False):\n if src == root:\n dirs[:] = [d for d in dirs if d not in exclude]\n files[:] = [f for f in files if f not in exclude]\n\n for d in dirs:\n try:\n os.mkdir(os.path.join(dst, os.path.relpath(os.path.join(root, d), src)))\n except OSError as e:\n if e.errno != errno.EEXIST:\n raise e\n\n for f in files:\n src_entry = os.path.join(root, f)\n dst_entry = os.path.join(dst, os.path.relpath(src_entry, src))\n try:\n if link:\n try:\n os.link(src_entry, dst_entry)\n continue\n except OSError as e:\n if e.errno != errno.EXDEV:\n raise e\n link = False\n shutil.copy(src_entry, dst_entry)\n except OSError as e:\n if e.errno == errno.EEXIST:\n pex_warnings.warn(\n \"Failed to overwrite {} with {}: {}\".format(dst_entry, src_entry, e)\n )\n if not collisions_ok:\n raise e\n\n\nclass Venv(Command):\n \"\"\"Creates a venv from the PEX file.\"\"\"\n\n def add_arguments(self, parser):\n # type: (ArgumentParser) -> None\n parser.add_argument(\n \"venv\",\n nargs=1,\n metavar=\"PATH\",\n help=\"The directory to create the virtual environment in.\",\n )\n parser.add_argument(\n \"-b\",\n \"--bin-path\",\n choices=(\"prepend\", \"append\"),\n default=None,\n help=\"Add the venv bin dir to the PATH in the __main__.py script.\",\n )\n parser.add_argument(\n \"-f\",\n \"--force\",\n action=\"store_true\",\n default=False,\n help=\"If the venv directory already exists, overwrite it.\",\n )\n parser.add_argument(\n \"--collisions-ok\",\n action=\"store_true\",\n default=False,\n help=(\n \"Don't error if population of the venv encounters distributions in the PEX file \"\n \"with colliding files.\"\n ),\n )\n parser.add_argument(\n \"-p\",\n \"--pip\",\n action=\"store_true\",\n default=False,\n help=\"Add pip to the venv.\",\n )\n\n def run(\n self,\n pex, # type: PEX\n options, # type: Namespace\n ):\n # type: (...) -> Result\n\n # 0. 
Create an empty virtual environment to populate with the PEX code and dependencies.\n venv = Virtualenv.create(options.venv[0], interpreter=pex.interpreter, force=options.force)\n\n # 1. Populate the venv with the PEX contents.\n pex_info = pex.pex_info()\n if zipfile.is_zipfile(pex.path()):\n PEXEnvironment.explode_code(\n pex.path(), pex_info, venv.site_packages_dir, exclude=(\"__main__.py\",)\n )\n else:\n _copytree(\n src=pex.path(),\n dst=venv.site_packages_dir,\n exclude=(pex_info.internal_cache, pex_builder.BOOTSTRAP_DIR, \"__main__.py\"),\n )\n\n for dist in pex.activate():\n _copytree(\n src=dist.location,\n dst=venv.site_packages_dir,\n exclude=(\"bin\",),\n collisions_ok=options.collisions_ok,\n )\n dist_bin_dir = os.path.join(dist.location, \"bin\")\n if os.path.isdir(dist_bin_dir):\n _copytree(dist_bin_dir, venv.bin_dir, collisions_ok=options.collisions_ok)\n\n # 2. Add a __main__ to the root of the venv for running the venv dir like a loose PEX dir\n # and a main.py for running as a script.\n main_contents = dedent(\n \"\"\"\\\n #!{venv_python} -sE\n\n import os\n import sys\n\n python = {venv_python!r}\n if sys.executable != python:\n os.execv(python, [python, \"-sE\"] + sys.argv)\n\n os.environ[\"VIRTUAL_ENV\"] = {venv_dir!r}\n sys.path.extend(os.environ.get(\"PEX_EXTRA_SYS_PATH\", \"\").split(os.pathsep))\n\n bin_dir = {venv_bin_dir!r}\n bin_path = {bin_path!r}\n if bin_path:\n PATH = os.environ.get(\"PATH\", \"\").split(os.pathsep)\n if bin_path == \"prepend\":\n PATH = [bin_dir] + PATH\n else:\n PATH.append(bin_dir)\n os.environ[\"PATH\"] = os.pathsep.join(PATH)\n\n PEX_OVERRIDE_KEYS = (\"PEX_INTERPRETER\", \"PEX_SCRIPT\", \"PEX_MODULE\")\n pex_overrides = dict(\n (key, os.environ.pop(key)) for key in PEX_OVERRIDE_KEYS if key in os.environ\n )\n if len(pex_overrides) > 1:\n sys.stderr.write(\n \"Can only specify one of {{overrides}}; found: {{found}}\\\\n\".format(\n overrides=\", \".join(PEX_OVERRIDE_KEYS),\n found=\" 
\".join(\"{{}}={{}}\".format(k, v) for k, v in pex_overrides.items())\n )\n )\n sys.exit(1)\n\n pex_script = pex_overrides.get(\"PEX_SCRIPT\")\n if pex_script:\n script_path = os.path.join(bin_dir, pex_script)\n os.execv(script_path, [script_path] + sys.argv[1:])\n\n # TODO(John Sirois): Support `-c`, `-m` and `-` special modes when PEX_INTERPRETER is\n # activated like PEX files do: https://github.com/pantsbuild/pex/issues/1136\n pex_interpreter = pex_overrides.get(\"PEX_INTERPRETER\", \"\").lower()\n entry_point = (\n \"code:interact\"\n if pex_interpreter in (\"1\", \"true\")\n else pex_overrides.get(\"PEX_MODULE\", {entry_point!r} or \"code:interact\")\n )\n module_name, _, function = entry_point.partition(\":\")\n if not function:\n import runpy\n runpy.run_module(module_name, run_name=\"__main__\")\n else:\n import importlib\n module = importlib.import_module(module_name)\n # N.B.: Functions may be hung off top-level objects in the module namespace,\n # e.g.: Class.method; so we drill down through any attributes to the final function\n # object.\n namespace, func = module, None\n for attr in function.split(\".\"):\n func = namespace = getattr(namespace, attr)\n func()\n \"\"\".format(\n venv_python=venv.interpreter.binary,\n bin_path=options.bin_path,\n venv_dir=venv.venv_dir,\n venv_bin_dir=venv.bin_dir,\n entry_point=pex_info.entry_point,\n )\n )\n with open(venv.join_path(\"__main__.py\"), \"w\") as fp:\n fp.write(main_contents)\n chmod_plus_x(fp.name)\n os.symlink(os.path.basename(fp.name), venv.join_path(\"pex\"))\n\n # 3. 
Re-write any (console) scripts to use the venv Python.\n for script in venv.rewrite_scripts(python_args=\"-sE\"):\n TRACER.log(\"Re-writing {}\".format(script))\n\n if options.pip:\n try:\n venv.install_pip()\n except PipUnavailableError as e:\n return Error(\n \"The virtual environment was successfully created, but Pip was not \"\n \"installed:\\n{}\".format(e)\n )\n\n return Ok()\n", "path": "pex/tools/commands/venv.py"}], "after_files": [{"content": "# Copyright 2020 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import absolute_import\n\nimport errno\nimport os\nimport shutil\nimport zipfile\nfrom argparse import ArgumentParser, Namespace\nfrom textwrap import dedent\n\nfrom pex import pex_builder, pex_warnings\nfrom pex.common import chmod_plus_x, safe_mkdir\nfrom pex.environment import PEXEnvironment\nfrom pex.pex import PEX\nfrom pex.tools.command import Command, Error, Ok, Result\nfrom pex.tools.commands.virtualenv import PipUnavailableError, Virtualenv\nfrom pex.tracer import TRACER\nfrom pex.typing import TYPE_CHECKING\n\nif TYPE_CHECKING:\n from typing import Tuple\n\n\n# N.B.: We can't use shutil.copytree since we copy from multiple source locations to the same site\n# packages directory destination. Since we're forced to stray from the stdlib here, support for\n# hardlinks is added to provide a measurable speed up and disk space savings when possible.\ndef _copytree(\n src, # type: str\n dst, # type: str\n exclude=(), # type: Tuple[str, ...]\n collisions_ok=False, # type: bool\n):\n # type: (...) 
-> None\n safe_mkdir(dst)\n link = True\n for root, dirs, files in os.walk(src, topdown=True, followlinks=False):\n if src == root:\n dirs[:] = [d for d in dirs if d not in exclude]\n files[:] = [f for f in files if f not in exclude]\n\n for d in dirs:\n try:\n os.mkdir(os.path.join(dst, os.path.relpath(os.path.join(root, d), src)))\n except OSError as e:\n if e.errno != errno.EEXIST:\n raise e\n\n for f in files:\n src_entry = os.path.join(root, f)\n dst_entry = os.path.join(dst, os.path.relpath(src_entry, src))\n try:\n if link:\n try:\n os.link(src_entry, dst_entry)\n continue\n except OSError as e:\n if e.errno != errno.EXDEV:\n raise e\n link = False\n shutil.copy(src_entry, dst_entry)\n except OSError as e:\n if e.errno == errno.EEXIST:\n pex_warnings.warn(\n \"Failed to overwrite {} with {}: {}\".format(dst_entry, src_entry, e)\n )\n if not collisions_ok:\n raise e\n\n\nclass Venv(Command):\n \"\"\"Creates a venv from the PEX file.\"\"\"\n\n def add_arguments(self, parser):\n # type: (ArgumentParser) -> None\n parser.add_argument(\n \"venv\",\n nargs=1,\n metavar=\"PATH\",\n help=\"The directory to create the virtual environment in.\",\n )\n parser.add_argument(\n \"-b\",\n \"--bin-path\",\n choices=(\"prepend\", \"append\"),\n default=None,\n help=\"Add the venv bin dir to the PATH in the __main__.py script.\",\n )\n parser.add_argument(\n \"-f\",\n \"--force\",\n action=\"store_true\",\n default=False,\n help=\"If the venv directory already exists, overwrite it.\",\n )\n parser.add_argument(\n \"--collisions-ok\",\n action=\"store_true\",\n default=False,\n help=(\n \"Don't error if population of the venv encounters distributions in the PEX file \"\n \"with colliding files.\"\n ),\n )\n parser.add_argument(\n \"-p\",\n \"--pip\",\n action=\"store_true\",\n default=False,\n help=\"Add pip to the venv.\",\n )\n\n def run(\n self,\n pex, # type: PEX\n options, # type: Namespace\n ):\n # type: (...) -> Result\n\n # 0. 
Create an empty virtual environment to populate with the PEX code and dependencies.\n venv = Virtualenv.create(options.venv[0], interpreter=pex.interpreter, force=options.force)\n\n # 1. Populate the venv with the PEX contents.\n pex_info = pex.pex_info()\n if zipfile.is_zipfile(pex.path()):\n PEXEnvironment.explode_code(\n pex.path(), pex_info, venv.site_packages_dir, exclude=(\"__main__.py\",)\n )\n else:\n _copytree(\n src=pex.path(),\n dst=venv.site_packages_dir,\n exclude=(pex_info.internal_cache, pex_builder.BOOTSTRAP_DIR, \"__main__.py\"),\n )\n\n for dist in pex.activate():\n _copytree(\n src=dist.location,\n dst=venv.site_packages_dir,\n exclude=(\"bin\",),\n collisions_ok=options.collisions_ok,\n )\n dist_bin_dir = os.path.join(dist.location, \"bin\")\n if os.path.isdir(dist_bin_dir):\n _copytree(dist_bin_dir, venv.bin_dir, collisions_ok=options.collisions_ok)\n\n # 2. Add a __main__ to the root of the venv for running the venv dir like a loose PEX dir\n # and a main.py for running as a script.\n main_contents = dedent(\n \"\"\"\\\n #!{venv_python} -sE\n\n import os\n import sys\n\n python = {venv_python!r}\n if sys.executable != python:\n os.execv(python, [python, \"-sE\"] + sys.argv)\n\n os.environ[\"VIRTUAL_ENV\"] = {venv_dir!r}\n sys.path.extend(os.environ.get(\"PEX_EXTRA_SYS_PATH\", \"\").split(os.pathsep))\n\n bin_dir = {venv_bin_dir!r}\n bin_path = {bin_path!r}\n if bin_path:\n PATH = os.environ.get(\"PATH\", \"\").split(os.pathsep)\n if bin_path == \"prepend\":\n PATH = [bin_dir] + PATH\n else:\n PATH.append(bin_dir)\n os.environ[\"PATH\"] = os.pathsep.join(PATH)\n\n PEX_OVERRIDE_KEYS = (\"PEX_INTERPRETER\", \"PEX_SCRIPT\", \"PEX_MODULE\")\n pex_overrides = dict(\n (key, os.environ.pop(key)) for key in PEX_OVERRIDE_KEYS if key in os.environ\n )\n if len(pex_overrides) > 1:\n sys.stderr.write(\n \"Can only specify one of {{overrides}}; found: {{found}}\\\\n\".format(\n overrides=\", \".join(PEX_OVERRIDE_KEYS),\n found=\" 
\".join(\"{{}}={{}}\".format(k, v) for k, v in pex_overrides.items())\n )\n )\n sys.exit(1)\n\n pex_script = pex_overrides.get(\"PEX_SCRIPT\")\n if pex_script:\n script_path = os.path.join(bin_dir, pex_script)\n os.execv(script_path, [script_path] + sys.argv[1:])\n\n pex_interpreter = pex_overrides.get(\"PEX_INTERPRETER\", \"\").lower() in (\"1\", \"true\")\n PEX_INTERPRETER_ENTRYPOINT = \"code:interact\"\n entry_point = (\n PEX_INTERPRETER_ENTRYPOINT\n if pex_interpreter\n else pex_overrides.get(\"PEX_MODULE\", {entry_point!r} or PEX_INTERPRETER_ENTRYPOINT)\n )\n if entry_point == PEX_INTERPRETER_ENTRYPOINT and len(sys.argv) > 1:\n args = sys.argv[1:]\n arg = args[0]\n if arg == \"-m\":\n if len(args) < 2:\n sys.stderr.write(\"Argument expected for the -m option\\\\n\")\n sys.exit(2)\n entry_point = module = args[1]\n sys.argv = args[1:]\n # Fall through to entry_point handling below.\n else:\n filename = arg\n sys.argv = args\n if arg == \"-c\":\n if len(args) < 2:\n sys.stderr.write(\"Argument expected for the -c option\\\\n\")\n sys.exit(2)\n filename = \"-c <cmd>\"\n content = args[1]\n sys.argv = [\"-c\"] + args[2:]\n elif arg == \"-\":\n content = sys.stdin.read()\n else:\n with open(arg) as fp:\n content = fp.read()\n \n ast = compile(content, filename, \"exec\", flags=0, dont_inherit=1)\n globals_map = globals().copy()\n globals_map[\"__name__\"] = \"__main__\"\n globals_map[\"__file__\"] = filename\n locals_map = globals_map\n {exec_ast}\n sys.exit(0)\n\n module_name, _, function = entry_point.partition(\":\")\n if not function:\n import runpy\n runpy.run_module(module_name, run_name=\"__main__\")\n else:\n import importlib\n module = importlib.import_module(module_name)\n # N.B.: Functions may be hung off top-level objects in the module namespace,\n # e.g.: Class.method; so we drill down through any attributes to the final function\n # object.\n namespace, func = module, None\n for attr in function.split(\".\"):\n func = namespace = getattr(namespace, 
attr)\n func()\n \"\"\".format(\n venv_python=venv.interpreter.binary,\n bin_path=options.bin_path,\n venv_dir=venv.venv_dir,\n venv_bin_dir=venv.bin_dir,\n entry_point=pex_info.entry_point,\n exec_ast=(\n \"exec ast in globals_map, locals_map\"\n if venv.interpreter.version[0] == 2\n else \"exec(ast, globals_map, locals_map)\"\n ),\n )\n )\n with open(venv.join_path(\"__main__.py\"), \"w\") as fp:\n fp.write(main_contents)\n chmod_plus_x(fp.name)\n os.symlink(os.path.basename(fp.name), venv.join_path(\"pex\"))\n\n # 3. Re-write any (console) scripts to use the venv Python.\n for script in venv.rewrite_scripts(python_args=\"-sE\"):\n TRACER.log(\"Re-writing {}\".format(script))\n\n if options.pip:\n try:\n venv.install_pip()\n except PipUnavailableError as e:\n return Error(\n \"The virtual environment was successfully created, but Pip was not \"\n \"installed:\\n{}\".format(e)\n )\n\n return Ok()\n", "path": "pex/tools/commands/venv.py"}]}

| 2,971 | 776 |

gh_patches_debug_13620

|

rasdani/github-patches

|

git_diff

|

python-poetry__poetry-5880

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Poetry inheriting issue for git-client on github.com

- [X] I am on the [latest](https://github.com/python-poetry/poetry/releases/latest) Poetry version.

- [X] I have searched the [issues](https://github.com/python-poetry/poetry/issues) of this repo and believe that this is not a duplicate.

- [X] If an exception occurs when executing a command, I executed it again in debug mode (`-vvv` option).

- **OS version and name**: Linux Mint 20, Py3.8.2 virtualenv

- **Poetry version**: 1.1.2

- **Link of a [Gist](https://gist.github.com/) with the contents of your pyproject.toml file**:

## Issue

While `installing` or `locking`, if the GitHub git repo URL is wrong (e.g. it returns a 404 in a browser), then poetry (sometimes) shows an authentication error and asks for the username for that URL.

```

Updating dependencies

Resolving dependencies...

1: fact: actions-gateway is 0.7.0

1: derived: actions-gateway

Username for 'https://github.com':

```

The pyproject.toml has a git dependency like

```

Flask-Pika = { git = "https://github.com/rienafairefr/flask_pika.git", rev= "b2b4d68186c52ae034b39f4fb56fe86786b3a055"}

```

The typo is hard to see, it should be `flask-pika` instead of `flask_pika`

If the command is run without verbose output, then the "Username for 'https://github.com':" is sometimes shown only for a fraction of a second, so the command may never terminate and it's hard to know why.

Not sure poetry can or should mitigate the problem that comes from a lower level.

The problem comes (pretty sure) from github.com returning a 401 when it should return a 404:

```

GET /inexistent-user/inexistent-repo/info/refs?service=git-upload-pack

Host github.com

User-Agent: git/inexistent-version

```

gives us

```

HTTP/1.1 401 Authorization Required

Server: GitHub Babel 2.0

```

This causes the git client (which poetry calls in a subprocess) to ask for authentication.

Setting the GIT_ASKPASS variable to false while calling `git` is an option; the credentials to use for a git dependency should be provided by poetry, rather than leaving `git` to figure them out by itself.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/poetry/vcs/git/system.py`

Content:

```
1 from __future__ import annotations
2 
3 import subprocess
4 
5 from typing import TYPE_CHECKING
6 
7 from dulwich.client import find_git_command
8 
9 
10 if TYPE_CHECKING:
11     from pathlib import Path
12     from typing import Any
13 
14 
15 class SystemGit:
16     @classmethod
17     def clone(cls, repository: str, dest: Path) -> str:
18         cls._check_parameter(repository)
19 
20         return cls.run("clone", "--recurse-submodules", "--", repository, str(dest))
21 
22     @classmethod
23     def checkout(cls, rev: str, target: Path | None = None) -> str:
24         args = []
25 
26         if target:
27             args += [
28                 "--git-dir",
29                 (target / ".git").as_posix(),
30                 "--work-tree",
31                 target.as_posix(),
32             ]
33 
34         cls._check_parameter(rev)
35 
36         args += ["checkout", rev]
37 
38         return cls.run(*args)
39 
40     @staticmethod
41     def run(*args: Any, **kwargs: Any) -> str:
42         folder = kwargs.pop("folder", None)
43         if folder:
44             args = (
45                 "--git-dir",
46                 (folder / ".git").as_posix(),
47                 "--work-tree",
48                 folder.as_posix(),
49             ) + args
50 
51         git_command = find_git_command()
52         return (
53             subprocess.check_output(git_command + list(args), stderr=subprocess.STDOUT)
54             .decode()
55             .strip()
56         )
57 
58     @staticmethod
59     def _check_parameter(parameter: str) -> None:
60         """
61         Checks a git parameter to avoid unwanted code execution.
62         """
63         if parameter.strip().startswith("-"):
64             raise RuntimeError(f"Invalid Git parameter: {parameter}")
65 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/src/poetry/vcs/git/system.py b/src/poetry/vcs/git/system.py
--- a/src/poetry/vcs/git/system.py
+++ b/src/poetry/vcs/git/system.py
@@ -1,5 +1,6 @@
 from __future__ import annotations
 
+import os
 import subprocess
 
 from typing import TYPE_CHECKING
@@ -49,8 +50,14 @@
             ) + args
 
         git_command = find_git_command()
+        env = os.environ.copy()
+        env["GIT_TERMINAL_PROMPT"] = "0"
         return (
-            subprocess.check_output(git_command + list(args), stderr=subprocess.STDOUT)
+            subprocess.check_output(
+                git_command + list(args),
+                stderr=subprocess.STDOUT,
+                env=env,
+            )
             .decode()
             .strip()
         )
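The core idea of the patch above can be sketched in isolation: copy the parent environment, set `GIT_TERMINAL_PROMPT` to `"0"` so that git fails instead of waiting for a username, and pass that environment to the subprocess. This is a minimal illustration rather than Poetry's actual code; the helper names `noninteractive_git_env` and `run_command` are hypothetical, and the demo spawns a Python child process instead of `git` so that it runs without git installed.

```python
from __future__ import annotations

import os
import subprocess
import sys


def noninteractive_git_env(base: dict[str, str] | None = None) -> dict[str, str]:
    """Return a copy of the environment with git's terminal prompt disabled.

    Hypothetical helper: the input mapping is never mutated.
    """
    env = dict(os.environ if base is None else base)
    # With this set to "0", git errors out instead of prompting for credentials.
    env["GIT_TERMINAL_PROMPT"] = "0"
    return env


def run_command(command: list[str]) -> str:
    """Run a command with prompting disabled and return its stripped output."""
    output = subprocess.check_output(
        command,
        stderr=subprocess.STDOUT,
        env=noninteractive_git_env(),
    )
    return output.decode().strip()


if __name__ == "__main__":
    # Stand-in for a git invocation: the child reports the variable it received.
    child = [
        sys.executable,
        "-c",
        "import os; print(os.environ['GIT_TERMINAL_PROMPT'])",
    ]
    print(run_command(child))
```

Copying the environment instead of modifying `os.environ` in place keeps the override scoped to the child process, which is the same choice the patch makes with `os.environ.copy()`.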

|

{"golden_diff": "diff --git a/src/poetry/vcs/git/system.py b/src/poetry/vcs/git/system.py\n--- a/src/poetry/vcs/git/system.py\n+++ b/src/poetry/vcs/git/system.py\n@@ -1,5 +1,6 @@\n from __future__ import annotations\n \n+import os\n import subprocess\n \n from typing import TYPE_CHECKING\n@@ -49,8 +50,14 @@\n ) + args\n \n git_command = find_git_command()\n+ env = os.environ.copy()\n+ env[\"GIT_TERMINAL_PROMPT\"] = \"0\"\n return (\n- subprocess.check_output(git_command + list(args), stderr=subprocess.STDOUT)\n+ subprocess.check_output(\n+ git_command + list(args),\n+ stderr=subprocess.STDOUT,\n+ env=env,\n+ )\n .decode()\n .strip()\n )\n", "issue": "Poetry inheriting issue for git-client on github.com\n- [X] I am on the [latest](https://github.com/python-poetry/poetry/releases/latest) Poetry version. \r\n- [X] I have searched the [issues](https://github.com/python-poetry/poetry/issues) of this repo and believe that this is not a duplicate.\r\n- [X] If an exception occurs when executing a command, I executed it again in debug mode (`-vvv` option).\r\n\r\n- **OS version and name**: Linux Mint 20, Py3.8.2 virtualenv\r\n- **Poetry version**: 1.1.2\r\n- **Link of a [Gist](https://gist.github.com/) with the contents of your pyproject.toml file**: \r\n\r\n## Issue\r\nWhile `installing` or `locking`, if the github git repo is wrong (e.g. returns a 404 in a browser), then poetry (sometimes) shows an authentication error and ask for the username for that url. 
\r\n\r\n```Updating dependencies\r\nResolving dependencies...\r\n 1: fact: actions-gateway is 0.7.0\r\n 1: derived: actions-gateway\r\nUsername for 'https://github.com':\r\n```\r\nThe pyproject.toml has a git dependency like\r\n```\r\nFlask-Pika = { git = \"https://github.com/rienafairefr/flask_pika.git\", rev= \"b2b4d68186c52ae034b39f4fb56fe86786b3a055\"}\r\n```\r\nThe typo is hard to see, it should be `flask-pika` instead of `flask_pika`\r\n\r\nIf the command is run without verbose output, then the \"Username for 'https://github.com':\" is sometimes shown only for a fraction of a second, so the command may never terminate and it's hard to know why.\r\n\r\nNot sure poetry can or should mitigate the problem that comes from a lower level.\r\n\r\nThe problem comes (pretty sure) from github.com returning a 401 when it should return a 404:\r\n```\r\nGET /inexistent-user/inexistent-repo/info/refs?service=git-upload-pack\r\nHost github.com\r\nUser-Agent: git/inexistent-version\r\n```\r\ngives us\r\n```\r\nHTTP/1.1 401 Authorization Required\r\nServer: GitHub Babel 2.0\r\n```\r\nThis makes the git client (which is called in a subprocess by poetry) to ask for authentication. 
\r\n\r\nsetting the GIT_ASKPASS variable to false while caling `git` is an option, the credentials to use for a git dependency should be provided by poetry, not leaving `git` to figure it out by itself\r\n\n", "before_files": [{"content": "from __future__ import annotations\n\nimport subprocess\n\nfrom typing import TYPE_CHECKING\n\nfrom dulwich.client import find_git_command\n\n\nif TYPE_CHECKING:\n from pathlib import Path\n from typing import Any\n\n\nclass SystemGit:\n @classmethod\n def clone(cls, repository: str, dest: Path) -> str:\n cls._check_parameter(repository)\n\n return cls.run(\"clone\", \"--recurse-submodules\", \"--\", repository, str(dest))\n\n @classmethod\n def checkout(cls, rev: str, target: Path | None = None) -> str:\n args = []\n\n if target:\n args += [\n \"--git-dir\",\n (target / \".git\").as_posix(),\n \"--work-tree\",\n target.as_posix(),\n ]\n\n cls._check_parameter(rev)\n\n args += [\"checkout\", rev]\n\n return cls.run(*args)\n\n @staticmethod\n def run(*args: Any, **kwargs: Any) -> str:\n folder = kwargs.pop(\"folder\", None)\n if folder:\n args = (\n \"--git-dir\",\n (folder / \".git\").as_posix(),\n \"--work-tree\",\n folder.as_posix(),\n ) + args\n\n git_command = find_git_command()\n return (\n subprocess.check_output(git_command + list(args), stderr=subprocess.STDOUT)\n .decode()\n .strip()\n )\n\n @staticmethod\n def _check_parameter(parameter: str) -> None:\n \"\"\"\n Checks a git parameter to avoid unwanted code execution.\n \"\"\"\n if parameter.strip().startswith(\"-\"):\n raise RuntimeError(f\"Invalid Git parameter: {parameter}\")\n", "path": "src/poetry/vcs/git/system.py"}], "after_files": [{"content": "from __future__ import annotations\n\nimport os\nimport subprocess\n\nfrom typing import TYPE_CHECKING\n\nfrom dulwich.client import find_git_command\n\n\nif TYPE_CHECKING:\n from pathlib import Path\n from typing import Any\n\n\nclass SystemGit:\n @classmethod\n def clone(cls, repository: str, dest: Path) -> str:\n 
cls._check_parameter(repository)\n\n return cls.run(\"clone\", \"--recurse-submodules\", \"--\", repository, str(dest))\n\n @classmethod\n def checkout(cls, rev: str, target: Path | None = None) -> str:\n args = []\n\n if target:\n args += [\n \"--git-dir\",\n (target / \".git\").as_posix(),\n \"--work-tree\",\n target.as_posix(),\n ]\n\n cls._check_parameter(rev)\n\n args += [\"checkout\", rev]\n\n return cls.run(*args)\n\n @staticmethod\n def run(*args: Any, **kwargs: Any) -> str:\n folder = kwargs.pop(\"folder\", None)\n if folder:\n args = (\n \"--git-dir\",\n (folder / \".git\").as_posix(),\n \"--work-tree\",\n folder.as_posix(),\n ) + args\n\n git_command = find_git_command()\n env = os.environ.copy()\n env[\"GIT_TERMINAL_PROMPT\"] = \"0\"\n return (\n subprocess.check_output(\n git_command + list(args),\n stderr=subprocess.STDOUT,\n env=env,\n )\n .decode()\n .strip()\n )\n\n @staticmethod\n def _check_parameter(parameter: str) -> None:\n \"\"\"\n Checks a git parameter to avoid unwanted code execution.\n \"\"\"\n if parameter.strip().startswith(\"-\"):\n raise RuntimeError(f\"Invalid Git parameter: {parameter}\")\n", "path": "src/poetry/vcs/git/system.py"}]}

| 1,328 | 189 |

gh_patches_debug_26049

|

rasdani/github-patches

|

git_diff

|

mindee__doctr-384

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[utils] Add visualization capabilities for independent tasks