| problem_id (string, length 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–25.4k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_34765

|

rasdani/github-patches

|

git_diff

|

crytic__slither-1909

|

We are currently solving the following issue within our repository. Here is the issue text:

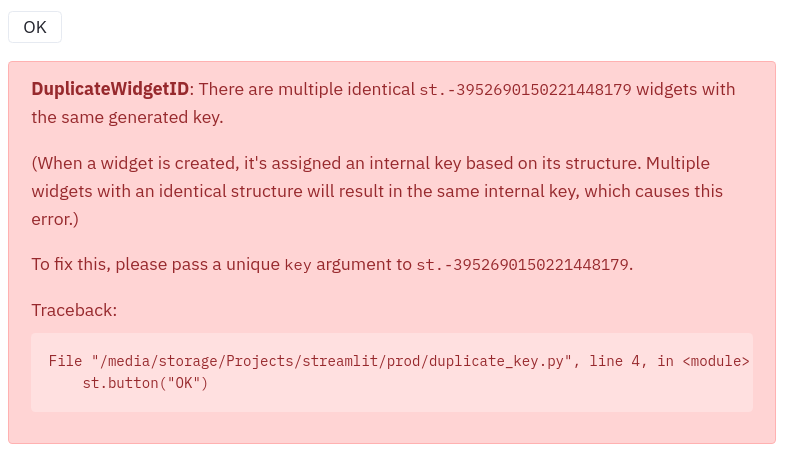

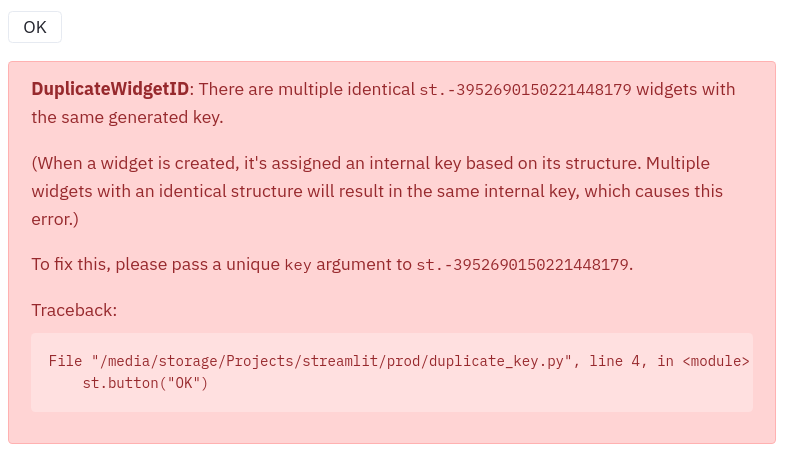

--- BEGIN ISSUE ---

[Bug] contract reports ether as locked when ether is sent in Yul

The following contract reports ether as locked despite it being sent in a Yul block

```
contract FPLockedEther {
    receive() payable external {}

    function yulSendEther() external {
        bool success;
        assembly {
            success := call(gas(), caller(), balance(address()), 0,0,0,0)
        }
    }
}
```

```
Contract locking ether found:
	Contract FPLockedEther (locked-ether.sol#1-13) has payable functions:
	 - FPLockedEther.receive() (locked-ether.sol#2-3)
	But does not have a function to withdraw the ether
Reference: https://github.com/crytic/slither/wiki/Detector-Documentation#contracts-that-lock-ether
```

It could be that the IR is incorrect here as it should not be a `SOLIDITY_CALL`

```
Contract FPLockedEther
	Function FPLockedEther.receive() (*)
	Function FPLockedEther.yulSendEther() (*)
		Expression: success = call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)(gas()(),caller()(),balance(uint256)(address()()),0,0,0,0)
		IRs:
			TMP_0(uint256) = SOLIDITY_CALL gas()()
			TMP_1(address) := msg.sender(address)
			TMP_2 = CONVERT this to address
			TMP_3(uint256) = SOLIDITY_CALL balance(uint256)(TMP_2)
			TMP_4(uint256) = SOLIDITY_CALL call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)(TMP_0,TMP_1,TMP_3,0,0,0,0)
			success(bool) := TMP_4(uint256)
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `slither/detectors/attributes/locked_ether.py`

Content:

```
1 """
2     Check if ethers are locked in the contract
3 """
4 from typing import List
5
6 from slither.core.declarations.contract import Contract
7 from slither.detectors.abstract_detector import (
8     AbstractDetector,
9     DetectorClassification,
10     DETECTOR_INFO,
11 )
12 from slither.slithir.operations import (
13     HighLevelCall,
14     LowLevelCall,
15     Send,
16     Transfer,
17     NewContract,
18     LibraryCall,
19     InternalCall,
20 )
21 from slither.utils.output import Output
22
23
24 class LockedEther(AbstractDetector):  # pylint: disable=too-many-nested-blocks
25
26     ARGUMENT = "locked-ether"
27     HELP = "Contracts that lock ether"
28     IMPACT = DetectorClassification.MEDIUM
29     CONFIDENCE = DetectorClassification.HIGH
30
31     WIKI = "https://github.com/crytic/slither/wiki/Detector-Documentation#contracts-that-lock-ether"
32
33     WIKI_TITLE = "Contracts that lock Ether"
34     WIKI_DESCRIPTION = "Contract with a `payable` function, but without a withdrawal capacity."
35
36     # region wiki_exploit_scenario
37     WIKI_EXPLOIT_SCENARIO = """
38 ```solidity
39 pragma solidity 0.4.24;
40 contract Locked{
41     function receive() payable public{
42     }
43 }
44 ```
45 Every Ether sent to `Locked` will be lost."""
46     # endregion wiki_exploit_scenario
47
48     WIKI_RECOMMENDATION = "Remove the payable attribute or add a withdraw function."
49
50     @staticmethod
51     def do_no_send_ether(contract: Contract) -> bool:
52         functions = contract.all_functions_called
53         to_explore = functions
54         explored = []
55         while to_explore:  # pylint: disable=too-many-nested-blocks
56             functions = to_explore
57             explored += to_explore
58             to_explore = []
59             for function in functions:
60                 calls = [c.name for c in function.internal_calls]
61                 if "suicide(address)" in calls or "selfdestruct(address)" in calls:
62                     return False
63                 for node in function.nodes:
64                     for ir in node.irs:
65                         if isinstance(
66                             ir,
67                             (Send, Transfer, HighLevelCall, LowLevelCall, NewContract),
68                         ):
69                             if ir.call_value and ir.call_value != 0:
70                                 return False
71                         if isinstance(ir, (LowLevelCall)):
72                             if ir.function_name in ["delegatecall", "callcode"]:
73                                 return False
74                         # If a new internal call or librarycall
75                         # Add it to the list to explore
76                         # InternalCall if to follow internal call in libraries
77                         if isinstance(ir, (InternalCall, LibraryCall)):
78                             if not ir.function in explored:
79                                 to_explore.append(ir.function)
80
81         return True
82
83     def _detect(self) -> List[Output]:
84         results = []
85
86         for contract in self.compilation_unit.contracts_derived:
87             if contract.is_signature_only():
88                 continue
89             funcs_payable = [function for function in contract.functions if function.payable]
90             if funcs_payable:
91                 if self.do_no_send_ether(contract):
92                     info: DETECTOR_INFO = ["Contract locking ether found:\n"]
93                     info += ["\tContract ", contract, " has payable functions:\n"]
94                     for function in funcs_payable:
95                         info += ["\t - ", function, "\n"]
96                     info += "\tBut does not have a function to withdraw the ether\n"
97
98                     json = self.generate_result(info)
99
100                     results.append(json)
101
102         return results
103
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/slither/detectors/attributes/locked_ether.py b/slither/detectors/attributes/locked_ether.py

--- a/slither/detectors/attributes/locked_ether.py

+++ b/slither/detectors/attributes/locked_ether.py

@@ -3,7 +3,7 @@

"""

from typing import List

-from slither.core.declarations.contract import Contract

+from slither.core.declarations import Contract, SolidityFunction

from slither.detectors.abstract_detector import (

AbstractDetector,

DetectorClassification,

@@ -17,7 +17,9 @@

NewContract,

LibraryCall,

InternalCall,

+ SolidityCall,

)

+from slither.slithir.variables import Constant

from slither.utils.output import Output

@@ -68,8 +70,28 @@

):

if ir.call_value and ir.call_value != 0:

return False

- if isinstance(ir, (LowLevelCall)):

- if ir.function_name in ["delegatecall", "callcode"]:

+ if isinstance(ir, (LowLevelCall)) and ir.function_name in [

+ "delegatecall",

+ "callcode",

+ ]:

+ return False

+ if isinstance(ir, SolidityCall):

+ call_can_send_ether = ir.function in [

+ SolidityFunction(

+ "delegatecall(uint256,uint256,uint256,uint256,uint256,uint256)"

+ ),

+ SolidityFunction(

+ "callcode(uint256,uint256,uint256,uint256,uint256,uint256,uint256)"

+ ),

+ SolidityFunction(

+ "call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)"

+ ),

+ ]

+ nonzero_call_value = call_can_send_ether and (

+ not isinstance(ir.arguments[2], Constant)

+ or ir.arguments[2].value != 0

+ )

+ if nonzero_call_value:

return False

# If a new internal call or librarycall

# Add it to the list to explore

|

{"golden_diff": "diff --git a/slither/detectors/attributes/locked_ether.py b/slither/detectors/attributes/locked_ether.py\n--- a/slither/detectors/attributes/locked_ether.py\n+++ b/slither/detectors/attributes/locked_ether.py\n@@ -3,7 +3,7 @@\n \"\"\"\n from typing import List\n \n-from slither.core.declarations.contract import Contract\n+from slither.core.declarations import Contract, SolidityFunction\n from slither.detectors.abstract_detector import (\n AbstractDetector,\n DetectorClassification,\n@@ -17,7 +17,9 @@\n NewContract,\n LibraryCall,\n InternalCall,\n+ SolidityCall,\n )\n+from slither.slithir.variables import Constant\n from slither.utils.output import Output\n \n \n@@ -68,8 +70,28 @@\n ):\n if ir.call_value and ir.call_value != 0:\n return False\n- if isinstance(ir, (LowLevelCall)):\n- if ir.function_name in [\"delegatecall\", \"callcode\"]:\n+ if isinstance(ir, (LowLevelCall)) and ir.function_name in [\n+ \"delegatecall\",\n+ \"callcode\",\n+ ]:\n+ return False\n+ if isinstance(ir, SolidityCall):\n+ call_can_send_ether = ir.function in [\n+ SolidityFunction(\n+ \"delegatecall(uint256,uint256,uint256,uint256,uint256,uint256)\"\n+ ),\n+ SolidityFunction(\n+ \"callcode(uint256,uint256,uint256,uint256,uint256,uint256,uint256)\"\n+ ),\n+ SolidityFunction(\n+ \"call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)\"\n+ ),\n+ ]\n+ nonzero_call_value = call_can_send_ether and (\n+ not isinstance(ir.arguments[2], Constant)\n+ or ir.arguments[2].value != 0\n+ )\n+ if nonzero_call_value:\n return False\n # If a new internal call or librarycall\n # Add it to the list to explore\n", "issue": "[Bug] contract reports ether as locked when ether is sent in Yul\nThe following contract reports ether as locked despite it being sent in a Yul block\r\n```\r\ncontract FPLockedEther {\r\n receive() payable external {}\r\n\r\n function yulSendEther() external {\r\n bool success;\r\n assembly {\r\n success := call(gas(), caller(), balance(address()), 0,0,0,0)\r\n 
}\r\n }\r\n}\r\n```\r\n```\r\nContract locking ether found:\r\n\tContract FPLockedEther (locked-ether.sol#1-13) has payable functions:\r\n\t - FPLockedEther.receive() (locked-ether.sol#2-3)\r\n\tBut does not have a function to withdraw the ether\r\nReference: https://github.com/crytic/slither/wiki/Detector-Documentation#contracts-that-lock-ether\r\n```\r\n\r\nIt could be that the IR is incorrect here as it should not be a `SOLIDITY_CALL`\r\n```\r\nContract FPLockedEther\r\n\tFunction FPLockedEther.receive() (*)\r\n\tFunction FPLockedEther.yulSendEther() (*)\r\n\t\tExpression: success = call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)(gas()(),caller()(),balance(uint256)(address()()),0,0,0,0)\r\n\t\tIRs:\r\n\t\t\tTMP_0(uint256) = SOLIDITY_CALL gas()()\r\n\t\t\tTMP_1(address) := msg.sender(address)\r\n\t\t\tTMP_2 = CONVERT this to address\r\n\t\t\tTMP_3(uint256) = SOLIDITY_CALL balance(uint256)(TMP_2)\r\n\t\t\tTMP_4(uint256) = SOLIDITY_CALL call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)(TMP_0,TMP_1,TMP_3,0,0,0,0)\r\n\t\t\tsuccess(bool) := TMP_4(uint256)\r\n```\n", "before_files": [{"content": "\"\"\"\n Check if ethers are locked in the contract\n\"\"\"\nfrom typing import List\n\nfrom slither.core.declarations.contract import Contract\nfrom slither.detectors.abstract_detector import (\n AbstractDetector,\n DetectorClassification,\n DETECTOR_INFO,\n)\nfrom slither.slithir.operations import (\n HighLevelCall,\n LowLevelCall,\n Send,\n Transfer,\n NewContract,\n LibraryCall,\n InternalCall,\n)\nfrom slither.utils.output import Output\n\n\nclass LockedEther(AbstractDetector): # pylint: disable=too-many-nested-blocks\n\n ARGUMENT = \"locked-ether\"\n HELP = \"Contracts that lock ether\"\n IMPACT = DetectorClassification.MEDIUM\n CONFIDENCE = DetectorClassification.HIGH\n\n WIKI = \"https://github.com/crytic/slither/wiki/Detector-Documentation#contracts-that-lock-ether\"\n\n WIKI_TITLE = \"Contracts that lock Ether\"\n WIKI_DESCRIPTION = 
\"Contract with a `payable` function, but without a withdrawal capacity.\"\n\n # region wiki_exploit_scenario\n WIKI_EXPLOIT_SCENARIO = \"\"\"\n```solidity\npragma solidity 0.4.24;\ncontract Locked{\n function receive() payable public{\n }\n}\n```\nEvery Ether sent to `Locked` will be lost.\"\"\"\n # endregion wiki_exploit_scenario\n\n WIKI_RECOMMENDATION = \"Remove the payable attribute or add a withdraw function.\"\n\n @staticmethod\n def do_no_send_ether(contract: Contract) -> bool:\n functions = contract.all_functions_called\n to_explore = functions\n explored = []\n while to_explore: # pylint: disable=too-many-nested-blocks\n functions = to_explore\n explored += to_explore\n to_explore = []\n for function in functions:\n calls = [c.name for c in function.internal_calls]\n if \"suicide(address)\" in calls or \"selfdestruct(address)\" in calls:\n return False\n for node in function.nodes:\n for ir in node.irs:\n if isinstance(\n ir,\n (Send, Transfer, HighLevelCall, LowLevelCall, NewContract),\n ):\n if ir.call_value and ir.call_value != 0:\n return False\n if isinstance(ir, (LowLevelCall)):\n if ir.function_name in [\"delegatecall\", \"callcode\"]:\n return False\n # If a new internal call or librarycall\n # Add it to the list to explore\n # InternalCall if to follow internal call in libraries\n if isinstance(ir, (InternalCall, LibraryCall)):\n if not ir.function in explored:\n to_explore.append(ir.function)\n\n return True\n\n def _detect(self) -> List[Output]:\n results = []\n\n for contract in self.compilation_unit.contracts_derived:\n if contract.is_signature_only():\n continue\n funcs_payable = [function for function in contract.functions if function.payable]\n if funcs_payable:\n if self.do_no_send_ether(contract):\n info: DETECTOR_INFO = [\"Contract locking ether found:\\n\"]\n info += [\"\\tContract \", contract, \" has payable functions:\\n\"]\n for function in funcs_payable:\n info += [\"\\t - \", function, \"\\n\"]\n info += \"\\tBut does not have a 
function to withdraw the ether\\n\"\n\n json = self.generate_result(info)\n\n results.append(json)\n\n return results\n", "path": "slither/detectors/attributes/locked_ether.py"}], "after_files": [{"content": "\"\"\"\n Check if ethers are locked in the contract\n\"\"\"\nfrom typing import List\n\nfrom slither.core.declarations import Contract, SolidityFunction\nfrom slither.detectors.abstract_detector import (\n AbstractDetector,\n DetectorClassification,\n DETECTOR_INFO,\n)\nfrom slither.slithir.operations import (\n HighLevelCall,\n LowLevelCall,\n Send,\n Transfer,\n NewContract,\n LibraryCall,\n InternalCall,\n SolidityCall,\n)\nfrom slither.slithir.variables import Constant\nfrom slither.utils.output import Output\n\n\nclass LockedEther(AbstractDetector): # pylint: disable=too-many-nested-blocks\n\n ARGUMENT = \"locked-ether\"\n HELP = \"Contracts that lock ether\"\n IMPACT = DetectorClassification.MEDIUM\n CONFIDENCE = DetectorClassification.HIGH\n\n WIKI = \"https://github.com/crytic/slither/wiki/Detector-Documentation#contracts-that-lock-ether\"\n\n WIKI_TITLE = \"Contracts that lock Ether\"\n WIKI_DESCRIPTION = \"Contract with a `payable` function, but without a withdrawal capacity.\"\n\n # region wiki_exploit_scenario\n WIKI_EXPLOIT_SCENARIO = \"\"\"\n```solidity\npragma solidity 0.4.24;\ncontract Locked{\n function receive() payable public{\n }\n}\n```\nEvery Ether sent to `Locked` will be lost.\"\"\"\n # endregion wiki_exploit_scenario\n\n WIKI_RECOMMENDATION = \"Remove the payable attribute or add a withdraw function.\"\n\n @staticmethod\n def do_no_send_ether(contract: Contract) -> bool:\n functions = contract.all_functions_called\n to_explore = functions\n explored = []\n while to_explore: # pylint: disable=too-many-nested-blocks\n functions = to_explore\n explored += to_explore\n to_explore = []\n for function in functions:\n calls = [c.name for c in function.internal_calls]\n if \"suicide(address)\" in calls or \"selfdestruct(address)\" in calls:\n 
return False\n for node in function.nodes:\n for ir in node.irs:\n if isinstance(\n ir,\n (Send, Transfer, HighLevelCall, LowLevelCall, NewContract),\n ):\n if ir.call_value and ir.call_value != 0:\n return False\n if isinstance(ir, (LowLevelCall)) and ir.function_name in [\n \"delegatecall\",\n \"callcode\",\n ]:\n return False\n if isinstance(ir, SolidityCall):\n call_can_send_ether = ir.function in [\n SolidityFunction(\n \"delegatecall(uint256,uint256,uint256,uint256,uint256,uint256)\"\n ),\n SolidityFunction(\n \"callcode(uint256,uint256,uint256,uint256,uint256,uint256,uint256)\"\n ),\n SolidityFunction(\n \"call(uint256,uint256,uint256,uint256,uint256,uint256,uint256)\"\n ),\n ]\n nonzero_call_value = call_can_send_ether and (\n not isinstance(ir.arguments[2], Constant)\n or ir.arguments[2].value != 0\n )\n if nonzero_call_value:\n return False\n # If a new internal call or librarycall\n # Add it to the list to explore\n # InternalCall if to follow internal call in libraries\n if isinstance(ir, (InternalCall, LibraryCall)):\n if not ir.function in explored:\n to_explore.append(ir.function)\n\n return True\n\n def _detect(self) -> List[Output]:\n results = []\n\n for contract in self.compilation_unit.contracts_derived:\n if contract.is_signature_only():\n continue\n funcs_payable = [function for function in contract.functions if function.payable]\n if funcs_payable:\n if self.do_no_send_ether(contract):\n info: DETECTOR_INFO = [\"Contract locking ether found:\\n\"]\n info += [\"\\tContract \", contract, \" has payable functions:\\n\"]\n for function in funcs_payable:\n info += [\"\\t - \", function, \"\\n\"]\n info += \"\\tBut does not have a function to withdraw the ether\\n\"\n\n json = self.generate_result(info)\n\n results.append(json)\n\n return results\n", "path": "slither/detectors/attributes/locked_ether.py"}]}

| 1,643 | 485 |

gh_patches_debug_22330

|

rasdani/github-patches

|

git_diff

|

comic__grand-challenge.org-1744

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Markdown preview fails CSRF validation checks

Caused by the name change of the CSRF cookie.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `app/grandchallenge/core/widgets.py`

Content:

```
1 from django import forms
2 from markdownx.widgets import AdminMarkdownxWidget, MarkdownxWidget
3
4
5 class JSONEditorWidget(forms.Textarea):
6     template_name = "jsoneditor/jsoneditor_widget.html"
7
8     def __init__(self, schema=None, attrs=None):
9         super().__init__(attrs)
10         self.schema = schema
11
12     def get_context(self, name, value, attrs):
13         context = super().get_context(name, value, attrs)
14         context.update({"schema": self.schema})
15         return context
16
17     class Media:
18         css = {
19             "all": (
20                 "https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.css",
21             )
22         }
23         js = (
24             "https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.js",
25         )
26
27
28 class MarkdownEditorWidget(MarkdownxWidget):
29     class Media(MarkdownxWidget.Media):
30         js = [
31             *MarkdownxWidget.Media.js,
32             "vendor/js/markdown-toolbar-element/index.umd.js",
33         ]
34
35
36 class MarkdownEditorAdminWidget(AdminMarkdownxWidget):
37     class Media(AdminMarkdownxWidget.Media):
38         css = {
39             "all": [
40                 *AdminMarkdownxWidget.Media.css["all"],
41                 "vendor/css/base.min.css",
42                 "vendor/fa/css/all.css",
43             ]
44         }
45         js = [
46             *AdminMarkdownxWidget.Media.js,
47             "vendor/js/markdown-toolbar-element/index.umd.js",
48         ]
49
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/app/grandchallenge/core/widgets.py b/app/grandchallenge/core/widgets.py

--- a/app/grandchallenge/core/widgets.py

+++ b/app/grandchallenge/core/widgets.py

@@ -26,23 +26,29 @@

class MarkdownEditorWidget(MarkdownxWidget):

- class Media(MarkdownxWidget.Media):

- js = [

- *MarkdownxWidget.Media.js,

- "vendor/js/markdown-toolbar-element/index.umd.js",

- ]

+ @property

+ def media(self):

+ return forms.Media(

+ js=(

+ "js/markdownx.js",

+ "vendor/js/markdown-toolbar-element/index.umd.js",

+ )

+ )

class MarkdownEditorAdminWidget(AdminMarkdownxWidget):

- class Media(AdminMarkdownxWidget.Media):

- css = {

- "all": [

- *AdminMarkdownxWidget.Media.css["all"],

- "vendor/css/base.min.css",

- "vendor/fa/css/all.css",

- ]

- }

- js = [

- *AdminMarkdownxWidget.Media.js,

- "vendor/js/markdown-toolbar-element/index.umd.js",

- ]

+ @property

+ def media(self):

+ return forms.Media(

+ css={

+ "all": [

+ *AdminMarkdownxWidget.Media.css["all"],

+ "vendor/css/base.min.css",

+ "vendor/fa/css/all.css",

+ ]

+ },

+ js=[

+ "js/markdownx.js",

+ "vendor/js/markdown-toolbar-element/index.umd.js",

+ ],

+ )

|

{"golden_diff": "diff --git a/app/grandchallenge/core/widgets.py b/app/grandchallenge/core/widgets.py\n--- a/app/grandchallenge/core/widgets.py\n+++ b/app/grandchallenge/core/widgets.py\n@@ -26,23 +26,29 @@\n \n \n class MarkdownEditorWidget(MarkdownxWidget):\n- class Media(MarkdownxWidget.Media):\n- js = [\n- *MarkdownxWidget.Media.js,\n- \"vendor/js/markdown-toolbar-element/index.umd.js\",\n- ]\n+ @property\n+ def media(self):\n+ return forms.Media(\n+ js=(\n+ \"js/markdownx.js\",\n+ \"vendor/js/markdown-toolbar-element/index.umd.js\",\n+ )\n+ )\n \n \n class MarkdownEditorAdminWidget(AdminMarkdownxWidget):\n- class Media(AdminMarkdownxWidget.Media):\n- css = {\n- \"all\": [\n- *AdminMarkdownxWidget.Media.css[\"all\"],\n- \"vendor/css/base.min.css\",\n- \"vendor/fa/css/all.css\",\n- ]\n- }\n- js = [\n- *AdminMarkdownxWidget.Media.js,\n- \"vendor/js/markdown-toolbar-element/index.umd.js\",\n- ]\n+ @property\n+ def media(self):\n+ return forms.Media(\n+ css={\n+ \"all\": [\n+ *AdminMarkdownxWidget.Media.css[\"all\"],\n+ \"vendor/css/base.min.css\",\n+ \"vendor/fa/css/all.css\",\n+ ]\n+ },\n+ js=[\n+ \"js/markdownx.js\",\n+ \"vendor/js/markdown-toolbar-element/index.umd.js\",\n+ ],\n+ )\n", "issue": "Markdown preview fails CSRF validation checks\nCaused by the name change of the CSRF cookie.\n", "before_files": [{"content": "from django import forms\nfrom markdownx.widgets import AdminMarkdownxWidget, MarkdownxWidget\n\n\nclass JSONEditorWidget(forms.Textarea):\n template_name = \"jsoneditor/jsoneditor_widget.html\"\n\n def __init__(self, schema=None, attrs=None):\n super().__init__(attrs)\n self.schema = schema\n\n def get_context(self, name, value, attrs):\n context = super().get_context(name, value, attrs)\n context.update({\"schema\": self.schema})\n return context\n\n class Media:\n css = {\n \"all\": (\n \"https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.css\",\n )\n }\n js = (\n 
\"https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.js\",\n )\n\n\nclass MarkdownEditorWidget(MarkdownxWidget):\n class Media(MarkdownxWidget.Media):\n js = [\n *MarkdownxWidget.Media.js,\n \"vendor/js/markdown-toolbar-element/index.umd.js\",\n ]\n\n\nclass MarkdownEditorAdminWidget(AdminMarkdownxWidget):\n class Media(AdminMarkdownxWidget.Media):\n css = {\n \"all\": [\n *AdminMarkdownxWidget.Media.css[\"all\"],\n \"vendor/css/base.min.css\",\n \"vendor/fa/css/all.css\",\n ]\n }\n js = [\n *AdminMarkdownxWidget.Media.js,\n \"vendor/js/markdown-toolbar-element/index.umd.js\",\n ]\n", "path": "app/grandchallenge/core/widgets.py"}], "after_files": [{"content": "from django import forms\nfrom markdownx.widgets import AdminMarkdownxWidget, MarkdownxWidget\n\n\nclass JSONEditorWidget(forms.Textarea):\n template_name = \"jsoneditor/jsoneditor_widget.html\"\n\n def __init__(self, schema=None, attrs=None):\n super().__init__(attrs)\n self.schema = schema\n\n def get_context(self, name, value, attrs):\n context = super().get_context(name, value, attrs)\n context.update({\"schema\": self.schema})\n return context\n\n class Media:\n css = {\n \"all\": (\n \"https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.css\",\n )\n }\n js = (\n \"https://cdnjs.cloudflare.com/ajax/libs/jsoneditor/5.25.0/jsoneditor.min.js\",\n )\n\n\nclass MarkdownEditorWidget(MarkdownxWidget):\n @property\n def media(self):\n return forms.Media(\n js=(\n \"js/markdownx.js\",\n \"vendor/js/markdown-toolbar-element/index.umd.js\",\n )\n )\n\n\nclass MarkdownEditorAdminWidget(AdminMarkdownxWidget):\n @property\n def media(self):\n return forms.Media(\n css={\n \"all\": [\n *AdminMarkdownxWidget.Media.css[\"all\"],\n \"vendor/css/base.min.css\",\n \"vendor/fa/css/all.css\",\n ]\n },\n js=[\n \"js/markdownx.js\",\n \"vendor/js/markdown-toolbar-element/index.umd.js\",\n ],\n )\n", "path": "app/grandchallenge/core/widgets.py"}]}

| 685 | 355 |

gh_patches_debug_19406

|

rasdani/github-patches

|

git_diff

|

aws-cloudformation__cfn-lint-441

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

E3002 error thrown when using parameter lists

*cfn-lint version cfn-lint 0.8.3

*Description of issue. When using SSM parameters that are type list, the linter doesn't properly recognize them as a list and throws an error E3002

Specifically E3002 Property PreferredAvailabilityZones should be of type List or Parameter should be a list for resource cacheRedisV1

us-east-1.yaml:249:7

The parameter section for this is:

Parameters:

azList:

Type: "AWS::SSM::Parameter::Value<List<String>>"

Description: "The list of AZs from Parameter Store"

Default: '/regionSettings/azList'

The resource is defined as:

cacheForumRedisV1:

Type: AWS::ElastiCache::CacheCluster

Properties:

PreferredAvailabilityZones: !Ref azList

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/cfnlint/rules/resources/properties/Properties.py`

Content:

```

1 """

2 Copyright 2018 Amazon.com, Inc. or its affiliates. All Rights Reserved.

3

4 Permission is hereby granted, free of charge, to any person obtaining a copy of this

5 software and associated documentation files (the "Software"), to deal in the Software

6 without restriction, including without limitation the rights to use, copy, modify,

7 merge, publish, distribute, sublicense, and/or sell copies of the Software, and to

8 permit persons to whom the Software is furnished to do so.

9

10 THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED,

11 INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A

12 PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

13 HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

14 OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE

15 SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

16 """

17 import six

18 from cfnlint import CloudFormationLintRule

19 from cfnlint import RuleMatch

20 import cfnlint.helpers

21

22

23 class Properties(CloudFormationLintRule):

24 """Check Base Resource Configuration"""

25 id = 'E3002'

26 shortdesc = 'Resource properties are valid'

27 description = 'Making sure that resources properties ' + \

28 'are properly configured'

29 source_url = 'https://github.com/awslabs/cfn-python-lint/blob/master/docs/cfn-resource-specification.md#properties'

30 tags = ['resources']

31

32 def __init__(self):

33 super(Properties, self).__init__()

34 self.cfn = {}

35 self.resourcetypes = {}

36 self.propertytypes = {}

37 self.parameternames = {}

38

39 def primitivetypecheck(self, value, primtype, proppath):

40 """

41 Check primitive types.

42 Only check that a primitive type is actual a primitive type:

43 - If its JSON let it go

44 - If its Conditions check each sub path of the condition

45 - If its a object make sure its a valid function and function

46 - If its a list raise an error

47

48 """

49

50 matches = []

51 if isinstance(value, dict) and primtype == 'Json':

52 return matches

53 if isinstance(value, dict):

54 if len(value) == 1:

55 for sub_key, sub_value in value.items():

56 if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:

57 # not erroring on bad Ifs but not need to account for it

58 # so the rule doesn't error out

59 if isinstance(sub_value, list):

60 if len(sub_value) == 3:

61 matches.extend(self.primitivetypecheck(

62 sub_value[1], primtype, proppath + ['Fn::If', 1]))

63 matches.extend(self.primitivetypecheck(

64 sub_value[2], primtype, proppath + ['Fn::If', 2]))

65 elif sub_key not in ['Fn::Base64', 'Fn::GetAtt', 'Fn::GetAZs', 'Fn::ImportValue',

66 'Fn::Join', 'Fn::Split', 'Fn::FindInMap', 'Fn::Select', 'Ref',

67 'Fn::If', 'Fn::Contains', 'Fn::Sub', 'Fn::Cidr']:

68 message = 'Property %s has an illegal function %s' % ('/'.join(map(str, proppath)), sub_key)

69 matches.append(RuleMatch(proppath, message))

70 else:

71 message = 'Property is an object instead of %s at %s' % (primtype, '/'.join(map(str, proppath)))

72 matches.append(RuleMatch(proppath, message))

73 elif isinstance(value, list):

74 message = 'Property should be of type %s not List at %s' % (primtype, '/'.join(map(str, proppath)))

75 matches.append(RuleMatch(proppath, message))

76

77 return matches

78

79 def check_list_for_condition(self, text, prop, parenttype, resourcename, propspec, path):

80 """Checks lists that are a dict for conditions"""

81 matches = []

82 if len(text[prop]) == 1:

83 for sub_key, sub_value in text[prop].items():

84 if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:

85 if len(sub_value) == 3:

86 for if_i, if_v in enumerate(sub_value[1:]):

87 condition_path = path[:] + [sub_key, if_i + 1]

88 if isinstance(if_v, list):

89 for index, item in enumerate(if_v):

90 arrproppath = condition_path[:]

91

92 arrproppath.append(index)

93 matches.extend(self.propertycheck(

94 item, propspec['ItemType'],

95 parenttype, resourcename, arrproppath, False))

96 elif isinstance(if_v, dict):

97 if len(if_v) == 1:

98 for d_k, d_v in if_v.items():

99 if d_k != 'Ref' or d_v != 'AWS::NoValue':

100 message = 'Property {0} should be of type List for resource {1} at {2}'

101 matches.append(

102 RuleMatch(

103 condition_path,

104 message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))

105 else:

106 message = 'Property {0} should be of type List for resource {1} at {2}'

107 matches.append(

108 RuleMatch(

109 condition_path,

110 message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))

111 else:

112 message = 'Property {0} should be of type List for resource {1} at {2}'

113 matches.append(

114 RuleMatch(

115 condition_path,

116 message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))

117

118 else:

119 message = 'Invalid !If condition specified at %s' % ('/'.join(map(str, path)))

120 matches.append(RuleMatch(path, message))

121 else:

122 message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))

123 matches.append(RuleMatch(path, message))

124 else:

125 message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))

126 matches.append(RuleMatch(path, message))

127

128 return matches

129

130 def check_exceptions(self, parenttype, proptype, text):

131 """

132 Checks for exceptions to the spec

133 - Start with handling exceptions for templated code.

134 """

135 templated_exceptions = {

136 'AWS::ApiGateway::RestApi': ['BodyS3Location'],

137 'AWS::Lambda::Function': ['Code'],

138 'AWS::ElasticBeanstalk::ApplicationVersion': ['SourceBundle'],

139 }

140

141 exceptions = templated_exceptions.get(parenttype, [])

142 if proptype in exceptions:

143 if isinstance(text, six.string_types):

144 return True

145

146 return False

147

148 def propertycheck(self, text, proptype, parenttype, resourcename, path, root):

149 """Check individual properties"""

150

151 parameternames = self.parameternames

152 matches = []

153 if root:

154 specs = self.resourcetypes

155 resourcetype = parenttype

156 else:

157 specs = self.propertytypes

158 resourcetype = str.format('{0}.{1}', parenttype, proptype)

159 # Handle tags

160 if resourcetype not in specs:

161 if proptype in specs:

162 resourcetype = proptype

163 else:

164 resourcetype = str.format('{0}.{1}', parenttype, proptype)

165 else:

166 resourcetype = str.format('{0}.{1}', parenttype, proptype)

167

168 resourcespec = specs[resourcetype].get('Properties', {})

169 supports_additional_properties = specs[resourcetype].get('AdditionalProperties', False)

170

171 if text == 'AWS::NoValue':

172 return matches

173 if not isinstance(text, dict):

174 if not self.check_exceptions(parenttype, proptype, text):

175 message = 'Expecting an object at %s' % ('/'.join(map(str, path)))

176 matches.append(RuleMatch(path, message))

177 return matches

178

179 for prop in text:

180 proppath = path[:]

181 proppath.append(prop)

182 if prop not in resourcespec:

183 if prop in cfnlint.helpers.CONDITION_FUNCTIONS:

184 cond_values = self.cfn.get_condition_values(text[prop])

185 for cond_value in cond_values:

186 matches.extend(self.propertycheck(

187 cond_value['Value'], proptype, parenttype, resourcename,

188 proppath + cond_value['Path'], root))

189 elif not supports_additional_properties:

190 message = 'Invalid Property %s' % ('/'.join(map(str, proppath)))

191 matches.append(RuleMatch(proppath, message))

192 else:

193 if 'Type' in resourcespec[prop]:

194 if resourcespec[prop]['Type'] == 'List':

195 if 'PrimitiveItemType' not in resourcespec[prop]:

196 if isinstance(text[prop], list):

197 for index, item in enumerate(text[prop]):

198 arrproppath = proppath[:]

199 arrproppath.append(index)

200 matches.extend(self.propertycheck(

201 item, resourcespec[prop]['ItemType'],

202 parenttype, resourcename, arrproppath, False))

203 elif (isinstance(text[prop], dict)):

204 # A list can be be specific as a Conditional

205 matches.extend(

206 self.check_list_for_condition(

207 text, prop, parenttype, resourcename, resourcespec[prop], proppath)

208 )

209 else:

210 message = 'Property {0} should be of type List for resource {1}'

211 matches.append(

212 RuleMatch(

213 proppath,

214 message.format(prop, resourcename)))

215 else:

216 if isinstance(text[prop], list):

217 primtype = resourcespec[prop]['PrimitiveItemType']

218 for index, item in enumerate(text[prop]):

219 arrproppath = proppath[:]

220 arrproppath.append(index)

221 matches.extend(self.primitivetypecheck(item, primtype, arrproppath))

222 elif isinstance(text[prop], dict):

223 if 'Ref' in text[prop]:

224 ref = text[prop]['Ref']

225 if ref in parameternames:

226 param_type = self.cfn.template['Parameters'][ref]['Type']

227 if param_type:

228 if not param_type.startswith('List<') and not param_type == 'CommaDelimitedList':

229 message = 'Property {0} should be of type List or Parameter should ' \

230 'be a list for resource {1}'

231 matches.append(

232 RuleMatch(

233 proppath,

234 message.format(prop, resourcename)))

235 else:

236 message = 'Property {0} should be of type List for resource {1}'

237 matches.append(

238 RuleMatch(

239 proppath,

240 message.format(prop, resourcename)))

241 else:

242 message = 'Property {0} should be of type List for resource {1}'

243 matches.append(

244 RuleMatch(

245 proppath,

246 message.format(prop, resourcename)))

247 else:

248 if resourcespec[prop]['Type'] not in ['Map']:

249 matches.extend(self.propertycheck(

250 text[prop], resourcespec[prop]['Type'], parenttype,

251 resourcename, proppath, False))

252 elif 'PrimitiveType' in resourcespec[prop]:

253 primtype = resourcespec[prop]['PrimitiveType']

254 matches.extend(self.primitivetypecheck(text[prop], primtype, proppath))

255

256 return matches

257

258 def match(self, cfn):

259 """Check CloudFormation Properties"""

260 matches = []

261 self.cfn = cfn

262

263 resourcespecs = cfnlint.helpers.RESOURCE_SPECS[cfn.regions[0]]

264 self.resourcetypes = resourcespecs['ResourceTypes']

265 self.propertytypes = resourcespecs['PropertyTypes']

266 self.parameternames = self.cfn.get_parameter_names()

267 for resourcename, resourcevalue in cfn.get_resources().items():

268 if 'Properties' in resourcevalue and 'Type' in resourcevalue:

269 resourcetype = resourcevalue.get('Type', None)

270 if resourcetype.startswith('Custom::'):

271 resourcetype = 'AWS::CloudFormation::CustomResource'

272 if resourcetype in self.resourcetypes:

273 path = ['Resources', resourcename, 'Properties']

274 matches.extend(self.propertycheck(

275 resourcevalue.get('Properties', {}), '',

276 resourcetype, resourcename, path, True))

277

278 return matches

279

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/src/cfnlint/rules/resources/properties/Properties.py b/src/cfnlint/rules/resources/properties/Properties.py

--- a/src/cfnlint/rules/resources/properties/Properties.py

+++ b/src/cfnlint/rules/resources/properties/Properties.py

@@ -225,7 +225,7 @@

if ref in parameternames:

param_type = self.cfn.template['Parameters'][ref]['Type']

if param_type:

- if not param_type.startswith('List<') and not param_type == 'CommaDelimitedList':

+ if 'List<' not in param_type and '<List' not in param_type and not param_type == 'CommaDelimitedList':

message = 'Property {0} should be of type List or Parameter should ' \

'be a list for resource {1}'

matches.append(

|

{"golden_diff": "diff --git a/src/cfnlint/rules/resources/properties/Properties.py b/src/cfnlint/rules/resources/properties/Properties.py\n--- a/src/cfnlint/rules/resources/properties/Properties.py\n+++ b/src/cfnlint/rules/resources/properties/Properties.py\n@@ -225,7 +225,7 @@\n if ref in parameternames:\n param_type = self.cfn.template['Parameters'][ref]['Type']\n if param_type:\n- if not param_type.startswith('List<') and not param_type == 'CommaDelimitedList':\n+ if 'List<' not in param_type and '<List' not in param_type and not param_type == 'CommaDelimitedList':\n message = 'Property {0} should be of type List or Parameter should ' \\\n 'be a list for resource {1}'\n matches.append(\n", "issue": "E3002 error thrown when using parameter lists\n*cfn-lint version cfn-lint 0.8.3\r\n\r\n*Description of issue. When using SSM parameters that are type list, the linter doesn't properly recognize them as a list and thows an error E3002 \r\n\r\nSpecifically E3002 Property PreferredAvailabilityZones should be of type List or Parameter should be a list for resource cacheRedisV1\r\nus-east-1.yaml:249:7\r\n\r\nThe parameter section for this is:\r\nParameters:\r\n azList:\r\n Type: \"AWS::SSM::Parameter::Value<List<String>>\"\r\n Description: \"The list of AZs from Parameter Store\"\r\n Default: '/regionSettings/azList'\r\n\r\nThe resource is defined as:\r\ncacheForumRedisV1:\r\n Type: AWS::ElastiCache::CacheCluster\r\n Properties:\r\n PreferredAvailabilityZones: !Ref azList\r\n\n", "before_files": [{"content": "\"\"\"\n Copyright 2018 Amazon.com, Inc. or its affiliates. 
All Rights Reserved.\n\n Permission is hereby granted, free of charge, to any person obtaining a copy of this\n software and associated documentation files (the \"Software\"), to deal in the Software\n without restriction, including without limitation the rights to use, copy, modify,\n merge, publish, distribute, sublicense, and/or sell copies of the Software, and to\n permit persons to whom the Software is furnished to do so.\n\n THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED,\n INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A\n PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT\n HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION\n OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE\n SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.\n\"\"\"\nimport six\nfrom cfnlint import CloudFormationLintRule\nfrom cfnlint import RuleMatch\nimport cfnlint.helpers\n\n\nclass Properties(CloudFormationLintRule):\n \"\"\"Check Base Resource Configuration\"\"\"\n id = 'E3002'\n shortdesc = 'Resource properties are valid'\n description = 'Making sure that resources properties ' + \\\n 'are properly configured'\n source_url = 'https://github.com/awslabs/cfn-python-lint/blob/master/docs/cfn-resource-specification.md#properties'\n tags = ['resources']\n\n def __init__(self):\n super(Properties, self).__init__()\n self.cfn = {}\n self.resourcetypes = {}\n self.propertytypes = {}\n self.parameternames = {}\n\n def primitivetypecheck(self, value, primtype, proppath):\n \"\"\"\n Check primitive types.\n Only check that a primitive type is actual a primitive type:\n - If its JSON let it go\n - If its Conditions check each sub path of the condition\n - If its a object make sure its a valid function and function\n - If its a list raise an error\n\n \"\"\"\n\n matches = []\n if isinstance(value, dict) and primtype == 'Json':\n return 
matches\n if isinstance(value, dict):\n if len(value) == 1:\n for sub_key, sub_value in value.items():\n if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:\n # not erroring on bad Ifs but not need to account for it\n # so the rule doesn't error out\n if isinstance(sub_value, list):\n if len(sub_value) == 3:\n matches.extend(self.primitivetypecheck(\n sub_value[1], primtype, proppath + ['Fn::If', 1]))\n matches.extend(self.primitivetypecheck(\n sub_value[2], primtype, proppath + ['Fn::If', 2]))\n elif sub_key not in ['Fn::Base64', 'Fn::GetAtt', 'Fn::GetAZs', 'Fn::ImportValue',\n 'Fn::Join', 'Fn::Split', 'Fn::FindInMap', 'Fn::Select', 'Ref',\n 'Fn::If', 'Fn::Contains', 'Fn::Sub', 'Fn::Cidr']:\n message = 'Property %s has an illegal function %s' % ('/'.join(map(str, proppath)), sub_key)\n matches.append(RuleMatch(proppath, message))\n else:\n message = 'Property is an object instead of %s at %s' % (primtype, '/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n elif isinstance(value, list):\n message = 'Property should be of type %s not List at %s' % (primtype, '/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n\n return matches\n\n def check_list_for_condition(self, text, prop, parenttype, resourcename, propspec, path):\n \"\"\"Checks lists that are a dict for conditions\"\"\"\n matches = []\n if len(text[prop]) == 1:\n for sub_key, sub_value in text[prop].items():\n if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:\n if len(sub_value) == 3:\n for if_i, if_v in enumerate(sub_value[1:]):\n condition_path = path[:] + [sub_key, if_i + 1]\n if isinstance(if_v, list):\n for index, item in enumerate(if_v):\n arrproppath = condition_path[:]\n\n arrproppath.append(index)\n matches.extend(self.propertycheck(\n item, propspec['ItemType'],\n parenttype, resourcename, arrproppath, False))\n elif isinstance(if_v, dict):\n if len(if_v) == 1:\n for d_k, d_v in if_v.items():\n if d_k != 'Ref' or d_v != 'AWS::NoValue':\n message = 
'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n else:\n message = 'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n else:\n message = 'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n\n else:\n message = 'Invalid !If condition specified at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n else:\n message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n else:\n message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n\n return matches\n\n def check_exceptions(self, parenttype, proptype, text):\n \"\"\"\n Checks for exceptions to the spec\n - Start with handling exceptions for templated code.\n \"\"\"\n templated_exceptions = {\n 'AWS::ApiGateway::RestApi': ['BodyS3Location'],\n 'AWS::Lambda::Function': ['Code'],\n 'AWS::ElasticBeanstalk::ApplicationVersion': ['SourceBundle'],\n }\n\n exceptions = templated_exceptions.get(parenttype, [])\n if proptype in exceptions:\n if isinstance(text, six.string_types):\n return True\n\n return False\n\n def propertycheck(self, text, proptype, parenttype, resourcename, path, root):\n \"\"\"Check individual properties\"\"\"\n\n parameternames = self.parameternames\n matches = []\n if root:\n specs = self.resourcetypes\n resourcetype = parenttype\n else:\n specs = self.propertytypes\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n # Handle tags\n if resourcetype not in specs:\n if proptype in specs:\n resourcetype = 
proptype\n else:\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n else:\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n\n resourcespec = specs[resourcetype].get('Properties', {})\n supports_additional_properties = specs[resourcetype].get('AdditionalProperties', False)\n\n if text == 'AWS::NoValue':\n return matches\n if not isinstance(text, dict):\n if not self.check_exceptions(parenttype, proptype, text):\n message = 'Expecting an object at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n return matches\n\n for prop in text:\n proppath = path[:]\n proppath.append(prop)\n if prop not in resourcespec:\n if prop in cfnlint.helpers.CONDITION_FUNCTIONS:\n cond_values = self.cfn.get_condition_values(text[prop])\n for cond_value in cond_values:\n matches.extend(self.propertycheck(\n cond_value['Value'], proptype, parenttype, resourcename,\n proppath + cond_value['Path'], root))\n elif not supports_additional_properties:\n message = 'Invalid Property %s' % ('/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n else:\n if 'Type' in resourcespec[prop]:\n if resourcespec[prop]['Type'] == 'List':\n if 'PrimitiveItemType' not in resourcespec[prop]:\n if isinstance(text[prop], list):\n for index, item in enumerate(text[prop]):\n arrproppath = proppath[:]\n arrproppath.append(index)\n matches.extend(self.propertycheck(\n item, resourcespec[prop]['ItemType'],\n parenttype, resourcename, arrproppath, False))\n elif (isinstance(text[prop], dict)):\n # A list can be be specific as a Conditional\n matches.extend(\n self.check_list_for_condition(\n text, prop, parenttype, resourcename, resourcespec[prop], proppath)\n )\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n if isinstance(text[prop], list):\n primtype = resourcespec[prop]['PrimitiveItemType']\n for index, item in 
enumerate(text[prop]):\n arrproppath = proppath[:]\n arrproppath.append(index)\n matches.extend(self.primitivetypecheck(item, primtype, arrproppath))\n elif isinstance(text[prop], dict):\n if 'Ref' in text[prop]:\n ref = text[prop]['Ref']\n if ref in parameternames:\n param_type = self.cfn.template['Parameters'][ref]['Type']\n if param_type:\n if not param_type.startswith('List<') and not param_type == 'CommaDelimitedList':\n message = 'Property {0} should be of type List or Parameter should ' \\\n 'be a list for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n if resourcespec[prop]['Type'] not in ['Map']:\n matches.extend(self.propertycheck(\n text[prop], resourcespec[prop]['Type'], parenttype,\n resourcename, proppath, False))\n elif 'PrimitiveType' in resourcespec[prop]:\n primtype = resourcespec[prop]['PrimitiveType']\n matches.extend(self.primitivetypecheck(text[prop], primtype, proppath))\n\n return matches\n\n def match(self, cfn):\n \"\"\"Check CloudFormation Properties\"\"\"\n matches = []\n self.cfn = cfn\n\n resourcespecs = cfnlint.helpers.RESOURCE_SPECS[cfn.regions[0]]\n self.resourcetypes = resourcespecs['ResourceTypes']\n self.propertytypes = resourcespecs['PropertyTypes']\n self.parameternames = self.cfn.get_parameter_names()\n for resourcename, resourcevalue in cfn.get_resources().items():\n if 'Properties' in resourcevalue and 'Type' in resourcevalue:\n resourcetype = resourcevalue.get('Type', None)\n if resourcetype.startswith('Custom::'):\n resourcetype = 'AWS::CloudFormation::CustomResource'\n if resourcetype in self.resourcetypes:\n path = ['Resources', resourcename, 
'Properties']\n matches.extend(self.propertycheck(\n resourcevalue.get('Properties', {}), '',\n resourcetype, resourcename, path, True))\n\n return matches\n", "path": "src/cfnlint/rules/resources/properties/Properties.py"}], "after_files": [{"content": "\"\"\"\n Copyright 2018 Amazon.com, Inc. or its affiliates. All Rights Reserved.\n\n Permission is hereby granted, free of charge, to any person obtaining a copy of this\n software and associated documentation files (the \"Software\"), to deal in the Software\n without restriction, including without limitation the rights to use, copy, modify,\n merge, publish, distribute, sublicense, and/or sell copies of the Software, and to\n permit persons to whom the Software is furnished to do so.\n\n THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED,\n INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A\n PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT\n HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION\n OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE\n SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.\n\"\"\"\nimport six\nfrom cfnlint import CloudFormationLintRule\nfrom cfnlint import RuleMatch\nimport cfnlint.helpers\n\n\nclass Properties(CloudFormationLintRule):\n \"\"\"Check Base Resource Configuration\"\"\"\n id = 'E3002'\n shortdesc = 'Resource properties are valid'\n description = 'Making sure that resources properties ' + \\\n 'are properly configured'\n source_url = 'https://github.com/awslabs/cfn-python-lint/blob/master/docs/cfn-resource-specification.md#properties'\n tags = ['resources']\n\n def __init__(self):\n super(Properties, self).__init__()\n self.cfn = {}\n self.resourcetypes = {}\n self.propertytypes = {}\n self.parameternames = {}\n\n def primitivetypecheck(self, value, primtype, proppath):\n \"\"\"\n Check primitive types.\n Only check that a 
primitive type is actual a primitive type:\n - If its JSON let it go\n - If its Conditions check each sub path of the condition\n - If its a object make sure its a valid function and function\n - If its a list raise an error\n\n \"\"\"\n\n matches = []\n if isinstance(value, dict) and primtype == 'Json':\n return matches\n if isinstance(value, dict):\n if len(value) == 1:\n for sub_key, sub_value in value.items():\n if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:\n # not erroring on bad Ifs but not need to account for it\n # so the rule doesn't error out\n if isinstance(sub_value, list):\n if len(sub_value) == 3:\n matches.extend(self.primitivetypecheck(\n sub_value[1], primtype, proppath + ['Fn::If', 1]))\n matches.extend(self.primitivetypecheck(\n sub_value[2], primtype, proppath + ['Fn::If', 2]))\n elif sub_key not in ['Fn::Base64', 'Fn::GetAtt', 'Fn::GetAZs', 'Fn::ImportValue',\n 'Fn::Join', 'Fn::Split', 'Fn::FindInMap', 'Fn::Select', 'Ref',\n 'Fn::If', 'Fn::Contains', 'Fn::Sub', 'Fn::Cidr']:\n message = 'Property %s has an illegal function %s' % ('/'.join(map(str, proppath)), sub_key)\n matches.append(RuleMatch(proppath, message))\n else:\n message = 'Property is an object instead of %s at %s' % (primtype, '/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n elif isinstance(value, list):\n message = 'Property should be of type %s not List at %s' % (primtype, '/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n\n return matches\n\n def check_list_for_condition(self, text, prop, parenttype, resourcename, propspec, path):\n \"\"\"Checks lists that are a dict for conditions\"\"\"\n matches = []\n if len(text[prop]) == 1:\n for sub_key, sub_value in text[prop].items():\n if sub_key in cfnlint.helpers.CONDITION_FUNCTIONS:\n if len(sub_value) == 3:\n for if_i, if_v in enumerate(sub_value[1:]):\n condition_path = path[:] + [sub_key, if_i + 1]\n if isinstance(if_v, list):\n for index, item in enumerate(if_v):\n 
arrproppath = condition_path[:]\n\n arrproppath.append(index)\n matches.extend(self.propertycheck(\n item, propspec['ItemType'],\n parenttype, resourcename, arrproppath, False))\n elif isinstance(if_v, dict):\n if len(if_v) == 1:\n for d_k, d_v in if_v.items():\n if d_k != 'Ref' or d_v != 'AWS::NoValue':\n message = 'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n else:\n message = 'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n else:\n message = 'Property {0} should be of type List for resource {1} at {2}'\n matches.append(\n RuleMatch(\n condition_path,\n message.format(prop, resourcename, ('/'.join(str(x) for x in condition_path)))))\n\n else:\n message = 'Invalid !If condition specified at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n else:\n message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n else:\n message = 'Property is an object instead of List at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n\n return matches\n\n def check_exceptions(self, parenttype, proptype, text):\n \"\"\"\n Checks for exceptions to the spec\n - Start with handling exceptions for templated code.\n \"\"\"\n templated_exceptions = {\n 'AWS::ApiGateway::RestApi': ['BodyS3Location'],\n 'AWS::Lambda::Function': ['Code'],\n 'AWS::ElasticBeanstalk::ApplicationVersion': ['SourceBundle'],\n }\n\n exceptions = templated_exceptions.get(parenttype, [])\n if proptype in exceptions:\n if isinstance(text, six.string_types):\n return True\n\n return False\n\n def propertycheck(self, text, proptype, parenttype, resourcename, path, root):\n \"\"\"Check individual 
properties\"\"\"\n\n parameternames = self.parameternames\n matches = []\n if root:\n specs = self.resourcetypes\n resourcetype = parenttype\n else:\n specs = self.propertytypes\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n # Handle tags\n if resourcetype not in specs:\n if proptype in specs:\n resourcetype = proptype\n else:\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n else:\n resourcetype = str.format('{0}.{1}', parenttype, proptype)\n\n resourcespec = specs[resourcetype].get('Properties', {})\n supports_additional_properties = specs[resourcetype].get('AdditionalProperties', False)\n\n if text == 'AWS::NoValue':\n return matches\n if not isinstance(text, dict):\n if not self.check_exceptions(parenttype, proptype, text):\n message = 'Expecting an object at %s' % ('/'.join(map(str, path)))\n matches.append(RuleMatch(path, message))\n return matches\n\n for prop in text:\n proppath = path[:]\n proppath.append(prop)\n if prop not in resourcespec:\n if prop in cfnlint.helpers.CONDITION_FUNCTIONS:\n cond_values = self.cfn.get_condition_values(text[prop])\n for cond_value in cond_values:\n matches.extend(self.propertycheck(\n cond_value['Value'], proptype, parenttype, resourcename,\n proppath + cond_value['Path'], root))\n elif not supports_additional_properties:\n message = 'Invalid Property %s' % ('/'.join(map(str, proppath)))\n matches.append(RuleMatch(proppath, message))\n else:\n if 'Type' in resourcespec[prop]:\n if resourcespec[prop]['Type'] == 'List':\n if 'PrimitiveItemType' not in resourcespec[prop]:\n if isinstance(text[prop], list):\n for index, item in enumerate(text[prop]):\n arrproppath = proppath[:]\n arrproppath.append(index)\n matches.extend(self.propertycheck(\n item, resourcespec[prop]['ItemType'],\n parenttype, resourcename, arrproppath, False))\n elif (isinstance(text[prop], dict)):\n # A list can be be specific as a Conditional\n matches.extend(\n self.check_list_for_condition(\n text, prop, parenttype, 
resourcename, resourcespec[prop], proppath)\n )\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n if isinstance(text[prop], list):\n primtype = resourcespec[prop]['PrimitiveItemType']\n for index, item in enumerate(text[prop]):\n arrproppath = proppath[:]\n arrproppath.append(index)\n matches.extend(self.primitivetypecheck(item, primtype, arrproppath))\n elif isinstance(text[prop], dict):\n if 'Ref' in text[prop]:\n ref = text[prop]['Ref']\n if ref in parameternames:\n param_type = self.cfn.template['Parameters'][ref]['Type']\n if param_type:\n if 'List<' not in param_type and '<List' not in param_type and not param_type == 'CommaDelimitedList':\n message = 'Property {0} should be of type List or Parameter should ' \\\n 'be a list for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n message = 'Property {0} should be of type List for resource {1}'\n matches.append(\n RuleMatch(\n proppath,\n message.format(prop, resourcename)))\n else:\n if resourcespec[prop]['Type'] not in ['Map']:\n matches.extend(self.propertycheck(\n text[prop], resourcespec[prop]['Type'], parenttype,\n resourcename, proppath, False))\n elif 'PrimitiveType' in resourcespec[prop]:\n primtype = resourcespec[prop]['PrimitiveType']\n matches.extend(self.primitivetypecheck(text[prop], primtype, proppath))\n\n return matches\n\n def match(self, cfn):\n \"\"\"Check CloudFormation Properties\"\"\"\n matches = []\n self.cfn = cfn\n\n resourcespecs = cfnlint.helpers.RESOURCE_SPECS[cfn.regions[0]]\n self.resourcetypes = resourcespecs['ResourceTypes']\n self.propertytypes = resourcespecs['PropertyTypes']\n self.parameternames = self.cfn.get_parameter_names()\n for resourcename, 
resourcevalue in cfn.get_resources().items():\n if 'Properties' in resourcevalue and 'Type' in resourcevalue:\n resourcetype = resourcevalue.get('Type', None)\n if resourcetype.startswith('Custom::'):\n resourcetype = 'AWS::CloudFormation::CustomResource'\n if resourcetype in self.resourcetypes:\n path = ['Resources', resourcename, 'Properties']\n matches.extend(self.propertycheck(\n resourcevalue.get('Properties', {}), '',\n resourcetype, resourcename, path, True))\n\n return matches\n", "path": "src/cfnlint/rules/resources/properties/Properties.py"}]}

| 3,918 | 184 |

gh_patches_debug_17287

|

rasdani/github-patches

|

git_diff

|

streamlit__streamlit-999

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

PyDeck warning Mapbox API key not set

# Summary

Migrated a deck_gl chart to the PyDeck API. When the page is run, a UserWarning says that the Mapbox API key is not set. The old deck_gl_chart() function does not display the warning.

# Steps to reproduce

1. Get a personal Mapbox token and verify it is set using `streamlit config show`:

```

[mapbox]

# Configure Streamlit to use a custom Mapbox token for elements like st.deck_gl_chart and st.map. If you don't do this you'll be using Streamlit's own token, which has limitations and is not guaranteed to always work. To get a token for yourself, create an account at https://mapbox.com. It's free! (for moderate usage levels)

# Default: "pk.eyJ1IjoidGhpYWdvdCIsImEiOiJjamh3bm85NnkwMng4M3dydnNveWwzeWNzIn0.vCBDzNsEF2uFSFk2AM0WZQ"

# The value below was set in C:\Users\...path...\.streamlit\config.toml

token = "pk.eyJ1Ijoia25......................."

```

2. Run a PyDeck chart; any demo should do.

3. Inspect the shell output of `streamlit run app.py`.

## Expected behavior:

The map displays and no message appears in the shell.

## Actual behavior:

The map displays, but the shell shows a UserWarning:

```

You can now view your Streamlit app in your browser.

URL: http://localhost:8501

c:\apps\anaconda3\envs\ccadash\lib\site-packages\pydeck\bindings\deck.py:82: UserWarning: Mapbox API key is not set. This may impact available features of pydeck.

UserWarning,

```

## Is this a regression?

That is, did this use to work the way you expected in the past?

yes

# Debug info

- Streamlit version:0.53.0

- Python version: 3.7.3

- Using Conda? PipEnv? PyEnv? Pex? Conda

- OS version: Windows 10

- Browser version: Chrome Version 79.0.3945.117 (Official Build) (64-bit)

# Additional information

If needed, add any other context about the problem here. For example, did this bug come from https://discuss.streamlit.io or another site? Link the original source here!

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `lib/streamlit/bootstrap.py`

Content:

```

1 # -*- coding: utf-8 -*-
2 # Copyright 2018-2020 Streamlit Inc.
3 #
4 # Licensed under the Apache License, Version 2.0 (the "License");
5 # you may not use this file except in compliance with the License.
6 # You may obtain a copy of the License at
7 #
8 #     http://www.apache.org/licenses/LICENSE-2.0
9 #
10 # Unless required by applicable law or agreed to in writing, software
11 # distributed under the License is distributed on an "AS IS" BASIS,
12 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
13 # See the License for the specific language governing permissions and
14 # limitations under the License.
15
16 import os
17 import signal
18 import sys
19
20 import click
21 import tornado.ioloop
22
23 from streamlit import config
24 from streamlit import net_util
25 from streamlit import url_util
26 from streamlit import env_util
27 from streamlit import util
28 from streamlit.Report import Report
29 from streamlit.logger import get_logger
30 from streamlit.server.Server import Server
31
32 LOGGER = get_logger(__name__)
33
34 # Wait for 1 second before opening a browser. This gives old tabs a chance to
35 # reconnect.
36 # This must be >= 2 * WebSocketConnection.ts#RECONNECT_WAIT_TIME_MS.
37 BROWSER_WAIT_TIMEOUT_SEC = 1
38
39
40 def _set_up_signal_handler():
41     LOGGER.debug("Setting up signal handler")
42
43     def signal_handler(signal_number, stack_frame):
44         # The server will shut down its threads and stop the ioloop
45         Server.get_current().stop()
46
47     signal.signal(signal.SIGTERM, signal_handler)
48     signal.signal(signal.SIGINT, signal_handler)
49     if sys.platform == "win32":
50         signal.signal(signal.SIGBREAK, signal_handler)
51     else:
52         signal.signal(signal.SIGQUIT, signal_handler)
53
54
55 def _fix_sys_path(script_path):
56     """Add the script's folder to the sys path.
57
58     Python normally does this automatically, but since we exec the script
59     ourselves we need to do it instead.
60     """
61     sys.path.insert(0, os.path.dirname(script_path))
62
63
64 def _fix_matplotlib_crash():
65     """Set Matplotlib backend to avoid a crash.
66
67     The default Matplotlib backend crashes Python on OSX when run on a thread
68     that's not the main thread, so here we set a safer backend as a fix.
69     Users can always disable this behavior by setting the config
70     runner.fixMatplotlib = false.
71
72     This fix is OS-independent. We didn't see a good reason to make this
73     Mac-only. Consistency within Streamlit seemed more important.
74     """
75     if config.get_option("runner.fixMatplotlib"):
76         try:
77             # TODO: a better option may be to set
78             # os.environ["MPLBACKEND"] = "Agg". We'd need to do this towards
79             # the top of __init__.py, before importing anything that imports
80             # pandas (which imports matplotlib). Alternately, we could set
81             # this environment variable in a new entrypoint defined in
82             # setup.py. Both of these introduce additional trickiness: they
83             # need to run without consulting streamlit.config.get_option,
84             # because this would import streamlit, and therefore matplotlib.
85             import matplotlib
86
87             matplotlib.use("Agg")
88         except ImportError:
89             pass
90
91
92 def _fix_tornado_crash():
93     """Set default asyncio policy to be compatible with Tornado 6.
94
95     Tornado 6 (at least) is not compatible with the default
96     asyncio implementation on Windows. So here we
97     pick the older SelectorEventLoopPolicy when the OS is Windows
98     if the known-incompatible default policy is in use.
99
100     This has to happen as early as possible to make it a low priority and
101     overrideable
102
103     See: https://github.com/tornadoweb/tornado/issues/2608
104
105     FIXME: if/when tornado supports the defaults in asyncio,
106     remove and bump tornado requirement for py38
107     """
108     if env_util.IS_WINDOWS and sys.version_info >= (3, 8):
109         import asyncio
110
111         try:
112             from asyncio import (
113                 WindowsProactorEventLoopPolicy,
114                 WindowsSelectorEventLoopPolicy,
115             )
116         except ImportError:
117             pass
118             # Not affected
119         else:
120             if type(asyncio.get_event_loop_policy()) is WindowsProactorEventLoopPolicy:
121                 # WindowsProactorEventLoopPolicy is not compatible with
122                 # Tornado 6 fallback to the pre-3.8 default of Selector
123                 asyncio.set_event_loop_policy(WindowsSelectorEventLoopPolicy())
124
125
126 def _fix_sys_argv(script_path, args):
127     """sys.argv needs to exclude streamlit arguments and parameters
128     and be set to what a user's script may expect.
129     """
130     import sys
131
132     sys.argv = [script_path] + list(args)
133
134
135 def _on_server_start(server):
136     _print_url()
137
138     def maybe_open_browser():
139         if config.get_option("server.headless"):
140             # Don't open browser when in headless mode.
141             return
142
143         if server.browser_is_connected:
144             # Don't auto-open browser if there's already a browser connected.
145             # This can happen if there's an old tab repeatedly trying to
146             # connect, and it happens to success before we launch the browser.
147             return
148
149         if config.is_manually_set("browser.serverAddress"):
150             addr = config.get_option("browser.serverAddress")
151         else:
152             addr = "localhost"
153
154         util.open_browser(Report.get_url(addr))
155
156     # Schedule the browser to open using the IO Loop on the main thread, but
157     # only if no other browser connects within 1s.
158     ioloop = tornado.ioloop.IOLoop.current()
159     ioloop.call_later(BROWSER_WAIT_TIMEOUT_SEC, maybe_open_browser)
160
161
162 def _print_url():
163     title_message = "You can now view your Streamlit app in your browser."
164     named_urls = []
165
166     if config.is_manually_set("browser.serverAddress"):
167         named_urls = [
168             ("URL", Report.get_url(config.get_option("browser.serverAddress")))
169         ]
170
171     elif config.get_option("server.headless"):
172         named_urls = [
173             ("Network URL", Report.get_url(net_util.get_internal_ip())),
174             ("External URL", Report.get_url(net_util.get_external_ip())),
175         ]
176
177     else:
178         named_urls = [
179             ("Local URL", Report.get_url("localhost")),
180             ("Network URL", Report.get_url(net_util.get_internal_ip())),
181         ]
182
183     click.secho("")
184     click.secho(" %s" % title_message, fg="blue", bold=True)
185     click.secho("")
186
187     for url_name, url in named_urls:
188         url_util.print_url(url_name, url)
189
190     click.secho("")
191
192
193 def run(script_path, command_line, args):
194     """Run a script in a separate thread and start a server for the app.
195
196     This starts a blocking ioloop.
197
198     Parameters
199     ----------
200     script_path : str
201     command_line : str
202     args : [str]
203
204     """
205     _fix_sys_path(script_path)
206     _fix_matplotlib_crash()
207     _fix_tornado_crash()
208     _fix_sys_argv(script_path, args)
209
210     # Install a signal handler that will shut down the ioloop
211     # and close all our threads
212     _set_up_signal_handler()
213
214     ioloop = tornado.ioloop.IOLoop.current()
215
216     # Create and start the server.
217     server = Server(ioloop, script_path, command_line)
218     server.start(_on_server_start)
219
220     # (Must com after start(), because this starts a new thread and start() may
221     # call sys.exit() which doesn't kill other threads.
222     server.add_preheated_report_session()
223
224     # Start the ioloop. This function will not return until the
225     # server is shut down.
226     ioloop.start()
227

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/lib/streamlit/bootstrap.py b/lib/streamlit/bootstrap.py
--- a/lib/streamlit/bootstrap.py
+++ b/lib/streamlit/bootstrap.py
@@ -159,6 +159,12 @@
     ioloop.call_later(BROWSER_WAIT_TIMEOUT_SEC, maybe_open_browser)
 
 
+def _fix_pydeck_mapbox_api_warning():
+    """Sets MAPBOX_API_KEY environment variable needed for PyDeck otherwise it will throw an exception"""
+
+    os.environ["MAPBOX_API_KEY"] = config.get_option("mapbox.token")
+
+
 def _print_url():
     title_message = "You can now view your Streamlit app in your browser."
     named_urls = []
@@ -206,6 +212,7 @@
     _fix_matplotlib_crash()
     _fix_tornado_crash()
     _fix_sys_argv(script_path, args)
+    _fix_pydeck_mapbox_api_warning()
 
     # Install a signal handler that will shut down the ioloop
     # and close all our threads

|