[FEEDBACK] Inference Playground

This discussion is dedicated to providing feedback on the Inference Playground and Serverless Inference API.

Visit the playground here: huggingface.co/playground

About the Inference Playground:

The Inference Playground is a user interface for testing our Serverless Inference API with chat models. It lists the available models and lets you adjust each model's settings, try the models in the UI, and copy code snippets.

- To view all available settings, refer to the Serverless Inference for Chat Completion documentation.

- Browse available chat models here.

If you need more usage, you can subscribe to PRO.

| User Tier | Rate Limit |
|---|---|
| Unregistered Users | 1 request per hour |
| Signed-up Users | 50 requests per hour |
| PRO and Enterprise Users | 500 requests per hour |
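As a rough illustration of what these limits mean in practice, the sketch below spaces requests evenly across the hour. The tier labels and the helper name `min_seconds_between_requests` are made up for this example; only the numbers come from the table above.

```python
# Requests-per-hour limits copied from the table above.
# The dictionary keys are illustrative labels, not official tier identifiers.
RATE_LIMITS_PER_HOUR = {
    "unregistered": 1,
    "signed_up": 50,
    "pro_or_enterprise": 500,
}


def min_seconds_between_requests(tier: str) -> float:
    """Return the average spacing that keeps a client under its hourly limit."""
    return 3600 / RATE_LIMITS_PER_HOUR[tier]


for tier in RATE_LIMITS_PER_HOUR:
    print(f"{tier}: one request every {min_seconds_between_requests(tier):.1f} s")
```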

Upcoming Features:

- Continuous UI improvements

- A dedicated UI for function calling

- Support for vision language models

- A feature to easily compare models

Add place to change API keys in Playground.

Yes, I'll try to add this today. Edit: I added it.

Support tool use 🛠️

How can I use this 🤗 InferenceClient with LangChain or LlamaIndex?

What do you mean? This is just a UI for easier testing and getting the code to do Inference on HF models.
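For context, the code the Playground hands you is typically a short `huggingface_hub` call along the lines of the sketch below. This is a minimal illustration, not the exact snippet the UI generates: the model ID is only an example and may not be available to you, `HF_TOKEN` is an assumed environment variable name, and `build_messages` and `chat_once` are hypothetical helper names.

```python
import os


def build_messages(prompt: str) -> list:
    """Wrap a single user prompt in the chat-completion message format."""
    return [{"role": "user", "content": prompt}]


def chat_once(prompt: str, model: str = "meta-llama/Llama-3.2-3B-Instruct") -> str:
    """Send one chat-completion request and return the reply text."""
    # Imported lazily so build_messages works without the package installed.
    from huggingface_hub import InferenceClient

    # HF_TOKEN is an assumed environment variable holding your access token.
    client = InferenceClient(token=os.environ["HF_TOKEN"])
    response = client.chat_completion(
        messages=build_messages(prompt),
        model=model,
        max_tokens=100,
    )
    return response.choices[0].message.content
```

Calling `chat_once("What is the capital of France?")` with a valid token set should return the model's reply as a string. Any framework integration would sit on top of a call like this.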

Being able to use text completion like in Open Web UI would be great.

Testing function calling output would also be much appreciated. Actually calling the functions doesn't make sense in this case, but generating the JSON for the call would be very useful.

I am aware that this probably takes some work, since the chat templates need to be evaluated to implement it, but it would be great even if it only covered popular models (like the recent Llama 3B).
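To make the request concrete: in the OpenAI-style chat-completion format, a model that supports tool use returns a JSON description of the call rather than executing anything. The sketch below defines one tool and checks a hand-written example of such output. `get_weather`, its parameters, `check_tool_call`, and the sample call are all hypothetical, and real models or providers may format their output differently.

```python
import json

# Hypothetical tool definition in the OpenAI-style "tools" format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def check_tool_call(tool: dict, tool_call: dict) -> dict:
    """Return the parsed arguments if the call matches the tool definition."""
    spec = tool["function"]
    call = tool_call["function"]
    if call["name"] != spec["name"]:
        raise ValueError(f"unexpected tool name: {call['name']}")
    args = call["arguments"]
    # Some backends return the arguments as a JSON string, others as an object.
    if isinstance(args, str):
        args = json.loads(args)
    missing = [key for key in spec["parameters"]["required"] if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return args


# Hand-written example of the kind of JSON a model might generate.
sample_call = {
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}
print(check_tool_call(WEATHER_TOOL, sample_call))
```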

> Being able to use text completion like in Open Web UI would be great.

What do you mean? I think this is HuggingChat, no?

> Testing function calling output would also be much appreciated. Actually calling the functions doesn't make sense in this case, but generating the JSON for the call would be very useful.

Yes, we'll add that.

Thanks 🤗

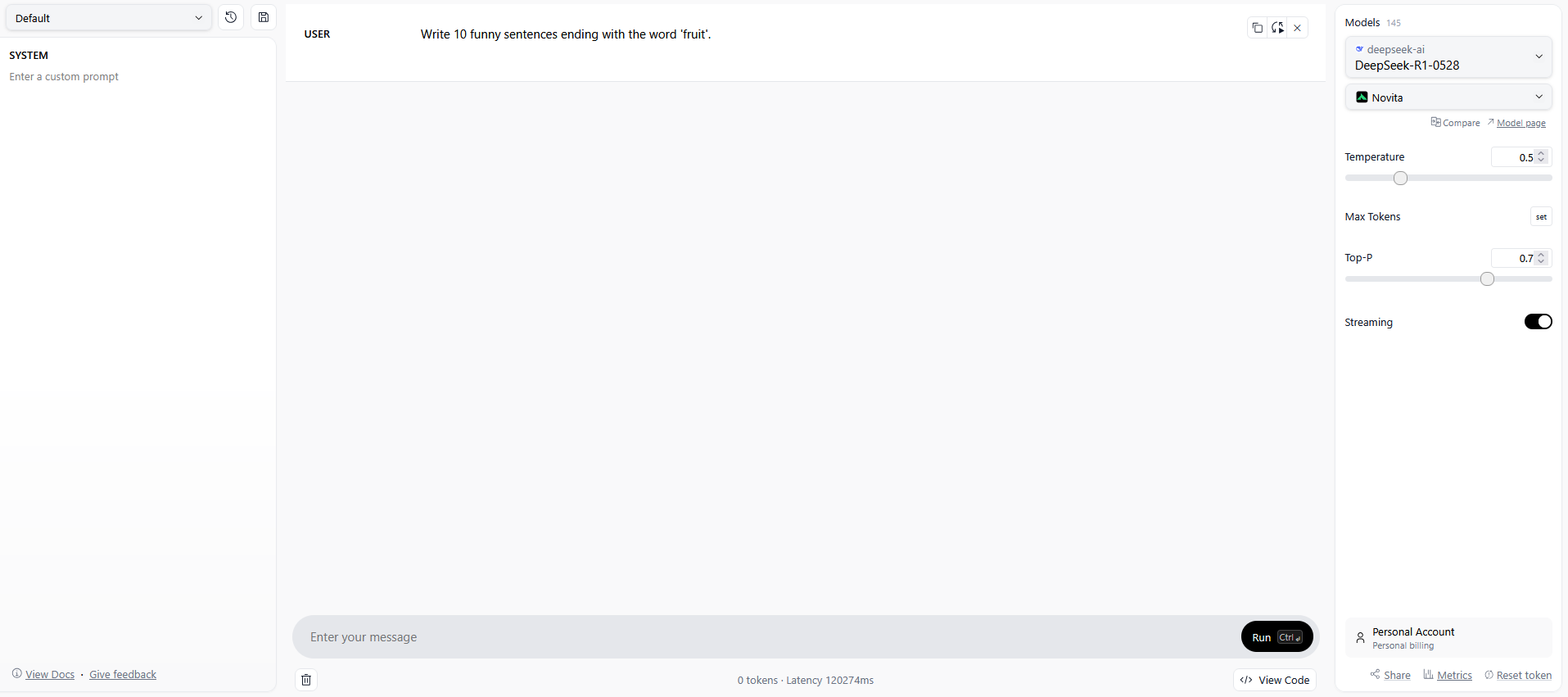

The Playground regularly produces "Error NetworkError when attempting to fetch resource." (0 tokens · Latency 120268ms). It happened three times in the last hour alone, with different models and providers. I can rule out my internet connection or other local issues as the cause.

Also, as a PRO user, I see another, more entertaining error pop up from time to time: "Error Failed to perform inference: Your current plan only allows you to switch models Infinity times per minute. Please try again later or upgrade your plan to increase this limit."

@hideosnes Sorry to hear about that. Do you recall if retrying with the same model and provider after the error worked?

Will also investigate the other error. Thanks for your feedback!

@thomasglopes Also, would it be possible to add support for setting custom parameters (maybe via a JSON input)? For example, K2 is almost unusable without setting the repetition penalty. Thanks 🤗
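One way such a JSON input could work is sketched below: the user's JSON object is parsed and merged into the request payload, with the core fields protected. This is only an illustration of the idea, not how the Playground is implemented. `build_payload` is a hypothetical helper, the value `1.1` is arbitrary, and whether a given provider accepts `repetition_penalty` is an assumption.

```python
import json


def build_payload(model: str, messages: list, custom_params_json: str = "{}") -> dict:
    """Merge user-supplied JSON parameters into a chat-completion payload."""
    custom = json.loads(custom_params_json)
    if not isinstance(custom, dict):
        raise ValueError("custom parameters must be a JSON object")
    # Do not let custom parameters overwrite the core request fields.
    clash = {"model", "messages"}.intersection(custom)
    if clash:
        raise ValueError(f"reserved keys cannot be overridden: {sorted(clash)}")
    return {"model": model, "messages": messages, **custom}


payload = build_payload(
    "some-org/some-model",  # placeholder model ID
    [{"role": "user", "content": "Hello"}],
    '{"repetition_penalty": 1.1}',
)
print(json.dumps(payload, indent=2))
```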

> @hideosnes Sorry to hear about that. Do you recall if retrying with the same model and provider after the error worked?
>
> Will also investigate the other error. Thanks for your feedback!

It's usually a matter of minutes until it works again. The error occurs independently of model or provider, and I tried quite a bit to rule out an issue on my side. In case it helps: a similar error occurs when using huggingface_hub's InferenceClient (I think that's the name, can't remember at the moment -_-) for text-generation tasks (in this case via Colab): "service not available at this time".
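For script or Colab use, a possible workaround for transient failures like this is to retry with exponential backoff. The sketch below is generic and not tied to any particular client: `call_with_retries` is a hypothetical helper, `request` stands for whatever function performs the inference call, and the delays are illustrative (an outage lasting minutes would need longer waits). Real code should catch a narrower exception type than `Exception`.

```python
import time


def call_with_retries(request, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `request()` until it succeeds, backing off exponentially between tries."""
    for attempt in range(max_attempts):
        try:
            return request()
        except Exception:
            # Give up after the final attempt and surface the original error.
            if attempt == max_attempts - 1:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** attempt)
```

The `sleep` parameter exists so the waiting can be replaced in tests.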

@thomasglopes

It is back again with the updated interface.

I am using Safari.

It was only with the Playground; the website was dark.