
---

title: Create a Power BI app offer in Microsoft AppSource

description: Learn how to create and publish a Power BI app offer to Microsoft AppSource.

author: navits09

ms.author: navits

ms.service: marketplace

ms.subservice: partnercenter-marketplace-publisher

ms.topic: how-to

ms.date: 07/22/2020

ms.openlocfilehash: d5eb253fb24f463106866f8b0fe17f634e805cbb

ms.sourcegitcommit: 5f482220a6d994c33c7920f4e4d67d2a450f7f08

ms.translationtype: MT

ms.contentlocale: pt-PT

ms.lasthandoff: 04/08/2021

ms.locfileid: "107107482"

---

# <a name="create-a-power-bi-app-offer"></a>Create a Power BI app offer

This article describes how to create and publish a Power BI app offer to [Microsoft AppSource](https://appsource.microsoft.com/).

Before you start, [create a commercial marketplace account in Partner Center](../create-account.md) if you haven't already. Make sure your account is enrolled in the commercial marketplace program.

## <a name="create-a-new-offer"></a>Create a new offer

1. Sign in to [Partner Center](https://partner.microsoft.com/dashboard/home).

2. In the left-nav menu, select **Commercial Marketplace** > **Overview**.

3. On the Overview page, select **+ New offer** > **Power BI Service App**.

> [!NOTE]
> After an offer is published, edits you make to it in Partner Center appear in online stores only after you republish the offer. Make sure you always republish after making changes.

> [!IMPORTANT]
> If **Power BI Service App** isn't shown or enabled, your account doesn't have permission to create this offer type. Please check that you've met all the [requirements](create-power-bi-app-overview.md) for this offer type, including registering for a developer account.

## <a name="new-offer"></a>New offer

Enter an **Offer ID**. This is a unique identifier for each offer in your account.

- This ID is visible to customers in the web address for the marketplace offer and in Azure Resource Manager templates, if applicable.

- Use only lowercase letters and numbers. The ID can include hyphens and underscores, but no spaces, and is limited to 50 characters. For example, if you enter **test-offer-1** here, the offer web address will be `https://azuremarketplace.microsoft.com/marketplace/../test-offer-1`.

- The Offer ID can't be changed after you select **Create**.
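The Offer ID rules above can be checked locally before you fill in the form. The following is a minimal Python sketch. It assumes the rules are exactly as listed: lowercase ASCII letters, digits, hyphens, and underscores, with at most 50 characters. `is_valid_offer_id` is a hypothetical helper and is not part of any Partner Center API. Partner Center itself may enforce additional rules.

```python
import re

# Hypothetical local check, transcribed from the documented rules:
# lowercase ASCII letters, digits, hyphens and underscores, 1-50 characters.
OFFER_ID_PATTERN = re.compile(r"[a-z0-9_-]{1,50}")


def is_valid_offer_id(offer_id: str) -> bool:
    """Return True if offer_id matches the documented Offer ID rules."""
    # fullmatch requires the whole string to match, so a space or an
    # uppercase letter anywhere makes the ID invalid.
    return OFFER_ID_PATTERN.fullmatch(offer_id) is not None


if __name__ == "__main__":
    for candidate in ["test-offer-1", "Test-Offer-1", "my offer", "a" * 51]:
        print(repr(candidate), is_valid_offer_id(candidate))
```

A regular expression with `fullmatch` keeps the check to one line. The length limit is part of the pattern, so no separate `len()` check is needed.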

Enter an **Offer alias**. This is the name used for the offer in Partner Center.

- This name isn't used in the marketplace and is different from the offer name and other values shown to customers.

- The Offer alias can't be changed after you select **Create**.

Select **Create** to generate the offer and continue.

## <a name="offer-overview"></a>Offer overview

This page shows a visual representation of the steps required to publish this offer (both completed and upcoming) and how long each step should take to complete.

It includes links to perform operations on this offer based on the selection you make. For example:

- If the offer is a draft - Delete draft offer

- If the offer is live - [Stop selling the offer](update-existing-offer.md#stop-selling-an-offer-or-plan)

- If the offer is in preview - [Go-live](../review-publish-offer.md#previewing-and-approving-your-offer)

- If you haven't completed publisher sign-off - [Cancel publishing](../review-publish-offer.md#cancel-publishing)

## <a name="offer-setup"></a>Offer setup

### <a name="customer-leads"></a>Customer leads

When you publish your offer to the marketplace with Partner Center, you should connect it to your Customer Relationship Management (CRM) system. This lets you receive customer contact information as soon as someone expresses interest in or uses your product.

1. Select a lead destination where you want us to send customer leads. Partner Center supports the following CRM systems:

    - [Dynamics 365](commercial-marketplace-lead-management-instructions-dynamics.md) for Customer Engagement
    - [Marketo](commercial-marketplace-lead-management-instructions-marketo.md)
    - [Salesforce](commercial-marketplace-lead-management-instructions-salesforce.md)

    > [!NOTE]
    > If your CRM system isn't listed here, use [Azure Table](commercial-marketplace-lead-management-instructions-azure-table.md) or an [HTTPS endpoint](commercial-marketplace-lead-management-instructions-https.md) to store customer lead data. Then export the data to your CRM system.

2. Connect your offer to the lead destination when publishing in Partner Center.

3. Confirm that the connection to the lead destination is configured properly. After you publish in Partner Center, we'll validate the connection and send you a test lead. While you preview the offer before it goes live, you can also test your lead connection by trying to purchase the offer in the preview environment.

4. Make sure the connection to the lead destination stays up to date so you don't lose any leads.

Here are some additional lead-management resources:

- [Customer leads from your commercial marketplace offer](commercial-marketplace-get-customer-leads.md)

- [Common questions about lead management](../lead-management-faq.md#common-questions-about-lead-management)

- [Troubleshooting lead configuration errors](../lead-management-faq.md#publishing-config-errors)

- [Lead management overview](https://assetsprod.microsoft.com/mpn/cloud-marketplace-lead-management.pdf) PDF (make sure your pop-up blocker is turned off).

Select **Save draft** before continuing.

## <a name="properties"></a>Properties

This page lets you define the categories and industries used to group your offer on the marketplace, your app version, and the legal contracts that support your offer.

### <a name="category"></a>Category

Select categories and subcategories to place your offer in the appropriate marketplace search areas. Be sure to describe how your offer supports these categories in the offer description. Select:

- At least one and up to two categories, including a primary and a secondary category (optional).

- Up to two subcategories for each primary and/or secondary category. If no subcategory applies to your offer, select **Not applicable**.

See the full list of categories and subcategories in [Offer listing best practices](../gtm-offer-listing-best-practices.md).

### <a name="industry"></a>Industry

[!INCLUDE [Industry Taxonomy](./includes/industry-taxonomy.md)]

### <a name="legal"></a>Legal

#### <a name="terms-and-conditions"></a>Terms and conditions

To provide your own custom terms and conditions, enter up to 10,000 characters in the **Terms and conditions** box. Customers must accept these terms before they can try your offer.

Select **Save draft** before continuing to the next section, Offer listing.

## <a name="offer-listing"></a>Offer listing

Here you'll define the offer details that are displayed in the marketplace. This includes the offer name, description, images, and so on.

### <a name="language"></a>Language

Select the language in which your offer will be listed. Currently, **English (United States)** is the only available option.

Define marketplace details (such as offer name, description, and images) for each language/market. Select the language/market name to provide this information.

> [!NOTE]
> Offer details aren't required to be in English if the offer description begins with the phrase: "This application is available only in [non-English language]." It's also OK to provide a Useful Link to offer content in a language other than the one used in the offer listing.

Here's an example of how offer information appears in Microsoft AppSource (any prices listed are for example purposes only and aren't intended to reflect actual costs):

:::image type="content" source="media/example-power-bi-app.png" alt-text="Illustrates how this offer appears in Microsoft AppSource.":::

#### <a name="call-out-descriptions"></a>Call-out descriptions

1. Logo

2. Products

3. Categories

4. Industries

5. Support address (link)

6. Terms of use

7. Privacy policy

8. Offer name

9. Summary

10. Description

11. Screenshots/videos

### <a name="name"></a>Name

The name you enter here displays as the title of your offer. This field is prefilled with the text you entered in the **Offer alias** box when you created the offer. You can change this name later.

The name:

- Can be trademarked (and can include trademark or copyright symbols).

- Must be no more than 50 characters long.

- Can't include emojis.

### <a name="search-results-summary"></a>Search results summary

Provide a short description of your offer. This can be up to 100 characters long and is used in marketplace search results.
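You can check the Name and Search results summary limits above before you submit. Below is a rough Python sketch that makes two assumptions:

- Lengths are counted in Unicode code points.
- "Emoji" is approximated by two common code point ranges. As a result, some emoji will slip through and a few non-emoji symbols will be flagged.

Trademark and copyright symbols (™, ®, ©) fall outside those ranges, so they are allowed, as the text permits. `listing_problems` and `contains_emoji` are hypothetical helpers. Partner Center may count characters differently.

```python
# Approximate emoji detection: an assumption, not the full Unicode emoji set.
EMOJI_RANGES = [
    (0x1F300, 0x1FAFF),  # pictographs, emoticons, transport and map symbols, etc.
    (0x2600, 0x27BF),    # miscellaneous symbols and dingbats
]

NAME_MAX = 50      # "must be no more than 50 characters long"
SUMMARY_MAX = 100  # "can be up to 100 characters long"


def contains_emoji(text: str) -> bool:
    """Return True if any character falls in one of EMOJI_RANGES."""
    return any(lo <= ord(ch) <= hi for ch in text for lo, hi in EMOJI_RANGES)


def listing_problems(name: str, summary: str) -> list[str]:
    """Return human-readable problems with the offer name and summary."""
    problems = []
    if len(name) > NAME_MAX:
        problems.append(f"name exceeds {NAME_MAX} characters")
    if contains_emoji(name):
        problems.append("name contains an emoji")
    if len(summary) > SUMMARY_MAX:
        problems.append(f"summary exceeds {SUMMARY_MAX} characters")
    return problems


if __name__ == "__main__":
    print(listing_problems("Contoso Sales Analytics\u2122", "Sales dashboards for Power BI."))
```

An empty list means neither documented limit was exceeded.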

### <a name="description"></a>Description

[!INCLUDE [Long description-1](./includes/long-description-1.md)]

[!INCLUDE [Long description-2](./includes/long-description-2.md)]

[!INCLUDE [Long description-3](./includes/long-description-3.md)]

### <a name="search-keywords"></a>Search keywords

Enter up to three optional search keywords to help customers find your offer in the marketplace. For best results, also use these keywords in your description.

### <a name="helpprivacy-web-addresses"></a>Help/Privacy web addresses

Provide links to help customers understand your offer better.

#### <a name="help-link"></a>Help link

Enter the web address where customers can learn more about your offer.

#### <a name="privacy-policy-url"></a>Privacy policy URL

Enter the web address for your organization's privacy policy. You're responsible for ensuring your offer complies with privacy laws and regulations. You're also responsible for posting a valid privacy policy on your website.

### <a name="contact-information"></a>Contact information

You must provide the name, email, and phone number for a **Support contact** and an **Engineering contact**. This information isn't shown to customers. It's available to Microsoft and may be provided to Cloud Solution Provider (CSP) partners.

- Support contact (required): For general support questions.

- Engineering contact (required): For technical questions and certification issues.

- CSP Program contact (optional): For reseller questions related to the CSP program.

In the **Support contact** section, provide the web address of the **Support website** where partners can find support for your offer.

### <a name="supporting-documents"></a>Supporting documents

Provide at least one and up to three related marketing documents in PDF format. For example, white papers, brochures, checklists, or presentations.

### <a name="marketplace-images"></a>Marketplace images

Provide logos and images to use with your offer. All images must be in PNG format. Blurry images will be rejected.

[!INCLUDE [logo tips](../includes/graphics-suggestions.md)]

>[!NOTE]
>If you have a problem uploading files, make sure your local network doesn't block the `https://upload.xboxlive.com` service used by Partner Center.

#### <a name="store-logos"></a>Store logos

Provide a PNG file for the **Large** size logo. Partner Center will use it to create a **Small** logo. You can optionally replace this with a different image later.

- **Large** (from 216 x 216 to 350 x 350 px, required)

- **Small** (48 x 48 px, optional)

These logos are used in different places in the listing:

[!INCLUDE [logos-appsource-only](../includes/logos-appsource-only.md)]


#### <a name="screenshots"></a>Screenshots

Add at least one and up to five screenshots that show how your offer works. Each must be 1280 x 720 pixels in size and in PNG format.
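The image size rules in this section can be checked before upload by reading the width and height from a PNG file's IHDR chunk. The rules are:

- Large logo: 216 x 216 to 350 x 350 px
- Small logo: 48 x 48 px
- Screenshots: 1280 x 720 px
- All images: PNG

This is a minimal sketch that uses only the Python standard library. `png_size`, `meets_size_rule`, and the `SIZE_RULES` keys are hypothetical names. The sketch only checks dimensions; it cannot assess image quality (for example, blurriness).

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# (min_width, min_height, max_width, max_height), transcribed from the text above.
SIZE_RULES = {
    "large_logo": (216, 216, 350, 350),
    "small_logo": (48, 48, 48, 48),
    "screenshot": (1280, 720, 1280, 720),
}


def png_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) read from the IHDR chunk of PNG data."""
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte chunk type "IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("data is not a PNG image")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def meets_size_rule(kind: str, data: bytes) -> bool:
    """Return True if the PNG's dimensions fall within SIZE_RULES[kind]."""
    min_w, min_h, max_w, max_h = SIZE_RULES[kind]
    width, height = png_size(data)
    return min_w <= width <= max_w and min_h <= height <= max_h
```

For a file on disk you might call, for example, `meets_size_rule("screenshot", pathlib.Path("screenshot-1.png").read_bytes())`, where the file name is a placeholder. Only the first 24 bytes are inspected, so the check is cheap even for large images.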

#### <a name="videos-optional"></a>Videos (optional)

Add up to five videos that demonstrate your offer. Enter the video's name, its web address, and a PNG thumbnail image of the video at 1280 x 720 pixels in size.

#### <a name="additional-marketplace-listing-resources"></a>Additional marketplace listing resources

To learn more about creating offer listings, see [Offer listing best practices](../gtm-offer-listing-best-practices.md).

## <a name="technical-configuration"></a>Technical configuration

Promote your app in the Power BI service to production and provide the Power BI app installer link that lets customers install your app. For more information, see [Publish apps with dashboards and reports in Power BI](/power-bi/service-create-distribute-apps).

## <a name="supplemental-content"></a>Supplemental content

Provide additional information about your offer to help us validate it. This information isn't shown to customers or published to the marketplace.

### <a name="validation-assets"></a>Validation assets

Optionally, add instructions (up to 3,000 characters) to help the Microsoft validation team configure, connect, and test your app. Include typical configuration settings, accounts, parameters, or other information that can be used to test the Connect Data option. This information is visible only to the validation team and is used only for validation purposes.

## <a name="review-and-publish"></a>Review and publish

After you've completed all required sections of the offer, you can submit your offer for review and publishing.

In the upper-right corner of the portal, select **Review and publish**.

On the review page you can:

- See the completion status for each section of the offer. You can't publish until all sections of the offer are marked as complete.

    - **Not started** - The section hasn't been started and needs to be completed.

    - **Incomplete** - The section has errors that need to be fixed or requires you to provide more information. See the sections earlier in this document for guidance.

    - **Complete** - The section has all required data and there are no errors. All sections of the offer must be complete before you can submit the offer.

- Provide testing instructions to the certification team to ensure your app is tested correctly. Also, provide any supplemental notes that are helpful for understanding your offer.

To submit the offer for publishing, select **Publish**.

We'll send you an email to let you know when a preview version of the offer is available for review and approval. To publish your offer to the public, go to Partner Center and select **Go-live**.



Zig Language Server, or `zls`, is a language server for Zig. The Zig wiki states that "The Zig community is decentralized" and "There is no concept of 'official' or 'unofficial'", so instead of calling `zls` unofficial, I'm going to call it a cool option, one of [many](https://github.com/search?q=zig+language+server).

<!-- omit in toc -->

## Table Of Contents

- [Installation](#installation)
    - [Build Options](#build-options)
    - [Configuration Options](#configuration-options)
- [Usage](#usage)
    - [VSCode](#vscode)
    - [Sublime Text 3](#sublime-text-3)
    - [Kate](#kate)
    - [Neovim/Vim8](#neovimvim8)
    - [Emacs](#emacs)
- [Related Projects](#related-projects)
- [License](#license)

## Installation

Installing `zls` is pretty simple. You will need [a build of Zig master](https://ziglang.org/download/) (or >0.6) to build ZLS.

```bash
git clone --recurse-submodules https://github.com/zigtools/zls
cd zls
zig build
# To configure ZLS:
zig build config
```

### Build Options

| Option | Type | Default Value | What it Does |
| --- | --- | --- | --- |
| `-Ddata_version` | `string` (master or 0.6.0) | 0.6.0 | The data file version. This selects the files in the `src/data` folder that correspond to the Zig version being served. |

Then, you can use the `zls` executable in an editor of your choice that has a Zig language server client!

### Configuration Options

You can configure zls by providing a `zls.json` file.

zls will look for a `zls.json` configuration file in multiple locations, in the following order of priority:

- In the folders open in your workspace (this applies to files in those folders)

- In the local configuration folder of your OS (as provided by [known-folders](https://github.com/ziglibs/known-folders#folder-list))

- In the same directory as the executable
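The lookup order above can be illustrated with a short Python sketch. This is not zls's actual implementation, which is written in Zig; it only models the documented priority. It assumes a workspace `zls.json` applies to a file when that file lies inside the workspace folder. `find_zls_config` and its parameters are hypothetical. In zls the local configuration folder comes from the OS via known-folders, whereas here it is simply passed in.

```python
import tempfile
from pathlib import Path
from typing import Iterable, Optional


def find_zls_config(
    file_path: Path,
    workspace_folders: Iterable[Path],
    local_config_dir: Path,
    executable_dir: Path,
) -> Optional[Path]:
    """Return the highest-priority zls.json that exists, or None."""
    file_path = Path(file_path).resolve()
    candidates = []
    # 1. Workspace folders, but only those that contain the file being edited.
    for folder in workspace_folders:
        folder = Path(folder).resolve()
        if folder in file_path.parents:
            candidates.append(folder / "zls.json")
    # 2. The OS-specific local configuration folder.
    candidates.append(Path(local_config_dir) / "zls.json")
    # 3. The directory containing the zls executable.
    candidates.append(Path(executable_dir) / "zls.json")
    for candidate in candidates:
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        workspace = Path(root, "project")
        config_dir = Path(root, "config")
        workspace.mkdir()
        config_dir.mkdir()
        (config_dir / "zls.json").write_text("{}")
        # No workspace zls.json exists, so the local config folder wins.
        print(find_zls_config(workspace / "main.zig", [workspace], config_dir, Path(root, "bin")))
```

The first existing candidate wins. A file outside every workspace folder therefore falls through to the global locations.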

The following options are currently available.

| Option | Type | Default value | What it Does |
| --- | --- | --- | --- |
| `enable_snippets` | `bool` | `false` | Enables snippet completions when the client also supports them. |
| `zig_lib_path` | `?[]const u8` | `null` | Zig library path, e.g. `/path/to/zig/lib/zig`, used to analyze std library imports. |
| `zig_exe_path` | `?[]const u8` | `null` | Zig executable path, e.g. `/path/to/zig/zig`, used to run the custom build runner. If `null`, zig is looked up in `PATH`. Will be used to infer the Zig standard library path if none is provided. |
| `warn_style` | `bool` | `false` | Enables warnings for style *guideline* mismatches. |
| `build_runner_path` | `?[]const u8` | `null` | Path to the `build_runner.zig` file provided by zls. This option must be present in one of the global configuration files to have any effect. `null` is equivalent to `${executable_directory}/build_runner.zig`. |
| `enable_semantic_tokens` | `bool` | `false` | Enables semantic token support when the client also supports it. |
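The following Python snippet writes out a `zls.json` containing every option from the table above at its default value, plus any overrides you pass. The helper name `make_zls_config` is hypothetical. It rejects unknown keys to catch typos; that is a choice made here, not documented zls behavior. Paths in the example are placeholders.

```python
import json

# Defaults transcribed from the options table above (None becomes JSON null).
ZLS_DEFAULTS = {
    "enable_snippets": False,
    "zig_lib_path": None,
    "zig_exe_path": None,
    "warn_style": False,
    "build_runner_path": None,
    "enable_semantic_tokens": False,
}


def make_zls_config(**overrides) -> str:
    """Return zls.json text with the documented defaults merged with overrides."""
    unknown = set(overrides) - set(ZLS_DEFAULTS)
    if unknown:
        # Catch misspelled option names early; zls's own handling may differ.
        raise KeyError(f"unknown zls option(s): {sorted(unknown)}")
    return json.dumps({**ZLS_DEFAULTS, **overrides}, indent=4)


if __name__ == "__main__":
    print(make_zls_config(enable_snippets=True, zig_exe_path="/path/to/zig/zig"))
```

Save the printed output as `zls.json` in one of the locations zls searches.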

## Usage

`zls` will supercharge your Zig programming experience with autocomplete, function documentation, and more! Follow the instructions for your specific editor below:

### VSCode

Install the `zls-vscode` extension from [here](https://github.com/zigtools/zls-vscode/releases).

### Sublime Text 3

- Install the `LSP` package from [here](https://github.com/sublimelsp/LSP/releases) or via Package Control.

- Add this snippet to `LSP's` user settings:

```json
{
    "clients": {
        "zig": {
            "command": ["zls"],
            "enabled": true,
            "languageId": "zig",
            "scopes": ["source.zig"],
            "syntaxes": ["Packages/Zig Language/Syntaxes/Zig.tmLanguage"]
        }
    }
}
```

### Kate

- Enable `LSP client` plugin in Kate settings.

- Add this snippet to the `LSP client` user settings (e.g. `$HOME/.config/kate/lspclient`), or paste it into the `LSP client` GUI settings:

```json
{
    "servers": {
        "zig": {
            "command": ["zls"],
            "url": "https://github.com/zigtools/zls",
            "highlightingModeRegex": "^Zig$"
        }
    }
}
```

### Neovim/Vim8

- Install the CoC engine from [here](https://github.com/neoclide/coc.nvim).

- Issue `:CocConfig` from within your Vim editor, and add the following snippet:

```json
{
    "languageserver": {
        "zls": {
            "command": "command_or_path_to_zls",
            "filetypes": ["zig"]
        }
    }
}
```

### Emacs

- Install [lsp-mode](https://github.com/emacs-lsp/lsp-mode) from MELPA

- [zig mode](https://github.com/ziglang/zig-mode) is also useful

```elisp
(require 'lsp)
(add-to-list 'lsp-language-id-configuration '(zig-mode . "zig"))
(lsp-register-client
  (make-lsp-client
    :new-connection (lsp-stdio-connection "<path to zls>")
    :major-modes '(zig-mode)
    :server-id 'zls))
```

## Related Projects

- [`sublime-zig-language` by @prime31](https://github.com/prime31/sublime-zig-language)
    - Supports basic language features
    - Uses data provided by `src/data` to perform builtin autocompletion
- [`zig-lsp` by @xackus](https://github.com/xackus/zig-lsp)
    - Inspiration for `zls`
- [`known-folders` by @ziglibs](https://github.com/ziglibs/known-folders)
    - Provides API to access known folders on Linux, Windows and Mac OS

## License

MIT


---

title: "Page at nested section level"

linktitle: "Nested link Title"

date: "2018-08-06"

subtitle: 'I am the subtitle'

description: 'I am the description used at head meta and footer description'

resources:
- name: #header
  src: #victor_hugo.jpg
  title: #Portrait photograph of Victor Hugo
  params:
    license: #"Public Domain"
    original: #"https://commons.wikimedia.org/wiki/File:Victor_Hugo_by_%C3%89tienne_Carjat_1876_-_full.jpg"

translationKey: "page-at-foo-second-level"

---

## Overview

I am an article at `/content/en/foo-first-level-section/foo-second-level/page-at-foo-second-level.md`.

## Lorem ipsum dolor sit amet

Lorem ipsum dolor sit amet, eu eos vitae deseruisse eloquentiam. Ex his nemore dolorem incorrupte, vide omnis facete pro an, cum te summo simul.

## An veri sensibus

An veri sensibus hendrerit vim, duo omnis expetenda at, error numquam expetendis eum ea. No cum simul iriure sensibus, consequuntur conclusionemque cum an.

Admodum rationibus percipitur eos an.


---

title: "Sensu Go media"

linkTitle: "Media"

description: "Looking for resources on Sensu Go? Check out our media guide, which includes a collection of blog posts, videos, tutorials, and podcasts, all covering Sensu Go."

version: "5.11"

weight: 100

product: "Sensu Go"

menu:
  sensu-go-5.11:
    parent: getting-started

---

### Talks

- [Greg Poirier - Sensu Go Deep Dive at Sensu Summit 2017](https://www.youtube.com/watch?v=mfOk0mOfkvA)

- [Greg Poirier - Sensu Go Assets](https://www.youtube.com/watch?v=JNHs4VD_-1M&t=1s)

- [Sean Porter, Influx Days - Data Collection & Prometheus Scraping with Sensu 5.0](https://www.youtube.com/watch?v=vn32Gx8rL4o)

### Blog posts

- [Simon Plourde: Understanding RBAC in Sensu Go](https://blog.sensu.io/understanding-rbac-in-sensu-go)

- [Sean Porter: Self-service monitoring checks in Sensu Go](https://blog.sensu.io/self-service-monitoring-checks-in-sensu-go)

- [Christian Michel - How to monitor 1,000 network devices using Sensu Go and Ansible](https://blog.sensu.io/network-monitoring-tools-sensu-ansible)

- [Eric Chlebek - Filters: valves for the Sensu monitoring event pipeline](https://blog.sensu.io/filters-valves-for-the-sensu-monitoring-event-pipeline)

- [Greg Schofield - Sensu Habitat Core Plans are Here](https://blog.chef.io/2018/08/22/guest-post-sensu-habitat-core-plans-are-here/)

- [Nikki Attea - Check output metric extraction with InfluxDB & Grafana](http://blog.sensu.io/check-output-metric-extraction-with-influxdb-grafana)

- [Jef Spaleta - Migrating to 5.0](https://blog.sensu.io/migrating-to-2.0-the-good-the-bad-the-ugly)

- [Anna Plotkin - Sensu Go is here!](https://blog.sensu.io/sensu-go-is-here)

### Tutorials

- [Sensu sandbox tutorials](../sandbox)

### Podcasts

- [Sensu Community Chat November 2018](https://www.youtube.com/watch?v=5tIPv-rJMZU)

_NOTE: Prior to October 2018, Sensu Go was known as Sensu 2.0._


# zkb-backup

Creates a sqlite backup of killmails known by zkillboard.

# Requirements

Requires the curl and sqlite3 PHP extensions.

# Install

Clone this repository and chdir into it.

Install Composer if you don't already have it. Instructions can be found at https://getcomposer.org/download/

Execute:

    ./composer.phar update

chdir into the `cron` directory and execute `go.php`:

    php go.php

If all is well, you'll see output that includes the fetcher grabbing individual killmails, the RedisQ listener, and the daily fetcher pulling the `killmail_id` values and hashes. The data is stored under `/data/` in the installation directory.

## Cron

Add this entry to your crontab, replacing the `~` with the appropriate location:

    ~/zkb-backup/cron/cron.sh

That's it. Fetching all killmails takes time, so be patient.


# npmtest-hippie

#### basic test coverage for [hippie (v0.5.1)](https://github.com/vesln/hippie)

#### Simple end-to-end API testing

| git-branch : | [alpha](https://github.com/npmtest/node-npmtest-hippie/tree/alpha)|
|--:|:--|
| coverage : | [coverage report](https://npmtest.github.io/node-npmtest-hippie/build/coverage.html/index.html)|
| test-report : | [test report](https://npmtest.github.io/node-npmtest-hippie/build/test-report.html)|
| test-server-github : | [test server](https://npmtest.github.io/node-npmtest-hippie/build/app/index.html)|
| build-artifacts : | [build artifacts](https://github.com/npmtest/node-npmtest-hippie/tree/gh-pages/build)|

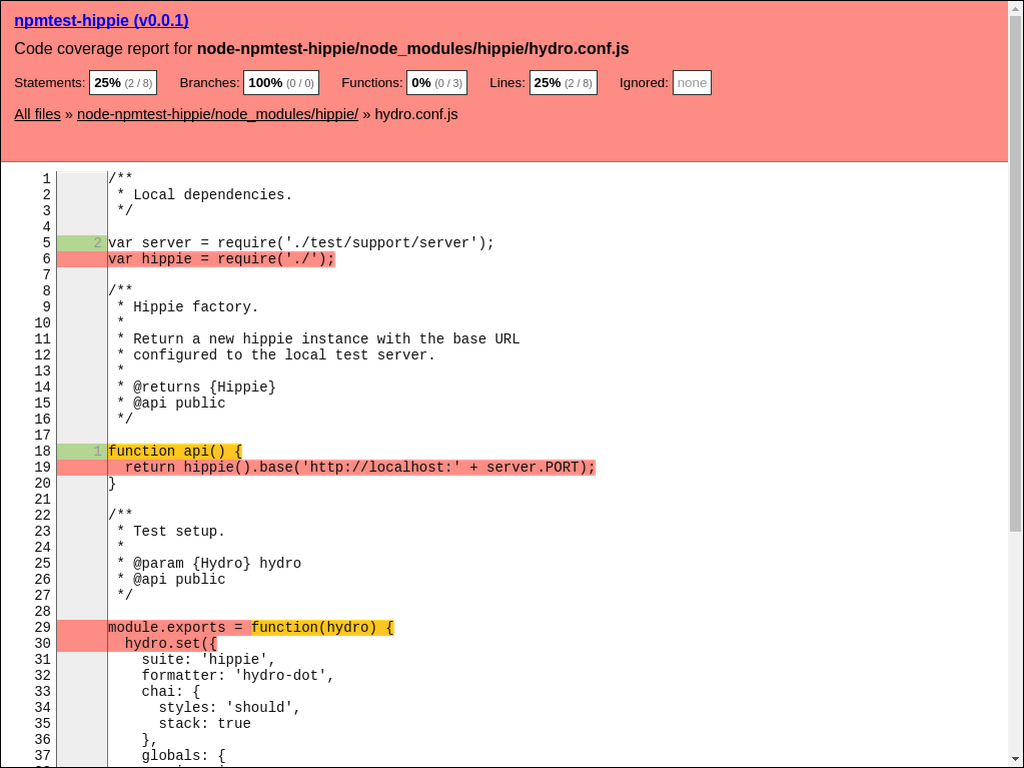

- [https://npmtest.github.io/node-npmtest-hippie/build/coverage.html/index.html](https://npmtest.github.io/node-npmtest-hippie/build/coverage.html/index.html)

[](https://npmtest.github.io/node-npmtest-hippie/build/coverage.html/index.html)

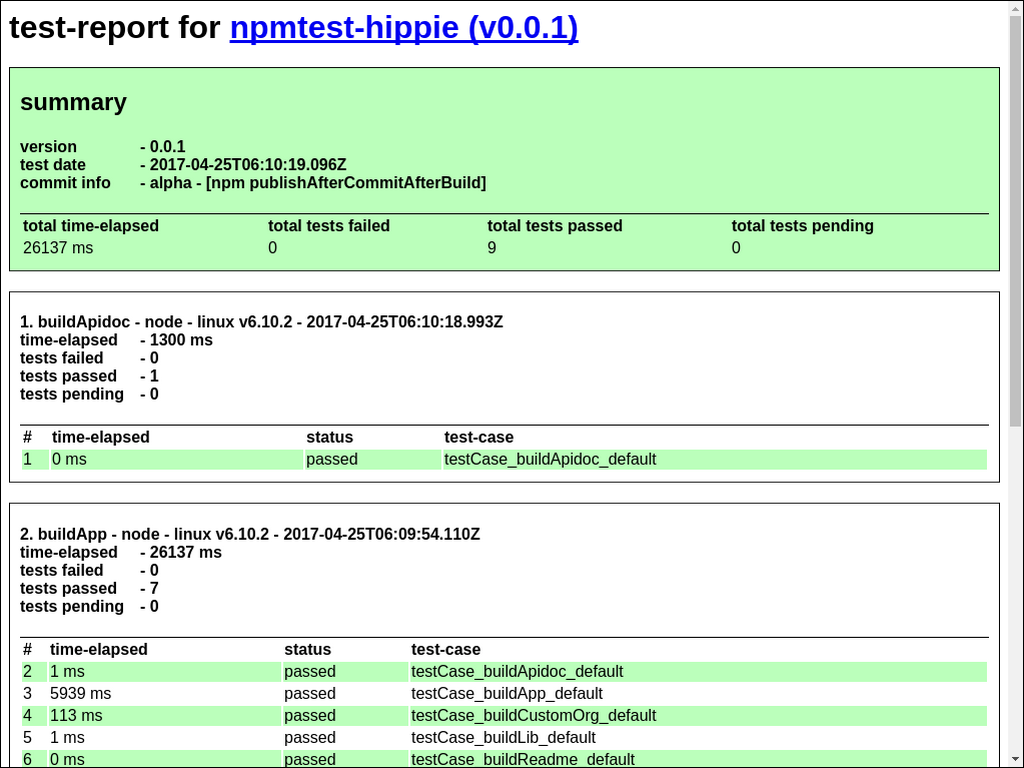

- [https://npmtest.github.io/node-npmtest-hippie/build/test-report.html](https://npmtest.github.io/node-npmtest-hippie/build/test-report.html)

[](https://npmtest.github.io/node-npmtest-hippie/build/test-report.html)

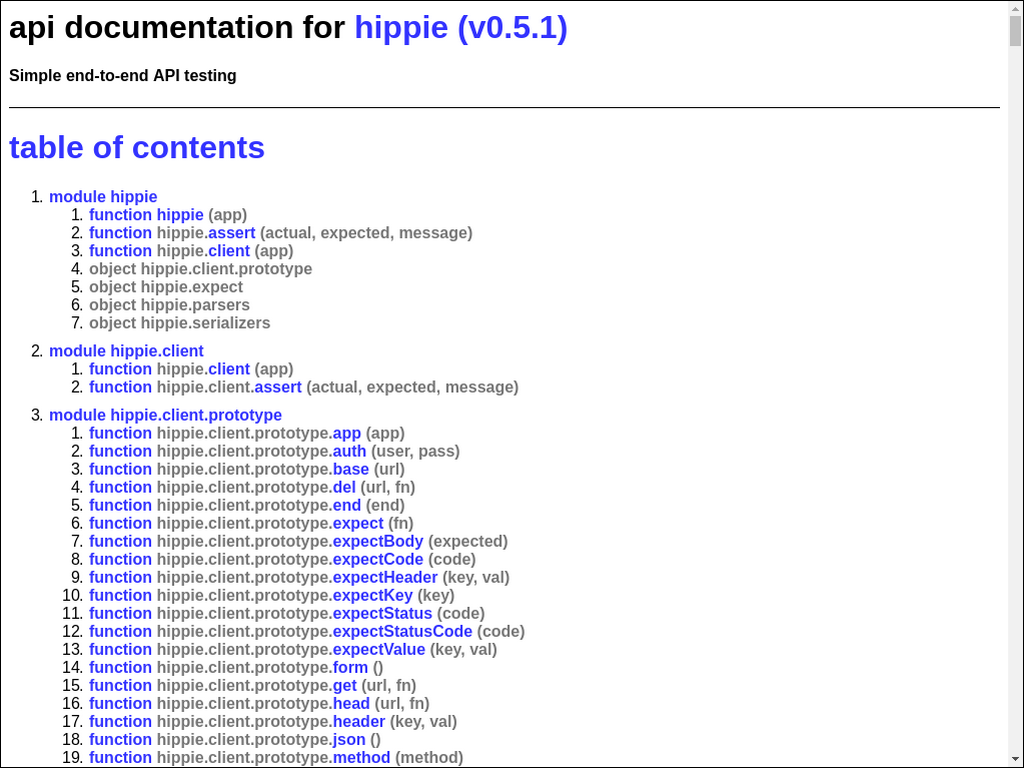

- [https://npmdoc.github.io/node-npmdoc-hippie/build/apidoc.html](https://npmdoc.github.io/node-npmdoc-hippie/build/apidoc.html)

[](https://npmdoc.github.io/node-npmdoc-hippie/build/apidoc.html)

# package.json

```json
{
  "author": {
    "name": "Veselin Todorov"
  },
  "bugs": {
    "url": "https://github.com/vesln/hippie/issues"
  },
  "dependencies": {
    "assertion-error": "~1.0.0",
    "deep-eql": "~0.1.3",
    "es6-promise": "^3.0.2",
    "pathval": "0.0.1",
    "qs": "~0.6.5",
    "request": "~2.74.0"
  },
  "description": "Simple end-to-end API testing",
  "devDependencies": {
    "chai": "~1.8.1",
    "express": "~3.4.4",
    "hydro": "~0.8.7",
    "hydro-bdd": "~0.1.0",
    "hydro-chai": "~0.1.3",
    "hydro-clean-stacks": "~0.1.0",
    "hydro-dot": "~1.0.5",
    "istanbul": "~0.1.44",
    "jshint": "~2.3.0"
  },
  "directories": {},
  "dist": {
    "shasum": "050db4f3b6ee8daa8029abeba6b51e58b6cf1526",
    "tarball": "https://registry.npmjs.org/hippie/-/hippie-0.5.1.tgz"
  },
  "gitHead": "376c7e0985c599162e9f47ba7a33d1bfaa644760",
  "homepage": "https://github.com/vesln/hippie",
  "license": "MIT",
  "main": "./lib/hippie.js",
  "maintainers": [
    {
      "name": "cachecontrol"
    },
    {
      "name": "veselin"
    },
    {
      "name": "vesln"
    }
  ],
  "name": "hippie",
  "optionalDependencies": {},
  "repository": {
    "type": "git",
    "url": "git+https://github.com/vesln/hippie.git"
  },
  "scripts": {
    "coverage": "istanbul cover _hydro",
    "pretest": "jshint .",
    "test": "hydro"
  },
  "version": "0.5.1",
  "bin": {}
}
```

# misc

- this document was created with [utility2](https://github.com/kaizhu256/node-utility2)

<!--- app-name: Harbor -->

# Harbor packaged by Bitnami

Harbor is an open source trusted cloud-native registry to store, sign, and scan content. It adds functionalities like security, identity, and management to the open source Docker distribution.

[Overview of Harbor](https://goharbor.io/)

## TL;DR

```bash
helm repo add bitnami https://charts.bitnami.com/bitnami
helm install my-release bitnami/harbor
```

## Introduction

This [Helm](https://github.com/kubernetes/helm) chart installs [Harbor](https://github.com/goharbor/harbor) in a Kubernetes cluster. Contributions to this Helm chart for Harbor are welcome; see the [contributing guidelines](https://github.com/bitnami/charts/blob/master/CONTRIBUTING.md).

This Helm chart is based on the [goharbor/harbor-helm](https://github.com/goharbor/harbor-helm) chart, but it includes some features common to the Bitnami chart library.

For example, the following changes have been introduced:

- Possibility to pull all the required images from a private registry through the Global Docker image parameters.
- Redis™ and PostgreSQL are managed as chart dependencies.
- Liveness and Readiness probes for all deployments are exposed in `values.yaml`.
- Uses new Helm chart labels formatting.
- Uses Bitnami container images:
  - non-root by default
  - published for debian-10 and ol-7
- This chart supports the optional Harbor components Chartmuseum, Clair and Notary.

## Prerequisites

- Kubernetes 1.19+

- Helm 3.2.0+

- PV provisioner support in the underlying infrastructure

- ReadWriteMany volumes for deployment scaling

## Installing the Chart

Install the Harbor helm chart with a release name `my-release`:

```bash
helm repo add bitnami https://charts.bitnami.com/bitnami
helm install my-release bitnami/harbor
```

## Uninstalling the Chart

To uninstall/delete the `my-release` deployment:

```bash
helm uninstall my-release
```

Additionally, if `persistence.resourcePolicy` is set to `keep`, you should manually delete the PVCs.

## Parameters

### Global parameters

| Name | Description | Value |
| --- | --- | --- |
| `global.imageRegistry` | Global Docker image registry | `""` |
| `global.imagePullSecrets` | Global Docker registry secret names as an array | `[]` |
| `global.storageClass` | Global StorageClass for Persistent Volume(s) | `""` |
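
The following is a minimal sketch of the private-registry option mentioned in the introduction. It writes a values override file that sets the global image parameters. The registry host `registry.example.com`, the secret name `my-registry-secret` and the file name `harbor-global-values.yaml` are hypothetical placeholders. The sketch also assumes the pull secret already exists in the release namespace.

```bash
# Write a hypothetical override file so every chart image is pulled from a private registry.
# registry.example.com and my-registry-secret are placeholders, not chart defaults.
cat > harbor-global-values.yaml <<'EOF'
global:
  imageRegistry: registry.example.com
  imagePullSecrets:
    - my-registry-secret
EOF
```

Pass the file at install time, for example with `helm install my-release bitnami/harbor -f harbor-global-values.yaml`.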

### Common Parameters

| Name | Description | Value |
| --- | --- | --- |
| `nameOverride` | String to partially override common.names.fullname template (will maintain the release name) | `""` |
| `fullnameOverride` | String to fully override common.names.fullname template with a string | `""` |
| `kubeVersion` | Force target Kubernetes version (using Helm capabilities if not set) | `""` |
| `clusterDomain` | Kubernetes Cluster Domain | `cluster.local` |
| `commonAnnotations` | Annotations to add to all deployed objects | `{}` |
| `commonLabels` | Labels to add to all deployed objects | `{}` |
| `extraDeploy` | Array of extra objects to deploy with the release (evaluated as a template) | `[]` |
| `diagnosticMode.enabled` | Enable diagnostic mode (all probes will be disabled and the command will be overridden) | `false` |
| `diagnosticMode.command` | Command to override all containers in the deployment(s)/statefulset(s) | `["sleep"]` |
| `diagnosticMode.args` | Args to override all containers in the deployment(s)/statefulset(s) | `["infinity"]` |

### Harbor common parameters

| Name | Description | Value |
| --- | --- | --- |
| `adminPassword` | The initial password of the Harbor admin. Change it from the portal after launching Harbor | `""` |
| `externalURL` | The external URL for Harbor Core service | `https://core.harbor.domain` |
| `proxy.httpProxy` | The URL of the HTTP proxy server | `""` |
| `proxy.httpsProxy` | The URL of the HTTPS proxy server | `""` |
| `proxy.noProxy` | The URLs that the proxy settings do not apply to | `127.0.0.1,localhost,.local,.internal` |
| `proxy.components` | The component list that the proxy settings apply to | `["core","jobservice","clair","trivy"]` |
| `logLevel` | The log level used for Harbor services. Allowed values are [ fatal \| error \| warn \| info \| debug \| trace ] | `debug` |
| `internalTLS.enabled` | Use TLS in all the supported containers: chartmuseum, clair, core, jobservice, portal, registry and trivy | `false` |
| `internalTLS.caBundleSecret` | Name of an existing secret with a custom CA that will be injected into the trust store for chartmuseum, clair, core, jobservice, registry, trivy components | `""` |
| `ipFamily.ipv6.enabled` | Enable listening on IPv6 ([::]) for NGINX-based components (NGINX, portal) | `true` |
| `ipFamily.ipv4.enabled` | Enable listening on IPv4 for NGINX-based components (NGINX, portal) | `true` |
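
The override file below is a sketch of setting the Harbor common parameters. It sets an initial admin password, sets the external URL that clients will use, and lowers the log level from the default `debug` to `info`. The password, the hostname `harbor.example.com` and the file name are hypothetical placeholders.

```bash
# Hypothetical override file for the Harbor common parameters.
# change-me-please and harbor.example.com are placeholders; replace them before use.
cat > harbor-common-values.yaml <<'EOF'
adminPassword: change-me-please
externalURL: https://harbor.example.com
logLevel: info
EOF
```

As with any values file, it is applied with `helm install my-release bitnami/harbor -f harbor-common-values.yaml`.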

### Traffic Exposure Parameters

| Name | Description | Value |
| --- | --- | --- |
| `exposureType` | The way to expose Harbor. Allowed values are [ ingress \| proxy ] | `proxy` |
| `service.type` | NGINX proxy service type | `LoadBalancer` |
| `service.ports.http` | NGINX proxy service HTTP port | `80` |
| `service.ports.https` | NGINX proxy service HTTPS port | `443` |
| `service.ports.notary` | Notary service port | `4443` |
| `service.nodePorts.http` | Node port for HTTP | `""` |
| `service.nodePorts.https` | Node port for HTTPS | `""` |
| `service.nodePorts.notary` | Node port for Notary | `""` |
| `service.sessionAffinity` | Control where client requests go, to the same pod or round-robin | `None` |
| `service.clusterIP` | NGINX proxy service Cluster IP | `""` |
| `service.loadBalancerIP` | NGINX proxy service Load Balancer IP | `""` |
| `service.loadBalancerSourceRanges` | NGINX proxy service Load Balancer sources | `[]` |
| `service.externalTrafficPolicy` | NGINX proxy service external traffic policy | `Cluster` |
| `service.annotations` | Additional custom annotations for NGINX proxy service | `{}` |
| `service.extraPorts` | Extra ports to expose on NGINX proxy service | `[]` |
| `ingress.core.ingressClassName` | IngressClass that will be used to implement the Ingress (Kubernetes 1.18+) | `""` |
| `ingress.core.pathType` | Ingress path type | `ImplementationSpecific` |
| `ingress.core.apiVersion` | Force Ingress API version (automatically detected if not set) | `""` |
| `ingress.core.controller` | The ingress controller type. Currently supports `default`, `gce` and `ncp` | `default` |
| `ingress.core.hostname` | Default host for the ingress record | `core.harbor.domain` |
| `ingress.core.annotations` | Additional annotations for the Ingress resource. To enable certificate autogeneration, place your cert-manager annotations here. | `{}` |
| `ingress.core.tls` | Enable TLS configuration for the host defined at `ingress.core.hostname` parameter | `false` |
| `ingress.core.selfSigned` | Create a TLS secret for this ingress record using self-signed certificates generated by Helm | `false` |
| `ingress.core.extraHosts` | An array with additional hostname(s) to be covered with the ingress record | `[]` |
| `ingress.core.extraPaths` | An array with additional arbitrary paths that may need to be added to the ingress under the main host | `[]` |
| `ingress.core.extraTls` | TLS configuration for additional hostname(s) to be covered with this ingress record | `[]` |
| `ingress.core.secrets` | Custom TLS certificates as secrets | `[]` |
| `ingress.notary.ingressClassName` | IngressClass that will be used to implement the Ingress (Kubernetes 1.18+) | `""` |
| `ingress.notary.pathType` | Ingress path type | `ImplementationSpecific` |
| `ingress.notary.apiVersion` | Force Ingress API version (automatically detected if not set) | `""` |
| `ingress.notary.controller` | The ingress controller type. Currently supports `default`, `gce` and `ncp` | `default` |
| `ingress.notary.hostname` | Default host for the ingress record | `notary.harbor.domain` |
| `ingress.notary.annotations` | Additional annotations for the Ingress resource. To enable certificate autogeneration, place your cert-manager annotations here. | `{}` |
| `ingress.notary.tls` | Enable TLS configuration for the host defined at `ingress.notary.hostname` parameter | `false` |
| `ingress.notary.selfSigned` | Create a TLS secret for this ingress record using self-signed certificates generated by Helm | `false` |
| `ingress.notary.extraHosts` | An array with additional hostname(s) to be covered with the ingress record | `[]` |
| `ingress.notary.extraPaths` | An array with additional arbitrary paths that may need to be added to the ingress under the main host | `[]` |
| `ingress.notary.extraTls` | TLS configuration for additional hostname(s) to be covered with this ingress record | `[]` |
| `ingress.notary.secrets` | Custom TLS certificates as secrets | `[]` |
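
The following sketch exposes Harbor through an Ingress instead of the default NGINX proxy service. It assumes an ingress controller is already running in the cluster. The hostname `harbor.example.com` is a placeholder. Keeping `externalURL` in line with `ingress.core.hostname` is an assumption made so that the URLs Harbor hands out match the ingress host.

```bash
# Hypothetical override file: expose Harbor via Ingress with a Helm-generated self-signed cert.
# harbor.example.com is a placeholder hostname.
cat > harbor-ingress-values.yaml <<'EOF'
exposureType: ingress
externalURL: https://harbor.example.com
ingress:
  core:
    hostname: harbor.example.com
    tls: true
    selfSigned: true
EOF
```

For a publicly trusted certificate, a reasonable alternative is to leave `selfSigned` unset and add cert-manager annotations under `ingress.core.annotations`, as the table describes.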

### Persistence Parameters

| Name | Description | Value |
| --- | --- | --- |
| `persistence.enabled` | Enable data persistence | `true` |
| `persistence.resourcePolicy` | Set it to `keep` to avoid removing PVCs during a `helm delete` operation. Leaving it empty will delete PVCs after the chart is deleted | `keep` |
| `persistence.persistentVolumeClaim.registry.existingClaim` | Name of an existing PVC to use | `""` |
| `persistence.persistentVolumeClaim.registry.storageClass` | PVC Storage Class for Harbor Registry data volume | `""` |
| `persistence.persistentVolumeClaim.registry.subPath` | The sub path used in the volume | `""` |
| `persistence.persistentVolumeClaim.registry.accessModes` | The access mode of the volume | `["ReadWriteOnce"]` |
| `persistence.persistentVolumeClaim.registry.size` | The size of the volume | `5Gi` |
| `persistence.persistentVolumeClaim.registry.annotations` | Annotations for the PVC | `{}` |
| `persistence.persistentVolumeClaim.registry.selector` | Selector to match an existing Persistent Volume | `{}` |
| `persistence.persistentVolumeClaim.jobservice.existingClaim` | Name of an existing PVC to use | `""` |
| `persistence.persistentVolumeClaim.jobservice.storageClass` | PVC Storage Class for Harbor Jobservice data volume | `""` |
| `persistence.persistentVolumeClaim.jobservice.subPath` | The sub path used in the volume | `""` |
| `persistence.persistentVolumeClaim.jobservice.accessModes` | The access mode of the volume | `["ReadWriteOnce"]` |
| `persistence.persistentVolumeClaim.jobservice.size` | The size of the volume | `1Gi` |
| `persistence.persistentVolumeClaim.jobservice.annotations` | Annotations for the PVC | `{}` |
| `persistence.persistentVolumeClaim.jobservice.selector` | Selector to match an existing Persistent Volume | `{}` |
| `persistence.persistentVolumeClaim.chartmuseum.existingClaim` | Name of an existing PVC to use | `""` |
| `persistence.persistentVolumeClaim.chartmuseum.storageClass` | PVC Storage Class for Chartmuseum data volume | `""` |
| `persistence.persistentVolumeClaim.chartmuseum.subPath` | The sub path used in the volume | `""` |
| `persistence.persistentVolumeClaim.chartmuseum.accessModes` | The access mode of the volume | `["ReadWriteOnce"]` |
| `persistence.persistentVolumeClaim.chartmuseum.size` | The size of the volume | `5Gi` |
| `persistence.persistentVolumeClaim.chartmuseum.annotations` | Annotations for the PVC | `{}` |
| `persistence.persistentVolumeClaim.chartmuseum.selector` | Selector to match an existing Persistent Volume | `{}` |
| `persistence.persistentVolumeClaim.trivy.storageClass` | PVC Storage Class for Trivy data volume | `""` |
| `persistence.persistentVolumeClaim.trivy.accessModes` | The access mode of the volume | `["ReadWriteOnce"]` |
| `persistence.persistentVolumeClaim.trivy.size` | The size of the volume | `5Gi` |
| `persistence.persistentVolumeClaim.trivy.annotations` | Annotations for the PVC | `{}` |
| `persistence.persistentVolumeClaim.trivy.selector` | Selector to match an existing Persistent Volume | `{}` |
| `persistence.imageChartStorage.caBundleSecret` | Specify the `caBundleSecret` if the storage service uses a self-signed certificate. The secret must contain a key named `ca.crt`, which will be injected into the trust store of the registry and chartmuseum containers. | `""` |
| `persistence.imageChartStorage.disableredirect` | The configuration for managing redirects from content backends. For backends that do not support redirects (such as MinIO® for the `s3` storage type), set it to `true` to disable them. Refer to the [guide](https://github.com/docker/distribution/blob/master/docs/configuration.md#redirect) for more details | `false` |
| `persistence.imageChartStorage.type` | The type of storage for images and charts: `filesystem`, `azure`, `gcs`, `s3`, `swift` or `oss`. The type must be `filesystem` if you want to use persistent volumes for registry and chartmuseum. Refer to the [guide](https://github.com/docker/distribution/blob/master/docs/configuration.md#storage) for more details | `filesystem` |
| `persistence.imageChartStorage.filesystem.rootdirectory` | Filesystem storage type setting: Storage root directory | `/storage` |
| `persistence.imageChartStorage.filesystem.maxthreads` | Filesystem storage type setting: Maximum threads | `""` |
| `persistence.imageChartStorage.azure.accountname` | Azure storage type setting: Name of the Azure account | `accountname` |
| `persistence.imageChartStorage.azure.accountkey` | Azure storage type setting: Key of the Azure account | `base64encodedaccountkey` |
| `persistence.imageChartStorage.azure.container` | Azure storage type setting: Container | `containername` |
| `persistence.imageChartStorage.azure.storagePrefix` | Azure storage type setting: Storage prefix | `/azure/harbor/charts` |
| `persistence.imageChartStorage.azure.realm` | Azure storage type setting: Realm of the Azure account | `""` |
| `persistence.imageChartStorage.gcs.bucket` | GCS storage type setting: Bucket name | `bucketname` |
| `persistence.imageChartStorage.gcs.encodedkey` | GCS storage type setting: Base64 encoded key | `base64-encoded-json-key-file` |
| `persistence.imageChartStorage.gcs.rootdirectory` | GCS storage type setting: Root directory | `""` |
| `persistence.imageChartStorage.gcs.chunksize` | GCS storage type setting: Chunk size | `""` |
| `persistence.imageChartStorage.s3.region` | S3 storage type setting: Region | `us-west-1` |
| `persistence.imageChartStorage.s3.bucket` | S3 storage type setting: Bucket name | `bucketname` |
| `persistence.imageChartStorage.s3.accesskey` | S3 storage type setting: Access key | `""` |
| `persistence.imageChartStorage.s3.secretkey` | S3 storage type setting: Secret key | `""` |
| `persistence.imageChartStorage.s3.regionendpoint` | S3 storage type setting: Region endpoint | `""` |
| `persistence.imageChartStorage.s3.encrypt` | S3 storage type setting: Encrypt | `""` |
| `persistence.imageChartStorage.s3.keyid` | S3 storage type setting: Key ID | `""` |
| `persistence.imageChartStorage.s3.secure` | S3 storage type setting: Secure | `""` |
| `persistence.imageChartStorage.s3.skipverify` | S3 storage type setting: TLS skip verification | `""` |
| `persistence.imageChartStorage.s3.v4auth` | S3 storage type setting: V4 authorization | `""` |
| `persistence.imageChartStorage.s3.chunksize` | S3 storage type setting: Chunk size | `""` |
| `persistence.imageChartStorage.s3.rootdirectory` | S3 storage type setting: Root directory | `""` |
| `persistence.imageChartStorage.s3.storageClass` | S3 storage type setting: Storage class | `""` |
| `persistence.imageChartStorage.s3.sse` | S3 storage type setting: SSE | `""` |
| `persistence.imageChartStorage.swift.authurl` | Swift storage type setting: Authentication URL | `https://storage.myprovider.com/v3/auth` |
| `persistence.imageChartStorage.swift.username` | Swift storage type setting: Username | `""` |
| `persistence.imageChartStorage.swift.password` | Swift storage type setting: Password | `""` |
| `persistence.imageChartStorage.swift.container` | Swift storage type setting: Container | `""` |
| `persistence.imageChartStorage.swift.region` | Swift storage type setting: Region | `""` |
| `persistence.imageChartStorage.swift.tenant` | Swift storage type setting: Tenant | `""` |
| `persistence.imageChartStorage.swift.tenantid` | Swift storage type setting: TenantID | `""` |
| `persistence.imageChartStorage.swift.domain` | Swift storage type setting: Domain | `""` |
| `persistence.imageChartStorage.swift.domainid` | Swift storage type setting: DomainID | `""` |
| `persistence.imageChartStorage.swift.trustid` | Swift storage type setting: TrustID | `""` |
| `persistence.imageChartStorage.swift.insecureskipverify` | Swift storage type setting: Skip TLS verification | `""` |
| `persistence.imageChartStorage.swift.chunksize` | Swift storage type setting: Chunk size | `""` |
| `persistence.imageChartStorage.swift.prefix` | Swift storage type setting: Prefix | `""` |
| `persistence.imageChartStorage.swift.secretkey` | Swift storage type setting: Secret key | `""` |
| `persistence.imageChartStorage.swift.accesskey` | Swift storage type setting: Access key | `""` |
| `persistence.imageChartStorage.swift.authversion` | Swift storage type setting: Auth version | `""` |
| `persistence.imageChartStorage.swift.endpointtype` | Swift storage type setting: Endpoint type | `""` |
| `persistence.imageChartStorage.swift.tempurlcontainerkey` | Swift storage type setting: Temp URL container key | `""` |
| `persistence.imageChartStorage.swift.tempurlmethods` | Swift storage type setting: Temp URL methods | `""` |
| `persistence.imageChartStorage.oss.accesskeyid` | OSS storage type setting: Access key ID | `""` |
| `persistence.imageChartStorage.oss.accesskeysecret` | OSS storage type setting: Access key secret name containing the token | `""` |
| `persistence.imageChartStorage.oss.region` | OSS storage type setting: Region name | `""` |
| `persistence.imageChartStorage.oss.bucket` | OSS storage type setting: Bucket name | `""` |
| `persistence.imageChartStorage.oss.endpoint` | OSS storage type setting: Endpoint | `""` |
| `persistence.imageChartStorage.oss.internal` | OSS storage type setting: Internal | `""` |
| `persistence.imageChartStorage.oss.encrypt` | OSS storage type setting: Encrypt | `""` |
| `persistence.imageChartStorage.oss.secure` | OSS storage type setting: Secure | `""` |
| `persistence.imageChartStorage.oss.chunksize` | OSS storage type setting: Chunk size | `""` |
| `persistence.imageChartStorage.oss.rootdirectory` | OSS storage type setting: Root directory | `""` |
| `persistence.imageChartStorage.oss.secretkey` | OSS storage type setting: Secret key | `""` |
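
This sketch switches image and chart storage from the default `filesystem` type to `s3`, using only keys from the table above. It assumes the bucket already exists. The bucket name, region and credentials are placeholders. For an S3-compatible backend such as MinIO®, the table indicates that `regionendpoint` would also need setting, along with `disableredirect: true`.

```bash
# Hypothetical override file: store registry and chartmuseum data in an existing S3 bucket.
# my-harbor-bucket and the REPLACE_WITH_* credentials are placeholders.
cat > harbor-s3-values.yaml <<'EOF'
persistence:
  imageChartStorage:
    type: s3
    s3:
      region: us-west-1
      bucket: my-harbor-bucket
      accesskey: REPLACE_WITH_ACCESS_KEY
      secretkey: REPLACE_WITH_SECRET_KEY
EOF
```

Because `type` is no longer `filesystem`, the registry and chartmuseum PVC settings above would not be used for image and chart data.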

### Volume Permissions parameters

| Name | Description | Value |
| --- | --- | --- |
| `volumePermissions.enabled` | Enable init container that changes the owner and group of the persistent volume | `false` |
| `volumePermissions.image.registry` | Init container volume-permissions image registry | `docker.io` |
| `volumePermissions.image.repository` | Init container volume-permissions image repository | `bitnami/bitnami-shell` |
| `volumePermissions.image.tag` | Init container volume-permissions image tag (immutable tags are recommended) | `10-debian-10-r370` |
| `volumePermissions.image.pullPolicy` | Init container volume-permissions image pull policy | `IfNotPresent` |
| `volumePermissions.image.pullSecrets` | Init container volume-permissions image pull secrets | `[]` |
| `volumePermissions.resources.limits` | Init container volume-permissions resource limits | `{}` |
| `volumePermissions.resources.requests` | Init container volume-permissions resource requests | `{}` |
| `volumePermissions.containerSecurityContext.enabled` | Enable init container Security Context | `true` |
| `volumePermissions.containerSecurityContext.runAsUser` | User ID for the init container | `0` |

### NGINX Parameters

| Name | Description | Value |
| --- | --- | --- |
| `nginx.image.registry` | NGINX image registry | `docker.io` |
| `nginx.image.repository` | NGINX image repository | `bitnami/nginx` |
| `nginx.image.tag` | NGINX image tag (immutable tags are recommended) | `1.21.6-debian-10-r50` |
| `nginx.image.pullPolicy` | NGINX image pull policy | `IfNotPresent` |
| `nginx.image.pullSecrets` | NGINX image pull secrets | `[]` |
| `nginx.image.debug` | Enable NGINX image debug mode | `false` |
| `nginx.tls.enabled` | Enable TLS termination | `true` |
| `nginx.tls.existingSecret` | Existing secret name containing your own TLS certificates | `""` |
| `nginx.tls.commonName` | The common name used to generate the self-signed TLS certificates | `core.harbor.domain` |
| `nginx.behindReverseProxy` | If NGINX is behind another reverse proxy, set to true | `false` |
| `nginx.command` | Override default container command (useful when using custom images) | `[]` |
| `nginx.args` | Override default container args (useful when using custom images) | `[]` |
| `nginx.extraEnvVars` | Array with extra environment variables to add to NGINX pods | `[]` |
| `nginx.extraEnvVarsCM` | ConfigMap containing extra environment variables for NGINX pods | `""` |
| `nginx.extraEnvVarsSecret` | Secret containing extra environment variables (in case of sensitive data) for NGINX pods | `""` |
| `nginx.containerPorts.http` | NGINX HTTP container port | `8080` |
| `nginx.containerPorts.https` | NGINX HTTPS container port | `8443` |
| `nginx.containerPorts.notary` | NGINX container port where Notary svc is exposed | `4443` |
| `nginx.replicaCount` | Number of NGINX replicas | `1` |
| `nginx.livenessProbe.enabled` | Enable livenessProbe on NGINX containers | `true` |
| `nginx.livenessProbe.initialDelaySeconds` | Initial delay seconds for livenessProbe | `20` |
| `nginx.livenessProbe.periodSeconds` | Period seconds for livenessProbe | `10` |
| `nginx.livenessProbe.timeoutSeconds` | Timeout seconds for livenessProbe | `5` |
| `nginx.livenessProbe.failureThreshold` | Failure threshold for livenessProbe | `6` |
| `nginx.livenessProbe.successThreshold` | Success threshold for livenessProbe | `1` |
| `nginx.readinessProbe.enabled` | Enable readinessProbe on NGINX containers | `true` |
| `nginx.readinessProbe.initialDelaySeconds` | Initial delay seconds for readinessProbe | `20` |
| `nginx.readinessProbe.periodSeconds` | Period seconds for readinessProbe | `10` |
| `nginx.readinessProbe.timeoutSeconds` | Timeout seconds for readinessProbe | `5` |
| `nginx.readinessProbe.failureThreshold` | Failure threshold for readinessProbe | `6` |
| `nginx.readinessProbe.successThreshold` | Success threshold for readinessProbe | `1` |
| `nginx.startupProbe.enabled` | Enable startupProbe on NGINX containers | `false` |
| `nginx.startupProbe.initialDelaySeconds` | Initial delay seconds for startupProbe | `10` |
| `nginx.startupProbe.periodSeconds` | Period seconds for startupProbe | `10` |
| `nginx.startupProbe.timeoutSeconds` | Timeout seconds for startupProbe | `1` |
| `nginx.startupProbe.failureThreshold` | Failure threshold for startupProbe | `15` |
| `nginx.startupProbe.successThreshold` | Success threshold for startupProbe | `1` |
| `nginx.customLivenessProbe` | Custom livenessProbe that overrides the default one | `{}` |
| `nginx.customReadinessProbe` | Custom readinessProbe that overrides the default one | `{}` |
| `nginx.customStartupProbe` | Custom startupProbe that overrides the default one | `{}` |
| `nginx.resources.limits` | The resources limits for the NGINX containers | `{}` |
| `nginx.resources.requests` | The requested resources for the NGINX containers | `{}` |
| `nginx.podSecurityContext.enabled` | Enable NGINX pods' Security Context | `true` |
| `nginx.podSecurityContext.fsGroup` | Set NGINX pod's Security Context fsGroup | `1001` |
| `nginx.containerSecurityContext.enabled` | Enable NGINX containers' Security Context | `true` |
| `nginx.containerSecurityContext.runAsUser` | Set NGINX containers' Security Context runAsUser | `1001` |
| `nginx.containerSecurityContext.runAsNonRoot` | Set NGINX containers' Security Context runAsNonRoot | `true` |
| `nginx.updateStrategy.type` | NGINX deployment strategy type (only really applicable for deployments with RWO PVs attached) | `RollingUpdate` |
| `nginx.updateStrategy.rollingUpdate` | NGINX deployment rolling update configuration parameters | `{}` |
| `nginx.lifecycleHooks` | LifecycleHook for the NGINX container(s) to automate configuration before or after startup | `{}` |
| `nginx.hostAliases` | NGINX pods host aliases | `[]` |
| `nginx.podLabels` | Add additional labels to the NGINX pods (evaluated as a template) | `{}` |
| `nginx.podAnnotations` | Annotations to add to the NGINX pods (evaluated as a template) | `{}` |
| `nginx.podAffinityPreset` | NGINX Pod affinity preset. Ignored if `affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `nginx.podAntiAffinityPreset` | NGINX Pod anti-affinity preset. Ignored if `affinity` is set. Allowed values: `soft` or `hard` | `soft` |
| `nginx.nodeAffinityPreset.type` | NGINX Node affinity preset type. Ignored if `affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `nginx.nodeAffinityPreset.key` | NGINX Node label key to match. Ignored if `affinity` is set. | `""` |
| `nginx.nodeAffinityPreset.values` | NGINX Node label values to match. Ignored if `affinity` is set. | `[]` |
| `nginx.affinity` | NGINX Affinity for pod assignment | `{}` |
| `nginx.nodeSelector` | NGINX Node labels for pod assignment | `{}` |
| `nginx.tolerations` | NGINX Tolerations for pod assignment | `[]` |
| `nginx.topologySpreadConstraints` | Topology Spread Constraints for pod assignment spread across your cluster among failure-domains. Evaluated as a template | `{}` |
| `nginx.priorityClassName` | Priority Class Name | `""` |
| `nginx.schedulerName` | Use an alternate scheduler, e.g. "stork". | `""` |
| `nginx.sidecars` | Add additional sidecar containers to the NGINX pods | `[]` |
| `nginx.initContainers` | Add additional init containers to the NGINX pods | `[]` |
| `nginx.extraVolumeMounts` | Optionally specify extra list of additional volumeMounts for the NGINX pods | `[]` |
| `nginx.extraVolumes` | Optionally specify extra list of additional volumes for the NGINX pods | `[]` |
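
As a sketch of combining several NGINX parameters, the override file below runs two proxy replicas and sets explicit resource requests and limits. The CPU and memory figures are illustrative placeholders, not sizing recommendations from the chart authors.

```bash
# Hypothetical override file: scale the NGINX proxy and bound its resources.
# The replica count and resource figures are illustrative only.
cat > harbor-nginx-values.yaml <<'EOF'
nginx:
  replicaCount: 2
  resources:
    requests:
      cpu: 100m
      memory: 128Mi
    limits:
      cpu: 250m
      memory: 256Mi
EOF
```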

### Harbor Portal Parameters

| Name | Description | Value |
| --- | --- | --- |
| `portal.image.registry` | Harbor Portal image registry | `docker.io` |
| `portal.image.repository` | Harbor Portal image repository | `bitnami/harbor-portal` |
| `portal.image.tag` | Harbor Portal image tag (immutable tags are recommended) | `2.4.2-debian-10-r2` |
| `portal.image.pullPolicy` | Harbor Portal image pull policy | `IfNotPresent` |
| `portal.image.pullSecrets` | Harbor Portal image pull secrets | `[]` |
| `portal.image.debug` | Enable Harbor Portal image debug mode | `false` |
| `portal.tls.existingSecret` | Name of an existing secret with the certificates for internal TLS access | `""` |
| `portal.command` | Override default container command (useful when using custom images) | `[]` |
| `portal.args` | Override default container args (useful when using custom images) | `[]` |
| `portal.extraEnvVars` | Array with extra environment variables to add to Harbor Portal pods | `[]` |
| `portal.extraEnvVarsCM` | ConfigMap containing extra environment variables for Harbor Portal pods | `""` |
| `portal.extraEnvVarsSecret` | Secret containing extra environment variables (in case of sensitive data) for Harbor Portal pods | `""` |
| `portal.containerPorts.http` | Harbor Portal HTTP container port | `8080` |
| `portal.containerPorts.https` | Harbor Portal HTTPS container port | `8443` |
| `portal.replicaCount` | Number of Harbor Portal replicas | `1` |
| `portal.livenessProbe.enabled` | Enable livenessProbe on Harbor Portal containers | `true` |
| `portal.livenessProbe.initialDelaySeconds` | Initial delay seconds for livenessProbe | `20` |
| `portal.livenessProbe.periodSeconds` | Period seconds for livenessProbe | `10` |
| `portal.livenessProbe.timeoutSeconds` | Timeout seconds for livenessProbe | `5` |
| `portal.livenessProbe.failureThreshold` | Failure threshold for livenessProbe | `6` |
| `portal.livenessProbe.successThreshold` | Success threshold for livenessProbe | `1` |
| `portal.readinessProbe.enabled` | Enable readinessProbe on Harbor Portal containers | `true` |
| `portal.readinessProbe.initialDelaySeconds` | Initial delay seconds for readinessProbe | `20` |
| `portal.readinessProbe.periodSeconds` | Period seconds for readinessProbe | `10` |
| `portal.readinessProbe.timeoutSeconds` | Timeout seconds for readinessProbe | `5` |
| `portal.readinessProbe.failureThreshold` | Failure threshold for readinessProbe | `6` |
| `portal.readinessProbe.successThreshold` | Success threshold for readinessProbe | `1` |
| `portal.startupProbe.enabled` | Enable startupProbe on Harbor Portal containers | `false` |
| `portal.startupProbe.initialDelaySeconds` | Initial delay seconds for startupProbe | `5` |
| `portal.startupProbe.periodSeconds` | Period seconds for startupProbe | `10` |
| `portal.startupProbe.timeoutSeconds` | Timeout seconds for startupProbe | `1` |
| `portal.startupProbe.failureThreshold` | Failure threshold for startupProbe | `15` |
| `portal.startupProbe.successThreshold` | Success threshold for startupProbe | `1` |
| `portal.customLivenessProbe` | Custom livenessProbe that overrides the default one | `{}` |
| `portal.customReadinessProbe` | Custom readinessProbe that overrides the default one | `{}` |
| `portal.customStartupProbe` | Custom startupProbe that overrides the default one | `{}` |
| `portal.resources.limits` | The resources limits for the Harbor Portal containers | `{}` |
| `portal.resources.requests` | The requested resources for the Harbor Portal containers | `{}` |
| `portal.podSecurityContext.enabled` | Enable Harbor Portal pods' Security Context | `true` |
| `portal.podSecurityContext.fsGroup` | Set Harbor Portal pod's Security Context fsGroup | `1001` |
| `portal.containerSecurityContext.enabled` | Enable Harbor Portal containers' Security Context | `true` |
| `portal.containerSecurityContext.runAsUser` | Set Harbor Portal containers' Security Context runAsUser | `1001` |
| `portal.containerSecurityContext.runAsNonRoot` | Set Harbor Portal containers' Security Context runAsNonRoot | `true` |
| `portal.updateStrategy.type` | Harbor Portal deployment strategy type (only really applicable for deployments with RWO PVs attached) | `RollingUpdate` |
| `portal.updateStrategy.rollingUpdate` | Harbor Portal deployment rolling update configuration parameters | `{}` |
| `portal.lifecycleHooks` | LifecycleHook for the Harbor Portal container(s) to automate configuration before or after startup | `{}` |
| `portal.hostAliases` | Harbor Portal pods host aliases | `[]` |
| `portal.podLabels` | Add additional labels to the Harbor Portal pods (evaluated as a template) | `{}` |
| `portal.podAnnotations` | Annotations to add to the Harbor Portal pods (evaluated as a template) | `{}` |
| `portal.podAffinityPreset` | Harbor Portal Pod affinity preset. Ignored if `portal.affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `portal.podAntiAffinityPreset` | Harbor Portal Pod anti-affinity preset. Ignored if `portal.affinity` is set. Allowed values: `soft` or `hard` | `soft` |
| `portal.nodeAffinityPreset.type` | Harbor Portal Node affinity preset type. Ignored if `portal.affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `portal.nodeAffinityPreset.key` | Harbor Portal Node label key to match. Ignored if `portal.affinity` is set. | `""` |
| `portal.nodeAffinityPreset.values` | Harbor Portal Node label values to match. Ignored if `portal.affinity` is set. | `[]` |
| `portal.affinity` | Harbor Portal Affinity for pod assignment | `{}` |

| `portal.nodeSelector` | Harbor Portal Node labels for pod assignment | `{}` |

| `portal.tolerations` | Harbor Portal Tolerations for pod assignment | `[]` |

| `portal.topologySpreadConstraints` | Topology Spread Constraints for pod assignment spread across your cluster among failure-domains. Evaluated as a template | `{}` |

| `portal.priorityClassName` | Priority Class Name | `""` |

| `portal.schedulerName` | Use an alternate scheduler, e.g. "stork". | `""` |

| `portal.sidecars` | Add additional sidecar containers to the Harbor Portal pods | `[]` |

| `portal.initContainers` | Add additional init containers to the Harbor Portal pods | `[]` |

| `portal.extraVolumeMounts` | Optionally specify extra list of additional volumeMounts for the Harbor Portal pods | `[]` |

| `portal.extraVolumes` | Optionally specify extra list of additional volumes for the Harbor Portal pods | `[]` |

| `portal.automountServiceAccountToken` | Automount service account token | `false` |

| `portal.service.ports.http` | Harbor Portal HTTP service port | `80` |

| `portal.service.ports.https` | Harbor Portal HTTPS service port | `443` |
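As a rough illustration of how the parameters above nest in a values file, the fragment below enables the startup probe, sets resource requests and limits, and hardens the anti-affinity preset for the Harbor Portal. It is a sketch, not a recommended configuration: the numbers and the `team` label are hypothetical placeholders to be tuned for your cluster.

```yaml
# Hypothetical values.yaml override for the Harbor Portal component.
# All numbers and labels are illustrative placeholders, not recommendations.
portal:
  startupProbe:
    enabled: true          # disabled by default
    failureThreshold: 30   # allow more time for a slow first start
  resources:
    requests:
      cpu: 100m
      memory: 128Mi
    limits:
      cpu: 250m
      memory: 256Mi
  podAntiAffinityPreset: hard   # ignored if portal.affinity is set
  podLabels:
    team: platform              # hypothetical label
```

A file like this would be passed to Helm with the standard `-f` / `--values` flag on `helm install` or `helm upgrade`.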

### Harbor Core Parameters

| Name | Description | Value |
| -------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | --------------------- |
| `core.image.registry` | Harbor Core image registry | `docker.io` |
| `core.image.repository` | Harbor Core image repository | `bitnami/harbor-core` |
| `core.image.tag` | Harbor Core image tag (immutable tags are recommended) | `2.4.2-debian-10-r2` |
| `core.image.pullPolicy` | Harbor Core image pull policy | `IfNotPresent` |
| `core.image.pullSecrets` | Harbor Core image pull secrets | `[]` |
| `core.image.debug` | Enable Harbor Core image debug mode | `false` |
| `core.uaaSecret` | If using external UAA auth with a self-signed certificate, provide a pre-created secret containing that certificate under the key `ca.crt`. | `""` |
| `core.secretKey` | The key used for encryption. Must be a string of 16 characters | `""` |
| `core.secret` | Secret used when the core server communicates with other components. If not specified, Helm generates one. Must be a string of 16 characters. | `""` |
| `core.secretName` | Name of a Kubernetes secret holding your own TLS certificate and private key for token encryption/decryption. The secret must contain two keys: `tls.crt` (the certificate) and `tls.key` (the private key). If not set, the default key pair is used | `""` |
| `core.csrfKey` | The CSRF key. Generated automatically if not specified | `""` |
| `core.tls.existingSecret` | Name of an existing secret with the certificates for internal TLS access | `""` |
| `core.command` | Override default container command (useful when using custom images) | `[]` |
| `core.args` | Override default container args (useful when using custom images) | `[]` |
| `core.extraEnvVars` | Array with extra environment variables to add to Harbor Core pods | `[]` |
| `core.extraEnvVarsCM` | ConfigMap containing extra environment variables for Harbor Core pods | `""` |
| `core.extraEnvVarsSecret` | Secret containing extra environment variables (in case of sensitive data) for Harbor Core pods | `""` |
| `core.containerPorts.http` | Harbor Core HTTP container port | `8080` |
| `core.containerPorts.https` | Harbor Core HTTPS container port | `8443` |
| `core.containerPorts.metrics` | Harbor Core metrics container port | `8001` |
| `core.replicaCount` | Number of Harbor Core replicas | `1` |
| `core.livenessProbe.enabled` | Enable livenessProbe on Harbor Core containers | `true` |
| `core.livenessProbe.initialDelaySeconds` | Initial delay seconds for livenessProbe | `20` |
| `core.livenessProbe.periodSeconds` | Period seconds for livenessProbe | `10` |
| `core.livenessProbe.timeoutSeconds` | Timeout seconds for livenessProbe | `5` |
| `core.livenessProbe.failureThreshold` | Failure threshold for livenessProbe | `6` |
| `core.livenessProbe.successThreshold` | Success threshold for livenessProbe | `1` |
| `core.readinessProbe.enabled` | Enable readinessProbe on Harbor Core containers | `true` |
| `core.readinessProbe.initialDelaySeconds` | Initial delay seconds for readinessProbe | `20` |
| `core.readinessProbe.periodSeconds` | Period seconds for readinessProbe | `10` |
| `core.readinessProbe.timeoutSeconds` | Timeout seconds for readinessProbe | `5` |
| `core.readinessProbe.failureThreshold` | Failure threshold for readinessProbe | `6` |
| `core.readinessProbe.successThreshold` | Success threshold for readinessProbe | `1` |
| `core.startupProbe.enabled` | Enable startupProbe on Harbor Core containers | `false` |
| `core.startupProbe.initialDelaySeconds` | Initial delay seconds for startupProbe | `5` |
| `core.startupProbe.periodSeconds` | Period seconds for startupProbe | `10` |
| `core.startupProbe.timeoutSeconds` | Timeout seconds for startupProbe | `1` |
| `core.startupProbe.failureThreshold` | Failure threshold for startupProbe | `15` |
| `core.startupProbe.successThreshold` | Success threshold for startupProbe | `1` |
| `core.customLivenessProbe` | Custom livenessProbe that overrides the default one | `{}` |
| `core.customReadinessProbe` | Custom readinessProbe that overrides the default one | `{}` |
| `core.customStartupProbe` | Custom startupProbe that overrides the default one | `{}` |
| `core.resources.limits` | The resource limits for the Harbor Core containers | `{}` |
| `core.resources.requests` | The requested resources for the Harbor Core containers | `{}` |
| `core.podSecurityContext.enabled` | Enable Harbor Core pods' Security Context | `true` |
| `core.podSecurityContext.fsGroup` | Set Harbor Core pod's Security Context fsGroup | `1001` |
| `core.containerSecurityContext.enabled` | Enable Harbor Core containers' Security Context | `true` |
| `core.containerSecurityContext.runAsUser` | Set Harbor Core containers' Security Context runAsUser | `1001` |
| `core.containerSecurityContext.runAsNonRoot` | Set Harbor Core containers' Security Context runAsNonRoot | `true` |
| `core.updateStrategy.type` | Harbor Core deployment strategy type. In practice, only relevant for deployments with RWO PVs attached | `RollingUpdate` |
| `core.updateStrategy.rollingUpdate` | Harbor Core deployment rolling update configuration parameters | `{}` |
| `core.lifecycleHooks` | Lifecycle hooks for the Harbor Core container(s) to automate configuration before or after startup | `{}` |
| `core.hostAliases` | Harbor Core pods host aliases | `[]` |
| `core.podLabels` | Add additional labels to the Harbor Core pods (evaluated as a template) | `{}` |
| `core.podAnnotations` | Annotations to add to the Harbor Core pods (evaluated as a template) | `{}` |
| `core.podAffinityPreset` | Harbor Core Pod affinity preset. Ignored if `core.affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `core.podAntiAffinityPreset` | Harbor Core Pod anti-affinity preset. Ignored if `core.affinity` is set. Allowed values: `soft` or `hard` | `soft` |
| `core.nodeAffinityPreset.type` | Harbor Core Node affinity preset type. Ignored if `core.affinity` is set. Allowed values: `soft` or `hard` | `""` |
| `core.nodeAffinityPreset.key` | Harbor Core Node label key to match. Ignored if `core.affinity` is set. | `""` |
| `core.nodeAffinityPreset.values` | Harbor Core Node label values to match. Ignored if `core.affinity` is set. | `[]` |
| `core.affinity` | Harbor Core Affinity for pod assignment | `{}` |
| `core.nodeSelector` | Harbor Core Node labels for pod assignment | `{}` |
| `core.tolerations` | Harbor Core Tolerations for pod assignment | `[]` |
| `core.topologySpreadConstraints` | Topology Spread Constraints for pod assignment spread across your cluster among failure-domains. Evaluated as a template | `{}` |
| `core.priorityClassName` | Harbor Core pods' Priority Class Name | `""` |
| `core.schedulerName` | Use an alternate scheduler, e.g. "stork". | `""` |
| `core.sidecars` | Add additional sidecar containers to the Harbor Core pods | `[]` |
| `core.initContainers` | Add additional init containers to the Harbor Core pods | `[]` |
| `core.extraVolumeMounts` | Optionally specify a list of additional volumeMounts for the Harbor Core pods | `[]` |
| `core.extraVolumes` | Optionally specify a list of additional volumes for the Harbor Core pods | `[]` |
| `core.automountServiceAccountToken` | Automount service account token | `false` |
| `core.service.ports.http` | Harbor Core HTTP service port | `80` |
| `core.service.ports.https` | Harbor Core HTTPS service port | `443` |
| `core.service.ports.metrics` | Harbor Core metrics service port | `8001` |
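The secret-related Core parameters are the easiest to get wrong, so here is a hedged sketch of how they might be set together in a values file. The two 16-character strings are throwaway placeholders and `harbor-core-token-keypair` is a hypothetical secret name. A secret with the `tls.crt` and `tls.key` keys that `core.secretName` expects can be created beforehand with `kubectl create secret tls harbor-core-token-keypair --cert=<cert-file> --key=<key-file>`.

```yaml
# Hypothetical values.yaml override for the Harbor Core component.
# The key strings below are placeholders only; generate your own for real deployments.
core:
  secretKey: "not-a-real-key16"   # encryption key, must be exactly 16 characters
  secret: "change-me-16char"      # inter-component secret, must be exactly 16 characters
  # Pre-created Kubernetes secret containing `tls.crt` and `tls.key`
  # for token encryption/decryption (hypothetical name).
  secretName: "harbor-core-token-keypair"
  startupProbe:
    enabled: true                 # disabled by default
  resources:
    requests:
      cpu: 250m                   # illustrative value
      memory: 256Mi               # illustrative value
```

Leaving `core.secret` and `core.csrfKey` empty lets the chart generate them, as described in the table. Setting them explicitly mainly helps keep values stable across `helm upgrade` runs.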

### Harbor Jobservice Parameters

| Name | Description | Value |