| text (string, lengths 20 to 57.3k) | labels (class label, 4 classes) |

|---|---|

Title: Adding Example for FastApi OpenAPI documentation

Body: ## Problem

Currently for my pydantic models, I use the example field to document FastAPI:

` = Field(..., example="This is my example for SwaggerUI")`

## Suggested solution

Would it be possible to add these examples through a comment in the schema.prisma?

## Alternatives

Open to any solution.

## Additional context

I want to completely remove my custom pydantic object definitions and rely on your generated models and partials (which I find amazing!)

| 1medium

|

Title: [Call for contributions] help us improve LoKr, LoHa, and other LyCORIS

Body: Originally reported by @bghira in https://github.com/huggingface/peft/issues/1931.

Our LoKr, LoHa, and other LyCORIS modules are outdated and could benefit from your help quite a bit. The following is a list of things that need modifications and fixing:

- [ ] fixed rank dropout implementation

- [ ] fixed maths (not multiplying against the vector, but only the scalar)

- [ ] full matrix tuning

- [ ] 1x1 convolutions

- [ ] quantised LoHa/LoKr

- [ ] weight-decomposed LoHa/LoKr

So, if you are interested, feel free to take one of these up at a time and open PRs. Of course, we will be with you for the PRs, learning from them and providing guidance as needed.

Please mention this issue when opening PRs and tag @BenjaminBossan and myself.

| 1medium

|

Title: Incorrect CBOW implementation in Gensim leads to inferior performance

Body: #### Problem description

According to this article https://aclanthology.org/2021.insights-1.1.pdf:

<img width="636" alt="Screen Shot 2021-11-09 at 15 47 21" src="https://user-images.githubusercontent.com/610412/140945923-7d279468-a9e9-41b4-b7c2-919919832bc5.png">

#### Steps/code/corpus to reproduce

I haven't tried to verify / reproduce. Gensim's goal is to follow the original C implementation faithfully, which it does. So this is not a bug per se, more a question of "whether / how much we want to deviate from the reference implementation". I'm in favour if the result is unambiguously better (more accurate, faster, no downsides).

#### Versions

All versions since the beginning of word2vec in Gensim.

| 1medium

|

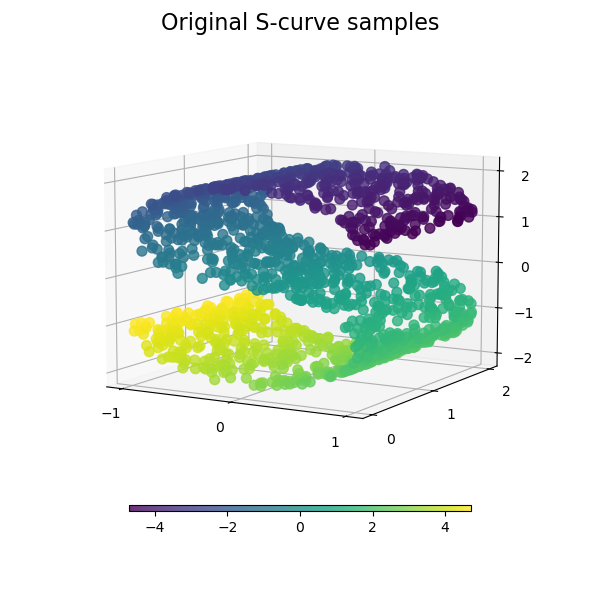

Title: Manifold Feature Engineering

Body:

Currently we have a t-SNE visualizer for text, but we can create a general manifold learning visualizer for projecting high dimensional data into 2 dimensions that respect non-linear effects (unlike our current decomposition methods).

### Proposal/Issue

The visualizer would take as hyperparameters:

- The color space of the original data (either by class or more specifically for each point)

- The manifold method (string or estimator)

It would be fit to training data.

The visualizer would display the representation in 2D space, as well as the training time and any other associated metrics.

### Code Snippet

- Code snippet found here: [Plot Compare Manifold Methods](http://scikit-learn.org/stable/auto_examples/manifold/plot_compare_methods.html)

### Background

- [Comparison of Manifold Algorithms (sklearn docs)](http://scikit-learn.org/stable/modules/manifold.html#manifold)

This investigation started with self-organizing maps (SOMs) visualization:

- http://blog.yhat.com/posts/self-organizing-maps-2.html

- https://stats.stackexchange.com/questions/210446/how-does-one-visualize-the-self-organizing-map-of-n-dimensional-data

| 1medium

|

Title: [BUG] --report flag without arguments is broken.

Body: **Checklist**

- [x] I checked the [FAQ section](https://schemathesis.readthedocs.io/en/stable/faq.html#frequently-asked-questions) of the documentation

- [x] I looked for similar issues in the [issue tracker](https://github.com/schemathesis/schemathesis/issues)

**Describe the bug**

When running from the CLI with the --report flag an error is thrown. When adding any arguments like true or 1, a file with that name is created. I would like a report on schemathesis.io to be generated.

**To Reproduce**

Steps to reproduce the behavior:

Run a valid schemathesis command with the --report flag

The error received is the following:

```

Traceback (most recent call last):

File "/home/docker/.local/bin/st", line 8, in <module>

sys.exit(schemathesis())

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 1157, in __call__

return self.main(*args, **kwargs)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 1078, in main

rv = self.invoke(ctx)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 1686, in invoke

sub_ctx = cmd.make_context(cmd_name, args, parent=ctx)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 943, in make_context

self.parse_args(ctx, args)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 1408, in parse_args

value, args = param.handle_parse_result(ctx, opts, args)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 2400, in handle_parse_result

value = self.process_value(ctx, value)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 2356, in process_value

value = self.type_cast_value(ctx, value)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 2344, in type_cast_value

return convert(value)

File "/home/docker/.local/lib/python3.8/site-packages/click/core.py", line 2316, in convert

return self.type(value, param=self, ctx=ctx)

File "/home/docker/.local/lib/python3.8/site-packages/click/types.py", line 83, in __call__

return self.convert(value, param, ctx)

File "/home/docker/.local/lib/python3.8/site-packages/click/types.py", line 712, in convert

lazy = self.resolve_lazy_flag(value)

File "/home/docker/.local/lib/python3.8/site-packages/click/types.py", line 694, in resolve_lazy_flag

if os.fspath(value) == "-":

TypeError: expected str, bytes or os.PathLike object, not object

```

**Expected behavior**

--report with no arguments should upload a report to schemathesis.io
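The expected behavior, an option that works both bare and with a value, can be illustrated with a sentinel constant. This is only an analogy: it uses the standard library's `argparse` instead of Click (which the traceback shows Schemathesis uses), and `UPLOAD` and `parse_report` are hypothetical names, not part of Schemathesis.

```python
import argparse

# Hypothetical sentinel meaning "--report was given without a value".
UPLOAD = "<upload>"


def parse_report(argv):
    """Return None (no report), UPLOAD (bare flag), or a file name."""
    parser = argparse.ArgumentParser(prog="demo")
    # nargs="?" makes the value optional; const is used when it is omitted.
    parser.add_argument("--report", nargs="?", const=UPLOAD, default=None)
    return parser.parse_args(argv).report
```

With this shape, the caller can branch on the three outcomes (skip, upload, write to a file) before any file-type conversion runs on the value.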

**Environment (please complete the following information):**

- OS: Debian WSL

- Python version: 3.8.10

- Schemathesis version: 3.19.5

- Spec version: 3.0.3

| 1medium

|

Title: connect() fails if /etc/os-release is not available

Body: ## Bug description

App fails if `/etc/os-release` is missing.

## How to reproduce

```

sudo mv /etc/os-release /etc/os-release.old

#start app

```

## Environment & setup

<!-- In which environment does the problem occur -->

- OS: Cloud Linux OS (4.18.0-372.19.1.lve.el8.x86_64)

- Database: SQLite

- Python version: 3.9

- Prisma version:

```

INFO: Waiting for application startup.

cat: /etc/os-release: No such file or directory

ERROR: Traceback (most recent call last):

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/starlette/routing.py", line 671, in lifespan

async with self.lifespan_context(app):

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/starlette/routing.py", line 566, in __aenter__

await self._router.startup()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/starlette/routing.py", line 648, in startup

await handler()

File "/home/wodorec1/wodore/tests/prisma/main.py", line 13, in startup

await prisma.connect()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/client.py", line 252, in connect

await self.__engine.connect(

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/engine/query.py", line 128, in connect

self.file = file = self._ensure_file()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/engine/query.py", line 116, in _ensure_file

return utils.ensure(BINARY_PATHS.query_engine)

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/engine/utils.py", line 72, in ensure

name = query_engine_name()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/engine/utils.py", line 36, in query_engine_name

return f'prisma-query-engine-{platform.check_for_extension(platform.binary_platform())}'

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/binaries/platform.py", line 56, in binary_platform

distro = linux_distro()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/binaries/platform.py", line 23, in linux_distro

distro_id, distro_id_like = _get_linux_distro_details()

File "/home/wodorec1/virtualenv/wodore/tests/prisma/3.9/lib/python3.9/site-packages/prisma/binaries/platform.py", line 37, in _get_linux_distro_details

process = subprocess.run(

File "/opt/alt/python39/lib64/python3.9/subprocess.py", line 528, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['cat', '/etc/os-release']' returned non-zero exit status 1.

```
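The traceback shows the failure comes from shelling out to `cat /etc/os-release`. One possible way to tolerate a missing file is to read it directly and fall back to an empty result. The sketch below is an assumption about how that could look, not the Prisma Client Python implementation; `parse_os_release` and `read_os_release` are hypothetical names, and `/usr/lib/os-release` is the fallback location described in the os-release(5) manual.

```python
from pathlib import Path


def parse_os_release(text):
    """Parse KEY=value lines, ignoring blanks and comments."""
    details = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        details[key] = value.strip().strip('"').strip("'")
    return details


def read_os_release(paths=("/etc/os-release", "/usr/lib/os-release")):
    """Return details from the first readable file, or {} if none exists."""
    for candidate in paths:
        try:
            return parse_os_release(Path(candidate).read_text(encoding="utf-8"))
        except OSError:
            continue  # missing or unreadable: try the next candidate
    return {}
```

A caller could then treat an empty dictionary as "unknown distribution" and pick a default binary platform instead of raising.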

| 1medium

|

Title: Consider renaming root `OR` query to `ANY`

Body: ## Problem

<!-- A clear and concise description of what the problem is. Ex. I'm always frustrated when [...] -->

The root `OR` part of a query can be confusing: https://news.ycombinator.com/item?id=30416531#30422118

## Suggested solution

<!-- A clear and concise description of what you want to happen. -->

Replace the `OR` field with an `ANY` field, for example:

```py
posts = await client.post.find_many(
    where={
        'OR': [
            {'title': {'contains': 'prisma'}},
            {'content': {'contains': 'prisma'}},
        ]
    }
)
```

Becomes:

```py
posts = await client.post.find_many(
    where={
        'ANY': [
            {'title': {'contains': 'prisma'}},
            {'content': {'contains': 'prisma'}},
        ]
    }
)
```

If we decide to go with this (or similar), the `OR` field should be deprecated first and then removed in a later release.
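The deprecate-then-remove path could be sketched as a small normalization step that accepts the old key, warns, and rewrites it. `normalize_where` is a hypothetical helper for illustration only; it is not part of the client, and it only handles the root level of the filter.

```python
import warnings


def normalize_where(where):
    """Return a copy of a filter dict with the legacy 'OR' key renamed to 'ANY'."""
    if "OR" not in where:
        return dict(where)
    if "ANY" in where:
        raise ValueError("pass either 'OR' or 'ANY', not both")
    warnings.warn(
        "the root 'OR' field is deprecated; use 'ANY' instead",
        DeprecationWarning,
        stacklevel=2,
    )
    renamed = {key: value for key, value in where.items() if key != "OR"}
    renamed["ANY"] = where["OR"]
    return renamed
```

Existing queries keep working during the deprecation window, and removing the `OR` branch later is a one-function change.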

| 1medium

|

Title: Change "alpha" parameter to "opacity"

Body: In working on issue #558, it's becoming clear that there is potential for naming confusion and collision around our use of `alpha` to mean the opacity/translucency (e.g. in the matplotlib sense), since in scikit-learn, `alpha` is often used to reference other things, such as the regularization hyperparameter, e.g.

```

oz = ResidualsPlot(Lasso(alpha=0.1), alpha=0.7)

```

As such, we should probably change our `alpha` to something like `opacity`. This update will impact a lot of the codebase and docs, and therefore has the potential to be disruptive, so I propose waiting until things are a bit quieter.

| 1medium

|

Title: Remove old datasets code and rewire with new datasets.load_* api

Body: As a follow up to reworking the datasets API, we need to go through and remove redundant old code in these locations:

- [x] `yellowbrick/download.py`

- [ ] `tests/dataset.py`

Part of this will be a requirement to rewire tests and examples as needed. There will likely also be slight transformations of data in code that have to happen.

@DistrictDataLabs/team-oz-maintainers

| 1medium

|

Title: Add typing to all modules under `robot.api`

Body: Currently, the `@keyword` and `@library` functions exposed through the `robot.api` module have no annotations. This limits the type-checking that tools like mypy and Pylance / pyright can perform, in turn limiting what types of issues language servers can detect.

Adding annotations to these functions (and, ultimately, all public-facing interfaces of the Robot Framework) would enable better IDE / tool integration.

Note that the improvements with regard to automatic type conversion also closely relate to this issue since the framework uses the signatures of methods decorated with the `@keyword` decorator to attempt automatic type conversion, while the missing annotations limit static analysis of the decorated methods.
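To illustrate what an annotated decorator buys static analysis, here is a minimal sketch of a decorator factory typed with a `TypeVar` so that the decorated function keeps its own signature. `typed_keyword` is a hypothetical stand-in, not the real `robot.api.deco.keyword`, and the attribute names it sets are assumptions for illustration.

```python
from typing import Callable, Optional, Sequence, TypeVar

F = TypeVar("F", bound=Callable[..., object])


def typed_keyword(
    name: Optional[str] = None, tags: Sequence[str] = ()
) -> Callable[[F], F]:
    """Hypothetical annotated decorator factory that preserves the signature."""

    def decorator(func: F) -> F:
        # Attach metadata without wrapping, so type checkers still see F.
        func.robot_name = name  # type: ignore[attr-defined]
        func.robot_tags = tuple(tags)  # type: ignore[attr-defined]
        return func

    return decorator


@typed_keyword(name="Add Numbers", tags=["math"])
def add(left: int, right: int) -> int:
    return left + right
```

Because the factory returns `Callable[[F], F]`, a checker such as mypy or pyright can still flag a call like `add("1", 2)`, which an unannotated decorator would hide.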

| 1medium

|

Title: [BUG] Optimization that compacts multiple filters into `eval` generates unexpected node in graph

Body: <!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

Optimization that compacts multiple filters into eval generates unexpected node in graph.

**To Reproduce**

To help us reproduce this bug, please provide information below:

1. Your Python version

2. The version of Mars you use

3. Versions of crucial packages, such as numpy, scipy and pandas

4. Full stack of the error.

5. Minimized code to reproduce the error.

```python
@enter_mode(build=True)
def test_arithmetic_query(setup):
    df1 = md.DataFrame(raw, chunk_size=10)
    df2 = md.DataFrame(raw2, chunk_size=10)
    df3 = df1.merge(df2, on='A', suffixes=('', '_'))
    df3['K'] = df4 = df3["A"] * (1 - df3["B"])
    graph = TileableGraph([df3.data])
    next(TileableGraphBuilder(graph).build())
    records = optimize(graph)
    opt_df4 = records.get_optimization_result(df4.data)
    assert opt_df4.op.expr == "(`A`) * ((1) - (`B`))"
    assert len(graph) == 5  # for now len(graph) is 6
    assert len([n for n in graph if isinstance(n.op, DataFrameEval)]) == 1  # and 2 evals exist
```

| 1medium

|

Title: Allow ModelVisualizers to wrap Pipeline objects

Body: **Describe the solution you'd like**

Our model visualizers expect to wrap classifiers, regressors, or clusterers in order to visualize the model under the hood; they even do checks to ensure the right estimator is passed in. Unfortunately in many cases, passing a pipeline object as the model in question does not allow the visualizer to work, even though the model is acceptable as a pipeline, e.g. it is a classifier for classification score visualizers (more on this below). This is primarily because the Pipeline wrapper masks the attributes needed by the visualizer.

I propose that we modify the [`ModelVisualizer`](https://github.com/DistrictDataLabs/yellowbrick/blob/develop/yellowbrick/base.py#L274) to change the `ModelVisualizer.estimator` attribute to a `@property` - when setting the estimator property, we can perform a check to ensure that the Pipeline has a `final_estimator` attribute (e.g. that it is not a transformer pipeline). When getting the estimator property, we can return the final estimator instead of the entire Pipeline. This should ensure that we can use pipelines in our model visualizers.

**NOTE** however that we will still have to `fit()`, `predict()`, and `score()` on the entire pipeline, so this is a bit more nuanced than it seems on first glance. There will probably have to be `is_pipeline()` checking and other estimator access utilities.
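A rough sketch of the property-based idea follows. It is not the Yellowbrick implementation: `ModelVisualizerSketch` is a hypothetical class, and the pipeline check is duck-typed on a `steps` list of `(name, estimator)` pairs (the layout scikit-learn pipelines use) so the example has no dependencies.

```python
class ModelVisualizerSketch:
    """Hypothetical wrapper exposing a pipeline's last step as ``estimator``."""

    def __init__(self, model):
        self.estimator = model  # goes through the property setter below

    @staticmethod
    def is_pipeline(model):
        # Duck-typed check: pipelines expose a list of (name, estimator) pairs.
        return hasattr(model, "steps")

    @property
    def estimator(self):
        if self.is_pipeline(self._wrapped):
            return self._wrapped.steps[-1][1]
        return self._wrapped

    @estimator.setter
    def estimator(self, model):
        if self.is_pipeline(model):
            final = model.steps[-1][1]
            # A transformer-only pipeline has nothing to predict with.
            if not hasattr(final, "predict"):
                raise TypeError("pipeline does not end in a predictive estimator")
        self._wrapped = model

    def fit(self, X, y=None):
        # Fitting must still run the whole pipeline, not just the last step.
        self._wrapped.fit(X, y)
        return self
```

Attribute lookups such as `classes_` go to the final estimator, while `fit()` (and, in a fuller version, `predict()` and `score()`) still delegate to the entire pipeline.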

**Is your feature request related to a problem? Please describe.**

Consider the following, fairly common code:

```python
from sklearn.pipeline import Pipeline
from sklearn.neural_network import MLPClassifier
from sklearn.feature_extraction.text import TfidfVectorizer

from yellowbrick.classifier import ClassificationReport

model = Pipeline([
    ('tfidf', TfidfVectorizer()),
    ('mlp', MLPClassifier()),
])

oz = ClassificationReport(model)
oz.fit(X_train, y_train)
oz.score(X_test, y_test)
oz.poof()
```

This seems to be a valid model for a classification report. Unfortunately, the classification report is not able to access the MLPClassifier's `classes_` attribute since the Pipeline doesn't know how to pass that on to the final estimator.

I think the original idea for the `ScoreVisualizers` was that they would be inside of Pipelines, e.g.

```python
model = Pipeline([
    ('tfidf', TfidfVectorizer()),
    ('clf', ClassificationReport(MLPClassifier())),
])

model.fit(X, y)
model.score(X_test, y_test)
model.named_steps['clf'].poof()
```

But this makes it difficult to use more than one visualizer; e.g. ROCAUC visualizer and CR visualizer.

**Definition of Done**

- [ ] Update `ModelVisualizer` class with pipeline helpers

- [ ] Ensure current tests pass

- [ ] Add test to all model visualizer subclasses to pass in a pipeline as the estimator

- [ ] Add documentation about using visualizers with pipelines

| 1medium

|

Title: Provide a JSON Schema generator

Body: ## Problem

This would be useful as a real world example of a custom Prisma generator.

It could also help find any features we could add that would make building custom Prisma generators easier.

## Additional context

This has already been implemented in TypeScript: https://github.com/valentinpalkovic/prisma-json-schema-generator

| 1medium

|

Title: Allow removing tags using `-tag` syntax also in `Test Tags`

Body: `Test Tags` from `*** Settings ***` and `[Tags]` from Test Case behave differently when removing tags: while it is possible to remove tags with Test Case's `[Tag] -something`, Settings `Test Tags -something` introduces a new tag `-something`.

Running tests with these robot files (also [attached](https://github.com/user-attachments/files/17566740/TagsTest.zip)):

* `__init__.robot`:

```

*** Settings ***

Test Tags something

```

* `-SomethingInSettings.robot`:

```

*** Settings ***

Test Tags -something

*** Test Cases ***

-Something In Settings

Should Be Empty ${TEST TAGS}

```

* `-SomethingInTestCase.robot`:

```

*** Test Cases ***

-Something In Test Case

[Tags] -something

Should Be Empty ${TEST TAGS}

```

gives the following output:

```

> robot .

==============================================================================

TagsTest

==============================================================================

TagsTest.-SomethingInSettings

==============================================================================

-Something In Settings | FAIL |

'['-something', 'something']' should be empty.

------------------------------------------------------------------------------

TagsTest.-SomethingInSettings | FAIL |

1 test, 0 passed, 1 failed

==============================================================================

TagsTest.-SomethingInTestCase

==============================================================================

-Something In Test Case | PASS |

------------------------------------------------------------------------------

TagsTest.-SomethingInTestCase | PASS |

1 test, 1 passed, 0 failed

==============================================================================

TagsTest | FAIL |

2 tests, 1 passed, 1 failed

==============================================================================

```

(https://forum.robotframework.org/t/removing-tags-from-the-test-tags-setting/7513/6?u=romanliv confirms this as an issue to be fixed)
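The requested semantics can be sketched as a small merge function: entries starting with `-` remove a matching inherited tag instead of being added. `apply_tags` is a hypothetical helper, and its normalization (ignoring case and spaces) is a simplification; it does not reproduce Robot Framework's actual tag matching or pattern support.

```python
def apply_tags(inherited, new_tags):
    """Combine tags, treating entries that start with '-' as removals."""

    def normalize(tag):
        # Simplified normalization: ignore case and spaces.
        return tag.lower().replace(" ", "")

    removals = {normalize(tag[1:]) for tag in new_tags if tag.startswith("-")}
    additions = [tag for tag in new_tags if not tag.startswith("-")]
    result = []
    seen = set()
    for tag in list(inherited) + additions:
        key = normalize(tag)
        if key in removals or key in seen:
            continue
        seen.add(key)
        result.append(tag)
    return result
```

Under this sketch, `Test Tags    -something` in a suite that inherits `something` would yield no tags, matching what `[Tags]    -something` already does in the test case example.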

| 1medium

|

Title: Add fail-safe for downloading binaries.

Body: ## Problem

<!-- A clear and concise description of what the problem is. Ex. I'm always frustrated when [...] -->

Whenever I run the prisma command after installation, it downloads binaries. If I press CTRL+C during the download, I am not able to download the binaries again unless there is a new update or I install NodeJS. It is frustrating every time this happens, because I then have to wait until the binaries can be installed again.

## Suggested solution

<!-- A clear and concise description of what you want to happen. -->

I suggest adding a fail-safe for downloading the binaries, because cancelling the download partway through currently leaves the binaries missing.
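One common shape for such a fail-safe is to download into a temporary file and only rename it into place once the download has completed, so an interrupted run never leaves a partial binary behind. The sketch below assumes the download is available as an iterable of byte chunks; `save_atomically` is a hypothetical helper, not the project's code.

```python
import os
import tempfile


def save_atomically(chunks, destination):
    """Write chunks to a temp file, then rename it over ``destination``."""
    directory = os.path.dirname(os.path.abspath(destination))
    fd, temp_path = tempfile.mkstemp(dir=directory, suffix=".part")
    try:
        with os.fdopen(fd, "wb") as handle:
            for chunk in chunks:
                handle.write(chunk)
        # os.replace is atomic when source and target share a filesystem.
        os.replace(temp_path, destination)
    except BaseException:
        # Also covers KeyboardInterrupt (Ctrl+C): drop the partial file.
        if os.path.exists(temp_path):
            os.remove(temp_path)
        raise
```

Because the destination only ever appears after a complete write, a later run can treat "file missing" as "download again" without any extra state.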

## Alternatives

<!-- A clear and concise description of any alternative solutions or features you've considered. -->

I haven't currently considered anything else, sorry!

## Additional context

<!-- Add any other context or screenshots about the feature request here. -->

Issue #665.

| 1medium

|

Title: Word2vec: loss tally maxes at 134217728.0 due to float32 limited-precision

Body:

<!--

**IMPORTANT**:

- Use the [Gensim mailing list](https://groups.google.com/forum/#!forum/gensim) to ask general or usage questions. Github issues are only for bug reports.

- Check [Recipes&FAQ](https://github.com/RaRe-Technologies/gensim/wiki/Recipes-&-FAQ) first for common answers.

Github bug reports that do not include relevant information and context will be closed without an answer. Thanks!

-->

#### Cumulative loss of word2vec maxes out at 134217728.0

I'm training a word2vec model with 2,793,404 sentences / 33,499,912 words, vocabulary size 162,253 (words with at least 5 occurrences).

Expected behaviour: with `compute_loss=True`, gensim's word2vec should compute the loss in the expected way.

Actual behaviour: the cumulative loss seems to be maxing out at `134217728.0`:

Building vocab...

Vocab done. Training model for 120 epochs, with 16 workers...

Loss after epoch 1: 16162246.0 / cumulative loss: 16162246.0

Loss after epoch 2: 11594642.0 / cumulative loss: 27756888.0

[ - snip - ]

Loss after epoch 110: 570688.0 / cumulative loss: 133002056.0

Loss after epoch 111: 564448.0 / cumulative loss: 133566504.0

Loss after epoch 112: 557848.0 / cumulative loss: 134124352.0

Loss after epoch 113: 93376.0 / cumulative loss: 134217728.0

Loss after epoch 114: 0.0 / cumulative loss: 134217728.0

Loss after epoch 115: 0.0 / cumulative loss: 134217728.0

And it stays at `134217728.0` thereafter. The value `134217728.0` is of course exactly `128*1024*1024`, which does not seem like a coincidence.
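The plateau is consistent with float32 rounding: `134217728.0` is `2**27`, and from that value upward adjacent float32 numbers are 16 apart, so any single addition smaller than 8 rounds back to the same total. It also matches the log, where the epoch 113 "loss" of 93376.0 is exactly `134217728 - 134124352`. The sketch below emulates float32 accumulation with the standard library; the increment of `5.0` is an arbitrary assumption, since the size of Gensim's individual loss updates is not stated here.

```python
import struct


def to_float32(value):
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", value))[0]


def accumulate_float32(total, increment, times):
    """Add ``increment`` repeatedly, rounding to float32 after each step."""
    for _ in range(times):
        total = to_float32(total + increment)
    return total


LIMIT = float(2 ** 27)  # 134217728.0

# Just below 2**27 the float32 spacing is 8, so adding 5.0 still moves the total.
below = accumulate_float32(LIMIT - 64.0, 5.0, 100)
# From 2**27 upward the spacing is 16, so adding 5.0 always rounds back down.
stuck = accumulate_float32(LIMIT, 5.0, 1000)
```

Both `below` and `stuck` end at exactly `LIMIT`: the running total climbs until it reaches `2**27` and then stops absorbing small increments, which is the pattern in the output above.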

#### Steps to reproduce

My code is as follows:

    import pandas as pd
    from gensim.models import Word2Vec
    from gensim.models.callbacks import CallbackAny2Vec


    class MyLossCalculator(CallbackAny2Vec):
        """Derive per-epoch loss from gensim's cumulative loss tally."""

        def __init__(self):
            self.epoch = 1
            self.losses = []
            self.cumu_losses = []

        def on_epoch_end(self, model):
            cumu_loss = model.get_latest_training_loss()
            # The tally is cumulative, so subtract the previous epoch's value.
            loss = cumu_loss if self.epoch <= 1 else cumu_loss - self.cumu_losses[-1]
            print(f"Loss after epoch {self.epoch}: {loss} / cumulative loss: {cumu_loss}")
            self.epoch += 1
            self.losses.append(loss)
            self.cumu_losses.append(cumu_loss)


    def train_and_check(my_sentences, my_epochs, my_workers=8):
        print(f"Building vocab...")
        my_model: Word2Vec = Word2Vec(sg=1, compute_loss=True, workers=my_workers)
        my_model.build_vocab(my_sentences)
        print(f"Vocab done. Training model for {my_epochs} epochs, with {my_workers} workers...")
        loss_calc = MyLossCalculator()
        trained_word_count, raw_word_count = my_model.train(my_sentences, total_examples=my_model.corpus_count, compute_loss=True,
                                                            epochs=my_epochs, callbacks=[loss_calc])
        loss = loss_calc.losses[-1]
        print(trained_word_count, raw_word_count, loss)
        loss_df = pd.DataFrame({"training loss": loss_calc.losses})
        loss_df.plot(color="blue")
        # print(f"Calculating accuracy...")
        # acc, details = my_model.wv.evaluate_word_analogies(questions_file, case_insensitive=True)
        # print(acc)
        return loss_calc, my_model

The data is a news article corpus in Finnish; I'm not at liberty to share all of it (and anyway it's a bit big), but it looks like one would expect:

[7]: df.head(2)

[7]: [Row(file_and_id='data_in_json/2018/04/0001.json.gz%%3-10169118', index_in_file='853', headline='Parainen pyristelee pois lastensuojelun kriisistä: irtisanoutuneiden tilalle houkutellaan uusia sosiaalityöntekijöitä paremmilla työeduilla', publication_date='2018-04-20 11:59:35+03:00', publication_year='2018', publication_month='04', sentence='hän tiesi minkälaiseen tilanteeseen tulee', lemmatised_sentence='hän tietää minkälainen tilanne tulla', source='yle', rnd=8.436637410902392e-08),

Row(file_and_id='data_in_xml/arkistosiirto2018.zip%%arkistosiirto2018/102054668.xml', index_in_file=None, headline='*** Tiedote/SDP: Medialle tiedoksi: SDP:n puheenjohtaja Antti Rinteen puhe puoluevaltuuston kokouksessa ***', publication_date='2018-04-21T12:51:44', publication_year='2018', publication_month='04', sentence='me haluamme jättää hallitukselle välikysymyksen siitä miksi nuorten ihmisten tulevaisuuden uskoa halutaan horjuttaa miksi epävarmuutta ja näköalattomuutta sekä pelkoa tulevaisuuden suhteen halutaan lisätä', lemmatised_sentence='me haluta jättää hallitus välikysymys se miksi nuori ihminen tulevaisuus usko haluta horjuttaa miksi epävarmuus ja näköalattomuus sekä pelko tulevaisuus suhteen haluta lisätä', source='stt', rnd=8.547760445010155e-07)]

sentences = list(map(lambda r: r["lemmatised_sentence"].split(" "), df.select("lemmatised_sentence").collect()))

[18]: sentences[0]

[18]: ['hän', 'tietää', 'minkälainen', 'tilanne', 'tulla']

#### Versions

The output of:

```python

import platform; print(platform.platform())

import sys; print("Python", sys.version)

import numpy; print("NumPy", numpy.__version__)

import scipy; print("SciPy", scipy.__version__)

import gensim; print("gensim", gensim.__version__)

from gensim.models import word2vec;print("FAST_VERSION", word2vec.FAST_VERSION)

```

is:

Windows-10-10.0.18362-SP0

Python 3.7.3 | packaged by conda-forge | (default, Jul 1 2019, 22:01:29) [MSC v.1900 64 bit (AMD64)]

NumPy 1.17.3

SciPy 1.3.1

gensim 3.8.1

FAST_VERSION 1

Finally, I'm not the only one who has encountered this issue. I found the following related links:

https://groups.google.com/forum/#!topic/gensim/IH5-nWoR_ZI

https://stackoverflow.com/questions/59823688/gensim-word2vec-model-loss-becomes-0-after-few-epochs

I'm not sure if this is only a display issue and the training continues normally even after the cumulative loss reaches its "maximum", or if the training in fact stops at that point. The trained word vectors seem reasonably ok, judging by `my_model.wv.evaluate_word_analogies()`, though they do need more training than this.

| 1medium

|

Title: Add optional typed base classes for listener API

Body: Issue #4567 proposes adding a base class for the dynamic library API and having similar base classes for the listener API would be convenient as well. The usage would be something like this:

```python
from robot.api.interfaces import ListenerV3

class Listener(ListenerV3):
    ...
```

The base class should have all available listener methods with documentation and appropriate type information. We should have base classes both for listener v2 and for v3 and they should have `ROBOT_LISTENER_API_VERSION` set accordingly.

As with #4567, this would be easy to implement and could be done already in RF 6.1. We mainly need to agree on naming and where to import these base classes.
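As a rough picture of what such a base class might contain, the sketch below declares the API version and a few no-op hooks with docstrings, so subclasses override only what they need. `ListenerV3Sketch` is a hypothetical name and covers only a subset of the listener v3 methods; the eventual `robot.api.interfaces` classes may differ in naming, typing, and coverage.

```python
class ListenerV3Sketch:
    """Hypothetical optional base class for listener API version 3."""

    ROBOT_LISTENER_API_VERSION = 3

    def start_suite(self, data, result) -> None:
        """Called when a suite starts."""

    def end_suite(self, data, result) -> None:
        """Called when a suite ends."""

    def start_test(self, data, result) -> None:
        """Called when a test starts."""

    def end_test(self, data, result) -> None:
        """Called when a test ends."""

    def close(self) -> None:
        """Called when the whole execution ends."""


class CountingListener(ListenerV3Sketch):
    """Example subclass that overrides only the hook it needs."""

    def __init__(self) -> None:
        self.tests_started = 0

    def start_test(self, data, result) -> None:
        self.tests_started += 1
```

The main benefit is discoverability: editors can offer completion and signature help for every hook, and the version attribute no longer has to be set by hand.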

| 1medium

|

Title: [BUG] Failed to create Mars DataFrame when mars object exists in a list

Body: <!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

Failed to create Mars DataFrame when mars object exists in a list.

**To Reproduce**

To help us reproduce this bug, please provide information below:

1. Your Python version

2. The version of Mars you use

3. Versions of crucial packages, such as numpy, scipy and pandas

4. Full stack of the error.

5. Minimized code to reproduce the error.

```

In [1]: import mars

In [2]: mars.new_session()

Web service started at http://0.0.0.0:24172

Out[2]: <mars.deploy.oscar.session.SyncSession at 0x7f8ada249370>

In [3]: import mars.dataframe as md

In [5]: s = md.Series([1, 2, 3])

In [6]: df2 = md.DataFrame({'a': [s.sum()]})

100%|█████████████████████████████████████| 100.0/100 [00:00<00:00, 1592.00it/s]

In [7]: df2

Out[7]: DataFrame <op=DataFrameDataSource, key=5a704fd6d6ab7aee6f31d874c2f11347>

In [12]: df2.execute()

0%| | 0/100 [00:00<?, ?it/s]Failed to run subtask 00lm3BBKMBsieIow3LtlHrwv on band numa-0

Traceback (most recent call last):

File "/Users/qinxuye/Workspace/mars/mars/services/scheduling/worker/execution.py", line 331, in internal_run_subtask

subtask_info.result = await self._retry_run_subtask(

File "/Users/qinxuye/Workspace/mars/mars/services/scheduling/worker/execution.py", line 420, in _retry_run_subtask

return await _retry_run(subtask, subtask_info, _run_subtask_once)

File "/Users/qinxuye/Workspace/mars/mars/services/scheduling/worker/execution.py", line 107, in _retry_run

raise ex

File "/Users/qinxuye/Workspace/mars/mars/services/scheduling/worker/execution.py", line 67, in _retry_run

return await target_async_func(*args)

File "/Users/qinxuye/Workspace/mars/mars/services/scheduling/worker/execution.py", line 373, in _run_subtask_once

return await asyncio.shield(aiotask)

File "/Users/qinxuye/Workspace/mars/mars/services/subtask/api.py", line 68, in run_subtask_in_slot

return await ref.run_subtask.options(profiling_context=profiling_context).send(

File "/Users/qinxuye/Workspace/mars/mars/oscar/backends/context.py", line 183, in send

future = await self._call(actor_ref.address, message, wait=False)

File "/Users/qinxuye/Workspace/mars/mars/oscar/backends/context.py", line 61, in _call

return await self._caller.call(

File "/Users/qinxuye/Workspace/mars/mars/oscar/backends/core.py", line 95, in call

await client.send(message)

File "/Users/qinxuye/Workspace/mars/mars/oscar/backends/communication/base.py", line 258, in send

return await self.channel.send(message)

File "/Users/qinxuye/Workspace/mars/mars/oscar/backends/communication/socket.py", line 73, in send

buffers = await serializer.run()

File "/Users/qinxuye/Workspace/mars/mars/serialization/aio.py", line 80, in run

return self._get_buffers()

File "/Users/qinxuye/Workspace/mars/mars/serialization/aio.py", line 37, in _get_buffers

headers, buffers = serialize(self._obj)

File "/Users/qinxuye/Workspace/mars/mars/serialization/core.py", line 363, in serialize

gen_result = gen_serializer.serialize(gen_to_serial, context)

File "/Users/qinxuye/Workspace/mars/mars/serialization/core.py", line 72, in wrapped

return func(self, obj, context)

File "/Users/qinxuye/Workspace/mars/mars/serialization/core.py", line 151, in serialize

return {}, pickle_buffers(obj)

File "/Users/qinxuye/Workspace/mars/mars/serialization/core.py", line 88, in pickle_buffers

buffers[0] = cloudpickle.dumps(

File "/Users/qinxuye/miniconda3/envs/mars3.8/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py", line 73, in dumps

cp.dump(obj)

File "/Users/qinxuye/miniconda3/envs/mars3.8/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py", line 563, in dump

return Pickler.dump(self, obj)

TypeError: cannot pickle 'weakref' object

100%|█████████████████████████████████████| 100.0/100 [00:00<00:00, 3581.29it/s]

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-12-fe40b754f95d> in <module>

----> 1 df2.execute()

~/Workspace/mars/mars/core/entity/tileables.py in execute(self, session, **kw)

460

461 def execute(self, session=None, **kw):

--> 462 result = self.data.execute(session=session, **kw)

463 if isinstance(result, TILEABLE_TYPE):

464 return self

~/Workspace/mars/mars/core/entity/executable.py in execute(self, session, **kw)

96

97 session = _get_session(self, session)

---> 98 return execute(self, session=session, **kw)

99

100 def _check_session(self, session: SessionType, action: str):

~/Workspace/mars/mars/deploy/oscar/session.py in execute(tileable, session, wait, new_session_kwargs, show_progress, progress_update_interval, *tileables, **kwargs)

1777 session = get_default_or_create(**(new_session_kwargs or dict()))

1778 session = _ensure_sync(session)

-> 1779 return session.execute(

1780 tileable,

1781 *tileables,

~/Workspace/mars/mars/deploy/oscar/session.py in execute(self, tileable, show_progress, *tileables, **kwargs)

1575 fut = asyncio.run_coroutine_threadsafe(coro, self._loop)

1576 try:

-> 1577 execution_info: ExecutionInfo = fut.result(

1578 timeout=self._isolated_session.timeout

1579 )

~/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/_base.py in result(self, timeout)

437 raise CancelledError()

438 elif self._state == FINISHED:

--> 439 return self.__get_result()

440 else:

441 raise TimeoutError()

~/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/_base.py in __get_result(self)

386 def __get_result(self):

387 if self._exception:

--> 388 raise self._exception

389 else:

390 return self._result

~/Workspace/mars/mars/deploy/oscar/session.py in _execute(session, wait, show_progress, progress_update_interval, cancelled, *tileables, **kwargs)

1757 # set cancelled to avoid wait task leak

1758 cancelled.set()

-> 1759 await execution_info

1760 else:

1761 return execution_info

~/Workspace/mars/mars/deploy/oscar/session.py in wait()

100

101 async def wait():

--> 102 return await self._aio_task

103

104 self._future_local.future = fut = asyncio.run_coroutine_threadsafe(

~/Workspace/mars/mars/deploy/oscar/session.py in _run_in_background(self, tileables, task_id, progress, profiling)

905 )

906 if task_result.error:

--> 907 raise task_result.error.with_traceback(task_result.traceback)

908 if cancelled:

909 return

~/Workspace/mars/mars/services/scheduling/worker/execution.py in internal_run_subtask(self, subtask, band_name)

329

330 batch_quota_req = {(subtask.session_id, subtask.subtask_id): calc_size}

--> 331 subtask_info.result = await self._retry_run_subtask(

332 subtask, band_name, subtask_api, batch_quota_req

333 )

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run_subtask(self, subtask, band_name, subtask_api, batch_quota_req)

418 # any exceptions occurred.

419 if subtask.retryable:

--> 420 return await _retry_run(subtask, subtask_info, _run_subtask_once)

421 else:

422 try:

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run(subtask, subtask_info, target_async_func, *args)

105 )

106 else:

--> 107 raise ex

108

109

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run(subtask, subtask_info, target_async_func, *args)

65 while True:

66 try:

---> 67 return await target_async_func(*args)

68 except (OSError, MarsError) as ex:

69 if subtask_info.num_retries < subtask_info.max_retries:

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _run_subtask_once()

371 subtask_api.run_subtask_in_slot(band_name, slot_id, subtask)

372 )

--> 373 return await asyncio.shield(aiotask)

374 except asyncio.CancelledError as ex:

375 # make sure allocated slots are traced

~/Workspace/mars/mars/services/subtask/api.py in run_subtask_in_slot(self, band_name, slot_id, subtask)

66 ProfilingContext(task_id=subtask.task_id) if enable_profiling else None

67 )

---> 68 return await ref.run_subtask.options(profiling_context=profiling_context).send(

69 subtask

70 )

~/Workspace/mars/mars/oscar/backends/context.py in send(self, actor_ref, message, wait_response, profiling_context)

181 ):

182 detect_cycle_send(message, wait_response)

--> 183 future = await self._call(actor_ref.address, message, wait=False)

184 if wait_response:

185 result = await self._wait(future, actor_ref.address, message)

~/Workspace/mars/mars/oscar/backends/context.py in _call(self, address, message, wait)

59 self, address: str, message: _MessageBase, wait: bool = True

60 ) -> Union[ResultMessage, ErrorMessage, asyncio.Future]:

---> 61 return await self._caller.call(

62 Router.get_instance_or_empty(), address, message, wait=wait

63 )

~/Workspace/mars/mars/oscar/backends/core.py in call(self, router, dest_address, message, wait)

93 with Timer() as timer:

94 try:

---> 95 await client.send(message)

96 except ConnectionError:

97 try:

~/Workspace/mars/mars/oscar/backends/communication/base.py in send(self, message)

256 @implements(Channel.send)

257 async def send(self, message):

--> 258 return await self.channel.send(message)

259

260 @implements(Channel.recv)

~/Workspace/mars/mars/oscar/backends/communication/socket.py in send(self, message)

71 compress = self.compression or 0

72 serializer = AioSerializer(message, compress=compress)

---> 73 buffers = await serializer.run()

74

75 # write buffers

~/Workspace/mars/mars/serialization/aio.py in run(self)

78

79 async def run(self):

---> 80 return self._get_buffers()

81

82

~/Workspace/mars/mars/serialization/aio.py in _get_buffers(self)

35

36 def _get_buffers(self):

---> 37 headers, buffers = serialize(self._obj)

38

39 def _is_cuda_buffer(buf): # pragma: no cover

~/Workspace/mars/mars/serialization/core.py in serialize(obj, context)

361 gen_to_serial = gen.send(last_serial)

362 gen_serializer = _serial_dispatcher.get_handler(type(gen_to_serial))

--> 363 gen_result = gen_serializer.serialize(gen_to_serial, context)

364 if isinstance(gen_result, types.GeneratorType):

365 # when intermediate result still generator, push its contexts

~/Workspace/mars/mars/serialization/core.py in wrapped(self, obj, context)

70 else:

71 context[id(obj)] = obj

---> 72 return func(self, obj, context)

73

74 return wrapped

~/Workspace/mars/mars/serialization/core.py in serialize(self, obj, context)

149 @buffered

150 def serialize(self, obj, context: Dict):

--> 151 return {}, pickle_buffers(obj)

152

153 def deserialize(self, header: Dict, buffers: List, context: Dict):

~/Workspace/mars/mars/serialization/core.py in pickle_buffers(obj)

86 buffers.append(memoryview(x))

87

---> 88 buffers[0] = cloudpickle.dumps(

89 obj,

90 buffer_callback=buffer_cb,

~/miniconda3/envs/mars3.8/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py in dumps(obj, protocol, buffer_callback)

71 file, protocol=protocol, buffer_callback=buffer_callback

72 )

---> 73 cp.dump(obj)

74 return file.getvalue()

75

~/miniconda3/envs/mars3.8/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py in dump(self, obj)

561 def dump(self, obj):

562 try:

--> 563 return Pickler.dump(self, obj)

564 except RuntimeError as e:

565 if "recursion" in e.args[0]:

TypeError: cannot pickle 'weakref' object

```

| 1medium

|

Title: Propose using `$var` syntax if evaluation IF or WHILE condition using `${var}` fails

Body: A common error when evaluating expressions with IF or otherwise is using something like

```

IF ${x} == 'expected'

Keyword

END

```

when the variable `${x}` contains a string. Normal variables are resolved _before_ evaluating the expression, so if `${x}` contains a string `value`, the evaluated expression will be `value == 'expected'`. Evaluating it fails because `value` isn't quoted, it's thus considered a variable in Python, and no such variable exists. The resulting error is this:

> Evaluating IF condition failed: Evaluating expression 'value == 'expected'' failed: NameError: name 'value' is not defined nor importable as module

One solution to this problem is quoting the variable like `'${x}' == 'expected'`, but that doesn't work if the variable value contains quotes or newlines. A better solution is using the special `$var` syntax like `$x == 'value'` that makes the variable available in the Python evaluation namespace (#2040). All this is explained in the [User Guide](http://robotframework.org/robotframework/latest/RobotFrameworkUserGuide.html#evaluating-expressions), but there are many users who don't know about this and struggle with the syntax.
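The difference between the two approaches can be reproduced with plain Python. The sketch below is not Robot Framework's implementation: `resolve_then_evaluate` and `evaluate_with_namespace` are hypothetical helpers that only imitate textual substitution of `${var}` and the namespace-based `$var` handling.

```python
import re


def resolve_then_evaluate(expression, variables):
    """Imitate `${var}` handling: substitute values as text, then evaluate."""
    resolved = re.sub(
        r"\$\{(\w+)\}", lambda m: str(variables[m.group(1)]), expression
    )
    return eval(resolved)


def evaluate_with_namespace(expression, variables):
    """Imitate `$var` handling: expose variables as Python names instead."""
    python_expr = re.sub(r"\$(\w+)", r"\1", expression)
    return eval(python_expr, {}, dict(variables))


variables = {"x": "value"}

try:
    # Becomes `value == 'expected'`, so Python looks up a name called `value`.
    resolve_then_evaluate("${x} == 'expected'", variables)
except NameError as err:
    print(f"NameError: {err}")

# The value stays a Python string object, so no quoting is needed.
print(evaluate_with_namespace("$x == 'expected'", variables))
print(evaluate_with_namespace("$x == 'value'", variables))
```

With the same helpers, the quoted form `'${x}' == 'expected'` works for simple values but raises a `SyntaxError` as soon as the value itself contains a single quote, which is the limitation described above.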

Because this is such a common error, we should make the error more informative if the expression contains "normal" variables. We could, for example, also show the original expression and recommend quoting or using the "special" variable syntax. Possibly it could look like this:

> Evaluating IF condition failed: Evaluating expression 'value == 'expected'' failed: NameError: name 'value' is not defined nor importable as module

> The original expression was '${x} == 'expected''. Try using the '$var' syntax like '$x == 'expected'' to avoid resolving variables before the expression is evaluated. See the Evaluating expression appendix in the User Guide for more details.

There are a few problems implementing this:

1. Variables are resolved before [evaluate_expression](https://github.com/robotframework/robotframework/blob/master/src/robot/variables/evaluation.py#L31) is called, so this function knows neither the original expression nor whether it contained variables. This information needs to be passed to it, but in some cases (at least with inline Python evaluation) that isn't easy.

2. It's not easy to detect when exactly this extra information should be included in the error. Always including it when evaluating the expression fails can add confusion when the error isn't related to variables. It would probably be better to include it only if evaluation fails with a NameError, but even then you could have an expression like `'${x}' == value` where the variable likely isn't the problem. We could try some heuristics to see what causes the error, but that's probably too much work compared to including the extra info in some cases where it's not needed.

3. Coming up with a good but somewhat short error message isn't easy. I'm not totally happy with the above, but I guess it would be better than nothing.
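The NameError-only heuristic from point 2 can be sketched in a few lines. This is an illustration under assumptions, not the actual Robot Framework code: `evaluate_with_hint` and `VARIABLE_PATTERN` are hypothetical names, and the caller is assumed to have both the original and the resolved expression available, which point 1 notes is not always the case.

```python
import re

# Matches "normal" variables such as ${x} in the original expression.
VARIABLE_PATTERN = re.compile(r"\$\{[^}]+\}")


def evaluate_with_hint(original, resolved):
    """Evaluate `resolved`; on NameError, add a hint if `original` used `${var}`."""
    try:
        return eval(resolved, {})
    except NameError as err:
        message = f"Evaluating expression {resolved!r} failed: NameError: {err}"
        if VARIABLE_PATTERN.search(original):
            message += (
                f"\nThe original expression was {original!r}. "
                "Try using the '$var' syntax to avoid resolving variables "
                "before the expression is evaluated."
            )
        raise ValueError(message) from err


try:
    evaluate_with_hint("${x} == 'expected'", "value == 'expected'")
except ValueError as err:
    print(err)
```

As point 2 warns, this simple check would also add the hint for `'${x}' == value`, where the unquoted `value` rather than the variable causes the failure.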

Because this extra info is added only if evaluation fails, this should be a totally backwards compatible change. It would be nice to include it already into RF 6.1, but that release is already about to be late and this isn't that easy to implement, so RF 6.2 is probably a better target. If someone is interested to look at this, including it already into RF 6.1 ought to be possible.

| 1medium

|

Title: Stacked bar plots helper function

Body: Create a helper function in the `yellowbrick.draw` module that can create a stacked bar chart from a 2D array on the specified axes. This function should have the following basic signature:

```python

def bar_stack(data, ax=None, labels=None, ticks=None, colors=None, **kwargs):
    """
    An advanced bar chart plotting utility that can draw bar and stacked bar charts from
    data, wrapping calls to the specified matplotlib.Axes object.

    Parameters
    ----------
    data : 2D array-like
        The data associated with the bar chart where the columns represent each bar
        and the rows represent each stack in the bar chart. A single bar chart would
        be a 2D array with only one row, a bar chart with three stacks per bar would
        have a shape of (3, b).

    ax : matplotlib.Axes, default: None
        The axes object to draw the barplot on, uses plt.gca() if not specified.

    labels : list of str, default: None
        The labels for each row in the bar stack, used to create a legend.

    ticks : list of str, default: None
        The labels for each bar, added to the x-axis for a vertical plot, or the y-axis
        for a horizontal plot.

    colors : array-like, default: None
        Specify the colors of each bar, each row in the stack, or every segment.

    kwargs : dict
        Additional keyword arguments to pass to ``ax.bar``.
    """

```

This is just a base signature and should be further refined as we dig into the work. Once this helper function is created, the following visualizers should be updated to use it:

- [ ] `PosTagVisualizer`

- [ ] `FeatureImportances`

- [ ] `ClassPredictionError`

(@DistrictDataLabs/team-oz-maintainers please feel free to add other visualizers above as needed)

**Describe the solution you'd like**

A helper function to create stacked bar plots (see #510, #847, and #771).

**Is your feature request related to a problem? Please describe.**

This can be useful for `ClassPredictionError`, `FeatureImportances`, and future stacked bar plots.
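A minimal sketch of how such a helper might stack the rows is shown below. It is not the Yellowbrick implementation and it makes several simplifying assumptions: only vertical bars are drawn, `colors` is taken to hold one color per row, and matplotlib is imported lazily so that it is only needed when no axes object is passed.

```python
def bar_stack(data, ax=None, labels=None, ticks=None, colors=None, **kwargs):
    """Draw a (stacked) bar chart; rows of ``data`` are stacks, columns are bars."""
    if ax is None:
        # Lazy import: matplotlib is only required when no axes is supplied.
        import matplotlib.pyplot as plt

        ax = plt.gca()

    rows = [list(row) for row in data]
    n_bars = len(rows[0]) if rows else 0
    positions = list(range(n_bars))
    bottoms = [0.0] * n_bars  # running height of each bar so far

    for idx, row in enumerate(rows):
        row_kwargs = dict(kwargs)
        if labels is not None:
            row_kwargs["label"] = labels[idx]
        if colors is not None:
            row_kwargs["color"] = colors[idx]  # assumption: one color per row
        # Each row is drawn on top of the accumulated height of earlier rows.
        ax.bar(positions, row, bottom=list(bottoms), **row_kwargs)
        bottoms = [b + h for b, h in zip(bottoms, row)]

    if ticks is not None:
        ax.set_xticks(positions)
        ax.set_xticklabels(ticks)
    if labels is not None:
        ax.legend()
    return ax
```

For example, `bar_stack([[1, 2], [3, 4]], labels=["a", "b"], ticks=["x", "y"])` would draw two bars with two segments each on the current axes. Per-bar or per-segment colors and horizontal orientation, both mentioned in the docstring, would need extra handling that this sketch leaves out.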

| 1medium

|

Title: [Documentation]: More FastAPI examples and clearer ASGI/WSGI sections

Body: Our documentation currently falls short in providing comprehensive examples for FastAPI and ASGI. This gap has led to user confusion. Additionally, the WSGI & ASGI topics are combined in a way that muddles the information.

### Proposed Changes

- Add more FastAPI examples across the "Python" documentation section to cater to a broader audience and use cases.

- Promote the use of `call_and_validate` throughout the documentation for consistency.

- Create separate sub-sections for ASGI and WSGI under the "Python" section to eliminate confusion and provide targeted guidance.

| 1medium

|

Title: Add support for middleware

Body: [https://www.prisma.io/docs/concepts/components/prisma-client/middleware](https://www.prisma.io/docs/concepts/components/prisma-client/middleware)

```py

client = Client()

async def logging_middleware(params: MiddlewareParams, next: NextMiddleware) -> MiddlewareResult:
    log.info('Running %s query on %s', params.action, params.model)
    yield await next(params)
    log.info('Successfully ran %s query on %s ', params.action, params.model)

async def foo_middleware(params: MiddlewareParams, next: NextMiddleware) -> MiddlewareResult:
    ...
    result = await next(params)
    ...
    return result

client.use(logging_middleware, foo_middleware)

```

### Should Support

* Yielding result

* Returning result
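One way a client could support both styles is to check whether calling a middleware produced an async generator or a plain coroutine. The following is only a sketch of that idea using the standard library: `run_chain`, `call_next`, `upper_middleware`, and `fake_query` are hypothetical names and not part of any existing Prisma Client Python API.

```python
import asyncio
import inspect


async def run_chain(middlewares, params, handler):
    """Run `handler` wrapped by `middlewares`, outermost first (hypothetical helper)."""

    async def call(index, current):
        if index == len(middlewares):
            return await handler(current)

        async def call_next(next_params):
            return await call(index + 1, next_params)

        outcome = middlewares[index](current, call_next)
        if inspect.isasyncgen(outcome):
            # "Yielding" style: the first yielded value is the result ...
            result = await outcome.__anext__()
            try:
                # ... and resuming the generator runs the code after the yield.
                await outcome.__anext__()
            except StopAsyncIteration:
                pass
            return result
        # "Returning" style: a plain coroutine whose return value is the result.
        return await outcome

    return await call(0, params)


events = []


async def logging_middleware(params, call_next):
    events.append("before")
    yield await call_next(params)
    events.append("after")


async def upper_middleware(params, call_next):
    result = await call_next(params)
    return result.upper()


async def fake_query(params):
    events.append("query")
    return f"ran {params}"


print(asyncio.run(run_chain([logging_middleware, upper_middleware], "find_many", fake_query)))
```

In this sketch a yielding middleware cannot replace the result after the `yield`; it can only run follow-up code, which matches the logging use case shown above.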

| 1medium

|

Title: Using aggregation instead of transform to perform `df.groupby().nunique()`

Body: <!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

Currently, `df.groupby().nunique()` is delegated to `transform` for execution, which involves a very time-consuming shuffle operation. We can delegate it to `aggregation` instead, which is far better optimized.
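The reason an aggregation can avoid a full shuffle is that distinct counts can be computed in a map stage per chunk and then merged. The pure-Python sketch below illustrates that idea only; it is not Mars code, and `map_chunk`, `combine`, and `nunique` are hypothetical names operating on lists of `(key, value)` pairs rather than DataFrame chunks.

```python
from collections import defaultdict


def map_chunk(rows):
    """Map stage: collect the distinct values seen for each key within one chunk."""
    partial = defaultdict(set)
    for key, value in rows:
        partial[key].add(value)
    return partial


def combine(partials):
    """Combine stage: union the per-chunk sets, so duplicates across chunks collapse."""
    merged = defaultdict(set)
    for partial in partials:
        for key, values in partial.items():
            merged[key] |= values
    return merged


def nunique(chunks):
    """Final stage: the number of unique values per key is the size of each set."""
    merged = combine(map_chunk(chunk) for chunk in chunks)
    return {key: len(values) for key, values in merged.items()}


chunks = [
    [("a", 1), ("a", 2), ("b", 1)],
    [("a", 2), ("b", 3)],
]
print(nunique(chunks))
```

Each chunk only sends its already-deduplicated values to the combine step, which is typically much less data than moving every row to the worker that owns its group key.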

| 1medium

|

Title: [BUG] NameError raised when comparing string inside agg function.

Body: <!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

NameError raised when comparing string inside agg function.

**To Reproduce**

To help us reproduce this bug, please provide the information below:

1. Your Python version

2. The version of Mars you use

3. Versions of crucial packages, such as numpy, scipy and pandas

4. Full stack of the error.

5. Minimized code to reproduce the error.

```

In [3]: import mars.dataframe as md

In [4]: df = md.DataFrame({'a': ['1', '2', '3'], 'b': ['a1', 'a2', 'a1']})

In [5]: df.groupby('a', as_index=False)['b'].agg(lambda x: (x == "a1").sum()).ex

...: ecute()

0%| | 0/100 [00:00<?, ?it/s]Failed to run subtask aRWmQqEgc5OymCkUZLYRcTb9 on band numa-0

Traceback (most recent call last):

File "/Users/xuyeqin/Workspace/mars/mars/services/scheduling/worker/execution.py", line 315, in internal_run_subtask

subtask_info.result = await self._retry_run_subtask(

File "/Users/xuyeqin/Workspace/mars/mars/services/scheduling/worker/execution.py", line 404, in _retry_run_subtask

return await _retry_run(subtask, subtask_info, _run_subtask_once)

File "/Users/xuyeqin/Workspace/mars/mars/services/scheduling/worker/execution.py", line 91, in _retry_run

raise ex

File "/Users/xuyeqin/Workspace/mars/mars/services/scheduling/worker/execution.py", line 66, in _retry_run

return await target_async_func(*args)

File "/Users/xuyeqin/Workspace/mars/mars/services/scheduling/worker/execution.py", line 357, in _run_subtask_once

return await asyncio.shield(aiotask)

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/api.py", line 66, in run_subtask_in_slot

return await ref.run_subtask.options(profiling_context=profiling_context).send(

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/context.py", line 186, in send

return self._process_result_message(result)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/context.py", line 70, in _process_result_message

raise message.error.with_traceback(message.traceback)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/pool.py", line 520, in send

result = await self._run_coro(message.message_id, coro)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/pool.py", line 319, in _run_coro

return await coro

File "/Users/xuyeqin/Workspace/mars/mars/oscar/api.py", line 115, in __on_receive__

return await super().__on_receive__(message)

File "mars/oscar/core.pyx", line 373, in __on_receive__

raise ex

File "mars/oscar/core.pyx", line 367, in mars.oscar.core._BaseActor.__on_receive__

return await self._handle_actor_result(result)

File "mars/oscar/core.pyx", line 252, in _handle_actor_result

task_result = await coros[0]

File "mars/oscar/core.pyx", line 295, in _run_actor_async_generator

with debug_async_timeout('actor_lock_timeout',

File "mars/oscar/core.pyx", line 297, in mars.oscar.core._BaseActor._run_actor_async_generator

async with self._lock:

File "mars/oscar/core.pyx", line 301, in mars.oscar.core._BaseActor._run_actor_async_generator

res = await gen.athrow(*res)

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/worker/runner.py", line 118, in run_subtask

result = yield self._running_processor.run(subtask)

File "mars/oscar/core.pyx", line 306, in mars.oscar.core._BaseActor._run_actor_async_generator

res = await self._handle_actor_result(res)

File "mars/oscar/core.pyx", line 226, in _handle_actor_result

result = await result

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/context.py", line 186, in send

return self._process_result_message(result)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/context.py", line 70, in _process_result_message

raise message.error.with_traceback(message.traceback)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/pool.py", line 520, in send

result = await self._run_coro(message.message_id, coro)

File "/Users/xuyeqin/Workspace/mars/mars/oscar/backends/pool.py", line 319, in _run_coro

return await coro

File "/Users/xuyeqin/Workspace/mars/mars/oscar/api.py", line 115, in __on_receive__

return await super().__on_receive__(message)

File "mars/oscar/core.pyx", line 373, in __on_receive__

raise ex

File "mars/oscar/core.pyx", line 367, in mars.oscar.core._BaseActor.__on_receive__

return await self._handle_actor_result(result)

File "mars/oscar/core.pyx", line 252, in _handle_actor_result

task_result = await coros[0]

File "mars/oscar/core.pyx", line 295, in _run_actor_async_generator

with debug_async_timeout('actor_lock_timeout',

File "mars/oscar/core.pyx", line 297, in mars.oscar.core._BaseActor._run_actor_async_generator

async with self._lock:

File "mars/oscar/core.pyx", line 301, in mars.oscar.core._BaseActor._run_actor_async_generator

res = await gen.athrow(*res)

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/worker/processor.py", line 596, in run

result = yield self._running_aio_task

File "mars/oscar/core.pyx", line 306, in mars.oscar.core._BaseActor._run_actor_async_generator

res = await self._handle_actor_result(res)

File "mars/oscar/core.pyx", line 226, in _handle_actor_result

result = await result

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/worker/processor.py", line 457, in run

await self._execute_graph(chunk_graph)

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/worker/processor.py", line 209, in _execute_graph

await to_wait

File "/Users/xuyeqin/Workspace/mars/mars/lib/aio/_threads.py", line 36, in to_thread

return await loop.run_in_executor(None, func_call)

File "/Users/xuyeqin/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/thread.py", line 57, in run

result = self.fn(*self.args, **self.kwargs)

File "/Users/xuyeqin/Workspace/mars/mars/core/mode.py", line 77, in _inner

return func(*args, **kwargs)

File "/Users/xuyeqin/Workspace/mars/mars/services/subtask/worker/processor.py", line 177, in _execute_operand

return execute(ctx, op)

File "/Users/xuyeqin/Workspace/mars/mars/core/operand/core.py", line 487, in execute

result = executor(results, op)

File "/Users/xuyeqin/Workspace/mars/mars/core/custom_log.py", line 94, in wrap

return func(cls, ctx, op)

File "/Users/xuyeqin/Workspace/mars/mars/utils.py", line 1128, in wrapped

result = func(cls, ctx, op)

File "/Users/xuyeqin/Workspace/mars/mars/dataframe/groupby/aggregation.py", line 1050, in execute

cls._execute_map(ctx, op)

File "/Users/xuyeqin/Workspace/mars/mars/dataframe/groupby/aggregation.py", line 854, in _execute_map

pre_df = func(pre_df, gpu=op.is_gpu())

File "<string>", line 3, in expr_function

NameError: name 'a1' is not defined

0%| | 0/100 [00:00<?, ?it/s]

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-5-3a632fccd78f> in <module>

----> 1 df.groupby('a', as_index=False)['b'].agg(lambda x: (x == "a1").sum()).execute()

~/Workspace/mars/mars/core/entity/tileables.py in execute(self, session, **kw)

460

461 def execute(self, session=None, **kw):

--> 462 result = self.data.execute(session=session, **kw)

463 if isinstance(result, TILEABLE_TYPE):

464 return self

~/Workspace/mars/mars/core/entity/executable.py in execute(self, session, **kw)

96

97 session = _get_session(self, session)

---> 98 return execute(self, session=session, **kw)

99

100 def _check_session(self, session: SessionType, action: str):

~/Workspace/mars/mars/deploy/oscar/session.py in execute(tileable, session, wait, new_session_kwargs, show_progress, progress_update_interval, *tileables, **kwargs)

1771 session = get_default_or_create(**(new_session_kwargs or dict()))

1772 session = _ensure_sync(session)

-> 1773 return session.execute(

1774 tileable,

1775 *tileables,

~/Workspace/mars/mars/deploy/oscar/session.py in execute(self, tileable, show_progress, *tileables, **kwargs)

1571 fut = asyncio.run_coroutine_threadsafe(coro, self._loop)

1572 try:

-> 1573 execution_info: ExecutionInfo = fut.result(

1574 timeout=self._isolated_session.timeout

1575 )

~/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/_base.py in result(self, timeout)

437 raise CancelledError()

438 elif self._state == FINISHED:

--> 439 return self.__get_result()

440 else:

441 raise TimeoutError()

~/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/_base.py in __get_result(self)

386 def __get_result(self):

387 if self._exception:

--> 388 raise self._exception

389 else:

390 return self._result

~/Workspace/mars/mars/deploy/oscar/session.py in _execute(session, wait, show_progress, progress_update_interval, cancelled, *tileables, **kwargs)

1722 while not cancelled.is_set():

1723 try:

-> 1724 await asyncio.wait_for(

1725 asyncio.shield(execution_info), progress_update_interval

1726 )

~/miniconda3/envs/mars3.8/lib/python3.8/asyncio/tasks.py in wait_for(fut, timeout, loop)

481

482 if fut.done():

--> 483 return fut.result()

484 else:

485 fut.remove_done_callback(cb)

~/miniconda3/envs/mars3.8/lib/python3.8/asyncio/tasks.py in _wrap_awaitable(awaitable)

682 that will later be wrapped in a Task by ensure_future().

683 """

--> 684 return (yield from awaitable.__await__())

685

686 _wrap_awaitable._is_coroutine = _is_coroutine

~/Workspace/mars/mars/deploy/oscar/session.py in wait()

100

101 async def wait():

--> 102 return await self._aio_task

103

104 self._future_local.future = fut = asyncio.run_coroutine_threadsafe(

~/Workspace/mars/mars/deploy/oscar/session.py in _run_in_background(self, tileables, task_id, progress, profiling)

901 )

902 if task_result.error:

--> 903 raise task_result.error.with_traceback(task_result.traceback)

904 if cancelled:

905 return

~/Workspace/mars/mars/services/scheduling/worker/execution.py in internal_run_subtask(self, subtask, band_name)

313

314 batch_quota_req = {(subtask.session_id, subtask.subtask_id): calc_size}

--> 315 subtask_info.result = await self._retry_run_subtask(

316 subtask, band_name, subtask_api, batch_quota_req

317 )

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run_subtask(self, subtask, band_name, subtask_api, batch_quota_req)

402 # any exceptions occurred.

403 if subtask.retryable:

--> 404 return await _retry_run(subtask, subtask_info, _run_subtask_once)

405 else:

406 return await _run_subtask_once()

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run(subtask, subtask_info, target_async_func, *args)

89 ex,

90 )

---> 91 raise ex

92

93

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _retry_run(subtask, subtask_info, target_async_func, *args)

64 while True:

65 try:

---> 66 return await target_async_func(*args)

67 except (OSError, MarsError) as ex:

68 if subtask_info.num_retries < subtask_info.max_retries:

~/Workspace/mars/mars/services/scheduling/worker/execution.py in _run_subtask_once()

355 subtask_api.run_subtask_in_slot(band_name, slot_id, subtask)

356 )

--> 357 return await asyncio.shield(aiotask)

358 except asyncio.CancelledError as ex:

359 # make sure allocated slots are traced

~/Workspace/mars/mars/services/subtask/api.py in run_subtask_in_slot(self, band_name, slot_id, subtask)

64 else None

65 )

---> 66 return await ref.run_subtask.options(profiling_context=profiling_context).send(

67 subtask

68 )

~/Workspace/mars/mars/oscar/backends/context.py in send(self, actor_ref, message, wait_response, profiling_context)

184 if wait_response:

185 result = await self._wait(future, actor_ref.address, message)

--> 186 return self._process_result_message(result)

187 else:

188 return future

~/Workspace/mars/mars/oscar/backends/context.py in _process_result_message(message)

68 return message.result

69 else:

---> 70 raise message.error.with_traceback(message.traceback)

71

72 async def _wait(self, future: asyncio.Future, address: str, message: _MessageBase):

~/Workspace/mars/mars/oscar/backends/pool.py in send()

518 raise ActorNotExist(f"Actor {actor_id} does not exist")

519 coro = self._actors[actor_id].__on_receive__(message.content)

--> 520 result = await self._run_coro(message.message_id, coro)

521 processor.result = ResultMessage(

522 message.message_id,

~/Workspace/mars/mars/oscar/backends/pool.py in _run_coro()

317 self._process_messages[message_id] = asyncio.tasks.current_task()

318 try:

--> 319 return await coro

320 finally:

321 self._process_messages.pop(message_id, None)

~/Workspace/mars/mars/oscar/api.py in __on_receive__()

113 Message shall be (method_name,) + args + (kwargs,)

114 """

--> 115 return await super().__on_receive__(message)

116

117

~/Workspace/mars/mars/oscar/core.pyx in __on_receive__()

371 debug_logger.exception('Got unhandled error when handling message %r'

372 'in actor %s at %s', message, self.uid, self.address)

--> 373 raise ex

374

375

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor.__on_receive__()

365 raise ValueError(f'call_method {call_method} not valid')

366

--> 367 return await self._handle_actor_result(result)

368 except Exception as ex:

369 if _log_unhandled_errors:

~/Workspace/mars/mars/oscar/core.pyx in _handle_actor_result()

250 # asyncio.wait as it introduces much overhead

251 if len(coros) == 1:

--> 252 task_result = await coros[0]

253 if extract_tuple:

254 result = task_result

~/Workspace/mars/mars/oscar/core.pyx in _run_actor_async_generator()

293 res = None

294 while True:

--> 295 with debug_async_timeout('actor_lock_timeout',

296 'async_generator %r hold lock timeout', gen):

297 async with self._lock:

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

295 with debug_async_timeout('actor_lock_timeout',

296 'async_generator %r hold lock timeout', gen):

--> 297 async with self._lock:

298 if not is_exception:

299 res = await gen.asend(res)

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

299 res = await gen.asend(res)

300 else:

--> 301 res = await gen.athrow(*res)

302 try:

303 if _log_cycle_send:

~/Workspace/mars/mars/services/subtask/worker/runner.py in run_subtask()

116 self._running_processor = self._last_processor = processor

117 try:

--> 118 result = yield self._running_processor.run(subtask)

119 finally:

120 self._running_processor = None

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

304 message_trace = pop_message_trace()

305

--> 306 res = await self._handle_actor_result(res)

307 is_exception = False

308 except:

~/Workspace/mars/mars/oscar/core.pyx in _handle_actor_result()

224

225 if inspect.isawaitable(result):

--> 226 result = await result

227 elif is_async_generator(result):

228 result = (result,)

~/Workspace/mars/mars/oscar/backends/context.py in send()

184 if wait_response:

185 result = await self._wait(future, actor_ref.address, message)

--> 186 return self._process_result_message(result)

187 else:

188 return future

~/Workspace/mars/mars/oscar/backends/context.py in _process_result_message()

68 return message.result

69 else:

---> 70 raise message.error.with_traceback(message.traceback)

71

72 async def _wait(self, future: asyncio.Future, address: str, message: _MessageBase):

~/Workspace/mars/mars/oscar/backends/pool.py in send()

518 raise ActorNotExist(f"Actor {actor_id} does not exist")

519 coro = self._actors[actor_id].__on_receive__(message.content)

--> 520 result = await self._run_coro(message.message_id, coro)

521 processor.result = ResultMessage(

522 message.message_id,

~/Workspace/mars/mars/oscar/backends/pool.py in _run_coro()

317 self._process_messages[message_id] = asyncio.tasks.current_task()

318 try:

--> 319 return await coro

320 finally:

321 self._process_messages.pop(message_id, None)

~/Workspace/mars/mars/oscar/api.py in __on_receive__()

113 Message shall be (method_name,) + args + (kwargs,)

114 """

--> 115 return await super().__on_receive__(message)

116

117

~/Workspace/mars/mars/oscar/core.pyx in __on_receive__()

371 debug_logger.exception('Got unhandled error when handling message %r'

372 'in actor %s at %s', message, self.uid, self.address)

--> 373 raise ex

374

375

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor.__on_receive__()

365 raise ValueError(f'call_method {call_method} not valid')

366

--> 367 return await self._handle_actor_result(result)

368 except Exception as ex:

369 if _log_unhandled_errors:

~/Workspace/mars/mars/oscar/core.pyx in _handle_actor_result()

250 # asyncio.wait as it introduces much overhead

251 if len(coros) == 1:

--> 252 task_result = await coros[0]

253 if extract_tuple:

254 result = task_result

~/Workspace/mars/mars/oscar/core.pyx in _run_actor_async_generator()

293 res = None

294 while True:

--> 295 with debug_async_timeout('actor_lock_timeout',

296 'async_generator %r hold lock timeout', gen):

297 async with self._lock:

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

295 with debug_async_timeout('actor_lock_timeout',

296 'async_generator %r hold lock timeout', gen):

--> 297 async with self._lock:

298 if not is_exception:

299 res = await gen.asend(res)

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

299 res = await gen.asend(res)

300 else:

--> 301 res = await gen.athrow(*res)

302 try:

303 if _log_cycle_send:

~/Workspace/mars/mars/services/subtask/worker/processor.py in run()

594 self._running_aio_task = asyncio.create_task(processor.run())

595 try:

--> 596 result = yield self._running_aio_task

597 raise mo.Return(result)

598 finally:

~/Workspace/mars/mars/oscar/core.pyx in mars.oscar.core._BaseActor._run_actor_async_generator()

304 message_trace = pop_message_trace()

305

--> 306 res = await self._handle_actor_result(res)

307 is_exception = False

308 except:

~/Workspace/mars/mars/oscar/core.pyx in _handle_actor_result()

224

225 if inspect.isawaitable(result):

--> 226 result = await result

227 elif is_async_generator(result):

228 result = (result,)

~/Workspace/mars/mars/services/subtask/worker/processor.py in run()

455 try:

456 # execute chunk graph

--> 457 await self._execute_graph(chunk_graph)

458 finally:

459 # unpin inputs data

~/Workspace/mars/mars/services/subtask/worker/processor.py in _execute_graph()

207

208 try:

--> 209 await to_wait

210 logger.debug(

211 "Finish executing operand: %s," "chunk: %s, subtask id: %s",

~/Workspace/mars/mars/lib/aio/_threads.py in to_thread()

34 ctx = contextvars.copy_context()

35 func_call = functools.partial(ctx.run, func, *args, **kwargs)

---> 36 return await loop.run_in_executor(None, func_call)

~/miniconda3/envs/mars3.8/lib/python3.8/concurrent/futures/thread.py in run()

55

56 try:

---> 57 result = self.fn(*self.args, **self.kwargs)

58 except BaseException as exc:

59 self.future.set_exception(exc)

~/Workspace/mars/mars/core/mode.py in _inner()

75 def _inner(*args, **kwargs):

76 with enter_mode(**mode_name_to_value):

---> 77 return func(*args, **kwargs)

78

79 else:

~/Workspace/mars/mars/services/subtask/worker/processor.py in _execute_operand()

175 self, ctx: Dict[str, Any], op: OperandType

176 ): # noqa: R0201 # pylint: disable=no-self-use

--> 177 return execute(ctx, op)

178

179 async def _execute_graph(self, chunk_graph: ChunkGraph):

~/Workspace/mars/mars/core/operand/core.py in execute()

485 # The `UFuncTypeError` was introduced by numpy#12593 since v1.17.0.

486 try:

--> 487 result = executor(results, op)

488 succeeded = True

489 return result

~/Workspace/mars/mars/core/custom_log.py in wrap()

92

93 if custom_log_dir is None:

---> 94 return func(cls, ctx, op)

95

96 log_path = os.path.join(custom_log_dir, op.key)

~/Workspace/mars/mars/utils.py in wrapped()

1126

1127 try:

-> 1128 result = func(cls, ctx, op)

1129 finally:

1130 with AbstractSession._lock:

~/Workspace/mars/mars/dataframe/groupby/aggregation.py in execute()

1048 pd.set_option("mode.use_inf_as_na", op.use_inf_as_na)

1049 if op.stage == OperandStage.map:

-> 1050 cls._execute_map(ctx, op)

1051 elif op.stage == OperandStage.combine:

1052 cls._execute_combine(ctx, op)

~/Workspace/mars/mars/dataframe/groupby/aggregation.py in _execute_map()

852 pre_df = in_data if cols is None else in_data[cols]

853 try:

--> 854 pre_df = func(pre_df, gpu=op.is_gpu())

855 except TypeError:

856 pre_df = pre_df.transform(_wrapped_func)

~/Workspace/mars/mars/dataframe/reduction/core.py in expr_function()

NameError: name 'a1' is not defined

```

| 1medium

|

Title: Audit usage of `Any` throughout the code base

Body: Currently there are a couple of places where we use `Any` when we should instead use a more accurate type or just fall back to `object`. For example, the `DataError` class, https://github.com/RobertCraigie/prisma-client-py/blob/5fe90fa3e09918f289aab573cdce79985c21d1ad/src/prisma/errors.py#L59

Other places for improvement:

- `QueryBuilder.method_format` should use a `Literal` type
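
As a rough illustration of both points, here is a minimal sketch. The names `MethodFormat`, `data_summary`, and `check_format`, and the literal values `"single"` and `"list"`, are hypothetical and not taken from the code base; the real allowed values would need to be checked against `QueryBuilder`.

```python
from typing import Literal, get_args

# Hypothetical set of allowed values; the real ones are not given in this issue.
MethodFormat = Literal["single", "list"]


def data_summary(data: object) -> str:
    """Accept any value, but narrow with isinstance before using it.

    With `object` a type checker rejects unchecked attribute access,
    whereas `Any` would silently allow it.
    """
    if isinstance(data, dict):
        return f"dict with {len(data)} keys"
    return type(data).__name__


def check_format(method_format: MethodFormat) -> MethodFormat:
    """Runtime guard mirroring what a type checker enforces statically."""
    if method_format not in get_args(MethodFormat):
        raise ValueError(f"unsupported format: {method_format!r}")
    return method_format
```

The `Literal` alias lets a type checker flag a misspelled format at the call site, while the runtime guard is optional and only shown to make the allowed set explicit.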

| 1medium

|

Title: Make it possible for custom converters to get access to the library

Body: Custom converters are a handy feature added in RF 5.0 (#4088). It would be convenient if converters were able to access the library they are attached to. This would allow conversion to depend on the state of the library or the automated application. For example, a web testing library could automatically check whether a locator string (id, xpath, css, ...) matches any object on the page and pass a reference to the object to the keyword.

A simple way to support this would be passing the library instance to the converter along with the value to be converted:

```python

def converter(value, library):

    ...

```

For backwards compatibility reasons we cannot make the second argument mandatory, and having to accept it in cases where it's not needed wouldn't be convenient in general. We can, however, easily check whether the converter accepts one or two arguments and pass it the library instance only in the latter case.
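
The argument-count check could look roughly like the sketch below. It is only an illustration under assumptions: `call_converter` is a hypothetical helper name, not an existing Robot Framework function, and the real implementation may inspect converters differently.

```python
import inspect


def call_converter(converter, value, library=None):
    """Pass `library` to `converter` only if it accepts a second positional argument."""
    params = list(inspect.signature(converter).parameters.values())
    positional = [
        p for p in params
        if p.kind in (inspect.Parameter.POSITIONAL_ONLY,
                      inspect.Parameter.POSITIONAL_OR_KEYWORD)
    ]
    accepts_varargs = any(
        p.kind is inspect.Parameter.VAR_POSITIONAL for p in params
    )
    if len(positional) >= 2 or accepts_varargs:
        return converter(value, library)
    # Existing one-argument converters keep working unchanged.
    return converter(value)
```

With this approach a converter written as `def converter(value)` is called exactly as before, while `def converter(value, library)` additionally receives the library instance.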

| 1medium

|

Title: Script to autogenerate images in docs

Body: Currently in our `docs` folder, we have code that generates png files to be used in the generated docs. It would be helpful to have a script to generate images and save them in the appropriate folder location.

related to: https://github.com/DistrictDataLabs/yellowbrick/issues/38

| 1medium

|

Title: Maximize whitespace with feature-reordering optimization in ParallelCoordinates and RadViz

Body: Both `RadViz` and `ParallelCoordinates` would benefit from the increased whitespace/transparency that can be achieved simply by reordering the columns around the circle in `RadViz` and along the horizontal axis in `ParallelCoordinates`. Potentially some optimization technique would allow us to discover the best feature ordering/subset of features to display.

### Proposal/Issue

- [ ] enhance `RadViz`/`ParallelCoordinates` to specify the ordering of the features

- [ ] create a function/method to compute the amount of whitespace or alpha transparency in the figure

- [ ] implement an optimization method (Hill Climbing, Simulated Annealing, etc.) to maximize the whitespace/alpha transparency using feature orders as individual search points.
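
To make the third item concrete, here is a minimal hill-climbing sketch over feature orderings. It assumes a caller-supplied `score` function (for example, one that measures whitespace in the rendered figure); that function and the name `hill_climb_order` are hypothetical and not part of Yellowbrick.

```python
import random
from typing import Callable, List, Sequence, Tuple


def hill_climb_order(
    features: Sequence[str],
    score: Callable[[List[str]], float],
    max_iter: int = 1000,
    seed: int = 0,
) -> Tuple[List[str], float]:
    """Search feature orderings by swapping two positions at a time.

    A swap is kept only if it strictly improves the score, so the
    result is a local optimum, not necessarily the global one.
    """
    rng = random.Random(seed)
    best = list(features)
    best_score = score(best)
    if len(best) < 2:
        return best, best_score
    for _ in range(max_iter):
        i, j = rng.sample(range(len(best)), 2)
        candidate = best[:]
        candidate[i], candidate[j] = candidate[j], candidate[i]
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best, best_score
```

Simulated annealing would differ mainly in occasionally accepting a worse candidate, which helps escape local optima at the cost of more evaluations of `score`.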

| 1medium

|

Title: Request: Add Flash Attention 2.0 Support for ViTMAEForPreTraining

Body: Hi Hugging Face team!

I am currently working on pre-training a Foundation Model using ViTMAEForPreTraining, and I was hoping to use Flash Attention 2.0 to speed up training and reduce memory usage. However, when I attempted to enable Flash Attention, I encountered the following error:

`ValueError: ViTMAEForPreTraining does not support Flash Attention 2.0 yet.

Please request to add support where the model is hosted, on its model hub page: https://huggingface.co//discussions/new

or in the Transformers GitHub repo: https://github.com/huggingface/transformers/issues/new`

Since MAE pre-training is heavily dependent on the attention mechanism, adding Flash Attention support would be a valuable enhancement—especially for larger ViT models and high-resolution datasets, like Landsat data we are working with.

**Feature Request**

- Please add support for Flash Attention 2.0 to ViTMAEForPreTraining.

- This would help make MAE pre-training more efficient in terms of speed and memory consumption.

**Why This Matters**

- Many users working with large imagery datasets (like remote sensing, medical imaging, etc.) would greatly benefit from this.

- Flash Attention has already proven useful in other ViT variants, so bringing this to MAE feels like a natural next step.

**Environment Details**

- Transformers version: v4.41.0.dev0

- PyTorch version: 2.5.1

- Running on multi-GPU with NCCL backend

| 1medium

|

Title: [FEATURE] graphql not required handling

Body: Currently when fields in graphql schemas are not required, schemathesis can send `null` to them.

According to the graphql specs this is valid and it is useful to find bugs.

But sometimes it would be easier not to send null values.

Is there a way to turn the null value sending behavior off? It would be nice to have a simple switch for it

| 1medium

|

Title: [BUG] index out of range when using Mars with XGBOOST

Body: <!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

IndexError: list assignment index out of range

**To Reproduce**

To help us reproduce this bug, please provide the information below:

1. Your Python version

Python 3.7.7 on ray docker 1.9

2. The version of Mars you use

0.9.0a2

3. Versions of crucial packages, such as numpy, scipy and pandas

pip install xgboost

pip install "xgboost_ray"

pip install lightgbm

4. Full stack of the error.

(base) ray@eb0b527fa9ea:~/ray/ray$ python main.py

2022-02-16 12:39:47,496 WARNING ray.py:301 -- Ray is not started, start the local ray cluster by `ray.init`.

2022-02-16 12:39:50,168 INFO services.py:1340 -- View the Ray dashboard at http://127.0.0.1:8265

2022-02-16 12:39:51,580 INFO driver.py:34 -- Setup cluster with {'ray://ray-cluster-1645043987/0': {'CPU': 8}, 'ray://ray-cluster-1645043987/1': {'CPU': 8}, 'ray://ray-cluster-1645043987/2': {'CPU': 8}, 'ray://ray-cluster-1645043987/3': {'CPU': 8}}

2022-02-16 12:39:51,581 INFO driver.py:36 -- Creating placement group ray-cluster-1645043987 with bundles [{'CPU': 8}, {'CPU': 8}, {'CPU': 8}, {'CPU': 8}].

2022-02-16 12:39:51,716 INFO driver.py:50 -- Create placement group success.

2022-02-16 12:39:52,978 INFO ray.py:479 -- Create supervisor on node ray://ray-cluster-1645043987/0/0 succeeds.

2022-02-16 12:39:53,230 INFO ray.py:489 -- Start services on supervisor ray://ray-cluster-1645043987/0/0 succeeds.

2022-02-16 12:40:07,025 INFO ray.py:498 -- Create 4 workers and start services on workers succeeds.

2022-02-16 12:40:07,036 WARNING ray.py:510 -- Web service started at http://0.0.0.0:46910

0%| | 0/100 [00:00<?, ?it/s]

Traceback (most recent call last):

File "main.py", line 69, in <module>

main()

File "main.py", line 35, in main

df_train, df_test = _load_data(n_samples, n_features, n_classes, test_size=0.2)

File "main.py", line 25, in _load_data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=test_size, random_state=shuffle_seed)

File "/home/ray/anaconda3/lib/python3.7/site-packages/mars/learn/model_selection/_split.py", line 145, in train_test_split

session=session, **(run_kwargs or dict())

File "/home/ray/anaconda3/lib/python3.7/site-packages/mars/core/entity/executable.py", line 221, in execute

ret = execute(*self, session=session, **kw)

File "/home/ray/anaconda3/lib/python3.7/site-packages/mars/deploy/oscar/session.py", line 1779, in execute

**kwargs,