repo_name | issue_id | text |

|---|---|---|

jlippold/tweakCompatible | 340425466 | Title: `Cercube for YouTube` working on iOS 11.3.1

Question:

username_0: ```

{

"packageId": "me.alfhaily.cercube",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "me.alfhaily.cercube",

"deviceId": "iPhone6,2",

"url": "http://cydia.saurik.com/package/me.alfhaily.cercube/",

"iOSVersion": "11.3.1",

"packageVersionIndexed": true,

"packageName": "Cercube for YouTube",

"category": "Tweaks",

"repository": "BigBoss",

"name": "Cercube for YouTube",

"packageIndexed": true,

"packageStatusExplaination": "This package version has been marked as Working based on feedback from users in the community. The current positive rating is 100% with 6 working reports.",

"id": "me.alfhaily.cercube",

"commercial": false,

"packageInstalled": true,

"tweakCompatVersion": "0.0.7",

"shortDescription": "Download YouTube videos and more!",

"latest": "4.2.2.5",

"author": "<NAME>",

"packageStatus": "Working"

},

"base64": "<KEY>",

"chosenStatus": "working",

"notes": ""

}

``` |

Pokecube-Development/Pokecube-Issues-and-Wiki | 585634858 | Title: Sound issue

Question:

username_0: Issue Description:

Clipping sound

What happens:

When pokemon are attacking or being attacked at a distance, a very short, clipped sound is played. This may be due to lag or to the distance from the battle; I have not fully worked out why it occurs.

What you expected to happen:

Clean sound

Steps to reproduce:

1. Stand within visual range of a pokemon battle but not close enough to hear the sounds

2. May also require artificial latency to reproduce (sorry, it's not quite clear what is causing it)

____

Affected Versions (Do *not* use "latest"): Replace with a list of all mods you have in.

- Pokecube AIO: 2.0.5

- Minecraft: 1.15.2

- Forge: 31.1.27<issue_closed>

Status: Issue closed |

WormBase/db-migration | 227653631 | Title: Document manual steps at the end of the migration

Question:

username_0: * Missing from documentation (after step 8):

9. `azanium backup-db`

10. Transfer backed-up database to AWS S3

10.1 Setting `$FROM_URI` and `$TO_URI`:

`FROM_URI="file:///wormbase/datomic-db-backups/$LATEST_DATE/$WS_RELEASE"`

`TO_URI="datomic:ddb://us-east-1/$WS_RELEASE/wormbase"`

10.2 Ensuring use of correct version of datomic-pro

10.3 `cd $DATOMIC_PRO_HOME && ./bin/datomic backup-db "$FROM_URI" "$TO_URI"`<issue_closed>

Status: Issue closed |

litmuschaos/litmus | 550392727 | Title: (feat): Using Gitlab remote templates

Question:

username_0: This Issue is for the feature request in Gitlab pipeline of litmus-e2e:

**_What it is about?_**

- Adding the remote template feature for different experiments running in the Gitlab pipeline.

**_Why and how?_**

- Using GitLab templates for every experiment in the pipeline is good practice for both coding and readability: the logic is written separately in the templates and called from the jobs.

Status: Issue closed

Answers:

username_0: PR to fix this issue - https://github.com/mayadata-io/gitlab-remote-templates/pull/1 |

PaulSonOfLars/gotgbot | 613928009 | Title: Get chat users list

Question:

username_0: Hi! How can I get the list of users in a chat? I need it to save the users' IDs and usernames in a database.

Answers:

username_1: Bots can't get the list of users in a chat. This is a bot API limitation, I can't do anything about it. You need to store the data as they speak.
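The advice to store the data as users speak can be sketched roughly as follows. This is a language-neutral illustration in Python, not gotgbot code: `SeenUser` and `record_user` are hypothetical names, and the in-memory dict stands in for whatever database is actually used. The idea is simply to upsert the sender of every incoming message.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class SeenUser:
    """One chat member the bot has observed (hypothetical record type)."""
    user_id: int
    username: Optional[str]  # usernames are optional, so allow None


def record_user(store: Dict[int, SeenUser], user_id: int, username: Optional[str]) -> None:
    """Insert or refresh a user; meant to be called for every incoming message."""
    store[user_id] = SeenUser(user_id, username)


# The dict stands in for a real database table keyed by user id.
seen: Dict[int, SeenUser] = {}
record_user(seen, 1001, "alice")
record_user(seen, 1002, None)
record_user(seen, 1001, "alice_renamed")  # same id: the row is updated, not duplicated
```

Keying on the numeric id rather than the username means a renamed user updates the existing row instead of creating a second one.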

Status: Issue closed

username_0: Okay, thank you for the answer! |

sebgroup/react-components | 500754803 | Title: CRUD-pattern (new pattern)

Question:

username_0: **Pattern (sep 2019)**

A pattern that describes a uniform way for the user to add both soft and hard properties. This two-step pattern is especially useful in pages where the user has to manually update values.

A white card triggers a slide-out. The behaviour is the same on all devices.

**Details**

See the measurements (etc) in Design Library:

https://designlibrary.sebgroup.com/patterns/crud/#usage

Design library identifier: component-crud

Any questions or feedback? /Ulrika, <EMAIL>

Answers:

username_1: @username_0 ... I think we need more details about this component. We're not sure how this component should behave. The only screenshots you have provided are mobile only. We don't know the behaviour when it's rendered in a desktop browser.

username_2: I have developed this component separately in my project. Please let me know if there is a need for it and I will add it to this repo. |

eslint/eslint | 234457928 | Title: ESLint requires trailing comma for function parameters.

Question:

username_0: **Tell us about your environment**

* **ESLint Version:** v3.19.0

* **Node Version:** v6.10.0

* **npm Version:** 5.0.3

**What parser (default, Babel-ESLint, etc.) are you using?**

default

**Please show your full configuration:**

```

{

"extends": "eslint-config-airbnb-base",

"parserOptions": {

"ecmaVersion": 6,

"sourceType": "module",

},

"globals": {

"ga": true,

},

"env": {

"browser": true,

"node": true,

"es6": true,

"jquery": true,

},

}

```

**What did you do? Please include the actual source code causing the issue.**

```js

function extract(a, b, c) {

console.log(c);

}

extract(

'style-loader',

[

'css?sourceMap',

'postcss',

'sass?sourceMap',

],

{

publicPath: '../',

} //👈 ESLint error: Missing trailing comma on the last function parameter.

);

```

**What did you expect to happen?**

ESLint should not check trailing comma for function parameter.

**What actually happened? Please include the actual, raw output from ESLint.**

➜ demo git:(dev) ✗ eslint eslint-test.js

/Users/tonni/Projects/demo/eslint-test.js

2:3 warning Unexpected console statement no-console

14:4 error Missing trailing comma comma-dangle

✖ 2 problems (1 error, 1 warning)

Answers:

username_1: Thanks for the report, but this is working as intended -- you are extending the `airbnb-base` configuration, which requires trailing commas for function arguments.

Also see: https://github.com/eslint/eslint/issues/8513, https://github.com/eslint/eslint/issues/7851, https://github.com/eslint/eslint/issues/7749, https://github.com/eslint/eslint/issues/7571.

username_0: 👍 Thanks for your excellent explanation, closing it. @username_1

Status: Issue closed

|

cout970/Magneticraft-API-and-Issues | 120082360 | Title: Pumpjack issues

Question:

username_0: I think the one which causes server TPS to nosedive when it depletes all oil source blocks has been fixed so I'll leave that one out (although I did just type it in here lol)

We are seeing 2 issues on our server which need addressing but we do run cauldron so not sure if one of them is related.

1) - pickaxing pumpjacks doesn't always drop the pumpjack in the world, sometimes they just disappear. Some of the guys on our server have mentioned they are getting them to drop if they pick the block which has the oil outlets on it. I tried with a vanilla iron pick always on that block and have lost 7 of them now. Not sure if this is related to Cauldron.

2) - large surface oil pools, if you get a large pool the pumpjack is not draining all the surface oil , needs to look further out.

Answers:

username_1: Fixed drop problems (probably). Oil searching still needs a lot of work.

username_2: Found the same issue with 0.6.0-beta2. Pumpjack does not drop at all. kinda frustrating when playing a pack like Cthulhu Awakens, where the recipe has been made more expensive.

username_3: Has this been fixed? Players from a Civilization server are reporting that these pumps keep disappearing when broken.

username_4: Are you all using 0.6.1-final? It should be fixed in that version. If it is fixed for you, please let me know so I can close this :)

Status: Issue closed

username_0: I've not had an issue with 0.6.1 final, these are all fixed |

adobe-research/node-theseus | 171702560 | Title: Question: how hard would it be to interface theseus with another editor?

Question:

username_0: I used to work a lot with brackets, and I still think it's the most comfortable editor for HTML and CSS, but there's better node.js support elsewhere. I was wondering if there's a fundamental reason why theseus is only supporting brackets, other than it being enough.

Answers:

username_1: It's pretty easy to integrate node-theseus with other editors. I tried to [document the node-theseus protocol](http://adobe-research.github.io/fondue/) completely enough that someone could do just that.

The node-theseus protocol is basically a simple JSON-based RPC layer over WebSockets. Most of the work is done on the fondue side (because that's where the data is), so the data you get via the protocol is processed enough to be usable straight away.

If the editor you're targeting is also written in JavaScript, you should have no problem. I wrote [a simple example with d3](https://github.com/username_1/node-theseus-d3) that you might use as a base. I also wrote [a little profiler](https://github.com/username_1/fondue-profile) and [the start of a browser-based debugger](https://github.com/username_1/theseus-browser), but those last two projects have probably bit rot. I think they were based on experimental modifications of fondue, but the basic infrastructure will be the same.

It only took me a few hours to write a C++ client for node-theseus recently (using [nlohmann/json](https://github.com/nlohmann/json) and [zaphoyd/websocketpp](https://github.com/zaphoyd/websocketpp)), so supporting additional languages should be no problem.

Let me know if I can help! The documentation is a direct result of other people asking for assistance integrating with their projects. :)

Status: Issue closed

|

GoogleChrome/lighthouse | 332108303 | Title: DevTools Error: FAILED_DOCUMENT_REQUEST

Question:

username_0: **Initial URL**: http://localhost:8000/

**Chrome Version**: 66.0.3359.181

**Error Message**: FAILED_DOCUMENT_REQUEST

**Stack Trace**:

```

LHError: FAILED_DOCUMENT_REQUEST

at Function.getPageLoadError (chrome-devtools://devtools/remote/serve_file/@<KEY>/audits2_worker/audits2_worker_module.js:917:27)

at pass.then._ (chrome-devtools://devtools/remote/serve_file/@<KEY>/audits2_worker/audits2_worker_module.js:922:270)

``` |

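ikedaosushi/tech-news | 610044807 | Title: Introducing self-driving software for RC cars that uses deep reinforcement learning and runs on the Jetson Nano - masato-ka's diary

Question:

username_0: Introducing self-driving software for RC cars that uses deep reinforcement learning and runs on the Jetson Nano - masato-ka's diary<br>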

<br>

https://ift.tt/3aL45AE |

intersystems-community/Global-Masters | 820034004 | Title: Badges for Votes - changes

Question:

username_0: **Posts Votes:**

dc_v50_postvotes

dc_v100_postvotes

dc_v500_postvotes

dc_v1000_postvotes

Counting rules:

Awarded when your posts (articles, questions, discussions, announcements) gathered 50 / 100 / 500 / 1000 votes in sum on DC.

Posts must not be deleted; they must be published.

Posts from the Developer Community Feedback group are not counted.

**Comments Votes:**

dc_a50_answervotes

dc_a100_answervotes

dc_a500_answervotes

dc_a1000_answervotes

Counting rules:

Awarded when your comments (any comments) gather 50 / 100 / 500 / 1000 votes in sum on DC.

Deleted answers are not counted

Answers at the Developer Community Feedback group are not counted.

@MakarovS96<issue_closed>

Status: Issue closed |

samvera-labs/avalon-bundle | 327762157 | Title: Create a rubocop cop to ensure necessary files have license headers

Question:

username_0: A rubocop cop would allow us to easily check that each new file gets a license header as part of the normal build process. It also gives us a standard place to record which files should and shouldn't have license headers via rubocop's config file.

I did a prototype here: https://github.com/samvera-labs/avalon-bundle/commit/a8784600ca0ef34d4c6010acdd2198cde83bf8fb

The prototype would need to be altered to work properly and be less brittle, but it proved the approach to be viable. It might also be best to move this to the `license_header` gem.

### Description

Create a gem similar to Bixby because we have multiple repositories we need to run this over

- [ ] the cop should detect when the license is not present (and in the correct format)

- [ ] the cop should autocorrect the license when appropriate (rubocop -a), so that in the future we just update the gem and run `bundle install && bundle exec rubocop` to update the license

- Put this new gem in the gemfile

Related to #29.

Answers:

username_1: This can wait and be re-evaluated later. This is a before-release type of thing (formal release).

Status: Issue closed

|

MaybeShewill-CV/CRNN_Tensorflow | 488422393 | Title: How to improve prediction speed

Question:

username_0: I wrapped the trained model in a service. When sequence_length is 70, one image takes 2 seconds. How should I optimize the speed?

Answers:

username_1: @username_0 If you are using a GPU, the first run needs a warm-up. You can run more test data first and then measure the throughput :)
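The warm-up advice can be illustrated with a small timing helper. This is a minimal sketch under assumptions: `mean_latency` and `fake_predict` are hypothetical names, and `fake_predict` stands in for the real model call, which is not shown in the issue. The first few calls are run but not timed, so one-off initialization cost does not distort the per-image figure.

```python
import time
from typing import Callable, List


def mean_latency(predict: Callable[[], object], warmup: int = 3, runs: int = 10) -> float:
    """Average seconds per call, excluding the first `warmup` calls."""
    for _ in range(warmup):
        predict()  # untimed: absorbs one-off setup cost
    start = time.perf_counter()
    for _ in range(runs):
        predict()
    return (time.perf_counter() - start) / runs


calls: List[int] = []


def fake_predict() -> int:
    """Placeholder workload standing in for a model inference call."""
    calls.append(1)
    return sum(range(1000))


latency = mean_latency(fake_predict, warmup=2, runs=5)
print(f"mean latency over 5 timed runs: {latency:.6f} s")
```

Comparing this figure with the latency of the very first call shows how much of the reported 2 seconds is start-up cost rather than steady-state inference.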

Status: Issue closed

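username_2: Hello! I ran into the same problem. I changed the beam_width parameter of tf.nn.ctc_beam_search_decoder to 1, and processing one image now takes less than 0.5 s. You could give it a try!

However, I have not looked closely at what this parameter does. I tested a few images and it did not seem to affect the results..... Looking forward to your feedback |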

NervJS/taro | 877149243 | Title: Setting header in Taro.uploadFile has no effect

Question:

username_0: <!-- Please do not delete the automatically generated issue labels -->

### Relevant platform

H5

**Browser version: Chrome 90**

**Framework: React**

### Steps to reproduce

```

const uploadTask = Taro.uploadFile({

url: `/openapi/${query}`,

filePath: files[0],

name: 'file',

formData: {

user: 'test',

},

header: { token: '123', abc: 'abc' },

success: function(res) {

var data = res.data

//do something

return data

},

})

uploadTask.progress(res => {

console.log('upload progress', res.progress)

console.log('bytes sent so far', res.totalBytesSent)

console.log('total bytes expected to be sent', res.totalBytesExpectedToSend)

})

uploadTask.abort() // cancel the upload task

```

### Expected result

The request headers show the token

### Actual result

```

Accept: */*

Accept-Encoding: gzip, deflate

Accept-Language: zh-CN,zh;q=0.9,en-US;q=0.8,en;q=0.7,zh-TW;q=0.6,ko;q=0.5,ja;q=0.4

Cache-Control: no-cache

Connection: keep-alive

Content-Length: 1354

Content-Type: multipart/form-data; boundary=----WebKitFormBoundarypnWFAxyBMAiBz5zp

DNT: 1

Host: 10.0.2.135:10086

Origin: http://10.0.2.135:10086

Pragma: no-cache

Referer: http://10.0.2.135:10086/

User-Agent: Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1

```

### Environment info

```

👽 Taro v3.1.4

Taro CLI 3.1.4 environment info:

System:

[Truncated]

Shell: 5.8 - /bin/zsh

Binaries:

Node: 12.16.3 - ~/.nvm/versions/node/v12.16.3/bin/node

Yarn: 1.22.4 - ~/.nvm/versions/node/v12.16.3/bin/yarn

npm: 7.6.3 - ~/.nvm/versions/node/v12.16.3/bin/npm

npmPackages:

@tarojs/cli: 3.1.4 => 3.1.4

@tarojs/components: 3.1.4 => 3.1.4

@tarojs/mini-runner: 3.1.4 => 3.1.4

@tarojs/react: 3.1.4 => 3.1.4

@tarojs/runtime: 3.1.4 => 3.1.4

@tarojs/taro: 3.1.4 => 3.1.4

@tarojs/webpack-runner: 3.1.4 => 3.1.4

babel-preset-taro: 3.1.4 => 3.1.4

eslint-config-taro: 3.1.4 => 3.1.4

react: ^17.0.0 => 17.0.2

taro-ui: ^3.0.0-alpha.10 => 3.0.0-alpha.10

```

<!-- generated by taro-issues. Please do not modify or delete this comment line --><!--labels=T-h5,V-3,F-react--><issue_closed>

Status: Issue closed |

cfpb/hmda-frontend | 545929122 | Title: Parser isn't reading LEIs with spaces

Question:

username_0: Short description explaining the high-level reason for the new issue.

Parser isn't reading LEIs with spaces as incorrect. When there is a space in the LEI in the LAR line, the parser doesn't note this as a formatting error. But it notes it in the S/V edits. It should be a parser error. This does however work with ULIs.
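The kind of format check being asked for can be sketched as below. This is only an illustration, not the HMDA platform's validation: `is_well_formed_lei` is a hypothetical helper, the sample values are made up, and the rule assumed here is just the general shape of an LEI (20 uppercase alphanumeric characters), without any check-digit verification.

```python
import re

# Assumed shape of an LEI: exactly 20 uppercase letters or digits.
LEI_PATTERN = re.compile(r"[A-Z0-9]{20}")


def is_well_formed_lei(value: str) -> bool:
    """True only if the whole field matches the assumed LEI shape."""
    return LEI_PATTERN.fullmatch(value) is not None


# Made-up sample values, each 20 characters long.
print(is_well_formed_lei("ABCDEFGHIJ0123456789"))  # no spaces
print(is_well_formed_lei("ABCDEFGHIJ 123456789"))  # contains a space
```

Using `fullmatch` rather than `search` matters here: a field with an embedded or trailing space must fail as a whole instead of matching on a valid-looking substring.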

## Current behavior

## Expected behavior

## Steps to replicate behavior (include URLs)

1. File to test: [Bank1_is not NA (lowercase).txt](https://github.com/cfpb/hmda-frontend/files/4027697/Bank1_is.not.NA.lowercase.txt)

## Screenshots

Answers:

username_1: The [FFVT](https://ffiec.cfpb.gov/tools/file-format-verification) also considers the format of the provided test file correct, so this may be a validation that needs to be added on the backend.

The [FFVT](https://ffiec.cfpb.gov/tools/file-format-verification) does not identify a format error when there is a space in the ULI, so it could be using different parsing logic.

@username_2 @BarakStout @PatrickHSI could you please check if the backend is currently looking for spaces in the LEI and ULI fields of LAR rows during file format verification of a filing submission?

Observations:

- A space in a LAR LEI uploads without error and does not trigger a parse error but shows up as a syntactical edit.

- A space in a LAR ULI fails during upload with the following status

```json

{

"code": -1,

"message":"An error occurred while submitting the data.",

"description":"Please re-upload your file."

}

```

username_2: This appears to be a backend issue. Ticket with PR here: https://github.com/cfpb/hmda-platform/pull/3408

username_3: Closing since this is tracked in cfpb/hmda-platform#3408

Status: Issue closed

|

refined-bitbucket/refined-bitbucket | 709952429 | Title: Plugin not working in pull request screen

Question:

username_0: I've tried it in a commit diff and it works well. However inside a pull request screen, there is no syntax highlighting.

Answers:

username_1: The syntax highlighting is only implemented in the old pull request experience, not the new one.

Status: Issue closed

|

julianlam/nodebb-plugin-session-sharing | 264499909 | Title: Can you make register override ?

Question:

username_0: I see that you have login override. Can you make register override ? It will be a great option

Answers:

username_1: Hi there, you can do this by forcing all users to go to the login override. Simply disable user registration

Status: Issue closed

username_1: Let me know if this doesn't work for you...

username_2: I am having a similar issue with this with regard to admin users.

I have "Revaluate" set. With normal (non-admin) users, it works great. When I log out from the main site and shared session cookie is deleted, the user is likewise logged out of NodeBB. However, when I do the same with an admin user, I can confirm that the shared session cookie is deleted, but the person is NOT logged out of NodeBB. The only way I have found to log out such a person is by logging in someone else.

I am using NodeBB v1.10.2.

username_1: Hi @username_2 -- this is by design, we didn't want the admin user to be logged out because occasionally they may run into situations where they accidentally change some session-sharing option, and then they get logged out and can't log back in to fix it :grimacing:

Did you need to log out admins as well?

username_1: Bypass located here:

https://github.com/username_1/nodebb-plugin-session-sharing/blob/ae5bd15cbe3cb5a68dce34e87a8fecd94a5c4008/library.js#L495-L498

username_2: Yes I need to be able to log out admins as well.

Here is the problematic scenario:

- Admin is on a shared computer

- Admin logs in via my main site login page (not NodeBB)

- Admin navigates to the forum, and is automatically logged in

- Admin navigates back to the main site and logs out there, thinking he/she is logged out of the entire site, including forum

- Someone else navigates to the forum on this computer and discovers they are logged in as this admin.

username_1: Makes sense. I will see about adding an option to toggle this bypass on and off via the ACP.

username_1: Tracked in #67

username_2: Much appreciated.

username_3: Hey, is anyone else still facing the same issue?

Revalidate is enabled.

Registration is disabled.

Login page is set to the other app's login url.

Shared Cookie is deleted through the other website.

(even I don't see the cookie (named token) in the inspector on both website after it has been deleted)

The nodebb is still logged in ...

username_1: Admin account stays logged in, just in case.

username_3: Forgot to mention ... not logged in as admin ...

username_3: <img width="1439" alt="Screenshot 2021-07-30 at 6 35 21 PM" src="https://user-images.githubusercontent.com/23694746/127661081-ccdadad0-8147-42ef-8dfe-1f6714a441b8.png">

As you can see, I have already deleted the token cookie, but it is still logged in based on the express.sid cookie ...

Revalidate is turned on, but it seems that cookie is not taken into account once the user is logged in.

username_3: Yes I am certain re-validate is checked in admin panel ...

/debug/session generated the token for test user ... even my other app generated token were letting me login ..

but deleting or expiring the cookie was not logging it out ... It kept logged in even after deleting / expiring the cookie ..

(I even tried deleting the shared cookie from the inspector manually .. it still kept logged in) |

a14n/dart-google-maps | 458932683 | Title: StreetViewPanorama.controls array can't be indexed using ControlPosition enum

Question:

username_0: This code leads to the following error:

google.maps.GMap map;

...

var topRightControls = map.streetView.controls[ControlPosition.TOP_RIGHT];

Error:

The argument type 'ControlPosition' can't be assigned to the parameter type 'int'. #argument_type_not_assignable

I believe lib/src/core/street_view/street_view_panorama.dart should replace lines 57-66 with simply:

Controls controls;

Status: Issue closed

Answers:

username_1: Thanks for the report.

Fix available in version 3.3.4. |

dhall-lang/dhall-haskell | 474709097 | Title: `//` for recursive records?

Question:

username_0: For a [“recursive record” as defined in the Wiki](https://github.com/dhall-lang/dhall-lang/wiki/How-to-translate-recursive-code-to-Dhall#recursive-record), is it possible to implement the equivalent of the // operator/function?

(I asked this in the #dhall Slack channel where @MonoidMusician and @sellout offered some high-level ideas but I think I need further help)

Answers:

username_0: Wrong repo, sorry about that. Opened as https://github.com/dhall-lang/dhall-lang/issues/681

Status: Issue closed

|

societe-generale/github-crawler | 361732107 | Title: new FileContentParser : number of XML elements

Question:

username_0: ## Summary

It would be interesting to get a new parser that counts the number of elements under a certain xpath.

## Type of Issue

<!-- This issue is a -->

<!-- put an `x` in the boxes that apply. -->

It is a :

- [ ] bug

- [x] request

- [ ] question regarding the documentation

## Motivation

It would be helpful for example to know quickly the number of modules in a multi-module Maven project

## Expected Behavior

Provide the XPath under which to count nested elements. Count only the XML elements. Return "not found" if the XPath doesn't exist.
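The expected behaviour can be sketched with Python's standard library. This is not github-crawler code (that project is not written in Python); `count_children` is a hypothetical helper, the sample document is made up, and real Maven POMs usually declare an XML namespace that this sketch ignores. Note that `ElementTree` supports only a subset of XPath, with paths relative to the root element.

```python
import xml.etree.ElementTree as ET
from typing import Union


def count_children(xml_text: str, path: str) -> Union[int, str]:
    """Count direct child elements under `path`, or report that the path is missing."""
    root = ET.fromstring(xml_text)
    parent = root.find(path)  # path is relative to the root element
    if parent is None:
        return "not found"
    # Iterating an Element yields child elements only; the default parser drops comments.
    return len(list(parent))


# Made-up, namespace-free stand-in for a multi-module pom.xml.
POM = """<project>
  <modules>
    <!-- comments are not elements -->
    <module>core</module>
    <module>web</module>
  </modules>
</project>"""

print(count_children(POM, "modules"))
print(count_children(POM, "profiles"))
```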

## Steps to Reproduce (for bugs)

<!--- Please provide a link to a live example or steps to -->

<!-- reproduce this behavior -->

## Your Environment

<!--- If you're reporting a bug, include as many relevant details about the environment you experienced the bug in -->

* Version used: 1.0.8

* OS and version:

* Version of libs used:<issue_closed>

Status: Issue closed |

dbeaver/dbeaver | 624339424 | Title: Allow to see PostgreSQL REFCURSOR results in editor

Question:

username_0: **Is your feature request related to a problem? Please describe.**

I always wanted to see the results of a cursor returned from a function in PostgreSQL instead of just "<unnamed portal 1>"

**Describe the solution you'd like**

I would like to be able to double click "<unnamed portal 1>" in the query results so that a popup window opens with the cursor data (all columns and values).

**Describe alternatives you've considered**

Fetching the cursor manually in an anonymous block and printing its values requires writing custom code.

**Additional context**

Answers:

username_1: Yeah, we had to fix it a long time ago.

In fact we already have ref cursors support for Oracle and PostgreSQL.

It works only in manual commit mode. Currently you can see cursor contents by <kbd>shift+enter</kbd> (it will be opened in popup dialog).

But we definitely need to show it in panel viewer + have ability to reuse cursor.

Like this:

Status: Issue closed

username_2: verified |

connell-class/revassess | 634924902 | Title: Tier 3 Test 1 asserts single result but returns List

Question:

username_0: **Describe the bug**

Tier 3 Test 1 asserts single result but returns List

java.lang.AssertionError: expected:<10> but was:<org.hibernate.query.internal.NativeQueryImpl@67ab1c47>

**To Reproduce**

Steps to reproduce the behavior:

1. Go to RevassessTier3\src\test\java\com\tier3\answers\Answer1Tests.java

2. Run as Junit Test

**Possible solution**

Replace

RevassessTier3\src\test\java\com\tier3\answers\Answer1Tests.java

Line 25

assertEquals(10,sess.createNativeQuery("select * from abs(-10)", Integer.class));

with

assertEquals(10,sess.createNativeQuery("select * from abs(-10)").getSingleResult());

Answers:

username_1: fixed

Status: Issue closed

|

serverless/serverless | 207710950 | Title: Error: spawn java ENOENT

Question:

username_0: <!--

1. If you have a question and not a bug/feature request please ask it at http://forum.serverless.com

2. Please check if an issue already exists so there are no duplicates

3. Check out and follow our Guidelines: https://github.com/serverless/serverless/blob/master/CONTRIBUTING.md

4. Fill out the whole template so we have a good overview on the issue

5. Do not remove any section of the template. If something is not applicable leave it empty but leave it in the Issue

6. Please follow the template, otherwise we'll have to ask you to update it

-->

# This is a (Bug Report)

## Description

Every time I try to start serverless using the command below I get the same error. My JDK is updated to 1.8 and JAVA_HOME is set to that. I can't figure out how to fix this issue.

For bug reports:

* What went wrong?

Trying to run this command: sls dynamodb start --stage local -P 8001

* What did you expect should have happened?

local dynamodb to start running

* What was the config you used?

* What stacktrace or error message from your provider did you see?

events.js:160

throw er; // Unhandled 'error' event

^

Error: spawn java ENOENT

at exports._errnoException (util.js:1022:11)

at Process.ChildProcess._handle.onexit (internal/child_process.js:193:32)

at onErrorNT (internal/child_process.js:359:16)

at _combinedTickCallback (internal/process/next_tick.js:74:11)

at process._tickDomainCallback (internal/process/next_tick.js:122:9)

For feature proposals:

* What is the use case that should be solved. The more detail you describe this in the easier it is to understand for us.

* If there is additional config how would it look

Similar or dependent issues:

* #12345

## Additional Data

* ***Serverless Framework Version you're using***:

1.7

* ***Operating System***:

macOS Sierra

* ***Stack Trace***:

Error: spawn java ENOENT

at exports._errnoException (util.js:1022:11)

at Process.ChildProcess._handle.onexit (internal/child_process.js:193:32)

at onErrorNT (internal/child_process.js:359:16)

at _combinedTickCallback (internal/process/next_tick.js:74:11)

at process._tickDomainCallback (internal/process/next_tick.js:122:9)

* ***Provider Error messages***:

events.js:160

throw er; // Unhandled 'error' event

^

Answers:

username_1: Have you tried running `sls dynamodb install`? This downloads the DynamoDb libs you need.

Status: Issue closed

username_2: `sls dynamodb install` solved this issue for me. Thanks!

username_3: I have this same issue,

i'm on a mac, my java_home path is set and i've run `sls dynamodb install`

still getting this error

username_4: Same here in a docker environment (circleCI)

username_5: I fixed this by installing Java in the Alpine image:

apk --update add openjdk7-jre

But still can't start the dynamodb-local and serverless-offline in container.

@username_4

Did you try to start local dynamodb and serverless offline in container successfully?

username_4: Hey @username_5 ,

Yes, i've been able to do it successfully => https://github.com/username_4/circleci-node8-sls-jre

You can use this image in your circleCI build, or you can also get it from hub.docker, use it as a base image, and install your app with it.

I've been able to start local dynamodb + serverless offline with it.

Ping me if you need more information.

username_5: Thanks, @username_4 👍

I used your image and successfully started local dynamodb and serverless offline in the container. I also added features to build and push a new `image:tag` with the latest serverless release.

```

$ docker run --rm -it -v $(pwd):/opt/app -v ~/.aws:/root/.aws -v ~/.ssh:/root/.ssh svls/serverless:1.24.1 bash

bash-4.3# sls plugin install -n serverless-dynamodb-local

bash-4.3# sls plugin install -n serverless-offline

bash-4.3# sls dynamodb install

bash-4.3# sls offline start -r us-east-2 --noTimeout --corsDisallowCredentials false &

bash-4.3# npm run test

```

https://github.com/serverless-lambda/docker-serverless

username_4: @username_5 for running test locally with sls offline, I use:

```bash

bash# sls offline start --exec "npm run test"

```

Which method do you think is better?

username_6: Got the same error, and fixed it: I forgot to install Java ;)

Fetch JDK from http://www.oracle.com/technetwork/java/javase/downloads/jdk10-downloads-4416644.html , install it and don't forget to restart your cmd on Windows.
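`spawn java ENOENT` means the process launcher could not find an executable named `java` on the `PATH`; setting `JAVA_HOME` alone does not put it there. A quick way to check what a child process would see is sketched below. `find_on_path` is a hypothetical helper name; `shutil.which` is the standard-library lookup that does the work.

```python
import shutil
from typing import Optional


def find_on_path(executable: str) -> Optional[str]:
    """Return the full path of `executable` if it is on PATH, else None."""
    return shutil.which(executable)


java_path = find_on_path("java")
if java_path is None:
    print("java is not on PATH; spawning it would fail with ENOENT")
else:
    print(f"java found at {java_path}")
```

Running this in the same shell or container that runs `sls dynamodb start` shows whether Java is missing or just not visible, which is why restarting the terminal after installing helps.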

username_7: ```

java version "10.0.2" 2018-07-17

Java(TM) SE Runtime Environment 18.3 (build 10.0.2+13)

Java HotSpot(TM) 64-Bit Server VM 18.3 (build 10.0.2+13, mixed mode)

```

On ubuntu I have OpenJDK v1.8.0 installed:

```

$ java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-8u181-b13-0ubuntu0.16.04.1-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

```

username_8: `sudo apt install default-jdk` helped me for ubuntu. (user `sudo apt install default-jre` for mint) |

gcivil-nyu-org/spring2020-cs-gy-9223-class | 587922901 | Title: User can add new sensor

Question:

username_0: **User story**

As a user I can easily add a new sensor without having to manually write code so I can focus on other things.

**Acceptance criteria**

- Sensor is added and can be viewed

- Sensor can contain multiple fields with different data types

- Adding sensor does not cause any problems

**Definition of Done**

User can easily add sensor<issue_closed>

Status: Issue closed |

notgiven688/webminerpool | 360547903 | Title: About the Cryptonight V2

Question:

username_0: I've tested it today and I can confirm that it is slower than V1.

Is there anyone who has tried it before?

Answers:

username_1: Did you check the cn_v2 branch? Did you compile it yourself? The current cn_v2 branch is slower for v0 and v1 because of some compiler troubles which I have already fixed; I will update the branch soon. The cn_v2 version is slower because of the additional SQRT and 64-bit DIVISION operations. Not much we can do about that, I think.

username_0: Yes compiled and tested on killallasics, i get almost 15% slower :(

username_1: It is expected that cnv2 runs slower, also with other miner programs. The problem is that code without CPU intrinsics suffers in particular.

username_1: @username_0

Probably we can cast here to double and do a floating point division which should be faster.

https://github.com/username_1/webminerpool/blob/66f1379dbabd3a44483704e7ba3fa1deaf587a35/hash_cn/webassembly/cryptonight.c#L98

I will test it later.

username_0: @username_1

I really hope it will work; that would really help.

Thanks for your effort.

username_1: `const uint64_t aa = (uint64_t)((double)dividend / (double)divisor); `

It does indeed speed up the calculations by around 1-2%, but it fails some corner cases.
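The corner cases come from precision: a double has a 53-bit significand, so 64-bit operands above 2^53 may be rounded before the division happens. The sketch below reproduces the effect in Python, assuming Python floats are IEEE-754 doubles (true on common platforms). `exact_div` and `float_div` are hypothetical names that mirror the integer division and the cast-to-double shortcut; this is not the miner's code.

```python
def exact_div(dividend: int, divisor: int) -> int:
    """Integer division, as the original 64-bit code does."""
    return dividend // divisor


def float_div(dividend: int, divisor: int) -> int:
    """Mimics (uint64_t)((double)dividend / (double)divisor)."""
    return int(float(dividend) / float(divisor))


small = (10, 3)
large = (2**53 + 1, 1)  # 2**53 + 1 is not representable as a double

print(exact_div(*small), float_div(*small))
print(exact_div(*large), float_div(*large))
```

For small operands the two agree, but for `2**53 + 1` the conversion to double rounds the dividend to `2**53`, so the quotient is off by one. In a hash function a single wrong bit is enough to invalidate the result.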

Status: Issue closed

|

tadashi-aikawa/jumeaux | 314383423 | Title: final/notify add-on

Question:

username_0: - [ ] Create this

- [ ] Make [final/slack] deprecated

- [ ] Create issue to be [final/slack] deprecated

[final/slack]: https://username_0.github.io/jumeaux/ja/addons/final/#slack

Answers:

username_0: Support since 0.65.0

Status: Issue closed

|

dart-lang/build | 269355859 | Title: LibraryBuilder: generate `y.dart` from input `x.dart`

Question:

username_0: I'm trying to create `test/src/foo_nullable.dart` given `test/src/foo.dart`

But I seem to only be able to generate `test/src/foo.nullable.dart`

Intentional? I'd love to have a bit more flexibility here...

`#ThingsIShouldKnow`

Related to https://github.com/dart-lang/build/issues/552

Answers:

username_1: Isn't this pkg/source_gen?

username_0: I hit this just using Builders – so I don't *think* so...

username_2: No, this is not possible today. We intentionally limited the files you can output to only changing extensions on the primary input.

We want to use only configuration driven output possibilities so that we can find outputs in Skylark without writing custom code for each builder.

What is the use case for being able to output to a different file basename?

username_0: I have some file `x.dart` and I'd like to treat it like a template to generate 3 other flavors.

`x_nullable.dart`, `x_nullable_custom_classes.dart`, `x_custom_classes.dart`

I can do all of this with extensions, obviously – just starts feeling a bit weird.

...more curious than anything

username_2: This would be possible today. We say "extension" but the way it's implemented is "postfix", so you could configure a builder to have `buildExtensions` as `{ '.dart': [ '_nullable.dart', '_custom_classes.dart']}`

Similarly if you had a use case you could go from `_test.dart` to `_harness.dart` or similar.
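The postfix behaviour described in this comment can be modelled in a few lines. This is only a Python sketch of the mapping as the comment describes it, not the `build` package's implementation; `expected_outputs` is a hypothetical helper name.

```python
def expected_outputs(input_path, build_extensions):
    """Return the output paths a postfix-style mapping would produce.

    build_extensions maps an input postfix to a list of output postfixes.
    """
    outputs = []
    for input_postfix, output_postfixes in build_extensions.items():
        if input_path.endswith(input_postfix):
            # Strip the matched postfix, then append each output postfix.
            stem = input_path[: len(input_path) - len(input_postfix)]
            outputs.extend(stem + postfix for postfix in output_postfixes)
    return outputs


build_extensions = {".dart": ["_nullable.dart", "_custom_classes.dart"]}
print(expected_outputs("test/src/foo.dart", build_extensions))
# ['test/src/foo_nullable.dart', 'test/src/foo_custom_classes.dart']

print(expected_outputs("test/foo_test.dart", {"_test.dart": ["_harness.dart"]}))
# ['test/foo_harness.dart']
```

Under this model the output always stays in the input's directory, which matches the limitation discussed in the thread.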

Status: Issue closed

username_3: We have also had requests to generate files in a separate directory. This matters less when you are setting `writeToCache: true`, which will become more common soon, but I think it is still a valid use case we will want to eventually support, and we should be able to do it in a generic fashion that works across build systems without custom code per builder.

username_2: Interesting, the `example/` use case is pretty compelling, I'm having a hard time coming up with a configuration we could use that would work for that without being overly specific to that use case.

The types of directory moves I can imagine are:

1. Move from one top-level directory to another (`lib/something/foo.dart` to `example/something/foo.example.dart`)

2. Move to a sibling directory (`lib/something/foo.dart` to `lib/different/foo.dart`)

3. Move to a sub directory (`lib/something/foo.dart` to `lib/something/sub/foo.dart`)

Describing each of these with only configuration metadata could be tough.

username_2: I think we would most likely want the `example/` use case to be solved with `example/$example$` like our other [magic placeholder](https://github.com/dart-lang/build/pull/746) files. I don't think we want to go down a path of allowing an arbitrary number of assets to be mirrored in a separate directory tree.

We can reopen if we find a compelling use case, but for now I'm going to close this as not planned and we can file a separate issue for `$example$` if and when we need it.

Status: Issue closed

|

venveo/serverless-sharp | 595413185 | Title: Custom alias / domain + domain certificate for Cloudfront Distribution

Question:

username_0: Is this not yet supported at the moment?

If not, I'm interested in seeing if I can spend some time to add this option to the stack. Would be nice to have beautiful domains for image CDNs I was thinking ;-)

Answers:

username_0: https://github.com/venveo/serverless-sharp/pull/48

username_1: Thanks again! Added for next release!

Status: Issue closed

username_0: @username_1 np. :-) Please note that when a custom domain is used, the region will have to be us-east-1, because that is the only way certificates can be created automatically (CloudFront certificates have to be in us-east-1 in order to be bound).

username_1: @username_0 Good catch! I'm working on refactoring the docs, so I'll be sure to include a note of that. |

zocteam/website | 442805444 | Title: Governance promo video

Question:

username_0: Would like to integrate this video into the website somewhere... https://youtu.be/HTMAkn7R6mE

Answers:

username_1: video is unavailable

username_0: Can alternatively be grabbed from here: https://mega.nz/#!2xkEhaxA!NmNRxug-bGvQXxfvo2WjtptBMqU0ZafcnyBQpg0z4Z8

Status: Issue closed

|

Esri/military-tools-geoprocessing-toolbox | 332068550 | Title: NumberFeatures tool does not label feature correctly when new feature class is created

Question:

username_0: _From @kgonzago on June 7, 2018 19:44_

6/13: Tasks remaining

- [ ] Add tool to MT toolbox

- [ ] Test from MT build

- [ ] Doc GRG toolset topic - add Number Features to table

- [ ] MT What's new (added the tool to GRG toolset)

When creating a new feature layer (feature class) during the Number Feature tool process, the tool:

1. Creates a new feature class in the project GDB

2. Adds it as a feature layer to the TOC

3. Applies the appropriate layer file for symbology

But, the features are not labeled at all. Somehow the layer file is preventing it from labeling.

## Expected Behavior

Features should be labeled in the newly created feature layer

## Current Behavior

Run tool on:

Get result:

Features are not labeled.

## Possible Solution

Update LYRX file to provide labels as well as symbology.

## Steps to Reproduce (for bugs)

1. Open the Clearing Operations solution

2. Open the Number features tool

3. Choose to number the NewLocations feature layer

4. Specify a new output feature class

5. Run the tool.

6. The new layer is added to the map. It has the correct symbology, but the features are not labeled.

7. Turn on labels - for some reason, even when you turn on labels they don't display (though sometimes they do. @kgonzago and @ACueva saw it not working, then I (BB) tried again on my own ArcGIS Pro 2.1.0 machine and I could manually apply labels and have them display).

## Context

<!--- How has this issue affected you? What are you trying to accomplish? -->

<!--- Providing context helps us come up with a solution that is most useful in the real world -->

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Version used:

* Environment name and version (e.g. Chrome 39, node.js 5.4):

* Operating System and version (desktop or mobile):

* Link to your project:

Win10, ArcGIS Pro 2.1.

Win8, ArcGIS Pro 2.1.0 (BB)

_Copied from original issue: Esri/solutions-geoprocessing-toolbox#677_

Answers:

username_0: _From @username_1 on June 12, 2018 18:22_

I ran this on Pro 2.2.12776(Beta2) and setting the symbology/labeling on the output layer using a lyrx file seems to be now working correctly for setting the labels. So **this issue is dependent on Pro 2.2 final**:

There is just a small code change needed ([here](https://github.com/Esri/solutions-geoprocessing-toolbox/blob/dev/clearing_operations/scripts/NumberFeaturesTool.py#L93)) to check whether in Pro or ArcMap because a Pro .lyrx file is needed to make the GP output labeling work (a 10.X .lyr file doesn't work for this labeling scenario)

Moving/migrating NumberFeatures tool opened with issue: https://github.com/Esri/military-tools-geoprocessing-toolbox/issues/339

FYI @username_2 @username_3 @username_4 @ACueva

username_0: _From @username_3 on June 12, 2018 18:26_

@username_1 - cool! So, an if product == ArcMap use lyr, if product == Pro use lyrx check here is all it would need?

username_0: _From @username_1 on June 12, 2018 18:40_

@username_3 - that is correct. There is an [existing method/utility](https://github.com/Esri/solutions-geoprocessing-toolbox/blob/dev/clearing_operations/scripts/Utilities.py#L41) for this - though its behavior is not consistent if run from arcpy outside of the Pro/ArcMap - since it returns: 1. Pro 2. ArcMap 3. Other (standalone arcpy but could be Pro or ArcMap python) - so we may need to add a method
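The check discussed here could look roughly like the sketch below. It assumes a product string of "Pro", "ArcMap" or "Other", as the comment describes for the existing utility; the function name `choose_layer_file` and the base name `NumberFeatures` are hypothetical, and no arcpy call is made.

```python
def choose_layer_file(product, base_name="NumberFeatures"):
    """Pick the symbology layer file for the running product.

    Returns None for "Other" (standalone arcpy), where the product
    cannot be told apart and a separate check would be needed.
    """
    if product == "Pro":
        return base_name + ".lyrx"  # Pro needs a .lyrx for GP output labeling
    if product == "ArcMap":
        return base_name + ".lyr"   # 10.x layer file
    return None


print(choose_layer_file("Pro"))     # NumberFeatures.lyrx
print(choose_layer_file("ArcMap"))  # NumberFeatures.lyr
print(choose_layer_file("Other"))   # None
```

Returning `None` for the standalone case keeps the ambiguity visible to the caller instead of guessing a file type.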

username_1: Addressed/added/migrated from [previous clearing ops toolbox](https://github.com/Esri/solutions-geoprocessing-toolbox/tree/dev/clearing_operations) in PR #342

This should work as well as it did in the [previous clearing ops repo/toolbox](https://github.com/Esri/solutions-geoprocessing-toolbox/tree/dev/clearing_operations) - though more rigorous testing of this [may reveal new issues - ex.](https://github.com/Esri/military-tools-geoprocessing-toolbox/issues/339#issuecomment-396974424)

username_2: Symbology is missing from output in ArcMap and ArcGIS Pro

username_1: @username_2 - can you provide some additional info/repro steps or step me through

The issue might be the one mentioned here: https://github.com/Esri/military-tools-geoprocessing-toolbox/issues/339#issuecomment-396974424 that the exact field names **"Number" and "Purpose"** must exist in the input+output feature class because the layer files are set to use those fields.

Also Pro should be version 2.2.12776(Beta2) or later.

Example: ArcMap no symbols or labels with missing fields:

username_1: I found another way to repro @username_2 's [reported issue above](https://github.com/Esri/military-tools-geoprocessing-toolbox/issues/341#issuecomment-397469457) - if you don't supply an "Output Numbered Features" parameter - the symbology will also not be applied.

This has to do with the peculiar design of this tool that allows the input parameter to **sometimes** be used as the output parameter if no output parameter is supplied. The output is empty in this case so GP does not apply the symbology.

I think we have had similar problems with this tool design before https://github.com/Esri/solutions-geoprocessing-toolbox/issues/607 - https://github.com/Esri/solutions-geoprocessing-toolbox/issues/607#issuecomment-331572977

username_1: Just to summarize when labeling should work

Setting Labeling / Layer Symbology on the output only works currently with the NumberFeatures tool when:

1. An output parameter "Output Numbered Features" parameter is set

2. The field names "Number" and "Purpose" exist in the input

3. If in Pro, using 2.2.12776(Beta2) or later

These limitations were present in the previous ClearingOps toolset/template - but we are likely finding them as a result of just doing more testing *outside of* the template

username_2: With the information above I am going to verify the statements of @username_1

username_2: based on all the testing I have done I do not think that labels from gp tools work in ArcGIS Pro 2.2. I have tested this in

I would like to move this out of the sprint if someone else cannot get the labels to work correctly. @username_4 and @ACueva.

username_3: @username_2 - I think we can include logic to test whether or not the Purpose field is present and populated and choose which LYRX file to apply.

username_4: On Pro 2.2 final the tool is not labeling for me, either upon immediate output or by trying to turn labeling off then back on. I confirmed the "Number" field is present in the input and a "Purpose" field is present in the input. I also tried making sure the purpose field had non-null text values in it. Also I made sure to use an output named differently than the input. I confirmed that the output which is not labeling does have values in the number field and the label class expression is {Number]. In the label expression I tried to switch between all four languages in the label expression as well, also no luck. I attempted to switch and label by some other field, such as "Purpose" which I also calculated values, and still no labels at all.

username_2: @username_3 will try and use the old layer file that only populates simple symbology (yellow boxes with labels). If that does not work we will move on and not tackle this issue during this release.

username_3: I think I've got it working correctly now.

https://github.com/Esri/military-tools-geoprocessing-toolbox/pull/347

Had to add a feature class to the featuresetsWebMerc.gdb and point the symbology LYRX file to it in order for Pro to apply the symbology.

@username_2 - please test.

Status: Issue closed

|

planetarypy/pvl | 90711204 | Title: Write docs

Question:

username_0: Please add a simple example of opening a file containing a label and then extracting some sample information from that label for both a PDS3 image (Pancam?) and the sample `pattern.cub`.

As you have done elsewhere, write a short example in the readme, but more complete examples in the actual Sphinx docs.

Once those easy tasks are done then try doing the following things:

* Writing out a label using `encode`

* Modifying a label

* Adding an entry

* changing an entry

* Writing out the modified label

* Creating a label from scratch

Answers:

username_0: Note that some of the additional tasks above may be difficult, for example, creating a label from scratch. It might be possible but likely not easy, we will probably need to make some helper methods to improve this task and possibly others (like modifying label). The point of asking you to try it now is to expose and document those rough spots.

username_0: FYI there is an example of using `pvl.load` here: https://github.com/planetarypy/planetaryimage/blob/master/planetaryimage/image.py Note that `pvl.load` is renamed to `load_label` in this example just to be explicit about what's being loaded. This is not necessary for documentation purposes.

There are also simpler examples here:

https://github.com/planetarypy/pvl/blob/master/tests/test_decoder.py

Note there is both:

* `load` - which loads from file

* `loads` - which parses a label from a string.

The `load` form is probably going to be the most common.

username_1: Do you want the label from scratch to be based on an image without a label or from random information?

username_0: I would start by trying to recreate a very simple but valid label. Like `tiny1.lbl` in https://github.com/planetarypy/pvl/tree/master/tests/data/pds3 and then try making increasingly complex labels.

Status: Issue closed

username_0: Great start! |

MicrosoftDocs/feedback | 420820849 | Title: No search result for short terms like "GC"

Question:

username_0: **Describe the bug**

docs.microsoft.com/en-us/dotnet/api/ shows no result for short term like "GC"

**To Reproduce**

Steps to reproduce the behavior:

search **GC** on https://docs.microsoft.com/en-us/dotnet/api/

https://docs.microsoft.com/en-us/dotnet/api/?term=GC

**Expected behavior**

**Desktop (please complete the following information):**

- OS: win 10

- Browser: chrome

Answers:

username_1: @username_2 do you have a minimum of 3 letters for the API browser search?

username_2: Yes. You do need to use at least 3 letters but if you are looking for items in a two letter class name you can also type a . after GC and get results that way.

Status: Issue closed

username_0: @username_2

Thank you for your workaround.

I think lifting the "at least 3 letters" restriction for some well-known terms like GC/IO would provide a better experience.

But I'm OK with your workaround.

So closing. |

fiveisprime/iron-cache | 116988858 | Title: No function for client.get()

Question:

username_0: I get the following while trying to get from a cache. Using IronWorker fine but notice this isn't an official repository. Is it no longer supported?

```

var cache = new ironcache.Client()

```

```

TypeError: cache.get is not a function

at File.store (/worker/lib/File.js:112:13)

at throw (native)

at onRejected (/worker/node_modules/co/index.js:81:24)

at /worker/node_modules/superagent/lib/node/index.js:1036:11

at Request.callback (/worker/node_modules/superagent/lib/node/index.js:797:3)

at IncomingMessage.<anonymous> (/worker/node_modules/superagent/lib/node/index.js:990:12)

at emitNone (events.js:72:20)

at IncomingMessage.emit (events.js:166:7)

at endReadableNT (_stream_readable.js:903:12)

at doNTCallback2 (node.js:439:9)

```

Status: Issue closed

|

acceptbitcoincash/acceptbitcoincash | 328781180 | Title: Other porn site accepting BCH! (hush-hush.com)

Question:

username_0: Hey! I'm searching porn sites accepting BCH and I found this one

---------------

- name: Hush-Hush

url: http://www.hush-hush.com/

img: http://www.hush-hush.com/images/logo-trans.png

twitter: HHgalleries

facebook:

region:

country:

city:

bch: yes

btc: yes

othercrypto: yes

doc:

```<issue_closed>

Status: Issue closed |

dotnet/interactive | 561508234 | Title: PowerShell Notebook - Can't import Pester

Question:

username_0: #### Describe the bug

I tried to run one of my Azure Data Studio PowerShell notebooks into a PowerShell Notebook using .NET Jupyter. It worked fine until I tried to import my dbachecks module which uses Pester. I then created a new Notebook and tried to just run some Pester

The error I receive is

````

Describe: The 'Describe' command was found in the module 'Pester', but the module could not be loaded. For more information, run 'Import-Module Pester'.

````

When I try to just import Pester by itself using any of my available versions even 3.4.0 I get

````

Get-Command: C:\Users\mrrob\Documents\PowerShell\Modules\pester\4.9.0\Pester.psm1

Line |

94 | $script:SafeCommands['Get-CimInstance'] = Get-Command -Name Get-CimInstance -Module CimCmdlets @safeCommandLookupParameters

| ^ The term 'Get-CimInstance' is not recognized as the name of a

| cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included,

| verify that the path is correct and try again.

Import-Module: The module to process 'Pester.psm1', listed in field 'ModuleToProcess/RootModule' of module manifest 'C:\Users\mrrob\Documents\PowerShell\Modules\pester\4.9.0\pester.psd1' was not processed because no valid module was found in any module directory.

````

output of Get-Module Pester -ListAvailable

````

Directory: C:\Users\mrrob\Documents\PowerShell\Modules

ModuleType Version PreRelease Name PSEdition ExportedCommands

---------- ------- ---------- ---- --------- ----------------

Script 4.9.0 Pester Desk {Describe, Context, It, Should…}

Script 4.8.1 Pester Desk {Describe, Context, It, Should…}

Directory: C:\Program Files\WindowsPowerShell\Modules

ModuleType Version PreRelease Name PSEdition ExportedCommands

---------- ------- ---------- ---- --------- ----------------

Script 4.8.1 Pester Desk {Describe, Context, It, Should…}

Script 3.4.0 Pester Desk {Describe, Context, It, Should…}

````

#### Did this error occur while using `dotnet interactive`?

- [ X] .NET Jupyter Notebook

#### Screenshots

If applicable, add screenshots to help explain your problem.

#### Please complete the following:

- OS

- [X ] Windows 10

- [ ] macOS

- [ ] Linux (Please specify distro)

- [ ] iOS

- [ ] Android

- Browser

- [X ] Chrome

- [ X] Edge

- [ ] Safari

- Frontend

- [ ] Jupyter notebook

- [X ] Jupyter lab

- [ ] nteract

Answers:

username_0: {

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# This is a new Notebook\n",

"\n",

"That has been written in .NET Interactive\n"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"<pre></pre>\r\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"<pre>Name Value</pre>\r\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"<pre>---- -----</pre>\r\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"<pre>PSVersion 7.0.0-rc.1</pre>\r\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"<pre>PSEdition Core</pre>\r\n"

]

},

"metadata": {},

"output_type": "display_data"

[Truncated]

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": ".NET (PowerShell)",

"language": "PowerShell",

"name": ".net-powershell"

},

"language_info": {

"file_extension": ".ps1",

"mimetype": "text/x-powershell",

"name": "PowerShell",

"pygments_lexer": "powershell",

"version": "7.0"

}

},

"nbformat": 4,

"nbformat_minor": 4

}

username_0: The notebook can be seen

https://gist.github.com/username_0/545b9c353c609ff5bb1bff4b6ccb57cd

username_1: This seems to be an issue with splatting in PowerShell when also providing named parameters:

username_1: A bit of a twist in how this is being done in the kernel, is likely going to be the issue:

username_2: @username_1 I am seeing the error below, which I also see in your screenshot. Do you have any fix for that?

```powershell

Import-Module: The module to process 'Pester.psm1', listed in field 'ModuleToProcess/RootModule' of module manifest '...\Documents\PowerShell\Modules\pester\4.9.0\pester.psd1' was not processed because no valid module was found in any module directory.

```

username_1: I go by this, but then checking the Exported Commands and it is there:

username_1: ...but even changing the psm1 to this line of the module it still fails, so I think the implicit remoting is the issue:

```

$script:SafeCommands['Get-CimInstance'] = Get-Command -Name Get-CimInstance -Module CimCmdlets -CommandType Cmdlet -ErrorAction Stop -All

```

username_1: This is indeed the remoting issue because I just caught that the kernel is importing the Windows PowerShell version of the CimCmdlets module (note the version is `1.0.0.0` which is Windows release). The version matched to PowerShell 7 is version 7.0.0.0

username_3: @username_1 Thanks for reporting this! Yes, it was the CimCmdlets module from the system32 module path that got imported (via the `WinCompat` feature added in PS7). This is because currently the PS kernel doesn't ship all the built-in modules along with it ...

The built-in modules are not published anywhere and are platform specific, it's hard for an application that host powershell to ship them along. We have the issue https://github.com/PowerShell/PowerShell/issues/11783 to track this work.

username_1: @username_3 maybe I'm missing something...

While it is true that not all modules are going to be shipped with PowerShell, the CIM cmdlets are not one of them; they are indeed shipped with the versions of PowerShell (both 6 and 7). You can find the DLL, `Microsoft.Management.Infrastructure.CimCmdlets.dll`, located in the root directory of each version of PowerShell:

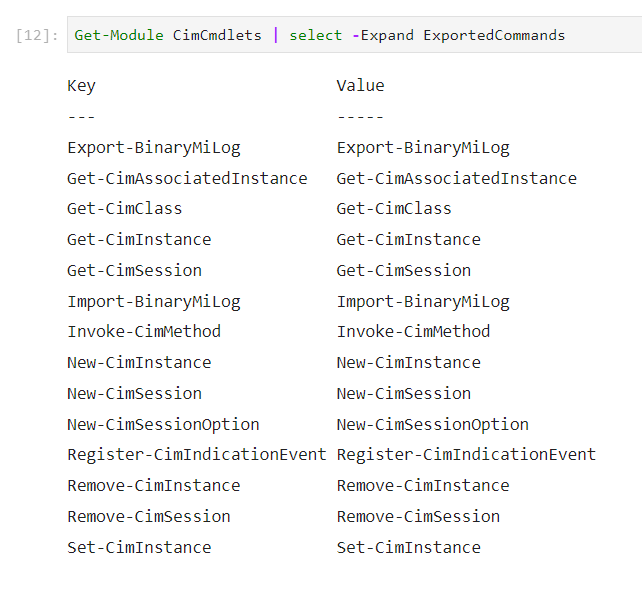

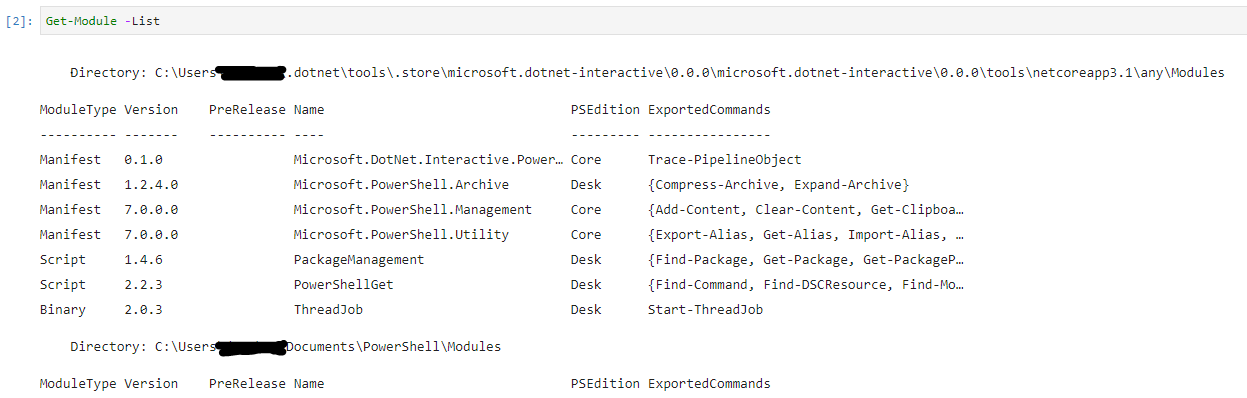

username_3: Yes, indeed.

What I meant is that not all built-in modules (literally the module folders) that come with PowerShell (6/7) out-of-box are currently shipped along with the PowerShell Jupyter kernel.

The following 7 modules are what the PowerShell Jupyter kernel currently ship, and we only have the `Utility` and `Management` built-in modules, no `CimCmdlets`.

We are working on getting this fixed.

Status: Issue closed

username_0: Of course it can be resolved by importing from the local PowerShell Core

username_0: That was a mistake that everyone at PowerShell Saturday Hamburg saw!!

Please re-open

Status: Issue closed

username_3: @username_0 The PR #189 brought in `CimCmdlets` and other built-in modules for the PowerShell kernel, so importing Pester should work fine now. |

byuitechops/module-publish-settings | 294882417 | Title: README: module-publish-settings

Question:

username_0: Please get the new README.md from the [Child Template](https://github.com/byuitechops/child-template/blob/master/README.md) and fill it out for your child module. Push it to your child module's repository.

If you have questions, ask Zach, Daniel, or Josh.<issue_closed>

Status: Issue closed |

lh3/minimap2 | 1101081595 | Title: [E::sam_parse1] query name too long

Question:

username_0: 12 12 12 12

```

Status: Issue closed

Answers:

username_1: ```

<(samples/NA19240/hifiasm/NA19240.asm.bp.hap2.p_ctg.fasta.gz)

```

Remove `<()`.

username_1: Then you can generate SAM first and then see if there are long query names. The error report is from samtools.

username_0: Thanks. Generated intermediate SAM file and query names were still only 11 characters. Bug in samtools 1.10, fixed by 1.12. |

cclib/cclib | 41575132 | Title: NMR and EPR attributes

Question:

username_0: Just to start the discussion....

Taking a look at the Gaussian NMR page, I see there are options for different gauge origins and methods. Do we parse all of them, similar to how we handle atomic charges? That is, a dictionary with the various origins/methods as keys? Or just the most common? I think NWChem and GAMESS only support the GIAO method

Are there other print options (e.g. eigenvectors) that would be useful?

Answers:

username_1: I'm looking to start parsing the NMR section of ORCA, and was hoping we could get some sort of consensus on what should be parsed. ORCA 4 only supports GIAO. While there are other methods, such as IGLO (available in ORCA 3), they are generally not recommended, so I don't know if we need a separate method flag. I know very little about NMR and what is important, but my talks with a developer of the NMR module in ORCA suggested that just parsing the isotropic and anisotropic should be sufficient.

I would propose that nmr be a tuple of two numpy arrays `(isotropic, anisotropic)`. However, it could also be a dictionary wherein `isotropic`, `anisotropic`, `shielding_tensor`, etc. are keys. Thoughts?

Here is a sample of all the data ORCA prints out for an NMR computation (He atom).

```

---------------

CHEMICAL SHIFTS

---------------

Note: using conversion factor for au to ppm alpha^2/2 = 26.625677252

Doing GIAO para- and diamagnetic shielding integrals analytically ...done

Doing remaining GIAO terms numerically ...done

--------------

Nucleus 0He:

--------------

Diamagnetic contribution to the shielding tensor (ppm) :

59.949 0.000 -0.000

0.000 59.949 0.000

-0.000 0.000 59.949

Paramagnetic contribution to the shielding tensor (ppm):

-0.000 0.000 0.000

0.000 -0.000 -0.000

0.000 -0.000 -0.000

Total shielding tensor (ppm):

59.949 0.000 0.000

0.000 59.949 -0.000

0.000 -0.000 59.949

Diagonalized sT*s matrix:

sDSO 59.949 59.949 59.949 iso= 59.949

sPSO -0.000 -0.000 -0.000 iso= -0.000

--------------- --------------- ---------------

Total 59.949 59.949 59.949 iso= 59.949

--------------------------

CHEMICAL SHIELDING SUMMARY (ppm)

--------------------------

Nucleus Element Isotropic Anisotropy

------- ------- ------------ ------------

0 He 59.949 0.000

```
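To make the dictionary proposal above concrete, here is a minimal sketch that reads only the summary table from output like the sample. It is not cclib's parser; the function name `parse_shielding_summary` and the key names are assumptions taken from the discussion, and plain lists stand in for numpy arrays.

```python
import re

# Trimmed copy of the summary block from the sample output above.
SAMPLE = """\
--------------------------
CHEMICAL SHIELDING SUMMARY (ppm)
--------------------------
  Nucleus  Element    Isotropic     Anisotropy
  -------  -------  ------------   ------------
      0       He           59.949          0.000
"""

# One data row: nucleus index, element symbol, two decimal numbers.
ROW = re.compile(r"^\s*(\d+)\s+([A-Za-z]{1,2})\s+(-?\d+\.\d+)\s+(-?\d+\.\d+)\s*$")


def parse_shielding_summary(text):
    """Collect isotropic and anisotropic shieldings (ppm) per nucleus."""
    result = {"isotropic": [], "anisotropic": []}
    in_summary = False
    for line in text.splitlines():
        if "CHEMICAL SHIELDING SUMMARY" in line:
            in_summary = True
            continue
        if not in_summary:
            continue
        match = ROW.match(line)
        if match:
            result["isotropic"].append(float(match.group(3)))
            result["anisotropic"].append(float(match.group(4)))
    return result


print(parse_shielding_summary(SAMPLE))
# {'isotropic': [59.949], 'anisotropic': [0.0]}
```

A dictionary like this leaves room for further keys such as a shielding tensor later, which a fixed two-element tuple would not.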

username_2: I'll think about the structure once 1.5.2 is out, but I can comment on the first part. In practice, one is interested only in GIAO for the gauge problem because of absolute accuracy problems and poor/slow convergence with respect to the basis set for the other methods. In principle, one could choose IGLO, each individual nucleus, or a common origin. The g-tensor is less sensitive to the choice of gauge origin, so historically the choice is a common origin, usually the center of electronic charge. For hyperfine tensors, each nucleus is usually the center. I'm not sure about ISSC or ZFS tensors. If we'd like a common appearance for each of these, then there needs to be some flexibility for specifying the gauge origin.

More advanced (and for 2.0) is the choice of spin-orbit operator. @ghutchis also mentioned to me some time ago that the tensor orientation may be desirable for visualization, say in Avogadro.

I generally prefer dictionaries over tuples due to the descriptiveness.

username_3: Pushing back to v1.5.3 |

Clinical-Genomics/scout | 900388722 | Title: Dropdown height is a tad too small

Question:

username_0: I've just noticed this:

Sorry I didn't realize sooner when I reviewed yesterday @moedarrah!

Answers:

username_0: This could be easily fixed by assigning a custom style class only to the dropdowns present on the variants filters.

Status: Issue closed

|

DavidTanner/nodecredstash | 216820854 | Title: Allow passing custom endpoints into underlying AWS clients

Question:

username_0: To facilitate talking to a local DynamoDB instance, we need the ability to pass different endpoint parameters to KMS and DynamoDB. Because the same `awsOpts` param is shared by DynamoDB and KMS, it's not possible to change the endpoint for only one of them.

I would propose two new items added to the high-level configuration (not `awsOpts`): `dynamoEndpoint` and `kmsEndpoint` that get merged into the respective `awsOpts` objects.
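
A minimal sketch of how that merge could look, assuming the shared `awsOpts` is a plain options object. The `CredstashConfig` shape and the `resolveClientOpts` helper are hypothetical names used only for illustration; only `awsOpts`, `dynamoEndpoint`, and `kmsEndpoint` come from the proposal itself.

```typescript
// Hypothetical option shapes for illustration; not the library's actual types.
interface AwsOpts {
  region?: string;
  endpoint?: string;
  [key: string]: unknown;
}

interface CredstashConfig {
  awsOpts?: AwsOpts;
  dynamoEndpoint?: string; // proposed high-level option
  kmsEndpoint?: string; // proposed high-level option
}

// Build one options object per client so that an endpoint override for
// one service never leaks into the other.
function resolveClientOpts(config: CredstashConfig): { dynamoOpts: AwsOpts; kmsOpts: AwsOpts } {
  const base: AwsOpts = { ...(config.awsOpts ?? {}) };
  const dynamoOpts: AwsOpts = { ...base };
  const kmsOpts: AwsOpts = { ...base };
  if (config.dynamoEndpoint !== undefined) {
    dynamoOpts.endpoint = config.dynamoEndpoint;
  }
  if (config.kmsEndpoint !== undefined) {
    kmsOpts.endpoint = config.kmsEndpoint;
  }
  return { dynamoOpts, kmsOpts };
}

// Example: point only DynamoDB at a local instance; KMS keeps the defaults.
const { dynamoOpts, kmsOpts } = resolveClientOpts({
  awsOpts: { region: "us-east-1" },
  dynamoEndpoint: "http://localhost:8000",
});
```

Copying `awsOpts` before applying the overrides keeps the caller's object untouched, so existing users who only pass `awsOpts` would see no change in behavior.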

Answers:

username_1: Can you submit a pull request with said functionality and a test?

Status: Issue closed

|

aws/aws-xray-sdk-node | 274398492 | Title: Feature Request: Capturing additional SQL Query information

Question:

username_0: The current MySQL instrumentation only captures the url and query type of each query made.

It would be nice to update the instrumentation to also capture the actual query being run.

Obviously there would be privacy concerns attached to this, since you probably don't want to log out every query.

I'm happy to implement this if I can get some direction from the core team as to how you want to handle this.

I was thinking you could provide a predicate as part of the options used to initialise the instrumentation. This predicate would be given the query being run and would return whether or not the text of that query should be logged.
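
A rough sketch of what I mean, assuming the instrumentation has access to the connection URL and the SQL string. All names here (`CaptureOptions`, `shouldCaptureQuery`, `buildSqlMetadata`) are hypothetical and not part of the SDK's current API.

```typescript
// Hypothetical types for illustration; not the SDK's actual API.
type QueryPredicate = (sql: string) => boolean;

interface CaptureOptions {
  shouldCaptureQuery?: QueryPredicate;
}

interface SqlMetadata {
  url: string;
  queryType: string;
  query?: string; // only set when the predicate allows it
}

function buildSqlMetadata(url: string, sql: string, options: CaptureOptions = {}): SqlMetadata {
  // The first keyword of the statement stands in for the query type.
  const queryType = (sql.trim().split(/\s+/)[0] ?? "").toUpperCase();
  const metadata: SqlMetadata = { url, queryType };
  // Default to NOT recording the query text unless the predicate says yes.
  if (options.shouldCaptureQuery?.(sql) === true) {
    metadata.query = sql;
  }
  return metadata;
}

// Example predicate: only record SELECT statements.
const onlyReads: CaptureOptions = {
  shouldCaptureQuery: (sql) => /^\s*select\b/i.test(sql),
};
const captured = buildSqlMetadata("mysql://db.example:3306/app", "SELECT id FROM users", onlyReads);
const skipped = buildSqlMetadata("mysql://db.example:3306/app", "UPDATE users SET name = 'x'", onlyReads);
```

Making capture opt-in means a missing or misconfigured predicate results in no query text being recorded, which seems like the safer default given the privacy concerns.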

I came to look for this after seeing this forum thread: https://forums.aws.amazon.com/thread.jspa?messageID=809685

Answers:

username_1: Hi username_0,

Due to the sensitive nature of the data and information that could be captured, we have plans to address this issue internally. We are still discussing how best to handle this so that it delivers the most value while remaining safe, protecting our customers' potentially sensitive data, and preventing unintentional data leaks due to misconfiguration. We do intend to address this in the next calendar year.

Thanks,

Sandra

username_2: Has there been anything further on this? Without the SQL query data, I don't really get much value out of instrumenting PostgreSQL. I think the configuration proposals in the forums are sensible - it at least gives people the option on turning on query/parameter capture.

username_3: Any Updates @username_1 ? Is there a plan for this?

username_4: @username_3 Still on the roadmap and being prioritized against other items. PRs are welcome.

username_5: @username_4 / @username_1: Any updates on this enhancement request? We see a lot of value in including our SQL statements when instrumenting our PostgreSQL DB round trips and, as @username_2 points out, there is not much value in instrumenting DB round trips without the SQL info. It looks like customers have been waiting for this feature for 3 years now.

username_6: Hi @username_5,

We are sorry this feature hasn't been prioritized yet. We're continuing to work on how best to capture these queries in a secure manner. |

scala/bug | 220096896 | Title: Private constructors and methods are compiled to public visibility when accessed from a companion object

Question:

username_0: Private constructors and methods are compiled to public visibility when a companion object accesses them. They should arguably be compiled to the "most private" visibility possible. While the Scala compiler should (and likely does, I didn't test it) enforce the visibility restrictions, when interoperating with Java, it would be preferable to have something other than public visibility here.

Per <NAME>, the issue could be solvable by emitting an appropriate static forwarder in the class.

Example with expected results (no companion object):

```scala

class Private private(x: Int) {

private[this] val y = x

private def yValue = y

}

```

javap -p output with private constructor and method:

```java

public class Private implements scala.ScalaObject {

private final int y;

private int yValue();

private Private(int);

}

```

This is expected.

However, adding the companion object as follows results in the private constructor and method being generated as public.

```scala

object Private {

def main(args: Array[String]) {

val p = new Private(7)

p.yValue

}

}

```

javap -p output with public constructor and method:

```java

public class Private implements scala.ScalaObject {

private final int y;

public static final void main(java.lang.String[]);

public final int Private$$yValue();

public Private(int);

}

``` |

google/play-services-plugins | 487871192 | Title: Strict Version Matcher Gradle Plugin: Release tag 1.2.0 is missing

Question:

username_0: I am missing the release tag (and a [changelog](https://github.com/google/play-services-plugins/issues/40)) for version 1.2.0 of the _Strict Version Matcher Gradle Plugin_ which is already available via the Google Maven repository and [advertised in release notes of Google Play Services](https://developers.google.com/android/guides/releases#june_27_2019).

- [ ] Please tag your commits and push them as soon as you release new versions.

Answers:

username_0: Meanwhile, [version 1.2.1 has been released](https://developers.google.com/android/guides/releases#november_19_2019) on November 19, 2019.

I kindly ask you to add a detailed CHANGELOG and the corresponding tags to this repository. It appears to be abandoned otherwise. :skull: |

smith-chem-wisc/MetaMorpheus | 1046738430 | Title: File was not found in the dictionary

Question:

username_0: I get an error, noting that the run has failed, with the error that one of the files was not found in the dictionary.

I re-ran only that file, and it was okay.

What does that error mean?

Answers:

username_1: I'm not sure. If you see the error again, could you please paste the full error message or a screenshot into the issue here?

Otherwise, I believe there is an option to report the error to the metamorpheus email. Did you click that button to send the error report?

Which files is it complaining about? Please share an example of the files that reproduce the error.

Thanks!

username_1: Thank you for reporting the issue. Please open another issue if you encounter it again.

Status: Issue closed

|

EasyNetQ/EasyNetQ | 180687948 | Title: Crash Binding or Create Queue

Question:

username_0: Hi, I get an exception when I try to bind a queue to my exchange.

My topology is:

1) 2 VHost each with

send

Client -> Ex_cli -> routing Key1 -> Ex_Srv-> permanent Queue -> Server

receive

Client <- temporary Queue <- Ex_cli <- routing Key2 <- Ex_Srv<- Server

The server creates both topologies.

I do this for strong isolation between the client side and the server side.

The client connects to VLogin/Ex_Cli, creates an exclusive, temporary queue on Ex_Cli, starts the consumer on that queue, and sends a message with a reply-to (I use the advanced bus).

When the server receives the login message (and after validating the credentials), it replies to the client with a message containing all the information needed to connect to VBusiness and start processing.

The client closes the IBus and creates a new one with the new parameters.

After that, I use my RPC to send messages in a loop.

After some time (200, 1,000, or 10,000 messages), I get a crash when I try to create the binding on the queue used to consume the reply, and rarely when I try to create the queue (queue name Q_<ClientGuid>_<COUNTER>).

try

{

queue = _bus.Advanced.QueueDeclare(

name: queueName,

passive: false,

durable: false,

exclusive: true,

autoDelete: true

); <==== Exception happen here rarely

}

catch (Exception ex)

{

_log.ErrorFormat(ex, "Unable to create Queue Name{0}", queueName);

}

try

{

binding = _bus.Advanced.Bind(_exchange, queue, replyToRoutingKey); <==== Exception happen here frequently

}

catch (Exception ex)

{

_log.ErrorFormat(ex, "Unable to open BUS Topology QueueName{0}", queueName);

}

The RabbitMQ log:

Error on AMQP connection <0.5590.192> ([::1]:52399 -> [::1]:33704, vhost: 'VHDEMO', user: 'BHCClient', state: running), channel 3:

operation basic.ack caused a connection exception channel_error: "expected 'channel.open'"

Exception caught on binding:

The AMQP operation was interrupted: AMQP close-reason, initiated by Peer, code=504, text="CHANNEL_ERROR - expected 'channel.open'", classId=60, methodId=80, cause=

Stack:

at EasyNetQ.Producer.ClientCommandDispatcherSingleton.Invoke(Action`1 channelAction)

at EasyNetQ.Producer.ClientCommandDispatcher.Invoke(Action`1 channelAction)

at EasyNetQ.RabbitAdvancedBus.Bind(IExchange exchange, IQueue queue, String routingKey)

at Network.RabbitMQBus.createQueueConsume(String queueName, String replyToRoutingKey, Action`3 callBackConsume) in f:\OpalNetwork-RabbitMQV2\sources\technical\Network\RabbitMQSBus\RabbitMQBus.cs:line 523

I've tried to find a solution, but I was unable to find anything.

I use the latest NuGet package and a single thread (so there is no race on my side).

Do you have any idea that could help me understand what is happening?

Thanks in advance

Answers:

username_0: I'm closing this issue. I made a workaround with less overhead (240/s => 920/s).

Status: Issue closed

|

grid-js/gridjs | 1097060058 | Title: Import server-side data example

Question:

username_0: The live results on this template, https://gridjs.io/docs/examples/server/, fail with the error "An error happened while fetching the data". The console reports this error: "[Grid.js] [ERROR]: TypeError: data.map is not a function".

I believe the problem is in this line of the example code:

then: data => data.map(card => [card.name, card.lang, card.released_at, card.artist])

I think it should read:

then: data => data.results.map(card => [card.name, card.lang, card.released_at, card.artist]) |

argoproj/argo-cd | 819114953 | Title: Cluster cache requires manual refresh

Question: