repo_name | issue_id | text |
|---|---|---|

cake-build/cake | 115399437 | Title: OpenCover: Code example is wrong

Question:

username_0: The following example is wrong.

There is no `SetOutputFile` extension method for `OpenCoverSettings`.

<issue_closed>

Status: Issue closed |

bokeh/bokeh | 321963321 | Title: Major Label Overriders not accepting dictionary

Question:

username_0: #### Description of expected behavior and the observed behavior

When overriding ticks I'm unable to pass a dict unless wrapping in eval(str()).

Although I found a fix I'm kinda curious why this is happening... Newbie coder 👍

#### Complete, minimal, self-contained example code that reproduces the issue

```

from bokeh.layouts import row

from bokeh.plotting import figure, show, output_file

factors = range(0,4)

labels = ['Ed', 'Jon', 'Christian', 'Ed']

x = [50, 40, 65, 10]

dot = figure(title="Categorical Dot Plot", tools="", toolbar_location=None,

y_range=[0-1,max(factors)+1], x_range=[0,100])

dot.segment(0, factors, x, factors, line_width=2, line_color="green", )

dot.circle(x, factors, size=15, fill_color="orange", line_color="green", line_width=3, )

factor_labels = dict(zip(factors, labels))

dot.yaxis.major_label_overrides = factor_labels

#dot.yaxis.major_label_overrides = eval(str(factor_labels))

```

Gives

#### Stack traceback and/or browser JavaScript console output

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-224-fea246dbd58f> in <module>()

----> 1 show(dot)

~\Anaconda3\lib\site-packages\bokeh\util\api.py in wrapper(*args, **kw)

188 @wraps(obj)

189 def wrapper(*args, **kw):

--> 190 return obj(*args, **kw)

191

192 wrapper.__bkversion__ = version

~\Anaconda3\lib\site-packages\bokeh\io\showing.py in show(obj, browser, new, notebook_handle, notebook_url)

135 if obj not in state.document.roots:

136 state.document.add_root(obj)

--> 137 return _show_with_state(obj, state, browser, new, notebook_handle=notebook_handle)

138

139 #-----------------------------------------------------------------------------

~\Anaconda3\lib\site-packages\bokeh\io\showing.py in _show_with_state(obj, state, browser, new, notebook_handle)

162

163 if state.notebook:

--> 164 comms_handle = run_notebook_hook(state.notebook_type, 'doc', obj, state, notebook_handle)

165 shown = True

166

~\Anaconda3\lib\site-packages\bokeh\util\api.py in wrapper(*args, **kw)

188 @wraps(obj)

189 def wrapper(*args, **kw):

--> 190 return obj(*args, **kw)

191

192 wrapper.__bkversion__ = version

[Truncated]

237 separators=separators, default=default, sort_keys=sort_keys,

--> 238 **kw).encode(obj)

239

240

~\Anaconda3\lib\json\encoder.py in encode(self, o)

197 # exceptions aren't as detailed. The list call should be roughly

198 # equivalent to the PySequence_Fast that ''.join() would do.

--> 199 chunks = self.iterencode(o, _one_shot=True)

200 if not isinstance(chunks, (list, tuple)):

201 chunks = list(chunks)

~\Anaconda3\lib\json\encoder.py in iterencode(self, o, _one_shot)

255 self.key_separator, self.item_separator, self.sort_keys,

256 self.skipkeys, _one_shot)

--> 257 return _iterencode(o, 0)

258

259 def _make_iterencode(markers, _default, _encoder, _indent, _floatstr,

TypeError: keys must be a string

Answers:

username_1: Your code works as-is for me on master with no errors:

<img width="224" alt="screen shot 2018-05-10 at 08 08 42" src="https://user-images.githubusercontent.com/1078448/39876924-61124076-5429-11e8-871a-5190635e30ab.png">

If there ever was a bug, it's been fixed since (your issue did not state any version info, please **always** provide version info).

In any case there is no reason to do things this way, Bokeh has built-in support for real categorical factors:

https://bokeh.pydata.org/en/latest/docs/user_guide/categorical.html
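For context on the traceback above: it ends in the standard-library `json` encoder, which accepts only `str`, `int`, `float`, `bool` and `None` as dict keys. The following is a minimal sketch, independent of Bokeh, of normalizing the override keys to plain Python `int`s before assignment. The helper name `normalize_overrides` is hypothetical, and whether non-plain keys were the actual cause in this report is an assumption, since no version information was given.

```python
import json


def normalize_overrides(factors, labels):
    """Map tick positions to labels using built-in ints as keys.

    Hypothetical helper: int() converts int-like values (for example
    values produced by another numeric library) to built-in ints,
    which the json module can serialize as dict keys.
    """
    return {int(factor): str(label) for factor, label in zip(factors, labels)}


factors = range(0, 4)
labels = ['Ed', 'Jon', 'Christian', 'Ed']
overrides = normalize_overrides(factors, labels)

# json converts int keys to strings while encoding.
encoded = json.dumps(overrides)
decoded = json.loads(encoded)

# A key type that json does not accept (here a tuple) raises TypeError.
try:
    json.dumps({(0, 1): 'bad key'})
    tuple_key_rejected = False
except TypeError:
    tuple_key_rejected = True
```

The result would then be assigned as in the report: `dot.yaxis.major_label_overrides = overrides`.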

Status: Issue closed

|

simonbengtsson/jsPDF-AutoTable | 334205281 | Title: The afterPageContent, beforePageContent and afterPageAdd hooks are deprecated.

Question:

username_0: First of all, awesome plugin! It seems that it is using deprecated jsPDF hooks though.

I get this error in the console:

`The afterPageContent, beforePageContent and afterPageAdd hooks are deprecated. Use addPageContent instead.`

Status: Issue closed

Answers:

username_1: Did you resolve the problem? I'm getting the same error. |

dlr-eoc/ukis-pysat | 618837727 | Title: Provide documentation for datahub log in

Question:

username_0: Connecting to a datahub requires user credentials to be set according to the target datahub, e.g. Copernicus SciHub.

Calling `src = Source(source=Datahub.Scihub)` looks for user credentials but fails if not set in an environment:

```

File "<env_dir>\lib\site-packages\ukis_pysat\data.py", line 57, in __init__

self.user = env_get("SCIHUB_USER")

File "<env_dir>\lib\site-packages\ukis_pysat\file.py", line 23, in env_get

raise KeyError(f"No environment variable {key} found")

KeyError: 'No environment variable SCIHUB_USER found'

```

The documentation on this is rather sparse and should be more detailed.

There are different ways of providing user credentials, depending on the OS and development tools.

- User credentials could be provided as plain text, which is not recommended.

- PyCharm offers the possibility to store the credentials in an environment in the Run/Debug configuration dialog.

- Credentials could be set in the following way (example in plain text, but can be loaded from files):

```

os.environ["SCIHUB_USER"] = "Tim"

os.environ["SCIHUB_PW"] = "<PASSWORD>"

```

<issue_closed>
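The "loaded from files" option mentioned above could look roughly like the sketch below. Only the variable names `SCIHUB_USER` and `SCIHUB_PW` come from this issue; the helpers `parse_credentials` and `export_credentials` and the `KEY=VALUE` file layout are assumptions made for illustration and are not part of ukis_pysat.

```python
import os


def parse_credentials(lines):
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments.

    Hypothetical helper: `lines` can be an open file or any iterable of strings.
    """
    credentials = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, separator, value = line.partition("=")
        if not separator:
            raise ValueError(f"Expected KEY=VALUE, got: {line!r}")
        credentials[key.strip()] = value.strip()
    return credentials


def export_credentials(credentials, required=("SCIHUB_USER", "SCIHUB_PW")):
    """Copy the credentials into os.environ after checking nothing is missing."""
    missing = [key for key in required if key not in credentials]
    if missing:
        raise KeyError(f"Missing credentials: {', '.join(missing)}")
    os.environ.update(credentials)
```

With a file kept outside version control, usage would be along the lines of `export_credentials(parse_credentials(open("credentials.env")))` before `Source(source=Datahub.Scihub)` is called; the file name is a placeholder.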

Status: Issue closed |

rossfuhrman/_why_the_lucky_markov | 563720162 | Title: I probably cried. Often you’ll use other classes in the arguments for the first monster in the list and goes through each item in a little colorblind girl with a colon.

Question:

username_0: Toot: I probably cried. Often you’ll use other classes in the arguments for the first monster in the list and goes through each item in a little colorblind girl with a colon.

One comment = 1 upvote. Sometime after this gets 2 upvotes, it will be posted to the main account at https://mastodon.xyz/@_why_toots |

sympy/sympy | 185453192 | Title: assume_integer_order in bessel.py should be more liberal

Question:

username_0: Right now things like `jn(nu, x).rewrite(besselj)` won't work unless you explicitly set `nu = Symbol('nu', integer=True)`. It should be more liberal, only failing if the argument is not an integer. In general, SymPy should not require the correct assumptions to be true, only for the incorrect assumptions to not be True.

As a side note: I'm unclear why this has to require nu be an integer. Aren't bessel functions defined for any value?
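The "more liberal" check described above can be sketched as a three-valued test. In SymPy, an assumption query such as `Symbol('nu').is_integer` returns `None` when nothing is known, `True` for `Symbol('nu', integer=True)`, and `False` for `Symbol('nu', integer=False)`. The function names below are hypothetical and the sketch deliberately avoids importing SymPy; it is not the code in `bessel.py`.

```python
from typing import Optional


def strict_check(is_integer: Optional[bool]) -> bool:
    """Current behaviour as described: proceed only if the order is known to be an integer."""
    return is_integer is True


def liberal_check(is_integer: Optional[bool]) -> bool:
    """Proposed behaviour: refuse only if the order is known NOT to be an integer."""
    return is_integer is not False
```

The two checks differ only for the `None` case, which is exactly the plain `Symbol('nu')` situation from the issue.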

Answers:

username_1: Some tests are failing as the assumption of having an integer parameter is removed.

```python

assert jn(2.57082614100218 - 0.580811206370047*I, z) == sqrt(2)*sqrt(pi)*sqrt(1/z)*besselj(3.07082614100218 - 0.580811206370047*I, z)/2

```

Should I change the test in this case?

username_0: We should double check the result.

username_2: hello i would like to work on this. can you guide me where to start. I'm new here

username_0: I believe there is already a fix at https://github.com/sympy/sympy/pull/11785

Status: Issue closed

username_3: @username_0, regarding your question about the integer order in the description of this issue, for spherical bessel functions (jn, yn) the order has to be an integer AFAIU. This is in contrast to normal bessel functions, where the order can even be complex-valued (modified bessel functions).

username_0: So is the merged PR #11785 incorrect?

username_3: I totally agree with what you said in https://github.com/sympy/sympy/issues/11777#issue-185453192: "It should be more liberal, only failing if the argument is not an integer. In general, SymPy should not require the correct assumptions to be true, only for the incorrect assumptions to not be True." Since in PR #11785 there is no check for the incorrect assumptions to not be True, i.e., it does not check whether `nu != Symbol('nu', integer=False)`, this PR does not address your statement. I'll work on a new PR to fix this. |

S0NN1/advent-of-code-2020 | 774725990 | Title: Cannot compile solution for day1 part 2

Question:

username_0: I'm having issues with compiling code for day 1 part 2.

Steps to reproduce:

Clone the repo

Run `cargo run` inside the folder `day_1`

The last command executes correctly but only prints the solution for part 1

I believe this is a critical issue and should be handled ASAP.

Thank you

Answers:

username_1: Fixed in latest commit

Status: Issue closed

|

alexedwards/scs | 292160942 | Title: stale sessions in data store - `deadline` plus `saved` property?

Question:

username_0: How are folks handling stale sessions in the data store? For example, redis has its [expire](https://redis.io/commands/expire) command but by default it is set to never expire.

If a vacuuming routine compares `time.Now()` > `deadline`, then the session should be deleted. That's the logic, yes?

Is there any use case for including a `saved` property (the date when a session was last saved) - in addition to the deadline? Right now I can't think of any. Maybe I just answered my own question but am double-checking with others.
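The vacuuming logic described above, sketched in Python rather than Go. The in-memory dict store and the function name `vacuum` are assumptions for illustration; this is not how the scs stores are implemented.

```python
import time


def vacuum(store, now=None):
    """Delete every session whose deadline has passed and return the removed tokens.

    `store` maps a session token to a dict with a numeric "deadline"
    (seconds since the epoch). A session expires when now > deadline.
    """
    if now is None:
        now = time.time()
    expired = [token for token, session in store.items() if now > session["deadline"]]
    for token in expired:
        del store[token]
    return expired
```

Because the deadline alone decides whether a session is stale, a separate `saved` timestamp is not needed for this routine.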

Answers:

username_0: Wow, this is great! I see the stores already include checks for expired tokens. Excellent library! Thanks, @alexedwards!

Status: Issue closed

|

fluffysquirrels/GGJ2015 | 55533909 | Title: Player can move through wall if collision volume is not clamped to grid unit size

Question:

username_0: This is currently the case on Level1 if you move up to the back wall. It is a result of the position clamp fix forcing the player through the wall, meaning that Unity temporarily disregards collisions between the player and the wall, allowing the player to escape. A simple fix for now is to ensure that all art assets adhere to the unit grid layout.

Status: Issue closed

Answers:


username_1: I'm not quite clear on how to reproduce this. Can you show a video or an annotated screenshot demonstrating which wall to jump over?

username_0: Sure - will do at 12, I'll update you then....

username_0: Uploaded a video to /testing

username_1: Ah! Got it. I thought you meant the bottom left edge / wall. I especially like when the player crouches at the end and falls into infinity!

I'll take a look at why this is happening and try to stop it.

username_0: Haha. I think I know why - it's kind of my fault but possibly unavoidable in a way (the fix being to fix the art and collision volumes). At the moment the collision geometry of the level prevents the player from intersecting it (due to the rigid body with isKinematic ticked on the player object). However if you adjust the transform through code (er.... significantly on a single frame I guess?) as we are currently (and necessarily) with my clamp position function then you can force the volumes to intersect. On the next FixedUpdate the collision system will then ignore collisions from intersecting volumes so the player can then escape. All because I hastily modelled the environment and have messed up the size!

I guess this can be fixed from an art standpoint but we could possibly prevent it from happening from a code stand point as well? By snapping the player BACK to the previous square or something along those lines? We definitely do not want to allow the player to not adhere to the grid system as then nothing works well.
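The "snap the player BACK to the previous square" idea could be sketched as follows. This is plain Python rather than Unity C#, and representing walls as a set of blocked grid cells is an assumption; the names `snap_to_grid` and `move_player` are hypothetical.

```python
def snap_to_grid(value, unit=1.0):
    """Clamp a coordinate to the nearest multiple of the grid unit."""
    return round(value / unit) * unit


def move_player(position, delta, walls, unit=1.0):
    """Return the new grid-aligned position, or the old one if the target is a wall.

    `position` and `delta` are (x, y) tuples; `walls` is a set of blocked
    grid cells. Checking the snapped target before committing the move
    means the clamp can never place the player inside a wall.
    """
    target = (
        snap_to_grid(position[0] + delta[0], unit),
        snap_to_grid(position[1] + delta[1], unit),
    )
    if target in walls:
        return position  # snap back to the previous square
    return target
```

The key design choice is that the clamp is applied to a candidate position first, so the transform is only changed once the target square is known to be free.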

username_0: Oops didn't realise you can only assign one person at a time? I'll reassign you for now...

username_0: Fixed environment model - you'll need to pull as I had to update the Level1 scene as a result!

username_0: Not sure whether to close this one for now?

Status: Issue closed

username_1: Just tested this after your fix. I tried to hop through all four walls of the grid and couldn't.

I think it's fine to close this issue now with the environment fix and we can open a new one if the problem re-occurs. |

notify-run/notify.run | 1043201688 | Title: Subscribed channel says: Not found

Question:

username_0: Subscribed channel says: Not found.

Notifications (pop-ups and sounds) ok, but the channel doesn't show list and says (in red): Not found.

Phone and pc have the same problem.

Thank you very much.

Answers:

username_1: Sorry about that, I found the bug and am deploying a fix. Should be live in < 10 minutes.

username_0: Working.

Tnx.

Status: Issue closed

|

ray-project/ray | 606303483 | Title: fine grained CUDA / GPU controls?

Question:

username_0: <!--Please include [tune], [rllib], [autoscaler] etc. in the issue title if relevant-->

### What is your question?

Hi I have multiple systems with diverse hardware in my portfolio. For example this system with 3 GPUs.

Cuda device 0 has 1gb VRAM

Cuda device 1 has 16gb VRAM

Cuda device 2 has 48gb VRAM

How can I configure ray to only do calculations on cuda devices 1 and 2?

My current example model has a size of 11gb. So I can load it once on Cuda device 1 but four times on cuda device 2. How to configure for this with the fractional gpus?

Is there a way to allocate memory on an absolute gb scale?

Or is there a flag so ray automatically figures out the parallelization under the hood?

I had an intense look through the docs but failed to understand:

https://ray.readthedocs.io/en/latest/using-ray-with-gpus.html#fractional-gpus

*Ray version and other system information (Python version, TensorFlow version, OS):*

latest
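The arithmetic behind this question can be sketched without Ray. `CUDA_VISIBLE_DEVICES` is the standard CUDA environment variable for hiding devices from a process; it has to be set before CUDA is initialized, and the remaining devices are renumbered from 0. The helper names are hypothetical, the sketch ignores any memory headroom a real model needs, and it does not claim that Ray can apply a different fraction to each GPU.

```python
import os


def copies_per_device(vram_gb, model_gb):
    """For each device index, how many whole model copies fit into its VRAM."""
    return {index: int(vram // model_gb) for index, vram in vram_gb.items()}


def visible_devices(copies):
    """Comma-separated indices of the devices that fit at least one copy."""
    return ",".join(str(index) for index in sorted(copies) if copies[index] > 0)


def gpu_fraction(copies_on_device):
    """Fraction of one GPU that a single copy would occupy on that device."""
    return 1.0 / copies_on_device


# Figures from the question: 1 GB, 16 GB and 48 GB devices, 11 GB model.
vram_gb = {0: 1, 1: 16, 2: 48}
copies = copies_per_device(vram_gb, model_gb=11)

# Hide device 0 from anything started in this process afterwards.
os.environ["CUDA_VISIBLE_DEVICES"] = visible_devices(copies)
```

For the 48 GB device this gives four copies, i.e. a fraction of 0.25 per copy, which is the kind of value the fractional-GPU documentation linked above describes for `num_gpus`.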

Answers:

username_1: Ray doesn't innately support this for now. (It currently does not support resource isolation). You should probably find a way to pin each task to GPU. For example, when you want to pin CPU, you can do sth like this https://stackoverflow.com/questions/61051911/how-to-ensure-each-worker-use-exactly-one-cpu. (I am not that familiar with GPU, but I assume there should be a way to do). @robertnishihara Do you know any way to do this? |

jlippold/tweakCompatible | 350097067 | Title: `InteliX` partial on iOS 11.3.1

Question:

username_0: ```

{

"packageId": "com.ioscreatix.intelix",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "com.ioscreatix.intelix",

"deviceId": "iPhone10,3",

"url": "http://cydia.saurik.com/package/com.ioscreatix.intelix/",

"iOSVersion": "11.3.1",

"packageVersionIndexed": true,

"packageName": "InteliX",

"category": "Tweaks",

"repository": "Packix",

"name": "InteliX",

"installed": "1.3.8",

"packageIndexed": true,

"packageStatusExplaination": "This package version has been marked as Working based on feedback from users in the community. The current positive rating is 90% with 19 working reports.",

"id": "com.ioscreatix.intelix",

"commercial": true,

"packageInstalled": true,

"tweakCompatVersion": "0.1.0",

"shortDescription": "Grouped Notifications for iOS 11",

"latest": "1.3.8",

"author": "iOS Creatix",

"packageStatus": "Working"

},

"base64": "<KEY>

"chosenStatus": "partial",

"notes": "Notifications don’t open"

}

``` |

department-of-veterans-affairs/va.gov-team | 967155077 | Title: AWS Staging: VAOS Direct Scheduling clinic Availability Calendar >Next button not enabled

Question:

username_0: 1. Log in as Cecil.

2. Select Primary Care. Select Cheyenne.

3. Enter April 1, 2022 for 'when do you want to schedule this appointment'. Select continue.

4. Expected response: The month of April displays and I can advance to the next month (using the >Next button).

5. Actual Response: The month of April displays and I CAN NOT advance to the next month (using the >Next button). I have to go back and change the date to May 1, 2022 if I want to see May availability.

6. I can use < Previous just fine. It is the >Next that is all in grey.

april 1 selected call: https://staging-api.va.gov/vaos/v0/facilities/983/available_appointments?type_of_care_[…]&clinic_ids[]=455&start_date=2022-04-01&end_date=2022-05-31

may 2 selected call: https://staging-api.va.gov/vaos/v0/facilities/983/available_appointments?type_of_care_[…]&clinic_ids[]=455&start_date=2022-05-01&end_date=2022-06-30 (edited)

Max future days to the clinic is 450 days. So that is not the issue.
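Judging only from the two request URLs above, each call appears to cover the selected month plus the following one. A minimal sketch of that window and of the 450-day limit is shown below; the function names are hypothetical and this is not the VAOS front-end code.

```python
import calendar
from datetime import date


def availability_window(selected):
    """Return (start, end): first day of the selected month to last day of the next month."""
    start = selected.replace(day=1)
    # Move to the following month, wrapping December into January.
    year = start.year + (start.month // 12)
    month = start.month % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return start, date(year, month, last_day)


def within_max_future(selected, today, max_days=450):
    """True if the selected date is no more than `max_days` after today."""
    return 0 <= (selected - today).days <= max_days
```

If the window for April already ends on May 31, the data for May is present in the response, which suggests the disabled >Next button is a display problem rather than a missing request.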

Status: Issue closed

Answers:

username_1: Appears to be fixed now. @username_0 feel free to validate on your end as well |

Way2CU/Ranger-Site | 240007442 | Title: Site doesn't pass W3C validation.

Question:

username_0: [As seen here](https://validator.w3.org/nu/?doc=http%3A%2F%2Flp.berghoff.co.il%2F) site doesn't pass validation. Notable issues are using `li` elements as direct children of `div`.

@iliyaM you just make sure this passes afterwards. @username_1 this bug is solely yours. What you can't do, write here.

Answers:

username_1: Errors for LI elements fixed.

Error: Attribute seamless not allowed on element iframe at this point.

Spoke with @username_0 about this. It should be done on system level.

Status: Issue closed

|

FCC-Alumni/alumni-network | 217080624 | Title: User Profile Route

Question:

username_0: A route specifically for viewing a user's profile, that really highlights their profile and is less of a preview.

e.g. `/dashboard/users/:username_1`

Could link to this from community, mentorship, and chat, to give users a nice way of seeing details about another user.

Thoughts? Can discuss.

Answers:

username_1: @username_0 Yes, this is the next big step - I think once we have this done, we will have a pretty solid working app, and can think about cleaning things up and an initial potential release. I have more in mind for the future, but once this, chat, and search are fully done, I think we will be in good shape for an MVP (oh yeah, plus copy revisions and looking at what to do with both protected and unprotected home pages)

username_1: @username_0 should this be an unprotected route? so even non-users could see it? If so we'd have to hide email and such - if we even include that.

Status: Issue closed

|

AzureAD/microsoft-authentication-library-for-objc | 637428512 | Title: Can MSALWebviewParameters be used with SwiftUI to fetch a token interactively?

Answers:

username_1: SwiftUI should be compatible with existing UIKit frameworks. Apple has some guidance here: https://developer.apple.com/tutorials/swiftui/interfacing-with-uikit. Let us know if that still doesn't address your issue. Thanks. |

ballerina-platform/ballerina-lang | 588694425 | Title: Not possible to access json payload when payload is a json array

Question:

username_0: **Description:**

I have a POST resource and I want to pass a JSON array as the request payload.

````java

@http:ResourceConfig {

methods: ["POST"],

path: "/news-articles/validatetest",

cors: {

allowOrigins: ["*"],

allowHeaders: ["Authorization, Lang"]

},

produces: ["application/json"],

consumes: ["application/json"]

}

resource function validateArticlesTest(http:Caller caller, http:Request req) {

var x = req.getJsonPayload();

io:println(x);

io:println("test");

}

````

But it seems that when I run this and invoke it, `var x` is always null.

````

curl -X POST http://localhost:9090/news-articles/validatetest -H "Content-Type: application/json" --data '[{"aaa":"amaval", "bbb":"bbbval"},{"ccc":"amaval", "ddd":"bbb val"}]'

````

**Affected Versions:**

1.1.3

Answers:

username_0: I got this to work with the approach below, using a cast.

```

resource function validateArticlesTest(http:Caller caller, http:Request req) {

json[]|error jsonarray = <json[]>req.getJsonPayload();

io:println(jsonarray);

}

```

Hence closing the issue.

Status: Issue closed

username_1: @username_0, when casting, the resultant value is always the target type if successful.

So the following (without `error`) will work.

```ballerina

json[] jsonarray = <json[]>req.getJsonPayload();

```

But if unsuccessful, it will result in a panic.

For example, in case `req.getJsonPayload()` could evaluate to an error or could even be a `json` payload but not a JSON array (e.g., JSON object, digit, null, etc.) the cast to `json[]` will result in a panic, which in an HTTP resource will result in a 500 internal server error.

If that's not the intended behaviour, and you want to specifically handle the error scenarios, it would be better to do something like

```ballerina

json|error x = req.getJsonPayload();

if x is json[] {

// Valid, received a JSON array.

} else if x is json {

// Invalid - JSON, but not an array.

} else {

// Invalid - `error`

}

```
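For readers less familiar with Ballerina's type tests, the same three-way branching is sketched here in Python, purely as an illustration of the logic. The function name `classify_payload` and the returned labels are hypothetical.

```python
import json


def classify_payload(raw):
    """Classify a request body as a JSON array, other valid JSON, or an error."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return "error"  # corresponds to the `error` branch
    if isinstance(payload, list):
        return "array"  # corresponds to `x is json[]`
    return "not-array"  # valid JSON, but an object, number, string, bool or null
```

Handling the three cases explicitly is what avoids the panic, and therefore the 500 response, that the bare cast produces.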

username_0: Thank you very much for the insight @username_1 |

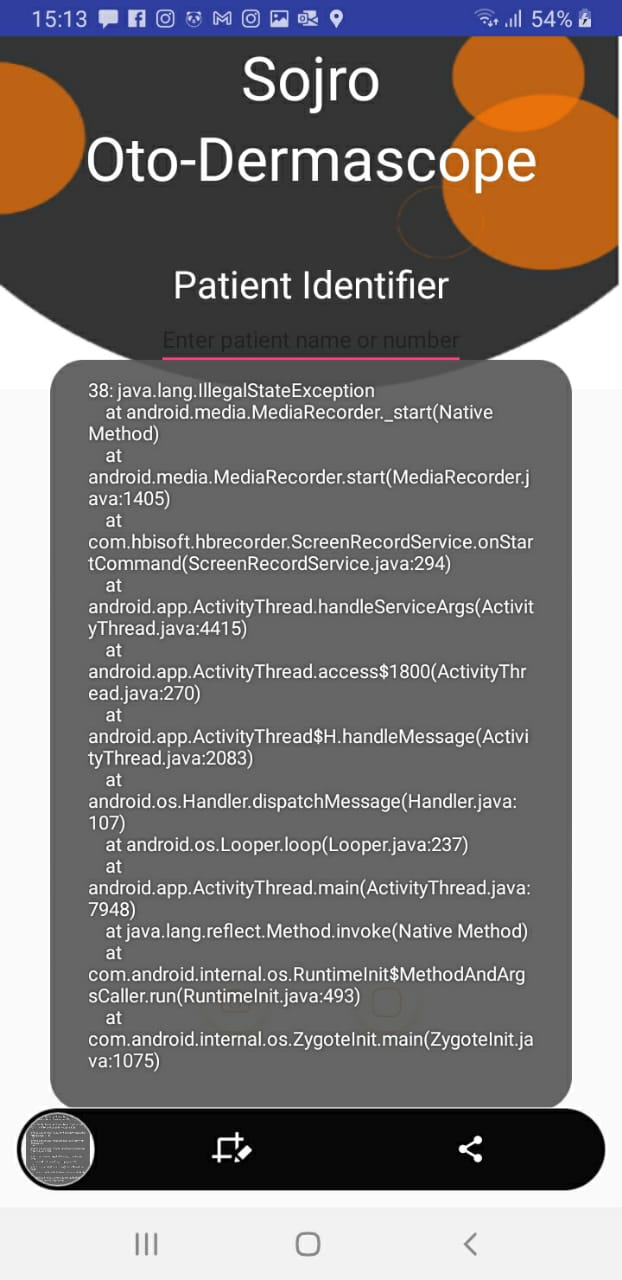

HBiSoft/HBRecorder | 918554116 | Title: Screen recording not working in android version 9

Question:

username_0: **HBRecorder version**

for example 2.0.0

**Device information**

Samsung

- SDK version 29

**Screenshots**

If applicable, add screenshots to help explain your problem.

|

Neztore/save-server | 994334801 | Title: (413) Payload Too Large.

Question:

username_0: **Describe the bug**

When I try uploading a file around 1MB or more it does not let me, some screenshots also give this error.

**To Reproduce**

1. Get a 1MB file or bigger.

2. Upload the file and it will give you the error.

**Expected behavior**

The file should upload normally.

**Screenshots**

**Additional context**

Latest ShareX version.

Answers:

username_1: This is a common issue, and is usually not due to Save-Server itself. The default limit within save-server is around 10mb

Nginx, by default, applies a low payload limit. [Follow these steps](https://www.tecmint.com/limit-file-upload-size-in-nginx/) to fix this - most proxy softwares, I imagine, apply a similar restriction by default.

username_0: Hey, I have tried that and it has worked. I have also found how to change the default save-server limit. Thanks!

Status: Issue closed

|

Yaruno292/EndlessRunner | 269596145 | Title: Naming and English

Question:

username_0: You have received feedback from **username_0**

on:

```c

public class DIE : MonoBehaviour {

private void OnTriggerEnter2D(Collider2D collision)

{

animationPlayer.ded = true;

}

}

```

URL: https://github.com/Yaruno292/EndlessRunner/blob/master/code/Player/DIE.cs

Feedback: Class names always use PascalCase; use correct English.[](http://www.studiozoetekauw.nl/codereview-in-het-onderwijs/ '#cr:{"sha":"fb4e6075badd5775e05b549059fa4523e333bf98","path":"code/Player/DIE.cs","reviewer":"username_0"}') |

github-vet/rangeloop-pointer-findings | 777117186 | Title: keybase/client: go/kbtest/chat.go; 6 LoC

Question:

username_0: [Click here to see the code in its original context.](https://github.com/keybase/client/blob/a144e0ce38ee9e495cc5acbcd4ef859f5534d820/go/kbtest/chat.go#L1139-L1144)

<details>

<summary>Click here to show the 6 line(s) of Go which triggered the analyzer.</summary>

```go

for _, inboxItem := range inboxItems {

c.InboxCb <- NonblockInboxResult{

ConvRes: &inboxItem,

ConvID: inboxItem.GetConvID(),

}

}

```

</details>

Leave a reaction on this issue to contribute to the project by classifying this instance as a **Bug** :-1:, **Mitigated** :+1:, or **Desirable Behavior** :rocket:

See the descriptions of the classifications [here](https://github.com/github-vet/rangeclosure-findings#how-can-i-help) for more information.

commit ID: a144e0ce38ee9e495cc5acbcd4ef859f5534d820 |

dotvanilla/vanilla | 937225842 | Title: Getting started

Question:

username_0: Hey @username_1. Sorry to disturb you again.

I just cloned this repo but am unable to open the project in my VS. Can you publish a release? It will be very helpful if you release a compiled version.

It will be very helpful of you.

Thanks.

Answers:

username_1: Hi, please wait a few more days. I need time to relearn WebAssembly; there are some things I forgot about WebAssembly compiler development.

WebAssembly is not designed for Win32 programming, and COM technology is designed for Win32, so WebAssembly probably will not work with COM. But you can search for a Node.js package that may support the COM interface; a WebAssembly application can use the Node.js API.

username_1: There is an unknown bug in this compiler project; I need time to solve it. |

JulzCryptoMyriad/JulzWidget | 987230209 | Title: Create 2 options for wallets

Question:

username_0:

Users must be able to choose whether to use a mobile wallet (via WalletConnect) or to identify which wallet they have on the webpage they are using.

Answers:

username_0: Depends on #7 |

johnfairh/RubyGateway | 1033258879 | Title: Ruby version problem

Question:

username_0: I have a Swift project. In it, $LOAD_PATH is:

/Library/Ruby/Site/2.6.0

/Library/Ruby/Site/2.6.0/x86_64-darwin20

/Library/Ruby/Site/2.6.0/universal-darwin20

/Library/Ruby/Site

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/vendor_ruby/2.6.0

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/vendor_ruby/2.6.0/x86_64-darwin20

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/vendor_ruby/2.6.0/universal-darwin20

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/vendor_ruby

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/2.6.0

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/2.6.0/x86_64-darwin20

/System/Library/Frameworks/Ruby.framework/Versions/2.6/usr/lib/ruby/2.6.0/universal-darwin20

In the terminal:

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/site_ruby/2.7.0

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/site_ruby/2.7.0/x86_64-darwin20

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/site_ruby

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/vendor_ruby/2.7.0

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/vendor_ruby/2.7.0/x86_64-darwin20

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/vendor_ruby

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/2.7.0

/Users/alexandr/.rvm/rubies/ruby-2.7.0/lib/ruby/2.7.0/x86_64-darwin20

How can I make Ruby look in the same place from the project as it does from the terminal?

Answers:

username_1: Closing, answered.

Status: Issue closed

|

glotzerlab/hoomd-blue | 541195435 | Title: No error issued when different particle vertices are specified for different ranks

Question:

username_0: ## Description

<!-- Describe the problem. -->

When different vertices are applied to each rank in a hoomd run, no error is produced meaning that different particles can be run for each rank. This has been shown to be a problem if the user is generating a new particle configuration each time the code is run meaning that for each rank the particle vertices will be different.

The issues become clear during compression of a simulation because the system is unable to properly run overlap checks.

To reproduce the problem, initialize the signac workspace and run the signac project file submit_job.

## Script

```python

# Include a minimal script that reproduces the problem

you can reproduce this problem on great lakes with the script provided here.

The reduce overlap code is what generates a slightly different particle for each rank based on the isovalue file which is just the file for the vertices of the particle.

[Problem_Reproduction.zip](https://github.com/glotzerlab/hoomd-blue/files/3990248/Problem_Reproduction.zip)

```

<!-- Attach any input files needed to execute the script. -->

## Output

<!-- What output did you get? -->

## Expected output

<!-- What output did you expect? -->

## Configuration

<!-- What is your system configuration? -->

<!-- Remove items that do not apply. -->

Platform:

- Mac

- Linux

- GPU

- CPU

Installation method:

- Conda package

- Compiled from source

- glotzerlab-software container

<!-- What software versions do you have? -->

Versions:

- Python version: 3.7

- **HOOMD-blue** version: 2.8.1

## Developer

<!-- Are you able to fix this bug for the benefit of the HOOMD-blue user community? -->

Answers:

username_1: @b-butler This issue is general to all param and TypeParam dict values. The user can break things by specifying different values (e.g. temperatures) on different ranks. In v2.x I have strategically inserted broadcast calls on parameters where I expect users to pass different values (such as seed which many users set to a clock value). The broadcast call passes the value from rank 0 out to all the ranks and any values set on other ranks are ignored.

For v3 we could consider a general solution for all parameters and type parameters. This would require writing and exposing broadcast methods for all possible types that users may pass to params and TypeParams. Given that these may be user-defined classes (e.g. particle filters) or nested dicts, we may need to rely on pickle to pack and unpack the objects. Alternately, we could just not broadcast custom types and assume that users pass them in correctly.
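The broadcast-from-rank-0 idea, including the pickle-based packing mentioned above, can be simulated without MPI as in the sketch below. The list stands in for the per-rank values and both function names are hypothetical; a real implementation would use an MPI collective (for example mpi4py's `comm.bcast(value, root=0)`) and this is not HOOMD-blue's code.

```python
import pickle


def ranks_agree(values_by_rank):
    """True when every rank holds a value equal to the one on rank 0."""
    return all(value == values_by_rank[0] for value in values_by_rank)


def broadcast_from_root(values_by_rank):
    """Mimic a broadcast: every rank receives an independent copy of rank 0's value.

    pickle is used to pack and unpack so that nested dicts and
    user-defined (picklable) objects are handled uniformly.
    """
    packed = pickle.dumps(values_by_rank[0])
    return [pickle.loads(packed) for _ in values_by_rank]
```

A check like `ranks_agree` could be used to raise an error instead of silently overriding the other ranks, which is the behaviour the issue title asks for.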

username_1: I'm not sure a general solution is possible given the nature of the data model. We will need to address topics like this in a future MPI tutorial.

Status: Issue closed

|

Septima/spatialsuite-google-analytics | 154417549 | Title: Haderslev: GoogleAnalytics lacks advanced setup

Question:

username_0: We are missing a setup for our profiles.

Two main profiles have been created, Basis and Maxi, but they are completely empty...

What we can currently see is a total number of users.

Our expectation was to track the usage of:

- **all** existing profiles

- **all** existing layers.

Is this something we could set up ourselves? |

NYCPlanning/labs-geosearch-docker | 564213913 | Title: 1912 Ditmars fails

Question:

username_0: @username_0 commented on [Fri Jan 19 2018](https://github.com/NYCPlanning/labs-geosearch-pad-normalize/issues/27)

19-12 Ditmars works

---

@chriswhong commented on [Mon Jan 22 2018](https://github.com/NYCPlanning/labs-geosearch-pad-normalize/issues/27#issuecomment-359464865)

Should be addressed by #11 |

space-wizards/space-station-14 | 267477189 | Title: We need sprite offsets.

Question:

username_0: Just a general way to offset sprites would be nice.

Answers:

username_1: I think we have this though, we have offset parameters that load in? I might be mistaken

username_0: Not that I can see, at least not on the basic `SpriteComponent`

Status: Issue closed

|

DimensionDataResearch/packer-plugins-ddcloud | 271743181 | Title: Unable to find uploaded source_image

Question:

username_0: Hi,

I am experiencing an issue with packer-plugins-ddcloud.

I have uploaded a customer image to use as a source_image, however the plugin does not seem to find it.

Expected result is that it should find the customer image and use this as a source_image to modify (provisioners e.g. Ansible) and build and upload a new customer image on the Dimension Data cloud.

Packer output:

```bash

# packer build debian9.json

ddcloud-customerimage output will be in this color.

ddcloud-customerimage: Resolving datacenter 'EU7'...

ddcloud-customerimage: Resolved datacenter 'EU7'.

==> ddcloud-customerimage: Image 'Debian9-base' not found in datacenter 'EU7'.

Build 'ddcloud-customerimage' errored: One or more steps failed to complete

```

Used builder configuration:

```json

"builders": [{

"type": "ddcloud-customerimage",

"mcp_region": "EU",

"mcp_user": "MYLOGIN",

"mcp_password": "<PASSWORD>",

"datacenter": "EU7",

"networkdomain": "develop",

"vlan": "prod-vlan-120",

"source_image": "Debian9-base",

"target_image": "Debian 9 test",

"use_private_ipv4": "true",

"communicator" : "ssh"

}

```

Thanks in advance!

Answers:

username_1: Hi looks like it should work - I'll check this out first thing tomorrow (am in AU and it's almost bedtime)

username_1: Ok, had a glance at the code and it looks like you've hit a bug - will sort it out first thing tomorrow; sorry for the inconvenience :)

username_0: Thank you!

username_1: No problem :)

In the meanwhile, would you mind running packer again with the following environment variables set and then attaching the resulting log file to this issue? I'm off to bed but will have a look tomorrow.

```bash

export PACKER_LOG=1

export PACKER_LOG_PATH=$PWD/packer.log

export MCP_EXTENDED_LOGGING=1

```

username_0: [packer.log](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/files/1449860/packer.log)

I have installed your latest release beta4 which shows that it is unable to find an OS Image or Customer Image named Debian9-base. Which I did upload as a customer image.

username_1: From the log, it looks like the CloudControl API says there is no image, but I suspect that this relates to guest OS customisation (the older API doesn't return images with GOC disabled); I had to deal with this in our Terraform provider a while back. Will check it out tomorrow.

username_1: Ok, I'm going to try updating to the current version of the CloudControl client library (should be able to see images that have GOC disabled) and rebuild. Will post here when a new release is out.

username_1: Ok, so I'm about to create the release (thanks for bearing with me). This won't actually _fix_ your problem but will at least confirm for us that the problem relates to the newer style of images (where you can disable GOC). If that's the case, it should only take a couple of hours to implement.

Sorry about that, BTW - it looks like a case of our Packer plugins not keeping up with new features in CloudControl (I didn't realise anyone was using the plugins, but if you are then I'm happy to keep them up to date).

username_1: Ok @username_0, could you try running [v0.1.3-beta5](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/releases/tag/v0.1.3-beta5) with logging and posting the log, please?

username_0: Just woke up and about to go to work, will run it immediately once I get there.

username_0: I have run beta5, it seems to work alright. But cleanup on failure goes wrong.

I still have a network issue as ddcloud does not seem to support DHCP for some reason, hoping to figure out how to get around that...

[packer.log](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/files/1452747/packer.log)

username_1: Yeah, they don't support DHCP unfortunately - I've been caught out by that one too :grin:

Fortunately, I built one that'll do what you want:

https://github.com/DimensionDataResearch/mcp2-dhcp-server

username_1: (sorry, I know it's slightly more awkward than native support within CloudControl but once it's set up, it's pretty much set-and-forget)

username_1: (just turn off PXE / iPXE features as needed)

username_0: Thanks :+1:

username_1: No worries - give us a yell if you have any trouble with it.

username_0: I am a bit disappointed as to needing to run a VM with a DHCP server in order to deploy VMs naturally.

How do the Dimension Data images work to circumvent this?

username_1: Yeah, it's not the best :-/

As I understand it (I'm not part of the team that does the MCP and CloudControl), CloudControl uses VMWare's [Guest OS Customisation](https://docs.mcp-services.net/display/CCD/Introduction+to+Cloud+Server+Provisioning%2C+OS+Customization%2C+and+Best+Practices) facility to achieve configuration of stuff like IP addresses (more [here](https://docs.mcp-services.net/display/CCD/Best+Practices+and+Tips+around+Linux+Guest+OS+Customization+Client+Images) and distro support matrix [here](https://docs.mcp-services.net/pages/viewpage.action?pageId=3015255)).

When you initially imported your image, were you presented with a choice to enable / disable guest OS customisation? I could be wrong, but if not then you might be using a distro that VMWare doesn't know how to customise.

username_1: I'll reach out to the relevant team to confirm this, BTW.

username_0: Thanks I'll give that a try, yes I did disable guest OS customisation as I did not have vmware tools installed.

username_1: Ah, sorry, just looked through the support matrix myself - looks like Debian is supported, but not for GOC :(

username_1: So if you're using Debian, you'll probably need either DHCP or static IPs baked into the image (yuck).

username_0: I tried to fake it being Ubuntu 16.04 instead, giving the following error:

ddcloud-customerimage output will be in this color.

ddcloud-customerimage: Resolving datacenter 'EU7'...

ddcloud-customerimage: Resolved datacenter 'EU7'.

ddcloud-customerimage: Deploying server 'packer-build-bddaa7002f' in network domain 'develop' ('e7e74af0-6dcd-41a9-8c12-9e60ad24c6a5')...

==> ddcloud-customerimage: Request to deploy server 'packer-build-bddaa7002f' failed with status code 400 (INVALID_INPUT_DATA): administratorPassword must not be provided if the imageId corresponds to a Linux Customer Image or Windows 2003 Customer Image.

Build 'ddcloud-customerimage' errored: unexpected EOF

==> Some builds didn't complete successfully and had errors:

--> ddcloud-customerimage: unexpected EOF

Not quite sure what this is supposed to mean.

username_0: I did not provide "initial_admin_password" as part of the builder in Packer.

username_1: Ah - ok, that might be a bug (or undefined behaviour at least); let me have a look.

username_1: Creating a new release now...

username_1: Ok, would you mind giving it one more try on [beta7](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/releases/tag/v0.1.3-beta7) (with logging)?

username_0: [packer.log](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/files/1453284/packer.log)

Issue is still present unfortunately.

username_1: LOL ok, sorry, I know what the problem is now. Give me a minute.

username_1: Creating a new release (sorry about this, but I think we've got it right this time)...

username_1: (it's been close to 12 months since I've touched this codebase and it's taking me a while to get familiar with the design again)

username_1: https://github.com/DimensionDataResearch/packer-plugins-ddcloud/releases/tag/v0.1.3-beta8

username_0: Thanks! It seems to be doing something right now.

username_0: Right now my VM is stuck on "Wait for Guest Ip Address"

username_1: Hmm - you may need to follow [these](https://docs.mcp-services.net/display/CCD/Best+Practices+and+Tips+around+Linux+Guest+OS+Customization+Client+Images) instructions to make sure the original source image is customisation-compatible.

I'm not sure if you actually need `open-vm-tools` or equivalent to be present in the image...

You could also try just deploying a server from the source image and then logging into the console to see the effects of customisation (assuming the image was marked as supporting customisation).

username_1: Probably worth seeing if a server deployed via the UI from that source image boots correctly; it would at least make it easier to see what IP it winds up with (otherwise, the DHCP option may wind up being easier to use).

username_0: Thanks for the help so far. I am still trying to figure out why the IP is not assigned; it would be nice if someone from Dimension Data could tell me what's causing it.

username_1: Hi, sorry to hear you're still having problems :-/

I think the best way to get support on this is to try deploying your image using the UI and then log a new ticket that doesn't mention Packer but just the server deployment problem(s). That way it'll get routed to the right people.

username_1: I take it following the instructions in that page I linked didn't help?

username_1: If the deployment fails I can probably show you how to use `curl` or `httpie` to view the full server details (including reason for failure) via the CloudControl API, which may help the CloudControl folks diagnose the issue...

username_0: If you could provide me with that info, that would be most helpful. Thanks!

username_1: Sure - no problem:

Do you prefer curl, HTTPie, or POSTMAN (personally I prefer HTTPie or POSTMAN but any of those is fine)?

username_0: I prefer httpie or postman as well.

username_1: Try this:

```bash

http get 'https://api-EU.dimensiondata.com/caas/2.5/{orgId}/server/server/{serverId}' Accept:application/json --auth-type basic --auth 'user:password'

```

Where `{orgId}` is, from the logs, `799dda5b-93ba-411c-a2a3-61c4c6e20c54`, and `{serverId}` is the Id of the server (you can see that in the CloudControl UI).
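
For anyone who would rather script this than use HTTPie, here is a rough Python equivalent of the call above. It is only a sketch using the standard library: the helper names `build_server_request` and `fetch_server` are made up for illustration, and the URL layout and basic-auth scheme are taken from the HTTPie command, not from any official client.

```python
import base64
import json
import urllib.request

# Base URL copied from the HTTPie command above (EU region, API version 2.5).
API_BASE = "https://api-EU.dimensiondata.com/caas/2.5"


def build_server_request(org_id, server_id, user, password):
    """Build (but do not send) the GET request for one server's details."""
    url = f"{API_BASE}/{org_id}/server/server/{server_id}"
    # HTTP basic auth: base64 of "user:password".
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {
        "Accept": "application/json",
        "Authorization": f"Basic {token}",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


def fetch_server(org_id, server_id, user, password):
    """Send the request and return the decoded JSON body as a dict."""
    request = build_server_request(org_id, server_id, user, password)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `fetch_server(org_id, server_id, user, password)["progress"]` should then show the deployment step the server is currently on, assuming the response has the same shape as the HTTPie output.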

username_0: Thanks, that resulted in giving me the following information.

```json

"progress": {

"action": "DEPLOY_SERVER",

"numberOfSteps": 14,

"requestTime": "2017-11-09T07:54:52.000Z",

"step": {

"name": "WAIT_FOR_GUEST_IP_ADDRESS",

"number": 11

},

"updateTime": "2017-11-09T08:29:00.000Z",

"userName": "x"

},

```

Doesn't really say why, but I guess I should make a ticket with this information.

username_1: Yeah - that part of the process is a little opaque, but essentially it means that the server has booted but has not picked up the configured IP address. I believe the customisation process modifies stuff in `/etc` and if your distro has unexpected stuff in there it may not be successful in doing so. I'd say at this stage yes, the best option is to raise a ticket; someone with knowledge of the system internals will need to take a look at it.

username_1: BTW, you can see a list of the steps and what they do [here](https://docs.mcp-services.net/display/CCD/Introduction+to+Cloud+Server+Provisioning%2C+OS+Customization%2C+and+Best+Practices).

(sorry I couldn't be more directly helpful but I have no deeper access to the system than you do)

username_1: BTW, it looks like you do need to have VMWare tools or open-vm-tools installed for GOC to work correctly.

username_0: I did have them installed, I am seeing the following issue still when using Ubuntu 16.04 as a base image.

```

==> ddcloud-customerimage: Request to delete server failed with unexpected status code 400 (SERVER_STARTED): Server with id 6a2c6ef9-ddd0-4e41-88a5-0717e9ae7053 is started but must be stopped to perform this operation. Please Power Off or Shutdown the Server (as appropriate) and try again.

Build 'ddcloud-customerimage' errored: unexpected EOF

```

Could you make it so that it forces deletion or stops and then deletes?

username_1: Oops, I would have thought it would already do that - I'll look into it first thing tomorrow. Thanks for sticking with it!

username_1: I've made the change (turns out it was done correctly in another step, but not this one), but have to get to bed; will create a release as soon as I wake up (sorry about that).

username_1: Never mind - just created `beta9` release. Go for it. Will be back online tomorrow morning :)

PS. If you still have any problems, attach a log and I'll see what's going on.

username_0: Thanks!! Sleep well :)

username_0: [packer.log](https://github.com/DimensionDataResearch/packer-plugins-ddcloud/files/1457819/packer.log)

Getting a segmentation fault now.

username_1: 😭

username_1: Believe it or not, we're making progress! The server deploy / destroy is working now and it's the destroying the firewall rule that is causing problems. Looking into it now.

username_1: Turns out the code path for `use_private_ipv4` hasn't been used before and it was trying to delete a non-existent firewall rule. Fixing now.

username_1: Right, `beta10` is ready to go - fingers crossed this is the last release you'll have to try.

username_0: Thanks, sorry for the lack of response. You are a day ahead of us and by the time you are at work I am home and unable to run Packer. :smile: I am running it now and waiting for the results!

username_1: No worries - I'm used to the time-zone thing and besides, it seems like you're the one who's having to wait for me rather than the other way around :)

username_0: The build has finished successfully now!

Build 'ddcloud-customerimage' finished.

==> Builds finished. The artifacts of successful builds are:

--> ddcloud-customerimage: Customer image 'Ubuntu 16.04 test' ('ea3e0f47-d598-4135-8943-0f5b60c11d67') in datacenter 'EU7'.

username_1: Sorry again for all the trouble; I built this more than a year ago, and nobody used it so it didn't receive much testing. I'll give it a little love in the coming months if I can (will probably get it merged into Packer as a built-in module).

username_1: (let me know if the image deploys successfully, BTW)

username_0: No worries! Glad to be able to help test it! In fact I used to do a lot of testing for companies, and worked in software development myself.

I'll test if the image deploys now, I didn't do much to it besides install python and run Ansible debug returning which network it's in.

username_1: If it helps, BTW, there's a libcloud driver for CloudControl, and some (rather basic because we've had a couple of PRs stuck in limbo for a while) Ansible modules for it too.

username_0: Haven't heard of it before, what is its use case?

username_1: Libcloud's a Python library that provides an abstraction over most of the cloud providers out there. If you can write Python it's not a bad way to automate things (it's how we wrote those Ansible modules, for example).

https://docs.mcp-services.net/display/LPC/LibCloud+Python+Client

username_0: Ah cool, thanks I'll give it a look! Yes I do write Python.. for quite a few years already, but you probably checked my profile? :+1:

username_1: Yep, that's why I suggested it :wink:

Status: Issue closed

|

mrdoob/three.js | 1054838890 | Title: Drag control needs to access its raycaster

Question:

username_0: **Is your feature request related to a problem? Please describe.**

I need to access the raycaster of drag control to handle the objects when they are not in the default layer.

I see that the transform control can access its raycaster to set to different layer, why it isn't the same with drag control?

**Describe the solution you'd like**

How about ?

```

getRaycaster() {

return _raycaster;

}

```

Answers:

username_1: Sounds good! Would you like to make a PR?

Status: Issue closed

|

ContinualAI/avalanche | 761278573 | Title: Add (Lopez-paz, 2017) Metrics: ACC, FWT & BWT

Question:

username_0: We should add the metrics proposed in (Lopez-paz, 2017) within the **beta version** of Avalanche.
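
For reference, a minimal sketch of the three metrics as I understand them from the GEM paper (Lopez-Paz & Ranzato, 2017). This is not Avalanche code: the function name `lopez_paz_metrics` is hypothetical, and it assumes you already have an accuracy matrix `R` where `R[i][j]` is the test accuracy on task `j` after training on task `i` (0-indexed), plus a vector `b` of accuracies for a randomly initialised model.

```python
def lopez_paz_metrics(R, b):
    """Return (ACC, BWT, FWT) for a T x T accuracy matrix R and baseline vector b.

    R[i][j]: test accuracy on task j after finishing training on task i.
    b[j]:    test accuracy on task j of the model at random initialisation.
    """
    T = len(R)
    if T < 2:
        raise ValueError("BWT and FWT need at least two tasks")

    # ACC: average accuracy over all tasks after training on the last task.
    acc = sum(R[T - 1][j] for j in range(T)) / T

    # BWT: how much learning later tasks changed accuracy on earlier ones
    # (negative values indicate forgetting).
    bwt = sum(R[T - 1][j] - R[j][j] for j in range(T - 1)) / (T - 1)

    # FWT: accuracy on a task just before training on it, relative to the
    # random-initialisation baseline.
    fwt = sum(R[j - 1][j] - b[j] for j in range(1, T)) / (T - 1)

    return acc, bwt, fwt
```

Keeping the computation as a pure function over `R` and `b` means it could be wrapped in whatever metric interface the beta ends up exposing.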

Answers:

username_1: I'd love to work on this issue

username_0: Perfect @username_1, refer to @username_2 for any problem/doubts you may encounter! :)

Status: Issue closed

|

Neeke/PHP-Druid | 197592130 | Title: PHP 7 extension is broken

Question:

username_0: Build time:

```

/dev/shm/BUILD/php-pecl-druid-0.6.0/ZTS/druid.c: In function 'zim_DRUID_NAME_getData':

/dev/shm/BUILD/php-pecl-druid-0.6.0/ZTS/druid.c:559:21: warning: implicit declaration of function 'Z_TYPE_PP' [-Wimplicit-function-declaration]

if (argc > 1 && Z_TYPE_PP(content) != IS_ARRAY)

^~~~~~~~~

```

Runtime:

```

+ /usr/bin/php -n -d extension=json.so -d extension=curl.so --define extension=/dev/shm/BUILDROOT/php-pecl-druid-0.6.0-1.fc25.remi.7.0.x86_64/usr/lib64/php/modules/druid.so --modules

+ grep Druid

PHP Warning: PHP Startup: Unable to load dynamic library '/dev/shm/BUILDROOT/php-pecl-druid-0.6.0-1.fc25.remi.7.0.x86_64/usr/lib64/php/modules/druid.so' - /dev/shm/BUILDROOT/php-pecl-druid-0.6.0-1.fc25.remi.7.0.x86_64/usr/lib64/php/modules/druid.so: undefined symbol: Z_TYPE_PP in Unknown on line 0

```

Answers:

username_1: Can you fix it?

username_0: Sorry, not any time soon.

username_0: See pr #6 (untested, only build)

Status: Issue closed

|

owncloud/ocis-reva | 698802455 | Title: [eos] Cannot set mtime in file upload

Question:

username_0: <d:prop><d:getetag/><d:getlastmodified/></d:prop>

</d:propfind>' -k | xmllint --format -

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 649 100 415 100 234 387 218 0:00:01 0:00:01 --:--:-- 605

<?xml version="1.0" encoding="utf-8"?>

<d:multistatus xmlns:d="DAV:" xmlns:s="http://sabredav.org/ns" xmlns:oc="http://owncloud.org/ns">

<d:response>

<d:href>/remote.php/webdav/file.txt</d:href>

<d:propstat>

<d:prop>

<d:getetag>"2452963196928:062c0215"</d:getetag>

<d:getlastmodified>Fri, 11 Sep 2020 03:43:45 +0000</d:getlastmodified>

</d:prop>

<d:status>HTTP/1.1 200 OK</d:status>

</d:propstat>

</d:response>

</d:multistatus>

``` |

tidyverse/ggplot2 | 810435363 | Title: ragg device functions not working with ggsave

Question:

username_0: It's not working for me however, the following code results in an empty image being saved.

Related to https://github.com/r-lib/ragg/issues/69

``` r

library(ggplot2)

library(ragg)

p <- ggplot(iris, aes(Sepal.Length, Sepal.Width, col = Species)) + geom_point()

ggsave("gg_test.png", p, device = agg_png)

#> Saving 7 x 5 in image

```

<sup>Created on 2021-02-17 by the [reprex package](https://reprex.tidyverse.org) (v1.0.0)</sup>

<details style="margin-bottom:10px;">

<summary>

Session info

</summary>

``` r

sessioninfo::session_info()

#> ─ Session info ───────────────────────────────────────────────────────────────

#> setting value

#> version R version 4.0.3 (2020-10-10)

#> os macOS Mojave 10.14.6

#> system x86_64, darwin17.0

#> ui X11

#> language (EN)

#> collate en_GB.UTF-8

#> ctype en_GB.UTF-8

#> tz Europe/Paris

#> date 2021-02-17

#>

#> ─ Packages ───────────────────────────────────────────────────────────────────

#> package * version date lib source

#> assertthat 0.2.1 2019-03-21 [1] standard (@0.2.1)

#> backports 1.2.0 2020-11-02 [1] standard (@1.2.0)

#> cli 2.2.0 2020-11-20 [1] standard (@2.2.0)

#> colorspace 2.0-0 2020-11-11 [1] standard (@2.0-0)

#> crayon 1.3.4 2017-09-16 [1] standard (@1.3.4)

#> digest 0.6.27 2020-10-24 [1] standard (@0.6.27)

#> dplyr 1.0.2 2020-08-18 [1] standard (@1.0.2)

#> ellipsis 0.3.1 2020-05-15 [1] standard (@0.3.1)

#> evaluate 0.14 2019-05-28 [1] standard (@0.14)

#> fansi 0.4.1 2020-01-08 [1] standard (@0.4.1)

#> farver 2.0.3 2020-01-16 [1] standard (@2.0.3)

#> fs 1.5.0 2020-07-31 [1] standard (@1.5.0)

#> generics 0.1.0 2020-10-31 [1] standard (@0.1.0)

#> ggplot2 * 3.3.3 2020-12-30 [1] standard (@3.3.3)

#> glue 1.4.2 2020-08-27 [1] standard (@1.4.2)

#> gtable 0.3.0 2019-03-25 [1] standard (@0.3.0)

#> highr 0.8 2019-03-20 [1] standard (@0.8)

#> htmltools 0.5.1.1 2021-01-22 [1] standard (@0.5.1.1)

#> knitr 1.30 2020-09-22 [1] standard (@1.30)

#> labeling 0.4.2 2020-10-20 [1] standard (@0.4.2)

#> lifecycle 0.2.0 2020-03-06 [1] standard (@0.2.0)

#> magrittr 2.0.1 2020-11-17 [1] standard (@2.0.1)

#> munsell 0.5.0 2018-06-12 [1] standard (@0.5.0)

[Truncated]

#> scales 1.1.1 2020-05-11 [1] standard (@1.1.1)

#> sessioninfo 1.1.1 2018-11-05 [1] standard (@1.1.1)

#> stringi 1.5.3 2020-09-09 [1] standard (@1.5.3)

#> stringr 1.4.0 2019-02-10 [1] standard (@1.4.0)

#> styler 1.3.2 2020-02-23 [1] standard (@1.3.2)

#> systemfonts 1.0.1 2021-02-09 [1] standard (@1.0.1)

#> textshaping 0.3.0 2021-02-10 [1] standard (@0.3.0)

#> tibble 3.0.4 2020-10-12 [1] standard (@3.0.4)

#> tidyselect 1.1.0 2020-05-11 [1] standard (@1.1.0)

#> vctrs 0.3.5 2020-11-17 [1] standard (@0.3.5)

#> withr 2.3.0 2020-09-22 [1] standard (@2.3.0)

#> xfun 0.19 2020-10-30 [1] standard (@0.19)

#> yaml 2.2.1 2020-02-01 [1] standard (@2.2.1)

#>

#> [1] /Library/Frameworks/R.framework/Versions/4.0/Resources/library

```

</details>

Thanks.

Answers:

username_1: Thanks, I too faced this problem a while ago. As the default value of `ragg::agg_png()`'s `unit` is `px`, which is the same as `grDevices::png()`, I guess ggplot2 needs some wrappers like this:

https://github.com/tidyverse/ggplot2/blob/dbd7d79aa35c49b4eab200cf5f7a084a7748e776/R/save.r#L181-L185

username_2: This is the lookup table that converts file extensions into graphic devices, so it wouldn't have an effect if users specify the graphics device as a function. Instead, if they do so, they will have to set `res` and `units` manually.

However, we should change the lookup table so it uses ragg devices by default if available.

username_2: See also: #4086

username_3: Yeah — the plan is to use ragg if available for all file types where it makes sense in the next release. If someone is eager to implement the logic they should feel free but otherwise I'm on it the next time I look at ggplot2

username_1: Yes, what I meant was the users need to define some wrapper function like the one below. I guess a lot of people will use AGG as RStudio graphic device (as it's great) and they'll want to use the same rendering on `ggsave()` as well. Probably the next release will not be very soon, so I think it's meaningful to show a workaround for this here.

``` r

library(ggplot2)

p <- ggplot(iris, aes(Sepal.Length, Sepal.Width, col = Species)) + geom_point()

# 300 is the same dpi as ggsave()'s default

png <- function(...) ragg::agg_png(..., res = 300, units = "in")

ggsave("gg_test.png", p, device = png)

```

username_3: The behaviour of `ggsave()` is quite unfortunate... ragg uses the exact same default values as `grDevices::png()` but still does not behave the same way

username_2: The following works just fine, though. If people want to use custom graphics devices they need to be prepared to set a few parameters correctly, I think. Maybe the real issue is to document `ggsave()` better, and to explain that when using a device function, some settings such as `dpi` or `units` may not work and/or may have to be set explicitly.

``` r

library(ggplot2)

p <- ggplot(iris, aes(Sepal.Length, Sepal.Width, col = Species)) + geom_point()

ggsave("~/Desktop/gg_test.png", p, device = ragg::agg_png, res = 300, units = "in")

#> Saving 7 x 5 in image

```

<sup>Created on 2021-02-22 by the [reprex package](https://reprex.tidyverse.org) (v1.0.0)</sup>

username_4: @username_2, when I run your example, my `gg_test.png` file is 7x7 pixels big and 116 bytes big.

`p` prints to Viewer just fine, it's `ggsave` with `agg_png` that saves a tiny 7x7 pixels file.

My session info:

```

R version 4.0.3 (2020-10-10)

Platform: x86_64-apple-darwin17.0 (64-bit)

Running under: macOS Big Sur 10.16

Matrix products: default

LAPACK: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRlapack.dylib

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] ggplot2_3.3.3

loaded via a namespace (and not attached):

[1] magrittr_2.0.1 tidyselect_1.1.0 munsell_0.5.0 colorspace_2.0-0 R6_2.5.0

[6] ragg_1.1.1.9000 rlang_0.4.10 fansi_0.4.2 dplyr_1.0.5 tools_4.0.3

[11] grid_4.0.3 gtable_0.3.0 utf8_1.1.4 DBI_1.1.1 withr_2.4.1

[16] systemfonts_1.0.1 ellipsis_0.3.1 digest_0.6.27 assertthat_0.2.1 tibble_3.0.6

[21] lifecycle_1.0.0 crayon_1.4.1 textshaping_0.3.1 farver_2.0.3 purrr_0.3.4

[26] vctrs_0.3.6 glue_1.4.2 labeling_0.4.2 compiler_4.0.3 pillar_1.5.0

[31] generics_0.1.0 scales_1.1.1 pkgconfig_2.0.3

```

username_5: Hello,

In addition, it seems that `ggsave()` does not close the device when using `agg_png()` as device (it does when specifying `'png'` as device). The following reprex ends with the `Too many open devices` error.

```

library(ggplot2)

library(ragg)

library(glue)

data(iris)

for (i in 1:200){

p <- ggplot(iris) +

geom_point(aes(Sepal.Length,Sepal.Width,color=Species))

ggsave(glue("Plot_{i}.png"), plot=p, device=agg_png(), path="./tmp/")

}

```

(This is solved by explicitly calling `dev.off()` after `ggsave()`)

username_1: @username_5

What `device` argument accepts is a **function** (e.g. `agg_png`), not a function call (e.g. `png()`).

username_6: I can confirm w/ @username_4 that using your code Claus with `agg_png` + friends as the device returns small images, 116 bytes, 7x7 *pixels*.

`ragg` version 1.1.2

username_2: Ah, this makes sense. `ggsave()` has a `units` argument so it doesn't get captured by `...` and thus doesn't get handed off to `agg_png()`. Workaround is to specify the resolution directly in px, not in inch.

username_6: Yup!

You can get the "expected" behavior as so, without specifying arguments inside `agg_png`:

```

ggsave(

"gg_test.png",

p,

device = ragg::agg_png,

res = 300,

units = "in",

height = 1200, # this is ACTUALLY pixels

width = 1400, # also pixels

limitsize = FALSE # because ggplot2 thinks it's inches

)

```
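
The pixel values in a workaround like this come from multiplying the intended size in inches by the resolution. A tiny sketch of that arithmetic (in Python only to show the numbers; `inches_to_pixels` is a made-up helper, and it assumes the device interprets `width`/`height` as pixels, as described above):

```python
def inches_to_pixels(width_in, height_in, dpi=300):
    """Convert a plot size in inches to whole pixels at the given resolution."""
    return round(width_in * dpi), round(height_in * dpi)


# ggsave()'s reported "7 x 5 in" at 300 dpi corresponds to this many pixels.
default_size_px = inches_to_pixels(7, 5, dpi=300)
```

So to reproduce the usual 7 x 5 inch output at 300 dpi under this workaround, `width` and `height` would be 2100 and 1500.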

Status: Issue closed

|

JulienNigon/SpellcasterUniversityPrivateBeta | 876679001 | Title: Polish typeface for titles

Question:

username_0:

Could you make it so that when the game is launched in Polish, it uses the Mops typeface for titles instead of Berry Rotunda?

Status: Issue closed

Answers:

username_0: Mops typeface put in as a replacement for the missing letters |

espressif/esp-adf | 794918763 | Title: How to make playback of mp3 from SD card using esp32-s2?

Question:

username_0: Hi all,

I'm working on MP3 playback from an SD card using an ESP32-S2 (WROOM module). I'm able to create/save a text file and open/edit that file, but I'm stuck at MP3 playback.

Requesting suggestions and guidance from experienced users.

I will be happy if there is a sample code.

thanks in advance.

Answers:

username_1: Hi, @username_0

Currently ADF supports the SDIO interface as the FatFs driver, but the S2 has no SDIO interface.

So you need to choose SPI as the FatFs interface; the app code does not need to change.

username_0: @username_1 Sir, can you please provide a sample code?

username_0: Any updates? Highly appreciates your suggestions...

username_1: HI , @username_0

The log provided shows some undeclared errors.

It may be an error such as not including a header file. You need to be careful about some dependency issues when porting.

username_0: the i2s header files seems to be OK.

username_0: esp32 works perfectly with internal DAC. While using the same code for Espressif ESP32-S2-Saola-1_V1.2 (MP3 playback from SPIFFS), it gives an i2s error, as in my previous post. The issue is still not resolved. Has any example code been released so far, for playback from SPIFFS or from an SD card? Hoping for a solution at the earliest. Thank you.

username_2: Hi there!

I've just encountered this issue as well, albeit with another example (the "Play MP3 file from SD Card" to be precise). I ended up just using the sdspi host in the audio_board_sdcard_init function of my custom board, just like username_1 mentioned. It ended up looking (and working) like this.

``` c++

esp_err_t audio_board_sdcard_init(esp_periph_set_handle_t set, periph_sdcard_mode_t mode){

esp_err_t ret;

esp_vfs_fat_sdmmc_mount_config_t mount_config = {

.format_if_mount_failed = SDCARD_FORMAT_ON_MOUNT_FAIL,

.max_files = SDCARD_OPEN_FILE_NUM_MAX,

.allocation_unit_size = 16 * 1024

};

sdmmc_card_t* card;

const char mount_point[] = SDCARD_MOUNT_POINT;

ESP_LOGI(TAG, "Initializing SD card");

gpio_set_pull_mode(SDCARD_MISO_GPIO, GPIO_PULLUP_ONLY); // TODO: Replace with separate pull-ups.

gpio_set_pull_mode(SDCARD_MOSI_GPIO, GPIO_PULLUP_ONLY);

gpio_set_pull_mode(SDCARD_SCLK_GPIO, GPIO_PULLUP_ONLY);

gpio_set_pull_mode(SDCARD_CS_GPIO, GPIO_PULLUP_ONLY);

ESP_LOGI(TAG, "Using SPI peripheral");

sdmmc_host_t host = SDSPI_HOST_DEFAULT();

spi_bus_config_t bus_cfg = {

.mosi_io_num = SDCARD_MOSI_GPIO,

.miso_io_num = SDCARD_MISO_GPIO,

.sclk_io_num = SDCARD_SCLK_GPIO,

.quadwp_io_num = -1,

.quadhd_io_num = -1,

.max_transfer_sz = 4000,

};

ret = spi_bus_initialize(host.slot, &bus_cfg, SPI_DMA_CHAN);

if (ret != ESP_OK) {

ESP_LOGE(TAG, "Failed to initialize bus.");

return ret;

}

sdspi_device_config_t slot_config = SDSPI_DEVICE_CONFIG_DEFAULT();

slot_config.gpio_cs = SDCARD_CS_GPIO;

slot_config.host_id = host.slot;

ret = esp_vfs_fat_sdspi_mount(mount_point, &host, &slot_config, &mount_config, &card);

if (ret != ESP_OK) {

if (ret == ESP_FAIL) {

ESP_LOGE(TAG, "Failed to mount filesystem. "

"If you want the card to be formatted, set the EXAMPLE_FORMAT_IF_MOUNT_FAILED menuconfig option.");

} else {

ESP_LOGE(TAG, "Failed to initialize the card (%s). "

"Make sure SD card lines have pull-up resistors in place.", esp_err_to_name(ret));

}

return ret;

}

return ret;

}

```

I hope this helps you and maybe others in the future as well!

Kind regards,

Jochem

username_0: Thanks @username_2 ,

It helps me. i2s shows some error while using the internal DAC. I think the i2s function is not portable to esp32s2 from esp32.

Status: Issue closed

|

sixteenmillimeter/mcopy | 459255131 | Title: Frame counting method takes a long time on large videos

Question:

username_0: Improve the frame counting feature for filmout so that it determines frame count using

```

ffprobe -v error -count_frames -select_streams v:0 -show_entries stream=nb_read_frames -of default=nokey=1:noprint_wrappers=1 "${video}"

```
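
If it helps to try this outside of mcopy, here is a minimal Python sketch that wraps the command quoted above. The helper names are hypothetical and this is not how mcopy itself invokes ffprobe; it only assumes `ffprobe` is on the `PATH` and prints a single integer for the first video stream.

```python
import subprocess


def build_ffprobe_frame_count_cmd(video):
    """Return the ffprobe argument list from the command quoted above."""
    return [
        "ffprobe",
        "-v", "error",
        "-count_frames",
        "-select_streams", "v:0",
        "-show_entries", "stream=nb_read_frames",
        "-of", "default=nokey=1:noprint_wrappers=1",
        video,
    ]


def count_frames(video):
    """Run ffprobe and parse the frame count from the first line of output."""
    result = subprocess.run(
        build_ffprobe_frame_count_cmd(video),
        check=True,           # raise if ffprobe exits with an error
        capture_output=True,
        text=True,
    )
    return int(result.stdout.strip().splitlines()[0])
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.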

Status: Issue closed

Answers:

username_0: This has been resolved with commit f239f862e8b44f925d5ec8c7ab943d622c73ff56. For every type of supported video format or container *except* .mkv (Matroska) there is a new ffprobe command which runs in a fraction of the time. For .mkv files, the old command is used. |

kubernetes/kubernetes | 117488864 | Title: Rename RawPodStatus/PodStatus in kubelet

Question:

username_0: Forked from #17259

We want to rename some types and functions in the container runtime interface.

- type RawPodStatus to PodStatus

- GetPodStatus() to GetAPIPodStatus() (should be eventually deprecated).

- GetRawPodStatus() to GetPodStatus()

@username_1, I am assigning this to you so that you can do it with, or after, your pending PRs to avoid rebases.

Answers:

username_0: /cc @dchen1107

Status: Issue closed

username_1: close this issue, because it has been done in #17420. :) |

ajency/F-BCircle | 240932751 | Title: Single View of Listing html - Issues

Question:

username_0: Tested on: http://staging.fnbcircle.com/single-view.html

Tested on: Chrome

1) The start of the breadcrumb is not aligned with the next section.

2) The header elements should be prominent- maybe bold.

3) The location and map below the listing title looks faded.

4) View when clicked on +10 for areas of operation should be shown.

5) Change the text "Send Enquiry" to "Send an Enquiry".

6) For the count of contacts and enquiries, there will be buckets set.

So we have the following values. "Fewer than 10, 10+ Contact Requests/Enquiries, 50+ Contact Requests/Enquiries, 100+ Contact Requests/Enquiries and so on.

7) Change "Is this your business? Claim it now. or Report Now" to "Is this your business? Claim it now

or Report"

8) Photos & Documents - On click of cover image - no way to go to the next images.

9) "Article" should be "Articles"

10) "Also listed In" should be "Also Listed In"

11) Browse Categories should display the categories having higher count first.

12) On click of the map link below the title, it should take the user to the Map section, with the address displayed too.

13) When clicked on Listed In - it should scroll exactly above Also Listed in section. Similarly for Overview, Similar Businesses.

14) The scrollable menu should appear as soon as the menu section disappears.

15) On mouse hover of the categories under browse categories section, they should be highlighted. Similarly the download links for documents.

16) Hours of operation - See more view?

17) "Updates" should be inline with the next section

18) Add share icons - Whatsapp, Linkedin, Facebook, Twitter, Google+
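
For point 6, the bucket logic could look something like the sketch below. The function name `count_bucket` and the `noun` parameter are made up for illustration, and since the buckets beyond 100+ are only described as "and so on", this version stops at 100+.

```python
def count_bucket(count, noun="Enquiries"):
    """Map an exact count to the display bucket described in point 6."""
    if count < 10:
        return "Fewer than 10"
    # Check the largest threshold first so that 120 maps to "100+", not "10+".
    for threshold in (100, 50, 10):
        if count >= threshold:
            return f"{threshold}+ {noun}"
```

For example, a listing with 57 contact requests would be shown as `count_bucket(57, "Contact Requests")`, which falls in the 50+ bucket.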

Answers:

username_1: Points fixed leaving 18 and 21.

username_0: @username_1

6. For the count of contacts and enquiries, there will be buckets set.

So we have the following values. "Fewer than 10, 10+ Contact Requests/Enquiries, 50+ Contact Requests/Enquiries, 100+ Contact Requests/Enquiries and so on.

12. On click of the map link below the title, it should take the user to the Map section, with the address displayed too.

On mouse hover the download links for documents should be highlighted. |

uwsampl/relay-bench | 548356106 | Title: Including telemetry graphs in the webpage

Question:

username_0: Now that we have the `vis_telemetry` subsystem, we should include the generated graphs in the generated webpage. @AD1024 can you try to modify the website generator to include the last run's telemetry graphs in the generated web page (say, under a header for each experiment that has a graph)? It would be valuable for usability |

trondr/DriverTool | 1112442361 | Title: DriverTool.exe missing from package.

Question:

username_0: The Script\DriverTool folder of a package is missing DriverTool.exe when using the PowerShell module to create the package.

Reproduced with:

$models = @("10RS")

$models | Foreach-Object{ Write-Host "Getting driver updates for model $_"; Get-DtDriverUpdates -Manufacturer Lenovo -ModelCode "$_" -OperatingSystem "WIN10X64" -OsBuild "21H2" -ExcludeDriverUpdates @("BIOS","Firmware") -Verbose } | Invoke-DtDownloadDriverUpdates

Workaround: Copy DriverTool.exe from powershell module manually. Example:

copy "C:\Program Files\WindowsPowerShell\Modules\DriverTool.PowerCLI\1.0.22023\internal\tools\DriverTool\DriverTool.exe" "C:\temp\DU\10RS\2021-11-12-1.0\Script\DriverTool\DriverTool.exe"

<issue_closed>

Status: Issue closed |

coleifer/peewee | 88295364 | Title: [Support request] No primary key for a model

Question:

username_0: Hi Charles,

I've got a table which logs keywords, schema is:

```

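from datetime import datetime

from peewee import CharField, DateTimeField, Model


class Keyword(Model):
    name = CharField(null=False)
    created = DateTimeField(null=False, default=datetime.utcnow)

    class Meta:
        # Trailing comma keeps this a tuple of index definitions.
        indexes = (
            (('created', 'name'), False),
        )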

```

In short, I want to drop the auto-generated primary key column (id). How do I prevent it from being re-added in subsequent db creations? I've looked through the manual and haven't found the syntax.

-----

In long, in the database however it has added an id column and associated pkey index. This table now has 35 million rows and that pkey index is 10gb. I've taken a look into the code and it looks safe to drop the column, we only perform an aggregate query on the table in any case:

`Keyword.select(Keyword.created, Keyword.name).where(Keyword.created > 3_days)` OR `Keyword.select().where(Keyword.created > 3_days).count()`

Neither of these should need a primary key as we can get exact duplicates (35mil rows are for 3 days of data).

Many thanks,

Alex

PS. It would also be awesome to be able to name index constraints, getting a few problems when running schema migrations because of name mismatches between environments.

Status: Issue closed

Answers:

username_1: You need a primary key of some sort, so your best bet is to:

```python

class Keyword(Model):
    name = CharField(null=False)
    created = DateTimeField(null=False, default=datetime.utcnow)

    class Meta:
        primary_key = CompositeKey('name', 'created')

```

Unfortunately peewee does not support "no primary key" at all.

username_0: Hmm, but surely this will imply a unique constraint, which isn't what I'm after because the dataset can contain duplicate values. Are there any plans for peewee to support setups with no primary key?

username_1: Oh wait my mistake I forgot that this *is* possible!

Edited my comment above.

username_1: I've also updated the docs to make this more clear.

username_0: Awesome thanks Charles :)

username_2: Thanks @username_1 for this answer of yours - it helped me greatly now, three years later. :+1: (I was struggling with peewee trying to retrieve data from a non-existing sequence as below)

```

psycopg2.ProgrammingError: relation "issue_assignees_id_seq" does not exist

LINE 1: SELECT CURRVAL('"issue_assignees_id_seq"')

```

Applying the `primary_key = False` to the inner class fixed the issue. |

os-js/osjs-server | 752990458 | Title: Dynamic mount point

Question:

username_0: Hi,

Is it possible to create a mountpoint at runtime?

For example, in an application, can I create a new mountpoint with one of the previously registered adapters?

server.js:

Can we do something like this?

`{...core.configuration.mountpoints, newMountpoint}` and use it as the mountpoints list in the application, or just create a mountpoint entry and use it:

```js

const mountpoint = ({

adapter:'monster',

name:'Run time mountpoint',

attributes : .............

})

```

and then use `mountpoint` to create the mountpoint in the application.

Also, how can I do this independently of an application, i.e. create a `mountpoint` at osjs runtime under some condition and access it across the whole of osjs?

Answers:

username_1: Create a service provider. You could wire it together either via signals (events) or a service contract.

```

class MyServiceProvider {
  constructor(core, options = {}) {
    this.core = core
    this.options = options
  }

  async init() {
    this.core.singleton('my-service', this.createMyContract())
  }

  createMyContract() {
    return {
      mount: () => this.createSomeMountpoint()
    }
  }

  createSomeMountpoint() {
    // This is where you would add stuff to do actual operations
  }
}

```

Then via your apps do `core.make('my-service').mount()`.

username_1: Which does not have to be an app ofc. And as for conditions, you can create your own state(s) and such and as I mentioned wire it together with events.

If you have some idea how you need it to work, then show me and I can write a more complete example.

username_0: I still haven't written the service provider.

I think one approach might help, though:

When a user logs in with a username and password, after validation they get a token and an endpoint list. We could then save the tokens in session storage for every adapter method query (to check the user's privileges) and save the endpoints and names in the osjs settings. With this approach, we would need to implement `server.js` for each application that needs these mountpoints (passing `token` and `endpoint` to `server.js`), call the VFS there, and return the response.

I hope I have explained it clearly.

username_1: With the scenario you just described it sounds like you might not need any runtime dynamic mount/unmount on the client-side, but rather make the login process manipulate the mountpoint list (upon login) that usually comes from the statically generated configuration.

As for the server side, since you're using the same adapter for everything, I think a nice way to approach this is to make a mountpoint adapter support RegEx in the `name` property.

I think this solves the issue in a fast and efficient way for your needs -- at least if you know what name(s) you're going to use for your mountpoints.

So, to summarize:

1. Change the Filesystem component in the client to initially store the mountpoint list from config

* Add API method to add to this list

* Add this API method to the VFS service contract

2. Change the Filesystem component in the server to support RegEx matching on mountpoint names

username_0: As you said before, we must write a service provider to decouple this operation from login and make it depend on the auth service provider.

But our new issue is creating client mountpoints to show to the user! Do we need to create a client service provider for this purpose?

we store mountpoint name in server session. (actually we need create mountpoint list in client after user login too).

username_0: It wasn't clear to me why I need to modify the Filesystem component. What do you mean by `VFS service contract`?

Is it necessary to modify the server filesystem when we can add mountpoints dynamically? Where might pushing to the mountpoints list cause a problem?

username_1:

> we store mountpoint name in server session. (actually we need create mountpoint list in client after user login too).

Please use the following issue for client side stuff: https://github.com/os-js/osjs-client/issues/134

In any case, I would recommend creating a service provider for this. Store the data required to set up the mountpoints in the user data, then just use the new methods that will be implemented by https://github.com/os-js/osjs-client/issues/134.

The reason for this is that the VFS is not available until *after* a user has logged in (this can be customized, but I would not recommend it).

Status: Issue closed

username_1: Why did you close this?

username_0: I shouldn't have closed an issue when someone had self-assigned it, right?

username_1: But I don't think this is solved :smile:

There still is no proper way to dynamically add/remove mountpoints on the server.

Pushing into that array is a very brute force way of doing this, and is not guaranteed to work forever if some internal mechanics change slightly.

I wrote here what I feel needs to be done to solve this correctly: https://github.com/os-js/osjs-server/issues/42#issuecomment-736073632

username_1: I just realized there's a way to dynamically add mountpoints in the server already :blush:

```

// Example using the standard home mountpoint setup
core.make('osjs/fs').mount({
  name: 'example',
  attributes: {
    root: '{vfs}/{username}'
  }
})

```

I might actually make it so the client also supports using `mount()` with an object just like the new `addMountpoint` I made there so that the API signatures are similar.

username_1: If that does not solve your issue, please re-open :blush: