markdown

stringlengths 0

1.02M

| code

stringlengths 0

832k

| output

stringlengths 0

1.02M

| license

stringlengths 3

36

| path

stringlengths 6

265

| repo_name

stringlengths 6

127

|

|---|---|---|---|---|---|

Setup If you are running this generator locally(i.e. in a jupyter notebook in conda, just make sure you installed:- RDKit- DeepChem 2.5.0 & above- Tensorflow 2.4.0 & aboveThen, please skip the following part and continue from `Data Preparations`. To increase efficiency, we recommend running this molecule generator in Colab.Then, we'll first need to run the following lines of code, these will download conda with the deepchem environment in colab.

|

#!curl -Lo conda_installer.py https://raw.githubusercontent.com/deepchem/deepchem/master/scripts/colab_install.py

#import conda_installer

#conda_installer.install()

#!/root/miniconda/bin/conda info -e

#!pip install --pre deepchem

#import deepchem

#deepchem.__version__

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Data PreparationsNow we are ready to import some useful functions/packages, along with our model. Import Data

|

import model##our model

from rdkit import Chem

from rdkit.Chem import AllChem

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import deepchem as dc

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Then, we are ready to import our dataset for training. Here, for demonstration, we'll be using this dataset of in-vitro assay that detects inhibition of SARS-CoV 3CL protease via fluorescence.The dataset is originally from [PubChem AID1706](https://pubchem.ncbi.nlm.nih.gov/bioassay/1706), previously handled by [JClinic AIcure](https://www.aicures.mit.edu/) team at MIT into this [binarized label form](https://github.com/yangkevin2/coronavirus_data/blob/master/data/AID1706_binarized_sars.csv).

|

df = pd.read_csv('AID1706_binarized_sars.csv')

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Observe the data above, it contains a 'smiles' column, which stands for the smiles representation of the molecules. There is also an 'activity' column, in which it is the label specifying whether that molecule is considered as hit for the protein.Here, we only need those 405 molecules considered as hits, and we'll be extracting features from them to generate new molecules that may as well be hits.

|

true = df[df['activity']==1]

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Set Minimum Length for molecules Since we'll be using graphic neural network, it might be more helpful and efficient if our graph data are of the same size, thus, we'll eliminate the molecules from the training set that are shorter(i.e. lacking enough atoms) than our desired minimum size.

|

num_atoms = 6 #here the minimum length of molecules is 6

input_df = true['smiles']

df_length = []

for _ in input_df:

df_length.append(Chem.MolFromSmiles(_).GetNumAtoms() )

true['length'] = df_length #create a new column containing each molecule's length

true = true[true['length']>num_atoms] #Here we leave only the ones longer than 6

input_df = true['smiles']

input_df_smiles = input_df.apply(Chem.MolFromSmiles) #convert the smiles representations into rdkit molecules

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Now, we are ready to apply the `featurizer` function to our molecules to convert them into graphs with nodes and edges for training.

|

#input_df = input_df.apply(Chem.MolFromSmiles)

train_set = input_df_smiles.apply( lambda x: model.featurizer(x,max_length = num_atoms))

train_set

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

We'll take one more step to make the train_set into separate nodes and edges, which fits the format later to supply to the model for training

|

nodes_train, edges_train = list(zip(*train_set) )

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Training Now, we're finally ready for generating new molecules. We'll first import some necessay functions from tensorflow.

|

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

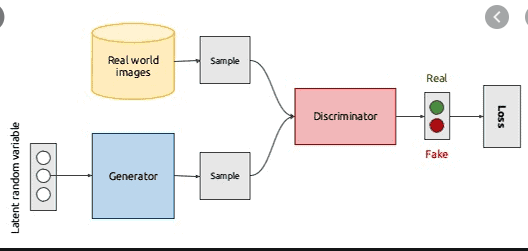

The network here we'll be using is Generative Adversarial Network, as mentioned in the project introduction. Here's a great [introduction](https://machinelearningmastery.com/what-are-generative-adversarial-networks-gans/).  Here we'll first initiate a discriminator and a generator model with the corresponding functions in the package.

|

disc = model.make_discriminator(num_atoms)

gene = model.make_generator(num_atoms, noise_input_shape = 100)

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Then, with the `train_batch` function, we'll supply the necessary inputs and train our network. Upon some experimentations, an epoch of around 160 would be nice for this dataset.

|

generator_trained = model.train_batch(

disc, gene,

np.array(nodes_train), np.array(edges_train),

noise_input_shape = 100, EPOCH = 160, BATCHSIZE = 2,

plot_hist = True, temp_result = False

)

|

>0, d1=0.221, d2=0.833 g=0.681, a1=100, a2=0

>1, d1=0.054, d2=0.714 g=0.569, a1=100, a2=0

>2, d1=0.026, d2=0.725 g=0.631, a1=100, a2=0

>3, d1=0.016, d2=0.894 g=0.636, a1=100, a2=0

>4, d1=0.016, d2=0.920 g=0.612, a1=100, a2=0

>5, d1=0.012, d2=0.789 g=0.684, a1=100, a2=0

>6, d1=0.014, d2=0.733 g=0.622, a1=100, a2=0

>7, d1=0.056, d2=0.671 g=0.798, a1=100, a2=100

>8, d1=0.029, d2=0.587 g=0.653, a1=100, a2=100

>9, d1=0.133, d2=0.537 g=0.753, a1=100, a2=100

>10, d1=0.049, d2=0.640 g=0.839, a1=100, a2=100

>11, d1=0.056, d2=0.789 g=0.836, a1=100, a2=0

>12, d1=0.086, d2=0.564 g=0.916, a1=100, a2=100

>13, d1=0.067, d2=0.550 g=0.963, a1=100, a2=100

>14, d1=0.062, d2=0.575 g=0.940, a1=100, a2=100

>15, d1=0.053, d2=0.534 g=1.019, a1=100, a2=100

>16, d1=0.179, d2=0.594 g=1.087, a1=100, a2=100

>17, d1=0.084, d2=0.471 g=0.987, a1=100, a2=100

>18, d1=0.052, d2=0.366 g=1.226, a1=100, a2=100

>19, d1=0.065, d2=0.404 g=1.220, a1=100, a2=100

>20, d1=0.044, d2=0.311 g=1.274, a1=100, a2=100

>21, d1=0.015, d2=0.231 g=1.567, a1=100, a2=100

>22, d1=0.010, d2=0.222 g=1.838, a1=100, a2=100

>23, d1=0.007, d2=0.177 g=1.903, a1=100, a2=100

>24, d1=0.004, d2=0.139 g=2.155, a1=100, a2=100

>25, d1=0.132, d2=0.111 g=2.316, a1=100, a2=100

>26, d1=0.004, d2=0.139 g=2.556, a1=100, a2=100

>27, d1=0.266, d2=0.133 g=2.131, a1=100, a2=100

>28, d1=0.001, d2=0.199 g=2.211, a1=100, a2=100

>29, d1=0.000, d2=0.252 g=2.585, a1=100, a2=100

>30, d1=0.000, d2=0.187 g=2.543, a1=100, a2=100

>31, d1=0.002, d2=0.081 g=2.454, a1=100, a2=100

>32, d1=0.171, d2=0.061 g=2.837, a1=100, a2=100

>33, d1=0.028, d2=0.045 g=2.858, a1=100, a2=100

>34, d1=0.011, d2=0.072 g=2.627, a1=100, a2=100

>35, d1=2.599, d2=0.115 g=1.308, a1=0, a2=100

>36, d1=0.000, d2=0.505 g=0.549, a1=100, a2=100

>37, d1=0.000, d2=1.463 g=0.292, a1=100, a2=0

>38, d1=0.002, d2=1.086 g=0.689, a1=100, a2=0

>39, d1=0.153, d2=0.643 g=0.861, a1=100, a2=100

>40, d1=0.000, d2=0.353 g=1.862, a1=100, a2=100

>41, d1=0.034, d2=0.143 g=2.683, a1=100, a2=100

>42, d1=0.003, d2=0.110 g=2.784, a1=100, a2=100

>43, d1=0.093, d2=0.058 g=2.977, a1=100, a2=100

>44, d1=0.046, d2=0.051 g=3.051, a1=100, a2=100

>45, d1=0.185, d2=0.062 g=2.922, a1=100, a2=100

>46, d1=0.097, d2=0.070 g=2.670, a1=100, a2=100

>47, d1=0.060, d2=0.073 g=2.444, a1=100, a2=100

>48, d1=0.093, d2=0.156 g=2.385, a1=100, a2=100

>49, d1=0.785, d2=0.346 g=1.026, a1=0, a2=100

>50, d1=0.057, d2=0.869 g=0.667, a1=100, a2=0

>51, d1=0.002, d2=1.001 g=0.564, a1=100, a2=0

>52, d1=0.000, d2=0.764 g=1.047, a1=100, a2=0

>53, d1=0.010, d2=0.362 g=1.586, a1=100, a2=100

>54, d1=0.033, d2=0.230 g=2.469, a1=100, a2=100

>55, d1=0.179, d2=0.134 g=2.554, a1=100, a2=100

>56, d1=0.459, d2=0.103 g=2.356, a1=100, a2=100

>57, d1=0.245, d2=0.185 g=1.769, a1=100, a2=100

>58, d1=0.014, d2=0.227 g=1.229, a1=100, a2=100

>59, d1=0.016, d2=0.699 g=0.882, a1=100, a2=0

>60, d1=0.002, d2=0.534 g=1.192, a1=100, a2=100

>61, d1=0.010, d2=0.335 g=1.630, a1=100, a2=100

>62, d1=0.019, d2=0.283 g=2.246, a1=100, a2=100

>63, d1=0.240, d2=0.132 g=2.547, a1=100, a2=100

>64, d1=0.965, d2=0.219 g=1.534, a1=0, a2=100

>65, d1=0.040, d2=0.529 g=0.950, a1=100, a2=100

>66, d1=0.012, d2=0.611 g=0.978, a1=100, a2=100

>67, d1=0.015, d2=0.576 g=1.311, a1=100, a2=100

>68, d1=0.102, d2=0.214 g=1.840, a1=100, a2=100

>69, d1=0.020, d2=0.140 g=2.544, a1=100, a2=100

>70, d1=5.089, d2=0.314 g=1.231, a1=0, a2=100

>71, d1=0.026, d2=0.700 g=0.556, a1=100, a2=0

>72, d1=0.005, d2=1.299 g=0.460, a1=100, a2=0

>73, d1=0.009, d2=1.033 g=0.791, a1=100, a2=0

>74, d1=0.013, d2=0.343 g=1.408, a1=100, a2=100

>75, d1=0.247, d2=0.267 g=1.740, a1=100, a2=100

>76, d1=0.184, d2=0.172 g=2.105, a1=100, a2=100

>77, d1=0.150, d2=0.133 g=2.297, a1=100, a2=100

>78, d1=0.589, d2=0.112 g=2.557, a1=100, a2=100

>79, d1=0.477, d2=0.232 g=1.474, a1=100, a2=100

>80, d1=0.173, d2=0.360 g=1.034, a1=100, a2=100

>81, d1=0.052, d2=0.790 g=0.936, a1=100, a2=0

>82, d1=0.042, d2=0.537 g=1.135, a1=100, a2=100

>83, d1=0.296, d2=0.363 g=1.152, a1=100, a2=100

>84, d1=0.157, d2=0.377 g=1.283, a1=100, a2=100

>85, d1=0.139, d2=0.436 g=1.445, a1=100, a2=100

>86, d1=0.163, d2=0.343 g=1.370, a1=100, a2=100

>87, d1=0.189, d2=0.290 g=1.576, a1=100, a2=100

>88, d1=1.223, d2=0.548 g=0.822, a1=0, a2=100

>89, d1=0.016, d2=1.042 g=0.499, a1=100, a2=0

>90, d1=0.013, d2=1.033 g=0.829, a1=100, a2=0

>91, d1=0.006, d2=0.589 g=1.421, a1=100, a2=100

>92, d1=0.054, d2=0.160 g=2.414, a1=100, a2=100

>93, d1=0.214, d2=0.070 g=3.094, a1=100, a2=100

>94, d1=0.445, d2=0.089 g=2.564, a1=100, a2=100

>95, d1=2.902, d2=0.180 g=1.358, a1=0, a2=100

>96, d1=0.485, d2=0.684 g=0.625, a1=100, a2=100

>97, d1=0.287, d2=1.296 g=0.405, a1=100, a2=0

>98, d1=0.159, d2=1.149 g=0.689, a1=100, a2=0

>99, d1=0.021, d2=0.557 g=1.405, a1=100, a2=100

>100, d1=0.319, d2=0.243 g=1.905, a1=100, a2=100

>101, d1=0.811, d2=0.241 g=1.523, a1=0, a2=100

>102, d1=0.469, d2=0.439 g=0.987, a1=100, a2=100

>103, d1=0.073, d2=0.760 g=0.698, a1=100, a2=0

>104, d1=0.040, d2=0.762 g=0.869, a1=100, a2=0

>105, d1=0.073, d2=0.444 g=1.453, a1=100, a2=100

>106, d1=0.455, d2=0.272 g=1.632, a1=100, a2=100

>107, d1=0.320, d2=0.365 g=1.416, a1=100, a2=100

>108, d1=0.245, d2=0.409 g=1.245, a1=100, a2=100

>109, d1=0.258, d2=0.572 g=1.146, a1=100, a2=100

>110, d1=0.120, d2=0.447 g=1.538, a1=100, a2=100

>111, d1=2.707, d2=0.376 g=1.343, a1=0, a2=100

>112, d1=3.112, d2=0.604 g=0.873, a1=0, a2=100

>113, d1=0.107, d2=0.750 g=0.873, a1=100, a2=0

>114, d1=0.284, d2=0.682 g=0.905, a1=100, a2=100

>115, d1=1.768, d2=0.717 g=0.824, a1=0, a2=0

>116, d1=0.530, d2=0.822 g=0.560, a1=100, a2=0

>117, d1=0.424, d2=0.984 g=0.613, a1=100, a2=0

>118, d1=1.608, d2=1.398 g=0.244, a1=0, a2=0

>119, d1=4.422, d2=2.402 g=0.135, a1=0, a2=0

>120, d1=0.011, d2=1.998 g=0.321, a1=100, a2=0

>121, d1=0.085, d2=1.066 g=0.815, a1=100, a2=0

>122, d1=0.895, d2=0.444 g=1.495, a1=0, a2=100

>123, d1=2.659, d2=0.288 g=1.417, a1=0, a2=100

>124, d1=1.780, d2=0.450 g=0.869, a1=0, a2=100

>125, d1=2.271, d2=1.046 g=0.324, a1=0, a2=0

>126, d1=0.836, d2=1.970 g=0.123, a1=0, a2=0

>127, d1=0.108, d2=2.396 g=0.103, a1=100, a2=0

>128, d1=0.146, d2=2.371 g=0.174, a1=100, a2=0

>129, d1=0.189, d2=1.623 g=0.424, a1=100, a2=0

>130, d1=0.508, d2=0.877 g=0.876, a1=100, a2=0

>131, d1=0.723, d2=0.423 g=1.367, a1=0, a2=100

>132, d1=1.306, d2=0.292 g=1.445, a1=0, a2=100

>133, d1=0.920, d2=0.318 g=1.378, a1=0, a2=100

>134, d1=1.120, d2=0.481 g=0.827, a1=0, a2=100

>135, d1=0.278, d2=0.763 g=0.562, a1=100, a2=0

>136, d1=0.134, d2=0.901 g=0.555, a1=100, a2=0

>137, d1=0.061, d2=0.816 g=0.864, a1=100, a2=0

>138, d1=0.057, d2=0.451 g=1.533, a1=100, a2=100

>139, d1=0.111, d2=0.214 g=2.145, a1=100, a2=100

>140, d1=0.260, d2=0.107 g=2.451, a1=100, a2=100

>141, d1=4.498, d2=0.209 g=1.266, a1=0, a2=100

>142, d1=0.016, d2=0.681 g=0.672, a1=100, a2=100

>143, d1=0.007, d2=0.952 g=0.702, a1=100, a2=0

>144, d1=0.008, d2=0.624 g=1.337, a1=100, a2=100

>145, d1=0.010, d2=0.241 g=2.114, a1=100, a2=100

>146, d1=2.108, d2=0.121 g=2.536, a1=0, a2=100

>147, d1=4.086, d2=0.111 g=2.315, a1=0, a2=100

>148, d1=1.247, d2=0.177 g=1.781, a1=0, a2=100

>149, d1=2.684, d2=0.377 g=1.026, a1=0, a2=100

>150, d1=0.572, d2=0.701 g=0.710, a1=100, a2=0

>151, d1=0.608, d2=0.899 g=0.571, a1=100, a2=0

>152, d1=0.118, d2=0.904 g=0.592, a1=100, a2=0

>153, d1=0.228, d2=0.837 g=0.735, a1=100, a2=0

>154, d1=0.353, d2=0.671 g=0.912, a1=100, a2=100

>155, d1=0.959, d2=0.563 g=0.985, a1=0, a2=100

>156, d1=0.427, d2=0.478 g=1.184, a1=100, a2=100

>157, d1=0.307, d2=0.348 g=1.438, a1=100, a2=100

>158, d1=0.488, d2=0.286 g=1.383, a1=100, a2=100

>159, d1=0.264, d2=0.333 g=1.312, a1=100, a2=100

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

There are two possible kind of failures regarding a GAN model: model collapse and failure of convergence. Model collapse would often mean that the generative part of the model wouldn't be able to generate diverse outcomes. Failure of convergence between the generative and the discriminative model could likely way be identified as that the loss for the discriminator has gone to zero or close to zero. Observe the above generated plot, in the upper plot, the loss of discriminator has not gone to zero/close to zero, indicating that the model has possibily find a balance between the generator and the discriminator. In the lower plot, the accuracy is fluctuating between 1 and 0, indicating possible variability within the data generated. Therefore, it is reasonable to conclude that within the possible range of epoch and other parameters, the model has successfully avoided the two common types of failures associated with GAN. Rewarding Phase The above `train_batch` function is set to return a trained generator. Thus, we could use that function directly and observe the possible molecules we could get from that function.

|

no, ed = generator_trained(np.random.randint(0,20

, size =(1,100)))#generated nodes and edges

abs(no.numpy()).astype(int).reshape(num_atoms), abs(ed.numpy()).astype(int).reshape(num_atoms,num_atoms)

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

With the `de_featurizer`, we could convert the generated matrix into a smiles molecule and plot it out=)

|

cat, dog = model.de_featurizer(abs(no.numpy()).astype(int).reshape(num_atoms), abs(ed.numpy()).astype(int).reshape(num_atoms,num_atoms))

Chem.MolToSmiles(cat)

Chem.MolFromSmiles(Chem.MolToSmiles(cat))

|

RDKit ERROR: [14:09:13] Explicit valence for atom # 1 O, 5, is greater than permitted

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Brief Result Analysis

|

from rdkit import DataStructs

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

With the rdkit function of comparing similarities, here we'll demonstrate a preliminary analysis of the molecule we've generated. With "CCO" molecule as a control, we could observe that the new molecule we've generated is more similar to a random selected molecule(the fourth molecule) from the initial training set.This may indicate that our model has indeed extracted some features from our original dataset and generated a new molecule that is relevant.

|

DataStructs.FingerprintSimilarity(Chem.RDKFingerprint(Chem.MolFromSmiles("[Li]NBBC=N")), Chem.RDKFingerprint(Chem.MolFromSmiles("CCO")))# compare with the control

#compare with one from the original data

DataStructs.FingerprintSimilarity(Chem.RDKFingerprint(Chem.MolFromSmiles("[Li]NBBC=N")), Chem.RDKFingerprint(Chem.MolFromSmiles("CCN1C2=NC(=O)N(C(=O)C2=NC(=N1)C3=CC=CC=C3)C")))

|

_____no_output_____

|

MIT

|

example.ipynb

|

susanzhang233/mollykill

|

Large DataFrame Tests - NYC CAB

|

BASE_DIR = '/mnt/roscoe/data/fuzzydata/fuzzydatatest/nyc-cab/'

frameworks = ['pandas', 'sqlite', 'modin_dask', 'modin_ray']

all_perfs = []

for f in frameworks:

result_file = glob.glob(f"{BASE_DIR}/{f}/*_perf.csv")[0]

perf_df = pd.read_csv(result_file, index_col=0)

perf_df['end_time_seconds'] = np.cumsum(perf_df.elapsed_time)

perf_df['start_time_seconds'] = perf_df['end_time_seconds'].shift().fillna(0)

perf_df['framework'] = f

all_perfs.append(perf_df)

#plot_gantt(perf_df, title=f'Example Workflow 1.18 GB CSV load/groupby on {f}', x_range=[0.0, 320])

nyc_cab_perfs = pd.concat(all_perfs, ignore_index=True)

new_op_labels = ['load', 'groupby_1', 'groupby_2', 'groupby_3']

nyc_cab_perfs['op'] = np.tile(new_op_labels,4)

pivoted = nyc_cab_perfs.pivot(index='framework', columns='op', values='elapsed_time')

pivoted = pivoted.reindex(['pandas', 'modin_dask', 'modin_ray', 'sqlite'])[new_op_labels]

ax = pivoted.plot.bar(stacked=True)

plt.xticks(rotation=0)

plt.legend()

plt.xlabel('Client')

plt.ylabel('Runtime (Seconds)')

plt.savefig('real_example.pdf', bbox_inches='tight')

|

_____no_output_____

|

MIT

|

examples/Result_Analysis.ipynb

|

suhailrehman/fuzzydata

|

Combined Performance / Scaling Graph

|

BASE_DIR = '/mnt/roscoe/data/fuzzydata/fuzzydata_scaling_test_3/'

frameworks = ['pandas', 'sqlite', 'modin_dask', 'modin_ray']

sizes = ['1000', '10000', '100000', '1000000', '5000000']

all_perfs = []

for framework in frameworks:

for size in sizes:

input_dir = f"{BASE_DIR}/{framework}_{size}/"

try:

#print(f"{input_dir}/*_perf.csv")

perf_file = glob.glob(f"{input_dir}/*_perf.csv")[0]

perf_df = pd.read_csv(perf_file, index_col=0)

perf_df['end_time_seconds'] = np.cumsum(perf_df.elapsed_time)

perf_df['start_time_seconds'] = perf_df['end_time_seconds'].shift().fillna(0)

perf_df['framework'] = framework

perf_df['size'] = size

all_perfs.append(perf_df)

except (IndexError, FileNotFoundError) as e:

#raise(e)

pass

all_perfs_df = pd.concat(all_perfs, ignore_index=True)

total_wf_times = all_perfs_df.loc[all_perfs_df.dst == 'artifact_14'][['framework','size','end_time_seconds']].reset_index(drop=True).pivot(index='size', columns='framework', values='end_time_seconds')

total_wf_times = total_wf_times.rename_axis('Client')

total_wf_times = total_wf_times[['pandas', 'modin_dask', 'modin_ray', 'sqlite']]

total_wf_times

import seaborn as sns

import matplotlib

import matplotlib.pyplot as plt

font = {'family' : 'serif',

'weight' : 'normal',

'size' : 12}

matplotlib.rc('font', **font)

x_axis_replacements = ['1K', '10K', '100K', '1M', '5M']

plt.figure(figsize=(6,4))

ax = sns.lineplot(data=total_wf_times, markers=True, linewidth=2.5, markersize=10)

plt.xticks(total_wf_times.index, x_axis_replacements)

plt.grid()

plt.xlabel('Base Artifact Number of Rows (r)')

plt.ylabel('Runtime (Seconds)')

handles, labels = ax.get_legend_handles_labels()

ax.legend(handles=handles, labels=labels)

plt.savefig("scaling.pdf", bbox_inches='tight')

breakdown = all_perfs_df[['framework', 'size', 'op', 'elapsed_time']].groupby(['framework', 'size', 'op']).sum().reset_index()

pivoted_breakdown = breakdown.pivot(index=['size', 'framework'], columns=['op'], values='elapsed_time')

pivoted_breakdown

pivoted_breakdown.plot.bar(stacked=True)

plt.savefig("breakdown.pdf", bbox_inches='tight')

breakdown

# Sources: https://stackoverflow.com/questions/22787209/how-to-have-clusters-of-stacked-bars-with-python-pandas

import pandas as pd

import matplotlib.cm as cm

import numpy as np

import matplotlib.pyplot as plt

def plot_clustered_stacked(dfall, labels=None, title="multiple stacked bar plot", H="/",

x_axis_replacements=None, **kwargs):

"""Given a list of dataframes, with identical columns and index, create a clustered stacked bar plot.

labels is a list of the names of the dataframe, used for the legend

title is a string for the title of the plot

H is the hatch used for identification of the different dataframe"""

n_df = len(dfall)

n_col = len(dfall[0].columns)

n_ind = len(dfall[0].index)

fig = plt.figure(figsize=(6,4))

axe = fig.add_subplot(111)

for df in dfall : # for each data frame

axe = df.plot(kind="bar",

linewidth=0,

stacked=True,

ax=axe,

legend=False,

grid=False,

**kwargs) # make bar plots

hatches = ['', 'oo', '///', '++']

h,l = axe.get_legend_handles_labels() # get the handles we want to modify

for i in range(0, n_df * n_col, n_col): # len(h) = n_col * n_df

for j, pa in enumerate(h[i:i+n_col]):

for rect in pa.patches: # for each index

rect.set_x(rect.get_x() + 1 / float(n_df + 1) * i / float(n_col) -0.1)

rect.set_hatch(hatches[int(i / n_col)]) #edited part

rect.set_width(1 / float(n_df + 1))

axe.set_xticks((np.arange(0, 2 * n_ind, 2) + 1 / float(n_df + 1)) / 2.)

if x_axis_replacements == None:

x_axis_replacements = df.index

axe.set_xticklabels(x_axis_replacements, rotation = 0)

#axe.set_title(title)

# Add invisible data to add another legend

n=[]

for i in range(n_df):

n.append(axe.bar(0, 0, color="gray", hatch=hatches[i]))

l1 = axe.legend(h[:n_col], l[:n_col], loc=[0.38, 0.545])

if labels is not None:

l2 = plt.legend(n, labels)# , loc=[1.01, 0.1])

axe.add_artist(l1)

return axe

cols = ['groupby','load','merge','project','sample']

pbr = pivoted_breakdown.reset_index()

pbr = pbr.set_index('size')

df_splits = [pbr.loc[pbr.framework == f][cols] for f in ['pandas', 'modin_dask', 'modin_ray', 'sqlite']]

# Then, just call :

plot_clustered_stacked(df_splits,['pandas', 'modin_dask', 'modin_ray', 'sqlite'],

x_axis_replacements=x_axis_replacements,

title='Timing Breakdown Per Operation Type')

plt.xlabel('Base Artifact Number of Rows (r)')

plt.ylabel('Runtime (Seconds)')

plt.savefig("breakdown.eps")

pivoted_breakdown.reset_index().set_index('size')

|

_____no_output_____

|

MIT

|

examples/Result_Analysis.ipynb

|

suhailrehman/fuzzydata

|

DataMining TwitterAPIRequirements:- TwitterAccount- TwitterApp credentials ImportsThe following imports are requiered to mine data from Twitter

|

# http://tweepy.readthedocs.io/en/v3.5.0/index.html

import tweepy

# https://api.mongodb.com/python/current/

import pymongo

import json

import sys

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Access and Test the TwitterAPIInsert your `CONSUMER_KEY`, `CONSUMER_SECRET`, `ACCESS_TOKEN` and `ACCESS_TOKEN_SECRET` and run the code snippet to test if access is granted. If everything works well 'tweepy...' will be posted to your timeline.

|

# Set the received credentials for your recently created TwitterAPI

CONSUMER_KEY = 'MmiELrtF7fSp3vptCID8jKril'

CONSUMER_SECRET = 'HqtMRk4jpt30uwDOLz30jHqZm6TPN6rj3oHFaL6xFxw2k0GkDC'

ACCESS_TOKEN = '116725830-rkT63AILxR4fpf4kUXd8xJoOcHTsGkKUOKSMpMJQ'

ACCESS_TOKEN_SECRET = 'eKzxfku4GdYu1wWcMr5iusTmhFT35cDWezMU2Olr5UD4i'

# auth with your provided

auth = tweepy.OAuthHandler(CONSUMER_KEY, CONSUMER_SECRET)

auth.set_access_token(ACCESS_TOKEN, ACCESS_TOKEN_SECRET)

# Create an instance for the TwitterApi

twitter = tweepy.API(auth)

status = twitter.update_status('tweepy ...')

print(json.dumps(status._json, indent=1))

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

mongoDBTo gain access to the mongoDB the library `pymongo` is used.In the first step the mongoDB URL is defined.

|

MONGO_URL = 'mongodb://twitter-mongodb:27017/'

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Next, two functions are defined to save and load data from mongoDB.

|

def save_to_mongo(data, mongo_db, mongo_db_coll):

# Connects to the MongoDB server running on

client = pymongo.MongoClient(MONGO_URL)

# Get a reference to a particular database

db = client[mongo_db]

# Reference a particular collection in the database

coll = db[mongo_db_coll]

# Perform a bulk insert and return the IDs

return coll.insert_one(data)

def load_from_mongo(mongo_db, mongo_db_coll, return_cursor=False, criteria=None, projection=None):

# Optionally, use criteria and projection to limit the data that is

# returned - http://docs.mongodb.org/manual/reference/method/db.collection.find/

# Connects to the MongoDB server running on

client = pymongo.MongoClient(MONGO_URL)

# Reference a particular collection in the database

db = client[mongo_db]

# Perform a bulk insert and return the IDs

coll = db[mongo_db_coll]

if criteria is None:

criteria = {}

if projection is None:

cursor = coll.find(criteria)

else:

cursor = coll.find(criteria, projection)

# Returning a cursor is recommended for large amounts of data

if return_cursor:

return cursor

else:

return [ item for item in cursor ]

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Stream tweets to mongoDBNow we want to stream tweets to a current trend to the mongoDB.Therefore we ask the TwitterAPI for current Trends within different places. Places are defined with WOEID https://www.flickr.com/places/info/1

|

# WORLD

print('trends WORLD')

trends = twitter.trends_place(1)[0]['trends']

for t in trends[:5]:

print(json.dumps(t['name'],indent=1))

# US

print('\ntrends US')

trends = twitter.trends_place(23424977)[0]['trends']

for t in trends[:5]:

print(json.dumps(t['name'],indent=1))

# AT

print('\ntrends AUSTRIA')

trends = twitter.trends_place(23424750)[0]['trends']

for t in trends[:5]:

print(json.dumps(t['name'],indent=1))

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

StreamListenertweepy provides a StreamListener that allows to stream live tweets. All streamed tweets are stored to the mongoDB.

|

MONGO_DB = 'trends'

MONGO_COLL = 'tweets'

TREND = '#BestBoyBand'

class CustomStreamListener(tweepy.StreamListener):

def __init__(self, twitter):

self.twitter = twitter

super(tweepy.StreamListener, self).__init__()

self.db = pymongo.MongoClient(MONGO_URL)[MONGO_DB]

self.number = 1

print('Streaming tweets to mongo ...')

def on_data(self, tweet):

self.number += 1

self.db[MONGO_COLL].insert_one(json.loads(tweet))

if self.number % 100 == 0 : print('{} tweets added'.format(self.number))

def on_error(self, status_code):

return True # Don't kill the stream

def on_timeout(self):

return True # Don't kill the stream

sapi = tweepy.streaming.Stream(auth, CustomStreamListener(twitter))

sapi.filter(track=[TREND])

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Collect tweets from a specific userIn this use-case we mine data from a specific user.

|

MONGO_DB = 'trump'

MONGO_COLL = 'tweets'

TWITTER_USER = '@realDonaldTrump'

def get_all_tweets(screen_name):

#initialize a list to hold all the tweepy Tweets

alltweets = []

#make initial request for most recent tweets (200 is the maximum allowed count)

new_tweets = twitter.user_timeline(screen_name = screen_name,count=200)

#save most recent tweets

alltweets.extend(new_tweets)

#save the id of the oldest tweet less one

oldest = alltweets[-1].id - 1

#keep grabbing tweets until there are no tweets left to grab

while len(new_tweets) > 0:

#all subsiquent requests use the max_id param to prevent duplicates

new_tweets = twitter.user_timeline(screen_name = screen_name,count=200,max_id=oldest)

#save most recent tweets

alltweets.extend(new_tweets)

#update the id of the oldest tweet less one

oldest = alltweets[-1].id - 1

print("...{} tweets downloaded so far".format(len(alltweets)))

#write tweet objects to JSON

print("Writing tweet objects to MongoDB please wait...")

number = 1

for status in alltweets:

print(save_to_mongo(status._json, MONGO_DB, MONGO_COLL))

number += 1

print("Done - {} tweets saved!".format(number))

#pass in the username of the account you want to download

get_all_tweets(TWITTER_USER)

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Load tweets from mongo

|

data = load_from_mongo('trends', 'tweets')

for d in data[:5]:

print(d['text'])

|

_____no_output_____

|

MIT

|

jupyter-notebooks/02_DataMining_TwitterAPI.ipynb

|

amarbajric/INDBA-ML

|

Graphs from the presentation

|

import matplotlib.pyplot as plt

%matplotlib notebook

# create a new figure

plt.figure()

# create x and y coordinates via lists

x = [99, 19, 88, 12, 95, 47, 81, 64, 83, 76]

y = [43, 18, 11, 4, 78, 47, 77, 70, 21, 24]

# scatter the points onto the figure

plt.scatter(x, y)

# create a new figure

plt.figure()

# create x and y values via lists

x = [1, 2, 3, 4, 5, 6, 7, 8]

y = [1, 4, 9, 16, 25, 36, 49, 64]

# plot the line

plt.plot(x, y)

# create a new figure

plt.figure()

# create a list of observations

observations = [5.24, 3.82, 3.73, 5.3 , 3.93, 5.32, 6.43, 4.4 , 5.79, 4.05, 5.34, 5.62, 6.02, 6.08, 6.39, 5.03, 5.34, 4.98, 3.84, 4.91, 6.62, 4.66, 5.06, 2.37, 5. , 3.7 , 5.22, 5.86, 3.88, 4.68, 4.88, 5.01, 3.09, 5.38, 4.78, 6.26, 6.29, 5.77, 4.33, 5.96, 4.74, 4.54, 7.99, 5. , 4.85, 5.68, 3.73, 4.42, 4.99, 4.47, 6.06, 5.88, 4.56, 5.37, 6.39, 4.15]

# create a histogram with 15 intervals

plt.hist(observations, bins=15)

# create a new figure

plt.figure()

# plot a red line with a transparancy of 40%. Label this 'line 1'

plt.plot(x, y, color='red', alpha=0.4, label='line 1')

# make a key appear on the plot

plt.legend()

# import pandas

import pandas as pd

# read in data from a csv

data = pd.read_csv('data/weather.csv', parse_dates=['Date'])

# create a new matplotlib figure

plt.figure()

# plot the temperature over time

plt.plot(data['Date'], data['Temp (C)'])

# add a ylabel

plt.ylabel('Temperature (C)')

plt.figure()

# create inputs

x = ['UK', 'France', 'Germany', 'Spain', 'Italy']

y = [67.5, 65.1, 83.5, 46.7, 60.6]

# plot the chart

plt.bar(x, y)

plt.ylabel('Population (M)')

plt.figure()

# create inputs

x = ['UK', 'France', 'Germany', 'Spain', 'Italy']

y = [67.5, 65.1, 83.5, 46.7, 60.6]

# create a list of colours

colour = ['red', 'green', 'blue', 'orange', 'purple']

# plot the chart with the colors and transparancy

plt.bar(x, y, color=colour, alpha=0.5)

plt.ylabel('Population (M)')

plt.figure()

x = [1, 2, 3, 4, 5, 6, 7, 8, 9]

y1 = [2, 4, 6, 8, 10, 12, 14, 16, 18]

y2 = [4, 8, 12, 16, 20, 24, 28, 32, 36]

plt.scatter(x, y1, color='cyan', s=5)

plt.scatter(x, y2, color='violet', s=15)

plt.figure()

x = [1, 2, 3, 4, 5, 6, 7, 8, 9]

y1 = [2, 4, 6, 8, 10, 12, 14, 16, 18]

y2 = [4, 8, 12, 16, 20, 24, 28, 32, 36]

size1 = [10, 20, 30, 40, 50, 60, 70, 80, 90]

size2 = [90, 80, 70, 60, 50, 40, 30, 20, 10]

plt.scatter(x, y1, color='cyan', s=size1)

plt.scatter(x, y2, color='violet', s=size2)

co2_file = '../5. Examples of Visual Analytics in Python/data/national/co2_emissions_tonnes_per_person.csv'

gdp_file = '../5. Examples of Visual Analytics in Python/data/national/gdppercapita_us_inflation_adjusted.csv'

pop_file = '../5. Examples of Visual Analytics in Python/data/national/population.csv'

co2_per_cap = pd.read_csv(co2_file, index_col=0, parse_dates=True)

gdp_per_cap = pd.read_csv(gdp_file, index_col=0, parse_dates=True)

population = pd.read_csv(pop_file, index_col=0, parse_dates=True)

plt.figure()

x = gdp_per_cap.loc['2017'] # gdp in 2017

y = co2_per_cap.loc['2017'] # co2 emmissions in 2017

# population in 2017 will give size of points (divide pop by 1M)

size = population.loc['2017'] / 1e6

# scatter points with vector size and some transparancy

plt.scatter(x, y, s=size, alpha=0.5)

# set a log-scale

plt.xscale('log')

plt.yscale('log')

plt.xlabel('GDP per capita, $US')

plt.ylabel('CO2 emissions per person per year, tonnes')

plt.figure()

# create grid of numbers

grid = [[1, 2, 3],

[4, 5, 6],

[7, 8, 9]]

# plot the grid with 'autumn' color map

plt.imshow(grid, cmap='autumn')

# add a colour key

plt.colorbar()

import pandas as pd

data = pd.read_csv("../5. Examples of Visual Analytics in Python/data/stocks/FTSE_stock_prices.csv", index_col=0)

correlation_matrix = data.pct_change().corr()

# create a new figure

plt.figure()

# imshow the grid of correlation

plt.imshow(correlation_matrix, cmap='terrain')

# add a color bar

plt.colorbar()

# remove cluttering x and y ticks

plt.xticks([])

plt.yticks([])

elevation = pd.read_csv('data/UK_elevation.csv', index_col=0)

# create figure

plt.figure()

# imshow data

plt.imshow(elevation, # grid data

vmin=-50, # minimum for colour bar

vmax=500, # maximum for colour bar

cmap='terrain', # terrain style colour map

extent=[-11, 3, 50, 60]) # [x1, x2, y1, y2] plot boundaries

# add axis labels and a title

plt.xlabel('Longitude')

plt.ylabel('Latitude')

plt.title('UK Elevation Profile')

# add a colourbar

plt.colorbar()

|

_____no_output_____

|

MIT

|

6. Advanced Visualisation Tools/Graphs from the presentation.ipynb

|

nickelnine37/VisualAnalyticsWithPython

|

SOLUCION 1

|

h1 = 0

h2 = 0

m1 = 0

m2 = 0 # 1440 + 24 *6

contador = 0 # 5 + (1440 + ?) * 2 + 144 + 24 + 2= 3057

while [h1, h2, m1, m2] != [2,3,5,9]:

if [h1, h2] == [m2, m1]:

print(h1, h2,":", m1, m2)

m2 = m2 + 1

if m2 == 10:

m2 = 0

m1 = m1 + 1

if m1 == 6:

h2 = h2 + 1

m2 = 0

contador = contador + 1

m2 = m2 + 1

if m2 == 10:

m2 = 0

m1 = m1 + 1

if m1 == 6:

m1 = 0

h2 = h2 +1

if h2 == 10:

h2 = 0

h1 = h1 +1

print("Numero de palindromos: ",contador)

|

_____no_output_____

|

MIT

|

28Octubre.ipynb

|

VxctxrTL/daa_2021_1

|

Solucion 2

|

horario="0000"

contador=0

while horario!="2359":

inv=horario[::-1]

if horario==inv:

contador+=1

print(horario[0:2],":",horario[2:4])

new=int(horario)

new+=1

horario=str(new).zfill(4)

print("son ",contador,"palindromos")

# 2 + (2360 * 4 ) + 24

|

_____no_output_____

|

MIT

|

28Octubre.ipynb

|

VxctxrTL/daa_2021_1

|

Solucion 3

|

lista=[]

for i in range(0,24,1): # 24

for j in range(0,60,1): # 60 1440

if i<10:

if j<10:

lista.append("0"+str(i)+":"+"0"+str(j))

elif j>=10:

lista.append("0"+str(i)+":"+str(j))

else:

if i>=10:

if j<10:

lista.append(str(i)+":"+"0"+str(j))

elif j>=10:

lista.append(str(i)+":"+str(j))

# 1440 + 2 + 1440 + 16 * 2 = 2900

lista2=[]

contador=0

for i in range(len(lista)): # 1440

x=lista[i][::-1]

if x==lista[i]:

lista2.append(x)

contador=contador+1

print(contador)

for j in (lista2):

print(j)

for x in range (0,24,1):

for y in range(0,60,1): #1440 * 3 +13 = 4333

hora=str(x)+":"+str(y)

if x<10:

hora="0"+str(x)+":"+str(y)

if y<10:

hora=str(x)+"0"+":"+str(y)

p=hora[::-1]

if p==hora:

print(f"{hora} es palindromo")

total = int(0) #Contador de numero de palindromos

for hor in range(0,24): #Bucles anidados for para dar aumentar las horas y los minutos al mismo tiempo

for min in range(0,60):

hor_n = str(hor) #Variables

min_n = str(min)

if (hor<10): #USamos condiciones para que las horas y los minutos no rebasen el horario

hor_n = ("0"+hor_n)

if (min<10):

min_n = ("0"+ min_n)

if (hor_n[::-1] == min_n): #Mediante un slicing le damos el formato a las horas para que este empiece desde la derecha

print("{}:{}".format(hor_n,min_n))

total += 1

#1 + 1440 * 5 =7201

|

_____no_output_____

|

MIT

|

28Octubre.ipynb

|

VxctxrTL/daa_2021_1

|

Getting ready

|

import tensorflow as tf

import tensorflow.keras as keras

import pandas as pd

import numpy as np

census_dir = 'https://archive.ics.uci.edu/ml/machine-learning-databases/adult/'

train_path = tf.keras.utils.get_file('adult.data', census_dir + 'adult.data')

test_path = tf.keras.utils.get_file('adult.test', census_dir + 'adult.test')

columns = ['age', 'workclass', 'fnlwgt', 'education', 'education_num', 'marital_status', 'occupation',

'relationship', 'race', 'gender', 'capital_gain', 'capital_loss', 'hours_per_week', 'native_country',

'income_bracket']

train_data = pd.read_csv(train_path, header=None, names=columns)

test_data = pd.read_csv(test_path, header=None, names=columns, skiprows=1)

|

_____no_output_____

|

MIT

|

Chapter 4 - Linear Regression/Using Wide & Deep models.ipynb

|

Young-Picasso/TensorFlow2.x_Cookbook

|

How to do it

|

predictors = ['age', 'workclass', 'education', 'education_num', 'marital_status', 'occupation', 'relationship',

'gender']

y_train = (train_data.income_bracket==' >50K').astype(int)

y_test = (test_data.income_bracket==' >50K').astype(int)

train_data = train_data[predictors]

test_data = test_data[predictors]

train_data[['age', 'education_num']] = train_data[['age', 'education_num']].fillna(train_data[['age', 'education_num']]).mean()

test_data[['age', 'education_num']] = test_data[['age', 'education_num']].fillna(test_data[['age', 'education_num']]).mean()

def define_feature_columns(data_df, numeric_cols, categorical_cols, categorical_embeds, dimension=30):

numeric_columns = []

categorical_columns = []

embeddings = []

for feature_name in numeric_cols:

numeric_columns.append(tf.feature_column.numeric_column(feature_name, dtype=tf.float32))

for feature_name in categorical_cols:

vocabulary = data_df[feature_name].unique()

categorical_columns.append(tf.feature_column.categorical_column_with_vocabulary_list(feature_name, vocabulary))

for feature_name in categorical_embeds:

vocabulary = data_df[feature_name].unique()

to_categorical = tf.feature_column.categorical_column_with_vocabulary_list(feature_name, vocabulary)

embeddings.append(tf.feature_column.embedding_column(to_categorical, dimension=dimension))

return numeric_columns, categorical_columns, embeddings

def create_interactions(interactions_list, buckets=10):

feature_columns = []

for (a, b) in interactions_list:

crossed_feature = tf.feature_column.crossed_column([a, b], hash_bucket_size=buckets)

crossed_feature_one_hot = tf.feature_column.indicator_column(crossed_feature)

feature_columns.append(crossed_feature_one_hot)

return feature_columns

numeric_columns, categorical_columns, embeddings = define_feature_columns(train_data,

numeric_cols=['age', 'education_num'],

categorical_cols=['gender'],

categorical_embeds=['workclass', 'education',

'marital_status', 'occupation',

'relationship'],

dimension=32

)

interactions = create_interactions([['education', 'occupation']], buckets=10)

estimator = tf.estimator.DNNLinearCombinedClassifier(

# wide settings

linear_feature_columns=numeric_columns+categorical_columns+interactions,

linear_optimizer=keras.optimizers.Ftrl(learning_rate=0.0002),

# deep settings

dnn_feature_columns=embeddings,

dnn_hidden_units=[1024, 256, 128, 64],

dnn_optimizer=keras.optimizers.Adam(learning_rate=0.0001))

def make_input_fn(data_df, label_df, num_epochs=10, shuffle=True, batch_size=256):

def input_function():

ds = tf.data.Dataset.from_tensor_slices((dict(data_df), label_df))

if shuffle:

ds = ds.shuffle(1000)

ds = ds.batch(batch_size).repeat(num_epochs)

return ds

return input_function

train_input_fn = make_input_fn(train_data, y_train, num_epochs=100, batch_size=256)

test_input_fn = make_input_fn(test_data, y_test, num_epochs=1, shuffle=False)

estimator.train(input_fn=train_input_fn, steps=1500)

results = estimator.evaluate(input_fn=test_input_fn)

print(results)

def predict_proba(predictor):

preds = list()

for pred in predictor:

preds.append(pred['probabilities'])

return np.array(preds)

predictions = predict_proba(estimator.predict(input_fn=test_input_fn))

print(predictions)

|

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmp/tmpxnk3fqlo/model.ckpt-1500

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

|

MIT

|

Chapter 4 - Linear Regression/Using Wide & Deep models.ipynb

|

Young-Picasso/TensorFlow2.x_Cookbook

|

Automated Machine Learning Forecasting away from training data Contents1. [Introduction](Introduction)2. [Setup](Setup)3. [Data](Data)4. [Prepare remote compute and data.](prepare_remote)4. [Create the configuration and train a forecaster](train)5. [Forecasting from the trained model](forecasting)6. [Forecasting away from training data](forecasting_away) IntroductionThis notebook demonstrates the full interface to the `forecast()` function. The best known and most frequent usage of `forecast` enables forecasting on test sets that immediately follows training data. However, in many use cases it is necessary to continue using the model for some time before retraining it. This happens especially in **high frequency forecasting** when forecasts need to be made more frequently than the model can be retrained. Examples are in Internet of Things and predictive cloud resource scaling.Here we show how to use the `forecast()` function when a time gap exists between training data and prediction period.Terminology:* forecast origin: the last period when the target value is known* forecast periods(s): the period(s) for which the value of the target is desired.* lookback: how many past periods (before forecast origin) the model function depends on. The larger of number of lags and length of rolling window.* prediction context: `lookback` periods immediately preceding the forecast origin Setup Please make sure you have followed the `configuration.ipynb` notebook so that your ML workspace information is saved in the config file.

|

import os

import pandas as pd

import numpy as np

import logging

import warnings

import azureml.core

from azureml.core.dataset import Dataset

from pandas.tseries.frequencies import to_offset

from azureml.core.compute import AmlCompute

from azureml.core.compute import ComputeTarget

from azureml.core.runconfig import RunConfiguration

from azureml.core.conda_dependencies import CondaDependencies

# Squash warning messages for cleaner output in the notebook

warnings.showwarning = lambda *args, **kwargs: None

np.set_printoptions(precision=4, suppress=True, linewidth=120)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

This sample notebook may use features that are not available in previous versions of the Azure ML SDK.

|

print("This notebook was created using version 1.8.0 of the Azure ML SDK")

print("You are currently using version", azureml.core.VERSION, "of the Azure ML SDK")

from azureml.core.workspace import Workspace

from azureml.core.experiment import Experiment

from azureml.train.automl import AutoMLConfig

ws = Workspace.from_config()

# choose a name for the run history container in the workspace

experiment_name = 'automl-forecast-function-demo'

experiment = Experiment(ws, experiment_name)

output = {}

output['Subscription ID'] = ws.subscription_id

output['Workspace'] = ws.name

output['SKU'] = ws.sku

output['Resource Group'] = ws.resource_group

output['Location'] = ws.location

output['Run History Name'] = experiment_name

pd.set_option('display.max_colwidth', -1)

outputDf = pd.DataFrame(data = output, index = [''])

outputDf.T

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

DataFor the demonstration purposes we will generate the data artificially and use them for the forecasting.

|

TIME_COLUMN_NAME = 'date'

GRAIN_COLUMN_NAME = 'grain'

TARGET_COLUMN_NAME = 'y'

def get_timeseries(train_len: int,

test_len: int,

time_column_name: str,

target_column_name: str,

grain_column_name: str,

grains: int = 1,

freq: str = 'H'):

"""

Return the time series of designed length.

:param train_len: The length of training data (one series).

:type train_len: int

:param test_len: The length of testing data (one series).

:type test_len: int

:param time_column_name: The desired name of a time column.

:type time_column_name: str

:param

:param grains: The number of grains.

:type grains: int

:param freq: The frequency string representing pandas offset.

see https://pandas.pydata.org/pandas-docs/stable/user_guide/timeseries.html

:type freq: str

:returns: the tuple of train and test data sets.

:rtype: tuple

"""

data_train = [] # type: List[pd.DataFrame]

data_test = [] # type: List[pd.DataFrame]

data_length = train_len + test_len

for i in range(grains):

X = pd.DataFrame({

time_column_name: pd.date_range(start='2000-01-01',

periods=data_length,

freq=freq),

target_column_name: np.arange(data_length).astype(float) + np.random.rand(data_length) + i*5,

'ext_predictor': np.asarray(range(42, 42 + data_length)),

grain_column_name: np.repeat('g{}'.format(i), data_length)

})

data_train.append(X[:train_len])

data_test.append(X[train_len:])

X_train = pd.concat(data_train)

y_train = X_train.pop(target_column_name).values

X_test = pd.concat(data_test)

y_test = X_test.pop(target_column_name).values

return X_train, y_train, X_test, y_test

n_test_periods = 6

n_train_periods = 30

X_train, y_train, X_test, y_test = get_timeseries(train_len=n_train_periods,

test_len=n_test_periods,

time_column_name=TIME_COLUMN_NAME,

target_column_name=TARGET_COLUMN_NAME,

grain_column_name=GRAIN_COLUMN_NAME,

grains=2)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Let's see what the training data looks like.

|

X_train.tail()

# plot the example time series

import matplotlib.pyplot as plt

whole_data = X_train.copy()

target_label = 'y'

whole_data[target_label] = y_train

for g in whole_data.groupby('grain'):

plt.plot(g[1]['date'].values, g[1]['y'].values, label=g[0])

plt.legend()

plt.show()

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Prepare remote compute and data. The [Machine Learning service workspace](https://docs.microsoft.com/en-us/azure/machine-learning/service/concept-workspace), is paired with the storage account, which contains the default data store. We will use it to upload the artificial data and create [tabular dataset](https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.data.tabulardataset?view=azure-ml-py) for training. A tabular dataset defines a series of lazily-evaluated, immutable operations to load data from the data source into tabular representation.

|

# We need to save thw artificial data and then upload them to default workspace datastore.

DATA_PATH = "fc_fn_data"

DATA_PATH_X = "{}/data_train.csv".format(DATA_PATH)

if not os.path.isdir('data'):

os.mkdir('data')

pd.DataFrame(whole_data).to_csv("data/data_train.csv", index=False)

# Upload saved data to the default data store.

ds = ws.get_default_datastore()

ds.upload(src_dir='./data', target_path=DATA_PATH, overwrite=True, show_progress=True)

train_data = Dataset.Tabular.from_delimited_files(path=ds.path(DATA_PATH_X))

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

You will need to create a [compute target](https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-set-up-training-targetsamlcompute) for your AutoML run. In this tutorial, you create AmlCompute as your training compute resource.

|

from azureml.core.compute import ComputeTarget, AmlCompute

from azureml.core.compute_target import ComputeTargetException

# Choose a name for your CPU cluster

amlcompute_cluster_name = "fcfn-cluster"

# Verify that cluster does not exist already

try:

compute_target = ComputeTarget(workspace=ws, name=amlcompute_cluster_name)

print('Found existing cluster, use it.')

except ComputeTargetException:

compute_config = AmlCompute.provisioning_configuration(vm_size='STANDARD_D2_V2',

max_nodes=6)

compute_target = ComputeTarget.create(ws, amlcompute_cluster_name, compute_config)

compute_target.wait_for_completion(show_output=True)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Create the configuration and train a forecaster First generate the configuration, in which we:* Set metadata columns: target, time column and grain column names.* Validate our data using cross validation with rolling window method.* Set normalized root mean squared error as a metric to select the best model.* Set early termination to True, so the iterations through the models will stop when no improvements in accuracy score will be made.* Set limitations on the length of experiment run to 15 minutes.* Finally, we set the task to be forecasting.* We apply the lag lead operator to the target value i.e. we use the previous values as a predictor for the future ones.

|

lags = [1,2,3]

max_horizon = n_test_periods

time_series_settings = {

'time_column_name': TIME_COLUMN_NAME,

'grain_column_names': [ GRAIN_COLUMN_NAME ],

'max_horizon': max_horizon,

'target_lags': lags

}

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Run the model selection and training process.

|

from azureml.core.workspace import Workspace

from azureml.core.experiment import Experiment

from azureml.train.automl import AutoMLConfig

automl_config = AutoMLConfig(task='forecasting',

debug_log='automl_forecasting_function.log',

primary_metric='normalized_root_mean_squared_error',

experiment_timeout_hours=0.25,

enable_early_stopping=True,

training_data=train_data,

compute_target=compute_target,

n_cross_validations=3,

verbosity = logging.INFO,

max_concurrent_iterations=4,

max_cores_per_iteration=-1,

label_column_name=target_label,

**time_series_settings)

remote_run = experiment.submit(automl_config, show_output=False)

remote_run.wait_for_completion()

# Retrieve the best model to use it further.

_, fitted_model = remote_run.get_output()

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Forecasting from the trained model In this section we will review the `forecast` interface for two main scenarios: forecasting right after the training data, and the more complex interface for forecasting when there is a gap (in the time sense) between training and testing data. X_train is directly followed by the X_testLet's first consider the case when the prediction period immediately follows the training data. This is typical in scenarios where we have the time to retrain the model every time we wish to forecast. Forecasts that are made on daily and slower cadence typically fall into this category. Retraining the model every time benefits the accuracy because the most recent data is often the most informative.We use `X_test` as a **forecast request** to generate the predictions. Typical path: X_test is known, forecast all upcoming periods

|

# The data set contains hourly data, the training set ends at 01/02/2000 at 05:00

# These are predictions we are asking the model to make (does not contain thet target column y),

# for 6 periods beginning with 2000-01-02 06:00, which immediately follows the training data

X_test

y_pred_no_gap, xy_nogap = fitted_model.forecast(X_test)

# xy_nogap contains the predictions in the _automl_target_col column.

# Those same numbers are output in y_pred_no_gap

xy_nogap

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Confidence intervals Forecasting model may be used for the prediction of forecasting intervals by running ```forecast_quantiles()```. This method accepts the same parameters as forecast().

|

quantiles = fitted_model.forecast_quantiles(X_test)

quantiles

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Distribution forecastsOften the figure of interest is not just the point prediction, but the prediction at some quantile of the distribution. This arises when the forecast is used to control some kind of inventory, for example of grocery items or virtual machines for a cloud service. In such case, the control point is usually something like "we want the item to be in stock and not run out 99% of the time". This is called a "service level". Here is how you get quantile forecasts.

|

# specify which quantiles you would like

fitted_model.quantiles = [0.01, 0.5, 0.95]

# use forecast_quantiles function, not the forecast() one

y_pred_quantiles = fitted_model.forecast_quantiles(X_test)

# quantile forecasts returned in a Dataframe along with the time and grain columns

y_pred_quantiles

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Destination-date forecast: "just do something"In some scenarios, the X_test is not known. The forecast is likely to be weak, because it is missing contemporaneous predictors, which we will need to impute. If you still wish to predict forward under the assumption that the last known values will be carried forward, you can forecast out to "destination date". The destination date still needs to fit within the maximum horizon from training.

|

# We will take the destination date as a last date in the test set.

dest = max(X_test[TIME_COLUMN_NAME])

y_pred_dest, xy_dest = fitted_model.forecast(forecast_destination=dest)

# This form also shows how we imputed the predictors which were not given. (Not so well! Use with caution!)

xy_dest

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Forecasting away from training data Suppose we trained a model, some time passed, and now we want to apply the model without re-training. If the model "looks back" -- uses previous values of the target -- then we somehow need to provide those values to the model.The notion of forecast origin comes into play: the forecast origin is **the last period for which we have seen the target value**. This applies per grain, so each grain can have a different forecast origin. The part of data before the forecast origin is the **prediction context**. To provide the context values the model needs when it looks back, we pass definite values in `y_test` (aligned with corresponding times in `X_test`).

|

# generate the same kind of test data we trained on,

# but now make the train set much longer, so that the test set will be in the future

X_context, y_context, X_away, y_away = get_timeseries(train_len=42, # train data was 30 steps long

test_len=4,

time_column_name=TIME_COLUMN_NAME,

target_column_name=TARGET_COLUMN_NAME,

grain_column_name=GRAIN_COLUMN_NAME,

grains=2)

# end of the data we trained on

print(X_train.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].max())

# start of the data we want to predict on

print(X_away.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].min())

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

There is a gap of 12 hours between end of training and beginning of `X_away`. (It looks like 13 because all timestamps point to the start of the one hour periods.) Using only `X_away` will fail without adding context data for the model to consume.

|

try:

y_pred_away, xy_away = fitted_model.forecast(X_away)

xy_away

except Exception as e:

print(e)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

How should we read that eror message? The forecast origin is at the last time the model saw an actual value of `y` (the target). That was at the end of the training data! The model is attempting to forecast from the end of training data. But the requested forecast periods are past the maximum horizon. We need to provide a define `y` value to establish the forecast origin.We will use this helper function to take the required amount of context from the data preceding the testing data. It's definition is intentionally simplified to keep the idea in the clear.

|

def make_forecasting_query(fulldata, time_column_name, target_column_name, forecast_origin, horizon, lookback):

"""

This function will take the full dataset, and create the query

to predict all values of the grain from the `forecast_origin`

forward for the next `horizon` horizons. Context from previous

`lookback` periods will be included.

fulldata: pandas.DataFrame a time series dataset. Needs to contain X and y.

time_column_name: string which column (must be in fulldata) is the time axis

target_column_name: string which column (must be in fulldata) is to be forecast

forecast_origin: datetime type the last time we (pretend to) have target values

horizon: timedelta how far forward, in time units (not periods)

lookback: timedelta how far back does the model look?

Example:

```

forecast_origin = pd.to_datetime('2012-09-01') + pd.DateOffset(days=5) # forecast 5 days after end of training

print(forecast_origin)

X_query, y_query = make_forecasting_query(data,

forecast_origin = forecast_origin,

horizon = pd.DateOffset(days=7), # 7 days into the future

lookback = pd.DateOffset(days=1), # model has lag 1 period (day)

)

```

"""

X_past = fulldata[ (fulldata[ time_column_name ] > forecast_origin - lookback) &

(fulldata[ time_column_name ] <= forecast_origin)

]

X_future = fulldata[ (fulldata[ time_column_name ] > forecast_origin) &

(fulldata[ time_column_name ] <= forecast_origin + horizon)

]

y_past = X_past.pop(target_column_name).values.astype(np.float)

y_future = X_future.pop(target_column_name).values.astype(np.float)

# Now take y_future and turn it into question marks

y_query = y_future.copy().astype(np.float) # because sometimes life hands you an int

y_query.fill(np.NaN)

print("X_past is " + str(X_past.shape) + " - shaped")

print("X_future is " + str(X_future.shape) + " - shaped")

print("y_past is " + str(y_past.shape) + " - shaped")

print("y_query is " + str(y_query.shape) + " - shaped")

X_pred = pd.concat([X_past, X_future])

y_pred = np.concatenate([y_past, y_query])

return X_pred, y_pred

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Let's see where the context data ends - it ends, by construction, just before the testing data starts.

|

print(X_context.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].agg(['min','max','count']))

print(X_away.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].agg(['min','max','count']))

X_context.tail(5)

# Since the length of the lookback is 3,

# we need to add 3 periods from the context to the request

# so that the model has the data it needs

# Put the X and y back together for a while.

# They like each other and it makes them happy.

X_context[TARGET_COLUMN_NAME] = y_context

X_away[TARGET_COLUMN_NAME] = y_away

fulldata = pd.concat([X_context, X_away])

# forecast origin is the last point of data, which is one 1-hr period before test

forecast_origin = X_away[TIME_COLUMN_NAME].min() - pd.DateOffset(hours=1)

# it is indeed the last point of the context

assert forecast_origin == X_context[TIME_COLUMN_NAME].max()

print("Forecast origin: " + str(forecast_origin))

# the model uses lags and rolling windows to look back in time

n_lookback_periods = max(lags)

lookback = pd.DateOffset(hours=n_lookback_periods)

horizon = pd.DateOffset(hours=max_horizon)

# now make the forecast query from context (refer to figure)

X_pred, y_pred = make_forecasting_query(fulldata, TIME_COLUMN_NAME, TARGET_COLUMN_NAME,

forecast_origin, horizon, lookback)

# show the forecast request aligned

X_show = X_pred.copy()

X_show[TARGET_COLUMN_NAME] = y_pred

X_show

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Note that the forecast origin is at 17:00 for both grains, and periods from 18:00 are to be forecast.

|

# Now everything works

y_pred_away, xy_away = fitted_model.forecast(X_pred, y_pred)

# show the forecast aligned

X_show = xy_away.reset_index()

# without the generated features

X_show[['date', 'grain', 'ext_predictor', '_automl_target_col']]

# prediction is in _automl_target_col

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Forecasting farther than the maximum horizon When the forecast destination, or the latest date in the prediction data frame, is farther into the future than the specified maximum horizon, the `forecast()` function will still make point predictions out to the later date using a recursive operation mode. Internally, the method recursively applies the regular forecaster to generate context so that we can forecast further into the future. To illustrate the use-case and operation of recursive forecasting, we'll consider an example with a single time-series where the forecasting period directly follows the training period and is twice as long as the maximum horizon given at training time.Internally, we apply the forecaster in an iterative manner and finish the forecast task in two interations. In the first iteration, we apply the forecaster and get the prediction for the first max-horizon periods (y_pred1). In the second iteraction, y_pred1 is used as the context to produce the prediction for the next max-horizon periods (y_pred2). The combination of (y_pred1 and y_pred2) gives the results for the total forecast periods. A caveat: forecast accuracy will likely be worse the farther we predict into the future since errors are compounded with recursive application of the forecaster.

|

# generate the same kind of test data we trained on, but with a single grain/time-series and test period twice as long as the max_horizon

_, _, X_test_long, y_test_long = get_timeseries(train_len=n_train_periods,

test_len=max_horizon*2,

time_column_name=TIME_COLUMN_NAME,

target_column_name=TARGET_COLUMN_NAME,

grain_column_name=GRAIN_COLUMN_NAME,

grains=1)

print(X_test_long.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].min())

print(X_test_long.groupby(GRAIN_COLUMN_NAME)[TIME_COLUMN_NAME].max())

# forecast() function will invoke the recursive forecast method internally.

y_pred_long, X_trans_long = fitted_model.forecast(X_test_long)

y_pred_long

# What forecast() function does in this case is equivalent to iterating it twice over the test set as the following.

y_pred1, _ = fitted_model.forecast(X_test_long[:max_horizon])

y_pred_all, _ = fitted_model.forecast(X_test_long, np.concatenate((y_pred1, np.full(max_horizon, np.nan))))

np.array_equal(y_pred_all, y_pred_long)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

Confidence interval and distributional forecastsAutoML cannot currently estimate forecast errors beyond the maximum horizon set during training, so the `forecast_quantiles()` function will return missing values for quantiles not equal to 0.5 beyond the maximum horizon.

|

fitted_model.forecast_quantiles(X_test_long)

|

_____no_output_____

|

MIT

|

how-to-use-azureml/automated-machine-learning/forecasting-forecast-function/auto-ml-forecasting-function.ipynb

|

hyoshioka0128/MachineLearningNotebooks

|

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

path =("https://raw.githubusercontent.com/18cse005/DMDW/main/USA_cars_datasets.csv")

data=pd.read_csv(path)

type(data)

data.info

data.shape

data.index

data.columns

data.head()

data.tail()

data.head(10)

data.isnull().sum()

data.dropna(inplace=True) # removed the null values 1st method remove roes when large data we are having

data.isnull().sum()

data.shape

data.head(10)

# 2nd method handling missing value

data['price'].mean()

data['price'].head()

data['price'].replace(np.NaN,data['price'].mean()).head()

|

_____no_output_____

|

Apache-2.0

|

Data_preprocessing.ipynb

|

18cse081/dmdw

|

|

Introduction and Foundations: Titanic Survival Exploration> Udacity Machine Learning Engineer Nanodegree: _Project 0_>> Author: _Ke Zhang_>> Submission Date: _2017-04-27_ (Revision 2) AbstractIn 1912, the ship RMS Titanic struck an iceberg on its maiden voyage and sank, resulting in the deaths of most of its passengers and crew. In this introductory project, we will explore a subset of the RMS Titanic passenger manifest to determine which features best predict whether someone survived or did not survive. To complete this project, you will need to implement several conditional predictions and answer the questions below. Your project submission will be evaluated based on the completion of the code and your responses to the questions. Content- [Getting Started](Getting-Started)- [Making Predictions](Making-Predictions)- [Conclusion](Conclusion)- [References](References)- [Reproduction Environment](Reproduction-Environment) Getting StartedTo begin working with the RMS Titanic passenger data, we'll first need to `import` the functionality we need, and load our data into a `pandas` DataFrame.

|

# Import libraries necessary for this project

import numpy as np

import pandas as pd

from IPython.display import display # Allows the use of display() for DataFrames

# Import supplementary visualizations code visuals.py

import visuals as vs

# Pretty display for notebooks

%matplotlib inline

# Load the dataset

in_file = 'titanic_data.csv'

full_data = pd.read_csv(in_file)

# Print the first few entries of the RMS Titanic data

display(full_data.head())

|

_____no_output_____

|

Apache-2.0

|

p0_ss_titanic_survival_exploration/p0_ss_titanic_survival_exploration.ipynb

|

superkley/udacity-mlnd

|

From a sample of the RMS Titanic data, we can see the various features present for each passenger on the ship:- **Survived**: Outcome of survival (0 = No; 1 = Yes)- **Pclass**: Socio-economic class (1 = Upper class; 2 = Middle class; 3 = Lower class)- **Name**: Name of passenger- **Sex**: Sex of the passenger- **Age**: Age of the passenger (Some entries contain `NaN`)- **SibSp**: Number of siblings and spouses of the passenger aboard- **Parch**: Number of parents and children of the passenger aboard- **Ticket**: Ticket number of the passenger- **Fare**: Fare paid by the passenger- **Cabin** Cabin number of the passenger (Some entries contain `NaN`)- **Embarked**: Port of embarkation of the passenger (C = Cherbourg; Q = Queenstown; S = Southampton)Since we're interested in the outcome of survival for each passenger or crew member, we can remove the **Survived** feature from this dataset and store it as its own separate variable `outcomes`. We will use these outcomes as our prediction targets. Run the code cell below to remove **Survived** as a feature of the dataset and store it in `outcomes`.

|

# Store the 'Survived' feature in a new variable and remove it from the dataset

outcomes = full_data['Survived']

data = full_data.drop('Survived', axis = 1)

# Show the new dataset with 'Survived' removed

display(data.head())

|

_____no_output_____

|

Apache-2.0

|

p0_ss_titanic_survival_exploration/p0_ss_titanic_survival_exploration.ipynb

|

superkley/udacity-mlnd

|

The very same sample of the RMS Titanic data now shows the **Survived** feature removed from the DataFrame. Note that `data` (the passenger data) and `outcomes` (the outcomes of survival) are now *paired*. That means for any passenger `data.loc[i]`, they have the survival outcome `outcomes[i]`.To measure the performance of our predictions, we need a metric to score our predictions against the true outcomes of survival. Since we are interested in how *accurate* our predictions are, we will calculate the proportion of passengers where our prediction of their survival is correct. Run the code cell below to create our `accuracy_score` function and test a prediction on the first five passengers. **Think:** *Out of the first five passengers, if we predict that all of them survived, what would you expect the accuracy of our predictions to be?*

|

def accuracy_score(truth, pred):

""" Returns accuracy score for input truth and predictions. """

# Ensure that the number of predictions matches number of outcomes

if len(truth) == len(pred):

# Calculate and return the accuracy as a percent

return "Predictions have an accuracy of {:.2f}%.".format((truth == pred).mean()*100)

else:

return "Number of predictions does not match number of outcomes!"

# Test the 'accuracy_score' function

predictions = pd.Series(np.ones(5, dtype = int))

print accuracy_score(outcomes[:5], predictions)

|

Predictions have an accuracy of 60.00%.

|

Apache-2.0

|

p0_ss_titanic_survival_exploration/p0_ss_titanic_survival_exploration.ipynb

|

superkley/udacity-mlnd

|

> **Tip:** If you save an iPython Notebook, the output from running code blocks will also be saved. However, the state of your workspace will be reset once a new session is started. Make sure that you run all of the code blocks from your previous session to reestablish variables and functions before picking up where you last left off. Making PredictionsIf we were asked to make a prediction about any passenger aboard the RMS Titanic whom we knew nothing about, then the best prediction we could make would be that they did not survive. This is because we can assume that a majority of the passengers (more than 50%) did not survive the ship sinking. The `predictions_0` function below will always predict that a passenger did not survive.

|

def predictions_0(data):

""" Model with no features. Always predicts a passenger did not survive. """

predictions = []

for _, passenger in data.iterrows():

# Predict the survival of 'passenger'

predictions.append(0)

# Return our predictions

return pd.Series(predictions)