hexsha

stringlengths 40

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

4a12697bd20ecf05dd79c37739ad90c58d3b3bec

| 30,134 |

ipynb

|

Jupyter Notebook

|

notebooks/workshop-6/variational_inference.ipynb

|

Imperial-College-Data-Science-Society/workshops

|

8ce86f9f70ab47644e1f2e988da3bac41c0b9625

|

[

"MIT"

] | 17 |

2020-08-05T03:17:50.000Z

|

2021-02-06T05:07:28.000Z

|

notebooks/workshop-6/variational_inference.ipynb

|

Imperial-College-Data-Science-Society/Workshops

|

8ce86f9f70ab47644e1f2e988da3bac41c0b9625

|

[

"MIT"

] | 4 |

2021-01-31T09:23:26.000Z

|

2022-03-12T00:50:02.000Z

|

notebooks/workshop-6/variational_inference.ipynb

|

Imperial-College-Data-Science-Society/workshops

|

8ce86f9f70ab47644e1f2e988da3bac41c0b9625

|

[

"MIT"

] | 12 |

2020-10-15T17:16:58.000Z

|

2021-03-04T18:27:39.000Z

| 65.084233 | 5,712 | 0.702496 |

[

[

[

"# Variational Inference and Learning in the Big Data Regime\n\nMany real-world modelling solutions require fitting models with large numbers of data-points and parameters, which is made convenient recently through software implementing automatic differentiation, but also require uncertainty quantification. Variational inference is a generic family of tools that reformulates (Bayesian) model inference into an optimisation problem, thereby making use of modern software tools but also having the ability to give model uncertainty. This talk will motivate how variational inference works and what the state-of-the-art methods are. We will also accompany the theory with implementations on some simple probabilistic models, such as variational autoencoders (VAE). If time-permitting, we will briefly talk about some of the recent frontiers of variational inference, namely normalising flows and Stein Variational Gradient Descent.\n\n \n\n💻 Content covered:\n\nCurrent inference methods: maximum likelihood and Markov chain Monte Carlo\n\nInformation theory and KL divergence\n\nMean field variational inference\n\nBayesian linear regression\n\nMonte Carlo variational inference (MCVI), reparameterisation trick and law of the unconscious statistician (LOTUS)\n\nExample software implementations: VAE\n\n👾 This lecture will be held online on Microsoft Teams.\n\n🔴The event will be recorded and will be publicly available.\n\n🎉 Attendance is FREE for members! Whether you are a student at Imperial College or not, sign up to be a member at www.icdss.club/joinus\n\n⭐️ We encourage participants of this workshop to have looked at our previous sessions on YouTube. Prerequisites: basic understanding of Bayesian statistics\n\n📖 A schedule of our lecture series is currently available",

"_____no_output_____"

],

[

"## Background\n\n- Variational Inference: A Review for Statisticians: https://www.tandfonline.com/doi/full/10.1080/01621459.2017.1285773\n- Auto-Encoding Variational Bayes: https://arxiv.org/pdf/1312.6114.pdf\n- http://yingzhenli.net/home/en/approximateinference\n- https://github.com/ethanluoyc/pytorch-vae\n\nConsider crop yields $y$ and we have a likelihood $p(y|z)$ where $z$ are latent parameters. Suppose $z$ has some prior distribution $p(z)$, then the posterior distribution is\n$$\np(z|y) \\propto p(y|z)p(z) := \\tilde{p}(z|y).\n$$\n\nWe then want to be able to compute quantities $\\mathbb{E}_{z\\sim p(z|y)}[h(Z)]$, for certain functions $h$ e.g. $h(z)=z$ for the posterior mean of $Z$.\n\nWe could compute $p(z|y$) analytically if we have nice priors (conjugate priors), but this is usually not the case for most models e.g. Autoencoders with latent parameters or certain Gaussian mixture models. \n\nMarkov chain Monte Carlo (MCMC) allows us to obtain samples from $z\\sim p(z|y)$ using samplers (e.g. Hamiltonian Monte Carlo (HMC) or Metropolis-Hastings), but it could be very expensive and prohibits it from being used for the big data setting.",

"_____no_output_____"

],

[

"### Variational Inference\n\nVariational Inference (VI)/Variational Bayes/Variational Approximation turns this problem into an optimisation problem. We now seek $q(z)$ in a space of functions $\\mathcal{Q}$, instead of computing the exact $p(z|y)$, in which\n\n$$KL(q(z) || p(z|y)) = \\int \\log\\frac{q(z)}{p(z|y)} q(z) dq$$\n\nis minimised. This KL denotes the KL-divergence, which is a divergence measure that looks at how close 2 distributions are to one-another. It is:\n\n- Non-negative\n- Is equal to 0 if and only if $q(z) = p(z|y)$\n- Note: $KL(q(z)||p(z|y)) \\neq KL(p(z|y) || q(z))$. Minimising $KL(p(z|y) || q(z))$ is the objective of Expectation Propagation, which is another method for approximating posterior distributions.\n\nNote that maximum likelihood estimation (MLE) is done by maximising the log-likelihood, which is the same as minimising the KL divergence:\n\n$$\n\\text{argmin}_{\\theta} KL(\\hat{p}(y|\\theta^*) || p(y|\\theta)) = \\text{argmin}_{\\theta} \\frac{1}{n}\\sum_{i=1}^n \\log \\frac{p(y_i|\\hat{\\theta})}{p(y_i|\\theta)} = \\text{argmin}_{\\theta} \\frac{1}{n}\\sum_{i=1}^n \\log \\frac{1}{p(y_i|\\theta)} = \\text{argmax}_{\\theta} \\frac{1}{n}\\sum_{i=1}^n \\log p(y_i|\\theta).\n$$\n\n**Evidence Lower-Bound**\n\nSuppose I pose a family of posteriors $q(z)$, then\n\n\\begin{align*}\nKL(q(z) || p(z|y)) = \\int \\log\\frac{q(z)}{p(z|y)} q(z) dq &= \\mathbb{E}_{z\\sim q(z)}[\\log q(z)] - \\mathbb{E}_{z\\sim q(z)}[\\log p(z|y)] \\\\\n&= \\mathbb{E}_{z\\sim q(z)}[\\log q(z)] - \\mathbb{E}_{z\\sim q(z)}[\\log p(z,y)] + \\log p(y) \\\\\n&= \\mathbb{E}_{z\\sim q(z)}[\\log q(z)] - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)] - \\mathbb{E}_{z\\sim q(z)}[p(z)] + \\log p(y) \\\\\n&=\\log p(y) + \\mathbb{E}_{z\\sim q(z)}[\\log \\frac{q(z)}{p(z)}] - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)] \\\\\n&= \\log p(y) + KL(q(z) || p(z)) - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)].\n\\end{align*}\n\nSince the left term is positive and $\\log p(y)$ is fixed, it is sufficient to minimise:\n\n$$\nKL(q(z) || p(z)) - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)].\n$$\n\nThe evidence lower-bound is $ELBO(q) = \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)] - KL(q(z) || p(z))$, which is maximised.\n",

"_____no_output_____"

],

[

"### Mean-Field Variational Inference\n\nAs fancy as it sounds, it just means specifying a family of posteriors $\\mathcal{Q}$ such that \n\n$$\nq(z) = \\prod_{j=1}^m q_j(z_j),\n$$\nwhere $m$ is the number of parameters.\n\n**Coordinate Ascent Variational Inference (CAVI)**\nBlei et al. (2017)\n\n\nLet's look at an example (Li (2021)):\n$$\ny|x \\sim \\mathcal{N}(y; x^\\intercal\\theta, \\sigma^2),\\qquad \\theta\\sim\\mathcal{N}(\\theta; \\mu_0, \\Gamma_0^{-1}).\n$$\nThis has an analytical solution\n$$\np(\\theta|\\mathcal{D}) = \\mathcal{N}(\\theta; \\mu,\\Gamma^{-1})\n$$\nwith\n\\begin{align*}\n\\Gamma &= \\Gamma_0 + \\frac{1}{\\sigma^2}X^\\intercal X \\\\\n\\mu &= \\frac{1}{\\sigma^2}(X^\\intercal X + \\Gamma_0)^{-1}X^Ty,\n\\end{align*}\n\nwhere $X=(x_1,\\ldots,x_n)^\\intercal$ and $y=(y_1,\\ldots,y_n)^\\intercal$. **Let's try CAVI**:\n\n\\begin{align*}\n\\log q_1(\\theta_1) =& \\int q_2(\\theta_2) \\log \\tilde{p}(\\theta_1, \\theta_2) d\\theta_2\\\\\n=& \\int -\\frac{1}{2}\\left[(\\theta_1-\\mu_1)^2\\Gamma_{11} + 2(\\theta_1-\\mu_1)\\Gamma_{12}(\\theta_2-\\mu_2) \\right]q_2(\\theta_2) d\\theta_2 + const \\\\\n=& -\\frac{1}{2}\\left[(\\theta_1-\\mu_1)^2\\Gamma_{11} + 2(\\theta_1-\\mu_1)\\Gamma_{12}(\\mathbb{E}_{\\theta_2\\sim q_2}[\\theta_2]-\\mu_2) \\right] + const,\n\\end{align*}\nwhich is Gaussian with mean and variance\n$$\n\\tilde{\\mu}_1 = \\mu_1 - \\Gamma_{11}^{-1}\\Gamma_{12}(\\mathbb{E}_{q_2}[\\theta_2] - \\mu_2),\\qquad \\tilde{\\gamma}_2^{-1} = \\Gamma_{11}.\n$$\nSimilarly, you can obtain a similar expression for $q_2(\\theta_2)$. For CAVI to convergence, it can be shown that $(\\tilde{\\mu}_1, \\tilde{\\mu}_2)^\\intercal = \\mu$, giving\n\n$$\n\\tilde{\\mu}_1 = \\mu_1, \\qquad \\tilde{\\mu}_2 = \\mu_2.\n$$\n\nIn this case, CAVI gives a Gaussian posteriors.",

"_____no_output_____"

],

[

"### Monte Carlo Variational Inference (MCVI)\n\nFor big data situations, the variational expectation term can be (1) very expensive and (2) is not available in closed form. We can also add some more complexity to the posterior instead of just having a mean-field approximation. Recall the bound:\n\n$$\n\\mathcal{L}(q; p) = KL(q(z) || p(z)) - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)].\n$$\n\nMCVI calculates the variational expectation using Monte Carlo integration\n$$\n\\mathbb{E}_{z\\sim q(z)}[\\log p(y_i|z)] \\approx \\frac{1}{M}\\sum_{j=1}^M \\log p(y_i|z^j),\\qquad z^j\\sim q(z).\n$$\nEven better, we can calculate this using mini-batches:\n$$\n\\sum_{i=1}^n\\mathbb{E}_{z\\sim q(z)}[\\log p(y_i|z)] = \\mathbb{E}_{S\\sim \\{1,\\ldots,n\\}}\\left[\\frac{n}{|S|}\\sum_{i\\in S} \\mathbb{E}_q[\\log p(y_i|z)] \\right],\n$$\n\nwhere the inner expectation can be calculated as before. Now, to minimise $\\mathcal{L}(q; p)$, we differentiate with respect to the parameters, let's call it $\\theta$. Therefore, we need\n\n\\begin{align*}\n\\nabla_\\theta \\mathcal{L}(q; p) =& \\nabla_\\theta\\left[KL(q(z) || p(z)) - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)] \\right] \\\\\n=& \\nabla_\\theta \\left[ \\frac{1}{M}\\sum_{j=1}^M \\log\\frac{q(z^j)}{p(z^j)} \\right] - \\nabla_\\theta\\left[\\mathbb{E}_{S\\sim \\{1,\\ldots,n\\}}\\left[\\frac{n}{|S|}\\sum_{i\\in S} \\frac{1}{M}\\sum_{j=1}^M \\log p(y_i|z^j)\\right] \\right],\n\\end{align*}\n\nwhere $z^j\\sim q(z)$. We can get rid of the expectation with respect to the mini-batches and get a nice approximation for the bound for each batch $S$.\n\n**Reparameterisation Trick/Law of the Unconcious Statistician (LOTUS)**\nLOTUS basically refers to the identity:\n\n$$\nE_X[f(X)] = \\int f(x) p(x) dx = \\int f(g(\\epsilon)) p(\\epsilon) dx = E_\\epsilon[f(g(\\epsilon))]\n$$\n\nfor $x=g(\\epsilon)$, via the inverse function theorem and the change of variable theorem. The reparameterisation trick thus makes it easier to compute the bound by allowing us to sample from a simpler distribution $p(\\epsilon)$ to get $q(z)$:\n\n\\begin{align*}\n\\nabla_\\theta \\mathcal{L}(q; p) =& \\nabla_\\theta\\left[KL(q(z) || p(z)) - \\mathbb{E}_{z\\sim q(z)}[\\log p(y|z)] \\right] \\\\\n=& \\nabla_\\theta\\left[KL(q(z) || p(z)) - \\mathbb{E}_{\\epsilon}[\\log p(y|g_\\theta(\\epsilon))] \\right]\\\\\n=& \\nabla_\\theta KL(q(z) || p(z)) - \\mathbb{E}_{\\epsilon}[\\nabla_g \\log p(y|g_\\theta(\\epsilon)) \\times \\nabla_\\theta g_\\theta(\\epsilon)].\n\\end{align*}\n\nThen repeat using the same MCVI integration method to approximate the variational expectation. In practice, we can also use automatic differentiation to calculate the gradients.\n\n\n**Example: Variational Autoencoders (VAEs)**\n\nModel (Taken from https://lilianweng.github.io/lil-log/2018/08/12/from-autoencoder-to-beta-vae.html)\n\n\n\n**(1)**\nThe decoder represents the likelihood $p(y|z)$, where $y$ is an image. In the upcoming example, we have\n\n$$\n\\log p(y|z) = \\log N(y; f_\\theta(z), I) \\equiv ||y - f_\\theta(z)||_2^2,\n$$\n\nthe MSE loss.\n\n**(2)**\nThe prior is $z\\sim \\mathcal{N}(0, I)$. \n\n**(3)**\nAs you will see in many applications, they people only use 1 sample to calculate the variational expectation. i.e. taking $M=1$.\n\n**(4)**\nThe variational distribution that we are going for is $$q(z|y) = N(g_\\phi(y)[0], g_\\phi(y)[1] I),$$\nwhere the variational distribution is parameterised by the encoder network.\n\n**(5)**\nWe note that we can actually analytically compute the KL divergence as they are 2 Gaussians (proceed to Wikipedia for the formula...)\n",

"_____no_output_____"

],

[

"## Experiments",

"_____no_output_____"

]

],

[

[

"# from https://github.com/ethanluoyc/pytorch-vae/blob/master/vae.py\n\nimport torch\nfrom torch.autograd import Variable\nimport numpy as np\nimport torch.nn.functional as F\nimport torchvision\nfrom torchvision import transforms\nimport torch.optim as optim\nfrom torch import nn\nimport matplotlib.pyplot as plt\nfrom six.moves import urllib\nopener = urllib.request.build_opener()\nopener.addheaders = [('User-agent', 'Mozilla/5.0')]\nurllib.request.install_opener(opener)\n\nclass Normal(object):\n def __init__(self, mu, sigma, log_sigma, v=None, r=None):\n self.mu = mu\n self.sigma = sigma # either stdev diagonal itself, or stdev diagonal from decomposition\n self.logsigma = log_sigma\n dim = mu.get_shape()\n if v is None:\n v = torch.FloatTensor(*dim)\n if r is None:\n r = torch.FloatTensor(*dim)\n self.v = v\n self.r = r\n\n\nclass Encoder(torch.nn.Module):\n def __init__(self, D_in, H, D_out):\n super(Encoder, self).__init__()\n self.linear1 = torch.nn.Linear(D_in, H)\n self.linear2 = torch.nn.Linear(H, D_out)\n\n def forward(self, x):\n x = F.relu(self.linear1(x))\n return F.relu(self.linear2(x))\n\n\nclass Decoder(torch.nn.Module):\n def __init__(self, D_in, H, D_out):\n super(Decoder, self).__init__()\n self.linear1 = torch.nn.Linear(D_in, H)\n self.linear2 = torch.nn.Linear(H, D_out)\n\n def forward(self, x):\n x = F.relu(self.linear1(x))\n return F.relu(self.linear2(x))\n\n\nclass VAE(torch.nn.Module):\n latent_dim = 8\n\n def __init__(self, encoder, decoder):\n super(VAE, self).__init__()\n self.encoder = encoder\n self.decoder = decoder\n self._enc_mu = torch.nn.Linear(100, 8)\n self._enc_log_sigma = torch.nn.Linear(100, 8)\n\n def _sample_latent(self, h_enc):\n \"\"\"\n Return the latent normal sample z ~ N(mu, sigma^2)\n \"\"\"\n mu = self._enc_mu(h_enc)\n log_sigma = self._enc_log_sigma(h_enc)\n sigma = torch.exp(log_sigma)\n std_z = torch.from_numpy(np.random.normal(0, 1, size=sigma.size())).float()\n\n self.z_mean = mu\n self.z_sigma = sigma\n\n return mu + sigma * Variable(std_z, requires_grad=False) # Reparameterization trick\n\n def forward(self, state):\n h_enc = self.encoder(state)\n z = self._sample_latent(h_enc)\n return self.decoder(z)\n\n\ndef latent_loss(z_mean, z_stddev):\n mean_sq = z_mean * z_mean\n stddev_sq = z_stddev * z_stddev\n return 0.5 * torch.mean(mean_sq + stddev_sq - torch.log(stddev_sq) - 1)\n\n\n\ninput_dim = 28 * 28\nbatch_size = 32\n\ntransform = transforms.Compose(\n [transforms.ToTensor()])\nmnist = torchvision.datasets.MNIST('./', download=True, transform=transform)\n\ndataloader = torch.utils.data.DataLoader(mnist, batch_size=batch_size,\n shuffle=True, num_workers=2)\n\nprint('Number of samples: ', len(mnist))\n\nencoder = Encoder(input_dim, 100, 100)\ndecoder = Decoder(8, 100, input_dim)\nvae = VAE(encoder, decoder)\n\ncriterion = nn.MSELoss()\n\noptimizer = optim.Adam(vae.parameters(), lr=0.001)\nl = None\nfor epoch in range(5):\n for i, data in enumerate(dataloader, 0):\n inputs, classes = data\n inputs, classes = Variable(inputs.resize_(batch_size, input_dim)), Variable(classes)\n optimizer.zero_grad()\n dec = vae(inputs)\n ll = latent_loss(vae.z_mean, vae.z_sigma)\n loss = criterion(dec, inputs) + ll\n loss.backward()\n optimizer.step()\n l = loss.item()\n print(epoch, l)\n\nplt.imshow(vae(inputs).data[0].numpy().reshape(28, 28), cmap='gray')\nplt.show(block=True)",

"Number of samples: 60000\n0 0.07181154936552048\n1 0.07108696550130844\n2 0.0702378898859024\n3 0.06724850833415985\n4 0.07055552303791046\n"

],

[

"plt.imshow(inputs[0].numpy().reshape(28, 28), cmap='gray')",

"_____no_output_____"

]

],

[

[

"### Normalising Flows\n\nUsing a \"nice\" class of diffeomorphisms, one can obtain diagonal Jacobians from the diffeomorphisms, we apply the change of variables formula:\n\\begin{align*}\nq(z_L) = q(z) \\prod_{l=1}^L |\\det(\\nabla_{z_{l-1}} T_l(z_{l-1}))|^{-1}\n\\end{align*}",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown"

] |

[

[

"markdown",

"markdown",

"markdown",

"markdown",

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

]

] |

4a1271caef0ec888281eb787c403207f855f889a

| 12,058 |

ipynb

|

Jupyter Notebook

|

jupyter/paddlepaddle/face_mask_detection_paddlepaddle_zh.ipynb

|

dandansamax/djl

|

3c4262de4c60922a2f7ce22fd7c686cff62c24f8

|

[

"Apache-2.0"

] | null | null | null |

jupyter/paddlepaddle/face_mask_detection_paddlepaddle_zh.ipynb

|

dandansamax/djl

|

3c4262de4c60922a2f7ce22fd7c686cff62c24f8

|

[

"Apache-2.0"

] | null | null | null |

jupyter/paddlepaddle/face_mask_detection_paddlepaddle_zh.ipynb

|

dandansamax/djl

|

3c4262de4c60922a2f7ce22fd7c686cff62c24f8

|

[

"Apache-2.0"

] | null | null | null | 34.15864 | 202 | 0.560541 |

[

[

[

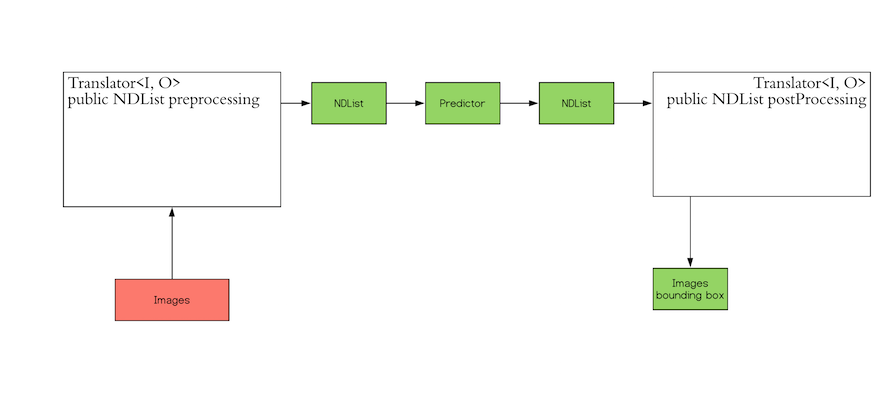

"# 用飛槳+ DJL 實作人臉口罩辨識\n在這個教學中我們將會展示利用 PaddleHub 下載預訓練好的 PaddlePaddle 模型並針對範例照片做人臉口罩辨識。這個範例總共會分成兩個步驟:\n\n- 用臉部檢測模型識別圖片中的人臉(無論是否有戴口罩) \n- 確認圖片中的臉是否有戴口罩\n\n這兩個步驟會包含使用兩個 Paddle 模型,我們會在接下來的內容介紹兩個模型對應需要做的前後處理邏輯\n\n## 導入相關環境依賴及子類別\n在這個例子中的前處理飛槳深度學習引擎需要搭配 DJL 混合模式進行深度學習推理,原因是引擎本身沒有包含 NDArray 操作,因此需要藉用其他引擎的 NDArray 操作能力來完成。這邊我們導入 PyTorch 來做協同的前處理工作:",

"_____no_output_____"

]

],

[

[

"// %mavenRepo snapshots https://oss.sonatype.org/content/repositories/snapshots/\n\n%maven ai.djl:api:0.17.0\n%maven ai.djl.paddlepaddle:paddlepaddle-model-zoo:0.17.0\n%maven org.slf4j:slf4j-simple:1.7.32\n\n// second engine to do preprocessing and postprocessing\n%maven ai.djl.pytorch:pytorch-engine:0.17.0",

"_____no_output_____"

],

[

"import ai.djl.*;\nimport ai.djl.inference.*;\nimport ai.djl.modality.*;\nimport ai.djl.modality.cv.*;\nimport ai.djl.modality.cv.output.*;\nimport ai.djl.modality.cv.transform.*;\nimport ai.djl.modality.cv.translator.*;\nimport ai.djl.modality.cv.util.*;\nimport ai.djl.ndarray.*;\nimport ai.djl.ndarray.types.Shape;\nimport ai.djl.repository.zoo.*;\nimport ai.djl.translate.*;\n\nimport java.io.*;\nimport java.nio.file.*;\nimport java.util.*;",

"_____no_output_____"

]

],

[

[

"## 臉部偵測模型\n現在我們可以開始處理第一個模型,在將圖片輸入臉部檢測模型前我們必須先做一些預處理:\n•\t調整圖片尺寸: 以特定比例縮小圖片\n•\t用一個數值對縮小後圖片正規化\n對開發者來說好消息是,DJL 提供了 Translator 介面來幫助開發做這樣的預處理. 一個比較粗略的 Translator 架構如下:\n\n\n\n在接下來的段落,我們會利用一個 FaceTranslator 子類別實作來完成工作\n### 預處理\n在這個階段我們會讀取一張圖片並且對其做一些事先的預處理,讓我們先示範讀取一張圖片:",

"_____no_output_____"

]

],

[

[

"String url = \"https://raw.githubusercontent.com/PaddlePaddle/PaddleHub/release/v1.5/demo/mask_detection/python/images/mask.jpg\";\nImage img = ImageFactory.getInstance().fromUrl(url);\nimg.getWrappedImage();",

"_____no_output_____"

]

],

[

[

"接著,讓我們試著對圖片做一些預處理的轉換:",

"_____no_output_____"

]

],

[

[

"NDList processImageInput(NDManager manager, Image input, float shrink) {\n NDArray array = input.toNDArray(manager);\n Shape shape = array.getShape();\n array = NDImageUtils.resize(\n array, (int) (shape.get(1) * shrink), (int) (shape.get(0) * shrink));\n array = array.transpose(2, 0, 1).flip(0); // HWC -> CHW BGR -> RGB\n NDArray mean = manager.create(new float[] {104f, 117f, 123f}, new Shape(3, 1, 1));\n array = array.sub(mean).mul(0.007843f); // normalization\n array = array.expandDims(0); // make batch dimension\n return new NDList(array);\n}\n\nprocessImageInput(NDManager.newBaseManager(), img, 0.5f);",

"_____no_output_____"

]

],

[

[

"如上述所見,我們已經把圖片轉成如下尺寸的 NDArray: (披量, 通道(RGB), 高度, 寬度). 這是物件檢測模型輸入的格式\n### 後處理\n當我們做後處理時, 模型輸出的格式是 (number_of_boxes, (class_id, probability, xmin, ymin, xmax, ymax)). 我們可以將其存入預先建立好的 DJL 子類別 DetectedObjects 以便做後續操作. 我們假設有一組推論後的輸出是 ((1, 0.99, 0.2, 0.4, 0.5, 0.8)) 並且試著把人像框顯示在圖片上",

"_____no_output_____"

]

],

[

[

"DetectedObjects processImageOutput(NDList list, List<String> className, float threshold) {\n NDArray result = list.singletonOrThrow();\n float[] probabilities = result.get(\":,1\").toFloatArray();\n List<String> names = new ArrayList<>();\n List<Double> prob = new ArrayList<>();\n List<BoundingBox> boxes = new ArrayList<>();\n for (int i = 0; i < probabilities.length; i++) {\n if (probabilities[i] >= threshold) {\n float[] array = result.get(i).toFloatArray();\n names.add(className.get((int) array[0]));\n prob.add((double) probabilities[i]);\n boxes.add(\n new Rectangle(\n array[2], array[3], array[4] - array[2], array[5] - array[3]));\n }\n }\n return new DetectedObjects(names, prob, boxes);\n}\n\nNDArray tempOutput = NDManager.newBaseManager().create(new float[]{1f, 0.99f, 0.1f, 0.1f, 0.2f, 0.2f}, new Shape(1, 6));\nDetectedObjects testBox = processImageOutput(new NDList(tempOutput), Arrays.asList(\"Not Face\", \"Face\"), 0.7f);\nImage newImage = img.duplicate();\nnewImage.drawBoundingBoxes(testBox);\nnewImage.getWrappedImage();",

"_____no_output_____"

]

],

[

[

"### 生成一個翻譯器並執行推理任務\n透過這個步驟,你會理解 DJL 中的前後處理如何運作,現在讓我們把前數的幾個步驟串在一起並對真實圖片進行操作:",

"_____no_output_____"

]

],

[

[

"class FaceTranslator implements NoBatchifyTranslator<Image, DetectedObjects> {\n\n private float shrink;\n private float threshold;\n private List<String> className;\n\n FaceTranslator(float shrink, float threshold) {\n this.shrink = shrink;\n this.threshold = threshold;\n className = Arrays.asList(\"Not Face\", \"Face\");\n }\n\n @Override\n public DetectedObjects processOutput(TranslatorContext ctx, NDList list) {\n return processImageOutput(list, className, threshold);\n }\n\n @Override\n public NDList processInput(TranslatorContext ctx, Image input) {\n return processImageInput(ctx.getNDManager(), input, shrink);\n }\n}",

"_____no_output_____"

]

],

[

[

"要執行這個人臉檢測推理,我們必須先從 DJL 的 Paddle Model Zoo 讀取模型,在讀取模型之前我們必須指定好 `Crieteria` . `Crieteria` 是用來確認要從哪邊讀取模型而後執行 `Translator` 來進行模型導入. 接著,我們只要利用 `Predictor` 就可以開始進行推論",

"_____no_output_____"

]

],

[

[

"Criteria<Image, DetectedObjects> criteria = Criteria.builder()\n .setTypes(Image.class, DetectedObjects.class)\n .optModelUrls(\"djl://ai.djl.paddlepaddle/face_detection/0.0.1/mask_detection\")\n .optFilter(\"flavor\", \"server\")\n .optTranslator(new FaceTranslator(0.5f, 0.7f))\n .build();\n \nvar model = criteria.loadModel();\nvar predictor = model.newPredictor();\n\nDetectedObjects inferenceResult = predictor.predict(img);\nnewImage = img.duplicate();\nnewImage.drawBoundingBoxes(inferenceResult);\nnewImage.getWrappedImage();",

"_____no_output_____"

]

],

[

[

"如圖片所示,這個推論服務已經可以正確的辨識出圖片中的三張人臉\n## 口罩分類模型\n一旦有了圖片的座標,我們就可以將圖片裁剪到適當大小並且將其傳給口罩分類模型做後續的推論\n### 圖片裁剪\n圖中方框位置的數值範圍從0到1, 只要將這個數值乘上圖片的長寬我們就可以將方框對應到圖片中的準確位置. 為了使裁剪後的圖片有更好的精確度,我們將圖片裁剪成方形,讓我們示範一下:",

"_____no_output_____"

]

],

[

[

"int[] extendSquare(\n double xmin, double ymin, double width, double height, double percentage) {\n double centerx = xmin + width / 2;\n double centery = ymin + height / 2;\n double maxDist = Math.max(width / 2, height / 2) * (1 + percentage);\n return new int[] {\n (int) (centerx - maxDist), (int) (centery - maxDist), (int) (2 * maxDist)\n };\n}\n\nImage getSubImage(Image img, BoundingBox box) {\n Rectangle rect = box.getBounds();\n int width = img.getWidth();\n int height = img.getHeight();\n int[] squareBox =\n extendSquare(\n rect.getX() * width,\n rect.getY() * height,\n rect.getWidth() * width,\n rect.getHeight() * height,\n 0.18);\n return img.getSubImage(squareBox[0], squareBox[1], squareBox[2], squareBox[2]);\n}\n\nList<DetectedObjects.DetectedObject> faces = inferenceResult.items();\ngetSubImage(img, faces.get(2).getBoundingBox()).getWrappedImage();",

"_____no_output_____"

]

],

[

[

"### 事先準備 Translator 並讀取模型\n在使用臉部檢測模型的時候,我們可以利用 DJL 預先建好的 `ImageClassificationTranslator` 並且加上一些轉換。這個 Translator 提供了一些基礎的圖片翻譯處理並且同時包含一些進階的標準化圖片處理。以這個例子來說, 我們不需要額外建立新的 `Translator` 而使用預先建立的就可以",

"_____no_output_____"

]

],

[

[

"var criteria = Criteria.builder()\n .setTypes(Image.class, Classifications.class)\n .optModelUrls(\"djl://ai.djl.paddlepaddle/mask_classification/0.0.1/mask_classification\")\n .optFilter(\"flavor\", \"server\")\n .optTranslator(\n ImageClassificationTranslator.builder()\n .addTransform(new Resize(128, 128))\n .addTransform(new ToTensor()) // HWC -> CHW div(255)\n .addTransform(\n new Normalize(\n new float[] {0.5f, 0.5f, 0.5f},\n new float[] {1.0f, 1.0f, 1.0f}))\n .addTransform(nd -> nd.flip(0)) // RGB -> GBR\n .build())\n .build();\n\nvar classifyModel = criteria.loadModel();\nvar classifier = classifyModel.newPredictor();",

"_____no_output_____"

]

],

[

[

"### 執行推論任務\n最後,要完成一個口罩識別的任務,我們只需要將上述的步驟合在一起即可。我們先將圖片做裁剪後並對其做上述的推論操作,結束之後再生成一個新的分類子類別 `DetectedObjects`:",

"_____no_output_____"

]

],

[

[

"List<String> names = new ArrayList<>();\nList<Double> prob = new ArrayList<>();\nList<BoundingBox> rect = new ArrayList<>();\nfor (DetectedObjects.DetectedObject face : faces) {\n Image subImg = getSubImage(img, face.getBoundingBox());\n Classifications classifications = classifier.predict(subImg);\n names.add(classifications.best().getClassName());\n prob.add(face.getProbability());\n rect.add(face.getBoundingBox());\n}\n\nnewImage = img.duplicate();\nnewImage.drawBoundingBoxes(new DetectedObjects(names, prob, rect));\nnewImage.getWrappedImage();",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

]

] |

4a12777909877ab21d1c749a9f29b34afcfe9129

| 7,332 |

ipynb

|

Jupyter Notebook

|

Numpy-Assignment-2.ipynb

|

Jazz-hash/Python-Raw-Codes

|

d43cb8ec9261167037550bd59ee79e53eb9d7abf

|

[

"Apache-2.0"

] | null | null | null |

Numpy-Assignment-2.ipynb

|

Jazz-hash/Python-Raw-Codes

|

d43cb8ec9261167037550bd59ee79e53eb9d7abf

|

[

"Apache-2.0"

] | null | null | null |

Numpy-Assignment-2.ipynb

|

Jazz-hash/Python-Raw-Codes

|

d43cb8ec9261167037550bd59ee79e53eb9d7abf

|

[

"Apache-2.0"

] | null | null | null | 32.017467 | 257 | 0.30401 |

[

[

[

"import numpy as np\nimport os\nfrom PIL import Image\nimport glob\ni = 0\nrootDir = '.'\nimageDir = 'images'\nresizedImageDir = 'ResizedImages'\nimageList = []\nresizedImageList = []\nfor dirName, subDirList, fileList in os.walk(rootDir):\n if imageDir in dirName:\n print('Found Directory: %s' % dirName)\n for file in glob.glob(r\"{}/*.png\".format(imageDir)):\n print('\\t%s' %file)\n image = Image.open(file)\n imageList.append(image)\n resized_image = image.resize((200,150))\n resized_image = np.array(resized_image)\n resizedImageList.append(resized_image)\n break",

"Found Directory: .\\images\n\timages\\Screenshot-1.png\n\timages\\Screenshot-2.png\n\timages\\Screenshot-3.png\n"

],

[

"print(imageList)\nprint(resizedImageList)",

"[<PIL.PngImagePlugin.PngImageFile image mode=RGBA size=1366x768 at 0x2046903E358>, <PIL.PngImagePlugin.PngImageFile image mode=RGBA size=1366x768 at 0x20469128358>, <PIL.PngImagePlugin.PngImageFile image mode=RGBA size=1366x768 at 0x20469128780>]\n[array([[[ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n ...,\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255]],\n\n [[ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n ...,\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255]],\n\n [[ 48, 9, 12, 255],\n [ 18, 97, 146, 255],\n [ 7, 103, 161, 255],\n ...,\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255],\n [ 48, 9, 12, 255]],\n\n ...,\n\n [[ 30, 6, 8, 255],\n [255, 255, 255, 255],\n [255, 255, 255, 255],\n ...,\n [ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 31, 7, 9, 255]],\n\n [[ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 30, 6, 8, 255],\n ...,\n [ 31, 7, 9, 255],\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255]],\n\n [[ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255],\n ...,\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255]]], dtype=uint8), array([[[ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n [[ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n [[ 44, 20, 22, 255],\n [ 16, 99, 149, 255],\n [ 7, 105, 163, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n ...,\n\n [[ 30, 6, 8, 255],\n [255, 255, 255, 255],\n [255, 255, 255, 255],\n ...,\n [ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 31, 7, 9, 255]],\n\n [[ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 30, 6, 8, 255],\n ...,\n [ 31, 7, 9, 255],\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255]],\n\n [[ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255],\n ...,\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255]]], dtype=uint8), array([[[ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n [[ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n [[ 44, 20, 22, 255],\n [ 16, 99, 149, 255],\n [ 7, 105, 163, 255],\n ...,\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255],\n [ 44, 20, 22, 255]],\n\n ...,\n\n [[ 30, 6, 8, 255],\n [255, 255, 255, 255],\n [255, 255, 255, 255],\n ...,\n [ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 31, 7, 9, 255]],\n\n [[ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 30, 6, 8, 255],\n ...,\n [ 31, 7, 9, 255],\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255]],\n\n [[ 28, 4, 6, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255],\n ...,\n [ 30, 6, 8, 255],\n [ 29, 5, 7, 255],\n [ 29, 5, 7, 255]]], dtype=uint8)]\n"

]

]

] |

[

"code"

] |

[

[

"code",

"code"

]

] |

4a128342fa71f007e28422cc0439776cb803ef10

| 287,990 |

ipynb

|

Jupyter Notebook

|

_notebooks/2022-05-06-Residual-Networks.ipynb

|

geon-youn/DunGeon

|

70792a1042630fbf114fe43263b851d0b57d5b18

|

[

"Apache-2.0"

] | null | null | null |

_notebooks/2022-05-06-Residual-Networks.ipynb

|

geon-youn/DunGeon

|

70792a1042630fbf114fe43263b851d0b57d5b18

|

[

"Apache-2.0"

] | null | null | null |

_notebooks/2022-05-06-Residual-Networks.ipynb

|

geon-youn/DunGeon

|

70792a1042630fbf114fe43263b851d0b57d5b18

|

[

"Apache-2.0"

] | null | null | null | 143.63591 | 189,145 | 0.821397 |

[

[

[

"# \"Understanding Residual Networks\"\n> \"Probably about residuals, right?\"\n\n- comments: true\n- categories: [vision]",

"_____no_output_____"

]

],

[

[

"#hide\n!pip install -Uqq fastai>=2.0.0 graphviz ipywidgets matplotlib nbdev>=0.2.12 pandas scikit_learn azure-cognitiveservices-search-imagesearch sentencepiece",

"_____no_output_____"

],

[

"#hide\nfrom google.colab import drive\ndrive.mount('/content/gdrive/', force_remount=True)",

"Mounted at /content/gdrive/\n"

]

],

[

[

"## Introduction",

"_____no_output_____"

],

[

"We've covered the basics of CNNs with the MNIST data set where we trained a model to recognize handwritten digits. Today, we'll be moving towards residual networks that allow us to train CNNs with more layers. \n\nSince we've already got a good result with the MNIST data set, we'll move onto the Imagenette data set, which is a smaller version of the ImageNet data set. \n\nWe train with a smaller version so that we can make small changes without having to wait long periods of time for the model to train.",

"_____no_output_____"

]

],

[

[

"#hide\nfrom fastai.vision.all import *",

"_____no_output_____"

],

[

"def get_dls(url, presize, resize):\n path = untar_data(url)\n return DataBlock((ImageBlock, CategoryBlock), get_items=get_image_files,\n splitter=GrandparentSplitter(valid_name='val'),\n get_y=parent_label, item_tfms=Resize(presize),\n batch_tfms=[*aug_transforms(min_scale=0.5, size=resize),\n Normalize.from_stats(*imagenet_stats)]\n ).dataloaders(path, bs=128)",

"_____no_output_____"

],

[

"#hide_output\ndls = get_dls(URLs.IMAGENETTE_160, 160, 128)",

"_____no_output_____"

],

[

"dls.show_batch(max_n=4)",

"_____no_output_____"

]

],

[

[

"## Adding flexibility through fully convolutional neural networks",

"_____no_output_____"

],

[

"First, we'll first change how our model works. With the MNIST data set, we have images of shape 28 $\\times$ 28. If we added a few more layers with a stride of 1, then we'd get more layers. But, how do we do classification for images with sizes that aren't 28 $\\times$ 28? And, what do we do if we want to have additional layers of different strides?\n\nIn reducing the last two dimensions of our output from each layer (the height and width) through strides, we get two problems:\n- with larger images, we need a lot more stride 2 layers; and\n- the model won't work for images of different shape than our training images. \n\nThe latter can be solved through resizing, but do we really want to resize images to 28 $\\times$ 28? We might be losing a lot of information for more complex tasks. \n\nSo, we solve it through *fully convolutional networks*, which uses a trick of taking the average of activations along a convolutional grid. In other words, we take the average of the last two axes from the final layer like so:",

"_____no_output_____"

]

],

[

[

"def avg_pool(x):\n return x.mean((2, 3))",

"_____no_output_____"

]

],

[

[

"Remember that our final layer has a shape of `n_batches * n_channels * height * width`. So, taking the average of the last two axes gives us a new tensor of shape `n_batches * n_channels * 1` from which we flatten to get `n_batches * n_channels`. \n\nUnlike our last approach of striding until our last two axes are 1 $\\times$ 1, we can have a final layer with any value for our last two axes and still end up with 1 $\\times$ 1 after average pooling. In other words, through average pooling, we can have a CNN that can have as many layers as we want, with images of any shape during inference. \n\nOverall, a fully convolutional network has a number of convolutional layers that can be of any stride, an adaptive average pooling layer, a flatten layer, and then a linear layer. An adaptive average pooling layer allows us to specify the shape of the output, but we want one value so we pass in `1`:",

"_____no_output_____"

]

],

[

[

"def block(ni, nf):\n return ConvLayer(ni, nf, stride=2)\n\ndef get_model():\n return nn.Sequential(\n block(3, 16),\n block(16, 32),\n block(32, 64),\n block(64, 128),\n block(128, 256),\n nn.AdaptiveAvgPool2d(1), \n Flatten(),\n nn.Linear(256, dls.c)\n )",

"_____no_output_____"

]

],

[

[

"The [`ConvLayer`](https://docs.fast.ai/layers.html#ConvLayer) is fastai's version of our `conv` layer from the [last blog](https://geon-youn.github.io/DunGeon/vision/2022/04/30/Convolutional-Neural-Networks.html), which includes the convolutional layer, the activation function, and batch normalization, but also adds more functionalities. \n\n---\n\n*Activation function or nonlinearity?* In the last blog, I defined ReLU as a nonlinearity, because it is a nonlinearity. However, it can also be called an activation function since it's taking the activations from the convolutional layer to output new activations. An activation function is just that: a function between two linear layers. \n\n---\n\nIn retrospect, our simple CNN for the MNIST data set looked something like this:",

"_____no_output_____"

]

],

[

[

"def get_simple_cnn():\n return nn.Sequential(\n block(3, 16),\n block(16, 32),\n block(32, 64),\n block(64, 128),\n block(128, 10),\n Flatten()\n )",

"_____no_output_____"

]

],

[

[

"In a fully convolutional network we have what we had before in a CNN, except that we pool the last two axes from our final convolutional layer into a unit axis, flatten the output to get rid of the unit axis, then use a linear layer to get `dls.c` output channels. `dls.c` returns how many unique labels there are for our data set. \n\nSo, why didn't we just use fully convolutional networks from the beginning? Well, fully convolutional networks take an image, cut them into pieces, shake them all about, do the hokey pokey, and decide, on average, what the image should be classified as. However, we were dealing with an optical character recognition (OCR) problem. With OCR, it doesn't make sense to cut a character into pieces and decide, on averge, what character it is. \n\nThat doesn't mean fully convolutional networks are useless; they're bad for OCR problems, but they're good for problems where the objects-to-be-classified don't have a specific orientation or size. \n\nLet's try training a fully convolutional network on the Imagenette data set:",

"_____no_output_____"

]

],

[

[

"def get_learner(model):\n return Learner(dls, model, loss_func=nn.CrossEntropyLoss(), \n metrics=accuracy).to_fp16()",

"_____no_output_____"

],

[

"learn = get_learner(get_model())",

"_____no_output_____"

],

[

"learn.fit_one_cycle(5, 3e-3)",

"_____no_output_____"

]

],

[

[

"## How resnet came about",

"_____no_output_____"

],

[

"Now we're ready to add more layers through a fully convolutional network. But, how does it turn out if we just add more layers? Not that promising. \n\n<figure>\n <img src='https://inotgo.com/imagesLocal/202103/24/20210324084503876z_0.png' alt='Comparisons of small and large layer CNNs'>\n <figcaption>Comparison of small and large layer CNNs through error rate on training and test sets.</figcaption>\n</figure>\n\nYou'd expect a model with more layers to have an easier time predicting the correct label. However, [the founders](https://arxiv.org/abs/1512.03385) of resnet found different results when training and comparing the results of a 20- and 56-layer CNN: the 56-layer model was doing worse than the 20-layer model in both training and test sets. Interestingly, the lower performance isn't caused by overfitting because the same pattern persists between the training and test errors.\n\nHowever, shouldn't we be able to add 36 identity layers (that output the same activations) to the 20-layer model to achieve a 56-layer model that has the same results as the 20-layer model? For some reason, SGD isn't able to find that kind of model. \n\nSo, here comes *residuals*. Instead of each layer being the output of `F(x)` where `F` is a layer and `x` is the input, what if we had `x + F(x)`? In essense, we want each layer to learn well. We could go straight to `H(x)`, but we can have it learn `H(x)` by learning `F(x) = H(x) - x`, which turns out to `H(x) = x + F(x)`. \n\nReturning to the idea of identity layers, `F` contains a batchnorm which performs $\\gamma y + \\beta$ with the output `y`. We could have $\\gamma$ equal to 0, which turns `x + F(x)` to `x + 0`, which is equivalent to `x`.\n\nTherefore, we could start with a good 20-layer model, initialize 36 layers on top of it with $\\gamma$ initialized to 0, then fine-tune the entire model. \n\nHowever, instead of starting with a trained model, we have something like this:\n\n<figure>\n <img src='https://www.researchgate.net/publication/331195671/figure/fig1/AS:727865238753280@1550548004386/ResNet-module-adapted-from-1.ppm' alt='Diagram of a resnet block'>\n <figcaption>The \"resnet\" layer</figcaption>\n</figure>\n\nIn a residual network layer, we have an input `x` that passes through two convolutional layers `f` and `g`, where `F(x) = g(f(x))`. The right arrow is the *identity branch* or *skip connection* that gives us the identity part of the equation: `x + F(x)`, whereby `F(x)` is the residual. \n\nSo instead of adding on these resnet layers to a 20-layer model, we just initialize a model with these layers. Then, we initialize them randomly per usual and train them with SGD. Skip connections enable SGD to optimize the model even though there's more layers. \n\nWhy resnet works so well compared to just adding more layers is like how weight decay works so well: we're training to minimize residuals. \n\nAt each layer of a residual network, we're training the residuals given by the function `F` since that's the only part with trainable parameters; however, the output of each layer is `x + F(x)`. So, if the desired output is `H(x)` where `H(x) = x + F(x)`, we're asking the model to predict the *residual* `F(x) = H(x) - x`, which is the difference between the desired output and the given input. Therefore at each optimization step, we're minimizing the error (residual). Hence a residual network is good at learning the difference between doing nothing and doing something (by going through the two weight layers). \n\nSo, a residual network block looks like this:",

"_____no_output_____"

]

],

[

[

"class ResBlock(Module):\n def __init__(self, ni, nf):\n self.convs = nn.Sequential(\n ConvLayer(ni, nf),\n ConvLayer(nf, nf, norm_type=NormType.BatchZero)\n )\n\n def forward(self, x):\n return x + self.convs(x)",

"_____no_output_____"

]

],

[

[

"By passing `NormType.BatchZero` to the second convolutional layer, we initialize the $\\gamma$s in the batchnorm equation to 0. \n\n",

"_____no_output_____"

],

[

"## How to use a resblock in practice",

"_____no_output_____"

],

[

"Although we could begin training with the `ResBlock`, how would `x + F(x)` work if `x` and `F(x)` are of different shapes? \n\nWell, how could they become different shapes? When `ni != nf` or we use a stride that's not 1. Remember that `x` has the shape `n_batch * n_channels * height * width`. If we have `ni != nf`, then `n_channels` is different for `x` and `F(x)`. Similarly, if `stride != 1`, then `height` and `width` would be different for `x` and `F(x)`. \n\nInstead of accepting these restrictions, we can resize `x` to become the same shape as `F(x)`. First, we appply average pooling to change `height` and `width`, then apply a 1 $\\times$ 1 convolution (a convolution layer with a kernel size of 1 $\\times$ 1) with `ni` in-channels and `nf` out-channels to change `n_channels`. \n\nThrough changing our code, we have:",

"_____no_output_____"

]

],

[

[

"def _conv_block(ni, nf, stride):\n return nn.Sequential(\n ConvLayer(ni, nf, stride=stride),\n ConvLayer(nf, nf, act_cls=None, norm_type=NormType.BatchZero)\n )",

"_____no_output_____"

],

[

"class ResBlock(Module):\n def __init__(self, ni, nf, stride=1):\n self.convs = _conv_block(ni, nf, stride)\n # noop is `lamda x: x`, short for \"no operation\"\n self.idconv = noop if ni == nf else ConvLayer(ni, nf, 1, act_cls=None)\n self.pool = noop if stride == 1 else nn.AvgPool2d(stride, ceil_mode=True)\n\n def forward(self, x):\n # we can apply ReLU for both x and F(x) since we specified \n # no activation functions for the last layer of conv and idconvs\n return F.relu(self.idconv(self.pool(x)) + self.convs(x))",

"_____no_output_____"

]

],

[

[

"In our new `ResBlock`, one pass will lead to `H(G(x)) + F(x)` where `F` is two convolutional layers and `G` is a pooling layer followed by `H`, a convolutional layer. We have a pooling layer to make the height and width of `x` the same as `F(x)` and the convolutional layer to make `G(x)` have the same out-channels as `F(x)`. Then, `H(G(x))` and `F(x)` will have the same shape all the time, allowing them to be added. Overall, a resnet block is 2 layers deep.",

"_____no_output_____"

]

],

[

[

"def block(ni, nf):\n return ResBlock(ni, nf, stride=2)",

"_____no_output_____"

]

],

[

[

"So, let's try retraining with the new model:",

"_____no_output_____"

]

],

[

[

"learn = get_learner(get_model())\nlearn.fit_one_cycle(5, 3e-3)",

"_____no_output_____"

]

],

[

[

"We didn't get much of an accuracy boost, but that's because we still have the same number of layers. Let's double the layers:",

"_____no_output_____"

]

],

[

[

"def block(ni, nf):\n return nn.Sequential(ResBlock(ni, nf, stride=2), ResBlock(nf, nf))",

"_____no_output_____"

],

[

"learn = get_learner(get_model())\nlearn.fit_one_cycle(5, 3e-3)",

"_____no_output_____"

]

],

[

[

"## Implementing a resnet",

"_____no_output_____"

],

[

"The first step to go from a resblock to a resnet is to improve the stem of the model.\n\nThe first few layers of the model are called its *stem*. Through practice, [some researchers](https://arxiv.org/abs/1812.01187) found improvements by beginning the model with a few convolutional layers followed by a max pooling layer. A max pooling layer, unlike average pooling, takes the maximum instead of the average.\n\nThe new stem, which we prepend the the model looks like this:",

"_____no_output_____"

]

],

[

[

"def _resnet_stem(*sizes):\n return [\n ConvLayer(sizes[i], sizes[i + 1], 3, stride=2 if i == 0 else 1) \n for i in range(len(sizes) - 1)\n ] + [nn.MaxPool2d(kernel_size=3, stride=2, padding=1)]",

"_____no_output_____"

]

],

[

[

"Keeping the model simple in the beginning helps with training because with CNNs, the vast majority of *computations*, not parameters, occur in the beginning where images have large dimensions.",

"_____no_output_____"

]

],

[

[

"_resnet_stem(3, 32, 32, 64)",

"_____no_output_____"

]

],

[

[

"These same researchers found additional \"tricks\" to substantially improve the model: using **four** groups of resnet blocks with channels of 64, 128, 256, and 512. Each group starts with a stride of 2 except for the first one since it's after the max pooling layer from the stem. \n\nBelow is *the* resnet:",

"_____no_output_____"

]

],

[

[

"class ResNet(nn.Sequential):\n def __init__(self, n_out, layers, expansion=1):\n stem = _resnet_stem(3, 32, 32, 64)\n self.channels = [64, 64, 128, 256, 512]\n for i in range(1, 5): self.channels[i] *= expansion\n blocks = [self._make_layer(*o) for o in enumerate(layers)]\n super().__init__(*stem, *blocks, \n nn.AdaptiveAvgPool2d(1), Flatten(),\n nn.Linear(self.channels[-1], n_out))\n \n def _make_layer(self, idx, n_layers):\n stride = 1 if idx == 0 else 2\n ni, nf = self.channels[idx:idx + 2]\n return nn.Sequential(*[\n ResBlock(ni if i == 0 else nf, nf, stride if i == 0 else 1)\n for i in range(n_layers)\n ])",

"_____no_output_____"

]

],

[

[

"The \"total\" number of layers in a resnet is double sum of the layers passed in as `layers` in instantiating `ResNet` plus 2 from `stem` and `nn.Linear`. We consider `stem` as one layer because of the max pooling layer at the end. \n\nSo, a resnet-18 is:",

"_____no_output_____"

]

],

[

[

"#collapse_output\nrn18 = ResNet(dls.c, [2, 2, 2, 2])\nrn18",

"_____no_output_____"

]

],

[

[

"Where it has 18 layers since we pass in `[2, 2, 2, 2]` for `layers`, which sums of 8 and double that is `16` (we double since each `ResBlock` is 2 layers deep). Then, we add 2 from `stem` and `nn.Linear` to get 18 \"total\" layers. \n\nBy increasing the number of layers to 18, we see a significant increase in our model's accuracy:",

"_____no_output_____"

]

],

[

[

"learn = get_learner(rn18)\nlearn.fit_one_cycle(5, 3e-3)",

"_____no_output_____"

]

],

[

[

"## Adding more layers to resnet-18",

"_____no_output_____"

],

[

"As you increase the number of layers in a resnet, the number of parameters increases substantially. Thus, to avoid running out of GPU memory, we can apply *bottlenecking*, which alters the convolutional layer in `ResBlock` for `F` in `x + F(x)` as follows:\n\n<figure>\n <img src='https://miro.medium.com/max/588/0*9tCUFp28oQGOK6bE.jpg' alt='Bottleneck layer'>\n</figure>\n\nPreviously, we stacked two convolutions with a kernel size of 3. However, a bottleneck layer has a convolution with a kernel size of 1, which decreases the channels by a factor of 4, then a convolution with a kernel size of 3 that maintains the same number of channels, and then a convolution with a kernel size of 1 that increases the number of channels by a factor of 4 to return the original dimension. It's coined *bottleneck* because we start with, in this case, a 256-channel image that's \"bottlenecked\" by the first convolution into 64 channels, and then enlarged to 256 channels by the last convolution.\n\nAlthough we'll have another layer, it performs faster than the two convolutions with a kernel size of 3 because convolutions with a kernel size of 1 are much faster. \n\nThrough bottleneck layers, we can have more channels in more-or-less the same amount of time. Additionally, we'll have fewer parameters since we replace a 3 $\\times$ 3 kernel layer with two 1 $\\times$ 1 kernel layers, although one has 4 more out-channels. Overall, we have a difference of 4$:$9. \n\nIn code, the bottleneck layer looks like this:",

"_____no_output_____"

]

],

[

[

"def _conv_block(ni, nf, stride):\n return nn.Sequential(\n ConvLayer(ni, nf // 4, stride=1),\n ConvLayer(nf // 4, nf // 4, stride=stride),\n ConvLayer(nf // 4, nf, stride=1, act_cls=None, norm_type=NormType.BatchZero)\n )",

"_____no_output_____"

]

],

[

[

"Then, when we make our resnet model, we'll have to pass in 4 for our `expansion` to account for the decreasing factor of 4 in our bottleneck layer. ",

"_____no_output_____"

]

],

[

[

"rn50 = ResNet(dls.c, [3, 4, 6, 3], 4)",

"_____no_output_____"

],

[

"learn = get_learner(rn50)\nlearn.fit_one_cycle(20, 3e-3)",

"_____no_output_____"

]

],

[

[

"## MNIST: to CNN or resnet?",

"_____no_output_____"

],

[

"In the previous blog, we achieved a 99.2% accuracy with just the CNN. Let's try training a resnet-50 with the MNIST data set and see what we can get:",

"_____no_output_____"

]

],

[

[

"def get_dls(url, bs=512):\n path = untar_data(url)\n return DataBlock(\n blocks=(ImageBlock(cls=PILImageBW), CategoryBlock),\n get_items=get_image_files,\n splitter=GrandparentSplitter('training', 'testing'), \n get_y=parent_label,\n batch_tfms=Normalize()\n ).dataloaders(path, bs=bs)",

"_____no_output_____"

],

[

"#hide_output\ndls = get_dls(URLs.MNIST)",

"_____no_output_____"

],

[

"dls.show_batch(max_n=4)",

"_____no_output_____"

]

],

[

[

"We have to change the first input into `_resnet_stem` to 1 from 3 since the MNIST data set is a greyscale image.",

"_____no_output_____"

]

],

[

[

"class ResNet(nn.Sequential):\n def __init__(self, n_out, layers, expansion=1):\n stem = _resnet_stem(1, 32, 32, 64)\n self.channels = [64, 64, 128, 256, 512]\n for i in range(1, 5): self.channels[i] *= expansion\n blocks = [self._make_layer(*o) for o in enumerate(layers)]\n super().__init__(*stem, *blocks, \n nn.AdaptiveAvgPool2d(1), Flatten(),\n nn.Linear(self.channels[-1], n_out))\n \n def _make_layer(self, idx, n_layers):\n stride = 1 if idx == 0 else 2\n ni, nf = self.channels[idx:idx + 2]\n return nn.Sequential(*[\n ResBlock(ni if i == 0 else nf, nf, stride if i == 0 else 1)\n for i in range(n_layers)\n ])",

"_____no_output_____"

],

[

"rn50 = ResNet(dls.c, [3, 4, 6, 3], 4)",

"_____no_output_____"

],

[

"learn = get_learner(rn50)",

"_____no_output_____"

],

[

"lr = learn.lr_find().valley",

"_____no_output_____"

],

[

"learn.fit_one_cycle(20, lr)",

"_____no_output_____"

]

],

[

[

"After training the model by 20 epochs, we can confidently say that it's not always the best idea to start with a more complex architecture that takes much longer to train and gives a worse accuracy. \n\nIn addition, I mentioned before that resnets aren't the best for OCR problems like digit recognition since we're slicing the image and deciding, on average, what the digit is. \n\nIn this scenario, a regular CNN would be a better choice than a resnet. ",

"_____no_output_____"

],

[

"## Conclusion",

"_____no_output_____"

],

[

"In this blog, we covered residual networks, which allow us to train CNNs with more layers by having the model learn indirectly through residuals. It's as if before, we were just training the weights of the activations, when in reality, we also needed bias to have the models learn efficiently. To visualize, imagine the following:\n\nBefore, we had\n```\nweights * x + bias\n```\nas our activations, where `x` is our input. But, that just gives us activations. Through residual networks, we have\n```\n(weights * x + bias) + residuals\n```\nwhich adds on another parameter that we can train. Through exploring, we found that we'd need some way to make `(weights * x + bias)` have the same shape as `residuals` to allow them to be added. Thus, we formed\n```\nreshape * (weights * x + bias) + residuals\n```\nTherefore, a residual network *fixes* a CNN to be more like how we train neural networks since the above can be simplified to:\n```\nweights * x + bias\n```\n\nThen, to use this new kind of layer, we had to use fully convolutional networks that allow us to have input of any shape. Finally, we looked at a bag of tricks like stems, groups, and bottleneck layers that allow us to train RNNs more efficiently and have many more layers.\n\nAt this point, we've covered all the main architectures for training great models with computer vision (CNN and resnet), natural language processing (AWD-LSTM), tabular data (random forests and neural networks), and collaborative filtering (probabilitic matrix factorization and neural networks). \n\nFrom now on, we'll be looking at the foundations of deep learning and fastai through modifying the mentioned architectures, building better optimization functions than the standard SGD, exactly what PyTorch is doing for us, how to visualize what the model is learning, and a deeper look into what fastai is doing for us through its `Learner` class. ",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown"

] |

[

[

"markdown"

],

[

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown",

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown",

"markdown"

]

] |

4a129330fb0ae33fad42abdccc4b5bedaa0674ad

| 19,103 |

ipynb

|

Jupyter Notebook

|

Assignment_Day_2.ipynb

|

VishnuM24/LetsUpgrade-Python

|

b1d1e74df9f6bbebab155e460e45335cd0f9888a

|

[

"Apache-2.0"

] | null | null | null |

Assignment_Day_2.ipynb

|

VishnuM24/LetsUpgrade-Python

|

b1d1e74df9f6bbebab155e460e45335cd0f9888a

|

[

"Apache-2.0"

] | null | null | null |

Assignment_Day_2.ipynb

|

VishnuM24/LetsUpgrade-Python

|

b1d1e74df9f6bbebab155e460e45335cd0f9888a

|

[

"Apache-2.0"

] | null | null | null | 23.789539 | 243 | 0.403968 |

[

[

[

"<a href=\"https://colab.research.google.com/github/VishnuM24/LetsUpgrade-Python/blob/master/Assignment_Day_2.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>",

"_____no_output_____"

],

[

"# - DAY 2 -",

"_____no_output_____"

],

[

"##**QUESTION 1 :**\n\nList & its default methods",

"_____no_output_____"

]

],

[

[

"vowels = [ 'a', 'e' ]",

"_____no_output_____"

],

[

"# Method 1 - extend()\nvow2 = ['i', 'o', 'u']\nvowels.extend( vow2 )\nprint( vowels )",

"['a', 'e', 'i', 'o', 'u']\n"

],

[

"# Method 2 - append()\nvowels.append( 'y' )\nprint( vowels )",

"['a', 'e', 'i', 'o', 'u', 'y']\n"

],

[

"# Method 3 - insert()\nvowels.insert( 2, 'x' )\nprint(vowels)",

"['a', 'e', 'x', 'i', 'o', 'u', 'y']\n"

],

[

"# Method 4 - pop()\nvowels.pop(-1)\nprint(vowels)",

"['a', 'e', 'x', 'i', 'o', 'u']\n"

],

[

"# Method 5 - remove()\nvowels.remove('x')\nprint(vowels)",

"['a', 'e', 'i', 'o', 'u']\n"

]

],

[

[

"## **Question 2:**\n\nDictionary & its default methods",

"_____no_output_____"

]

],

[

[

"dailyFood = {\n 'breakfast' : 'dosa',\n 'lunch' : 'rice',\n 'dinner' : 'chapathi'\n}",

"_____no_output_____"

],

[

"# Method 1 - keys()\nkeys_list = dailyFood.keys()\nprint( keys_list )",

"dict_keys(['breakfast', 'lunch', 'dinner'])\n"

],

[

"# Method 2 - items()\ndailyfood_list = dailyFood.items()\nprint( dailyfood_list )",

"dict_items([('breakfast', 'dosa'), ('lunch', 'rice'), ('dinner', 'chapathi')])\n"

],

[

"# Method 3 - update()\ndailyFood.update( { 'evening_snack' : 'biscuits' } )\nprint( dailyfood_list )",

"dict_items([('breakfast', 'dosa'), ('lunch', 'rice'), ('dinner', 'chapathi'), ('evening_snack', 'biscuits')])\n"

],

[

"# Method 4 - get()\nfood_lunch = dailyFood.get('lunch')\nprint( food_lunch )",

"rice\n"

],

[

"# Method 5 - pop()\ndailyFood.pop( 'evening_snack' )\nprint( dailyFood )",

"{'breakfast': 'dosa', 'lunch': 'rice', 'dinner': 'chapathi'}\n"

]

],

[

[

"## **Question 3:**\n\nSets & its default methods",

"_____no_output_____"

]

],

[

[

"language_set = { 'c', 'python', 'java' }",

"_____no_output_____"

],

[

"# Method 1 - add()\nlanguage_set.add( 'PHP' )\nprint( language_set )",

"{'python', 'PHP', 'c', 'java'}\n"

],

[

"# Method 2 - union()\nprogramming_set = language_set.union( { 'c#', 'cpp', 'Go', 'R' } )\nprint( programming_set )",

"{'python', 'PHP', 'java', 'R', 'c#', 'c', 'cpp', 'Go'}\n"

],

[

"# Method 3 - discard()\nprogramming_set.discard( 'PHP' )\nprint( programming_set )",

"{'python', 'java', 'R', 'c#', 'c', 'cpp', 'Go'}\n"

],

[

"# Method 4 - issubset()\nunder_programming = language_set.issubset( programming_set )\nprint( under_programming )",

"False\n"

],

[

"# Method 5 - update()\nprogramming_set.update( { 'html', 'css', 'javascript' } )\nprint( programming_set )",

"{'python', 'javascript', 'html', 'java', 'R', 'c#', 'css', 'c', 'cpp', 'Go'}\n"

]

],

[

[

"## **Question 4:**\n\nTuple & its default methods",

"_____no_output_____"

]

],

[

[

"oct_values = ( '000', '001' , '010' , '011' , '100', '101', '110', '111' )",

"_____no_output_____"

],

[

"# Method 1 - count()\nrepeat_3 = oct_values.count( '011' )\nprint( repeat_3 )",

"1\n"

],

[

"# Method 2 - index()\nindx = oct_values.index( '101' )\nprint( indx )",

"5\n"

],

[

"oct_values[6]",

"_____no_output_____"

]

],

[

[

"## **Question 5:**\n\nStrings & its default methods",

"_____no_output_____"

]

],

[

[

"intro_template = 'Hi This is <NAME> from <PLACE>'\n",

"_____no_output_____"

],

[

"# Method 1 - replace()\nmy_template = intro_template.replace( '<NAME>', 'Vishnu' )\nmy_template = my_template.replace( '<PLACE>', 'Kerala' )\n\nprint( my_template )",

"Hi This is Vishnu from Kerala\n"

],

[

"# Method 2 - split()\nwords = my_template.split(' ')\nprint( words )",

"['Hi', 'This', 'is', 'Vishnu', 'from', 'Kerala']\n"

],

[

"# Method 3 - find()\nx = my_template.find('Vishnu')\nprint( 'My name starts at position',x )",

"My name starts at position 11\n"

],

[

"# Method 4 - upper()\ncaps_template = my_template.upper()\nprint( caps_template )",

"HI THIS IS VISHNU FROM KERALA\n"

],

[

"# Method 5 - isnumeric()\nisNumber = my_template.isnumeric()\nprint( isNumber )\n\ntemp_string = '2412'\nisNo = temp_string.isnumeric()\nprint( isNo )",

"False\nTrue\n"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown",

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

]

] |

4a129a1ce0ae589c15398b44b1c1bb8ad57981b7

| 5,402 |

ipynb

|

Jupyter Notebook

|

Movie recommendation system.ipynb

|

i-m-ram/movie-recommendation-system

|

9ab1d27f877765299d0079fa703da3c5587dd703

|

[

"MIT"

] | null | null | null |

Movie recommendation system.ipynb

|

i-m-ram/movie-recommendation-system

|

9ab1d27f877765299d0079fa703da3c5587dd703

|

[

"MIT"

] | null | null | null |

Movie recommendation system.ipynb

|

i-m-ram/movie-recommendation-system

|

9ab1d27f877765299d0079fa703da3c5587dd703

|

[

"MIT"

] | null | null | null | 31.776471 | 151 | 0.52351 |

[

[

[

"import pandas as pd\nimport numpy as np\n\ncredit_df=pd.read_csv(r'E:\\Projects\\Movie recommendation System\\DataSet\\tmdb_5000_credits.csv')\nmovie_df=pd.read_csv(r'E:\\Projects\\Movie recommendation System\\DataSet\\tmdb_5000_movies.csv')\n\n#movie_df.head()\n\n#credit_df.head()\n\ncredit_df.columns=['id','title','cast','crew']\n\nmovie_df=movie_df.merge(credit_df,on='id')\n\n#movie_df.head()\n\n#movie_df.describe()\n\n#movie_df.info()\n\nfrom ast import literal_eval\nfeatures = [\"cast\", \"crew\", \"keywords\", \"genres\"]\nfor feature in features:\n movie_df[feature] = movie_df[feature].apply(literal_eval)\n#movie_df[features].head(10)\n\n#movie_df['cast'].head()\n\n\n\ndef get_director(x):\n for i in x:\n if i[\"job\"] == \"Director\":\n return i[\"name\"]\n return np.nan\n\ndef get_list(x):\n if isinstance(x, list):\n names = [i[\"name\"] for i in x]\n if len(names) > 3:\n names = names[:3]\n return names\n return []\n\nmovie_df['Director']=movie_df['crew'].apply(get_director)\n\nfeatures = [\"cast\", \"keywords\", \"genres\"]\nfor feature in features:\n movie_df[feature] = movie_df[feature].apply(get_list)\n\nmovie_df['title']=movie_df['original_title']\n\n#movie_df[['title', 'cast', 'Director', 'keywords', 'genres']].head()\n\ndef clean_data(row):\n if isinstance(row, list):\n return [str.lower(i.replace(\" \", \"\")) for i in row if isinstance(i,str)]\n else:\n if isinstance(row, str):\n return str.lower(row.replace(\" \", \"\"))\n else:\n return \"\"\nfeatures = ['cast', 'keywords', 'Director', 'genres']\nfor feature in features:\n movie_df[feature] = movie_df[feature].apply(clean_data)\n\n#movie_df['cast'].head()\n\ndef create_soup(features):\n return ' '.join(features['keywords']) + ' ' + ' '.join(features['cast']) + ' ' + features['Director'] + ' ' + ' '.join(features['genres'])\n\nmovie_df['soup']=movie_df.apply(create_soup, axis=1)\n#movie_df['soup'].head()\n\n\nfrom sklearn.feature_extraction.text import CountVectorizer\nfrom sklearn.metrics.pairwise import cosine_similarity\n\ncount_vectorizer = CountVectorizer(stop_words='english')\ncount_matrix=count_vectorizer.fit_transform(movie_df['soup'])\n#print(count_matrix.shape)\ncosine_sim2 = cosine_similarity(count_matrix, count_matrix) \n#print(cosine_sim2.shape)\n\nmovie_df = movie_df.reset_index()\nindices = pd.Series(movie_df.index, index=movie_df[\"title\"]).drop_duplicates()\n#print(indices.head())\n\ndef get_recommendations(title, cosine_sim):\n idx = indices[title]\n similarity_scores = list(enumerate(cosine_sim[idx]))\n similarity_scores= sorted(similarity_scores, key=lambda x: x[1], reverse=True)\n similarity_scores= similarity_scores[1:11]\n # (a, b) where a is id of movie, b is similarity_scores\n movie_indices = [ind[0] for ind in similarity_scores]\n movies = movie_df[\"title\"].iloc[movie_indices]\n return movies\n\n#print(get_recommendations(\"Iron Man 2\", cosine_sim2))\nMovie_name=str(input('Enter Movie Name'))\nget_recommendations(Movie_name, cosine_sim2)\n",

"Enter Movie NameIron Man\n"

]

]

] |

[

"code"

] |

[

[

"code"

]

] |

4a12aaf71a4731f35b182f57673746dc3056b805

| 25,890 |

ipynb

|

Jupyter Notebook

|

Curso3.ipynb

|

camilogutierrez/MachineLearning

|

ec129ac09ae86f94b4535523bdacf800295cea8c

|

[

"MIT"

] | 2 |

2020-05-08T21:18:08.000Z

|

2020-07-18T22:13:22.000Z

|

Curso3.ipynb

|

camilogutierrez/MachineLearning

|

ec129ac09ae86f94b4535523bdacf800295cea8c

|

[

"MIT"

] | null | null | null |

Curso3.ipynb

|

camilogutierrez/MachineLearning

|

ec129ac09ae86f94b4535523bdacf800295cea8c

|

[

"MIT"

] | null | null | null | 61.937799 | 941 | 0.687524 |

[

[

[

"# ML Strategy \n* Collect more data\n* Collect more diverse trainign set\n* Train algorithm longer with gradient descetn\n* Try adam isntead of gradient descent\n* Try bigger networks\n* Try smaller networks\n* Try dropout\n* Add L2 regularizatión\n* Network architecture\n* Network archicteture \n - Activvation\n - \\# hidden units\n \n# Orthogonalization \nFor a supervised learning system to do well, you usually need to tune the knobs of your system to make sure that four things hold true. \n1. **Fit training set well on cost function** First, is that you usually have to make sure that you're at least doing well on the training set. So performance on the training set needs to pass some acceptability assessment. For some applications, this might mean doing comparably to human level performance. But this will depend on your application, and we'll talk more about comparing to human level performance next week.\n2. **Fit dev set well on cost function**\n3. **Fit test set well on cost function**\n3. **Performs well in real world**\n\nel priemro se soluccion con bigget network, the optiization algorithm\nel segundo con regularization o con un bigger traingin set\net tres con bigger dev set\ny el cuarto cambiando el dev set o la cost function\n\n The exact details of what's precision and recall don't matter too much for this example. But briefly, the definition of precision is, of the examples that your classifier recognizes as cats,\nPlay video starting at 1 minute 23 seconds and follow transcript1:23\nWhat percentage actually are cats?\nPlay video starting at 1 minute 32 seconds and follow transcript1:32\nSo if classifier A has 95% precision, this means that when classifier A says something is a cat, there's a 95% chance it really is a cat. And recall is, of all the images that really are cats, what percentage were correctly recognized by your classifier? So what percentage of actual cats, Are correctly recognized?\n\n<img align='center' src='images/metric.PNG' width='650'/>",

"_____no_output_____"

],

[

"* I often recommend that you set up a single real number evaluation metric for your problem. Let's look at an example.\n\nprecision: the examples that your classifier recognizes as cats, What percentage actually are cats\no if classifier A has 95% precision, this means that when classifier A says something is a cat, there's a 95% chance it really is a cat.\n\nrecall: of all the images that really are cats, what percentage were correctly recognized by your classifier? So what percentage of actual cats, Are correctly recognized? So if classifier A is 90% recall, this means that of all of the images in, say, your dev sets that really are cats, classifier A accurately pulled out 90% of them. \n\n\ntrade-off between precision and recall\n\n\nThe problem with using precision recall as your evaluation metric is that if classifier A does better on recall, which it does here, the classifier B does better on precision, then you're not sure which classifier is better.\n\nyou just have to find a new evaluation metric that combines precision and recall.\n \nIn the machine learning literature, the standard way to combine precision and recall is something called an F1 score. Think as average of precision (P) and recall\n\n\n$$F1 = \\frac{2}{\\frac{1}{P}+\\frac{1}{R}}$$ Harmonic mean of precition P and Recall R\n\nwhat I recommend in this example is, in addition to tracking your performance in the four different geographies, to also compute the average. And assuming that average performance is a reasonable single real number evaluation metric, by computing the average, you can quickly tell that it looks like algorithm C has a lowest average error.\n\n---\n\n**Satisficing and Optimizing metric**\n\nTo summarize, if there are multiple things you care about by say there's one as the optimizing metric that you want to do as well as possible on and one or more as satisficing metrics were you'll be satisfice. Almost it does better than some threshold you can now have an almost automatic way of quickly looking at multiple core size and picking the, quote, best one. Now these evaluation matrix must be evaluated or calculated on a training set or a development set or maybe on the test set. So one of the things you also need to do is set up training, dev or development, as well as test sets. In the next video, I want to share with you some guidelines for how to set up training, dev, and test sets. So let's go on to the next.\n\ncost = accuracy - 0.5 * running time\n\nmaximize accuracy but subject \n\nthat maximizes accuracy but subject to that the running time, that is the time it takes to classify an image, that that has to be less than or equal to 100 milliseconds. \n\nthat running time is what we call a satisficing metric\n\nSo in this case accuracy is the optimizing metric and a number of false positives every 24 hours is the satisficing metric\n\n**Train/dev/test distributions**\n\nThe way you set up your training dev, or development sets and test sets, can have a huge impact on how rapidly you or your team can make progress on building machine learning application.\n\n* Dev set So, that dev set is also called the development set, or sometimes called the hold out cross validation set. And, workflow in machine learning is that you try a lot of ideas, train up different models on the training set, and then use the dev set to evaluate the different ideas and pick one. And, keep iterating to improve dev set performance until, finally, you have one clause that you're happy with that you then evaluate on your test set.\n* choose a dev set and test set to reflect data you expect to get in future and consider important to do well on. And, in particular, the dev set and the test set here, should come from the same distribution. So, whatever type of data you expect to get in the future, and once you do well on, try to get data that looks like that. ",

"_____no_output_____"

],

[