hexsha

stringlengths 40

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

4a3018bf9f835bf9cde5f5d1043aa4ed2ca8cee2

| 31,081 |

ipynb

|

Jupyter Notebook

|

models/ML_Pipeline_Preparation.ipynb

|

AntonioBauer/DisasterResponsePipelines

|

561c99e2f938f41ce857aec2e83846b399d2a8e6

|

[

"FTL",

"CNRI-Python",

"RSA-MD"

] | null | null | null |

models/ML_Pipeline_Preparation.ipynb

|

AntonioBauer/DisasterResponsePipelines

|

561c99e2f938f41ce857aec2e83846b399d2a8e6

|

[

"FTL",

"CNRI-Python",

"RSA-MD"

] | null | null | null |

models/ML_Pipeline_Preparation.ipynb

|

AntonioBauer/DisasterResponsePipelines

|

561c99e2f938f41ce857aec2e83846b399d2a8e6

|

[

"FTL",

"CNRI-Python",

"RSA-MD"

] | 1 |

2021-01-23T14:53:52.000Z

|

2021-01-23T14:53:52.000Z

| 39.796415 | 337 | 0.519835 |

[

[

[

"# ML Pipeline Preparation\nFollow the instructions below to help you create your ML pipeline.\n### 1. Import libraries and load data from database.\n- Import Python libraries\n- Load dataset from database with [`read_sql_table`](https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_sql_table.html)\n- Define feature and target variables X and Y",

"_____no_output_____"

]

],

[

[

"# import libraries\nimport sys\nimport pandas as pd\nimport numpy as np\nfrom sqlalchemy import create_engine\n\nimport nltk\nnltk.download(['punkt', 'wordnet', 'stopwords'])\nfrom nltk.tokenize import word_tokenize\nfrom nltk.stem import WordNetLemmatizer\nfrom nltk.corpus import stopwords\nfrom nltk.stem.porter import PorterStemmer\nfrom nltk.tokenize import RegexpTokenizer\n\nfrom nltk.tokenize import word_tokenize\nfrom nltk.stem import WordNetLemmatizer\nfrom sklearn.pipeline import Pipeline\nfrom sklearn.metrics import confusion_matrix\nfrom sklearn.model_selection import train_test_split\nfrom sklearn.multioutput import MultiOutputClassifier\nfrom sklearn.ensemble import RandomForestClassifier\nfrom sklearn.neighbors import KNeighborsClassifier\nfrom sklearn.model_selection import GridSearchCV\nfrom sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer\nfrom sklearn.metrics import classification_report\nfrom sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score\n\nimport re\nimport pickle",

"[nltk_data] Downloading package punkt to /root/nltk_data...\n[nltk_data] Package punkt is already up-to-date!\n[nltk_data] Downloading package wordnet to /root/nltk_data...\n[nltk_data] Package wordnet is already up-to-date!\n[nltk_data] Downloading package stopwords to /root/nltk_data...\n[nltk_data] Package stopwords is already up-to-date!\n"

],

[

"# load data from database into DataFrame df\nengine = create_engine('sqlite:///DisasterResponse.db')\ndf = pd.read_sql('DisasterData', con=engine)",

"_____no_output_____"

],

[

"# split Dataframe df into X and y\nX = df['message']\ny = df.iloc[:, 4:]",

"_____no_output_____"

]

],

[

[

"### 2. Write a tokenization function to process your text data",

"_____no_output_____"

]

],

[

[

"def tokenize(text):\n\n # tokenize text and instantiate lemmatizer\n tokens = word_tokenize(text)\n lemmatizer = WordNetLemmatizer()\n \n # remove stopwords\n tokens = [token for token in tokens if token not in stopwords.words('english')]\n \n # remove punctuaction\n tokens = [token for token in tokens if token.isalpha()]\n\n # create clean tokens\n clean_tokens = []\n for tok in tokens:\n clean_tok = lemmatizer.lemmatize(tok).lower().strip()\n clean_tokens.append(clean_tok)\n\n return clean_tokens",

"_____no_output_____"

]

],

[

[

"### 3. Build a machine learning pipeline\nThis machine pipeline should take in the `message` column as input and output classification results on the other 36 categories in the dataset. You may find the [MultiOutputClassifier](http://scikit-learn.org/stable/modules/generated/sklearn.multioutput.MultiOutputClassifier.html) helpful for predicting multiple target variables.",

"_____no_output_____"

]

],

[

[

"# build pipeline for the text transformation and for estimator\npipeline = Pipeline([\n ('vect', CountVectorizer(tokenizer = tokenize)),\n ('tfidf', TfidfTransformer()),\n ('clf', MultiOutputClassifier(RandomForestClassifier()))\n])",

"_____no_output_____"

]

],

[

[

"### 4. Train pipeline\n- Split data into train and test sets\n- Train pipeline",

"_____no_output_____"

]

],

[

[

"# split data into training and test data\nX_train, X_test, y_train, y_test = train_test_split(X, y)",

"_____no_output_____"

],

[

"%%time\n# train data\npipeline.fit(X_train, y_train)",

"CPU times: user 2min 37s, sys: 7.35 s, total: 2min 45s\nWall time: 2min 45s\n"

],

[

"y_test.columns",

"_____no_output_____"

]

],

[

[

"### 5. Test your model\nReport the f1 score, precision and recall for each output category of the dataset. You can do this by iterating through the columns and calling sklearn's `classification_report` on each.",

"_____no_output_____"

]

],

[

[

"# predict responses for basic model\nY_pred = pipeline.predict(X_test)",

"_____no_output_____"

],

[

"# print classification_report\nprint(classification_report(y_test, Y_pred, target_names = y_test.columns, digits = 2))",

" precision recall f1-score support\n\n related 0.87 0.91 0.89 5105\n request 0.82 0.44 0.57 1140\n offer 0.00 0.00 0.00 33\n aid_related 0.75 0.60 0.66 2739\n medical_help 0.60 0.07 0.12 546\n medical_products 0.88 0.11 0.19 355\n search_and_rescue 0.54 0.04 0.08 173\n security 0.00 0.00 0.00 123\n military 0.69 0.10 0.17 226\n child_alone 0.00 0.00 0.00 0\n water 0.86 0.29 0.43 414\n food 0.80 0.60 0.69 727\n shelter 0.83 0.30 0.44 592\n clothing 0.69 0.12 0.20 95\n money 0.80 0.02 0.05 161\n missing_people 0.50 0.01 0.02 86\n refugees 0.53 0.04 0.07 204\n death 0.78 0.16 0.27 313\n other_aid 0.40 0.05 0.09 858\ninfrastructure_related 0.11 0.00 0.00 445\n transport 0.65 0.06 0.12 313\n buildings 0.74 0.12 0.20 353\n electricity 0.75 0.07 0.12 132\n tools 0.00 0.00 0.00 34\n hospitals 0.00 0.00 0.00 63\n shops 0.00 0.00 0.00 26\n aid_centers 0.00 0.00 0.00 85\n other_infrastructure 0.17 0.00 0.01 307\n weather_related 0.86 0.59 0.70 1864\n floods 0.89 0.36 0.52 547\n storm 0.79 0.41 0.54 641\n fire 0.33 0.01 0.03 71\n earthquake 0.88 0.73 0.79 626\n cold 0.52 0.14 0.22 122\n other_weather 0.41 0.03 0.06 346\n direct_report 0.75 0.32 0.44 1294\n\n avg / total 0.74 0.49 0.54 21159\n\n"

]

],

[

[

"### 6. Improve your model\nUse grid search to find better parameters. ",

"_____no_output_____"

]

],

[

[

"# build pipeline for the text transformation and for estimator\ncv_pipeline = Pipeline([\n ('vect', CountVectorizer(tokenizer=tokenize)),\n ('tfidf', TfidfTransformer()),\n ('clf', MultiOutputClassifier(RandomForestClassifier()))\n])",

"_____no_output_____"

],

[

"MultiOutputClassifier(RandomForestClassifier()).get_params()",

"_____no_output_____"

],

[

"# reduced hyperparameter tuning and cross validation due to runtime\nparameters = {\n 'clf__estimator__n_estimators': [4, 6, 9],\n 'clf__estimator__min_samples_split': [2, 3, 5],\n}\n \n\ncv_forest = GridSearchCV(cv_pipeline, param_grid = parameters, cv = 2, verbose = 2, n_jobs = 4)",

"_____no_output_____"

],

[

"%%time\n# train improved model\ncv_forest.fit(X_train, y_train)",

"Fitting 2 folds for each of 9 candidates, totalling 18 fits\n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=6, total= 8.2min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=6, total= 8.2min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=9, total= 8.8min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=2, clf__estimator__n_estimators=9, total= 8.9min\n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=6, total= 8.2min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=6, total= 8.2min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=4 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=9, total= 8.7min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=3, clf__estimator__n_estimators=9, total= 8.7min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=6 \n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=4, total= 7.8min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=9 \n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=6, total= 8.1min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=6, total= 8.1min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=9, total= 5.0min\n[CV] clf__estimator__min_samples_split=5, clf__estimator__n_estimators=9, total= 4.9min\n"

],

[

"# display the best performing parameters\ncv_forest.best_params_",

"_____no_output_____"

]

],

[

[

"### 7. Test your model\nShow the accuracy, precision, and recall of the tuned model. \n\nSince this project focuses on code quality, process, and pipelines, there is no minimum performance metric needed to pass. However, make sure to fine tune your models for accuracy, precision and recall to make your project stand out - especially for your portfolio!",

"_____no_output_____"

]

],

[

[

"# predict responses for improved model\nY_pred_cv = cv_forest.predict(X_test)",

"_____no_output_____"

],

[

"# print classification_report\nprint(classification_report(y_test, Y_pred_cv, target_names = y_test.columns, digits = 2))",

" precision recall f1-score support\n\n related 0.87 0.89 0.88 5105\n request 0.79 0.39 0.52 1140\n offer 0.00 0.00 0.00 33\n aid_related 0.74 0.55 0.63 2739\n medical_help 0.60 0.09 0.15 546\n medical_products 0.70 0.08 0.14 355\n search_and_rescue 0.53 0.05 0.09 173\n security 0.00 0.00 0.00 123\n military 0.56 0.09 0.15 226\n child_alone 0.00 0.00 0.00 0\n water 0.81 0.32 0.46 414\n food 0.82 0.43 0.56 727\n shelter 0.79 0.26 0.39 592\n clothing 1.00 0.05 0.10 95\n money 0.78 0.09 0.16 161\n missing_people 0.00 0.00 0.00 86\n refugees 0.53 0.08 0.14 204\n death 0.80 0.15 0.25 313\n other_aid 0.42 0.05 0.09 858\ninfrastructure_related 0.31 0.02 0.03 445\n transport 0.66 0.11 0.18 313\n buildings 0.60 0.09 0.15 353\n electricity 0.67 0.09 0.16 132\n tools 0.00 0.00 0.00 34\n hospitals 0.00 0.00 0.00 63\n shops 0.00 0.00 0.00 26\n aid_centers 0.00 0.00 0.00 85\n other_infrastructure 0.17 0.01 0.01 307\n weather_related 0.82 0.57 0.67 1864\n floods 0.84 0.28 0.42 547\n storm 0.77 0.37 0.50 641\n fire 0.33 0.01 0.03 71\n earthquake 0.87 0.66 0.75 626\n cold 0.68 0.16 0.25 122\n other_weather 0.47 0.05 0.10 346\n direct_report 0.72 0.29 0.41 1294\n\n avg / total 0.73 0.46 0.52 21159\n\n"

]

],

[

[

"### 8. Try improving your model further. Here are a few ideas:\n* try other machine learning algorithms\n* add other features besides the TF-IDF",

"_____no_output_____"

],

[

"I'm going to use the KNeighborsClassifier like in my previous submission and see how well it performs in this case.",

"_____no_output_____"

]

],

[

[

"# using KNeighborsClassifier\npipeline_knn = Pipeline([\n ('vect', CountVectorizer(tokenizer = tokenize)),\n ('tfidf', TfidfTransformer()),\n ('clf', MultiOutputClassifier(KNeighborsClassifier()))\n])",

"_____no_output_____"

],

[

"# train KNeighborsClassifier model\npipeline_knn.fit(X_train, y_train)",

"_____no_output_____"

],

[

"# predict responses for KNeighborsClassifier model\nY_pred_knn = pipeline_knn.predict(X_test)",

"_____no_output_____"

],

[

"# print classification_report\nprint(classification_report(y_test, Y_pred_knn, target_names = y_test.columns, digits = 2))",

" precision recall f1-score support\n\n related 0.80 0.99 0.88 5105\n request 0.75 0.09 0.16 1140\n offer 0.00 0.00 0.00 33\n aid_related 0.78 0.05 0.09 2739\n medical_help 0.00 0.00 0.00 546\n medical_products 0.50 0.00 0.01 355\n search_and_rescue 0.00 0.00 0.00 173\n security 0.00 0.00 0.00 123\n military 0.00 0.00 0.00 226\n child_alone 0.00 0.00 0.00 0\n water 0.73 0.05 0.09 414\n food 0.75 0.07 0.12 727\n shelter 0.84 0.04 0.07 592\n clothing 0.50 0.02 0.04 95\n money 0.75 0.02 0.04 161\n missing_people 0.00 0.00 0.00 86\n refugees 0.00 0.00 0.00 204\n death 0.78 0.02 0.04 313\n other_aid 0.50 0.01 0.02 858\ninfrastructure_related 0.50 0.00 0.00 445\n transport 0.00 0.00 0.00 313\n buildings 1.00 0.01 0.02 353\n electricity 0.00 0.00 0.00 132\n tools 0.00 0.00 0.00 34\n hospitals 0.00 0.00 0.00 63\n shops 0.00 0.00 0.00 26\n aid_centers 0.00 0.00 0.00 85\n other_infrastructure 0.00 0.00 0.00 307\n weather_related 0.80 0.06 0.11 1864\n floods 0.50 0.00 0.00 547\n storm 0.75 0.01 0.03 641\n fire 0.00 0.00 0.00 71\n earthquake 0.81 0.14 0.24 626\n cold 1.00 0.01 0.02 122\n other_weather 0.75 0.01 0.02 346\n direct_report 0.73 0.06 0.11 1294\n\n avg / total 0.67 0.27 0.27 21159\n\n"

]

],

[

[

"### 9. Export your model as a pickle file",

"_____no_output_____"

]

],

[

[

"pickle_out = open('model.pkl','wb')\npickle.dump(cv_forest, pickle_out)\npickle_out.close()",

"_____no_output_____"

]

],

[

[

"### 10. Use this notebook to complete `train.py`\nUse the template file attached in the Resources folder to write a script that runs the steps above to create a database and export a model based on a new dataset specified by the user.",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown"

] |

[

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

]

] |

4a301978d2b7fcffd2e4a8064a6f891c1c4fdfff

| 316,244 |

ipynb

|

Jupyter Notebook

|

notebooks/graph_generator.ipynb

|

dschaub95/pyconcorde

|

8b4a7af120071e939445050efdf613412475cb26

|

[

"BSD-3-Clause"

] | null | null | null |

notebooks/graph_generator.ipynb

|

dschaub95/pyconcorde

|

8b4a7af120071e939445050efdf613412475cb26

|

[

"BSD-3-Clause"

] | null | null | null |

notebooks/graph_generator.ipynb

|

dschaub95/pyconcorde

|

8b4a7af120071e939445050efdf613412475cb26

|

[

"BSD-3-Clause"

] | null | null | null | 215.425068 | 101,302 | 0.806169 |

[

[

[

"%load_ext autoreload\n%autoreload 2",

"_____no_output_____"

],

[

"from TSP_utils import TSP_solver, TSP_plotter, TSP_generator, TSP_loader\nimport numpy as np\nimport networkx as nx\nimport tqdm\nimport tsplib95\nimport time\nimport sys",

"_____no_output_____"

],

[

"solver = TSP_solver()",

"_____no_output_____"

],

[

"folder = 'test_sets'\nNUM_NODES = 10\ngenerator = TSP_generator(g_type='tsp_2d', num_min=NUM_NODES, num_max=NUM_NODES)\nnum_graphs = 1000\ngraph_list = generator.gen_graphs(num_graphs=num_graphs)\nsave_path = f'../{folder}/synthetic_n_{NUM_NODES}_{num_graphs}'\ngenerator.save_nx_as_tsp(graph_list, save_path, scale=6, start_index=0)\nsolver.add_lengths_and_sols(data_dir=save_path, scale_factor=10**6, lengths_name='lengths.txt', sols_name='solutions.txt')",

"TSP_Problem_0.tsp\nTSP_Problem_1.tsp\nTSP_Problem_2.tsp\nTSP_Problem_3.tsp\nTSP_Problem_4.tsp\nTSP_Problem_5.tsp\nTSP_Problem_6.tsp\nTSP_Problem_7.tsp\nTSP_Problem_8.tsp\nTSP_Problem_9.tsp\nTSP_Problem_10.tsp\nTSP_Problem_11.tsp\nTSP_Problem_12.tsp\nTSP_Problem_13.tsp\nTSP_Problem_14.tsp\nTSP_Problem_15.tsp\nTSP_Problem_16.tsp\nTSP_Problem_17.tsp\nTSP_Problem_18.tsp\nTSP_Problem_19.tsp\nTSP_Problem_20.tsp\nTSP_Problem_21.tsp\nTSP_Problem_22.tsp\nTSP_Problem_23.tsp\nTSP_Problem_24.tsp\nTSP_Problem_25.tsp\nTSP_Problem_26.tsp\nTSP_Problem_27.tsp\nTSP_Problem_28.tsp\nTSP_Problem_29.tsp\nTSP_Problem_30.tsp\nTSP_Problem_31.tsp\nTSP_Problem_32.tsp\nTSP_Problem_33.tsp\nTSP_Problem_34.tsp\nTSP_Problem_35.tsp\nTSP_Problem_36.tsp\nTSP_Problem_37.tsp\nTSP_Problem_38.tsp\nTSP_Problem_39.tsp\nTSP_Problem_40.tsp\nTSP_Problem_41.tsp\nTSP_Problem_42.tsp\nTSP_Problem_43.tsp\nTSP_Problem_44.tsp\nTSP_Problem_45.tsp\nTSP_Problem_46.tsp\nTSP_Problem_47.tsp\nTSP_Problem_48.tsp\nTSP_Problem_49.tsp\nTSP_Problem_50.tsp\nTSP_Problem_51.tsp\nTSP_Problem_52.tsp\nTSP_Problem_53.tsp\nTSP_Problem_54.tsp\nTSP_Problem_55.tsp\nTSP_Problem_56.tsp\nTSP_Problem_57.tsp\nTSP_Problem_58.tsp\nTSP_Problem_59.tsp\nTSP_Problem_60.tsp\nTSP_Problem_61.tsp\nTSP_Problem_62.tsp\nTSP_Problem_63.tsp\nTSP_Problem_64.tsp\nTSP_Problem_65.tsp\nTSP_Problem_66.tsp\nTSP_Problem_67.tsp\nTSP_Problem_68.tsp\nTSP_Problem_69.tsp\nTSP_Problem_70.tsp\nTSP_Problem_71.tsp\nTSP_Problem_72.tsp\nTSP_Problem_73.tsp\nTSP_Problem_74.tsp\nTSP_Problem_75.tsp\nTSP_Problem_76.tsp\nTSP_Problem_77.tsp\nTSP_Problem_78.tsp\nTSP_Problem_79.tsp\nTSP_Problem_80.tsp\nTSP_Problem_81.tsp\nTSP_Problem_82.tsp\nTSP_Problem_83.tsp\nTSP_Problem_84.tsp\nTSP_Problem_85.tsp\nTSP_Problem_86.tsp\nTSP_Problem_87.tsp\nTSP_Problem_88.tsp\nTSP_Problem_89.tsp\nTSP_Problem_90.tsp\nTSP_Problem_91.tsp\nTSP_Problem_92.tsp\nTSP_Problem_93.tsp\nTSP_Problem_94.tsp\nTSP_Problem_95.tsp\nTSP_Problem_96.tsp\nTSP_Problem_97.tsp\nTSP_Problem_98.tsp\nTSP_Problem_99.tsp\nTSP_Problem_100.tsp\nTSP_Problem_101.tsp\nTSP_Problem_102.tsp\nTSP_Problem_103.tsp\nTSP_Problem_104.tsp\nTSP_Problem_105.tsp\nTSP_Problem_106.tsp\nTSP_Problem_107.tsp\nTSP_Problem_108.tsp\nTSP_Problem_109.tsp\nTSP_Problem_110.tsp\nTSP_Problem_111.tsp\nTSP_Problem_112.tsp\nTSP_Problem_113.tsp\nTSP_Problem_114.tsp\nTSP_Problem_115.tsp\nTSP_Problem_116.tsp\nTSP_Problem_117.tsp\nTSP_Problem_118.tsp\nTSP_Problem_119.tsp\nTSP_Problem_120.tsp\nTSP_Problem_121.tsp\nTSP_Problem_122.tsp\nTSP_Problem_123.tsp\nTSP_Problem_124.tsp\nTSP_Problem_125.tsp\nTSP_Problem_126.tsp\nTSP_Problem_127.tsp\nTSP_Problem_128.tsp\nTSP_Problem_129.tsp\nTSP_Problem_130.tsp\nTSP_Problem_131.tsp\nTSP_Problem_132.tsp\nTSP_Problem_133.tsp\nTSP_Problem_134.tsp\nTSP_Problem_135.tsp\nTSP_Problem_136.tsp\nTSP_Problem_137.tsp\nTSP_Problem_138.tsp\nTSP_Problem_139.tsp\nTSP_Problem_140.tsp\nTSP_Problem_141.tsp\nTSP_Problem_142.tsp\nTSP_Problem_143.tsp\nTSP_Problem_144.tsp\nTSP_Problem_145.tsp\nTSP_Problem_146.tsp\nTSP_Problem_147.tsp\nTSP_Problem_148.tsp\nTSP_Problem_149.tsp\nTSP_Problem_150.tsp\nTSP_Problem_151.tsp\nTSP_Problem_152.tsp\nTSP_Problem_153.tsp\nTSP_Problem_154.tsp\nTSP_Problem_155.tsp\nTSP_Problem_156.tsp\nTSP_Problem_157.tsp\nTSP_Problem_158.tsp\nTSP_Problem_159.tsp\nTSP_Problem_160.tsp\nTSP_Problem_161.tsp\nTSP_Problem_162.tsp\nTSP_Problem_163.tsp\nTSP_Problem_164.tsp\nTSP_Problem_165.tsp\nTSP_Problem_166.tsp\nTSP_Problem_167.tsp\nTSP_Problem_168.tsp\nTSP_Problem_169.tsp\nTSP_Problem_170.tsp\nTSP_Problem_171.tsp\nTSP_Problem_172.tsp\nTSP_Problem_173.tsp\nTSP_Problem_174.tsp\nTSP_Problem_175.tsp\nTSP_Problem_176.tsp\nTSP_Problem_177.tsp\nTSP_Problem_178.tsp\nTSP_Problem_179.tsp\nTSP_Problem_180.tsp\nTSP_Problem_181.tsp\nTSP_Problem_182.tsp\nTSP_Problem_183.tsp\nTSP_Problem_184.tsp\nTSP_Problem_185.tsp\nTSP_Problem_186.tsp\nTSP_Problem_187.tsp\nTSP_Problem_188.tsp\nTSP_Problem_189.tsp\nTSP_Problem_190.tsp\nTSP_Problem_191.tsp\nTSP_Problem_192.tsp\nTSP_Problem_193.tsp\nTSP_Problem_194.tsp\nTSP_Problem_195.tsp\nTSP_Problem_196.tsp\nTSP_Problem_197.tsp\nTSP_Problem_198.tsp\nTSP_Problem_199.tsp\nTSP_Problem_200.tsp\nTSP_Problem_201.tsp\nTSP_Problem_202.tsp\nTSP_Problem_203.tsp\nTSP_Problem_204.tsp\nTSP_Problem_205.tsp\nTSP_Problem_206.tsp\nTSP_Problem_207.tsp\nTSP_Problem_208.tsp\nTSP_Problem_209.tsp\nTSP_Problem_210.tsp\nTSP_Problem_211.tsp\nTSP_Problem_212.tsp\nTSP_Problem_213.tsp\nTSP_Problem_214.tsp\nTSP_Problem_215.tsp\nTSP_Problem_216.tsp\nTSP_Problem_217.tsp\nTSP_Problem_218.tsp\nTSP_Problem_219.tsp\nTSP_Problem_220.tsp\nTSP_Problem_221.tsp\nTSP_Problem_222.tsp\nTSP_Problem_223.tsp\nTSP_Problem_224.tsp\nTSP_Problem_225.tsp\nTSP_Problem_226.tsp\nTSP_Problem_227.tsp\nTSP_Problem_228.tsp\nTSP_Problem_229.tsp\nTSP_Problem_230.tsp\nTSP_Problem_231.tsp\nTSP_Problem_232.tsp\nTSP_Problem_233.tsp\nTSP_Problem_234.tsp\nTSP_Problem_235.tsp\nTSP_Problem_236.tsp\nTSP_Problem_237.tsp\nTSP_Problem_238.tsp\nTSP_Problem_239.tsp\nTSP_Problem_240.tsp\nTSP_Problem_241.tsp\nTSP_Problem_242.tsp\nTSP_Problem_243.tsp\nTSP_Problem_244.tsp\nTSP_Problem_245.tsp\nTSP_Problem_246.tsp\nTSP_Problem_247.tsp\nTSP_Problem_248.tsp\nTSP_Problem_249.tsp\nTSP_Problem_250.tsp\nTSP_Problem_251.tsp\nTSP_Problem_252.tsp\nTSP_Problem_253.tsp\nTSP_Problem_254.tsp\nTSP_Problem_255.tsp\nTSP_Problem_256.tsp\nTSP_Problem_257.tsp\nTSP_Problem_258.tsp\nTSP_Problem_259.tsp\nTSP_Problem_260.tsp\nTSP_Problem_261.tsp\nTSP_Problem_262.tsp\nTSP_Problem_263.tsp\nTSP_Problem_264.tsp\nTSP_Problem_265.tsp\nTSP_Problem_266.tsp\nTSP_Problem_267.tsp\nTSP_Problem_268.tsp\nTSP_Problem_269.tsp\nTSP_Problem_270.tsp\nTSP_Problem_271.tsp\nTSP_Problem_272.tsp\nTSP_Problem_273.tsp\nTSP_Problem_274.tsp\nTSP_Problem_275.tsp\nTSP_Problem_276.tsp\nTSP_Problem_277.tsp\nTSP_Problem_278.tsp\nTSP_Problem_279.tsp\nTSP_Problem_280.tsp\nTSP_Problem_281.tsp\nTSP_Problem_282.tsp\nTSP_Problem_283.tsp\nTSP_Problem_284.tsp\nTSP_Problem_285.tsp\nTSP_Problem_286.tsp\nTSP_Problem_287.tsp\nTSP_Problem_288.tsp\nTSP_Problem_289.tsp\nTSP_Problem_290.tsp\nTSP_Problem_291.tsp\nTSP_Problem_292.tsp\nTSP_Problem_293.tsp\nTSP_Problem_294.tsp\nTSP_Problem_295.tsp\nTSP_Problem_296.tsp\nTSP_Problem_297.tsp\nTSP_Problem_298.tsp\nTSP_Problem_299.tsp\nTSP_Problem_300.tsp\nTSP_Problem_301.tsp\nTSP_Problem_302.tsp\nTSP_Problem_303.tsp\nTSP_Problem_304.tsp\nTSP_Problem_305.tsp\nTSP_Problem_306.tsp\nTSP_Problem_307.tsp\nTSP_Problem_308.tsp\nTSP_Problem_309.tsp\nTSP_Problem_310.tsp\nTSP_Problem_311.tsp\nTSP_Problem_312.tsp\nTSP_Problem_313.tsp\nTSP_Problem_314.tsp\nTSP_Problem_315.tsp\nTSP_Problem_316.tsp\nTSP_Problem_317.tsp\nTSP_Problem_318.tsp\nTSP_Problem_319.tsp\nTSP_Problem_320.tsp\nTSP_Problem_321.tsp\nTSP_Problem_322.tsp\nTSP_Problem_323.tsp\nTSP_Problem_324.tsp\nTSP_Problem_325.tsp\nTSP_Problem_326.tsp\nTSP_Problem_327.tsp\nTSP_Problem_328.tsp\nTSP_Problem_329.tsp\nTSP_Problem_330.tsp\nTSP_Problem_331.tsp\nTSP_Problem_332.tsp\nTSP_Problem_333.tsp\nTSP_Problem_334.tsp\nTSP_Problem_335.tsp\nTSP_Problem_336.tsp\nTSP_Problem_337.tsp\nTSP_Problem_338.tsp\nTSP_Problem_339.tsp\nTSP_Problem_340.tsp\nTSP_Problem_341.tsp\nTSP_Problem_342.tsp\nTSP_Problem_343.tsp\nTSP_Problem_344.tsp\nTSP_Problem_345.tsp\nTSP_Problem_346.tsp\nTSP_Problem_347.tsp\nTSP_Problem_348.tsp\nTSP_Problem_349.tsp\nTSP_Problem_350.tsp\nTSP_Problem_351.tsp\nTSP_Problem_352.tsp\nTSP_Problem_353.tsp\nTSP_Problem_354.tsp\nTSP_Problem_355.tsp\nTSP_Problem_356.tsp\nTSP_Problem_357.tsp\nTSP_Problem_358.tsp\nTSP_Problem_359.tsp\nTSP_Problem_360.tsp\nTSP_Problem_361.tsp\nTSP_Problem_362.tsp\nTSP_Problem_363.tsp\nTSP_Problem_364.tsp\nTSP_Problem_365.tsp\nTSP_Problem_366.tsp\nTSP_Problem_367.tsp\nTSP_Problem_368.tsp\nTSP_Problem_369.tsp\nTSP_Problem_370.tsp\nTSP_Problem_371.tsp\nTSP_Problem_372.tsp\nTSP_Problem_373.tsp\nTSP_Problem_374.tsp\nTSP_Problem_375.tsp\nTSP_Problem_376.tsp\nTSP_Problem_377.tsp\nTSP_Problem_378.tsp\nTSP_Problem_379.tsp\nTSP_Problem_380.tsp\nTSP_Problem_381.tsp\nTSP_Problem_382.tsp\nTSP_Problem_383.tsp\nTSP_Problem_384.tsp\nTSP_Problem_385.tsp\nTSP_Problem_386.tsp\nTSP_Problem_387.tsp\nTSP_Problem_388.tsp\nTSP_Problem_389.tsp\nTSP_Problem_390.tsp\nTSP_Problem_391.tsp\nTSP_Problem_392.tsp\nTSP_Problem_393.tsp\nTSP_Problem_394.tsp\nTSP_Problem_395.tsp\nTSP_Problem_396.tsp\nTSP_Problem_397.tsp\nTSP_Problem_398.tsp\nTSP_Problem_399.tsp\nTSP_Problem_400.tsp\nTSP_Problem_401.tsp\nTSP_Problem_402.tsp\nTSP_Problem_403.tsp\nTSP_Problem_404.tsp\nTSP_Problem_405.tsp\nTSP_Problem_406.tsp\nTSP_Problem_407.tsp\nTSP_Problem_408.tsp\nTSP_Problem_409.tsp\nTSP_Problem_410.tsp\nTSP_Problem_411.tsp\nTSP_Problem_412.tsp\nTSP_Problem_413.tsp\nTSP_Problem_414.tsp\nTSP_Problem_415.tsp\nTSP_Problem_416.tsp\nTSP_Problem_417.tsp\nTSP_Problem_418.tsp\nTSP_Problem_419.tsp\nTSP_Problem_420.tsp\nTSP_Problem_421.tsp\nTSP_Problem_422.tsp\nTSP_Problem_423.tsp\nTSP_Problem_424.tsp\nTSP_Problem_425.tsp\nTSP_Problem_426.tsp\nTSP_Problem_427.tsp\nTSP_Problem_428.tsp\nTSP_Problem_429.tsp\nTSP_Problem_430.tsp\nTSP_Problem_431.tsp\nTSP_Problem_432.tsp\nTSP_Problem_433.tsp\nTSP_Problem_434.tsp\nTSP_Problem_435.tsp\nTSP_Problem_436.tsp\nTSP_Problem_437.tsp\nTSP_Problem_438.tsp\nTSP_Problem_439.tsp\nTSP_Problem_440.tsp\nTSP_Problem_441.tsp\nTSP_Problem_442.tsp\nTSP_Problem_443.tsp\nTSP_Problem_444.tsp\nTSP_Problem_445.tsp\nTSP_Problem_446.tsp\nTSP_Problem_447.tsp\nTSP_Problem_448.tsp\nTSP_Problem_449.tsp\nTSP_Problem_450.tsp\nTSP_Problem_451.tsp\nTSP_Problem_452.tsp\nTSP_Problem_453.tsp\nTSP_Problem_454.tsp\nTSP_Problem_455.tsp\nTSP_Problem_456.tsp\nTSP_Problem_457.tsp\nTSP_Problem_458.tsp\nTSP_Problem_459.tsp\nTSP_Problem_460.tsp\nTSP_Problem_461.tsp\nTSP_Problem_462.tsp\nTSP_Problem_463.tsp\nTSP_Problem_464.tsp\nTSP_Problem_465.tsp\nTSP_Problem_466.tsp\nTSP_Problem_467.tsp\nTSP_Problem_468.tsp\nTSP_Problem_469.tsp\nTSP_Problem_470.tsp\nTSP_Problem_471.tsp\nTSP_Problem_472.tsp\nTSP_Problem_473.tsp\nTSP_Problem_474.tsp\nTSP_Problem_475.tsp\nTSP_Problem_476.tsp\nTSP_Problem_477.tsp\nTSP_Problem_478.tsp\nTSP_Problem_479.tsp\nTSP_Problem_480.tsp\nTSP_Problem_481.tsp\nTSP_Problem_482.tsp\nTSP_Problem_483.tsp\nTSP_Problem_484.tsp\nTSP_Problem_485.tsp\nTSP_Problem_486.tsp\nTSP_Problem_487.tsp\nTSP_Problem_488.tsp\nTSP_Problem_489.tsp\nTSP_Problem_490.tsp\nTSP_Problem_491.tsp\nTSP_Problem_492.tsp\nTSP_Problem_493.tsp\nTSP_Problem_494.tsp\nTSP_Problem_495.tsp\nTSP_Problem_496.tsp\nTSP_Problem_497.tsp\nTSP_Problem_498.tsp\nTSP_Problem_499.tsp\nTSP_Problem_500.tsp\nTSP_Problem_501.tsp\nTSP_Problem_502.tsp\nTSP_Problem_503.tsp\nTSP_Problem_504.tsp\nTSP_Problem_505.tsp\nTSP_Problem_506.tsp\nTSP_Problem_507.tsp\nTSP_Problem_508.tsp\nTSP_Problem_509.tsp\nTSP_Problem_510.tsp\nTSP_Problem_511.tsp\nTSP_Problem_512.tsp\nTSP_Problem_513.tsp\nTSP_Problem_514.tsp\nTSP_Problem_515.tsp\nTSP_Problem_516.tsp\nTSP_Problem_517.tsp\nTSP_Problem_518.tsp\nTSP_Problem_519.tsp\nTSP_Problem_520.tsp\nTSP_Problem_521.tsp\nTSP_Problem_522.tsp\nTSP_Problem_523.tsp\nTSP_Problem_524.tsp\nTSP_Problem_525.tsp\nTSP_Problem_526.tsp\nTSP_Problem_527.tsp\nTSP_Problem_528.tsp\nTSP_Problem_529.tsp\nTSP_Problem_530.tsp\nTSP_Problem_531.tsp\nTSP_Problem_532.tsp\nTSP_Problem_533.tsp\nTSP_Problem_534.tsp\nTSP_Problem_535.tsp\nTSP_Problem_536.tsp\nTSP_Problem_537.tsp\nTSP_Problem_538.tsp\nTSP_Problem_539.tsp\nTSP_Problem_540.tsp\nTSP_Problem_541.tsp\nTSP_Problem_542.tsp\nTSP_Problem_543.tsp\nTSP_Problem_544.tsp\nTSP_Problem_545.tsp\nTSP_Problem_546.tsp\nTSP_Problem_547.tsp\nTSP_Problem_548.tsp\nTSP_Problem_549.tsp\nTSP_Problem_550.tsp\nTSP_Problem_551.tsp\nTSP_Problem_552.tsp\nTSP_Problem_553.tsp\nTSP_Problem_554.tsp\nTSP_Problem_555.tsp\nTSP_Problem_556.tsp\nTSP_Problem_557.tsp\nTSP_Problem_558.tsp\nTSP_Problem_559.tsp\nTSP_Problem_560.tsp\nTSP_Problem_561.tsp\nTSP_Problem_562.tsp\nTSP_Problem_563.tsp\nTSP_Problem_564.tsp\nTSP_Problem_565.tsp\nTSP_Problem_566.tsp\nTSP_Problem_567.tsp\nTSP_Problem_568.tsp\nTSP_Problem_569.tsp\nTSP_Problem_570.tsp\nTSP_Problem_571.tsp\nTSP_Problem_572.tsp\nTSP_Problem_573.tsp\nTSP_Problem_574.tsp\nTSP_Problem_575.tsp\nTSP_Problem_576.tsp\nTSP_Problem_577.tsp\nTSP_Problem_578.tsp\nTSP_Problem_579.tsp\nTSP_Problem_580.tsp\nTSP_Problem_581.tsp\nTSP_Problem_582.tsp\nTSP_Problem_583.tsp\nTSP_Problem_584.tsp\nTSP_Problem_585.tsp\nTSP_Problem_586.tsp\nTSP_Problem_587.tsp\nTSP_Problem_588.tsp\nTSP_Problem_589.tsp\nTSP_Problem_590.tsp\nTSP_Problem_591.tsp\nTSP_Problem_592.tsp\nTSP_Problem_593.tsp\nTSP_Problem_594.tsp\nTSP_Problem_595.tsp\nTSP_Problem_596.tsp\nTSP_Problem_597.tsp\nTSP_Problem_598.tsp\nTSP_Problem_599.tsp\nTSP_Problem_600.tsp\nTSP_Problem_601.tsp\nTSP_Problem_602.tsp\nTSP_Problem_603.tsp\nTSP_Problem_604.tsp\nTSP_Problem_605.tsp\nTSP_Problem_606.tsp\nTSP_Problem_607.tsp\nTSP_Problem_608.tsp\nTSP_Problem_609.tsp\nTSP_Problem_610.tsp\nTSP_Problem_611.tsp\nTSP_Problem_612.tsp\nTSP_Problem_613.tsp\nTSP_Problem_614.tsp\nTSP_Problem_615.tsp\nTSP_Problem_616.tsp\nTSP_Problem_617.tsp\nTSP_Problem_618.tsp\nTSP_Problem_619.tsp\nTSP_Problem_620.tsp\nTSP_Problem_621.tsp\nTSP_Problem_622.tsp\nTSP_Problem_623.tsp\nTSP_Problem_624.tsp\nTSP_Problem_625.tsp\nTSP_Problem_626.tsp\nTSP_Problem_627.tsp\nTSP_Problem_628.tsp\nTSP_Problem_629.tsp\nTSP_Problem_630.tsp\nTSP_Problem_631.tsp\nTSP_Problem_632.tsp\nTSP_Problem_633.tsp\nTSP_Problem_634.tsp\nTSP_Problem_635.tsp\nTSP_Problem_636.tsp\nTSP_Problem_637.tsp\nTSP_Problem_638.tsp\nTSP_Problem_639.tsp\nTSP_Problem_640.tsp\nTSP_Problem_641.tsp\nTSP_Problem_642.tsp\nTSP_Problem_643.tsp\nTSP_Problem_644.tsp\nTSP_Problem_645.tsp\nTSP_Problem_646.tsp\nTSP_Problem_647.tsp\nTSP_Problem_648.tsp\nTSP_Problem_649.tsp\nTSP_Problem_650.tsp\nTSP_Problem_651.tsp\nTSP_Problem_652.tsp\nTSP_Problem_653.tsp\nTSP_Problem_654.tsp\nTSP_Problem_655.tsp\nTSP_Problem_656.tsp\nTSP_Problem_657.tsp\nTSP_Problem_658.tsp\nTSP_Problem_659.tsp\nTSP_Problem_660.tsp\nTSP_Problem_661.tsp\nTSP_Problem_662.tsp\nTSP_Problem_663.tsp\nTSP_Problem_664.tsp\nTSP_Problem_665.tsp\nTSP_Problem_666.tsp\nTSP_Problem_667.tsp\nTSP_Problem_668.tsp\nTSP_Problem_669.tsp\nTSP_Problem_670.tsp\nTSP_Problem_671.tsp\nTSP_Problem_672.tsp\nTSP_Problem_673.tsp\nTSP_Problem_674.tsp\nTSP_Problem_675.tsp\nTSP_Problem_676.tsp\nTSP_Problem_677.tsp\nTSP_Problem_678.tsp\nTSP_Problem_679.tsp\nTSP_Problem_680.tsp\nTSP_Problem_681.tsp\nTSP_Problem_682.tsp\nTSP_Problem_683.tsp\nTSP_Problem_684.tsp\nTSP_Problem_685.tsp\nTSP_Problem_686.tsp\nTSP_Problem_687.tsp\nTSP_Problem_688.tsp\nTSP_Problem_689.tsp\nTSP_Problem_690.tsp\nTSP_Problem_691.tsp\nTSP_Problem_692.tsp\nTSP_Problem_693.tsp\nTSP_Problem_694.tsp\nTSP_Problem_695.tsp\nTSP_Problem_696.tsp\nTSP_Problem_697.tsp\nTSP_Problem_698.tsp\nTSP_Problem_699.tsp\nTSP_Problem_700.tsp\nTSP_Problem_701.tsp\nTSP_Problem_702.tsp\nTSP_Problem_703.tsp\nTSP_Problem_704.tsp\nTSP_Problem_705.tsp\nTSP_Problem_706.tsp\nTSP_Problem_707.tsp\nTSP_Problem_708.tsp\nTSP_Problem_709.tsp\nTSP_Problem_710.tsp\nTSP_Problem_711.tsp\nTSP_Problem_712.tsp\nTSP_Problem_713.tsp\nTSP_Problem_714.tsp\nTSP_Problem_715.tsp\nTSP_Problem_716.tsp\nTSP_Problem_717.tsp\nTSP_Problem_718.tsp\nTSP_Problem_719.tsp\nTSP_Problem_720.tsp\nTSP_Problem_721.tsp\nTSP_Problem_722.tsp\nTSP_Problem_723.tsp\nTSP_Problem_724.tsp\nTSP_Problem_725.tsp\nTSP_Problem_726.tsp\nTSP_Problem_727.tsp\nTSP_Problem_728.tsp\nTSP_Problem_729.tsp\nTSP_Problem_730.tsp\nTSP_Problem_731.tsp\nTSP_Problem_732.tsp\nTSP_Problem_733.tsp\nTSP_Problem_734.tsp\nTSP_Problem_735.tsp\nTSP_Problem_736.tsp\nTSP_Problem_737.tsp\nTSP_Problem_738.tsp\nTSP_Problem_739.tsp\nTSP_Problem_740.tsp\nTSP_Problem_741.tsp\nTSP_Problem_742.tsp\nTSP_Problem_743.tsp\nTSP_Problem_744.tsp\nTSP_Problem_745.tsp\nTSP_Problem_746.tsp\nTSP_Problem_747.tsp\nTSP_Problem_748.tsp\nTSP_Problem_749.tsp\nTSP_Problem_750.tsp\nTSP_Problem_751.tsp\nTSP_Problem_752.tsp\nTSP_Problem_753.tsp\nTSP_Problem_754.tsp\nTSP_Problem_755.tsp\nTSP_Problem_756.tsp\nTSP_Problem_757.tsp\nTSP_Problem_758.tsp\nTSP_Problem_759.tsp\nTSP_Problem_760.tsp\nTSP_Problem_761.tsp\nTSP_Problem_762.tsp\nTSP_Problem_763.tsp\nTSP_Problem_764.tsp\nTSP_Problem_765.tsp\nTSP_Problem_766.tsp\nTSP_Problem_767.tsp\nTSP_Problem_768.tsp\nTSP_Problem_769.tsp\nTSP_Problem_770.tsp\nTSP_Problem_771.tsp\nTSP_Problem_772.tsp\nTSP_Problem_773.tsp\nTSP_Problem_774.tsp\nTSP_Problem_775.tsp\nTSP_Problem_776.tsp\nTSP_Problem_777.tsp\nTSP_Problem_778.tsp\nTSP_Problem_779.tsp\nTSP_Problem_780.tsp\nTSP_Problem_781.tsp\nTSP_Problem_782.tsp\nTSP_Problem_783.tsp\nTSP_Problem_784.tsp\nTSP_Problem_785.tsp\nTSP_Problem_786.tsp\nTSP_Problem_787.tsp\nTSP_Problem_788.tsp\nTSP_Problem_789.tsp\nTSP_Problem_790.tsp\nTSP_Problem_791.tsp\nTSP_Problem_792.tsp\nTSP_Problem_793.tsp\nTSP_Problem_794.tsp\nTSP_Problem_795.tsp\nTSP_Problem_796.tsp\nTSP_Problem_797.tsp\nTSP_Problem_798.tsp\nTSP_Problem_799.tsp\nTSP_Problem_800.tsp\nTSP_Problem_801.tsp\nTSP_Problem_802.tsp\nTSP_Problem_803.tsp\nTSP_Problem_804.tsp\nTSP_Problem_805.tsp\nTSP_Problem_806.tsp\nTSP_Problem_807.tsp\nTSP_Problem_808.tsp\nTSP_Problem_809.tsp\nTSP_Problem_810.tsp\nTSP_Problem_811.tsp\nTSP_Problem_812.tsp\nTSP_Problem_813.tsp\nTSP_Problem_814.tsp\nTSP_Problem_815.tsp\nTSP_Problem_816.tsp\nTSP_Problem_817.tsp\nTSP_Problem_818.tsp\nTSP_Problem_819.tsp\nTSP_Problem_820.tsp\nTSP_Problem_821.tsp\nTSP_Problem_822.tsp\nTSP_Problem_823.tsp\nTSP_Problem_824.tsp\nTSP_Problem_825.tsp\nTSP_Problem_826.tsp\nTSP_Problem_827.tsp\nTSP_Problem_828.tsp\nTSP_Problem_829.tsp\nTSP_Problem_830.tsp\nTSP_Problem_831.tsp\nTSP_Problem_832.tsp\nTSP_Problem_833.tsp\nTSP_Problem_834.tsp\nTSP_Problem_835.tsp\nTSP_Problem_836.tsp\nTSP_Problem_837.tsp\nTSP_Problem_838.tsp\nTSP_Problem_839.tsp\nTSP_Problem_840.tsp\nTSP_Problem_841.tsp\nTSP_Problem_842.tsp\nTSP_Problem_843.tsp\nTSP_Problem_844.tsp\nTSP_Problem_845.tsp\nTSP_Problem_846.tsp\nTSP_Problem_847.tsp\nTSP_Problem_848.tsp\nTSP_Problem_849.tsp\nTSP_Problem_850.tsp\nTSP_Problem_851.tsp\nTSP_Problem_852.tsp\nTSP_Problem_853.tsp\nTSP_Problem_854.tsp\nTSP_Problem_855.tsp\nTSP_Problem_856.tsp\nTSP_Problem_857.tsp\nTSP_Problem_858.tsp\nTSP_Problem_859.tsp\nTSP_Problem_860.tsp\nTSP_Problem_861.tsp\nTSP_Problem_862.tsp\nTSP_Problem_863.tsp\nTSP_Problem_864.tsp\nTSP_Problem_865.tsp\nTSP_Problem_866.tsp\nTSP_Problem_867.tsp\nTSP_Problem_868.tsp\nTSP_Problem_869.tsp\nTSP_Problem_870.tsp\nTSP_Problem_871.tsp\nTSP_Problem_872.tsp\nTSP_Problem_873.tsp\nTSP_Problem_874.tsp\nTSP_Problem_875.tsp\nTSP_Problem_876.tsp\nTSP_Problem_877.tsp\nTSP_Problem_878.tsp\nTSP_Problem_879.tsp\nTSP_Problem_880.tsp\nTSP_Problem_881.tsp\nTSP_Problem_882.tsp\nTSP_Problem_883.tsp\nTSP_Problem_884.tsp\nTSP_Problem_885.tsp\nTSP_Problem_886.tsp\nTSP_Problem_887.tsp\nTSP_Problem_888.tsp\nTSP_Problem_889.tsp\nTSP_Problem_890.tsp\nTSP_Problem_891.tsp\nTSP_Problem_892.tsp\nTSP_Problem_893.tsp\nTSP_Problem_894.tsp\nTSP_Problem_895.tsp\nTSP_Problem_896.tsp\nTSP_Problem_897.tsp\nTSP_Problem_898.tsp\nTSP_Problem_899.tsp\nTSP_Problem_900.tsp\nTSP_Problem_901.tsp\nTSP_Problem_902.tsp\nTSP_Problem_903.tsp\nTSP_Problem_904.tsp\nTSP_Problem_905.tsp\nTSP_Problem_906.tsp\nTSP_Problem_907.tsp\nTSP_Problem_908.tsp\nTSP_Problem_909.tsp\nTSP_Problem_910.tsp\nTSP_Problem_911.tsp\nTSP_Problem_912.tsp\nTSP_Problem_913.tsp\nTSP_Problem_914.tsp\nTSP_Problem_915.tsp\nTSP_Problem_916.tsp\nTSP_Problem_917.tsp\nTSP_Problem_918.tsp\nTSP_Problem_919.tsp\nTSP_Problem_920.tsp\nTSP_Problem_921.tsp\nTSP_Problem_922.tsp\nTSP_Problem_923.tsp\nTSP_Problem_924.tsp\nTSP_Problem_925.tsp\nTSP_Problem_926.tsp\nTSP_Problem_927.tsp\nTSP_Problem_928.tsp\nTSP_Problem_929.tsp\nTSP_Problem_930.tsp\nTSP_Problem_931.tsp\nTSP_Problem_932.tsp\nTSP_Problem_933.tsp\nTSP_Problem_934.tsp\nTSP_Problem_935.tsp\nTSP_Problem_936.tsp\nTSP_Problem_937.tsp\nTSP_Problem_938.tsp\nTSP_Problem_939.tsp\nTSP_Problem_940.tsp\nTSP_Problem_941.tsp\nTSP_Problem_942.tsp\nTSP_Problem_943.tsp\nTSP_Problem_944.tsp\nTSP_Problem_945.tsp\nTSP_Problem_946.tsp\nTSP_Problem_947.tsp\nTSP_Problem_948.tsp\nTSP_Problem_949.tsp\nTSP_Problem_950.tsp\nTSP_Problem_951.tsp\nTSP_Problem_952.tsp\nTSP_Problem_953.tsp\nTSP_Problem_954.tsp\nTSP_Problem_955.tsp\nTSP_Problem_956.tsp\nTSP_Problem_957.tsp\nTSP_Problem_958.tsp\nTSP_Problem_959.tsp\nTSP_Problem_960.tsp\nTSP_Problem_961.tsp\nTSP_Problem_962.tsp\nTSP_Problem_963.tsp\nTSP_Problem_964.tsp\nTSP_Problem_965.tsp\nTSP_Problem_966.tsp\nTSP_Problem_967.tsp\nTSP_Problem_968.tsp\nTSP_Problem_969.tsp\nTSP_Problem_970.tsp\nTSP_Problem_971.tsp\nTSP_Problem_972.tsp\nTSP_Problem_973.tsp\nTSP_Problem_974.tsp\nTSP_Problem_975.tsp\nTSP_Problem_976.tsp\nTSP_Problem_977.tsp\nTSP_Problem_978.tsp\nTSP_Problem_979.tsp\nTSP_Problem_980.tsp\nTSP_Problem_981.tsp\nTSP_Problem_982.tsp\nTSP_Problem_983.tsp\nTSP_Problem_984.tsp\nTSP_Problem_985.tsp\nTSP_Problem_986.tsp\nTSP_Problem_987.tsp\nTSP_Problem_988.tsp\nTSP_Problem_989.tsp\nTSP_Problem_990.tsp\nTSP_Problem_991.tsp\nTSP_Problem_992.tsp\nTSP_Problem_993.tsp\nTSP_Problem_994.tsp\nTSP_Problem_995.tsp\nTSP_Problem_996.tsp\nTSP_Problem_997.tsp\nTSP_Problem_998.tsp\nTSP_Problem_999.tsp\n"

],

[

"NUM_MIN = 20\nNUM_MAX = 20\ngenerator = TSP_generator(g_type='tsp_2d', num_min=NUM_MIN, num_max=NUM_MAX)\nnum_graphs = 50000\ngraph_list = generator.gen_graphs(num_graphs=num_graphs)\nsave_path = f'../training_sets/synthetic_nrange_{NUM_MIN}_{NUM_MAX}_{num_graphs}'\ngenerator.save_nx_as_tsp(graph_list, save_path, scale=6, start_index=0)\nsolver.add_lengths_and_sols(data_dir=save_path, scale_factor=10**6, lengths_name='lengths.txt', sols_name='solutions.txt')",

"TSP_Problem_0.tsp\nTSP_Problem_1.tsp\nTSP_Problem_2.tsp\nTSP_Problem_3.tsp\nTSP_Problem_4.tsp\nTSP_Problem_5.tsp\nTSP_Problem_6.tsp\nTSP_Problem_7.tsp\nTSP_Problem_8.tsp\nTSP_Problem_9.tsp\nTSP_Problem_10.tsp\nTSP_Problem_11.tsp\nTSP_Problem_12.tsp\nTSP_Problem_13.tsp\nTSP_Problem_14.tsp\nTSP_Problem_15.tsp\nTSP_Problem_16.tsp\nTSP_Problem_17.tsp\nTSP_Problem_18.tsp\nTSP_Problem_19.tsp\nTSP_Problem_20.tsp\nTSP_Problem_21.tsp\nTSP_Problem_22.tsp\nTSP_Problem_23.tsp\nTSP_Problem_24.tsp\nTSP_Problem_25.tsp\nTSP_Problem_26.tsp\nTSP_Problem_27.tsp\nTSP_Problem_28.tsp\nTSP_Problem_29.tsp\nTSP_Problem_30.tsp\nTSP_Problem_31.tsp\nTSP_Problem_32.tsp\nTSP_Problem_33.tsp\nTSP_Problem_34.tsp\nTSP_Problem_35.tsp\nTSP_Problem_36.tsp\nTSP_Problem_37.tsp\nTSP_Problem_38.tsp\nTSP_Problem_39.tsp\nTSP_Problem_40.tsp\nTSP_Problem_41.tsp\nTSP_Problem_42.tsp\nTSP_Problem_43.tsp\nTSP_Problem_44.tsp\nTSP_Problem_45.tsp\nTSP_Problem_46.tsp\nTSP_Problem_47.tsp\nTSP_Problem_48.tsp\nTSP_Problem_49.tsp\nTSP_Problem_50.tsp\nTSP_Problem_51.tsp\nTSP_Problem_52.tsp\nTSP_Problem_53.tsp\nTSP_Problem_54.tsp\nTSP_Problem_55.tsp\nTSP_Problem_56.tsp\nTSP_Problem_57.tsp\nTSP_Problem_58.tsp\nTSP_Problem_59.tsp\nTSP_Problem_60.tsp\nTSP_Problem_61.tsp\nTSP_Problem_62.tsp\nTSP_Problem_63.tsp\nTSP_Problem_64.tsp\nTSP_Problem_65.tsp\nTSP_Problem_66.tsp\nTSP_Problem_67.tsp\nTSP_Problem_68.tsp\nTSP_Problem_69.tsp\nTSP_Problem_70.tsp\nTSP_Problem_71.tsp\nTSP_Problem_72.tsp\nTSP_Problem_73.tsp\nTSP_Problem_74.tsp\nTSP_Problem_75.tsp\nTSP_Problem_76.tsp\nTSP_Problem_77.tsp\nTSP_Problem_78.tsp\nTSP_Problem_79.tsp\nTSP_Problem_80.tsp\nTSP_Problem_81.tsp\nTSP_Problem_82.tsp\nTSP_Problem_83.tsp\nTSP_Problem_84.tsp\nTSP_Problem_85.tsp\nTSP_Problem_86.tsp\nTSP_Problem_87.tsp\nTSP_Problem_88.tsp\nTSP_Problem_89.tsp\nTSP_Problem_90.tsp\nTSP_Problem_91.tsp\nTSP_Problem_92.tsp\nTSP_Problem_93.tsp\nTSP_Problem_94.tsp\nTSP_Problem_95.tsp\nTSP_Problem_96.tsp\nTSP_Problem_97.tsp\nTSP_Problem_98.tsp\nTSP_Problem_99.tsp\n"

],

[

"NUM_MIN = 40\nNUM_MAX = 50\ngenerator = TSP_generator(g_type='tsp_2d', num_min=NUM_MIN, num_max=NUM_MAX)\n\nnum_graphs = 100\ngraph_list = generator.gen_graphs(num_graphs=num_graphs)\nsave_path = f'valid_sets/synthetic_nrange_{NUM_MIN}_{NUM_MAX}_{num_graphs}'\ngenerator.save_nx_as_tsp(graph_list, save_path, scale=6, start_index=0)\nsolver.add_lengths_and_sols(data_dir=save_path, scale_factor=10**6, lengths_name='lengths.txt', sols_name='solutions.txt')",

"TSP_Problem_0.tsp\nTSP_Problem_1.tsp\nTSP_Problem_2.tsp\nTSP_Problem_3.tsp\nTSP_Problem_4.tsp\nTSP_Problem_5.tsp\nTSP_Problem_6.tsp\nTSP_Problem_7.tsp\nTSP_Problem_8.tsp\nTSP_Problem_9.tsp\nTSP_Problem_10.tsp\nTSP_Problem_11.tsp\nTSP_Problem_12.tsp\nTSP_Problem_13.tsp\nTSP_Problem_14.tsp\nTSP_Problem_15.tsp\nTSP_Problem_16.tsp\nTSP_Problem_17.tsp\nTSP_Problem_18.tsp\nTSP_Problem_19.tsp\nTSP_Problem_20.tsp\nTSP_Problem_21.tsp\nTSP_Problem_22.tsp\nTSP_Problem_23.tsp\nTSP_Problem_24.tsp\nTSP_Problem_25.tsp\nTSP_Problem_26.tsp\nTSP_Problem_27.tsp\nTSP_Problem_28.tsp\nTSP_Problem_29.tsp\nTSP_Problem_30.tsp\nTSP_Problem_31.tsp\nTSP_Problem_32.tsp\nTSP_Problem_33.tsp\nTSP_Problem_34.tsp\nTSP_Problem_35.tsp\nTSP_Problem_36.tsp\nTSP_Problem_37.tsp\nTSP_Problem_38.tsp\nTSP_Problem_39.tsp\nTSP_Problem_40.tsp\nTSP_Problem_41.tsp\nTSP_Problem_42.tsp\nTSP_Problem_43.tsp\nTSP_Problem_44.tsp\nTSP_Problem_45.tsp\nTSP_Problem_46.tsp\nTSP_Problem_47.tsp\nTSP_Problem_48.tsp\nTSP_Problem_49.tsp\nTSP_Problem_50.tsp\nTSP_Problem_51.tsp\nTSP_Problem_52.tsp\nTSP_Problem_53.tsp\nTSP_Problem_54.tsp\nTSP_Problem_55.tsp\nTSP_Problem_56.tsp\nTSP_Problem_57.tsp\nTSP_Problem_58.tsp\nTSP_Problem_59.tsp\nTSP_Problem_60.tsp\nTSP_Problem_61.tsp\nTSP_Problem_62.tsp\nTSP_Problem_63.tsp\nTSP_Problem_64.tsp\nTSP_Problem_65.tsp\nTSP_Problem_66.tsp\nTSP_Problem_67.tsp\nTSP_Problem_68.tsp\nTSP_Problem_69.tsp\nTSP_Problem_70.tsp\nTSP_Problem_71.tsp\nTSP_Problem_72.tsp\nTSP_Problem_73.tsp\nTSP_Problem_74.tsp\nTSP_Problem_75.tsp\nTSP_Problem_76.tsp\nTSP_Problem_77.tsp\nTSP_Problem_78.tsp\nTSP_Problem_79.tsp\nTSP_Problem_80.tsp\nTSP_Problem_81.tsp\nTSP_Problem_82.tsp\nTSP_Problem_83.tsp\nTSP_Problem_84.tsp\nTSP_Problem_85.tsp\nTSP_Problem_86.tsp\nTSP_Problem_87.tsp\nTSP_Problem_88.tsp\nTSP_Problem_89.tsp\nTSP_Problem_90.tsp\nTSP_Problem_91.tsp\nTSP_Problem_92.tsp\nTSP_Problem_93.tsp\nTSP_Problem_94.tsp\nTSP_Problem_95.tsp\nTSP_Problem_96.tsp\nTSP_Problem_97.tsp\nTSP_Problem_98.tsp\nTSP_Problem_99.tsp\n"

],

[

"plotter.plot_nx_graph(test[0], title='Fittness = {}'.format(np.round(test[1], 3)))",

"_____no_output_____"

],

[

"\nplotter = gTSP.TSP_plotter()\nplotter.plot_nx_graph(test[0])\n",

"_____no_output_____"

],

[

"np.arange(10)",

"_____no_output_____"

],

[

"generator = gTSP.TSP_generator(g_type='tsp_2d', num_min=15, num_max=20)\nmin = np.inf\nfor g in graph_list:\n tmp = generator.calc_control_parameter(g)\n if tmp < min:\n min = tmp\nprint(min)",

"0.8869272752360513\n"

],

[

"solver = gTSP.TSP_solver()\nsolver.calc_best_tour(graph_list[3])",

"_____no_output_____"

]

]

] |

[

"code"

] |

[

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

]

] |

4a302eb7c1f7fa7a54635822e060571644e52e5d

| 340,180 |

ipynb

|

Jupyter Notebook

|

07_food_vision_milestone_project_1.ipynb

|

Ankur099IIT/Tensorflow-and-DeepLearning

|

ef684d7613902f8c7185e67629440e6abc264789

|

[

"MIT"

] | 2,375 |

2020-11-24T06:18:23.000Z

|

2022-03-31T13:29:55.000Z

|

07_food_vision_milestone_project_1.ipynb

|

Ankur099IIT/Tensorflow-and-DeepLearning

|

ef684d7613902f8c7185e67629440e6abc264789

|

[

"MIT"

] | 41 |

2021-03-03T08:43:52.000Z

|

2022-03-31T19:49:49.000Z

|

07_food_vision_milestone_project_1.ipynb

|

Ankur099IIT/Tensorflow-and-DeepLearning

|

ef684d7613902f8c7185e67629440e6abc264789

|

[

"MIT"

] | 1,036 |

2021-01-17T05:14:41.000Z

|

2022-03-31T10:10:09.000Z

| 100.141301 | 82,790 | 0.775331 |

[

[

[

"<a href=\"https://colab.research.google.com/github/mrdbourke/tensorflow-deep-learning/blob/main/07_food_vision_milestone_project_1.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>",

"_____no_output_____"

],

[

"# 07. Milestone Project 1: 🍔👁 Food Vision Big™\n\nIn the previous notebook ([transfer learning part 3: scaling up](https://github.com/mrdbourke/tensorflow-deep-learning/blob/main/06_transfer_learning_in_tensorflow_part_3_scaling_up.ipynb)) we built Food Vision mini: a transfer learning model which beat the original results of the [Food101 paper](https://data.vision.ee.ethz.ch/cvl/datasets_extra/food-101/) with only 10% of the data.\n\nBut you might be wondering, what would happen if we used all the data?\n\nWell, that's what we're going to find out in this notebook!\n\nWe're going to be building Food Vision Big™, using all of the data from the Food101 dataset.\n\nYep. All 75,750 training images and 25,250 testing images.\n\nAnd guess what...\n\nThis time **we've got the goal of beating [DeepFood](https://www.researchgate.net/publication/304163308_DeepFood_Deep_Learning-Based_Food_Image_Recognition_for_Computer-Aided_Dietary_Assessment)**, a 2016 paper which used a Convolutional Neural Network trained for 2-3 days to achieve 77.4% top-1 accuracy.\n\n> 🔑 **Note:** **Top-1 accuracy** means \"accuracy for the top softmax activation value output by the model\" (because softmax ouputs a value for every class, but top-1 means only the highest one is evaluated). **Top-5 accuracy** means \"accuracy for the top 5 softmax activation values output by the model\", in other words, did the true label appear in the top 5 activation values? Top-5 accuracy scores are usually noticeably higher than top-1.\n\n| | 🍔👁 Food Vision Big™ | 🍔👁 Food Vision mini |\n|-----|-----|-----|\n| Dataset source | TensorFlow Datasets | Preprocessed download from Kaggle | \n| Train data | 75,750 images | 7,575 images | \n| Test data | 25,250 images | 25,250 images | \n| Mixed precision | Yes | No |\n| Data loading | Performanant tf.data API | TensorFlow pre-built function | \n| Target results | 77.4% top-1 accuracy (beat [DeepFood paper](https://arxiv.org/abs/1606.05675)) | 50.76% top-1 accuracy (beat [Food101 paper](https://data.vision.ee.ethz.ch/cvl/datasets_extra/food-101/static/bossard_eccv14_food-101.pdf)) | \n\n*Table comparing difference between Food Vision Big (this notebook) versus Food Vision mini (previous notebook).*\n\nAlongside attempting to beat the DeepFood paper, we're going to learn about two methods to significantly improve the speed of our model training:\n1. Prefetching\n2. Mixed precision training\n\nBut more on these later.\n\n## What we're going to cover\n\n* Using TensorFlow Datasets to download and explore data\n* Creating preprocessing function for our data\n* Batching & preparing datasets for modelling (**making our datasets run fast**)\n* Creating modelling callbacks\n* Setting up **mixed precision training**\n* Building a feature extraction model (see [transfer learning part 1: feature extraction](https://github.com/mrdbourke/tensorflow-deep-learning/blob/main/04_transfer_learning_in_tensorflow_part_1_feature_extraction.ipynb))\n* Fine-tuning the feature extraction model (see [transfer learning part 2: fine-tuning](https://github.com/mrdbourke/tensorflow-deep-learning/blob/main/05_transfer_learning_in_tensorflow_part_2_fine_tuning.ipynb))\n* Viewing training results on TensorBoard\n\n## How you should approach this notebook\n\nYou can read through the descriptions and the code (it should all run, except for the cells which error on purpose), but there's a better option.\n\nWrite all of the code yourself.\n\nYes. I'm serious. Create a new notebook, and rewrite each line by yourself. Investigate it, see if you can break it, why does it break?\n\nYou don't have to write the text descriptions but writing the code yourself is a great way to get hands-on experience.\n\nDon't worry if you make mistakes, we all do. The way to get better and make less mistakes is to write more code.\n\n> 📖 **Resource:** See the full set of course materials on GitHub: https://github.com/mrdbourke/tensorflow-deep-learning",

"_____no_output_____"

],

[

"## Check GPU\n\nFor this notebook, we're going to be doing something different.\n\nWe're going to be using mixed precision training.\n\nMixed precision training was introduced in [TensorFlow 2.4.0](https://blog.tensorflow.org/2020/12/whats-new-in-tensorflow-24.html) (a very new feature at the time of writing).\n\nWhat does **mixed precision training** do?\n\nMixed precision training uses a combination of single precision (float32) and half-preicison (float16) data types to speed up model training (up 3x on modern GPUs).\n\nWe'll talk about this more later on but in the meantime you can read the [TensorFlow documentation on mixed precision](https://www.tensorflow.org/guide/mixed_precision) for more details.\n\nFor now, before we can move forward if we want to use mixed precision training, we need to make sure the GPU powering our Google Colab instance (if you're using Google Colab) is compataible. \n\nFor mixed precision training to work, you need access to a GPU with a compute compability score of 7.0+. \n\nGoogle Colab offers P100, K80 and T4 GPUs, however, **the P100 and K80 aren't compatible with mixed precision training**.\n\nTherefore before we proceed we need to make sure we have **access to a Tesla T4 GPU in our Google Colab instance**.\n\nIf you're not using Google Colab, you can find a list of various [Nvidia GPU compute capabilities on Nvidia's developer website](https://developer.nvidia.com/cuda-gpus#compute).\n\n> 🔑 **Note:** If you run the cell below and see a P100 or K80, try going to to Runtime -> Factory Reset Runtime (note: this will remove any saved variables and data from your Colab instance) and then retry to get a T4.\n>\n> **You can still run the code *without* a GPU capable of mixed precision** (it'll just be a little slower). ",

"_____no_output_____"

]

],

[

[

"# If using Google Colab, this should output \"Tesla T4\" otherwise, \n# you won't be able to use mixed precision training\n!nvidia-smi -L",

"GPU 0: NVIDIA TITAN RTX (UUID: GPU-64b1678c-cec3-56bb-af0c-8ae69de44cbd)\r\n"

]

],

[

[

"Since mixed precision training was introduced in TensorFlow 2.4.0, make sure you've got at least TensorFlow 2.4.0+.",

"_____no_output_____"

]

],

[

[

"# Hide warning logs (see: https://stackoverflow.com/a/38645250/7900723)\nimport os\nos.environ[\"TF_CPP_MIN_LOG_LEVEL\"] = \"3\"\n\n# Check TensorFlow version (should be 2.4.0+)\nimport tensorflow as tf\nprint(tf.__version__)",

"2.6.2\n"

]

],

[

[

"## Get helper functions\n\nWe've created a series of helper functions throughout the previous notebooks in the course. Instead of rewriting them (tedious), we'll import the [`helper_functions.py`](https://github.com/mrdbourke/tensorflow-deep-learning/blob/main/extras/helper_functions.py) file from the GitHub repo.",

"_____no_output_____"

]

],

[

[

"# Get helper functions file\nif not os.path.exists(\"helper_functions.py\"):\n print(\"Downloading helper functions...\")\n !wget https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/helper_functions.py\nelse:\n print(\"Helper functions file already exists, skipping download...\")",

"Helper functions file already exists, skipping download...\n"

],

[

"# Import series of helper functions for the notebook (we've created/used these in previous notebooks)\nfrom helper_functions import create_tensorboard_callback, plot_loss_curves, compare_historys",

"_____no_output_____"

]

],

[

[

"## Use TensorFlow Datasets to Download Data\n\nIn previous notebooks, we've downloaded our food images (from the [Food101 dataset](https://www.kaggle.com/dansbecker/food-101/home)) from Google Storage.\n\nAnd this is a typical workflow you'd use if you're working on your own datasets.\n\nHowever, there's another way to get datasets ready to use with TensorFlow.\n\nFor many of the most popular datasets in the machine learning world (often referred to and used as benchmarks), you can access them through [TensorFlow Datasets (TFDS)](https://www.tensorflow.org/datasets/overview).\n\nWhat is **TensorFlow Datasets**?\n\nA place for prepared and ready-to-use machine learning datasets.\n\nWhy use TensorFlow Datasets?\n\n* Load data already in Tensors\n* Practice on well established datasets\n* Experiment with differet data loading techniques (like we're going to use in this notebook)\n* Experiment with new TensorFlow features quickly (such as mixed precision training)\n\nWhy *not* use TensorFlow Datasets?\n\n* The datasets are static (they don't change, like your real-world datasets would)\n* Might not be suited for your particular problem (but great for experimenting)\n\nTo begin using TensorFlow Datasets we can import it under the alias `tfds`.\n",

"_____no_output_____"

]

],

[

[

"# Get TensorFlow Datasets\nimport tensorflow_datasets as tfds",

"_____no_output_____"

]

],

[

[

"To find all of the available datasets in TensorFlow Datasets, you can use the `list_builders()` method.\n\nAfter doing so, we can check to see if the one we're after (`\"food101\"`) is present.",

"_____no_output_____"

]

],

[

[

"# List available datasets\ndatasets_list = tfds.list_builders() # get all available datasets in TFDS\nprint(\"food101\" in datasets_list) # is the dataset we're after available?",

"True\n"

]

],

[

[

"Beautiful! It looks like the dataset we're after is available (note there are plenty more available but we're on Food101).\n\nTo get access to the Food101 dataset from the TFDS, we can use the [`tfds.load()`](https://www.tensorflow.org/datasets/api_docs/python/tfds/load) method.\n\nIn particular, we'll have to pass it a few parameters to let it know what we're after:\n* `name` (str) : the target dataset (e.g. `\"food101\"`)\n* `split` (list, optional) : what splits of the dataset we're after (e.g. `[\"train\", \"validation\"]`)\n * the `split` parameter is quite tricky. See [the documentation for more](https://github.com/tensorflow/datasets/blob/master/docs/splits.md).\n* `shuffle_files` (bool) : whether or not to shuffle the files on download, defaults to `False` \n* `as_supervised` (bool) : `True` to download data samples in tuple format (`(data, label)`) or `False` for dictionary format \n* `with_info` (bool) : `True` to download dataset metadata (labels, number of samples, etc)\n\n> 🔑 **Note:** Calling the `tfds.load()` method will start to download a target dataset to disk if the `download=True` parameter is set (default). This dataset could be 100GB+, so make sure you have space.",

"_____no_output_____"

]

],

[

[

"# Load in the data (takes about 5-6 minutes in Google Colab)\n(train_data, test_data), ds_info = tfds.load(name=\"food101\", # target dataset to get from TFDS\n split=[\"train\", \"validation\"], # what splits of data should we get? note: not all datasets have train, valid, test\n shuffle_files=True, # shuffle files on download?\n as_supervised=True, # download data in tuple format (sample, label), e.g. (image, label)\n with_info=True) # include dataset metadata? if so, tfds.load() returns tuple (data, ds_info)",

"_____no_output_____"

]

],

[

[

"Wonderful! After a few minutes of downloading, we've now got access to entire Food101 dataset (in tensor format) ready for modelling.\n\nNow let's get a little information from our dataset, starting with the class names.\n\nGetting class names from a TensorFlow Datasets dataset requires downloading the \"`dataset_info`\" variable (by using the `as_supervised=True` parameter in the `tfds.load()` method, **note:** this will only work for supervised datasets in TFDS).\n\nWe can access the class names of a particular dataset using the `dataset_info.features` attribute and accessing `names` attribute of the the `\"label\"` key.",

"_____no_output_____"

]

],

[

[

"# Features of Food101 TFDS\nds_info.features",

"_____no_output_____"

],

[

"# Get class names\nclass_names = ds_info.features[\"label\"].names\nclass_names[:10]",

"_____no_output_____"

]

],

[

[

"### Exploring the Food101 data from TensorFlow Datasets\n\nNow we've downloaded the Food101 dataset from TensorFlow Datasets, how about we do what any good data explorer should?\n\nIn other words, \"visualize, visualize, visualize\". \n\nLet's find out a few details about our dataset:\n* The shape of our input data (image tensors)\n* The datatype of our input data\n* What the labels of our input data look like (e.g. one-hot encoded versus label-encoded)\n* Do the labels match up with the class names?\n\nTo do, let's take one sample off the training data (using the [`.take()` method](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#take)) and explore it. ",

"_____no_output_____"

]

],

[

[

"# Take one sample off the training data\ntrain_one_sample = train_data.take(1) # samples are in format (image_tensor, label)",

"_____no_output_____"

]

],

[

[

"Because we used the `as_supervised=True` parameter in our `tfds.load()` method above, data samples come in the tuple format structure `(data, label)` or in our case `(image_tensor, label)`.",

"_____no_output_____"

]

],

[

[

"# What does one sample of our training data look like?\ntrain_one_sample",

"_____no_output_____"

]

],

[

[

"Let's loop through our single training sample and get some info from the `image_tensor` and `label`.",

"_____no_output_____"

]

],

[

[

"# Output info about our training sample\nfor image, label in train_one_sample:\n print(f\"\"\"\n Image shape: {image.shape}\n Image dtype: {image.dtype}\n Target class from Food101 (tensor form): {label}\n Class name (str form): {class_names[label.numpy()]}\n \"\"\")",

"\n Image shape: (512, 289, 3)\n Image dtype: <dtype: 'uint8'>\n Target class from Food101 (tensor form): 45\n Class name (str form): frozen_yogurt\n \n"

]

],

[

[

"Because we set the `shuffle_files=True` parameter in our `tfds.load()` method above, running the cell above a few times will give a different result each time.\n\nChecking these you might notice some of the images have different shapes, for example `(512, 342, 3)` and `(512, 512, 3)` (height, width, color_channels).\n\nLet's see what one of the image tensors from TFDS's Food101 dataset looks like.",

"_____no_output_____"

]

],

[

[

"# What does an image tensor from TFDS's Food101 look like?\nimage",

"_____no_output_____"

],

[

"# What are the min and max values?\ntf.reduce_min(image), tf.reduce_max(image)",

"_____no_output_____"

]

],

[

[

"Alright looks like our image tensors have values of between 0 & 255 (standard red, green, blue colour values) and the values are of data type `unit8`.\n\nWe might have to preprocess these before passing them to a neural network. But we'll handle this later.\n\nIn the meantime, let's see if we can plot an image sample.",

"_____no_output_____"

],

[

"### Plot an image from TensorFlow Datasets\n\nWe've seen our image tensors in tensor format, now let's really adhere to our motto.\n\n\"Visualize, visualize, visualize!\"\n\nLet's plot one of the image samples using [`matplotlib.pyplot.imshow()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.imshow.html) and set the title to target class name.",

"_____no_output_____"

]

],

[

[

"# Plot an image tensor\nimport matplotlib.pyplot as plt\nplt.imshow(image)\nplt.title(class_names[label.numpy()]) # add title to image by indexing on class_names list\nplt.axis(False);",

"_____no_output_____"

]

],

[

[

"Delicious!\n\nOkay, looks like the Food101 data we've got from TFDS is similar to the datasets we've been using in previous notebooks.\n\nNow let's preprocess it and get it ready for use with a neural network.",

"_____no_output_____"

],

[

"## Create preprocessing functions for our data\n\nIn previous notebooks, when our images were in folder format we used the method [`tf.keras.preprocessing.image_dataset_from_directory()`](https://www.tensorflow.org/api_docs/python/tf/keras/preprocessing/image_dataset_from_directory) to load them in.\n\nDoing this meant our data was loaded into a format ready to be used with our models.\n\nHowever, since we've downloaded the data from TensorFlow Datasets, there are a couple of preprocessing steps we have to take before it's ready to model. \n\nMore specifically, our data is currently:\n\n* In `uint8` data type\n* Comprised of all differnet sized tensors (different sized images)\n* Not scaled (the pixel values are between 0 & 255)\n\nWhereas, models like data to be:\n\n* In `float32` data type\n* Have all of the same size tensors (batches require all tensors have the same shape, e.g. `(224, 224, 3)`)\n* Scaled (values between 0 & 1), also called normalized\n\nTo take care of these, we'll create a `preprocess_img()` function which:\n\n* Resizes an input image tensor to a specified size using [`tf.image.resize()`](https://www.tensorflow.org/api_docs/python/tf/image/resize)\n* Converts an input image tensor's current datatype to `tf.float32` using [`tf.cast()`](https://www.tensorflow.org/api_docs/python/tf/cast)\n\n> 🔑 **Note:** Pretrained EfficientNetBX models in [`tf.keras.applications.efficientnet`](https://www.tensorflow.org/api_docs/python/tf/keras/applications/efficientnet) (what we're going to be using) have rescaling built-in. But for many other model architectures you'll want to rescale your data (e.g. get its values between 0 & 1). This could be incorporated inside your \"`preprocess_img()`\" function (like the one below) or within your model as a [`tf.keras.layers.experimental.preprocessing.Rescaling`](https://www.tensorflow.org/api_docs/python/tf/keras/layers/experimental/preprocessing/Rescaling) layer.",

"_____no_output_____"

]

],

[

[

"# Make a function for preprocessing images\ndef preprocess_img(image, label, img_shape=224):\n \"\"\"\n Converts image datatype from 'uint8' -> 'float32' and reshapes image to\n [img_shape, img_shape, color_channels]\n \"\"\"\n image = tf.image.resize(image, [img_shape, img_shape]) # reshape to img_shape\n return tf.cast(image, tf.float32), label # return (float32_image, label) tuple",

"_____no_output_____"

]

],

[

[

"Our `preprocess_img()` function above takes image and label as input (even though it does nothing to the label) because our dataset is currently in the tuple structure `(image, label)`.\n\nLet's try our function out on a target image.",

"_____no_output_____"

]

],

[

[

"# Preprocess a single sample image and check the outputs\npreprocessed_img = preprocess_img(image, label)[0]\nprint(f\"Image before preprocessing:\\n {image[:2]}...,\\nShape: {image.shape},\\nDatatype: {image.dtype}\\n\")\nprint(f\"Image after preprocessing:\\n {preprocessed_img[:2]}...,\\nShape: {preprocessed_img.shape},\\nDatatype: {preprocessed_img.dtype}\")",

"Image before preprocessing:\n [[[145 151 125]\n [157 163 137]\n [159 165 139]\n ...\n [197 209 199]\n [197 209 199]\n [197 207 198]]\n\n [[146 152 126]\n [156 162 136]\n [158 164 138]\n ...\n [197 209 199]\n [197 209 199]\n [199 209 200]]]...,\nShape: (512, 289, 3),\nDatatype: <dtype: 'uint8'>\n\nImage after preprocessing:\n [[[147.19739 153.19739 127.19739]\n [157.22768 163.22768 137.22768]\n [158.09805 164.09805 138.09805]\n ...\n [197. 209. 197.54907]\n [197. 209. 199. ]\n [198.09914 208.38936 199.24425]]\n\n [[154.69467 160.69467 134.69467]\n [155.87053 161.87053 135.87053]\n [158.39908 164.39908 138.39908]\n ...\n [197. 209. 197.54907]\n [197. 209. 199. ]\n [196.32831 206.61853 195.76364]]]...,\nShape: (224, 224, 3),\nDatatype: <dtype: 'float32'>\n"

]

],

[

[

"Excellent! Looks like our `preprocess_img()` function is working as expected.\n\nThe input image gets converted from `uint8` to `float32` and gets reshaped from its current shape to `(224, 224, 3)`.\n\nHow does it look?",

"_____no_output_____"

]

],

[

[

"# We can still plot our preprocessed image as long as we \n# divide by 255 (for matplotlib capatibility)\nplt.imshow(preprocessed_img/255.)\nplt.title(class_names[label])\nplt.axis(False);",

"_____no_output_____"

]

],

[

[

"All this food visualization is making me hungry. How about we start preparing to model it?",

"_____no_output_____"

],

[

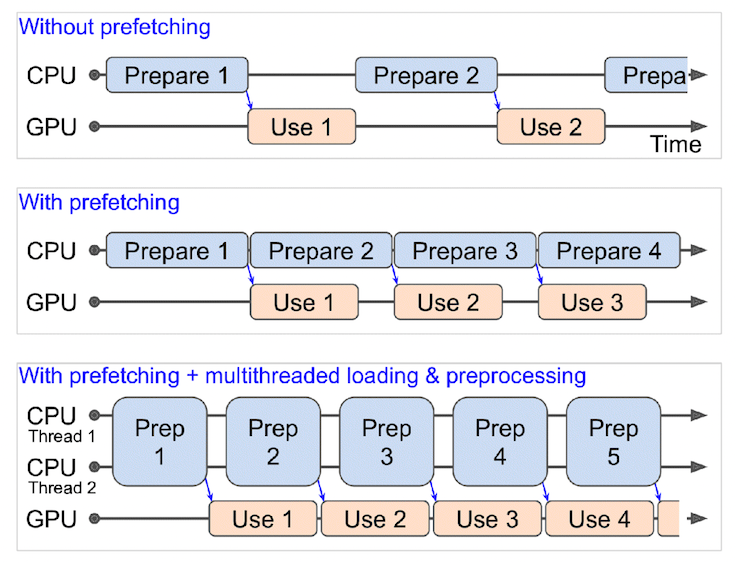

"## Batch & prepare datasets\n\nBefore we can model our data, we have to turn it into batches.\n\nWhy?\n\nBecause computing on batches is memory efficient.\n\nWe turn our data from 101,000 image tensors and labels (train and test combined) into batches of 32 image and label pairs, thus enabling it to fit into the memory of our GPU.\n\nTo do this in effective way, we're going to be leveraging a number of methods from the [`tf.data` API](https://www.tensorflow.org/api_docs/python/tf/data).\n\n> 📖 **Resource:** For loading data in the most performant way possible, see the TensorFlow docuemntation on [Better performance with the tf.data API](https://www.tensorflow.org/guide/data_performance).\n\nSpecifically, we're going to be using:\n\n* [`map()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#map) - maps a predefined function to a target dataset (e.g. `preprocess_img()` to our image tensors)\n* [`shuffle()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#shuffle) - randomly shuffles the elements of a target dataset up `buffer_size` (ideally, the `buffer_size` is equal to the size of the dataset, however, this may have implications on memory)\n* [`batch()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#batch) - turns elements of a target dataset into batches (size defined by parameter `batch_size`)\n* [`prefetch()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#prefetch) - prepares subsequent batches of data whilst other batches of data are being computed on (improves data loading speed but costs memory)\n* Extra: [`cache()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#cache) - caches (saves them for later) elements in a target dataset, saving loading time (will only work if your dataset is small enough to fit in memory, standard Colab instances only have 12GB of memory) \n\nThings to note:\n- Can't batch tensors of different shapes (e.g. different image sizes, need to reshape images first, hence our `preprocess_img()` function)\n- `shuffle()` keeps a buffer of the number you pass it images shuffled, ideally this number would be all of the samples in your training set, however, if your training set is large, this buffer might not fit in memory (a fairly large number like 1000 or 10000 is usually suffice for shuffling)\n- For methods with the `num_parallel_calls` parameter available (such as `map()`), setting it to`num_parallel_calls=tf.data.AUTOTUNE` will parallelize preprocessing and significantly improve speed\n- Can't use `cache()` unless your dataset can fit in memory\n\nWoah, the above is alot. But once we've coded below, it'll start to make sense.\n\nWe're going to through things in the following order:\n\n```\nOriginal dataset (e.g. train_data) -> map() -> shuffle() -> batch() -> prefetch() -> PrefetchDataset\n```\n\nThis is like saying, \n\n> \"Hey, map this preprocessing function across our training dataset, then shuffle a number of elements before batching them together and make sure you prepare new batches (prefetch) whilst the model is looking through the current batch\".\n\n\n\n*What happens when you use prefetching (faster) versus what happens when you don't use prefetching (slower). **Source:** Page 422 of [Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow Book by Aurélien Géron](https://www.oreilly.com/library/view/hands-on-machine-learning/9781492032632/).*\n",

"_____no_output_____"

]

],

[

[

"# Map preprocessing function to training data (and paralellize)\ntrain_data = train_data.map(map_func=preprocess_img, num_parallel_calls=tf.data.AUTOTUNE)\n# Shuffle train_data and turn it into batches and prefetch it (load it faster)\ntrain_data = train_data.shuffle(buffer_size=1000).batch(batch_size=32).prefetch(buffer_size=tf.data.AUTOTUNE)\n\n# Map prepreprocessing function to test data\ntest_data = test_data.map(preprocess_img, num_parallel_calls=tf.data.AUTOTUNE)\n# Turn test data into batches (don't need to shuffle)\ntest_data = test_data.batch(32).prefetch(tf.data.AUTOTUNE)",

"_____no_output_____"

]

],

[

[

"And now let's check out what our prepared datasets look like.",

"_____no_output_____"

]

],

[

[

"train_data, test_data",

"_____no_output_____"

]

],

[

[

"Excellent! Looks like our data is now in tutples of `(image, label)` with datatypes of `(tf.float32, tf.int64)`, just what our model is after.\n\n> 🔑 **Note:** You can get away without calling the `prefetch()` method on the end of your datasets, however, you'd probably see significantly slower data loading speeds when building a model. So most of your dataset input pipelines should end with a call to [`prefecth()`](https://www.tensorflow.org/api_docs/python/tf/data/Dataset#prefetch).\n\nOnward.",

"_____no_output_____"

],

[

"## Create modelling callbacks\n\nSince we're going to be training on a large amount of data and training could take a long time, it's a good idea to set up some modelling callbacks so we be sure of things like our model's training logs being tracked and our model being checkpointed (saved) after various training milestones.\n\nTo do each of these we'll use the following callbacks:\n* [`tf.keras.callbacks.TensorBoard()`](https://www.tensorflow.org/api_docs/python/tf/keras/callbacks/TensorBoard) - allows us to keep track of our model's training history so we can inspect it later (**note:** we've created this callback before have imported it from `helper_functions.py` as `create_tensorboard_callback()`)\n* [`tf.keras.callbacks.ModelCheckpoint()`](https://www.tensorflow.org/api_docs/python/tf/keras/callbacks/ModelCheckpoint) - saves our model's progress at various intervals so we can load it and resuse it later without having to retrain it\n * Checkpointing is also helpful so we can start fine-tuning our model at a particular epoch and revert back to a previous state if fine-tuning offers no benefits",

"_____no_output_____"

]

],

[

[

"# Create TensorBoard callback (already have \"create_tensorboard_callback()\" from a previous notebook)\nfrom helper_functions import create_tensorboard_callback\n\n# Create ModelCheckpoint callback to save model's progress\ncheckpoint_path = \"model_checkpoints/cp.ckpt\" # saving weights requires \".ckpt\" extension\nmodel_checkpoint = tf.keras.callbacks.ModelCheckpoint(checkpoint_path,\n montior=\"val_accuracy\", # save the model weights with best validation accuracy\n save_best_only=True, # only save the best weights\n save_weights_only=True, # only save model weights (not whole model)\n verbose=1) # don't print out whether or not model is being saved ",

"_____no_output_____"

]

],

[

[

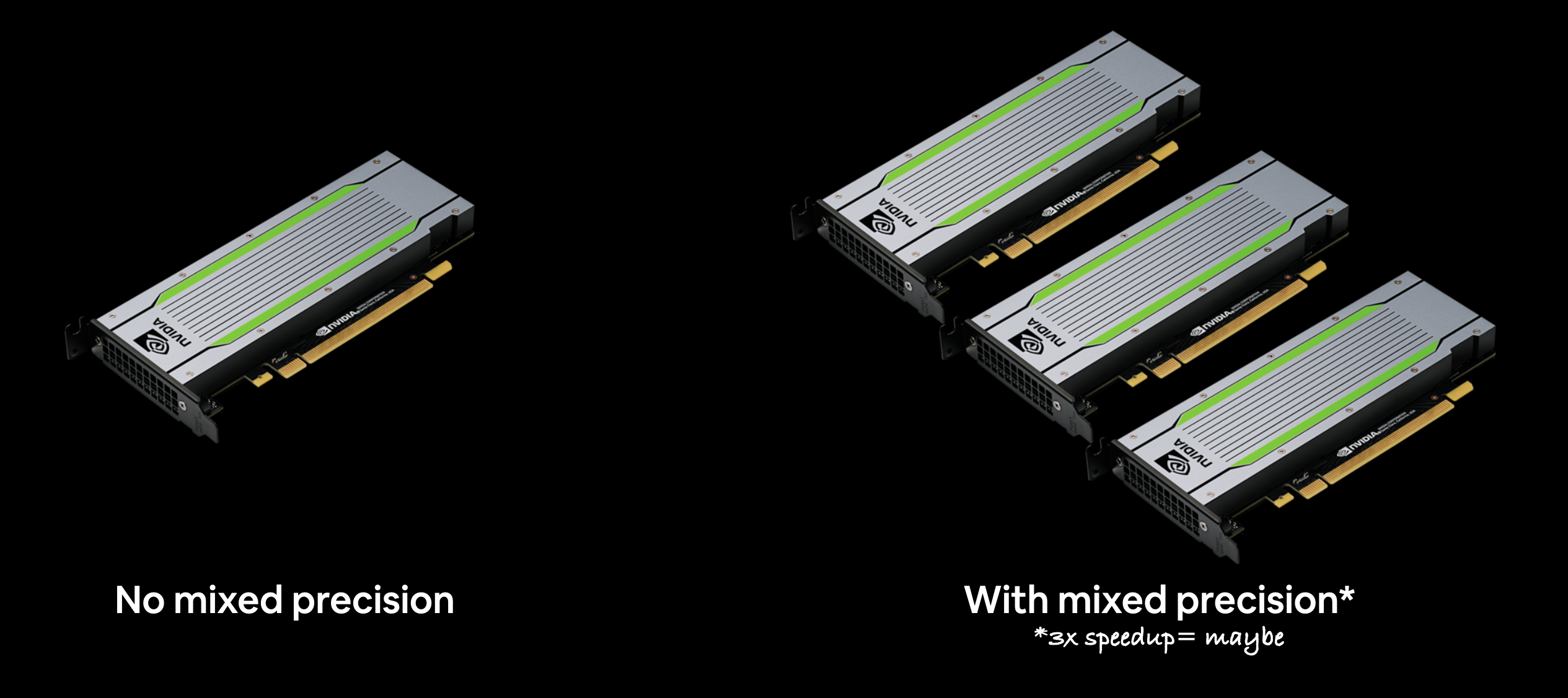

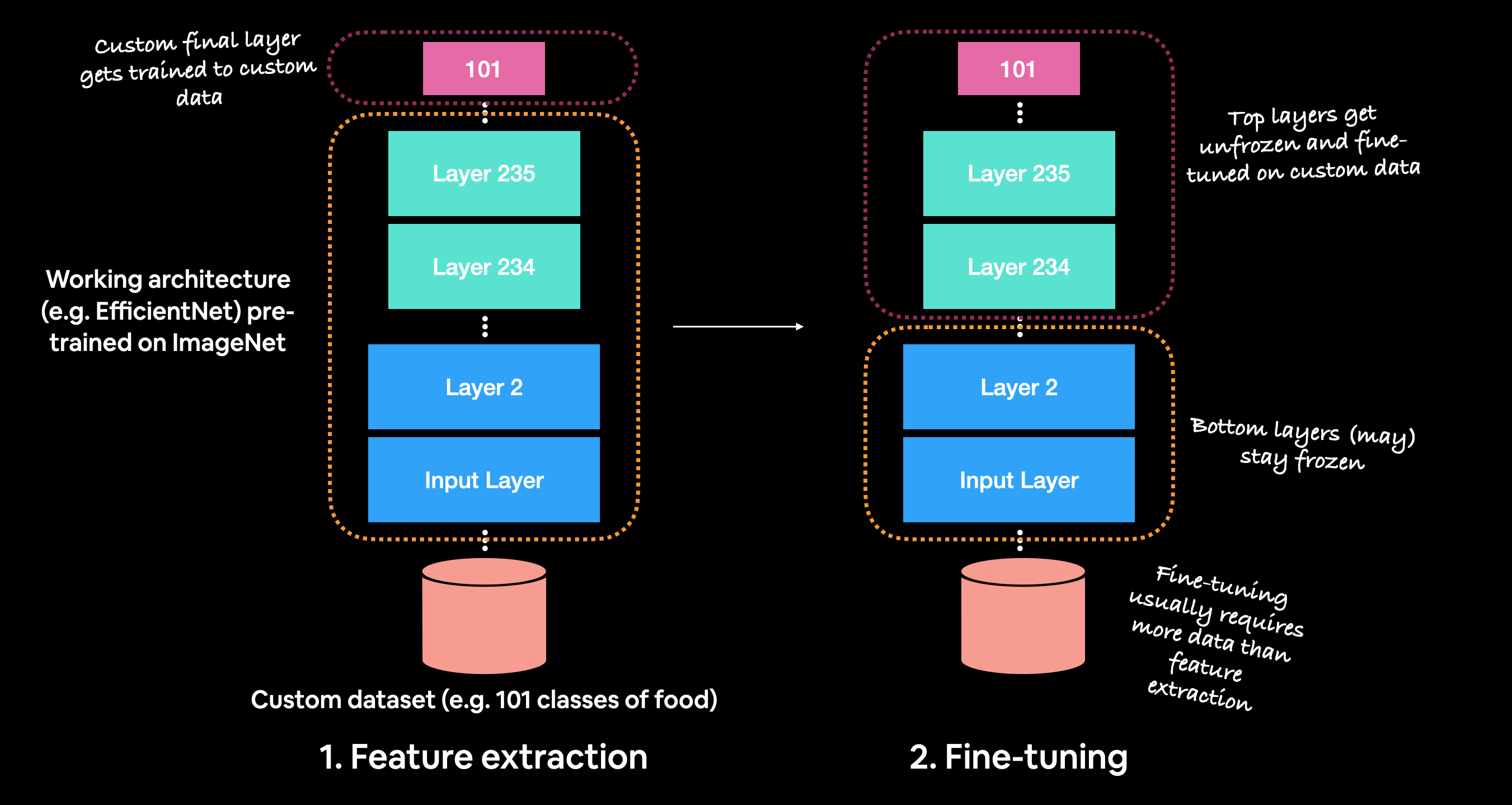

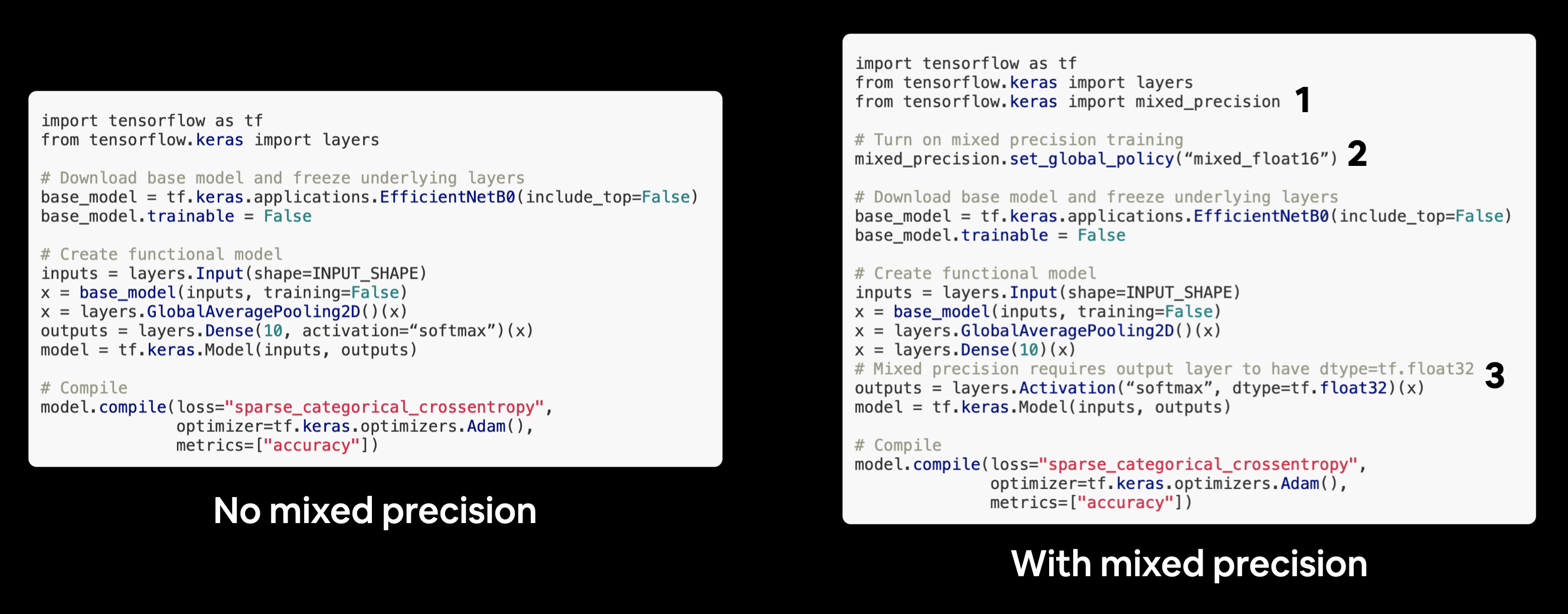

"## Setup mixed precision training\n\nWe touched on mixed precision training above.\n\nHowever, we didn't quite explain it.\n\nNormally, tensors in TensorFlow default to the float32 datatype (unless otherwise specified).\n\nIn computer science, float32 is also known as [single-precision floating-point format](https://en.wikipedia.org/wiki/Single-precision_floating-point_format). The 32 means it usually occupies 32 bits in computer memory.\n\nYour GPU has a limited memory, therefore it can only handle a number of float32 tensors at the same time.\n\nThis is where mixed precision training comes in.\n\nMixed precision training involves using a mix of float16 and float32 tensors to make better use of your GPU's memory.\n\nCan you guess what float16 means?\n\nWell, if you thought since float32 meant single-precision floating-point, you might've guessed float16 means [half-precision floating-point format](https://en.wikipedia.org/wiki/Half-precision_floating-point_format). And if you did, you're right! And if not, no trouble, now you know.\n\nFor tensors in float16 format, each element occupies 16 bits in computer memory.\n\nSo, where does this leave us?\n\nAs mentioned before, when using mixed precision training, your model will make use of float32 and float16 data types to use less memory where possible and in turn run faster (using less memory per tensor means more tensors can be computed on simultaneously).\n\nAs a result, using mixed precision training can improve your performance on modern GPUs (those with a compute capability score of 7.0+) by up to 3x.\n\nFor a more detailed explanation, I encourage you to read through the [TensorFlow mixed precision guide](https://www.tensorflow.org/guide/mixed_precision) (I'd highly recommend at least checking out the summary).\n\n\n*Because mixed precision training uses a combination of float32 and float16 data types, you may see up to a 3x speedup on modern GPUs.*\n\n> 🔑 **Note:** If your GPU doesn't have a score of over 7.0+ (e.g. P100 in Colab), mixed precision won't work (see: [\"Supported Hardware\"](https://www.tensorflow.org/guide/mixed_precision#supported_hardware) in the mixed precision guide for more).\n\n> 📖 **Resource:** If you'd like to learn more about precision in computer science (the detail to which a numerical quantity is expressed by a computer), see the [Wikipedia page](https://en.wikipedia.org/wiki/Precision_(computer_science)) (and accompanying resources). \n\nOkay, enough talk, let's see how we can turn on mixed precision training in TensorFlow.\n\nThe beautiful thing is, the [`tensorflow.keras.mixed_precision`](https://www.tensorflow.org/api_docs/python/tf/keras/mixed_precision/) API has made it very easy for us to get started.\n\nFirst, we'll import the API and then use the [`set_global_policy()`](https://www.tensorflow.org/api_docs/python/tf/keras/mixed_precision/set_global_policy) method to set the *dtype policy* to `\"mixed_float16\"`.\n",

"_____no_output_____"

]

],

[

[

"# Turn on mixed precision training\nfrom tensorflow.keras import mixed_precision\nmixed_precision.set_global_policy(policy=\"mixed_float16\") # set global policy to mixed precision ",

"_____no_output_____"

]

],

[

[