hexsha

stringlengths 40

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

cb7dae4f95d26ef216946744e7c368badae9ad28

| 12,165 |

ipynb

|

Jupyter Notebook

|

pages/workshop/NumPy/Intermediate NumPy.ipynb

|

rrbuchholz/python-training

|

6bade362a17b174f44a63d3474e54e0e6402b954

|

[

"BSD-3-Clause"

] | 87 |

2019-08-29T06:54:06.000Z

|

2022-03-14T12:52:59.000Z

|

pages/workshop/NumPy/Intermediate NumPy.ipynb

|

rrbuchholz/python-training

|

6bade362a17b174f44a63d3474e54e0e6402b954

|

[

"BSD-3-Clause"

] | 100 |

2019-08-30T16:52:36.000Z

|

2022-02-10T12:12:05.000Z

|

pages/workshop/NumPy/Intermediate NumPy.ipynb

|

rrbuchholz/python-training

|

6bade362a17b174f44a63d3474e54e0e6402b954

|

[

"BSD-3-Clause"

] | 58 |

2019-07-19T20:39:18.000Z

|

2022-03-07T13:47:32.000Z

| 24.378758 | 311 | 0.538512 |

[

[

[

"<a name=\"top\"></a>\n<div style=\"width:1000 px\">\n\n<div style=\"float:right; width:98 px; height:98px;\">\n<img src=\"https://raw.githubusercontent.com/Unidata/MetPy/master/src/metpy/plots/_static/unidata_150x150.png\" alt=\"Unidata Logo\" style=\"height: 98px;\">\n</div>\n\n<h1>Intermediate NumPy</h1>\n<h3>Unidata Python Workshop</h3>\n\n<div style=\"clear:both\"></div>\n</div>\n\n<hr style=\"height:2px;\">\n\n<div style=\"float:right; width:250 px\"><img src=\"http://www.contribute.geeksforgeeks.org/wp-content/uploads/numpy-logo1.jpg\" alt=\"NumPy Logo\" style=\"height: 250px;\"></div>\n\n### Questions\n1. How do we work with the multiple dimensions in a NumPy Array?\n1. How can we extract irregular subsets of data?\n1. How can we sort an array?\n\n### Objectives\n1. <a href=\"#indexing\">Using axes to slice arrays</a>\n1. <a href=\"#boolean\">Index arrays using true and false</a>\n1. <a href=\"#integers\">Index arrays using arrays of indices</a>",

"_____no_output_____"

],

[

"<a name=\"indexing\"></a>\n## 1. Using axes to slice arrays\n\nThe solution to the last exercise in the NumPy Basics notebook introduces an important concept when working with NumPy: the axis. The axis indicates the dimension along which a function operates, for functions that reduce multiple values to a single value (such as `sum`, `mean`, or `max`).\n\nLet's look at a concrete example with `sum`:",

"_____no_output_____"

]

],

[

[

"# Convention for import to get shortened namespace\nimport numpy as np",

"_____no_output_____"

],

[

"# Create an array for testing\na = np.arange(12).reshape(3, 4)\na",

"_____no_output_____"

],

[

"# This calculates the total of all values in the array\nnp.sum(a)",

"_____no_output_____"

],

[

"# Keep this in mind:\na.shape",

"_____no_output_____"

],

[

"# Instead, sum along axis 0 (down the rows), giving one total per column:\nnp.sum(a, axis=0)",

"_____no_output_____"

],

[

"# Or sum along axis 1 (across the columns), giving one total per row:\nnp.sum(a, axis=1)",

"_____no_output_____"

]

],

[

[

"<div class=\"alert alert-success\">\n <b>EXERCISE</b>:\n <ul>\n <li>Finish the code below to calculate advection. The trick is to figure out\n how to do the summation.</li>\n </ul>\n</div>",

"_____no_output_____"

]

],

[

[

"# Synthetic data\ntemp = np.random.randn(100, 50)\nu = np.random.randn(100, 50)\nv = np.random.randn(100, 50)\n\n# Calculate the gradient components\ngradx, grady = np.gradient(temp)\n\n# Turn into an array of vectors:\n# axis 0 is x position\n# axis 1 is y position\n# axis 2 is the vector components\ngrad_vec = np.dstack([gradx, grady])\nprint(grad_vec.shape)\n\n# Turn wind components into vector\nwind_vec = np.dstack([u, v])\n\n# Calculate advection, the dot product of wind and the negative of gradient\n# DON'T USE NUMPY.DOT (doesn't work). Multiply and add.\n",

"_____no_output_____"

]

],

[

[

"<div class=\"alert alert-info\">\n <b>SOLUTION</b>\n</div>",

"_____no_output_____"

]

],

[

[

"# %load solutions/advection.py",

"_____no_output_____"

]

],
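In case the `solutions/advection.py` file is not at hand, here is a minimal sketch of one possible approach (not necessarily the workshop's official solution). It assumes the same synthetic shapes as the exercise: a dot product over the vector-component axis is just an element-wise multiply followed by `np.sum(..., axis=2)`.

```python
import numpy as np

# Synthetic data with the same shapes as the exercise
temp = np.random.randn(100, 50)
u = np.random.randn(100, 50)
v = np.random.randn(100, 50)

# Gradient components, stacked so that axis 2 holds the vector components
gradx, grady = np.gradient(temp)
grad_vec = np.dstack([gradx, grady])  # shape (100, 50, 2)
wind_vec = np.dstack([u, v])          # shape (100, 50, 2)

# Advection = wind . (-gradient): multiply component-wise, then sum over axis 2
advection = np.sum(wind_vec * -grad_vec, axis=2)
print(advection.shape)  # (100, 50)
```

Summing over `axis=2` collapses only the component dimension, so the result keeps one value per grid point.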

[

[

"<a href=\"#top\">Top</a>\n<hr style=\"height:2px;\">",

"_____no_output_____"

],

[

"<a name=\"boolean\"></a>\n## 2. Indexing Arrays with Boolean Values\nNumPy can easily create arrays of boolean values and use them to select which values to extract from an array.",

"_____no_output_____"

]

],

[

[

"# Create some synthetic data representing temperature and wind speed data\nnp.random.seed(19990503) # Make sure we all have the same data\ntemp = (20 * np.cos(np.linspace(0, 2 * np.pi, 100)) +\n 50 + 2 * np.random.randn(100))\nspd = (np.abs(10 * np.sin(np.linspace(0, 2 * np.pi, 100)) +\n 10 + 5 * np.random.randn(100)))",

"_____no_output_____"

],

[

"%matplotlib inline\nimport matplotlib.pyplot as plt\nplt.plot(temp, 'tab:red')\nplt.plot(spd, 'tab:blue');",

"_____no_output_____"

]

],

[

[

"By comparing a NumPy array with a value, we get an\narray of boolean values representing the result of comparing\neach element with the value:",

"_____no_output_____"

]

],

[

[

"temp > 45",

"_____no_output_____"

]

],

[

[

"We can use the resulting array to index into the\nNumPy array and retrieve the values where the result was `True`:",

"_____no_output_____"

]

],

[

[

"print(temp[temp > 45])",

"_____no_output_____"

]

],

[

[

"As long as the boolean array has the same shape as the data, it can come from anywhere:",

"_____no_output_____"

]

],

[

[

"print(temp[spd > 10])",

"_____no_output_____"

],

[

"# Make a copy so we don't modify the original data\ntemp2 = temp.copy()\n\n# Replace all places where spd is <10 with NaN (not a number) so matplotlib skips it\ntemp2[spd < 10] = np.nan\nplt.plot(temp2, 'tab:red')",

"_____no_output_____"

]

],

[

[

"We can also combine multiple boolean arrays using the bitwise operators `&` (and), `|` (or), and `~` (not). **The parentheses are required** because these operators bind more tightly than comparisons such as `<` and `>`.",

"_____no_output_____"

]

],

[

[

"print(temp[(temp < 45) & (spd > 10)])",

"_____no_output_____"

]

],

[

[

"<div class=\"alert alert-success\">\n <b>EXERCISE</b>:\n <ul>\n <li>Heat index is only defined for temperatures >= 80F and relative humidity values >= 40%. Using the data generated below, use boolean indexing to extract the data where heat index has a valid value.</li>\n </ul>\n</div>",

"_____no_output_____"

]

],

[

[

"# Here's the \"data\"\nnp.random.seed(19990503) # Make sure we all have the same data\ntemp = (20 * np.cos(np.linspace(0, 2 * np.pi, 100)) +\n 80 + 2 * np.random.randn(100))\nrh = (np.abs(20 * np.cos(np.linspace(0, 4 * np.pi, 100)) +\n 50 + 5 * np.random.randn(100)))\n\n\n# Create a mask for the two conditions described above\n# good_heat_index = \n\n\n\n# Use this mask to grab the temperature and relative humidity values that together\n# will give good heat index values\n# temp[] ?\n\n\n# BONUS POINTS: Plot only the data where heat index is defined by\n# inverting the mask (using `~mask`) and setting invalid values to np.nan",

"_____no_output_____"

]

],

[

[

"<div class=\"alert alert-info\">\n <b>SOLUTION</b>\n</div>",

"_____no_output_____"

]

],

[

[

"# %load solutions/heat_index.py",

"_____no_output_____"

]

],
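For reference, a minimal sketch of one way to approach the heat index exercise (it may differ from the workshop's `solutions/heat_index.py`). It regenerates the same synthetic "data" and combines the two conditions with `&`, keeping the required parentheses.

```python
import numpy as np

# Same synthetic "data" as the exercise
np.random.seed(19990503)
temp = (20 * np.cos(np.linspace(0, 2 * np.pi, 100)) +
        80 + 2 * np.random.randn(100))
rh = (np.abs(20 * np.cos(np.linspace(0, 4 * np.pi, 100)) +
             50 + 5 * np.random.randn(100)))

# Heat index is defined only where T >= 80F AND RH >= 40%
good_heat_index = (temp >= 80) & (rh >= 40)

# Temperature/RH pairs that together give a valid heat index
valid_temp = temp[good_heat_index]
valid_rh = rh[good_heat_index]

# Bonus: invert the mask with ~ and blank out invalid points with NaN
temp_plot = temp.copy()
temp_plot[~good_heat_index] = np.nan
# plt.plot(temp_plot, 'tab:red') would then skip the NaN points
```

Because the same mask indexes both arrays, `valid_temp[i]` and `valid_rh[i]` always come from the same time step.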

[

[

"<a href=\"#top\">Top</a>\n<hr style=\"height:2px;\">",

"_____no_output_____"

],

[

"<a name=\"integers\"></a>\n## 3. Indexing using arrays of indices\n\nYou can also use a list or array of indices to extract particular values--this is a natural extension of the regular indexing. For instance, just as we can select the first element:",

"_____no_output_____"

]

],

[

[

"print(temp[0])",

"_____no_output_____"

]

],

[

[

"We can also extract the first, fifth, and tenth elements:",

"_____no_output_____"

]

],

[

[

"print(temp[[0, 4, 9]])",

"_____no_output_____"

]

],

[

[

"One place this comes into play is sorting NumPy arrays with `argsort`. This function returns the indices that would put the array's items in sorted order. So for our temp \"data\":",

"_____no_output_____"

]

],

[

[

"inds = np.argsort(temp)\nprint(inds)",

"_____no_output_____"

]

],

[

[

"We can use this array of indices to pass into temp to get it in sorted order:",

"_____no_output_____"

]

],

[

[

"print(temp[inds])",

"_____no_output_____"

]

],

[

[

"Or we can slice `inds` to get only the 10 highest temperatures:",

"_____no_output_____"

]

],

[

[

"ten_highest = inds[-10:]\nprint(temp[ten_highest])",

"_____no_output_____"

]

],

[

[

"NumPy has other `arg` functions that return indices rather than values:",

"_____no_output_____"

]

],

[

[

"np.*arg*?",

"_____no_output_____"

]

],
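A small sketch of a few of these `arg` functions on a made-up array (the values are illustrative only). It also shows that reversing the `argsort` result gives descending order.

```python
import numpy as np

temps = np.array([52.1, 48.3, 61.7, 45.0, 58.2])

print(np.argmax(temps))                 # 2 (index of 61.7)
print(np.argmin(temps))                 # 3 (index of 45.0)
print(np.argwhere(temps > 50).ravel())  # [0 2 4]

# Descending order: reverse the ascending argsort indices
desc = np.argsort(temps)[::-1]
print(temps[desc])
```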

[

[

"<a href=\"#top\">Top</a>\n<hr style=\"height:2px;\">",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown"

] |

[

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

]

] |

cb7db3058c7478a3eee48f68f82e8250cbd6da38

| 20,610 |

ipynb

|

Jupyter Notebook

|

test.ipynb

|

rdrockz/c9-python-getting-started

|

3b6a1e08851dc795f11a0214fecc8678078433eb

|

[

"MIT"

] | null | null | null |

test.ipynb

|

rdrockz/c9-python-getting-started

|

3b6a1e08851dc795f11a0214fecc8678078433eb

|

[

"MIT"

] | null | null | null |

test.ipynb

|

rdrockz/c9-python-getting-started

|

3b6a1e08851dc795f11a0214fecc8678078433eb

|

[

"MIT"

] | null | null | null | 26.627907 | 278 | 0.450364 |

[

[

[

"def dig_pow(n, p):\n # creating a placeholder\n length = len(str(n))\n total=0\n for digits in range(1,length):\n \n a= n % (10**digits)\n print(a)\n total+= (a ** (p+length-digits))\n print(total)\n if total % n==0:\n return total //n\n else:\n return -1 \n",

"_____no_output_____"

],

[

"dig_pow(46288,3) # SHOULD RETURN 51 as 4³ + 6⁴+ 2⁵ + 8⁶ + 8⁷ = 2360688 = 46288 * 51",

"8\n2097152\n88\n464406183936\n288\n2445761839104\n6288\n1565773854474240\n"

],

[

"def dig_pow(n, p):\n # iterate over the digits of n as characters\n total=0\n new_p=int(p)\n for digit in str(n):\n #a= n % (10**digits)\n #print(a)\n print(digit)\n total += (int(digit) ** new_p)\n new_p+=1\n print(total)\n if total % n==0:\n return total //n\n else:\n return -1 \n",

"_____no_output_____"

],

[

"dig_pow(46288,3)",

"4\n64\n6\n1360\n2\n1392\n8\n263536\n8\n2360688\n"

],

[

"def openOrSenior(data):\n # List of List and make a newList\n newList =[]\n \n for a,b in data:\n print (a,b)\n #if #more than 60 and more than 7\n \n #newList.append(\"Senior\")\n \n #else:\n #newList.append(\"Open\")\n \n \n #return new_list\n ",

"_____no_output_____"

],

[

"openOrSenior([[45, 12],[55,21],[19, -2],[104, 20]])",

"45 12\n55 21\n19 -2\n104 20\n"

],

[

"def dirReduc(arr):\n # so n is +1 and s is -1 for vertical, so is the case for e and w for horizontal\n # reduce function to return [] \n # might have to use recursive\n newList =[]\n #newArray =[]\n for i,dir in enumerate(arr):\n #print (dir)\n print (arr[i])\n if arr[i] == \"NORTH\" and arr[i+1]!= \"SOUTH\" or arr[i] == \"EAST\" and arr[i+1]!= \"WEST\" :\n print (arr[i])\n\n \n #if newArray.append(dir) \n \n \n return arr",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a) # West",

"NORTH\nSOUTH\nSOUTH\nEAST\nWEST\nNORTH\nNORTH\nWEST\n"

],

[

"#Check if North and South are adjacent,\n\ndef dirReduc(arr):\n newList=[]\n for i, element in enumerate(arr):\n previous_element = arr[i-1] if i > 0 else None\n current_element = element\n if current_element == \"NORTH\" and previous_element != \"SOUTH\":\n newList.append(\"NORTH\")\n elif current_element == \"SOUTH\" and previous_element != \"NORTH\":\n newList.append(\"SOUTH\")\n elif current_element == \"EAST\" and previous_element != \"WEST\":\n newList.append(\"EAST\") \n elif current_element == \"WEST\" and previous_element != \"EAST\":\n newList.append(\"WEST\")\n\n #if dir== \"NORTH\" and newList[i-1]\n #newList[i].append(dir) \n #if dir[i] == \"NORTH\" and dir[i+1] == \"SOUTH\":\n # dir.pop(i)\n print (newList)\n",

"_____no_output_____"

],

[

"def dirReduc(arr):\n dir = [\"NORTH\",\"SOUTH\",\"EAST\",\"WEST\"]\n for i,element in enumerate(arr):\n if element in dir:\n pass # TODO: compare element with its neighbours\n\n# dir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\n# Pattern for looking at each element's neighbours:\n# for i, element in enumerate(mylist):\n# previous_element = mylist[i-1] if i > 0 else None\n# current_element = element\n# next_element = mylist[i+1] if i < len(mylist)-1 else None\n# print(previous_element, current_element, next_element)",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\n#dirReduc(a) # West \narr=a\ndir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\ndef tup(a):\n if len(a) % 2 != 0:\n listTup= list(zip(a[::2],a[1::2]))\n listTup.append(a[-1])\n else:\n listTup= list(zip(a[::2],a[1::2]))\n\n return listTup\n\n\n\n#i=iter(arr)\n#if i in dir and i.next()==",

"_____no_output_____"

],

[

"def dirReduc(arr):\n dir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\n \n def tup(a):\n if len(a) % 2 != 0:\n listTup= list(zip(a[::2],a[1::2]))\n listTup.append(a[-1])\n else:\n listTup= list(zip(a[::2],a[1::2]))\n\n return listTup\n \n new=[]\n tuplist=tup(arr)\n print (tuplist)\n \n if dir in tuplist :\n print(\"y\")\n else:\n print(\"nah\")\n print (dir)\n while dir in tuplist:\n new= (x for x in tuplist if x not in dir)\n tuplist =dirReduc(new)\n print(new)\n #l3 = [x for x in l1 if x not in l2]\n #else:\n # pass\n #print(a)\n ",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a) # West",

"[('NORTH', 'SOUTH'), ('SOUTH', 'EAST'), ('WEST', 'NORTH'), 'WEST']\nnah\n[('NORTH', 'SOUTH'), ('EAST', 'WEST'), ('SOUTH', 'NORTH'), ('WEST', 'EAST')]\n"

],

[

"def dirReduc(arr): \n def tup(a):\n if len(a) % 2 != 0:\n listTup= list(zip(a[::2],a[1::2]))\n listTup.append(a[-1])\n else:\n listTup= list(zip(a[::2],a[1::2]))\n\n return listTup\n\n new= tup(arr)\n old=[]\n dir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\n #recursive\n while len(old)== len(new):\n \n old= tup(new)\n new= [element for element in old if element not in dir ]\n new= [x for t in new for x in t if len(x)>1]\n #and element for x in new for element in x\n print (list(new))\n #old=new\n #print (old)\n \n #print (new)",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a) # West\n",

"_____no_output_____"

],

[

"def dirReduc(arr): \n def tup(a):\n if len(a) % 2 != 0:\n listTup= list(zip(a[::2],a[1::2]))\n listTup.append(a[-1])\n else:\n listTup= list(zip(a[::2],a[1::2]))\n\n return listTup\n a=arr\n old= tup(a)\n new=[]\n dir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\n #recursive?\n if len(old) != len(new): \n #old= tup(new)\n new= [element for element in old if element not in dir ]\n print(new)\n #new= [element for tup in new for element in new ]\n #new= [element for t in new for element in t if len(element)>1]\n #and element for x in new for element in x\n print (list(new))\n #old=new\n #print (old)\n else:\n print (new)",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a) # West",

"[('SOUTH', 'EAST'), ('WEST', 'NORTH'), 'WEST']\n[('SOUTH', 'EAST'), ('WEST', 'NORTH'), 'WEST']\n"

]

],
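The attempts above try to cancel adjacent opposite directions by pairing elements up, which misses pairs that only become adjacent after an earlier cancellation. A minimal sketch of a different technique, a stack, handles that case in one pass. The names `OPPOSITE` and `dir_reduc` are hypothetical, chosen so they do not clash with the notebook's `dirReduc`.

```python
# Hypothetical helper: each direction mapped to the one that cancels it
OPPOSITE = {"NORTH": "SOUTH", "SOUTH": "NORTH", "EAST": "WEST", "WEST": "EAST"}

def dir_reduc(arr):
    """Remove adjacent opposite directions until none remain (stack-based sketch)."""
    stack = []
    for d in arr:
        # If the last kept direction cancels this one, drop both
        if stack and stack[-1] == OPPOSITE[d]:
            stack.pop()
        else:
            stack.append(d)
    return stack

a = ["NORTH", "SOUTH", "SOUTH", "EAST", "WEST", "NORTH", "WEST"]
print(dir_reduc(a))  # ['WEST']
```

Popping from the stack exposes the previously kept direction, so newly adjacent opposites (such as the second `SOUTH` and the later `NORTH`) are cancelled without restarting the scan.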

[

[

" if arr[index]==\"NORTH\" and tempList[index+1]!=\"SOUTH\" :#or arr[index]==\"SOUTH\" and tempList[index+1]!=\"NORTH\" :\n newList.append(arr[index])\n #elif arr[index]==\"EAST\" and tempList[index+1]!=\"WEST\" or arr[index]==\"WEST\" and tempList[index+1]!=\"EAST\" :\n newList.append(arr[index])\n else:\n pass \n #while len(newList)==len(tempList):\n #print(newList)\n #dirReduc(newList)\n print(newList)",

"_____no_output_____"

]

],

[

[

"def dirReduc(arr):\n tempList= arr\n newList=[]\n #print(arr)\n dir = [(\"NORTH\",\"SOUTH\"),(\"EAST\",\"WEST\"),(\"SOUTH\",\"NORTH\"),(\"WEST\",\"EAST\")]\n #print (dir)\n for index in range(len(arr)-1):\n \n #for i in range(len(dir)):\n \n #if [(zip(arr[::],tempList[1::]))] not in dir[:]:\n #newList.append(arr[index+1])\n\n #print(list(zip(arr[::],tempList[+1::])))\n if [list(zip(arr[index:],tempList[index+1:]))] not in [(x for x in dir)]:\n newList.append(arr[index+1])\n #else:\n #pass\n print(newList)\n\n \n\n\n",

"_____no_output_____"

],

[

"dirReduc([\"NORTH\",\"SOUTH\",\"SOUTH\",\"EAST\",\"WEST\"])",

"['SOUTH', 'SOUTH', 'EAST', 'WEST']\n"

],

[

"dirReduc([\"NORTH\",\"SOUTH\",\"SOUTH\",\"EAST\",\"WEST\"])",

"{'N': 0, 'S': 1, 'E': 0, 'W': 0}\n"

],

[

"def dirReduc(arr):\n turtle ={'N':0,'S':0,'E':0,'W':0}\n turtleNew=turtle\n a=arr[1:]\n for i in range(0,len(arr)-1):\n if arr[i] == \"NORTH\" and a[i] != \"SOUTH\":\n turtle['N']+=1\n #turtle['S']-=1\n elif arr[i] == \"SOUTH\" and a[i] != \"NORTH\":\n turtle['S']+=1\n #turtle['N']-=1\n\n elif arr[i] == \"EAST\" and a[i] != \"WEST\":\n turtle['E']+=1\n #turtle['W']-=1\n\n elif arr[i] == \"WEST\" and a[i] != \"EAST\":\n turtle['W']+=1\n #turtle['E']-=1\n\n print(turtle)",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a)",

"{'N': 1, 'S': 2, 'E': 0, 'W': 1}\n"

],

[

"def dirReduc(arr):\n new=arr\n for l in arr:\n for dir in new:\n \n\n \"\"\"\n if new[:-2] ==\"NORTH\" and l == \"SOUTH\":\n new.pop()\n new.pop()\n print(new)\n elif new[:-2]==\"SOUTH\" and l == \"NORTH\":\n new.pop()\n new.pop()\n print(new)\n elif new[:-2]==\"EAST\" and l == \"WEST\":\n new.pop()\n new.pop()\n print(new)\n elif new[:-2]==\"WEST\" and l == \"EAST\":\n new.pop()\n new.pop()\n print(new)\n #else:\n #break\n # elif new[:-1]==False:\n # new.append(l)\n \"\"\"\n print(new) \n \n",

"_____no_output_____"

],

[

"a = [\"NORTH\", \"SOUTH\", \"SOUTH\", \"EAST\", \"WEST\", \"NORTH\", \"WEST\"]\ndirReduc(a)\n",

"['NORTH', 'SOUTH', 'SOUTH', 'EAST', 'WEST', 'NORTH', 'WEST']\n"

]

]

] |

[

"code",

"markdown",

"code"

] |

[

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code"

]

] |

cb7db3889930e741d325cf2d06df1a7fc4d9bf71

| 6,252 |

ipynb

|

Jupyter Notebook

|

courses/machine_learning/tensorflow/c_batched.ipynb

|

ecuriotto/training-data-analyst

|

3da6b9a4f9715c6cf9653c2025ac071b15011111

|

[

"Apache-2.0"

] | 4 |

2021-02-20T19:23:56.000Z

|

2021-02-21T07:28:49.000Z

|

courses/machine_learning/tensorflow/c_batched.ipynb

|

jgamblegeorge/training-data-analyst

|

9f9006e7b740f59798ac6016d55dd4e38a1e0528

|

[

"Apache-2.0"

] | 11 |

2020-01-28T23:13:27.000Z

|

2022-03-12T00:11:30.000Z

|

courses/machine_learning/tensorflow/c_batched.ipynb

|

jgamblegeorge/training-data-analyst

|

9f9006e7b740f59798ac6016d55dd4e38a1e0528

|

[

"Apache-2.0"

] | 4 |

2020-05-15T06:23:05.000Z

|

2021-12-20T06:00:15.000Z

| 33.612903 | 553 | 0.602047 |

[

[

[

"<h1> 2c. Refactoring to add batching and feature-creation </h1>\n\nIn this notebook, we continue reading the same small dataset, but refactor our ML pipeline in two small, but significant, ways:\n<ol>\n<li> Refactor the input to read data in batches.\n<li> Refactor the feature creation so that it is not one-to-one with inputs.\n</ol>\nThe Pandas function in the previous notebook also batched, but only after it had read all the data into memory; on a large dataset, this won't be an option.",

"_____no_output_____"

]

],

[

[

"import tensorflow as tf\nimport numpy as np\nimport shutil\nprint(tf.__version__)",

"_____no_output_____"

]

],

[

[

"<h2> 1. Refactor the input </h2>\n\nRead data created in Lab1a, but this time make it more general and performant. Instead of using Pandas, we will use TensorFlow's Dataset API.",

"_____no_output_____"

]

],

[

[

"CSV_COLUMNS = ['fare_amount', 'pickuplon','pickuplat','dropofflon','dropofflat','passengers', 'key']\nLABEL_COLUMN = 'fare_amount'\nDEFAULTS = [[0.0], [-74.0], [40.0], [-74.0], [40.7], [1.0], ['nokey']]\n\ndef read_dataset(filename, mode, batch_size = 512):\n def _input_fn():\n def decode_csv(value_column):\n columns = tf.decode_csv(value_column, record_defaults = DEFAULTS)\n features = dict(zip(CSV_COLUMNS, columns))\n label = features.pop(LABEL_COLUMN)\n return features, label\n\n # Create list of files that match pattern\n file_list = tf.gfile.Glob(filename)\n\n # Create dataset from file list\n dataset = tf.data.TextLineDataset(file_list).map(decode_csv)\n if mode == tf.estimator.ModeKeys.TRAIN:\n num_epochs = None # indefinitely\n dataset = dataset.shuffle(buffer_size = 10 * batch_size)\n else:\n num_epochs = 1 # end-of-input after this\n\n dataset = dataset.repeat(num_epochs).batch(batch_size)\n return dataset.make_one_shot_iterator().get_next()\n return _input_fn\n \n\ndef get_train():\n return read_dataset('./taxi-train.csv', mode = tf.estimator.ModeKeys.TRAIN)\n\ndef get_valid():\n return read_dataset('./taxi-valid.csv', mode = tf.estimator.ModeKeys.EVAL)\n\ndef get_test():\n return read_dataset('./taxi-test.csv', mode = tf.estimator.ModeKeys.EVAL)",

"_____no_output_____"

]

],

[

[

"<h2> 2. Refactor the way features are created. </h2>\n\nFor now, pass these through (same as previous lab). However, refactoring this way will enable us to break the one-to-one relationship between inputs and features.",

"_____no_output_____"

]

],

[

[

"INPUT_COLUMNS = [\n tf.feature_column.numeric_column('pickuplon'),\n tf.feature_column.numeric_column('pickuplat'),\n tf.feature_column.numeric_column('dropofflat'),\n tf.feature_column.numeric_column('dropofflon'),\n tf.feature_column.numeric_column('passengers'),\n]\n\ndef add_more_features(feats):\n # Nothing to add (yet!)\n return feats\n\nfeature_cols = add_more_features(INPUT_COLUMNS)",

"_____no_output_____"

]

],

[

[

"<h2> Create and train the model </h2>\n\nNote that we train for num_steps * batch_size examples.",

"_____no_output_____"

]

],

[

[

"tf.logging.set_verbosity(tf.logging.INFO)\nOUTDIR = 'taxi_trained'\nshutil.rmtree(OUTDIR, ignore_errors = True) # start fresh each time\nmodel = tf.estimator.LinearRegressor(\n feature_columns = feature_cols, model_dir = OUTDIR)\nmodel.train(input_fn = get_train(), steps = 100); # TODO: change the name of input_fn as needed",

"_____no_output_____"

]

],

[

[

"<h3> Evaluate model </h3>\n\nAs before, evaluate on the validation data. We'll do the third refactoring (to move the evaluation into the training loop) in the next lab.",

"_____no_output_____"

]

],

[

[

"def print_rmse(model, name, input_fn):\n metrics = model.evaluate(input_fn = input_fn, steps = 1)\n print('RMSE on {} dataset = {}'.format(name, np.sqrt(metrics['average_loss'])))\nprint_rmse(model, 'validation', get_valid())",

"_____no_output_____"

]

],

[

[

"Copyright 2017 Google Inc. Licensed under the Apache License, Version 2.0 (the \"License\"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown"

] |

[

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

]

] |

cb7db702fb856e37e6eb6126ed3667aa0522b39b

| 16,970 |

ipynb

|

Jupyter Notebook

|

Baseline/resnet.ipynb

|

satish860/foodimageclassifier

|

12ce22e56f42de36f2b32d4344a57d20cf2fe089

|

[

"Apache-2.0"

] | null | null | null |

Baseline/resnet.ipynb

|

satish860/foodimageclassifier

|

12ce22e56f42de36f2b32d4344a57d20cf2fe089

|

[

"Apache-2.0"

] | null | null | null |

Baseline/resnet.ipynb

|

satish860/foodimageclassifier

|

12ce22e56f42de36f2b32d4344a57d20cf2fe089

|

[

"Apache-2.0"

] | null | null | null | 29.309154 | 274 | 0.470183 |

[

[

[

"# Food Image Classifier",

"_____no_output_____"

],

[

"This is part of the Manning liveProject https://liveproject.manning.com/project/210. In this project, I will classify images of 101 types of food. The dataset is already publicly available, but we will start with a subset of it.",

"_____no_output_____"

],

[

"## Dataset",

"_____no_output_____"

],

[

"It is a general best practice to ALWAYS start with a subset of the dataset rather than the full one, for two reasons:\n1. As you experiment with the model, you don't want to run over the whole dataset, which slows down the process.\n2. You would otherwise waste a lot of GPU resources well before getting the best model for the job.",

"_____no_output_____"

],

[

"In this liveProject, the authors have already shared a subset of the dataset, so we can use it for the baseline model.",

"_____no_output_____"

]

],

[

[

"#!wget https://lp-prod-resources.s3-us-west-2.amazonaws.com/other/Deploying+a+Deep+Learning+Model+on+Web+and+Mobile+Applications+Using+TensorFlow/Food+101+-+Data+Subset.zip\n#!unzip Food+101+-+Data+Subset.zip",

"_____no_output_____"

],

[

"import torch\nfrom torchvision import datasets,models\nimport torchvision.transforms as tt\nimport numpy as np\nimport matplotlib.pyplot as plt\nfrom torchvision.utils import make_grid\nfrom torch.utils.data import DataLoader,random_split,Dataset\nimport torch.nn as nn\nimport torch.nn.functional as F\nimport torch.optim as optim\nfrom fastprogress.fastprogress import master_bar, progress_bar",

"_____no_output_____"

],

[

"# ImageNet channel mean/std, matching the data the pretrained ResNet was trained on\nstats = ((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))\ntrain_tfms = tt.Compose([tt.RandomHorizontalFlip(),\n tt.Resize([224,224]),\n tt.ToTensor(), \n tt.Normalize(*stats,inplace=True)])\nvalid_tfms = tt.Compose([tt.Resize([224,224]),tt.ToTensor(), tt.Normalize(*stats)])",

"_____no_output_____"

]

],

[

[

"Create a PyTorch dataset from the image folder. This will allow us to split it into training and validation datasets.",

"_____no_output_____"

]

],

[

[

"ds = datasets.ImageFolder('food-101-subset/images/')",

"_____no_output_____"

],

[

"class CustomDataset(Dataset):\n def __init__(self,ds,transformer):\n self.ds = ds\n self.transform = transformer\n \n def __getitem__(self,idx):\n image,label = self.ds[idx]\n img = self.transform(image)\n return img,label\n \n def __len__(self):\n return len(self.ds)",

"_____no_output_____"

],

[

"# Integer lengths that are guaranteed to sum to len(ds), as random_split requires\ntrain_len = int(0.8 * len(ds))\nval_len = len(ds) - train_len\ntrain_len, val_len",

"_____no_output_____"

],

[

"train_ds,val_ds = random_split(dataset=ds,lengths=[int(train_len),int(val_len)],generator=torch.Generator().manual_seed(42))",

"_____no_output_____"

],

[

"# Wrap the Subsets themselves (not .dataset, which is the full ImageFolder) so each split keeps only its own images\nt_ds = CustomDataset(train_ds,train_tfms)\nv_ds = CustomDataset(val_ds,valid_tfms)",

"_____no_output_____"

],

[

"batch_size = 32\ntrain_dl = DataLoader(t_ds, batch_size, shuffle=True, pin_memory=True)\nvalid_dl = DataLoader(v_ds, batch_size, pin_memory=True)",

"_____no_output_____"

],

[

"for x,yb in train_dl:\n print(x.shape)\n break;",

"torch.Size([32, 3, 224, 224])\n"

],

[

"def show_batch(dl):\n for images, labels in dl:\n fig, ax = plt.subplots(figsize=(12, 12))\n ax.set_xticks([]); ax.set_yticks([])\n ax.imshow(make_grid(images[:64], nrow=8).permute(1, 2, 0))\n break",

"_____no_output_____"

]

],

[

[

"# Create a ResNet Model with default Parameters",

"_____no_output_____"

]

],

[

[

"class Flatten(nn.Module):\n def forward(self,x):\n return torch.flatten(x,1)\n\nclass FoodImageClassifer(nn.Module):\n def __init__(self):\n super().__init__()\n resnet = models.resnet34(pretrained=True)\n self.body = nn.Sequential(*list(resnet.children())[:-2])\n self.head = nn.Sequential(nn.AdaptiveAvgPool2d(1),Flatten(),nn.Linear(resnet.fc.in_features,3))\n \n def forward(self,x):\n x = self.body(x)\n return self.head(x)\n \n def freeze(self):\n # Stop gradient updates to the pretrained body so only the head is trained\n for name,param in self.body.named_parameters():\n param.requires_grad = False",

"_____no_output_____"

],

[

"def fit(epochs,model,train_dl,valid_dl,loss_fn,opt):\n mb = master_bar(range(epochs))\n mb.write(['epoch','train_loss','valid_loss','trn_acc','val_acc'],table=True)\n\n for i in mb: \n trn_loss,val_loss = 0.0,0.0\n trn_acc,val_acc = 0,0\n trn_n,val_n = len(train_dl.dataset),len(valid_dl.dataset)\n model.train()\n for xb,yb in progress_bar(train_dl,parent=mb):\n xb,yb = xb.to(device), yb.to(device)\n out = model(xb)\n opt.zero_grad()\n loss = loss_fn(out,yb)\n _,pred = torch.max(out.data, 1)\n trn_acc += (pred == yb).sum().item()\n trn_loss += loss.item()\n loss.backward()\n opt.step()\n trn_loss /= mb.child.total\n trn_acc /= trn_n\n\n model.eval()\n with torch.no_grad():\n for xb,yb in progress_bar(valid_dl,parent=mb):\n xb,yb = xb.to(device), yb.to(device)\n out = model(xb)\n loss = loss_fn(out,yb)\n val_loss += loss.item()\n _,pred = torch.max(out.data, 1)\n val_acc += (pred == yb).sum().item()\n val_loss /= mb.child.total\n val_acc /= val_n\n\n mb.write([i,f'{trn_loss:.6f}',f'{val_loss:.6f}',f'{trn_acc:.6f}',f'{val_acc:.6f}'],table=True)",

"_____no_output_____"

]

],

[

[

"# Fine-tuning the whole ResNet (all layers trainable)",

"_____no_output_____"

]

],

[

[

"model = FoodImageClassifer()\ncriterion = nn.CrossEntropyLoss()\noptimizer_ft = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)\n#model.freeze()\ndevice = torch.device(\"cuda:0\" if torch.cuda.is_available() else \"cpu\")\nmodel = model.to(device)\nfit(10,model=model,train_dl=train_dl,valid_dl=valid_dl,loss_fn=criterion,opt=optimizer_ft)",

"_____no_output_____"

]

],

[

[

"# Freeze the layers",

"_____no_output_____"

]

],

[

[

"model = FoodImageClassifer()\ncriterion = nn.CrossEntropyLoss()\noptimizer_ft = optim.Adam(model.parameters(), lr=1e-4)\nmodel.freeze()\ndevice = torch.device(\"cuda:0\" if torch.cuda.is_available() else \"cpu\")\nmodel = model.to(device)\nfit(10,model=model,train_dl=train_dl,valid_dl=valid_dl,loss_fn=criterion,opt=optimizer_ft)",

"_____no_output_____"

],

[

"torch.save(model.state_dict,'resnet.pth')",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown",

"markdown",

"markdown",

"markdown",

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

]

] |
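A minimal PyTorch sketch of what `freeze()` is meant to achieve. To run without downloading ResNet-34 weights, it uses a small hypothetical `TinyClassifier` (a `body` plus a 3-class `head`) in place of `FoodImageClassifer`. It checks that frozen body weights are untouched by an optimizer step while the head is updated. It also shows that `torch.save` should receive the result of `state_dict()`, not the bound method. Passing only the trainable parameters to the optimizer is one common choice, not necessarily what the original notebook intended.

```python
import io

import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for FoodImageClassifer: a 'body' plus a 3-class 'head'."""

    def __init__(self):
        super().__init__()
        self.body = nn.Sequential(nn.Linear(8, 16), nn.ReLU())
        self.head = nn.Linear(16, 3)

    def forward(self, x):
        return self.head(self.body(x))

    def freeze(self):
        # Freezing = switching gradients OFF for the (pretrained) body
        for param in self.body.parameters():
            param.requires_grad = False


torch.manual_seed(0)
model = TinyClassifier()
model.freeze()

# Hand only the still-trainable (head) parameters to the optimizer
trainable = [p for p in model.parameters() if p.requires_grad]
opt = torch.optim.SGD(trainable, lr=0.1)

body_before = model.body[0].weight.detach().clone()
head_before = model.head.weight.detach().clone()

# One training step on random data
x = torch.randn(4, 8)
y = torch.tensor([0, 1, 2, 0])
loss = nn.functional.cross_entropy(model(x), y)
opt.zero_grad()
loss.backward()
opt.step()

body_unchanged = torch.equal(model.body[0].weight, body_before)
head_changed = not torch.equal(model.head.weight, head_before)

# Save the dict returned by state_dict(), then restore it into a fresh model
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)
restored = TinyClassifier()
restored.load_state_dict(torch.load(buffer))
weights_match = torch.equal(restored.head.weight, model.head.weight)

print(body_unchanged, head_changed, weights_match)
```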

cb7dbf352b347a29e861d64bb94dae0e7e59b66b

| 27,661 |

ipynb

|

Jupyter Notebook

|

day_5.ipynb

|

mzignis/dw_matrix_road_signs

|

8b648686794a6076a8cfb52ab26fc21aea2c196f

|

[

"MIT"

] | null | null | null |

day_5.ipynb

|

mzignis/dw_matrix_road_signs

|

8b648686794a6076a8cfb52ab26fc21aea2c196f

|

[

"MIT"

] | null | null | null |

day_5.ipynb

|

mzignis/dw_matrix_road_signs

|

8b648686794a6076a8cfb52ab26fc21aea2c196f

|

[

"MIT"

] | null | null | null | 44.977236 | 237 | 0.511695 |

[

[

[

"import datetime\nimport os\n\nimport matplotlib.pyplot as plt\nimport numpy as np\nimport pandas as pd\nimport seaborn as sns\n\nfrom skimage import color, exposure\n\nfrom sklearn.metrics import accuracy_score\n\nimport tensorflow as tf\nfrom tensorflow.keras.models import Sequential\nfrom tensorflow.keras.layers import Conv2D, MaxPool2D, Dense, Flatten, Dropout\nfrom tensorflow.keras.utils import to_categorical\n\nfrom hyperopt import hp, STATUS_OK, tpe, Trials, fmin\n\nsns.set()\n%load_ext tensorboard",

"/usr/local/lib/python3.6/dist-packages/statsmodels/tools/_testing.py:19: FutureWarning: pandas.util.testing is deprecated. Use the functions in the public API at pandas.testing instead.\n import pandas.util.testing as tm\n"

],

[

"HOME = '/content/drive/My Drive/Colab Notebooks/matrix/dw_matrix_road_signs'\n%cd $HOME",

"/content/drive/My Drive/Colab Notebooks/matrix/dw_matrix_road_signs\n"

],

[

"train_db = pd.read_pickle('data/train.p')\ntest_db = pd.read_pickle('data/test.p')\n\nX_train, y_train = train_db['features'], train_db['labels']\nX_test, y_test = test_db['features'], test_db['labels']",

"_____no_output_____"

],

[

"sign_names = pd.read_csv('data/dw_signnames.csv')\nsign_names.head()",

"_____no_output_____"

],

[

"y_train = to_categorical(y_train)\ny_test = to_categorical(y_test)",

"_____no_output_____"

],

[

"input_shape = X_train.shape[1:]\ncat_num = y_train.shape[1]",

"_____no_output_____"

],

[

"def get_cnn_v1(input_shape, cat_num, verbose=False):\n model = Sequential([Conv2D(filters=64, kernel_size=(3, 3), activation='relu', input_shape=input_shape),\n Flatten(),\n Dense(cat_num, activation='softmax')])\n if verbose:\n model.summary()\n\n return model\n\n\ncnn_v1 = get_cnn_v1(input_shape, cat_num, True)",

"Model: \"sequential\"\n_________________________________________________________________\nLayer (type) Output Shape Param # \n=================================================================\nconv2d (Conv2D) (None, 30, 30, 64) 1792 \n_________________________________________________________________\nflatten (Flatten) (None, 57600) 0 \n_________________________________________________________________\ndense (Dense) (None, 43) 2476843 \n=================================================================\nTotal params: 2,478,635\nTrainable params: 2,478,635\nNon-trainable params: 0\n_________________________________________________________________\n"

],

[

"def train_model(model, X_train, y_train, params_fit=dict()):\n\n logdir = os.path.join('logs', datetime.datetime.now().strftime('%Y%m%d_%H%M%S'))\n tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)\n\n model.compile(loss='categorical_crossentropy', optimizer='Adam', metrics=['accuracy'])\n model.fit(X_train, \n y_train,\n batch_size=params_fit.get('batch_size', 128),\n epochs=params_fit.get('epochs', 5),\n verbose=params_fit.get('verbose', 1),\n validation_data=params_fit.get('validation_data', (X_train, y_train)),\n callbacks=[tensorboard_callback])\n\n return model\n\nmodel_trained = train_model(cnn_v1, X_train, y_train)",

"Epoch 1/5\n272/272 [==============================] - 7s 25ms/step - loss: 21.1410 - accuracy: 0.7725 - val_loss: 0.2157 - val_accuracy: 0.9500\nEpoch 2/5\n272/272 [==============================] - 6s 23ms/step - loss: 0.1960 - accuracy: 0.9538 - val_loss: 0.1232 - val_accuracy: 0.9707\nEpoch 3/5\n272/272 [==============================] - 6s 23ms/step - loss: 0.1243 - accuracy: 0.9693 - val_loss: 0.0857 - val_accuracy: 0.9778\nEpoch 4/5\n272/272 [==============================] - 6s 23ms/step - loss: 0.0941 - accuracy: 0.9764 - val_loss: 0.0711 - val_accuracy: 0.9825\nEpoch 5/5\n272/272 [==============================] - 6s 23ms/step - loss: 0.0943 - accuracy: 0.9792 - val_loss: 0.1108 - val_accuracy: 0.9747\n"

],

[

"def predict(model, X_test, y_test, scoring=accuracy_score):\n y_test_norm = np.argmax(y_test, axis=1)\n y_pred_prob = model.predict(X_test)\n y_pred = np.argmax(y_pred_prob, axis=1)\n\n return scoring(y_test_norm, y_pred)",

"_____no_output_____"

],

[

"def get_cnn(input_shape, cat_num):\n model = Sequential([Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=input_shape),\n Conv2D(filters=32, kernel_size=(3, 3), activation='relu', padding='same'),\n MaxPool2D(),\n Dropout(0.3),\n\n Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same'),\n Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),\n MaxPool2D(),\n Dropout(0.3),\n\n Flatten(),\n\n Dense(1024, activation='relu'),\n Dropout(0.3),\n\n Dense(1024, activation='relu'),\n Dropout(0.3),\n\n Dense(cat_num, activation='softmax')])\n return model",

"_____no_output_____"

],

[

"def train_and_predict(model, X_train, y_train, X_test, y_test):\n model_trained = train_model(model, X_train, y_train)\n return predict(model_trained, X_test, y_test)\n\n# train_and_predict(get_cnn(input_shape, cat_num), X_train, y_train, X_test, y_test)",

"Epoch 1/5\n272/272 [==============================] - 12s 45ms/step - loss: 2.3776 - accuracy: 0.4112 - val_loss: 0.4694 - val_accuracy: 0.8598\nEpoch 2/5\n272/272 [==============================] - 12s 43ms/step - loss: 0.5820 - accuracy: 0.8224 - val_loss: 0.1191 - val_accuracy: 0.9720\nEpoch 3/5\n272/272 [==============================] - 12s 43ms/step - loss: 0.3022 - accuracy: 0.9095 - val_loss: 0.0622 - val_accuracy: 0.9845\nEpoch 4/5\n272/272 [==============================] - 12s 43ms/step - loss: 0.1948 - accuracy: 0.9410 - val_loss: 0.0264 - val_accuracy: 0.9929\nEpoch 5/5\n272/272 [==============================] - 12s 43ms/step - loss: 0.1507 - accuracy: 0.9559 - val_loss: 0.0218 - val_accuracy: 0.9943\n"

],

[

"def get_model(input_shape, cat_num, params):\n model = Sequential([Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=input_shape),\n Conv2D(filters=32, kernel_size=(3, 3), activation='relu', padding='same'),\n MaxPool2D(),\n Dropout(params['dropout_cnn_0']),\n\n Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same'),\n Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),\n MaxPool2D(),\n Dropout(params['dropout_cnn_1']),\n\n Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same'),\n Conv2D(filters=128, kernel_size=(3, 3), activation='relu'),\n MaxPool2D(),\n Dropout(params['dropout_cnn_2']),\n\n Flatten(),\n\n Dense(1024, activation='relu'),\n Dropout(params['dropout_dense_0']),\n\n Dense(1024, activation='relu'),\n Dropout(params['dropout_dense_1']),\n\n Dense(cat_num, activation='softmax')])\n return model",

"_____no_output_____"

],

[

"def func_obj(params):\n model = get_model(input_shape, cat_num, params)\n model.compile(loss='categorical_crossentropy', optimizer='Adam', metrics=['accuracy'])\n\n model.fit(X_train, \n y_train,\n batch_size=int(params.get('batch_size', 128)),\n epochs=params.get('epochs', 5),\n verbose=params.get('verbose', 0)\n )\n\n score = model.evaluate(X_test, y_test, verbose=0)\n accuracy = score[1]\n print(f'params={params}')\n print(f'accuracy={accuracy}')\n\n return {'loss': -accuracy, 'status': STATUS_OK, 'model': model}",

"_____no_output_____"

],

[

"space = {\n 'batch_size': hp.quniform('batch_size', 100, 200, 10),\n 'dropout_cnn_0': hp.uniform('dropout_cnn_0', 0.3, 0.5),\n 'dropout_cnn_1': hp.uniform('dropout_cnn_1', 0.3, 0.5),\n 'dropout_cnn_2': hp.uniform('dropout_cnn_2', 0.3, 0.5),\n 'dropout_dense_0': hp.uniform('dropout_dense_0', 0.3, 0.7),\n 'dropout_dense_1': hp.uniform('dropout_dense_1', 0.3, 0.7),\n}",

"_____no_output_____"

],

[

"best = fmin(\n func_obj,\n space,\n tpe.suggest,\n 30, \n Trials()\n)",

"params={'batch_size': 100.0, 'dropout_cnn_0': 0.4879239947277033, 'dropout_cnn_1': 0.41844465850576257, 'dropout_cnn_2': 0.30965372112329087, 'dropout_dense_0': 0.4021819483430512, 'dropout_dense_1': 0.5484462572265736}\naccuracy=0.9546485543251038\nparams={'batch_size': 110.0, 'dropout_cnn_0': 0.4454706127504861, 'dropout_cnn_1': 0.4624384690395012, 'dropout_cnn_2': 0.33913598936283884, 'dropout_dense_0': 0.5740434204430944, 'dropout_dense_1': 0.6296515937419154}\naccuracy=0.8337868452072144\nparams={'batch_size': 160.0, 'dropout_cnn_0': 0.36067887838440155, 'dropout_cnn_1': 0.46008918518034936, 'dropout_cnn_2': 0.43839249380954826, 'dropout_dense_0': 0.3246246348241844, 'dropout_dense_1': 0.3160098003597073}\naccuracy=0.9435374140739441\nparams={'batch_size': 120.0, 'dropout_cnn_0': 0.32139805632785357, 'dropout_cnn_1': 0.3682460950330647, 'dropout_cnn_2': 0.35017506728862746, 'dropout_dense_0': 0.636572271397232, 'dropout_dense_1': 0.571017519489049}\naccuracy=0.9678004384040833\nparams={'batch_size': 120.0, 'dropout_cnn_0': 0.4913879097246001, 'dropout_cnn_1': 0.47033985564382663, 'dropout_cnn_2': 0.31865265519409725, 'dropout_dense_0': 0.4649288055725062, 'dropout_dense_1': 0.5296904186684789}\naccuracy=0.9235827922821045\nparams={'batch_size': 150.0, 'dropout_cnn_0': 0.4164041967473282, 'dropout_cnn_1': 0.37056626479342125, 'dropout_cnn_2': 0.3436300186166929, 'dropout_dense_0': 0.5087510602359042, 'dropout_dense_1': 0.42453105625736365}\naccuracy=0.9616780281066895\nparams={'batch_size': 180.0, 'dropout_cnn_0': 0.4854568816341579, 'dropout_cnn_1': 0.48877389527091347, 'dropout_cnn_2': 0.32601913003927036, 'dropout_dense_0': 0.5560442462373014, 'dropout_dense_1': 0.3649109619366709}\naccuracy=0.8356009125709534\nparams={'batch_size': 150.0, 'dropout_cnn_0': 0.4700263840446974, 'dropout_cnn_1': 0.322993910912036, 'dropout_cnn_2': 0.3856630233645689, 'dropout_dense_0': 0.6065512537170488, 'dropout_dense_1': 
0.5801993674122023}\naccuracy=0.8383219838142395\nparams={'batch_size': 190.0, 'dropout_cnn_0': 0.3297266714814264, 'dropout_cnn_1': 0.4093366714067281, 'dropout_cnn_2': 0.37022163453985824, 'dropout_dense_0': 0.4170767345499065, 'dropout_dense_1': 0.6575939918759518}\naccuracy=0.942630410194397\nparams={'batch_size': 190.0, 'dropout_cnn_0': 0.41931974165794494, 'dropout_cnn_1': 0.3658335614786773, 'dropout_cnn_2': 0.38194136852267363, 'dropout_dense_0': 0.35608031433606646, 'dropout_dense_1': 0.520155315556423}\naccuracy=0.9589568972587585\nparams={'batch_size': 200.0, 'dropout_cnn_0': 0.47952859607728693, 'dropout_cnn_1': 0.45881924368073185, 'dropout_cnn_2': 0.4852393119246963, 'dropout_dense_0': 0.629240760228121, 'dropout_dense_1': 0.6294628440800268}\naccuracy=0.6000000238418579\nparams={'batch_size': 140.0, 'dropout_cnn_0': 0.3150257556317754, 'dropout_cnn_1': 0.31747190509765943, 'dropout_cnn_2': 0.35274788955799385, 'dropout_dense_0': 0.4694085515989886, 'dropout_dense_1': 0.37821243166844476}\naccuracy=0.9501133561134338\nparams={'batch_size': 190.0, 'dropout_cnn_0': 0.42318543432060074, 'dropout_cnn_1': 0.4679243745883398, 'dropout_cnn_2': 0.33994933689087475, 'dropout_dense_0': 0.5234438903237745, 'dropout_dense_1': 0.6297812010676287}\naccuracy=0.8433106541633606\nparams={'batch_size': 180.0, 'dropout_cnn_0': 0.4111970954079379, 'dropout_cnn_1': 0.3810825698020177, 'dropout_cnn_2': 0.34368611965100887, 'dropout_dense_0': 0.4377954919072118, 'dropout_dense_1': 0.408624015159865}\naccuracy=0.8283446431159973\nparams={'batch_size': 140.0, 'dropout_cnn_0': 0.3439677060908322, 'dropout_cnn_1': 0.35799455118265633, 'dropout_cnn_2': 0.33208044451947605, 'dropout_dense_0': 0.5981815864983848, 'dropout_dense_1': 0.6511843812879597}\naccuracy=0.9365079402923584\nparams={'batch_size': 150.0, 'dropout_cnn_0': 0.46748242209200613, 'dropout_cnn_1': 0.3676523437653032, 'dropout_cnn_2': 0.4312792131485984, 'dropout_dense_0': 0.32625690833721793, 'dropout_dense_1': 
0.39700280191442144}\naccuracy=0.9539682269096375\nparams={'batch_size': 180.0, 'dropout_cnn_0': 0.42118581162583, 'dropout_cnn_1': 0.4195010230907402, 'dropout_cnn_2': 0.4484039188546435, 'dropout_dense_0': 0.6910918343546651, 'dropout_dense_1': 0.4624531349632961}\naccuracy=0.8224489688873291\nparams={'batch_size': 160.0, 'dropout_cnn_0': 0.39549131897703976, 'dropout_cnn_1': 0.3057757730736938, 'dropout_cnn_2': 0.3440014812703657, 'dropout_dense_0': 0.38230910863624784, 'dropout_dense_1': 0.5782683789010685}\naccuracy=0.9417233467102051\nparams={'batch_size': 160.0, 'dropout_cnn_0': 0.40973697044396806, 'dropout_cnn_1': 0.44794678886427797, 'dropout_cnn_2': 0.43617063275156226, 'dropout_dense_0': 0.4707042293286713, 'dropout_dense_1': 0.5573245013217827}\naccuracy=0.9058957099914551\nparams={'batch_size': 150.0, 'dropout_cnn_0': 0.484035628014369, 'dropout_cnn_1': 0.3354419819793466, 'dropout_cnn_2': 0.3679687322020443, 'dropout_dense_0': 0.527323731619307, 'dropout_dense_1': 0.5803313861614661}\naccuracy=0.934920608997345\nparams={'batch_size': 130.0, 'dropout_cnn_0': 0.3788726918102912, 'dropout_cnn_1': 0.39487133539431546, 'dropout_cnn_2': 0.4093930677647206, 'dropout_dense_0': 0.6899431290444987, 'dropout_dense_1': 0.4715426582507825}\naccuracy=0.91700679063797\nparams={'batch_size': 120.0, 'dropout_cnn_0': 0.3751135026304699, 'dropout_cnn_1': 0.3450941747375239, 'dropout_cnn_2': 0.4027596876155159, 'dropout_dense_0': 0.653364076129978, 'dropout_dense_1': 0.456372854022631}\naccuracy=0.9428571462631226\nparams={'batch_size': 100.0, 'dropout_cnn_0': 0.4430692000951883, 'dropout_cnn_1': 0.3920331534487922, 'dropout_cnn_2': 0.30809641707342283, 'dropout_dense_0': 0.6457738013910003, 'dropout_dense_1': 0.6931556825310153}\naccuracy=0.9020408391952515\nparams={'batch_size': 130.0, 'dropout_cnn_0': 0.3098449776598965, 'dropout_cnn_1': 0.4330141894396331, 'dropout_cnn_2': 0.36462177532083384, 'dropout_dense_0': 0.5080213947756672, 'dropout_dense_1': 
0.436456594672405}\naccuracy=0.961904764175415\nparams={'batch_size': 120.0, 'dropout_cnn_0': 0.3100682995875006, 'dropout_cnn_1': 0.4403059761171209, 'dropout_cnn_2': 0.3647118024249737, 'dropout_dense_0': 0.5499327379699052, 'dropout_dense_1': 0.33047800715612013}\naccuracy=0.9215419292449951\nparams={'batch_size': 110.0, 'dropout_cnn_0': 0.33554440076591774, 'dropout_cnn_1': 0.43474055482913365, 'dropout_cnn_2': 0.41893302140483907, 'dropout_dense_0': 0.6605185879511281, 'dropout_dense_1': 0.4832971499239135}\naccuracy=0.9360544085502625\nparams={'batch_size': 130.0, 'dropout_cnn_0': 0.30379539369144754, 'dropout_cnn_1': 0.4064463518523729, 'dropout_cnn_2': 0.4656824710935996, 'dropout_dense_0': 0.5881258356630151, 'dropout_dense_1': 0.42907421627055664}\naccuracy=0.9514739513397217\nparams={'batch_size': 110.0, 'dropout_cnn_0': 0.3224440342224547, 'dropout_cnn_1': 0.48668517280337237, 'dropout_cnn_2': 0.3000177265182328, 'dropout_dense_0': 0.4908740626213097, 'dropout_dense_1': 0.5070687093481369}\naccuracy=0.9489796161651611\nparams={'batch_size': 130.0, 'dropout_cnn_0': 0.3486243130709472, 'dropout_cnn_1': 0.42415652169302975, 'dropout_cnn_2': 0.38653332278305086, 'dropout_dense_0': 0.42873294452965865, 'dropout_dense_1': 0.3484720970071098}\naccuracy=0.9428571462631226\nparams={'batch_size': 100.0, 'dropout_cnn_0': 0.3033407328546241, 'dropout_cnn_1': 0.3872273792780576, 'dropout_cnn_2': 0.31589339084649687, 'dropout_dense_0': 0.6212959824180401, 'dropout_dense_1': 0.5453367051678037}\naccuracy=0.9696145057678223\n100%|██████████| 30/30 [22:54<00:00, 45.82s/it, best loss: -0.9696145057678223]\n"

],

[

" ",

"_____no_output_____"

]

]

] |

[

"code"

] |

[

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

]

] |
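`to_categorical` turns integer class labels into one-hot rows, and `predict` undoes this with `np.argmax` on both the one-hot targets and the softmax outputs before scoring. Here is a minimal NumPy sketch of that round trip, using made-up labels and probabilities. The helper names `one_hot` and `accuracy_from_probs` are hypothetical and not part of Keras.

```python
import numpy as np


def one_hot(labels, num_classes=None):
    """Hypothetical helper mirroring to_categorical for integer labels."""
    labels = np.asarray(labels, dtype=int)
    if num_classes is None:
        num_classes = int(labels.max()) + 1
    # Row i of the identity matrix is the one-hot vector for class i
    return np.eye(num_classes, dtype=np.float32)[labels]


def accuracy_from_probs(y_true_onehot, y_pred_prob):
    """Recover class indices with argmax on both sides, then compare."""
    y_true = np.argmax(y_true_onehot, axis=1)
    y_pred = np.argmax(y_pred_prob, axis=1)
    return float(np.mean(y_true == y_pred))


labels = [2, 0, 1, 2]
y_onehot = one_hot(labels)               # shape (4, 3)
probs = np.array([[0.1, 0.2, 0.7],       # predicts 2 (correct)
                  [0.8, 0.1, 0.1],       # predicts 0 (correct)
                  [0.3, 0.4, 0.3],       # predicts 1 (correct)
                  [0.5, 0.3, 0.2]])      # predicts 0 (wrong, true class is 2)
print(y_onehot.shape, accuracy_from_probs(y_onehot, probs))  # (4, 3) 0.75
```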

cb7dc17d12e262d52f55df313be22b7ce178aa8f

| 889,700 |

ipynb

|

Jupyter Notebook

|

Feedforward Learning Rate Search/Adam.ipynb

|

Kulbear/AdaBound-Reproduction

|

eb436e4fa6c7087042e435c2b40b94bde0dcbf2d

|

[

"MIT"

] | 1 |

2019-02-27T06:14:45.000Z

|

2019-02-27T06:14:45.000Z

|

Feedforward Learning Rate Search/Adam.ipynb

|

Kulbear/AdaBound-Reproduction

|

eb436e4fa6c7087042e435c2b40b94bde0dcbf2d

|

[

"MIT"

] | null | null | null |

Feedforward Learning Rate Search/Adam.ipynb

|

Kulbear/AdaBound-Reproduction

|

eb436e4fa6c7087042e435c2b40b94bde0dcbf2d

|

[

"MIT"

] | null | null | null | 1,032.134571 | 116,648 | 0.952295 |

[

[

[

"import torch\ntorch.backends.cudnn.deterministic = True\ntorch.backends.cudnn.benchmark = False",

"_____no_output_____"

],

[

"import numpy as np\n\nimport pickle\nfrom collections import namedtuple\nfrom tqdm import tqdm\n\nimport torch\ntorch.backends.cudnn.deterministic = True\ntorch.backends.cudnn.benchmark = False\nimport torch.nn as nn\nimport torch.nn.functional as F\nimport torch.optim as optim\nimport torchvision\nimport torchvision.transforms as transforms\n\nfrom adabound import AdaBound\n\nimport matplotlib.pyplot as plt",

"_____no_output_____"

],

[

"transform = transforms.Compose(\n [transforms.ToTensor(),\n transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])\n\ntrainset = torchvision.datasets.MNIST(root='./data_mnist', train=True,\n download=True, transform=transform)\ntrainloader = torch.utils.data.DataLoader(trainset, batch_size=200,\n shuffle=True, num_workers=4)\n\ntestset = torchvision.datasets.MNIST(root='./data_mnist', train=False,\n download=True, transform=transform)\ntestloader = torch.utils.data.DataLoader(testset, batch_size=200,\n shuffle=False, num_workers=4)",

"_____no_output_____"

],

[

"device = 'cuda:0'\n\noptim_configs = {\n '1e-4': {\n 'optimizer': optim.Adam, \n 'kwargs': {\n 'lr': 1e-4,\n 'weight_decay': 0,\n 'betas': (0.9, 0.999),\n 'eps': 1e-08,\n 'amsgrad': False\n }\n },\n '5e-3': {\n 'optimizer': optim.Adam, \n 'kwargs': {\n 'lr': 5e-3,\n 'weight_decay': 0,\n 'betas': (0.9, 0.999),\n 'eps': 1e-08,\n 'amsgrad': False\n }\n },\n '1e-2': {\n 'optimizer': optim.Adam, \n 'kwargs': {\n 'lr': 1e-2,\n 'weight_decay': 0,\n 'betas': (0.9, 0.999),\n 'eps': 1e-08,\n 'amsgrad': False\n }\n },\n '1e-3': {\n 'optimizer': optim.Adam, \n 'kwargs': {\n 'lr': 1e-3,\n 'weight_decay': 0,\n 'betas': (0.9, 0.999),\n 'eps': 1e-08,\n 'amsgrad': False\n }\n },\n '5e-4': {\n 'optimizer': optim.Adam, \n 'kwargs': {\n 'lr': 5e-4,\n 'weight_decay': 0,\n 'betas': (0.9, 0.999),\n 'eps': 1e-08,\n 'amsgrad': False\n }\n },\n}",

"_____no_output_____"

],

[

"class MLP(nn.Module):\n def __init__(self, hidden_size=256):\n super(MLP, self).__init__()\n self.fc1 = nn.Linear(28 * 28, hidden_size)\n self.fc2 = nn.Linear(hidden_size, 10)\n\n def forward(self, x):\n x = x.view(-1, 28 * 28)\n x = F.relu(self.fc1(x))\n x = self.fc2(x)\n return x\n \ncriterion = nn.CrossEntropyLoss()",

"_____no_output_____"

],

[

"hidden_sizes = [256, 512, 1024, 2048]\n\nfor h_size in hidden_sizes:\n Stat = namedtuple('Stat', ['losses', 'accs'])\n train_results = {}\n test_results = {} \n for optim_name, optim_config in optim_configs.items():\n torch.manual_seed(0)\n np.random.seed(0)\n train_results[optim_name] = Stat(losses=[], accs=[])\n test_results[optim_name] = Stat(losses=[], accs=[])\n net = MLP(hidden_size=h_size).to(device)\n optimizer = optim_config['optimizer'](net.parameters(), **optim_config['kwargs'])\n print(optimizer)\n\n for epoch in tqdm(range(100)): # loop over the dataset multiple times\n train_stat = {\n 'loss': .0,\n 'correct': 0,\n 'total': 0\n }\n\n test_stat = {\n 'loss': .0,\n 'correct': 0,\n 'total': 0\n }\n for i, data in enumerate(trainloader, 0):\n # get the inputs\n inputs, labels = data\n inputs = inputs.to(device)\n labels = labels.to(device)\n optimizer.zero_grad()\n outputs = net(inputs)\n loss = criterion(outputs, labels)\n loss.backward()\n optimizer.step()\n _, predicted = torch.max(outputs, 1)\n c = (predicted == labels).sum()\n\n # calculate\n train_stat['loss'] += loss.item()\n train_stat['correct'] += c.item()\n train_stat['total'] += labels.size()[0]\n train_results[optim_name].losses.append(train_stat['loss'] / (i + 1))\n train_results[optim_name].accs.append(train_stat['correct'] / train_stat['total'])\n\n\n with torch.no_grad():\n for i, data in enumerate(testloader, 0):\n inputs, labels = data\n inputs = inputs.to(device)\n labels = labels.to(device)\n outputs = net(inputs)\n loss = criterion(outputs, labels)\n _, predicted = torch.max(outputs, 1)\n c = (predicted == labels).sum()\n\n test_stat['loss'] += loss.item()\n test_stat['correct'] += c.item()\n test_stat['total'] += labels.size()[0]\n test_results[optim_name].losses.append(test_stat['loss'] / (i + 1))\n test_results[optim_name].accs.append(test_stat['correct'] / test_stat['total'])\n \n # Save stat!\n stat = {\n 'train': train_results,\n 'test': test_results\n }\n with 
open(f'adam_stat_mlp_{h_size}.pkl', 'wb') as f:\n pickle.dump(stat, f)\n \n # Plot loss \n f, (ax1, ax2) = plt.subplots(1, 2, figsize=(13, 5))\n for optim_name in optim_configs:\n if 'Bound' in optim_name:\n ax1.plot(train_results[optim_name].losses, '--', label=optim_name)\n else:\n ax1.plot(train_results[optim_name].losses, label=optim_name)\n ax1.set_ylabel('Training Loss')\n ax1.set_xlabel('# of Epcoh')\n ax1.legend()\n\n for optim_name in optim_configs:\n if 'Bound' in optim_name:\n ax2.plot(test_results[optim_name].losses, '--', label=optim_name)\n else:\n ax2.plot(test_results[optim_name].losses, label=optim_name)\n ax2.set_ylabel('Test Loss')\n ax2.set_xlabel('# of Epcoh')\n ax2.legend()\n\n plt.suptitle(f'Training Loss and Test Loss for MLP({h_size}) on MNIST', y=1.01)\n plt.tight_layout()\n plt.show()\n \n # Plot accuracy \n f, (ax1, ax2) = plt.subplots(1, 2, figsize=(13, 5))\n for optim_name in optim_configs:\n if 'Bound' in optim_name:\n ax1.plot(train_results[optim_name].accs, '--', label=optim_name)\n else:\n ax1.plot(train_results[optim_name].accs, label=optim_name)\n ax1.set_ylabel('Training Accuracy %')\n ax1.set_xlabel('# of Epcoh')\n ax1.legend()\n\n for optim_name in optim_configs:\n if 'Bound' in optim_name:\n ax2.plot(test_results[optim_name].accs, '--', label=optim_name)\n else:\n ax2.plot(test_results[optim_name].accs, label=optim_name)\n ax2.set_ylabel('Test Accuracy %')\n ax2.set_xlabel('# of Epcoh')\n ax2.legend()\n\n plt.suptitle(f'Training Accuracy and Test Accuracy for MLP({h_size}) on MNIST', y=1.01)\n plt.tight_layout()\n plt.show()",

" 0%| | 0/100 [00:00<?, ?it/s]"

]

]

] |

[

"code"

] |

[

[

"code",

"code",

"code",

"code",

"code",

"code"

]

] |
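Each entry in `optim_configs` changes only `lr`, while `betas` and `eps` stay fixed. To show what these settings control, here is a minimal pure-Python sketch of the Adam update with bias correction. It runs on the toy 1-D objective f(x) = (x - 3)^2, which is an illustrative assumption and not part of the original MNIST experiment. The function name `adam_minimize` is hypothetical.

```python
import math


def adam_minimize(grad, x0, lr=0.1, betas=(0.9, 0.999), eps=1e-8, steps=500):
    """Run plain Adam on a scalar parameter and return its final value."""
    beta1, beta2 = betas
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias-corrected estimates
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def quadratic_grad(x):
    # Gradient of f(x) = (x - 3)^2
    return 2.0 * (x - 3.0)


for lr in (1e-4, 1e-3, 1e-2, 1e-1):
    print(f'lr={lr:g}: x after 500 steps = {adam_minimize(quadratic_grad, 0.0, lr=lr):.4f}')
```

Because the normalized step `m_hat / sqrt(v_hat)` stays close to 1 in magnitude while the gradient sign is constant, each update moves `x` by roughly `lr`. With `lr=1e-4`, 500 steps barely leave the starting point. This is one reason the learning-rate sweep matters more for Adam than the other hyperparameters.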

cb7dc30f282cbb1c813608eb66feb52f2edaff0d

| 75,312 |

ipynb

|

Jupyter Notebook

|

assignment_cnn_preenchido.ipynb

|

WittmannF/udemy-deep-learning-cnns

|

4aefdd611824284b31b40ac3b496d3f8ee86b691

|

[

"MIT"

] | null | null | null |

assignment_cnn_preenchido.ipynb

|

WittmannF/udemy-deep-learning-cnns

|

4aefdd611824284b31b40ac3b496d3f8ee86b691

|

[

"MIT"

] | null | null | null |

assignment_cnn_preenchido.ipynb

|

WittmannF/udemy-deep-learning-cnns

|

4aefdd611824284b31b40ac3b496d3f8ee86b691

|

[

"MIT"

] | null | null | null | 137.681901 | 17,278 | 0.840504 |

[

[

[

"<a href=\"https://colab.research.google.com/github/WittmannF/udemy-deep-learning-cnns/blob/main/assignment_cnn_preenchido.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>",

"_____no_output_____"

],

[

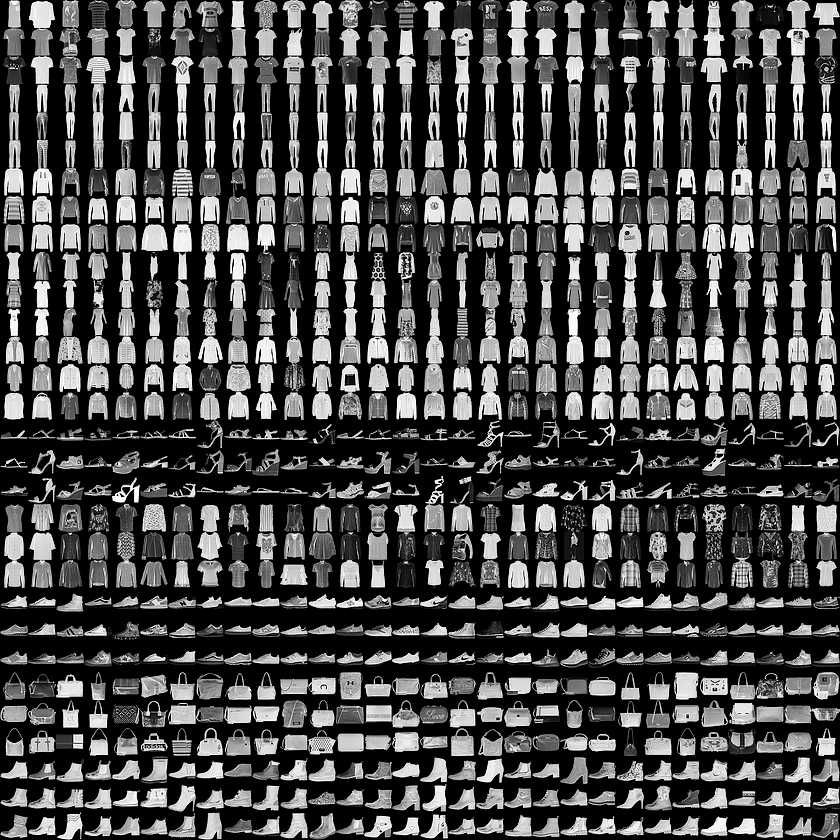

"## Assignment: Fashion MNIST\nNow it is your turn! You are going to use the same methods presented in the previous video in order to classify clothes from a black and white dataset of images (image by Zalando, MIT License):\n\n\nThe class labels are:\n```\n0. T-shirt/top\n1. Trouser\n2. Pullover\n3. Dress\n4. Coat\n5. Sandal\n6. Shirt\n7. Sneaker\n8. Bag\n9. Ankle boot\n```\n\n### 1. Preparing the input data\nLet's first import the dataset. It is available on [tensorflow.keras.datasets](https://keras.io/datasets/):",

"_____no_output_____"

]

],

[

[

"import tensorflow\nfashion_mnist = tensorflow.keras.datasets.fashion_mnist\n\n(X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()",

"Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz\n32768/29515 [=================================] - 0s 0us/step\n40960/29515 [=========================================] - 0s 0us/step\nDownloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz\n26427392/26421880 [==============================] - 0s 0us/step\n26435584/26421880 [==============================] - 0s 0us/step\nDownloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz\n16384/5148 [===============================================================================================] - 0s 0us/step\nDownloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz\n4423680/4422102 [==============================] - 0s 0us/step\n4431872/4422102 [==============================] - 0s 0us/step\n"

],

[

"print(\"Shape of the training set: {}\".format(X_train.shape))\nprint(\"Shape of the test set: {}\".format(X_test.shape))",

"Shape of the training set: (60000, 28, 28)\nShape of the test set: (10000, 28, 28)\n"

],

[

"# TODO: Normalize the training and testing set using standardization\ndef normalize(x,m,s):\n return (x-m)/s\n\ntrain_mean = X_train.mean()\ntrain_std = X_train.std()\n\nX_train = normalize(X_train, train_mean, train_std)\nX_test = normalize(X_test, train_mean, train_std)\n",

"_____no_output_____"

],

[

"print(f'Training Mean after standardization {X_train.mean():.3f}')\nprint(f'Training Std after standardization {X_train.std():.3f}')\nprint(f'Test Mean after standardization {X_test.mean():.3f}')\nprint(f'Test Std after standardization {X_test.std():.3f}')",

"Training Mean after standardization -0.000\nTraining Std after standardization 1.000\nTest Mean after standardization 0.002\nTest Std after standardization 0.998\n"

]

],

[

[

"### 2. Training with fully connected layers",

"_____no_output_____"

]

],

[

[

"from tensorflow.keras.models import Sequential\nfrom tensorflow.keras.layers import Flatten, Dense\n\nmodel = Sequential([\n Flatten(),\n Dense(512, activation='relu'),\n Dense(10, activation='softmax')\n])\n\nmodel.compile(optimizer='adam',\n loss='sparse_categorical_crossentropy',\n metrics=['accuracy'])\n\nmodel.fit(X_train, y_train, epochs=2, validation_data=(X_test, y_test))",

"Epoch 1/2\n1875/1875 [==============================] - 6s 2ms/step - loss: 0.4515 - accuracy: 0.8380 - val_loss: 0.4077 - val_accuracy: 0.8497\nEpoch 2/2\n1875/1875 [==============================] - 4s 2ms/step - loss: 0.3473 - accuracy: 0.8720 - val_loss: 0.3933 - val_accuracy: 0.8556\n"

]

],

[

[

"### 3. Extending to CNNs\nNow your goal is to develop an architecture that can reach a test accuracy higher than 0.85.",

"_____no_output_____"

]

],

[

[

"X_train.shape",

"_____no_output_____"

],

[

"# TODO: Reshape the dataset in order to add the channel dimension\nX_train = X_train.reshape(-1, 28, 28, 1)\nX_test = X_test.reshape(-1, 28, 28, 1)",

"_____no_output_____"

],

[

"from tensorflow.keras.layers import Conv2D, MaxPooling2D\n\nmodel = Sequential([\n Conv2D(6, kernel_size=(3,3), activation='relu', input_shape=(28,28,1)),\n MaxPooling2D(),\n Conv2D(16, kernel_size=(3,3), activation='relu'),\n MaxPooling2D(),\n Flatten(),\n Dense(512, activation='relu'),\n Dense(10, activation='softmax')\n])\n\nmodel.summary()",

"Model: \"sequential_1\"\n_________________________________________________________________\nLayer (type) Output Shape Param # \n=================================================================\nconv2d (Conv2D) (None, 26, 26, 6) 60 \n_________________________________________________________________\nmax_pooling2d (MaxPooling2D) (None, 13, 13, 6) 0 \n_________________________________________________________________\nconv2d_1 (Conv2D) (None, 11, 11, 16) 880 \n_________________________________________________________________\nmax_pooling2d_1 (MaxPooling2 (None, 5, 5, 16) 0 \n_________________________________________________________________\nflatten_1 (Flatten) (None, 400) 0 \n_________________________________________________________________\ndense_2 (Dense) (None, 512) 205312 \n_________________________________________________________________\ndense_3 (Dense) (None, 10) 5130 \n=================================================================\nTotal params: 211,382\nTrainable params: 211,382\nNon-trainable params: 0\n_________________________________________________________________\n"

],

[

"model.compile(optimizer='adam',\n loss='sparse_categorical_crossentropy',\n metrics=['accuracy'])\n",

"_____no_output_____"

],

[

"hist=model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))",

"Epoch 1/10\n1875/1875 [==============================] - 21s 3ms/step - loss: 0.4505 - accuracy: 0.8368 - val_loss: 0.3674 - val_accuracy: 0.8636\nEpoch 2/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.3198 - accuracy: 0.8812 - val_loss: 0.3373 - val_accuracy: 0.8787\nEpoch 3/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.2808 - accuracy: 0.8971 - val_loss: 0.3061 - val_accuracy: 0.8911\nEpoch 4/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.2502 - accuracy: 0.9064 - val_loss: 0.3113 - val_accuracy: 0.8861\nEpoch 5/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.2270 - accuracy: 0.9147 - val_loss: 0.2970 - val_accuracy: 0.8898\nEpoch 6/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.2039 - accuracy: 0.9232 - val_loss: 0.2721 - val_accuracy: 0.9022\nEpoch 7/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.1843 - accuracy: 0.9305 - val_loss: 0.2746 - val_accuracy: 0.9061\nEpoch 8/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.1652 - accuracy: 0.9372 - val_loss: 0.2927 - val_accuracy: 0.9047\nEpoch 9/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.1484 - accuracy: 0.9436 - val_loss: 0.2862 - val_accuracy: 0.9040\nEpoch 10/10\n1875/1875 [==============================] - 5s 3ms/step - loss: 0.1318 - accuracy: 0.9504 - val_loss: 0.3239 - val_accuracy: 0.9003\n"

],

[

"import pandas as pd\npd.DataFrame(hist.history).plot()",

"_____no_output_____"

]

],

[

[

"### 4. Visualizing Predictions",

"_____no_output_____"

]

],

[

[

"import numpy as np\nimport matplotlib.pyplot as plt\n\nlabel_names = {0:\"T-shirt/top\",\n 1:\"Trouser\",\n 2:\"Pullover\",\n 3:\"Dress\",\n 4:\"Coat\",\n 5:\"Sandal\",\n 6:\"Shirt\",\n 7:\"Sneaker\",\n 8:\"Bag\",\n 9:\"Ankle boot\"}\n\n# Index to be visualized\nfor idx in range(5):\n plt.imshow(X_test[idx].reshape(28,28), cmap='gray')\n out = model.predict(X_test[idx].reshape(1,28,28,1))\n plt.title(\"True: {}, Pred: {}\".format(label_names[y_test[idx]], label_names[np.argmax(out)]))\n plt.show()",

"_____no_output_____"

],

[

"",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

]

] |
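The Fashion-MNIST visualization loop in this notebook calls `model.predict` once per image and takes `np.argmax(out)` over the whole output. A minimal sketch, assuming `model.predict` returns an `(n_samples, 10)` array of class probabilities, decodes a whole batch in one call by taking the argmax along `axis=1`. `decode_predictions` and `fake_probs` are hypothetical names, and the probabilities below are synthetic stand-ins, not real model output.

```python
import numpy as np

# Class names for the 10 Fashion-MNIST labels, as used in the notebook
LABEL_NAMES = {0: "T-shirt/top", 1: "Trouser", 2: "Pullover", 3: "Dress", 4: "Coat",
               5: "Sandal", 6: "Shirt", 7: "Sneaker", 8: "Bag", 9: "Ankle boot"}


def decode_predictions(probs, label_names=LABEL_NAMES):
    """Map an (n_samples, n_classes) probability array to class names.

    argmax is taken along axis=1, so each row (one image) yields one label.
    """
    probs = np.asarray(probs)
    if probs.ndim != 2:
        raise ValueError("expected a 2-D array of shape (n_samples, n_classes)")
    return [label_names[int(i)] for i in np.argmax(probs, axis=1)]


# Synthetic stand-in for something like model.predict(X_test[:3].reshape(-1, 28, 28, 1))
fake_probs = np.array([
    [0.01, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.99],
    [0.0, 0.0, 0.9, 0.0, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.8, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
])
print(decode_predictions(fake_probs))  # ['Ankle boot', 'Pullover', 'Trouser']
```

Predicting a batch with a single call avoids per-image overhead; the true labels can then be decoded the same way and compared element by element.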

cb7dc3c69efe8faa3a301aad4d196418c9fb835d

| 8,709 |

ipynb

|

Jupyter Notebook

|

Classificationa ,recomention and the word is positive or negative of text data using NLP.ipynb

|

Vivek-Upadhya/Vivek-Upadhya.github.io

|

b02d9c7ae91a652e8ac5d5ed42ef196a8a710704

|

[

"BSD-3-Clause",

"MIT"

] | null | null | null |

Classificationa ,recomention and the word is positive or negative of text data using NLP.ipynb

|

Vivek-Upadhya/Vivek-Upadhya.github.io

|

b02d9c7ae91a652e8ac5d5ed42ef196a8a710704

|

[

"BSD-3-Clause",

"MIT"

] | null | null | null |

Classificationa ,recomention and the word is positive or negative of text data using NLP.ipynb

|

Vivek-Upadhya/Vivek-Upadhya.github.io

|

b02d9c7ae91a652e8ac5d5ed42ef196a8a710704

|

[

"BSD-3-Clause",

"MIT"

] | null | null | null | 26.231928 | 432 | 0.546102 |

[

[

[

"# Load libraries\nimport os\nimport nltk\nfrom nltk.tokenize import sent_tokenize, word_tokenize",

"_____no_output_____"

],

[

"os.getcwd()",

"_____no_output_____"

],

[

"# Read the data (the path below is specific to the author's machine)\n\nwith open(\"C:\\\\Users\\\\vivek\\\\Desktop\\\\NLP Python Practice\\\\Labeled Dateset.txt\") as f:\n    raw = f.read()",

"_____no_output_____"

]

],

[

[

"# Tokenize the data and convert it to lowercase",

"_____no_output_____"

]

],

[

[

"# Convert the data to lowercase\n\nraw = raw.lower()",

"_____no_output_____"

],

[

"# Tokenize the data into sentences\n\ndocs = sent_tokenize(raw)",

"_____no_output_____"

],

[

"docs",

"_____no_output_____"

],

[

"# Split the text into lines, one labeled review (\"review : label\") per line\n\ndocs = docs[0].split(\"\\n\")",

"_____no_output_____"

],

[

"docs",

"_____no_output_____"

]

],

[

[

"# Pre-processing: removing punctuation",

"_____no_output_____"

]

],

[

[

"from string import punctuation as punc",

"_____no_output_____"

],

[

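"# str.replace returns a new string, so rebuild the list instead of discarding the result.\n# Keep ':' because it separates each review from its label.\ndocs = [\"\".join(ch for ch in d if ch not in punc or ch == \":\") for d in docs]",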

"_____no_output_____"

]

],

[

[

"# Removing stop words and stemming",

"_____no_output_____"

]

],

[

[

"from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS\nfrom nltk.stem import PorterStemmer",

"_____no_output_____"

],

[

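"ps = PorterStemmer()\ncleaned = []\nfor d in docs:\n    # Process only the text before ':' so the label stays unchanged\n    text, sep, label = d.partition(\":\")\n    # Drop stop words token by token (not by substring), then stem the remaining tokens\n    tokens = [ps.stem(tok) for tok in word_tokenize(text) if tok not in ENGLISH_STOP_WORDS]\n    cleaned.append(\" \".join(tokens) + \" \" + sep + label)\n# Note: the user's test sentence entered in the next step is not preprocessed this way\ndocs = cleaned",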

"_____no_output_____"

]

],

[

[

"# Ask the user for test data",

"_____no_output_____"

]

],

[

[

"for i in range(len(docs)):\n    print(\"D\" + str(i) + \":\" + docs[i])\ntest = input(\"Enter your text:\")\ndocs.append(test + \":\")  # the test sentence gets an empty label\n\n## Separate each document into text and label, stripping unwanted whitespace\nx, y = [], []\nfor d in docs:\n    x.append(d[:d.index(\":\")].strip())\n    y.append(d[d.index(\":\")+1:].strip())\n\n# The last document is the user's test sentence; all earlier ones are training data\nn_train = len(docs) - 1\n\n# Vectorize with TF-IDF\n\nfrom sklearn.feature_extraction.text import TfidfVectorizer\nvectorizer = TfidfVectorizer()\nvec = vectorizer.fit_transform(x)\n\n# Train a 1-nearest-neighbor KNN classifier\n\nfrom sklearn.neighbors import KNeighborsClassifier\nknn = KNeighborsClassifier(n_neighbors=1)\nknn.fit(vec[:n_train], y[:n_train])\nprint(\"Label: \", knn.predict(vec[n_train]))\n\n# Sentiment analysis: sum each token's WordNet similarity to \"good\" and to \"evil\"\n\nfrom nltk.corpus import wordnet\ntest_tokens = test.split(\" \")\ngood = wordnet.synsets(\"good\")\nbad = wordnet.synsets(\"evil\")\nscore_pos = 0\nscore_neg = 0\n\nfor token in test_tokens:\n    t = wordnet.synsets(token)\n    if len(t) > 0:\n        sim_good = wordnet.wup_similarity(good[0], t[0])\n        sim_bad = wordnet.wup_similarity(bad[0], t[0])\n        if sim_good is not None:\n            score_pos += sim_good\n        if sim_bad is not None:\n            score_neg += sim_bad\n\nif (score_pos - score_neg) > 0.1:\n    print(\"Subjective Statement, Positive opinion of strength: %.2f\" % score_pos)\nelif (score_neg - score_pos) > 0.1:\n    print(\"Subjective Statement, Negative opinion of strength: %.2f\" % score_neg)\nelse:\n    print(\"Objective Statement, No opinion shown\")\n\n# Nearest documents (recommendation)\n\nfrom sklearn.neighbors import NearestNeighbors\nnb = NearestNeighbors(n_neighbors=2)\nnb.fit(vec[:n_train])\nclosest_docs = nb.kneighbors(vec[n_train])\nprint(\"Recommended document IDs \", closest_docs[1])\nprint(\"with distances \", closest_docs[0])",

"D0:this recipe is very special for cooking snacks : cooking\nD1:i like to cook but it is usually takes longer : cokking \nD2:my priorities is cooking include pastas and soup: cooking\nD3:one need to stay fit while playing profesional sport : sports\nD4:it is very important for sportsman to take care of their diet : sports\nD5:professional sports demand a lot of hardwork: sports\nEnter your text:i like to make specual food\nLabel: ['cokking']\nObjective Statement, No openion Showed\nRecomended document with IDs [[1 3]]\nhiving distance [[1.26626032 1.36748507]]\n"

]

]

] |

[

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

]

] |
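In the NLP notebook's preprocessing cells, `d.replace(...)` returns a new string, so discarding the result leaves `docs` unchanged. Stripping all punctuation naively would also remove the `:` that separates each review from its label. A minimal stdlib-only sketch, assuming each line has the form `text : label`, cleans only the text part and reassigns the result to the list. `clean_labeled_line` and the small `STOP_WORDS` set are hypothetical illustrations (the notebook itself uses scikit-learn's `ENGLISH_STOP_WORDS`), and stemming is omitted for brevity.

```python
import string

# Hypothetical, tiny stop-word list for illustration only
STOP_WORDS = {"i", "to", "but", "it", "is", "a", "of", "for", "and", "the", "their"}


def clean_labeled_line(line, stop_words=STOP_WORDS):
    """Clean the text part of a 'text : label' line and keep the label intact."""
    text, sep, label = line.partition(":")
    # Remove punctuation from the text only; the ':' separator was already split off
    text = text.translate(str.maketrans("", "", string.punctuation))
    # Drop stop words by whole token, not by substring (so "i" inside "like" survives)
    kept = [tok for tok in text.lower().split() if tok not in stop_words]
    cleaned = " ".join(kept)
    # Re-attach the label so a later d.index(":") split still works
    return f"{cleaned} : {label.strip()}" if sep else cleaned


docs = ["I like to cook, but it is usually takes longer : cooking",
        "Professional sports demand a lot of hardwork!: sports"]
# Reassign: strings are immutable, so the list must hold the new strings
docs = [clean_labeled_line(d) for d in docs]
print(docs)
# ['like cook usually takes longer : cooking', 'professional sports demand lot hardwork : sports']
```

Whatever cleaning is applied to the training lines should also be applied to the user's test sentence before vectorizing, so both share the same vocabulary.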

cb7dd0490e2079bdc9038b6f506e0c7f53ab0bd3

| 7,007 |

ipynb

|

Jupyter Notebook

|

courses/fast-and-lean-data-science/colab_intro.ipynb

|

KayvanShah1/training-data-analyst

|

3f778a57b8e6d2446af40ca6063b2fd9c1b4bc88

|

[

"Apache-2.0"

] | 6,140 |

2016-05-23T16:09:35.000Z

|

2022-03-30T19:00:46.000Z

|

courses/fast-and-lean-data-science/colab_intro.ipynb

|

KayvanShah1/training-data-analyst

|

3f778a57b8e6d2446af40ca6063b2fd9c1b4bc88

|

[

"Apache-2.0"

] | 1,384 |

2016-07-08T22:26:41.000Z

|

2022-03-24T16:39:43.000Z

|

courses/fast-and-lean-data-science/colab_intro.ipynb

|

KayvanShah1/training-data-analyst

|

3f778a57b8e6d2446af40ca6063b2fd9c1b4bc88

|

[

"Apache-2.0"

] | 5,110 |

2016-05-27T13:45:18.000Z

|

2022-03-31T18:40:42.000Z

| 30.072961 | 395 | 0.517625 |

[

[

[