sha

stringlengths 40

40

| text

stringlengths 0

13.4M

| id

stringlengths 2

117

| tags

list | created_at

stringlengths 25

25

| metadata

stringlengths 2

31.7M

| last_modified

stringlengths 25

25

|

|---|---|---|---|---|---|---|

024c3202dd522d1fec98d154895ad8cbaedb74fb | # Dataset Card for the High-Level Dataset

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Supported Tasks](#supported-tasks)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

The High-Level (HL) dataset aligns **object-centric descriptions** from [COCO](https://arxiv.org/pdf/1405.0312.pdf)

with **high-level descriptions** crowdsourced along 3 axes: **_scene_, _action_, _rationale_**

The HL dataset contains 14997 images from COCO and a total of 134973 crowdsourced captions (3 captions for each axis) aligned with ~749984 object-centric captions from COCO.

Each axis is collected by asking the following 3 questions:

1) Where is the picture taken?

2) What is the subject doing?

3) Why is the subject doing it?

**The high-level descriptions capture the human interpretations of the images**. These interpretations contain abstract concepts not directly linked to physical objects.

Each high-level description is provided with a _confidence score_, crowdsourced by an independent worker measuring the extent to which

the high-level description is likely given the corresponding image, question, and caption. The higher the score, the more the high-level caption is close to the commonsense (in a Likert scale from 1-5).

- **🗃️ Repository:** [github.com/michelecafagna26/HL-dataset](https://github.com/michelecafagna26/HL-dataset)

- **📜 Paper:** [HL Dataset: Visually-grounded Description of Scenes, Actions and Rationales](https://arxiv.org/abs/2302.12189?context=cs.CL)

- **🧭 Spaces:** [Dataset explorer](https://huggingface.co/spaces/michelecafagna26/High-Level-Dataset-explorer)

- **🖊️ Contact:** [email protected]

### Supported Tasks

- image captioning

- visual question answering

- multimodal text-scoring

- zero-shot evaluation

### Languages

English

## Dataset Structure

The dataset is provided with images from COCO and two metadata jsonl files containing the annotations

### Data Instances

An instance looks like this:

```json

{

"file_name": "COCO_train2014_000000138878.jpg",

"captions": {

"scene": [

"in a car",

"the picture is taken in a car",

"in an office."

],

"action": [

"posing for a photo",

"the person is posing for a photo",

"he's sitting in an armchair."

],

"rationale": [

"to have a picture of himself",

"he wants to share it with his friends",

"he's working and took a professional photo."

],

"object": [

"A man sitting in a car while wearing a shirt and tie.",

"A man in a car wearing a dress shirt and tie.",

"a man in glasses is wearing a tie",

"Man sitting in the car seat with button up and tie",

"A man in glasses and a tie is near a window."

]

},

"confidence": {

"scene": [

5,

5,

4

],

"action": [

5,

5,

4

],

"rationale": [

5,

5,

4

]

},

"purity": {

"scene": [

-1.1760284900665283,

-1.0889461040496826,

-1.442818284034729

],

"action": [

-1.0115827322006226,

-0.5917857885360718,

-1.6931917667388916

],

"rationale": [

-1.0546956062316895,

-0.9740906357765198,

-1.2204363346099854

]

},

"diversity": {

"scene": 25.965358893403383,

"action": 32.713305568898775,

"rationale": 2.658757840479801

}

}

```

### Data Fields

- ```file_name```: original COCO filename

- ```captions```: Dict containing all the captions for the image. Each axis can be accessed with the axis name and it contains a list of captions.

- ```confidence```: Dict containing the captions confidence scores. Each axis can be accessed with the axis name and it contains a list of captions. Confidence scores are not provided for the _object_ axis (COCO captions).t

- ```purity score```: Dict containing the captions purity scores. The purity score measures the semantic similarity of the captions within the same axis (Bleurt-based).

- ```diversity score```: Dict containing the captions diversity scores. The diversity score measures the lexical diversity of the captions within the same axis (Self-BLEU-based).

### Data Splits

There are 14997 images and 134973 high-level captions split into:

- Train-val: 13498 images and 121482 high-level captions

- Test: 1499 images and 13491 high-level captions

## Dataset Creation

The dataset has been crowdsourced on Amazon Mechanical Turk.

From the paper:

>We randomly select 14997 images from the COCO 2014 train-val split. In order to answer questions related to _actions_ and _rationales_ we need to

> ensure the presence of a subject in the image. Therefore, we leverage the entity annotation provided in COCO to select images containing

> at least one person. The whole annotation is conducted on Amazon Mechanical Turk (AMT). We split the workload into batches in order to ease

>the monitoring of the quality of the data collected. Each image is annotated by three different annotators, therefore we collect three annotations per axis.

### Curation Rationale

From the paper:

>In this work, we tackle the issue of **grounding high-level linguistic concepts in the visual modality**, proposing the High-Level (HL) Dataset: a

V\&L resource aligning existing object-centric captions with human-collected high-level descriptions of images along three different axes: _scenes_, _actions_ and _rationales_.

The high-level captions capture the human interpretation of the scene, providing abstract linguistic concepts complementary to object-centric captions

>used in current V\&L datasets, e.g. in COCO. We take a step further, and we collect _confidence scores_ to distinguish commonsense assumptions

>from subjective interpretations and we characterize our data under a variety of semantic and lexical aspects.

### Source Data

- Images: COCO

- object axis annotations: COCO

- scene, action, rationale annotations: crowdsourced

- confidence scores: crowdsourced

- purity score and diversity score: automatically computed

#### Annotation process

From the paper:

>**Pilot:** We run a pilot study with the double goal of collecting feedback and defining the task instructions.

>With the results from the pilot we design a beta version of the task and we run a small batch of cases on the crowd-sourcing platform.

>We manually inspect the results and we further refine the instructions and the formulation of the task before finally proceeding with the

>annotation in bulk. The final annotation form is shown in Appendix D.

>***Procedure:*** The participants are shown an image and three questions regarding three aspects or axes: _scene_, _actions_ and _rationales_

> i,e. _Where is the picture taken?_, _What is the subject doing?_, _Why is the subject doing it?_. We explicitly ask the participants to use

>their personal interpretation of the scene and add examples and suggestions in the instructions to further guide the annotators. Moreover,

>differently from other VQA datasets like (Antol et al., 2015) and (Zhu et al., 2016), where each question can refer to different entities

>in the image, we systematically ask the same three questions about the same subject for each image. The full instructions are reported

>in Figure 1. For details regarding the annotation costs see Appendix A.

#### Who are the annotators?

Turkers from Amazon Mechanical Turk

### Personal and Sensitive Information

There is no personal or sensitive information

## Considerations for Using the Data

[More Information Needed]

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

From the paper:

>**Quantitying grammatical errors:** We ask two expert annotators to correct grammatical errors in a sample of 9900 captions, 900 of which are shared between the two annotators.

> The annotators are shown the image caption pairs and they are asked to edit the caption whenever they identify a grammatical error.

>The most common errors reported by the annotators are:

>- Misuse of prepositions

>- Wrong verb conjugation

>- Pronoun omissions

>In order to quantify the extent to which the corrected captions differ from the original ones, we compute the Levenshtein distance (Levenshtein, 1966) between them.

>We observe that 22.5\% of the sample has been edited and only 5\% with a Levenshtein distance greater than 10. This suggests a reasonable

>level of grammatical quality overall, with no substantial grammatical problems. This can also be observed from the Levenshtein distance

>distribution reported in Figure 2. Moreover, the human evaluation is quite reliable as we observe a moderate inter-annotator agreement

>(alpha = 0.507, (Krippendorff, 2018) computed over the shared sample.

### Dataset Curators

Michele Cafagna

### Licensing Information

The Images and the object-centric captions follow the [COCO terms of Use](https://cocodataset.org/#termsofuse)

The remaining annotations are licensed under Apache-2.0 license.

### Citation Information

```BibTeX

@inproceedings{cafagna2023hl,

title={{HL} {D}ataset: {V}isually-grounded {D}escription of {S}cenes, {A}ctions and

{R}ationales},

author={Cafagna, Michele and van Deemter, Kees and Gatt, Albert},

booktitle={Proceedings of the 16th International Natural Language Generation Conference (INLG'23)},

address = {Prague, Czech Republic},

year={2023}

}

```

| michelecafagna26/hl | [

"task_categories:image-to-text",

"task_categories:question-answering",

"task_categories:zero-shot-classification",

"task_ids:text-scoring",

"annotations_creators:crowdsourced",

"multilinguality:monolingual",

"size_categories:10K<n<100K",

"language:en",

"license:apache-2.0",

"arxiv:1405.0312",

"arxiv:2302.12189",

"region:us"

]

| 2023-01-25T16:15:17+00:00 | {"annotations_creators": ["crowdsourced"], "language": ["en"], "license": "apache-2.0", "multilinguality": ["monolingual"], "size_categories": ["10K<n<100K"], "task_categories": ["image-to-text", "question-answering", "zero-shot-classification"], "task_ids": ["text-scoring"], "pretty_name": "HL (High-Level Dataset)", "annotations_origin": ["crowdsourced"], "dataset_info": {"splits": [{"name": "train", "num_examples": 13498}, {"name": "test", "num_examples": 1499}]}} | 2023-08-02T10:50:20+00:00 |

0ea08bf8ff41c8ea54d6671411ce0005fb46113a | # Dataset Card for "RSSCN7"

## Dataset Description

- **Paper** [Deep Learning Based Feature Selection for Remote Sensing Scene Classification](https://ieeexplore.ieee.org/iel7/8859/7305891/07272047.pdf)

### Licensing Information

For research and academic purposes.

## Citation Information

[Deep Learning Based Feature Selection for Remote Sensing Scene Classification](https://ieeexplore.ieee.org/iel7/8859/7305891/07272047.pdf)

```

@article{7272047,

title = {Deep Learning Based Feature Selection for Remote Sensing Scene Classification},

author = {Zou, Qin and Ni, Lihao and Zhang, Tong and Wang, Qian},

year = 2015,

journal = {IEEE Geoscience and Remote Sensing Letters},

volume = 12,

number = 11,

pages = {2321--2325},

doi = {10.1109/LGRS.2015.2475299}

}

``` | jonathan-roberts1/RSSCN7 | [

"task_categories:image-classification",

"task_categories:zero-shot-image-classification",

"license:other",

"region:us"

]

| 2023-01-25T16:16:29+00:00 | {"license": "other", "task_categories": ["image-classification", "zero-shot-image-classification"], "dataset_info": {"features": [{"name": "image", "dtype": "image"}, {"name": "label", "dtype": {"class_label": {"names": {"0": "field", "1": "forest", "2": "grass", "3": "industry", "4": "parking", "5": "resident", "6": "river or lake"}}}}], "splits": [{"name": "train", "num_bytes": 345895442.4, "num_examples": 2800}], "download_size": 367257922, "dataset_size": 345895442.4}} | 2023-03-31T16:20:53+00:00 |

212f53dc625a4caaefa8f105679d3434381158c1 | # Dataset Card for "OxfordPets_test_facebook_opt_2.7b_Attributes_Caption_ns_3669"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Multimodal-Fatima/OxfordPets_test_facebook_opt_2.7b_Attributes_Caption_ns_3669 | [

"region:us"

]

| 2023-01-25T16:20:51+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "true_label", "dtype": "string"}, {"name": "prediction", "dtype": "string"}, {"name": "scores", "sequence": "float64"}], "splits": [{"name": "fewshot_0_bs_16", "num_bytes": 121189501.375, "num_examples": 3669}, {"name": "fewshot_1_bs_16", "num_bytes": 122187449.375, "num_examples": 3669}, {"name": "fewshot_3_bs_16", "num_bytes": 124265920.375, "num_examples": 3669}, {"name": "fewshot_5_bs_16", "num_bytes": 126336943.375, "num_examples": 3669}, {"name": "fewshot_8_bs_16", "num_bytes": 129454684.375, "num_examples": 3669}], "download_size": 603074119, "dataset_size": 623434498.875}} | 2023-01-25T20:23:48+00:00 |

ac46d216ebaf87e36a4dae607253e6985e6e5a75 | # Dataset Card for "OxfordPets_test_facebook_opt_125m_Visclues_ns_3669"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Multimodal-Fatima/OxfordPets_test_facebook_opt_125m_Visclues_ns_3669 | [

"region:us"

]

| 2023-01-25T16:23:25+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "true_label", "dtype": "string"}, {"name": "prediction", "dtype": "string"}, {"name": "scores", "sequence": "float64"}], "splits": [{"name": "fewshot_0_bs_16", "num_bytes": 121460903.375, "num_examples": 3669}, {"name": "fewshot_1_bs_16", "num_bytes": 122822438.375, "num_examples": 3669}, {"name": "fewshot_3_bs_16", "num_bytes": 125536937.375, "num_examples": 3669}, {"name": "fewshot_5_bs_16", "num_bytes": 128243714.375, "num_examples": 3669}, {"name": "fewshot_8_bs_16", "num_bytes": 132312290.375, "num_examples": 3669}], "download_size": 604694650, "dataset_size": 630376283.875}} | 2023-01-25T20:30:55+00:00 |

bcb88fa457c2bea86e317aa0fc22e177f1ce49b1 | # Dataset Card for "OxfordPets_test_facebook_opt_350m_Visclues_ns_3669"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Multimodal-Fatima/OxfordPets_test_facebook_opt_350m_Visclues_ns_3669 | [

"region:us"

]

| 2023-01-25T16:27:08+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "true_label", "dtype": "string"}, {"name": "prediction", "dtype": "string"}, {"name": "scores", "sequence": "float64"}], "splits": [{"name": "fewshot_0_bs_16", "num_bytes": 121460915.375, "num_examples": 3669}, {"name": "fewshot_1_bs_16", "num_bytes": 122822636.375, "num_examples": 3669}, {"name": "fewshot_3_bs_16", "num_bytes": 125537076.375, "num_examples": 3669}, {"name": "fewshot_5_bs_16", "num_bytes": 128243735.375, "num_examples": 3669}, {"name": "fewshot_8_bs_16", "num_bytes": 132312128.375, "num_examples": 3669}], "download_size": 604694442, "dataset_size": 630376491.875}} | 2023-01-25T20:41:27+00:00 |

80b92e231adc6c0cd9314ab5de5e9a3997c0be16 | # Dataset Card for "yuvalkirstain-pickapic-ft-eval-random-prompts"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | yuvalkirstain/yuvalkirstain-pickapic-ft-eval-random-prompts | [

"region:us"

]

| 2023-01-25T16:28:58+00:00 | {"dataset_info": {"features": [{"name": "prompt", "dtype": "string"}, {"name": "url", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 31392, "num_examples": 200}], "download_size": 11259, "dataset_size": 31392}} | 2023-01-25T16:29:05+00:00 |

c6912d3c9b04c0edc0857e7c4c458b0e3fef1b4b | # Dataset Card for "OxfordPets_test_facebook_opt_1.3b_Visclues_ns_3669"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Multimodal-Fatima/OxfordPets_test_facebook_opt_1.3b_Visclues_ns_3669 | [

"region:us"

]

| 2023-01-25T16:32:27+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "true_label", "dtype": "string"}, {"name": "prediction", "dtype": "string"}, {"name": "scores", "sequence": "float64"}], "splits": [{"name": "fewshot_0_bs_16", "num_bytes": 121477284.375, "num_examples": 3669}, {"name": "fewshot_1_bs_16", "num_bytes": 122822944.375, "num_examples": 3669}, {"name": "fewshot_3_bs_16", "num_bytes": 125537165.375, "num_examples": 3669}, {"name": "fewshot_5_bs_16", "num_bytes": 128243890.375, "num_examples": 3669}, {"name": "fewshot_8_bs_16", "num_bytes": 132312524.375, "num_examples": 3669}], "download_size": 604685676, "dataset_size": 630393808.875}} | 2023-01-25T21:01:08+00:00 |

83bc4b9a3afda020c5cb388a4fd470a7133301e6 | DewaNyoman/parkir-perahu | [

"license:unknown",

"region:us"

]

| 2023-01-25T16:36:11+00:00 | {"license": "unknown"} | 2023-01-25T16:45:02+00:00 |

|

3e793948e63e15c2ada57984aff1e8848c55c560 | # Dataset Card for "OxfordPets_test_facebook_opt_2.7b_Visclues_ns_3669"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Multimodal-Fatima/OxfordPets_test_facebook_opt_2.7b_Visclues_ns_3669 | [

"region:us"

]

| 2023-01-25T16:39:10+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "image", "dtype": "image"}, {"name": "prompt", "dtype": "string"}, {"name": "true_label", "dtype": "string"}, {"name": "prediction", "dtype": "string"}, {"name": "scores", "sequence": "float64"}], "splits": [{"name": "fewshot_0_bs_16", "num_bytes": 121488865.375, "num_examples": 3669}, {"name": "fewshot_1_bs_16", "num_bytes": 122822889.375, "num_examples": 3669}, {"name": "fewshot_3_bs_16", "num_bytes": 125537183.375, "num_examples": 3669}, {"name": "fewshot_5_bs_16", "num_bytes": 128243845.375, "num_examples": 3669}, {"name": "fewshot_8_bs_16", "num_bytes": 132312365.375, "num_examples": 3669}], "download_size": 604681164, "dataset_size": 630405148.875}} | 2023-01-25T21:31:11+00:00 |

4228afe8a630ba39652b20c3f12cf34eb80a0cd6 | # Dataset Card for "BusinessNewsDataset"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | LIDIA-HESSEN/vencortex-BusinessNewsDataset | [

"region:us"

]

| 2023-01-25T17:09:47+00:00 | {"dataset_info": {"features": [{"name": "title", "dtype": "string"}, {"name": "image", "dtype": "string"}, {"name": "text", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "type", "dtype": "string"}, {"name": "context_id", "dtype": "string"}, {"name": "source", "dtype": "string"}, {"name": "date", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 290733891, "num_examples": 469361}], "download_size": 123671926, "dataset_size": 290733891}} | 2023-01-25T17:09:54+00:00 |

d26e9511bf4570dcba1ea244f93807bdf14750c6 | # Dataset Card for "FAQ_student_accesiblity_for_UTD"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Rami/FAQ_student_accesiblity_for_UTD | [

"region:us"

]

| 2023-01-25T17:15:01+00:00 | {"dataset_info": {"features": [{"name": "Question", "dtype": "string"}, {"name": "Answering", "dtype": "string"}, {"name": "URL", "dtype": "string"}, {"name": "Label", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 86308, "num_examples": 156}], "download_size": 44389, "dataset_size": 86308}} | 2023-03-25T22:17:38+00:00 |

763c2084a6b03532f4b6277818b03e5263d229d3 | # This repository contains the dataset of weather forecasting competition - Datavidia 2022

## Deskripsi File

- train.csv - Data yang digunakan untuk melatih model berisi fitur-fitur dan target

- train_hourly.csv - Data tambahan berisi fitur-fitur untuk setiap jam

- test.csv - Data uji yang berisi fitur-fitur untuk prediksi target

- test_hourly.csv - Data tambahan berisi fitur-fitur untuk setiap jam pada tanggal-tanggal yang termasuk dalam test.csv

- sample_submission.csv - File berisi contoh submisi untuk kompetisi ini

## Deskripsi Fitur

### train.csv

- time – Tanggal pencatatan

- temperature_2m_max (°C) – Temperatur udara tertinggi pada ketinggian 2 m di atas permukaan

- temperature_2m_min (°C) – Temperatur udara terendah pada ketinggian 2 m di atas permukaan

- apparent_temperature_max (°C) – Temperatur semu maksimum yang terasa

- apparent_temperature_min (°C) – Temperatur semu minimum yang terasa

- sunrise (iso8601) – Waktu matahari terbit pada hari itu dengan format ISO 8601

- sunset (iso8601) – Waktu matahari tenggelam pada hari itu dengan format ISO 8601

- shortwave_radiation_sum (MJ/m²) – Total radiasi matahari pada hari tersebut

- rain_sum (mm) – Jumlah curah hujan pada hari tersebut

- snowfall_sum (cm) – Jumlah hujan salju pada hari tersebut

- windspeed_10m_max (km/h) – Kecepatan angin maksimum pada ketinggian 10 m

- windgusts_10m_max (km/h) - Kecepatan angin minimum pada ketinggian 10 m

- winddirection_10m_dominant (°) – Arah angin dominan pada hari tersebut

- et0_fao_evapotranspiration (mm) – Jumlah evaporasi dan transpirasi pada hari tersebut

- elevation – Ketinggian kota yang tercatat

- city – Nama kota yang tercatat

### train_hourly.csv

- time – Tanggal dan jam pencatatan

- temperature_2m (°C) – Temperatur pada ketinggian 2 m

- relativehumidity_2m (%) – Kelembapan pada ketinggian 2 m

- dewpoint_2m (°C) – Titik embun; suhu ambang udara mengembun

- apparent_temperature (°C) – Temperatur semu yang dirasakan

- pressure_msl (hPa) – Tekanan udara pada ketinggian permukaan air laut rata-rata (mean sea level)

- surface_pressure (hPa) – Tekanan udara pada ketinggian permukaan daerah tersebut

- snowfall (cm) – Jumlah hujan salju pada jam tersebut

- cloudcover (%) – Persentase awan yang menutupi langit

- cloudcover_low (%) – Persentase cloud cover pada awan sampai ketinggian 2 km

- cloudcover_mid (%) – Persentase cloud cover pada ketinggian 2-6 km

- cloudcover_high (%) – Persentase cloud cover pada ketinggian di atas 6 km

- shortwave_radiation (W/m²) – Rata-rata energi pancaran matahari pada gelombang inframerah hingga ultraviolet

- direct_radiation (W/m²) – Rata-rata pancaran matahari langsung pada permukaan tanah seluas 1 m2

- diffuse_radiation (W/m²) – Rata-rata pancaran matahari yang dihamburkan oleh permukaan dan atmosfer

- direct_normal_irradiance (W/m²) – Rata-rata pancaran matahari langsung pada luas 1 m2 tegak lurus dengan arah pancaran

- windspeed_10m (km/h) – Kecepatan angin pada ketinggian 10 m

- windspeed_100m (km/h) – Kecepatan angin pada ketinggian 100 m

- winddirection_10m (°) – Arah angin pada ketinggian 10 m

- winddirection_100m (°) – Arah angin pada ketinggian 100 m

- windgusts_10m (km/h) – Kecepatan angin ketika terdapat angin kencang

- et0_fao_evapotranspiration (mm) – Jumlah evapotranspirasi (evaporasi dan transpirasi) pada jam tersebut

- vapor_pressure_deficit (kPa) – Perbedaan tekanan uap air dari udara dengan tekanan uap air ketika udara tersaturasi

- soil_temperature_0_to_7cm (°C) – Rata-rata temperatur tanah pada kedalaman 0-7 cm

- soil_temperature_7_to_28cm (°C) – Rata-rata temperatur tanah pada kedalaman 7-28 cm

- soil_temperature_28_to_100cm (°C) – Rata-rata temperatur tanah pada kedalaman 28-100 cm

- soil_temperature_100_to_255cm (°C) – Rata-rata temperatur tanah pada kedalaman 100-255 cm

- soil_moisture_0_to_7cm (m³/m³) – Rata-rata kelembapan air pada tanah untuk kedalaman 0-7 cm

- soil_moisture_7_to_28cm (m³/m³) – Rata-rata kelembapan air pada tanah untuk kedalaman 7-28 cm

- soil_moisture_28_to_100cm (m³/m³) – Rata-rata kelembapan air pada tanah untuk kedalaman 28-100 cm

- soil_moisture_100_to_255cm (m³/m³) – Rata-rata kelembapan air pada tanah untuk kedalaman 100-255 cm

- city – Nama kota | elskow/Weather4cast | [

"license:unlicense",

"region:us"

]

| 2023-01-25T17:31:20+00:00 | {"license": "unlicense"} | 2023-01-25T17:58:10+00:00 |

a4c789887a5064ddb505b642b381c347ac0c6964 |

# Dataset Card for pile-pii-scrubadub

## Dataset Description

- **Repository: https://github.com/tomekkorbak/aligned-pretraining-objectives**

- **Paper: Arxiv link to be added**

### Dataset Summary

This dataset contains text from [The Pile](https://huggingface.co/datasets/the_pile), annotated based on the toxicity of each sentence.

Each document (row in the dataset) is segmented into sentences, and each sentence is given a score: the toxicity predicted by the [Detoxify](https://github.com/unitaryai/detoxify).

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

This dataset is taken from [The Pile](https://huggingface.co/datasets/the_pile), which is English text.

## Dataset Structure

### Data Instances

1949977

### Data Fields

- texts (sequence): a list of the sentences in the document, segmented using SpaCy

- meta (dict): the section of [The Pile](https://huggingface.co/datasets/the_pile) from which it originated

- scores (sequence): a score for each sentence in the `texts` column indicating the toxicity predicted by [Detoxify](https://github.com/unitaryai/detoxify)

- avg_score (float64): the average of the scores listed in the `scores` column

- num_sents (int64): the number of sentences (and scores) in that document

### Data Splits

Training set only

## Dataset Creation

### Curation Rationale

This is labeled text from [The Pile](https://huggingface.co/datasets/the_pile), a large dataset of text in English. The text is scored for toxicity so that generative language models can be trained to avoid generating toxic text.

### Source Data

#### Initial Data Collection and Normalization

This is labeled text from [The Pile](https://huggingface.co/datasets/the_pile).

#### Who are the source language producers?

Please see [The Pile](https://huggingface.co/datasets/the_pile) for the source of the dataset.

### Annotations

#### Annotation process

Each sentence was scored using [Detoxify](https://github.com/unitaryai/detoxify), which is a toxic comment classifier.

We used the `unbiased` model which is based on the 124M parameter [RoBERTa](https://arxiv.org/abs/1907.11692) and trained on the [Jigsaw Unintended Bias in Toxicity Classification dataset](https://www.kaggle.com/c/jigsaw-unintended-bias-in-toxicity-classification).

#### Who are the annotators?

[Detoxify](https://github.com/unitaryai/detoxify)

### Personal and Sensitive Information

This dataset contains all personal identifable information and toxic text that was originally contained in [The Pile](https://huggingface.co/datasets/the_pile).

## Considerations for Using the Data

### Social Impact of Dataset

This dataset contains examples of toxic text and personal identifiable information.

(A version of this datatset with personal identifiable information annotated is [available here](https://huggingface.co/datasets/tomekkorbak/pile-pii-scrubadub).)

Please take care to avoid misusing the toxic text or putting anybody in danger by publicizing their information.

This dataset is intended for research purposes only. We cannot guarantee that all toxic text has been detected, and we cannot guarantee that models trained using it will avoid generating toxic text.

We do not recommend deploying models trained on this data.

### Discussion of Biases

This dataset contains all biases from The Pile discussed in their paper: https://arxiv.org/abs/2101.00027

### Other Known Limitations

The toxic text in this dataset was detected using imperfect automated detection methods. We cannot guarantee that the labels are 100% accurate.

## Additional Information

### Dataset Curators

[The Pile](https://huggingface.co/datasets/the_pile)

### Licensing Information

From [The Pile](https://huggingface.co/datasets/the_pile): PubMed Central: [MIT License](https://github.com/EleutherAI/pile-pubmedcentral/blob/master/LICENSE)

### Citation Information

Paper information to be added

### Contributions

[The Pile](https://huggingface.co/datasets/the_pile) | tomekkorbak/pile-detoxify | [

"task_categories:text-classification",

"task_categories:other",

"task_ids:acceptability-classification",

"task_ids:hate-speech-detection",

"task_ids:text-scoring",

"annotations_creators:machine-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:1M<n<10M",

"source_datasets:extended|the_pile",

"language:en",

"license:mit",

"toxicity",

"pretraining-with-human-feedback",

"arxiv:1907.11692",

"arxiv:2101.00027",

"region:us"

]

| 2023-01-25T17:32:30+00:00 | {"annotations_creators": ["machine-generated"], "language_creators": ["found"], "language": ["en"], "license": ["mit"], "multilinguality": ["monolingual"], "size_categories": ["1M<n<10M"], "source_datasets": ["extended|the_pile"], "task_categories": ["text-classification", "other"], "task_ids": ["acceptability-classification", "hate-speech-detection", "text-scoring"], "pretty_name": "pile-detoxify", "tags": ["toxicity", "pretraining-with-human-feedback"]} | 2023-02-07T15:31:11+00:00 |

b61d29f477163034001472614dc97fb9614dddea |

# Dataset Card for DocLayNet small

## About this card (01/27/2023)

### Property and license

All information from this page but the content of this paragraph "About this card (01/27/2023)" has been copied/pasted from [Dataset Card for DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet).

DocLayNet is a dataset created by Deep Search (IBM Research) published under [license CDLA-Permissive-1.0](https://huggingface.co/datasets/ds4sd/DocLayNet#licensing-information).

I do not claim any rights to the data taken from this dataset and published on this page.

### DocLayNet dataset

[DocLayNet dataset](https://github.com/DS4SD/DocLayNet) (IBM) provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories.

Until today, the dataset can be downloaded through direct links or as a dataset from Hugging Face datasets:

- direct links: [doclaynet_core.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_core.zip) (28 GiB), [doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip) (7.5 GiB)

- Hugging Face dataset library: [dataset DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet)

Paper: [DocLayNet: A Large Human-Annotated Dataset for Document-Layout Analysis](https://arxiv.org/abs/2206.01062) (06/02/2022)

### Processing into a format facilitating its use by HF notebooks

These 2 options require the downloading of all the data (approximately 30GBi), which requires downloading time (about 45 mn in Google Colab) and a large space on the hard disk. These could limit experimentation for people with low resources.

Moreover, even when using the download via HF datasets library, it is necessary to download the EXTRA zip separately ([doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip), 7.5 GiB) to associate the annotated bounding boxes with the text extracted by OCR from the PDFs. This operation also requires additional code because the boundings boxes of the texts do not necessarily correspond to those annotated (a calculation of the percentage of area in common between the boundings boxes annotated and those of the texts makes it possible to make a comparison between them).

At last, in order to use Hugging Face notebooks on fine-tuning layout models like LayoutLMv3 or LiLT, DocLayNet data must be processed in a proper format.

For all these reasons, I decided to process the DocLayNet dataset:

- into 3 datasets of different sizes:

- [DocLayNet small](https://huggingface.co/datasets/pierreguillou/DocLayNet-small) (about 1% of DocLayNet) < 1.000k document images (691 train, 64 val, 49 test)

- [DocLayNet base](https://huggingface.co/datasets/pierreguillou/DocLayNet-base) (about 10% of DocLayNet) < 10.000k document images (6910 train, 648 val, 499 test)

- [DocLayNet large](https://huggingface.co/datasets/pierreguillou/DocLayNet-large) (about 100% of DocLayNet) < 100.000k document images (69.103 train, 6.480 val, 4.994 test)

- with associated texts and PDFs (base64 format),

- and in a format facilitating their use by HF notebooks.

*Note: the layout HF notebooks will greatly help participants of the IBM [ICDAR 2023 Competition on Robust Layout Segmentation in Corporate Documents](https://ds4sd.github.io/icdar23-doclaynet/)!*

### About PDFs languages

Citation of the page 3 of the [DocLayNet paper](https://arxiv.org/abs/2206.01062):

"We did not control the document selection with regard to language. **The vast majority of documents contained in DocLayNet (close to 95%) are published in English language.** However, **DocLayNet also contains a number of documents in other languages such as German (2.5%), French (1.0%) and Japanese (1.0%)**. While the document language has negligible impact on the performance of computer vision methods such as object detection and segmentation models, it might prove challenging for layout analysis methods which exploit textual features."

### About PDFs categories distribution

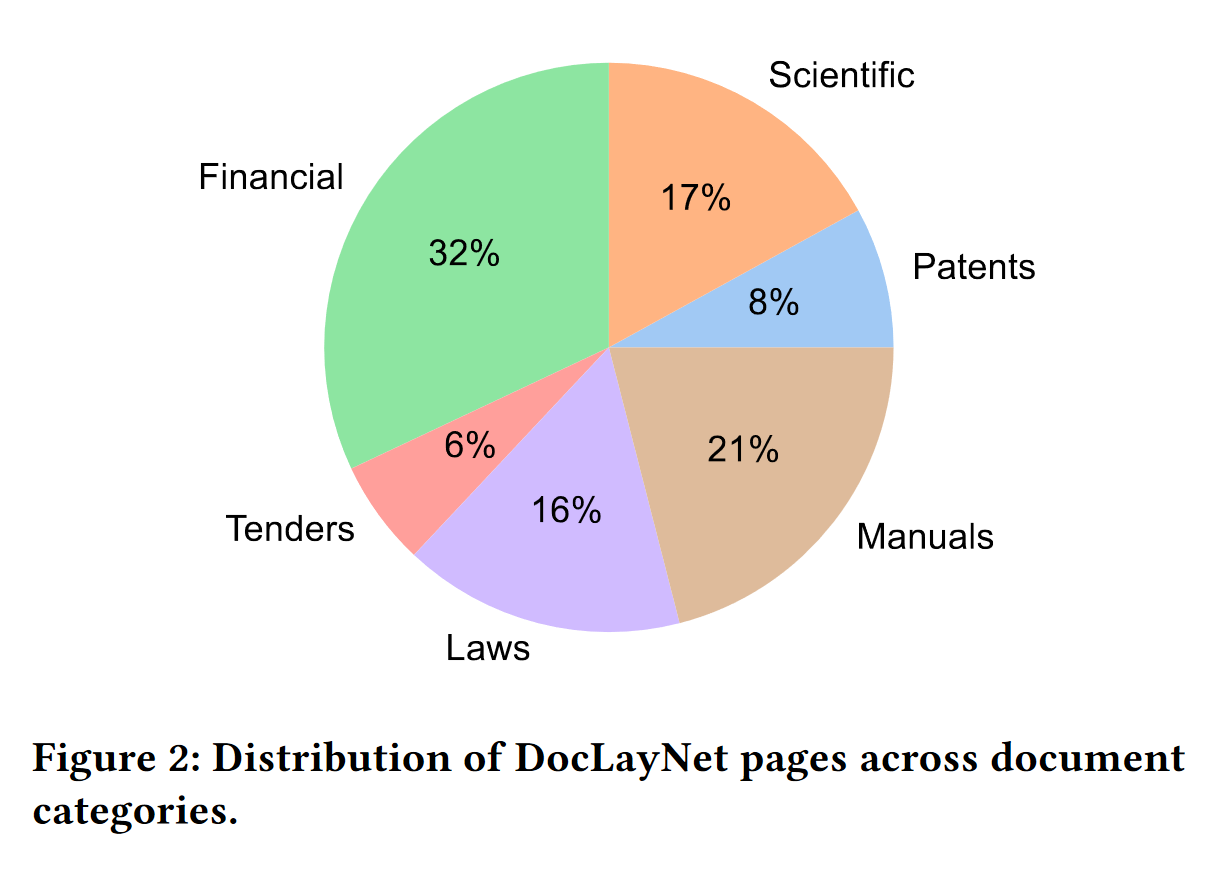

Citation of the page 3 of the [DocLayNet paper](https://arxiv.org/abs/2206.01062):

"The pages in DocLayNet can be grouped into **six distinct categories**, namely **Financial Reports, Manuals, Scientific Articles, Laws & Regulations, Patents and Government Tenders**. Each document category was sourced from various repositories. For example, Financial Reports contain both free-style format annual reports which expose company-specific, artistic layouts as well as the more formal SEC filings. The two largest categories (Financial Reports and Manuals) contain a large amount of free-style layouts in order to obtain maximum variability. In the other four categories, we boosted the variability by mixing documents from independent providers, such as different government websites or publishers. In Figure 2, we show the document categories contained in DocLayNet with their respective sizes."

### Download & overview

The size of the DocLayNet small is about 1% of the DocLayNet dataset (random selection respectively in the train, val and test files).

```

# !pip install -q datasets

from datasets import load_dataset

dataset_small = load_dataset("pierreguillou/DocLayNet-small")

# overview of dataset_small

DatasetDict({

train: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 691

})

validation: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 64

})

test: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 49

})

})

```

### Annotated bounding boxes

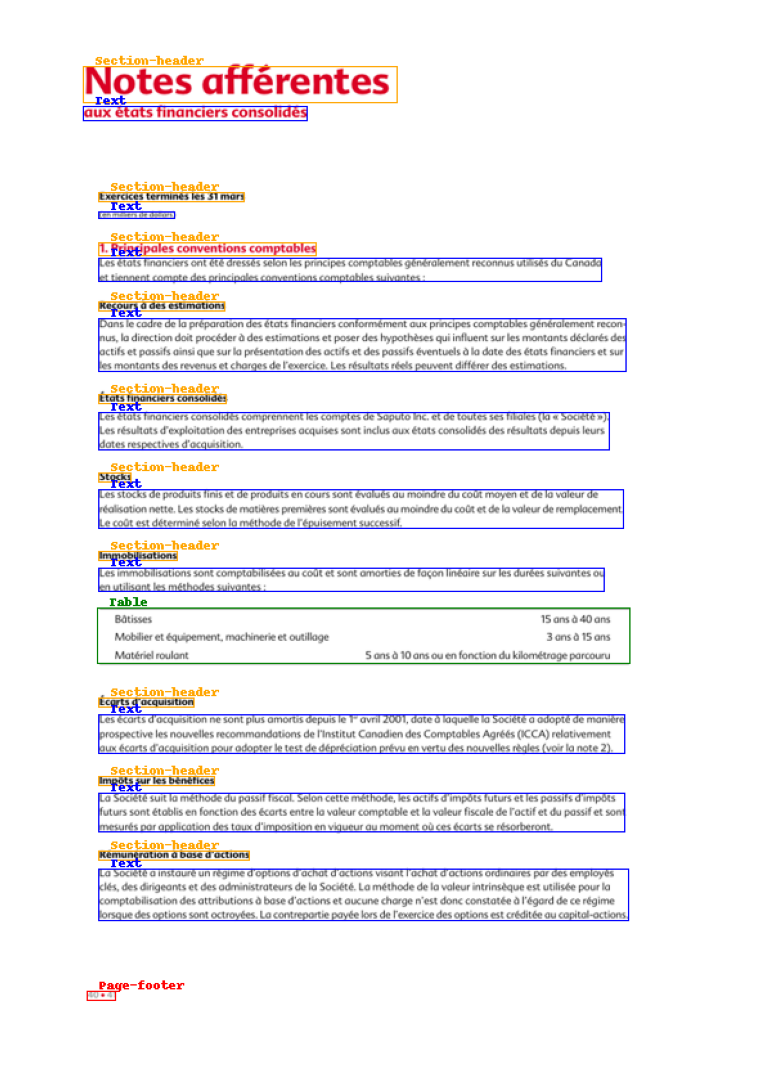

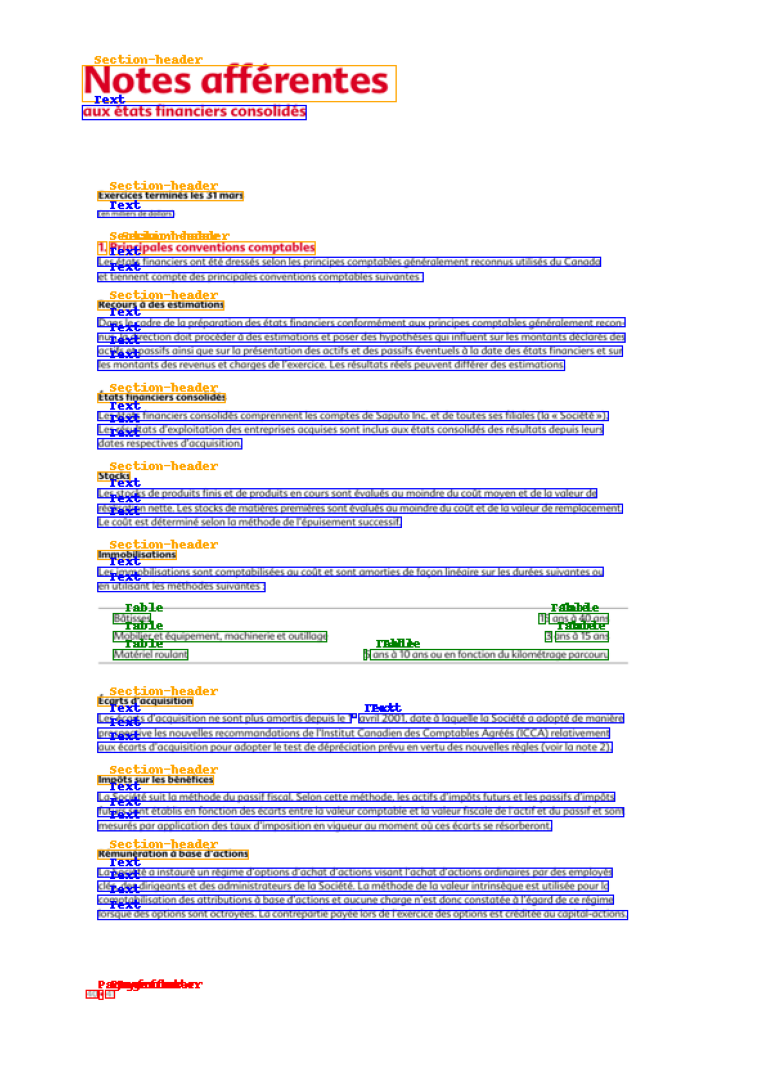

The DocLayNet base makes easy to display document image with the annotaed bounding boxes of paragraphes or lines.

Check the notebook [processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb](https://github.com/piegu/language-models/blob/master/processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb) in order to get the code.

#### Paragraphes

#### Lines

### HF notebooks

- [notebooks LayoutLM](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLM) (Niels Rogge)

- [notebooks LayoutLMv2](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLMv2) (Niels Rogge)

- [notebooks LayoutLMv3](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLMv3) (Niels Rogge)

- [notebooks LiLT](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LiLT) (Niels Rogge)

- [Document AI: Fine-tuning LiLT for document-understanding using Hugging Face Transformers](https://github.com/philschmid/document-ai-transformers/blob/main/training/lilt_funsd.ipynb) ([post](https://www.philschmid.de/fine-tuning-lilt#3-fine-tune-and-evaluate-lilt) of Phil Schmid)

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Dataset Structure](#dataset-structure)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Annotations](#annotations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://developer.ibm.com/exchanges/data/all/doclaynet/

- **Repository:** https://github.com/DS4SD/DocLayNet

- **Paper:** https://doi.org/10.1145/3534678.3539043

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

DocLayNet provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories. It provides several unique features compared to related work such as PubLayNet or DocBank:

1. *Human Annotation*: DocLayNet is hand-annotated by well-trained experts, providing a gold-standard in layout segmentation through human recognition and interpretation of each page layout

2. *Large layout variability*: DocLayNet includes diverse and complex layouts from a large variety of public sources in Finance, Science, Patents, Tenders, Law texts and Manuals

3. *Detailed label set*: DocLayNet defines 11 class labels to distinguish layout features in high detail.

4. *Redundant annotations*: A fraction of the pages in DocLayNet are double- or triple-annotated, allowing to estimate annotation uncertainty and an upper-bound of achievable prediction accuracy with ML models

5. *Pre-defined train- test- and validation-sets*: DocLayNet provides fixed sets for each to ensure proportional representation of the class-labels and avoid leakage of unique layout styles across the sets.

### Supported Tasks and Leaderboards

We are hosting a competition in ICDAR 2023 based on the DocLayNet dataset. For more information see https://ds4sd.github.io/icdar23-doclaynet/.

## Dataset Structure

### Data Fields

DocLayNet provides four types of data assets:

1. PNG images of all pages, resized to square `1025 x 1025px`

2. Bounding-box annotations in COCO format for each PNG image

3. Extra: Single-page PDF files matching each PNG image

4. Extra: JSON file matching each PDF page, which provides the digital text cells with coordinates and content

The COCO image record are defined like this example

```js

...

{

"id": 1,

"width": 1025,

"height": 1025,

"file_name": "132a855ee8b23533d8ae69af0049c038171a06ddfcac892c3c6d7e6b4091c642.png",

// Custom fields:

"doc_category": "financial_reports" // high-level document category

"collection": "ann_reports_00_04_fancy", // sub-collection name

"doc_name": "NASDAQ_FFIN_2002.pdf", // original document filename

"page_no": 9, // page number in original document

"precedence": 0, // Annotation order, non-zero in case of redundant double- or triple-annotation

},

...

```

The `doc_category` field uses one of the following constants:

```

financial_reports,

scientific_articles,

laws_and_regulations,

government_tenders,

manuals,

patents

```

### Data Splits

The dataset provides three splits

- `train`

- `val`

- `test`

## Dataset Creation

### Annotations

#### Annotation process

The labeling guideline used for training of the annotation experts are available at [DocLayNet_Labeling_Guide_Public.pdf](https://raw.githubusercontent.com/DS4SD/DocLayNet/main/assets/DocLayNet_Labeling_Guide_Public.pdf).

#### Who are the annotators?

Annotations are crowdsourced.

## Additional Information

### Dataset Curators

The dataset is curated by the [Deep Search team](https://ds4sd.github.io/) at IBM Research.

You can contact us at [[email protected]](mailto:[email protected]).

Curators:

- Christoph Auer, [@cau-git](https://github.com/cau-git)

- Michele Dolfi, [@dolfim-ibm](https://github.com/dolfim-ibm)

- Ahmed Nassar, [@nassarofficial](https://github.com/nassarofficial)

- Peter Staar, [@PeterStaar-IBM](https://github.com/PeterStaar-IBM)

### Licensing Information

License: [CDLA-Permissive-1.0](https://cdla.io/permissive-1-0/)

### Citation Information

```bib

@article{doclaynet2022,

title = {DocLayNet: A Large Human-Annotated Dataset for Document-Layout Segmentation},

doi = {10.1145/3534678.353904},

url = {https://doi.org/10.1145/3534678.3539043},

author = {Pfitzmann, Birgit and Auer, Christoph and Dolfi, Michele and Nassar, Ahmed S and Staar, Peter W J},

year = {2022},

isbn = {9781450393850},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

booktitle = {Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},

pages = {3743–3751},

numpages = {9},

location = {Washington DC, USA},

series = {KDD '22}

}

```

### Contributions

Thanks to [@dolfim-ibm](https://github.com/dolfim-ibm), [@cau-git](https://github.com/cau-git) for adding this dataset. | pierreguillou/DocLayNet-small | [

"task_categories:object-detection",

"task_categories:image-segmentation",

"task_categories:token-classification",

"task_ids:instance-segmentation",

"annotations_creators:crowdsourced",

"size_categories:1K<n<10K",

"language:en",

"language:de",

"language:fr",

"language:ja",

"license:other",

"DocLayNet",

"COCO",

"PDF",

"IBM",

"Financial-Reports",

"Finance",

"Manuals",

"Scientific-Articles",

"Science",

"Laws",

"Law",

"Regulations",

"Patents",

"Government-Tenders",

"object-detection",

"image-segmentation",

"token-classification",

"arxiv:2206.01062",

"region:us"

]

| 2023-01-25T17:47:43+00:00 | {"annotations_creators": ["crowdsourced"], "language": ["en", "de", "fr", "ja"], "license": "other", "size_categories": ["1K<n<10K"], "task_categories": ["object-detection", "image-segmentation", "token-classification"], "task_ids": ["instance-segmentation"], "pretty_name": "DocLayNet small", "tags": ["DocLayNet", "COCO", "PDF", "IBM", "Financial-Reports", "Finance", "Manuals", "Scientific-Articles", "Science", "Laws", "Law", "Regulations", "Patents", "Government-Tenders", "object-detection", "image-segmentation", "token-classification"]} | 2023-05-17T07:56:10+00:00 |

86fa5ebffa3d336210ee1eeeec349b2c7f07899b |

# Dataset Card for DocLayNet base

## About this card (01/27/2023)

### Property and license

All information from this page but the content of this paragraph "About this card (01/27/2023)" has been copied/pasted from [Dataset Card for DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet).

DocLayNet is a dataset created by Deep Search (IBM Research) published under [license CDLA-Permissive-1.0](https://huggingface.co/datasets/ds4sd/DocLayNet#licensing-information).

I do not claim any rights to the data taken from this dataset and published on this page.

### DocLayNet dataset

[DocLayNet dataset](https://github.com/DS4SD/DocLayNet) (IBM) provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories.

Until today, the dataset can be downloaded through direct links or as a dataset from Hugging Face datasets:

- direct links: [doclaynet_core.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_core.zip) (28 GiB), [doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip) (7.5 GiB)

- Hugging Face dataset library: [dataset DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet)

Paper: [DocLayNet: A Large Human-Annotated Dataset for Document-Layout Analysis](https://arxiv.org/abs/2206.01062) (06/02/2022)

### Processing into a format facilitating its use by HF notebooks

These 2 options require the downloading of all the data (approximately 30GBi), which requires downloading time (about 45 mn in Google Colab) and a large space on the hard disk. These could limit experimentation for people with low resources.

Moreover, even when using the download via HF datasets library, it is necessary to download the EXTRA zip separately ([doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip), 7.5 GiB) to associate the annotated bounding boxes with the text extracted by OCR from the PDFs. This operation also requires additional code because the boundings boxes of the texts do not necessarily correspond to those annotated (a calculation of the percentage of area in common between the boundings boxes annotated and those of the texts makes it possible to make a comparison between them).

At last, in order to use Hugging Face notebooks on fine-tuning layout models like LayoutLMv3 or LiLT, DocLayNet data must be processed in a proper format.

For all these reasons, I decided to process the DocLayNet dataset:

- into 3 datasets of different sizes:

- [DocLayNet small](https://huggingface.co/datasets/pierreguillou/DocLayNet-small) (about 1% of DocLayNet) < 1.000k document images (691 train, 64 val, 49 test)

- [DocLayNet base](https://huggingface.co/datasets/pierreguillou/DocLayNet-base) (about 10% of DocLayNet) < 10.000k document images (6910 train, 648 val, 499 test)

- [DocLayNet large](https://huggingface.co/datasets/pierreguillou/DocLayNet-large) (about 100% of DocLayNet) < 100.000k document images (69.103 train, 6.480 val, 4.994 test)

- with associated texts and PDFs (base64 format),

- and in a format facilitating their use by HF notebooks.

*Note: the layout HF notebooks will greatly help participants of the IBM [ICDAR 2023 Competition on Robust Layout Segmentation in Corporate Documents](https://ds4sd.github.io/icdar23-doclaynet/)!*

### About PDFs languages

Citation of the page 3 of the [DocLayNet paper](https://arxiv.org/abs/2206.01062):

"We did not control the document selection with regard to language. **The vast majority of documents contained in DocLayNet (close to 95%) are published in English language.** However, **DocLayNet also contains a number of documents in other languages such as German (2.5%), French (1.0%) and Japanese (1.0%)**. While the document language has negligible impact on the performance of computer vision methods such as object detection and segmentation models, it might prove challenging for layout analysis methods which exploit textual features."

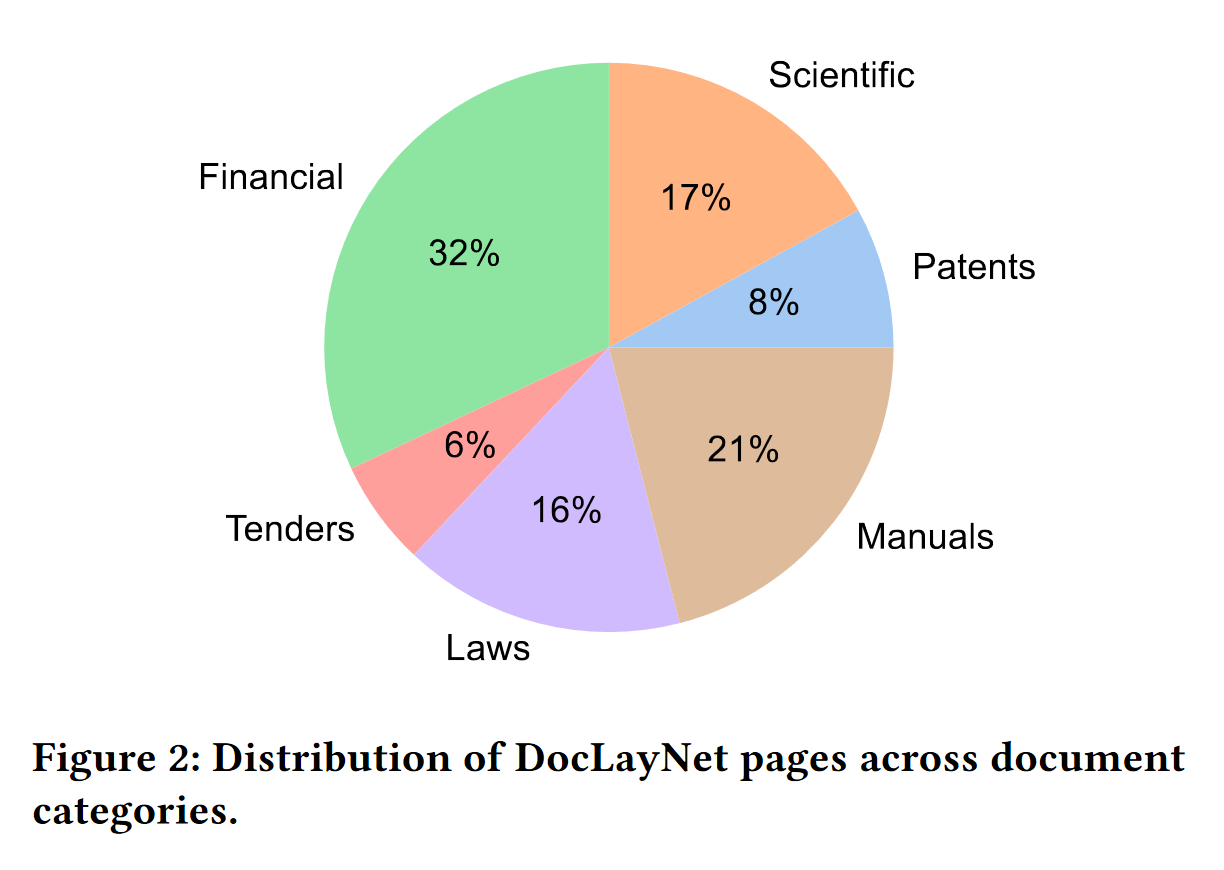

### About PDFs categories distribution

Citation of the page 3 of the [DocLayNet paper](https://arxiv.org/abs/2206.01062):

"The pages in DocLayNet can be grouped into **six distinct categories**, namely **Financial Reports, Manuals, Scientific Articles, Laws & Regulations, Patents and Government Tenders**. Each document category was sourced from various repositories. For example, Financial Reports contain both free-style format annual reports which expose company-specific, artistic layouts as well as the more formal SEC filings. The two largest categories (Financial Reports and Manuals) contain a large amount of free-style layouts in order to obtain maximum variability. In the other four categories, we boosted the variability by mixing documents from independent providers, such as different government websites or publishers. In Figure 2, we show the document categories contained in DocLayNet with their respective sizes."

### Download & overview

The size of the DocLayNet small is about 10% of the DocLayNet dataset (random selection respectively in the train, val and test files).

```

# !pip install -q datasets

from datasets import load_dataset

dataset_base = load_dataset("pierreguillou/DocLayNet-base")

# overview of dataset_base

DatasetDict({

train: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 6910

})

validation: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 648

})

test: Dataset({

features: ['id', 'texts', 'bboxes_block', 'bboxes_line', 'categories', 'image', 'pdf', 'page_hash', 'original_filename', 'page_no', 'num_pages', 'original_width', 'original_height', 'coco_width', 'coco_height', 'collection', 'doc_category'],

num_rows: 499

})

})

```

### Annotated bounding boxes

The DocLayNet base makes easy to display document image with the annotaed bounding boxes of paragraphes or lines.

Check the notebook [processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb](https://github.com/piegu/language-models/blob/master/processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb) in order to get the code.

#### Paragraphes

#### Lines

### HF notebooks

- [notebooks LayoutLM](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLM) (Niels Rogge)

- [notebooks LayoutLMv2](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLMv2) (Niels Rogge)

- [notebooks LayoutLMv3](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLMv3) (Niels Rogge)

- [notebooks LiLT](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LiLT) (Niels Rogge)

- [Document AI: Fine-tuning LiLT for document-understanding using Hugging Face Transformers](https://github.com/philschmid/document-ai-transformers/blob/main/training/lilt_funsd.ipynb) ([post](https://www.philschmid.de/fine-tuning-lilt#3-fine-tune-and-evaluate-lilt) of Phil Schmid)

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Dataset Structure](#dataset-structure)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Annotations](#annotations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://developer.ibm.com/exchanges/data/all/doclaynet/

- **Repository:** https://github.com/DS4SD/DocLayNet

- **Paper:** https://doi.org/10.1145/3534678.3539043

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

DocLayNet provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories. It provides several unique features compared to related work such as PubLayNet or DocBank:

1. *Human Annotation*: DocLayNet is hand-annotated by well-trained experts, providing a gold-standard in layout segmentation through human recognition and interpretation of each page layout

2. *Large layout variability*: DocLayNet includes diverse and complex layouts from a large variety of public sources in Finance, Science, Patents, Tenders, Law texts and Manuals

3. *Detailed label set*: DocLayNet defines 11 class labels to distinguish layout features in high detail.

4. *Redundant annotations*: A fraction of the pages in DocLayNet are double- or triple-annotated, allowing to estimate annotation uncertainty and an upper-bound of achievable prediction accuracy with ML models

5. *Pre-defined train- test- and validation-sets*: DocLayNet provides fixed sets for each to ensure proportional representation of the class-labels and avoid leakage of unique layout styles across the sets.

### Supported Tasks and Leaderboards

We are hosting a competition in ICDAR 2023 based on the DocLayNet dataset. For more information see https://ds4sd.github.io/icdar23-doclaynet/.

## Dataset Structure

### Data Fields

DocLayNet provides four types of data assets:

1. PNG images of all pages, resized to square `1025 x 1025px`

2. Bounding-box annotations in COCO format for each PNG image

3. Extra: Single-page PDF files matching each PNG image

4. Extra: JSON file matching each PDF page, which provides the digital text cells with coordinates and content

The COCO image record are defined like this example

```js

...

{

"id": 1,

"width": 1025,

"height": 1025,

"file_name": "132a855ee8b23533d8ae69af0049c038171a06ddfcac892c3c6d7e6b4091c642.png",

// Custom fields:

"doc_category": "financial_reports" // high-level document category

"collection": "ann_reports_00_04_fancy", // sub-collection name

"doc_name": "NASDAQ_FFIN_2002.pdf", // original document filename

"page_no": 9, // page number in original document

"precedence": 0, // Annotation order, non-zero in case of redundant double- or triple-annotation

},

...

```

The `doc_category` field uses one of the following constants:

```

financial_reports,

scientific_articles,

laws_and_regulations,

government_tenders,

manuals,

patents

```

### Data Splits

The dataset provides three splits

- `train`

- `val`

- `test`

## Dataset Creation

### Annotations

#### Annotation process

The labeling guideline used for training of the annotation experts are available at [DocLayNet_Labeling_Guide_Public.pdf](https://raw.githubusercontent.com/DS4SD/DocLayNet/main/assets/DocLayNet_Labeling_Guide_Public.pdf).

#### Who are the annotators?

Annotations are crowdsourced.

## Additional Information

### Dataset Curators

The dataset is curated by the [Deep Search team](https://ds4sd.github.io/) at IBM Research.

You can contact us at [[email protected]](mailto:[email protected]).

Curators:

- Christoph Auer, [@cau-git](https://github.com/cau-git)

- Michele Dolfi, [@dolfim-ibm](https://github.com/dolfim-ibm)

- Ahmed Nassar, [@nassarofficial](https://github.com/nassarofficial)

- Peter Staar, [@PeterStaar-IBM](https://github.com/PeterStaar-IBM)

### Licensing Information

License: [CDLA-Permissive-1.0](https://cdla.io/permissive-1-0/)

### Citation Information

```bib

@article{doclaynet2022,

title = {DocLayNet: A Large Human-Annotated Dataset for Document-Layout Segmentation},

doi = {10.1145/3534678.353904},

url = {https://doi.org/10.1145/3534678.3539043},

author = {Pfitzmann, Birgit and Auer, Christoph and Dolfi, Michele and Nassar, Ahmed S and Staar, Peter W J},

year = {2022},

isbn = {9781450393850},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

booktitle = {Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},

pages = {3743–3751},

numpages = {9},

location = {Washington DC, USA},

series = {KDD '22}

}

```

### Contributions

Thanks to [@dolfim-ibm](https://github.com/dolfim-ibm), [@cau-git](https://github.com/cau-git) for adding this dataset. | pierreguillou/DocLayNet-base | [

"task_categories:object-detection",

"task_categories:image-segmentation",

"task_categories:token-classification",

"task_ids:instance-segmentation",

"annotations_creators:crowdsourced",

"size_categories:1K<n<10K",

"language:en",

"language:de",

"language:fr",

"language:ja",

"license:other",

"DocLayNet",

"COCO",

"PDF",

"IBM",

"Financial-Reports",

"Finance",

"Manuals",

"Scientific-Articles",

"Science",

"Laws",

"Law",

"Regulations",

"Patents",

"Government-Tenders",

"object-detection",

"image-segmentation",

"token-classification",

"arxiv:2206.01062",

"region:us"

]

| 2023-01-25T17:53:26+00:00 | {"annotations_creators": ["crowdsourced"], "language": ["en", "de", "fr", "ja"], "license": "other", "size_categories": ["1K<n<10K"], "task_categories": ["object-detection", "image-segmentation", "token-classification"], "task_ids": ["instance-segmentation"], "pretty_name": "DocLayNet base", "tags": ["DocLayNet", "COCO", "PDF", "IBM", "Financial-Reports", "Finance", "Manuals", "Scientific-Articles", "Science", "Laws", "Law", "Regulations", "Patents", "Government-Tenders", "object-detection", "image-segmentation", "token-classification"]} | 2023-05-17T07:56:30+00:00 |

ade0789f658fd356185f9cc1438d268835b99204 | <h1 style="text-align: center;">MPSC Multi-view Dataset</h1>

<p style='text-align: justify;'>

Deep video representation learning has recently attained state-of-the-art performance in video action recognition. However, when used with video clips from varied perspectives, the performance of these models degrades significantly. Existing VAR models frequently simultaneously contain both view information and action attributes, making it difficult to learn a view-invariant representation. Therefore, to study the attribute of multiview representation, we collected a large-scale time synchronous multiview video dataset from 10 subjects in both indoor and outdoor settings performing 10 different actions with three horizontal and vertical viewpoints using a smartphone, an action camera, and a drone camera. We provide the multiview video dataset with various meta-data information to facilitate further research for robust VAR systems.

</p>

### Collecting multiview videos

<p style='text-align: justify;'>

In our data collection strategy, we choose regular sensors (smartphone camera), wide-angle sensors (go-pro, action camera), and drone cameras covering front views, side views, and top view positions to receive simultaneous three 2D projections of ten action events. To collect multi-angular and positional projections of the same actions, smartphones (Samsung S8 plus, flat-angle sensor), action cameras (Dragon touch EK700, wide-angle sensor), and a drone (Parrot Anafi, flat-angle sensor) capture the action events simultaneously from different positions in 1080p at 30 FPS. Among the cameras, the smartphone was hand-held and tracked the events. The action camera was placed in a stationary position and captured the events using its wide-view sensor. Both of them were positions approximately 6 feet away from the participants to capture two completely different side-views of the actions from horizontal position. Lastly, the drone captures the events' top view while flying at a low altitude of varying distances from 8 feet to 15 feet. Although we positioned the cameras to capture events from a particular angular position with some occasional movement, it effectively captured an almost complete-view of actions, as the volunteers turn in different directions to perform different actions without any constraints.

</p>

<p style='text-align: justify;'>

We have selected ten regular micro-actions in our dataset with both static (poses: sitting, standing, lying with face up, lying with face down) and dynamic actions (temporal patterns: walking, push up, waving hand, leg exercise, object carrying, object pick/drop). We hypothesize this would further foundation for complex action recognition since some complex actions require sequentially performing a subset of these micro-actions. In our target actions selection, some actions have only minor differences to distinguish and require contextual knowledge (walking and object carrying, push-ups and lying down, lying with face down and lying with face up, standing and hand waving in standing position). Further, we have collected the background-only data without the human to provide a no-action/human dataset for the identical backgrounds.

</p>

<p style='text-align: justify;'>

We collect these data [sampled shown in the follwing figure] from 12 volunteer participants with varying traits. The participant performs all ten actions for 30 seconds while being recorded from three-positional cameras simultaneously in each session. The participants provided data multiple times, under different environments with different clothing amassing 30 sessions, yielding approximately ten hours of total video data in a time-controlled and safe setup.

</p>

<p style='text-align: justify;'>

Further, the videos are collected under varying realistic lighting conditions; natural lighting, artificial lighting, and a mix of both indoors, and outdoor environments, and multiple realistic backgrounds like walls, doors, windows, grasses, roads, and reflective tiles with varying camera settings like zoom, brightness and contrast filter, relative motions. Environments and lighting conditions are presented in the above figure. We also provide the videos containing only background to avail further research.

</p>

### Data Preprocessing and AI readiness

<p style='text-align: justify;'>

We align each session's simultaneously recorded videos from the starting time-stamp, and at any given time, all three sensors of any particular session capture their corresponding positional projection of the same event. The alignment allows us to annotate one video file per session for the underlying action in the time duration and receive action annotation for the other two videos, significantly reducing the annotation burden for these multiview videos.

</p>

<p style='text-align: justify;'>

Besides action information, each video is also tagged with the following meta-information: the subjects' ID, backgrounds environments, lighting conditions, camera specifications, settings (varying zoom, brightness), camera-subject distances, and relative movements, for various research directions. Additionally, other information such as the date, time, and the trial number were also listed for each video. Multiple human volunteers manually annotated video files, and these annotations went through multiple cross-checking. Finally, we prepare the video data in pickle file format for quick loading using python/C++/Matlab libraries.

</p>

### Dataset Statistics

Here we provide the our collected dataset characteristics insight.

<p style='text-align: justify;'>

<strong> 1) Inter and Intra action variations:</strong> We ensure fine-grain inter and intra-action variation in our dataset by requesting the participants to perform similar actions in freestyle. Further, we take multiple sessions on different dates and times to incorporate inter-personal variation in the dataset. 80% of our participants provided data in multiple sessions. 58% of the participant provides their data from multiple backgrounds. We have 20% of female participants in for multiple sessions. In actions, we have 40% stable pose as action and 60% dynamic simple actions in our collected dataset. Further, 10% of our volunteers are athletes. Moreover, our dataset are relatively balanced with almost equal duration of each actions.

</p>

<p style='text-align: justify;'>

<strong> 2) Background Variations:</strong> We considered different realistic backgrounds for our data collection while ensuring safety for the participants. We have 75% data for the indoor laboratory environment. Among that, we have 60% of data with white wall background with regular inventories like computers, bookshelves, doors, and windows, 25% with reflective tiles, sunny windows, and 5% under a messy laboratory background with multiple office tables and carpets. Among the 25% outdoor data, we collected 50% of the outdoor data in green fields and concrete parking spaces. We have about 60% of the data in the artificial lighting, and the rest are in natural sunlight conditions. We also provide the backgrounds without the subjects from the three sensors' viewpoints for reference.

</p>

<p style='text-align: justify;'>

<strong>3) Viewpoint and sensor Variations:</strong> We have collected 67% data from the horizontal view and 33% from the top-angular positional viewpoints. Our 67% data are captured by the flat lens from a angular viewpoint, and 33% are captured via the wide angular view from the horizontal position. 40% data are recorded from the stable camera position, and 60% data are captured via moving camera sensors. We have 20% data from the subject-focused zoomed camera lens. Further, the subjects perform the actions while facing away from the sensors 20% of the time.

</p>

### Reference

Please refer to the following papers to cite the dataset.

- Hasan, Z., Ahmed, M., Faridee, A. Z. M., Purushotham, S., Kwon, H., Lee, H., & Roy, N. (2023). NEV-NCD: Negative Learning, Entropy, and Variance regularization based novel action categories discovery. arXiv preprint arXiv:2304.07354.

### Acknowledgement

<p style='text-align: justify;'>

We acknowledge the support of DEVCOM Army Research Laboratory (ARL) and U.S. Army Grant No. W911NF21-20076.

</p> | mahmed10/MPSC_MV | [

"task_categories:video-classification",

"Video Acitvity Recognition",

"region:us"

]

| 2023-01-25T17:53:36+00:00 | {"task_categories": ["video-classification"], "tags": ["Video Acitvity Recognition"]} | 2023-04-28T14:25:02+00:00 |

297227012467386b09e6bf7d270d277d0e2b9325 |

# Dataset Card for pile-pii-scrubadub

## Dataset Description

- **Repository: https://github.com/tomekkorbak/aligned-pretraining-objectives**

- **Paper: Arxiv link to be added**

### Dataset Summary

This dataset contains text from [The Pile](https://huggingface.co/datasets/the_pile), annotated based on the personal idenfitiable information (PII) in each sentence.

Each document (row in the dataset) is segmented into sentences, and each sentence is given a score: the percentage of words in it that are classified as PII by [Scrubadub](https://scrubadub.readthedocs.io/en/stable/).

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

This dataset is taken from [The Pile](https://huggingface.co/datasets/the_pile), which is English text.

## Dataset Structure

### Data Instances

1949977

### Data Fields

- texts (sequence): a list of the sentences in the document (segmented using [SpaCy](https://spacy.io/))

- meta (dict): the section of [The Pile](https://huggingface.co/datasets/the_pile) from which it originated

- scores (sequence): a score for each sentence in the `texts` column indicating the percent of words that are detected as PII by [Scrubadub](https://scrubadub.readthedocs.io/en/stable/)

- avg_score (float64): the average of the scores listed in the `scores` column

- num_sents (int64): the number of sentences (and scores) in that document

### Data Splits

Training set only

## Dataset Creation

### Curation Rationale

This is labeled text from [The Pile](https://huggingface.co/datasets/the_pile), a large dataset of text in English. The PII is labeled so that generative language models can be trained to avoid generating PII.

### Source Data

#### Initial Data Collection and Normalization

This is labeled text from [The Pile](https://huggingface.co/datasets/the_pile).

#### Who are the source language producers?

Please see [The Pile](https://huggingface.co/datasets/the_pile) for the source of the dataset.

### Annotations

#### Annotation process

For each sentence, [Scrubadub](https://scrubadub.readthedocs.io/en/stable/) was used to detect:

- email addresses

- addresses and postal codes

- phone numbers

- credit card numbers

- US social security numbers

- vehicle plates numbers

- dates of birth

- URLs

- login credentials

#### Who are the annotators?

[Scrubadub](https://scrubadub.readthedocs.io/en/stable/)

### Personal and Sensitive Information

This dataset contains all PII that was originally contained in [The Pile](https://huggingface.co/datasets/the_pile), with all detected PII annotated.

## Considerations for Using the Data

### Social Impact of Dataset

This dataset contains examples of real PII (conveniently annotated in the text!). Please take care to avoid misusing it or putting anybody in danger by publicizing their information.

This dataset is intended for research purposes only. We cannot guarantee that all PII has been detected, and we cannot guarantee that models trained using it will avoid generating PII.

We do not recommend deploying models trained on this data.

### Discussion of Biases

This dataset contains all biases from The Pile discussed in their paper: https://arxiv.org/abs/2101.00027

### Other Known Limitations

The PII in this dataset was detected using imperfect automated detection methods. We cannot guarantee that the labels are 100% accurate.

## Additional Information

### Dataset Curators

[The Pile](https://huggingface.co/datasets/the_pile)

### Licensing Information

From [The Pile](https://huggingface.co/datasets/the_pile): PubMed Central: [MIT License](https://github.com/EleutherAI/pile-pubmedcentral/blob/master/LICENSE)

### Citation Information

Paper information to be added

### Contributions

[The Pile](https://huggingface.co/datasets/the_pile) | tomekkorbak/pile-pii-scrubadub | [

"task_categories:text-classification",

"task_categories:other",

"task_ids:acceptability-classification",

"task_ids:text-scoring",

"annotations_creators:machine-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:1M<n<10M",

"source_datasets:extended|the_pile",

"language:en",

"license:mit",

"pii",

"personal",

"identifiable",

"information",

"pretraining-with-human-feedback",

"arxiv:2101.00027",

"region:us"

]

| 2023-01-25T18:00:01+00:00 | {"annotations_creators": ["machine-generated"], "language_creators": ["found"], "language": ["en"], "license": ["mit"], "multilinguality": ["monolingual"], "size_categories": ["1M<n<10M"], "source_datasets": ["extended|the_pile"], "task_categories": ["text-classification", "other"], "task_ids": ["acceptability-classification", "text-scoring"], "pretty_name": "pile-pii-scrubadub", "tags": ["pii", "personal", "identifiable", "information", "pretraining-with-human-feedback"]} | 2023-02-07T15:26:41+00:00 |

a06d4250163274a43e10baad618e61f097583d27 | # Dataset Card for "lat_en_loeb_morph"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | grosenthal/lat_en_loeb_morph | [

"region:us"

]

| 2023-01-25T18:11:22+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "la", "dtype": "string"}, {"name": "en", "dtype": "string"}, {"name": "file", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 60797479, "num_examples": 99343}, {"name": "test", "num_bytes": 628768, "num_examples": 1014}, {"name": "valid", "num_bytes": 605889, "num_examples": 1014}], "download_size": 31059812, "dataset_size": 62032136}} | 2023-02-28T18:49:30+00:00 |

7c87c5db319dab81696ecb1b7e9ea2eb92c8f6dd |

# Dataset for training Russian language models

Overall: 75G

Scripts: https://github.com/IlyaGusev/rulm/tree/master/data_processing

| Website | Char count (M) | Word count (M) |

|-----------------|---------------|---------------|

| pikabu | 14938 | 2161 |

| lenta | 1008 | 135 |

| stihi | 2994 | 393 |

| stackoverflow | 1073 | 228 |

| habr | 5112 | 753 |

| taiga_fontanka | 419 | 55 |

| librusec | 10149 | 1573 |

| buriy | 2646 | 352 |

| ods_tass | 1908 | 255 |

| wiki | 3473 | 469 |

| math | 987 | 177 |

| IlyaGusev/rulm | [

"task_categories:text-generation",

"size_categories:10M<n<100M",

"language:ru",

"region:us"

]

| 2023-01-25T18:14:38+00:00 | {"language": ["ru"], "size_categories": ["10M<n<100M"], "task_categories": ["text-generation"], "dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 78609111353, "num_examples": 14811026}, {"name": "test", "num_bytes": 397130292, "num_examples": 74794}, {"name": "validation", "num_bytes": 395354867, "num_examples": 74691}], "download_size": 24170140196, "dataset_size": 79401596512}} | 2023-03-20T23:53:53+00:00 |

aa69145a9f971d214419ee3eba2838f3b4522fd0 | # Dataset Card for "lat_en_loeb_split"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | grosenthal/lat_en_loeb_split | [

"region:us"

]

| 2023-01-25T18:27:37+00:00 | {"dataset_info": {"features": [{"name": "id", "dtype": "int64"}, {"name": "la", "dtype": "string"}, {"name": "en", "dtype": "string"}, {"name": "file", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 46936015, "num_examples": 99343}, {"name": "test", "num_bytes": 484664, "num_examples": 1014}, {"name": "valid", "num_bytes": 468616, "num_examples": 1014}], "download_size": 26225698, "dataset_size": 47889295}} | 2023-03-25T00:31:49+00:00 |

9bc3c0c62180045ce419b1a58c9cf14666ece180 | Jupyter notebooks and supporting code | SDbiaseval/notebooks | [

"license:apache-2.0",

"region:us"

]

| 2023-01-25T18:31:00+00:00 | {"license": "apache-2.0", "viewer": false} | 2023-01-31T16:17:43+00:00 |

62c181fb787a3e753e52abc328a7c4fd83af4f00 | # Dataset Card for "methods2test_raw_grouped"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | dembastu/methods2test_raw_grouped | [

"region:us"

]