| sha | text | id | tags | created_at | metadata | last_modified |

|---|---|---|---|---|---|---|

c487313ad85c48d196cd3aa4373ebddb42447e23 | OddBunny/fox_femboy | [

"license:cc-by-nc-nd-4.0",

"region:us"

] | 2022-09-15T07:10:35+00:00 | {"license": "cc-by-nc-nd-4.0"} | 2022-09-18T16:43:18+00:00 |

|

4b1a960c1331c8bf2a9114b9bb8d895a0a317b64 |

A PubMed-based dataset used to fine-tune the [BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://arxiv.org/abs/2007.15779) model for context-based classification of the names of genes and proteins.

<br><br>

HuggingFace [card](https://huggingface.co/Timofey/PubMedBERT_Genes_Proteins_Context_Classifier) of the fine-tuned model.<br>

GitHub [link](https://github.com/ANDDigest/ANDDigest_classification_models) with notebooks for fine-tuning and applying the model.

| Timofey/Genes_Proteins_Fine-Tuning_Dataset | [

"ANDDigest",

"ANDSystem",

"PubMed",

"arxiv:2007.15779",

"region:us"

] | 2022-09-15T08:38:09+00:00 | {"tags": ["ANDDigest", "ANDSystem", "PubMed"], "viewer": false, "extra_gated_fields": {"I agree to share my contact Information": "checkbox"}} | 2022-11-11T12:01:38+00:00 |

12a67fa2b064a06d7c22d3e32b223f484d2f3a57 |

A PubMed-based dataset used to fine-tune the [BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://arxiv.org/abs/2007.15779) model for context-based classification of the names of diseases and side effects.

<br><br>

HuggingFace [card](https://huggingface.co/Timofey/PubMedBERT_Diseases_Side-Effects_Context_Classifier) of the fine-tuned model.<br>

GitHub [link](https://github.com/ANDDigest/ANDDigest_classification_models) with notebooks for fine-tuning and applying the model.

--- | Timofey/Diseases_Side-Effects_Fine-Tuning_Dataset | [

"ANDDigest",

"ANDSystem",

"PubMed",

"arxiv:2007.15779",

"region:us"

] | 2022-09-15T08:48:34+00:00 | {"tags": ["ANDDigest", "ANDSystem", "PubMed"], "viewer": false, "extra_gated_fields": {"I agree to share my contact Information": "checkbox"}} | 2022-11-11T12:01:08+00:00 |

fc4e15ea42bdae5e66a3df41a9f047acda875ebf |

A PubMed-based dataset used to fine-tune the [BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://arxiv.org/abs/2007.15779) model for context-based classification of the names of molecular pathways.

<br><br>

HuggingFace [card](https://huggingface.co/Timofey/PubMedBERT_Pathways_Context_Classifier) of the fine-tuned model.<br>

GitHub [link](https://github.com/ANDDigest/ANDDigest_classification_models) with notebooks for fine-tuning and applying the model. | Timofey/Pathways_Fine-Tuning_Dataset | [

"ANDDigest",

"ANDSystem",

"PubMed",

"arxiv:2007.15779",

"region:us"

] | 2022-09-15T08:55:55+00:00 | {"tags": ["ANDDigest", "ANDSystem", "PubMed"], "viewer": false, "extra_gated_fields": {"I agree to share my contact Information": "checkbox"}} | 2022-11-11T12:02:39+00:00 |

a8d0fb879ef9b12fd3f2ceb910a25af0bfbea10f |

A PubMed-based dataset used to fine-tune the [BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://arxiv.org/abs/2007.15779) model for context-based classification of the names of cell components.

<br><br>

HuggingFace [card](https://huggingface.co/Timofey/PubMedBERT_Cell_Components_Context_Classifier) of the fine-tuned model.<br>

GitHub [link](https://github.com/ANDDigest/ANDDigest_classification_models) with notebooks for fine-tuning and applying the model.

| Timofey/Cell_Components_Fine-Tuning_Dataset | [

"ANDDigest",

"ANDSystem",

"PubMed",

"arxiv:2007.15779",

"region:us"

] | 2022-09-15T10:26:27+00:00 | {"tags": ["ANDDigest", "ANDSystem", "PubMed"], "viewer": false, "extra_gated_fields": {"I agree to share my contact Information": "checkbox"}} | 2022-11-11T12:02:12+00:00 |

86daa918401d71f6df102d24db7ed4bc60d39caa |

A PubMed-based dataset used to fine-tune the [BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://arxiv.org/abs/2007.15779) model for context-based classification of the names of drugs and metabolites.

<br><br>

HuggingFace [card](https://huggingface.co/Timofey/PubMedBERT_Drugs_Metabolites_Context_Classifier) of the fine-tuned model.<br>

GitHub [link](https://github.com/ANDDigest/ANDDigest_classification_models) with notebooks for fine-tuning and applying the model.

| Timofey/Drugs_Metabolites_Fine-Tuning_Dataset | [

"ANDDigest",

"ANDSystem",

"PubMed",

"arxiv:2007.15779",

"region:us"

] | 2022-09-15T10:35:21+00:00 | {"tags": ["ANDDigest", "ANDSystem", "PubMed"], "viewer": false, "extra_gated_fields": {"I agree to share my contact Information": "checkbox"}} | 2022-11-11T12:00:35+00:00 |

5e4f6b0f9b29eeb9034c01d76ccaf6e71f3db775 | taspecustu/Nanachi | [

"license:cc-by-4.0",

"region:us"

] | 2022-09-15T11:25:52+00:00 | {"license": "cc-by-4.0"} | 2022-09-15T11:32:36+00:00 |

|

dd7d748ed3c8e00fd078e625a01c2d9addff358b |

# Data card for Internet Archive historic book pages unlabelled.

- `10,844,387` unlabelled pages from historical books from the Internet Archive.

- Intended to be used for:

  - pre-training computer vision models in an unsupervised manner

  - using weak supervision to generate labels | ImageIN/IA_unlabelled | [

"region:us"

] | 2022-09-15T12:52:19+00:00 | {"annotations_creators": [], "language_creators": [], "language": [], "license": [], "multilinguality": [], "size_categories": [], "source_datasets": [], "task_categories": [], "task_ids": [], "pretty_name": "Internet Archive historic book pages unlabelled.", "tags": []} | 2022-10-21T13:38:12+00:00 |

bc2dd80f3fe48061b9648e867ef6f41a71ed5660 | Kipol/vs_art | [

"license:cc",

"region:us"

] | 2022-09-15T14:17:14+00:00 | {"license": "cc"} | 2022-09-15T14:18:08+00:00 |

|

e0aa6f54740139a2bde073beac5f93403ed2e990 | annotations_creators:

- no-annotation

languages:

- English

All data was pulled from the Gene Expression Omnibus (GEO) website. It is a tab-separated file with a GSE number followed by the title and abstract text. | spiccolo/gene_expression_omnibus_nlp | [

"region:us"

] | 2022-09-15T14:53:44+00:00 | {} | 2022-10-13T15:34:55+00:00 |
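The spiccolo/gene_expression_omnibus_nlp card above describes a tab-separated file that holds a GSE number followed by the title and abstract text. Below is a minimal Python sketch for reading such a file. It rests on two assumptions the card does not state: each line holds exactly one record, and there is no header row. The `read_geo_tsv` helper and the sample lines are hypothetical.

```python
import csv
import io


def read_geo_tsv(handle):
    """Yield (gse_id, title, abstract) tuples from a tab-separated text stream.

    Assumes one record per line, no header row, and the column order
    GSE number, title, abstract (inferred from the card, not documented).
    """
    reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for line_no, row in enumerate(reader, start=1):
        if not row:
            continue  # tolerate blank lines
        if len(row) < 3:
            raise ValueError(f"line {line_no}: expected 3 columns, got {len(row)}")
        # Re-join any extra fields so a stray tab inside the abstract is not lost.
        gse_id, title, abstract = row[0], row[1], "\t".join(row[2:])
        yield gse_id, title, abstract


# Hypothetical two-line input in the assumed layout.
sample = (
    "GSE0001\tFirst title\tFirst abstract.\n"
    "GSE0002\tSecond title\tAbstract with\ta stray tab.\n"
)
rows = list(read_geo_tsv(io.StringIO(sample)))
for gse_id, title, abstract in rows:
    print(gse_id, "|", title, "|", abstract)
```

For a real file, pass a handle opened with `newline=""`. The card gives neither the file name nor the encoding.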

7b976142cd87d9b99c4e9841a3c579e99eee09ed | # AutoTrain Dataset for project: ratnakar_1000_sample_curated

## Dataset Description

This dataset has been automatically processed by AutoTrain for project ratnakar_1000_sample_curated.

### Languages

The BCP-47 code for the dataset's language is en.

## Dataset Structure

### Data Instances

A sample from this dataset looks as follows:

```json

[

{

"tokens": [

"INTRADAY",

"NAHARINDUS",

" ABOVE ",

"128",

" - 129 SL ",

"126",

" TARGET ",

"140",

" "

],

"tags": [

8,

10,

0,

3,

0,

9,

0,

5,

0

]

},

{

"tokens": [

"INTRADAY",

"ASTRON",

" ABV ",

"39",

" SL ",

"37.50",

" TARGET ",

"45",

" "

],

"tags": [

8,

10,

0,

3,

0,

9,

0,

5,

0

]

}

]

```

### Dataset Fields

The dataset has the following fields (also called "features"):

```json

{

"tokens": "Sequence(feature=Value(dtype='string', id=None), length=-1, id=None)",

"tags": "Sequence(feature=ClassLabel(num_classes=12, names=['NANA', 'btst', 'delivery', 'enter', 'entry_momentum', 'exit', 'exit2', 'exit3', 'intraday', 'sl', 'symbol', 'touched'], id=None), length=-1, id=None)"

}

```
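The integer `tags` in the data instances are indices into the `ClassLabel` names listed above. The short sketch below decodes the second sample back into readable labels. The `decode` helper is illustrative only and is not part of AutoTrain.

```python
# Class names copied from the ClassLabel feature above; each integer tag
# is an index into this list.
TAG_NAMES = ['NANA', 'btst', 'delivery', 'enter', 'entry_momentum', 'exit',
             'exit2', 'exit3', 'intraday', 'sl', 'symbol', 'touched']


def decode(example):
    """Pair each token with the string name of its tag."""
    return [(tok, TAG_NAMES[tag]) for tok, tag in zip(example["tokens"], example["tags"])]


# Second data instance from the sample above.
example = {
    "tokens": ["INTRADAY", "ASTRON", " ABV ", "39", " SL ", "37.50", " TARGET ", "45", " "],
    "tags": [8, 10, 0, 3, 0, 9, 0, 5, 0],
}

for token, name in decode(example):
    if name != "NANA":  # NANA appears to mark filler text between entities
        print(f"{name:>8}: {token}")
```

If you load the data with the `datasets` library, `dataset.features["tags"].feature.int2str(...)` should perform the same lookup from the stored `ClassLabel`.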

### Dataset Splits

This dataset is split into a train split and a validation split. The split sizes are as follows:

| Split name | Num samples |
| ------------ | ------------------- |
| train | 726 |
| valid | 259 |

# GitHub Link to this project : [Telegram Trade Msg Backtest ML](https://github.com/hemangjoshi37a/TelegramTradeMsgBacktestML)

# Need a custom model for your application? Place an order on hjLabs.in : [Custom Token Classification or Named Entity Recognition (NER) model as in Natural Language Processing (NLP) Machine Learning](https://hjlabs.in/product/custom-token-classification-or-named-entity-recognition-ner-model-as-in-natural-language-processing-nlp-machine-learning/)

## What this repository contains:

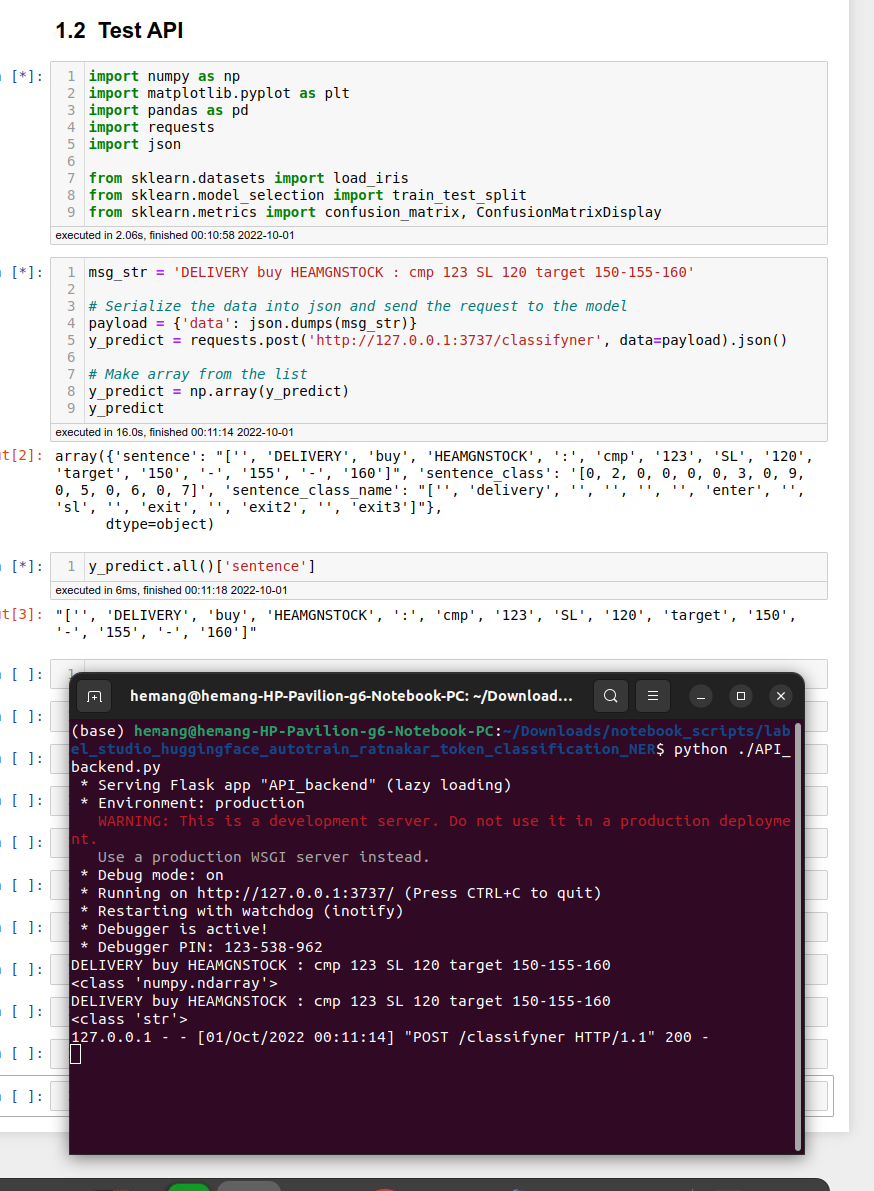

1. Label data using the LabelStudio NER (Named Entity Recognition, or token classification) tool.

2. Convert the LabelStudio CSV or JSON export to a HuggingFace AutoTrain dataset with a conversion script.

3. Train an NER model on HuggingFace AutoTrain.

4. Use the HuggingFace AutoTrain model to predict labels on new data in LabelStudio via LabelStudio-ML-Backend.

5. Define a Python function that predicts labels with the HuggingFace AutoTrain model.

6. Manually label only those items in the newly predicted dataset whose predicted labels are wrong.

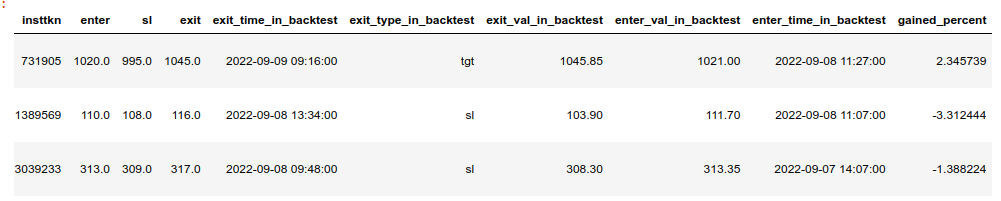

7. Backtest the correctly labelled dataset against real historical stock data using Zerodha kiteconnect and jugaad_trader.

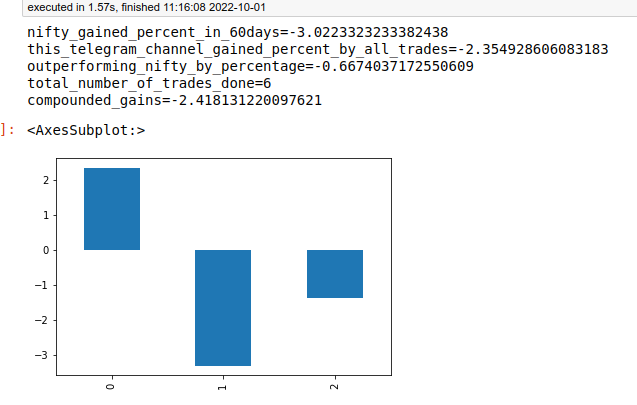

8. Evaluate and plot the total percentage gain since inception, both summed and compounded.

9. Listen to a Telegram channel for new live messages via the Telegram API for algotrading.

10. Serve the model as a Flask web API that responds to requests with labelled tokens.

11. Compare the results of the Telegram channel's tips against the exchange index, as percentage outperformance or underperformance.
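Step 8 separates summed gains from compounded gains. The sketch below shows the difference on a list of per-trade percentage returns. It makes one assumption for the compounded figure: the whole balance is reinvested in every trade. The repository's own backtest code is not reproduced here, and the trade values are hypothetical.

```python
import math


def summed_return(pct_returns):
    """Total gain as the plain sum of per-trade percentage returns."""
    return sum(pct_returns)


def compounded_return(pct_returns):
    """Total gain if the whole balance is reinvested in every trade."""
    growth = math.prod(1 + r / 100 for r in pct_returns)
    return (growth - 1) * 100


trades = [10.0, -5.0, 20.0]  # hypothetical per-trade returns, in percent
print(summed_return(trades))                # 25.0
print(round(compounded_return(trades), 2))  # 25.4
```

The two figures diverge more as the number of trades and the size of the returns grow. That divergence is why step 8 reports both.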

Place a custom order on hjLabs.in : [https://hjLabs.in](https://hjlabs.in/?product=custom-algotrading-software-for-zerodha-and-angel-w-source-code)

----------------------------------------------------------------------

### Contact us

Mobile : [+917016525813](tel:+917016525813)

Whatsapp & Telegram : [+919409077371](tel:+919409077371)

Email : [[email protected]](mailto:[email protected])

Place a custom order on hjLabs.in : [https://hjLabs.in](https://hjlabs.in/)

Please contribute your suggestions and corrections to support our efforts.

Thank you.

Buy us a coffee for $5 on [PayPal](https://www.paypal.com/cgi-bin/webscr?cmd=_s-xclick&hosted_button_id=5JXC8VRCSUZWJ)?

----------------------------------------------------------------------

### Checkout Our Other Repositories

- [pyPortMan](https://github.com/hemangjoshi37a/pyPortMan)

- [transformers_stock_prediction](https://github.com/hemangjoshi37a/transformers_stock_prediction)

- [TrendMaster](https://github.com/hemangjoshi37a/TrendMaster)

- [hjAlgos_notebooks](https://github.com/hemangjoshi37a/hjAlgos_notebooks)

- [AutoCut](https://github.com/hemangjoshi37a/AutoCut)

- [My_Projects](https://github.com/hemangjoshi37a/My_Projects)

- [Cool Arduino and ESP8266 or NodeMCU Projects](https://github.com/hemangjoshi37a/my_Arduino)

- [Telegram Trade Msg Backtest ML](https://github.com/hemangjoshi37a/TelegramTradeMsgBacktestML)

### Checkout Our Other Products

- [WiFi IoT LED Matrix Display](https://hjlabs.in/product/wifi-iot-led-display)

- [SWiBoard WiFi Switch Board IoT Device](https://hjlabs.in/product/swiboard-wifi-switch-board-iot-device)

- [Electric Bicycle](https://hjlabs.in/product/electric-bicycle)

- [Product 3D Design Service with Solidworks](https://hjlabs.in/product/product-3d-design-with-solidworks/)

- [AutoCut : Automatic Wire Cutter Machine](https://hjlabs.in/product/automatic-wire-cutter-machine/)

- [Custom AlgoTrading Software Coding Services](https://hjlabs.in/product/custom-algotrading-software-for-zerodha-and-angel-w-source-code//)

- [SWiBoard :Tasmota MQTT Control App](https://play.google.com/store/apps/details?id=in.hjlabs.swiboard)

- [Custom Token Classification or Named Entity Recognition (NER) model as in Natural Language Processing (NLP) Machine Learning](https://hjlabs.in/product/custom-token-classification-or-named-entity-recognition-ner-model-as-in-natural-language-processing-nlp-machine-learning/)

## Some Cool Arduino and ESP8266 (or NodeMCU) IoT projects:

- [IoT_LED_over_ESP8266_NodeMCU : Turn LED on and off using web server hosted on a nodemcu or esp8266](https://github.com/hemangjoshi37a/my_Arduino/tree/master/IoT_LED_over_ESP8266_NodeMCU)

- [ESP8266_NodeMCU_BasicOTA : Simple OTA (Over The Air) upload code from Arduino IDE using WiFi to NodeMCU or ESP8266](https://github.com/hemangjoshi37a/my_Arduino/tree/master/ESP8266_NodeMCU_BasicOTA)

- [IoT_CSV_SD : Read analog value of Voltage and Current and write it to SD Card in CSV format for Arduino, ESP8266, NodeMCU etc](https://github.com/hemangjoshi37a/my_Arduino/tree/master/IoT_CSV_SD)

- [Honeywell_I2C_Datalogger : Log data to an SD card from a Honeywell I2C HIH8000 or HIH6000 series sensor with an external I2C RTC clock](https://github.com/hemangjoshi37a/my_Arduino/tree/master/Honeywell_I2C_Datalogger)

- [IoT_Load_Cell_using_ESP8266_NodeMC : Read ADC value from High Precision 12bit ADS1015 ADC Sensor and Display on SSD1306 SPI Display as progress bar for Arduino or ESP8266 or NodeMCU](https://github.com/hemangjoshi37a/my_Arduino/tree/master/IoT_Load_Cell_using_ESP8266_NodeMC)

- [IoT_SSD1306_ESP8266_NodeMCU : Read from High Precision 12bit ADC sensor ADS1015 and display to SSD1306 SPI as progress bar in ESP8266 or NodeMCU or Arduino](https://github.com/hemangjoshi37a/my_Arduino/tree/master/IoT_SSD1306_ESP8266_NodeMCU)

## Checkout Our Awesome 3D GrabCAD Models:

- [AutoCut : Automatic Wire Cutter Machine](https://grabcad.com/library/automatic-wire-cutter-machine-1)

- [ESP Matrix Display 5mm Acrylic Box](https://grabcad.com/library/esp-matrix-display-5mm-acrylic-box-1)

- [Acrylic Bending Machine w/ Hot Air Gun](https://grabcad.com/library/arcylic-bending-machine-w-hot-air-gun-1)

- [Automatic Wire Cutter/Stripper](https://grabcad.com/library/automatic-wire-cutter-stripper-1)

## Our HuggingFace Models :

- [hemangjoshi37a/autotrain-ratnakar_1000_sample_curated-1474454086 : Stock tip message NER (Named Entity Recognition or Token Classification) using HuggingFace-AutoTrain and LabelStudio and Ratnakar Securities Pvt. Ltd.](https://huggingface.co/hemangjoshi37a/autotrain-ratnakar_1000_sample_curated-1474454086)

## Our HuggingFace Datasets :

- [hemangjoshi37a/autotrain-data-ratnakar_1000_sample_curated : Stock tip message NER (Named Entity Recognition or Token Classification) using HuggingFace-AutoTrain and LabelStudio and Ratnakar Securities Pvt. Ltd.](https://huggingface.co/datasets/hemangjoshi37a/autotrain-data-ratnakar_1000_sample_curated)

## We sell Gigs on Fiverr :

- [code android and ios app for you using flutter firebase software stack](https://business.fiverr.com/share/3v14pr)

- [code custom algotrading software for zerodha or angel broking](https://business.fiverr.com/share/kzkvEy)

## Awesome Fiverr Gigs:

- [develop machine learning ner model as in nlp using python](https://www.fiverr.com/share/9YNabx)

- [train custom chatgpt question answering model](https://www.fiverr.com/share/rwx6r7)

- [build algotrading, backtesting and stock monitoring tools using python](https://www.fiverr.com/share/A7Y14q)

- [tutor you in your science problems](https://www.fiverr.com/share/zPzmlz)

- [make cross-platform apps for you](https://www.fiverr.com/share/BGw12l)

| hemangjoshi37a/autotrain-data-ratnakar_1000_sample_curated | [

"language:en",

"region:us"

] | 2022-09-15T16:35:58+00:00 | {"language": ["en"]} | 2023-02-16T12:45:39+00:00 |

f5295abf41f24f8fc5b9790311a2484400dcdf00 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Zero-Shot Text Classification

* Model: autoevaluate/zero-shot-classification

* Dataset: autoevaluate/zero-shot-classification-sample

* Config: autoevaluate--zero-shot-classification-sample

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-staging-eval-autoevaluate__zero-shot-classification-sample-autoevalu-acab52-16766274 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-15T17:06:48+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["autoevaluate/zero-shot-classification-sample"], "eval_info": {"task": "text_zero_shot_classification", "model": "autoevaluate/zero-shot-classification", "metrics": [], "dataset_name": "autoevaluate/zero-shot-classification-sample", "dataset_config": "autoevaluate--zero-shot-classification-sample", "dataset_split": "test", "col_mapping": {"text": "text", "classes": "classes", "target": "target"}}} | 2022-09-15T18:13:14+00:00 |

be8e467ab348721baeae3c5e8761e120f1b9e341 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Zero-Shot Text Classification

* Model: autoevaluate/zero-shot-classification

* Dataset: Tristan/zero_shot_classification_test

* Config: Tristan--zero_shot_classification_test

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@Tristan](https://huggingface.co/Tristan) for evaluating this model. | autoevaluate/autoeval-staging-eval-Tristan__zero_shot_classification_test-Tristan__zero_sh-997db8-16786276 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-15T18:25:59+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["Tristan/zero_shot_classification_test"], "eval_info": {"task": "text_zero_shot_classification", "model": "autoevaluate/zero-shot-classification", "metrics": [], "dataset_name": "Tristan/zero_shot_classification_test", "dataset_config": "Tristan--zero_shot_classification_test", "dataset_split": "test", "col_mapping": {"text": "text", "classes": "classes", "target": "target"}}} | 2022-09-15T18:26:29+00:00 |

5993d6f8de645d09e4e076540e6d25f0ee2b747a | polinaeterna/earn | [

"license:cc-by-sa-4.0",

"region:us"

] | 2022-09-15T19:43:48+00:00 | {"license": "cc-by-sa-4.0"} | 2022-09-15T19:48:46+00:00 |

|

64df8d986e65b342699e9dbed622775ae1ce4ba1 | darcksky/Ringsofsaturnlugalkien | [

"license:artistic-2.0",

"region:us"

] | 2022-09-15T21:47:45+00:00 | {"license": "artistic-2.0"} | 2022-09-16T02:01:05+00:00 |

|

e36da016ad8b2fec475e4af1af4ce5e26766b1cd | g0d/BroadcastingCommission_Patois_Dataset | [

"license:other",

"region:us"

] | 2022-09-15T22:19:56+00:00 | {"license": "other"} | 2022-09-15T23:16:22+00:00 |

|

c2a2bfe23d23992408295e0dcaa40e1d06fbacc9 |

# openwebtext_20p

## Dataset Description

- **Origin:** [openwebtext](https://huggingface.co/datasets/openwebtext)

- **Download Size:** 4.60 GiB

- **Generated Size:** 7.48 GiB

- **Total Size:** 12.08 GiB

The first 20% of [openwebtext](https://huggingface.co/datasets/openwebtext). | Bingsu/openwebtext_20p | [

"task_categories:text-generation",

"task_categories:fill-mask",

"task_ids:language-modeling",

"task_ids:masked-language-modeling",

"annotations_creators:no-annotation",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:1M<n<10M",

"source_datasets:extended|openwebtext",

"language:en",

"license:cc0-1.0",

"region:us"

] | 2022-09-16T01:15:16+00:00 | {"annotations_creators": ["no-annotation"], "language_creators": ["found"], "language": ["en"], "license": ["cc0-1.0"], "multilinguality": ["monolingual"], "size_categories": ["1M<n<10M"], "source_datasets": ["extended|openwebtext"], "task_categories": ["text-generation", "fill-mask"], "task_ids": ["language-modeling", "masked-language-modeling"], "paperswithcode_id": "openwebtext", "pretty_name": "openwebtext_20p"} | 2022-09-16T01:36:38+00:00 |

a99cdd9ebcda07905cf2d6c5cdf58b70c43cce8e |

# Dataset Card for Kelly

Keywords for Language Learning for Young and adults alike

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Additional Information](#additional-information)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://spraakbanken.gu.se/en/resources/kelly

- **Paper:** https://link.springer.com/article/10.1007/s10579-013-9251-2

### Dataset Summary

The Swedish Kelly list is a freely available, frequency-based vocabulary list of general-purpose modern Swedish. The list was generated from a large web-acquired corpus (SweWaC) of 114 million words dating from the 2010s. It is adapted to the needs of language learners and contains the 8,425 most frequent lemmas, which together cover 80% of SweWaC.

### Languages

Swedish (sv-SE)

## Dataset Structure

### Data Instances

Here is a sample of the data:

```python

{

'id': 190,

'raw_frequency': 117835.0,

'relative_frequency': 1033.61,

'cefr_level': 'A1',

'source': 'SweWaC',

'marker': 'en',

'lemma': 'dag',

'pos': 'noun-en',

'examples': 'e.g. god dag'

}

```

This can be understood as:

> The common noun "dag" ("day") has a rank of 190 in the list. It was used 117,835 times in SweWaC, meaning it occurred 1033.61 times per million words. This word is among the most important vocabulary words for Swedish language learners and should be learned at the A1 CEFR level. An example usage of this word is the phrase "god dag" ("good day").
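The relative frequency is the raw count rescaled to occurrences per million words. The sketch below is a quick sanity check on the "dag" example. It assumes a corpus size of 114 million words, the rounded figure from the summary, because the exact token count behind the list is not given here. `per_million` is a hypothetical helper.

```python
def per_million(raw_count, corpus_words):
    """Occurrences per million words of running text."""
    return raw_count / corpus_words * 1_000_000


# "dag": 117,835 occurrences; corpus size rounded to 114 million (assumption).
estimate = per_million(117_835, 114_000_000)
print(round(estimate, 2))  # 1033.64, close to the listed 1033.61

# Working backwards from the listed value gives the implied corpus size.
implied_words = 117_835 / 1033.61 * 1_000_000
print(round(implied_words / 1_000_000, 1))  # 114.0 (million words)
```

The small gap between 1033.64 and 1033.61 comes from rounding the corpus size to 114 million.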

### Data Fields

- `id`: The row number for the data entry, starting at 1. Generally corresponds to the rank of the word.

- `raw_frequency`: The raw frequency (absolute count) of the word.

- `relative_frequency`: The relative frequency of the word, measured in occurrences per million words.

- `cefr_level`: The CEFR level (A1, A2, B1, B2, C1, C2) of the word.

- `source`: Whether the word came from SweWaC, translation lists (T2), or was manually added (manual).

- `marker`: The grammatical marker of the word, if any, such as an article or infinitive marker.

- `lemma`: The lemma of the word, sometimes provided with its spelling or stylistic variants.

- `pos`: The word's part of speech.

- `examples`: Usage examples and comments. Only available for some of the words.

Manual entries were prepended to the list, giving them a higher rank than they might otherwise have had. For example, the manual entry "Göteborg" ("Gothenburg") has a rank of 20, while the first non-manual entry, "och" ("and"), has a rank of 87, even though a conjunction and common stopword is far more likely to occur than the name of a city.

### Data Splits

There is a single split, `train`.

## Dataset Creation

Please refer to the article [Corpus-based approaches for the creation of a frequency based vocabulary list in the EU project KELLY – issues on reliability, validity and coverage](https://gup.ub.gu.se/publication/148533?lang=en) for information about how the original dataset was created and considerations for using the data.

**The following changes have been made to the original dataset**:

- Changed header names.

- Normalized the large web-acquired corpus name to "SweWaC" in the `source` field.

- Set the relative frequency of manual entries to null rather than 1000000.

## Additional Information

### Licensing Information

[CC BY 4.0](https://creativecommons.org/licenses/by/4.0)

### Citation Information

Please cite the authors if you use this dataset in your work:

```bibtex

@article{Kilgarriff2013,

doi = {10.1007/s10579-013-9251-2},

url = {https://doi.org/10.1007/s10579-013-9251-2},

year = {2013},

month = sep,

publisher = {Springer Science and Business Media {LLC}},

volume = {48},

number = {1},

pages = {121--163},

author = {Adam Kilgarriff and Frieda Charalabopoulou and Maria Gavrilidou and Janne Bondi Johannessen and Saussan Khalil and Sofie Johansson Kokkinakis and Robert Lew and Serge Sharoff and Ravikiran Vadlapudi and Elena Volodina},

title = {Corpus-based vocabulary lists for language learners for nine languages},

journal = {Language Resources and Evaluation}

}

```

### Contributions

Thanks to [@spraakbanken](https://github.com/spraakbanken) for creating this dataset and to [@codesue](https://github.com/codesue) for adding it.

| codesue/kelly | [

"task_categories:text-classification",

"task_ids:text-scoring",

"annotations_creators:expert-generated",

"language_creators:expert-generated",

"multilinguality:monolingual",

"size_categories:1K<n<10K",

"language:sv",

"license:cc-by-4.0",

"lexicon",

"swedish",

"CEFR",

"region:us"

] | 2022-09-16T01:18:16+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["expert-generated"], "language": ["sv"], "license": ["cc-by-4.0"], "multilinguality": ["monolingual"], "size_categories": ["1K<n<10K"], "source_datasets": [], "task_categories": ["text-classification"], "task_ids": ["text-scoring"], "pretty_name": "kelly", "tags": ["lexicon", "swedish", "CEFR"]} | 2022-12-18T22:06:55+00:00 |

dc137a6a976f6b5bb8768e9bb51ec58df930ccd1 |

# Dataset Card for "privy-english"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://github.com/pixie-io/pixie/tree/main/src/datagen/pii/privy](https://github.com/pixie-io/pixie/tree/main/src/datagen/pii/privy)

### Dataset Summary

A synthetic PII dataset generated using [Privy](https://github.com/pixie-io/pixie/tree/main/src/datagen/pii/privy), a tool which parses OpenAPI specifications and generates synthetic request payloads, searching for keywords in API schema definitions to select appropriate data providers. Generated API payloads are converted to various protocol trace formats like JSON and SQL to approximate the data developers might encounter while debugging applications.

This labelled PII dataset consists of protocol traces (JSON, SQL (PostgreSQL, MySQL), HTML, and XML) generated from OpenAPI specifications and includes 60+ PII types.

### Supported Tasks and Leaderboards

Named Entity Recognition (NER) and PII classification.

### Label Scheme

<details>

<summary>View label scheme (26 labels for 60 PII data providers)</summary>

| Component | Labels |

| --- | --- |

| **`ner`** | `PERSON`, `LOCATION`, `NRP`, `DATE_TIME`, `CREDIT_CARD`, `URL`, `IBAN_CODE`, `US_BANK_NUMBER`, `PHONE_NUMBER`, `US_SSN`, `US_PASSPORT`, `US_DRIVER_LICENSE`, `IP_ADDRESS`, `US_ITIN`, `EMAIL_ADDRESS`, `ORGANIZATION`, `TITLE`, `COORDINATE`, `IMEI`, `PASSWORD`, `LICENSE_PLATE`, `CURRENCY`, `ROUTING_NUMBER`, `SWIFT_CODE`, `MAC_ADDRESS`, `AGE` |

</details>

### Languages

English

## Dataset Structure

### Data Instances

A sample:

```json

{

"full_text": "{\"full_name_female\": \"Bethany Williams\", \"NewServerCertificateName\": \"\", \"NewPath\": \"\", \"ServerCertificateName\": \"dCwMNqR\", \"Action\": \"\", \"Version\": \"u zNS zNS\"}",

"masked": "{\"full_name_female\": \"{{name_female}}\", \"NewServerCertificateName\": \"{{string}}\", \"NewPath\": \"{{string}}\", \"ServerCertificateName\": \"{{string}}\", \"Action\": \"{{string}}\", \"Version\": \"{{string}}\"}",

"spans": [

{

"entity_type": "PERSON",

"entity_value": "Bethany Williams",

"start_position": 22,

"end_position": 38

}

],

"template_id": 51889,

"metadata": null

}

```
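In this sample, `start_position` and `end_position` appear to be zero-based character offsets into `full_text`, with the end offset exclusive: 38 - 22 = 16, which is the length of "Bethany Williams". This reading is inferred from the single sample and is not documented on the card. The sketch below checks it with Python slicing. It also parses `full_text`, which for this JSON-format trace is itself a JSON document.

```python
import json

# The sample record above, minus the masked/template_id/metadata fields.
sample = {
    "full_text": "{\"full_name_female\": \"Bethany Williams\", \"NewServerCertificateName\": \"\", \"NewPath\": \"\", \"ServerCertificateName\": \"dCwMNqR\", \"Action\": \"\", \"Version\": \"u zNS zNS\"}",
    "spans": [
        {"entity_type": "PERSON", "entity_value": "Bethany Williams",
         "start_position": 22, "end_position": 38},
    ],
}

text = sample["full_text"]
for span in sample["spans"]:
    # Treat the offsets as a half-open interval [start, end).
    value = text[span["start_position"]:span["end_position"]]
    assert value == span["entity_value"], (value, span)
    print(span["entity_type"], repr(value))

# For JSON protocol traces, full_text can be parsed back into the payload.
payload = json.loads(text)
print(payload["full_name_female"])
```

Traces in the SQL, HTML, or XML formats would not parse with `json.loads`. For those traces, rely on the span offsets alone.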

## Dataset Creation

### Curation Rationale

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the source language producers?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Annotations

#### Annotation process

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the annotators?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Personal and Sensitive Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Discussion of Biases

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Other Known Limitations

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Additional Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Dataset Curators

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Licensing Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Citation Information

```

@online{WinNT,

author = {Benjamin Kilimnik},

title = {{Privy} Synthetic PII Protocol Trace Dataset},

year = 2022,

url = {https://huggingface.co/datasets/beki/privy},

}

```

### Contributions

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | beki/privy | [

"task_categories:token-classification",

"task_ids:named-entity-recognition",

"multilinguality:monolingual",

"size_categories:100K<n<200K",

"size_categories:300K<n<400K",

"language:en",

"license:mit",

"pii-detection",

"region:us"

] | 2022-09-16T03:41:28+00:00 | {"language": ["en"], "license": ["mit"], "multilinguality": ["monolingual"], "size_categories": ["100K<n<200K", "300K<n<400K"], "task_categories": ["token-classification"], "task_ids": ["named-entity-recognition"], "pretty_name": "Privy English", "tags": ["pii-detection"], "train-eval-index": [{"config": "privy-small", "task": "token-classification", "task_id": "entity_extraction", "splits": {"train_split": "train", "eval_split": "test"}, "metrics": [{"type": "seqeval", "name": "seqeval"}]}]} | 2023-04-25T20:45:06+00:00 |

ffe47778949ab10a9d142c9156da20cceae5488e |

# Dataset Card for Nexdata/Mandarin_Spontaneous_Speech_Data_by_Mobile_Phone

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://www.nexdata.ai/datasets/77?source=Huggingface

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

The data were recorded by 700 Mandarin speakers, 65% of whom were women. There is no pre-written script: speakers make phone calls naturally while the contents of the calls are recorded. The annotations mainly cover the near-end speech, and the speech content is naturally colloquial.

For more details, please refer to the link: https://www.nexdata.ai/datasets/77?source=Huggingface

### Supported Tasks and Leaderboards

automatic-speech-recognition, audio-speaker-identification: The dataset can be used to train a model for Automatic Speech Recognition (ASR).

### Languages

Mandarin

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

Commercial License

### Citation Information

[More Information Needed]

### Contributions | Nexdata/Mandarin_Spontaneous_Speech_Data_by_Mobile_Phone | [

"task_categories:automatic-speech-recognition",

"language:zh",

"region:us"

] | 2022-09-16T09:10:40+00:00 | {"language": ["zh"], "task_categories": ["automatic-speech-recognition"], "YAML tags": [{"copy-paste the tags obtained with the tagging app": "https://github.com/huggingface/datasets-tagging"}]} | 2023-11-22T09:44:03+00:00 |

2751c683885849b771797fec13e146fe59811180 |

# Dataset Card for Nexdata/Korean_Conversational_Speech_Data_by_Mobile_Phone

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://www.nexdata.ai/datasets/1103?source=Huggingface

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

About 700 Korean speakers participated in the recording and communicated face-to-face in a natural way. They discussed a number of given topics freely, covering a wide range of fields; the speech is natural and fluent, in line with real dialogue scenarios. The text was transcribed manually, with high accuracy.

For more details, please refer to the link: https://www.nexdata.ai/datasets/1103?source=Huggingface

### Supported Tasks and Leaderboards

automatic-speech-recognition, audio-speaker-identification: The dataset can be used to train a model for Automatic Speech Recognition (ASR).

### Languages

Korean

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

Commercial License

### Citation Information

[More Information Needed]

### Contributions | Nexdata/Korean_Conversational_Speech_Data_by_Mobile_Phone | [

"task_categories:conversational",

"language:ko",

"region:us"

] | 2022-09-16T09:13:43+00:00 | {"language": ["ko"], "task_categories": ["conversational"], "YAML tags": [{"copy-paste the tags obtained with the tagging app": "https://github.com/huggingface/datasets-tagging"}]} | 2023-11-22T09:43:54+00:00 |

466e1bbc26e58600d32cfdab7779aea4be5f6c78 |

# Dataset Card for Nexdata/Japanese_Conversational_Speech_by_Mobile_Phone

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://www.nexdata.ai/datasets/1166?source=Huggingface

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

About 1000 speakers participated in the recording and communicated face-to-face in a natural way. They discussed a number of given topics freely, covering a wide range of fields; the speech is natural and fluent, in line with real dialogue scenarios. The text was transcribed manually, with high accuracy.

For more details, please refer to the link: https://www.nexdata.ai/datasets/1166?source=Huggingface

### Supported Tasks and Leaderboards

automatic-speech-recognition, audio-speaker-identification: The dataset can be used to train a model for Automatic Speech Recognition (ASR).

### Languages

Japanese

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

Commercial License

### Citation Information

[More Information Needed]

### Contributions | Nexdata/Japanese_Conversational_Speech_by_Mobile_Phone | [

"task_categories:conversational",

"language:ja",

"region:us"

] | 2022-09-16T09:14:35+00:00 | {"language": ["ja"], "task_categories": ["conversational"], "YAML tags": [{"copy-paste the tags obtained with the tagging app": "https://github.com/huggingface/datasets-tagging"}]} | 2023-11-22T09:44:24+00:00 |

9d53d40614e2466e905a48c39d3593ad4ed52b81 |

# Dataset Card for Nexdata/Italian_Conversational_Speech_Data_by_Mobile_Phone

## Description

About 700 speakers participated in the recording and communicated face-to-face in a natural way. They discussed a number of given topics freely, covering a wide range of fields; the speech is natural and fluent, in line with real dialogue scenarios. The text was transcribed manually, with high accuracy.

For more details, please refer to the link: https://www.nexdata.ai/datasets/1178?source=Huggingface

## Format

16kHz, 16bit, uncompressed wav, mono channel;
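The stated format can be checked mechanically. A minimal sketch using Python's standard `wave` module, assuming the recordings are ordinary uncompressed RIFF/WAV files; the helper name `check_wav_format` is hypothetical and not part of any Nexdata tooling:

```python
import io
import wave


def check_wav_format(source, rate=16000, sample_width_bytes=2, channels=1):
    """Return a list of mismatches between a WAV file and the expected format.

    `source` may be a path or a binary file-like object. The defaults encode
    16 kHz, 16-bit (2 bytes per sample), mono, uncompressed audio.
    """
    problems = []
    with wave.open(source, "rb") as wav:
        if wav.getframerate() != rate:
            problems.append(f"sample rate {wav.getframerate()} != {rate}")
        if wav.getsampwidth() != sample_width_bytes:
            problems.append(f"sample width {wav.getsampwidth()} bytes != {sample_width_bytes}")
        if wav.getnchannels() != channels:
            problems.append(f"channels {wav.getnchannels()} != {channels}")
        if wav.getcomptype() != "NONE":
            problems.append(f"compression {wav.getcomptype()!r} != 'NONE'")
    return problems


# Usage: write one second of silence in the expected format to memory, then check it.
buf = io.BytesIO()
with wave.open(buf, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(16000)
    out.writeframes(b"\x00\x00" * 16000)
buf.seek(0)
print(check_wav_format(buf))  # prints []
```

An empty list means the file matches; each string describes one mismatch.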

## Recording Environment

quiet indoor environment, without echo;

## Recording content

dozens of topics are specified, and the speakers hold conversations on those topics while being recorded;

## Demographics

About 700 people.

## Annotation

annotated with transcription text, speaker identification and gender

## Device

Android mobile phone, iPhone;

## Language

Italian

## Application scenarios

speech recognition; voiceprint recognition;

## Accuracy rate

the word accuracy rate is not less than 98%
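The card does not define how word accuracy is computed. One common convention, assumed here and not confirmed by the source, is word accuracy = 1 - WER, where WER = (S + D + I) / N from a word-level Levenshtein alignment (S substitutions, D deletions, I insertions, N reference words). A minimal sketch with hypothetical helper names:

```python
def word_edit_distance(reference, hypothesis):
    """Minimum number of word substitutions + deletions + insertions needed
    to turn `reference` into `hypothesis` (word-level Levenshtein distance)."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = distance between the ref words seen so far and the first j hyp words
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of a hypothesis word
                prev[j - 1] + (r != h),  # substitution (cost 0 on a match)
            )
        prev = curr
    return prev[-1]


def word_accuracy(reference, hypothesis):
    """Word accuracy as 1 - WER, with WER = edits / number of reference words."""
    n = len(reference.split())
    if n == 0:
        raise ValueError("reference transcript is empty")
    return 1 - word_edit_distance(reference, hypothesis) / n


print(word_accuracy("ciao come stai oggi", "ciao come sta oggi"))  # prints 0.75
```

Under this definition, a word accuracy of at least 98% means at most 2 word errors per 100 reference words.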

## Licensing Information

Commercial License

| Nexdata/Italian_Conversational_Speech_Data_by_Mobile_Phone | [

"task_categories:conversational",

"language:it",

"region:us"

] | 2022-09-16T09:15:32+00:00 | {"language": ["it"], "task_categories": ["conversational"], "YAML tags": [{"copy-paste the tags obtained with the tagging app": "https://github.com/huggingface/datasets-tagging"}]} | 2023-11-10T07:48:10+00:00 |

b96e3be1f0db925f88558b78d9092a1269c814e0 |

A Korean proverb dataset for NLI.

The 'question' field contains the meaning of a proverb and five answer choices, and the 'label' field contains the index of the correct answer (0-4).
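Since each question has five options and `label` holds the index (0-4) of the correct one, a model can be scored by comparing its predicted index with `label`. A minimal sketch, assuming the predictions are already available as a list of integer option indices; `multiple_choice_accuracy` is a hypothetical helper:

```python
from typing import Sequence

NUM_CHOICES = 5  # five answer options per question, labels 0-4


def multiple_choice_accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of questions whose predicted option index equals `label`."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("no examples to score")
    # Reject indices outside the 0-4 option range before scoring
    for value in list(predictions) + list(labels):
        if not 0 <= value < NUM_CHOICES:
            raise ValueError(f"option index {value} outside 0-{NUM_CHOICES - 1}")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


print(multiple_choice_accuracy([0, 3, 4, 1], [0, 3, 2, 1]))  # prints 0.75
```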

license: cc-by-sa-2.0-kr (original source: National Institute of Korean Language, Standard Korean Language Dictionary)

|Model| psyche/korean_idioms |
|:------:|:---:|
|klue/bert-base|0.7646| | psyche/korean_idioms | [

"task_categories:text-classification",

"annotations_creators:machine-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:1K<n<10K",

"source_datasets:original",

"language:ko",

"region:us"

] | 2022-09-16T10:31:37+00:00 | {"annotations_creators": ["machine-generated"], "language_creators": ["found"], "language": ["ko"], "multilinguality": ["monolingual"], "size_categories": ["1K<n<10K"], "source_datasets": ["original"], "task_categories": ["text-classification"], "pretty_name": "psyche/korean_idioms", "tags": []} | 2022-10-23T03:02:44+00:00 |

28fb0d7e0d32c1ac7b6dd09f8d9a4e283212e1c0 |

|Model| psyche/bool_sentence (10k) |
|:------:|:---:|
|klue/bert-base|0.9335|

license: cc-by-sa-2.0-kr (original source: National Institute of Korean Language, Standard Korean Language Dictionary) | psyche/bool_sentence | [

"task_categories:text-classification",

"annotations_creators:machine-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:100K<n<1M",

"source_datasets:original",

"language:ko",

"region:us"

] | 2022-09-16T11:30:21+00:00 | {"annotations_creators": ["machine-generated"], "language_creators": ["found"], "language": ["ko"], "multilinguality": ["monolingual"], "size_categories": ["100K<n<1M"], "source_datasets": ["original"], "task_categories": ["text-classification"], "task_ids": [], "pretty_name": "psyche/bool_sentence", "tags": []} | 2022-10-23T01:52:40+00:00 |

7dfaa5ab1015d802d08b5ca624675a53d4502bda |

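```bash
# Clone the Rust bioinformatics repositories used as the training corpus
git clone https://github.com/natir/br.git
git clone https://github.com/natir/pcon
git clone https://github.com/natir/yacrd
git clone https://github.com/natir/rasusa
git clone https://github.com/natir/fpa
git clone https://github.com/natir/kmrf
# Build one CSV row per .rs file: "repo_name","path","content","license".
# File content is flattened to one line (newlines become a literal \n) and
# double quotes are replaced with single quotes so each field can be quoted.
# Process substitution <(...) requires bash; it is not available in plain sh.
rm -f RustBioGPT-train.csv
find . -name "*.rs" | while IFS= read -r i; do
  paste -d "," \
    <(echo "$i" | perl -pe "s/\.\/(\w+)\/.+/\"\1\"/g") \
    <(echo "$i" | perl -pe "s/(.+)/\"\1\"/g") \
    <(perl -pe "s/\n/\\\n/g" "$i" | perl -pe s"/\"/\'/g" | perl -pe "s/(.+)/\"\1\"/g") \
    <(echo "mit" | perl -pe "s/(.+)/\"\1\"/g") >> RustBioGPT-train.csv
done
# Prepend the header row (GNU sed one-line "1i" syntax)
sed -i '1i "repo_name","path","content","license"' RustBioGPT-train.csv
``` | jelber2/RustBioGPT | [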

"license:mit",

"region:us"

] | 2022-09-16T11:59:39+00:00 | {"license": "mit"} | 2022-09-27T11:02:09+00:00 |

6a10b37e1971cde1ac72ff68a431519efcbe249a | wjm123/wjm123 | [

"license:afl-3.0",

"region:us"

] | 2022-09-16T12:15:45+00:00 | {"license": "afl-3.0"} | 2022-09-16T12:18:02+00:00 |

|

5156a742da7df2bd1796e2e34840ca6231509e82 | cakiki/token-graph | [

"license:apache-2.0",

"region:us"

] | 2022-09-16T12:43:04+00:00 | {"license": "apache-2.0"} | 2022-09-17T08:31:00+00:00 |

|

6ca3d7b3c4711e6f9df5d73ee70958c2750f925c |

# WNLI-es

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Website:** https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html

- **Point of Contact:** [Carlos Rodríguez-Penagos]([email protected]) and [Carme Armentano-Oller]([email protected])

### Dataset Summary

"A Winograd schema is a pair of sentences that differ in only one or two words and that contain an ambiguity that is resolved in opposite ways in the two sentences and requires the use of world knowledge and reasoning for its resolution. The schema takes its name from Terry Winograd." Source: [The Winograd Schema Challenge](https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html).

The [Winograd NLI dataset](https://dl.fbaipublicfiles.com/glue/data/WNLI.zip) contains 855 sentence pairs, in which the first sentence contains an ambiguity and the second one a possible interpretation of it. The label indicates whether the interpretation is correct (1) or not (0).

This dataset is a professional Spanish translation of the [Winograd NLI dataset](https://dl.fbaipublicfiles.com/glue/data/WNLI.zip) as published in the [GLUE Benchmark](https://gluebenchmark.com/tasks).

Both the original dataset and this translation are licensed under a [Creative Commons Attribution 4.0 International License](https://creativecommons.org/licenses/by/4.0/).

### Supported Tasks and Leaderboards

Textual entailment, text classification, language modeling.

### Languages

* Spanish (es)

## Dataset Structure

### Data Instances

Three tsv files.

### Data Fields

- index

- sentence 1: first sentence of the pair

- sentence 2: second sentence of the pair

- label: relation between the two sentences:

* 0: the second sentence does not entail a correct interpretation of the first one (neutral)

* 1: the second sentence entails a correct interpretation of the first one (entailment)

### Data Splits

- wnli-train-es.csv: 636 sentence pairs

- wnli-dev-es.csv: 72 sentence pairs

- wnli-test-shuffled-es.csv: 147 sentence pairs
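A minimal loading sketch for one split, assuming tab-separated files with a GLUE-style header (`index`, `sentence1`, `sentence2`, `label`); the column names and the placeholder rows below are assumptions, not taken from the released files. If a file is actually comma-separated, pass `delimiter=","` and `quoting=csv.QUOTE_MINIMAL`. Rows from a file without a label column (as in GLUE's unlabeled test files) get `None`:

```python
import csv
import io


def read_wnli_split(f, delimiter="\t", quoting=csv.QUOTE_NONE):
    """Parse a WNLI-es split into (sentence1, sentence2, label) tuples.

    `label` is 1 (entailment), 0 (not entailment), or None when the file
    has no label column (e.g. an unlabeled test split).
    """
    pairs = []
    for row in csv.DictReader(f, delimiter=delimiter, quoting=quoting):
        raw = row.get("label")
        label = int(raw) if raw not in (None, "") else None
        if label not in (0, 1, None):
            raise ValueError(f"unexpected label {raw!r} in row {row.get('index')}")
        pairs.append((row["sentence1"], row["sentence2"], label))
    return pairs


# Placeholder rows for illustration only (not real WNLI-es data).
sample = io.StringIO(
    "index\tsentence1\tsentence2\tlabel\n"
    "0\tfrase de ejemplo A\tfrase de ejemplo B\t1\n"
    "1\tfrase de ejemplo C\tfrase de ejemplo D\t0\n"
)
pairs = read_wnli_split(sample)
print(pairs[0])  # prints ('frase de ejemplo A', 'frase de ejemplo B', 1)
```

`QUOTE_NONE` is used for the tab-separated case so that quotation marks inside sentences are kept as literal text rather than parsed as CSV quoting.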

## Dataset Creation

### Curation Rationale

We translated this dataset to contribute to the development of language models in Spanish.

### Source Data

- [GLUE Benchmark site](https://gluebenchmark.com)

#### Initial Data Collection and Normalization

This is a professional translation of the [WNLI dataset](https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html) into Spanish, commissioned by [BSC TeMU](https://temu.bsc.es/) within the framework of the [Plan-TL](https://plantl.mineco.gob.es/Paginas/index.aspx).

For more information on how the Winograd NLI dataset was created, visit the webpage [The Winograd Schema Challenge](https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html).

#### Who are the source language producers?

For more information on how the Winograd NLI dataset was created, visit the webpage [The Winograd Schema Challenge](https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html).

### Annotations

#### Annotation process

We commissioned a professional translation of the [WNLI dataset](https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html) into Spanish.

#### Who are the annotators?

The translation was commissioned from a professional translation agency.

### Personal and Sensitive Information

No personal or sensitive information included.

## Considerations for Using the Data

### Social Impact of Dataset

This dataset contributes to the development of language models in Spanish.

### Discussion of Biases

[N/A]

### Other Known Limitations

[N/A]

## Additional Information

### Dataset Curators

Text Mining Unit (TeMU) at the Barcelona Supercomputing Center ([email protected]).

For further information, send an email to ([email protected]).

This work was funded by the [Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA)](https://avancedigital.mineco.gob.es/en-us/Paginas/index.aspx) within the framework of the [Plan-TL](https://plantl.mineco.gob.es/Paginas/index.aspx).

### Licensing information

This work is licensed under [CC Attribution 4.0 International](https://creativecommons.org/licenses/by/4.0/) License.

Copyright by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) (2022)

### Contributions

[N/A]

| PlanTL-GOB-ES/wnli-es | [

"task_categories:text-classification",

"task_ids:natural-language-inference",

"annotations_creators:expert-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:unknown",

"source_datasets:extended|glue",

"language:es",

"license:cc-by-4.0",

"region:us"

] | 2022-09-16T12:51:45+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["found"], "language": ["es"], "license": ["cc-by-4.0"], "multilinguality": ["monolingual"], "size_categories": ["unknown"], "source_datasets": ["extended|glue"], "task_categories": ["text-classification"], "task_ids": ["natural-language-inference"], "pretty_name": "wnli-es"} | 2022-11-18T12:03:25+00:00 |

4a15933dcd0acf4d468b13e12f601a4e456deeb6 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Question Answering

* Model: Jiqing/bert-large-uncased-whole-word-masking-finetuned-squad-finetuned-squad

* Dataset: squad_v2

* Config: squad_v2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-squad_v2-squad_v2-e15d25-1483654271 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T15:14:24+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["squad_v2"], "eval_info": {"task": "extractive_question_answering", "model": "Jiqing/bert-large-uncased-whole-word-masking-finetuned-squad-finetuned-squad", "metrics": [], "dataset_name": "squad_v2", "dataset_config": "squad_v2", "dataset_split": "validation", "col_mapping": {"context": "context", "question": "question", "answers-text": "answers.text", "answers-answer_start": "answers.answer_start"}}} | 2022-09-16T15:19:11+00:00 |

dd8b911a18f8578bdc3a4009ce27af553ff6dd62 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Question Answering

* Model: MYX4567/distilbert-base-uncased-finetuned-squad

* Dataset: squad_v2

* Config: squad_v2

* Split: validation

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-squad_v2-squad_v2-e15d25-1483654272 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T15:14:27+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["squad_v2"], "eval_info": {"task": "extractive_question_answering", "model": "MYX4567/distilbert-base-uncased-finetuned-squad", "metrics": [], "dataset_name": "squad_v2", "dataset_config": "squad_v2", "dataset_split": "validation", "col_mapping": {"context": "context", "question": "question", "answers-text": "answers.text", "answers-answer_start": "answers.answer_start"}}} | 2022-09-16T15:16:56+00:00 |

ad46374198d1c2b567649b3aef123d746ba4278c | Violence/Cloud | [

"license:afl-3.0",

"region:us"

] | 2022-09-16T16:45:20+00:00 | {"license": "afl-3.0"} | 2022-09-16T16:45:20+00:00 |

|

ecd209ffe06e918e4c7e7ce8684640434697e830 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Zero-Shot Text Classification

* Model: mathemakitten/opt-125m

* Dataset: autoevaluate/zero-shot-classification-sample

* Config: autoevaluate--zero-shot-classification-sample

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@mathemakitten](https://huggingface.co/mathemakitten) for evaluating this model. | autoevaluate/autoeval-eval-autoevaluate__zero-shot-classification-sample-autoevalu-912bbb-1484454284 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T16:55:47+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["autoevaluate/zero-shot-classification-sample"], "eval_info": {"task": "text_zero_shot_classification", "model": "mathemakitten/opt-125m", "metrics": [], "dataset_name": "autoevaluate/zero-shot-classification-sample", "dataset_config": "autoevaluate--zero-shot-classification-sample", "dataset_split": "test", "col_mapping": {"text": "text", "classes": "classes", "target": "target"}}} | 2022-09-16T16:56:15+00:00 |

63a9e740124aeaed97c6cc48ed107b95833d7121 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Zero-Shot Text Classification

* Model: mathemakitten/opt-125m

* Dataset: autoevaluate/zero-shot-classification-sample

* Config: autoevaluate--zero-shot-classification-sample

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@mathemakitten](https://huggingface.co/mathemakitten) for evaluating this model. | autoevaluate/autoeval-eval-autoevaluate__zero-shot-classification-sample-autoevalu-c3526e-1484354283 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T16:55:48+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["autoevaluate/zero-shot-classification-sample"], "eval_info": {"task": "text_zero_shot_classification", "model": "mathemakitten/opt-125m", "metrics": [], "dataset_name": "autoevaluate/zero-shot-classification-sample", "dataset_config": "autoevaluate--zero-shot-classification-sample", "dataset_split": "test", "col_mapping": {"text": "text", "classes": "classes", "target": "target"}}} | 2022-09-16T16:56:15+00:00 |

589bf157b543e47fc4bc6e2d681eb765df768a60 | spacemanidol/query-rewriting-dense-retrieval | [

"license:mit",

"region:us"

] | 2022-09-16T17:08:15+00:00 | {"license": "mit"} | 2022-09-16T17:08:15+00:00 |

|

37ea2ff12fdef2021a8068cf76c186aa9c1ca50a | jemale/test | [

"license:mit",

"region:us"

] | 2022-09-16T17:27:16+00:00 | {"license": "mit"} | 2022-09-16T17:27:16+00:00 |

|

4f7cf75267bc4b751a03ed9f668350be69d9ce4a | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Token Classification

* Model: chandrasutrisnotjhong/bert-finetuned-ner

* Dataset: conll2003

* Config: conll2003

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-conll2003-conll2003-bc26c9-1485554291 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:21:31+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["conll2003"], "eval_info": {"task": "entity_extraction", "model": "chandrasutrisnotjhong/bert-finetuned-ner", "metrics": [], "dataset_name": "conll2003", "dataset_config": "conll2003", "dataset_split": "test", "col_mapping": {"tokens": "tokens", "tags": "ner_tags"}}} | 2022-09-16T19:22:45+00:00 |

c816be36bf214a2b8ed525580d849ac7df0d2634 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Token Classification

* Model: baptiste/deberta-finetuned-ner

* Dataset: conll2003

* Config: conll2003

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-conll2003-conll2003-bc26c9-1485554292 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:21:37+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["conll2003"], "eval_info": {"task": "entity_extraction", "model": "baptiste/deberta-finetuned-ner", "metrics": [], "dataset_name": "conll2003", "dataset_config": "conll2003", "dataset_split": "test", "col_mapping": {"tokens": "tokens", "tags": "ner_tags"}}} | 2022-09-16T19:23:02+00:00 |

4c2a0ee535002890fffbd6b6a0fe8afc5bc2f6cf | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Token Classification

* Model: mariolinml/roberta_large-ner-conll2003_0818_v0

* Dataset: conll2003

* Config: conll2003

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-conll2003-conll2003-bc26c9-1485554294 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:21:47+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["conll2003"], "eval_info": {"task": "entity_extraction", "model": "mariolinml/roberta_large-ner-conll2003_0818_v0", "metrics": [], "dataset_name": "conll2003", "dataset_config": "conll2003", "dataset_split": "test", "col_mapping": {"tokens": "tokens", "tags": "ner_tags"}}} | 2022-09-16T19:23:36+00:00 |

5e2e4e90132c48d0b3e0afa6337a75225510eb8a | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Token Classification

* Model: jjglilleberg/bert-finetuned-ner

* Dataset: conll2003

* Config: conll2003

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-conll2003-conll2003-bc26c9-1485554295 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:21:53+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["conll2003"], "eval_info": {"task": "entity_extraction", "model": "jjglilleberg/bert-finetuned-ner", "metrics": [], "dataset_name": "conll2003", "dataset_config": "conll2003", "dataset_split": "test", "col_mapping": {"tokens": "tokens", "tags": "ner_tags"}}} | 2022-09-16T19:23:06+00:00 |

2105a9d5dd2b3d9ca6f7a7d51c60455a31a40e2a | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Token Classification

* Model: Yv/bert-finetuned-ner

* Dataset: conll2003

* Config: conll2003

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-conll2003-conll2003-bc26c9-1485554297 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:22:05+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["conll2003"], "eval_info": {"task": "entity_extraction", "model": "Yv/bert-finetuned-ner", "metrics": [], "dataset_name": "conll2003", "dataset_config": "conll2003", "dataset_split": "test", "col_mapping": {"tokens": "tokens", "tags": "ner_tags"}}} | 2022-09-16T19:23:19+00:00 |

6d4a3c8d5c40bf818348fcef1f6147e947481fef | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Multi-class Text Classification

* Model: armandnlp/distilbert-base-uncased-finetuned-emotion

* Dataset: emotion

* Config: default

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-emotion-default-fe1aa0-1485654301 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:22:29+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["emotion"], "eval_info": {"task": "multi_class_classification", "model": "armandnlp/distilbert-base-uncased-finetuned-emotion", "metrics": [], "dataset_name": "emotion", "dataset_config": "default", "dataset_split": "test", "col_mapping": {"text": "text", "target": "label"}}} | 2022-09-16T19:22:59+00:00 |

f009dc448491e5daf234a5e867b3fb012e366dc9 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Multi-class Text Classification

* Model: andreaschandra/distilbert-base-uncased-finetuned-emotion

* Dataset: emotion

* Config: default

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-emotion-default-fe1aa0-1485654303 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:22:41+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["emotion"], "eval_info": {"task": "multi_class_classification", "model": "andreaschandra/distilbert-base-uncased-finetuned-emotion", "metrics": [], "dataset_name": "emotion", "dataset_config": "default", "dataset_split": "test", "col_mapping": {"text": "text", "target": "label"}}} | 2022-09-16T19:23:06+00:00 |

b42408bed4845eabbde9ec840f2c77be1ce455ae | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Multi-class Text Classification

* Model: bousejin/distilbert-base-uncased-finetuned-emotion

* Dataset: emotion

* Config: default

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@lewtun](https://huggingface.co/lewtun) for evaluating this model. | autoevaluate/autoeval-eval-emotion-default-fe1aa0-1485654304 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T19:22:48+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["emotion"], "eval_info": {"task": "multi_class_classification", "model": "bousejin/distilbert-base-uncased-finetuned-emotion", "metrics": [], "dataset_name": "emotion", "dataset_config": "default", "dataset_split": "test", "col_mapping": {"text": "text", "target": "label"}}} | 2022-09-16T19:23:15+00:00 |

8f69a50e60bac11a0b2f12e5354f0678281aaf50 | # AutoTrain Dataset for project: consbert

## Dataset Description

This dataset has been automatically processed by AutoTrain for project consbert.

### Languages

The BCP-47 code for the dataset's language is listed as `unk` (unknown); the samples below include both English and French text.

## Dataset Structure

### Data Instances

A sample from this dataset looks as follows:

```json

[

{

"text": "DECLARATION OF PERFORMANCE fermacell Screws 1. unique identification code of the product type 2. purpose of use 3. manufacturer 5. system(s) for assessment and verification of constancy of performance 6. harmonised standard Notified body(ies) 7. Declared performance Essential feature Reaction to fire Tensile strength Length Corrosion protection (Reis oeueelt Nr. FC-0103 A FC-0103 A Drywall screws type TSN for fastening gypsum fibreboards James Hardie Europe GmbH Bennigsen- Platz 1 D-40474 Disseldorf Tel. +49 800 3864001 E-Mail fermacell jameshardie.de System 4 DIN EN 14566:2008+A1:2009 Stichting Hout Research (2590) Performance Al fulfilled <63mm Phosphated - Class 48 The performance of the above product corresponds to the declared performance(s). The manufacturer mentioned aboveis solely responsible for the preparation of the declaration of performancein accordance with Regulation (EU) No. 305/2011. Signed for the manufacturer and on behalf of the manufacturerof: Dusseldorf, 01.01.2020 2020 James Hardie Europe GmbH. and designate registered and incorporated trademarks of James Hardie Technology Limited Dr. J\u00e9rg Brinkmann (CEO) AESTUVER Seite 1/1 ",

"target": 1

},

{

"text": "DERBIGUM\u201d MAKING BUILDINGS SMART 9 - Performances d\u00e9clar\u00e9es selon EN 13707 : 2004 + A2: 2009 Caract\u00e9ristiques essentielles Performances Unit\u00e9s R\u00e9sistance a un feu ext\u00e9rieur (Note 1) FRoof (t3) - R\u00e9action au feu F - Etanch\u00e9it\u00e9 a l\u2019eau Conforme - Propri\u00e9t\u00e9s en traction : R\u00e9sistance en traction LxT* 900 x 700(+4 20%) N/50 mm Allongement LxT* 45 x 45 (+ 15) % R\u00e9sistance aux racines NPD** - R\u00e9sistance au poinconnementstatique (A) 20 kg R\u00e9sistance au choc (A et B) NPD** mm R\u00e9sistance a la d\u00e9chirure LxT* 200 x 200 (+ 20%) N R\u00e9sistance des jonctions: R\u00e9sistance au pelage NPD** N/50 mm R\u00e9sistance au cisaillement NPD** N/50 mm Durabilit\u00e9 : Sous UV, eau et chaleur Conforme - Pliabilit\u00e9 a froid apr\u00e9s vieillissement a la -10 (+ 5) \u00b0C chaleur Pliabilit\u00e9 a froid -18 \u00b0C Substances dangereuses (Note 2) - * L signifie la direction longitudinale, T signifie la direction transversale **NPD signifie Performance Non D\u00e9termin\u00e9e Note 1: Aucune performance ne peut \u00e9tre donn\u00e9e pourle produit seul, la performance de r\u00e9sistance a un feu ext\u00e9rieur d\u2019une toiture d\u00e9pend du syst\u00e9me complet Note 2: En l\u2019absence de norme d\u2019essai europ\u00e9enne harmonis\u00e9e, aucune performanceli\u00e9e au comportementa la lixiviation ne peut \u00e9tre d\u00e9clar\u00e9e, la d\u00e9claration doit \u00e9tre \u00e9tablie selon les dispositions nationales en vigueur. 10 - Les performances du produit identifi\u00e9 aux points 1 et 2 ci-dessus sont conformes aux performances d\u00e9clar\u00e9es indiqu\u00e9es au point 9. La pr\u00e9sente d\u00e9claration des performances est \u00e9tablie sous la seule responsabilit\u00e9 du fabricant identifi\u00e9 au point 4 Sign\u00e9 pourle fabricant et en son nom par: Mr Steve Geubels, Group Operations Director Perwez ,30/09/2016 Page 2 of 2 ",

"target": 8

}

]

```

### Dataset Fields

The dataset has the following fields (also called "features"):

```json

{

"text": "Value(dtype='string', id=None)",

"target": "ClassLabel(num_classes=9, names=['0', '1', '2', '3', '4', '5', '6', '7', '8'], id=None)"

}

```
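To make the schema above concrete, here is a minimal stdlib-only sketch of what the `target` feature encodes: 9 integer classes whose names are the strings `'0'` through `'8'`. `TargetLabels` and `validate_record` are hypothetical helpers written for illustration. They are not part of AutoTrain or the `datasets` library, though the `int2str`/`str2int` names mirror the methods on `datasets.ClassLabel`.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TargetLabels:
    """Hypothetical stand-in for the `target` ClassLabel feature.

    The schema lists 9 classes whose names are the strings '0' through '8'.
    """
    names: List[str] = field(default_factory=lambda: [str(i) for i in range(9)])

    @property
    def num_classes(self) -> int:
        return len(self.names)

    def int2str(self, idx: int) -> str:
        # Map an integer label (as stored in "target") to its class name.
        if not 0 <= idx < self.num_classes:
            raise ValueError(f"label {idx} is outside 0..{self.num_classes - 1}")
        return self.names[idx]

    def str2int(self, name: str) -> int:
        # Inverse mapping; list.index raises ValueError for unknown names.
        return self.names.index(name)


def validate_record(record: dict, labels: TargetLabels) -> bool:
    """Return True if a record has a string "text" and an in-range integer "target"."""
    text, target = record.get("text"), record.get("target")
    return (
        isinstance(text, str)
        and type(target) is int  # excludes bool, which subclasses int
        and 0 <= target < labels.num_classes
    )


labels = TargetLabels()
print(labels.int2str(8))                                      # 8
print(validate_record({"text": "...", "target": 1}, labels))  # True
print(validate_record({"text": "...", "target": 9}, labels))  # False
```

Both sample instances shown above (targets `1` and `8`) pass this check, while a target of `9` would fall outside the 9 declared classes.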

### Dataset Splits

This dataset is divided into a train split and a validation split. The split sizes are as follows:

| Split name | Num samples |
| ------------ | ------------------- |
| train | 59 |
| valid | 18 |

| Chemsseddine/autotrain-data-consbert | [

"task_categories:text-classification",

"region:us"

] | 2022-09-16T20:00:22+00:00 | {"task_categories": ["text-classification"]} | 2022-09-16T20:03:18+00:00 |

55c4e0884053ad905c6ceccdff7e02e8a0d9c7b8 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Zero-Shot Text Classification

* Model: autoevaluate/zero-shot-classification

* Dataset: Tristan/zero-shot-classification-large-test

* Config: Tristan--zero-shot-classification-large-test

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@Tristan](https://huggingface.co/Tristan) for evaluating this model. | autoevaluate/autoeval-eval-Tristan__zero-shot-classification-large-test-Tristan__z-7873ce-1486054319 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-16T22:52:59+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["Tristan/zero-shot-classification-large-test"], "eval_info": {"task": "text_zero_shot_classification", "model": "autoevaluate/zero-shot-classification", "metrics": [], "dataset_name": "Tristan/zero-shot-classification-large-test", "dataset_config": "Tristan--zero-shot-classification-large-test", "dataset_split": "test", "col_mapping": {"text": "text", "classes": "classes", "target": "target"}}} | 2022-09-16T23:43:54+00:00 |

35d2e5d9f41feed5ca053572780ad7263b060d96 | # Dataset Card for AutoTrain Evaluator

This repository contains model predictions generated by [AutoTrain](https://huggingface.co/autotrain) for the following task and dataset:

* Task: Summarization

* Model: SamuelAllen123/t5-efficient-large-nl36_fine_tune_sum

* Dataset: samsum

* Config: samsum

* Split: test

To run new evaluation jobs, visit Hugging Face's [automatic model evaluator](https://huggingface.co/spaces/autoevaluate/model-evaluator).

## Contributions

Thanks to [@samuelfipps123](https://huggingface.co/samuelfipps123) for evaluating this model. | autoevaluate/autoeval-eval-samsum-samsum-7cb0ac-1486354325 | [

"autotrain",

"evaluation",

"region:us"

] | 2022-09-17T00:56:39+00:00 | {"type": "predictions", "tags": ["autotrain", "evaluation"], "datasets": ["samsum"], "eval_info": {"task": "summarization", "model": "SamuelAllen123/t5-efficient-large-nl36_fine_tune_sum", "metrics": [], "dataset_name": "samsum", "dataset_config": "samsum", "dataset_split": "test", "col_mapping": {"text": "dialogue", "target": "summary"}}} | 2022-09-17T01:01:53+00:00 |

834a9ec3ad3d01d96e9371cce33ce5a28a721102 | # Dataset Card for AutoTrain Evaluator