| modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, UTC], 2020-02-15 11:33:14 to 2025-09-02 12:32:32) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (534 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (55 classes) | createdAt (timestamp[us, UTC], 2022-03-02 23:29:04 to 2025-09-02 12:31:20) | card (string, 11 to 1.01M chars) |
|---|---|---|---|---|---|---|---|---|---|

Butanium/simple-stories-2L16H128D-attention-only-toy-transformer

|

Butanium

| 2025-08-06T14:29:37Z | 9 | 0 | null |

[

"safetensors",

"llama",

"region:us"

] | null | 2025-08-06T14:29:27Z |

# 2-Layer 16-Head Attention-Only Transformer

This is a simplified transformer model with 2 attention layers, 16 attention heads, and a hidden size of 128, designed for studying attention mechanisms in isolation.

## Architecture Differences from Vanilla Transformer

**Removed Components:**

- **No MLP/Feed-Forward layers** - Only attention layers

- **No Layer Normalization** - No LayerNorm before/after attention

- **No positional encoding** - No position embeddings of any kind

**Kept Components:**

- Token embeddings

- Multi-head self-attention with causal masking

- Residual connections around attention layers

- Language modeling head (linear projection to vocabulary)

This minimal architecture isolates the attention mechanism, making it useful for mechanistic interpretability research as described in [A Mathematical Framework for Transformer Circuits](https://transformer-circuits.pub/2021/framework/index.html).
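To make the removed and kept components concrete, here is a minimal pure-Python sketch of the same kind of forward pass: token embeddings, a stack of causal multi-head self-attention layers each wrapped in a residual connection, and an unembedding, with no MLPs, no LayerNorm, and no positional embeddings. It is a hypothetical illustration with random weights (the class and helper names are invented here), not the author's `AttentionLayer` code. For this model the per-head dimension would be 128 / 16 = 8.

```python
import math
import random


def matvec(W, x):
    # W is a list of rows (out_dim x in_dim); returns W @ x
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class ToyAttentionOnlyTransformer:
    """Hypothetical sketch: embed -> n_layers x (causal MHA + residual) -> unembed.
    No MLP blocks, no LayerNorm, no positional embeddings."""

    def __init__(self, vocab_size, d_model, n_heads, n_layers, seed=0):
        assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
        rng = random.Random(seed)
        self.n_heads, self.d_head = n_heads, d_model // n_heads
        self.embed = rand_matrix(vocab_size, d_model, rng)  # token embeddings only
        self.layers = [
            {name: rand_matrix(d_model, d_model, rng) for name in ("W_Q", "W_K", "W_V", "W_O")}
            for _ in range(n_layers)
        ]
        self.unembed = rand_matrix(vocab_size, d_model, rng)  # lm_head without bias

    def attention(self, layer, xs):
        q = [matvec(layer["W_Q"], x) for x in xs]
        k = [matvec(layer["W_K"], x) for x in xs]
        v = [matvec(layer["W_V"], x) for x in xs]
        outputs = []
        for i in range(len(xs)):
            heads = []
            for h in range(self.n_heads):
                lo, hi = h * self.d_head, (h + 1) * self.d_head
                # Causal mask: position i only scores positions 0..i
                scores = [
                    sum(a * b for a, b in zip(q[i][lo:hi], k[j][lo:hi])) / math.sqrt(self.d_head)
                    for j in range(i + 1)
                ]
                weights = softmax(scores)
                heads.extend(
                    sum(w * v[j][d] for j, w in enumerate(weights)) for d in range(lo, hi)
                )
            outputs.append(matvec(layer["W_O"], heads))  # concatenate heads, project back
        return outputs

    def forward(self, tokens):
        resid = [list(self.embed[t]) for t in tokens]  # residual stream, one vector per position
        for layer in self.layers:
            attn = self.attention(layer, resid)
            # Residual add with no LayerNorm before or after attention
            resid = [[r + a for r, a in zip(rv, av)] for rv, av in zip(resid, attn)]
        return [matvec(self.unembed, r) for r in resid]  # logits per position
```

Because nothing in the residual stream encodes position, a repeated token yields the same logits at every position, and the causal mask means editing a later token never changes the outputs at earlier positions.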

## Usage

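```python
import torch.nn as nn
from transformers import LlamaConfig, PreTrainedModel


class AttentionOnlyTransformer(PreTrainedModel):
    config_class = LlamaConfig

    def __init__(self, config: LlamaConfig):
        super().__init__(config)
        self.embed_tokens = nn.Embedding(config.vocab_size, config.hidden_size)
        # AttentionLayer (causal multi-head self-attention + residual) and forward()
        # come from the original training code and are not reproduced in this card
        self.layers = nn.ModuleList([AttentionLayer(config) for _ in range(config.num_hidden_layers)])
        self.lm_head = nn.Linear(config.hidden_size, config.vocab_size, bias=False)


model = AttentionOnlyTransformer.from_pretrained('Butanium/simple-stories-2L16H128D-attention-only-toy-transformer')
```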

## Training Data

The model is trained on the [SimpleStories dataset](https://huggingface.co/datasets/SimpleStories/SimpleStories) for next-token prediction.

|

Butanium/simple-stories-2L16H512D-attention-only-toy-transformer

|

Butanium

| 2025-08-06T14:29:29Z | 7 | 0 | null |

[

"safetensors",

"llama",

"region:us"

] | null | 2025-08-06T14:29:26Z |

# 2-Layer 16-Head Attention-Only Transformer

This is a simplified transformer model with 2 attention layers, 16 attention heads, and a hidden size of 512, designed for studying attention mechanisms in isolation.

## Architecture Differences from Vanilla Transformer

**Removed Components:**

- **No MLP/Feed-Forward layers** - Only attention layers

- **No Layer Normalization** - No LayerNorm before/after attention

- **No positional encoding** - No position embeddings of any kind

**Kept Components:**

- Token embeddings

- Multi-head self-attention with causal masking

- Residual connections around attention layers

- Language modeling head (linear projection to vocabulary)

This minimal architecture isolates the attention mechanism, making it useful for mechanistic interpretability research as described in [A Mathematical Framework for Transformer Circuits](https://transformer-circuits.pub/2021/framework/index.html).

## Usage

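```python
import torch.nn as nn
from transformers import LlamaConfig, PreTrainedModel


class AttentionOnlyTransformer(PreTrainedModel):
    config_class = LlamaConfig

    def __init__(self, config: LlamaConfig):
        super().__init__(config)
        self.embed_tokens = nn.Embedding(config.vocab_size, config.hidden_size)
        # AttentionLayer (causal multi-head self-attention + residual) and forward()
        # come from the original training code and are not reproduced in this card
        self.layers = nn.ModuleList([AttentionLayer(config) for _ in range(config.num_hidden_layers)])
        self.lm_head = nn.Linear(config.hidden_size, config.vocab_size, bias=False)


model = AttentionOnlyTransformer.from_pretrained('Butanium/simple-stories-2L16H512D-attention-only-toy-transformer')
```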

## Training Data

The model is trained on the [SimpleStories dataset](https://huggingface.co/datasets/SimpleStories/SimpleStories) for next-token prediction.

|

pasithbas159/MIVC_Typhoon2_HII_satellite_v1

|

pasithbas159

| 2025-08-06T14:29:29Z | 6 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_vl",

"image-to-text",

"text-generation-inference",

"unsloth",

"trl",

"sft",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-to-text

| 2025-07-21T17:13:37Z |

---

base_model: pasithbas/Typhoon2_HII_satellite_v1

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2_vl

- trl

- sft

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** pasithbas159

- **License:** apache-2.0

- **Finetuned from model:** pasithbas/Typhoon2_HII_satellite_v1

This qwen2_vl model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

sbunlp/fabert

|

sbunlp

| 2025-08-06T14:29:15Z | 3,116 | 15 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"bert",

"fill-mask",

"fa",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2024-02-09T14:00:20Z |

---

language:

- fa

library_name: transformers

widget:

- text: "ز سوزناکی گفتار من [MASK] بگریست"

example_title: "Poetry 1"

- text: "نظر از تو برنگیرم همه [MASK] تا بمیرم که تو در دلم نشستی و سر مقام داری"

example_title: "Poetry 2"

- text: "هر ساعتم اندرون بجوشد [MASK] را وآگاهی نیست مردم بیرون را"

example_title: "Poetry 3"

- text: "غلام همت آن رند عافیت سوزم که در گدا صفتی [MASK] داند"

example_title: "Poetry 4"

- text: "این [MASK] اولشه."

example_title: "Informal 1"

- text: "دیگه خسته شدم! [MASK] اینم شد کار؟!"

example_title: "Informal 2"

- text: "فکر نکنم به موقع برسیم. بهتره [MASK] این یکی بشیم."

example_title: "Informal 3"

- text: "تا صبح بیدار موندم و داشتم برای [MASK] آماده می شدم."

example_title: "Informal 4"

- text: "زندگی بدون [MASK] خسته‌کننده است."

example_title: "Formal 1"

- text: "در حکم اولیه این شرکت مجاز به فعالیت شد ولی پس از بررسی مجدد، مجوز این شرکت [MASK] شد."

example_title: "Formal 2"

---

# FaBERT: Pre-training BERT on Persian Blogs

## Model Details

FaBERT is a Persian BERT-base model trained on the diverse HmBlogs corpus, which covers both casual and formal Persian text. Across a range of Natural Language Understanding (NLU) tasks, it outperforms ParsBERT and XLM-R on 9 of the 12 benchmarks reported in the Evaluation section, while having fewer parameters than either. FaBERT is available on Hugging Face and loads with the standard `transformers` Auto classes.

## Features

- Pre-trained on the diverse HmBlogs corpus, which contains more than 50 GB of text from Persian blogs

- Strong performance across various downstream NLP tasks

- BERT architecture with 124 million parameters

## Useful Links

- **Repository:** [FaBERT on Github](https://github.com/SBU-NLP-LAB/FaBERT)

- **Paper:** [ACL Anthology](https://aclanthology.org/2025.wnut-1.10/)

## Usage

### Loading the Model with MLM head

```python

from transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("sbunlp/fabert") # make sure to use the default fast tokenizer

model = AutoModelForMaskedLM.from_pretrained("sbunlp/fabert")

```

### Downstream Tasks

Like the original English BERT, FaBERT can be fine-tuned on many downstream tasks; see the [Hugging Face fine-tuning guide](https://huggingface.co/docs/transformers/en/training).

Examples on Persian datasets are available in our [GitHub repository](#useful-links).

**Make sure to use the default fast tokenizer.**

## Training Details

FaBERT was pre-trained with the masked language modeling (MLM) objective using whole word masking (WWM), reaching a perplexity of 7.76 on the validation set.

| Hyperparameter | Value |
|-------------------|:--------------:|
| Batch Size | 32 |
| Optimizer | Adam |
| Learning Rate | 6e-5 |
| Weight Decay | 0.01 |
| Total Steps | 18 Million |
| Warmup Steps | 1.8 Million |
| Precision Format | TF32 |
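Whole word masking differs from plain MLM in that all sub-tokens of a selected word are masked together, so the model cannot recover a word from its own remaining pieces. A minimal sketch, assuming WordPiece-style `##` continuation markers (FaBERT's actual tokenizer may mark word boundaries differently) and omitting BERT's 80/10/10 mask/random/keep replacement; the function name is hypothetical:

```python
import random


def whole_word_mask(tokens, mask_prob=0.15, mask_token="[MASK]", rng=None):
    """Mask whole words: when a word is chosen, every one of its sub-tokens is masked.

    Assumes continuation sub-tokens start with '##' (an assumption, see above).
    Returns (masked_tokens, labels); labels[i] is None where no prediction is needed.
    """
    rng = rng or random.Random()
    # Group sub-token indices into words
    words = []
    for i, tok in enumerate(tokens):
        if tok.startswith("##") and words:
            words[-1].append(i)
        else:
            words.append([i])
    # Choose roughly mask_prob of the words, at least one
    n_to_mask = max(1, round(len(words) * mask_prob))
    chosen = rng.sample(words, n_to_mask)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for word in chosen:
        for i in word:
            labels[i] = tokens[i]  # the model must predict the original sub-token
            masked[i] = mask_token
    return masked, labels


tokens = ["the", "play", "##ground", "was", "emp", "##ty"]
masked, labels = whole_word_mask(tokens, mask_prob=0.5, rng=random.Random(0))
print(masked)
```

Here the six sub-tokens form four words, so two whole words are masked; "play" and "##ground" are always masked or kept together.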

## Evaluation

Here are some key performance results for the FaBERT model:

**Sentiment Analysis**

| Task | FaBERT | ParsBERT | XLM-R |
|:-------------|:------:|:--------:|:-----:|
| MirasOpinion | **87.51** | 86.73 | 84.92 |
| MirasIrony | 74.82 | 71.08 | **75.51** |
| DeepSentiPers | **79.85** | 74.94 | 79.00 |

**Named Entity Recognition**

| Task | FaBERT | ParsBERT | XLM-R |
|:-------------|:------:|:--------:|:-----:|
| PEYMA | **91.39** | 91.24 | 90.91 |
| ParsTwiner | **82.22** | 81.13 | 79.50 |
| MultiCoNER v2 | 57.92 | **58.09** | 51.47 |

**Question Answering**

| Task | FaBERT | ParsBERT | XLM-R |
|:-------------|:------:|:--------:|:-----:|
| ParsiNLU | **55.87** | 44.89 | 42.55 |
| PQuAD | 87.34 | 86.89 | **87.60** |
| PCoQA | **53.51** | 50.96 | 51.12 |

**Natural Language Inference & QQP**

| Task | FaBERT | ParsBERT | XLM-R |
|:-------------|:------:|:--------:|:-----:|
| FarsTail | **84.45** | 82.52 | 83.50 |
| SBU-NLI | **66.65** | 58.41 | 58.85 |
| ParsiNLU QQP | **82.62** | 77.60 | 79.74 |

**Number of Parameters**

| | FaBERT | ParsBERT | XLM-R |
|:-------------|:------:|:--------:|:-----:|
| Parameter Count (M) | 124 | 162 | 278 |
| Vocabulary Size (K) | 50 | 100 | 250 |

For a more detailed performance analysis, refer to the paper.

## How to Cite

If you use FaBERT in your research or projects, please cite it using the following BibTeX:

```bibtex

@inproceedings{masumi-etal-2025-fabert,

title = "{F}a{BERT}: Pre-training {BERT} on {P}ersian Blogs",

author = "Masumi, Mostafa and

Majd, Seyed Soroush and

Shamsfard, Mehrnoush and

Beigy, Hamid",

editor = "Bak, JinYeong and

Goot, Rob van der and

Jang, Hyeju and

Buaphet, Weerayut and

Ramponi, Alan and

Xu, Wei and

Ritter, Alan",

booktitle = "Proceedings of the Tenth Workshop on Noisy and User-generated Text",

month = may,

year = "2025",

address = "Albuquerque, New Mexico, USA",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2025.wnut-1.10/",

doi = "10.18653/v1/2025.wnut-1.10",

pages = "85--96",

ISBN = "979-8-89176-232-9",

}

```

|

luc4s-0liv3ra/P.m.f

|

luc4s-0liv3ra

| 2025-08-06T14:28:10Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-06T14:28:09Z |

---

license: apache-2.0

---

|

johnbridges/UIGEN-X-32B-0727-GGUF

|

johnbridges

| 2025-08-06T14:27:57Z | 3,877 | 0 |

transformers

|

[

"transformers",

"gguf",

"text-generation-inference",

"qwen3",

"ui-generation",

"tailwind-css",

"html",

"reasoning",

"step-by-step-generation",

"hybrid-thinking",

"tool-calling",

"en",

"base_model:Qwen/Qwen3-32B",

"base_model:quantized:Qwen/Qwen3-32B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-08-05T14:11:32Z |

---

base_model:

- Qwen/Qwen3-32B

tags:

- text-generation-inference

- transformers

- qwen3

- ui-generation

- tailwind-css

- html

- reasoning

- step-by-step-generation

- hybrid-thinking

- tool-calling

license: apache-2.0

language:

- en

---

# <span style="color: #7FFF7F;">UIGEN-X-32B-0727 GGUF Models</span>

## <span style="color: #7F7FFF;">Model Generation Details</span>

This model was generated using [llama.cpp](https://github.com/ggerganov/llama.cpp) at commit [`4cb208c9`](https://github.com/ggerganov/llama.cpp/commit/4cb208c93c1c938591a5b40354e2a6f9b94489bc).

---

## <span style="color: #7FFF7F;">Quantization Beyond the IMatrix</span>

I've been experimenting with a new quantization approach that selectively elevates the precision of key layers beyond what the default IMatrix configuration provides.

In my testing, standard IMatrix quantization underperforms at lower bit depths, especially with Mixture of Experts (MoE) models. To address this, I'm using the `--tensor-type` option in `llama.cpp` to manually "bump" important layers to higher precision. You can see the implementation here:

👉 [Layer bumping with llama.cpp](https://github.com/Mungert69/GGUFModelBuilder/blob/main/model-converter/tensor_list_builder.py)

While this does increase model file size, it significantly improves precision for a given quantization level.
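The layer bumping described above amounts to passing one `--tensor-type TENSOR=TYPE` override per tensor pattern to `llama-quantize`. The sketch below is a hypothetical helper that only assembles the argument list; the file names, the `attn_v`/`ffn_down` choices, and the target types are illustrative assumptions rather than the author's actual recipe (that lives in the linked `tensor_list_builder.py`), so check the exact syntax with `llama-quantize --help` for your llama.cpp build.

```python
import shlex


def build_quantize_cmd(src_gguf, dst_gguf, base_type, imatrix=None, bumps=None):
    """Build an argv list for llama.cpp's llama-quantize with per-tensor precision overrides."""
    cmd = ["llama-quantize"]
    if imatrix:
        cmd += ["--imatrix", imatrix]  # importance matrix used for the base quantization
    # Each override quantizes matching tensors to a higher-precision type than base_type
    for tensor, qtype in (bumps or {}).items():
        cmd += ["--tensor-type", f"{tensor}={qtype}"]
    cmd += [src_gguf, dst_gguf, base_type]  # positional: input, output, base quant type
    return cmd


cmd = build_quantize_cmd(
    "model-bf16.gguf", "model-q4_k_m.gguf", "Q4_K_M",
    imatrix="imatrix.dat", bumps={"attn_v": "q8_0", "ffn_down": "q6_k"},
)
print(shlex.join(cmd))
```

Passing `cmd` to `subprocess.run(cmd, check=True)` would run the quantization; building a list rather than a shell string avoids quoting problems with file paths.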

### **I'd love your feedback—have you tried this? How does it perform for you?**

---

<a href="https://readyforquantum.com/huggingface_gguf_selection_guide.html" style="color: #7FFF7F;">

Click here to get info on choosing the right GGUF model format

</a>

---

<!--Begin Original Model Card-->

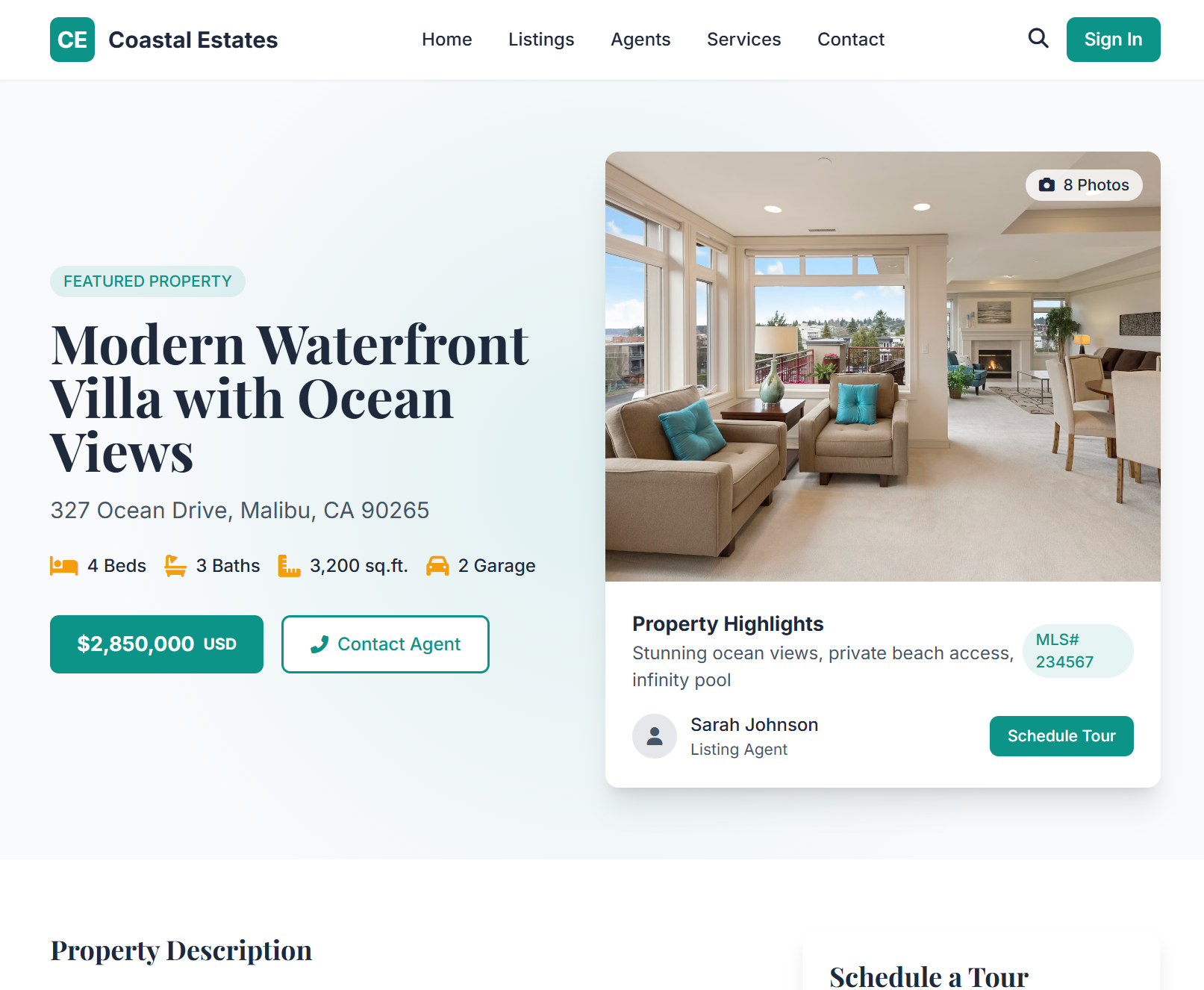

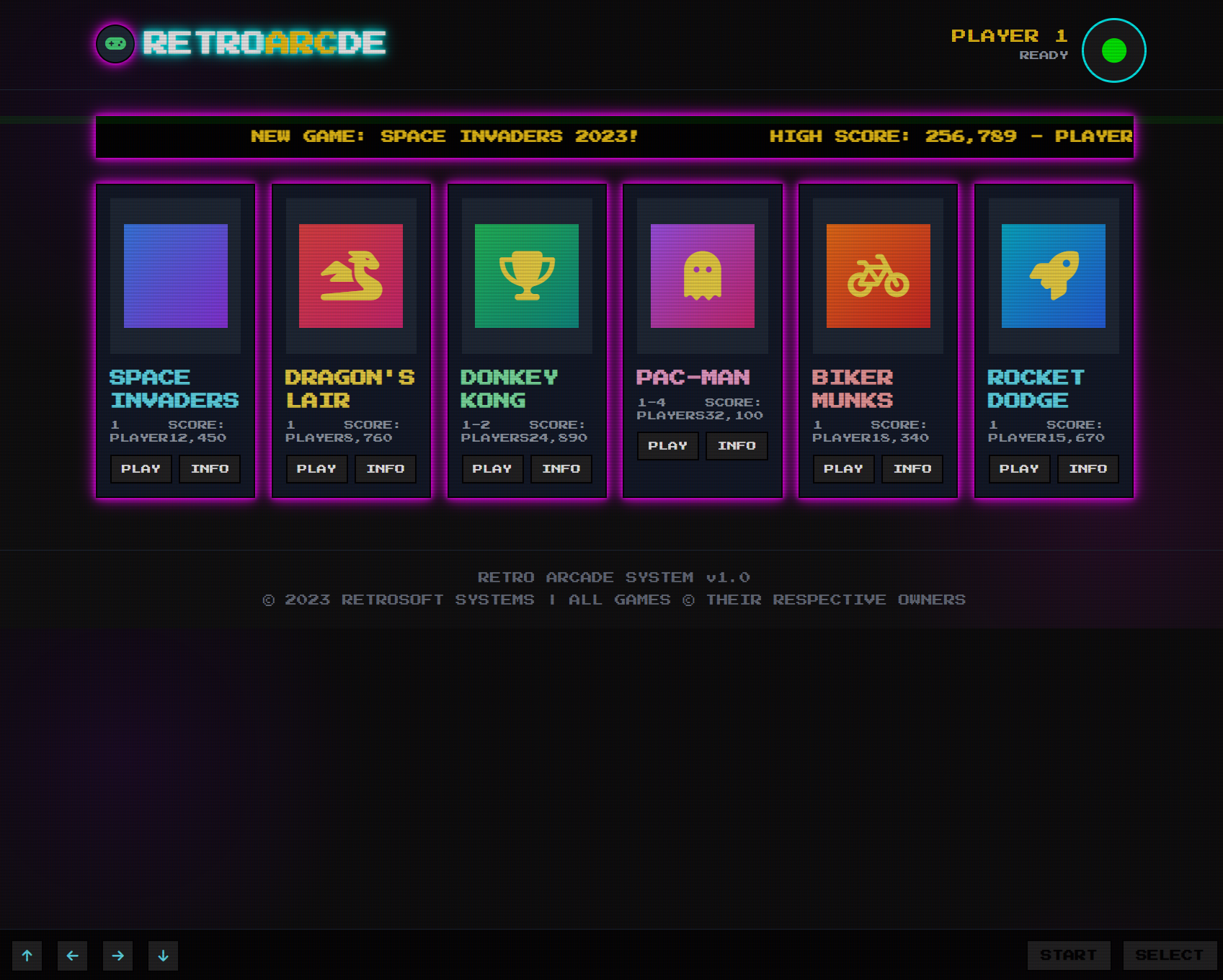

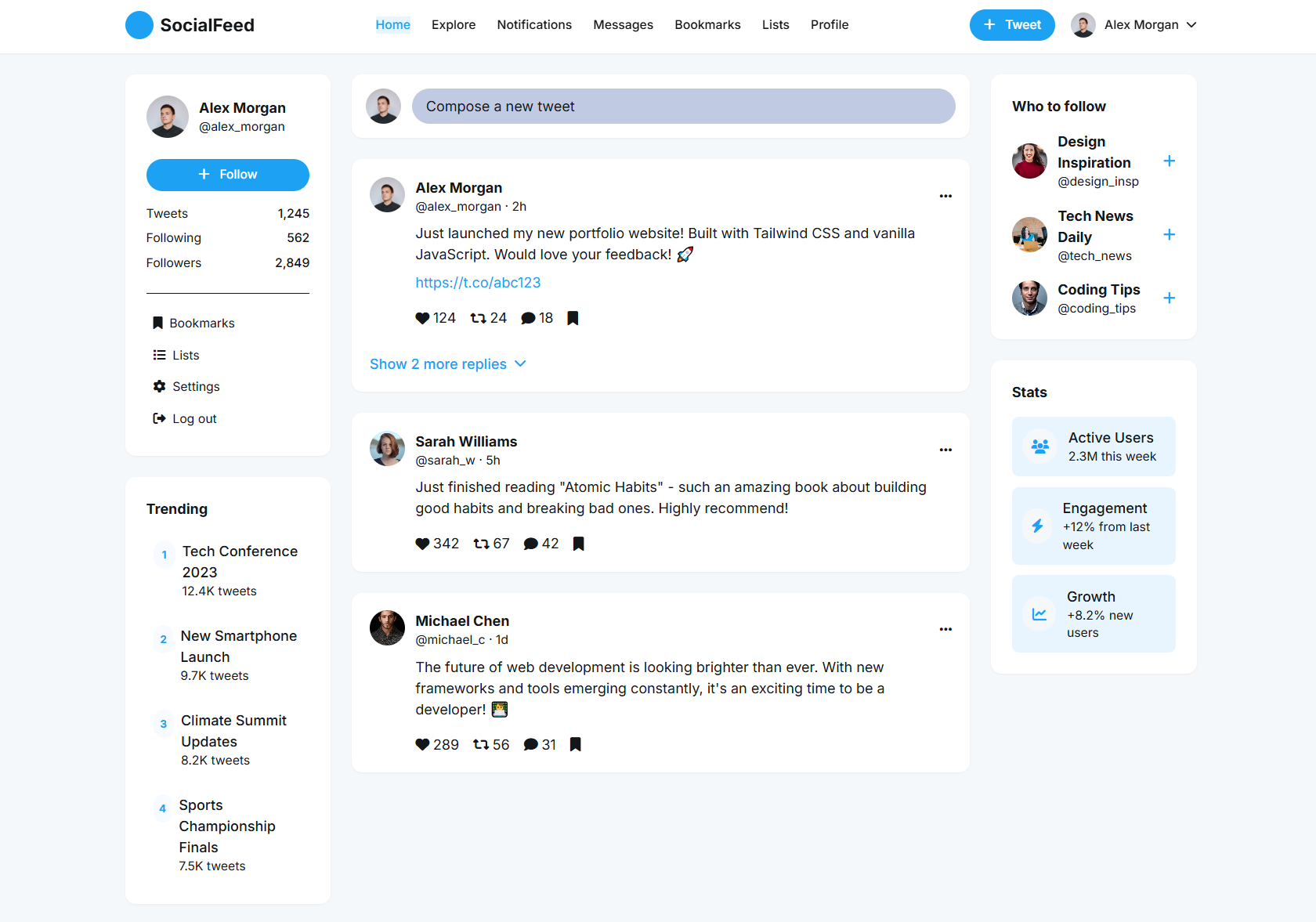

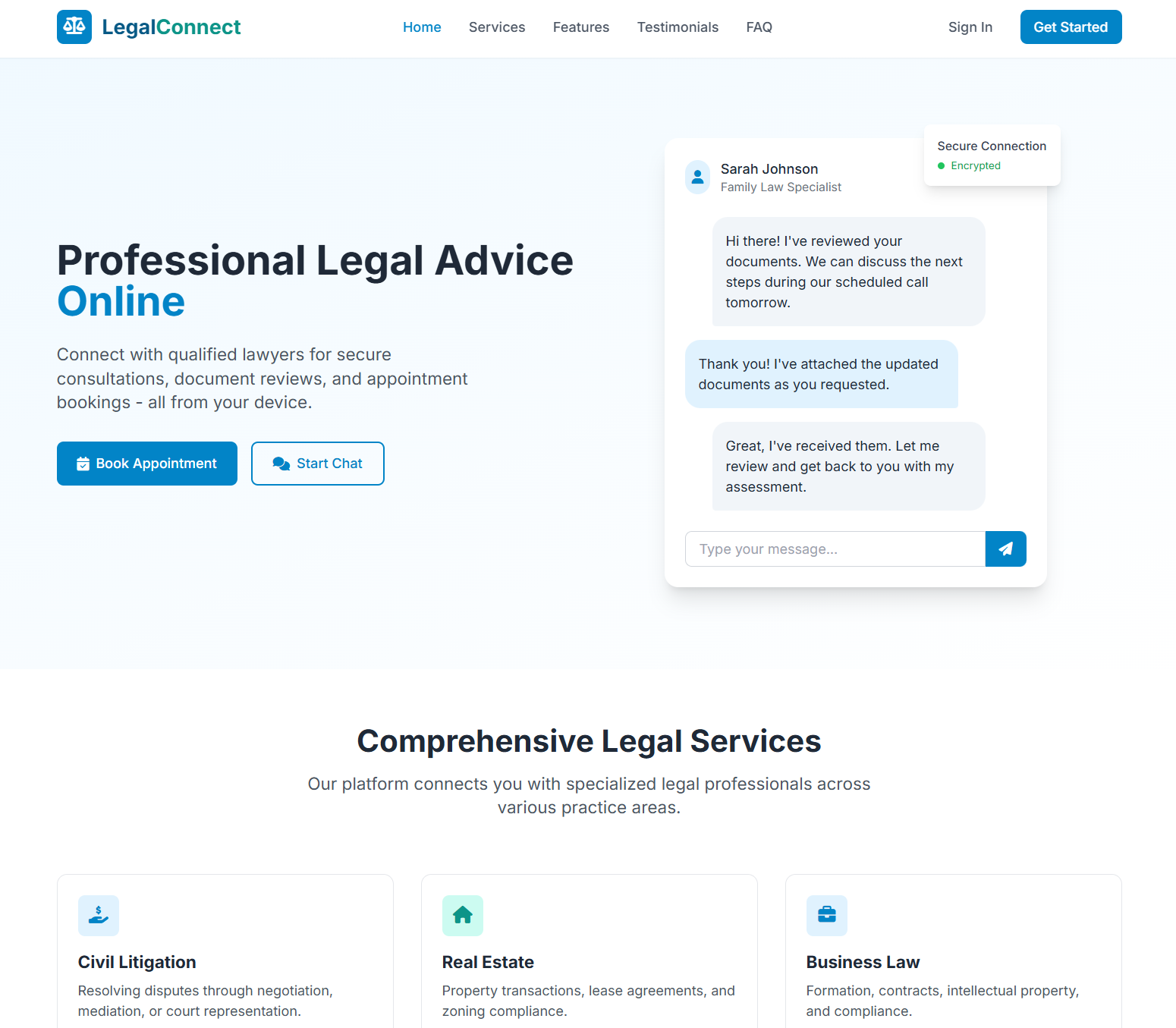

# UIGEN-X-32B-0727 Reasoning Only UI Generation Model

> Tesslate's Reasoning Only UI generation model built on the Qwen3-32B architecture. Trained to systematically plan, architect, and implement complete user interfaces across modern development stacks.

**Live Examples**: [https://uigenoutput.tesslate.com](https://uigenoutput.tesslate.com)

**Discord Community**: [https://discord.gg/EcCpcTv93U](https://discord.gg/EcCpcTv93U)

**Website**: [https://tesslate.com](https://tesslate.com)

---

## Model Architecture

UIGEN-X-32B-0727 implements **Reasoning Only** from the Qwen3 family, combining systematic planning with direct implementation. The model follows a structured thinking process:

1. **Problem Analysis** — Understanding requirements and constraints

2. **Architecture Planning** — Component structure and technology decisions

3. **Design System Definition** — Color schemes, typography, and styling approach

4. **Implementation Strategy** — Step-by-step code generation with reasoning

This hybrid approach enables both thoughtful planning and efficient code generation, making it suitable for complex UI development tasks.

---

## Complete Technology Coverage

UIGEN-X-32B-0727 supports **26 major categories** spanning **frameworks and libraries** across **7 platforms**:

### Web Frameworks

- **React**: Next.js, Remix, Gatsby, Create React App, Vite

- **Vue**: Nuxt.js, Quasar, Gridsome

- **Angular**: Angular CLI, Ionic Angular

- **Svelte**: SvelteKit, Astro

- **Modern**: Solid.js, Qwik, Alpine.js

- **Static**: Astro, 11ty, Jekyll, Hugo

### Styling Systems

- **Utility-First**: Tailwind CSS, UnoCSS, Windi CSS

- **CSS-in-JS**: Styled Components, Emotion, Stitches

- **Component Systems**: Material-UI, Chakra UI, Mantine

- **Traditional**: Bootstrap, Bulma, Foundation

- **Design Systems**: Carbon Design, IBM Design Language

- **Framework-Specific**: Angular Material, Vuetify, Quasar

### UI Component Libraries

- **React**: shadcn/ui, Material-UI, Ant Design, Chakra UI, Mantine, PrimeReact, Headless UI, NextUI, DaisyUI

- **Vue**: Vuetify, PrimeVue, Quasar, Element Plus, Naive UI

- **Angular**: Angular Material, PrimeNG, ng-bootstrap, Clarity Design

- **Svelte**: Svelte Material UI, Carbon Components Svelte

- **Headless**: Radix UI, Reach UI, Ariakit, React Aria

### State Management

- **React**: Redux Toolkit, Zustand, Jotai, Valtio, Context API

- **Vue**: Pinia, Vuex, Composables

- **Angular**: NgRx, Akita, Services

- **Universal**: MobX, XState, Recoil

### Animation Libraries

- **React**: Framer Motion, React Spring, React Transition Group

- **Vue**: Vue Transition, VueUse Motion

- **Universal**: GSAP, Lottie, CSS Animations, Web Animations API

- **Mobile**: React Native Reanimated, Expo Animations

### Icon Systems

Lucide, Heroicons, Material Icons, Font Awesome, Ant Design Icons, Bootstrap Icons, Ionicons, Tabler Icons, Feather, Phosphor, React Icons, Vue Icons

---

## Platform Support

### Web Development

Complete coverage of modern web development from simple HTML/CSS to complex enterprise applications.

### Mobile Development

- **React Native**: Expo, CLI, with navigation and state management

- **Flutter**: Cross-platform mobile with Material and Cupertino designs

- **Ionic**: Angular, React, and Vue-based hybrid applications

### Desktop Applications

- **Electron**: Cross-platform desktop apps (Slack- or VS Code-style)

- **Tauri**: Rust-based lightweight desktop applications

- **Flutter Desktop**: Native desktop performance

### Python Applications

- **Web UI**: Streamlit, Gradio, Flask, FastAPI

- **Desktop GUI**: Tkinter, PyQt5/6, Kivy, wxPython, Dear PyGui

### Development Tools

Build tools, bundlers, testing frameworks, and development environments.

---

## Programming Language Support

**26 Languages and Approaches**:

JavaScript, TypeScript, Python, Dart, HTML5, CSS3, SCSS, SASS, Less, PostCSS, CSS Modules, Styled Components, JSX, TSX, Vue SFC, Svelte Components, Angular Templates, Tailwind, PHP

---

## Visual Style System

UIGEN-X-32B-0727 includes **21 distinct visual style categories** that can be applied to any framework:

### Modern Design Styles

- **Glassmorphism**: Frosted glass effects with blur and transparency

- **Neumorphism**: Soft, extruded design elements

- **Material Design**: Google's design system principles

- **Fluent Design**: Microsoft's design language

### Traditional & Classic

- **Skeuomorphism**: Real-world object representations

- **Swiss Design**: Clean typography and grid systems

- **Bauhaus**: Functional, geometric design principles

### Contemporary Trends

- **Brutalism**: Bold, raw, unconventional layouts

- **Anti-Design**: Intentionally imperfect, organic aesthetics

- **Minimalism**: Essential elements only, generous whitespace

### Thematic Styles

- **Cyberpunk**: Neon colors, glitch effects, futuristic elements

- **Dark Mode**: High contrast, reduced eye strain

- **Retro-Futurism**: 80s/90s inspired futuristic design

- **Geocities/90s Web**: Nostalgic early web aesthetics

### Experimental

- **Maximalism**: Rich, layered, abundant visual elements

- **Madness/Experimental**: Unconventional, boundary-pushing designs

- **Abstract Shapes**: Geometric, non-representational elements

---

## Prompt Structure Guide

### Basic Structure

To achieve the best results, use this prompting structure below:

```

[Action] + [UI Type] + [Framework Stack] + [Specific Features] + [Optional: Style]

```
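When many prompts are generated programmatically, the template can be filled by a small function. A hypothetical helper (the name `build_ui_prompt` and its argument layout are assumptions, not part of the model's tooling) that reproduces the **Complex Application** example in the Examples section:

```python
def build_ui_prompt(action, ui_type, stack, features, style=None):
    """Fill [Action] + [UI Type] + [Framework Stack] + [Specific Features] + [Optional: Style]."""
    lines = [f"{action} {ui_type} using {' + '.join(stack)} with:"]
    lines += [f"- {feature}" for feature in features]
    if style:  # the style clause is optional
        lines.append(f"Style: {style}")
    return "\n".join(lines)


prompt = build_ui_prompt(
    "Build", "a complete e-commerce dashboard",
    ["Next.js", "TypeScript", "Tailwind CSS", "shadcn/ui"],
    ["Product management (CRUD operations)", "Order tracking with status updates",
     "Customer analytics with charts", "Responsive design for mobile/desktop", "Dark mode toggle"],
    style="Use a clean, modern glassmorphism aesthetic",
)
print(prompt)
```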

### Examples

**Simple Component**:

```

Create a navigation bar using React + Tailwind CSS with logo, menu items, and mobile hamburger menu

```

**Complex Application**:

```

Build a complete e-commerce dashboard using Next.js + TypeScript + Tailwind CSS + shadcn/ui with:

- Product management (CRUD operations)

- Order tracking with status updates

- Customer analytics with charts

- Responsive design for mobile/desktop

- Dark mode toggle

Style: Use a clean, modern glassmorphism aesthetic

```

**Framework-Specific**:

```

Design an Angular Material admin panel with:

- Sidenav with expandable menu items

- Data tables with sorting and filtering

- Form validation with reactive forms

- Charts using ng2-charts

- SCSS custom theming

```

### Advanced Prompt Techniques

**Multi-Page Applications**:

```

Create a complete SaaS application using Vue 3 + Nuxt 3 + Tailwind CSS + Pinia:

Pages needed:

1. Landing page with hero, features, pricing

2. Dashboard with metrics and quick actions

3. Settings page with user preferences

4. Billing page with subscription management

Include: Navigation between pages, state management, responsive design

Style: Professional, modern with subtle animations

```

**Style Mixing**:

```

Build a portfolio website using Svelte + SvelteKit + Tailwind CSS combining:

- Minimalist layout principles

- Cyberpunk color scheme (neon accents)

- Smooth animations for page transitions

- Typography-driven content sections

```

---

## Tool Calling & Agentic Usage

UIGEN-X-32B-0727 supports **function calling** for dynamic asset integration and enhanced development workflows.

### Image Integration with Unsplash

Register tools for dynamic image fetching:

```json
{
  "type": "function",
  "function": {
    "name": "fetch_unsplash_image",
    "description": "Fetch high-quality images from Unsplash for UI mockups",
    "parameters": {
      "type": "object",
      "properties": {
        "query": {
          "type": "string",
          "description": "Search term for image (e.g., 'modern office', 'technology', 'nature')"
        },
        "orientation": {
          "type": "string",
          "enum": ["landscape", "portrait", "squarish"],
          "description": "Image orientation"
        },
        "size": {
          "type": "string",
          "enum": ["small", "regular", "full"],
          "description": "Image size"
        }
      },
      "required": ["query"]
    }
  }
}
```

### Content Generation Tools

```json
{
  "type": "function",
  "function": {
    "name": "generate_content",
    "description": "Generate realistic content for UI components",
    "parameters": {
      "type": "object",
      "properties": {
        "type": {
          "type": "string",
          "enum": ["user_profiles", "product_data", "blog_posts", "testimonials"],
          "description": "Type of content to generate"
        },
        "count": {
          "type": "integer",
          "description": "Number of items to generate"
        },
        "theme": {
          "type": "string",
          "description": "Content theme or industry"
        }
      },
      "required": ["type", "count"]
    }
  }
}
```
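A model can propose arguments that fall outside a tool's schema (for example, a `type` value missing from the `enum`), so it is worth checking each call before executing it. The sketch below is a hypothetical, hand-rolled check covering only `required`, `enum`, and basic `type` for the `generate_content` parameters above; it is not a complete JSON Schema validator, and the helper names are invented for illustration.

```python
GENERATE_CONTENT_PARAMS = {
    "type": "object",
    "properties": {
        "type": {"type": "string", "enum": ["user_profiles", "product_data", "blog_posts", "testimonials"]},
        "count": {"type": "integer"},
        "theme": {"type": "string"},
    },
    "required": ["type", "count"],
}

# Only the JSON Schema types used by the schemas above
JSON_TYPES = {"string": str, "integer": int, "object": dict}


def check_tool_args(params_schema, args):
    """Return a list of problems with `args`; an empty list means the call looks valid."""
    errors = [
        f"missing required argument '{name}'"
        for name in params_schema.get("required", [])
        if name not in args
    ]
    for name, value in args.items():
        spec = params_schema["properties"].get(name)
        if spec is None:
            errors.append(f"unexpected argument '{name}'")
            continue
        # bool is a subclass of int in Python, so reject it explicitly
        if not isinstance(value, JSON_TYPES[spec["type"]]) or isinstance(value, bool):
            errors.append(f"'{name}' should be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            errors.append(f"'{name}' must be one of {spec['enum']}")
    return errors


print(check_tool_args(GENERATE_CONTENT_PARAMS, {"type": "destinations", "count": 10}))
```

Tool-call arguments usually arrive as a JSON string, so they would be decoded with `json.loads` first. For full JSON Schema coverage, the `jsonschema` package's `jsonschema.validate(instance, schema)` performs this check more thoroughly and raises `ValidationError` on failure.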

### Complete Agentic Workflow Example

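```python
import json
from openai import OpenAI

# Assumption: the GGUF is served by llama.cpp's OpenAI-compatible llama-server
# (started with --jinja for tool calling); adjust base_url and model to your setup.
client = OpenAI(base_url="http://localhost:8080/v1", api_key="not-needed")
tools = [fetch_unsplash_image, generate_content]  # the two tool schemas above, as Python dicts

# 1. Plan the application; the model may respond with tool calls
messages = [{"role": "user", "content": "Plan a complete travel booking website using React + Next.js + Tailwind CSS + shadcn/ui"}]
reply = client.chat.completions.create(model="UIGEN-X-32B-0727", messages=messages, tools=tools).choices[0].message
messages.append(reply)

# 2. Run each requested call, e.g. fetch_unsplash_image(query="travel destinations", orientation="landscape"),
#    generate_content(type="product_data", count=10, theme="popular travel destinations")
for call in reply.tool_calls or []:
    result = run_tool(call.function.name, json.loads(call.function.arguments))  # run_tool: your own dispatcher (hypothetical)
    messages.append({"role": "tool", "tool_call_id": call.id, "content": json.dumps(result)})

# 3. Generate the complete implementation with the fetched assets in context
messages.append({"role": "user", "content": "Now implement the complete website with the fetched images and content"})
final_response = client.chat.completions.create(model="UIGEN-X-32B-0727", messages=messages, tools=tools)
print(final_response.choices[0].message.content)
```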

### Tool Integration Patterns

**Dynamic Asset Loading**:

- Fetch relevant images during UI generation

- Generate realistic content for components

- Create cohesive color palettes from images

- Optimize assets for web performance

**Multi-Step Development**:

- Plan application architecture

- Generate individual components

- Integrate components into pages

- Apply consistent styling and theming

- Test responsive behavior

**Content-Aware Design**:

- Adapt layouts based on content types

- Optimize typography for readability

- Create responsive image galleries

- Generate accessible alt text

---

## Inference Configuration

### Optimal Parameters

```python
generation_params = {
    "temperature": 0.6,         # balanced creativity and consistency (consider lowering it for quantized models)
    "top_p": 0.9,               # nucleus sampling for quality
    "top_k": 40,                # sample only from the 40 most likely tokens
    "max_tokens": 25000,        # room for full component generation
    "repetition_penalty": 1.1,  # discourage repetitive patterns
}
```
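The sketch below shows what each of these settings does to a vector of next-token logits. It is a hypothetical pure-Python illustration (the function name is invented). The order used here (repetition penalty, then temperature, then top-k, then top-p) is assumed to mirror Hugging Face `transformers`' default logits processors; llama.cpp's sampler chain orders its stages differently and names the penalty `--repeat-penalty`, so treat this as a conceptual model rather than a reproduction of any backend.

```python
import math
import random


def sample_next_token(logits, prev_tokens, temperature=0.6, top_k=40, top_p=0.9,
                      repetition_penalty=1.1, rng=None):
    """Hypothetical sketch of one sampling step using the four settings above."""
    rng = rng or random.Random()
    logits = list(logits)
    # 1. Repetition penalty (CTRL-style): shrink positive logits, grow negative ones
    for t in set(prev_tokens):
        logits[t] = logits[t] / repetition_penalty if logits[t] > 0 else logits[t] * repetition_penalty
    # 2. Temperature: values below 1 sharpen the distribution
    logits = [x / temperature for x in logits]
    # 3. Top-k: keep only the k highest-scoring token ids
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the survivors (ranked[0] is the maximum, subtracted for stability)
    exps = [math.exp(logits[i] - logits[ranked[0]]) for i in ranked]
    total = sum(exps)
    probs = [e / total for e in exps]
    # 4. Top-p (nucleus): smallest prefix whose cumulative probability reaches top_p
    kept, cumulative = [], 0.0
    for i, p in zip(ranked, probs):
        kept.append((i, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # Sample from the renormalised nucleus
    r = rng.random() * sum(p for _, p in kept)
    for i, p in kept:
        r -= p
        if r <= 0:
            return i
    return kept[-1][0]  # guard against floating-point leftovers


print(sample_next_token([0.1, 5.0, 0.2], prev_tokens=[], rng=random.Random(0)))
```

Because temperature is applied before top-p here, lowering it (as suggested for quantized models) also shrinks the nucleus, which makes output more deterministic.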

---

## Use Cases & Applications

### Rapid Prototyping

- Quick mockups for client presentations

- A/B testing different design approaches

- Concept validation with interactive prototypes

### Production Development

- Component library creation

- Design system implementation

- Template and boilerplate generation

### Educational & Learning

- Teaching modern web development

- Framework comparison and evaluation

- Best practices demonstration

### Enterprise Solutions

- Dashboard and admin panel generation

- Internal tool development

- Legacy system modernization

---

## Technical Requirements

### Hardware

- **GPU**: 8GB+ VRAM recommended (RTX 3080/4070 or equivalent)

- **RAM**: 16GB system memory minimum

- **Storage**: 20GB for model weights and cache
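As a rough check on storage and memory figures, the weights of a quantized model take about parameters × bits per weight ÷ 8 bytes. A minimal sketch, assuming roughly 32 billion parameters for a Qwen3-32B derivative and ignoring the KV cache, activations, and runtime overhead; the helper name is hypothetical, and the bits-per-weight values are those of the simple GGUF block formats (Q4_0 stores 32 4-bit weights plus one fp16 scale, i.e. 4.5 bpw; Q8_0 likewise gives 8.5 bpw):

```python
def weights_size_gb(n_params, bits_per_weight):
    """Approximate size of the quantized weights in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for label, bpw in [("Q4_0 (4.5 bpw)", 4.5), ("Q8_0 (8.5 bpw)", 8.5), ("BF16 (16 bpw)", 16)]:
    print(f"{label}: {weights_size_gb(32e9, bpw):.1f} GB")
```

By this estimate even a 4.5 bpw file is around 18 GB, so on an 8 GB GPU most layers would stay in system RAM, with only part of the model offloaded via llama.cpp's `--n-gpu-layers`.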

### Software

- **Python**: 3.8+ with transformers, torch, unsloth

- **Node.js**: For running generated JavaScript/TypeScript code

- **Browser**: Modern browser for testing generated UIs

### Integration

- Compatible with HuggingFace transformers

- Supports GGML/GGUF quantization

- Works with text-generation-webui

- API-ready for production deployment

---

## Limitations & Considerations

- **Token Usage**: Reasoning process increases token consumption

- **Complex Logic**: Focuses on UI structure rather than business logic

- **Real-time Features**: Generated code requires backend integration

- **Testing**: Output may need manual testing and refinement

- **Accessibility**: While the output is ARIA-aware, manual accessibility (a11y) testing is recommended

---

## Community & Support

**Discord**: [https://discord.gg/EcCpcTv93U](https://discord.gg/EcCpcTv93U)

**Website**: [https://tesslate.com](https://tesslate.com)

**Examples**: [https://uigenoutput.tesslate.com](https://uigenoutput.tesslate.com)

Join our community to share creations, get help, and contribute to the ecosystem.

---

## Citation

```bibtex

@misc{tesslate_uigen_x_2025,

title={UIGEN-X-32B-0727: Reasoning Only UI Generation with Qwen3},

author={Tesslate Team},

year={2025},

publisher={Tesslate},

url={https://huggingface.co/tesslate/UIGEN-X-32B-0727}

}

```

---

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/ZhW150gEhg0lkXoSjkiiU.png" alt="UI Screenshot 1" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/NdxVu6Zv6beigOYjbKCl1.png" alt="UI Screenshot 2" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/RX8po_paCIxrrcTvZ3xfA.png" alt="UI Screenshot 3" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/DBssA7zan39uxy9HQOo5N.png" alt="UI Screenshot 4" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/ttljEdBcYh1tkmyrCUQku.png" alt="UI Screenshot 5" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/duLxNQAuqv1FPVlsmQsWr.png" alt="UI Screenshot 6" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/ja2nhpNrvucf_zwCARXxa.png" alt="UI Screenshot 7" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/ca0f_8U9HQdaSVAejpzPn.png" alt="UI Screenshot 8" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/gzZF2CiOjyEbPAPRYSV-N.png" alt="UI Screenshot 9" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/y8wB78PffUUoVLzw3al2R.png" alt="UI Screenshot 10" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/M12dGr0xArAIF7gANSC5T.png" alt="UI Screenshot 11" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/t7r7cYlUwmI1QQf3fxO7o.png" alt="UI Screenshot 12" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/-uCIIJqTrrY9xkJHKCEqC.png" alt="UI Screenshot 13" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/eqT3IUWaPtoNQb-IWQNuy.png" alt="UI Screenshot 14" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/RhbGMcxCNlMIXRLEacUGi.png" alt="UI Screenshot 15" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64d1129297ca59bcf7458d07/FWhs43BKkXku12MwiW0v9.png" alt="UI Screenshot 16" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/67db34a5e7f1d129b294e2af/ILHx-xcn18cyDLX5a63xV.png" alt="UIGEN-X UI Screenshot 1" width="400">

<img src="https://cdn-uploads.huggingface.co/production/uploads/67db34a5e7f1d129b294e2af/A-zKo1J4HYftjiOjq_GB4.png" alt="UIGEN-X UI Screenshot 2" width="400">

*Built with Reasoning Only capabilities from Qwen3, UIGEN-X-32B-0727 represents a comprehensive approach to AI-driven UI development across the entire modern web development ecosystem.*

<!--End Original Model Card-->

---

# <span id="testllm" style="color: #7F7FFF;">🚀 If you find these models useful</span>

Help me test my **AI-Powered Quantum Network Monitor Assistant** with **quantum-ready security checks**:

👉 [Quantum Network Monitor](https://readyforquantum.com/?assistant=open&utm_source=huggingface&utm_medium=referral&utm_campaign=huggingface_repo_readme)

The full open-source code for the Quantum Network Monitor service is available in my GitHub repos (the ones with NetworkMonitor in the name): [Source Code Quantum Network Monitor](https://github.com/Mungert69). You will also find the code I use to quantize the models, if you want to do it yourself: [GGUFModelBuilder](https://github.com/Mungert69/GGUFModelBuilder).

💬 **How to test**:

Choose an **AI assistant type**:

- `TurboLLM` (GPT-4.1-mini)

- `HugLLM` (Hugging Face open-source models)

- `TestLLM` (Experimental CPU-only)

### **What I’m Testing**

I’m pushing the limits of **small open-source models for AI network monitoring**, specifically:

- **Function calling** against live network services

- **How small can a model go** while still handling:

- Automated **Nmap security scans**

- **Quantum-readiness checks**

- **Network Monitoring tasks**

🟡 **TestLLM** – Current experimental model (llama.cpp on 2 CPU threads in a Hugging Face Docker Space):

- ✅ **Zero-configuration setup**

- ⏳ 30s load time (slow inference but **no API costs**). No token limit, as the cost is low.

- 🔧 **Help wanted!** If you’re into **edge-device AI**, let’s collaborate!

### **Other Assistants**

🟢 **TurboLLM** – Uses **gpt-4.1-mini** :

- **It performs very well**, but unfortunately OpenAI charges per token, so token usage is limited.

- **Create custom cmd processors to run .NET code on Quantum Network Monitor Agents**

- **Real-time network diagnostics and monitoring**

- **Security Audits**

- **Penetration testing** (Nmap/Metasploit)

🔵 **HugLLM** – Latest Open-source models:

- 🌐 Runs on the Hugging Face Inference API. Performs well using the latest models hosted on Novita.

### 💡 **Example commands you could test**:

1. `"Give me info on my website's SSL certificate"`

2. `"Check if my server is using quantum-safe encryption for communication"`

3. `"Run a comprehensive security audit on my server"`

4. `"Create a cmd processor to .. (whatever you want)"` Note: you need to install a [Quantum Network Monitor Agent](https://readyforquantum.com/Download/?utm_source=huggingface&utm_medium=referral&utm_campaign=huggingface_repo_readme) to run the .NET code on. This is a very flexible and powerful feature. Use with caution!

### Final Word

I fund the servers used to create these model files, run the Quantum Network Monitor service, and pay for inference from Novita and OpenAI—all out of my own pocket. All the code behind the model creation and the Quantum Network Monitor project is [open source](https://github.com/Mungert69). Feel free to use whatever you find helpful.

If you appreciate the work, please consider [buying me a coffee](https://www.buymeacoffee.com/mahadeva) ☕. Your support helps cover service costs and allows me to raise token limits for everyone.

I'm also open to job opportunities or sponsorship.

Thank you! 😊

|

DeusImperator/L3.3-Shakudo-70b_exl3_3.0bpw_H6

|

DeusImperator

| 2025-08-06T14:27:54Z | 5 | 0 | null |

[

"safetensors",

"llama",

"base_model:Steelskull/L3.3-Shakudo-70b",

"base_model:quantized:Steelskull/L3.3-Shakudo-70b",

"license:llama3.3",

"3-bit",

"exl3",

"region:us"

] | null | 2025-08-05T17:57:37Z |

---

license: llama3.3

base_model:

- Steelskull/L3.3-Shakudo-70b

---

# L3.3-Shakudo-70b - EXL3 3.0bpw H6

This is a 3.0 bpw EXL3 quant of [Steelskull/L3.3-Shakudo-70b](https://huggingface.co/Steelskull/L3.3-Shakudo-70b).

This quant was made using exllamav3-0.0.5 with `--cal_cols 4096` (instead of the default 2048), which in my experience slightly improves quant quality.

The 3.0 bpw quant fits in 32 GB of VRAM on Windows with around 18k to 20k tokens of Q8 context.

I briefly tested this quant in some random RPs (including ones over 8k and 16k context), and it seems to work fine.
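A rough back-of-the-envelope check of the 32 GB figure, assuming about 70 billion weights at an average of 3.0 bits (ignoring the 6-bit head and other overheads), Llama 3.3 70B's attention shape of 80 layers with 8 key/value heads of dimension 128, and one byte per cached key or value at Q8 (ignoring quantization scales):

$$
\text{weights} \approx \frac{70 \times 10^{9} \times 3.0}{8}\ \text{bytes} \approx 26.3\ \text{GB}
$$

$$
\text{KV cache per token} \approx 2 \times 80 \times 8 \times 128 \times 1\ \text{byte} = 163{,}840\ \text{bytes},
\qquad
20{,}000 \times 163{,}840\ \text{bytes} \approx 3.3\ \text{GB}
$$

Together that is roughly 29.5 GB, leaving a couple of gigabytes for activations and Windows desktop overhead, which is consistent with about 18k to 20k tokens of Q8 context fitting alongside the weights.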

## Prompt Templates

Uses the Llama 3 Instruct format. Supports thinking via a `<thinking>` prefill in the assistant response.
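The following is a minimal sketch of what a Llama 3 Instruct prompt with a `<thinking>` prefill might look like when built by hand. The special tokens follow Meta's published Llama 3 chat format. The helper `build_llama3_prompt` is hypothetical and not part of any library. Whether a newline should follow `<thinking>` is also an assumption. In practice, the tokenizer's bundled chat template is the safer source of truth.

```python
# Hypothetical helper: assembles a Llama 3 Instruct prompt string and can
# optionally prefill the assistant turn with "<thinking>".

def build_llama3_prompt(messages: list[dict[str, str]], think: bool = False) -> str:
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: role header, blank line, content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content']}<|eot_id|>"
        )
    # Open the assistant turn; generation continues from here.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    if think:
        # Prefill so the model begins its reply inside a thinking block.
        parts.append("<thinking>")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_llama3_prompt(
        [{"role": "system", "content": "You are a storyteller."},
         {"role": "user", "content": "Begin the tale."}],
        think=True,
    )
    print(prompt)
```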

### Original readme below

---

<!DOCTYPE html><html lang="en" style="margin:0; padding:0; width:100%; height:100%;">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>L3.3-Shakudo-70b</title>

<link href="https://fonts.googleapis.com/css2?family=Cinzel+Decorative:wght@400;700&family=Lora:ital,wght@0,400;0,500;0,600;0,700;1,400;1,500;1,600;1,700&display=swap" rel="stylesheet">

<style>

/* GOTHIC ALCHEMIST THEME */

/* Base styles */

/* DEBUG STYLES FOR SMALL SCREENS - Added temporarily to diagnose responsive issues */

@media (max-width: 480px) {

.debug-overflow {

border: 2px solid red !important;

}

}

/* Fix for vertical text in composition list on mobile */

@media (max-width: 480px) {

.composition-list li {

grid-template-columns: 1fr; /* Change to single column on mobile */

}

.model-component a {

display: inline; /* Change from block to inline */

word-break: break-word; /* Better word breaking behavior */

}

}

/* Remove horizontal padding on containers for mobile */

@media (max-width: 480px) {

.container {

padding-left: 0;

padding-right: 0;

}

}

* {

margin: 0;

padding: 0;

box-sizing: border-box;

}

html {

font-size: 16px;

scroll-behavior: smooth;

}

body {

font-family: 'Lora', serif;

background-color: #1A1A1A;

color: #E0EAE0;

line-height: 1.6;

background: radial-gradient(ellipse at center, #2a2a2a 0%, #1A1A1A 70%);

background-attachment: fixed;

position: relative;

overflow-x: hidden;

margin: 0;

padding: 0;

font-size: 16px;

overflow-y: auto;

min-height: 100vh;

height: auto;

}

body::before {

content: '';

position: fixed;

top: 0;

left: 0;

width: 100%;

height: 100%;

background:

radial-gradient(circle at 10% 20%, rgba(229, 91, 0, 0.15) 0%, transparent 40%),

radial-gradient(circle at 90% 80%, rgba(212, 175, 55, 0.15) 0%, transparent 40%);

pointer-events: none;

z-index: -1;

}

/* Typography */

h1, h2, h3, h4, h5, h6 {

font-family: 'Cinzel Decorative', serif;

font-weight: 700;

color: #E0EAE0;

margin-bottom: 1rem;

text-transform: uppercase;

letter-spacing: 1px;

}

p {

margin-bottom: 1.5rem;

color: rgba(224, 234, 224, 0.9);

}

a {

color: #E55B00; /* Fiery Orange */

text-decoration: none;

transition: all 0.3s ease;

}

a:hover {

color: #D4AF37; /* Gold */

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

}

/* Aesthetic neon details */

.neon-border {

border: 1px solid #E55B00;

box-shadow: 0 0 10px rgba(229, 91, 0, 0.5);

}

.glowing-text {

color: #E55B00;

text-shadow:

0 0 5px rgba(229, 91, 0, 0.7),

0 0 10px rgba(229, 91, 0, 0.5),

0 0 15px rgba(229, 91, 0, 0.3);

}

/* Form elements */

input, select, textarea, button {

font-family: 'Lora', serif;

padding: 0.75rem 1rem;

border: 1px solid rgba(229, 91, 0, 0.5);

background-color: rgba(26, 26, 26, 0.8);

color: #E0EAE0;

border-radius: 0;

transition: all 0.3s ease;

}

input:focus, select:focus, textarea:focus {

outline: none;

border-color: #E55B00;

box-shadow: 0 0 10px rgba(229, 91, 0, 0.5);

}

button {

cursor: pointer;

background-color: rgba(229, 91, 0, 0.2);

border: 1px solid #E55B00;

border-radius: 0;

}

button:hover {

background-color: rgba(229, 91, 0, 0.4);

transform: translateY(-2px);

box-shadow: 0 0 15px rgba(229, 91, 0, 0.5);

}

/* Details and summary */

details {

margin-bottom: 1.5rem;

}

summary {

padding: 1rem;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

font-weight: 600;

cursor: pointer;

position: relative;

overflow: hidden;

border-radius: 0;

transition: all 0.3s ease;

}

summary:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.4);

}

summary::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 8px;

height: 100%;

background: linear-gradient(135deg, #E55B00, #D4AF37);

opacity: 0.7;

}

details[open] summary {

margin-bottom: 1rem;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.4);

}

/* Code blocks */

code {

font-family: 'Cascadia Code', 'Source Code Pro', monospace;

background: rgba(229, 91, 0, 0.1);

padding: 0.2rem 0.4rem;

border: 1px solid rgba(229, 91, 0, 0.3);

border-radius: 0;

font-size: 0.9rem;

color: #E55B00;

}

pre {

background: rgba(26, 26, 26, 0.8);

padding: 1.5rem;

border: 1px solid rgba(229, 91, 0, 0.3);

overflow-x: auto;

margin-bottom: 1.5rem;

border-radius: 0;

}

pre code {

background: transparent;

padding: 0;

border: none;

color: #E0EAE0;

}

/* Scrollbar styling */

::-webkit-scrollbar {

width: 8px;

height: 8px;

background-color: #1A1A1A;

}

::-webkit-scrollbar-thumb {

background: linear-gradient(135deg, #E55B00, #D4AF37);

border-radius: 0;

}

::-webkit-scrollbar-track {

background-color: rgba(26, 26, 26, 0.8);

border-radius: 0;

}

/* Selection styling */

::selection {

background-color: rgba(229, 91, 0, 0.3);

color: #E0EAE0;

}

/* Metrics section */

.metrics-section {

margin-bottom: 30px;

position: relative;

background: rgba(3, 6, 18, 0.8);

border: 1px solid #00b2ff;

padding: 20px;

clip-path: polygon(0 0, calc(100% - 15px) 0, 100% 15px, 100% 100%, 15px 100%, 0 calc(100% - 15px));

box-shadow: 0 0 20px rgba(0, 178, 255, 0.15);

}

/* Core metrics grid */

.core-metrics-grid {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(200px, 1fr));

gap: 15px;

margin-bottom: 30px;

}

.info-grid {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(150px, 1fr));

gap: 15px;

}

/* Metric box */

.metric-box {

background: rgba(3, 6, 18, 0.8);

border: 1px solid #00b2ff;

border-radius: 0;

padding: 15px;

display: flex;

flex-direction: column;

gap: 8px;

position: relative;

overflow: hidden;

clip-path: polygon(0 0, calc(100% - 10px) 0, 100% 10px, 100% 100%, 10px 100%, 0 calc(100% - 10px));

box-shadow: 0 0 15px rgba(0, 178, 255, 0.15);

transition: all 0.3s ease;

}

.metric-box:hover {

box-shadow: 0 0 20px rgba(0, 178, 255, 0.3);

transform: translateY(-2px);

}

.metric-box::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background-image:

linear-gradient(45deg, rgba(0, 178, 255, 0.1) 25%, transparent 25%, transparent 75%, rgba(0, 178, 255, 0.1) 75%),

linear-gradient(-45deg, rgba(0, 178, 255, 0.1) 25%, transparent 25%, transparent 75%, rgba(0, 178, 255, 0.1) 75%);

background-size: 10px 10px;

pointer-events: none;

opacity: 0.5;

}

.metric-box .label {

color: #e0f7ff;

font-size: 14px;

font-weight: 500;

text-transform: uppercase;

letter-spacing: 1px;

text-shadow: 0 0 5px rgba(0, 178, 255, 0.3);

}

.metric-box .value {

color: #00b2ff;

font-size: 28px;

font-weight: 700;

text-shadow:

0 0 10px rgba(0, 178, 255, 0.5),

0 0 20px rgba(0, 178, 255, 0.3);

letter-spacing: 1px;

font-family: 'Orbitron', sans-serif;

}

/* Progress metrics */

.progress-metrics {

display: grid;

gap: 15px;

padding: 20px;

background: rgba(3, 6, 18, 0.8);

border: 1px solid #00b2ff;

position: relative;

overflow: hidden;

clip-path: polygon(0 0, calc(100% - 15px) 0, 100% 15px, 100% 100%, 15px 100%, 0 calc(100% - 15px));

box-shadow: 0 0 20px rgba(0, 178, 255, 0.15);

}

.progress-metric {

display: grid;

gap: 8px;

}

.progress-label {

display: flex;

justify-content: space-between;

align-items: center;

color: #e0f7ff;

font-size: 14px;

text-transform: uppercase;

letter-spacing: 1px;

text-shadow: 0 0 5px rgba(0, 178, 255, 0.3);

}

.progress-value {

color: #00b2ff;

font-weight: 600;

text-shadow:

0 0 5px rgba(0, 178, 255, 0.5),

0 0 10px rgba(0, 178, 255, 0.3);

font-family: 'Orbitron', sans-serif;

}

/* Progress bars */

.progress-bar {

height: 4px;

background: rgba(0, 178, 255, 0.1);

border-radius: 0;

overflow: hidden;

position: relative;

border: 1px solid rgba(0, 178, 255, 0.2);

clip-path: polygon(0 0, 100% 0, calc(100% - 4px) 100%, 0 100%);

}

.progress-fill {

height: 100%;

background: linear-gradient(90deg, #0062ff, #00b2ff);

border-radius: 0;

position: relative;

overflow: hidden;

clip-path: polygon(0 0, calc(100% - 4px) 0, 100% 100%, 0 100%);

box-shadow:

0 0 10px rgba(0, 178, 255, 0.4),

0 0 20px rgba(0, 178, 255, 0.2);

}

.progress-fill::after {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background: linear-gradient(90deg,

rgba(255, 255, 255, 0.1) 0%,

rgba(255, 255, 255, 0.1) 40%,

rgba(255, 255, 255, 0.3) 50%,

rgba(255, 255, 255, 0.1) 60%,

rgba(255, 255, 255, 0.1) 100%

);

background-size: 200% 100%;

animation: shimmer 2s infinite;

}

/* Split progress bars */

.progress-metric.split .progress-label {

justify-content: space-between;

font-size: 13px;

}

.progress-bar.split {

display: flex;

background: rgba(0, 178, 255, 0.1);

position: relative;

justify-content: center;

border: 1px solid rgba(0, 178, 255, 0.2);

clip-path: polygon(0 0, 100% 0, calc(100% - 4px) 100%, 0 100%);

}

.progress-bar.split::after {

content: '';

position: absolute;

top: 0;

left: 50%;

transform: translateX(-50%);

width: 2px;

height: 100%;

background: rgba(0, 178, 255, 0.3);

z-index: 2;

box-shadow: 0 0 10px rgba(0, 178, 255, 0.4);

}

.progress-fill-left,

.progress-fill-right {

height: 100%;

background: linear-gradient(90deg, #0062ff, #00b2ff);

position: relative;

width: 50%;

overflow: hidden;

}

.progress-fill-left {

clip-path: polygon(0 0, calc(100% - 4px) 0, 100% 100%, 0 100%);

margin-right: 1px;

transform-origin: right;

transform: scaleX(var(--scale, 0));

box-shadow:

0 0 10px rgba(0, 178, 255, 0.4),

0 0 20px rgba(0, 178, 255, 0.2);

}

.progress-fill-right {

clip-path: polygon(0 0, 100% 0, 100% 100%, 4px 100%);

margin-left: 1px;

transform-origin: left;

transform: scaleX(var(--scale, 0));

box-shadow:

0 0 10px rgba(0, 178, 255, 0.4),

0 0 20px rgba(0, 178, 255, 0.2);

}

/* Benchmark container */

.benchmark-container {

background: rgba(3, 6, 18, 0.8);

border: 1px solid #00b2ff;

position: relative;

overflow: hidden;

clip-path: polygon(0 0, calc(100% - 15px) 0, 100% 15px, 100% 100%, 15px 100%, 0 calc(100% - 15px));

box-shadow: 0 0 20px rgba(0, 178, 255, 0.15);

padding: 20px;

}

/* Benchmark notification */

.benchmark-notification {

background: rgba(3, 6, 18, 0.8);

border: 1px solid #00b2ff;

padding: 15px;

margin-bottom: 20px;

position: relative;

overflow: hidden;

clip-path: polygon(0 0, calc(100% - 10px) 0, 100% 10px, 100% 100%, 10px 100%, 0 calc(100% - 10px));

box-shadow: 0 0 15px rgba(0, 178, 255, 0.15);

}

.notification-content {

display: flex;

align-items: center;

gap: 10px;

position: relative;

z-index: 1;

}

.notification-icon {

font-size: 20px;

color: #00b2ff;

text-shadow:

0 0 10px rgba(0, 178, 255, 0.5),

0 0 20px rgba(0, 178, 255, 0.3);

}

.notification-text {

color: #e0f7ff;

font-size: 14px;

display: flex;

align-items: center;

gap: 10px;

flex-wrap: wrap;

text-transform: uppercase;

letter-spacing: 1px;

text-shadow: 0 0 5px rgba(0, 178, 255, 0.3);

}

.benchmark-link {

color: #00b2ff;

font-weight: 500;

white-space: nowrap;

text-shadow:

0 0 5px rgba(0, 178, 255, 0.5),

0 0 10px rgba(0, 178, 255, 0.3);

position: relative;

padding: 2px 5px;

border: 1px solid rgba(0, 178, 255, 0.3);

clip-path: polygon(0 0, calc(100% - 5px) 0, 100% 5px, 100% 100%, 5px 100%, 0 calc(100% - 5px));

transition: all 0.3s ease;

}

.benchmark-link:hover {

background: rgba(0, 178, 255, 0.1);

border-color: #00b2ff;

box-shadow: 0 0 10px rgba(0, 178, 255, 0.3);

}

@keyframes shimmer {

0% { background-position: 200% 0; }

100% { background-position: -200% 0; }

}

/* Button styles */

.button {

display: inline-block;

padding: 10px 20px;

background-color: rgba(229, 91, 0, 0.2);

color: #E0EAE0;

border: 1px solid #E55B00;

font-family: 'Cinzel Decorative', serif;

font-weight: 600;

font-size: 15px;

text-transform: uppercase;

letter-spacing: 1px;

cursor: pointer;

transition: all 0.3s ease;

position: relative;

overflow: hidden;

text-align: center;

border-radius: 0;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.3);

}

.button:hover {

background-color: rgba(229, 91, 0, 0.4);

color: #E0EAE0;

transform: translateY(-2px);

box-shadow: 0 0 20px rgba(212, 175, 55, 0.5);

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

}

.button:active {

transform: translateY(1px);

box-shadow: 0 0 10px rgba(229, 91, 0, 0.4);

}

.button::before {

content: '';

position: absolute;

top: 0;

left: -100%;

width: 100%;

height: 100%;

background: linear-gradient(

90deg,

transparent,

rgba(212, 175, 55, 0.3),

transparent

);

transition: left 0.7s ease;

}

.button:hover::before {

left: 100%;

}

.button::after {

content: '';

position: absolute;

inset: 0;

background-image: linear-gradient(45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%), linear-gradient(-45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%);

background-size: 10px 10px;

opacity: 0;

transition: opacity 0.3s ease;

pointer-events: none;

}

.button:hover::after {

opacity: 0.5;

}

/* Support buttons */

.support-buttons {

display: flex;

gap: 15px;

flex-wrap: wrap;

}

.support-buttons .button {

min-width: 150px;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.3);

}

.support-buttons .button:hover {

box-shadow: 0 0 20px rgba(212, 175, 55, 0.5);

}

/* Button animations */

@keyframes pulse {

0% {

box-shadow: 0 0 10px rgba(0, 178, 255, 0.3);

}

50% {

box-shadow: 0 0 20px rgba(0, 178, 255, 0.5);

}

100% {

box-shadow: 0 0 10px rgba(0, 178, 255, 0.3);

}

}

.animated-button {

animation: pulse 2s infinite;

}

/* Button variants */

.button.primary {

background-color: rgba(0, 98, 255, 0.2);

border-color: #00b2ff;

}

.button.primary:hover {

background-color: rgba(0, 98, 255, 0.3);

}

.button.outline {

background-color: transparent;

border-color: #00b2ff;

}

.button.outline:hover {

background-color: rgba(0, 98, 255, 0.1);

}

.button.small {

padding: 6px 12px;

font-size: 13px;

}

.button.large {

padding: 12px 24px;

font-size: 16px;

}

/* Button with icon */

.button-with-icon {

display: inline-flex;

align-items: center;

gap: 8px;

}

.button-icon {

font-size: 18px;

line-height: 1;

}

/* Responsive adjustments */

@media (max-width: 768px) {

.support-buttons {

flex-direction: column;

}

.support-buttons .button {

width: 100%;

}

}

/* Container & Layout */

.container {

width: 100%;

max-width: 100%;

margin: 0;

padding: 20px;

position: relative;

background-color: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.5);

border-radius: 0;

}

.container::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background:

radial-gradient(circle at 20% 30%, rgba(229, 91, 0, 0.15) 0%, transparent 50%),

radial-gradient(circle at 80% 70%, rgba(212, 175, 55, 0.1) 0%, transparent 40%);

pointer-events: none;

z-index: -1;

}

/* Header */

.header {

margin-bottom: 50px;

position: relative;

padding-bottom: 20px;

border-bottom: 1px solid #E55B00;

overflow: hidden;

}

.header::before {

content: '';

position: absolute;

bottom: -1px;

left: 0;

width: 50%;

height: 1px;

background: linear-gradient(90deg, #E55B00, transparent);

box-shadow: 0 0 20px #E55B00;

}

.header::after {

content: '';

position: absolute;

bottom: -1px;

right: 0;

width: 50%;

height: 1px;

background: linear-gradient(90deg, transparent, #E55B00);

box-shadow: 0 0 20px #E55B00;

}

.header h1 {

font-family: 'Cinzel Decorative', serif;

font-size: 48px;

color: #E0EAE0;

text-align: center;

text-transform: uppercase;

letter-spacing: 2px;

margin: 0;

position: relative;

text-shadow:

0 0 5px rgba(229, 91, 0, 0.7),

0 0 10px rgba(229, 91, 0, 0.5),

0 0 20px rgba(229, 91, 0, 0.3);

}

.header h1::before {

content: '';

position: absolute;

width: 100px;

height: 1px;

bottom: -10px;

left: 50%;

transform: translateX(-50%);

background: #E55B00;

box-shadow: 0 0 20px #E55B00;

}

/* Info section */

.info {

margin-bottom: 50px;

overflow: visible; /* Ensure content can extend beyond container */

}

.info > img {

width: 100%;

height: auto;

border: 1px solid #E55B00;

margin-bottom: 30px;

box-shadow: 0 0 30px rgba(229, 91, 0, 0.5);

border-radius: 0;

background-color: rgba(26, 26, 26, 0.6);

display: block;

}

.info h2 {

font-family: 'Cinzel Decorative', serif;

font-size: 28px;

color: #E0EAE0;

text-transform: uppercase;

letter-spacing: 1.5px;

margin: 30px 0 20px 0;

padding-bottom: 10px;

border-bottom: 1px solid rgba(229, 91, 0, 0.4);

position: relative;

text-shadow: 0 0 10px rgba(229, 91, 0, 0.5);

}

.info h2::after {

content: '';

position: absolute;

bottom: -1px;

left: 0;

width: 100px;

height: 1px;

background: #E55B00;

box-shadow: 0 0 15px #E55B00;

}

.info h3 {

font-family: 'Cinzel Decorative', serif;

font-size: 24px;

color: #E0EAE0;

margin: 20px 0 15px 0;

letter-spacing: 1px;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.4);

}

.info h4 {

font-family: 'Lora', serif;

font-size: 18px;

color: #E55B00;

margin: 15px 0 10px 0;

letter-spacing: 0.5px;

text-transform: uppercase;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.info p {

margin: 0 0 15px 0;

line-height: 1.6;

}

/* Creator section */

.creator-section {

margin-bottom: 30px;

padding: 20px 20px 10px 20px;

background: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

position: relative;

border-radius: 15px;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.3);

}

.creator-badge {

position: relative;

z-index: 1;

}

.creator-info {

display: flex;

flex-direction: column;

}

.creator-label {

color: #E0EAE0;

font-size: 14px;

text-transform: uppercase;

letter-spacing: 1px;

margin-bottom: 5px;

}

.creator-link {

color: #E55B00;

text-decoration: none;

font-weight: 600;

display: flex;

align-items: center;

gap: 5px;

transition: all 0.3s ease;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.creator-link:hover {

transform: translateX(5px);

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

}

.creator-name {

font-size: 18px;

}

.creator-arrow {

font-weight: 600;

transition: transform 0.3s ease;

}

/* Supporters dropdown section */

.sponsors-section {

margin-top: 15px;

position: relative;

z-index: 2;

}

.sponsors-dropdown {

width: 100%;

background: rgba(229, 91, 0, 0.1);

border: 1px solid #E55B00;

border-radius: 15px;

overflow: hidden;

position: relative;

}

.sponsors-summary {

padding: 12px 15px;

display: flex;

justify-content: space-between;

align-items: center;

cursor: pointer;

outline: none;

position: relative;

z-index: 1;

transition: all 0.3s ease;

}

.sponsors-summary:hover {

background-color: rgba(229, 91, 0, 0.2);

}

.sponsors-title {

font-family: 'Cinzel Decorative', serif;

color: #E0EAE0;

font-size: 16px;

text-transform: uppercase;

letter-spacing: 1px;

font-weight: 600;

text-shadow: 0 0 8px rgba(229, 91, 0, 0.4);

}

.sponsors-list {

padding: 15px;

display: grid;

grid-template-columns: repeat(auto-fill, minmax(120px, 1fr));

gap: 15px;

background: transparent;

border-top: 1px solid rgba(229, 91, 0, 0.3);

}

.sponsor-item {

display: flex;

flex-direction: column;

align-items: center;

text-align: center;

padding: 10px;

border: 1px solid rgba(229, 91, 0, 0.2);

background: rgba(229, 91, 0, 0.1);

border-radius: 15px;

transition: all 0.3s ease;

}

.sponsor-item:hover {

transform: translateY(-3px);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.3);

background: rgba(229, 91, 0, 0.2);

}

.sponsor-rank {

color: #E55B00;

font-weight: 600;

font-size: 14px;

margin-bottom: 5px;

text-shadow: 0 0 8px rgba(229, 91, 0, 0.5);

}

.sponsor-img {

width: 60px;

height: 60px;

border-radius: 50%;

object-fit: cover;

border: 2px solid #E55B00;

box-shadow: 0 0 12px rgba(229, 91, 0, 0.3);

margin-bottom: 8px;

transition: all 0.3s ease;

}

.sponsor-item:nth-child(1) .sponsor-img {

border-color: gold;

box-shadow: 0 0 12px rgba(255, 215, 0, 0.5);

}

.sponsor-item:nth-child(2) .sponsor-img {

border-color: silver;

box-shadow: 0 0 12px rgba(192, 192, 192, 0.5);

}

.sponsor-item:nth-child(3) .sponsor-img {

border-color: #cd7f32; /* bronze */

box-shadow: 0 0 12px rgba(205, 127, 50, 0.5);

}

.sponsor-item:hover .sponsor-img {

border-color: #D4AF37;

}

.sponsor-name {

color: #E0EAE0;

font-size: 14px;

font-weight: 500;

word-break: break-word;

}

.creator-link:hover .creator-arrow {

transform: translateX(5px);

}

.dropdown-icon {

color: #E55B00;

transition: transform 0.3s ease;

}

details[open] .dropdown-icon {

transform: rotate(180deg);

}

/* Model info */

.model-info {

margin-bottom: 50px;

}

/* Section container */

.section-container {

margin-bottom: 50px;

padding: 25px;

background: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

position: relative;

overflow: hidden;

border-radius: 15px;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.3);

}

.section-container::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background-image:

linear-gradient(45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%),

linear-gradient(-45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%);

background-size: 10px 10px;

pointer-events: none;

z-index: 0;

opacity: 0.5;

}

.section-container h2 {

margin-top: 0;

}

/* Support section */

.support-section {

margin-bottom: 50px;

padding: 25px;

background: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

position: relative;

overflow: hidden;

border-radius: 15px;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.3);

}

.support-section::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background-image:

linear-gradient(45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%),

linear-gradient(-45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%);

background-size: 10px 10px;

pointer-events: none;

z-index: 0;

opacity: 0.5;

}

.support-section h2 {

margin-top: 0;

}

/* Special thanks */

.special-thanks {

margin-top: 30px;

}

.thanks-list {

list-style: none;

padding: 0;

margin: 15px 0;

display: grid;

grid-template-columns: repeat(auto-fill, minmax(250px, 1fr));

gap: 15px;

}

.thanks-list li {

padding: 10px 15px;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

position: relative;

overflow: hidden;

border-radius: 0;

transition: all 0.3s ease;

}

.thanks-list li:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.4);

transform: translateY(-2px);

}

.thanks-list li strong {

color: #E55B00;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.thanks-note {

font-style: italic;

color: rgba(224, 234, 224, 0.7);

text-align: center;

margin-top: 20px;

}

/* General card styles */

.info-card,

.template-card,

.settings-card,

.quantized-section {

background: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

padding: 25px;

margin: 20px 0;

position: relative;

overflow: hidden;

border-radius: 15px;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.3);

}

.info-card::before,

.template-card::before,

.settings-card::before,

.quantized-section::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background-image:

linear-gradient(45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%),

linear-gradient(-45deg, rgba(229, 91, 0, 0.1) 25%, transparent 25%, transparent 75%, rgba(229, 91, 0, 0.1) 75%);

background-size: 10px 10px;

pointer-events: none;

z-index: 0;

opacity: 0.5;

}

.info-card::after,

.template-card::after,

.settings-card::after,

.quantized-section::after {

content: '';

position: absolute;

top: 0;

left: 0;

width: 100%;

height: 100%;

background: linear-gradient(135deg, rgba(229, 91, 0, 0.15), transparent 70%);

pointer-events: none;

z-index: 0;

}

/* Info card specific */

.info-card {

box-shadow: 0 0 30px rgba(229, 91, 0, 0.4);

}

.info-header {

margin-bottom: 25px;

padding-bottom: 15px;

border-bottom: 1px solid rgba(229, 91, 0, 0.4);

position: relative;

}

.info-header::after {

content: '';

position: absolute;

bottom: -1px;

left: 0;

width: 100px;

height: 1px;

background: #E55B00;

box-shadow: 0 0 10px #E55B00;

}

.model-tags {

display: flex;

flex-wrap: wrap;

gap: 10px;

margin-top: 10px;

}

.model-tag {

background: rgba(229, 91, 0, 0.2);

border: 1px solid #E55B00;

color: #E0EAE0;

font-size: 12px;

padding: 5px 10px;

text-transform: uppercase;

letter-spacing: 1px;

font-weight: 500;

position: relative;

overflow: hidden;

border-radius: 0;

box-shadow: 0 0 10px rgba(229, 91, 0, 0.4);

transition: all 0.3s ease;

}

.model-tag:hover {

background: rgba(229, 91, 0, 0.4);

box-shadow: 0 0 15px rgba(229, 91, 0, 0.6);

transform: translateY(-2px);

}

/* Model composition list */

.model-composition h4 {

margin-bottom: 15px;

}

.composition-list {

list-style: none;

padding: 0;

margin: 0 0 20px 0;

display: grid;

gap: 12px;

}

.composition-list li {

display: grid;

grid-template-columns: minmax(0, 1fr) auto;

align-items: center;

gap: 10px;

padding: 10px 15px;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

position: relative;

overflow: hidden;

border-radius: 0;

transition: all 0.3s ease;

}

.composition-list li:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.4);

transform: translateY(-2px);

}

.composition-list li::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 8px;

height: 100%;

background: linear-gradient(180deg, #E55B00, #D4AF37);

opacity: 0.7;

box-shadow: 0 0 10px rgba(229, 91, 0, 0.6);

}

.model-component {

color: #E55B00;

font-weight: 500;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.model-component a {

display: block;

overflow-wrap: break-word;

word-wrap: break-word;

word-break: break-word;

transition: all 0.3s ease;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.model-component a:hover {

transform: translateX(5px);

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

}

/* Base model dropdown styles */

.base-model-dropdown {

width: 100%;

position: relative;

padding-right: 50px; /* Make space for the BASE label */

display: block;

margin-bottom: 0;

}

.base-model-summary {

display: flex;

justify-content: space-between;

align-items: center;

padding: 8px 12px 8px 20px; /* Increased left padding to prevent text overlap with the accent stripe */

cursor: pointer;

border: 1px solid rgba(229, 91, 0, 0.3);

position: relative;

border-radius: 0;

margin-bottom: 0;

transition: all 0.3s ease;

color: #E55B00;

font-weight: 500;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

}

.base-model-summary:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.4);

}

.base-model-summary span:first-child {

overflow: hidden;

text-overflow: ellipsis;

display: inline-block;

white-space: nowrap;

flex: 1;

}

.dropdown-icon {

font-size: 0.75rem;

margin-left: 8px;

color: rgba(229, 91, 0, 0.7);

transition: transform 0.3s ease;

}

.base-model-dropdown[open] .dropdown-icon {

transform: rotate(180deg);

}

.base-model-list {

position: absolute;

margin-top: 0;

left: 50%;

transform: translateX(-50%);

background: rgba(26, 26, 26, 0.95);

border: 1px solid rgba(229, 91, 0, 0.5);

border-radius: 0;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.3);

min-width: 100%;

overflow: visible;

}

.base-model-item {

padding: 8px 12px 8px 20px; /* Increased left padding for the model items */

border-bottom: 1px solid rgba(229, 91, 0, 0.2);

position: relative;

transition: all 0.3s ease;

}

.base-model-item:last-child {

border-bottom: none;

margin-bottom: 0;

}

.base-model-item:hover {

background: rgba(229, 91, 0, 0.2);

box-shadow: 0 0 15px rgba(229, 91, 0, 0.4);

transform: translateY(-1px) translateX(0);

}

.base-model-item a {

display: block;

width: 100%;

overflow: hidden;

padding-left: 10px;

}

.model-label {

color: #E55B00;

text-decoration: none;

transition: all 0.3s ease;

display: inline-block;

font-weight: 500;

white-space: nowrap;

overflow: hidden;

text-overflow: ellipsis;

}

.model-label:hover {

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

}

/* BASE label */

.base-model-dropdown::after {

z-index: 1;

content: attr(data-merge-type);

position: absolute;

right: 0;

top: 8px;

transform: translateY(0);

font-size: 10px;

padding: 2px 5px;

background: rgba(229, 91, 0, 0.3);

color: #E0EAE0;

border: 1px solid #E55B00;

box-shadow: 0 0 10px rgba(229, 91, 0, 0.5);

border-radius: 0;

}

/* Accent stripe (orange-to-gold) for base-model-summary and items */

.base-model-dropdown {

position: relative;

}

.base-model-summary::before,

.base-model-item::before {

content: '';

position: absolute;

top: 0;

left: 0;

width: 8px;

height: 100%;

background: linear-gradient(180deg, #E55B00, #D4AF37);

opacity: 0.7;

}

.base-model-dropdown[open] .base-model-summary,

.base-model-dropdown[open] .base-model-list {

border-color: rgba(229, 91, 0, 0.7);

box-shadow: 0 0 25px rgba(229, 91, 0, 0.5);

z-index: 20;

position: relative;

}

/* Model description */

.model-description {

margin-top: 30px;

}

.model-description h4 {

margin-bottom: 15px;

}

.model-description p {

margin-bottom: 20px;

}

.model-description ul {

padding-left: 20px;

margin-bottom: 20px;

list-style: none;

}

.model-description li {

margin-bottom: 8px;

position: relative;

padding-left: 15px;

}

.model-description li::before {

content: '†';

position: absolute;

left: 0;

top: 0;

color: #E55B00;

text-shadow: 0 0 10px rgba(229, 91, 0, 0.7);

}

/* Template card */

.template-card {

box-shadow: 0 0 30px rgba(229, 91, 0, 0.4);

}

.template-item {

padding: 15px;

margin-bottom: 15px;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

position: relative;

border-radius: 0;

transition: all 0.3s ease;

}

.template-item:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.5);

transform: translateY(-2px);

}

.template-content {

display: flex;

flex-direction: column;

gap: 5px;

}

.template-link {

display: flex;

align-items: center;

justify-content: space-between;

font-weight: 600;

color: #E55B00;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.5);

padding: 5px;

transition: all 0.3s ease;

}

.template-link:hover {

text-shadow: 0 0 10px rgba(212, 175, 55, 0.7);

transform: translateX(5px);

}

.link-arrow {

font-weight: 600;

transition: transform 0.3s ease;

}

.template-link:hover .link-arrow {

transform: translateX(5px);

}

.template-author {

font-size: 14px;

color: rgba(224, 234, 224, 0.8);

text-transform: uppercase;

letter-spacing: 1px;

}

/* Settings card */

.settings-card {

box-shadow: 0 0 30px rgba(229, 91, 0, 0.4);

}

.settings-header {

margin-bottom: 15px;

padding-bottom: 10px;

border-bottom: 1px solid rgba(229, 91, 0, 0.4);

position: relative;

}

.settings-header::after {

content: '';

position: absolute;

bottom: -1px;

left: 0;

width: 80px;

height: 1px;

background: #E55B00;

box-shadow: 0 0 10px #E55B00;

}

.settings-content {

padding: 15px;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

margin-bottom: 15px;

position: relative;

border-radius: 0;

}

.settings-grid {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(250px, 1fr));

gap: 20px;

margin-top: 20px;

}

.setting-item {

display: flex;

justify-content: space-between;

align-items: center;

margin-bottom: 10px;

padding: 8px 0;

border-bottom: 1px solid rgba(229, 91, 0, 0.2);

}

.setting-item:last-child {

margin-bottom: 0;

border-bottom: none;

}

.setting-label {

color: #E0EAE0;

font-size: 14px;

font-weight: 500;

text-transform: uppercase;

letter-spacing: 1px;

}

.setting-value {

color: #E55B00;

font-weight: 600;

font-family: 'Lora', serif;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.7);

}

.setting-item.highlight {

padding: 15px;

background: rgba(229, 91, 0, 0.2);

border: 1px solid rgba(229, 91, 0, 0.4);

border-radius: 0;

display: flex;

justify-content: center;

position: relative;

}

.setting-item.highlight .setting-value {

font-size: 24px;

font-weight: 700;

text-shadow:

0 0 10px rgba(229, 91, 0, 0.7),

0 0 20px rgba(229, 91, 0, 0.5);

}

/* Sampler Settings Section */

.sampler-settings {

position: relative;

overflow: visible;

}

.sampler-settings .settings-card {

background: rgba(26, 26, 26, 0.8);

border: 1px solid #E55B00;

box-shadow: 0 0 20px rgba(229, 91, 0, 0.4), inset 0 0 30px rgba(229, 91, 0, 0.2);

padding: 20px;

margin: 15px 0;

position: relative;

}

.sampler-settings .settings-header h3 {

color: #E55B00;

text-shadow: 0 0 8px rgba(229, 91, 0, 0.7);

font-size: 1.2rem;

letter-spacing: 1px;

}

.sampler-settings .settings-grid {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(250px, 1fr));

gap: 15px;

}

.sampler-settings .setting-item {

border-bottom: 1px solid rgba(229, 91, 0, 0.3);

padding: 12px 0;

transition: all 0.3s ease;

}

.sampler-settings .setting-label {

font-family: 'Lora', serif;

font-weight: 600;

color: #E0EAE0;

}

.sampler-settings .setting-value {

font-family: 'Lora', serif;

color: #E55B00;

}

/* DRY Settings styles */

.dry-settings {

margin-top: 8px;

padding-left: 8px;

border-left: 2px solid rgba(229, 91, 0, 0.4);

display: flex;

flex-direction: column;

gap: 6px;

}

.dry-item {

display: flex;

justify-content: space-between;

align-items: center;

}

.dry-label {

font-size: 13px;

color: #E0EAE0;

}

.dry-value {

color: #E55B00;

font-family: 'Lora', serif;

text-shadow: 0 0 5px rgba(229, 91, 0, 0.6);

}

/* Quantized sections */

.quantized-section {

margin-bottom: 30px;

}

.quantized-items {

display: grid;

gap: 15px;

margin-top: 15px;

}

.quantized-item {

padding: 15px;

background: rgba(229, 91, 0, 0.1);

border: 1px solid rgba(229, 91, 0, 0.3);

display: grid;

gap: 8px;

position: relative;

border-radius: 0;

transition: all 0.3s ease;

}

.quantized-item:hover {

background: rgba(229, 91, 0, 0.2);

border-color: #E55B00;

box-shadow: 0 0 15px rgba(229, 91, 0, 0.5);

transform: translateY(-2px);

}

.author {

color: #E0EAE0;

font-size: 12px;

text-transform: uppercase;

letter-spacing: 1px;

font-weight: 500;

}

.multi-links {

display: flex;

align-items: center;

flex-wrap: wrap;

gap: 5px;

}

.separator {

color: rgba(224, 234, 224, 0.5);

margin: 0 5px;

}

/* Medieval Corners */

.corner {

position: absolute;

background: none;

width: 6em;

height: 6em;

font-size: 10px;

opacity: 1.0;

transition: opacity 0.3s ease-in-out;

}

.corner::after {

position: absolute;

content: '';

display: block;

width: 0.2em;

height: 0.2em;

}

/* New Progress Bar Design */

.new-progress-container {

margin: 2rem 0;

padding: 1.5rem;

background: rgba(229, 91, 0, 0.05);

border: 1px solid rgba(229, 91, 0, 0.2);