| problem_id (string, lengths 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.1k to 25.4k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_8043

|

rasdani/github-patches

|

git_diff

|

scoutapp__scout_apm_python-226

|

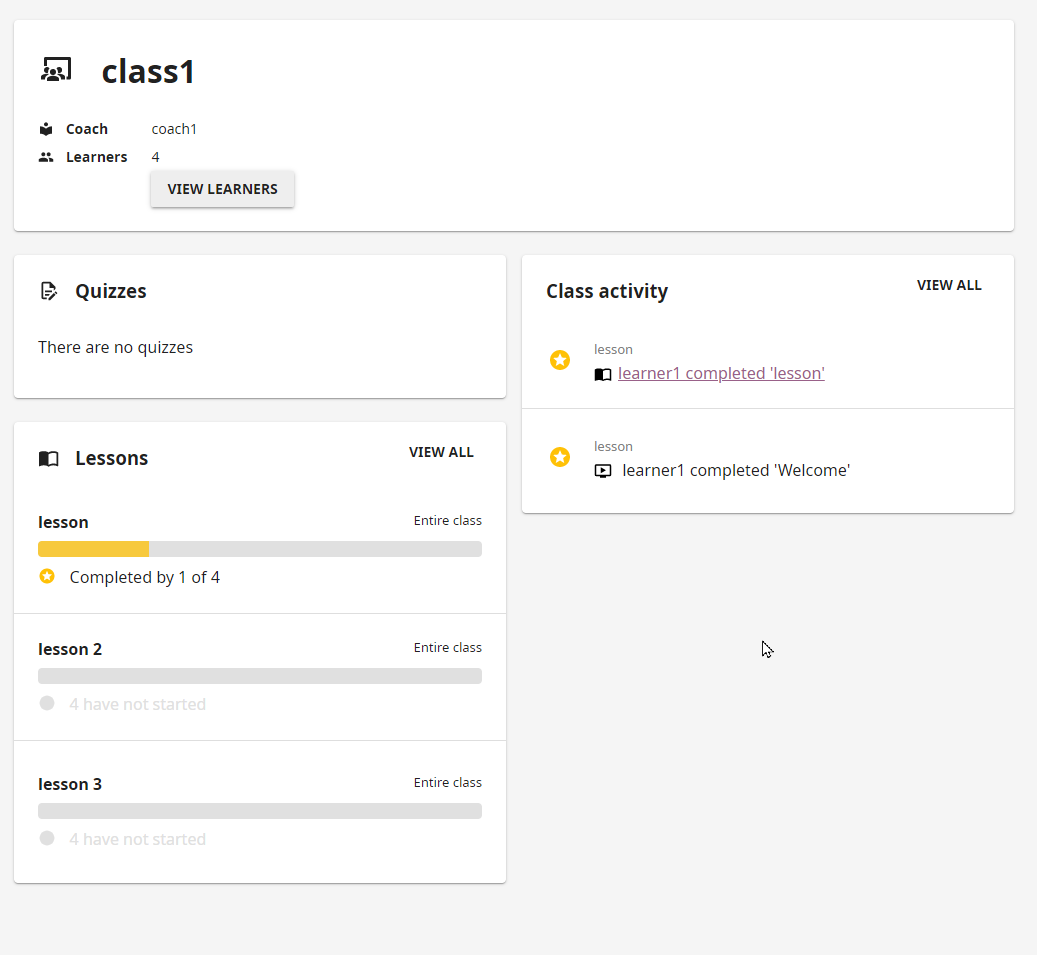

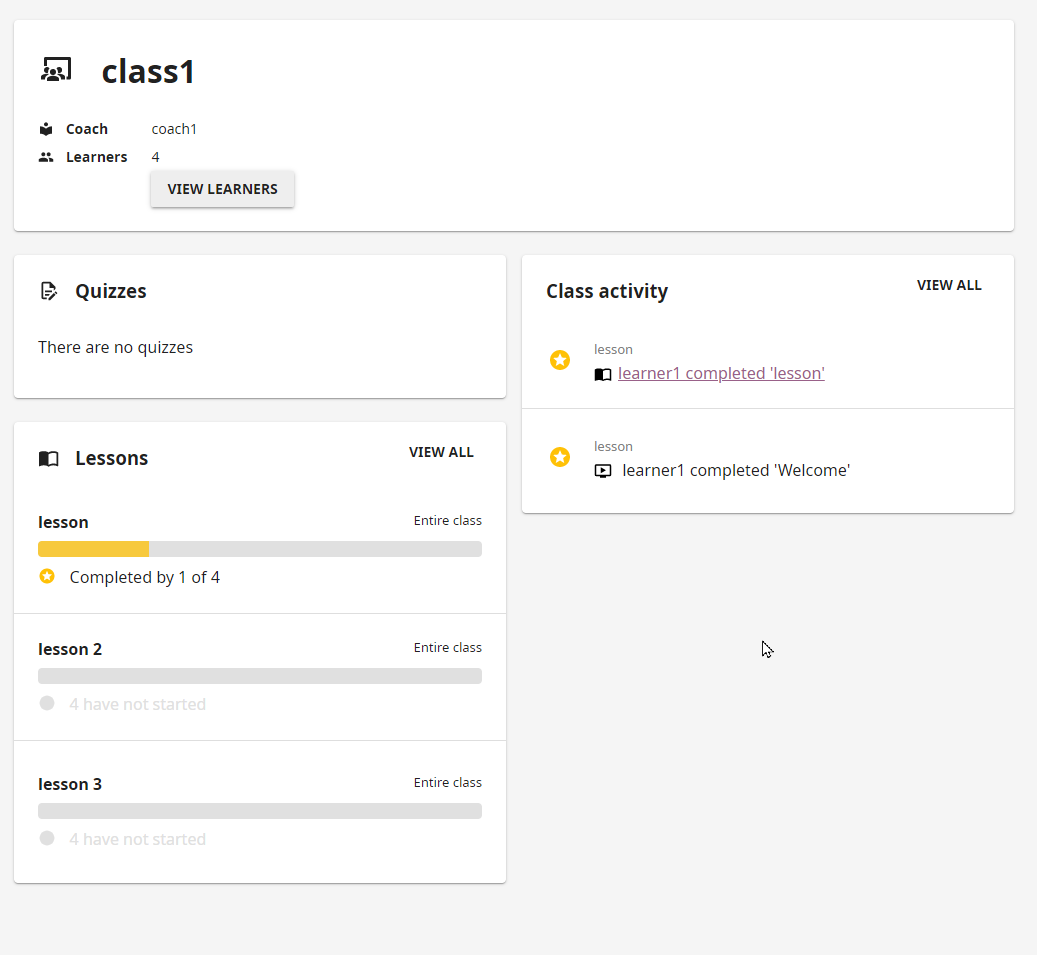

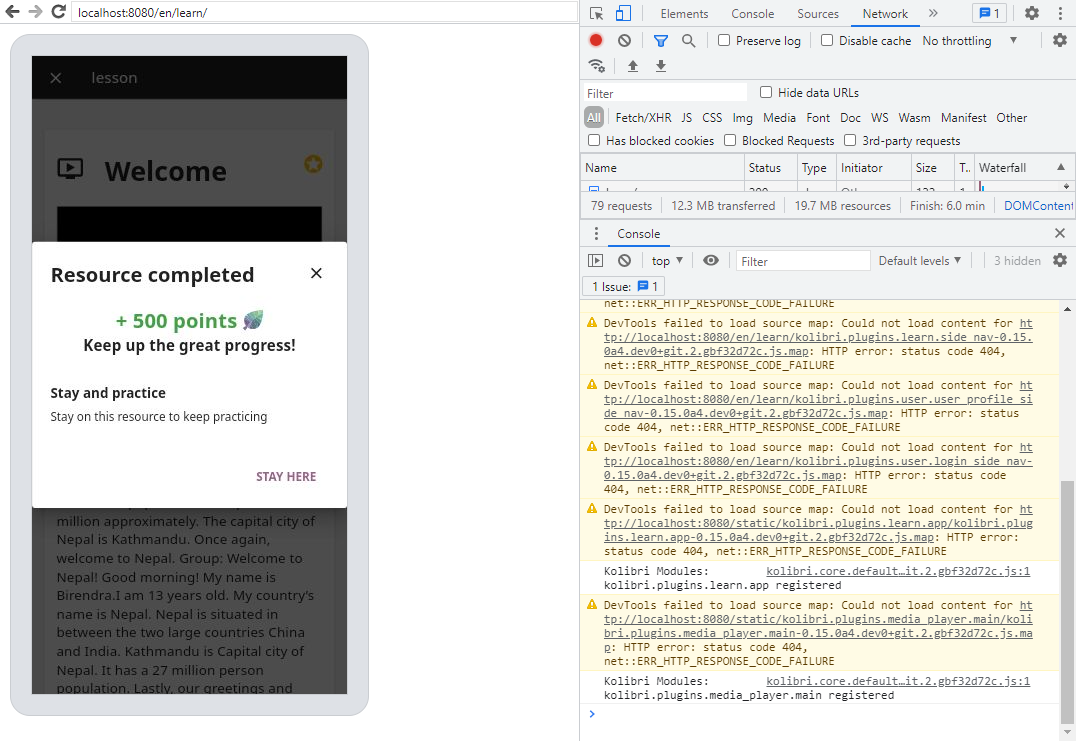

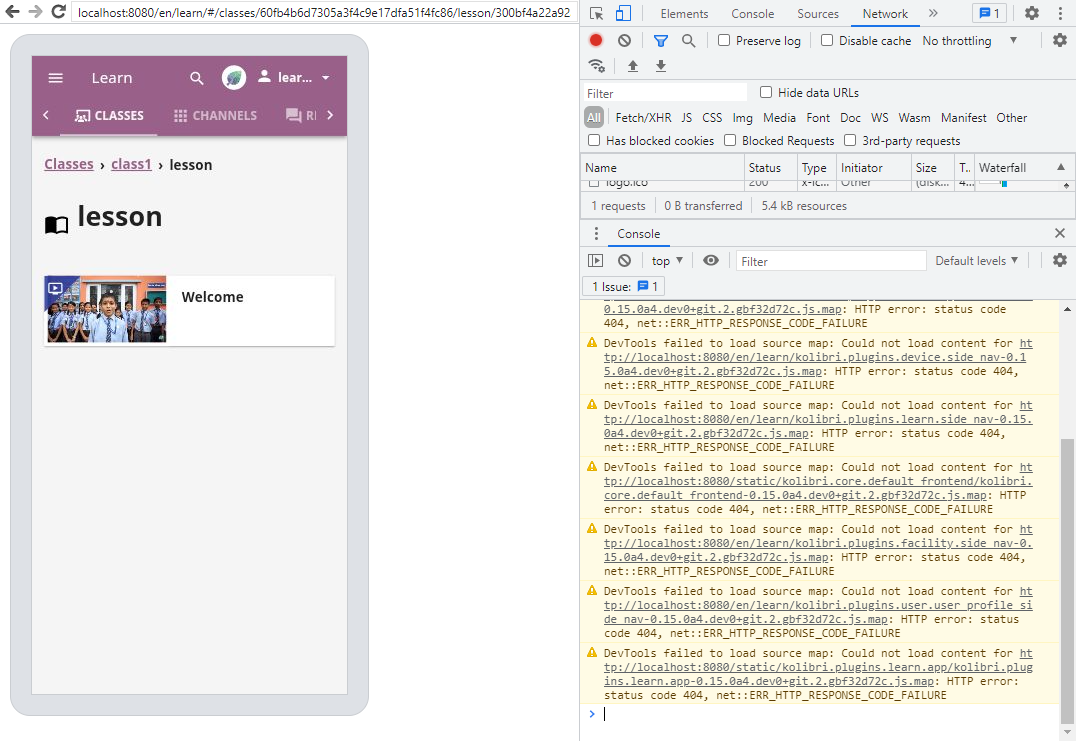

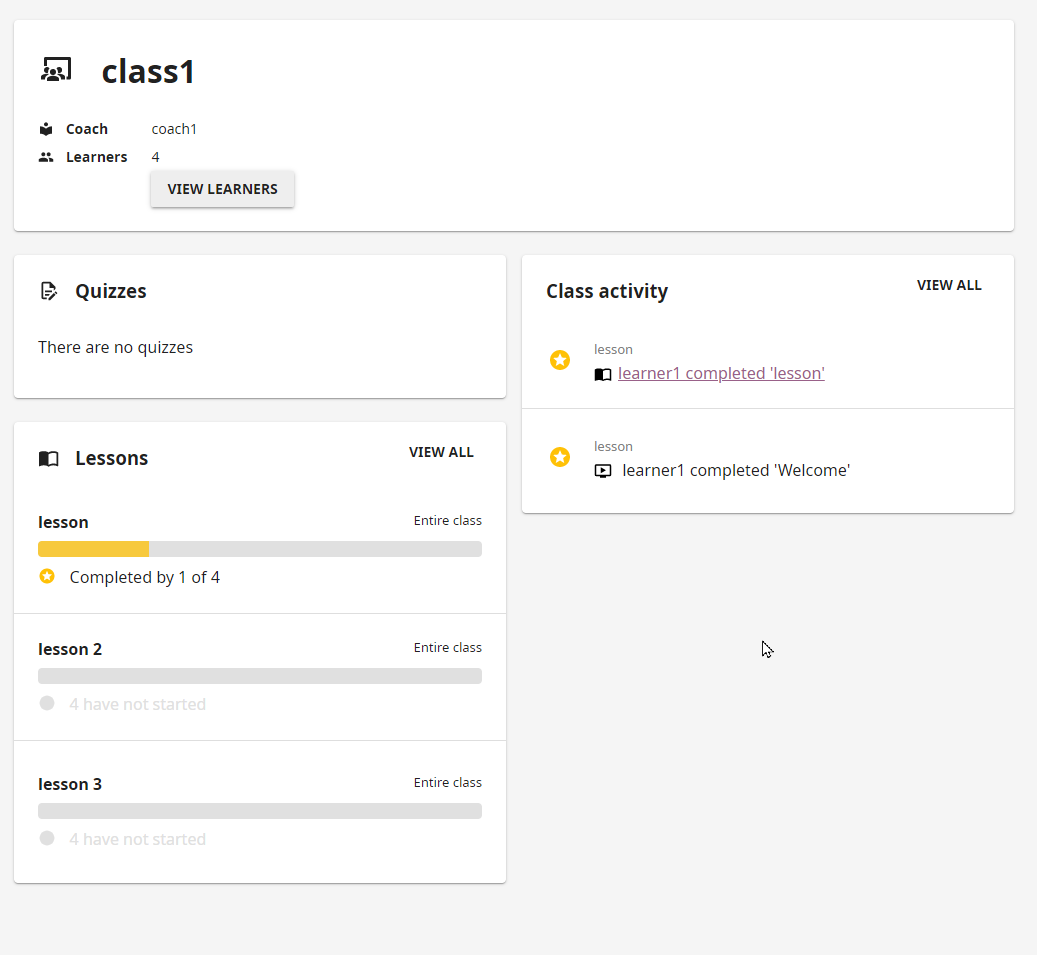

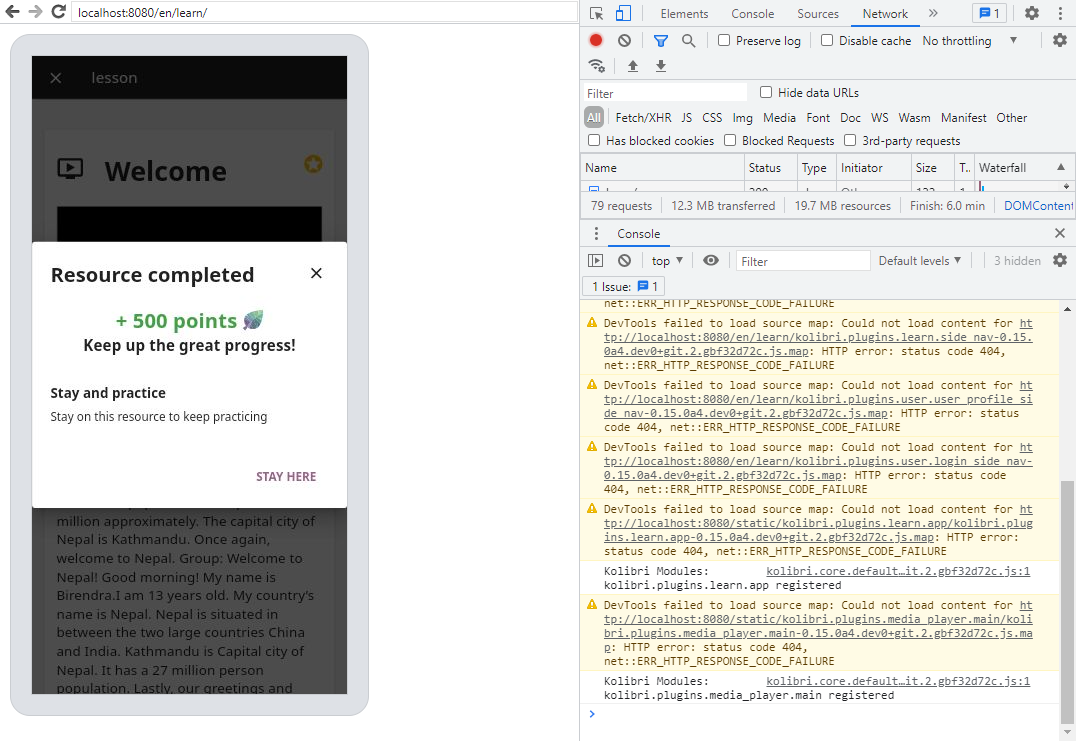

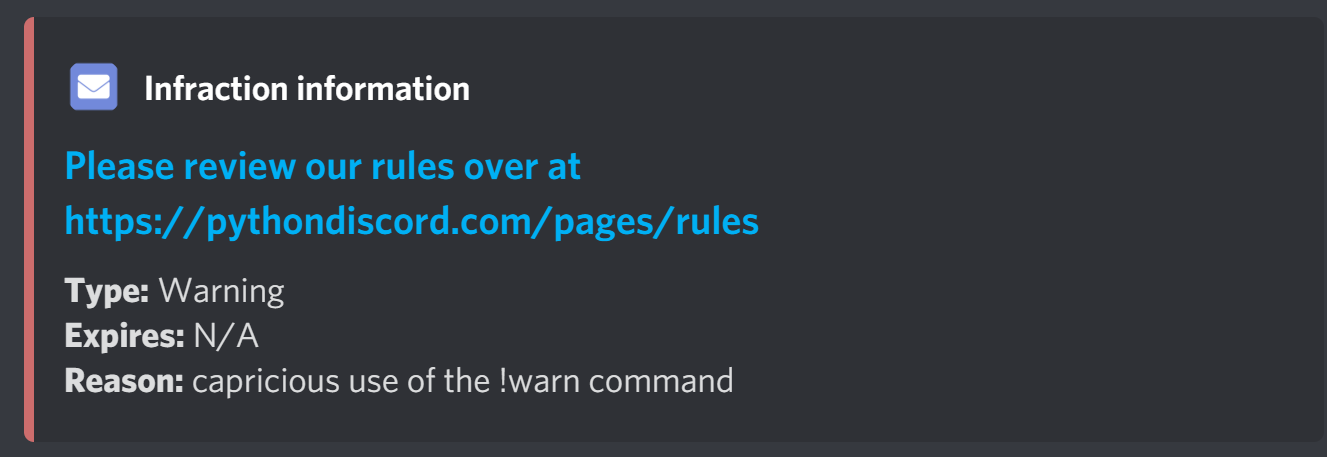

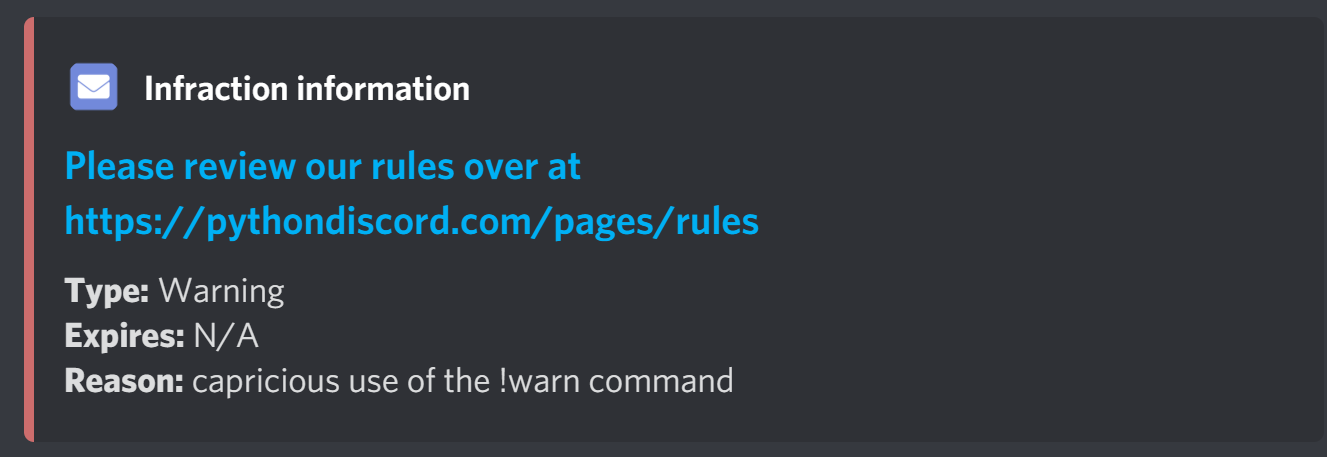

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Name Django Admin Views clearer

Currently, Django Admin views are captured with confusingly internal names. We should capture something clearer

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

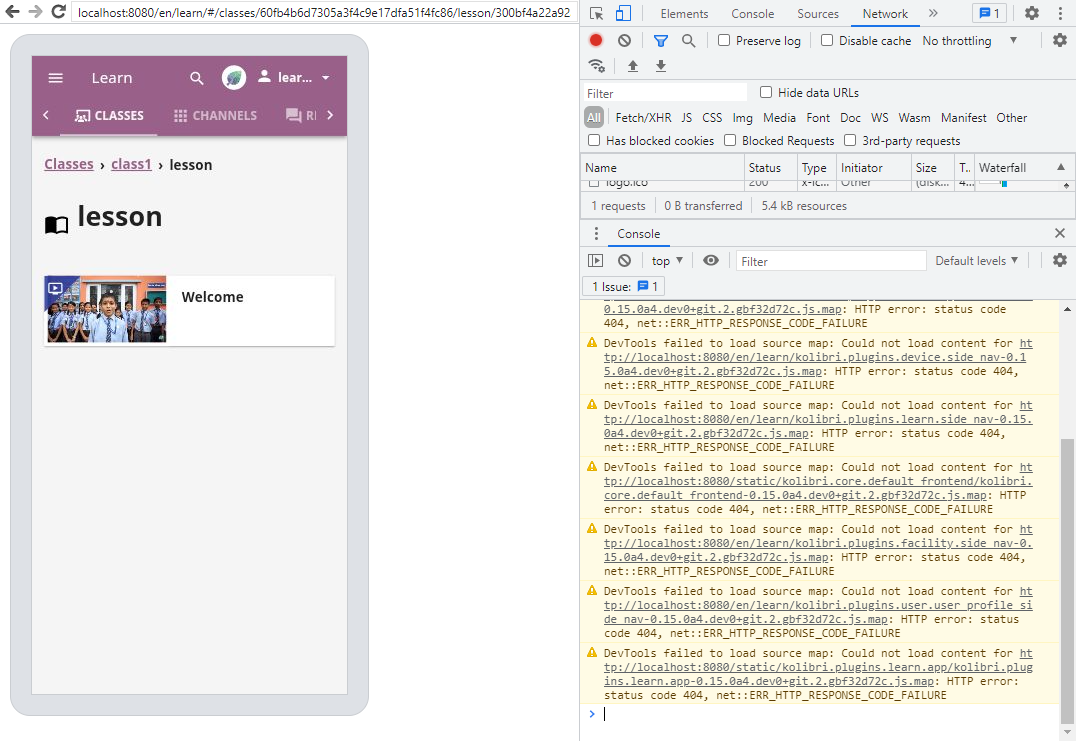

Path: `src/scout_apm/django/middleware.py`

Content:

```

1 # coding=utf-8

2 from __future__ import absolute_import, division, print_function, unicode_literals

3

4 import logging

5

6 from scout_apm.core.ignore import ignore_path

7 from scout_apm.core.queue_time import track_request_queue_time

8 from scout_apm.core.tracked_request import TrackedRequest

9

10 logger = logging.getLogger(__name__)

11

12

13 def get_operation_name(request):

14 view_name = request.resolver_match._func_path

15 return "Controller/" + view_name

16

17

18 def track_request_view_data(request, tracked_request):

19 tracked_request.tag("path", request.path)

20 if ignore_path(request.path):

21 tracked_request.tag("ignore_transaction", True)

22

23 try:

24 # Determine a remote IP to associate with the request. The value is

25 # spoofable by the requester so this is not suitable to use in any

26 # security sensitive context.

27 user_ip = (

28 request.META.get("HTTP_X_FORWARDED_FOR", "").split(",")[0]

29 or request.META.get("HTTP_CLIENT_IP", "").split(",")[0]

30 or request.META.get("REMOTE_ADDR", None)

31 )

32 tracked_request.tag("user_ip", user_ip)

33 except Exception:

34 pass

35

36 user = getattr(request, "user", None)

37 if user is not None:

38 try:

39 tracked_request.tag("username", user.get_username())

40 except Exception:

41 pass

42

43

44 class MiddlewareTimingMiddleware(object):

45 """

46 Insert as early into the Middleware stack as possible (outermost layers),

47 so that other middlewares called after can be timed.

48 """

49

50 def __init__(self, get_response):

51 self.get_response = get_response

52

53 def __call__(self, request):

54 tracked_request = TrackedRequest.instance()

55

56 tracked_request.start_span(operation="Middleware")

57 queue_time = request.META.get("HTTP_X_QUEUE_START") or request.META.get(

58 "HTTP_X_REQUEST_START", ""

59 )

60 track_request_queue_time(queue_time, tracked_request)

61

62 try:

63 return self.get_response(request)

64 finally:

65 TrackedRequest.instance().stop_span()

66

67

68 class ViewTimingMiddleware(object):

69 """

70 Insert as deep into the middleware stack as possible, ideally wrapping no

71 other middleware. Designed to time the View itself

72 """

73

74 def __init__(self, get_response):

75 self.get_response = get_response

76

77 def __call__(self, request):

78 """

79 Wrap a single incoming request with start and stop calls.

80 This will start timing, but relies on the process_view callback to

81 capture more details about what view was really called, and other

82 similar info.

83

84 If process_view isn't called, then the request will not

85 be recorded. This can happen if a middleware further along the stack

86 doesn't call onward, and instead returns a response directly.

87 """

88 tracked_request = TrackedRequest.instance()

89

90 # This operation name won't be recorded unless changed later in

91 # process_view

92 tracked_request.start_span(operation="Unknown")

93 try:

94 response = self.get_response(request)

95 finally:

96 tracked_request.stop_span()

97 return response

98

99 def process_view(self, request, view_func, view_args, view_kwargs):

100 """

101 Capture details about the view_func that is about to execute

102 """

103 tracked_request = TrackedRequest.instance()

104 tracked_request.mark_real_request()

105

106 track_request_view_data(request, tracked_request)

107

108 span = tracked_request.current_span()

109 if span is not None:

110 span.operation = get_operation_name(request)

111

112 def process_exception(self, request, exception):

113 """

114 Mark this request as having errored out

115

116 Does not modify or catch or otherwise change the exception thrown

117 """

118 TrackedRequest.instance().tag("error", "true")

119

120

121 class OldStyleMiddlewareTimingMiddleware(object):

122 """

123 Insert as early into the Middleware stack as possible (outermost layers),

124 so that other middlewares called after can be timed.

125 """

126

127 def process_request(self, request):

128 tracked_request = TrackedRequest.instance()

129 request._scout_tracked_request = tracked_request

130

131 queue_time = request.META.get("HTTP_X_QUEUE_START") or request.META.get(

132 "HTTP_X_REQUEST_START", ""

133 )

134 track_request_queue_time(queue_time, tracked_request)

135

136 tracked_request.start_span(operation="Middleware")

137

138 def process_response(self, request, response):

139 # Only stop span if there's a request, but presume we are balanced,

140 # i.e. that custom instrumentation within the application is not

141 # causing errors

142 tracked_request = getattr(request, "_scout_tracked_request", None)

143 if tracked_request is not None:

144 tracked_request.stop_span()

145 return response

146

147

148 class OldStyleViewMiddleware(object):

149 def process_view(self, request, view_func, view_func_args, view_func_kwargs):

150 tracked_request = getattr(request, "_scout_tracked_request", None)

151 if tracked_request is None:

152 # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so

153 # don't do anything

154 return

155

156 tracked_request.mark_real_request()

157

158 track_request_view_data(request, tracked_request)

159

160 span = tracked_request.start_span(operation=get_operation_name(request))

161 # Save the span into the request, so we can check

162 # if we're matched up when stopping

163 request._scout_view_span = span

164

165 def process_response(self, request, response):

166 tracked_request = getattr(request, "_scout_tracked_request", None)

167 if tracked_request is None:

168 # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so

169 # don't do anything

170 return response

171

172 # Only stop span if we started, but presume we are balanced, i.e. that

173 # custom instrumentation within the application is not causing errors

174 span = getattr(request, "_scout_view_span", None)

175 if span is not None:

176 tracked_request.stop_span()

177 return response

178

179 def process_exception(self, request, exception):

180 tracked_request = getattr(request, "_scout_tracked_request", None)

181 if tracked_request is None:

182 # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so

183 # don't do anything

184 return

185

186 tracked_request.tag("error", "true")

187

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/src/scout_apm/django/middleware.py b/src/scout_apm/django/middleware.py

--- a/src/scout_apm/django/middleware.py

+++ b/src/scout_apm/django/middleware.py

@@ -11,7 +11,20 @@

def get_operation_name(request):

+ view_func = request.resolver_match.func

view_name = request.resolver_match._func_path

+

+ if hasattr(view_func, "model_admin"):

+ # Seems to comes from Django admin (attribute only set on Django 1.9+)

+ admin_class = view_func.model_admin.__class__

+ view_name = (

+ admin_class.__module__

+ + "."

+ + admin_class.__name__

+ + "."

+ + view_func.__name__

+ )

+

return "Controller/" + view_name
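As a rough illustration of what the patch above changes, the following sketch runs the patched `get_operation_name` against stand-in objects instead of a real Django request. `BookAdmin`, `changelist_view`, `plain_view`, `fake_request`, and the dotted paths passed as `_func_path` are all hypothetical names invented for this example; only the body of `get_operation_name` is taken from the diff.

```python
from types import SimpleNamespace


def get_operation_name(request):
    # Body copied from the patched function in the diff above.
    view_func = request.resolver_match.func
    view_name = request.resolver_match._func_path

    if hasattr(view_func, "model_admin"):
        # Admin views carry a reference to their ModelAdmin instance,
        # so the name is built from the admin class, not the wrapper.
        admin_class = view_func.model_admin.__class__
        view_name = (
            admin_class.__module__
            + "."
            + admin_class.__name__
            + "."
            + view_func.__name__
        )

    return "Controller/" + view_name


class BookAdmin(object):
    """Hypothetical stand-in for a ModelAdmin subclass."""


def changelist_view(request):
    """Hypothetical stand-in for an admin view function."""


# Assumption: mimics the attribute the patch checks for.
changelist_view.model_admin = BookAdmin()


def plain_view(request):
    """Hypothetical non-admin view without a model_admin attribute."""


def fake_request(func, func_path):
    # Minimal object exposing only what get_operation_name reads.
    match = SimpleNamespace(func=func, _func_path=func_path)
    return SimpleNamespace(resolver_match=match)


admin_name = get_operation_name(
    fake_request(changelist_view, "django.contrib.admin.options.changelist_view")
)
plain_name = get_operation_name(fake_request(plain_view, "myapp.views.plain_view"))
print(admin_name)
print(plain_name)
```

Under these assumptions, the admin view is reported under its admin class name, while a view without `model_admin` keeps the resolver's original path.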

|

{"golden_diff": "diff --git a/src/scout_apm/django/middleware.py b/src/scout_apm/django/middleware.py\n--- a/src/scout_apm/django/middleware.py\n+++ b/src/scout_apm/django/middleware.py\n@@ -11,7 +11,20 @@\n \n \n def get_operation_name(request):\n+ view_func = request.resolver_match.func\n view_name = request.resolver_match._func_path\n+\n+ if hasattr(view_func, \"model_admin\"):\n+ # Seems to comes from Django admin (attribute only set on Django 1.9+)\n+ admin_class = view_func.model_admin.__class__\n+ view_name = (\n+ admin_class.__module__\n+ + \".\"\n+ + admin_class.__name__\n+ + \".\"\n+ + view_func.__name__\n+ )\n+\n return \"Controller/\" + view_name\n", "issue": "Name Django Admin Views clearer\nCurrently, Django Admin views are captured with confusingly internal names. We should capture something clearer\r\n\r\n\r\n\n", "before_files": [{"content": "# coding=utf-8\nfrom __future__ import absolute_import, division, print_function, unicode_literals\n\nimport logging\n\nfrom scout_apm.core.ignore import ignore_path\nfrom scout_apm.core.queue_time import track_request_queue_time\nfrom scout_apm.core.tracked_request import TrackedRequest\n\nlogger = logging.getLogger(__name__)\n\n\ndef get_operation_name(request):\n view_name = request.resolver_match._func_path\n return \"Controller/\" + view_name\n\n\ndef track_request_view_data(request, tracked_request):\n tracked_request.tag(\"path\", request.path)\n if ignore_path(request.path):\n tracked_request.tag(\"ignore_transaction\", True)\n\n try:\n # Determine a remote IP to associate with the request. 
The value is\n # spoofable by the requester so this is not suitable to use in any\n # security sensitive context.\n user_ip = (\n request.META.get(\"HTTP_X_FORWARDED_FOR\", \"\").split(\",\")[0]\n or request.META.get(\"HTTP_CLIENT_IP\", \"\").split(\",\")[0]\n or request.META.get(\"REMOTE_ADDR\", None)\n )\n tracked_request.tag(\"user_ip\", user_ip)\n except Exception:\n pass\n\n user = getattr(request, \"user\", None)\n if user is not None:\n try:\n tracked_request.tag(\"username\", user.get_username())\n except Exception:\n pass\n\n\nclass MiddlewareTimingMiddleware(object):\n \"\"\"\n Insert as early into the Middleware stack as possible (outermost layers),\n so that other middlewares called after can be timed.\n \"\"\"\n\n def __init__(self, get_response):\n self.get_response = get_response\n\n def __call__(self, request):\n tracked_request = TrackedRequest.instance()\n\n tracked_request.start_span(operation=\"Middleware\")\n queue_time = request.META.get(\"HTTP_X_QUEUE_START\") or request.META.get(\n \"HTTP_X_REQUEST_START\", \"\"\n )\n track_request_queue_time(queue_time, tracked_request)\n\n try:\n return self.get_response(request)\n finally:\n TrackedRequest.instance().stop_span()\n\n\nclass ViewTimingMiddleware(object):\n \"\"\"\n Insert as deep into the middleware stack as possible, ideally wrapping no\n other middleware. Designed to time the View itself\n \"\"\"\n\n def __init__(self, get_response):\n self.get_response = get_response\n\n def __call__(self, request):\n \"\"\"\n Wrap a single incoming request with start and stop calls.\n This will start timing, but relies on the process_view callback to\n capture more details about what view was really called, and other\n similar info.\n\n If process_view isn't called, then the request will not\n be recorded. 
This can happen if a middleware further along the stack\n doesn't call onward, and instead returns a response directly.\n \"\"\"\n tracked_request = TrackedRequest.instance()\n\n # This operation name won't be recorded unless changed later in\n # process_view\n tracked_request.start_span(operation=\"Unknown\")\n try:\n response = self.get_response(request)\n finally:\n tracked_request.stop_span()\n return response\n\n def process_view(self, request, view_func, view_args, view_kwargs):\n \"\"\"\n Capture details about the view_func that is about to execute\n \"\"\"\n tracked_request = TrackedRequest.instance()\n tracked_request.mark_real_request()\n\n track_request_view_data(request, tracked_request)\n\n span = tracked_request.current_span()\n if span is not None:\n span.operation = get_operation_name(request)\n\n def process_exception(self, request, exception):\n \"\"\"\n Mark this request as having errored out\n\n Does not modify or catch or otherwise change the exception thrown\n \"\"\"\n TrackedRequest.instance().tag(\"error\", \"true\")\n\n\nclass OldStyleMiddlewareTimingMiddleware(object):\n \"\"\"\n Insert as early into the Middleware stack as possible (outermost layers),\n so that other middlewares called after can be timed.\n \"\"\"\n\n def process_request(self, request):\n tracked_request = TrackedRequest.instance()\n request._scout_tracked_request = tracked_request\n\n queue_time = request.META.get(\"HTTP_X_QUEUE_START\") or request.META.get(\n \"HTTP_X_REQUEST_START\", \"\"\n )\n track_request_queue_time(queue_time, tracked_request)\n\n tracked_request.start_span(operation=\"Middleware\")\n\n def process_response(self, request, response):\n # Only stop span if there's a request, but presume we are balanced,\n # i.e. 
that custom instrumentation within the application is not\n # causing errors\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is not None:\n tracked_request.stop_span()\n return response\n\n\nclass OldStyleViewMiddleware(object):\n def process_view(self, request, view_func, view_func_args, view_func_kwargs):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return\n\n tracked_request.mark_real_request()\n\n track_request_view_data(request, tracked_request)\n\n span = tracked_request.start_span(operation=get_operation_name(request))\n # Save the span into the request, so we can check\n # if we're matched up when stopping\n request._scout_view_span = span\n\n def process_response(self, request, response):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return response\n\n # Only stop span if we started, but presume we are balanced, i.e. 
that\n # custom instrumentation within the application is not causing errors\n span = getattr(request, \"_scout_view_span\", None)\n if span is not None:\n tracked_request.stop_span()\n return response\n\n def process_exception(self, request, exception):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return\n\n tracked_request.tag(\"error\", \"true\")\n", "path": "src/scout_apm/django/middleware.py"}], "after_files": [{"content": "# coding=utf-8\nfrom __future__ import absolute_import, division, print_function, unicode_literals\n\nimport logging\n\nfrom scout_apm.core.ignore import ignore_path\nfrom scout_apm.core.queue_time import track_request_queue_time\nfrom scout_apm.core.tracked_request import TrackedRequest\n\nlogger = logging.getLogger(__name__)\n\n\ndef get_operation_name(request):\n view_func = request.resolver_match.func\n view_name = request.resolver_match._func_path\n\n if hasattr(view_func, \"model_admin\"):\n # Seems to comes from Django admin (attribute only set on Django 1.9+)\n admin_class = view_func.model_admin.__class__\n view_name = (\n admin_class.__module__\n + \".\"\n + admin_class.__name__\n + \".\"\n + view_func.__name__\n )\n\n return \"Controller/\" + view_name\n\n\ndef track_request_view_data(request, tracked_request):\n tracked_request.tag(\"path\", request.path)\n if ignore_path(request.path):\n tracked_request.tag(\"ignore_transaction\", True)\n\n try:\n # Determine a remote IP to associate with the request. 
The value is\n # spoofable by the requester so this is not suitable to use in any\n # security sensitive context.\n user_ip = (\n request.META.get(\"HTTP_X_FORWARDED_FOR\", \"\").split(\",\")[0]\n or request.META.get(\"HTTP_CLIENT_IP\", \"\").split(\",\")[0]\n or request.META.get(\"REMOTE_ADDR\", None)\n )\n tracked_request.tag(\"user_ip\", user_ip)\n except Exception:\n pass\n\n user = getattr(request, \"user\", None)\n if user is not None:\n try:\n tracked_request.tag(\"username\", user.get_username())\n except Exception:\n pass\n\n\nclass MiddlewareTimingMiddleware(object):\n \"\"\"\n Insert as early into the Middleware stack as possible (outermost layers),\n so that other middlewares called after can be timed.\n \"\"\"\n\n def __init__(self, get_response):\n self.get_response = get_response\n\n def __call__(self, request):\n tracked_request = TrackedRequest.instance()\n\n tracked_request.start_span(operation=\"Middleware\")\n queue_time = request.META.get(\"HTTP_X_QUEUE_START\") or request.META.get(\n \"HTTP_X_REQUEST_START\", \"\"\n )\n track_request_queue_time(queue_time, tracked_request)\n\n try:\n return self.get_response(request)\n finally:\n TrackedRequest.instance().stop_span()\n\n\nclass ViewTimingMiddleware(object):\n \"\"\"\n Insert as deep into the middleware stack as possible, ideally wrapping no\n other middleware. Designed to time the View itself\n \"\"\"\n\n def __init__(self, get_response):\n self.get_response = get_response\n\n def __call__(self, request):\n \"\"\"\n Wrap a single incoming request with start and stop calls.\n This will start timing, but relies on the process_view callback to\n capture more details about what view was really called, and other\n similar info.\n\n If process_view isn't called, then the request will not\n be recorded. 
This can happen if a middleware further along the stack\n doesn't call onward, and instead returns a response directly.\n \"\"\"\n tracked_request = TrackedRequest.instance()\n\n # This operation name won't be recorded unless changed later in\n # process_view\n tracked_request.start_span(operation=\"Unknown\")\n try:\n response = self.get_response(request)\n finally:\n tracked_request.stop_span()\n return response\n\n def process_view(self, request, view_func, view_args, view_kwargs):\n \"\"\"\n Capture details about the view_func that is about to execute\n \"\"\"\n tracked_request = TrackedRequest.instance()\n tracked_request.mark_real_request()\n\n track_request_view_data(request, tracked_request)\n\n span = tracked_request.current_span()\n if span is not None:\n span.operation = get_operation_name(request)\n\n def process_exception(self, request, exception):\n \"\"\"\n Mark this request as having errored out\n\n Does not modify or catch or otherwise change the exception thrown\n \"\"\"\n TrackedRequest.instance().tag(\"error\", \"true\")\n\n\nclass OldStyleMiddlewareTimingMiddleware(object):\n \"\"\"\n Insert as early into the Middleware stack as possible (outermost layers),\n so that other middlewares called after can be timed.\n \"\"\"\n\n def process_request(self, request):\n tracked_request = TrackedRequest.instance()\n request._scout_tracked_request = tracked_request\n\n queue_time = request.META.get(\"HTTP_X_QUEUE_START\") or request.META.get(\n \"HTTP_X_REQUEST_START\", \"\"\n )\n track_request_queue_time(queue_time, tracked_request)\n\n tracked_request.start_span(operation=\"Middleware\")\n\n def process_response(self, request, response):\n # Only stop span if there's a request, but presume we are balanced,\n # i.e. 
that custom instrumentation within the application is not\n # causing errors\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is not None:\n tracked_request.stop_span()\n return response\n\n\nclass OldStyleViewMiddleware(object):\n def process_view(self, request, view_func, view_func_args, view_func_kwargs):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return\n\n tracked_request.mark_real_request()\n\n track_request_view_data(request, tracked_request)\n\n span = tracked_request.start_span(operation=get_operation_name(request))\n # Save the span into the request, so we can check\n # if we're matched up when stopping\n request._scout_view_span = span\n\n def process_response(self, request, response):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return response\n\n # Only stop span if we started, but presume we are balanced, i.e. that\n # custom instrumentation within the application is not causing errors\n span = getattr(request, \"_scout_view_span\", None)\n if span is not None:\n tracked_request.stop_span()\n return response\n\n def process_exception(self, request, exception):\n tracked_request = getattr(request, \"_scout_tracked_request\", None)\n if tracked_request is None:\n # Looks like OldStyleMiddlewareTimingMiddleware didn't run, so\n # don't do anything\n return\n\n tracked_request.tag(\"error\", \"true\")\n", "path": "src/scout_apm/django/middleware.py"}]}

| 2,156 | 192 |

gh_patches_debug_1074

|

rasdani/github-patches

|

git_diff

|

huggingface__diffusers-1052

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Improve the precision of our integration tests

We currently have a rather low precision when testing our pipeline, for two reasons.

1. Our reference is an image and not a numpy array. This means that when we created our reference image we lost float precision, which is unnecessary.

2. We only test for `.max() < 1e-2`. IMO we should test for `.max() < 1e-4` with the numpy arrays. In my experiments across multiple devices I have **not** seen differences bigger than `.max() < 1e-4` when using full precision.

IMO this could have also prevented: https://github.com/huggingface/diffusers/issues/902

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/diffusers/utils/__init__.py`

Content:

```

1 # Copyright 2022 The HuggingFace Inc. team. All rights reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15

16 import os

17

18 from .deprecation_utils import deprecate

19 from .import_utils import (

20 ENV_VARS_TRUE_AND_AUTO_VALUES,

21 ENV_VARS_TRUE_VALUES,

22 USE_JAX,

23 USE_TF,

24 USE_TORCH,

25 DummyObject,

26 is_accelerate_available,

27 is_flax_available,

28 is_inflect_available,

29 is_modelcards_available,

30 is_onnx_available,

31 is_scipy_available,

32 is_tf_available,

33 is_torch_available,

34 is_transformers_available,

35 is_unidecode_available,

36 requires_backends,

37 )

38 from .logging import get_logger

39 from .outputs import BaseOutput

40

41

42 if is_torch_available():

43 from .testing_utils import (

44 floats_tensor,

45 load_image,

46 load_numpy,

47 parse_flag_from_env,

48 require_torch_gpu,

49 slow,

50 torch_all_close,

51 torch_device,

52 )

53

54

55 logger = get_logger(__name__)

56

57

58 hf_cache_home = os.path.expanduser(

59 os.getenv("HF_HOME", os.path.join(os.getenv("XDG_CACHE_HOME", "~/.cache"), "huggingface"))

60 )

61 default_cache_path = os.path.join(hf_cache_home, "diffusers")

62

63

64 CONFIG_NAME = "config.json"

65 WEIGHTS_NAME = "diffusion_pytorch_model.bin"

66 FLAX_WEIGHTS_NAME = "diffusion_flax_model.msgpack"

67 ONNX_WEIGHTS_NAME = "model.onnx"

68 HUGGINGFACE_CO_RESOLVE_ENDPOINT = "https://huggingface.co"

69 DIFFUSERS_CACHE = default_cache_path

70 DIFFUSERS_DYNAMIC_MODULE_NAME = "diffusers_modules"

71 HF_MODULES_CACHE = os.getenv("HF_MODULES_CACHE", os.path.join(hf_cache_home, "modules"))

72

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/src/diffusers/utils/__init__.py b/src/diffusers/utils/__init__.py

--- a/src/diffusers/utils/__init__.py

+++ b/src/diffusers/utils/__init__.py

@@ -42,6 +42,7 @@

if is_torch_available():

from .testing_utils import (

floats_tensor,

+ load_hf_numpy,

load_image,

load_numpy,

parse_flag_from_env,

|

{"golden_diff": "diff --git a/src/diffusers/utils/__init__.py b/src/diffusers/utils/__init__.py\n--- a/src/diffusers/utils/__init__.py\n+++ b/src/diffusers/utils/__init__.py\n@@ -42,6 +42,7 @@\n if is_torch_available():\n from .testing_utils import (\n floats_tensor,\n+ load_hf_numpy,\n load_image,\n load_numpy,\n parse_flag_from_env,\n", "issue": "Improve the precision of our integration tests\nWe currently have a rather low precision when testing our pipeline due to due reasons. \r\n1. - Our reference is an image and not a numpy array. This means that when we created our reference image we lost float precision which is unnecessary\r\n2. - We only test for `.max() < 1e-2` . IMO we should test for `.max() < 1e-4` with the numpy arrays. In my experiements across multiple devices I have **not** seen differences bigger than `.max() < 1e-4` when using full precision.\r\n\r\nIMO this could have also prevented: https://github.com/huggingface/diffusers/issues/902\n", "before_files": [{"content": "# Copyright 2022 The HuggingFace Inc. team. 
All rights reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\nimport os\n\nfrom .deprecation_utils import deprecate\nfrom .import_utils import (\n ENV_VARS_TRUE_AND_AUTO_VALUES,\n ENV_VARS_TRUE_VALUES,\n USE_JAX,\n USE_TF,\n USE_TORCH,\n DummyObject,\n is_accelerate_available,\n is_flax_available,\n is_inflect_available,\n is_modelcards_available,\n is_onnx_available,\n is_scipy_available,\n is_tf_available,\n is_torch_available,\n is_transformers_available,\n is_unidecode_available,\n requires_backends,\n)\nfrom .logging import get_logger\nfrom .outputs import BaseOutput\n\n\nif is_torch_available():\n from .testing_utils import (\n floats_tensor,\n load_image,\n load_numpy,\n parse_flag_from_env,\n require_torch_gpu,\n slow,\n torch_all_close,\n torch_device,\n )\n\n\nlogger = get_logger(__name__)\n\n\nhf_cache_home = os.path.expanduser(\n os.getenv(\"HF_HOME\", os.path.join(os.getenv(\"XDG_CACHE_HOME\", \"~/.cache\"), \"huggingface\"))\n)\ndefault_cache_path = os.path.join(hf_cache_home, \"diffusers\")\n\n\nCONFIG_NAME = \"config.json\"\nWEIGHTS_NAME = \"diffusion_pytorch_model.bin\"\nFLAX_WEIGHTS_NAME = \"diffusion_flax_model.msgpack\"\nONNX_WEIGHTS_NAME = \"model.onnx\"\nHUGGINGFACE_CO_RESOLVE_ENDPOINT = \"https://huggingface.co\"\nDIFFUSERS_CACHE = default_cache_path\nDIFFUSERS_DYNAMIC_MODULE_NAME = \"diffusers_modules\"\nHF_MODULES_CACHE = os.getenv(\"HF_MODULES_CACHE\", os.path.join(hf_cache_home, \"modules\"))\n", "path": 
"src/diffusers/utils/__init__.py"}], "after_files": [{"content": "# Copyright 2022 The HuggingFace Inc. team. All rights reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\nimport os\n\nfrom .deprecation_utils import deprecate\nfrom .import_utils import (\n ENV_VARS_TRUE_AND_AUTO_VALUES,\n ENV_VARS_TRUE_VALUES,\n USE_JAX,\n USE_TF,\n USE_TORCH,\n DummyObject,\n is_accelerate_available,\n is_flax_available,\n is_inflect_available,\n is_modelcards_available,\n is_onnx_available,\n is_scipy_available,\n is_tf_available,\n is_torch_available,\n is_transformers_available,\n is_unidecode_available,\n requires_backends,\n)\nfrom .logging import get_logger\nfrom .outputs import BaseOutput\n\n\nif is_torch_available():\n from .testing_utils import (\n floats_tensor,\n load_hf_numpy,\n load_image,\n load_numpy,\n parse_flag_from_env,\n require_torch_gpu,\n slow,\n torch_all_close,\n torch_device,\n )\n\n\nlogger = get_logger(__name__)\n\n\nhf_cache_home = os.path.expanduser(\n os.getenv(\"HF_HOME\", os.path.join(os.getenv(\"XDG_CACHE_HOME\", \"~/.cache\"), \"huggingface\"))\n)\ndefault_cache_path = os.path.join(hf_cache_home, \"diffusers\")\n\n\nCONFIG_NAME = \"config.json\"\nWEIGHTS_NAME = \"diffusion_pytorch_model.bin\"\nFLAX_WEIGHTS_NAME = \"diffusion_flax_model.msgpack\"\nONNX_WEIGHTS_NAME = \"model.onnx\"\nHUGGINGFACE_CO_RESOLVE_ENDPOINT = \"https://huggingface.co\"\nDIFFUSERS_CACHE = default_cache_path\nDIFFUSERS_DYNAMIC_MODULE_NAME = 
\"diffusers_modules\"\nHF_MODULES_CACHE = os.getenv(\"HF_MODULES_CACHE\", os.path.join(hf_cache_home, \"modules\"))\n", "path": "src/diffusers/utils/__init__.py"}]}

| 1,046 | 98 |

gh_patches_debug_24220

|

rasdani/github-patches

|

git_diff

|

ietf-tools__datatracker-4407

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Schedule editor icons need to be more distinct

From @flynnliz

The various “person” icons are confusing. It’s hard to know at a glance in the grid which conflicts are “person who must be present” and which are “chair conflict,” and it’s even more confusing that in the session request data box on the bottom right, the “requested by” icon is the same as the chair conflict. Can these three be more distinct from each other?

- The “technology overlap” chain icon shows up really faintly and it’s very tiny, so it’s easy to miss. Same with the “key participant overlap” key icon — those two are really difficult to distinguish from each other when they are so small. Can these be made larger or even just changed to something that takes up more vertical space so they’re easier to distinguish?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `ietf/meeting/templatetags/editor_tags.py`

Content:

```

1 # Copyright The IETF Trust 2022, All Rights Reserved

2 # -*- coding: utf-8 -*-

3

4 """Custom tags for the schedule editor"""

5 import debug # pyflakes: ignore

6

7 from django import template

8 from django.utils.html import format_html

9

10 register = template.Library()

11

12

13 @register.simple_tag

14 def constraint_icon_for(constraint_name, count=None):

15 # icons must be valid HTML and kept up to date with tests.EditorTagTests.test_constraint_icon_for()

16 icons = {

17 'conflict': '<span class="encircled">{reversed}1</span>',

18 'conflic2': '<span class="encircled">{reversed}2</span>',

19 'conflic3': '<span class="encircled">{reversed}3</span>',

20 'bethere': '<i class="bi bi-person"></i>{count}',

21 'timerange': '<i class="bi bi-calendar"></i>',

22 'time_relation': 'Δ',

23 'wg_adjacent': '{reversed}<i class="bi bi-skip-end"></i>',

24 'chair_conflict': '{reversed}<i class="bi bi-person-circle"></i>',

25 'tech_overlap': '{reversed}<i class="bi bi-link"></i>',

26 'key_participant': '{reversed}<i class="bi bi-key"></i>',

27 'joint_with_groups': '<i class="bi bi-merge"></i>',

28 'responsible_ad': '<span class="encircled">AD</span>',

29 }

30 reversed_suffix = '-reversed'

31 if constraint_name.slug.endswith(reversed_suffix):

32 reversed = True

33 cn = constraint_name.slug[: -len(reversed_suffix)]

34 else:

35 reversed = False

36 cn = constraint_name.slug

37 return format_html(

38 icons[cn],

39 count=count or '',

40 reversed='-' if reversed else '',

41 )

42

```
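As a rough illustration of how the placeholder substitution in `constraint_icon_for` behaves, here is a minimal sketch that does not need Django. It assumes plain `str.format` plus `html.escape` as a stand-in for `format_html`; `render_icon` and the two-entry `ICONS` table are hypothetical names for this example only and are not part of the datatracker code.

```python
from html import escape

# Hypothetical two-entry subset of the icon table shown above.
ICONS = {
    "bethere": '<i class="bi bi-person"></i>{count}',
    "chair_conflict": '{reversed}<i class="bi bi-person-circle"></i>',
}
REVERSED_SUFFIX = "-reversed"


def render_icon(slug, count=None):
    """Return the icon markup for a constraint slug (illustrative stand-in)."""
    # A slug ending in "-reversed" maps to the same icon, prefixed with "-".
    is_reversed = slug.endswith(REVERSED_SUFFIX)
    name = slug[: -len(REVERSED_SUFFIX)] if is_reversed else slug
    # Unused keyword arguments are ignored by str.format, so every template
    # can be passed both values regardless of which placeholders it contains.
    return ICONS[name].format(
        count=escape(str(count)) if count else "",
        reversed="-" if is_reversed else "",
    )


print(render_icon("bethere", 3))
print(render_icon("chair_conflict-reversed"))
```

The real tag relies on Django's `format_html`, which escapes the substituted arguments and returns a safe string; this sketch only mirrors the slug handling and placeholder filling.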

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/ietf/meeting/templatetags/editor_tags.py b/ietf/meeting/templatetags/editor_tags.py

--- a/ietf/meeting/templatetags/editor_tags.py

+++ b/ietf/meeting/templatetags/editor_tags.py

@@ -17,13 +17,13 @@

'conflict': '<span class="encircled">{reversed}1</span>',

'conflic2': '<span class="encircled">{reversed}2</span>',

'conflic3': '<span class="encircled">{reversed}3</span>',

- 'bethere': '<i class="bi bi-person"></i>{count}',

+ 'bethere': '<i class="bi bi-people-fill"></i>{count}',

'timerange': '<i class="bi bi-calendar"></i>',

'time_relation': 'Δ',

'wg_adjacent': '{reversed}<i class="bi bi-skip-end"></i>',

- 'chair_conflict': '{reversed}<i class="bi bi-person-circle"></i>',

- 'tech_overlap': '{reversed}<i class="bi bi-link"></i>',

- 'key_participant': '{reversed}<i class="bi bi-key"></i>',

+ 'chair_conflict': '{reversed}<i class="bi bi-circle-fill"></i>',

+ 'tech_overlap': '{reversed}<i class="bi bi-link-45deg"></i>',

+ 'key_participant': '{reversed}<i class="bi bi-star"></i>',

'joint_with_groups': '<i class="bi bi-merge"></i>',

'responsible_ad': '<span class="encircled">AD</span>',

}

|

{"golden_diff": "diff --git a/ietf/meeting/templatetags/editor_tags.py b/ietf/meeting/templatetags/editor_tags.py\n--- a/ietf/meeting/templatetags/editor_tags.py\n+++ b/ietf/meeting/templatetags/editor_tags.py\n@@ -17,13 +17,13 @@\n 'conflict': '<span class=\"encircled\">{reversed}1</span>',\n 'conflic2': '<span class=\"encircled\">{reversed}2</span>',\n 'conflic3': '<span class=\"encircled\">{reversed}3</span>',\n- 'bethere': '<i class=\"bi bi-person\"></i>{count}',\n+ 'bethere': '<i class=\"bi bi-people-fill\"></i>{count}',\n 'timerange': '<i class=\"bi bi-calendar\"></i>',\n 'time_relation': 'Δ',\n 'wg_adjacent': '{reversed}<i class=\"bi bi-skip-end\"></i>',\n- 'chair_conflict': '{reversed}<i class=\"bi bi-person-circle\"></i>',\n- 'tech_overlap': '{reversed}<i class=\"bi bi-link\"></i>',\n- 'key_participant': '{reversed}<i class=\"bi bi-key\"></i>',\n+ 'chair_conflict': '{reversed}<i class=\"bi bi-circle-fill\"></i>',\n+ 'tech_overlap': '{reversed}<i class=\"bi bi-link-45deg\"></i>',\n+ 'key_participant': '{reversed}<i class=\"bi bi-star\"></i>',\n 'joint_with_groups': '<i class=\"bi bi-merge\"></i>',\n 'responsible_ad': '<span class=\"encircled\">AD</span>',\n }\n", "issue": "Schedule editor icons need to be more distinct\nFrom @flynnliz\r\n\r\nThe various \u201cperson\u201d icons are confusing. It\u2019s hard to know at a glance in the grid which conflicts are \u201cperson who must be present\u201d and which are \u201cchair conflict,\u201d and it\u2019s even more confusing that in the session request data box on the bottom right, the \u201crequested by\u201d icon is the same as the chair conflict. Can these three be more distinct from each other? \r\n\r\n\r\n\r\n\r\n- The \u201ctechnology overlap\u201d chain icon shows up really faintly and it\u2019s very tiny, so it\u2019s easy to miss. Same with the \u201ckey participant overlap\u201d key icon \u2014 those two are really difficult to distinguish from each other when they are so small. 
Can these be made larger or even just changed to something that takes up more vertical space so they\u2019re easier to distinguish?\r\n\r\n\r\n\r\n\n", "before_files": [{"content": "# Copyright The IETF Trust 2022, All Rights Reserved\n# -*- coding: utf-8 -*-\n\n\"\"\"Custom tags for the schedule editor\"\"\"\nimport debug # pyflakes: ignore\n\nfrom django import template\nfrom django.utils.html import format_html\n\nregister = template.Library()\n\n\n@register.simple_tag\ndef constraint_icon_for(constraint_name, count=None):\n # icons must be valid HTML and kept up to date with tests.EditorTagTests.test_constraint_icon_for()\n icons = {\n 'conflict': '<span class=\"encircled\">{reversed}1</span>',\n 'conflic2': '<span class=\"encircled\">{reversed}2</span>',\n 'conflic3': '<span class=\"encircled\">{reversed}3</span>',\n 'bethere': '<i class=\"bi bi-person\"></i>{count}',\n 'timerange': '<i class=\"bi bi-calendar\"></i>',\n 'time_relation': 'Δ',\n 'wg_adjacent': '{reversed}<i class=\"bi bi-skip-end\"></i>',\n 'chair_conflict': '{reversed}<i class=\"bi bi-person-circle\"></i>',\n 'tech_overlap': '{reversed}<i class=\"bi bi-link\"></i>',\n 'key_participant': '{reversed}<i class=\"bi bi-key\"></i>',\n 'joint_with_groups': '<i class=\"bi bi-merge\"></i>',\n 'responsible_ad': '<span class=\"encircled\">AD</span>',\n }\n reversed_suffix = '-reversed'\n if constraint_name.slug.endswith(reversed_suffix):\n reversed = True\n cn = constraint_name.slug[: -len(reversed_suffix)]\n else:\n reversed = False\n cn = constraint_name.slug\n return format_html(\n icons[cn],\n count=count or '',\n reversed='-' if reversed else '',\n )\n", "path": "ietf/meeting/templatetags/editor_tags.py"}], "after_files": [{"content": "# Copyright The IETF Trust 2022, All Rights Reserved\n# -*- coding: utf-8 -*-\n\n\"\"\"Custom tags for the schedule editor\"\"\"\nimport debug # pyflakes: ignore\n\nfrom django import template\nfrom django.utils.html import format_html\n\nregister = 
template.Library()\n\n\n@register.simple_tag\ndef constraint_icon_for(constraint_name, count=None):\n # icons must be valid HTML and kept up to date with tests.EditorTagTests.test_constraint_icon_for()\n icons = {\n 'conflict': '<span class=\"encircled\">{reversed}1</span>',\n 'conflic2': '<span class=\"encircled\">{reversed}2</span>',\n 'conflic3': '<span class=\"encircled\">{reversed}3</span>',\n 'bethere': '<i class=\"bi bi-people-fill\"></i>{count}',\n 'timerange': '<i class=\"bi bi-calendar\"></i>',\n 'time_relation': 'Δ',\n 'wg_adjacent': '{reversed}<i class=\"bi bi-skip-end\"></i>',\n 'chair_conflict': '{reversed}<i class=\"bi bi-circle-fill\"></i>',\n 'tech_overlap': '{reversed}<i class=\"bi bi-link-45deg\"></i>',\n 'key_participant': '{reversed}<i class=\"bi bi-star\"></i>',\n 'joint_with_groups': '<i class=\"bi bi-merge\"></i>',\n 'responsible_ad': '<span class=\"encircled\">AD</span>',\n }\n reversed_suffix = '-reversed'\n if constraint_name.slug.endswith(reversed_suffix):\n reversed = True\n cn = constraint_name.slug[: -len(reversed_suffix)]\n else:\n reversed = False\n cn = constraint_name.slug\n return format_html(\n icons[cn],\n count=count or '',\n reversed='-' if reversed else '',\n )\n", "path": "ietf/meeting/templatetags/editor_tags.py"}]}

| 1,041 | 379 |

gh_patches_debug_1063

|

rasdani/github-patches

|

git_diff

|

open-telemetry__opentelemetry-python-2307

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Rename `ConsoleExporter` to `ConsoleLogExporter`?

As suggested by @lonewolf3739, we should rename the ConsoleExporter to ConsoleLogExporter to follow the pattern established by the ConsoleSpanExporter.

Not in this PR; Should we rename this to `ConsoleLogExporter`?

_Originally posted by @lonewolf3739 in https://github.com/open-telemetry/opentelemetry-python/pull/2253#r759589860_

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py`

Content:

```

1 # Copyright The OpenTelemetry Authors

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 import abc

16 import collections

17 import enum

18 import logging

19 import os

20 import sys

21 import threading

22 from os import linesep

23 from typing import IO, Callable, Deque, List, Optional, Sequence

24

25 from opentelemetry.context import attach, detach, set_value

26 from opentelemetry.sdk._logs import LogData, LogProcessor, LogRecord

27 from opentelemetry.util._time import _time_ns

28

29 _logger = logging.getLogger(__name__)

30

31

32 class LogExportResult(enum.Enum):

33 SUCCESS = 0

34 FAILURE = 1

35

36

37 class LogExporter(abc.ABC):

38 """Interface for exporting logs.

39

40 Interface to be implemented by services that want to export logs received

41 in their own format.

42

43 To export data this MUST be registered to the :class`opentelemetry.sdk._logs.LogEmitter` using a

44 log processor.

45 """

46

47 @abc.abstractmethod

48 def export(self, batch: Sequence[LogData]):

49 """Exports a batch of logs.

50

51 Args:

52 batch: The list of `LogData` objects to be exported

53

54 Returns:

55 The result of the export

56 """

57

58 @abc.abstractmethod

59 def shutdown(self):

60 """Shuts down the exporter.

61

62 Called when the SDK is shut down.

63 """

64

65

66 class ConsoleExporter(LogExporter):

67 """Implementation of :class:`LogExporter` that prints log records to the

68 console.

69

70 This class can be used for diagnostic purposes. It prints the exported

71 log records to the console STDOUT.

72 """

73

74 def __init__(

75 self,

76 out: IO = sys.stdout,

77 formatter: Callable[[LogRecord], str] = lambda record: record.to_json()

78 + linesep,

79 ):

80 self.out = out

81 self.formatter = formatter

82

83 def export(self, batch: Sequence[LogData]):

84 for data in batch:

85 self.out.write(self.formatter(data.log_record))

86 self.out.flush()

87 return LogExportResult.SUCCESS

88

89 def shutdown(self):

90 pass

91

92

93 class SimpleLogProcessor(LogProcessor):

94 """This is an implementation of LogProcessor which passes

95 received logs in the export-friendly LogData representation to the

96 configured LogExporter, as soon as they are emitted.

97 """

98

99 def __init__(self, exporter: LogExporter):

100 self._exporter = exporter

101 self._shutdown = False

102

103 def emit(self, log_data: LogData):

104 if self._shutdown:

105 _logger.warning("Processor is already shutdown, ignoring call")

106 return

107 token = attach(set_value("suppress_instrumentation", True))

108 try:

109 self._exporter.export((log_data,))

110 except Exception: # pylint: disable=broad-except

111 _logger.exception("Exception while exporting logs.")

112 detach(token)

113

114 def shutdown(self):

115 self._shutdown = True

116 self._exporter.shutdown()

117

118 def force_flush(

119 self, timeout_millis: int = 30000

120 ) -> bool: # pylint: disable=no-self-use

121 return True

122

123

124 class _FlushRequest:

125 __slots__ = ["event", "num_log_records"]

126

127 def __init__(self):

128 self.event = threading.Event()

129 self.num_log_records = 0

130

131

132 class BatchLogProcessor(LogProcessor):

133 """This is an implementation of LogProcessor which creates batches of

134 received logs in the export-friendly LogData representation and

135 send to the configured LogExporter, as soon as they are emitted.

136 """

137

138 def __init__(

139 self,

140 exporter: LogExporter,

141 schedule_delay_millis: int = 5000,

142 max_export_batch_size: int = 512,

143 export_timeout_millis: int = 30000,

144 ):

145 self._exporter = exporter

146 self._schedule_delay_millis = schedule_delay_millis

147 self._max_export_batch_size = max_export_batch_size

148 self._export_timeout_millis = export_timeout_millis

149 self._queue = collections.deque() # type: Deque[LogData]

150 self._worker_thread = threading.Thread(target=self.worker, daemon=True)

151 self._condition = threading.Condition(threading.Lock())

152 self._shutdown = False

153 self._flush_request = None # type: Optional[_FlushRequest]

154 self._log_records = [

155 None

156 ] * self._max_export_batch_size # type: List[Optional[LogData]]

157 self._worker_thread.start()

158 # Only available in *nix since py37.

159 if hasattr(os, "register_at_fork"):

160 os.register_at_fork(

161 after_in_child=self._at_fork_reinit

162 ) # pylint: disable=protected-access

163

164 def _at_fork_reinit(self):

165 self._condition = threading.Condition(threading.Lock())

166 self._queue.clear()

167 self._worker_thread = threading.Thread(target=self.worker, daemon=True)

168 self._worker_thread.start()

169

170 def worker(self):

171 timeout = self._schedule_delay_millis / 1e3

172 flush_request = None # type: Optional[_FlushRequest]

173 while not self._shutdown:

174 with self._condition:

175 if self._shutdown:

176 # shutdown may have been called, avoid further processing

177 break

178 flush_request = self._get_and_unset_flush_request()

179 if (

180 len(self._queue) < self._max_export_batch_size

181 and self._flush_request is None

182 ):

183 self._condition.wait(timeout)

184

185 flush_request = self._get_and_unset_flush_request()

186 if not self._queue:

187 timeout = self._schedule_delay_millis / 1e3

188 self._notify_flush_request_finished(flush_request)

189 flush_request = None

190 continue

191 if self._shutdown:

192 break

193

194 start_ns = _time_ns()

195 self._export(flush_request)

196 end_ns = _time_ns()

197 # subtract the duration of this export call to the next timeout

198 timeout = self._schedule_delay_millis / 1e3 - (

199 (end_ns - start_ns) / 1e9

200 )

201

202 self._notify_flush_request_finished(flush_request)

203 flush_request = None

204

205 # there might have been a new flush request while export was running

206 # and before the done flag switched to true

207 with self._condition:

208 shutdown_flush_request = self._get_and_unset_flush_request()

209

210 # flush the remaining logs

211 self._drain_queue()

212 self._notify_flush_request_finished(flush_request)

213 self._notify_flush_request_finished(shutdown_flush_request)

214

215 def _export(self, flush_request: Optional[_FlushRequest] = None):

216 """Exports logs considering the given flush_request.

217

218 If flush_request is not None then logs are exported in batches

219 until the number of exported logs reached or exceeded the num of logs in

220 flush_request, otherwise exports at max max_export_batch_size logs.

221 """

222 if flush_request is None:

223 self._export_batch()

224 return

225

226 num_log_records = flush_request.num_log_records

227 while self._queue:

228 exported = self._export_batch()

229 num_log_records -= exported

230

231 if num_log_records <= 0:

232 break

233

234 def _export_batch(self) -> int:

235 """Exports at most max_export_batch_size logs and returns the number of

236 exported logs.

237 """

238 idx = 0

239 while idx < self._max_export_batch_size and self._queue:

240 record = self._queue.pop()

241 self._log_records[idx] = record

242 idx += 1

243 token = attach(set_value("suppress_instrumentation", True))

244 try:

245 self._exporter.export(self._log_records[:idx]) # type: ignore

246 except Exception: # pylint: disable=broad-except

247 _logger.exception("Exception while exporting logs.")

248 detach(token)

249

250 for index in range(idx):

251 self._log_records[index] = None

252 return idx

253

254 def _drain_queue(self):

255 """Export all elements until queue is empty.

256

257 Can only be called from the worker thread context because it invokes

258 `export` that is not thread safe.

259 """

260 while self._queue:

261 self._export_batch()

262

263 def _get_and_unset_flush_request(self) -> Optional[_FlushRequest]:

264 flush_request = self._flush_request

265 self._flush_request = None

266 if flush_request is not None:

267 flush_request.num_log_records = len(self._queue)

268 return flush_request

269

270 @staticmethod

271 def _notify_flush_request_finished(

272 flush_request: Optional[_FlushRequest] = None,

273 ):

274 if flush_request is not None:

275 flush_request.event.set()

276

277 def _get_or_create_flush_request(self) -> _FlushRequest:

278 if self._flush_request is None:

279 self._flush_request = _FlushRequest()

280 return self._flush_request

281

282 def emit(self, log_data: LogData) -> None:

283 """Adds the `LogData` to queue and notifies the waiting threads

284 when size of queue reaches max_export_batch_size.

285 """

286 if self._shutdown:

287 return

288 self._queue.appendleft(log_data)

289 if len(self._queue) >= self._max_export_batch_size:

290 with self._condition:

291 self._condition.notify()

292

293 def shutdown(self):

294 self._shutdown = True

295 with self._condition:

296 self._condition.notify_all()

297 self._worker_thread.join()

298 self._exporter.shutdown()

299

300 def force_flush(self, timeout_millis: Optional[int] = None) -> bool:

301 if timeout_millis is None:

302 timeout_millis = self._export_timeout_millis

303 if self._shutdown:

304 return True

305

306 with self._condition:

307 flush_request = self._get_or_create_flush_request()

308 self._condition.notify_all()

309

310 ret = flush_request.event.wait(timeout_millis / 1e3)

311 if not ret:

312 _logger.warning("Timeout was exceeded in force_flush().")

313 return ret

314

```
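The queue handling in `BatchLogProcessor` (records added with `appendleft` in `emit`, removed with `pop` in `_export_batch`) can be sketched in isolation. The following is a simplified, single-threaded illustration using only the standard library; `drain_in_batches` is a hypothetical helper written for this example and is not part of the OpenTelemetry SDK. It leaves out the condition variable, flush requests, and the exporter call.

```python
import collections


def drain_in_batches(queue, max_batch_size):
    """Drain a deque into lists of at most max_batch_size items."""
    batches = []
    while queue:
        batch = []
        # pop() takes from the right end; because producers use appendleft(),
        # the right end always holds the oldest item, giving FIFO order.
        while len(batch) < max_batch_size and queue:
            batch.append(queue.pop())
        batches.append(batch)
    return batches


pending = collections.deque()
for item in range(5):
    pending.appendleft(item)  # same direction as BatchLogProcessor.emit

print(drain_in_batches(pending, 2))
```

Pairing `appendleft` with `pop` keeps the oldest record at the right end of the deque, so batches leave in arrival order, and both operations are constant time at either end of a `deque`.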

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py b/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py

--- a/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py

+++ b/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py

@@ -63,7 +63,7 @@

"""

-class ConsoleExporter(LogExporter):

+class ConsoleLogExporter(LogExporter):

"""Implementation of :class:`LogExporter` that prints log records to the

console.
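To show what the `out` and `formatter` constructor parameters of the renamed exporter do, here is a self-contained sketch that mimics the shape of the class above without importing OpenTelemetry. `SketchRecord` and `SketchConsoleLogExporter` are hypothetical stand-ins for this example; the real class takes `LogData` items and returns a `LogExportResult`.

```python
import io
import json
from os import linesep


class SketchRecord:
    """Hypothetical stand-in for a log record that exposes to_json()."""

    def __init__(self, body):
        self.body = body

    def to_json(self):
        return json.dumps({"body": self.body})


class SketchConsoleLogExporter:
    """Illustrative mimic: writes each formatted record to a stream."""

    def __init__(self, out, formatter=lambda record: record.to_json() + linesep):
        self.out = out
        self.formatter = formatter

    def export(self, batch):
        for record in batch:
            self.out.write(self.formatter(record))
        self.out.flush()
        return len(batch)  # the real exporter returns LogExportResult.SUCCESS


buffer = io.StringIO()
exporter = SketchConsoleLogExporter(
    out=buffer, formatter=lambda record: record.body.upper() + "\n"
)
exporter.export([SketchRecord("started"), SketchRecord("stopped")])
print(buffer.getvalue(), end="")
```

Passing an in-memory stream for `out` is one way to capture exporter output in a test instead of writing to STDOUT.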

|

{"golden_diff": "diff --git a/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py b/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py\n--- a/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py\n+++ b/opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py\n@@ -63,7 +63,7 @@\n \"\"\"\n \n \n-class ConsoleExporter(LogExporter):\n+class ConsoleLogExporter(LogExporter):\n \"\"\"Implementation of :class:`LogExporter` that prints log records to the\n console.\n", "issue": "Rename `ConsoleExporter` to `ConsoleLogExporter`?\nAs suggested by @lonewolf3739, we should rename the ConsoleExporter to ConsoleLogExporter to follow the pattern established by the ConsoleSpanExporter.\r\n\r\nNot in this PR; Should we rename this to `ConsoleLogExporter`?\r\n\r\n_Originally posted by @lonewolf3739 in https://github.com/open-telemetry/opentelemetry-python/pull/2253#r759589860_\n", "before_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport abc\nimport collections\nimport enum\nimport logging\nimport os\nimport sys\nimport threading\nfrom os import linesep\nfrom typing import IO, Callable, Deque, List, Optional, Sequence\n\nfrom opentelemetry.context import attach, detach, set_value\nfrom opentelemetry.sdk._logs import LogData, LogProcessor, LogRecord\nfrom opentelemetry.util._time import _time_ns\n\n_logger = logging.getLogger(__name__)\n\n\nclass 
LogExportResult(enum.Enum):\n SUCCESS = 0\n FAILURE = 1\n\n\nclass LogExporter(abc.ABC):\n \"\"\"Interface for exporting logs.\n\n Interface to be implemented by services that want to export logs received\n in their own format.\n\n To export data this MUST be registered to the :class`opentelemetry.sdk._logs.LogEmitter` using a\n log processor.\n \"\"\"\n\n @abc.abstractmethod\n def export(self, batch: Sequence[LogData]):\n \"\"\"Exports a batch of logs.\n\n Args:\n batch: The list of `LogData` objects to be exported\n\n Returns:\n The result of the export\n \"\"\"\n\n @abc.abstractmethod\n def shutdown(self):\n \"\"\"Shuts down the exporter.\n\n Called when the SDK is shut down.\n \"\"\"\n\n\nclass ConsoleExporter(LogExporter):\n \"\"\"Implementation of :class:`LogExporter` that prints log records to the\n console.\n\n This class can be used for diagnostic purposes. It prints the exported\n log records to the console STDOUT.\n \"\"\"\n\n def __init__(\n self,\n out: IO = sys.stdout,\n formatter: Callable[[LogRecord], str] = lambda record: record.to_json()\n + linesep,\n ):\n self.out = out\n self.formatter = formatter\n\n def export(self, batch: Sequence[LogData]):\n for data in batch:\n self.out.write(self.formatter(data.log_record))\n self.out.flush()\n return LogExportResult.SUCCESS\n\n def shutdown(self):\n pass\n\n\nclass SimpleLogProcessor(LogProcessor):\n \"\"\"This is an implementation of LogProcessor which passes\n received logs in the export-friendly LogData representation to the\n configured LogExporter, as soon as they are emitted.\n \"\"\"\n\n def __init__(self, exporter: LogExporter):\n self._exporter = exporter\n self._shutdown = False\n\n def emit(self, log_data: LogData):\n if self._shutdown:\n _logger.warning(\"Processor is already shutdown, ignoring call\")\n return\n token = attach(set_value(\"suppress_instrumentation\", True))\n try:\n self._exporter.export((log_data,))\n except Exception: # pylint: disable=broad-except\n 
_logger.exception(\"Exception while exporting logs.\")\n detach(token)\n\n def shutdown(self):\n self._shutdown = True\n self._exporter.shutdown()\n\n def force_flush(\n self, timeout_millis: int = 30000\n ) -> bool: # pylint: disable=no-self-use\n return True\n\n\nclass _FlushRequest:\n __slots__ = [\"event\", \"num_log_records\"]\n\n def __init__(self):\n self.event = threading.Event()\n self.num_log_records = 0\n\n\nclass BatchLogProcessor(LogProcessor):\n \"\"\"This is an implementation of LogProcessor which creates batches of\n received logs in the export-friendly LogData representation and\n send to the configured LogExporter, as soon as they are emitted.\n \"\"\"\n\n def __init__(\n self,\n exporter: LogExporter,\n schedule_delay_millis: int = 5000,\n max_export_batch_size: int = 512,\n export_timeout_millis: int = 30000,\n ):\n self._exporter = exporter\n self._schedule_delay_millis = schedule_delay_millis\n self._max_export_batch_size = max_export_batch_size\n self._export_timeout_millis = export_timeout_millis\n self._queue = collections.deque() # type: Deque[LogData]\n self._worker_thread = threading.Thread(target=self.worker, daemon=True)\n self._condition = threading.Condition(threading.Lock())\n self._shutdown = False\n self._flush_request = None # type: Optional[_FlushRequest]\n self._log_records = [\n None\n ] * self._max_export_batch_size # type: List[Optional[LogData]]\n self._worker_thread.start()\n # Only available in *nix since py37.\n if hasattr(os, \"register_at_fork\"):\n os.register_at_fork(\n after_in_child=self._at_fork_reinit\n ) # pylint: disable=protected-access\n\n def _at_fork_reinit(self):\n self._condition = threading.Condition(threading.Lock())\n self._queue.clear()\n self._worker_thread = threading.Thread(target=self.worker, daemon=True)\n self._worker_thread.start()\n\n def worker(self):\n timeout = self._schedule_delay_millis / 1e3\n flush_request = None # type: Optional[_FlushRequest]\n while not self._shutdown:\n with 
self._condition:\n if self._shutdown:\n # shutdown may have been called, avoid further processing\n break\n flush_request = self._get_and_unset_flush_request()\n if (\n len(self._queue) < self._max_export_batch_size\n and self._flush_request is None\n ):\n self._condition.wait(timeout)\n\n flush_request = self._get_and_unset_flush_request()\n if not self._queue:\n timeout = self._schedule_delay_millis / 1e3\n self._notify_flush_request_finished(flush_request)\n flush_request = None\n continue\n if self._shutdown:\n break\n\n start_ns = _time_ns()\n self._export(flush_request)\n end_ns = _time_ns()\n # subtract the duration of this export call to the next timeout\n timeout = self._schedule_delay_millis / 1e3 - (\n (end_ns - start_ns) / 1e9\n )\n\n self._notify_flush_request_finished(flush_request)\n flush_request = None\n\n # there might have been a new flush request while export was running\n # and before the done flag switched to true\n with self._condition:\n shutdown_flush_request = self._get_and_unset_flush_request()\n\n # flush the remaining logs\n self._drain_queue()\n self._notify_flush_request_finished(flush_request)\n self._notify_flush_request_finished(shutdown_flush_request)\n\n def _export(self, flush_request: Optional[_FlushRequest] = None):\n \"\"\"Exports logs considering the given flush_request.\n\n If flush_request is not None then logs are exported in batches\n until the number of exported logs reached or exceeded the num of logs in\n flush_request, otherwise exports at max max_export_batch_size logs.\n \"\"\"\n if flush_request is None:\n self._export_batch()\n return\n\n num_log_records = flush_request.num_log_records\n while self._queue:\n exported = self._export_batch()\n num_log_records -= exported\n\n if num_log_records <= 0:\n break\n\n def _export_batch(self) -> int:\n \"\"\"Exports at most max_export_batch_size logs and returns the number of\n exported logs.\n \"\"\"\n idx = 0\n while idx < self._max_export_batch_size and self._queue:\n 
record = self._queue.pop()\n self._log_records[idx] = record\n idx += 1\n token = attach(set_value(\"suppress_instrumentation\", True))\n try:\n self._exporter.export(self._log_records[:idx]) # type: ignore\n except Exception: # pylint: disable=broad-except\n _logger.exception(\"Exception while exporting logs.\")\n detach(token)\n\n for index in range(idx):\n self._log_records[index] = None\n return idx\n\n def _drain_queue(self):\n \"\"\"Export all elements until queue is empty.\n\n Can only be called from the worker thread context because it invokes\n `export` that is not thread safe.\n \"\"\"\n while self._queue:\n self._export_batch()\n\n def _get_and_unset_flush_request(self) -> Optional[_FlushRequest]:\n flush_request = self._flush_request\n self._flush_request = None\n if flush_request is not None:\n flush_request.num_log_records = len(self._queue)\n return flush_request\n\n @staticmethod\n def _notify_flush_request_finished(\n flush_request: Optional[_FlushRequest] = None,\n ):\n if flush_request is not None:\n flush_request.event.set()\n\n def _get_or_create_flush_request(self) -> _FlushRequest:\n if self._flush_request is None:\n self._flush_request = _FlushRequest()\n return self._flush_request\n\n def emit(self, log_data: LogData) -> None:\n \"\"\"Adds the `LogData` to queue and notifies the waiting threads\n when size of queue reaches max_export_batch_size.\n \"\"\"\n if self._shutdown:\n return\n self._queue.appendleft(log_data)\n if len(self._queue) >= self._max_export_batch_size:\n with self._condition:\n self._condition.notify()\n\n def shutdown(self):\n self._shutdown = True\n with self._condition:\n self._condition.notify_all()\n self._worker_thread.join()\n self._exporter.shutdown()\n\n def force_flush(self, timeout_millis: Optional[int] = None) -> bool:\n if timeout_millis is None:\n timeout_millis = self._export_timeout_millis\n if self._shutdown:\n return True\n\n with self._condition:\n flush_request = self._get_or_create_flush_request()\n 
self._condition.notify_all()\n\n ret = flush_request.event.wait(timeout_millis / 1e3)\n if not ret:\n _logger.warning(\"Timeout was exceeded in force_flush().\")\n return ret\n", "path": "opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py"}], "after_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport abc\nimport collections\nimport enum\nimport logging\nimport os\nimport sys\nimport threading\nfrom os import linesep\nfrom typing import IO, Callable, Deque, List, Optional, Sequence\n\nfrom opentelemetry.context import attach, detach, set_value\nfrom opentelemetry.sdk._logs import LogData, LogProcessor, LogRecord\nfrom opentelemetry.util._time import _time_ns\n\n_logger = logging.getLogger(__name__)\n\n\nclass LogExportResult(enum.Enum):\n SUCCESS = 0\n FAILURE = 1\n\n\nclass LogExporter(abc.ABC):\n \"\"\"Interface for exporting logs.\n\n Interface to be implemented by services that want to export logs received\n in their own format.\n\n To export data this MUST be registered to the :class`opentelemetry.sdk._logs.LogEmitter` using a\n log processor.\n \"\"\"\n\n @abc.abstractmethod\n def export(self, batch: Sequence[LogData]):\n \"\"\"Exports a batch of logs.\n\n Args:\n batch: The list of `LogData` objects to be exported\n\n Returns:\n The result of the export\n \"\"\"\n\n @abc.abstractmethod\n def shutdown(self):\n \"\"\"Shuts down the exporter.\n\n Called when the SDK is shut down.\n 
\"\"\"\n\n\nclass ConsoleLogExporter(LogExporter):\n \"\"\"Implementation of :class:`LogExporter` that prints log records to the\n console.\n\n This class can be used for diagnostic purposes. It prints the exported\n log records to the console STDOUT.\n \"\"\"\n\n def __init__(\n self,\n out: IO = sys.stdout,\n formatter: Callable[[LogRecord], str] = lambda record: record.to_json()\n + linesep,\n ):\n self.out = out\n self.formatter = formatter\n\n def export(self, batch: Sequence[LogData]):\n for data in batch:\n self.out.write(self.formatter(data.log_record))\n self.out.flush()\n return LogExportResult.SUCCESS\n\n def shutdown(self):\n pass\n\n\nclass SimpleLogProcessor(LogProcessor):\n \"\"\"This is an implementation of LogProcessor which passes\n received logs in the export-friendly LogData representation to the\n configured LogExporter, as soon as they are emitted.\n \"\"\"\n\n def __init__(self, exporter: LogExporter):\n self._exporter = exporter\n self._shutdown = False\n\n def emit(self, log_data: LogData):\n if self._shutdown:\n _logger.warning(\"Processor is already shutdown, ignoring call\")\n return\n token = attach(set_value(\"suppress_instrumentation\", True))\n try:\n self._exporter.export((log_data,))\n except Exception: # pylint: disable=broad-except\n _logger.exception(\"Exception while exporting logs.\")\n detach(token)\n\n def shutdown(self):\n self._shutdown = True\n self._exporter.shutdown()\n\n def force_flush(\n self, timeout_millis: int = 30000\n ) -> bool: # pylint: disable=no-self-use\n return True\n\n\nclass _FlushRequest:\n __slots__ = [\"event\", \"num_log_records\"]\n\n def __init__(self):\n self.event = threading.Event()\n self.num_log_records = 0\n\n\nclass BatchLogProcessor(LogProcessor):\n \"\"\"This is an implementation of LogProcessor which creates batches of\n received logs in the export-friendly LogData representation and\n send to the configured LogExporter, as soon as they are emitted.\n \"\"\"\n\n def __init__(\n self,\n 
exporter: LogExporter,\n schedule_delay_millis: int = 5000,\n max_export_batch_size: int = 512,\n export_timeout_millis: int = 30000,\n ):\n self._exporter = exporter\n self._schedule_delay_millis = schedule_delay_millis\n self._max_export_batch_size = max_export_batch_size\n self._export_timeout_millis = export_timeout_millis\n self._queue = collections.deque() # type: Deque[LogData]\n self._worker_thread = threading.Thread(target=self.worker, daemon=True)\n self._condition = threading.Condition(threading.Lock())\n self._shutdown = False\n self._flush_request = None # type: Optional[_FlushRequest]\n self._log_records = [\n None\n ] * self._max_export_batch_size # type: List[Optional[LogData]]\n self._worker_thread.start()\n # Only available in *nix since py37.\n if hasattr(os, \"register_at_fork\"):\n os.register_at_fork(\n after_in_child=self._at_fork_reinit\n ) # pylint: disable=protected-access\n\n def _at_fork_reinit(self):\n self._condition = threading.Condition(threading.Lock())\n self._queue.clear()\n self._worker_thread = threading.Thread(target=self.worker, daemon=True)\n self._worker_thread.start()\n\n def worker(self):\n timeout = self._schedule_delay_millis / 1e3\n flush_request = None # type: Optional[_FlushRequest]\n while not self._shutdown:\n with self._condition:\n if self._shutdown:\n # shutdown may have been called, avoid further processing\n break\n flush_request = self._get_and_unset_flush_request()\n if (\n len(self._queue) < self._max_export_batch_size\n and self._flush_request is None\n ):\n self._condition.wait(timeout)\n\n flush_request = self._get_and_unset_flush_request()\n if not self._queue:\n timeout = self._schedule_delay_millis / 1e3\n self._notify_flush_request_finished(flush_request)\n flush_request = None\n continue\n if self._shutdown:\n break\n\n start_ns = _time_ns()\n self._export(flush_request)\n end_ns = _time_ns()\n # subtract the duration of this export call to the next timeout\n timeout = self._schedule_delay_millis / 
1e3 - (\n (end_ns - start_ns) / 1e9\n )\n\n self._notify_flush_request_finished(flush_request)\n flush_request = None\n\n # there might have been a new flush request while export was running\n # and before the done flag switched to true\n with self._condition:\n shutdown_flush_request = self._get_and_unset_flush_request()\n\n # flush the remaining logs\n self._drain_queue()\n self._notify_flush_request_finished(flush_request)\n self._notify_flush_request_finished(shutdown_flush_request)\n\n def _export(self, flush_request: Optional[_FlushRequest] = None):\n \"\"\"Exports logs considering the given flush_request.\n\n If flush_request is not None then logs are exported in batches\n until the number of exported logs reached or exceeded the num of logs in\n flush_request, otherwise exports at max max_export_batch_size logs.\n \"\"\"\n if flush_request is None:\n self._export_batch()\n return\n\n num_log_records = flush_request.num_log_records\n while self._queue:\n exported = self._export_batch()\n num_log_records -= exported\n\n if num_log_records <= 0:\n break\n\n def _export_batch(self) -> int:\n \"\"\"Exports at most max_export_batch_size logs and returns the number of\n exported logs.\n \"\"\"\n idx = 0\n while idx < self._max_export_batch_size and self._queue:\n record = self._queue.pop()\n self._log_records[idx] = record\n idx += 1\n token = attach(set_value(\"suppress_instrumentation\", True))\n try:\n self._exporter.export(self._log_records[:idx]) # type: ignore\n except Exception: # pylint: disable=broad-except\n _logger.exception(\"Exception while exporting logs.\")\n detach(token)\n\n for index in range(idx):\n self._log_records[index] = None\n return idx\n\n def _drain_queue(self):\n \"\"\"Export all elements until queue is empty.\n\n Can only be called from the worker thread context because it invokes\n `export` that is not thread safe.\n \"\"\"\n while self._queue:\n self._export_batch()\n\n def _get_and_unset_flush_request(self) -> 
Optional[_FlushRequest]:\n flush_request = self._flush_request\n self._flush_request = None\n if flush_request is not None:\n flush_request.num_log_records = len(self._queue)\n return flush_request\n\n @staticmethod\n def _notify_flush_request_finished(\n flush_request: Optional[_FlushRequest] = None,\n ):\n if flush_request is not None:\n flush_request.event.set()\n\n def _get_or_create_flush_request(self) -> _FlushRequest:\n if self._flush_request is None:\n self._flush_request = _FlushRequest()\n return self._flush_request\n\n def emit(self, log_data: LogData) -> None:\n \"\"\"Adds the `LogData` to queue and notifies the waiting threads\n when size of queue reaches max_export_batch_size.\n \"\"\"\n if self._shutdown:\n return\n self._queue.appendleft(log_data)\n if len(self._queue) >= self._max_export_batch_size:\n with self._condition:\n self._condition.notify()\n\n def shutdown(self):\n self._shutdown = True\n with self._condition:\n self._condition.notify_all()\n self._worker_thread.join()\n self._exporter.shutdown()\n\n def force_flush(self, timeout_millis: Optional[int] = None) -> bool:\n if timeout_millis is None:\n timeout_millis = self._export_timeout_millis\n if self._shutdown:\n return True\n\n with self._condition:\n flush_request = self._get_or_create_flush_request()\n self._condition.notify_all()\n\n ret = flush_request.event.wait(timeout_millis / 1e3)\n if not ret:\n _logger.warning(\"Timeout was exceeded in force_flush().\")\n return ret\n", "path": "opentelemetry-sdk/src/opentelemetry/sdk/_logs/export/__init__.py"}]}

| 3,507 | 125 |

gh_patches_debug_6152 | rasdani/github-patches | git_diff | tobymao__sqlglot-1549 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

FROM_UNIXTIME has different types in Trino and SparkSQL

```FROM_UNIXTIME(`created`)``` in Trino SQL returns a datetime, but ```FROM_UNIXTIME(`created`)``` in SparkSQL returns a string, which can cause queries to fail.

I would expect:

```select FROM_UNIXTIME(`created`) from a``` # (read='trino')

to convert to

```select CAST(FROM_UNIXTIME('created') as timestamp) from a``` # (write='spark')

https://docs.databricks.com/sql/language-manual/functions/from_unixtime.html

https://spark.apache.org/docs/3.0.0/api/sql/#from_unixtime

--- END ISSUE ---
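
The rewrite the issue asks for can be sketched as a small, self-contained example. This is not sqlglot code and not the eventual fix: the helper name `cast_from_unixtime` and the regex-based approach are assumptions made purely for illustration. A real fix would operate on sqlglot's expression tree rather than on raw SQL text.

```python
import re

# Hypothetical helper (not part of sqlglot): wraps each FROM_UNIXTIME(<arg>)
# call in CAST(... AS TIMESTAMP), mirroring the output the issue expects for
# Spark. It only handles arguments without nested parentheses and does not
# detect calls that are already wrapped in a CAST.
_FROM_UNIXTIME = re.compile(r"FROM_UNIXTIME\(([^()]*)\)", re.IGNORECASE)


def cast_from_unixtime(sql: str) -> str:
    """Return `sql` with every simple FROM_UNIXTIME call cast to TIMESTAMP."""
    return _FROM_UNIXTIME.sub(
        lambda m: f"CAST(FROM_UNIXTIME({m.group(1)}) AS TIMESTAMP)", sql
    )


print(cast_from_unixtime("select FROM_UNIXTIME(`created`) from a"))
```

Text substitution is used here only to keep the sketch dependency-free; it would likely misfire on nested calls or string literals, which is one reason a tree-based transformation is the safer design.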

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `sqlglot/dialects/spark2.py`

Content:

```

1 from __future__ import annotations

2

3 import typing as t

4

5 from sqlglot import exp, parser

6 from sqlglot.dialects.dialect import create_with_partitions_sql, rename_func, trim_sql

7 from sqlglot.dialects.hive import Hive

8 from sqlglot.helper import seq_get

9

10

11 def _create_sql(self: Hive.Generator, e: exp.Create) -> str:

12 kind = e.args["kind"]

13 properties = e.args.get("properties")

14

15 if kind.upper() == "TABLE" and any(

16 isinstance(prop, exp.TemporaryProperty)

17 for prop in (properties.expressions if properties else [])

18 ):

19 return f"CREATE TEMPORARY VIEW {self.sql(e, 'this')} AS {self.sql(e, 'expression')}"

20 return create_with_partitions_sql(self, e)

21

22

23 def _map_sql(self: Hive.Generator, expression: exp.Map) -> str:

24 keys = self.sql(expression.args["keys"])

25 values = self.sql(expression.args["values"])

26 return f"MAP_FROM_ARRAYS({keys}, {values})"

27

28

29 def _parse_as_cast(to_type: str) -> t.Callable[[t.Sequence], exp.Expression]:

30 return lambda args: exp.Cast(this=seq_get(args, 0), to=exp.DataType.build(to_type))

31

32

33 def _str_to_date(self: Hive.Generator, expression: exp.StrToDate) -> str:

34 this = self.sql(expression, "this")

35 time_format = self.format_time(expression)

36 if time_format == Hive.date_format:

37 return f"TO_DATE({this})"

38 return f"TO_DATE({this}, {time_format})"

39

40

41 def _unix_to_time_sql(self: Hive.Generator, expression: exp.UnixToTime) -> str:

42 scale = expression.args.get("scale")

43 timestamp = self.sql(expression, "this")

44 if scale is None:

45 return f"FROM_UNIXTIME({timestamp})"

46 if scale == exp.UnixToTime.SECONDS:

47 return f"TIMESTAMP_SECONDS({timestamp})"

48 if scale == exp.UnixToTime.MILLIS: