| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |

|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_40220 | rasdani/github-patches | git_diff | encode__starlette-1715 |

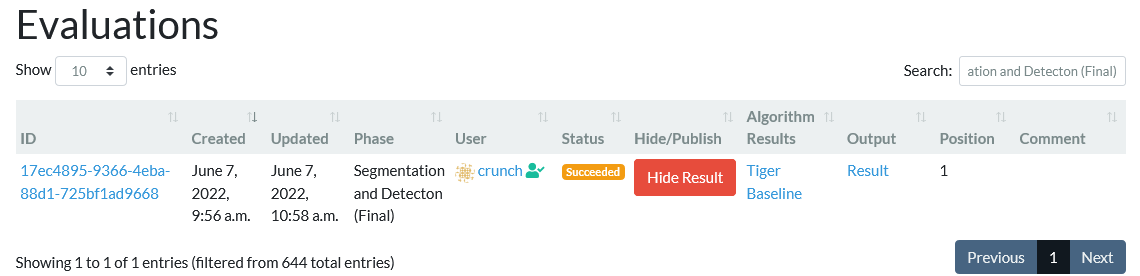

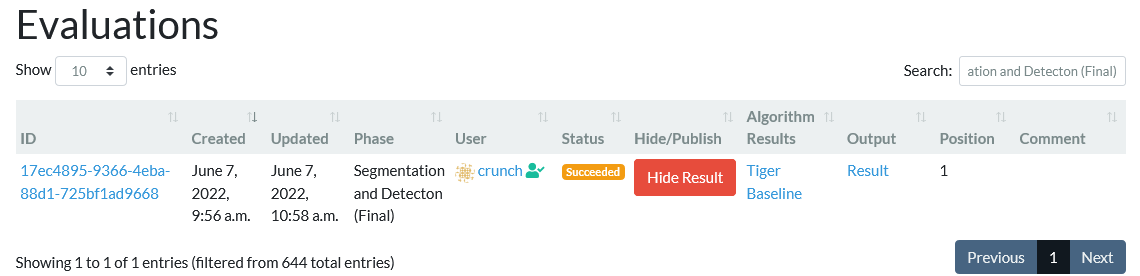

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Background tasks are cancelled if the client closes connection

### Checklist

- [X] The bug is reproducible against the latest release or `master`.

- [X] There are no similar issues or pull requests to fix it yet.

### Describe the bug

When the HTTP client closes the TCP socket immediately after receiving the HTTP response, background tasks are cancelled.

This bug only happens when running the ASGI application under uvicorn, and only if at least one HTTP middleware is defined in the user middleware chain.

### Steps to reproduce the bug

1. Write the following ASGI Starlette application in `repro.py`:

```python
import traceback

import anyio
from starlette.applications import Starlette
from starlette.background import BackgroundTasks
from starlette.middleware import Middleware
from starlette.middleware.base import BaseHTTPMiddleware
from starlette.responses import Response
from starlette.routing import Route


async def passthrough(request, call_next):
    return await call_next(request)


async def _sleep(identifier, delay):
    print(identifier, "started")
    try:
        await anyio.sleep(delay)
        print(identifier, "completed")
    except BaseException:
        print(identifier, "error")
        traceback.print_exc()
        raise


async def response_with_sleeps(request):
    background_tasks = BackgroundTasks()
    background_tasks.add_task(_sleep, "background task 1", 2)
    background_tasks.add_task(_sleep, "background task 2", 2)
    return Response(background=background_tasks)


application = Starlette(
    middleware=[
        Middleware(BaseHTTPMiddleware, dispatch=passthrough),
    ],
    routes=[
        Route("/", response_with_sleeps),
    ],
)
```

2. Run that application using `uvicorn` (either uvloop or regular asyncio will reproduce the issue) on localhost:8000

```bash
uvicorn repro:application --port 8000
```

3. Run the following client script

```python
#!/usr/bin/env python
import socket

connection = socket.create_connection(("localhost", 8000))
connection.sendall(b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")
print(connection.recv(10000).decode("utf8"))
connection.close()
```

### Expected behavior

The client script gets the HTTP response, and both background tasks should complete successfully.

The expected behavior will be detectable by the following content in standard output:

```
background task 1 started
background task 1 completed
background task 2 started
background task 2 completed
```

### Actual behavior

Background task 1 is interrupted at the `await` point and background task 2 is never started.

That results in the following content in the output (when running the `repro.py` application):

```
background task 1 started
background task 1 error
Traceback (most recent call last):
  File "/Users/jean/PycharmProjects/starlette-bg-cancelled/./repro.py", line 19, in _sleep
    await anyio.sleep(delay)
  File "/Users/jean/PycharmProjects/starlette-bg-cancelled/venv/lib/python3.9/site-packages/anyio/_core/_eventloop.py", line 69, in sleep
    return await get_asynclib().sleep(delay)
  File "/usr/local/Cellar/[email protected]/3.9.6/Frameworks/Python.framework/Versions/3.9/lib/python3.9/asyncio/tasks.py", line 654, in sleep
    return await future
asyncio.exceptions.CancelledError
```
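The observed pattern (first task interrupted at its `await`, second task never started) is what one would expect when a single cancellation hits a coroutine that runs its tasks sequentially. The following is a minimal sketch of that mechanism using only the standard-library `asyncio`, not Starlette or uvicorn; `run_background_tasks` and the explicit `task.cancel()` are hypothetical stand-ins for the framework's background-task runner and for the cancellation triggered by the client disconnect.

```python
import asyncio

log = []


async def _sleep(identifier, delay):
    log.append(f"{identifier} started")
    try:
        await asyncio.sleep(delay)
        log.append(f"{identifier} completed")
    except asyncio.CancelledError:
        log.append(f"{identifier} error")
        raise


async def run_background_tasks():
    # Hypothetical runner: executes the tasks one after another.
    await _sleep("background task 1", 0.2)
    await _sleep("background task 2", 0.2)


async def main():
    task = asyncio.create_task(run_background_tasks())
    await asyncio.sleep(0.05)  # let task 1 reach its await point
    task.cancel()  # stands in for the cancellation caused by the disconnect
    try:
        await task
    except asyncio.CancelledError:
        pass
    return log


result = asyncio.run(main())
print(result)
```

In this sketch the log ends after "background task 1 error", which mirrors the output reported above: the cancellation propagates out of the first task, so the runner never reaches the second one.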

### Debugging material

_No response_

### Environment

- MacOS 10.14.6 / Python 3.9 / Starlette 0.18.0

### Additional context

* When I remove the `passthrough` middleware, the bug goes away.

* When I run the same application in `hypercorn`, the bug goes away.

* There does not seem to be a difference between using `uvloop` or not.

* If the client script keeps the TCP connection open (e.g. with a `time.sleep(10)`), the bug goes away.

Shield send "http.response.start" from cancellation (BaseHTTPMiddleware)

Fixes #1634

- Discussion #1527

- Caused by #1157

`await recv_stream.receive()` will raise `anyio.EndOfStream` if request is disconnected, due to:

- `task_group.cancel_scope.cancel()` in `StreamingResponse.__call__.<locals>.wrap` and

- cancellation check in `await checkpoint()` of `MemoryObjectSendStream.send`,

and then `RuntimeError: No response returned.` will be raised in `BaseHTTPMiddleware`.

Let's shield send "http.response.start" from cancellation, since the message is ready to be sent to the receiver.

This is an alternative implementation of #1706 in `BaseHTTPMiddleware` instead of `StreamingResponse`.

We should not force the shielding in `StreamingResponse`, since the cancellation check is an intended feature of `MemoryObjectSendStream`. `BaseHTTPMiddleware`, which uses both, should be responsible for the compatibility.
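The idea of shielding a send from cancellation can be illustrated with a small sketch. It assumes the standard-library `asyncio.shield` as an analogue of anyio's shielded cancel scopes; it is not the code from the pull request, and `send` and `send_start_shielded` are hypothetical names introduced only for this illustration.

```python
import asyncio

delivered = []


async def send(message):
    # Hypothetical stand-in for an ASGI send callable that takes a moment to finish.
    await asyncio.sleep(0.05)
    delivered.append(message["type"])


async def send_start_shielded():
    # Keep a reference to the inner task so it outlives the outer cancellation.
    inner = asyncio.ensure_future(send({"type": "http.response.start"}))
    await asyncio.shield(inner)


async def main():
    task = asyncio.create_task(send_start_shielded())
    await asyncio.sleep(0.01)  # let the send begin
    task.cancel()  # simulates cancellation arriving while the send is in flight
    try:
        await task
    except asyncio.CancelledError:
        pass
    await asyncio.sleep(0.1)  # give the shielded send time to complete
    return delivered


shielded_result = asyncio.run(main())
print(shielded_result)
```

Here the outer task is cancelled, yet the message is still delivered because the inner send runs to completion. Whether shielding belongs in the middleware or in the response class is exactly the design question the text above raises.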

--- END ISSUE ---

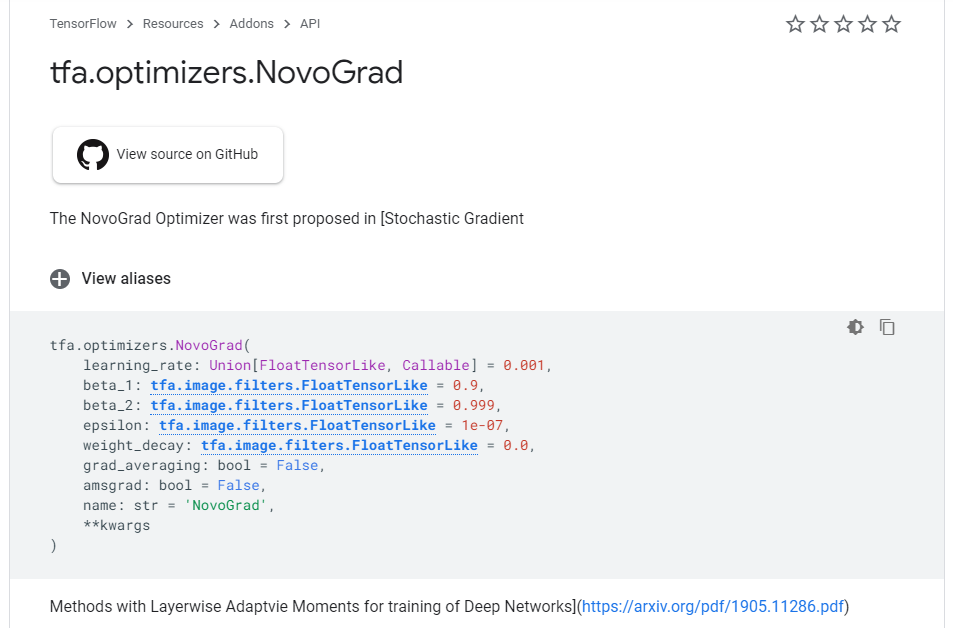

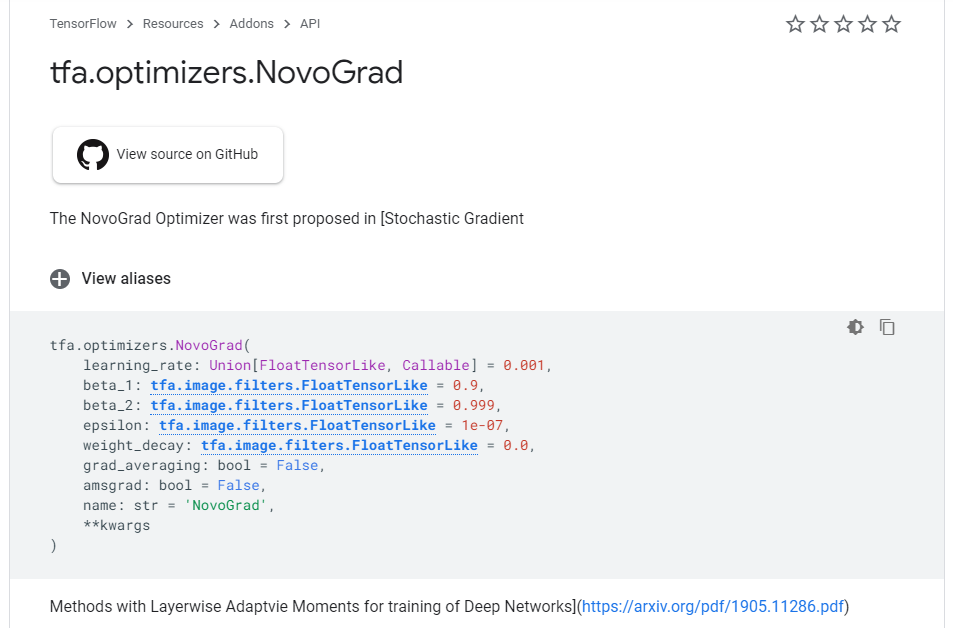

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `starlette/middleware/base.py`

Content:

```
1 import typing
2
3 import anyio
4
5 from starlette.requests import Request
6 from starlette.responses import Response, StreamingResponse
7 from starlette.types import ASGIApp, Receive, Scope, Send
8
9 RequestResponseEndpoint = typing.Callable[[Request], typing.Awaitable[Response]]
10 DispatchFunction = typing.Callable[
11     [Request, RequestResponseEndpoint], typing.Awaitable[Response]
12 ]
13
14
15 class BaseHTTPMiddleware:
16     def __init__(
17         self, app: ASGIApp, dispatch: typing.Optional[DispatchFunction] = None
18     ) -> None:
19         self.app = app
20         self.dispatch_func = self.dispatch if dispatch is None else dispatch
21
22     async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:
23         if scope["type"] != "http":
24             await self.app(scope, receive, send)
25             return
26
27         async def call_next(request: Request) -> Response:
28             app_exc: typing.Optional[Exception] = None
29             send_stream, recv_stream = anyio.create_memory_object_stream()
30
31             async def coro() -> None:
32                 nonlocal app_exc
33
34                 async with send_stream:
35                     try:
36                         await self.app(scope, request.receive, send_stream.send)
37                     except Exception as exc:
38                         app_exc = exc
39
40             task_group.start_soon(coro)
41
42             try:
43                 message = await recv_stream.receive()
44             except anyio.EndOfStream:
45                 if app_exc is not None:
46                     raise app_exc
47                 raise RuntimeError("No response returned.")
48
49             assert message["type"] == "http.response.start"
50
51             async def body_stream() -> typing.AsyncGenerator[bytes, None]:
52                 async with recv_stream:
53                     async for message in recv_stream:
54                         assert message["type"] == "http.response.body"
55                         body = message.get("body", b"")
56                         if body:
57                             yield body
58                         if not message.get("more_body", False):
59                             break
60
61                 if app_exc is not None:
62                     raise app_exc
63
64             response = StreamingResponse(
65                 status_code=message["status"], content=body_stream()
66             )
67             response.raw_headers = message["headers"]
68             return response
69
70         async with anyio.create_task_group() as task_group:
71             request = Request(scope, receive=receive)
72             response = await self.dispatch_func(request, call_next)
73             await response(scope, receive, send)
74             task_group.cancel_scope.cancel()
75
76     async def dispatch(
77         self, request: Request, call_next: RequestResponseEndpoint
78     ) -> Response:
79         raise NotImplementedError()  # pragma: no cover
80
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/starlette/middleware/base.py b/starlette/middleware/base.py
--- a/starlette/middleware/base.py
+++ b/starlette/middleware/base.py
@@ -4,12 +4,13 @@

 from starlette.requests import Request
 from starlette.responses import Response, StreamingResponse
-from starlette.types import ASGIApp, Receive, Scope, Send
+from starlette.types import ASGIApp, Message, Receive, Scope, Send

 RequestResponseEndpoint = typing.Callable[[Request], typing.Awaitable[Response]]
 DispatchFunction = typing.Callable[
     [Request, RequestResponseEndpoint], typing.Awaitable[Response]
 ]
+T = typing.TypeVar("T")


 class BaseHTTPMiddleware:
@@ -24,19 +25,52 @@
             await self.app(scope, receive, send)
             return

+        response_sent = anyio.Event()
+
         async def call_next(request: Request) -> Response:
             app_exc: typing.Optional[Exception] = None
             send_stream, recv_stream = anyio.create_memory_object_stream()

+            async def receive_or_disconnect() -> Message:
+                if response_sent.is_set():
+                    return {"type": "http.disconnect"}
+
+                async with anyio.create_task_group() as task_group:
+
+                    async def wrap(func: typing.Callable[[], typing.Awaitable[T]]) -> T:
+                        result = await func()
+                        task_group.cancel_scope.cancel()
+                        return result
+
+                    task_group.start_soon(wrap, response_sent.wait)
+                    message = await wrap(request.receive)
+
+                if response_sent.is_set():
+                    return {"type": "http.disconnect"}
+
+                return message
+
+            async def close_recv_stream_on_response_sent() -> None:
+                await response_sent.wait()
+                recv_stream.close()
+
+            async def send_no_error(message: Message) -> None:
+                try:
+                    await send_stream.send(message)
+                except anyio.BrokenResourceError:
+                    # recv_stream has been closed, i.e. response_sent has been set.
+                    return
+
             async def coro() -> None:
                 nonlocal app_exc

                 async with send_stream:
                     try:
-                        await self.app(scope, request.receive, send_stream.send)
+                        await self.app(scope, receive_or_disconnect, send_no_error)
                     except Exception as exc:
                         app_exc = exc

+            task_group.start_soon(close_recv_stream_on_response_sent)
             task_group.start_soon(coro)

             try:
@@ -71,7 +105,7 @@
             request = Request(scope, receive=receive)
             response = await self.dispatch_func(request, call_next)
             await response(scope, receive, send)
-            task_group.cancel_scope.cancel()
+            response_sent.set()

     async def dispatch(
         self, request: Request, call_next: RequestResponseEndpoint

|

{"golden_diff": "diff --git a/starlette/middleware/base.py b/starlette/middleware/base.py\n--- a/starlette/middleware/base.py\n+++ b/starlette/middleware/base.py\n@@ -4,12 +4,13 @@\n \n from starlette.requests import Request\n from starlette.responses import Response, StreamingResponse\n-from starlette.types import ASGIApp, Receive, Scope, Send\n+from starlette.types import ASGIApp, Message, Receive, Scope, Send\n \n RequestResponseEndpoint = typing.Callable[[Request], typing.Awaitable[Response]]\n DispatchFunction = typing.Callable[\n [Request, RequestResponseEndpoint], typing.Awaitable[Response]\n ]\n+T = typing.TypeVar(\"T\")\n \n \n class BaseHTTPMiddleware:\n@@ -24,19 +25,52 @@\n await self.app(scope, receive, send)\n return\n \n+ response_sent = anyio.Event()\n+\n async def call_next(request: Request) -> Response:\n app_exc: typing.Optional[Exception] = None\n send_stream, recv_stream = anyio.create_memory_object_stream()\n \n+ async def receive_or_disconnect() -> Message:\n+ if response_sent.is_set():\n+ return {\"type\": \"http.disconnect\"}\n+\n+ async with anyio.create_task_group() as task_group:\n+\n+ async def wrap(func: typing.Callable[[], typing.Awaitable[T]]) -> T:\n+ result = await func()\n+ task_group.cancel_scope.cancel()\n+ return result\n+\n+ task_group.start_soon(wrap, response_sent.wait)\n+ message = await wrap(request.receive)\n+\n+ if response_sent.is_set():\n+ return {\"type\": \"http.disconnect\"}\n+\n+ return message\n+\n+ async def close_recv_stream_on_response_sent() -> None:\n+ await response_sent.wait()\n+ recv_stream.close()\n+\n+ async def send_no_error(message: Message) -> None:\n+ try:\n+ await send_stream.send(message)\n+ except anyio.BrokenResourceError:\n+ # recv_stream has been closed, i.e. 
response_sent has been set.\n+ return\n+\n async def coro() -> None:\n nonlocal app_exc\n \n async with send_stream:\n try:\n- await self.app(scope, request.receive, send_stream.send)\n+ await self.app(scope, receive_or_disconnect, send_no_error)\n except Exception as exc:\n app_exc = exc\n \n+ task_group.start_soon(close_recv_stream_on_response_sent)\n task_group.start_soon(coro)\n \n try:\n@@ -71,7 +105,7 @@\n request = Request(scope, receive=receive)\n response = await self.dispatch_func(request, call_next)\n await response(scope, receive, send)\n- task_group.cancel_scope.cancel()\n+ response_sent.set()\n \n async def dispatch(\n self, request: Request, call_next: RequestResponseEndpoint\n", "issue": "Background tasks are cancelled if the client closes connection\n### Checklist\n\n- [X] The bug is reproducible against the latest release or `master`.\n- [X] There are no similar issues or pull requests to fix it yet.\n\n### Describe the bug\n\nWhen the HTTP client closes the TCP socket immediately after receiving the HTTP response, background tasks are cancelled.\r\n\r\nThis bug only happens when running the ASGI under uvicorn, and only if at least one HTTP Middleware is defined in the user middleware chain.\n\n### Steps to reproduce the bug\n\n1. 
Write the following ASGI Starlette application in `repro.py`:\r\n```python\r\nimport traceback\r\n\r\nimport anyio\r\nfrom starlette.applications import Starlette\r\nfrom starlette.background import BackgroundTasks\r\nfrom starlette.middleware import Middleware\r\nfrom starlette.middleware.base import BaseHTTPMiddleware\r\nfrom starlette.responses import Response\r\nfrom starlette.routing import Route\r\n\r\n\r\nasync def passthrough(request, call_next):\r\n return await call_next(request)\r\n\r\n\r\nasync def _sleep(identifier, delay):\r\n print(identifier, \"started\")\r\n try:\r\n await anyio.sleep(delay)\r\n print(identifier, \"completed\")\r\n except BaseException:\r\n print(identifier, \"error\")\r\n traceback.print_exc()\r\n raise\r\n\r\n\r\nasync def response_with_sleeps(request):\r\n background_tasks = BackgroundTasks()\r\n background_tasks.add_task(_sleep, \"background task 1\", 2)\r\n background_tasks.add_task(_sleep, \"background task 2\", 2)\r\n return Response(background=background_tasks)\r\n\r\n\r\napplication = Starlette(\r\n middleware=[\r\n Middleware(BaseHTTPMiddleware, dispatch=passthrough),\r\n ],\r\n routes=[\r\n Route(\"/\", response_with_sleeps),\r\n ],\r\n)\r\n```\r\n2. Run that application using `uvicorn` (either uvloop or regular asyncio will reproduce the issue) on localhost:8000\r\n```bash\r\nuvicorn repro:application --port 8000\r\n```\r\n3. 
Run the following client script\r\n```python\r\n#!/usr/bin/env python\r\nimport socket\r\n\r\nconnection = socket.create_connection((\"localhost\", 8000))\r\nconnection.sendall(b\"GET / HTTP/1.1\\r\\nHost: localhost\\r\\n\\r\\n\")\r\nprint(connection.recv(10000).decode(\"utf8\"))\r\nconnection.close()\r\n```\r\n\n\n### Expected behavior\n\nThe client script gets the HTTP response, and both background tasks should complete successfully.\r\n\r\nThe expected behavior will be detectable by the following content in standard output:\r\n\r\n```\r\nbackground task 1 started\r\nbackground task 1 completed\r\nbackground task 2 started\r\nbackground task 2 completed\r\n```\n\n### Actual behavior\n\nBackground task 1 is interrupted at the `await` point and background task 2 is never started.\r\n\r\nThat results in the following content in the output (when running the `repro.py` application):\r\n```\r\nbackground task 1 started\r\nbackground task 1 error\r\nTraceback (most recent call last):\r\n File \"/Users/jean/PycharmProjects/starlette-bg-cancelled/./repro.py\", line 19, in _sleep\r\n await anyio.sleep(delay)\r\n File \"/Users/jean/PycharmProjects/starlette-bg-cancelled/venv/lib/python3.9/site-packages/anyio/_core/_eventloop.py\", line 69, in sleep\r\n return await get_asynclib().sleep(delay)\r\n File \"/usr/local/Cellar/[email protected]/3.9.6/Frameworks/Python.framework/Versions/3.9/lib/python3.9/asyncio/tasks.py\", line 654, in sleep\r\n return await future\r\nasyncio.exceptions.CancelledError\r\n```\n\n### Debugging material\n\n_No response_\n\n### Environment\n\n- MacOS 10.14.6 / Python 3.9 / Starlette 0.18.0\n\n### Additional context\n\n * When I remove the `passthrough` middleware, the bug goes away.\r\n * When I run the same application in `hypercorn`, the bug goes away.\r\n * There does not seem to be a difference between using `uvloop` or not.\r\n * If the client script (e.g. 
with a `time.sleep(10)`) maintains the TCP connection open, the bug goes away.\nShield send \"http.response.start\" from cancellation (BaseHTTPMiddleware)\nFixes #1634 \r\n- Discussion #1527 \r\n- Caused by #1157 \r\n\r\n`await recv_stream.receive()` will raise `anyio.EndOfStream` if request is disconnected, due to:\r\n- `task_group.cancel_scope.cancel()` in `StreamingResponse.__call__.<locals>.wrap` and\r\n- cancellation check in `await checkpoint()` of `MemoryObjectSendStream.send`,\r\n\r\nand then `RuntimeError: No response returned.` will be raised in `BaseHTTPMiddleware`.\r\n\r\nLet's shield send \"http.response.start\" from cancellation, since the message is ready to be sent to the receiver.\r\n\r\nThis is an alternative implementation of #1706 in `BaseHTTPMiddleware` instead of `StreamingResponse`.\r\nWe should not force the shielding in `StreamingResponse`, since the cancellation check is an intended feature of `MemoryObjectSendStream`. `BaseHTTPMiddleware`, which uses both, should be responsible for the compatibility.\n", "before_files": [{"content": "import typing\n\nimport anyio\n\nfrom starlette.requests import Request\nfrom starlette.responses import Response, StreamingResponse\nfrom starlette.types import ASGIApp, Receive, Scope, Send\n\nRequestResponseEndpoint = typing.Callable[[Request], typing.Awaitable[Response]]\nDispatchFunction = typing.Callable[\n [Request, RequestResponseEndpoint], typing.Awaitable[Response]\n]\n\n\nclass BaseHTTPMiddleware:\n def __init__(\n self, app: ASGIApp, dispatch: typing.Optional[DispatchFunction] = None\n ) -> None:\n self.app = app\n self.dispatch_func = self.dispatch if dispatch is None else dispatch\n\n async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:\n if scope[\"type\"] != \"http\":\n await self.app(scope, receive, send)\n return\n\n async def call_next(request: Request) -> Response:\n app_exc: typing.Optional[Exception] = None\n send_stream, recv_stream = 
anyio.create_memory_object_stream()\n\n async def coro() -> None:\n nonlocal app_exc\n\n async with send_stream:\n try:\n await self.app(scope, request.receive, send_stream.send)\n except Exception as exc:\n app_exc = exc\n\n task_group.start_soon(coro)\n\n try:\n message = await recv_stream.receive()\n except anyio.EndOfStream:\n if app_exc is not None:\n raise app_exc\n raise RuntimeError(\"No response returned.\")\n\n assert message[\"type\"] == \"http.response.start\"\n\n async def body_stream() -> typing.AsyncGenerator[bytes, None]:\n async with recv_stream:\n async for message in recv_stream:\n assert message[\"type\"] == \"http.response.body\"\n body = message.get(\"body\", b\"\")\n if body:\n yield body\n if not message.get(\"more_body\", False):\n break\n\n if app_exc is not None:\n raise app_exc\n\n response = StreamingResponse(\n status_code=message[\"status\"], content=body_stream()\n )\n response.raw_headers = message[\"headers\"]\n return response\n\n async with anyio.create_task_group() as task_group:\n request = Request(scope, receive=receive)\n response = await self.dispatch_func(request, call_next)\n await response(scope, receive, send)\n task_group.cancel_scope.cancel()\n\n async def dispatch(\n self, request: Request, call_next: RequestResponseEndpoint\n ) -> Response:\n raise NotImplementedError() # pragma: no cover\n", "path": "starlette/middleware/base.py"}], "after_files": [{"content": "import typing\n\nimport anyio\n\nfrom starlette.requests import Request\nfrom starlette.responses import Response, StreamingResponse\nfrom starlette.types import ASGIApp, Message, Receive, Scope, Send\n\nRequestResponseEndpoint = typing.Callable[[Request], typing.Awaitable[Response]]\nDispatchFunction = typing.Callable[\n [Request, RequestResponseEndpoint], typing.Awaitable[Response]\n]\nT = typing.TypeVar(\"T\")\n\n\nclass BaseHTTPMiddleware:\n def __init__(\n self, app: ASGIApp, dispatch: typing.Optional[DispatchFunction] = None\n ) -> None:\n self.app = 
app\n self.dispatch_func = self.dispatch if dispatch is None else dispatch\n\n async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:\n if scope[\"type\"] != \"http\":\n await self.app(scope, receive, send)\n return\n\n response_sent = anyio.Event()\n\n async def call_next(request: Request) -> Response:\n app_exc: typing.Optional[Exception] = None\n send_stream, recv_stream = anyio.create_memory_object_stream()\n\n async def receive_or_disconnect() -> Message:\n if response_sent.is_set():\n return {\"type\": \"http.disconnect\"}\n\n async with anyio.create_task_group() as task_group:\n\n async def wrap(func: typing.Callable[[], typing.Awaitable[T]]) -> T:\n result = await func()\n task_group.cancel_scope.cancel()\n return result\n\n task_group.start_soon(wrap, response_sent.wait)\n message = await wrap(request.receive)\n\n if response_sent.is_set():\n return {\"type\": \"http.disconnect\"}\n\n return message\n\n async def close_recv_stream_on_response_sent() -> None:\n await response_sent.wait()\n recv_stream.close()\n\n async def send_no_error(message: Message) -> None:\n try:\n await send_stream.send(message)\n except anyio.BrokenResourceError:\n # recv_stream has been closed, i.e. 
response_sent has been set.\n return\n\n async def coro() -> None:\n nonlocal app_exc\n\n async with send_stream:\n try:\n await self.app(scope, receive_or_disconnect, send_no_error)\n except Exception as exc:\n app_exc = exc\n\n task_group.start_soon(close_recv_stream_on_response_sent)\n task_group.start_soon(coro)\n\n try:\n message = await recv_stream.receive()\n except anyio.EndOfStream:\n if app_exc is not None:\n raise app_exc\n raise RuntimeError(\"No response returned.\")\n\n assert message[\"type\"] == \"http.response.start\"\n\n async def body_stream() -> typing.AsyncGenerator[bytes, None]:\n async with recv_stream:\n async for message in recv_stream:\n assert message[\"type\"] == \"http.response.body\"\n body = message.get(\"body\", b\"\")\n if body:\n yield body\n if not message.get(\"more_body\", False):\n break\n\n if app_exc is not None:\n raise app_exc\n\n response = StreamingResponse(\n status_code=message[\"status\"], content=body_stream()\n )\n response.raw_headers = message[\"headers\"]\n return response\n\n async with anyio.create_task_group() as task_group:\n request = Request(scope, receive=receive)\n response = await self.dispatch_func(request, call_next)\n await response(scope, receive, send)\n response_sent.set()\n\n async def dispatch(\n self, request: Request, call_next: RequestResponseEndpoint\n ) -> Response:\n raise NotImplementedError() # pragma: no cover\n", "path": "starlette/middleware/base.py"}]}

| 2,057 | 623 |

| gh_patches_debug_7549 | rasdani/github-patches | git_diff | great-expectations__great_expectations-3463 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

`\n` and `,` transposed in notebook generation

**Describe the bug**

Following the tutorial, in the Jupyter notebook, the `ignored_columns` list has commas at the start of the line.

Probably just transpose [here](https://github.com/great-expectations/great_expectations/blob/c49e533c012c275a791fce09df207d2bd7126780/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py#L74-L77) to fix:

```diff
- f' "{column_name}"\n,' for column_name in self.validator.columns()
+ f' "{column_name}",\n' for column_name in self.validator.columns()
```
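The effect of the transposition is easy to see in a standalone sketch. It does not use Great Expectations; `render_ignored_columns`, its `transposed` flag, the sample column names, and the four-space indent inside the string are all assumptions made for illustration.

```python
def render_ignored_columns(columns, transposed=False):
    """Build the source text of an `ignored_columns` list literal."""
    if transposed:
        # Buggy order: newline first, then comma, so each comma starts the next line.
        lines = [f'    "{name}"\n,' for name in columns]
    else:
        # Intended order: comma first, then newline.
        lines = [f'    "{name}",\n' for name in columns]
    return f'ignored_columns = [\n{"".join(lines)}]'


buggy = render_ignored_columns(["a", "b"], transposed=True)
fixed = render_ignored_columns(["a", "b"])
print(buggy)
print(fixed)
```

Both strings are valid Python, which is probably why the problem shows up only as odd formatting: in the buggy variant each comma lands at the start of the following line.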

**To Reproduce**

Steps to reproduce the behavior:

1. Follow the tutorial.

2. In the Jupyter notebook, notice the formatting of the `ignored_columns` list.

**Expected behavior**

Generated code in notebooks should follow normal formatting conventions.

**Environment (please complete the following information):**

- Operating System: MacOS

- Great Expectations Version:

- superconductive/ge_tutorials @ 5db831a92af51f77dde19723a81a2cea92e2a756

- great-expectations==0.13.35

**Additional context**

Mentioned this when talking with Ortal on September 24: Might already be filed or handled somewhere else.

Use cleaner solution for non-truncating division in python 2

Prefer `from __future__ import division` to `1.*x/y`

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py`

Content:

```

1 from typing import Any, Dict, List, Union

2

3 import nbformat

4

5 from great_expectations import DataContext

6 from great_expectations.core.batch import BatchRequest

7 from great_expectations.render.renderer.suite_edit_notebook_renderer import (

8 SuiteEditNotebookRenderer,

9 )

10

11

12 class SuiteProfileNotebookRenderer(SuiteEditNotebookRenderer):

13 def __init__(

14 self,

15 context: DataContext,

16 expectation_suite_name: str,

17 batch_request: Union[str, Dict[str, Union[str, int, Dict[str, Any]]]],

18 ):

19 super().__init__(context=context)

20

21 if batch_request is None:

22 batch_request = {}

23 self.batch_request = batch_request

24

25 self.validator = context.get_validator(

26 batch_request=BatchRequest(**batch_request),

27 expectation_suite_name=expectation_suite_name,

28 )

29

30 self.expectation_suite_name = self.validator.expectation_suite_name

31

32 # noinspection PyMethodOverriding

33 def add_header(self):

34 self.add_markdown_cell(

35 markdown=f"""# Initialize a new Expectation Suite by profiling a batch of your data.

36 This process helps you avoid writing lots of boilerplate when authoring suites by allowing you to select columns and other factors that you care about and letting a profiler write some candidate expectations for you to adjust.

37

38 **Expectation Suite Name**: `{self.expectation_suite_name}`

39 """

40 )

41 self.add_code_cell(

42 code=f"""\

43 import datetime

44

45 import pandas as pd

46

47 import great_expectations as ge

48 import great_expectations.jupyter_ux

49 from great_expectations.core.batch import BatchRequest

50 from great_expectations.profile.user_configurable_profiler import UserConfigurableProfiler

51 from great_expectations.checkpoint import SimpleCheckpoint

52 from great_expectations.exceptions import DataContextError

53

54 context = ge.data_context.DataContext()

55

56 batch_request = {self.batch_request}

57

58 expectation_suite_name = "{self.expectation_suite_name}"

59

60 validator = context.get_validator(

61 batch_request=BatchRequest(**batch_request),

62 expectation_suite_name=expectation_suite_name

63 )

64 column_names = [f'"{{column_name}}"' for column_name in validator.columns()]

65 print(f"Columns: {{', '.join(column_names)}}.")

66 validator.head(n_rows=5, fetch_all=False)

67 """,

68 lint=True,

69 )

70

71 def _add_available_columns_list(self):

72 column_names: List[str]

73 column_name: str

74 column_names = [

75 f' "{column_name}"\n,' for column_name in self.validator.columns()

76 ]

77 code: str = f'ignored_columns = [\n{"".join(column_names)}]'

78 self.add_code_cell(code=code, lint=True)

79

80 def add_footer(self):

81 self.add_markdown_cell(

82 markdown="""# Save & review your new Expectation Suite

83

84 Let's save the draft expectation suite as a JSON file in the

85 `great_expectations/expectations` directory of your project and rebuild the Data

86 Docs site to make it easy to review your new suite."""

87 )

88 code_cell: str = """\

89 print(validator.get_expectation_suite(discard_failed_expectations=False))

90 validator.save_expectation_suite(discard_failed_expectations=False)

91

92 checkpoint_config = {

93 "class_name": "SimpleCheckpoint",

94 "validations": [

95 {

96 "batch_request": batch_request,

97 "expectation_suite_name": expectation_suite_name

98 }

99 ]

100 }

101 checkpoint = SimpleCheckpoint(

102 f"_tmp_checkpoint_{expectation_suite_name}",

103 context,

104 **checkpoint_config

105 )

106 checkpoint_result = checkpoint.run()

107

108 context.build_data_docs()

109

110 validation_result_identifier = checkpoint_result.list_validation_result_identifiers()[0]

111 context.open_data_docs(resource_identifier=validation_result_identifier)

112 """

113 self.add_code_cell(code=code_cell, lint=True)

114 self.add_markdown_cell(

115 markdown=f"""## Next steps

116 After you review this initial Expectation Suite in Data Docs you

117 should edit this suite to make finer grained adjustments to the expectations.

118 This can be done by running `great_expectations suite edit {self.expectation_suite_name}`."""

119 )

120

121 # noinspection PyMethodOverriding

122 def render(self) -> nbformat.NotebookNode:

123 self._notebook = nbformat.v4.new_notebook()

124 self.add_header()

125 self.add_markdown_cell(

126 markdown="""# Select columns

127

128 Select the columns on which you would like to set expectations and those which you would like to ignore.

129

130 Great Expectations will choose which expectations might make sense for a column based on the **data type** and **cardinality** of the data in each selected column.

131

132 Simply comment out columns that are important and should be included. You can select multiple lines and

133 use a jupyter keyboard shortcut to toggle each line: **Linux/Windows**:

134 `Ctrl-/`, **macOS**: `Cmd-/`"""

135 )

136 self._add_available_columns_list()

137 self.add_markdown_cell(

138 markdown="""# Run the data profiler

139

140 The suites generated here are **not meant to be production suites** -- they are **a starting point to build upon**.

141

142 **To get to a production-grade suite, you will definitely want to [edit this

143 suite](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_edit_an_expectation_suite_using_a_disposable_notebook.html?utm_source=notebook&utm_medium=profile_based_expectations)

144 after this initial step gets you started on the path towards what you want.**

145

146 This is highly configurable depending on your goals.

147 You can ignore columns or exclude certain expectations, specify a threshold for creating value set expectations, or even specify semantic types for a given column.

148 You can find more information about [how to configure this profiler, including a list of the expectations that it uses, here.](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_create_an_expectation_suite_with_the_user_configurable_profiler.html)

149

150 """

151 )

152 self._add_profiler_cell()

153 self.add_footer()

154 return self._notebook

155

156 # noinspection PyMethodOverriding

157 def render_to_disk(self, notebook_file_path: str):

158 """

159 Render a notebook to disk from an expectation suite.

160 """

161 self.render()

162 self.write_notebook_to_disk(

163 notebook=self._notebook, notebook_file_path=notebook_file_path

164 )

165

166 def _add_profiler_cell(self):

167 self.add_code_cell(

168 code=f"""\

169 profiler = UserConfigurableProfiler(

170 profile_dataset=validator,

171 excluded_expectations=None,

172 ignored_columns=ignored_columns,

173 not_null_only=False,

174 primary_or_compound_key=False,

175 semantic_types_dict=None,

176 table_expectations_only=False,

177 value_set_threshold="MANY",

178 )

179 suite = profiler.build_suite()""",

180 lint=True,

181 )

182

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py b/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py

--- a/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py

+++ b/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py

@@ -72,7 +72,7 @@

column_names: List[str]

column_name: str

column_names = [

- f' "{column_name}"\n,' for column_name in self.validator.columns()

+ f' "{column_name}",\n' for column_name in self.validator.columns()

]

code: str = f'ignored_columns = [\n{"".join(column_names)}]'

self.add_code_cell(code=code, lint=True)
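
The effect of the one-character transposition in the diff above can be reproduced outside the renderer. The following is a minimal sketch, not the library's code: `build_ignored_columns_cell` and its `transposed` flag are hypothetical names introduced only for illustration, and the column list stands in for `self.validator.columns()`.

```python
def build_ignored_columns_cell(columns, transposed=False):
    """Build the source of the `ignored_columns` notebook cell.

    `transposed=True` mimics the original bug, where the newline was
    emitted before the comma, so every comma started the next line.
    """
    if transposed:
        # Buggy form: quote, newline, then comma.
        lines = [f'    "{name}"\n,' for name in columns]
    else:
        # Fixed form: quote, comma, then newline.
        lines = [f'    "{name}",\n' for name in columns]
    return f'ignored_columns = [\n{"".join(lines)}]'


if __name__ == "__main__":
    print(build_ignored_columns_cell(["a", "b"], transposed=True))
    print(build_ignored_columns_cell(["a", "b"]))
```

Both variants happen to be valid Python, which is presumably why the bug only showed up as odd formatting in the generated notebook rather than as an error.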

|

{"golden_diff": "diff --git a/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py b/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py\n--- a/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py\n+++ b/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py\n@@ -72,7 +72,7 @@\n column_names: List[str]\n column_name: str\n column_names = [\n- f' \"{column_name}\"\\n,' for column_name in self.validator.columns()\n+ f' \"{column_name}\",\\n' for column_name in self.validator.columns()\n ]\n code: str = f'ignored_columns = [\\n{\"\".join(column_names)}]'\n self.add_code_cell(code=code, lint=True)\n", "issue": "`\\n` and `,` transposed in notebook generation\n**Describe the bug**\r\nFollowing the tutorial, in the Jupyter notebook, the `ignored_columns` list has commas at the start of the line.\r\nProbably just transpose [here](https://github.com/great-expectations/great_expectations/blob/c49e533c012c275a791fce09df207d2bd7126780/great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py#L74-L77) to fix:\r\n\r\n```diff\r\n- f' \"{column_name}\"\\n,' for column_name in self.validator.columns()\r\n+ f' \"{column_name}\",\\n' for column_name in self.validator.columns()\r\n```\r\n\r\n**To Reproduce**\r\nSteps to reproduce the behavior:\r\n1. Follow the tutorial.\r\n2. 
In the jupyter notebook, notice the formatting for the `ignored_columns`.\r\n\r\n**Expected behavior**\r\nGenerated code in notebooks should follow normal formatting conventions.\r\n\r\n**Environment (please complete the following information):**\r\n - Operating System: MacOS\r\n - Great Expectations Version: \r\n - superconductive/ge_tutorials @ 5db831a92af51f77dde19723a81a2cea92e2a756\r\n - great-expectations==0.13.35\r\n\r\n**Additional context**\r\nMentioned this when talking with Ortal on September 24: Might already be filed or handled somewhere else.\r\n\r\n\nUse cleaner solution for non-truncating division in python 2\nPrefer `from __future__ import division` to `1.*x/y`\n", "before_files": [{"content": "from typing import Any, Dict, List, Union\n\nimport nbformat\n\nfrom great_expectations import DataContext\nfrom great_expectations.core.batch import BatchRequest\nfrom great_expectations.render.renderer.suite_edit_notebook_renderer import (\n SuiteEditNotebookRenderer,\n)\n\n\nclass SuiteProfileNotebookRenderer(SuiteEditNotebookRenderer):\n def __init__(\n self,\n context: DataContext,\n expectation_suite_name: str,\n batch_request: Union[str, Dict[str, Union[str, int, Dict[str, Any]]]],\n ):\n super().__init__(context=context)\n\n if batch_request is None:\n batch_request = {}\n self.batch_request = batch_request\n\n self.validator = context.get_validator(\n batch_request=BatchRequest(**batch_request),\n expectation_suite_name=expectation_suite_name,\n )\n\n self.expectation_suite_name = self.validator.expectation_suite_name\n\n # noinspection PyMethodOverriding\n def add_header(self):\n self.add_markdown_cell(\n markdown=f\"\"\"# Initialize a new Expectation Suite by profiling a batch of your data.\nThis process helps you avoid writing lots of boilerplate when authoring suites by allowing you to select columns and other factors that you care about and letting a profiler write some candidate expectations for you to adjust.\n\n**Expectation Suite Name**: 
`{self.expectation_suite_name}`\n\"\"\"\n )\n self.add_code_cell(\n code=f\"\"\"\\\nimport datetime\n\nimport pandas as pd\n\nimport great_expectations as ge\nimport great_expectations.jupyter_ux\nfrom great_expectations.core.batch import BatchRequest\nfrom great_expectations.profile.user_configurable_profiler import UserConfigurableProfiler\nfrom great_expectations.checkpoint import SimpleCheckpoint\nfrom great_expectations.exceptions import DataContextError\n\ncontext = ge.data_context.DataContext()\n\nbatch_request = {self.batch_request}\n\nexpectation_suite_name = \"{self.expectation_suite_name}\"\n\nvalidator = context.get_validator(\n batch_request=BatchRequest(**batch_request),\n expectation_suite_name=expectation_suite_name\n)\ncolumn_names = [f'\"{{column_name}}\"' for column_name in validator.columns()]\nprint(f\"Columns: {{', '.join(column_names)}}.\")\nvalidator.head(n_rows=5, fetch_all=False)\n\"\"\",\n lint=True,\n )\n\n def _add_available_columns_list(self):\n column_names: List[str]\n column_name: str\n column_names = [\n f' \"{column_name}\"\\n,' for column_name in self.validator.columns()\n ]\n code: str = f'ignored_columns = [\\n{\"\".join(column_names)}]'\n self.add_code_cell(code=code, lint=True)\n\n def add_footer(self):\n self.add_markdown_cell(\n markdown=\"\"\"# Save & review your new Expectation Suite\n\nLet's save the draft expectation suite as a JSON file in the\n`great_expectations/expectations` directory of your project and rebuild the Data\n Docs site to make it easy to review your new suite.\"\"\"\n )\n code_cell: str = \"\"\"\\\nprint(validator.get_expectation_suite(discard_failed_expectations=False))\nvalidator.save_expectation_suite(discard_failed_expectations=False)\n\ncheckpoint_config = {\n \"class_name\": \"SimpleCheckpoint\",\n \"validations\": [\n {\n \"batch_request\": batch_request,\n \"expectation_suite_name\": expectation_suite_name\n }\n ]\n}\ncheckpoint = SimpleCheckpoint(\n 
f\"_tmp_checkpoint_{expectation_suite_name}\",\n context,\n **checkpoint_config\n)\ncheckpoint_result = checkpoint.run()\n\ncontext.build_data_docs()\n\nvalidation_result_identifier = checkpoint_result.list_validation_result_identifiers()[0]\ncontext.open_data_docs(resource_identifier=validation_result_identifier)\n\"\"\"\n self.add_code_cell(code=code_cell, lint=True)\n self.add_markdown_cell(\n markdown=f\"\"\"## Next steps\nAfter you review this initial Expectation Suite in Data Docs you\nshould edit this suite to make finer grained adjustments to the expectations.\nThis can be done by running `great_expectations suite edit {self.expectation_suite_name}`.\"\"\"\n )\n\n # noinspection PyMethodOverriding\n def render(self) -> nbformat.NotebookNode:\n self._notebook = nbformat.v4.new_notebook()\n self.add_header()\n self.add_markdown_cell(\n markdown=\"\"\"# Select columns\n\nSelect the columns on which you would like to set expectations and those which you would like to ignore.\n\nGreat Expectations will choose which expectations might make sense for a column based on the **data type** and **cardinality** of the data in each selected column.\n\nSimply comment out columns that are important and should be included. 
You can select multiple lines and\nuse a jupyter keyboard shortcut to toggle each line: **Linux/Windows**:\n`Ctrl-/`, **macOS**: `Cmd-/`\"\"\"\n )\n self._add_available_columns_list()\n self.add_markdown_cell(\n markdown=\"\"\"# Run the data profiler\n\nThe suites generated here are **not meant to be production suites** -- they are **a starting point to build upon**.\n\n**To get to a production-grade suite, you will definitely want to [edit this\nsuite](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_edit_an_expectation_suite_using_a_disposable_notebook.html?utm_source=notebook&utm_medium=profile_based_expectations)\nafter this initial step gets you started on the path towards what you want.**\n\nThis is highly configurable depending on your goals.\nYou can ignore columns or exclude certain expectations, specify a threshold for creating value set expectations, or even specify semantic types for a given column.\nYou can find more information about [how to configure this profiler, including a list of the expectations that it uses, here.](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_create_an_expectation_suite_with_the_user_configurable_profiler.html)\n\n\"\"\"\n )\n self._add_profiler_cell()\n self.add_footer()\n return self._notebook\n\n # noinspection PyMethodOverriding\n def render_to_disk(self, notebook_file_path: str):\n \"\"\"\n Render a notebook to disk from an expectation suite.\n \"\"\"\n self.render()\n self.write_notebook_to_disk(\n notebook=self._notebook, notebook_file_path=notebook_file_path\n )\n\n def _add_profiler_cell(self):\n self.add_code_cell(\n code=f\"\"\"\\\nprofiler = UserConfigurableProfiler(\n profile_dataset=validator,\n excluded_expectations=None,\n ignored_columns=ignored_columns,\n not_null_only=False,\n primary_or_compound_key=False,\n semantic_types_dict=None,\n table_expectations_only=False,\n 
value_set_threshold=\"MANY\",\n)\nsuite = profiler.build_suite()\"\"\",\n lint=True,\n )\n", "path": "great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py"}], "after_files": [{"content": "from typing import Any, Dict, List, Union\n\nimport nbformat\n\nfrom great_expectations import DataContext\nfrom great_expectations.core.batch import BatchRequest\nfrom great_expectations.render.renderer.suite_edit_notebook_renderer import (\n SuiteEditNotebookRenderer,\n)\n\n\nclass SuiteProfileNotebookRenderer(SuiteEditNotebookRenderer):\n def __init__(\n self,\n context: DataContext,\n expectation_suite_name: str,\n batch_request: Union[str, Dict[str, Union[str, int, Dict[str, Any]]]],\n ):\n super().__init__(context=context)\n\n if batch_request is None:\n batch_request = {}\n self.batch_request = batch_request\n\n self.validator = context.get_validator(\n batch_request=BatchRequest(**batch_request),\n expectation_suite_name=expectation_suite_name,\n )\n\n self.expectation_suite_name = self.validator.expectation_suite_name\n\n # noinspection PyMethodOverriding\n def add_header(self):\n self.add_markdown_cell(\n markdown=f\"\"\"# Initialize a new Expectation Suite by profiling a batch of your data.\nThis process helps you avoid writing lots of boilerplate when authoring suites by allowing you to select columns and other factors that you care about and letting a profiler write some candidate expectations for you to adjust.\n\n**Expectation Suite Name**: `{self.expectation_suite_name}`\n\"\"\"\n )\n self.add_code_cell(\n code=f\"\"\"\\\nimport datetime\n\nimport pandas as pd\n\nimport great_expectations as ge\nimport great_expectations.jupyter_ux\nfrom great_expectations.core.batch import BatchRequest\nfrom great_expectations.profile.user_configurable_profiler import UserConfigurableProfiler\nfrom great_expectations.checkpoint import SimpleCheckpoint\nfrom great_expectations.exceptions import DataContextError\n\ncontext = 
ge.data_context.DataContext()\n\nbatch_request = {self.batch_request}\n\nexpectation_suite_name = \"{self.expectation_suite_name}\"\n\nvalidator = context.get_validator(\n batch_request=BatchRequest(**batch_request),\n expectation_suite_name=expectation_suite_name\n)\ncolumn_names = [f'\"{{column_name}}\"' for column_name in validator.columns()]\nprint(f\"Columns: {{', '.join(column_names)}}.\")\nvalidator.head(n_rows=5, fetch_all=False)\n\"\"\",\n lint=True,\n )\n\n def _add_available_columns_list(self):\n column_names: List[str]\n column_name: str\n column_names = [\n f' \"{column_name}\",\\n' for column_name in self.validator.columns()\n ]\n code: str = f'ignored_columns = [\\n{\"\".join(column_names)}]'\n self.add_code_cell(code=code, lint=True)\n\n def add_footer(self):\n self.add_markdown_cell(\n markdown=\"\"\"# Save & review your new Expectation Suite\n\nLet's save the draft expectation suite as a JSON file in the\n`great_expectations/expectations` directory of your project and rebuild the Data\n Docs site to make it easy to review your new suite.\"\"\"\n )\n code_cell: str = \"\"\"\\\nprint(validator.get_expectation_suite(discard_failed_expectations=False))\nvalidator.save_expectation_suite(discard_failed_expectations=False)\n\ncheckpoint_config = {\n \"class_name\": \"SimpleCheckpoint\",\n \"validations\": [\n {\n \"batch_request\": batch_request,\n \"expectation_suite_name\": expectation_suite_name\n }\n ]\n}\ncheckpoint = SimpleCheckpoint(\n f\"_tmp_checkpoint_{expectation_suite_name}\",\n context,\n **checkpoint_config\n)\ncheckpoint_result = checkpoint.run()\n\ncontext.build_data_docs()\n\nvalidation_result_identifier = checkpoint_result.list_validation_result_identifiers()[0]\ncontext.open_data_docs(resource_identifier=validation_result_identifier)\n\"\"\"\n self.add_code_cell(code=code_cell, lint=True)\n self.add_markdown_cell(\n markdown=f\"\"\"## Next steps\nAfter you review this initial Expectation Suite in Data Docs you\nshould edit this suite 
to make finer grained adjustments to the expectations.\nThis can be done by running `great_expectations suite edit {self.expectation_suite_name}`.\"\"\"\n )\n\n # noinspection PyMethodOverriding\n def render(self) -> nbformat.NotebookNode:\n self._notebook = nbformat.v4.new_notebook()\n self.add_header()\n self.add_markdown_cell(\n markdown=\"\"\"# Select columns\n\nSelect the columns on which you would like to set expectations and those which you would like to ignore.\n\nGreat Expectations will choose which expectations might make sense for a column based on the **data type** and **cardinality** of the data in each selected column.\n\nSimply comment out columns that are important and should be included. You can select multiple lines and\nuse a jupyter keyboard shortcut to toggle each line: **Linux/Windows**:\n`Ctrl-/`, **macOS**: `Cmd-/`\"\"\"\n )\n self._add_available_columns_list()\n self.add_markdown_cell(\n markdown=\"\"\"# Run the data profiler\n\nThe suites generated here are **not meant to be production suites** -- they are **a starting point to build upon**.\n\n**To get to a production-grade suite, you will definitely want to [edit this\nsuite](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_edit_an_expectation_suite_using_a_disposable_notebook.html?utm_source=notebook&utm_medium=profile_based_expectations)\nafter this initial step gets you started on the path towards what you want.**\n\nThis is highly configurable depending on your goals.\nYou can ignore columns or exclude certain expectations, specify a threshold for creating value set expectations, or even specify semantic types for a given column.\nYou can find more information about [how to configure this profiler, including a list of the expectations that it uses, 
here.](https://docs.greatexpectations.io/en/latest/guides/how_to_guides/creating_and_editing_expectations/how_to_create_an_expectation_suite_with_the_user_configurable_profiler.html)\n\n\"\"\"\n )\n self._add_profiler_cell()\n self.add_footer()\n return self._notebook\n\n # noinspection PyMethodOverriding\n def render_to_disk(self, notebook_file_path: str):\n \"\"\"\n Render a notebook to disk from an expectation suite.\n \"\"\"\n self.render()\n self.write_notebook_to_disk(\n notebook=self._notebook, notebook_file_path=notebook_file_path\n )\n\n def _add_profiler_cell(self):\n self.add_code_cell(\n code=f\"\"\"\\\nprofiler = UserConfigurableProfiler(\n profile_dataset=validator,\n excluded_expectations=None,\n ignored_columns=ignored_columns,\n not_null_only=False,\n primary_or_compound_key=False,\n semantic_types_dict=None,\n table_expectations_only=False,\n value_set_threshold=\"MANY\",\n)\nsuite = profiler.build_suite()\"\"\",\n lint=True,\n )\n", "path": "great_expectations/render/renderer/v3/suite_profile_notebook_renderer.py"}]}

| 2,512 | 175 |

gh_patches_debug_14974

|

rasdani/github-patches

|

git_diff

|

enthought__chaco-423

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Demo world_map.py does not work on python 2

**Problem Description**

urllib.request deprecated in Python 2

**Reproduction Steps:**

Run world_map.py

**Expected behavior:**

Traceback (most recent call last):

File "world_map.py", line 15, in <module>

import urllib.request, urllib.parse, urllib.error

ImportError: No module named request

**OS, Python version:** [Enter OS name and Python version]

OSX, Python 2.7

xref: #385

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `examples/demo/world_map.py`

Content:

```

1 #!/usr/bin/env python

2 """

3 Displays a world map with locations plotted on top. Locations are expected to

4 be tuples of latitude, longitude where West and South are expressed as

5 negative values.

6 - Mousewheel up and down zooms the plot in and out.

7 - Pressing "z" brings up the Zoom Box, and you can click-drag a rectangular

8 region to zoom. If you use a sequence of zoom boxes, pressing alt-left-arrow

9 and alt-right-arrow moves you forwards and backwards through the "zoom

10 history".

11 """

12

13 # Standard library imports

14 import os.path

15 import urllib.request, urllib.parse, urllib.error

16

17 # Major library imports

18 import numpy

19

20 # ETS imports

21 from chaco.api import Plot, ArrayPlotData, ImageData

22 from chaco.tools.api import ZoomTool

23 from enable.component_editor import ComponentEditor

24 from traits.api import HasTraits, Instance, Str

25 from traitsui.api import Item, View

26

27 class WorldMapPlot(HasTraits):

28

29 ### Public Traits ##########################################################

30

31 # The plot which will be displayed

32 plot = Instance(Plot)

33

34 # The URL which points to the world map image to be downloaded

35 image_url = Str("http://eoimages.gsfc.nasa.gov/ve//2433/land_shallow_topo_2048.jpg")

36

37

38 ### Private Traits #########################################################

39

40 # The path to where the image exists on the filesystem

41 image_path = Str()

42

43 # The view

44 traits_view = View(Item('plot', editor=ComponentEditor(),

45 width=800, height=400, show_label=False),

46 resizable=True)

47

48 #---------------------------------------------------------------------------

49 # Public interface

50 #---------------------------------------------------------------------------

51

52 def __init__(self, **kw):

53 super(WorldMapPlot, self).__init__(**kw)

54

55 self._download_map_image()

56 image = ImageData.fromfile(self.image_path)

57

58 # For now, the locations are hardcoded, though this can be changed

59 # eassily to take command line args, read from a file, or by other

60 # means

61 austin_loc = (30.16, -97.44)

62

63 locations_x = numpy.array([austin_loc[1]])

64 locations_y = numpy.array([austin_loc[0]])

65

66 # transform each of the locations to the image data space, including

67 # moving the origin from bottom left to top left

68 locations_x = (locations_x + 180) * image.data.shape[1]/360

69 locations_y = (locations_y*-1 + 90) * image.data.shape[0]/180

70

71 # Create the plott data, adding the image and the locations

72 plot_data = ArrayPlotData()

73 plot_data.set_data("imagedata", image._data)

74 plot_data.set_data("locations_x", locations_x)

75 plot_data.set_data("locations_y", locations_y)

76

77 # Create the plot with the origin as top left, which matches

78 # how the image data is aligned

79 self.plot = Plot(plot_data, default_origin="top left")

80 self.plot.img_plot('imagedata')

81

82 # Plot the locations as a scatter plot to be overlayed on top

83 # of the map

84 loc_plot = self.plot.plot(('locations_x', 'locations_y'),

85 type='scatter', size=3, color='yellow',

86 marker='dot')[0]

87

88 loc_plot.x_mapper.range.high = image.data.shape[1]

89 loc_plot.x_mapper.range.low = 0

90 loc_plot.y_mapper.range.high = image.data.shape[0]

91 loc_plot.y_mapper.range.low = -0

92

93 # set up any tools, in this case just the zoom tool

94 zoom = ZoomTool(component=self.plot, tool_mode="box", always_on=False)

95 self.plot.overlays.append(zoom)

96

97 #---------------------------------------------------------------------------

98 # Protected interface

99 #---------------------------------------------------------------------------

100

101 def _download_map_image(self):

102 """ Downloads a map from the image_url attribute. This is done

103 primarily to keep the redistributable Chaco package as small

104 as possible

105 """

106 example_dir = os.path.dirname(__file__)

107 self.image_path = os.path.join(example_dir, 'data',

108 os.path.split(self.image_url)[1])

109

110 if not os.path.exists(self.image_path):

111 print("Downloading map image")

112 urllib.request.urlretrieve(self.image_url, self.image_path)

113

114 #===============================================================================

115 # demo object that is used by the demo.py application.

116 #===============================================================================

117 demo = WorldMapPlot()

118

119 if __name__ == "__main__":

120 demo.configure_traits()

121

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/examples/demo/world_map.py b/examples/demo/world_map.py

--- a/examples/demo/world_map.py

+++ b/examples/demo/world_map.py

@@ -12,7 +12,7 @@

# Standard library imports

import os.path

-import urllib.request, urllib.parse, urllib.error

+from six.moves.urllib import request

# Major library imports

import numpy

@@ -109,7 +109,7 @@

if not os.path.exists(self.image_path):

print("Downloading map image")

- urllib.request.urlretrieve(self.image_url, self.image_path)

+ request.urlretrieve(self.image_url, self.image_path)

#===============================================================================

# demo object that is used by the demo.py application.
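
The diff above relies on `six.moves.urllib` to get one import path for both Python versions. For comparison, here is a sketch of an alternative that avoids the `six` dependency by falling back between the standard-library locations; this is a different technique from the one in the patch, and `download_if_missing` is a hypothetical helper name, not part of the demo.

```python
import os.path

try:
    # Python 3 location of urlretrieve.
    from urllib.request import urlretrieve
except ImportError:
    # Python 2 location of urlretrieve.
    from urllib import urlretrieve


def download_if_missing(url, path):
    """Download `url` to `path` unless the file already exists.

    Returns True if a download was attempted, False otherwise.
    """
    if os.path.exists(path):
        return False
    print("Downloading map image")
    urlretrieve(url, path)
    return True
```

The `six` approach keeps the import to a single line, which is likely why the patch prefers it in a codebase that already depends on `six`.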

|

{"golden_diff": "diff --git a/examples/demo/world_map.py b/examples/demo/world_map.py\n--- a/examples/demo/world_map.py\n+++ b/examples/demo/world_map.py\n@@ -12,7 +12,7 @@\n \n # Standard library imports\n import os.path\n-import urllib.request, urllib.parse, urllib.error\n+from six.moves.urllib import request\n \n # Major library imports\n import numpy\n@@ -109,7 +109,7 @@\n \n if not os.path.exists(self.image_path):\n print(\"Downloading map image\")\n- urllib.request.urlretrieve(self.image_url, self.image_path)\n+ request.urlretrieve(self.image_url, self.image_path)\n \n #===============================================================================\n # demo object that is used by the demo.py application.\n", "issue": "Demo world_map.py does not work on python 2\n**Problem Description**\r\nurllib.request deprecated in Python 2\r\n**Reproduction Steps:**\r\nRun world_map.py\r\n\r\n**Expected behavior:**\r\n\r\nTraceback (most recent call last):\r\n File \"world_map.py\", line 15, in <module>\r\n import urllib.request, urllib.parse, urllib.error\r\nImportError: No module named request\r\n\r\n**OS, Python version:** [Enter OS name and Python version]\r\nOSX, Python 2.7\r\n\r\nxref: #385\n", "before_files": [{"content": "#!/usr/bin/env python\n\"\"\"\nDisplays a world map with locations plotted on top. Locations are expected to\nbe tuples of latitude, longitude where West and South are expressed as\nnegative values.\n - Mousewheel up and down zooms the plot in and out.\n - Pressing \"z\" brings up the Zoom Box, and you can click-drag a rectangular\n region to zoom. 
If you use a sequence of zoom boxes, pressing alt-left-arrow\n and alt-right-arrow moves you forwards and backwards through the \"zoom\n history\".\n\"\"\"\n\n# Standard library imports\nimport os.path\nimport urllib.request, urllib.parse, urllib.error\n\n# Major library imports\nimport numpy\n\n# ETS imports\nfrom chaco.api import Plot, ArrayPlotData, ImageData\nfrom chaco.tools.api import ZoomTool\nfrom enable.component_editor import ComponentEditor\nfrom traits.api import HasTraits, Instance, Str\nfrom traitsui.api import Item, View\n\nclass WorldMapPlot(HasTraits):\n\n ### Public Traits ##########################################################\n\n # The plot which will be displayed\n plot = Instance(Plot)\n\n # The URL which points to the world map image to be downloaded\n image_url = Str(\"http://eoimages.gsfc.nasa.gov/ve//2433/land_shallow_topo_2048.jpg\")\n\n\n ### Private Traits #########################################################\n\n # The path to where the image exists on the filesystem\n image_path = Str()\n\n # The view\n traits_view = View(Item('plot', editor=ComponentEditor(),\n width=800, height=400, show_label=False),\n resizable=True)\n\n #---------------------------------------------------------------------------\n # Public interface\n #---------------------------------------------------------------------------\n\n def __init__(self, **kw):\n super(WorldMapPlot, self).__init__(**kw)\n\n self._download_map_image()\n image = ImageData.fromfile(self.image_path)\n\n # For now, the locations are hardcoded, though this can be changed\n # eassily to take command line args, read from a file, or by other\n # means\n austin_loc = (30.16, -97.44)\n\n locations_x = numpy.array([austin_loc[1]])\n locations_y = numpy.array([austin_loc[0]])\n\n # transform each of the locations to the image data space, including\n # moving the origin from bottom left to top left\n locations_x = (locations_x + 180) * image.data.shape[1]/360\n locations_y = (locations_y*-1 + 
90) * image.data.shape[0]/180\n\n # Create the plott data, adding the image and the locations\n plot_data = ArrayPlotData()\n plot_data.set_data(\"imagedata\", image._data)\n plot_data.set_data(\"locations_x\", locations_x)\n plot_data.set_data(\"locations_y\", locations_y)\n\n # Create the plot with the origin as top left, which matches\n # how the image data is aligned\n self.plot = Plot(plot_data, default_origin=\"top left\")\n self.plot.img_plot('imagedata')\n\n # Plot the locations as a scatter plot to be overlayed on top\n # of the map\n loc_plot = self.plot.plot(('locations_x', 'locations_y'),\n type='scatter', size=3, color='yellow',\n marker='dot')[0]\n\n loc_plot.x_mapper.range.high = image.data.shape[1]\n loc_plot.x_mapper.range.low = 0\n loc_plot.y_mapper.range.high = image.data.shape[0]\n loc_plot.y_mapper.range.low = -0\n\n # set up any tools, in this case just the zoom tool\n zoom = ZoomTool(component=self.plot, tool_mode=\"box\", always_on=False)\n self.plot.overlays.append(zoom)\n\n #---------------------------------------------------------------------------\n # Protected interface\n #---------------------------------------------------------------------------\n\n def _download_map_image(self):\n \"\"\" Downloads a map from the image_url attribute. 
This is done\n primarily to keep the redistributable Chaco package as small\n as possible\n \"\"\"\n example_dir = os.path.dirname(__file__)\n self.image_path = os.path.join(example_dir, 'data',\n os.path.split(self.image_url)[1])\n\n if not os.path.exists(self.image_path):\n print(\"Downloading map image\")\n urllib.request.urlretrieve(self.image_url, self.image_path)\n\n#===============================================================================\n# demo object that is used by the demo.py application.\n#===============================================================================\ndemo = WorldMapPlot()\n\nif __name__ == \"__main__\":\n demo.configure_traits()\n", "path": "examples/demo/world_map.py"}], "after_files": [{"content": "#!/usr/bin/env python\n\"\"\"\nDisplays a world map with locations plotted on top. Locations are expected to\nbe tuples of latitude, longitude where West and South are expressed as\nnegative values.\n - Mousewheel up and down zooms the plot in and out.\n - Pressing \"z\" brings up the Zoom Box, and you can click-drag a rectangular\n region to zoom. 
If you use a sequence of zoom boxes, pressing alt-left-arrow\n and alt-right-arrow moves you forwards and backwards through the \"zoom\n history\".\n\"\"\"\n\n# Standard library imports\nimport os.path\nfrom six.moves.urllib import request\n\n# Major library imports\nimport numpy\n\n# ETS imports\nfrom chaco.api import Plot, ArrayPlotData, ImageData\nfrom chaco.tools.api import ZoomTool\nfrom enable.component_editor import ComponentEditor\nfrom traits.api import HasTraits, Instance, Str\nfrom traitsui.api import Item, View\n\nclass WorldMapPlot(HasTraits):\n\n ### Public Traits ##########################################################\n\n # The plot which will be displayed\n plot = Instance(Plot)\n\n # The URL which points to the world map image to be downloaded\n image_url = Str(\"http://eoimages.gsfc.nasa.gov/ve//2433/land_shallow_topo_2048.jpg\")\n\n\n ### Private Traits #########################################################\n\n # The path to where the image exists on the filesystem\n image_path = Str()\n\n # The view\n traits_view = View(Item('plot', editor=ComponentEditor(),\n width=800, height=400, show_label=False),\n resizable=True)\n\n #---------------------------------------------------------------------------\n # Public interface\n #---------------------------------------------------------------------------\n\n def __init__(self, **kw):\n super(WorldMapPlot, self).__init__(**kw)\n\n self._download_map_image()\n image = ImageData.fromfile(self.image_path)\n\n # For now, the locations are hardcoded, though this can be changed\n # eassily to take command line args, read from a file, or by other\n # means\n austin_loc = (30.16, -97.44)\n\n locations_x = numpy.array([austin_loc[1]])\n locations_y = numpy.array([austin_loc[0]])\n\n # transform each of the locations to the image data space, including\n # moving the origin from bottom left to top left\n locations_x = (locations_x + 180) * image.data.shape[1]/360\n locations_y = (locations_y*-1 + 90) * 
image.data.shape[0]/180\n\n # Create the plott data, adding the image and the locations\n plot_data = ArrayPlotData()\n plot_data.set_data(\"imagedata\", image._data)\n plot_data.set_data(\"locations_x\", locations_x)\n plot_data.set_data(\"locations_y\", locations_y)\n\n # Create the plot with the origin as top left, which matches\n # how the image data is aligned\n self.plot = Plot(plot_data, default_origin=\"top left\")\n self.plot.img_plot('imagedata')\n\n # Plot the locations as a scatter plot to be overlayed on top\n # of the map\n loc_plot = self.plot.plot(('locations_x', 'locations_y'),\n type='scatter', size=3, color='yellow',\n marker='dot')[0]\n\n loc_plot.x_mapper.range.high = image.data.shape[1]\n loc_plot.x_mapper.range.low = 0\n loc_plot.y_mapper.range.high = image.data.shape[0]\n loc_plot.y_mapper.range.low = -0\n\n # set up any tools, in this case just the zoom tool\n zoom = ZoomTool(component=self.plot, tool_mode=\"box\", always_on=False)\n self.plot.overlays.append(zoom)\n\n #---------------------------------------------------------------------------\n # Protected interface\n #---------------------------------------------------------------------------\n\n def _download_map_image(self):\n \"\"\" Downloads a map from the image_url attribute. This is done\n primarily to keep the redistributable Chaco package as small\n as possible\n \"\"\"\n example_dir = os.path.dirname(__file__)\n self.image_path = os.path.join(example_dir, 'data',\n os.path.split(self.image_url)[1])\n\n if not os.path.exists(self.image_path):\n print(\"Downloading map image\")\n request.urlretrieve(self.image_url, self.image_path)\n\n#===============================================================================\n# demo object that is used by the demo.py application.\n#===============================================================================\ndemo = WorldMapPlot()\n\nif __name__ == \"__main__\":\n demo.configure_traits()\n", "path": "examples/demo/world_map.py"}]}

| 1,631 | 156 |

| gh_patches_debug_28762 | rasdani/github-patches | git_diff | ray-project__ray-9057 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[tune] sync_on_checkpoint ignored when list of experiments passed to tune.run

### What is the problem?

When passing a list of predefined Experiments to tune via the `run` function, the `sync_on_checkpoint` flag is ignored.

**Reason for this behaviour:**

The `make_parser` function takes in `no_sync_on_checkpoint`; it's set to False by default.

https://github.com/ray-project/ray/blob/981f67bfb01725f1e153c881f81082fcba0ac223/python/ray/tune/config_parser.py#L75-L77

The create_trial_from_spec function gets a `sync_on_checkpoint` from the Experiment spec, but that key is never used by the parser. So the following line makes the `sync_on_checkpoint` of the returned `Trial` object `True` by default, thus ignoring the value of `sync_on_checkpoint` in the incoming Experiment spec.

https://github.com/ray-project/ray/blob/981f67bfb01725f1e153c881f81082fcba0ac223/python/ray/tune/config_parser.py#L185

*Ray Version: All versions starting from 0.7.7 when the feature was released.*

### Reproduction (REQUIRED)

```python3

import ray

from ray import tune

from ray.tune import Experiment

def func(config):

    pass

sync_true_obj = Experiment("test_sync", func, sync_on_checkpoint=True)

sync_false_obj = Experiment("test_sync", func, sync_on_checkpoint=False)

ls = [sync_true_obj, sync_false_obj]

ray.init(local_mode=True)

analysis = tune.run(ls)

```

To check the error, add the following lines just before the `return Trial(` line in `config_parser.py`

```python3

print(args)

print(args.no_sync_on_checkpoint)

```

https://github.com/ray-project/ray/blob/981f67bfb01725f1e153c881f81082fcba0ac223/python/ray/tune/config_parser.py#L173

- [x] I have verified my script runs in a clean environment and reproduces the issue.

- [x] I have verified the issue also occurs with the [latest wheels](https://docs.ray.io/en/latest/installation.html).

--- END ISSUE ---
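
The mechanism described in the issue can be sketched in isolation. The snippet below is only an illustration, not the Ray code: `to_argv` is a simplified stand-in for the function of the same name in `config_parser.py`, the parser registers only the `--no-sync-on-checkpoint` flag, and `parsed_sync_on_checkpoint` is a hypothetical helper that mirrors the `not args.no_sync_on_checkpoint` expression. Under those assumptions it shows that both `True` and `False` in the spec end up as `True`.

```python
import argparse
import json


def to_argv(config):
    """Simplified stand-in for to_argv in config_parser.py (assumption)."""
    argv = []
    for key, value in config.items():
        if value is None:
            continue
        # A boolean that is False emits no flag at all.
        if not isinstance(value, bool) or value:
            argv.append("--{}".format(key.replace("_", "-")))
        if isinstance(value, str):
            argv.append(value)
        elif isinstance(value, bool):
            pass
        else:
            argv.append(json.dumps(value))
    return argv


# The parser only knows the negated flag, as in make_parser.
parser = argparse.ArgumentParser()
parser.add_argument("--no-sync-on-checkpoint", action="store_true")


def parsed_sync_on_checkpoint(spec):
    """Hypothetical helper mirroring `not args.no_sync_on_checkpoint`."""
    # parse_known_args silently sets aside the unknown --sync-on-checkpoint.
    args, _unknown = parser.parse_known_args(to_argv(spec))
    return not args.no_sync_on_checkpoint


results = {
    flag: parsed_sync_on_checkpoint({"sync_on_checkpoint": flag})
    for flag in (True, False)
}
print(results)
```

In this sketch a spec value of `True` becomes `--sync-on-checkpoint`, which the parser does not recognise, and a value of `False` produces no argument at all, so the parsed result is the same either way. One possible remedy, consistent with the issue's analysis, would be to read `sync_on_checkpoint` from the spec directly instead of relying on the parsed flag.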

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `python/ray/tune/config_parser.py`

Content:

```

1 import argparse
2 import json
3 import os
4
5 # For compatibility under py2 to consider unicode as str
6 from six import string_types
7
8 from ray.tune import TuneError
9 from ray.tune.trial import Trial
10 from ray.tune.resources import json_to_resources
11 from ray.tune.logger import _SafeFallbackEncoder
12
13
14 def make_parser(parser_creator=None, **kwargs):
15     """Returns a base argument parser for the ray.tune tool.
16
17     Args:
18         parser_creator: A constructor for the parser class.
19         kwargs: Non-positional args to be passed into the
20             parser class constructor.
21     """
22
23     if parser_creator:
24         parser = parser_creator(**kwargs)
25     else:
26         parser = argparse.ArgumentParser(**kwargs)
27
28     # Note: keep this in sync with rllib/train.py
29     parser.add_argument(
30         "--run",
31         default=None,
32         type=str,
33         help="The algorithm or model to train. This may refer to the name "
34         "of a built-on algorithm (e.g. RLLib's DQN or PPO), or a "
35         "user-defined trainable function or class registered in the "
36         "tune registry.")
37     parser.add_argument(
38         "--stop",
39         default="{}",
40         type=json.loads,
41         help="The stopping criteria, specified in JSON. The keys may be any "
42         "field returned by 'train()' e.g. "
43         "'{\"time_total_s\": 600, \"training_iteration\": 100000}' to stop "
44         "after 600 seconds or 100k iterations, whichever is reached first.")
45     parser.add_argument(
46         "--config",
47         default="{}",
48         type=json.loads,
49         help="Algorithm-specific configuration (e.g. env, hyperparams), "
50         "specified in JSON.")
51     parser.add_argument(
52         "--resources-per-trial",
53         default=None,
54         type=json_to_resources,
55         help="Override the machine resources to allocate per trial, e.g. "
56         "'{\"cpu\": 64, \"gpu\": 8}'. Note that GPUs will not be assigned "
57         "unless you specify them here. For RLlib, you probably want to "
58         "leave this alone and use RLlib configs to control parallelism.")
59     parser.add_argument(
60         "--num-samples",
61         default=1,
62         type=int,
63         help="Number of times to repeat each trial.")
64     parser.add_argument(
65         "--checkpoint-freq",
66         default=0,
67         type=int,
68         help="How many training iterations between checkpoints. "
69         "A value of 0 (default) disables checkpointing.")
70     parser.add_argument(
71         "--checkpoint-at-end",
72         action="store_true",
73         help="Whether to checkpoint at the end of the experiment. "
74         "Default is False.")
75     parser.add_argument(
76         "--no-sync-on-checkpoint",
77         action="store_true",
78         help="Disable sync-down of trial checkpoint, which is enabled by "
79         "default to guarantee recoverability. If set, checkpoint syncing from "
80         "worker to driver is asynchronous. Set this only if synchronous "
81         "checkpointing is too slow and trial restoration failures can be "
82         "tolerated")
83     parser.add_argument(
84         "--keep-checkpoints-num",
85         default=None,
86         type=int,
87         help="Number of best checkpoints to keep. Others get "
88         "deleted. Default (None) keeps all checkpoints.")
89     parser.add_argument(
90         "--checkpoint-score-attr",
91         default="training_iteration",
92         type=str,
93         help="Specifies by which attribute to rank the best checkpoint. "
94         "Default is increasing order. If attribute starts with min- it "
95         "will rank attribute in decreasing order. Example: "
96         "min-validation_loss")
97     parser.add_argument(
98         "--export-formats",
99         default=None,
100         help="List of formats that exported at the end of the experiment. "
101         "Default is None. For RLlib, 'checkpoint' and 'model' are "
102         "supported for TensorFlow policy graphs.")
103     parser.add_argument(
104         "--max-failures",
105         default=3,
106         type=int,
107         help="Try to recover a trial from its last checkpoint at least this "
108         "many times. Only applies if checkpointing is enabled.")
109     parser.add_argument(
110         "--scheduler",
111         default="FIFO",
112         type=str,
113         help="FIFO (default), MedianStopping, AsyncHyperBand, "
114         "HyperBand, or HyperOpt.")
115     parser.add_argument(
116         "--scheduler-config",
117         default="{}",
118         type=json.loads,
119         help="Config options to pass to the scheduler.")
120
121     # Note: this currently only makes sense when running a single trial
122     parser.add_argument(
123         "--restore",
124         default=None,
125         type=str,
126         help="If specified, restore from this checkpoint.")
127
128     return parser
129
130

131 def to_argv(config):
132     """Converts configuration to a command line argument format."""
133     argv = []
134     for k, v in config.items():
135         if "-" in k:
136             raise ValueError("Use '_' instead of '-' in `{}`".format(k))
137         if v is None:
138             continue
139         if not isinstance(v, bool) or v:  # for argparse flags
140             argv.append("--{}".format(k.replace("_", "-")))
141         if isinstance(v, string_types):
142             argv.append(v)
143         elif isinstance(v, bool):
144             pass
145         else:
146             argv.append(json.dumps(v, cls=_SafeFallbackEncoder))
147     return argv
148
149

150 def create_trial_from_spec(spec, output_path, parser, **trial_kwargs):
151     """Creates a Trial object from parsing the spec.
152
153     Arguments:
154         spec (dict): A resolved experiment specification. Arguments should
155             The args here should correspond to the command line flags
156             in ray.tune.config_parser.
157         output_path (str); A specific output path within the local_dir.
158             Typically the name of the experiment.
159         parser (ArgumentParser): An argument parser object from
160             make_parser.
161         trial_kwargs: Extra keyword arguments used in instantiating the Trial.
162
163     Returns:
164         A trial object with corresponding parameters to the specification.
165     """
166     try:
167         args, _ = parser.parse_known_args(to_argv(spec))
168     except SystemExit:
169         raise TuneError("Error parsing args, see above message", spec)
170     if "resources_per_trial" in spec:
171         trial_kwargs["resources"] = json_to_resources(
172             spec["resources_per_trial"])
173     return Trial(
174         # Submitting trial via server in py2.7 creates Unicode, which does not
175         # convert to string in a straightforward manner.
176         trainable_name=spec["run"],
177         # json.load leads to str -> unicode in py2.7
178         config=spec.get("config", {}),
179         local_dir=os.path.join(spec["local_dir"], output_path),
180         # json.load leads to str -> unicode in py2.7
181         stopping_criterion=spec.get("stop", {}),
182         remote_checkpoint_dir=spec.get("remote_checkpoint_dir"),
183         checkpoint_freq=args.checkpoint_freq,
184         checkpoint_at_end=args.checkpoint_at_end,
185         sync_on_checkpoint=not args.no_sync_on_checkpoint,
186         keep_checkpoints_num=args.keep_checkpoints_num,
187         checkpoint_score_attr=args.checkpoint_score_attr,