| problem_id (string, length 18–22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–25.4k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_10128 | rasdani/github-patches | git_diff | MycroftAI__mycroft-core-2338 |

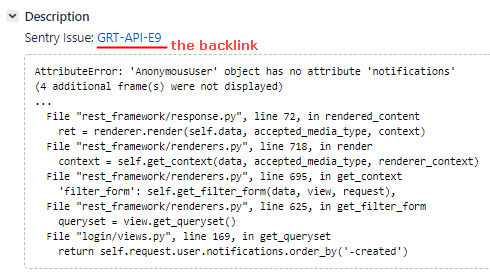

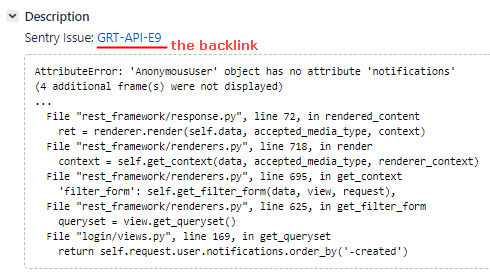

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Skill Shutdown sometimes fails causing duplicate instances of Skill

## Devices:

Issue occurred on two separate Debian Linux installations

- one running Mycroft-core 19.02.14,

- the other running dev branch the date of this issue.

## Steps to reproduce:

1. Load Mycroft and ensure working as expected

2. Make intentional code error in Alarm Skill to raise exception eg `self.log.info(not_a_real_var)`

3. Skill fails on reload with the following error:

```
12:04:25.758 | INFO | 22386 | mycroft.skills.skill_loader:reload:109 | ATTEMPTING TO RELOAD SKILL: mycroft-alarm.mycroftai
12:04:25.760 | ERROR | 22386 | mycroft.skills.skill_loader:_execute_instance_shutdown:145 | An error occurred while shutting down AlarmSkill
Traceback (most recent call last):
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/skill_loader.py", line 142, in _execute_instance_shutdown
    self.instance.default_shutdown()
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/mycroft_skill/mycroft_skill.py", line 1162, in default_shutdown
    self.event_scheduler.shutdown()
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/event_scheduler.py", line 433, in shutdown
    self.events.clear()
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/mycroft_skill/event_container.py", line 183, in clear
    self.bus.remove(e, f)
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/messagebus/client/client.py", line 172, in remove
    self.emitter.remove_listener(event_name, func)
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/messagebus/client/threaded_event_emitter.py", line 57, in remove_listener
    return super().remove_listener(event_name, func)
  File "/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/.venv/lib/python3.6/site-packages/pyee/__init__.py", line 205, in remove_listener
    self._events[event].pop(f)
KeyError: <function create_basic_wrapper.<locals>.wrapper at 0x7f2a504781e0>
```

4. Remove bad code and save to reload Skill

```
12:04:25.803 | INFO | 22386 | mycroft.skills.skill_loader:_communicate_load_status:273 | Skill mycroft-alarm.mycroftai loaded successfully
```

5. Trigger the Skill with an utterance eg "what alarms are set"

6. Mycroft responds twice

- once from the failed instance of the Skill: "An error occurred while processing a request in Alarm Skill"

- once from the new working instance of the Skill: "No alarms have been set"

The failed instance of the Skill will remain until Mycroft services are restarted.

After restarting, the same steps cause the same error.

## Investigation:

This was only occurring for the Alarm Skill; I did not get the same behavior in the Date Time Skill.

To see what might be causing it, I added some logging to the offending function in [`mycroft/messagebus/client/threaded_event_emitter.py`](https://github.com/MycroftAI/mycroft-core/blob/dev/mycroft/messagebus/client/threaded_event_emitter.py):

```
def remove_listener(self, event_name, func):
    """ Wrap the remove to translate from function to wrapped
    function.
    """
    for w in self.wrappers[event_name]:
        if w[0] == func:
            self.wrappers[event_name].remove(w)
            return super().remove_listener(event_name, w[1])
    # if no wrapper exists try removing the function
    LOG.info("REMOVING LISTENER")
    LOG.info("event_name: " + str(event_name))
    LOG.info(event_name)
    LOG.info("func: " + str(func))
    LOG.info(func)
    return super().remove_listener(event_name, func)
```

I then reloaded Mycroft and can no longer trigger the issue.

## Hypothesis

- Presumably the `func` was not being found in `self.wrappers`, allowing it to proceed to try removing the function itself on [line 57](https://github.com/MycroftAI/mycroft-core/blob/dev/mycroft/messagebus/client/threaded_event_emitter.py#L57), which then failed.

- Possibly the function was modified or removed from `self.wrappers` causing it to not match?

- Is `self.wrappers` cached between service restarts, and would editing this file have recreated it?

As I'm no longer able to reproduce the error, it's difficult to continue debugging, so I'm documenting the situation here in case anyone else runs into it and we can pick it back up.

Unless anyone else has an idea of what might be happening?

--- END ISSUE ---
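The hypothesis in the issue (the callable being removed is not the one the emitter holds) can be made concrete with a small, self-contained model. `TinyEmitter` and `Container` are hypothetical stand-ins, not Mycroft or pyee classes. The emitter stores listeners in a per-event dict keyed by the callable, so removing an unknown callable raises `KeyError`, like the `pop(f)` at the bottom of the traceback. The container mirrors the `add()`/`clear()` pair of `EventContainer`, where a one-shot handler reaches the bus as `once_wrapper` but the original `handler` is what gets recorded. The event name `'alarm.expired'` is invented for the demo.

```python
from collections import defaultdict


class TinyEmitter:
    """Hypothetical stand-in for the bus emitter. Listeners live in a
    per-event dict keyed by the callable, so removing a callable that was
    never registered raises KeyError, just as dict.pop does."""

    def __init__(self):
        self._events = defaultdict(dict)

    def on(self, event, f):
        self._events[event][f] = f

    def remove_listener(self, event, f):
        self._events[event].pop(f)  # KeyError if f was never registered


class Container:
    """Simplified model of EventContainer.add()/clear().

    record_registered=False mirrors the original add(), which records the
    caller's handler even when once_wrapper is what reached the bus;
    record_registered=True mirrors the patched add()."""

    def __init__(self, bus, record_registered=False):
        self.bus = bus
        self.events = []
        self.record_registered = record_registered

    def add(self, name, handler, once=False):
        def once_wrapper(message):
            handler(message)  # one-shot self-removal is not modelled here

        registered = once_wrapper if once else handler
        self.bus.on(name, registered)
        recorded = registered if self.record_registered else handler
        self.events.append((name, recorded))

    def clear(self):
        for name, f in self.events:
            self.bus.remove_listener(name, f)
        self.events = []


def handler(message):
    pass


buggy = Container(TinyEmitter())
buggy.add('alarm.expired', handler, once=True)
try:
    buggy.clear()
    buggy_raised = False
except KeyError:
    buggy_raised = True  # clear() aborted; once_wrapper is still registered

fixed = Container(TinyEmitter(), record_registered=True)
fixed.add('alarm.expired', handler, once=True)
fixed.clear()  # removes once_wrapper, the callable the bus actually holds

print(buggy_raised)                              # True
print(len(buggy.bus._events['alarm.expired']))   # 1
print(len(fixed.bus._events['alarm.expired']))   # 0
```

In this model the buggy `clear()` raises before it resets its list, so `once_wrapper` stays registered; that is one plausible route to a stale skill instance that keeps answering. Recording the callable that was actually handed to the bus lets removal find it.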

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mycroft/skills/mycroft_skill/event_container.py`

Content:

```

1 from inspect import signature
2 
3 from mycroft.messagebus.message import Message
4 from mycroft.metrics import Stopwatch, report_timing
5 from mycroft.util.log import LOG
6 
7 from ..skill_data import to_alnum
8 
9 
10 def unmunge_message(message, skill_id):
11     """Restore message keywords by removing the Letterified skill ID.
12     Arguments:
13         message (Message): Intent result message
14         skill_id (str): skill identifier
15     Returns:
16         Message without clear keywords
17     """
18     if isinstance(message, Message) and isinstance(message.data, dict):
19         skill_id = to_alnum(skill_id)
20         for key in list(message.data.keys()):
21             if key.startswith(skill_id):
22                 # replace the munged key with the real one
23                 new_key = key[len(skill_id):]
24                 message.data[new_key] = message.data.pop(key)
25 
26     return message
27 
28 
29 def get_handler_name(handler):
30     """Name (including class if available) of handler function.
31 
32     Arguments:
33         handler (function): Function to be named
34 
35     Returns:
36         string: handler name as string
37     """
38     if '__self__' in dir(handler) and 'name' in dir(handler.__self__):
39         return handler.__self__.name + '.' + handler.__name__
40     else:
41         return handler.__name__
42 
43 
44 def create_wrapper(handler, skill_id, on_start, on_end, on_error):
45     """Create the default skill handler wrapper.
46 
47     This wrapper handles things like metrics, reporting handler start/stop
48     and errors.
49         handler (callable): method/function to call
50         skill_id: skill_id for associated skill
51         on_start (function): function to call before executing the handler
52         on_end (function): function to call after executing the handler
53         on_error (function): function to call for error reporting
54     """
55     def wrapper(message):
56         stopwatch = Stopwatch()
57         try:
58             message = unmunge_message(message, skill_id)
59             if on_start:
60                 on_start(message)
61 
62             with stopwatch:
63                 if len(signature(handler).parameters) == 0:
64                     handler()
65                 else:
66                     handler(message)
67 
68         except Exception as e:
69             if on_error:
70                 on_error(e)
71         finally:
72             if on_end:
73                 on_end(message)
74 
75             # Send timing metrics
76             context = message.context
77             if context and 'ident' in context:
78                 report_timing(context['ident'], 'skill_handler', stopwatch,
79                               {'handler': handler.__name__,
80                                'skill_id': skill_id})
81     return wrapper
82 
83 
84 def create_basic_wrapper(handler, on_error=None):
85     """Create the default skill handler wrapper.
86 
87     This wrapper handles things like metrics, reporting handler start/stop
88     and errors.
89 
90     Arguments:
91         handler (callable): method/function to call
92         on_error (function): function to call to report error.
93 
94     Returns:
95         Wrapped callable
96     """
97     def wrapper(message):
98         try:
99             if len(signature(handler).parameters) == 0:
100                 handler()
101             else:
102                 handler(message)
103         except Exception as e:
104             if on_error:
105                 on_error(e)
106 
107     return wrapper
108 
109 
110 class EventContainer:
111     """Container tracking messagbus handlers.
112 
113     This container tracks events added by a skill, allowing unregistering
114     all events on shutdown.
115     """
116     def __init__(self, bus=None):
117         self.bus = bus
118         self.events = []
119 
120     def set_bus(self, bus):
121         self.bus = bus
122 
123     def add(self, name, handler, once=False):
124         """Create event handler for executing intent or other event.
125 
126         Arguments:
127             name (string): IntentParser name
128             handler (func): Method to call
129             once (bool, optional): Event handler will be removed after it has
130                 been run once.
131         """
132         def once_wrapper(message):
133             # Remove registered one-time handler before invoking,
134             # allowing them to re-schedule themselves.
135             handler(message)
136             self.remove(name)
137 
138         if handler:
139             if once:
140                 self.bus.once(name, once_wrapper)
141             else:
142                 self.bus.on(name, handler)
143             self.events.append((name, handler))
144 
145     def remove(self, name):
146         """Removes an event from bus emitter and events list.
147 
148         Args:
149             name (string): Name of Intent or Scheduler Event
150         Returns:
151             bool: True if found and removed, False if not found
152         """
153         print("Removing event {}".format(name))
154         removed = False
155         for _name, _handler in list(self.events):
156             if name == _name:
157                 try:
158                     self.events.remove((_name, _handler))
159                 except ValueError:
160                     LOG.error('Failed to remove event {}'.format(name))
161                     pass
162                 removed = True
163 
164         # Because of function wrappers, the emitter doesn't always directly
165         # hold the _handler function, it sometimes holds something like
166         # 'wrapper(_handler)'.  So a call like:
167         #     self.bus.remove(_name, _handler)
168         # will not find it, leaving an event handler with that name left behind
169         # waiting to fire if it is ever re-installed and triggered.
170         # Remove all handlers with the given name, regardless of handler.
171         if removed:
172             self.bus.remove_all_listeners(name)
173         return removed
174 
175     def __iter__(self):
176         return iter(self.events)
177 
178     def clear(self):
179         """Unregister all registered handlers and clear the list of registered
180         events.
181         """
182         for e, f in self.events:
183             self.bus.remove(e, f)
184         self.events = []  # Remove reference to wrappers
185 

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/mycroft/skills/mycroft_skill/event_container.py b/mycroft/skills/mycroft_skill/event_container.py
--- a/mycroft/skills/mycroft_skill/event_container.py
+++ b/mycroft/skills/mycroft_skill/event_container.py
@@ -138,9 +138,12 @@
         if handler:
             if once:
                 self.bus.once(name, once_wrapper)
+                self.events.append((name, once_wrapper))
             else:
                 self.bus.on(name, handler)
-            self.events.append((name, handler))
+                self.events.append((name, handler))
+
+        LOG.debug('Added event: {}'.format(name))
 
     def remove(self, name):
         """Removes an event from bus emitter and events list.

|

{"golden_diff": "diff --git a/mycroft/skills/mycroft_skill/event_container.py b/mycroft/skills/mycroft_skill/event_container.py\n--- a/mycroft/skills/mycroft_skill/event_container.py\n+++ b/mycroft/skills/mycroft_skill/event_container.py\n@@ -138,9 +138,12 @@\n if handler:\n if once:\n self.bus.once(name, once_wrapper)\n+ self.events.append((name, once_wrapper))\n else:\n self.bus.on(name, handler)\n- self.events.append((name, handler))\n+ self.events.append((name, handler))\n+\n+ LOG.debug('Added event: {}'.format(name))\n \n def remove(self, name):\n \"\"\"Removes an event from bus emitter and events list.\n", "issue": "Skill Shutdown sometimes fails causing duplicate instances of Skill\n## Devices:\r\nIssue occurred on two separate Debian Linux installations\r\n- one running Mycroft-core 19.02.14, \r\n- the other running dev branch the date of this issue.\r\n\r\n## Steps to reproduce:\r\n1. Load Mycroft and ensure working as expected\r\n2. Make intentional code error in Alarm Skill to raise exception eg `self.log.info(not_a_real_var)`\r\n3. 
Skill fails on reload with the following error:\r\n```\r\n 12:04:25.758 | INFO | 22386 | mycroft.skills.skill_loader:reload:109 | ATTEMPTING TO RELOAD SKILL: mycroft-alarm.mycroftai\r\n 12:04:25.760 | ERROR | 22386 | mycroft.skills.skill_loader:_execute_instance_shutdown:145 | An error occurred while shutting down AlarmSkill\r\nTraceback (most recent call last):\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/skill_loader.py\", line 142, in _execute_instance_shutdown\r\n self.instance.default_shutdown()\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/mycroft_skill/mycroft_skill.py\", line 1162, in default_shutdown\r\n self.event_scheduler.shutdown()\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/event_scheduler.py\", line 433, in shutdown\r\n self.events.clear()\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/skills/mycroft_skill/event_container.py\", line 183, in clear\r\n self.bus.remove(e, f)\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/messagebus/client/client.py\", line 172, in remove\r\n self.emitter.remove_listener(event_name, func)\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/mycroft/messagebus/client/threaded_event_emitter.py\", line 57, in remove_listener\r\n return super().remove_listener(event_name, func)\r\n File \"/home/fs-neriahbjato/Documents/Full_Scale/Mycroft/mycroft-core/.venv/lib/python3.6/site-packages/pyee/__init__.py\", line 205, in remove_listener\r\n self._events[event].pop(f)\r\nKeyError: <function create_basic_wrapper.<locals>.wrapper at 0x7f2a504781e0>\r\n```\r\n4. Remove bad code and save to reload Skill\r\n```\r\n 12:04:25.803 | INFO | 22386 | mycroft.skills.skill_loader:_communicate_load_status:273 | Skill mycroft-alarm.mycroftai loaded successfully\r\n```\r\n5. 
Trigger the Skill with an utterance eg \"what alarms are set\"\r\n6. Mycroft responds twice\r\n - once from the failed instance of the Skill: \"An error occurred while processing a request in Alarm Skill\"\r\n - once from the new working instance of the Skill: \"No alarms have been set\"\r\n\r\nThe failed instance of the Skill will remain until Mycroft services are restarted.\r\nAfter restarting, the same steps cause the same error.\r\n\r\n## Investigation:\r\nThis was only occurring for the Alarm Skill, did not get the same behavior in the Date Time Skill.\r\nTo see what might be causing it, I added some logging to the offending function in [`mycroft/messagebus/client/threaded_event_emitter.py`](https://github.com/MycroftAI/mycroft-core/blob/dev/mycroft/messagebus/client/threaded_event_emitter.py):\r\n```\r\ndef remove_listener(self, event_name, func):\r\n \"\"\" Wrap the remove to translate from function to wrapped\r\n function.\r\n \"\"\"\r\n for w in self.wrappers[event_name]:\r\n if w[0] == func:\r\n self.wrappers[event_name].remove(w)\r\n return super().remove_listener(event_name, w[1])\r\n # if no wrapper exists try removing the function\r\n LOG.info(\"REMOVING LISTENER\")\r\n LOG.info(\"event_name: \" + str(event_name))\r\n LOG.info(event_name)\r\n LOG.info(\"func: \" + str(func))\r\n LOG.info(func)\r\n return super().remove_listener(event_name, func)\r\n```\r\nThen reloaded Mycroft and can no longer trigger the issue.\r\n\r\n## Hypothesis\r\n- Presumably the `func` was not being found in `self.wrappers` allowing it to proceed to try removing the function itself on [line 57](https://github.com/MycroftAI/mycroft-core/blob/dev/mycroft/messagebus/client/threaded_event_emitter.py#L57) which then failed.\r\n- Possibly the function was modified or removed from `self.wrappers` causing it to not match?\r\n- Is `self.wrappers` cached between service restarts, and would editing this file have recreated it?\r\n\r\n\r\nAs I'm no longer able to reproduce the error it's 
difficult to continue debugging. So documenting the situation here in case anyone else runs into it and we can pick it back up.\r\n\r\nUnless anyone else has an idea of what might be happening?\n", "before_files": [{"content": "from inspect import signature\n\nfrom mycroft.messagebus.message import Message\nfrom mycroft.metrics import Stopwatch, report_timing\nfrom mycroft.util.log import LOG\n\nfrom ..skill_data import to_alnum\n\n\ndef unmunge_message(message, skill_id):\n \"\"\"Restore message keywords by removing the Letterified skill ID.\n Arguments:\n message (Message): Intent result message\n skill_id (str): skill identifier\n Returns:\n Message without clear keywords\n \"\"\"\n if isinstance(message, Message) and isinstance(message.data, dict):\n skill_id = to_alnum(skill_id)\n for key in list(message.data.keys()):\n if key.startswith(skill_id):\n # replace the munged key with the real one\n new_key = key[len(skill_id):]\n message.data[new_key] = message.data.pop(key)\n\n return message\n\n\ndef get_handler_name(handler):\n \"\"\"Name (including class if available) of handler function.\n\n Arguments:\n handler (function): Function to be named\n\n Returns:\n string: handler name as string\n \"\"\"\n if '__self__' in dir(handler) and 'name' in dir(handler.__self__):\n return handler.__self__.name + '.' 
+ handler.__name__\n else:\n return handler.__name__\n\n\ndef create_wrapper(handler, skill_id, on_start, on_end, on_error):\n \"\"\"Create the default skill handler wrapper.\n\n This wrapper handles things like metrics, reporting handler start/stop\n and errors.\n handler (callable): method/function to call\n skill_id: skill_id for associated skill\n on_start (function): function to call before executing the handler\n on_end (function): function to call after executing the handler\n on_error (function): function to call for error reporting\n \"\"\"\n def wrapper(message):\n stopwatch = Stopwatch()\n try:\n message = unmunge_message(message, skill_id)\n if on_start:\n on_start(message)\n\n with stopwatch:\n if len(signature(handler).parameters) == 0:\n handler()\n else:\n handler(message)\n\n except Exception as e:\n if on_error:\n on_error(e)\n finally:\n if on_end:\n on_end(message)\n\n # Send timing metrics\n context = message.context\n if context and 'ident' in context:\n report_timing(context['ident'], 'skill_handler', stopwatch,\n {'handler': handler.__name__,\n 'skill_id': skill_id})\n return wrapper\n\n\ndef create_basic_wrapper(handler, on_error=None):\n \"\"\"Create the default skill handler wrapper.\n\n This wrapper handles things like metrics, reporting handler start/stop\n and errors.\n\n Arguments:\n handler (callable): method/function to call\n on_error (function): function to call to report error.\n\n Returns:\n Wrapped callable\n \"\"\"\n def wrapper(message):\n try:\n if len(signature(handler).parameters) == 0:\n handler()\n else:\n handler(message)\n except Exception as e:\n if on_error:\n on_error(e)\n\n return wrapper\n\n\nclass EventContainer:\n \"\"\"Container tracking messagbus handlers.\n\n This container tracks events added by a skill, allowing unregistering\n all events on shutdown.\n \"\"\"\n def __init__(self, bus=None):\n self.bus = bus\n self.events = []\n\n def set_bus(self, bus):\n self.bus = bus\n\n def add(self, name, handler, 
once=False):\n \"\"\"Create event handler for executing intent or other event.\n\n Arguments:\n name (string): IntentParser name\n handler (func): Method to call\n once (bool, optional): Event handler will be removed after it has\n been run once.\n \"\"\"\n def once_wrapper(message):\n # Remove registered one-time handler before invoking,\n # allowing them to re-schedule themselves.\n handler(message)\n self.remove(name)\n\n if handler:\n if once:\n self.bus.once(name, once_wrapper)\n else:\n self.bus.on(name, handler)\n self.events.append((name, handler))\n\n def remove(self, name):\n \"\"\"Removes an event from bus emitter and events list.\n\n Args:\n name (string): Name of Intent or Scheduler Event\n Returns:\n bool: True if found and removed, False if not found\n \"\"\"\n print(\"Removing event {}\".format(name))\n removed = False\n for _name, _handler in list(self.events):\n if name == _name:\n try:\n self.events.remove((_name, _handler))\n except ValueError:\n LOG.error('Failed to remove event {}'.format(name))\n pass\n removed = True\n\n # Because of function wrappers, the emitter doesn't always directly\n # hold the _handler function, it sometimes holds something like\n # 'wrapper(_handler)'. 
So a call like:\n # self.bus.remove(_name, _handler)\n # will not find it, leaving an event handler with that name left behind\n # waiting to fire if it is ever re-installed and triggered.\n # Remove all handlers with the given name, regardless of handler.\n if removed:\n self.bus.remove_all_listeners(name)\n return removed\n\n def __iter__(self):\n return iter(self.events)\n\n def clear(self):\n \"\"\"Unregister all registered handlers and clear the list of registered\n events.\n \"\"\"\n for e, f in self.events:\n self.bus.remove(e, f)\n self.events = [] # Remove reference to wrappers\n", "path": "mycroft/skills/mycroft_skill/event_container.py"}], "after_files": [{"content": "from inspect import signature\n\nfrom mycroft.messagebus.message import Message\nfrom mycroft.metrics import Stopwatch, report_timing\nfrom mycroft.util.log import LOG\n\nfrom ..skill_data import to_alnum\n\n\ndef unmunge_message(message, skill_id):\n \"\"\"Restore message keywords by removing the Letterified skill ID.\n Arguments:\n message (Message): Intent result message\n skill_id (str): skill identifier\n Returns:\n Message without clear keywords\n \"\"\"\n if isinstance(message, Message) and isinstance(message.data, dict):\n skill_id = to_alnum(skill_id)\n for key in list(message.data.keys()):\n if key.startswith(skill_id):\n # replace the munged key with the real one\n new_key = key[len(skill_id):]\n message.data[new_key] = message.data.pop(key)\n\n return message\n\n\ndef get_handler_name(handler):\n \"\"\"Name (including class if available) of handler function.\n\n Arguments:\n handler (function): Function to be named\n\n Returns:\n string: handler name as string\n \"\"\"\n if '__self__' in dir(handler) and 'name' in dir(handler.__self__):\n return handler.__self__.name + '.' 
+ handler.__name__\n else:\n return handler.__name__\n\n\ndef create_wrapper(handler, skill_id, on_start, on_end, on_error):\n \"\"\"Create the default skill handler wrapper.\n\n This wrapper handles things like metrics, reporting handler start/stop\n and errors.\n handler (callable): method/function to call\n skill_id: skill_id for associated skill\n on_start (function): function to call before executing the handler\n on_end (function): function to call after executing the handler\n on_error (function): function to call for error reporting\n \"\"\"\n def wrapper(message):\n stopwatch = Stopwatch()\n try:\n message = unmunge_message(message, skill_id)\n if on_start:\n on_start(message)\n\n with stopwatch:\n if len(signature(handler).parameters) == 0:\n handler()\n else:\n handler(message)\n\n except Exception as e:\n if on_error:\n on_error(e)\n finally:\n if on_end:\n on_end(message)\n\n # Send timing metrics\n context = message.context\n if context and 'ident' in context:\n report_timing(context['ident'], 'skill_handler', stopwatch,\n {'handler': handler.__name__,\n 'skill_id': skill_id})\n return wrapper\n\n\ndef create_basic_wrapper(handler, on_error=None):\n \"\"\"Create the default skill handler wrapper.\n\n This wrapper handles things like metrics, reporting handler start/stop\n and errors.\n\n Arguments:\n handler (callable): method/function to call\n on_error (function): function to call to report error.\n\n Returns:\n Wrapped callable\n \"\"\"\n def wrapper(message):\n try:\n if len(signature(handler).parameters) == 0:\n handler()\n else:\n handler(message)\n except Exception as e:\n if on_error:\n on_error(e)\n\n return wrapper\n\n\nclass EventContainer:\n \"\"\"Container tracking messagbus handlers.\n\n This container tracks events added by a skill, allowing unregistering\n all events on shutdown.\n \"\"\"\n def __init__(self, bus=None):\n self.bus = bus\n self.events = []\n\n def set_bus(self, bus):\n self.bus = bus\n\n def add(self, name, handler, 
once=False):\n \"\"\"Create event handler for executing intent or other event.\n\n Arguments:\n name (string): IntentParser name\n handler (func): Method to call\n once (bool, optional): Event handler will be removed after it has\n been run once.\n \"\"\"\n def once_wrapper(message):\n # Remove registered one-time handler before invoking,\n # allowing them to re-schedule themselves.\n handler(message)\n self.remove(name)\n\n if handler:\n if once:\n self.bus.once(name, once_wrapper)\n self.events.append((name, once_wrapper))\n else:\n self.bus.on(name, handler)\n self.events.append((name, handler))\n\n LOG.debug('Added event: {}'.format(name))\n\n def remove(self, name):\n \"\"\"Removes an event from bus emitter and events list.\n\n Args:\n name (string): Name of Intent or Scheduler Event\n Returns:\n bool: True if found and removed, False if not found\n \"\"\"\n print(\"Removing event {}\".format(name))\n removed = False\n for _name, _handler in list(self.events):\n if name == _name:\n try:\n self.events.remove((_name, _handler))\n except ValueError:\n LOG.error('Failed to remove event {}'.format(name))\n pass\n removed = True\n\n # Because of function wrappers, the emitter doesn't always directly\n # hold the _handler function, it sometimes holds something like\n # 'wrapper(_handler)'. So a call like:\n # self.bus.remove(_name, _handler)\n # will not find it, leaving an event handler with that name left behind\n # waiting to fire if it is ever re-installed and triggered.\n # Remove all handlers with the given name, regardless of handler.\n if removed:\n self.bus.remove_all_listeners(name)\n return removed\n\n def __iter__(self):\n return iter(self.events)\n\n def clear(self):\n \"\"\"Unregister all registered handlers and clear the list of registered\n events.\n \"\"\"\n for e, f in self.events:\n self.bus.remove(e, f)\n self.events = [] # Remove reference to wrappers\n", "path": "mycroft/skills/mycroft_skill/event_container.py"}]}

| 3,054 | 160 |

| gh_patches_debug_33830 | rasdani/github-patches | git_diff | scikit-image__scikit-image-4416 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Interpolation error in measure.profile_line

## Description

#4206, once merged, exposes interesting behavior for [measure.profile_line](https://scikit-image.org/docs/dev/api/skimage.measure.html#skimage.measure.profile_line). Consider

```python
image = np.arange(12).reshape(4, 3)
# reduce_func is a new parameter proposed in #4206
prof = profile_line(image, (0, 1), (3, 1), linewidth=3, reduce_func=None)
assert_array_equal(image, prof)
```

As I understand it, the returned profile should be identical with the original image but we get this (value at (0, 2) is different):

```
AssertionError:
Arrays are not equal

Mismatch: 8.33%
Max absolute difference: 2
Max relative difference: 0.
 x: array([[ 0,  1,  2],
       [ 3,  4,  5],
       [ 6,  7,  8],
       [ 9, 10, 11]])
 y: array([[ 0,  1,  0],
       [ 3,  4,  5],
       [ 6,  7,  8],
       [ 9, 10, 11]])
```

This might originate in [scipy.ndimage.map_coordinates](https://docs.scipy.org/doc/scipy/reference/generated/scipy.ndimage.map_coordinates.html#scipy.ndimage.map_coordinates). Looks like a potential bug to me but might be an edge case for the underlying interpolation that can be ignored/is not reasonable to fix.
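One way to narrow down whether `map_coordinates` is at fault is to inspect the sample positions it is given rather than the interpolation itself. The sketch below is hypothetical: `perpendicular_samples` is not a scikit-image function, and it assumes the perpendicular half-widths are derived from the line angle with `cos`/`sin`, in the spirit of `_line_profile_coordinates` (whose body is cut off in this listing). It computes only the samples across the first point of the vertical line (0, 1) → (3, 1) with `linewidth=3`.

```python
import numpy as np


def perpendicular_samples(src, dst, linewidth):
    """Hypothetical helper: (row, col) sample positions across the first
    point of a scan line, assuming the perpendicular half-widths come from
    the line angle via cos/sin."""
    src_row, src_col = float(src[0]), float(src[1])
    d_row = dst[0] - src_row
    d_col = dst[1] - src_col
    theta = np.arctan2(d_row, d_col)
    # linewidth - 1 turns a pixel count into the distance between the
    # centres of the outermost pixels; halve it for each side of the line.
    row_width = (linewidth - 1) * np.cos(theta) / 2
    col_width = (linewidth - 1) * np.sin(-theta) / 2
    rows = np.linspace(src_row - row_width, src_row + row_width, linewidth)
    cols = np.linspace(src_col - col_width, src_col + col_width, linewidth)
    return rows, cols


# The vertical scan line from the issue: (0, 1) -> (3, 1), linewidth=3.
rows, cols = perpendicular_samples((0, 1), (3, 1), linewidth=3)
print(cols)         # [2. 1. 0.]: columns run right-to-left
print(rows)         # cos(pi/2) is tiny but non-zero, so rows are not all 0
print(rows[0] < 0)  # True: the sample at column 2 sits just above row 0
```

Under this assumption, the sample paired with column 2 lies outside the image by a rounding error, and with the default `mode='constant'` it can pick up `cval` (0). After `np.flip(pixels, axis=1)` that sample lands at position (0, 2), which would match the single wrong value in the assertion output.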

This behavior is already relevant even before merging #4206 because the faulty value will change the mean value of the profile line.

https://github.com/scikit-image/scikit-image/pull/4206#issuecomment-544920806 also highlights additional strange behavior.

## Version information

Current master, exposed once #4206 is merged.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `skimage/measure/profile.py`

Content:

```

1 from warnings import warn
2 import numpy as np
3 from scipy import ndimage as ndi
4 
5 
6 def profile_line(image, src, dst, linewidth=1,
7                  order=None, mode='constant', cval=0.0,
8                  *, reduce_func=np.mean):
9     """Return the intensity profile of an image measured along a scan line.
10 
11     Parameters
12     ----------
13     image : numeric array, shape (M, N[, C])
14         The image, either grayscale (2D array) or multichannel
15         (3D array, where the final axis contains the channel
16         information).
17     src : 2-tuple of numeric scalar (float or int)
18         The start point of the scan line.
19     dst : 2-tuple of numeric scalar (float or int)
20         The end point of the scan line. The destination point is *included*
21         in the profile, in contrast to standard numpy indexing.
22     linewidth : int, optional
23         Width of the scan, perpendicular to the line
24     order : int in {0, 1, 2, 3, 4, 5}, optional
25         The order of the spline interpolation, default is 0 if
26         image.dtype is bool and 1 otherwise. The order has to be in
27         the range 0-5. See `skimage.transform.warp` for detail.
28     mode : {'constant', 'nearest', 'reflect', 'mirror', 'wrap'}, optional
29         How to compute any values falling outside of the image.
30     cval : float, optional
31         If `mode` is 'constant', what constant value to use outside the image.
32     reduce_func : callable, optional
33         Function used to calculate the aggregation of pixel values
34         perpendicular to the profile_line direction when `linewidth` > 1.
35         If set to None the unreduced array will be returned.
36 
37     Returns
38     -------
39     return_value : array
40         The intensity profile along the scan line. The length of the profile
41         is the ceil of the computed length of the scan line.
42 
43     Examples
44     --------
45     >>> x = np.array([[1, 1, 1, 2, 2, 2]])
46     >>> img = np.vstack([np.zeros_like(x), x, x, x, np.zeros_like(x)])
47     >>> img
48     array([[0, 0, 0, 0, 0, 0],
49            [1, 1, 1, 2, 2, 2],
50            [1, 1, 1, 2, 2, 2],
51            [1, 1, 1, 2, 2, 2],
52            [0, 0, 0, 0, 0, 0]])
53     >>> profile_line(img, (2, 1), (2, 4))
54     array([1., 1., 2., 2.])
55     >>> profile_line(img, (1, 0), (1, 6), cval=4)
56     array([1., 1., 1., 2., 2., 2., 4.])
57 
58     The destination point is included in the profile, in contrast to
59     standard numpy indexing.
60     For example:
61 
62     >>> profile_line(img, (1, 0), (1, 6))  # The final point is out of bounds
63     array([1., 1., 1., 2., 2., 2., 0.])
64     >>> profile_line(img, (1, 0), (1, 5))  # This accesses the full first row
65     array([1., 1., 1., 2., 2., 2.])
66 
67     For different reduce_func inputs:
68 
69     >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.mean)
70     array([0.66666667, 0.66666667, 0.66666667, 1.33333333])
71     >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.max)
72     array([1, 1, 1, 2])
73     >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sum)
74     array([2, 2, 2, 4])
75 
76     The unreduced array will be returned when `reduce_func` is None or when
77     `reduce_func` acts on each pixel value individually.
78 
79     >>> profile_line(img, (1, 2), (4, 2), linewidth=3, order=0,
80     ...     reduce_func=None)
81     array([[1, 1, 2],
82            [1, 1, 2],
83            [1, 1, 2],
84            [0, 0, 0]])
85     >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sqrt)
86     array([[1.        , 1.        , 0.        ],
87            [1.        , 1.        , 0.        ],
88            [1.        , 1.        , 0.        ],
89            [1.41421356, 1.41421356, 0.        ]])
90     """
91     if order is None:
92         order = 0 if image.dtype == bool else 1
93 
94     if image.dtype == bool and order != 0:
95         warn("Input image dtype is bool. Interpolation is not defined "
96              "with bool data type. Please set order to 0 or explicitely "
97              "cast input image to another data type. Starting from version "
98              "0.19 a ValueError will be raised instead of this warning.",
99              FutureWarning, stacklevel=2)
100 
101     perp_lines = _line_profile_coordinates(src, dst, linewidth=linewidth)
102     if image.ndim == 3:
103         pixels = [ndi.map_coordinates(image[..., i], perp_lines,
104                                       prefilter=order > 1,
105                                       order=order, mode=mode,
106                                       cval=cval) for i in
107                   range(image.shape[2])]
108         pixels = np.transpose(np.asarray(pixels), (1, 2, 0))
109     else:
110         pixels = ndi.map_coordinates(image, perp_lines, prefilter=order > 1,
111                                      order=order, mode=mode, cval=cval)
112     # The outputted array with reduce_func=None gives an array where the
113     # row values (axis=1) are flipped. Here, we make this consistent.
114     pixels = np.flip(pixels, axis=1)
115 
116     if reduce_func is None:
117         intensities = pixels
118     else:
119         try:
120             intensities = reduce_func(pixels, axis=1)
121         except TypeError:  # function doesn't allow axis kwarg
122             intensities = np.apply_along_axis(reduce_func, arr=pixels, axis=1)
123 
124     return intensities
125 
126 
127 def _line_profile_coordinates(src, dst, linewidth=1):
128     """Return the coordinates of the profile of an image along a scan line.
129 
130     Parameters
131     ----------
132     src : 2-tuple of numeric scalar (float or int)
133         The start point of the scan line.

134 dst : 2-tuple of numeric scalar (float or int)

135 The end point of the scan line.

136 linewidth : int, optional

137 Width of the scan, perpendicular to the line

138

139 Returns

140 -------

141 coords : array, shape (2, N, C), float

142 The coordinates of the profile along the scan line. The length of the

143 profile is the ceil of the computed length of the scan line.

144

145 Notes

146 -----

147 This is a utility method meant to be used internally by skimage functions.

148 The destination point is included in the profile, in contrast to

149 standard numpy indexing.

150 """

151 src_row, src_col = src = np.asarray(src, dtype=float)

152 dst_row, dst_col = dst = np.asarray(dst, dtype=float)

153 d_row, d_col = dst - src

154 theta = np.arctan2(d_row, d_col)

155

156 length = int(np.ceil(np.hypot(d_row, d_col) + 1))

157 # we add one above because we include the last point in the profile

158 # (in contrast to standard numpy indexing)

159 line_col = np.linspace(src_col, dst_col, length)

160 line_row = np.linspace(src_row, dst_row, length)

161

162 # we subtract 1 from linewidth to change from pixel-counting

163 # (make this line 3 pixels wide) to point distances (the

164 # distance between pixel centers)

165 col_width = (linewidth - 1) * np.sin(-theta) / 2

166 row_width = (linewidth - 1) * np.cos(theta) / 2

167 perp_rows = np.array([np.linspace(row_i - row_width, row_i + row_width,

168 linewidth) for row_i in line_row])

169 perp_cols = np.array([np.linspace(col_i - col_width, col_i + col_width,

170 linewidth) for col_i in line_col])

171 return np.array([perp_rows, perp_cols])

172

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/skimage/measure/profile.py b/skimage/measure/profile.py

--- a/skimage/measure/profile.py

+++ b/skimage/measure/profile.py

@@ -4,21 +4,22 @@

def profile_line(image, src, dst, linewidth=1,

- order=None, mode='constant', cval=0.0,

+ order=None, mode=None, cval=0.0,

*, reduce_func=np.mean):

"""Return the intensity profile of an image measured along a scan line.

Parameters

----------

- image : numeric array, shape (M, N[, C])

+ image : ndarray, shape (M, N[, C])

The image, either grayscale (2D array) or multichannel

(3D array, where the final axis contains the channel

information).

- src : 2-tuple of numeric scalar (float or int)

- The start point of the scan line.

- dst : 2-tuple of numeric scalar (float or int)

- The end point of the scan line. The destination point is *included*

- in the profile, in contrast to standard numpy indexing.

+ src : array_like, shape (2, )

+ The coordinates of the start point of the scan line.

+ dst : array_like, shape (2, )

+ The coordinates of the end point of the scan

+ line. The destination point is *included* in the profile, in

+ contrast to standard numpy indexing.

linewidth : int, optional

Width of the scan, perpendicular to the line

order : int in {0, 1, 2, 3, 4, 5}, optional

@@ -97,6 +98,12 @@

"cast input image to another data type. Starting from version "

"0.19 a ValueError will be raised instead of this warning.",

FutureWarning, stacklevel=2)

+ if mode is None:

+ warn("Default out of bounds interpolation mode 'constant' is "

+ "deprecated. In version 0.19 it will be set to 'reflect'. "

+ "To avoid this warning, set `mode=` explicitly.",

+ FutureWarning, stacklevel=2)

+ mode = 'constant'

perp_lines = _line_profile_coordinates(src, dst, linewidth=linewidth)

if image.ndim == 3:

|

{"golden_diff": "diff --git a/skimage/measure/profile.py b/skimage/measure/profile.py\n--- a/skimage/measure/profile.py\n+++ b/skimage/measure/profile.py\n@@ -4,21 +4,22 @@\n \n \n def profile_line(image, src, dst, linewidth=1,\n- order=None, mode='constant', cval=0.0,\n+ order=None, mode=None, cval=0.0,\n *, reduce_func=np.mean):\n \"\"\"Return the intensity profile of an image measured along a scan line.\n \n Parameters\n ----------\n- image : numeric array, shape (M, N[, C])\n+ image : ndarray, shape (M, N[, C])\n The image, either grayscale (2D array) or multichannel\n (3D array, where the final axis contains the channel\n information).\n- src : 2-tuple of numeric scalar (float or int)\n- The start point of the scan line.\n- dst : 2-tuple of numeric scalar (float or int)\n- The end point of the scan line. The destination point is *included*\n- in the profile, in contrast to standard numpy indexing.\n+ src : array_like, shape (2, )\n+ The coordinates of the start point of the scan line.\n+ dst : array_like, shape (2, )\n+ The coordinates of the end point of the scan\n+ line. The destination point is *included* in the profile, in\n+ contrast to standard numpy indexing.\n linewidth : int, optional\n Width of the scan, perpendicular to the line\n order : int in {0, 1, 2, 3, 4, 5}, optional\n@@ -97,6 +98,12 @@\n \"cast input image to another data type. Starting from version \"\n \"0.19 a ValueError will be raised instead of this warning.\",\n FutureWarning, stacklevel=2)\n+ if mode is None:\n+ warn(\"Default out of bounds interpolation mode 'constant' is \"\n+ \"deprecated. In version 0.19 it will be set to 'reflect'. 
\"\n+ \"To avoid this warning, set `mode=` explicitly.\",\n+ FutureWarning, stacklevel=2)\n+ mode = 'constant'\n \n perp_lines = _line_profile_coordinates(src, dst, linewidth=linewidth)\n if image.ndim == 3:\n", "issue": "Interpolation error in measure.profile_line\n## Description\r\n\r\n#4206, once merged, exposes interesting behavior for [measure.profile_line](https://scikit-image.org/docs/dev/api/skimage.measure.html#skimage.measure.profile_line). Consider\r\n\r\n```python\r\nimage = np.arange(12).reshape(4, 3)\r\n# reduce_func is a new parameter proposed in #4206\r\nprof = profile_line(image, (0, 1), (3, 1), linewidth=3, reduce_func=None)\r\nassert_array_equal(image, prof)\r\n```\r\nAs I understand it, the returned profile should be identical with the original image but we get this (value at (0, 2) is different):\r\n```\r\nAssertionError: \r\nArrays are not equal\r\nMismatch: 8.33%\r\nMax absolute difference: 2\r\nMax relative difference: 0.\r\n x: array([[ 0, 1, 2],\r\n [ 3, 4, 5],\r\n [ 6, 7, 8],\r\n [ 9, 10, 11]])\r\n y: array([[ 0, 1, 0],\r\n [ 3, 4, 5],\r\n [ 6, 7, 8],\r\n [ 9, 10, 11]])\r\n```\r\nThis might originate in [scipy.ndimage.map_coordinates](https://docs.scipy.org/doc/scipy/reference/generated/scipy.ndimage.map_coordinates.html#scipy.ndimage.map_coordinates). Looks like a potential bug to me but might be an edge case for the underlying interpolation that can be ignored/is not reasonable to fix. 
\r\nThis behavior is already relevant even before merging #4206 because the faulty value will change the mean value of the profile line.\r\n\r\nhttps://github.com/scikit-image/scikit-image/pull/4206#issuecomment-544920806 also highlights additional strange behavior.\r\n\r\n## Version information\r\n\r\nCurrent master, exposed once #4206 is merged.\r\n\n", "before_files": [{"content": "from warnings import warn\nimport numpy as np\nfrom scipy import ndimage as ndi\n\n\ndef profile_line(image, src, dst, linewidth=1,\n order=None, mode='constant', cval=0.0,\n *, reduce_func=np.mean):\n \"\"\"Return the intensity profile of an image measured along a scan line.\n\n Parameters\n ----------\n image : numeric array, shape (M, N[, C])\n The image, either grayscale (2D array) or multichannel\n (3D array, where the final axis contains the channel\n information).\n src : 2-tuple of numeric scalar (float or int)\n The start point of the scan line.\n dst : 2-tuple of numeric scalar (float or int)\n The end point of the scan line. The destination point is *included*\n in the profile, in contrast to standard numpy indexing.\n linewidth : int, optional\n Width of the scan, perpendicular to the line\n order : int in {0, 1, 2, 3, 4, 5}, optional\n The order of the spline interpolation, default is 0 if\n image.dtype is bool and 1 otherwise. The order has to be in\n the range 0-5. See `skimage.transform.warp` for detail.\n mode : {'constant', 'nearest', 'reflect', 'mirror', 'wrap'}, optional\n How to compute any values falling outside of the image.\n cval : float, optional\n If `mode` is 'constant', what constant value to use outside the image.\n reduce_func : callable, optional\n Function used to calculate the aggregation of pixel values\n perpendicular to the profile_line direction when `linewidth` > 1.\n If set to None the unreduced array will be returned.\n\n Returns\n -------\n return_value : array\n The intensity profile along the scan line. 
The length of the profile\n is the ceil of the computed length of the scan line.\n\n Examples\n --------\n >>> x = np.array([[1, 1, 1, 2, 2, 2]])\n >>> img = np.vstack([np.zeros_like(x), x, x, x, np.zeros_like(x)])\n >>> img\n array([[0, 0, 0, 0, 0, 0],\n [1, 1, 1, 2, 2, 2],\n [1, 1, 1, 2, 2, 2],\n [1, 1, 1, 2, 2, 2],\n [0, 0, 0, 0, 0, 0]])\n >>> profile_line(img, (2, 1), (2, 4))\n array([1., 1., 2., 2.])\n >>> profile_line(img, (1, 0), (1, 6), cval=4)\n array([1., 1., 1., 2., 2., 2., 4.])\n\n The destination point is included in the profile, in contrast to\n standard numpy indexing.\n For example:\n\n >>> profile_line(img, (1, 0), (1, 6)) # The final point is out of bounds\n array([1., 1., 1., 2., 2., 2., 0.])\n >>> profile_line(img, (1, 0), (1, 5)) # This accesses the full first row\n array([1., 1., 1., 2., 2., 2.])\n\n For different reduce_func inputs:\n\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.mean)\n array([0.66666667, 0.66666667, 0.66666667, 1.33333333])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.max)\n array([1, 1, 1, 2])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sum)\n array([2, 2, 2, 4])\n\n The unreduced array will be returned when `reduce_func` is None or when\n `reduce_func` acts on each pixel value individually.\n\n >>> profile_line(img, (1, 2), (4, 2), linewidth=3, order=0,\n ... reduce_func=None)\n array([[1, 1, 2],\n [1, 1, 2],\n [1, 1, 2],\n [0, 0, 0]])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sqrt)\n array([[1. , 1. , 0. ],\n [1. , 1. , 0. ],\n [1. , 1. , 0. ],\n [1.41421356, 1.41421356, 0. ]])\n \"\"\"\n if order is None:\n order = 0 if image.dtype == bool else 1\n\n if image.dtype == bool and order != 0:\n warn(\"Input image dtype is bool. Interpolation is not defined \"\n \"with bool data type. Please set order to 0 or explicitely \"\n \"cast input image to another data type. 
Starting from version \"\n \"0.19 a ValueError will be raised instead of this warning.\",\n FutureWarning, stacklevel=2)\n\n perp_lines = _line_profile_coordinates(src, dst, linewidth=linewidth)\n if image.ndim == 3:\n pixels = [ndi.map_coordinates(image[..., i], perp_lines,\n prefilter=order > 1,\n order=order, mode=mode,\n cval=cval) for i in\n range(image.shape[2])]\n pixels = np.transpose(np.asarray(pixels), (1, 2, 0))\n else:\n pixels = ndi.map_coordinates(image, perp_lines, prefilter=order > 1,\n order=order, mode=mode, cval=cval)\n # The outputted array with reduce_func=None gives an array where the\n # row values (axis=1) are flipped. Here, we make this consistent.\n pixels = np.flip(pixels, axis=1)\n\n if reduce_func is None:\n intensities = pixels\n else:\n try:\n intensities = reduce_func(pixels, axis=1)\n except TypeError: # function doesn't allow axis kwarg\n intensities = np.apply_along_axis(reduce_func, arr=pixels, axis=1)\n\n return intensities\n\n\ndef _line_profile_coordinates(src, dst, linewidth=1):\n \"\"\"Return the coordinates of the profile of an image along a scan line.\n\n Parameters\n ----------\n src : 2-tuple of numeric scalar (float or int)\n The start point of the scan line.\n dst : 2-tuple of numeric scalar (float or int)\n The end point of the scan line.\n linewidth : int, optional\n Width of the scan, perpendicular to the line\n\n Returns\n -------\n coords : array, shape (2, N, C), float\n The coordinates of the profile along the scan line. 
The length of the\n profile is the ceil of the computed length of the scan line.\n\n Notes\n -----\n This is a utility method meant to be used internally by skimage functions.\n The destination point is included in the profile, in contrast to\n standard numpy indexing.\n \"\"\"\n src_row, src_col = src = np.asarray(src, dtype=float)\n dst_row, dst_col = dst = np.asarray(dst, dtype=float)\n d_row, d_col = dst - src\n theta = np.arctan2(d_row, d_col)\n\n length = int(np.ceil(np.hypot(d_row, d_col) + 1))\n # we add one above because we include the last point in the profile\n # (in contrast to standard numpy indexing)\n line_col = np.linspace(src_col, dst_col, length)\n line_row = np.linspace(src_row, dst_row, length)\n\n # we subtract 1 from linewidth to change from pixel-counting\n # (make this line 3 pixels wide) to point distances (the\n # distance between pixel centers)\n col_width = (linewidth - 1) * np.sin(-theta) / 2\n row_width = (linewidth - 1) * np.cos(theta) / 2\n perp_rows = np.array([np.linspace(row_i - row_width, row_i + row_width,\n linewidth) for row_i in line_row])\n perp_cols = np.array([np.linspace(col_i - col_width, col_i + col_width,\n linewidth) for col_i in line_col])\n return np.array([perp_rows, perp_cols])\n", "path": "skimage/measure/profile.py"}], "after_files": [{"content": "from warnings import warn\nimport numpy as np\nfrom scipy import ndimage as ndi\n\n\ndef profile_line(image, src, dst, linewidth=1,\n order=None, mode=None, cval=0.0,\n *, reduce_func=np.mean):\n \"\"\"Return the intensity profile of an image measured along a scan line.\n\n Parameters\n ----------\n image : ndarray, shape (M, N[, C])\n The image, either grayscale (2D array) or multichannel\n (3D array, where the final axis contains the channel\n information).\n src : array_like, shape (2, )\n The coordinates of the start point of the scan line.\n dst : array_like, shape (2, )\n The coordinates of the end point of the scan\n line. 
The destination point is *included* in the profile, in\n contrast to standard numpy indexing.\n linewidth : int, optional\n Width of the scan, perpendicular to the line\n order : int in {0, 1, 2, 3, 4, 5}, optional\n The order of the spline interpolation, default is 0 if\n image.dtype is bool and 1 otherwise. The order has to be in\n the range 0-5. See `skimage.transform.warp` for detail.\n mode : {'constant', 'nearest', 'reflect', 'mirror', 'wrap'}, optional\n How to compute any values falling outside of the image.\n cval : float, optional\n If `mode` is 'constant', what constant value to use outside the image.\n reduce_func : callable, optional\n Function used to calculate the aggregation of pixel values\n perpendicular to the profile_line direction when `linewidth` > 1.\n If set to None the unreduced array will be returned.\n\n Returns\n -------\n return_value : array\n The intensity profile along the scan line. The length of the profile\n is the ceil of the computed length of the scan line.\n\n Examples\n --------\n >>> x = np.array([[1, 1, 1, 2, 2, 2]])\n >>> img = np.vstack([np.zeros_like(x), x, x, x, np.zeros_like(x)])\n >>> img\n array([[0, 0, 0, 0, 0, 0],\n [1, 1, 1, 2, 2, 2],\n [1, 1, 1, 2, 2, 2],\n [1, 1, 1, 2, 2, 2],\n [0, 0, 0, 0, 0, 0]])\n >>> profile_line(img, (2, 1), (2, 4))\n array([1., 1., 2., 2.])\n >>> profile_line(img, (1, 0), (1, 6), cval=4)\n array([1., 1., 1., 2., 2., 2., 4.])\n\n The destination point is included in the profile, in contrast to\n standard numpy indexing.\n For example:\n\n >>> profile_line(img, (1, 0), (1, 6)) # The final point is out of bounds\n array([1., 1., 1., 2., 2., 2., 0.])\n >>> profile_line(img, (1, 0), (1, 5)) # This accesses the full first row\n array([1., 1., 1., 2., 2., 2.])\n\n For different reduce_func inputs:\n\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.mean)\n array([0.66666667, 0.66666667, 0.66666667, 1.33333333])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, 
reduce_func=np.max)\n array([1, 1, 1, 2])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sum)\n array([2, 2, 2, 4])\n\n The unreduced array will be returned when `reduce_func` is None or when\n `reduce_func` acts on each pixel value individually.\n\n >>> profile_line(img, (1, 2), (4, 2), linewidth=3, order=0,\n ... reduce_func=None)\n array([[1, 1, 2],\n [1, 1, 2],\n [1, 1, 2],\n [0, 0, 0]])\n >>> profile_line(img, (1, 0), (1, 3), linewidth=3, reduce_func=np.sqrt)\n array([[1. , 1. , 0. ],\n [1. , 1. , 0. ],\n [1. , 1. , 0. ],\n [1.41421356, 1.41421356, 0. ]])\n \"\"\"\n if order is None:\n order = 0 if image.dtype == bool else 1\n\n if image.dtype == bool and order != 0:\n warn(\"Input image dtype is bool. Interpolation is not defined \"\n \"with bool data type. Please set order to 0 or explicitely \"\n \"cast input image to another data type. Starting from version \"\n \"0.19 a ValueError will be raised instead of this warning.\",\n FutureWarning, stacklevel=2)\n if mode is None:\n warn(\"Default out of bounds interpolation mode 'constant' is \"\n \"deprecated. In version 0.19 it will be set to 'reflect'. \"\n \"To avoid this warning, set `mode=` explicitly.\",\n FutureWarning, stacklevel=2)\n mode = 'constant'\n\n perp_lines = _line_profile_coordinates(src, dst, linewidth=linewidth)\n if image.ndim == 3:\n pixels = [ndi.map_coordinates(image[..., i], perp_lines,\n prefilter=order > 1,\n order=order, mode=mode,\n cval=cval) for i in\n range(image.shape[2])]\n pixels = np.transpose(np.asarray(pixels), (1, 2, 0))\n else:\n pixels = ndi.map_coordinates(image, perp_lines, prefilter=order > 1,\n order=order, mode=mode, cval=cval)\n # The outputted array with reduce_func=None gives an array where the\n # row values (axis=1) are flipped. 
Here, we make this consistent.\n pixels = np.flip(pixels, axis=1)\n\n if reduce_func is None:\n intensities = pixels\n else:\n try:\n intensities = reduce_func(pixels, axis=1)\n except TypeError: # function doesn't allow axis kwarg\n intensities = np.apply_along_axis(reduce_func, arr=pixels, axis=1)\n\n return intensities\n\n\ndef _line_profile_coordinates(src, dst, linewidth=1):\n \"\"\"Return the coordinates of the profile of an image along a scan line.\n\n Parameters\n ----------\n src : 2-tuple of numeric scalar (float or int)\n The start point of the scan line.\n dst : 2-tuple of numeric scalar (float or int)\n The end point of the scan line.\n linewidth : int, optional\n Width of the scan, perpendicular to the line\n\n Returns\n -------\n coords : array, shape (2, N, C), float\n The coordinates of the profile along the scan line. The length of the\n profile is the ceil of the computed length of the scan line.\n\n Notes\n -----\n This is a utility method meant to be used internally by skimage functions.\n The destination point is included in the profile, in contrast to\n standard numpy indexing.\n \"\"\"\n src_row, src_col = src = np.asarray(src, dtype=float)\n dst_row, dst_col = dst = np.asarray(dst, dtype=float)\n d_row, d_col = dst - src\n theta = np.arctan2(d_row, d_col)\n\n length = int(np.ceil(np.hypot(d_row, d_col) + 1))\n # we add one above because we include the last point in the profile\n # (in contrast to standard numpy indexing)\n line_col = np.linspace(src_col, dst_col, length)\n line_row = np.linspace(src_row, dst_row, length)\n\n # we subtract 1 from linewidth to change from pixel-counting\n # (make this line 3 pixels wide) to point distances (the\n # distance between pixel centers)\n col_width = (linewidth - 1) * np.sin(-theta) / 2\n row_width = (linewidth - 1) * np.cos(theta) / 2\n perp_rows = np.array([np.linspace(row_i - row_width, row_i + row_width,\n linewidth) for row_i in line_row])\n perp_cols = np.array([np.linspace(col_i - col_width, 
col_i + col_width,\n linewidth) for col_i in line_col])\n return np.array([perp_rows, perp_cols])\n", "path": "skimage/measure/profile.py"}]}

| 3,125 | 528 |

gh_patches_debug_25538

|

rasdani/github-patches

|

git_diff

|

mathesar-foundation__mathesar-2331

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix API error response when filtering on an invalid date

## Steps to reproduce

1. Send a request like

```http

GET http://localhost:8000/api/db/v0/tables/1/records/?limit=500&offset=0&filter={"equal":[{"column_id":[6]},{"literal":["2"]}]}

```

Here, column with id `6` is a date column, and the value `"2"` is a partially-entered date that should be considered invalid.

1. Expect an error response conforming to our [error standards](https://wiki.mathesar.org/en/engineering/standards/api#errors)

1. Instead, observe an HTTP 500 Django error.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mathesar/api/db/viewsets/records.py`

Content:

```

1 from psycopg2.errors import ForeignKeyViolation

2 from rest_access_policy import AccessViewSetMixin

3 from rest_framework import status, viewsets

4 from rest_framework.exceptions import NotFound

5 from rest_framework.renderers import BrowsableAPIRenderer

6 from rest_framework.response import Response

7 from sqlalchemy.exc import IntegrityError

8

9 from mathesar.api.db.permissions.records import RecordAccessPolicy

10 from mathesar.api.exceptions.error_codes import ErrorCodes

11 import mathesar.api.exceptions.database_exceptions.exceptions as database_api_exceptions

12 import mathesar.api.exceptions.generic_exceptions.base_exceptions as generic_api_exceptions

13 from db.functions.exceptions import (

14 BadDBFunctionFormat, ReferencedColumnsDontExist, UnknownDBFunctionID,

15 )

16 from db.records.exceptions import (

17 BadGroupFormat, GroupFieldNotFound, InvalidGroupType, UndefinedFunction,

18 BadSortFormat, SortFieldNotFound

19 )

20 from mathesar.api.pagination import TableLimitOffsetPagination

21 from mathesar.api.serializers.records import RecordListParameterSerializer, RecordSerializer

22 from mathesar.api.utils import get_table_or_404

23 from mathesar.functions.operations.convert import rewrite_db_function_spec_column_ids_to_names

24 from mathesar.models.base import Table

25 from mathesar.utils.json import MathesarJSONRenderer

26

27

28 class RecordViewSet(AccessViewSetMixin, viewsets.ViewSet):

29 access_policy = RecordAccessPolicy

30

31 # There is no 'update' method.

32 # We're not supporting PUT requests because there aren't a lot of use cases

33 # where the entire record needs to be replaced, PATCH suffices for updates.

34 def get_queryset(self):

35 return Table.objects.all().order_by('-created_at')

36

37 renderer_classes = [MathesarJSONRenderer, BrowsableAPIRenderer]

38

39 # For filter parameter formatting, see:

40 # db/functions/operations/deserialize.py::get_db_function_from_ma_function_spec function doc>

41 # For sorting parameter formatting, see:

42 # https://github.com/centerofci/sqlalchemy-filters#sort-format

43 def list(self, request, table_pk=None):

44 paginator = TableLimitOffsetPagination()

45

46 serializer = RecordListParameterSerializer(data=request.GET)

47 serializer.is_valid(raise_exception=True)

48 table = get_table_or_404(table_pk)

49

50 filter_unprocessed = serializer.validated_data['filter']

51 order_by = serializer.validated_data['order_by']

52 grouping = serializer.validated_data['grouping']

53 search_fuzzy = serializer.validated_data['search_fuzzy']

54 filter_processed = None

55 column_names_to_ids = table.get_column_name_id_bidirectional_map()

56 column_ids_to_names = column_names_to_ids.inverse

57 if filter_unprocessed:

58 filter_processed = rewrite_db_function_spec_column_ids_to_names(

59 column_ids_to_names=column_ids_to_names,

60 spec=filter_unprocessed,

61 )

62 # Replace column id value used in the `field` property with column name

63 name_converted_group_by = None

64 if grouping:

65 group_by_columns_names = [column_ids_to_names[column_id] for column_id in grouping['columns']]

66 name_converted_group_by = {**grouping, 'columns': group_by_columns_names}

67 name_converted_order_by = [{**column, 'field': column_ids_to_names[column['field']]} for column in order_by]

68 name_converted_search = [{**column, 'column': column_ids_to_names[column['field']]} for column in search_fuzzy]

69

70 try:

71

72 records = paginator.paginate_queryset(

73 self.get_queryset(), request, table, column_names_to_ids,

74 filters=filter_processed,

75 order_by=name_converted_order_by,

76 grouping=name_converted_group_by,

77 search=name_converted_search,

78 duplicate_only=serializer.validated_data['duplicate_only']

79 )

80 except (BadDBFunctionFormat, UnknownDBFunctionID, ReferencedColumnsDontExist) as e:

81 raise database_api_exceptions.BadFilterAPIException(

82 e,

83 field='filters',

84 status_code=status.HTTP_400_BAD_REQUEST

85 )

86 except (BadSortFormat, SortFieldNotFound) as e:

87 raise database_api_exceptions.BadSortAPIException(

88 e,

89 field='order_by',

90 status_code=status.HTTP_400_BAD_REQUEST

91 )

92 except (BadGroupFormat, GroupFieldNotFound, InvalidGroupType) as e:

93 raise database_api_exceptions.BadGroupAPIException(

94 e,

95 field='grouping',

96 status_code=status.HTTP_400_BAD_REQUEST

97 )

98 except UndefinedFunction as e:

99 raise database_api_exceptions.UndefinedFunctionAPIException(

100 e,

101 details=e.args[0],

102 status_code=status.HTTP_400_BAD_REQUEST

103 )

104 serializer = RecordSerializer(

105 records,

106 many=True,

107 context=self.get_serializer_context(table)

108 )

109 return paginator.get_paginated_response(serializer.data)

110

111 def retrieve(self, request, pk=None, table_pk=None):

112 table = get_table_or_404(table_pk)

113 # TODO refactor to use serializer for more DRY response logic

114 paginator = TableLimitOffsetPagination()

115 record_filters = {

116 "equal": [

117 {"column_name": [table.primary_key_column_name]},

118 {"literal": [pk]}

119 ]

120 }

121 column_names_to_ids = table.get_column_name_id_bidirectional_map()

122 records = paginator.paginate_queryset(

123 table,

124 request,

125 table,

126 column_names_to_ids,

127 filters=record_filters

128 )

129 if not records:

130 raise NotFound

131 serializer = RecordSerializer(

132 records,

133 many=True,

134 context=self.get_serializer_context(table)

135 )

136 return paginator.get_paginated_response(serializer.data)

137

138 def create(self, request, table_pk=None):

139 table = get_table_or_404(table_pk)

140 serializer = RecordSerializer(data=request.data, context=self.get_serializer_context(table))

141 serializer.is_valid(raise_exception=True)

142 serializer.save()

143 # TODO refactor to use serializer for more DRY response logic

144 column_name_id_map = table.get_column_name_id_bidirectional_map()

145 table_pk_column_id = column_name_id_map[table.primary_key_column_name]

146 pk_value = serializer.data[table_pk_column_id]

147 paginator = TableLimitOffsetPagination()

148 record_filters = {

149 "equal": [

150 {"column_name": [table.primary_key_column_name]},

151 {"literal": [pk_value]}

152 ]

153 }

154 column_names_to_ids = table.get_column_name_id_bidirectional_map()

155 records = paginator.paginate_queryset(

156 table,

157 request,

158 table,

159 column_names_to_ids,

160 filters=record_filters

161 )

162 serializer = RecordSerializer(

163 records,

164 many=True,

165 context=self.get_serializer_context(table)

166 )

167 response = paginator.get_paginated_response(serializer.data)

168 response.status_code = status.HTTP_201_CREATED

169 return response

170

171 def partial_update(self, request, pk=None, table_pk=None):

172 table = get_table_or_404(table_pk)

173 serializer = RecordSerializer(

174 {'id': pk},

175 data=request.data,

176 context=self.get_serializer_context(table),

177 partial=True

178 )

179 serializer.is_valid(raise_exception=True)

180 serializer.save()

181 # TODO refactor to use serializer for more DRY response logic

182 paginator = TableLimitOffsetPagination()

183 record_filters = {

184 "equal": [

185 {"column_name": [table.primary_key_column_name]},

186 {"literal": [pk]}

187 ]

188 }

189 column_names_to_ids = table.get_column_name_id_bidirectional_map()

190 records = paginator.paginate_queryset(

191 table,

192 request,

193 table,

194 column_names_to_ids,

195 filters=record_filters

196 )

197 serializer = RecordSerializer(

198 records,

199 many=True,

200 context=self.get_serializer_context(table)

201 )

202 return paginator.get_paginated_response(serializer.data)

203

204 def destroy(self, request, pk=None, table_pk=None):

205 table = get_table_or_404(table_pk)

206 if table.get_record(pk) is None:

207 raise generic_api_exceptions.NotFoundAPIException(

208 NotFound,

209 error_code=ErrorCodes.RecordNotFound.value,

210 message="Record doesn't exist"

211 )

212 try:

213 table.delete_record(pk)

214 except IntegrityError as e:

215 if isinstance(e.orig, ForeignKeyViolation):

216 raise database_api_exceptions.ForeignKeyViolationAPIException(

217 e,

218 status_code=status.HTTP_400_BAD_REQUEST,

219 referent_table=table,

220 )

221

222 return Response(status=status.HTTP_204_NO_CONTENT)

223

224 def get_serializer_context(self, table):

225 columns_map = table.get_column_name_id_bidirectional_map()

226 context = {'columns_map': columns_map, 'table': table}

227 return context

228

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/mathesar/api/db/viewsets/records.py b/mathesar/api/db/viewsets/records.py

--- a/mathesar/api/db/viewsets/records.py

+++ b/mathesar/api/db/viewsets/records.py

@@ -1,10 +1,10 @@

-from psycopg2.errors import ForeignKeyViolation

+from psycopg2.errors import ForeignKeyViolation, InvalidDatetimeFormat

from rest_access_policy import AccessViewSetMixin

from rest_framework import status, viewsets

from rest_framework.exceptions import NotFound

from rest_framework.renderers import BrowsableAPIRenderer

from rest_framework.response import Response

-from sqlalchemy.exc import IntegrityError

+from sqlalchemy.exc import IntegrityError, DataError

from mathesar.api.db.permissions.records import RecordAccessPolicy

from mathesar.api.exceptions.error_codes import ErrorCodes

@@ -101,6 +101,13 @@

details=e.args[0],

status_code=status.HTTP_400_BAD_REQUEST

)

+ except DataError as e:

+ if isinstance(e.orig, InvalidDatetimeFormat):

+ raise database_api_exceptions.InvalidDateFormatAPIException(

+ e,

+ status_code=status.HTTP_400_BAD_REQUEST,

+ )

+

serializer = RecordSerializer(

records,

many=True,

|