| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| stringlengths 18-22 | stringclasses (1 value) | stringclasses (1 value) | stringlengths 13-58 | stringlengths 1.1k-25.4k | stringlengths 145-5.13k | stringlengths 582-39.1k | int64 271-4.1k | int64 47-1.02k |

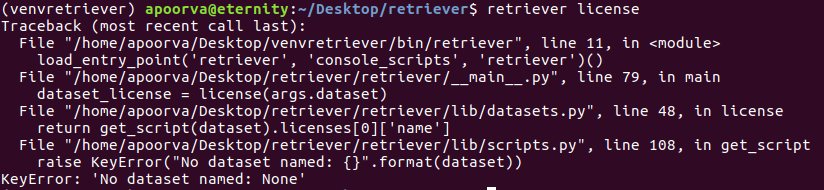

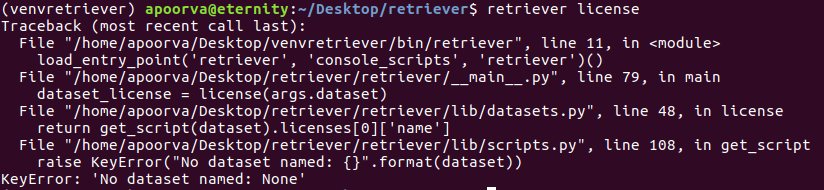

gh_patches_debug_5891 | rasdani/github-patches | git_diff | sublimelsp__LSP-1732 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

`os.path.relpath` may throw an exception on Windows.

`os.path.relpath` may throw an exception on Windows.

```
Traceback (most recent call last):
File "C:\tools\sublime\Data\Installed Packages\LSP.sublime-package\plugin/references.py", line 55, in
File "C:\tools\sublime\Data\Installed Packages\LSP.sublime-package\plugin/references.py", line 62, in _handle_response
File "C:\tools\sublime\Data\Installed Packages\LSP.sublime-package\plugin/references.py", line 85, in _show_references_in_output_panel
File "C:\tools\sublime\Data\Installed Packages\LSP.sublime-package\plugin/references.py", line 107, in _get_relative_path
File "./python3.3/ntpath.py", line 564, in relpath
ValueError: path is on mount 'C:', start on mount '\myserver\myshare'
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `plugin/references.py`

Content:

```
1 from .core.panels import ensure_panel
2 from .core.protocol import Location
3 from .core.protocol import Point
4 from .core.protocol import Request
5 from .core.registry import get_position
6 from .core.registry import LspTextCommand
7 from .core.sessions import Session
8 from .core.settings import PLUGIN_NAME
9 from .core.settings import userprefs
10 from .core.types import ClientConfig
11 from .core.types import PANEL_FILE_REGEX
12 from .core.types import PANEL_LINE_REGEX
13 from .core.typing import Dict, List, Optional, Tuple
14 from .core.views import get_line
15 from .core.views import get_uri_and_position_from_location
16 from .core.views import text_document_position_params
17 from .locationpicker import LocationPicker
18 import functools
19 import linecache
20 import os
21 import sublime
22
23
24 def ensure_references_panel(window: sublime.Window) -> Optional[sublime.View]:
25     return ensure_panel(window, "references", PANEL_FILE_REGEX, PANEL_LINE_REGEX,
26                         "Packages/" + PLUGIN_NAME + "/Syntaxes/References.sublime-syntax")
27
28
29 class LspSymbolReferencesCommand(LspTextCommand):
30
31     capability = 'referencesProvider'
32
33     def __init__(self, view: sublime.View) -> None:
34         super().__init__(view)
35         self._picker = None  # type: Optional[LocationPicker]
36
37     def run(self, _: sublime.Edit, event: Optional[dict] = None, point: Optional[int] = None) -> None:
38         session = self.best_session(self.capability)
39         file_path = self.view.file_name()
40         pos = get_position(self.view, event, point)
41         if session and file_path and pos is not None:
42             params = text_document_position_params(self.view, pos)
43             params['context'] = {"includeDeclaration": False}
44             request = Request("textDocument/references", params, self.view, progress=True)
45             session.send_request(
46                 request,
47                 functools.partial(
48                     self._handle_response_async,
49                     self.view.substr(self.view.word(pos)),
50                     session
51                 )
52             )
53
54     def _handle_response_async(self, word: str, session: Session, response: Optional[List[Location]]) -> None:
55         sublime.set_timeout(lambda: self._handle_response(word, session, response))
56
57     def _handle_response(self, word: str, session: Session, response: Optional[List[Location]]) -> None:
58         if response:
59             if userprefs().show_references_in_quick_panel:
60                 self._show_references_in_quick_panel(session, response)
61             else:
62                 self._show_references_in_output_panel(word, session, response)
63         else:
64             window = self.view.window()
65             if window:
66                 window.status_message("No references found")
67
68     def _show_references_in_quick_panel(self, session: Session, locations: List[Location]) -> None:
69         self.view.run_command("add_jump_record", {"selection": [(r.a, r.b) for r in self.view.sel()]})
70         LocationPicker(self.view, session, locations, side_by_side=False)
71
72     def _show_references_in_output_panel(self, word: str, session: Session, locations: List[Location]) -> None:
73         window = session.window
74         panel = ensure_references_panel(window)
75         if not panel:
76             return
77         manager = session.manager()
78         if not manager:
79             return
80         base_dir = manager.get_project_path(self.view.file_name() or "")
81         to_render = []  # type: List[str]
82         references_count = 0
83         references_by_file = _group_locations_by_uri(window, session.config, locations)
84         for file, references in references_by_file.items():
85             to_render.append('{}:'.format(_get_relative_path(base_dir, file)))
86             for reference in references:
87                 references_count += 1
88                 point, line = reference
89                 to_render.append('{:>5}:{:<4} {}'.format(point.row + 1, point.col + 1, line))
90             to_render.append("")  # add spacing between filenames
91         characters = "\n".join(to_render)
92         panel.settings().set("result_base_dir", base_dir)
93         panel.run_command("lsp_clear_panel")
94         window.run_command("show_panel", {"panel": "output.references"})
95         panel.run_command('append', {
96             'characters': "{} references for '{}'\n\n{}".format(references_count, word, characters),
97             'force': True,
98             'scroll_to_end': False
99         })
100         # highlight all word occurrences
101         regions = panel.find_all(r"\b{}\b".format(word))
102         panel.add_regions('ReferenceHighlight', regions, 'comment', flags=sublime.DRAW_OUTLINED)
103
104
105 def _get_relative_path(base_dir: Optional[str], file_path: str) -> str:
106     if base_dir:
107         return os.path.relpath(file_path, base_dir)
108     else:
109         return file_path
110
111
112 def _group_locations_by_uri(
113     window: sublime.Window,
114     config: ClientConfig,
115     locations: List[Location]
116 ) -> Dict[str, List[Tuple[Point, str]]]:
117     """Return a dictionary that groups locations by the URI it belongs."""
118     grouped_locations = {}  # type: Dict[str, List[Tuple[Point, str]]]
119     for location in locations:
120         uri, position = get_uri_and_position_from_location(location)
121         file_path = config.map_server_uri_to_client_path(uri)
122         point = Point.from_lsp(position)
123         # get line of the reference, to showcase its use
124         reference_line = get_line(window, file_path, point.row)
125         if grouped_locations.get(file_path) is None:
126             grouped_locations[file_path] = []
127         grouped_locations[file_path].append((point, reference_line))
128     # we don't want to cache the line, we always want to get fresh data
129     linecache.clearcache()
130     return grouped_locations
131
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/plugin/references.py b/plugin/references.py
--- a/plugin/references.py
+++ b/plugin/references.py
@@ -104,9 +104,12 @@
 
 def _get_relative_path(base_dir: Optional[str], file_path: str) -> str:
     if base_dir:
-        return os.path.relpath(file_path, base_dir)
-    else:
-        return file_path
+        try:
+            return os.path.relpath(file_path, base_dir)
+        except ValueError:
+            # On Windows, ValueError is raised when path and start are on different drives.
+            pass
+    return file_path
 
 
 def _group_locations_by_uri(
```

| {"golden_diff": "diff --git a/plugin/references.py b/plugin/references.py\n--- a/plugin/references.py\n+++ b/plugin/references.py\n@@ -104,9 +104,12 @@\n \n def _get_relative_path(base_dir: Optional[str], file_path: str) -> str:\n if base_dir:\n- return os.path.relpath(file_path, base_dir)\n- else:\n- return file_path\n+ try:\n+ return os.path.relpath(file_path, base_dir)\n+ except ValueError:\n+ # On Windows, ValueError is raised when path and start are on different drives.\n+ pass\n+ return file_path\n \n \n def _group_locations_by_uri(\n", "issue": "`os.path.relpath` may throw an exception on Windows.\n`os.path.relpath` may throw an exception on Windows.\r\n\r\n```\r\nTraceback (most recent call last):\r\nFile \"C:\\tools\\sublime\\Data\\Installed Packages\\LSP.sublime-package\\plugin/references.py\", line 55, in \r\nFile \"C:\\tools\\sublime\\Data\\Installed Packages\\LSP.sublime-package\\plugin/references.py\", line 62, in _handle_response\r\nFile \"C:\\tools\\sublime\\Data\\Installed Packages\\LSP.sublime-package\\plugin/references.py\", line 85, in _show_references_in_output_panel\r\nFile \"C:\\tools\\sublime\\Data\\Installed Packages\\LSP.sublime-package\\plugin/references.py\", line 107, in _get_relative_path\r\nFile \"./python3.3/ntpath.py\", line 564, in relpath\r\nValueError: path is on mount 'C:', start on mount '\\myserver\\myshare'\r\n```\n", "before_files": [{"content": "from .core.panels import ensure_panel\nfrom .core.protocol import Location\nfrom .core.protocol import Point\nfrom .core.protocol import Request\nfrom .core.registry import get_position\nfrom .core.registry import LspTextCommand\nfrom .core.sessions import Session\nfrom .core.settings import PLUGIN_NAME\nfrom .core.settings import userprefs\nfrom .core.types import ClientConfig\nfrom .core.types import PANEL_FILE_REGEX\nfrom .core.types import PANEL_LINE_REGEX\nfrom .core.typing import Dict, List, Optional, Tuple\nfrom .core.views import get_line\nfrom .core.views import 
get_uri_and_position_from_location\nfrom .core.views import text_document_position_params\nfrom .locationpicker import LocationPicker\nimport functools\nimport linecache\nimport os\nimport sublime\n\n\ndef ensure_references_panel(window: sublime.Window) -> Optional[sublime.View]:\n return ensure_panel(window, \"references\", PANEL_FILE_REGEX, PANEL_LINE_REGEX,\n \"Packages/\" + PLUGIN_NAME + \"/Syntaxes/References.sublime-syntax\")\n\n\nclass LspSymbolReferencesCommand(LspTextCommand):\n\n capability = 'referencesProvider'\n\n def __init__(self, view: sublime.View) -> None:\n super().__init__(view)\n self._picker = None # type: Optional[LocationPicker]\n\n def run(self, _: sublime.Edit, event: Optional[dict] = None, point: Optional[int] = None) -> None:\n session = self.best_session(self.capability)\n file_path = self.view.file_name()\n pos = get_position(self.view, event, point)\n if session and file_path and pos is not None:\n params = text_document_position_params(self.view, pos)\n params['context'] = {\"includeDeclaration\": False}\n request = Request(\"textDocument/references\", params, self.view, progress=True)\n session.send_request(\n request,\n functools.partial(\n self._handle_response_async,\n self.view.substr(self.view.word(pos)),\n session\n )\n )\n\n def _handle_response_async(self, word: str, session: Session, response: Optional[List[Location]]) -> None:\n sublime.set_timeout(lambda: self._handle_response(word, session, response))\n\n def _handle_response(self, word: str, session: Session, response: Optional[List[Location]]) -> None:\n if response:\n if userprefs().show_references_in_quick_panel:\n self._show_references_in_quick_panel(session, response)\n else:\n self._show_references_in_output_panel(word, session, response)\n else:\n window = self.view.window()\n if window:\n window.status_message(\"No references found\")\n\n def _show_references_in_quick_panel(self, session: Session, locations: List[Location]) -> None:\n 
self.view.run_command(\"add_jump_record\", {\"selection\": [(r.a, r.b) for r in self.view.sel()]})\n LocationPicker(self.view, session, locations, side_by_side=False)\n\n def _show_references_in_output_panel(self, word: str, session: Session, locations: List[Location]) -> None:\n window = session.window\n panel = ensure_references_panel(window)\n if not panel:\n return\n manager = session.manager()\n if not manager:\n return\n base_dir = manager.get_project_path(self.view.file_name() or \"\")\n to_render = [] # type: List[str]\n references_count = 0\n references_by_file = _group_locations_by_uri(window, session.config, locations)\n for file, references in references_by_file.items():\n to_render.append('{}:'.format(_get_relative_path(base_dir, file)))\n for reference in references:\n references_count += 1\n point, line = reference\n to_render.append('{:>5}:{:<4} {}'.format(point.row + 1, point.col + 1, line))\n to_render.append(\"\") # add spacing between filenames\n characters = \"\\n\".join(to_render)\n panel.settings().set(\"result_base_dir\", base_dir)\n panel.run_command(\"lsp_clear_panel\")\n window.run_command(\"show_panel\", {\"panel\": \"output.references\"})\n panel.run_command('append', {\n 'characters': \"{} references for '{}'\\n\\n{}\".format(references_count, word, characters),\n 'force': True,\n 'scroll_to_end': False\n })\n # highlight all word occurrences\n regions = panel.find_all(r\"\\b{}\\b\".format(word))\n panel.add_regions('ReferenceHighlight', regions, 'comment', flags=sublime.DRAW_OUTLINED)\n\n\ndef _get_relative_path(base_dir: Optional[str], file_path: str) -> str:\n if base_dir:\n return os.path.relpath(file_path, base_dir)\n else:\n return file_path\n\n\ndef _group_locations_by_uri(\n window: sublime.Window,\n config: ClientConfig,\n locations: List[Location]\n) -> Dict[str, List[Tuple[Point, str]]]:\n \"\"\"Return a dictionary that groups locations by the URI it belongs.\"\"\"\n grouped_locations = {} # type: Dict[str, List[Tuple[Point, 
str]]]\n for location in locations:\n uri, position = get_uri_and_position_from_location(location)\n file_path = config.map_server_uri_to_client_path(uri)\n point = Point.from_lsp(position)\n # get line of the reference, to showcase its use\n reference_line = get_line(window, file_path, point.row)\n if grouped_locations.get(file_path) is None:\n grouped_locations[file_path] = []\n grouped_locations[file_path].append((point, reference_line))\n # we don't want to cache the line, we always want to get fresh data\n linecache.clearcache()\n return grouped_locations\n", "path": "plugin/references.py"}], "after_files": [{"content": "from .core.panels import ensure_panel\nfrom .core.protocol import Location\nfrom .core.protocol import Point\nfrom .core.protocol import Request\nfrom .core.registry import get_position\nfrom .core.registry import LspTextCommand\nfrom .core.sessions import Session\nfrom .core.settings import PLUGIN_NAME\nfrom .core.settings import userprefs\nfrom .core.types import ClientConfig\nfrom .core.types import PANEL_FILE_REGEX\nfrom .core.types import PANEL_LINE_REGEX\nfrom .core.typing import Dict, List, Optional, Tuple\nfrom .core.views import get_line\nfrom .core.views import get_uri_and_position_from_location\nfrom .core.views import text_document_position_params\nfrom .locationpicker import LocationPicker\nimport functools\nimport linecache\nimport os\nimport sublime\n\n\ndef ensure_references_panel(window: sublime.Window) -> Optional[sublime.View]:\n return ensure_panel(window, \"references\", PANEL_FILE_REGEX, PANEL_LINE_REGEX,\n \"Packages/\" + PLUGIN_NAME + \"/Syntaxes/References.sublime-syntax\")\n\n\nclass LspSymbolReferencesCommand(LspTextCommand):\n\n capability = 'referencesProvider'\n\n def __init__(self, view: sublime.View) -> None:\n super().__init__(view)\n self._picker = None # type: Optional[LocationPicker]\n\n def run(self, _: sublime.Edit, event: Optional[dict] = None, point: Optional[int] = None) -> None:\n session = 
self.best_session(self.capability)\n file_path = self.view.file_name()\n pos = get_position(self.view, event, point)\n if session and file_path and pos is not None:\n params = text_document_position_params(self.view, pos)\n params['context'] = {\"includeDeclaration\": False}\n request = Request(\"textDocument/references\", params, self.view, progress=True)\n session.send_request(\n request,\n functools.partial(\n self._handle_response_async,\n self.view.substr(self.view.word(pos)),\n session\n )\n )\n\n def _handle_response_async(self, word: str, session: Session, response: Optional[List[Location]]) -> None:\n sublime.set_timeout(lambda: self._handle_response(word, session, response))\n\n def _handle_response(self, word: str, session: Session, response: Optional[List[Location]]) -> None:\n if response:\n if userprefs().show_references_in_quick_panel:\n self._show_references_in_quick_panel(session, response)\n else:\n self._show_references_in_output_panel(word, session, response)\n else:\n window = self.view.window()\n if window:\n window.status_message(\"No references found\")\n\n def _show_references_in_quick_panel(self, session: Session, locations: List[Location]) -> None:\n self.view.run_command(\"add_jump_record\", {\"selection\": [(r.a, r.b) for r in self.view.sel()]})\n LocationPicker(self.view, session, locations, side_by_side=False)\n\n def _show_references_in_output_panel(self, word: str, session: Session, locations: List[Location]) -> None:\n window = session.window\n panel = ensure_references_panel(window)\n if not panel:\n return\n manager = session.manager()\n if not manager:\n return\n base_dir = manager.get_project_path(self.view.file_name() or \"\")\n to_render = [] # type: List[str]\n references_count = 0\n references_by_file = _group_locations_by_uri(window, session.config, locations)\n for file, references in references_by_file.items():\n to_render.append('{}:'.format(_get_relative_path(base_dir, file)))\n for reference in references:\n 
references_count += 1\n point, line = reference\n to_render.append('{:>5}:{:<4} {}'.format(point.row + 1, point.col + 1, line))\n to_render.append(\"\") # add spacing between filenames\n characters = \"\\n\".join(to_render)\n panel.settings().set(\"result_base_dir\", base_dir)\n panel.run_command(\"lsp_clear_panel\")\n window.run_command(\"show_panel\", {\"panel\": \"output.references\"})\n panel.run_command('append', {\n 'characters': \"{} references for '{}'\\n\\n{}\".format(references_count, word, characters),\n 'force': True,\n 'scroll_to_end': False\n })\n # highlight all word occurrences\n regions = panel.find_all(r\"\\b{}\\b\".format(word))\n panel.add_regions('ReferenceHighlight', regions, 'comment', flags=sublime.DRAW_OUTLINED)\n\n\ndef _get_relative_path(base_dir: Optional[str], file_path: str) -> str:\n if base_dir:\n try:\n return os.path.relpath(file_path, base_dir)\n except ValueError:\n # On Windows, ValueError is raised when path and start are on different drives.\n pass\n return file_path\n\n\ndef _group_locations_by_uri(\n window: sublime.Window,\n config: ClientConfig,\n locations: List[Location]\n) -> Dict[str, List[Tuple[Point, str]]]:\n \"\"\"Return a dictionary that groups locations by the URI it belongs.\"\"\"\n grouped_locations = {} # type: Dict[str, List[Tuple[Point, str]]]\n for location in locations:\n uri, position = get_uri_and_position_from_location(location)\n file_path = config.map_server_uri_to_client_path(uri)\n point = Point.from_lsp(position)\n # get line of the reference, to showcase its use\n reference_line = get_line(window, file_path, point.row)\n if grouped_locations.get(file_path) is None:\n grouped_locations[file_path] = []\n grouped_locations[file_path].append((point, reference_line))\n # we don't want to cache the line, we always want to get fresh data\n linecache.clearcache()\n return grouped_locations\n", "path": "plugin/references.py"}]} | 1,973 | 149 |
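The `sublimelsp__LSP-1732` golden diff catches the `ValueError` that `os.path.relpath` raises on Windows when the path and the start directory are on different drives or shares, and falls back to the unmodified path. Below is a minimal standalone sketch of that pattern. It calls `ntpath.relpath` directly rather than `os.path.relpath` (the plugin uses the latter; the swap is an assumption made for illustration), so the Windows behaviour can be reproduced on any OS. The paths are made-up examples.

```python
import ntpath
from typing import Optional


def get_relative_path(base_dir: Optional[str], file_path: str) -> str:
    """Same fallback logic as the patched _get_relative_path, but pinned to
    Windows path semantics via ntpath (the plugin itself uses os.path)."""
    if base_dir:
        try:
            return ntpath.relpath(file_path, base_dir)
        except ValueError:
            # Raised when file_path and base_dir are on different drives/shares.
            pass
    return file_path


print(get_relative_path("C:\\proj", "C:\\proj\\plugin\\references.py"))
# prints plugin\references.py
print(get_relative_path("\\\\myserver\\myshare\\proj", "C:\\proj\\plugin\\references.py"))
# prints C:\proj\plugin\references.py (no relative path exists across shares)
```

Returning the absolute path is a reasonable fallback here because the output panel only needs a displayable, openable location, not necessarily a short one.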

gh_patches_debug_7263 | rasdani/github-patches | git_diff | iterative__dvc-5753 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

exp show: failing with rich==10.0.0

```console
$ dvc exp show
dvc exp show -v
2021-03-29 11:30:45,071 DEBUG: Check for update is disabled.
2021-03-29 11:30:46,006 ERROR: unexpected error - 'int' object has no attribute 'max_width'
------------------------------------------------------------
Traceback (most recent call last):
  File "/home/saugat/repos/iterative/dvc/dvc/main.py", line 55, in main
    ret = cmd.run()
  File "/home/saugat/repos/iterative/dvc/dvc/command/experiments.py", line 411, in run
    measurement = table.__rich_measure__(console, SHOW_MAX_WIDTH)
  File "/home/saugat/venvs/dvc/env39/lib/python3.9/site-packages/rich/table.py", line 287, in __rich_measure__
    max_width = options.max_width
AttributeError: 'int' object has no attribute 'max_width'
------------------------------------------------------------
2021-03-29 11:30:47,022 DEBUG: Version info for developers:
DVC version: 2.0.11+f8c567
---------------------------------
Platform: Python 3.9.2 on Linux-5.11.8-arch1-1-x86_64-with-glibc2.33
Supports: All remotes
Cache types: hardlink, symlink
Cache directory: ext4 on /dev/sda9
Caches: local
Remotes: https
Workspace directory: ext4 on /dev/sda9
Repo: dvc, git

Having any troubles? Hit us up at https://dvc.org/support, we are always happy to help!
```

This is also breaking our linter ([here](https://github.com/iterative/dvc/runs/2214172187?check_suite_focus=true#step:7:250)) and tests as well due to the change in rich's internal API that we are using:

https://github.com/iterative/dvc/blob/1a25ebe3bd2eda4c3612e408fb503d64490fb56c/dvc/utils/table.py#L59

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `dvc/utils/table.py`

Content:

```
1 from dataclasses import dataclass
2 from typing import TYPE_CHECKING, List, cast
3
4 from rich.style import StyleType
5 from rich.table import Column as RichColumn
6 from rich.table import Table as RichTable
7
8 if TYPE_CHECKING:
9     from rich.console import (
10         Console,
11         ConsoleOptions,
12         JustifyMethod,
13         OverflowMethod,
14         RenderableType,
15     )
16
17
18 @dataclass
19 class Column(RichColumn):
20     collapse: bool = False
21
22
23 class Table(RichTable):
24     def add_column(  # pylint: disable=arguments-differ
25         self,
26         header: "RenderableType" = "",
27         footer: "RenderableType" = "",
28         *,
29         header_style: StyleType = None,
30         footer_style: StyleType = None,
31         style: StyleType = None,
32         justify: "JustifyMethod" = "left",
33         overflow: "OverflowMethod" = "ellipsis",
34         width: int = None,
35         min_width: int = None,
36         max_width: int = None,
37         ratio: int = None,
38         no_wrap: bool = False,
39         collapse: bool = False,
40     ) -> None:
41         column = Column(  # type: ignore[call-arg]
42             _index=len(self.columns),
43             header=header,
44             footer=footer,
45             header_style=header_style or "",
46             footer_style=footer_style or "",
47             style=style or "",
48             justify=justify,
49             overflow=overflow,
50             width=width,
51             min_width=min_width,
52             max_width=max_width,
53             ratio=ratio,
54             no_wrap=no_wrap,
55             collapse=collapse,
56         )
57         self.columns.append(column)
58
59     def _calculate_column_widths(
60         self, console: "Console", options: "ConsoleOptions"
61     ) -> List[int]:
62         """Calculate the widths of each column, including padding, not
63         including borders.
64
65         Adjacent collapsed columns will be removed until there is only a single
66         truncated column remaining.
67         """
68         widths = super()._calculate_column_widths(console, options)
69         last_collapsed = -1
70         columns = cast(List[Column], self.columns)
71         for i in range(len(columns) - 1, -1, -1):
72             if widths[i] == 1 and columns[i].collapse:
73                 if last_collapsed >= 0:
74                     del widths[last_collapsed]
75                     del columns[last_collapsed]
76                     if self.box:
77                         options.max_width += 1
78                     for column in columns[last_collapsed:]:
79                         column._index -= 1
80                 last_collapsed = i
81                 padding = self._get_padding_width(i)
82                 if (
83                     columns[i].overflow == "ellipsis"
84                     and (sum(widths) + padding) <= options.max_width
85                 ):
86                     # Set content width to 1 (plus padding) if we can fit a
87                     # single unicode ellipsis in this column
88                     widths[i] = 1 + padding
89             else:
90                 last_collapsed = -1
91         return widths
92
93     def _collapse_widths(  # type: ignore[override]
94         self, widths: List[int], wrapable: List[bool], max_width: int,
95     ) -> List[int]:
96         """Collapse columns right-to-left if possible to fit table into
97         max_width.
98
99         If table is still too wide after collapsing, rich's automatic overflow
100         handling will be used.
101         """
102         columns = cast(List[Column], self.columns)
103         collapsible = [column.collapse for column in columns]
104         total_width = sum(widths)
105         excess_width = total_width - max_width
106         if any(collapsible):
107             for i in range(len(widths) - 1, -1, -1):
108                 if collapsible[i]:
109                     total_width -= widths[i]
110                     excess_width -= widths[i]
111                     widths[i] = 0
112                     if excess_width <= 0:
113                         break
114         return super()._collapse_widths(widths, wrapable, max_width)
115
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/dvc/utils/table.py b/dvc/utils/table.py
--- a/dvc/utils/table.py
+++ b/dvc/utils/table.py
@@ -69,7 +69,7 @@
         last_collapsed = -1
         columns = cast(List[Column], self.columns)
         for i in range(len(columns) - 1, -1, -1):
-            if widths[i] == 1 and columns[i].collapse:
+            if widths[i] == 0 and columns[i].collapse:
                 if last_collapsed >= 0:
                     del widths[last_collapsed]
                     del columns[last_collapsed]
```
| {"golden_diff": "diff --git a/dvc/utils/table.py b/dvc/utils/table.py\n--- a/dvc/utils/table.py\n+++ b/dvc/utils/table.py\n@@ -69,7 +69,7 @@\n last_collapsed = -1\n columns = cast(List[Column], self.columns)\n for i in range(len(columns) - 1, -1, -1):\n- if widths[i] == 1 and columns[i].collapse:\n+ if widths[i] == 0 and columns[i].collapse:\n if last_collapsed >= 0:\n del widths[last_collapsed]\n del columns[last_collapsed]\n", "issue": "exp show: failing with rich==10.0.0\n```console\r\n$ dvc exp show\r\ndvc exp show -v\r\n2021-03-29 11:30:45,071 DEBUG: Check for update is disabled.\r\n2021-03-29 11:30:46,006 ERROR: unexpected error - 'int' object has no attribute 'max_width'\r\n------------------------------------------------------------\r\nTraceback (most recent call last):\r\n File \"/home/saugat/repos/iterative/dvc/dvc/main.py\", line 55, in main\r\n ret = cmd.run()\r\n File \"/home/saugat/repos/iterative/dvc/dvc/command/experiments.py\", line 411, in run\r\n measurement = table.__rich_measure__(console, SHOW_MAX_WIDTH)\r\n File \"/home/saugat/venvs/dvc/env39/lib/python3.9/site-packages/rich/table.py\", line 287, in __rich_measure__\r\n max_width = options.max_width\r\nAttributeError: 'int' object has no attribute 'max_width'\r\n------------------------------------------------------------\r\n2021-03-29 11:30:47,022 DEBUG: Version info for developers:\r\nDVC version: 2.0.11+f8c567 \r\n---------------------------------\r\nPlatform: Python 3.9.2 on Linux-5.11.8-arch1-1-x86_64-with-glibc2.33\r\nSupports: All remotes\r\nCache types: hardlink, symlink\r\nCache directory: ext4 on /dev/sda9\r\nCaches: local\r\nRemotes: https\r\nWorkspace directory: ext4 on /dev/sda9\r\nRepo: dvc, git\r\n\r\nHaving any troubles? 
Hit us up at https://dvc.org/support, we are always happy to help!\r\n```\r\n\r\n\r\nThis is also breaking our linter ([here](https://github.com/iterative/dvc/runs/2214172187?check_suite_focus=true#step:7:250\r\n)) and tests as well due to the change in rich's internal API that we are using:\r\nhttps://github.com/iterative/dvc/blob/1a25ebe3bd2eda4c3612e408fb503d64490fb56c/dvc/utils/table.py#L59\r\n\r\n\n", "before_files": [{"content": "from dataclasses import dataclass\nfrom typing import TYPE_CHECKING, List, cast\n\nfrom rich.style import StyleType\nfrom rich.table import Column as RichColumn\nfrom rich.table import Table as RichTable\n\nif TYPE_CHECKING:\n from rich.console import (\n Console,\n ConsoleOptions,\n JustifyMethod,\n OverflowMethod,\n RenderableType,\n )\n\n\n@dataclass\nclass Column(RichColumn):\n collapse: bool = False\n\n\nclass Table(RichTable):\n def add_column( # pylint: disable=arguments-differ\n self,\n header: \"RenderableType\" = \"\",\n footer: \"RenderableType\" = \"\",\n *,\n header_style: StyleType = None,\n footer_style: StyleType = None,\n style: StyleType = None,\n justify: \"JustifyMethod\" = \"left\",\n overflow: \"OverflowMethod\" = \"ellipsis\",\n width: int = None,\n min_width: int = None,\n max_width: int = None,\n ratio: int = None,\n no_wrap: bool = False,\n collapse: bool = False,\n ) -> None:\n column = Column( # type: ignore[call-arg]\n _index=len(self.columns),\n header=header,\n footer=footer,\n header_style=header_style or \"\",\n footer_style=footer_style or \"\",\n style=style or \"\",\n justify=justify,\n overflow=overflow,\n width=width,\n min_width=min_width,\n max_width=max_width,\n ratio=ratio,\n no_wrap=no_wrap,\n collapse=collapse,\n )\n self.columns.append(column)\n\n def _calculate_column_widths(\n self, console: \"Console\", options: \"ConsoleOptions\"\n ) -> List[int]:\n \"\"\"Calculate the widths of each column, including padding, not\n including borders.\n\n Adjacent collapsed columns will be removed 
until there is only a single\n truncated column remaining.\n \"\"\"\n widths = super()._calculate_column_widths(console, options)\n last_collapsed = -1\n columns = cast(List[Column], self.columns)\n for i in range(len(columns) - 1, -1, -1):\n if widths[i] == 1 and columns[i].collapse:\n if last_collapsed >= 0:\n del widths[last_collapsed]\n del columns[last_collapsed]\n if self.box:\n options.max_width += 1\n for column in columns[last_collapsed:]:\n column._index -= 1\n last_collapsed = i\n padding = self._get_padding_width(i)\n if (\n columns[i].overflow == \"ellipsis\"\n and (sum(widths) + padding) <= options.max_width\n ):\n # Set content width to 1 (plus padding) if we can fit a\n # single unicode ellipsis in this column\n widths[i] = 1 + padding\n else:\n last_collapsed = -1\n return widths\n\n def _collapse_widths( # type: ignore[override]\n self, widths: List[int], wrapable: List[bool], max_width: int,\n ) -> List[int]:\n \"\"\"Collapse columns right-to-left if possible to fit table into\n max_width.\n\n If table is still too wide after collapsing, rich's automatic overflow\n handling will be used.\n \"\"\"\n columns = cast(List[Column], self.columns)\n collapsible = [column.collapse for column in columns]\n total_width = sum(widths)\n excess_width = total_width - max_width\n if any(collapsible):\n for i in range(len(widths) - 1, -1, -1):\n if collapsible[i]:\n total_width -= widths[i]\n excess_width -= widths[i]\n widths[i] = 0\n if excess_width <= 0:\n break\n return super()._collapse_widths(widths, wrapable, max_width)\n", "path": "dvc/utils/table.py"}], "after_files": [{"content": "from dataclasses import dataclass\nfrom typing import TYPE_CHECKING, List, cast\n\nfrom rich.style import StyleType\nfrom rich.table import Column as RichColumn\nfrom rich.table import Table as RichTable\n\nif TYPE_CHECKING:\n from rich.console import (\n Console,\n ConsoleOptions,\n JustifyMethod,\n OverflowMethod,\n RenderableType,\n )\n\n\n@dataclass\nclass 
Column(RichColumn):\n collapse: bool = False\n\n\nclass Table(RichTable):\n def add_column( # pylint: disable=arguments-differ\n self,\n header: \"RenderableType\" = \"\",\n footer: \"RenderableType\" = \"\",\n *,\n header_style: StyleType = None,\n footer_style: StyleType = None,\n style: StyleType = None,\n justify: \"JustifyMethod\" = \"left\",\n overflow: \"OverflowMethod\" = \"ellipsis\",\n width: int = None,\n min_width: int = None,\n max_width: int = None,\n ratio: int = None,\n no_wrap: bool = False,\n collapse: bool = False,\n ) -> None:\n column = Column( # type: ignore[call-arg]\n _index=len(self.columns),\n header=header,\n footer=footer,\n header_style=header_style or \"\",\n footer_style=footer_style or \"\",\n style=style or \"\",\n justify=justify,\n overflow=overflow,\n width=width,\n min_width=min_width,\n max_width=max_width,\n ratio=ratio,\n no_wrap=no_wrap,\n collapse=collapse,\n )\n self.columns.append(column)\n\n def _calculate_column_widths(\n self, console: \"Console\", options: \"ConsoleOptions\"\n ) -> List[int]:\n \"\"\"Calculate the widths of each column, including padding, not\n including borders.\n\n Adjacent collapsed columns will be removed until there is only a single\n truncated column remaining.\n \"\"\"\n widths = super()._calculate_column_widths(console, options)\n last_collapsed = -1\n columns = cast(List[Column], self.columns)\n for i in range(len(columns) - 1, -1, -1):\n if widths[i] == 0 and columns[i].collapse:\n if last_collapsed >= 0:\n del widths[last_collapsed]\n del columns[last_collapsed]\n if self.box:\n options.max_width += 1\n for column in columns[last_collapsed:]:\n column._index -= 1\n last_collapsed = i\n padding = self._get_padding_width(i)\n if (\n columns[i].overflow == \"ellipsis\"\n and (sum(widths) + padding) <= options.max_width\n ):\n # Set content width to 1 (plus padding) if we can fit a\n # single unicode ellipsis in this column\n widths[i] = 1 + padding\n else:\n last_collapsed = -1\n return 
widths\n\n def _collapse_widths( # type: ignore[override]\n self, widths: List[int], wrapable: List[bool], max_width: int,\n ) -> List[int]:\n \"\"\"Collapse columns right-to-left if possible to fit table into\n max_width.\n\n If table is still too wide after collapsing, rich's automatic overflow\n handling will be used.\n \"\"\"\n columns = cast(List[Column], self.columns)\n collapsible = [column.collapse for column in columns]\n total_width = sum(widths)\n excess_width = total_width - max_width\n if any(collapsible):\n for i in range(len(widths) - 1, -1, -1):\n if collapsible[i]:\n total_width -= widths[i]\n excess_width -= widths[i]\n widths[i] = 0\n if excess_width <= 0:\n break\n return super()._collapse_widths(widths, wrapable, max_width)\n", "path": "dvc/utils/table.py"}]} | 1,877 | 134 |

gh_patches_debug_15548 | rasdani/github-patches | git_diff | tensorflow__addons-340 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

tfa.seq2seq.sequence_loss can't average over one dimension (batch or timesteps) while summing over the other one

**System information**
- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Google Colab
- TensorFlow installed from (source or binary): binary
- TensorFlow version (use command below): 2.0.0=beta1
- TensorFlow Addons installed from (source, PyPi): PyPi
- TensorFlow Addons version: 0.4.0
- Python version and type (eg. Anaconda Python, Stock Python as in Mac, or homebrew installed Python etc): Google Colab Python
- Is GPU used? (yes/no): yes
- GPU model (if used): T4

**Describe the bug**

`tfa.seq2seq.sequence_loss` can't average over one dimension (`batch` or `timesteps`) while summing over the other one. It will arbitrarily only execute the averaging and ignore the sum right now.

**Describe the expected behavior**

I think the weights should be associated with the summing operation, and then the averaging should happen irrespective of that.

Concretely, when passing, say `average_across_batch=True` and `sum_over_timesteps=True` (of course, making sure `average_across_timesteps=False` is set), you should expect either of these things:

1. An error stating that this is not implemented (might be the wisest).
2. Return a scalar tensor obtained by either of these two following orders:
   a) first computing the *weighted sum* of xents over timesteps (yielding a batchsize-sized tensor of xent-sums), then simply averaging this vector, i.e., summing and dividing by the batchsize. The result, however, is just the both-averaged version times the batchsize, divided by the sum of all weights.
   b) first computing the *weighted average* over the batchsize, then summing these averages over all timesteps. The result here is different from 1a and the double-averaged (of course, there is some correlation...)!

I think 1a is the desired behavior (as the loglikelihood of a sequence really is the sum of the individual loglikelihoods and batches do correspond to sequence-length agnostic averages) and I'd be happy to establish it as the standard for this. Either way, doing something other than failing with an error will require an explicit notice in the docs. An error (or warning for backwards-compatibility?) might just be the simplest and safest option.
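To make the two orderings concrete, here is a small NumPy sketch; it is not part of the original issue or of `tensorflow_addons`. It assumes a toy 2 x 3 batch of made-up per-token cross-entropies that have already been multiplied by a 0/1 mask (as `sequence_loss` does with `weights`), and computes ordering 1a, ordering 1b, and the double weighted average for comparison:

```python
import numpy as np

# Toy 0/1 mask: sequence 0 has length 2, sequence 1 has length 3.
weights = np.array([[1.0, 1.0, 0.0],
                    [1.0, 1.0, 1.0]])
# Made-up per-token cross-entropies, masked by elementwise product with
# `weights` (the padded 5.0 becomes 0.0).
xent = np.array([[1.0, 3.0, 5.0],
                 [2.0, 4.0, 2.0]]) * weights

batch_size = xent.shape[0]

# Ordering 1a: weighted sum over timesteps per sequence, then a plain mean
# over the batch (divide by batch_size).
case_1a = xent.sum(axis=1).sum() / batch_size

# Ordering 1b: weighted average over the batch at each timestep, then a sum
# over timesteps. Plain division is used here; a timestep with no valid
# tokens would need tf.math.divide_no_nan-style handling.
case_1b = (xent.sum(axis=0) / weights.sum(axis=0)).sum()

# Reference: the double weighted average (both average_* flags set).
double_avg = xent.sum() / weights.sum()

print(case_1a, case_1b, double_avg)
```

With these toy values, 1a gives 6.0, 1b gives 7.0 and the double average gives 2.4. Multiplying 1a by `batch_size / weights.sum()` recovers the double average, consistent with the relation described above, while 1b divided by the sequence length (7/3) does not.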

**Code to reproduce the issue**

```python
tfa.seq2seq.sequence_loss(
    logits=tf.random.normal([3, 5, 7]),
    targets=tf.zeros([3, 5], dtype=tf.int32),
    weights=tf.sequence_mask(lengths=[3, 5, 1], maxlen=5, dtype=tf.float32),
    average_across_batch=True,
    average_across_timesteps=False,
    sum_over_batch=False,
    sum_over_timesteps=True,
)
```

...should return a scalar but returns only the batch-averaged tensor.

**Some more code to play with to test the claims above**

```python
import tensorflow.compat.v2 as tf
import tensorflow_addons as tfa
import numpy as np
import random

case1b = []
dblavg = []

for _ in range(100):
    dtype = tf.float32
    batchsize = random.randint(2, 10)
    maxlen = random.randint(2, 10)
    logits = tf.random.normal([batchsize, maxlen, 3])
    labels = tf.zeros([batchsize, maxlen], dtype=tf.int32)
    lengths = tf.squeeze(tf.random.categorical(tf.zeros([1, maxlen - 1]), batchsize)) + 1
    weights = tf.sequence_mask(lengths=lengths, maxlen=maxlen, dtype=tf.float32)

    def sl(ab, sb, at, st):
        return tfa.seq2seq.sequence_loss(
            logits,
            labels,
            weights,
            average_across_batch=ab,
            average_across_timesteps=at,
            sum_over_batch=sb,
            sum_over_timesteps=st,
        )

    all_b_all_t = sl(ab=False, sb=False, at=False, st=False)
    avg_b_avg_t = sl(ab=True, sb=False, at=True, st=False)
    sum_b_all_t = sl(ab=False, sb=True, at=False, st=False)

    tf.assert_equal(sum_b_all_t, tf.math.divide_no_nan(tf.reduce_sum(all_b_all_t, axis=0), tf.reduce_sum(weights, axis=0)))

    weighted = all_b_all_t * weights

    first_sum_timesteps = tf.reduce_sum(weighted, axis=1)
    then_average_batch = tf.reduce_sum(first_sum_timesteps) / batchsize

    first_average_batch = tf.math.divide_no_nan(tf.reduce_sum(weighted, axis=0), tf.reduce_sum(weights, axis=0))
    then_sum_timesteps = tf.reduce_sum(first_average_batch)

    # Case 1a and 1b are different.
    assert not np.isclose(then_average_batch, then_sum_timesteps)
    # Case 1a is just the double-averaging up to a constant.
    assert np.allclose(then_average_batch * batchsize / tf.reduce_sum(weights), avg_b_avg_t)
    # Case 1b is not just the averaging.
    assert not np.allclose(then_sum_timesteps / maxlen, avg_b_avg_t)
    # They only kind of correlate:
    case1b.append(then_sum_timesteps / maxlen)
    dblavg.append(avg_b_avg_t)
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `tensorflow_addons/seq2seq/loss.py`

Content:

```
1 # Copyright 2016 The TensorFlow Authors. All Rights Reserved.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 # ==============================================================================
15 """Seq2seq loss operations for use in sequence models."""
16
17 from __future__ import absolute_import
18 from __future__ import division
19 from __future__ import print_function
20
21 import tensorflow as tf
22
23
24 def sequence_loss(logits,
25                   targets,
26                   weights,
27                   average_across_timesteps=True,
28                   average_across_batch=True,
29                   sum_over_timesteps=False,
30                   sum_over_batch=False,
31                   softmax_loss_function=None,
32                   name=None):
33     """Weighted cross-entropy loss for a sequence of logits.
34
35     Depending on the values of `average_across_timesteps` /
36     `sum_over_timesteps` and `average_across_batch` / `sum_over_batch`, the
37     return Tensor will have rank 0, 1, or 2 as these arguments reduce the
38     cross-entropy at each target, which has shape
39     `[batch_size, sequence_length]`, over their respective dimensions. For
40     example, if `average_across_timesteps` is `True` and `average_across_batch`
41     is `False`, then the return Tensor will have shape `[batch_size]`.
42
43     Note that `average_across_timesteps` and `sum_over_timesteps` cannot be
44     True at same time. Same for `average_across_batch` and `sum_over_batch`.
45
46     The recommended loss reduction in tf 2.0 has been changed to sum_over,
47     instead of weighted average. User are recommend to use `sum_over_timesteps`
48     and `sum_over_batch` for reduction.
49
50     Args:
51       logits: A Tensor of shape
52         `[batch_size, sequence_length, num_decoder_symbols]` and dtype float.
53         The logits correspond to the prediction across all classes at each
54         timestep.
55       targets: A Tensor of shape `[batch_size, sequence_length]` and dtype
56         int. The target represents the true class at each timestep.
57       weights: A Tensor of shape `[batch_size, sequence_length]` and dtype
58         float. `weights` constitutes the weighting of each prediction in the
59         sequence. When using `weights` as masking, set all valid timesteps to 1
60         and all padded timesteps to 0, e.g. a mask returned by
61         `tf.sequence_mask`.
62       average_across_timesteps: If set, sum the cost across the sequence
63         dimension and divide the cost by the total label weight across
64         timesteps.
65       average_across_batch: If set, sum the cost across the batch dimension and
66         divide the returned cost by the batch size.
67       sum_over_timesteps: If set, sum the cost across the sequence dimension
68         and divide the size of the sequence. Note that any element with 0
69         weights will be excluded from size calculation.
70       sum_over_batch: if set, sum the cost across the batch dimension and
71         divide the total cost by the batch size. Not that any element with 0
72         weights will be excluded from size calculation.
73       softmax_loss_function: Function (labels, logits) -> loss-batch
74         to be used instead of the standard softmax (the default if this is
75         None). **Note that to avoid confusion, it is required for the function
76         to accept named arguments.**
77       name: Optional name for this operation, defaults to "sequence_loss".
78
79     Returns:
80       A float Tensor of rank 0, 1, or 2 depending on the
81       `average_across_timesteps` and `average_across_batch` arguments. By
82       default, it has rank 0 (scalar) and is the weighted average cross-entropy
83       (log-perplexity) per symbol.
84
85     Raises:
86       ValueError: logits does not have 3 dimensions or targets does not have 2
87         dimensions or weights does not have 2 dimensions.
88     """
89     if len(logits.get_shape()) != 3:
90         raise ValueError("Logits must be a "
91                          "[batch_size x sequence_length x logits] tensor")
92     if len(targets.get_shape()) != 2:
93         raise ValueError(
94             "Targets must be a [batch_size x sequence_length] tensor")
95     if len(weights.get_shape()) != 2:
96         raise ValueError(
97             "Weights must be a [batch_size x sequence_length] tensor")
98     if average_across_timesteps and sum_over_timesteps:
99         raise ValueError(
100             "average_across_timesteps and sum_over_timesteps cannot "
101             "be set to True at same time.")
102     if average_across_batch and sum_over_batch:
103         raise ValueError(
104             "average_across_batch and sum_over_batch cannot be set "
105             "to True at same time.")
106     with tf.name_scope(name or "sequence_loss"):
107         num_classes = tf.shape(input=logits)[2]
108         logits_flat = tf.reshape(logits, [-1, num_classes])
109         targets = tf.reshape(targets, [-1])
110         if softmax_loss_function is None:
111             crossent = tf.nn.sparse_softmax_cross_entropy_with_logits(
112                 labels=targets, logits=logits_flat)
113         else:
114             crossent = softmax_loss_function(
115                 labels=targets, logits=logits_flat)
116         crossent *= tf.reshape(weights, [-1])
117         if average_across_timesteps and average_across_batch:
118             crossent = tf.reduce_sum(input_tensor=crossent)
119             total_size = tf.reduce_sum(input_tensor=weights)
120             crossent = tf.math.divide_no_nan(crossent, total_size)
121         elif sum_over_timesteps and sum_over_batch:
122             crossent = tf.reduce_sum(input_tensor=crossent)
123             total_count = tf.cast(
124                 tf.math.count_nonzero(weights), crossent.dtype)
125             crossent = tf.math.divide_no_nan(crossent, total_count)
126         else:
127             crossent = tf.reshape(crossent, tf.shape(input=logits)[0:2])
128             if average_across_timesteps or average_across_batch:
129                 reduce_axis = [0] if average_across_batch else [1]
130                 crossent = tf.reduce_sum(
131                     input_tensor=crossent, axis=reduce_axis)
132                 total_size = tf.reduce_sum(
133                     input_tensor=weights, axis=reduce_axis)
134                 crossent = tf.math.divide_no_nan(crossent, total_size)
135             elif sum_over_timesteps or sum_over_batch:
136                 reduce_axis = [0] if sum_over_batch else [1]
137                 crossent = tf.reduce_sum(
138                     input_tensor=crossent, axis=reduce_axis)
139                 total_count = tf.cast(
140                     tf.math.count_nonzero(weights, axis=reduce_axis),
141                     dtype=crossent.dtype)
142                 crossent = tf.math.divide_no_nan(crossent, total_count)
143         return crossent
144
145
146 class SequenceLoss(tf.keras.losses.Loss):
147     """Weighted cross-entropy loss for a sequence of logits."""
148
149     def __init__(self,
150                  average_across_timesteps=False,
151                  average_across_batch=False,
152                  sum_over_timesteps=True,
153                  sum_over_batch=True,
154                  softmax_loss_function=None,
155                  name=None):
156         super(SequenceLoss, self).__init__(name=name)
157         self.average_across_timesteps = average_across_timesteps
158         self.average_across_batch = average_across_batch
159         self.sum_over_timesteps = sum_over_timesteps
160         self.sum_over_batch = sum_over_batch
161         self.softmax_loss_function = softmax_loss_function
162
163     def __call__(self, y_true, y_pred, sample_weight=None):
164         """Override the parent __call__ to have a customized reduce
165         behavior."""
166         return sequence_loss(
167             y_pred,
168             y_true,
169             sample_weight,
170             average_across_timesteps=self.average_across_timesteps,
171             average_across_batch=self.average_across_batch,
172             sum_over_timesteps=self.sum_over_timesteps,
173             sum_over_batch=self.sum_over_batch,
174             softmax_loss_function=self.softmax_loss_function,
175             name=self.name)
176
177     def call(self, y_true, y_pred):
178         # Skip this method since the __call__ contains real implementation.
179         pass
180
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/tensorflow_addons/seq2seq/loss.py b/tensorflow_addons/seq2seq/loss.py
--- a/tensorflow_addons/seq2seq/loss.py
+++ b/tensorflow_addons/seq2seq/loss.py
@@ -103,6 +103,14 @@
         raise ValueError(
             "average_across_batch and sum_over_batch cannot be set "
             "to True at same time.")
+    if average_across_batch and sum_over_timesteps:
+        raise ValueError(
+            "average_across_batch and sum_over_timesteps cannot be set "
+            "to True at same time because of ambiguous order.")
+    if sum_over_batch and average_across_timesteps:
+        raise ValueError(
+            "sum_over_batch and average_across_timesteps cannot be set "
+            "to True at same time because of ambiguous order.")
     with tf.name_scope(name or "sequence_loss"):
         num_classes = tf.shape(input=logits)[2]
         logits_flat = tf.reshape(logits, [-1, num_classes])
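This patch resolves the issue by rejecting the two mixed reduction combinations outright rather than picking an order. The flag logic can be exercised without TensorFlow using the standalone sketch below. `validate_reduction_flags` is a hypothetical helper that copies the two pre-existing checks and the two added ones; it is not part of the `tensorflow_addons` API:

```python
def validate_reduction_flags(average_across_timesteps, average_across_batch,
                             sum_over_timesteps, sum_over_batch):
    """Hypothetical mirror of the flag checks in sequence_loss after the patch."""
    # Pre-existing checks: average and sum on the same axis are exclusive.
    if average_across_timesteps and sum_over_timesteps:
        raise ValueError("average_across_timesteps and sum_over_timesteps "
                         "cannot be set to True at same time.")
    if average_across_batch and sum_over_batch:
        raise ValueError("average_across_batch and sum_over_batch cannot be "
                         "set to True at same time.")
    # Checks added by the patch: mixing average on one axis with sum on the
    # other is rejected because the result depends on the reduction order.
    if average_across_batch and sum_over_timesteps:
        raise ValueError("average_across_batch and sum_over_timesteps cannot "
                         "be set to True at same time because of ambiguous order.")
    if sum_over_batch and average_across_timesteps:
        raise ValueError("sum_over_batch and average_across_timesteps cannot "
                         "be set to True at same time because of ambiguous order.")


# The flag combination from the issue's reproduction is now rejected.
try:
    validate_reduction_flags(average_across_timesteps=False,
                             average_across_batch=True,
                             sum_over_timesteps=True,
                             sum_over_batch=False)
except ValueError as err:
    print("rejected:", err)
```

Uniform combinations such as the function defaults (`average_across_timesteps=True, average_across_batch=True`) or both `sum_over_*` flags still pass, as do single-axis reductions.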

| {"golden_diff": "diff --git a/tensorflow_addons/seq2seq/loss.py b/tensorflow_addons/seq2seq/loss.py\n--- a/tensorflow_addons/seq2seq/loss.py\n+++ b/tensorflow_addons/seq2seq/loss.py\n@@ -103,6 +103,14 @@\n raise ValueError(\n \"average_across_batch and sum_over_batch cannot be set \"\n \"to True at same time.\")\n+ if average_across_batch and sum_over_timesteps:\n+ raise ValueError(\n+ \"average_across_batch and sum_over_timesteps cannot be set \"\n+ \"to True at same time because of ambiguous order.\")\n+ if sum_over_batch and average_across_timesteps:\n+ raise ValueError(\n+ \"sum_over_batch and average_across_timesteps cannot be set \"\n+ \"to True at same time because of ambiguous order.\")\n with tf.name_scope(name or \"sequence_loss\"):\n num_classes = tf.shape(input=logits)[2]\n logits_flat = tf.reshape(logits, [-1, num_classes])\n", "issue": "tfa.seq2seq.sequence_loss can't average over one dimension (batch or timesteps) while summing over the other one\n**System information**\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Google Colab\r\n- TensorFlow installed from (source or binary): binary\r\n- TensorFlow version (use command below): 2.0.0=beta1\r\n- TensorFlow Addons installed from (source, PyPi): PyPi\r\n- TensorFlow Addons version: 0.4.0\r\n- Python version and type (eg. Anaconda Python, Stock Python as in Mac, or homebrew installed Python etc): Google Colab Python\r\n- Is GPU used? (yes/no): yes\r\n- GPU model (if used): T4\r\n\r\n**Describe the bug**\r\n\r\n`tfa.seq2seq.sequence_loss` can't average over one dimension (`batch` or `timesteps`) while summing over the other one. 
It will arbitrarily only execute the averaging and ignore the sum right now.\r\n\r\n**Describe the expected behavior**\r\n\r\nI think the weights should be associated with the summing operation, and then the averaging should happen irrespective of that.\r\nConcretely, when passing, say `average_across_batch=True` and `sum_over_timesteps=True` (of course, making sure `average_across_timesteps=False` is set), you should expect either of these things:\r\n\r\n1. An error stating that this is not implemented (might be the wisest).\r\n2. Return a scalar tensor obtained by either of these two following orders:\r\n a) first computing the *weighted sum* of xents over timesteps (yielding a batchsize-sized tensor of xent-sums), then simply averaging this vector, i.e., summing and dividing by the batchsize. The result, however, is just the both-averaged version times the batchsize, divided by the sum of all weights.\r\n b) first computing the *weighted average* over the batchsize, then summing these averages over all timesteps. The result here is different from 1a and the double-averaged (of course, there is some correlation...)!\r\n\r\nI think 1a is the desired behavior (as the loglikelihood of a sequence really is the sum of the individual loglikelihoods and batches do correspond to sequence-length agnostic averages) and I'd be happy to establish it as the standard for this. Either way, doing something other than failing with an error will require an explicit notice in the docs. An error (or warning for backwards-compatibility?) 
might just be the simplest and safest option.\r\n\r\n**Code to reproduce the issue**\r\n\r\n```python\r\ntfa.seq2seq.sequence_loss(\r\n logits=tf.random.normal([3, 5, 7]),\r\n targets=tf.zeros([3, 5], dtype=tf.int32),\r\n weights=tf.sequence_mask(lengths=[3, 5, 1], maxlen=5, dtype=tf.float32),\r\n average_across_batch=True,\r\n average_across_timesteps=False,\r\n sum_over_batch=False,\r\n sum_over_timesteps=True,\r\n)\r\n```\r\n...should return a scalar but returns only the batch-averaged tensor.\r\n\r\n**Some more code to play with to test the claims above**\r\n\r\n```python\r\nimport tensorflow.compat.v2 as tf\r\nimport tensorflow_addons as tfa\r\nimport numpy as np\r\nimport random\r\n\r\ncase1b = []\r\ndblavg = []\r\n\r\nfor _ in range(100):\r\n dtype = tf.float32\r\n batchsize = random.randint(2, 10)\r\n maxlen = random.randint(2, 10)\r\n logits = tf.random.normal([batchsize, maxlen, 3])\r\n labels = tf.zeros([batchsize, maxlen], dtype=tf.int32)\r\n lengths = tf.squeeze(tf.random.categorical(tf.zeros([1, maxlen - 1]), batchsize)) + 1\r\n weights = tf.sequence_mask(lengths=lengths, maxlen=maxlen, dtype=tf.float32)\r\n\r\n def sl(ab, sb, at, st):\r\n return tfa.seq2seq.sequence_loss(\r\n logits,\r\n labels,\r\n weights,\r\n average_across_batch=ab,\r\n average_across_timesteps=at,\r\n sum_over_batch=sb,\r\n sum_over_timesteps=st,\r\n )\r\n\r\n all_b_all_t = sl(ab=False, sb=False, at=False, st=False)\r\n avg_b_avg_t = sl(ab=True, sb=False, at=True, st=False)\r\n sum_b_all_t = sl(ab=False, sb=True, at=False, st=False)\r\n\r\n tf.assert_equal(sum_b_all_t, tf.math.divide_no_nan(tf.reduce_sum(all_b_all_t, axis=0), tf.reduce_sum(weights, axis=0)))\r\n\r\n weighted = all_b_all_t * weights\r\n\r\n first_sum_timesteps = tf.reduce_sum(weighted, axis=1)\r\n then_average_batch = tf.reduce_sum(first_sum_timesteps) / batchsize\r\n\r\n first_average_batch = tf.math.divide_no_nan(tf.reduce_sum(weighted, axis=0), tf.reduce_sum(weights, axis=0))\r\n then_sum_timesteps = 
tf.reduce_sum(first_average_batch)\r\n\r\n # Case 1a and 1b are different.\r\n assert not np.isclose(then_average_batch, then_sum_timesteps)\r\n # Case 1a is just the double-averaging up to a constant.\r\n assert np.allclose(then_average_batch * batchsize / tf.reduce_sum(weights), avg_b_avg_t)\r\n # Case 1b is not just the averaging.\r\n assert not np.allclose(then_sum_timesteps / maxlen, avg_b_avg_t)\r\n # They only kind of correlate:\r\n case1b.append(then_sum_timesteps / maxlen)\r\n dblavg.append(avg_b_avg_t)\r\n```\n", "before_files": [{"content": "# Copyright 2016 The TensorFlow Authors. All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ==============================================================================\n\"\"\"Seq2seq loss operations for use in sequence models.\"\"\"\n\nfrom __future__ import absolute_import\nfrom __future__ import division\nfrom __future__ import print_function\n\nimport tensorflow as tf\n\n\ndef sequence_loss(logits,\n targets,\n weights,\n average_across_timesteps=True,\n average_across_batch=True,\n sum_over_timesteps=False,\n sum_over_batch=False,\n softmax_loss_function=None,\n name=None):\n \"\"\"Weighted cross-entropy loss for a sequence of logits.\n\n Depending on the values of `average_across_timesteps` /\n `sum_over_timesteps` and `average_across_batch` / `sum_over_batch`, the\n return Tensor will have rank 0, 1, or 2 as these arguments reduce the\n cross-entropy at each target, which has shape\n 
`[batch_size, sequence_length]`, over their respective dimensions. For\n example, if `average_across_timesteps` is `True` and `average_across_batch`\n is `False`, then the return Tensor will have shape `[batch_size]`.\n\n Note that `average_across_timesteps` and `sum_over_timesteps` cannot be\n True at same time. Same for `average_across_batch` and `sum_over_batch`.\n\n The recommended loss reduction in tf 2.0 has been changed to sum_over,\n instead of weighted average. User are recommend to use `sum_over_timesteps`\n and `sum_over_batch` for reduction.\n\n Args:\n logits: A Tensor of shape\n `[batch_size, sequence_length, num_decoder_symbols]` and dtype float.\n The logits correspond to the prediction across all classes at each\n timestep.\n targets: A Tensor of shape `[batch_size, sequence_length]` and dtype\n int. The target represents the true class at each timestep.\n weights: A Tensor of shape `[batch_size, sequence_length]` and dtype\n float. `weights` constitutes the weighting of each prediction in the\n sequence. When using `weights` as masking, set all valid timesteps to 1\n and all padded timesteps to 0, e.g. a mask returned by\n `tf.sequence_mask`.\n average_across_timesteps: If set, sum the cost across the sequence\n dimension and divide the cost by the total label weight across\n timesteps.\n average_across_batch: If set, sum the cost across the batch dimension and\n divide the returned cost by the batch size.\n sum_over_timesteps: If set, sum the cost across the sequence dimension\n and divide the size of the sequence. Note that any element with 0\n weights will be excluded from size calculation.\n sum_over_batch: if set, sum the cost across the batch dimension and\n divide the total cost by the batch size. Not that any element with 0\n weights will be excluded from size calculation.\n softmax_loss_function: Function (labels, logits) -> loss-batch\n to be used instead of the standard softmax (the default if this is\n None). 
**Note that to avoid confusion, it is required for the function\n to accept named arguments.**\n name: Optional name for this operation, defaults to \"sequence_loss\".\n\n Returns:\n A float Tensor of rank 0, 1, or 2 depending on the\n `average_across_timesteps` and `average_across_batch` arguments. By\n default, it has rank 0 (scalar) and is the weighted average cross-entropy\n (log-perplexity) per symbol.\n\n Raises:\n ValueError: logits does not have 3 dimensions or targets does not have 2\n dimensions or weights does not have 2 dimensions.\n \"\"\"\n if len(logits.get_shape()) != 3:\n raise ValueError(\"Logits must be a \"\n \"[batch_size x sequence_length x logits] tensor\")\n if len(targets.get_shape()) != 2:\n raise ValueError(\n \"Targets must be a [batch_size x sequence_length] tensor\")\n if len(weights.get_shape()) != 2:\n raise ValueError(\n \"Weights must be a [batch_size x sequence_length] tensor\")\n if average_across_timesteps and sum_over_timesteps:\n raise ValueError(\n \"average_across_timesteps and sum_over_timesteps cannot \"\n \"be set to True at same time.\")\n if average_across_batch and sum_over_batch:\n raise ValueError(\n \"average_across_batch and sum_over_batch cannot be set \"\n \"to True at same time.\")\n with tf.name_scope(name or \"sequence_loss\"):\n num_classes = tf.shape(input=logits)[2]\n logits_flat = tf.reshape(logits, [-1, num_classes])\n targets = tf.reshape(targets, [-1])\n if softmax_loss_function is None:\n crossent = tf.nn.sparse_softmax_cross_entropy_with_logits(\n labels=targets, logits=logits_flat)\n else:\n crossent = softmax_loss_function(\n labels=targets, logits=logits_flat)\n crossent *= tf.reshape(weights, [-1])\n if average_across_timesteps and average_across_batch:\n crossent = tf.reduce_sum(input_tensor=crossent)\n total_size = tf.reduce_sum(input_tensor=weights)\n crossent = tf.math.divide_no_nan(crossent, total_size)\n elif sum_over_timesteps and sum_over_batch:\n crossent = 
tf.reduce_sum(input_tensor=crossent)\n total_count = tf.cast(\n tf.math.count_nonzero(weights), crossent.dtype)\n crossent = tf.math.divide_no_nan(crossent, total_count)\n else:\n crossent = tf.reshape(crossent, tf.shape(input=logits)[0:2])\n if average_across_timesteps or average_across_batch:\n reduce_axis = [0] if average_across_batch else [1]\n crossent = tf.reduce_sum(\n input_tensor=crossent, axis=reduce_axis)\n total_size = tf.reduce_sum(\n input_tensor=weights, axis=reduce_axis)\n crossent = tf.math.divide_no_nan(crossent, total_size)\n elif sum_over_timesteps or sum_over_batch:\n reduce_axis = [0] if sum_over_batch else [1]\n crossent = tf.reduce_sum(\n input_tensor=crossent, axis=reduce_axis)\n total_count = tf.cast(\n tf.math.count_nonzero(weights, axis=reduce_axis),\n dtype=crossent.dtype)\n crossent = tf.math.divide_no_nan(crossent, total_count)\n return crossent\n\n\nclass SequenceLoss(tf.keras.losses.Loss):\n \"\"\"Weighted cross-entropy loss for a sequence of logits.\"\"\"\n\n def __init__(self,\n average_across_timesteps=False,\n average_across_batch=False,\n sum_over_timesteps=True,\n sum_over_batch=True,\n softmax_loss_function=None,\n name=None):\n super(SequenceLoss, self).__init__(name=name)\n self.average_across_timesteps = average_across_timesteps\n self.average_across_batch = average_across_batch\n self.sum_over_timesteps = sum_over_timesteps\n self.sum_over_batch = sum_over_batch\n self.softmax_loss_function = softmax_loss_function\n\n def __call__(self, y_true, y_pred, sample_weight=None):\n \"\"\"Override the parent __call__ to have a customized reduce\n behavior.\"\"\"\n return sequence_loss(\n y_pred,\n y_true,\n sample_weight,\n average_across_timesteps=self.average_across_timesteps,\n average_across_batch=self.average_across_batch,\n sum_over_timesteps=self.sum_over_timesteps,\n sum_over_batch=self.sum_over_batch,\n softmax_loss_function=self.softmax_loss_function,\n name=self.name)\n\n def call(self, y_true, y_pred):\n # Skip this 
method since the __call__ contains real implementation.\n pass\n", "path": "tensorflow_addons/seq2seq/loss.py"}], "after_files": [{"content": "# Copyright 2016 The TensorFlow Authors. All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ==============================================================================\n\"\"\"Seq2seq loss operations for use in sequence models.\"\"\"\n\nfrom __future__ import absolute_import\nfrom __future__ import division\nfrom __future__ import print_function\n\nimport tensorflow as tf\n\n\ndef sequence_loss(logits,\n targets,\n weights,\n average_across_timesteps=True,\n average_across_batch=True,\n sum_over_timesteps=False,\n sum_over_batch=False,\n softmax_loss_function=None,\n name=None):\n \"\"\"Weighted cross-entropy loss for a sequence of logits.\n\n Depending on the values of `average_across_timesteps` /\n `sum_over_timesteps` and `average_across_batch` / `sum_over_batch`, the\n return Tensor will have rank 0, 1, or 2 as these arguments reduce the\n cross-entropy at each target, which has shape\n `[batch_size, sequence_length]`, over their respective dimensions. For\n example, if `average_across_timesteps` is `True` and `average_across_batch`\n is `False`, then the return Tensor will have shape `[batch_size]`.\n\n Note that `average_across_timesteps` and `sum_over_timesteps` cannot be\n True at same time. 
Same for `average_across_batch` and `sum_over_batch`.\n\n The recommended loss reduction in tf 2.0 has been changed to sum_over,\n instead of weighted average. User are recommend to use `sum_over_timesteps`\n and `sum_over_batch` for reduction.\n\n Args:\n logits: A Tensor of shape\n `[batch_size, sequence_length, num_decoder_symbols]` and dtype float.\n The logits correspond to the prediction across all classes at each\n timestep.\n targets: A Tensor of shape `[batch_size, sequence_length]` and dtype\n int. The target represents the true class at each timestep.\n weights: A Tensor of shape `[batch_size, sequence_length]` and dtype\n float. `weights` constitutes the weighting of each prediction in the\n sequence. When using `weights` as masking, set all valid timesteps to 1\n and all padded timesteps to 0, e.g. a mask returned by\n `tf.sequence_mask`.\n average_across_timesteps: If set, sum the cost across the sequence\n dimension and divide the cost by the total label weight across\n timesteps.\n average_across_batch: If set, sum the cost across the batch dimension and\n divide the returned cost by the batch size.\n sum_over_timesteps: If set, sum the cost across the sequence dimension\n and divide the size of the sequence. Note that any element with 0\n weights will be excluded from size calculation.\n sum_over_batch: if set, sum the cost across the batch dimension and\n divide the total cost by the batch size. Not that any element with 0\n weights will be excluded from size calculation.\n softmax_loss_function: Function (labels, logits) -> loss-batch\n to be used instead of the standard softmax (the default if this is\n None). **Note that to avoid confusion, it is required for the function\n to accept named arguments.**\n name: Optional name for this operation, defaults to \"sequence_loss\".\n\n Returns:\n A float Tensor of rank 0, 1, or 2 depending on the\n `average_across_timesteps` and `average_across_batch` arguments. 
By\n default, it has rank 0 (scalar) and is the weighted average cross-entropy\n (log-perplexity) per symbol.\n\n Raises:\n ValueError: logits does not have 3 dimensions or targets does not have 2\n dimensions or weights does not have 2 dimensions.\n \"\"\"\n if len(logits.get_shape()) != 3:\n raise ValueError(\"Logits must be a \"\n \"[batch_size x sequence_length x logits] tensor\")\n if len(targets.get_shape()) != 2:\n raise ValueError(\n \"Targets must be a [batch_size x sequence_length] tensor\")\n if len(weights.get_shape()) != 2:\n raise ValueError(\n \"Weights must be a [batch_size x sequence_length] tensor\")\n if average_across_timesteps and sum_over_timesteps:\n raise ValueError(\n \"average_across_timesteps and sum_over_timesteps cannot \"\n \"be set to True at same time.\")\n if average_across_batch and sum_over_batch:\n raise ValueError(\n \"average_across_batch and sum_over_batch cannot be set \"\n \"to True at same time.\")\n if average_across_batch and sum_over_timesteps:\n raise ValueError(\n \"average_across_batch and sum_over_timesteps cannot be set \"\n \"to True at same time because of ambiguous order.\")\n if sum_over_batch and average_across_timesteps:\n raise ValueError(\n \"sum_over_batch and average_across_timesteps cannot be set \"\n \"to True at same time because of ambiguous order.\")\n with tf.name_scope(name or \"sequence_loss\"):\n num_classes = tf.shape(input=logits)[2]\n logits_flat = tf.reshape(logits, [-1, num_classes])\n targets = tf.reshape(targets, [-1])\n if softmax_loss_function is None:\n crossent = tf.nn.sparse_softmax_cross_entropy_with_logits(\n labels=targets, logits=logits_flat)\n else:\n crossent = softmax_loss_function(\n labels=targets, logits=logits_flat)\n crossent *= tf.reshape(weights, [-1])\n if average_across_timesteps and average_across_batch:\n crossent = tf.reduce_sum(input_tensor=crossent)\n total_size = tf.reduce_sum(input_tensor=weights)\n crossent = tf.math.divide_no_nan(crossent, total_size)\n elif 
sum_over_timesteps and sum_over_batch:\n crossent = tf.reduce_sum(input_tensor=crossent)\n total_count = tf.cast(\n tf.math.count_nonzero(weights), crossent.dtype)\n crossent = tf.math.divide_no_nan(crossent, total_count)\n else:\n crossent = tf.reshape(crossent, tf.shape(input=logits)[0:2])\n if average_across_timesteps or average_across_batch:\n reduce_axis = [0] if average_across_batch else [1]\n crossent = tf.reduce_sum(\n input_tensor=crossent, axis=reduce_axis)\n total_size = tf.reduce_sum(\n input_tensor=weights, axis=reduce_axis)\n crossent = tf.math.divide_no_nan(crossent, total_size)\n elif sum_over_timesteps or sum_over_batch:\n reduce_axis = [0] if sum_over_batch else [1]\n crossent = tf.reduce_sum(\n input_tensor=crossent, axis=reduce_axis)\n total_count = tf.cast(\n tf.math.count_nonzero(weights, axis=reduce_axis),\n dtype=crossent.dtype)\n crossent = tf.math.divide_no_nan(crossent, total_count)\n return crossent\n\n\nclass SequenceLoss(tf.keras.losses.Loss):\n \"\"\"Weighted cross-entropy loss for a sequence of logits.\"\"\"\n\n def __init__(self,\n average_across_timesteps=False,\n average_across_batch=False,\n sum_over_timesteps=True,\n sum_over_batch=True,\n softmax_loss_function=None,\n name=None):\n super(SequenceLoss, self).__init__(name=name)\n self.average_across_timesteps = average_across_timesteps\n self.average_across_batch = average_across_batch\n self.sum_over_timesteps = sum_over_timesteps\n self.sum_over_batch = sum_over_batch\n self.softmax_loss_function = softmax_loss_function\n\n def __call__(self, y_true, y_pred, sample_weight=None):\n \"\"\"Override the parent __call__ to have a customized reduce\n behavior.\"\"\"\n return sequence_loss(\n y_pred,\n y_true,\n sample_weight,\n average_across_timesteps=self.average_across_timesteps,\n average_across_batch=self.average_across_batch,\n sum_over_timesteps=self.sum_over_timesteps,\n sum_over_batch=self.sum_over_batch,\n softmax_loss_function=self.softmax_loss_function,\n 
name=self.name)\n\n def call(self, y_true, y_pred):\n # Skip this method since the __call__ contains real implementation.\n pass\n", "path": "tensorflow_addons/seq2seq/loss.py"}]} | 3,719 | 232 |

gh_patches_debug_10799 | rasdani/github-patches | git_diff | optuna__optuna-1680 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Use function annotation syntax for Type Hints.

After dropping Python 2.7 support at #710, we can define type hints with function annotation syntax.

~~Do you have a plan to update the coding style guideline?~~

https://github.com/optuna/optuna/wiki/Coding-Style-Conventions

## Progress

- [x] `optuna/integration/sklearn.py` (#1735)

- [x] `optuna/study.py` - assigned to harpy

## Note to the questioner

We still cannot use the variable annotation syntax introduced by [PEP 526](https://www.python.org/dev/peps/pep-0526/) because we support Python 3.5.

--- END ISSUE ---
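The sketch below illustrates the distinction the issue draws. The function names are hypothetical stand-ins modelled on `NopPruner.prune`.

- **Type comment:** the old, Python 2-compatible way to describe a signature.
- **Function annotation:** usable on Python 3.5.
- **Variable annotation (PEP 526):** needs Python 3.6, so 3.5-compatible code still writes variable types as comments.

```python
from typing import List


# Python 2-compatible style: the signature lives in a type comment,
# which the interpreter ignores at runtime.
def prune_with_comment(study, trial):
    # type: (object, object) -> bool
    return False


# Function annotation syntax, available on Python 3.5. Quoted (string)
# annotations are not evaluated at definition time, so the referenced
# optuna classes do not need to be imported for this definition to run.
def prune_with_annotations(study: "optuna.study.Study", trial: "optuna.trial.FrozenTrial") -> bool:
    return False


# PEP 526 variable annotations (`values: List[int] = []`) require Python 3.6,
# so code that must still run on 3.5 keeps a type comment for variables.
values = []  # type: List[int]
```

Only the annotated version records its types in `__annotations__`. The type-comment version leaves them for static checkers such as mypy to read.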

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `optuna/pruners/_nop.py`

Content:

```
1 from optuna.pruners import BasePruner
2 from optuna import type_checking
3
4 if type_checking.TYPE_CHECKING:
5     from optuna.study import Study  # NOQA
6     from optuna.trial import FrozenTrial  # NOQA
7
8
9 class NopPruner(BasePruner):
10     """Pruner which never prunes trials.
11
12     Example:
13
14         .. testcode::
15
16             import numpy as np
17             from sklearn.datasets import load_iris
18             from sklearn.linear_model import SGDClassifier
19             from sklearn.model_selection import train_test_split
20
21             import optuna
22
23             X, y = load_iris(return_X_y=True)
24             X_train, X_valid, y_train, y_valid = train_test_split(X, y)
25             classes = np.unique(y)
26
27             def objective(trial):
28                 alpha = trial.suggest_uniform('alpha', 0.0, 1.0)
29                 clf = SGDClassifier(alpha=alpha)
30                 n_train_iter = 100
31
32                 for step in range(n_train_iter):
33                     clf.partial_fit(X_train, y_train, classes=classes)
34
35                     intermediate_value = clf.score(X_valid, y_valid)
36                     trial.report(intermediate_value, step)
37
38                     if trial.should_prune():
39                         assert False, "should_prune() should always return False with this pruner."
40                         raise optuna.TrialPruned()
41
42                 return clf.score(X_valid, y_valid)
43
44             study = optuna.create_study(direction='maximize',
45                                         pruner=optuna.pruners.NopPruner())
46             study.optimize(objective, n_trials=20)
47     """
48
49     def prune(self, study, trial):
50         # type: (Study, FrozenTrial) -> bool
51
52         return False
53
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/optuna/pruners/_nop.py b/optuna/pruners/_nop.py
--- a/optuna/pruners/_nop.py
+++ b/optuna/pruners/_nop.py
@@ -1,9 +1,5 @@
+import optuna
 from optuna.pruners import BasePruner
-from optuna import type_checking
-
-if type_checking.TYPE_CHECKING:
-    from optuna.study import Study  # NOQA
-    from optuna.trial import FrozenTrial  # NOQA
 
 
 class NopPruner(BasePruner):
@@ -46,7 +42,6 @@
             study.optimize(objective, n_trials=20)
     """
 
-    def prune(self, study, trial):
-        # type: (Study, FrozenTrial) -> bool
+    def prune(self, study: "optuna.study.Study", trial: "optuna.trial.FrozenTrial") -> bool:
 
         return False