problem_id (string, lengths 18 to 22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.1k to 25.4k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_30341 | rasdani/github-patches | git_diff | pyinstaller__pyinstaller-7411 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Multiprocessing "spawn" not thread-safe on Linux.

<!--

Welcome to the PyInstaller issue tracker! Before creating an issue, please heed the following:

1. This tracker should only be used to report bugs and request features / enhancements to PyInstaller

- For questions and general support, use the discussions forum.

2. Use the search function before creating a new issue. Duplicates will be closed and directed to

the original discussion.

3. When making a bug report, make sure you provide all required information. The easier it is for

maintainers to reproduce, the faster it'll be fixed.

-->

<!-- +++ ONLY TEXT +++ DO NOT POST IMAGES +++ -->

## Description of the issue

When using multiprocessing with the "spawn" method on Linux, processes sometimes fail to start with the message: `FileNotFoundError: [Errno 2] No such file or directory: '/tmp/_MEIOchafX/multiprocessing_bug.py'` This happens if different threads try to launch processes concurrently. It would appear that the "spawn" method is not thread-safe when used with freeze support.

As mentioned below, this bug does not manifest when built with `--onedir`.

[debug.log](https://github.com/pyinstaller/pyinstaller/files/10560051/debug.log) contains import and bootloader logging for a failure case.

Q: Does this happen with "fork"?

A: No

Q: Does this happen when running from source?

A: No, only when packaged as a pyinstaller executable with `--onefile`.

Q: Does this happen on Windows?

A: Unknown

### Context information (for bug reports)

* Output of `pyinstaller --version`: ```5.7.0```

* Version of Python: Python 3.10.6

* Platform: Ubuntu 22.04.1 LTS

* How you installed Python: apt

* Did you also try this on another platform?

* Ubuntu 18.04.6 LTS, pyinstaller 4.7, Python 3.7 - Bug is present

* WSL2 pyinstaller 4.7, Python 3.7 - Bug is present

* try the latest development version, using the following command:

```shell

pip install https://github.com/pyinstaller/pyinstaller/archive/develop.zip

```

* follow *all* the instructions in our "If Things Go Wrong" Guide

(https://github.com/pyinstaller/pyinstaller/wiki/If-Things-Go-Wrong) and

### Make sure [everything is packaged correctly](https://github.com/pyinstaller/pyinstaller/wiki/How-to-Report-Bugs#make-sure-everything-is-packaged-correctly)

* [x] start with clean installation

* [x] use the latest development version

* [x] Run your frozen program **from a command window (shell)** — instead of double-clicking on it

* [x] Package your program in **--onedir mode** - **BUG DOES NOT MANIFEST**

* [x] Package **without UPX**, say: use the option `--noupx` or set `upx=False` in your .spec-file - **BUG DOES NOT MANIFEST**

* [x] Repackage your application in **verbose/debug mode**. For this, pass the option `--debug` to `pyi-makespec` or `pyinstaller` or use `EXE(..., debug=1, ...)` in your .spec file.

### A minimal example program which shows the error

```python

import multiprocessing
import sys
from threading import Thread

DEFAULT_N = 3

def main():
    try:
        n = int(sys.argv[1])
    except IndexError:
        n=DEFAULT_N

    threads = []
    for i in range(n):
        threads.append(Thread(target=foo, args=(i, )))
    for i in range(n):
        threads[i].start()
    for i in range(n):
        threads[i].join()

def foo(i):
    multiprocessing_context = multiprocessing.get_context(method="spawn")
    q = multiprocessing_context.Queue()
    p = multiprocessing_context.Process(target=bar, args=(q, i), daemon=True)
    p.start()
    p.join()


def bar(q, i):
    q.put('hello')
    print(f"{i} Added to queue")

if __name__ == "__main__":
    multiprocessing.freeze_support()
    main()

```

### Stacktrace / full error message

Note: If you can't reproduce the bug, try increasing the parameter from 2 to 5 (or higher).

```

$> dist/multiprocessing_bug 2
0 Added to queue
Traceback (most recent call last):
  File "multiprocessing_bug.py", line 34, in <module>
    multiprocessing.freeze_support()
  File "PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py", line 49, in _freeze_support
  File "multiprocessing/spawn.py", line 116, in spawn_main
  File "multiprocessing/spawn.py", line 125, in _main
  File "multiprocessing/spawn.py", line 236, in prepare
  File "multiprocessing/spawn.py", line 287, in _fixup_main_from_path
  File "runpy.py", line 288, in run_path
  File "runpy.py", line 252, in _get_code_from_file
FileNotFoundError: [Errno 2] No such file or directory: '/tmp/_MEIOchafX/multiprocessing_bug.py'
[8216] Failed to execute script 'multiprocessing_bug' due to unhandled exception!

```

### Workaround

As shown below, adding a lock around the call to `process.start()` seems to resolve the issue.

```python

import multiprocessing
import sys
from threading import Thread, Lock

DEFAULT_N = 3

def main():
    try:
        n = int(sys.argv[1])
    except IndexError:
        n=DEFAULT_N

    threads = []
    for i in range(n):
        threads.append(Thread(target=foo, args=(i, )))
    for i in range(n):
        threads[i].start()
    for i in range(n):
        threads[i].join()

lock = Lock()
def foo(i):
    multiprocessing_context = multiprocessing.get_context(method="spawn")
    q = multiprocessing_context.Queue()
    p = multiprocessing_context.Process(target=bar, args=(q, i), daemon=True)

    with lock:
        p.start()

    p.join()


def bar(q, i):
    q.put('hello')
    print(f"{i} Added to queue")

if __name__ == "__main__":
    multiprocessing.freeze_support()
    main()

```
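The same idea can be applied one level lower, around the environment change itself rather than around `p.start()`. The following is only a minimal sketch under stated assumptions: `ENV_KEY`, `launch_child`, and `run_demo` are hypothetical names used for illustration and are not part of PyInstaller or `multiprocessing`. It uses `subprocess` instead of a frozen executable, so it shows the locking pattern, not the actual bug.

```python
import os
import subprocess
import sys
import threading

ENV_KEY = "DEMO_PARENT_DIR"  # hypothetical variable name, for illustration only
_env_lock = threading.Lock()  # one lock shared by every launching thread


def launch_child(value):
    """Start a child that reports ENV_KEY; set and unset happen under the lock."""
    with _env_lock:
        os.environ[ENV_KEY] = value
        try:
            # The child receives a copy of the environment when it is created.
            child = subprocess.Popen(
                [sys.executable, "-c",
                 f"import os; print(os.environ.get({ENV_KEY!r}, 'MISSING'))"],
                stdout=subprocess.PIPE,
                text=True,
            )
        finally:
            # Without the lock, this removal could run while another thread
            # is still creating its child, which would then see no value.
            os.environ.pop(ENV_KEY, None)
    out, _ = child.communicate()
    return out.strip()


def run_demo(n=4):
    """Launch n children from n threads and collect what each child saw."""
    results = [None] * n

    def worker(i):
        results[i] = launch_child(f"value-{i}")

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Because the variable is set, the child is created, and the variable is removed all while holding the lock, each child should see the value written by its own thread.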

--- END ISSUE ---

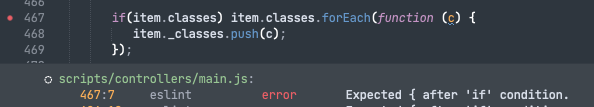

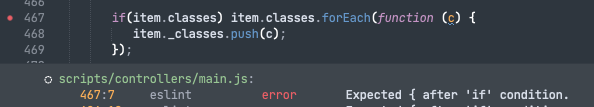

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py`

Content:

```

1 #-----------------------------------------------------------------------------
2 # Copyright (c) 2017-2023, PyInstaller Development Team.
3 #
4 # Licensed under the Apache License, Version 2.0 (the "License");
5 # you may not use this file except in compliance with the License.
6 #
7 # The full license is in the file COPYING.txt, distributed with this software.
8 #
9 # SPDX-License-Identifier: Apache-2.0
10 #-----------------------------------------------------------------------------
11
12 import multiprocessing
13 import multiprocessing.spawn as spawn
14 # 'spawn' multiprocessing needs some adjustments on osx
15 import os
16 import sys
17 from subprocess import _args_from_interpreter_flags
18
19 # prevent spawn from trying to read __main__ in from the main script
20 multiprocessing.process.ORIGINAL_DIR = None
21
22
23 def _freeze_support():
24     # We want to catch the two processes that are spawned by the multiprocessing code:
25     # - the semaphore tracker, which cleans up named semaphores in the spawn multiprocessing mode
26     # - the fork server, which keeps track of worker processes in forkserver mode.
27     # both of these processes are started by spawning a new copy of the running executable, passing it the flags from
28     # _args_from_interpreter_flags and then "-c" and an import statement.
29     # Look for those flags and the import statement, then exec() the code ourselves.
30
31     if (
32         len(sys.argv) >= 2 and sys.argv[-2] == '-c' and sys.argv[-1].startswith((
33             'from multiprocessing.semaphore_tracker import main', # Py<3.8
34             'from multiprocessing.resource_tracker import main', # Py>=3.8
35             'from multiprocessing.forkserver import main'
36         )) and set(sys.argv[1:-2]) == set(_args_from_interpreter_flags())
37     ):
38         exec(sys.argv[-1])
39         sys.exit()
40
41     if spawn.is_forking(sys.argv):
42         kwds = {}
43         for arg in sys.argv[2:]:
44             name, value = arg.split('=')
45             if value == 'None':
46                 kwds[name] = None
47             else:
48                 kwds[name] = int(value)
49         spawn.spawn_main(**kwds)
50         sys.exit()
51
52
53 multiprocessing.freeze_support = spawn.freeze_support = _freeze_support
54
55 # Bootloader unsets _MEIPASS2 for child processes to allow running PyInstaller binaries inside pyinstaller binaries.
56 # This is ok for mac or unix with fork() system call. But on Windows we need to overcome missing fork() function.
57
58 if sys.platform.startswith('win'):
59     import multiprocessing.popen_spawn_win32 as forking
60 else:
61     import multiprocessing.popen_fork as forking
62     import multiprocessing.popen_spawn_posix as spawning
63
64
65 # Mix-in to re-set _MEIPASS2 from sys._MEIPASS.
66 class FrozenSupportMixIn:
67     def __init__(self, *args, **kw):
68         if hasattr(sys, 'frozen'):
69             # We have to set original _MEIPASS2 value from sys._MEIPASS to get --onefile mode working.
70             os.putenv('_MEIPASS2', sys._MEIPASS) # @UndefinedVariable
71         try:
72             super().__init__(*args, **kw)
73         finally:
74             if hasattr(sys, 'frozen'):
75                 # On some platforms (e.g. AIX) 'os.unsetenv()' is not available. In those cases we cannot delete the
76                 # variable but only set it to the empty string. The bootloader can handle this case.
77                 if hasattr(os, 'unsetenv'):
78                     os.unsetenv('_MEIPASS2')
79                 else:
80                     os.putenv('_MEIPASS2', '')
81
82
83 # Patch forking.Popen to re-set _MEIPASS2 from sys._MEIPASS.
84 class _Popen(FrozenSupportMixIn, forking.Popen):
85     pass
86
87
88 forking.Popen = _Popen
89
90 if not sys.platform.startswith('win'):
91     # Patch spawning.Popen to re-set _MEIPASS2 from sys._MEIPASS.
92     class _Spawning_Popen(FrozenSupportMixIn, spawning.Popen):
93         pass
94
95     spawning.Popen = _Spawning_Popen
96

```
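The argument handling in `_freeze_support` (file lines 41 to 50 above) turns `name=value` command-line items into keyword arguments for `spawn.spawn_main`. A minimal sketch of just that parsing step follows; `parse_spawn_args` is a hypothetical helper name, and the sample argument values are made up for illustration.

```python
def parse_spawn_args(args):
    """Convert items such as 'tracker_fd=5' into a keyword-argument dict.

    Mirrors the loop in the hook: the literal string 'None' becomes None,
    every other value is converted with int().
    """
    kwds = {}
    for arg in args:
        name, value = arg.split("=")
        kwds[name] = None if value == "None" else int(value)
    return kwds


# Illustrative values only; real descriptors are chosen at run time.
example = parse_spawn_args(["tracker_fd=5", "pipe_handle=7", "parent_pid=None"])
```

In the hook itself the input is `sys.argv[2:]`, that is, everything after the executable name and the fork marker argument.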

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py b/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py

--- a/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py
+++ b/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py
@@ -9,6 +9,7 @@
 # SPDX-License-Identifier: Apache-2.0
 #-----------------------------------------------------------------------------
 
+import threading
 import multiprocessing
 import multiprocessing.spawn as spawn
 # 'spawn' multiprocessing needs some adjustments on osx
@@ -64,14 +65,17 @@
 
 # Mix-in to re-set _MEIPASS2 from sys._MEIPASS.
 class FrozenSupportMixIn:
+    _lock = threading.Lock()
+
     def __init__(self, *args, **kw):
-        if hasattr(sys, 'frozen'):
+        # The whole code block needs be executed under a lock to prevent race conditions between `os.putenv` and
+        # `os.unsetenv` calls when processes are spawned concurrently from multiple threads. See #7410.
+        with self._lock:
             # We have to set original _MEIPASS2 value from sys._MEIPASS to get --onefile mode working.
             os.putenv('_MEIPASS2', sys._MEIPASS) # @UndefinedVariable
-        try:
-            super().__init__(*args, **kw)
-        finally:
-            if hasattr(sys, 'frozen'):
+            try:
+                super().__init__(*args, **kw)
+            finally:
                 # On some platforms (e.g. AIX) 'os.unsetenv()' is not available. In those cases we cannot delete the
                 # variable but only set it to the empty string. The bootloader can handle this case.
                 if hasattr(os, 'unsetenv'):

| {"golden_diff": "diff --git a/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py b/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py\n--- a/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py\n+++ b/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py\n@@ -9,6 +9,7 @@\n # SPDX-License-Identifier: Apache-2.0\n #-----------------------------------------------------------------------------\n \n+import threading\n import multiprocessing\n import multiprocessing.spawn as spawn\n # 'spawn' multiprocessing needs some adjustments on osx\n@@ -64,14 +65,17 @@\n \n # Mix-in to re-set _MEIPASS2 from sys._MEIPASS.\n class FrozenSupportMixIn:\n+ _lock = threading.Lock()\n+\n def __init__(self, *args, **kw):\n- if hasattr(sys, 'frozen'):\n+ # The whole code block needs be executed under a lock to prevent race conditions between `os.putenv` and\n+ # `os.unsetenv` calls when processes are spawned concurrently from multiple threads. See #7410.\n+ with self._lock:\n # We have to set original _MEIPASS2 value from sys._MEIPASS to get --onefile mode working.\n os.putenv('_MEIPASS2', sys._MEIPASS) # @UndefinedVariable\n- try:\n- super().__init__(*args, **kw)\n- finally:\n- if hasattr(sys, 'frozen'):\n+ try:\n+ super().__init__(*args, **kw)\n+ finally:\n # On some platforms (e.g. AIX) 'os.unsetenv()' is not available. In those cases we cannot delete the\n # variable but only set it to the empty string. The bootloader can handle this case.\n if hasattr(os, 'unsetenv'):\n", "issue": "Multiprocessing \"spawn\" not thread-safe on Linux.\n<!--\r\nWelcome to the PyInstaller issue tracker! Before creating an issue, please heed the following:\r\n\r\n1. This tracker should only be used to report bugs and request features / enhancements to PyInstaller\r\n - For questions and general support, use the discussions forum.\r\n2. Use the search function before creating a new issue. Duplicates will be closed and directed to\r\n the original discussion.\r\n3. 
When making a bug report, make sure you provide all required information. The easier it is for\r\n maintainers to reproduce, the faster it'll be fixed.\r\n-->\r\n\r\n<!-- +++ ONLY TEXT +++ DO NOT POST IMAGES +++ -->\r\n\r\n## Description of the issue\r\n\r\nWhen using multiprocessing with the \"spawn\" method on Linux, processes sometimes fail to start with the message: `FileNotFoundError: [Errno 2] No such file or directory: '/tmp/_MEIOchafX/multiprocessing_bug.py'` This happens if different threads try to launch processes concurrently. It would appear that the \"spawn\" method is not thread-safe when used with freeze support.\r\n\r\nAs mentioned below, this bug does not manifest when built with `--onedir`.\r\n\r\n[debug.log](https://github.com/pyinstaller/pyinstaller/files/10560051/debug.log) contains import and bootloader logging for a failure case. \r\n\r\n\r\nQ: Does this happen with \"fork\"? \r\nA: No\r\n\r\nQ: Does this happen when running from source?\r\nA: No, only when packaged as a pyinstaller executable with `--onefile`.\r\n\r\nQ: Does this happen on Windows?\r\nA: Unknown\r\n\r\n### Context information (for bug reports)\r\n\r\n* Output of `pyinstaller --version`: ```5.7.0```\r\n* Version of Python: Python 3.10.6\r\n* Platform: Ubuntu 22.04.1 LTS\r\n* How you installed Python: apt\r\n* Did you also try this on another platform?\r\n * Ubuntu 18.04.6 LTS, pyinstaller 4.7, Python 3.7 - Bug is present\r\n * WSL2 pyinstaller 4.7, Python 3.7 - Bug is present\r\n\r\n\r\n* try the latest development version, using the following command:\r\n\r\n```shell\r\npip install https://github.com/pyinstaller/pyinstaller/archive/develop.zip\r\n```\r\n\r\n* follow *all* the instructions in our \"If Things Go Wrong\" Guide\r\n (https://github.com/pyinstaller/pyinstaller/wiki/If-Things-Go-Wrong) and\r\n\r\n### Make sure [everything is packaged correctly](https://github.com/pyinstaller/pyinstaller/wiki/How-to-Report-Bugs#make-sure-everything-is-packaged-correctly)\r\n\r\n * 
[x] start with clean installation\r\n * [x] use the latest development version\r\n * [x] Run your frozen program **from a command window (shell)** \u2014 instead of double-clicking on it\r\n * [x] Package your program in **--onedir mode** - **BUG DOES NOT MANIFEST**\r\n * [x] Package **without UPX**, say: use the option `--noupx` or set `upx=False` in your .spec-file - - **BUG DOES NOT MANIFEST**\r\n * [x] Repackage you application in **verbose/debug mode**. For this, pass the option `--debug` to `pyi-makespec` or `pyinstaller` or use `EXE(..., debug=1, ...)` in your .spec file.\r\n\r\n\r\n### A minimal example program which shows the error\r\n\r\n```python\r\nimport multiprocessing\r\nimport sys\r\nfrom threading import Thread\r\n\r\nDEFAULT_N = 3\r\n\r\ndef main():\r\n try:\r\n n = int(sys.argv[1])\r\n except IndexError:\r\n n=DEFAULT_N\r\n\r\n threads = []\r\n for i in range(n):\r\n threads.append(Thread(target=foo, args=(i, )))\r\n for i in range(n):\r\n threads[i].start()\r\n for i in range(n):\r\n threads[i].join()\r\n\r\ndef foo(i):\r\n multiprocessing_context = multiprocessing.get_context(method=\"spawn\")\r\n q = multiprocessing_context.Queue()\r\n p = multiprocessing_context.Process(target=bar, args=(q, i), daemon=True)\r\n p.start()\r\n p.join()\r\n\r\n\r\ndef bar(q, i):\r\n q.put('hello')\r\n print(f\"{i} Added to queue\")\r\n\r\nif __name__ == \"__main__\":\r\n multiprocessing.freeze_support()\r\n main()\r\n\r\n```\r\n\r\n### Stacktrace / full error message\r\n\r\nNote: If you can't reproduce the bug, try increasing the parameter from 2 to 5 (or higher).\r\n\r\n```\r\n$> dist/multiprocessing_bug 2\r\n0 Added to queue\r\nTraceback (most recent call last):\r\n File \"multiprocessing_bug.py\", line 34, in <module>\r\n multiprocessing.freeze_support()\r\n File \"PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py\", line 49, in _freeze_support\r\n File \"multiprocessing/spawn.py\", line 116, in spawn_main\r\n File \"multiprocessing/spawn.py\", line 125, 
in _main\r\n File \"multiprocessing/spawn.py\", line 236, in prepare\r\n File \"multiprocessing/spawn.py\", line 287, in _fixup_main_from_path\r\n File \"runpy.py\", line 288, in run_path\r\n File \"runpy.py\", line 252, in _get_code_from_file\r\nFileNotFoundError: [Errno 2] No such file or directory: '/tmp/_MEIOchafX/multiprocessing_bug.py'\r\n[8216] Failed to execute script 'multiprocessing_bug' due to unhandled exception!\r\n\r\n```\r\n\r\n### Workaround\r\n\r\nAs shown below, adding a lock around the call to `process.start()` seems to resolve the issue.\r\n\r\n```python\r\nimport multiprocessing\r\nimport sys\r\nfrom threading import Thread, Lock\r\n\r\nDEFAULT_N = 3\r\n\r\ndef main():\r\n try:\r\n n = int(sys.argv[1])\r\n except IndexError:\r\n n=DEFAULT_N\r\n\r\n threads = []\r\n for i in range(n):\r\n threads.append(Thread(target=foo, args=(i, )))\r\n for i in range(n):\r\n threads[i].start()\r\n for i in range(n):\r\n threads[i].join()\r\n\r\nlock = Lock()\r\ndef foo(i):\r\n multiprocessing_context = multiprocessing.get_context(method=\"spawn\")\r\n q = multiprocessing_context.Queue()\r\n p = multiprocessing_context.Process(target=bar, args=(q, i), daemon=True)\r\n\r\n with lock:\r\n p.start()\r\n\r\n p.join()\r\n\r\n\r\ndef bar(q, i):\r\n q.put('hello')\r\n print(f\"{i} Added to queue\")\r\n\r\nif __name__ == \"__main__\":\r\n multiprocessing.freeze_support()\r\n main()\r\n\r\n```\r\n\n", "before_files": [{"content": "#-----------------------------------------------------------------------------\n# Copyright (c) 2017-2023, PyInstaller Development Team.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n#\n# The full license is in the file COPYING.txt, distributed with this software.\n#\n# SPDX-License-Identifier: Apache-2.0\n#-----------------------------------------------------------------------------\n\nimport multiprocessing\nimport multiprocessing.spawn as 
spawn\n# 'spawn' multiprocessing needs some adjustments on osx\nimport os\nimport sys\nfrom subprocess import _args_from_interpreter_flags\n\n# prevent spawn from trying to read __main__ in from the main script\nmultiprocessing.process.ORIGINAL_DIR = None\n\n\ndef _freeze_support():\n # We want to catch the two processes that are spawned by the multiprocessing code:\n # - the semaphore tracker, which cleans up named semaphores in the spawn multiprocessing mode\n # - the fork server, which keeps track of worker processes in forkserver mode.\n # both of these processes are started by spawning a new copy of the running executable, passing it the flags from\n # _args_from_interpreter_flags and then \"-c\" and an import statement.\n # Look for those flags and the import statement, then exec() the code ourselves.\n\n if (\n len(sys.argv) >= 2 and sys.argv[-2] == '-c' and sys.argv[-1].startswith((\n 'from multiprocessing.semaphore_tracker import main', # Py<3.8\n 'from multiprocessing.resource_tracker import main', # Py>=3.8\n 'from multiprocessing.forkserver import main'\n )) and set(sys.argv[1:-2]) == set(_args_from_interpreter_flags())\n ):\n exec(sys.argv[-1])\n sys.exit()\n\n if spawn.is_forking(sys.argv):\n kwds = {}\n for arg in sys.argv[2:]:\n name, value = arg.split('=')\n if value == 'None':\n kwds[name] = None\n else:\n kwds[name] = int(value)\n spawn.spawn_main(**kwds)\n sys.exit()\n\n\nmultiprocessing.freeze_support = spawn.freeze_support = _freeze_support\n\n# Bootloader unsets _MEIPASS2 for child processes to allow running PyInstaller binaries inside pyinstaller binaries.\n# This is ok for mac or unix with fork() system call. 
But on Windows we need to overcome missing fork() function.\n\nif sys.platform.startswith('win'):\n import multiprocessing.popen_spawn_win32 as forking\nelse:\n import multiprocessing.popen_fork as forking\n import multiprocessing.popen_spawn_posix as spawning\n\n\n# Mix-in to re-set _MEIPASS2 from sys._MEIPASS.\nclass FrozenSupportMixIn:\n def __init__(self, *args, **kw):\n if hasattr(sys, 'frozen'):\n # We have to set original _MEIPASS2 value from sys._MEIPASS to get --onefile mode working.\n os.putenv('_MEIPASS2', sys._MEIPASS) # @UndefinedVariable\n try:\n super().__init__(*args, **kw)\n finally:\n if hasattr(sys, 'frozen'):\n # On some platforms (e.g. AIX) 'os.unsetenv()' is not available. In those cases we cannot delete the\n # variable but only set it to the empty string. The bootloader can handle this case.\n if hasattr(os, 'unsetenv'):\n os.unsetenv('_MEIPASS2')\n else:\n os.putenv('_MEIPASS2', '')\n\n\n# Patch forking.Popen to re-set _MEIPASS2 from sys._MEIPASS.\nclass _Popen(FrozenSupportMixIn, forking.Popen):\n pass\n\n\nforking.Popen = _Popen\n\nif not sys.platform.startswith('win'):\n # Patch spawning.Popen to re-set _MEIPASS2 from sys._MEIPASS.\n class _Spawning_Popen(FrozenSupportMixIn, spawning.Popen):\n pass\n\n spawning.Popen = _Spawning_Popen\n", "path": "PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py"}], "after_files": [{"content": "#-----------------------------------------------------------------------------\n# Copyright (c) 2017-2023, PyInstaller Development Team.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n#\n# The full license is in the file COPYING.txt, distributed with this software.\n#\n# SPDX-License-Identifier: Apache-2.0\n#-----------------------------------------------------------------------------\n\nimport threading\nimport multiprocessing\nimport multiprocessing.spawn as spawn\n# 'spawn' multiprocessing needs some adjustments on 
osx\nimport os\nimport sys\nfrom subprocess import _args_from_interpreter_flags\n\n# prevent spawn from trying to read __main__ in from the main script\nmultiprocessing.process.ORIGINAL_DIR = None\n\n\ndef _freeze_support():\n # We want to catch the two processes that are spawned by the multiprocessing code:\n # - the semaphore tracker, which cleans up named semaphores in the spawn multiprocessing mode\n # - the fork server, which keeps track of worker processes in forkserver mode.\n # both of these processes are started by spawning a new copy of the running executable, passing it the flags from\n # _args_from_interpreter_flags and then \"-c\" and an import statement.\n # Look for those flags and the import statement, then exec() the code ourselves.\n\n if (\n len(sys.argv) >= 2 and sys.argv[-2] == '-c' and sys.argv[-1].startswith((\n 'from multiprocessing.semaphore_tracker import main', # Py<3.8\n 'from multiprocessing.resource_tracker import main', # Py>=3.8\n 'from multiprocessing.forkserver import main'\n )) and set(sys.argv[1:-2]) == set(_args_from_interpreter_flags())\n ):\n exec(sys.argv[-1])\n sys.exit()\n\n if spawn.is_forking(sys.argv):\n kwds = {}\n for arg in sys.argv[2:]:\n name, value = arg.split('=')\n if value == 'None':\n kwds[name] = None\n else:\n kwds[name] = int(value)\n spawn.spawn_main(**kwds)\n sys.exit()\n\n\nmultiprocessing.freeze_support = spawn.freeze_support = _freeze_support\n\n# Bootloader unsets _MEIPASS2 for child processes to allow running PyInstaller binaries inside pyinstaller binaries.\n# This is ok for mac or unix with fork() system call. 
But on Windows we need to overcome missing fork() function.\n\nif sys.platform.startswith('win'):\n import multiprocessing.popen_spawn_win32 as forking\nelse:\n import multiprocessing.popen_fork as forking\n import multiprocessing.popen_spawn_posix as spawning\n\n\n# Mix-in to re-set _MEIPASS2 from sys._MEIPASS.\nclass FrozenSupportMixIn:\n _lock = threading.Lock()\n\n def __init__(self, *args, **kw):\n # The whole code block needs be executed under a lock to prevent race conditions between `os.putenv` and\n # `os.unsetenv` calls when processes are spawned concurrently from multiple threads. See #7410.\n with self._lock:\n # We have to set original _MEIPASS2 value from sys._MEIPASS to get --onefile mode working.\n os.putenv('_MEIPASS2', sys._MEIPASS) # @UndefinedVariable\n try:\n super().__init__(*args, **kw)\n finally:\n # On some platforms (e.g. AIX) 'os.unsetenv()' is not available. In those cases we cannot delete the\n # variable but only set it to the empty string. The bootloader can handle this case.\n if hasattr(os, 'unsetenv'):\n os.unsetenv('_MEIPASS2')\n else:\n os.putenv('_MEIPASS2', '')\n\n\n# Patch forking.Popen to re-set _MEIPASS2 from sys._MEIPASS.\nclass _Popen(FrozenSupportMixIn, forking.Popen):\n pass\n\n\nforking.Popen = _Popen\n\nif not sys.platform.startswith('win'):\n # Patch spawning.Popen to re-set _MEIPASS2 from sys._MEIPASS.\n class _Spawning_Popen(FrozenSupportMixIn, spawning.Popen):\n pass\n\n spawning.Popen = _Spawning_Popen\n", "path": "PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py"}]} | 2,763 | 402 |

gh_patches_debug_13193 | rasdani/github-patches | git_diff | opensearch-project__opensearch-build-499 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Make plugin integtest.sh run against non-snapshot build

The plugin integtest.sh picks up the OpenSearch version provided in build.gradle, which is 1.1.0-SNAPSHOT. Since the release candidates are non-snapshot build artifacts, make this configurable in the integ test job.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `bundle-workflow/src/paths/script_finder.py`

Content:

```

1 # SPDX-License-Identifier: Apache-2.0
2 #
3 # The OpenSearch Contributors require contributions made to
4 # this file be licensed under the Apache-2.0 license or a
5 # compatible open source license.
6
7 import os
8
9
10 class ScriptFinder:
11     class ScriptNotFoundError(Exception):
12         def __init__(self, kind, paths):
13             self.kind = kind
14             self.paths = paths
15             super().__init__(f"Could not find {kind} script. Looked in {paths}.")
16
17     component_scripts_path = os.path.realpath(
18         os.path.join(
19             os.path.dirname(os.path.abspath(__file__)), "../../scripts/components"
20         )
21     )
22
23     default_scripts_path = os.path.realpath(
24         os.path.join(
25             os.path.dirname(os.path.abspath(__file__)), "../../scripts/default"
26         )
27     )
28
29     """
30     ScriptFinder is a helper that abstracts away the details of where to look for build, test and install scripts.
31
32     For build.sh and integtest.sh scripts, given a component name and a checked-out Git repository,
33     it will look in the following locations, in order:
34     * Root of the Git repository
35     * /scripts/<script-name> in the Git repository
36     * <component_scripts_path>/<component_name>/<script-name>
37     * <default_scripts_path>/<script-name>
38
39     For install.sh scripts, given a component name, it will look in the following locations, in order:
40     * <component_scripts_path>/<component_name>/<script-name>
41     * <default_scripts_path>/<script-name>
42     """
43
44     @classmethod
45     def __find_script(cls, name, paths):
46         script = next(filter(lambda path: os.path.exists(path), paths), None)
47         if script is None:
48             raise ScriptFinder.ScriptNotFoundError(name, paths)
49         return script
50
51     @classmethod
52     def find_build_script(cls, component_name, git_dir):
53         paths = [
54             os.path.realpath(os.path.join(git_dir, "build.sh")),
55             os.path.realpath(os.path.join(git_dir, "scripts/build.sh")),
56             os.path.realpath(
57                 os.path.join(cls.component_scripts_path, component_name, "build.sh")
58             ),
59             os.path.realpath(os.path.join(cls.default_scripts_path, "build.sh")),
60         ]
61
62         return cls.__find_script("build.sh", paths)
63
64     @classmethod
65     def find_integ_test_script(cls, component_name, git_dir):
66         paths = [
67             os.path.realpath(os.path.join(git_dir, "integtest.sh")),
68             os.path.realpath(os.path.join(git_dir, "scripts/integtest.sh")),
69             os.path.realpath(
70                 os.path.join(cls.component_scripts_path, component_name, "integtest.sh")
71             ),
72             os.path.realpath(os.path.join(cls.default_scripts_path, "integtest.sh")),
73         ]
74
75         return cls.__find_script("integtest.sh", paths)
76
77     @classmethod
78     def find_install_script(cls, component_name):
79         paths = [
80             os.path.realpath(
81                 os.path.join(cls.component_scripts_path, component_name, "install.sh")
82             ),
83             os.path.realpath(os.path.join(cls.default_scripts_path, "install.sh")),
84         ]
85
86         return cls.__find_script("install.sh", paths)
87

```
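The lookup order described in the `ScriptFinder` docstring amounts to "the first candidate path that exists wins". A minimal sketch of that rule is shown below, assuming a temporary directory layout invented for the example; `first_existing` and `demo` are hypothetical names, not part of the repository.

```python
import os
import tempfile


def first_existing(paths):
    """Return the first path that exists on disk, or None if none do."""
    return next(filter(os.path.exists, paths), None)


def demo():
    """Build a tiny layout where only the default script exists."""
    with tempfile.TemporaryDirectory() as root:
        repo_script = os.path.join(root, "repo", "build.sh")  # never created
        default_script = os.path.join(root, "default", "build.sh")
        os.makedirs(os.path.dirname(default_script))
        open(default_script, "w").close()
        # Candidates are listed from most specific to least specific.
        found = first_existing([repo_script, default_script])
        return os.path.relpath(found, root)
```

Since earlier entries take priority, removing or reordering entries in the candidate list changes which script is picked without touching the search function itself.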

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/bundle-workflow/src/paths/script_finder.py b/bundle-workflow/src/paths/script_finder.py

--- a/bundle-workflow/src/paths/script_finder.py
+++ b/bundle-workflow/src/paths/script_finder.py
@@ -64,8 +64,9 @@
     @classmethod
     def find_integ_test_script(cls, component_name, git_dir):
         paths = [
-            os.path.realpath(os.path.join(git_dir, "integtest.sh")),
-            os.path.realpath(os.path.join(git_dir, "scripts/integtest.sh")),
+            # TODO: Uncomment this after the integtest.sh tool is removed from plugin repos. See issue #497
+            # os.path.realpath(os.path.join(git_dir, "integtest.sh")),
+            # os.path.realpath(os.path.join(git_dir, "scripts/integtest.sh")),
             os.path.realpath(
                 os.path.join(cls.component_scripts_path, component_name, "integtest.sh")
             ),

| {"golden_diff": "diff --git a/bundle-workflow/src/paths/script_finder.py b/bundle-workflow/src/paths/script_finder.py\n--- a/bundle-workflow/src/paths/script_finder.py\n+++ b/bundle-workflow/src/paths/script_finder.py\n@@ -64,8 +64,9 @@\n @classmethod\n def find_integ_test_script(cls, component_name, git_dir):\n paths = [\n- os.path.realpath(os.path.join(git_dir, \"integtest.sh\")),\n- os.path.realpath(os.path.join(git_dir, \"scripts/integtest.sh\")),\n+ # TODO: Uncomment this after the integtest.sh tool is removed from plugin repos. See issue #497\n+ # os.path.realpath(os.path.join(git_dir, \"integtest.sh\")),\n+ # os.path.realpath(os.path.join(git_dir, \"scripts/integtest.sh\")),\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"integtest.sh\")\n ),\n", "issue": "Make plugin integtest.sh run against non-snapshot build\nThe plugin integtest.sh picks up the opensearch version provided in build.gradle, which is 1.1.0-SNAPSHOT. Since the release candidates are non snapshot built artifacts, make this configurable in integ test job\n", "before_files": [{"content": "# SPDX-License-Identifier: Apache-2.0\n#\n# The OpenSearch Contributors require contributions made to\n# this file be licensed under the Apache-2.0 license or a\n# compatible open source license.\n\nimport os\n\n\nclass ScriptFinder:\n class ScriptNotFoundError(Exception):\n def __init__(self, kind, paths):\n self.kind = kind\n self.paths = paths\n super().__init__(f\"Could not find {kind} script. 
Looked in {paths}.\")\n\n component_scripts_path = os.path.realpath(\n os.path.join(\n os.path.dirname(os.path.abspath(__file__)), \"../../scripts/components\"\n )\n )\n\n default_scripts_path = os.path.realpath(\n os.path.join(\n os.path.dirname(os.path.abspath(__file__)), \"../../scripts/default\"\n )\n )\n\n \"\"\"\n ScriptFinder is a helper that abstracts away the details of where to look for build, test and install scripts.\n\n For build.sh and integtest.sh scripts, given a component name and a checked-out Git repository,\n it will look in the following locations, in order:\n * Root of the Git repository\n * /scripts/<script-name> in the Git repository\n * <component_scripts_path>/<component_name>/<script-name>\n * <default_scripts_path>/<script-name>\n\n For install.sh scripts, given a component name, it will look in the following locations, in order:\n * <component_scripts_path>/<component_name>/<script-name>\n * <default_scripts_path>/<script-name>\n \"\"\"\n\n @classmethod\n def __find_script(cls, name, paths):\n script = next(filter(lambda path: os.path.exists(path), paths), None)\n if script is None:\n raise ScriptFinder.ScriptNotFoundError(name, paths)\n return script\n\n @classmethod\n def find_build_script(cls, component_name, git_dir):\n paths = [\n os.path.realpath(os.path.join(git_dir, \"build.sh\")),\n os.path.realpath(os.path.join(git_dir, \"scripts/build.sh\")),\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"build.sh\")\n ),\n os.path.realpath(os.path.join(cls.default_scripts_path, \"build.sh\")),\n ]\n\n return cls.__find_script(\"build.sh\", paths)\n\n @classmethod\n def find_integ_test_script(cls, component_name, git_dir):\n paths = [\n os.path.realpath(os.path.join(git_dir, \"integtest.sh\")),\n os.path.realpath(os.path.join(git_dir, \"scripts/integtest.sh\")),\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"integtest.sh\")\n ),\n 
os.path.realpath(os.path.join(cls.default_scripts_path, \"integtest.sh\")),\n ]\n\n return cls.__find_script(\"integtest.sh\", paths)\n\n @classmethod\n def find_install_script(cls, component_name):\n paths = [\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"install.sh\")\n ),\n os.path.realpath(os.path.join(cls.default_scripts_path, \"install.sh\")),\n ]\n\n return cls.__find_script(\"install.sh\", paths)\n", "path": "bundle-workflow/src/paths/script_finder.py"}], "after_files": [{"content": "# SPDX-License-Identifier: Apache-2.0\n#\n# The OpenSearch Contributors require contributions made to\n# this file be licensed under the Apache-2.0 license or a\n# compatible open source license.\n\nimport os\n\n\nclass ScriptFinder:\n class ScriptNotFoundError(Exception):\n def __init__(self, kind, paths):\n self.kind = kind\n self.paths = paths\n super().__init__(f\"Could not find {kind} script. Looked in {paths}.\")\n\n component_scripts_path = os.path.realpath(\n os.path.join(\n os.path.dirname(os.path.abspath(__file__)), \"../../scripts/components\"\n )\n )\n\n default_scripts_path = os.path.realpath(\n os.path.join(\n os.path.dirname(os.path.abspath(__file__)), \"../../scripts/default\"\n )\n )\n\n \"\"\"\n ScriptFinder is a helper that abstracts away the details of where to look for build, test and install scripts.\n\n For build.sh and integtest.sh scripts, given a component name and a checked-out Git repository,\n it will look in the following locations, in order:\n * Root of the Git repository\n * /scripts/<script-name> in the Git repository\n * <component_scripts_path>/<component_name>/<script-name>\n * <default_scripts_path>/<script-name>\n\n For install.sh scripts, given a component name, it will look in the following locations, in order:\n * <component_scripts_path>/<component_name>/<script-name>\n * <default_scripts_path>/<script-name>\n \"\"\"\n\n @classmethod\n def __find_script(cls, name, paths):\n script = next(filter(lambda 
path: os.path.exists(path), paths), None)\n if script is None:\n raise ScriptFinder.ScriptNotFoundError(name, paths)\n return script\n\n @classmethod\n def find_build_script(cls, component_name, git_dir):\n paths = [\n os.path.realpath(os.path.join(git_dir, \"build.sh\")),\n os.path.realpath(os.path.join(git_dir, \"scripts/build.sh\")),\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"build.sh\")\n ),\n os.path.realpath(os.path.join(cls.default_scripts_path, \"build.sh\")),\n ]\n\n return cls.__find_script(\"build.sh\", paths)\n\n @classmethod\n def find_integ_test_script(cls, component_name, git_dir):\n paths = [\n # TODO: Uncomment this after the integtest.sh tool is removed from plugin repos. See issue #497\n # os.path.realpath(os.path.join(git_dir, \"integtest.sh\")),\n # os.path.realpath(os.path.join(git_dir, \"scripts/integtest.sh\")),\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"integtest.sh\")\n ),\n os.path.realpath(os.path.join(cls.default_scripts_path, \"integtest.sh\")),\n ]\n\n return cls.__find_script(\"integtest.sh\", paths)\n\n @classmethod\n def find_install_script(cls, component_name):\n paths = [\n os.path.realpath(\n os.path.join(cls.component_scripts_path, component_name, \"install.sh\")\n ),\n os.path.realpath(os.path.join(cls.default_scripts_path, \"install.sh\")),\n ]\n\n return cls.__find_script(\"install.sh\", paths)\n", "path": "bundle-workflow/src/paths/script_finder.py"}]} | 1,165 | 214 |

gh_patches_debug_1486 | rasdani/github-patches | git_diff | certbot__certbot-8776 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix lint and mypy with Python < 3.8

In https://github.com/certbot/certbot/pull/8748, we made a change that causes our lint and mypy tests to need to be run on Python 3.8+ to pass. See https://github.com/certbot/certbot/pull/8748#issuecomment-808790093 for the discussion of the problem here.

I don't think we should do this. Certbot supports Python 3.6+ and I think it could cause a particularly bad experience for new devs that don't happen to know they need Python 3.8+. This change also broke our development Dockerfile as can be seen at https://dev.azure.com/certbot/certbot/_build/results?buildId=3742&view=logs&j=bea2d267-f41e-5b33-7b51-a88065a8cbb0&t=0dc90756-6888-5ee6-5a6a-5855e6b9ae76&l=1873. Instead, I think we should change our approach here so the tests work on all versions of Python we support. I'm open to other ideas, but the two ideas I had for this are:

1. Just declare a runtime dependency on `typing-extensions`.

2. Add `typing-extensions` as a dev/test dependency and try to import it, but use similar fallback code to what we currently have if it's not available.
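
A minimal sketch of what option 2 could look like, assuming the symbol in question is `Protocol` (the golden diff mentions `typing.Protocol`). The final fallback to `object` and the `SupportsClose` class are hypothetical and stand in for whatever fallback code the project actually has:

```python
try:
    # Python 3.8+ ships Protocol in the standard library.
    from typing import Protocol
except ImportError:
    try:
        # Backport for Python 3.6 and 3.7, when typing-extensions is installed.
        from typing_extensions import Protocol
    except ImportError:
        # Assumed last-resort fallback: a plain base class, so that importing
        # the module at runtime still succeeds without the backport.
        Protocol = object  # type: ignore


class SupportsClose(Protocol):
    """Hypothetical structural type, used only for illustration."""

    def close(self) -> None:
        """Release any resources held by the object."""
```

With this shape, type checkers see a real `Protocol` whenever one is importable, and the package does not gain a hard runtime dependency.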

What do you think @adferrand? Are you interested in working on this?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `certbot/setup.py`

Content:

```

1 import codecs

2 from distutils.version import LooseVersion

3 import os

4 import re

5 import sys

6

7 from setuptools import __version__ as setuptools_version

8 from setuptools import find_packages

9 from setuptools import setup

10

11 min_setuptools_version='39.0.1'

12 # This conditional isn't necessary, but it provides better error messages to

13 # people who try to install this package with older versions of setuptools.

14 if LooseVersion(setuptools_version) < LooseVersion(min_setuptools_version):

15 raise RuntimeError(f'setuptools {min_setuptools_version}+ is required')

16

17 # Workaround for https://bugs.python.org/issue8876, see

18 # https://bugs.python.org/issue8876#msg208792

19 # This can be removed when using Python 2.7.9 or later:

20 # https://hg.python.org/cpython/raw-file/v2.7.9/Misc/NEWS

21 if os.path.abspath(__file__).split(os.path.sep)[1] == 'vagrant':

22 del os.link

23

24

25 def read_file(filename, encoding='utf8'):

26 """Read unicode from given file."""

27 with codecs.open(filename, encoding=encoding) as fd:

28 return fd.read()

29

30

31 here = os.path.abspath(os.path.dirname(__file__))

32

33 # read version number (and other metadata) from package init

34 init_fn = os.path.join(here, 'certbot', '__init__.py')

35 meta = dict(re.findall(r"""__([a-z]+)__ = '([^']+)""", read_file(init_fn)))

36

37 readme = read_file(os.path.join(here, 'README.rst'))

38 version = meta['version']

39

40 # This package relies on PyOpenSSL and requests, however, it isn't specified

41 # here to avoid masking the more specific request requirements in acme. See

42 # https://github.com/pypa/pip/issues/988 for more info.

43 install_requires = [

44 'acme>=1.8.0',

45 # We technically need ConfigArgParse 0.10.0 for Python 2.6 support, but

46 # saying so here causes a runtime error against our temporary fork of 0.9.3

47 # in which we added 2.6 support (see #2243), so we relax the requirement.

48 'ConfigArgParse>=0.9.3',

49 'configobj>=5.0.6',

50 'cryptography>=2.1.4',

51 'distro>=1.0.1',

52 # 1.1.0+ is required to avoid the warnings described at

53 # https://github.com/certbot/josepy/issues/13.

54 'josepy>=1.1.0',

55 'parsedatetime>=2.4',

56 'pyrfc3339',

57 'pytz',

58 # This dependency needs to be added using environment markers to avoid its

59 # installation on Linux.

60 'pywin32>=300 ; sys_platform == "win32"',

61 f'setuptools>={min_setuptools_version}',

62 'zope.component',

63 'zope.interface',

64 ]

65

66 dev_extras = [

67 'astroid',

68 'azure-devops',

69 'coverage',

70 'ipdb',

71 'mypy',

72 'PyGithub',

73 # 1.1.0+ is required for poetry to use the poetry-core library for the

74 # build system declared in tools/pinning/pyproject.toml.

75 'poetry>=1.1.0',

76 'pylint',

77 'pytest',

78 'pytest-cov',

79 'pytest-xdist',

80 'tox',

81 'twine',

82 'wheel',

83 ]

84

85 docs_extras = [

86 # If you have Sphinx<1.5.1, you need docutils<0.13.1

87 # https://github.com/sphinx-doc/sphinx/issues/3212

88 'repoze.sphinx.autointerface',

89 'Sphinx>=1.2', # Annotation support

90 'sphinx_rtd_theme',

91 ]

92

93 setup(

94 name='certbot',

95 version=version,

96 description="ACME client",

97 long_description=readme,

98 url='https://github.com/letsencrypt/letsencrypt',

99 author="Certbot Project",

100 author_email='[email protected]',

101 license='Apache License 2.0',

102 python_requires='>=3.6',

103 classifiers=[

104 'Development Status :: 5 - Production/Stable',

105 'Environment :: Console',

106 'Environment :: Console :: Curses',

107 'Intended Audience :: System Administrators',

108 'License :: OSI Approved :: Apache Software License',

109 'Operating System :: POSIX :: Linux',

110 'Programming Language :: Python',

111 'Programming Language :: Python :: 3',

112 'Programming Language :: Python :: 3.6',

113 'Programming Language :: Python :: 3.7',

114 'Programming Language :: Python :: 3.8',

115 'Programming Language :: Python :: 3.9',

116 'Topic :: Internet :: WWW/HTTP',

117 'Topic :: Security',

118 'Topic :: System :: Installation/Setup',

119 'Topic :: System :: Networking',

120 'Topic :: System :: Systems Administration',

121 'Topic :: Utilities',

122 ],

123

124 packages=find_packages(exclude=['docs', 'examples', 'tests', 'venv']),

125 include_package_data=True,

126

127 install_requires=install_requires,

128 extras_require={

129 'dev': dev_extras,

130 'docs': docs_extras,

131 },

132

133 entry_points={

134 'console_scripts': [

135 'certbot = certbot.main:main',

136 ],

137 'certbot.plugins': [

138 'manual = certbot._internal.plugins.manual:Authenticator',

139 'null = certbot._internal.plugins.null:Installer',

140 'standalone = certbot._internal.plugins.standalone:Authenticator',

141 'webroot = certbot._internal.plugins.webroot:Authenticator',

142 ],

143 },

144 )

145

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/certbot/setup.py b/certbot/setup.py

--- a/certbot/setup.py

+++ b/certbot/setup.py

@@ -77,6 +77,9 @@

'pytest',

'pytest-cov',

'pytest-xdist',

+ # typing-extensions is required to import typing.Protocol and make the mypy checks

+ # pass (along with pylint about non-existent objects) on Python 3.6 & 3.7

+ 'typing-extensions',

'tox',

'twine',

'wheel',

| {"golden_diff": "diff --git a/certbot/setup.py b/certbot/setup.py\n--- a/certbot/setup.py\n+++ b/certbot/setup.py\n@@ -77,6 +77,9 @@\n 'pytest',\n 'pytest-cov',\n 'pytest-xdist',\n+ # typing-extensions is required to import typing.Protocol and make the mypy checks\n+ # pass (along with pylint about non-existent objects) on Python 3.6 & 3.7\n+ 'typing-extensions',\n 'tox',\n 'twine',\n 'wheel',\n", "issue": "Fix lint and mypy with Python < 3.8\nIn https://github.com/certbot/certbot/pull/8748, we made a change that causes our lint and mypy tests to need to be run on Python 3.8+ to pass. See https://github.com/certbot/certbot/pull/8748#issuecomment-808790093 for the discussion of the problem here.\r\n\r\nI don't think we should do this. Certbot supports Python 3.6+ and I think it could cause a particularly bad experience for new devs that don't happen to know they need Python 3.8+. This change also broke our development Dockerfile as can be seen at https://dev.azure.com/certbot/certbot/_build/results?buildId=3742&view=logs&j=bea2d267-f41e-5b33-7b51-a88065a8cbb0&t=0dc90756-6888-5ee6-5a6a-5855e6b9ae76&l=1873. Instead, I think we should change our approach here so the tests work on all versions of Python we support. I'm open to other ideas, but the two ideas I had for this are:\r\n\r\n1. Just declare a runtime dependency on `typing-extensions`.\r\n2. Add `typing-extensions` as a dev/test dependency and try to import it, but use similar fallback code to what we current have if it's not available.\r\n\r\nWhat do you think @adferrand? 
Are you interested in working on this?\n", "before_files": [{"content": "import codecs\nfrom distutils.version import LooseVersion\nimport os\nimport re\nimport sys\n\nfrom setuptools import __version__ as setuptools_version\nfrom setuptools import find_packages\nfrom setuptools import setup\n\nmin_setuptools_version='39.0.1'\n# This conditional isn't necessary, but it provides better error messages to\n# people who try to install this package with older versions of setuptools.\nif LooseVersion(setuptools_version) < LooseVersion(min_setuptools_version):\n raise RuntimeError(f'setuptools {min_setuptools_version}+ is required')\n\n# Workaround for https://bugs.python.org/issue8876, see\n# https://bugs.python.org/issue8876#msg208792\n# This can be removed when using Python 2.7.9 or later:\n# https://hg.python.org/cpython/raw-file/v2.7.9/Misc/NEWS\nif os.path.abspath(__file__).split(os.path.sep)[1] == 'vagrant':\n del os.link\n\n\ndef read_file(filename, encoding='utf8'):\n \"\"\"Read unicode from given file.\"\"\"\n with codecs.open(filename, encoding=encoding) as fd:\n return fd.read()\n\n\nhere = os.path.abspath(os.path.dirname(__file__))\n\n# read version number (and other metadata) from package init\ninit_fn = os.path.join(here, 'certbot', '__init__.py')\nmeta = dict(re.findall(r\"\"\"__([a-z]+)__ = '([^']+)\"\"\", read_file(init_fn)))\n\nreadme = read_file(os.path.join(here, 'README.rst'))\nversion = meta['version']\n\n# This package relies on PyOpenSSL and requests, however, it isn't specified\n# here to avoid masking the more specific request requirements in acme. 
See\n# https://github.com/pypa/pip/issues/988 for more info.\ninstall_requires = [\n 'acme>=1.8.0',\n # We technically need ConfigArgParse 0.10.0 for Python 2.6 support, but\n # saying so here causes a runtime error against our temporary fork of 0.9.3\n # in which we added 2.6 support (see #2243), so we relax the requirement.\n 'ConfigArgParse>=0.9.3',\n 'configobj>=5.0.6',\n 'cryptography>=2.1.4',\n 'distro>=1.0.1',\n # 1.1.0+ is required to avoid the warnings described at\n # https://github.com/certbot/josepy/issues/13.\n 'josepy>=1.1.0',\n 'parsedatetime>=2.4',\n 'pyrfc3339',\n 'pytz',\n # This dependency needs to be added using environment markers to avoid its\n # installation on Linux.\n 'pywin32>=300 ; sys_platform == \"win32\"',\n f'setuptools>={min_setuptools_version}',\n 'zope.component',\n 'zope.interface',\n]\n\ndev_extras = [\n 'astroid',\n 'azure-devops',\n 'coverage',\n 'ipdb',\n 'mypy',\n 'PyGithub',\n # 1.1.0+ is required for poetry to use the poetry-core library for the\n # build system declared in tools/pinning/pyproject.toml.\n 'poetry>=1.1.0',\n 'pylint',\n 'pytest',\n 'pytest-cov',\n 'pytest-xdist',\n 'tox',\n 'twine',\n 'wheel',\n]\n\ndocs_extras = [\n # If you have Sphinx<1.5.1, you need docutils<0.13.1\n # https://github.com/sphinx-doc/sphinx/issues/3212\n 'repoze.sphinx.autointerface',\n 'Sphinx>=1.2', # Annotation support\n 'sphinx_rtd_theme',\n]\n\nsetup(\n name='certbot',\n version=version,\n description=\"ACME client\",\n long_description=readme,\n url='https://github.com/letsencrypt/letsencrypt',\n author=\"Certbot Project\",\n author_email='[email protected]',\n license='Apache License 2.0',\n python_requires='>=3.6',\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Environment :: Console',\n 'Environment :: Console :: Curses',\n 'Intended Audience :: System Administrators',\n 'License :: OSI Approved :: Apache Software License',\n 'Operating System :: POSIX :: Linux',\n 'Programming Language :: Python',\n 
'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Programming Language :: Python :: 3.8',\n 'Programming Language :: Python :: 3.9',\n 'Topic :: Internet :: WWW/HTTP',\n 'Topic :: Security',\n 'Topic :: System :: Installation/Setup',\n 'Topic :: System :: Networking',\n 'Topic :: System :: Systems Administration',\n 'Topic :: Utilities',\n ],\n\n packages=find_packages(exclude=['docs', 'examples', 'tests', 'venv']),\n include_package_data=True,\n\n install_requires=install_requires,\n extras_require={\n 'dev': dev_extras,\n 'docs': docs_extras,\n },\n\n entry_points={\n 'console_scripts': [\n 'certbot = certbot.main:main',\n ],\n 'certbot.plugins': [\n 'manual = certbot._internal.plugins.manual:Authenticator',\n 'null = certbot._internal.plugins.null:Installer',\n 'standalone = certbot._internal.plugins.standalone:Authenticator',\n 'webroot = certbot._internal.plugins.webroot:Authenticator',\n ],\n },\n)\n", "path": "certbot/setup.py"}], "after_files": [{"content": "import codecs\nfrom distutils.version import LooseVersion\nimport os\nimport re\nimport sys\n\nfrom setuptools import __version__ as setuptools_version\nfrom setuptools import find_packages\nfrom setuptools import setup\n\nmin_setuptools_version='39.0.1'\n# This conditional isn't necessary, but it provides better error messages to\n# people who try to install this package with older versions of setuptools.\nif LooseVersion(setuptools_version) < LooseVersion(min_setuptools_version):\n raise RuntimeError(f'setuptools {min_setuptools_version}+ is required')\n\n# Workaround for https://bugs.python.org/issue8876, see\n# https://bugs.python.org/issue8876#msg208792\n# This can be removed when using Python 2.7.9 or later:\n# https://hg.python.org/cpython/raw-file/v2.7.9/Misc/NEWS\nif os.path.abspath(__file__).split(os.path.sep)[1] == 'vagrant':\n del os.link\n\n\ndef read_file(filename, encoding='utf8'):\n \"\"\"Read unicode from 
given file.\"\"\"\n with codecs.open(filename, encoding=encoding) as fd:\n return fd.read()\n\n\nhere = os.path.abspath(os.path.dirname(__file__))\n\n# read version number (and other metadata) from package init\ninit_fn = os.path.join(here, 'certbot', '__init__.py')\nmeta = dict(re.findall(r\"\"\"__([a-z]+)__ = '([^']+)\"\"\", read_file(init_fn)))\n\nreadme = read_file(os.path.join(here, 'README.rst'))\nversion = meta['version']\n\n# This package relies on PyOpenSSL and requests, however, it isn't specified\n# here to avoid masking the more specific request requirements in acme. See\n# https://github.com/pypa/pip/issues/988 for more info.\ninstall_requires = [\n 'acme>=1.8.0',\n # We technically need ConfigArgParse 0.10.0 for Python 2.6 support, but\n # saying so here causes a runtime error against our temporary fork of 0.9.3\n # in which we added 2.6 support (see #2243), so we relax the requirement.\n 'ConfigArgParse>=0.9.3',\n 'configobj>=5.0.6',\n 'cryptography>=2.1.4',\n 'distro>=1.0.1',\n # 1.1.0+ is required to avoid the warnings described at\n # https://github.com/certbot/josepy/issues/13.\n 'josepy>=1.1.0',\n 'parsedatetime>=2.4',\n 'pyrfc3339',\n 'pytz',\n # This dependency needs to be added using environment markers to avoid its\n # installation on Linux.\n 'pywin32>=300 ; sys_platform == \"win32\"',\n f'setuptools>={min_setuptools_version}',\n 'zope.component',\n 'zope.interface',\n]\n\ndev_extras = [\n 'astroid',\n 'azure-devops',\n 'coverage',\n 'ipdb',\n 'mypy',\n 'PyGithub',\n # 1.1.0+ is required for poetry to use the poetry-core library for the\n # build system declared in tools/pinning/pyproject.toml.\n 'poetry>=1.1.0',\n 'pylint',\n 'pytest',\n 'pytest-cov',\n 'pytest-xdist',\n # typing-extensions is required to import typing.Protocol and make the mypy checks\n # pass (along with pylint about non-existent objects) on Python 3.6 & 3.7\n 'typing-extensions',\n 'tox',\n 'twine',\n 'wheel',\n]\n\ndocs_extras = [\n # If you have Sphinx<1.5.1, you need 
docutils<0.13.1\n # https://github.com/sphinx-doc/sphinx/issues/3212\n 'repoze.sphinx.autointerface',\n 'Sphinx>=1.2', # Annotation support\n 'sphinx_rtd_theme',\n]\n\nsetup(\n name='certbot',\n version=version,\n description=\"ACME client\",\n long_description=readme,\n url='https://github.com/letsencrypt/letsencrypt',\n author=\"Certbot Project\",\n author_email='[email protected]',\n license='Apache License 2.0',\n python_requires='>=3.6',\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Environment :: Console',\n 'Environment :: Console :: Curses',\n 'Intended Audience :: System Administrators',\n 'License :: OSI Approved :: Apache Software License',\n 'Operating System :: POSIX :: Linux',\n 'Programming Language :: Python',\n 'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Programming Language :: Python :: 3.8',\n 'Programming Language :: Python :: 3.9',\n 'Topic :: Internet :: WWW/HTTP',\n 'Topic :: Security',\n 'Topic :: System :: Installation/Setup',\n 'Topic :: System :: Networking',\n 'Topic :: System :: Systems Administration',\n 'Topic :: Utilities',\n ],\n\n packages=find_packages(exclude=['docs', 'examples', 'tests', 'venv']),\n include_package_data=True,\n\n install_requires=install_requires,\n extras_require={\n 'dev': dev_extras,\n 'docs': docs_extras,\n },\n\n entry_points={\n 'console_scripts': [\n 'certbot = certbot.main:main',\n ],\n 'certbot.plugins': [\n 'manual = certbot._internal.plugins.manual:Authenticator',\n 'null = certbot._internal.plugins.null:Installer',\n 'standalone = certbot._internal.plugins.standalone:Authenticator',\n 'webroot = certbot._internal.plugins.webroot:Authenticator',\n ],\n },\n)\n", "path": "certbot/setup.py"}]} | 2,224 | 125 |

gh_patches_debug_32163 | rasdani/github-patches | git_diff | opsdroid__opsdroid-1183 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add Google Style Docstrings

We should implement Google Style Docstrings for every function, method, and class in opsdroid. This style will support existing documentation and will help in the future by generating documentation automatically.

This will take a fair amount of effort, so more than one contributor can work on this issue. Just make sure that everyone knows what you are working on, so that other contributors don't spend time on the same thing.

If you are unfamiliar with the Google Style Docstrings I'd recommend that you check these resources:

- [Sphinx 1.8.0+ - Google Style Docstrings](https://sphinxcontrib-napoleon.readthedocs.io/en/latest/example_google.html)
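
For orientation, a Google Style docstring looks roughly like the sketch below. The `scale` function is a hypothetical example and not part of opsdroid; only the section layout (`Args`, `Returns`, `Raises`) is the point:

```python
def scale(values, factor=2):
    """Multiply every number in a list by a constant factor.

    Args:
        values (list of float): The numbers to scale.
        factor (float): The multiplier applied to each number. Defaults to 2.

    Returns:
        list of float: A new list holding the scaled numbers.

    Raises:
        TypeError: If ``values`` is not iterable.

    """
    # Build a new list rather than modifying the caller's list in place.
    return [value * factor for value in values]
```

Each section is optional, so a function that returns nothing and raises nothing only needs the summary line and `Args`.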

Docstrings that need to be updated:

- main.py

- [x] configure_lang

- [ ] configure_log

- [ ] get_logging_level

- [ ] check_dependencies

- [ ] print_version

- [ ] print_example_config

- [ ] edit_files

- [x] welcome_message

- ~~helper.py~~

- [x] get_opsdroid

- [x] del_rw

- [x] move_config_to_appdir

- memory.py

- [x] Memory

- [x] get

- [x] put

- [x] _get_from_database

- [x] _put_to_database

- message.py

- [x] Message

- [x] __init__

- [x] _thinking_delay

- [x] _typing delay

- [x] respond

- [x] react

- web.py

- [ ] Web

- [x] get_port

- [x] get_host

- [x] get_ssl_context

- [ ] start

- [ ] build_response

- [ ] web_index_handler

- [ ] web_stats_handler

- matchers.py

- [ ] match_regex

- [ ] match_apiai_action

- [ ] match_apiai_intent

- [ ] match_dialogflow_action

- [ ] match_dialogflow_intent

- [ ] match_luisai_intent

- [ ] match_rasanlu

- [ ] match_recastai

- [ ] match_witai

- [ ] match_crontab

- [ ] match_webhook

- [ ] match_always

- core.py

- [ ] OpsDroid

- [ ] default_connector

- [ ] exit

- [ ] critical

- [ ] call_stop

- [ ] disconnect

- [ ] stop

- [ ] load

- [ ] start_loop

- [x] setup_skills

- [ ] train_parsers

- [ ] start_connector_tasks

- [ ] start_database

- [ ] run_skill

- [ ] get_ranked_skills

- [ ] parse

- loader.py

- [ ] Loader

- [x] import_module_from_spec

- [x] import_module

- [x] check_cache

- [x] build_module_import_path

- [x] build_module_install_path

- [x] git_clone

- [x] git_pull

- [x] pip_install_deps

- [x] create_default_config

- [x] load_config_file

- [ ] envvar_constructor

- [ ] include_constructor

- [x] setup_modules_directory

- [x] load_modules_from_config

- [x] _load_modules

- [x] _install_module

- [x] _update_module

- [ ] _install_git_module

- [x] _install_local_module

---- ORIGINAL POST ----

I've been wondering about this for a while now and I would like to know if we should replace/update all the docstrings in opsdroid with the Google Style doc strings.

I think this could help new and old contributors to contribute and commit to opsdroid, since the Google Style docstrings give more information about every method/function and specify clearly what sort of input the function/method expects, what it will return, and what will be raised (if applicable).

The downside of this style is that the length of every .py file will increase due to the docstrings, but since most IDEs allow you to hide those fields it shouldn't be too bad.

Here is a good example of Google Style docstrings: [Sphinx 1.8.0+ - Google Style Docstrings](http://www.sphinx-doc.org/en/master/ext/example_google.html)

I would like to know what you all think about this idea and if it's worth spending time on it.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `opsdroid/cli/utils.py`

Content:

```

1 """Utilities for the opsdroid CLI commands."""

2

3 import click

4 import gettext

5 import os

6 import logging

7 import subprocess

8 import sys

9 import time

10 import warnings

11

12 from opsdroid.const import (

13 DEFAULT_LOG_FILENAME,

14 LOCALE_DIR,

15 DEFAULT_LANGUAGE,

16 DEFAULT_CONFIG_PATH,

17 )

18

19 _LOGGER = logging.getLogger("opsdroid")

20

21

22 def edit_files(ctx, param, value):

23 """Open config/log file with favourite editor."""

24 if value == "config":

25 file = DEFAULT_CONFIG_PATH

26 if ctx.command.name == "cli":

27 warn_deprecated_cli_option(

28 "The flag -e/--edit-files has been deprecated. "

29 "Please run `opsdroid config edit` instead."

30 )

31 elif value == "log":

32 file = DEFAULT_LOG_FILENAME

33 if ctx.command.name == "cli":

34 warn_deprecated_cli_option(

35 "The flag -l/--view-log has been deprecated. "

36 "Please run `opsdroid logs` instead."

37 )

38 else:

39 return

40

41 editor = os.environ.get("EDITOR", "vi")

42 if editor == "vi":

43 click.echo(

44 "You are about to edit a file in vim. \n"

45 "Read the tutorial on vim at: https://bit.ly/2HRvvrB"

46 )

47 time.sleep(3)

48

49 subprocess.run([editor, file])

50 ctx.exit(0)

51

52

53 def warn_deprecated_cli_option(text):

54 """Warn users that the cli option they have used is deprecated."""

55 print(f"Warning: {text}")

56 warnings.warn(text, DeprecationWarning)

57

58

59 def configure_lang(config):

60 """Configure app language based on user config.

61

62 Args:

63 config: Language Configuration and it uses ISO 639-1 code.

64 for more info https://en.m.wikipedia.org/wiki/List_of_ISO_639-1_codes

65

66

67 """

68 lang_code = config.get("lang", DEFAULT_LANGUAGE)

69 if lang_code != DEFAULT_LANGUAGE:

70 lang = gettext.translation("opsdroid", LOCALE_DIR, (lang_code,), fallback=True)

71 lang.install()

72

73

74 def check_dependencies():

75 """Check for system dependencies required by opsdroid."""

76 if sys.version_info.major < 3 or sys.version_info.minor < 6:

77 logging.critical(_("Whoops! opsdroid requires python 3.6 or above."))

78 sys.exit(1)

79

80

81 def welcome_message(config):

82 """Add welcome message if set to true in configuration.

83

84 Args:

85 config: config loaded by Loader

86

87 Raises:

88 KeyError: If 'welcome-message' key is not found in configuration file

89

90 """

91 try:

92 if config["welcome-message"]:

93 _LOGGER.info("=" * 40)

94 _LOGGER.info(

95 _(

96 "You can customise your opsdroid by modifying "

97 "your configuration.yaml"

98 )

99 )

100 _LOGGER.info(

101 _("Read more at: " "http://opsdroid.readthedocs.io/#configuration")

102 )

103 _LOGGER.info(_("Watch the Get Started Videos at: " "http://bit.ly/2fnC0Fh"))

104 _LOGGER.info(

105 _(

106 "Install Opsdroid Desktop at: \n"

107 "https://github.com/opsdroid/opsdroid-desktop/"

108 "releases"

109 )

110 )

111 _LOGGER.info("=" * 40)

112 except KeyError:

113 _LOGGER.warning(

114 _("'welcome-message: true/false' is missing in configuration.yaml")

115 )

116

```

Path: `opsdroid/cli/config.py`

Content:

```

1 """The config subcommand for opsdroid cli."""

2

3 import click

4

5 from opsdroid.cli.utils import edit_files, warn_deprecated_cli_option

6 from opsdroid.const import EXAMPLE_CONFIG_FILE

7

8

9 def print_example_config(ctx, param, value):

10 """[Deprecated] Print out the example config."""

11 if not value or ctx.resilient_parsing:

12 return

13 if ctx.command.name == "cli":

14 warn_deprecated_cli_option(

15 "The flag --gen-config has been deprecated. "

16 "Please run `opsdroid config gen` instead."

17 )

18 with open(EXAMPLE_CONFIG_FILE, "r") as conf:

19 click.echo(conf.read())

20 ctx.exit(0)

21

22

23 @click.group()

24 def config():

25 """Subcommands related to opsdroid configuration."""

26

27

28 @config.command()

29 @click.pass_context

30 def gen(ctx):

31 """Print out the example config."""

32 print_example_config(ctx, None, True)

33

34

35 @config.command()

36 @click.pass_context

37 def edit(ctx):

38 """Print out the example config."""

39 edit_files(ctx, None, "config")

40

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/opsdroid/cli/config.py b/opsdroid/cli/config.py

--- a/opsdroid/cli/config.py

+++ b/opsdroid/cli/config.py

@@ -7,7 +7,19 @@

def print_example_config(ctx, param, value):

- """[Deprecated] Print out the example config."""

+ """[Deprecated] Print out the example config.

+

+ Args:

+ ctx (:obj:`click.Context`): The current click cli context.

+ param (dict): a dictionary of all parameters pass to the click

+ context when invoking this function as a callback.

+ value (bool): the value of this parameter after invocation.

+ Defaults to False, set to True when this flag is called.

+

+ Returns:

+ int: the exit code. Always returns 0 in this case.

+

+ """

if not value or ctx.resilient_parsing:

return

if ctx.command.name == "cli":

diff --git a/opsdroid/cli/utils.py b/opsdroid/cli/utils.py

--- a/opsdroid/cli/utils.py

+++ b/opsdroid/cli/utils.py

@@ -20,7 +20,21 @@

def edit_files(ctx, param, value):

- """Open config/log file with favourite editor."""

+ """Open config/log file with favourite editor.

+

+ Args:

+ ctx (:obj:`click.Context`): The current click cli context.

+ param (dict): a dictionary of all parameters pass to the click

+ context when invoking this function as a callback.

+ value (string): the value of this parameter after invocation.

+ It is either "config" or "log" depending on the program

+ calling this function.

+

+ Returns:

+ int: the exit code. Always returns 0 in this case.

+

+ """

+

if value == "config":

file = DEFAULT_CONFIG_PATH

if ctx.command.name == "cli":

@@ -72,7 +86,13 @@

def check_dependencies():

- """Check for system dependencies required by opsdroid."""

+ """Check for system dependencies required by opsdroid.

+

+ Returns:

+ int: the exit code. Returns 1 if the Python version installed is

+ below 3.6.

+

+ """

if sys.version_info.major < 3 or sys.version_info.minor < 6:

logging.critical(_("Whoops! opsdroid requires python 3.6 or above."))

sys.exit(1)