| problem_id (string, lengths 18-22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13-58) | prompt (string, lengths 1.1k-25.4k) | golden_diff (string, lengths 145-5.13k) | verification_info (string, lengths 582-39.1k) | num_tokens (int64, 271-4.1k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_17942

|

rasdani/github-patches

|

git_diff

|

open-mmlab__mmocr-334

|

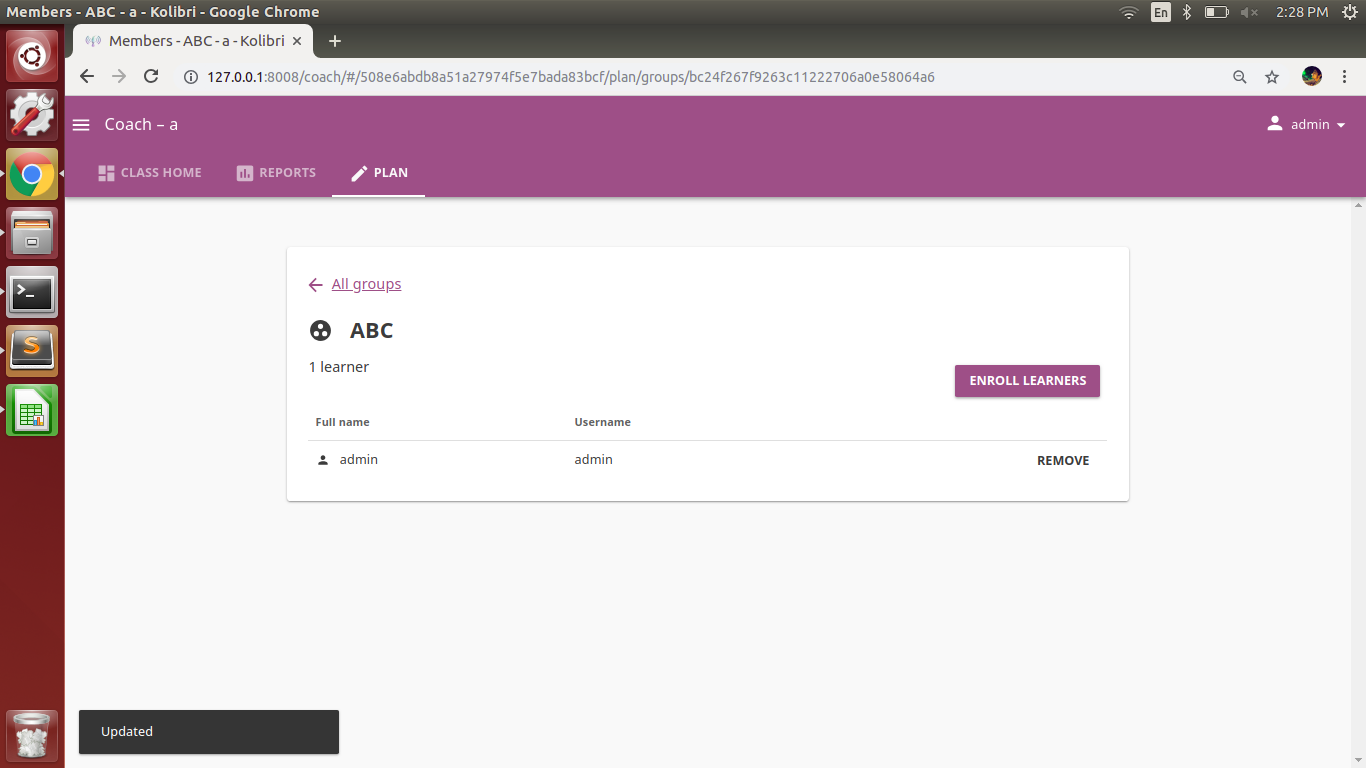

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

When I want to train a fcenet, I met a problem

It did run, but several epoch,there note that data not on the gpu.

how to solve it?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mmocr/models/textdet/losses/fce_loss.py`

Content:

```

1 import numpy as np

2 import torch

3 import torch.nn.functional as F

4 from mmdet.core import multi_apply

5 from mmdet.models.builder import LOSSES

6 from torch import nn

7

8

9 @LOSSES.register_module()

10 class FCELoss(nn.Module):

11 """The class for implementing FCENet loss

12 FCENet(CVPR2021): Fourier Contour Embedding for Arbitrary-shaped

13 Text Detection

14

15 [https://arxiv.org/abs/2104.10442]

16

17 Args:

18 fourier_degree (int) : The maximum Fourier transform degree k.

19 num_sample (int) : The sampling points number of regression

20 loss. If it is too small, fcenet tends to be overfitting.

21 ohem_ratio (float): the negative/positive ratio in OHEM.

22 """

23

24 def __init__(self, fourier_degree, num_sample, ohem_ratio=3.):

25 super().__init__()

26 self.fourier_degree = fourier_degree

27 self.num_sample = num_sample

28 self.ohem_ratio = ohem_ratio

29

30 def forward(self, preds, _, p3_maps, p4_maps, p5_maps):

31 assert isinstance(preds, list)

32 assert p3_maps[0].shape[0] == 4 * self.fourier_degree + 5,\

33 'fourier degree not equal in FCEhead and FCEtarget'

34

35 device = preds[0][0].device

36 # to tensor

37 gts = [p3_maps, p4_maps, p5_maps]

38 for idx, maps in enumerate(gts):

39 gts[idx] = torch.from_numpy(np.stack(maps)).float().to(device)

40

41 losses = multi_apply(self.forward_single, preds, gts)

42

43 loss_tr = torch.tensor(0., device=device).float()

44 loss_tcl = torch.tensor(0., device=device).float()

45 loss_reg_x = torch.tensor(0., device=device).float()

46 loss_reg_y = torch.tensor(0., device=device).float()

47

48 for idx, loss in enumerate(losses):

49 if idx == 0:

50 loss_tr += sum(loss)

51 elif idx == 1:

52 loss_tcl += sum(loss)

53 elif idx == 2:

54 loss_reg_x += sum(loss)

55 else:

56 loss_reg_y += sum(loss)

57

58 results = dict(

59 loss_text=loss_tr,

60 loss_center=loss_tcl,

61 loss_reg_x=loss_reg_x,

62 loss_reg_y=loss_reg_y,

63 )

64

65 return results

66

67 def forward_single(self, pred, gt):

68 cls_pred = pred[0].permute(0, 2, 3, 1).contiguous()

69 reg_pred = pred[1].permute(0, 2, 3, 1).contiguous()

70 gt = gt.permute(0, 2, 3, 1).contiguous()

71

72 k = 2 * self.fourier_degree + 1

73 tr_pred = cls_pred[:, :, :, :2].view(-1, 2)

74 tcl_pred = cls_pred[:, :, :, 2:].view(-1, 2)

75 x_pred = reg_pred[:, :, :, 0:k].view(-1, k)

76 y_pred = reg_pred[:, :, :, k:2 * k].view(-1, k)

77

78 tr_mask = gt[:, :, :, :1].view(-1)

79 tcl_mask = gt[:, :, :, 1:2].view(-1)

80 train_mask = gt[:, :, :, 2:3].view(-1)

81 x_map = gt[:, :, :, 3:3 + k].view(-1, k)

82 y_map = gt[:, :, :, 3 + k:].view(-1, k)

83

84 tr_train_mask = train_mask * tr_mask

85 device = x_map.device

86 # tr loss

87 loss_tr = self.ohem(tr_pred, tr_mask.long(), train_mask.long())

88

89 # tcl loss

90 loss_tcl = torch.tensor(0.).float().to(device)

91 tr_neg_mask = 1 - tr_train_mask

92 if tr_train_mask.sum().item() > 0:

93 loss_tcl_pos = F.cross_entropy(

94 tcl_pred[tr_train_mask.bool()],

95 tcl_mask[tr_train_mask.bool()].long())

96 loss_tcl_neg = F.cross_entropy(tcl_pred[tr_neg_mask.bool()],

97 tcl_mask[tr_neg_mask.bool()].long())

98 loss_tcl = loss_tcl_pos + 0.5 * loss_tcl_neg

99

100 # regression loss

101 loss_reg_x = torch.tensor(0.).float().to(device)

102 loss_reg_y = torch.tensor(0.).float().to(device)

103 if tr_train_mask.sum().item() > 0:

104 weight = (tr_mask[tr_train_mask.bool()].float() +

105 tcl_mask[tr_train_mask.bool()].float()) / 2

106 weight = weight.contiguous().view(-1, 1)

107

108 ft_x, ft_y = self.fourier2poly(x_map, y_map)

109 ft_x_pre, ft_y_pre = self.fourier2poly(x_pred, y_pred)

110

111 loss_reg_x = torch.mean(weight * F.smooth_l1_loss(

112 ft_x_pre[tr_train_mask.bool()],

113 ft_x[tr_train_mask.bool()],

114 reduction='none'))

115 loss_reg_y = torch.mean(weight * F.smooth_l1_loss(

116 ft_y_pre[tr_train_mask.bool()],

117 ft_y[tr_train_mask.bool()],

118 reduction='none'))

119

120 return loss_tr, loss_tcl, loss_reg_x, loss_reg_y

121

122 def ohem(self, predict, target, train_mask):

123 pos = (target * train_mask).bool()

124 neg = ((1 - target) * train_mask).bool()

125

126 n_pos = pos.float().sum()

127

128 if n_pos.item() > 0:

129 loss_pos = F.cross_entropy(

130 predict[pos], target[pos], reduction='sum')

131 loss_neg = F.cross_entropy(

132 predict[neg], target[neg], reduction='none')

133 n_neg = min(

134 int(neg.float().sum().item()),

135 int(self.ohem_ratio * n_pos.float()))

136 else:

137 loss_pos = torch.tensor(0.)

138 loss_neg = F.cross_entropy(

139 predict[neg], target[neg], reduction='none')

140 n_neg = 100

141 if len(loss_neg) > n_neg:

142 loss_neg, _ = torch.topk(loss_neg, n_neg)

143

144 return (loss_pos + loss_neg.sum()) / (n_pos + n_neg).float()

145

146 def fourier2poly(self, real_maps, imag_maps):

147 """Transform Fourier coefficient maps to polygon maps.

148

149 Args:

150 real_maps (tensor): A map composed of the real parts of the

151 Fourier coefficients, whose shape is (-1, 2k+1)

152 imag_maps (tensor):A map composed of the imag parts of the

153 Fourier coefficients, whose shape is (-1, 2k+1)

154

155 Returns

156 x_maps (tensor): A map composed of the x value of the polygon

157 represented by n sample points (xn, yn), whose shape is (-1, n)

158 y_maps (tensor): A map composed of the y value of the polygon

159 represented by n sample points (xn, yn), whose shape is (-1, n)

160 """

161

162 device = real_maps.device

163

164 k_vect = torch.arange(

165 -self.fourier_degree,

166 self.fourier_degree + 1,

167 dtype=torch.float,

168 device=device).view(-1, 1)

169 i_vect = torch.arange(

170 0, self.num_sample, dtype=torch.float, device=device).view(1, -1)

171

172 transform_matrix = 2 * np.pi / self.num_sample * torch.mm(

173 k_vect, i_vect)

174

175 x1 = torch.einsum('ak, kn-> an', real_maps,

176 torch.cos(transform_matrix))

177 x2 = torch.einsum('ak, kn-> an', imag_maps,

178 torch.sin(transform_matrix))

179 y1 = torch.einsum('ak, kn-> an', real_maps,

180 torch.sin(transform_matrix))

181 y2 = torch.einsum('ak, kn-> an', imag_maps,

182 torch.cos(transform_matrix))

183

184 x_maps = x1 - x2

185 y_maps = y1 + y2

186

187 return x_maps, y_maps

188

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/mmocr/models/textdet/losses/fce_loss.py b/mmocr/models/textdet/losses/fce_loss.py
--- a/mmocr/models/textdet/losses/fce_loss.py
+++ b/mmocr/models/textdet/losses/fce_loss.py
@@ -120,6 +120,7 @@
         return loss_tr, loss_tcl, loss_reg_x, loss_reg_y
 
     def ohem(self, predict, target, train_mask):
+        device = train_mask.device
         pos = (target * train_mask).bool()
         neg = ((1 - target) * train_mask).bool()
 
@@ -134,7 +135,7 @@
                 int(neg.float().sum().item()),
                 int(self.ohem_ratio * n_pos.float()))
         else:
-            loss_pos = torch.tensor(0.)
+            loss_pos = torch.tensor(0.).to(device)
             loss_neg = F.cross_entropy(
                 predict[neg], target[neg], reduction='none')
             n_neg = 100
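
A note on where the patched line sits: the CPU-only `torch.tensor(0.)` is in the `else` branch of `ohem`, which runs only when `n_pos` is zero, that is, when no positive pixel survives the training mask. That would be consistent with the issue's report that training ran for several epochs before failing. The sketch below mirrors the branch structure with plain Python lists instead of tensors, so it illustrates the selection logic only and cannot reproduce the device error itself. `ohem_loss` and `fallback_neg` are illustrative names, and the placement of the top-k step after the `if`/`else` is an assumption, since the listing above has lost its indentation.

```python
def ohem_loss(neg_losses, pos_losses, ohem_ratio=3.0, fallback_neg=100):
    """Plain-Python sketch of the hard-negative selection in FCELoss.ohem."""
    n_pos = len(pos_losses)
    if n_pos > 0:
        # Keep at most `ohem_ratio` negatives per positive sample.
        n_neg = min(len(neg_losses), int(ohem_ratio * n_pos))
    else:
        # No positives at all: the branch touched by the patch.
        n_neg = fallback_neg

    # Keep only the n_neg largest (hardest) negative losses.
    if len(neg_losses) > n_neg:
        hardest = sorted(neg_losses, reverse=True)[:n_neg]
    else:
        hardest = list(neg_losses)

    return (sum(pos_losses) + sum(hardest)) / (n_pos + n_neg)
```

With one positive and four negatives, only the three hardest negatives contribute; with no positives, the denominator falls back to the fixed count of 100.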

|

{"golden_diff": "diff --git a/mmocr/models/textdet/losses/fce_loss.py b/mmocr/models/textdet/losses/fce_loss.py\n--- a/mmocr/models/textdet/losses/fce_loss.py\n+++ b/mmocr/models/textdet/losses/fce_loss.py\n@@ -120,6 +120,7 @@\n return loss_tr, loss_tcl, loss_reg_x, loss_reg_y\n \n def ohem(self, predict, target, train_mask):\n+ device = train_mask.device\n pos = (target * train_mask).bool()\n neg = ((1 - target) * train_mask).bool()\n \n@@ -134,7 +135,7 @@\n int(neg.float().sum().item()),\n int(self.ohem_ratio * n_pos.float()))\n else:\n- loss_pos = torch.tensor(0.)\n+ loss_pos = torch.tensor(0.).to(device)\n loss_neg = F.cross_entropy(\n predict[neg], target[neg], reduction='none')\n n_neg = 100\n", "issue": "When I want to train a fcenet, I met a problem\n\r\nIt did run, but several epoch,there note that data not on the gpu.\r\nhow to solve it?\n", "before_files": [{"content": "import numpy as np\nimport torch\nimport torch.nn.functional as F\nfrom mmdet.core import multi_apply\nfrom mmdet.models.builder import LOSSES\nfrom torch import nn\n\n\n@LOSSES.register_module()\nclass FCELoss(nn.Module):\n \"\"\"The class for implementing FCENet loss\n FCENet(CVPR2021): Fourier Contour Embedding for Arbitrary-shaped\n Text Detection\n\n [https://arxiv.org/abs/2104.10442]\n\n Args:\n fourier_degree (int) : The maximum Fourier transform degree k.\n num_sample (int) : The sampling points number of regression\n loss. 
If it is too small, fcenet tends to be overfitting.\n ohem_ratio (float): the negative/positive ratio in OHEM.\n \"\"\"\n\n def __init__(self, fourier_degree, num_sample, ohem_ratio=3.):\n super().__init__()\n self.fourier_degree = fourier_degree\n self.num_sample = num_sample\n self.ohem_ratio = ohem_ratio\n\n def forward(self, preds, _, p3_maps, p4_maps, p5_maps):\n assert isinstance(preds, list)\n assert p3_maps[0].shape[0] == 4 * self.fourier_degree + 5,\\\n 'fourier degree not equal in FCEhead and FCEtarget'\n\n device = preds[0][0].device\n # to tensor\n gts = [p3_maps, p4_maps, p5_maps]\n for idx, maps in enumerate(gts):\n gts[idx] = torch.from_numpy(np.stack(maps)).float().to(device)\n\n losses = multi_apply(self.forward_single, preds, gts)\n\n loss_tr = torch.tensor(0., device=device).float()\n loss_tcl = torch.tensor(0., device=device).float()\n loss_reg_x = torch.tensor(0., device=device).float()\n loss_reg_y = torch.tensor(0., device=device).float()\n\n for idx, loss in enumerate(losses):\n if idx == 0:\n loss_tr += sum(loss)\n elif idx == 1:\n loss_tcl += sum(loss)\n elif idx == 2:\n loss_reg_x += sum(loss)\n else:\n loss_reg_y += sum(loss)\n\n results = dict(\n loss_text=loss_tr,\n loss_center=loss_tcl,\n loss_reg_x=loss_reg_x,\n loss_reg_y=loss_reg_y,\n )\n\n return results\n\n def forward_single(self, pred, gt):\n cls_pred = pred[0].permute(0, 2, 3, 1).contiguous()\n reg_pred = pred[1].permute(0, 2, 3, 1).contiguous()\n gt = gt.permute(0, 2, 3, 1).contiguous()\n\n k = 2 * self.fourier_degree + 1\n tr_pred = cls_pred[:, :, :, :2].view(-1, 2)\n tcl_pred = cls_pred[:, :, :, 2:].view(-1, 2)\n x_pred = reg_pred[:, :, :, 0:k].view(-1, k)\n y_pred = reg_pred[:, :, :, k:2 * k].view(-1, k)\n\n tr_mask = gt[:, :, :, :1].view(-1)\n tcl_mask = gt[:, :, :, 1:2].view(-1)\n train_mask = gt[:, :, :, 2:3].view(-1)\n x_map = gt[:, :, :, 3:3 + k].view(-1, k)\n y_map = gt[:, :, :, 3 + k:].view(-1, k)\n\n tr_train_mask = train_mask * tr_mask\n device = x_map.device\n # 
tr loss\n loss_tr = self.ohem(tr_pred, tr_mask.long(), train_mask.long())\n\n # tcl loss\n loss_tcl = torch.tensor(0.).float().to(device)\n tr_neg_mask = 1 - tr_train_mask\n if tr_train_mask.sum().item() > 0:\n loss_tcl_pos = F.cross_entropy(\n tcl_pred[tr_train_mask.bool()],\n tcl_mask[tr_train_mask.bool()].long())\n loss_tcl_neg = F.cross_entropy(tcl_pred[tr_neg_mask.bool()],\n tcl_mask[tr_neg_mask.bool()].long())\n loss_tcl = loss_tcl_pos + 0.5 * loss_tcl_neg\n\n # regression loss\n loss_reg_x = torch.tensor(0.).float().to(device)\n loss_reg_y = torch.tensor(0.).float().to(device)\n if tr_train_mask.sum().item() > 0:\n weight = (tr_mask[tr_train_mask.bool()].float() +\n tcl_mask[tr_train_mask.bool()].float()) / 2\n weight = weight.contiguous().view(-1, 1)\n\n ft_x, ft_y = self.fourier2poly(x_map, y_map)\n ft_x_pre, ft_y_pre = self.fourier2poly(x_pred, y_pred)\n\n loss_reg_x = torch.mean(weight * F.smooth_l1_loss(\n ft_x_pre[tr_train_mask.bool()],\n ft_x[tr_train_mask.bool()],\n reduction='none'))\n loss_reg_y = torch.mean(weight * F.smooth_l1_loss(\n ft_y_pre[tr_train_mask.bool()],\n ft_y[tr_train_mask.bool()],\n reduction='none'))\n\n return loss_tr, loss_tcl, loss_reg_x, loss_reg_y\n\n def ohem(self, predict, target, train_mask):\n pos = (target * train_mask).bool()\n neg = ((1 - target) * train_mask).bool()\n\n n_pos = pos.float().sum()\n\n if n_pos.item() > 0:\n loss_pos = F.cross_entropy(\n predict[pos], target[pos], reduction='sum')\n loss_neg = F.cross_entropy(\n predict[neg], target[neg], reduction='none')\n n_neg = min(\n int(neg.float().sum().item()),\n int(self.ohem_ratio * n_pos.float()))\n else:\n loss_pos = torch.tensor(0.)\n loss_neg = F.cross_entropy(\n predict[neg], target[neg], reduction='none')\n n_neg = 100\n if len(loss_neg) > n_neg:\n loss_neg, _ = torch.topk(loss_neg, n_neg)\n\n return (loss_pos + loss_neg.sum()) / (n_pos + n_neg).float()\n\n def fourier2poly(self, real_maps, imag_maps):\n \"\"\"Transform Fourier coefficient maps to 
polygon maps.\n\n Args:\n real_maps (tensor): A map composed of the real parts of the\n Fourier coefficients, whose shape is (-1, 2k+1)\n imag_maps (tensor):A map composed of the imag parts of the\n Fourier coefficients, whose shape is (-1, 2k+1)\n\n Returns\n x_maps (tensor): A map composed of the x value of the polygon\n represented by n sample points (xn, yn), whose shape is (-1, n)\n y_maps (tensor): A map composed of the y value of the polygon\n represented by n sample points (xn, yn), whose shape is (-1, n)\n \"\"\"\n\n device = real_maps.device\n\n k_vect = torch.arange(\n -self.fourier_degree,\n self.fourier_degree + 1,\n dtype=torch.float,\n device=device).view(-1, 1)\n i_vect = torch.arange(\n 0, self.num_sample, dtype=torch.float, device=device).view(1, -1)\n\n transform_matrix = 2 * np.pi / self.num_sample * torch.mm(\n k_vect, i_vect)\n\n x1 = torch.einsum('ak, kn-> an', real_maps,\n torch.cos(transform_matrix))\n x2 = torch.einsum('ak, kn-> an', imag_maps,\n torch.sin(transform_matrix))\n y1 = torch.einsum('ak, kn-> an', real_maps,\n torch.sin(transform_matrix))\n y2 = torch.einsum('ak, kn-> an', imag_maps,\n torch.cos(transform_matrix))\n\n x_maps = x1 - x2\n y_maps = y1 + y2\n\n return x_maps, y_maps\n", "path": "mmocr/models/textdet/losses/fce_loss.py"}], "after_files": [{"content": "import numpy as np\nimport torch\nimport torch.nn.functional as F\nfrom mmdet.core import multi_apply\nfrom mmdet.models.builder import LOSSES\nfrom torch import nn\n\n\n@LOSSES.register_module()\nclass FCELoss(nn.Module):\n \"\"\"The class for implementing FCENet loss\n FCENet(CVPR2021): Fourier Contour Embedding for Arbitrary-shaped\n Text Detection\n\n [https://arxiv.org/abs/2104.10442]\n\n Args:\n fourier_degree (int) : The maximum Fourier transform degree k.\n num_sample (int) : The sampling points number of regression\n loss. 
If it is too small, fcenet tends to be overfitting.\n ohem_ratio (float): the negative/positive ratio in OHEM.\n \"\"\"\n\n def __init__(self, fourier_degree, num_sample, ohem_ratio=3.):\n super().__init__()\n self.fourier_degree = fourier_degree\n self.num_sample = num_sample\n self.ohem_ratio = ohem_ratio\n\n def forward(self, preds, _, p3_maps, p4_maps, p5_maps):\n assert isinstance(preds, list)\n assert p3_maps[0].shape[0] == 4 * self.fourier_degree + 5,\\\n 'fourier degree not equal in FCEhead and FCEtarget'\n\n device = preds[0][0].device\n # to tensor\n gts = [p3_maps, p4_maps, p5_maps]\n for idx, maps in enumerate(gts):\n gts[idx] = torch.from_numpy(np.stack(maps)).float().to(device)\n\n losses = multi_apply(self.forward_single, preds, gts)\n\n loss_tr = torch.tensor(0., device=device).float()\n loss_tcl = torch.tensor(0., device=device).float()\n loss_reg_x = torch.tensor(0., device=device).float()\n loss_reg_y = torch.tensor(0., device=device).float()\n\n for idx, loss in enumerate(losses):\n if idx == 0:\n loss_tr += sum(loss)\n elif idx == 1:\n loss_tcl += sum(loss)\n elif idx == 2:\n loss_reg_x += sum(loss)\n else:\n loss_reg_y += sum(loss)\n\n results = dict(\n loss_text=loss_tr,\n loss_center=loss_tcl,\n loss_reg_x=loss_reg_x,\n loss_reg_y=loss_reg_y,\n )\n\n return results\n\n def forward_single(self, pred, gt):\n cls_pred = pred[0].permute(0, 2, 3, 1).contiguous()\n reg_pred = pred[1].permute(0, 2, 3, 1).contiguous()\n gt = gt.permute(0, 2, 3, 1).contiguous()\n\n k = 2 * self.fourier_degree + 1\n tr_pred = cls_pred[:, :, :, :2].view(-1, 2)\n tcl_pred = cls_pred[:, :, :, 2:].view(-1, 2)\n x_pred = reg_pred[:, :, :, 0:k].view(-1, k)\n y_pred = reg_pred[:, :, :, k:2 * k].view(-1, k)\n\n tr_mask = gt[:, :, :, :1].view(-1)\n tcl_mask = gt[:, :, :, 1:2].view(-1)\n train_mask = gt[:, :, :, 2:3].view(-1)\n x_map = gt[:, :, :, 3:3 + k].view(-1, k)\n y_map = gt[:, :, :, 3 + k:].view(-1, k)\n\n tr_train_mask = train_mask * tr_mask\n device = x_map.device\n # 
tr loss\n loss_tr = self.ohem(tr_pred, tr_mask.long(), train_mask.long())\n\n # tcl loss\n loss_tcl = torch.tensor(0.).float().to(device)\n tr_neg_mask = 1 - tr_train_mask\n if tr_train_mask.sum().item() > 0:\n loss_tcl_pos = F.cross_entropy(\n tcl_pred[tr_train_mask.bool()],\n tcl_mask[tr_train_mask.bool()].long())\n loss_tcl_neg = F.cross_entropy(tcl_pred[tr_neg_mask.bool()],\n tcl_mask[tr_neg_mask.bool()].long())\n loss_tcl = loss_tcl_pos + 0.5 * loss_tcl_neg\n\n # regression loss\n loss_reg_x = torch.tensor(0.).float().to(device)\n loss_reg_y = torch.tensor(0.).float().to(device)\n if tr_train_mask.sum().item() > 0:\n weight = (tr_mask[tr_train_mask.bool()].float() +\n tcl_mask[tr_train_mask.bool()].float()) / 2\n weight = weight.contiguous().view(-1, 1)\n\n ft_x, ft_y = self.fourier2poly(x_map, y_map)\n ft_x_pre, ft_y_pre = self.fourier2poly(x_pred, y_pred)\n\n loss_reg_x = torch.mean(weight * F.smooth_l1_loss(\n ft_x_pre[tr_train_mask.bool()],\n ft_x[tr_train_mask.bool()],\n reduction='none'))\n loss_reg_y = torch.mean(weight * F.smooth_l1_loss(\n ft_y_pre[tr_train_mask.bool()],\n ft_y[tr_train_mask.bool()],\n reduction='none'))\n\n return loss_tr, loss_tcl, loss_reg_x, loss_reg_y\n\n def ohem(self, predict, target, train_mask):\n device = train_mask.device\n pos = (target * train_mask).bool()\n neg = ((1 - target) * train_mask).bool()\n\n n_pos = pos.float().sum()\n\n if n_pos.item() > 0:\n loss_pos = F.cross_entropy(\n predict[pos], target[pos], reduction='sum')\n loss_neg = F.cross_entropy(\n predict[neg], target[neg], reduction='none')\n n_neg = min(\n int(neg.float().sum().item()),\n int(self.ohem_ratio * n_pos.float()))\n else:\n loss_pos = torch.tensor(0.).to(device)\n loss_neg = F.cross_entropy(\n predict[neg], target[neg], reduction='none')\n n_neg = 100\n if len(loss_neg) > n_neg:\n loss_neg, _ = torch.topk(loss_neg, n_neg)\n\n return (loss_pos + loss_neg.sum()) / (n_pos + n_neg).float()\n\n def fourier2poly(self, real_maps, imag_maps):\n 
\"\"\"Transform Fourier coefficient maps to polygon maps.\n\n Args:\n real_maps (tensor): A map composed of the real parts of the\n Fourier coefficients, whose shape is (-1, 2k+1)\n imag_maps (tensor):A map composed of the imag parts of the\n Fourier coefficients, whose shape is (-1, 2k+1)\n\n Returns\n x_maps (tensor): A map composed of the x value of the polygon\n represented by n sample points (xn, yn), whose shape is (-1, n)\n y_maps (tensor): A map composed of the y value of the polygon\n represented by n sample points (xn, yn), whose shape is (-1, n)\n \"\"\"\n\n device = real_maps.device\n\n k_vect = torch.arange(\n -self.fourier_degree,\n self.fourier_degree + 1,\n dtype=torch.float,\n device=device).view(-1, 1)\n i_vect = torch.arange(\n 0, self.num_sample, dtype=torch.float, device=device).view(1, -1)\n\n transform_matrix = 2 * np.pi / self.num_sample * torch.mm(\n k_vect, i_vect)\n\n x1 = torch.einsum('ak, kn-> an', real_maps,\n torch.cos(transform_matrix))\n x2 = torch.einsum('ak, kn-> an', imag_maps,\n torch.sin(transform_matrix))\n y1 = torch.einsum('ak, kn-> an', real_maps,\n torch.sin(transform_matrix))\n y2 = torch.einsum('ak, kn-> an', imag_maps,\n torch.cos(transform_matrix))\n\n x_maps = x1 - x2\n y_maps = y1 + y2\n\n return x_maps, y_maps\n", "path": "mmocr/models/textdet/losses/fce_loss.py"}]}

| 2,686 | 230 |

gh_patches_debug_43238

|

rasdani/github-patches

|

git_diff

|

openvinotoolkit__datumaro-689

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[WIP]Fix yolo format output path

### Summary

delete 'data' folder from path because it is not generated

### Checklist

<!-- Put an 'x' in all the boxes that apply -->

- [ ] I submit my changes into the `develop` branch

- [ ] I have added description of my changes into [CHANGELOG](https://github.com/openvinotoolkit/datumaro/blob/develop/CHANGELOG.md)

- [ ] I have updated the [documentation](

https://github.com/openvinotoolkit/datumaro/tree/develop/docs) accordingly

- [ ] I have added tests to cover my changes

- [ ] I have [linked related issues](

https://help.github.com/en/github/managing-your-work-on-github/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword)

### License

- [ ] I submit _my code changes_ under the same [MIT License](

https://github.com/openvinotoolkit/datumaro/blob/develop/LICENSE) that covers the project.

Feel free to contact the maintainers if that's a concern.

- [ ] I have updated the license header for each file (see an example below)

```python

# Copyright (C) 2021 Intel Corporation

#

# SPDX-License-Identifier: MIT

```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `datumaro/plugins/yolo_format/converter.py`

Content:

```

1 # Copyright (C) 2019-2022 Intel Corporation

2 #

3 # SPDX-License-Identifier: MIT

4

5 import logging as log

6 import os

7 import os.path as osp

8 from collections import OrderedDict

9

10 from datumaro.components.annotation import AnnotationType, Bbox

11 from datumaro.components.converter import Converter

12 from datumaro.components.dataset import ItemStatus

13 from datumaro.components.errors import MediaTypeError

14 from datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem

15 from datumaro.components.media import Image

16

17 from .format import YoloPath

18

19

20 def _make_yolo_bbox(img_size, box):

21 # https://github.com/pjreddie/darknet/blob/master/scripts/voc_label.py

22 # <x> <y> <width> <height> - values relative to width and height of image

23 # <x> <y> - are center of rectangle

24 x = (box[0] + box[2]) / 2 / img_size[0]

25 y = (box[1] + box[3]) / 2 / img_size[1]

26 w = (box[2] - box[0]) / img_size[0]

27 h = (box[3] - box[1]) / img_size[1]

28 return x, y, w, h

29

30

31 class YoloConverter(Converter):

32 # https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects

33 DEFAULT_IMAGE_EXT = ".jpg"

34

35 def apply(self):

36 extractor = self._extractor

37 save_dir = self._save_dir

38

39 if self._extractor.media_type() and not issubclass(self._extractor.media_type(), Image):

40 raise MediaTypeError("Media type is not an image")

41

42 os.makedirs(save_dir, exist_ok=True)

43

44 if self._save_dataset_meta:

45 self._save_meta_file(self._save_dir)

46

47 label_categories = extractor.categories()[AnnotationType.label]

48 label_ids = {label.name: idx for idx, label in enumerate(label_categories.items)}

49 with open(osp.join(save_dir, "obj.names"), "w", encoding="utf-8") as f:

50 f.writelines("%s\n" % l[0] for l in sorted(label_ids.items(), key=lambda x: x[1]))

51

52 subset_lists = OrderedDict()

53

54 subsets = self._extractor.subsets()

55 pbars = self._ctx.progress_reporter.split(len(subsets))

56 for (subset_name, subset), pbar in zip(subsets.items(), pbars):

57 if not subset_name or subset_name == DEFAULT_SUBSET_NAME:

58 subset_name = YoloPath.DEFAULT_SUBSET_NAME

59 elif subset_name not in YoloPath.SUBSET_NAMES:

60 log.warning(

61 "Skipping subset export '%s'. "

62 "If specified, the only valid names are %s"

63 % (subset_name, ", ".join("'%s'" % s for s in YoloPath.SUBSET_NAMES))

64 )

65 continue

66

67 subset_dir = osp.join(save_dir, "obj_%s_data" % subset_name)

68 os.makedirs(subset_dir, exist_ok=True)

69

70 image_paths = OrderedDict()

71 for item in pbar.iter(subset, desc=f"Exporting '{subset_name}'"):

72 try:

73 if not item.media or not (item.media.has_data or item.media.has_size):

74 raise Exception(

75 "Failed to export item '%s': " "item has no image info" % item.id

76 )

77

78 image_name = self._make_image_filename(item)

79 if self._save_media:

80 if item.media:

81 self._save_image(item, osp.join(subset_dir, image_name))

82 else:

83 log.warning("Item '%s' has no image" % item.id)

84 image_paths[item.id] = osp.join("data", osp.basename(subset_dir), image_name)

85

86 yolo_annotation = self._export_item_annotation(item)

87 annotation_path = osp.join(subset_dir, "%s.txt" % item.id)

88 os.makedirs(osp.dirname(annotation_path), exist_ok=True)

89 with open(annotation_path, "w", encoding="utf-8") as f:

90 f.write(yolo_annotation)

91 except Exception as e:

92 self._report_item_error(e, item_id=(item.id, item.subset))

93

94 subset_list_name = "%s.txt" % subset_name

95 subset_list_path = osp.join(save_dir, subset_list_name)

96 if self._patch and subset_name in self._patch.updated_subsets and not image_paths:

97 if osp.isfile(subset_list_path):

98 os.remove(subset_list_path)

99 continue

100

101 subset_lists[subset_name] = subset_list_name

102 with open(subset_list_path, "w", encoding="utf-8") as f:

103 f.writelines("%s\n" % s for s in image_paths.values())

104

105 with open(osp.join(save_dir, "obj.data"), "w", encoding="utf-8") as f:

106 f.write("classes = %s\n" % len(label_ids))

107

108 for subset_name, subset_list_name in subset_lists.items():

109 f.write("%s = %s\n" % (subset_name, osp.join("data", subset_list_name)))

110

111 f.write("names = %s\n" % osp.join("data", "obj.names"))

112 f.write("backup = backup/\n")

113

114 def _export_item_annotation(self, item):

115 height, width = item.media.size

116

117 yolo_annotation = ""

118

119 for bbox in item.annotations:

120 if not isinstance(bbox, Bbox) or bbox.label is None:

121 continue

122

123 yolo_bb = _make_yolo_bbox((width, height), bbox.points)

124 yolo_bb = " ".join("%.6f" % p for p in yolo_bb)

125 yolo_annotation += "%s %s\n" % (bbox.label, yolo_bb)

126

127 return yolo_annotation

128

129 @classmethod

130 def patch(cls, dataset, patch, save_dir, **kwargs):

131 conv = cls(dataset, save_dir=save_dir, **kwargs)

132 conv._patch = patch

133 conv.apply()

134

135 for (item_id, subset), status in patch.updated_items.items():

136 if status != ItemStatus.removed:

137 item = patch.data.get(item_id, subset)

138 else:

139 item = DatasetItem(item_id, subset=subset)

140

141 if not (status == ItemStatus.removed or not item.media):

142 continue

143

144 if subset == DEFAULT_SUBSET_NAME:

145 subset = YoloPath.DEFAULT_SUBSET_NAME

146 subset_dir = osp.join(save_dir, "obj_%s_data" % subset)

147

148 image_path = osp.join(subset_dir, conv._make_image_filename(item))

149 if osp.isfile(image_path):

150 os.remove(image_path)

151

152 ann_path = osp.join(subset_dir, "%s.txt" % item.id)

153 if osp.isfile(ann_path):

154 os.remove(ann_path)

155

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/datumaro/plugins/yolo_format/converter.py b/datumaro/plugins/yolo_format/converter.py

--- a/datumaro/plugins/yolo_format/converter.py

+++ b/datumaro/plugins/yolo_format/converter.py

@@ -11,8 +11,9 @@

from datumaro.components.converter import Converter

from datumaro.components.dataset import ItemStatus

from datumaro.components.errors import MediaTypeError

-from datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem

+from datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem, IExtractor

from datumaro.components.media import Image

+from datumaro.util import str_to_bool

from .format import YoloPath

@@ -32,6 +33,24 @@

# https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects

DEFAULT_IMAGE_EXT = ".jpg"

+ @classmethod

+ def build_cmdline_parser(cls, **kwargs):

+ parser = super().build_cmdline_parser(**kwargs)

+ parser.add_argument(

+ "--add-path-prefix",

+ default=True,

+ type=str_to_bool,

+ help="Add the 'data/' prefix for paths in the dataset info (default: %(default)s)",

+ )

+ return parser

+

+ def __init__(

+ self, extractor: IExtractor, save_dir: str, *, add_path_prefix: bool = True, **kwargs

+ ) -> None:

+ super().__init__(extractor, save_dir, **kwargs)

+

+ self._prefix = "data" if add_path_prefix else ""

+

def apply(self):

extractor = self._extractor

save_dir = self._save_dir

@@ -81,7 +100,9 @@

self._save_image(item, osp.join(subset_dir, image_name))

else:

log.warning("Item '%s' has no image" % item.id)

- image_paths[item.id] = osp.join("data", osp.basename(subset_dir), image_name)

+ image_paths[item.id] = osp.join(

+ self._prefix, osp.basename(subset_dir), image_name

+ )

yolo_annotation = self._export_item_annotation(item)

annotation_path = osp.join(subset_dir, "%s.txt" % item.id)

@@ -91,7 +112,7 @@

except Exception as e:

self._report_item_error(e, item_id=(item.id, item.subset))

- subset_list_name = "%s.txt" % subset_name

+ subset_list_name = f"{subset_name}.txt"

subset_list_path = osp.join(save_dir, subset_list_name)

if self._patch and subset_name in self._patch.updated_subsets and not image_paths:

if osp.isfile(subset_list_path):

@@ -100,15 +121,18 @@

subset_lists[subset_name] = subset_list_name

with open(subset_list_path, "w", encoding="utf-8") as f:

- f.writelines("%s\n" % s for s in image_paths.values())

+ f.writelines("%s\n" % s.replace("\\", "/") for s in image_paths.values())

with open(osp.join(save_dir, "obj.data"), "w", encoding="utf-8") as f:

- f.write("classes = %s\n" % len(label_ids))

+ f.write(f"classes = {len(label_ids)}\n")

for subset_name, subset_list_name in subset_lists.items():

- f.write("%s = %s\n" % (subset_name, osp.join("data", subset_list_name)))

+ f.write(

+ "%s = %s\n"

+ % (subset_name, osp.join(self._prefix, subset_list_name).replace("\\", "/"))

+ )

- f.write("names = %s\n" % osp.join("data", "obj.names"))

+ f.write("names = %s\n" % osp.join(self._prefix, "obj.names"))

f.write("backup = backup/\n")

def _export_item_annotation(self, item):
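
The effect of the `add_path_prefix` switch in the diff above can be sketched in isolation. The helper name `list_entry` below is hypothetical and not part of Datumaro; it only mirrors the `osp.join(self._prefix, ...)` call and the backslash normalization shown in the patch.

```python
import os.path as osp


def list_entry(subset_dir_name: str, image_name: str, add_path_prefix: bool = True) -> str:
    """Hypothetical helper mirroring how the patched converter builds a list entry."""
    prefix = "data" if add_path_prefix else ""
    # os.path.join skips empty components, so an empty prefix adds no leading separator.
    path = osp.join(prefix, subset_dir_name, image_name)
    # Normalize Windows separators, as the patch does before writing the list file.
    return path.replace("\\", "/")


print(list_entry("obj_train_data", "1.jpg"))
print(list_entry("obj_train_data", "1.jpg", add_path_prefix=False))
```

With the prefix disabled, the entries are relative to the export directory itself, which appears to be what the linked pull request was after when it noted that no `data` folder is generated.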

|

{"golden_diff": "diff --git a/datumaro/plugins/yolo_format/converter.py b/datumaro/plugins/yolo_format/converter.py\n--- a/datumaro/plugins/yolo_format/converter.py\n+++ b/datumaro/plugins/yolo_format/converter.py\n@@ -11,8 +11,9 @@\n from datumaro.components.converter import Converter\n from datumaro.components.dataset import ItemStatus\n from datumaro.components.errors import MediaTypeError\n-from datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem\n+from datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem, IExtractor\n from datumaro.components.media import Image\n+from datumaro.util import str_to_bool\n \n from .format import YoloPath\n \n@@ -32,6 +33,24 @@\n # https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects\n DEFAULT_IMAGE_EXT = \".jpg\"\n \n+ @classmethod\n+ def build_cmdline_parser(cls, **kwargs):\n+ parser = super().build_cmdline_parser(**kwargs)\n+ parser.add_argument(\n+ \"--add-path-prefix\",\n+ default=True,\n+ type=str_to_bool,\n+ help=\"Add the 'data/' prefix for paths in the dataset info (default: %(default)s)\",\n+ )\n+ return parser\n+\n+ def __init__(\n+ self, extractor: IExtractor, save_dir: str, *, add_path_prefix: bool = True, **kwargs\n+ ) -> None:\n+ super().__init__(extractor, save_dir, **kwargs)\n+\n+ self._prefix = \"data\" if add_path_prefix else \"\"\n+\n def apply(self):\n extractor = self._extractor\n save_dir = self._save_dir\n@@ -81,7 +100,9 @@\n self._save_image(item, osp.join(subset_dir, image_name))\n else:\n log.warning(\"Item '%s' has no image\" % item.id)\n- image_paths[item.id] = osp.join(\"data\", osp.basename(subset_dir), image_name)\n+ image_paths[item.id] = osp.join(\n+ self._prefix, osp.basename(subset_dir), image_name\n+ )\n \n yolo_annotation = self._export_item_annotation(item)\n annotation_path = osp.join(subset_dir, \"%s.txt\" % item.id)\n@@ -91,7 +112,7 @@\n except Exception as e:\n self._report_item_error(e, item_id=(item.id, item.subset))\n \n- 
subset_list_name = \"%s.txt\" % subset_name\n+ subset_list_name = f\"{subset_name}.txt\"\n subset_list_path = osp.join(save_dir, subset_list_name)\n if self._patch and subset_name in self._patch.updated_subsets and not image_paths:\n if osp.isfile(subset_list_path):\n@@ -100,15 +121,18 @@\n \n subset_lists[subset_name] = subset_list_name\n with open(subset_list_path, \"w\", encoding=\"utf-8\") as f:\n- f.writelines(\"%s\\n\" % s for s in image_paths.values())\n+ f.writelines(\"%s\\n\" % s.replace(\"\\\\\", \"/\") for s in image_paths.values())\n \n with open(osp.join(save_dir, \"obj.data\"), \"w\", encoding=\"utf-8\") as f:\n- f.write(\"classes = %s\\n\" % len(label_ids))\n+ f.write(f\"classes = {len(label_ids)}\\n\")\n \n for subset_name, subset_list_name in subset_lists.items():\n- f.write(\"%s = %s\\n\" % (subset_name, osp.join(\"data\", subset_list_name)))\n+ f.write(\n+ \"%s = %s\\n\"\n+ % (subset_name, osp.join(self._prefix, subset_list_name).replace(\"\\\\\", \"/\"))\n+ )\n \n- f.write(\"names = %s\\n\" % osp.join(\"data\", \"obj.names\"))\n+ f.write(\"names = %s\\n\" % osp.join(self._prefix, \"obj.names\"))\n f.write(\"backup = backup/\\n\")\n \n def _export_item_annotation(self, item):\n", "issue": "[WIP]Fix yolo format output path\n\r\n\r\n### Summary\r\ndelete 'data' folder from path because it is not generated\r\n\r\n\r\n\r\n### Checklist\r\n<!-- Put an 'x' in all the boxes that apply -->\r\n- [ ] I submit my changes into the `develop` branch\r\n- [ ] I have added description of my changes into [CHANGELOG](https://github.com/openvinotoolkit/datumaro/blob/develop/CHANGELOG.md)\r\n- [ ] I have updated the [documentation](\r\n https://github.com/openvinotoolkit/datumaro/tree/develop/docs) accordingly\r\n- [ ] I have added tests to cover my changes\r\n- [ ] I have [linked related issues](\r\n https://help.github.com/en/github/managing-your-work-on-github/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword)\r\n\r\n### 
License\r\n\r\n- [ ] I submit _my code changes_ under the same [MIT License](\r\n https://github.com/openvinotoolkit/datumaro/blob/develop/LICENSE) that covers the project.\r\n Feel free to contact the maintainers if that's a concern.\r\n- [ ] I have updated the license header for each file (see an example below)\r\n\r\n```python\r\n# Copyright (C) 2021 Intel Corporation\r\n#\r\n# SPDX-License-Identifier: MIT\r\n```\r\n\n", "before_files": [{"content": "# Copyright (C) 2019-2022 Intel Corporation\n#\n# SPDX-License-Identifier: MIT\n\nimport logging as log\nimport os\nimport os.path as osp\nfrom collections import OrderedDict\n\nfrom datumaro.components.annotation import AnnotationType, Bbox\nfrom datumaro.components.converter import Converter\nfrom datumaro.components.dataset import ItemStatus\nfrom datumaro.components.errors import MediaTypeError\nfrom datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem\nfrom datumaro.components.media import Image\n\nfrom .format import YoloPath\n\n\ndef _make_yolo_bbox(img_size, box):\n # https://github.com/pjreddie/darknet/blob/master/scripts/voc_label.py\n # <x> <y> <width> <height> - values relative to width and height of image\n # <x> <y> - are center of rectangle\n x = (box[0] + box[2]) / 2 / img_size[0]\n y = (box[1] + box[3]) / 2 / img_size[1]\n w = (box[2] - box[0]) / img_size[0]\n h = (box[3] - box[1]) / img_size[1]\n return x, y, w, h\n\n\nclass YoloConverter(Converter):\n # https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects\n DEFAULT_IMAGE_EXT = \".jpg\"\n\n def apply(self):\n extractor = self._extractor\n save_dir = self._save_dir\n\n if self._extractor.media_type() and not issubclass(self._extractor.media_type(), Image):\n raise MediaTypeError(\"Media type is not an image\")\n\n os.makedirs(save_dir, exist_ok=True)\n\n if self._save_dataset_meta:\n self._save_meta_file(self._save_dir)\n\n label_categories = extractor.categories()[AnnotationType.label]\n label_ids = 
{label.name: idx for idx, label in enumerate(label_categories.items)}\n with open(osp.join(save_dir, \"obj.names\"), \"w\", encoding=\"utf-8\") as f:\n f.writelines(\"%s\\n\" % l[0] for l in sorted(label_ids.items(), key=lambda x: x[1]))\n\n subset_lists = OrderedDict()\n\n subsets = self._extractor.subsets()\n pbars = self._ctx.progress_reporter.split(len(subsets))\n for (subset_name, subset), pbar in zip(subsets.items(), pbars):\n if not subset_name or subset_name == DEFAULT_SUBSET_NAME:\n subset_name = YoloPath.DEFAULT_SUBSET_NAME\n elif subset_name not in YoloPath.SUBSET_NAMES:\n log.warning(\n \"Skipping subset export '%s'. \"\n \"If specified, the only valid names are %s\"\n % (subset_name, \", \".join(\"'%s'\" % s for s in YoloPath.SUBSET_NAMES))\n )\n continue\n\n subset_dir = osp.join(save_dir, \"obj_%s_data\" % subset_name)\n os.makedirs(subset_dir, exist_ok=True)\n\n image_paths = OrderedDict()\n for item in pbar.iter(subset, desc=f\"Exporting '{subset_name}'\"):\n try:\n if not item.media or not (item.media.has_data or item.media.has_size):\n raise Exception(\n \"Failed to export item '%s': \" \"item has no image info\" % item.id\n )\n\n image_name = self._make_image_filename(item)\n if self._save_media:\n if item.media:\n self._save_image(item, osp.join(subset_dir, image_name))\n else:\n log.warning(\"Item '%s' has no image\" % item.id)\n image_paths[item.id] = osp.join(\"data\", osp.basename(subset_dir), image_name)\n\n yolo_annotation = self._export_item_annotation(item)\n annotation_path = osp.join(subset_dir, \"%s.txt\" % item.id)\n os.makedirs(osp.dirname(annotation_path), exist_ok=True)\n with open(annotation_path, \"w\", encoding=\"utf-8\") as f:\n f.write(yolo_annotation)\n except Exception as e:\n self._report_item_error(e, item_id=(item.id, item.subset))\n\n subset_list_name = \"%s.txt\" % subset_name\n subset_list_path = osp.join(save_dir, subset_list_name)\n if self._patch and subset_name in self._patch.updated_subsets and not 
image_paths:\n if osp.isfile(subset_list_path):\n os.remove(subset_list_path)\n continue\n\n subset_lists[subset_name] = subset_list_name\n with open(subset_list_path, \"w\", encoding=\"utf-8\") as f:\n f.writelines(\"%s\\n\" % s for s in image_paths.values())\n\n with open(osp.join(save_dir, \"obj.data\"), \"w\", encoding=\"utf-8\") as f:\n f.write(\"classes = %s\\n\" % len(label_ids))\n\n for subset_name, subset_list_name in subset_lists.items():\n f.write(\"%s = %s\\n\" % (subset_name, osp.join(\"data\", subset_list_name)))\n\n f.write(\"names = %s\\n\" % osp.join(\"data\", \"obj.names\"))\n f.write(\"backup = backup/\\n\")\n\n def _export_item_annotation(self, item):\n height, width = item.media.size\n\n yolo_annotation = \"\"\n\n for bbox in item.annotations:\n if not isinstance(bbox, Bbox) or bbox.label is None:\n continue\n\n yolo_bb = _make_yolo_bbox((width, height), bbox.points)\n yolo_bb = \" \".join(\"%.6f\" % p for p in yolo_bb)\n yolo_annotation += \"%s %s\\n\" % (bbox.label, yolo_bb)\n\n return yolo_annotation\n\n @classmethod\n def patch(cls, dataset, patch, save_dir, **kwargs):\n conv = cls(dataset, save_dir=save_dir, **kwargs)\n conv._patch = patch\n conv.apply()\n\n for (item_id, subset), status in patch.updated_items.items():\n if status != ItemStatus.removed:\n item = patch.data.get(item_id, subset)\n else:\n item = DatasetItem(item_id, subset=subset)\n\n if not (status == ItemStatus.removed or not item.media):\n continue\n\n if subset == DEFAULT_SUBSET_NAME:\n subset = YoloPath.DEFAULT_SUBSET_NAME\n subset_dir = osp.join(save_dir, \"obj_%s_data\" % subset)\n\n image_path = osp.join(subset_dir, conv._make_image_filename(item))\n if osp.isfile(image_path):\n os.remove(image_path)\n\n ann_path = osp.join(subset_dir, \"%s.txt\" % item.id)\n if osp.isfile(ann_path):\n os.remove(ann_path)\n", "path": "datumaro/plugins/yolo_format/converter.py"}], "after_files": [{"content": "# Copyright (C) 2019-2022 Intel Corporation\n#\n# SPDX-License-Identifier: 
MIT\n\nimport logging as log\nimport os\nimport os.path as osp\nfrom collections import OrderedDict\n\nfrom datumaro.components.annotation import AnnotationType, Bbox\nfrom datumaro.components.converter import Converter\nfrom datumaro.components.dataset import ItemStatus\nfrom datumaro.components.errors import MediaTypeError\nfrom datumaro.components.extractor import DEFAULT_SUBSET_NAME, DatasetItem, IExtractor\nfrom datumaro.components.media import Image\nfrom datumaro.util import str_to_bool\n\nfrom .format import YoloPath\n\n\ndef _make_yolo_bbox(img_size, box):\n # https://github.com/pjreddie/darknet/blob/master/scripts/voc_label.py\n # <x> <y> <width> <height> - values relative to width and height of image\n # <x> <y> - are center of rectangle\n x = (box[0] + box[2]) / 2 / img_size[0]\n y = (box[1] + box[3]) / 2 / img_size[1]\n w = (box[2] - box[0]) / img_size[0]\n h = (box[3] - box[1]) / img_size[1]\n return x, y, w, h\n\n\nclass YoloConverter(Converter):\n # https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects\n DEFAULT_IMAGE_EXT = \".jpg\"\n\n @classmethod\n def build_cmdline_parser(cls, **kwargs):\n parser = super().build_cmdline_parser(**kwargs)\n parser.add_argument(\n \"--add-path-prefix\",\n default=True,\n type=str_to_bool,\n help=\"Add the 'data/' prefix for paths in the dataset info (default: %(default)s)\",\n )\n return parser\n\n def __init__(\n self, extractor: IExtractor, save_dir: str, *, add_path_prefix: bool = True, **kwargs\n ) -> None:\n super().__init__(extractor, save_dir, **kwargs)\n\n self._prefix = \"data\" if add_path_prefix else \"\"\n\n def apply(self):\n extractor = self._extractor\n save_dir = self._save_dir\n\n if self._extractor.media_type() and not issubclass(self._extractor.media_type(), Image):\n raise MediaTypeError(\"Media type is not an image\")\n\n os.makedirs(save_dir, exist_ok=True)\n\n if self._save_dataset_meta:\n self._save_meta_file(self._save_dir)\n\n label_categories = 
extractor.categories()[AnnotationType.label]\n label_ids = {label.name: idx for idx, label in enumerate(label_categories.items)}\n with open(osp.join(save_dir, \"obj.names\"), \"w\", encoding=\"utf-8\") as f:\n f.writelines(\"%s\\n\" % l[0] for l in sorted(label_ids.items(), key=lambda x: x[1]))\n\n subset_lists = OrderedDict()\n\n subsets = self._extractor.subsets()\n pbars = self._ctx.progress_reporter.split(len(subsets))\n for (subset_name, subset), pbar in zip(subsets.items(), pbars):\n if not subset_name or subset_name == DEFAULT_SUBSET_NAME:\n subset_name = YoloPath.DEFAULT_SUBSET_NAME\n elif subset_name not in YoloPath.SUBSET_NAMES:\n log.warning(\n \"Skipping subset export '%s'. \"\n \"If specified, the only valid names are %s\"\n % (subset_name, \", \".join(\"'%s'\" % s for s in YoloPath.SUBSET_NAMES))\n )\n continue\n\n subset_dir = osp.join(save_dir, \"obj_%s_data\" % subset_name)\n os.makedirs(subset_dir, exist_ok=True)\n\n image_paths = OrderedDict()\n for item in pbar.iter(subset, desc=f\"Exporting '{subset_name}'\"):\n try:\n if not item.media or not (item.media.has_data or item.media.has_size):\n raise Exception(\n \"Failed to export item '%s': \" \"item has no image info\" % item.id\n )\n\n image_name = self._make_image_filename(item)\n if self._save_media:\n if item.media:\n self._save_image(item, osp.join(subset_dir, image_name))\n else:\n log.warning(\"Item '%s' has no image\" % item.id)\n image_paths[item.id] = osp.join(\n self._prefix, osp.basename(subset_dir), image_name\n )\n\n yolo_annotation = self._export_item_annotation(item)\n annotation_path = osp.join(subset_dir, \"%s.txt\" % item.id)\n os.makedirs(osp.dirname(annotation_path), exist_ok=True)\n with open(annotation_path, \"w\", encoding=\"utf-8\") as f:\n f.write(yolo_annotation)\n except Exception as e:\n self._report_item_error(e, item_id=(item.id, item.subset))\n\n subset_list_name = f\"{subset_name}.txt\"\n subset_list_path = osp.join(save_dir, subset_list_name)\n if self._patch 
and subset_name in self._patch.updated_subsets and not image_paths:\n if osp.isfile(subset_list_path):\n os.remove(subset_list_path)\n continue\n\n subset_lists[subset_name] = subset_list_name\n with open(subset_list_path, \"w\", encoding=\"utf-8\") as f:\n f.writelines(\"%s\\n\" % s.replace(\"\\\\\", \"/\") for s in image_paths.values())\n\n with open(osp.join(save_dir, \"obj.data\"), \"w\", encoding=\"utf-8\") as f:\n f.write(f\"classes = {len(label_ids)}\\n\")\n\n for subset_name, subset_list_name in subset_lists.items():\n f.write(\n \"%s = %s\\n\"\n % (subset_name, osp.join(self._prefix, subset_list_name).replace(\"\\\\\", \"/\"))\n )\n\n f.write(\"names = %s\\n\" % osp.join(self._prefix, \"obj.names\"))\n f.write(\"backup = backup/\\n\")\n\n def _export_item_annotation(self, item):\n height, width = item.media.size\n\n yolo_annotation = \"\"\n\n for bbox in item.annotations:\n if not isinstance(bbox, Bbox) or bbox.label is None:\n continue\n\n yolo_bb = _make_yolo_bbox((width, height), bbox.points)\n yolo_bb = \" \".join(\"%.6f\" % p for p in yolo_bb)\n yolo_annotation += \"%s %s\\n\" % (bbox.label, yolo_bb)\n\n return yolo_annotation\n\n @classmethod\n def patch(cls, dataset, patch, save_dir, **kwargs):\n conv = cls(dataset, save_dir=save_dir, **kwargs)\n conv._patch = patch\n conv.apply()\n\n for (item_id, subset), status in patch.updated_items.items():\n if status != ItemStatus.removed:\n item = patch.data.get(item_id, subset)\n else:\n item = DatasetItem(item_id, subset=subset)\n\n if not (status == ItemStatus.removed or not item.media):\n continue\n\n if subset == DEFAULT_SUBSET_NAME:\n subset = YoloPath.DEFAULT_SUBSET_NAME\n subset_dir = osp.join(save_dir, \"obj_%s_data\" % subset)\n\n image_path = osp.join(subset_dir, conv._make_image_filename(item))\n if osp.isfile(image_path):\n os.remove(image_path)\n\n ann_path = osp.join(subset_dir, \"%s.txt\" % item.id)\n if osp.isfile(ann_path):\n os.remove(ann_path)\n", "path": 
"datumaro/plugins/yolo_format/converter.py"}]}

| 2,400 | 906 |

gh_patches_debug_18222

|

rasdani/github-patches

|

git_diff

|

streamlit__streamlit-1908

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

st.map() fails with zoom error for small number of points

# Summary

In this [forum post](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950), the user reports that `st.map()` does not plot a single point on the map. To troubleshoot, I opened the Chrome console and saw a "Zoom must be set" error message for the map.

# Solution

Adding a non-`None` zoom value in the `st.map()` call [resolves the issue](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950/3?u=randyzwitch). We need a heuristic for a "small" number of points where `st.map()` automatically sets an integer value for the `zoom` parameter. At larger volumes of data, `zoom=None` seems to be fine, and deck.gl infers the proper zoom level from the bounding box of the data points.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `lib/streamlit/elements/map.py`

Content:

```

1 # Copyright 2018-2020 Streamlit Inc.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """A wrapper for simple PyDeck scatter charts."""

16

17 import copy

18 import json

19 from typing import Any, Dict

20

21 import pandas as pd

22

23 from streamlit.proto.DeckGlJsonChart_pb2 import DeckGlJsonChart as DeckGlJsonChartProto

24 import streamlit.elements.deck_gl_json_chart as deck_gl_json_chart

25 from streamlit.errors import StreamlitAPIException

26

27

28 class MapMixin:

29 def map(dg, data=None, zoom=None, use_container_width=True):

30 """Display a map with points on it.

31

32 This is a wrapper around st.pydeck_chart to quickly create scatterplot

33 charts on top of a map, with auto-centering and auto-zoom.

34

35 When using this command, we advise all users to use a personal Mapbox

36 token. This ensures the map tiles used in this chart are more

37 robust. You can do this with the mapbox.token config option.

38

39 To get a token for yourself, create an account at

40 https://mapbox.com. It's free! (for moderate usage levels) See

41 https://docs.streamlit.io/en/latest/cli.html#view-all-config-options for more

42 info on how to set config options.

43

44 Parameters

45 ----------

46 data : pandas.DataFrame, pandas.Styler, numpy.ndarray, Iterable, dict,

47 or None

48 The data to be plotted. Must have columns called 'lat', 'lon',

49 'latitude', or 'longitude'.

50 zoom : int

51 Zoom level as specified in

52 https://wiki.openstreetmap.org/wiki/Zoom_levels

53

54 Example

55 -------

56 >>> import pandas as pd

57 >>> import numpy as np

58 >>>

59 >>> df = pd.DataFrame(

60 ... np.random.randn(1000, 2) / [50, 50] + [37.76, -122.4],

61 ... columns=['lat', 'lon'])

62 >>>

63 >>> st.map(df)

64

65 .. output::

66 https://share.streamlit.io/0.53.0-SULT/index.html?id=9gTiomqPEbvHY2huTLoQtH

67 height: 600px

68

69 """

70 map_proto = DeckGlJsonChartProto()

71 map_proto.json = to_deckgl_json(data, zoom)

72 map_proto.use_container_width = use_container_width

73 return dg._enqueue("deck_gl_json_chart", map_proto) # type: ignore

74

75

76 # Map used as the basis for st.map.

77 _DEFAULT_MAP = dict(deck_gl_json_chart.EMPTY_MAP) # type: Dict[str, Any]

78 _DEFAULT_MAP["mapStyle"] = "mapbox://styles/mapbox/light-v10"

79

80 # Other default parameters for st.map.

81 _DEFAULT_COLOR = [200, 30, 0, 160]

82 _ZOOM_LEVELS = [

83 360,

84 180,

85 90,

86 45,

87 22.5,

88 11.25,

89 5.625,

90 2.813,

91 1.406,

92 0.703,

93 0.352,

94 0.176,

95 0.088,

96 0.044,

97 0.022,

98 0.011,

99 0.005,

100 0.003,

101 0.001,

102 0.0005,

103 ]

104

105

106 def _get_zoom_level(distance):

107 """Get the zoom level for a given distance in degrees.

108

109 See https://wiki.openstreetmap.org/wiki/Zoom_levels for reference.

110

111 Parameters

112 ----------

113 distance : float

114 How many degrees of longitude should fit in the map.

115

116 Returns

117 -------

118 int

119 The zoom level, from 0 to 29.

120

121 """

122

123 for i in range(len(_ZOOM_LEVELS) - 1):

124 if _ZOOM_LEVELS[i + 1] < distance <= _ZOOM_LEVELS[i]:

125 return i

126

127

128 def to_deckgl_json(data, zoom):

129

130 if data is None or data.empty:

131 return json.dumps(_DEFAULT_MAP)

132

133 if "lat" in data:

134 lat = "lat"

135 elif "latitude" in data:

136 lat = "latitude"

137 else:

138 raise StreamlitAPIException(

139 'Map data must contain a column named "latitude" or "lat".'

140 )

141

142 if "lon" in data:

143 lon = "lon"

144 elif "longitude" in data:

145 lon = "longitude"

146 else:

147 raise StreamlitAPIException(

148 'Map data must contain a column called "longitude" or "lon".'

149 )

150

151 if data[lon].isnull().values.any() or data[lat].isnull().values.any():

152 raise StreamlitAPIException("Latitude and longitude data must be numeric.")

153

154 data = pd.DataFrame(data)

155

156 min_lat = data[lat].min()

157 max_lat = data[lat].max()

158 min_lon = data[lon].min()

159 max_lon = data[lon].max()

160 center_lat = (max_lat + min_lat) / 2.0

161 center_lon = (max_lon + min_lon) / 2.0

162 range_lon = abs(max_lon - min_lon)

163 range_lat = abs(max_lat - min_lat)

164

165 if zoom == None:

166 if range_lon > range_lat:

167 longitude_distance = range_lon

168 else:

169 longitude_distance = range_lat

170 zoom = _get_zoom_level(longitude_distance)

171

172 # "+1" because itertuples includes the row index.

173 lon_col_index = data.columns.get_loc(lon) + 1

174 lat_col_index = data.columns.get_loc(lat) + 1

175 final_data = []

176 for row in data.itertuples():

177 final_data.append(

178 {"lon": float(row[lon_col_index]), "lat": float(row[lat_col_index])}

179 )

180

181 default = copy.deepcopy(_DEFAULT_MAP)

182 default["initialViewState"]["latitude"] = center_lat

183 default["initialViewState"]["longitude"] = center_lon

184 default["initialViewState"]["zoom"] = zoom

185 default["layers"] = [

186 {

187 "@@type": "ScatterplotLayer",

188 "getPosition": "@@=[lon, lat]",

189 "getRadius": 10,

190 "radiusScale": 10,

191 "radiusMinPixels": 3,

192 "getFillColor": _DEFAULT_COLOR,

193 "data": final_data,

194 }

195 ]

196 return json.dumps(default)

197

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/lib/streamlit/elements/map.py b/lib/streamlit/elements/map.py

--- a/lib/streamlit/elements/map.py

+++ b/lib/streamlit/elements/map.py

@@ -79,6 +79,7 @@

# Other default parameters for st.map.

_DEFAULT_COLOR = [200, 30, 0, 160]

+_DEFAULT_ZOOM_LEVEL = 12

_ZOOM_LEVELS = [

360,

180,

@@ -100,6 +101,7 @@

0.003,

0.001,

0.0005,

+ 0.00025,

]

@@ -116,10 +118,14 @@

Returns

-------

int

- The zoom level, from 0 to 29.

+ The zoom level, from 0 to 20.

"""

+ # For small number of points the default zoom level will be used.

+ if distance < _ZOOM_LEVELS[-1]:

+ return _DEFAULT_ZOOM_LEVEL

+

for i in range(len(_ZOOM_LEVELS) - 1):

if _ZOOM_LEVELS[i + 1] < distance <= _ZOOM_LEVELS[i]:

return i
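
The behaviour this patch targets can be checked with a standalone sketch. The table and lookup below are copied from the patched `map.py`; the names without a leading underscore are this sketch's own. For a single point the coordinate range is 0, which previously matched no interval, so the function returned `None`.

```python
DEFAULT_ZOOM_LEVEL = 12
ZOOM_LEVELS = [
    360, 180, 90, 45, 22.5, 11.25, 5.625, 2.813, 1.406, 0.703, 0.352,
    0.176, 0.088, 0.044, 0.022, 0.011, 0.005, 0.003, 0.001, 0.0005, 0.00025,
]


def get_zoom_level(distance):
    """Return the zoom level whose span (in degrees) fits the given distance."""
    # Very small spreads (e.g. one point, distance 0) fall back to the default.
    if distance < ZOOM_LEVELS[-1]:
        return DEFAULT_ZOOM_LEVEL

    # Otherwise pick the interval (next level, this level] containing the distance.
    for i in range(len(ZOOM_LEVELS) - 1):
        if ZOOM_LEVELS[i + 1] < distance <= ZOOM_LEVELS[i]:
            return i


print(get_zoom_level(0.0))   # single point
print(get_zoom_level(0.04))  # points spread over a few kilometres
```

Note that in this sketch a distance exactly equal to the last table entry, or one above 360, still matches no branch and yields `None`.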

|

{"golden_diff": "diff --git a/lib/streamlit/elements/map.py b/lib/streamlit/elements/map.py\n--- a/lib/streamlit/elements/map.py\n+++ b/lib/streamlit/elements/map.py\n@@ -79,6 +79,7 @@\n \n # Other default parameters for st.map.\n _DEFAULT_COLOR = [200, 30, 0, 160]\n+_DEFAULT_ZOOM_LEVEL = 12\n _ZOOM_LEVELS = [\n 360,\n 180,\n@@ -100,6 +101,7 @@\n 0.003,\n 0.001,\n 0.0005,\n+ 0.00025,\n ]\n \n \n@@ -116,10 +118,14 @@\n Returns\n -------\n int\n- The zoom level, from 0 to 29.\n+ The zoom level, from 0 to 20.\n \n \"\"\"\n \n+ # For small number of points the default zoom level will be used.\n+ if distance < _ZOOM_LEVELS[-1]:\n+ return _DEFAULT_ZOOM_LEVEL\n+\n for i in range(len(_ZOOM_LEVELS) - 1):\n if _ZOOM_LEVELS[i + 1] < distance <= _ZOOM_LEVELS[i]:\n return i\n", "issue": "st.map() fails with zoom error for small number of points\n# Summary\r\n\r\nIn this [forum post](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950), the user highlights that `st.map()` does not plot a single point for the map. To troubleshoot, I opened up the Chrome console, saw a \"Zoom must be set error\" message in regards to the map.\r\n\r\n# Solution\r\n\r\nAdding a non `None` zoom value in the `st.map()` call [resolves the issue](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950/3?u=randyzwitch). Need a heuristic for a \"small\" number of points where `st.map()` automatically adds an integer value for the `zoom` parameter. At larger volumes of data, `zoom =None` seems to be ok, and deck.gl infers the proper zoom level based on the bounding box of data points.\nst.map() fails with zoom error for small number of points\n# Summary\r\n\r\nIn this [forum post](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950), the user highlights that `st.map()` does not plot a single point for the map. 
To troubleshoot, I opened up the Chrome console, saw a \"Zoom must be set error\" message in regards to the map.\r\n\r\n# Solution\r\n\r\nAdding a non `None` zoom value in the `st.map()` call [resolves the issue](https://discuss.streamlit.io/t/plot-map-with-address-from-user-input/2950/3?u=randyzwitch). Need a heuristic for a \"small\" number of points where `st.map()` automatically adds an integer value for the `zoom` parameter. At larger volumes of data, `zoom =None` seems to be ok, and deck.gl infers the proper zoom level based on the bounding box of data points.\n", "before_files": [{"content": "# Copyright 2018-2020 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"A wrapper for simple PyDeck scatter charts.\"\"\"\n\nimport copy\nimport json\nfrom typing import Any, Dict\n\nimport pandas as pd\n\nfrom streamlit.proto.DeckGlJsonChart_pb2 import DeckGlJsonChart as DeckGlJsonChartProto\nimport streamlit.elements.deck_gl_json_chart as deck_gl_json_chart\nfrom streamlit.errors import StreamlitAPIException\n\n\nclass MapMixin:\n def map(dg, data=None, zoom=None, use_container_width=True):\n \"\"\"Display a map with points on it.\n\n This is a wrapper around st.pydeck_chart to quickly create scatterplot\n charts on top of a map, with auto-centering and auto-zoom.\n\n When using this command, we advise all users to use a personal Mapbox\n token. This ensures the map tiles used in this chart are more\n robust. 
You can do this with the mapbox.token config option.\n\n To get a token for yourself, create an account at\n https://mapbox.com. It's free! (for moderate usage levels) See\n https://docs.streamlit.io/en/latest/cli.html#view-all-config-options for more\n info on how to set config options.\n\n Parameters\n ----------\n data : pandas.DataFrame, pandas.Styler, numpy.ndarray, Iterable, dict,\n or None\n The data to be plotted. Must have columns called 'lat', 'lon',\n 'latitude', or 'longitude'.\n zoom : int\n Zoom level as specified in\n https://wiki.openstreetmap.org/wiki/Zoom_levels\n\n Example\n -------\n >>> import pandas as pd\n >>> import numpy as np\n >>>\n >>> df = pd.DataFrame(\n ... np.random.randn(1000, 2) / [50, 50] + [37.76, -122.4],\n ... columns=['lat', 'lon'])\n >>>\n >>> st.map(df)\n\n .. output::\n https://share.streamlit.io/0.53.0-SULT/index.html?id=9gTiomqPEbvHY2huTLoQtH\n height: 600px\n\n \"\"\"\n map_proto = DeckGlJsonChartProto()\n map_proto.json = to_deckgl_json(data, zoom)\n map_proto.use_container_width = use_container_width\n return dg._enqueue(\"deck_gl_json_chart\", map_proto) # type: ignore\n\n\n# Map used as the basis for st.map.\n_DEFAULT_MAP = dict(deck_gl_json_chart.EMPTY_MAP) # type: Dict[str, Any]\n_DEFAULT_MAP[\"mapStyle\"] = \"mapbox://styles/mapbox/light-v10\"\n\n# Other default parameters for st.map.\n_DEFAULT_COLOR = [200, 30, 0, 160]\n_ZOOM_LEVELS = [\n 360,\n 180,\n 90,\n 45,\n 22.5,\n 11.25,\n 5.625,\n 2.813,\n 1.406,\n 0.703,\n 0.352,\n 0.176,\n 0.088,\n 0.044,\n 0.022,\n 0.011,\n 0.005,\n 0.003,\n 0.001,\n 0.0005,\n]\n\n\ndef _get_zoom_level(distance):\n \"\"\"Get the zoom level for a given distance in degrees.\n\n See https://wiki.openstreetmap.org/wiki/Zoom_levels for reference.\n\n Parameters\n ----------\n distance : float\n How many degrees of longitude should fit in the map.\n\n Returns\n -------\n int\n The zoom level, from 0 to 29.\n\n \"\"\"\n\n for i in range(len(_ZOOM_LEVELS) - 1):\n if _ZOOM_LEVELS[i + 1] < 
distance <= _ZOOM_LEVELS[i]:\n return i\n\n\ndef to_deckgl_json(data, zoom):\n\n if data is None or data.empty:\n return json.dumps(_DEFAULT_MAP)\n\n if \"lat\" in data:\n lat = \"lat\"\n elif \"latitude\" in data:\n lat = \"latitude\"\n else:\n raise StreamlitAPIException(\n 'Map data must contain a column named \"latitude\" or \"lat\".'\n )\n\n if \"lon\" in data:\n lon = \"lon\"\n elif \"longitude\" in data:\n lon = \"longitude\"\n else:\n raise StreamlitAPIException(\n 'Map data must contain a column called \"longitude\" or \"lon\".'\n )\n\n if data[lon].isnull().values.any() or data[lat].isnull().values.any():\n raise StreamlitAPIException(\"Latitude and longitude data must be numeric.\")\n\n data = pd.DataFrame(data)\n\n min_lat = data[lat].min()\n max_lat = data[lat].max()\n min_lon = data[lon].min()\n max_lon = data[lon].max()\n center_lat = (max_lat + min_lat) / 2.0\n center_lon = (max_lon + min_lon) / 2.0\n range_lon = abs(max_lon - min_lon)\n range_lat = abs(max_lat - min_lat)\n\n if zoom == None:\n if range_lon > range_lat:\n longitude_distance = range_lon\n else:\n longitude_distance = range_lat\n zoom = _get_zoom_level(longitude_distance)\n\n # \"+1\" because itertuples includes the row index.\n lon_col_index = data.columns.get_loc(lon) + 1\n lat_col_index = data.columns.get_loc(lat) + 1\n final_data = []\n for row in data.itertuples():\n final_data.append(\n {\"lon\": float(row[lon_col_index]), \"lat\": float(row[lat_col_index])}\n )\n\n default = copy.deepcopy(_DEFAULT_MAP)\n default[\"initialViewState\"][\"latitude\"] = center_lat\n default[\"initialViewState\"][\"longitude\"] = center_lon\n default[\"initialViewState\"][\"zoom\"] = zoom\n default[\"layers\"] = [\n {\n \"@@type\": \"ScatterplotLayer\",\n \"getPosition\": \"@@=[lon, lat]\",\n \"getRadius\": 10,\n \"radiusScale\": 10,\n \"radiusMinPixels\": 3,\n \"getFillColor\": _DEFAULT_COLOR,\n \"data\": final_data,\n }\n ]\n return json.dumps(default)\n", "path": 
"lib/streamlit/elements/map.py"}], "after_files": [{"content": "# Copyright 2018-2020 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"A wrapper for simple PyDeck scatter charts.\"\"\"\n\nimport copy\nimport json\nfrom typing import Any, Dict\n\nimport pandas as pd\n\nfrom streamlit.proto.DeckGlJsonChart_pb2 import DeckGlJsonChart as DeckGlJsonChartProto\nimport streamlit.elements.deck_gl_json_chart as deck_gl_json_chart\nfrom streamlit.errors import StreamlitAPIException\n\n\nclass MapMixin:\n def map(dg, data=None, zoom=None, use_container_width=True):\n \"\"\"Display a map with points on it.\n\n This is a wrapper around st.pydeck_chart to quickly create scatterplot\n charts on top of a map, with auto-centering and auto-zoom.\n\n When using this command, we advise all users to use a personal Mapbox\n token. This ensures the map tiles used in this chart are more\n robust. You can do this with the mapbox.token config option.\n\n To get a token for yourself, create an account at\n https://mapbox.com. It's free! (for moderate usage levels) See\n https://docs.streamlit.io/en/latest/cli.html#view-all-config-options for more\n info on how to set config options.\n\n Parameters\n ----------\n data : pandas.DataFrame, pandas.Styler, numpy.ndarray, Iterable, dict,\n or None\n The data to be plotted. 
Must have columns called 'lat', 'lon',\n 'latitude', or 'longitude'.\n zoom : int\n Zoom level as specified in\n https://wiki.openstreetmap.org/wiki/Zoom_levels\n\n Example\n -------\n >>> import pandas as pd\n >>> import numpy as np\n >>>\n >>> df = pd.DataFrame(\n ... np.random.randn(1000, 2) / [50, 50] + [37.76, -122.4],\n ... columns=['lat', 'lon'])\n >>>\n >>> st.map(df)\n\n .. output::\n https://share.streamlit.io/0.53.0-SULT/index.html?id=9gTiomqPEbvHY2huTLoQtH\n height: 600px\n\n \"\"\"\n map_proto = DeckGlJsonChartProto()\n map_proto.json = to_deckgl_json(data, zoom)\n map_proto.use_container_width = use_container_width\n return dg._enqueue(\"deck_gl_json_chart\", map_proto) # type: ignore\n\n\n# Map used as the basis for st.map.\n_DEFAULT_MAP = dict(deck_gl_json_chart.EMPTY_MAP) # type: Dict[str, Any]\n_DEFAULT_MAP[\"mapStyle\"] = \"mapbox://styles/mapbox/light-v10\"\n\n# Other default parameters for st.map.\n_DEFAULT_COLOR = [200, 30, 0, 160]\n_DEFAULT_ZOOM_LEVEL = 12\n_ZOOM_LEVELS = [\n 360,\n 180,\n 90,\n 45,\n 22.5,\n 11.25,\n 5.625,\n 2.813,\n 1.406,\n 0.703,\n 0.352,\n 0.176,\n 0.088,\n 0.044,\n 0.022,\n 0.011,\n 0.005,\n 0.003,\n 0.001,\n 0.0005,\n 0.00025,\n]\n\n\ndef _get_zoom_level(distance):\n \"\"\"Get the zoom level for a given distance in degrees.\n\n See https://wiki.openstreetmap.org/wiki/Zoom_levels for reference.\n\n Parameters\n ----------\n distance : float\n How many degrees of longitude should fit in the map.\n\n Returns\n -------\n int\n The zoom level, from 0 to 20.\n\n \"\"\"\n\n # For small number of points the default zoom level will be used.\n if distance < _ZOOM_LEVELS[-1]:\n return _DEFAULT_ZOOM_LEVEL\n\n for i in range(len(_ZOOM_LEVELS) - 1):\n if _ZOOM_LEVELS[i + 1] < distance <= _ZOOM_LEVELS[i]:\n return i\n\n\ndef to_deckgl_json(data, zoom):\n\n if data is None or data.empty:\n return json.dumps(_DEFAULT_MAP)\n\n if \"lat\" in data:\n lat = \"lat\"\n elif \"latitude\" in data:\n lat = \"latitude\"\n else:\n raise 
StreamlitAPIException(\n 'Map data must contain a column named \"latitude\" or \"lat\".'\n )\n\n if \"lon\" in data:\n lon = \"lon\"\n elif \"longitude\" in data:\n lon = \"longitude\"\n else:\n raise StreamlitAPIException(\n 'Map data must contain a column called \"longitude\" or \"lon\".'\n )\n\n if data[lon].isnull().values.any() or data[lat].isnull().values.any():\n raise StreamlitAPIException(\"Latitude and longitude data must be numeric.\")\n\n data = pd.DataFrame(data)\n\n min_lat = data[lat].min()\n max_lat = data[lat].max()\n min_lon = data[lon].min()\n max_lon = data[lon].max()\n center_lat = (max_lat + min_lat) / 2.0\n center_lon = (max_lon + min_lon) / 2.0\n range_lon = abs(max_lon - min_lon)\n range_lat = abs(max_lat - min_lat)\n\n if zoom == None:\n if range_lon > range_lat:\n longitude_distance = range_lon\n else:\n longitude_distance = range_lat\n zoom = _get_zoom_level(longitude_distance)\n\n # \"+1\" because itertuples includes the row index.\n lon_col_index = data.columns.get_loc(lon) + 1\n lat_col_index = data.columns.get_loc(lat) + 1\n final_data = []\n for row in data.itertuples():\n final_data.append(\n {\"lon\": float(row[lon_col_index]), \"lat\": float(row[lat_col_index])}\n )\n\n default = copy.deepcopy(_DEFAULT_MAP)\n default[\"initialViewState\"][\"latitude\"] = center_lat\n default[\"initialViewState\"][\"longitude\"] = center_lon\n default[\"initialViewState\"][\"zoom\"] = zoom\n default[\"layers\"] = [\n {\n \"@@type\": \"ScatterplotLayer\",\n \"getPosition\": \"@@=[lon, lat]\",\n \"getRadius\": 10,\n \"radiusScale\": 10,\n \"radiusMinPixels\": 3,\n \"getFillColor\": _DEFAULT_COLOR,\n \"data\": final_data,\n }\n ]\n return json.dumps(default)\n", "path": "lib/streamlit/elements/map.py"}]}

| 2,735 | 300 |

gh_patches_debug_21737

|

rasdani/github-patches

|

git_diff

|

openmc-dev__openmc-1254

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add method to get total material density

For multiphysics coupling, convergence between successive iterations of OpenMC with a T/H solver may be based on the densities, in which case we will need to make the initial density used in OpenMC available to a multiphysics driver.

There is an `openmc_material_get_densities()` method to get the density of each nuclide in a material, but it would be cleaner for a multiphysics driver to avoid doing a summation on this density and instead directly get the density in g/cm^3.

--- END ISSUE ---
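
The summation the issue wants to spare a multiphysics driver can be sketched as follows. This is a minimal illustration, not the OpenMC implementation: the helper name `mass_density` and the `atomic_masses` lookup table are assumptions made for this example, and the input is assumed to be a mapping of nuclide names to atom densities in atom/b-cm, the unit the `densities` attribute is documented to use.

```python
AVOGADRO = 6.02214076e23  # atoms per mole (exact SI value)


def mass_density(atom_densities, atomic_masses):
    """Return the total mass density in g/cm^3.

    atom_densities: dict of nuclide name -> atom density in atom/b-cm
    atomic_masses:  dict of nuclide name -> atomic mass in g/mol
                    (hypothetical lookup table supplied by the caller)
    """
    total = 0.0
    for nuclide, n in atom_densities.items():
        # 1 barn = 1e-24 cm^2, so atom/b-cm * 1e24 gives atom/cm^3;
        # dividing by Avogadro's number converts atoms to moles.
        total += n * 1e24 * atomic_masses[nuclide] / AVOGADRO
    return total
```

A driver that only has per-nuclide densities would have to carry its own atomic mass table to do this, which is the bookkeeping a direct density getter would avoid.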

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `openmc/capi/material.py`

Content:

```

1 from collections.abc import Mapping

2 from ctypes import c_int, c_int32, c_double, c_char_p, POINTER, c_size_t

3 from weakref import WeakValueDictionary

4

5 import numpy as np

6 from numpy.ctypeslib import as_array

7

8 from openmc.exceptions import AllocationError, InvalidIDError, OpenMCError

9 from . import _dll, Nuclide

10 from .core import _FortranObjectWithID

11 from .error import _error_handler

12

13

14 __all__ = ['Material', 'materials']

15

16 # Material functions

17 _dll.openmc_extend_materials.argtypes = [c_int32, POINTER(c_int32), POINTER(c_int32)]

18 _dll.openmc_extend_materials.restype = c_int

19 _dll.openmc_extend_materials.errcheck = _error_handler

20 _dll.openmc_get_material_index.argtypes = [c_int32, POINTER(c_int32)]

21 _dll.openmc_get_material_index.restype = c_int

22 _dll.openmc_get_material_index.errcheck = _error_handler

23 _dll.openmc_material_add_nuclide.argtypes = [

24 c_int32, c_char_p, c_double]

25 _dll.openmc_material_add_nuclide.restype = c_int

26 _dll.openmc_material_add_nuclide.errcheck = _error_handler

27 _dll.openmc_material_get_id.argtypes = [c_int32, POINTER(c_int32)]

28 _dll.openmc_material_get_id.restype = c_int

29 _dll.openmc_material_get_id.errcheck = _error_handler

30 _dll.openmc_material_get_densities.argtypes = [

31 c_int32, POINTER(POINTER(c_int)), POINTER(POINTER(c_double)),

32 POINTER(c_int)]

33 _dll.openmc_material_get_densities.restype = c_int

34 _dll.openmc_material_get_densities.errcheck = _error_handler

35 _dll.openmc_material_get_volume.argtypes = [c_int32, POINTER(c_double)]

36 _dll.openmc_material_get_volume.restype = c_int

37 _dll.openmc_material_get_volume.errcheck = _error_handler

38 _dll.openmc_material_set_density.argtypes = [c_int32, c_double, c_char_p]

39 _dll.openmc_material_set_density.restype = c_int

40 _dll.openmc_material_set_density.errcheck = _error_handler

41 _dll.openmc_material_set_densities.argtypes = [

42 c_int32, c_int, POINTER(c_char_p), POINTER(c_double)]

43 _dll.openmc_material_set_densities.restype = c_int

44 _dll.openmc_material_set_densities.errcheck = _error_handler

45 _dll.openmc_material_set_id.argtypes = [c_int32, c_int32]

46 _dll.openmc_material_set_id.restype = c_int

47 _dll.openmc_material_set_id.errcheck = _error_handler

48 _dll.openmc_material_set_volume.argtypes = [c_int32, c_double]

49 _dll.openmc_material_set_volume.restype = c_int

50 _dll.openmc_material_set_volume.errcheck = _error_handler

51 _dll.n_materials.argtypes = []

52 _dll.n_materials.restype = c_size_t

53

54

55 class Material(_FortranObjectWithID):

56 """Material stored internally.

57

58 This class exposes a material that is stored internally in the OpenMC

59 library. To obtain a view of a material with a given ID, use the

60 :data:`openmc.capi.materials` mapping.

61

62 Parameters

63 ----------

64 uid : int or None

65 Unique ID of the material

66 new : bool

67 When `index` is None, this argument controls whether a new object is

68 created or a view to an existing object is returned.

69 index : int or None

70 Index in the `materials` array.

71

72 Attributes

73 ----------

74 id : int

75 ID of the material

76 nuclides : list of str

77 List of nuclides in the material

78 densities : numpy.ndarray

79 Array of densities in atom/b-cm

80

81 """

82 __instances = WeakValueDictionary()

83

84 def __new__(cls, uid=None, new=True, index=None):

85 mapping = materials

86 if index is None:

87 if new:

88 # Determine ID to assign

89 if uid is None:

90 uid = max(mapping, default=0) + 1

91 else:

92 if uid in mapping: