| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| string (length 18–22) | string (1 value) | string (1 value) | string (length 13–58) | string (length 1.1k–25.4k) | string (length 145–5.13k) | string (length 582–39.1k) | int64 (271–4.1k) | int64 (47–1.02k) |

| gh_patches_debug_6268 | rasdani/github-patches | git_diff | pymedusa__Medusa-3047 |

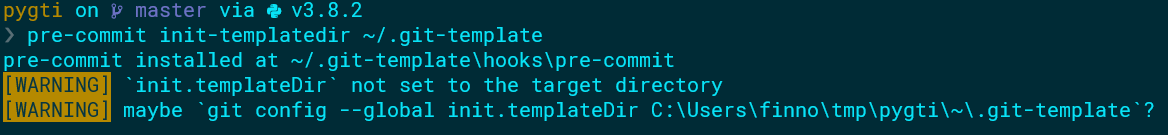

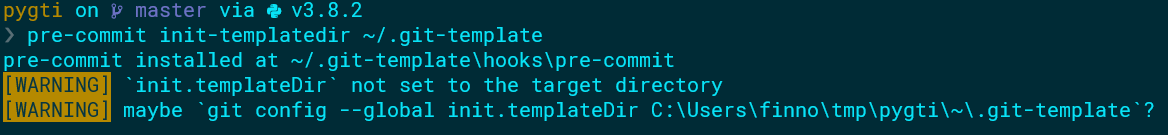

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[APP SUBMITTED]: AttributeError: 'NavigableString' object has no attribute 'span'

### INFO

**Python Version**: `2.7.13 (v2.7.13:a06454b1afa1, Dec 17 2016, 20:53:40) [MSC v.1500 64 bit (AMD64)]`

**Operating System**: `Windows-10-10.0.15063`

**Locale**: `cp1252`

**Branch**: [develop](../tree/develop)

**Database**: `44.8`

**Commit**: pymedusa/Medusa@38e6cbf13d9cede5de23106b656dbbb90df2dd38

**Link to Log**: https://gist.github.com/39feec6e67c967a5e2fdec7f7bc2b69f

### ERROR

<pre>
2017-08-13 22:44:17 ERROR SEARCHQUEUE-FORCED-175001 :: [LimeTorrents] :: [38e6cbf] Unknown exception while searching LimeTorrents. Error: "'NavigableString' object has no attribute 'span'"
Traceback (most recent call last):
  File "C:\Medusa\medusa\search\core.py", line 555, in search_providers
    down_cur_quality, manual_search, manual_search_type)
  File "C:\Medusa\medusa\providers\generic_provider.py", line 226, in find_search_results
    items_list += self.search(search_string, ep_obj=episode)
  File "C:\Medusa\medusa\providers\torrent\html\limetorrents.py", line 97, in search
    results += self.parse(response.text, mode)
  File "C:\Medusa\medusa\providers\torrent\html\limetorrents.py", line 124, in parse
    labels = [process_column_header(label) for label in torrent_rows[0]]
  File "C:\Medusa\medusa\providers\torrent\html\limetorrents.py", line 113, in process_column_header
    return th.span.get_text() if th.span else th.get_text()
  File "C:\Medusa\lib\bs4\element.py", line 713, in __getattr__
    self.__class__.__name__, attr))
AttributeError: 'NavigableString' object has no attribute 'span'
</pre>

---

_STAFF NOTIFIED_: @pymedusa/support @pymedusa/moderators

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `medusa/providers/torrent/html/limetorrents.py`

Content:

```
1 # coding=utf-8
2
3 """Provider code for Limetorrents."""
4
5 from __future__ import unicode_literals
6
7 import logging
8 import re
9 import traceback
10
11 from contextlib2 import suppress
12
13 from medusa import tv
14 from medusa.bs4_parser import BS4Parser
15 from medusa.helper.common import (
16     convert_size,
17     try_int,
18 )
19 from medusa.logger.adapters.style import BraceAdapter
20 from medusa.providers.torrent.torrent_provider import TorrentProvider
21
22 from requests.compat import urljoin
23 from requests.exceptions import ConnectionError as RequestsConnectionError, Timeout
24
25 log = BraceAdapter(logging.getLogger(__name__))
26 log.logger.addHandler(logging.NullHandler())
27
28 id_regex = re.compile(r'(?:\/)(.*)(?:-torrent-([0-9]*)\.html)', re.I)
29 hash_regex = re.compile(r'(.*)([0-9a-f]{40})(.*)', re.I)
30
31
32 class LimeTorrentsProvider(TorrentProvider):
33     """LimeTorrents Torrent provider."""
34
35     def __init__(self):
36         """Initialize the class."""
37         super(LimeTorrentsProvider, self).__init__('LimeTorrents')
38
39         # Credentials
40         self.public = True
41
42         # URLs
43         self.url = 'https://www.limetorrents.cc'
44         self.urls = {
45             'update': urljoin(self.url, '/post/updatestats.php'),
46             'search': urljoin(self.url, '/search/tv/{query}/'),
47             # Original rss feed url, temporary offline. Replaced by the main Tv-show page.
48             # 'rss': urljoin(self.url, '/browse-torrents/TV-shows/date/{page}/'),
49             'rss': urljoin(self.url, '/browse-torrents/TV-shows/'),
50         }
51
52         # Proper Strings
53         self.proper_strings = ['PROPER', 'REPACK', 'REAL']
54
55         # Miscellaneous Options
56         self.confirmed = False
57
58         # Torrent Stats
59         self.minseed = None
60         self.minleech = None
61
62         # Cache
63         self.cache = tv.Cache(self, min_time=10)
64
65     def search(self, search_strings, age=0, ep_obj=None):
66         """
67         Search a provider and parse the results.
68
69         :param search_strings: A dict with mode (key) and the search value (value)
70         :param age: Not used
71         :param ep_obj: Not used
72         :returns: A list of search results (structure)
73         """
74         results = []
75
76         for mode in search_strings:
77             log.debug('Search mode: {0}', mode)
78
79             for search_string in search_strings[mode]:
80
81                 if mode != 'RSS':
82                     log.debug('Search string: {search}',
83                               {'search': search_string})
84                     if self.confirmed:
85                         log.debug('Searching only confirmed torrents')
86
87                     search_url = self.urls['search'].format(query=search_string)
88                 else:
89                     # search_url = self.urls['rss'].format(page=1)
90                     search_url = self.urls['rss']
91
92                 response = self.session.get(search_url)
93                 if not response or not response.text:
94                     log.debug('No data returned from provider')
95                     continue
96
97                 results += self.parse(response.text, mode)
98
99         return results
100
101     def parse(self, data, mode):
102         """
103         Parse search results for items.
104
105         :param data: The raw response from a search
106         :param mode: The current mode used to search, e.g. RSS
107
108         :return: A list of items found
109         """
110         items = []
111
112         def process_column_header(th):
113             return th.span.get_text() if th.span else th.get_text()
114
115         with BS4Parser(data, 'html5lib') as html:
116             torrent_table = html.find('table', class_='table2')
117
118             if not torrent_table:
119                 log.debug('Data returned from provider does not contain any {0}torrents',
120                           'confirmed ' if self.confirmed else '')
121                 return items
122
123             torrent_rows = torrent_table.find_all('tr')
124             labels = [process_column_header(label) for label in torrent_rows[0]]
125
126             # Skip the first row, since it isn't a valid result
127             for row in torrent_rows[1:]:
128                 cells = row.find_all('td')
129
130                 try:
131                     title_cell = cells[labels.index('Torrent Name')]
132
133                     verified = title_cell.find('img', title='Verified torrent')
134                     if self.confirmed and not verified:
135                         continue
136
137                     title_anchors = title_cell.find_all('a')
138                     if not title_anchors or len(title_anchors) < 2:
139                         continue
140
141                     title_url = title_anchors[0].get('href')
142                     title = title_anchors[1].get_text(strip=True)
143                     regex_result = id_regex.search(title_anchors[1].get('href'))
144
145                     alt_title = regex_result.group(1)
146                     if len(title) < len(alt_title):
147                         title = alt_title.replace('-', ' ')
148
149                     torrent_id = regex_result.group(2)
150                     info_hash = hash_regex.search(title_url).group(2)
151                     if not all([title, torrent_id, info_hash]):
152                         continue
153
154                     with suppress(RequestsConnectionError, Timeout):
155                         # Suppress the timeout since we are not interested in actually getting the results
156                         self.session.get(self.urls['update'], timeout=0.1, params={'torrent_id': torrent_id,
157                                                                                    'infohash': info_hash})
158
159                     download_url = 'magnet:?xt=urn:btih:{hash}&dn={title}{trackers}'.format(
160                         hash=info_hash, title=title, trackers=self._custom_trackers)
161
162                     # Remove comma as thousands separator from larger number like 2,000 seeders = 2000
163                     seeders = try_int(cells[labels.index('Seed')].get_text(strip=True).replace(',', ''), 1)
164                     leechers = try_int(cells[labels.index('Leech')].get_text(strip=True).replace(',', ''))
165
166                     if seeders < min(self.minseed, 1):
167                         if mode != 'RSS':
168                             log.debug("Discarding torrent because it doesn't meet the"
169                                       " minimum seeders: {0}. Seeders: {1}",
170                                       title, seeders)
171                         continue
172
173                     size = convert_size(cells[labels.index('Size')].get_text(strip=True)) or -1
174
175                     pubdate_raw = cells[1].get_text().replace('Last', '1').replace('Yesterday', '24 hours')
176                     pubdate = self.parse_pubdate(pubdate_raw, human_time=True)
177
178                     item = {
179                         'title': title,
180                         'link': download_url,
181                         'size': size,
182                         'seeders': seeders,
183                         'leechers': leechers,
184                         'pubdate': pubdate,
185                     }
186                     if mode != 'RSS':
187                         log.debug('Found result: {0} with {1} seeders and {2} leechers',
188                                   title, seeders, leechers)
189
190                     items.append(item)
191                 except (AttributeError, TypeError, KeyError, ValueError, IndexError):
192                     log.error('Failed parsing provider. Traceback: {0!r}',
193                               traceback.format_exc())
194
195         return items
196
197
198 provider = LimeTorrentsProvider()
199
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/medusa/providers/torrent/html/limetorrents.py b/medusa/providers/torrent/html/limetorrents.py
--- a/medusa/providers/torrent/html/limetorrents.py
+++ b/medusa/providers/torrent/html/limetorrents.py
@@ -121,7 +121,7 @@
                 return items
 
             torrent_rows = torrent_table.find_all('tr')
-            labels = [process_column_header(label) for label in torrent_rows[0]]
+            labels = [process_column_header(label) for label in torrent_rows[0].find_all('th')]
 
             # Skip the first row, since it isn't a valid result
             for row in torrent_rows[1:]:
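The traceback shows why iterating `torrent_rows[0]` directly fails: iterating a BeautifulSoup `Tag` walks all of its children, including the whitespace `NavigableString` nodes between the `<th>` cells, and a string has no `.span`. Filtering with `find_all('th')` keeps only the header cells. The sketch below reproduces the same pattern with the standard library's `xml.dom.minidom` instead of bs4, so it runs without third-party packages; it is an analogy, not Medusa code, and `HEADER_ROW`, `text_of`, `column_header`, `labels_buggy`, and `labels_fixed` are hypothetical names.

```python
from xml.dom import minidom

# Header row with whitespace between cells, as in typical HTML.
HEADER_ROW = """<tr>
  <th><span>Torrent Name</span></th>
  <th>Seed</th>
  <th>Leech</th>
</tr>"""


def text_of(node):
    """Concatenate all descendant text of a DOM node."""
    parts = []
    for child in node.childNodes:
        if child.nodeType == child.TEXT_NODE:
            parts.append(child.data)
        else:
            parts.append(text_of(child))
    return ''.join(parts)


def column_header(th):
    """Prefer a nested <span>'s text, like process_column_header in the provider."""
    spans = th.getElementsByTagName('span')  # Text nodes lack this method
    return text_of(spans[0] if spans else th).strip()


def labels_buggy(tr):
    # Iterating every child also yields the whitespace Text nodes between cells,
    # so column_header() raises AttributeError on the first one.
    return [column_header(child) for child in tr.childNodes]


def labels_fixed(tr):
    # Only <th> elements, analogous to torrent_rows[0].find_all('th').
    return [column_header(th) for th in tr.getElementsByTagName('th')]


tr = minidom.parseString(HEADER_ROW).documentElement
print(labels_fixed(tr))  # ['Torrent Name', 'Seed', 'Leech']
```

Selecting by tag name, rather than trusting that every child is an element, is what makes the fixed version robust to whitespace and other text nodes in the markup.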

|

{"golden_diff": "diff --git a/medusa/providers/torrent/html/limetorrents.py b/medusa/providers/torrent/html/limetorrents.py\n--- a/medusa/providers/torrent/html/limetorrents.py\n+++ b/medusa/providers/torrent/html/limetorrents.py\n@@ -121,7 +121,7 @@\n return items\n \n torrent_rows = torrent_table.find_all('tr')\n- labels = [process_column_header(label) for label in torrent_rows[0]]\n+ labels = [process_column_header(label) for label in torrent_rows[0].find_all('th')]\n \n # Skip the first row, since it isn't a valid result\n for row in torrent_rows[1:]:\n", "issue": "[APP SUBMITTED]: AttributeError: 'NavigableString' object has no attribute 'span'\n### INFO\n**Python Version**: `2.7.13 (v2.7.13:a06454b1afa1, Dec 17 2016, 20:53:40) [MSC v.1500 64 bit (AMD64)]`\n**Operating System**: `Windows-10-10.0.15063`\n**Locale**: `cp1252`\n**Branch**: [develop](../tree/develop)\n**Database**: `44.8`\n**Commit**: pymedusa/Medusa@38e6cbf13d9cede5de23106b656dbbb90df2dd38\n**Link to Log**: https://gist.github.com/39feec6e67c967a5e2fdec7f7bc2b69f\n### ERROR\n<pre>\n2017-08-13 22:44:17 ERROR SEARCHQUEUE-FORCED-175001 :: [LimeTorrents] :: [38e6cbf] Unknown exception while searching LimeTorrents. 
Error: \"'NavigableString' object has no attribute 'span'\"\nTraceback (most recent call last):\n File \"C:\\Medusa\\medusa\\search\\core.py\", line 555, in search_providers\n down_cur_quality, manual_search, manual_search_type)\n File \"C:\\Medusa\\medusa\\providers\\generic_provider.py\", line 226, in find_search_results\n items_list += self.search(search_string, ep_obj=episode)\n File \"C:\\Medusa\\medusa\\providers\\torrent\\html\\limetorrents.py\", line 97, in search\n results += self.parse(response.text, mode)\n File \"C:\\Medusa\\medusa\\providers\\torrent\\html\\limetorrents.py\", line 124, in parse\n labels = [process_column_header(label) for label in torrent_rows[0]]\n File \"C:\\Medusa\\medusa\\providers\\torrent\\html\\limetorrents.py\", line 113, in process_column_header\n return th.span.get_text() if th.span else th.get_text()\n File \"C:\\Medusa\\lib\\bs4\\element.py\", line 713, in __getattr__\n self.__class__.__name__, attr))\nAttributeError: 'NavigableString' object has no attribute 'span'\n</pre>\n---\n_STAFF NOTIFIED_: @pymedusa/support @pymedusa/moderators\n", "before_files": [{"content": "# coding=utf-8\n\n\"\"\"Provider code for Limetorrents.\"\"\"\n\nfrom __future__ import unicode_literals\n\nimport logging\nimport re\nimport traceback\n\nfrom contextlib2 import suppress\n\nfrom medusa import tv\nfrom medusa.bs4_parser import BS4Parser\nfrom medusa.helper.common import (\n convert_size,\n try_int,\n)\nfrom medusa.logger.adapters.style import BraceAdapter\nfrom medusa.providers.torrent.torrent_provider import TorrentProvider\n\nfrom requests.compat import urljoin\nfrom requests.exceptions import ConnectionError as RequestsConnectionError, Timeout\n\nlog = BraceAdapter(logging.getLogger(__name__))\nlog.logger.addHandler(logging.NullHandler())\n\nid_regex = re.compile(r'(?:\\/)(.*)(?:-torrent-([0-9]*)\\.html)', re.I)\nhash_regex = re.compile(r'(.*)([0-9a-f]{40})(.*)', re.I)\n\n\nclass LimeTorrentsProvider(TorrentProvider):\n \"\"\"LimeTorrents 
Torrent provider.\"\"\"\n\n def __init__(self):\n \"\"\"Initialize the class.\"\"\"\n super(LimeTorrentsProvider, self).__init__('LimeTorrents')\n\n # Credentials\n self.public = True\n\n # URLs\n self.url = 'https://www.limetorrents.cc'\n self.urls = {\n 'update': urljoin(self.url, '/post/updatestats.php'),\n 'search': urljoin(self.url, '/search/tv/{query}/'),\n # Original rss feed url, temporary offline. Replaced by the main Tv-show page.\n # 'rss': urljoin(self.url, '/browse-torrents/TV-shows/date/{page}/'),\n 'rss': urljoin(self.url, '/browse-torrents/TV-shows/'),\n }\n\n # Proper Strings\n self.proper_strings = ['PROPER', 'REPACK', 'REAL']\n\n # Miscellaneous Options\n self.confirmed = False\n\n # Torrent Stats\n self.minseed = None\n self.minleech = None\n\n # Cache\n self.cache = tv.Cache(self, min_time=10)\n\n def search(self, search_strings, age=0, ep_obj=None):\n \"\"\"\n Search a provider and parse the results.\n\n :param search_strings: A dict with mode (key) and the search value (value)\n :param age: Not used\n :param ep_obj: Not used\n :returns: A list of search results (structure)\n \"\"\"\n results = []\n\n for mode in search_strings:\n log.debug('Search mode: {0}', mode)\n\n for search_string in search_strings[mode]:\n\n if mode != 'RSS':\n log.debug('Search string: {search}',\n {'search': search_string})\n if self.confirmed:\n log.debug('Searching only confirmed torrents')\n\n search_url = self.urls['search'].format(query=search_string)\n else:\n # search_url = self.urls['rss'].format(page=1)\n search_url = self.urls['rss']\n\n response = self.session.get(search_url)\n if not response or not response.text:\n log.debug('No data returned from provider')\n continue\n\n results += self.parse(response.text, mode)\n\n return results\n\n def parse(self, data, mode):\n \"\"\"\n Parse search results for items.\n\n :param data: The raw response from a search\n :param mode: The current mode used to search, e.g. 
RSS\n\n :return: A list of items found\n \"\"\"\n items = []\n\n def process_column_header(th):\n return th.span.get_text() if th.span else th.get_text()\n\n with BS4Parser(data, 'html5lib') as html:\n torrent_table = html.find('table', class_='table2')\n\n if not torrent_table:\n log.debug('Data returned from provider does not contain any {0}torrents',\n 'confirmed ' if self.confirmed else '')\n return items\n\n torrent_rows = torrent_table.find_all('tr')\n labels = [process_column_header(label) for label in torrent_rows[0]]\n\n # Skip the first row, since it isn't a valid result\n for row in torrent_rows[1:]:\n cells = row.find_all('td')\n\n try:\n title_cell = cells[labels.index('Torrent Name')]\n\n verified = title_cell.find('img', title='Verified torrent')\n if self.confirmed and not verified:\n continue\n\n title_anchors = title_cell.find_all('a')\n if not title_anchors or len(title_anchors) < 2:\n continue\n\n title_url = title_anchors[0].get('href')\n title = title_anchors[1].get_text(strip=True)\n regex_result = id_regex.search(title_anchors[1].get('href'))\n\n alt_title = regex_result.group(1)\n if len(title) < len(alt_title):\n title = alt_title.replace('-', ' ')\n\n torrent_id = regex_result.group(2)\n info_hash = hash_regex.search(title_url).group(2)\n if not all([title, torrent_id, info_hash]):\n continue\n\n with suppress(RequestsConnectionError, Timeout):\n # Suppress the timeout since we are not interested in actually getting the results\n self.session.get(self.urls['update'], timeout=0.1, params={'torrent_id': torrent_id,\n 'infohash': info_hash})\n\n download_url = 'magnet:?xt=urn:btih:{hash}&dn={title}{trackers}'.format(\n hash=info_hash, title=title, trackers=self._custom_trackers)\n\n # Remove comma as thousands separator from larger number like 2,000 seeders = 2000\n seeders = try_int(cells[labels.index('Seed')].get_text(strip=True).replace(',', ''), 1)\n leechers = try_int(cells[labels.index('Leech')].get_text(strip=True).replace(',', 
''))\n\n if seeders < min(self.minseed, 1):\n if mode != 'RSS':\n log.debug(\"Discarding torrent because it doesn't meet the\"\n \" minimum seeders: {0}. Seeders: {1}\",\n title, seeders)\n continue\n\n size = convert_size(cells[labels.index('Size')].get_text(strip=True)) or -1\n\n pubdate_raw = cells[1].get_text().replace('Last', '1').replace('Yesterday', '24 hours')\n pubdate = self.parse_pubdate(pubdate_raw, human_time=True)\n\n item = {\n 'title': title,\n 'link': download_url,\n 'size': size,\n 'seeders': seeders,\n 'leechers': leechers,\n 'pubdate': pubdate,\n }\n if mode != 'RSS':\n log.debug('Found result: {0} with {1} seeders and {2} leechers',\n title, seeders, leechers)\n\n items.append(item)\n except (AttributeError, TypeError, KeyError, ValueError, IndexError):\n log.error('Failed parsing provider. Traceback: {0!r}',\n traceback.format_exc())\n\n return items\n\n\nprovider = LimeTorrentsProvider()\n", "path": "medusa/providers/torrent/html/limetorrents.py"}], "after_files": [{"content": "# coding=utf-8\n\n\"\"\"Provider code for Limetorrents.\"\"\"\n\nfrom __future__ import unicode_literals\n\nimport logging\nimport re\nimport traceback\n\nfrom contextlib2 import suppress\n\nfrom medusa import tv\nfrom medusa.bs4_parser import BS4Parser\nfrom medusa.helper.common import (\n convert_size,\n try_int,\n)\nfrom medusa.logger.adapters.style import BraceAdapter\nfrom medusa.providers.torrent.torrent_provider import TorrentProvider\n\nfrom requests.compat import urljoin\nfrom requests.exceptions import ConnectionError as RequestsConnectionError, Timeout\n\nlog = BraceAdapter(logging.getLogger(__name__))\nlog.logger.addHandler(logging.NullHandler())\n\nid_regex = re.compile(r'(?:\\/)(.*)(?:-torrent-([0-9]*)\\.html)', re.I)\nhash_regex = re.compile(r'(.*)([0-9a-f]{40})(.*)', re.I)\n\n\nclass LimeTorrentsProvider(TorrentProvider):\n \"\"\"LimeTorrents Torrent provider.\"\"\"\n\n def __init__(self):\n \"\"\"Initialize the class.\"\"\"\n 
super(LimeTorrentsProvider, self).__init__('LimeTorrents')\n\n # Credentials\n self.public = True\n\n # URLs\n self.url = 'https://www.limetorrents.cc'\n self.urls = {\n 'update': urljoin(self.url, '/post/updatestats.php'),\n 'search': urljoin(self.url, '/search/tv/{query}/'),\n # Original rss feed url, temporary offline. Replaced by the main Tv-show page.\n # 'rss': urljoin(self.url, '/browse-torrents/TV-shows/date/{page}/'),\n 'rss': urljoin(self.url, '/browse-torrents/TV-shows/'),\n }\n\n # Proper Strings\n self.proper_strings = ['PROPER', 'REPACK', 'REAL']\n\n # Miscellaneous Options\n self.confirmed = False\n\n # Torrent Stats\n self.minseed = None\n self.minleech = None\n\n # Cache\n self.cache = tv.Cache(self, min_time=10)\n\n def search(self, search_strings, age=0, ep_obj=None):\n \"\"\"\n Search a provider and parse the results.\n\n :param search_strings: A dict with mode (key) and the search value (value)\n :param age: Not used\n :param ep_obj: Not used\n :returns: A list of search results (structure)\n \"\"\"\n results = []\n\n for mode in search_strings:\n log.debug('Search mode: {0}', mode)\n\n for search_string in search_strings[mode]:\n\n if mode != 'RSS':\n log.debug('Search string: {search}',\n {'search': search_string})\n if self.confirmed:\n log.debug('Searching only confirmed torrents')\n\n search_url = self.urls['search'].format(query=search_string)\n else:\n # search_url = self.urls['rss'].format(page=1)\n search_url = self.urls['rss']\n\n response = self.session.get(search_url)\n if not response or not response.text:\n log.debug('No data returned from provider')\n continue\n\n results += self.parse(response.text, mode)\n\n return results\n\n def parse(self, data, mode):\n \"\"\"\n Parse search results for items.\n\n :param data: The raw response from a search\n :param mode: The current mode used to search, e.g. 
RSS\n\n :return: A list of items found\n \"\"\"\n items = []\n\n def process_column_header(th):\n return th.span.get_text() if th.span else th.get_text()\n\n with BS4Parser(data, 'html5lib') as html:\n torrent_table = html.find('table', class_='table2')\n\n if not torrent_table:\n log.debug('Data returned from provider does not contain any {0}torrents',\n 'confirmed ' if self.confirmed else '')\n return items\n\n torrent_rows = torrent_table.find_all('tr')\n labels = [process_column_header(label) for label in torrent_rows[0].find_all('th')]\n\n # Skip the first row, since it isn't a valid result\n for row in torrent_rows[1:]:\n cells = row.find_all('td')\n\n try:\n title_cell = cells[labels.index('Torrent Name')]\n\n verified = title_cell.find('img', title='Verified torrent')\n if self.confirmed and not verified:\n continue\n\n title_anchors = title_cell.find_all('a')\n if not title_anchors or len(title_anchors) < 2:\n continue\n\n title_url = title_anchors[0].get('href')\n title = title_anchors[1].get_text(strip=True)\n regex_result = id_regex.search(title_anchors[1].get('href'))\n\n alt_title = regex_result.group(1)\n if len(title) < len(alt_title):\n title = alt_title.replace('-', ' ')\n\n torrent_id = regex_result.group(2)\n info_hash = hash_regex.search(title_url).group(2)\n if not all([title, torrent_id, info_hash]):\n continue\n\n with suppress(RequestsConnectionError, Timeout):\n # Suppress the timeout since we are not interested in actually getting the results\n self.session.get(self.urls['update'], timeout=0.1, params={'torrent_id': torrent_id,\n 'infohash': info_hash})\n\n download_url = 'magnet:?xt=urn:btih:{hash}&dn={title}{trackers}'.format(\n hash=info_hash, title=title, trackers=self._custom_trackers)\n\n # Remove comma as thousands separator from larger number like 2,000 seeders = 2000\n seeders = try_int(cells[labels.index('Seed')].get_text(strip=True).replace(',', ''), 1)\n leechers = 
try_int(cells[labels.index('Leech')].get_text(strip=True).replace(',', ''))\n\n if seeders < min(self.minseed, 1):\n if mode != 'RSS':\n log.debug(\"Discarding torrent because it doesn't meet the\"\n \" minimum seeders: {0}. Seeders: {1}\",\n title, seeders)\n continue\n\n size = convert_size(cells[labels.index('Size')].get_text(strip=True)) or -1\n\n pubdate_raw = cells[1].get_text().replace('Last', '1').replace('Yesterday', '24 hours')\n pubdate = self.parse_pubdate(pubdate_raw, human_time=True)\n\n item = {\n 'title': title,\n 'link': download_url,\n 'size': size,\n 'seeders': seeders,\n 'leechers': leechers,\n 'pubdate': pubdate,\n }\n if mode != 'RSS':\n log.debug('Found result: {0} with {1} seeders and {2} leechers',\n title, seeders, leechers)\n\n items.append(item)\n except (AttributeError, TypeError, KeyError, ValueError, IndexError):\n log.error('Failed parsing provider. Traceback: {0!r}',\n traceback.format_exc())\n\n return items\n\n\nprovider = LimeTorrentsProvider()\n", "path": "medusa/providers/torrent/html/limetorrents.py"}]}

| 2,915 | 159 |

| gh_patches_debug_4612 | rasdani/github-patches | git_diff | buildbot__buildbot-1441 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

fix E741 on test_process_build

recent pep8 does not like l (the letter) as a variable because some fonts make it undistinguishable from 1 (the number).

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `master/buildbot/status/web/slaves.py`

Content:

```
1 # This file is part of Buildbot. Buildbot is free software: you can
2 # redistribute it and/or modify it under the terms of the GNU General Public
3 # License as published by the Free Software Foundation, version 2.
4 #
5 # This program is distributed in the hope that it will be useful, but WITHOUT
6 # ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS
7 # FOR A PARTICULAR PURPOSE. See the GNU General Public License for more
8 # details.
9 #
10 # You should have received a copy of the GNU General Public License along with
11 # this program; if not, write to the Free Software Foundation, Inc., 51
12 # Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.
13 #
14 # Copyright Buildbot Team Members
15
16
17 import time
18 import urllib
19
20 from twisted.internet import defer
21 from twisted.web import html
22 from twisted.web.resource import NoResource
23 from twisted.web.util import Redirect
24
25 from buildbot import util
26 from buildbot.status.web.base import ActionResource
27 from buildbot.status.web.base import BuildLineMixin
28 from buildbot.status.web.base import HtmlResource
29 from buildbot.status.web.base import abbreviate_age
30 from buildbot.status.web.base import path_to_authzfail
31 from buildbot.status.web.base import path_to_slave
32
33
34 class ShutdownActionResource(ActionResource):
35
36     def __init__(self, slave):
37         self.slave = slave
38         self.action = "gracefulShutdown"
39
40     @defer.inlineCallbacks
41     def performAction(self, request):
42         res = yield self.getAuthz(request).actionAllowed(self.action,
43                                                          request,
44                                                          self.slave)
45
46         url = None
47         if res:
48             self.slave.setGraceful(True)
49             url = path_to_slave(request, self.slave)
50         else:
51             url = path_to_authzfail(request)
52         defer.returnValue(url)
53
54
55 class PauseActionResource(ActionResource):
56
57     def __init__(self, slave, state):
58         self.slave = slave
59         self.action = "pauseSlave"
60         self.state = state
61
62     @defer.inlineCallbacks
63     def performAction(self, request):
64         res = yield self.getAuthz(request).actionAllowed(self.action,
65                                                          request,
66                                                          self.slave)
67
68         url = None
69         if res:
70             self.slave.setPaused(self.state)
71             url = path_to_slave(request, self.slave)
72         else:
73             url = path_to_authzfail(request)
74         defer.returnValue(url)
75
76 # /buildslaves/$slavename
77
78
79 class OneBuildSlaveResource(HtmlResource, BuildLineMixin):
80     addSlash = False
81
82     def __init__(self, slavename):
83         HtmlResource.__init__(self)
84         self.slavename = slavename
85
86     def getPageTitle(self, req):
87         return "Buildbot: %s" % self.slavename
88
89     def getChild(self, path, req):
90         s = self.getStatus(req)
91         slave = s.getSlave(self.slavename)
92         if path == "shutdown":
93             return ShutdownActionResource(slave)
94         if path == "pause" or path == "unpause":
95             return PauseActionResource(slave, path == "pause")
96         return Redirect(path_to_slave(req, slave))
97
98     def content(self, request, ctx):
99         s = self.getStatus(request)
100         slave = s.getSlave(self.slavename)
101
102         my_builders = []
103         for bname in s.getBuilderNames():
104             b = s.getBuilder(bname)
105             for bs in b.getSlaves():
106                 if bs.getName() == self.slavename:
107                     my_builders.append(b)
108
109         # Current builds
110         current_builds = []
111         for b in my_builders:
112             for cb in b.getCurrentBuilds():
113                 if cb.getSlavename() == self.slavename:
114                     current_builds.append(self.get_line_values(request, cb))
115
116         try:
117             max_builds = int(request.args.get('numbuilds')[0])
118         except ValueError:
119             max_builds = 10
120
121         recent_builds = []
122         n = 0
123         for rb in s.generateFinishedBuilds(builders=[b.getName() for b in my_builders]):
124             if rb.getSlavename() == self.slavename:
125                 n += 1
126                 recent_builds.append(self.get_line_values(request, rb))
127                 if n > max_builds:
128                     break
129
130         # connects over the last hour
131         slave = s.getSlave(self.slavename)
132         connect_count = slave.getConnectCount()
133
134         if slave.isPaused():
135             pause_url = request.childLink("unpause")
136         else:
137             pause_url = request.childLink("pause")
138
139         ctx.update(dict(slave=slave,
140                         slavename=self.slavename,
141                         current=current_builds,
142                         recent=recent_builds,
143                         shutdown_url=request.childLink("shutdown"),
144                         pause_url=pause_url,
145                         authz=self.getAuthz(request),
146                         this_url="../../../" + path_to_slave(request, slave),
147                         access_uri=slave.getAccessURI(),
148                         admin=slave.getAdmin() or u'',
149                         host=slave.getHost() or u'',
150                         info=slave.getInfoAsDict(),
151                         slave_version=slave.getVersion(),
152                         show_builder_column=True,
153                         connect_count=connect_count))
154         template = request.site.buildbot_service.templates.get_template("buildslave.html")
155         data = template.render(**ctx)
156         return data
157
158 # /buildslaves
159
160
161 class BuildSlavesResource(HtmlResource):
162     pageTitle = "BuildSlaves"
163     addSlash = True
164
165     def content(self, request, ctx):
166         s = self.getStatus(request)
167
168         # ?no_builders=1 disables build column
169         show_builder_column = not (request.args.get('no_builders', '0')[0]) == '1'
170         ctx['show_builder_column'] = show_builder_column
171
172         used_by_builder = {}
173         for bname in s.getBuilderNames():
174             b = s.getBuilder(bname)
175             for bs in b.getSlaves():
176                 slavename = bs.getName()
177                 if slavename not in used_by_builder:
178                     used_by_builder[slavename] = []
179                 used_by_builder[slavename].append(bname)
180
181         slaves = ctx['slaves'] = []
182         for name in util.naturalSort(s.getSlaveNames()):
183             info = {}
184             slaves.append(info)
185             slave = s.getSlave(name)
186             slave_status = s.botmaster.slaves[name].slave_status
187             info['running_builds'] = len(slave_status.getRunningBuilds())
188             info['link'] = request.childLink(urllib.quote(name, ''))
189             info['name'] = name
190
191             if show_builder_column:
192                 info['builders'] = []
193                 for b in used_by_builder.get(name, []):
194                     info['builders'].append(dict(link=request.childLink("../builders/%s" % b), name=b))
195
196             info['version'] = slave.getVersion()
197             info['connected'] = slave.isConnected()
198             info['connectCount'] = slave.getConnectCount()
199             info['paused'] = slave.isPaused()
200
201             info['admin'] = slave.getAdmin() or u''
202             last = slave.lastMessageReceived()
203             if last:
204                 info['last_heard_from_age'] = abbreviate_age(time.time() - last)
205                 info['last_heard_from_time'] = time.strftime("%Y-%b-%d %H:%M:%S",
206                                                              time.localtime(last))
207
208         template = request.site.buildbot_service.templates.get_template("buildslaves.html")
209         data = template.render(**ctx)
210         return data
211
212     def getChild(self, path, req):
213         try:
214             self.getStatus(req).getSlave(path)
215             return OneBuildSlaveResource(path)
216         except KeyError:
217             return NoResource("No such slave '%s'" % html.escape(path))
218
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/master/buildbot/status/web/slaves.py b/master/buildbot/status/web/slaves.py
--- a/master/buildbot/status/web/slaves.py
+++ b/master/buildbot/status/web/slaves.py
@@ -114,8 +114,8 @@
                     current_builds.append(self.get_line_values(request, cb))
 
         try:
-            max_builds = int(request.args.get('numbuilds')[0])
-        except ValueError:
+            max_builds = int(request.args.get('numbuilds', ['10'])[0])
+        except (TypeError, ValueError):
             max_builds = 10
 
         recent_builds = []

|

{"golden_diff": "diff --git a/master/buildbot/status/web/slaves.py b/master/buildbot/status/web/slaves.py\n--- a/master/buildbot/status/web/slaves.py\n+++ b/master/buildbot/status/web/slaves.py\n@@ -114,8 +114,8 @@\n current_builds.append(self.get_line_values(request, cb))\n \n try:\n- max_builds = int(request.args.get('numbuilds')[0])\n- except ValueError:\n+ max_builds = int(request.args.get('numbuilds', ['10'])[0])\n+ except (TypeError, ValueError):\n max_builds = 10\n \n recent_builds = []\n", "issue": "fix E741 on test_process_build\nrecent pep8 does not like l (the letter) as a variable because some fonts make it undistinguishable from 1 (the number).\r\n\n", "before_files": [{"content": "# This file is part of Buildbot. Buildbot is free software: you can\n# redistribute it and/or modify it under the terms of the GNU General Public\n# License as published by the Free Software Foundation, version 2.\n#\n# This program is distributed in the hope that it will be useful, but WITHOUT\n# ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS\n# FOR A PARTICULAR PURPOSE. 
See the GNU General Public License for more\n# details.\n#\n# You should have received a copy of the GNU General Public License along with\n# this program; if not, write to the Free Software Foundation, Inc., 51\n# Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.\n#\n# Copyright Buildbot Team Members\n\n\nimport time\nimport urllib\n\nfrom twisted.internet import defer\nfrom twisted.web import html\nfrom twisted.web.resource import NoResource\nfrom twisted.web.util import Redirect\n\nfrom buildbot import util\nfrom buildbot.status.web.base import ActionResource\nfrom buildbot.status.web.base import BuildLineMixin\nfrom buildbot.status.web.base import HtmlResource\nfrom buildbot.status.web.base import abbreviate_age\nfrom buildbot.status.web.base import path_to_authzfail\nfrom buildbot.status.web.base import path_to_slave\n\n\nclass ShutdownActionResource(ActionResource):\n\n def __init__(self, slave):\n self.slave = slave\n self.action = \"gracefulShutdown\"\n\n @defer.inlineCallbacks\n def performAction(self, request):\n res = yield self.getAuthz(request).actionAllowed(self.action,\n request,\n self.slave)\n\n url = None\n if res:\n self.slave.setGraceful(True)\n url = path_to_slave(request, self.slave)\n else:\n url = path_to_authzfail(request)\n defer.returnValue(url)\n\n\nclass PauseActionResource(ActionResource):\n\n def __init__(self, slave, state):\n self.slave = slave\n self.action = \"pauseSlave\"\n self.state = state\n\n @defer.inlineCallbacks\n def performAction(self, request):\n res = yield self.getAuthz(request).actionAllowed(self.action,\n request,\n self.slave)\n\n url = None\n if res:\n self.slave.setPaused(self.state)\n url = path_to_slave(request, self.slave)\n else:\n url = path_to_authzfail(request)\n defer.returnValue(url)\n\n# /buildslaves/$slavename\n\n\nclass OneBuildSlaveResource(HtmlResource, BuildLineMixin):\n addSlash = False\n\n def __init__(self, slavename):\n HtmlResource.__init__(self)\n self.slavename = slavename\n\n def 
getPageTitle(self, req):\n return \"Buildbot: %s\" % self.slavename\n\n def getChild(self, path, req):\n s = self.getStatus(req)\n slave = s.getSlave(self.slavename)\n if path == \"shutdown\":\n return ShutdownActionResource(slave)\n if path == \"pause\" or path == \"unpause\":\n return PauseActionResource(slave, path == \"pause\")\n return Redirect(path_to_slave(req, slave))\n\n def content(self, request, ctx):\n s = self.getStatus(request)\n slave = s.getSlave(self.slavename)\n\n my_builders = []\n for bname in s.getBuilderNames():\n b = s.getBuilder(bname)\n for bs in b.getSlaves():\n if bs.getName() == self.slavename:\n my_builders.append(b)\n\n # Current builds\n current_builds = []\n for b in my_builders:\n for cb in b.getCurrentBuilds():\n if cb.getSlavename() == self.slavename:\n current_builds.append(self.get_line_values(request, cb))\n\n try:\n max_builds = int(request.args.get('numbuilds')[0])\n except ValueError:\n max_builds = 10\n\n recent_builds = []\n n = 0\n for rb in s.generateFinishedBuilds(builders=[b.getName() for b in my_builders]):\n if rb.getSlavename() == self.slavename:\n n += 1\n recent_builds.append(self.get_line_values(request, rb))\n if n > max_builds:\n break\n\n # connects over the last hour\n slave = s.getSlave(self.slavename)\n connect_count = slave.getConnectCount()\n\n if slave.isPaused():\n pause_url = request.childLink(\"unpause\")\n else:\n pause_url = request.childLink(\"pause\")\n\n ctx.update(dict(slave=slave,\n slavename=self.slavename,\n current=current_builds,\n recent=recent_builds,\n shutdown_url=request.childLink(\"shutdown\"),\n pause_url=pause_url,\n authz=self.getAuthz(request),\n this_url=\"../../../\" + path_to_slave(request, slave),\n access_uri=slave.getAccessURI(),\n admin=slave.getAdmin() or u'',\n host=slave.getHost() or u'',\n info=slave.getInfoAsDict(),\n slave_version=slave.getVersion(),\n show_builder_column=True,\n connect_count=connect_count))\n template = 
request.site.buildbot_service.templates.get_template(\"buildslave.html\")\n data = template.render(**ctx)\n return data\n\n# /buildslaves\n\n\nclass BuildSlavesResource(HtmlResource):\n pageTitle = \"BuildSlaves\"\n addSlash = True\n\n def content(self, request, ctx):\n s = self.getStatus(request)\n\n # ?no_builders=1 disables build column\n show_builder_column = not (request.args.get('no_builders', '0')[0]) == '1'\n ctx['show_builder_column'] = show_builder_column\n\n used_by_builder = {}\n for bname in s.getBuilderNames():\n b = s.getBuilder(bname)\n for bs in b.getSlaves():\n slavename = bs.getName()\n if slavename not in used_by_builder:\n used_by_builder[slavename] = []\n used_by_builder[slavename].append(bname)\n\n slaves = ctx['slaves'] = []\n for name in util.naturalSort(s.getSlaveNames()):\n info = {}\n slaves.append(info)\n slave = s.getSlave(name)\n slave_status = s.botmaster.slaves[name].slave_status\n info['running_builds'] = len(slave_status.getRunningBuilds())\n info['link'] = request.childLink(urllib.quote(name, ''))\n info['name'] = name\n\n if show_builder_column:\n info['builders'] = []\n for b in used_by_builder.get(name, []):\n info['builders'].append(dict(link=request.childLink(\"../builders/%s\" % b), name=b))\n\n info['version'] = slave.getVersion()\n info['connected'] = slave.isConnected()\n info['connectCount'] = slave.getConnectCount()\n info['paused'] = slave.isPaused()\n\n info['admin'] = slave.getAdmin() or u''\n last = slave.lastMessageReceived()\n if last:\n info['last_heard_from_age'] = abbreviate_age(time.time() - last)\n info['last_heard_from_time'] = time.strftime(\"%Y-%b-%d %H:%M:%S\",\n time.localtime(last))\n\n template = request.site.buildbot_service.templates.get_template(\"buildslaves.html\")\n data = template.render(**ctx)\n return data\n\n def getChild(self, path, req):\n try:\n self.getStatus(req).getSlave(path)\n return OneBuildSlaveResource(path)\n except KeyError:\n return NoResource(\"No such slave '%s'\" % 
html.escape(path))\n", "path": "master/buildbot/status/web/slaves.py"}], "after_files": [{"content": "# This file is part of Buildbot. Buildbot is free software: you can\n# redistribute it and/or modify it under the terms of the GNU General Public\n# License as published by the Free Software Foundation, version 2.\n#\n# This program is distributed in the hope that it will be useful, but WITHOUT\n# ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS\n# FOR A PARTICULAR PURPOSE. See the GNU General Public License for more\n# details.\n#\n# You should have received a copy of the GNU General Public License along with\n# this program; if not, write to the Free Software Foundation, Inc., 51\n# Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.\n#\n# Copyright Buildbot Team Members\n\n\nimport time\nimport urllib\n\nfrom twisted.internet import defer\nfrom twisted.web import html\nfrom twisted.web.resource import NoResource\nfrom twisted.web.util import Redirect\n\nfrom buildbot import util\nfrom buildbot.status.web.base import ActionResource\nfrom buildbot.status.web.base import BuildLineMixin\nfrom buildbot.status.web.base import HtmlResource\nfrom buildbot.status.web.base import abbreviate_age\nfrom buildbot.status.web.base import path_to_authzfail\nfrom buildbot.status.web.base import path_to_slave\n\n\nclass ShutdownActionResource(ActionResource):\n\n def __init__(self, slave):\n self.slave = slave\n self.action = \"gracefulShutdown\"\n\n @defer.inlineCallbacks\n def performAction(self, request):\n res = yield self.getAuthz(request).actionAllowed(self.action,\n request,\n self.slave)\n\n url = None\n if res:\n self.slave.setGraceful(True)\n url = path_to_slave(request, self.slave)\n else:\n url = path_to_authzfail(request)\n defer.returnValue(url)\n\n\nclass PauseActionResource(ActionResource):\n\n def __init__(self, slave, state):\n self.slave = slave\n self.action = \"pauseSlave\"\n self.state = state\n\n @defer.inlineCallbacks\n def 
performAction(self, request):\n res = yield self.getAuthz(request).actionAllowed(self.action,\n request,\n self.slave)\n\n url = None\n if res:\n self.slave.setPaused(self.state)\n url = path_to_slave(request, self.slave)\n else:\n url = path_to_authzfail(request)\n defer.returnValue(url)\n\n# /buildslaves/$slavename\n\n\nclass OneBuildSlaveResource(HtmlResource, BuildLineMixin):\n addSlash = False\n\n def __init__(self, slavename):\n HtmlResource.__init__(self)\n self.slavename = slavename\n\n def getPageTitle(self, req):\n return \"Buildbot: %s\" % self.slavename\n\n def getChild(self, path, req):\n s = self.getStatus(req)\n slave = s.getSlave(self.slavename)\n if path == \"shutdown\":\n return ShutdownActionResource(slave)\n if path == \"pause\" or path == \"unpause\":\n return PauseActionResource(slave, path == \"pause\")\n return Redirect(path_to_slave(req, slave))\n\n def content(self, request, ctx):\n s = self.getStatus(request)\n slave = s.getSlave(self.slavename)\n\n my_builders = []\n for bname in s.getBuilderNames():\n b = s.getBuilder(bname)\n for bs in b.getSlaves():\n if bs.getName() == self.slavename:\n my_builders.append(b)\n\n # Current builds\n current_builds = []\n for b in my_builders:\n for cb in b.getCurrentBuilds():\n if cb.getSlavename() == self.slavename:\n current_builds.append(self.get_line_values(request, cb))\n\n try:\n max_builds = int(request.args.get('numbuilds', ['10'])[0])\n except (TypeError, ValueError):\n max_builds = 10\n\n recent_builds = []\n n = 0\n for rb in s.generateFinishedBuilds(builders=[b.getName() for b in my_builders]):\n if rb.getSlavename() == self.slavename:\n n += 1\n recent_builds.append(self.get_line_values(request, rb))\n if n > max_builds:\n break\n\n # connects over the last hour\n slave = s.getSlave(self.slavename)\n connect_count = slave.getConnectCount()\n\n if slave.isPaused():\n pause_url = request.childLink(\"unpause\")\n else:\n pause_url = request.childLink(\"pause\")\n\n 
ctx.update(dict(slave=slave,\n slavename=self.slavename,\n current=current_builds,\n recent=recent_builds,\n shutdown_url=request.childLink(\"shutdown\"),\n pause_url=pause_url,\n authz=self.getAuthz(request),\n this_url=\"../../../\" + path_to_slave(request, slave),\n access_uri=slave.getAccessURI(),\n admin=slave.getAdmin() or u'',\n host=slave.getHost() or u'',\n info=slave.getInfoAsDict(),\n slave_version=slave.getVersion(),\n show_builder_column=True,\n connect_count=connect_count))\n template = request.site.buildbot_service.templates.get_template(\"buildslave.html\")\n data = template.render(**ctx)\n return data\n\n# /buildslaves\n\n\nclass BuildSlavesResource(HtmlResource):\n pageTitle = \"BuildSlaves\"\n addSlash = True\n\n def content(self, request, ctx):\n s = self.getStatus(request)\n\n # ?no_builders=1 disables build column\n show_builder_column = not (request.args.get('no_builders', '0')[0]) == '1'\n ctx['show_builder_column'] = show_builder_column\n\n used_by_builder = {}\n for bname in s.getBuilderNames():\n b = s.getBuilder(bname)\n for bs in b.getSlaves():\n slavename = bs.getName()\n if slavename not in used_by_builder:\n used_by_builder[slavename] = []\n used_by_builder[slavename].append(bname)\n\n slaves = ctx['slaves'] = []\n for name in util.naturalSort(s.getSlaveNames()):\n info = {}\n slaves.append(info)\n slave = s.getSlave(name)\n slave_status = s.botmaster.slaves[name].slave_status\n info['running_builds'] = len(slave_status.getRunningBuilds())\n info['link'] = request.childLink(urllib.quote(name, ''))\n info['name'] = name\n\n if show_builder_column:\n info['builders'] = []\n for b in used_by_builder.get(name, []):\n info['builders'].append(dict(link=request.childLink(\"../builders/%s\" % b), name=b))\n\n info['version'] = slave.getVersion()\n info['connected'] = slave.isConnected()\n info['connectCount'] = slave.getConnectCount()\n info['paused'] = slave.isPaused()\n\n info['admin'] = slave.getAdmin() or u''\n last = 
slave.lastMessageReceived()\n if last:\n info['last_heard_from_age'] = abbreviate_age(time.time() - last)\n info['last_heard_from_time'] = time.strftime(\"%Y-%b-%d %H:%M:%S\",\n time.localtime(last))\n\n template = request.site.buildbot_service.templates.get_template(\"buildslaves.html\")\n data = template.render(**ctx)\n return data\n\n def getChild(self, path, req):\n try:\n self.getStatus(req).getSlave(path)\n return OneBuildSlaveResource(path)\n except KeyError:\n return NoResource(\"No such slave '%s'\" % html.escape(path))\n", "path": "master/buildbot/status/web/slaves.py"}]}

| 2,523 | 141 |
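The buildbot patch above guards against a missing `numbuilds` query argument: `request.args.get('numbuilds')` returns `None` when the key is absent, and `None[0]` raises `TypeError`, which the original `except ValueError` did not catch. A minimal standalone sketch of the patched parsing logic follows. `parse_numbuilds` is a hypothetical helper (not part of buildbot), and a plain dict of lists stands in for Twisted's `request.args`, assuming `str` keys as in this Python 2 code.

```python
DEFAULT_MAX_BUILDS = 10


def parse_numbuilds(args, default=DEFAULT_MAX_BUILDS):
    """Parse a ?numbuilds= value the way the patched code does (sketch).

    `args` mimics Twisted's request.args: a dict mapping each query key
    to a list of string values.
    """
    try:
        # A list default means a missing key yields ['10'] instead of None,
        # so indexing with [0] no longer raises TypeError.
        return int(args.get('numbuilds', [str(default)])[0])
    except (TypeError, ValueError):
        # ValueError: non-numeric input such as ?numbuilds=abc.
        # TypeError: an unexpected None value slipping through.
        return default


if __name__ == "__main__":
    try:
        int({}.get('numbuilds')[0])  # the pre-patch expression
    except TypeError as exc:
        print("pre-patch behaviour:", exc)
    print(parse_numbuilds({}))  # 10
```

Catching both exception types keeps the page rendering with the default instead of failing on a malformed or absent query string.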

gh_patches_debug_8268

|

rasdani/github-patches

|

git_diff

|

googleapis__python-bigquery-87

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Packaging issue with GRPC and google-cloud-bigquery + optional packages?

We're apparently losing some needed dependencies when using the optional installs for google-cloud-bigquery

```
$ ~/.pyenv/versions/3.8.2/bin/virtualenv repro
$ source repro/bin/activate
$ pip freeze
```
(no results)
```
$ pip install --upgrade google-cloud-bigquery[bqstorage,pandas]
$ pip freeze > before.txt
$ cat before.txt
```
cachetools==4.1.0
certifi==2020.4.5.1
chardet==3.0.4
google-api-core==1.17.0
google-auth==1.14.1
google-cloud-bigquery==1.24.0
google-cloud-bigquery-storage==0.8.0
google-cloud-core==1.3.0
google-resumable-media==0.5.0
googleapis-common-protos==1.51.0
idna==2.9
numpy==1.18.3
pandas==1.0.3
protobuf==3.11.3
pyarrow==0.17.0
pyasn1==0.4.8
pyasn1-modules==0.2.8
python-dateutil==2.8.1
pytz==2019.3
requests==2.23.0
rsa==4.0
six==1.14.0
urllib3==1.25.9

```
$ python
>>> from google.cloud import bigquery_storage_v1beta1
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/cloud/bigquery_storage_v1beta1/__init__.py", line 26, in <module>
    from google.cloud.bigquery_storage_v1beta1 import client
  File "/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/cloud/bigquery_storage_v1beta1/client.py", line 24, in <module>
    import google.api_core.gapic_v1.method
  File "/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/api_core/gapic_v1/__init__.py", line 16, in <module>
    from google.api_core.gapic_v1 import config
  File "/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/api_core/gapic_v1/config.py", line 23, in <module>
    import grpc
ModuleNotFoundError: No module named 'grpc'
```

```
$ pip install google-cloud-bigquery-storage
$ pip freeze > after.txt
$ diff before.txt after.txt
```

> grpcio==1.28.1

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 # Copyright 2018 Google LLC
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 import io
16 import os
17
18 import setuptools
19
20
21 # Package metadata.
22
23 name = "google-cloud-bigquery"
24 description = "Google BigQuery API client library"
25 version = "1.24.0"
26 # Should be one of:
27 # 'Development Status :: 3 - Alpha'
28 # 'Development Status :: 4 - Beta'
29 # 'Development Status :: 5 - Production/Stable'
30 release_status = "Development Status :: 5 - Production/Stable"
31 dependencies = [
32     'enum34; python_version < "3.4"',
33     "google-auth >= 1.9.0, < 2.0dev",
34     "google-api-core >= 1.15.0, < 2.0dev",
35     "google-cloud-core >= 1.1.0, < 2.0dev",
36     "google-resumable-media >= 0.5.0, < 0.6dev",
37     "protobuf >= 3.6.0",
38     "six >=1.13.0,< 2.0.0dev",
39 ]
40 extras = {
41     "bqstorage": [
42         "google-cloud-bigquery-storage >= 0.6.0, <2.0.0dev",
43         "pyarrow>=0.16.0, < 2.0dev",
44     ],
45     "pandas": ["pandas>=0.17.1"],
46     # Exclude PyArrow dependency from Windows Python 2.7.
47     'pyarrow: platform_system != "Windows" or python_version >= "3.4"': [
48         # Bad Linux release for 0.14.0.
49         # https://issues.apache.org/jira/browse/ARROW-5868
50         "pyarrow>=0.4.1, != 0.14.0"
51     ],
52     "tqdm": ["tqdm >= 4.0.0, <5.0.0dev"],
53     "fastparquet": [
54         "fastparquet",
55         "python-snappy",
56         # llvmlite >= 0.32.0 cannot be installed on Python 3.5 and below
57         # (building the wheel fails), thus needs to be restricted.
58         # See: https://github.com/googleapis/python-bigquery/issues/78
59         "llvmlite <= 0.31.0",
60     ],
61 }
62
63 all_extras = []
64
65 for extra in extras:
66     if extra == "fastparquet":
67         # Skip fastparquet from "all" because it is redundant with pyarrow and
68         # creates a dependency on pre-release versions of numpy. See:
69         # https://github.com/googleapis/google-cloud-python/issues/8549
70         continue
71     all_extras.extend(extras[extra])
72
73 extras["all"] = all_extras
74
75 # Setup boilerplate below this line.
76
77 package_root = os.path.abspath(os.path.dirname(__file__))
78
79 readme_filename = os.path.join(package_root, "README.rst")
80 with io.open(readme_filename, encoding="utf-8") as readme_file:
81     readme = readme_file.read()
82
83 # Only include packages under the 'google' namespace. Do not include tests,
84 # benchmarks, etc.
85 packages = [
86     package for package in setuptools.find_packages() if package.startswith("google")
87 ]
88
89 # Determine which namespaces are needed.
90 namespaces = ["google"]
91 if "google.cloud" in packages:
92     namespaces.append("google.cloud")
93
94
95 setuptools.setup(
96     name=name,
97     version=version,
98     description=description,
99     long_description=readme,
100     author="Google LLC",
101     author_email="[email protected]",
102     license="Apache 2.0",
103     url="https://github.com/googleapis/python-bigquery",
104     classifiers=[
105         release_status,
106         "Intended Audience :: Developers",
107         "License :: OSI Approved :: Apache Software License",
108         "Programming Language :: Python",
109         "Programming Language :: Python :: 2",
110         "Programming Language :: Python :: 2.7",
111         "Programming Language :: Python :: 3",
112         "Programming Language :: Python :: 3.5",
113         "Programming Language :: Python :: 3.6",
114         "Programming Language :: Python :: 3.7",
115         "Programming Language :: Python :: 3.8",
116         "Operating System :: OS Independent",
117         "Topic :: Internet",
118     ],
119     platforms="Posix; MacOS X; Windows",
120     packages=packages,
121     namespace_packages=namespaces,
122     install_requires=dependencies,
123     extras_require=extras,
124     python_requires=">=2.7,!=3.0.*,!=3.1.*,!=3.2.*,!=3.3.*",
125     include_package_data=True,
126     zip_safe=False,
127 )
128
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -40,6 +40,11 @@
 extras = {
     "bqstorage": [
         "google-cloud-bigquery-storage >= 0.6.0, <2.0.0dev",
+        # Due to an issue in pip's dependency resolver, the `grpc` extra is not
+        # installed, even though `google-cloud-bigquery-storage` specifies it
+        # as `google-api-core[grpc]`. We thus need to explicitly specify it here.
+        # See: https://github.com/googleapis/python-bigquery/issues/83
+        "grpcio >= 1.8.2, < 2.0dev",
         "pyarrow>=0.16.0, < 2.0dev",
     ],
     "pandas": ["pandas>=0.17.1"],

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -40,6 +40,11 @@\n extras = {\n \"bqstorage\": [\n \"google-cloud-bigquery-storage >= 0.6.0, <2.0.0dev\",\n+ # Due to an issue in pip's dependency resolver, the `grpc` extra is not\n+ # installed, even though `google-cloud-bigquery-storage` specifies it\n+ # as `google-api-core[grpc]`. We thus need to explicitly specify it here.\n+ # See: https://github.com/googleapis/python-bigquery/issues/83\n+ \"grpcio >= 1.8.2, < 2.0dev\",\n \"pyarrow>=0.16.0, < 2.0dev\",\n ],\n \"pandas\": [\"pandas>=0.17.1\"],\n", "issue": "Packaging issue with GRPC and google-cloud-bigquery + optional packages?\nWe're apparently losing some needed dependencies when using the optional installs for google-cloud-bigquery\r\n\r\n```\r\n$ ~/.pyenv/versions/3.8.2/bin/virtualenv repro\r\n$ source repro/bin/activate\r\n$ pip freeze \r\n```\r\n(no results)\r\n```\r\n$ pip install --upgrade google-cloud-bigquery[bqstorage,pandas]\r\n$ pip freeze > before.txt\r\n$ cat before.txt\r\n```\r\ncachetools==4.1.0\r\ncertifi==2020.4.5.1\r\nchardet==3.0.4\r\ngoogle-api-core==1.17.0\r\ngoogle-auth==1.14.1\r\ngoogle-cloud-bigquery==1.24.0\r\ngoogle-cloud-bigquery-storage==0.8.0\r\ngoogle-cloud-core==1.3.0\r\ngoogle-resumable-media==0.5.0\r\ngoogleapis-common-protos==1.51.0\r\nidna==2.9\r\nnumpy==1.18.3\r\npandas==1.0.3\r\nprotobuf==3.11.3\r\npyarrow==0.17.0\r\npyasn1==0.4.8\r\npyasn1-modules==0.2.8\r\npython-dateutil==2.8.1\r\npytz==2019.3\r\nrequests==2.23.0\r\nrsa==4.0\r\nsix==1.14.0\r\nurllib3==1.25.9\r\n\r\n\r\n```\r\n$ python\r\n>>> from google.cloud import bigquery_storage_v1beta1\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\n File \"/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/cloud/bigquery_storage_v1beta1/__init__.py\", line 26, in <module>\r\n from google.cloud.bigquery_storage_v1beta1 import client\r\n File 
\"/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/cloud/bigquery_storage_v1beta1/client.py\", line 24, in <module>\r\n import google.api_core.gapic_v1.method\r\n File \"/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/api_core/gapic_v1/__init__.py\", line 16, in <module>\r\n from google.api_core.gapic_v1 import config\r\n File \"/usr/local/google/home/shollyman/python_envs/repro/lib/python3.8/site-packages/google/api_core/gapic_v1/config.py\", line 23, in <module>\r\n import grpc\r\nModuleNotFoundError: No module named 'grpc'\r\n```\r\n\r\n```\r\n$ pip install google-cloud-bigquery-storage\r\n$ pip freeze > after.txt\r\n$ diff before.txt after.txt\r\n```\r\n\r\n> grpcio==1.28.1\r\n\n", "before_files": [{"content": "# Copyright 2018 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport io\nimport os\n\nimport setuptools\n\n\n# Package metadata.\n\nname = \"google-cloud-bigquery\"\ndescription = \"Google BigQuery API client library\"\nversion = \"1.24.0\"\n# Should be one of:\n# 'Development Status :: 3 - Alpha'\n# 'Development Status :: 4 - Beta'\n# 'Development Status :: 5 - Production/Stable'\nrelease_status = \"Development Status :: 5 - Production/Stable\"\ndependencies = [\n 'enum34; python_version < \"3.4\"',\n \"google-auth >= 1.9.0, < 2.0dev\",\n \"google-api-core >= 1.15.0, < 2.0dev\",\n \"google-cloud-core >= 1.1.0, < 2.0dev\",\n \"google-resumable-media >= 0.5.0, < 
0.6dev\",\n \"protobuf >= 3.6.0\",\n \"six >=1.13.0,< 2.0.0dev\",\n]\nextras = {\n \"bqstorage\": [\n \"google-cloud-bigquery-storage >= 0.6.0, <2.0.0dev\",\n \"pyarrow>=0.16.0, < 2.0dev\",\n ],\n \"pandas\": [\"pandas>=0.17.1\"],\n # Exclude PyArrow dependency from Windows Python 2.7.\n 'pyarrow: platform_system != \"Windows\" or python_version >= \"3.4\"': [\n # Bad Linux release for 0.14.0.\n # https://issues.apache.org/jira/browse/ARROW-5868\n \"pyarrow>=0.4.1, != 0.14.0\"\n ],\n \"tqdm\": [\"tqdm >= 4.0.0, <5.0.0dev\"],\n \"fastparquet\": [\n \"fastparquet\",\n \"python-snappy\",\n # llvmlite >= 0.32.0 cannot be installed on Python 3.5 and below\n # (building the wheel fails), thus needs to be restricted.\n # See: https://github.com/googleapis/python-bigquery/issues/78\n \"llvmlite <= 0.31.0\",\n ],\n}\n\nall_extras = []\n\nfor extra in extras:\n if extra == \"fastparquet\":\n # Skip fastparquet from \"all\" because it is redundant with pyarrow and\n # creates a dependency on pre-release versions of numpy. See:\n # https://github.com/googleapis/google-cloud-python/issues/8549\n continue\n all_extras.extend(extras[extra])\n\nextras[\"all\"] = all_extras\n\n# Setup boilerplate below this line.\n\npackage_root = os.path.abspath(os.path.dirname(__file__))\n\nreadme_filename = os.path.join(package_root, \"README.rst\")\nwith io.open(readme_filename, encoding=\"utf-8\") as readme_file:\n readme = readme_file.read()\n\n# Only include packages under the 'google' namespace. 
Do not include tests,\n# benchmarks, etc.\npackages = [\n package for package in setuptools.find_packages() if package.startswith(\"google\")\n]\n\n# Determine which namespaces are needed.\nnamespaces = [\"google\"]\nif \"google.cloud\" in packages:\n namespaces.append(\"google.cloud\")\n\n\nsetuptools.setup(\n name=name,\n version=version,\n description=description,\n long_description=readme,\n author=\"Google LLC\",\n author_email=\"[email protected]\",\n license=\"Apache 2.0\",\n url=\"https://github.com/googleapis/python-bigquery\",\n classifiers=[\n release_status,\n \"Intended Audience :: Developers\",\n \"License :: OSI Approved :: Apache Software License\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n \"Operating System :: OS Independent\",\n \"Topic :: Internet\",\n ],\n platforms=\"Posix; MacOS X; Windows\",\n packages=packages,\n namespace_packages=namespaces,\n install_requires=dependencies,\n extras_require=extras,\n python_requires=\">=2.7,!=3.0.*,!=3.1.*,!=3.2.*,!=3.3.*\",\n include_package_data=True,\n zip_safe=False,\n)\n", "path": "setup.py"}], "after_files": [{"content": "# Copyright 2018 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the 
License.\n\nimport io\nimport os\n\nimport setuptools\n\n\n# Package metadata.\n\nname = \"google-cloud-bigquery\"\ndescription = \"Google BigQuery API client library\"\nversion = \"1.24.0\"\n# Should be one of:\n# 'Development Status :: 3 - Alpha'\n# 'Development Status :: 4 - Beta'\n# 'Development Status :: 5 - Production/Stable'\nrelease_status = \"Development Status :: 5 - Production/Stable\"\ndependencies = [\n 'enum34; python_version < \"3.4\"',\n \"google-auth >= 1.9.0, < 2.0dev\",\n \"google-api-core >= 1.15.0, < 2.0dev\",\n \"google-cloud-core >= 1.1.0, < 2.0dev\",\n \"google-resumable-media >= 0.5.0, < 0.6dev\",\n \"protobuf >= 3.6.0\",\n \"six >=1.13.0,< 2.0.0dev\",\n]\nextras = {\n \"bqstorage\": [\n \"google-cloud-bigquery-storage >= 0.6.0, <2.0.0dev\",\n # Due to an issue in pip's dependency resolver, the `grpc` extra is not\n # installed, even though `google-cloud-bigquery-storage` specifies it\n # as `google-api-core[grpc]`. We thus need to explicitly specify it here.\n # See: https://github.com/googleapis/python-bigquery/issues/83\n \"grpcio >= 1.8.2, < 2.0dev\",\n \"pyarrow>=0.16.0, < 2.0dev\",\n ],\n \"pandas\": [\"pandas>=0.17.1\"],\n # Exclude PyArrow dependency from Windows Python 2.7.\n 'pyarrow: platform_system != \"Windows\" or python_version >= \"3.4\"': [\n # Bad Linux release for 0.14.0.\n # https://issues.apache.org/jira/browse/ARROW-5868\n \"pyarrow>=0.4.1, != 0.14.0\"\n ],\n \"tqdm\": [\"tqdm >= 4.0.0, <5.0.0dev\"],\n \"fastparquet\": [\n \"fastparquet\",\n \"python-snappy\",\n # llvmlite >= 0.32.0 cannot be installed on Python 3.5 and below\n # (building the wheel fails), thus needs to be restricted.\n # See: https://github.com/googleapis/python-bigquery/issues/78\n \"llvmlite <= 0.31.0\",\n ],\n}\n\nall_extras = []\n\nfor extra in extras:\n if extra == \"fastparquet\":\n # Skip fastparquet from \"all\" because it is redundant with pyarrow and\n # creates a dependency on pre-release versions of numpy. 
See:\n # https://github.com/googleapis/google-cloud-python/issues/8549\n continue\n all_extras.extend(extras[extra])\n\nextras[\"all\"] = all_extras\n\n# Setup boilerplate below this line.\n\npackage_root = os.path.abspath(os.path.dirname(__file__))\n\nreadme_filename = os.path.join(package_root, \"README.rst\")\nwith io.open(readme_filename, encoding=\"utf-8\") as readme_file:\n readme = readme_file.read()\n\n# Only include packages under the 'google' namespace. Do not include tests,\n# benchmarks, etc.\npackages = [\n package for package in setuptools.find_packages() if package.startswith(\"google\")\n]\n\n# Determine which namespaces are needed.\nnamespaces = [\"google\"]\nif \"google.cloud\" in packages:\n namespaces.append(\"google.cloud\")\n\n\nsetuptools.setup(\n name=name,\n version=version,\n description=description,\n long_description=readme,\n author=\"Google LLC\",\n author_email=\"[email protected]\",\n license=\"Apache 2.0\",\n url=\"https://github.com/googleapis/python-bigquery\",\n classifiers=[\n release_status,\n \"Intended Audience :: Developers\",\n \"License :: OSI Approved :: Apache Software License\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n \"Operating System :: OS Independent\",\n \"Topic :: Internet\",\n ],\n platforms=\"Posix; MacOS X; Windows\",\n packages=packages,\n namespace_packages=namespaces,\n install_requires=dependencies,\n extras_require=extras,\n python_requires=\">=2.7,!=3.0.*,!=3.1.*,!=3.2.*,!=3.3.*\",\n include_package_data=True,\n zip_safe=False,\n)\n", "path": "setup.py"}]}

| 2,309 | 201 |

gh_patches_debug_8666

|

rasdani/github-patches

|

git_diff

|

open-telemetry__opentelemetry-python-570

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Remove unnecessary Flask app

The current auto instrumentation example includes an unnecessary Flask app in its client, remove that.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `docs/examples/auto-instrumentation/client.py`

Content:

```

1 # Copyright The OpenTelemetry Authors
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 from sys import argv
16
17 from flask import Flask
18 from requests import get
19
20 from opentelemetry import propagators, trace
21 from opentelemetry.sdk.trace import TracerProvider
22 from opentelemetry.sdk.trace.export import (
23     ConsoleSpanExporter,
24     SimpleExportSpanProcessor,
25 )
26
27 app = Flask(__name__)
28
29 trace.set_tracer_provider(TracerProvider())
30 tracer = trace.get_tracer_provider().get_tracer(__name__)
31
32 trace.get_tracer_provider().add_span_processor(
33     SimpleExportSpanProcessor(ConsoleSpanExporter())
34 )
35
36
37 assert len(argv) == 2
38
39 with tracer.start_as_current_span("client"):
40
41     with tracer.start_as_current_span("client-server"):
42         headers = {}
43         propagators.inject(dict.__setitem__, headers)
44         requested = get(
45             "http://localhost:8082/server_request",
46             params={"param": argv[1]},
47             headers=headers,
48         )
49
50         assert requested.status_code == 200
51

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/docs/examples/auto-instrumentation/client.py b/docs/examples/auto-instrumentation/client.py
--- a/docs/examples/auto-instrumentation/client.py
+++ b/docs/examples/auto-instrumentation/client.py
@@ -14,7 +14,6 @@
 
 from sys import argv
 
-from flask import Flask
 from requests import get
 
 from opentelemetry import propagators, trace
@@ -24,8 +23,6 @@
     SimpleExportSpanProcessor,
 )
 
-app = Flask(__name__)
-
 trace.set_tracer_provider(TracerProvider())
 tracer = trace.get_tracer_provider().get_tracer(__name__)
 

|

{"golden_diff": "diff --git a/docs/examples/auto-instrumentation/client.py b/docs/examples/auto-instrumentation/client.py\n--- a/docs/examples/auto-instrumentation/client.py\n+++ b/docs/examples/auto-instrumentation/client.py\n@@ -14,7 +14,6 @@\n \n from sys import argv\n \n-from flask import Flask\n from requests import get\n \n from opentelemetry import propagators, trace\n@@ -24,8 +23,6 @@\n SimpleExportSpanProcessor,\n )\n \n-app = Flask(__name__)\n-\n trace.set_tracer_provider(TracerProvider())\n tracer = trace.get_tracer_provider().get_tracer(__name__)\n", "issue": "Remove unnecessary Flask app\nThe current auto instrumentation example includes an unnecessary Flask app in its client, remove that.\n", "before_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom sys import argv\n\nfrom flask import Flask\nfrom requests import get\n\nfrom opentelemetry import propagators, trace\nfrom opentelemetry.sdk.trace import TracerProvider\nfrom opentelemetry.sdk.trace.export import (\n ConsoleSpanExporter,\n SimpleExportSpanProcessor,\n)\n\napp = Flask(__name__)\n\ntrace.set_tracer_provider(TracerProvider())\ntracer = trace.get_tracer_provider().get_tracer(__name__)\n\ntrace.get_tracer_provider().add_span_processor(\n SimpleExportSpanProcessor(ConsoleSpanExporter())\n)\n\n\nassert len(argv) == 2\n\nwith tracer.start_as_current_span(\"client\"):\n\n with tracer.start_as_current_span(\"client-server\"):\n 
headers = {}\n propagators.inject(dict.__setitem__, headers)\n requested = get(\n \"http://localhost:8082/server_request\",\n params={\"param\": argv[1]},\n headers=headers,\n )\n\n assert requested.status_code == 200\n", "path": "docs/examples/auto-instrumentation/client.py"}], "after_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom sys import argv\n\nfrom requests import get\n\nfrom opentelemetry import propagators, trace\nfrom opentelemetry.sdk.trace import TracerProvider\nfrom opentelemetry.sdk.trace.export import (\n ConsoleSpanExporter,\n SimpleExportSpanProcessor,\n)\n\ntrace.set_tracer_provider(TracerProvider())\ntracer = trace.get_tracer_provider().get_tracer(__name__)\n\ntrace.get_tracer_provider().add_span_processor(\n SimpleExportSpanProcessor(ConsoleSpanExporter())\n)\n\n\nassert len(argv) == 2\n\nwith tracer.start_as_current_span(\"client\"):\n\n with tracer.start_as_current_span(\"client-server\"):\n headers = {}\n propagators.inject(dict.__setitem__, headers)\n requested = get(\n \"http://localhost:8082/server_request\",\n params={\"param\": argv[1]},\n headers=headers,\n )\n\n assert requested.status_code == 200\n", "path": "docs/examples/auto-instrumentation/client.py"}]}

| 710 | 133 |

gh_patches_debug_22214

|

rasdani/github-patches

|

git_diff

|

vega__altair-167

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

numpy scalar in Dataframe not JSON serializable

Is it possible to allow numpy scalars in a dataframe?

The following code is not working, because a np.array is not json serializable:

```

df_numpy = pd.DataFrame([dict(
    x=np.array(1),
    y=np.array(2),
)])
Chart(df_numpy).encode(x='x', y='y')
# TypeError: array(1) is not JSON serializable

```

Full Example: [https://github.com/boeddeker/ipynb/blob/master/bug_altair_numpy.ipynb](https://github.com/boeddeker/ipynb/blob/master/bug_altair_numpy.ipynb)

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `altair/utils/core.py`

Content:

```

1 """

2 Utility routines

3 """

4 import re

5 import warnings

6

7 import pandas as pd

8 import numpy as np

9

10

11 TYPECODE_MAP = {'ordinal': 'O',

12 'nominal': 'N',

13 'quantitative': 'Q',

14 'temporal': 'T'}

15

16 INV_TYPECODE_MAP = {v: k for k, v in TYPECODE_MAP.items()}

17

18 TYPE_ABBR = TYPECODE_MAP.values()

19

20

21 def parse_shorthand(shorthand):

22 """

23 Parse the shorthand expression for aggregation, field, and type.

24

25 These are of the form:

26

27 - "col_name"

28 - "col_name:O"

29 - "average(col_name)"

30 - "average(col_name):O"

31

32 Parameters

33 ----------

34 shorthand: str

35 Shorthand string

36

37 Returns

38 -------

39 D : dict

40 Dictionary containing the field, aggregate, and typecode

41 """

42 if not shorthand:

43 return {}

44

45 # Must import this here to avoid circular imports

46 from ..schema import AggregateOp

47 valid_aggregates = AggregateOp().values

48 valid_typecodes = list(TYPECODE_MAP) + list(INV_TYPECODE_MAP)

49

50 # build regular expressions

51 units = dict(field='(?P<field>.*)',

52 type='(?P<type>{0})'.format('|'.join(valid_typecodes)),

53 aggregate='(?P<aggregate>{0})'.format('|'.join(valid_aggregates)))

54 patterns = [r'{field}',

55 r'{field}:{type}',

56 r'{aggregate}\({field}\)',

57 r'{aggregate}\({field}\):{type}']

58 regexps = (re.compile('\A' + p.format(**units) + '\Z', re.DOTALL)