| problem_id (string, 18 to 22 chars) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, 13 to 58 chars) | prompt (string, 1.1k to 25.4k chars) | golden_diff (string, 145 to 5.13k chars) | verification_info (string, 582 to 39.1k chars) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_14331 | rasdani/github-patches | git_diff | facebookresearch__fairscale-795 |

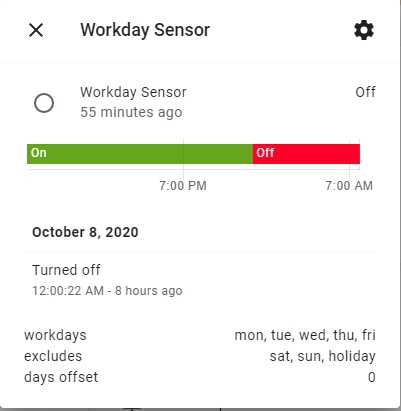

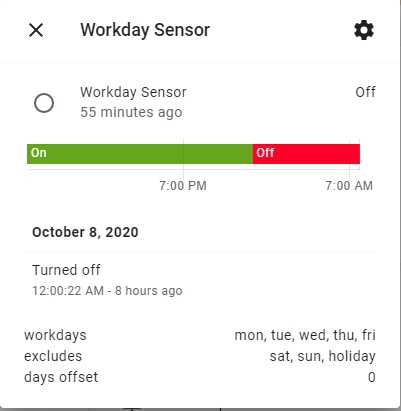

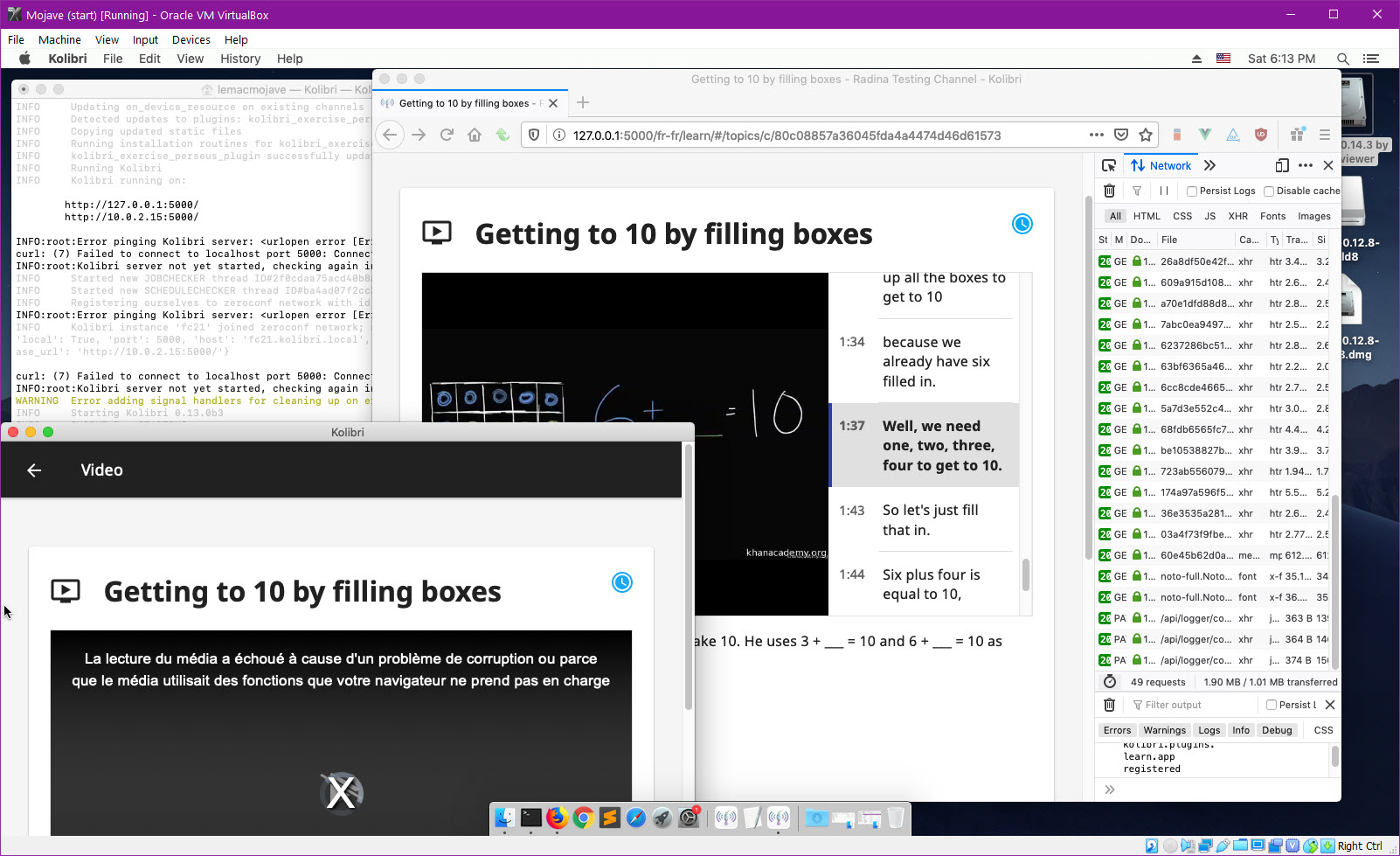

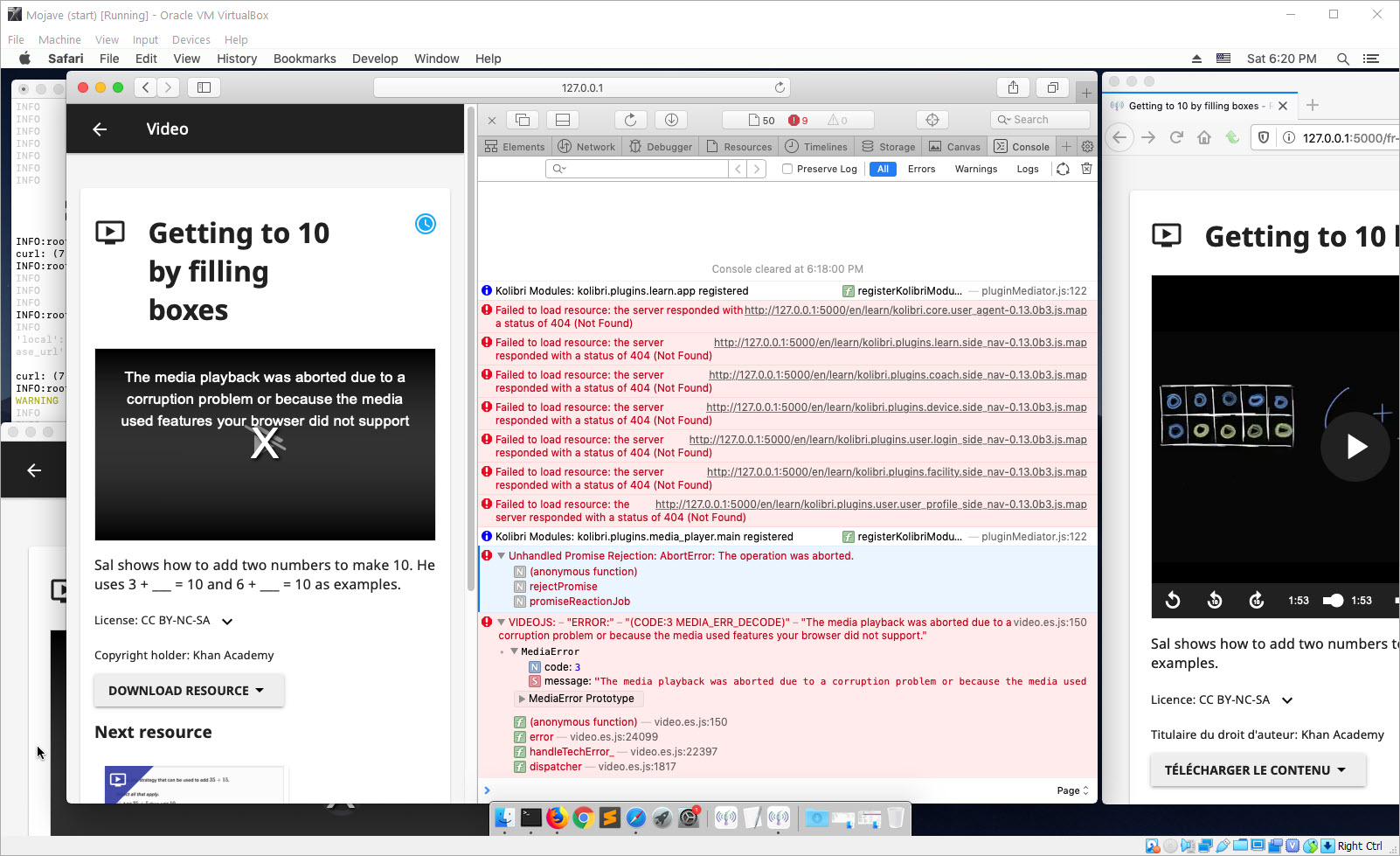

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

The Argument broadcast_fp16 is not working on OSS

## 🐛 Bug

The argument `broadcast_fp16` has no effect in `OSS`.

## Command

## To Reproduce

Steps to reproduce the behavior:

1. Add a `print` call to fairscale's source code [here](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/optim/oss.py#L603):

```python
for device in self.buckets.keys():
    for dst_rank, bucket in self.buckets[device].items():
        print(bucket.buffer.dtype, bucket.buffer.shape)
        work_handles.append(
```

2. Run the following test script:

```python
import sys
import pdb
import torch
import torch.distributed as dist
import torch.multiprocessing as mp
from torch.nn.parallel import DistributedDataParallel as DDP

from fairscale.optim.oss import OSS
from fairscale.nn.data_parallel import ShardedDataParallel as ShardedDDP

CUDA_DEVICE = [0, 1]
HIDDEN_SIZE = 8


class ToyModel(torch.nn.Module):
    def __init__(self):
        super(ToyModel, self).__init__()
        self.net1 = torch.nn.Linear(HIDDEN_SIZE, HIDDEN_SIZE)
        self.relu = torch.nn.ReLU()
        self.net2 = torch.nn.Linear(HIDDEN_SIZE, 5)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


def main(rank, world_size):
    dist.init_process_group(
        backend='nccl',
        init_method="tcp://localhost:29501",
        rank=rank,
        world_size=world_size)
    device = CUDA_DEVICE[rank]
    torch.manual_seed(rank + 1007)
    torch.cuda.set_device(device)
    torch.cuda.manual_seed(rank + 1007)
    print('Rank: %d/%d Device: %s' % (rank, world_size, device))

    model = ToyModel().to(device)
    loss_fn = torch.nn.MSELoss()
    # ddp_model = DDP(model, device_ids=[device])
    # optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.1)
    optimizer = OSS(params=model.parameters(), optim=torch.optim.SGD, lr=0.1, broadcast_fp16=True)
    ddp_model = ShardedDDP(model, optimizer)

    inputs = torch.randn(2, HIDDEN_SIZE).to(device)
    print('inputs', rank, inputs)

    optimizer.zero_grad()
    outputs = ddp_model(inputs)
    labels = torch.randn(2, 5).to(device)
    loss_fn(outputs, labels).backward()
    optimizer.step()

    dist.destroy_process_group()


if __name__ == "__main__":
    WORLD_SIZE = len(CUDA_DEVICE)
    mp.spawn(
        main,
        args=(WORLD_SIZE,),
        nprocs=WORLD_SIZE,
        join=True,
    )
```

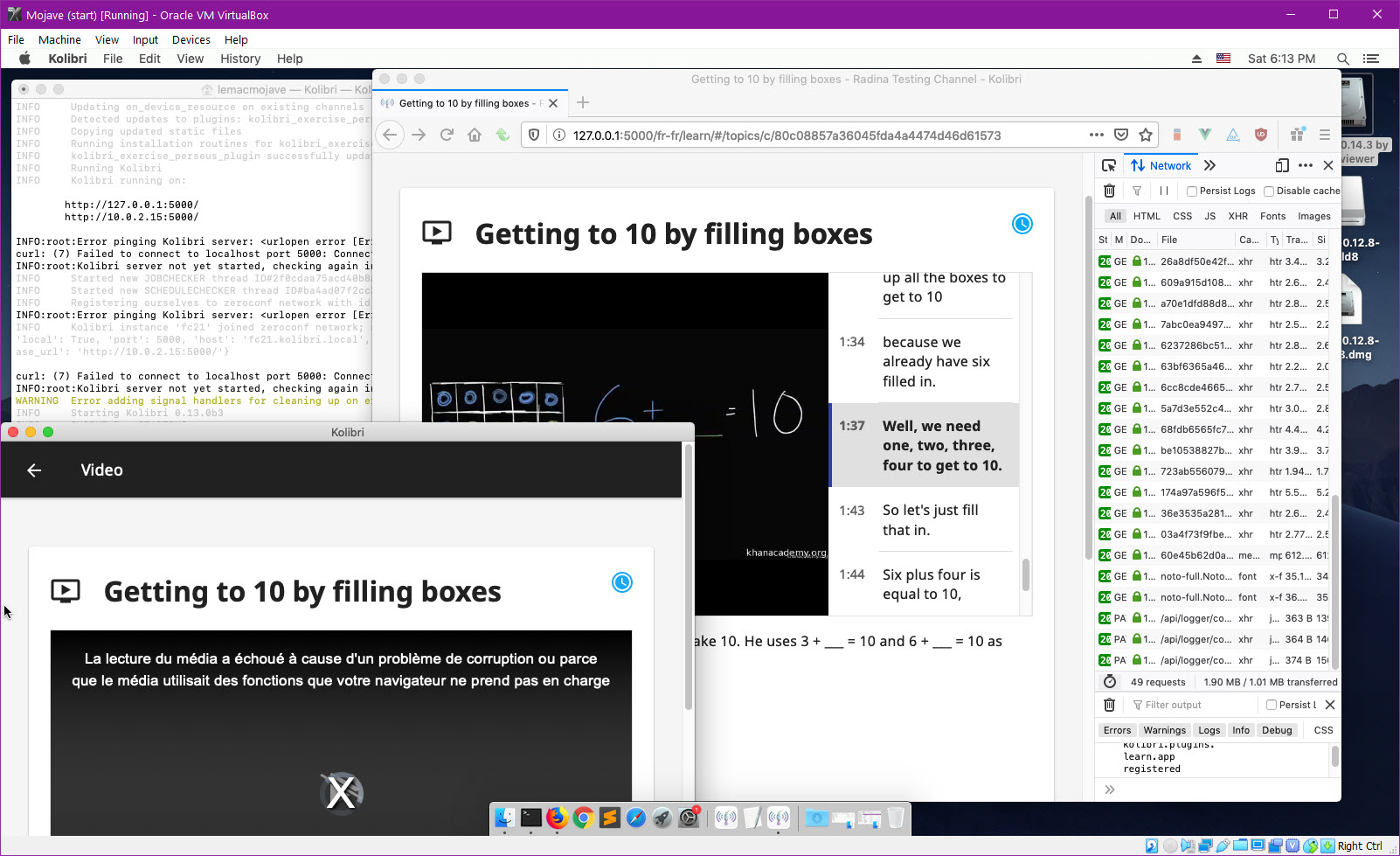

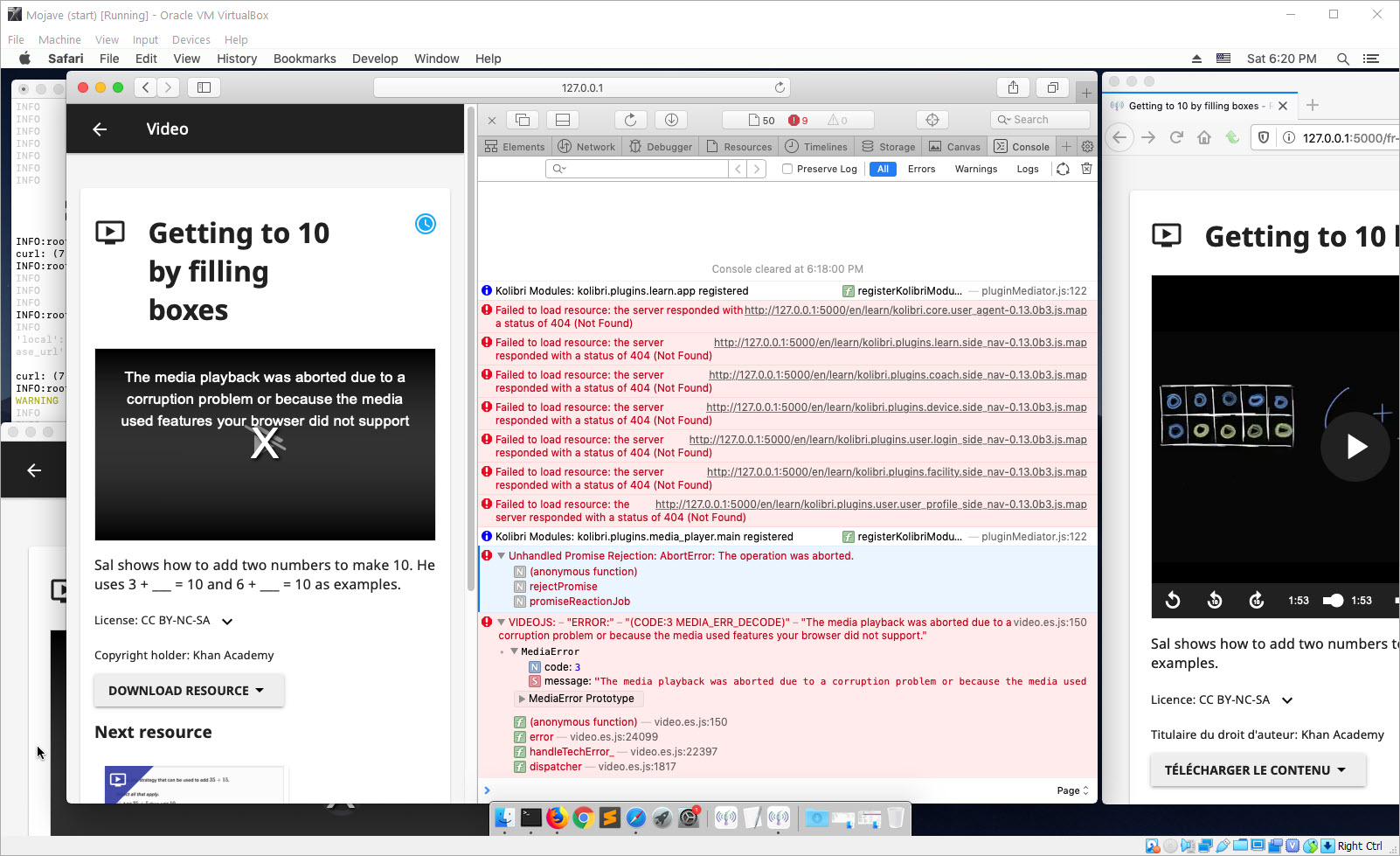

And the output is:

```
...
torch.float32 torch.Size([64])
torch.float32 torch.Size([53])
torch.float32 torch.Size([64])
torch.float32 torch.Size([53])
```

Note that `broadcast_fp16` is already set to `True`, yet fairscale still broadcasts float32 tensors.

## Reason

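The `Tensor.to` method [(code here)](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/nn/misc/param_bucket.py#L35) is not an in-place operation: it returns a converted tensor, and that return value is discarded here, so `self.buffer` keeps its original dtype.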
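A CPU-only illustration of the pitfall with plain PyTorch (not fairscale code; the tensor sizes are arbitrary). Per the PyTorch documentation, `Tensor.to` returns `self` when no conversion is needed and a new tensor otherwise, so calling it without keeping the result silently does nothing whenever the dtype actually changes.

```python
import torch

buffer = torch.zeros(4, dtype=torch.float32)

# Tensor.to does not modify `buffer`; it returns the converted tensor
buffer.to(torch.float16)
print(buffer.dtype)  # torch.float32: the converted copy was discarded

# Rebinding the name keeps the converted tensor, which is what the proposed fix does
buffer = buffer.to(torch.float16)
print(buffer.dtype)  # torch.float16

# When no conversion is needed, Tensor.to returns the very same tensor object
same = torch.zeros(2)
print(same.to(torch.float32) is same)  # True
```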

## Possible Solution

Change [fairscale/nn/misc/param_bucket.py:35](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/nn/misc/param_bucket.py#L35) to:

```python
self.buffer = self.buffer.to(device, dtype, non_blocking)
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `fairscale/nn/misc/param_bucket.py`

Content:

```python
1 # Copyright (c) Facebook, Inc. and its affiliates.
2 #
3 # This source code is licensed under the BSD license found in the
4 # LICENSE file in the root directory of this source tree.
5 
6 from typing import Any, Callable, List, Optional, Union
7 
8 import torch
9 
10 
11 class Bucket:
12     """
13     Helper class to simplify the handling of buckets, which unify the underlying storage of multiple tensors
14     """
15 
16     def __init__(self, size: int, dtype: torch.dtype, device: torch.device) -> None:
17         self._params: List[torch.Tensor] = []
18         self._param_ids: List[int] = []
19         self._fill = 0
20 
21         # The actual flat tensor
22         self.buffer: torch.Tensor = torch.zeros(size, dtype=dtype, device=device)
23 
24     def to(  # type: ignore
25         self,
26         device: Optional[Union[int, torch.device]],
27         dtype: Optional[torch.dtype] = None,
28         non_blocking: bool = False,
29         keep_param_alignment: bool = True,
30     ) -> "ParamBucket":
31         """
32         Move the underlying buffer
33         """
34         assert self.buffer is not None, "Cannot move a collapsed bucket, please rebuild it"
35         self.buffer.to(device, dtype, non_blocking)
36 
37 
38 class ParamBucket(Bucket):
39     """
40     Helper class to simplify the handling of parameter buckets
41     """
42 
43     def __init__(self, size: int, dtype: torch.dtype, device: torch.device) -> None:
44         super().__init__(size, dtype, device)
45 
46     def to(  # type: ignore
47         self,
48         device: Optional[Union[int, torch.device]],
49         dtype: Optional[torch.dtype] = None,
50         non_blocking: bool = False,
51         keep_param_alignment: bool = True,
52     ) -> "ParamBucket":
53         """
54         Move the underlying buffer
55         """
56         super().to(device, dtype, non_blocking)
57 
58         if keep_param_alignment:
59             self._reattach_params()
60 
61     @torch.no_grad()
62     def add_param(self, param: torch.Tensor) -> None:
63         """
64         Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer
65         """
66 
67         assert id(param) not in self._param_ids, "The same param cannot be checked in twice"
68 
69         self._add_param_as_view(param)
70         self._params.append(param)
71         self._param_ids.append(id(param))
72 
73     @torch.no_grad()
74     def _add_param_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:
75         assert self.buffer is not None
76         assert (
77             param.dtype == self.buffer.dtype
78         ), f"Different types for the bucket and the param, cannot proceed: {param.dtype} - {self.buffer.dtype}"
79         assert (
80             param.device == self.buffer.device
81         ), f"Different devices for the bucket and the param, cannot proceed: {param.device} - {self.buffer.device}"
82 
83         fill_next = self._fill + param.numel()
84         assert fill_next <= self.buffer.numel()
85 
86         # Copy the current param value
87         if keep_existing_value:
88             self.buffer[self._fill : fill_next].copy_(param.data.flatten())
89         param.data = self.buffer[self._fill : fill_next].view_as(param.data)
90         self._fill = fill_next
91 
92     @torch.no_grad()
93     def _reattach_params(self) -> None:
94         """
95         Given the parameters which have been registered previously, rebuild the whole bucket
96         """
97         assert len(self._params) > 0
98 
99         self._fill = 0
100         for p in self._params:
101             self._add_param_as_view(p, keep_existing_value=False)
102 
103 
104 class GradBucket(Bucket):
105     """
106     Helper class to simplify the handling of gradient buckets
107     """
108 
109     def __init__(self, size: int, dtype: torch.dtype, device: torch.device, destination: int) -> None:
110         super().__init__(size, dtype, device)
111 
112         self._max_size = size
113         self._is_collapsed = False
114 
115         self.params_checked_in = 0
116         self.destination = destination
117         self.sent = True
118         self.callback: Optional[Callable[[Any], None]] = None
119 
120     def reset_checked_in(self) -> None:
121         """ Reset the counter of the parameter grads which have been checked in
122         """
123         self.params_checked_in = 0
124         self.sent = False
125 
126     @property
127     def all_checked_in(self) -> bool:
128         """ Have all the expected gradient check-in happened ?"""
129         return len(self._params) == self.params_checked_in
130 
131     def can_add_grad_view(self, param: torch.Tensor) -> bool:
132         """ Is there enough room in the bucket to add this parameter gradient, and is this param not already checked in ?
133         """
134         return self._fill + param.numel() < self._max_size and id(param) not in self._param_ids
135 
136     def to(  # type: ignore
137         self,
138         device: Optional[Union[int, torch.device]],
139         dtype: Optional[torch.dtype] = None,
140         non_blocking: bool = False,
141         keep_param_alignment: bool = True,
142     ) -> "GradBucket":
143         """
144         Move the underlying buffer
145         """
146         if self._is_collapsed:
147             self.rebuild()
148 
149         super().to(device, dtype, non_blocking)
150 
151         if keep_param_alignment:
152             self._reattach_grads()
153 
154     def zero(self) -> None:
155         """
156         Set all the grads to zero
157         """
158         self.buffer.fill_(0.0)
159 
160     @torch.no_grad()
161     def add_grad(self, param: torch.Tensor) -> None:
162         """
163         Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer
164         """
165 
166         assert id(param) not in self._param_ids, "The same gradients cannot be checked in twice"
167 
168         if param.grad is None:
169             param.grad = torch.zeros_like(param)
170 
171         self._add_grad_as_view(param)
172         self._params.append(param)
173         self._param_ids.append(id(param))
174 
175     @torch.no_grad()
176     def collapse(self) -> None:
177         """
178         Release the buffer from memory. The bucket will need to be rebuilt before use
179         """
180         if not self._is_collapsed:
181             for p in self._params:
182                 assert p.grad is not None
183                 p.grad.detach_()
184                 p.grad = None
185 
186             self.buffer = torch.zeros(0, dtype=self.buffer.dtype, device=self.buffer.device)
187             self._fill = 0
188             self.params_checked_in = 0
189             self._is_collapsed = True
190 
191     @torch.no_grad()
192     def rebuild(self) -> None:
193         """
194         Given the parameter gradients which have been registered previously, rebuild the whole bucket
195         """
196         assert len(self._params) > 0
197 
198         if self._is_collapsed:
199             self.buffer = torch.zeros(self._max_size, dtype=self._params[0].dtype, device=self._params[0].device)
200 
201             for p in self._params:
202                 self._add_grad_as_view(p)
203 
204             self._is_collapsed = False
205 
206     @torch.no_grad()
207     def shrink(self) -> None:
208         """
209         Shrink the buffer to the size of the parameter gradients currently checked in, release the extra memory
210         """
211         assert self.buffer.numel() > 0, "Cannot shrink a collapsed bucket, please rebuild"
212 
213         self.buffer = self.buffer.resize_(self._fill).clone()
214         self._fill = 0
215         for p in self._params:
216             self._add_grad_as_view(p)
217 
218         self._max_size = self._fill
219 
220     @torch.no_grad()
221     def _reattach_grads(self) -> None:
222         """
223         Given the parameters gradients which have been registered previously, rebuild the whole bucket
224         """
225         assert len(self._params) > 0
226 
227         self._fill = 0
228         for p in self._params:
229             self._add_grad_as_view(p, keep_existing_value=False)
230 
231     @torch.no_grad()
232     def _add_grad_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:
233         assert self.buffer.numel() > 0, "Cannot add a gradient to a collapsed bucket, please rebuild"
234         assert param.dtype == self.buffer.dtype
235         assert param.device == self.buffer.device
236 
237         fill_next = self._fill + param.numel()
238         assert fill_next <= self.buffer.numel()
239 
240         # Copy the current grad value, if any
241         if param.grad is not None:
242             # keep param.grad in place
243             if keep_existing_value:
244                 self.buffer[self._fill : fill_next].copy_(param.grad.data.flatten())
245             param.grad.data = self.buffer[self._fill : fill_next].view_as(param.data)
246         else:
247             param.grad = self.buffer[self._fill : fill_next].view_as(param.data)
248         self._fill = fill_next
249 
```
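Fixing the buffer dtype is enough for the broadcast because `ParamBucket` turns every registered parameter into a view of one flat `buffer` (see `_add_param_as_view`). The CPU-only sketch below reproduces that view trick with plain PyTorch; the parameter shapes are arbitrary and the loop is a simplified copy of the bucket logic, not fairscale's API.

```python
import torch

# Two parameters of different shapes (arbitrary, for illustration only)
params = [torch.nn.Parameter(torch.randn(2, 3)), torch.nn.Parameter(torch.randn(4))]
buffer = torch.zeros(sum(p.numel() for p in params), dtype=torch.float32)

fill = 0
with torch.no_grad():
    for p in params:
        fill_next = fill + p.numel()
        buffer[fill:fill_next].copy_(p.data.flatten())   # keep the current value
        p.data = buffer[fill:fill_next].view_as(p.data)  # param now aliases the buffer
        fill = fill_next

# Any write into the flat buffer (e.g. a broadcast from the owning rank) is
# immediately visible through every parameter view
buffer.fill_(1.0)
print(all(bool((p == 1.0).all()) for p in params))  # True
```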

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/fairscale/nn/misc/param_bucket.py b/fairscale/nn/misc/param_bucket.py
--- a/fairscale/nn/misc/param_bucket.py
+++ b/fairscale/nn/misc/param_bucket.py
@@ -32,7 +32,7 @@
         Move the underlying buffer
         """
         assert self.buffer is not None, "Cannot move a collapsed bucket, please rebuild it"
-        self.buffer.to(device, dtype, non_blocking)
+        self.buffer = self.buffer.to(device, dtype, non_blocking)
 
 
 class ParamBucket(Bucket):
@@ -98,6 +98,8 @@
 
         self._fill = 0
         for p in self._params:
+            if p.dtype != self.buffer.dtype:
+                p.data = p.data.to(self.buffer.dtype)
             self._add_param_as_view(p, keep_existing_value=False)
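The second hunk is needed because, once `Bucket.to` really converts the buffer, the registered parameters still carry their old dtype, and `_add_param_as_view` asserts that both dtypes match. `ToyParamBucket` below is a hypothetical, CPU-only reduction of `ParamBucket` that applies both hunks, to check that a float32 to float16 move now succeeds and leaves the parameters aliasing the new buffer. It is a sketch, not fairscale's class.

```python
import torch


class ToyParamBucket:
    """Hypothetical, simplified ParamBucket that applies both hunks of the patch."""

    def __init__(self, params, dtype=torch.float32):
        self._params = list(params)
        self.buffer = torch.zeros(sum(p.numel() for p in self._params), dtype=dtype)
        self._reattach_params(keep_existing_value=True)

    @torch.no_grad()
    def _reattach_params(self, keep_existing_value=False):
        fill = 0
        for p in self._params:
            # Second hunk: align the param dtype with the (already converted) buffer
            if p.dtype != self.buffer.dtype:
                p.data = p.data.to(self.buffer.dtype)
            assert p.dtype == self.buffer.dtype  # mirrors the check in _add_param_as_view
            fill_next = fill + p.numel()
            if keep_existing_value:
                self.buffer[fill:fill_next].copy_(p.data.flatten())
            p.data = self.buffer[fill:fill_next].view_as(p.data)
            fill = fill_next

    def to(self, dtype):
        # First hunk: rebind, since Tensor.to is not in-place
        self.buffer = self.buffer.to(dtype=dtype)
        self._reattach_params()


params = [torch.nn.Parameter(torch.randn(3)), torch.nn.Parameter(torch.randn(2, 2))]
bucket = ToyParamBucket(params)
bucket.to(torch.float16)
print(bucket.buffer.dtype, params[0].dtype)  # torch.float16 torch.float16
```

Without the dtype cast in `_reattach_params`, the assertion would fire on the first parameter, which is presumably why the patch touches both places.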

|

{"golden_diff": "diff --git a/fairscale/nn/misc/param_bucket.py b/fairscale/nn/misc/param_bucket.py\n--- a/fairscale/nn/misc/param_bucket.py\n+++ b/fairscale/nn/misc/param_bucket.py\n@@ -32,7 +32,7 @@\n Move the underlying buffer\n \"\"\"\n assert self.buffer is not None, \"Cannot move a collapsed bucket, please rebuild it\"\n- self.buffer.to(device, dtype, non_blocking)\n+ self.buffer = self.buffer.to(device, dtype, non_blocking)\n \n \n class ParamBucket(Bucket):\n@@ -98,6 +98,8 @@\n \n self._fill = 0\n for p in self._params:\n+ if p.dtype != self.buffer.dtype:\n+ p.data = p.data.to(self.buffer.dtype)\n self._add_param_as_view(p, keep_existing_value=False)\n", "issue": "The Argument broadcast_fp16 is not working on OSS\n## \ud83d\udc1b Bug\r\n\r\nThe Argument `broadcast_fp16` is not working on `OSS`.\r\n\r\n## Command\r\n\r\n\r\n\r\n## To Reproduce\r\n\r\nSteps to reproduce the behavior:\r\n\r\n1. Add one print line in fairscale's source code [here](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/optim/oss.py#L603)\r\n```\r\n for device in self.buckets.keys():\r\n for dst_rank, bucket in self.buckets[device].items():\r\n print(bucket.buffer.dtype, bucket.buffer.shape)\r\n work_handles.append(\r\n```\r\n2. 
Run a test script as below:\r\n```\r\nimport sys\r\nimport pdb\r\nimport torch\r\nimport torch.distributed as dist\r\nimport torch.multiprocessing as mp\r\nfrom torch.nn.parallel import DistributedDataParallel as DDP\r\n\r\nfrom fairscale.optim.oss import OSS\r\nfrom fairscale.nn.data_parallel import ShardedDataParallel as ShardedDDP\r\n\r\nCUDA_DEVICE = [0, 1]\r\nHIDDEN_SIZE = 8\r\n\r\nclass ToyModel(torch.nn.Module):\r\n def __init__(self):\r\n super(ToyModel, self).__init__()\r\n self.net1 = torch.nn.Linear(HIDDEN_SIZE, HIDDEN_SIZE)\r\n self.relu = torch.nn.ReLU()\r\n self.net2 = torch.nn.Linear(HIDDEN_SIZE, 5)\r\n\r\n def forward(self, x):\r\n return self.net2(self.relu(self.net1(x)))\r\n\r\n\r\ndef main(rank, world_size):\r\n\r\n dist.init_process_group(\r\n backend='nccl',\r\n init_method=\"tcp://localhost:29501\",\r\n rank=rank,\r\n world_size=world_size)\r\n device = CUDA_DEVICE[rank]\r\n torch.manual_seed(rank + 1007)\r\n torch.cuda.set_device(device)\r\n torch.cuda.manual_seed(rank + 1007)\r\n print('Rank: %d/%d Device: %s' % (rank, world_size, device))\r\n\r\n\r\n model = ToyModel().to(device)\r\n loss_fn = torch.nn.MSELoss()\r\n # ddp_model = DDP(model, device_ids=[device])\r\n # optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.1)\r\n optimizer = OSS(params=model.parameters(), optim=torch.optim.SGD, lr=0.1, broadcast_fp16=True)\r\n ddp_model = ShardedDDP(model, optimizer)\r\n\r\n inputs = torch.randn(2, HIDDEN_SIZE).to(device)\r\n print('inputs', rank, inputs)\r\n\r\n optimizer.zero_grad()\r\n outputs = ddp_model(inputs)\r\n labels = torch.randn(2, 5).to(device)\r\n loss_fn(outputs, labels).backward()\r\n optimizer.step()\r\n \r\n\r\n dist.destroy_process_group()\r\n\r\n\r\nif __name__ == \"__main__\":\r\n WORLD_SIZE = len(CUDA_DEVICE)\r\n mp.spawn(\r\n main,\r\n args=(WORLD_SIZE,),\r\n nprocs=WORLD_SIZE,\r\n join=True,\r\n )\r\n\r\n```\r\n\r\nAnd the output is:\r\n```\r\n...\r\ntorch.float32 torch.Size([64])\r\ntorch.float32 
torch.Size([53])\r\ntorch.float32 torch.Size([64])\r\ntorch.float32 torch.Size([53])\r\n```\r\n\r\nNote that we have already set the argument `broadcast_fp16` to be true, but fairscale still boardcasts float32 tensor.\r\n\r\n## Reason\r\n\r\nThe Tensor.to [(code here)](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/nn/misc/param_bucket.py#L35) method is not in-place operation.\r\n\r\n## Posible Solution\r\nChange [fairscale/nn/misc/param_bucket.py:35](https://github.com/facebookresearch/fairscale/blob/31e3645371e1462c0fb119a7a54642e327d0d4bf/fairscale/nn/misc/param_bucket.py#L35) to \r\n```\r\nself.buffer = self.buffer.to(device, dtype, non_blocking)\r\n```\n", "before_files": [{"content": "# Copyright (c) Facebook, Inc. and its affiliates.\n#\n# This source code is licensed under the BSD license found in the\n# LICENSE file in the root directory of this source tree.\n\nfrom typing import Any, Callable, List, Optional, Union\n\nimport torch\n\n\nclass Bucket:\n \"\"\"\n Helper class to simplify the handling of buckets, which unify the underlying storage of multiple tensors\n \"\"\"\n\n def __init__(self, size: int, dtype: torch.dtype, device: torch.device) -> None:\n self._params: List[torch.Tensor] = []\n self._param_ids: List[int] = []\n self._fill = 0\n\n # The actual flat tensor\n self.buffer: torch.Tensor = torch.zeros(size, dtype=dtype, device=device)\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"ParamBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n assert self.buffer is not None, \"Cannot move a collapsed bucket, please rebuild it\"\n self.buffer.to(device, dtype, non_blocking)\n\n\nclass ParamBucket(Bucket):\n \"\"\"\n Helper class to simplify the handling of parameter buckets\n \"\"\"\n\n def __init__(self, size: int, dtype: torch.dtype, device: 
torch.device) -> None:\n super().__init__(size, dtype, device)\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"ParamBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n super().to(device, dtype, non_blocking)\n\n if keep_param_alignment:\n self._reattach_params()\n\n @torch.no_grad()\n def add_param(self, param: torch.Tensor) -> None:\n \"\"\"\n Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer\n \"\"\"\n\n assert id(param) not in self._param_ids, \"The same param cannot be checked in twice\"\n\n self._add_param_as_view(param)\n self._params.append(param)\n self._param_ids.append(id(param))\n\n @torch.no_grad()\n def _add_param_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:\n assert self.buffer is not None\n assert (\n param.dtype == self.buffer.dtype\n ), f\"Different types for the bucket and the param, cannot proceed: {param.dtype} - {self.buffer.dtype}\"\n assert (\n param.device == self.buffer.device\n ), f\"Different devices for the bucket and the param, cannot proceed: {param.device} - {self.buffer.device}\"\n\n fill_next = self._fill + param.numel()\n assert fill_next <= self.buffer.numel()\n\n # Copy the current param value\n if keep_existing_value:\n self.buffer[self._fill : fill_next].copy_(param.data.flatten())\n param.data = self.buffer[self._fill : fill_next].view_as(param.data)\n self._fill = fill_next\n\n @torch.no_grad()\n def _reattach_params(self) -> None:\n \"\"\"\n Given the parameters which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n self._fill = 0\n for p in self._params:\n self._add_param_as_view(p, keep_existing_value=False)\n\n\nclass GradBucket(Bucket):\n \"\"\"\n Helper class to simplify the handling of gradient buckets\n \"\"\"\n\n def __init__(self, size: 
int, dtype: torch.dtype, device: torch.device, destination: int) -> None:\n super().__init__(size, dtype, device)\n\n self._max_size = size\n self._is_collapsed = False\n\n self.params_checked_in = 0\n self.destination = destination\n self.sent = True\n self.callback: Optional[Callable[[Any], None]] = None\n\n def reset_checked_in(self) -> None:\n \"\"\" Reset the counter of the parameter grads which have been checked in\n \"\"\"\n self.params_checked_in = 0\n self.sent = False\n\n @property\n def all_checked_in(self) -> bool:\n \"\"\" Have all the expected gradient check-in happened ?\"\"\"\n return len(self._params) == self.params_checked_in\n\n def can_add_grad_view(self, param: torch.Tensor) -> bool:\n \"\"\" Is there enough room in the bucket to add this parameter gradient, and is this param not already checked in ?\n \"\"\"\n return self._fill + param.numel() < self._max_size and id(param) not in self._param_ids\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"GradBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n if self._is_collapsed:\n self.rebuild()\n\n super().to(device, dtype, non_blocking)\n\n if keep_param_alignment:\n self._reattach_grads()\n\n def zero(self) -> None:\n \"\"\"\n Set all the grads to zero\n \"\"\"\n self.buffer.fill_(0.0)\n\n @torch.no_grad()\n def add_grad(self, param: torch.Tensor) -> None:\n \"\"\"\n Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer\n \"\"\"\n\n assert id(param) not in self._param_ids, \"The same gradients cannot be checked in twice\"\n\n if param.grad is None:\n param.grad = torch.zeros_like(param)\n\n self._add_grad_as_view(param)\n self._params.append(param)\n self._param_ids.append(id(param))\n\n @torch.no_grad()\n def collapse(self) -> None:\n \"\"\"\n Release the buffer from memory. 
The bucket will need to be rebuilt before use\n \"\"\"\n if not self._is_collapsed:\n for p in self._params:\n assert p.grad is not None\n p.grad.detach_()\n p.grad = None\n\n self.buffer = torch.zeros(0, dtype=self.buffer.dtype, device=self.buffer.device)\n self._fill = 0\n self.params_checked_in = 0\n self._is_collapsed = True\n\n @torch.no_grad()\n def rebuild(self) -> None:\n \"\"\"\n Given the parameter gradients which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n if self._is_collapsed:\n self.buffer = torch.zeros(self._max_size, dtype=self._params[0].dtype, device=self._params[0].device)\n\n for p in self._params:\n self._add_grad_as_view(p)\n\n self._is_collapsed = False\n\n @torch.no_grad()\n def shrink(self) -> None:\n \"\"\"\n Shrink the buffer to the size of the parameter gradients currently checked in, release the extra memory\n \"\"\"\n assert self.buffer.numel() > 0, \"Cannot shrink a collapsed bucket, please rebuild\"\n\n self.buffer = self.buffer.resize_(self._fill).clone()\n self._fill = 0\n for p in self._params:\n self._add_grad_as_view(p)\n\n self._max_size = self._fill\n\n @torch.no_grad()\n def _reattach_grads(self) -> None:\n \"\"\"\n Given the parameters gradients which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n self._fill = 0\n for p in self._params:\n self._add_grad_as_view(p, keep_existing_value=False)\n\n @torch.no_grad()\n def _add_grad_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:\n assert self.buffer.numel() > 0, \"Cannot add a gradient to a collapsed bucket, please rebuild\"\n assert param.dtype == self.buffer.dtype\n assert param.device == self.buffer.device\n\n fill_next = self._fill + param.numel()\n assert fill_next <= self.buffer.numel()\n\n # Copy the current grad value, if any\n if param.grad is not None:\n # keep param.grad in place\n if keep_existing_value:\n 
self.buffer[self._fill : fill_next].copy_(param.grad.data.flatten())\n param.grad.data = self.buffer[self._fill : fill_next].view_as(param.data)\n else:\n param.grad = self.buffer[self._fill : fill_next].view_as(param.data)\n self._fill = fill_next\n", "path": "fairscale/nn/misc/param_bucket.py"}], "after_files": [{"content": "# Copyright (c) Facebook, Inc. and its affiliates.\n#\n# This source code is licensed under the BSD license found in the\n# LICENSE file in the root directory of this source tree.\n\nfrom typing import Any, Callable, List, Optional, Union\n\nimport torch\n\n\nclass Bucket:\n \"\"\"\n Helper class to simplify the handling of buckets, which unify the underlying storage of multiple tensors\n \"\"\"\n\n def __init__(self, size: int, dtype: torch.dtype, device: torch.device) -> None:\n self._params: List[torch.Tensor] = []\n self._param_ids: List[int] = []\n self._fill = 0\n\n # The actual flat tensor\n self.buffer: torch.Tensor = torch.zeros(size, dtype=dtype, device=device)\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"ParamBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n assert self.buffer is not None, \"Cannot move a collapsed bucket, please rebuild it\"\n self.buffer = self.buffer.to(device, dtype, non_blocking)\n\n\nclass ParamBucket(Bucket):\n \"\"\"\n Helper class to simplify the handling of parameter buckets\n \"\"\"\n\n def __init__(self, size: int, dtype: torch.dtype, device: torch.device) -> None:\n super().__init__(size, dtype, device)\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"ParamBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n super().to(device, dtype, non_blocking)\n\n if keep_param_alignment:\n 
self._reattach_params()\n\n @torch.no_grad()\n def add_param(self, param: torch.Tensor) -> None:\n \"\"\"\n Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer\n \"\"\"\n\n assert id(param) not in self._param_ids, \"The same param cannot be checked in twice\"\n\n self._add_param_as_view(param)\n self._params.append(param)\n self._param_ids.append(id(param))\n\n @torch.no_grad()\n def _add_param_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:\n assert self.buffer is not None\n assert (\n param.dtype == self.buffer.dtype\n ), f\"Different types for the bucket and the param, cannot proceed: {param.dtype} - {self.buffer.dtype}\"\n assert (\n param.device == self.buffer.device\n ), f\"Different devices for the bucket and the param, cannot proceed: {param.device} - {self.buffer.device}\"\n\n fill_next = self._fill + param.numel()\n assert fill_next <= self.buffer.numel()\n\n # Copy the current param value\n if keep_existing_value:\n self.buffer[self._fill : fill_next].copy_(param.data.flatten())\n param.data = self.buffer[self._fill : fill_next].view_as(param.data)\n self._fill = fill_next\n\n @torch.no_grad()\n def _reattach_params(self) -> None:\n \"\"\"\n Given the parameters which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n self._fill = 0\n for p in self._params:\n if p.dtype != self.buffer.dtype:\n p.data = p.data.to(self.buffer.dtype)\n self._add_param_as_view(p, keep_existing_value=False)\n\n\nclass GradBucket(Bucket):\n \"\"\"\n Helper class to simplify the handling of gradient buckets\n \"\"\"\n\n def __init__(self, size: int, dtype: torch.dtype, device: torch.device, destination: int) -> None:\n super().__init__(size, dtype, device)\n\n self._max_size = size\n self._is_collapsed = False\n\n self.params_checked_in = 0\n self.destination = destination\n self.sent = True\n self.callback: Optional[Callable[[Any], None]] = None\n\n def 
reset_checked_in(self) -> None:\n \"\"\" Reset the counter of the parameter grads which have been checked in\n \"\"\"\n self.params_checked_in = 0\n self.sent = False\n\n @property\n def all_checked_in(self) -> bool:\n \"\"\" Have all the expected gradient check-in happened ?\"\"\"\n return len(self._params) == self.params_checked_in\n\n def can_add_grad_view(self, param: torch.Tensor) -> bool:\n \"\"\" Is there enough room in the bucket to add this parameter gradient, and is this param not already checked in ?\n \"\"\"\n return self._fill + param.numel() < self._max_size and id(param) not in self._param_ids\n\n def to( # type: ignore\n self,\n device: Optional[Union[int, torch.device]],\n dtype: Optional[torch.dtype] = None,\n non_blocking: bool = False,\n keep_param_alignment: bool = True,\n ) -> \"GradBucket\":\n \"\"\"\n Move the underlying buffer\n \"\"\"\n if self._is_collapsed:\n self.rebuild()\n\n super().to(device, dtype, non_blocking)\n\n if keep_param_alignment:\n self._reattach_grads()\n\n def zero(self) -> None:\n \"\"\"\n Set all the grads to zero\n \"\"\"\n self.buffer.fill_(0.0)\n\n @torch.no_grad()\n def add_grad(self, param: torch.Tensor) -> None:\n \"\"\"\n Add a new parameter gradient to the bucket. Param.grad becomes a view of this bucket buffer\n \"\"\"\n\n assert id(param) not in self._param_ids, \"The same gradients cannot be checked in twice\"\n\n if param.grad is None:\n param.grad = torch.zeros_like(param)\n\n self._add_grad_as_view(param)\n self._params.append(param)\n self._param_ids.append(id(param))\n\n @torch.no_grad()\n def collapse(self) -> None:\n \"\"\"\n Release the buffer from memory. 
The bucket will need to be rebuilt before use\n \"\"\"\n if not self._is_collapsed:\n for p in self._params:\n assert p.grad is not None\n p.grad.detach_()\n p.grad = None\n\n self.buffer = torch.zeros(0, dtype=self.buffer.dtype, device=self.buffer.device)\n self._fill = 0\n self.params_checked_in = 0\n self._is_collapsed = True\n\n @torch.no_grad()\n def rebuild(self) -> None:\n \"\"\"\n Given the parameter gradients which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n if self._is_collapsed:\n self.buffer = torch.zeros(self._max_size, dtype=self._params[0].dtype, device=self._params[0].device)\n\n for p in self._params:\n self._add_grad_as_view(p)\n\n self._is_collapsed = False\n\n @torch.no_grad()\n def shrink(self) -> None:\n \"\"\"\n Shrink the buffer to the size of the parameter gradients currently checked in, release the extra memory\n \"\"\"\n assert self.buffer.numel() > 0, \"Cannot shrink a collapsed bucket, please rebuild\"\n\n self.buffer = self.buffer.resize_(self._fill).clone()\n self._fill = 0\n for p in self._params:\n self._add_grad_as_view(p)\n\n self._max_size = self._fill\n\n @torch.no_grad()\n def _reattach_grads(self) -> None:\n \"\"\"\n Given the parameters gradients which have been registered previously, rebuild the whole bucket\n \"\"\"\n assert len(self._params) > 0\n\n self._fill = 0\n for p in self._params:\n self._add_grad_as_view(p, keep_existing_value=False)\n\n @torch.no_grad()\n def _add_grad_as_view(self, param: torch.Tensor, keep_existing_value: bool = True) -> None:\n assert self.buffer.numel() > 0, \"Cannot add a gradient to a collapsed bucket, please rebuild\"\n assert param.dtype == self.buffer.dtype\n assert param.device == self.buffer.device\n\n fill_next = self._fill + param.numel()\n assert fill_next <= self.buffer.numel()\n\n # Copy the current grad value, if any\n if param.grad is not None:\n # keep param.grad in place\n if keep_existing_value:\n 
self.buffer[self._fill : fill_next].copy_(param.grad.data.flatten())\n param.grad.data = self.buffer[self._fill : fill_next].view_as(param.data)\n else:\n param.grad = self.buffer[self._fill : fill_next].view_as(param.data)\n self._fill = fill_next\n", "path": "fairscale/nn/misc/param_bucket.py"}]}

| 3,769 | 190 |

gh_patches_debug_21047

|

rasdani/github-patches

|

git_diff

|

dask__distributed-3387

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

When --dashboard-prefix is used root location is always redirected to /status without adding the prefix

The problem arises when using --dashboard-prefix, using http://DASHBOARD[:PORT]/PREFIX/ redirects to http://DASHBOARD[:PORT]/status instead of http://DASHBOARD[:PORT]/PREFIX/status.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `distributed/dashboard/core.py`

Content:

```
1 from distutils.version import LooseVersion
2 import os
3 import warnings
4
5 import bokeh
6 from bokeh.server.server import Server
7 from tornado import web
8
9
10 if LooseVersion(bokeh.__version__) < LooseVersion("0.13.0"):
11     warnings.warn(
12         "\nDask needs bokeh >= 0.13.0 for the dashboard."
13         "\nContinuing without the dashboard."
14     )
15     raise ImportError("Dask needs bokeh >= 0.13.0")
16
17
18 class BokehServer(object):
19     server_kwargs = {}
20
21     def listen(self, addr):
22         if self.server:
23             return
24         if isinstance(addr, tuple):
25             ip, port = addr
26         else:
27             port = addr
28             ip = None
29         for i in range(5):
30             try:
31                 server_kwargs = dict(
32                     port=port,
33                     address=ip,
34                     check_unused_sessions_milliseconds=500,
35                     allow_websocket_origin=["*"],
36                     use_index=False,
37                     extra_patterns=[(r"/", web.RedirectHandler, {"url": "/status"})],
38                 )
39                 server_kwargs.update(self.server_kwargs)
40                 self.server = Server(self.apps, **server_kwargs)
41                 self.server.start()
42
43                 handlers = [
44                     (
45                         self.prefix + r"/statics/(.*)",
46                         web.StaticFileHandler,
47                         {"path": os.path.join(os.path.dirname(__file__), "static")},
48                     )
49                 ]
50
51                 self.server._tornado.add_handlers(r".*", handlers)
52
53                 return
54             except (SystemExit, EnvironmentError) as exc:
55                 if port != 0:
56                     if "already in use" in str(
57                         exc
58                     ) or "Only one usage of" in str(  # Unix/Mac
59                         exc
60                     ):  # Windows
61                         msg = (
62                             "Port %d is already in use. "
63                             "\nPerhaps you already have a cluster running?"
64                             "\nHosting the diagnostics dashboard on a random port instead."
65                             % port
66                         )
67                     else:
68                         msg = (
69                             "Failed to start diagnostics server on port %d. " % port
70                             + str(exc)
71                         )
72                     warnings.warn("\n" + msg)
73                     port = 0
74                 if i == 4:
75                     raise
76
77     @property
78     def port(self):
79         return (
80             self.server.port
81             or list(self.server._http._sockets.values())[0].getsockname()[1]
82         )
83
84     def stop(self):
85         for context in self.server._tornado._applications.values():
86             context.run_unload_hook()
87
88         self.server._tornado._stats_job.stop()
89         self.server._tornado._cleanup_job.stop()
90         if self.server._tornado._ping_job is not None:
91             self.server._tornado._ping_job.stop()
92
93         # https://github.com/bokeh/bokeh/issues/5494
94         if LooseVersion(bokeh.__version__) >= "0.12.4":
95             self.server.stop()
96
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/distributed/dashboard/core.py b/distributed/dashboard/core.py
--- a/distributed/dashboard/core.py
+++ b/distributed/dashboard/core.py
@@ -5,6 +5,7 @@
 import bokeh
 from bokeh.server.server import Server
 from tornado import web
+from urllib.parse import urljoin
 
 
 if LooseVersion(bokeh.__version__) < LooseVersion("0.13.0"):
@@ -34,7 +35,13 @@
                     check_unused_sessions_milliseconds=500,
                     allow_websocket_origin=["*"],
                     use_index=False,
-                    extra_patterns=[(r"/", web.RedirectHandler, {"url": "/status"})],
+                    extra_patterns=[
+                        (
+                            r"/",
+                            web.RedirectHandler,
+                            {"url": urljoin(self.prefix.rstrip("/") + "/", r"status")},
+                        )
+                    ],
                 )
                 server_kwargs.update(self.server_kwargs)
                 self.server = Server(self.apps, **server_kwargs)

|

{"golden_diff": "diff --git a/distributed/dashboard/core.py b/distributed/dashboard/core.py\n--- a/distributed/dashboard/core.py\n+++ b/distributed/dashboard/core.py\n@@ -5,6 +5,7 @@\n import bokeh\n from bokeh.server.server import Server\n from tornado import web\n+from urllib.parse import urljoin\n \n \n if LooseVersion(bokeh.__version__) < LooseVersion(\"0.13.0\"):\n@@ -34,7 +35,13 @@\n check_unused_sessions_milliseconds=500,\n allow_websocket_origin=[\"*\"],\n use_index=False,\n- extra_patterns=[(r\"/\", web.RedirectHandler, {\"url\": \"/status\"})],\n+ extra_patterns=[\n+ (\n+ r\"/\",\n+ web.RedirectHandler,\n+ {\"url\": urljoin(self.prefix.rstrip(\"/\") + \"/\", r\"status\")},\n+ )\n+ ],\n )\n server_kwargs.update(self.server_kwargs)\n self.server = Server(self.apps, **server_kwargs)\n", "issue": "When --dashboard-prefix is used root location is always redirected to /status without adding the prefix\nThe problem arises when using --dashboard-prefix, using http://DASHBOARD[:PORT]/PREFIX/ redirects to http://DASHBOARD[:PORT]/status instead of http://DASHBOARD[:PORT]/PREFIX/status.\n", "before_files": [{"content": "from distutils.version import LooseVersion\nimport os\nimport warnings\n\nimport bokeh\nfrom bokeh.server.server import Server\nfrom tornado import web\n\n\nif LooseVersion(bokeh.__version__) < LooseVersion(\"0.13.0\"):\n warnings.warn(\n \"\\nDask needs bokeh >= 0.13.0 for the dashboard.\"\n \"\\nContinuing without the dashboard.\"\n )\n raise ImportError(\"Dask needs bokeh >= 0.13.0\")\n\n\nclass BokehServer(object):\n server_kwargs = {}\n\n def listen(self, addr):\n if self.server:\n return\n if isinstance(addr, tuple):\n ip, port = addr\n else:\n port = addr\n ip = None\n for i in range(5):\n try:\n server_kwargs = dict(\n port=port,\n address=ip,\n check_unused_sessions_milliseconds=500,\n allow_websocket_origin=[\"*\"],\n use_index=False,\n extra_patterns=[(r\"/\", web.RedirectHandler, {\"url\": \"/status\"})],\n )\n 
server_kwargs.update(self.server_kwargs)\n self.server = Server(self.apps, **server_kwargs)\n self.server.start()\n\n handlers = [\n (\n self.prefix + r\"/statics/(.*)\",\n web.StaticFileHandler,\n {\"path\": os.path.join(os.path.dirname(__file__), \"static\")},\n )\n ]\n\n self.server._tornado.add_handlers(r\".*\", handlers)\n\n return\n except (SystemExit, EnvironmentError) as exc:\n if port != 0:\n if \"already in use\" in str(\n exc\n ) or \"Only one usage of\" in str( # Unix/Mac\n exc\n ): # Windows\n msg = (\n \"Port %d is already in use. \"\n \"\\nPerhaps you already have a cluster running?\"\n \"\\nHosting the diagnostics dashboard on a random port instead.\"\n % port\n )\n else:\n msg = (\n \"Failed to start diagnostics server on port %d. \" % port\n + str(exc)\n )\n warnings.warn(\"\\n\" + msg)\n port = 0\n if i == 4:\n raise\n\n @property\n def port(self):\n return (\n self.server.port\n or list(self.server._http._sockets.values())[0].getsockname()[1]\n )\n\n def stop(self):\n for context in self.server._tornado._applications.values():\n context.run_unload_hook()\n\n self.server._tornado._stats_job.stop()\n self.server._tornado._cleanup_job.stop()\n if self.server._tornado._ping_job is not None:\n self.server._tornado._ping_job.stop()\n\n # https://github.com/bokeh/bokeh/issues/5494\n if LooseVersion(bokeh.__version__) >= \"0.12.4\":\n self.server.stop()\n", "path": "distributed/dashboard/core.py"}], "after_files": [{"content": "from distutils.version import LooseVersion\nimport os\nimport warnings\n\nimport bokeh\nfrom bokeh.server.server import Server\nfrom tornado import web\nfrom urllib.parse import urljoin\n\n\nif LooseVersion(bokeh.__version__) < LooseVersion(\"0.13.0\"):\n warnings.warn(\n \"\\nDask needs bokeh >= 0.13.0 for the dashboard.\"\n \"\\nContinuing without the dashboard.\"\n )\n raise ImportError(\"Dask needs bokeh >= 0.13.0\")\n\n\nclass BokehServer(object):\n server_kwargs = {}\n\n def listen(self, addr):\n if self.server:\n return\n 
if isinstance(addr, tuple):\n ip, port = addr\n else:\n port = addr\n ip = None\n for i in range(5):\n try:\n server_kwargs = dict(\n port=port,\n address=ip,\n check_unused_sessions_milliseconds=500,\n allow_websocket_origin=[\"*\"],\n use_index=False,\n extra_patterns=[\n (\n r\"/\",\n web.RedirectHandler,\n {\"url\": urljoin(self.prefix.rstrip(\"/\") + \"/\", r\"status\")},\n )\n ],\n )\n server_kwargs.update(self.server_kwargs)\n self.server = Server(self.apps, **server_kwargs)\n self.server.start()\n\n handlers = [\n (\n self.prefix + r\"/statics/(.*)\",\n web.StaticFileHandler,\n {\"path\": os.path.join(os.path.dirname(__file__), \"static\")},\n )\n ]\n\n self.server._tornado.add_handlers(r\".*\", handlers)\n\n return\n except (SystemExit, EnvironmentError) as exc:\n if port != 0:\n if \"already in use\" in str(\n exc\n ) or \"Only one usage of\" in str( # Unix/Mac\n exc\n ): # Windows\n msg = (\n \"Port %d is already in use. \"\n \"\\nPerhaps you already have a cluster running?\"\n \"\\nHosting the diagnostics dashboard on a random port instead.\"\n % port\n )\n else:\n msg = (\n \"Failed to start diagnostics server on port %d. \" % port\n + str(exc)\n )\n warnings.warn(\"\\n\" + msg)\n port = 0\n if i == 4:\n raise\n\n @property\n def port(self):\n return (\n self.server.port\n or list(self.server._http._sockets.values())[0].getsockname()[1]\n )\n\n def stop(self):\n for context in self.server._tornado._applications.values():\n context.run_unload_hook()\n\n self.server._tornado._stats_job.stop()\n self.server._tornado._cleanup_job.stop()\n if self.server._tornado._ping_job is not None:\n self.server._tornado._ping_job.stop()\n\n # https://github.com/bokeh/bokeh/issues/5494\n if LooseVersion(bokeh.__version__) >= \"0.12.4\":\n self.server.stop()\n", "path": "distributed/dashboard/core.py"}]}

| 1,139 | 209 |
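The dask fix depends on how `urllib.parse.urljoin` resolves a relative path against a base: unless the base ends in `/`, its last path segment is replaced rather than extended. The sketch below assumes `self.prefix` is an empty string when no `--dashboard-prefix` is given. `redirect_target` is a hypothetical helper that isolates the expression the patch inlines.

```python
from urllib.parse import urljoin


def redirect_target(prefix: str) -> str:
    # Hypothetical helper mirroring the expression inlined in the patch:
    # normalize the prefix to end in exactly one "/" so that urljoin
    # appends "status" instead of replacing the prefix's last segment.
    return urljoin(prefix.rstrip("/") + "/", "status")


# Without the trailing slash, urljoin drops the last path segment of the base.
print(urljoin("/dask", "status"))  # /status (the prefix is lost)

for prefix in ["", "/dask", "/dask/"]:
    print(repr(prefix), "->", redirect_target(prefix))
```

With an empty prefix the target stays `/status`, so deployments without `--dashboard-prefix` keep the previous redirect.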

gh_patches_debug_40460

|

rasdani/github-patches

|

git_diff

|

vacanza__python-holidays-371

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Wrong workday info for country HR

Today (Oct. 8, 2020) my alarmclock automation did not go off, because my workday sensor gave the wrong info (no workday). This day used to be a holiday in Croatia, but is not anymore.

binary_sensor:
  - platform: workday
    country: HR

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `holidays/countries/croatia.py`

Content:

```
1 # -*- coding: utf-8 -*-
2
3 # python-holidays
4 # ---------------
5 # A fast, efficient Python library for generating country, province and state
6 # specific sets of holidays on the fly. It aims to make determining whether a
7 # specific date is a holiday as fast and flexible as possible.
8 #
9 # Author: ryanss <[email protected]> (c) 2014-2017
10 # dr-prodigy <[email protected]> (c) 2017-2020
11 # Website: https://github.com/dr-prodigy/python-holidays
12 # License: MIT (see LICENSE file)
13
14 from datetime import date
15
16 from dateutil.easter import easter
17 from dateutil.relativedelta import relativedelta as rd
18
19 from holidays.constants import JAN, MAY, JUN, AUG, OCT, \
20     NOV, DEC
21 from holidays.holiday_base import HolidayBase
22
23
24 class Croatia(HolidayBase):
25
26     # https://en.wikipedia.org/wiki/Public_holidays_in_Croatia
27
28     def __init__(self, **kwargs):
29         self.country = "HR"
30         HolidayBase.__init__(self, **kwargs)
31
32     def _populate(self, year):
33         # New years
34         self[date(year, JAN, 1)] = "Nova Godina"
35         # Epiphany
36         self[date(year, JAN, 6)] = "Sveta tri kralja"
37         easter_date = easter(year)
38
39         # Easter
40         self[easter_date] = "Uskrs"
41         # Easter Monday
42         self[easter_date + rd(days=1)] = "Uskršnji ponedjeljak"
43
44         # Corpus Christi
45         self[easter_date + rd(days=60)] = "Tijelovo"
46
47         # International Workers' Day
48         self[date(year, MAY, 1)] = "Međunarodni praznik rada"
49
50         if year >= 2020:
51             # Statehood day
52             self[date(year, MAY, 30)] = "Dan državnosti"
53
54         # Anti-fascist struggle day
55         self[date(year, JUN, 22)] = "Dan antifašističke borbe"
56
57         if year < 2020:
58             # Statehood day
59             self[date(year, JUN, 25)] = "Dan državnosti"
60
61         # Victory and Homeland Thanksgiving Day
62         self[date(year, AUG, 5)] = "Dan pobjede i domovinske zahvalnosti"
63
64         # Assumption of Mary
65         self[date(year, AUG, 15)] = "Velika Gospa"
66
67         if year < 2020:
68             # Independence Day
69             self[date(year, OCT, 8)] = "Dan neovisnosti"
70
71         # All Saints' Day
72         self[date(year, NOV, 1)] = "Dan svih svetih"
73
74         if year >= 2020:
75             # Memorial day
76             self[date(year, NOV, 18)] =\
77                 "Dan sjećanja na žrtve Domovinskog rata"
78
79         # Christmas day
80         self[date(year, DEC, 25)] = "Božić"
81
82         # St. Stephen's day
83         self[date(year, DEC, 26)] = "Sveti Stjepan"
84
85
86 class HR(Croatia):
87     pass
88
89
90 class HRV(Croatia):
91     pass
92
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/holidays/countries/croatia.py b/holidays/countries/croatia.py
--- a/holidays/countries/croatia.py
+++ b/holidays/countries/croatia.py
@@ -11,18 +11,18 @@
 # Website: https://github.com/dr-prodigy/python-holidays
 # License: MIT (see LICENSE file)
 
-from datetime import date
+from datetime import date, timedelta
 
 from dateutil.easter import easter
-from dateutil.relativedelta import relativedelta as rd
 
-from holidays.constants import JAN, MAY, JUN, AUG, OCT, \
-    NOV, DEC
+from holidays.constants import JAN, MAY, JUN, AUG, OCT, NOV, DEC
 from holidays.holiday_base import HolidayBase
 
 
 class Croatia(HolidayBase):
 
+    # Updated with act 022-03 / 19-01 / 219 of 14 November 2019
+    # https://narodne-novine.nn.hr/clanci/sluzbeni/2019_11_110_2212.html
     # https://en.wikipedia.org/wiki/Public_holidays_in_Croatia
 
     def __init__(self, **kwargs):
@@ -32,6 +32,7 @@
     def _populate(self, year):
         # New years
         self[date(year, JAN, 1)] = "Nova Godina"
+
         # Epiphany
         self[date(year, JAN, 6)] = "Sveta tri kralja"
         easter_date = easter(year)
@@ -39,23 +40,23 @@
         # Easter
         self[easter_date] = "Uskrs"
         # Easter Monday
-        self[easter_date + rd(days=1)] = "Uskršnji ponedjeljak"
+        self[easter_date + timedelta(days=1)] = "Uskrsni ponedjeljak"
 
         # Corpus Christi
-        self[easter_date + rd(days=60)] = "Tijelovo"
+        self[easter_date + timedelta(days=60)] = "Tijelovo"
 
         # International Workers' Day
         self[date(year, MAY, 1)] = "Međunarodni praznik rada"
 
+        # Statehood day (new)
         if year >= 2020:
-            # Statehood day
             self[date(year, MAY, 30)] = "Dan državnosti"
 
         # Anti-fascist struggle day
         self[date(year, JUN, 22)] = "Dan antifašističke borbe"
 
+        # Statehood day (old)
         if year < 2020:
-            # Statehood day
             self[date(year, JUN, 25)] = "Dan državnosti"
 
         # Victory and Homeland Thanksgiving Day
@@ -64,17 +65,16 @@
         # Assumption of Mary
         self[date(year, AUG, 15)] = "Velika Gospa"
 
+        # Independence Day (old)
         if year < 2020:
-            # Independence Day
             self[date(year, OCT, 8)] = "Dan neovisnosti"
 
         # All Saints' Day
-        self[date(year, NOV, 1)] = "Dan svih svetih"
+        self[date(year, NOV, 1)] = "Svi sveti"
 
         if year >= 2020:
             # Memorial day
-            self[date(year, NOV, 18)] =\
-                "Dan sjećanja na žrtve Domovinskog rata"
+            self[date(year, NOV, 18)] = "Dan sjećanja"
 
         # Christmas day
         self[date(year, DEC, 25)] = "Božić"

|

{"golden_diff": "diff --git a/holidays/countries/croatia.py b/holidays/countries/croatia.py\n--- a/holidays/countries/croatia.py\n+++ b/holidays/countries/croatia.py\n@@ -11,18 +11,18 @@\n # Website: https://github.com/dr-prodigy/python-holidays\n # License: MIT (see LICENSE file)\n \n-from datetime import date\n+from datetime import date, timedelta\n \n from dateutil.easter import easter\n-from dateutil.relativedelta import relativedelta as rd\n \n-from holidays.constants import JAN, MAY, JUN, AUG, OCT, \\\n- NOV, DEC\n+from holidays.constants import JAN, MAY, JUN, AUG, OCT, NOV, DEC\n from holidays.holiday_base import HolidayBase\n \n \n class Croatia(HolidayBase):\n \n+ # Updated with act 022-03 / 19-01 / 219 of 14 November 2019\n+ # https://narodne-novine.nn.hr/clanci/sluzbeni/2019_11_110_2212.html\n # https://en.wikipedia.org/wiki/Public_holidays_in_Croatia\n \n def __init__(self, **kwargs):\n@@ -32,6 +32,7 @@\n def _populate(self, year):\n # New years\n self[date(year, JAN, 1)] = \"Nova Godina\"\n+\n # Epiphany\n self[date(year, JAN, 6)] = \"Sveta tri kralja\"\n easter_date = easter(year)\n@@ -39,23 +40,23 @@\n # Easter\n self[easter_date] = \"Uskrs\"\n # Easter Monday\n- self[easter_date + rd(days=1)] = \"Uskr\u0161nji ponedjeljak\"\n+ self[easter_date + timedelta(days=1)] = \"Uskrsni ponedjeljak\"\n \n # Corpus Christi\n- self[easter_date + rd(days=60)] = \"Tijelovo\"\n+ self[easter_date + timedelta(days=60)] = \"Tijelovo\"\n \n # International Workers' Day\n self[date(year, MAY, 1)] = \"Me\u0111unarodni praznik rada\"\n \n+ # Statehood day (new)\n if year >= 2020:\n- # Statehood day\n self[date(year, MAY, 30)] = \"Dan dr\u017eavnosti\"\n \n # Anti-fascist struggle day\n self[date(year, JUN, 22)] = \"Dan antifa\u0161isti\u010dke borbe\"\n \n+ # Statehood day (old)\n if year < 2020:\n- # Statehood day\n self[date(year, JUN, 25)] = \"Dan dr\u017eavnosti\"\n \n # Victory and Homeland Thanksgiving Day\n@@ -64,17 +65,16 @@\n # Assumption of Mary\n 
self[date(year, AUG, 15)] = \"Velika Gospa\"\n \n+ # Independence Day (old)\n if year < 2020:\n- # Independence Day\n self[date(year, OCT, 8)] = \"Dan neovisnosti\"\n \n # All Saints' Day\n- self[date(year, NOV, 1)] = \"Dan svih svetih\"\n+ self[date(year, NOV, 1)] = \"Svi sveti\"\n \n if year >= 2020:\n # Memorial day\n- self[date(year, NOV, 18)] =\\\n- \"Dan sje\u0107anja na \u017ertve Domovinskog rata\"\n+ self[date(year, NOV, 18)] = \"Dan sje\u0107anja\"\n \n # Christmas day\n self[date(year, DEC, 25)] = \"Bo\u017ei\u0107\"\n", "issue": "Wrong workday info for country HR\nToday (Oct. 8, 2020) my alarmclock automation did not go off, because my workday sensor gave the wrong info (no workday). This day used to be a holiday in Croatia, but is not anymore.\r\n\r\nbinary_sensor:\r\n - platform: workday\r\n country: HR\r\n\r\n\r\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\n# python-holidays\n# ---------------\n# A fast, efficient Python library for generating country, province and state\n# specific sets of holidays on the fly. 
It aims to make determining whether a\n# specific date is a holiday as fast and flexible as possible.\n#\n# Author: ryanss <[email protected]> (c) 2014-2017\n# dr-prodigy <[email protected]> (c) 2017-2020\n# Website: https://github.com/dr-prodigy/python-holidays\n# License: MIT (see LICENSE file)\n\nfrom datetime import date\n\nfrom dateutil.easter import easter\nfrom dateutil.relativedelta import relativedelta as rd\n\nfrom holidays.constants import JAN, MAY, JUN, AUG, OCT, \\\n NOV, DEC\nfrom holidays.holiday_base import HolidayBase\n\n\nclass Croatia(HolidayBase):\n\n # https://en.wikipedia.org/wiki/Public_holidays_in_Croatia\n\n def __init__(self, **kwargs):\n self.country = \"HR\"\n HolidayBase.__init__(self, **kwargs)\n\n def _populate(self, year):\n # New years\n self[date(year, JAN, 1)] = \"Nova Godina\"\n # Epiphany\n self[date(year, JAN, 6)] = \"Sveta tri kralja\"\n easter_date = easter(year)\n\n # Easter\n self[easter_date] = \"Uskrs\"\n # Easter Monday\n self[easter_date + rd(days=1)] = \"Uskr\u0161nji ponedjeljak\"\n\n # Corpus Christi\n self[easter_date + rd(days=60)] = \"Tijelovo\"\n\n # International Workers' Day\n self[date(year, MAY, 1)] = \"Me\u0111unarodni praznik rada\"\n\n if year >= 2020:\n # Statehood day\n self[date(year, MAY, 30)] = \"Dan dr\u017eavnosti\"\n\n # Anti-fascist struggle day\n self[date(year, JUN, 22)] = \"Dan antifa\u0161isti\u010dke borbe\"\n\n if year < 2020:\n # Statehood day\n self[date(year, JUN, 25)] = \"Dan dr\u017eavnosti\"\n\n # Victory and Homeland Thanksgiving Day\n self[date(year, AUG, 5)] = \"Dan pobjede i domovinske zahvalnosti\"\n\n # Assumption of Mary\n self[date(year, AUG, 15)] = \"Velika Gospa\"\n\n if year < 2020:\n # Independence Day\n self[date(year, OCT, 8)] = \"Dan neovisnosti\"\n\n # All Saints' Day\n self[date(year, NOV, 1)] = \"Dan svih svetih\"\n\n if year >= 2020:\n # Memorial day\n self[date(year, NOV, 18)] =\\\n \"Dan sje\u0107anja na \u017ertve Domovinskog rata\"\n\n # Christmas day\n 
self[date(year, DEC, 25)] = \"Bo\u017ei\u0107\"\n\n # St. Stephen's day\n self[date(year, DEC, 26)] = \"Sveti Stjepan\"\n\n\nclass HR(Croatia):\n pass\n\n\nclass HRV(Croatia):\n pass\n", "path": "holidays/countries/croatia.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\n\n# python-holidays\n# ---------------\n# A fast, efficient Python library for generating country, province and state\n# specific sets of holidays on the fly. It aims to make determining whether a\n# specific date is a holiday as fast and flexible as possible.\n#\n# Author: ryanss <[email protected]> (c) 2014-2017\n# dr-prodigy <[email protected]> (c) 2017-2020\n# Website: https://github.com/dr-prodigy/python-holidays\n# License: MIT (see LICENSE file)\n\nfrom datetime import date, timedelta\n\nfrom dateutil.easter import easter\n\nfrom holidays.constants import JAN, MAY, JUN, AUG, OCT, NOV, DEC\nfrom holidays.holiday_base import HolidayBase\n\n\nclass Croatia(HolidayBase):\n\n # Updated with act 022-03 / 19-01 / 219 of 14 November 2019\n # https://narodne-novine.nn.hr/clanci/sluzbeni/2019_11_110_2212.html\n # https://en.wikipedia.org/wiki/Public_holidays_in_Croatia\n\n def __init__(self, **kwargs):\n self.country = \"HR\"\n HolidayBase.__init__(self, **kwargs)\n\n def _populate(self, year):\n # New years\n self[date(year, JAN, 1)] = \"Nova Godina\"\n\n # Epiphany\n self[date(year, JAN, 6)] = \"Sveta tri kralja\"\n easter_date = easter(year)\n\n # Easter\n self[easter_date] = \"Uskrs\"\n # Easter Monday\n self[easter_date + timedelta(days=1)] = \"Uskrsni ponedjeljak\"\n\n # Corpus Christi\n self[easter_date + timedelta(days=60)] = \"Tijelovo\"\n\n # International Workers' Day\n self[date(year, MAY, 1)] = \"Me\u0111unarodni praznik rada\"\n\n # Statehood day (new)\n if year >= 2020:\n self[date(year, MAY, 30)] = \"Dan dr\u017eavnosti\"\n\n # Anti-fascist struggle day\n self[date(year, JUN, 22)] = \"Dan antifa\u0161isti\u010dke borbe\"\n\n # Statehood day (old)\n if year < 2020:\n 
self[date(year, JUN, 25)] = \"Dan dr\u017eavnosti\"\n\n # Victory and Homeland Thanksgiving Day\n self[date(year, AUG, 5)] = \"Dan pobjede i domovinske zahvalnosti\"\n\n # Assumption of Mary\n self[date(year, AUG, 15)] = \"Velika Gospa\"\n\n # Independence Day (old)\n if year < 2020:\n self[date(year, OCT, 8)] = \"Dan neovisnosti\"\n\n # All Saints' Day\n self[date(year, NOV, 1)] = \"Svi sveti\"\n\n if year >= 2020:\n # Memorial day\n self[date(year, NOV, 18)] = \"Dan sje\u0107anja\"\n\n # Christmas day\n self[date(year, DEC, 25)] = \"Bo\u017ei\u0107\"\n\n # St. Stephen's day\n self[date(year, DEC, 26)] = \"Sveti Stjepan\"\n\n\nclass HR(Croatia):\n pass\n\n\nclass HRV(Croatia):\n pass\n", "path": "holidays/countries/croatia.py"}]}

| 1,373 | 883 |
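The Croatia patch changes which fixed dates count as holidays from 2020 onward. The dependency-free sketch below keeps only the year-dependent rules from the patched `croatia.py` and shows why 8 October 2020 should now be a workday. `year_dependent_holidays` is a hypothetical name, and the holidays observed every year, along with the Easter-based ones, are deliberately left out.

```python
from datetime import date


def year_dependent_holidays(year: int) -> dict:
    """Year-dependent fixed-date holidays encoded in the patched croatia.py.

    Holidays observed every year and the Easter-based ones are omitted
    to keep the sketch self-contained.
    """
    days = {}
    if year >= 2020:
        # Dates introduced by the act of 14 November 2019
        days[date(year, 5, 30)] = "Dan državnosti"
        days[date(year, 11, 18)] = "Dan sjećanja"
    else:
        # Dates that are no longer public holidays from 2020
        days[date(year, 6, 25)] = "Dan državnosti"
        days[date(year, 10, 8)] = "Dan neovisnosti"
    return days


for day in (date(2019, 10, 8), date(2020, 10, 8)):
    status = "holiday" if day in year_dependent_holidays(day.year) else "not a holiday"
    print(day, status)
```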

gh_patches_debug_7385

|

rasdani/github-patches

|

git_diff

|

mozilla__bugbug-138

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix linting issue preventing us from updating to flake8 4.7.1

https://github.com/mozilla/bugbug/pull/135 is failing because:

> ./bugbug/model.py:102:21: E117 over-indented

We should fix it, and then we can merge https://github.com/mozilla/bugbug/pull/135.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `bugbug/model.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 # This Source Code Form is subject to the terms of the Mozilla Public
3 # License, v. 2.0. If a copy of the MPL was not distributed with this file,
4 # You can obtain one at http://mozilla.org/MPL/2.0/.
5
6 import numpy as np
7 import shap
8 from imblearn.metrics import classification_report_imbalanced
9 from imblearn.under_sampling import RandomUnderSampler
10 from sklearn import metrics
11 from sklearn.externals import joblib
12 from sklearn.feature_extraction.text import TfidfVectorizer
13 from sklearn.model_selection import cross_validate
14 from sklearn.model_selection import train_test_split
15
16 from bugbug import bugzilla
17 from bugbug.nlp import SpacyVectorizer
18
19
20 class Model():
21     def __init__(self, lemmatization=False):
22         if lemmatization:
23             self.text_vectorizer = SpacyVectorizer
24         else:
25             self.text_vectorizer = TfidfVectorizer
26
27         self.undersampling_enabled = True
28         self.cross_validation_enabled = True
29
30     def get_feature_names(self):
31         return []
32
33     def get_important_features(self, cutoff, shap_values):
34         # Calculate the values that represent the fraction of the model output variability attributable
35         # to each feature across the whole dataset.
36         shap_sums = shap_values.sum(0)
37         abs_shap_sums = np.abs(shap_values).sum(0)
38         rel_shap_sums = abs_shap_sums / abs_shap_sums.sum()
39
40         cut_off_value = cutoff * np.amax(rel_shap_sums)
41
42         # Get indices of features that pass the cut off value
43         top_feature_indices = np.where(rel_shap_sums >= cut_off_value)[0]
44         # Get the importance values of the top features from their indices
45         top_features = np.take(rel_shap_sums, top_feature_indices)
46         # Gets the sign of the importance from shap_sums as boolean
47         is_positive = (np.take(shap_sums, top_feature_indices)) >= 0
48         # Stack the importance, indices and shap_sums in a 2D array
49         top_features = np.column_stack((top_features, top_feature_indices, is_positive))
50         # Sort the array (in decreasing order of importance values)
51         top_features = top_features[top_features[:, 0].argsort()][::-1]
52
53         return top_features
54
55     def train(self, importance_cutoff=0.15):
56         classes = self.get_labels()
57         class_names = sorted(list(set(classes.values())), reverse=True)
58
59         # Get bugs, filtering out those for which we have no labels.
60         def bugs():
61             return (bug for bug in bugzilla.get_bugs() if bug['id'] in classes)
62
63         # Calculate labels.
64         y = np.array([classes[bug['id']] for bug in bugs()])
65
66         # Extract features from the bugs.
67         X = self.extraction_pipeline.fit_transform(bugs())
68
69         print(f'X: {X.shape}, y: {y.shape}')
70
71         # Split dataset in training and test.
72         X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, random_state=0)
73
74         if self.undersampling_enabled:
75             # Under-sample the majority classes, as the datasets are imbalanced.
76             X_train, y_train = RandomUnderSampler(random_state=0).fit_sample(X_train, y_train)
77
78         print(f'X_train: {X_train.shape}, y_train: {y_train.shape}')
79         print(f'X_test: {X_test.shape}, y_test: {y_test.shape}')
80
81         # Use k-fold cross validation to evaluate results.
82         if self.cross_validation_enabled:
83             scorings = ['accuracy', 'precision', 'recall']
84             scores = cross_validate(self.clf, X_train, y_train, scoring=scorings, cv=5)
85             print('Cross Validation scores:')
86             for scoring in scorings:
87                 score = scores[f'test_{scoring}']
88                 print(f'{scoring.capitalize()}: f{score.mean()} (+/- {score.std() * 2})')
89
90         # Evaluate results on the test set.
91         self.clf.fit(X_train, y_train)
92
93         feature_names = self.get_feature_names()
94         if len(feature_names):
95             explainer = shap.TreeExplainer(self.clf)
96             shap_values = explainer.shap_values(X_train)
97
98             important_features = self.get_important_features(importance_cutoff, shap_values)
99
100             print(f'\nTop {len(important_features)} Features :')
101             for i, [importance, index, is_positive] in enumerate(important_features):
102                     print(f'{i + 1}. \'{feature_names[int(index)]}\' ({"+" if (is_positive) else "-"}{importance})')
103
104         y_pred = self.clf.predict(X_test)
105
106         print(f'No confidence threshold - {len(y_test)} classified')
107         print(metrics.confusion_matrix(y_test, y_pred, labels=class_names))
108         print(classification_report_imbalanced(y_test, y_pred, labels=class_names))
109
110         # Evaluate results on the test set for some confidence thresholds.
111         for confidence_threshold in [0.6, 0.7, 0.8, 0.9]:
112             y_pred_probas = self.clf.predict_proba(X_test)
113
114             y_test_filter = []
115             y_pred_filter = []
116             for i in range(0, len(y_test)):
117                 argmax = np.argmax(y_pred_probas[i])
118                 if y_pred_probas[i][argmax] < confidence_threshold:
119                     continue
120
121                 y_test_filter.append(y_test[i])
122                 y_pred_filter.append(argmax)
123
124             y_pred_filter = self.clf._le.inverse_transform(y_pred_filter)
125
126             print(f'\nConfidence threshold > {confidence_threshold} - {len(y_test_filter)} classified')
127             print(metrics.confusion_matrix(y_test_filter, y_pred_filter, labels=class_names))
128             print(classification_report_imbalanced(y_test_filter, y_pred_filter, labels=class_names))
129
130         joblib.dump(self, self.__class__.__name__.lower())
131
132     @staticmethod
133     def load(model_file_name):
134         return joblib.load(model_file_name)
135
136     def overwrite_classes(self, bugs, classes, probabilities):
137         return classes
138
139     def classify(self, bugs, probabilities=False, importances=False, importance_cutoff=0.15):
140         assert bugs is not None
141         assert self.extraction_pipeline is not None and self.clf is not None, 'The module needs to be initialized first'
142
143         if not isinstance(bugs, list):
144             bugs = [bugs]
145
146         assert isinstance(bugs[0], dict)
147
148         X = self.extraction_pipeline.transform(bugs)
149         if probabilities:
150             classes = self.clf.predict_proba(X)
151         else:
152             classes = self.clf.predict(X)
153
154         classes = self.overwrite_classes(bugs, classes, probabilities)
155
156         if importances:
157             explainer = shap.TreeExplainer(self.clf)
158             shap_values = explainer.shap_values(X)
159
160             importances = self.get_important_features(importance_cutoff, shap_values)
161
162             return classes, importances
163
164         return classes
165
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/bugbug/model.py b/bugbug/model.py
--- a/bugbug/model.py
+++ b/bugbug/model.py
@@ -99,7 +99,7 @@
 
             print(f'\nTop {len(important_features)} Features :')
             for i, [importance, index, is_positive] in enumerate(important_features):
-                    print(f'{i + 1}. \'{feature_names[int(index)]}\' ({"+" if (is_positive) else "-"}{importance})')
+                print(f'{i + 1}. \'{feature_names[int(index)]}\' ({"+" if (is_positive) else "-"}{importance})')
 
         y_pred = self.clf.predict(X_test)
 

|