| problem_id (string, length 18-22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, length 13-58) | prompt (string, length 1.71k-9.01k) | golden_diff (string, length 151-4.94k) | verification_info (string, length 465-11.3k) | num_tokens_prompt (int64, 557-2.05k) | num_tokens_diff (int64, 48-1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_20827

|

rasdani/github-patches

|

git_diff

|

shuup__shuup-742

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

System check to verify Parler sanity

Shuup should check that the Parler configuration is sane before starting.

@JsseL and @juhakujala puzzled over an unrelated exception (`'shuup.admin.modules.services.behavior_form_part.BehaviorFormSet object' has no attribute 'empty_form'`) for a while – turns out it was an `AttributeError` ([which, as we unfortunately know, are hidden within `@property`s](https://github.com/shuup/shuup/blob/5584ebf912bae415fe367ea0c00ad4c5cff49244/shuup/utils/form_group.py#L86-L100)) within `FormSet.empty_form` calls that happens due to `PARLER_DEFAULT_LANGUAGE_CODE` being undefined:

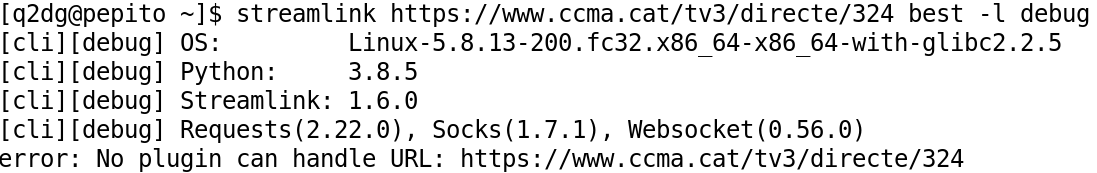

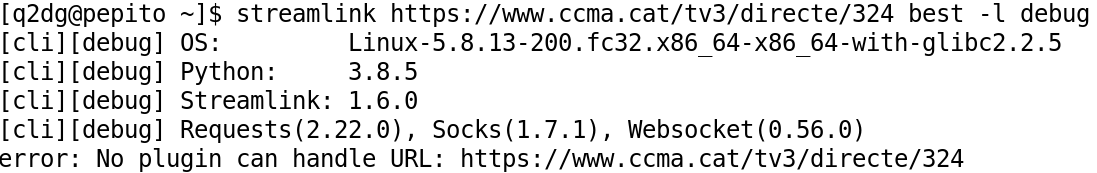

```
Traceback (most recent call last):
  File "~/django/forms/formsets.py", line 187, in empty_form
    empty_permitted=True,
  File "~/shuup/admin/modules/services/behavior_form_part.py", line 49, in form
    kwargs.setdefault("default_language", settings.PARLER_DEFAULT_LANGUAGE_CODE)
  File "~/django/conf/__init__.py", line 49, in __getattr__
    return getattr(self._wrapped, name)
AttributeError: 'Settings' object has no attribute 'PARLER_DEFAULT_LANGUAGE_CODE'
```

My suggestion is to add a simple system check in [ShuupCoreAppConfig.ready()](https://github.com/shuup/shuup/blob/5584ebf912bae415fe367ea0c00ad4c5cff49244/shuup/core/__init__.py#L11) that throws an exception if some of the Parler settings (`PARLER_DEFAULT_LANGUAGE_CODE` and `PARLER_LANGUAGES`) are unset -- or perhaps it could automatically derive them based on the Django `LANGUAGES` setting, as "sane defaults" go?

</issue>

<code>

[start of shuup/core/__init__.py]

1 # -*- coding: utf-8 -*-
2 # This file is part of Shuup.
3 #
4 # Copyright (c) 2012-2016, Shoop Ltd. All rights reserved.
5 #
6 # This source code is licensed under the AGPLv3 license found in the
7 # LICENSE file in the root directory of this source tree.
8 from shuup.apps import AppConfig
9
10
11 class ShuupCoreAppConfig(AppConfig):
12     name = "shuup.core"
13     verbose_name = "Shuup Core"
14     label = "shuup" # Use "shuup" as app_label instead of "core"
15     required_installed_apps = (
16         "django.contrib.auth",
17         "django.contrib.contenttypes",
18         "easy_thumbnails",
19         "filer",
20     )
21     provides = {
22         "api_populator": [
23             "shuup.core.api:populate_core_api"
24         ],
25         "pricing_module": [
26             "shuup.core.pricing.default_pricing:DefaultPricingModule"
27         ],
28     }
29
30
31 default_app_config = "shuup.core.ShuupCoreAppConfig"
32

[end of shuup/core/__init__.py]

[start of shuup/core/excs.py]

1 # -*- coding: utf-8 -*-
2 # This file is part of Shuup.
3 #
4 # Copyright (c) 2012-2016, Shoop Ltd. All rights reserved.
5 #
6 # This source code is licensed under the AGPLv3 license found in the
7 # LICENSE file in the root directory of this source tree.
8 from shuup.utils.excs import Problem
9
10
11 class ImmutabilityError(ValueError):
12     pass
13
14
15 class NoProductsToShipException(Exception):
16     pass
17
18
19 class NoPaymentToCreateException(Exception):
20     pass
21
22
23 class NoRefundToCreateException(Exception):
24     pass
25
26
27 class RefundExceedsAmountException(Exception):
28     pass
29
30
31 class InvalidRefundAmountException(Exception):
32     pass
33
34
35 class ProductNotOrderableProblem(Problem):
36     pass
37
38
39 class ProductNotVisibleProblem(Problem):
40     pass
41
42
43 class ImpossibleProductModeException(ValueError):
44     def __init__(self, message, code=None):
45         super(ImpossibleProductModeException, self).__init__(message)
46         self.code = code
47

[end of shuup/core/excs.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/shuup/core/__init__.py b/shuup/core/__init__.py
--- a/shuup/core/__init__.py
+++ b/shuup/core/__init__.py
@@ -6,6 +6,7 @@
 # This source code is licensed under the AGPLv3 license found in the
 # LICENSE file in the root directory of this source tree.
 from shuup.apps import AppConfig
+from shuup.core.excs import MissingSettingException
 
 
 class ShuupCoreAppConfig(AppConfig):
@@ -27,5 +28,12 @@
         ],
     }
 
+    def ready(self):
+        from django.conf import settings
+        if not getattr(settings, "PARLER_DEFAULT_LANGUAGE_CODE", None):
+            raise MissingSettingException("PARLER_DEFAULT_LANGUAGE_CODE must be set.")
+        if not getattr(settings, "PARLER_LANGUAGES", None):
+            raise MissingSettingException("PARLER_LANGUAGES must be set.")
+
 
 default_app_config = "shuup.core.ShuupCoreAppConfig"
diff --git a/shuup/core/excs.py b/shuup/core/excs.py
--- a/shuup/core/excs.py
+++ b/shuup/core/excs.py
@@ -32,6 +32,10 @@
     pass
 
 
+class MissingSettingException(Exception):
+    pass
+
+
 class ProductNotOrderableProblem(Problem):
     pass

|

{"golden_diff": "diff --git a/shuup/core/__init__.py b/shuup/core/__init__.py\n--- a/shuup/core/__init__.py\n+++ b/shuup/core/__init__.py\n@@ -6,6 +6,7 @@\n # This source code is licensed under the AGPLv3 license found in the\n # LICENSE file in the root directory of this source tree.\n from shuup.apps import AppConfig\n+from shuup.core.excs import MissingSettingException\n \n \n class ShuupCoreAppConfig(AppConfig):\n@@ -27,5 +28,12 @@\n ],\n }\n \n+ def ready(self):\n+ from django.conf import settings\n+ if not getattr(settings, \"PARLER_DEFAULT_LANGUAGE_CODE\", None):\n+ raise MissingSettingException(\"PARLER_DEFAULT_LANGUAGE_CODE must be set.\")\n+ if not getattr(settings, \"PARLER_LANGUAGES\", None):\n+ raise MissingSettingException(\"PARLER_LANGUAGES must be set.\")\n+\n \n default_app_config = \"shuup.core.ShuupCoreAppConfig\"\ndiff --git a/shuup/core/excs.py b/shuup/core/excs.py\n--- a/shuup/core/excs.py\n+++ b/shuup/core/excs.py\n@@ -32,6 +32,10 @@\n pass\n \n \n+class MissingSettingException(Exception):\n+ pass\n+\n+\n class ProductNotOrderableProblem(Problem):\n pass\n", "issue": "System check to verify Parler sanity\nShuup should check that the Parler configuration is sane before starting.\n\n@JsseL and @juhakujala puzzled over an unrelated exception (`'shuup.admin.modules.services.behavior_form_part.BehaviorFormSet object' has no attribute 'empty_form'`) for a while \u2013 turns out it was an `AttributeError` ([which, as we unfortunately know, are hidden within `@property`s](https://github.com/shuup/shuup/blob/5584ebf912bae415fe367ea0c00ad4c5cff49244/shuup/utils/form_group.py#L86-L100)) within `FormSet.empty_form` calls that happens due to `PARLER_DEFAULT_LANGUAGE_CODE` being undefined:\n\n```\nTraceback (most recent call last):\n File \"~/django/forms/formsets.py\", line 187, in empty_form\n empty_permitted=True,\n File \"~/shuup/admin/modules/services/behavior_form_part.py\", line 49, in form\n kwargs.setdefault(\"default_language\", 
settings.PARLER_DEFAULT_LANGUAGE_CODE)\n File \"~/django/conf/__init__.py\", line 49, in __getattr__\n return getattr(self._wrapped, name)\nAttributeError: 'Settings' object has no attribute 'PARLER_DEFAULT_LANGUAGE_CODE'\n```\n\nMy suggestion is to add a simple system check in [ShuupCoreAppConfig.ready()](https://github.com/shuup/shuup/blob/5584ebf912bae415fe367ea0c00ad4c5cff49244/shuup/core/__init__.py#L11) that throws an exception if some of the Parler settings (`PARLER_DEFAULT_LANGUAGE_CODE` and `PARLER_LANGUAGES`) are unset -- or perhaps it could automatically derive them based on the Django `LANGUAGES` setting, as \"sane defaults\" go?\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# This file is part of Shuup.\n#\n# Copyright (c) 2012-2016, Shoop Ltd. All rights reserved.\n#\n# This source code is licensed under the AGPLv3 license found in the\n# LICENSE file in the root directory of this source tree.\nfrom shuup.apps import AppConfig\n\n\nclass ShuupCoreAppConfig(AppConfig):\n name = \"shuup.core\"\n verbose_name = \"Shuup Core\"\n label = \"shuup\" # Use \"shuup\" as app_label instead of \"core\"\n required_installed_apps = (\n \"django.contrib.auth\",\n \"django.contrib.contenttypes\",\n \"easy_thumbnails\",\n \"filer\",\n )\n provides = {\n \"api_populator\": [\n \"shuup.core.api:populate_core_api\"\n ],\n \"pricing_module\": [\n \"shuup.core.pricing.default_pricing:DefaultPricingModule\"\n ],\n }\n\n\ndefault_app_config = \"shuup.core.ShuupCoreAppConfig\"\n", "path": "shuup/core/__init__.py"}, {"content": "# -*- coding: utf-8 -*-\n# This file is part of Shuup.\n#\n# Copyright (c) 2012-2016, Shoop Ltd. 
All rights reserved.\n#\n# This source code is licensed under the AGPLv3 license found in the\n# LICENSE file in the root directory of this source tree.\nfrom shuup.utils.excs import Problem\n\n\nclass ImmutabilityError(ValueError):\n pass\n\n\nclass NoProductsToShipException(Exception):\n pass\n\n\nclass NoPaymentToCreateException(Exception):\n pass\n\n\nclass NoRefundToCreateException(Exception):\n pass\n\n\nclass RefundExceedsAmountException(Exception):\n pass\n\n\nclass InvalidRefundAmountException(Exception):\n pass\n\n\nclass ProductNotOrderableProblem(Problem):\n pass\n\n\nclass ProductNotVisibleProblem(Problem):\n pass\n\n\nclass ImpossibleProductModeException(ValueError):\n def __init__(self, message, code=None):\n super(ImpossibleProductModeException, self).__init__(message)\n self.code = code\n", "path": "shuup/core/excs.py"}]}

| 1,602 | 312 |

gh_patches_debug_29881

|

rasdani/github-patches

|

git_diff

|

e2nIEE__pandapower-880

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Missing dependencies: xlswriter, xlrd, cryptography

Hi,

I am currently following the instructions for the installation of the development version, as shown here: https://www.pandapower.org/start/#develop

I have a brand new virtual environment on Python 3.8.3 (Windows 10, 64 bits), and the tests failed because of the following missing dependencies:

> Edit: Same result on Python 3.7.8.

1. xlsxwriter: `FAILED pandapower\test\api\test_file_io.py::test_excel[1] - ModuleNotFoundError: No module named 'xlsxwriter'`

2. xlrd: `FAILED pandapower\test\api\test_file_io.py::test_excel[1] - ImportError: Missing optional dependency 'xlrd'. Install xlrd >= 1.0.0 for Excel support Use pip or conda to install xlrd.`

3. cryptography: `FAILED pandapower\test\api\test_file_io.py::test_encrypted_json[1] - ModuleNotFoundError: No module named 'cryptography'`

The permanent solution would most likely be to add those to setup.py and mention them in the documentation, but you might want to check if you should restrict the version.

P.S.: The tests still ended up failing, but that's a seperate issue (see issue #876 ).

</issue>

<code>

[start of setup.py]

1 # -*- coding: utf-8 -*-
2
3 # Copyright (c) 2016-2020 by University of Kassel and Fraunhofer Institute for Energy Economics
4 # and Energy System Technology (IEE), Kassel. All rights reserved.
5
6 from setuptools import setup, find_packages
7 import re
8
9 with open('README.rst', 'rb') as f:
10     install = f.read().decode('utf-8')
11
12 with open('CHANGELOG.rst', 'rb') as f:
13     changelog = f.read().decode('utf-8')
14
15 classifiers = [
16     'Development Status :: 5 - Production/Stable',
17     'Environment :: Console',
18     'Intended Audience :: Developers',
19     'Intended Audience :: Education',
20     'Intended Audience :: Science/Research',
21     'License :: OSI Approved :: BSD License',
22     'Natural Language :: English',
23     'Operating System :: OS Independent',
24     'Programming Language :: Python',
25     'Programming Language :: Python :: 3']
26
27 with open('.travis.yml', 'rb') as f:
28     lines = f.read().decode('utf-8')
29     for version in re.findall('python: 3.[0-9]', lines):
30         classifiers.append('Programming Language :: Python :: 3.%s' % version[-1])
31
32 long_description = '\n\n'.join((install, changelog))
33
34 setup(
35     name='pandapower',
36     version='2.3.0',
37     author='Leon Thurner, Alexander Scheidler',
38     author_email='[email protected], [email protected]',
39     description='Convenient Power System Modelling and Analysis based on PYPOWER and pandas',
40     long_description=long_description,
41 	long_description_content_type='text/x-rst',
42     url='http://www.pandapower.org',
43     license='BSD',
44     install_requires=["pandas>=0.17",
45                       "networkx",
46                       "scipy",
47                       "numpy>=0.11",
48                       "packaging"],
49     extras_require={":python_version<'3.0'": ["future"],
50                     "docs": ["numpydoc", "sphinx", "sphinx_rtd_theme"],
51                     "plotting": ["plotly", "matplotlib", "python-igraph"],
52                     "test": ["pytest", "pytest-xdist"]},
53     packages=find_packages(),
54     include_package_data=True,
55     classifiers=classifiers
56 )
57

57

[end of setup.py]

[start of pandapower/__init__.py]

1 __version__ = "2.3.0"
2
3 import os
4 pp_dir = os.path.dirname(os.path.realpath(__file__))
5
6 from pandapower.auxiliary import *
7 from pandapower.convert_format import *
8 from pandapower.create import *
9 from pandapower.diagnostic import *
10 from pandapower.file_io import *
11 from pandapower.run import *
12 from pandapower.runpm import *
13 from pandapower.std_types import *
14 from pandapower.toolbox import *
15 from pandapower.powerflow import *
16 from pandapower.opf import *
17 from pandapower.optimal_powerflow import OPFNotConverged
18 from pandapower.pf.runpp_3ph import runpp_3ph
19 import pandas as pd
20 pd.options.mode.chained_assignment = None # default='warn'
21

[end of pandapower/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/pandapower/__init__.py b/pandapower/__init__.py

--- a/pandapower/__init__.py

+++ b/pandapower/__init__.py

@@ -1,4 +1,4 @@

-__version__ = "2.3.0"

+__version__ = "2.3.1"

import os

pp_dir = os.path.dirname(os.path.realpath(__file__))

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -33,10 +33,10 @@

setup(

name='pandapower',

- version='2.3.0',

+ version='2.3.1',

author='Leon Thurner, Alexander Scheidler',

author_email='[email protected], [email protected]',

- description='Convenient Power System Modelling and Analysis based on PYPOWER and pandas',

+ description='An easy to use open source tool for power system modeling, analysis and optimization with a high degree of automation.',

long_description=long_description,

long_description_content_type='text/x-rst',

url='http://www.pandapower.org',

@@ -45,11 +45,14 @@

"networkx",

"scipy",

"numpy>=0.11",

- "packaging"],

- extras_require={":python_version<'3.0'": ["future"],

- "docs": ["numpydoc", "sphinx", "sphinx_rtd_theme"],

- "plotting": ["plotly", "matplotlib", "python-igraph"],

- "test": ["pytest", "pytest-xdist"]},

+ "packaging",

+ "xlsxwriter",

+ "xlrd",

+ "cryptography"],

+ extras_require={

+ "docs": ["numpydoc", "sphinx", "sphinx_rtd_theme"],

+ "plotting": ["plotly", "matplotlib", "python-igraph"],

+ "test": ["pytest", "pytest-xdist"]},

packages=find_packages(),

include_package_data=True,

classifiers=classifiers

|

{"golden_diff": "diff --git a/pandapower/__init__.py b/pandapower/__init__.py\n--- a/pandapower/__init__.py\n+++ b/pandapower/__init__.py\n@@ -1,4 +1,4 @@\n-__version__ = \"2.3.0\"\n+__version__ = \"2.3.1\"\n \n import os\n pp_dir = os.path.dirname(os.path.realpath(__file__))\ndiff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -33,10 +33,10 @@\n \n setup(\n name='pandapower',\n- version='2.3.0',\n+ version='2.3.1',\n author='Leon Thurner, Alexander Scheidler',\n author_email='[email protected], [email protected]',\n- description='Convenient Power System Modelling and Analysis based on PYPOWER and pandas',\n+ description='An easy to use open source tool for power system modeling, analysis and optimization with a high degree of automation.',\n long_description=long_description,\n \tlong_description_content_type='text/x-rst',\n url='http://www.pandapower.org',\n@@ -45,11 +45,14 @@\n \"networkx\",\n \"scipy\",\n \"numpy>=0.11\",\n- \"packaging\"],\n- extras_require={\":python_version<'3.0'\": [\"future\"],\n- \"docs\": [\"numpydoc\", \"sphinx\", \"sphinx_rtd_theme\"],\n- \"plotting\": [\"plotly\", \"matplotlib\", \"python-igraph\"],\n- \"test\": [\"pytest\", \"pytest-xdist\"]},\n+ \"packaging\",\n+\t\t\t\t\t \"xlsxwriter\",\n+\t\t\t\t\t \"xlrd\",\n+\t\t\t\t\t \"cryptography\"],\n+ extras_require={\n+\t\t\"docs\": [\"numpydoc\", \"sphinx\", \"sphinx_rtd_theme\"],\n+\t\t\"plotting\": [\"plotly\", \"matplotlib\", \"python-igraph\"],\n+\t\t\"test\": [\"pytest\", \"pytest-xdist\"]},\n packages=find_packages(),\n include_package_data=True,\n classifiers=classifiers\n", "issue": "Missing dependencies: xlswriter, xlrd, cryptography\nHi,\r\n\r\nI am currently following the instructions for the installation of the development version, as shown here: https://www.pandapower.org/start/#develop\r\n\r\nI have a brand new virtual environment on Python 3.8.3 (Windows 10, 64 bits), and the tests failed because of the following missing dependencies:\r\n\r\n> Edit: Same 
result on Python 3.7.8.\r\n\r\n1. xlsxwriter: `FAILED pandapower\\test\\api\\test_file_io.py::test_excel[1] - ModuleNotFoundError: No module named 'xlsxwriter'`\r\n2. xlrd: `FAILED pandapower\\test\\api\\test_file_io.py::test_excel[1] - ImportError: Missing optional dependency 'xlrd'. Install xlrd >= 1.0.0 for Excel support Use pip or conda to install xlrd.`\r\n3. cryptography: `FAILED pandapower\\test\\api\\test_file_io.py::test_encrypted_json[1] - ModuleNotFoundError: No module named 'cryptography'`\r\n\r\nThe permanent solution would most likely be to add those to setup.py and mention them in the documentation, but you might want to check if you should restrict the version.\r\n\r\nP.S.: The tests still ended up failing, but that's a seperate issue (see issue #876 ).\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\n# Copyright (c) 2016-2020 by University of Kassel and Fraunhofer Institute for Energy Economics\n# and Energy System Technology (IEE), Kassel. All rights reserved.\n\nfrom setuptools import setup, find_packages\nimport re\n\nwith open('README.rst', 'rb') as f:\n install = f.read().decode('utf-8')\n\nwith open('CHANGELOG.rst', 'rb') as f:\n changelog = f.read().decode('utf-8')\n\nclassifiers = [\n 'Development Status :: 5 - Production/Stable',\n 'Environment :: Console',\n 'Intended Audience :: Developers',\n 'Intended Audience :: Education',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: BSD License',\n 'Natural Language :: English',\n 'Operating System :: OS Independent',\n 'Programming Language :: Python',\n 'Programming Language :: Python :: 3']\n\nwith open('.travis.yml', 'rb') as f:\n lines = f.read().decode('utf-8')\n for version in re.findall('python: 3.[0-9]', lines):\n classifiers.append('Programming Language :: Python :: 3.%s' % version[-1])\n\nlong_description = '\\n\\n'.join((install, changelog))\n\nsetup(\n name='pandapower',\n version='2.3.0',\n author='Leon Thurner, Alexander Scheidler',\n 
author_email='[email protected], [email protected]',\n description='Convenient Power System Modelling and Analysis based on PYPOWER and pandas',\n long_description=long_description,\n\tlong_description_content_type='text/x-rst',\n url='http://www.pandapower.org',\n license='BSD',\n install_requires=[\"pandas>=0.17\",\n \"networkx\",\n \"scipy\",\n \"numpy>=0.11\",\n \"packaging\"],\n extras_require={\":python_version<'3.0'\": [\"future\"],\n \"docs\": [\"numpydoc\", \"sphinx\", \"sphinx_rtd_theme\"],\n \"plotting\": [\"plotly\", \"matplotlib\", \"python-igraph\"],\n \"test\": [\"pytest\", \"pytest-xdist\"]},\n packages=find_packages(),\n include_package_data=True,\n classifiers=classifiers\n)\n", "path": "setup.py"}, {"content": "__version__ = \"2.3.0\"\n\nimport os\npp_dir = os.path.dirname(os.path.realpath(__file__))\n\nfrom pandapower.auxiliary import *\nfrom pandapower.convert_format import *\nfrom pandapower.create import *\nfrom pandapower.diagnostic import *\nfrom pandapower.file_io import *\nfrom pandapower.run import *\nfrom pandapower.runpm import *\nfrom pandapower.std_types import *\nfrom pandapower.toolbox import *\nfrom pandapower.powerflow import *\nfrom pandapower.opf import *\nfrom pandapower.optimal_powerflow import OPFNotConverged\nfrom pandapower.pf.runpp_3ph import runpp_3ph\nimport pandas as pd\npd.options.mode.chained_assignment = None # default='warn'\n", "path": "pandapower/__init__.py"}]}

| 1,671 | 490 |

gh_patches_debug_19563

|

rasdani/github-patches

|

git_diff

|

Flexget__Flexget-1345

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

TypeError with Form Login Plugin

### Expected behaviour:

Task runs without generating error.

### Actual behaviour:

Task runs and generates the following error

```
TypeError: must be unicode, not str
```

### Steps to reproduce:

- Step 1: Install latest version of Flexget using virtualenv

- Step 2: pip install mechanize

- Step 3: Create config.yml

- Step 4: flexget --test execute

#### Config:

```
tasks:
  test task:
    form:
      url: http://example.com/login.php
      username: email address
      password: password
    html:
      url: http://example.com/
```

#### Log:

Crash:

```
2016-08-16 11:40 DEBUG manager test task Traceback:
Traceback (most recent call last):
  File "/home/username/flexget/local/lib/python2.7/site-packages/flexget/task.py", line 444, in __run_plugin
    return method(*args, **kwargs)
  File "/home/username/flexget/local/lib/python2.7/site-packages/flexget/event.py", line 23, in __call__
    return self.func(*args, **kwargs)
  File "/home/username/flexget/local/lib/python2.7/site-packages/flexget/plugins/plugin_formlogin.py", line 73, in on_task_start
    f.write(br.response().get_data())
TypeError: must be unicode, not str
2016-08-16 11:40 WARNING task test task Aborting task (plugin: form)
2016-08-16 11:40 DEBUG task_queue task test task aborted: TaskAbort(reason=BUG: Unhandled error in plugin form: must be unicode, not str, silent=False)
```

Full log.

```
http://pastebin.com/yBRqhYjR
```

### Additional information:

- Flexget Version: 2.2.20

- Python Version: 2.7.9

- Installation method: Virtualenv

- OS and version: Debian 8

</issue>

<code>

[start of flexget/plugins/plugin_formlogin.py]

1 from __future__ import unicode_literals, division, absolute_import
2 from builtins import * # pylint: disable=unused-import, redefined-builtin
3
4 import logging
5 import os
6 import socket
7
8 from flexget import plugin
9 from flexget.event import event
10
11 log = logging.getLogger('formlogin')
12
13
14 class FormLogin(object):
15     """
16     Login on form
17     """
18
19     schema = {
20         'type': 'object',
21         'properties': {
22             'url': {'type': 'string', 'format': 'url'},
23             'username': {'type': 'string'},
24             'password': {'type': 'string'},
25             'userfield': {'type': 'string'},
26             'passfield': {'type': 'string'}
27         },
28         'required': ['url', 'username', 'password'],
29         'additionalProperties': False
30     }
31
32     def on_task_start(self, task, config):
33         try:
34             from mechanize import Browser
35         except ImportError:
36             raise plugin.PluginError('mechanize required (python module), please install it.', log)
37
38         userfield = config.get('userfield', 'username')
39         passfield = config.get('passfield', 'password')
40
41         url = config['url']
42         username = config['username']
43         password = config['password']
44
45         br = Browser()
46         br.set_handle_robots(False)
47         try:
48             br.open(url)
49         except Exception:
50             # TODO: improve error handling
51             raise plugin.PluginError('Unable to post login form', log)
52
53         # br.set_debug_redirects(True)
54         # br.set_debug_responses(True)
55         # br.set_debug_http(True)
56
57         try:
58             for form in br.forms():
59                 loginform = form
60
61                 try:
62                     loginform[userfield] = username
63                     loginform[passfield] = password
64                     break
65                 except Exception:
66                     pass
67             else:
68                 received = os.path.join(task.manager.config_base, 'received')
69                 if not os.path.isdir(received):
70                     os.mkdir(received)
71                 filename = os.path.join(received, '%s.formlogin.html' % task.name)
72                 with open(filename, 'w') as f:
73                     f.write(br.response().get_data())
74                 log.critical('I have saved the login page content to %s for you to view' % filename)
75                 raise plugin.PluginError('Unable to find login fields', log)
76         except socket.timeout:
77             raise plugin.PluginError('Timed out on url %s' % url)
78
79         br.form = loginform
80
81         br.submit()
82
83         cookiejar = br._ua_handlers["_cookies"].cookiejar
84
85         # Add cookiejar to our requests session
86         task.requests.add_cookiejar(cookiejar)
87
88
89 @event('plugin.register')
90 def register_plugin():
91     plugin.register(FormLogin, 'form', api_ver=2)
92

[end of flexget/plugins/plugin_formlogin.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/flexget/plugins/plugin_formlogin.py b/flexget/plugins/plugin_formlogin.py
--- a/flexget/plugins/plugin_formlogin.py
+++ b/flexget/plugins/plugin_formlogin.py
@@ -2,6 +2,7 @@
 from builtins import * # pylint: disable=unused-import, redefined-builtin
 
 import logging
+import io
 import os
 import socket
 
@@ -69,7 +70,7 @@
                 if not os.path.isdir(received):
                     os.mkdir(received)
                 filename = os.path.join(received, '%s.formlogin.html' % task.name)
-                with open(filename, 'w') as f:
+                with io.open(filename, 'wb') as f:
                     f.write(br.response().get_data())
                 log.critical('I have saved the login page content to %s for you to view' % filename)
                 raise plugin.PluginError('Unable to find login fields', log)

|

{"golden_diff": "diff --git a/flexget/plugins/plugin_formlogin.py b/flexget/plugins/plugin_formlogin.py\n--- a/flexget/plugins/plugin_formlogin.py\n+++ b/flexget/plugins/plugin_formlogin.py\n@@ -2,6 +2,7 @@\n from builtins import * # pylint: disable=unused-import, redefined-builtin\n \n import logging\n+import io\n import os\n import socket\n \n@@ -69,7 +70,7 @@\n if not os.path.isdir(received):\n os.mkdir(received)\n filename = os.path.join(received, '%s.formlogin.html' % task.name)\n- with open(filename, 'w') as f:\n+ with io.open(filename, 'wb') as f:\n f.write(br.response().get_data())\n log.critical('I have saved the login page content to %s for you to view' % filename)\n raise plugin.PluginError('Unable to find login fields', log)\n", "issue": "TypeError with Form Login Plugin\n### Expected behaviour:\n\nTask runs without generating error.\n### Actual behaviour:\n\nTask runs and generates the following error\n\n```\nTypeError: must be unicode, not str\n```\n### Steps to reproduce:\n- Step 1: Install latest version of Flexget using virtualenv\n- Step 2: pip install mechanize\n- Step 3: Create config.yml\n- Step 4: flexget --test execute\n#### Config:\n\n```\ntasks:\n test task:\n form:\n url: http://example.com/login.php\n username: email address\n password: password\n html:\n url: http://example.com/\n```\n#### Log:\n\nCrash:\n\n```\n2016-08-16 11:40 DEBUG manager test task Traceback:\nTraceback (most recent call last):\n File \"/home/username/flexget/local/lib/python2.7/site-packages/flexget/task.py\", line 444, in __run_plugin\n return method(*args, **kwargs)\n File \"/home/username/flexget/local/lib/python2.7/site-packages/flexget/event.py\", line 23, in __call__\n return self.func(*args, **kwargs)\n File \"/home/username/flexget/local/lib/python2.7/site-packages/flexget/plugins/plugin_formlogin.py\", line 73, in on_task_start\n f.write(br.response().get_data())\nTypeError: must be unicode, not str\n2016-08-16 11:40 WARNING task test task Aborting task 
(plugin: form)\n2016-08-16 11:40 DEBUG task_queue task test task aborted: TaskAbort(reason=BUG: Unhandled error in plugin form: must be unicode, not str, silent=False)\n```\n\nFull log.\n\n```\nhttp://pastebin.com/yBRqhYjR\n```\n### Additional information:\n- Flexget Version: 2.2.20\n- Python Version: 2.7.9\n- Installation method: Virtualenv\n- OS and version: Debian 8\n\n", "before_files": [{"content": "from __future__ import unicode_literals, division, absolute_import\nfrom builtins import * # pylint: disable=unused-import, redefined-builtin\n\nimport logging\nimport os\nimport socket\n\nfrom flexget import plugin\nfrom flexget.event import event\n\nlog = logging.getLogger('formlogin')\n\n\nclass FormLogin(object):\n \"\"\"\n Login on form\n \"\"\"\n\n schema = {\n 'type': 'object',\n 'properties': {\n 'url': {'type': 'string', 'format': 'url'},\n 'username': {'type': 'string'},\n 'password': {'type': 'string'},\n 'userfield': {'type': 'string'},\n 'passfield': {'type': 'string'}\n },\n 'required': ['url', 'username', 'password'],\n 'additionalProperties': False\n }\n\n def on_task_start(self, task, config):\n try:\n from mechanize import Browser\n except ImportError:\n raise plugin.PluginError('mechanize required (python module), please install it.', log)\n\n userfield = config.get('userfield', 'username')\n passfield = config.get('passfield', 'password')\n\n url = config['url']\n username = config['username']\n password = config['password']\n\n br = Browser()\n br.set_handle_robots(False)\n try:\n br.open(url)\n except Exception:\n # TODO: improve error handling\n raise plugin.PluginError('Unable to post login form', log)\n\n # br.set_debug_redirects(True)\n # br.set_debug_responses(True)\n # br.set_debug_http(True)\n\n try:\n for form in br.forms():\n loginform = form\n\n try:\n loginform[userfield] = username\n loginform[passfield] = password\n break\n except Exception:\n pass\n else:\n received = os.path.join(task.manager.config_base, 'received')\n if not 
os.path.isdir(received):\n os.mkdir(received)\n filename = os.path.join(received, '%s.formlogin.html' % task.name)\n with open(filename, 'w') as f:\n f.write(br.response().get_data())\n log.critical('I have saved the login page content to %s for you to view' % filename)\n raise plugin.PluginError('Unable to find login fields', log)\n except socket.timeout:\n raise plugin.PluginError('Timed out on url %s' % url)\n\n br.form = loginform\n\n br.submit()\n\n cookiejar = br._ua_handlers[\"_cookies\"].cookiejar\n\n # Add cookiejar to our requests session\n task.requests.add_cookiejar(cookiejar)\n\n\n@event('plugin.register')\ndef register_plugin():\n plugin.register(FormLogin, 'form', api_ver=2)\n", "path": "flexget/plugins/plugin_formlogin.py"}]}

| 1,783 | 204 |
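The flexget traceback in the row above likely arises because `br.response().get_data()` returns raw bytes, while `from builtins import *` (python-future) makes `open` behave like `io.open`, whose text mode (`'w'`) only accepts unicode on Python 2. The golden diff switches to `io.open(filename, 'wb')`. A minimal sketch of that binary-mode write; `save_page` is a hypothetical helper for illustration, not part of FlexGet:

```python
import io
import os
import tempfile


def save_page(directory, task_name, data):
    """Write raw response bytes to <directory>/<task_name>.formlogin.html (hypothetical helper)."""
    if not os.path.isdir(directory):
        os.mkdir(directory)
    filename = os.path.join(directory, '%s.formlogin.html' % task_name)
    # Binary mode accepts bytes on both Python 2 and 3; io.open in text
    # mode ('w') would demand unicode and raise TypeError for bytes.
    with io.open(filename, 'wb') as f:
        f.write(data)
    return filename


path = save_page(tempfile.mkdtemp(), 'test task', b'<html>login</html>')
with io.open(path, 'rb') as f:
    print(f.read())  # b'<html>login</html>'
```

Writing in binary mode also avoids guessing the page's encoding: the bytes are saved exactly as the server sent them.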

gh_patches_debug_3564

|

rasdani/github-patches

|

git_diff

|

pypa__setuptools-2369

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

SystemError: Parent module 'setuptools' not loaded, cannot perform relative import with setuptools 50

After upgrading setuptools to 50.0 today, the environment fails to locate the entry points as it could not import distutils

```

$ python --version

Python 3.5.1

$ python -c "import distutils"

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "<frozen importlib._bootstrap>", line 969, in _find_and_load

File "<frozen importlib._bootstrap>", line 958, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 666, in _load_unlocked

File "<frozen importlib._bootstrap>", line 577, in module_from_spec

File "/home/gchan/tmp/setuptools-python-3.5/lib/python3.5/site-packages/_distutils_hack/__init__.py", line 82, in create_module

return importlib.import_module('._distutils', 'setuptools')

File "/home/gchan/tmp/setuptools-python-3.5/lib64/python3.5/importlib/__init__.py", line 126, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 981, in _gcd_import

File "<frozen importlib._bootstrap>", line 931, in _sanity_check

SystemError: Parent module 'setuptools' not loaded, cannot perform relative import

```

The issue could not be found in the python 3.8 environment.

</issue>

<code>

[start of _distutils_hack/__init__.py]

1 import sys

2 import os

3 import re

4 import importlib

5 import warnings

6

7

8 is_pypy = '__pypy__' in sys.builtin_module_names

9

10

11 def warn_distutils_present():

12 if 'distutils' not in sys.modules:

13 return

14 if is_pypy and sys.version_info < (3, 7):

15 # PyPy for 3.6 unconditionally imports distutils, so bypass the warning

16 # https://foss.heptapod.net/pypy/pypy/-/blob/be829135bc0d758997b3566062999ee8b23872b4/lib-python/3/site.py#L250

17 return

18 warnings.warn(

19 "Distutils was imported before Setuptools, but importing Setuptools "

20 "also replaces the `distutils` module in `sys.modules`. This may lead "

21 "to undesirable behaviors or errors. To avoid these issues, avoid "

22 "using distutils directly, ensure that setuptools is installed in the "

23 "traditional way (e.g. not an editable install), and/or make sure that "

24 "setuptools is always imported before distutils.")

25

26

27 def clear_distutils():

28 if 'distutils' not in sys.modules:

29 return

30 warnings.warn("Setuptools is replacing distutils.")

31 mods = [name for name in sys.modules if re.match(r'distutils\b', name)]

32 for name in mods:

33 del sys.modules[name]

34

35

36 def enabled():

37 """

38 Allow selection of distutils by environment variable.

39 """

40 which = os.environ.get('SETUPTOOLS_USE_DISTUTILS', 'local')

41 return which == 'local'

42

43

44 def ensure_local_distutils():

45 clear_distutils()

46 distutils = importlib.import_module('setuptools._distutils')

47 distutils.__name__ = 'distutils'

48 sys.modules['distutils'] = distutils

49

50 # sanity check that submodules load as expected

51 core = importlib.import_module('distutils.core')

52 assert '_distutils' in core.__file__, core.__file__

53

54

55 def do_override():

56 """

57 Ensure that the local copy of distutils is preferred over stdlib.

58

59 See https://github.com/pypa/setuptools/issues/417#issuecomment-392298401

60 for more motivation.

61 """

62 if enabled():

63 warn_distutils_present()

64 ensure_local_distutils()

65

66

67 class DistutilsMetaFinder:

68 def find_spec(self, fullname, path, target=None):

69 if path is not None:

70 return

71

72 method_name = 'spec_for_{fullname}'.format(**locals())

73 method = getattr(self, method_name, lambda: None)

74 return method()

75

76 def spec_for_distutils(self):

77 import importlib.abc

78 import importlib.util

79

80 class DistutilsLoader(importlib.abc.Loader):

81

82 def create_module(self, spec):

83 return importlib.import_module('._distutils', 'setuptools')

84

85 def exec_module(self, module):

86 pass

87

88 return importlib.util.spec_from_loader('distutils', DistutilsLoader())

89

90 def spec_for_pip(self):

91 """

92 Ensure stdlib distutils when running under pip.

93 See pypa/pip#8761 for rationale.

94 """

95 clear_distutils()

96 self.spec_for_distutils = lambda: None

97

98

99 DISTUTILS_FINDER = DistutilsMetaFinder()

100

101

102 def add_shim():

103 sys.meta_path.insert(0, DISTUTILS_FINDER)

104

105

106 def remove_shim():

107 try:

108 sys.meta_path.remove(DISTUTILS_FINDER)

109 except ValueError:

110 pass

111

[end of _distutils_hack/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/_distutils_hack/__init__.py b/_distutils_hack/__init__.py

--- a/_distutils_hack/__init__.py

+++ b/_distutils_hack/__init__.py

@@ -80,7 +80,7 @@

class DistutilsLoader(importlib.abc.Loader):

def create_module(self, spec):

- return importlib.import_module('._distutils', 'setuptools')

+ return importlib.import_module('setuptools._distutils')

def exec_module(self, module):

pass

|

{"golden_diff": "diff --git a/_distutils_hack/__init__.py b/_distutils_hack/__init__.py\n--- a/_distutils_hack/__init__.py\n+++ b/_distutils_hack/__init__.py\n@@ -80,7 +80,7 @@\n class DistutilsLoader(importlib.abc.Loader):\n \n def create_module(self, spec):\n- return importlib.import_module('._distutils', 'setuptools')\n+ return importlib.import_module('setuptools._distutils')\n \n def exec_module(self, module):\n pass\n", "issue": "SystemError: Parent module 'setuptools' not loaded, cannot perform relative import with setuptools 50\nAfter upgrading setuptools to 50.0 today, the environment fails to locate the entry points as it could not import distutils\r\n\r\n```\r\n$ python --version\r\nPython 3.5.1\r\n$ python -c \"import distutils\"\r\nTraceback (most recent call last):\r\n File \"<string>\", line 1, in <module>\r\n File \"<frozen importlib._bootstrap>\", line 969, in _find_and_load\r\n File \"<frozen importlib._bootstrap>\", line 958, in _find_and_load_unlocked\r\n File \"<frozen importlib._bootstrap>\", line 666, in _load_unlocked\r\n File \"<frozen importlib._bootstrap>\", line 577, in module_from_spec\r\n File \"/home/gchan/tmp/setuptools-python-3.5/lib/python3.5/site-packages/_distutils_hack/__init__.py\", line 82, in create_module\r\n return importlib.import_module('._distutils', 'setuptools')\r\n File \"/home/gchan/tmp/setuptools-python-3.5/lib64/python3.5/importlib/__init__.py\", line 126, in import_module\r\n return _bootstrap._gcd_import(name[level:], package, level)\r\n File \"<frozen importlib._bootstrap>\", line 981, in _gcd_import\r\n File \"<frozen importlib._bootstrap>\", line 931, in _sanity_check\r\nSystemError: Parent module 'setuptools' not loaded, cannot perform relative import\r\n```\r\n\r\nThe issue could not be found in the python 3.8 environment. 
\n", "before_files": [{"content": "import sys\nimport os\nimport re\nimport importlib\nimport warnings\n\n\nis_pypy = '__pypy__' in sys.builtin_module_names\n\n\ndef warn_distutils_present():\n if 'distutils' not in sys.modules:\n return\n if is_pypy and sys.version_info < (3, 7):\n # PyPy for 3.6 unconditionally imports distutils, so bypass the warning\n # https://foss.heptapod.net/pypy/pypy/-/blob/be829135bc0d758997b3566062999ee8b23872b4/lib-python/3/site.py#L250\n return\n warnings.warn(\n \"Distutils was imported before Setuptools, but importing Setuptools \"\n \"also replaces the `distutils` module in `sys.modules`. This may lead \"\n \"to undesirable behaviors or errors. To avoid these issues, avoid \"\n \"using distutils directly, ensure that setuptools is installed in the \"\n \"traditional way (e.g. not an editable install), and/or make sure that \"\n \"setuptools is always imported before distutils.\")\n\n\ndef clear_distutils():\n if 'distutils' not in sys.modules:\n return\n warnings.warn(\"Setuptools is replacing distutils.\")\n mods = [name for name in sys.modules if re.match(r'distutils\\b', name)]\n for name in mods:\n del sys.modules[name]\n\n\ndef enabled():\n \"\"\"\n Allow selection of distutils by environment variable.\n \"\"\"\n which = os.environ.get('SETUPTOOLS_USE_DISTUTILS', 'local')\n return which == 'local'\n\n\ndef ensure_local_distutils():\n clear_distutils()\n distutils = importlib.import_module('setuptools._distutils')\n distutils.__name__ = 'distutils'\n sys.modules['distutils'] = distutils\n\n # sanity check that submodules load as expected\n core = importlib.import_module('distutils.core')\n assert '_distutils' in core.__file__, core.__file__\n\n\ndef do_override():\n \"\"\"\n Ensure that the local copy of distutils is preferred over stdlib.\n\n See https://github.com/pypa/setuptools/issues/417#issuecomment-392298401\n for more motivation.\n \"\"\"\n if enabled():\n warn_distutils_present()\n ensure_local_distutils()\n\n\nclass 
DistutilsMetaFinder:\n def find_spec(self, fullname, path, target=None):\n if path is not None:\n return\n\n method_name = 'spec_for_{fullname}'.format(**locals())\n method = getattr(self, method_name, lambda: None)\n return method()\n\n def spec_for_distutils(self):\n import importlib.abc\n import importlib.util\n\n class DistutilsLoader(importlib.abc.Loader):\n\n def create_module(self, spec):\n return importlib.import_module('._distutils', 'setuptools')\n\n def exec_module(self, module):\n pass\n\n return importlib.util.spec_from_loader('distutils', DistutilsLoader())\n\n def spec_for_pip(self):\n \"\"\"\n Ensure stdlib distutils when running under pip.\n See pypa/pip#8761 for rationale.\n \"\"\"\n clear_distutils()\n self.spec_for_distutils = lambda: None\n\n\nDISTUTILS_FINDER = DistutilsMetaFinder()\n\n\ndef add_shim():\n sys.meta_path.insert(0, DISTUTILS_FINDER)\n\n\ndef remove_shim():\n try:\n sys.meta_path.remove(DISTUTILS_FINDER)\n except ValueError:\n pass\n", "path": "_distutils_hack/__init__.py"}]}

| 1,942 | 123 |
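In the setuptools row above, the Python 3.5 `SystemError` comes from the relative form `importlib.import_module('._distutils', 'setuptools')`, which on that version requires the parent package to already be in `sys.modules`. The golden diff uses the absolute name instead, which makes importlib import each parent package first. A small sketch of that behaviour using a standard-library package (`xml.dom.minidom`) as a stand-in for `setuptools._distutils`:

```python
import importlib
import sys


def import_submodule(qualified_name):
    # Absolute import: importlib imports every parent package first,
    # so the parent does not need to be in sys.modules beforehand.
    return importlib.import_module(qualified_name)


mod = import_submodule("xml.dom.minidom")
print(mod.__name__)              # xml.dom.minidom
print("xml.dom" in sys.modules)  # True
```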

gh_patches_debug_31088

|

rasdani/github-patches

|

git_diff

|

shapiromatron__hawc-505

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

dosing regime dose groups hotfix

We had a reported data corruption issue where a user edited content in a dosing regime and then after saving, we found multiple endpoint-groups with the same endpoint group id, which shouldn't be possible.

After investigation, we found it was an error in the signal which keeps dose-groups and endpoint-groups synced. If there were multiple representation of dose-groups, for example 5 dose-groups and 2 units, then hawc would create 10 endpoint-groups instead of 5. Further, it would create these even for endpoints where data is not extracted.

Here we fix this issue and write a few tests.

</issue>

<code>

[start of hawc/apps/animal/admin.py]

1 from django.contrib import admin

2

3 from . import models

4

5

6 @admin.register(models.Experiment)

7 class ExperimentAdmin(admin.ModelAdmin):

8 list_display = (

9 "id",

10 "study",

11 "name",

12 "type",

13 "has_multiple_generations",

14 "chemical",

15 "cas",

16 "created",

17 )

18 list_filter = ("type", "has_multiple_generations", "chemical", "study__assessment")

19 search_fields = (

20 "study__short_citation",

21 "name",

22 )

23

24

25 @admin.register(models.AnimalGroup)

26 class AnimalGroupAdmin(admin.ModelAdmin):

27 list_display = (

28 "id",

29 "experiment",

30 "name",

31 "species",

32 "strain",

33 "sex",

34 "created",

35 )

36 list_filter = ("species", "strain", "sex", "experiment__study__assessment_id")

37 search_fields = ("name",)

38

39

40 @admin.register(models.DosingRegime)

41 class DosingRegimeAdmin(admin.ModelAdmin):

42 list_display = (

43 "id",

44 "dosed_animals",

45 "route_of_exposure",

46 "duration_exposure",

47 "num_dose_groups",

48 "created",

49 )

50 list_filter = (

51 "route_of_exposure",

52 "num_dose_groups",

53 "dosed_animals__experiment__study__assessment_id",

54 )

55 list_select_related = ("dosed_animals",)

56 search_fields = ("dosed_animals__name",)

57

58

59 @admin.register(models.Endpoint)

60 class EndpointAdmin(admin.ModelAdmin):

61 list_display = (

62 "id",

63 "assessment_id",

64 "animal_group",

65 "name",

66 "system",

67 "organ",

68 "effect",

69 "data_extracted",

70 "created",

71 )

72 list_filter = ("system", "organ", "data_extracted", "assessment_id")

73 search_fields = ("name",)

74

[end of hawc/apps/animal/admin.py]

[start of hawc/services/utils/git.py]

1 import subprocess

2 from datetime import datetime

3

4 from pydantic import BaseModel

5

6

7 class Commit(BaseModel):

8 sha: str

9 dt: datetime

10

11 @classmethod

12 def current(cls, cwd: str = ".") -> "Commit":

13 """Return information on the last commit at the repository path desired.

14

15 Returns:

16 A Commit instance

17 """

18 cmd = "git log -1 --format=%H"

19 sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:12]

20 cmd = "git show -s --format=%ct"

21 dt = datetime.fromtimestamp(

22 int(subprocess.check_output(cmd.split(), cwd=cwd).decode().strip())

23 )

24 return cls(sha=sha, dt=dt)

25

[end of hawc/services/utils/git.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/hawc/apps/animal/admin.py b/hawc/apps/animal/admin.py

--- a/hawc/apps/animal/admin.py

+++ b/hawc/apps/animal/admin.py

@@ -35,6 +35,13 @@

)

list_filter = ("species", "strain", "sex", "experiment__study__assessment_id")

search_fields = ("name",)

+ raw_id_fields = ("experiment", "species", "strain", "dosing_regime", "siblings")

+

+

+class DoseGroupInline(admin.TabularInline):

+ model = models.DoseGroup

+ raw_id_fields = ("dose_units",)

+ extra = 0

@admin.register(models.DosingRegime)

@@ -54,6 +61,12 @@

)

list_select_related = ("dosed_animals",)

search_fields = ("dosed_animals__name",)

+ inlines = (DoseGroupInline,)

+

+

+class EndpointGroupInline(admin.TabularInline):

+ model = models.EndpointGroup

+ extra = 0

@admin.register(models.Endpoint)

@@ -71,3 +84,13 @@

)

list_filter = ("system", "organ", "data_extracted", "assessment_id")

search_fields = ("name",)

+ raw_id_fields = (

+ "assessment",

+ "animal_group",

+ "system_term",

+ "organ_term",

+ "effect_term",

+ "effect_subtype_term",

+ "name_term",

+ )

+ inlines = (EndpointGroupInline,)

diff --git a/hawc/services/utils/git.py b/hawc/services/utils/git.py

--- a/hawc/services/utils/git.py

+++ b/hawc/services/utils/git.py

@@ -16,7 +16,7 @@

A Commit instance

"""

cmd = "git log -1 --format=%H"

- sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:12]

+ sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:8]

cmd = "git show -s --format=%ct"

dt = datetime.fromtimestamp(

int(subprocess.check_output(cmd.split(), cwd=cwd).decode().strip())

|

{"golden_diff": "diff --git a/hawc/apps/animal/admin.py b/hawc/apps/animal/admin.py\n--- a/hawc/apps/animal/admin.py\n+++ b/hawc/apps/animal/admin.py\n@@ -35,6 +35,13 @@\n )\n list_filter = (\"species\", \"strain\", \"sex\", \"experiment__study__assessment_id\")\n search_fields = (\"name\",)\n+ raw_id_fields = (\"experiment\", \"species\", \"strain\", \"dosing_regime\", \"siblings\")\n+\n+\n+class DoseGroupInline(admin.TabularInline):\n+ model = models.DoseGroup\n+ raw_id_fields = (\"dose_units\",)\n+ extra = 0\n \n \n @admin.register(models.DosingRegime)\n@@ -54,6 +61,12 @@\n )\n list_select_related = (\"dosed_animals\",)\n search_fields = (\"dosed_animals__name\",)\n+ inlines = (DoseGroupInline,)\n+\n+\n+class EndpointGroupInline(admin.TabularInline):\n+ model = models.EndpointGroup\n+ extra = 0\n \n \n @admin.register(models.Endpoint)\n@@ -71,3 +84,13 @@\n )\n list_filter = (\"system\", \"organ\", \"data_extracted\", \"assessment_id\")\n search_fields = (\"name\",)\n+ raw_id_fields = (\n+ \"assessment\",\n+ \"animal_group\",\n+ \"system_term\",\n+ \"organ_term\",\n+ \"effect_term\",\n+ \"effect_subtype_term\",\n+ \"name_term\",\n+ )\n+ inlines = (EndpointGroupInline,)\ndiff --git a/hawc/services/utils/git.py b/hawc/services/utils/git.py\n--- a/hawc/services/utils/git.py\n+++ b/hawc/services/utils/git.py\n@@ -16,7 +16,7 @@\n A Commit instance\n \"\"\"\n cmd = \"git log -1 --format=%H\"\n- sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:12]\n+ sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:8]\n cmd = \"git show -s --format=%ct\"\n dt = datetime.fromtimestamp(\n int(subprocess.check_output(cmd.split(), cwd=cwd).decode().strip())\n", "issue": "dosing regime dose groups hotfix\nWe had a reported data corruption issue where a user edited content in a dosing regime and then after saving, we found multiple endpoint-groups with the same endpoint group id, which shouldn't be possible.\r\n\r\nAfter investigation, we found it was 
an error in the signal which keeps dose-groups and endpoint-groups synced. If there were multiple representation of dose-groups, for example 5 dose-groups and 2 units, then hawc would create 10 endpoint-groups instead of 5. Further, it would create these even for endpoints where data is not extracted.\r\n\r\nHere we fix this issue and write a few tests.\n", "before_files": [{"content": "from django.contrib import admin\n\nfrom . import models\n\n\n@admin.register(models.Experiment)\nclass ExperimentAdmin(admin.ModelAdmin):\n list_display = (\n \"id\",\n \"study\",\n \"name\",\n \"type\",\n \"has_multiple_generations\",\n \"chemical\",\n \"cas\",\n \"created\",\n )\n list_filter = (\"type\", \"has_multiple_generations\", \"chemical\", \"study__assessment\")\n search_fields = (\n \"study__short_citation\",\n \"name\",\n )\n\n\n@admin.register(models.AnimalGroup)\nclass AnimalGroupAdmin(admin.ModelAdmin):\n list_display = (\n \"id\",\n \"experiment\",\n \"name\",\n \"species\",\n \"strain\",\n \"sex\",\n \"created\",\n )\n list_filter = (\"species\", \"strain\", \"sex\", \"experiment__study__assessment_id\")\n search_fields = (\"name\",)\n\n\n@admin.register(models.DosingRegime)\nclass DosingRegimeAdmin(admin.ModelAdmin):\n list_display = (\n \"id\",\n \"dosed_animals\",\n \"route_of_exposure\",\n \"duration_exposure\",\n \"num_dose_groups\",\n \"created\",\n )\n list_filter = (\n \"route_of_exposure\",\n \"num_dose_groups\",\n \"dosed_animals__experiment__study__assessment_id\",\n )\n list_select_related = (\"dosed_animals\",)\n search_fields = (\"dosed_animals__name\",)\n\n\n@admin.register(models.Endpoint)\nclass EndpointAdmin(admin.ModelAdmin):\n list_display = (\n \"id\",\n \"assessment_id\",\n \"animal_group\",\n \"name\",\n \"system\",\n \"organ\",\n \"effect\",\n \"data_extracted\",\n \"created\",\n )\n list_filter = (\"system\", \"organ\", \"data_extracted\", \"assessment_id\")\n search_fields = (\"name\",)\n", "path": "hawc/apps/animal/admin.py"}, 
{"content": "import subprocess\nfrom datetime import datetime\n\nfrom pydantic import BaseModel\n\n\nclass Commit(BaseModel):\n sha: str\n dt: datetime\n\n @classmethod\n def current(cls, cwd: str = \".\") -> \"Commit\":\n \"\"\"Return information on the last commit at the repository path desired.\n\n Returns:\n A Commit instance\n \"\"\"\n cmd = \"git log -1 --format=%H\"\n sha = subprocess.check_output(cmd.split(), cwd=cwd).decode().strip()[:12]\n cmd = \"git show -s --format=%ct\"\n dt = datetime.fromtimestamp(\n int(subprocess.check_output(cmd.split(), cwd=cwd).decode().strip())\n )\n return cls(sha=sha, dt=dt)\n", "path": "hawc/services/utils/git.py"}]}

| 1,437 | 505 |
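The hawc issue above describes the sync signal creating one endpoint-group per dose-group *representation* (5 dose groups x 2 units = 10) instead of one per distinct dose group, and doing so even for endpoints without extracted data. The golden diff shown only adjusts the Django admin, so the following is a hypothetical sketch of the intended counting rule, not hawc's code; `DoseRow` and `endpoint_group_ids` are illustrative names:

```python
from collections import namedtuple

# Hypothetical flattened rows: one per (dose group, unit) representation.
DoseRow = namedtuple("DoseRow", ["dose_group_id", "dose_units", "dose"])


def endpoint_group_ids(dose_rows, data_extracted=True):
    """Return the dose_group_ids that should each get exactly one endpoint-group."""
    if not data_extracted:
        # Endpoints without extracted data get no endpoint-groups.
        return []
    # Deduplicate: several unit representations share one dose_group_id.
    return sorted({row.dose_group_id for row in dose_rows})


rows = [DoseRow(i, unit, float(i)) for i in range(5) for unit in ("mg/kg", "ppm")]
print(len(rows))                        # 10
print(endpoint_group_ids(rows))         # [0, 1, 2, 3, 4]
print(endpoint_group_ids(rows, False))  # []
```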

gh_patches_debug_3278

|

rasdani/github-patches

|

git_diff

|

certbot__certbot-7294

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Certbot's Apache plugin doesn't work on Scientific Linux

See https://community.letsencrypt.org/t/noinstallationerror-cannot-find-apache-executable-apache2ctl/97980.

This should be fixable by adding an override in https://github.com/certbot/certbot/blob/master/certbot-apache/certbot_apache/entrypoint.py#L17.

</issue>

<code>

[start of certbot-apache/certbot_apache/entrypoint.py]

1 """ Entry point for Apache Plugin """

2 # Pylint does not like disutils.version when running inside a venv.

3 # See: https://github.com/PyCQA/pylint/issues/73

4 from distutils.version import LooseVersion # pylint: disable=no-name-in-module,import-error

5

6 from certbot import util

7

8 from certbot_apache import configurator

9 from certbot_apache import override_arch

10 from certbot_apache import override_fedora

11 from certbot_apache import override_darwin

12 from certbot_apache import override_debian

13 from certbot_apache import override_centos

14 from certbot_apache import override_gentoo

15 from certbot_apache import override_suse

16

17 OVERRIDE_CLASSES = {

18 "arch": override_arch.ArchConfigurator,

19 "darwin": override_darwin.DarwinConfigurator,

20 "debian": override_debian.DebianConfigurator,

21 "ubuntu": override_debian.DebianConfigurator,

22 "centos": override_centos.CentOSConfigurator,

23 "centos linux": override_centos.CentOSConfigurator,

24 "fedora_old": override_centos.CentOSConfigurator,

25 "fedora": override_fedora.FedoraConfigurator,

26 "ol": override_centos.CentOSConfigurator,

27 "red hat enterprise linux server": override_centos.CentOSConfigurator,

28 "rhel": override_centos.CentOSConfigurator,

29 "amazon": override_centos.CentOSConfigurator,

30 "gentoo": override_gentoo.GentooConfigurator,

31 "gentoo base system": override_gentoo.GentooConfigurator,

32 "opensuse": override_suse.OpenSUSEConfigurator,

33 "suse": override_suse.OpenSUSEConfigurator,

34 }

35

36

37 def get_configurator():

38 """ Get correct configurator class based on the OS fingerprint """

39 os_name, os_version = util.get_os_info()

40 os_name = os_name.lower()

41 override_class = None

42

43 # Special case for older Fedora versions

44 if os_name == 'fedora' and LooseVersion(os_version) < LooseVersion('29'):

45 os_name = 'fedora_old'

46

47 try:

48 override_class = OVERRIDE_CLASSES[os_name]

49 except KeyError:

50 # OS not found in the list

51 os_like = util.get_systemd_os_like()

52 if os_like:

53 for os_name in os_like:

54 if os_name in OVERRIDE_CLASSES.keys():

55 override_class = OVERRIDE_CLASSES[os_name]

56 if not override_class:

57 # No override class found, return the generic configurator

58 override_class = configurator.ApacheConfigurator

59 return override_class

60

61

62 ENTRYPOINT = get_configurator()

63

[end of certbot-apache/certbot_apache/entrypoint.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/certbot-apache/certbot_apache/entrypoint.py b/certbot-apache/certbot_apache/entrypoint.py

--- a/certbot-apache/certbot_apache/entrypoint.py

+++ b/certbot-apache/certbot_apache/entrypoint.py

@@ -31,6 +31,8 @@

"gentoo base system": override_gentoo.GentooConfigurator,

"opensuse": override_suse.OpenSUSEConfigurator,

"suse": override_suse.OpenSUSEConfigurator,

+ "scientific": override_centos.CentOSConfigurator,

+ "scientific linux": override_centos.CentOSConfigurator,

}

|

{"golden_diff": "diff --git a/certbot-apache/certbot_apache/entrypoint.py b/certbot-apache/certbot_apache/entrypoint.py\n--- a/certbot-apache/certbot_apache/entrypoint.py\n+++ b/certbot-apache/certbot_apache/entrypoint.py\n@@ -31,6 +31,8 @@\n \"gentoo base system\": override_gentoo.GentooConfigurator,\n \"opensuse\": override_suse.OpenSUSEConfigurator,\n \"suse\": override_suse.OpenSUSEConfigurator,\n+ \"scientific\": override_centos.CentOSConfigurator,\n+ \"scientific linux\": override_centos.CentOSConfigurator,\n }\n", "issue": "Certbot's Apache plugin doesn't work on Scientific Linux\nSee https://community.letsencrypt.org/t/noinstallationerror-cannot-find-apache-executable-apache2ctl/97980.\r\n\r\nThis should be fixable by adding an override in https://github.com/certbot/certbot/blob/master/certbot-apache/certbot_apache/entrypoint.py#L17.\n", "before_files": [{"content": "\"\"\" Entry point for Apache Plugin \"\"\"\n# Pylint does not like disutils.version when running inside a venv.\n# See: https://github.com/PyCQA/pylint/issues/73\nfrom distutils.version import LooseVersion # pylint: disable=no-name-in-module,import-error\n\nfrom certbot import util\n\nfrom certbot_apache import configurator\nfrom certbot_apache import override_arch\nfrom certbot_apache import override_fedora\nfrom certbot_apache import override_darwin\nfrom certbot_apache import override_debian\nfrom certbot_apache import override_centos\nfrom certbot_apache import override_gentoo\nfrom certbot_apache import override_suse\n\nOVERRIDE_CLASSES = {\n \"arch\": override_arch.ArchConfigurator,\n \"darwin\": override_darwin.DarwinConfigurator,\n \"debian\": override_debian.DebianConfigurator,\n \"ubuntu\": override_debian.DebianConfigurator,\n \"centos\": override_centos.CentOSConfigurator,\n \"centos linux\": override_centos.CentOSConfigurator,\n \"fedora_old\": override_centos.CentOSConfigurator,\n \"fedora\": override_fedora.FedoraConfigurator,\n \"ol\": override_centos.CentOSConfigurator,\n 
\"red hat enterprise linux server\": override_centos.CentOSConfigurator,\n \"rhel\": override_centos.CentOSConfigurator,\n \"amazon\": override_centos.CentOSConfigurator,\n \"gentoo\": override_gentoo.GentooConfigurator,\n \"gentoo base system\": override_gentoo.GentooConfigurator,\n \"opensuse\": override_suse.OpenSUSEConfigurator,\n \"suse\": override_suse.OpenSUSEConfigurator,\n}\n\n\ndef get_configurator():\n \"\"\" Get correct configurator class based on the OS fingerprint \"\"\"\n os_name, os_version = util.get_os_info()\n os_name = os_name.lower()\n override_class = None\n\n # Special case for older Fedora versions\n if os_name == 'fedora' and LooseVersion(os_version) < LooseVersion('29'):\n os_name = 'fedora_old'\n\n try:\n override_class = OVERRIDE_CLASSES[os_name]\n except KeyError:\n # OS not found in the list\n os_like = util.get_systemd_os_like()\n if os_like:\n for os_name in os_like:\n if os_name in OVERRIDE_CLASSES.keys():\n override_class = OVERRIDE_CLASSES[os_name]\n if not override_class:\n # No override class found, return the generic configurator\n override_class = configurator.ApacheConfigurator\n return override_class\n\n\nENTRYPOINT = get_configurator()\n", "path": "certbot-apache/certbot_apache/entrypoint.py"}]}

| 1,340 | 156 |
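The certbot fix above adds `"scientific"` and `"scientific linux"` keys so Scientific Linux maps to the CentOS configurator before falling back to `ID_LIKE`. A simplified, self-contained sketch of that lookup order, using strings in place of the real configurator classes (the names here are illustrative). One design difference: this sketch returns the first `os_like` match, whereas the original loop keeps iterating and so the last match wins.

```python
OVERRIDES = {
    "centos": "CentOSConfigurator",
    "rhel": "CentOSConfigurator",
    "scientific": "CentOSConfigurator",
    "scientific linux": "CentOSConfigurator",
}


def pick_configurator(os_name, os_like=(), default="ApacheConfigurator"):
    """Resolve a configurator name from the OS name, then ID_LIKE, then a default."""
    name = os_name.lower()
    if name in OVERRIDES:
        return OVERRIDES[name]
    # Fall back to distributions this OS declares itself "like".
    for like in os_like:
        if like in OVERRIDES:
            return OVERRIDES[like]
    return default


print(pick_configurator("Scientific Linux"))     # CentOSConfigurator
print(pick_configurator("someos", ["rhel"]))     # CentOSConfigurator
print(pick_configurator("someos"))               # ApacheConfigurator
```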

gh_patches_debug_4216

|

rasdani/github-patches

|

git_diff

|

great-expectations__great_expectations-4055

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

get_validator method do not work

Hello!

I have a problem with get_validator component.

Here’s my code:

```

batch_request = BatchRequest(

datasource_name="redshift_",

data_connector_name="default_inferred_data_connector_name",

data_asset_name="daily_chargeback_table_v1", # this is the name of the table you want to retrieve

)

context.create_expectation_suite(

expectation_suite_name="test_suite", overwrite_existing=True

)

validator = context.get_validator(

batch_request=batch_request, expectation_suite_name="test_suite"

)

print(validator.head())

```

I get this exception:

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
<ipython-input-67-16f90e0aa558> in <module>
      8 )
      9 validator = context.get_validator(
---> 10     batch_request=batch_request, expectation_suite_name="test_suite"
     11 )
     12 print(validator.head())
.
.
.
~/anaconda3/lib/python3.7/site-packages/great_expectations/execution_engine/sqlalchemy_execution_engine.py in _build_selectable_from_batch_spec(self, batch_spec)
    979 )
    980 .where(
--> 981 sa.and_(
    982 split_clause,
    983 sampler_fn(**batch_spec["sampling_kwargs"]),
TypeError: table() got an unexpected keyword argument 'schema'
```

My Datasource configuration looks like this:

```
name: redshift_
class_name: Datasource
execution_engine:
  class_name: SqlAlchemyExecutionEngine
  credentials:
    host: redshift_host
    port: '5443'
    username: username
    password: password
    database: dbname
    query:
      sslmode: prefer
    drivername: postgresql+psycopg2
data_connectors:
  default_runtime_data_connector_name:
    class_name: RuntimeDataConnector
    batch_identifiers:
      - default_identifier_name
  default_inferred_data_connector_name:
    class_name: InferredAssetSqlDataConnector
    name: whole_table
```

My environment:

MacOS

python 3.7.4

great_expectations 0.13.34

I will be grateful for any help.

</issue>

<code>

[start of setup.py]

1 from setuptools import find_packages, setup
2 
3 import versioneer
4 
5 # Parse requirements.txt
6 with open("requirements.txt") as f:
7     required = f.read().splitlines()
8 
9 # try:
10 # import pypandoc
11 # long_description = pypandoc.convert_file('README.md', 'rst')
12 # except (IOError, ImportError):
13 long_description = "Always know what to expect from your data. (See https://github.com/great-expectations/great_expectations for full description)."
14 
15 config = {
16     "description": "Always know what to expect from your data.",
17     "author": "The Great Expectations Team",
18     "url": "https://github.com/great-expectations/great_expectations",
19     "author_email": "[email protected]",
20     "version": versioneer.get_version(),
21     "cmdclass": versioneer.get_cmdclass(),
22     "install_requires": required,
23     "extras_require": {
24         "spark": ["pyspark>=2.3.2"],
25         "sqlalchemy": ["sqlalchemy>=1.3.16"],
26         "airflow": ["apache-airflow[s3]>=1.9.0", "boto3>=1.7.3"],
27         "gcp": [
28             "google-cloud>=0.34.0",
29             "google-cloud-storage>=1.28.0",
30             "google-cloud-secret-manager>=1.0.0",
31             "pybigquery==0.4.15",
32         ],
33         "redshift": ["psycopg2>=2.8"],
34         "s3": ["boto3>=1.14"],
35         "aws_secrets": ["boto3>=1.8.7"],
36         "azure_secrets": ["azure-identity>=1.0.0", "azure-keyvault-secrets>=4.0.0"],
37         "snowflake": ["snowflake-sqlalchemy>=1.2"],
38     },
39     "packages": find_packages(exclude=["contrib*", "docs*", "tests*", "examples*"]),
40     "entry_points": {
41         "console_scripts": ["great_expectations=great_expectations.cli:main"]
42     },
43     "name": "great_expectations",
44     "long_description": long_description,
45     "license": "Apache-2.0",
46     "keywords": "data science testing pipeline data quality dataquality validation datavalidation",
47     "include_package_data": True,
48     "classifiers": [
49         "Development Status :: 4 - Beta",
50         "Intended Audience :: Developers",
51         "Intended Audience :: Science/Research",
52         "Intended Audience :: Other Audience",
53         "Topic :: Scientific/Engineering",
54         "Topic :: Software Development",
55         "Topic :: Software Development :: Testing",
56         "License :: OSI Approved :: Apache Software License",
57         "Programming Language :: Python :: 3",
58         "Programming Language :: Python :: 3.6",
59         "Programming Language :: Python :: 3.7",
60         "Programming Language :: Python :: 3.8",
61         "Programming Language :: Python :: 3.9",
62     ],
63 }
64 
65 setup(**config)
66 

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -22,7 +22,7 @@
     "install_requires": required,
     "extras_require": {
         "spark": ["pyspark>=2.3.2"],
-        "sqlalchemy": ["sqlalchemy>=1.3.16"],
+        "sqlalchemy": ["sqlalchemy>=1.3.18"],
         "airflow": ["apache-airflow[s3]>=1.9.0", "boto3>=1.7.3"],
         "gcp": [
             "google-cloud>=0.34.0",

|