hexsha

stringlengths 40

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

cbe124020a3dae905bcbdeb8aaee4d8c089d4027

| 50,157 |

ipynb

|

Jupyter Notebook

|

web-server-related-tutorials/pandas-movie-recommender/movie-recommendation-system-scratch-master/movie-lens-recommendation-system-from-scratch.ipynb

|

djfedos/djfedos-boostrap

|

8a2fb1259c52134f6bcbda53821ab8f3b20e50e4

|

[

"Apache-2.0"

] | null | null | null |

web-server-related-tutorials/pandas-movie-recommender/movie-recommendation-system-scratch-master/movie-lens-recommendation-system-from-scratch.ipynb

|

djfedos/djfedos-boostrap

|

8a2fb1259c52134f6bcbda53821ab8f3b20e50e4

|

[

"Apache-2.0"

] | 9 |

2021-11-03T18:57:45.000Z

|

2022-03-26T06:29:38.000Z

|

web-server-related-tutorials/pandas-movie-recommender/movie-recommendation-system-scratch-master/movie-lens-recommendation-system-from-scratch.ipynb

|

djfedos/djfedos-boostrap

|

8a2fb1259c52134f6bcbda53821ab8f3b20e50e4

|

[

"Apache-2.0"

] | null | null | null | 32.954665 | 640 | 0.427677 |

[

[

[

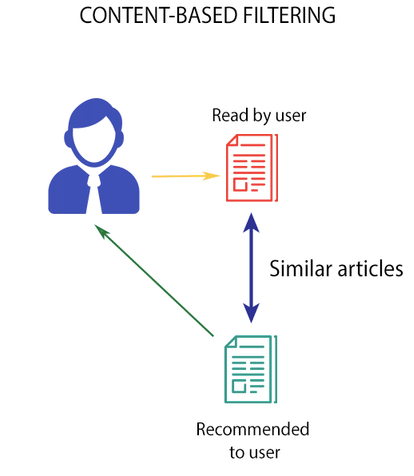

"# Coding Recommendation Engines Ground Up\n***\n## Overview\nRecommendation Engines are the programs which basically compute the similarities between two entities and on that basis, they give us the targeted output. If we look at the root level of the recommendation engines, they all are trying to find out the level of similarity between two entities. Then, the computed similarities can be used to calculate the various kinds of results.\n\n**Recommendation Engines are mostly based on the following concepts:**\n 1. Popularity Model\n 2. Collaborative Filtering Technique (Content Based / User Based)\n 3. Matrix Factorization Techniques. \n\n\n### Popularity Model\n***\nThe most basic form of a recommendation engine would be where the engine recommends the most popular items to all the customers. That would be generalised as everyone is getting the similar recommendations as we didn't personalize the recommendations. These kinds of recommendation engines are based on the **Popularity Model**. THe use case for this model would be the 'Top News' Section for the day on a news website where the most popular new for everyone is same irespective of the interests of every user because that makes a logical sense because News is a generalized thing and it has got nothing to do with your likeliness.\n\n\n\n### Collaborative Filtering Techniques\n***\n**User Based Collaborative Filetering**\n\nIn user based collaborative filtering, we find out the similarity score between the two users. On the basis of similarity score, we recommend the items bought/liked by one user to other user assuing that he might like these items on the basis of similarity. This will be more clear when we go ahead and implement this.\n\n\n**Content Based Filtering**\n\nIn user based filtering technique, we saw that we recommend items to a used based on the similarity score between two users where it does not matter whether the items were of a similar type. 
But, in this tehchnique out interest is in the content rather than the users. Here, if user 1 like watching movies of genre A (most of the movies he has watched/rated highly are of genre A), then we will recommend him more movies of the same genre. That's how this things works.\n\n\n\n### Matrix Factorization Techniques\n***\nIn this technique, our objective is to find out the latent features which we derive m*n matrix by taking the dot product of m*k and k*n matrices where k is out latent feature matrix. Here if we go by an example, if m is the row index of the users and n is column index of the items adn data is the rating provided by every user, then we start with m*k and k*n matrices by adjusting values such that they finally converge to ~ m*n matrix (not totally the same ofocurse). This is a very expensive approach but highly accurate.\n\n\n\n## Problem Statement\n***\nWe have a movie lens database and our objective is to apply various kinds of recommendation techniques from scratch and find out similarities between the users, most popular movies, and personalized recommendations for the targeted user based on user based collaborative filtering.",

"_____no_output_____"

]

],
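The popularity model described above can be sketched in a few lines. This is a minimal illustration, not part of the original notebook: `toy_ratings` and `most_popular` are hypothetical names, the data is made up, and "popular" is assumed to mean "rated most often, with ties broken by mean rating".

```python
import pandas as pd

# Hypothetical toy ratings using the same column names as the ratings dataframe.
toy_ratings = pd.DataFrame({
    "user_id":  [1, 2, 3, 1, 2, 1],
    "movie_id": [10, 10, 10, 20, 20, 30],
    "rating":   [4, 5, 3, 5, 4, 2],
})

def most_popular(ratings_df, top_n=2):
    # Count and average the ratings of every movie.
    stats = ratings_df.groupby("movie_id")["rating"].agg(["count", "mean"])
    # Rank by number of ratings first, then by mean rating.
    ranked = stats.sort_values(["count", "mean"], ascending=False)
    return ranked.head(top_n).index.tolist()

popular = most_popular(toy_ratings)
print(popular)
```

Every user receives the same list, which is exactly the lack of personalization the text describes.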

[

[

"# Importing the required libraries.\nimport pandas as pd\nfrom sklearn.model_selection import train_test_split\nfrom math import pow, sqrt",

"_____no_output_____"

],

[

"# Reading users dataset into a pandas dataframe object.\nu_cols = ['user_id', 'age', 'sex', 'occupation', 'zip_code']\nusers = pd.read_csv('data/users.dat', sep='::', names=u_cols,\n encoding='latin-1')",

"/tmp/ipykernel_44083/484106584.py:3: ParserWarning: Falling back to the 'python' engine because the 'c' engine does not support regex separators (separators > 1 char and different from '\\s+' are interpreted as regex); you can avoid this warning by specifying engine='python'.\n users = pd.read_csv('data/users.dat', sep='::', names=u_cols,\n"

],

[

"users.head(8)",

"_____no_output_____"

],

[

"# Reading ratings dataset into a pandas dataframe object.\nr_cols = ['user_id', 'movie_id', 'rating', 'unix_timestamp']\nratings = pd.read_csv('data/ratings.dat', sep='::', names=r_cols,\n encoding='latin-1')",

"/tmp/ipykernel_44083/1225598432.py:3: ParserWarning: Falling back to the 'python' engine because the 'c' engine does not support regex separators (separators > 1 char and different from '\\s+' are interpreted as regex); you can avoid this warning by specifying engine='python'.\n ratings = pd.read_csv('data/ratings.dat', sep='::', names=r_cols,\n"

],

[

"ratings.head(8)",

"_____no_output_____"

],

[

"# Reading movies dataset into a pandas dataframe object.\nm_cols = ['movie_id', 'movie_title', 'genre']\nmovies = pd.read_csv('data/movies.dat', sep='::', names=m_cols, encoding='latin-1')",

"/tmp/ipykernel_44083/3303852832.py:3: ParserWarning: Falling back to the 'python' engine because the 'c' engine does not support regex separators (separators > 1 char and different from '\\s+' are interpreted as regex); you can avoid this warning by specifying engine='python'.\n movies = pd.read_csv('data/movies.dat', sep='::', names=m_cols, encoding='latin-1')\n"

],

[

"movies.head(8)",

"_____no_output_____"

]

],

[

[

"As seen in the above dataframe, the genre column has data with pipe separators which cannot be processed for recommendations as such. Hence, we need to genrate columns for every genre type such that if the movie belongs to that genre its value will be 1 otheriwse 0.(Sort of one hot encoding)",

"_____no_output_____"

]

],

[

[

"# Getting series of lists by applying split operation.\nmovies.genre = movies.genre.str.split('|')",

"_____no_output_____"

],

[

"# Getting distinct genre types for generating columns of genre type.\ngenre_columns = list(set([j for i in movies['genre'].tolist() for j in i]))",

"_____no_output_____"

],

[

"# Iterating over every list to create and fill values into columns.\nfor j in genre_columns:\n movies[j] = 0\nfor i in range(movies.shape[0]):\n for j in genre_columns:\n if(j in movies['genre'].iloc[i]):\n movies.loc[i,j] = 1",

"_____no_output_____"

],

[

"movies.head(7)",

"_____no_output_____"

]

],
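The genre expansion above can also be sketched without explicit loops. This is a minimal alternative under stated assumptions: it uses pandas' `Series.str.get_dummies`, the `toy_movies` frame is hypothetical, and it operates on the raw pipe-separated strings (that is, before the `str.split('|')` step).

```python
import pandas as pd

# Hypothetical toy frame standing in for the movies dataframe.
toy_movies = pd.DataFrame({
    "movie_id": [1, 2, 3],
    "genre": ["Animation|Comedy", "Adventure", "Comedy|Drama"],
})

# One indicator column per distinct genre: 1 if the movie has it, else 0.
genre_flags = toy_movies["genre"].str.get_dummies(sep="|")

# Attach the indicator columns next to the original columns.
toy_encoded = pd.concat([toy_movies, genre_flags], axis=1)
print(toy_encoded)
```

On a frame with thousands of rows this usually runs much faster than filling cells one by one with `.loc`.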

[

[

"Also, we need to separate the year part of the 'movie_title' columns for better interpretability and processing. Hence, a columns named 'release_year' will be created using the below code.",

"_____no_output_____"

]

],

[

[

"# Separting movie title and year part using split function\nsplit_values = movies['movie_title'].str.split(\"(\", n = 1, expand = True) \n\n# setting 'movie_title' values to title part and creating 'release_year' column.\nmovies.movie_title = split_values[0]\nmovies['release_year'] = split_values[1]\n\n# Cleaning the release_year series and dropping 'genre' columns as it has already been one hot encoded.\nmovies['release_year'] = movies.release_year.str.replace(')','')\nmovies.drop('genre',axis=1,inplace=True)",

"/tmp/ipykernel_44083/1326198417.py:9: FutureWarning: The default value of regex will change from True to False in a future version. In addition, single character regular expressions will *not* be treated as literal strings when regex=True.\n movies['release_year'] = movies.release_year.str.replace(')','')\n"

]

],

[

[

"Let's visualize all the dataframes after all the preprocessing we did.",

"_____no_output_____"

]

],

[

[

"movies[['movie_title', 'release_year']]",

"_____no_output_____"

],

[

"ratings.head()",

"_____no_output_____"

],

[

"users.head()",

"_____no_output_____"

],

[

"ratings.shape",

"_____no_output_____"

]

],

[

[

"### Writing generally used getter functions in the implementation\nHere, we have written down a few getters so that we do not need to write down them again adn again and it also increases readability and reusability of the code.",

"_____no_output_____"

]

],

[

[

"#Function to get the rating given by a user to a movie.\ndef get_rating_(userid,movieid):\n return (ratings.loc[(ratings.user_id==userid) & (ratings.movie_id == movieid),'rating'].iloc[0])\n\n# Function to get the list of all movie ids the specified user has rated.\ndef get_movieids_(userid):\n return (ratings.loc[(ratings.user_id==userid),'movie_id'].tolist())\n\n# Function to get the movie titles against the movie id.\ndef get_movie_title_(movieid):\n return (movies.loc[(movies.movie_id == movieid),'movie_title'].iloc[0])",

"_____no_output_____"

]

],

[

[

"## Similarity Scores\n***\nIn this implementation the similarity between the two users have been calculated on the basis of the distance between the two users (i.e. Euclidean distances) and by calculating Pearson Correlation between the two users.\n\nWe have written two functions.",

"_____no_output_____"

]

],

[

[

"def distance_similarity_score(user1,user2):\n '''\n user1 & user2 : user ids of two users between which similarity score is to be calculated.\n '''\n both_watch_count = 0\n both_watch_list = []\n for element in ratings.loc[ratings.user_id==user1,'movie_id'].tolist():\n if element in ratings.loc[ratings.user_id==user2,'movie_id'].tolist():\n both_watch_count += 1\n both_watch_list.append(element)\n if both_watch_count == 0 :\n return 0\n distance = []\n for element in both_watch_list:\n rating1 = get_rating_(user1,element)\n rating2 = get_rating_(user2,element)\n distance.append(pow(rating1 - rating2, 2))\n total_distance = sum(distance)\n return 1/(1+sqrt(total_distance))",

"_____no_output_____"

],

[

"distance_similarity_score(1,310)",

"_____no_output_____"

],

[

"distance_similarity_score(1,310)",

"_____no_output_____"

]

],
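The distance-based score can also be sketched with a single join instead of repeated lookups. This is a hedged illustration, not the notebook's own code: `toy_ratings` and `distance_similarity_sketch` are hypothetical, and it assumes each user rates a movie at most once.

```python
from math import sqrt
import pandas as pd

# Hypothetical toy ratings with the same column names as the ratings dataframe.
toy_ratings = pd.DataFrame({
    "user_id":  [1, 1, 1, 2, 2, 2],
    "movie_id": [10, 20, 30, 10, 20, 40],
    "rating":   [5, 3, 4, 4, 1, 2],
})

def distance_similarity_sketch(ratings_df, user1, user2):
    a = ratings_df.loc[ratings_df.user_id == user1, ["movie_id", "rating"]]
    b = ratings_df.loc[ratings_df.user_id == user2, ["movie_id", "rating"]]
    # The inner join keeps only the movies rated by both users.
    common = a.merge(b, on="movie_id", suffixes=("_1", "_2"))
    if common.empty:
        return 0
    squared = (common["rating_1"] - common["rating_2"]) ** 2
    # Same mapping as above: distance 0 gives 1, larger distances approach 0.
    return 1 / (1 + sqrt(squared.sum()))

score = distance_similarity_sketch(toy_ratings, 1, 2)
print(score)
```

Here users 1 and 2 share movies 10 and 20, so the squared differences are 1 and 4 and the score is 1 / (1 + sqrt(5)).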

[

[

"Calculating Similarity Scores based on the distances have an inherent problem. We do not have a threshold to decide how much more distance between two users is to be considered for calculating whether the users are close enough or far enough. On the other side, this problem is resolved by pearson correlation method as it always returns a value between -1 & 1 which clearly provides us with the boundaries for closeness as we prefer.",

"_____no_output_____"

]

],

[

[

"def pearson_correlation_score(user1,user2):\n '''\n user1 & user2 : user ids of two users between which similarity score is to be calculated.\n '''\n both_watch_count = []\n for element in ratings.loc[ratings.user_id==user1,'movie_id'].tolist():\n if element in ratings.loc[ratings.user_id==user2,'movie_id'].tolist():\n both_watch_count.append(element)\n if len(both_watch_count) == 0 :\n return 0\n ratings_1 = [get_rating_(user1,element) for element in both_watch_count]\n ratings_2 = [get_rating_(user2,element) for element in both_watch_count]\n rating_sum_1 = sum(ratings_1)\n rating_sum_2 = sum(ratings_2)\n rating_squared_sum_1 = sum([pow(element,2) for element in ratings_1])\n rating_squared_sum_2 = sum([pow(element,2) for element in ratings_2])\n product_sum_rating = sum([get_rating_(user1,element) * get_rating_(user2,element) for element in both_watch_count])\n \n numerator = product_sum_rating - ((rating_sum_1 * rating_sum_2) / len(both_watch_count))\n denominator = sqrt((rating_squared_sum_1 - pow(rating_sum_1,2) / len(both_watch_count)) * (rating_squared_sum_2 - pow(rating_sum_2,2) / len(both_watch_count)))\n if denominator == 0:\n return 0\n return numerator/denominator",

"_____no_output_____"

],

[

"pearson_correlation_score(1,310)",

"_____no_output_____"

],

[

"pearson_correlation_score(1,310)",

"_____no_output_____"

]

],
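The Pearson score can be written in its centred form, which is algebraically the same quantity as the sums used above. This is a minimal sketch under stated assumptions: `toy_ratings` and `pearson_sketch` are hypothetical names, the data is made up, and each user is assumed to rate a movie at most once.

```python
import numpy as np
import pandas as pd

# Hypothetical toy ratings: user 2 always rates exactly twice as high as user 1.
toy_ratings = pd.DataFrame({
    "user_id":  [1, 1, 1, 2, 2, 2],
    "movie_id": [10, 20, 30, 10, 20, 30],
    "rating":   [1, 2, 3, 2, 4, 6],
})

def pearson_sketch(ratings_df, user1, user2):
    a = ratings_df.loc[ratings_df.user_id == user1, ["movie_id", "rating"]]
    b = ratings_df.loc[ratings_df.user_id == user2, ["movie_id", "rating"]]
    # Keep only the movies rated by both users.
    common = a.merge(b, on="movie_id", suffixes=("_1", "_2"))
    if common.empty:
        return 0
    x = common["rating_1"].to_numpy(dtype=float)
    y = common["rating_2"].to_numpy(dtype=float)
    # Deviations from each user's mean rating over the common movies.
    dx, dy = x - x.mean(), y - y.mean()
    denominator = np.sqrt((dx ** 2).sum() * (dy ** 2).sum())
    if denominator == 0:
        # At least one user gave identical ratings; correlation is undefined.
        return 0
    return float((dx * dy).sum() / denominator)

r = pearson_sketch(toy_ratings, 1, 2)
print(r)
```

Because the two rating series are perfectly linearly related, the score is 1 even though the raw ratings differ, which the distance-based score would not capture.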

[

[

"### Most Similar Users\n\nThe objective is to find out **Most Similar Users** to the targeted user. Here we have two metrics to find the score i.e. distance and correlation. ",

"_____no_output_____"

]

],

[

[

"def most_similar_users_(user1,number_of_users,metric='pearson'):\n '''\n user1 : Targeted User\n number_of_users : number of most similar users you want to user1.\n metric : metric to be used to calculate inter-user similarity score. ('pearson' or else)\n '''\n # Getting distinct user ids.\n user_ids = ratings.user_id.unique().tolist()\n \n # Getting similarity score between targeted and every other suer in the list(or subset of the list).\n if(metric == 'pearson'):\n similarity_score = [(pearson_correlation_score(user1,nth_user),nth_user) for nth_user in user_ids[:100] if nth_user != user1]\n else:\n similarity_score = [(distance_similarity_score(user1,nth_user),nth_user) for nth_user in user_ids[:100] if nth_user != user1]\n \n # Sorting in descending order.\n similarity_score.sort()\n similarity_score.reverse()\n \n # Returning the top most 'number_of_users' similar users. \n return similarity_score[:number_of_users]",

"_____no_output_____"

]

],

[

[

"\n\n## Getting Movie Recommendations for Targeted User\n***\nThe concept is very simple. First, we need to iterate over only those movies not watched(or rated) by the targeted user and the subsetting items based on the users highly correlated with targeted user. Here, we have used a weighted similarity approach where we have taken product of rating and score into account to make sure that the highly similar users affect the recommendations more than those less similar. Then, we have sorted the list on the basis of score along with movie ids and returned the movie titles against those movie ids.\n\n",

"_____no_output_____"

]

],

[

[

"def get_recommendation_(userid):\n user_ids = ratings.user_id.unique().tolist()\n total = {}\n similariy_sum = {}\n \n # Iterating over subset of user ids.\n for user in user_ids[:100]:\n \n # not comparing the user to itself (obviously!)\n if user == userid:\n continue\n \n # Getting similarity score between the users.\n score = pearson_correlation_score(userid,user)\n \n # not considering users having zero or less similarity score.\n if score <= 0:\n continue\n \n # Getting weighted similarity score and sum of similarities between both the users.\n for movieid in get_movieids_(user):\n # Only considering not watched/rated movies\n if movieid not in get_movieids_(userid) or get_rating_(userid,movieid) == 0:\n total[movieid] = 0\n total[movieid] += get_rating_(user,movieid) * score\n similariy_sum[movieid] = 0\n similariy_sum[movieid] += score\n \n # Normalizing ratings\n ranking = [(tot/similariy_sum[movieid],movieid) for movieid,tot in total.items()]\n ranking.sort()\n ranking.reverse()\n \n # Getting movie titles against the movie ids.\n recommendations = [get_movie_title_(movieid) for score,movieid in ranking]\n return recommendations[:10]",

"_____no_output_____"

]

],
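The weighted-average step is easier to follow on plain dictionaries. The sketch below is a hypothetical, simplified stand-in for the function above: `weighted_recommendations` and the `neighbours` data are made up, similarity scores are assumed to be precomputed, and it returns `(predicted_rating, movie_id)` pairs instead of titles.

```python
def weighted_recommendations(seen_by_target, neighbours, top_n=10):
    """neighbours: list of (similarity_score, {movie_id: rating}) pairs."""
    total = {}           # sum of rating * similarity per movie
    similarity_sum = {}  # sum of similarities per movie
    for score, their_ratings in neighbours:
        if score <= 0:
            continue  # ignore dissimilar users
        for movie_id, rating in their_ratings.items():
            if movie_id in seen_by_target:
                continue  # only movies the target has not rated
            total[movie_id] = total.get(movie_id, 0) + rating * score
            similarity_sum[movie_id] = similarity_sum.get(movie_id, 0) + score
    # Dividing by the similarity sum turns the weighted sum into a weighted mean.
    ranking = [(total[m] / similarity_sum[m], m) for m in total]
    ranking.sort(reverse=True)
    return ranking[:top_n]

# Hypothetical neighbours: two similar users and one dissimilar user.
neighbours = [
    (0.9, {10: 5, 20: 1, 30: 4}),
    (0.3, {20: 5, 30: 2}),
    (-0.5, {20: 5}),
]
ranking = weighted_recommendations({10}, neighbours)
print(ranking)
```

Movie 30 ends up with a predicted rating of about 3.5 and movie 20 with about 2.0, because the more similar neighbour's opinion dominates each weighted mean.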

[

[

"**NOTE**: We have applied the above three techniques only to specific subset of the dataset as the dataset is too big and iterating over every row multiple times will increase runtime manifolds.",

"_____no_output_____"

],

[

"### Implementations\n***\nWe will call all the functions one by one and let's see whether they return the desird output (or they return any output at all!) ;)",

"_____no_output_____"

]

],

[

[

"print(most_similar_users_(23,5))",

"[(0.936585811581694, 61), (0.7076731463403717, 41), (0.6123724356957956, 21), (0.5970863767331771, 25), (0.5477225575051661, 64)]\n"

]

],

[

[

"I don't know if few of the people have noticed that the most similar users' logic can be stregthened more by considering other factors as well sucb as age etc. Here, we have created our logic on the basis of only one feature i.e. rating.",

"_____no_output_____"

]

],

[

[

"print(get_recommendation_(320))",

"['Contender, The ', 'Requiem for a Dream ', 'Bamboozled ', 'Invisible Man, The ', 'Creature From the Black Lagoon, The ', 'Hellraiser ', 'Almost Famous ', 'Way of the Gun, The ', 'Shane ', 'Naked Gun 2 1/2: The Smell of Fear, The ']\n"

]

],

[

[

"Next in line, we will discuss and implement matrix factorization approach.",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown"

] |

[

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

]

] |

cbe12adc241842607bc47e65918d3f820700d192

| 22,166 |

ipynb

|

Jupyter Notebook

|

knn/digit-recognision.ipynb

|

ayushnagar123/Data-Science

|

22fa3a2d2eee1adaf4be51663b61bcae587cfe21

|

[

"MIT"

] | 1 |

2020-12-19T19:04:41.000Z

|

2020-12-19T19:04:41.000Z

|

knn/digit-recognision.ipynb

|

ayushnagar123/Data-Science

|

22fa3a2d2eee1adaf4be51663b61bcae587cfe21

|

[

"MIT"

] | null | null | null |

knn/digit-recognision.ipynb

|

ayushnagar123/Data-Science

|

22fa3a2d2eee1adaf4be51663b61bcae587cfe21

|

[

"MIT"

] | null | null | null | 42.626923 | 4,828 | 0.611703 |

[

[

[

"# hand written digit recognision on mnist dataset using knn",

"_____no_output_____"

]

],

[

[

"import numpy as np\nimport pandas as pd\nimport matplotlib.pyplot as plt",

"_____no_output_____"

]

],

[

[

"# step 1:- Data Preperation",

"_____no_output_____"

]

],

[

[

"df = pd.read_csv('mnist_train.csv')\nprint(df.shape)",

"(42000, 785)\n"

],

[

"print(df.columns)",

"Index(['label', 'pixel0', 'pixel1', 'pixel2', 'pixel3', 'pixel4', 'pixel5',\n 'pixel6', 'pixel7', 'pixel8',\n ...\n 'pixel774', 'pixel775', 'pixel776', 'pixel777', 'pixel778', 'pixel779',\n 'pixel780', 'pixel781', 'pixel782', 'pixel783'],\n dtype='object', length=785)\n"

],

[

"df.head(n=5)",

"_____no_output_____"

],

[

"data =df.values\nprint(data.shape)\nprint(type(data))",

"(42000, 785)\n<class 'numpy.ndarray'>\n"

],

[

"x = data[:,1:]\ny = data[:,0]\nprint(x.shape,y.shape)",

"(42000, 784) (42000,)\n"

],

[

"split = int(0.8*x.shape[0])\nprint(split)\n\nx_train = x[:split,:]\ny_train = y[:split]\n\nx_test = x[split:,:]\ny_test = y[split:]\n\nprint(x_train.shape,y_train.shape)\nprint(x_test.shape,y_test.shape)",

"33600\n(33600, 784) (33600,)\n(8400, 784) (8400,)\n"

],

[

"def drawImg(sample):\n img = sample.reshape((28,28))\n plt.imshow(img,cmap='gray')\n plt.show()\ndrawImg(x_train[3])",

"_____no_output_____"

]

],

[

[

"# Step 2 :- K-NN",

"_____no_output_____"

]

],

[

[

"def dist(x1,x2):\n return np.sqrt(sum((x1-x2)**2))\n\ndef knn(x,y,queryPoint,k=5):\n vals = []\n m = x.shape[0]\n \n for i in range(m):\n d = dist(queryPoint,x[i])\n vals.append((d,y[i]))\n vals = sorted(vals)\n \n vals = vals[:k]\n vals = np.array(vals)\n# print(vals)\n \n new_vals = np.unique(vals[:,1],return_counts = True)\n# print(new_vals)\n index = new_vals[1].argmax()\n pred = new_vals[0][index]\n return pred",

"_____no_output_____"

]

],
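The same k-NN vote can be sketched with NumPy array operations instead of a Python loop over every training row. This is a hedged alternative, not the notebook's code: `knn_vectorized` and the toy arrays are hypothetical, and points at exactly equal distance may be ordered differently than in the tuple sort used above.

```python
import numpy as np

def knn_vectorized(x, y, query_point, k=5):
    # Work in float so that differences of unsigned pixel values cannot wrap around.
    x = np.asarray(x, dtype=float)
    query_point = np.asarray(query_point, dtype=float)
    # Euclidean distance from the query to every training row in one step.
    distances = np.sqrt(((x - query_point) ** 2).sum(axis=1))
    # Indices of the k closest training rows.
    nearest = np.argsort(distances)[:k]
    # Majority vote among the labels of those rows.
    labels, counts = np.unique(y[nearest], return_counts=True)
    return labels[counts.argmax()]

# Hypothetical toy data: two well separated clusters with labels 0 and 1.
toy_x = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]])
toy_y = np.array([0, 0, 0, 1, 1, 1])
prediction = knn_vectorized(toy_x, toy_y, np.array([4, 4]), k=3)
print(prediction)
```

For a full test set this form typically reduces the runtime considerably, since each query costs one array expression rather than tens of thousands of Python-level calls.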

[

[

"# Step 3 :- Make predictions",

"_____no_output_____"

]

],

[

[

"pred = knn(x_train,y_train,x_test[1])\nprint(int(pred))",

"7\n"

],

[

"drawImg(x_test[1])\nprint(y_test[1])",

"_____no_output_____"

]

],

[

[

"# write one method to compute accuracy of KNN over the test set",

"_____no_output_____"

]

],

[

[

"c=0\nfor i in range(len(x_test)):\n if(int(knn(x_train,y_train,x_test[i]))==y_test[i]):\n c+=1\naccuracy = c*100/len(x_test)\nprint(accuracy)",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

]

] |

cbe12cd281bf7b2a33085152d6d8a48fe38c597e

| 119,926 |

ipynb

|

Jupyter Notebook

|

Examples/EISImportPlot/EISImportPlot.ipynb

|

Zahner-elektrik/Thales-Remote-Python

|

23bd86c1a662ecbb5faf02e635aa8ef9c6596243

|

[

"MIT"

] | 6 |

2021-02-03T19:31:59.000Z

|

2022-01-25T04:16:16.000Z

|

Examples/EISImportPlot/EISImportPlot.ipynb

|

Zahner-elektrik/Thales-Remote-Python

|

23bd86c1a662ecbb5faf02e635aa8ef9c6596243

|

[

"MIT"

] | null | null | null |

Examples/EISImportPlot/EISImportPlot.ipynb

|

Zahner-elektrik/Thales-Remote-Python

|

23bd86c1a662ecbb5faf02e635aa8ef9c6596243

|

[

"MIT"

] | 3 |

2020-11-08T14:25:20.000Z

|

2021-11-16T09:00:05.000Z

| 336.870787 | 69,606 | 0.933184 |

[

[

[

"# ism Import and Plotting\n\nThis example shows how to measure an impedance spectrum and then plot it in Bode and Nyquist using the Python library [matplotlib](https://matplotlib.org/).",

"_____no_output_____"

]

],

[

[

"import sys\nfrom thales_remote.connection import ThalesRemoteConnection\nfrom thales_remote.script_wrapper import PotentiostatMode,ThalesRemoteScriptWrapper\n\nfrom thales_file_import.ism_import import IsmImport\nimport matplotlib.pyplot as plt\nimport numpy as np\nfrom matplotlib.ticker import EngFormatter\n\nfrom jupyter_utils import executionInNotebook, notebookCodeToPython",

"_____no_output_____"

]

],

[

[

"\n# Connect Python to the already launched Thales-Software",

"_____no_output_____"

]

],

[

[

"if __name__ == \"__main__\":\n zenniumConnection = ThalesRemoteConnection()\n connectionSuccessful = zenniumConnection.connectToTerm(\"localhost\", \"ScriptRemote\")\n if connectionSuccessful:\n print(\"connection successfull\")\n else:\n print(\"connection not possible\")\n sys.exit()\n \n zahnerZennium = ThalesRemoteScriptWrapper(zenniumConnection)\n \n zahnerZennium.forceThalesIntoRemoteScript()",

"connection successfull\n"

]

],

[

[

"# Setting the parameters for the measurement\n\nAfter the connection with Thales, the naming of the files of the measurement results is set.\n\nMeasure EIS spectra with a sequential number in the file name that has been specified.\nStarting with number 1.",

"_____no_output_____"

]

],

[

[

" zahnerZennium.setEISNaming(\"counter\")\n zahnerZennium.setEISCounter(1)\n zahnerZennium.setEISOutputPath(r\"C:\\THALES\\temp\\test1\")\n zahnerZennium.setEISOutputFileName(\"spectra\")",

"_____no_output_____"

]

],

[

[

"Setting the parameters for the spectra.\nAlternatively a rule file can be used as a template.",

"_____no_output_____"

]

],

[

[

" zahnerZennium.setPotentiostatMode(PotentiostatMode.POTMODE_POTENTIOSTATIC)\n zahnerZennium.setAmplitude(10e-3)\n zahnerZennium.setPotential(0)\n zahnerZennium.setLowerFrequencyLimit(0.01)\n zahnerZennium.setStartFrequency(1000)\n zahnerZennium.setUpperFrequencyLimit(200000)\n zahnerZennium.setLowerNumberOfPeriods(3)\n zahnerZennium.setLowerStepsPerDecade(5)\n zahnerZennium.setUpperNumberOfPeriods(20)\n zahnerZennium.setUpperStepsPerDecade(10)\n zahnerZennium.setScanDirection(\"startToMax\")\n zahnerZennium.setScanStrategy(\"single\")",

"_____no_output_____"

]

],

[

[

"After setting the parameters, the measurement is started. \n\n<div class=\"alert alert-block alert-info\">\n<b>Note:</b> If the potentiostat is set to potentiostatic before the impedance measurement and is switched off, the measurement is performed at the open circuit voltage/potential.\n</div>\n\nAfter the measurement the potentiostat is switched off.",

"_____no_output_____"

]

],

[

[

" zahnerZennium.enablePotentiostat()\n zahnerZennium.measureEIS()\n zahnerZennium.disablePotentiostat()\n zenniumConnection.disconnectFromTerm()",

"_____no_output_____"

]

],

[

[

"# Importing the ism file\n\nImport the spectrum from the previous measurement. This was saved under the set path and name with the number expanded. \nThe measurement starts at 1 therefore the following path results: \"C:\\THALES\\temp\\test1\\spectra_0001.ism\".",

"_____no_output_____"

]

],

[

[

" ismFile = IsmImport(r\"C:\\THALES\\temp\\test1\\spectra_0001.ism\")\n \n impedanceFrequencies = ismFile.getFrequencyArray()\n \n impedanceAbsolute = ismFile.getImpedanceArray()\n impedancePhase = ismFile.getPhaseArray()\n \n impedanceComplex = ismFile.getComplexImpedanceArray()",

"_____no_output_____"

]

],
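The three representations read above (magnitude, phase and complex impedance) are related through the polar form of a complex number. The following sketch shows that relation on made-up values; it does not use the Thales libraries, the array `z` is hypothetical rather than measured data, and it assumes the phase is expressed in radians, as the `360 / (2 * np.pi)` conversion in the Bode cell suggests.

```python
import numpy as np

# Hypothetical complex impedance values in ohms (not measured data).
z = np.array([100 + 0j, 50 - 50j, 10 - 100j])

magnitude = np.abs(z)              # |Z| in ohms
phase_rad = np.angle(z)            # phase in radians
phase_deg = np.degrees(phase_rad)  # phase in degrees, as used on a Bode plot

# The polar form reconstructs the complex values.
z_rebuilt = magnitude * np.exp(1j * phase_rad)
print(magnitude, phase_deg)
```

The Nyquist plot uses the real and negative imaginary parts of these values, while the Bode plot uses the magnitude and phase over frequency.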

[

[

"The Python datetime object of the measurement date is output to the console next.",

"_____no_output_____"

]

],

[

[

" print(\"Measurement end time: \" + str(ismFile.getMeasurementEndDateTime()))",

"Measurement end time: 2021-10-14 13:21:30.032500\n"

]

],

[

[

"# Displaying the measurement results\n\nThe spectra are presented in the Bode and Nyquist representation. For this test, the Zahner test box was measured in the lin position.\n\n## Nyquist Plot\n\nThe matplotlib diagram is configured to match the Nyquist representation. For this, the diagram aspect is set equal and the axes are labeled in engineering units. The axis labeling is realized with [LaTeX](https://www.latex-project.org/) for subscript text.\n\nThe possible settings of the graph can be found in the detailed documentation and tutorials of [matplotlib](https://matplotlib.org/).",

"_____no_output_____"

]

],

[

[

" figNyquist, (nyquistAxis) = plt.subplots(1, 1)\n figNyquist.suptitle(\"Nyquist\")\n \n nyquistAxis.plot(np.real(impedanceComplex), -np.imag(impedanceComplex), marker=\"x\", markersize=5)\n nyquistAxis.grid(which=\"both\")\n nyquistAxis.set_aspect(\"equal\")\n nyquistAxis.xaxis.set_major_formatter(EngFormatter(unit=\"$\\Omega$\"))\n nyquistAxis.yaxis.set_major_formatter(EngFormatter(unit=\"$\\Omega$\"))\n nyquistAxis.set_xlabel(r\"$Z_{\\rm re}$\")\n nyquistAxis.set_ylabel(r\"$-Z_{\\rm im}$\")\n figNyquist.set_size_inches(18, 18)\n plt.show()\n figNyquist.savefig(\"nyquist.svg\")",

"_____no_output_____"

]

],

[

[

"## Bode Plot\n\nThe matplotlib representation was also adapted for the Bode plot. A figure with two plots was created for the separate display of phase and impedance which are plotted over the same x-axis.",

"_____no_output_____"

]

],

[

[

" figBode, (impedanceAxis, phaseAxis) = plt.subplots(2, 1, sharex=True)\n figBode.suptitle(\"Bode\")\n \n impedanceAxis.loglog(impedanceFrequencies, impedanceAbsolute, marker=\"+\", markersize=5)\n impedanceAxis.xaxis.set_major_formatter(EngFormatter(unit=\"Hz\"))\n impedanceAxis.yaxis.set_major_formatter(EngFormatter(unit=\"$\\Omega$\"))\n impedanceAxis.set_xlabel(r\"$f$\")\n impedanceAxis.set_ylabel(r\"$|Z|$\")\n impedanceAxis.grid(which=\"both\")\n \n phaseAxis.semilogx(impedanceFrequencies, np.abs(impedancePhase * (360 / (2 * np.pi))), marker=\"+\", markersize=5)\n phaseAxis.xaxis.set_major_formatter(EngFormatter(unit=\"Hz\"))\n phaseAxis.yaxis.set_major_formatter(EngFormatter(unit=\"$°$\", sep=\"\"))\n phaseAxis.set_xlabel(r\"$f$\")\n phaseAxis.set_ylabel(r\"$|Phase|$\")\n phaseAxis.grid(which=\"both\")\n phaseAxis.set_ylim([0, 90])\n figBode.set_size_inches(18, 12)\n plt.show()\n figBode.savefig(\"bode.svg\")",

"_____no_output_____"

]

],

[

[

"# Deployment of the source code\n\n**The following instruction is not needed by the user.**\n\nIt automatically extracts the pure python code from the jupyter notebook to provide it to the user. Thus the user does not need jupyter itself and does not have to copy the code manually.\n\nThe source code is saved in a .py file with the same name as the notebook.",

"_____no_output_____"

]

],

[

[

" if executionInNotebook() == True:\n notebookCodeToPython(\"EISImportPlot.ipynb\")",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

]

] |

cbe13597ee21a93f3ffebfb0847151c4945b3ad5

| 4,824 |

ipynb

|

Jupyter Notebook

|

2. Omega and Xi, Constraints.ipynb

|

dhruvspatel/Robot-Localization-and-Landmark-Detection-Using-Graph-SLAM

|

b4f199cd237b45bb45a410d1032beeef88a7da77

|

[

"MIT"

] | 2 |

2020-09-28T21:08:00.000Z

|

2022-02-15T09:22:31.000Z

|

2. Omega and Xi, Constraints.ipynb

|

dhruvspatel/Robot-Localization-and-Landmark-Detection-Using-Graph-SLAM

|

b4f199cd237b45bb45a410d1032beeef88a7da77

|

[

"MIT"

] | null | null | null |

2. Omega and Xi, Constraints.ipynb

|

dhruvspatel/Robot-Localization-and-Landmark-Detection-Using-Graph-SLAM

|

b4f199cd237b45bb45a410d1032beeef88a7da77

|

[

"MIT"

] | null | null | null | 41.586207 | 607 | 0.615672 |

[

[

[

"#### Omega and Xi\n\nTo implement Graph SLAM, a matrix and a vector (omega and xi, respectively) are introduced. The matrix is square and labelled with all the robot poses (xi) and all the landmarks (Li). Every time you make an observation, for example, as you move between two poses by some distance `dx` and can relate those two positions, you can represent this as a numerical relationship in these matrices.\n\nIt's easiest to see how these work in an example. Below you can see a matrix representation of omega and a vector representation of xi.\n\n <img src='images/omega_xi.png' width=\"20%\" height=\"20%\" />\nNext, let's look at a simple example that relates 3 poses to one another. \n* When you start out in the world most of these values are zeros or contain only values from the initial robot position\n* In this example, you have been given constraints, which relate these poses to one another\n* Constraints translate into matrix values\n\n<img src='images/omega_xi_constraints.png' width=\"70%\" height=\"70%\" />\n\nIf you have ever solved linear systems of equations before, this may look familiar, and if not, let's keep going!\n\n### Solving for x\n\nTo \"solve\" for all these x values, we can use linear algebra; all the values of x are in the vector `mu` which can be calculated as a product of the inverse of omega times xi.\n\n<img src='images/solution.png' width=\"30%\" height=\"30%\" />\n\n---\n**You can confirm this result for yourself by executing the math in the cell below.**\n",

"_____no_output_____"

]

],

[

[

"import numpy as np\n\n# define omega and xi as in the example\nomega = np.array([[1,0,0],\n [-1,1,0],\n [0,-1,1]])\n\nxi = np.array([[-3],\n [5],\n [3]])\n\n# calculate the inverse of omega (plain arrays; np.matrix is no longer recommended)\nomega_inv = np.linalg.inv(omega)\n\n# calculate the solution, mu, as the matrix product of omega_inv and xi\nmu = omega_inv @ xi\n\n# print out the values of mu (x0, x1, x2)\nprint(mu)",

"[[-3.]\n [ 2.]\n [ 5.]]\n"

]

],
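The same system can also be solved without forming the inverse explicitly. This is a minimal sketch, assuming the same `omega` and `xi` as in the cell above; `np.linalg.solve` is a standard NumPy routine, but using it here is a substitution for the inverse-based approach, not something the notebook itself does.

```python
import numpy as np

# Same omega and xi as in the example above
omega = np.array([[1, 0, 0],
                  [-1, 1, 0],
                  [0, -1, 1]])
xi = np.array([[-3],
               [5],
               [3]])

# Solve omega @ mu = xi directly instead of computing the inverse first
mu = np.linalg.solve(omega, xi)
print(mu)
```

Solving directly is usually preferred for larger systems because it avoids the extra work and rounding error of building the full inverse.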

[

[

"## Motion Constraints and Landmarks\n\nIn the last example, the constraint equations relating one pose to another were given to you. In this next example, let's look at how motion (and similarly, sensor measurements) can be used to create constraints and fill up the constraint matrices, omega and xi. Let's start with empty/zero matrices.\n\n<img src='images/initial_constraints.png' width=\"35%\" height=\"35%\" />\n\nThis example also includes relationships between poses and landmarks. Say we move from x0 to x1 with a displacement `dx` of 5. Then we have created a motion constraint that relates x0 to x1, and we can start to fill up these matrices.\n\n<img src='images/motion_constraint.png' width=\"50%\" height=\"50%\" />\n\nIn fact, the one constraint equation can be written in two ways. So, the motion constraint that relates x0 and x1 by the motion of 5 has affected the matrix, adding values for *all* elements that correspond to x0 and x1.\n\n---\n\n### 2D case\n\nIn these examples, we've been showing you change in only one dimension, the x-dimension. In the project, it will be up to you to represent x and y positional values in omega and xi. One solution could be to create an omega and xi that are twice as large as the total number of robot poses (generated over a series of time steps) and landmarks, so that they can hold both x and y values for poses and landmark locations. I suggest drawing out a rough solution to Graph SLAM as you read the instructions in the next notebook; that always helps me organize my thoughts. Good luck!",

"_____no_output_____"

]

]
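The motion constraint described above can be sketched in code. This is only an illustration under stated assumptions: the helper name `add_motion_constraint` is hypothetical, and the sign convention (the constraint is written as x1 - x0 = dx) is the usual Graph SLAM one, assumed here because the figure itself is not reproduced in this text.

```python
import numpy as np

def add_motion_constraint(omega, xi, i, j, dx):
    """Add the constraint x_j - x_i = dx to omega and xi in place.

    Hypothetical helper for illustration; indices i and j are the
    row/column positions of the two poses in omega and xi.
    """
    omega[i, i] += 1
    omega[j, j] += 1
    omega[i, j] -= 1
    omega[j, i] -= 1
    xi[i] -= dx
    xi[j] += dx

# Start with empty (zero) matrices for two poses, x0 and x1
omega = np.zeros((2, 2))
xi = np.zeros((2, 1))

# Move from x0 to x1 with a displacement of 5
add_motion_constraint(omega, xi, 0, 1, 5)
print(omega)
print(xi)
```

Note that with only this one relative constraint omega is singular; an initial-position constraint on x0 is needed before the system can be solved for `mu`.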

] |

[

"markdown",

"code",

"markdown"

] |

[

[

"markdown"

],

[

"code"

],

[

"markdown"

]

] |

cbe13627259ed9905f6a6acf2a2f7057882d3b19

| 109,513 |

ipynb

|

Jupyter Notebook

|

Week1-Introduction-to-Python-_-NumPy/Intro_to_Python_plus_NumPy.ipynb

|

isabella232/Bitcamp-DataSci

|

78b921b60b66d8cfb88ce54dcfdef37664004552

|

[

"MIT"

] | 1 |

2020-10-02T19:18:36.000Z

|

2020-10-02T19:18:36.000Z

|

Week1-Introduction-to-Python-_-NumPy/Intro_to_Python_plus_NumPy.ipynb

|

kylebegovich/Bitcamp-DataSci

|

0bf52ca1d250a915c3e7e4ba2eb6bc1edfb2766b

|

[

"MIT"

] | 2 |

2020-09-10T05:45:50.000Z

|

2020-09-25T07:23:26.000Z

|

Week1-Introduction-to-Python-_-NumPy/Intro_to_Python_plus_NumPy.ipynb

|

kylebegovich/Bitcamp-DataSci

|

0bf52ca1d250a915c3e7e4ba2eb6bc1edfb2766b

|

[

"MIT"

] | 3 |

2020-09-03T03:43:47.000Z

|

2020-12-27T02:33:49.000Z

| 22.598638 | 740 | 0.528741 |

[

[

[

"<a href=\"https://colab.research.google.com/github/bitprj/Bitcamp-DataSci/blob/master/Week1-Introduction-to-Python-_-NumPy/Intro_to_Python_plus_NumPy.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>",

"_____no_output_____"

],

[

"<img src=\"https://github.com/bitprj/Bitcamp-DataSci/blob/master/Week1-Introduction-to-Python-_-NumPy/assets/icons/bitproject.png?raw=1\" width=\"200\" align=\"left\"> \n<img src=\"https://github.com/bitprj/Bitcamp-DataSci/blob/master/Week1-Introduction-to-Python-_-NumPy/assets/icons/data-science.jpg?raw=1\" width=\"300\" align=\"right\">",

"_____no_output_____"

],

[

"# Introduction to Python",

"_____no_output_____"

],

[

"### Table of Contents\n\n- Why, Where, and How we use Python\n- What we will be learning today\n - Goals\n- Numbers\n - Types of Numbers\n - Basic Arithmetic\n - Arithmetic Continued\n- Variable Assignment\n- Strings\n - Creating Strings\n - Printing Strings\n - String Basics\n - String Properties\n - Basic Built-In String Methods\n - Print Formatting\n - **1.0 Now Try This**\n- Booleans\n- Lists\n - Creating Lists\n - Basic List Methods\n - Nesting Lists\n - List Comprehensions\n - **2.0 Now Try This**\n- Tuples\n - Constructing Tuples\n - Basic Tuple Methods\n - Immutability\n - When To Use Tuples\n - **3.0 Now Try This**\n- Dictionaries\n - Constructing a Dictionary\n - Nesting With Dictionaries\n - Dictionary Methods\n - **4.0 Now Try This**\n- Comparison Operators\n- Functions\n - Intro to Functions\n - `def` Statements\n - Examples\n - Using `return`\n - **5.0 Now Try This**\n- Modules and Packages\n - Overview\n- NumPy\n - Creating Arrays\n - Indexing\n - Slicing\n - **6.0 Now Try This**\n - Data Types\n - **7.0 Now Try This**\n - Copy vs. View\n - **8.0 Now Try This**\n - Shape\n - **9.0 Now Try This**\n - Iterating Through Arrays\n - Joining Arrays\n - Splitting Arrays\n - Searching Arrays\n - Sorting Arrays\n - Filtering Arrays\n - **10.0 Now Try This**\n- Resources\n",

"_____no_output_____"

],

[

"## Why, Where, and How we use Python\n\nPython is a popular general-purpose language that you can use to build applications and programs of any size and complexity. Its syntax is compact and readable, which makes it relatively easy to learn and quick to write. Python is also the language of choice for many people performing comprehensive data analysis. ",

"_____no_output_____"

],

[

"## What we will be learning today\n\n### Goals\n- Understanding key Python data types, operators and data structures\n- Understanding functions\n- Understanding modules\n- Understanding errors and exceptions",

"_____no_output_____"

],

[

"The first data type we'll cover in detail is numbers!",

"_____no_output_____"

],

[

"## Numbers",

"_____no_output_____"

],

[

"### Types of numbers\n\nPython has various \"types\" of numbers. We'll strictly cover integers and floating point numbers for now.\n\nIntegers are just whole numbers, positive or negative. (2,4,-21,etc.)\n\nFloating point numbers in Python have a decimal point in them, or use an exponential (e). For example 3.14 and 2.17 are *floats*. 5E7 (5 times 10 to the power of 7) is also a float. This is scientific notation and something you've probably seen in math classes.\n\nLet's start working through numbers and arithmetic:",

"_____no_output_____"

],

[

"### Basic Arithmetic",

"_____no_output_____"

]

],

[

[

"# Addition\n4+5",

"_____no_output_____"

],

[

"# Subtraction\n5-10",

"_____no_output_____"

],

[

"# Multiplication\n4*8",

"_____no_output_____"

],

[

"# Division\n25/5",

"_____no_output_____"

],

[

"# Floor Division\n12//4",

"_____no_output_____"

]

],

[

[

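"What happened here?\n\nNotice that 25/5 gave 5.0 (a float) while 12//4 gave 3 (an integer). We get this result because we are using \"*floor*\" division. The // operator (two forward slashes) divides and then rounds the result down to the nearest whole number, so 7 // 2 is 3 and -7 // 2 is -4. When both operands are integers the result is an integer; if either operand is a float, the result is a float (for example, 7.0 // 2 is 3.0).",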

"_____no_output_____"

],

[

"**So what if we just want the remainder of division?**",

"_____no_output_____"

]

],

[

[

"# Modulo\n9 % 4",

"_____no_output_____"

]

],

[

[

"4 goes into 9 twice, with a remainder of 1. The % operator returns the remainder after division.",

"_____no_output_____"

],
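Floor division and modulo fit together: the quotient from `//` and the remainder from `%` always rebuild the original number. The short sketch below illustrates this with the same 9 and 4 used above, plus a negative and a float case; the variable names `q` and `r` are just for illustration.

```python
# Floor division rounds down; it does not simply drop the decimals
print(7 // 2)
print(-7 // 2)
print(7.0 // 2)

# Quotient and remainder together rebuild the original number
q = 9 // 4
r = 9 % 4
print(q, r)
print(q * 4 + r == 9)
```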

[

"### Arithmetic continued",

"_____no_output_____"

]

],

[

[

"# Powers\n4**2",

"_____no_output_____"

],

[

"# A way to do roots\n144**0.5",

"_____no_output_____"

],

[

"# Order of Operations\n4 + 20 * 52 + 5",

"_____no_output_____"

],

[

"# Can use parentheses to specify orders\n(21+5) * (4+89)",

"_____no_output_____"

]

],

[

[

"## Variable Assignments\n\nWe can do a lot more with Python than just using it as a calculator. We can store any numbers we create in **variables**.\n\nWe use a single equals sign to assign a value to a variable name. Let's see a few examples of how we can do this.",

"_____no_output_____"

]

],

[

[

"# Let's create a variable called \"a\" and assign to it the number 10\na = 10\n\na",

"_____no_output_____"

]

],

[

[

"Now if I call *a* in my Python script, Python will treat it as the integer 10.",

"_____no_output_____"

]

],

[

[

"# Adding the objects\na+a",

"_____no_output_____"

]

],

[

[

"What happens on reassignment? Will Python let us write it over?",

"_____no_output_____"

]

],

[

[

"# Reassignment\na = 20\n\n# Check\na",

"_____no_output_____"

]

],

[

[

"Yes! Python allows you to write over assigned variable names. We can also use the variables themselves when doing the reassignment. Here is an example of what I mean:",

"_____no_output_____"

]

],

[

[

"# Use a to redefine a\na = a+a\n\n# check\na",

"_____no_output_____"

]

],

[

[

"The names you use when creating these labels need to follow a few rules:\n\n 1. Names cannot start with a number.\n 2. There can be no spaces in the name; use _ instead.\n 3. Names can't contain any of these symbols: :'\",<>/?|\\()!@#$%^&*~-+\n 4. Using lowercase names is best practice.\n 5. Don't use words that have special meaning in Python. Keywords such as \"if\" and \"for\" are not allowed at all, and built-in names such as \"list\" and \"str\" are allowed but should be avoided because reusing them hides the built-in; we'll see why later.\n\n\nUsing descriptive variable names is a very useful way to keep track of different values in Python. For example:",

"_____no_output_____"

]

],
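If you are unsure whether a name is allowed, Python can check it for you. The sketch below uses the standard `str.isidentifier()` method and the standard-library `keyword` module; the example names are made up for illustration.

```python
import keyword

# isidentifier() checks the spelling rules (no leading digit, no spaces, no symbols)
print("tax_rate".isidentifier())
print("2nd_value".isidentifier())
print("tax rate".isidentifier())

# iskeyword() tells you whether a word is reserved by the language
print(keyword.iskeyword("for"))
print(keyword.iskeyword("list"))
```

`list` is not a keyword, so Python will let you assign to it, but doing so hides the built-in `list` type for the rest of your session.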

[

[

"# Use object names to keep better track of what's going on in your code!\nincome = 1000\n\ntax_rate = 0.2\n\ntaxes = income*tax_rate",

"_____no_output_____"

],

[

"# Show the result!\ntaxes",

"_____no_output_____"

]

],

[

[

"So what have we learned? We learned some of the basics of numbers in Python. We also learned how to do arithmetic and use Python as a basic calculator. We then wrapped it up with learning about Variable Assignment in Python.\n\nUp next we'll learn about Strings!",

"_____no_output_____"

],

[

"## Strings",

"_____no_output_____"

],

[

"Strings are used in Python to record text information, such as names. A string in Python is a *sequence*: an ordered series of characters. For example, Python understands the string 'hello' to be a sequence of letters in a specific order. This means we will be able to use indexing to grab particular letters (like the first letter, or the last letter).\n",

"_____no_output_____"

],

[

"### Creating Strings\nTo create a string in Python you need to use either single quotes or double quotes. For example:",

"_____no_output_____"

]

],

[

[

"# A word\n'hi'",

"_____no_output_____"

],

[

"# A phrase\n'A string can even be a sentence like this.'",

"_____no_output_____"

],

[

"# Using double quotes\n\"The quote type doesn't really matter.\"",

"_____no_output_____"

],

[

"# Be wary of contractions and apostrophes!\n'I'm using single quotes, but this will create an error!'",

"_____no_output_____"

]

],

[

[

"The error above occurs because the single quote in <code>I'm</code> ended the string early. You can use combinations of double and single quotes to get the complete statement.",

"_____no_output_____"

]

],

[

[

"\"This shouldn't cause an error now.\"",

"_____no_output_____"

]

],

[

[

"Now let's learn about printing strings!",

"_____no_output_____"

],

[

"### Printing Strings\n\nJupyter Notebooks have many neat behaviors that aren't available when running a plain Python script. One of those is that a cell automatically displays the value of its last expression, so you can show a string just by typing it into a cell. The universal way to display strings, however, is to use the **print()** function.",

"_____no_output_____"

]

],

[

[

"# In Jupyter, this is all we need\n'Hello World'",

"_____no_output_____"

],

[

"# This is the same as:\nprint('Hello World')",

"_____no_output_____"

],

[

"# Without the print function, we can't print multiple times in one block of code:\n'Hello World'\n'Second string'",

"_____no_output_____"

]

],

[

[

"A print statement can look like the following.",

"_____no_output_____"

]

],

[

[

"print('Hello World')\nprint('Second string')\nprint('\\n prints a new line')\nprint('\\n')\nprint('Just to prove it to you.')",

"Hello World\nSecond string\n\n prints a new line\n\n\nJust to prove it to you.\n"

]

],

[

[

"Now let's move on to understanding how we can manipulate strings in our programs.",

"_____no_output_____"

],

[

"### String Basics",

"_____no_output_____"

],

[

"Oftentimes, we would like to know how many characters are in a string. We can do this very easily with the **len()** function (short for 'length').",

"_____no_output_____"

]

],

[

[

"len('Hello World')",

"_____no_output_____"

]

],

[

[

"Python's built-in len() function counts all of the characters in the string, including spaces and punctuation.",

"_____no_output_____"

],

[

"Naturally, we can assign strings to variables.",

"_____no_output_____"

]

],

[

[

"# Assign 'Hello World' to mystring variable\nmystring = 'Hello World'",

"_____no_output_____"

],

[

"# Did it work?\nmystring",

"_____no_output_____"

],

[

"# Print it to make sure\nprint(mystring) ",

"Hello World\n"

]

],

[

[

"As stated before, Python treats strings as a sequence of characters. That means we can access each letter in a string individually. The way we access these letters is called **indexing**. Each letter has an index, which corresponds to its position in the string. In Python, indices start at 0. For instance, in the string 'Hello World', 'H' has an index of 0, 'e' has an index of 1, the 'W' has an index of 6 (because spaces count as characters), and 'd' has an index of 10. The syntax for indexing is shown below.",

"_____no_output_____"

]

],

[

[

"# Extract first character in a string.\nmystring[0]",

"_____no_output_____"

],

[

"mystring[1]",

"_____no_output_____"

],

[

"mystring[2]",

"_____no_output_____"

]

],

[

[

"We can use a <code>:</code> to perform *slicing* which grabs everything up to a designated index. For example:",

"_____no_output_____"

]

],

[

[

"# Grab all letters past the first letter all the way to the end of the string\nmystring[1:]",

"_____no_output_____"

],

[

"# This does not change the original string in any way\nmystring",

"_____no_output_____"

],

[

"# Grab everything UP TO the 5th index\nmystring[:5]",

"_____no_output_____"

]

],

[

[

"Note what happened above. We told Python to grab everything from 0 up to 5. It doesn't include the character in the 5th index. You'll notice this a lot in Python, where statements are usually in the context of \"up to, but not including\".",

"_____no_output_____"

]

],
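One handy consequence of the "up to, but not including" rule is that slicing at the same index from both sides splits a string into two pieces that fit back together with nothing lost or duplicated. A minimal sketch, using the same 'Hello World' string (the names `first` and `rest` are only for illustration):

```python
mystring = 'Hello World'

# Everything before index 5, and everything from index 5 onward
first = mystring[:5]
rest = mystring[5:]
print(first)
print(rest)

# The two halves rebuild the original string exactly
print(first + rest == mystring)

# A slice from index 2 up to (not including) 7 has 7 - 2 = 5 characters
print(len(mystring[2:7]))
```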

[

[

"# The whole string\nmystring[:]",

"_____no_output_____"

],

[

"# The 'default' values, if you leave the sides of the colon blank, are 0 and the length of the string\nend = len(mystring)\n\n# See that it matches above\nmystring[0:end]",

"_____no_output_____"

]

],

[

[

"But we don't have to go forwards. Negative indexing allows us to start from the *end* of the string and work backwards.",

"_____no_output_____"

]

],

[

[

"# The LAST letter (one index 'behind' 0, so it loops back around)\nmystring[-1]",

"_____no_output_____"

],

[

"# Grab everything but the last letter\nmystring[:-1]",

"_____no_output_____"

]

],

[

[

"We can also use indexing and slicing to grab characters by a specified step size (1 is the default). See the following examples.",

"_____no_output_____"

]

],

[

[

"# Grab everything (default), go in steps size of 1\nmystring[::1]",

"_____no_output_____"

],

[

"# Grab everything, but go in step sizes of 2 (every other letter)\nmystring[0::2]",

"_____no_output_____"

],

[

"# A handy way to reverse a string!\nmystring[::-1]",

"_____no_output_____"

]

],

[

[

"Strings have certain properties to them that affect the way we can, and cannot, interact with them.",

"_____no_output_____"

],

[

"### String Properties\nIt's important to note that strings are *immutable*. This means that once a string is created, the elements within it can not be changed or replaced. For example:",

"_____no_output_____"

]

],

[

[

"mystring",

"_____no_output_____"

],

[

"# Let's try to change the first letter\nmystring[0] = 'a'",

"_____no_output_____"

]

],

[

[

"The error tells it to us straight. Strings do not support item assignment the way some other data types do.\n\nHowever, we *can* **concatenate** strings.",

"_____no_output_____"

]

],
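Because item assignment is not allowed, the usual way to "change" a string is to build a new one from slices of the old one. The sketch below shows both the failing assignment (caught with `try`/`except`, which is covered properly later) and the slicing alternative; the name `changed` is just for illustration.

```python
mystring = 'Hello World'

# Item assignment raises a TypeError because strings are immutable
try:
    mystring[0] = 'J'
except TypeError as err:
    print('TypeError:', err)

# Build a new string instead: a new first letter plus the rest of the old string
changed = 'J' + mystring[1:]
print(changed)

# The original string is untouched
print(mystring)
```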

[

[

"mystring",

"_____no_output_____"

],

[

"# Combine strings through concatenation\nmystring + \". It's me.\"",

"_____no_output_____"

],

[

"# We can reassign mystring to a new value, however\nmystring = mystring + \". It's me.\"",

"_____no_output_____"

],

[

"mystring",

"_____no_output_____"

]

],

[

[

"One neat trick we can do with strings is use multiplication whenever we want to repeat characters a certain number of times.",

"_____no_output_____"

]

],

[

[

"letter = 'a'",

"_____no_output_____"

],

[

"letter*20",

"_____no_output_____"

]

],

[

[

"We already saw how to use len(). That is a built-in *function* that works on strings (and other sequences). Strings also come with their own built-in *methods*, which we will cover next.",

"_____no_output_____"

],

[

"### Basic Built-in String methods\n\nObjects in Python usually have built-in methods. These methods are functions inside the object that can perform actions or commands on the object itself.\n\nWe call methods with a period and then the method name. Methods are in the form:\n\nobject.method(parameters)\n\nParameters are extra arguments we can pass into the method. Don't worry if the details don't make 100% sense right now. We will be going into more depth with these later.\n\nHere are some examples of built-in methods in strings:",

"_____no_output_____"

]

],

[

[

"mystring",

"_____no_output_____"

],

[

"# Make all letters in a string uppercase\nmystring.upper()",

"_____no_output_____"

],

[

"# Make all letters in a string lowercase\nmystring.lower()",

"_____no_output_____"

],

[

"# Split strings with a specified character as the separator. Spaces are the default.\nmystring.split()",

"_____no_output_____"

],

[

"# Split by a specific character (the separator itself is not included in the resulting pieces)\nmystring.split('W')",

"_____no_output_____"

]

],
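Since strings are immutable, string methods never modify the string they are called on; they return a new value that you can store or pass along. A short sketch of this, which also uses `str.join` (a standard method not shown above) to reverse a split:

```python
mystring = 'Hello World'

# upper() returns a new string; mystring itself is unchanged
shouted = mystring.upper()
print(shouted)
print(mystring)

# split() returns a list of pieces, and join() glues a list back together
words = mystring.split()
print(words)
rejoined = '-'.join(words)
print(rejoined)
```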

[

[

"### 1.0 Now Try This",

"_____no_output_____"

],

[

"Given the string 'Amsterdam' give an index command that returns 'd'. Enter your code in the cell below:",

"_____no_output_____"

]

],

[

[

"s = 'Amsterdam'\n# Print out 'd' using indexing\nanswer1 = # INSERT CODE HERE\nprint(answer1)\n",

"_____no_output_____"

]

],

[

[

"Reverse the string 'Amsterdam' using slicing:",

"_____no_output_____"

]

],

[

[

"s ='Amsterdam'\n# Reverse the string using slicing\nanswer2 = # INSERT CODE HERE\nprint(answer2)",

"_____no_output_____"

]

],

[

[

"Given the string Amsterdam, extract the letter 'm' using negative indexing.",

"_____no_output_____"

]

],

[

[

"s ='Amsterdam'\n\n# Print out the 'm'\nanswer3 = # INSERT CODE HERE\nprint(answer3)",

"_____no_output_____"

]

],

[

[

"## Booleans\n\nPython comes with *booleans* (values that are essentially binary: True or False, 1 or 0). It also has a placeholder object called None. Let's walk through a few quick examples of Booleans.",

"_____no_output_____"

]

],

[

[

"# Set object to be a boolean\na = True",

"_____no_output_____"

],

[

"#Show\na",

"_____no_output_____"

]

],

[

[

"We can also use comparison operators to create booleans. We'll cover comparison operators a little later.",

"_____no_output_____"

]

],

[

[

"# Output is boolean\n1 > 2",

"_____no_output_____"

]

],

[

[

"We can use None as a placeholder for an object that we don't want to assign a real value to yet:",

"_____no_output_____"

]

],

[

[

"# None placeholder\nb = None",

"_____no_output_____"

],

[

"# Show\nprint(b)",

"_____no_output_____"

]

],

[

[

"That's all there is to booleans! Next we start covering data structures. First up, lists.",

"_____no_output_____"

],

[

"## Lists\n\nEarlier when discussing strings we introduced the concept of a *sequence*. Lists are the most general kind of sequence in Python. Unlike strings, they are mutable, meaning the elements inside a list can be changed!\n\nLists are constructed with brackets [] and commas separating every element in the list.\n\nLet's start with seeing how we can build a list.",

"_____no_output_____"

],

[

"### Creating Lists",

"_____no_output_____"

]

],

[

[

"# Assign a list to a variable named my_list\nmy_list = [1,2,3]",

"_____no_output_____"

]

],

[

[

"We just created a list of integers, but lists can actually hold elements of multiple data types. For example:",

"_____no_output_____"

]

],

[

[

"my_list = ['A string',23,100.232,'o']",

"_____no_output_____"

]

],

[

[

"Just like strings, the len() function will tell you how many items are in the sequence of the list.",

"_____no_output_____"

]

],

[

[

"len(my_list)",

"_____no_output_____"

],

[

"my_list = ['one','two','three',4,5]",

"_____no_output_____"

],

[

"# Grab element at index 0\nmy_list[0]",

"_____no_output_____"

],

[

"# Grab index 1 and everything past it\nmy_list[1:]",

"_____no_output_____"

],

[

"# Grab everything UP TO index 3\nmy_list[:3]",

"_____no_output_____"

]

],

[

[

"We can also use + to concatenate lists, just like we did for strings.",

"_____no_output_____"

]

],

[

[

"my_list + ['new item']",

"_____no_output_____"

]

],

[

[

"Note: This doesn't actually change the original list!",

"_____no_output_____"

]

],

[

[

"my_list",

"_____no_output_____"

]

],

[

[

"You would have to reassign the list to make the change permanent.",

"_____no_output_____"

]

],

[

[

"# Reassign\nmy_list = my_list + ['add new item permanently']",

"_____no_output_____"

],

[

"my_list",

"_____no_output_____"

]

],

[

[

"We can also use the * for a duplication method similar to strings:",

"_____no_output_____"

]

],

[

[

"# Make the list double\nmy_list * 2",

"_____no_output_____"

],

[

"# Again doubling not permanent\nmy_list",

"_____no_output_____"

]

],

[

[

"Use the **append** method to permanently add an item to the end of a list:",

"_____no_output_____"

]

],

[

[

"# Append\nmy_list.append('append me!')",

"_____no_output_____"

],

[

"# Show\nmy_list",

"_____no_output_____"

]

],
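The difference between `+` and `append` is worth seeing side by side: `+` builds a new list and leaves the original alone, while `append` (and item assignment) change the existing list in place. A minimal sketch with a small example list:

```python
my_list = [1, 2, 3]

# + creates a new list; my_list is unchanged
combined = my_list + [4]
print(combined)
print(my_list)

# append modifies my_list itself and returns None
my_list.append(4)
print(my_list)

# Lists are mutable, so item assignment works (unlike with strings)
my_list[0] = 'changed'
print(my_list)
```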

[

[

"### List Comprehensions\nPython has an advanced feature called list comprehensions. They allow for quick construction of lists. To fully understand list comprehensions we need to understand for loops. So don't worry if you don't completely understand this section, and feel free to just skip it since we will return to this topic later.\n\nBut in case you want to know now, here are a few examples!",

"_____no_output_____"

]

],

[

[

"# An example nested list (a list of rows) to work with\nmatrix = [[1,2,3],[4,5,6],[7,8,9]]\n\n# Build a list comprehension by deconstructing a for loop within a []\nfirst_col = [row[0] for row in matrix]",

"_____no_output_____"

],

[

"first_col",

"_____no_output_____"

]

],

[

[

"We used a list comprehension here to grab the first element of every row in the matrix object. We will cover this in much more detail later on!",

"_____no_output_____"

],
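To make the connection to for loops concrete, here is the same first-column extraction written both ways. The `matrix` value is an example nested list assumed for illustration; both versions produce the same result.

```python
matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# The long way: start with an empty list and append inside a for loop
first_col_loop = []
for row in matrix:
    first_col_loop.append(row[0])

# The same thing as a list comprehension
first_col = [row[0] for row in matrix]

print(first_col_loop)
print(first_col)
```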

[

"### 2.0 Now Try This",

"_____no_output_____"

],

[

"Build this list [0,0,0] using any of the shown ways.",

"_____no_output_____"

]

],

[

[

"# Build the list\nanswer1 = #INSERT CODE HERE\nprint(answer1)",

"_____no_output_____"

]

],

[

[

"## Tuples\n\nIn Python, tuples are very similar to lists; however, unlike lists they are *immutable*, meaning they cannot be changed. You would use tuples to represent things that shouldn't be changed, such as days of the week, or dates on a calendar. \n\nYou'll have an intuition of how to use tuples based on what you've learned about lists. We can treat them very similarly with the major distinction being that tuples are immutable.",

"_____no_output_____"

],

[

"### Constructing Tuples\n\nTuples are constructed using () with elements separated by commas. For example:",

"_____no_output_____"

]

],

[

[

"# Create a tuple\nt = (1,2,3)",

"_____no_output_____"

],

[

"# Check len just like a list\nlen(t)",

"_____no_output_____"

],

[

"# Can also mix object types\nt = ('one',2)\n\n# Show\nt",

"_____no_output_____"

],

[

"# Use indexing just like we did in lists\nt[0]",

"_____no_output_____"

],

[

"# Negative indexing works just like a list\nt[-1]",

"_____no_output_____"

]

],

[

[

"### Basic Tuple Methods\n\nTuples have built-in methods, but not as many as lists do. Let's look at two of them:",

"_____no_output_____"

]

],

[

[

"# Use .index to enter a value and return the index\nt.index('one')",

"_____no_output_____"

],

[

"# Use .count to count the number of times a value appears\nt.count('one')",

"_____no_output_____"

]

],

[

[

"### Immutability\n\nIt can't be stressed enough that tuples are immutable. To drive that point home:",

"_____no_output_____"

]

],

[

[

"t[0]= 'change'",

"_____no_output_____"

]

],

[

[

"Because of this immutability, tuples can't grow. Once a tuple is made we can not add to it.",

"_____no_output_____"

]

],

[

[

"t.append('nope')",

"_____no_output_____"

]

],

[

[

"### When to use Tuples\n\nYou may be wondering, \"Why bother using tuples when they have fewer available methods?\" To be honest, tuples are not used as often as lists in programming, but are used when immutability is necessary. If in your program you are passing around an object and need to make sure it does not get changed, then a tuple becomes your solution. It provides a convenient source of data integrity.\n\nYou should now be able to create and use tuples in your programming as well as have an understanding of their immutability.",

"_____no_output_____"

],
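Two everyday places where tuples show up are unpacking and dictionary keys (dictionaries are introduced in the next section). The sketch below is illustrative only; the names `point` and `labels` are made up.

```python
# A tuple groups values that belong together and should not change
point = (3, 4)

# Unpacking assigns each element to its own variable
x, y = point
print(x, y)

# Because tuples are immutable they can be used as dictionary keys
labels = {(0, 0): 'origin', (3, 4): 'corner'}
print(labels[point])

# A list cannot be used as a key, because it could change after being stored
try:
    bad = {[3, 4]: 'corner'}
except TypeError as err:
    print('TypeError:', err)
```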

[

"### 3.0 Now Try This",

"_____no_output_____"

],

[

"Create a tuple.",

"_____no_output_____"

]

],

[

[

"answer1 = #INSERT CODE HERE\nprint(type(answer1))",

"_____no_output_____"

]

],

[

[

"## Dictionaries\n\nWe've been learning about *sequences* in Python but now we're going to switch gears and learn about *mappings* in Python. If you're familiar with other languages you can think of dictionaries as hash tables. \n\nSo what are mappings? Mappings are collections of objects that are stored by a *key*, unlike a sequence, which stores objects by their relative position. This is an important distinction: you look up a value by its key, not by its position. (Since Python 3.7 dictionaries do remember the order in which keys were inserted, but you still cannot index them by position.)\n\nA Python dictionary consists of keys, each with an associated value. That value can be almost any Python object.",

"_____no_output_____"

],

[

"### Constructing a Dictionary\nLet's see how we can build dictionaries and better understand how they work.",

"_____no_output_____"

]

],

[

[

"# Make a dictionary with {} and : to signify a key and a value\nmy_dict = {'key1':'value1','key2':'value2'}",

"_____no_output_____"

],

[

"# Call values by their key\nmy_dict['key2']",

"_____no_output_____"

]

],

[

[

"It's important to note that dictionaries are very flexible in the data types they can hold. For example:",

"_____no_output_____"

]

],

[

[

"my_dict = {'key1':123,'key2':[12,23,33],'key3':['item0','item1','item2']}",

"_____no_output_____"

],

[

"# Let's call items from the dictionary\nmy_dict['key3']",

"_____no_output_____"

],

[

"# Can call an index on that value\nmy_dict['key3'][0]",

"_____no_output_____"

],

[

"# Can then even call methods on that value\nmy_dict['key3'][0].upper()",

"_____no_output_____"

]

],

[

[

"We can affect the values of a key as well. For instance:",

"_____no_output_____"

]

],

[

[

"my_dict['key1']",

"_____no_output_____"

],

[

"# Subtract 123 from the value\nmy_dict['key1'] = my_dict['key1'] - 123",

"_____no_output_____"

],

[

"#Check\nmy_dict['key1']",

"_____no_output_____"

]

],

[

[

"A quick note: Python has shorthand operators for updating a value in place by addition, subtraction, multiplication or division (+=, -=, *=, /=). We could have used -= for the statement above. For example:",

"_____no_output_____"

]

],

[

[

"# Set the object equal to itself minus 123 \nmy_dict['key1'] -= 123\nmy_dict['key1']",

"_____no_output_____"

]

],

[

[

"We can also create keys by assignment. For instance if we started off with an empty dictionary, we could continually add to it:",

"_____no_output_____"

]

],

[

[

"# Create a new dictionary\nd = {}",

"_____no_output_____"

],

[

"# Create a new key through assignment\nd['animal'] = 'Dog'",

"_____no_output_____"

],

[

"# Can do this with any object\nd['answer'] = 42",

"_____no_output_____"

],

[

"#Show\nd",

"_____no_output_____"

]

],

[

[

"### Nesting with Dictionaries\n\nHopefully you're starting to see how powerful Python is with its flexibility of nesting objects and calling methods on them. Let's see a dictionary nested inside a dictionary:",

"_____no_output_____"

]

],

[

[

"# Dictionary nested inside a dictionary nested inside a dictionary\nd = {'key1':{'nestkey':{'subnestkey':'value'}}}",

"_____no_output_____"

]

],

[

[

"Seems complicated, but let's see how we can grab that value:",

"_____no_output_____"

]

],

[

[

"# Keep calling the keys\nd['key1']['nestkey']['subnestkey']",

"_____no_output_____"

]

],

[

[

"### Dictionary Methods\n\nThere are a few methods we can call on a dictionary. Let's get a quick introduction to a few of them:",

"_____no_output_____"

]

],

[

[

"# Create a typical dictionary\nd = {'key1':1,'key2':2,'key3':3}",

"_____no_output_____"

],

[

"# Method to return a view of all keys (wrap it in list() if you need a list)\nd.keys()",

"_____no_output_____"

],

[

"# Method to grab all values\nd.values()",

"_____no_output_____"

],

[

"# Method to return all items as (key, value) tuples\nd.items()",

"_____no_output_____"

]

],
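These methods are most useful together with a loop or a safe lookup. The sketch below reuses the same example dictionary; `dict.get` is a standard method not shown above, and the key 'key4' is a made-up missing key used to show the default value.

```python
d = {'key1': 1, 'key2': 2, 'key3': 3}

# keys() and values() return views; list() turns them into regular lists
print(list(d.keys()))
print(list(d.values()))

# items() yields (key, value) tuples, which can be unpacked in a for loop
for key, value in d.items():
    print(key, value)

# get() returns a default instead of raising an error when a key is missing
print(d.get('key4', 0))
```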

[

[

"### 4.0 Now Try This\n",

"_____no_output_____"

],

[

"Using keys and indexing, grab the 'hello' from the following dictionaries:",

"_____no_output_____"

]

],

[

[

"d = {'simple_key':'hello'}\n\n# Grab 'hello'\nanswer1 = #INSERT CODE HERE\nprint(answer1)",

"_____no_output_____"

],

[

"d = {'k1':{'k2':'hello'}}\n\n# Grab 'hello'\nanswer2 = #INSERT CODE HERE\nprint(answer2)",

"_____no_output_____"

],

[

"# Getting a little trickier\nd = {'k1':[{'nest_key':['this is deep',['hello']]}]}\n\n#Grab hello\nanswer3 = #INSERT CODE HERE\nprint(answer3)",

"_____no_output_____"

],

[

"# This will be hard and annoying!\nd = {'k1':[1,2,{'k2':['this is tricky',{'tough':[1,2,['hello']]}]}]}\n\n# Grab hello\nanswer4 = #INSERT CODE HERE\nprint(answer4)",

"_____no_output_____"

]

],

[

[

"## Comparison Operators \n\nAs stated previously, comparison operators allow us to compare variables and output a Boolean value (True or False). \n\nThese operators are the same as the ones you've seen in math, so there's nothing new here.\n\nFirst we'll present a table of the comparison operators and then work through some examples:\n\n<h2> Table of Comparison Operators </h2><p> In the table below, a=9 and b=11.</p>\n\n<table class=\"table table-bordered\">\n<tr>\n<th style=\"width:10%\">Operator</th><th style=\"width:45%\">Description</th><th>Example</th>\n</tr>\n<tr>\n<td>==</td>\n<td>If the values of two operands are equal, then the condition becomes true.</td>\n<td> (a == b) is not true.</td>\n</tr>\n<tr>\n<td>!=</td>\n<td>If the values of two operands are not equal, then the condition becomes true.</td>\n<td>(a != b) is true</td>\n</tr>\n<tr>\n<td>&gt;</td>\n<td>If the value of the left operand is greater than the value of the right operand, then the condition becomes true.</td>\n<td> (a &gt; b) is not true.</td>\n</tr>\n<tr>\n<td>&lt;</td>\n<td>If the value of the left operand is less than the value of the right operand, then the condition becomes true.</td>\n<td> (a &lt; b) is true.</td>\n</tr>\n<tr>\n<td>&gt;=</td>\n<td>If the value of the left operand is greater than or equal to the value of the right operand, then the condition becomes true.</td>\n<td> (a &gt;= b) is not true. </td>\n</tr>\n<tr>\n<td>&lt;=</td>\n<td>If the value of the left operand is less than or equal to the value of the right operand, then the condition becomes true.</td>\n<td> (a &lt;= b) is true. </td>\n</tr>\n</table>",

"_____no_output_____"

],
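The table can be checked directly in Python. The following is a minimal sketch that uses the same values as the table (a=9 and b=11); the dictionary name `results` is only an illustrative choice and does not come from the original notebook.

```python
# Values taken from the table above.
a = 9
b = 11

# Each comparison evaluates to a Boolean (True or False).
results = {
    "a == b": a == b,
    "a != b": a != b,
    "a > b": a > b,
    "a < b": a < b,
    "a >= b": a >= b,
    "a <= b": a <= b,
}

# Print every expression next to the Boolean it produced.
for expression, value in results.items():
    print(f"{expression}: {value}")
```

Running this should agree with the "Example" column: the three comparisons the table marks as true come out as True, and the others as False.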

[

"Let's now work through quick examples of each of these.\n\n#### Equal",

"_____no_output_____"

]

],

[

[

"4 == 4",

"_____no_output_____"

],

[

"1 == 0",

"_____no_output_____"

]

],

[

[

"Note that <code>==</code> is a <em>comparison</em> operator, while <code>=</code> is an <em>assignment</em> operator.",

"_____no_output_____"

],
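To make the difference between the two operators concrete, here is a small sketch. The variable names `x`, `is_four`, and `is_five` are hypothetical and chosen only for illustration.

```python
# A single '=' assigns: the name x now refers to the value 4.
x = 4

# A double '==' compares: each expression yields a Boolean,
# which is then stored with an ordinary assignment.
is_four = x == 4
is_five = x == 5

print(is_four)
print(is_five)
```

Note that `x == 4` does not change `x`; it only asks whether the two values are equal.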

[

"#### Not Equal",

"_____no_output_____"

]

],

[

[

"4 != 5",

"_____no_output_____"

],

[

"1 != 1",

"_____no_output_____"

]

],

[

[

"#### Greater Than",

"_____no_output_____"

]

],

[

[

"8 > 3",

"_____no_output_____"

],

[

"1 > 9",

"_____no_output_____"

]

],

[

[

"#### Less Than",

"_____no_output_____"

]

],

[

[

"3 < 8",

"_____no_output_____"

],

[

"7 < 0",

"_____no_output_____"

]

],

[

[

"#### Greater Than or Equal to",

"_____no_output_____"

]

],

[

[

"7 >= 7",

"_____no_output_____"

],

[

"9 >= 4",

"_____no_output_____"

]

],

[

[

"#### Less Than or Equal to",

"_____no_output_____"

]

],

[

[

"4 <= 4",

"_____no_output_____"

],

[

"1 <= 3",

"_____no_output_____"

]

],

[

[

"Hopefully this was more of a review than anything new! Next, we move on to one of the most important aspects of building programs: functions and how to use them.",

"_____no_output_____"

],

[

"## Functions\n\n### Introduction to Functions\n\nHere, we will explain what a function is in Python and how to create one. Functions will be one of our main building blocks as we write larger and larger programs to solve problems.\n\n**So what is a function?**\n\nFormally, a function is a device that groups together a set of statements so they can be run more than once. Functions also let us specify parameters that serve as inputs.\n\nOn a more fundamental level, functions save us from writing the same code again and again. Recall from the lessons on strings and lists that we used the function len() to get the length of a string. Because checking the length of a sequence is such a common task, it makes sense to have a function that can do it on demand.\n\nFunctions are one of the most basic ways of reusing code in Python, and they also let us start thinking about program design.",

"_____no_output_____"

],
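As a quick preview of the reuse idea, the sketch below wraps the built-in `len()` in a small function and calls it on two different sequences. The function name `describe_length` and its message format are assumptions made for this illustration; they are not part of the original notebook.

```python
def describe_length(sequence):
    '''Return a short message reporting the length of a sequence.'''
    # len() is itself a function: we reuse it instead of counting by hand.
    return f"Length is {len(sequence)}"

# The same statements run for different inputs without being rewritten.
print(describe_length("hello"))
print(describe_length([1, 2, 3]))
```

The parameter `sequence` is the input mentioned above: each call supplies a different value, and the body of the function stays the same.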

[

"### def Statements\n\nLet's see how to build out a function's syntax in Python. It has the following form:",

"_____no_output_____"

]

],

[

[

"def name_of_function(arg1, arg2):\n    '''\n    This is where the function's Document String (docstring) goes\n    '''\n    # Do stuff here\n    # Return desired result",

"_____no_output_____"

]

],

[

[