| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |

|---|---|---|---|---|---|---|---|---|---|

Wheatley961/Raw_1_Test_1_new.model

|

Wheatley961

| 2022-11-15T14:45:41Z | 2 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-11-15T14:45:17Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```
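To make the masked averaging in `mean_pooling` concrete, here is a minimal sketch in plain Python (no torch) for a single sentence. The helper name `masked_mean` and the toy vectors are illustrative assumptions, not part of the model card; the sketch only mirrors the idea that padded positions (mask value 0) are excluded from the average.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, skipping positions where the mask is 0.

    Hypothetical helper for illustration; it handles one sentence at a time.
    """
    dim = len(token_embeddings[0])
    totals = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                totals[i] += value
    # Mirror the clamp in mean_pooling: avoid dividing by zero
    # when every position is padding.
    denom = max(count, 1e-9)
    return [total / denom for total in totals]


# Two real tokens followed by one padding token (toy values).
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
pooled = masked_mean(tokens, mask)
print(pooled)
```

The padding vector does not affect the result, which is the reason the attention mask is passed to the pooling function.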

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 128 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{
    "epochs": 1,
    "evaluation_steps": 0,
    "evaluator": "NoneType",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": 128,
    "warmup_steps": 13,
    "weight_decay": 0.01
}

```
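As a rough illustration of what the `WarmupLinear` scheduler does with these values, the sketch below computes the learning rate per step, assuming the usual behavior of a linear ramp from 0 to the base rate over `warmup_steps`, followed by a linear decay to 0 at the final step. The function name `warmup_linear_lr` is hypothetical, and the total step count is taken as `epochs * steps_per_epoch` (1 × 128).

```python
def warmup_linear_lr(step, base_lr=2e-5, warmup_steps=13, total_steps=128):
    """Learning rate at a given optimizer step (illustrative sketch only)."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 6, 13, 70, 128):
    print(step, warmup_linear_lr(step))
```

With 13 warmup steps out of 128, roughly the first 10% of training runs at a reduced learning rate.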

## Full Model Architecture

```

SentenceTransformer(
  (0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: XLMRobertaModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

SundayPunch/Combined_Tech_SF

|

SundayPunch

| 2022-11-15T14:43:46Z | 0 | 10 | null |

[

"license:openrail",

"region:us"

] | null | 2022-11-15T12:47:56Z |

---

license: openrail

---

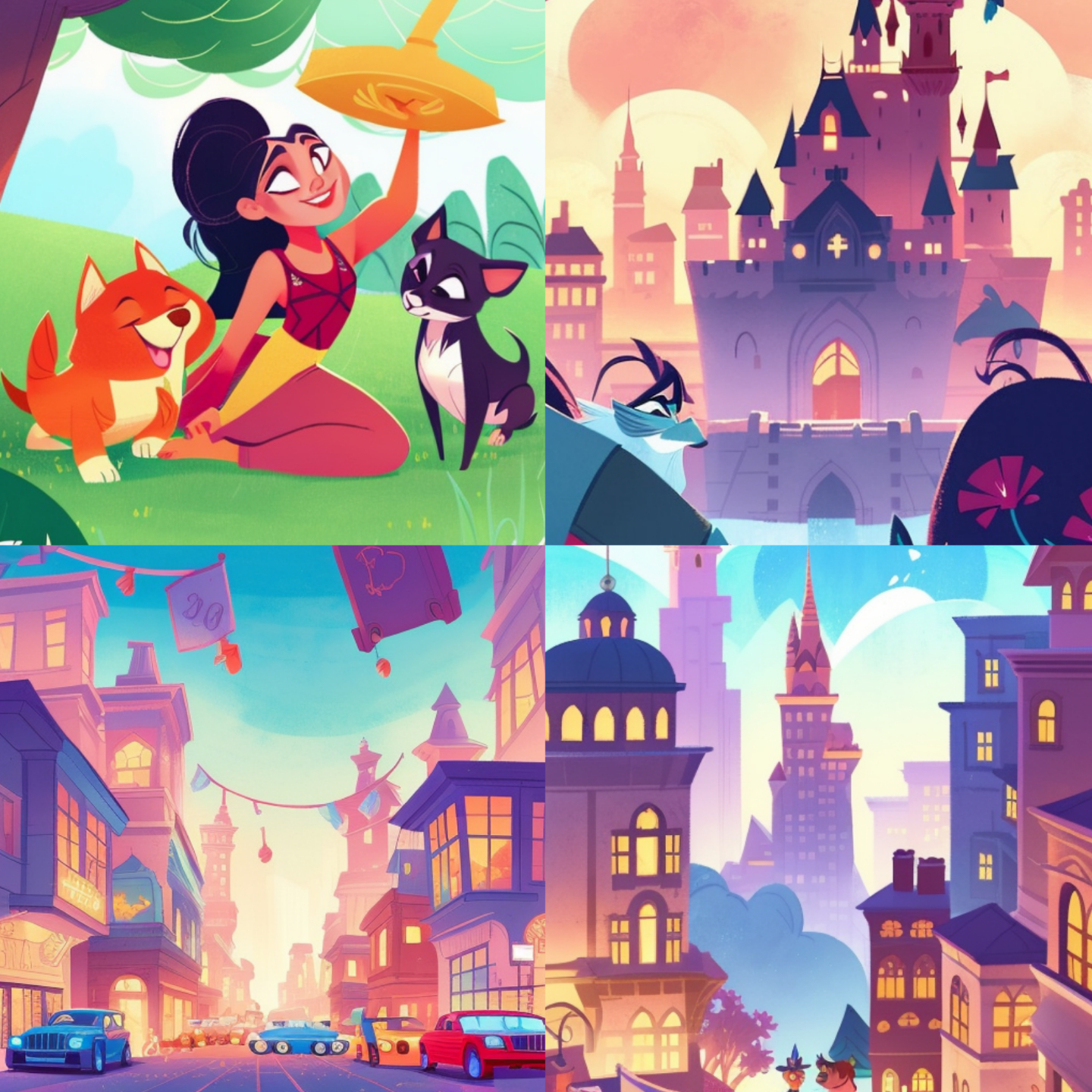

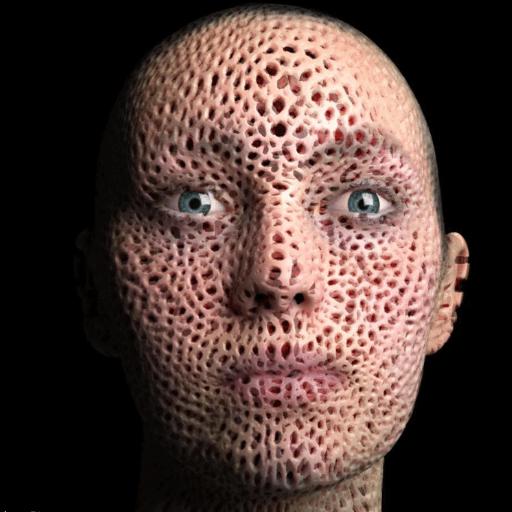

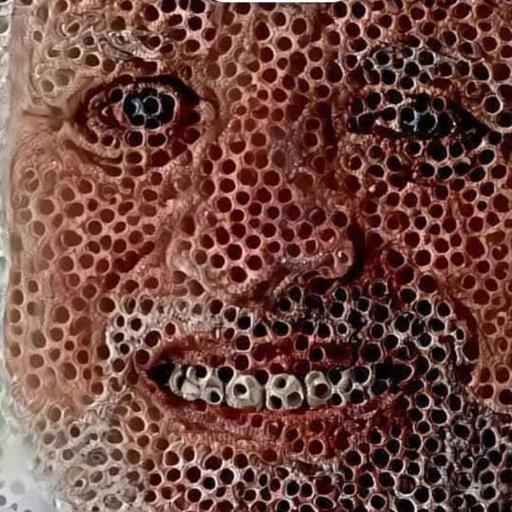

## Dreambooth model for a high-tech, detailed concept art style

This is a model trained on a mix of real images of fighter aircraft, warships, and spacecraft, and techy, detailed concept art from Aaron Beck, Paul Chadeisson and Rasmus Poulsen. High-tech, industrial sci-fi with a grungy aesthetic.

Use prompt: 'combotechsf'

## Example images

|

Tom11/xlm-roberta-base-finetuned-panx-de-fr

|

Tom11

| 2022-11-15T13:51:06Z | 111 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-15T08:58:04Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1637

- F1: 0.8581

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1     |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 0.29          | 1.0   | 715  | 0.1885          | 0.8231 |
| 0.1443        | 2.0   | 1430 | 0.1607          | 0.8479 |
| 0.0937        | 3.0   | 2145 | 0.1637          | 0.8581 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cpu

- Datasets 1.16.1

- Tokenizers 0.13.2

|

egorulz/malayalam-news

|

egorulz

| 2022-11-15T13:36:08Z | 62 | 0 |

transformers

|

[

"transformers",

"tf",

"gpt2",

"text-generation",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-15T13:35:20Z |

---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: malayalam-news
  results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# malayalam-news

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 10.9255

- Validation Loss: 10.9247

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 5e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5e-05, 'decay_steps': -999, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |
|:----------:|:---------------:|:-----:|
| 10.9636    | 10.9321         | 0     |
| 10.9425    | 10.9296         | 1     |
| 10.9255    | 10.9247         | 2     |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.6.1

- Tokenizers 0.13.2

|

DenilsenAxel/nlp-text-classification

|

DenilsenAxel

| 2022-11-15T13:30:57Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"dataset:amazon_us_reviews",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T13:22:15Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- amazon_us_reviews

metrics:

- accuracy

model-index:
- name: test_trainer
  results:
  - task:
      name: Text Classification
      type: text-classification
    dataset:
      name: amazon_us_reviews
      type: amazon_us_reviews
      config: Books_v1_01
      split: train[:1%]
      args: Books_v1_01
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.7441424554826617

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# test_trainer

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the amazon_us_reviews dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9348

- Accuracy: 0.7441

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step  | Validation Loss | Accuracy |
|:-------------:|:-----:|:-----:|:---------------:|:--------:|
| 0.6471        | 1.0   | 7500  | 0.6596          | 0.7376   |
| 0.5235        | 2.0   | 15000 | 0.6997          | 0.7423   |
| 0.3955        | 3.0   | 22500 | 0.9348          | 0.7441   |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

cjvt/sloberta-trendi-topics

|

cjvt

| 2022-11-15T13:24:38Z | 207 | 0 |

transformers

|

[

"transformers",

"pytorch",

"camembert",

"text-classification",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T09:03:01Z |

---

license: apache-2.0

---

*Text classification model SloBERTa-Trendi-Topics 1.0*

The SloBERTa-Trendi-Topics model is a text classification model that assigns each news text one of 13 topic labels. It was trained on a set of approx. 36,000 Slovene texts from various Slovene news sources included in the Trendi Monitor Corpus of Slovene (http://hdl.handle.net/11356/1590) such as "rtvslo.si", "sta.si", "delo.si", "dnevnik.si", "vecer.com", "24ur.com", "siol.net", "gorenjskiglas.si", etc.

The texts were semi-automatically categorized into 13 categories based on the sections under which they were published (i.e. URLs). The set of labels was developed in accordance with related categorization schemas used in other corpora and comprises the following topics: "črna kronika" (crime and accidents), "gospodarstvo, posel, finance" (economy, business, finance), "izobraževanje" (education), "okolje" (environment), "prosti čas" (free time), "šport" (sport), "umetnost, kultura" (art, culture), "vreme" (weather), "zabava" (entertainment), "zdravje" (health), "znanost in tehnologija" (science and technology), "politika" (politics), and "družba" (society). The categorization process is explained in more detail in Kosem et al. (2022): https://nl.ijs.si/jtdh22/pdf/JTDH2022_Kosem-et-al_Spremljevalni-korpus-Trendi.pdf

The model was trained on the labeled texts using the SloBERTa 2.0 contextual embeddings model (https://huggingface.co/EMBEDDIA/sloberta, also available at CLARIN.SI: http://hdl.handle.net/11356/1397) and validated on a development set of 1,293 texts using the simpletransformers library and the following hyperparameters:

- Train batch size: 8

- Learning rate: 1e-5

- Max. sequence length: 512

- Number of epochs: 2

The model achieves a macro-F1-score of 0.94 on a test set of 1,295 texts (best for "črna kronika", "politika", "šport", and "vreme" at 0.98, worst for "prosti čas" at 0.83).
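For readers unfamiliar with the metric, the sketch below shows how a macro-F1 score is typically computed: F1 is calculated per label and then averaged with equal weight, so rare topics count as much as frequent ones. The function names and the four toy predictions are hypothetical and are not taken from the model's evaluation data.

```python
def f1_score(tp, fp, fn):
    """F1 from true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(gold, predicted):
    """Unweighted mean of per-label F1 scores."""
    labels = sorted(set(gold) | set(predicted))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, predicted))
        fp = sum(g != label and p == label for g, p in zip(gold, predicted))
        fn = sum(g == label and p != label for g, p in zip(gold, predicted))
        scores.append(f1_score(tp, fp, fn))
    return sum(scores) / len(scores)


# Toy example (hypothetical labels and predictions).
gold = ["šport", "vreme", "šport", "politika"]
predicted = ["šport", "vreme", "politika", "politika"]
score = macro_f1(gold, predicted)
print(round(score, 4))
```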

|

ogkalu/Illustration-Diffusion

|

ogkalu

| 2022-11-15T12:57:36Z | 0 | 162 | null |

[

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2022-10-22T02:13:26Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

---

2D illustration styles are scarce on Stable Diffusion. Inspired by Hollie Mengert, this is a fine-tuned Stable Diffusion model trained on her work. The correct token is holliemengert artstyle.

Hollie is **not** affiliated with this. You can read about her stance on the issue here - https://waxy.org/2022/11/invasive-diffusion-how-one-unwilling-illustrator-found-herself-turned-into-an-ai-model/

**Portraits generated by this model:**

**Landscapes generated by this model:**

|

davide1998/a2c-AntBulletEnv-v0

|

davide1998

| 2022-11-15T12:40:21Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-15T12:39:17Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: A2C
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: AntBulletEnv-v0
      type: AntBulletEnv-v0
    metrics:
    - type: mean_reward
      value: 1309.17 +/- 78.04
      name: mean_reward
      verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of an **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
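The `mean_reward` value in the metadata has the form "mean +/- standard deviation" over a set of evaluation episodes. The sketch below shows how such a summary string could be produced from per-episode returns. It assumes a population standard deviation, and the helper name `summarize_rewards` and the three reward values are made up for illustration; they are not this agent's actual episodes.

```python
import statistics


def summarize_rewards(episode_rewards):
    """Format episode returns as 'mean +/- std' (population std assumed)."""
    mean = statistics.fmean(episode_rewards)
    std = statistics.pstdev(episode_rewards)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical per-episode returns.
rewards = [1200.0, 1300.0, 1400.0]
summary = summarize_rewards(rewards)
print(summary)
```

A large spread relative to the mean suggests the policy's performance varies a lot from episode to episode.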

|

OSalem99/Pixelcopter-PLE-v0

|

OSalem99

| 2022-11-15T12:03:00Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-15T12:02:52Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:
- name: Pixelcopter-PLE-v0
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: Pixelcopter-PLE-v0
      type: Pixelcopter-PLE-v0
    metrics:
    - type: mean_reward
      value: 11.50 +/- 7.03
      name: mean_reward
      verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

Bhuvana/setfit_2class_model

|

Bhuvana

| 2022-11-15T11:58:40Z | 4 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-11-15T11:58:09Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 75 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`
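As a rough guide to what this loss optimizes, the sketch below computes, in plain Python, the mean squared error between the cosine similarity of each embedding pair and its gold similarity label. This assumes the loss is used with its default squared-error objective. The function names and toy vectors are illustrative assumptions; the real loss operates on batches of torch tensors produced by the model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between predicted cosine similarity and the label."""
    errors = [(cosine_similarity(u, v) - y) ** 2 for (u, v), y in zip(pairs, labels)]
    return sum(errors) / len(errors)


# Toy embeddings: one identical pair and one orthogonal pair.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
perfect = cosine_similarity_loss(pairs, [1.0, 0.0])
mismatch = cosine_similarity_loss(pairs, [0.0, 0.0])
print(perfect, mismatch)
```

Training pushes pairs labeled as similar toward a cosine similarity near their label and pairs labeled as dissimilar away from it.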

Parameters of the fit()-Method:

```

{
    "epochs": 1,
    "evaluation_steps": 0,
    "evaluator": "NoneType",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": 75,
    "warmup_steps": 8,
    "weight_decay": 0.01
}

```

## Full Model Architecture

```

SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

aayu/bert-base-uncased-finetuned-jd_Nov15

|

aayu

| 2022-11-15T11:11:33Z | 56 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-15T10:14:23Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-uncased-finetuned-jd_Nov15
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-jd_Nov15

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0061

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| No log        | 1.0   | 469  | 0.0547          |
| 1.8699        | 2.0   | 938  | 0.0090          |
| 0.0888        | 3.0   | 1407 | 0.0061          |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

alexcaillet/ddpm-butterflies-128

|

alexcaillet

| 2022-11-15T10:43:02Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-11-08T10:37:38Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/alexcaillet/ddpm-butterflies-128/tensorboard?#scalars)

|

OSalem99/Reinforce-CartPole01

|

OSalem99

| 2022-11-15T10:37:58Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-15T10:36:59Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:
- name: Reinforce-CartPole01
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 65.80 +/- 17.22
      name: mean_reward
      verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

Freazling/main-sentiment-model-chats-2-labels

|

Freazling

| 2022-11-15T10:18:19Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T09:31:05Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: main-sentiment-model-chats-2-labels
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# main-sentiment-model-chats-2-labels

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3718

- Accuracy: 0.8567

- F1: 0.8459

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.14.0.dev20221113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

Bhuvana/setfit_2class

|

Bhuvana

| 2022-11-15T09:19:34Z | 2 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-11-15T09:19:15Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 40 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{
    "epochs": 1,
    "evaluation_steps": 0,
    "evaluator": "NoneType",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": 40,
    "warmup_steps": 4,
    "weight_decay": 0.01
}

```

## Full Model Architecture

```

SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

iampedroalz/ppo-LunarLander-v2

|

iampedroalz

| 2022-11-15T07:55:45Z | 4 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-15T07:40:41Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 115.65 +/- 116.41
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

nightalon/distilroberta-base-finetuned-wikitext2

|

nightalon

| 2022-11-15T07:53:44Z | 195 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-11-15T07:24:02Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilroberta-base-finetuned-wikitext2
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-base-finetuned-wikitext2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8340
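Language-modeling losses are often easier to read as perplexity. The sketch below converts the evaluation loss above, assuming it is a mean cross-entropy in nats (the usual convention for this kind of training run, but not stated in the card). For a masked language model the figure covers only the masked tokens, so it is not directly comparable to the perplexity of a causal language model.

```python
import math

# Evaluation loss reported in the card; assumed to be mean cross-entropy in nats.
eval_loss = 1.8340

# Perplexity is the exponential of the mean cross-entropy.
perplexity = math.exp(eval_loss)
print(f"Perplexity: {perplexity:.2f}")
```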

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.0843 | 1.0 | 2406 | 1.9226 |

| 1.9913 | 2.0 | 4812 | 1.8820 |

| 1.9597 | 3.0 | 7218 | 1.8214 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

GhifSmile/mT5_multilingual_XLSum-finetuned-liputan6-coba

|

GhifSmile

| 2022-11-15T07:44:16Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-15T04:34:47Z |

---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mT5_multilingual_XLSum-finetuned-liputan6-coba

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mT5_multilingual_XLSum-finetuned-liputan6-coba

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2713

- Rouge1: 0.3371

- Rouge2: 0.2029

- Rougel: 0.2927

- Rougelsum: 0.309
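
As a reminder of what the Rouge1 figure measures, here is a minimal sketch of ROUGE-1 F1 as clipped unigram overlap. It assumes plain whitespace tokenization with no stemming, and the function name `rouge1_f` and the example sentences are illustrative only. The scores reported above were presumably produced by a ROUGE library, not by this code.

```python
from collections import Counter


def rouge1_f(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1: unigram overlap with clipped counts."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count per token (clipping).
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


# Five of the six unigrams match in each direction, so P = R = F1 = 5/6.
score = rouge1_f("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 4))
```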

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:---------:|

| 1.4304 | 1.0 | 4474 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

| 1.4286 | 2.0 | 8948 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

| 1.429 | 3.0 | 13422 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

chuchun9/distilbert-base-uncased-finetuned-squad

|

chuchun9

| 2022-11-15T07:24:11Z | 127 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-08T02:14:30Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6727

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.5227 | 1.0 | 1107 | 2.0485 |

| 1.7555 | 2.0 | 2214 | 1.7443 |

| 1.4567 | 3.0 | 3321 | 1.6511 |

| 1.2107 | 4.0 | 4428 | 1.6496 |

| 1.083 | 5.0 | 5535 | 1.6727 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

nightalon/distilgpt2-finetuned-wikitext2

|

nightalon

| 2022-11-15T07:13:57Z | 165 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-15T06:37:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilgpt2-finetuned-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2-finetuned-wikitext2

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6421
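
For a causal language model, the evaluation loss is often easier to read as a perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which is the usual Trainer convention but is not stated in this card.

```python
import math

eval_loss = 3.6421  # evaluation loss reported above

# Perplexity is the exponential of the mean per-token cross-entropy.
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.2f}")
```

Under that assumption the model's perplexity on the evaluation set is roughly 38.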

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.7602 | 1.0 | 2334 | 3.6669 |

| 3.653 | 2.0 | 4668 | 3.6472 |

| 3.6006 | 3.0 | 7002 | 3.6421 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

Guroruseru/distilbert-base-uncased-finetuned-emotion

|

Guroruseru

| 2022-11-15T06:53:06Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T04:34:21Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1

- Datasets 1.16.1

- Tokenizers 0.10.3

|

teacookies/autotrain-15112022-cert2-2099767621

|

teacookies

| 2022-11-15T05:45:30Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"token-classification",

"unk",

"dataset:teacookies/autotrain-data-15112022-cert2",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-15T05:27:01Z |

---

tags:

- autotrain

- token-classification

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- teacookies/autotrain-data-15112022-cert2

co2_eq_emissions:

emissions: 30.88105111466208

---

# Model Trained Using AutoTrain

- Problem type: Entity Extraction

- Model ID: 2099767621

- CO2 Emissions (in grams): 30.8811

## Validation Metrics

- Loss: 0.004

- Accuracy: 0.999

- Precision: 0.982

- Recall: 0.990

- F1: 0.986
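
The F1 value can be cross-checked against the precision and recall listed above, since F1 is their harmonic mean. A short sketch using only the numbers from this card:

```python
precision = 0.982
recall = 0.990

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)
print(round(f1, 3))
```

The result rounds to the reported 0.986, so the three metrics are mutually consistent.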

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/teacookies/autotrain-15112022-cert2-2099767621

```

Or Python API:

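```python
from transformers import AutoModelForTokenClassification, AutoTokenizer

# use_auth_token=True reads the token saved by `huggingface-cli login`
model = AutoModelForTokenClassification.from_pretrained("teacookies/autotrain-15112022-cert2-2099767621", use_auth_token=True)
tokenizer = AutoTokenizer.from_pretrained("teacookies/autotrain-15112022-cert2-2099767621", use_auth_token=True)

# Tokenize the input and run a forward pass
inputs = tokenizer("I love AutoTrain", return_tensors="pt")
outputs = model(**inputs)

# logits have shape (batch, tokens, labels); pick the best label per token
predicted_ids = outputs.logits.argmax(dim=-1)[0].tolist()
print([model.config.id2label[i] for i in predicted_ids])
```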
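
The cURL call above can also be issued from Python without installing `transformers`. The following is a minimal sketch using only the standard library. The helper name `build_request` is hypothetical, and `YOUR_API_KEY` is the same placeholder as in the cURL example. The request is built but not sent, so the snippet runs offline.

```python
import json
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/teacookies/autotrain-15112022-cert2-2099767621"


def build_request(api_key: str, text: str) -> urllib.request.Request:
    """Build the same POST request as the cURL example (hypothetical helper)."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("YOUR_API_KEY", "I love AutoTrain")
# To actually send it: urllib.request.urlopen(request).read()
```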

|

jbreunig/xlm-roberta-base-finetuned-panx-de-fr

|

jbreunig

| 2022-11-15T05:07:16Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-15T02:12:52Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1637

- F1: 0.8581

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.29 | 1.0 | 715 | 0.1885 | 0.8231 |

| 0.1443 | 2.0 | 1430 | 0.1607 | 0.8479 |

| 0.0937 | 3.0 | 2145 | 0.1637 | 0.8581 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1

- Datasets 1.16.1

- Tokenizers 0.10.3

|

hcho22/opus-mt-ko-en-finetuned-en-to-kr

|

hcho22

| 2022-11-15T05:03:46Z | 83 | 1 |

transformers

|

[

"transformers",

"tf",

"marian",

"text2text-generation",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-10T03:37:03Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: hcho22/opus-mt-ko-en-finetuned-en-to-kr

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# hcho22/opus-mt-ko-en-finetuned-en-to-kr

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-ko-en](https://huggingface.co/Helsinki-NLP/opus-mt-ko-en) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.5856

- Validation Loss: 2.0437

- Train Bleu: 2.0518

- Train Gen Len: 20.8110

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Bleu | Train Gen Len | Epoch |

|:----------:|:---------------:|:----------:|:-------------:|:-----:|

| 2.5856 | 2.0437 | 2.0518 | 20.8110 | 0 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.6.1

- Tokenizers 0.13.2

|

rohitsan/bart-finetuned-idl-new

|

rohitsan

| 2022-11-15T04:50:30Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-14T08:00:11Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bart-finetuned-idl-new

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-finetuned-idl-new

This model is a fine-tuned version of [rohitsan/bart-finetuned-idl-new](https://huggingface.co/rohitsan/bart-finetuned-idl-new) on an unknown dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.2981

- eval_bleu: 18.5188

- eval_gen_len: 19.3843

- eval_runtime: 257.315

- eval_samples_per_second: 24.464

- eval_steps_per_second: 3.059

- epoch: 8.0

- step: 56648
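
The throughput figures above allow a quick consistency check. The sketch below derives the steps per epoch and the approximate evaluation set size from the reported numbers. It assumes a single device and an evaluation batch size of 8, as listed under the training hyperparameters further down.

```python
import math

step, epoch = 56648, 8.0
eval_batch_size = 8  # from the hyperparameters listed in this card
eval_runtime = 257.315
eval_samples_per_second = 24.464
eval_steps_per_second = 3.059

# Optimizer steps taken per training epoch.
steps_per_epoch = step / epoch

# Evaluation set size implied by runtime x throughput.
eval_samples = round(eval_runtime * eval_samples_per_second)

# Two independent estimates of the number of evaluation batches.
eval_steps_from_rate = round(eval_runtime * eval_steps_per_second)
eval_steps_from_batches = math.ceil(eval_samples / eval_batch_size)

print(steps_per_epoch, eval_samples, eval_steps_from_rate, eval_steps_from_batches)
```

Both estimates of the evaluation step count agree, which suggests the reported rates and the batch size of 8 describe the same run.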

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 35

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

Marqo/marqo-yolo-v1

|

Marqo

| 2022-11-15T04:14:14Z | 0 | 0 | null |

[

"onnx",

"region:us"

] | null | 2022-11-15T04:02:30Z |

https://github.com/marqo-ai/marqo

|

Signorlimone/StylizR

|

Signorlimone

| 2022-11-15T04:06:53Z | 0 | 7 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-11-15T03:38:15Z |

---

license: creativeml-openrail-m

---

This model tries to mimic the stylized 3D look, but with a realistic twist on textures and overall material rendering.

Use "tdst style" (without quotes) to activate the model

As usual, if you want a better likeness to your subject, you can either use brackets, as in [3dst style:10], or give more emphasis to the subject, as in (subject:1.3).

|

Bhathiya/setfit-model

|

Bhathiya

| 2022-11-15T04:05:45Z | 2 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-11-15T04:05:13Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```
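
To see why `mean_pooling` needs the attention mask, here is a dependency-free sketch of the same idea on a toy example. The function name `masked_mean` and the numbers are illustrative assumptions; it handles one sentence as a list of token vectors rather than a batched tensor.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average only the token vectors whose mask entry is 1 (toy, single sentence)."""
    dim = len(token_embeddings[0])
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    # Guard against an all-padding input, mirroring the clamp in mean_pooling.
    count = max(len(kept), 1e-9)
    return [sum(vec[d] for vec in kept) / count for d in range(dim)]


# Two real tokens followed by one padding token with arbitrary values.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]

pooled = masked_mean(tokens, mask)
print(pooled)  # the padding vector does not affect the average
```

Without the mask, the padding vector would be averaged in and distort the sentence embedding.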

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 64 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 64,

"warmup_steps": 7,

"weight_decay": 0.01

}

```
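
The `WarmupLinear` scheduler with the values above can be sketched as a plain function. This is an approximation of the usual linear-warmup, linear-decay rule, not the library's own code. It assumes the total number of steps is `steps_per_epoch * epochs = 64`; the function name `warmup_linear_lr` is illustrative.

```python
def warmup_linear_lr(step, base_lr=2e-05, warmup_steps=7, total_steps=64):
    """Learning rate at a given optimizer step: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return base_lr * remaining


for step in (0, 3, 7, 35, 64):
    print(step, warmup_linear_lr(step))
```

Under these assumptions the learning rate climbs from 0 to 2e-05 over the first 7 steps and then falls linearly back to 0 at step 64.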

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

fuh990202/distilbert-base-uncased-finetuned-squad

|

fuh990202

| 2022-11-15T03:59:32Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-15T02:48:12Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1634

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.0874 | 1.0 | 1113 | 1.7948 |

| 1.1106 | 2.0 | 2226 | 1.7791 |

| 0.4632 | 3.0 | 3339 | 2.1634 |
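
In the table above, the training loss keeps falling while the validation loss rises in the last epoch, which is a typical sign of overfitting. A small sketch of picking the checkpoint with the lowest validation loss from these rows (the tuple layout is an assumption made for illustration):

```python
# (epoch, step, training_loss, validation_loss), copied from the table above
rows = [
    (1.0, 1113, 2.0874, 1.7948),
    (2.0, 2226, 1.1106, 1.7791),
    (3.0, 3339, 0.4632, 2.1634),
]

# Choose the row with the smallest validation loss.
best_epoch, best_step, _, best_val_loss = min(rows, key=lambda row: row[3])
print(best_epoch, best_step, best_val_loss)
```

By that criterion the epoch 2 checkpoint would be preferred over the final one, although this card reports the final-epoch loss.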

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

herpritts/FFXIV-Style

|

herpritts

| 2022-11-15T03:14:41Z | 0 | 53 | null |

[

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2022-10-29T22:01:56Z |

---

tags:

- stable-diffusion

- text-to-image

license: creativeml-openrail-m

---

v1.1: [xivcine-style-1-1.ckpt](https://huggingface.co/herpritts/FFXIV-Style/blob/main/xivcine-style-1-1.ckpt)

token/class: <b>xivcine style</b>

All training images are from the trailers of the critically acclaimed MMORPG Final Fantasy XIV, which has a free trial that includes the entirety of A Realm Reborn AND the award-winning Heavensward expansion up to level 60 with no restrictions on playtime. Sign up, and enjoy Eorzea today! https://secure.square-enix.com/account/app/svc/ffxivregister?lng=en-gb. This model hopes to replicate that style. (Use recruitment code D8PE6VFZ if you like my model and decide to play the game. /smile)

If using the newer version of this model, use "<b>xivcine style</b>" in your prompt. The following images were made with a generic prompt like "portrait, xivcine style, action pose, [hyper realistic], colorful background, bokeh, detailed, cinematic, 3d octane render, 4k, concept art, trending on artstation"

Faces will trend toward this style with a non-specific prompt:

Armor styles can be tweaked effectively with variations in X/Y plots:

Landscapes will trend toward this style with a non-specific prompt:

Merging with other checkpoints can produce entirely unique styles while maintaining an ornate armor style:

These are examples of how elements combine in various mergers:

Future updates will be aimed at training specific pieces of armor, etc. The intent is to create gposes in the style of FFXIV trailers.

v1.0: [xivcine_person_v1.ckpt](https://huggingface.co/herpritts/FFXIV-Style/blob/main/xivcine_person_v1.ckpt)

token/class: <b>xivcine person</b>

This model is just for fun. It makes deep fried images with bright colors and cool lights if you want it to, but it won't listen to your prompts very well.

The images below were prompted with a generic prompt like "xivcine person, cinematic, colorful background, concept art, dramatic lighting, high detail, highly detailed, hyper realistic, intricate, intricate sharp details, octane render, smooth, studio lighting, trending on artstation" plus forest or castle.

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

Owos/tb-classifier

|

Owos

| 2022-11-15T02:51:01Z | 22 | 0 |

transformers

|

[

"transformers",

"inception",

"vision",

"image-classification",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-10-23T04:17:59Z |

---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- https://www.kaggle.com/datasets/tawsifurrahman/tuberculosis-tb-chest-xray-dataset

widget:

- src: https://huggingface.co/Owos/tb-classifier/blob/main/tb-negative.png

example_title: Negative

- src: https://huggingface.co/Owos/tb-classifier/blob/main/tb-positive.png

example_title: Positive

metrics:

- Accuracy

- Precision

- Recall

---

# Tuberculosis Classifier

[GitHub repo is here](https://github.com/owos/tb_project) <br>

[HuggingFace Space](https://huggingface.co/spaces/Owos/tb_prediction_space)

# Model description

This is a computer vision model built with TensorFlow to classify whether a given chest X-ray is positive for tuberculosis.

# Intended uses & limitations

The model was built to support under-resourced and short-staffed primary healthcare centers in Nigeria. In particular, the aim was to create a computer-aided diagnostic tool for radiologists in these centers.

The model has not undergone clinical testing, and usage is at the user's own risk. It has, however, been tested on real-life images that are positive for tuberculosis.

# How to use

Download the pre-trained model and use it to run inference.

A Space has been created for testing [here](https://huggingface.co/spaces/Owos/tb_prediction_space).

# Training data

The entire dataset consists of 3500 negative images and 700 positive TB images. <br>

The data was split into 80% for training and 20% for validation.

# Training procedure

Transfer learning was used with InceptionV3 as the pre-trained base model. Training ran for 20 epochs, and the classes were weighted during training to offset the class imbalance in the dataset. Training was done on Kaggle using the GPUs provided. More details of the experiments can be found [here](https://www.kaggle.com/code/abrahamowodunni/tb-project).
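
The card does not say how the class weights were chosen. One common choice is the "balanced" heuristic, where each class is weighted by `total / (n_classes * class_count)`. The sketch below applies that heuristic to the full-dataset counts given above; both the formula and the use of pre-split counts are assumptions, and the label mapping (0 = negative, 1 = positive) is hypothetical.

```python
# Image counts for the whole dataset, as stated in the training data section.
counts = {0: 3500, 1: 700}  # assumed labels: 0 = TB-negative, 1 = TB-positive

total = sum(counts.values())
n_classes = len(counts)

# "Balanced" heuristic: rarer classes receive proportionally larger weights.
class_weight = {label: total / (n_classes * n) for label, n in counts.items()}
print(class_weight)
```

A dictionary of this shape is what Keras accepts through the `class_weight` argument of `Model.fit`, so a positive image would count five times as much as a negative one in the loss.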

# Evaluation results

The evaluation results are as follows:

- loss: 0.0923

- binary_accuracy: 0.9857

- precision: 0.9259

- recall: 0.9843

More information can be found in the plot below.

[Evaluation results of the TB model](https://github.com/owos/tb_project/blob/main/README.md)

|

huggingtweets/ianflynnbkc

|

huggingtweets

| 2022-11-15T02:50:43Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-14T02:48:05Z |

---

language: en

thumbnail: http://www.huggingtweets.com/ianflynnbkc/1668480615006/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1107777212835614720/g_KwstYD_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Ian Flynn</div>

<div style="text-align: center; font-size: 14px;">@ianflynnbkc</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Ian Flynn.

| Data | Ian Flynn |

| --- | --- |

| Tweets downloaded | 3243 |

| Retweets | 964 |

| Short tweets | 315 |

| Tweets kept | 1964 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/gnis1yl2/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @ianflynnbkc's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2692e7ob) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2692e7ob/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/ianflynnbkc')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

hfl/minirbt-h256

|

hfl

| 2022-11-15T02:21:47Z | 461 | 5 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"fill-mask",

"zh",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-11-14T05:13:06Z |

---

language:

- zh

tags:

- bert

license: "apache-2.0"

---

# Please use 'Bert' related functions to load this model!

## Chinese small pre-trained model MiniRBT

To further promote research and development in Chinese information processing, we released MiniRBT, a small Chinese pre-trained model built with our self-developed knowledge distillation toolkit TextBrewer, combining Whole Word Masking with knowledge distillation.

This repository is developed based on: https://github.com/iflytek/MiniRBT

You may also be interested in:

- Chinese LERT: https://github.com/ymcui/LERT

- Chinese PERT: https://github.com/ymcui/PERT

- Chinese MacBERT: https://github.com/ymcui/MacBERT

- Chinese ELECTRA: https://github.com/ymcui/Chinese-ELECTRA

- Chinese XLNet: https://github.com/ymcui/Chinese-XLNet

- Knowledge Distillation Toolkit - TextBrewer: https://github.com/airaria/TextBrewer

More resources by HFL: https://github.com/iflytek/HFL-Anthology

|

hfl/minirbt-h288

|

hfl

| 2022-11-15T02:21:41Z | 358 | 5 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"fill-mask",

"zh",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-11-14T05:53:35Z |

---

language:

- zh

tags:

- bert

license: "apache-2.0"

---

# Please use 'Bert' related functions to load this model!

## Chinese small pre-trained model MiniRBT

To further promote research and development in Chinese information processing, we released MiniRBT, a small Chinese pre-trained model built with our self-developed knowledge distillation toolkit TextBrewer, combining Whole Word Masking with knowledge distillation.

This repository is developed based on: https://github.com/iflytek/MiniRBT

You may also be interested in:

- Chinese LERT: https://github.com/ymcui/LERT

- Chinese PERT: https://github.com/ymcui/PERT

- Chinese MacBERT: https://github.com/ymcui/MacBERT

- Chinese ELECTRA: https://github.com/ymcui/Chinese-ELECTRA

- Chinese XLNet: https://github.com/ymcui/Chinese-XLNet

- Knowledge Distillation Toolkit - TextBrewer: https://github.com/airaria/TextBrewer

More resources by HFL: https://github.com/iflytek/HFL-Anthology

|

hfl/rbt4-h312

|

hfl

| 2022-11-15T02:21:23Z | 211 | 4 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"bert",

"fill-mask",

"zh",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-11-14T06:24:07Z |

---

language:

- zh

tags:

- bert

license: "apache-2.0"

---

# Please use BERT-related functions to load this model!
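Because this entry is tagged `fill-mask`, the BERT masked-language-model head is the natural way to try it out. The sketch below assumes `transformers` and `torch` are installed and that the repo id `hfl/rbt4-h312` (from this entry's metadata) can be downloaded; `fill_mask` and its `top_k` parameter are hypothetical names chosen for illustration.

```python
def fill_mask(text, model_id="hfl/rbt4-h312", top_k=5):
    """Return the top_k candidate tokens for the first [MASK] in `text`."""
    # Imported lazily so that defining the helper does not require the packages.
    import torch
    from transformers import BertForMaskedLM, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained(model_id)
    model = BertForMaskedLM.from_pretrained(model_id)
    model.eval()

    inputs = tokenizer(text, return_tensors="pt")
    # Positions of the mask token in the (single) input sequence.
    is_mask = inputs["input_ids"][0] == tokenizer.mask_token_id
    mask_positions = is_mask.nonzero(as_tuple=True)[0]
    if len(mask_positions) == 0:
        raise ValueError("text must contain the tokenizer's mask token")

    with torch.no_grad():
        logits = model(**inputs).logits
    # Highest-scoring vocabulary ids at the first masked position.
    top_ids = logits[0, mask_positions[0]].topk(top_k).indices.tolist()
    return tokenizer.convert_ids_to_tokens(top_ids)
```

For example, `fill_mask("今天天气很[MASK]")` would return five candidate characters for the masked slot.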

## Chinese small pre-trained model MiniRBT

To further promote research and development in Chinese information processing, we released MiniRBT, a small Chinese pre-trained model built with our in-house knowledge distillation toolkit TextBrewer, combining Whole Word Masking with knowledge distillation.

This repository is based on https://github.com/iflytek/MiniRBT

You may also be interested in:

- Chinese LERT: https://github.com/ymcui/LERT

- Chinese PERT: https://github.com/ymcui/PERT

- Chinese MacBERT: https://github.com/ymcui/MacBERT

- Chinese ELECTRA: https://github.com/ymcui/Chinese-ELECTRA

- Chinese XLNet: https://github.com/ymcui/Chinese-XLNet

- Knowledge Distillation Toolkit - TextBrewer: https://github.com/airaria/TextBrewer

More resources by HFL: https://github.com/iflytek/HFL-Anthology

|

RawMean/farsi_lastname_classifier_1

|

RawMean

| 2022-11-15T01:53:10Z | 75 | 0 |

transformers

|

[

"transformers",

"pytorch",

"deberta-v2",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T01:45:21Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: farsi_lastname_classifier_1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# farsi_lastname_classifier_1

This model is a fine-tuned version of [microsoft/deberta-v3-small](https://huggingface.co/microsoft/deberta-v3-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0482

- Pearson: 0.9232
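The card does not say how the Pearson score is computed. Presumably it is the Pearson correlation between the model's predicted scores and the reference labels on the evaluation set. A minimal pure-Python sketch of that statistic follows; the function name and the sample values in the usage note are illustrative, not taken from the model's data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of centered products and centered squares.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)
```

A value near 1, such as the 0.9232 reported above, means predictions rise and fall almost linearly with the labels; `pearson([1, 2, 3, 4], [2, 4, 6, 8])` is a perfectly linear toy case.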

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 8e-05

- train_batch_size: 128

- eval_batch_size: 256

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- mixed_precision_training: Native AMP
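To make the scheduler settings concrete, here is a sketch of a cosine schedule with linear warmup in plain Python. It assumes 120 total optimizer steps (the last step reported in the training results table for this model) and that warmup covers the first 10% of those steps; the formula follows the usual half-cosine decay and is not copied from the training code.

```python
import math


def cosine_lr_with_warmup(step, base_lr=8e-5, total_steps=120, warmup_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by cosine decay."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the decay phase completed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    # Half-cosine decay from base_lr down to 0.
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))
```

Under these assumptions the rate peaks at 8e-05 at step 12, which is the end of the first epoch, and decays to zero by step 120.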

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| No log | 1.0 | 12 | 0.2705 | 0.7018 |
| No log | 2.0 | 24 | 0.0993 | 0.7986 |
| No log | 3.0 | 36 | 0.0804 | 0.8347 |
| No log | 4.0 | 48 | 0.0433 | 0.9246 |
| No log | 5.0 | 60 | 0.0559 | 0.9176 |
| No log | 6.0 | 72 | 0.0465 | 0.9334 |
| No log | 7.0 | 84 | 0.0503 | 0.9154 |
| No log | 8.0 | 96 | 0.0438 | 0.9222 |
| No log | 9.0 | 108 | 0.0468 | 0.9260 |
| No log | 10.0 | 120 | 0.0482 | 0.9232 |

### Framework versions

- Transformers 4.24.0

- PyTorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

RawMean/farsi_lastname_classifier

|

RawMean

| 2022-11-15T01:41:04Z | 74 | 0 |

transformers

|

[

"transformers",

"pytorch",

"deberta-v2",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-15T00:57:22Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: farsi_lastname_classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# farsi_lastname_classifier