hexsha

stringlengths 40

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

c5122cd9f6752a889bff43edc3ea8e61526f06aa

| 5,200 |

ipynb

|

Jupyter Notebook

|

doc/code/CodeForTutor/primer.ipynb

|

runawayhorse001/PythonTipsDS

|

e82a4be4774b56ff487644d2328fe7c6a782faeb

|

[

"MIT"

] | 26 |

2019-02-23T01:09:23.000Z

|

2021-11-25T21:50:27.000Z

|

doc/code/CodeForTutor/primer.ipynb

|

runawayhorse001/PythonTipsDS

|

e82a4be4774b56ff487644d2328fe7c6a782faeb

|

[

"MIT"

] | null | null | null |

doc/code/CodeForTutor/primer.ipynb

|

runawayhorse001/PythonTipsDS

|

e82a4be4774b56ff487644d2328fe7c6a782faeb

|

[

"MIT"

] | 8 |

2019-05-24T02:05:46.000Z

|

2021-11-25T20:52:01.000Z

| 17.04918 | 68 | 0.444038 |

[

[

[

"import random\nimport numpy as np",

"_____no_output_____"

]

],

[

[

"# Primer Functions",

"_____no_output_____"

],

[

"### *",

"_____no_output_____"

]

],

[

[

"my_list = [1,2,3]\nprint(my_list)",

"[1, 2, 3]\n"

],

[

"print(*my_list)",

"1 2 3\n"

]

],

[

[

"### Random ",

"_____no_output_____"

]

],

[

[

"import random\nrandom.random()",

"_____no_output_____"

],

[

"# (b - a) * random_sample() + a\nrandom.uniform(3,8)",

"_____no_output_____"

],

[

"np.random.random_sample()",

"_____no_output_____"

],

[

"np.random.random_sample(4)",

"_____no_output_____"

],

[

"np.random.random_sample([2,4])",

"_____no_output_____"

],

[

"# (b - a) * random_sample() + a\na = 3; b = 8\n(b-a)*np.random.random_sample([2,4])+a",

"_____no_output_____"

]

],

[

[

"### Round",

"_____no_output_____"

]

],

[

[

"np.round(np.random.random_sample([2,4]),2)",

"_____no_output_____"

]

],

[

[

"### range",

"_____no_output_____"

]

],

[

[

"print(range(5))\nprint(*range(5))\nprint(*range(3,8))",

"range(0, 5)\n0 1 2 3 4\n3 4 5 6 7\n"

],

[

"# range([start], stop[, step])\n\nfor i in range(3, 8):\n    print(i)",

"3\n4\n5\n6\n7\n"

]

]

] |

[

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

]

] |

c51236fc1bee552e8c875f8294304bfe157137ac

| 551,823 |

ipynb

|

Jupyter Notebook

|

examples/webinars_conferences_etc/python_web_conf/NLU_crashcourse_py_web.ipynb

|

UPbook-innovations/nlu

|

2ae02ce7b6ca163f47271e98b71de109d38adefe

|

[

"Apache-2.0"

] | null | null | null |

examples/webinars_conferences_etc/python_web_conf/NLU_crashcourse_py_web.ipynb

|

UPbook-innovations/nlu

|

2ae02ce7b6ca163f47271e98b71de109d38adefe

|

[

"Apache-2.0"

] | 2 |

2021-09-28T05:55:05.000Z

|

2022-02-26T11:16:21.000Z

|

examples/webinars_conferences_etc/python_web_conf/NLU_crashcourse_py_web.ipynb

|

atdavidpark/nlu

|

619d07299e993323d83086c86506db71e2a139a9

|

[

"Apache-2.0"

] | 1 |

2021-09-13T10:06:20.000Z

|

2021-09-13T10:06:20.000Z

| 110.276379 | 73,646 | 0.754262 |

[

[

[

"\n\n[Open in Colab](https://colab.research.google.com/github/JohnSnowLabs/nlu/blob/master/examples/webinars_conferences_etc/python_web_conf/NLU_crashcourse_py_web.ipynb)\n\n\n<div>\n<img src=\"https://2021.pythonwebconf.com/images/pwcgenericlogo-opt2.jpg\" width=\"400\" height=\"250\" >\n</div>\n\n\n\n\n# NLU 20-Minute Crash Course - the fast Data Science route\nThis short notebook covers:\n- Sentiment classification: binary, multi-class, and regression\n- Extract Parts of Speech (POS)\n- Extract Named Entities (NER)\n- Extract Keywords (YAKE!)\n- Answer open and closed book questions with T5\n- Summarize text and more with multi-task T5\n- Translate text with Microsoft's Marian models\n- Train a multilingual classifier for 100+ languages from a dataset with just one language\n\n## More resources \n- [Join our Slack](https://join.slack.com/t/spark-nlp/shared_invite/zt-lutct9gm-kuUazcyFKhuGY3_0AMkxqA)\n- [NLU Website](https://nlu.johnsnowlabs.com/)\n- [NLU Github](https://github.com/JohnSnowLabs/nlu)\n- [Many more NLU example tutorials](https://github.com/JohnSnowLabs/nlu/tree/master/examples)\n- [Overview of every powerful NLU 1-liner](https://nlu.johnsnowlabs.com/docs/en/examples)\n- [Check out the Modelshub for an overview of all models](https://nlp.johnsnowlabs.com/models) \n- [Check out the NLU Namespace where you can find every model as a table](https://nlu.johnsnowlabs.com/docs/en/namespace)\n- [Intro to NLU article](https://medium.com/spark-nlp/1-line-of-code-350-nlp-models-with-john-snow-labs-nlu-in-python-2f1c55bba619)\n- [In-depth and easy Sentence Similarity Tutorial, with StackOverflow Questions using BERTology embeddings](https://medium.com/spark-nlp/easy-sentence-similarity-with-bert-sentence-embeddings-using-john-snow-labs-nlu-ea078deb6ebf)\n- [1 line of Python code for BERT, ALBERT, ELMO, ELECTRA, XLNET, GLOVE, Part of Speech with NLU and t-SNE](https://medium.com/spark-nlp/1-line-of-code-for-bert-albert-elmo-electra-xlnet-glove-part-of-speech-with-nlu-and-t-sne-9ebcd5379cd)",

"_____no_output_____"

],

[

"# Install NLU\nYou need Java 8, PySpark, and Spark NLP installed; [see the installation guide for instructions](https://nlu.johnsnowlabs.com/docs/en/install). If you need help or run into trouble, [ping us on Slack :)](https://join.slack.com/t/spark-nlp/shared_invite/zt-lutct9gm-kuUazcyFKhuGY3_0AMkxqA) ",

"_____no_output_____"

]

],

[

[

"import os\n! apt-get update -qq > /dev/null \n# Install java\n! apt-get install -y openjdk-8-jdk-headless -qq > /dev/null\nos.environ[\"JAVA_HOME\"] = \"/usr/lib/jvm/java-8-openjdk-amd64\"\nos.environ[\"PATH\"] = os.environ[\"JAVA_HOME\"] + \"/bin:\" + os.environ[\"PATH\"]\n! pip install nlu pyspark==2.4.7 > /dev/null \nimport nlu",

"_____no_output_____"

]

],

[

[

"# Simple NLU basics on Strings",

"_____no_output_____"

],

[

"## Context based spell Checking in 1 line\n\n",

"_____no_output_____"

]

],

[

[

"nlu.load('spell').predict('I also liek to live dangertus')",

"spellcheck_dl download started this may take some time.\nApproximate size to download 112.2 MB\n[OK!]\n"

]

],

[

[

"## Binary Sentiment classification in 1 Line\n\n",

"_____no_output_____"

]

],

[

[

"nlu.load('sentiment').predict('I love NLU and rainy days!')",

"analyze_sentiment download started this may take some time.\nApprox size to download 4.9 MB\n[OK!]\n"

]

],

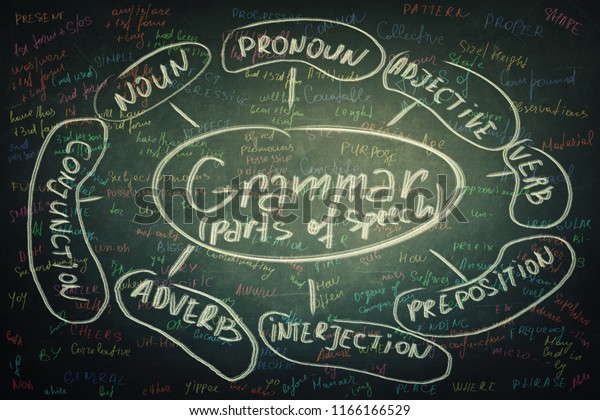

[

[

"## Part of Speech (POS) in 1 line\n\n\n|Tag |Description | Example|\n|------|------------|------|\n|CC| Coordinating conjunction | This batch of mushroom stew is savory **and** delicious |\n|CD| Cardinal number | Here are **five** coins |\n|DT| Determiner | **The** bunny went home |\n|EX| Existential there | **There** is a storm coming |\n|FW| Foreign word | I'm having a **déjà vu** |\n|IN| Preposition or subordinating conjunction | He is cleverer **than** I am |\n|JJ| Adjective | She wore a **beautiful** dress |\n|JJR| Adjective, comparative | My house is **bigger** than yours |\n|JJS| Adjective, superlative | I am the **shortest** person in my family |\n|LS| List item marker | A number of things need to be considered before starting a business **,** such as premises **,** finance **,** product demand **,** staffing and access to customers |\n|MD| Modal | You **must** stop when the traffic lights turn red |\n|NN| Noun, singular or mass | The **dog** likes to run |\n|NNS| Noun, plural | The **cars** are fast |\n|NNP| Proper noun, singular | I ordered the chair from **Amazon** |\n|NNPS| Proper noun, plural | We visited the **Kennedys** |\n|PDT| Predeterminer | **Both** the children had a toy |\n|POS| Possessive ending | I built the dog'**s** house |\n|PRP| Personal pronoun | **You** need to stop |\n|PRP$| Possessive pronoun | Remember not to judge a book by **its** cover |\n|RB| Adverb | The dog barks **loudly** |\n|RBR| Adverb, comparative | Could you sing more **quietly** please? |\n|RBS| Adverb, superlative | Everyone in the race ran fast, but John ran **the fastest** of all |\n|RP| Particle | He ate **up** all his dinner |\n|SYM| Symbol | What are you doing **?** |\n|TO| to | Please send it back **to** me |\n|UH| Interjection | **Wow!** You look gorgeous |\n|VB| Verb, base form | We **play** soccer |\n|VBD| Verb, past tense | I **worked** at a restaurant |\n|VBG| Verb, gerund or present participle | **Smoking** kills people |\n|VBN| Verb, past participle | She has **done** her homework |\n|VBP| Verb, non-3rd person singular present | You **flit** from place to place |\n|VBZ| Verb, 3rd person singular present | He never **calls** me |\n|WDT| Wh-determiner | The store honored the complaints, **which** were less than 25 days old |\n|WP| Wh-pronoun | **Who** can help me? |\n|WP\\$| Possessive wh-pronoun | **Whose** fault is it? |\n|WRB| Wh-adverb | **Where** are you going? |",

"_____no_output_____"

]

],

[

[

"nlu.load('pos').predict('POS assigns each token in a sentence a grammatical label')",

"pos_anc download started this may take some time.\nApproximate size to download 4.3 MB\n[OK!]\n"

]

],

[

[

"## Named Entity Recognition (NER) in 1 line\n\n\n\n|Type | \tDescription |\n|------|--------------|\n| PERSON | \tPeople, including fictional, like **Harry Potter** |\n| NORP | \tNationalities or religious or political groups, like the **Germans** |\n| FAC | \tBuildings, airports, highways, bridges, etc., like **New York Airport** |\n| ORG | \tCompanies, agencies, institutions, etc., like **Microsoft** |\n| GPE | \tCountries, cities, states, like **Germany** |\n| LOC | \tNon-GPE locations, mountain ranges, bodies of water, like the **Sahara desert**|\n| PRODUCT | \tObjects, vehicles, foods, etc. (not services), like **PlayStation** |\n| EVENT | \tNamed hurricanes, battles, wars, sports events, etc., like **hurricane Katrina**|\n| WORK_OF_ART | \tTitles of books, songs, etc., like **Mona Lisa** |\n| LAW | \tNamed documents made into laws, like the **Declaration of Independence** |\n| LANGUAGE | \tAny named language, like **Turkish**|\n| DATE | \tAbsolute or relative dates or periods, like every second **friday**|\n| TIME | \tTimes smaller than a day, like **every minute**|\n| PERCENT | \tPercentage, including ”%“, like **55%** of workers enjoy their work |\n| MONEY | \tMonetary values, including unit, like **50$** for those pants |\n| QUANTITY | \tMeasurements, as of weight or distance, like this person weighs **50kg** |\n| ORDINAL | \t“first”, “second”, etc., like David placed **first** in the tournament |\n| CARDINAL | \tNumerals that do not fall under another type, like **hundreds** of models are available in NLU |\n",

"_____no_output_____"

]

],

[

[

"nlu.load('ner').predict(\"John Snow Labs congratulates the American John Biden on winning the American election!\", output_level='chunk')",

"onto_recognize_entities_sm download started this may take some time.\nApprox size to download 159 MB\n[OK!]\n"

]

],

[

[

"# Let's apply NLU to a dataset!\n\n<div>\n<img src=\"http://ckl-it.de/wp-content/uploads/2021/02/crypto.jpeg \" width=\"400\" height=\"250\" >\n</div>\n",

"_____no_output_____"

]

],

[

[

"import pandas as pd \nimport nlu\n!wget http://ckl-it.de/wp-content/uploads/2020/12/small_btc.csv \ndf = pd.read_csv('/content/small_btc.csv').iloc[0:5000].title\ndf\n\n",

"--2021-03-24 09:32:01-- http://ckl-it.de/wp-content/uploads/2020/12/small_btc.csv\nResolving ckl-it.de (ckl-it.de)... 217.160.0.108, 2001:8d8:100f:f000::209\nConnecting to ckl-it.de (ckl-it.de)|217.160.0.108|:80... connected.\nHTTP request sent, awaiting response... 200 OK\nLength: 22244914 (21M) [text/csv]\nSaving to: ‘small_btc.csv’\n\nsmall_btc.csv 100%[===================>] 21.21M 6.62MB/s in 3.5s \n\n2021-03-24 09:32:04 (6.06 MB/s) - ‘small_btc.csv’ saved [22244914/22244914]\n\n"

]

],

[

[

"## NER on a Crypto News dataset\n### The **NER** model which you can load via `nlu.load('ner')` recognizes 18 different classes in your dataset.\nWe set the output level to chunk, so that we get one row per recognized entity.\n\n\n#### Predicted entities:\n\n\nNER is available in many languages, which you can [find in the John Snow Labs Modelshub](https://nlp.johnsnowlabs.com/models)",

"_____no_output_____"

],

[

"",

"_____no_output_____"

]

],

[

[

"ner_df = nlu.load('ner').predict(df, output_level = 'chunk')\nner_df ",

"onto_recognize_entities_sm download started this may take some time.\nApprox size to download 159 MB\n[OK!]\n"

]

],

[

[

"### Top 50 Named Entities",

"_____no_output_____"

]

],

[

[

"ner_df.entities.value_counts()[:50].plot.barh(figsize = (16,20))",

"_____no_output_____"

]

],

[

[

"### Top 50 Named Entities which are PERSONS",

"_____no_output_____"

]

],

[

[

"ner_df[ner_df.entities_class == 'PERSON'].entities.value_counts()[:50].plot.barh(figsize=(18,20), title ='Top 50 Occurring Persons in the dataset')",

"_____no_output_____"

]

],

[

[

"### Top 50 Named Entities which are Countries/Cities/States",

"_____no_output_____"

]

],

[

[

"ner_df[ner_df.entities_class == 'GPE'].entities.value_counts()[:50].plot.barh(figsize=(18,20),title ='Top 50 Countries/Cities/States Occurring in the dataset')",

"_____no_output_____"

]

],

[

[

"### Top 50 Named Entities which are PRODUCTS ",

"_____no_output_____"

]

],

[

[

"ner_df[ner_df.entities_class == 'PRODUCT'].entities.value_counts()[:50].plot.barh(figsize=(18,20),title ='Top 50 products occurring in the dataset')",

"_____no_output_____"

]

],

[

[

"### Top 50 Named Entities which are ORGANIZATIONS",

"_____no_output_____"

]

],

[

[

"ner_df[ner_df.entities_class == 'ORG'].entities.value_counts()[:50].plot.barh(figsize=(18,20),title ='Top 50 organizations occurring in the dataset')",

"_____no_output_____"

]

],

[

[

"## YAKE on a Crypto News dataset\n### The **YAKE!** model (Yet Another Keyword Extractor) is an **unsupervised** keyword extraction algorithm.\nYou can load it via `nlu.load('yake')`. It has no weights and is very fast.\nIt has various parameters that can be configured to influence which keywords are extracted; [see here for a more in-depth YAKE guide](https://github.com/JohnSnowLabs/nlu/blob/master/examples/webinars_conferences_etc/multi_lingual_webinar/1_NLU_base_features_on_dataset_with_YAKE_Lemma_Stemm_classifiers_NER_.ipynb)",

"_____no_output_____"

]

],

[

[

"yake_df = nlu.load('yake').predict(df)\nyake_df",

"_____no_output_____"

]

],

[

[

"### Top 50 extracted Keywords with YAKE!",

"_____no_output_____"

]

],

[

[

"yake_df.explode('keywords_classes').keywords_classes.value_counts()[0:50].plot.barh(figsize=(14,18))",

"_____no_output_____"

]

],

[

[

"## Binary Sentiment Analysis and Distribution on a dataset",

"_____no_output_____"

]

],

[

[

"sent_df = nlu.load('sentiment').predict(df)\nsent_df",

"analyze_sentiment download started this may take some time.\nApprox size to download 4.9 MB\n[OK!]\n"

],

[

"sent_df.sentiment.value_counts().plot.bar(title='Sentiment ')",

"_____no_output_____"

]

],

[

[

"## Emotion Analysis and Distribution of Headlines ",

"_____no_output_____"

]

],

[

[

"emo_df = nlu.load('emotion').predict(df)\nemo_df",

"classifierdl_use_emotion download started this may take some time.\nApproximate size to download 21.3 MB\n[OK!]\ntfhub_use download started this may take some time.\nApproximate size to download 923.7 MB\n[OK!]\n\n\n\n\n\n\n"

],

[

"emo_df.emotion.value_counts().plot.bar(title='Emotion Distribution')\n",

"_____no_output_____"

]

],

[

[

"**Make sure to restart your notebook again** before starting the next section",

"_____no_output_____"

]

],

[

[

"print(\"Please restart kernel if you are in google colab and run next cell after the restart to configure java 8 back\")\n# Deliberately raises a TypeError so that 'Run all' stops here until the kernel is restarted\n1+'wait'\n",

"Please restart kernel if you are in google colab and run next cell after the restart to configure java 8 back\n"

],

[

"# This configures colab to use Java 8 again. \n# You need to run this in Google colab, because after restart it likes to set Java 11 as default, which will cause issues\n! echo 2 | update-alternatives --config java\nimport pandas as pd\nimport nlu ",

"There are 2 choices for the alternative java (providing /usr/bin/java).\n\n Selection Path Priority Status\n------------------------------------------------------------\n 0 /usr/lib/jvm/java-11-openjdk-amd64/bin/java 1111 auto mode\n 1 /usr/lib/jvm/java-11-openjdk-amd64/bin/java 1111 manual mode\n* 2 /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java 1081 manual mode\n\nPress <enter> to keep the current choice[*], or type selection number: "

]

],

[

[

"# Answer **Closed Book** and **Open Book Questions** with Google's T5!\n\n<!-- [T5]() -->\n\n\nYou can load the **question answering** model with `nlu.load('en.t5')`",

"_____no_output_____"

]

],

[

[

"# Load question answering T5 model\nt5_closed_question = nlu.load('en.t5')",

"google_t5_small_ssm_nq download started this may take some time.\nApproximate size to download 139 MB\n[OK!]\n"

]

],

[

[

"## Answer **Closed Book Questions** \nClosed book means that no additional context is given and the model must answer the question with the knowledge stored in its weights.",

"_____no_output_____"

]

],

[

[

"t5_closed_question.predict(\"Who is president of Nigeria?\")",

"_____no_output_____"

],

[

"t5_closed_question.predict(\"What is the most common language in India?\")",

"_____no_output_____"

],

[

"t5_closed_question.predict(\"What is the capital of Germany?\")",

"_____no_output_____"

]

],

[

[

"## Answer **Open Book Questions** \nThese are questions where we give the model additional context that it uses to answer the question.",

"_____no_output_____"

]

],

[

[

"t5_open_book = nlu.load('answer_question')",

"t5_base download started this may take some time.\nApproximate size to download 446 MB\n[OK!]\n"

],

[

"context = 'Peters last week was terrible! He had an accident and broke his leg while skiing!'\nquestion1 = 'Why was peters week so bad?' \nquestion2 = 'How did peter broke his leg?' \n\nt5_open_book.predict([question1+context, question2 + context]) ",

"_____no_output_____"

],

[

"# Ask T5 questions in the context of a News Article\nquestion1 = 'Who is Jack ma?'\nquestion2 = 'Who is founder of Alibaba Group?'\nquestion3 = 'When did Jack Ma re-appear?'\nquestion4 = 'How did Alibaba stocks react?'\nquestion5 = 'Whom did Jack Ma meet?'\nquestion6 = 'Who did Jack Ma hide from?'\n\n\n# from https://www.bbc.com/news/business-55728338 \nnews_article_context = \"\"\" context:\nAlibaba Group founder Jack Ma has made his first appearance since Chinese regulators cracked down on his business empire.\nHis absence had fuelled speculation over his whereabouts amid increasing official scrutiny of his businesses.\nThe billionaire met 100 rural teachers in China via a video meeting on Wednesday, according to local government media.\nAlibaba shares surged 5% on Hong Kong's stock exchange on the news.\n\"\"\"\n\nquestions = [\n question1+ news_article_context,\n question2+ news_article_context,\n question3+ news_article_context,\n question4+ news_article_context,\n question5+ news_article_context,\n question6+ news_article_context,]\n\n",

"_____no_output_____"

],

[

"t5_open_book.predict(questions)",

"_____no_output_____"

]

],

[

[

"# Multi Problem T5 model for Summarization and more\nThe main T5 model was trained for over 20 tasks from the SQUAD/GLUE/SUPERGLUE datasets. See [this notebook](https://github.com/JohnSnowLabs/nlu/blob/master/examples/webinars_conferences_etc/multi_lingual_webinar/7_T5_SQUAD_GLUE_SUPER_GLUE_TASKS.ipynb) for a demo of all tasks \n\n\n# Overview of every task available with T5\n[The T5 model](https://arxiv.org/pdf/1910.10683.pdf) is trained on various datasets for the 18 tasks listed below, which fall into 8 categories.\n\n\n\n1. Text summarization\n2. Question answering\n3. Translation\n4. Sentiment analysis\n5. Natural Language inference\n6. Coreference resolution\n7. Sentence Completion\n8. Word sense disambiguation\n\n### Every T5 Task with explanation:\n|Task Name | Explanation | \n|----------|--------------|\n|[1.CoLA](https://nyu-mll.github.io/CoLA/) | Classify if a sentence is grammatically correct|\n|[2.RTE](https://dl.acm.org/doi/10.1007/11736790_9) | Classify whether a statement can be deduced from a sentence|\n|[3.MNLI](https://arxiv.org/abs/1704.05426) | Classify for a hypothesis and premise whether the premise entails the hypothesis, contradicts it, or neither (3 classes).|\n|[4.MRPC](https://www.aclweb.org/anthology/I05-5002.pdf) | Classify whether a pair of sentences is a re-phrasing of each other (semantically equivalent)|\n|[5.QNLI](https://arxiv.org/pdf/1804.07461.pdf) | Classify whether the answer to a question can be deduced from an answer candidate.|\n|[6.QQP](https://www.quora.com/q/quoradata/First-Quora-Dataset-Release-Question-Pairs) | Classify whether a pair of questions is a re-phrasing of each other (semantically equivalent)|\n|[7.SST2](https://www.aclweb.org/anthology/D13-1170.pdf) | Classify the sentiment of a sentence as positive or negative|\n|[8.STSB](https://www.aclweb.org/anthology/S17-2001/) | Rate the semantic similarity of a pair of sentences on a scale from 1 to 5 (21 classes)|\n|[9.CB](https://ojs.ub.uni-konstanz.de/sub/index.php/sub/article/view/601) | Classify for a premise and a hypothesis whether they contradict each other or not (binary).|\n|[10.COPA](https://www.aaai.org/ocs/index.php/SSS/SSS11/paper/view/2418/0) | Classify for a question, premise, and 2 choices which choice is the correct one (binary).|\n|[11.MultiRc](https://www.aclweb.org/anthology/N18-1023.pdf) | Classify for a question, a paragraph of text, and an answer candidate, if the answer is correct (binary).|\n|[12.WiC](https://arxiv.org/abs/1808.09121) | Classify for a pair of sentences and an ambiguous word whether the word has the same meaning in both sentences.|\n|[13.WSC/DPR](https://www.aaai.org/ocs/index.php/KR/KR12/paper/view/4492/0) | Predict for an ambiguous pronoun in a sentence what it is referring to. |\n|[14.Summarization](https://arxiv.org/abs/1506.03340) | Summarize text into a shorter representation.|\n|[15.SQuAD](https://arxiv.org/abs/1606.05250) | Answer a question for a given context.|\n|[16.WMT1.](https://arxiv.org/abs/1706.03762) | Translate English to German|\n|[17.WMT2.](https://arxiv.org/abs/1706.03762) | Translate English to French|\n|[18.WMT3.](https://arxiv.org/abs/1706.03762) | Translate English to Romanian|\n\n",

"_____no_output_____"

]

],

[

[

"# Load the Multi Task Model T5\nt5_multi = nlu.load('en.t5.base')",

"t5_base download started this may take some time.\nApproximate size to download 446 MB\n[OK!]\n"

],

[

"# https://www.reuters.com/article/instant-article/idCAKBN2AA2WF\ntext = \"\"\"(Reuters) - Mastercard Inc said on Wednesday it was planning to offer support for some cryptocurrencies on its network this year, joining a string of big-ticket firms that have pledged similar support.\n\nThe credit-card giant’s announcement comes days after Elon Musk’s Tesla Inc revealed it had purchased $1.5 billion of bitcoin and would soon accept it as a form of payment.\n\nAsset manager BlackRock Inc and payments companies Square and PayPal have also recently backed cryptocurrencies.\n\nMastercard already offers customers cards that allow people to transact using their cryptocurrencies, although without going through its network.\n\n\"Doing this work will create a lot more possibilities for shoppers and merchants, allowing them to transact in an entirely new form of payment. This change may open merchants up to new customers who are already flocking to digital assets,\" Mastercard said. (mstr.cd/3tLaPZM)\n\nMastercard specified that not all cryptocurrencies will be supported on its network, adding that many of the hundreds of digital assets in circulation still need to tighten their compliance measures.\n\nMany cryptocurrencies have struggled to win the trust of mainstream investors and the general public due to their speculative nature and potential for money laundering.\n\"\"\"\nt5_multi['t5'].setTask('summarize ') \nshort = t5_multi.predict(text)\nshort",

"_____no_output_____"

],

[

"print(f\"Original Length {len(short.document.iloc[0])} Summarized Length : {len(short.T5.iloc[0])} \\n summarized text :{short.T5.iloc[0]} \")\n",

"Original Length 1277 Summarized Length : 352 \n summarized text :mastercard said on Wednesday it was planning to offer support for some cryptocurrencies on its network this year . the credit-card giant’s announcement comes days after Elon Musk’s Tesla Inc revealed it had purchased $1.5 billion of bitcoin . asset manager blackrock and payments companies Square and PayPal have also recently backed cryptocurrencies . \n"

],

[

"short.T5.iloc[0]",

"_____no_output_____"

]

],

[

[

"**Make sure to restart your notebook again** before starting the next section",

"_____no_output_____"

]

],

[

[

"print(\"Please restart kernel if you are in google colab and run next cell after the restart to configure java 8 back\")\n# Deliberately raises a TypeError so that 'Run all' stops here until the kernel is restarted\n1+'wait'\n",

"_____no_output_____"

],

[

"# This configures colab to use Java 8 again. \n# You need to run this in Google colab, because after restart it likes to set Java 11 as default, which will cause issues\n! echo 2 | update-alternatives --config java\n",

"_____no_output_____"

]

],

[

[

"# Translate between more than 200 Languages with [Microsoft's Marian Models](https://marian-nmt.github.io/publications/)\n\nMarian is an efficient, free Neural Machine Translation framework developed mainly by the Microsoft Translator team (646+ pretrained models & pipelines in 192+ languages).\nYou need to specify the language your data is in as `start_language` and the language you want to translate to as `target_language`. \n The language references must be [ISO language codes](https://en.wikipedia.org/wiki/List_of_ISO_639-1_codes)\n\n`nlu.load('<start_language>.translate_to.<target_language>')` \n\n**Translate Turkish to English:** \n`nlu.load('tr.translate_to.en')`\n\n**Translate English to French:** \n`nlu.load('en.translate_to.fr')`\n\n\n**Translate French to Hebrew:** \n`nlu.load('fr.translate_to.he')`\n\n\n\n\n\n",

"_____no_output_____"

]

],

[

[

"import nlu\nimport pandas as pd\n!wget http://ckl-it.de/wp-content/uploads/2020/12/small_btc.csv \ndf = pd.read_csv('/content/small_btc.csv').iloc[0:20].title",

"_____no_output_____"

]

],

[

[

"## Translate to German",

"_____no_output_____"

]

],

[

[

"translate_pipe = nlu.load('en.translate_to.de')\ntranslate_pipe.predict(df)",

"translate_en_de download started this may take some time.\nApprox size to download 370.2 MB\n[OK!]\n"

]

],

[

[

"## Translate to Chinese",

"_____no_output_____"

]

],

[

[

"translate_pipe = nlu.load('en.translate_to.zh')\ntranslate_pipe.predict(df)",

"translate_en_zh download started this may take some time.\nApprox size to download 396.8 MB\n[OK!]\n"

]

],

[

[

"## Translate to Hindi",

"_____no_output_____"

]

],

[

[

"translate_pipe = nlu.load('en.translate_to.hi')\ntranslate_pipe.predict(df)",

"translate_en_hi download started this may take some time.\nApprox size to download 385.8 MB\n[OK!]\n"

]

],

[

[

"# Train a Multi Lingual Classifier for 100+ languages from a dataset with just one language\n\n[Leverage Language-agnostic BERT Sentence Embedding (LABSE) and achieve state of the art!](https://arxiv.org/abs/2007.01852) \n\nTraining a classifier with LABSE embeddings enables the knowledge to be transferred to 109 languages!\nWith the [SentimentDL model](https://nlp.johnsnowlabs.com/docs/en/annotators#sentimentdl-multi-class-sentiment-analysis-annotator) from Spark NLP you can achieve State Of the Art results on any binary class text classification problem.\n\n### Languages supported by LABSE\n\n\n",

"_____no_output_____"

]

],

[

[

"# Download French twitter Sentiment dataset https://www.kaggle.com/hbaflast/french-twitter-sentiment-analysis\n! wget http://ckl-it.de/wp-content/uploads/2021/02/french_tweets.csv\n\nimport pandas as pd\n\ntrain_path = '/content/french_tweets.csv'\n\ntrain_df = pd.read_csv(train_path)\n# the text data to use for classification should be in a column named 'text'\ncolumns=['text','y']\ntrain_df = train_df[columns]\ntrain_df = train_df.sample(frac=1).reset_index(drop=True)\ntrain_df",

"_____no_output_____"

]

],

[

[

"## Train Deep Learning Classifier using `nlu.load('train.sentiment')`\n\nAll you need is a Pandas DataFrame with a label column named `y`; the column with text data should be named `text`\n\nWe are training on a French dataset and can then predict classes correctly **in 100+ languages**",

"_____no_output_____"

]

],

[

[

"from sklearn.metrics import classification_report\n\n# Train longer!\ntrainable_pipe = nlu.load('xx.embed_sentence.labse train.sentiment')\ntrainable_pipe['sentiment_dl'].setMaxEpochs(60) \ntrainable_pipe['sentiment_dl'].setLr(0.005) \nfitted_pipe = trainable_pipe.fit(train_df.iloc[:2000])\n# predict with the fitted pipeline on the dataset and get predictions\npreds = fitted_pipe.predict(train_df.iloc[:2000],output_level='document')\n\n# the sentence detector that is part of the pipe generates some NaNs; drop them first\npreds.dropna(inplace=True)\nprint(classification_report(preds['y'], preds['sentiment']))\n\npreds",

"labse download started this may take some time.\nApproximate size to download 1.7 GB\n[OK!]\n precision recall f1-score support\n\n negative 0.88 0.94 0.91 980\n positive 0.94 0.88 0.91 1020\n\n accuracy 0.91 2000\n macro avg 0.91 0.91 0.91 2000\nweighted avg 0.91 0.91 0.91 2000\n\n"

]

],

[

[

"### Test the fitted pipe on new example",

"_____no_output_____"

],

[

"#### The model understands English\n",

"_____no_output_____"

]

],

[

[

"fitted_pipe.predict(\"This was awful!\")",

"_____no_output_____"

],

[

"fitted_pipe.predict(\"This was great!\")",

"_____no_output_____"

]

],

[

[

"#### The Model understands German\n",

"_____no_output_____"

]

],

[

[

"# German for:' this movie was great!'\nfitted_pipe.predict(\"Der Film war echt klasse!\")",

"_____no_output_____"

],

[

"# German for: 'This movie was really boring'\nfitted_pipe.predict(\"Der Film war echt langweilig!\")",

"_____no_output_____"

]

],

[

[

"#### The Model understands Chinese\n",

"_____no_output_____"

]

],

[

[

"# Chinese for: \"This movie was awful!\"\nfitted_pipe.predict(\"这部电影太糟糕了!\")",

"_____no_output_____"

],

[

"# Chinese for: \"This move was great!\"\nfitted_pipe.predict(\"此举很棒!\")\n",

"_____no_output_____"

]

],

[

[

"#### The model understands Afrikaans\n\n\n\n",

"_____no_output_____"

]

],

[

[

"# Afrikaans for 'This movie was amazing!'\nfitted_pipe.predict(\"Hierdie film was ongelooflik!\")\n",

"_____no_output_____"

],

[

"# Afrikaans for :'The movie made me fall asleep, it's awful!'\nfitted_pipe.predict('Die film het my aan die slaap laat raak, dit is verskriklik!')",

"_____no_output_____"

]

],

[

[

"#### The model understands Vietnamese\n",

"_____no_output_____"

]

],

[

[

"# Vietnamese for : 'The movie was painful to watch'\nfitted_pipe.predict('Phim đau điếng người xem')\n",

"_____no_output_____"

],

[

"\n# Vietnamese for : 'This was the best movie ever'\nfitted_pipe.predict('Đây là bộ phim hay nhất từ trước đến nay')",

"_____no_output_____"

]

],

[

[

"#### The model understands Japanese\n\n",

"_____no_output_____"

]

],

[

[

"\n# Japanese for : 'This is now my favorite movie!'\nfitted_pipe.predict('これが私のお気に入りの映画です!')",

"_____no_output_____"

],

[

"\n# Japanese for : 'I would rather kill myself than watch that movie again'\nfitted_pipe.predict('その映画をもう一度見るよりも自殺したい')",

"_____no_output_____"

]

],

[

[

"# There are many more models you can put to use in 1 line of code!\n## Checkout [the Modelshub](https://nlp.johnsnowlabs.com/models) and the [NLU Namespace](https://nlu.johnsnowlabs.com/docs/en/namespace) for more models\n\n\n### More ressources \n- [Join our Slack](https://join.slack.com/t/spark-nlp/shared_invite/zt-lutct9gm-kuUazcyFKhuGY3_0AMkxqA)\n- [NLU Website](https://nlu.johnsnowlabs.com/)\n- [NLU Github](https://github.com/JohnSnowLabs/nlu)\n- [Many more NLU example tutorials](https://github.com/JohnSnowLabs/nlu/tree/master/examples)\n- [Overview of every powerful nlu 1-liner](https://nlu.johnsnowlabs.com/docs/en/examples)\n- [Checkout the Modelshub for an overview of all models](https://nlp.johnsnowlabs.com/models) \n- [Checkout the NLU Namespace where you can find every model as a tabel](https://nlu.johnsnowlabs.com/docs/en/namespace)\n- [Intro to NLU article](https://medium.com/spark-nlp/1-line-of-code-350-nlp-models-with-john-snow-labs-nlu-in-python-2f1c55bba619)\n- [Indepth and easy Sentence Similarity Tutorial, with StackOverflow Questions using BERTology embeddings](https://medium.com/spark-nlp/easy-sentence-similarity-with-bert-sentence-embeddings-using-john-snow-labs-nlu-ea078deb6ebf)\n- [1 line of Python code for BERT, ALBERT, ELMO, ELECTRA, XLNET, GLOVE, Part of Speech with NLU and t-SNE](https://medium.com/spark-nlp/1-line-of-code-for-bert-albert-elmo-electra-xlnet-glove-part-of-speech-with-nlu-and-t-sne-9ebcd5379cd)",

"_____no_output_____"

]

],

[

[

"while 1 : 1 ",

"_____no_output_____"

],

[

"",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

]

] |

c512479ff28d562f3953384f7f2cf54a4f1fbeda

| 696,729 |

ipynb

|

Jupyter Notebook

|

flu-trained-models/florida/florida_flu_temp_nextWed.ipynb

|

tamjazad/ml-covid19

|

85130e22fd3ded2dc55ca1f773fb42a20318b75a

|

[

"MIT"

] | 1 |

2020-09-02T11:59:02.000Z

|

2020-09-02T11:59:02.000Z

|

flu-trained-models/florida/florida_flu_temp_nextWed.ipynb

|

tamjazad/ml-covid19

|

85130e22fd3ded2dc55ca1f773fb42a20318b75a

|

[

"MIT"

] | null | null | null |

flu-trained-models/florida/florida_flu_temp_nextWed.ipynb

|

tamjazad/ml-covid19

|

85130e22fd3ded2dc55ca1f773fb42a20318b75a

|

[

"MIT"

] | 1 |

2020-09-02T11:59:06.000Z

|

2020-09-02T11:59:06.000Z

| 402.500867 | 202,248 | 0.928896 |

[

[

[

"# Florida Single Weekly Predictions, trained on historical flu data and temperature\n\n> Once again, just like before in the USA flu model, I am going to index COVID weekly cases by Wednesdays",

"_____no_output_____"

]

],

[

[

"import tensorflow as tf\nphysical_devices = tf.config.list_physical_devices('GPU')\ntf.config.experimental.set_memory_growth(physical_devices[0], enable=True)\nimport matplotlib.pyplot as plt\nimport numpy as np\nimport pandas as pd\nimport sklearn\nfrom sklearn import preprocessing",

"_____no_output_____"

]

],

[

[

"### getting historical flu data",

"_____no_output_____"

]

],

[

[

"system = \"Windows\"\n\nif system == \"Windows\":\n flu_dir = \"..\\\\..\\\\..\\\\cdc-fludata\\\\us_national\\\\\"\nelse:\n flu_dir = \"../../../cdc-fludata/us_national/\"",

"_____no_output_____"

],

[

"flu_dictionary = {}\n\nfor year in range(1997, 2019):\n filepath = \"usflu_\"\n year_string = str(year) + \"-\" + str(year + 1)\n filepath = flu_dir + filepath + year_string + \".csv\"\n temp_df = pd.read_csv(filepath)\n flu_dictionary[year] = temp_df",

"_____no_output_____"

]

],

[

[

"### combining flu data into one chronological series of total cases",

"_____no_output_____"

]

],

[

[

"# getting total cases and putting them in a series by week\nflu_series_dict = {} \n\nfor year in flu_dictionary:\n temp_df = flu_dictionary[year]\n temp_df = temp_df.set_index(\"WEEK\")\n abridged_df = temp_df.iloc[:, 2:]\n \n try:\n abridged_df = abridged_df.drop(columns=\"PERCENT POSITIVE\")\n except:\n pass\n \n total_cases_series = abridged_df.sum(axis=1)\n flu_series_dict[year] = total_cases_series\n ",

"_____no_output_____"

],

[

"all_cases_series = pd.Series(dtype=\"int64\")\n\nfor year in flu_series_dict:\n temp_series = flu_series_dict[year]\n all_cases_series = all_cases_series.append(temp_series, ignore_index=True)",

"_____no_output_____"

],

[

"all_cases_series",

"_____no_output_____"

],

[

"all_cases_series.plot(grid=True, figsize=(60,20))",

"_____no_output_____"

]

],

[

[

"### Now, making a normalized series between 0, 1",

"_____no_output_____"

]

],

[

[

"norm_flu_series_dict = {}\n\nfor year in flu_series_dict:\n temp_series = flu_series_dict[year]\n temp_list = preprocessing.minmax_scale(temp_series)\n temp_series = pd.Series(temp_list)\n norm_flu_series_dict[year] = temp_series",

"_____no_output_____"

],

[

"all_cases_norm_series = pd.Series(dtype=\"int64\")\n\nfor year in norm_flu_series_dict:\n temp_series = norm_flu_series_dict[year]\n all_cases_norm_series = all_cases_norm_series.append(temp_series, ignore_index=True)",

"_____no_output_____"

],

[

"all_cases_norm_series.plot(grid=True, figsize=(60,5))\nall_cases_norm_series",

"_____no_output_____"

]

],

[

[

"## Getting COVID-19 Case Data",

"_____no_output_____"

]

],

[

[

"if system == \"Windows\":\n datapath = \"..\\\\..\\\\..\\\\COVID-19\\\\csse_covid_19_data\\\\csse_covid_19_time_series\\\\\"\nelse:\n datapath = \"../../../COVID-19/csse_covid_19_data/csse_covid_19_time_series/\"\n\n# Choose from \"US Cases\", \"US Deaths\", \"World Cases\", \"World Deaths\", \"World Recoveries\"\nkey = \"US Cases\" \n\nif key == \"US Cases\":\n datapath = datapath + \"time_series_covid19_confirmed_US.csv\"\nelif key == \"US Deaths\":\n datapath = datapath + \"time_series_covid19_deaths_US.csv\"\nelif key == \"World Cases\":\n datapath = datapath + \"time_series_covid19_confirmed_global.csv\"\nelif key == \"World Deaths\":\n datapath = datapath + \"time_series_covid19_deaths_global.csv\"\nelif key == \"World Recoveries\":\n datapath = datapath + \"time_series_covid19_recovered_global.csv\"",

"_____no_output_____"

],

[

"covid_df = pd.read_csv(datapath)",

"_____no_output_____"

],

[

"covid_df",

"_____no_output_____"

],

[

"florida_data = covid_df.loc[covid_df[\"Province_State\"] == \"Florida\"]",

"_____no_output_____"

],

[

"florida_cases = florida_data.iloc[:,11:]",

"_____no_output_____"

],

[

"florida_cases_total = florida_cases.sum(axis=0)",

"_____no_output_____"

],

[

"florida_cases_total.plot()",

"_____no_output_____"

]

],

[

[

"### convert daily data to weekly data",

"_____no_output_____"

]

],

[

[

"florida_weekly_cases = florida_cases_total.iloc[::7]",

"_____no_output_____"

],

[

"florida_weekly_cases",

"_____no_output_____"

],

[

"florida_weekly_cases.plot()",

"_____no_output_____"

]

],

[

[

"### Converting cumulative series to non-cumulative series",

"_____no_output_____"

]

],

[

[

"florida_wnew_cases = florida_weekly_cases.diff()\nflorida_wnew_cases[0] = 1.0\nflorida_wnew_cases",

"_____no_output_____"

],

[

"florida_wnew_cases.plot()",

"_____no_output_____"

]

],

[

[

"### normalizing weekly case data\n> This is going to be different for texas. This is because, the peak number of weekly new infections probably has not been reached yet. We need to divide everything by a guess for the peak number of predictions instead of min-max scaling.",

"_____no_output_____"

]

],

[

[

"# I'm guessing that the peak number of weekly cases will be about 60,000. Could definitely be wrong.\npeak_guess = 60000\n\nflorida_wnew_cases_norm = florida_wnew_cases / peak_guess\nflorida_wnew_cases_norm.plot()\nflorida_wnew_cases_norm",

"_____no_output_____"

]

],

[

[

"## getting temperature data\n> At the moment, this will be dummy data",

"_____no_output_____"

]

],

[

[

"flu_temp_data = np.full(len(all_cases_norm_series), 0.5)",

"_____no_output_____"

],

[

"training_data_df = pd.DataFrame({\n \"Temperature\" : flu_temp_data,\n \"Flu Cases\" : all_cases_norm_series\n})\ntraining_data_df",

"_____no_output_____"

],

[

"covid_temp_data = np.full(len(florida_wnew_cases_norm), 0.5)",

"_____no_output_____"

],

[

"testing_data_df = pd.DataFrame({\n \"Temperature\" : covid_temp_data,\n \"COVID Cases\" : florida_wnew_cases_norm\n})\ntesting_data_df",

"_____no_output_____"

],

[

"testing_data_df.shape",

"_____no_output_____"

],

[

"training_data_np = training_data_df.values\ntesting_data_np = testing_data_df.values",

"_____no_output_____"

]

],

[

[

"## Building Neural Net Model",

"_____no_output_____"

],

[

"### preparing model data",

"_____no_output_____"

]

],

[

[

"# this code is directly from https://www.tensorflow.org/tutorials/structured_data/time_series\n# much of below data formatting code is derived straight from same link\n\ndef multivariate_data(dataset, target, start_index, end_index, history_size,\n target_size, step, single_step=False):\n data = []\n labels = []\n\n start_index = start_index + history_size\n if end_index is None:\n end_index = len(dataset) - target_size\n\n for i in range(start_index, end_index):\n indices = range(i-history_size, i, step)\n data.append(dataset[indices])\n\n if single_step:\n labels.append(target[i+target_size])\n else:\n labels.append(target[i:i+target_size])\n\n return np.array(data), np.array(labels)",

"_____no_output_____"

],

[

"past_history = 22\nfuture_target = 0\nSTEP = 1\n\nx_train_single, y_train_single = multivariate_data(training_data_np, training_data_np[:, 1], 0,\n None, past_history,\n future_target, STEP,\n single_step=True)\nx_test_single, y_test_single = multivariate_data(testing_data_np, testing_data_np[:, 1],\n 0, None, past_history,\n future_target, STEP,\n single_step=True)",

"_____no_output_____"

],

[

"BATCH_SIZE = 300\nBUFFER_SIZE = 1000\n\ntrain_data_single = tf.data.Dataset.from_tensor_slices((x_train_single, y_train_single))\ntrain_data_single = train_data_single.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()\n\ntest_data_single = tf.data.Dataset.from_tensor_slices((x_test_single, y_test_single))\ntest_data_single = test_data_single.batch(1).repeat()",

"_____no_output_____"

]

],

[

[

"### designing actual model",

"_____no_output_____"

]

],

[

[

"# creating the neural network model\n\nlstm_prediction_model = tf.keras.Sequential([\n tf.keras.layers.LSTM(32, input_shape=x_train_single.shape[-2:]),\n tf.keras.layers.Dense(32),\n tf.keras.layers.Dense(1)\n])\n\nlstm_prediction_model.compile(optimizer=tf.keras.optimizers.RMSprop(), loss=\"mae\")",

"_____no_output_____"

],

[

"single_step_history = lstm_prediction_model.fit(train_data_single, epochs=10,\n steps_per_epoch=250,\n validation_data=test_data_single,\n validation_steps=50)",

"Train for 250 steps, validate for 50 steps\nEpoch 1/10\n250/250 [==============================] - 4s 17ms/step - loss: 0.0578 - val_loss: 0.1777\nEpoch 2/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0321 - val_loss: 0.1247\nEpoch 3/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0292 - val_loss: 0.1219\nEpoch 4/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0278 - val_loss: 0.1129\nEpoch 5/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0269 - val_loss: 0.1129\nEpoch 6/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0262 - val_loss: 0.1099\nEpoch 7/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0256 - val_loss: 0.1091\nEpoch 8/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0251 - val_loss: 0.1077\nEpoch 9/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0245 - val_loss: 0.1165\nEpoch 10/10\n250/250 [==============================] - 1s 4ms/step - loss: 0.0239 - val_loss: 0.1006\n"

],

[

"def create_time_steps(length):\n return list(range(-length, 0))\n\ndef show_plot(plot_data, delta, title):\n labels = ['History', 'True Future', 'Model Prediction']\n marker = ['.-', 'rx', 'go']\n time_steps = create_time_steps(plot_data[0].shape[0])\n if delta:\n future = delta\n else:\n future = 0\n\n plt.title(title)\n for i, x in enumerate(plot_data):\n if i:\n plt.plot(future, plot_data[i], marker[i], markersize=10,\n label=labels[i])\n else:\n plt.plot(time_steps, plot_data[i].flatten(), marker[i], label=labels[i])\n plt.legend()\n plt.xlim([time_steps[0], (future+5)*2])\n plt.xlabel('Week (defined by Wednesdays)')\n plt.ylabel('Normalized Cases')\n return plt",

"_____no_output_____"

],

[

"for x, y in train_data_single.take(10):\n #print(lstm_prediction_model.predict(x))\n plot = show_plot([x[0][:, 1].numpy(), y[0].numpy(),\n lstm_prediction_model.predict(x)[0]], 0,\n 'Training Data Prediction')\n plot.show()",

"_____no_output_____"

],

[

"for x, y in test_data_single.take(1):\n plot = show_plot([x[0][:, 1].numpy(), y[0].numpy(),\n lstm_prediction_model.predict(x)[0]], 0,\n 'Florida COVID Case Prediction, Single Week')\n plot.show()",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] |

[

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code"

]

] |

c51252b9a6b53f76ff37e9b0153bd424ae9ea8ac

| 130,790 |

ipynb

|

Jupyter Notebook

|

Healthcare_Project/semi_proposal/Project_Figure_2.ipynb

|

vasudhathinks/Personal-Projects

|

7e59ddf4d3277efcb524f1e046528f7e9e8def18

|

[

"MIT"

] | null | null | null |

Healthcare_Project/semi_proposal/Project_Figure_2.ipynb

|

vasudhathinks/Personal-Projects

|

7e59ddf4d3277efcb524f1e046528f7e9e8def18

|

[

"MIT"

] | null | null | null |

Healthcare_Project/semi_proposal/Project_Figure_2.ipynb

|

vasudhathinks/Personal-Projects

|

7e59ddf4d3277efcb524f1e046528f7e9e8def18

|

[

"MIT"

] | null | null | null | 783.173653 | 125,204 | 0.956113 |

[

[

[

"### Background and Overview:\nThe [MIMIC-III](https://mimic.mit.edu/about/mimic/) (Medical Information Mart for Intensive Care) Clinical Database is comprised of deidentified health-related data associated with over 40,000 patients (available through request). Its 26 tables have a vast amount of information on the patients who stayed in critical care units of the Beth Israel Deaconess Medical Center between 2001 and 2012 ranging from patient demographics to lab reports to detailed clinical notes. ",

"_____no_output_____"

],

[

"#### Figure 2: Compares an Unstructured Data Field's Values for Alive and Deceased Patients\nThis analysis was done on 1,000 patients, with a 50/50 split between patients who were marked alive and those who were marked deceased. It explores the different reasons patients are admitted to the hospital. ",

"_____no_output_____"

]

],

[

[

"# import pandas as pd\n# import numpy as np\n# import matplotlib.pyplot as plt\n\nfrom IPython.display import Image",

"_____no_output_____"

],

[

"\"\"\"NOTE: This code block is commented out because I have not uploaded data files or source code; it is only here to show to the process.\"\"\"\n\n# # Set up path/s to file/s\n# path_to_data = '../data/'\n\n# # Read data\n# alive_admissions = pd.read_csv(path_to_data + 'alive_admissions.csv', header=None, \n# names=['patient_id', 'flag', 'type'])\n# deceased_admissions = pd.read_csv(path_to_data + 'deceased_admissions.csv', header=None,\n# names=['patient_id', 'flag', 'type'])\n\n# # Process data\n# alive_type_count = alive_admissions.groupby(['type']).size().reset_index()\n# deceased_type_count = deceased_admissions.groupby(['type']).size().reset_index()",

"_____no_output_____"

],

[

"\"\"\"NOTE: This code block is also commented out as above; it is only here to show to the process.\"\"\"\n\n# # Plot the data\n# n_bars = 3\n# index = np.arange(n_bars)\n# bar_width = 0.5\n\n# fig2 = plt.subplots()\n# plt.bar(index, alive_type_count[0], bar_width, alpha=0.5, label='alive')\n# plt.bar(index + bar_width / 2, deceased_type_count[0], bar_width, alpha=0.5, label='deceased')\n# plt.xticks(index + bar_width / 4, alive_type_count['type'])\n# plt.ylabel(\"Frequency\")\n# plt.xlabel(\"Admission Type\")\n# plt.legend(loc='upper right')\n# plt.title('Type of Admissions for Alive & Deceased Patients')\n\n# plt.tight_layout()\n# plt.show()",

"_____no_output_____"

],

[

"Image(filename='Figure_2.png')",

"_____no_output_____"

]

],

[

[

"**Figure 2** reviews the reasons behind why 1,000 patients were admitted to the hospital for each visit. The admission types are segmented by whether the patients were marked alive or deceased. Figure 2 shows that, overall, deceased patients tended to have more emergency-type visits, whereas alive patients tended to have more elective-based visits. This data field can be converted to another feature (e.g.: a normalized number of visits per patient, altogether or for each type of admission) to be added to the feature vectors for training a predictive model. ",

"_____no_output_____"

],

[

"**_Note:_** *as a starting point, I used 1,000 patients. I would like to expand this to a larger subset of the 46,000+ patients available through MIMIC-III. Additionally, I intend to review other unstructured data fields, such as diagnosis description and clinical notes.*",

"_____no_output_____"

]

]

] |

[

"markdown",

"code",

"markdown"

] |

[

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown"

]

] |

c51266470d6094c9cc990ae321228a0ef6e97721

| 84,141 |

ipynb

|

Jupyter Notebook

|

04 - KNN & Scikit-learn/Part 1 - K Nearest Neighbor.ipynb

|

AdamArthurF/supervised_learning

|

4cb90c1503b57e685c9bb0721964c3fe93e274df

|

[

"MIT"

] | 1 |

2021-08-28T05:38:19.000Z

|

2021-08-28T05:38:19.000Z

|

04 - KNN & Scikit-learn/Part 1 - K Nearest Neighbor.ipynb

|

AdamArthurF/supervised_learning

|

4cb90c1503b57e685c9bb0721964c3fe93e274df

|

[

"MIT"

] | null | null | null |

04 - KNN & Scikit-learn/Part 1 - K Nearest Neighbor.ipynb

|

AdamArthurF/supervised_learning

|

4cb90c1503b57e685c9bb0721964c3fe93e274df

|

[

"MIT"

] | null | null | null | 858.581633 | 82,185 | 0.952698 |

[

[

[

"from luwiji.knn import illustration, demo",

"_____no_output_____"

],

[

"demo.knn()",

"_____no_output_____"

],

[

"illustration.knn_distance",

"_____no_output_____"

]

],

[

[

"### Other Distance Metric",

"_____no_output_____"

],

[

"https://scikit-learn.org/stable/modules/generated/sklearn.neighbors.DistanceMetric.html",

"_____no_output_____"

]

]

] |

[

"code",

"markdown"

] |

[

[

"code",

"code",

"code"

],

[

"markdown",

"markdown"

]

] |

c51266714a754c6f85570f619a3d59b12267998e

| 134,662 |

ipynb

|

Jupyter Notebook

|

docs/notebooks/Guide_for_Authors.ipynb

|

MaxCamillo/debuggingbook

|

9675706d2f089929aeb8211a508508b9d3e9348d

|

[

"MIT"

] | null | null | null |

docs/notebooks/Guide_for_Authors.ipynb

|

MaxCamillo/debuggingbook

|

9675706d2f089929aeb8211a508508b9d3e9348d

|

[

"MIT"

] | null | null | null |

docs/notebooks/Guide_for_Authors.ipynb

|

MaxCamillo/debuggingbook

|

9675706d2f089929aeb8211a508508b9d3e9348d

|

[

"MIT"

] | null | null | null | 49.727474 | 42,388 | 0.725691 |

[

[

[

"# Guide for Authors",

"_____no_output_____"

]

],

[

[

"print('Welcome to \"The Debugging Book\"!')",

"Welcome to \"The Debugging Book\"!\n"

]

],

[

[

"This notebook compiles the most important conventions for all chapters (notebooks) of \"The Debugging Book\".",

"_____no_output_____"

],

[

"## Organization of this Book",

"_____no_output_____"

],

[

"### Chapters as Notebooks\n\nEach chapter comes in its own _Jupyter notebook_. A single notebook (= a chapter) should cover the material (text and code, possibly slides) for a 90-minute lecture.\n\nA chapter notebook should be named `Topic.ipynb`, where `Topic` is the topic. `Topic` must be usable as a Python module and should characterize the main contribution. If the main contribution of your chapter is a class `FooDebugger`, for instance, then your topic (and notebook name) should be `FooDebugger`, such that users can state\n\n```python\nfrom FooDebugger import FooDebugger\n```\n\nSince class and module names should start with uppercase letters, all non-notebook files and folders start with lowercase letters. this may make it easier to differentiate them. The special notebook `index.ipynb` gets converted into the home pages `index.html` (on fuzzingbook.org) and `README.md` (on GitHub).\n\nNotebooks are stored in the `notebooks` folder.",

"_____no_output_____"

],

[

"### DebuggingBook and FuzzingBook\n\nThis project shares some infrastructure (and even chapters) with \"The Fuzzing Book\", established through _symbolic links_. Your file organization should be such that `debuggingbook` and `fuzzingbook` are checked out in the same folder; otherwise, sharing infrastructure will not work\n\n```\n<some folder>\n|- fuzzingbook\n|- debuggingbook (this project folder)\n```\n\nTo check whether the organization fits, check whether the `debuggingbook` `Makefile` properly points to `../fuzzingbook/Makefile` - that is, the `fuzzingbook` `Makefile`. If you can properly open the (shared) `Makefile` in both projects, things are set up properly.",

"_____no_output_____"

],

[

"### Output Formats\n\nThe notebooks by themselves can be used by instructors and students to toy around with. They can edit code (and text) as they like and even run them as a slide show.\n\nThe notebook can be _exported_ to multiple (non-interactive) formats:\n\n* HTML – for placing this material online.\n* PDF – for printing\n* Python – for coding\n* Slides – for presenting\n\nThe included Makefile can generate all of these automatically (and a few more).\n\nAt this point, we mostly focus on HTML and Python, as we want to get these out quickly; but you should also occasionally ensure that your notebooks can (still) be exported into PDF. Other formats (Word, Markdown) are experimental.",

"_____no_output_____"

],

[

"## Sites\n\nAll sources for the book end up on the [Github project page](https://github.com/uds-se/debuggingbook). This holds the sources (notebooks), utilities (Makefiles), as well as an issue tracker.\n\nThe derived material for the book ends up in the `docs/` folder, from where it is eventually pushed to the [debuggingbook website](http://www.debuggingbook.org/). This site allows to read the chapters online, can launch Jupyter notebooks using the binder service, and provides access to code and slide formats. Use `make publish` to create and update the site.",

"_____no_output_____"

],

[

"### The Book PDF\n\nThe book PDF is compiled automatically from the individual notebooks. Each notebook becomes a chapter; references are compiled in the final chapter. Use `make book` to create the book.",

"_____no_output_____"

],

[

"## Creating and Building",

"_____no_output_____"

],

[

"### Tools you will need\n\nTo work on the notebook files, you need the following:\n\n1. Jupyter notebook. The easiest way to install this is via the [Anaconda distribution](https://www.anaconda.com/download/).\n\n2. Once you have the Jupyter notebook installed, you can start editing and coding right away by starting `jupyter notebook` (or `jupyter lab`) in the topmost project folder.\n\n3. If (like me) you don't like the Jupyter Notebook interface, I recommend [Jupyter Lab](https://jupyterlab.readthedocs.io/en/stable/), the designated successor to Jupyter Notebook. Invoke it as `jupyter lab`. It comes with a much more modern interface, but misses autocompletion and a couple of extensions. I am running it [as a Desktop application](http://christopherroach.com/articles/jupyterlab-desktop-app/) which gets rid of all the browser toolbars.\nOn the Mac, there is also the [Pineapple app](https://nwhitehead.github.io/pineapple/), which integrates a nice editor with a local server. This is easy to use, but misses a few features; also, it hasn't seen updates since 2015.\n\n4. To create the entire book (with citations, references, and all), you also need the [ipybublish](https://github.com/chrisjsewell/ipypublish) package. This allows you to create the HTML files, merge multiple chapters into a single PDF or HTML file, create slides, and more. The Makefile provides the essential tools for creation.\n",

"_____no_output_____"

],

[

"### Version Control\n\nWe use git in a single strand of revisions. Feel free branch for features, but eventually merge back into the main \"master\" branch. Sync early; sync often. Only push if everything (\"make all\") builds and passes.\n\nThe Github repo thus will typically reflect work in progress. If you reach a stable milestone, you can push things on the fuzzingbook.org web site, using `make publish`.",

"_____no_output_____"

],

[

"#### nbdime\n\nThe [nbdime](https://github.com/jupyter/nbdime) package gives you tools such as `nbdiff` (and even better, `nbdiff-web`) to compare notebooks against each other; this ensures that cell _contents_ are compared rather than the binary format.\n\n`nbdime config-git --enable` integrates nbdime with git such that `git diff` runs the above tools; merging should also be notebook-specific.",

"_____no_output_____"

],

[

"#### nbstripout\n\nNotebooks in version control _should not contain output cells,_ as these tend to change a lot. (Hey, we're talking random output generation here!) To have output cells automatically stripped during commit, install the [nbstripout](https://github.com/kynan/nbstripout) package and use\n\n```\nnbstripout --install\n```\n\nto set it up as a git filter. The `notebooks/` folder comes with a `.gitattributes` file already set up for `nbstripout`, so you should be all set.\n\nNote that _published_ notebooks (in short, anything under the `docs/` tree _should_ have their output cells included, such that users can download and edit notebooks with pre-rendered output. This folder contains a `.gitattributes` file that should explicitly disable `nbstripout`, but it can't hurt to check.\n\nAs an example, the following cell \n\n1. _should_ have its output included in the [HTML version of this guide](https://www.debuggingbook.org/beta/html/Guide_for_Authors.html);\n2. _should not_ have its output included in [the git repo](https://github.com/uds-se/debuggingbook/blob/master/notebooks/Guide_for_Authors.ipynb) (`notebooks/`);\n3. _should_ have its output included in [downloadable and editable notebooks](https://github.com/uds-se/debuggingbook/blob/master/docs/beta/notebooks/Guide_for_Authors.ipynb) (`docs/notebooks/` and `docs/beta/notebooks/`).",

"_____no_output_____"

]

],

[

[

"import random",

"_____no_output_____"

],

[

"random.random()",

"_____no_output_____"

]

],

[

[

"### Inkscape and GraphViz\n\nCreating derived files uses [Inkscape](https://inkscape.org/en/) and [Graphviz](https://www.graphviz.org/) – through its [Python wrapper](https://pypi.org/project/graphviz/) – to process SVG images. These tools are not automatically installed, but are available on pip, _brew_ and _apt-get_ for all major distributions.",

"_____no_output_____"

],

[

"### LaTeX Fonts\n\nBy default, creating PDF uses XeLaTeX with a couple of special fonts, which you can find in the `fonts/` folder; install these fonts system-wide to make them accessible to XeLaTeX.\n\nYou can also run `make LATEX=pdflatex` to use `pdflatex` and standard LaTeX fonts instead.",

"_____no_output_____"

],

[

"### Creating Derived Formats (HTML, PDF, code, ...)\n\nThe [Makefile](../Makefile) provides rules for all targets. Type `make help` for instructions.\n\nThe Makefile should work with GNU make and a standard Jupyter Notebook installation. To create the multi-chapter book and BibTeX citation support, you need to install the [iPyPublish](https://github.com/chrisjsewell/ipypublish) package (which includes the `nbpublish` command).",

"_____no_output_____"

],

[

"### Creating a New Chapter\n\nTo create a new chapter for the book,\n\n1. Set up a new `.ipynb` notebook file as copy of [Template.ipynb](Template.ipynb).\n2. Include it in the `CHAPTERS` list in the `Makefile`.\n3. Add it to the git repository.",

"_____no_output_____"

],

[

"## Teaching a Topic\n\nEach chapter should be devoted to a central concept and a small set of lessons to be learned. I recommend the following structure:\n\n* Introduce the problem (\"We want to parse inputs\")\n* Illustrate it with some code examples (\"Here's some input I'd like to parse\")\n* Develop a first (possibly quick and dirty) solution (\"A PEG parser is short and often does the job\"_\n* Show that it works and how it works (\"Here's a neat derivation tree. Look how we can use this to mutate and combine expressions!\")\n* Develop a second, more elaborated solution, which should then become the main contribution. (\"Here's a general LR(1) parser that does not require a special grammar format. (You can skip it if you're not interested)\")\n* Offload non-essential extensions to later sections or to exercises. (\"Implement a universal parser, using the Dragon Book\")\n\nThe key idea is that readers should be able to grasp the essentials of the problem and the solution in the beginning of the chapter, and get further into details as they progress through it. Make it easy for readers to be drawn in, providing insights of value quickly. If they are interested to understand how things work, they will get deeper into the topic. If they just want to use the technique (because they may be more interested in later chapters), having them read only the first few examples should be fine for them, too.\n\nWhatever you introduce should be motivated first, and illustrated after. Motivate the code you'll be writing, and use plenty of examples to show what the code just introduced is doing. Remember that readers should have fun interacting with your code and your examples. Show and tell again and again and again.",

"_____no_output_____"

],

[

"### Special Sections",

"_____no_output_____"

],

[

"#### Quizzes",

"_____no_output_____"

],

[

"You can have _quizzes_ as part of the notebook. These are created using the `quiz()` function. Its arguments are\n\n* The question\n* A list of options\n* The correct answer(s) - either\n * the single number of the one single correct answer (starting with 1)\n * a list of numbers of correct answers (multiple choices)\n \nTo make the answer less obvious, you can specify it as a string containing an arithmetic expression evaluating to the desired number(s). The expression will remain in the code (and possibly be shown as hint in the quiz).",

"_____no_output_____"

]

],

[

[

"from bookutils import quiz",

"_____no_output_____"

],

[

"# A single-choice quiz\nquiz(\"The color of the sky is\",\n [\n \"blue\",\n \"red\",\n \"black\"\n ], '5 - 4')",

"_____no_output_____"

],

[

"# A multiple-choice quiz\nquiz(\"What is this book?\",\n [\n \"Novel\",\n \"Friendly\",\n \"Useful\"\n ], '[5 - 4, 1 + 1, 27 / 9]')",

"_____no_output_____"

]

],

[

[

"Cells that contain only the `quiz()` call will not be rendered (but the quiz will).",

"_____no_output_____"

],

[

"#### Synopsis",

"_____no_output_____"

],

[

"Each chapter should have a section named \"Synopsis\" at the very end:\n\n```markdown\n## Synopsis\n\nThis is the text of the synopsis.\n```",

"_____no_output_____"

],

[

"This section is evaluated at the very end of the notebook. It should summarize the most important functionality (classes, methods, etc.) together with examples. In the derived HTML and PDF files, it is rendered at the beginning, such that it can serve as a quick reference",

"_____no_output_____"

],

[

"#### Excursions",

"_____no_output_____"

],

[

"There may be longer stretches of text (and code!) that are too special, too boring, or too repetitve to read. You can mark such stretches as \"Excursions\" by enclosing them in MarkDown cells that state:\n\n```markdown\n#### Excursion: TITLE\n```\n\nand\n\n```markdown\n#### End of Excursion\n```",

"_____no_output_____"

],

[

"Stretches between these two markers get special treatment when rendering:\n\n* In the resulting HTML output, these blocks are set up such that they are shown on demand only.\n* In printed (PDF) versions, they will be replaced by a pointer to the online version.\n* In the resulting slides, they will be omitted right away.",

"_____no_output_____"

],

[

"Here is an example of an excursion:",

"_____no_output_____"

],

[

"#### Excursion: Fine points on Excursion Cells",

"_____no_output_____"

],

[

"Note that the `Excursion` and `End of Excursion` cells must be separate cells; they cannot be merged with others.",

"_____no_output_____"

],

[

"#### End of Excursion",

"_____no_output_____"

],

[

"### Ignored Code\n\nIf a code cell starts with\n```python\n# ignore\n```\nthen the code will not show up in rendered input. Its _output_ will, however. \nThis is useful for cells that create drawings, for instance - the focus should be on the result, not the code.\n\nThis also applies to cells that start with a call to `display()` or `quiz()`.",

"_____no_output_____"

],

[

"### Ignored Cells\n\nYou can have _any_ cell not show up at all (including its output) in any rendered input by adding the following meta-data to the cell:\n```json\n{\n \"ipub\": {\n \"ignore\": true\n}\n```\n",

"_____no_output_____"

],

[

"*This* text, for instance, does not show up in the rendered version.",

"_____no_output_____"

],

[

"## Coding",

"_____no_output_____"

],

[

"### Set up\n\nThe first code block in each notebook should be",

"_____no_output_____"

]

],

[

[

"import bookutils",

"_____no_output_____"

]

],

[

[