| modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-02 06:30:45) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (533 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-02 06:30:39) | card (string, 11 to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

| north/t5_base_NCC | north | 2022-10-13T13:53:50Z | 5 | 5 | transformers | ["transformers", "pytorch", "tf", "jax", "tensorboard", "t5", "text2text-generation", "no", "nn", "sv", "dk", "is", "en", "dataset:nbailab/NCC", "dataset:mc4", "dataset:wikipedia", "arxiv:2104.09617", "arxiv:1910.10683", "license:apache-2.0", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text2text-generation | 2022-05-21T11:45:48Z |

---

language:

- no

- nn

- sv

- dk

- is

- en

datasets:

- nbailab/NCC

- mc4

- wikipedia

widget:

- text: <extra_id_0> hver uke samles Regjeringens medlemmer til Statsråd på <extra_id_1>. Dette organet er øverste <extra_id_2> i Norge. For at møtet skal være <extra_id_3>, må over halvparten av regjeringens <extra_id_4> være til stede.

- text: På <extra_id_0> kan man <extra_id_1> en bok, og man kan også <extra_id_2> seg ned og lese den.

license: apache-2.0

---

The North-T5 models are a set of Norwegian and Scandinavian sequence-to-sequence models. They build on [T5](https://github.com/google-research/text-to-text-transfer-transformer) and [T5X](https://github.com/google-research/t5x) and can be used for a variety of NLP tasks, ranging from classification to translation.

| |**Small** <br />_60M_|**Base** <br />_220M_|**Large** <br />_770M_|**XL** <br />_3B_|**XXL** <br />_11B_|
|:-----------|:------------:|:------------:|:------------:|:------------:|:------------:|
|North-T5‑NCC|[🤗](https://huggingface.co/north/t5_small_NCC)|✔|[🤗](https://huggingface.co/north/t5_large_NCC)|[🤗](https://huggingface.co/north/t5_xl_NCC)|[🤗](https://huggingface.co/north/t5_xxl_NCC)|
|North-T5‑NCC‑lm|[🤗](https://huggingface.co/north/t5_small_NCC_lm)|[🤗](https://huggingface.co/north/t5_base_NCC_lm)|[🤗](https://huggingface.co/north/t5_large_NCC_lm)|[🤗](https://huggingface.co/north/t5_xl_NCC_lm)|[🤗](https://huggingface.co/north/t5_xxl_NCC_lm)|

## T5X Checkpoint

The original T5X checkpoint for this model is also available in the Google Cloud Storage bucket at `gs://north-t5x/pretrained_models/base/norwegian_NCC_plus_English_t5x_base/`.

## Performance

A thorough evaluation of the North-T5 models is planned, and I strongly encourage external researchers to run their own evaluations. The main advantage of the T5 models is their flexibility. Traditionally, encoder-only models (like BERT) excel at classification tasks, while sequence-to-sequence models are easier to train for tasks like translation and Q&A. Despite this, here are the results from using North-T5 on the political classification task described [here](https://arxiv.org/abs/2104.09617).

|**Model** | **F1** |
|:-----------|:------------|
|mT5-base|73.2 |
|mBERT-base|78.4 |
|NorBERT-base|78.2 |
|North-T5-small|80.5 |
|nb-bert-base|81.8 |
|North-T5-base|85.3 |
|North-T5-large|86.7 |
|North-T5-xl|88.7 |
|North-T5-xxl|91.8|

These are preliminary results. The [BERT results](https://arxiv.org/abs/2104.09617) are test-set scores from the best model out of 10 runs, using early stopping and a decaying learning rate. The T5 results are the average of five runs on the evaluation set, so the two groups of numbers come from different protocols and are not strictly comparable. The small model was trained for 10,000 steps and the others for 5,000 steps, with a fixed learning rate (no decay) and no early stopping. Nor was the recommended rank classification used. The maximum sequence length was 512. This setup simplifies testing and gives results that are easy to interpret, but the T5 scores may therefore be somewhat below what a tuned setup would achieve.

## Sub-versions of North-T5

The following sub-versions are available. More versions will be made available shortly.

|**Model** | **Description** |
|:-----------|:-------|
|**North‑T5‑NCC** |This is the main version. It is trained for an additional 500,000 steps starting from the mT5 checkpoint. The training corpus is based on [the Norwegian Colossal Corpus (NCC)](https://huggingface.co/datasets/NbAiLab/NCC), with additional data from mC4 and English Wikipedia.|
|**North‑T5‑NCC‑lm**|This model is pretrained for an additional 100k steps on the LM objective discussed in the [T5 paper](https://arxiv.org/pdf/1910.10683.pdf). In a way, this turns a masked language model into an autoregressive model. It also prepares the model for some tasks: for translation and NLI, for instance, it is well documented that a step of unsupervised LM training before fine-tuning gives a clear benefit.|

## Fine-tuned versions

As explained below, the models need to be fine-tuned for specific tasks. This procedure is relatively simple, and the models are not very sensitive to the hyperparameters used. A fixed learning rate of 1e-3 usually gives decent results. Smaller versions of the model typically need to be trained for longer. The base models are easy to train in Google Colab.

For those who want to see what the models are capable of without going through the training procedure, I provide a couple of test models. These models are by no means optimised and are only meant to demonstrate how the North-T5 models can be used.

* Nynorsk Translator. Translates any text from Norwegian Bokmål to Norwegian Nynorsk. Please try the [Streamlit demo](https://huggingface.co/spaces/north/Nynorsk) and the [HuggingFace repo](https://huggingface.co/north/demo-nynorsk-base).

* DeUnCaser. The model adds punctuation, spaces and capitalisation back into the text. The input needs to be in Norwegian but does not have to be divided into sentences or have proper capitalisation. You can even remove the spaces from the text and have the model reconstruct them. It can be tested with the [Streamlit demo](https://huggingface.co/spaces/north/DeUnCaser) and directly in the [HuggingFace repo](https://huggingface.co/north/demo-deuncaser-base).

## Training details

All models are built using the Flax-based T5X codebase and are initialized from the mT5 pretrained weights. They are trained using the T5.1.1 training regime, in which they are trained only on an unsupervised masking task. This also means that, unlike the original T5, the models need to be fine-tuned to solve specific tasks. This fine-tuning is, however, usually not very compute-intensive, and in most cases it can be performed even with free online training resources.
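The unsupervised masking task (span corruption in the T5.1.1 setup) uses the same sentinel tokens as the widget examples above: spans of the input are replaced by `<extra_id_N>` markers, and the model learns to output the dropped spans. The sketch below is illustrative only. It assumes whitespace-split words and hand-picked spans, whereas the real T5X pipeline works on SentencePiece pieces and samples the spans randomly; `span_corrupt` is a hypothetical helper, not part of T5X.

```python
from typing import List, Sequence, Tuple


def span_corrupt(tokens: Sequence[str], spans: List[Tuple[int, int]]) -> Tuple[str, str]:
    """Build a T5-style span-corruption (input, target) pair.

    tokens: whitespace-split words (a simplification; T5X uses SentencePiece pieces).
    spans:  sorted, non-overlapping (start, end) index pairs to mask, end exclusive.
    """
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        if start < cursor or end <= start:
            raise ValueError("spans must be sorted, non-overlapping and non-empty")
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the unmasked words
        inputs.append(sentinel)              # one sentinel replaces the whole span
        targets.append(sentinel)             # the target repeats the sentinel...
        targets.extend(tokens[start:end])    # ...followed by the dropped words
        cursor = end
    inputs.extend(tokens[cursor:])
    # A final sentinel marks the end of the last dropped span.
    targets.append(f"<extra_id_{len(spans)}>")
    return " ".join(inputs), " ".join(targets)
```

For example, masking words 1 and 4 of `På biblioteket kan man låne en bok` gives the input `På <extra_id_0> kan man <extra_id_1> en bok` (the same shape as the second widget example) and the target `<extra_id_0> biblioteket <extra_id_1> låne <extra_id_2>`.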

All the main model versions are trained for 500,000 steps beyond the mT5 checkpoint, which itself was trained for 1,000,000 steps. They are trained mainly on a 75GB corpus consisting of NCC, Common Crawl and some additional high-quality English text (Wikipedia). The corpus is roughly 80% Norwegian text. Additional languages are included to retain some of the multilingual capabilities, making the model both more robust to new words and concepts and better suited as a basis for translation tasks.

While the largest models almost always give the best results, they are also more difficult and more expensive to fine-tune. I strongly recommend starting by fine-tuning a base model. The base models can easily be fine-tuned on a standard graphics card or on a free TPU through Google Colab.

All models were trained on TPUs: the XXL model on a TPU v4-64, the XL model on a TPU v4-32, the Large model on a TPU v4-16, and the rest on a TPU v4-8. Since the batch size can be reduced during fine-tuning, it is also possible to fine-tune on somewhat smaller hardware. The rule of thumb is that you can go "one step down" when fine-tuning. The largest models still require access to significant hardware, even for fine-tuning.

## Formats

All models are trained using the Flax-based T5X library. The original checkpoints are available in T5X format and can be used for both fine-tuning and inference. All models except the XXL model have also been converted to Transformers/HuggingFace format. In this framework, the models can be loaded for fine-tuning or inference in Flax, PyTorch and TensorFlow.

## Future

I will continue to train and release additional models in this set. Which models are added will depend on feedback from users.

## Thanks

This release would not have been possible without the support and hardware provided by the [TPU Research Cloud](https://sites.research.google/trc/about/) at Google Research. Both the TPU Research Cloud team and the T5X team have provided extremely useful support in getting this running.

Freddy Wetjen at the National Library of Norway has been of tremendous help in generating the original NCC corpus, and has also helped generate the collated corpus used for this training. In addition, he has been a discussion partner in the creation of these models.

Also thanks to Stefan Schweter for writing the [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_flax.py) for converting these models from T5X to HuggingFace and to Javier de la Rosa for writing the dataloader for reading the HuggingFace Datasets in T5X.

## Warranty

Use at your own risk. The models have not yet been thoroughly tested and may contain both errors and biases.

## Contact/About

These models were trained by Per E Kummervold. Please contact me at [email protected].

|

| north/t5_base_NCC_lm | north | 2022-10-13T13:53:23Z | 9 | 1 | transformers | ["transformers", "pytorch", "tf", "jax", "tensorboard", "t5", "text2text-generation", "no", "nn", "sv", "dk", "is", "en", "dataset:nbailab/NCC", "dataset:mc4", "dataset:wikipedia", "arxiv:2104.09617", "arxiv:1910.10683", "license:apache-2.0", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text2text-generation | 2022-05-21T11:45:24Z |

---

language:

- no

- nn

- sv

- dk

- is

- en

datasets:

- nbailab/NCC

- mc4

- wikipedia

widget:

- text: <extra_id_0> hver uke samles Regjeringens medlemmer til Statsråd på <extra_id_1>. Dette organet er øverste <extra_id_2> i Norge. For at møtet skal være <extra_id_3>, må over halvparten av regjeringens <extra_id_4> være til stede.

- text: På <extra_id_0> kan man <extra_id_1> en bok, og man kan også <extra_id_2> seg ned og lese den.

license: apache-2.0

---

The North-T5 models are a set of Norwegian and Scandinavian sequence-to-sequence models. They build on [T5](https://github.com/google-research/text-to-text-transfer-transformer) and [T5X](https://github.com/google-research/t5x) and can be used for a variety of NLP tasks, ranging from classification to translation.

| |**Small** <br />_60M_|**Base** <br />_220M_|**Large** <br />_770M_|**XL** <br />_3B_|**XXL** <br />_11B_|
|:-----------|:------------:|:------------:|:------------:|:------------:|:------------:|
|North-T5‑NCC|[🤗](https://huggingface.co/north/t5_small_NCC)|[🤗](https://huggingface.co/north/t5_base_NCC)|[🤗](https://huggingface.co/north/t5_large_NCC)|[🤗](https://huggingface.co/north/t5_xl_NCC)|[🤗](https://huggingface.co/north/t5_xxl_NCC)|
|North-T5‑NCC‑lm|[🤗](https://huggingface.co/north/t5_small_NCC_lm)|✔|[🤗](https://huggingface.co/north/t5_large_NCC_lm)|[🤗](https://huggingface.co/north/t5_xl_NCC_lm)|[🤗](https://huggingface.co/north/t5_xxl_NCC_lm)|

## T5X Checkpoint

The original T5X checkpoint for this model is also available in the Google Cloud Storage bucket at `gs://north-t5x/pretrained_models/base/norwegian_NCC_plus_English_pluss100k_lm_t5x_base/`.

## Performance

A thorough evaluation of the North-T5 models is planned, and I strongly encourage external researchers to run their own evaluations. The main advantage of the T5 models is their flexibility. Traditionally, encoder-only models (like BERT) excel at classification tasks, while sequence-to-sequence models are easier to train for tasks like translation and Q&A. Despite this, here are the results from using North-T5 on the political classification task described [here](https://arxiv.org/abs/2104.09617).

|**Model** | **F1** |
|:-----------|:------------|
|mT5-base|73.2 |
|mBERT-base|78.4 |
|NorBERT-base|78.2 |
|North-T5-small|80.5 |
|nb-bert-base|81.8 |
|North-T5-base|85.3 |
|North-T5-large|86.7 |
|North-T5-xl|88.7 |
|North-T5-xxl|91.8|

These are preliminary results. The [BERT results](https://arxiv.org/abs/2104.09617) are test-set scores from the best model out of 10 runs, using early stopping and a decaying learning rate. The T5 results are the average of five runs on the evaluation set, so the two groups of numbers come from different protocols and are not strictly comparable. The small model was trained for 10,000 steps and the others for 5,000 steps, with a fixed learning rate (no decay) and no early stopping. Nor was the recommended rank classification used. The maximum sequence length was 512. This setup simplifies testing and gives results that are easy to interpret, but the T5 scores may therefore be somewhat below what a tuned setup would achieve.
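Rank classification scores each candidate label string under the model and picks the most likely one, instead of letting the model generate free text. A minimal sketch, assuming a hypothetical `score(input, target)` callable that would return the model's log-likelihood of `target` given `input`; the toy scorer and the labels `label_a`/`label_b` use made-up numbers and exist only so the example runs.

```python
from typing import Callable, Dict, Sequence


def rank_classify(
    text: str,
    labels: Sequence[str],
    score: Callable[[str, str], float],
) -> str:
    """Return the candidate label with the highest score for `text`.

    `score(input_text, target_text)` stands in for the model's log-likelihood
    of `target_text` given `input_text` (hypothetical; not a library API).
    """
    if not labels:
        raise ValueError("need at least one candidate label")
    # Only the candidate strings are compared, so the prediction can never
    # fall outside the label set (unlike free-form generation).
    return max(labels, key=lambda label: score(text, label))


# Toy scorer with made-up log-probabilities, only so the example runs.
TOY_LOGPROBS: Dict[str, float] = {"label_a": -2.3, "label_b": -0.4}


def toy_score(text: str, label: str) -> float:
    return TOY_LOGPROBS[label]
```

With the toy scores, `rank_classify("Et innlegg.", ["label_a", "label_b"], toy_score)` returns `"label_b"`. Some implementations also normalise the log-likelihood by target length so that longer label strings are not penalised; that choice is left out here.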

## Sub-versions of North-T5

The following sub-versions are available. More versions will be made available shortly.

|**Model** | **Description** |
|:-----------|:-------|
|**North‑T5‑NCC** |This is the main version. It is trained for an additional 500,000 steps starting from the mT5 checkpoint. The training corpus is based on [the Norwegian Colossal Corpus (NCC)](https://huggingface.co/datasets/NbAiLab/NCC), with additional data from mC4 and English Wikipedia.|
|**North‑T5‑NCC‑lm**|This model is pretrained for an additional 100k steps on the LM objective discussed in the [T5 paper](https://arxiv.org/pdf/1910.10683.pdf). In a way, this turns a masked language model into an autoregressive model. It also prepares the model for some tasks: for translation and NLI, for instance, it is well documented that a step of unsupervised LM training before fine-tuning gives a clear benefit.|
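The card does not spell out which LM variant was used. Assuming it is the prefix language modeling objective from the T5 paper, each training example splits a stretch of text in two: the first part goes to the encoder and the decoder must produce the continuation. A sketch with whitespace-split words; `prefix_lm_example` is a hypothetical helper, not the T5X preprocessing code.

```python
import random
from typing import List, Optional, Tuple


def prefix_lm_example(
    tokens: List[str],
    split: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> Tuple[str, str]:
    """Split a token sequence into an encoder prefix and a decoder target.

    If `split` is None, a split point is drawn uniformly from 1..len(tokens)-1,
    so that both halves are non-empty.
    """
    if len(tokens) < 2:
        raise ValueError("need at least two tokens to split")
    if split is None:
        rng = rng or random.Random()
        split = rng.randint(1, len(tokens) - 1)  # randint is inclusive at both ends
    if not 0 < split < len(tokens):
        raise ValueError("split must leave both halves non-empty")
    # Encoder sees the prefix; decoder learns to continue it autoregressively.
    return " ".join(tokens[:split]), " ".join(tokens[split:])
```

For instance, `prefix_lm_example("Hver uke samles regjeringens medlemmer til statsråd".split(), split=3)` gives the input `Hver uke samles` and the target `regjeringens medlemmer til statsråd`.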

## Fine-tuned versions

As explained below, the models need to be fine-tuned for specific tasks. This procedure is relatively simple, and the models are not very sensitive to the hyperparameters used. A fixed learning rate of 1e-3 usually gives decent results. Smaller versions of the model typically need to be trained for longer. The base models are easy to train in Google Colab.

For those who want to see what the models are capable of without going through the training procedure, I provide a couple of test models. These models are by no means optimised and are only meant to demonstrate how the North-T5 models can be used.

* Nynorsk Translator. Translates any text from Norwegian Bokmål to Norwegian Nynorsk. Please try the [Streamlit demo](https://huggingface.co/spaces/north/Nynorsk) and the [HuggingFace repo](https://huggingface.co/north/demo-nynorsk-base).

* DeUnCaser. The model adds punctuation, spaces and capitalisation back into the text. The input needs to be in Norwegian but does not have to be divided into sentences or have proper capitalisation. You can even remove the spaces from the text and have the model reconstruct them. It can be tested with the [Streamlit demo](https://huggingface.co/spaces/north/DeUnCaser) and directly in the [HuggingFace repo](https://huggingface.co/north/demo-deuncaser-base).

## Training details

All models are built using the Flax-based T5X codebase and are initialized from the mT5 pretrained weights. They are trained using the T5.1.1 training regime, in which they are trained only on an unsupervised masking task. This also means that, unlike the original T5, the models need to be fine-tuned to solve specific tasks. This fine-tuning is, however, usually not very compute-intensive, and in most cases it can be performed even with free online training resources.

All the main model versions are trained for 500,000 steps beyond the mT5 checkpoint, which itself was trained for 1,000,000 steps. They are trained mainly on a 75GB corpus consisting of NCC, Common Crawl and some additional high-quality English text (Wikipedia). The corpus is roughly 80% Norwegian text. Additional languages are included to retain some of the multilingual capabilities, making the model both more robust to new words and concepts and better suited as a basis for translation tasks.
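As a rough worked example of these proportions (approximate, since the card only says "roughly 80%"):

$$
0.80 \times 75\,\text{GB} \approx 60\,\text{GB (Norwegian)}, \qquad 0.20 \times 75\,\text{GB} \approx 15\,\text{GB (other languages)}
$$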

While the largest models almost always give the best results, they are also more difficult and more expensive to fine-tune. I strongly recommend starting by fine-tuning a base model. The base models can easily be fine-tuned on a standard graphics card or on a free TPU through Google Colab.

All models were trained on TPUs: the XXL model on a TPU v4-64, the XL model on a TPU v4-32, the Large model on a TPU v4-16, and the rest on a TPU v4-8. Since the batch size can be reduced during fine-tuning, it is also possible to fine-tune on somewhat smaller hardware. The rule of thumb is that you can go "one step down" when fine-tuning. The largest models still require access to significant hardware, even for fine-tuning.

## Formats

All models are trained using the Flax-based T5X library. The original checkpoints are available in T5X format and can be used for both fine-tuning and inference. All models except the XXL model have also been converted to Transformers/HuggingFace format. In this framework, the models can be loaded for fine-tuning or inference in Flax, PyTorch and TensorFlow.

## Future

I will continue to train and release additional models in this set. Which models are added will depend on feedback from users.

## Thanks

This release would not have been possible without the support and hardware provided by the [TPU Research Cloud](https://sites.research.google/trc/about/) at Google Research. Both the TPU Research Cloud team and the T5X team have provided extremely useful support in getting this running.

Freddy Wetjen at the National Library of Norway has been of tremendous help in generating the original NCC corpus, and has also helped generate the collated corpus used for this training. In addition, he has been a discussion partner in the creation of these models.

Also thanks to Stefan Schweter for writing the [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_flax.py) for converting these models from T5X to HuggingFace and to Javier de la Rosa for writing the dataloader for reading the HuggingFace Datasets in T5X.

## Warranty

Use at your own risk. The models have not yet been thoroughly tested and may contain both errors and biases.

## Contact/About

These models were trained by Per E Kummervold. Please contact me at [email protected].

|

| north/t5_small_NCC | north | 2022-10-13T13:53:07Z | 109 | 0 | transformers | ["transformers", "pytorch", "tf", "jax", "tensorboard", "t5", "text2text-generation", "no", "nn", "sv", "dk", "is", "en", "dataset:nbailab/NCC", "dataset:mc4", "dataset:wikipedia", "arxiv:2104.09617", "arxiv:1910.10683", "license:apache-2.0", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text2text-generation | 2022-05-21T11:45:05Z |

---

language:

- no

- nn

- sv

- dk

- is

- en

datasets:

- nbailab/NCC

- mc4

- wikipedia

widget:

- text: <extra_id_0> hver uke samles Regjeringens medlemmer til Statsråd på <extra_id_1>. Dette organet er øverste <extra_id_2> i Norge. For at møtet skal være <extra_id_3>, må over halvparten av regjeringens <extra_id_4> være til stede.

- text: På <extra_id_0> kan man <extra_id_1> en bok, og man kan også <extra_id_2> seg ned og lese den.

license: apache-2.0

---

The North-T5 models are a set of Norwegian and Scandinavian sequence-to-sequence models. They build on [T5](https://github.com/google-research/text-to-text-transfer-transformer) and [T5X](https://github.com/google-research/t5x) and can be used for a variety of NLP tasks, ranging from classification to translation.

| |**Small** <br />_60M_|**Base** <br />_220M_|**Large** <br />_770M_|**XL** <br />_3B_|**XXL** <br />_11B_|
|:-----------|:------------:|:------------:|:------------:|:------------:|:------------:|
|North-T5‑NCC|✔|[🤗](https://huggingface.co/north/t5_base_NCC)|[🤗](https://huggingface.co/north/t5_large_NCC)|[🤗](https://huggingface.co/north/t5_xl_NCC)|[🤗](https://huggingface.co/north/t5_xxl_NCC)|
|North-T5‑NCC‑lm|[🤗](https://huggingface.co/north/t5_small_NCC_lm)|[🤗](https://huggingface.co/north/t5_base_NCC_lm)|[🤗](https://huggingface.co/north/t5_large_NCC_lm)|[🤗](https://huggingface.co/north/t5_xl_NCC_lm)|[🤗](https://huggingface.co/north/t5_xxl_NCC_lm)|

## T5X Checkpoint

The original T5X checkpoint for this model is also available in the Google Cloud Storage bucket at `gs://north-t5x/pretrained_models/small/norwegian_NCC_plus_English_t5x_small/`.

## Performance

A thorough evaluation of the North-T5 models is planned, and I strongly encourage external researchers to run their own evaluations. The main advantage of the T5 models is their flexibility. Traditionally, encoder-only models (like BERT) excel at classification tasks, while sequence-to-sequence models are easier to train for tasks like translation and Q&A. Despite this, here are the results from using North-T5 on the political classification task described [here](https://arxiv.org/abs/2104.09617).

|**Model** | **F1** |
|:-----------|:------------|
|mT5-base|73.2 |
|mBERT-base|78.4 |
|NorBERT-base|78.2 |
|North-T5-small|80.5 |
|nb-bert-base|81.8 |
|North-T5-base|85.3 |
|North-T5-large|86.7 |
|North-T5-xl|88.7 |
|North-T5-xxl|91.8|

These are preliminary results. The [BERT results](https://arxiv.org/abs/2104.09617) are test-set scores from the best model out of 10 runs, using early stopping and a decaying learning rate. The T5 results are the average of five runs on the evaluation set, so the two groups of numbers come from different protocols and are not strictly comparable. The small model was trained for 10,000 steps and the others for 5,000 steps, with a fixed learning rate (no decay) and no early stopping. Nor was the recommended rank classification used. The maximum sequence length was 512. This setup simplifies testing and gives results that are easy to interpret, but the T5 scores may therefore be somewhat below what a tuned setup would achieve.
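The card does not say how F1 is averaged for this task. Assuming macro-averaging (the unweighted mean of per-class F1, where per-class F1 is 2·TP / (2·TP + FP + FN)), scores like those in the table could be computed as below and multiplied by 100. `macro_f1` is an illustrative helper, not the evaluation code behind these results.

```python
from collections import Counter
from typing import Sequence


def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[g] += 1  # missed the gold label g
    labels = set(gold) | set(pred)
    scores = []
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores) if scores else 0.0
```

Weighted or micro averaging would give different numbers on imbalanced data, which is one more reason to compare models only under the same protocol.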

## Sub-versions of North-T5

The following sub-versions are available. More versions will be made available shortly.

|**Model** | **Description** |
|:-----------|:-------|
|**North‑T5‑NCC** |This is the main version. It is trained for an additional 500,000 steps starting from the mT5 checkpoint. The training corpus is based on [the Norwegian Colossal Corpus (NCC)](https://huggingface.co/datasets/NbAiLab/NCC), with additional data from mC4 and English Wikipedia.|
|**North‑T5‑NCC‑lm**|This model is pretrained for an additional 100k steps on the LM objective discussed in the [T5 paper](https://arxiv.org/pdf/1910.10683.pdf). In a way, this turns a masked language model into an autoregressive model. It also prepares the model for some tasks: for translation and NLI, for instance, it is well documented that a step of unsupervised LM training before fine-tuning gives a clear benefit.|

## Fine-tuned versions

As explained below, the models need to be fine-tuned for specific tasks. This procedure is relatively simple, and the models are not very sensitive to the hyperparameters used. A fixed learning rate of 1e-3 usually gives decent results. Smaller versions of the model typically need to be trained for longer. The base models are easy to train in Google Colab.
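Fine-tuning a T5 model means casting every task as string to string: an input string in, a target string out. For a classification task, the data preparation might look roughly like this. The `klassifiser: ` prefix and the `text`/`label` field names are hypothetical choices, not something the card prescribes; the fixed 1e-3 learning rate suggested in the card would apply to the training loop itself, which is not shown.

```python
from typing import Dict, Iterable, List


def to_text2text(
    examples: Iterable[Dict[str, str]], prefix: str = "klassifiser: "
) -> List[Dict[str, str]]:
    """Convert labelled examples into T5-style input/target string pairs.

    `prefix` and the field names "text"/"label" are hypothetical; any fixed
    prefix works as long as training and inference use the same one.
    """
    pairs = []
    for ex in examples:
        pairs.append({
            "inputs": prefix + ex["text"].strip(),  # what the encoder sees
            "targets": ex["label"],                  # what the decoder must generate
        })
    return pairs
```

Keeping the label strings short and distinct makes the generated output easy to map back to classes.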

For those who want to see what the models are capable of without going through the training procedure, I provide a couple of test models. These models are by no means optimised and are only meant to demonstrate how the North-T5 models can be used.

* Nynorsk Translator. Translates any text from Norwegian Bokmål to Norwegian Nynorsk. Please try the [Streamlit demo](https://huggingface.co/spaces/north/Nynorsk) and the [HuggingFace repo](https://huggingface.co/north/demo-nynorsk-base).

* DeUnCaser. The model adds punctuation, spaces and capitalisation back into the text. The input needs to be in Norwegian but does not have to be divided into sentences or have proper capitalisation. You can even remove the spaces from the text and have the model reconstruct them. It can be tested with the [Streamlit demo](https://huggingface.co/spaces/north/DeUnCaser) and directly in the [HuggingFace repo](https://huggingface.co/north/demo-deuncaser-base).

## Training details

All models are built using the Flax-based T5X codebase and are initialized from the mT5 pretrained weights. They are trained using the T5.1.1 training regime, in which they are trained only on an unsupervised masking task. This also means that, unlike the original T5, the models need to be fine-tuned to solve specific tasks. This fine-tuning is, however, usually not very compute-intensive, and in most cases it can be performed even with free online training resources.

All the main model versions are trained for 500,000 steps beyond the mT5 checkpoint, which itself was trained for 1,000,000 steps. They are trained mainly on a 75GB corpus consisting of NCC, Common Crawl and some additional high-quality English text (Wikipedia). The corpus is roughly 80% Norwegian text. Additional languages are included to retain some of the multilingual capabilities, making the model both more robust to new words and concepts and better suited as a basis for translation tasks.
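Combining the step counts stated in this card, and assuming the 100k LM steps of the -lm sub-version come on top of the 500,000 main-version steps, the cumulative pretraining budget would be:

$$
\underbrace{1{,}000{,}000}_{\text{mT5}} + \underbrace{500{,}000}_{\text{North-T5-NCC}} = 1{,}500{,}000 \text{ steps}, \qquad 1{,}500{,}000 + \underbrace{100{,}000}_{\text{-lm}} = 1{,}600{,}000 \text{ steps}
$$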

While the largest models almost always give the best results, they are also more difficult and more expensive to fine-tune. I strongly recommend starting by fine-tuning a base model. The base models can easily be fine-tuned on a standard graphics card or on a free TPU through Google Colab.

All models were trained on TPUs: the XXL model on a TPU v4-64, the XL model on a TPU v4-32, the Large model on a TPU v4-16, and the rest on a TPU v4-8. Since the batch size can be reduced during fine-tuning, it is also possible to fine-tune on somewhat smaller hardware. The rule of thumb is that you can go "one step down" when fine-tuning. The largest models still require access to significant hardware, even for fine-tuning.
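The "one step down" rule of thumb can be written out as a small lookup over the TPU slices listed above. This is a hypothetical helper that encodes only what the card states; actual memory needs also depend on batch size and sequence length, so treat its answer as a starting point rather than a guarantee.

```python
from typing import Optional

# Pre-training hardware per model size, as listed in this card.
PRETRAIN_TPU = {
    "xxl": "v4-64",
    "xl": "v4-32",
    "large": "v4-16",
    "base": "v4-8",
    "small": "v4-8",
}

# The v4 slice sizes named in the card, largest first.
V4_SLICES = ["v4-64", "v4-32", "v4-16", "v4-8"]


def finetune_tpu(size: str) -> Optional[str]:
    """Suggest a fine-tuning slice one step below the pre-training slice.

    Returns None when the pre-training slice is already the smallest one
    listed (base/small); the card says those can be fine-tuned on a standard
    graphics card or a free Colab TPU.
    """
    pretrain_slice = PRETRAIN_TPU[size.lower()]
    idx = V4_SLICES.index(pretrain_slice)
    return V4_SLICES[idx + 1] if idx + 1 < len(V4_SLICES) else None
```

For example, `finetune_tpu("XXL")` suggests `"v4-32"`, while `finetune_tpu("base")` returns `None`.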

## Formats

All models are trained using the Flax-based T5X library. The original checkpoints are available in T5X format and can be used for both fine-tuning and inference. All models except the XXL model have also been converted to Transformers/HuggingFace format. In this framework, the models can be loaded for fine-tuning or inference in Flax, PyTorch and TensorFlow.

## Future

I will continue to train and release additional models in this set. Which models are added will depend on feedback from users.

## Thanks

This release would not have been possible without the support and hardware provided by the [TPU Research Cloud](https://sites.research.google/trc/about/) at Google Research. Both the TPU Research Cloud team and the T5X team have provided extremely useful support in getting this running.

Freddy Wetjen at the National Library of Norway has been of tremendous help in generating the original NCC corpus, and has also helped generate the collated corpus used for this training. In addition, he has been a discussion partner in the creation of these models.

Also thanks to Stefan Schweter for writing the [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_flax.py) for converting these models from T5X to HuggingFace and to Javier de la Rosa for writing the dataloader for reading the HuggingFace Datasets in T5X.

## Warranty

Use at your own risk. The models have not yet been thoroughly tested and may contain both errors and biases.

## Contact/About

These models were trained by Per E Kummervold. Please contact me at [email protected].

|

| north/t5_small_NCC_lm | north | 2022-10-13T13:52:45Z | 104 | 0 | transformers | ["transformers", "pytorch", "tf", "jax", "tensorboard", "t5", "text2text-generation", "no", "nn", "sv", "dk", "is", "en", "dataset:nbailab/NCC", "dataset:mc4", "dataset:wikipedia", "arxiv:2104.09617", "arxiv:1910.10683", "license:apache-2.0", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text2text-generation | 2022-05-21T11:44:41Z |

---

language:

- no

- nn

- sv

- dk

- is

- en

datasets:

- nbailab/NCC

- mc4

- wikipedia

widget:

- text: <extra_id_0> hver uke samles Regjeringens medlemmer til Statsråd på <extra_id_1>. Dette organet er øverste <extra_id_2> i Norge. For at møtet skal være <extra_id_3>, må over halvparten av regjeringens <extra_id_4> være til stede.

- text: På <extra_id_0> kan man <extra_id_1> en bok, og man kan også <extra_id_2> seg ned og lese den.

license: apache-2.0

---

The North-T5 models are a set of Norwegian and Scandinavian sequence-to-sequence models. They build on [T5](https://github.com/google-research/text-to-text-transfer-transformer) and [T5X](https://github.com/google-research/t5x) and can be used for a variety of NLP tasks, ranging from classification to translation.

| |**Small** <br />_60M_|**Base** <br />_220M_|**Large** <br />_770M_|**XL** <br />_3B_|**XXL** <br />_11B_|
|:-----------|:------------:|:------------:|:------------:|:------------:|:------------:|
|North-T5‑NCC|[🤗](https://huggingface.co/north/t5_small_NCC)|[🤗](https://huggingface.co/north/t5_base_NCC)|[🤗](https://huggingface.co/north/t5_large_NCC)|[🤗](https://huggingface.co/north/t5_xl_NCC)|[🤗](https://huggingface.co/north/t5_xxl_NCC)|
|North-T5‑NCC‑lm|✔|[🤗](https://huggingface.co/north/t5_base_NCC_lm)|[🤗](https://huggingface.co/north/t5_large_NCC_lm)|[🤗](https://huggingface.co/north/t5_xl_NCC_lm)|[🤗](https://huggingface.co/north/t5_xxl_NCC_lm)|

## T5X Checkpoint

The original T5X checkpoint for this model is also available in the Google Cloud Storage bucket at `gs://north-t5x/pretrained_models/small/norwegian_NCC_plus_English_pluss100k_lm_t5x_small/`.

## Performance

A thorough evaluation of the North-T5 models is planned, and I strongly encourage external researchers to run their own evaluations. The main advantage of the T5 models is their flexibility. Traditionally, encoder-only models (like BERT) excel at classification tasks, while sequence-to-sequence models are easier to train for tasks like translation and Q&A. Despite this, here are the results from using North-T5 on the political classification task described [here](https://arxiv.org/abs/2104.09617).

|**Model** | **F1** |
|:-----------|:------------|
|mT5-base|73.2 |
|mBERT-base|78.4 |
|NorBERT-base|78.2 |
|North-T5-small|80.5 |
|nb-bert-base|81.8 |
|North-T5-base|85.3 |
|North-T5-large|86.7 |
|North-T5-xl|88.7 |
|North-T5-xxl|91.8|

These are preliminary results. The [BERT results](https://arxiv.org/abs/2104.09617) are test-set scores from the best model out of 10 runs, using early stopping and a decaying learning rate. The T5 results are the average of five runs on the evaluation set, so the two groups of numbers come from different protocols and are not strictly comparable. The small model was trained for 10,000 steps and the others for 5,000 steps, with a fixed learning rate (no decay) and no early stopping. Nor was the recommended rank classification used. The maximum sequence length was 512. This setup simplifies testing and gives results that are easy to interpret, but the T5 scores may therefore be somewhat below what a tuned setup would achieve.

## Sub-versions of North-T5

The following sub-versions are available. More versions will be available shortly.

|**Model** | **Description** |
|:-----------|:-------|
|**North‑T5‑NCC** |This is the main version. It is trained for an additional 500,000 steps from the mT5 checkpoint. The training corpus is based on [the Norwegian Colossal Corpus (NCC)](https://huggingface.co/datasets/NbAiLab/NCC). In addition, data from MC4 and English Wikipedia is added.|
|**North‑T5‑NCC‑lm**|The model is pretrained for an additional 100k steps on the LM objective discussed in the [T5 paper](https://arxiv.org/pdf/1910.10683.pdf). In a way, this turns a masked language model into an autoregressive model. It also prepares the model for some tasks: for translation and NLI, for instance, it is well documented that a step of unsupervised LM training before fine-tuning gives a clear benefit.|
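
The T5 paper describes a prefix language-modeling variant of this objective. A span of text is split at a random point, the first part becomes the encoder input, and the second part becomes the decoder target. The following is a minimal sketch of building one such example. It assumes that variant and uses whitespace tokens for readability, whereas a real pipeline works on SentencePiece token ids. `prefix_lm_example` is a hypothetical helper, not a T5X function.

```python
import random
from typing import List, Optional, Tuple


def prefix_lm_example(
    tokens: List[str], rng: Optional[random.Random] = None
) -> Tuple[List[str], List[str]]:
    """Split a token sequence at a uniformly random point into (inputs, targets).

    The encoder sees `inputs`; the decoder learns to continue with `targets`.
    Both parts are kept non-empty, so at least two tokens are required.
    """
    if len(tokens) < 2:
        raise ValueError("need at least two tokens to form a prefix and a target")
    rng = rng or random.Random()
    split = rng.randint(1, len(tokens) - 1)  # inclusive bounds keep both parts non-empty
    return tokens[:split], tokens[split:]


words = "På biblioteket kan man låne en bok".split()
inputs, targets = prefix_lm_example(words, random.Random(0))
print(inputs, "->", targets)
```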

## Fine-tuned versions

As explained below, the models need to be fine-tuned for specific tasks. This procedure is relatively simple, and the models are not very sensitive to the hyperparameters used. A decent result can usually be obtained with a fixed learning rate of 1e-3. Smaller versions of the model typically need to be trained for longer. The base models are easy to train in Google Colab.

Since some people really want to see what the models are capable of, without going through the training procedure, I provide a couple of test models. These models are by no means optimised, and are just for demonstrating how the North-T5 models can be used.

* Nynorsk Translator. Translates any text from Norwegian Bokmål to Norwegian Nynorsk. Please test the [Streamlit-demo](https://huggingface.co/spaces/north/Nynorsk) and the [HuggingFace repo](https://huggingface.co/north/demo-nynorsk-base).

* DeUnCaser. The model adds punctuation, spaces and capitalisation back into the text. The input needs to be in Norwegian but does not have to be divided into sentences or have proper capitalisation of words. You can even remove the spaces from the text and let the model reconstruct them. It can be tested with the [Streamlit-demo](https://huggingface.co/spaces/north/DeUnCaser) and directly on the [HuggingFace repo](https://huggingface.co/north/demo-deuncaser-base).
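
Training pairs for a task like the DeUnCaser can be produced from clean text: corrupt the text, then ask the model to undo the corruption. The following is a minimal sketch of such a pair generator. It assumes the corruption consists of stripping ASCII punctuation (plus the quotation marks « and »), lowercasing, and optionally deleting spaces. This is an illustration, not necessarily how the demo model was trained, and `make_deuncaser_pair` is a hypothetical name.

```python
import string
from typing import Tuple

# ASCII punctuation plus Norwegian-style quotation marks; an assumed choice.
_STRIP_PUNCT = str.maketrans("", "", string.punctuation + "«»")


def make_deuncaser_pair(text: str, drop_spaces: bool = False) -> Tuple[str, str]:
    """Return (corrupted_input, target); the model learns to map input back to text."""
    corrupted = text.translate(_STRIP_PUNCT).lower()
    corrupted = " ".join(corrupted.split())  # collapse whitespace left by removed marks
    if drop_spaces:
        corrupted = corrupted.replace(" ", "")
    return corrupted, text


source, target = make_deuncaser_pair("Hei, jeg heter Per. Hvordan går det?")
print(source)  # hei jeg heter per hvordan går det
print(make_deuncaser_pair("Hei, jeg heter Per.", drop_spaces=True)[0])  # heijegheterper
```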

## Training details

All models are built using the Flax-based T5X codebase, and all models are initialized with the mT5 pretrained weights. The models are trained using the T5.1.1 training regime, where they are only trained on an unsupervised masking task. This also means that the models (unlike the original T5) need to be fine-tuned to solve specific tasks. This fine-tuning is, however, usually not very compute intensive, and in most cases it can be performed even with free online training resources.

All the main model versions are trained for 500,000 steps, starting from the mT5 checkpoint (1,000,000 steps). They are trained mainly on a 75GB corpus consisting of NCC, Common Crawl and some additional high-quality English text (Wikipedia). The corpus is roughly 80% Norwegian text. Additional languages are added to retain some of the multilingual capabilities, making the model both more robust to new words/concepts and better suited as a basis for translation tasks.

While the huge models almost always give the best results, they are also both more difficult and more expensive to fine-tune. I strongly recommend starting by fine-tuning a base model. The base models can easily be fine-tuned on a standard graphics card or a free TPU through Google Colab.

All models were trained on TPUs. The largest XXL model was trained on a TPU v4-64, the XL model on a TPU v4-32, the Large model on a TPU v4-16 and the rest on a TPU v4-8. Since the batch size can be reduced during fine-tuning, it is also possible to fine-tune on slightly smaller hardware. The rule of thumb is that you can go "one step down" when fine-tuning. The large models still require access to significant hardware, even for fine-tuning.

## Formats

All models are trained using the Flax-based T5X library. The original checkpoints are available in T5X format and can be used for both fine-tuning and inference. All models except the XXL model are also converted to Transformers/HuggingFace format. In this framework, the models can be loaded for fine-tuning or inference in Flax, PyTorch and TensorFlow.

## Future

I will continue to train and release additional models to this set. Which models are added depends on feedback from the users.

## Thanks

This release would not have been possible without support and hardware from the [TPU Research Cloud](https://sites.research.google/trc/about/) at Google Research. Both the TPU Research Cloud Team and the T5X Team have provided extremely useful support for getting this running.

Freddy Wetjen at the National Library of Norway has been of tremendous help in generating the original NCC corpus, and has also contributed to generating the collated corpus used for this training. In addition, he has been a discussion partner in the creation of these models.

Also thanks to Stefan Schweter for writing the [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_flax.py) for converting these models from T5X to HuggingFace and to Javier de la Rosa for writing the dataloader for reading the HuggingFace Datasets in T5X.

## Warranty

Use at your own risk. The models have not yet been thoroughly tested, and may contain both errors and biases.

## Contact/About

These models were trained by Per E Kummervold. Please contact me on [email protected].

|

huggingtweets/boredapeyc-garyvee-opensea

|

huggingtweets

| 2022-10-13T12:52:04Z | 130 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-10-13T12:48:37Z |

---

language: en

thumbnail: http://www.huggingtweets.com/boredapeyc-garyvee-opensea/1665665519153/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1493524673962852353/qRxbC9Xq_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1544105652330631168/ZuvjfGkT_400x400.png')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1446569222352654344/Uc-tml-6_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Gary Vaynerchuk & OpenSea & Bored Ape Yacht Club</div>

<div style="text-align: center; font-size: 14px;">@boredapeyc-garyvee-opensea</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Gary Vaynerchuk & OpenSea & Bored Ape Yacht Club.

| Data | Gary Vaynerchuk | OpenSea | Bored Ape Yacht Club |
| --- | --- | --- | --- |
| Tweets downloaded | 3249 | 3239 | 3243 |
| Retweets | 723 | 1428 | 3014 |
| Short tweets | 838 | 410 | 11 |
| Tweets kept | 1688 | 1401 | 218 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/8ylc2l06/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
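
The counts in the table reflect a simple filtering step: retweets and very short tweets are removed, and the rest are kept. The following is a minimal sketch of such a filter. Both the `RT @` retweet check and the three-word threshold are assumptions for illustration, not necessarily what huggingtweets uses.

```python
from typing import Dict, List, Tuple

MIN_WORDS = 3  # hypothetical cut-off for a "short" tweet


def filter_tweets(tweets: List[str]) -> Tuple[List[str], Dict[str, int]]:
    """Drop retweets and short tweets; return the kept tweets and per-bucket counts."""
    counts = {"downloaded": len(tweets), "retweets": 0, "short": 0, "kept": 0}
    kept: List[str] = []
    for tweet in tweets:
        if tweet.startswith("RT @"):  # assumed marker for a retweet
            counts["retweets"] += 1
        elif len(tweet.split()) < MIN_WORDS:
            counts["short"] += 1
        else:
            kept.append(tweet)
    counts["kept"] = len(kept)
    return kept, counts


kept, counts = filter_tweets(["RT @someone great drop", "gm", "Patience is the ultimate skill"])
print(counts)  # {'downloaded': 3, 'retweets': 1, 'short': 1, 'kept': 1}
```

Every tweet falls into exactly one bucket, so kept = downloaded - retweets - short. The table satisfies this for all three accounts (for example, 3249 - 723 - 838 = 1688 for Gary Vaynerchuk).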

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @boredapeyc-garyvee-opensea's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2t159hph) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2t159hph/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/boredapeyc-garyvee-opensea')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*


For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

jinofcoolnes/sksjinxmerge

|

jinofcoolnes

| 2022-10-13T11:03:07Z | 0 | 11 | null |

[

"region:us"

] | null | 2022-10-12T16:25:28Z |

Use "sks jinx" in the prompt; just "jinx" should also work.

|

shed-e/ner_peoples_daily

|

shed-e

| 2022-10-13T10:26:38Z | 138 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:peoples_daily_ner",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-10-13T09:49:28Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- peoples_daily_ner

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: ner_peoples_daily

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: peoples_daily_ner

type: peoples_daily_ner

config: peoples_daily_ner

split: train

args: peoples_daily_ner

metrics:

- name: Precision

type: precision

value: 0.9205354599829109

- name: Recall

type: recall

value: 0.9365401332946972

- name: F1

type: f1

value: 0.9284688307957485

- name: Accuracy

type: accuracy

value: 0.9929549534505072

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ner_peoples_daily

This model is a fine-tuned version of [hfl/rbt6](https://huggingface.co/hfl/rbt6) on the peoples_daily_ner dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0249

- Precision: 0.9205

- Recall: 0.9365

- F1: 0.9285

- Accuracy: 0.9930

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.3154 | 1.0 | 164 | 0.0410 | 0.8258 | 0.8684 | 0.8466 | 0.9868 |
| 0.0394 | 2.0 | 328 | 0.0287 | 0.8842 | 0.9070 | 0.8954 | 0.9905 |
| 0.0293 | 3.0 | 492 | 0.0264 | 0.8978 | 0.9168 | 0.9072 | 0.9916 |
| 0.02 | 4.0 | 656 | 0.0254 | 0.9149 | 0.9226 | 0.9188 | 0.9923 |
| 0.016 | 5.0 | 820 | 0.0250 | 0.9167 | 0.9281 | 0.9224 | 0.9927 |
| 0.0124 | 6.0 | 984 | 0.0252 | 0.9114 | 0.9328 | 0.9220 | 0.9928 |
| 0.0108 | 7.0 | 1148 | 0.0249 | 0.9169 | 0.9339 | 0.9254 | 0.9928 |
| 0.0097 | 8.0 | 1312 | 0.0249 | 0.9205 | 0.9365 | 0.9285 | 0.9930 |
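
For NER, precision, recall and F1 are usually computed over whole entities rather than tokens. A predicted entity counts only if its type and boundaries exactly match the gold annotation. The following is a minimal sketch of entity-level micro scores from BIO tags, in the spirit of seqeval/conlleval. The card does not say which scorer produced the numbers above, so treat this as an assumption. As a sanity check, the reported F1 of 0.9285 is the harmonic mean of the reported precision and recall.

```python
from typing import List, Optional, Set, Tuple

Span = Tuple[str, int, int]  # (entity type, start token, end token exclusive)


def bio_spans(tags: List[str]) -> Set[Span]:
    """Collect typed entity spans from one BIO-tagged sentence.

    An I- tag that does not continue an entity of the same type opens a new
    entity, similar to the lenient conlleval convention.
    """
    spans: Set[Span] = set()
    kind: Optional[str] = None
    start = 0
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes a final entity
        prefix, _, label = tag.partition("-")
        continues = prefix == "I" and label == kind
        if kind is not None and not continues:
            spans.add((kind, start, i))
            kind = None
        if prefix in ("B", "I") and not continues:
            kind, start = label, i
    return spans


def entity_prf(gold: List[List[str]], pred: List[List[str]]) -> Tuple[float, float, float]:
    """Micro-averaged entity-level precision, recall and F1 over all sentences."""
    tp = n_pred = n_gold = 0
    for g, p in zip(gold, pred):
        g_spans, p_spans = bio_spans(g), bio_spans(p)
        tp += len(g_spans & p_spans)  # exact match on type and boundaries
        n_pred += len(p_spans)
        n_gold += len(g_spans)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [["B-PER", "I-PER", "O", "B-LOC"]]
pred = [["B-PER", "I-PER", "O", "O"]]
print(entity_prf(gold, pred))
```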

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.5.2

- Tokenizers 0.13.1

|

osanseviero/titanic_mlconsole

|

osanseviero

| 2022-10-13T09:56:26Z | 0 | 1 |

mlconsole

|

[

"mlconsole",

"tabular-classification",

"dataset:train.csv",

"license:unknown",

"model-index",

"region:us"

] |

tabular-classification

| 2022-10-13T09:56:23Z |

---

license: unknown

inference: false

tags:

- mlconsole

- tabular-classification

library_name: mlconsole

metrics:

- accuracy

- loss

datasets:

- train.csv

model-index:

- name: titanic_mlconsole

results:

- task:

type: tabular-classification

name: tabular-classification

dataset:

type: train.csv

name: train.csv

metrics:

- type: accuracy

name: Accuracy

value: 0.792792797088623

- type: loss

name: Model loss

value: 0.5146282911300659

---

# train.csv (#0)

Trained on [ML Console](https://mlconsole.com).

[Load the model on ML Console](https://mlconsole.com/model/hf/osanseviero/titanic_mlconsole).

|

sd-concepts-library/masyanya

|

sd-concepts-library

| 2022-10-13T09:35:28Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-10-13T09:35:25Z |

---

license: mit

---

### Masyanya on Stable Diffusion

This is the `<masyanya>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sanjeev498/vit-base-beans

|

sanjeev498

| 2022-10-13T07:04:07Z | 217 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:beans",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-10-13T06:38:50Z |

---

license: apache-2.0

tags:

- image-classification

- generated_from_trainer

datasets:

- beans

metrics:

- accuracy

model-index:

- name: vit-base-beans

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: beans

type: beans

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 1.0

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-beans

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0189

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.0568 | 1.54 | 100 | 0.0299 | 1.0 |
| 0.0135 | 3.08 | 200 | 0.0189 | 1.0 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.5.2

- Tokenizers 0.13.1

|

sxxyxn/kogpt_reduced_vocab

|

sxxyxn

| 2022-10-13T06:56:45Z | 7 | 1 |

transformers

|

[

"transformers",

"pytorch",

"gptj",

"text-generation",

"KakaoBrain",

"KoGPT",

"GPT",

"GPT3",

"ko",

"arxiv:2104.09864",

"arxiv:2109.04650",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-10-13T02:04:31Z |

---

language: ko

tags:

- KakaoBrain

- KoGPT

- GPT

- GPT3

license: cc-by-nc-4.0

---

# KoGPT

KakaoBrain's Pre-Trained Language Models.

* KoGPT (Korean Generative Pre-trained Transformer)

* [https://github.com/kakaobrain/kogpt](https://github.com/kakaobrain/kogpt)

* [https://huggingface.co/kakaobrain/kogpt](https://huggingface.co/kakaobrain/kogpt)

## Model Descriptions

### KoGPT6B-ryan1.5b

* [\[huggingface\]\[kakaobrain/kogpt\]\[KoGPT6B-ryan1.5b\]](https://huggingface.co/kakaobrain/kogpt/tree/KoGPT6B-ryan1.5b)

* [\[huggingface\]\[kakaobrain/kogpt\]\[KoGPT6B-ryan1.5b-float16\]](https://huggingface.co/kakaobrain/kogpt/tree/KoGPT6B-ryan1.5b-float16)

| Hyperparameter | Value |
|:---------------------|--------------:|
| \\(n_{parameters}\\) | 6,166,502,400 |
| \\(n_{layers}\\) | 28 |
| \\(d_{model}\\) | 4,096 |
| \\(d_{ff}\\) | 16,384 |
| \\(n_{heads}\\) | 16 |
| \\(d_{head}\\) | 256 |
| \\(n_{ctx}\\) | 2,048 |
| \\(n_{vocab}\\) | 64,512 |
| Positional Encoding | [Rotary Position Embedding (RoPE)](https://arxiv.org/abs/2104.09864) |
| RoPE Dimensions | 64 |
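
The parameter count in the table can be reproduced from the other hyperparameters. The following sketch assumes the GPT-J block layout of the Transformers `gptj` implementation (this repository is tagged `gptj`):

* attention projections without biases
* a two-layer MLP with biases
* a single LayerNorm per block, shared by the parallel attention and MLP branches
* an untied output head with a bias

Rotary position embeddings add no learned weights, so they do not appear in the count.

```python
def gptj_param_count(n_layers: int, d_model: int, d_ff: int, n_vocab: int) -> int:
    """Parameter count for a GPT-J-style decoder under the layout assumed above."""
    attention = 4 * d_model * d_model  # q, k, v and output projections, no biases
    mlp = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)  # fc_in and fc_out with biases
    block_norm = 2 * d_model  # one LayerNorm (scale + shift) per block
    per_layer = attention + mlp + block_norm
    token_embeddings = n_vocab * d_model
    final_norm = 2 * d_model
    lm_head = n_vocab * d_model + n_vocab  # untied output projection with bias
    return n_layers * per_layer + token_embeddings + final_norm + lm_head


total = gptj_param_count(n_layers=28, d_model=4096, d_ff=16384, n_vocab=64512)
print(f"{total:,}")  # 6,166,502,400
```

The result matches the table exactly, which supports the assumed layout. A further consistency check is that d_head times n_heads (256 × 16) equals d_model (4,096).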

## Hardware requirements

### KoGPT6B-ryan1.5b

#### GPU

Recommended minimum GPU hardware for running KoGPT:

* At least `32GB` of GPU memory

### KoGPT6B-ryan1.5b-float16

#### GPU

Recommended minimum GPU hardware for running KoGPT:

* Half precision requires an NVIDIA GPU based on the Volta, Turing or Ampere architecture

* At least `16GB` of GPU memory
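
These minimums are consistent with a back-of-the-envelope estimate. The weights alone take 4 bytes per parameter in float32 and 2 bytes in float16; activations, the attention cache and framework overhead come on top of that. The following sketch uses the parameter count from the hyperparameter table.

```python
N_PARAMS = 6_166_502_400  # n_parameters from the hyperparameter table


def weight_memory_gb(n_params: int, bytes_per_param: int) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


for dtype, nbytes in [("float32", 4), ("float16", 2)]:
    print(f"{dtype}: {weight_memory_gb(N_PARAMS, nbytes):.1f} GB")
```

This gives about 24.7 GB for float32 weights and 12.3 GB for float16 weights, which leaves some headroom within the 32 GB and 16 GB recommendations.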

## Usage

### prompt

```bash

python -m kogpt --help

usage: KoGPT inference [-h] [--model MODEL] [--revision {KoGPT6B-ryan1.5b}]

[--device {cpu,cuda}] [-d]

KakaoBrain Korean(hangul) Generative Pre-Training Model

optional arguments:

-h, --help show this help message and exit

--model MODEL huggingface repo (default:kakaobrain/kogpt)

--revision {KoGPT6B-ryan1.5b}

--device {cpu,cuda} (default:cuda)

-d, --debug

```

```bash

python -m kogpt

prompt> 인간처럼 생각하고, 행동하는 '지능'을 통해 인류가 이제까지 풀지 못했던

temperature(0.8)>

max_length(128)> 64

인간처럼 생각하고, 행동하는 '지능'을 통해 인류가 이제까지 풀지 못했던 문제의 해답을 찾을 수 있을 것이다. 과학기술이 고도로 발달한 21세기를 살아갈 우리 아이들에게 가장 필요한 것은 사고력 훈련이다. 사고력 훈련을 통해, 세상

prompt>

...

```

### python

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained(
    'kakaobrain/kogpt', revision='KoGPT6B-ryan1.5b-float16',  # or float32 version: revision=KoGPT6B-ryan1.5b
    bos_token='[BOS]', eos_token='[EOS]', unk_token='[UNK]', pad_token='[PAD]', mask_token='[MASK]'
)
model = AutoModelForCausalLM.from_pretrained(
    'kakaobrain/kogpt', revision='KoGPT6B-ryan1.5b-float16',  # or float32 version: revision=KoGPT6B-ryan1.5b
    pad_token_id=tokenizer.eos_token_id,
    torch_dtype='auto', low_cpu_mem_usage=True
).to(device='cuda', non_blocking=True)
_ = model.eval()

prompt = '인간처럼 생각하고, 행동하는 \'지능\'을 통해 인류가 이제까지 풀지 못했던'
with torch.no_grad():
    tokens = tokenizer.encode(prompt, return_tensors='pt').to(device='cuda', non_blocking=True)
    gen_tokens = model.generate(tokens, do_sample=True, temperature=0.8, max_length=64)
    generated = tokenizer.batch_decode(gen_tokens)[0]

print(generated)  # print: 인간처럼 생각하고, 행동하는 '지능'을 통해 인류가 이제까지 풀지 못했던 문제의 해답을 찾을 수 있을 것이다. 과학기술이 고도로 발달한 21세기를 살아갈 우리 아이들에게 가장 필요한 것은 사고력 훈련이다. 사고력 훈련을 통해, 세상
```

## Experiments

### In-context Few-Shots

| Models | #params | NSMC (Acc.) | YNAT (F1) | KLUE-STS (F1) |
|:--------------|--------:|------------:|----------:|--------------:|
| HyperCLOVA[1] | 1.3B | 83.9 | 58.7 | 60.9 |
| HyperCLOVA[1] | 6.9B | 83.8 | 67.5 | 59.3 |
| HyperCLOVA[1] | 13.0B | 87.9 | 67.9 | 60.0 |
| HyperCLOVA[1] | 39.0B | 88.0 | 71.4 | 61.6 |
| HyperCLOVA[1] | 82.0B | **88.2** | 72.7 | **65.1** |
| **Ours** | 6.0B | 87.8 | **78.0** | 64.3 |

### Finetuning / P-Tuning

Issues have been reported with our downstream evaluation ([issue #17](https://github.com/kakaobrain/kogpt/issues/17)).

The previously published performance evaluation table was removed because it could not be considered a fair comparison: the baseline algorithms differed, and the performance measurement method could not be confirmed.

You can refer to the issue linked above for the previous performance evaluation table and the troubleshooting results.

## Limitations

KakaoBrain `KoGPT` was trained on the `rayn dataset`, a dataset known to contain profanity, lewd, politically charged, and other harsh language.

Therefore, `KoGPT` can generate socially unacceptable texts. As with all language models, it is difficult to predict in advance how `KoGPT` will respond to particular prompts, and it may produce offensive content without warning.

Primarily Korean: `KoGPT` is primarily trained on Korean texts, and is best for classifying, searching, summarizing or generating such texts.

By default, `KoGPT` performs worse on inputs that differ from the distribution of its training data, including non-Korean text as well as specific dialects of Korean that are not well represented in the training data.

[comment]: <> (If abnormal or socially unacceptable text is generated during testing, please send a "prompt" and the "generated text" to [[email protected]](mailto:[email protected]). )


## Citation

If you use this library or model in any project or research, please cite our code:

```

@misc{kakaobrain2021kogpt,

title = {KoGPT: KakaoBrain Korean(hangul) Generative Pre-trained Transformer},

author = {Ildoo Kim and Gunsoo Han and Jiyeon Ham and Woonhyuk Baek},

year = {2021},

howpublished = {\url{https://github.com/kakaobrain/kogpt}},

}

```

## Contact

This is released as open source in the hope that it will be helpful to many research institutes and startups for research purposes. We look forward to hearing from anyone who wishes to collaborate with us.

[[email protected]](mailto:[email protected])

## License

The `source code` of KakaoBrain `KoGPT` is licensed under the [Apache 2.0](LICENSE.apache-2.0) License.

The `pretrained weights` of KakaoBrain `KoGPT` are licensed under the [CC-BY-NC-ND 4.0 License](https://creativecommons.org/licenses/by-nc-nd/4.0/).

Please comply with the license terms when using the model, code, and pretrained weights. The full license texts are available in the [Apache 2.0](LICENSE.apache-2.0) and [LICENSE.cc-by-nc-nd-4.0](LICENSE.cc-by-nc-nd-4.0) files.

## References

[1] [HyperCLOVA](https://arxiv.org/abs/2109.04650): Kim, Boseop, et al. "What changes can large-scale language models bring? intensive study on hyperclova: Billions-scale korean generative pretrained transformers." arXiv preprint arXiv:2109.04650 (2021).

|

luomingshuang/icefall_asr_wenetspeech_pruned_transducer_stateless2

|

luomingshuang

| 2022-10-13T06:43:37Z | 0 | 3 | null |

[

"onnx",

"region:us"

] | null | 2022-05-19T14:32:27Z |

Note: This recipe is trained with the code from this PR: https://github.com/k2-fsa/icefall/pull/349

# Pre-trained Transducer-Stateless2 models for the WenetSpeech dataset with icefall.

The model was trained on the L subset of WenetSpeech with the scripts in [icefall](https://github.com/k2-fsa/icefall), based on the latest version of k2.

## Training procedure

The main repositories are listed below. We will update the training and decoding scripts as new versions are released.

k2: https://github.com/k2-fsa/k2

icefall: https://github.com/k2-fsa/icefall

lhotse: https://github.com/lhotse-speech/lhotse

* Install k2 and lhotse. For k2, see the installation guide at https://k2.readthedocs.io/en/latest/installation/index.html; for lhotse, see https://lhotse.readthedocs.io/en/latest/getting-started.html#installation. The latest versions should be fine. Please also install the requirements listed in icefall.

* Clone icefall (https://github.com/k2-fsa/icefall) and check out the commit referenced above.

```

git clone https://github.com/k2-fsa/icefall

cd icefall

```

* Preparing data.

```

cd egs/wenetspeech/ASR

bash ./prepare.sh

```

* Training

```

export CUDA_VISIBLE_DEVICES="0,1,2,3,4,5,6,7"

./pruned_transducer_stateless2/train.py \

--world-size 8 \

--num-epochs 15 \

--start-epoch 0 \

--exp-dir pruned_transducer_stateless2/exp \

--lang-dir data/lang_char \

--max-duration 180 \

--valid-interval 3000 \

--model-warm-step 3000 \

--save-every-n 8000 \

--training-subset L

```

## Evaluation results

The decoding results (WER%) on WenetSpeech (dev, test-net and test-meeting) are listed below. They were obtained by averaging the models from epochs 9 and 10.

| | dev | test-net | test-meeting | comment |
|------------------------------------|-------|----------|--------------|------------------------------------------|
| greedy search | 7.80 | 8.75 | 13.49 | --epoch 10, --avg 2, --max-duration 100 |
| modified beam search (beam size 4) | 7.76 | 8.71 | 13.41 | --epoch 10, --avg 2, --max-duration 100 |
| fast beam search (1best) | 7.94 | 8.74 | 13.80 | --epoch 10, --avg 2, --max-duration 1500 |
| fast beam search (nbest) | 9.82 | 10.98 | 16.37 | --epoch 10, --avg 2, --max-duration 600 |
| fast beam search (nbest oracle) | 6.88 | 7.18 | 11.77 | --epoch 10, --avg 2, --max-duration 600 |
| fast beam search (nbest LG) | 14.94 | 16.14 | 22.93 | --epoch 10, --avg 2, --max-duration 600 |
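
Error rates like these are based on edit distance. The substitutions, deletions and insertions needed to turn each hypothesis into its reference are summed over the test set and divided by the total number of reference tokens. The following is a minimal sketch of that computation. The scoring unit is an assumption here: with a character-based lang dir (`data/lang_char`) the tokens are usually characters, and icefall's own scoring code may differ in details.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance (substitutions + deletions + insertions) between token lists."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution, free if tokens match
            )
        prev = curr
    return prev[-1]


def error_rate(refs: Sequence[Sequence[str]], hyps: Sequence[Sequence[str]]) -> float:
    """Corpus-level error rate in percent: total edits over total reference tokens."""
    edits = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    total = sum(len(r) for r in refs)
    return 100.0 * edits / total


# Character-level example: one substitution in a six-character reference.
print(error_rate([list("今天天气很好")], [list("今天天汽很好")]))
```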

|

SaurabhKaushik/distilbert-base-uncased-finetuned-cola

|

SaurabhKaushik

| 2022-10-13T06:25:21Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-23T08:08:06Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: SaurabhKaushik/distilbert-base-uncased-finetuned-cola

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# SaurabhKaushik/distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1919

- Validation Loss: 0.5520

- Train Matthews Correlation: 0.5123

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 1602, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
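
With `power` 1.0 and `cycle` False, the `PolynomialDecay` schedule in the optimizer config is a straight line. The learning rate falls from 2e-05 to 0.0 over 1,602 steps and then stays at 0.0. The following plain-Python sketch of that schedule follows the formula given in the Keras `PolynomialDecay` documentation. The function is a standalone re-implementation, not the Keras class.

```python
def polynomial_decay(
    step: int,
    initial_learning_rate: float = 2e-05,
    decay_steps: int = 1602,
    end_learning_rate: float = 0.0,
    power: float = 1.0,
) -> float:
    """Learning rate at `step` for a non-cycling polynomial decay schedule."""
    step = min(step, decay_steps)  # hold at end_learning_rate after decay_steps
    fraction_left = 1 - step / decay_steps
    return (initial_learning_rate - end_learning_rate) * fraction_left ** power + end_learning_rate


print([polynomial_decay(s) for s in (0, 801, 1602, 2000)])
```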

### Training results

| Train Loss | Validation Loss | Train Matthews Correlation | Epoch |
|:----------:|:---------------:|:--------------------------:|:-----:|
| 0.5232 | 0.4685 | 0.4651 | 0 |
| 0.3245 | 0.4620 | 0.4982 | 1 |
| 0.1919 | 0.5520 | 0.5123 | 2 |
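
CoLA is conventionally scored with the Matthews correlation coefficient, which remains informative when the two acceptability classes are imbalanced. The following is a minimal sketch for binary labels. The card does not state how its metric was computed, so this only illustrates the standard definition.

```python
import math
from typing import Sequence


def matthews_corrcoef(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Matthews correlation coefficient for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Same convention as scikit-learn: return 0.0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


print(matthews_corrcoef([1, 1, 0, 0], [1, 0, 0, 0]))
```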

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.10.0

- Datasets 2.5.1

- Tokenizers 0.12.1

|

azad-wolf-se/FExGAN

|

azad-wolf-se

| 2022-10-13T06:01:13Z | 0 | 0 | null |

[

"Computer Vision",

"Machine Learning",

"Deep Learning",

"en",

"arxiv:2201.09061",

"region:us"

] | null | 2022-10-13T05:27:57Z |

---

language: en

tags:

- Computer Vision

- Machine Learning

- Deep Learning

---

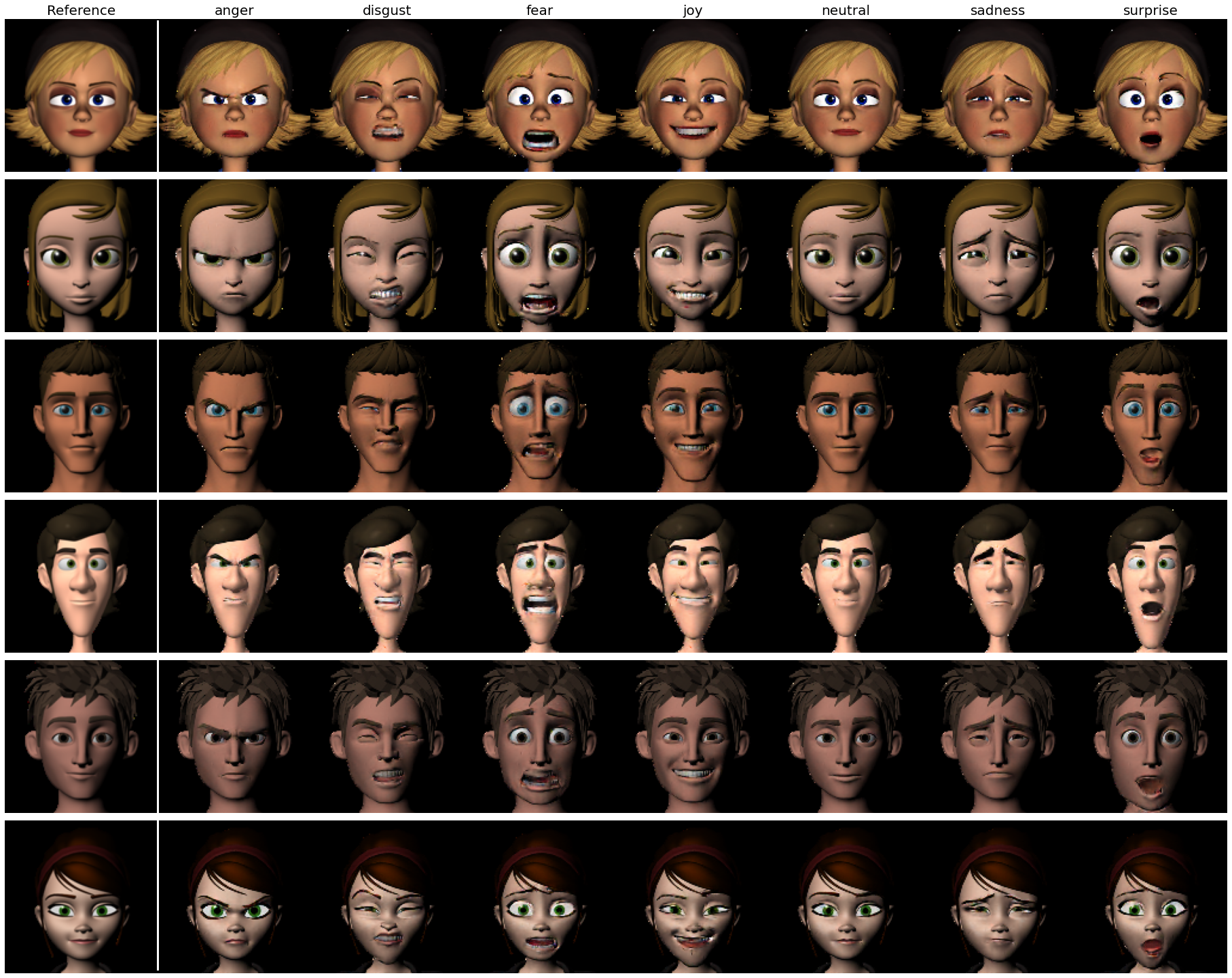

# Explore the Expression: Facial Expression Generation using Auxiliary Classifier Generative Adversarial Network

This is the implementation of the FExGAN proposed in the following article:

[Explore the Expression: Facial Expression Generation using Auxiliary Classifier Generative Adversarial Network](http://arxiv.org/abs/2201.09061)

FExGAN takes as input an image and a vector of the desired affect (e.g. angry, disgust, sad, surprise, joy, neutral and fear) and converts the input image to the desired emotion while preserving the identity of the original image.

# Requirements

To run this, you need the following:

* Python >= 3.7

* Tensorflow >= 2.6

* CUDA enabled GPU (e.g. GTX1070/GTX1080)

# Usage Code

https://www.github.com/azadlab/FExGAN

# Citation

If you use any part of this code or use ideas mentioned in the paper, please cite the following article.

```

@article{Siddiqui_FExGAN_2022,

author = {{Siddiqui}, J. Rafid},

title = {{Explore the Expression: Facial Expression Generation using Auxiliary Classifier Generative Adversarial Network}},

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

keywords = {Deep Learning, GAN, Facial Expressions},

year = {2022},

url = {http://arxiv.org/abs/2201.09061},

}

```

|

g30rv17ys/ddpm-geeve-dme-10k-200ep

|

g30rv17ys

| 2022-10-13T05:07:02Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-10-12T14:38:43Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: imagefolder

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-geeve-dme-10k-200ep

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python

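from diffusers import DDPMPipeline

# Load the trained pipeline from the Hub and sample one image.
pipeline = DDPMPipeline.from_pretrained("g30rv17ys/ddpm-geeve-dme-10k-200ep")
image = pipeline().images[0]
image.save("sample.png")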

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None


- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/geevegeorge/ddpm-geeve-dme-10k-200ep/tensorboard?#scalars)

|

format37/PPO-MountainCar-v0

|

format37

| 2022-10-13T04:43:04Z | 3 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"MountainCar-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-10-13T04:42:45Z |

---

library_name: stable-baselines3

tags:

- MountainCar-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - metrics:
    - type: mean_reward
      value: -151.80 +/- 16.12
      name: mean_reward
    task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: MountainCar-v0
      type: MountainCar-v0

---

# **PPO** Agent playing **MountainCar-v0**

This is a trained model of a **PPO** agent playing **MountainCar-v0** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).
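
The `mean_reward` value of -151.80 +/- 16.12 in the metadata is presumably the mean and standard deviation of episode returns over several evaluation episodes. The sketch below shows how such a summary could be computed. It assumes the figure follows Stable-Baselines3's `evaluate_policy`, which reports the population standard deviation (`numpy.std` with its default `ddof=0`). The episode returns are made up for illustration, and `summarize_returns` is a hypothetical helper. MountainCar-v0 gives a reward of -1 per step and truncates episodes at 200 steps, so a mean of about -152 corresponds to reaching the goal in roughly 152 steps on average.

```python
import statistics


def summarize_returns(episode_returns):
    """Return (mean, std) of per-episode returns.

    Uses the population standard deviation (ddof=0), matching numpy.std's default.
    """
    mean = statistics.fmean(episode_returns)
    std = statistics.pstdev(episode_returns)
    return mean, std


# Hypothetical evaluation returns: each step costs -1 in MountainCar-v0,
# so -150 means the car reached the flag after 150 steps.
returns = [-150.0, -140.0, -170.0, -147.0]
mean, std = summarize_returns(returns)
print(f"{mean:.2f} +/- {std:.2f}")
```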

## Usage (with Stable-baselines3)

TODO: Add your code

|

fathan/ijelid-bert-base-multilingual

|

fathan

| 2022-10-13T03:51:51Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-10-05T06:00:07Z |

---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:
- name: ijelid-bert-base-multilingual
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ijelid-bert-base-multilingual

This model is a fine-tuned version of [BERT multilingual base model (cased)](https://huggingface.co/bert-base-multilingual-cased) on the Indonesian-Javanese-English code-mixed Twitter dataset.

Label IDs and their corresponding names:

| Label ID | Label Name |
|:--------:|:----------:|
| LABEL_0 | English (EN) |
| LABEL_1 | Indonesian (ID) |
| LABEL_2 | Javanese (JV) |
| LABEL_3 | Mixed Indonesian-English (MIX-ID-EN) |
| LABEL_4 | Mixed Indonesian-Javanese (MIX-ID-JV) |
| LABEL_5 | Mixed Javanese-English (MIX-JV-EN) |
| LABEL_6 | Other (O) |
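
The classification head emits generic `LABEL_n` names rather than language tags. The following minimal sketch maps predictions back to the tags in the table. It assumes predictions arrive as `LABEL_n` strings, for example the `entity` field of a Transformers token-classification pipeline result. `ID2TAG` and `decode_labels` are hypothetical names and are not part of the model repository.

```python
# Hypothetical mapping from the head's generic label names to language tags,
# transcribed from the label table of this model card.
ID2TAG = {
    "LABEL_0": "EN",
    "LABEL_1": "ID",
    "LABEL_2": "JV",
    "LABEL_3": "MIX-ID-EN",
    "LABEL_4": "MIX-ID-JV",
    "LABEL_5": "MIX-JV-EN",
    "LABEL_6": "O",
}


def decode_labels(predicted):
    """Translate a sequence of LABEL_n strings into language tags."""
    return [ID2TAG[label] for label in predicted]


print(decode_labels(["LABEL_1", "LABEL_2", "LABEL_0"]))
```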

It achieves the following results on the evaluation set:

- Loss: 0.3553

- Precision: 0.9189

- Recall: 0.9188

- F1: 0.9187

- Accuracy: 0.9451

It achieves the following results on the test set:

- Overall Precision: 0.9249

- Overall Recall: 0.9251

- Overall F1: 0.925

- Overall Accuracy: 0.951

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| No log | 1.0 | 386 | 0.2340 | 0.8956 | 0.8507 | 0.8715 | 0.9239 |
| 0.3379 | 2.0 | 772 | 0.2101 | 0.9057 | 0.8904 | 0.8962 | 0.9342 |
| 0.1603 | 3.0 | 1158 | 0.2231 | 0.9252 | 0.8896 | 0.9065 | 0.9367 |
| 0.1079 | 4.0 | 1544 | 0.2013 | 0.9272 | 0.8902 | 0.9070 | 0.9420 |
| 0.1079 | 5.0 | 1930 | 0.2179 | 0.9031 | 0.9179 | 0.9103 | 0.9425 |
| 0.0701 | 6.0 | 2316 | 0.2330 | 0.9075 | 0.9165 | 0.9114 | 0.9435 |
| 0.051 | 7.0 | 2702 | 0.2433 | 0.9117 | 0.9190 | 0.9150 | 0.9432 |
| 0.0384 | 8.0 | 3088 | 0.2545 | 0.9001 | 0.9167 | 0.9078 | 0.9439 |
| 0.0384 | 9.0 | 3474 | 0.2629 | 0.9164 | 0.9159 | 0.9158 | 0.9444 |
| 0.0293 | 10.0 | 3860 | 0.2881 | 0.9263 | 0.9096 | 0.9178 | 0.9427 |
| 0.022 | 11.0 | 4246 | 0.2882 | 0.9167 | 0.9222 | 0.9191 | 0.9450 |
| 0.0171 | 12.0 | 4632 | 0.3028 | 0.9203 | 0.9152 | 0.9177 | 0.9447 |
| 0.0143 | 13.0 | 5018 | 0.3236 | 0.9155 | 0.9167 | 0.9158 | 0.9440 |
| 0.0143 | 14.0 | 5404 | 0.3301 | 0.9237 | 0.9163 | 0.9199 | 0.9444 |
| 0.0109 | 15.0 | 5790 | 0.3290 | 0.9187 | 0.9154 | 0.9169 | 0.9442 |
| 0.0092 | 16.0 | 6176 | 0.3308 | 0.9213 | 0.9178 | 0.9194 | 0.9448 |
| 0.0075 | 17.0 | 6562 | 0.3501 | 0.9273 | 0.9142 | 0.9206 | 0.9445 |
| 0.0075 | 18.0 | 6948 | 0.3520 | 0.9200 | 0.9184 | 0.9190 | 0.9447 |
| 0.0062 | 19.0 | 7334 | 0.3524 | 0.9238 | 0.9183 | 0.9210 | 0.9458 |
| 0.0051 | 20.0 | 7720 | 0.3553 | 0.9189 | 0.9188 | 0.9187 | 0.9451 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.7.1

- Datasets 2.5.1

- Tokenizers 0.12.1

|

masusuka/wav2vec2-large-xls-r-300m-tr-colab

|

masusuka

| 2022-10-13T03:44:51Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-10-10T18:43:46Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:
- name: wav2vec2-large-xls-r-300m-tr-colab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-tr-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4316

- Wer: 0.2905
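
WER (word error rate) is the word-level edit distance between the reference transcript and the model output (substitutions + deletions + insertions), divided by the number of reference words. A WER of 0.2905 therefore means roughly 29 word errors per 100 reference words. The minimal pure-Python sketch below scores a single utterance. It illustrates the definition and is not the metric implementation used during training; `word_error_rate` is a hypothetical helper. Corpus-level WER is typically computed by summing edits over all utterances before dividing by the total number of reference words.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Assumes a non-empty reference transcript.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    # dist[i][j] = word-level edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Turkish example: the hypothesis drops one of four reference words.
print(word_error_rate("bugün hava çok güzel", "bugün hava güzel"))
```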

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 100

- mixed_precision_training: Native AMP
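
With `gradient_accumulation_steps: 2`, gradients from two batches of 16 are accumulated before each optimizer step. This gives the listed `total_train_batch_size` of 32. The `linear` scheduler with 500 warmup steps ramps the learning rate from 0 up to 3e-4 and then decays it linearly to 0. The sketch below reproduces that shape, mirroring the formula of Transformers' `get_linear_schedule_with_warmup`. The total of 10,900 optimizer steps is an estimate, not a value stated in the card: the results table shows step 10800 at epoch 99.08, which gives about 109 steps per epoch, times 100 epochs. `linear_warmup_lr` is a hypothetical helper.

```python
def linear_warmup_lr(step, peak_lr=3e-4, warmup_steps=500, total_steps=10_900):
    """Learning rate at a given optimizer step under linear warmup + linear decay.

    total_steps is an estimate (~109 steps/epoch x 100 epochs), not from the card.
    """
    if step < warmup_steps:
        # Warmup: rise linearly from 0 to peak_lr.
        return peak_lr * step / warmup_steps
    # Decay: fall linearly from peak_lr to 0 at total_steps, then stay at 0.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 5_700, 10_900):
    print(step, linear_warmup_lr(step))
```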

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:-----:|:---------------:|:------:|
| 3.9953 | 3.67 | 400 | 0.7024 | 0.7226 |
| 0.4046 | 7.34 | 800 | 0.4342 | 0.5343 |
| 0.201 | 11.01 | 1200 | 0.4396 | 0.5290 |
| 0.1513 | 14.68 | 1600 | 0.4319 | 0.4108 |
| 0.1285 | 18.35 | 2000 | 0.4422 | 0.3864 |
| 0.1086 | 22.02 | 2400 | 0.4568 | 0.3796 |
| 0.0998 | 25.69 | 2800 | 0.4687 | 0.3732 |
| 0.0863 | 29.36 | 3200 | 0.4726 | 0.3803 |
| 0.0809 | 33.03 | 3600 | 0.4479 | 0.3601 |
| 0.0747 | 36.7 | 4000 | 0.4624 | 0.3525 |
| 0.0692 | 40.37 | 4400 | 0.4366 | 0.3435 |
| 0.0595 | 44.04 | 4800 | 0.4204 | 0.3510 |
| 0.0584 | 47.71 | 5200 | 0.4202 | 0.3402 |
| 0.0545 | 51.38 | 5600 | 0.4366 | 0.3343 |
| 0.0486 | 55.05 | 6000 | 0.4492 | 0.3678 |
| 0.0444 | 58.72 | 6400 | 0.4471 | 0.3301 |
| 0.0406 | 62.39 | 6800 | 0.4382 | 0.3318 |
| 0.0341 | 66.06 | 7200 | 0.4295 | 0.3258 |
| 0.0297 | 69.72 | 7600 | 0.4336 | 0.3205 |
| 0.0295 | 73.39 | 8000 | 0.4240 | 0.3199 |
| 0.0261 | 77.06 | 8400 | 0.4316 | 0.3143 |
| 0.0247 | 80.73 | 8800 | 0.4300 | 0.3165 |
| 0.0207 | 84.4 | 9200 | 0.4380 | 0.3111 |
| 0.0203 | 88.07 | 9600 | 0.4218 | 0.2998 |
| 0.0174 | 91.74 | 10000 | 0.4271 | 0.2973 |
| 0.015 | 95.41 | 10400 | 0.4330 | 0.2939 |
| 0.0144 | 99.08 | 10800 | 0.4316 | 0.2905 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu102

- Datasets 2.5.2

- Tokenizers 0.13.1

|

caotouchan/distilbert_uncase_emo

|

caotouchan

| 2022-10-13T03:23:35Z | 59 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"text-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-13T03:23:28Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:
- name: distilbert_uncase_emo
  results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# distilbert_uncase_emo

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It reports the following training results:

- Train Loss: 0.4588

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data