| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

DeepakGautam/Gautam

|

DeepakGautam

| 2023-07-22T07:51:38Z | 0 | 0 | null |

[

"license:bigscience-openrail-m",

"region:us"

] | null | 2023-07-22T07:51:38Z |

---

license: bigscience-openrail-m

---

|

vineetsharma/a2c-AntBulletEnv-v0

|

vineetsharma

| 2023-07-22T07:47:29Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T07:46:54Z |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 1480.41 +/- 128.67

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of an **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import A2C

from huggingface_sb3 import load_from_hub

...

```
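
The `mean_reward` in the metadata above is written as a mean followed by `+/-` and a spread. A minimal sketch of how such a figure can be produced, assuming the spread is the population standard deviation over evaluation episodes; the episode returns below are hypothetical and not taken from this model:

```python
from statistics import fmean, pstdev


def summarize_returns(episode_returns):
    """Format episode returns as 'mean +/- std' using the population std."""
    mean = fmean(episode_returns)
    std = pstdev(episode_returns)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical returns from four evaluation episodes (illustration only).
print(summarize_returns([1400.0, 1500.0, 1600.0, 1500.0]))
```

A larger spread relative to the mean indicates less consistent behaviour across episodes.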

|

ailabturkiye/kratosGOWRAGNAROK

|

ailabturkiye

| 2023-07-22T07:40:18Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-07-22T07:36:28Z |


# Kratos (God Of War Ragnarök) - RVC V2 500 Epoch

**A voice model built from Kratos's voice recordings in Ragnarök, the latest game in the series.

Trained with RVC V2 on a 10-minute dataset for 500 epochs.**

_The dataset and training were done by me._

__Sharing the model outside the [Ai Lab Discord](discord.gg/ailab) server without permission is strictly prohibited; the model is under the openrail license.__

## Credits

**If you share a cover made with this model on any platform, please give credit.**

- Discord: hydragee

- YouTube: CoverLai (https://www.youtube.com/@coverlai)


|

josephrich/my_awesome_model_721_2

|

josephrich

| 2023-07-22T07:27:32Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-22T04:00:33Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

model-index:

- name: my_awesome_model_721_2

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: test

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.93228

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_model_721_2

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5942

- Accuracy: 0.9323

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.4604 | 1.0 | 12500 | 0.6389 | 0.8761 |

| 0.2442 | 2.0 | 25000 | 0.4233 | 0.9264 |

| 0.1495 | 3.0 | 37500 | 0.4755 | 0.9303 |

| 0.0516 | 4.0 | 50000 | 0.5942 | 0.9323 |
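
The Step column can be cross-checked against the batch size listed under the hyperparameters. A small sketch, assuming a single device and no gradient accumulation (neither is stated in the card; `grad_accum` and `n_devices` are illustrative parameters):

```python
def examples_per_epoch(steps_per_epoch, batch_size, grad_accum=1, n_devices=1):
    """Upper bound on training examples seen per epoch (the last batch may be partial)."""
    return steps_per_epoch * batch_size * grad_accum * n_devices


steps_per_epoch = 12500   # from the table: step 12500 at epoch 1.0
train_batch_size = 2      # from the hyperparameter list

print(examples_per_epoch(steps_per_epoch, train_batch_size))
```

Under those assumptions the run sees 25,000 examples per epoch, which is consistent with the size of the IMDb training split.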

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.0

- Datasets 2.13.1

- Tokenizers 0.13.3

|

Ryukijano/Mujoco_rl_halfcheetah_Decision_Trasformer

|

Ryukijano

| 2023-07-22T07:27:15Z | 62 | 0 |

transformers

|

[

"transformers",

"pytorch",

"decision_transformer",

"Generated_From_Trainer",

"reinforcement-learning",

"Mujoco",

"dataset:decision_transformer_gym_replay",

"endpoints_compatible",

"region:us"

] |

reinforcement-learning

| 2023-07-19T15:13:27Z |

---

base_model: ''

tags:

- Generated_From_Trainer

- reinforcement-learning

- Mujoco

datasets:

- decision_transformer_gym_replay

model-index:

- name: Mujoco_rl_halfcheetah_Decision_Trasformer

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Mujoco_rl_halfcheetah_Decision_Trasformer

This model was trained on the decision_transformer_gym_replay dataset. The card does not name a base model (`base_model: ''`).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 250
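
The `linear` scheduler with a warmup ratio of 0.1 can be pictured as a ramp up to the base learning rate followed by a straight-line decay to zero. A minimal sketch under stated assumptions: the total number of optimizer steps is not given in the card, so `total_steps` is a hypothetical argument, and the function is a standalone re-implementation rather than the Trainer's own code:

```python
import math


def linear_lr(step, total_steps, base_lr=1e-4, warmup_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = total_steps - step
    return base_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


# Hypothetical run length of 1000 steps, for illustration only.
for s in (0, 50, 100, 550, 1000):
    print(s, linear_lr(s, total_steps=1000))
```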

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

gokuls/hbertv2-wt-frz-48-emotion

|

gokuls

| 2023-07-22T07:19:29Z | 48 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"hybridbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"base_model:gokuls/bert_12_layer_model_v2_complete_training_new_wt_init_48_frz",

"base_model:finetune:gokuls/bert_12_layer_model_v2_complete_training_new_wt_init_48_frz",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-22T07:09:53Z |

---

base_model: gokuls/bert_12_layer_model_v2_complete_training_new_wt_init_48_frz

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

model-index:

- name: hbertv2-wt-frz-48-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

config: split

split: validation

args: split

metrics:

- name: Accuracy

type: accuracy

value: 0.927

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hbertv2-wt-frz-48-emotion

This model is a fine-tuned version of [gokuls/bert_12_layer_model_v2_complete_training_new_wt_init_48_frz](https://huggingface.co/gokuls/bert_12_layer_model_v2_complete_training_new_wt_init_48_frz) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2271

- Accuracy: 0.927

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 33

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6559 | 1.0 | 250 | 0.2760 | 0.9015 |

| 0.2565 | 2.0 | 500 | 0.2507 | 0.9035 |

| 0.1862 | 3.0 | 750 | 0.2221 | 0.919 |

| 0.1455 | 4.0 | 1000 | 0.2271 | 0.927 |

| 0.1218 | 5.0 | 1250 | 0.2059 | 0.9235 |

| 0.1003 | 6.0 | 1500 | 0.2576 | 0.9215 |

| 0.0812 | 7.0 | 1750 | 0.2603 | 0.92 |

| 0.0676 | 8.0 | 2000 | 0.2949 | 0.9215 |

| 0.0515 | 9.0 | 2250 | 0.3322 | 0.919 |

| 0.0411 | 10.0 | 2500 | 0.3375 | 0.924 |
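
The headline figures reported earlier in this card (loss 0.2271, accuracy 0.927) match one specific row of the table rather than the final epoch. A short sketch that picks out the best rows; the tuples are copied from the table, and the idea that the reported checkpoint was chosen by accuracy is only an inference:

```python
# (epoch, validation_loss, accuracy) copied from the table above.
rows = [
    (1, 0.2760, 0.9015), (2, 0.2507, 0.9035), (3, 0.2221, 0.9190),
    (4, 0.2271, 0.9270), (5, 0.2059, 0.9235), (6, 0.2576, 0.9215),
    (7, 0.2603, 0.9200), (8, 0.2949, 0.9215), (9, 0.3322, 0.9190),
    (10, 0.3375, 0.9240),
]

best_by_accuracy = max(rows, key=lambda r: r[2])
best_by_loss = min(rows, key=lambda r: r[1])

print("best accuracy:", best_by_accuracy)
print("lowest validation loss:", best_by_loss)
```

The two criteria select different epochs (4 and 5), and validation loss rises after epoch 5 while training loss keeps falling, which is the usual sign of overfitting.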

### Framework versions

- Transformers 4.31.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.13.1

- Tokenizers 0.13.3

|

qwerty8409/Medical_dataset

|

qwerty8409

| 2023-07-22T07:04:27Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-22T07:00:20Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16
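
The settings above can be collected into a plain dictionary for reuse. This is a sketch only: the keys mirror keyword arguments of `transformers.BitsAndBytesConfig`, but whether a given library version accepts them unchanged is an assumption, and `describe` is a hypothetical helper added for illustration:

```python
# Values copied from the list above.
bnb_kwargs = {
    "load_in_8bit": False,
    "load_in_4bit": True,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "nf4",
    "bnb_4bit_use_double_quant": False,
    "bnb_4bit_compute_dtype": "float16",
}


def describe(cfg):
    """Return a one-line, human-readable summary of a quantization config dict."""
    if cfg["load_in_4bit"]:
        bits = "4-bit"
    elif cfg["load_in_8bit"]:
        bits = "8-bit"
    else:
        bits = "unquantized"
    double = "on" if cfg["bnb_4bit_use_double_quant"] else "off"
    return (f"{bits} {cfg['bnb_4bit_quant_type']}, "
            f"compute dtype {cfg['bnb_4bit_compute_dtype']}, "
            f"double quantization {double}")


print(describe(bnb_kwargs))
```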

### Framework versions

- PEFT 0.5.0.dev0

|

EXrRor3/ppo-Huggy

|

EXrRor3

| 2023-07-22T06:47:29Z | 0 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] |

reinforcement-learning

| 2023-07-22T06:47:19Z |

---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote tutorials on training your first agent with ML-Agents and publishing it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how ML-Agents works: https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. If the environment is one of the official ML-Agents environments, go to https://huggingface.co/unity

2. Find your model_id: EXrRor3/ppo-Huggy

3. Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

AndrewL088/SpaceInvadersNoFrameskip-v4_20230722

|

AndrewL088

| 2023-07-22T06:31:47Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T06:31:18Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 29.00 +/- 64.30

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```bash

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga AndrewL088 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```bash

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga AndrewL088

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.025),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 10000000.0),

('learning_starts', 100000),

('n_timesteps', 110000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
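
The `exploration_fraction` and `exploration_final_eps` entries describe a linearly decaying epsilon for epsilon-greedy action selection. A minimal sketch of that schedule, assuming an initial epsilon of 1.0 (not listed above) and written as a standalone function rather than the library's own code:

```python
def epsilon_at(step, total_timesteps=110_000, exploration_fraction=0.025,
               initial_eps=1.0, final_eps=0.01):
    """Epsilon after `step` environment steps under a linear decay schedule."""
    progress = step / total_timesteps
    if progress >= exploration_fraction:
        # Decay finished: stay at the final value for the rest of training.
        return final_eps
    slope = (final_eps - initial_eps) / exploration_fraction
    return initial_eps + progress * slope


# With the values above, the decay ends after 0.025 * 110000 = 2750 steps.
for s in (0, 1375, 2750, 50_000):
    print(s, epsilon_at(s))
```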

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

NasimB/guten-rarity-neg-log-rarity-end-19p1k

|

NasimB

| 2023-07-22T06:23:58Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-22T04:00:43Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: guten-rarity-neg-log-rarity-end-19p1k

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# guten-rarity-neg-log-rarity-end-19p1k

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.1078
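
For a causal language model the evaluation loss is usually the mean cross-entropy per token in nats, in which case perplexity is its exponential. A quick sketch under that assumption (the card itself does not report perplexity):

```python
import math

eval_loss = 4.1078                  # from the evaluation results above
perplexity = math.exp(eval_loss)    # valid if the loss is per-token cross-entropy in nats

print(f"perplexity: {perplexity:.1f}")
```

That works out to a perplexity of roughly 61.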

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP
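
The `cosine` scheduler with 1000 warmup steps ramps the learning rate up linearly and then follows half a cosine wave down to zero. A minimal standalone sketch; the total step count is not stated in the card, so `total_steps` is a hypothetical argument:

```python
import math


def cosine_lr(step, total_steps, base_lr=5e-4, warmup_steps=1000):
    """Learning rate at `step` for linear warmup followed by half-cosine decay."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Hypothetical run length of 11000 steps, for illustration only.
for s in (0, 500, 1000, 6000, 11000):
    print(s, cosine_lr(s, total_steps=11000))
```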

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.3472 | 0.29 | 500 | 5.3359 |

| 5.0242 | 0.59 | 1000 | 4.9159 |

| 4.7018 | 0.88 | 1500 | 4.6868 |

| 4.4382 | 1.17 | 2000 | 4.5458 |

| 4.2888 | 1.47 | 2500 | 4.4338 |

| 4.1941 | 1.76 | 3000 | 4.3265 |

| 4.0652 | 2.05 | 3500 | 4.2631 |

| 3.8933 | 2.34 | 4000 | 4.2118 |

| 3.8664 | 2.64 | 4500 | 4.1589 |

| 3.8275 | 2.93 | 5000 | 4.1077 |

| 3.6287 | 3.22 | 5500 | 4.1006 |

| 3.5847 | 3.52 | 6000 | 4.0707 |

| 3.5697 | 3.81 | 6500 | 4.0389 |

| 3.4614 | 4.1 | 7000 | 4.0369 |

| 3.3179 | 4.4 | 7500 | 4.0323 |

| 3.307 | 4.69 | 8000 | 4.0175 |

| 3.3039 | 4.98 | 8500 | 4.0058 |

| 3.1413 | 5.28 | 9000 | 4.0177 |

| 3.132 | 5.57 | 9500 | 4.0172 |

| 3.1349 | 5.86 | 10000 | 4.0158 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

AndrewL088/SpaceInvadersNoFrameskip-v4_0722

|

AndrewL088

| 2023-07-22T06:18:45Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T06:09:45Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 257.00 +/- 38.81

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```bash

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga AndrewL088 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```bash

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga AndrewL088

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.025),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 10000000.0),

('learning_starts', 100000),

('n_timesteps', 70000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
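
The interaction between `learning_starts`, `train_freq`, `gradient_steps` and `n_timesteps` determines how many gradient updates a run performs. A rough estimate, assuming a single environment and that updates begin only once the step count exceeds `learning_starts`; this is a simplified model of the training loop, not the library's code:

```python
def estimated_gradient_updates(n_timesteps, learning_starts=100_000,
                               train_freq=4, gradient_steps=1):
    """Count gradient updates under a simplified off-policy training loop."""
    updates = 0
    for t in range(1, int(n_timesteps) + 1):
        # Train every `train_freq` steps, but only after the warm-up phase.
        if t > learning_starts and t % train_freq == 0:
            updates += gradient_steps
    return updates


print(estimated_gradient_updates(70_000))    # n_timesteps listed above
print(estimated_gradient_updates(110_000))   # a longer run, for comparison
```

Under these assumptions a 70,000-step run ends before `learning_starts` is reached, so the estimate is zero updates; a 110,000-step run would perform about 2,500.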

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

ebilal79/watsonx-falcon-7b

|

ebilal79

| 2023-07-22T06:04:59Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-19T19:42:58Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: bfloat16

### Framework versions

- PEFT 0.5.0.dev0

|

4bit/Nous-Hermes-Llama2-13b-GPTQ

|

4bit

| 2023-07-22T05:32:28Z | 11 | 3 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"llama-2",

"self-instruct",

"distillation",

"synthetic instruction",

"en",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-22T05:26:48Z |

---

license: llama2

language:

- en

tags:

- llama-2

- self-instruct

- distillation

- synthetic instruction

---

# Model Card: Nous-Hermes-Llama2-13b

Compute provided by our project sponsor Redmond AI, thank you! Follow RedmondAI on Twitter @RedmondAI.

## Model Description

Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors.

This Hermes model uses the exact same dataset as Hermes on Llama-1. This keeps the new Hermes consistent with the old one, for anyone who wants a model as similar as possible to the original, just more capable.

This model stands out for its long responses, lower hallucination rate, and absence of OpenAI censorship mechanisms. The fine-tuning process was performed with a 4096 sequence length on an 8x A100 80GB DGX machine.

## Model Training

The model was trained almost entirely on synthetic GPT-4 outputs. Curating high-quality GPT-4 datasets enables very high quality in knowledge, task completion, and style.

This includes data from diverse sources such as GPTeacher (the general, roleplay v1 & 2, and code instruct datasets), Nous Instruct & PDACTL (unpublished), and several others, detailed further below.

## Collaborators

The model fine-tuning and the datasets were a collaboration of efforts and resources between Teknium, Karan4D, Emozilla, Huemin Art, and Redmond AI.

Special mention goes to @winglian for assisting with some of the training issues.

A huge shoutout and acknowledgement goes to all the dataset creators who generously share their datasets openly.

Among the contributors of datasets:

- GPTeacher was made available by Teknium

- Wizard LM by nlpxucan

- Nous Research Instruct Dataset was provided by Karan4D and HueminArt.

- GPT4-LLM and Unnatural Instructions were provided by Microsoft

- Airoboros dataset by jondurbin

- Camel-AI's domain expert datasets are from Camel-AI

- CodeAlpaca dataset by Sahil 2801.

If anyone was left out, please open a thread in the community tab.

## Prompt Format

The model follows the Alpaca prompt format:

```

### Instruction:

<prompt>

### Response:

<leave a newline blank for model to respond>

```

or

```

### Instruction:

<prompt>

### Input:

<additional context>

### Response:

<leave a newline blank for model to respond>

```
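
Either template can be assembled with a small helper. A minimal sketch: `build_prompt` is a hypothetical function name, and the single blank line between sections is an assumption about the intended spacing:

```python
def build_prompt(instruction, context=None):
    """Build an Alpaca-style prompt, with an optional '### Input:' section."""
    parts = [f"### Instruction:\n{instruction}\n"]
    if context:
        parts.append(f"### Input:\n{context}\n")
    # End right after the response header so the model continues from there.
    parts.append("### Response:\n")
    return "\n".join(parts)


print(build_prompt("Summarize the text.", context="Hermes is a fine-tuned Llama 2 model."))
```

The prompt ends immediately after `### Response:` and a newline, so generation starts on the blank line the template leaves for the model.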

## Benchmark Results

AGI-Eval

```

| Task |Version| Metric |Value | |Stderr|

|agieval_aqua_rat | 0|acc |0.2362|± |0.0267|

| | |acc_norm|0.2480|± |0.0272|

|agieval_logiqa_en | 0|acc |0.3425|± |0.0186|

| | |acc_norm|0.3472|± |0.0187|

|agieval_lsat_ar | 0|acc |0.2522|± |0.0287|

| | |acc_norm|0.2087|± |0.0269|

|agieval_lsat_lr | 0|acc |0.3510|± |0.0212|

| | |acc_norm|0.3627|± |0.0213|

|agieval_lsat_rc | 0|acc |0.4647|± |0.0305|

| | |acc_norm|0.4424|± |0.0303|

|agieval_sat_en | 0|acc |0.6602|± |0.0331|

| | |acc_norm|0.6165|± |0.0340|

|agieval_sat_en_without_passage| 0|acc |0.4320|± |0.0346|

| | |acc_norm|0.4272|± |0.0345|

|agieval_sat_math | 0|acc |0.2909|± |0.0307|

| | |acc_norm|0.2727|± |0.0301|

```

GPT-4All Benchmark Set

```

| Task |Version| Metric |Value | |Stderr|

|arc_challenge| 0|acc |0.5102|± |0.0146|

| | |acc_norm|0.5213|± |0.0146|

|arc_easy | 0|acc |0.7959|± |0.0083|

| | |acc_norm|0.7567|± |0.0088|

|boolq | 1|acc |0.8394|± |0.0064|

|hellaswag | 0|acc |0.6164|± |0.0049|

| | |acc_norm|0.8009|± |0.0040|

|openbookqa | 0|acc |0.3580|± |0.0215|

| | |acc_norm|0.4620|± |0.0223|

|piqa | 0|acc |0.7992|± |0.0093|

| | |acc_norm|0.8069|± |0.0092|

|winogrande | 0|acc |0.7127|± |0.0127|

```

BigBench Reasoning Test

```

| Task |Version| Metric |Value | |Stderr|

|bigbench_causal_judgement | 0|multiple_choice_grade|0.5526|± |0.0362|

|bigbench_date_understanding | 0|multiple_choice_grade|0.7344|± |0.0230|

|bigbench_disambiguation_qa | 0|multiple_choice_grade|0.2636|± |0.0275|

|bigbench_geometric_shapes | 0|multiple_choice_grade|0.0195|± |0.0073|

| | |exact_str_match |0.0000|± |0.0000|

|bigbench_logical_deduction_five_objects | 0|multiple_choice_grade|0.2760|± |0.0200|

|bigbench_logical_deduction_seven_objects | 0|multiple_choice_grade|0.2100|± |0.0154|

|bigbench_logical_deduction_three_objects | 0|multiple_choice_grade|0.4400|± |0.0287|

|bigbench_movie_recommendation | 0|multiple_choice_grade|0.2440|± |0.0192|

|bigbench_navigate | 0|multiple_choice_grade|0.4950|± |0.0158|

|bigbench_reasoning_about_colored_objects | 0|multiple_choice_grade|0.5570|± |0.0111|

|bigbench_ruin_names | 0|multiple_choice_grade|0.3728|± |0.0229|

|bigbench_salient_translation_error_detection | 0|multiple_choice_grade|0.1854|± |0.0123|

|bigbench_snarks | 0|multiple_choice_grade|0.6298|± |0.0360|

|bigbench_sports_understanding | 0|multiple_choice_grade|0.6156|± |0.0155|

|bigbench_temporal_sequences | 0|multiple_choice_grade|0.3140|± |0.0147|

|bigbench_tracking_shuffled_objects_five_objects | 0|multiple_choice_grade|0.2032|± |0.0114|

|bigbench_tracking_shuffled_objects_seven_objects| 0|multiple_choice_grade|0.1406|± |0.0083|

|bigbench_tracking_shuffled_objects_three_objects| 0|multiple_choice_grade|0.4400|± |0.0287|

```

These are the highest benchmarks Hermes has seen on every metric, achieving the following average scores:

- 70.0 on the GPT4All benchmark average, up from 68.8 on Hermes-Llama1

- 0.3657 on BigBench, up from 0.328 on Hermes-Llama1

- 0.372 on AGIEval, up from 0.354 on Hermes-Llama1

These benchmarks currently put us at #1 on ARC-c, ARC-e, HellaSwag, and OpenBookQA, and in 2nd place on Winogrande, compared with GPT4All's benchmarking list, supplanting Hermes 1 for the new top position.
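
The averages can be recomputed from the tables. The sketch below assumes the averaging rule is "use `acc_norm` where the table reports it, otherwise `acc`" for GPT4All and AGI-Eval, and `multiple_choice_grade` for BigBench; the card does not state the rule, so treat this as one plausible reading:

```python
from statistics import fmean

# GPT4All: acc_norm where reported, otherwise acc (boolq, winogrande).
gpt4all = [0.5213, 0.7567, 0.8394, 0.8009, 0.4620, 0.8069, 0.7127]

# AGI-Eval: acc_norm for each of the eight tasks.
agieval = [0.2480, 0.3472, 0.2087, 0.3627, 0.4424, 0.6165, 0.4272, 0.2727]

# BigBench: multiple_choice_grade for each of the eighteen tasks.
bigbench = [
    0.5526, 0.7344, 0.2636, 0.0195, 0.2760, 0.2100, 0.4400, 0.2440, 0.4950,
    0.5570, 0.3728, 0.1854, 0.6298, 0.6156, 0.3140, 0.2032, 0.1406, 0.4400,
]

print(f"GPT4All:  {100 * fmean(gpt4all):.1f}")
print(f"AGI-Eval: {fmean(agieval):.4f}")
print(f"BigBench: {fmean(bigbench):.4f}")
```

Under that rule the GPT4All figure reproduces the reported 70.0. The AGI-Eval table averages to about 0.3657 and the BigBench table to about 0.372, which suggests the BigBench and AGIEval labels in the list above may be swapped.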

## Resources for Applied Use Cases:

For an example of a back-and-forth chatbot using Hugging Face Transformers and Discord, check out: https://github.com/teknium1/alpaca-discord

For an example of a roleplaying Discord chatbot, check out: https://github.com/teknium1/alpaca-roleplay-discordbot

## Future Plans

We plan to keep iterating on both higher-quality data and new data-filtering techniques that eliminate lower-quality data.

## Model Usage

The model is available for download on Hugging Face. It is suitable for a wide range of language tasks, from generating creative text to understanding and following complex instructions.

|

Vsukiyaki/Shungiku-Mix

|

Vsukiyaki

| 2023-07-22T05:11:31Z | 0 | 23 | null |

[

"stable-diffusion",

"text-to-image",

"ja",

"en",

"license:other",

"region:us"

] |

text-to-image

| 2023-06-03T16:25:04Z |

---

license: other

language:

- ja

- en

tags:

- stable-diffusion

- text-to-image

---

# Shungiku-Mix

<img src="https://huggingface.co/Vsukiyaki/Shungiku-Mix/resolve/main/imgs/header.jpg" style="width: 640px;">

## 概要 / Overview

- **Shungiku-Mix**は、アニメ風の画風に特化したマージモデルです。 / **Shungiku-Mix** is a merge model that specializes in an anime-like painting style.

- 幻想的な空や光の表現が得意です。 / This model excels in the expression of fantastic skies and light.

- VAEはお好きなものをお使いください。VAEが無くても鮮やかな色合いで出力されますが、clearvaeを使用することを推奨しています。 / You can use whatever VAE you like. Colors come out vivid even without a VAE, but we recommend using clearvae.

- clearvaeを含んだモデルも提供しています。 / A model that includes clearvae is also provided.

=> **Shungiku-Mix_v1-better-vae-fp16.safetensors**

<hr>

## 更新 / UPDATE NOTE

- 2023/07/22:ライセンスを変更しました。 / License changed.

<hr>

## 推奨設定 / Recommended Settings

<pre style="margin: 1em 0; padding: 1em; border-radius: 5px; background: #25292f; color: #fff; white-space: pre-line;">

Steps: 20 ~ 60

Sampler: DPM++ SDE Karras

CFG scale: 7.5

Denoising strength: 0.55

Hires steps: 20

Hires upscaler: Latent

Clip skip: 2

Negative embeddings: EasyNegative, verybadimagenegative

</pre>

**Negative prompt**:

<pre style="margin: 1em 0; padding: 1em; border-radius: 5px; background: #25292f; color: #fff; white-space: pre-line;">

(easynegative:1.0),(worst quality,low quality:1.2),(bad anatomy:1.4),(realistic:1.1),nose,lips,adult,fat,sad,(inaccurate limb:1.2),extra digit,fewer digits,six fingers,(monochrome:0.95),verybadimagenegative_v1.3,

</pre>

<hr>

## 例 / Examples

<img src="https://huggingface.co/Vsukiyaki/Shungiku-Mix/resolve/main/imgs/sample1.png" style="width: 512px;">

<pre style="margin: 1em 0; padding: 1em; border-radius: 5px; background: #25292f; color: #fff; white-space: pre-line;">

((solo:1.2)),cute girl,(harbor),(blue sky:1.2),looking at viewer,dramatic,fantastic atmosphere,magnificent view,cumulonimbus,(cowboy shot:1.2),scenery,Mediterranean Buildings,silver hair

Negative prompt:

(easynegative:1.0),(worst quality,low quality:1.2),(bad anatomy:1.4),(realistic:1.1),nose,lips,adult,fat,sad,(inaccurate limb:1.2),extra digit,fewer digits,six fingers,(monochrome:0.95),verybadimagenegative_v1.3,

Steps: 60

Sampler: DPM++ SDE Karras

CFG scale: 7.5

Seed: 1896063174

Size: 768x768

Denoising strength: 0.58

Clip skip: 2

Hires upscale: 2

Hires steps: 20

Hires upscaler: Latent

</pre>

<br>

<img src="https://huggingface.co/Vsukiyaki/Shungiku-Mix/resolve/main/imgs/sample2.png" style="width: 640px;">

<pre style="margin: 1em 0; padding: 1em; border-radius: 5px; background: #25292f; color: #fff; white-space: pre-line;">

((solo:1.2)),cute little (1girl:1.3) walking,landscape,beautiful sky,village,head tilt,bloom effect,fantastic atmosphere,magnificent view,cowboy shot,pale-blonde hair,blue eyes,long twintails,blush,light smile,white dress,wind,(petals)

Negative prompt:

(easynegative:1.0),(worst quality,low quality:1.2),(bad anatomy:1.4),(realistic:1.1),nose,lips,adult,fat,sad,(inaccurate limb:1.2),extra digit,fewer digits,six fingers,(monochrome:0.95),verybadimagenegative_v1.3,

Steps: 60

Sampler: DPM++ SDE Karras

CFG scale: 7.5

Seed: 400031884

Size: 848x600

Denoising strength: 0.55

Clip skip: 2

Hires upscale: 2.5

Hires steps: 20

Hires upscaler: Latent

</pre>

<hr>

## ライセンス / License

<div class="px-2">

<table class="table-fixed border mt-0 text-xs">

<tbody>

<tr>

<td class="px-4 text-base text-bold" colspan="2">

<a href="https://huggingface.co/spaces/CompVis/stable-diffusion-license">

修正 CreativeML OpenRAIL-M ライセンス / Modified CreativeML OpenRAIL-M license

</a>

</td>

</tr>

<tr>

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

🚫

</span>

</td>

<td>

このモデルのクレジットを入れずに使用する<br>

Use the model without crediting the creator

</td>

</tr>

<tr>

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

🚫

</span>

</td>

<td>

このモデルで生成した画像を商用利用する<br>

Sell images they generate

</td>

</tr>

<tr class="bg-danger-100">

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

🚫

</span>

</td>

<td>

このモデルを商用の画像生成サービスで利用する<br>

Run on services that generate images for money

</td>

</tr>

<tr>

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

✅

</span>

</td>

<td>

このモデルを使用したマージモデルを共有する<br>

Share merges using this model

</td>

</tr>

<tr class="bg-danger-100">

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

🚫

</span>

</td>

<td>

このモデル、またはこのモデルをマージしたモデルを販売する<br>

Sell this model or merges using this model

</td>

</tr>

<tr class="bg-danger-100">

<td class="align-middle px-2 w-8">

<span style="font-size: 18px;">

🚫

</span>

</td>

<td>

このモデルをマージしたモデルに異なる権限を設定する<br>

Have different permissions when sharing merges

</td>

</tr>

</tbody>

</table>

</div>

<hr>

Twitter: [@Vsukiyaki_AIArt](https://twitter.com/Vsukiyaki_AIArt)


|

nvidia/GCViT

|

nvidia

| 2023-07-22T04:47:32Z | 0 | 5 | null |

[

"arxiv:2206.09959",

"region:us"

] | null | 2023-07-21T19:28:35Z |

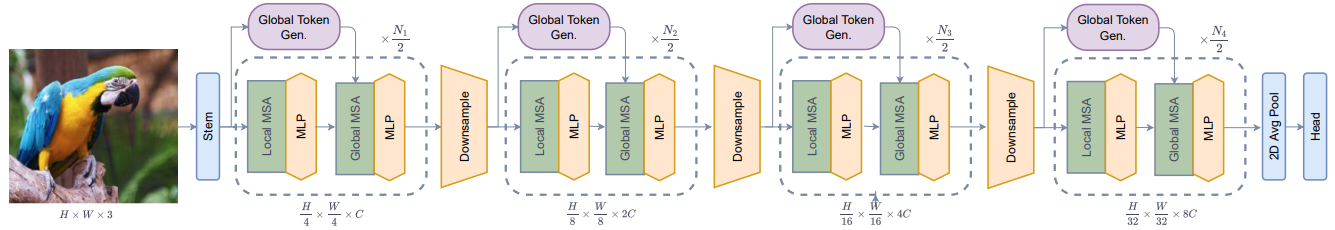

# Global Context Vision Transformer (GC ViT)

This model contains the official PyTorch implementation of **Global Context Vision Transformers** (ICML2023) \

\

[Global Context Vision Transformers](https://arxiv.org/pdf/2206.09959.pdf) \

[Ali Hatamizadeh](https://research.nvidia.com/person/ali-hatamizadeh),

[Hongxu (Danny) Yin](https://scholar.princeton.edu/hongxu),

[Greg Heinrich](https://developer.nvidia.com/blog/author/gheinrich/),

[Jan Kautz](https://jankautz.com/),

and [Pavlo Molchanov](https://www.pmolchanov.com/).

GC ViT achieves state-of-the-art results across image classification, object detection and semantic segmentation tasks. On the ImageNet-1K dataset for classification, GC ViT variants with `51M`, `90M` and `201M` parameters achieve `84.3`, `85.0` and `85.7` Top-1 accuracy, respectively, surpassing comparably-sized prior art such as CNN-based ConvNeXt and ViT-based Swin Transformer.

<p align="center">

<img src="https://github.com/NVlabs/GCVit/assets/26806394/d1820d6d-3aef-470e-a1d3-af370f1c1f77" width=63% height=63%

class="center">

</p>

The architecture of GC ViT is demonstrated in the following:

## Introduction

**GC ViT** leverages global context self-attention modules, joint with local self-attention, to effectively yet efficiently model both long and short-range spatial interactions, without the need for expensive operations such as computing attention masks or shifting local windows.

<p align="center">

<img src="https://github.com/NVlabs/GCVit/assets/26806394/da64f22a-e7af-4577-8884-b08ba4e24e49" width=72% height=72%

class="center">

</p>

## ImageNet Benchmarks

**ImageNet-1K Pretrained Models**

<table>

<tr>

<th>Model Variant</th>

<th>Acc@1</th>

<th>#Params(M)</th>

<th>FLOPs(G)</th>

<th>Download</th>

</tr>

<tr>

<td>GC ViT-XXT</td>

<th>79.9</th>

<td>12</td>

<td>2.1</td>

<td><a href="https://drive.google.com/uc?export=download&id=1apSIWQCa5VhWLJws8ugMTuyKzyayw4Eh">model</a></td>

</tr>

<tr>

<td>GC ViT-XT</td>

<th>82.0</th>

<td>20</td>

<td>2.6</td>

<td><a href="https://drive.google.com/uc?export=download&id=1OgSbX73AXmE0beStoJf2Jtda1yin9t9m">model</a></td>

</tr>

<tr>

<td>GC ViT-T</td>

<th>83.5</th>

<td>28</td>

<td>4.7</td>

<td><a href="https://drive.google.com/uc?export=download&id=11M6AsxKLhfOpD12Nm_c7lOvIIAn9cljy">model</a></td>

</tr>

<tr>

<td>GC ViT-T2</td>

<th>83.7</th>

<td>34</td>

<td>5.5</td>

<td><a href="https://drive.google.com/uc?export=download&id=1cTD8VemWFiwAx0FB9cRMT-P4vRuylvmQ">model</a></td>

</tr>

<tr>

<td>GC ViT-S</td>

<th>84.3</th>

<td>51</td>

<td>8.5</td>

<td><a href="https://drive.google.com/uc?export=download&id=1Nn6ABKmYjylyWC0I41Q3oExrn4fTzO9Y">model</a></td>

</tr>

<tr>

<td>GC ViT-S2</td>

<th>84.8</th>

<td>68</td>

<td>10.7</td>

<td><a href="https://drive.google.com/uc?export=download&id=1E5TtYpTqILznjBLLBTlO5CGq343RbEan">model</a></td>

</tr>

<tr>

<td>GC ViT-B</td>

<th>85.0</th>

<td>90</td>

<td>14.8</td>

<td><a href="https://drive.google.com/uc?export=download&id=1PF7qfxKLcv_ASOMetDP75n8lC50gaqyH">model</a></td>

</tr>

<tr>

<td>GC ViT-L</td>

<th>85.7</th>

<td>201</td>

<td>32.6</td>

<td><a href="https://drive.google.com/uc?export=download&id=1Lkz1nWKTwCCUR7yQJM6zu_xwN1TR0mxS">model</a></td>

</tr>

</table>

**ImageNet-21K Pretrained Models**

<table>

<tr>

<th>Model Variant</th>

<th>Resolution</th>

<th>Acc@1</th>

<th>#Params(M)</th>

<th>FLOPs(G)</th>

<th>Download</th>

</tr>

<tr>

<td>GC ViT-L</td>

<td>224 x 224</td>

<th>86.6</th>

<td>201</td>

<td>32.6</td>

<td><a href="https://drive.google.com/uc?export=download&id=1maGDr6mJkLyRTUkspMzCgSlhDzNRFGEf">model</a></td>

</tr>

<tr>

<td>GC ViT-L</td>

<td>384 x 384</td>

<th>87.4</th>

<td>201</td>

<td>120.4</td>

<td><a href="https://drive.google.com/uc?export=download&id=1P-IEhvQbJ3FjnunVkM1Z9dEpKw-tsuWv">model</a></td>

</tr>

</table>

## Citation

Please consider citing the GC ViT paper if it is useful for your work:

```

@inproceedings{hatamizadeh2023global,

title={Global context vision transformers},

author={Hatamizadeh, Ali and Yin, Hongxu and Heinrich, Greg and Kautz, Jan and Molchanov, Pavlo},

booktitle={International Conference on Machine Learning},

pages={12633--12646},

year={2023},

organization={PMLR}

}

```

## Licenses

Copyright © 2023, NVIDIA Corporation. All rights reserved.

This work is made available under the Nvidia Source Code License-NC. Click [here](LICENSE) to view a copy of this license.

The pre-trained models are shared under [CC-BY-NC-SA-4.0](https://creativecommons.org/licenses/by-nc-sa/4.0/). If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

For license information regarding timm, please refer to its [repository](https://github.com/rwightman/pytorch-image-models).

For license information regarding the ImageNet dataset, please refer to the ImageNet [official website](https://www.image-net.org/).

|

EXrRor3/ppo-LunarLander-v2

|

EXrRor3

| 2023-07-22T03:46:54Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T03:40:27Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 251.86 +/- 17.63

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).
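The `mean_reward` entry in the metadata above is reported as a mean plus or minus a standard deviation over evaluation episodes. A minimal sketch of that summary follows. The episode returns are made-up toy values (not this agent's results), the helper name is hypothetical, and the population standard deviation is used because that is what NumPy's `std` computes by default.

```python
from statistics import mean, pstdev


def summarize_returns(episode_returns: list[float]) -> str:
    """Format per-episode returns as 'mean +/- std' using the population std."""
    return f"{mean(episode_returns):.2f} +/- {pstdev(episode_returns):.2f}"


# Toy values for illustration only; they are not measurements of this model.
toy_returns = [240.0, 260.0, 250.0, 270.0, 230.0]
summary = summarize_returns(toy_returns)
print(summary)
```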

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

ittailup/lallama-13b-chat

|

ittailup

| 2023-07-22T03:20:47Z | 1 | 0 |

peft

|

[

"peft",

"pytorch",

"llama",

"region:us"

] | null | 2023-07-21T19:10:35Z |

---

library_name: peft

---

## Training procedure

### Framework versions

- PEFT 0.4.0

|

Jonathaniu/vicuna-breast-cancer-7b-mix-data-epoch-2_5

|

Jonathaniu

| 2023-07-22T03:09:35Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-22T03:09:21Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

### Framework versions

- PEFT 0.4.0.dev0

|

UNIST-Eunchan/Pegasus-x-base-govreport-12288-1024-numepoch-10

|

UNIST-Eunchan

| 2023-07-22T03:05:31Z | 93 | 0 |

transformers

|

[

"transformers",

"pytorch",

"pegasus_x",

"text2text-generation",

"generated_from_trainer",

"dataset:govreport-summarization",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-07-20T02:20:44Z |

---

tags:

- generated_from_trainer

datasets:

- govreport-summarization

model-index:

- name: Pegasus-x-base-govreport-12288-1024-numepoch-10

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Pegasus-x-base-govreport-12288-1024-numepoch-10

This model is a fine-tuned version of [google/pegasus-x-base](https://huggingface.co/google/pegasus-x-base) on the govreport-summarization dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6234

## Model description

More information needed

## Evaluation Score

**'ROUGE'**:

{

'rouge1': 0.5012,

'rouge2': 0.2205,

'rougeL': 0.2552,

'rougeLsum': 0.2554

}

**'BERT_SCORE'**

{'f1': 0.859,

'precision': 0.8619,

'recall': 0.8563

}
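As a quick sanity check on the BERTScore numbers above, the F1 should sit close to the harmonic mean of precision and recall. This is only an approximation: the reported values are presumably averages over examples, and per-example F1 scores do not average exactly to the harmonic mean of the averaged precision and recall.

```python
def harmonic_f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the card.
f1_estimate = harmonic_f1(0.8619, 0.8563)
print(round(f1_estimate, 4))
```

The estimate agrees with the reported `f1` of 0.859 to three decimal places.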

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 64

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10
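The batch-size entries above are related: the effective batch per optimizer step is the per-device batch size times the gradient accumulation steps, times the number of devices (assumed to be 1 here, since the card does not state it). The sketch below also estimates optimizer steps per epoch from the first row of the training results table (step 100 at epoch 0.37). The derived example count is an estimate limited by the two-decimal epoch value, not a figure from the card.

```python
def effective_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    """Number of examples contributing to one optimizer step."""
    return per_device * grad_accum * num_devices


total_batch = effective_batch_size(per_device=1, grad_accum=64)

# The results table logs step 100 at epoch 0.37, so one epoch is roughly 100 / 0.37 steps.
steps_per_epoch = 100 / 0.37
approx_examples = steps_per_epoch * total_batch

print(total_batch, round(steps_per_epoch), round(approx_examples))
```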

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.1149 | 0.37 | 100 | 1.9237 |

| 1.9545 | 0.73 | 200 | 1.8380 |

| 1.8835 | 1.1 | 300 | 1.7574 |

| 1.862 | 1.46 | 400 | 1.7305 |

| 1.8536 | 1.83 | 500 | 1.7100 |

| 1.8062 | 2.19 | 600 | 1.6944 |

| 1.8161 | 2.56 | 700 | 1.6882 |

| 1.7611 | 2.92 | 800 | 1.6803 |

| 1.7878 | 3.29 | 900 | 1.6671 |

| 1.7299 | 3.65 | 1000 | 1.6599 |

| 1.7636 | 4.02 | 1100 | 1.6558 |

| 1.7262 | 4.38 | 1200 | 1.6547 |

| 1.715 | 4.75 | 1300 | 1.6437 |

| 1.7178 | 5.12 | 1400 | 1.6445 |

| 1.7163 | 5.48 | 1500 | 1.6386 |

| 1.7367 | 5.85 | 1600 | 1.6364 |

| 1.7114 | 6.21 | 1700 | 1.6365 |

| 1.6452 | 6.58 | 1800 | 1.6309 |

| 1.7251 | 6.94 | 1900 | 1.6301 |

| 1.6726 | 7.31 | 2000 | 1.6305 |

| 1.7104 | 7.67 | 2100 | 1.6285 |

| 1.6739 | 8.04 | 2200 | 1.6252 |

| 1.7082 | 8.4 | 2300 | 1.6246 |

| 1.6888 | 8.77 | 2400 | 1.6244 |

| 1.6609 | 9.13 | 2500 | 1.6256 |

| 1.6707 | 9.5 | 2600 | 1.6241 |

| 1.669 | 9.86 | 2700 | 1.6234 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu117

- Datasets 2.13.1

- Tokenizers 0.13.3

|

Falcinspire/Reinforce-MLP-v1-Cartpole-v1

|

Falcinspire

| 2023-07-22T02:22:54Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T00:42:23Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-MLP-v1-Cartpole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 491.10 +/- 26.70

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

LarryAIDraw/YelanV4-09

|

LarryAIDraw

| 2023-07-22T02:16:49Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-22T02:15:12Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/61470/yelan-lora-genshin-impact

|

Blackroot/Llama-2-13B-Storyweaver-LORA-Deprecated

|

Blackroot

| 2023-07-22T02:12:40Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-07-21T23:33:22Z |

Join the [Coffee & AI Discord](https://discord.gg/2JhHVh7CGu) for AI stuff and things!

## **Probably bad model**

Test results show that although this model does produce long outputs, the quality has generally degraded. I'm leaving this up for the time being, but I would recommend one of my other LoRAs instead. As an aside, this model is really, really funny; try it if you want a laugh.

## Get the base model here:

Base Model Quantizations by The Bloke here:

https://huggingface.co/TheBloke/Llama-2-13B-GGML

https://huggingface.co/TheBloke/Llama-2-13B-GPTQ

## Prompting for this model:

A brief warning: no alignment has been done, and no attempt has been made to sanitize or otherwise filter the dataset or the outputs. This is a completely raw model and may behave unpredictably or create scenarios that are unpleasant.

The base Llama2 is a text completion model. That means it will continue writing from the story in whatever manner you direct it. This is not an instruct-tuned model, so don't try to give it instructions.

Correct prompting:

```

He grabbed his sword, his gleaming armor, he readied himself. The battle was coming, he walked into the dawn light and

```

Incorrect prompting:

```

Write a story about...

```

This model has been trained to generate as much text as possible, so you should use some mechanism to force it to stop after N tokens. For example, on one prompt I averaged about 7,000 output tokens. Basically, make sure you have a max sequence length set or it'll just keep going forever.
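One way to picture the stopping mechanism described above is a hard cap on newly generated tokens. The loop below is a library-agnostic sketch: `next_token` is a hypothetical stand-in for a model's decoding step, and `generate_capped` is not part of any real API. Most inference front ends expose an equivalent "maximum new tokens" setting.

```python
from typing import Callable, List, Optional


def generate_capped(
    next_token: Callable[[List[int]], Optional[int]],
    prompt: List[int],
    max_new_tokens: int,
) -> List[int]:
    """Append tokens until the model stops on its own or the cap is reached."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token is None:  # the model signalled end of sequence
            break
        tokens.append(token)
    return tokens


# Toy "model" that never stops by itself, mimicking a run-on generator.
def endless(tokens: List[int]) -> int:
    return len(tokens)


output = generate_capped(endless, prompt=[0, 1, 2], max_new_tokens=5)
print(len(output))
```

Without the cap, the toy generator would loop forever, which is the failure mode the warning above describes.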

## Training procedure

22,000 steps over 7 epochs. Final training loss of 1.8. Total training time was 30 hours on a single 3090 Ti.

PEFT:

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32
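To give a feel for why 4-bit loading matters on a single consumer GPU, here is a back-of-the-envelope estimate of weight memory at different precisions. It assumes roughly 13 billion parameters (taken from the model name) and counts only the base weights. Quantization metadata, activations, LoRA parameters, and optimizer state are ignored, so real usage is higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params_13b = 13e9  # assumption: parameter count implied by "13B" in the model name

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(params_13b, bits):.1f} GB")
```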

### Framework versions

- PEFT 0.5.0.dev0

|

LarryAIDraw/ots-14

|

LarryAIDraw

| 2023-07-22T02:11:14Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-22T02:09:45Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/21833/girls-frontline-ots-14

|

LarryAIDraw/niloutest

|

LarryAIDraw

| 2023-07-22T01:50:09Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-22T01:49:28Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/101969/nilou-genshin-impact

|

LarryAIDraw/Genshin_Impact-Nilou_V2_nilou__genshin_impact_-000012

|

LarryAIDraw

| 2023-07-22T01:49:53Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-22T01:46:49Z |

---

license: creativeml-openrail-m

---

https://civitai.com/models/5367/tsumasaky-nilou-genshin-impact-lora

|

minhanhtuan/llama2-qlora-finetunined-french

|

minhanhtuan

| 2023-07-22T01:25:58Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-22T01:25:51Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0.dev0

|

jonmay/ppo-LunarLander-v2

|

jonmay

| 2023-07-22T01:24:58Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-22T01:24:35Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 255.45 +/- 20.35

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Mel-Iza0/RedPajama-ZeroShot-20K-new_prompt_classe_bias

|

Mel-Iza0

| 2023-07-22T01:12:05Z | 2 | 0 |

peft

|

[

"peft",

"pytorch",

"gpt_neox",

"region:us"

] | null | 2023-07-21T21:11:26Z |

---

library_name: peft

---

## Training procedure

### Framework versions

- PEFT 0.4.0

|

Bainbridge/vilt-b32-mlm-mami

|

Bainbridge

| 2023-07-22T01:03:05Z | 38 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vilt",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2023-07-22T00:22:38Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: vilt-b32-mlm-mami

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vilt-b32-mlm-mami

This model is a fine-tuned version of [dandelin/vilt-b32-mlm](https://huggingface.co/dandelin/vilt-b32-mlm) on the MAMI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5796

- F1: 0.7899

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10.0
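Together, `lr_scheduler_type: linear` and `lr_scheduler_warmup_ratio: 0.1` describe a learning rate that ramps up linearly over the first 10% of steps and then decays linearly to zero. A minimal sketch of that shape follows. The total step count of 2,080 is an estimate derived from the training results table (step 100 at epoch 0.48, 10 epochs), not a value stated in the card, and the function name is hypothetical.

```python
def linear_warmup_decay(step: int, base_lr: float, total_steps: int, warmup_ratio: float) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    warmup_steps = round(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 2080  # assumption: estimated from the results table, not given in the card

for step in (0, 104, 208, 700, 2080):
    print(step, linear_warmup_decay(step, 1e-05, TOTAL_STEPS, 0.1))
```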

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.6898 | 0.48 | 100 | 0.6631 | 0.6076 |

| 0.5824 | 0.96 | 200 | 0.5055 | 0.7545 |

| 0.4306 | 1.44 | 300 | 0.4586 | 0.7861 |

| 0.4207 | 1.91 | 400 | 0.4439 | 0.7927 |

| 0.3055 | 2.39 | 500 | 0.4912 | 0.7949 |

| 0.2582 | 2.87 | 600 | 0.4921 | 0.7873 |

| 0.1875 | 3.35 | 700 | 0.5796 | 0.7899 |
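In the table above, validation loss bottoms out at step 400 and climbs afterwards, while F1 peaks at step 500, so the final checkpoint (step 700) is not the best one by either measure. The snippet below re-derives that from the logged rows. The tuple layout and variable names are illustrative.

```python
# (step, validation_loss, f1) copied from the training results table.
log = [
    (100, 0.6631, 0.6076),
    (200, 0.5055, 0.7545),
    (300, 0.4586, 0.7861),
    (400, 0.4439, 0.7927),
    (500, 0.4912, 0.7949),
    (600, 0.4921, 0.7873),
    (700, 0.5796, 0.7899),
]

best_by_loss = min(log, key=lambda row: row[1])  # lowest validation loss
best_by_f1 = max(log, key=lambda row: row[2])    # highest F1

print("best by loss: step", best_by_loss[0])
print("best by F1:   step", best_by_f1[0])
```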

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu117

- Datasets 2.12.0

- Tokenizers 0.13.3

|

NasimB/cbt-norm-rarity-neg-log-rarity

|

NasimB

| 2023-07-22T00:46:15Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-21T22:20:45Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: cbt-norm-rarity-neg-log-rarity

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# cbt-norm-rarity-neg-log-rarity

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.1046
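For a language model, a cross-entropy loss is often easier to interpret as perplexity, the exponential of the loss. Assuming the reported loss is the mean per-token cross-entropy in nats (the usual convention, though the card does not say so), the conversion is:

```python
import math


def perplexity(cross_entropy_nats: float) -> float:
    """Convert a mean per-token cross-entropy (in nats) to perplexity."""
    return math.exp(cross_entropy_nats)


# Evaluation loss as reported in the card.
eval_ppl = perplexity(4.1046)
print(f"{eval_ppl:.1f}")
```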

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.3494 | 0.29 | 500 | 5.3385 |

| 5.0263 | 0.58 | 1000 | 4.9258 |

| 4.7061 | 0.87 | 1500 | 4.6888 |

| 4.4468 | 1.16 | 2000 | 4.5463 |

| 4.2956 | 1.46 | 2500 | 4.4260 |

| 4.1947 | 1.75 | 3000 | 4.3302 |

| 4.0756 | 2.04 | 3500 | 4.2520 |

| 3.8921 | 2.33 | 4000 | 4.2106 |

| 3.8655 | 2.62 | 4500 | 4.1572 |

| 3.8345 | 2.91 | 5000 | 4.1064 |

| 3.6432 | 3.2 | 5500 | 4.1013 |

| 3.581 | 3.49 | 6000 | 4.0704 |

| 3.569 | 3.79 | 6500 | 4.0362 |

| 3.4919 | 4.08 | 7000 | 4.0338 |

| 3.3226 | 4.37 | 7500 | 4.0289 |

| 3.3106 | 4.66 | 8000 | 4.0166 |

| 3.297 | 4.95 | 8500 | 4.0046 |

| 3.1568 | 5.24 | 9000 | 4.0152 |

| 3.1358 | 5.53 | 9500 | 4.0145 |

| 3.1313 | 5.82 | 10000 | 4.0135 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

SaffalPoosh/falcon-7b-autogptq-custom

|

SaffalPoosh

| 2023-07-22T00:17:42Z | 6 | 0 |

transformers

|

[

"transformers",

"RefinedWebModel",

"text-generation",

"custom_code",

"autotrain_compatible",

"region:us"

] |

text-generation

| 2023-07-22T00:02:03Z |

AutoGPTQ quantization logs:

```

>>> model.quantize(examples)

2023-07-21 16:54:47 INFO [auto_gptq.modeling._base] Start quantizing layer 1/32

2023-07-21 16:54:47 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 1/32...

2023-07-21 16:54:48 INFO [auto_gptq.quantization.gptq] duration: 0.8171646595001221

2023-07-21 16:54:48 INFO [auto_gptq.quantization.gptq] avg loss: 3.7546463012695312

2023-07-21 16:54:48 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 1/32...

2023-07-21 16:54:49 INFO [auto_gptq.quantization.gptq] duration: 0.8055715560913086

2023-07-21 16:54:49 INFO [auto_gptq.quantization.gptq] avg loss: 0.2164316177368164

2023-07-21 16:54:49 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 1/32...

2023-07-21 16:54:50 INFO [auto_gptq.quantization.gptq] duration: 0.8417620658874512

2023-07-21 16:54:50 INFO [auto_gptq.quantization.gptq] avg loss: 16.070518493652344

2023-07-21 16:54:50 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 1/32...

2023-07-21 16:54:53 INFO [auto_gptq.quantization.gptq] duration: 3.90244197845459

2023-07-21 16:54:53 INFO [auto_gptq.quantization.gptq] avg loss: 0.5676069855690002

2023-07-21 16:54:53 INFO [auto_gptq.modeling._base] Start quantizing layer 2/32

2023-07-21 16:54:54 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 2/32...

2023-07-21 16:54:54 INFO [auto_gptq.quantization.gptq] duration: 0.8373761177062988

2023-07-21 16:54:54 INFO [auto_gptq.quantization.gptq] avg loss: 4.066518783569336

2023-07-21 16:54:54 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 2/32...

2023-07-21 16:54:55 INFO [auto_gptq.quantization.gptq] duration: 0.8285796642303467

2023-07-21 16:54:55 INFO [auto_gptq.quantization.gptq] avg loss: 0.2558078169822693

2023-07-21 16:55:25 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 2/32...

2023-07-21 16:55:25 INFO [auto_gptq.quantization.gptq] duration: 0.8859198093414307

2023-07-21 16:55:25 INFO [auto_gptq.quantization.gptq] avg loss: 16.571727752685547

2023-07-21 16:55:26 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 2/32...

2023-07-21 16:55:29 INFO [auto_gptq.quantization.gptq] duration: 3.86962890625

2023-07-21 16:55:29 INFO [auto_gptq.quantization.gptq] avg loss: 0.34605544805526733

2023-07-21 16:55:30 INFO [auto_gptq.modeling._base] Start quantizing layer 3/32

2023-07-21 16:55:30 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 3/32...

2023-07-21 16:55:30 INFO [auto_gptq.quantization.gptq] duration: 0.8118832111358643

2023-07-21 16:55:30 INFO [auto_gptq.quantization.gptq] avg loss: 5.4185943603515625

2023-07-21 16:55:30 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 3/32...

2023-07-21 16:55:31 INFO [auto_gptq.quantization.gptq] duration: 0.8096959590911865

2023-07-21 16:55:31 INFO [auto_gptq.quantization.gptq] avg loss: 0.22585009038448334

2023-07-21 16:55:31 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 3/32...

2023-07-21 16:55:32 INFO [auto_gptq.quantization.gptq] duration: 0.8473665714263916

2023-07-21 16:55:32 INFO [auto_gptq.quantization.gptq] avg loss: 27.050426483154297

2023-07-21 16:55:32 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 3/32...

2023-07-21 16:55:36 INFO [auto_gptq.quantization.gptq] duration: 3.8430850505828857

2023-07-21 16:55:36 INFO [auto_gptq.quantization.gptq] avg loss: 0.6839203834533691

2023-07-21 16:55:36 INFO [auto_gptq.modeling._base] Start quantizing layer 4/32

2023-07-21 16:55:36 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 4/32...

2023-07-21 16:55:37 INFO [auto_gptq.quantization.gptq] duration: 0.7948899269104004

2023-07-21 16:55:37 INFO [auto_gptq.quantization.gptq] avg loss: 6.523550987243652

2023-07-21 16:55:37 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 4/32...

2023-07-21 16:55:38 INFO [auto_gptq.quantization.gptq] duration: 0.7990512847900391

2023-07-21 16:55:38 INFO [auto_gptq.quantization.gptq] avg loss: 0.21638213098049164

2023-07-21 16:55:38 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 4/32...

2023-07-21 16:55:39 INFO [auto_gptq.quantization.gptq] duration: 0.8403058052062988

2023-07-21 16:55:39 INFO [auto_gptq.quantization.gptq] avg loss: 36.57025146484375

2023-07-21 16:55:39 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 4/32...

2023-07-21 16:55:43 INFO [auto_gptq.quantization.gptq] duration: 3.856529474258423

2023-07-21 16:55:43 INFO [auto_gptq.quantization.gptq] avg loss: 9.424503326416016

2023-07-21 16:55:43 INFO [auto_gptq.modeling._base] Start quantizing layer 5/32

2023-07-21 16:55:43 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 5/32...

2023-07-21 16:55:44 INFO [auto_gptq.quantization.gptq] duration: 0.7926647663116455

2023-07-21 16:55:44 INFO [auto_gptq.quantization.gptq] avg loss: 6.277029037475586

2023-07-21 16:55:44 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 5/32...

2023-07-21 16:55:44 INFO [auto_gptq.quantization.gptq] duration: 0.7987856864929199

2023-07-21 16:55:44 INFO [auto_gptq.quantization.gptq] avg loss: 0.1324760764837265

2023-07-21 16:55:44 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 5/32...

2023-07-21 16:55:45 INFO [auto_gptq.quantization.gptq] duration: 0.8394050598144531

2023-07-21 16:55:45 INFO [auto_gptq.quantization.gptq] avg loss: 36.26388168334961

2023-07-21 16:55:45 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 5/32...

2023-07-21 16:55:49 INFO [auto_gptq.quantization.gptq] duration: 3.849104166030884

2023-07-21 16:55:49 INFO [auto_gptq.quantization.gptq] avg loss: 2.376619338989258

2023-07-21 16:55:49 INFO [auto_gptq.modeling._base] Start quantizing layer 6/32

2023-07-21 16:55:49 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 6/32...

2023-07-21 16:55:50 INFO [auto_gptq.quantization.gptq] duration: 0.7964150905609131

2023-07-21 16:55:50 INFO [auto_gptq.quantization.gptq] avg loss: 8.479263305664062

2023-07-21 16:55:50 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 6/32...

2023-07-21 16:55:51 INFO [auto_gptq.quantization.gptq] duration: 0.7951827049255371

2023-07-21 16:55:51 INFO [auto_gptq.quantization.gptq] avg loss: 0.14170163869857788

2023-07-21 16:56:21 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 6/32...

2023-07-21 16:56:22 INFO [auto_gptq.quantization.gptq] duration: 0.8720560073852539

2023-07-21 16:56:22 INFO [auto_gptq.quantization.gptq] avg loss: 42.756919860839844

2023-07-21 16:56:22 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 6/32...

2023-07-21 16:56:25 INFO [auto_gptq.quantization.gptq] duration: 3.8685550689697266

2023-07-21 16:56:25 INFO [auto_gptq.quantization.gptq] avg loss: 0.8117952346801758

2023-07-21 16:56:26 INFO [auto_gptq.modeling._base] Start quantizing layer 7/32

2023-07-21 16:56:26 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 7/32...

2023-07-21 16:56:26 INFO [auto_gptq.quantization.gptq] duration: 0.7976808547973633

2023-07-21 16:56:26 INFO [auto_gptq.quantization.gptq] avg loss: 7.019394397735596

2023-07-21 16:56:26 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 7/32...

2023-07-21 16:56:27 INFO [auto_gptq.quantization.gptq] duration: 0.803225040435791

2023-07-21 16:56:27 INFO [auto_gptq.quantization.gptq] avg loss: 0.21443051099777222

2023-07-21 16:56:27 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 7/32...

2023-07-21 16:56:28 INFO [auto_gptq.quantization.gptq] duration: 0.8342931270599365

2023-07-21 16:56:28 INFO [auto_gptq.quantization.gptq] avg loss: 39.33504104614258

2023-07-21 16:56:28 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 7/32...

2023-07-21 16:56:32 INFO [auto_gptq.quantization.gptq] duration: 3.8671581745147705

2023-07-21 16:56:32 INFO [auto_gptq.quantization.gptq] avg loss: 0.9214520454406738

2023-07-21 16:56:32 INFO [auto_gptq.modeling._base] Start quantizing layer 8/32

2023-07-21 16:56:32 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 8/32...

2023-07-21 16:56:33 INFO [auto_gptq.quantization.gptq] duration: 0.7989864349365234

2023-07-21 16:56:33 INFO [auto_gptq.quantization.gptq] avg loss: 7.602280616760254

2023-07-21 16:56:33 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 8/32...

2023-07-21 16:56:34 INFO [auto_gptq.quantization.gptq] duration: 0.8112733364105225

2023-07-21 16:56:34 INFO [auto_gptq.quantization.gptq] avg loss: 0.11391645669937134

2023-07-21 16:56:34 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 8/32...

2023-07-21 16:56:35 INFO [auto_gptq.quantization.gptq] duration: 0.8388988971710205

2023-07-21 16:56:35 INFO [auto_gptq.quantization.gptq] avg loss: 34.74957275390625

2023-07-21 16:56:35 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 8/32...

2023-07-21 16:56:39 INFO [auto_gptq.quantization.gptq] duration: 3.8561182022094727

2023-07-21 16:56:39 INFO [auto_gptq.quantization.gptq] avg loss: 1.1289432048797607

2023-07-21 16:56:39 INFO [auto_gptq.modeling._base] Start quantizing layer 9/32

2023-07-21 16:56:39 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 9/32...

2023-07-21 16:56:40 INFO [auto_gptq.quantization.gptq] duration: 0.7969386577606201

2023-07-21 16:56:40 INFO [auto_gptq.quantization.gptq] avg loss: 6.806826591491699

2023-07-21 16:56:40 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 9/32...

2023-07-21 16:56:41 INFO [auto_gptq.quantization.gptq] duration: 0.7953078746795654

2023-07-21 16:56:41 INFO [auto_gptq.quantization.gptq] avg loss: 0.2318212240934372

2023-07-21 16:56:41 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 9/32...

2023-07-21 16:56:41 INFO [auto_gptq.quantization.gptq] duration: 0.8294937610626221

2023-07-21 16:56:41 INFO [auto_gptq.quantization.gptq] avg loss: 35.324676513671875

2023-07-21 16:56:41 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 9/32...

2023-07-21 16:56:45 INFO [auto_gptq.quantization.gptq] duration: 3.8630259037017822

2023-07-21 16:56:45 INFO [auto_gptq.quantization.gptq] avg loss: 1.4622347354888916

2023-07-21 16:56:45 INFO [auto_gptq.modeling._base] Start quantizing layer 10/32

2023-07-21 16:56:46 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 10/32...

2023-07-21 16:56:46 INFO [auto_gptq.quantization.gptq] duration: 0.8029708862304688

2023-07-21 16:56:46 INFO [auto_gptq.quantization.gptq] avg loss: 6.056252956390381

2023-07-21 16:56:46 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 10/32...

2023-07-21 16:56:47 INFO [auto_gptq.quantization.gptq] duration: 0.8028323650360107

2023-07-21 16:56:47 INFO [auto_gptq.quantization.gptq] avg loss: 1.092197060585022

2023-07-21 16:56:47 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 10/32...

2023-07-21 16:56:48 INFO [auto_gptq.quantization.gptq] duration: 0.8335537910461426

2023-07-21 16:56:48 INFO [auto_gptq.quantization.gptq] avg loss: 30.71457290649414

2023-07-21 16:56:48 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 10/32...

2023-07-21 16:56:52 INFO [auto_gptq.quantization.gptq] duration: 3.8703184127807617

2023-07-21 16:56:52 INFO [auto_gptq.quantization.gptq] avg loss: 1.2208330631256104

2023-07-21 16:56:52 INFO [auto_gptq.modeling._base] Start quantizing layer 11/32

2023-07-21 16:56:52 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 11/32...

2023-07-21 16:56:53 INFO [auto_gptq.quantization.gptq] duration: 0.814570426940918

2023-07-21 16:56:53 INFO [auto_gptq.quantization.gptq] avg loss: 6.145627021789551

2023-07-21 16:56:53 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 11/32...

2023-07-21 16:56:54 INFO [auto_gptq.quantization.gptq] duration: 0.8268287181854248

2023-07-21 16:56:54 INFO [auto_gptq.quantization.gptq] avg loss: 0.24324843287467957

2023-07-21 16:56:54 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 11/32...

2023-07-21 16:56:55 INFO [auto_gptq.quantization.gptq] duration: 0.8359119892120361

2023-07-21 16:56:55 INFO [auto_gptq.quantization.gptq] avg loss: 30.847026824951172

2023-07-21 16:56:55 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 11/32...

2023-07-21 16:56:58 INFO [auto_gptq.quantization.gptq] duration: 3.831470489501953

2023-07-21 16:56:58 INFO [auto_gptq.quantization.gptq] avg loss: 1.3961751461029053

2023-07-21 16:57:26 INFO [auto_gptq.modeling._base] Start quantizing layer 12/32

2023-07-21 16:57:26 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 12/32...

2023-07-21 16:57:27 INFO [auto_gptq.quantization.gptq] duration: 0.7964096069335938

2023-07-21 16:57:27 INFO [auto_gptq.quantization.gptq] avg loss: 6.053964614868164

2023-07-21 16:57:27 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 12/32...

2023-07-21 16:57:28 INFO [auto_gptq.quantization.gptq] duration: 0.799691915512085

2023-07-21 16:57:28 INFO [auto_gptq.quantization.gptq] avg loss: 0.2671034336090088

2023-07-21 16:57:28 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 12/32...

2023-07-21 16:57:29 INFO [auto_gptq.quantization.gptq] duration: 0.8342888355255127

2023-07-21 16:57:29 INFO [auto_gptq.quantization.gptq] avg loss: 29.729408264160156

2023-07-21 16:57:29 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 12/32...

2023-07-21 16:57:33 INFO [auto_gptq.quantization.gptq] duration: 3.8561949729919434

2023-07-21 16:57:33 INFO [auto_gptq.quantization.gptq] avg loss: 1.495622158050537

2023-07-21 16:57:33 INFO [auto_gptq.modeling._base] Start quantizing layer 13/32

2023-07-21 16:57:33 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 13/32...

2023-07-21 16:57:34 INFO [auto_gptq.quantization.gptq] duration: 0.7953364849090576

2023-07-21 16:57:34 INFO [auto_gptq.quantization.gptq] avg loss: 5.408998489379883

2023-07-21 16:57:34 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 13/32...

2023-07-21 16:57:34 INFO [auto_gptq.quantization.gptq] duration: 0.7990250587463379

2023-07-21 16:57:34 INFO [auto_gptq.quantization.gptq] avg loss: 0.5066410303115845

2023-07-21 16:57:34 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 13/32...

2023-07-21 16:57:35 INFO [auto_gptq.quantization.gptq] duration: 0.8330769538879395

2023-07-21 16:57:35 INFO [auto_gptq.quantization.gptq] avg loss: 27.790515899658203

2023-07-21 16:57:35 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_4h_to_h in layer 13/32...

2023-07-21 16:57:39 INFO [auto_gptq.quantization.gptq] duration: 3.861015558242798

2023-07-21 16:57:39 INFO [auto_gptq.quantization.gptq] avg loss: 1.3019633293151855

2023-07-21 16:57:39 INFO [auto_gptq.modeling._base] Start quantizing layer 14/32

2023-07-21 16:57:39 INFO [auto_gptq.modeling._base] Quantizing self_attention.query_key_value in layer 14/32...

2023-07-21 16:57:40 INFO [auto_gptq.quantization.gptq] duration: 0.8011329174041748

2023-07-21 16:57:40 INFO [auto_gptq.quantization.gptq] avg loss: 6.027165412902832

2023-07-21 16:57:40 INFO [auto_gptq.modeling._base] Quantizing self_attention.dense in layer 14/32...

2023-07-21 16:57:41 INFO [auto_gptq.quantization.gptq] duration: 0.7977538108825684

2023-07-21 16:57:41 INFO [auto_gptq.quantization.gptq] avg loss: 0.28969255089759827

2023-07-21 16:57:41 INFO [auto_gptq.modeling._base] Quantizing mlp.dense_h_to_4h in layer 14/32...

2023-07-21 16:57:42 INFO [auto_gptq.quantization.gptq] duration: 0.8305981159210205