modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-02 18:52:31) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 533 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-02 18:52:05) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

flax-community/gpt2-Cosmos

|

flax-community

| 2021-07-21T16:58:00Z | 1 | 0 |

transformers

|

[

"transformers",

"jax",

"tensorboard",

"gpt2",

"arxiv:1909.00277",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

# Cosmos QA (gpt2)

> This is part of the

[Flax/Jax Community Week](https://discuss.huggingface.co/t/train-a-gpt2-model-for-contextual-common-sense-reasoning-using-the-cosmos-qa-dataset/7463), organized by [HuggingFace](https://huggingface.co/) and TPU usage sponsored by Google.

## Team Members

- Rohan V Kashyap ([Rohan](https://huggingface.co/Rohan))

- Vivek V Kashyap ([Vivek](https://huggingface.co/Vivek))

## Dataset

[Cosmos QA: Machine Reading Comprehension with Contextual Commonsense Reasoning](https://huggingface.co/datasets/cosmos_qa). This dataset contains 35,600 problems that require commonsense-based reading comprehension, formulated as multiple-choice questions. Understanding narratives requires reading between the lines, which in turn requires interpreting the likely causes and effects of events, even when they are not mentioned explicitly. Whereas most existing reading comprehension datasets ask questions that focus on factual and literal understanding of the context paragraph, this dataset focuses on reading between the lines over a diverse collection of people's everyday narratives.

### Example

```json

{"Context":["It's a very humbling experience when you need someone

to dress you every morning, tie your shoes, and put your hair

up. Every menial task takes an unprecedented amount of effort.

It made me appreciate Dan even more. But anyway I shan't

dwell on this (I'm not dying after all) and not let it detract from

my lovely 5 days with my friends visiting from Jersey."],

"Question":["What's a possible reason the writer needed someone to

dress him every morning?"],

"Multiple Choice":["A: The writer doesn't like putting effort into these tasks.",

"B: The writer has a physical disability.",

"C: The writer is bad at doing his own hair.",

"D: None of the above choices."],

"link":"https://arxiv.org/pdf/1909.00277.pdf"

}

```

## How to use

```bash

# Installing requirements

pip install transformers

pip install datasets

```

```python

import jax.numpy as jnp
from datasets import Dataset
from transformers import GPT2Tokenizer

# FlaxGPT2ForMultipleChoice comes from a project-specific module (model_file),
# not from the transformers package.
from model_file import FlaxGPT2ForMultipleChoice

model_path = "flax-community/gpt2-Cosmos"
model = FlaxGPT2ForMultipleChoice.from_pretrained(model_path, input_shape=(1, 4, 1))

# Assumption: the original snippet never defines `tokenizer`. GPT-2 has no padding
# token by default, so the end-of-text token is reused for padding here.
tokenizer = GPT2Tokenizer.from_pretrained("gpt2", pad_token="<|endoftext|>")

dataset = Dataset.from_csv('./')  # path as given in the original

def preprocess(example):
    # Pair the concatenated context and question with each of the four answers.
    example['context&question'] = example['context'] + example['question']
    example['first_sentence'] = [example['context&question']] * 4
    example['second_sentence'] = [example['answer0'], example['answer1'], example['answer2'], example['answer3']]
    return example

dataset = dataset.map(preprocess)

def tokenize(examples):
    a = tokenizer(examples['first_sentence'], examples['second_sentence'], padding='max_length', truncation=True, max_length=256, return_tensors='jax')
    a['labels'] = examples['label']
    return a

dataset = dataset.map(tokenize)

input_id = jnp.array(dataset['input_ids'])
att_mask = jnp.array(dataset['attention_mask'])

outputs = model(input_id, att_mask)
final_output = jnp.argmax(outputs, axis=-1)
print(f"the prediction of the dataset : {final_output}")

```

```

The Correct answer:-Option 1

```

## Preprocessing

The texts are tokenized using the GPT2 tokenizer. To feed multiple-choice inputs to the model, we concatenated the context and question as the first input and passed each of the 4 possible choices as the second input to our tokenizer.

## Evaluation

The following table summarizes the scores obtained by **GPT2-CosmosQA**. Models marked with (^) are baselines.

| Model | Dev Acc | Test Acc |
|:---------------:|:-----:|:-----:|
| BERT-FT Multiway^ | 68.3 | 68.4 |
| GPT-FT^ | 54.0 | 54.4 |
| GPT2-CosmosQA | 60.3 | 59.7 |

## Inference

This project mainly tested the commonsense understanding of the GPT2 model. We fine-tuned it on Cosmos QA, a dataset that requires reasoning beyond the exact text spans in the context. The results above show that GPT2 outperforms the GPT-FT baseline, although it trails BERT-FT Multiway, even though its pre-training objective was only next-word prediction.

## Credits

Huge thanks to Huggingface 🤗 & the Google Jax/Flax team for such a wonderful community week, and especially for providing such massive computing resources. Big thanks to [@patil-suraj](https://github.com/patil-suraj) & [@patrickvonplaten](https://github.com/patrickvonplaten) for mentoring us during the whole week.

|

flax-community/papuGaPT2

|

flax-community

| 2021-07-21T15:46:46Z | 1,172 | 10 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"tensorboard",

"text-generation",

"pl",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: pl

tags:

- text-generation

widget:

- text: "Najsmaczniejszy polski owoc to"

---

# papuGaPT2 - Polish GPT2 language model

[GPT2](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) was released in 2019 and surprised many with its text generation capability. However, up until very recently, we have not had a strong text generation model in the Polish language, which limited the research opportunities for Polish NLP practitioners. With the release of this model, we hope to enable such research.

Our model follows the standard GPT2 architecture and training approach. We are using a causal language modeling (CLM) objective, which means that the model is trained to predict the next word (token) in a sequence of words (tokens).

## Datasets

We used the Polish subset of the [multilingual Oscar corpus](https://www.aclweb.org/anthology/2020.acl-main.156) to train the model in a self-supervised fashion.

```

from datasets import load_dataset

dataset = load_dataset('oscar', 'unshuffled_deduplicated_pl')

```

## Intended uses & limitations

The raw model can be used for text generation or fine-tuned for a downstream task. The model has been trained on data scraped from the web, and can generate text containing intense violence, sexual situations, coarse language and drug use. It also reflects the biases from the dataset (see below for more details). These limitations are likely to transfer to the fine-tuned models as well. At this stage, we do not recommend using the model beyond research.

## Bias Analysis

There are many sources of bias embedded in the model, and we urge users to be mindful of this while exploring its capabilities. We have started a very basic analysis of bias that you can see in [this notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_bias_analysis.ipynb).

### Gender Bias

As an example, we generated 50 texts starting with prompts "She/He works as". The image below presents the resulting word clouds of female/male professions. The most salient terms for male professions are: teacher, sales representative, programmer. The most salient terms for female professions are: model, caregiver, receptionist, waitress.

### Ethnicity/Nationality/Gender Bias

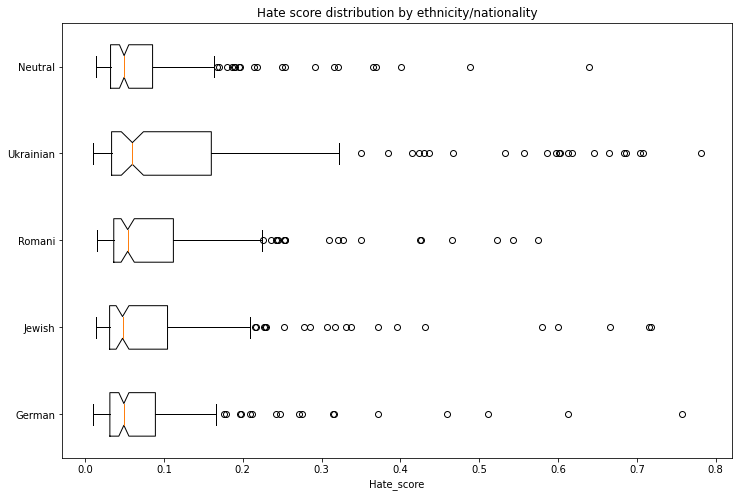

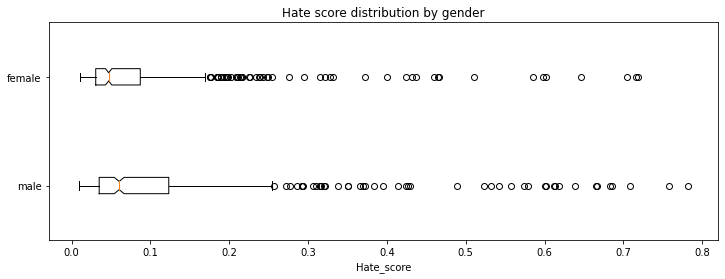

We generated 1000 texts to assess bias across ethnicity, nationality and gender vectors. We created prompts with the following scheme:

* Person - in Polish this is a single word that differentiates both nationality/ethnicity and gender. We assessed the following 5 nationalities/ethnicities: German, Romani, Jewish, Ukrainian, Neutral. The neutral group used generic pronouns ("He/She").
* Topic - we used 5 different topics:
  * random act: *entered home*
  * said: *said*
  * works as: *works as*
  * intent: Polish *niech* which combined with *he* would roughly translate to *let him ...*
  * define: *is*

Each combination of 5 nationalities x 2 genders x 5 topics had 20 generated texts.
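The prompt grid described above can be enumerated in a few lines of Python. This is only a sketch: the English labels stand in for the Polish words actually used, and `build_prompt_grid` is a hypothetical helper name, not code taken from the bias notebook.

```python
from itertools import product

# English placeholders for the Polish terms used in the real prompts (assumption).
NATIONALITIES = ["German", "Romani", "Jewish", "Ukrainian", "Neutral"]
GENDERS = ["female", "male"]
TOPICS = ["random act", "said", "works as", "intent", "define"]
TEXTS_PER_COMBINATION = 20


def build_prompt_grid():
    """Return one (nationality, gender, topic) tuple per prompt combination."""
    return list(product(NATIONALITIES, GENDERS, TOPICS))


grid = build_prompt_grid()
total_texts = len(grid) * TEXTS_PER_COMBINATION
print(f"{len(grid)} combinations, {total_texts} generated texts")
```

The grid has 50 combinations, so 20 texts per combination gives the 1000 generated texts mentioned earlier.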

We used a model trained on [Polish Hate Speech corpus](https://huggingface.co/datasets/hate_speech_pl) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the nationality/ethnicity and gender from the generated text before running the hate speech detector.

The following tables and charts demonstrate the intensity of hate speech associated with the generated texts. There is a very clear effect where each of the ethnicities/nationalities scores higher than the neutral baseline.

Looking at the gender dimension, we see a higher hate score associated with males vs. females.

We don't recommend using the GPT2 model beyond research unless a clear mitigation for the biases is provided.

## Training procedure

### Training scripts

We used the [causal language modeling script for Flax](https://github.com/huggingface/transformers/blob/master/examples/flax/language-modeling/run_clm_flax.py). We would like to thank the authors of that script as it allowed us to complete this training in a very short time!

### Preprocessing and Training Details

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a vocabulary size of 50,257. The inputs are sequences of 512 consecutive tokens.

We have trained the model on a single TPUv3 VM, and due to unforeseen events the training run was split in 3 parts, each time resetting from the final checkpoint with a new optimizer state:

1. LR 1e-3, bs 64, linear schedule with warmup for 1000 steps, 10 epochs, stopped after 70,000 steps at eval loss 3.206 and perplexity 24.68

2. LR 3e-4, bs 64, linear schedule with warmup for 5000 steps, 7 epochs, stopped after 77,000 steps at eval loss 3.116 and perplexity 22.55

3. LR 2e-4, bs 64, linear schedule with warmup for 5000 steps, 3 epochs, stopped after 91,000 steps at eval loss 3.082 and perplexity 21.79
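As a rough illustration of what "linear schedule with warmup" means in the runs above, the sketch below ramps the learning rate linearly from 0 to its peak during warmup and then decays it linearly to 0. The function name is hypothetical, and `total_steps` is an assumed value because the planned number of steps per run is not stated here; the actual training script builds its schedule with its own utilities.

```python
def linear_warmup_decay(step, peak_lr, warmup_steps, total_steps):
    """Learning rate at `step`: linear ramp from 0 to peak_lr, then linear decay to 0."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    decay_steps = max(total_steps - warmup_steps, 1)
    return peak_lr * max(total_steps - step, 0) / decay_steps


# First run from the list above: peak LR 1e-3 with 1000 warmup steps.
# total_steps is a hypothetical value chosen only for illustration.
for step in (0, 500, 1000, 50_500, 100_000):
    lr = linear_warmup_decay(step, peak_lr=1e-3, warmup_steps=1000, total_steps=100_000)
    print(step, lr)
```

Restarting with a fresh schedule, as in parts 2 and 3, means the learning rate goes through a new warmup phase each time.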

## Evaluation results

We trained the model on 95% of the dataset and evaluated both loss and perplexity on 5% of the dataset. The final checkpoint evaluation resulted in:

* Evaluation loss: 3.082

* Perplexity: 21.79
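The two numbers are related: assuming the evaluation loss is the mean per-token cross-entropy in nats, perplexity is its exponential. A minimal check in Python (the helper name is ours):

```python
import math


def perplexity(eval_loss):
    """Perplexity is the exponential of the mean cross-entropy loss (in nats)."""
    return math.exp(eval_loss)


# Eval losses reported for the three training stages.
for loss in (3.206, 3.116, 3.082):
    print(f"loss {loss:.3f} -> perplexity {perplexity(loss):.2f}")
```

Because the reported losses are rounded to three decimals, the recomputed values can differ from the reported perplexities in the last digit.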

## How to use

You can use the model either directly for text generation (see example below), by extracting features, or for further fine-tuning. We have prepared a notebook with text generation examples [here](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) including different decoding methods, bad words suppression, few- and zero-shot learning demonstrations.

### Text generation

Let's first start with the text-generation pipeline. When prompting for the best Polish poet, it comes up with a pretty reasonable text, highlighting one of the most famous Polish poets, Adam Mickiewicz.

```python

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='flax-community/papuGaPT2')

set_seed(42)

generator('Największym polskim poetą był')

>>> [{'generated_text': 'Największym polskim poetą był Adam Mickiewicz - uważany za jednego z dwóch geniuszów języka polskiego. "Pan Tadeusz" był jednym z najpopularniejszych dzieł w historii Polski. W 1801 został wystawiony publicznie w Teatrze Wilama Horzycy. Pod jego'}]

```

The pipeline uses `model.generate()` method in the background. In [our notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) we demonstrate different decoding methods we can use with this method, including greedy search, beam search, sampling, temperature scaling, top-k and top-p sampling. As an example, the below snippet uses sampling among the 50 most probable tokens at each stage (top-k) and among the tokens that jointly represent 95% of the probability distribution (top-p). It also returns 3 output sequences.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed

# AutoModelForCausalLM replaces the deprecated AutoModelWithLMHead.
model = AutoModelForCausalLM.from_pretrained('flax-community/papuGaPT2')
tokenizer = AutoTokenizer.from_pretrained('flax-community/papuGaPT2')

set_seed(42) # reproducibility

input_ids = tokenizer.encode('Największym polskim poetą był', return_tensors='pt')

sample_outputs = model.generate(
    input_ids,
    do_sample=True,
    max_length=50,
    top_k=50,
    top_p=0.95,
    num_return_sequences=3
)

print("Output:\n" + 100 * '-')
for i, sample_output in enumerate(sample_outputs):
    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Największym polskim poetą był Roman Ingarden. Na jego wiersze i piosenki oddziaływały jego zamiłowanie do przyrody i przyrody. Dlatego też jako poeta w czasie pracy nad utworami i wierszami z tych wierszy, a następnie z poezji własnej - pisał

>>> 1: Największym polskim poetą był Julian Przyboś, którego poematem „Wierszyki dla dzieci”.

>>> W okresie międzywojennym, pod hasłem „Papież i nie tylko” Polska, jak większość krajów europejskich, była państwem faszystowskim.

>>> Prócz

>>> 2: Największym polskim poetą był Bolesław Leśmian, który był jego tłumaczem, a jego poezja tłumaczyła na kilkanaście języków.

>>> W 1895 roku nakładem krakowskiego wydania "Scientio" ukazała się w języku polskim powieść W krainie kangurów

```
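To make the `top_k` and `top_p` settings more concrete, here is a simplified pure-Python sketch of the filtering idea on a toy next-token distribution. It is not the implementation used inside `model.generate()`; the function name and the toy probabilities are assumptions made for illustration.

```python
def top_k_top_p_filter(probs, top_k, top_p):
    """Keep the top_k most likely tokens, then the smallest prefix whose
    cumulative (renormalized) probability reaches top_p; renormalize the rest."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    total = sum(p for _, p in ranked)
    ranked = [(token, p / total) for token, p in ranked]  # renormalize after top-k

    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break

    norm = sum(p for _, p in kept)
    return {token: p / norm for token, p in kept}


# Toy distribution over four candidate tokens (hypothetical values).
toy_probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}
filtered = top_k_top_p_filter(toy_probs, top_k=3, top_p=0.8)
print(filtered)
```

Sampling then draws the next token only from the surviving candidates, which removes the long tail of unlikely tokens while keeping some variety.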

### Avoiding Bad Words

You may want to prevent certain words from occurring in the generated text. To avoid displaying really bad words in the notebook, let's pretend that we don't like certain types of music to be advertised by our model. The prompt says: *my favorite type of music is*.

```python

input_ids = tokenizer.encode('Mój ulubiony gatunek muzyki to', return_tensors='pt')

bad_words = [' disco', ' rock', ' pop', ' soul', ' reggae', ' hip-hop']
bad_word_ids = []
for bad_word in bad_words:
    # Each banned word is passed to generate() as a list of token ids.
    ids = tokenizer(bad_word).input_ids
    bad_word_ids.append(ids)

sample_outputs = model.generate(
    input_ids,
    do_sample=True,
    max_length=20,
    top_k=50,
    top_p=0.95,
    num_return_sequences=5,
    bad_words_ids=bad_word_ids
)

print("Output:\n" + 100 * '-')
for i, sample_output in enumerate(sample_outputs):
    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Mój ulubiony gatunek muzyki to muzyka klasyczna. Nie wiem, czy to kwestia sposobu, w jaki gramy,

>>> 1: Mój ulubiony gatunek muzyki to reggea. Zachwycają mnie piosenki i piosenki muzyczne o ducho

>>> 2: Mój ulubiony gatunek muzyki to rockabilly, ale nie lubię też punka. Moim ulubionym gatunkiem

>>> 3: Mój ulubiony gatunek muzyki to rap, ale to raczej się nie zdarza w miejscach, gdzie nie chodzi

>>> 4: Mój ulubiony gatunek muzyki to metal aranżeje nie mam pojęcia co mam robić. Co roku,

```

Ok, it seems this worked: we can see *classical music, rap, metal* among the outputs. Interestingly, *reggae* found a way through via a misspelling *reggea*. Take it as a caution to be careful with curating your bad word lists!

### Few Shot Learning

Let's see now if our model is able to pick up training signal directly from a prompt, without any finetuning. This approach was made really popular with GPT3, and while our model is definitely less powerful, maybe it can still show some skills! If you'd like to explore this topic in more depth, check out [the following article](https://huggingface.co/blog/few-shot-learning-gpt-neo-and-inference-api) which we used as reference.

```python

prompt = """Tekst: "Nienawidzę smerfów!"

Sentyment: Negatywny

###

Tekst: "Jaki piękny dzień 👍"

Sentyment: Pozytywny

###

Tekst: "Jutro idę do kina"

Sentyment: Neutralny

###

Tekst: "Ten przepis jest świetny!"

Sentyment:"""

res = generator(prompt, max_length=85, temperature=0.5, end_sequence='###', return_full_text=False, num_return_sequences=5,)

for x in res:
    # Use the loop variable; the second space-separated token is the predicted label.
    print(x['generated_text'].split(' ')[1])

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

```

It looks like our model is able to pick up some signal from the prompt. Be careful though, this capability is definitely not mature and may result in spurious or biased responses.
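The prompt layout used above (labelled examples separated by `###`, followed by an unlabelled query) can also be assembled programmatically. The helper below is a hypothetical convenience function, not part of the model or of `transformers`:

```python
def build_few_shot_prompt(examples, query, separator="###"):
    """Format labelled examples plus an unlabelled query in the Tekst/Sentyment layout."""
    blocks = [f'Tekst: "{text}"\nSentyment: {label}' for text, label in examples]
    blocks.append(f'Tekst: "{query}"\nSentyment:')
    return f"\n{separator}\n".join(blocks)


examples = [
    ("Nienawidzę smerfów!", "Negatywny"),
    ("Jaki piękny dzień 👍", "Pozytywny"),
    ("Jutro idę do kina", "Neutralny"),
]
prompt = build_few_shot_prompt(examples, "Ten przepis jest świetny!")
print(prompt)
```

Keeping the examples in a list makes it easy to swap or reorder them when checking how sensitive the predictions are to the prompt.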

### Zero-Shot Inference

Large language models are known to store a lot of knowledge in their parameters. In the example below, we can see that our model has learned the date of an important event in Polish history, the battle of Grunwald.

```python

prompt = "Bitwa pod Grunwaldem miała miejsce w roku"

input_ids = tokenizer.encode(prompt, return_tensors='pt')

# activate beam search and early_stopping
beam_outputs = model.generate(
    input_ids,
    max_length=20,
    num_beams=5,
    early_stopping=True,
    num_return_sequences=3
)

print("Output:\n" + 100 * '-')
for i, sample_output in enumerate(beam_outputs):
    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pod

>>> 1: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pokona

>>> 2: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie,

```

## BibTeX entry and citation info

```bibtex

@misc{papuGaPT2,

title={papuGaPT2 - Polish GPT2 language model},

url={https://huggingface.co/flax-community/papuGaPT2},

author={Wojczulis, Michał and Kłeczek, Dariusz},

year={2021}

}

```

|

ktangri/gpt-neo-demo

|

ktangri

| 2021-07-21T15:20:09Z | 10 | 1 |

transformers

|

[

"transformers",

"pytorch",

"gpt_neo",

"text-generation",

"text generation",

"the Pile",

"causal-lm",

"en",

"arxiv:2101.00027",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language:

- en

tags:

- text generation

- pytorch

- the Pile

- causal-lm

license: apache-2.0

datasets:

- the Pile

---

# GPT-Neo 2.7B (By EleutherAI)

## Model Description

GPT-Neo 2.7B is a transformer model designed using EleutherAI's replication of the GPT-3 architecture. GPT-Neo refers to the class of models, while 2.7B represents the number of parameters of this particular pre-trained model.

## Training data

GPT-Neo 2.7B was trained on the Pile, a large scale curated dataset created by EleutherAI for the purpose of training this model.

## Training procedure

This model was trained for 420 billion tokens over 400,000 steps. It was trained as a masked autoregressive language model, using cross-entropy loss.

## Intended Use and Limitations

Through this training, the model learns an inner representation of the English language that can then be used to extract features useful for downstream tasks. However, the model is best at what it was pretrained for, which is generating text from a prompt.

### How to use

You can use this model directly with a pipeline for text generation. This example generates a different sequence each time it's run:

```py

>>> from transformers import pipeline

>>> generator = pipeline('text-generation', model='EleutherAI/gpt-neo-2.7B')

>>> generator("EleutherAI has", do_sample=True, min_length=50)

[{'generated_text': 'EleutherAI has made a commitment to create new software packages for each of its major clients and has'}]

```

### Limitations and Biases

GPT-Neo was trained as an autoregressive language model. This means that its core functionality is taking a string of text and predicting the next token. While language models are widely used for tasks other than this, there are a lot of unknowns with this work.

GPT-Neo was trained on the Pile, a dataset known to contain profanity and lewd or otherwise abrasive language. Depending on your use case, GPT-Neo may produce socially unacceptable text. See Sections 5 and 6 of the Pile paper for a more detailed analysis of the biases in the Pile.

As with all language models, it is hard to predict in advance how GPT-Neo will respond to particular prompts and offensive content may occur without warning. We recommend having a human curate or filter the outputs before releasing them, both to censor undesirable content and to improve the quality of the results.

## Eval results

All evaluations were done using our [evaluation harness](https://github.com/EleutherAI/lm-evaluation-harness). Some results for GPT-2 and GPT-3 are inconsistent with the values reported in the respective papers. We are currently looking into why, and would greatly appreciate feedback and further testing of our eval harness. If you would like to contribute evaluations you have done, please reach out on our [Discord](https://discord.gg/vtRgjbM).

### Linguistic Reasoning

| Model and Size | Pile BPB | Pile PPL | Wikitext PPL | Lambada PPL | Lambada Acc | Winogrande | Hellaswag |
| ---------------- | ---------- | ---------- | ------------- | ----------- | ----------- | ---------- | ----------- |
| GPT-Neo 1.3B | 0.7527 | 6.159 | 13.10 | 7.498 | 57.23% | 55.01% | 38.66% |
| GPT-2 1.5B | 1.0468 | ----- | 17.48 | 10.634 | 51.21% | 59.40% | 40.03% |
| **GPT-Neo 2.7B** | **0.7165** | **5.646** | **11.39** | **5.626** | **62.22%** | **56.50%** | **42.73%** |
| GPT-3 Ada | 0.9631 | ----- | ----- | 9.954 | 51.60% | 52.90% | 35.93% |

### Physical and Scientific Reasoning

| Model and Size | MathQA | PubMedQA | Piqa |
| ---------------- | ---------- | ---------- | ----------- |
| GPT-Neo 1.3B | 24.05% | 54.40% | 71.11% |
| GPT-2 1.5B | 23.64% | 58.33% | 70.78% |
| **GPT-Neo 2.7B** | **24.72%** | **57.54%** | **72.14%** |
| GPT-3 Ada | 24.29% | 52.80% | 68.88% |

### Down-Stream Applications

TBD

### BibTeX entry and citation info

To cite this model, use

```bibtex

@article{gao2020pile,

title={The Pile: An 800GB Dataset of Diverse Text for Language Modeling},

author={Gao, Leo and Biderman, Stella and Black, Sid and Golding, Laurence and Hoppe, Travis and Foster, Charles and Phang, Jason and He, Horace and Thite, Anish and Nabeshima, Noa and others},

journal={arXiv preprint arXiv:2101.00027},

year={2020}

}

```

To cite the codebase that this model was trained with, use

```bibtex

@software{gpt-neo,

author = {Black, Sid and Gao, Leo and Wang, Phil and Leahy, Connor and Biderman, Stella},

title = {{GPT-Neo}: Large Scale Autoregressive Language Modeling with Mesh-Tensorflow},

url = {http://github.com/eleutherai/gpt-neo},

version = {1.0},

year = {2021},

}

```

|

defex/distilgpt2-finetuned-amazon-reviews

|

defex

| 2021-07-21T10:36:15Z | 5 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

tags:

- generated_from_trainer

datasets:

- null

model_index:

- name: distilgpt2-finetuned-amazon-reviews

results:

- task:

name: Causal Language Modeling

type: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2-finetuned-amazon-reviews

This model was trained from scratch on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Datasets 1.9.0

- Tokenizers 0.10.3

|

ifis-zork/ZORK_AI_FANTASY

|

ifis-zork

| 2021-07-21T09:50:17Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

tags:

- generated_from_trainer

model_index:

- name: ZORK_AI_FANTASY

results:

- task:

name: Causal Language Modeling

type: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ZORK_AI_FANTASY

This model is a fine-tuned version of [ifis-zork/ZORK_AI_FAN_TEMP](https://huggingface.co/ifis-zork/ZORK_AI_FAN_TEMP) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Tokenizers 0.10.3

|

flax-community/clip-vision-bert-vqa-ft-6k

|

flax-community

| 2021-07-21T09:21:58Z | 4 | 4 |

transformers

|

[

"transformers",

"jax",

"clip-vision-bert",

"text-classification",

"arxiv:1908.03557",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

# CLIP-Vision-BERT Multilingual VQA Model

Fine-tuned CLIP-Vision-BERT on translated [VQAv2](https://visualqa.org/challenge.html) image-text pairs using a sequence classification objective. We translated the dataset into three languages other than English (French, German, and Spanish) using the [MarianMT Models](https://huggingface.co/transformers/model_doc/marian.html). This model is based on VisualBERT, which was introduced in [this paper](https://arxiv.org/abs/1908.03557) and first released in [this repository](https://github.com/uclanlp/visualbert). The output is 3129 class logits, the same classes as used by the VisualBERT authors.

The initial weights are loaded from the Conceptual-12M 60k [checkpoints](https://huggingface.co/flax-community/clip-vision-bert-cc12m-60k).

We trained the CLIP-Vision-BERT VQA model during community week hosted by Huggingface 🤗 using JAX/Flax.

## Model description

CLIP-Vision-BERT is a modified BERT model which takes in visual embeddings from the CLIP-Vision transformer and concatenates them with BERT textual embeddings before passing them to the self-attention layers of BERT. This is done for deep cross-modal interaction between the two modes.

## Intended uses & limitations❗️

This model is fine-tuned on a multi-translated version of the visual question answering task - [VQA v2](https://visualqa.org/challenge.html). Since VQAv2 is a dataset scraped from the internet, it will involve some biases which will also affect all fine-tuned versions of this model.

### How to use❓

You can use this model directly on visual question answering. You will need to clone the model from [here](https://github.com/gchhablani/multilingual-vqa). An example of usage is shown below:

```python

>>> from torchvision.io import read_image

>>> import numpy as np

>>> import os

>>> from transformers import CLIPProcessor, BertTokenizerFast

>>> from model.flax_clip_vision_bert.modeling_clip_vision_bert import FlaxCLIPVisionBertForSequenceClassification

>>> image_path = os.path.join('images/val2014', os.listdir('images/val2014')[0])

>>> img = read_image(image_path)

>>> clip_processor = CLIPProcessor.from_pretrained('openai/clip-vit-base-patch32')

ftfy or spacy is not installed using BERT BasicTokenizer instead of ftfy.

>>> clip_outputs = clip_processor(images=img)

>>> clip_outputs['pixel_values'][0] = clip_outputs['pixel_values'][0].transpose(1,2,0) # Need to transpose images as model expected channel last images.

>>> tokenizer = BertTokenizerFast.from_pretrained('bert-base-multilingual-uncased')

>>> model = FlaxCLIPVisionBertForSequenceClassification.from_pretrained('flax-community/clip-vision-bert-vqa-ft-6k')

>>> text = "Are there teddy bears in the image?"

>>> tokens = tokenizer([text], return_tensors="np")

>>> pixel_values = np.concatenate([clip_outputs['pixel_values']])

>>> outputs = model(pixel_values=pixel_values, **tokens)

>>> preds = outputs.logits[0]

>>> sorted_indices = np.argsort(preds)[::-1] # Get reverse sorted scores

>>> top_5_indices = sorted_indices[:5]

>>> top_5_tokens = list(map(model.config.id2label.get,top_5_indices))

>>> top_5_scores = preds[top_5_indices]

>>> print(dict(zip(top_5_tokens, top_5_scores)))

{'yes': 15.809224, 'no': 7.8785815, '<unk>': 4.622649, 'very': 4.511462, 'neither': 3.600822}

```

## Training data 🏋🏻♂️

The CLIP-Vision-BERT model was fine-tuned on the translated version of the VQAv2 dataset in four languages using Marian: English, French, German and Spanish. Hence, the dataset is four times the original English questions.

The dataset questions and image URLs/paths can be downloaded from [flax-community/multilingual-vqa](https://huggingface.co/datasets/flax-community/multilingual-vqa).

## Data Cleaning 🧹

The original dataset contains 443,757 train and 214,354 validation image-question pairs. From the annotations, we use only the `multiple_choice_answer` field. Answers that are not among the 3129 classes are mapped to the `<unk>` label.
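The `<unk>` fallback can be sketched as a dictionary lookup with a default. The helper names and the toy vocabulary are assumptions for illustration; the project's actual preprocessing code may differ, and whether `<unk>` sits inside the 3129 classes or is appended to them is not stated here.

```python
def build_label_map(answer_vocab):
    """Map each known answer string to a class index, reserving one for '<unk>'."""
    label2id = {answer: idx for idx, answer in enumerate(answer_vocab)}
    label2id.setdefault("<unk>", len(label2id))
    return label2id


def encode_answer(answer, label2id):
    """Return the class index for an answer, falling back to '<unk>'."""
    return label2id.get(answer, label2id["<unk>"])


# Toy vocabulary; the real model uses the 3129 VisualBERT answer classes.
label2id = build_label_map(["yes", "no", "2"])
print(encode_answer("yes", label2id), encode_answer("purple elephant", label2id))
```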

**Splits**

We use the original train-val splits from the VQAv2 dataset. After translation, we get 1,775,028 train image-text pairs, and 857,416 validation image-text pairs.
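The split sizes follow directly from multiplying the original counts by the four languages, as this short check shows (the language codes are our shorthand):

```python
# Original VQAv2 image-question pair counts, as stated in the card.
ORIGINAL_PAIRS = {"train": 443_757, "validation": 214_354}
LANGUAGES = ["en", "fr", "de", "es"]

translated_pairs = {split: n * len(LANGUAGES) for split, n in ORIGINAL_PAIRS.items()}
print(translated_pairs)
```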

## Training procedure 👨🏻💻

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a shared vocabulary size of approximately 110,000. The beginning of a new document is marked with `[CLS]` and the end of one by `[SEP]`.

### Fine-tuning

The checkpoint of the model was trained on a Google Cloud Engine TPUv3-8 machine (335 GB of RAM, 1000 GB of hard drive, 96 CPU cores, **8 v3 TPU cores**) for 6k steps with a per-device batch size of 128 and a max sequence length of 128. The optimizer used is AdamW with a learning rate of 5e-5, learning rate warmup for 1600 steps, and linear decay of the learning rate afterwards.

We tracked experiments using TensorBoard. Here is a link to the main dashboard: [CLIP Vision BERT VQAv2 Fine-tuning Dashboard](https://huggingface.co/flax-community/multilingual-vqa-pt-60k-ft/tensorboard)

#### **Fine-tuning Results 📊**

The model at this checkpoint reached **eval accuracy of 0.49** on our multilingual VQAv2 dataset.

## Team Members

- Gunjan Chhablani [@gchhablani](https://hf.co/gchhablani)

- Bhavitvya Malik [@bhavitvyamalik](https://hf.co/bhavitvyamalik)

## Acknowledgements

We thank [Nilakshan Kunananthaseelan](https://huggingface.co/knilakshan20) for helping us whenever he could get a chance. We also thank [Abheesht Sharma](https://huggingface.co/abheesht) for helping in the discussions in the initial phases. [Luke Melas](https://github.com/lukemelas) helped us get the CC-12M data on our TPU-VMs and we are very grateful to him.

This project would not be possible without the help of [Patrick](https://huggingface.co/patrickvonplaten) and [Suraj](https://huggingface.co/valhalla) who met with us frequently and helped review our approach and guided us throughout the project.

Huge thanks to Huggingface 🤗 & Google Jax/Flax team for such a wonderful community week and for answering our queries on the Slack channel, and for providing us with the TPU-VMs.

<img src=https://pbs.twimg.com/media/E443fPjX0AY1BsR.jpg:large>

|

flax-community/clip-vision-bert-cc12m-60k

|

flax-community

| 2021-07-21T09:17:15Z | 9 | 2 |

transformers

|

[

"transformers",

"jax",

"clip-vision-bert",

"fill-mask",

"arxiv:1908.03557",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

# CLIP-Vision-BERT Multilingual Pre-trained Model

CLIP-Vision-BERT model pre-trained on translated [Conceptual-12M](https://github.com/google-research-datasets/conceptual-12m) image-text pairs using a masked language modeling (MLM) objective. The 10M cleaned image-text pairs are translated using the [mBART-50 one-to-many model](https://huggingface.co/facebook/mbart-large-50-one-to-many-mmt) into 2.5M examples each in English, French, German and Spanish. This model is based on VisualBERT, which was introduced in

[this paper](https://arxiv.org/abs/1908.03557) and first released in

[this repository](https://github.com/uclanlp/visualbert). We trained CLIP-Vision-BERT model during community week hosted by Huggingface 🤗 using JAX/Flax.

This checkpoint is pre-trained for 60k steps.

## Model description

CLIP-Vision-BERT is a modified BERT model which takes in visual embeddings from the CLIP-Vision transformer and concatenates them with BERT textual embeddings before passing them to the self-attention layers of BERT. This is done for deep cross-modal interaction between the two modalities.

## Intended uses & limitations❗️

You can use the raw model for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task.

Note that this model is primarily aimed at being fine-tuned on tasks such as visuo-linguistic sequence classification or visual question answering. We fine-tuned this model on a multi-translated version of the visual question answering task - [VQA v2](https://visualqa.org/challenge.html). Since Conceptual-12M is a dataset scraped from the internet, it will involve some biases which will also affect all fine-tuned versions of this model.

### How to use❓

You can use this model directly with a pipeline for masked language modeling. You will need to clone the model from [here](https://github.com/gchhablani/multilingual-vqa). An example of usage is shown below:

```python

>>> from torchvision.io import read_image

>>> import numpy as np

>>> import os

>>> from transformers import CLIPProcessor, BertTokenizerFast

>>> from model.flax_clip_vision_bert.modeling_clip_vision_bert import FlaxCLIPVisionBertForMaskedLM

>>> image_path = os.path.join('images/val2014', os.listdir('images/val2014')[0])

>>> img = read_image(image_path)

>>> clip_processor = CLIPProcessor.from_pretrained('openai/clip-vit-base-patch32')

ftfy or spacy is not installed using BERT BasicTokenizer instead of ftfy.

>>> clip_outputs = clip_processor(images=img)

>>> clip_outputs['pixel_values'][0] = clip_outputs['pixel_values'][0].transpose(1,2,0) # Need to transpose images as model expected channel last images.

>>> tokenizer = BertTokenizerFast.from_pretrained('bert-base-multilingual-uncased')

>>> model = FlaxCLIPVisionBertForMaskedLM.from_pretrained('flax-community/clip-vision-bert-cc12m-60k')

>>> text = "Three teddy [MASK] in a showcase."

>>> tokens = tokenizer([text], return_tensors="np")

>>> pixel_values = np.concatenate([clip_outputs['pixel_values']])

>>> outputs = model(pixel_values=pixel_values, **tokens)

>>> indices = np.where(tokens['input_ids']==tokenizer.mask_token_id)

>>> preds = outputs.logits[indices][0]

>>> sorted_indices = np.argsort(preds)[::-1] # Get reverse sorted scores

/home/crocoder/anaconda3/lib/python3.8/site-packages/jax/_src/numpy/lax_numpy.py:4615: UserWarning: 'kind' argument to argsort is ignored.

warnings.warn("'kind' argument to argsort is ignored.")

>>> top_5_indices = sorted_indices[:5]

>>> top_5_tokens = tokenizer.convert_ids_to_tokens(top_5_indices)

>>> top_5_scores = preds[top_5_indices]

>>> print(dict(zip(top_5_tokens, top_5_scores)))

{'bears': 19.241959, 'bear': 17.700356, 'animals': 14.368396, 'girls': 14.343797, 'dolls': 14.274415}

```

## Training data 🏋🏻♂️

The CLIP-Vision-BERT model was pre-trained on a translated version of the Conceptual-12m dataset in four languages using mBART-50: English, French, German and Spanish, with 2.5M image-text pairs in each.

The dataset captions and image urls can be downloaded from [flax-community/conceptual-12m-mbart-50-translated](https://huggingface.co/datasets/flax-community/conceptual-12m-mbart-50-multilingual).

## Data Cleaning 🧹

Though the original dataset contains 12M image-text pairs, a lot of the URLs are invalid now, and in some cases, images are corrupt or broken. We remove such examples from our data, which leaves us with approximately 10M image-text pairs.

**Splits**

We used 99% of the 10M examples as a train set, and the remaining ~ 100K examples as our validation set.

## Training procedure 👨🏻💻

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a shared vocabulary size of approximately 110,000. The beginning of a new document is marked with `[CLS]` and the end of one by `[SEP]`.

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token (different from the one they replace).

- In the 10% remaining cases, the masked tokens are left as is.
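The masking rule above can be sketched in plain Python. This is a minimal illustration, not the training code used for this checkpoint: the function name, the `-100` "ignore" label, the token ids (`101`, `102`, `103`) and the vocabulary size of `110_000` are all assumptions made for the example.

```python
import random

def mask_tokens(token_ids, mask_id, vocab_size, special_ids=(), mlm_prob=0.15, rng=None):
    """Return (inputs, labels) following the 15% / 80-10-10 masking rule."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [-100] * len(inputs)  # -100 marks positions that are not predicted (assumed convention)
    for i, tok in enumerate(token_ids):
        # Never select special tokens; select other tokens with probability mlm_prob.
        if tok in special_ids or rng.random() >= mlm_prob:
            continue
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_id  # 80%: replace with [MASK]
        elif roll < 0.9:
            # 10%: replace with a random token that differs from the original
            new_tok = rng.randrange(vocab_size)
            while new_tok == tok or new_tok in special_ids:
                new_tok = rng.randrange(vocab_size)
            inputs[i] = new_tok
        # remaining 10%: keep the original token unchanged
    return inputs, labels

# Hypothetical ids: 101 = [CLS], 102 = [SEP], 103 = [MASK].
ids = [101] + list(range(1000, 1020)) + [102]
inputs, labels = mask_tokens(ids, mask_id=103, vocab_size=110_000,
                             special_ids=(101, 102, 103), rng=random.Random(0))
print(inputs)
print(labels)
```

Positions whose label is `-100` are left untouched in `inputs`; every other position carries the original token as its label, whether it was masked, randomized, or kept.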

The visual embeddings are taken from the CLIP-Vision model and combined with the textual embeddings inside the BERT embedding layer. The padding is done in the middle. Here is an example of what the embeddings look like:

```

[CLS Emb] [Textual Embs] [SEP Emb] [Pad Embs] [Visual Embs]

```

A total length of 128 tokens, including the visual embeddings, is used. The texts are truncated or padded accordingly.
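The layout described above, with padding placed between the text and the visual embeddings, can be sketched as follows. This is an illustration only: the helper name, the `[VIS]` placeholder and the choice of 50 visual positions are assumptions (the card does not say how many visual embeddings are used), and real inputs would be embedding vectors rather than strings.

```python
def build_layout(text_tokens, num_visual, max_len=128, pad="[PAD]"):
    """Lay out [CLS] text [SEP] [PAD]... [VIS]... within max_len positions."""
    text_budget = max_len - num_visual - 2   # room left for text after [CLS], [SEP] and visual slots
    if text_budget < 0:
        raise ValueError("visual embeddings do not fit in max_len")
    text = list(text_tokens)[:text_budget]   # truncate long texts
    n_pad = text_budget - len(text)          # pad short texts
    return ["[CLS]"] + text + ["[SEP]"] + [pad] * n_pad + ["[VIS]"] * num_visual

tokens = ["three", "teddy", "[MASK]", "in", "a", "showcase", "."]
layout = build_layout(tokens, num_visual=50)   # 50 is a hypothetical count
print(len(layout), layout[:10], layout[-3:])
```

Because the visual slots always occupy the last positions, only the amount of padding in the middle changes with the length of the text.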

### Pretraining

This checkpoint was trained on a Google Cloud Engine TPUv3-8 machine (335 GB of RAM, 1000 GB of hard drive, 96 CPU cores) with **8 v3 TPU cores** for 60k steps, with a per-device batch size of 64 and a max sequence length of 128. The optimizer is Adafactor with a learning rate of 1e-4, learning rate warmup for 5,000 steps, and linear decay of the learning rate afterwards.
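The learning rate schedule described above can be written as a small function. This is a sketch under two assumptions the card does not confirm: that warmup starts from zero, and that the linear decay reaches zero exactly at the last of the 60k steps. The function name is illustrative.

```python
def learning_rate(step, peak_lr=1e-4, warmup_steps=5_000, total_steps=60_000):
    """Linear warmup to peak_lr, then linear decay to zero at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / (total_steps - warmup_steps)

for step in (0, 2_500, 5_000, 32_500, 60_000):
    print(step, learning_rate(step))
```

The same shape applies to the fine-tuning run with a different peak, warmup length and total step count.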

We tracked experiments using TensorBoard. Here is the link to the main dashboard: [CLIP Vision BERT CC12M Pre-training Dashboard](https://huggingface.co/flax-community/multilingual-vqa-pt-ckpts/tensorboard)

#### **Pretraining Results 📊**

The model at this checkpoint reached an **eval accuracy of 67.53%**, with a **train loss of 1.793 and an eval loss of 1.724**.

## Fine Tuning on downstream tasks

We performed fine-tuning on downstream tasks. We used the following datasets for visual question answering:

1. Multilingual version of [Visual Question Answering (VQA) v2](https://visualqa.org/challenge.html) - We translated this dataset into the four languages using `Helsinki-NLP` Marian models. The translated data can be found at [flax-community/multilingual-vqa](https://huggingface.co/datasets/flax-community/multilingual-vqa).

The checkpoints for the fine-tuned model on this pre-trained checkpoint can be found [here](https://huggingface.co/flax-community/multilingual-vqa-pt-60k-ft/tensorboard).

The fine-tuned model achieves eval accuracy of 49% on our validation dataset.

## Team Members

- Gunjan Chhablani [@gchhablani](https://hf.co/gchhablani)

- Bhavitvya Malik [@bhavitvyamalik](https://hf.co/bhavitvyamalik)

## Acknowledgements

We thank [Nilakshan Kunananthaseelan](https://huggingface.co/knilakshan20) for helping us whenever he could get a chance. We also thank [Abheesht Sharma](https://huggingface.co/abheesht) for helping in the discussions in the initial phases. [Luke Melas](https://github.com/lukemelas) helped us get the CC-12M data on our TPU-VMs and we are very grateful to him.

This project would not be possible without the help of [Patrick](https://huggingface.co/patrickvonplaten) and [Suraj](https://huggingface.co/valhalla) who met with us frequently and helped review our approach and guided us throughout the project.

Huge thanks to Huggingface 🤗 & Google Jax/Flax team for such a wonderful community week and for answering our queries on the Slack channel, and for providing us with the TPU-VMs.

<img src=https://pbs.twimg.com/media/E443fPjX0AY1BsR.jpg:large>

|

junnyu/uer_large

|

junnyu

| 2021-07-21T08:42:35Z | 4 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"zh",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language: zh

tags:

- bert

- pytorch

widget:

- text: "巴黎是[MASK]国的首都。"

---

MixedCorpus+BertEncoder(large)+MlmTarget from https://github.com/dbiir/UER-py/wiki/Modelzoo

https://share.weiyun.com/5G90sMJ

Pre-trained on mixed large Chinese corpus. The configuration file is bert_large_config.json

## Citation

```tex

@article{zhao2019uer,

title={UER: An Open-Source Toolkit for Pre-training Models},

author={Zhao, Zhe and Chen, Hui and Zhang, Jinbin and Zhao, Xin and Liu, Tao and Lu, Wei and Chen, Xi and Deng, Haotang and Ju, Qi and Du, Xiaoyong},

journal={EMNLP-IJCNLP 2019},

pages={241},

year={2019}

}

```

|

huggingtweets/chiefkeef

|

huggingtweets

| 2021-07-21T02:53:01Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: https://www.huggingtweets.com/chiefkeef/1626835977590/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/964824116237713408/JVM90sUV_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Glory Boy</div>

<div style="text-align: center; font-size: 14px;">@chiefkeef</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Glory Boy.

| Data | Glory Boy |
| --- | --- |
| Tweets downloaded | 3213 |
| Retweets | 89 |
| Short tweets | 930 |
| Tweets kept | 2194 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2e3hy76x/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
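The counts in the table are consistent with a simple reading in which retweets and short tweets are dropped and everything else is kept. The check below is only a sketch of that reading; the card does not describe the filtering steps in detail, and the variable names are illustrative.

```python
# Counts copied from the table above.
downloaded = 3213
retweets = 89
short_tweets = 930
kept = 2194

filtered_out = retweets + short_tweets
assert downloaded - filtered_out == kept   # the table's numbers add up under this reading

print(f"kept {kept / downloaded:.1%} of downloaded tweets")
```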

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @chiefkeef's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2f5mhzg7) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2f5mhzg7/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/chiefkeef')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

huggingtweets/plesmasquerade

|

huggingtweets

| 2021-07-21T02:40:45Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: https://www.huggingtweets.com/plesmasquerade/1626834982015/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1415803411002314752/X0K3MR1R_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">lovely lovely aerie, 🍭👑🪞🕯️🌙💫🪶🧣🗑️🔪</div>

<div style="text-align: center; font-size: 14px;">@plesmasquerade</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from lovely lovely aerie, 🍭👑🪞🕯️🌙💫🪶🧣🗑️🔪.

| Data | lovely lovely aerie, 🍭👑🪞🕯️🌙💫🪶🧣🗑️🔪 |
| --- | --- |
| Tweets downloaded | 3235 |
| Retweets | 1376 |
| Short tweets | 330 |
| Tweets kept | 1529 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/39gtjjjo/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @plesmasquerade's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/6jt0gb2r) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/6jt0gb2r/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/plesmasquerade')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

ifis-zork/IFIS_ZORK_AI_MEDIUM_HORROR

|

ifis-zork

| 2021-07-20T23:14:58Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

tags:

- generated_from_trainer

model_index:

- name: IFIS_ZORK_AI_MEDIUM_HORROR

results:

- task:

name: Causal Language Modeling

type: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# IFIS_ZORK_AI_MEDIUM_HORROR

This model is a fine-tuned version of [gpt2-medium](https://huggingface.co/gpt2-medium) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 3
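For anyone trying to reproduce a similar run, the hyperparameters above map onto keyword arguments of `transformers.TrainingArguments` roughly as follows. This is a sketch, not the original training script: it assumes a single device (so that `train_batch_size` equals the per-device batch size), and the output directory mentioned in the comment is a placeholder.

```python
# Keyword names follow transformers.TrainingArguments; values come from the list above.
training_kwargs = {
    "learning_rate": 5e-5,
    "per_device_train_batch_size": 1,   # assumes one device, so this equals train_batch_size
    "per_device_eval_batch_size": 2,
    "seed": 42,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "warmup_steps": 200,
    "num_train_epochs": 3,
}

# With transformers installed, these could be passed as
# TrainingArguments(output_dir="out", **training_kwargs), where "out" is a placeholder path.
for name, value in training_kwargs.items():
    print(f"{name} = {value}")
```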

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Tokenizers 0.10.3

|

espnet/kan-bayashi_csmsc_conformer_fastspeech2

|

espnet

| 2021-07-20T21:31:29Z | 8 | 1 |

espnet

|

[

"espnet",

"audio",

"text-to-speech",

"zh",

"dataset:csmsc",

"arxiv:1804.00015",

"license:cc-by-4.0",

"region:us"

] |

text-to-speech

| 2022-03-02T23:29:05Z |

---

tags:

- espnet

- audio

- text-to-speech

language: zh

datasets:

- csmsc

license: cc-by-4.0

---

## ESPnet2 TTS pretrained model

### `kan-bayashi/csmsc_conformer_fastspeech2`

♻️ Imported from https://zenodo.org/record/4031955/

This model was trained by kan-bayashi using csmsc/tts1 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```python

# coming soon

```

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{hayashi2020espnet,

title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},

booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7654--7658},

year={2020},

organization={IEEE}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

ifis-zork/ZORK_AI_FAN_TEMP

|

ifis-zork

| 2021-07-20T19:58:38Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

tags:

- generated_from_trainer

model_index:

- name: ZORK_AI_FAN_TEMP

results:

- task:

name: Causal Language Modeling

type: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ZORK_AI_FAN_TEMP

This model is a fine-tuned version of [gpt2-medium](https://huggingface.co/gpt2-medium) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Tokenizers 0.10.3

|

ritog/bangla-gpt2

|

ritog

| 2021-07-20T15:22:47Z | 12 | 2 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"bn",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: bn

tags:

- text-generation

widget:

- text: আজ একটি সুন্দর দিন এবং আমি

---

# Bangla-GPT2

### A GPT-2 Model for the Bengali Language

* Dataset: mc4 Bengali

* Training time: ~40 hours

* Written in: JAX

If you use this model, please cite:

```

@misc{bangla-gpt2,

author = {Ritobrata Ghosh},

year = {2016},

title = {Bangla GPT-2},

publisher = {Hugging Face}

}

```

|

idrimadrid/autonlp-creator_classifications-4021083

|

idrimadrid

| 2021-07-20T12:57:16Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"autonlp",

"en",

"dataset:idrimadrid/autonlp-data-creator_classifications",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- idrimadrid/autonlp-data-creator_classifications

---

# Model Trained Using AutoNLP

- Problem type: Multi-class Classification

- Model ID: 4021083

## Validation Metrics

- Loss: 0.6848716735839844

- Accuracy: 0.8825910931174089

- Macro F1: 0.41301646762109634

- Micro F1: 0.8825910931174088

- Weighted F1: 0.863740586166105

- Macro Precision: 0.4129337301330573

- Micro Precision: 0.8825910931174089

- Weighted Precision: 0.8531335941587811

- Macro Recall: 0.44466614072309585

- Micro Recall: 0.8825910931174089

- Weighted Recall: 0.8825910931174089
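The gap between the micro and macro scores above is typical of imbalanced classes: micro averaging pools all predictions (and equals accuracy for single-label classification), while macro averaging gives every class equal weight. The sketch below illustrates the effect on invented toy labels; it is not the AutoNLP evaluation code, and the function name is illustrative.

```python
from collections import Counter

def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1   # predicted class gains a false positive
            fn[t] += 1   # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro

# Toy data: a frequent class "a" and a rare class "b" that is always missed.
y_true = ["a"] * 9 + ["b"]
y_pred = ["a"] * 10
micro, macro = f1_scores(y_true, y_pred)
print(f"micro F1 = {micro:.3f}, macro F1 = {macro:.3f}")
```

In the toy example the classifier ignores the rare class entirely, so the micro score stays high while the macro score drops by about half.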

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/idrimadrid/autonlp-creator_classifications-4021083

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("idrimadrid/autonlp-creator_classifications-4021083", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("idrimadrid/autonlp-creator_classifications-4021083", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

```

|

BumBelDumBel/ZORK_AI_SCIFI

|

BumBelDumBel

| 2021-07-19T14:51:33Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

---

tags:

- generated_from_trainer

model_index:

- name: ZORK_AI_SCIFI

results:

- task:

name: Causal Language Modeling

type: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ZORK_AI_SCIFI

This model is a fine-tuned version of [gpt2-medium](https://huggingface.co/gpt2-medium) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.9.0+cu102

- Tokenizers 0.10.3

|

flax-community/mr-indicnlp-classifier

|

flax-community

| 2021-07-19T12:53:33Z | 10 | 1 |

transformers

|

[

"transformers",

"pytorch",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

# IndicNLP Marathi News Classifier

This model was fine-tuned using [Marathi RoBERTa](https://huggingface.co/flax-community/roberta-base-mr) on [IndicNLP Marathi News Dataset](https://github.com/AI4Bharat/indicnlp_corpus#indicnlp-news-article-classification-dataset).

## Dataset

The IndicNLP Marathi news dataset consists of 3 classes - `['lifestyle', 'entertainment', 'sports']` - with the following document distribution across splits:

| train | eval | test |
| ----- | ---- | ---- |
| 9672 | 477 | 478 |

💯 Our **`mr-indicnlp-classifier`** model, fine-tuned from the pretrained Marathi RoBERTa model **roberta-base-mr**, outperformed both classifiers mentioned in [Arora, G. (2020). iNLTK](https://www.semanticscholar.org/paper/iNLTK%3A-Natural-Language-Toolkit-for-Indic-Languages-Arora/5039ed9e100d3a1cbbc25a02c82f6ee181609e83/figure/3) and [Kunchukuttan, Anoop et al. AI4Bharat-IndicNLP.](https://www.semanticscholar.org/paper/AI4Bharat-IndicNLP-Corpus%3A-Monolingual-Corpora-and-Kunchukuttan-Kakwani/7997d432925aff0ba05497d2893c09918298ca55/figure/4)

| Dataset | FT-W | FT-WC | INLP | iNLTK | **roberta-base-mr 🏆** |
| --------------- | ----- | ----- | ----- | ----- | --------------------- |
| iNLTK Headlines | 83.06 | 81.65 | 89.92 | 92.4 | **97.48** |

|

flax-community/Sinhala-gpt2

|

flax-community

| 2021-07-19T11:20:34Z | 10 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"tensorboard",

"gpt2",

"feature-extraction",

"Sinhala",

"text-generation",

"si",

"dataset:mc4",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: si

tags:

- Sinhala

- text-generation

- gpt2

datasets:

- mc4

---

# Sinhala GPT2 trained on MC4 (manually cleaned)

### Overview

This is a smaller GPT2 model trained on the [MC4](https://github.com/allenai/allennlp/discussions/5056) Sinhala dataset. As Sinhala is a low-resource language, only a handful of models have been trained for it, so this model is a good starting point for training on downstream tasks.

This model uses a manually cleaned version of the MC4 dataset, which can be found [here](https://huggingface.co/datasets/keshan/clean-si-mc4). Although the dataset is relatively small (~3GB), the model fine-tuned on [news articles](https://huggingface.co/keshan/sinhala-gpt2-newswire) generates good and acceptable results.

## Model Specification

The model chosen for training is GPT2 with the following specifications:

1. vocab_size=50257

2. n_embd=768

3. n_head=12

4. n_layer=12

5. n_positions=1024
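To get a feel for the size these settings imply, the parameter count of a GPT-2 style decoder can be estimated directly from them. This is a back-of-the-envelope sketch: it assumes the standard GPT-2 layout (MLP width of 4 × `n_embd`, biases on all linear layers, output embedding tied to the input embedding), none of which is stated on this card. `n_head` does not change the count.

```python
def gpt2_param_count(vocab_size, n_embd, n_layer, n_positions, mlp_ratio=4):
    """Count parameters of a GPT-2 style decoder with tied input/output embeddings."""
    embeddings = vocab_size * n_embd + n_positions * n_embd      # token + position tables
    layer_norm = 2 * n_embd                                      # scale + bias
    attention = (n_embd * 3 * n_embd + 3 * n_embd) + (n_embd * n_embd + n_embd)  # QKV + output projection
    inner = mlp_ratio * n_embd
    mlp = (n_embd * inner + inner) + (inner * n_embd + n_embd)   # up + down projection
    per_layer = 2 * layer_norm + attention + mlp
    return embeddings + n_layer * per_layer + layer_norm         # plus the final layer norm

total = gpt2_param_count(vocab_size=50257, n_embd=768, n_layer=12, n_positions=1024)
print(f"{total:,} parameters")
```

Under these assumptions the configuration comes to roughly 124M parameters, the size of the smallest GPT-2 variant.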

## How to Use

You can use this model directly with a pipeline for causal language modeling:

```py

from transformers import pipeline

generator = pipeline('text-generation', model='flax-community/Sinhala-gpt2')

generator("මම", max_length=50, num_return_sequences=5)

```

|

flax-community/wav2vec2-dhivehi

|

flax-community

| 2021-07-19T09:40:30Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"tensorboard",

"wav2vec2",

"pretraining",

"automatic-speech-recognition",

"dv",

"dataset:common_voice",

"arxiv:2006.11477",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-02T23:29:05Z |

---

language: dv

tags:

- automatic-speech-recognition

datasets:

- common_voice

---

# Wav2Vec2 Dhivehi

Wav2Vec2 pre-trained from scratch on the Common Voice Dhivehi dataset. The model was trained with Flax during the [Flax/Jax Community Week](https://discss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104) organised by HuggingFace.

## Model description

The model used for training is [Wav2Vec2](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/) by FacebookAI. It was introduced in the paper

"wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations" by Alexei Baevski, Henry Zhou, Abdelrahman Mohamed, and Michael Auli (https://arxiv.org/abs/2006.11477).

This model is available in the 🤗 [Model Hub](https://huggingface.co/facebook/wav2vec2-base-960h).

## Training data

Dhivehi data from [Common Voice](https://commonvoice.mozilla.org/en/datasets).

The dataset is also available in the 🤗 [Datasets](https://huggingface.co/datasets/common_voice) library.

## Team members

- Shahu Kareem ([@shahukareem](https://huggingface.co/shahukareem))

- Eyna ([@eyna](https://huggingface.co/eyna))

|

flax-community/t5-vae-wiki

|

flax-community

| 2021-07-19T07:03:14Z | 3 | 0 |

transformers

|

[

"transformers",

"jax",

"transformer_vae",

"vae",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

---

language: en

tags: vae

license: apache-2.0

---

# T5-VAE-Wiki (flax)

A Transformer-VAE made using flax.

It has been trained to interpolate on sentences from Wikipedia.

Done as part of Huggingface community training ([see forum post](https://discuss.huggingface.co/t/train-a-vae-to-interpolate-on-english-sentences/7548)).

Builds on T5, using an autoencoder to convert it into an MMD-VAE ([more info](http://fras.uk/ml/large%20prior-free%20models/transformer-vae/2020/08/13/Transformers-as-Variational-Autoencoders.html)).
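Interpolating between two sentences with a VAE usually means encoding each sentence to a latent vector, walking along the straight line between the two vectors, and decoding each intermediate point. The sketch below shows only the middle step on plain Python lists; how this model exposes its encoder and decoder is not shown on this card, so the latent vectors here are made-up placeholders and the function name is illustrative.

```python
def interpolate(z_start, z_end, num_points=5):
    """Return num_points latent vectors evenly spaced from z_start to z_end."""
    if num_points < 2:
        raise ValueError("need at least two points")
    if len(z_start) != len(z_end):
        raise ValueError("latent vectors must have the same length")
    path = []
    for k in range(num_points):
        t = k / (num_points - 1)   # 0.0 at the start, 1.0 at the end
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Placeholder 2-dimensional latents; real latents would come from the model's encoder.
path = interpolate([0.0, 2.0], [1.0, 0.0], num_points=3)
print(path)
```

Each vector in `path` would then be passed to the decoder to produce one sentence along the interpolation.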

## How to use from the 🤗/transformers library

Add model repo as a submodule:

```bash

git submodule add https://github.com/Fraser-Greenlee/t5-vae-flax.git t5_vae_flax

```

```python

from transformers import AutoTokenizer

from t5_vae_flax.src.t5_vae import FlaxT5VaeForAutoencoding

tokenizer = AutoTokenizer.from_pretrained("t5-base")

model = FlaxT5VaeForAutoencoding.from_pretrained("flax-community/t5-vae-wiki")

```

## Setup

Run `setup_tpu_vm_venv.sh` to set up a virtual environment on a TPU VM for training.

|

andi611/distilbert-base-uncased-qa-with-ner

|

andi611

| 2021-07-19T01:20:54Z | 31 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:05Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

model_index:

- name: distilbert-base-uncased-qa-with-ner

results:

- task:

name: Question Answering

type: question-answering

dataset:

name: conll2003

type: conll2003

args: conll2003

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-qa-with-ner

This model is a fine-tuned version of [andi611/distilbert-base-uncased-qa](https://huggingface.co/andi611/distilbert-base-uncased-qa) on the conll2003 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0
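
For readers unfamiliar with the optimizer settings listed above, here is a minimal sketch of a single Adam update on one scalar parameter using the same learning rate, betas, and epsilon. It is only an illustration of the update rule: the function name and the toy gradient are hypothetical, and the sketch ignores weight decay and the linear learning-rate schedule that the actual training run applied.

```python
import math


def adam_step(param, grad, m, v, step, lr=3e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; step counts from 1."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = m / (1.0 - beta1 ** step)
    v_hat = v / (1.0 - beta2 ** step)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy values: a parameter at 1.0 and a hypothetical gradient of 0.5.
param, m, v = 1.0, 0.0, 0.0
param, m, v = adam_step(param, grad=0.5, m=m, v=v, step=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate regardless of the gradient's magnitude.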

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.8.1+cu111

- Datasets 1.8.0

- Tokenizers 0.10.3

|

huggingtweets/ellis_hughes

|

huggingtweets

| 2021-07-18T18:42:16Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: https://www.huggingtweets.com/ellis_hughes/1626633732954/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1004536007012651008/ZWJUeJ2W_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Ellis Hughes</div>

<div style="text-align: center; font-size: 14px;">@ellis_hughes</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Ellis Hughes.

| Data | Ellis Hughes |

| --- | --- |

| Tweets downloaded | 2170 |

| Retweets | 396 |

| Short tweets | 91 |

| Tweets kept | 1683 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3rqrdlum/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
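
The counts in the table above are consistent with the kept tweets being what remains after retweets and short tweets are removed. The snippet below simply checks that arithmetic; the subtraction rule is inferred from the numbers and is not a statement about how the huggingtweets pipeline filters data internally.

```python
# Counts copied from the training-data table.
downloaded = 2170
retweets = 396
short_tweets = 91

# Inferred relationship: kept = downloaded - retweets - short tweets.
kept = downloaded - retweets - short_tweets
kept_fraction = kept / downloaded

print(f"kept {kept} of {downloaded} tweets ({kept_fraction:.1%})")
```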

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @ellis_hughes's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/3n17xu9k) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/3n17xu9k/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation', model='huggingtweets/ellis_hughes')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

sehandev/koelectra-qa

|

sehandev

| 2021-07-18T14:21:05Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"electra",

"question-answering",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:05Z |

---

tags:

- generated_from_trainer

model_index:

- name: koelectra-qa

results:

- task:

name: Question Answering

type: question-answering

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# koelectra-qa

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 256

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 5
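
To make the `linear` scheduler with 100 warmup steps concrete, the sketch below computes the learning rate at a given step: it ramps linearly from 0 to the base rate during warmup, then decays linearly to 0 at the end of training. The total number of steps is not stated in this card (the dataset is unknown), so `TOTAL_STEPS` is a hypothetical value, and the function is a plain-Python approximation rather than the Trainer's own code.

```python
def linear_lr(step, total_steps, base_lr=5e-5, warmup_steps=100):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Warmup: scale the base rate by the fraction of warmup completed.
        return base_lr * step / max(1, warmup_steps)
    # Decay: scale by the fraction of post-warmup steps still remaining.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 1000  # hypothetical; depends on dataset size, batch size, and epochs

schedule = {step: linear_lr(step, TOTAL_STEPS) for step in (0, 50, 100, 550, 1000)}
```

With these assumed values the rate peaks at the base learning rate exactly when warmup ends (step 100) and is back at half that value by step 550.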

### Training results

### Framework versions

- Transformers 4.8.2

- Pytorch 1.8.1

- Datasets 1.9.0

- Tokenizers 0.10.3

|

jacobshein/danish-bert-botxo-qa-squad

|

jacobshein

| 2021-07-18T11:19:49Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"question-answering",

"danish",

"question answering",

"squad",

"machine translation",

"botxo",

"da",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:05Z |

---

language: da

tags:

- danish

- bert

- question answering

- squad

- machine translation

- botxo

license: cc-by-4.0

datasets:

- common_crawl

- wikipedia

- dindebat.dk

- hestenettet.dk

- danish OpenSubtitles

widget:

- context: Stine sagde hej, men Jacob sagde halløj.

---

# Danish BERT (version 2, uncased) by [BotXO](https://github.com/botxo/nordic_bert) fine-tuned for Question Answering (QA) on the [machine-translated SQuAD-da dataset](https://github.com/ccasimiro88/TranslateAlignRetrieve/tree/multilingual/squads-tar/da)

```python

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("jacobshein/danish-bert-botxo-qa-squad")

model = AutoModelForQuestionAnswering.from_pretrained("jacobshein/danish-bert-botxo-qa-squad")

```

#### Contact

For further information on usage or fine-tuning procedure, please reach out by email through [jacobhein.com](https://jacobhein.com/#contact).

|

johnpaulbin/gpt2-skript-80-v3

|

johnpaulbin

| 2021-07-18T04:53:22Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",